Abstract

Background

Neural activity in basolateral amygdala has recently been shown to reflect surprise or attention as predicted by the Pearce-Kaye-Hall model (PKH)—an influential model of associative learning. Theoretically, a PKH attentional signal originates in prediction errors of the kind associated with phasic firing of dopamine neurons. This requirement for prediction errors, coupled with projections from the midbrain dopamine system into basolateral amygdala, suggests that the PKH signal in amygdala may depend on dopaminergic input.

Methods

To test this, we recorded single unit activity in basolateral amygdala in rats with 6-OHDA or sham lesions of the ipsilateral midbrain region. Neurons were recorded as the rats performed a task previously used to demonstrate both dopaminergic reward prediction errors and attentional signals in basolateral amygdala neurons.

Results

We found that neurons recorded in sham lesioned rats exhibited the same attention-related PKH signal observed in previous studies. By contrast, neurons recorded in rats with ipsilateral 6-OHDA lesions failed to show attentional signaling.

Conclusions

These results indicate a linkage between the neural instantiations of the ABL attentional signal and dopaminergic prediction errors. Such a linkage would have important implications for understanding both normal and aberrant learning and behavior, particularly in diseases thought to have a primary effect on dopamine systems, such as addiction and schizophrenia.

Keywords: attention, prediction error, dopamine, salience, schizophrenia, rat

INTRODUCTION

Evidence implicates the basolateral complex of the amygdala (ABL) in learning about rewards and punishments (1). More recently, a spate of studies has shown that reward- and punishment-related processing in ABL is influenced by whether these outcomes are expected (2–7). Hitherto, variations in outcome processing based on expectancies have most often been associated with phasic firing of midbrain dopamine (DA) neurons (8–11). DA neurons increase firing whenever reward is better than expected but suppress firing whenever reward is worse than expected. Although the pattern of activity of ABL neurons shares some of these features, it differs substantially in its details (3). Like DA neurons, a subpopulation of ABL neurons initially increase firing in the face of unexpected upshifts in reward, but they also increase firing in the face of downshifts. Furthermore, this surge in activity is not maximal on the first trial in which reward value is shifted, but rather peaks later, thus lagging the time course of phasic DA activity. A popular interpretation of phasic DA firing is that it reflects encoding of signed prediction errors (8, 12–14). According to this view, the surge in DA firing that accompanies an upshift signals a positive prediction error that contributes to establishing the cue as a predictor of a larger reward (i.e. excitatory learning). Inhibition of DA firing following a downshift, on the other hand, conveys a negative prediction error that serves to establish the cue as a predictor of a smaller reward (i.e. inhibitory learning). In this way signed prediction errors dictate both the amount and the direction of learning.

In contrast, surges in activity in ABL neurons to both upshifts and downshifts are reminiscent of the unsigned prediction errors employed by attentional models of conditioning (15, 16). Strictly speaking, most models utilize a straightforward unsigned prediction error, which requires that maximal activity be observed on the same trial as the reward shift. However, a lagging unsigned prediction error is precisely what the amended Pearce-Hall model (17) proposes (Pearce, Kaye & Hall, henceforward PKH) as a mechanism for attentional changes in learning. According to the PKH model, the amount of attention received by a cue is inversely related to how well the cue has predicted past reward. Thus, a cue that has reliably signaled reward (i.e. one that leads to no error) will receive relatively little attention, since no further learning is needed. Conversely, a cue that predicts reward poorly (i.e. one that leads to large errors, whatever their sign) is expected to receive substantial attention, thereby allowing the animal to determine the nature of the cue’s relationship—if any—with the outcome. For the system’s dynamics to be efficient, however, attentional changes should be somewhat gradual, allowing evidence of a contingency shift to accumulate, instead of reaching maxima or minima on the basis of a single, potentially spurious, occurrence. For this reason the model utilizes lagging unsigned prediction errors to allocate attentional resources (for more details about the model, see Supplementary Material). Support for a PKH-based interpretation of the ABL signal comes from the observation that ABL must be online for orienting responses towards the cues—a commonly used index of attention—to be affected by upshifts or downshifts in expected rewards (3).
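The difference between a signed error and a lagging unsigned, PKH-style attention term can be illustrated with a short simulation. This is a minimal sketch, not the model fit used in the study. The attention update α ← γ|δ| + (1 − γ)α follows the standard Pearce-Kaye-Hall form; the attention-gated value update, the parameter values, the reward magnitudes, and the names `simulate_shift` and `peak_trial` are assumptions chosen for illustration.

```python
def simulate_shift(old_reward, new_reward, n_trials=8,
                   gamma=0.3, eta=0.5, alpha0=0.0):
    """Simulate the trials that follow a shift in reward value.

    Returns (signed_errors, attention), one value per trial.
    All parameter values are illustrative assumptions.
    """
    value = old_reward   # the old reward is fully predicted before the shift
    alpha = alpha0       # attention is low for a well-predicted outcome
    signed_errors, attention = [], []
    for _ in range(n_trials):
        delta = new_reward - value                        # signed prediction error
        alpha = gamma * abs(delta) + (1 - gamma) * alpha  # lagging unsigned error
        signed_errors.append(delta)
        attention.append(alpha)
        value += eta * alpha * delta                      # attention-gated learning (assumed form)
    return signed_errors, attention


def peak_trial(series):
    """1-based index of the trial with the largest absolute value."""
    magnitudes = [abs(x) for x in series]
    return magnitudes.index(max(magnitudes)) + 1


up_err, up_att = simulate_shift(old_reward=1.0, new_reward=2.0)    # upshift
down_err, down_att = simulate_shift(old_reward=1.0, new_reward=0.0)  # downshift

print("upshift: signed error peaks on trial", peak_trial(up_err))
print("upshift: attention peaks on trial", peak_trial(up_att))
print("downshift: signed error on trial 1 =", down_err[0])
print("downshift: attention peaks on trial", peak_trial(down_att))
```

With these assumed parameters, the signed error is largest on the first trial after the shift and changes sign between upshifts and downshifts, whereas the attention term rises over the first few trials before declining and takes the same course for both shift types.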

Of course, the PKH model derives lagging unsigned prediction errors from signed prediction errors. The strong dopaminergic input from the ventral tegmental area (VTA) into ABL (18) suggests that the ABL signal might depend on signed prediction error signals provided by dopaminergic input. To test this, we recorded single unit activity in ABL in rats with 6-OHDA or sham lesions of the ipsilateral VTA during performance of a task in which rats learned to respond to surprising reward upshifts and downshifts.

MATERIALS AND METHODS

Subjects, behavioral apparatus and testing

Male Long-Evans rats served as subjects, tested at the University of Maryland, Baltimore in accordance with SOM and NIH guidelines. Equipment and training were identical to those in prior experiments (3). Briefly, training used aluminum chambers. A central odor port was located above two adjacent fluid wells on a panel in the right wall of each chamber. On each trial, a nosepoke into the odor port resulted in delivery of the odor cue. One of three different odors was delivered at the port on each trial. One odor instructed the rat to go to the left to get reward (10% sucrose solution), a second odor instructed the rat to go to the right, and a third odor indicated that the rat could obtain reward at either well. After shaping, we introduced blocks in which we independently manipulated the size or timing of reward in one well, as described in the results and elsewhere (3). After initial training on the task, rats underwent surgery to implant electrodes.

Surgery, single-unit recording and data analysis

Surgical procedures were identical to those described previously (3), except rats received saline or 6-OHDA in VTA (1.0-µL injection of 6 µg/µL 6-OHDA in a PBS-0.1% (w/v) ascorbic acid vehicle at 5.4 mm posterior to bregma, 0.67 laterally, and 8.21 mm ventral to the brain surface). Rats recovered after the surgery, were retrained, and recording began once their performance was accurate and stable. Procedures were identical to those described previously (3). At the end of recording, brains were removed, fixed, and sectioned; one series was processed for Nissl staining to verify electrode placement and another for tyrosine hydroxylase (TH) immunoreactivity to evaluate the VTA lesions.

RESULTS

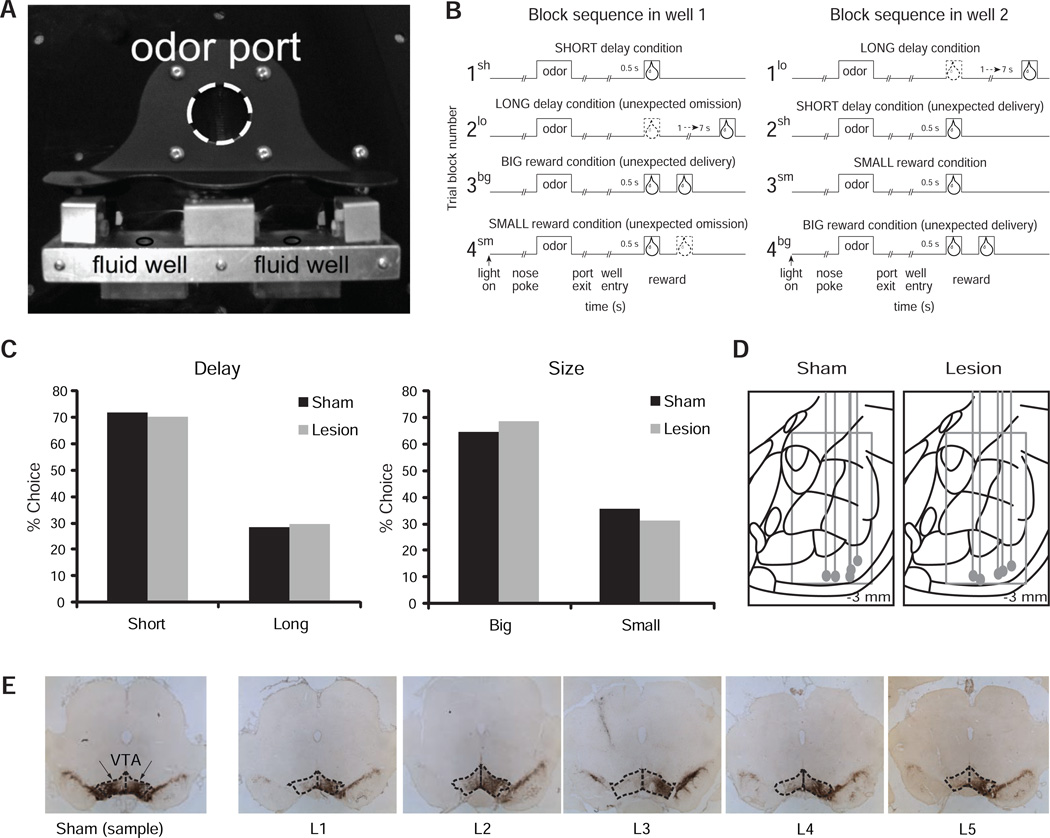

Neuronal activity in ABL was recorded while rats performed a choice task. On each trial, rats were required to nose-poke at an odor port where one of three possible odors could be presented. Odors predicted reward at one of two adjacent fluid wells situated below the odor port (Fig. 1A). Odor 1 signaled that the sucrose reward would be delivered in the left well (forced-left), Odor 2 that it would be delivered in the right well (forced-right), and Odor 3 that it would be made available in either well (free-choice). In each session, the relative value of the reward experienced in each well was manipulated across four blocks of trials (Fig. 1B) by changing its size (big vs. small) or timing (short vs. long). Thus, three blocks contained an unexpected increment or upshift in reward value at one of the wells (Fig. 1B, blocks 2sh, 3bg, and 4bg) and two blocks contained an unexpected decrement or downshift (Fig. 1B, blocks 2lo and 4sm). Both lesioned and sham rats visited the well containing the more valuable reward more often on free-choice trials (Fig. 1C). A two-way ANOVA with the factors of Lesion (lesion vs. sham) and Value (high vs. low reward value) confirmed this observation: a main effect of Value was found, F(1,8) = 345.98, but no effect of Lesion or Lesion × Value interaction (Fs < 1). Moreover, on forced-choice trials, both groups showed greater accuracy (two-way ANOVA: main effect of Value, F(1,8)=27.33, but no effect of Lesion or Lesion × Value interaction, Fs<1) and shorter latencies to leave the odor port (two-way ANOVA: main effect of Value, F(1,8) = 19.54, but no effect of Lesion or Lesion × Value interaction, Fs < 1) when the more valuable reward was at stake.

Figure 1. Behavioral task, performance during recording sessions, and histology.

A, Picture of the set-up in odor boxes. B, Task schematic. Line deflections indicate the time course of stimuli (odors and rewards) presented to the animal on each trial. Other trial events are listed below. At the start of each recording session, one well was randomly designated as short (a 0.5 s delay before reward) and the other long (a 1–7 s delay before reward) (block 1). In the second block of trials these contingencies were switched (block 2). In blocks 3–4, we held the delay constant while manipulating the number of the rewards delivered. C, Behavior on free choice trials lawfully reflects the difference in reward delay (left) and size (right) between wells. The bar graphs show the average performance (percent correct) of sham and 6-OHDA rats across all recording sessions. D, Location of recording tracks within amygdala for each rat in each group. Diagrams were drawn using the CD version of Paxinos and Watson’s atlas (68). Final electrode position, determined histologically, is indicated by the gray dot at the end of each track. Distance traveled for each session was calculated from this point to determine if neurons were within basolateral amygdala. E, The left-most section shows the intact midbrain region of a sample sham rat (dopamine neurons appear stained in dark brown). The area corresponding to the VTA is enclosed in dashed lines. Extensive dopaminergic damage is evident ipsilateral to the recording electrode in the remaining sections belonging to each of the five lesioned rats.

We recorded 335 ABL neurons in 5 sham rats performing this task (Fig. 1D); histology showed preserved staining for tyrosine hydroxylase (Fig. 1E; compare contra vs. ipsilateral staining). Consistent with numerous reports that a subset of ABL neurons encodes reward value (2, 19–25), 69 neurons (21%) significantly increased firing during the odor cue epoch (t-test, p < 0.05), some of which also encoded size- or delay-generated differences in expected reward value (high value: 26; low value: 29). In addition, 87 neurons (26%) significantly increased firing after reward delivery (1 s) relative to baseline (1 s before nosepoke) across all trial types, including 72 (83%) that fired differentially after learning based on either the delay (short vs. long; t-test; p < 0.05) or size (big vs. small; t-test; p < 0.05) of reward. Importantly, the activity of these neurons differed between the start and the end of trial blocks even though the actual reward being delivered was the same. This pattern of activity is apparent in average activity of all reward-responsive ABL neurons at the beginning and end of the four trial blocks. Inspection of upshift (Fig 2A; 2sh, 4bg, and 3bg) and downshift blocks (Fig 2A; 2lo and 4sm) in the figure reveals that activity was higher initially—while the value of the reward was surprising—but declined toward the end of the blocks, once the rat had learned the new values. This effect was also evident in an analysis of the relative activity of each neuron at the start vs. the end of blocks. For this, we represented the activity in each neuron as a contrast ratio of the form (early−late)/(early+late), using the maximum firing in the first and last 5 trials in each relevant block. The distributions of this contrast ratio appear shifted above zero, indicating that the populations tended to fire more early than late in both upshift and downshift blocks (Fig. 2B). 
This observation was confirmed by a Wilcoxon analysis, which revealed an overall significant effect (z = 2.68; p < 0.01; u = 0.055). Independent analyses showed a significant shift for upshifts (Fig. 2B-i; z = 2.38; p < 0.05; u = 0.058) and a marginally significant shift for downshifts (Fig. 2B-ii; z = 1.89; p = 0.059; u = 0.053). In general, weaker effects for downshifts relative to upshifts replicate previous reports on this effect (3, 26) and would be consistent with the greater salience of reward (an event that is directly perceived) over omission (an event that entirely depends on the contrast with a stored representation), which is supported by the fact that acquisition is generally faster than extinction (27). At any rate, separate analyses for upshifts versus downshifts showed no difference between them (Wilcoxon; z = 0.58; p = 0.56), and changes in firing produced by upshifts and downshifts were positively correlated (p < 0.01; r2 = 0.093; Fig. 2B-iii).
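The contrast ratio described above can be written out explicitly. The following is a minimal sketch of that index, taking the maximum firing in the first and last 5 trials of a block as stated in the text. The function name `contrast_index`, the handling of a silent neuron, and the firing rates are hypothetical and serve only as illustration.

```python
def contrast_index(rates_by_trial, n_trials=5):
    """(early - late) / (early + late) for one neuron in one block.

    rates_by_trial: firing rate (spikes/s) after reward on each trial,
    in the order the trials were performed.
    """
    early = max(rates_by_trial[:n_trials])   # peak rate in the first n trials
    late = max(rates_by_trial[-n_trials:])   # peak rate in the last n trials
    if early + late == 0:
        return 0.0                           # silent neuron: assumed convention
    return (early - late) / (early + late)


# Hypothetical firing rates for three neurons in one shift block.
example_blocks = {
    "neuron_a": [10, 12, 8, 9, 11, 6, 6, 6, 5, 4, 6, 5, 4],  # fires more early
    "neuron_b": [5, 5, 5, 5, 5, 5, 5, 5, 5, 5],              # no change
    "neuron_c": [2, 3, 2, 2, 3, 4, 6, 5, 6, 6],              # fires more late
}
indices = {name: contrast_index(r) for name, r in example_blocks.items()}
mean_index = sum(indices.values()) / len(indices)
print(indices, mean_index)
```

The index is bounded between −1 and 1. Positive values indicate more firing early than late in a block, so a population distribution shifted above zero corresponds to the pattern reported for sham rats.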

Figure 2. Attentional signaling in ABL in sham rats.

A, Heat plots of average activity across all neurons showing a significant effect of delay or size in an ANOVA during the 1 s after reward delivery. Activity over the course of the trials is plotted during the first and last 20 (10 per direction) trials in each training block (Fig. 1A; blocks 1–4). Activity is shown, aligned on odor onset (“align odor”) and reward delivery (“align reward”). Blocks 1–4 are shown in the order performed (top to bottom). Thus, during block 1, rats responded after a “long” delay or a “short” delay to receive reward (actual starting direction, left or right, was counterbalanced in each block and is collapsed here). In block 2, the locations of the “short” delay and “long” delay were reversed. In blocks 3–4, delays were held constant but the size of the reward (“big” or “small”) varied. B, Distribution of indices [(early−late)/(early+late)] representing the difference in firing to reward delivery (1 s) and omission (1 s) during trials 1–5 (early) and during the last 5 trials (late) after upshifts (i) (2sh, 3bg, and 4bg) and downshifts (ii) (2lo and 4sm). Filled bars in distribution plots (i, ii) indicate the number of cells that showed a main effect (p < 0.05) of learning (early vs. late). iii, Correlation between contrast indices shown in i and ii. Filled points in scatter plot (iii) indicate cells that showed a main effect (p < 0.05) of learning (early vs. late). Analysis and figures shown here include both free- and forced-choice trials. p values for distributions are derived from Wilcoxon test.

To further investigate these activity changes in individual neurons across trials and their relationship to block type, we conducted an ANOVA with the factors of learning (early vs. late) and shift-type (upshift vs. downshift), focusing on activity at the time of reward and reward omission (1 s) for each of the 87 reward-responsive neurons. This analysis identified 10 neurons (11%) with a main effect of learning (p < 0.05), firing differently early versus late in both upshift and downshift blocks. This population (Fig. 2B; black bars in distribution and dots on scatter) included 9 neurons that fired significantly more early than late, a proportion that was significantly greater than expected by chance (chi-square = 4.75; p < 0.05) and significantly greater than the number that showed the opposite effect (chi-square = 6.79; p < 0.01). These results are remarkably similar to those reported previously (3).

To determine whether the attentional signal in ABL depends on dopaminergic input, we recorded the activity of 329 ABL neurons from 5 rats with 6-OHDA lesions of ipsilateral midbrain (Fig. 1D). Staining for tyrosine hydroxylase verified near complete loss of dopaminergic neurons ipsilateral to the recording site in these rats (Fig. 1E; compare contra vs. ipsilateral staining). Neurons recorded in these rats showed a slight but significant increase in baseline firing (4.13 spikes/sec on average, compared with 3.79 spikes/sec in shams; Wilcoxon; z = 2.6728; p < 0.005). A general increase in the firing of regular spiking, pyramidal-type neurons, which microelectrodes of the type used here are biased to record, is consistent with observations that tonic dopaminergic input normally increases evoked and spontaneous activity of GABAergic interneurons in ABL (28–31).

On top of this apparent disinhibition, many of the ABL neurons recorded in 6-OHDA rats still increased firing from baseline during the relevant experimental events. This included 99 neurons (30%) that showed elevated activity during the odor cues (t-test, p < 0.05), notably a significantly greater proportion than in shams (chi-square = 4.35; p = 0.04). Some of these neurons also encoded size- or delay-generated differences in expected reward value (high value: 33; low value: 49), but these proportions were no different from those in shams (chi-square = 0.41; p = 0.52). Thus, lesion had no effect on value selectivity during the time of the odor cues.

In addition, 119 neurons in lesioned rats (36%) increased firing during reward presentation (t-test, p < 0.05). This proportion was, in fact, larger than that observed in sham rats (chi-square = 10.0; p < 0.01), indicating that the loss of dopaminergic input did not affect the ability of ABL neurons to respond to reward. Further, as in shams, most of these neurons (91 neurons or 76%) were sensitive to reward value as evinced by their differential firing based on either reward delay (t-test; p < 0.05) or size (t-test; p < 0.05) (vs. shams: chi-square = 0.07; p = 0.79).

However, activity in reward-responsive neurons recorded in lesioned rats was largely insensitive to learning. This is evident in the average firing rate of the reward-responsive neurons across each of the four blocks (Fig. 3A); with the possible exception of one trial block (3bg), activity to reward is generally the same at the start and end of the blocks. To quantify this, we again plotted the distribution of contrast ratios comparing firing early vs. late in the upshift and downshift blocks for each neuron (Fig. 3B). Wilcoxon analyses revealed that the combined distribution of these scores was shifted significantly above zero (p = 0.0121; u = 0.0284; z = 2.5093). However, this effect was entirely due to an isolated effect in the upshift distribution (z = 2.20; p < 0.05; Fig. 3B-i), consistent with the apparent presence of higher firing early versus late in block 3bg. The same neurons showed no change in firing in response to downshifts in reward (Wilcoxon; z = 0.83; p = 0.40; Fig. 3B-ii), and there was no correlation between the effects of up and downshifts on the firing of individual neurons in the 6-OHDA lesioned rats (Fig. 3B-iii; p = 0.07; r2 = 0.03). We also conducted a learning × shift-type ANOVA of activity during a 1-s epoch after reward delivery or omission for each of the 119 reward-responsive neurons. This analysis revealed 11 neurons (9%) that showed a main effect of learning (Fig. 3B, black bars in distribution and dots on scatter). This proportion is similar to that in controls (chi-square = 0.28, p = 0.6). However, unlike such neurons in controls, which were strongly biased to show more firing early than late in a shift block, these neurons were equally likely to show more firing late than early (7 vs. 4 neurons; chi-square = 0.86; p = 0.35). These proportions were essentially what would be expected by chance given the number of neurons recorded and our analytical approach.
Accordingly, the neurons that showed an upshift effect failed to show any significant effect of reward downshift on firing (u = 0.1438, p = 0.1563), whereas neurons in controls that showed an upshift effect also showed a significant effect of downshifts (u = 0.2682, p = 0.0039).

Figure 3. Attentional signaling in ABL in 6-OHDA rats.

A, B, Details of these plots are the same as in Figure 2.

It is possible that the lesion effect arises from the increased baseline firing in neurons from lesioned rats. To address this question, we classified neurons in the lesioned group according to whether they fired above or below the average firing rate of neurons recorded in the sham rats (Fig. 4). Contrary to this idea, the population that showed high baseline firing rates in the lesioned group was actually more likely to show greater activity early than late following upshifts (Wilcoxon; p < 0.01; u = 0.08; Fig. 4A-i). These fast firing neurons, however, failed to show a similar effect after downshifts (Wilcoxon; p = 0.59; u = −0.01; Fig. 4A-ii), nor did they show a correlation between upshifts and downshifts (p = 0.98; r2 = 0.01; Fig. 4A-iii). Notably, the few neurons in the control group with comparable high baseline firing rates showed no difference in firing after upshifts or downshifts (Upshifts: Wilcoxon; p = 0.16; u = 0.03; Fig. 4C-i; Downshifts: Wilcoxon; p = 0.71; u = −0.02; Fig. 4C-ii; Correlation: p = 0.69; r2 = 0.01; Fig. 4C-iii).

Figure 4. Differences in attentional signaling based on firing rate in sham and 6-OHDA rats.

Distribution of indices [(early−late)/(early+late)] in high-(A) and low-baseline (B) neurons in the lesion group, and for high-(C) and low-baseline neurons in shams (D). Details of these plots are the same as in Figure 2.

On the other hand, neurons in the lesioned rats that showed low baseline firing rates more comparable to those in controls were no more likely to fire early than late for either upshifts or downshifts (Upshifts: Wilcoxon; p = 0.90; u = 0.004; Fig. 4B-i; Downshifts: Wilcoxon; p = 0.15; u = 0.05; Fig. 4B-ii; Correlation: p = 0.16; r2 = 0.04; Fig. 4B-iii). This result contrasts with control data, in which the PKH signal became stronger when analyzed without the higher firing neurons (Upshifts: Wilcoxon; p < 0.05; u = 0.07; Fig. 4D-i; Downshifts: Wilcoxon; p < 0.05; u = 0.08; Fig. 4D-ii; Correlation: p < 0.05; r2 = 0.10; Fig. 4D-iii). Thus, far from accounting for the loss of the attentional changes, the presence of the higher firing neurons in the 6-OHDA lesioned rats appeared to mask the complete elimination of the PKH signal in the more typical, slower-firing ABL neurons.
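The baseline split used in this control analysis can be sketched as follows. This is an illustration under stated assumptions rather than the analysis code used in the study: the threshold is taken to be the mean baseline rate reported above for sham neurons (3.79 spikes/sec), and the neuron records, field names, and helper functions are hypothetical.

```python
SHAM_MEAN_BASELINE = 3.79  # spikes/s; mean baseline reported for sham neurons (assumed threshold)


def split_by_baseline(neurons, threshold=SHAM_MEAN_BASELINE):
    """Partition neurons into high- and low-baseline groups."""
    high = [n for n in neurons if n["baseline"] > threshold]
    low = [n for n in neurons if n["baseline"] <= threshold]
    return high, low


def mean_index(neurons, key):
    """Mean contrast index for one shift type; NaN for an empty group."""
    if not neurons:
        return float("nan")
    return sum(n[key] for n in neurons) / len(neurons)


# Hypothetical records: baseline rate plus upshift and downshift contrast indices.
lesion_neurons = [
    {"id": "n1", "baseline": 6.2, "up": 0.12, "down": -0.02},
    {"id": "n2", "baseline": 2.1, "up": 0.01, "down": 0.04},
    {"id": "n3", "baseline": 4.5, "up": 0.08, "down": 0.00},
    {"id": "n4", "baseline": 3.0, "up": -0.01, "down": 0.06},
]

high, low = split_by_baseline(lesion_neurons)
print("high baseline:", [n["id"] for n in high], mean_index(high, "up"))
print("low baseline: ", [n["id"] for n in low], mean_index(low, "up"))
```

Each group's upshift and downshift indices would then be tested against zero separately, as in the Wilcoxon analyses reported for Figure 4.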

Finally, we also examined activity across trials in the neurons that fired more early in learning, looking specifically for the integration that is a hallmark of the attentional signal proposed by Pearce, Kaye and Hall (17). While these neurons did fire more in early trials than later trials in lesioned rats (they were selected based on this criterion), they failed to show any evidence of integration at the start of a trial block; instead activity in these neurons was highest on the very first trial that reward was shifted before declining (Fig. 5, grey line, p < 0.05). By contrast, activity in comparable neurons recorded in shams increased to the first unexpected reward and then increased further to the second before declining (Fig. 5, black line, p < 0.05). Thus, although a few abnormally fast firing neurons in lesioned rats did respond more to reward when it was better than expected, activity in these specific neurons failed to exhibit the characteristics of a PKH signal shown by the larger proportion of similar neurons in control rats here and in our prior study (3).

Figure 5. Time course of attentional signal in sham and 6-OHDA rats.

Average firing (500 ms after reward delivery) in ABL across the first 5 trials after an increase in reward is plotted for all upshift-sensitive neurons in 6-OHDA rats (grey line); the comparable population in controls is shown for comparison (black line).

DISCUSSION

The current study replicates the attentional signal in ABL previously shown in rats (3) and humans (4), and further demonstrates its dependence on the dopaminergic system. What role does dopamine play in the construction of the attentional signal in ABL? An obvious possibility is that the latter has its origin in the signed prediction error signals widely reported in midbrain DA neurons (8–11, 32–39). In addition to inverting the polarity of neural activity for negative errors (downshifts) while maintaining that of positive errors, this would require a mechanism to delay or initially attenuate the signal from midbrain to ABL. This attenuation is necessary since the signal in ABL develops across several trials, essentially lagging the changes in the activity of DA neurons recorded in this task, which are maximal on the first trial on which reward is shifted (3, 32). The neural circuit responsible for carrying out these transformations could be local to the mesoamygdalar (VTA-ABL) pathway. As noted earlier, dopaminergic input normally increases activity of GABAergic interneurons in ABL (28–31). This mechanism might allow dopaminergic input to ABL to indirectly modulate firing of what are likely pyramidal-type neurons described here. Alternatively, the neural circuit might also include other areas not considered here. Indeed, in the extreme case, the disruption of the PKH signal might be entirely mediated by the loss of DA in areas other than ABL. This latter possibility deserves future investigation in view of recent reports that comparable attentional signals may be found in areas such as anterior cingulate cortex (26, 40), lateral prefrontal cortex, and dorsal striatum (41).
It has also been suggested that the effect could be secondary to uptake of 6-OHDA by noradrenergic terminals in VTA and subsequent loss of these neurons; while we view this possibility as remote, given the light noradrenergic innervation of VTA (42), it cannot be excluded as we did not include an agent to block noradrenergic reuptake. Alternatively, at least some of these transformations may take place within the midbrain dopaminergic system itself, consistent with reports that a subpopulation of dopamine neurons increase firing in the face of both positively- and negatively-valenced events (35). This model would be in keeping with evidence of dopamine release into ABL in response to either appetitive or aversive events (43–45).

The finding that the attentional signal in ABL depends on dopaminergic input joins extant evidence implicating amygdala-midbrain interactions in attentional processing (46–48). Our results, while preliminary compared to this established line of research, expand upon it in several important ways. For example, while this prior work has focused on information flow from the central nucleus of the amygdala to the substantia nigra pars compacta, our results suggest a parallel interaction in the opposite direction between VTA and ABL, since dopaminergic input to ABL from midbrain originates primarily from this part of midbrain (18). In addition, the central nucleus-substantia nigra pathway seems to be specifically required for restoring attention to cues after it has been reduced by prior experience, perhaps exclusively in the face of the surprising omission of an expected event. This relatively circumscribed role is reflected in neural activity in central nucleus in the same task used here, which is selectively responsive to the omission of an expected reward (49). Activity in ABL provides a much more faithful and general reflection of the PKH signal (3). Supported by dopaminergic errors, such a signal would be important for facilitating learning in a wider variety of circumstances. Indeed, ABL is required for increased orienting - a measure of attention - within our task after either the addition or omission of rewards (3), and damage to this area has recently been shown to affect unblocking when new rewards are added (50). By contrast, central nucleus is only required for increased orienting in response to the omission of reward in our task (49), and damage to this area does not affect unblocking driven by additional rewards (51, 52).

If ABL plays a general role in driving attentional changes, then the role of this region in a variety of learning processes may need to be reconceptualized or at least modified to include this function. For example, associative encoding downstream requires input from ABL (53–55). This may reflect a role for ABL in acquiring the simple associative representations (56). However an alternative account – not mutually exclusive – is that ABL augments the allocation of attentional resources to directly drive acquisition of the same associative information downstream. This might occur if the signal identified here increased the associability of cue-representations in downstream regions. Such an effect may be evident in neural activity related to surprise and uncertainty reported recently in prefrontal cortex (26, 40, 57).

A tight linkage between this attentional signal and the more conventional dopaminergic errors, as suggested by the current results, would favor recent hybrid theories, which combine signed and unsigned error mechanisms (4, 58, 59). Such a linkage also has significance for our understanding of altered learning in neuropathological conditions known to impact the dopaminergic system. For example, some symptoms of schizophrenia have been proposed to reflect aberrant learning and the spurious attribution of salience to cues (60, 61). These symptoms might be secondary to altered signaling in ABL (62) under the influence of abnormally strong dopaminergic input (63, 64). Such alterations could drive frank attentional problems as well as positive symptoms such as hallucinations and delusions. The amygdala has also been central to ideas about addiction, where it is proposed to mediate craving and the abnormal attribution of motivational significance to drug-associated cues and contexts (65–67). Again such aberrant learning may reflect, in part, disrupted attentional processing, potentially under the influence of altered dopamine function.

Supplementary Material

Acknowledgments

This work was supported by grants from the NIDA (R01-DA015718, GS; K01DA021609, MR; R01DA031695, MR) and NIA (R01-AG027097; GS) and the NIDA-IRP (GS). This article was prepared in part while GS was employed at UMB. The opinions expressed in this article are the author's own and do not reflect the view of the National Institutes of Health, the Department of Health and Human Services, or the United States government.

Footnotes

Financial Disclosures: The authors declare no biomedical financial interests or potential conflicts of interest.

REFERENCES

- 1. Murray EA. The amygdala, reward and emotion. Trends in Cognitive Sciences. 2007;11:489–497. doi: 10.1016/j.tics.2007.08.013.

- 2. Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55:970–984. doi: 10.1016/j.neuron.2007.08.004.

- 3. Roesch MR, Calu DJ, Esber GR, Schoenbaum G. Neural correlates of variations in event processing during learning in basolateral amygdala. Journal of Neuroscience. 2010;30:2464–2471. doi: 10.1523/JNEUROSCI.5781-09.2010.

- 4. Li J, Schiller D, Schoenbaum G, Phelps EA, Daw ND. Differential roles of human striatum and amygdala in associative learning. Nature Neuroscience. 2011;14:1250–1252. doi: 10.1038/nn.2904.

- 5. Herry C, Bach DR, Esposito F, Di Salle F, Perrig WJ, Scheffler K, et al. Processing of temporal unpredictability in human and animal amygdala. Journal of Neuroscience. 2007;27:5958–5966. doi: 10.1523/JNEUROSCI.5218-06.2007.

- 6. Tye KM, Cone JJ, Schairer WW, Janak PH. Amygdala neural encoding in the absence of reward during extinction. Journal of Neuroscience. 2010;30:116–125. doi: 10.1523/JNEUROSCI.4240-09.2010.

- 7. Johansen JP, Tarpley JW, LeDoux JE, Blair HT. Neural substrates for expectation-modulated fear learning in the amygdala and periaqueductal gray. Nature Neuroscience. 2010;13:979–986. doi: 10.1038/nn.2594.

- 8. Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. Journal of Neuroscience. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- 9. Mirenowicz J, Schultz W. Importance of unpredictability for reward responses in primate dopamine neurons. Journal of Neurophysiology. 1994;72:1024–1027. doi: 10.1152/jn.1994.72.2.1024.

- 10. Pan W-X, Schmidt R, Wickens JR, Hyland BI. Dopamine cells respond to predicted events during classical conditioning: evidence for eligibility traces in the reward-learning network. Journal of Neuroscience. 2005;25:6235–6242. doi: 10.1523/JNEUROSCI.1478-05.2005.

- 11. D'Ardenne K, McClure SM, Nystrom LE, Cohen JD. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319:1264–1267. doi: 10.1126/science.1150605.

- 12. Rescorla RA, Wagner AR. A theory of Pavlovian conditioning: variations in the effectiveness of reinforcement and nonreinforcement. In: Black AH, Prokasy WF, editors. Classical Conditioning II: Current Research and Theory. New York: Appleton-Century-Crofts; 1972. pp. 64–99.

- 13. Schultz W, Dayan P, Montague PR. A neural substrate for prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- 14. Sutton RS. Learning to predict by the methods of temporal differences. Machine Learning. 1988;3:9–44.

- 15. Pearce JM, Hall G. A model for Pavlovian learning: variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychological Review. 1980;87:532–552.

- 16. Mackintosh NJ. A theory of attention: variations in the associability of stimuli with reinforcement. Psychological Review. 1975;82:276–298.

- 17. Pearce JM, Kaye H, Hall G. Predictive accuracy and stimulus associability: development of a model for Pavlovian learning. In: Commons ML, Herrnstein RJ, Wagner AR, editors. Quantitative Analyses of Behavior. Cambridge MA: Ballinger; 1982. pp. 241–255.

- 18. Brinley-Reed M, McDonald AJ. Evidence that dopaminergic axons provide a dense innervation of specific neuronal subpopulations in the rat basolateral amygdala. Brain Research. 1999;850:127–135. doi: 10.1016/s0006-8993(99)02112-5.

- 19. Tye KM, Stuber GD, De Ridder B, Bonci A, Janak PH. Rapid strengthening of thalamo-amygdala synapses mediates cue-reward learning. Nature. 2008;453:1253–1257. doi: 10.1038/nature06963.

- 20. Schoenbaum G, Chiba AA, Gallagher M. Neural encoding in orbitofrontal cortex and basolateral amygdala during olfactory discrimination learning. Journal of Neuroscience. 1999;19:1876–1884. doi: 10.1523/JNEUROSCI.19-05-01876.1999.

- 21. Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490.

- 22. Fontanini A, Grossman SE, Figueroa JA, Katz DB. Distinct subtypes of basolateral amygdala neurons reflect palatability and reward. Journal of Neuroscience. 2009;29:2486–2495. doi: 10.1523/JNEUROSCI.3898-08.2009.

- 23. Sugase-Miyamoto Y, Richmond BJ. Neuronal signals in the monkey basolateral amygdala during reward schedules. Journal of Neuroscience. 2005;25:11071–11083. doi: 10.1523/JNEUROSCI.1796-05.2005.

- 24. Nishijo H, Ono T, Nishino H. Single neuron responses in alert monkey during complex sensory stimulation with affective significance. Journal of Neuroscience. 1988;8:3570–3583. doi: 10.1523/JNEUROSCI.08-10-03570.1988.

- 25. Belova MA, Paton JJ, Salzman CD. Moment-to-moment tracking of state value in the amygdala. Journal of Neuroscience. 2008;28:10023–10030. doi: 10.1523/JNEUROSCI.1400-08.2008.

- 26. Bryden DW, Johnson EE, Tobia SC, Kashtelyan V, Roesch MR. Attention for learning signals in anterior cingulate cortex. Journal of Neuroscience. 2011;31:18266–18274. doi: 10.1523/JNEUROSCI.4715-11.2011.

- 27. Rescorla RA. Comparison of the rates of associative change during acquisition and extinction. Journal of Experimental Psychology: Animal Behavior Processes. 2002;28:406–415.

- 28. Rosenkranz JA, Grace AA. Modulation of basolateral amygdala neuronal firing and afferent drive by dopamine receptor activation in vivo. Journal of Neuroscience. 1999;19:11027–11039. doi: 10.1523/JNEUROSCI.19-24-11027.1999.

- 29. Bissiere S, Humeau Y, Luthi A. Dopamine gates LTP induction in the lateral amygdala by suppressing feedforward inhibition. Nature Neuroscience. 2003;6:587–592. doi: 10.1038/nn1058.

- 30. Kroner S, Rosenkranz JA, Grace AA, Barrionuevo G. Dopamine modulates excitability of basolateral amygdala neurons in vitro. Journal of Neurophysiology. 2005;93:1598–1610. doi: 10.1152/jn.00843.2004.

- 31. Loretan K, Bissiere S, Luthi A. Dopamine modulation of spontaneous inhibitory network activity in the lateral amygdala. Neuropharmacology. 2004;47:631–639. doi: 10.1016/j.neuropharm.2004.07.015.

- 32. Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nature Neuroscience. 2007;10:1615–1624. doi: 10.1038/nn2013.

- 33. Bayer HM, Glimcher P. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020.

- 34. Nakahara H, Itoh H, Kawagoe R, Takikawa Y, Hikosaka O. Dopamine neurons can represent context-dependent prediction error. Neuron. 2004;41:269–280. doi: 10.1016/s0896-6273(03)00869-9.

- 35. Matsumoto M, Hikosaka O. Two types of dopamine neuron distinctly convey positive and negative motivational signals. Nature. 2009;459:837–841. doi: 10.1038/nature08028.

- 36. Schultz W, Romo R. Neuronal activity in the monkey striatum during the initiation of movements. Experimental Brain Research. 1988;71:431–436. doi: 10.1007/BF00247503.

- 37. Hollerman JR, Schultz W. Dopamine neurons report an error in the temporal prediction of reward during learning. Nature Neuroscience. 1998;1:304–309. doi: 10.1038/1124.

- 38. Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412:43–48. doi: 10.1038/35083500.

- 39. Morris G, Nevet A, Arkadir D, Vaadia E, Bergman H. Midbrain dopamine neurons encode decisions for future action. Nature Neuroscience. 2006;9:1057–1063. doi: 10.1038/nn1743.

- 40. Hayden BY, Heilbronner SR, Pearson JM, Platt ML. Surprise signals in anterior cingulate cortex: neuronal encoding of unsigned reward prediction errors driving adjustment in behavior. Journal of Neuroscience. 2011;31:4178–4187. doi: 10.1523/JNEUROSCI.4652-10.2011.

- 41. Asaad WF, Eskandar EN. Encoding of both positive and negative reward prediction errors by neurons of the primate lateral prefrontal cortex and caudate nucleus. Journal of Neuroscience. 2011;31:17772–17787. doi: 10.1523/JNEUROSCI.3793-11.2011.

- 42. Moore RY, Card JP. Noradrenaline-containing neuron systems. In: Bjorklund A, Hokfelt T, editors. Handbook of Chemical Neuroanatomy Classical Transmitters in the CNS Part I. Amsterdam: Elsevier Science Publishers B. V.; 1984. pp. 123–156.

- 43. Coco ML, Kuhn CM, Ely TD, Kilts CD. Selective activation of mesoamygdaloid dopamine neurons by conditioned stress: attenuation by diazepam. Brain Research. 1992;590:39–47. doi: 10.1016/0006-8993(92)91079-t.

- 44. Inglis FM, Moghaddam B. Dopaminergic innervation of the amygdala is highly responsive to stress. Journal of Neurochemistry. 1999;72:1088–1094. doi: 10.1046/j.1471-4159.1999.0721088.x.

- 45. Harmer CJ, Phillips GD. Enhanced dopamine efflux in the amygdala by a predictive, but not a non-predictive, stimulus: facilitation by prior repeated D-amphetamine. Neuroscience. 1999;90:119–130. doi: 10.1016/s0306-4522(98)00464-3.

- 46. Lee HJ, Gallagher M, Holland PC. The central amygdala projection to the substantia nigra reflects prediction error information in appetitive conditioning. Learning & Memory. 2010;17:531–538. doi: 10.1101/lm.1889510.

- 47. Lee HJ, Youn JM, O MJ, Gallagher M, Holland PC. Role of substantia nigra-amygdala connections in surprise-induced enhancement of attention. Journal of Neuroscience. 2006;26:6077–6081. doi: 10.1523/JNEUROSCI.1316-06.2006.

- 48. Lee HJ, Youn JM, Gallagher M, Holland PC. Temporally limited role of substantia nigra-central amygdala connections in surprise-induced enhancement of learning. European Journal of Neuroscience. 2008;27:3043–3049. doi: 10.1111/j.1460-9568.2008.06272.x.

- 49. Calu DJ, Roesch MR, Haney RZ, Holland PC, Schoenbaum G. Neural correlates of variations in event processing during learning in central nucleus of amygdala. Neuron. 2010;68:991–1001. doi: 10.1016/j.neuron.2010.11.019.

- 50. Chang SE, Wheeler DS, McDannald M, Holland PC. The effects of basolateral amygdala lesions on unblocking. Society for Neuroscience Abstracts. 2010.

- 51. Holland PC, Gallagher M. Different roles for amygdala central nucleus and substantia innominata in the surprise-induced enhancement of learning. Journal of Neuroscience. 2006;26:3791–3797. doi: 10.1523/JNEUROSCI.0390-06.2006.

- 52. Holland PC, Gallagher M. Effects of amygdala central nucleus lesions on blocking and unblocking. Behavioral Neuroscience. 1993;107:235–245. doi: 10.1037//0735-7044.107.2.235.

- 53. Schoenbaum G, Setlow B, Saddoris MP, Gallagher M. Encoding predicted outcome and acquired value in orbitofrontal cortex during cue sampling depends upon input from basolateral amygdala. Neuron. 2003;39:855–867. doi: 10.1016/s0896-6273(03)00474-4.

- 54. Ambroggi F, Ishikawa A, Fields HL, Nicola SM. Basolateral amygdala neurons facilitate reward-seeking behavior by exciting nucleus accumbens neurons. Neuron. 2008;59:648–661. doi: 10.1016/j.neuron.2008.07.004.

- 55. McGaugh JL, Cahill L, Roozendaal B. Involvement of the amygdala in memory storage: interaction with other brain systems. Proceedings of the National Academy of Sciences. 1996;93:13508–13514. doi: 10.1073/pnas.93.24.13508.

- 56. Pickens CL, Setlow B, Saddoris MP, Gallagher M, Holland PC, Schoenbaum G. Different roles for orbitofrontal cortex and basolateral amygdala in a reinforcer devaluation task. Journal of Neuroscience. 2003;23:11078–11084. doi: 10.1523/JNEUROSCI.23-35-11078.2003.

- 57. Behrens TE, Woolrich MW, Walton ME, Rushworth MF. Learning the value of information in an uncertain world. Nature Neuroscience. 2007;10:1214–1221. doi: 10.1038/nn1954.

- 58. Courville AC, Daw ND, Touretzky DS. Bayesian theories of conditioning in a changing world. Trends in Cognitive Sciences. 2006;10:294–300. doi: 10.1016/j.tics.2006.05.004.

- 59. Le Pelley ME. The role of associative history in models of associative learning: a selective review and a hybrid model. Quarterly Journal of Experimental Psychology. 2004;57:193–243. doi: 10.1080/02724990344000141.

- 60. Corlett PR, Taylor JR, Wang XJ, Fletcher PC, Krystal JH. Toward a neurobiology of delusions. Progress in Neurobiology. 2010;92:345–369. doi: 10.1016/j.pneurobio.2010.06.007.

- 61. Kapur S. Psychosis as a state of aberrant salience: a framework linking biology, phenomenology, and pharmacology in schizophrenia. American Journal of Psychiatry. 2003;160:13–23. doi: 10.1176/appi.ajp.160.1.13.

- 62. Taylor SF, Phan KL, Britton JC, Liberzon I. Neural responses to emotional salience in schizophrenia. Neuropsychopharmacology. 2005;30:984–995. doi: 10.1038/sj.npp.1300679.

- 63. Corlett PR, Murray GK, Honey GD, Aitken MR, Shanks DR, Robbins TW, et al. Disrupted prediction-error signal in psychosis: evidence for an associative account of delusions. Brain. 2007;130:2387–2400. doi: 10.1093/brain/awm173.

- 64. Murray GK, Corlett PR, Clark L, Pessiglione M, Blackwell AD, Honey G, et al. Substantia nigra/ventral tegmental reward prediction error disruption in psychosis. Molecular Psychiatry. 2008;13:267–276. doi: 10.1038/sj.mp.4002058.

- 65. Torregrossa M, Corlett PR, Taylor JR. Aberrant learning and memory in addiction. Neurobiology of Learning and Memory. 2011;96:609–623. doi: 10.1016/j.nlm.2011.02.014.

- 66. Everitt BJ, Cardinal RN, Hall J, Parkinson JA, Robbins TW. Differential involvement of amygdala subsystems in appetitive conditioning and drug addiction. In: Aggleton JP, editor. The Amygdala: A Functional Analysis. New York: Oxford University Press; 2000. pp. 353–390.

- 67. See RE, Fuchs RA, Ledford CC, McLaughlin J. Drug addiction, relapse, and the amygdala. Annals of the New York Academy of Sciences. 2003;985:294–307. doi: 10.1111/j.1749-6632.2003.tb07089.x.

- 68. Paxinos G, Watson C. The rat brain in stereotaxic coordinates, compact sixth edition. Academic Press; 2008.
