Abstract

The trajectory of the somatic membrane potential of a cortical neuron exactly reflects the computations performed on its afferent inputs. However, the spikes of such a neuron are a very low-dimensional and discrete projection of this continually evolving signal. We explored the possibility that the neuron’s efferent synapses perform the critical computational step of estimating the membrane potential trajectory from the spikes. We found that short-term changes in synaptic efficacy can be interpreted as implementing an optimal estimator of this trajectory. Short-term depression arose when presynaptic spiking was sufficiently intense to reduce the uncertainty associated with the estimate; short-term facilitation reflected structural features of the statistics of the presynaptic neuron such as up and down states. Our analysis provides a unifying account of a powerful, but puzzling, form of plasticity.

Synaptic efficacies can increase (facilitate) or decrease (depress) several-fold in strength on the time scale of single interspike intervals1-3. This short-term plasticity (STP), which is well captured by simple, but powerful, mechanistic models1,3,4, is of a regularity and magnitude that argues against it being treated only as wanton variability5. There have thus been various suggestions for the function of STP, including low, high or band-pass filtering of inputs3,6 (but see ref. 7), rendering postsynaptic responses insensitive to the absolute intensity of presynaptic activity8,9, decorrelating input spike sequences10, and maintaining working memories in the prefrontal cortex11.

However, despite the ubiquity of STP in cortical circuits2, these suggestions are restricted to select neural subsystems9,11 or forms of STP5,8-10 and are often limited to feedforward networks8-10 or to a firing rate–based description of presynaptic activities8, thereby ignoring the fundamentally fast fluctuations in synaptic efficacies as a result of STP. Worse, the vast bulk of models of neural circuit information processing require synaptic efficacies to be constant over the short term of a single computation, changing at most very slowly to average across the statistics of input or changing only in the light of a gating mechanism12,13. These would seem to be incompatible with substantial STP. Here, we argue that, far from hindering such circuit computations, STP is in fact a near-optimal solution to a central problem neural circuits face that is associated with spike-based communication.

Although, as digital quantities, spikes have the mechanistic advantage of allowing regenerative error correction, they are a substantially impoverished representation of the fast-evolving, analog membrane potentials of the neurons concerned14-16. These analog quantities are normally considered to lie at the heart of computations17,18, and it is common to appeal to averages over space (that is, multiple identical neurons) and/or time (that is, slow currents) to allow them to be represented by spike trains18. However, both sorts of averages are neurobiologically questionable. In many circumstances, computations need to be executed in a matter of a few interspike intervals19-21, precluding extensive averaging over time; and, in many circuits, neurons represent independent analog quantities, as in recurrent network models of autoassociative memories22,23, or partially independent quantities, as in surface attractor models of population codes24. We make the alternative suggestion that the analog membrane potential of a neuron is being estimated in a statistically appropriate manner by its efferent synapses on the basis of the spike trains that the neuron emits and that STP is a signature of this solution.

In particular, the informativeness of an incoming spike about the membrane potential varies greatly depending on the uncertainty left by the preceding spike train. This makes the spike’s effect very context dependent. We found that important elements of this context dependency are realized by synaptic depression and facilitation. Furthermore, as incoming spikes are sparse, the behavior of the optimal estimator critically depends on prior assumptions about presynaptic membrane potential dynamics. Thus, our approach allowed us to make detailed predictions about how the properties of STP, implementing the optimal estimator, should be matched to the statistics of presynaptic membrane potential fluctuations.

RESULTS

Postsynaptic potentials and the optimal estimator

We first defined the optimal estimator of the continuously varying membrane potential $u$ of a presynaptic cell from its past spikes. We found that it depends on these spikes in the same way as a particular measure of its efferent synapses’ contributions to their postsynaptic membrane potentials. Because spikes are discrete, they cannot support recovery of $u$ with absolute certainty, and the full solution to the estimation task is a posterior probability distribution20,25-29 $P(u_t \mid s_{0:t})$ over the possible values that the presynaptic membrane potential at time $t$, $u_t$, might take on the basis of all of the spikes observed so far, $s_{0:t}$. The mean of this posterior is then the estimator that minimizes the squared error25. We interpret the local postsynaptic potential $v_t$ at an excitatory synapse as representing this optimal estimate. This local potential is loosely defined as the sum of all excitatory postsynaptic potentials (EPSPs) at this synapse (Supplementary Note and Supplementary Fig. 1); a filtered version of it is what standard experiments on STP record.

To be correct, the estimator must implicitly incorporate a statistically appropriate model of membrane potential fluctuations and spike generation in the presynaptic neuron25. For the latter, we adopted the common characterization that a spike is created stochastically whenever $u_t$ exceeds a (soft) threshold30,31 (Fig. 1 and Supplementary Fig. 2). In this case, the occurrence of a spike implies that the membrane potential is likely to be high and the absence of spikes implies that the membrane potential is likely to be low. Thus, the estimate $\hat{u}_t$ should increase following a spike and decrease during interspike intervals (Fig. 1a and Online Methods). The decrease should be gradual, as the firing rate of a neuron is limited even at high membrane potential values, and thus the absence of a spike is relatively weak evidence that the membrane potential is low. As required by our interpretation, the local postsynaptic potential $v_t$ at an excitatory synapse shows the same qualitative characteristics: it increases suddenly at the times of presynaptic spikes and decays gradually toward a lower baseline between transmission events (Fig. 1a).

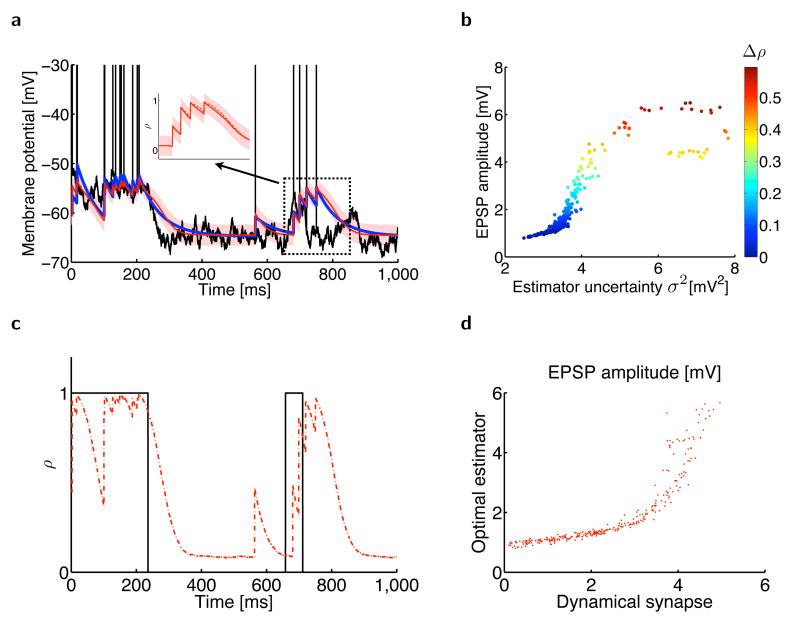

Figure 1.

Estimating the presynaptic membrane potential from spiking information. (a) Sample trace (black) of the presynaptic membrane potential generated by an Ornstein-Uhlenbeck process. When the membrane potential exceeds a soft threshold, action potentials (vertical black lines) are generated. The optimal estimator of the presynaptic membrane potential (red line, mean estimate $\hat{u}_t$; red shading, one s.d. $\sigma_t$) closely matches an optimally tuned canonical model of short-term plasticity11 (blue). Inset shows a magnified section. (b) EPSP amplitude of the optimal estimator (red, mean ± s.d.) and of the canonical model of short-term plasticity (blue, mean ± s.d.) as a function of the estimator uncertainty $\sigma^2$. Note that EPSP amplitudes in the biophysical model tend to be smaller than those in the optimal estimator; this compensates for a somewhat slower decay in the biophysical model (see inset in a). (c) The dynamics of the scaled uncertainty $\sigma^2$ of the optimal estimator (red) closely match those of the resource variable $x_t$ of the canonical model of STP (blue).

However, this observation is only approximate. As we have mentioned, under STP, the actual size of an EPSP depends on the past history of spiking. Such history dependence is also a hallmark of optimal estimation, as the evidence supplied by a spike needs to be evaluated in the context of the current state of the estimator, that is, the current posterior25. The posterior is computed on-line in a recursive manner, according to standard Bayesian filtering. In this, likelihood information from the current presence or absence of a spike, st, P(st | ut), is combined with the posterior computed in the previous time step, P(ut–δt | s0:t–δt) (Online Methods):

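$$P(u_t \mid s_{0:t}) \propto P(s_t \mid u_t) \int P(u_t \mid u_{t-\delta t})\, P(u_{t-\delta t} \mid s_{0:t-\delta t})\, \mathrm{d}u_{t-\delta t} \qquad (1)$$

Thus, the effect of an incoming spike on the mean of the posterior, $\hat{u}_t$, will be context dependent and vary as a function of the posterior propagated from the past.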
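For intuition, the recursion in equation (1) can be carried out by brute force on a discretized voltage axis: the integral over $u_{t-\delta t}$ becomes a matrix-vector product and the proportionality sign becomes a renormalization. The Python sketch below is our own illustration, not the estimator used for the figures. It assumes the Ornstein-Uhlenbeck dynamics and exponential escape rate detailed in the Online Methods, uses hypothetical parameter values (τ = 20 ms, σOU = 1 mV, g0 = 5 Hz, β = 2 mV−1, 1-ms bins), and the function name `grid_filter` is hypothetical.

```python
import numpy as np

def grid_filter(spikes, dt=1e-3, tau=0.02, u_rest=0.0, sigma_ou=1.0,
                g0=5.0, beta=2.0, n_grid=481):
    """Grid-based version of the recursive filter in equation (1).

    spikes: sequence of 0/1 values, one per time bin of width dt (s).
    Returns the posterior mean and variance of u_t after each bin.
    """
    grid = np.linspace(u_rest - 6 * sigma_ou, u_rest + 6 * sigma_ou, n_grid)
    sigma_w2 = 2.0 * sigma_ou**2 / tau   # chosen so the stationary variance is sigma_ou**2
    # Transition matrix: column j is P(u_t | u_{t-dt} = grid[j]) for one AR(1) step.
    drift = grid + dt / tau * (u_rest - grid)
    K = np.exp(-(grid[:, None] - drift[None, :]) ** 2 / (2.0 * sigma_w2 * dt))
    K /= K.sum(axis=0, keepdims=True)
    # Per-bin spike probability: 1 - exp(-g dt) equals g(u) dt to first order
    # in dt but never exceeds 1 at very depolarized grid points (our choice).
    p_spike = -np.expm1(-g0 * np.exp(beta * grid) * dt)
    # Start from the stationary prior N(u_rest, sigma_ou**2).
    post = np.exp(-((grid - u_rest) ** 2) / (2.0 * sigma_ou**2))
    post /= post.sum()
    means = np.empty(len(spikes))
    variances = np.empty(len(spikes))
    for i, s in enumerate(spikes):
        prior = K @ post                                    # integral over u_{t-dt}
        post = prior * (p_spike if s else 1.0 - p_spike)    # times P(s_t | u_t)
        post /= post.sum()                                  # proportionality constant
        means[i] = post @ grid
        variances[i] = post @ (grid - means[i]) ** 2
    return means, variances

# Usage: 100 ms of silence followed by a single spike.
spikes = np.zeros(200, dtype=int)
spikes[100] = 1
m, v = grid_filter(spikes)
```

During the silence the posterior mean drifts below the resting value and the spike pushes it back up. The grid makes no Gaussian assumption, which makes it a convenient reference for checking approximations, but its cost grows with the square of the number of grid points per time step.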

The precise nature of the context dependence of the changes in $\hat{u}_t$ will depend on the particular statistical model assumed for the dynamics of $u_t$, $P(u_t \mid u_{t-\delta t})$. Below, we consider two increasingly complex models: one in which $u_t$ performs a random walk around a fixed ‘resting’ (or baseline) membrane potential, $u_{\mathrm{rest}}$ (Fig. 1), and one in which the resting potential itself also changes in time (Fig. 2). This allowed us to explore two fundamental factors contributing to the context dependence of $\hat{u}_t$ that correspond to the effects of synaptic depression and facilitation on $v_t$.

Figure 2.

Estimating the presynaptic membrane potential when the resting membrane potential randomly switches between two different values. (a) Presynaptic subthreshold membrane potential with action potentials (black), its optimal mean estimate ($\hat{u}_t$, red line) with the associated s.d. ($\sigma_t$, red shading), and the postsynaptic membrane potential in a model synapse11 with optimally tuned short-term plasticity (blue line). Inset, the (scaled) optimal estimator (red solid line) strongly depends on the estimated probability ρ of being in the up state (red dashed line). (b) EPSP amplitude in the optimal estimator depends on its uncertainty (horizontal axis, $\sigma^2$) and the change in the estimated probability that the presynaptic cell is in its up state (color code, Δρ). (c) The estimated probability ρ that the presynaptic cell is in its up state (red) tracks the state of the presynaptic neuron (black) as it randomly switches between its up and down states. (d) EPSP magnitudes in the optimal estimator against EPSP magnitudes in the model synapse.

Synaptic depression and uncertainty

After eliminating the influence of spikes themselves, the simplest approximation to the statistics of the membrane potential $u_t$ of the presynaptic neuron is as an Ornstein-Uhlenbeck process32,33. For this, the total input to the presynaptic cell is assumed to be Gaussian white noise33 and is subject to leaky integration, decaying toward its resting value, $u_{\mathrm{rest}}$ (Fig. 1a and Supplementary Fig. 2; Online Methods). In this case, the posterior distribution $P(u_t \mid s_{0:t})$ can be well characterized as a Gaussian with mean $\hat{u}_t$ and variance $\sigma_t^2$, which expresses the estimator’s current uncertainty about $u_t$. Given the assumption about the way spikes are generated, observation of a spike provides evidence that $u_t$ is high. However, the quantitative effect of a spike on raising $\hat{u}_t$ depends on the uncertainty $\sigma_t$. The lower the uncertainty, that is, the better $u_t$ is already known, the less the estimate should be influenced by a spike (Fig. 1b) and the lower the synapse’s apparent efficacy. The uncertainty is determined by the evidence from past spikes, and we should therefore expect the magnitude of the EPSPs to fluctuate according to this history.

Uncertainty in the optimal estimate increases during interspike intervals (Fig. 1c), as the absence of a spike is only weak evidence for a low membrane potential. Therefore, spikes that arrive after a longer period of silence should increase $\hat{u}_t$ by more than spikes arriving in quick succession. This closely resembles, down to fine quantitative details, the effect of synaptic depression in a biophysically motivated canonical STP model5,34 (Fig. 1). In such a model, depression is mediated by the depletion of a synaptic resource variable $x_t$, which behaves as the biophysical analog of the estimator uncertainty $\sigma_t^2$.

In fact, for a paired-pulse protocol, the dynamical equations describing the time evolution of the optimal estimator and its uncertainty, $\hat{u}_t$ and $\sigma_t^2$, are formally equivalent to those of the biophysical STP model describing the time evolution of the postsynaptic membrane potential and synaptic resource variable, $v_t$ and $x_t$, respectively (Online Methods). As a result, higher presynaptic firing rates lead to diminishing postsynaptic responses in both the optimal estimator and the STP model (Fig. 3a).

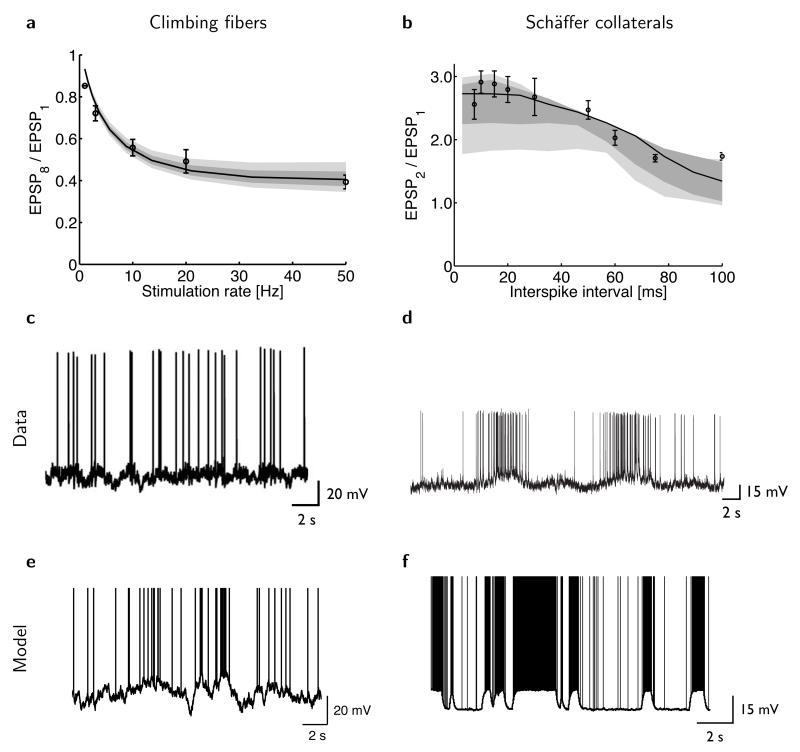

Figure 3.

The optimal estimator reproduces experimentally observed patterns of synaptic depression and facilitation. (a) Synaptic depression in cerebellar climbing fibers (circles: mean ± s.e.m.; redrawn from ref. 6) and in the model (solid line), measured as the ratio of the amplitude of the eighth and first EPSP as a function of the stimulation rate during a train of eight presynaptic spikes. (b) Synaptic facilitation in hippocampal Schäffer collaterals (circles, mean ± s.e.m.; redrawn from ref. 39) and in the model (solid line), measured as the ratio of the amplitude of the second and first EPSP as a function of the interval between a pair of presynaptic spikes. Shading in a and b shows the robustness of the fits (Online Methods): model predictions when best-fit parameters are perturbed by 5% (dark gray) or 10% (light gray). (c–d) Predictions of the model for the dynamics of inferior olive neurons (c) and hippocampal pyramidal neurons (d). Sample traces were generated with parameters fitted to the data about STP in cerebellar climbing fibers (shown in a) and Schäffer collaterals (shown in b). (e–f) In vivo intracellular recordings from inferior olive neurons of the (anesthetized) rat (e, reproduced from ref. 40) and hippocampal pyramidal cells of the behaving rat (f, reproduced from ref. 38).

Synaptic facilitation and ‘up’ state probability

The assumption that the presynaptic membrane potential follows an Ornstein-Uhlenbeck process is often too simplistic35. Fortunately, it is straightforward in our framework to incorporate other statistical properties of membrane potential fluctuations and to study their effects on the features of STP. For example, many cortical cells show phasic activation patterns in which their membrane potential alternates between a resting and a depolarized state, such as ‘up’ and ‘down’ states in the cortex36,37, or out-of-place field and within-place field activity for hippocampal place cells38. This can be captured by extending the model for the dynamics of ut to allow the resting membrane potential to switch between two possible values, corresponding to an up and a down state (Fig. 2, Supplementary Note and Supplementary Fig. 2).

In this extended model, the true current value of the resting potential $u^{\mathrm{rest}}_t$ is unknown to the postsynaptic cell, as is $u_t$. Thus, the full solution to the estimation problem is a posterior distribution expressing joint uncertainty about these two quantities (Supplementary Note). In this case, the estimated probability $\rho_t$ that $u^{\mathrm{rest}}_t$ is in its up state also reflects recent spikes and influences $\hat{u}_t$ (Fig. 2a–c). Observing a spike when the current estimate of the membrane potential is compatible with the presynaptic neuron being in its down state increases this probability somewhat. Observing a second spike in a short time window provides substantial extra evidence for the up state and will thus cause a larger increment in $\hat{u}_t$ (Fig. 2a). EPSPs in the facilitating biophysical STP model and in actual facilitating synapses in a paired-pulse protocol39 (Figs. 2d and 3b) showed the same effect.

Match between STP and the dynamics of the presynaptic cell

Our theory requires the synaptic estimator to be matched to the statistical properties of the presynaptic membrane potential fluctuations that it needs to estimate. Thus, fitting the optimal estimator to experimentally measured STP data (Fig. 3a,b) allowed us to predict, without further parameter fitting, properties of the membrane potential dynamics that the corresponding presynaptic cell type should exhibit. Testing these predictions is challenging because they are about the natural statistics of membrane potential fluctuations and thus require in vivo intracellular recordings, preferably from behaving rather than anaesthetized animals.

Nevertheless, starting from our fits to data about synaptic depression and facilitation for cerebellar climbing fibers (Fig. 3a) and hippocampal Schäffer collateral inputs (Fig. 3b), we predicted membrane potential fluctuations in inferior olive neurons (Fig. 3c) and hippocampal pyramidal cells (Fig. 3d) respectively, that were in broad qualitative agreement with those found in vivo in these cell types38,40 (Fig. 3e,f). Note that the absence and presence of marked up and down states in these two cell types, respectively, is predicted by our theory directly from the absence and presence of facilitation in their corresponding efferent synapses.

The advantage of STP

To quantify the advantage that STP brings to synapses for tracking the presynaptic membrane potential, we measured how well $\hat{u}_t$ or its biophysical analog $v_t$ performed on the estimation task in terms of the time-averaged squared error between $u_t$ and its estimate (Online Methods). We compared the optimal estimator of the presynaptic membrane potential (Fig. 4a) with the postsynaptic membrane potential occasioned by a synapse undergoing STP. These two traces were very close. Notably, a static synapse without STP, whose fixed efficacy is still optimized for the same estimation task, performed substantially less well.

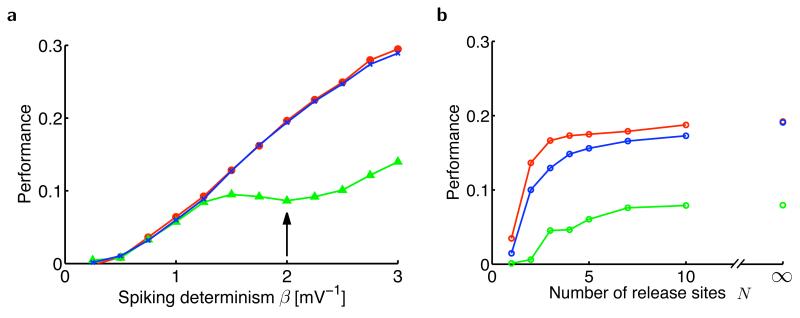

Figure 4.

Estimation performance of the presynaptic membrane potential. (a) Performance as a function of the determinism of presynaptic spiking, β (Online Methods), in the optimal estimator (red), an optimally fitted dynamic synapse (blue) and an optimally fitted static synapse without short-term plasticity (green). When β = 0 (entirely stochastic spiking), spikes are generated independently of the membrane potential and, as a consequence, all models fail to track the membrane potential. As β becomes larger (more deterministic spiking), the dynamic synapse model matches the optimal estimator in performance and substantially outperforms the static synapse. Realistic values for βσOU (here σOU = 1 mV) have been found to be between 2 and 3 in L5 pyramidal cells of somatosensory cortex49. (b) Estimation performance with stochastic vesicle release as a function of the number of synaptic release sites, N. The dynamic synapse (blue) tracked the performance of the optimal estimator (red) well and outperformed the static synapse (green) at all values of N. The performance of all estimators decreases only when the number of independent release sites becomes very low (N = 1 or 2). When N is large (N → ∞), synaptic transmission becomes deterministic, even though spike generation itself remains stochastic (with parameter β = 2 shown by the arrow in a).

Our account of synaptic dynamics assumes no transmission failure or, equivalently, a large number of release sites between the pre- and postsynaptic cells. Therefore, we also ran simulations with stochastic synapses4 (Online Methods) to test a more realistic regime of synaptic communication (Fig. 4b). The advantage of a dynamic over a static synapse was maintained even when the number of release sites was low, despite transmission failures and the added variability of the dynamic synapse entailed by the stochastic restocking of vesicles.

DISCUSSION

We found that STP arranges for the local postsynaptic membrane potential at a synapse, vt, to behave as an optimal estimator of the presynaptic membrane potential ut. We argue that this is central for a wide swathe of feedforward and recurrent neural circuits. In particular, it allows network computations based on analog quantities encoded in the somatic membrane potentials of neurons to be realized, even though their spikes offer only a low-dimensional and discrete projection of those potentials.

As a first step, we concentrated on the interaction between a pair of cells and on reproducing as close an estimate as possible to the exact presynaptic membrane potential on the postsynaptic side. Of course, vt can be changed by many factors other than synaptic currents, such as voltage signals propagating from other dendritic compartments or backpropagating action potentials from the soma. However, under most experimental procedures that have been used to test STP41,42, the magnitudes of these other factors are minimized, such that the somatic membrane potential recorded reflects, perhaps with some dendritic filtering, the local EPSPs at the stimulated synapse. This allows our theory to be directly applied to data obtained under such conditions (Supplementary Note).

A substantial apparent challenge to our theory, as indeed to many previous functional accounts of STP8-11, is that different efferent synapses of the same cell can express different forms of STP1. Two particular classes of factors that can affect vt may account for this. Both factors have to do with the overall computation performed by the neuron in the network, which need not strictly factorize into individual estimation of each presynaptic membrane potential and combination of these across multiple synapses (Supplementary Fig. 1).

First, computations might be based on estimates of different functions of the presynaptic membrane potentials rather than just their mean value; for example, their rates of change or higher order temporal derivatives. In this case, the different efferent synapses of a single neuron might estimate different functions and therefore be different. It will be an interesting extension of our theory to study how synaptic estimation and single-neuron computation can be blended, rather than being performed as separate algorithmic steps.

Second, the long-run efficacies of synapses in a neural circuit are also important in the computations it performs18,43, suggesting that presynaptic membrane potentials scaled by these computational factors should be reproduced instead. In this case, the optimal estimator just scales as well. However, if the synapse suffers from saturation or other similar nonlinear constraints, then the form of STP that minimizes the error in the estimate of the scaled presynaptic potential would have to reflect these nonlinearities and change as the long-run weight alters. These changes could take potentially quite complex forms. As different efferent synapses have different long-term weights, they could exhibit different forms of STP. Indeed, metaplasticity of STP as a result of long-term potentiation has been observed experimentally41,44.

We should also note that differences in STP across efferent synapses have been predominantly shown for inhibitory interneurons1,45,46. In our theory, estimation of the membrane potential comes in the service of network computations, which are typically posed in terms of the excitatory principal cells rather than inhibitory interneurons. Thus, the theory does not fully extend to cover inhibitory neurons.

Our theory employs a standard account of the relationship between ut and the presynaptic spikes and thus complements the suggestion47 that spike generation is more complex so that estimation can be straightforward even with a static synapse. The latter account is not easy to reconcile with the fluctuations evident in STP. Others have pursued ideas similar to ours about adaptive gain control mechanisms in neurons generally acting as optimal filters48 and dynamic synapses specifically acting as estimators of presynaptic firing rates15 (B. Cronin, M. Sur & K. Koerding. Soc. Neurosci. Abstr. 663.6, 2007) or interspike intervals15. Some of these studies do not encompass STP, whereas others address depression, but not facilitation. Finally, these studies have primarily sought to predict a general advantage (if one exists at all7) of dynamic synapses over static ones.

Our approach is unique in suggesting that synaptic dynamics are matched to the statistics of presynaptic membrane potential fluctuations, a match that we were able to demonstrate at least qualitatively (Fig. 3c–f). Even such a qualitative match is noteworthy, given that the STP data that we fitted were not extensive, were recorded in vitro under potentially very different stimulation regimes and neuromodulatory milieux for synaptic dynamics than those relevant in vivo, and given our highly simplified statistical model of presynaptic membrane potential dynamics. For example, a common dynamical motif shared by many neurons, including olivary neurons and hippocampal pyramids, and ignored by our model, is the presence of subthreshold membrane potential oscillations38,40.

The simplicity of our model of presynaptic membrane potential dynamics makes it hard to provide a direct biological interpretation of the optimal parameter values (Online Methods) from the fits to data. Further theoretical work would be necessary to incorporate higher-order statistical regularities of these dynamics into the model. Further empirical studies recording STP under more naturalistic conditions and in vivo membrane potential recordings from the same identified pair of neurons, or at least the same cell type, will also be required. These would jointly license more direct comparisons and interpretations. Such experiments would of course be technically challenging. However, the link to optimal estimation offered by our theory provides them with the potential to test directly an important facet of neural circuit computations.

ONLINE METHODS

The optimal estimator

Our goal was to understand the factors that determine the mean of the posterior, that is, the estimate

$$\hat{u}_t = \int u_t\, P(u_t \mid s_{0:t})\, \mathrm{d}u_t \qquad (2)$$

which corresponds to the postsynaptic potential under our interpretation, and, in particular, the size of the change in this estimate in response to an incoming spike, the analogue of the size of an EPSP.

The Ornstein-Uhlenbeck process

The generative model involves two simplifying assumptions. First, we assume that presynaptic membrane potential dynamics are discrete time and Markovian (Supplementary Fig. 1)

$$P(u_t \mid u_{0:t-\delta t}) = P(u_t \mid u_{t-\delta t}) \qquad (3)$$

In particular, we assume that the presynaptic membrane potential evolves as an Ornstein-Uhlenbeck process, given (in discrete time steps of size δt and thus as a first-order autoregressive process, AR(1)) by

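$$u_t = u_{t-\delta t} + \frac{\delta t}{\tau}\left(u_{\mathrm{rest}} - u_{t-\delta t}\right) + \sigma_W \sqrt{\delta t}\,\xi_t, \qquad \xi_t \sim \mathcal{N}(0, 1) \qquad (4)$$

where $u_{\mathrm{rest}}$ is the resting membrane potential of the presynaptic cell (assumed to be constant here), τ is its membrane time constant, σW is the step size for the random walk behavior of its membrane potential and $\xi_t$ is standard Gaussian noise, drawn independently at each time step. Because both τ and σW are assumed to be constant, the marginal variance of the presynaptic membrane potential, $\sigma_{\mathrm{OU}}^2 = \sigma_W^2\tau/2$ (for small δt), is stationary.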

The second assumption is that spiking activity at any time only depends on the membrane potential at that time

$$P(s_t \mid u_{0:t}, s_{0:t-\delta t}) = P(s_t \mid u_t) \qquad (5)$$

In particular, we assume that the spike-generating mechanism is an inhomogeneous Poisson process. Thus, at time t, the neuron emits a spike (st = 1) with probability g(ut)δt and the spiking probability given the membrane potential can be written as

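$$P(s_t \mid u_t) = \left[g(u_t)\,\delta t\right]^{s_t}\left[1 - g(u_t)\,\delta t\right]^{1 - s_t} \qquad (6)$$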

We further assume that the transfer function is exponential (Supplementary Fig. 1)

$$g(u) = g_0 \exp(\beta u) \qquad (7)$$

where g0 and β are the base rate and determinism of the spike generation process, respectively.
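The generative model of equations (4), (6) and (7) can be sampled directly. The Python sketch below is a minimal illustration assuming hypothetical parameter values (τ = 20 ms, σOU = 1 mV, g0 = 5 Hz, β = 2 mV−1, 0.1-ms bins) with $u_{\mathrm{rest}}$ = 0; `simulate_presynaptic` is a hypothetical name. Because the transfer function is exponential, g(ut)δt can exceed 1 at very depolarized values, in which case the bin simply always contains a spike.

```python
import numpy as np

def simulate_presynaptic(T=2.0, dt=1e-4, tau=0.02, u_rest=0.0, sigma_ou=1.0,
                         g0=5.0, beta=2.0, seed=0):
    """Sample u_t from the AR(1) form of the OU process (equation (4)) and
    spikes from the exponential escape-rate model (equations (6)-(7))."""
    rng = np.random.default_rng(seed)
    n = int(round(T / dt))
    sigma_w = np.sqrt(2.0 * sigma_ou**2 / tau)   # stationary variance = sigma_ou**2
    noise = rng.standard_normal(n)
    uniforms = rng.random(n)
    decay = dt / tau
    step = float(sigma_w * np.sqrt(dt))
    u = np.empty(n)
    u_prev = u_rest + sigma_ou * rng.standard_normal()   # stationary initial condition
    for i in range(n):
        # One AR(1) step of equation (4).
        u_prev = u_prev + decay * (u_rest - u_prev) + step * noise[i]
        u[i] = u_prev
    # Bernoulli(g(u_t) dt) spikes, equations (6)-(7).
    s = (uniforms < g0 * np.exp(beta * u) * dt).astype(int)
    return u, s

u, s = simulate_presynaptic(T=20.0, seed=1)
print(np.var(u), s.sum() / 20.0)   # empirical variance (mV^2) and rate (Hz)
```

With these values u is Gaussian with mean 0 and variance σOU², so the long-run firing rate is g0 exp(β²σOU²/2), about 37 Hz.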

Because equations (3) and (5) define a hidden Markov model, the posterior distribution over ut can be written in a recursive form as in equation (1)

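$$P(u_t \mid s_{0:t}) \propto P(s_t \mid u_t) \int P(u_t \mid u_{t-\delta t})\, P(u_{t-\delta t} \mid s_{0:t-\delta t})\, \mathrm{d}u_{t-\delta t} \qquad (8)$$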

That is, the posterior at time t, P(ut | s0:t), can be computed by combining information from the current time step with the posterior obtained in the previous time step, P(ut–δt | s0:t–δt). Note that even though inference can be performed recursively and the hidden dynamics is linear-Gaussian (equation (4)), the standard (extended) Kalman filter cannot be used for inference because the measurement does not involve additive Gaussian noise, but instead comes from the stochasticity of the spiking process (equations (6) and (7)).

Performing recursive inference (filtering), as described by equation (8), under the generative model described by equations (3)–(7) results in a posterior distribution that is approximately Gaussian5 with mean $\hat{u}_t$ and variance $\sigma_t^2$

$$P(u_t \mid s_{0:t}) \approx \mathcal{N}\!\left(u_t;\, \hat{u}_t,\, \sigma_t^2\right) \qquad (9)$$

Note that the smaller the bin size δt is, the closer this posterior distribution is to a Gaussian. The expected firing rate γt of the presynaptic cell at time t is obtained from the normalization condition

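$$\gamma_t = \int g(u_t)\, P(u_t \mid s_{0:t})\, \mathrm{d}u_t = g_0 \exp\!\left(\beta\hat{u}_t + \tfrac{1}{2}\beta^2\sigma_t^2\right) \qquad (10)$$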
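Under the Gaussian posterior of equation (9), the expected rate in equation (10) is the mean of a log-normal variable, g0 exp(βût + β²σt²/2). A quick Monte Carlo check in Python, with arbitrary illustrative values for ût, σt², g0 and β (assumptions, not fitted parameters):

```python
import numpy as np

def expected_rate(u_hat, var, g0, beta):
    """Expected rate for a Gaussian posterior N(u_hat, var) and g(u) = g0 exp(beta u)."""
    return g0 * np.exp(beta * u_hat + 0.5 * beta**2 * var)

# Monte Carlo estimate of E[g(u)] under the Gaussian posterior.
rng = np.random.default_rng(0)
u_hat, var, g0, beta = -0.5, 0.4, 5.0, 2.0
samples = rng.normal(u_hat, np.sqrt(var), size=1_000_000)
mc = np.mean(g0 * np.exp(beta * samples))
closed = expected_rate(u_hat, var, g0, beta)
print(closed, mc)
```

The β²σt²/2 term is why γt rises with uncertainty even at a fixed mean estimate.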

The mean and variance of the posterior in equation (9) evolve (in continuous time, by taking the limit δt → 0) as5

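$$\frac{\mathrm{d}\hat{u}_t}{\mathrm{d}t} = -\frac{\hat{u}_t - u_{\mathrm{rest}}}{\tau} + \beta\,\sigma^2_{t-\epsilon}\left[S(t) - \gamma_t\right] \qquad (11)$$

$$\frac{\mathrm{d}\sigma^2_t}{\mathrm{d}t} = -\frac{2}{\tau}\left(\sigma^2_t - \sigma^2_{\mathrm{OU}}\right) - \gamma_t\,\beta^2\,\sigma^4_t \qquad (12)$$

where $S(t) = \sum_{t_{\mathrm{spike}}} \delta(t - t_{\mathrm{spike}})$ denotes the spike train of the presynaptic cell represented as a sum of Dirac delta functions, and ε is an arbitrary small positive constant that ensures that at the time of a spike, $t = t_{\mathrm{spike}}$, the update of $\hat{u}_t$ is based on the variance just before the spike, $\sigma^2_{t_{\mathrm{spike}}-\epsilon}$. (A similar, but not identical, derivation can be found in ref. 26.)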

Equation (11) indicates that each time a spike is observed, the estimated membrane potential should increase in proportion to the uncertainty (variance) about the current estimate. In turn, this estimation uncertainty then decreases after each spike, because the spike-induced increase in $\hat{u}_t$ raises the expected firing rate $\gamma_t$ and hence the rate at which the variance shrinks (equations (10) and (12)). Conversely, in the absence of spikes, the estimated membrane potential decreases while the variance increases back to its asymptotic value. It can be shown5 that the representation of uncertainty about the membrane potential by $\sigma^2$ is self-consistent because it is predictive of the error of the mean estimator, $\sigma_t^2 \approx \langle (u_t - \hat{u}_t)^2 \rangle$.
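Equations (10)–(12) can be integrated numerically on a time grid. The Python sketch below is one way to do so under choices of our own: the spike term of equation (11) is applied as a jump of βσ² using the pre-spike variance (the role of ε); the leak and relaxation terms are Euler steps; and the firing-rate terms −βσ²γ and −γβ²σ⁴ are integrated in closed form over each bin (holding σ² fixed for the former and γ fixed for the latter), which keeps σ² positive when γ becomes very large during bursts. Parameter values and the name `gaussian_filter` are hypothetical.

```python
import numpy as np

def gaussian_filter(spikes, dt=1e-4, tau=0.02, u_rest=0.0, sigma_ou=1.0,
                    g0=5.0, beta=2.0):
    """Numerical integration of equations (10)-(12).

    spikes: 0/1 per bin of width dt (s). Returns u_hat and sigma^2 after each bin.
    """
    u_hat, var = u_rest, sigma_ou**2          # start from the stationary prior
    means = np.empty(len(spikes))
    variances = np.empty(len(spikes))
    for i, s in enumerate(spikes):
        if s:
            u_hat += beta * var               # spike: jump of beta * sigma^2(t - eps)
        gamma = g0 * np.exp(beta * u_hat + 0.5 * beta**2 * var)   # equation (10)
        k = dt * beta**2 * var * gamma
        # Firing-rate terms, solved in closed form over one bin:
        # d(u_hat)/dt = -beta*var*gamma (var fixed), d(var)/dt = -gamma*beta^2*var^2 (gamma fixed).
        u_hat -= np.log1p(k) / beta
        var /= 1.0 + k
        # Leak toward rest and relaxation toward sigma_OU^2 (explicit Euler).
        u_hat += dt * (u_rest - u_hat) / tau
        var += dt * 2.0 * (sigma_ou**2 - var) / tau
        means[i] = u_hat
        variances[i] = var
    return means, variances

# Usage: 100 ms of silence, then one spike.
spikes = np.zeros(2000, dtype=int)
spikes[1000] = 1
m, v = gaussian_filter(spikes)
```

The spike raises ût by roughly βσ² and is followed by a rapid drop in σ², so a second spike arriving shortly afterwards would produce a smaller jump: the depression-like behavior described in the main text.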

The dynamics of the membrane potential estimator in equations (11) and (12) is closely related to the dynamics of short-term depression. This can be shown formally by taking the limit when presynaptic spikes are rare. In this case, equations (11) and (12) can be rewritten5 as

| (13) |

| (14) |

where … is the normalized variance of the estimator. The other constants involved are …, and $\hat{u}_\infty$, $\sigma_\infty^2$ and $\gamma_\infty$ are the stationary posterior mean, variance and expected firing rate in the optimal estimator (equations (9) and (10)) in the absence of presynaptic spikes. More precisely, from equations (11) and (12), we have

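$$\hat{u}_\infty = u_{\mathrm{rest}} - \tau\beta\,\sigma_\infty^2\,\gamma_\infty \qquad (15)$$

$$\sigma_\infty^2 = \sigma_{\mathrm{OU}}^2 - \frac{\tau}{2}\,\gamma_\infty\,\beta^2\,\sigma_\infty^4 \qquad (16)$$

where the expected firing rate is $\gamma_\infty = g_0 \exp\!\left(\beta\hat{u}_\infty + \tfrac{1}{2}\beta^2\sigma_\infty^2\right)$. Although it is difficult to get an explicit expression for $\hat{u}_\infty$ as a function of the model parameters alone, from equations (15) and (16) we can still express it as a function of the stationary variance

$$\hat{u}_\infty = u_{\mathrm{rest}} - \frac{2\left(\sigma_{\mathrm{OU}}^2 - \sigma_\infty^2\right)}{\beta\,\sigma_\infty^2} \qquad (17)$$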
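The stationary state reduces to a single scalar equation in σ∞²: combining equations (15) and (16) gives û∞ = urest − 2(σOU² − σ∞²)/(βσ∞²); substituting this into γ∞ = g0 exp(βû∞ + β²σ∞²/2) and then into equation (16) leaves σ∞² as the only unknown. The residual of that equation is positive as σ∞² → 0 and negative at σ∞² = σOU², so bisection on that interval finds a root. The Python sketch below assumes hypothetical parameter values; `stationary_estimator` is a hypothetical name.

```python
import math

def stationary_estimator(tau=0.02, u_rest=0.0, sigma_ou=1.0, g0=5.0, beta=2.0,
                         tol=1e-12):
    """Stationary mean, variance and rate of the estimator during silence,
    found by bisection on the stationary variance."""
    s2_ou = sigma_ou**2

    def u_inf(v):                      # mean as a function of the stationary variance
        return u_rest - 2.0 * (s2_ou - v) / (beta * v)

    def gamma_inf(v):                  # expected rate at the stationary point
        return g0 * math.exp(beta * u_inf(v) + 0.5 * beta**2 * v)

    def residual(v):                   # stationary variance equation as residual(v) = 0
        return s2_ou - 0.5 * tau * gamma_inf(v) * beta**2 * v**2 - v

    lo, hi = 1e-9 * s2_ou, s2_ou       # residual(lo) > 0, residual(hi) < 0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    v = 0.5 * (lo + hi)
    return u_inf(v), v, gamma_inf(v)

u_inf, var_inf, gamma_inf = stationary_estimator()
print(u_inf, var_inf, gamma_inf)
```

Very small trial variances send û∞ far below rest; `math.exp` then underflows harmlessly to 0.0 rather than raising an error.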

Equations (13) and (14) directly map the posterior mean and (normalized) variance onto the postsynaptic membrane potential v and the synaptic resource variable x in a canonical, biophysically motivated model of a synapse undergoing synaptic depression (see equations (20) and (21)).

The switching Ornstein-Uhlenbeck process

We modeled presynaptic membrane potential fluctuations with an Ornstein-Uhlenbeck process around a constant resting membrane potential. We then generalized this process by letting the resting potential itself change. In this switching Ornstein-Uhlenbeck process, the resting membrane potential is not fixed, but randomly switches between two levels, u+ and u−, corresponding to up and down states (Supplementary Fig. 1)

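$$P\!\left(u^{\mathrm{rest}}_t = u_+ \mid u^{\mathrm{rest}}_{t-\delta t} = u_-\right) = \eta_+\,\delta t, \qquad P\!\left(u^{\mathrm{rest}}_t = u_- \mid u^{\mathrm{rest}}_{t-\delta t} = u_+\right) = \eta_-\,\delta t \qquad (18)$$

where η− and η+ are the rates of switching to the down and up states, respectively. Similarly to equation (4), the presynaptic membrane potential evolves as an Ornstein-Uhlenbeck process around the resting potential, which is now time dependent

$$u_t = u_{t-\delta t} + \frac{\delta t}{\tau}\left(u^{\mathrm{rest}}_t - u_{t-\delta t}\right) + \sigma_W \sqrt{\delta t}\,\xi_t, \qquad \xi_t \sim \mathcal{N}(0, 1) \qquad (19)$$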

Spike generation is described by the same rule as before (equations (6) and (7)). Although we were able to develop some analytical insight into the behavior of the optimal estimator in the case of a switching Ornstein-Uhlenbeck process (Supplementary Note), a full analytical treatment remains a challenging task. Thus, the results displayed in Figure 2 were obtained by using standard particle filtering techniques50 (see below).
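A standard particle filter for this model can be sketched as a bootstrap filter: each particle carries both a membrane potential and an up/down label, is propagated with equations (18) and (19), is weighted by the spike likelihood of equations (6) and (7), and the population is then resampled. The Python sketch below is our own minimal version, not necessarily the implementation behind Figure 2. The values of u+, u−, η± and the other parameters, as well as the function name, are hypothetical, and we resample at every step for simplicity; ρt is the weighted fraction of particles in the up state.

```python
import numpy as np

def particle_filter_switching(spikes, dt=1e-3, tau=0.02, u_up=1.0, u_down=-1.0,
                              eta_up=1.0, eta_down=1.0, sigma_ou=1.0,
                              g0=5.0, beta=2.0, n_particles=2000, seed=0):
    """Bootstrap particle filter for the switching OU model.

    Returns the posterior mean of u_t and rho_t, the posterior probability of
    the up state, after each bin. eta_up / eta_down are the rates of switching
    *to* the up / down state.
    """
    rng = np.random.default_rng(seed)
    sigma_w = np.sqrt(2.0 * sigma_ou**2 / tau)
    # Initialize from the stationary state occupancy and within-state spread.
    up = rng.random(n_particles) < eta_up / (eta_up + eta_down)
    u = np.where(up, u_up, u_down) + sigma_ou * rng.standard_normal(n_particles)
    means = np.empty(len(spikes))
    rho = np.empty(len(spikes))
    for i, s in enumerate(spikes):
        # 1. Propagate: switch state, then take one AR(1) step around the current rest.
        switch_rate = np.where(up, eta_down, eta_up)
        up = up ^ (rng.random(n_particles) < switch_rate * dt)
        u_rest = np.where(up, u_up, u_down)
        u = (u + dt / tau * (u_rest - u)
             + sigma_w * np.sqrt(dt) * rng.standard_normal(n_particles))
        # 2. Weight by P(s_t | u_t); 1 - exp(-g dt) ~ g dt but stays within [0, 1].
        p_spike = -np.expm1(-g0 * np.exp(beta * u) * dt)
        w = p_spike if s else 1.0 - p_spike
        w = w / w.sum()
        means[i] = w @ u
        rho[i] = w @ up.astype(float)
        # 3. Multinomial resampling to counter weight degeneracy.
        idx = rng.choice(n_particles, size=n_particles, p=w)
        u, up = u[idx], up[idx]
    return means, rho

# Usage: 300 ms of silence, then sustained firing (one spike every 3 ms for 60 ms).
spikes = np.zeros(400, dtype=int)
spikes[300:360:3] = 1
m, rho = particle_filter_switching(spikes)
```

Systematic or residual resampling would reduce Monte Carlo noise; multinomial resampling is used here only because it is the simplest to state.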

Biophysically motivated STP model

The model we used was taken directly from reference 11 as a canonical model of a synapse undergoing STP. It describes how the postsynaptic potential vt, the synaptic resource xt (responsible for depression) and the utilization factor yt (responsible for facilitation) co-vary in time

| (20) |

| (21) |

| (22) |

where v0 is the postsynaptic resting membrane potential, τm is the postsynaptic membrane time constant, J is (the static part of) synaptic efficacy, Y is the maximal synaptic utilization (and the rate of increase in y in response to a spike), τD is the time constant of synaptic depression and τF is the facilitation time constant. Note that if the facilitation time constant is very short (τF → 0), yt can be replaced by Y in equations (20) and (21), resulting in pure depression. This standard model also ignores the finite rise time of EPSPs. However, because rise times are usually about an order of magnitude shorter than decay time constants, the effect of this approximation on the estimation performance of a synapse (as shown in Fig. 4) is expected to be negligible and, in any case, applies equally to the static and the dynamic versions of the model.
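Equations (20) to (22) are not reproduced in this section. The event-driven sketch below assumes a common form of this model: between spikes x recovers to 1 with τD and y relaxes to Y with τF; at a spike the EPSP amplitude is J·y·x, then x loses the fraction y and y increases by Y(1 − y). Using y before its spike-triggered increase is our assumption; it makes τF → 0 reduce to pure depression with utilization Y, as stated above. The function names and the value v0 = −70 mV are hypothetical.

```python
import math

import numpy as np


def stp_epsp_amplitudes(spike_times, J=1.0, Y=0.5, tau_d=200.0, tau_f=1e-3):
    """EPSP amplitude J * y * x evoked by each presynaptic spike (times in ms)."""
    x, y = 1.0, Y                  # fully recovered resources, baseline utilization
    amps, last_t = [], None
    for t in spike_times:
        if last_t is not None:
            gap = t - last_t
            x = 1.0 - (1.0 - x) * math.exp(-gap / tau_d)   # recovery from depression
            y = Y + (y - Y) * math.exp(-gap / tau_f)       # decay of facilitation
        release = y * x
        amps.append(J * release)
        x -= release               # depression: a fraction y of resources is used
        y += Y * (1.0 - y)         # facilitation: utilization increases
        last_t = t
    return np.array(amps)


def postsynaptic_potential(t_grid, spike_times, amps, v0=-70.0, tau_m=20.0):
    """v(t) as a sum of instantaneously rising, exponentially decaying EPSPs."""
    v = np.full(len(t_grid), v0, dtype=float)
    for t_sp, a in zip(spike_times, amps):
        after = t_grid >= t_sp
        v[after] += a * np.exp(-(t_grid[after] - t_sp) / tau_m)
    return v


train = [0.0, 20.0, 40.0]
print(stp_epsp_amplitudes(train, Y=0.5, tau_d=200.0, tau_f=1e-3))   # depressing
print(stp_epsp_amplitudes(train, Y=0.1, tau_d=50.0, tau_f=500.0))   # facilitating
```

A large Y with negligible τF gives successively smaller EPSPs, whereas a small Y with a long τF lets facilitation outweigh depression over the first few spikes.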

Measuring the performance of estimators

The performance of an estimator, P, was quantified in terms of its rescaled root mean squared error (Fig. 4)

| (23) |

where can be substituted with vt to measure the performance of the biophysical models. This provides a suitably normalized measure of performance: perfect estimation yields P = 1, whereas an estimator that always outputs the expected mean presynaptic membrane potential, and thus completely ignores presynaptic spikes, has P = 0.
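Equation (23) is not reproduced in this section. One form consistent with the normalization described above is P = 1 − RMSE(estimate) / RMSE(constant mean), where both errors are measured against the true presynaptic potential. The sketch below computes this assumed form; the function name `performance` is hypothetical.

```python
import numpy as np


def performance(u_true, u_est, u_mean=None):
    """Assumed form P = 1 - RMSE(u_est) / RMSE(u_mean).

    u_mean is the expected mean presynaptic potential; if omitted, the sample
    mean of u_true is used instead.
    """
    u_true = np.asarray(u_true, dtype=float)
    u_est = np.asarray(u_est, dtype=float)
    if u_mean is None:
        u_mean = u_true.mean()
    rmse = np.sqrt(np.mean((u_true - u_est) ** 2))
    rmse_baseline = np.sqrt(np.mean((u_true - u_mean) ** 2))
    return 1.0 - rmse / rmse_baseline


u = np.array([-61.0, -59.0, -60.0, -62.0, -58.0])
print(performance(u, u))                              # perfect estimator
print(performance(u, np.full(5, -60.0), u_mean=-60.0))  # ignores all spikes
```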

Stochastic release

Figure 4 shows the performance of static and depressing synapses and the optimal estimator for the case of stochastic vesicle release. Here we provide the details of these calculations, which follow reference 4.

Depressing synapses

Let N denote the total number of independent release sites. Each site can release at most one vesicle per presynaptic spike, and each released vesicle gives rise to a postsynaptic response of quantal size q = J/N, where J is the maximal EPSP amplitude. The postsynaptic membrane potential evolves as

| (24) |

where τm is the membrane time constant, v0 is the postsynaptic resting potential and St is the presynaptic (delta) spike train. The total number of vesicles released at time t in response to a presynaptic spike, , depends on the number of vesicles that are ready to fuse, , and the utilization fraction Y. More precisely, at the time of a spike, is drawn from a binomial distribution

| (25) |

The number of ready-to-fuse vesicles decreases by each time there is a spike and increases stochastically back to N with a time constant τD in the absence of spikes. Formally, the dynamics of is given by

| (26) |

where and tstk denote the stochastic restocking times produced by an inhomogeneous Poisson process with intensity .

It is easy to show that by taking the expectation of equations (24) and (26) over the stochastic release and restocking events, and setting , we recover the standard model of short-term depression (see equations (20) and (21)).
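A Monte Carlo sketch of the stochastic depressing synapse of equations (24) to (26), under the assumption that each empty site restocks independently at rate 1/τD (so the restocking intensity is proportional to the number of empty sites). Under that assumption, the number of sites restocked during an interval Δt is binomial with probability 1 − exp(−Δt/τD), and the trial-averaged second EPSP after an interval Δt is J·Y·(1 − Y exp(−Δt/τD)), matching the deterministic depression model as stated above. N = 10 and τD = 200 ms are illustrative values, not taken from the text; Y = 0.39 is the value used for all models.

```python
import numpy as np


def stochastic_depressing_epsps(spike_times, n_trials, N=10, J=1.0, Y=0.39,
                                tau_d=200.0, seed=0):
    """EPSP amplitudes (trials x spikes) with binomial release and restocking."""
    rng = np.random.default_rng(seed)
    q = J / N                                    # quantal amplitude
    ready = np.full(n_trials, N)                 # all sites start stocked
    amps = np.empty((n_trials, len(spike_times)))
    last_t = None
    for j, t in enumerate(spike_times):
        if last_t is not None:
            # each empty site refills with probability 1 - exp(-gap / tau_d)
            p_restock = 1.0 - np.exp(-(t - last_t) / tau_d)
            ready = ready + rng.binomial(N - ready, p_restock)
        released = rng.binomial(ready, Y)        # each ready vesicle fuses w.p. Y
        amps[:, j] = q * released
        ready = ready - released
        last_t = t
    return amps


amps = stochastic_depressing_epsps([0.0, 20.0], n_trials=20_000)
print(amps.mean(axis=0))
```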

Static synapses

If we take the limit of a short time constant for depression, that is, τD → 0, the restocking of vesicles described by equation (26) becomes instantaneous and we have . As a consequence, the number of released vesicles at the time of a spike is given by

| (27) |
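In the τD → 0 limit every site is ready at every spike, so the release count is Binomial(N, Y) independently of the spiking history. A minimal sketch with illustrative values N = 10 and J = 1 (hypothetical) and Y = 0.39 (the value used in the text); the function name is hypothetical.

```python
import numpy as np


def stochastic_static_epsps(n_spikes, n_trials, N=10, J=1.0, Y=0.39, seed=0):
    """Static synapse: each EPSP is q times a Binomial(N, Y) count, drawn
    independently for every spike because all sites are always restocked."""
    rng = np.random.default_rng(seed)
    q = J / N
    return q * rng.binomial(N, Y, size=(n_trials, n_spikes))


amps = stochastic_static_epsps(n_spikes=2, n_trials=20_000)
print(amps.mean(axis=0))   # both spikes have mean amplitude close to J * Y
```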

Optimal estimator

In the case of stochastic vesicle release, the variables of the optimal estimator evolve as

| (28) |

| (29) |

where the number of released vesicles is given by equation (27). Note that if we replace by its expectation , we get back the deterministic optimal estimator derived in equations (11) and (12).

The utilization parameter Y describes the probability of release for a presynaptic spike in all three models. For a fair comparison, it was chosen to be Y = 0.39 for all models, which optimized the performance of the dynamic synapse in the deterministic case for β = 2 (the value we used for the stochastic simulations) and which is consistent with experimentally reported values for the probability of release in cortical pyramidal-to-pyramidal cell connections42.

Numerical simulations for the optimal estimator

We evaluated the posterior mean , the conditional means μ+, μ− and the conditional variances σ+, σ− numerically using a standard particle filtering technique50. In practice, we used Npart = 10,000 particles, each of which was a two-dimensional vector , i = 1, …, Npart. They evolved according to

| (30) |

| (31) |

where is given by equation (18) and by equation (19).

Each particle was assigned an (importance) weight, , which was updated according to

| (32) |

In each step of the simulation, all weights were renormalized such that . The particles were resampled when the weights became strongly uneven. Formally, if the number of effective particles at time t, defined as

| (33) |

fell below a given threshold Nthresh = 9,000, then all particles were resampled and the weights were all set back to .
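Equations (30) to (33) are not reproduced in this section. The sketch below is a generic bootstrap particle filter for the switching Ornstein-Uhlenbeck process under the same assumed dynamics as above: particles (state, u) are propagated through the prior, weights are multiplied by the probability of a spike (1 − exp(−g(u)dt)) or of no spike (exp(−g(u)dt)) in each bin, and all particles are resampled when the effective number 1/Σw² falls below Nthresh. Multinomial resampling, the 0.5 ms time step and the function name are our assumptions.

```python
import numpy as np


def particle_filter(spikes, dt=0.5, n_part=10_000, n_thresh=9_000, tau=20.0,
                    u_down=-65.0, u_up=-55.0, eta_up=0.002, eta_down=0.002,
                    sigma_ou=2.0, beta=1.0 / 3.0, rate_ref=0.01, seed=0):
    """Bootstrap particle filter for the switching OU process (ms, mV, 1/ms).
    Each particle is a pair (state, u); returns E[u] and P(up) at every step."""
    rng = np.random.default_rng(seed)
    g0 = rate_ref * np.exp(60.0 * beta)                    # g(-60 mV) = rate_ref
    up = rng.random(n_part) < eta_up / (eta_up + eta_down)  # stationary state prior
    u = np.where(up, u_up, u_down) + sigma_ou * rng.standard_normal(n_part)
    w = np.full(n_part, 1.0 / n_part)
    decay = np.exp(-dt / tau)
    noise_sd = sigma_ou * np.sqrt(1.0 - decay**2)
    p_leave_up = 1.0 - np.exp(-eta_down * dt)
    p_leave_down = 1.0 - np.exp(-eta_up * dt)
    mean_u, p_up = np.empty(len(spikes)), np.empty(len(spikes))
    for k, spike in enumerate(spikes):
        # 1) propagate every particle through the prior dynamics
        flip = rng.random(n_part) < np.where(up, p_leave_up, p_leave_down)
        up = up ^ flip
        u_rest = np.where(up, u_up, u_down)
        u = u_rest + (u - u_rest) * decay + noise_sd * rng.standard_normal(n_part)
        # 2) reweight by the likelihood of a spike / no spike in this bin
        p_silent = np.exp(-g0 * np.exp(beta * u) * dt)
        w = w * ((1.0 - p_silent) if spike else p_silent)
        w /= w.sum()
        # 3) resample when the effective number of particles is too small
        if 1.0 / np.sum(w**2) < n_thresh:
            idx = rng.choice(n_part, size=n_part, p=w)
            up, u = up[idx], u[idx]
            w = np.full(n_part, 1.0 / n_part)
        mean_u[k], p_up[k] = np.sum(w * u), np.sum(w[up])
    return mean_u, p_up


silent = np.zeros(1000, dtype=bool)                   # 500 ms without spikes
busy = np.zeros(1000, dtype=bool)
busy[::40] = True                                     # regular 50 Hz for 500 ms
mean_u, p_up = particle_filter(np.concatenate([silent, busy]),
                               n_part=2000, n_thresh=1800)
```

With these assumed parameters, a long silence should drive the inferred probability of the up state towards zero, and sustained 50 Hz firing should drive it close to one.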

The empirical mean and variance of the posterior membrane potential distribution were determined as

| (34) |

| (35) |

The numerical evaluation of , , and followed the same procedure except that the summation was restricted to the particles that were in the up (or down) state and the weights were renormalized accordingly.
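Equations (34) and (35) are not reproduced in this section. The sketch below uses the standard weighted estimators, mean = Σ wᵢuᵢ and variance = Σ wᵢ(uᵢ − mean)², and obtains the state-conditional moments by restricting the sums to particles in the up (or down) state and renormalizing their weights, as described above. The function name and the (u, up, w) particle layout are hypothetical.

```python
import numpy as np


def posterior_moments(u, up, w):
    """Weighted posterior mean/variance of u, overall and per state."""
    u = np.asarray(u, dtype=float)
    up = np.asarray(up, dtype=bool)
    w = np.asarray(w, dtype=float) / np.sum(w)       # normalised weights
    mean = np.sum(w * u)
    var = np.sum(w * (u - mean) ** 2)
    conditional = {}
    for label, mask in (("up", up), ("down", ~up)):
        w_s = w[mask]
        if w_s.sum() == 0.0:
            conditional[label] = (np.nan, np.nan)    # no particle in this state
            continue
        w_s = w_s / w_s.sum()                        # renormalise within the state
        m_s = np.sum(w_s * u[mask])
        conditional[label] = (m_s, np.sum(w_s * (u[mask] - m_s) ** 2))
    return mean, var, conditional


mean, var, cond = posterior_moments([-66.0, -64.0, -56.0, -54.0],
                                    [False, False, True, True],
                                    [0.25, 0.25, 0.25, 0.25])
print(mean, var, cond)
```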

Model parameters for simulations

Unless otherwise noted, the presynaptic membrane time constant was set to τ = 20 ms, the spiking determinism parameter to β−1 = 3 mV, and g0 such that g(−60 mV) = 10 Hz. For Figure 1, the s.d. of the presynaptic membrane potential was σOU = 5 mV and the resting membrane potential was urest = −60 mV. For Figure 2, the resting values were u− = −65 mV and u+ = −55 mV, the transition rates were η+ = η− = 2 Hz, and σOU = 2 mV. For Figure 3a, the fitted parameters were βσOU = 1.13, τ = 1000 ms and g(−60 mV) = 10 Hz; each of these three parameters was then perturbed in turn by ±5% and by ±10%. For Figure 3b, the lower baseline potential was fixed at u− = −65 mV, and the fitted parameters were σOU = 0.28 mV, τ = 85.7 ms, u+ = −53.9 mV, η− = 1.09 Hz, η+ = 1.13 Hz, β−1 = 3 mV and, through g0, g(−60 mV) = 17.8 Hz; each of these seven parameters was perturbed in turn by ±5% and by ±10%. In Figure 3b, we did not display the experimental data point for the shortest interspike interval (5 ms), because our spiking model does not include refractoriness and burstiness, which may dominate estimation at such short intervals. For Figure 4, we set urest = −60 mV, σOU = 1 mV and β−1 = 0.5 mV.
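Setting g0 from a reference rate is a one-line calculation once the form of the rate function is fixed. Assuming an exponential rate g(u) = g0 exp(u/β−1) (our assumption about the spiking rule of equations (6) and (7)), the condition g(uref) = rref gives g0 = rref exp(−uref/β−1). The helper names below are hypothetical.

```python
import math


def g0_for(rate_ref_hz, u_ref_mv=-60.0, beta_inv_mv=3.0):
    """Prefactor g0 (Hz) such that g(u_ref) = rate_ref for g(u) = g0 * exp(u / beta_inv)."""
    return rate_ref_hz * math.exp(-u_ref_mv / beta_inv_mv)


def g(u_mv, g0_hz, beta_inv_mv=3.0):
    """Assumed exponential firing rate (Hz) at membrane potential u (mV)."""
    return g0_hz * math.exp(u_mv / beta_inv_mv)


print(g0_for(10.0))                     # default setting: g(-60 mV) = 10 Hz
print(g0_for(17.8))                     # Figure 3b: g(-60 mV) = 17.8 Hz
print(g0_for(10.0, beta_inv_mv=0.5))    # Figure 4: beta^-1 = 0.5 mV
```

Because g0 absorbs the factor exp(−uref/β−1), it becomes very large for small β−1; only the product g0 exp(βu) at physiological potentials is meaningful.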


ACKNOWLEDGMENTS

We thank M. Häusser and R. Brown for useful references and L. Abbott, Sz. Káli and members of the Budapest Computational Neuroscience Forum for valuable discussions. This work was supported by the Wellcome Trust (J.-P.P., M.L. and P.D.) and the Gatsby Charitable Foundation (P.D.).

Footnotes

Author contributions

J.-P.P. and M.L. developed the mathematical framework. J.-P.P. performed the numerical simulations. All of the authors wrote the manuscript.

COMPETING FINANCIAL INTERESTS

The authors declare no competing financial interests.


METHODS

Methods and any associated references are available in the online version of the paper at http://www.nature.com/natureneuroscience/.

Note: Supplementary information is available on the Nature Neuroscience website.

References

- 1. Markram H, Wu Y, Tsodyks M. Differential signaling via the same axon of neocortical pyramidal neurons. Proc. Natl. Acad. Sci. USA. 1998;95:5323–5328. doi: 10.1073/pnas.95.9.5323.

- 2. Zucker RS, Regehr W. Short-term synaptic plasticity. Annu. Rev. Physiol. 2002;64:355–405. doi: 10.1146/annurev.physiol.64.092501.114547.

- 3. Abbott LF, Regehr WG. Synaptic computation. Nature. 2004;431:796–803. doi: 10.1038/nature03010.

- 4. Loebel A, et al. Multiquantal release underlies the distribution of synaptic efficacies in the neocortex. Front. Comput. Neurosci. 2009;3:1–13. doi: 10.3389/neuro.10.027.2009.

- 5. Pfister JP, Dayan P, Lengyel M. Know thy neighbour: A normative theory of synaptic depression. In: Bengio Y, Schuurmans D, Lafferty J, Williams CKI, Culotta A, editors. Advances in Neural Information Processing Systems 22. 2009. pp. 1464–1472.

- 6. Dittman JS, Kreitzer A, Regehr W. Interplay between facilitation, depression, and residual calcium at three presynaptic terminals. J. Neurosci. 2000;20:1374–1385. doi: 10.1523/JNEUROSCI.20-04-01374.2000.

- 7. Merkel M, Lindner B. Synaptic filtering of rate-coded information. Phys. Rev. E. 2010;81:041921. doi: 10.1103/PhysRevE.81.041921.

- 8. Abbott LF, Varela JA, Sen K, Nelson SB. Synaptic depression and cortical gain control. Science. 1997;275:220–224. doi: 10.1126/science.275.5297.221.

- 9. Cook DL, Schwindt P, Grande L, Spain W. Synaptic depression in the localization of sound. Nature. 2003;421:66–70. doi: 10.1038/nature01248.

- 10. Goldman MS, Maldonado P, Abbott L. Redundancy reduction and sustained firing with stochastic depressing synapses. J. Neurosci. 2002;22:584–591. doi: 10.1523/JNEUROSCI.22-02-00584.2002.

- 11. Mongillo G, Barak O, Tsodyks M. Synaptic theory of working memory. Science. 2008;319:1543–1546. doi: 10.1126/science.1150769.

- 12. Carpenter G, Grossberg S. Pattern Recognition by Self-Organizing Neural Networks. MIT Press; Cambridge, Massachusetts: 1991.

- 13. Hasselmo ME, Bower J. Acetylcholine and memory. Trends Neurosci. 1993;16:218–222. doi: 10.1016/0166-2236(93)90159-j.

- 14. Zador A. Impact of synaptic unreliability on the information transmitted by spiking neurons. J. Neurophysiol. 1998;79:1219–1229. doi: 10.1152/jn.1998.79.3.1219.

- 15. de la Rocha J, Nevado A, Parga N. Information transmission by stochastic synapses with short-term depression: neural coding and optimization. Neurocomputing. 2002;44:85–90.

- 16. Pfister JP, Lengyel M. Speed versus accuracy in spiking attractor networks. Front. Syst. Neurosci. Conference Abstract: Computational and Systems Neuroscience 2009. 2009.

- 17. Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 1972;12:1–24. doi: 10.1016/S0006-3495(72)86068-5.

- 18. Dayan P, Abbott LF. Theoretical Neuroscience. MIT Press; Cambridge, Massachusetts: 2001.

- 19. Thorpe S, Fize D, Marlot C. Speed of processing in the human visual system. Nature. 1996;381:520–522. doi: 10.1038/381520a0.

- 20. Huys QJ, Zemel R, Natarajan R, Dayan P. Fast population coding. Neural Comput. 2007;19:404–441. doi: 10.1162/neco.2007.19.2.404.

- 21. Stanford TR, Shankar S, Massoglia D, Costello M, Salinas E. Perceptual decision making in less than 30 milliseconds. Nat. Neurosci. 2010;13:379–385. doi: 10.1038/nn.2485.

- 22. Hopfield JJ. Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. USA. 1982;79:2554–2558. doi: 10.1073/pnas.79.8.2554.

- 23. Lengyel M, Kwag J, Paulsen O, Dayan P. Matching storage and recall: hippocampal spike timing-dependent plasticity and phase response curves. Nat. Neurosci. 2005;8:1677–1683. doi: 10.1038/nn1561.

- 24. Seung HS, Sompolinsky H. Simple models for reading neuronal population codes. Proc. Natl. Acad. Sci. USA. 1993;90:10749–10753. doi: 10.1073/pnas.90.22.10749.

- 25. Anderson B, Moore J. Optimal Filtering. Prentice-Hall; Englewood Cliffs, New Jersey: 1979.

- 26. Eden UT, Frank L, Barbieri R, Solo V, Brown E. Dynamic analysis of neural encoding by point process adaptive filtering. Neural Comput. 2004;16:971–998. doi: 10.1162/089976604773135069.

- 27. Paninski L. The most likely voltage path and large deviations approximations for integrate-and-fire neurons. J. Comput. Neurosci. 2006;21:71–87. doi: 10.1007/s10827-006-7200-4.

- 28. Bobrowski O, Meir R, Eldar Y. Bayesian filtering in spiking neural networks: noise, adaptation and multisensory integration. Neural Comput. 2009;21:1277–1320. doi: 10.1162/neco.2008.01-08-692.

- 29. Cunningham J, Yu B, Shenoy K, Sahani M. Inferring neural firing rates from spike trains using Gaussian processes. In: Platt J, Koller D, Singer Y, Roweis S, editors. Advances in Neural Information Processing Systems 20. MIT Press; Cambridge, Massachusetts: 2008. pp. 329–336.

- 30. Gerstner W, Kistler WK. Spiking Neuron Models. Cambridge University Press; Cambridge: 2002.

- 31. Paninski L, Pillow J, Simoncelli E. Maximum likelihood estimate of a stochastic integrate-and-fire neural encoding model. Neural Comput. 2004;16:2533–2561. doi: 10.1162/0899766042321797.

- 32. Stein RB. A theoretical analysis of neuronal variability. Biophys. J. 1965;5:173–194. doi: 10.1016/s0006-3495(65)86709-1.

- 33. Lansky P, Ditlevsen S. A review of the methods for signal estimation in stochastic diffusion leaky integrate-and-fire neuronal models. Biol. Cybern. 2008;99:253–262. doi: 10.1007/s00422-008-0237-x.

- 34. Tsodyks M, Pawelzik K, Markram H. Neural networks with dynamic synapses. Neural Comput. 1998;10:821–835. doi: 10.1162/089976698300017502.

- 35. Shinomoto S, Sakai Y, Funahashi S. The Ornstein-Uhlenbeck process does not reproduce spiking statistics of neurons in prefrontal cortex. Neural Comput. 1999;11:935–951. doi: 10.1162/089976699300016511.

- 36. Steriade M, Nunez A, Amzica F. A novel slow (< 1 Hz) oscillation of neocortical neurons in vivo: depolarizing and hyperpolarizing components. J. Neurosci. 1993;13:3252–3265. doi: 10.1523/JNEUROSCI.13-08-03252.1993.

- 37. Cossart R, Aronov D, Yuste R. Attractor dynamics of network UP states in the neocortex. Nature. 2003;423:283–288. doi: 10.1038/nature01614.

- 38. Harvey CD, Collman F, Dombeck D, Tank D. Intracellular dynamics of hippocampal place cells during virtual navigation. Nature. 2009;461:941–946. doi: 10.1038/nature08499.

- 39. Dobrunz LE, Huang E, Stevens C. Very short-term plasticity in hippocampal synapses. Proc. Natl. Acad. Sci. USA. 1997;94:14843–14847. doi: 10.1073/pnas.94.26.14843.

- 40. Chorev E, Yarom Y, Lampl I. Rhythmic episodes of subthreshold membrane potential oscillations in the rat inferior olive nuclei in vivo. J. Neurosci. 2007;27:5043–5052. doi: 10.1523/JNEUROSCI.5187-06.2007.

- 41. Markram H, Tsodyks M. Redistribution of synaptic efficacy between neocortical pyramidal neurons. Nature. 1996;382:807–810. doi: 10.1038/382807a0.

- 42. Thomson AM. Facilitation, augmentation and potentiation at central synapses. Trends Neurosci. 2000;23:305–312. doi: 10.1016/s0166-2236(00)01580-0.

- 43. Martin SJ, Grimwood P, Morris R. Synaptic plasticity and memory: an evaluation of the hypothesis. Annu. Rev. Neurosci. 2000;23:649–711. doi: 10.1146/annurev.neuro.23.1.649.

- 44. Manabe T, Wyllie D, Perkel D, Nicoll R. Modulation of synaptic transmission and long-term potentiation: effects on paired pulse facilitation and EPSC variance in the CA1 region of the hippocampus. J. Neurophysiol. 1993;70:1451. doi: 10.1152/jn.1993.70.4.1451.

- 45. Reyes A, et al. Target cell–specific facilitation and depression in neocortical circuits. Nat. Neurosci. 1998;1:279–285. doi: 10.1038/1092.

- 46. Koester HJ, Johnston D. Target cell-dependent normalization of transmitter release at neocortical synapses. Science. 2005;308:863–866. doi: 10.1126/science.1100815.

- 47. Denève S. Bayesian spiking neurons. I. inference. Neural Comput. 2008;20:91–117. doi: 10.1162/neco.2008.20.1.91.

- 48. Wark B, Fairhall A, Rieke F. Timescales of inference in visual adaptation. Neuron. 2009;61:750–761. doi: 10.1016/j.neuron.2009.01.019.

- 49. Jolivet R, Rauch A, Lüscher HR, Gerstner W. Predicting spike timing of neocortical pyramidal neurons by simple threshold models. J. Comput. Neurosci. 2006;21:35–49. doi: 10.1007/s10827-006-7074-5.

- 50. Doucet A, De Freitas N, Gordon N. Sequential Monte Carlo Methods in Practice. Springer; New York: 2001.
