Abstract

A hybrid multiscale and multilevel image fusion algorithm for green fluorescent protein (GFP) images and phase contrast images of Arabidopsis cells is proposed in this paper. Combining the intensity-hue-saturation (IHS) transform and the sharp frequency localization Contourlet transform (SFL-CT), this algorithm uses different fusion strategies for different detailed subbands, including a neighborhood consistency measurement (NCM) that adaptively balances the color background and the gray structure. Two kinds of neighborhood classes based on an empirical model are also taken into consideration. Visual information fidelity (VIF) is introduced as an objective criterion to evaluate the fused image. Experimental results on 117 groups of Arabidopsis cell images from the John Innes Center show that the new algorithm not only preserves the details of the original images well but also improves the visibility of the fused image, which shows the superiority of the novel method over traditional ones.

1. Introduction

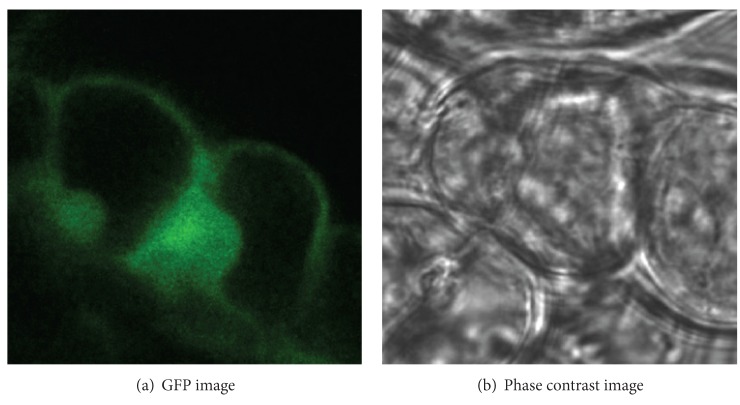

The purpose of image fusion is to integrate complementary and redundant information from multiple images of the same scene into a single composite that contains all the important features of the original images [1]. The resulting fused image is thus more suitable for human and machine perception and for further image processing tasks in many fields, such as remote sensing, disease diagnosis, and biomedical research. In molecular biology, fluorescence imaging and phase contrast imaging are two common imaging techniques [2]. Green fluorescent protein (GFP) imaging provides functional information related to the molecular distribution in living cells; phase contrast imaging provides high-resolution structural information by transforming phase differences, which are hard to observe, into amplitude differences. Combining a GFP image with a phase contrast image is valuable for the functional analysis of proteins and the accurate localization of subcellular structures. Figure 1 shows one group of registered GFP and phase contrast images of an Arabidopsis cell; the two images clearly differ greatly. Because of the low similarity between the originals, fusion methods that have been widely used in remote sensing image fusion [3–5], such as the Wavelet/Contourlet-based ARSIS fusion method [6], result in spectral and color distortion, a dark and nonuniform background, and poor detail preservation. Recently, Li and Wang proposed SWT-based (stationary wavelet transform) [7] and NSCT-based (nonsubsampled Contourlet transform) [8] fusion algorithms, which exploit the translation invariance of the two transforms to reduce artifacts in the fused image, but their complicated procedures, high time consumption, and low robustness limit their fusion capability.
To overcome these disadvantages, we bring the sharp frequency localization Contourlet transform (SFL-CT) [9] into the fusion of GFP and phase contrast images, taking advantage of its excellent edge representation, multiscale and directional characteristics, and anisotropy. We propose a new hybrid multiscale and multilevel image fusion method combining the intensity-hue-saturation (IHS) transform and the SFL-CT. Different fusion strategies are used for the coefficients of different subbands in order to keep the localization information in the GFP image and the high-resolution detail in the phase contrast image. We conduct a fusion test on 117 groups of Arabidopsis cell images from the GFP database of the John Innes Center [10]. Visual information fidelity (VIF) [11] is also introduced to quantify the similarity between the fused image and the original ones inside and outside the fluorescent area.

Figure 1.

Arabidopsis cell images.

The outline of this paper is as follows. In Section 2, the SFL-CT and IHS transforms are introduced in detail. Section 3 concretely describes our proposed fusion algorithm based on the neighborhood consistency measurement. Experimental results and performance analysis are presented and discussed in Section 4. Section 5 gives the conclusion of this paper.

2. SFL-Contourlet Transform and IHS Transform

2.1. Traditional Contourlet Transform

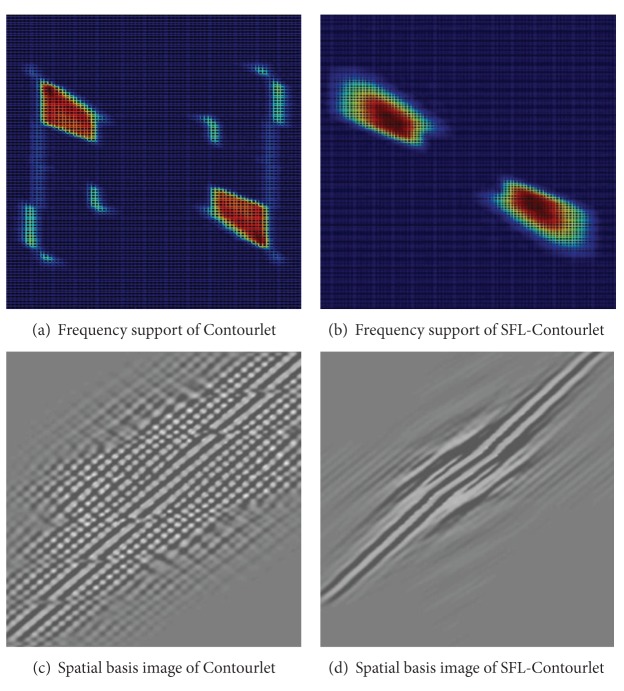

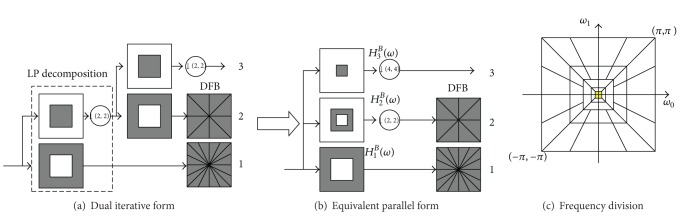

In 2005, Do and Vetterli [12] proposed the Contourlet transform as a directional multiresolution image representation that can efficiently capture and represent smooth object boundaries in natural images. The Contourlet transform is constructed as a combination of the Laplacian pyramid transform (LPT) [13] and the directional filter banks (DFB) [14], where the LPT iteratively decomposes a 2D image into low-pass and high-pass subbands, and the DFB are applied to the high-pass subbands to further decompose the frequency spectrum into directional subbands.

Figure 2(a) shows the block diagram of the Contourlet transform with two levels of multiscale decomposition, each followed by angular decomposition. Note that the Laplacian pyramid shown in the diagram is a simplified version of its actual implementation; this simplification is nevertheless sufficient for our explanation. Using the multirate identities, we can rewrite the filter bank in its equivalent parallel form, as shown in Figure 2(b), where $H_i^B(\omega)$, i = 1, 2, 3, is the equivalent filter of the LPT for each decomposition level [15]. With ideal filters, the Contourlet transform decomposes the 2D frequency spectrum into trapezoid-shaped regions, as shown in Figure 2(c).

Figure 2.

Block diagram of Contourlet transform with 2 levels of multiscale decomposition.

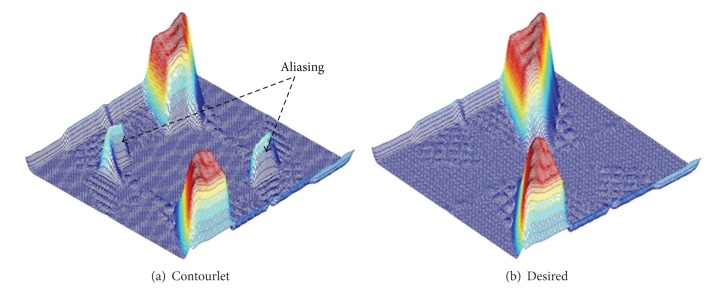

Because the 2D frequency spectrum of a discrete signal is periodic, and because critical sampling and perfect reconstruction conflict intrinsically in the DFB, a critically sampled filter bank with the frequency partitioning of the DFB cannot achieve perfect reconstruction and frequency-domain localization simultaneously. When the DFB is combined with a multiscale decomposition, as in the Contourlet transform, aliasing becomes a serious issue. For instance, Figure 3(a) shows the frequency support of the equivalent directional filter of the second channel in Figure 2(b). Contourlets are not localized in the frequency domain: a substantial amount of aliasing components lies outside the desired trapezoid-shaped support shown in Figure 3(b).

Figure 3.

Frequency support of one channel for Contourlet transform and desired scheme.

2.2. Sharp Frequency Localization Contourlet Transform

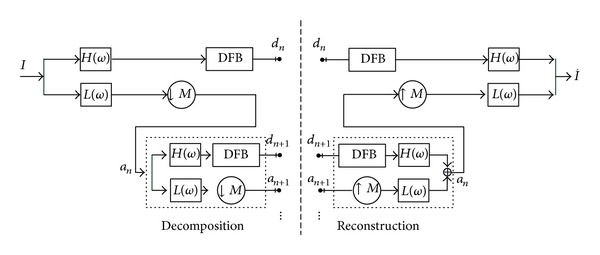

To overcome the aliasing disadvantage of the Contourlet transform, Lu proposed a new construction scheme that replaces the LPT with a new pyramidal structure for the multiscale decomposition [15]. This new construction is called the sharp frequency localization Contourlet transform (SFL-CT) [9], and its block diagram is shown in Figure 4.

Figure 4.

Block diagram of SFL-CT.

In the diagram, H(ω) represents the high-pass filter and L(ω) the low-pass filter in the multiscale decomposition, with $\omega = (\omega_0, \omega_1)$. The DFB, which is the same as in the Contourlet transform (CT), is attached to the high-pass channel at the finest scale and to the bandpass channels at all coarser scales. The output of the low-pass filter L(ω) at each level is downsampled by a matrix M, normally set to the diagonal matrix diag(2, 2). To obtain more levels of decomposition, we can iteratively insert at point $a_{n+1}$ a copy of the diagram contents enclosed by the dashed rectangle. An important difference from the LPT shown in Figure 2 is that the new multiscale pyramid can employ different sets of low-pass and high-pass filters for the first level and for all other levels; this is a crucial step in reducing the frequency-domain aliasing of the traditional Contourlet transform. We refer to [9] for a detailed explanation of this issue and for the specification of the filters H(ω) and L(ω).

Figure 5 shows one Contourlet basis image and its SFL-Contourlet counterpart in the frequency and spatial domains. As Figure 5(a) shows, the original Contourlet transform suffers from the frequency nonlocalization problem. In sharp contrast, the SFL-Contourlet produces a basis image that is well localized in the frequency domain, as shown in Figure 5(b). The improvement in frequency localization is also reflected in the spatial domain: as shown in Figures 5(c) and 5(d), the spatial regularity of the SFL-Contourlet is clearly superior to that of the Contourlet.

Figure 5.

Comparison of basis image.

2.3. IHS Transform

The intensity-hue-saturation (IHS) transform defines three color attributes based on the human visual mechanism, namely, intensity (I), hue (H), and saturation (S). IHS-based fusion substitutes the gray image for the intensity component of the color image and thus handles the fusion of gray and color images [1]. I stands for the intensity information of the source image, H stands for the spectral and color attributes, and S stands for the purity of a color relative to gray. In IHS space, the H and S components are closely tied to the way people perceive color, while the I component is almost independent of the color content of the image.

Various algorithms can transform an image from RGB to IHS space; common transformation models include the sphere, cylinder, triangle, and single-hexcone transforms [16]. We use the triangle transform here. The formulas of the forward and inverse transforms are as follows.

From RGB to IHS space (forward transform),

| (1) |

where

| (2) |

The reverse transform

| (3) |
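As an illustration of the forward and inverse transforms, the following minimal sketch implements one commonly used triangular IHS model in NumPy. It is an assumption that this matches the form of equations (1)–(3); the function names `rgb_to_ihs` and `ihs_to_rgb`, the hue range [0, 3], and the handling of gray pixels are illustrative choices, not taken from the paper.

```python
import numpy as np

def rgb_to_ihs(rgb):
    """Forward triangular-model transform (assumed form).

    rgb: float array of shape (..., 3) with values in [0, 1].
    Returns intensity I, hue H in [0, 3], and saturation S in [0, 1].
    """
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    i = (r + g + b) / 3.0
    mn = np.minimum(np.minimum(r, g), b)
    eps = 1e-12
    s = np.where(i > eps, 1.0 - mn / np.maximum(i, eps), 0.0)
    denom = np.maximum(3.0 * (i - mn), eps)
    h_b = (g - b) / denom          # blue is the minimum:  H in [0, 1]
    h_r = (b - r) / denom + 1.0    # red is the minimum:   H in [1, 2]
    h_g = (r - g) / denom + 2.0    # green is the minimum: H in [2, 3]
    h = np.where(b == mn, h_b, np.where(r == mn, h_r, h_g))
    h = np.where(i - mn > eps, h, 0.0)  # hue is undefined for gray pixels
    return i, h, s

def ihs_to_rgb(i, h, s):
    """Inverse of rgb_to_ihs for the same assumed triangular model."""
    i, h, s = (np.asarray(x, dtype=float) for x in (i, h, s))
    low = i * (1.0 - s)            # value of the minimum channel
    sec0 = h < 1.0
    sec1 = (h >= 1.0) & (h < 2.0)
    r = np.where(sec0, i * (1 + 2 * s - 3 * s * h),
                 np.where(sec1, low, i * (1 - 7 * s + 3 * s * h)))
    g = np.where(sec0, i * (1 - s + 3 * s * h),
                 np.where(sec1, i * (1 + 5 * s - 3 * s * h), low))
    b = np.where(sec0, low,
                 np.where(sec1, i * (1 - 4 * s + 3 * s * h),
                          i * (1 + 8 * s - 3 * s * h)))
    return np.stack([r, g, b], axis=-1)
```

In a fusion setting, only the intensity returned by `rgb_to_ihs` would be modified, while hue and saturation are passed unchanged to `ihs_to_rgb`.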

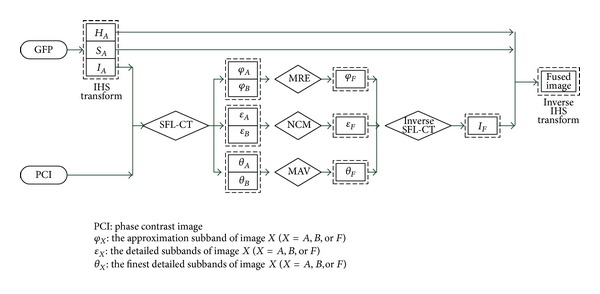

3. The Proposed Fusion Rule

From Figure 1(a), we can see that the background of the GFP image is partially dark. To avoid low contrast after fusion, the intensity component of the original GFP image is extracted by the IHS transform, which not only keeps most of the information of the original image but also improves the overall brightness of the fused image. On this basis, we develop a hybrid multiscale and multilevel fusion algorithm for biological cell images. We use the SFL-CT to decompose the intensity component of the GFP image and the phase contrast image; different fusion schemes are applied to different subband coefficients to balance the localization information in the GFP image against the high-frequency detail in the phase contrast image. To retain the protein distribution information of the GFP image, the approximation (coarsest) subband coefficients of the fused image are obtained with the maximum region energy (MRE) rule [17]. To retain the structural information of the phase contrast image, the coefficients of the finest detailed subbands of the fused image are selected with the maximum absolute value (MAV) rule [17]. To balance the structural information and the color molecular distribution information of the originals, a locally adaptive coefficient fusion rule, named neighborhood consistency measurement (NCM), is applied to the coefficients of the other detailed subbands. The schematic diagram is shown in Figure 6.

Figure 6.

Schematic diagram of the proposed image fusion algorithm, where subscripts A, B, and F mean GFP image, phase contrast image, and the fused image, respectively.

3.1. Maximum Region Energy (MRE) Rule

When the GFP image and the phase contrast image are decomposed by the SFL-CT, the coefficients of the coarsest subband represent the approximation component of the input images. Since the approximation information of the fused image is constructed from the approximation subband coefficients of both images, the maximum region energy (MRE) rule is a good choice for the fused approximation subband coefficients.

MRE rule is defined as follows:

| (4) |

where the regional energy E is defined as

| (5) |

where $E_J^A(m, n)$, $E_J^B(m, n)$, and $E_J^F(m, n)$ denote the regional energy of the original images A and B and of the fused image F at the coarsest scale J and location (m, n). Ω(m, n) is a 3 × 3 square region centered at (m, n). $c_J^X(m, n)$ denotes the coefficient of image X = A, B, or F within Ω(m, n) in the coarsest subband J, and $\mu_J^X(m, n)$ is the average value of the coefficients within Ω(m, n).
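The MRE rule can be sketched as follows. This is a minimal illustration under stated assumptions: the regional energy is taken to be the sum of squared deviations from the window mean over Ω(m, n), the coefficient with the larger regional energy is kept, and ties go to image A. The helper names `box_sum`, `region_energy`, and `fuse_mre`, as well as the reflect padding at the borders, are hypothetical choices rather than details given in the paper.

```python
import numpy as np

def box_sum(x, size=3):
    """Sum of x over a size-by-size window centred on each position."""
    x = np.asarray(x, dtype=float)
    pad = size // 2
    xp = np.pad(x, pad, mode="reflect")  # border handling is an assumption
    out = np.zeros_like(x)
    for dy in range(size):
        for dx in range(size):
            out += xp[dy:dy + x.shape[0], dx:dx + x.shape[1]]
    return out

def region_energy(c, size=3):
    """Assumed regional energy: sum over the window of (c - window mean)^2."""
    c = np.asarray(c, dtype=float)
    n = size * size
    s1 = box_sum(c, size)
    s2 = box_sum(c * c, size)
    return np.maximum(s2 - s1 * s1 / n, 0.0)  # clip tiny negative rounding errors

def fuse_mre(c_a, c_b, size=3):
    """Keep, at each position, the coefficient whose region has more energy."""
    c_a = np.asarray(c_a, dtype=float)
    c_b = np.asarray(c_b, dtype=float)
    e_a = region_energy(c_a, size)
    e_b = region_energy(c_b, size)
    return np.where(e_a >= e_b, c_a, c_b)
```

Writing the energy as the window sum of squares minus the squared window sum divided by the window size avoids an explicit loop over pixels.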

3.2. Maximum Absolute Value (MAV) Rule

After the input images are decomposed by the SFL-CT, the image details are contained in the directional subbands of the SFL-CT domain. Directional subband coefficients with larger absolute values, especially at the finest scale, generally correspond to pixels with sharper brightness changes in the image and thus to salient features such as edges, lines, and region boundaries. Therefore, we use the maximum absolute value (MAV) scheme to select the coefficients of the finest detailed subbands.

MAV fusion rule is defined as follows:

| (6) |

where $d_{j,l}^A(m, n)$, $d_{j,l}^B(m, n)$, and $d_{j,l}^F(m, n)$ denote the coefficients of the images A and B and of the fused image F at the jth scale, lth directional subband, and location (m, n). abs[·] denotes the absolute value operator.
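A minimal sketch of the MAV selection for one directional subband is given below. The function name `fuse_mav` and the tie-breaking in favor of image A are assumptions made for illustration.

```python
import numpy as np

def fuse_mav(d_a, d_b):
    """At each position keep the coefficient with the larger absolute value.

    Ties are resolved in favour of d_a (an assumption, not from the paper).
    """
    d_a = np.asarray(d_a, dtype=float)
    d_b = np.asarray(d_b, dtype=float)
    return np.where(np.abs(d_a) >= np.abs(d_b), d_a, d_b)
```

The rule would be applied independently to every directional subband at the finest scale.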

3.3. Neighborhood Consistency Measurement (NCM)

Let $N_{j,l}^X(m, n)$ denote a region centered at coefficient $d_{j,l}^X(m, n)$ in the jth level and lth directional subband of image X, and let the energy of this region be $\rho_{j,l}^X(m, n)$. Then,

| (7) |

The NCM is defined for the directional coefficients on the region described above and is compared against a threshold. Let $\Psi_{j,l}(m, n)$ denote the NCM, defined as follows:

| (8) |

It is not hard to see that the NCM is smaller than 1. The NCM indicates whether the neighborhood is homogeneous: a larger NCM means a more homogeneous neighborhood.

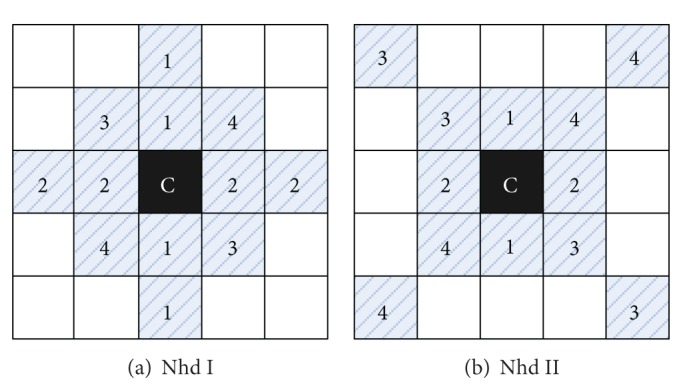

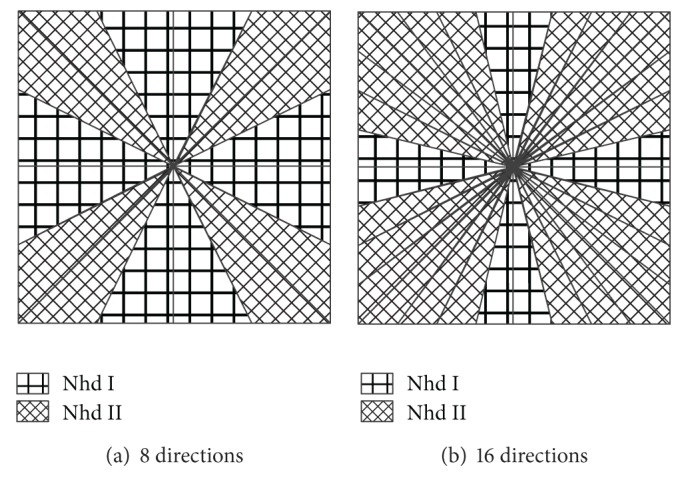

Taking the number of directions in each detailed subband into consideration, we classify neighborhoods into two classes, Nhd I and Nhd II, shown in Figures 7(a) and 7(b). Nhd I is mainly used in horizontal and vertical subbands, and Nhd II is used in the other subbands. For instance, if the number of directions is 8 or 16, we can use the empirical distribution model shown in Figure 8.

Figure 7.

Neighborhood coefficients of SFL-CT.

Figure 8.

Empirical distribution model for neighborhood selection.

We define a threshold T, normally chosen such that 0.5 < T < 1.

If Ψj,l(m, n) < T, then

| (9) |

If Ψj,l(m, n) ≥ T, then

| (10) |
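The thresholded NCM rule can be illustrated with the sketch below. It is an assumption-laden stand-in, not a reproduction of equations (7)–(10): the region energy is taken as the window sum of squared coefficients, the NCM as the classical normalized match measure, the low-consistency branch as selection of the coefficient with the larger region energy, and the high-consistency branch as a weighted average. A square window replaces the Nhd I and Nhd II shapes, and the names `box_sum` and `fuse_ncm` are hypothetical.

```python
import numpy as np

def box_sum(x, size=5):
    """Sum of x over a size-by-size window centred on each position."""
    x = np.asarray(x, dtype=float)
    pad = size // 2
    xp = np.pad(x, pad, mode="reflect")  # border handling is an assumption
    out = np.zeros_like(x)
    for dy in range(size):
        for dx in range(size):
            out += xp[dy:dy + x.shape[0], dx:dx + x.shape[1]]
    return out

def fuse_ncm(d_a, d_b, T=0.7, size=5):
    """Fuse one directional subband; returns (fused coefficients, NCM map)."""
    d_a = np.asarray(d_a, dtype=float)
    d_b = np.asarray(d_b, dtype=float)
    rho_a = box_sum(d_a * d_a, size)      # assumed region energy of A
    rho_b = box_sum(d_b * d_b, size)      # assumed region energy of B
    cross = box_sum(d_a * d_b, size)
    denom = rho_a + rho_b
    # Assumed match measure; it never exceeds 1. Empty regions count as consistent.
    psi = np.where(denom > 0, 2.0 * cross / np.maximum(denom, 1e-12), 1.0)
    a_stronger = rho_a >= rho_b
    # Low consistency: select the coefficient from the more energetic region.
    select = np.where(a_stronger, d_a, d_b)
    # High consistency: weighted average, leaning toward the stronger region.
    w_min = 0.5 - 0.5 * (1.0 - psi) / (1.0 - T)
    w_max = 1.0 - w_min
    blend = np.where(a_stronger, w_max * d_a + w_min * d_b,
                     w_min * d_a + w_max * d_b)
    return np.where(psi < T, select, blend), psi
```

With this assumed weighting, the blend reduces to a plain average when the NCM equals 1 and to pure selection when the NCM equals T, so the two branches join continuously at the threshold.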

3.4. Fusion Procedure

The fusion process based on the proposed fusion rules is carried out in the following steps.

(1) Define the registered original images: the GFP image as image A, the phase contrast image as image B, and the fused image as image F.

(2) Apply the IHS transform to image A, and calculate the corresponding intensity component $I_A$, hue component $H_A$, and saturation component $S_A$.

(3) Decompose $I_A$ and image B by the SFL-CT, and obtain two approximation subbands {$\varphi_A$, $\varphi_B$}, a series of the finest detailed subbands {$\theta_A$, $\theta_B$}, and the other detailed subbands {$\varepsilon_A$, $\varepsilon_B$}.

(4) Combine the transform coefficients according to the selection rules: coefficients of the approximation subbands follow the MRE rule, coefficients of the finest detailed subbands follow the MAV rule, and coefficients of the other detailed subbands follow the NCM rule. This yields the approximation subband $\varphi_F$ and the detailed subbands $\varepsilon_F$ and $\theta_F$ of the fused image F.

(5) Reconstruct the intensity of the fused image $I_F$ from $\varphi_F$, $\varepsilon_F$, and $\theta_F$ by the inverse SFL-CT.

(6) Reconstruct the fused image F from the hue $H_A$, the saturation $S_A$, and $I_F$ by the inverse IHS transform.
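The steps above can be sketched as a short orchestration function. It is only a skeleton under stated assumptions: every transform and fusion rule is passed in as a callable, because no particular SFL-CT implementation is assumed here, and all parameter names (`to_ihs`, `from_ihs`, `decompose`, `reconstruct`, `fuse_approx`, `fuse_finest`, `fuse_other`) are hypothetical.

```python
import numpy as np

def fuse_gfp_phase(rgb_a, gray_b, to_ihs, from_ihs, decompose, reconstruct,
                   fuse_approx, fuse_finest, fuse_other):
    """Hybrid fusion skeleton.

    decompose(x) is assumed to return (approximation subband,
    list of other detailed subbands, list of finest detailed subbands);
    reconstruct takes the same three items and returns an image.
    """
    rgb_a = np.asarray(rgb_a, dtype=float)
    gray_b = np.asarray(gray_b, dtype=float)

    # IHS transform of the GFP image (image A).
    i_a, h_a, s_a = to_ihs(rgb_a)

    # Multiscale decomposition of the intensity of A and of image B.
    phi_a, eps_a, theta_a = decompose(i_a)
    phi_b, eps_b, theta_b = decompose(gray_b)

    # Subband-specific fusion rules.
    phi_f = fuse_approx(phi_a, phi_b)                                  # e.g. MRE
    theta_f = [fuse_finest(a, b) for a, b in zip(theta_a, theta_b)]    # e.g. MAV
    eps_f = [fuse_other(a, b) for a, b in zip(eps_a, eps_b)]           # e.g. NCM

    # Inverse multiscale transform gives the fused intensity.
    i_f = reconstruct(phi_f, eps_f, theta_f)

    # Inverse IHS transform with the original hue and saturation of A.
    return from_ihs(i_f, h_a, s_a)
```

Passing the transforms as arguments keeps the skeleton independent of any specific filter bank and makes each rule easy to swap for comparison.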

4. Experimental Results and Discussion

4.1. Dataset

All images used in this experiment come from the GFP database of the John Innes Center [10]. The original size of the images is 358 × 358 pixels. We resize and crop them to 256 × 256 pixels to facilitate processing. The experiment covers 117 sets of GFP images (24-bit true color) and their corresponding phase contrast images (8-bit grayscale) of Arabidopsis. The former reveal the distribution of the labeled protein, and the latter present cell structure information.

4.2. Parameters Selection

For the proposed method, the window (Ω) in the NCM rule is usually chosen to be of size 3 × 3, 5 × 5, or 7 × 7. We investigated these window sizes and found that 5 × 5 provides good results in terms of fusion clarity and time consumption. Apart from the window size, the frequency parameters of the SFL-CT also need to be chosen to improve fusion performance. A large number of experiments show that setting the passband frequency $\omega_p$ and the stopband frequency $\omega_s$ to 4π/21 and 10π/21, respectively, not only provides pleasing fusion performance in most cases but also keeps a good balance between fusion quality and computational complexity.

4.3. Results Comparison

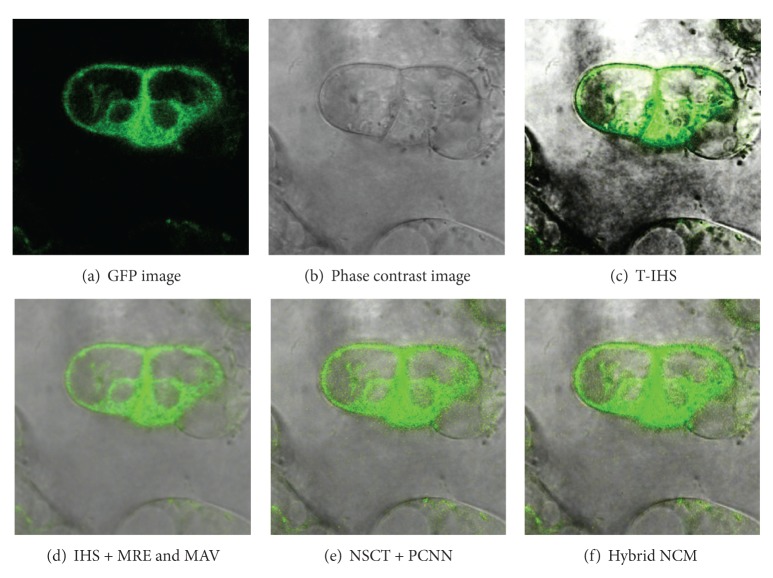

We compare the proposed fusion rule with several traditional methods: the traditional IHS fusion method (T-IHS) [18], the MRE and MAV fusion method in IHS space (IHS + MRE and MAV) [1], the PCNN-based fusion method [19] in which all images are decomposed by the nonsubsampled Contourlet transform (NSCT + PCNN), and our method (Hybrid NCM). Here, MAV stands for the maximum absolute value rule and MRE for the maximum region energy rule; "MRE and MAV" denotes the MRE rule for the approximation subband combined with the MAV rule for the detailed subbands. The parameters are set as follows. For the MRE rule, the neighborhood window is 3 × 3 pixels. The SFL-CT uses 4 decomposition levels with (4, 8, 16, 16) directions per level; the DFB filter is the "pkva" filter; the settings of the NSCT + PCNN fusion algorithm are the same as those in [19].

The fusion results obtained by the four methods, shown in Figure 9, differ visually. The fused image produced by the T-IHS method is clearly unsatisfactory: the foreground and background are significantly nonuniform, especially along the cell outlines, where blurred dark regions make it difficult to distinguish the inner information. In contrast, the brightness in Figures 9(d)–9(f) is largely improved, and the details are also clearer. Overall, the location information of the cell structure in the phase contrast image and the distribution information of the protein are largely retained. Nevertheless, it is not easy to judge the quality of these three methods objectively. To judge the fusion results better, we use a quantitative measure, visual information fidelity (VIF) [11]. Recent large-scale subjective experiments have assessed VIF, a novel image similarity criterion, and shown it to be a good substitute for subjective assessment. Traditional evaluation is either subjective or objective. The former depends on human visual perception, which differs from person to person. The latter depends little on subjective factors, but it does not measure the difference between the fused image and the original image well. Because the GFP fluorescence image and the phase contrast image have little similarity, the VIF method, which combines the human visual system (HVS) with image statistics, is introduced in this paper to measure the quality of the fused image. This method quantifies the similarity between different regions of the fused image and the original image. The VIF value ranges from 0 to 1; the closer it is to 1, the more similar the fused image is to the original image.
A number of experimental results have shown that the VIF method agrees better with human subjective evaluation of image quality than traditional measures such as root mean square error (RMSE), correlation coefficients (CCs), and mutual information (MI).

Figure 9.

Fused images using different methods.

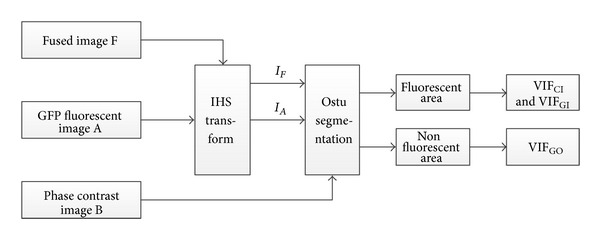

Considering the different functional roles of the two kinds of images, especially the correspondence between the fluorescent area in GFP images and the protein distribution in cells, the fluorescent area is first extracted from the original images; then the VIF between the fused image and the phase contrast image is calculated, and finally the VIF between the fused image and the fluorescence image is calculated. The fused image should keep high similarity with both the phase contrast image and the fluorescence image in the fluorescent area. In the remaining area, however, only its similarity with the phase contrast image is considered. Therefore, this paper first segments both the fused image and the source image into a fluorescent area and a nonfluorescent area with the Otsu method [20] and then calculates the VIF between the fused image and the source image in each area. The calculation procedure is shown in Figure 10. Table 1 lists the VIF values of the fused images in Figure 9.
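The segmentation step can be illustrated with a small NumPy version of Otsu's threshold. This is a sketch under assumptions: the input is taken to be an 8-bit intensity image, pixels above the threshold are treated as the fluorescent area, and the names `otsu_threshold` and `fluorescent_mask` are hypothetical. The VIF computation itself is not shown.

```python
import numpy as np

def otsu_threshold(gray_u8):
    """Otsu threshold of an 8-bit image: maximise between-class variance."""
    values = np.asarray(gray_u8, dtype=np.uint8).ravel()
    hist = np.bincount(values, minlength=256).astype(float)
    p = hist / hist.sum()
    omega = np.cumsum(p)                    # probability of class 0 (<= t)
    mu = np.cumsum(p * np.arange(256))      # cumulative mean up to t
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    sigma_b = np.zeros(256)
    valid = denom > 0
    sigma_b[valid] = (mu_total * omega[valid] - mu[valid]) ** 2 / denom[valid]
    return int(np.argmax(sigma_b))

def fluorescent_mask(gray_u8):
    """Boolean mask that is True in the (assumed brighter) fluorescent area."""
    gray_u8 = np.asarray(gray_u8, dtype=np.uint8)
    return gray_u8 > otsu_threshold(gray_u8)
```

The mask and its complement would then restrict the similarity computation to the fluorescent and nonfluorescent areas, respectively.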

Figure 10.

VIF algorithm flow chart.

Table 1.

VIF computing result.

| Fusion methods | VIFA-fl | VIFB-fl | VIFB-nfl |
|---|---|---|---|
| T-IHS | 0.4368 | 0.2759 | 0.4975 |
| IHS + MRE and MAV | 0.3119 | 0.8318 | 0.8318 |
| NSCT + PCNN | 0.3188 | 0.8992 | 0.8992 |
| Hybrid NCM | 0.3112 | 0.9299 | 0.9299 |

In the table, superscript fl refers to the fluorescent area while nfl refers to nonfluorescence area, A represents GFP fluorescence image, and B represents phase contrast image. VIFA-fl refers to the similarity between fused image and GFP fluorescence image in fluorescence area, and VIFB-fl refers to the similarity between fused image and phase contrast image in fluorescence area, while VIFB-nfl refers to the similarity between fused image and phase contrast image in nonfluorescence area.

Table 1 shows that, for every method except T-IHS, VIFB-fl and VIFB-nfl are almost the same; this indicates that the detailed information of the fused image comes from the phase contrast image in both the fluorescent and the nonfluorescent areas. Compared with the other three methods, the proposed one attains the highest VIFB-nfl, which agrees with the observation that the black background of the GFP image is suppressed. With the luminance improved, the structural information is well embedded in the fused image, which contributes to the increase of VIFB-nfl. The proposed method retains a VIFA-fl comparable to those of the other two multiscale methods and attains the highest VIFB-fl, which indicates that the information of the GFP image and the phase contrast image is well preserved in the fluorescent area and that the SFL-CT captures the structural information of the phase contrast image effectively.

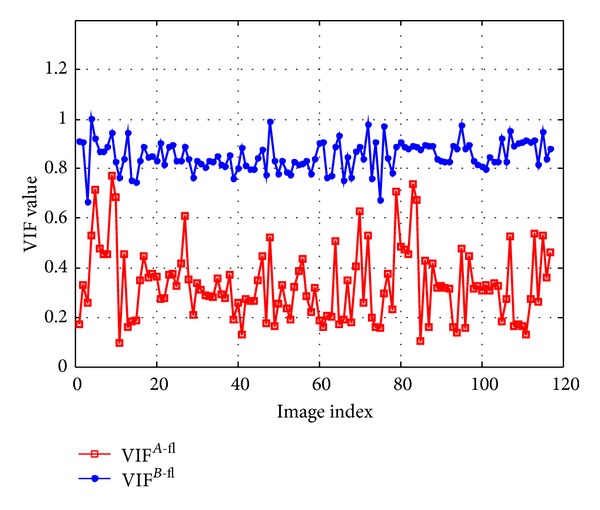

The VIF distribution histogram of the 117 groups of fused Arabidopsis cell images is shown in Figure 11; the red line with squares represents VIFA-fl, and the blue dotted line represents VIFB-fl. VIFB-fl is clearly higher than VIFA-fl, which agrees with the objective of using the SFL-CT to highlight the inner structural information of the phase contrast image. As the VIF within the fluorescent area increases, the VIF in the nonfluorescent area also tends to improve. This indicates the following: if the intensity in the fluorescent area is strengthened, the VIF increases because the functional information is fully reflected; once the brightness increases, the high-resolution structural information of the image can be fully shown, and the corresponding VIFB-fl increases; conversely, where the intensity in the fluorescent area is low, the structural information of the phase contrast image is not reflected well, which lowers the VIF value.

Figure 11.

VIF distribution histogram of the 117 groups of fused Arabidopsis cell images.

5. Conclusions

This paper proposes a hybrid multiscale and multilevel image fusion method based on the IHS transform and the SFL-CT to balance the gray structural information and the molecular distribution information in the fusion of GFP and phase contrast images. Taking advantage of the directionality and excellent detail representation of the SFL-CT, we use it to decompose the intensity components of both the GFP image and the phase contrast image, and we apply different fusion rules to the coefficients of different subbands in order to keep the localization information in the GFP image and the high-resolution detail in the phase contrast image. Visual information fidelity (VIF) is introduced to assess the fusion result objectively; it quantifies the similarity between the fused image and the original images inside and outside the fluorescent area. The fusion results for 117 groups of Arabidopsis cell images from the John Innes Center demonstrate that the new algorithm both preserves the details of the original images well and improves the visibility of the fused image, and they show the superiority of the novel method over traditional methods. Although the results of the proposed method and NSCT + PCNN look similar, the former achieves a higher similarity between the fused image and the source images, which means that the algorithm makes full use of the advantages of the SFL-CT to keep the structural information of the phase contrast image effectively. The complexity of the proposed algorithm is also lower than that of NSCT + PCNN, which makes it more suitable for practical application.

It should also be pointed out that, in our experiments, VIFB-fl is no longer equal to VIFB-nfl when we increase the intensity of the fluorescent image before reconstructing a new fused image; this is partially due to the nonlinear relationship between similarity and intensity within the fluorescent and nonfluorescent areas of the fused image. The Otsu segmentation method can also disturb the calculation of VIF to some extent. No single evaluation method is perfect for all kinds of images, and a suitable fusion and evaluation method for biological cell images remains an open problem.

Acknowledgment

This paper is partially supported by the Fundamental Research Funds for the Central Universities (no. CDJZR11120006).

References

- 1. Ukimura O. Image Fusion. Rijeka, Croatia: InTech Press; 2011.

- 2. Li S, Yang B, Hu J. Performance comparison of different multi-resolution transforms for image fusion. Information Fusion. 2011;12(2):74–84.

- 3. Huang DS, Yang MH, Yao XH, Yin J. A method to fuse SAR and multi-spectral images based on contourlet and HIS transformation. Journal of Astronautics. 2011;32(1):187–192.

- 4. Khaleghi B, Khamis A, Karray FO. Multisensor data fusion: a review of the state-of-the-art. Information Fusion. 2013;14(1):28–44.

- 5. Long XJ, He ZW, Liu YS, et al. Multi-method study of TM and SAR image fusion and effectiveness quantification evaluation. Science of Surveying and Mapping. 2010;35(5):24–27.

- 6. Ranchin T, Aiazzi B, Alparone L, Baronti S, Wald L. Image fusion: the ARSIS concept and some successful implementation schemes. ISPRS Journal of Photogrammetry & Remote Sensing. 2003;58(1-2):4–18.

- 7. Li TJ, Wang YY. Fusion of GFP fluorescent and phase contrast images using stationary wavelet transform based variable-transparency method. Optics and Precision Engineering. 2009;17(11):2871–2879.

- 8. Li T, Wang Y. Biological image fusion using a NSCT based variable-weight method. Information Fusion. 2011;12(2):85–92.

- 9. Lu Y, Do MN. A new contourlet transform with sharp frequency localization. Proceedings of IEEE International Conference on Image Processing (ICIP '06); October 2006; Atlanta, Ga, USA. pp. 1629–1632.

- 10. Information on website. http://data.jic.bbsrc.ac.uk/cgi-bin/gfp/

- 11. Sheikh HR, Bovik AC. Image information and visual quality. IEEE Transactions on Image Processing. 2006;15(2):430–444. doi: 10.1109/tip.2005.859378.

- 12. Do MN, Vetterli M. The contourlet transform: an efficient directional multiresolution image representation. IEEE Transactions on Image Processing. 2005;14(12):2091–2106. doi: 10.1109/tip.2005.859376.

- 13. Feng P, Pan Y, Wei B, Jin W, Mi D. Enhancing retinal image by the Contourlet transform. Pattern Recognition Letters. 2007;28(4):516–522.

- 14. Bamberger RH, Smith MJT. A filter bank for the directional decomposition of images: theory and design. IEEE Transactions on Signal Processing. 1992;40(4):882–893.

- 15. Feng P. Study on some key problems of non-aliasing Contourlet transform for high-resolution images processing [Dissertation]. Chongqing University; 2007.

- 16. Harris JR, Murray R, Hirose T. IHS transform for the integration of radar imagery with other remotely sensed data. Photogrammetric Engineering & Remote Sensing. 1990;56(12):1631–1641.

- 17. Chen XX. Image and Video Retrieving and Image Fusion. Beijing, China: Mechanical Industry Press; 2012.

- 18. Tu TM, Su SC, Shyu HC, Huang PS. A new look at IHS-like image fusion methods. Information Fusion. 2001;2(3):177–186.

- 19. Qu XB, Yan JW, Xiao HZ, Zhu ZQ. Image fusion algorithm based on spatial frequency-motivated pulse coupled neural networks in nonsubsampled contourlet transform domain. Acta Automatica Sinica. 2008;34(12):1508–1514.

- 20. Otsu N. A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man and Cybernetics. 1979;9(1):62–66.