Abstract

Complex networks of biochemical reactions, such as intracellular protein signaling pathways and genetic networks, are often conceptualized in terms of modules—semiindependent collections of components that perform a well-defined function and which may be incorporated in multiple pathways. However, due to sequestration of molecular messengers during interactions and other effects, collectively referred to as retroactivity, real biochemical systems do not exhibit perfect modularity. Biochemical signaling pathways can be insulated from impedance and competition effects, which inhibit modularity, through enzymatic futile cycles that consume energy, typically in the form of ATP. We hypothesize that better insulation necessarily requires higher energy consumption. We test this hypothesis through a combined theoretical and computational analysis of a simplified physical model of covalent cycles, using two innovative measures of insulation, as well as a possible new way to characterize optimal insulation through the balancing of these two measures in a Pareto sense. Our results indicate that indeed better insulation requires more energy. While insulation may facilitate evolution by enabling a modular plug-and-play interconnection architecture, allowing for the creation of new behaviors by adding targets to existing pathways, our work suggests that this potential benefit must be balanced against the metabolic costs of insulation necessarily incurred in not affecting the behavior of existing processes.

Introduction

A ubiquitous motif in signaling pathways responsible for a cell’s response to environmental stimuli is the ATP-consuming phosphorylation-dephosphorylation (PD) enzymatic cycle, in which a substrate is converted into product in an activation reaction triggered or facilitated by an enzyme, and subsequently the product is transformed back (or deactivated) into the original substrate, helped on by the action of a second enzyme. For example, a large variety of eukaryotic cell signal transduction processes (1–4) rely upon Mitogen-activated protein kinase cascades for some of the most fundamental processes of life (cell proliferation and growth, responses to hormones, apoptosis), and these are built upon cascades of PD cycles. More generally, this type of reaction, often called a futile, substrate, or enzymatic cycle (5), shows up in many other prokaryotic and eukaryotic systems, including, for example, bacterial two-component systems and phosphorelays (6,7), GTPase cycles (8), actin treadmilling (9), glucose mobilization (10), metabolic control (11), cell division and apoptosis (12), and cell-cycle checkpoint control (13). Many reasons have been proposed for the existence of these futile cycles. In some cases, their function is dictated by the underlying physical chemistry; this may be the case in transmembrane signal transduction, or in metabolic processes that drive energetically unfavorable transformations. In other contexts, and particularly in signaling pathways, there has been speculation that futile cycles play a role ranging from signal amplification to analog-to-digital conversion that triggers decision-making such as cell division (1). An alternative view is that futile cycles can act as insulators that minimize retroactivity impedance effects arising from interconnections. In that role, enzymatic cycles might help enable a plug-and-play interconnection architecture that facilitates evolution (14–16).

An important theme in contemporary molecular biology literature is the attempt to understand cell behavior in terms of cascades and feedback interconnections of more elementary modules, which may be reused in different pathways (17–19). Modular thinking plays a fundamental role in the prediction of the behavior of a system from the behavior of its components, guaranteeing that the properties of individual components do not change upon interconnection. Intracellular signal transduction networks are often thought of as modular interconnections, passing along information while also amplifying or performing other signal-processing tasks. It is assumed that their operation does not depend upon the presence or absence of downstream targets to which they convey information. However, just as electrical, hydraulic, and other physical systems often do not display true modularity, one may expect that biochemical systems, and specifically, intracellular protein signaling pathways and genetic networks, do not always connect in an ideal modular fashion.

Motivated by this observation, the article (14) dealt with a systematic study of the effect of interconnections on the input/output dynamic characteristics of signaling cascades. Following Saez-Rodriguez et al. (20), the term “retroactivity” was introduced for generic reference to such effects, which constitute an analog of nonzero output impedance in electrical and mechanical systems, and retroactivity in several simple models was quantified. It was shown how downstream targets of a signaling system (loads) can produce changes in signaling, thus propagating backward (and sideways) information about targets. Further theoretical work along these lines was reported in Ossareh et al. (15), Kim and Sauro (16), Del Vecchio and Sontag (21), and Sontag (22). Experimental verifications were reported in Ventura et al. (23) and in Jiang et al. (24), using a covalent modification cycle based on a reconstituted uridylyltransferase/uridylyl-removing enzyme PII cycle, which is a model system derived from the nitrogen assimilation control network of Escherichia coli.

The key reason for retroactivity is that signal transmission in biological systems involves chemical reactions between signaling molecules. These reactions take a finite time to occur, and during the process, while reactants are bound together, they generally cannot take part in the other dynamical processes that they would typically be involved in when unbound. One consequence of this sequestering effect is that the influences are also indirectly transmitted laterally, in that for a single input-multiple output system, the output to a given downstream system is influenced by other outputs.

To attenuate the effect of retroactivity, Del Vecchio et al. (14) proposed a negative feedback mechanism inspired by the design of operational amplifiers in electronics, employing a mechanism implemented through a covalent modification cycle based on phosphorylation-dephosphorylation reactions. For appropriate parameter ranges, this mechanism enjoys a remarkable insulation property, having an inherent capacity to shield upstream components from the influence of downstream systems and hence to increase the modularity of the system in which it is placed. One may speculate whether this is indeed one reason that such mechanisms are so ubiquitous in cell signaling pathways. Leaving aside speculation, however, one major potential disadvantage of insulating systems based on operational amplifier ideas is that they impose a metabolic load, ultimately because amplification requires energy expenditure.

Thus, a natural question to ask from a purely physical standpoint is whether better insulation requires more energy consumption. This is the subject of this article. We provide a qualified positive answer: for a specific (but generic) model of covalent cycles, natural notions of insulation and energy consumption, and a Pareto-like view of multiobjective optimization, we find, using a numerical parameter sweep in our models, that better insulation indeed requires more energy.

In addition to this positive answer in itself, two major contributions of this work are:

1. Introduction of the innovative measures of retroactivity combined with insulation, in terms of two competing goals: minimization of the difference between the output of the insulator and the ideal behavior, and attenuation of the competition effect (the change in output when a new downstream target is added).

2. Introduction of a possible new way to characterize optimality through balancing of these goals in a Pareto sense.

These contributions should be of interest even in other studies of insulation that do not involve energy use.

The relationship between energy use and optimal biological function is an active theme of contemporary research that has been explored in several different contexts. Recent studies have examined the need for energy dissipation when improving adaptation speed and accuracy, in the context of perfect adaptation (25), and when performing computation, such as sensing the concentration of a chemical ligand in the environment (26). The tradeoff between the energy expenditure necessary for chemotaxis and the payoff of finding nutrients has also been considered (27), yielding insights into the costs and benefits of chemotactic motility in different environments. Such studies are important in characterizing the practical constraints guiding evolutionary adaptation.

Our work contributes to this research by relating the metabolic costs of enzymatic futile cycles with their capacity to act as insulators, facilitating modular interconnections in biochemical networks. While energy use in PD cycles has been related to noise filtering properties (28) and switch stability (29), to our knowledge our work is the first to explore the connection between energy use and insulation. Recent work demonstrates that enzymatic futile cycles can be key components in providing amplification of input signals, while linearizing input-output response curves (30,31). We show that these properties come at a cost: better performance of the PD cycle as an insulator requires more energy use.

Mathematical Model of the Basic System

We are interested in biological pathways that transmit a single, time-dependent input signal to one or more downstream targets. A prototypical example is a transcription factor Z, which regulates the production of one or more proteins by binding directly to their promoters, forming a protein-promoter complex. Assuming a single promoter target for simplicity, this system is represented by the set of reactions

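$$
\emptyset \underset{\delta}{\overset{k(t)}{\rightleftharpoons}} Z, \qquad Z + p \underset{k_{\mathrm{off}}}{\overset{k_{\mathrm{on}}}{\rightleftharpoons}} C \tag{1}
$$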

where p stands for the promoter and C denotes the protein-promoter complex. For our analysis, the particular interpretation of Z, p, and C will not be important. One thinks of Z as describing an upstream system that regulates the downstream target C. Although mathematically the distinction between upstream and downstream is somewhat artificial, the roles of transcription factors as controllers of gene expression, or of enzymes on substrate conversions, and not the opposite, are biologically natural and accepted.

We adopt the convention that the (generally time-dependent) concentration of each species is denoted by the respective italic symbol; for example, X = X(t) is the concentration of X at time t. We assume that the transcription factor Z is produced or otherwise activated at a time-dependent rate k(t) and decays at a rate proportional to its concentration, with rate constant δ, and that the total concentration of the promoter, ptot, is fixed. This leads to a set of ordinary differential equations (ODEs) describing the dynamics of the system,

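$$
\begin{aligned}
\frac{dZ}{dt} &= k(t) - \delta Z - k_{\mathrm{on}} Z\,(p_{\mathrm{tot}} - C) + k_{\mathrm{off}} C,\\
\frac{dC}{dt} &= k_{\mathrm{on}} Z\,(p_{\mathrm{tot}} - C) - k_{\mathrm{off}} C.
\end{aligned} \tag{2}
$$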

The generalization of these equations to the case of multiple output targets is straightforward. Protein synthesis and degradation take place on timescales that are orders of magnitude larger than the typical timescales of small molecules binding to proteins, or of transcription factors binding to DNA (32). Thus, we will take the rates k(t) and δ to be much smaller than other interaction rates such as kon and koff. In Eq. 1, Z represents the input, and C the output.
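As a minimal sketch of how the direct coupling dynamics can be evaluated numerically, the following assumes mass-action kinetics for the production, decay, and binding reactions described above, with the free promoter written as ptot − C. The function names (`direct_coupling_rhs`, `rk4_step`) and the default rate values (taken from the Fig. 1 caption) are illustrative choices, not part of the original analysis.

```python
def direct_coupling_rhs(t, state, k_of_t, delta=0.01,
                        k_on=10.0, k_off=10.0, p_tot=100.0):
    """Right-hand side of the direct coupling system (mass-action assumption).

    state = (Z, C); k_of_t is a callable giving the production rate k(t).
    """
    Z, C = state
    # Net rate at which Z is bound into the complex C (free promoter = p_tot - C).
    binding = k_on * Z * (p_tot - C) - k_off * C
    dZ = k_of_t(t) - delta * Z - binding
    dC = binding
    return (dZ, dC)


def rk4_step(rhs, t, state, dt):
    """One classical fourth-order Runge-Kutta step for rhs(t, state)."""
    def shifted(base, slope, h):
        return tuple(b + h * s for b, s in zip(base, slope))

    s1 = rhs(t, state)
    s2 = rhs(t + dt / 2, shifted(state, s1, dt / 2))
    s3 = rhs(t + dt / 2, shifted(state, s2, dt / 2))
    s4 = rhs(t + dt, shifted(state, s3, dt))
    return tuple(x + dt / 6 * (a + 2 * b + 2 * c + d)
                 for x, a, b, c, d in zip(state, s1, s2, s3, s4))
```

Because kon·ptot is several orders of magnitude larger than k(t) and δ, the system is stiff. A fixed-step explicit scheme such as this one needs a small step, and a stiff-aware integrator would likely be preferable for long runs.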

The ideal system and the distortion measure

Sequestration of the input Z by its target p affects the dynamics of the system as a whole, distorting the output to C as well as to other potential downstream targets. In an ideal version of Eq. 2, where sequestration effects could be ignored, the dynamics would instead be given by

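$$
\begin{aligned}
\frac{dZ}{dt} &= k(t) - \delta Z,\\
\frac{dC}{dt} &= k_{\mathrm{on}} Z\,(p_{\mathrm{tot}} - C) - k_{\mathrm{off}} C.
\end{aligned} \tag{3}
$$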

The term that was removed from the first equation represents a “retroactivity” term, expressed in the language of Del Vecchio et al. (14). This is the term that quantifies how the dynamics of the upstream species Z is affected by its downstream target C. In the ideal system presented in Eq. 3, the transmission of the signal from input to output is undisturbed by retroactivity effects. We thus use the relative difference between the output signal in a system with realistic dynamics and the ideal output, as given by the solution of Eq. 3, as a measure of the output signal distortion, and define the distortion to be

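$$
\mathcal{D} = \frac{1}{\sigma_{C_{\mathrm{ideal}}}} \left\langle \left| C_{\mathrm{ideal}}(t) - C_{\mathrm{real}}(t) \right| \right\rangle, \tag{4}
$$

where C_real(t) is the output of the system with realistic dynamics, C_ideal(t) is the solution of Eq. 3, and 〈·〉 denotes a long-time average. Here we normalize by dividing by the standard deviation of the ideal signal,

$$
\sigma_{C_{\mathrm{ideal}}} = \sqrt{\left\langle \left( C_{\mathrm{ideal}}(t) - \langle C_{\mathrm{ideal}} \rangle \right)^{2} \right\rangle}. \tag{5}
$$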

Thus, Eq. 4 measures the difference between the output in the real and ideal systems, in units of the typical size of the time-dependent fluctuations in the ideal output signal.
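The distortion measure described above can be estimated from sampled trajectories. The sketch below assumes both outputs are sampled at the same, uniformly spaced times over a whole number of input periods in the steady state, so that plain sample means approximate the long-time averages. The function name `distortion` is a hypothetical helper.

```python
import math


def distortion(c_real, c_ideal):
    """Mean absolute deviation of the real output from the ideal output,
    in units of the standard deviation of the ideal output.

    Both arguments are equal-length sequences sampled at the same uniform times.
    """
    if len(c_real) != len(c_ideal) or not c_ideal:
        raise ValueError("signals must be non-empty and of equal length")
    n = len(c_ideal)
    mean_ideal = sum(c_ideal) / n
    sigma_ideal = math.sqrt(sum((c - mean_ideal) ** 2 for c in c_ideal) / n)
    if sigma_ideal == 0.0:
        raise ValueError("ideal signal is constant; normalization undefined")
    mean_abs_diff = sum(abs(i - r) for i, r in zip(c_ideal, c_real)) / n
    return mean_abs_diff / sigma_ideal
```

For instance, a real output that is the ideal sinusoid damped to half its amplitude about the same mean gives a distortion of √2/π ≈ 0.45, that is, a typical error of a little under half a standard deviation of the ideal signal.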

Fan-out: multiple targets

Another consequence of sequestration effects is the interdependence of the output signals to different downstream targets connected in parallel (33). Each molecule of Z may only bind to a single promoter at a time, thus introducing a competition between the promoters to bind with the limited amount of Z in the system. This is a question of practical interest, as transcription factors typically control a large number of target genes. For example, the tumor suppressor protein p53 has well over a hundred targets (34). A similar issue appears in biochemistry, where promiscuous enzymes may affect even hundreds of substrates. For example, alcohol dehydrogenases target ∼100 different substrates to break down toxic alcohols and to generate useful aldehyde, ketone, or alcohol groups during biosynthesis of various metabolites (35).

We quantify the size of the competition effect by the change in an output signal to a given target in response to an infinitesimal change in the abundance of another parallel target. For definiteness, consider Eq. 1 with an additional promoter p′, which binds to Z to form a complex C′ with the same on/off rates as p,

$$
Z + p' \underset{k_{\mathrm{off}}}{\overset{k_{\mathrm{on}}}{\rightleftharpoons}} C'
$$

and the corresponding equation added to Eq. 2,

$$
\frac{dC'}{dt} = k_{\mathrm{on}} Z\,(p'_{\mathrm{tot}} - C') - k_{\mathrm{off}} C'.
$$

We then define the competition effect of the system Eq. 2 as

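$$
\mathcal{C} = \frac{1}{\sigma_{C}} \left\langle \left| \left. \frac{\partial C(t)}{\partial p'_{\mathrm{tot}}} \right|_{p'_{\mathrm{tot}} = 0} \right| \right\rangle. \tag{6}
$$

Again, we normalize by the standard deviation of the output signal C(t),

$$
\sigma_{C} = \sqrt{\left\langle \left( C(t) - \langle C \rangle \right)^{2} \right\rangle}, \tag{7}
$$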

computed with p′tot = 0, so that Eq. 6 measures the change in the output signal when an additional target is introduced relative to the size of the fluctuations of the output in the unperturbed system.
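In a simulation, the sensitivity of the output to the added target can be approximated by a one-sided finite difference: the system is integrated once with p′tot = 0 and once with a small positive p′tot. The sketch below assumes both runs are sampled at the same uniform times in the steady state. The name `competition_effect` and the argument `dp_tot` (the small value of p′tot used in the perturbed run) are illustrative.

```python
import math


def competition_effect(c_unperturbed, c_perturbed, dp_tot):
    """Finite-difference estimate of the competition effect.

    c_unperturbed: output samples with p'_tot = 0
    c_perturbed:   output samples with p'_tot = dp_tot (small, positive)
    """
    if len(c_unperturbed) != len(c_perturbed) or not c_unperturbed:
        raise ValueError("signals must be non-empty and of equal length")
    if dp_tot <= 0.0:
        raise ValueError("dp_tot must be positive")
    n = len(c_unperturbed)
    mean_c = sum(c_unperturbed) / n
    sigma_c = math.sqrt(sum((c - mean_c) ** 2 for c in c_unperturbed) / n)
    if sigma_c == 0.0:
        raise ValueError("unperturbed output is constant; normalization undefined")
    # Time average of |dC/dp'_tot|, approximated by a one-sided difference.
    mean_abs_slope = sum(abs(b - a) for a, b in
                         zip(c_unperturbed, c_perturbed)) / (n * dp_tot)
    return mean_abs_slope / sigma_c
```

The accuracy of the estimate depends on choosing `dp_tot` small enough for the response to be linear but large enough to stay above integration error.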

Mathematical Model of an Insulator

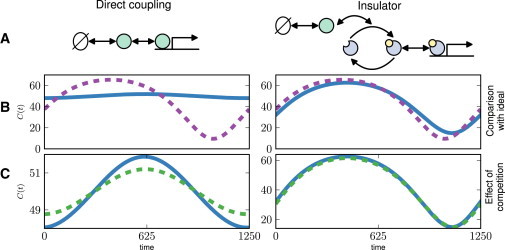

As shown in Fig. 1, typical performance of the simple direct coupling system defined by Eq. 2 is poor, assuming, as in Del Vecchio et al. (14), that we test the system with a simple sinusoidally varying production rate

$$
k(t) = k\,(1 + \sin \omega t), \tag{8}
$$

whose frequency ω is similar in magnitude to k and δ. Oscillation of the output signal in response to the time-varying input is strongly damped relative to the ideal. The output is also sensitive to other targets connected in parallel: as the total load increases, the output signal is noticeably damped in both the transient and steady state. Here the flux of Z into and out of the system is too slow to drive large changes in the output C as the rate of production k(t) varies.

Figure 1.

Retroactivity effects lead to signal distortion, and attenuation of output signals when additional targets are added. Comparison of retroactivity effects on a signaling system with a direct coupling (DC) architecture (left) and one with an insulator, represented by a phosphorylation-dephosphorylation cycle (right). (A) Cartoon schematic of the signaling system. In the DC system (Eq. 1), the input binds directly to the target. With an insulator (Eq. 9), the input drives phosphorylation of an intermediate signaling molecule, whose phosphorylated form binds to the target. (B) Illustration of distortion. The ideal output signal (dashed, and see Eq. 3 in text) with retroactivity effects neglected, is plotted against the output for each system with nonlinear dynamics (solid), given by Eq. 2 for the DC system and Eq. 10 for the insulator. (C) Illustration of competition effect. The output signal in a system with a single target (solid) is compared with the output signal when multiple targets are present (dashed). Note the greatly reduced amplitude of variation of the output in the DC system. Plots of the output signals in each system are shown in the steady state, over a single period of k(t). This plot was made using the parameters k(t) = 0.01(1 + sin(0.005t)); δ = 0.01; α1 = β1 = 0.01; α2 = β2 = k1 = k2 = 10; kon = koff = 10; ptot = 100; and Xtot = 800, Ytot = 800 for the insulator. Parameters specifying the interaction with the new promoter p′ in the perturbed system are k′on = k′off = 10, and p′tot = 60.

As suggested in Del Vecchio et al. (14), the retroactivity effects in this system can be ameliorated by using an intermediate signal processing system, specifically one based on a phosphorylation-dephosphorylation (PD) futile cycle, between the input and output. Such systems appear often in signaling pathways that mediate gene expression responses to the environment (32). In this system, the input signal Z plays the role of a kinase, facilitating the phosphorylation of a protein X. The phosphorylated version of the protein X∗ then binds to the target p to transmit the signal. Dephosphorylation of X∗ is driven by a phosphatase Y. Assuming a two-step model of the phosphorylation-dephosphorylation reactions, the full set of reactions is

| (9) |

The total protein concentrations Xtot and Ytot are fixed.

The forward and reverse rates of the phosphorylation-dephosphorylation reaction depend implicitly on the concentrations of phosphate donors and acceptors, such as ATP and ADP. Metabolic processes ensure that these concentrations are held far away from equilibrium, biasing the reaction rates and driving the phosphorylation-dephosphorylation cycle out of equilibrium. As routinely done in enzymatic biochemistry analysis, we have made the simplifying assumption of setting the small rates of the reverse processes X∗ + Z → C1 and X + Y → C2 to zero. The ODEs governing the dynamics of the system are then

| (10) |

In an Appendix, we provide mathematical results regarding the stability of this system of ODEs in response to the periodic rate k(t), justifying our focus on long-time steady-state behavior.
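For readers who wish to experiment with the insulator numerically, the following is a sketch of a mass-action right-hand side for the two-step scheme described above, with the reverse catalytic steps set to zero. The assignment of rate labels is an assumption, not confirmed by the text: β1 and β2 are taken as the binding and unbinding rates of Z + X ⇌ C1, α1 and α2 as those of Y + X∗ ⇌ C2, and k1 and k2 as the catalytic rates. Free X, Y, and p are eliminated using the fixed totals.

```python
def insulator_rhs(t, state, k_of_t, x_tot, y_tot, delta=0.01,
                  beta1=0.01, beta2=10.0, k1=10.0,
                  alpha1=0.01, alpha2=10.0, k2=10.0,
                  k_on=10.0, k_off=10.0, p_tot=100.0):
    """Mass-action dynamics of the PD-cycle insulator (assumed rate labels).

    state = (Z, C1, C2, Xs, C), where Xs is the phosphorylated form X*.
    """
    Z, C1, C2, Xs, C = state
    X = x_tot - Xs - C1 - C2 - C   # free unphosphorylated substrate
    Y = y_tot - C2                 # free phosphatase
    p = p_tot - C                  # free target

    bind_kinase = beta1 * Z * X - beta2 * C1           # Z + X <-> C1
    bind_phosphatase = alpha1 * Xs * Y - alpha2 * C2   # Y + X* <-> C2
    bind_target = k_on * Xs * p - k_off * C            # X* + p <-> C

    dZ = k_of_t(t) - delta * Z - bind_kinase + k1 * C1
    dC1 = bind_kinase - k1 * C1        # C1 -> X* + Z at rate k1
    dC2 = bind_phosphatase - k2 * C2   # C2 -> X + Y at rate k2
    dXs = k1 * C1 - bind_phosphatase - bind_target
    dC = bind_target
    return (dZ, dC1, dC2, dXs, dC)
```

The default values are those quoted in the Fig. 1 caption. If the labels in the original reaction scheme differ from the assumption made here, only the parameter names need to be swapped.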

As shown in Fig. 1, for suitable choices of parameters the output signal in the system including the PD cycle is able to match the ideal output much more closely than in the direct coupling system. The output signal is also much less sensitive to changes in other targets connected in parallel than in the system where the input couples directly to the promoter. One can think of this system with the insulator as equivalent to the direct coupling system, but with effective production and degradation rates

| (11) |

which may be much larger than the original k(t) and δ, thus allowing the system with the insulator to adapt much more rapidly to varying input.

The fact that the PD cycle is driven out of equilibrium, therefore consuming energy, is critical for its signal processing effectiveness. Our focus will be on how the performance of the PD cycle as an insulator depends upon its rate of energy consumption.

Our hypothesis is that better insulation requires more energy consumption. To formulate a more precise question, we need to find a proxy for energy consumption in our simple model.

Energy use and insulation

The free energy consumed in the PD cycle can be expressed in terms of the change in the free energy of the system ΔG resulting from the phosphorylation and subsequent dephosphorylation of a single molecule of X. One can also measure the amount of ATP which is converted to ADP, which is proportional to the current through the phosphorylation reaction C1 → X∗ + Z. In the steady state, because the phosphorylation and dephosphorylation reactions are assumed to be irreversible and the total concentration Xtot is fixed, the time averages of these two measures are directly proportional. The average free energy consumed per unit time in the steady state is then proportional to the average current

$$
J = \left\langle k_{1} C_{1}(t) \right\rangle. \tag{12}
$$

Different choices of the parameters appearing in the phosphorylation and dephosphorylation reactions, such as k1, k2, and Xtot, will lead to different rates of energy use and also different levels of performance in terms of the competition effect and distortion.
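Given a simulated trajectory, the average current through the phosphorylation step can be estimated as a time average of k1·C1(t). The sketch below uses the trapezoidal rule so that it also works for nonuniform time samples, such as those returned by adaptive integrators. The names `time_average` and `energy_rate` are hypothetical, and the result is only proportional to the free energy consumption rate, as discussed above.

```python
def time_average(ts, ys):
    """Trapezoidal time average of samples ys taken at increasing times ts."""
    if len(ts) != len(ys) or len(ts) < 2:
        raise ValueError("need at least two samples of equal length")
    area = 0.0
    for i in range(1, len(ts)):
        area += 0.5 * (ys[i] + ys[i - 1]) * (ts[i] - ts[i - 1])
    return area / (ts[-1] - ts[0])


def energy_rate(ts, c1_samples, k1=10.0):
    """Average phosphorylation current, a proxy for the rate of ATP use."""
    return k1 * time_average(ts, c1_samples)
```

The averaging window should cover a whole number of input periods after transients have decayed, so that the estimate reflects the steady state.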

We focus our attention on the concentrations Xtot and Ytot as tunable parameters. While the reaction rates such as k1 and k2 depend upon the details of the molecular structure and are harder to directly manipulate, concentrations of stable molecules like X and Y can be experimentally adjusted, and hence the behavior of the PD cycle as a function of Xtot and Ytot is of great practical interest. When building synthetic circuits in living cells, for example, Xtot and Ytot can be tuned by placing the genes that express these proteins under the control of constitutive promoters of adjustable strengths, or through the action of inducers (36).

Comparing different parameters in the insulator: Pareto optimality

In measuring the overall quality of our signaling system, the relative importance of faithful signal transmission, as measured by small distortion, and a small competition effect, will vary. This means that quality is intrinsically a multiobjective optimization problem, with competing objectives. Rather than applying arbitrary weights to each quantity, we will instead approach the problem of finding ideal parameters for the PD cycle from the point of view of Pareto optimality, a standard approach to optimization problems with multiple competing objectives that was originally introduced in economics (37). In this view, one seeks to determine the set of parameters of the system for which any improvement in one of the objectives necessitates a sacrifice in one of the others. Here, the competing objectives are the minimization of the distortion 𝒟 and the competition effect 𝒞.

A Pareto optimal choice of the parameters is one for which there is no other choice of parameters that gives a smaller value of both 𝒟 and 𝒞. Pareto optimal choices, also called Pareto efficient points, are generically optimal with respect to arbitrary positive linear combinations α𝒟 + β𝒞, thus eliminating the need to make an artificial choice of weights.
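Selecting the Pareto optimal points from a finite set of tested parameters can be sketched as follows. This sketch uses the common convention that a point is dominated if another point is at least as good in both objectives and strictly better in at least one, which is slightly stricter than requiring a smaller value of both. The function name `pareto_front` is illustrative.

```python
def pareto_front(points):
    """Return indices of non-dominated points when minimizing both objectives.

    points: sequence of (distortion, competition_effect) pairs.
    """
    front = []
    for i, (d_i, c_i) in enumerate(points):
        dominated = any(
            d_j <= d_i and c_j <= c_i and (d_j < d_i or c_j < c_i)
            for j, (d_j, c_j) in enumerate(points)
            if j != i
        )
        if not dominated:
            front.append(i)
    return front
```

This direct pairwise comparison scales quadratically with the number of points, which is adequate for a parameter sweep of modest size.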

An informal analysis

A full mathematical analysis of the system of nonlinear ODEs in Eq. 10 is difficult. In biologically plausible parameter ranges, however, certain simplifications allow one to develop intuition about its behavior. We now discuss this approximate analysis, to set the stage for, and to help interpret the results of, our numerical computations with the full nonlinear model.

We make the following Ansatz: the variables Z(t) and X∗(t) evolve more slowly than C1(t), C2(t), and C(t). Biochemically, this is justified because phosphorylation and dephosphorylation reactions tend to occur on the timescale of seconds (38,39), as do transcription factor promoter binding and unbinding events (32), while protein production and decay take place on the timescale of minutes (32). In addition, we analyze the behavior of the system under the assumption that the total concentrations of substrate and phosphatase, Xtot and Ytot, are large. In terms of the constants appearing in Eq. 10, we assume

| (13) |

Thus, on the timescale of Z(t) and X∗(t), we can make the quasi-steady-state (Michaelis-Menten) assumption that C1(t), C2(t), and C(t) are at equilibrium. Setting the right-hand sides of the equations for C1, C2, and C in Eq. 10 to zero, and substituting in the remaining two equations of Eq. 10, we obtain the following system:

The lack of additional terms in the equation for Z(t) is a consequence of the assumption that K1 >> Xtot, which amounts to a low binding affinity of Z to its target X (relative to the concentration of the latter); this follows from a total quasi-steady-state approximation as in Ciliberto et al. (40) and Borghans et al. (41). Observe that such an approximation is not generally possible for the original system Eq. 1, and indeed this is the key reason for the retroactivity effect (14).

With the above assumptions, in the system with the insulator, Z(t) evolves approximately as in the ideal system Eq. 3. In this quasi-steady-state approximation

and

and thus we have

Finally, let us consider the effect of the following condition:

| (14) |

If this condition is satisfied, then

with K >> 1, which means that X∗(t) ≈ Z(t), and thus the equation for C in Eq. 10 reduces to that for the ideal system in Eq. 3. In summary, if Eq. 14 holds, we argue that the system with the insulator will reproduce the behavior of the ideal system, instead of the real system in Eq. 2. Moreover, the energy consumption rate in Eq. 12 is proportional to k1C1 ≈ (k1/K1)XtotZ, and hence will be large if Eq. 14 holds, which intuitively leads us to expect high energy costs for insulation.

These informal arguments (or more formal ones based on singular perturbation theory (14)) justify the sufficiency, but not the necessity, of Eq. 14. Our numerical results will show that this condition is indeed satisfied for a wide range of parameters that lead to good insulation.

Numerical Results on Pareto Optimality

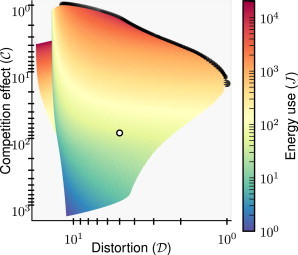

We have explored the performance of the insulating PD cycle over an extensive range of parameters to test our hypothesis that better insulation requires more energy consumption. In Fig. 2 we show a plot of 𝒞 and 𝒟 for systems with a range of Xtot and Ytot, obtained by numerical integration of the differential equations expressed in Eq. 10 (see also Fig. 3 for a three-dimensional view). Pareto optimal choices of parameters on the tested parameter space are indicated by solid points.
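The parameter sweep can be organized around a logarithmically spaced grid of concentrations, with each measure rescaled so that its best value equals one, as in Fig. 2. In the sketch below, the number of grid points per axis is an assumption (the text gives only the range, 10 to 10,000), and the helper names are hypothetical.

```python
def log_grid(lo, hi, n):
    """Return n logarithmically spaced values from lo to hi inclusive."""
    if n < 2 or lo <= 0 or hi <= lo:
        raise ValueError("need n >= 2 and 0 < lo < hi")
    ratio = hi / lo
    return [lo * ratio ** (i / (n - 1)) for i in range(n)]


def sweep_points(lo=10.0, hi=10000.0, n=4):
    """All (Xtot, Ytot) pairs with both concentrations varied independently."""
    values = log_grid(lo, hi, n)
    return [(x, y) for x in values for y in values]


def rescale_to_best(values):
    """Divide by the smallest value so that the best (smallest) entry is one."""
    best = min(values)
    if best <= 0:
        raise ValueError("values must be positive")
    return [v / best for v in values]
```

Each pair from `sweep_points` would then be passed to a simulation of the insulator to obtain its competition effect, distortion, and rate of energy consumption.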

Figure 2.

Performance of the insulator measured by the competition effect 𝒞 and distortion 𝒟 of the output in the system with an insulator (Eq. 10), tested over a range of Xtot and Ytot varied independently from 10 to 10,000 in logarithmic steps. For simplicity, 𝒞 and 𝒟 are rescaled such that the smallest (best) values are equal to one. Points are shaded according to the logarithm of the rate of energy consumption of the PD cycle. (Solid dots) Pareto efficient parameter points. Rates of energy consumption increase as one approaches the Pareto front; obtaining small values of the competition effect is particularly costly. (Open dot) For comparison, the values of 𝒞 and 𝒟 for the direct coupling system are marked. See Numerical Results on Pareto Optimality for details. This plot was made using the parameters k(t) = 0.01(1 + sin(0.005t)), δ = 0.01, α1 = β1 = 0.01, α2 = β2 = k1 = k2 = 10, kon = koff = 10, and ptot = 100.

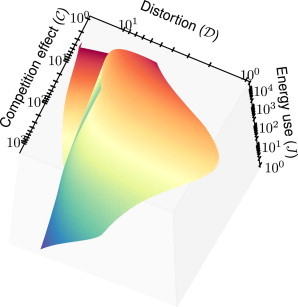

Figure 3.

Three-dimensional plot of competition effect 𝒞 and distortion 𝒟, along with the rate of energy consumption J, in the system including an insulator (Eq. 10). Note the fold in the plot; it is possible for two different values of the parameters to yield the same measures of insulation 𝒞 and 𝒟, but with different rates of energy consumption.

Superior performance of the insulator is clearly associated with higher rates of energy consumption, as shown in Figs. 2 and 3. Typically the rate of energy consumption increases as one approaches the set of Pareto optimal points, referred to as the Pareto front. Indeed, choices of parameters on or near the Pareto front have some of the highest rates of energy expenditure. Conversely, the parameter choices that have the poorest performance also consume the least energy. As shown above, this phenomenon can be understood by noting that the energy consumption rate from Eq. 12 will be large when the conditions for optimal insulation from Eq. 14 are met.

Note that it is possible for two different choices of the parameters Xtot and Ytot to yield the same measures of insulation 𝒞 and 𝒟, but with different rates of energy consumption. This results in a fold in the sheet in Fig. 2, most clearly observed near = 10 and = 4. We see then that while better insulation generally requires larger amounts of energy consumption, it is not necessarily true that systems with high rates of energy consumption always make better insulators. See also the three-dimensional plot of 𝒞, 𝒟, and J shown in Fig. 3 for a clearer picture.

In addition to the general trend of increasing energy consumption as competition effect or distortion decrease, we find that a strong local energy optimality property is satisfied. We observe numerically that any small change in the parameters Xtot and Ytot, which leads to a decrease in both the competition effect and distortion, must be accompanied by an increase in the rate of energy consumption, excluding jumps from one side of the fold to the other. This local property complements the global observation that Pareto optimal points are associated with the regions of parameter space with the highest rates of energy consumption.
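The local energy optimality property can be checked on the results of a grid sweep. The sketch below treats a move to any of the eight neighboring grid points as a "small change" in (Xtot, Ytot), which is an assumption about how locality is defined, and reports every move that lowers both measures without raising the energy consumption. It does not attempt to identify or exclude jumps across the fold. The function name is hypothetical.

```python
def local_energy_violations(grid):
    """Find neighbor moves that improve both measures at no extra energy cost.

    grid[i][j] = (competition_effect, distortion, energy_rate) at the
    (i, j)-th point of the (Xtot, Ytot) sweep. Returns a list of
    ((i, j), (ni, nj)) index pairs; an empty list means the property holds.
    """
    violations = []
    n_rows = len(grid)
    for i in range(n_rows):
        for j in range(len(grid[i])):
            c0, d0, e0 = grid[i][j]
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ni, nj = i + di, j + dj
                    if (di, dj) == (0, 0):
                        continue
                    if not (0 <= ni < n_rows and 0 <= nj < len(grid[ni])):
                        continue
                    c1, d1, e1 = grid[ni][nj]
                    # Both measures improve, yet energy use does not increase.
                    if c1 < c0 and d1 < d0 and e1 <= e0:
                        violations.append(((i, j), (ni, nj)))
    return violations
```

On a coarse grid, neighboring points may not be close enough for the result to reflect a truly local statement, so a fine grid is advisable.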

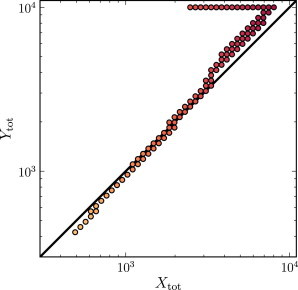

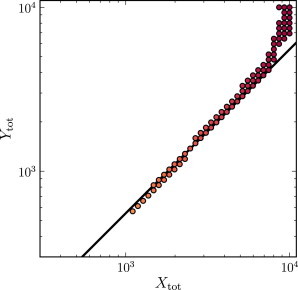

While we find Pareto optimal choices of the concentrations Xtot and Ytot span several orders of magnitude, the ratio of Xtot to Ytot is close to unity for nearly all Pareto optima (see Fig. 4). A small number of Pareto optimal points are found with very different total concentrations of X and Y, but these points appear to be due to boundary effects from the sampling of a finite region of the parameter space. Indeed, we have argued that the insulator should perform best when Eq. 14 is satisfied. For the choice of parameters considered here, this gives Xtot/Ytot = (k2K1)/(k1K2) = 1. Tests with randomized parameters confirm that Eq. 14 gives a good estimate of the relationship between Xtot and Ytot for Pareto optimal points (see Fig. 5 for an example).
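The predicted ratio Xtot/Ytot = (k2K1)/(k1K2) can be evaluated for any parameter set. The sketch below assumes that K1 and K2 are the usual Michaelis-Menten constants of the phosphorylation and dephosphorylation steps, K1 = (β2 + k1)/β1 and K2 = (α2 + k2)/α1. Both these definitions and the assignment of α and β to the two reactions are assumptions, as they are not spelled out in the surviving text.

```python
def michaelis_constant(binding_rate, unbinding_rate, catalytic_rate):
    """Michaelis-Menten constant (unbinding + catalytic) / binding."""
    if binding_rate <= 0:
        raise ValueError("binding rate must be positive")
    return (unbinding_rate + catalytic_rate) / binding_rate


def predicted_ratio(alpha1, alpha2, beta1, beta2, k1, k2):
    """Predicted Xtot/Ytot along the Pareto front, (k2 K1) / (k1 K2).

    Assumes beta1/beta2 describe Z + X <-> C1 and alpha1/alpha2 describe
    Y + X* <-> C2 (an assumed labeling).
    """
    K1 = michaelis_constant(beta1, beta2, k1)
    K2 = michaelis_constant(alpha1, alpha2, k2)
    return (k2 * K1) / (k1 * K2)
```

With the symmetric parameters of Fig. 2 (α1 = β1 = 0.01 and α2 = β2 = k1 = k2 = 10), both constants coincide and the predicted ratio is one, in agreement with the value quoted above.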

Figure 4.

Scatter plot of the Pareto optimal sets of parameters Xtot and Ytot corresponding to those in Figs. 2 and 3. Pareto optimal points are typically those which strike a balance between the total concentrations of X and Y, as suggested by the analysis in the section Mathematical Model of an Insulator. We predict that Pareto optima will lie along the line (k1/K1)Xtot = (k2/K2)Ytot (shown in background, prediction from Eq. 14), in good agreement with the simulation results. Each point is shaded according to the rate of energy consumption for that choice of parameters. Increases in either Xtot or Ytot result in increased energy expenditure. Due to the limited range of parameters that could be tested, some Pareto optima lie along the boundaries of the parameter space (see the elbow in the scatter points, top of the plot).

Figure 5.

Scatter plot of the Pareto optimal sets of parameters Xtot and Ytot for an insulator using randomly shifted parameters. Based on Eq. 14, we predict that Pareto optima will lie along the line (k1/K1)Xtot = (k2/K2)Ytot (shown in background). Parameters used are k(t) = 0.0137(1 + sin(0.005t)); δ = 0.0188; α1 = 0.0107; β1 = 0.0102; α2 = 5.31; β2 = 16.42; k1 = k2 = 10; kon = 5.19; koff = 12.49; and ptot = 100. Each point is shaded according to the rate of energy consumption for that choice of parameters (scale analogous to that of Figs. 2–4).

We also observe that there is a lower bound on the concentrations Xtot and Ytot for optimal insulation. Though we tested ranges of concentrations from 10 to 10,000, the first optimal points only appear when the concentrations are ∼500, several times larger than the concentration of the target (ptot = 100) and much larger than the concentration of the signal protein (Zmax ≈ 2 in our simulations). Interestingly, the insulator consumes less energy for these first Pareto optimal parameter choices than at higher concentrations, and achieves the best measures of distortion with relatively low competition effect as well. This suggests that smaller concentrations may be generically favored, particularly when energy constraints are important.

We conclude that the specification of Pareto optimality places few constraints on the absolute concentrations Xtot and Ytot in the model, save for a finite lower bound, but the performance of the insulator depends strongly on the ratio of the two concentrations. This observation connects with the work of Gutenkunst et al. (42), who noted sloppy parameter sensitivity for many variables in systems biology models, excepting some stiff combinations of variables that determine a model’s behavior.

For comparison, we indicate the values of the distortion and of the competition effect for the simpler direct coupling architecture, with no insulator, by an open dot in Fig. 2. While many choices of parameters for the insulating PD cycle, including most Pareto optimal points, lead to improvements in the distortion relative to that of the direct coupling system, the most dramatic improvement is in fact in the competition effect. Roughly of the parameter values tested for the insulator have a lower value of the competition effect than that found for the direct coupling system. This suggests that insulating PD cycles may be functionally favored over simple direct binding interactions, particularly when there is strong pressure for stable output to multiple downstream systems.

Finally, we note that the analysis performed here has not factored in the potential metabolic costs of production for X and Y. Such costs would depend on the structure of these components, as well as their rates of production and degradation, which we have not addressed and which may be difficult to estimate in great generality. However, as the rate of energy consumption increases with increasing Xtot and Ytot (see Fig. 4), we would expect to find similar qualitative results regarding rates of energy consumption even when factoring in production costs.

Discussion

A very common motif in cell signaling is that in which a substrate is ultimately converted into a product, in an activation reaction triggered or facilitated by an enzyme, and, conversely, the product is transformed back (or deactivated) into the original substrate, helped on by the action of a second enzyme. This type of reaction, often called a futile, substrate, or enzymatic cycle, appears in many signaling pathways: GTPase cycles (8); bacterial two-component systems and phosphorelays (6,7); actin treadmilling (9); glucose mobilization (10); metabolic control (11); cell division and apoptosis (12); and cell-cycle checkpoint control (13). See Samoilov et al. (5) for many more references and a discussion. While phosphorelay systems do not consume energy, most of these futile cycles consume energy, in the form of ATP or GTP use.

In this work we explored the connection between the ability of energy consuming enzymatic futile cycles to insulate biochemical signaling pathways from impedance and competition effects, and their rate of energy consumption. Our hypothesis was that better insulation requires more energy consumption. We tested this hypothesis through the computational analysis of a simplified physical model of covalent cycles, using two innovative measures of insulation, referred to as competition effect and distortion, as well as a possible new way to characterize optimal insulation through the balancing of these two measures in a Pareto sense. Our results indicate that indeed better insulation requires more energy.

Testing a wide range of parameters, we identified Pareto optimal choices that represent the best possible ways to compromise two competing objectives: the minimization of distortion and of the competition effect. The Pareto optimal points share two interesting features: First, they consume large amounts of energy, consistent with our hypothesis that better insulation requires greater energy consumption. Second, the total substrate and phosphatase concentrations Xtot and Ytot typically satisfy Eq. 14. Assuming that rates for phosphorylation and dephosphorylation are similar, this implies Xtot ∼ Ytot. There is also a minimum concentration required to achieve a Pareto optimal solution; arbitrarily low concentrations do not yield optimal solutions. Interestingly, insulators with Pareto optimal choices of parameters close to the minimum concentration also expend the least amount of energy, compared to other parameter choices on the Pareto front, and have the least distortion while still achieving low competition effect. This suggests that these points near the minimum concentration might be generically favored, particularly when energy constraints are important.
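
The notion of Pareto optimality used above can be made concrete with a short sketch. The code below is only an illustration, not the procedure used to produce the figures: it assumes each tested parameter choice has already been reduced to a pair (distortion, competition effect), both to be minimized, and keeps the pairs that no other pair dominates. The function names `dominates` and `pareto_front` and the sample values are hypothetical.

```python
from typing import List, Tuple

# A point is (distortion, competition_effect); both objectives are minimized.
Point = Tuple[float, float]


def dominates(a: Point, b: Point) -> bool:
    """True if a is no worse than b in both objectives and strictly better in one."""
    return a[0] <= b[0] and a[1] <= b[1] and (a[0] < b[0] or a[1] < b[1])


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the points that no other point dominates (simple O(n^2) scan)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (distortion, competition effect) pairs, not data from the article.
samples = [(0.9, 0.1), (0.5, 0.5), (0.6, 0.6), (0.1, 0.9), (0.5, 0.7)]
front = pareto_front(samples)
print(front)
```

In this toy set, (0.6, 0.6) and (0.5, 0.7) are discarded because (0.5, 0.5) is at least as good in both measures. The quadratic scan is adequate for a few thousand parameter choices; larger sweeps would call for a sort-based method.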

Many reasons have been proposed for the existence of futile cycles in nature, such as signal amplification, increased sensitivity, or analog-to-digital conversion for help in decision-making. An alternative, or at least complementary, possible explanation (14) lies in the capabilities of such cycles to provide insulation, thus enabling a plug-and-play interconnection architecture that might facilitate evolution. Our results suggest that better insulation requires a higher energy cost, so that a delicate balance may exist between, on the one hand, the ease of adaptation through creation of new behaviors by adding targets to existing pathways, and on the other hand, the metabolic costs necessarily incurred in not affecting the behavior of existing processes.

Our results were formulated for a model in which the input signal is a transcription factor Z that regulates the production of one or more proteins by promoter binding, as described by Eq. 1. We have also analyzed (details not given here) another model and derived a similar qualitative conclusion, namely that higher energy is required to attain better insulation. That model describes signaling systems in which Z denotes the active form of a kinase and p is a protein target that can be reversibly phosphorylated to give a modified form C.

Acknowledgments

The authors are very thankful to the anonymous referees for several very useful suggestions.

Work was supported in part by National Institutes of Health grants No. 1R01GM086881 and No. 1R01GM100473, and by Air Force Office of Scientific Research grant No. FA9550-11-1-0247.

Footnotes

John Barton’s work was performed at the Department of Physics, Rutgers University, Piscataway, NJ 08854.

Appendix

In this article we have studied numerical solutions of the set of ODEs given in Eq. 10. For the values of parameters we tested, solutions of Eq. 10 are well behaved, and after long times approach a periodic steady-state solution, where the period T is the same as that of the sinusoidal time-varying production rate k(t) given in Eq. 8. We present analytical results that verify that solutions of Eq. 10 are well behaved for more general choices of parameters. In particular, we prove that:

1. For an approximate one-step enzymatic model, a unique and globally attracting solution exists in response to any periodic input.

2. There is always a periodic solution of Eq. 10 in response to any periodic input k(t).

3. Given some very general physical assumptions for the parameters, solutions of Eq. 10 are linearly stable around an equilibrium point when the input is constant. When the input is time-varying with small amplitude oscillations, a periodic solution exists that is close to each asymptotically stable equilibrium point.

The first proof is shown below, and proofs for the second and third results are given in the Supporting Material.

Globally attracting periodic solutions for a one-step model

We now provide a theoretical analysis showing that there is a unique and globally attracting response to any periodic input, under the assumption that the phosphorylation and dephosphorylation reactions are well approximated by the one-step enzymatic model (43):

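$$
\emptyset \underset{\delta}{\overset{k(t)}{\rightleftharpoons}} Z, \qquad
Z + X \xrightarrow{k_1} Z + X^{*}, \qquad
Y + X^{*} \xrightarrow{k_2} Y + X, \qquad
X^{*} + p \underset{k_{\rm off}}{\overset{k_{\rm on}}{\rightleftharpoons}} C.
\tag{15}
$$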

(A similar simplified system was used in Del Vecchio et al. (14) to study the sensitivity of retroactivity to parameters.) Such a model can be obtained in the limit that k1,k2 → ∞ in Eq. 10, as considered previously, where now the rates k1 and k2 in Eq. 15 are equivalent to β1 and α1, respectively, in Eq. 10.

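The conservation of X and p gives the conservation equations X + X∗ + C = Xtot and p + C = ptot. We thus obtain the following set of nonlinear differential equations, where k(t) is a positive and time-varying input function:

$$
\begin{aligned}
\frac{dZ}{dt} &= k(t) - \delta Z,\\
\frac{dX^{*}}{dt} &= k_1 Z\,(X_{\rm tot} - X^{*} - C) - k_2 Y X^{*} - k_{\rm on} X^{*}(p_{\rm tot} - C) + k_{\rm off} C,\\
\frac{dC}{dt} &= k_{\rm on} X^{*}(p_{\rm tot} - C) - k_{\rm off} C.
\end{aligned}
$$

Observe that the amount of phosphatase Y is constant. Because X = Xtot – X∗ − C ≥ 0 and p = ptot – C ≥ 0, physically meaningful solutions are those that lie in the set $\mathcal{C}$ of vectors (Z(t), X∗(t), C(t)) that satisfy the constraints

$$
Z \ge 0, \qquad X^{*} \ge 0, \qquad C \ge 0, \qquad X^{*} + C \le X_{\rm tot}, \qquad C \le p_{\rm tot}.
$$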

Observe that any solution that starts in the set $\mathcal{C}$ at time t = 0 stays in $\mathcal{C}$ for all t > 0, because dZ/dt ≥ 0 when Z = 0; dX∗/dt ≥ 0 when X∗ = 0; dC/dt ≥ 0 when C = 0; d(X∗ + C)/dt ≤ 0 when X∗ + C = Xtot; and dC/dt ≤ 0 when C = ptot. We will now prove that, for any given but arbitrary periodic input k(t) ≥ 0, there is a unique periodic solution $\hat{\xi}(t)$ in $\mathcal{C}$ of the above system, and this solution is also a global attractor, in the sense that, for every initial condition $\xi_0 \in \mathcal{C}$, the solution ξ(t) with ξ(0) = ξ0 satisfies $|\xi(t) - \hat{\xi}(t)| \to 0$ as t → ∞.

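It is convenient to make the change of variables W(t) = X∗(t) + C(t) so that the equations become

$$
\begin{aligned}
\frac{dZ}{dt} &= k(t) - \delta Z,\\
\frac{dW}{dt} &= k_1 Z\,(X_{\rm tot} - W) - k_2 Y\,(W - C),\\
\frac{dC}{dt} &= k_{\rm on}(W - C)(p_{\rm tot} - C) - k_{\rm off} C.
\end{aligned}
$$

To prove the existence of a globally attracting periodic orbit, we can equivalently study the system in these new variables, where $\mathcal{C}$ is now the set defined by

$$
Z \ge 0, \qquad 0 \le C \le \min\{W,\, p_{\rm tot}\}, \qquad W \le X_{\rm tot}.
$$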
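
The attractivity claim can be illustrated numerically. The sketch below is a plausibility check, not part of the proof. It assumes the one-step model in the variables (Z, W, C) has right-hand side dZ/dt = k(t) − δZ, dW/dt = k1 Z (Xtot − W) − k2 Y (W − C), dC/dt = kon (W − C)(ptot − C) − koff C, which is consistent with the conservation laws and invariance conditions stated above. All parameter values, the input k(t) = 1 + sin t, and the fixed-step Runge-Kutta integrator are illustrative assumptions rather than the choices used in the article.

```python
import math

# Illustrative parameter values (assumptions, not the article's values).
DELTA, K1, K2, Y = 1.0, 1.0, 1.0, 1.0
KON, KOFF = 1.0, 1.0
XTOT, PTOT = 10.0, 1.0


def k_in(t):
    """Nonnegative periodic input with period 2*pi (hypothetical choice)."""
    return 1.0 + math.sin(t)


def rhs(t, s):
    """Assumed right-hand side in the variables (Z, W, C), with W = X* + C."""
    z, w, c = s
    dz = k_in(t) - DELTA * z
    dw = K1 * z * (XTOT - w) - K2 * Y * (w - c)
    dc = KON * (w - c) * (PTOT - c) - KOFF * c
    return (dz, dw, dc)


def rk4_step(t, s, h):
    """One classical fourth-order Runge-Kutta step of size h."""
    def shifted(base, slope, scale):
        return tuple(b + scale * m for b, m in zip(base, slope))

    a = rhs(t, s)
    b = rhs(t + h / 2, shifted(s, a, h / 2))
    c = rhs(t + h / 2, shifted(s, b, h / 2))
    d = rhs(t + h, shifted(s, c, h))
    return tuple(x + h / 6 * (p + 2 * q + 2 * r + u)
                 for x, p, q, r, u in zip(s, a, b, c, d))


def simulate(s0, t_end=100.0, h=0.01):
    """Integrate from t = 0 to t_end and return the final state."""
    t, s = 0.0, s0
    for _ in range(int(round(t_end / h))):
        s = rk4_step(t, s, h)
        t += h
    return s


def dist(a, b):
    """Infinity-norm distance between two states."""
    return max(abs(x - y) for x, y in zip(a, b))


# Two initial conditions inside the admissible set, far apart from each other.
start_a = (0.0, 0.0, 0.0)
start_b = (5.0, 10.0, 1.0)
end_a = simulate(start_a)
end_b = simulate(start_b)
gap_start = dist(start_a, start_b)
gap_end = dist(end_a, end_b)
print(gap_start, gap_end)
```

Under these assumptions the two trajectories, driven by the same input, should end up much closer together than they started, which is what a globally attracting periodic solution implies.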

To show the existence and global attractivity of a periodic solution, we appeal to the theory of contractive systems (44–46). The key result that we use is as follows. Consider a system of ODEs,

$$
\frac{dx}{dt} = f(t, x), \tag{16}
$$

defined for t ∈ [0, ∞) and $x \in \mathcal{C}$, where $\mathcal{C}$ is a closed and convex subset of $\mathbb{R}^n$; f(t,x) is differentiable in x; and f(t,x), as well as the Jacobian of f with respect to x, denoted as $\partial f/\partial x$, are continuous in (t,x). Furthermore, suppose that f is periodic with period T, that is, f(t + T, x) = f(t, x) for all t ≥ 0, $x \in \mathcal{C}$. In our system, n = 3, x(t) is the vector (Z(t), W(t), C(t)), and the periodicity arises from k(t + T) = k(t) for all t ≥ 0. We recall that, given a vector norm |·| on Euclidean space, with its induced matrix norm ||A||, the associated matrix measure or logarithmic norm μ is defined (47,48) as the directional derivative of this matrix norm in the direction of A, evaluated at the identity matrix:

$$
\mu(A) = \lim_{h \to 0^{+}} \frac{\|I + hA\| - 1}{h}.
$$

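The key result in our context is as follows. Suppose that, for some matrix measure μ,

$$
\sup_{x \in \mathcal{C},\; t \ge 0} \mu\!\left(\frac{\partial f}{\partial x}(t, x)\right) < 0.
$$

Then, there is a unique periodic solution $\hat{x}(t)$ of Eq. 16 of period T and, moreover, for every solution x(t), it holds that $|x(t) - \hat{x}(t)| \to 0$ as t → ∞. A self-contained exposition, with simple proofs, of this and several other basic results on contraction theory, is given in Sontag (49). As matrix measure, we will use the measure μP,∞ induced by the vector norm |Px|∞, where P is a suitable nonsingular matrix and $|x|_\infty = \max_i |x_i|$. For this norm, Michel et al. (50) show that $\mu_\infty(A) = \max_i \big( a_{ii} + \sum_{j \ne i} |a_{ij}| \big)$ and, in general, $\mu_{P,\infty}(A) = \mu_\infty(PAP^{-1})$. Computing with our example, we have, denoting the Jacobian by $J = \partial f/\partial x$,

$$
J = \begin{pmatrix}
-\delta & 0 & 0 \\
k_1 (X_{\rm tot} - W) & -k_1 Z - k_2 Y & k_2 Y \\
0 & k_{\rm on}(p_{\rm tot} - C) & -k_{\rm on}(p_{\rm tot} - C) - k_{\rm on}(W - C) - k_{\rm off}
\end{pmatrix}.
$$

With the weighting matrix P = diag(α,1,β), for our system we have

$$
PJP^{-1} = \begin{pmatrix}
-\delta & 0 & 0 \\
k_1 (X_{\rm tot} - W)/\alpha & -k_1 Z - k_2 Y & k_2 Y/\beta \\
0 & \beta k_{\rm on}(p_{\rm tot} - C) & -k_{\rm on}(p_{\rm tot} - C) - k_{\rm on}(W - C) - k_{\rm off}
\end{pmatrix}
$$

(which is independent of t), and

$$
\mu_{P,\infty}(J) = \max\{A_1, A_2, A_3\},
$$

where

$$
\begin{aligned}
A_1 &= -\delta,\\
A_2 &= -k_1 Z - k_2 Y \left(1 - \frac{1}{\beta}\right) + \frac{k_1 (X_{\rm tot} - W)}{\alpha},\\
A_3 &= -k_{\rm off} - k_{\rm on}(W - C) + (\beta - 1)\, k_{\rm on}(p_{\rm tot} - C).
\end{aligned}
$$

Because A1 = −δ < 0, it will be enough to show that there is a choice of α and β such that Ai is bounded above by a negative constant on $\mathcal{C}$ for i = 2, 3. Picking any number

$$
1 < \beta \le 1 + \frac{k_{\rm off}}{2 k_{\rm on} p_{\rm tot}},
$$

we guarantee that A3 ≤ −koff/2 < 0, and also 1 – 1/β > 0. Finally, picking any number

$$
\alpha \ge \frac{2 k_1 X_{\rm tot}}{k_2 Y (1 - 1/\beta)},
$$

we guarantee that A2 ≤ −k2Y(1−1/β)/2 < 0.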
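
The choice of weights can be checked numerically on a grid. The sketch below is an illustration under stated assumptions, not part of the proof. It takes the Jacobian of the one-step model in the variables (Z, W, C) to have rows (−δ, 0, 0), (k1(Xtot − W), −k1 Z − k2 Y, k2 Y), and (0, kon(ptot − C), −kon(ptot − C) − kon(W − C) − koff), uses the standard formula for the matrix measure induced by the infinity norm, and picks β > 1 small enough and α large enough in the spirit of the argument above. The parameter values, the grid, and the upper limit on Z are hypothetical.

```python
# Illustrative parameter values (assumptions, not the article's values).
DELTA, K1, K2, Y = 1.0, 1.0, 1.0, 1.0
KON, KOFF = 1.0, 1.0
XTOT, PTOT = 10.0, 1.0

# Weights: beta slightly above 1, alpha twice a sufficient lower bound.
BETA = 1.0 + KOFF / (4.0 * KON * PTOT)
ALPHA = 4.0 * K1 * XTOT / (K2 * Y * (1.0 - 1.0 / BETA))
P = (ALPHA, 1.0, BETA)

# Contraction rate that the bounds on A1, A2, A3 suggest.
RATE = min(DELTA, K2 * Y * (1.0 - 1.0 / BETA) / 2.0, KOFF / 2.0)


def mu_inf(a):
    """Matrix measure for the infinity norm: max_i (a_ii + sum_{j != i} |a_ij|)."""
    n = len(a)
    return max(a[i][i] + sum(abs(a[i][j]) for j in range(n) if j != i)
               for i in range(n))


def weighted(a, p):
    """Return P A P^{-1} for the diagonal matrix P = diag(p)."""
    n = len(a)
    return [[p[i] * a[i][j] / p[j] for j in range(n)] for i in range(n)]


def jacobian(z, w, c):
    """Assumed Jacobian of the one-step model in the variables (Z, W, C)."""
    return [
        [-DELTA, 0.0, 0.0],
        [K1 * (XTOT - w), -K1 * z - K2 * Y, K2 * Y],
        [0.0, KON * (PTOT - c), -KON * (PTOT - c) - KON * (w - c) - KOFF],
    ]


def grid(lo, hi, n):
    """n evenly spaced values from lo to hi inclusive."""
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]


# Scan admissible states: Z >= 0, 0 <= C <= min(W, ptot), W <= Xtot.
worst = max(
    mu_inf(weighted(jacobian(z, w, c), P))
    for z in grid(0.0, 5.0, 6)
    for w in grid(0.0, XTOT, 11)
    for c in grid(0.0, min(w, PTOT), 6)
)
print(worst, -RATE)
```

A negative value of `worst` on the grid is consistent with the contraction condition; it does not replace the analytical bound, which covers every point of the set and every parameter choice.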

Supporting Material

References

1. Huang C.-Y., Ferrell J.E., Jr. Ultrasensitivity in the mitogen-activated protein kinase cascade. Proc. Natl. Acad. Sci. USA. 1996;93:10078–10083. doi: 10.1073/pnas.93.19.10078.

2. Asthagiri A.R., Lauffenburger D.A. A computational study of feedback effects on signal dynamics in a mitogen-activated protein kinase (MAPK) pathway model. Biotechnol. Prog. 2001;17:227–239. doi: 10.1021/bp010009k.

3. Widmann C., Gibson S., Johnson G.L. Mitogen-activated protein kinase: conservation of a three-kinase module from yeast to human. Physiol. Rev. 1999;79:143–180. doi: 10.1152/physrev.1999.79.1.143.

4. Bardwell L., Zou X., Komarova N.L. Mathematical models of specificity in cell signaling. Biophys. J. 2007;92:3425–3441. doi: 10.1529/biophysj.106.090084.

5. Samoilov M., Plyasunov S., Arkin A.P. Stochastic amplification and signaling in enzymatic futile cycles through noise-induced bistability with oscillations. Proc. Natl. Acad. Sci. USA. 2005;102:2310–2315. doi: 10.1073/pnas.0406841102.

6. Bijlsma J.J., Groisman E.A. Making informed decisions: regulatory interactions between two-component systems. Trends Microbiol. 2003;11:359–366. doi: 10.1016/s0966-842x(03)00176-8.

7. Grossman A.D. Genetic networks controlling the initiation of sporulation and the development of genetic competence in Bacillus subtilis. Annu. Rev. Genet. 1995;29:477–508. doi: 10.1146/annurev.ge.29.120195.002401.

8. Donovan S., Shannon K.M., Bollag G. GTPase activating proteins: critical regulators of intracellular signaling. Biochim. Biophys. Acta. 2002;1602:23–45. doi: 10.1016/s0304-419x(01)00041-5.

9. Chen H., Bernstein B.W., Bamburg J.R. Regulating actin-filament dynamics in vivo. Trends Biochem. Sci. 2000;25:19–23. doi: 10.1016/s0968-0004(99)01511-x.

10. Karp G. Wiley; New York: 2002. Cell and Molecular Biology.

11. Stryer L. Freeman; New York: 1995. Biochemistry.

12. Sulis M.L., Parsons R. PTEN: from pathology to biology. Trends Cell Biol. 2003;13:478–483. doi: 10.1016/s0962-8924(03)00175-2.

13. Lew D.J., Burke D.J. The spindle assembly and spindle position checkpoints. Annu. Rev. Genet. 2003;37:251–282. doi: 10.1146/annurev.genet.37.042203.120656.

14. Del Vecchio D., Ninfa A., Sontag E. Modular cell biology: retroactivity and insulation. Nat. Mol. Sys. Biol. 2008;4:161. doi: 10.1038/msb4100204.

15. Ossareh H.R., Ventura A.C., Del Vecchio D. Long signaling cascades tend to attenuate retroactivity. Biophys. J. 2011;100:1617–1626. doi: 10.1016/j.bpj.2011.02.014.

16. Kim K.H., Sauro H.M. Measuring retroactivity from noise in gene regulatory networks. Biophys. J. 2011;100:1167–1177. doi: 10.1016/j.bpj.2010.12.3737.

17. Lauffenburger D.A. Cell signaling pathways as control modules: complexity for simplicity? Proc. Natl. Acad. Sci. USA. 2000;97:5031–5033. doi: 10.1073/pnas.97.10.5031.

18. Hartwell L., Hopfield J., Murray A. From molecular to modular cell biology. Nature. 1999;402:47–52. doi: 10.1038/35011540.

19. Alexander R.P., Kim P.M., Gerstein M.B. Understanding modularity in molecular networks requires dynamics. Sci. Signal. 2009;2:pe44. doi: 10.1126/scisignal.281pe44.

20. Saez-Rodriguez J., Kremling A., Gilles E. Dissecting the puzzle of life: modularization of signal transduction networks. Comput. Chem. Eng. 2005;29:619–629.

21. Del Vecchio D., Sontag E. Engineering principles in bio-molecular systems: from retroactivity to modularity. Eur. J. Control. 2009;15:389–397.

22. Sontag E. Modularity, retroactivity, and structural identification. In: Koeppl H., Setti G., di Bernardo M., Densmore D., editors. Design and Analysis of Biomolecular Circuits. Springer-Verlag; New York: 2011. pp. 183–202.

23. Ventura A.C., Jiang P., Ninfa A.J. Signaling properties of a covalent modification cycle are altered by a downstream target. Proc. Natl. Acad. Sci. USA. 2010;107:10032–10037. doi: 10.1073/pnas.0913815107.

24. Jiang P., Ventura A.C., Del Vecchio D. Load-induced modulation of signal transduction networks. Sci. Signal. 2011;4:ra67. doi: 10.1126/scisignal.2002152.

25. Lan G., Sartori P., Tu Y. The energy-speed-accuracy tradeoff in sensory adaptation. Nat. Phys. 2012;8:422–428. doi: 10.1038/nphys2276.

26. Mehta P., Schwab D.J. Energetic costs of cellular computation. Proc. Natl. Acad. Sci. USA. 2012;109:17978–17982. doi: 10.1073/pnas.1207814109.

27. Taylor J.R., Stocker R. Trade-offs of chemotactic foraging in turbulent water. Science. 2012;338:675–679. http://www.sciencemag.org/content/338/6107/675.

28. Detwiler P.B., Ramanathan S., Shraiman B.I. Engineering aspects of enzymatic signal transduction: photoreceptors in the retina. Biophys. J. 2000;79:2801–2817. doi: 10.1016/S0006-3495(00)76519-2.

29. Qian H., Reluga T.C. Nonequilibrium thermodynamics and nonlinear kinetics in a cellular signaling switch. Phys. Rev. Lett. 2005;94:028101. doi: 10.1103/PhysRevLett.94.028101.

30. Sturm O.E., Orton R., Kolch W. The mammalian MAPK/ERK pathway exhibits properties of a negative feedback amplifier. Sci. Signal. 2010;3:ra90. doi: 10.1126/scisignal.2001212.

31. Birtwistle M.R., Kolch W. Biology using engineering tools: the negative feedback amplifier. Cell Cycle. 2011;10:2069–2076. doi: 10.4161/cc.10.13.16245.

32. Alon U. Chapman & Hall/CRC; New York: 2006. An Introduction to Systems Biology: Design Principles of Biological Circuits.

33. Kim K.H., Sauro H.M. Fan-out in gene regulatory networks. J. Biol. Eng. 2010;4:16. doi: 10.1186/1754-1611-4-16.

34. Riley T., Sontag E., Levine A. Transcriptional control of human p53-regulated genes. Nat. Rev. Mol. Cell Biol. 2008;9:402–412. doi: 10.1038/nrm2395.

35. Adolph H.W., Zwart P., Cedergren-Zeppezauer E. Structural basis for substrate specificity differences of horse liver alcohol dehydrogenase isozymes. Biochemistry. 2000;39:12885–12897. doi: 10.1021/bi001376s.

36. Andrianantoandro E., Basu S., Weiss R. Synthetic biology: new engineering rules for an emerging discipline. Nat. Mol. Sys. Biol. 2006;2:1–14. doi: 10.1038/msb4100073.

37. Shoval O., Sheftel H., Alon U. Evolutionary trade-offs, Pareto optimality, and the geometry of phenotype space. Science. 2012;336:1157–1160. doi: 10.1126/science.1217405.

38. Kholodenko B.N., Brown G.C., Hoek J.B. Diffusion control of protein phosphorylation in signal transduction pathways. Biochem. J. 2000;350:901–907.

39. Hornberg J.J., Binder B., Westerhoff H.V. Control of MAPK signaling: from complexity to what really matters. Oncogene. 2005;24:5533–5542. doi: 10.1038/sj.onc.1208817.

40. Ciliberto A., Capuani F., Tyson J.J. Modeling networks of coupled enzymatic reactions using the total quasi-steady state approximation. PLOS Comput. Biol. 2007;3:e45. doi: 10.1371/journal.pcbi.0030045.

41. Borghans J.A., de Boer R.J., Segel L.A. Extending the quasi-steady state approximation by changing variables. Bull. Math. Biol. 1996;58:43–63. doi: 10.1007/BF02458281.

42. Gutenkunst R.N., Waterfall J.J., …, Sethna J.P. Universally sloppy parameter sensitivities in systems biology models. PLOS Comput. Biol. 2007;3:1871–1878. http://dx.plos.org/10.1371%2Fjournal.pcbi.0030189.

43. Heinrich R., Neel B.G., Rapoport T.A. Mathematical models of protein kinase signal transduction. Mol. Cell. 2002;9:957–970. doi: 10.1016/s1097-2765(02)00528-2.

44. Demidovich B. Dissipativity of a nonlinear system of differential equations. Ser. Mat. Mekh. I. 1961;6:19–27.

45. Yoshizawa T. The Mathematical Society of Japan; Tokyo: 1966. Stability Theory by Liapunov’s Second Method.

46. Lohmiller W., Slotine J.J.E. Nonlinear process control using contraction theory. AIChE J. 2000;46:588–596.

47. Dahlquist G. Stability and error bounds in the numerical integration of ordinary differential equations. Trans. Roy. Inst. Techn. (Stockholm) 1959;130:24.

48. Lozinskii S.M. Error estimate for numerical integration of ordinary differential equations. I. Izv. Vtssh. Uchebn. Zaved. Mat. 1959;5:222.

49. Sontag E. Contractive systems with inputs. In: Willems J., Hara S., Ohta Y., Fujioka H., editors. Perspectives in Mathematical System Theory, Control, and Signal Processing. Springer-Verlag; New York: 2010. pp. 217–228.

50. Michel A.N., Liu D., Hou L. Springer-Verlag; New York: 2007. Stability of Dynamical Systems: Continuous, Discontinuous, and Discrete Systems.

51. Granas A., Dugundji J. Springer-Verlag; New York: 2003. Fixed Point Theory.

52. Hurwitz A. On the conditions under which an equation has only roots with negative real parts. In: Bellman R., Kalaba R., editors. Selected Papers on Mathematical Trends in Control Theory. Dover; Mineola, NY: 1964. 65.

53. Khalil H. Prentice Hall; Upper Saddle River, NJ: 2002. Nonlinear Systems, 3rd Ed.
