Abstract

Our actions take place in space and time, but despite the role of time in decision theory and the growing acknowledgement that the encoding of time is crucial to behaviour, few studies have considered the interactions between neural codes for objects in space and for elapsed time during perceptual decisions. The speed-accuracy trade-off (SAT) provides a window into spatiotemporal interactions. Our hypothesis is that temporal coding determines the rate at which spatial evidence is integrated, controlling the SAT by gain modulation. Here, we propose that local cortical circuits are inherently suited to the relevant spatial and temporal coding. In simulations of an interval estimation task, we use a generic local-circuit model to encode time by ‘climbing’ activity, seen in cortex during tasks with a timing requirement. The model is a network of simulated pyramidal cells and inhibitory interneurons, connected by conductance-based synapses. A simple learning rule enables the network to quickly produce new interval estimates, which show signature characteristics of estimates made by experimental subjects. Analysis of network dynamics formally characterizes this generic, local-circuit timing mechanism. In simulations of a perceptual decision task, we couple two such networks. Network function is determined only by spatial selectivity and NMDA receptor conductance strength; all other parameters are identical. To trade speed and accuracy, the timing network simply learns longer or shorter intervals, driving the rate of downstream decision processing by spatially non-selective input, an established form of gain modulation. Like the timing network's interval estimates, decision times show signature characteristics of those made by experimental subjects. Overall, we propose, demonstrate and analyse a generic mechanism for timing and a generic mechanism for the modulation of decision processing by temporal codes, and we make predictions for experimental verification.

Author Summary

Studies in neuroscience have characterized how the brain represents objects in space and how these objects are selected for detailed perceptual processing. The selection process entails a decision about which object is favoured by the available evidence over time. This period of time is typically in the range of hundreds of milliseconds and is widely believed to be crucial for decisions, allowing neurons to filter noise in the evidence. Despite the widespread belief that time plays this role in decisions and the growing recognition that the brain estimates elapsed time during perceptual tasks, few studies have considered how the encoding of time affects decision making. We propose that neurons encode time in this range by the same general mechanisms used to select objects for detailed processing, and that these temporal representations determine how long evidence is filtered. To this end, we simulate a perceptual decision by coupling two instances of a neural network widely used to simulate localized regions of the cerebral cortex. One network encodes the passage of time and the other makes decisions based on noisy evidence. The former influences the performance of the latter, reproducing signature characteristics of temporal estimates and perceptual decisions.

Introduction

It is likely that the cerebral cortex evolved to provide a model of the world, serving decisions for action. Our actions take place in space and time and both of these dimensions are considered in the dominant hypothesis of decision making, where noisy spatial evidence is averaged over time (see [1]–[3]). The longer we spend averaging, the more accurate our decisions [4]. A trade-off between speed and accuracy is implicit in this framework and is a hallmark of decision tasks [5], but the mechanism by which we determine how long to spend averaging is an open question [6]. In recent years, there has been increasing acknowledgement that the encoding of time may be as crucial to behaviour as the encoding of space [7] and several studies have considered roles for temporal codes in decision making [8]–[11]. Under this approach, time is not a passive medium for spatial averaging, but is actively encoded during decisions, determining the rate at which they unfold. Accordingly, the speed-accuracy trade-off (SAT) can be controlled by the estimation of temporal intervals, determining how long spatial evidence is integrated [11].

Our ability to represent time covers at least twelve orders of magnitude, from the scale of microseconds to circadian rhythms, and different neural mechanisms are believed to support representations of vastly different temporal durations [12], [13]. Here, we focus on the range of hundreds of milliseconds, the relevant scale for the best-studied perceptual decision tasks [1], [3], [14]. Two fundamental questions in the study of temporal processing are whether the representation of time is centralized or distributed [15], [16], and whether the circuitry involved is specialized or generic [17], [18]. In this paper, we propose that local-circuit cortical processing is inherently suited to the representation of space and time on this scale, supporting a distributed, generic processing framework. To this end, we demonstrate that a generic biophysical model of a local cortical circuit can estimate time in the hundreds of milliseconds range, where ‘climbing’ activity resembles that seen in cortex during tasks with a timing requirement and estimates of temporal intervals show signature characteristics of temporal estimates by experimental subjects. The network estimates different intervals by scaling a single term that controls local-circuit dynamics: the strength of NMDA receptor (NMDAR) conductance. Analysis of network dynamics formally characterizes this timing mechanism, and a simple learning rule is sufficient for the network to quickly learn the intervals.

In simulations of a decision task, we couple two such generic networks with identical parameters except for the NMDAR scale factor. One network encodes elapsed time relative to a learned interval. The other decides which of two stimuli has more evidence. As climbing activity evolves in the timing network, it governs the rate of downstream decision processing by gain modulation. To trade speed and accuracy, the timing network simply imposes different temporal constraints on the decision network. The model's activity and behaviour are consistent with a large body of electrophysiological and behavioural data from timing and decision tasks, as well as the hypothesis that cortical circuitry is canonical (see [19]). In our opinion, these results should be expected of a generally uniform structure that evolved to provide a model for action in a spatiotemporal world.

Methods

To address the hypotheses that generic local-circuit processing is sufficient to support timing in the hundreds of milliseconds range (see [17], [18]) and that these temporal codes control the speed and accuracy of decisions [11], we simulated each of two local cortical circuits with a spiking-neuron implementation [20]–[26] of a model widely used in population and firing rate simulations of cortical circuits [27]–[29]. This class of model assumes a columnar structure, where a spatial continuum of bell-shaped population codes (bumps) is supported by net excitation between adjacent columns and net inhibition between distal columns. To emphasize the robustness of the principles underlying our hypotheses, the only differences between the two networks were their stimulus-selectivity and the strength of NMDAR conductance. The scaling of NMDAR conductance is an established mechanism for controlling intrinsic dynamics in these and related models [21], [26], [30], [31] and a potential biological correlate is provided in the Discussion, but our hypothesis does not require this specific mechanism, e.g. scaling the strength of feedback inhibition is another approach. As described below, the timing network had stronger NMDAR conductance, but only the decision network was selective for stimuli. These differences were sufficient to determine each network's function as a timer or decision maker.

In simulations of an interval estimation task, the timing network received noisy current injection, simulating synaptic bombardment from upstream cortical regions, but did not receive any spatially-selective input. Strong NMDAR conductance at intrinsic synapses enabled a local sub-population of the network to undergo ‘climbing’ activity (activity buildup) and the time at which this activity reached a fixed threshold was the network's estimate of a given interval (see [32]). Only the timing network was used in this task.

In coupled-circuit simulations of a decision task, both networks received noisy current injection, but the decision network received two noisy, spatially-selective inputs and its task was to decide which was stronger. It also received spatially non-selective input from the timing network, i.e. every neuron in the timing network projected uniformly to every neuron in the decision network. Spatially non-selective input to recurrent networks is an established form of gain modulation [33], where the magnitude of a selective neural response is modulated by a second source of input (see [34], [35] and Figure 1C). Temporal constraints encoded by climbing activity upstream thereby modulated the rate of downstream decision processing. Note that we use the terms climbing activity and ascending activity interchangeably, and we use the term ramping to refer to ascending or descending activity (see the Discussion).

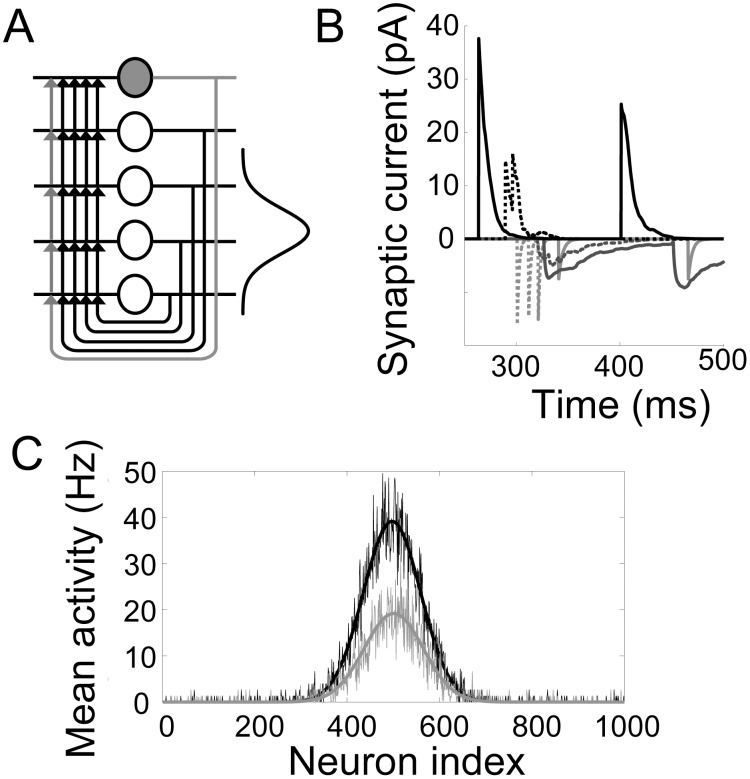

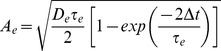

Figure 1. Local-circuit cortical model.

(A) Fully recurrent network of pyramidal neurons (white circles with black projections) and inhibitory interneurons (grey filled circles with grey projections). The 4/1 ratio of pyramidal neurons to interneurons preserves the population sizes in the spiking model (1000/250). The strength of synaptic conductance between pyramidal cells is a Gaussian function of the spatial distance between them (Equation 1), depicted by the Gaussian curve on the right hand side. (B) Intrinsic AMPAR (negative, light grey), NMDAR (negative, dark grey) and GABAR (positive, black) currents onto a pyramidal neuron (solid curves) and onto an interneuron (dotted curves) during the background state. (C) Gain modulation of the decision network by the timing network ( ) in a trial with only one stimulus for the purpose of demonstration. The stimulus was centred on pyramidal neuron 500. The grey curves show the mean spike density function (SDF, see Methods) over the downstream network during the first 250 ms of the trial. The black curves show the mean SDF over the last 250 ms. As climbing activity evolves in the timing network, the response to the stimulus in the downstream network increases without a change to stimulus selectivity. Smooth curves are Gaussian fits to the noisy curves.


The network model

The local circuit model is a fully recurrent network of leaky integrate-and-fire neurons [36], comprising $N_P = 1000$ simulated pyramidal neurons and $N_I = 250$ fast-spiking inhibitory interneurons. For pyramidal-to-pyramidal synapses, the synaptic weight is a Gaussian function of the distance between neurons arranged in a ring. The weight $W_{ij}$ between any two pyramidal neurons $i$ and $j$ is therefore given by

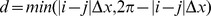

$$W_{ij} = J \exp\left(-\frac{d_{ij}^{2}}{2\sigma^{2}}\right) \qquad (1)$$

where $d_{ij} = \min\left(|i - j|,\, N_P - |i - j|\right)$ defines the distance between neurons $i$ and $j$ in the ring of $N_P$ pyramidal neurons, $J$ is a scale factor, and $\sigma$ is a width parameter; the resulting Gaussian is depicted on the right side of Figure 1A. The biological basis of $W_{ij}$ is the probability of lateral synaptic contact between pyramidal neurons, which is generally found to decrease monotonically with lateral distance in layers 2/3 and 5 [37], [38]. The width parameter $\sigma$ therefore corresponds to a lateral extent in cortical tissue that is consistent with cortical tuning curves [39], [40]. Like earlier authors (e.g. [21], [24]), we do not attribute biological significance to the spatial periodicity of the network; rather, this arrangement allows Equation 1 to be implemented with all-to-all connectivity, without biases due to asymmetric lateral interactions between pyramidal neurons. Synaptic connectivity from pyramidal neurons to interneurons, from interneurons to pyramidal neurons, and from interneurons to interneurons is unstructured, so in each of these cases $W_{ij}$ takes the same value for all $i$ and $j$.
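The ring geometry of Equation 1 can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: it assumes the Gaussian form and the shortest-path ring distance given above, and the values of `J` and `sigma` are hypothetical placeholders, since they are not stated in this section.

```python
import numpy as np

def ring_weights(n_pyr: int, J: float, sigma: float) -> np.ndarray:
    """Gaussian pyramidal-to-pyramidal weights on a ring (sketch of Equation 1)."""
    idx = np.arange(n_pyr)
    diff = np.abs(idx[:, None] - idx[None, :])      # |i - j| for every pair
    d = np.minimum(diff, n_pyr - diff)              # shortest distance around the ring
    return J * np.exp(-(d.astype(float) ** 2) / (2.0 * sigma ** 2))

# Hypothetical values: J and sigma are placeholders, not the model's parameters.
W = ring_weights(n_pyr=1000, J=1.0, sigma=40.0)
```

Because every row contains the same set of ring distances, the matrix is symmetric and circulant, so no pyramidal neuron receives systematically more lateral excitation than any other.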

Each model neuron is described by

$$C_m \frac{dV}{dt} = -g_L\,(V - V_L) - I \qquad (2)$$

where $C_m$ is the membrane capacitance of the neuron, $g_L$ is the leakage conductance, $V$ is the membrane potential, $V_L$ is the equilibrium potential, and $I$ is the total input current. When $V$ reaches a threshold $V_{th}$, it is reset to $V_{reset}$, after which it is unresponsive to its input for an absolute refractory period $\tau_{ref}$. Values of $C_m$, $g_L$, $V_L$, $V_{th}$, $V_{reset}$ and $\tau_{ref}$ were set separately for pyramidal neurons and for interneurons, following [21].
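A forward-Euler step of Equation 2 with threshold, reset and absolute refractory period can be sketched as follows. The numbers in `LIFParams` are illustrative assumptions (in nF, µS, mV and ms), not the values used in the model. Following the sign convention of Figure 1B, excitatory (inward) current is negative, so a negative `I` depolarizes the neuron.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LIFParams:
    # Illustrative values (nF, uS, mV, ms); assumptions, not the model's parameters.
    C_m: float = 0.5
    g_L: float = 0.025
    V_L: float = -70.0
    V_th: float = -50.0
    V_reset: float = -60.0
    tau_ref: float = 2.0

def simulate_lif(I, dt, p=LIFParams()):
    """Forward-Euler integration of C_m dV/dt = -g_L (V - V_L) - I (Equation 2).

    I: input current (nA) per timestep; negative values are depolarizing.
    Returns the spike times in ms.
    """
    ref_steps = int(round(p.tau_ref / dt))  # refractory period in timesteps
    V, ref_left, spikes = p.V_L, 0, []
    for step, I_t in enumerate(I):
        if ref_left > 0:                    # unresponsive to input while refractory
            ref_left -= 1
            continue
        V += dt * (-p.g_L * (V - p.V_L) - I_t) / p.C_m
        if V >= p.V_th:                     # threshold crossing: spike, reset, refractory
            spikes.append(step * dt)
            V = p.V_reset
            ref_left = ref_steps
    return spikes

# Constant inward current strong enough to drive repetitive firing.
spike_times = simulate_lif([-0.6] * 2000, dt=0.1)
```

With these assumed values the steady-state voltage is $V_L - I/g_L$, so a current of -0.4 nA settles below threshold while -0.6 nA produces regular spiking.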

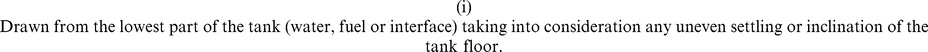

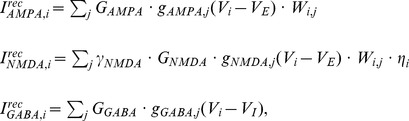

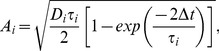

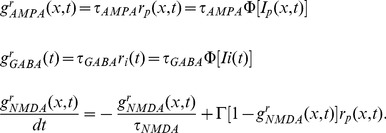

Excitatory currents from pyramidal neurons were mediated by AMPA receptor (AMPAR) and NMDAR conductances, and inhibitory currents from interneurons were mediated by GABA receptor (GABAR) conductances (Figure 1B). The total input current $I$ to each neuron is given by

$$I = I_{AMPA} + I_{NMDA} + I_{GABA} + I_{bg} \qquad (3)$$

where $I_{AMPA}$, $I_{NMDA}$ and $I_{GABA}$ are the summed AMPAR, NMDAR and GABAR currents from intrinsic (recurrent) synapses, and $I_{bg}$ is background noise, described below. For neuron $i$, these intrinsic currents are defined by

$$\begin{aligned}
I_{AMPA} &= g_{AMPA}\,(V - V_E)\sum_j W_{ij}\,s_j^{AMPA},\\
I_{NMDA} &= \kappa\, g_{NMDA}\,B(V)\,(V - V_E)\sum_j W_{ij}\,s_j^{NMDA},\\
I_{GABA} &= g_{GABA}\,(V - V_I)\sum_j W_{ij}\,s_j^{GABA},
\end{aligned} \qquad (4)$$

where $g_{AMPA}$, $g_{NMDA}$ and $g_{GABA}$ are the respective strengths of AMPAR, NMDAR and GABAR conductance, $V_E$ is the reversal potential for AMPARs and NMDARs and $V_I$ is the reversal potential for GABARs [21]. The voltage dependence of NMDARs, $B(V)$, and the NMDAR scale factor $\kappa$ are described below. AMPAR and GABAR activation (proportion of open channels) are described by

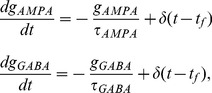


$$\frac{ds^{X}}{dt} = -\frac{s^{X}}{\tau_{X}} + \sum_k \delta(t - t_k), \qquad X \in \{AMPA, GABA\} \qquad (5)$$

where $\delta$ is the Dirac delta function and $t_k$ is the time of the $k$-th spike of a pre-synaptic neuron. NMDAR activation $s^{NMDA}$ has a slower rise and decay and is described by

$$\frac{ds^{NMDA}}{dt} = -\frac{s^{NMDA}}{\tau_{NMDA,decay}} + \alpha\, x\,(1 - s^{NMDA}) \qquad (6)$$

where $\alpha$ controls the saturation of NMDAR channels at high pre-synaptic spike frequencies [21]. The slower opening of NMDAR channels is captured by

$$\frac{dx}{dt} = -\frac{x}{\tau_{NMDA,rise}} + \sum_k \delta(t - t_k) \qquad (7)$$

where the rise time constant $\tau_{NMDA,rise}$ was set following [21]. The voltage dependence of NMDARs is captured by $B(V) = \left[1 + [\mathrm{Mg}^{2+}]\exp(-0.062\,V)/3.57\right]^{-1}$, where $[\mathrm{Mg}^{2+}]$ is the extracellular magnesium concentration (in mM) and $V$ is measured in millivolts [41]. The NMDAR scale factor $\kappa$ is described below.
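Equations 6 and 7, together with the voltage dependence of NMDARs, can be sketched for a single presynaptic neuron as below. The time constants, `alpha` and the 1 mM magnesium concentration are assumed illustrative values, and each delta function in Equation 7 is integrated as a unit jump in `x` at the spike's timestep.

```python
import numpy as np

def mg_block(V_mV, mg_mM=1.0):
    """NMDAR voltage dependence B(V) = 1 / (1 + [Mg2+] exp(-0.062 V) / 3.57), V in mV."""
    return 1.0 / (1.0 + mg_mM * np.exp(-0.062 * V_mV) / 3.57)

def nmda_gating(spike_steps, n_steps, dt, tau_rise=2.0, tau_decay=100.0, alpha=0.5):
    """Forward-Euler integration of Equations 6-7 for one presynaptic neuron.

    dx/dt = -x / tau_rise + sum_k delta(t - t_k)
    ds/dt = -s / tau_decay + alpha * x * (1 - s)
    Time constants in ms, alpha in 1/ms (assumed values). Returns s at every step.
    """
    spikes = set(spike_steps)
    x = s = 0.0
    trace = np.empty(n_steps)
    for n in range(n_steps):
        if n in spikes:
            x += 1.0                        # a delta function integrates to a unit jump
        dx = -x / tau_rise
        ds = -s / tau_decay + alpha * x * (1.0 - s)
        x += dt * dx
        s += dt * ds
        trace[n] = s
    return trace

# Regular 100 Hz presynaptic firing (one spike every 10 ms) for 500 ms at dt = 0.1 ms.
s_trace = nmda_gating(range(0, 5000, 100), n_steps=5000, dt=0.1)
```

The factor `(1 - s)` keeps NMDAR activation below 1 at high presynaptic rates, which is the saturation that $\alpha$ controls, while the slow decay lets activation accumulate across spikes.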

The time constants and conductance strengths of AMPAR, NMDAR and GABAR synapses onto cortical pyramidal neurons and inhibitory interneurons vary according to tissue preparation, recording method, species, cortical layer and, to some degree, cortical area within a species or layer. En masse, electrophysiological data provide reasonable guidelines for these parameters, but we emphasize that nothing in the model is fine-tuned and our results hold for a broad range of parameters. For AMPAR-mediated currents, $g_{AMPA}$ and $\tau_{AMPA}$ took separate values at synapses onto pyramidal neurons and at synapses onto interneurons, producing fast-decaying monosynaptic AMPAR currents [42], [43] that are stronger and faster onto inhibitory interneurons than onto pyramidal neurons [44]–[46]. For NMDAR-mediated currents, $g_{NMDA}$ and $\tau_{NMDA,decay}$ likewise took separate values at synapses onto pyramidal neurons and onto interneurons, producing slow-decaying monosynaptic NMDAR currents [42], [47] that are stronger and slower at synapses onto pyramidal neurons than onto interneurons [46]. Our excitatory synaptic parameters thus emphasize fast inhibitory recruitment in response to slower excitation (see [48] for discussion). For GABAR-mediated currents, $g_{GABA}$ and $\tau_{GABA}$ took separate values at synapses onto pyramidal neurons and onto interneurons [49], [50], producing monosynaptic GABAR currents several times stronger than the above excitatory currents; the stronger conductance onto pyramidal cells reflects the greater prevalence of GABAR synapses onto pyramidal cells than onto interneurons [51]. See Figure 1B for exemplary synaptic currents in the model.

Background activity

For each neuron, the current $I_{bg}$ simulates in vivo cortical background activity by the point-conductance model of [52]:

$$I_{bg} = g_e(t)\,(V - E_e) + g_i(t)\,(V - E_i) \qquad (8)$$

where the reversal potentials $E_e$ and $E_i$ are given the same values as those for excitatory and inhibitory synapses in Equation 4. The time-dependent excitatory and inhibitory conductances $g_e(t)$ and $g_i(t)$ are updated at each timestep $\Delta t$ according to

$$g_e(t + \Delta t) = g_{e0} + \left[g_e(t) - g_{e0}\right]\exp\left(-\frac{\Delta t}{\tau_e}\right) + A_e\,\chi_1(t) \qquad (9)$$

and

$$g_i(t + \Delta t) = g_{i0} + \left[g_i(t) - g_{i0}\right]\exp\left(-\frac{\Delta t}{\tau_i}\right) + A_i\,\chi_2(t) \qquad (10)$$

where $g_{e0}$ and $g_{i0}$ are average conductances, $\tau_e$ and $\tau_i$ are time constants, and $\chi_1(t)$ and $\chi_2(t)$ are independent, normally distributed random numbers with zero mean and unit standard deviation. The amplitude coefficients $A_e$ and $A_i$ are defined by

$$A_e = \sqrt{\frac{D_e\,\tau_e}{2}\left[1 - \exp\left(-\frac{2\Delta t}{\tau_e}\right)\right]} \qquad (11)$$

and

$$A_i = \sqrt{\frac{D_i\,\tau_i}{2}\left[1 - \exp\left(-\frac{2\Delta t}{\tau_i}\right)\right]} \qquad (12)$$

where $D_e$ and $D_i$ are noise ‘diffusion’ coefficients. See [52] for the derivation of these equations. We followed Table 1 of [52] for the time constants and noise parameters of both pyramidal neurons and interneurons, and for the average excitatory conductance $g_{e0}$ of pyramidal neurons. We gave nominal values to $g_{e0}$ for interneurons and to $g_{i0}$ for pyramidal neurons and interneurons, i.e. the network's intrinsic connectivity was sufficient to mediate realistic levels of inhibitory background activity onto pyramidal neurons and excitatory and inhibitory background activity onto interneurons.
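The update in Equations 9 to 12 is the exact discretization of an Ornstein-Uhlenbeck process, so the conductance keeps a stationary mean $g_0$ and standard deviation $\sigma$ for any timestep. Below is a minimal sketch, assuming the parameterization $D = 2\sigma^2/\tau$ used in [52]; the values of `g0`, `sigma` and `tau` (in µS and ms) are illustrative assumptions, not values taken from this section.

```python
import numpy as np

def point_conductance(g0, sigma, tau, dt, n_steps, rng):
    """Exact OU update of a background conductance (sketch of Equations 9-12).

    D = 2 sigma^2 / tau is the diffusion coefficient, so the stationary mean is g0 and
    the stationary standard deviation is sigma. Values can occasionally go negative.
    """
    D = 2.0 * sigma ** 2 / tau
    decay = np.exp(-dt / tau)
    A = np.sqrt(D * tau / 2.0 * (1.0 - np.exp(-2.0 * dt / tau)))   # Equations 11-12
    noise = rng.standard_normal(n_steps)
    g = np.empty(n_steps)
    g[0] = g0
    for n in range(1, n_steps):
        g[n] = g0 + (g[n - 1] - g0) * decay + A * noise[n]          # Equations 9-10
    return g

# Illustrative excitatory values (uS, ms); assumptions for this sketch.
rng = np.random.default_rng(0)
g_e = point_conductance(g0=0.012, sigma=0.003, tau=2.7, dt=0.1, n_steps=200_000, rng=rng)
```

Since $A^2 = \sigma^2\left[1 - e^{-2\Delta t/\tau}\right]$, the stationary variance $A^2/(1 - e^{-2\Delta t/\tau})$ equals $\sigma^2$ exactly, which is why this update is preferred to a plain Euler step of the underlying stochastic differential equation.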

Table 1. Parameters for the probability density of first passage times.

Parameter values for the probability density of first passage times, plotted in Figure 7. The tabulated quantities are the NMDAR conductance strength, the initial state, the timing threshold in terms of NMDAR activation, and the largest positive eigenvalue.

Population activity

In both simulated tasks, performance was determined by the mean activity of localized populations of neurons. Spike density functions (SDFs, rounded to the nearest millisecond) were therefore built for these neurons by convolving their spike trains with a rise-and-decay function,

$$\mathrm{SDF}(t) = \sum_{k:\,t_k \le t}\left(1 - e^{-(t - t_k)/\tau_g}\right) e^{-(t - t_k)/\tau_d}$$

where $t$ is the time following stimulus onset, $t_k$ are the neuron's spike times, and $\tau_g$ and $\tau_d$ are the time constants of rise and decay respectively [53]. In the interval estimation task, it was necessary to first identify the relevant population (the bump population) before averaging its activity. To this end, we built SDFs for all pyramidal neurons in the network, and the neuron with the highest mean SDF over the full trial was considered the centre of the bump. The bump population comprised this centre and a fixed number of neurons on either side of it. In the decision task, the centres of the response fields for the competing stimuli were pre-determined, so SDFs were constructed for these neurons, as well as the same number of neurons on either side. All simulations were run with a fixed timestep $\Delta t$ using the standard forward Euler method.
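The SDF and bump-population steps can be sketched on binned spike trains as follows. The sketch assumes 1 ms bins, illustrative kernel time constants `tau_g` and `tau_d`, a kernel normalized to unit area so that the SDF is in spikes/s, and a hypothetical `half_width` for the number of neurons taken on either side of the bump centre; none of these values are given in this section.

```python
import numpy as np

def sdf_kernel(tau_g=1.0, tau_d=20.0, dt=1.0, length=200.0):
    """Rise-and-decay kernel (1 - exp(-t/tau_g)) exp(-t/tau_d), scaled to unit area (1/ms)."""
    t = np.arange(0.0, length, dt)
    k = (1.0 - np.exp(-t / tau_g)) * np.exp(-t / tau_d)
    return k / (k.sum() * dt)

def spike_density(spike_counts, dt=1.0, **kernel_kw):
    """Causal SDF in spikes/s; spike_counts has shape (neurons, time bins of dt ms)."""
    k = sdf_kernel(dt=dt, **kernel_kw)
    n_bins = spike_counts.shape[-1]
    per_ms = np.apply_along_axis(lambda c: np.convolve(c, k)[:n_bins], -1, spike_counts)
    return 1000.0 * per_ms

def bump_population(sdf, half_width):
    """Ring indices of the neuron with the highest trial-mean SDF and half_width neighbours per side."""
    centre = int(np.argmax(sdf.mean(axis=1)))
    return (centre + np.arange(-half_width, half_width + 1)) % sdf.shape[0]

# Regular 50 Hz spiking in 1 ms bins for 2 s.
counts = np.zeros((1, 2000))
counts[0, ::20] = 1.0
rate = spike_density(counts)
```

Truncating the full convolution to the first `n_bins` samples keeps the SDF causal, so activity at time $t$ depends only on spikes at or before $t$, and the modulo in `bump_population` wraps the population around the ring.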

Reduction of the network to an integral and partial differential system

To investigate the mechanism by which the timing network produced climbing activity, we simplified the network to an equivalent integral and partial differential system using a Wilson-Cowan type approach [27], [54]. We then used methods for the study of non-linear dynamics and stochastic processes to analyse the reduced system.

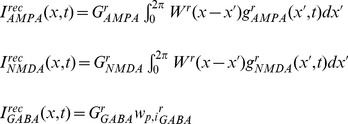

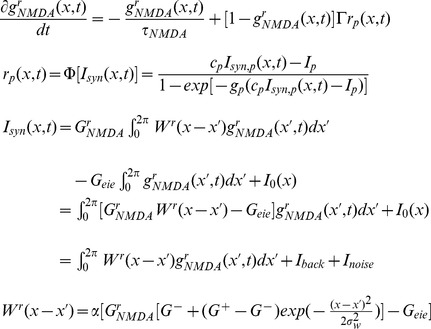

Because pyramidal-to-pyramidal synaptic connectivity is structured and all synaptic connections with interneurons are unstructured, the firing rate of pyramidal neurons and interneurons can be modelled as

| (13) |

and

| (14) |

where (as above) $x$ denotes spatial location and the activation function is given by

| (15) |

with a gain factor and a noise factor. The synaptic current onto pyramidal neurons at location $x$ consists of AMPAR-, NMDAR- and GABAR-mediated synaptic currents and background current, i.e. $I(x,t) = I_{AMPA}(x,t) + I_{NMDA}(x,t) + I_{GABA}(x,t) + I_{bg}(x,t)$. The first three of these currents can be approximated as

|

(16) |

where the three coefficients marked with superscript ‘r’ describe the effective synaptic strengths. Superscripts ‘r’ denote the correspondence of terms in the ‘reduced’ system with those in the timing network. The synaptic currents onto interneurons are similar. Synaptic activation is described by AMPAR, NMDAR and GABAR gating variables, obeying the dynamics

|

(17) |

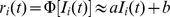

Because the NMDAR time constant is much longer than the respective time constants of AMPARs, GABARs and neuronal firing rates (Equations 13 and 14), these last three variables can be given their steady state values, while NMDAR activation dominates the dynamics of the system. Thus, the system can be described by

|

(18) |

By further linearizing the activation function of the interneurons, we obtain the integral and partial differential equation approximating the timing network:

|

(19) |

Three scale factors were used to tune the model to qualitatively reproduce the steady-state firing rates in the timing network, while a fourth parameter abstracts over the unstructured interactions between pyramidal neurons and interneurons.
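Table 1 characterizes first-passage times in terms of an initial state, a threshold on NMDAR activation and the largest positive eigenvalue. As a rough illustration only, and not the derivation behind Table 1 or Figure 7, one can simulate a single unstable linear mode $ds = \lambda s\,dt + \sigma\,dW$ from $s(0) = s_0$ by the Euler-Maruyama method and record when $s$ first reaches a threshold $\theta$. Without noise the crossing time is $\ln(\theta/s_0)/\lambda$, so a larger eigenvalue gives a shorter interval. The one-dimensional reduction and all parameter values below are assumptions.

```python
import numpy as np

def first_passage_times(lam, s0, theta, noise, dt, t_max, n_trials, rng):
    """Euler-Maruyama simulation of ds = lam * s dt + noise dW with s(0) = s0.

    Returns, per trial, the first time (ms) at which s >= theta, or nan if not reached.
    """
    s = np.full(n_trials, s0, dtype=float)
    t_cross = np.full(n_trials, np.nan)
    for step in range(1, int(round(t_max / dt)) + 1):
        s += lam * s * dt + noise * np.sqrt(dt) * rng.standard_normal(n_trials)
        newly = np.isnan(t_cross) & (s >= theta)   # record only the first crossing
        t_cross[newly] = step * dt
    return t_cross

# Hypothetical values: eigenvalues in 1/ms; s0, theta and noise are dimensionless.
rng = np.random.default_rng(1)
slow = first_passage_times(0.01, 0.05, 0.5, 0.002, 0.1, 1000.0, 500, rng)
fast = first_passage_times(0.02, 0.05, 0.5, 0.002, 0.1, 1000.0, 500, rng)
```

In this toy setting the noise acts mainly early on, when $s$ is small, and exponential growth then carries that early perturbation to threshold, so trial-to-trial variability in crossing times is set near the start of each trial.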

Results

We used the generic local-circuit model to simulate an interval estimation task, where intervals were estimated by the time that climbing activity crossed a fixed threshold. We coupled two instances of the local-circuit model to simulate a decision task, where the timing network governed the rate at which the decision network distinguished between two stimuli. As described above, the decision network was identical to the timing network, including all parameter values except for NMDAR conductance strength. Our results proceed as follows: (1) we show that climbing activity in the timing network is controlled by the strength of NMDAR conductance, encoding intervals by the time it takes to reach a fixed threshold. We explain the mechanism underlying this phenomenon by dynamic analysis of the reduced system. (2) We show that the interval estimates by the timing network conform to the scalar property of interval timing, a widely observed experimental phenomenon in which the standard deviation of interval estimates scales linearly with the mean [13], [55]. We explain how the scalar property emerges from the timing network by deriving the probability density of first passage times of the timing threshold. (3) We show that a simple learning rule is sufficient for the timing network to learn to estimate different intervals in the hundreds of milliseconds range. (4) We demonstrate several biologically plausible mechanisms for starting and stopping interval estimates by the timing network, each of which qualitatively reproduces electrophysiological data from tasks with a timing requirement. (5) We demonstrate that the timing network controls the SAT in the downstream decision network, using a biologically plausible means of gain modulation. (6) We show that the resulting distribution of decision times reproduces signature characteristics of decision times by experimental subjects.
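The scalar property can be checked directly from interval estimates grouped by condition (e.g. by NMDAR scale factor): if the standard deviation scales linearly with the mean, the coefficient of variation (CV) is roughly constant across conditions. The helper below is a hypothetical sketch of that check. It also returns the least-squares slope of standard deviation against mean through the origin, and its usage lines use synthetic data, not model output.

```python
import numpy as np

def scalar_property(estimates_by_condition):
    """Mean, SD and CV of interval estimates per condition, plus the least-squares
    slope of SD on mean through the origin (roughly constant CV under the scalar property)."""
    means = np.array([np.mean(e) for e in estimates_by_condition])
    sds = np.array([np.std(e, ddof=1) for e in estimates_by_condition])
    slope = float(means @ sds / (means @ means))
    return means, sds, sds / means, slope

# Synthetic example in which SD is exactly 10% of the mean in every condition.
base = np.array([0.9, 1.0, 1.1])
means, sds, cv, slope = scalar_property([300 * base, 500 * base, 800 * base])
```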

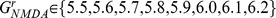

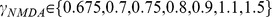

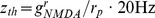

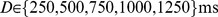

Interval estimates are controlled by NMDAR conductance strength in the timing network

We simulated an interval estimation task with the generic local-circuit model. Estimates of different intervals were produced by scaling the conductance strength of NMDARs by  , where different values of

, where different values of  supported different rates of buildup of activity by the bump population. On each trial, the time at which the mean SDF of the bump population reached a threshold of 20 Hz was considered the interval estimate. Thus, like earlier authors (e.g. [56], [57]), we assume that a behaviourally relevant ballistic process is initiated downstream when neural activity encoding the interval estimate reaches a certain firing rate. The task was simulated by running the network for  , sufficient time for a bump to develop for all of the above values of  on at least  of trials. This duration may seem long for estimates in the hundreds of milliseconds range, but it accommodated the growing variability of longer estimates, a feature commonly seen in interval estimates in experimental tasks (see Results section The scalar property of interval timing). For  , bumps did not consistently develop within the allotted time, and when they did, spiking activity did not consistently reach the 20 Hz threshold. For  , background spiking was approximately  among pyramidal neurons and  among interneurons [58], but the network did not support climbing activity. An upper limit of  was used for two reasons. It is consistent with the experimental enhancement of the NMDAR component of cortical excitatory post-synaptic currents by approximately  of baseline [59], [60]. In addition, interval estimates were increasingly indistinguishable above this value.  trials were run for each value of  .
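The interval read-out described above can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes a Gaussian smoothing kernel of hypothetical width `sigma_ms` for the SDFs; the kernel actually used is specified in Methods. Spike times are taken in milliseconds, and the function reports the first time the population-mean SDF reaches 20 Hz.

```python
import numpy as np


def spike_density(spike_times_ms, t_ms, sigma_ms=25.0):
    """Gaussian-kernel spike density function (Hz) of one neuron.

    sigma_ms is a hypothetical kernel width chosen for illustration.
    """
    t = np.asarray(t_ms, dtype=float)[:, None]
    s = np.asarray(spike_times_ms, dtype=float)[None, :]
    kernel = np.exp(-0.5 * ((t - s) / sigma_ms) ** 2) / (sigma_ms * np.sqrt(2.0 * np.pi))
    return kernel.sum(axis=1) * 1000.0  # spikes/ms -> spikes/s


def interval_estimate(population_spikes, t_ms, threshold_hz=20.0):
    """First time (ms) at which the population-mean SDF reaches threshold_hz,
    or None if it never does within the simulated window."""
    mean_sdf = np.mean([spike_density(s, t_ms) for s in population_spikes], axis=0)
    above = np.flatnonzero(mean_sdf >= threshold_hz)
    return float(t_ms[above[0]]) if above.size else None


# Toy bump population: silent until 300 ms, then regular 50 Hz firing.
t = np.arange(0.0, 1000.0, 1.0)
population = [np.arange(300.0, 1000.0, 20.0) for _ in range(5)]
estimate = interval_estimate(population, t)
```

Returning `None` when the threshold is never reached corresponds loosely to the trials in which bumps did not develop, or did not reach threshold, within the allotted time.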

Varying the scale factor  furnished a range of rates of buildup of activity: lower values of  led to slower buildup, and higher values led to faster buildup. The lowest value of  that consistently supported buildup activity produced a mean interval estimate of  (  ). The highest value of  consistent with experimental enhancement of cortical EPSCs [59], [60] produced a mean estimate of  (  ). The timing network thus supported interval estimates from approximately  to  , consistent with experimental evidence that temporal coding on this order is supported by a common mechanism [12], [55]. Example trials for three values of  are shown in Figure 2. Note that the location of the bump differs on each trial, as there is no bias favouring a particular network location. We are unaware of any data that conclusively confirm or refute such trial-to-trial variability. To produce climbing activity in the same sub-population from trial to trial, we would simply need to strengthen excitatory synaptic conductances among a few localized neurons, e.g. by Hebbian long-term potentiation among the neurons participating in the bump. In the coupled-circuit decision trials (Section Encoding time constraints for a decision), the location of climbing activity in the timing network does not matter, because projections from the timing network to the decision network are spatially non-selective.

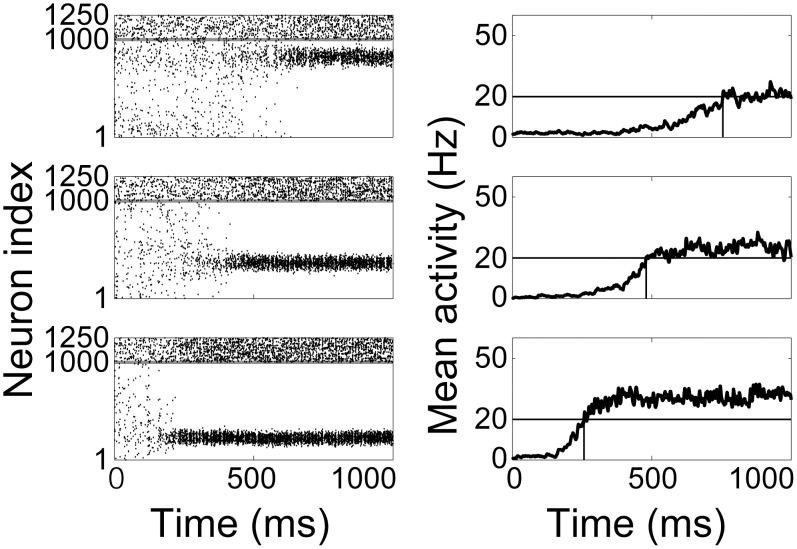

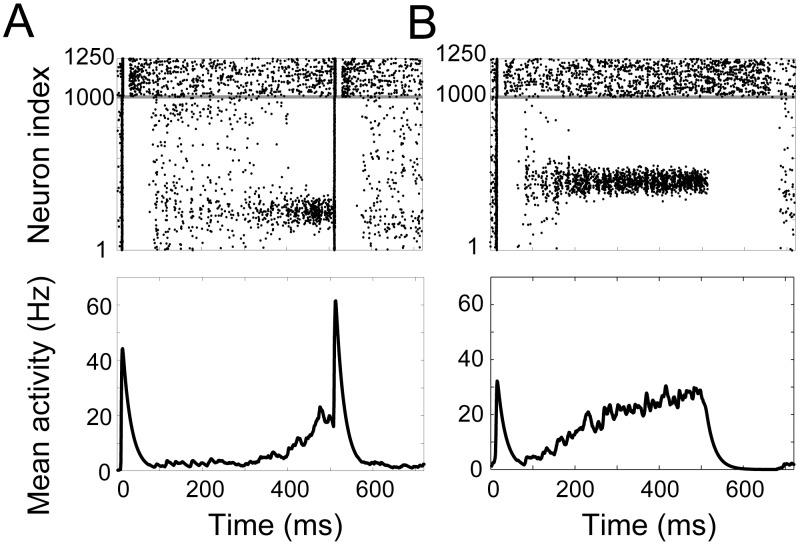

Figure 2. Raster plots (left) and mean SDFs over the ‘bump’ population (right) show the timing network coding temporal intervals with  (top),  (middle) and  (bottom).

Intervals were estimated when the mean SDF of the bump population reached 20 Hz. In raster plots, pyramidal neurons and inhibitory interneurons are indexed from 1–1000 and 1001–1250 respectively, indicated by the horizontal grey line.

The mechanism underlying climbing activity in the timing network can be understood by non-linear analysis of the reduced integral and partial differential system. For a given value of the effective synaptic strength  , corresponding to NMDAR conductance strength in the timing network, the steady states of the reduced system can be calculated by setting the right hand side of Equation 19 to zero and solving the resulting equations. Our analysis revealed three regimes of the reduced system. 1) Sufficiently small values of

, corresponding to NMDAR conductance strength in the timing network, the steady states of the reduced system can be calculated by setting the right hand side of Equation 19 to zero and solving the resulting equations. Our analysis revealed three regimes of the reduced system. 1) Sufficiently small values of  furnished a flat steady state which is stable and whose eigenvalues are negative. This regime corresponds to the common case in cortex, where background activity is stable and stimulus-selective activity decays to this background state after stimulus offset. This regime in the reduced system corresponds to approximately

furnished a flat steady state which is stable and whose eigenvalues are negative. This regime corresponds to the common case in cortex, where background activity is stable and stimulus-selective activity decays to this background state after stimulus offset. This regime in the reduced system corresponds to approximately  in the timing network. 2) With a moderate increase in

in the timing network. 2) With a moderate increase in  , the system enters a bistable regime. The stable flat steady state is retained, but a small unstable bump steady state and a large stable bump steady state emerge. This bistable regime corresponds to the classic persistent storage regime in these networks (e.g.

[21], [26]), in which a stimulus can trigger a bump state, which persists after stimulus offset. This regime in the reduced system corresponds to approximately

, the system enters a bistable regime. The stable flat steady state is retained, but a small unstable bump steady state and a large stable bump steady state emerge. This bistable regime corresponds to the classic persistent storage regime in these networks (e.g.

[21], [26]), in which a stimulus can trigger a bump state, which persists after stimulus offset. This regime in the reduced system corresponds to approximately  in the timing network. 3) With a further increase in

in the timing network. 3) With a further increase in  , the stable flat state and the unstable bump steady state coalesce into one unstable flat steady state whose largest eigenvalue is positive, while the stable bump state becomes higher. The magnitudes of the unstable flat steady state and the stable bump state increase with further increase to

, the stable flat state and the unstable bump steady state coalesce into one unstable flat steady state whose largest eigenvalue is positive, while the stable bump state becomes higher. The magnitudes of the unstable flat steady state and the stable bump state increase with further increase to  . This regime in the reduced system corresponds to approximately

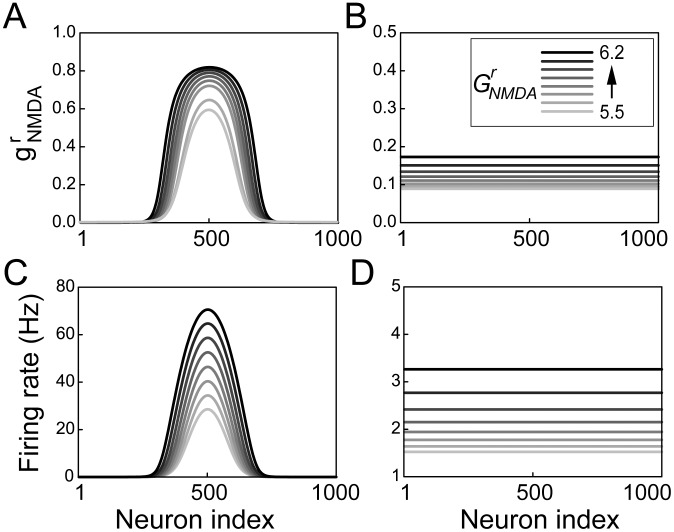

. This regime in the reduced system corresponds to approximately  in the timing network. This third regime is shown in Figure 3, where panels A and B show NMDAR activation at the stable bump state and the unstable flat steady state respectively with increasing

in the timing network. This third regime is shown in Figure 3, where panels A and B show NMDAR activation at the stable bump state and the unstable flat steady state respectively with increasing  . Panels C and D show the corresponding firing rates

. Panels C and D show the corresponding firing rates  .

.

Figure 3. Two steady states of the reduced system.

(A) NMDAR activation at the stable bump state for synaptic strengths  . Darker curves correspond to higher values of  (see legend in panel B). (B) NMDAR activation at the unstable steady state. (C) Firing rates at the stable steady state. (D) Firing rates at the unstable steady state. Shades of grey in B, C and D correspond to those in A.

The instantaneous firing rates  of the stable bump states in the reduced system were consistent with the steady state spike rates in the timing network, ranging from approximately

of the stable bump states in the reduced system were consistent with the steady state spike rates in the timing network, ranging from approximately  to

to  as

as  was increased from

was increased from  to

to  , corresponding to an increase in

, corresponding to an increase in  from

from  to

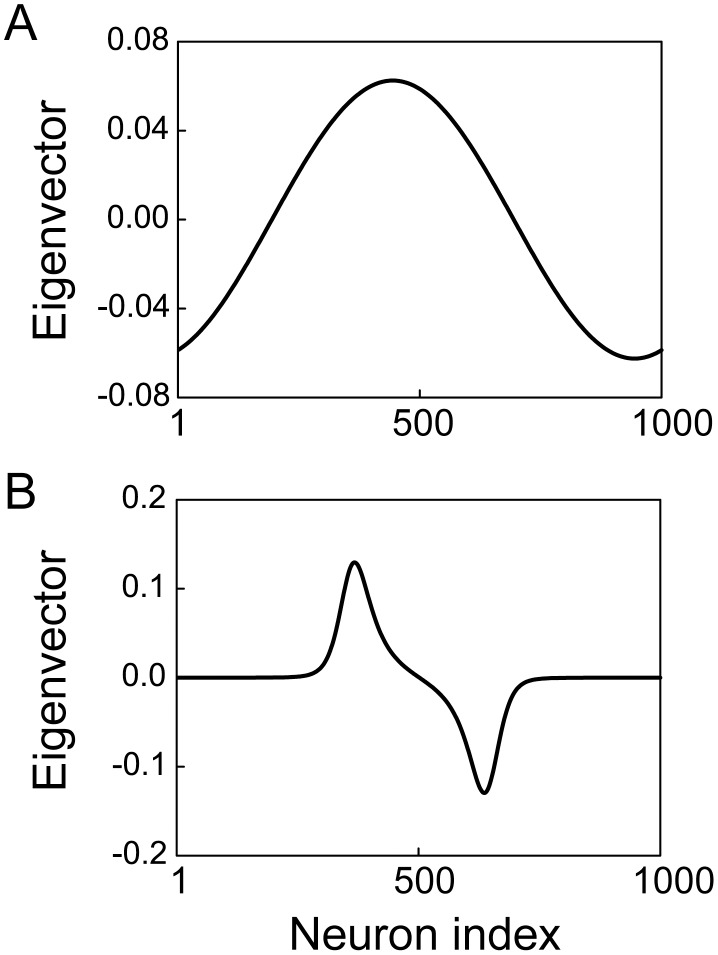

to  in the timing network (Figure 3C). The evolution of the system away from the unstable flat steady state is dominated by the largest positive eigenvalue and a localized activity bump emerges due to the corresponding eigenvector (Figure 4). On the other hand, the largest eigenvalue of the stable bump steady state is zero and the corresponding eigenvector explains the invariant location of the bump [61]. Climbing activity therefore occurs at arbitrary locations, as shown in Figure 2 above.

in the timing network (Figure 3C). The evolution of the system away from the unstable flat steady state is dominated by the largest positive eigenvalue and a localized activity bump emerges due to the corresponding eigenvector (Figure 4). On the other hand, the largest eigenvalue of the stable bump steady state is zero and the corresponding eigenvector explains the invariant location of the bump [61]. Climbing activity therefore occurs at arbitrary locations, as shown in Figure 2 above.

Figure 4. Eigenvectors of the reduced system.

The first eigenvector of the unstable flat steady state (A) and stable bump steady state (B).
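The regime classification above rests on the sign of the leading eigenvalue at each steady state. The sketch below shows that bookkeeping for a generic Jacobian. The toy linearized ring used to exercise it (`toy_ring_jacobian`: unit self-decay plus cosine coupling of hypothetical gain `g`) is an assumption for illustration. It is not the reduced system of Equation 19.

```python
import numpy as np


def classify_steady_state(jacobian, tol=1e-9):
    """Classify a steady state by the Jacobian eigenvalue with the largest
    real part; return (label, eigenvalue, eigenvector)."""
    eigvals, eigvecs = np.linalg.eig(np.asarray(jacobian, dtype=float))
    k = int(np.argmax(eigvals.real))
    lead = eigvals[k]
    if lead.real > tol:
        label = "unstable"  # perturbations along eigvecs[:, k] grow
    elif lead.real < -tol:
        label = "stable"    # all perturbations decay
    else:
        label = "marginal"  # e.g. a zero (translation) mode of a bump state
    return label, lead, eigvecs[:, k]


def toy_ring_jacobian(n, g):
    """Hypothetical linearization about a flat state on a ring of n units:
    unit self-decay plus cosine (first Fourier mode) coupling of gain g."""
    idx = np.arange(n)
    w = (2.0 / n) * np.cos(2.0 * np.pi * (idx[:, None] - idx[None, :]) / n)
    return -np.eye(n) + g * w


label, lead, vec = classify_steady_state(toy_ring_jacobian(64, 1.5))
```

For this toy, the leading eigenvalue is g − 1 and is doubly degenerate (cosine and sine modes). The flat state therefore loses stability when g exceeds 1, and the growing perturbation is a single-peaked profile whose position on the ring is arbitrary. A zero leading eigenvalue, as for the stable bump state, is reported as marginal. It signals neutral drift along the corresponding eigenvector rather than instability.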

The scalar property of interval timing

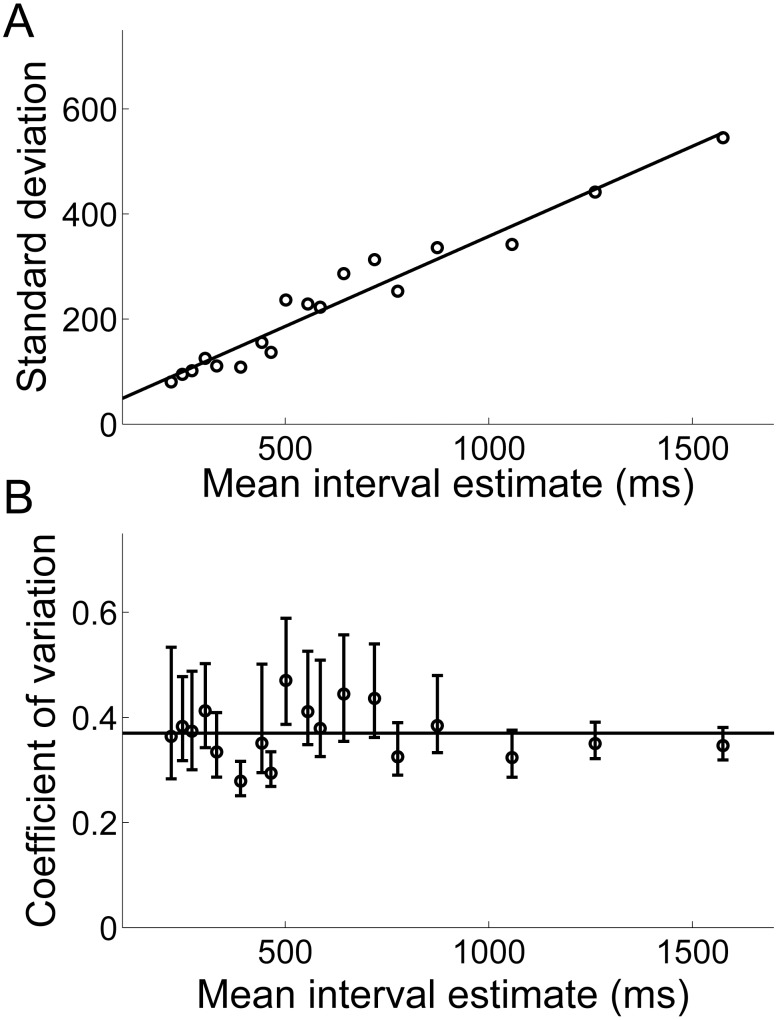

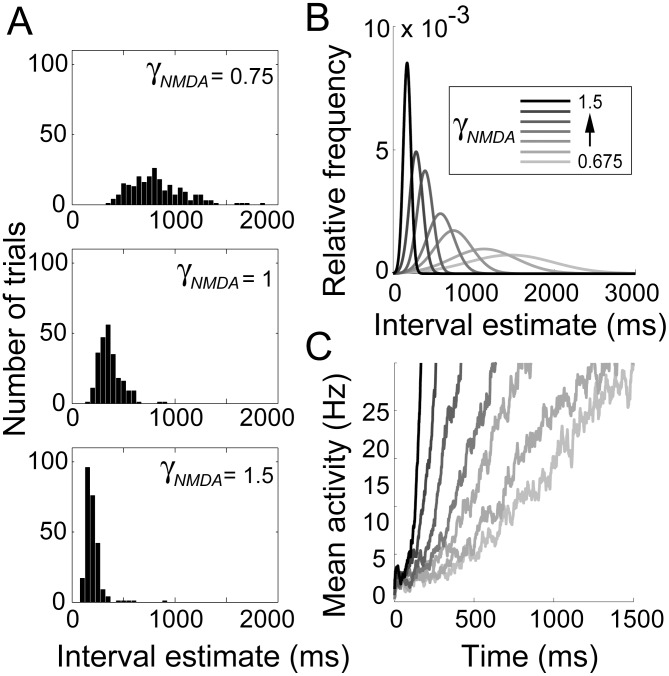

Not only did the timing network produce interval estimates, but the estimates also conformed to the scalar property of interval timing [62]. The scalar property is a strong form of Weber's law in which the standard deviation of estimates is proportional to the mean (Figure 5A). Weber's law is widely regarded as a signature characteristic of interval timing across a wide range of temporal orders [13], [55]. For a systematic description of conformities to and violations of the scalar property, see [63] for humans and [64] for non-human animals. The coefficient of variation (CV, the standard deviation divided by the mean) of the interval estimates produced by the timing network was approximately constant (Figure 5B). It compared favourably to experimental measurements of this order [55], [65]. The distribution of interval estimates for each value of  was roughly normal (Figure 6A), another widely reported characteristic of interval estimates across a range of temporal orders [13], [55]. Gaussian fits to the estimates are shown in Figure 6B. For comparisons with experimental data in the hundreds of milliseconds range, see e.g. [66] and [67].

Figure 5. The interval estimates produced by the timing network conform to the scalar property of interval timing.

(A) The standard deviation plotted against the mean interval produced by the timing network for each value of  . The plotted line shows the best linear fit (least squares). (B) Coefficient of variation (CV) plotted against the mean interval for each value of  . Error bars show  confidence intervals. The plotted line shows the best horizontal linear fit. As the estimates increase in length, the data converge to the slope of the linear fit in A.

Figure 6. Interval estimates produced by the timing network.

(A) Distribution of intervals produced by the timing network for  (top),  (middle) and  (bottom). Histogram bins were  . (B) Gaussian fits to the distribution of intervals produced for  , the first and last of which correspond to the lowest and highest values of  (see Methods). (C) Mean SDFs at the centre of the bump (see Methods) over all trials for the values of  shown in B.
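The scalar-property analysis in Figures 5 and 6 reduces to a few summary statistics per value of the scale factor. The sketch below is minimal and makes two assumptions. First, the estimates for each condition are held in one array. Second, the horizontal fit to the CVs is unweighted. The synthetic estimates used to exercise it are illustrative only and are not the network's data.

```python
import numpy as np


def scalar_property_summary(estimates_by_condition):
    """Per-condition mean, SD and CV of interval estimates, a least-squares
    line of SD against mean (as in Figure 5A) and a horizontal fit to the
    CVs (as in Figure 5B)."""
    means = np.array([np.mean(e) for e in estimates_by_condition])
    sds = np.array([np.std(e, ddof=1) for e in estimates_by_condition])
    cvs = sds / means
    slope, intercept = np.polyfit(means, sds, 1)  # SD ~ slope * mean + intercept
    cv_fit = cvs.mean()  # least-squares horizontal line through the CVs
    return {"mean": means, "sd": sds, "cv": cvs,
            "slope": slope, "intercept": intercept, "cv_fit": cv_fit}


# Synthetic check: estimates (ms) whose SD is exactly 10% of the mean.
rng = np.random.default_rng(0)
synthetic = [rng.normal(m, 0.1 * m, size=2000) for m in (300.0, 500.0, 800.0, 1200.0)]
summary = scalar_property_summary(synthetic)
```

If SD = slope × mean + intercept, then CV = slope + intercept/mean, which tends to the slope as the mean grows. This is the convergence described in the Figure 5 caption.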

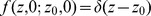

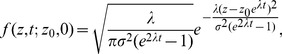

Climbing activity in the timing network can be understood as the evolution of the system from an initial state in the vicinity of the unstable flat steady state to the stable bump state. We linearized the system in the vicinity of the unstable flat steady state as  , where

, where  denotes

denotes  for simplicity. To consider the effects of noise, the linear system can be expressed as a Langevin equation

for simplicity. To consider the effects of noise, the linear system can be expressed as a Langevin equation  , where

, where  is the eigenvalue of the matrix

is the eigenvalue of the matrix  ,

,  is a vector, and

is a vector, and  is white noise with standard deviation

is white noise with standard deviation  . According to non-linear dynamics, the system expands along the manifold tangent to the eigenvector with positive eigenvalue. Thus, we focus on the largest positive eigenvalue, which dominates the expansion of the system [68], and further simplify the system as a 1-dimensional Ornstein-Uhlenbeck (OU) process

. According to non-linear dynamics, the system expands along the manifold tangent to the eigenvector with positive eigenvalue. Thus, we focus on the largest positive eigenvalue, which dominates the expansion of the system [68], and further simplify the system as a 1-dimensional Ornstein-Uhlenbeck (OU) process

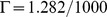

| (20) |

The parameter  of an OU process is typically negative, supporting a stable (stationary) distribution. Here,  is positive because the flat steady state is unstable. A positive  thus implies that the system departs from the flat state, starting at initial state  . The corresponding Fokker-Planck equation can be written as

(21)

with initial state  . The distribution of arrival times of  at the timing threshold can be calculated as

(22)

which shows that the system grows along the curve  with standard deviation  .
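For reference, the standard solution of a one-dimensional OU process with a positive rate constant is written out below. The symbols are our own labels, assumed for this sketch: x is the projection onto the leading eigenvector, λ > 0 the largest positive eigenvalue, σ the noise amplitude and x_0 the initial state. The noise convention may differ from that of Equations 20–22.

$$
dx = \lambda x\,dt + \sigma\,dW_t, \qquad x(0) = x_0, \qquad \lambda > 0
$$

$$
\frac{\partial p}{\partial t} = -\lambda \frac{\partial}{\partial x}\bigl(x\,p\bigr) + \frac{\sigma^2}{2}\,\frac{\partial^2 p}{\partial x^2}, \qquad p(x,0) = \delta(x - x_0)
$$

$$
p(x,t) = \frac{1}{\sqrt{2\pi v(t)}}\,\exp\!\left(-\frac{\bigl(x - x_0 e^{\lambda t}\bigr)^2}{2\,v(t)}\right), \qquad v(t) = \frac{\sigma^2}{2\lambda}\left(e^{2\lambda t} - 1\right)
$$

The mean x_0 e^{λt} is the curve along which the system grows, and √v(t) is its standard deviation. For large t, both grow as e^{λt}, so the ratio of standard deviation to mean at a fixed time approaches the constant σ/(x_0√(2λ)).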

Intervals are estimated when the system reaches the threshold  , so the interval estimates are the first passage times of the OU process, the distribution of which can be calculated as

, so the interval estimates are the first passage times of the OU process, the distribution of which can be calculated as

|

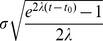

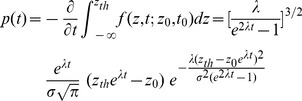

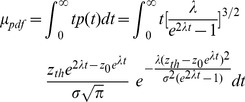

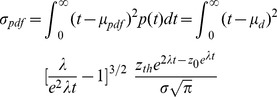

(23) |

with mean  and variance

and variance  given by

given by

|

(24) |

and

|

(25) |

respectively.
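Equations 23–25 give the first-passage statistics in closed form. As an independent check that does not rely on them, first passage times of an OU process with a positive rate constant can also be estimated by simulation. The sketch below uses the Euler-Maruyama scheme. The function name, its arguments (`lam`, `sigma`, `x0`, `threshold`, as our own labels for the rate, noise amplitude, initial state and threshold) and all parameter values are illustrative assumptions, not the Table 1 values.

```python
import numpy as np


def ou_first_passage_times(lam, sigma, x0, threshold, n_trials=2000,
                           dt=1e-3, t_max=10.0, seed=0):
    """Monte Carlo first passage times (s) of dx = lam*x dt + sigma dW to an
    upper threshold, via Euler-Maruyama. lam > 0 makes x = 0 unstable, as for
    the flat steady state. Trials that have not crossed by t_max (e.g. ones
    that escape downward) are returned as NaN."""
    rng = np.random.default_rng(seed)
    x = np.full(n_trials, float(x0))
    fpt = np.full(n_trials, np.nan)
    n_steps = int(round(t_max / dt))
    for step in range(1, n_steps + 1):
        active = np.isnan(fpt)
        if not active.any():
            break
        noise = rng.standard_normal(int(active.sum()))
        x[active] += lam * x[active] * dt + sigma * np.sqrt(dt) * noise
        crossed = np.zeros(n_trials, dtype=bool)
        crossed[active] = x[active] >= threshold
        fpt[crossed] = step * dt
    return fpt


# Illustrative parameters only: a slower and a faster growth rate.
slow = ou_first_passage_times(lam=2.0, sigma=0.05, x0=0.1, threshold=1.0)
fast = ou_first_passage_times(lam=4.0, sigma=0.05, x0=0.1, threshold=1.0)
```

For small noise, the mean passage time is close to ln(θ/x_0)/λ, the time at which the deterministic curve x_0 e^{λt} reaches the threshold θ (about 1.15 s for the slower example). Increasing λ shortens and narrows the distribution, qualitatively as described for Figure 7.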

Of note,  and  are the initial value and threshold of NMDAR activation (  , Equation 19), not of the firing rate. To calculate the distribution of first passage times numerically, we need to express the threshold in terms of  . For each value of  , we therefore calculated  by scaling the interval estimation threshold in the timing network by the ratio of the maximum values of  and  , i.e.  . This scaling preserves our use of a fixed firing rate threshold in the timing network. Values for  ,  and the largest positive eigenvalue  are given in Table 1 for increasing  . We used a constant level of background noise for all simulations (  ). This is consistent with our use of constant parameters for the background noise (point-conductance) model across the different values of  in the timing network.

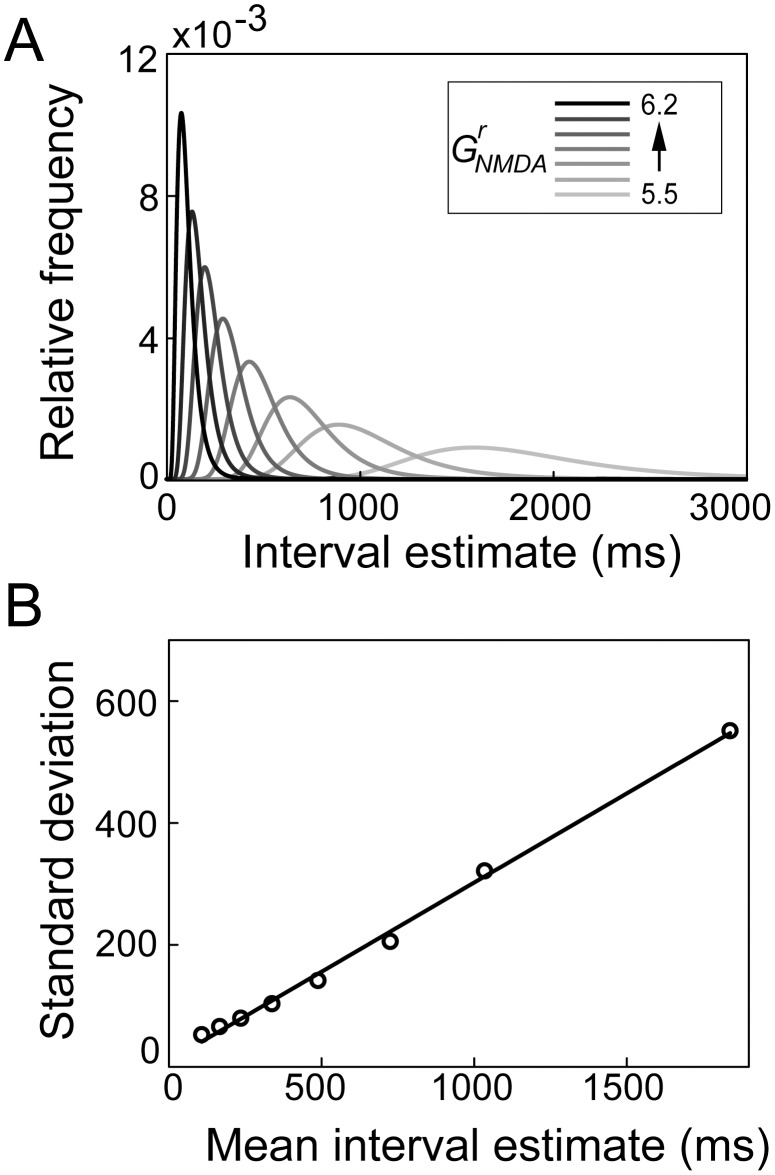

The distribution of first passage times is shown in Figure 7. These curves closely resemble the distributions of interval estimates produced by the timing network (compare Figures 6B and 7A). Stronger (weaker) NMDAR conductance causes faster (slower) ramping and a narrower (wider) distribution, while the relationship between the mean and standard deviation is approximately linear (compare Figures 5A and 7B).

Figure 7. The probability density of first passage of the timing threshold by the reduced system.

(A) Probability density of interval estimates for different values of NMDAR conductance strength  in the reduced system. (B) The standard deviation plotted against the mean for the curves shown in A.

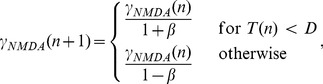

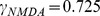

Learning interval estimates

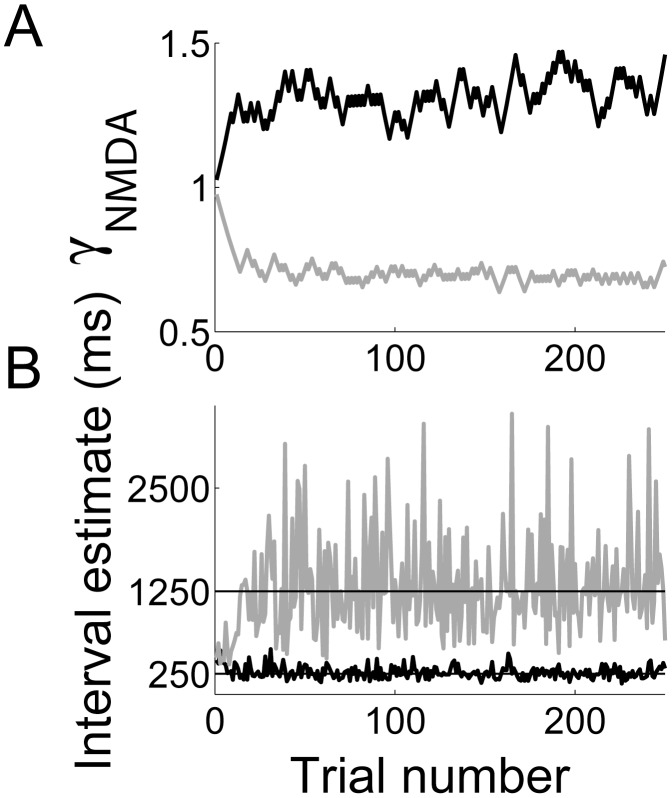

The previous sections demonstrate two things. First, the timing network estimates intervals in the hundreds of milliseconds range as a function of the scale factor  . Second, these estimates share signature characteristics with those of experimental subjects in studies of interval timing. Next, we consider whether the network can learn a given interval in this range, using a simple learning rule [57]. We ran the interval estimation task (described above in Results section Interval estimates are controlled by NMDAR conductance strength in the timing network) for desired intervals  . For each desired interval, the network began the block of trials in the baseline condition (  ), and  was adjusted after each trial  according to

(26)

where  is the estimate of  on trial  and  determines the rate of learning. As shown in Figure 8, the network learned each interval within a handful of trials [7], [32].

Figure 8. The timing network learns to estimate intervals under the rule given by Equation 26.

Black and grey curves correspond to specified intervals of  and  respectively. (A) Trial-to-trial fluctuations of  during learning. (B) Trial-to-trial interval estimates.
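Trial-by-trial learning of an interval can be illustrated with a toy stand-in for the timing network. Both pieces below are hypothetical. `toy_interval` assumes that the mean interval falls as the scale factor (labelled `kappa` here) rises, with scalar trial-to-trial noise. `learn_interval` applies a generic proportional error-correcting update. Equation 26 is not reproduced in this text, so this update is not necessarily its form.

```python
import numpy as np


def toy_interval(kappa, rng, a=300.0, kappa0=1.0, cv=0.1):
    """Hypothetical stand-in for one timing-network trial: the mean interval
    (ms) falls as kappa rises (faster buildup), and the trial-to-trial SD is
    cv * mean (scalar variability)."""
    mean = a / (kappa - kappa0)
    return rng.normal(mean, cv * mean)


def learn_interval(target_ms, kappa_init=2.0, eta=0.5, n_trials=30, seed=0):
    """Adjust kappa after every trial with a proportional error-correcting
    update (an assumed rule); return per-trial kappas and estimates."""
    rng = np.random.default_rng(seed)
    kappa = kappa_init
    kappas, estimates = [], []
    for _ in range(n_trials):
        estimate = toy_interval(kappa, rng)
        # Estimate too long -> raise kappa (faster buildup); too short -> lower it.
        kappa += eta * (estimate - target_ms) / target_ms
        kappa = max(kappa, 1.05)  # keep the toy inside its climbing regime
        kappas.append(kappa)
        estimates.append(estimate)
    return np.array(kappas), np.array(estimates)


kappas, estimates = learn_interval(target_ms=600.0)
```

In this toy, the mean interval equals a 600 ms target at kappa = 1.5. The update moves kappa towards that value within a few trials, after which it fluctuates around it because each estimate is noisy.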

Starting and stopping the estimate

Estimates of elapsed time occur relative to a start time, so the network requires a start signal to begin each estimate. Such a signal should be able to reset the network to the background state, regardless of its current state. A number of plausible mechanisms could play this role, and we demonstrate two of them. The first is a brief pulse of spatially non-selective excitation, which generates blanket feedback inhibition and thus shuts the network down. This mechanism was demonstrated in an earlier study simulating persistent mnemonic activity in prefrontal cortex, using a local-circuit model similar to ours [21]. Figure 9A shows this mechanism in the timing network. The average excitatory conductance of the point conductance model (  ) was increased by a factor of  at all pyramidal neurons for  to start the estimate, and again at time  to stop the estimate. In this case, the start signal potentially corresponds to broad excitation of the timing network by a cue stimulus, while the stop signal potentially corresponds to efference copy at the time of motor initiation [22]. We therefore do not expect these signals to be identical in duration or magnitude. Giving them the same parameters nonetheless demonstrates the robustness of the mechanism (fine-tuning of each signal was not necessary). Electrophysiological data showing a similar trajectory can be seen in e.g. [69], where these data were interpreted as encoding the anticipation of an upcoming stimulus.

Figure 9. Starting and stopping the timing network.

In raster plots (upper), pyramidal neurons and inhibitory interneurons are indexed from 1–1000 and 1001–1250 respectively, indicated by the horizontal grey line. Lower plots show the mean SDF of the bump population for the same simulations. See text for simulation details. (A) Brief, spatially non-selective excitation of all pyramidal neurons resets the network, regardless of its current state. (B) Brief, spatially non-selective excitation and inhibition of pyramidal neurons start and stop activity in the timing network respectively.

Another plausible reset mechanism is long-range excitatory input targeting inhibitory interneurons, which in turn inhibit local pyramidal neurons [70]. Such disynaptic inhibition has been suggested to underlie the control of motor initiation in anti-saccade [71] and countermanding [72] tasks. It is simulated in the timing network in Figure 9B. In this simulation, the average excitatory conductance of the point conductance model (  ) at pyramidal neurons was increased by a factor of  for  to start the estimate. To stop the estimate, the average excitatory conductance at interneurons was increased by a factor of  for  at  . Similar electrophysiological data can be seen in e.g. [73], where they were likewise interpreted as encoding the anticipation of an upcoming stimulus. There are, of course, other mechanisms that could start and stop estimates of elapsed time by climbing activity. Indeed, we do not expect cortical timing circuits to remain indefinitely in a regime with no stable background state. For example, at the onset of a cue stimulus, fast-acting neuromodulation could alter network dynamics in a manner similar to the scaling of  , or cortico-thalamo-cortical disinhibition could have a similar effect.

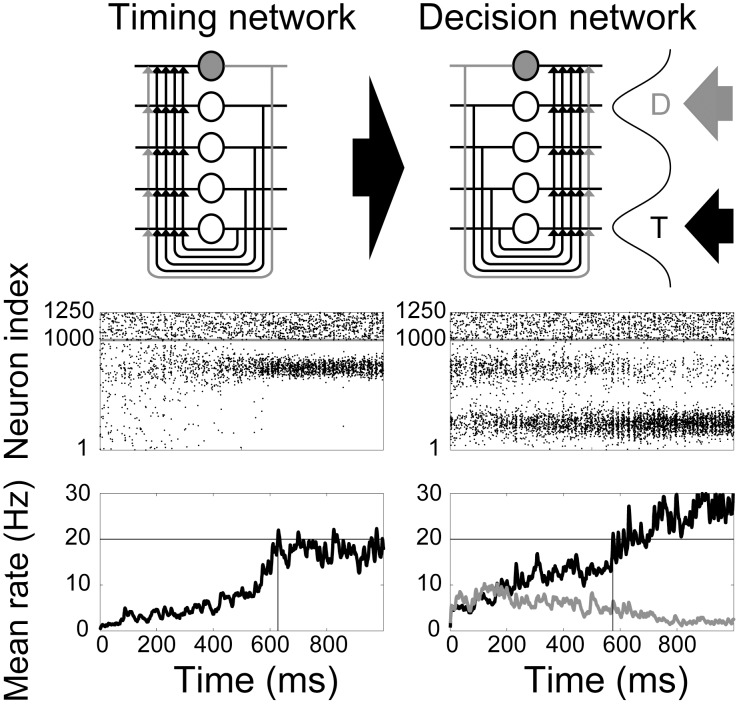

Encoding time constraints for a decision

We addressed the hypothesis that the encoding of elapsed time controls the speed and accuracy of decisions by gain modulation [11]. To do so, we ran further simulations to determine whether the timing network's temporal estimates could control the SAT in a downstream network during a decision task (Figure 10). As indicated above, the two networks were identical except for the inputs they received and the scale factor  . To emphasize the role played by the timing network in these simulations,  was given a low value in the decision network (  , one quarter of the baseline NMDAR conductance in the timing network for synapses onto pyramidal neurons and interneurons). As a result, the decision network's intrinsic processing was too weak to make decisions across all task difficulties without spatially non-selective input from the timing network. Note that this low value of  did not support climbing activity in the absence of selective input. Down-scaling NMDARs was thus a practical means of limiting the decision network's processing capability. Although we do not assign it a specific biological correlate, we note that the properties of NMDARs can vary markedly between cortical regions [74] and under receptor modulation within a single region [60], [75].

Figure 10. Gain modulation of the decision network (right) by the timing network (left).

(Top row) Each network schematic reproduces the schematic in Figure 1A. Climbing activity in the timing network provides spatially non-selective input to the decision network, depicted by the broad arrow. The decision network has Gaussian response fields for the target (T) and distractor (D) stimuli (see Methods), where the thinner arrows depict incoming evidence. (Middle row) Raster plots for each network during a single trial of the decision task with  and task difficulty  . Pyramidal neurons and inhibitory interneurons are indexed from 1–1000 and 1001–1250 respectively, indicated by the horizontal grey line. Neurons 250 and 750 are the centres of the target and distractor RFs respectively. (Bottom row) Mean SDFs over the bump population in the timing network and the target and distractor populations in the decision network (see Methods). Black horizontal lines indicate the 20 Hz threshold used for interval estimation and decision making in the respective networks. Vertical lines show the time of threshold-crossing.

We simulated a two-choice decision task by providing two noisy stimuli to the decision network for  . On each trial, the network's task was to distinguish the higher-rate input (the target) from the lower-rate input (the distractor). For each stimulus, independent, homogeneous Poisson spike trains were provided to all pyramidal neurons in the decision network, where spike rates were drawn from a normal distribution with mean

. On each trial, the network's task was to distinguish the higher-rate input (the target) from the lower-rate input (the distractor). For each stimulus, independent, homogeneous Poisson spike trains were provided to all pyramidal neurons in the decision network, where spike rates were drawn from a normal distribution with mean  corresponding to the centre of a Gaussian response field defined by  . Constants  and  are given above for the pyramidal interaction structure  (Methods section The network model). For the target stimulus, we simulated  upstream stimulus-selective neurons firing at  each by setting  and setting extrinsic AMPAR and NMDAR conductance strengths to  and  , respectively, trading spatial summation for temporal summation [76]. The distractor stimulus was similarly defined, where task difficulty (target-distractor similarity) was determined by multiplying  by  . On each trial, the decision was considered correct (incorrect) when the mean SDF of the target-selective (distractor-selective) population reached a threshold of 20 Hz. As with the interval estimation task, the threshold assumes that a downstream ballistic process is initiated when neural firing reaches a certain rate, an assumption supported by a large body of experimental and theoretical work on decision tasks (see [1], [3], [77], [78]). The time of threshold-crossing was considered the decision time. Note that the precise value of the threshold was not crucial.
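The decision readout described above can be sketched as follows. The rule is: the first population whose mean SDF reaches 20 Hz determines the choice, and its crossing time is the decision time. This is a minimal illustration under stated assumptions, not the authors' implementation. The causal exponential smoothing kernel and its time constant `tau` are assumptions, because the SDF kernel is not specified in this section. The function names are hypothetical, and a tie between the two populations is arbitrarily counted as correct.

```python
import numpy as np


def population_sdf(spike_times, n_neurons, t_grid, tau=0.02):
    """Mean spike density function (Hz) of one population.

    spike_times: spike times (s) pooled over all neurons in the population.
    Assumed causal exponential kernel (1/tau) * exp(-lag/tau) for lag >= 0;
    it integrates to 1, so the result is in spikes per second per neuron.
    """
    lag = t_grid[:, None] - np.asarray(spike_times, dtype=float)[None, :]
    kernel = np.where(lag >= 0.0, np.exp(-np.clip(lag, 0.0, None) / tau) / tau, 0.0)
    return kernel.sum(axis=1) / n_neurons


def first_crossing(sdf, t_grid, threshold):
    """Time of the first grid point at which the SDF reaches threshold, or None."""
    idx = np.flatnonzero(sdf >= threshold)
    return float(t_grid[idx[0]]) if idx.size else None


def decide(target_spikes, distractor_spikes, n_per_pop, t_grid, threshold=20.0):
    """Return (correct, decision_time), or (None, None) if neither population crosses."""
    t_tar = first_crossing(population_sdf(target_spikes, n_per_pop, t_grid), t_grid, threshold)
    t_dis = first_crossing(population_sdf(distractor_spikes, n_per_pop, t_grid), t_grid, threshold)
    if t_tar is None and t_dis is None:
        return None, None
    # The earlier crossing wins; a tie is (arbitrarily) scored as correct.
    if t_dis is None or (t_tar is not None and t_tar <= t_dis):
        return True, t_tar
    return False, t_dis


# Example with synthetic spike trains (not model output): 10 synchronous target
# cells at 50 Hz from 0.3 s, and 10 staggered distractor cells at 5 Hz each.
t_grid = np.arange(0.0, 1.0, 0.001)
target = np.repeat(np.arange(0.3, 1.0, 0.02), 10)
distractor = np.arange(0.0, 1.0, 0.02)
correct, decision_time = decide(target, distractor, 10, t_grid)
```

The 20 Hz threshold is the only value taken from the text. A different kernel would shift the crossing times somewhat but would leave the structure of the rule unchanged.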

Gain modulation of the decision network by the timing network was implemented by spatially non-selective excitation [24], [33], that is, each pyramidal neuron in the decision network received input from all pyramidal neurons in the timing network for the entirety of each trial. Only AMPAR conductances mediated these inter-network inputs, which were set to one fifth the strength of extrinsic AMPARs.
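A minimal sketch of what "spatially non-selective" means here follows. It assumes a hypothetical vector `s_timing` of AMPAR gating variables for the timing network's pyramidal cells; their dynamics follow Equations 5 and 6, which are not reproduced here. Population sizes and the value of `g_ext_ampa` are also hypothetical. Every decision-network pyramidal cell receives the same weighted sum, so the timing input raises excitability uniformly rather than favouring either choice.

```python
import numpy as np


def nonselective_weights(n_decision_pyr, n_timing_pyr, g_ext_ampa):
    """All-to-all timing -> decision weights at one fifth of the extrinsic AMPAR strength."""
    return np.full((n_decision_pyr, n_timing_pyr), g_ext_ampa / 5.0)


def timing_conductance(weights, s_timing):
    """Total AMPAR conductance from the timing network onto each decision pyramidal cell."""
    return weights @ np.asarray(s_timing, dtype=float)


# Hypothetical sizes and values, for illustration only.
rng = np.random.default_rng(1)
W = nonselective_weights(n_decision_pyr=8, n_timing_pyr=16, g_ext_ampa=1.0)
s_timing = rng.uniform(0.0, 1.0, size=16)  # placeholder gating variables
g_timing = timing_conductance(W, s_timing)  # identical entries for every decision cell
```

Interneurons in the decision network would simply receive no such input, since this term is set to 0 for them.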

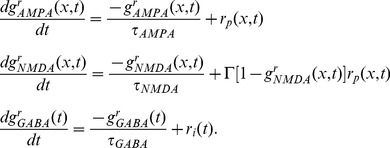

The total input current to each neuron  in the decision network was therefore

(27)

where  and  mediate stimulus-selective inputs (set to 0 for interneurons), and  mediates spatially non-selective inputs from the timing network (set to 0 for interneurons). NMDAR and AMPAR activation at these synapses follows Equations 5 and 6 above.

A block of  decision trials (  trials for  task difficulties) was run for values of  learned by the timing network for a short ( ) and a long ( ) interval (Section Learning interval estimates above). The mean value of  over the last  trials was used in each case. Tight and loose temporal constraints were thus imposed on the decision task by running the timing network with  and  respectively on each block of trials, where activity in the timing network served as a spatially non-selective input to the downstream decision network. In both temporal conditions, the model was very accurate on the easiest task ( mean target-distractor similarity) and performed at chance when the inputs were indistinguishable on average ( mean target-distractor similarity). For task difficulties in between, decisions were more accurate with the longer temporal estimate. Decision times were shorter for all task difficulties with the shorter temporal estimate. The coupled-circuit decision model thus traded speed and accuracy as a function of a learned interval (Figure 11).
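Each point in Figure 11 summarizes one task difficulty within a block. A minimal way to compute those summaries from a list of trial outcomes is sketched below. The Figure 11 caption specifies 95% confidence intervals for accuracy and standard errors for decision time, but not how the intervals were computed. The Wilson score interval used here is therefore an assumption, as are the trial-record format and the function names.

```python
import math
from statistics import mean, stdev


def wilson_ci(k, n, z=1.96):
    """Approximate 95% Wilson score interval for a binomial proportion k/n."""
    p = k / n
    denom = 1.0 + z * z / n
    centre = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


def summarize_block(trials):
    """Accuracy (with 95% CI) and mean decision time (with SE) per task difficulty.

    trials: iterable of (difficulty, correct, decision_time) tuples, where
    decision_time is the threshold-crossing time of whichever population won.
    """
    by_level = {}
    for level, correct, dt in trials:
        by_level.setdefault(level, []).append((bool(correct), float(dt)))
    summary = {}
    for level in sorted(by_level):
        outcomes = by_level[level]
        n = len(outcomes)
        k = sum(c for c, _ in outcomes)
        dts = [dt for _, dt in outcomes]
        se = stdev(dts) / math.sqrt(n) if n > 1 else float("nan")
        summary[level] = {
            "accuracy": k / n,
            "ci95": wilson_ci(k, n),
            "mean_dt": mean(dts),
            "se_dt": se,
        }
    return summary
```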

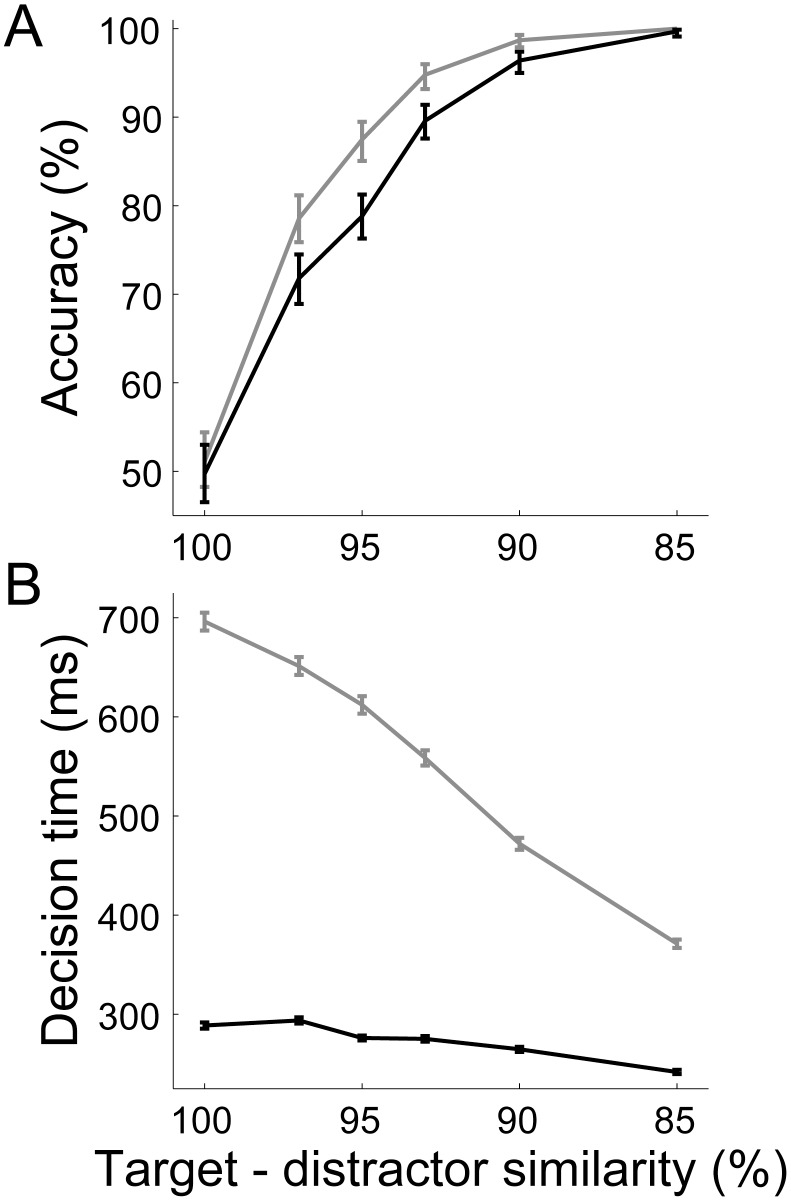

Figure 11. Temporal estimates by the timing network control the speed-accuracy trade-off in the decision network.

Black and grey curves show results for temporal estimates of  and  respectively. (A) Accuracy: the proportion of trials on which the decision network correctly chose the target for each level of task difficulty (target-distractor similarity). Error bars show 95% confidence intervals. (B) Decision time: mean time of threshold-crossing (20 Hz) by the target or distractor population (see Methods). Error bars show standard error.

Distributions of decision times under speed and accuracy conditions

Just as the timing network reproduced signature characteristics of interval estimates by experimental subjects, the coupled-circuit decision network reproduced signature characteristics of psychophysical data from decision tasks. As described in [77], these characteristics result from within-block and between-block experimental manipulations. Our within-block manipulation is task difficulty (mean target-distractor similarity), and our between-block manipulation is the imposition of speed and accuracy conditions by short ( ) and long ( ) interval estimates, respectively.

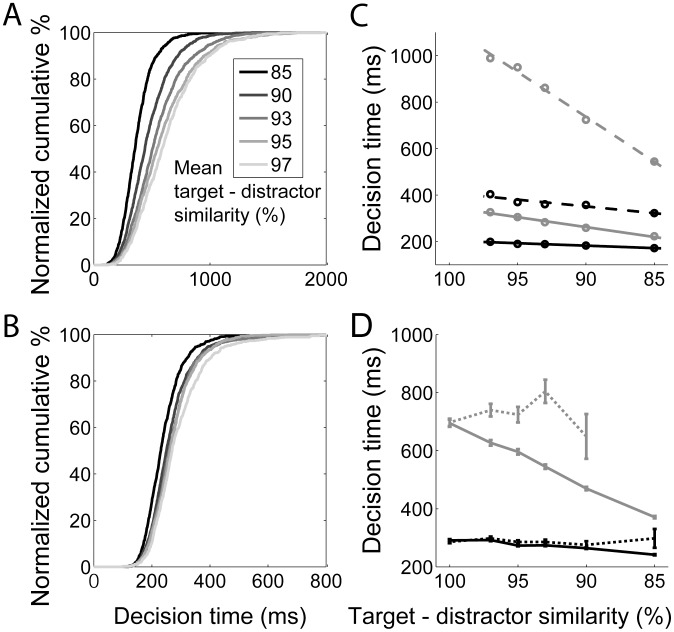

Three general within-block findings are identified in [2], [77]: (1) as task difficulty increases, accuracy decreases and reaction times (RT) on correct trials increase; (2) RT distributions are positively skewed, and on correct trials the tail of the distribution grows at a greater rate than the leading edge as task difficulty increases; and (3) mean RT differs between correct and error trials, depending on conditions. All three general findings are reproduced by the model (Figures 11 and 12). Here we ignore the non-decision component of RT, which is known to have minimal effect on RT distributions [79]. As described above, increasing task difficulty resulted in a decrease in accuracy and an increase in mean decision time (DT) under both between-block conditions. Increasing mean target-distractor similarity from  to  decreased accuracy from  to  and increased mean DT from  to  under speed conditions, and decreased accuracy from  to  and increased mean DT from  to  under accuracy conditions (Figure 11). Furthermore, under both between-block conditions:

- DT distributions were positively skewed (Figure 12A,B) for all task difficulties (not shown).
- The tail of the DT distribution for correct trials grew at a greater rate than the leading edge as task difficulty increased (Figure 12C, see caption).
- Mean DT was systematically longer on error trials than on correct trials (Figure 12D).
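Finding (2) rests on distributional summaries. The sketch below computes the quantile-slope comparison described in the Figure 12C caption: least-squares lines through the 0.1 and 0.9 quantiles of correct-trial decision times across task difficulties. It also computes a moment-based skewness. The input format and function names are hypothetical, and the text does not say how skewness was assessed, so the estimate in `sample_skewness` is an assumption.

```python
import numpy as np


def quantile_slopes(dts_by_difficulty, quantiles=(0.1, 0.9)):
    """Least-squares slope of each decision-time quantile against task difficulty.

    dts_by_difficulty: dict mapping difficulty level -> decision times (correct trials).
    A larger slope for the 0.9 quantile than for the 0.1 quantile means the tail
    of the distribution grows faster than its leading edge as difficulty increases.
    """
    levels = sorted(dts_by_difficulty)
    x = np.asarray(levels, dtype=float)
    slopes = {}
    for q in quantiles:
        y = np.array([np.quantile(np.asarray(dts_by_difficulty[lv], dtype=float), q)
                      for lv in levels])
        slope, _intercept = np.polyfit(x, y, 1)
        slopes[q] = float(slope)
    return slopes


def sample_skewness(x):
    """Moment-based skewness; positive values indicate a long right tail."""
    x = np.asarray(x, dtype=float)
    d = x - x.mean()
    return float(np.mean(d ** 3) / np.mean(d ** 2) ** 1.5)
```

The slopes carry the units of decision time per unit of the difficulty axis, so their absolute values depend on how difficulty is scaled. Only the comparison between the 0.1 and 0.9 quantiles within a condition is meaningful here.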

Figure 12. Distributions of decision times produced by the coupled-circuit model.

(A) Normalized cumulative distributions for correct trials under accuracy conditions. Darker curves correspond to more difficult tasks, determined by mean target-distractor similarity (see legend). (B) Same as in A, but under speed conditions. Note the different scales of the x-axis in A and B. (C) Decision times at which the cumulative distributions for correct trials reach quantiles 0.1 and 0.9 under accuracy (grey) and speed (black) conditions, plotted as a function of task difficulty. Lines show the best least-squares linear fits, where solid and dashed lines correspond to quantiles 0.1 and 0.9, respectively. Under each condition, the slope of the dashed line (38.6 grey, 5.8 black) is greater than that of the solid line (8.5 grey, 2.1 black), indicating that the tail of the distribution grows more than the leading edge as difficulty increases. (D) Mean decision times on correct (solid) and error (dotted) trials under accuracy (grey) and speed (black) conditions. Error bars show standard error.

Discussion

Over the past several decades, a wealth of data and theory has characterized the cortical mechanisms underlying decisions for action in the world, such as where in space to foveate or to reach (see [1], [3]). In this characterization, time is a passive vehicle for the filtering of noisy spatial codes (see [4]). Recently, there has been increasingly broad recognition that the encoding of time is an essential determinant of behaviour [12], [13], [32], [55], [80], [81]. It would, after all, be impossible to plan actions or anticipate upcoming events without a representation of time. Psychophysical studies have characterized temporal coding for decades [62], [67], [82], [83], and a growing body of electrophysiological [73], [84]–[89], neuroimaging [90], [91], and theoretical [56], [57], [92]–[96] studies has begun to reveal the neural mechanisms underlying the representation of time. Despite these developments, very few studies have considered the interactions between spatial and temporal codes for perception, decision and action [8]–[11].

Using a generic, local-circuit cortical model, we have proposed a neural mechanism for the encoding of time in the hundreds of milliseconds range. Climbing activity in the model resembles neural activity in a number of cortical regions during experimental tasks with a timing requirement in this range [9], [69], [73], [84]–[89], and our analysis of network dynamics formally characterizes the underlying mechanism. Strong feedback excitation destabilizes the network's background state, repelling the network toward a stable attractor. Because the dynamics evolve more quickly with stronger feedback excitation, estimates are shorter with stronger intrinsic NMDAR conductance. The model's interval estimates conform to Weber's law, which is widely observed experimentally (Figures 5 and 6), and the CV of these estimates is consistent with that of experimental subjects in timing tasks in the same range (Figure 5). Our derivation of the probability density of interval estimates reveals Weber's law as a mathematical property of the proposed timing mechanism (Figure 7). A simple learning rule is sufficient for the network to learn new intervals within a handful of trials (Figure 8), as observed across experimental paradigms and species [7], and we have demonstrated plausible mechanisms for starting and stopping the estimates (Figure 9). Consistent with the hypothesis that generic properties of local cortical circuits support spatial and temporal processing [12], an otherwise identical downstream network, differing only in spatial selectivity and NMDAR conductance strength, makes decisions in simulations of a perceptual discrimination task. It produces psychometric and chronometric curves consistent with experimental data (e.g. [9], [97], [98]) and demonstrates the SAT as a function of the learned temporal estimates upstream (Figure 11).
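The mechanism summarized above can be illustrated with a deliberately crude caricature. Its two ingredients are a destabilized background state whose escape is faster with stronger feedback excitation, and variability that scales with the interval. The caricature is neither the spiking network nor the derivation behind Figure 7. It uses a single linear variable with an unstable fixed point at zero, driven by noise, whose first passage to a threshold stands in for the end of an interval estimate. The growth rate `lam` is a hypothetical proxy for feedback excitation strength (for example, intrinsic NMDAR conductance), and all parameter values are arbitrary. Noise sets the initial seed of each excursion and the instability amplifies it. As a result, the standard deviation of the first-passage time scales approximately as 1/`lam`, and so does the mean, up to a slowly varying logarithmic factor. The CV therefore stays roughly constant as `lam` changes.

```python
import numpy as np


def escape_times(lam, sigma=1.0, theta=10.0, dt=1e-3, t_max=5.0, n_trials=2000, seed=0):
    """First-passage times of |x| to theta for dx = lam * x dt + sigma dW, x(0) = 0.

    Euler-Maruyama integration of a linearized caricature of a destabilized
    background state; lam > 0 (hypothetical units of 1/s) plays the role of net
    feedback excitation. Trials that do not cross within t_max are returned as NaN.
    """
    rng = np.random.default_rng(seed)
    x = np.zeros(n_trials)
    t_cross = np.full(n_trials, np.nan)
    waiting = np.ones(n_trials, dtype=bool)
    for step in range(1, int(round(t_max / dt)) + 1):
        x += lam * x * dt + sigma * np.sqrt(dt) * rng.standard_normal(n_trials)
        crossed = waiting & (np.abs(x) >= theta)
        t_cross[crossed] = step * dt
        waiting &= ~crossed
        if not waiting.any():
            break
    return t_cross


def mean_and_cv(times):
    """Mean and coefficient of variation, ignoring trials that never crossed."""
    times = times[~np.isnan(times)]
    m = times.mean()
    return m, times.std(ddof=1) / m


for lam in (5.0, 10.0):
    m, cv = mean_and_cv(escape_times(lam))
    print(f"lam={lam:>4}: mean first-passage time {m:.3f} s, CV {cv:.2f}")
```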

The representation of time in the hundreds of milliseconds range

Different neural mechanisms are expected to code for widely varying temporal durations, ranging from microseconds to days [12], [13]. While considerable overlap between mechanisms is expected at intermediate timescales, it has been proposed that a dedicated mechanism exists for the hundreds of milliseconds range (see [55], [67]), which is the relevant order for the most well-studied perceptual decision tasks [1], [3], [14]. These proposals are based on the premise that a single mechanism encoding time for different tasks and modalities will show common variability in these different contexts. For example, [55] suggested a dedicated timing mechanism in this range based on pooled data from a variety of tasks and species showing a similar CV from approximately 200 to 1500 ms (much like Figure 5B). Along similar lines, [82] described a constant Weber fraction for 200 to 2000 ms. [83] used the slope analysis method to distinguish timing-based variability from non-timing sources of variability, such as variability due to perceptual and motor processing during timing tasks. Under this approach, the slope of the linear fit of the variance against the square of interval duration reveals the time-dependent process, which their study showed to be similar for intervals from 325 to 550 ms in two timing tasks. [67] showed a common slope under this method for several auditory tasks requiring interval estimates from 350 ms to 1 s, though they also showed significantly different slopes for other auditory tasks, for visual tasks, and between auditory and visual implementations of the same task. See their study for a more extensive description of the evidence for and against a common timer in this range.
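The slope method of [83] splits the variance of timed responses into two parts: one that grows with the square of the interval and one that does not. In symbols, var = k²T² + c, where k is the Weber fraction of the timing component and c is duration-independent variance (for example, perceptual and motor). A minimal sketch of the fit follows, assuming one array of estimates per target interval. The function and argument names are hypothetical.

```python
import numpy as np


def slope_analysis(intervals, estimates):
    """Fit var(estimate) = slope * T**2 + intercept across target intervals T.

    intervals: target durations T (s); estimates: one array of produced or
    estimated durations per interval. The slope is the squared Weber fraction of
    the time-dependent (timing) component; the intercept is the duration-
    independent variance.
    """
    T = np.asarray(intervals, dtype=float)
    variances = np.array([np.var(np.asarray(e, dtype=float), ddof=1) for e in estimates])
    slope, intercept = np.polyfit(T ** 2, variances, 1)
    weber_fraction = float(np.sqrt(slope)) if slope > 0 else float("nan")
    return float(slope), float(intercept), weber_fraction
```

Two tasks that share a timer should yield similar slopes even if their intercepts differ, because perceptual and motor demands enter only through the intercept.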

In consideration of the above, it is important to distinguish between a common mechanism for timing and a common timer. A common timer refers to a ‘central clock’ processing time across a set of modalities and tasks. Inherent in this definition is a common mechanism, but a common mechanism does not necessarily imply a common timer. We propose that the capability to code time in the hundreds of milliseconds range is a generic property of local cortical circuits under conditions supporting strong attractor dynamics, but this capability does not imply that any single circuit should code time for all tasks and modalities, nor that all local cortical circuits should code time.