Abstract

The Laboratory of Translational Auditory Research (LTAR/NYUSM) is part of the Department of Otolaryngology at the New York University School of Medicine and has close ties to the New York University Cochlear Implant Center. LTAR investigators have expertise in multiple related disciplines including speech and hearing science, audiology, engineering, and physiology. The lines of research in the laboratory deal mostly with speech perception by hearing impaired listeners, and particularly those who use cochlear implants (CIs) or hearing aids (HAs). Although the laboratory's research interests are diverse, there are common threads that permeate and tie all of its work together. In particular, a strong interest in translational research underlies even the most basic studies carried out in the laboratory. Another important element is the development of engineering and computational tools, which range from mathematical models of speech perception to software and hardware that bypass clinical speech processors and stimulate cochlear implants directly, to novel ways of analyzing clinical outcomes data. If the appropriate tool to conduct an important experiment does not exist, we may work to develop it, either in house or in collaboration with academic or industrial partners. Another notable characteristic of the laboratory is its interdisciplinary nature where, for example, an audiologist and an engineer might work closely to develop an approach that would not have been feasible if each had worked singly on the project. Similarly, investigators with expertise in hearing aids and cochlear implants might join forces to study how human listeners integrate information provided by a CI and a HA. The following pages provide a flavor of the diversity and the commonalities of our research interests.

Keywords: Cochlear implants, diagnostic techniques, hearing aids and assistive listening devices, hearing science, speech perception

Plasticity and Adaptation

The ability of the human brain to adapt to distorted sensory input is of great interest to scientists, in large part because it has important clinical implications. For example, in the auditory domain the capabilities and limitations of human perceptual adaptation are important in part because they permit postlingually hearing impaired cochlear implant (CI) users to understand speech. Close to 200,000 patients have received CIs as of this writing, a large proportion of whom were deafened after acquiring language (or postlingually). A potentially significant problem in this population is a mismatch between the input acoustic frequency and the characteristic frequency of the neurons stimulated by the implant. If this occurs, then the listener must overcome a mismatch in the neural representation provided by the CI and the long-term representations of speech that were developed when they had acoustic hearing. Frequency mismatch may be particularly problematic for CI users because they must also contend with an input signal that is spectrally degraded.

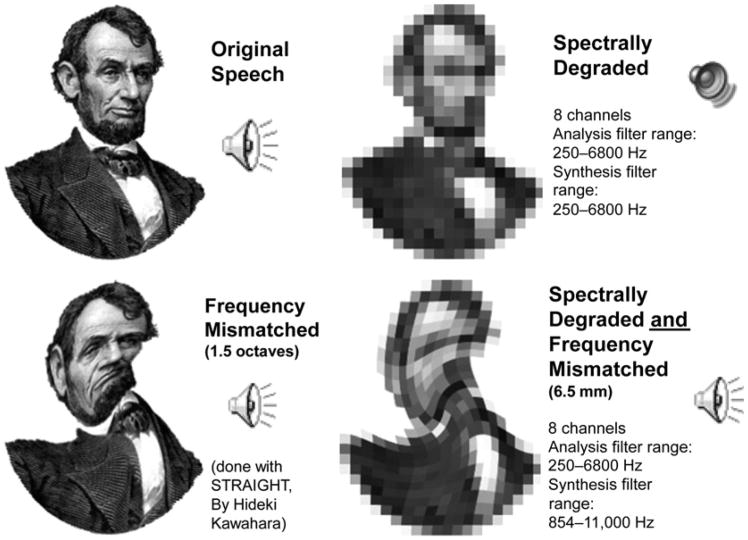

While users of cochlear implants must overcome a number of distortions, there are also numerous examples of the brain's remarkable ability to overcome auditory distortions, particularly when they are imposed one at a time (Ainsworth, 1967; Remez et al, 1981; Shannon et al, 1995). Figure 1 shows a visual analogy of this concept: a familiar picture is recognizable when two kinds of severe distortion are imposed separately but unrecognizable when both distortions are imposed at the same time. In the auditory domain, a sentence can be recognizable despite a severe frequency shift, or when processed through an eight-channel noise vocoder (which represents an acoustic simulation of an eight-channel CI without any frequency shift). However, naïve listeners cannot understand sentences that have been spectrally degraded by an eight-channel vocoder and then frequency shifted by a large amount.

Figure 1.

A picture of Abraham Lincoln (top left) is recognizable despite a rotational distortion (lower left) or heavy pixelation (upper right), but imposing both distortions at the same time renders the picture very hard to recognize. Supplemental to the online version of this article is a version of this figure (Audio 1) that is an auditory demonstration of the concept. A sentence read by an American speaker with a Brooklyn accent can be heard by clicking the top left panel. The bottom left panel will play the same sentence but with a frequency shift roughly equivalent to a 6.5 mm displacement along the cochlea, and the upper right panel plays the sentence as processed by a noise vocoder, which represents an acoustic simulation of an eight-channel CI without any frequency shift. Both degraded sentences are at least somewhat recognizable. However, when the sentence is both frequency shifted and spectrally degraded (lower right) it becomes impossible to recognize, at least without any training.

To what extent are human listeners able to adapt to these distortions? How much time is required for postlingually hearing impaired CI users to adapt? Our work attempts to address these basic questions and is based on a theoretical framework that includes the following general hypotheses: (1) Upon initial stimulation of their device, a postlingually deafened CI user may experience a frequency mismatch, the size of which is influenced by several factors. These include, but are not limited to, the acoustic frequency ranges associated with each electrode, the location of each electrode along the cochlea, the size of the listener's cochlea, patterns of neural survival, and patterns of electrical field transmission within the cochlea. (2) Small amounts of frequency mismatch are easily overcome, possibly by the same neural and cognitive mechanisms responsible for speaker normalization (e.g., the ability to understand a variety of talkers despite differences in pitch, formant frequencies, and accent). (3) Intermediate levels of frequency mismatch may be overcome as part of a nontrivial adaptation process involving perceptual learning; this process may require weeks, months, or even years of regular CI use. (4) Large amounts of frequency mismatch may be impossible to overcome, even after months or years of experience. (5) Individuals may differ in their capacity to adapt to frequency mismatch, and these differences may be predictable based on cognitive resources such as verbal learning ability or working memory. For those patients who cannot adapt completely to frequency mismatch, we speculate it is possible to minimize such mismatch with the use of appropriate frequency-to-electrode tables.

One of our major goals is to measure the extent and time course of adaptation to frequency mismatch in postlingually hearing impaired CI users, using patients who are recently implanted as well as a cross-section of experienced CI users. To avoid basing conclusions on a single method, converging evidence for adaptation is obtained using four different methods: (1) electroacoustic pitch matching, (2) computational modeling of vowel identification, (3) determination of a listener's perceptual vowel space, and (4) real time listener-guided selection of frequency tables.

The electroacoustic pitch-matching and computational modeling methods are both described in greater detail in subsequent sections. In brief, the electroacoustic pitch-matching method requires the listener to find the acoustic stimuli that best match the pitch elicited by stimulation of one or more intracochlear electrodes. Note, however, that this test can only be completed with CI users who have a sufficient amount of residual hearing. The computational modeling method is based on the concept that differing amounts of uncompensated frequency mismatch will yield different patterns of vowel confusions. By computationally examining the vowel-confusion matrices, we can then estimate whether a listener has completely adapted to a given frequency-to-electrode table.

The vowel-space method uses a method-of-adjustment procedure with synthetic vowel stimuli. These stimuli vary systematically in first and second formant frequencies (F1 and F2) and are arranged in a two-dimensional grid (Harnsberger et al, 2001; Svirsky, Silveira, et al, 2004). Patients select different squares in the grid until they find stimuli that most closely match the vowels depicted in visually presented words. Patients then provide goodness ratings for the stimuli they have chosen. The patients' responses to all vowels are then used to construct individual perceptual “vowel spaces,” or the range of F1 and F2 values that correspond to a given vowel. If CI users fail to adapt completely to a frequency mismatch, then the F1 and F2 values of their chosen “vowel space” should differ from those exhibited by normal-hearing individuals.

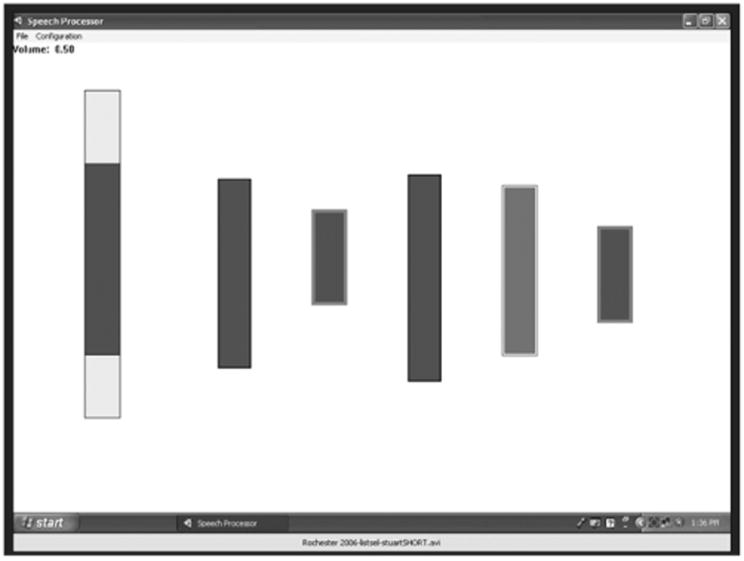

Lastly, the real-time frequency-table selection method involves the listener selecting a frequency table that makes speech sound most intelligible. The frequency table is adjusted by the listener in real time (or near real time) while listening to speech (Kaiser and Svirsky, 2000; Svirsky, 2011; Jethanamest et al, 2010). Figure 2 illustrates one of the programs we have developed for frequency table selection. The light rectangle at the left represents the frequency range of human audition, and the dark rectangle within it represents the frequency range of the active frequency table. The listener can adjust the frequency table manually to optimize the intelligibility of the incoming speech. The Web-based version of this article includes a demonstration of the program at work. In this demo, the output of each filter modulates a band of noise, creating an acoustic simulation of a CI like the one used in Figure 1. As the frequency table moves higher in frequency, the mismatch between the frequency table and the noise bands is minimized, and the talker becomes more and more intelligible. Beyond a certain point, the frequency table moves even higher than the output noise bands, and intelligibility starts to go down again, due to a frequency mismatch in the other direction: the frequency table is now higher in frequency than the noise bands rather than lower. Use of this program can provide insight into adaptation to frequency tables: a CI user who has adapted completely to his frequency table would be expected to select a table that is very close to the one he uses every day. In contrast, a CI user who has not adapted completely would select a table that differs significantly from the one he uses every day. This method, in addition to providing information about adaptation to frequency tables, may provide the basis for clinical methods to select alternative stimulation parameters in patients who show incomplete adaptation to their frequency tables.

Figure 2.

Graphical depiction of a PC-based tool for selection of frequency allocation tables. The vertical scale used for this graphical representation reflects distance along the cochlea. The range of the active frequency table is visually represented by a dark gray bar that is embedded within a larger, light gray bar (leftmost bar). The light gray bar represents the whole frequency range that is audible to humans. As the listener adjusts the frequency table, the dark gray bar moves and changes accordingly. Listeners modify the frequency map in real time until they find the one that sounds most intelligible. In addition to the continuous adjustment described above, the listener (or the experimenter) has the option of selecting a number of filter banks for further comparison. When a given filter bank is selected with the mouse, the corresponding dark rectangle is copied to the right of the screen (see five bars to the right of the figure). Any subset of those filter banks can be then selected for comparison. The active filter bank changes from one to the next, instantaneously, by pressing the space bar. During execution the program keeps track of which filter bank is active and saves the information to a file. Supplemental to the online version of this article is a version of this figure (Video 1) that demonstrates changes in speech intelligibility as the frequency table is adjusted while a talker speaks. Speech is initially unintelligible; it becomes better and becomes optimal as the frequency table moves up; and then it becomes unintelligible again as the frequency table moves even higher.
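The notion of mismatch between a frequency table and a fixed set of output bands can be illustrated with a short sketch. It is not the program shown in Figure 2: the function names are hypothetical, the tables are assumed to be purely logarithmically spaced (clinical tables generally are not), and the frequency ranges are made-up values chosen only for illustration.

```python
import math

def log_spaced_table(low_hz, high_hz, n_channels=8):
    """Band edges (Hz) for n_channels contiguous, logarithmically spaced bands.

    Log spacing is an assumption made for this sketch only.
    """
    ratio = (high_hz / low_hz) ** (1.0 / n_channels)
    return [low_hz * ratio ** i for i in range(n_channels + 1)]

def mean_mismatch_octaves(analysis_edges, output_edges):
    """Mean signed offset, in octaves, of analysis band edges relative to output band edges.

    Negative values mean the frequency table sits below the output bands.
    """
    offsets = [math.log2(a / o) for a, o in zip(analysis_edges, output_edges)]
    return sum(offsets) / len(offsets)

# Hypothetical ranges: fixed output noise bands and two candidate frequency tables.
output_bands = log_spaced_table(500.0, 8000.0)
table_too_low = log_spaced_table(250.0, 4000.0)
table_matched = log_spaced_table(500.0, 8000.0)

print(round(mean_mismatch_octaves(table_too_low, output_bands), 2))
print(round(mean_mismatch_octaves(table_matched, output_bands), 2))
```

In this toy setting, moving the table up in frequency drives the mismatch toward zero, and moving it further produces a mismatch of the opposite sign, which parallels the rise and fall in intelligibility described for the demonstration.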

To help interpret the adaptation data obtained with these four methods, we also obtain measures of speech perception, formant frequency discrimination, cochlear size, electrode location, verbal learning, working memory, and subjective judgments. Our working hypothesis is that incomplete adaptation to a frequency table is more likely in patients with large cochleas, shallow electrode insertion, low verbal learning skills, and low levels of working memory and that the ability to fully adapt may be affected by the presence of usable residual hearing.

In summary, this line of research investigates basic aspects of adaptation to different frequency tables after cochlear implantation in postlingually hearing impaired patients. These experiments also have an important translational aspect, as they try to predict (based on anatomical, cognitive, and psychophysical measures) which patients may have most difficulty adapting to frequency mismatch. Even more importantly from a translational perspective, we are investigating possible ways to mitigate the effect of such frequency mismatch. In so doing, the present studies may provide important basic knowledge about perceptual learning as well as useful and specific guidance to the clinicians who are in charge of fitting CIs.

Electroacoustic Pitch Matching: Behavioral, Physiological, and Anatomical Measurements

As the guidelines for selecting candidates for cochlear implantation become more relaxed, there is an increasing number of CI patients with residual hearing. This allows us to compare the pitch percepts elicited by electrical stimulation with those elicited by acoustic hearing; such comparisons are important because they can serve as a marker of whether a listener has fully adapted to his or her frequency-to-electrode table (or just “frequency table”). Specifically, we hypothesize that a patient has not fully adapted to his or her frequency table unless the pitch elicited by electrical stimulation of a single electrode is matched to an acoustically presented tone that has a frequency that falls within the band of frequencies allocated to that electrode. Thus, to further explore this adaptation process, we are tracking behavioral and physiological changes in acoustic-electric pitch matching in a group of CI patients over their first 2 yr of device use.

In our current experimental paradigm, pitch percepts are evaluated using psychoacoustic and physiological data, while anatomical data obtained from CT scan measurements are used to help interpret the results. In our psychoacoustic experiment, the CI patient is alternately stimulated with an acoustic tone in the unimplanted ear via a headphone and an electrical pulse train in the implanted ear. The CI patient first balances the loudness of the acoustic and electric percepts across both ears, if possible. Next, they adjust the frequency of the acoustic tone to match the pitch percept elicited by electrical stimulation. Six pitch-matching trials are conducted for each electrode tested, and the starting frequency of the acoustic tone is randomized for each trial to avoid potentially biasing the frequency matches (Carlyon et al, 2010).

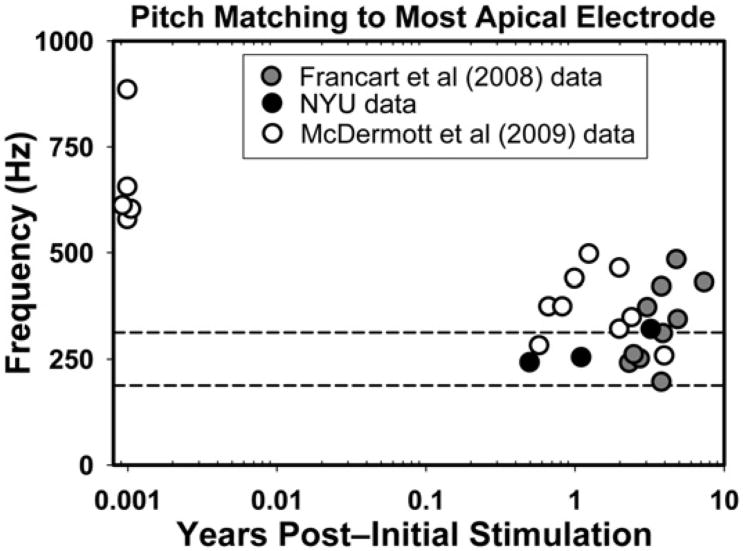

Three users of the Nucleus-24 device have participated in the psychoacoustic experiment as of this writing. Their pitch-matching data at the most apical electrode are plotted in Figure 3 along with data obtained from two related studies (Francart et al, 2008; McDermott et al, 2009). Other than the five subjects from McDermott et al (2009) who were tested shortly after implantation, the subjects had between 6 mo and 8 yr of experience. As the figure shows, recently implanted subjects match their most apical electrode to frequencies that are much higher than the 188–313 Hz frequency band that corresponds to that electrode (i.e., they show significant basalward shift). After months or years of experience, 9 of 22 experienced CI users displayed little to no frequency shift: the acoustic pitch match fell within the frequency range assigned to that electrode. In contrast, 13 experienced CI users still displayed different amounts of basalward shift, as the acoustic pitch match was higher than 313 Hz.

Figure 3.

Average pitch-matched frequency of acoustic tones for the most apical electrode in 27 users of the Nucleus-24 CI, as a function of years of listening experience with the CI. The y-axis corresponds to the acoustic pitch matches (in Hz), and the x-axis depicts the number of years after initial stimulation (on a logarithmic scale). The two horizontal dashed lines show the frequency range that is associated with the most apical electrode in Nucleus-24 users: 188–313 Hz with a 250 Hz center frequency. Values within this range are considered to reflect complete adaptation to any frequency mismatch and are reflected by symbols that fall within the two dashed lines. Symbols above this region represent basalward shift, a situation where the percept elicited by a given electrode is higher in frequency (i.e., a more basal cochlear location) than the electrode's analysis filter.
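The criterion used to read Figure 3 can be written down compactly. The sketch below is only an illustration of that criterion: the band limits and center frequency are the ones quoted for the most apical Nucleus-24 electrode, while the function names and the example pitch matches are hypothetical and are not data from the study.

```python
import math

# Analysis band of the most apical Nucleus-24 electrode, as quoted in the text.
BAND_LOW_HZ = 188.0
BAND_HIGH_HZ = 313.0
CENTER_HZ = 250.0

def classify_pitch_match(match_hz, low_hz=BAND_LOW_HZ, high_hz=BAND_HIGH_HZ):
    """Label an acoustic pitch match relative to the electrode's analysis band."""
    if match_hz > high_hz:
        return "basalward shift"
    if match_hz < low_hz:
        return "apicalward shift"
    return "within band"

def shift_in_octaves(match_hz, center_hz=CENTER_HZ):
    """Signed distance of the match from the band's center frequency, in octaves."""
    return math.log2(match_hz / center_hz)

# Hypothetical pitch matches in Hz (NOT measurements from the study).
for hz in (250.0, 500.0, 150.0):
    print(hz, classify_pitch_match(hz), round(shift_in_octaves(hz), 2))
```

Expressing the shift in octaves rather than in Hz is a design choice of this sketch; it makes shifts comparable across electrodes whose analysis bands differ in width.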

The picture was different when considering electrodes at intermediate parts of the array, where our three subjects displayed an apicalward shift as they selected acoustic pitch matches that were lower than the center frequency allocated to these electrodes. This trend is consistent with data showing that listeners may show basalward frequency shift for some electrodes (typically the most apical) and apicalward shift for more intermediate electrodes (Dorman et al, 2007). Finally, the data at the most basal electrodes are less reliable because the pitch sensations elicited by electrical stimulation exceed the limits of the listener's audible frequency range. Taken together, the observation that not all pitch matches fell within the frequency range specified by the clinical frequency table suggests that some experienced CI users may not have completely adapted to their clinical frequency table, at least for a subset of stimulating electrodes.

In parallel to the behavioral study we are attempting to develop a physiological measure of acoustic-electric pitch matches for users of cochlear implants who also have residual hearing. Toward this goal, we developed a Matlab program that uses the NIC V2 toolbox (Cochlear Americas) to present interleaving short intervals of acoustic and electrical stimulation, while we record auditory evoked potentials (AEPs) using a Neuroscan system (Charlotte, NC). All acoustic and electrical stimulation is presented sequentially, such that the electrode of interest in the implanted ear is stimulated for 1000 msec and followed by a 1000 msec acoustic tone presented to the contralateral ear; the acoustic tone is shaped by a trapezoidal window with a rising/falling time of 10 msec to prevent spectral splatter. Each pair of electric and acoustic stimuli is repeated 500 times. The AEP response recording is initiated by a trigger inserted at the end of each electrical stimulus. To date we have tested two normal hearing subjects and one CI patient to verify the efficacy of the system.
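As an illustration of the acoustic stimulus described above, the following sketch generates a 1000 msec tone shaped by a trapezoidal window with 10 msec linear ramps. It is written in Python rather than Matlab and does not use the NIC toolbox; the function name and the 44.1 kHz sampling rate are assumptions, since the text does not state the sampling rate used.

```python
import math

def trapezoid_tone(freq_hz, dur_ms=1000.0, ramp_ms=10.0, fs=44100):
    """Return a sine tone shaped by a trapezoidal (linear-ramp) window.

    fs is an assumed sampling rate; durations follow the values in the text.
    """
    n_total = int(round(fs * dur_ms / 1000.0))
    n_ramp = int(round(fs * ramp_ms / 1000.0))
    samples = []
    for n in range(n_total):
        # Linear rise over the first n_ramp samples, flat top, linear fall at the end.
        gain = min(1.0, n / n_ramp, (n_total - 1 - n) / n_ramp)
        samples.append(gain * math.sin(2.0 * math.pi * freq_hz * n / fs))
    return samples

tone = trapezoid_tone(1000.0)
print(len(tone), max(abs(s) for s in tone))
```

The gradual onset and offset avoid the abrupt amplitude steps that would otherwise spread energy across frequency.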

For the normal hearing subjects we presented a fixed 1000 Hz tone to one ear to simulate the fixed place of stimulation caused by stimulating a single intracochlear electrode. Then, in the contralateral ear, we presented tones of 250, 900, 1000, 1100, and 4000 Hz. All acoustic stimuli were presented via insert earphone at 70 dB SPL. As a general rule, for normal hearing listeners AEP latency increases as stimulus frequency decreases. In this study, however, N1 latency was minimized when the same frequency was presented to both ears (i.e., when the pitch percepts of the stimuli were matched between the two ears). A similar result occurred in our single CI user, as N1 latency was minimized when the acoustic stimulus was the frequency that was pitch matched to the stimulated electrode. While preliminary, these data suggest that the latency of N1 has potential as an indicator of electric-acoustic pitch matching across the two ears.

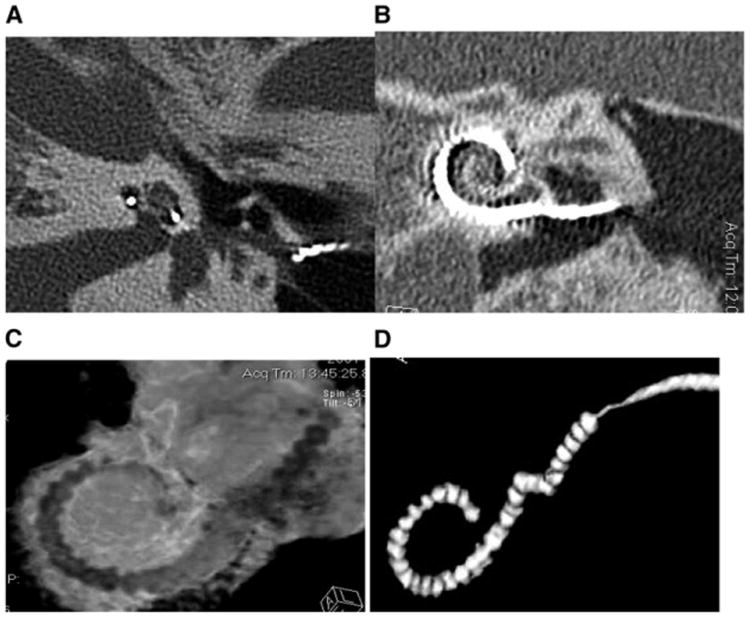

In addition to our psychophysical and physiological measures, we also plan to use imaging data to help interpret the pitch-matching data. Such measures are likely to be important, because the cochlear size and electrode location are two factors that could greatly influence acoustic-electric pitch matches. For example, normal human cochleae typically vary in size by 10% (Ketten et al, 1998) and can differ as much as 40% (Hardy, 1938). In consequence, an electrode location that is 24 mm from the cochlear base would stimulate spiral ganglion neurons with an average characteristic frequency of 76 Hz in a cochlea that is 28 mm long, and 1020 Hz in one that is 42 mm long, according to Stakhovskaya et al's (2007) recent spiral ganglion correction to Greenwood's equation (Greenwood, 1990). Figure 4 shows an example of the imaging data used to obtain the necessary anatomical measures for our studies. As can be seen in the figure, it is possible to visualize the approximate location of each electrode along the cochlea as well as interelectrode spacing of a 22-electrode implant. It is also possible to calculate cochlear size and relative intracochlear position of the implant array based on measurements of cochlear diameter radii and axial height (Ketten et al, 1998). By using extended scale and metal artifact reduction techniques, CT scans can also be used to obtain more fine-grained information about electrode position (e.g., distance to the modiolus or to the outer wall; scala vestibuli vs. scala tympani location) and about neural survival.
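The dependence of characteristic frequency on cochlear length can be sketched with Greenwood's (1990) frequency-position function for the human cochlea, F = 165.4(10^(2.1x) - 0.88), where x is the proportion of cochlear length measured from the apex. Note that this sketch applies the uncorrected function and does not include the spiral ganglion correction of Stakhovskaya et al (2007), so its output will differ from the 76 Hz and 1020 Hz values quoted above. The function name and the 35 mm intermediate length are illustrative assumptions.

```python
def greenwood_hz(dist_from_base_mm, cochlear_length_mm):
    """Greenwood (1990) human frequency-position function, without any
    spiral ganglion correction.

    x is the proportion of cochlear length measured from the apex.
    """
    x = 1.0 - dist_from_base_mm / cochlear_length_mm
    return 165.4 * (10.0 ** (2.1 * x) - 0.88)

# Same electrode position (24 mm from the base) in cochleae of different lengths.
for length_mm in (28.0, 35.0, 42.0):
    print(length_mm, round(greenwood_hz(24.0, length_mm)))
```

Even without the correction, the same insertion depth maps to very different characteristic frequencies in a short and a long cochlea, which is the point the anatomical measurements are meant to address.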

Figure 4.

The images show (A) a midmodiolar scan of an implanted right ear from which cochlear radii and height can be measured; (B) a 2-D reformatted image to show a coronal view of the implant in vivo; (C) a 3-D reconstruction of the implant within the cochlear canal (Supplemental to the online version of this article is a version of this figure in which electrodes are shown in green, the intracochlear tissues in red, and the cochlear capsule transparent with canal walls in white); (D) a 3-D reconstruction of the array extracted digitally with metal artifact reduction applied to provide better definition of the individual electrode positions. Note in A, B, and C the shift in position of the trajectory of the array from the outer wall of the cochlear canal to the modiolar wall in the upper basal turn.

Computational Modeling of Speech and Other Auditory Stimuli by Hearing Impaired Listeners

A likely source of interindividual differences in CI users' speech understanding is the combination of the limited speech information provided by the implant and the CI user's ability to utilize this speech information. A CI provides a degraded signal that limits the quality and number of available speech cues necessary for identifying vowels and consonants (Teoh et al, 2003). Moreover, the ability of CI users to discriminate spectral and temporal speech cues is highly variable and worse as a group than listeners with normal hearing (Fitzgerald et al, 2007; Sagi et al, 2009). These two properties provide an opportunity to develop relatively simple computational models of speech understanding by CI users. These models are useful for testing hypotheses about the mechanisms CI users employ to understand speech, for studying the process of adaptation as CI users gain experience with their device, and for exploring CI speech processor settings that may improve a listener's speech understanding.

The Multidimensional Phoneme Identification (MPI) model (Svirsky, 2000, 2002) is a computational framework that aims to predict a CI user's confusions of phonemes (i.e., vowels or consonants) based on his or her ability to discriminate a postulated set of speech cues. The model is multidimensional in the sense that each phoneme can be defined as a point within a multidimensional space, where each dimension is associated with a given speech cue; the phoneme's location within that multidimensional space is specified by that phoneme's speech cue values. The poorer a listener's discrimination for these speech cues, the higher the likelihood a phoneme in one location will be confused with a different phoneme in close proximity within the space. The MPI model's underlying assumptions and postulated speech cues are confirmed when the model produces a confusion matrix that closely matches a CI user's confusion pattern, but are otherwise rejected. In this way, the MPI model can be useful for testing hypotheses about the mechanisms CI users employ to understand both vowels and consonants.
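The general idea of predicting a confusion matrix from cue locations and discrimination ability can be illustrated with a toy Monte Carlo simulation. This is not the MPI model as published: the Gaussian perceptual noise, the nearest-center decision rule, the function name, and the vowel locations are all assumptions made for the purpose of the sketch.

```python
import math
import random

def simulate_confusions(stim_locs, resp_centers, jnd, n_trials=2000, seed=0):
    """Monte Carlo confusion matrix for a toy multidimensional identification model.

    stim_locs / resp_centers: dict mapping label -> tuple of cue values.
    jnd: standard deviation of Gaussian noise added to every cue (assumed form).
    """
    rng = random.Random(seed)
    labels = list(stim_locs)
    matrix = {s: {r: 0 for r in labels} for s in labels}
    for s in labels:
        for _ in range(n_trials):
            percept = tuple(v + rng.gauss(0.0, jnd) for v in stim_locs[s])
            # Respond with the label whose response center is nearest the percept.
            response = min(labels, key=lambda r: math.dist(percept, resp_centers[r]))
            matrix[s][response] += 1
    return matrix

# Hypothetical formant locations (mm along the array); not values from the study.
vowels = {"heed": (2.0, 14.0), "hid": (4.0, 12.5), "head": (6.0, 11.5)}
sharp = simulate_confusions(vowels, vowels, jnd=0.2)   # good discrimination
coarse = simulate_confusions(vowels, vowels, jnd=2.0)  # poor discrimination

for name, m in (("sharp", sharp), ("coarse", coarse)):
    correct = sum(m[v][v] for v in vowels)
    print(name, correct / (len(vowels) * 2000))
```

In this toy version, a larger noise parameter produces more confusions between neighboring vowels, which is the qualitative behavior described above.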

In listeners with normal hearing, vowel identification is closely related to their ability to determine the formant frequencies of each vowel (Peterson and Barney, 1952; Hillenbrand et al, 1995), which indicate the shape of the speaker's vocal tract during the vowel's production (e.g., tongue height, position, roundedness, etc.). In a CI, formant energies are delivered across subsets of electrodes in relation to the frequency-to-electrode allocation of the CI speech processor. Because this allocation is tonotopic, formant energies in the lower frequency ranges are delivered to more apical electrodes, and those with higher frequency ranges are delivered to more basal electrodes. As a test of this model and our ability to predict vowel identification in CI users, we implemented an MPI model of vowel identification using locations of mean formant energies along the implanted array as speech cues, combined with a parameter that indexed a CI user's ability to discriminate place of stimulation in the cochlea. Our model was applied to vowel identification data obtained from 25 postlingually deafened adult CI users. Notably, it was capable of accounting for the majority of their vowel confusions, even though these individuals differed in terms of CI device, stimulation strategy, age at implantation, implant experience, and levels of speech perception (Sagi, Meyer, et al, 2010).

In general, consonants are produced by some form of controlled constriction of the source airflow using the articulators within the oral cavity. Consonants can be classified in terms of distinctive features such as place within the oral cavity where the constriction occurs, the manner of this constriction, and whether the source airflow was periodic (i.e., voiced) or not (voiceless). Within the acoustic signal, there are a variety of speech cues that are related to these speech features (though they do not map exclusively one to the other), and many of these speech cues are transmitted through a CI (Teoh et al, 2003). To assess the ability of the MPI model to predict consonant identification in CI users, we implemented an MPI model of consonant identification using three types of speech cues (two spectral and one temporal) and three independent input parameters representative of a subject's discrimination for each speech cue. The model was subsequently applied to consonant identification data obtained from 28 postlingually deafened CI users. Suggesting the validity of our approach, the model was capable of accounting for many aspects of subjects' consonant confusions, including 70–85% of the variability in transmission of voicing, manner, and place of articulation information (Svirsky et al, 2011).

The MPI model has other applications, including the study of the adaptive process CI users undergo as they gain experience with their device. In a noteworthy study of adaptation in CI users (Fu et al, 2002), three postlingually deafened experienced CI users volunteered to use a frequency map shifted up to one octave below their clinically assigned maps and to use this map daily for 3 mo. At the end of the study period, subjects' speech understanding scores with the frequency-shifted map were lower than their scores with clinically assigned maps, though some improvement in scores did occur with the frequency-shifted map during the study period. This result was interpreted to mean that subjects were capable of adaptation, but their adaptation was incomplete. Sagi, Fu, et al (2010) applied the MPI model to Fu et al's (2002) vowel identification data to help explain their subjects' adaptation to the frequency-shifted map. The first two mean formant energies (F1 and F2) of the vowel stimuli used in Fu et al were used as speech cues. One type of model input parameter was used to account for subjects' discrimination of these speech cues. Two other types of input parameters were used to determine subjects' response center locations, that is, their expectations of where vowels are located in the F1 vs. F2 space, and their uncertainty in recalling these locations. In the case of complete adaptation, one would assume that response center locations are equal to the average locations of vowel stimuli in F1 vs. F2 space, and that subjects' uncertainty in recalling these locations is near zero. This was found to be true when the MPI model was applied to subjects' data when using their clinical maps. With the frequency-shifted map, subjects were able to formulate response center locations that were consistent with the new vowel locations within the first week. 
Furthermore, their uncertainty for these locations decreased during the 3 mo period (suggesting adaptation) but remained much larger in comparison to subjects' uncertainty with the clinically assigned maps. These results suggest that Fu et al's subjects could accommodate fairly quickly to how the new vowels sounded, but their adaptation was limited by their ability to formulate stable lexical labels for the new vowel sounds.

The MPI model can be used to predict how CI device settings affect speech perception outcomes. For example, Sagi, Meyer, et al (2010) demonstrated how the MPI model could account for the results of Skinner et al (1995) where it was found that a group of CI users performed better with one frequency allocation map over another. Similarly, in modeling the data of Fu et al (2002), Sagi, Fu, et al (2010) found that the frequency-shifted map would not have improved subjects' speech perception scores, even if adaptation were complete. Currently, this predictive potential of the MPI model is being developed into a tool that may assist audiologists in finding CI speech processor settings that have the greatest potential for improving a CI user's speech understanding in noisy environments.

Lastly, and in addition to these studies on CI patients, we also conducted a study to characterize and model the perceived quality of speech and music by hearing-impaired listeners (Tan and Moore, 2008). This study is in collaboration with Brian C.J. Moore from the Department of Experimental Psychology at University of Cambridge and is a continuation of research aimed at predicting sound quality ratings in cell phones (Moore and Tan, 2003, 2004a, 2004b; Tan et al, 2003, 2004). Unlike the MPI model, which aims to predict and explain vowel or consonant identification, this other model aims to predict quality ratings of speech and music that had been subjected to various forms of nonlinear distortion. Some of these distortions are inherent to certain hearing aid designs, including (1) hard and soft, symmetrical and asymmetrical clipping; (2) center clipping; (3) “full-range” distortion, produced by raising the absolute magnitude of the instantaneous amplitude of the signal to a power (alpha) not equal to 1, while preserving the sign of the amplitude; (4) automatic gain control (AGC); and (5) output limiting. In our test of this model, stimuli were subjected to frequency-dependent amplification as prescribed by the “Cambridge formula” (Moore and Glasberg, 1998) before presentation via Sennheiser HD580 earphones. The pattern of the ratings was reasonably consistent across listeners. One notable result is that mean ratings were not lower with increasing amount of soft or center clipping or when the compression ratios of the AGC and output limiting were increased. The deleterious effects produced by these nonlinear distortions may have been offset by the beneficial effects of improving audibility and compensating for loudness recruitment.
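Three of the memoryless distortions listed above can be sketched as sample-by-sample functions. These are generic textbook forms, not the exact processing used in the study: the function names and thresholds are hypothetical, the center-clipping variant shown is one of several in common use, and the full-range function assumes that the intent is to keep the sign of each sample while changing its magnitude.

```python
import math

def hard_clip(x, threshold):
    """Symmetrical hard clipping: limit the sample to +/- threshold."""
    return max(-threshold, min(threshold, x))

def center_clip(x, threshold):
    """Center clipping: zero any sample whose magnitude is at or below threshold.

    Larger samples are moved toward zero by the threshold (one common variant;
    other variants leave them unchanged).
    """
    if abs(x) <= threshold:
        return 0.0
    return math.copysign(abs(x) - threshold, x)

def full_range(x, alpha):
    """Raise |x| to the power alpha while keeping the sign of x (assumed form)."""
    return math.copysign(abs(x) ** alpha, x)

# One cycle of a unit-amplitude sine, processed by each distortion.
sine = [math.sin(2.0 * math.pi * n / 16) for n in range(16)]
print([round(hard_clip(s, 0.5), 2) for s in sine])
print([round(center_clip(s, 0.5), 2) for s in sine])
print([round(full_range(s, 0.5), 2) for s in sine])
```

With alpha below 1 the full-range function expands low-level samples relative to peaks, and with alpha above 1 it does the opposite; alpha equal to 1 leaves the signal unchanged.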

Speech Testing in Realistic Conditions: Cochlear Implants and Classroom Acoustics

Despite the fact that CI users often need to communicate in less than optimal acoustic environments, for the most part clinical evaluation is carried out in a sound-treated room having minimal reverberation, using speech stimuli that are carefully recorded under optimal conditions. Such evaluations may reveal maximal performance of the CI user but are not necessarily predictive of performance under real-world listening conditions. This is of concern because most children with CIs who are implanted at a relatively young age are educated in mainstream classrooms (Daya et al, 2000; Sorkin and Zwolan, 2004) and are expected to function in the same listening environments as their normal hearing peers.

Reverberation is one of the acoustic factors that affect the speech-understanding abilities of children with cochlear implants. In a room, sound reflected from the walls, ceiling, and floors is added to the original sound. In normal-hearing listeners, previous research on the effect of reverberation on speech recognition has shown that early reflections reinforce the original speech sound and are actually beneficial (Bolt and MacDonald, 1949; Lochner and Burger, 1961; Bradley et al, 2003). However, if the reverberation time is sufficiently long, late reflections can degrade the speech signal through the mechanisms of overlap masking and self-masking of speech sounds (Bolt and MacDonald, 1949; Nábĕlek et al, 1989) and can result in reduced speech understanding.

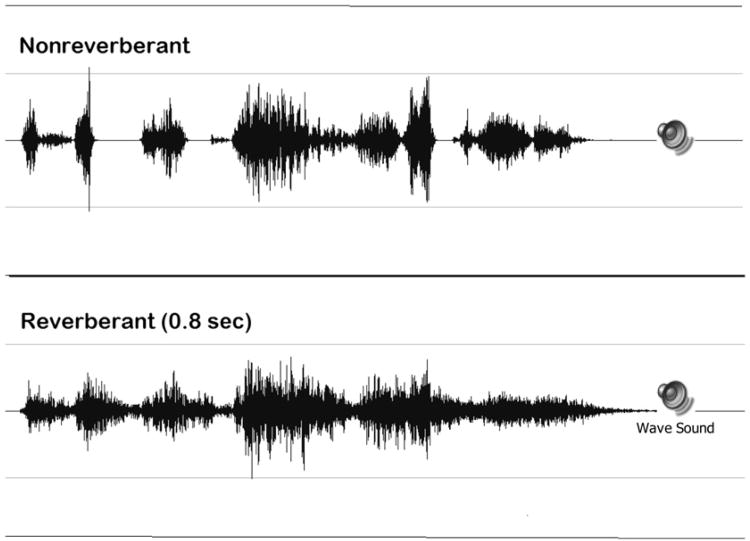

For users of cochlear implants, reverberation has the potential to degrade the speech signal in a more devastating manner than for persons with normal hearing because of the method of signal processing in the cochlear implant speech processor. The waveforms of a single sentence without reverberation and with 0.8 sec reverberation processed through an eight-channel simulation of a cochlear implant are shown in Figure 5, and the audio files of these stimuli can be accessed in the supplementary materials for this article. When we compare the reverberant to the nonreverberant version of the sentence, the prolongation of the sound is clearly visible. We can also clearly see the reduction in the amplitude modulation of the signal, which can hinder the ability to perceive these sounds. When one listens to nonreverberant and reverberant speech processed through the simulated cochlear implant speech processor, two types of degradation are evident in the processed stimuli—the lack of spectral fine structure typical of the speech processor output and the additional temporal smearing of the signal due to reverberation.

Figure 5.

Time waveform of nonreverberant and reverberant stimuli processed through a simulated eight-channel cochlear implant speech processor. Supplemental to the online version of this article is a version of this figure (Audio 2) that includes audio samples of each stimulus that can be played by clicking on the speaker icons.

Until recently it would not have been possible to test the effect of reverberation on the speech perception of persons with cochlear implants in a clinical setting. For example, a previous investigation utilized a classroom with an adjustable reverberation time to assess the effect of reverberation on speech understanding in children (Iglehart, 2009). Another commonly used method is to record reverberant speech test materials and administer the test under headphones. Neither option is feasible, however, for clinical use. In contrast, recent technological developments make it feasible to (1) develop reverberant test materials in a flexible and efficient way and (2) deliver the reverberant test materials directly through the cochlear implant through auxiliary input, thus allowing control of the characteristics of the test conditions in a manner analogous to testing under headphones.

Digital signal processing techniques are commonly used to create virtual listening environments. It is possible to obtain complete information about the acoustics of a room (for a given acoustic source and a given listening location in the room) by recording the binaural room impulse response (BRIR) using microphones located on the head of a research manikin or on the head of a human subject. This method incorporates the head related transfer function as part of the acoustics of the test material. When the BRIR is “convolved” with standard speech materials, the room characteristics are “overlaid” onto the speech. When the recordings created in this manner are played back through headphones, the output is a virtual representation of a specific environment. The listener hears the speech as if seated at the location in the room where the BRIRs were recorded. A benefit of this method of creating test materials is that once the BRIRs are recorded, any speech material can be used to create a test material. This virtual auditory test approach expands clinical testing capabilities by making it possible to test more realistic and complex listening environments than could be instrumented in the clinical environment and also maintains control over the presentation of the stimuli in a manner that would be difficult in sound field testing (Besing and Koehnke, 1995; Koehnke and Besing, 1996; Cameron et al, 2009). Until recently, this virtual auditory test approach could not be used to evaluate performance by persons using a cochlear implant, because of the requirement that stimuli be delivered through headphones. However, Chan et al (2008) have demonstrated the reliability and validity of testing sound localization of CI users by administering virtual auditory test stimuli through the auxiliary input of the CI.
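The convolution step can be sketched in a few lines. The example below is only an illustration: `apply_brir` and `synthetic_brir` are hypothetical names, the speech signal is a noise placeholder, and the impulse response is exponentially decaying noise standing in for a BRIR that would in practice be recorded in a real room.

```python
import numpy as np

def apply_brir(speech, brir):
    """Convolve a mono signal with a two-column (left, right) impulse response."""
    speech = np.asarray(speech, dtype=float)
    brir = np.asarray(brir, dtype=float)
    left = np.convolve(speech, brir[:, 0])
    right = np.convolve(speech, brir[:, 1])
    return np.stack([left, right], axis=1)

def synthetic_brir(rt60_s, fs, rng):
    """Stand-in impulse response: noise whose envelope falls 60 dB over rt60_s seconds."""
    n = int(rt60_s * fs)
    t = np.arange(n) / fs
    envelope = 10.0 ** (-3.0 * t / rt60_s)   # amplitude factor 10**-3 equals -60 dB
    brir = rng.standard_normal((n, 2)) * envelope[:, None]
    brir[0, :] = 1.0                         # direct sound arrives first in both ears
    return brir

rng = np.random.default_rng(0)
fs = 8000                                    # assumed sampling rate in Hz
speech = rng.standard_normal(fs // 4)        # placeholder for a recorded sentence
brir = synthetic_brir(0.8, fs, rng)          # 0.8 sec reverberation time
reverberant = apply_brir(speech, brir)       # two-channel signal for headphones or auxiliary input
```

Because the room is captured entirely by the impulse response, the same `brir` array can be reused with any other speech recording, which is the practical benefit described above.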

In one of our projects we have developed a set of reverberant test materials that simulate the acoustics of a classroom environment. In preparation for the assessment of children with cochlear implants, reverberant test materials in noise were prepared representing three different virtual classroom environments (reverberation times = 0.3, 0.6, and 0.8 sec). Speech and speech-in-noise recordings from the Bamford-Kowal-Bench Speech-in-Noise Test (BKB-SIN; Etymotic Research, 2005) were convolved with BRIRs recorded in a classroom with adjustable reverberation. Normative data in noise were obtained from 63 children with normal hearing and a group of 9 normal hearing adults (Neuman et al, 2010). In addition, test materials have been prepared for assessing the effect of reverberation alone (BKB sentences without noise).

Data collection on children who use cochlear implants is in progress. A sample set of data from one child is presented to illustrate how information obtained using such a test material differs from the information available from current clinical measures. Data are from a 7-yr-old girl who uses bilateral cochlear implants (Nucleus 24, Contour Advance, Freedom, ACE strategy). Born with congenital profound hearing loss, she received hearing aids at 7 mo of age and her first implant at 12 mo. She received her second implant at age 5 yr, 4 mo. Speech recognition scores from her most recent clinical evaluation and scores obtained using a virtual classroom test material administered directly to the cochlear implant speech processor via auxiliary input (TV/HiFi cable) appear in Table 1. Clinical speech tests included the Lexical Neighborhood Test (LNT, Kirk et al, 1995) and the Hearing in Noise Test for Children (HINT-C, Nilsson et al, 1996) in quiet and in noise. As can be seen, this child's scores on the clinical test measures are all excellent. Even the score on the HINT-C in noise is a ceiling score. Word recognition on the BKB sentences in the quiet, nonreverberant condition is likewise comparable to the word recognition scores obtained in the clinic and to those obtained by a group of normal hearing children of similar age. However, this child's word recognition is reduced substantially (to 73%) for the reverberant BKB test material (quiet). In contrast, the word recognition scores of normal hearing children of similar age (ages 6–7 yr) on this reverberant test material ranged from 91 to 99%. The SNR-50 (the signal-to-noise ratio required for 50% performance) in the nonreverberant condition for this child with cochlear implants is 6 dB, not significantly different from the mean SNR-50 for children with normal hearing of similar age (Etymotic Research, 2005). In the reverberant test condition, however, the SNR-50 is 12.5 dB for this child, 7.5 dB higher than that required by a group of normal hearing children of similar age for this reverberant test condition (Neuman et al, 2010).

Table 1. Word Recognition Scores (Percent Correct) and SNR-50 Values on Clinical Tests and the Virtual Classroom Test Material.

| Measure | Score |
| --- | --- |
| Clinical Scores | |
| HINT-C (quiet) | 100 |
| HINT-C (noise, +10 dB SNR) | 100 |
| LNT words | 96 |
| Virtual Test Scores | |
| BKB sentences, no reverberation | 95 |
| BKB sentences, RT = 0.8 sec | 73 |
| SNR-50, nonreverberant BKB-SIN | 6 dB |
| SNR-50, reverberant BKB-SIN (0.6 sec) | 12.5 dB |

Note: HINT-C = Hearing in Noise Test for Children; LNT = Lexical Neighborhood Test; RT = reverberation time; SNR-50 = signal-to-noise ratio required for 50% performance.

Taken together, the scores obtained for this child on the virtual classroom materials indicate that although the child has excellent speech recognition performance in optimal listening conditions, performance will be negatively affected by acoustic degradations typical of classroom listening environments. This child will therefore be at a significant acoustic disadvantage in classrooms where children with normal hearing might not exhibit difficulty.

In the children with cochlear implants already tested, we have noticed differences among listeners in susceptibility to the effects of reverberation, noise, and their combination. We are continuing to collect data on more children with cochlear implants and are considering developing test material for clinical use. The development of test material incorporating classroom acoustic effects could be helpful in developing the Individualized Education Plan for a child with cochlear implants. Results from such a test could be used to assess susceptibility to classroom acoustic effects and would provide objective evidence documenting the need for accommodation for a child in a mainstream classroom.

Bilateral Cochlear Implants

Another line of ongoing research in our group examines issues related to bilateral cochlear implantation. Use of bilateral implants is becoming increasingly common, in large part because when compared to monaural implant use, users of bilateral implants display improved abilities to understand speech in the presence of background noise (e.g., van Hoesel and Tyler, 2003; Nopp et al, 2004; Schleich et al, 2004; Litovsky, Parkinson, et al, 2006; Ricketts et al, 2006; Zeitler et al, 2008), and to localize sound (e.g., Gantz et al, 2002; Tyler, Gantz, et al, 2002; van Hoesel et al, 2002; van Hoesel and Tyler, 2003; Litovsky et al, 2004; Nopp et al, 2004; Schleich et al, 2004; Schoen et al, 2005; Senn et al, 2005; Verschuur et al, 2005; Litovsky, Johnstone, et al, 2006; Litovsky, Parkinson, et al, 2006; Grantham et al, 2007; Neuman et al, 2007). It is noteworthy that these benefits occur despite the possibility that a patient may have a between-ear mismatch in the insertion depth of the electrode, or in the number and location of surviving nerve fibers in each ear. If a between-ear mismatch were to exist, it is possible that the same-numbered electrodes in each ear could stimulate neural populations with different characteristic frequencies. When such mismatches become sufficiently large in users of bilateral implants, they have been shown to hinder sound-localization abilities (van Hoesel and Clark, 1997; Long et al, 2003; Wilson et al, 2003; van Hoesel, 2004; Poon et al, 2009), although there is evidence for sensitivity to ITD (interaural time difference) cues even with mismatches in place of stimulation (van Hoesel and Clark, 1997; Long et al, 2003; van Hoesel, 2004; Blanks et al, 2007, 2008; Poon et al, 2009).

Whereas the effects of between-ear mismatches on sound-source localization have been explored, their effects on speech understanding have rarely been investigated. This omission stems largely from the difficulty involved in determining whether a listener has a between-ear mismatch in electrode insertion depth or neural survival. Moreover, even if the presence and size of a between-ear mismatch could be reliably identified, there are few clear guidelines that audiologists could use to reprogram the frequency table of the speech processor to ameliorate any negative effects. These issues lie at the heart of one of the ongoing research focuses in our department, in which we try to better understand the effects of between-ear mismatches on speech understanding, to identify users of bilateral implants who may have a between-ear mismatch that affects their performance, and to develop a tool that audiologists can use to reprogram the speech processor of the patient in order to ameliorate any negative effects of a between-ear mismatch.

In one key line of research related to bilateral implantation, we are attempting to use behavioral measurements to identify patients who may have a between-ear mismatch that could benefit from reprogramming of the frequency table. The assumption underlying this approach is that, if a sequentially-implanted bilateral CI user is given the opportunity to select a preferred frequency table in the recently implanted ear, he or she will select one that is matched to the contralateral ear with regard to place of stimulation. By this line of logic, if a bilateral CI user selects a different frequency table in one implant than what is programmed in the contralateral device, then there may be a between-ear mismatch in place of stimulation with the standard frequency tables, and the patient may therefore benefit from reprogramming of the frequency table in one ear.

In an effort to test the validity of this approach, we are conducting an experiment in which the overall goal is to enable a user of bilateral cochlear implants to select a frequency table in one ear that maximizes speech intelligibility, and to better understand what acoustic factors drive that selection process. This experiment consists of several different stages. In the first stage, participants obtain a self-selected frequency table. In this crucial stage, participants are presented with running speech, ranging from the experimenter's live voice to prerecorded speech stimuli (a three-sentence Hearing in Noise Test [HINT] list presented at 65 dB SPL). The participants are instructed to focus on the intelligibility of the speech signal. Then, either the participant or the experimenter adjusts the frequency table in real time by use of a specialized system developed in our laboratory. This approach allows the participant to listen to many different frequency tables in rapid succession, which is virtually impossible to do using conventional clinical software. In this way, participants can listen until they select a frequency table that maximizes speech intelligibility. Data obtained with normal-hearing individuals listening to an acoustic simulation of an implant suggest that these judgments are made on the basis of speech intelligibility as opposed to other factors (Fitzgerald et al, 2006; Fitzgerald et al, forthcoming), and pilot data with cochlear implant users also suggest this to be the case. With regard to the present line of research, we obtain self-selected frequency tables from users of bilateral implants in two listening conditions. In one, the participant obtains a self-selected table for the more recently implanted ear by itself. In the second condition, the participant does so once again for the more recently implanted ear, but this time the contralateral implant is also active. As before, the participant is requested to choose a frequency table that maximizes speech intelligibility when the contralateral device is also active. By comparing the frequency tables chosen in each condition, we can obtain an estimate of whether there may be a between-ear mismatch in the place of stimulation.

In the second stage of this experiment, we are attempting to determine which factors drive the selection of the frequency table obtained with running speech. More specifically, we want to determine whether listeners weight different aspects of the speech signal more heavily when making their frequency table self-selections. Toward this goal, we are repeating the self-selection process in both the unilateral and bilateral conditions, but this time we are using vowels and consonants instead of running speech. In this way, we can determine whether participants are focusing on certain attributes of the speech signal when making their self-selections, as would be the case if, for example, the frequency tables selected with running speech and vowels were the same while a different table was selected with consonants. Conversely, if participants select the same frequency table in all instances, then it would imply that they are not weighting certain sounds more heavily than others when choosing a self-selected frequency table.

In a third stage of this experiment, we are also attempting to determine whether, for a given signal, the self-selected frequency table elicits the same percept of pitch as does the standard frequency table in the contralateral ear. When assessing the localization abilities of users of bilateral implants, many researchers choose to utilize electrode pairs that elicit the same pitch percept in each ear, under the assumption that similar pitch percepts mean that neurons of the same characteristic frequency are being stimulated in each ear. It is generally thought that pitch-matched electrodes yield the best localization abilities, although there are examples in which this is not necessarily the case (Long et al, 2003; Poon et al, 2009).

In the final stage of this experiment, we are attempting to obtain bilateral CT scans to determine whether there is a physical mismatch in the electrode location between ears. This information provides an important cross-check to our behavioral data and can help inform our results. For example, if there is a physical mismatch in between-ear electrode location but the patient selects a frequency table in the recently implanted ear that matches that programmed in the contralateral ear, then it suggests that the patient has adapted to the physical mismatch.

A second global theme in our research on bilateral implantation is to quantify the effects of between-ear mismatches on speech understanding in users of bilateral implants. As noted previously, very little is known in this regard, because of the difficulty in identifying a between-ear mismatch using conventional clinical tools. In the present study, we are attempting to estimate how large a between-ear mismatch must be before it would require clinical intervention. Toward this goal, we are bringing experienced bilateral cochlear implant users to our laboratory, and are simulating the effects of a between-ear mismatch in insertion depth by manipulating the frequency table. Specifically, in one ear, the frequency table is unchanged from the standard settings already programmed in the patient's processor. In the contralateral ear, we then manipulate the frequency table to emulate a case in which the electrode in one ear is inserted either shallower, or more deeply, than the contralateral ear. For each test condition, we are then measuring word- and vowel-recognition performance in order to document the effect of between-ear mismatch on speech understanding. We also are attempting to correlate these results with bilateral CT scan data, to determine whether our behavioral results are consistent with any between-ear differences in insertion depth observed in the imaging data.
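One way such a frequency-table manipulation might be parameterized is to move every band edge by a fixed distance along the cochlea. The sketch below does this with Greenwood's frequency-position function for the human cochlea; it is an assumption about how a shift could be expressed, not a description of the software used in our laboratory. The band edges, the nominal 35 mm cochlear length, and the function names are hypothetical.

```python
import numpy as np

# Greenwood constants for the human cochlea, with place measured in mm from the apex.
A_HZ = 165.4
SLOPE_PER_MM = 0.06
K = 0.88
COCHLEA_LENGTH_MM = 35.0  # nominal length assumed for this sketch

def place_to_frequency(place_mm):
    """Characteristic frequency (Hz) at a given distance from the apex."""
    place_mm = np.asarray(place_mm, dtype=float)
    return A_HZ * (10.0 ** (SLOPE_PER_MM * place_mm) - K)

def frequency_to_place(freq_hz):
    """Inverse mapping: distance from the apex (mm) for a given frequency."""
    freq_hz = np.asarray(freq_hz, dtype=float)
    return np.log10(freq_hz / A_HZ + K) / SLOPE_PER_MM

def shift_frequency_table(edges_hz, shift_mm):
    """Move every band edge by shift_mm along the cochlea (positive = toward the base)."""
    shifted_place = frequency_to_place(edges_hz) + shift_mm
    if np.any(shifted_place < 0.0) or np.any(shifted_place > COCHLEA_LENGTH_MM):
        raise ValueError("shift moves a band edge outside the modeled cochlea")
    return place_to_frequency(shifted_place)

# Illustrative log-spaced band edges for an 8-channel table (not a manufacturer's table).
standard_edges = np.geomspace(200.0, 8000.0, num=9)
basal_shift = shift_frequency_table(standard_edges, +3.0)   # every edge moves up in frequency
apical_shift = shift_frequency_table(standard_edges, -3.0)  # every edge moves down in frequency
```

Which sign of shift corresponds to a shallower or deeper simulated insertion depends on the convention adopted for the experiment, so the sketch leaves that choice to the caller.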

This line of research with bilateral CI users represents our group's first venture in this domain and exemplifies our interest in basic studies that may have direct relevance to clinical practice.

Bimodal Patients: Listening Using Acoustic and Electrical Auditory Input

Clinical practice with cochlear implants is undergoing a quiet, but significant, change. In the past only deaf persons were eligible to receive a CI. However, current criteria allow implantation of persons with more residual hearing who can understand up to 60% of words in sentences with a HA. The new audiometric criteria for cochlear implantation have yielded a quickly expanding group of cochlear implantees who have some residual hearing in the contralateral ear, as well as a smaller group of “hybrid” CI users who also have residual hearing in the implanted ear. Many of these patients may benefit from amplification, and those who use a hearing aid in the contralateral ear are known as bimodal CI users. As implantation criteria continue to evolve, it is likely that a large proportion of future CI patients will have usable hearing in the contralateral and/or ipsilateral ears. Our latest line of research involves the study of these patients.

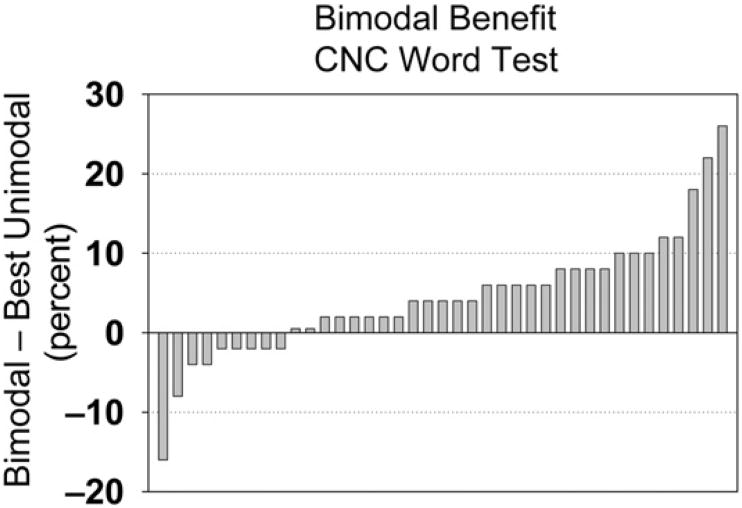

On the whole, improved speech recognition is observed when a HA is used in conjunction with a CI (see Waltzman et al, 1992; Ching et al, 2001; Tyler, Parkinson, et al, 2002; Hamzavi et al, 2004; Mok et al, 2006; Dorman et al, 2008; Brown and Bacon, 2009). This is thought to occur in part because the HA provides information that is not easily perceived with a CI, such as the fundamental frequency, lower harmonics of speech, and temporal fine structure. In addition, bimodal patients can access some of the benefits of listening with two ears, that is, binaural summation and the ability to benefit from the head-shadow effect. However, bimodal benefit is characterized by a great deal of variability among individuals. Figure 6 shows data for 39 of our postlingually deaf patients who have enough residual hearing to benefit from a HA in the contralateral ear. The figure shows bimodal benefit in quiet, which is defined as the difference between the score obtained in the bimodal condition and the best score of the two unimodal conditions (HA or CI). The patient on the far right of the figure had 26% bimodal benefit (84% bimodal score minus 58% HA score; his CI score was 40%). In contrast, the patient on the far left showed bimodal interference, as indicated by a negative bimodal benefit (−16%). His CI score was 72%, and his bimodal score was 56%, 16 percentage points lower than with the CI alone. In this case, the addition of the HA interfered with rather than helped speech perception. Other studies are consistent with these data: some patients do not obtain bimodal benefit, and in a few cases the addition of the HA even degrades performance (Armstrong et al, 1997; Ching et al, 2004; Dunn et al, 2005; Brown and Bacon, 2009).

Figure 6.

Bimodal benefit for word identification in quiet, defined as the bimodal score minus the best unimodal score. Negative numbers represent bimodal interference rather than benefit.
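The definition of bimodal benefit reduces to a single subtraction, sketched below with the two patients described in the text. The function name is hypothetical. The HA score of the patient on the far left is not reported, so only his CI score is passed in; this assumes, as the text implies, that the CI score was his best unimodal score.

```python
def bimodal_benefit(bimodal_score, *unimodal_scores):
    """Bimodal score minus the best unimodal score, in percentage points."""
    if not unimodal_scores:
        raise ValueError("at least one unimodal score is required")
    return bimodal_score - max(unimodal_scores)

# Patient on the far right of Figure 6: bimodal 84%, HA 58%, CI 40%.
far_right = bimodal_benefit(84, 58, 40)

# Patient on the far left: bimodal 56%, CI 72% (HA score not reported in the text).
far_left = bimodal_benefit(56, 72)

print(far_right, far_left)  # 26 -16
```

A negative value corresponds to bimodal interference.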

Current clinical practice with bimodal patients usually entails the separate fitting of each device by a different audiologist, using methods that are normally employed for stand-alone devices. This paradigm is based more on historical circumstances than on evidence of its effectiveness. In our latest line of research we aim to develop data-based tools that will allow clinicians to maximize bimodal speech perception by better coordinating the fitting of the hearing aid and the cochlear implant, as well as the postimplantation follow-up.

Outcomes Studies in Hearing Impaired Adults and Children

An important part of our group's research activities is the study of speech perception (Meyer et al, 1998; Meyer and Svirsky, 2000; Tajudeen et al, 2010), speech production (Miyamoto et al, 1997; Sehgal et al, 1998; Habib et al, 2010), and language development outcomes (Svirsky et al, 2000; Svirsky et al, 2002) in hearing impaired patients, with a particular focus on cochlear implant users. One of these studies (Svirsky et al, 2000) showed that language in children with cochlear implants developed at a normal rate after implantation (at least for some measures) and that this development rate exceeded what would be expected from unimplanted children with a similar level of hearing impairment. Another study suggested that a child's auditory input might influence the sequence in which different grammatical skills develop (Svirsky et al, 2002). This is an example where the CI population is used for a study that aims to answer basic questions about the nature of speech and language development.

A significant aspect of our outcomes research activities is that we also aim to examine the methods used in such studies and, when necessary, develop new analysis methods. For example, one of our recent studies examined estimation bias in Information Transfer analysis (Sagi and Svirsky, 2008), a method that is commonly used to measure the extent to which speech features are transmitted to a listener. This method is biased to overestimate the true value of information transfer when the analysis is based on a small number of stimulus presentations. Our study found that the overestimation could be substantial, and guidelines were provided to minimize bias depending on the number of samples, the size of the confusion matrix analyzed, and the manner in which the confusion matrix is partitioned.
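The direction of this bias can be reproduced with a short simulation. The sketch below is not the analysis of Sagi and Svirsky (2008); it assumes a simulated listener who responds purely at chance, so the true information transfer is zero, and it computes the standard relative information transfer from the empirical confusion matrix. The function names, the 8-category stimulus set, and the numbers of presentations are hypothetical.

```python
import numpy as np

def relative_information_transfer(confusions):
    """Transmitted information divided by stimulus entropy (rows = stimuli, columns = responses)."""
    counts = np.asarray(confusions, dtype=float)
    joint = counts / counts.sum()
    p_stim = joint.sum(axis=1, keepdims=True)
    p_resp = joint.sum(axis=0, keepdims=True)
    expected = p_stim * p_resp                  # joint probabilities under independence
    nonzero = joint > 0
    transmitted = np.sum(joint[nonzero] * np.log2(joint[nonzero] / expected[nonzero]))
    stim = p_stim[p_stim > 0]
    stimulus_entropy = -np.sum(stim * np.log2(stim))
    return transmitted / stimulus_entropy

def chance_confusion_matrix(n_categories, presentations_per_stimulus, rng):
    """Confusion matrix for a listener whose responses are independent of the stimulus."""
    counts = np.zeros((n_categories, n_categories), dtype=int)
    for stimulus in range(n_categories):
        responses = rng.integers(0, n_categories, size=presentations_per_stimulus)
        counts[stimulus] = np.bincount(responses, minlength=n_categories)
    return counts

def mean_estimate(n_categories, presentations_per_stimulus, n_runs=200, seed=0):
    """Average estimated information transfer over many simulated experiments."""
    rng = np.random.default_rng(seed)
    estimates = [
        relative_information_transfer(
            chance_confusion_matrix(n_categories, presentations_per_stimulus, rng)
        )
        for _ in range(n_runs)
    ]
    return float(np.mean(estimates))

few_presentations = mean_estimate(8, 5)     # 5 presentations of each of 8 stimuli
many_presentations = mean_estimate(8, 500)  # 500 presentations of each of 8 stimuli
```

Under these assumptions `few_presentations` comes out well above zero even though no information is transmitted, while `many_presentations` is close to zero, which illustrates why the number of presentations and the size of the confusion matrix matter.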

Some of our studies have examined the strong influence of age at implantation on the development of speech and language skills. One novel analysis method used in this work, which we named Developmental Trajectory Analysis (DTA), was introduced by Svirsky, Teoh, et al (2004) and further refined by Holt and Svirsky (2008). This method compares developmental trajectories (i.e., curves representing change in an outcome measure over time) for a number of groups of children who differ along a potentially important independent variable such as age at intervention. Some advantages of DTA over other analysis methods are that it assesses the complete developmental trajectory rather than individual data points, that all available data points can be used, and that missing data are handled gracefully. In addition, the method provides a reasonable estimate of the average difference between two groups of developmental curves without making any assumptions about the shape of those curves.

Another interesting example of a methodological study is found in Sagi et al (2007), who examined the conclusions and possible misinterpretations that may arise from the use of the “outcome-matching method.” This is a study design where patient groups are matched not only on potentially confounding variables but also on an outcome measure that is closely related to the outcome measure under analysis. For example, Spahr and Dorman (2004) compared users of CI devices from two manufacturers by matching patients from each group based on their word recognition scores in quiet and then comparing their sentence recognition scores in noise. It was found that the matched group's scores in noise were significantly better for one device in comparison to the other. Although not mentioned in the study, this result was interpreted by some to mean that one device outperformed the other in noise.

We used a simple computational analysis to test the validity of this interpretation. Our study had two parts: a simulation study and a questionnaire. In the simulation study, the outcome-matching method was applied to pseudo-randomly generated data. It was found that the outcome-matching method can provide important information when results are properly interpreted (e.g., Spahr et al, 2007), but it cannot be used to determine whether a given device or clinical intervention is better than another one. This is an important result because cochlear implant manufacturers have attempted to use studies based on the outcome-matching method to prove the superiority of their devices over their competitors' devices. Our simulation study showed that this inference is not valid. In the second part of the study we examined the level of misinterpretation that could arise from the outcome-matching method. A questionnaire was administered to 54 speech and hearing students before and after reading the Spahr and Dorman article. Before reading the article, when asked whether they believed one device was better than the other, more than 80% responded “I don't know” or “about the same.” After reading the article, a large majority of the students thought that one specific cochlear implant was better. This change in opinion happened despite the fact that (according to the simulation study) such a change was not warranted by the data. Taken together, the simulation study and the analysis of questionnaires provided important information that may help with the design or interpretation of outcomes studies of speech perception in hearing impaired listeners.
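One way to see why matching on a related outcome can mislead is with a toy simulation. The scenario below is hypothetical and is not the simulation reported in Sagi et al (2007): two imaginary devices yield identical scores in noise by construction, but device B adds a fixed offset to scores in quiet. All parameter values and function names are assumptions chosen for illustration, and scores are in arbitrary units.

```python
import numpy as np

def simulate_device_group(n, quiet_offset, rng):
    """Hypothetical patients whose scores in quiet and in noise share one latent ability."""
    ability = rng.normal(50.0, 15.0, size=n)
    quiet = ability + quiet_offset + rng.normal(0.0, 5.0, size=n)
    noise = ability - 20.0 + rng.normal(0.0, 5.0, size=n)  # same rule for both devices
    return quiet, noise

def match_on_quiet(quiet_a, quiet_b, bin_width=2.0):
    """Pair patients across groups whose quiet scores fall in the same narrow bin."""
    bins_a = np.floor(quiet_a / bin_width).astype(int)
    bins_b = np.floor(quiet_b / bin_width).astype(int)
    idx_a, idx_b = [], []
    for b in np.intersect1d(bins_a, bins_b):
        in_a = np.flatnonzero(bins_a == b)
        in_b = np.flatnonzero(bins_b == b)
        k = min(len(in_a), len(in_b))       # keep as many pairs as the smaller group allows
        idx_a.extend(in_a[:k])
        idx_b.extend(in_b[:k])
    return np.array(idx_a, dtype=int), np.array(idx_b, dtype=int)

rng = np.random.default_rng(0)
quiet_a, noise_a = simulate_device_group(2000, 0.0, rng)   # device A
quiet_b, noise_b = simulate_device_group(2000, 10.0, rng)  # device B: higher scores in quiet only

idx_a, idx_b = match_on_quiet(quiet_a, quiet_b)
unmatched_gap = noise_a.mean() - noise_b.mean()              # all patients
matched_gap = noise_a[idx_a].mean() - noise_b[idx_b].mean()  # outcome-matched pairs only
```

In this toy scenario `unmatched_gap` is close to zero, as built in, while `matched_gap` is clearly positive. Matching on quiet scores pairs device B users of lower underlying ability with device A users, so device A appears better in noise even though the two devices are identical in that condition.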

Summary

We hope that these pages provide some information about the current lines of research in our laboratory, the questions we ask, and some of the methods we use to answer them. Moreover, we anticipate that these studies will also help to answer basic questions about hearing and speech perception, as well as have an impact on or at least inform the clinical treatment of speech and hearing disorders.

Acknowledgments

We also acknowledge the invaluable assistance of NYU's Cochlear Implant Center, its codirectors J. Thomas Roland, MD, and Susan B. Waltzman, PhD, and its chief audiologist, William Shapiro, AuD; Wenjie Wang for her help with AEP measures; Julie Arruda, for assistance with reformatting of images and 3D VRT reconstructions; and Ben Guo for his help in many of the projects listed in the article.

The work described in these pages was supported by the following NIH-NIDCD grants: R01-DC03937 (PI: Svirsky), R01-DC011329 (PIs: Svirsky and Neuman), K99-DC009459 (PI: Fitzgerald), K25-DC010834 (PI: Tan), and K23-DC05386 (PI: Martin), as well as by NIDRR grants H133E030006 (PI: Neuman) and H133F090031 (PI: Sagi) (contents do not necessarily represent the policy of the Department of Education, and endorsement by the federal government should not be assumed), a PSC-CUNY grant (PI: Martin), AAO-HNS grants (PIs: Daniel Jethanamest and Kevin Wang; Mentor: Svirsky), a DRF grant (PI: Tan), and a grant from the Office of Naval Research (PI: Ketten). Cochlear Americas, Advanced Bionics, and Siemens have loaned equipment and software and provided technical advice to some of our projects over the past five years.

Abbreviations

- AEP: auditory evoked potentials

- BKB-SIN: Bamford-Kowal-Bench Speech-in-Noise Test

- BRIR: binaural room impulse response

- CI: cochlear implant

- F1: first formant frequency

- F2: second formant frequency

- MPI: Multidimensional Phoneme Identification

References

- Ainsworth WA. Relative intelligibility of different transforms of clipped speech. J Acoust Soc Am. 1967;41:1272–1276. doi: 10.1121/1.1910468.

- Armstrong M, Pegg P, James C, Blamey P. Speech perception in noise with implant and hearing aid. Am J Otol. 1997;18(6, Suppl.):S140–S141.

- Besing JM, Koehnke J. A test of virtual auditory localization. Ear Hear. 1995;16:220–229. doi: 10.1097/00003446-199504000-00009.

- Blanks DA, Buss E, Grose JH, Fitzpatrick DC, Hall JW 3rd. Interaural time discrimination of envelopes carried on high frequency tones as a function of level and interaural carrier mismatch. Ear Hear. 2008;29:674–683. doi: 10.1097/AUD.0b013e3181775e03.

- Blanks DA, Roberts JM, Buss E, Hall JW, Fitzpatrick DC. Neural and behavioral sensitivity to interaural time differences using amplitude modulated tones with mismatched carrier frequencies. J Assoc Res Otolaryngol. 2007;8:393–408. doi: 10.1007/s10162-007-0088-5.

- Bolt RH, MacDonald AD. Theory of speech masking by reverberation. J Acoust Soc Am. 1949;21:577–580.

- Bradley JS, Sato H, Picard M. On the importance of early reflections for speech in rooms. J Acoust Soc Am. 2003;113:3233–3244. doi: 10.1121/1.1570439.

- Brown CA, Bacon SP. Achieving electric-acoustic benefit with a modulated tone. Ear Hear. 2009;30:489–493. doi: 10.1097/AUD.0b013e3181ab2b87.

- Cameron S, Brown D, Keith R, Martin J, Watson C, Dillon H. Development of the North American Listening in Spatialized Noise-Sentences test (NA LiSN-S): sentence equivalence, normative data, and test-retest reliability studies. J Am Acad Audiol. 2009;20:128–146. doi: 10.3766/jaaa.20.2.6.

- Carlyon RP, Macherey O, Frijns JHM, Axon PR, Kalkman RK, Boyle P, Baguley DM, Briggs J, Deeks JM, Briaire JJ, Barreau X, Dauman R. Pitch comparisons between electrical stimulation of a cochlear implant and acoustic stimuli presented to a normal-hearing contralateral ear. J Assoc Res Otolaryngol. 2010;11:625–640. doi: 10.1007/s10162-010-0222-7.

- Chan JC, Freed DJ, Vermiglio AJ, Soli SD. Evaluation of binaural functions in bilateral cochlear implant users. Int J Audiol. 2008;47:296–310. doi: 10.1080/14992020802075407.

- Ching TY, Incerti P, Hill M. Binaural benefits for adults who use hearing aids and cochlear implants in opposite ears. Ear Hear. 2004;25:9–21. doi: 10.1097/01.AUD.0000111261.84611.C8.

- Ching TY, Psarros C, Hill M, Dillon H, Incerti P. Should children who use cochlear implants wear hearing aids in the opposite ear? Ear Hear. 2001;22:365–380. doi: 10.1097/00003446-200110000-00002.

- Daya H, Ashley A, Gysin C, et al. Changes in educational placement and speech perception ability after cochlear implantation in children. J Otolaryngol. 2000;29:224–228. [PubMed] [Google Scholar]

- Dorman MF, Gifford RH, Spahr AJ, McKarns SA. The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiol Neurootol. 2008;13(2):105–112. doi: 10.1159/000111782. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorman MF, Spahr T, Gifford R, Loiselle L, McKarns S, Holden T, Skinner M, Finley C. An electric frequency-to-place map for a cochlear implant patient with hearing in the nonimplanted ear. J Assoc Res Otolaryngol. 2007;8:234–240. doi: 10.1007/s10162-007-0071-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dunn CC, Tyler RS, Witt SA. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. J Speech Lang Hear Res. 2005;48:668–680. doi: 10.1044/1092-4388(2005/046). [DOI] [PubMed] [Google Scholar]

- Etymotic Research. Bamford-Kowal-Bench Speech in Noise Test User Manual (Version 1.03) Elk Grove Village, IL: Etymotic Research; 2005. [Google Scholar]

- Fitzgerald MB, Morbiwala TA, Svirsky MA. Customized selection of frequency maps in an acoustic cochlear implant simulation. Conf Proc IEEE Eng Med Biol Soc. 2006;1:3596–3599. doi: 10.1109/IEMBS.2006.260462. [DOI] [PubMed] [Google Scholar]

- Fitzgerald MB, Sagi E, Morbiwala TA, Svirsky MA. Customized selection of frequency maps and modeling of vowel identification in an acoustic simulation of a cochlear implant. Ear Hear Forthcoming. [Google Scholar]

- Fitzgerald MB, Shapiro WH, McDonald PD, et al. The effect of perimodiolar placement on speech perception and frequency discrimination by cochlear implant users. Acta Otolaryngol. 2007;127:378–383. doi: 10.1080/00016480701258671. [DOI] [PubMed] [Google Scholar]

- Francart T, Brokx J, Wouters J. Sensitivity to interaural level difference and loudness growth with bilateral biomodal stimulation. Audiol Neurootol. 2008;13:309–319. doi: 10.1159/000124279. [DOI] [PubMed] [Google Scholar]

- Fu QJ, Shannon RV, Galvin JJ., III Perceptual learning following changes in the frequency-to-electrode assignment with the Nucleus-22 cochlear implant. J Acoust Soc Am. 2002;112:1664–1674. doi: 10.1121/1.1502901. [DOI] [PubMed] [Google Scholar]

- Gantz BJ, Tyler RS, Rubinstein JT, et al. Bilateral cochlear implants placed during the same operation. Otol Neurotol. 2002;23:169–180. doi: 10.1097/00129492-200203000-00012. [DOI] [PubMed] [Google Scholar]

- Grantham DW, Ashmead DH, Ricketts TA, Labadie RF, Haynes DS. Horizontal-plane localization of noise and speech signals by postlingually deafened adults fitted with bilateral cochlear implants. Ear Hear. 2007;28:524–541. doi: 10.1097/AUD.0b013e31806dc21a. [DOI] [PubMed] [Google Scholar]

- Greenwood DD. A cochlear frequency-position function for several species–29 years later. J Acoust Soc Am. 1990;87(6):2592–2605. doi: 10.1121/1.399052. [DOI] [PubMed] [Google Scholar]

- Habib MG, Waltzman SB, Tajudeen B, Svirsky MA. Speech production intelligibility of early implanted pediatric cochlear implant users. Int J Pediatr Otorhinolaryngol. 2010;74:855–859. doi: 10.1016/j.ijporl.2010.04.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hamzavi J, Pok SM, Gstoettner W, Baumgartner WD. Speech perception with a cochlear implant used in conjunction with a hearing aid in the opposite ear. Int J Audiol. 2004;43:61–65. [PubMed] [Google Scholar]

- Hardy M. The length of the organ of Corti in man. Am J Anat. 1938;62:291–311. [Google Scholar]

- Harnsberger JD, Svirsky MA, Kaiser AR, Pisoni DB, Wright R, Meyer TA. Perceptual “vowel spaces” of cochlear implant users: implications for the study of auditory adaptation to spectral shift. J Acoust Soc Am. 2001;109:2135–2145. doi: 10.1121/1.1350403. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hillenbrand J, Getty LA, Clark MJ, Wheeler K. Acoustic characteristics of American English vowels. J Acoust Soc Am. 1995;97:3099–3111. doi: 10.1121/1.411872. [DOI] [PubMed] [Google Scholar]

- Holt RF, Svirsky MA. An exploratory look at pediatric cochlear implantation: is earliest always best? Ear Hear. 2008;29:492–511. doi: 10.1097/AUD.0b013e31816c409f. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Iglehart F. Combined effects of classroom reverberation and noise on speech perception by students with typical and impaired hearing. InterNoise Proc. 2009;218:650–657. [Google Scholar]

- Jethanamest D, Tan CT, Fitzgerald MB, Svirsky MA. A new software tool to optimize frequency table selection for cochlear implants. Otol Neurotol. 2010;31:1242–1247. doi: 10.1097/MAO.0b013e3181f2063e. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kaiser AR, Svirsky MA. Using a personal computer to perform real-time signal processing in cochlear implant research. Paper presented at the IXth IEEE-DSP Workshop; Hunt, TX. October 15-18; 2000. http://spib.ece.rice.edu/SPTM/DSP2000/submission/DSP/papers/paper123/paper123.pdf. [Google Scholar]

- Ketten DR, Skinner M, Wang G, Vannier M, Gates G, Neely G. In vivo measures of cochlear length and insertion depths of Nucleus® cochlear implant electrode arrays. Ann Otol Laryngol. 1998;107(11):1–16. [PubMed] [Google Scholar]

- Kirk KI, Pisoni DB, Osberger MJ. Lexical effects on spoken word recognition by pediatric cochlear implant users. Ear Hear. 1995;16:470–481. doi: 10.1097/00003446-199510000-00004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koehnke J, Besing JM. A procedure for testing speech intelligibility in a virtual Listening environment. Ear Hear. 1996;17:211–217. doi: 10.1097/00003446-199606000-00004. [DOI] [PubMed] [Google Scholar]

- Litovsky RY, Johnstone PM, Godar S, et al. Bilateral cochlear implants in children: localization acuity measured with minimum audible angle. Ear Hear. 2006;27:43–59. doi: 10.1097/01.aud.0000194515.28023.4b. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Litovsky RY, Parkinson A, Arcaroli J, et al. Bilateral cochlear implants in both adults and children. Arch Otolaryngol Head Neck Surg. 2004;130:648–655. doi: 10.1001/archotol.130.5.648. [DOI] [PubMed] [Google Scholar]

- Litovsky R, Parkinson A, Arcaroli J, Sammeth C. Simultaneous bilateral cochlear implantation in adults: a multicenter clinical study. Ear Hear. 2006;27:714–731. doi: 10.1097/01.aud.0000246816.50820.42. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lochner JPA, Burger JF. The intelligibility of speech under reverberant conditions. Acustica. 1961;11:195–200. [Google Scholar]

- Long CJ, Eddington DK, Colburn HS, Rabinowitz WM. Binaural sensitivity as a function of interaural electrode position with a bilateral cochlear implant user. J Acoust Soc Am. 2003;114:1565–1574. doi: 10.1121/1.1603765. [DOI] [PubMed] [Google Scholar]

- McDermott H, Sucher C, Simpson A. Electro-acoustic stimulation. Acoustic and electric pitch comparisons. Audiol Neurootol. 2009;14(Suppl. 1):2–7. doi: 10.1159/000206489. [DOI] [PubMed] [Google Scholar]

- Meyer TA, Svirsky MA. Speech perception by children with the Clarion (CIS) or Nucleus 22 (SPEAK) cochlear implantorhearing aids. Ann Otol Rhinol Laryngol. 2000;109:49–51. doi: 10.1177/0003489400109s1220. [DOI] [PubMed] [Google Scholar]

- Meyer TA, Svirsky MA, Kirk KI, Miyamoto RT. Improvements in speech perception by children with profound prelingual hearing loss: effects of device, communication mode, and chronological age. J Speech Lang Hear Res. 1998;41:846–858. doi: 10.1044/jslhr.4104.846. [DOI] [PubMed] [Google Scholar]

- Miyamoto RT, Svirsky MA, Robbins AM. Enhancement of expressive language in prelingually deaf children with cochlear implants. Acta Otolaryngol. 1997;117:154–157. doi: 10.3109/00016489709117758. [DOI] [PubMed] [Google Scholar]

- Mok M, Grayden D, Dowell RC, et al. Speech perception for adults who use hearing aids in conjunction with cochlear implants in opposite ears. J Speech Lang Hear Res. 2006;49:338–351. doi: 10.1044/1092-4388(2006/027). [DOI] [PubMed] [Google Scholar]

- Moore BCJ, Glasberg BR. Use of a loudness model for hearing-aid fitting. I. Linear hearing aids. Br J Audiol. 1998;32:317–335. doi: 10.3109/03005364000000083. [DOI] [PubMed] [Google Scholar]

- Moore BCJ, Tan CT. Perceived quality of spectrally distorted speech and music. J Acoust Soc Am. 2003;114(1):408–419. doi: 10.1121/1.1577552. [DOI] [PubMed] [Google Scholar]

- Moore BCJ, Tan CT. Measuring and predicting the perceived quality of music and speech subjected to combined linear and nonlinear distortion. J Audio Eng Soc. 2004a;52(2):1228–1244. [Google Scholar]

- Moore BCJ, Tan CT. Development of a method for predicting the perceived quality of sounds subjected to spectral distortion. J Audio Eng Soc. 2004b;52(9):900–914. [Google Scholar]

- Nábĕlek AK, Letowski TR, Tucker FM. Reverberant overlap and self masking in consonant identification. J Acoust Soc Am. 1989;86:1259–1265. doi: 10.1121/1.398740. [DOI] [PubMed] [Google Scholar]

- Neuman AC, Haravon A, Sislian N, Waltzman SB. Sound-direction identification with bilateral cochlear implants. Ear Hear. 2007;28(1):73–82. doi: 10.1097/01.aud.0000249910.80803.b9. [DOI] [PubMed] [Google Scholar]

- Neuman AC, Wroblewski M, Hajicek J, et al. Combined effects of noise and reverberation on speech recognition performance of normal-hearing children and adults. Ear Hear. 2010;31:336–344. doi: 10.1097/AUD.0b013e3181d3d514. [DOI] [PubMed] [Google Scholar]

- Nilsson M, Soli S, Gelnett D. House Ear Institute Internal Report. Vol. 1996 Los Angeles: House Ear Institute; 1996. Development and Norming of a Hearing in Noise Test for Children. [Google Scholar]

- Nopp P, Schleich PI, D'Haese P. Sound localization in bilateral users of MED-EL COMBI 40/40+ cochlear implants. Ear Hear. 2004;25:205–214. doi: 10.1097/01.aud.0000130793.20444.50. [DOI] [PubMed] [Google Scholar]

- Peterson GE, Barney HL. Control methods used in a study of the vowels. J Acoust Soc Am. 1952;24:175–184. [Google Scholar]

- Poon BB, Eddington DK, Noel V, Colburn HS. Sensitivity to interaural time difference with bilateral cochlear implants: development over time and effect of interaural electrode spacing. J Acoust Soc Am. 2009;126:806–815. doi: 10.1121/1.3158821. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Remez RE, Rubin PE, Pisoni DB, Carrell TD. Speech-perception without traditional speech cues. Science. 1981;212:947–950. doi: 10.1126/science.7233191. [DOI] [PubMed] [Google Scholar]

- Ricketts TA, Grantham DW, Ashmead DH, Haynes DS, Labadie RF. Speech recognition for unilateral and bilateral cochlear implant modes in the presence of uncorrelated noise sources. Ear Hear. 2006;27:763–773. doi: 10.1097/01.aud.0000240814.27151.b9. [DOI] [PubMed] [Google Scholar]

- Sagi E, Fitzgerald MB, Svirsky MA. What matched comparisons can and cannot tell us: the case of cochlear implants. Ear Hear. 2007;28:571–579. doi: 10.1097/AUD.0b013e31806dc237. [DOI] [PubMed] [Google Scholar]

- Sagi E, Fu QJ, Galvin JJ, III, Svirsky MA. A model of incomplete adaptation to a severely shifted frequency-to-electrode mapping by cochlear implant users. J Assoc Res Otolaryngol. 2010;11:69–78. doi: 10.1007/s10162-009-0187-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sagi E, Kaiser AR, Meyer TA, Svirsky MA. The effect of temporal gap identification on speech perception by users of cochlear implants. J Speech Lang Hear Res. 2009;52:385–395. doi: 10.1044/1092-4388(2008/07-0219). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sagi E, Meyer TA, Kaiser AR, Teoh SW, Svirsky MA. A mathematical model of vowel identification by users of cochlear implants. J Acoust Soc Am. 2010;127:1069–1083. doi: 10.1121/1.3277215. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sagi E, Svirsky MA. Information transfer analysis: a first look at estimation bias. J Acoust Soc Am. 2008;123:2848–2857. doi: 10.1121/1.2897914. [DOI] [PMC free article] [PubMed] [Google Scholar]