Abstract

Objective To examine whether network meta-analyses, increasingly used to assess comparative effectiveness of healthcare interventions, follow the key methodological recommendations for reporting and conduct of systematic reviews.

Design Methodological systematic review of reports of network meta-analyses.

Data sources Cochrane Database of Systematic Reviews, Database of Abstracts of Reviews of Effects, Medline, and Embase, searched from inception to 12 July 2012.

Review methods All network meta-analyses comparing clinical efficacy of three or more interventions based on randomised controlled trials, excluding meta-analyses with an open loop network of three interventions. We assessed the reporting of general characteristics and key methodological components of the systematic review process using two composite outcomes. For some components, if reporting was adequate, we assessed their conduct quality.

Results Of 121 network meta-analyses covering a wide range of medical areas, 100 (83%) assessed pharmacological interventions and 11 (9%) non-pharmacological interventions; 56 (46%) were published in journals with a high impact factor. The electronic search strategy for each database was not reported in 88 (73%) network meta-analyses; for 36 (30%), the primary outcome was not clearly identified. Overall, 61 (50%) network meta-analyses did not report any information regarding the assessment of risk of bias of individual studies, and 103 (85%) did not report any methods to assess the likelihood of publication bias. Overall, 87 (72%) network meta-analyses did not report the literature search, searched only one database, did not search other sources, or did not report an assessment of risk of bias of individual studies. These methodological components did not differ by publication in a general or specialty journal or by public or private funding.

Conclusions Essential methodological components of the systematic review process—conducting a literature search and assessing risk of bias of individual studies—are frequently lacking in reports of network meta-analyses, even when published in journals with high impact factors.

Introduction

Assessing the comparative effectiveness of many or all available interventions for a clinical indication is challenging.1 Direct evidence from head to head trials is often lacking.2 3 4 5 Indirect comparison between two interventions can still be estimated from the results of randomised controlled trials, without jeopardising the randomised comparisons within each trial, if each intervention is compared with an identical common comparator.6 7 When both direct and indirect comparisons are available, the two sources of information can be combined by using network meta-analyses—also known as multiple treatments meta-analyses or mixed treatment comparison meta-analyses.8 9 These methods allow for estimating all possible pairwise comparisons between interventions and placing them in rank order.

In the past few years, network meta-analyses have been increasingly adopted for comparing healthcare interventions.10 11 12 Network meta-analyses are attractive for clinical researchers because they seem to respond to their main concern: determining the best available treatment. Moreover, national agencies for health technology assessment and drug regulators increasingly use such methods.13 14 15 16

Several reviews previously evaluated how indirect comparisons have been conducted and reported.7 10 11 12 17 18 These reviews focused on checking validity assumptions (based on homogeneity, similarity, and consistency) and did not assess the key components of the systematic review process. Network meta-analyses are primarily meta-analyses, and should therefore be performed according to the explicit and rigorous methods used in systematic reviews and meta-analyses to minimise bias.19 We performed a methodological systematic review of published reports of network meta-analyses to examine how they were reported and conducted. In particular, we assessed whether the reports adequately featured the key methodological components of the systematic review process.

Methods

Search strategy

We systematically searched for all published network meta-analyses in the following databases from their dates of inception: Cochrane Database of Systematic Reviews, Database of Abstracts of Reviews of Effects, Medline, and Embase. Search equations were developed for each database and were based partly on search terms used by Song and colleagues12 and Salanti and colleagues20 (web table 1). The equations were based on specific free text words pertaining to network meta-analyses or overviews of reviews. The date of the last search was 12 July 2012. We also screened the references of methodology papers and reviews11 12 13 14 15 16 17 21 and searched for papers citing landmark statistical articles through the Web of Science or SCOPUS database.6 8 22 23 24

Eligibility criteria

All reports of network meta-analyses comparing the clinical efficacy of three or more interventions based on randomised controlled trials were eligible. We excluded reports of adjusted meta-analyses involving indirect comparisons (that is, an open loop network of three interventions)10 13 17 as well as publications of methodology, editorial style reviews or reports, cost effectiveness reviews, reviews based on individual patient data, and reviews not involving human participants. We also excluded reviews not published in English, French, German, Spanish, or Italian.

Selection of relevant reports of network meta-analyses

Two reviewers (AB, LT) independently selected potentially relevant reports of network meta-analyses on the basis of the title and abstract and, if needed, the full text according to prespecified eligibility criteria. Disagreements were discussed to reach consensus.

Data extraction

A standardised form was used to collect all data from the original reports of network meta-analyses and supplementary appendices when available. We collected all data for epidemiological and descriptive characteristics and those pertaining to the key methodological components of the systematic review process. Two reviewers (AB, RS) independently extracted all data from a random sample of 20% of reports. From all reports, two reviewers (AB, RS) independently extracted data for items that involved subjective interpretation. The corresponding items were questions referring to participants, interventions, comparisons, and outcomes; the type of comparator; how reporting bias was assessed; the publication status of selected randomised controlled trials; and whether statistical assumptions (homogeneity, similarity, and consistency) were mentioned. Disagreements were resolved by discussion.

General characteristics

The following general characteristics were collected: journal name, year of publication, country of corresponding author, medical area, funding source, and type of interventions included in the network. We categorised journal types into general or specialty; we also identified journals with high impact factors (that is, the 10 journals with the highest impact factor for each medical subject category of the Journal Citation Reports 2011). Regarding network typology, we collected the number of interventions, the number of comparisons conducted by at least one randomised controlled trial (that is, direct comparisons), and the number of randomised controlled trials included in the network.

Reporting of key methodological components of the systematic review process

According to PRISMA25 and AMSTAR26 guidelines, we assessed whether key methodological components were reported or not. In the introduction and methods sections of each report, these factors included:

Questions referring to participants, interventions, comparisons, and outcomes

Existence of a review protocol

Primary outcome explicitly specified in the article or abstract, in the primary study objectives, or as the only outcome reported in the article27

Information sources including databases searched, electronic search strategy, date of last search and period covered by the search of each database, any other sources (conference abstracts, unpublished studies, textbooks, specialty registers (for example, the US Food and Drug Administration), contact with study authors, reviewing the references in the studies found or any relevant systematic reviews), ongoing studies searched, and any or no restrictions related to language or publication status (that is, published studies or any other sources or ongoing studies)

Methods for study selection and data extraction

Methods used for assessing risk of bias of individual studies, such as use of any scales, checklists, or domain based evaluation recommended by the Cochrane Collaboration, with separate critical assessments for different domains (that is, allocation concealment, generation of sequence allocation, blinding, incomplete outcome data, or selective reporting)

Methods to incorporate assessment of risk of bias of individual studies in the analysis or conclusion of review (that is, subgroup analysis, inclusion criteria, sensitivity analysis, or the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach)28

Methods to assess publication or reporting bias (statistical or graphical evaluation).

Regarding the search strategy, we distinguished between a “de novo” search (a new comprehensive literature search) and a mixed search strategy (an initial search for systematic reviews complemented by an updated search of trials not covered by the search period of these systematic reviews).

In the results section, we assessed whether reports included the following components:

Study selection, including the number of studies screened, assessed for eligibility, and included in the review; with reasons for exclusions at each stage (for example, a PRISMA flowchart) and the list of studies included and excluded

Study characteristics, including a description of the trial network with the number of interventions and number of comparisons conducted by at least one randomised controlled trial (that is, direct comparisons), characteristics of patients (for example, age, sex, disease status, severity), duration of follow-up of patients and duration of interventions, description of interventions, number of study groups and patients, and funding sources

Risk of bias within studies

Risk of bias across studies (publication bias).

Finally, we assessed whether the discussion section included:

A limitation regarding reporting bias

Whether the authors mentioned or discussed the assumptions required in network meta-analyses (based on homogeneity, similarity, consistency, and exchangeability)

Whether authors mentioned a conflict of interest.

Conduct quality when the reporting was adequate

When items were adequately reported, we subsequently assessed whether the conduct quality was adequate. We considered the following items as inadequate conduct: electronic search of only one bibliographic database, restricting study selection to published reports, lack of independent duplicate study selection, and lack of independent duplicate data extraction.

Composite outcome assessing inadequate reporting or inadequate conduct quality

To summarise data, we built two composite outcomes assessing inadequate reporting or inadequate conduct quality (when the reporting was adequate). The first composite outcome was based on guidelines from Li and colleagues.29 Inadequate reporting or conduct quality was considered as one of the following:

The authors did not report a literature search, they reported an electronic search of only one bibliographic database, or they did not supplement their investigation by searching for any other sources

The authors did not report an assessment of risk of bias of individual studies.
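
As a minimal sketch, the first composite outcome can be read as a logical OR over the criteria above. The record type and its field names are hypothetical (they are not taken from the study's extraction form), and the example report is invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ReportItems:
    # Hypothetical field names; not the study's actual extraction form.
    search_reported: bool         # a literature search was reported
    n_databases: int              # number of bibliographic databases searched
    other_sources_searched: bool  # any supplementary source was searched
    risk_of_bias_reported: bool   # risk of bias of individual studies was assessed


def composite_one_inadequate(r: ReportItems) -> bool:
    """Return True if the report meets any criterion of the first composite."""
    inadequate_search = (
        not r.search_reported
        or r.n_databases < 2            # only one (or no) database searched
        or not r.other_sources_searched
    )
    return inadequate_search or not r.risk_of_bias_reported


# Invented example: adequate search, but no risk of bias assessment reported.
example = ReportItems(True, 3, True, False)
print(composite_one_inadequate(example))
```

A single failed criterion is enough to classify a report as inadequate, which is why this composite is sensitive to any one missing component.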

The second composite outcome was defined as an all or none approach, based on seven methodological items identified as mandatory according to the methodological expectations of Cochrane intervention reviews.30 Conduct or reporting quality was considered inadequate if:

The authors did not report questions referring to participants, interventions, comparisons, and outcomes

The authors did not report eligibility criteria

The authors did not report a literature search; they reported an electronic search of only one bibliographic database; or they did not supplement their investigation by searching for references in studies found, any relevant systematic reviews, or ongoing studies (for example, clinicaltrials.gov or the World Health Organization International Clinical Trials Registry Platform)

The authors did not report the methods of study selection and the data extraction process, or they reported inadequate methods

The authors did not report the results of the selection of primary studies

The authors did not provide a description of the primary studies

The authors did not report an assessment of the risk of bias of individual studies.
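
The all or none logic of the second composite can be sketched in the same spirit. The item keys below are hypothetical labels for the seven items listed above, and treating a missing key as "not reported" is an assumption of this sketch rather than a rule stated in the study.

```python
from typing import Mapping

# Hypothetical keys mirroring the seven mandatory items listed above.
MANDATORY_ITEMS = (
    "pico_question",
    "eligibility_criteria",
    "literature_search",
    "selection_and_extraction_methods",
    "selection_results",
    "description_of_primary_studies",
    "risk_of_bias_assessment",
)


def composite_two_inadequate(adequate: Mapping[str, bool]) -> bool:
    """All or none: inadequate unless every mandatory item is adequate.

    A missing key is treated as not reported (assumption of this sketch).
    """
    return not all(adequate.get(item, False) for item in MANDATORY_ITEMS)


# Invented example: six items adequate, risk of bias assessment missing.
report = {item: True for item in MANDATORY_ITEMS}
report["risk_of_bias_assessment"] = False
print(composite_two_inadequate(report))
```

Because every item must be satisfied, this composite is much stricter than the first one.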

Statistical analysis

Quantitative data were summarised by medians and interquartile ranges, and categorical data summarised by numbers and percentages. We compared general characteristics and reporting of key items for network meta-analyses with results published in general and specialty journals and with public and private funding sources. All or none measurements were analysed by χ² tests for categorical data. All tests were two sided, and P<0.05 was considered significant. Statistical analyses involved use of SAS version 9.3 (SAS Institute).
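
To illustrate the kind of comparison described here, the following sketch runs a Pearson χ² test (1 degree of freedom, no continuity correction) on a 2×2 table in plain Python. The cell counts are an assumption: they are back-calculated from the percentages reported for the first composite outcome by journal type (69% of 55 general journal reports and 74% of 66 specialty journal reports), not taken from the study data. The original analysis was run in SAS, and whether a continuity correction was applied is not stated.

```python
import math
from typing import Tuple


def chi2_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square test for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, two sided P value) with 1 degree of freedom.
    """
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Assumed counts (inadequate, adequate): general 38/17, specialty 49/17.
stat, p = chi2_2x2(38, 17, 49, 17)
print(f"chi2 = {stat:.2f}, P = {p:.2f}")
```

Under these assumed counts the P value is about 0.5, in line with the value reported for the comparison by journal type.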

Results

General characteristics

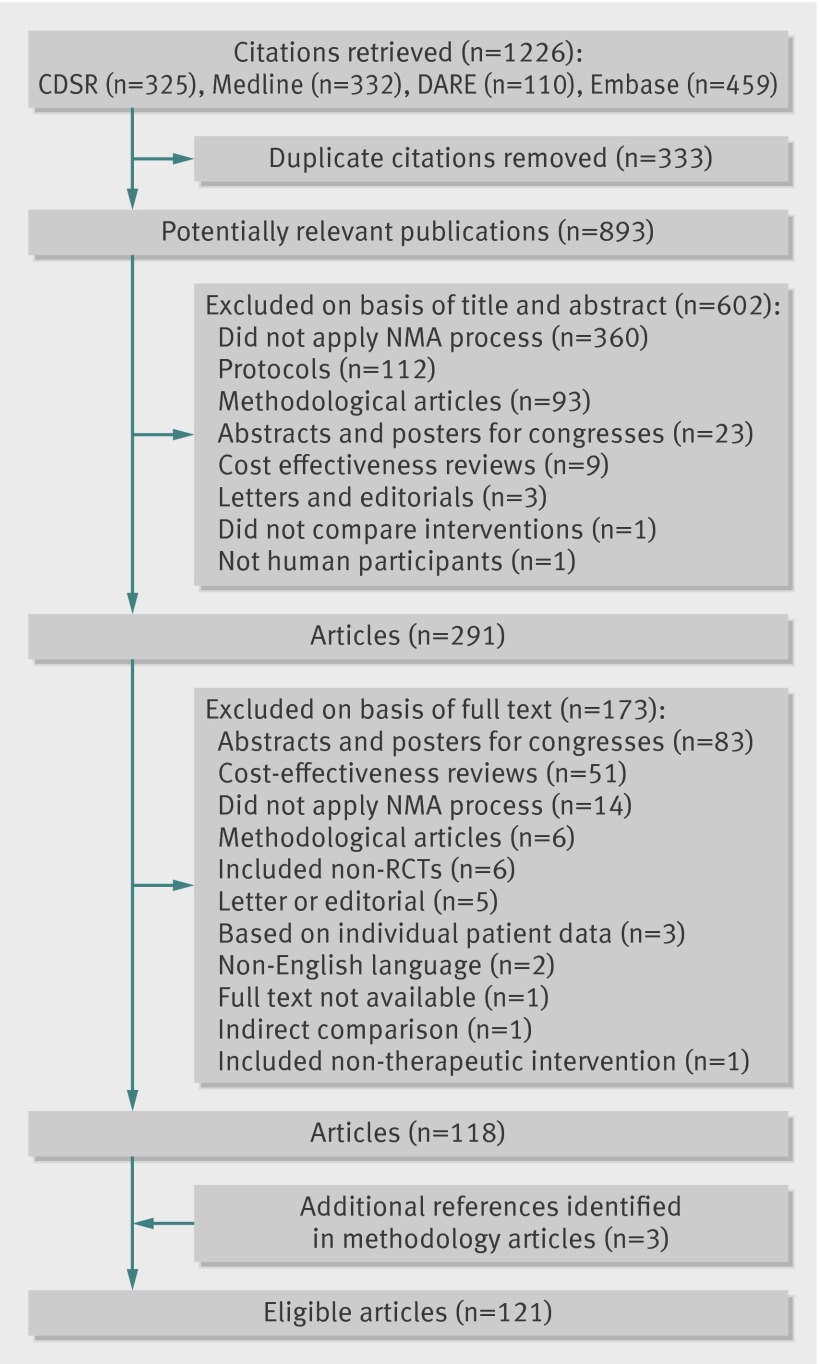

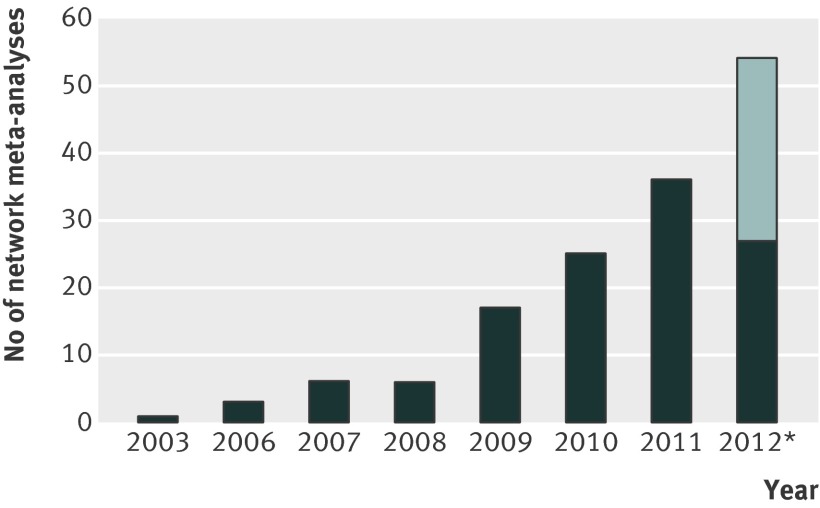

Our search identified 1226 citations, including 333 duplicate citations. Among the 893 potentially relevant publications, 118 were eligible reports of network meta-analyses. Three additional network meta-analyses were identified from methodological articles, resulting in 121 eligible reports of network meta-analyses (figs 1 and 2; web table 2 shows the main characteristics of the network meta-analyses (w1-w121)).

Fig 1 Flowchart of selection of network meta-analyses. CDSR=Cochrane Database of Systematic Reviews; DARE= Database of Abstracts of Reviews of Effects; NMA=network meta-analysis; RCT= randomised controlled trial

Fig 2 Number of reports of network meta-analyses published per year. *We estimated the number of reports published from July to December 2012 on the basis of the number of published network meta-analyses from the six previous months. Between January and June 2012, 27 reports were published (dark region); therefore, we estimated 27 reports from July to December 2012 (light region)

Reports of network meta-analyses were published in 75 different journals, with 55 reports (45%) published in general journals, 66 (55%) in specialty journals, and 56 (46%) in journals with a high impact factor (table 1; web table 3). The network meta-analyses covered a wide range of medical areas, and 100 (83%) described the assessment of pharmacological interventions. The median number of interventions assessed per network meta-analysis was seven (interquartile range 5-9), and the median number of randomised controlled trials included per network meta-analysis was 22 (15-40).

Table 1.

Epidemiological and descriptive characteristics of 121 reports of network meta-analyses

| Item and subcategory | No (%) of reports |
|---|---|
| Journal type | |
| General journal | 55 (45) |
| With high impact factor | 29 (53) |
| Specialty journal | 66 (55) |
| With high impact factor | 27 (41) |
| Location of corresponding author | |
| Europe | 69 (57) |
| North America | 38 (31) |
| Asia | 8 (7) |
| South America | 3 (2) |
| Oceania | 3 (2) |
| Funding source | |
| Private | 41 (34) |
| Public | 40 (33) |
| None | 15 (12) |
| Both private and public | 7 (6) |
| Unclear | 18 (15) |
| Medical area | |
| Cardiology | 27 (22) |
| Rheumatology | 16 (13) |
| Endocrinology | 12 (10) |
| Oncology | 10 (8) |
| Infectious disease | 9 (7) |
| Psychiatry/psychology | 8 (7) |
| Neurology | 8 (7) |
| Respiratology | 6 (5) |
| Ophthalmology | 5 (4) |
| Surgery | 5 (4) |
| Other (≤3 reviews per medical area; 11 medical areas) | 15 (12) |
| Type of intervention assessed | |
| Pharmacological intervention | 100 (83) |
| Different class * | 72 (60) |
| Same class * | 35 (29) |
| Any dose of same drug * | 12 (10) |
| Non-pharmacological intervention | 11 (9) |
| Devices * | 10 (8) |
| Surgery or procedure * | 7 (6) |
| Therapeutic strategy or education | 5 (4) |
| Both (pharmacological and non-pharmacological intervention) | 10 (8) |
| Type of network | |
| No of interventions assessed per network† | 7 (5-9) |
| No of comparisons conducted by at least one randomised controlled trial (per network meta-analysis)† | 10 (6-15) |
| No of network meta-analyses with at least one closed loop | 103 (85) |
| No of randomised controlled trials included in network meta-analysis (per network meta-analysis)† | 22 (15-40) |

Data are no (%) of reports unless stated otherwise.

*Multiple answers were possible, so the total does not equal 100%.

†Data are median (interquartile range).

Reporting of key methodological components of the systematic review process

Only three (2%) network meta-analyses did not report the databases searched in the methods section; of the remaining 118, 99 (82%) used a de novo search strategy and 19 (16%) a mixed search strategy. However, 42 (35%) did not report searching other sources, and 39 (32%) did not report any information regarding restriction or no restriction of study selection related to publication status (table 2). In addition, 42 (35%) and 32 (26%) network meta-analyses did not report the methods used for study selection and data extraction, respectively. A total of 61 (50%) network meta-analyses did not report any information regarding the assessment of risk of bias of individual studies. Of 121 network meta-analyses, 103 (85%) did not report any methods to assess the likelihood of publication bias.

Table 2.

Reporting of key methodological components of the systematic review process in network meta-analyses, by journal type

| Items | Overall (n=121) | General journals (n=55) | Specialty journals (n=66) |
|---|---|---|---|
| Introduction | | | |
| Questions referring to participants, interventions, comparisons, outcomes, and study design | 110 (91) | 51 (93) | 59 (89) |
| Methods | | | |
| Existence of systematic review protocol | 15 (12) | 9 (16) | 6 (9) |
| Primary outcome(s) | 85 (70) | 44 (80) | 41 (62) |
| Information sources searched | | | |
| Databases searched | 118 (98) | 53 (96) | 65 (98) |
| Electronic search strategy for each database | 33 (27) | 19 (35) | 14 (21) |
| Date of last search for each database | 109 (90) | 50 (91) | 59 (89) |
| Period covered by search for each database | 83 (69) | 36 (65) | 47 (71) |
| Search for any other sources (conference abstracts, unpublished studies, textbooks, specialty registers, contact with study authors, reviewing the references in the studies found or any relevant systematic reviews) | 79 (65) | 38 (69) | 41 (62) |
| Reviewing the references in the studies found or any relevant systematic reviews | 54 (45) | 27 (49) | 27 (41) |
| Search for ongoing studies | 19 (16) | 13 (24) | 6 (9) |
| Restriction or no restriction related to language | 92 (76) | 42 (76) | 50 (76) |
| Restriction or no restriction related to the publication status | 82 (68) | 38 (69) | 44 (67) |
| Study selection and data collection process | | | |
| Process for selecting studies | 79 (65) | 35 (64) | 44 (67) |
| Method of data extraction | 89 (74) | 42 (76) | 47 (71) |
| Methods used for assessing risk of bias of individual studies | 60 (50) | 27 (49) | 33 (50) |
| Methods to incorporate assessment of risk of bias of individual studies in the analysis or conclusions of review | | | |
| Subgroup analysis | 9 (7) | 4 (7) | 5 (8) |
| Inclusion criteria | 4 (3) | 1 (2) | 3 (5) |
| GRADE | 4 (3) | 4 (7) | 0 (0) |
| Adjustment | 2 (2) | 2 (4) | 0 (0) |
| Any of these methods | 19 (16) | 11 (20) | 8 (13) |
| Assessment of risk of bias that may affect the cumulative evidence (publication bias) | 18 (15) | 11 (20) | 7 (11) |
| Results | | | |
| Study selection | | | |
| No of studies screened, assessed for eligibility, and included in the review | 90 (74) | 43 (78) | 47 (71) |
| List of studies included | 117 (97) | 53 (96) | 64 (97) |
| List of studies excluded | 9 (7) | 6 (11) | 3 (5) |
| Study characteristics | | | |
| Description of network | 82 (68) | 39 (71) | 43 (65) |
| Characteristics of patients (for example, age, female:male ratio) | 70 (58) | 30 (55) | 40 (61) |
| Duration of follow-up of patients | 60 (50) | 26 (47) | 34 (52) |
| Duration of interventions | 34 (28) | 15 (27) | 19 (29) |
| Description of interventions | 64 (53) | 30 (55) | 34 (52) |
| No of study groups | 95 (79) | 42 (76) | 53 (80) |
| No of patients | 97 (80) | 41 (75) | 56 (85) |
| Funding source | 13 (11) | 8 (15) | 5 (8) |
| Risk of bias within studies | 51 (42) | 23 (42) | 28 (42) |
| Risk of bias across studies (publication bias) | 18 (15) | 11 (20) | 7 (11) |
| Discussion | | | |
| Reporting of limitations at review level (reporting or publication bias) | 58 (48) | 32 (58) | 26 (39) |
| Other items | | | |
| Assumptions required in network meta-analysis | | | |
| Homogeneity assumption | 107 (88) | 50 (91) | 57 (86) |
| Similarity assumption | 41 (34) | 18 (33) | 23 (35) |
| Consistency or exchangeability assumption | 58 (48) | 32 (58) | 26 (39) |
| Conflict of interest | 95 (79) | 47 (85) | 48 (73) |

Data are number (%) of reports featuring the corresponding item.

In the results section, 95 (79%) network meta-analyses did not describe the characteristics of primary studies (that is, characteristics of the network, patient characteristics, and interventions); 70 (58%) did not report the risk of bias assessment within studies.

The similarity and consistency assumptions were not mentioned in 66% (n=80) and 52% (n=63) of reports, respectively (table 2). These findings did not differ by journal type or funding source (web table 4).

Conduct quality when the reporting was adequate

For network meta-analyses with adequate reporting, the conduct quality was frequently inadequate: 11% of these network meta-analyses involved a search of only one bibliographic database, 20% restricted the study selection to published articles, 43% lacked independent duplicate study selection, and 21% lacked independent duplicate data extraction. These findings did not differ by journal type or funding source (table 3; web table 5).

Table 3.

Inadequate quality of conduct of the systematic review process with adequate reporting in network meta-analyses, by journal type

| Item (reported and of inadequate quality of conduct) | Overall | General journal | Specialty journal |
|---|---|---|---|
| Electronic search of only one bibliographic database | 13/118 (11) | 8/53 (15) | 5/65 (8) |
| Restriction of study selection based on publication status | 16/82 (20) | 6/38 (16) | 10/44 (23) |
| Lack of independent duplicate study selection | 34/79 (43) | 13/37 (35) | 21/42 (50) |
| Lack of independent duplicate data extraction | 19/89 (21) | 10/41 (24) | 9/48 (19) |

Data are number/total number (%) of reports. Denominators of fractions indicate the total number of reports in which the corresponding item was reported.

Composite outcome assessing inadequate reporting or inadequate conduct quality

Overall, 87 network meta-analyses (72%) showed inadequate reporting of key methodological components or inadequate conduct quality according to guidelines from Li and colleagues.29 This measurement did not differ by journal type (general journal, 69% (95% confidence interval 57% to 81%); specialty journal, 74% (63% to 85%); P=0.5) or funding source (public funding, 67% (54% to 79%); private funding, 79% (67% to 90%); P=0.2).
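
For readers who want to see how an interval of this kind can be obtained, the sketch below computes a normal approximation (Wald) 95% confidence interval for a proportion. Two things are assumptions here: the report does not state which interval method was used, and the count of 38 inadequate reports among 55 general journal reports is back-calculated from the reported 69%.

```python
import math
from typing import Tuple


def wald_ci(events: int, n: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Return (proportion, lower, upper) using the normal approximation."""
    p = events / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    # Clip to [0, 1] because the approximation can overshoot near the bounds.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# Assumed count: 38 of 55 general journal reports classified as inadequate.
p, lower, upper = wald_ci(38, 55)
print(f"{p:.0%} ({lower:.0%} to {upper:.0%})")
```

With these assumed counts the result agrees with the interval reported for general journals. Other interval methods (for example, exact or Wilson intervals) can differ by a percentage point or so.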

We used a second composite according to the seven mandatory items of methodological expectations of Cochrane intervention reviews.30 Based on this composite, 120 network meta-analyses (99%) showed inadequate reporting of key methodological components or inadequate conduct. These findings did not differ by journal type or funding source.

Discussion

We identified 121 reports of network meta-analyses covering a wide range of medical areas. Key methodological components of the systematic review process were missing in most reports. The reporting did not differ by publication in a general or specialty journal or by public or private funding.

Several guidelines have been developed to assess the methodological quality and reporting of systematic reviews and meta-analyses,26 30 31 32 33 and according to all these guidelines, retrieving all relevant studies is crucial for the success of every systematic review. The literature search needs to be exhaustive and adequate to maximise the likelihood of capturing all relevant studies and minimise the effects of reporting biases that might influence the nature and direction of results.34 35 36 More than half of our network meta-analyses failed to report exhaustive searches; for those with adequate reporting, 11% searched only one electronic database (web table 6).

In addition, more than half of our network meta-analyses did not describe the characteristics of patients, the duration of follow-up or treatment, or the intervention. The lack of adequate reporting of the characteristics of primary studies raises several problems. Knowing precisely the type of intervention, the characteristics of patients, and the duration of follow-up and treatment is a preliminary step for researchers and readers to assess the assumption of similarity required in network meta-analyses.12 37

An important step of the systematic review process is to assess the risk of bias of individual studies.38 39 40 41 42 The risk of bias in individual studies could affect the findings of network meta-analyses.43 However, 58% of our network meta-analyses did not report the risk of bias of individual studies in the results sections, and 84% did not report a method to incorporate assessment of risk of bias of individual studies in the analysis or conclusions of the review (web table 6).

Publication bias could affect the results of meta-analyses and network meta-analyses.44 45 46 47 This factor is all the more important because we lack validated methods to detect, appropriately adjust for, or exclude reporting bias in network meta-analyses. Moreover, reporting bias across trials could differentially affect the various comparisons in the network and modify the rank order efficacy of treatments. However, most of our network meta-analyses (85%) did not report a method to assess publication bias. Although reporting bias could have a substantial effect on the conclusions of a network meta-analysis, it is probably neglected.

Strengths and limitations of study

To the best of our knowledge, no previous study has investigated the systematic review process in network meta-analyses (mixed treatment comparison meta-analyses).10 13 Recently, the Agency for Healthcare Research and Quality assessed the statistical methods used in a sample of published network meta-analyses, but did not analyse the conduct and reporting of the systematic review process.48

Our study had some limitations. Assessing conduct quality from published reports alone could be unreliable, as has been shown for randomised trials.49 The study authors may have used adequate methods but omitted important details from their reports, or key information may have been deleted during the publication process. However, we were in the position of the reader, who can only assess what was reported. In addition, we judged that the conduct quality was inadequate only if the reporting was adequate. Furthermore, we did not assess health technology assessment reports, which could have had higher quality or precision than the reports analysed.

Conclusions and implications

Our study identified shortcomings in some important methodological components of the reports of network meta-analyses that may raise doubts about the confidence we can have in their conclusions. Potential flaws in the conduct of network meta-analyses could affect their findings. Performing a network meta-analysis outside the realm of a systematic review, without extensive and thorough searches for eligible trials, and without risk of bias assessments of trials, would increase the risk of a network meta-analysis producing biased results. Guidelines are needed to improve the quality of reporting and conduct of network meta-analyses.

What is already known on this topic

Network meta-analyses are primarily meta-analyses, and should be conducted by respecting the methodological rules of systematic reviews

Network meta-analyses are subject to the same methodological risks as standard pairwise systematic reviews and, because the method is more complex, are probably more vulnerable to these risks

What this study adds

Key methodological components of the systematic review process are frequently inadequately reported in publications of network meta-analyses

The level of inadequate reporting does not differ between reports published by a general or specialty journal or between reports with public or private funding

Inadequate reporting of results of network meta-analyses raises doubts about the ability of network meta-analyses to help clinical researchers determine the best available treatment

Contributors: AB was responsible for the study conception, search of trials, selection of trials, data extraction, data analysis, interpretation of results, and drafting the manuscript. LT was responsible for the study conception, search of trials, selection of trials, interpretation of results, and drafting the manuscript. RS was responsible for the data extraction, interpretation of results, and drafting the manuscript. PR was the guarantor and was responsible for the study conception, interpretation of results, and drafting the manuscript. All authors, external and internal, had full access to all of the data (including statistical reports and tables) in the study and can take responsibility for the integrity of the data and the accuracy of the data analysis.

Funding: This study was funded by an academic grant for the doctoral student from “Pierre et Marie Curie University.” Our team is supported by an academic grant (DEQ20101221475) for the programme “Equipe espoir de la Recherche,” from the Fondation pour la Recherche Médicale. The funding agencies had no role in the design or conduct of the study; collection, management, analysis, or interpretation of the data; or preparation and review of the manuscript.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf and declare: no support from any organisation for the submitted work; no financial relationships with any organisations that might have an interest in the submitted work in the previous three years; no other relationships or activities that could appear to have influenced the submitted work.

Ethical approval: Ethical approval not required.

Data sharing: No additional data available.

Cite this as: BMJ 2013;347:f3675

Web Extra. Extra material supplied by the author

Web appendix: Supplementary material

References

- 1. Dickersin K. Health-care policy. To reform US health care, start with systematic reviews. Science 2010;329:516-7.

- 2. Lathyris DN, Patsopoulos NA, Salanti G, Ioannidis JP. Industry sponsorship and selection of comparators in randomized clinical trials. Eur J Clin Invest 2010;40:172-82.

- 3. Hochman M, McCormick D. Characteristics of published comparative effectiveness studies of medications. JAMA 2010;303:951-8.

- 4. Volpp KG, Das A. Comparative effectiveness—thinking beyond medication A versus medication B. N Engl J Med 2009;361:331-3.

- 5. Estellat C, Ravaud P. Lack of head-to-head trials and fair control arms: randomized controlled trials of biologic treatment for rheumatoid arthritis. Arch Intern Med 2012;172:237-44.

- 6. Bucher HC, Guyatt GH, Griffith LE, Walter SD. The results of direct and indirect treatment comparisons in meta-analysis of randomized controlled trials. J Clin Epidemiol 1997;50:683-91.

- 7. Song F, Altman DG, Glenny AM, Deeks JJ. Validity of indirect comparison for estimating efficacy of competing interventions: empirical evidence from published meta-analyses. BMJ 2003;326:472.

- 8. Lumley T. Network meta-analysis for indirect treatment comparisons. Stat Med 2002;21:2313-24.

- 9. Lu G, Ades AE. Combination of direct and indirect evidence in mixed treatment comparisons. Stat Med 2004;23:3105-24.

- 10. Jansen JP, Fleurence R, Devine B, Itzler R, Barrett A, Hawkins N, et al. Interpreting indirect treatment comparisons and network meta-analysis for health-care decision making: report of the ISPOR task force on indirect treatment comparisons good research practices: part 1. Value Health 2011;14:417-28.

- 11. Donegan S, Williamson P, Gamble C, Tudur-Smith C. Indirect comparisons: a review of reporting and methodological quality. PLoS One 2010;5:e11054.

- 12. Song F, Loke YK, Walsh T, Glenny AM, Eastwood AJ, Altman DG. Methodological problems in the use of indirect comparisons for evaluating healthcare interventions: survey of published systematic reviews. BMJ 2009;338:b1147.

- 13. Wells GA, Sultan SA, Chen L, Khan M, Coyle D. Indirect evidence: indirect treatment comparisons in meta-analysis. Canadian Agency for Drugs and Technologies in Health, 2009.

- 14. Glenny AM, Altman DG, Song F, Sakarovitch C, Deeks JJ, D’Amico R, et al. Indirect comparisons of competing interventions. Health Technol Assess 2005;9:1-134, iii-iv.

- 15. Schöttker B, Luhmann D, Boulkheimair D, Raspe H. Indirekte vergleiche von therapieverfahren. GMS Health Technology Assessment, 2009.

- 16. Cucherat M, Izard V. Les comparaisons indirectes Méthodes et validité. HAS-Service de l’Evaluation des Medicaments, 2009:66.

- 17. Hoaglin DC, Hawkins N, Jansen JP, Scott DA, Itzler R, Cappelleri JC, et al. Conducting indirect-treatment-comparison and network-meta-analysis studies: report of the ISPOR task force on indirect treatment comparisons good research practices: part 2. Value Health 2011;14:429-37.

- 18. Song F, Harvey I, Lilford R. Adjusted indirect comparison may be less biased than direct comparison for evaluating new pharmaceutical interventions. J Clin Epidemiol 2008;61:455-63.

- 19. Li T, Puhan MA, Vedula SS, Singh S, Dickersin K. Network meta-analysis-highly attractive but more methodological research is needed. BMC Med 2011;9:79.

- 20. Salanti G, Kavvoura FK, Ioannidis JP. Exploring the geometry of treatment networks. Ann Intern Med 2008;148:544-53.

- 21. Edwards SJ, Clarke MJ, Wordsworth S, Borrill J. Indirect comparisons of treatments based on systematic reviews of randomised controlled trials. Int J Clin Pract 2009;63:841-54.

- 22. Lu G, Ades AE, Sutton AJ, Cooper NJ, Briggs AH, Caldwell DM. Meta-analysis of mixed treatment comparisons at multiple follow-up times. Stat Med 2007;26:3681-99.

- 23. Salanti G, Higgins JP, Ades AE, Ioannidis JP. Evaluation of networks of randomized trials. Stat Methods Med Res 2008;17:279-301.

- 24. Salanti G, Ades AE, Ioannidis JP. Graphical methods and numerical summaries for presenting results from multiple-treatment meta-analysis: an overview and tutorial. J Clin Epidemiol 2011;64:163-71.

- 25. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Ann Intern Med 2009;151:W65-94.

- 26. Shea BJ, Grimshaw JM, Wells GA, Boers M, Andersson N, Hamel C, et al. Development of AMSTAR: a measurement tool to assess the methodological quality of systematic reviews. BMC Med Res Methodol 2007;7:10.

- 27. Chan AW, Hrobjartsson A, Haahr MT, Gotzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA 2004;291:2457-65.

- 28. Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ 2008;336:924-6.

- 29. Li T, Vedula SS, Scherer R, Dickersin K. What comparative effectiveness research is needed? A framework for using guidelines and systematic reviews to identify evidence gaps and research priorities. Ann Intern Med 2012;156:367-77.

- 30. Higgins JPT, Green S, eds. Cochrane handbook for systematic reviews of interventions version 5.1.0. Cochrane Collaboration, 2011.

- 31. Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med 2009;6:e1000100.

- 32. Moher D, Cook DJ, Eastwood S, Olkin I, Rennie D, Stroup DF. Improving the quality of reports of meta-analyses of randomised controlled trials: the QUOROM statement. Quality of reporting of meta-analyses. Lancet 1999;354:1896-900.

- 33. Mills EJ, Ioannidis JP, Thorlund K, Schunemann HJ, Puhan MA, Guyatt GH. How to use an article reporting a multiple treatment comparison meta-analysis. JAMA 2012;308:1246-53.

- 34. McAuley L, Pham B, Tugwell P, Moher D. Does the inclusion of grey literature influence estimates of intervention effectiveness reported in meta-analyses? Lancet 2000;356:1228-31.

- 35. Royle P, Milne R. Literature searching for randomized controlled trials used in Cochrane reviews: rapid versus exhaustive searches. Int J Technol Assess Health Care 2003;19:591-603.

- 36. Sampson M, Barrowman NJ, Moher D, Klassen TP, Pham B, Platt R, et al. Should meta-analysts search Embase in addition to Medline? J Clin Epidemiol 2003;56:943-55.

- 37. Xiong T, Parekh-Bhurke S, Loke YK, Abdelhamid A, Sutton AJ, Eastwood AJ, et al. Overall similarity and consistency assessment scores are not sufficiently accurate for predicting discrepancy between direct and indirect comparison estimates. J Clin Epidemiol 2013;66:184-91.

- 38. Gluud LL. Bias in clinical intervention research. Am J Epidemiol 2006;163:493-501.

- 39. Juni P, Altman DG, Egger M. Systematic reviews in health care: Assessing the quality of controlled clinical trials. BMJ 2001;323:42-6.

- 40. Wood L, Egger M, Gluud LL, Schulz KF, Juni P, Altman DG, et al. Empirical evidence of bias in treatment effect estimates in controlled trials with different interventions and outcomes: meta-epidemiological study. BMJ 2008;336:601-5.

- 41. Moher D, Pham B, Jones A, Cook DJ, Jadad AR, Moher M, et al. Does quality of reports of randomised trials affect estimates of intervention efficacy reported in meta-analyses? Lancet 1998;352:609-13.

- 42. Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias. Dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA 1995;273:408-12.

- 43. Dias S, Welton NJ, Marinho V, Salanti G, Higgins JP, Ades AE. Estimation and adjustment of bias in randomized evidence by using mixed treatment comparison meta-analysis. J R Stat Soc 2010;173:613-29.

- 44. Thornton A, Lee P. Publication bias in meta-analysis: its causes and consequences. J Clin Epidemiol 2000;53:207-16.

- 45. Trinquart L, Abbe A, Ravaud P. Impact of reporting bias in network meta-analysis of antidepressant placebo-controlled trials. PLoS One 2012;7:e35219.

- 46. Trinquart L, Chatellier G, Ravaud P. Adjustment for reporting bias in network meta-analysis of antidepressant trials. BMC Med Res Methodol 2012;12:150.

- 47. Salanti G. Indirect and mixed-treatment comparison, network, or multiple-treatments meta-analysis: many names, many benefits, many concerns for the next generation evidence synthesis tool. Res Synth Methods 2012;3:80-97.

- 48. Coleman CI, Phung OJ, Cappelleri JC, Baker WL, Kluger J, White CM, et al. Use of mixed treatment comparisons in systematic reviews. AHRQ Methods for Effective Health Care 2012. www.ncbi.nlm.nih.gov/books/NBK107330/.

- 49. Vale CL, Tierney JF, Burdett S. Can trial quality be reliably assessed from published reports of cancer trials: evaluation of risk of bias assessments in systematic reviews. BMJ 2013;346:f1798.
