Abstract

The face inversion effect has been used as a basis for claims about the specialization of face-related perceptual and neural processes. One of these claims is that the fusiform face area (FFA) is the site of face-specific feature-based and/or configural/holistic processes that are responsible for producing the face inversion effect. However, the studies on which these claims were based almost exclusively used stimulus manipulations of whole faces. Here, we tested inversion effects using single, discrete features and combinations of multiple discrete features, in addition to whole faces, using both behavioral and fMRI measurements. In agreement with previous studies, we found behavioral inversion effects with whole faces and no inversion effects with a single eye stimulus or the two eyes in combination. However, we also found behavioral inversion effects with feature combination stimuli that included features in the top and bottom halves (eyes-mouth and eyes-nose-mouth). Activation in the FFA showed an inversion effect for the whole-face stimulus only, which did not match the behavioral pattern. Instead, a pattern of activation consistent with the behavior was found in the bilateral inferior frontal gyrus, which is a component of the extended face-preferring network. The results appear inconsistent with claims that the FFA is the site of face-specific feature-based and/or configural/holistic processes that are responsible for producing the face inversion effect. They are more consistent with claims that the FFA shows a stimulus preference for whole upright faces.

INTRODUCTION

For humans, the faces of conspecifics are among the most important environmental stimuli. Thus, it is not surprising that the mechanisms of human face recognition are the subject of extensive scientific debate. A long-standing and still active debate concerns whether face recognition is the product of face-specific neural mechanisms. A classic effect in face recognition research that has produced ample data for this debate is the face inversion effect (Yin, 1969). The difference in recognition accuracy between upright and inverted (upside-down) faces is usually found to be much greater than the difference for nonface objects. Whether the face inversion effect requires an underlying face-specific mechanism has been a central question in this debate.

One of the early frameworks put forward to explain face inversion effects was that recognition of upright whole faces is “holistic” and thus derives an advantage compared with recognition of inverted faces and upright and inverted nonface objects, which is thought to be feature based (Tanaka & Farah, 1993). More recent evidence suggests that other kinds of objects can also come to be processed holistically in their upright orientation through perceptual expertise (Rossion, 2008; Gauthier & Tarr, 2002). Other evidence suggests that the face inversion effect relies not only on configural/holistic processing of upright faces but also on feature-based processing (McKone & Yovel, 2009; Yovel & Kanwisher, 2004). Although these accounts differ strongly in some of their premises, they agree on the idea that upright whole faces (and perhaps objects of expertise) are processed qualitatively differently.

In contrast to these accounts, some recent studies suggest that the face inversion effect is not the product of a qualitative difference in processing of upright and inverted orientations, but a quantitative difference (Gold, Mundy, & Tjan, 2012; Richler, Mack, Palmeri, & Gauthier, 2010; Sekuler, Gaspar, Gold, & Bennett, 2004). The results of these studies suggest that the same perceptual processes are recruited for upright and inverted faces (and possibly other nonface objects), but those processes are facilitated when the faces are upright compared with inverted. The results further suggest that the process in question is feature-based, rather than “holistic.” In one of these studies, it was clearly shown that the reliance on specific face features was almost the same across orientations (Sekuler et al., 2004). In another study, it was shown that upright face identification performance could be entirely predicted by identification performance with each individual facial feature shown in isolation (Gold et al., 2012). In the third study, it was found that equating the pattern of performance across orientations required allowing more time for the inverted faces (Richler et al., 2010). Taken together, the results of these studies suggest that the face inversion effect reflects an enhancement of feature-based processes in the upright orientation.

The existence of a face-preferring region in the cortex, often called the fusiform face area (FFA), is sometimes cited as evidence for the existence of special feature-based and/or configural/holistic neural mechanisms for processing whole upright faces (for a review, see Kanwisher & Yovel, 2006). Findings converge to show that BOLD fMRI activation in the FFA is greater with whole upright faces than with most other kinds of objects. This pattern of activation has been called face-specific or face-selective by some (Kanwisher & Yovel, 2006), but face-preferring by others (Hanson & Schmidt, 2011; Pernet, Schyns, & Demonet, 2007). Activation in the FFA is greater with upright faces than inverted faces, but inversion does not influence FFA activation with nonface objects (Downing, Chan, Peelen, Dodds, & Kanwisher, 2006; Yovel & Kanwisher, 2004, 2005; Kanwisher, Tong, & Nakayama, 1998). Although activation in the STS—also a part of the core face-preferential network—has been shown to be sensitive to face inversion, the size of the STS inversion effect is not correlated with the size of the behavioral inversion effect, whereas the size of the FFA inversion effect is correlated with behavior (Yovel & Kanwisher, 2005). Also, when fMR adaptation (repetition suppression) was used to test the specificity of the neural representation of upright and inverted faces, adaptation was found with only upright faces and only in the FFA (Yovel & Kanwisher, 2005). Finally, when the inversion effect was tested with sets of faces with changes to feature shape (featural) or changes to feature location (configural), inversion effects in FFA activation were found with both manipulations (Yovel & Kanwisher, 2004). These findings converge to suggest that the FFA is the site of face-preferring processes—possibly configural/holistic, but possibly also feature based—that contribute to the behavioral face inversion effect.

The previous fMRI studies of face inversion, however, all used manipulations of whole faces as stimuli. Ideally, testing whether or not a system responds selectively to whole faces requires a comparison of whole faces and partial faces. Behavioral studies suggest that single face parts do not produce inversion effects (Bushmakin & James, under revision; Pellicano & Rhodes, 2003; Rhodes, Brake, & Atkinson, 1993), but this has not been tested with fMRI. Also, there is evidence that a key determinant of inversion effects may be the combination of information across multiple features (Bushmakin & James, under revision). This suggests that inversion effects can occur with less than a whole face, but that they require more than a single feature. However, this hypothesis has not been tested, either behaviorally or with fMRI. To examine the specificity of inversion effects to whole faces and to determine the neural substrates that underlie such specificity, the current study assessed inversion effects with whole faces compared with inversion effects with stimuli composed of a single facial feature or different combinations of multiple facial features using both behavioral measures and BOLD fMRI.

METHODS

Participants

Twenty-four healthy adults (10 men; ages, 21–32 years) participated for payment in the behavioral session. Subsequently, 12 of those 24 volunteers (6 men) participated for payment in an fMRI scanning session. All participants signed informed consent forms, and the Indiana University Institutional Review Board approved the study.

Stimuli

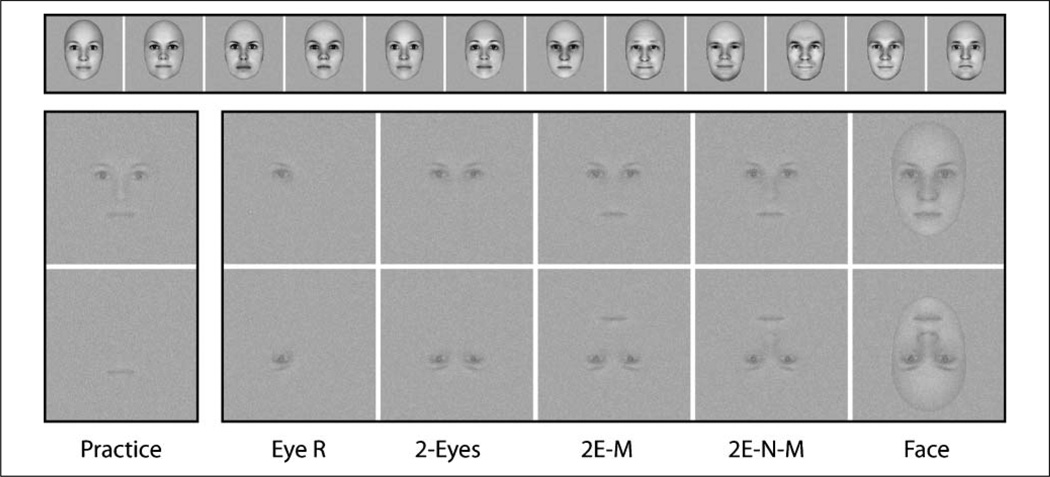

Twelve face images (6 men, 6 women) were created with FaceGen 3.2 (www.facegen.com) and are shown in the top row of Figure 1. Parameters in FaceGen were selected such that all faces were between the ages of 20 and 30 years, symmetric, and equally attractive. Faces were rendered as 256 × 256 pixel grayscale images. Different-sized apertures were used for the eye/eyebrow, nose, and mouth features, but across face images, the size and position of the apertures were kept constant. For single-feature stimuli, the mouth was chosen for use during practice trials, and the right eye was chosen for experimental trials. These choices were based on the results of previous work (Arcurio, Gold, & James, 2012). Multifeature combination stimuli were created by combining two, three, or four single features, always taken from the same face, and always positioned in the correct spatial locations. The combination stimulus used for practice trials was the four-feature eyes-nose-mouth stimulus, and the combinations used for experimental trials were the two-eyes, eyes-mouth, and eyes-nose-mouth stimuli. Note that no face outline was present in any of these five single-feature and feature-combination stimulus types. The last stimulus type was the whole face. Examples of the stimuli are shown in Figure 1, but at a higher contrast than used during scanning (see procedures below). During practice trials, mouth and eyes-nose-mouth stimuli were shown only in the upright orientation. During experimental trials, right-eye, two-eyes, eyes-mouth, eyes-nose-mouth, and whole-face stimuli were shown in both the upright and inverted (upside-down, i.e., flipped about the horizontal axis) orientations, for a total of 10 experimental stimulus conditions.
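The feature and feature-combination stimuli can be thought of as a single face image seen through a fixed set of apertures. The Python/NumPy sketch below illustrates that idea. The aperture coordinates, the uniform gray background, and the use of a vertical flip (`np.flipud`) for inversion are all assumptions for illustration. They are not the parameters used to build the original stimuli.

```python
import numpy as np

# HYPOTHETICAL aperture boxes (row_start, row_stop, col_start, col_stop) on a
# 256 x 256 image. The text states only that aperture size and position were
# held constant across faces; these coordinates are placeholders.
APERTURES = {
    "right_eye": (80, 120, 60, 120),
    "left_eye": (80, 120, 136, 196),
    "nose": (125, 165, 103, 153),
    "mouth": (170, 205, 88, 168),
}


def feature_stimulus(face, features, background=0.5):
    """Keep only the pixels inside the chosen apertures of a single face.

    Using fixed image coordinates keeps every feature in its original
    position; all other pixels are set to a uniform background, so no
    face outline survives.
    """
    mask = np.zeros(face.shape, dtype=bool)
    for name in features:
        r0, r1, c0, c1 = APERTURES[name]
        mask[r0:r1, c0:c1] = True
    return np.where(mask, face, background)


def invert(image):
    """Upside-down version, assumed here to be a flip about the horizontal axis."""
    return np.flipud(image)


# Stand-in for one FaceGen image with gray levels in [0, 1].
face = np.random.default_rng(0).random((256, 256))
eyes_mouth = feature_stimulus(face, ["right_eye", "left_eye", "mouth"])
eyes_mouth_inverted = invert(eyes_mouth)
```

If inversion were instead implemented as a 180° rotation (`np.rot90(image, 2)`), the result would additionally be mirrored left to right. For the two-eyes stimulus, that would move the single right eye to the other side of the image.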

Figure 1.

Stimuli used for practice and experimental trials. The top row shows the 12 different faces from which the stimuli were drawn. In the bottom two rows, the far left column shows the two types of practice stimuli, single mouth and eyes-nose-mouth combination. In the right five columns, from left to right, the stimuli are single right eye, two-eyes, eyes-mouth, and eyes-nose-mouth combinations, and whole face. These five types are also shown inverted (bottom row). The bottom two rows are shown at lowered contrast with Gaussian noise to mimic conditions in the scanner.

Scanning Session Procedures

Participants underwent a prescan practice procedure in an MRI simulator located in the Indiana University Imaging Research Facility to familiarize the participants with the MRI environment and the task and to help limit any perceptual learning during the subsequent scanning session. Participants practiced with the single mouth stimulus and the combination eyes-nose-mouth stimulus (Figure 1). Data from the practice trials were analyzed to find two individualized contrast levels for each participant for presenting stimuli in the subsequent scanning session. This was done to ensure that the tasks were difficult for each participant, but not impossible, and that overall performance for each subject was above floor and below ceiling for all stimulus types.
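The text does not say how the two individualized contrast levels were derived from the practice data. A fitted psychometric function or an adaptive staircase would both be common choices. As a simple, hypothetical stand-in, the sketch below finds the 75%-correct contrast for each practice stimulus by interpolating linearly in log contrast between the two measured levels that bracket 75%. The practice accuracies are invented for illustration.

```python
import math


def contrast_at_accuracy(contrasts, accuracies, target=0.75):
    """Contrast giving `target` proportion correct, by interpolation in log contrast.

    Assumes accuracy increases with contrast. Returns None when no pair of
    adjacent measured contrasts brackets the target accuracy.
    """
    points = sorted(zip(contrasts, accuracies))
    for (c0, a0), (c1, a1) in zip(points, points[1:]):
        if a0 <= target <= a1 and a1 > a0:
            frac = (target - a0) / (a1 - a0)
            log_c = math.log(c0) + frac * (math.log(c1) - math.log(c0))
            return math.exp(log_c)
    return None


# Hypothetical practice data: proportion correct at four RMS contrasts.
levels = [0.02, 0.04, 0.08, 0.16]
mouth_acc = [0.55, 0.62, 0.74, 0.90]  # harder stimulus, so it needs more contrast
enm_acc = [0.60, 0.80, 0.92, 0.97]    # eyes-nose-mouth, an easier stimulus

high_contrast = contrast_at_accuracy(levels, mouth_acc)
low_contrast = contrast_at_accuracy(levels, enm_acc)
print(f"low = {low_contrast:.4f}, high = {high_contrast:.4f}")
```

The design choice mirrors the procedure described above. The easier combination stimulus sets the lower contrast level, and the harder single-feature stimulus sets the higher one.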

During scanning, participants lay supine in the scanner bore with their head secured in the head coil by foam padding. Participants viewed stimuli through a mirror that was mounted above the head coil. This allowed the participants to see the stimuli on a rear-projection screen (40.2 × 30.3 cm) placed behind the subject in the bore. Stimuli were projected onto the screen with a Mitsubishi LCD projector (model XL30U). The viewing distance from the mirror to the eyelid was 11.5 cm, and the distance from the screen to the mirror was 79 cm, giving a total viewing distance of 90.5 cm. When projected in this manner, the size of the entire 256 × 256 pixel stimulus image subtended approximately 6° of visual angle.
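The reported stimulus size can be checked with the standard visual-angle formula, θ = 2·arctan(s / 2d). The sketch below adds the two distances given above. It then back-computes the on-screen image width implied by a 6° stimulus. That width is derived here and is not reported in the text.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Full visual angle subtended by an object of `size_cm` at `distance_cm`."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg, distance_cm):
    """Inverse: physical size that subtends `angle_deg` at `distance_cm`."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


viewing_distance = 11.5 + 79.0  # eye-to-mirror plus mirror-to-screen, in cm
image_width = size_for_angle_cm(6.0, viewing_distance)
print(f"{image_width:.2f} cm")  # prints: 9.49 cm
```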

Each scanning session consisted of one localizer run and 10 experimental runs. The localizer run was included to independently, functionally localize object- and face-preferring brain regions for ROI analyses, specifically the occipital face area (OFA), FFA, and lateral occipital cortex (LO). During the functional localizer run, full contrast, noise-free, grayscale images of familiar objects (e.g., chair, toaster), faces (different from those used in the experimental runs), and phase-scrambled noise (derived from the object and face stimuli) were presented in a blocked design while participants fixated the center of the screen. Six stimuli per block were presented for 1.5 sec each with an ISI of 500 msec, producing a block time of 12 sec. Blocks were presented in 48 sec cycles of noise–objects–noise–faces. There were eight cycles in the single run, and the run began and ended with 12 sec of rest, making the total run length 6 min and 48 sec.
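The localizer timing can be checked by building its block onset schedule from the parameters above. The volume count assumes one volume per 2-s TR, taken from the imaging parameters, and no discarded dummy scans. The text does not specify how dummy scans were handled.

```python
# Localizer parameters taken from the text.
STIM_S, ISI_S, PER_BLOCK = 1.5, 0.5, 6
CYCLE = ["noise", "objects", "noise", "faces"]
N_CYCLES, REST_S = 8, 12.0
TR_S = 2.0  # from the imaging parameters; used only to count volumes

BLOCK_S = PER_BLOCK * (STIM_S + ISI_S)  # 6 x 2 s = 12 s


def localizer_schedule():
    """Return (condition, onset_s) for every block and the total run length."""
    onsets = []
    t = REST_S  # the run opens with 12 s of rest
    for _ in range(N_CYCLES):
        for condition in CYCLE:
            onsets.append((condition, t))
            t += BLOCK_S
    return onsets, t + REST_S  # and closes with 12 s of rest


onsets, run_s = localizer_schedule()
minutes, seconds = divmod(int(run_s), 60)
print(f"{len(onsets)} blocks, {run_s:.0f} s = {minutes} min {seconds} s, "
      f"{int(run_s / TR_S)} volumes")  # prints: 32 blocks, 408 s = 6 min 48 s, 204 volumes
```

The same onset list is the kind of timing protocol that a block-design GLM converts into predictors.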

During experimental runs, the experimental stimuli from Figure 1 were presented in a blocked design while participants performed a 1-back matching task. All six stimuli in a block were of the same stimulus type. The stimuli were presented in noise and at lowered contrast so that recognition would be difficult and behavioral performance would be below ceiling and above floor. For each participant, contrast levels were determined from the data collected during the prescan practice: the lower contrast level produced 75% accuracy with the practice eyes-nose-mouth stimulus, and the higher contrast level produced 75% accuracy with the practice mouth stimulus. Stimuli in a block were presented at one of the two contrast levels and were embedded in Gaussian noise of constant variance (RMS = 0.1) that was resampled on each trial. Stimuli were presented for 1 sec each with an ISI of 2 sec, producing a total block length of 18 sec. Stimulus blocks were separated by fixation blocks 12 sec in length. Each run contained 10 stimulus blocks and 11 fixation blocks, for a total run length of 5 min and 12 sec. Across the 10 runs, there were a total of 100 stimulus blocks, equally divided among the 10 stimulus conditions (5 stimulus types by 2 orientations), resulting in 10 blocks per stimulus condition. Matlab R2008a (www.mathworks.com) combined with the Psychophysics Toolbox (www.psychtoolbox.org; Brainard, 1997; Pelli, 1997) was used to create the stimuli, adjust the signal-to-noise ratios, present the stimuli during the scanning session, and collect the behavioral responses.
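One way to present a stimulus at a chosen contrast in constant-variance noise is sketched below in Python. The original study used Matlab and the Psychophysics Toolbox. The sketch assumes that contrast means the RMS deviation of the stimulus from mean luminance on a 0 to 1 scale, and that signal and noise are simply added. The text does not spell out these details, and the 0.07 contrast is an arbitrary example value.

```python
import numpy as np

NOISE_RMS = 0.1  # noise standard deviation stated in the text


def embed_in_noise(image, rms_contrast, rng, mean_lum=0.5):
    """Scale an image to a target RMS contrast and add freshly drawn noise.

    The image is z-scored so that its RMS deviation from its own mean equals
    `rms_contrast`; a new Gaussian noise field is drawn on every call, which
    mirrors the per-trial resampling described in the text. Clipping to the
    display range is deliberately left out.
    """
    image = np.asarray(image, dtype=float)
    signal = (image - image.mean()) / image.std() * rms_contrast
    noise = rng.normal(0.0, NOISE_RMS, size=image.shape)
    return mean_lum + signal + noise


rng = np.random.default_rng(1)
stimulus = np.random.default_rng(2).random((256, 256))  # stand-in image
trial_1 = embed_in_noise(stimulus, 0.07, rng)
trial_2 = embed_in_noise(stimulus, 0.07, rng)  # same signal, new noise
```

Because the noise variance is fixed, changing `rms_contrast` is the only way this sketch changes the signal-to-noise ratio. That matches the idea of two contrast levels presented in identical noise.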

Imaging Parameters and Analysis

Imaging data were acquired with a Siemens Magnetom TIM TRIO (Munich, Germany) 3-T whole-body MRI. Images were collected using a 32-channel phased-array head coil. The field of view was 220 × 220 mm, with an in-plane matrix of 128 × 128 pixels and 35 axial slices of 3.4 mm thickness per volume. These parameters produced voxels that were 1.7 × 1.7 × 3.4 mm. Functional images were collected using a gradient-echo EPI sequence: TE = 24 msec, TR = 2000 msec, flip angle = 70° for BOLD imaging. Parallel imaging was used with a PAT factor of 2. High-resolution T1-weighted anatomical volumes were acquired using a Turbo-flash 3-D sequence: TI = 900 msec, TE = 2.67 msec, TR = 1800 msec, flip angle = 9°, with 192 sagittal slices of 1 mm thickness, a field of view of 224 × 256 mm, and an isotropic voxel size of 1 mm³.
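The functional voxel dimensions follow directly from the field of view, matrix size, and slice thickness. The short check below also derives the slab coverage and the number of volumes per experimental run. It assumes no inter-slice gap and no discarded dummy scans, neither of which is stated in the text.

```python
FOV_MM = 220.0
MATRIX = 128
N_SLICES, SLICE_MM = 35, 3.4
TR_S = 2.0

in_plane_mm = FOV_MM / MATRIX              # 1.71875 mm, reported as 1.7 mm
voxel_mm3 = in_plane_mm ** 2 * SLICE_MM    # acquired voxel volume
coverage_mm = N_SLICES * SLICE_MM          # assumes no inter-slice gap
vols_per_run = round((5 * 60 + 12) / TR_S)  # 5 min 12 s experimental run

print(f"{in_plane_mm:.2f} x {in_plane_mm:.2f} x {SLICE_MM} mm, "
      f"{voxel_mm3:.1f} mm^3, {coverage_mm:.0f} mm coverage, {vols_per_run} volumes")
```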

Imaging data were analyzed using BrainVoyager QX 2.2 (Maastricht, The Netherlands). Individual anatomical volumes were transformed into a common stereotactic space based on the reference of the Talairach atlas using an eight-parameter affine transformation. All functional volumes were realigned to the functional volume collected closest in time to the anatomical volume using an intensity-based motion correction algorithm. Functional imaging runs that showed estimated motion “spikes” of greater than 1 mm or motion “drifts” of greater than 2 mm were excluded. If more than 3 of 10 runs were excluded, then that participant was dropped from the analysis entirely. On the basis of these criteria, no participants were removed. Functional volumes also underwent slice scan time correction, 3-D spatial Gaussian filtering (FWHM 6 mm), and linear trend removal. Functional volumes were coregistered to the anatomical volume using an intensity-based matching algorithm and normalized to the common stereotactic space using an eight-parameter affine transformation. During normalization, functional data were resampled to 3 × 3 × 3 mm isotropic voxels. Whole-brain statistical parametric maps were calculated using a general linear model with predictors based on the timing protocol of the blocked stimulus presentation, convolved with a two-gamma hemodynamic response function. For maps where cluster-size correction is reported, the cluster-size threshold was determined by Monte Carlo simulation, such that the chosen voxel-wise probability of a Type I error combined with the cluster-size criterion to produce a family-wise false-positive rate of p < .05. ROI clusters were defined by starting with the voxel with the maximum statistical value in the cluster and including other voxels within a cube that extended 15 mm from that voxel in each direction. 
After determining the voxels included in each ROI cluster, beta weights for each participant were extracted from the ROIs using the ANCOVA table tool in BrainVoyager’s VOI module. Statistical hypothesis testing was performed on the extracted beta weights using repeated-measures ANOVAs in SPSS (New York). Because the main hypotheses were about inversion effects, planned comparisons were performed on those specific pairs of means, corrected for Type I error rate using the Bonferroni method. Other pairwise comparisons between means were carried out using post hoc Tukey’s tests. In the figures where graphs show error bars, those error bars represent 95% confidence intervals, calculated using the within-subject mean squared error taken from the highest-order interaction term in the appropriate ANOVA and a one-tailed t value based on a false positive rate of 5% for each test.
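The block-design GLM described above can be illustrated with the Python sketch below. It builds boxcar predictors from block onsets, convolves them with a two-gamma HRF, and estimates betas by ordinary least squares. It is not BrainVoyager's implementation. The HRF shape parameters, the 0.1-s convolution grid, the hypothetical onset schedule, and the absence of nuisance regressors and serial-correlation correction are all simplifying assumptions.

```python
import math

import numpy as np


def two_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=6.0):
    """Double-gamma HRF: a positive gamma minus a scaled undershoot gamma.

    With unit scale the two gamma densities peak at 5 s and 15 s. These
    SPM-style defaults are an assumption, not BrainVoyager's exact settings.
    """
    t = np.asarray(t, dtype=float)
    g1 = t ** (peak_shape - 1) * np.exp(-t) / math.gamma(peak_shape)
    g2 = t ** (under_shape - 1) * np.exp(-t) / math.gamma(under_shape)
    return g1 - g2 / ratio


def block_regressor(onsets_s, duration_s, n_vols, tr_s, dt=0.1):
    """Boxcar for one condition, convolved with the HRF and sampled once per TR."""
    n_fine = int(round(n_vols * tr_s / dt))
    box = np.zeros(n_fine)
    for onset in onsets_s:
        box[int(round(onset / dt)):int(round((onset + duration_s) / dt))] = 1.0
    hrf = two_gamma_hrf(np.arange(0.0, 32.0, dt))
    predicted = np.convolve(box, hrf)[:n_fine] * dt
    return predicted[:: int(round(tr_s / dt))]


def fit_glm(y, regressors):
    """Ordinary least-squares betas for the regressors plus a constant term."""
    X = np.column_stack(list(regressors) + [np.ones(len(y))])
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas


# Hypothetical run: 10 blocks of 18 s, each preceded by 12 s of fixation,
# alternating between two conditions (say upright and inverted), TR = 2 s.
n_vols, tr = 156, 2.0
onsets = [12.0 + 30.0 * k for k in range(10)]
upright = block_regressor(onsets[0::2], 18.0, n_vols, tr)
inverted = block_regressor(onsets[1::2], 18.0, n_vols, tr)

rng = np.random.default_rng(0)
y = 100.0 + 1.0 * upright + 0.6 * inverted + rng.normal(0.0, 0.05, n_vols)
betas = fit_glm(y, [upright, inverted])
print(np.round(betas, 2))
```

In an ROI analysis, the per-participant betas (or their percent-signal-change equivalents) are the values that then enter the repeated-measures ANOVA.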

RESULTS

The prescan practice trials were used to determine individual low and high contrast levels for each participant to be used during scanning. The mean high contrast level across participants was 0.0708 root mean square (RMS) (SNR = 0.6235), with a range of 0.035–0.181. The mean low contrast level across participants was 0.0338 RMS (SNR = 0.1226), with a range of 0.022–0.055. Contrast level was not a factor of interest in the experimental design; thus, the remaining analyses were performed after collapsing across contrast level.

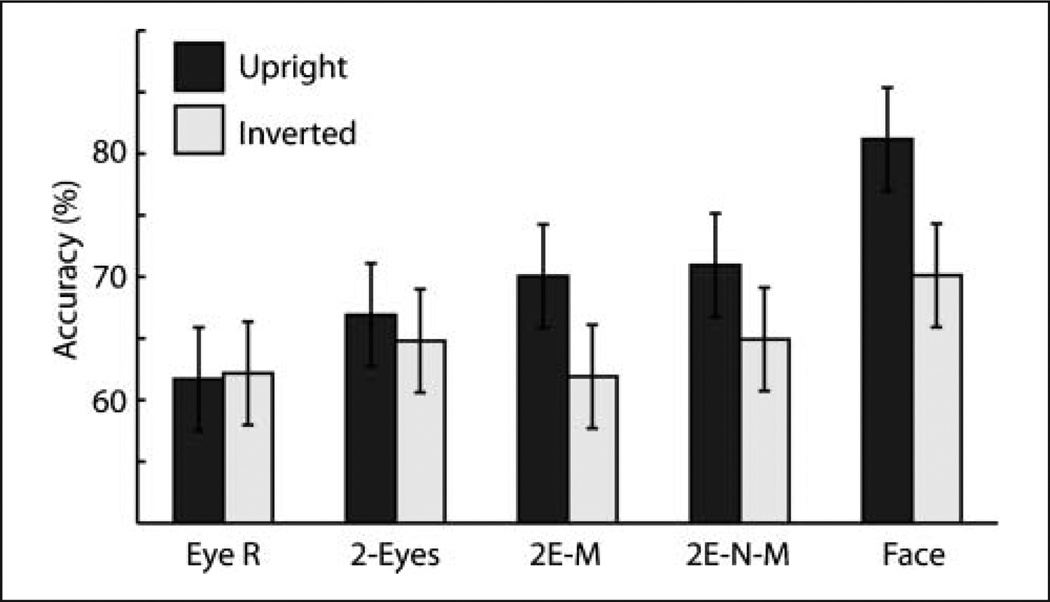

Trials on which participants gave no response were rare and were excluded from the analyses. Figure 2 shows accuracy for the five experimental stimulus types, presented in upright and inverted orientations. A repeated-measures ANOVA with Accuracy as the dependent variable and two within-subject factors (Stimulus Type and Orientation) showed a significant effect of Stimulus Type, F(4, 92) = 19.08, p < .001, a significant effect of Orientation, F(1, 23) = 23.56, p < .001, and a significant interaction between Stimulus Type and Orientation, F(4, 92) = 5.55, p < .001. Five one-tailed planned contrasts, corrected for multiple comparisons using the false discovery rate (FDR) method, were used to assess the effect of inversion for each of the five stimulus types. These revealed that accuracy was better for the upright than the inverted orientation for the eyes-mouth, eyes-nose-mouth, and whole-face stimulus types (all t(23) > 2.95, p < .01).
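For readers who want to reproduce this kind of analysis outside SPSS, the NumPy sketch below computes a fully within-subject two-way ANOVA from sums of squares. Here A is Stimulus Type (5 levels) and B is Orientation (2 levels). With 24 participants, it yields the same degrees of freedom as those reported above. It returns F ratios and degrees of freedom only. p values could then be obtained from the F distribution (for example, with `scipy.stats.f.sf`). No sphericity correction is applied, and the data in the usage lines are simulated.

```python
import numpy as np


def rm_anova_2way(x):
    """Fully within-subject two-way ANOVA on data shaped (n_subjects, a, b).

    Returns {effect: (F, df_effect, df_error)} for A, B and A x B, each
    tested against its own subject-by-effect error term. No sphericity
    correction is applied.
    """
    x = np.asarray(x, dtype=float)
    n, a, b = x.shape
    m = x.mean()
    m_s, m_a, m_b = x.mean(axis=(1, 2)), x.mean(axis=(0, 2)), x.mean(axis=(0, 1))
    m_sa, m_sb, m_ab = x.mean(axis=2), x.mean(axis=1), x.mean(axis=0)

    ss_a = n * b * ((m_a - m) ** 2).sum()
    ss_b = n * a * ((m_b - m) ** 2).sum()
    ss_ab = n * ((m_ab - m_a[:, None] - m_b[None, :] + m) ** 2).sum()
    ss_s = a * b * ((m_s - m) ** 2).sum()
    ss_sa = b * ((m_sa - m_s[:, None] - m_a[None, :] + m) ** 2).sum()
    ss_sb = a * ((m_sb - m_s[:, None] - m_b[None, :] + m) ** 2).sum()
    # Whatever is left over is the subject x A x B error term.
    ss_sab = ((x - m) ** 2).sum() - ss_a - ss_b - ss_ab - ss_s - ss_sa - ss_sb

    df_a, df_b, df_s = a - 1, b - 1, n - 1
    tests = {
        "A": (ss_a, df_a, ss_sa, df_a * df_s),
        "B": (ss_b, df_b, ss_sb, df_b * df_s),
        "AxB": (ss_ab, df_a * df_b, ss_sab, df_a * df_b * df_s),
    }
    return {name: ((ss / df) / (ss_e / df_e), df, df_e)
            for name, (ss, df, ss_e, df_e) in tests.items()}


# Hypothetical accuracies: 24 participants x 5 stimulus types x 2 orientations.
rng = np.random.default_rng(0)
cell_means = np.full((5, 2), 0.70)
cell_means[2:, 0] += 0.08  # upright advantage for three of the five types
data = cell_means + rng.normal(0.0, 0.05, size=(24, 5, 2))
for effect, (F, df1, df2) in rm_anova_2way(data).items():
    print(f"{effect}: F({df1}, {df2}) = {F:.2f}")
```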

Figure 2.

Accuracy as a function of stimulus type. 2E-M = eyes-mouth, 2E-N-M = eyes-nose-mouth. Error bars are 95% confidence intervals.
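The Methods describe these error bars as 95% confidence intervals built from the within-subject mean squared error of the highest-order interaction term. For a Stimulus Type × Orientation design, that term is the subject × type × orientation interaction. The NumPy sketch below computes the interval half-width from it. It assumes that the caller supplies the one-tailed critical t value, roughly 1.66 for the 92 error degrees of freedom of the behavioral ANOVA. The data in the usage lines are simulated.

```python
import numpy as np


def within_subject_ci(data, t_crit):
    """Half-width of a within-subject CI from the subject x A x B error term.

    data: array shaped (n_subjects, a, b). t_crit: critical t for
    df = (n - 1)(a - 1)(b - 1), supplied by the caller.
    """
    x = np.asarray(data, dtype=float)
    n, a, b = x.shape
    grand = x.mean()
    m_s = x.mean(axis=(1, 2), keepdims=True)
    m_a = x.mean(axis=(0, 2), keepdims=True)
    m_b = x.mean(axis=(0, 1), keepdims=True)
    m_sa = x.mean(axis=2, keepdims=True)
    m_sb = x.mean(axis=1, keepdims=True)
    m_ab = x.mean(axis=0, keepdims=True)
    # Residual left after removing all main effects and two-way terms.
    resid = x - m_sa - m_sb - m_ab + m_s + m_a + m_b - grand
    ms_error = (resid ** 2).sum() / ((n - 1) * (a - 1) * (b - 1))
    return t_crit * np.sqrt(ms_error / n)


rng = np.random.default_rng(0)
data = 0.7 + rng.normal(0.0, 0.05, size=(24, 5, 2))  # hypothetical accuracies
half_width = within_subject_ci(data, t_crit=1.66)
```

Because overall differences between participants are removed before the error term is computed, these intervals reflect the variability that matters for within-subject comparisons. They are usually narrower than between-subject standard errors.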

Two-tailed post hoc Tukey’s tests were conducted to assess the other pairwise differences between means. Within the upright orientation, accuracy with the whole-face stimulus was significantly better than accuracy with all other stimulus types (all q(5, 23) ≥ 6.05, p < .05), and accuracy with the single right-eye stimulus was significantly worse than with all other stimulus types (all q(5, 23) > 4.74, p < .05). No other comparisons among upright stimuli reached significance. Within the inverted orientation, accuracy with the whole-face stimulus was significantly better than with the eyes-nose-mouth, eyes-mouth, and right-eye stimulus types (all q(5, 23) > 4.18, p < .05), but not better than with the two-eyes stimulus type. No other comparisons among inverted stimuli reached significance.

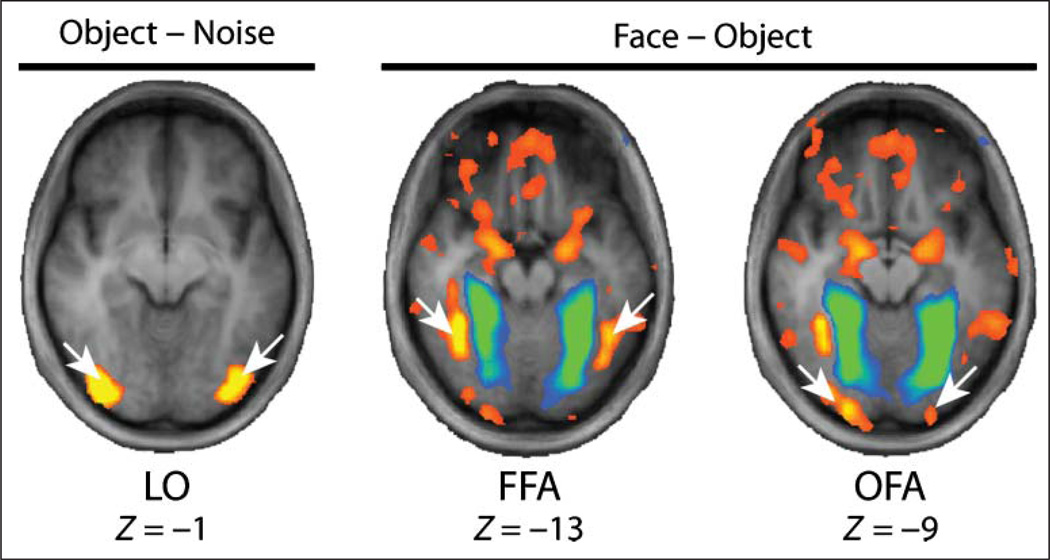

A priori ROIs were localized using the data from the independent functional localizer run. The ROIs were determined from a group-averaged whole-brain fixed-effects general linear model thresholded using the FDR method (q = .05). The locations of the OFA and FFA were determined by contrasting the face and object stimulus conditions, and the location of the LO was determined by contrasting the object and noise stimulus conditions. The locations of the ROIs are shown in Figure 3, and the Talairach coordinates are shown in Table 1. Beta weights representing BOLD signal change were extracted from the ROIs for each participant. A summary of the data is shown in Figure 4. A repeated-measures ANOVA with BOLD percent signal change as the dependent variable and four within-subject factors (Region, Hemisphere, Stimulus Type, Orientation; 3 × 2 × 5 × 2) revealed a significant two-way interaction between Region and Stimulus Type, F(8, 88) = 7.82, p < .001, and another significant two-way interaction between Region and Orientation, F(2, 22) = 8.60, p = .002.

Figure 3.

Locations of object- and face-preferring ROIs. The object–noise contrast is shown with a threshold of t = 16. The face–object contrast is shown with the FDR threshold (q = .05; voxel-wise t = 3.51). Z values are from the Talairach reference.

Table 1.

Talairach Coordinates for Face-preferring ROIs

| Region | X | Y | Z |
|---|---|---|---|
| L-FFA | −46 | −50 | −13 |
| R-FFA | +36 | −50 | −13 |
| L-LO | −35 | −81 | −5 |
| R-LO | +33 | −83 | −1 |
| L-OFA | −22 | −94 | −9 |
| R-OFA | +21 | −91 | −8 |
| L-STS | −59 | −53 | +6 |
| R-STS | +50 | −57 | +14 |
| L-IFG | −43 | +22 | +8 |
| R-IFG | +52 | +19 | +18 |

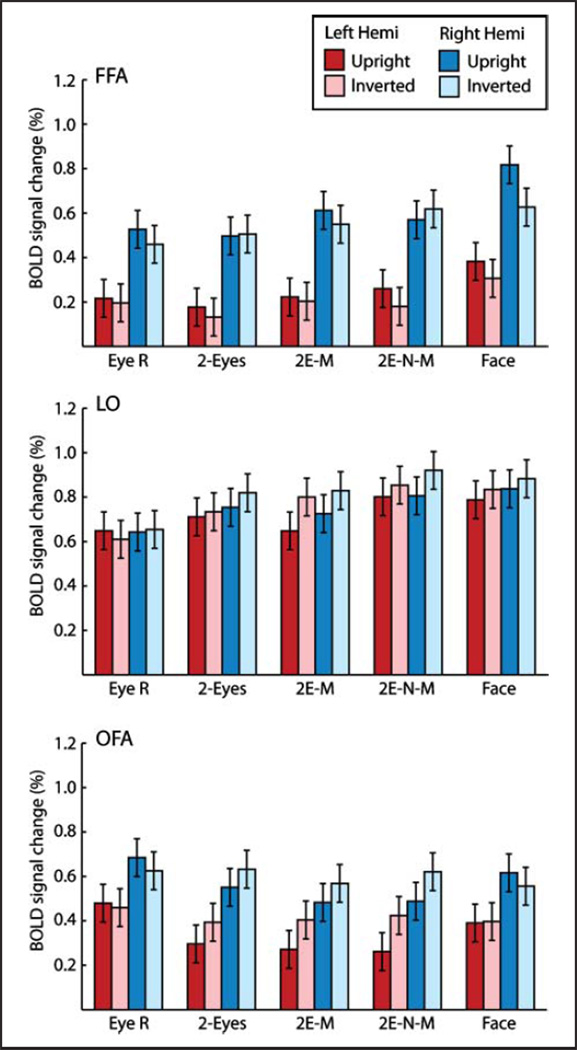

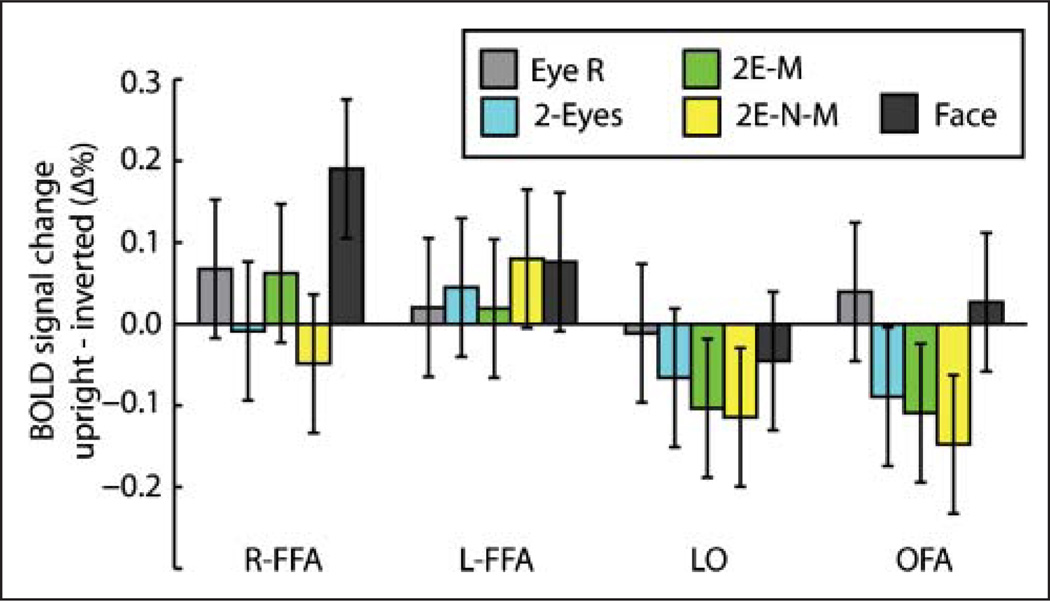

Figure 4.

BOLD signal change as a function of stimulus type, hemisphere, and orientation for the FFA, OFA, and LO. Error bars are 95% confidence intervals.

The main hypotheses were based on assessing inversion effects in BOLD fMRI activation across ROIs; therefore, one-tailed planned comparisons of these effects were undertaken using the FDR method of correction for multiple tests (q < .05). The sizes of the inversion effects are shown in Figure 5. In the right FFA, only the whole-face stimulus showed a significant inversion effect (t(11) = 4.92, q < .05). Because the hemisphere factor did not produce any significant interactions with the other three factors (region, stimulus types, or orientation), BOLD activation in the OFA and the LO is shown collapsed across hemispheres in Figure 5. In the OFA and LO, unlike in the FFA, inversion effects with the whole-face stimulus were not significant. The OFA and LO did show significant effects of inversion with the eyes-mouth and eyes-nose-mouth stimulus types; however, the preference was for inverted stimuli rather than upright (all t(11) > 2.67, q < .05), that is, a reverse inversion effect.
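The FDR correction used for these planned comparisons is not further specified. A common choice is the Benjamini-Hochberg step-up procedure, sketched below in plain Python under that assumption. The p values in the usage lines are hypothetical.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking hypotheses rejected at FDR level q.

    Step-up procedure: sort the p values, find the largest rank i with
    p_(i) <= i * q / m, and reject every hypothesis up to that rank.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    cutoff_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:
            cutoff_rank = rank
    rejected = [False] * m
    for idx in order[:cutoff_rank]:
        rejected[idx] = True
    return rejected


# Hypothetical one-tailed p values for the five stimulus types.
p = {"right eye": 0.40, "two eyes": 0.30, "eyes-mouth": 0.004,
     "eyes-nose-mouth": 0.012, "whole face": 0.001}
decisions = benjamini_hochberg(list(p.values()), q=0.05)
for name, rejected in zip(p, decisions):
    print(f"{name}: {'significant' if rejected else 'n.s.'}")
```

Unlike Bonferroni, the step-up rule can reject a hypothesis whose p value fails its own rank threshold, provided a larger p value further up the ranking passes its threshold.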

Figure 5.

Inversion effects as a function of stimulus type and brain region. The vertical axis represents the difference in BOLD signal change between upright and inverted presentations. Error bars are 95% confidence intervals.

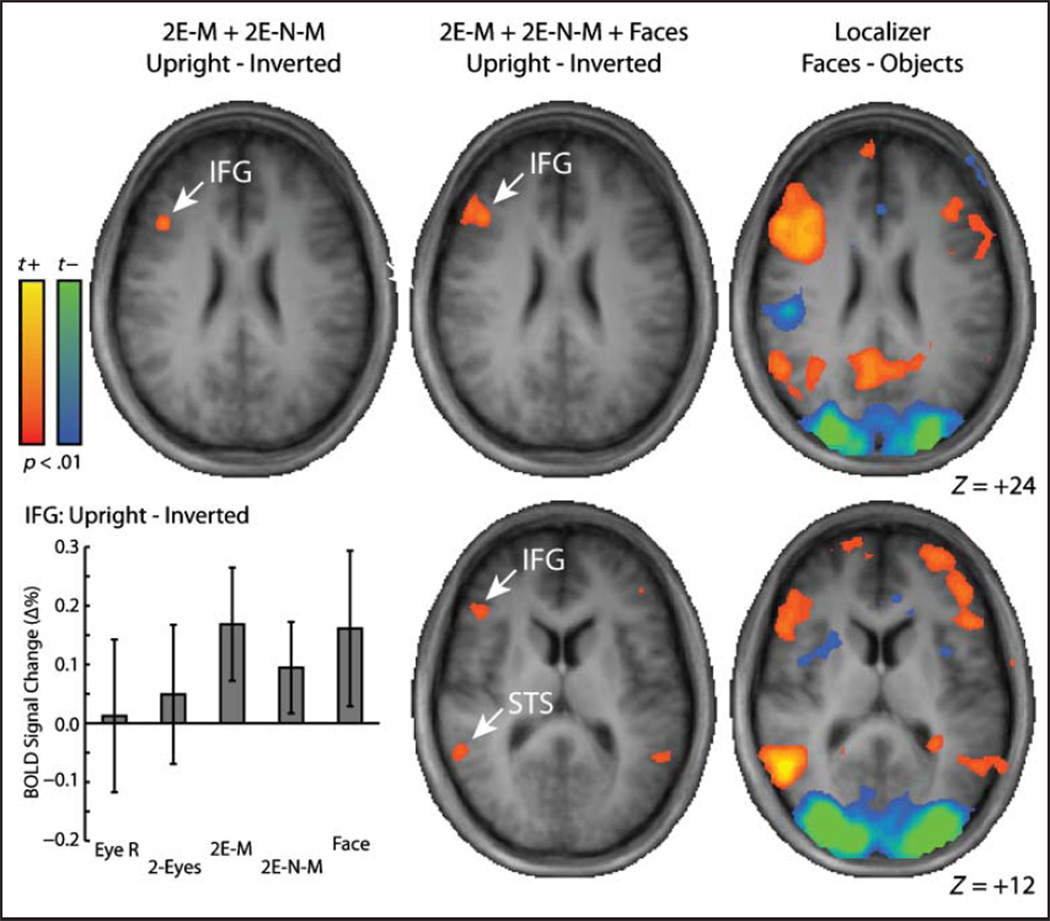

Because the behavioral inversion effects found with the eyes-mouth and eyes-nose-mouth stimuli were not reflected in any of the three independently defined ROIs, a further whole-brain analysis was performed to find the clusters that produced significant inversion effects in BOLD activation for those stimulus types. The left panel in Figure 6 shows a map of the contrast of upright versus inverted orientations, collapsed across the eyes-mouth and eyes-nose-mouth stimuli. Correction for multiple tests was performed by choosing a relatively liberal voxel-wise threshold (p < .01) and applying a cluster-size filter (Lazar, 2010; Thirion et al., 2007; Forman et al., 1995). The size of the cluster required to satisfy a family-wise error rate of p = .05 was determined using Monte Carlo simulation (Nichols, 2012; Goebel, Esposito, & Formisano, 2006) to be eight 3 × 3 × 3 mm voxels. Using that threshold, the only significant cluster was in the right inferior frontal gyrus (IFG), a region that is part of the extended face-preferring network (Ishai, 2008; Haxby, Hoffman, & Gobbini, 2000). For comparison, the right panel of Figure 6 shows the faces-versus-objects contrast from the independent functional localizer run, confirming that the IFG cluster in the left panel is face-preferring. The plot below the map shows mean BOLD signal change in the IFG cluster. It illustrates that the inversion effect in the IFG was not driven by a single stimulus type; instead, it was driven relatively equally by inversion effects with the eyes-mouth, eyes-nose-mouth, and whole-face stimuli, and not by the two-eyes or right-eye stimuli.
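The logic of the Monte Carlo cluster-size threshold can be sketched in a few dozen lines. The procedure simulates smooth Gaussian noise volumes under the null hypothesis and thresholds each at the voxel-wise level. It records the largest suprathreshold cluster in each volume and returns the smallest cluster size that occurs by chance in no more than 5% of simulations. This is not BrainVoyager's estimator. The small cubic search volume and the smoothness of 2 voxels FWHM (the 6-mm filter on 3-mm voxels, ignoring intrinsic smoothness) are assumptions. So are the one-sided z cutoff of 2.326 for p < .01 and the 6-neighbor connectivity rule. The sketch is therefore not expected to reproduce the eight-voxel threshold reported here.

```python
from collections import deque

import numpy as np

NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def gaussian_kernel(fwhm_vox):
    """Normalized 1-D Gaussian kernel for a given FWHM in voxels."""
    sigma = fwhm_vox / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def smooth_axis(vol, kernel, axis):
    """Convolve along one axis with circular (wrap-around) boundaries."""
    r = len(kernel) // 2
    pad = [(0, 0)] * vol.ndim
    pad[axis] = (r, r)
    padded = np.pad(vol, pad, mode="wrap")
    n = vol.shape[axis]
    out = np.zeros_like(vol)
    for j, w in enumerate(kernel):
        out += w * np.take(padded, np.arange(j, j + n), axis=axis)
    return out


def max_cluster_size(mask):
    """Size of the largest 6-connected cluster of True voxels (0 if none)."""
    visited = np.zeros(mask.shape, dtype=bool)
    best = 0
    for start in zip(*np.nonzero(mask)):
        if visited[start]:
            continue
        visited[start] = True
        queue, size = deque([start]), 0
        while queue:
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in NEIGHBORS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= nb[i] < mask.shape[i] for i in range(3))
                if inside and mask[nb] and not visited[nb]:
                    visited[nb] = True
                    queue.append(nb)
        best = max(best, size)
    return best


def cluster_size_threshold(shape=(24, 24, 24), fwhm_vox=2.0, z_thr=2.326,
                           n_iter=200, alpha=0.05, seed=0):
    """Smallest cluster size k with P(max null cluster >= k) <= alpha."""
    rng = np.random.default_rng(seed)
    kernel = gaussian_kernel(fwhm_vox)
    maxima = []
    for _ in range(n_iter):
        field = rng.standard_normal(shape)
        for axis in range(3):
            field = smooth_axis(field, kernel, axis)
        field /= field.std()  # re-standardize so z_thr keeps its meaning
        maxima.append(max_cluster_size(field > z_thr))
    maxima = np.array(maxima)
    k = 1
    while np.mean(maxima >= k) > alpha:
        k += 1
    return k


print(cluster_size_threshold())
```

Smoother data produce larger chance clusters, which is why the threshold must be estimated for the actual smoothness and search volume rather than fixed in advance.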

Figure 6.

Whole-brain maps of inversion effects and face-preferring regions. For the left and middle columns, the brain maps depict contrasts of upright versus inverted orientations. For the left column, the contrast included the eyes-mouth and eyes-nose-mouth combination stimuli. For the middle column, the contrast included the eyes-mouth, eyes-nose-mouth, and whole-face stimuli. For the right column, the brain maps depict a contrast of the face and object stimuli from the independent localizer run. Z values are from the Talairach reference.

The middle panel of Figure 6 shows the inversion effect contrast with whole faces added to eyes-mouth and eyes-nose-mouth stimuli (the three stimulus types that showed significant behavioral inversion effects), thresholded using the same voxel-wise and cluster criteria as above. For this contrast, the right IFG cluster expanded to include more ventral voxels and a small ventral cluster appeared in the left IFG. There were also significant clusters found in the bilateral STS, a region that is part of the core face-preferring network (Ishai, 2008; Haxby et al., 2000).

DISCUSSION

There has been debate in the literature about the interpretation of behavioral and neural face inversion effects and what they reveal about the specificity of perceptual and neural mechanisms involved in face recognition. Some of this debate has revolved around the possible contribution of feature-based processes (Gold et al., 2012; McKone & Yovel, 2009; Rossion, 2008; Sekuler et al., 2004). Although inversion effects have been tested before with single features without a whole-face context (Pellicano & Rhodes, 2003; Rhodes et al., 1993), to our knowledge, this is the first study to investigate inversion effects with multiple combinations of features without a whole-face context. The main findings were as follows. First, reliable behavioral inversion effects were found with the eyes-mouth and eyes-nose-mouth stimuli, which are combinations of facial features without a whole-face context. Second, accuracy with upright whole faces was greater than with any other upright stimulus type; therefore, adding a whole-face context to the eyes-nose-mouth feature combination facilitated recognition. However, accuracy with inverted whole faces was also greater than with the inverted eyes-nose-mouth stimulus (and with every other inverted stimulus type except the two-eyes stimulus), suggesting that, whether upright or inverted, adding a whole-face context to eyes-nose-mouth features facilitates recognition relatively equally. Third, unlike the pattern of behavioral inversion effects across stimulus types, the pattern of FFA activation showed an inversion effect for only the whole-face stimulus. This finding is more consistent with accounts that the FFA shows a preference for whole upright face stimuli (Caldara et al., 2006) than with accounts suggesting that the FFA is a site of specific feature-based or configural/holistic processes related to face inversion effects (Kanwisher & Yovel, 2006; Yovel & Duchaine, 2006; Yovel & Kanwisher, 2004, 2005).
Fourth, unexpectedly, the pattern of behavioral inversion effects across stimulus types was best reflected by the pattern of activation in the IFG, which is a region in the extended face-preferring network (Ishai, 2008; Haxby et al., 2000). This suggests that the IFG region may be a site of processes that act differentially on upright and inverted combinations of facial features to integrate those features into configurations or possibly whole percepts.

Behavioral inversion effects were small or negligible for the single eye and the two-eyes stimulus types. This is consistent with previous work showing that single face features and features made up of two eyes do not produce significant inversion effects (Pellicano & Rhodes, 2003; Rhodes et al., 1993). The result is also consistent with previous evidence that inversion effects are found with stimuli where the information is contained in features in both the top and bottom halves of a face but are not found with stimuli where the information is in features in only one half of the face (Bushmakin & James, under revision). Behavioral inversion effects with whole faces were not significantly larger than inversion effects with the eyes-mouth and eyes-nose-mouth combination stimuli. This result is not consistent with a framework that requires a whole-face context for large inversion effects, which is sometimes understood as the definition of “holistic” processing (Rossion, 2008; Farah, Wilson, Drain, & Tanaka, 1998). The results are more consistent with a framework in which inversion effects are derived from contributions of feature-based processes—or perhaps a combination of both feature-based and configural/holistic processes—whether or not the information is whole or partial.

There is evidence that the OFA is more involved in feature-based processing than the other regions of the face-selective network, and particularly more than the FFA (Arcurio et al., 2012; Liu, Harris, & Kanwisher, 2010; Nichols, Betts, & Wilson, 2010). Suppose one assumes that the inversion effects with combination stimuli, which are formed of several discrete features with no whole-face context, should be based more on feature-based processing than inversion effects with whole faces. One could then also assume that those effects should be mimicked more accurately by the pattern of activation in the OFA than by that in the FFA. However, the pattern of activation in the OFA did not mimic the pattern of behavioral inversion effects, even for the combination stimulus types alone. In fact, the OFA produced greater activation with inverted stimuli than with upright stimuli, which could be described as a reverse inversion effect or “face inversion superiority” (Haxby et al., 1999). The same pattern was also found in the LO, which is not face-selective. Previous explanations of face inversion superiority are based on evidence that non-face-selective brain regions (like the LO) sometimes show greater activation with nonface objects than with faces. If inverted faces are treated as nonface objects, then inversion superiority is explained by the greater activation with nonface objects compared with faces. Although this explanation is consistent with the inversion effects seen here in the FFA and the inversion superiority effect seen in the LO, it is inconsistent with the inversion superiority effect seen in the OFA. The OFA has been shown to be face-preferring (and preferred faces in the current study) and, by this account, should show an inversion effect similar to that of the FFA. Therefore, the explanation for inversion superiority may be more complicated than initially thought.
Furthermore, that inversion superiority effects are simply a biproduct of face-specific and generic object-specific processing is likely underestimating its role in producing behavioral inversion effects.

Unexpectedly, the pattern of behavioral inversion effects was best captured by activation in the IFG. In this region, inversion effects in activation were found for the whole-face stimulus and for feature combinations that included at least one part in each of the top and bottom halves of the stimulus, but not for the single-eye stimulus or even the two-eyes stimulus. The sensitivity of the IFG to inversion in stimuli composed of isolated facial features suggests that its activation may be influenced by processes that integrate piecemeal features into perceptual wholes. Although the IFG is part of the extended face-preferring network, it is rarely associated with either “configural/holistic” or feature-based processes; the results suggest that investigating its role in integrating specific configurations of discrete features may yield fruitful insights into the mechanisms of face recognition (and possibly other types of object recognition).

What is the role of configural/holistic processing in producing behavioral and neural inversion effects? The inversion effect has historically been used as a marker for configural/holistic processes that are sometimes considered to be specifically recruited for whole upright face processing (McKone & Yovel, 2009; Rossion, 2008; Farah et al., 1998). Following this idea, it has been argued that the FFA is both the site of whole upright face-specific processing and the site of configural/holistic processing (Kanwisher & Yovel, 2006; McKone, Kanwisher, & Duchaine, 2006; Rhodes, Byatt, Michie, & Puce, 2004). The current findings are consistent with some aspects of these hypotheses but inconsistent with this group of hypotheses as a whole. Consistent with expectations based on previous work, the FFA responded preferentially to whole upright faces compared with other stimulus types and responded to inverted whole faces much as it did to the other inverted stimulus types. Thus, the FFA showed a large inversion effect with whole faces, but only with whole faces. However, behavioral inversion effects were also found with partial faces, suggesting either that configural/holistic processing is not restricted to whole faces (Richler, Bukach, & Gauthier, 2009) or that inversion effects do not measure configural/holistic processing per se but instead reflect an enhancement of feature-based processing in the upright orientation (Gold et al., 2012; Sekuler et al., 2004). If either of these is the case, then it cannot be claimed that the FFA has a special role in configural/holistic processing specific to faces and specific to the face inversion effect.

Our data are consistent with a preference in the FFA for whole upright face stimuli (Hanson & Schmidt, 2011; Pernet et al., 2007; Caldara et al., 2006) and are inconsistent with claims that go beyond that preference regarding its specificity (Downing et al., 2006; Kanwisher & Yovel, 2006). However, our data also speak to the domain-general nature of activation in the FFA. One account of the whole upright face preference in the FFA is that the FFA responds more strongly to symmetric, face-like outlines that have more high-contrast elements in the top half, regardless of whether or not they resemble a face (Caldara & Seghier, 2009; Caldara et al., 2006). Although the current experiment was not designed to test these relations directly, some speculative conclusions can be drawn. The stimuli used in the current experiment can be characterized on the variables most relevant to this theory, most likely the ratio between the top and bottom halves of the stimulus for low-level visual characteristics such as number of features, contrast energy, luminance, and power spectrum amplitude. On the basis of the theory, stimulus types with a ratio favoring more information in the top half (i.e., more features, greater contrast, greater luminance, or greater amplitude) should produce the largest inversion effects, because these are the stimuli that produce the largest dichotomy between upright and inverted orientations. The right-eye and two-eyes stimulus types had all of their elements in the top half when upright and no elements in the top half when inverted. Thus, the right-eye and two-eyes stimulus types provided the greatest discrepancy between the upper and lower halves. Following this theory, one would expect the difference between upright and inverted orientations to be greatest for the two-eyes and right-eye stimulus types. However, the results show the opposite: the right-eye and two-eyes stimuli showed the smallest inversion effects. Whole faces, on the other hand, showed the greatest inversion effects, yet whole faces showed the smallest discrepancy between upper-half and lower-half visual characteristics. These findings suggest that the low-level visual characteristics of the stimuli may play a smaller role in the activation of the FFA than the feature-level and object-level characteristics of the stimuli.
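The prediction of the top-heavy account can be made concrete with a toy calculation. The sketch below is hypothetical and is not the authors' analysis: it uses one of the candidate measures named above, contrast energy, defined here (an assumption) as the summed squared deviation from a uniform gray background within each half of a synthetic 8 × 8 image. Because picture-plane inversion swaps the two halves, the upright-minus-inverted difference in the log top/bottom ratio indexes how much orientation changes the top-heavy signal. The feature layout, the `eps` term that avoids division by zero for empty halves, and all function names are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence

Image = List[List[float]]  # grayscale luminance in [0, 1], rows listed top to bottom

SIZE = 8           # hypothetical image size (even, so the halves swap exactly on inversion)
BACKGROUND = 0.5   # assumed uniform gray background
# Hypothetical top-left corners of 2x2 dark "features"; rows 0-3 are the top half.
FEATURE_POS = {"left_eye": (1, 1), "right_eye": (1, 5), "nose": (4, 3), "mouth": (6, 3)}


def make_stimulus(features: Sequence[str]) -> Image:
    """Draw black 2x2 squares for the requested features on a gray canvas."""
    img = [[BACKGROUND] * SIZE for _ in range(SIZE)]
    for name in features:
        r, c = FEATURE_POS[name]
        for dr in (0, 1):
            for dc in (0, 1):
                img[r + dr][c + dc] = 0.0
    return img


def rotate_180(img: Image) -> Image:
    """Picture-plane inversion: reverse the row order and each row."""
    return [row[::-1] for row in img[::-1]]


def top_bottom_log_ratio(img: Image, background: float = BACKGROUND, eps: float = 1e-6) -> float:
    """Log of (top-half contrast energy / bottom-half contrast energy)."""
    mid = len(img) // 2

    def energy(rows: Image) -> float:
        return sum((v - background) ** 2 for row in rows for v in row)

    return math.log((energy(img[:mid]) + eps) / (energy(img[mid:]) + eps))


def predicted_discrepancy(img: Image) -> float:
    """Upright minus inverted log ratio: larger means a bigger orientation change."""
    return top_bottom_log_ratio(img) - top_bottom_log_ratio(rotate_180(img))


STIMULI: Dict[str, Image] = {
    "right_eye": make_stimulus(["right_eye"]),
    "two_eyes": make_stimulus(["left_eye", "right_eye"]),
    "eyes_mouth": make_stimulus(["left_eye", "right_eye", "mouth"]),
    "eyes_nose_mouth": make_stimulus(["left_eye", "right_eye", "nose", "mouth"]),
}

for name, img in STIMULI.items():
    print(f"{name:16s} predicted upright-inverted discrepancy = {predicted_discrepancy(img):6.2f}")
```

On these toy images the eye-only stimuli give the largest predicted discrepancy and the balanced eyes-nose-mouth image gives none, which is the ordering the top-heavy account would predict for inversion effects; the results described above ran in the opposite direction.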

Finally, the incongruence between the behavior and the activation in the FFA demonstrates that a full understanding of the neural underpinnings of inversion effects, and of the configural/holistic processing involved in face and object recognition, cannot rely exclusively on examining the FFA or only face-preferring networks.

Acknowledgments

The research was supported in part by the Faculty Research Support Program administered through the IU Office of the Vice President of Research; in part by the Indiana METACyt Initiative of Indiana University; in part through a major grant from the Lilly Endowment, Inc.; and in part by National Institutes of Health grant EY019265 to J. M. G. We thank Karin Harman James for insights given on every aspect of the project and Thea Atwood and Rebecca Ward for assistance with data collection.

REFERENCES

- Arcurio LR, Gold JM, James TW. The response of face-selective cortex with single face parts and part combinations. Neuropsychologia. 2012;50:2454–2459. doi: 10.1016/j.neuropsychologia.2012.06.016.

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10:433–436.

- Bushmakin M, James TW. The influence of feature conjunction on object inversion effects. doi: 10.1068/p7610. Under revision.

- Caldara R, Seghier ML. The fusiform face area responds automatically to statistical regularities optimal for face categorization. Human Brain Mapping. 2009;30:1615–1625. doi: 10.1002/hbm.20626.

- Caldara R, Seghier ML, Rossion B, Lazeyras F, Michel C, Hauert CA. The fusiform face area is tuned for curvilinear patterns with more high-contrasted elements in the upper part. Neuroimage. 2006;31:313–319. doi: 10.1016/j.neuroimage.2005.12.011.

- Downing PE, Chan AW, Peelen MV, Dodds CM, Kanwisher N. Domain specificity in visual cortex. Cerebral Cortex. 2006;16:1453–1461. doi: 10.1093/cercor/bhj086.

- Farah MJ, Wilson KD, Drain M, Tanaka JW. What is “special” about face perception? Psychological Review. 1998;105:482–498. doi: 10.1037/0033-295x.105.3.482.

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, Noll DC. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): Use of a cluster-size threshold. Magnetic Resonance in Medicine. 1995;33:636–647. doi: 10.1002/mrm.1910330508.

- Gauthier I, Tarr MJ. Unraveling mechanisms for expert object recognition: Bridging brain activity and behavior. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:431–446. doi: 10.1037//0096-1523.28.2.431.

- Goebel R, Esposito F, Formisano E. Analysis of functional image analysis contest (FIAC) data with brainvoyager QX: From single-subject to cortically aligned group general linear model analysis and self-organizing group independent component analysis. Human Brain Mapping. 2006;27:392–401. doi: 10.1002/hbm.20249.

- Gold JM, Mundy PJ, Tjan BS. The perception of a face is no more than the sum of its parts. Psychological Science. 2012;23:427–434. doi: 10.1177/0956797611427407.

- Hanson SJ, Schmidt A. High-resolution imaging of the fusiform face area (FFA) using multivariate non-linear classifiers shows diagnosticity for non-face categories. Neuroimage. 2011;54:1715–1734. doi: 10.1016/j.neuroimage.2010.08.028.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Haxby JV, Ungerleider LG, Clark VP, Schouten JL, Hoffman EA, Martin A. The effect of face inversion on activity in human neural systems for face and object perception. Neuron. 1999;22:189–199. doi: 10.1016/s0896-6273(00)80690-x.

- Ishai A. Let’s face it: It’s a cortical network. Neuroimage. 2008;40:415–419. doi: 10.1016/j.neuroimage.2007.10.040.

- Kanwisher N, Tong F, Nakayama K. The effect of face inversion on the human fusiform face area. Cognition. 1998;68:B1–B11. doi: 10.1016/s0010-0277(98)00035-3.

- Kanwisher N, Yovel G. The fusiform face area: A cortical region specialized for the perception of faces. Philosophical Transactions of the Royal Society, Series B. 2006;361:2109–2128. doi: 10.1098/rstb.2006.1934.

- Lazar NA. The statistical analysis of functional MRI data. New York: Springer; 2010.

- Liu J, Harris A, Kanwisher N. Perception of face parts and face configurations: An fMRI study. Journal of Cognitive Neuroscience. 2010;22:203–211. doi: 10.1162/jocn.2009.21203.

- McKone E, Kanwisher N, Duchaine BC. Can generic expertise explain special processing for faces? Trends in Cognitive Sciences. 2006;11:8–15. doi: 10.1016/j.tics.2006.11.002.

- McKone E, Yovel G. Why does picture-plane inversion sometimes dissociate perception of features and spacing in faces, and sometimes not? Toward a new theory of holistic processing. Psychonomic Bulletin & Review. 2009;16:778–797. doi: 10.3758/PBR.16.5.778.

- Nichols DF, Betts LR, Wilson HR. Decoding of faces and face components in face-sensitive human visual cortex. Frontiers in Psychology. 2010;1:28. doi: 10.3389/fpsyg.2010.00028.

- Nichols TE. Multiple testing corrections, nonparametric methods, and random field theory. Neuroimage. 2012;62:811–815. doi: 10.1016/j.neuroimage.2012.04.014.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10:437–442.

- Pellicano E, Rhodes G. Holistic processing of faces in preschool children and adults. Psychological Science. 2003;14:618–622. doi: 10.1046/j.0956-7976.2003.psci_1474.x.

- Pernet C, Schyns PG, Demonet JF. Specific, selective or preferential: Comments on category specificity in neuroimaging. Neuroimage. 2007;35:991–997. doi: 10.1016/j.neuroimage.2007.01.017.

- Rhodes G, Brake S, Atkinson AP. What’s lost in inverted faces? Cognition. 1993;47:25–57. doi: 10.1016/0010-0277(93)90061-y.

- Rhodes G, Byatt G, Michie PT, Puce A. Is the fusiform face area specialized for faces, individuation, or expert individuation? Journal of Cognitive Neuroscience. 2004;16:189–203. doi: 10.1162/089892904322984508.

- Richler JJ, Bukach CM, Gauthier I. Context influences holistic processing of nonface objects in the composite task. Attention, Perception & Psychophysics. 2009;71:530–540. doi: 10.3758/APP.71.3.530.

- Richler JJ, Mack ML, Palmeri TJ, Gauthier I. Inverted faces are (eventually) processed holistically. Vision Research. 2010;51:333–342. doi: 10.1016/j.visres.2010.11.014.

- Rossion B. Picture-plane inversion leads to qualitative changes of face perception. Acta Psychologica. 2008;128:274–289. doi: 10.1016/j.actpsy.2008.02.003.

- Sekuler AB, Gaspar CM, Gold JM, Bennett PJ. Inversion leads to quantitative, not qualitative, changes in face processing. Current Biology. 2004;14:391–396. doi: 10.1016/j.cub.2004.02.028.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. The Quarterly Journal of Experimental Psychology Section A. 1993;46:225–245. doi: 10.1080/14640749308401045.

- Thirion B, Pinel P, Mériaux S, Roche A, Dehaene S, Poline J-B. Analysis of a large fMRI cohort: Statistical and methodological issues for group analyses. Neuroimage. 2007;35:105–120. doi: 10.1016/j.neuroimage.2006.11.054.

- Yin RK. Looking at upside-down faces. Journal of Experimental Psychology. 1969;81:141–148.

- Yovel G, Duchaine B. Specialized face perception mechanisms extract both part and spacing information: Evidence from developmental prosopagnosia. Journal of Cognitive Neuroscience. 2006;18:580–593. doi: 10.1162/jocn.2006.18.4.580.

- Yovel G, Kanwisher N. Face perception: Domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.

- Yovel G, Kanwisher N. The neural basis of the behavioral face-inversion effect. Current Biology. 2005;15:2256–2262. doi: 10.1016/j.cub.2005.10.072.