Abstract

This study documents our experience in designing, testing, and refining human subjects’ consent protocols in 3 of the first NIH-funded online studies of HIV/STI sexual risk behavior in the USA. We considered 4 challenges primary: a) designing recruitment and enrollment procedures to ensure adequate attention to subject considerations; b) obtaining and documenting subjects’ consent; c) establishing investigator credibility through investigator-participant interactions; d) enhancing confidentiality during all aspects of the study. Human consent in online studies appears more relative, continuous, inherent, tenuous, and diverse than in offline studies. Reasons for declining consent appear related to pragmatic concerns, not human subjects’ risks. Reordering the consent process and using short, chunked, stepwise, tailored consent procedures may enhance communicating information and documenting consent.

Introduction

Internet-based research is a promising way to access hard-to-reach, high-risk populations for HIV prevention research (National Institute of Mental Health, 2000). The Internet provides unique opportunities and challenges to the conduct of rigorous research, including both opportunities to improve human subjects’ consent procedures and challenges to traditional consent protocols (Pequegnat et al., 2007). As one of the first NIH-funded studies of the Internet, we grappled with several key considerations regarding informed consent (Gurak & Rosser, 2003). We provide an overview of the Men’s INTernet Sex (MINTS) studies, highlight key considerations when conducting Internet-based sex research, and present a case review in which we encountered human subjects’ challenges at every step of our Internet-based study, from recruitment to subject payment. This paper provides a case study on how we addressed four key issues in online consent: a) how to design recruitment and enrollment procedures to ensure adequate attention to human subjects considerations; b) how to obtain and document human subjects’ consent; c) how to establish investigator credibility in the absence of face-to-face interactions; and, d) how to enhance confidentiality provisions during all aspects of the study, including payment. We finish with recommendations for other researchers wanting to initiate Internet-based studies. The audiences for this paper are colleagues proposing or undertaking similar studies, IRB staff, human subjects’ committees, grant reviewers, and other key stakeholders.

MINTS Studies Overview

The focus of this paper is to report how our team addressed human consent concerns in MINTS I and II. As the primary aim of this line of HIV prevention research was to conduct an in-depth assessment of risk behavior, our surveys included questions about such socially sensitive information as number of male sex partners, history of abuse, and degree of sexual compulsivity; socially undesirable topics including rates of unsafe sexual behavior, “cheating” on primary partners, and frequency and type of misrepresentation in online sexual negotiations; and illegal activities including frequency of illegal drug use, unsafe sex by HIV positive persons, and deliberate misrepresentation of HIV status. Hence, the topic areas inherently hold risk to subjects, especially if information is intercepted by a third party. The MINTS studies provide a useful case study where we developed and tested new methods to address the challenges of informing and obtaining human consent in Internet-based studies and to protect human subjects’ confidentiality at all stages of the research process.

In 2002 and 2005, our team conducted two of the first and largest sexual and drug risk behavior assessments of Men who use the Internet to seek Sex with Men (MISM). In addition, in 2008 we recruited MISM into a randomized controlled trial of an online HIV prevention program. Risk factors for MISM of all race/ethnicities (Rosser et al., 2009), as well as for transgender persons (Bockting, Miner, & Rosser, 2007; Rosser, Oakes, Bockting, Babes, & Miner, 2007), African American MISM (Rosser et al., 2006a), Latino MISM (Ross, Rosser, Stanton, & Konstan, 2004; Rosser et al., 2008), and young adult MISM (Horvath, Rosser, & Remafedi, 2008), have been identified. A second series of published papers has focused on sexual cofactors of risk including HIV serodisclosure (Carballo-Diéguez, Miner, Dolezal, Rosser, & Jacoby, 2006), compulsive sexual behavior (Coleman et al., 2006; Smolenski, Ross, Risser, & Rosser, 2008), cybersex (Ross, Rosser & Stanton, 2006), online communication patterns (Horvath, Oakes, & Rosser, 2008), and the advantages and limitations of seeking sex online (Ross, Rosser, McCurdy & Feldman, 2007).

We have completed an extensive needs assessment on what MISM deem acceptable in online HIV prevention (Hooper et al., 2008), identified theoretical underpinnings for integrating theories of effective HIV prevention with effective e-learning (Allen, 2003; Rosser et al., 2006b), and reported on highly interactive online interventions then in development and currently in randomized controlled trial (Rosser, 2005; Rosser et al., 2005).

In addition to “content area” papers, we have sought to advance the emerging science of Internet-based research by publishing a series of “lessons learned” papers in such areas as Internet-based HIV prevention survey methods (Pequegnat et al., 2007), data security in online studies (Konstan, Rosser, Horvath, Gurak, & Edwards, 2008; Konstan, Rosser, Ross, Stanton, & Edwards, 2005; Konstan, Rosser, Stanton, Brady, & Gurak, 2003), impact of attrition in online studies on validity (Ross, Rosser, Stanton & Konstan, 2004), psychometric considerations in online scales (Rosser et al., 2004), and how demographic profiles of online samples differ from conventionally drawn samples (Rosser et al., 2008, 2009). Just as important is attention to ethical considerations (Rosser & Horvath, 2007) and, in particular, to advancing ethical practice in such areas as obtaining human consent.

Considerations in Internet-Based Research

The Internet revolution has transformed human communication. In 2 decades, e-mail and text messaging have replaced land lines, telegram, fax, and postal service as the dominant means of distance communication. In America, 62% of households have Internet access in the home, and a further 9% have access only outside of the home (National Telecommunications and Information Administration, 2007). While initial concerns about Internet research focused on the digital divide, those concerns are decreasing as non-Internet use becomes progressively rare in younger cohorts and the first generation of “Internet natives” (i.e., persons who have grown up always with access to the Internet) enters adulthood. In addition, the increasingly ubiquitous nature of other forms of digital communication (wireless access, cell phones, iPods, handheld devices) makes this divide even smaller (Horrigan, 2008). For behavioral and social scientists, such features as automated data collection, skip patterns, and anonymity make computer-assisted survey instruments, including web-based instruments, an attractive, competitive, often economical and, on several dimensions, scientifically more accurate alternative to pen-and-paper-based research (Gosling, Vazire, Srivastava & John, 2004; Kraut et al., 2004; Pequegnat et al., 2007). For online survey and intervention research, however, an identified limitation has been attention to human subjects’ considerations.

Questions about the ethical and methodological issues raised when conducting Internet research are not new and date back to the 1980s, when social scientists and others, mainly from the communication fields, began to observe the novel ways in which people were using computer-mediated communication (CMC) for workplace and day-to-day correspondence. Early work by researchers such as Sproull and Kiesler (1991), Hiltz and Turoff (1978), Herring (1996), and others illustrated how the lack of social cues and shifts from written to spoken features of discourse were creating new opportunities but also challenges for human communication.

In order to study these features, researchers embarked on using traditional methods such as textual analysis, surveys, comparative case studies, and so forth. But these studies quickly ran into ethical and human subjects conundrums that were not easily answered. Herring (1996), for example, noted that “in the early years of CMC research, those of us who research [CMC] had no choice but to make up rules and procedures as we went along” (p. 153). This essay was part of an entire special issue of the journal The Information Society, devoted to the topic of “doing Internet research,” in which issues such as working with the IRB, conducting surveys, and protecting confidentiality were discussed. In a similar example, Gurak (1997) in the appendix to her case study, noted that the seemingly simple matter of whether to use real names or pseudonyms is complicated by the fuzzy distinction between what is public and private online and what sort of expectations users have when posting information in a digital setting. In an attempt to help shape the discussion, a number of books began to appear. The edited collection Doing Internet Research (Jones, 1998), for example, brought together scholars mainly in qualitative fields in an exploration of issues such as studying social networks or analyzing web pages. Such work was helpful but in a way, continued to raise more questions than it answered. In 2000, Hine’s Virtual Ethnography put more shape to the matter, addressing the particular issues involved in ethnographic research.

As methods-based discussions continued to be published, and as the Internet became more ubiquitous and even members of IRBs became more familiar with the technology and the subtleties it lent to human subject research, some of these initial questions disappeared. But the topic of ethics and Internet research did not go away. In some ways, it became more complex, as Internet tools and usage broadened and as researchers from disciplines outside of communication studies began to conduct Internet research (Naglieri et al., 2004). This new wave of questions and research resulted in the Association of Internet Researchers’ (AoIR) publication called Ethical Decision-Making and Internet Research: Recommendations From the AoIR Ethics Working Committee. Published in 2002, the paper addresses questions such as whether chat rooms are public spaces (p. 10) and offers (as Addendum V) a series of questions and decision-making guidelines. Other researchers have offered guidelines for conducting Internet research that follow the American Psychological Association’s Code of Conduct (Keller & Lee, 2003; Pittenger, 2003). Similarly, work such as that of Bakardjieva and Feenberg (2001) and others continues to provide guidance on the increasingly complex world of online communication (in their case, as one example, the topic is “the virtual subject”). From these beginnings of consideration of consent in Internet research, studies have begun to emerge on Internet consent processes for nonsurvey-based contexts (Flicker, Haans & Skinner, 2004; Midkiff & Wyatt, 2008; Recupero & Rainey, 2005).

By the early 2000s, papers began appearing that addressed specifics about using surveys in Internet research. There have been numerous publications to date, too many to cite here, but for example, Wright (2005) examines the pros and cons of commercial online survey research tools. Complex Internet survey-based research is now accepted by many journals; one instance is Hargittai’s (2007) study of students using social networks, which employs a sophisticated survey apparatus with significant results.

Nevertheless, for online survey and intervention research, some questions still remain, and at the time of the study reported here, we had very little in the way of reference points. Canned survey tools (such as SurveyMonkey or the more sophisticated programs now available from statistical software companies) did not exist; the AoIR paper had not been published; serious survey researchers were only starting to think about using the Internet. Since the launch and initial conclusion of our studies, a few researchers have also investigated aspects of consent in Internet research. O’Neil, Penrod, and Bornstein (2003) found that upfront requests for personal information on web pages and lengthy consent procedures with multiple web pages encouraged study dropout. In a study of consent comprehension, Varnhagen et al. (2005) found no significant difference in participants’ comprehension between online and printed consent information. Thus, without those prior reference points, we began with a series of observations about Internet research. Internet research differs from offline research in at least four principal ways: state of the science, researcher-participant relationship, relativity, and the multidimensional nature of the Internet. When addressing human subjects’ considerations, it is critical to approach Internet studies mindful of how each of these theoretical considerations alters the relationship between research and human subjects.

State-of-the-Science

Compared to conventional study methodologies, Internet survey and intervention research in the field of public health is relatively new. For example, in HIV prevention, the first NIH-funded request for proposals for Internet-based studies was released in 2000 (National Institute for Mental Health/National Institute for Aging, 2000). Given the formative work needed and publication time lag, the results of many of these studies, and even more importantly, papers addressing lessons learned, are only now being published. Thus, the state-of-the-science of Internet-based research is still in its youth. Perhaps more problematic is that Internet-based technology develops so rapidly that innovation and transformation have outpaced research designs. By the time most studies are completed, the results may be out-of-date. Consistent with the NIH Roadmap (National Institutes of Health, 2002), Internet-based social science and behavioral research is inherently multidisciplinary. Expertise resides in the team and communities of interdisciplinary scholars addressing the psychological, social, behavioral, public health, computer science, instructional design, communication, technology, policy, ethical, and legal fields.

The investigator-subject relationship

It is equally important to recognize that online research necessarily changes the nature of the investigator-participant relationship, and thus, the relationship within which consent is obtained and risks are identified and handled. In most conventional studies the investigator(s) at some point meets and interacts with the research subject. Even in telephone survey research, the interviewer can react to verbal cues to inform the consent procedure and interview. In both, the investigator(s) and the subject have opportunities to interrupt the research, to clarify, and to ask questions. While normally an advantage, as Milgram’s (1963) experiment demonstrated, strong demands created by the researcher can be coercive. By contrast, in Internet-based research, the investigator and subject typically never meet. Thus, there is greater freedom for the investigators to choose what level of investigator-subject relationship is appropriate to the study, and then, as detailed below, to design research procedures accordingly to promote an optimal investigator-subject relationship. For example, online studies can be developed to enhance user anonymity, meaning the subject and the investigator may remain unknown to each other throughout the study. In such cases, procedures such as personalized emails of welcome, or detailed biosketches about the investigators would be minimized. Ethically, enhancing anonymity lessens the threat of coercion but may increase the threat of attrition. To prevent high attrition, greater sensitivity to anticipating negative experiences including subject burden, boredom and fatigue is necessary, since Internet-based studies afford subjects an instant and continuous opportunity to discontinue participation.

Relativity

A critical theoretical consideration is appreciating the greater relativity in Internet-based research of person, time and place, which in turn change risk to human subjects and threats to study validity. In offline research, subjects remain unique individuals who typically participate in research at a specific time and in a setting such as a hospital, school, or community venue. Just as anonymity afforded by the Internet may promote attrition, it may also encourage misrepresentation. In MINTS-I we were able to detect survey completion by a single participant 66 times (Konstan, Rosser, Horvath, Gurak, & Edwards, 2008; Konstan, Rosser, Ross, Stanton, & Edwards, 2005), and in MINTS-II an organized group of ineligible participants contributed 126 surveys (unpublished data). Time in online research is much faster than offline, making the use of long conventional consent forms obsolete (Rosser & Horvath, 2007). While a study participant may think nothing of taking 15 minutes to be enrolled in an offline study, they are unlikely to wait 15 seconds for a study form to upload, and almost certainly will not read preparatory material for an extended period. Multitasking by having multiple windows open simultaneously makes attention to studies more tenuous. If a subject is completing a survey or experiencing an intervention while having her or his morning coffee and answering e-mail, her or his attention may be divided. Additionally, if a subject is in a public place like a coffee shop, library, or place of employment, his attention may be unfocused if he fears being observed while participating in a survey.

Multidimensionality of the Internet

The Internet is simultaneously a tool, communication, environment, and culture. As a tool, Internet consent provides more options for subjects to choose their level of informed consent. In terms of communication, Internet language has its own rules, stylistic considerations and limits, making “translation” into Internet-appropriate formats essential for credibility (Gurak & Lannon, 2003). As an environment, the Internet appears less vulnerable to researcher demand characteristics and perhaps as well to volunteer effects. Demand characteristics, as defined by Orne (1962), are the cues in an experiment that may convey an experimenter’s hypothesis to the subject (and thereby influence subject behavior). The fact that Internet-based research takes place in the subject’s environment of choice can de-emphasize the researcher, and thus reduce such effects, though of course poorly framed survey questions or other interactions can always betray intents and introduce bias. Volunteer effects (Rosnow, Rosenthal, McConochie, Marmer, & Arms, 1969) are the differences between the behavior of experiment volunteers and the population being studied (e.g., in general Rosnow et al. report that volunteers tend to be better educated and have higher status than nonvolunteers). While some volunteer effects are likely to persist online, the ability to recruit from the natural interaction environment of the population (particularly when studying online phenomena) helps reduce this; in essence it is similar to surveying at a supermarket or shopping mall, rather than inviting students to a lab as Rosnow et al. did. Of course, Internet use is itself not evenly distributed across the population, so care must be taken when sampling to avoid oversampling high-level users. As a culture, the Internet has its own social rules and conventions, which researchers need to appreciate.
In both MINTS I and II, for example, participants reported greater disclosure and misrepresentation to potential sex partners when met online than in other settings (Ross, Rosser, Coleman, & Mazin, 2006). For research to be culturally sensitive and respectful, it must be framed in culturally acceptable ways. The clearest example of this is perhaps the common placement of copyright disclaimers at the start of software programs; and the equally common and we suspect almost universal practice of not reading such disclaimers. Similarly, when conducting sex research, it appears that more explicit, direct language and images are acceptable (Hooper et al., 2006; 2008).

Design Considerations in Internet-Based Consent Procedures: A Case Study of the MINTS I and II Studies

The following is a discussion of human subjects’ consent considerations, grouped by study stage.

The IRB-Research Team Relationship

When we began the MINTS studies, we could find no examples of how to present consent considerations in online surveys. Online surveys themselves were still in their infancy; most of today’s standard digital survey tools and companies were not in existence at that time. We considered the default of reproducing standard consent forms but rejected them as ethically inadequate since, on a computer screen, standard length consent forms, formatted and designed for the printed page, were unlikely to be read. We are indebted to our University’s IRB for their openness and willingness to work with us to advance methods in this area, which foremost included “chunking” the consent into short, easy-to-read pages. This procedure transformed consent documentation from a signature-focused event to a gradual and implicit consent process exempted from legal requirements for original signatures. We also collaborated with our IRB to obtain permission to study the motivations of persons who actively declined to consent. Each of these innovations in how consent is obtained and documented in scientific studies was possible only because of a productive IRB-researcher relationship. We encourage other investigators who wish to embark on online research to first assess their IRB, as IRBs differ in their institutional/social culture. Such differences may promote or inhibit innovative IRB strategies. Next, we recommend building a strong IRB-researcher relationship, which may include meeting with IRB staff to alert them to the lack of standard methods in online studies and to novel ways of presenting consent (such as those outlined in this article). Perhaps the key to success in this area is having knowledge of IRB rules and regulations. Approaching IRBs from a position of knowledge and respect for rules/constraints will likely enhance interactions and encourage IRBs to exercise with confidence the inherent flexibility in such rules.
These initial steps will promote a productive ethical research environment.

Human Subjects’ Considerations in Planning

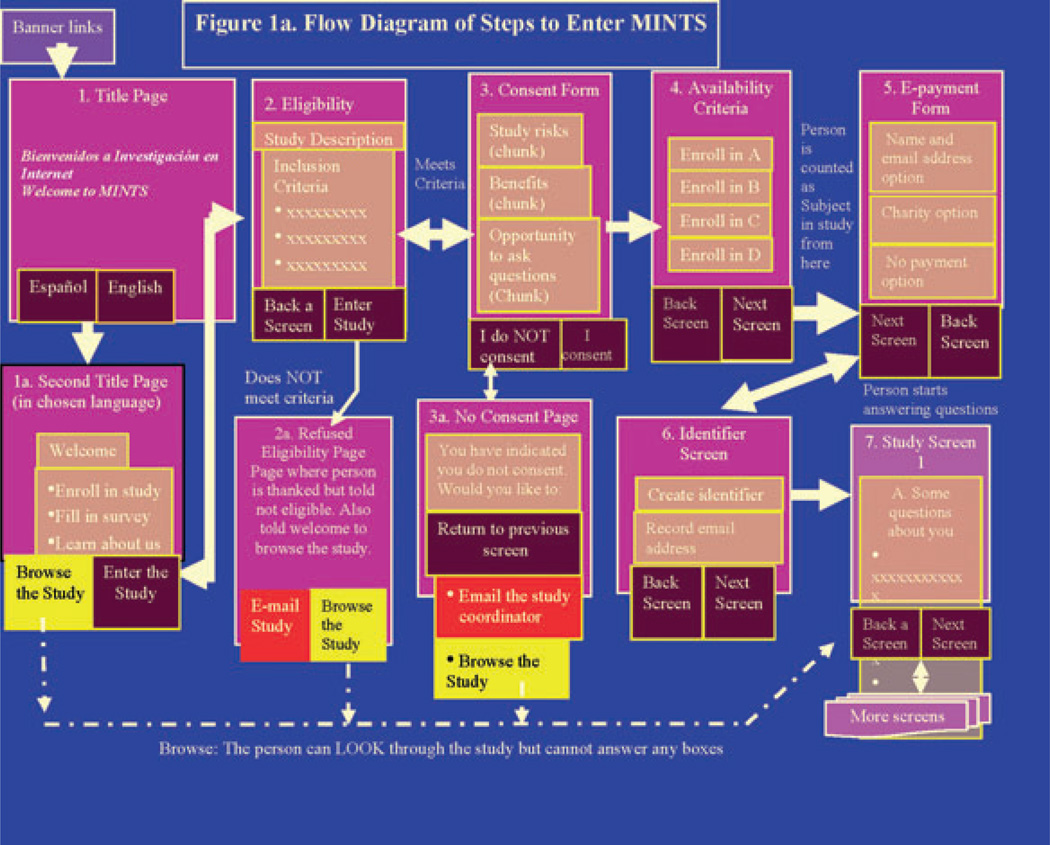

A well designed schematic is necessary for programming the logic in online surveys, including the process of chunking online consent (Lynch & Horton, 2002). Figure 1 shows such a schematic, used in the MINTS-I survey. Several differences may be observed from conventional surveys. As explained in more detail below, enrollment was brought forward, identifiers may be automatically generated or based upon user information, and what happens to an enrollee who is unsure or decides not to participate is detailed.

Figure 1.

Schematic Flow Diagram Showing Logic of Entering Study.

Human Subjects’ Considerations in Recruitment

Assessments of HIV risk behaviors and attitudes regarding sex involve recruitment of minority and hidden populations, some of whom may be deeply suspicious of research and researchers. As evidenced by the legacy of the Tuskegee study of untreated syphilis on African American men (Katz et al., 2006) and attempts by medicine to cure homosexuality (Stein, 1996), the concerns of racial and sexual minorities that they may be treated unethically or with prejudice have historical precedent. In addition, because the Internet can obscure the identity of the authors of content and websites, potential participants may wish to confirm the authenticity of the research and the credibility of the researchers. Additional concerns in our study were risks associated with participation by minors and those intentionally opposed to such research.

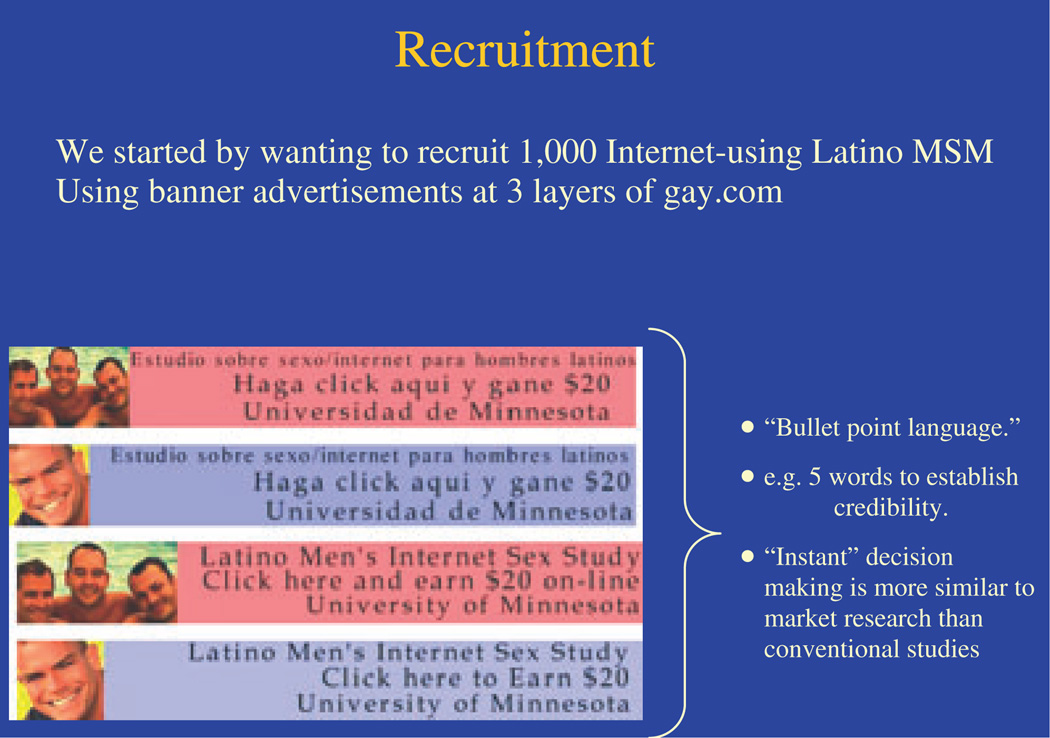

Common recruitment strategies in offline studies may involve distribution of flyers or advertisements which allow for considerable detail about the study to be conveyed. Online equivalents are banner or box advertisements. In an entirely virtual study, the use of banner advertising imposes severe word limitations. As shown in Figure 2, in MINTS-I, we had three lines: “Latino’s Internet Study, Click here to earn $20, University of Minnesota.” In online recruitment, the chief tradeoffs we identified were between providing sufficient detail to attract likely eligible participants and disclosing so much detail that ineligible participants were tipped off as to how to misrepresent themselves. Similarly, we debated at length the trade-off between detailing the compensation, which appears fairly standard in online commercial surveys, and concerns that some participants might view the compensation as an incentive. We concluded that prominently using the name of the university was sufficient at the advertisement stage to resolve the challenge of establishing researcher validity and credibility.

Figure 2.

Banner Advertisements.

Human Subjects Considerations in Opening Pages and Links

Clicking on the banner advertisement automatically sent subjects to the opening page of our website, where we provided enrollees with the options of consenting and enrolling immediately, reviewing information about the researchers, previewing the study, and changing languages between English and Spanish. A sense of personal relationship is important in online research and other online activities (West, Rosser, Hooper, Monani, & Gurak, 2007), and, therefore, an “About Us” page was important to provide reassurance to enrollees seeking information about the credibility of the research and the credentials of the researchers. Previewing the survey was considered important for two reasons: first, allowing an enrollee to preview a survey is the simplest and fastest way to provide enrollees with an overview of the type of questions asked while promoting a culture of full-disclosure and hence, trust. Second, it enables the curious-but-ineligible who might otherwise feel inclined to misrepresent themselves in order to investigate the study a means to examine the survey without contaminating the results. To encourage questions, every webpage contains the study 1–800 number, the e-mail address, and the IRB research subjects’ advocacy line to address questions and concerns about the study.

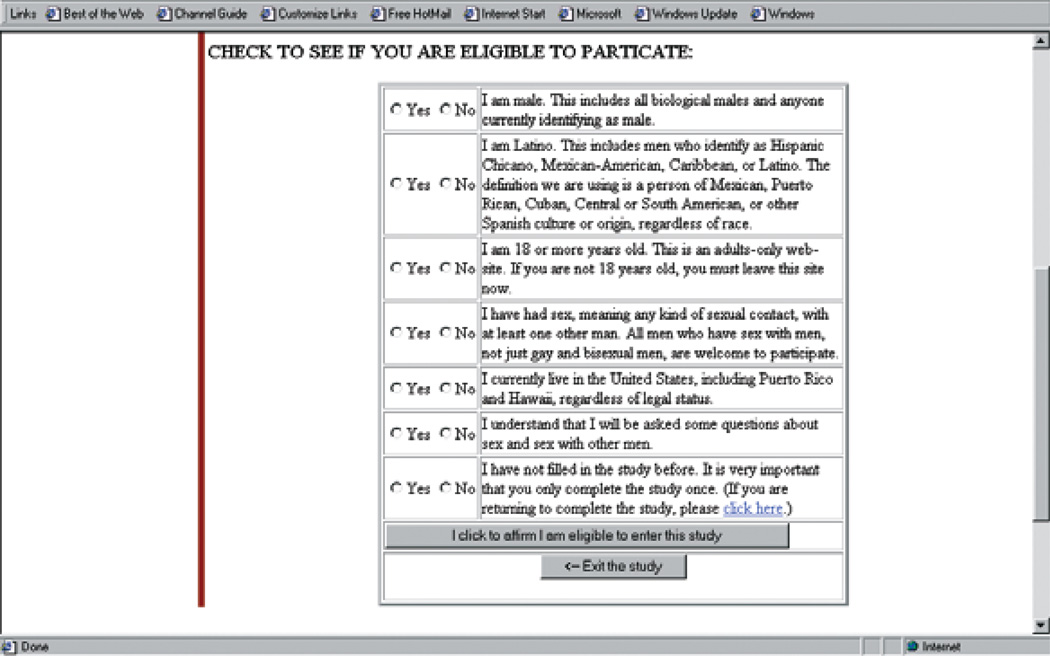

Human Subjects Considerations in Designing Eligibility

In MINTS-I, our University IRB provided permission for us to screen for eligibility prior to obtaining human consent. This was done to reduce the risk of noneligible participants entering the study, either for monetary gain or to deliberately skew findings. In MINTS-I we used unmasked criteria, but in MINTS-II, based upon our experience of threats to validity from ineligibles wanting to participate, we masked entry criteria more (see Figure 3). Since an aim of MINTS-II was to recruit men diverse in race/ethnicity (which required us to close out enrollment by race/ethnicity as categories filled), we felt it important to have sufficient numbers of screening questions to avoid the impression that some subjects were excluded because a particular racial/ethnic category had already filled. Screening for eligibility prior to discussing the purpose of the study minimized the time commitment of ineligible subjects.

Figure 3.

Eligibility Page (MINTS-II).

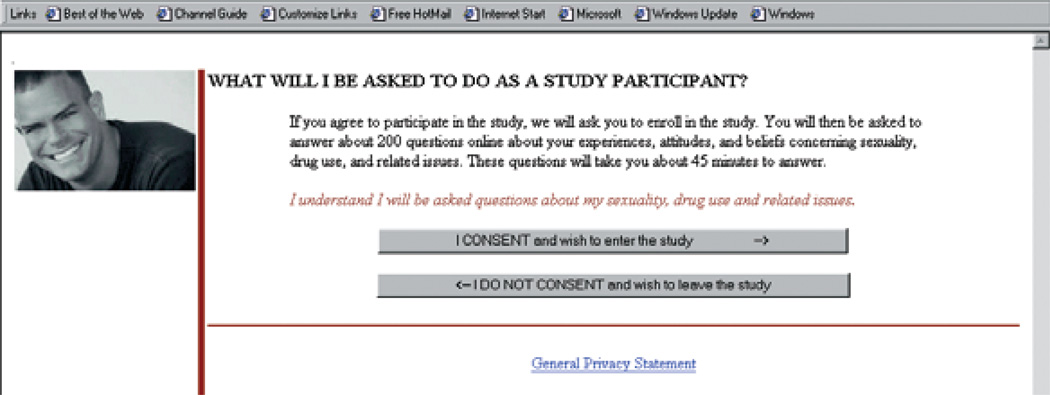

A Stepwise, Chunked, Human Consent Process

Since the early days of computers, it has been clear that people read differently on screens than they do in print. Screens are shaped differently from the printed page; in addition, the speedy nature of the digital environment seems to induce faster reading. For longer amounts of text, a process of chunking is often appropriate. “Chunking” is the process by which text is “[broken] into manageable pieces” (Lynch & Horton, 2002; Usability.gov, 2008). As shown in Figures 4–8, our approach to human consent was to “chunk” the main features of human consent into five web screens addressing (a) study overview and tasks; (b) risks and benefits; (c) confidentiality; (d) lack of deception; and (e) contact information and information about study funding and sponsorship. In “translating” human consent procedures into an online format, we weighed several competing concerns. First, online communication is most effective when it employs more direct, brief and nonnuanced sentences (e.g., employing a FAQ and/or bullet-list style). Thus, main human subjects concerns were presented as questions, followed by brief 3–4 sentence overviews. Because critical differences in reading online versus conventional reading include more skim-reading and hypertextual use (linking, surfing, jumping around, and vertical scanning), a one-sentence summary highlighted in red was added. Where information was complex, such as the limitations of a federal Certificate of Confidentiality or identification of strategies to protect identity, links were embedded into the text so that those wanting more detailed information could access it, while those not wanting detailed information nonetheless were exposed to essential points (see Figures 9–10). At the end of each chunk, buttons labeled “consent” or “do not consent” were presented, thus requiring subjects to actively consent to each chunk while also allowing us to track where persons dropped out of enrollment.
In MINTS-II, those who clicked “I do not consent” were thanked for their interest and also asked an exit item to identify the major reason for discontinuing. Prior to pilot testing, we reviewed each screen for sufficient white space and font size, shape, and consistency across screens, and inserted visuals to draw the eye into the webpage and to key text. Three options for subjects—to return to previous screens, to mail the researchers and to leave the site immediately—were also provided.
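
The stepwise logic described above can be illustrated with a minimal sketch. It is not the MINTS implementation; the class name `ConsentSession`, its fields, and the chunk labels are hypothetical and chosen only to mirror the five screens listed in the text. The sketch assumes that each screen records an explicit consent or decline, that a decline ends the process, and that the decline point and an optional exit reason are kept so that dropout can be located by chunk.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# The five consent "chunks" named in the text, in presentation order.
# The labels themselves are illustrative, not the study's screen titles.
CONSENT_CHUNKS = (
    "study overview and tasks",
    "risks and benefits",
    "confidentiality",
    "lack of deception",
    "contact, funding, and sponsorship",
)


@dataclass
class ConsentSession:
    """Hypothetical record of one enrollee's path through the consent screens."""

    next_chunk: int = 0                  # index of the screen to show next
    declined_at: Optional[str] = None    # chunk at which consent was refused
    exit_reason: Optional[str] = None    # answer to the optional exit item
    log: List[Tuple[str, bool]] = field(default_factory=list)

    @property
    def finished(self) -> bool:
        """True once the enrollee has declined or passed every chunk."""
        return self.declined_at is not None or self.next_chunk == len(CONSENT_CHUNKS)

    @property
    def fully_consented(self) -> bool:
        """True only if every chunk received an active consent."""
        return self.declined_at is None and self.next_chunk == len(CONSENT_CHUNKS)

    def respond(self, consents: bool, exit_reason: Optional[str] = None) -> None:
        """Record the button pressed on the current chunk."""
        if self.finished:
            raise ValueError("consent process already finished")
        chunk = CONSENT_CHUNKS[self.next_chunk]
        self.log.append((chunk, consents))
        if consents:
            self.next_chunk += 1
        else:
            # A decline stops the process and records where it happened.
            self.declined_at = chunk
            self.exit_reason = exit_reason
```

Tallying `declined_at` across sessions would give the per-chunk dropout counts that the active-consent buttons make possible.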

Figure 4.

Consent “Chunk” 1: Study Overview.

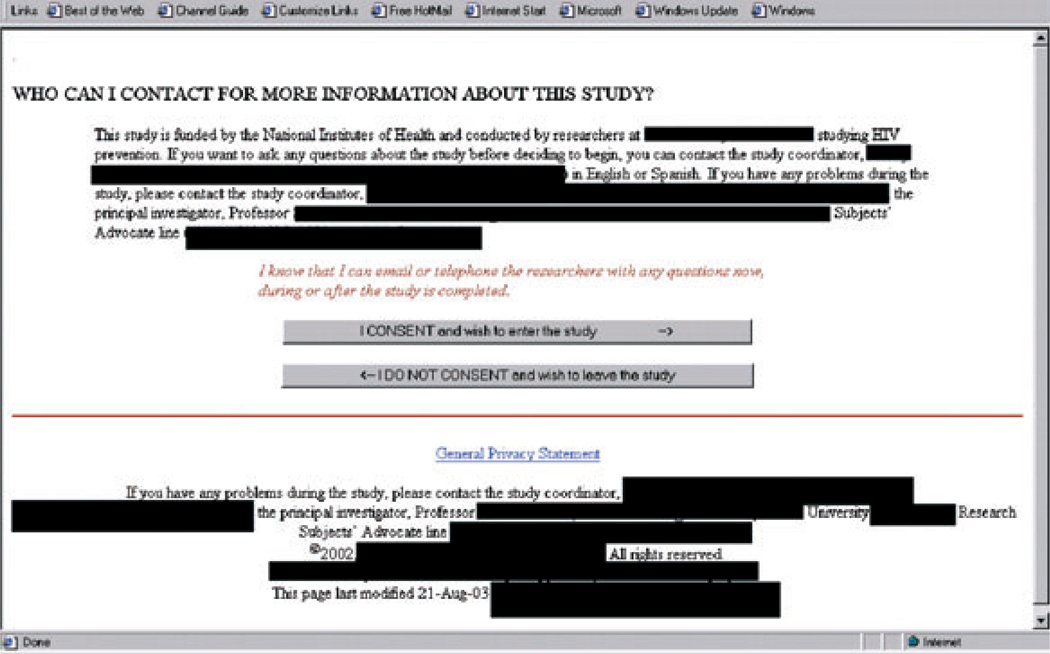

Figure 8.

Multiple Methods to Contact the Study Prior to and Following Consent.

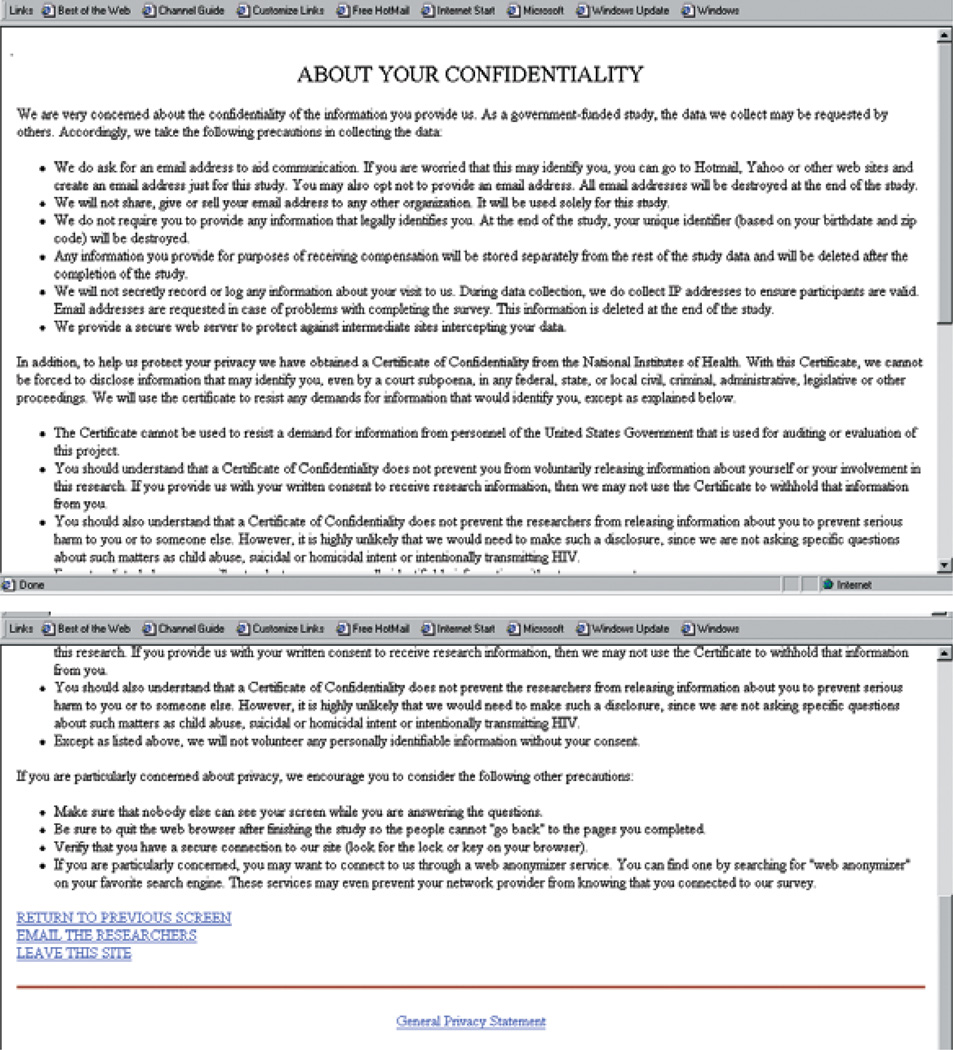

Figure 9.

Link Addressing Confidentiality at Study Site, Federal Limits on a Certificate of Confidentiality and Methods for Enrollees to Enhance Confidentiality at Site of Completion.

Figure 10.

Providing Subjects With Study Expectations and Enrollment Count.

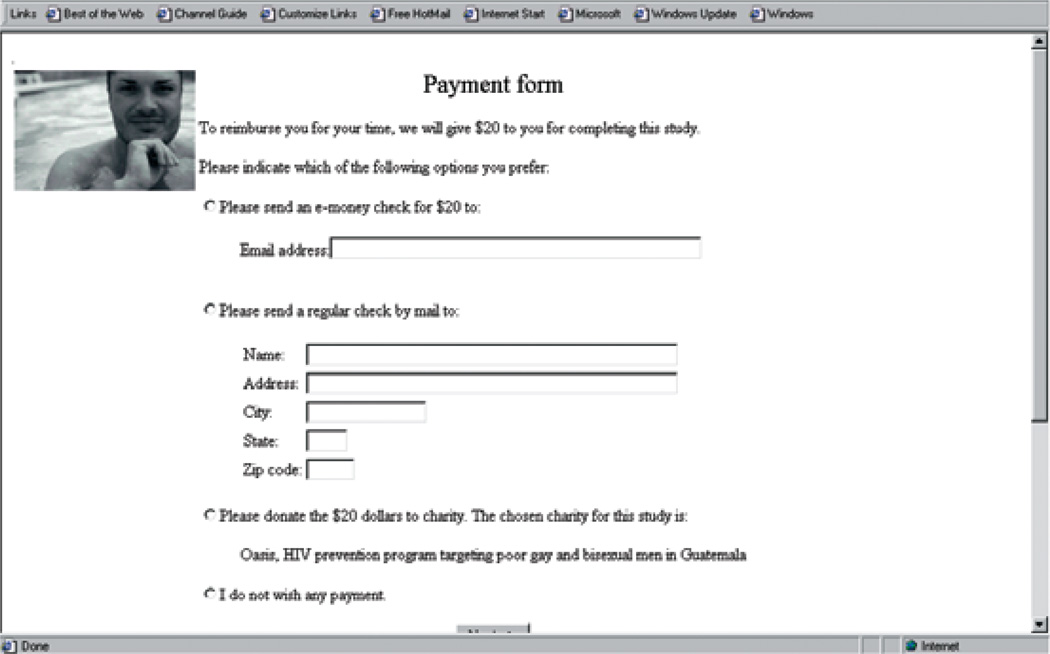

Human Subjects’ Considerations in Payment

Once participants had completed human subjects screening, the next webpage reviewed compensation options (see Figure 11). In considering options for payment, we considered both that different subjects would likely prefer different methods of payment, and that inherent to different payment methods were different levels of confidentiality. At the highest level of disclosure, a traditional check (chosen by 59% of the MINTS II study sample) requires a name and address. To be cashed, a check drawn on our university involves tracking of checks issued by central accounting, and carries the risk of interception by a third party at the participant’s address. E-Payment (29%) is a more efficient and confidential payment method, requiring only an e-mail address, but as we learned, PayPal also provides automatic confirmation of all payments cashed and account links. We realized that some participants might want compensation but decline it solely to protect their confidentiality. Hence, we received permission to provide a third option: donation by subjects of their compensation to a named charity (11%). In both studies, the charity chosen was an AIDS Service Organization in which the researchers had no involvement or conflict of interest. Finally, we provided subjects with a decline payment option (2%). Payment type significantly predicted completion rates: traditional check (73%), e-money (67%), charity (48%), and no money (39%), χ²(1) = 68.74, p < .001. Internet sex surveys conducted without compensation are vulnerable to attrition.

Figure 11.

Payment Form.

Whether to provide partial compensation for partial completion was also considered. A priori, we determined that we would not use partially completed surveys (beyond comparison with completers), and anticipated average completion time to be 20 to 40 minutes. Since science would not benefit from noncompletion and because the time involvement of noncompleters would be minimal, we felt comfortable in offering no partial compensation.

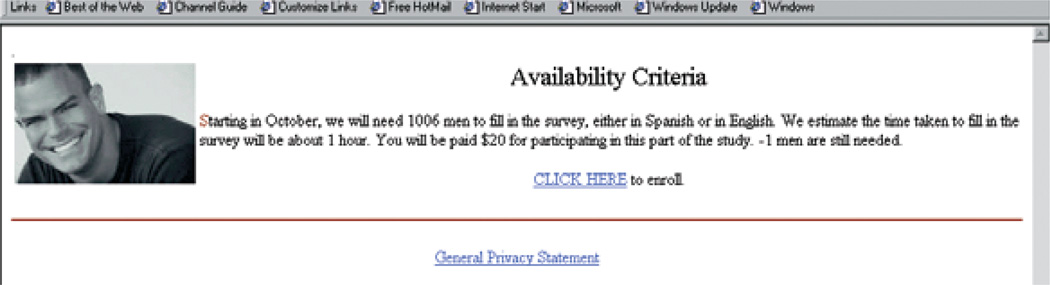

Excluding Based on Full Enrollment

A vulnerability in Internet studies is that recruitment of very large samples can lead to incorrect conclusions. Statistically speaking, very large datasets tend to permit analysts to reject null hypotheses of no effect even though estimated effects are substantively negligible. In studies offering compensation, there is also a budgetary threat caused by too many people enrolling in the study. For these reasons, it is not sufficient simply to state the targeted recruitment number; it is also necessary in Internet studies to plan for automated close-out of enrollment when sufficient numbers of eligible subjects have completed the study. We therefore devoted a screen to availability, which listed the number of questions in the study, the compensation, and the number of subjects still required. This screen was also programmed so that, if enrollment needed to be closed temporarily, this could easily be done by resetting the count of subjects needed.
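As an illustration only, an automated close-out of this kind might be sketched as follows in Python. This is not the code used in the MINTS studies: the class name `EnrollmentGate`, its methods, and the example target, question count, and compensation text are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class EnrollmentGate:
    """Tracks eligible completers against a recruitment target (hypothetical)."""

    target: int         # planned number of eligible completers
    completed: int = 0  # eligible subjects who have finished the study

    def remaining(self) -> int:
        """Number of subjects still required (never negative)."""
        return max(self.target - self.completed, 0)

    def is_open(self) -> bool:
        """Enrollment stays open only while subjects are still required."""
        return self.remaining() > 0

    def record_completion(self) -> None:
        """Count one more eligible subject who completed the study."""
        self.completed += 1

    def reset_needed(self, n: int) -> None:
        """Reset the number of subjects still needed; 0 closes enrollment."""
        self.target = self.completed + n

    def availability_message(self, n_questions: int, compensation: str) -> str:
        """Text for an availability screen; the wording is an assumption."""
        if not self.is_open():
            return "Enrollment is currently closed."
        return (
            f"This survey has {n_questions} questions. "
            f"Compensation: {compensation}. "
            f"Subjects still required: {self.remaining()}."
        )


# Hypothetical values, chosen only to exercise the sketch.
gate = EnrollmentGate(target=3)
gate.record_completion()
print(gate.availability_message(n_questions=100, compensation="see payment page"))
gate.reset_needed(0)  # temporary close
print(gate.availability_message(n_questions=100, compensation="see payment page"))
```

In this sketch a temporary close and a later reopening use the same call, `reset_needed`, so no separate "paused" state has to be maintained.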

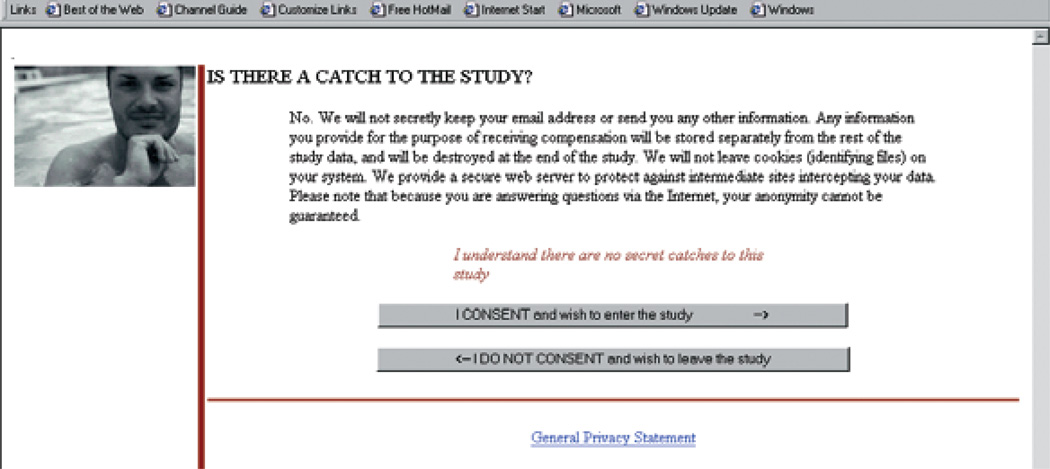

Unique Identifiers and Confirmation Pages

In the two studies, we experimented with two methods of generating unique identifiers. MINTS-I used ZIP code and birthdate to generate a unique identifier, while MINTS-II generated a unique subject code. The former allowed cross-validation of ZIP code at payment with ZIP code at end of study, while birthdate was cross-validated with age (within one year) at a later point in the study. Finally, as shown in Figure 12, participants were provided with a confirmation page requesting them to check study inclusion criteria one final time and to print out and save the page if they so wished.
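The two identifier approaches and the cross-validation checks described above could be sketched as follows. This is a minimal Python illustration under stated assumptions, not the studies’ implementation: the identifier format, the function names, and the use of a random hexadecimal code are all hypothetical.

```python
import secrets
from datetime import date


def mints1_style_identifier(zip_code: str, birthdate: date) -> str:
    """Join ZIP code and birthdate into one identifier (format is assumed)."""
    return f"{zip_code}-{birthdate:%Y%m%d}"


def mints2_style_identifier(n_bytes: int = 8) -> str:
    """Generate a random subject code (assumed form: hexadecimal string)."""
    return secrets.token_hex(n_bytes)


def age_on(birthdate: date, on: date) -> int:
    """Age in whole years on the given date."""
    had_birthday = (on.month, on.day) >= (birthdate.month, birthdate.day)
    return on.year - birthdate.year - (0 if had_birthday else 1)


def zip_matches(zip_at_payment: str, zip_at_end: str) -> bool:
    """Cross-validate ZIP code at payment against ZIP code at end of study."""
    return zip_at_payment.strip() == zip_at_end.strip()


def age_matches(birthdate: date, reported_age: int, on: date,
                tolerance_years: int = 1) -> bool:
    """Cross-validate birthdate against reported age, within one year."""
    return abs(age_on(birthdate, on) - reported_age) <= tolerance_years


# Hypothetical subject, used only to exercise the checks.
birth = date(1980, 6, 15)
survey_day = date(2005, 6, 14)
print(mints1_style_identifier("55455", birth))
print(age_matches(birth, reported_age=25, on=survey_day))
print(mints2_style_identifier())
```

The design trade-off in this sketch is that a random code carries no personal information, whereas an identifier built from ZIP code and birthdate is what makes the cross-validation checks possible.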

Figure 12.

Confirmation Page.

Human Subjects’ Considerations Related to Confidentiality

Our data security protocol has been published elsewhere (Konstan et al., 2008). Telephone inquiries were to a 1-800 number, with researchers having the only record of the telephone numbers. To prevent e-mail inquiries from being received on our university server and automatically backed up on machines available to persons outside the study team, we purchased our own server for the study and pointed all study e-mails to this server. Staff were instructed to print out paper copies of any subject e-mail communications, which were logged, together with the response, in a binder which remains in a locked filing cabinet. Electronic versions of e-mail inquiries were deleted after printing.

Studying Drop-Outs During Consent Procedures

To advance the study of online consent procedures, for both the MINTS-II risk survey and the randomized controlled trials, we received permission from our IRB to add a single-item exit survey for those who clicked on “I do not consent.” For both studies, we identified the major human subjects’ risks listed in the consent procedures (confidentiality, HIV risk, sex and drug content, sexual explicitness), pragmatic concerns (subject burden, compensation, time constraints, ineligibility), and provided an “other” write-in category. Table 1 lists the reasons given. Key considerations about participation appear focused on survey length and compensation, far more than on concerns about confidentiality. Almost no one appeared concerned about sex, drug, or explicit content of survey items. We conclude that participants declined to participate for pragmatic reasons, not human subjects’ risks, and recommend that future studies consider placing a web page early in the consent process that highlights key pragmatic factors such as number of questions, time taken, compensation, and confidentiality.

Table 1.

Reasons Given by Respondents Clicking on “I Do Not Consent”

| Reason | n | % |
|---|---|---|
| MINTS-II risk behavior survey (n = 142) | | |
| Too many questions, too long* | 75 | 52.8 |
| Too small a payment for my time | 46 | 32.4 |
| Concerns about confidentiality | 37 | 26.1 |
| I am not really eligible | 3 | 2.1 |
| Don’t wish to discuss HIV | 1 | 0.7 |
| Sex and drug content | 0 | 0.0 |
| Other* | 6 | 4.2 |
| No reason given | 16 | 11.3 |
| MINTS-II randomized controlled trial (n = 62) | | |
| Too much of a hassle | 33 | 53.2 |
| Inconvenient to do right now | 20 | 32.3 |
| Sexually explicit content | 9 | 14.5 |
| Too small a payment for my time | 8 | 12.9 |
| I am concerned about my confidentiality | 0 | 0.0 |
| Other** | 6 | 9.7 |

\* The six comments all expressed not enough time, so were recoded into the first category.

\*\* Four of the six write-in comments expressed concern about providing secondary contact information, one about “too much of a hassle,” and one about inconvenience.
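The percentages in Table 1 can be reproduced from the counts by dividing each count by the number of respondents in that study. The short Python sketch below does this; the function name `percent_of_respondents` is hypothetical, and the counts are copied from Table 1. Within each study the counts sum to more than n, which would be consistent with respondents being able to endorse more than one reason, although this is an inference from the table rather than something stated in the text.

```python
def percent_of_respondents(counts: dict[str, int], n_respondents: int) -> dict[str, float]:
    """Express each count as a percentage of respondents, to one decimal."""
    return {reason: round(100 * c / n_respondents, 1) for reason, c in counts.items()}


# Counts copied from Table 1 (MINTS-II risk behavior survey, n = 142).
risk_survey = {
    "Too many questions, too long": 75,
    "Too small a payment for my time": 46,
    "Concerns about confidentiality": 37,
    "I am not really eligible": 3,
    "Don't wish to discuss HIV": 1,
    "Sex and drug content": 0,
    "Other": 6,
    "No reason given": 16,
}

# Counts copied from Table 1 (MINTS-II randomized controlled trial, n = 62).
rct = {
    "Too much of a hassle": 33,
    "Inconvenient to do right now": 20,
    "Sexually explicit content": 9,
    "Too small a payment for my time": 8,
    "I am concerned about my confidentiality": 0,
    "Other": 6,
}

for label, counts, n in [("Risk survey", risk_survey, 142), ("RCT", rct, 62)]:
    print(label, "- total reasons endorsed:", sum(counts.values()), "of n =", n)
    for reason, pct in percent_of_respondents(counts, n).items():
        print(f"  {reason}: {pct}%")
```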

Discussion

Ethical dilemmas frequently involve a balancing or trade-off. In online survey research, the process of informing subjects sufficiently about a study so they can make an informed decision about whether or not to participate needs to be balanced by awareness of the Internet as an environment or culture that fosters fast interaction and response times, where long documents simply will not be read and full disclosure may not be realistic or achievable. In most cases, our solution to these dilemmas was to design consent procedures that highlighted key points and provided options so that each participant could choose how much information they reviewed before consenting or not. Our experience suggests that the online human consent process needs to be short, direct, and cover only the main points. By building on the strengths of the Internet, researchers can anticipate where more information may be most desired (in our case, around issues of confidentiality) and program links to additional information for those seeking it. We conceptualize human consent in Internet-based research with appropriate security as inherently less risky (since study termination is achievable with one simple click), more relative (subjects will choose what level of consent they wish to provide), continuous (in our experience, a webpage-by-webpage or question-by-question implicit consent), tenuous (as evidenced by higher attrition and fewer study demands on the subject), and fluid (as online surveys become more common, subjects’ willingness to participate and terms of participation appear to be changing) than conventional processes.

For IRBs and other key stakeholders, the most important feature to keep in mind is that a participant can exit an Internet-based study at any time with one simple click. Provided researchers are not proposing some nefarious methods of tracking subjects or recontacting them, in almost all cases, the risk to subjects appears minimal and can be self-monitored by the subject. We believe these innovations may ultimately advance how human consent procedures are conceptualized, moving toward highlighting only major risks and benefits rather than listing all possible concerns.

There are several limitations to the consent process reported in this study. Internet research is still in its infancy. Although standards are being developed, especially in the area of ethics (Ess, 2002), there are still no universally accepted methodological or ethical standards. We hope that as research teams in the social sciences gain experience in online studies and publish their methods, more consideration will be given to improving human consent procedures. Our experience suggests, and the data from the dropouts confirm, that many persons when online may prefer to simply “jump in” to studies without really considering risks to self. For researchers like ourselves, who study sensitive areas, clearly the ethical duty is incumbent upon us to develop methods that facilitate well-informed decisions. The higher the study’s risks, the more online researchers may need to be innovative and thorough in ensuring that enrollees are fully informed prior to participation. For example, in the MINTS randomized controlled trial, which includes exposure to sexually explicit content, we made discomfort with such materials an eligibility exclusion criterion while also warning participants on at least three webpages prior to enrollment.

A second challenge in online consent is the absence of research cues. The patient in a hospital office taking a health survey has multiple environmental factors reinforcing the serious nature of the survey and the importance of her/his answers. For online surveys, a well-designed consent process may well serve a similar function and help separate out the serious participant from the curious bystander. Monitoring dropout rates during consent is advised.

Clearly, our methods are only one approach to informed consent. We hope that the protocol summarized here will be superseded by better protocols informed by the emerging science. We encourage other research teams to consider researching methods of online consent and to publish protocols documenting advances in methods.

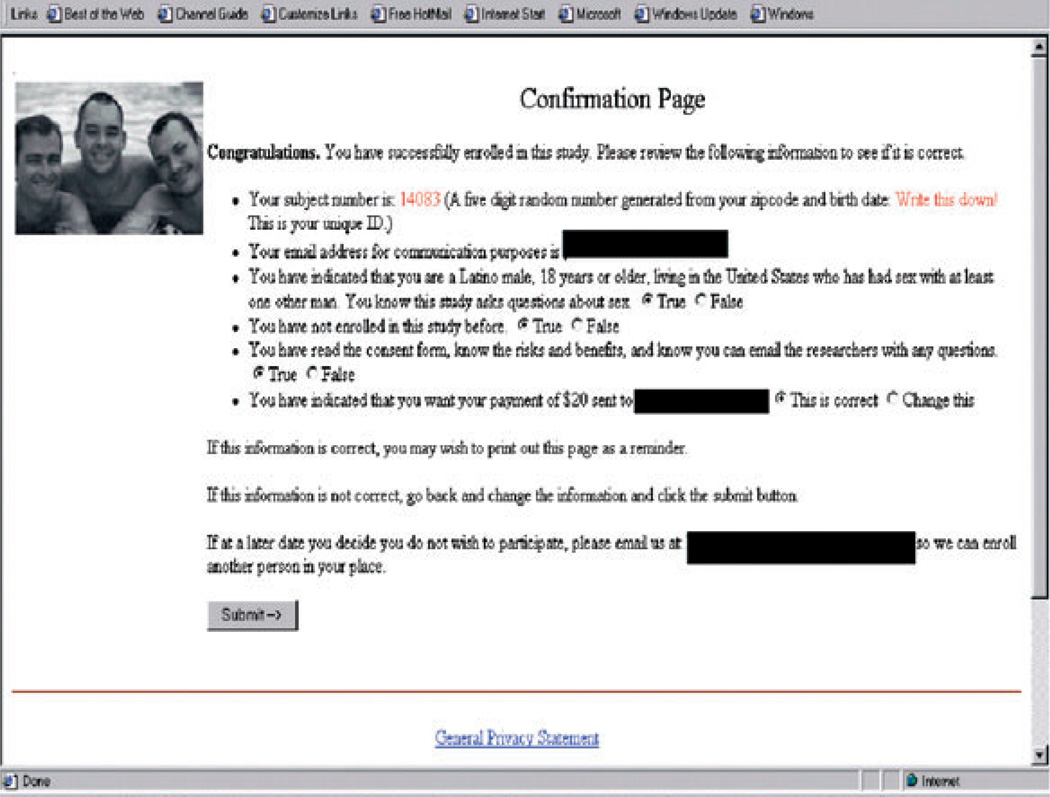

Figure 5.

Consent “Chunk” 2: Review of Risks and Benefits.

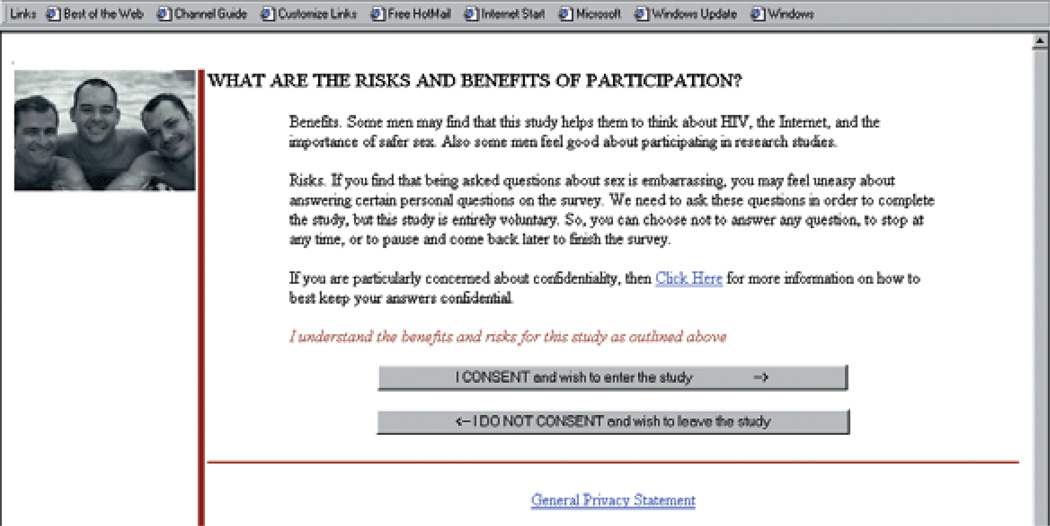

Figure 6.

Consent “Chunk” 3: Confidentiality.

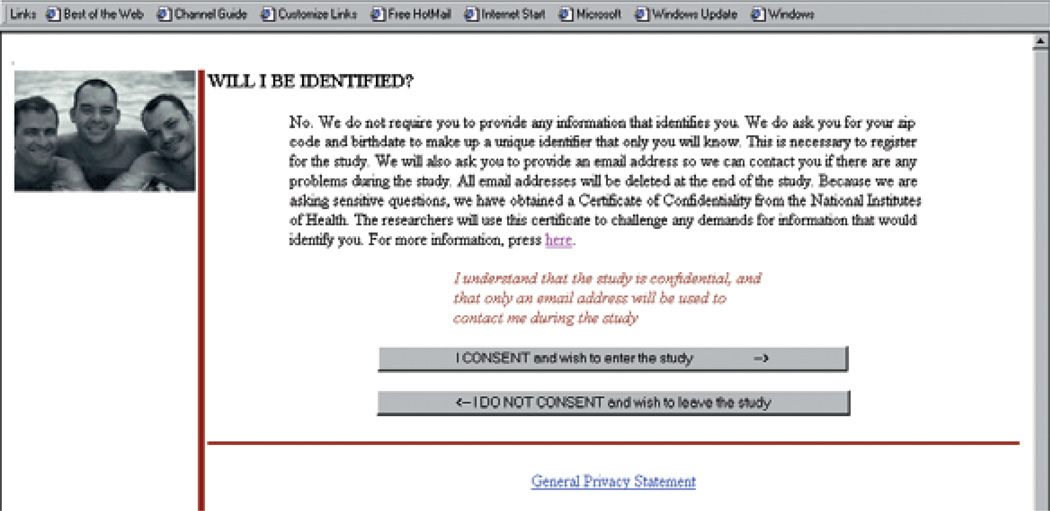

Figure 7.

Addressing Internet Risks as Part of Consent and Distinguishing Anonymity From Confidentiality.

Acknowledgements

The Men’s Internet Studies (MINTS I and II) were funded by the National Institute of Mental Health (NIMH) Center for Mental Health Research on AIDS, grant number AG63688-01 in response to a request for applications to MH-001-003 “Communications and HIV/STD Prevention.” All research was carried out with the approval of the University of Minnesota Institutional Review Board, human subjects’ committee, study number 0102S83821. The authors wish to acknowledge the assistance of the University of Minnesota IRB staff, and in particular Cynthia McGill, for working with us to develop new methods of addressing human consent procedures and to investigate how human subjects’ considerations impact participation.

Biographies

B. R. Simon Rosser, Ph.D., MPH is Professor of Epidemiology and Community Health at the University of Minnesota where he directs the HIV/STI Intervention and Prevention Studies (HIPS) Program. His research interests include e-Public Health interventions and research, HIV/STI prevention, sexual health, Internet sex, and virtual community health. He is principal investigator on two NIH R01 Internet-based studies: the Men’s INTernet Studies (MINTS I and II) funded through NIMH, and the Structural Interventions to Lower Alcohol-related STI/HIV (SILAS) funded through NIAAA. With Drs. Konstan and West, he coteaches a graduate multidisciplinary course in the design of effective online interventions and the health care of virtual communities.

Address: HIV/STI Intervention and Prevention Studies (HIPS) Program, Division of Epidemiology & Community Health, University of Minnesota School of Public Health, Minneapolis, MN. rosser@umn.edu

Laura Gurak, Ph.D. is a Professor and Chair of the Department of Writing Studies at the University of Minnesota. Her research is in technical communication and Internet studies. She is author of Cyberliteracy (Yale 2001) and Persuasion and Privacy in Cyberspace (Yale 1997), the latter of which was the first book-length work to study online social action. Current work examines the use of the Internet for social interactions, focusing on online communities, digital literacies, and Internet-based research. University of Minnesota, 180 Wesbrook Hall, 77 Pleasant St SE, Minneapolis, MN 55455.

Address: Department of Writing Studies, University of Minnesota. gurakl@umn.edu

Keith J. Horvath, Ph.D. is an Assistant Professor in the Division of Epidemiology and Community Health at the University of Minnesota. His research interests are HIV risk assessment and prevention, Internet-based interventions for chronic disease, online survey design, and sexual minority health. Division of Epidemiology & Community Health, University of Minnesota School of Public Health, 1300 South 2nd Street, Suite 300, Minneapolis, MN 55454.

Address: HIV/STI Intervention and Prevention Studies (HIPS) Program, Division of Epidemiology & Community Health, University of Minnesota School of Public Health, Minneapolis, MN. horva018@umn.edu

J. Michael Oakes, Ph.D. is an Associate Professor and McKnight Presidential Fellow at the Division of Epidemiology and Community Health at the University of Minnesota. His research interests are Quantitative Methods, Social Epidemiology, and Research Ethics. Division of Epidemiology & Community Health, University of Minnesota School of Public Health, 1300 South 2nd Street, Suite 300, Minneapolis, MN 55454.

Address: HIV/STI Intervention and Prevention Studies (HIPS) Program, Division of Epidemiology & Community Health, University of Minnesota School of Public Health, Minneapolis, MN. oakes007@umn.edu

Joseph A. Konstan, Ph.D. is Professor and Associate Head in the Department of Computer Science and Engineering, Institute of Technology, University of Minnesota. His research spans many areas of Human-Computer Interaction, including Social Computing, Recommender Systems, Online Community, and Online Public Health Applications. Department of Computer Science and Engineering, University of Minnesota, 4-192 EE/CS Building, 200 Union Street SE, Minneapolis, MN 55455

Address: HIV/STI Intervention and Prevention Studies (HIPS) Program, Division of Epidemiology & Community Health, University of Minnesota School of Public Health, Minneapolis, MN.

Address: Department of Computer Science and Engineering, Institute of Technology, University of Minnesota. konstan@umn.edu

Gene P. Danilenko, MS is the Project Coordinator for MINTS-II in the Division of Epidemiology & Community Health, University of Minnesota. He is also a PhD candidate in Education/Learning Technologies. His research interests include engagement and motivation in online learning and blended cognitive/social online learning environments. Division of Epidemiology & Community Health, University of Minnesota School of Public Health, 1300 South 2nd Street, Suite 300, Minneapolis, MN 55454.

Address: HIV/STI Intervention and Prevention Studies (HIPS) Program, Division of Epidemiology & Community Health, University of Minnesota School of Public Health, Minneapolis, MN. danil003@umn.edu

References

- Allen M. Michael Allen’s guide to e-learning: Building interactive, fun and effective learning programs for any company. Hoboken, NJ: John Wiley & Sons; 2003.

- Bakardjieva M, Feenberg A. Involving the virtual subject. Ethics and Information Technology. 2001;2:233–240.

- Bockting W, Miner M, Rosser BRS. Male partners of transgender persons and HIV/STI risk: Findings from an Internet study of Latino men who have sex with men. Archives of Sexual Behavior. 2007;36:778–786. doi: 10.1007/s10508-006-9133-4.

- Carballo-Diéguez A, Miner M, Dolezal C, Rosser BRS, Jacoby S. Sexual negotiation, HIV-status disclosure, and sexual risk behavior among Latino men who use the Internet to seek sex with other men. Archives of Sexual Behavior. 2006;35(4):473–481. doi: 10.1007/s10508-006-9078-7.

- Coleman E, Horvath KJ, Miner M, Ross MW, Rosser BRS the Men’s INTernet Study (MINTS-II) Team. Compulsive sexual behavior and risk for unsafe sex in online liaisons for men who use the Internet to seek sex with Men: Results of the Men’s INTernet Sex (MINTS-II) Study; Abstracts of the XVI International AIDS Conference; August 17, 2006; Toronto, Canada. 2006. #ThPDC06 (Poster presentation and discussion).

- Ess C. [Accessed 26 May 2008];Ethical decision making and Internet research. 2002 Nov 27; at http://www.aoir.org/reports/ethics.pdf.

- Flicker S, Haans D, Skinner H. Ethical dilemmas in research on Internet communities. Qualitative Health Research. 2004;14(1):124–134. doi: 10.1177/1049732303259842.

- Gosling SD, Vazire S, Srivastava S, John OP. Should we trust web-based studies? A comparative analysis of six preconceptions about internet questionnaires. American Psychologist. 2004;59(2):93–104. doi: 10.1037/0003-066X.59.2.93.

- Gurak LJ. Persuasion and privacy in cyberspace: The online protests over Lotus MarketPlace and the Clipper chip. New Haven, CT: Yale University Press; 1997.

- Gurak LJ, Lannon JM. A concise guide to technical communication. 3rd ed. New York, NY: Pearson Longman; 2003.

- Gurak L, Rosser BRS. The challenges of ensuring participant consent in internet studies: A case study of the Men’s INTernet Study; STD/HIV Prevention and the Internet Conference; August 25–27, 2003; Washington, DC. 2003.

- Hargittai E. Whose space? Differences among users and non-users of social network sites. Journal of Computer-Mediated Communication. 2007;13(1) article 14. http://jcmc.indiana.edu/vol13/issue1/hargittai.html.

- Herring SC. Linguistic and critical research on computer-mediated communication: Some ethical and scholarly considerations. The Information Society. 1996;12(2):153–168. http://ella.slis.indiana.edu/~herring/tis.1996.pdf.

- Hine C. Virtual ethnography. Thousand Oaks, CA: Sage Publications; 2000.

- Hiltz SR, Turoff M. The Network Nation. Reading, MA: Addison-Wesley; 1978.

- Hooper S, Rosser BRS, Horvath KJ, Remafedi G, Naumann C the Men’s INTernet Study II (MINTS-II) Team. An evidence-based approach to designing appropriate and effective Internet-based HIV prevention interventions: Results of the Men’s INTernet Sex (MINTS-II) Study needs assessment; Abstracts of the XVI International AIDS Conference, Toronto, Canada, August 17: 2006, #ThPe0451; 2006. (Poster presentation)

- Hooper S, Rosser BRS, Horvath KJ, Oakes JM, Danilenko G the Men’s INTernet Sex II (MINTS-II) Team. An online needs assessment of a virtual community: What men who use the Internet to seek sex with men want in Internet-based HIV prevention. AIDS and Behavior. 2008;12:867–875. doi: 10.1007/s10461-008-9373-5.

- Horrigan J. [Accessed on 26 May 2008];Mobile access to data and information. Pew Internet & American Life project. 2008 Mar 5; at http://www.pewinternet.org/PPF/r/ 244/report_display.asp.

- Horvath KJ, Oakes JM, Rosser BRS. Sexual negotiation and HIV serodisclosure among men who have sex with men with their online and offline partners. Journal of Urban Health. 2008;85(5):744–758. doi: 10.1007/s11524-008-9299-2.

- Horvath KJ, Rosser BRS, Remafedi G. The sexual behavior of young Internet-using men who have sex with men. American Journal of Public Health. 2008;98:1059–1067. doi: 10.2105/AJPH.2007.111070.

- Jones S, editor. Doing internet research. Thousand Oaks, CA: Sage Publications; 1998.

- Katz RV, Kegeles SS, Kressin NR, Green BL, Wang MQ, James SA, et al. The Tuskegee Legacy Project: Willingness of minorities to participate in biomedical research. Journal of Health Care for the Poor and Underserved. 2006;17(4):698–715. doi: 10.1353/hpu.2006.0126.

- Keller HE, Lee S. Ethical issues surrounding human participants research using the Internet. Ethics & Behavior. 2003;13(3):211–219. doi: 10.1207/S15327019EB1303_01.

- Konstan JA, Rosser BRS, Horvath KJ, Gurak L, Edwards W. Protecting subject data privacy in Internet-Based HIV/STI Prevention Survey Research. In: Conrad FG, Schober MF, editors. Envisioning the survey interview of the future. New York, NY: John Wiley & Sons; 2008.

- Konstan JA, Rosser BRS, Ross MW, Stanton J, Edwards WM. The story of Subject Naught: A cautionary but optimistic tale of internet survey research. Journal of Computer-Mediated Communication. 2005;10(2) 2005 article 11. http://jcmc.indiana/edu/vol10/issue2/konstan.html.

- Konstan J, Rosser BRS, Stanton J, Brady P, Gurak L. Protecting subject data privacy in Internet-based HIV survey research; STD/HIV Prevention and the Internet Conference; Washington, DC. 2003. Aug.

- Kraut R, Olson J, Banaji M, Bruckman A, Cohen J, Couper M. Psychological research online: Report of Board of Scientific Affairs’ Advisory Group on the Conduct of Research on the Internet. American Psychologist. 2004;59(2):105–117. doi: 10.1037/0003-066X.59.2.105.

- Lynch PJ, Horton S. Web style guide: Basic design principles for creating web sites. Second ed. New Haven, CT: Yale University Press; 2002.

- Midkiff DM, Wyatt WJ. Ethical issues in the provision of online mental health services (etherapy) Journal of Technology in Human Services. 2008;26(2):310–332.

- Milgram S. Behavioral study of obedience. Journal of Abnormal and Social Psychology. 1963;67:371–378. doi: 10.1037/h0040525.

- Naglieri JA, Drasgow F, Schmit M, Handler L, Prifitera A, Margolis A, Velasquez R. Psychological testing on the Internet: New problems, old issues. American Psychologist. 2004;59(3):150–162. doi: 10.1037/0003-066X.59.3.150.

- National Institute of Mental Health/National Institute on Aging. Mass communications and HIV/STD prevention. MH-01-003. 2000 Apr 17.

- National Institutes of Health, Office of Portfolio Analysis and Strategic Initiatives (OPASI) [Accessed April 8, 2007];NIH Roadmap for Medical Research. 2002 at: http://nihroadmap.nih.gov.

- National Telecommunications and Information Administration. Table 1: Total USA Households using the Internet in and outside the home, by selected characteristics: Total, Urban, Rural, Principal City, October 2007. [Accessed on May 7, 2008];2007 at http://www.ntia.doc.gov/reports/2008/Table_HouseholdInternet2007.pdf.

- O’Neil KM, Penrod SD, Bornstein BH. Web-based research: Methodological variables’ effects on dropout and sample characteristics. Behavior Research Methods, Instruments, & Computers. 2003;35(2):217–226. doi: 10.3758/bf03202544.

- Orne MT. On the social psychology of the psychological experiment: With particular reference to demand characteristics and their implications. American Psychologist. 1962;17:776–783.

- Pequegnat W, Rosser BRS, Bowen A, Bull SS, DiClemente RJ, Bockting WO, et al. Conducting Internet-based HIV/STD prevention survey research: Considerations in design and evaluation. AIDS & Behavior. 2007;11:505–521. doi: 10.1007/s10461-006-9172-9.

- Pittenger DJ. Internet research: An opportunity to revisit classic ethical problems in behavioral research. Ethics & Behavior. 2003;13(1):45–60. doi: 10.1207/S15327019EB1301_08.

- Recupero PR, Rainey SE. Informed consent to E-therapy. American Journal of Psychotherapy. 2005;59(4):319–331. doi: 10.1176/appi.psychotherapy.2005.59.4.319.

- Rosnow RL, Rosenthal R, McConochie R, Marmer, Arms RL. Volunteer effects on experimental outcomes. Educational and Psychological Measurement. 1969;29:825–846.

- Ross MW, Rosser BRS, Coleman E, Mazin R. Misrepresentation on the Internet and in real life about sex and HIV: A study of men who have sex with men. Culture, Health and Sexuality. 2006;8(2):133–144. doi: 10.1080/13691050500485604.

- Ross MW, Rosser BRS, McCurdy S, Feldman J. The advantages and limitations of seeking sex online: A comparison of reasons given for online and offline sexual liaisons by men who have sex with men. Journal of Sex Research. 2007;41:59–71. doi: 10.1080/00224490709336793.

- Ross MW, Rosser BRS, Stanton J. Beliefs about cybersex and Internet-mediated sex of Latino men who have Internet sex with men: Relationships with sexual practices in cybersex and in real life. AIDS Care. 2004;16:1002–1011. doi: 10.1080/09540120412331292444.

- Ross MW, Rosser BRS, Stanton J, Konstan J. Characteristics of Latino men who have sex with men on the Internet who complete and drop out of an Internet-based sexual behavior survey. AIDS Education and Prevention. 2004;16(6):526–537. doi: 10.1521/aeap.16.6.526.53793.

- Rosser BRS. Online HIV prevention and the Men’s Internet Study-II (MINTS-II): A glimpse into the world of designing highly interactive sexual health Internet-based interventions; Abstracts of the World Congress for Sexology; July 10–15, 2005; Montreal, Canada. 2005.

- Rosser BRS, Bockting WO, Gurak L, Konstan J, Ross MW, Coleman E. Sexual risk behavior and the Internet: Results of the Men’s INTernet Study (MINTS); Abstracts of the 2005 National HIV Prevention Conference; July 12–15, 2005; Atlanta GA. 2005. #T1-A0804, p.82.

- Rosser BRS, Hooper S, Naumann C, Konstan J, Manning T, Bratland D, et al. Next generation HIV prevention: Building highly interactive web-based HIV interventions for men who use the Internet to seek sex with other men; Abstracts of the XVI International AIDS Conference; August 17, 2006; Toronto, Canada. 2006a. #ThPDC05. (Poster presentation and discussion)

- Rosser BRS, Horvath KJ. Ethical issues in Internet-based HIV primary prevention research. In: Loue S, Pike E, editors. Case studies in ethics and HIV research. New York: Springer; 2007.

- Rosser BRS, Miner MH, Bockting WO, Ross MW, Konstan J, Gurak L, et al. HIV Risk and the Internet: Results of the Men’s INTernet Study (MINTS) AIDS & Behavior. 2009 doi: 10.1007/s10461-008-9399-8. in press.

- Rosser BRS, Miner M, Bockting WO, Gurak L, Konstan J, Ross MW, et al. Sexual risk behavior and the Internet: Results of the Men’s INTernet Study (MINTS); Abstracts of the XV International AIDS Conference; Bangkok, Thailand. July 11–16, 2004; 2004. #WePeC6083.

- Rosser BRS, Naumann C, Konstan J, Hooper S, Feiner K, Bockting W, et al. Next generation HIV prevention programs using the Internet: Men’s INTernet Study II (MINTS-II); Abstracts of the 2005 National HIV Prevention Conference; July 12–15, 2005; Atlanta GA. 2005. #T2-C1501, p. 112.

- Rosser BRS, Oakes JM, Bockting WO, Babes G, Miner M. Demographic characteristics of transgender persons in the United States: Results of the National Online Transgender Study. Sexuality Research and Social Policy. 2007;4(2):50–64.

- Rosser BRS, Oakes JM, Horvath KJ, Konstan JA, Danilenko GP, Peterson JL. HIV sexual risk behavior by men who use the Internet to seek sex with men: Results of the Men’s Internet Sex Study-II (MINTS-II) AIDS & Behavior. 2009 doi: 10.1007/s10461-009-9524-3. online First, Feb 10:2009.

- Rosser BRS, Oakes JM, Konstan J, Remafedi G, Zamboni B the Men’s INTernet Sex II (MINTS-II) Team. New large survey of United States men who seek sex by Internet finds African Americans have highest rate of unsafe sex; Abstracts of the XVI International AIDS Conference; August 15, 2006; Toronto, Canada. 2006b. #TuPE0615.(Poster presentation).

- Smolenski DJ, Ross MW, Risser JM, Rosser BRS. Sexual compulsivity and high-risk sexual behavior among Latino men: The role of internalized homonegativity and gay organizations. AIDS Care. 2008;21:42–49. doi: 10.1080/09540120802068803.

- Sproull L, Kiesler S. Connections: New ways of working in the networked organization. Cambridge, MA: MIT Press; 1991.

- Stein TS. A critique of approaches to changing sexual orientation. In: Cabaj RP, Stein TS, editors. Textbook of homosexuality and mental health. Washington, D.C.: American Psychiatric Association; 1996.

- Usability.gov. How should you organize your content? [Accessed 26 May 2008];2008 at http://www.usability.gov/design/writing4web.html.

- Varnhagen CK, Gushta M, Daniels J, Peters TC, Parmar N, Law D, et al. How informed is online informed consent? Ethics & Behavior. 2005;15(1):37–48. doi: 10.1207/s15327019eb1501_3.

- West W, Rosser BRS, Hooper S, Monani S, Gurak L. How learning styles impact e-Learning: A case-comparative study of undergraduate students who excelled, passed, or failed an online course in scientific writing. Journal of Administrators of Electronic Learning. 2007;3(4):533–541.

- Wright KB. Researching Internet-based populations: Advantages and disadvantages of online survey research, online questionnaire authoring software packages, and web survey services. Journal of Computer-Mediated Communication. 2005;10(3) article 11. http://jcmc.indiana.edu/vol10/issue3/wright.html.