SUMMARY

In everyday life attention operates within complex and dynamic environments, while laboratory paradigms typically employ simple and stereotyped stimuli. This fMRI study investigated stimulus-driven spatial attention using a virtual-environment video. We explored the influence of bottom-up signals by computing saliency maps of the environment and by introducing attention-grabbing events in the video. We parameterized the efficacy of these signals for the orienting of spatial attention by measuring eye movements and used these parameters to analyze the imaging data. The efficacy of bottom-up signals modulated ongoing activity in dorsal fronto-parietal regions and transient activation of the ventral attention system. Our results demonstrate that the combination of computational, behavioral, and imaging techniques enables studying cognitive functions in ecologically valid contexts. We highlight the central role of the efficacy of stimulus-driven signals in both dorsal and ventral attention systems, with a dissociation of the efficacy of background salience versus distinctive events in the two systems.

INTRODUCTION

In everyday life the brain receives a large number of signals from the external world. Some of these are important for a successful interaction with the environment, while others can be ignored. The operation of selecting relevant signals and filtering out irrelevant information is a key task of the attentional system (Desimone and Duncan, 1995). Much research has been dedicated to identifying the mechanisms underlying attention control, but, because of the need for methodological rigor, most studies have used highly stereotyped experimental paradigms (e.g., Posner, 1980). Typically, laboratory paradigms employ simple stimuli to “cue” spatial attention to one or another location (e.g., a central arrow or a peripheral box, presented in isolation), include tens or hundreds of repetitions of the same trial type for statistical averaging, and attempt to avoid any contingency between successive trials (e.g., by randomizing conditions). This is in striking contrast with the operation of the attentional system in real life, where a multitude of sensory signals continuously compete for the brain’s limited processing resources.

Recently, attention research has turned to the investigation of more ecologically valid situations involving, for example, the viewing of pictures or videos of naturalistic scenes (Carmi and Itti, 2006; Elazary and Itti, 2008). In this context, a highly influential approach has been proposed by Itti and Koch, who introduced the “saliency computational model” (Itti et al., 1998). This algorithm acts by decomposing complex input images into a set of multiscale feature-maps, which extract local discontinuities in line orientation, intensity contrast, and color opponency in parallel. These are then combined into a single topographic “saliency map” representing visual saliency irrespective of the feature dimension that makes the location salient. Saliency maps have been found to predict patterns of eye movements during the viewing of complex scenes (e.g., pictures: Elazary and Itti, 2008; video: Carmi and Itti, 2006) and are thought to well-characterize bottom-up contributions to the allocation of visuo-spatial attention (Itti et al., 1998).
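As a rough illustration of the center-surround idea behind such maps, the following Python sketch computes a toy saliency map from a single intensity channel. It is not the Itti et al. (1998) implementation: it omits the orientation and color channels, the multiscale pyramids, and the normalization steps, and the function names (`box_mean`, `toy_saliency`) and neighborhood radii are assumptions made for illustration only.

```python
def box_mean(img, r):
    """Mean of each pixel's (2r+1) x (2r+1) neighborhood, clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - r), min(h, y + r + 1))
            xs = range(max(0, x - r), min(w, x + r + 1))
            vals = [img[j][i] for j in ys for i in xs]
            out[y][x] = sum(vals) / len(vals)
    return out


def toy_saliency(intensity, center_r=0, surround_r=2):
    """Absolute center-surround difference, rescaled so that the maximum is 1."""
    center = box_mean(intensity, center_r)      # fine scale
    surround = box_mean(intensity, surround_r)  # coarse scale
    raw = [[abs(c - s) for c, s in zip(c_row, s_row)]
           for c_row, s_row in zip(center, surround)]
    peak = max(max(row) for row in raw)
    if peak == 0:
        return raw  # perfectly uniform image: nothing is salient
    return [[v / peak for v in row] for row in raw]


# Hypothetical 7 x 7 frame: uniform background with one bright pixel
# at row 3, column 4.
frame = [[0.1] * 7 for _ in range(7)]
frame[3][4] = 1.0
sal = toy_saliency(frame)
```

In this toy frame the isolated bright pixel receives the highest saliency value, whereas the uniform background stays close to zero.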

The neural representation of saliency in the brain remains unspecified. Electrophysiological studies in primates have demonstrated bottom-up effects of stimulus salience in occipital visual areas (Mazer and Gallant, 2003), parietal cortex (Gottlieb et al., 1998; Constantinidis and Steinmetz, 2001), and dorsal premotor regions (Thompson et al., 2005), suggesting the existence of multiple maps of visual salience that may mediate stimulus-driven orienting of visuo-spatial attention (Gottlieb, 2007). On the other hand, human neuroimaging studies have associated stimulus-driven attention primarily with activation of a ventral fronto-parietal network (temporo-parietal junction, TPJ; and inferior frontal gyrus, IFG; see Corbetta et al., 2008), while dorsal fronto-parietal regions have been associated with the voluntary control of eye movements and endogenous spatial attention (Corbetta and Shulman, 2002).

This apparent inconsistency between single-cell studies and imaging findings in humans can be reconciled when considering that bottom-up sensory signals are insufficient to drive spatial attention, which instead requires some combination of bottom-up and endogenous control signals. Indeed, saliency maps predict overt spatial orienting only poorly during long exposure to complex stimuli (Elazary and Itti, 2008), where endogenous and strategic factors are thought to play a major role (Itti, 2005). Analogously, neuroimaging studies using standard spatial cueing paradigms demonstrated that bottom-up salience alone does not activate the ventral fronto-parietal network (Kincade et al., 2005), which activates only when transient bottom-up sensory input interacts with endogenous task/set-related signals (Corbetta et al., 2008; Natale et al., 2010; but see Asplund et al., 2010). Thus, a comprehensive investigation of the brain processes associated with stimulus-driven visuo-spatial attention must take into account not only the sensory characteristics of the bottom-up visual input (e.g., in terms of saliency maps), but also the efficacy of these signals for driving spatial orienting. This can be achieved with naturalistic stimuli entailing heterogeneous bottom-up sensory signals that, in turn, may or may not produce orienting of spatial attention. Notably, this variable relationship between sensory input and spatial orienting behavior is akin to everyday situations, where attention is not always oriented toward salient signals. By contrast, standard experimental paradigms entail presenting the same stimulus configuration (i.e., an experimental condition) several times, on the assumption that it always triggers the same attentional effect across many trial repetitions.

Here we used eye movements and fMRI during the viewing of a virtual environment to investigate brain activity associated with both bottom-up saliency and the efficacy of these signals for stimulus-driven orienting of spatial attention. The video was recorded in a first-person perspective and included navigation through a range of indoor and outdoor scenes. Unlike movies, our stimuli entailed a continuous flow of information from one instant to the next, without any discontinuity in time (e.g., flash-backs) or space (e.g., shots of the same scene from multiple viewpoints, or nonnaturalistic perspectives as in “aerial” or “crane” shots). Thus, here the allocation of spatial attention was driven by the coherent unfolding of the scene, as would naturally happen in everyday life. We used two versions of the video. One version included only the environment (No_Entity video; see Figure 1A); the other version consisted of the same navigation pathway and also a number of human-like characters, who walked in and out of the scene at unpredictable times (Entity video; see Figure 2A).

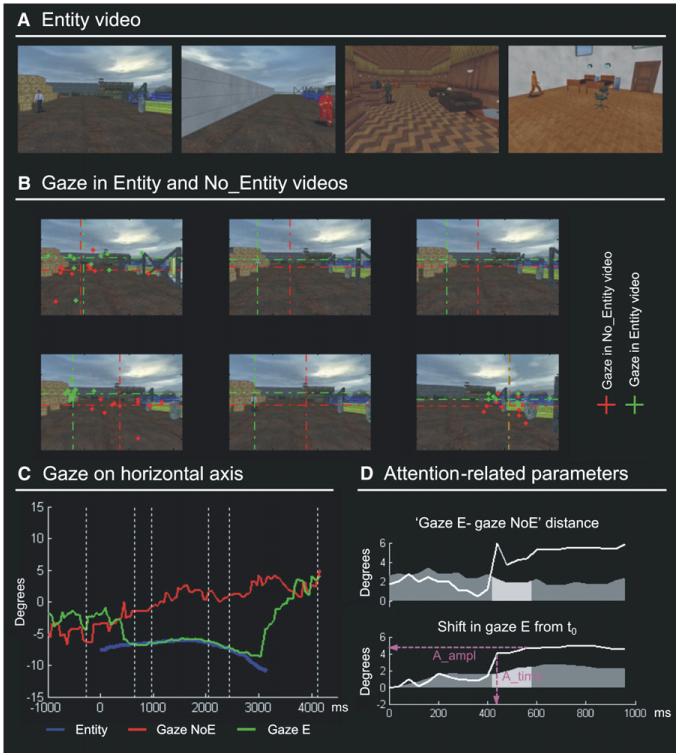

Figure 1. No_Entity Video: Examples, Attention Parameters, and Imaging Results.

(A) Four frames of the No_Entity (NoE) video exemplifying the high variability of the visual scenes.

(B) Examples of group-median gaze position (top panels, red crosses) and saliency maps (bottom panels, maxima highlighted with cyan crosses) for three frames. For each frame of the No_Entity video, we extracted the mean saliency and the distance between gaze position and maximum saliency (yellow lines) to compute the attention parameters that were then used for fMRI analyses (S_mean and SA_dist).

(C) Brain areas where the BOLD signal covaried positively with mean saliency (S_mean).

(D) Brain areas where the BOLD signal covaried negatively with the distance between gaze position and maximum saliency (SA_dist), i.e., where activity increased when subjects attended toward the most salient location of the video. aIPS, anterior intraparietal sulcus; FEF, frontal eye fields. Color bars indicate statistical thresholds. See also Figure S1.

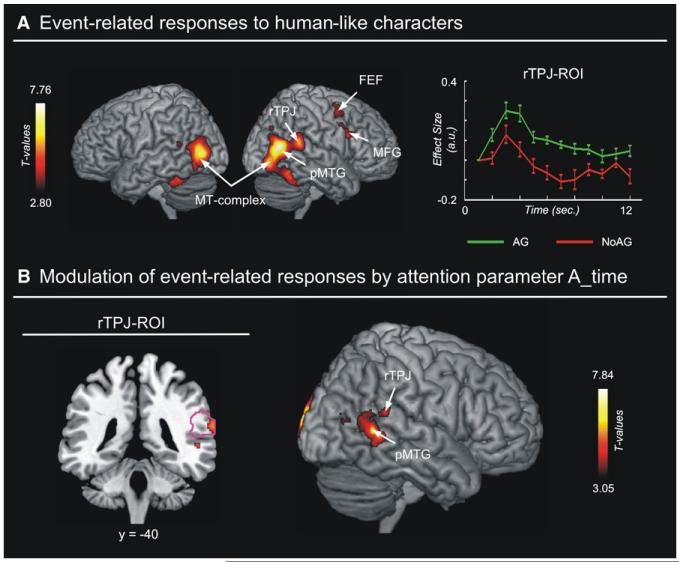

Figure 2. Entity Video: Examples and Computation of the Attention Parameters.

(A) Examples of a few frames of the Entity (E) video showing different characters in the complex environment.

(B) Gaze position during the free viewing of a video segment when the character was present (Entity video, gaze position plotted in green) or when it was absent (No_Entity video, gaze position plotted in red). This shows comparable gaze positions in the first frame (character absent in both videos), a systematic shift when the character appears in the Entity video (frames 2–5), and again similar positions after the character exited the scene (frame 6). The dashed crosses represent group-median gaze positions, and dots show single subjects’ positions.

(C) Time course of the group-median horizontal gaze position for the same character shown in (B) (viewing of the Entity video in green; viewing of the No_Entity video in red). The blue trace displays the horizontal position of the character over time, showing that subjects tracked the character in the Entity video (green line). Vertical dashed lines indicate the time points corresponding to the six frames shown in (B).

(D) Computation of the attention parameters (A_time and A_ampl) for the same character. The attention grabbing properties of each character were assessed by applying a combination of statistical criteria to the gaze position traces (see Experimental Procedures section for details), two of which are demonstrated here. The top subplot shows the Euclidean distance between gaze positions during the viewing of the Entity and No_Entity videos, plotted over time. The bottom subplot shows the shift of gaze position during the viewing of the Entity video, compared with the gaze position in the first frame when the character appeared (time = 0). The two attention parameters were computed by assessing when both distances exceeded the 95% confidence interval (dark gray shading in each subplot) for at least four consecutive data points (light gray shading). The time of the first data point exceeding the thresholds determined the processing time parameter (A_time), while the amplitude parameter (A_ampl) was measured at the last data point of the window; see magenta lines.

The videos were presented to two distinct groups of subjects. Participants of the first group were asked to freely view the two videos with eye movements allowed (preliminary study, outside the MR scanner). This provided us with an explicit measure of the allocation of spatial attention (overt orienting) and enabled us to characterize the efficacy of the sensory input for spatial orienting. For the No_Entity video we computed the relationship between the location of maximum salience and gaze position as an index of the efficacy of salience to capture visuo-spatial attention (see Figure 1B). For the Entity video, we considered changes in gaze position when the human-like characters appeared in the scene. Each character was scored as “attention grabbing” or “non-attention grabbing” depending on whether it produced a gaze shift or not. For the attention grabbing events, we computed additional temporal and spatial parameters to further characterize the attentional shifts (see Figure 2).

The Entity and No_Entity videos were then presented to a second group of subjects, for fMRI acquisition and “in-scanner” eye-movement monitoring. The videos were now presented in two different viewing conditions: with eye movements allowed (overt orienting, as in the preliminary study) or with central fixation required (covert orienting; see also Table S1 in Supplemental Experimental Procedures). Our main fMRI analyses concerned the covert viewing conditions, because this minimizes the intersubject variability that arises when the same visual stimuli are viewed from different gaze directions (e.g., a “left” visual stimulus, for a subject who looks straight ahead, will become a “central” or even a “right” stimulus for a subject who looks toward the left side). The fMRI data were analyzed using attention grabbing efficacy indexes derived from the preliminary study, as these should best reflect orienting behavior on the first viewing of the stimuli. Nonetheless, we also analyzed eye movements recorded in the scanner and the corresponding imaging data to compare overt and covert spatial orienting.

For the No_Entity video, we tested for brain regions where activity covaried with (1) the mean level of saliency; (2) the distance between the location of maximum salience and the attended position, indexing the efficacy of salience; and (3) saccade frequency. For the Entity video, we performed an event-related analysis time-locked to the appearance of the characters, thus identifying brain regions responding transiently to these stimuli. We then assessed whether the size of these activations covaried with the attention grabbing effectiveness of each character (grabbing versus non-grabbing characters). Finally, we used data-driven techniques to identify brain regions involved in the processing of the complex and dynamic visual stimuli, without making any a priori assumption about the video content and timing/shape of the BOLD changes. We introduced the interruns covariation analysis (IRC, conceptually derived from the intersubject correlation analysis first proposed by Hasson et al., 2004; but see Supplemental Experimental Procedures for relevant differences between these two methods). We also applied analyses of interregional connectivity to investigate the functional coupling of the right TPJ (rTPJ), which was identified by both the hypothesis-based and the IRC analyses as a key area for stimulus-driven spatial orienting in complex dynamic environments.

RESULTS

Overt Spatial Orienting during Free Viewing of Entity and No_Entity Videos

In the preliminary study, we presented Entity and No_Entity videos to 11 subjects and recorded eye movements during free viewing of these complex and dynamic stimuli. The aim of this behavioral experiment was to characterize overt spatial orienting, and the associated covert orienting of attention, upon the first viewing of the stimuli.

Using the No_Entity video we parameterized the relationship between stimulus salience (saliency map) and spatial orienting behavior (gaze position). Figure 1B shows the computation of these parameters for a few frames, including the movie frame (top row), the corresponding saliency map (bottom row, with the maximum highlighted with cyan dotted lines), group-median position of gaze (red dotted lines), and the distance between maximum saliency and gaze position (bold yellow lines). Mean salience and distance values for each frame were used to generate two covariates for the analyses of the fMRI data (S_mean and SA_dist; see also Experimental Procedures). In addition, we also quantified the overall degree of attention shifting, irrespective of salience, by computing the average saccade frequency throughout the video (Sac_freq covariate).
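The two frame-wise parameters can be sketched in Python as follows. This is a minimal illustration, assuming that gaze position and saliency maxima are expressed in the same pixel coordinates and that the group-median gaze is taken separately for the horizontal and vertical coordinates. The function names are hypothetical, and the procedure actually used is described in Experimental Procedures.

```python
import math
from statistics import median


def group_median_gaze(gaze_points):
    """Median gaze position across subjects, separately for x and y (assumption)."""
    xs = [x for x, _ in gaze_points]
    ys = [y for _, y in gaze_points]
    return median(xs), median(ys)


def frame_parameters(saliency_map, gaze_xy):
    """Return (S_mean, SA_dist) for one frame.

    saliency_map: list of rows (row index = y, column index = x).
    gaze_xy: (x, y) gaze position in the same pixel coordinates.
    """
    height, width = len(saliency_map), len(saliency_map[0])
    s_mean = sum(sum(row) for row in saliency_map) / (height * width)
    # Location of the saliency maximum (the first one found if there are ties).
    max_y, max_x = max(
        ((y, x) for y in range(height) for x in range(width)),
        key=lambda p: saliency_map[p[0]][p[1]],
    )
    # Euclidean distance between gaze position and maximum saliency.
    sa_dist = math.hypot(gaze_xy[0] - max_x, gaze_xy[1] - max_y)
    return s_mean, sa_dist


# Hypothetical 5 x 4 saliency map with its maximum at x = 3, y = 0.
saliency = [
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.5],
    [0.0, 0.0, 0.0, 0.0],
]
# Hypothetical gaze positions (x, y) of three subjects for this frame.
gaze = group_median_gaze([(0, 4), (1, 4), (0, 3)])
s_mean, sa_dist = frame_parameters(saliency, gaze)
```

Repeating this computation for every frame would yield two time series, which could then serve as the S_mean and SA_dist covariates.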

For the Entity video, we assessed the attention grabbing properties of the human-like characters by looking for changes in gaze position when these characters appeared. Using multiple statistical criteria at each time point (see Experimental Procedures), we found systematic shifts toward the unexpected character in 15 out of the 25 entities. Figure 2B shows an example of an attention grabbing character. The red dotted lines show the group-median gaze position when the character was absent (No_Entity video), and the green dotted lines show gaze position when the character was present (Entity video). This orienting behavior was quantified further by computing the processing time, i.e., the time needed to initiate the spatial shift, and the amplitude of the shift (A_time and A_ampl; see Figures 2C and 2D).
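The consecutive-samples criterion illustrated in Figure 2D can be sketched as below. This simplified Python illustration covers only the two criteria shown in that figure. It assumes that the 95% confidence thresholds have already been computed for each time point and that the amplitude is read from the gaze-shift trace. The function and argument names are hypothetical.

```python
def attention_parameters(times, d_between, d_shift,
                         thr_between, thr_shift, min_run=4):
    """Return (A_time, A_ampl) for one character, or None if non-grabbing.

    times:       time of each data point relative to character onset
    d_between:   distance between Entity and No_Entity gaze positions
    d_shift:     gaze shift relative to the gaze position at time = 0
    thr_between: per-sample threshold for d_between (assumed precomputed)
    thr_shift:   per-sample threshold for d_shift (assumed precomputed)
    """
    run = 0
    for i in range(len(times)):
        both_above = d_between[i] > thr_between[i] and d_shift[i] > thr_shift[i]
        run = run + 1 if both_above else 0
        if run == min_run:
            first = i - min_run + 1   # first data point exceeding the thresholds
            a_time = times[first]     # processing time
            a_ampl = d_shift[i]       # amplitude at the last point of the window
            return a_time, a_ampl
    return None


# Hypothetical traces sampled every 100 ms, with constant thresholds of 1.0.
times = [0, 100, 200, 300, 400, 500, 600]
d_between = [0.5, 0.6, 2.0, 2.5, 3.0, 3.5, 3.6]
d_shift = [0.0, 0.2, 1.5, 2.2, 2.8, 3.1, 3.3]
thresholds = [1.0] * len(times)

grabbing = attention_parameters(times, d_between, d_shift, thresholds, thresholds)
# A trace that never exceeds the threshold is scored as non-attention grabbing.
flat = attention_parameters(times, [0.5] * 7, [0.5] * 7, thresholds, thresholds)
```

In this example both traces first exceed their thresholds at 200 ms and stay above them for four samples, so the character would be scored as attention grabbing.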

We sought to confirm these findings using eye movement data acquired in the scanner (overt viewing fMRI runs; see also Supplemental Experimental Procedures). For the No_Entity video, the behavioral parameters were found to be consistent in the two groups (correlation coefficient for SA_dist: r = 0.94, p < 0.001; and for Sac_freq: r = 0.41, p < 0.001). For the Entity video, we found that the eye traces associated with the human-like characters were highly correlated in the two groups for 24 out of the 25 characters (p < 0.001). Overall, the 25 in-scanner eye traces could be predicted reliably using the corresponding traces recorded in the preliminary study (T = 8.20, p < 0.001). The application of our multiple criteria to the in-scanner gaze position data confirmed as attention grabbing 12 out of 15 characters that were initially identified in the preliminary study. The corresponding time and amplitude parameters were relatively consistent in the two groups (A_ampl: r = 0.75, p = 0.005; A_time: r = 0.56, p = 0.060).

Sensory Saliency and Covert Orienting in the Complex Visual Environment (No_Entity Video)

Figure 1C shows the results of the covariation analyses between the BOLD signal measured during covert viewing of the No_Entity video and the mean saliency of the visual input (S_mean). Positive covariation was found in visual cortex, including the calcarine sulcus (primary visual cortex); the dorsal, lateral, and ventral occipital cortices; and the left anterior intraparietal sulcus (aIPS, see Table 1). This indicates that the overall level of bottom-up stimulus salience primarily affects activity in sensory areas, irrespective of its influence on attentional/orienting behavior. A different pattern emerged when saliency and orienting behavior were considered together (i.e., the efficacy of salience for covert spatial orienting). We found that activity in frontal eye fields (FEF; at the intersection of the superior frontal and the precentral sulcus; Petit et al., 1997), in the aIPS (along the horizontal branch of IPS, extending into the superior parietal gyrus [SPG]), and in the right ventral occipital cortex covaried negatively with distance between maximum salience and attended position (SA_dist; see Figures 1D, S1A, and S1B, plus Table 1). These effects were not merely due to the overall amount of attention shifting, as the covariate based on saccade frequency (Sac_freq) did not reveal any significant effect in these regions. These results were confirmed using gaze position data acquired in the scanner (in-scanner indexes) and more targeted analyses using individually defined ROIs in the dorsal fronto-parietal network (see Supplemental Experimental Procedures). In summary, the ongoing activity in the dorsal fronto-parietal network increased when subjects attended toward the most salient location in the scene, demonstrating that these regions represent the efficacy of visual salience for covert spatial orienting rather than salience or attention shifting as such.

Table 1. Sensory Saliency and Attentional Orienting in the No_Entity Video.

| Contrast | Brain Region | p-cor (cluster) | k (cluster) | t value (voxel) | x y z | t value (voxel) | x y z |
|---|---|---|---|---|---|---|---|
| | | Covert | | | | Overt | |
| S_mean | R calcarine cortex | <0.001 | 2880 | 3.86 | 20 −100 0 | 3.93 | 14 −86 0 |
| | R dorsal occipital cortex | | | 7.55 | 24 −88 24 | 2.13 | 28 −88 28 |
| | R lateral occipital cortex | | | 8.05 | 36 −90 8 | 6.23 | 42 −86 8 |
| | R ventral occipital cortex | | | 4.84 | 36 −84 10 | 4.83 | 36 −88 −2 |
| | L calcarine cortex | <0.001 | 3725 | 6.30 | −16 −98 −4 | 4.34 | −4 −90 −10 |
| | L dorsal occipital cortex | | | 5.78 | −16 −94 22 | 3.20 | −20 −94 22 |
| | L lateral occipital cortex | | | 8.01 | −46 −80 8 | 9.51 | −28 −94 10 |
| | L ventral occipital cortex | | | 6.19 | −32 −80 −10 | 6.12 | −46 −72 −6 |
| | L aIPS/SPG | <0.001 | 1320 | 6.70 | −32 −38 48 | 6.16 | −34 −52 60 |
| | | Covert | | | | Covert > Overt | |
| SA_dist | R FEF | 0.098 | 318 | 6.56 | 28 0 62 | 4.11 | 32 0 64 |
| | L FEF | <0.001 | 985 | 4.69 | −24 2 58 | 5.47 | −28 0 54 |
| | R aIPS/SPG | 0.021 | 453 | 7.18 | 30 −40 60 | 6.06 | 30 −44 58 |
| | L aIPS/SPG | 0.033 | 412 | 6.15 | −32 −46 62 | 4.56 | −34 −46 60 |
| | R ventral occipital cortex | 0.002 | 680 | 5.99 | 38 −76 −8 | 3.64 | 36 −78 −12 |
| | | Overt | | | | Overt > Covert | |
| Sac_freq | R pIPS | - | 223 | 6.45 | 34 −54 40 | 5.32 | 32 −50 34 |
| | L pIPS | - | 206 | 6.34 | −24 −52 42 | 5.82 | −16 −56 44 |
| | L occipital cortex | <0.001 | 3193 | 6.90 | −8 −88 18 | - | - |

S_mean: brain regions where BOLD signal covaried positively with mean saliency. SA_dist: regions where BOLD signal covaried negatively with the distance between maximum salience and attended position. Sac_freq: regions where activity covaried positively with saccade frequency. p values are corrected for multiple comparisons at the whole-brain level (except for t values reported in italics), and k is the number of voxels in each cluster. Overt/Covert, spatial orienting with eye movements allowed/disallowed; FEF, frontal eye fields; a/pIPS, anterior/posterior intraparietal sulcus; SPG, superior parietal gyrus.

Covert Spatial Orienting toward the Human-like Characters (Entity Video)

We highlighted regions of the brain that activated when the human-like characters appeared in the scene. We modeled separately the characters that triggered significant changes of gaze position (AG: attention grabbing) and those that did not (NoAG: non-attention grabbing). Both types of events activated the ventral and lateral occipito-temporal cortex, comprising the MT-complex (V5+/MT+), the posterior part of right middle temporal gyrus (pMTG), and the rTPJ (see Figure 3A and Table 2). Significant clusters of activation were found also in the precuneus and in the right premotor cortex, the latter comprising the middle frontal gyrus (MFG, and inferior frontal sulcus) and extending dorsally into the superior frontal sulcus (i.e., the right FEF; see also Figure S1A). Thus, despite the complex and dynamic background visual stimulation, the analysis successfully identified regions transiently responding to the occurrence of these distinctive events.

Figure 3. Event-Related Responses and Attentional Modulation Associated with the Human-like Characters.

(A) Areas showing event-related activation time-locked to the characters’ appearance (mean effect across attention grabbing [AG] and non-grabbing [NoAG] characters). The right temporo-parietal junction (rTPJ) showed greater activation for grabbing versus non-grabbing characters (see signal plot on the right). Error bars = SEM.

(B) Areas where the transient activation associated with the appearance of attention grabbing characters was further modulated by the A_time parameter (processing time, see Figure 2D). In the rTPJ (left panel, outlining the location of the rTPJ ROI in magenta) and in the right posterior middle temporal gyrus (pMTG, right panel), there was a positive covariation between BOLD response and A_time values, indicating that characters requiring longer processing time lead to greater activation of these regions. Color bars indicate statistical thresholds. MT-complex, motion-sensitive middle-temporal complex; MFG, middle frontal gyrus.

Table 2. Transient Responses to the Human-like Characters in the Entity Video.

| Contrast | Brain Region | p-cor (cluster) | k (cluster) | t value (voxel) | x y z | t value (voxel) | x y z |
|---|---|---|---|---|---|---|---|
| | | Covert | | | | Overt | |
| Main Effect | R MT-complex | <0.001 | 3600 | 7.76 | 48 −62 14 | 8.55 | 50 −68 4 |
| | R ventral occipital cortex | | | 5.86 | 46 −76 −4 | 8.10 | 46 −76 −4 |
| | R TPJ | | | 5.57 | 58 −40 16 | 4.48 | 62 −38 24 |
| | R pMTG | | | 3.05 | 66 −50 10 | 3.62 | 66 −50 10 |
| | L MT-complex | <0.001 | 2231 | 3.63 | −56 −48 16 | 6.76 | −50 −68 4 |
| | L ventral occipital cortex | | | 4.34 | −40 −66 −6 | 7.29 | −46 −76 −4 |
| | R precuneus | 0.046 | 602 | 6.11 | 4 −46 58 | 4.16 | 8 −50 52 |
| | R FEF | 0.001 | 1281 | 4.58 | 22 2 56 | 4.01 | 26 2 56 |
| | R MFG/IFS | | | 5.86 | 36 16 26 | 7.19 | 44 18 24 |
| | | Covert | | | | Covert > Overt | |
| A_time | L dorsal occipital cortex | <0.001 | 1038 | 7.84 | −16 −100 12 | 7.34 | −12 −100 10 |
| | R pMTG | 0.005 | 570 | 6.65 | 66 −50 6 | 1.91 | 60 −44 2 |
| | | Covert | | | | Covert > Overt | |
| A_ampl | R IFG | <0.001 | 2245 | 7.84 | 52 40 8 | 3.31 | 40 36 10 |
| | R MFG | | | 4.98 | 46 14 42 | - | - |
| | R supramarginal gyrus | 0.006 | 463 | 5.39 | 54 −36 44 | 3.55 | 56 −30 36 |
| | R angular gyrus/dorsal occ. cortex | 0.004 | 496 | 5.46 | 40 −82 36 | 2.69 | 34 −86 36 |
| | R medial superior frontal gyrus | 0.001 | 656 | 5.84 | 16 22 44 | 3.36 | 12 16 40 |

Main Effect: brain regions that activated transiently upon presentation of unexpected human-like characters, irrespective of whether these were attention grabbing or non-attention grabbing. A_time: regions where the transient BOLD response for the attention grabbing characters covaried positively with the characters' processing times. A_ampl: regions where the transient BOLD response for the attention grabbing characters covaried negatively with the amplitude of the gaze shift. p values are corrected for multiple comparisons at the whole-brain level (except for t values reported in italics), and k is the number of voxels in each cluster. Overt/Covert, spatial orienting with eye movements allowed/disallowed; MT-complex, middle temporal complex (V5+/MT+); TPJ, temporo-parietal junction; pMTG, posterior middle temporal gyrus; FEF, frontal eye fields; MFG, middle frontal gyrus; IFG, inferior frontal gyrus; IFS, inferior frontal sulcus.

In order to ascertain whether activations triggered by the appearance of the characters can be associated with stimulus-driven orienting of spatial attention, we directly compared attention grabbing with non-attention grabbing characters. In particular, we examined activity in the rTPJ, which previous studies identified as a key region for stimulus-driven orienting of spatial attention (Corbetta et al., 2008). This targeted ROI analysis revealed that rTPJ activated more for attention grabbing than non-grabbing characters (T = 2.02; p < 0.028; see signal plot in Figure 3A).

We further confirmed the link between rTPJ activation and spatial attention by covarying BOLD activation for the attention grabbing characters with the corresponding attention-related parameters (processing time and amplitude of visuo-spatial orienting; see Figure 2D). This revealed a significant modulation of the transient rTPJ response by the timing parameter (A_time: T = 2.42; p < 0.017; see Figure 3B, left). Specifically, we found that characters requiring longer processing times activated rTPJ more than characters that required less time. At the whole-brain level, the peak of modulation was located in the right pMTG (see right panel in Figure 3B and Table 2). The amplitude parameter was also found to modulate activity in rTPJ (A_ampl: T = 2.22; p < 0.024). At the whole-brain level, modulation by amplitude was found in the right MFG, which also exhibited an overall response to the characters’ onset (see Figure 3A), as well as in the IFG, medial superior frontal gyrus, and supramarginal and angular gyri, which did not show an overall response to the characters’ onset (see Table 2). All regions modulated by A_ampl showed greater activation for characters that were presented close to the currently attended location (i.e., larger BOLD responses for smaller amplitudes).

Additional analyses using gaze position data acquired in the scanner (in-scanner indexes of orienting efficacy) confirmed the modulation of activity in the rTPJ for attention grabbing versus non-grabbing characters (while the effects of A_time and A_ampl did not reach full significance) and revealed related effects in the right IFG (rIFG) using a more targeted ROI approach; see Supplemental Experimental Procedures.

Functional Imaging of the Free Viewing, Overt Orienting Conditions

The in-scanner indexes were used also to analyze the imaging data acquired during the corresponding free-viewing fMRI runs (cf. Table S1 in Supplemental Experimental Procedures). We tested all attention-related effects in the overt viewing conditions, and directly compared overt and covert conditions when an effect was present in one condition, but not in the other.

For the No_Entity video, we found activations related to mean saliency (S_mean) in occipital cortex bilaterally as well as in the left aIPS (see Table 1, rightmost column), as in the covert viewing condition. By contrast, activation of dorsal fronto-parietal network for attention toward the most salient image location (SA_dist) was not found in the overt condition (see Table 1, reporting the direct comparison between covert and overt viewing). The overall effect of attention shifting (Sac_freq), which did not show any effect during covert viewing, was now found to modulate activity in the posterior/ventral part of IPS bilaterally (pIPS, posterior descending branch of IPS). The pIPS activation during overt spatial orienting did not colocalize with the activity associated with the efficacy of salience during covert orienting (aIPS; see Figure S1B, displaying both effects together), suggesting a segregation between overt oculomotor control and attention-related effects in pIPS and aIPS, respectively.

For the Entity video, analyses of the overt viewing fMRI data confirmed event-related activation at characters’ onset in extrastriate regions bilaterally, as well as in pMTG, TPJ, and premotor cortex in the right hemisphere. However, the tests related to the attention-grabbing efficacy of the human-like characters now failed to reveal any significant modulation in these regions. Direct comparisons between the two viewing conditions confirmed that the modulation for attention grabbing versus non-grabbing characters in the rTPJ-ROI was significantly larger for covert than overt viewing (p < 0.048), and corresponding trends were found for A_time (p = 0.144) and A_ampl (p = 0.077; see also Table 2 for whole-brain statistics).

Overall, the fMRI analyses of the overt viewing conditions showed that effects that do not depend on the specific spatial layout of the visual scene (e.g., effect of mean saliency in the No_Entity video, and activation for the characters’ appearance in the Entity video) were comparable in overt and covert conditions, whereas effects that depend on the specific spatial layout of the stimuli (i.e., SA_dist and presence of attention grabbing versus non-grabbing characters) were found only in conditions requiring central fixation.

IRC Analyses

Together with our hypothesis-based analyses that parameterized specific bottom-up attentional effects, we sought to investigate patterns of brain activation associated with the processing of the complex dynamic environment using IRC (see Experimental Procedures section and Supplemental Experimental Procedures), a data-driven approach assessing the “synchronization” of brain activity when a subject is presented twice with the same complex and dynamic stimulation (cf. also Hasson et al., 2004).
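The general idea of such a “synchronization” measure can be sketched in Python as follows. This is only one simple reading, in which the signal of each voxel in the first presentation is correlated with its signal in the second presentation. The procedure actually used for the IRC is described in the Supplemental Experimental Procedures and may differ. The function names, voxel labels, and data values are hypothetical.

```python
import math


def pearson_r(a, b):
    """Pearson correlation between two equally long time series."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat time series carries no covariation
    return cov / math.sqrt(var_a * var_b)


def inter_run_synchronization(run1, run2):
    """Correlate, voxel by voxel, the signal of two runs of the same video.

    run1, run2: dicts mapping a voxel label to its time series.
    """
    return {voxel: pearson_r(run1[voxel], run2[voxel]) for voxel in run1}


# Hypothetical signals from two voxels during two presentations of one video.
run1 = {
    "stimulus_locked": [1, 2, 3, 2, 1, 2, 3, 2],
    "unrelated": [1, 2, 1, 2, 1, 2, 1, 2],
}
run2 = {
    "stimulus_locked": [1.1, 2.0, 3.2, 2.1, 0.9, 2.0, 2.9, 2.1],
    "unrelated": [2, 2, 1, 1, 2, 2, 1, 1],
}
sync = inter_run_synchronization(run1, run2)
```

A voxel whose signal follows the video in both runs yields a value close to 1, whereas a voxel whose fluctuations are unrelated to the stimulus yields a value near 0. No model of the video content or of the shape of the BOLD response is needed.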

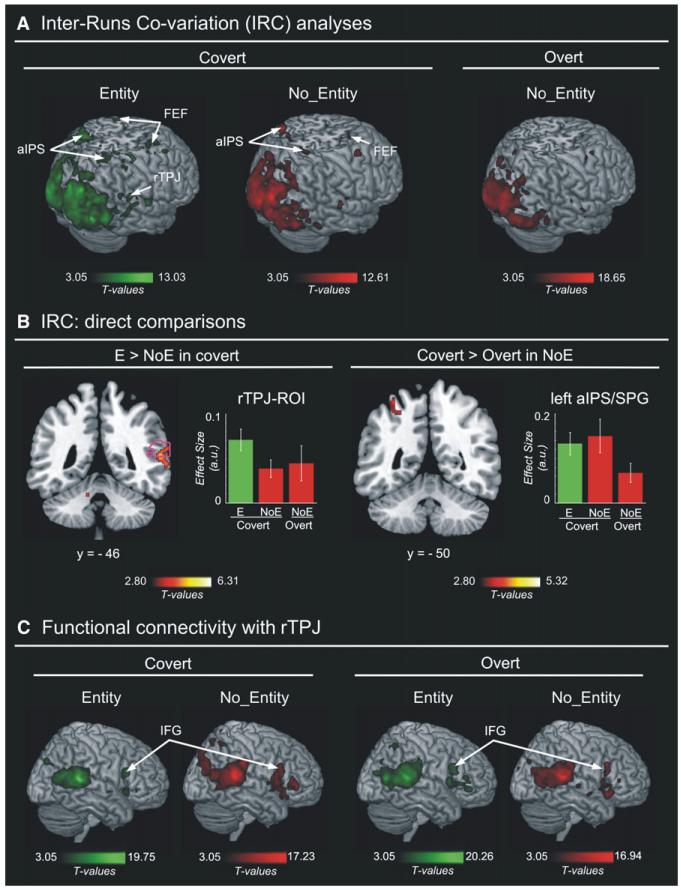

Figure 4A shows areas with a significant IRC during the covert viewing of the Entity and No_Entity videos, and during the overt viewing of the No_Entity video. In all three conditions, a significant IRC was detected in visual occipital cortex, as well as right aIPS/SPG and FEF (see Table 3). In the covert viewing conditions, the direct comparisons between the IRC for Entity and No_Entity videos demonstrated an Entity-specific effect in the rTPJ-ROI (T = 1.84; p < 0.040, Figure 4B, left), with peak activation in the right pMTG at the whole-brain level (see Table 3). The direct comparison of covert and overt viewing of the No_Entity video revealed larger synchronization during the covert condition in the left occipital cortex, plus trends in the left aIPS/SPG (Figure 4B, right; see also Figure S1B) and left medial prefrontal cortex (see Table 3). Thus, this data-driven approach confirmed the participation of both dorsal (aIPS/FEF) and ventral (rTPJ) attentional networks during viewing of the complex dynamic environments, and further supported the specificity of the rTPJ and right pMTG for the processing of the Entity video containing human-like characters.

Figure 4. IRC Analyses and Functional Coupling with the rTPJ.

(A) Brain regions showing significant IRC when subjects viewed the Entity and No_Entity videos during the covert and overt viewing conditions.

(B) Brain regions showing greater IRC when subjects viewed the Entity as compared with the No_Entity video in covert condition (left panel; including rTPJ ROI and right pMTG), and “covert versus overt” condition for the No_Entity video (right panel; including left aIPS/SPG). Error bars = 90% confidence interval.

(C) Maps of interregional coupling computed from the rTPJ ROI separately for the Entity and No_Entity videos, and for the covert and overt viewing conditions. Since functional coupling was estimated using covariation with the signal in the rTPJ ROI, the maps also include voxels within the original rTPJ seed region. However, significant coupling was also found in anatomically distant areas of both hemispheres (see Table 4). In particular, high coupling was found between the rTPJ and the inferior frontal gyrus (IFG), which are two key areas of the ventral fronto-parietal attention network. Color bars indicate statistical thresholds.

Table 3. IRC Analysis.

| Region group | Brain Region | Covert, Entity: t value | Covert, Entity: x y z | Covert, No_Entity: t value | Covert, No_Entity: x y z | Overt, No_Entity: t value | Overt, No_Entity: x y z |
|---|---|---|---|---|---|---|---|
| Occipito-temporal regions | R calcarine cortex | 9.67 | 6 −74 8 | 9.87 | 12 −86 6 | 14.27 | 18 −100 4 |
| | L calcarine cortex | 4.61 | −10 −88 4 | 10.87 | −12 −90 −6 | 11.83 | 2 −92 2 |
| | R dorsal occipital cortex | 11.41 | 26 −86 26 | 8.89 | 28 −86 28 | 11.15 | 22 −100 10 |
| | L dorsal occipital cortex | 8.46 | −16 −100 12 | 8.34 | −16 −76 30 | 9.87 | −14 −100 14 |
| | R lateral occipital cortex | 9.17 | 36 −82 12 | 5.67 | 18 −88 −8 | 4.52 | 30 −92 8 |
| | L lateral occipital cortex | 13.03 | −50 −72 2 | 9.58 | −18 −88 −8 | 10.05 | −22 −102 0 |
| | R ventral occipital cortex | 12.12 | 44 −82 −4 | 8.92 | 40 −76 −16 | 13.42 | 36 −90 −8 |
| | L ventral occipital cortex (b) | 10.64 | −22 −72 −14 | 10.06 | −22 −76 −12 | 5.40 | −16 −78 −12 |
| | R MT-complex | 9.24 | 52 −70 −4 | 5.69 | 50 −72 0 | 6.13 | 52 −66 4 |
| | L MT-complex (a) | 13.03 | −50 −72 2 | - | - | 7.08 | −52 −72 10 |
| Dorsal fronto-parietal regions | R aIPS/SPG | 7.66 | 36 −44 68 | 3.87 | 22 −48 60 | 5.23 | 44 −42 64 |
| | L aIPS/SPG (b) | 4.79 | −30 −44 56 | 3.94 | −34 −46 58 | - | - |
| | R FEF | 4.66 | 28 −2 56 | 6.85 | 42 4 54 | 3.54 | 26 −4 52 |
| | L FEF | 4.85 | −26 −4 58 | 2.62 | −26 −4 58 | 2.06 | −26 −6 58 |
| | R MFG | 5.50 | 28 18 46 | 2.55 | 28 20 44 | 1.96 | 26 12 46 |
| Ventral fronto-parietal regions | R superior temporal gyrus | 6.63 | 56 −16 6 | 4.59 | 66 −24 14 | 5.73 | 62 −18 −2 |
| | R TPJ (a) | 5.92 | 56 −38 18 | 4.32 | 52 −40 20 | 5.13 | 50 −38 18 |
| | R pMTG (a) | 5.39 | 66 −46 6 | - | - | - | - |
| Medial surface | R/L precuneus | 4.99 | 4 −62 40 | 4.66 | −2 −46 52 | 4.60 | 4 −56 64 |
| | L posterior cingulate cortex | 2.51 | −10 −18 48 | 5.56 | −12 −20 46 | 3.77 | −12 −22 42 |
| | R dorsal anterior cingulate cortex | 6.07 | 0 14 42 | 2.00 | 0 12 44 | 2.78 | 0 14 42 |
| | L medial prefrontal cortex (b) | 6.32 | −6 54 10 | 8.04 | −10 40 −6 | - | - |

Brain regions showing significant IRC while subjects viewed the Entity video (covert condition only) and No_Entity video (covert and overt conditions) are listed. p values are corrected for multiple comparisons at the whole-brain level (except for t values reported in italics). Overt/Covert, spatial orienting with eye movements allowed/disallowed; MT-complex, middle temporal complex (V5+/MT+); FEF, frontal eye fields; aIPS/SPG, anterior intraparietal sulcus/superior parietal gyrus; MFG, middle frontal gyrus; TPJ, temporo-parietal junction; pMTG, posterior middle temporal gyrus.

(a) Direct comparison of Entity and No_Entity in the covert condition showed significant differences in right pMTG (x y z = 50 −54 8; p-corr = 0.004) and left MT-complex (x y z = −50 −70 8; p-corr = 0.015), as well as in the rTPJ (ROI analysis, see main text).

(b) Direct comparison of covert and overt conditions for the No_Entity video showed significant differences in the left ventral occipital cortex (x y z = −16 −78 −12; p-corr = 0.011), plus trends in the left aIPS/SPG (x y z = −34 −46 58; p-corr = 0.059) and left medial prefrontal cortex (x y z = −10 38 −4; p-corr = 0.072).

Functional Coupling of the rTPJ

We completed the investigation of spatial covert orienting in complex dynamic environments by considering the functional coupling of the rTPJ with the rest of the brain. We found that, irrespective of the video (Entity/No_Entity) and viewing condition (covert/overt), there was a significant covariation between activity in rTPJ and activity in the IFG, bilaterally, and activity in the left TPJ (see Table 4, plus Figure 4C). A 2 × 2 ANOVA comparing rTPJ couplings in the four conditions did not reveal any significant main effect or interaction, indicating that the functional coupling between posterior (rTPJ) and anterior (IFG) nodes of the ventral attentional network was similar for the two types of video and the two forms of spatial orienting.

Table 4. Interregional Functional Coupling with the rTPJ.

| Brain Region | Covert, Entity: t value | Covert, Entity: x y z | Covert, No_Entity: t value | Covert, No_Entity: x y z | Overt, Entity: t value | Overt, Entity: x y z | Overt, No_Entity: t value | Overt, No_Entity: x y z |
|---|---|---|---|---|---|---|---|---|
| R TPJ | 19.75 | 58 −40 12 | 17.23 | 56 −38 10 | 15.20 | 58 −44 14 | 16.94 | 54 −38 10 |
| L TPJ | 7.88 | −58 −48 18 | 9.70 | −62 −38 24 | 8.40 | −64 −40 24 | 6.53 | −56 −38 26 |
| R IFG | 6.94 | 52 16 20 | 7.82 | 52 14 14 | 8.96 | 54 12 8 | 4.81 | 54 14 8 |
| L IFG | 7.05 | −60 12 12 | 7.21 | −60 10 10 | 6.51 | −52 18 20 | 4.48 | −58 4 6 |
| R/L precuneus | 9.15 | −2 −58 54 | 7.95 | 2 −46 52 | 8.19 | 8 −58 52 | 7.28 | −2 −58 54 |
| R/L calcarine cortex | 7.33 | −2 −80 6 | 6.80 | 0 −82 4 | 5.20 | 2 −78 10 | 11.38 | 4 −78 8 |

Brain regions where activity covaried positively with activity in the rTPJ during subjects’ viewing of the Entity and No_Entity videos (covert and overt conditions). p values are corrected for multiple comparisons at the whole-brain level. Overt/Covert, spatial orienting with eye movements allowed/disallowed; TPJ, temporo-parietal junction; IFG, inferior frontal gyrus.

DISCUSSION

The present study aimed at investigating stimulus-driven visuo-spatial attention in a complex and dynamic environment, combining computational modeling, behavioral measures, and BOLD activation. Our results demonstrate that task-irrelevant bottom-up input is processed both in the dorsal and the ventral attention systems. Activity in the two systems was associated with the efficacy of bottom-up signals for covert orienting of spatial attention. The results also revealed a distinction between the two systems: dorsal areas were found to continually represent the efficacy of background salience, while ventral regions responded transiently to attention-grabbing distinctive events. By using ecologically valid settings, these findings challenge traditional models of visuo-spatial attention, demonstrating that the efficacy of bottom-up input determines activation of the attention control systems, rather than the input signal or the orienting process as such.

Sensory Saliency and Attentional Orienting in the Complex Visual Environment

We used saliency maps to characterize our visual environment (Itti et al., 1998). The fMRI analyses showed that mean saliency covaried on a scan-by-scan basis with activity in the occipital visual cortex and the left aIPS (see Figure 1C). More targeted ROI analyses indicated that also the other nodes of the dorsal fronto-parietal network (right aIPS, and FEF bilaterally) showed an effect of mean saliency. The effect of salience in occipital cortex is not surprising, as movie segments with high saliency values typically comprise a larger and/or a greater number of disparities in basic visual features that are represented in occipital cortex. These findings are consistent with those of Thielscher et al. (2008), who showed correlations between saliency of texture borders in the visual scene and activity in visual cortex.

On the other hand, the effect of salience in the dorsal fronto-parietal network is most likely associated with higher-level attentional processes. The existence of representations of salience in posterior parietal and dorsal premotor cortex has been suggested by several authors (e.g., Koch and Ullman, 1985; Schall and Hanes, 1993; Constantinidis and Steinmetz, 2001). Nonetheless, saliency alone is a poor predictor of spatial orienting because other factors contribute to exploratory eye movements during the viewing of complex scenes (e.g., task: Navalpakkam and Itti, 2005; object representation: Einhäuser et al., 2008; “center bias”: Tseng et al., 2009). Indeed, here we found that the most reliable predictor of activity in the dorsal attention network was the efficacy of salience for the orienting of spatial attention (SA_dist parameter, see Figure 1D). In aIPS/SPG and FEF, we found BOLD signal increases when subjects attended toward the most salient location of the scene.

The involvement of dorsal parietal and premotor areas is common in fMRI studies of visuo-spatial attention (Corbetta and Shulman, 2002; see also Vandenberghe et al., 2001, showing a parametric relationship between activity in parietal cortex and the amplitude of spatial attention shifts). The dorsal attention network is thought to generate top-down control signals that bias the processing of relevant stimulus features or locations in sensory areas (Corbetta and Shulman, 2002). In standard experimental paradigms involving series of separate and repeated trials, control signals are typically assessed upon the presentation of a symbolic cue that specifies the “to-be-attended stimulus dimension” (e.g., feature/location), yielding changes of activity before the presentation of the target stimulus (e.g., Kastner et al., 1999). Our experimental paradigm did not include any such arbitrary cues, or cue-to-target separation; rather, here it was the context itself that provided the orienting signals. The fMRI results revealed that the continuous variation of the currently attended position with respect to the most salient location (SA_dist parameter) affected ongoing activity in this network. By contrast, our predictor assessing the overall effect of attention shifting (Sac_freq) did not modulate activity in these regions during the covert viewing condition (see below for the effect of overt orienting in pIPS).

The role of the intraparietal and dorsal premotor cortex in attention and oculomotor control has been debated for a long time. Some authors emphasized the link between spatial attention and the preparation of saccadic eye movements (e.g., Rizzolatti et al., 1987; Andersen et al., 1997), while others suggested that attentional operations can be distinguished from motor preparation (Colby and Goldberg, 1999). Early functional imaging studies comparing overt and covert forms of attention shifting revealed overlapping activation in IPS and FEF (e.g., Corbetta et al., 1998), consistent with a close relationship between spatial attention and oculomotor control. However, depending on paradigms, control conditions, and endogenous/exogenous mechanisms, differences have also emerged. For example, manipulating the rate of exogenous shifts, Beauchamp et al. (2001) reported greater activation for overt shifts than covert shifts in the dorsal fronto-parietal system. By contrast, other authors found greater activation for covert orienting as compared with that of overt orienting in IPS/FEF (e.g., Corbetta et al., 1998; see also Fairhall et al., 2009; who reported similar intraregional activation, but differential interregional connectivity for covert and overt orienting) and superior parietal cortex (e.g., see Fink et al., 1997, who reported greater activation for covert as compared with that of overt orienting using an object-based orienting task).

Our current study was not specifically designed to compare covert and overt orienting; rather, overt conditions were included primarily to confirm orienting behavior in the group of subjects who underwent fMRI. However, when we compared covert and overt imaging data, we found a distinction within IPS: a subregion in the horizontal branch of IPS responded to the efficacy of salience for spatial orienting (aIPS/SPG), while activity in the pIPS covaried with saccade frequency during overt orienting (see also Figure S1B). The posterior cluster may correspond to the intraparietal subregion IPS1/2 (cf. Schluppeck et al., 2005) that has been indicated as a possible human homolog of monkeys’ LIP area (Konen and Kastner, 2008; see also Kimmig et al., 2001). The more anterior cluster (aIPS/SPG) comprised a section of IPS that often activates in studies of visual attention (e.g., Shulman et al., 2009; see also Wojciulik and Kanwisher, 1999). This region is anterior to retinotopic areas IPS1–5 (Konen and Kastner, 2008), but posterior and dorsal with respect to AIP (an area involved in visually guided grasping; Shikata et al., 2003).

One limitation of the results concerning oculomotor control in pIPS is that here we were unable to distinguish activity related to the motor execution from the sensory consequences of the eye movements (cf. delayed-saccades paradigms specifically designed to investigate overt orienting). All our measures of overt orienting entailed highly variable visual input as a function of eye movements and gaze direction. This may explain why, in overt viewing conditions, we failed to detect any attention-related effects that depend on the relationship between the spatial layout of the stimuli and the current gaze direction (e.g., SA_dist). This, together with the lack of any control of the subject on the environment (e.g., the choice of where to go), limits the possibility of extending our findings to real-life situations, where subjects actively interact with the environment and are free to move their eyes. Nonetheless, the utilization of complex and dynamic stimuli enabled us to highlight the key role of the efficacy of salience for covert spatial orienting and to highlight that this can be distinguished from overt orienting within the IPS.

Another element of novelty in our study is that, unlike most previous fMRI studies, we found a relationship between activity in the dorsal system and orienting of attention toward task-irrelevant locations. Here, subjects did not perform any task and salient locations were computed only on the basis of low-level features (local disparities in color, intensity, and line orientations). Our fMRI results extend electrophysiological data reporting that parietal and premotor neurons are modulated both by intrinsically catching and by behaviorally relevant stimuli (see Gottlieb et al., 1998; Constantinidis and Steinmetz, 2001; Thompson et al., 2005), here showing activation of these areas when salient locations become behaviorally relevant (i.e., when they trigger a shift of gaze/attention). This indicates that the dorsal fronto-parietal network combines bottom-up and endogenous signals to guide spatial attention, consistent with the hypothesis that the dorsal attention network represents current attentional priorities (Gottlieb, 2007).

Spatial Orienting toward the Human-like Characters

For the Entity video we considered transient brain activations associated with the appearance of human-like characters. We found that these unexpected events activated the rTPJ extending into the pMTG, as well as bilateral motion-sensitive MT-complex (V5+/MT+), precuneus, ventral occipital cortex, and right premotor cortex (see Figure 3A). Attention grabbing characters activated rTPJ more than non-attention grabbing characters, linking the activation of these regions to attention rather than mere sensory processing. This was further confirmed by the modulation of the characters’ responses by specific attention-related parameters in the rTPJ and right pMTG (see Figure 3B). A more targeted ROI analysis revealed that the rIFG also showed a pattern of activation similar to rTPJ and right pMTG (cf. Supplemental Experimental Procedures).

The finding of transient activation in rTPJ and rIFG (and of specific attentional effects in these regions) is in agreement with the view that these two regions are core components of the ventral fronto-parietal attentional network (Corbetta et al., 2008). The ventral system has been associated with stimulus-driven reorienting toward task/set-relevant stimuli, while irrelevant stimuli typically do not activate this network (e.g., Kincade et al., 2005; but see Asplund et al., 2010).

In the present study, the unexpected human-like characters activated rTPJ/rIFG despite the fact that they were fully task-irrelevant. Recently, Asplund and colleagues reported activation of the TPJ for task-irrelevant stimuli, but these were presented during performance of a primary ongoing task (i.e., task-irrelevant faces presented within a stream of task-relevant letters; Asplund et al., 2010). The faces activated TPJ only on the first and second presentation (“surprise” trials), indicating that task-irrelevant stimuli can be processed in the ventral system, as long as they are unexpected and interfere with ongoing task performance. In our paradigm, the human-like characters were also unexpected, unrepeated, and distinctive visual events. But, notably, our experimental settings did not involve any primary task; rather, any attentional set arose only as a consequence of the coherent unfolding of the visual environment over time. This demonstrates that, in complex and dynamic settings, task-irrelevant stimuli can activate the rTPJ even when they do not interfere with any prespecified task rules or task sets (see also Iaria et al., 2008).

In our study, despite being fully task-irrelevant, the human-like characters were very distinctive visual events. The orienting efficacy of these stimuli may relate to the fact that they can be recognized on the basis of previous knowledge and/or category-specific representations (see also Navalpakkam and Itti, 2005; Einhäuser et al., 2008). Also, human-like characters may have attracted attention because they were the only moving objects in the scene. Motion was not included in our computation of salience because currently available computational models do not separate the contribution of global flow due to self motion from the local flow due to character motion, which are known to be processed in distinct brain regions (Bartels et al., 2008). Instead, we examined the possible relationship between the human-like characters and points of maximum saliency, computed using intensity, color, and orientation. This revealed that 14 out of the 25 characters did not show any coincidence with the location of maximum saliency. Five characters coincided with the location of maximum saliency for at least 25% of the character’s duration. Three of these were scored as attention grabbing and two as non-grabbing, indicating that there was no systematic relationship between maximum saliency and the appearance of the human-like characters in the scene. This further supports our main conclusion that the efficacy of low-level salience and the efficacy of distinctive visual events are processed separately in the dorsal and ventral attention systems, respectively. Nonetheless, future developments of saliency models will hopefully disentangle global and local motion components, which would permit further discrimination of the contribution of low-level saliency compared with that of higher-order category effects during the processing of moving objects/characters in dynamic environments.

Model-free Analyses of Brain Activity during the Processing of Complex Dynamic Environments

The results discussed above are derived from hypothesis-based analyses involving computations of only a few indexes of attentional orienting (e.g., shifts, timings, and distances). Therefore, we also analyzed the fMRI data using a data-driven technique (IRC analysis), which identifies brain regions involved in the processing of the complex and dynamic stimuli without making any a priori assumptions about stimulus content and the timing/shape of the BOLD response (synchronization; cf. Hasson et al., 2004).

The IRC analysis revealed significant synchronization in occipital visual areas and in the dorsal fronto-parietal network during covert viewing of both the Entity and the No_Entity videos. The rTPJ and right pMTG showed greater synchronization during covert viewing of the Entity video as compared with the No_Entity video (see Figure 4B). Accordingly, this data-driven analysis confirmed the differential involvement of dorsal and ventral attention networks, but now without making any a priori assumptions. Moreover, it should be noted that the computation of IRC for the Entity video factored out the transient response associated with the presentation of the human-like characters (see Supplemental Experimental Procedures), suggesting that IRC analysis can detect additional signal components. These may include specific changes related to variable processing times and shift amplitudes associated with the different characters, which would be consistent with the influences of character-specific attentional parameters that we found in these areas with the hypothesis-based analyses. Finally, the direct comparison of the IRC maps for covert and overt viewing of the No_Entity video revealed a trend toward higher synchronization in the left SPG during covert viewing. We link this differential effect with the hypothesis-based results showing systematic attention-related effects in the dorsal fronto-parietal network during the covert viewing condition only (SA_dist, cf. Table 1). Thus, overall the IRC analyses confirmed our hypothesis-driven results, but now without making any assumption about the video content and spatial orienting behavior.

Together with this data-driven approach, we also performed analyses of interregional functional coupling (Friston et al., 1997), using the rTPJ as the seed region. These revealed significant coupling between the rTPJ and the IFG bilaterally (i.e., the anterior nodes of the ventral fronto-parietal attention network), plus the TPJ in the left hemisphere. The rTPJ functional coupling was not affected by the video type (Entity and No_Entity videos) or the viewing condition (covert and overt; see Figure 4C). These results indicate that anterior and posterior nodes of the ventral fronto-parietal network operate in a coordinated manner during the processing of the complex dynamic environment, i.e., not just upon the appearance of the human-like characters (see also Shulman et al., 2009, showing high coupling between TPJ and IFG even at rest).

The dynamic interplay between rTPJ and premotor regions during covert spatial orienting has been the focus of several recent investigations. Corbetta and colleagues proposed that the MFG is the main area linking goal-driven attention in dorsal fronto-parietal network and stimulus-driven control in the ventral system (Corbetta et al., 2008). In this model, top-down filtering signals about the currently relevant task set would originate in the dorsal system and would deactivate rTPJ and rIFG via MFG. More recently, Shulman et al. (2009) demonstrated differential activation in anterior and posterior nodes of the ventral system. The rTPJ activated for stimulus-driven orienting irrespective of breaches of expectations, while the rIFG engaged specifically for stimulus-driven orienting toward unexpected stimuli. The authors interpreted these findings by suggesting that rTPJ itself may act as the switch triggering stimulus-driven activation of the dorsal system when attention is reoriented toward behaviorally important objects/stimuli. A different mechanism was recently proposed by Asplund et al. (2010), who found changes of functional coupling between TPJ and inferior prefrontal regions as a function of condition (surprise task-irrelevant face-trials versus task-relevant ongoing letter-trials; see also above). These authors suggested that the rIFG governs the transition between goal-directed performance (in dorsal regions) and stimulus-driven attention (in TPJ). In our study we did not observe any condition-specific changes of connectivity between TPJ and IFG, which were found to be highly coupled in all conditions (see Figure 4C). Aside from the many differences in terms of stimuli and analysis methods, the key difference between previous studies and our current experiment is that, here, the experimental procedure did not involve any primary goal-directed task. Accordingly, the onset of the task-irrelevant events (i.e., the human-like characters) did not interfere with any predefined task set, and no filtering or task-switching operations were required. On the basis of this, we hypothesize a distinction between intraregional activation of TPJ, which would not require any conflict with a prespecified task set, and the modulation of the TPJ-IFG interregional connectivity. The latter would instead mediate additional processes required when there is a mismatch between the incoming sensory input and the current task set (e.g., filtering and/or network-switching operations).

In conclusion, the present study investigated stimulus-driven attention by characterizing bottom-up sensory signals and their efficacy for the orienting of spatial attention during the viewing of complex and dynamic visual stimuli (virtual-environment videos). We combined a computational model of visual saliency and measurements of eye movements to derive a set of attentional parameters that were used to analyze fMRI data. We found that activity in visual cortex covaried with the stimulus mean saliency, whereas the efficacy of salience was found to affect ongoing activity in the dorsal fronto-parietal attentional network (aIPS/SPG and FEF). Further, comparisons of covert and overt viewing conditions revealed some segregation between orienting efficacy in aIPS and overt saccades in pIPS. On the other hand, the efficacy of attention-grabbing events was associated with modulation of transient activity in the ventral fronto-parietal attentional network (rTPJ and rIFG). Our findings demonstrate that both dorsal and ventral attention networks specify the efficacy of task-irrelevant bottom-up signals for the orienting of covert spatial attention, and indicate a segregation of ongoing/continuous efficacy coding in dorsal regions and transient representations of attention-grabbing events in the ventral network.

EXPERIMENTAL PROCEDURES

Procedure

The experimental procedure consisted of a preliminary behavioral study (n = 11) and an fMRI study in a different group of volunteers (n = 13). The aim of the preliminary study was to quantify the efficacy of bottom-up signals for visuo-spatial orienting, using overt eye movements during free viewing of the complex and dynamic visual stimuli (Entity and No_Entity videos, see below). The fMRI study was carried out with a Siemens Allegra 3T scanner. Each participant underwent seven fMRI runs, either with eye movements allowed (free viewing, overt spatial orienting) or with eye movements disallowed (central fixation, covert spatial orienting; cf. Table S1 in Supplemental Experimental Procedures). Our main fMRI analyses focused on covert orienting, but we also report additional results concerning runs with eye movements allowed (overt orienting in the MR scanner).

Visual Stimuli and Overt Orienting Behavior

Both the preliminary experiment and the main fMRI study used the same visual stimuli. These consisted of two videos depicting indoor and outdoor computer-generated scenarios, and containing many elements typical of real environments (paths, walls, columns, buildings, stairs, furnishings, boxes, objects, cars, trucks, beds, etc.; see Figure 1A for some examples). The two videos followed the same route through the same complex environments, but one video also included 25 human-like characters (Entity video, Figures 2A and 2B), while the other did not (No_Entity video, Figure 1A). In the Entity video, the characters entered the scene in an unpredictable manner, coming in from various directions, walking through the field of view, and then exiting in other locations, as would typically happen in real environments. Each event/character was unique, unrepeated, and with its own features: they could be either male or female, have different body builds, be dressed in different ways, etc. (see Figure 2A for a few examples).

For each frame of the No_Entity video, we extracted the mean saliency and the position of maximum saliency. Saliency maps were computed by using the “SaliencyToolbox 2.2.” (http://www.saliencytoolbox.net/). The mean saliency values were convolved with the statistical parametric mapping (SPM) hemodynamic response function (HRF), resampled at the scanning repetition time (TR = 2.08 s) and mean adjusted to generate the S_mean predictor for subsequent fMRI analyses. The coordinates of maximum saliency were combined with the gaze position data to generate the SA_dist predictor (i.e., “salience-attention” distance; see below). For the Entity video, we extracted the frame-by-frame position of the 25 characters. The characters’ coordinates were analyzed together with the gaze position data to classify each character as attention grabbing or non-grabbing and to generate the A_time and A_ampl parameters (i.e., processing time and amplitude of the attentional shifts; see below).
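The steps used to turn frame-wise saliency values into the S_mean predictor (convolution with an HRF, resampling at the TR, mean adjustment) can be sketched as follows. This is a minimal sketch under stated assumptions: the double-gamma function below only approximates the shape of a canonical HRF and is not taken from SPM, the frame rate of 25 frames/s is inferred from the statement that 25 frames correspond to 1 s, and the names `double_gamma_hrf` and `make_predictor` are hypothetical.

```python
import math

def double_gamma_hrf(t):
    """Approximate double-gamma HRF at time t (s): peak near 5 s, undershoot near 15 s.

    Assumption: illustrative shape only, not the SPM implementation.
    """
    if t <= 0:
        return 0.0
    peak = t ** 5 * math.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * math.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0

def make_predictor(frame_values, fps=25.0, tr=2.08, hrf_length=32.0):
    """Convolve frame-wise values with the HRF, sample once per TR, mean adjust."""
    dt = 1.0 / fps
    kernel = [double_gamma_hrf(i * dt) * dt for i in range(int(hrf_length * fps))]
    n = len(frame_values)
    convolved = [0.0] * n
    for i, value in enumerate(frame_values):
        if value == 0.0:
            continue
        for j, weight in enumerate(kernel):
            if i + j >= n:
                break
            convolved[i + j] += value * weight
    n_scans = int(n * dt / tr)
    if n_scans == 0:
        raise ValueError("time series is shorter than one TR")
    # Take the convolved value at the onset of each scan.
    sampled = [convolved[min(n - 1, int(round(s * tr * fps)))] for s in range(n_scans)]
    mean = sum(sampled) / n_scans
    return [v - mean for v in sampled]
```

The same function could in principle be applied to any frame-wise series, such as the distance values or saccade counts described below, since the text states that these were also convolved, resampled, and mean adjusted.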

Both in the preliminary study and during fMRI, the horizontal and vertical gaze positions were recorded with an infrared eye-tracking system (see Supplemental Experimental Procedures for details). For the main fMRI analyses we used the eye-tracking data recorded in the preliminary study, because these should best reflect the intrinsic attention-grabbing features of the bottom-up signals, as measured on the first viewing of the stimuli. However, we also report additional analyses based on eye-tracking data recorded during the overt viewing fMRI runs (in-scanner parameters). Eye-tracking data recorded during the covert viewing fMRI runs were used to identify losses of fixation (horizontal or vertical velocity exceeding 50°/s), which were modeled as events of no interest in all fMRI analyses.
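The velocity criterion for losses of fixation can be illustrated with a short sketch. It assumes gaze samples expressed in degrees of visual angle and a known sampling rate; the sampling rate used in the example and the function name `velocity_events` are hypothetical, and only the 50°/s threshold comes from the text.

```python
def velocity_events(x_deg, y_deg, sample_rate_hz, threshold_deg_s=50.0):
    """Indices of samples whose horizontal or vertical speed exceeds the threshold.

    Speed is estimated from the difference between consecutive samples.
    """
    if len(x_deg) != len(y_deg):
        raise ValueError("x and y traces must have the same length")
    events = []
    for i in range(1, len(x_deg)):
        vx = abs(x_deg[i] - x_deg[i - 1]) * sample_rate_hz
        vy = abs(y_deg[i] - y_deg[i - 1]) * sample_rate_hz
        if vx > threshold_deg_s or vy > threshold_deg_s:
            events.append(i)
    return events

# Example with an assumed 60 Hz tracker: a 2 degree jump between samples
# corresponds to 120 deg/s and is flagged.
flagged = velocity_events([0, 0, 0, 2, 2], [0, 0, 0, 0, 0], sample_rate_hz=60)
```

In the covert runs such events would be entered in the model as events of no interest rather than being treated as orienting behavior.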

Eye-tracking data collected while viewing the No_Entity video were used to characterize the relationship between gaze/attention direction and the point of maximum saliency in the image. For each frame we extracted the group median gaze position and computed the Euclidean distance between this and the point of maximum saliency. Distance values were convolved with the HRF, resampled, and mean adjusted to generate the SA_dist predictor for the fMRI analyses. We also computed the overall saccade frequency during viewing of the video, as an index of attention shifting irrespective of salience. The group-average number of saccades per second (horizontal or vertical velocity exceeding 50°/s) was convolved, resampled, and mean adjusted to generate the Sac_freq predictor.
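The frame-wise distance underlying SA_dist can be sketched as below. The sketch assumes that the group median gaze position is taken separately for the horizontal and vertical coordinates, which the text does not specify; the data layout and the name `sa_dist_per_frame` are hypothetical.

```python
import math
from statistics import median

def sa_dist_per_frame(gaze_by_subject, max_saliency_xy):
    """Distance between group-median gaze and the maximum-saliency point, per frame.

    gaze_by_subject: one list per subject, each holding an (x, y) pair per frame.
    max_saliency_xy: one (x, y) pair per frame.
    Assumption: the median is computed component-wise across subjects.
    """
    distances = []
    for frame, (sal_x, sal_y) in enumerate(max_saliency_xy):
        med_x = median(subject[frame][0] for subject in gaze_by_subject)
        med_y = median(subject[frame][1] for subject in gaze_by_subject)
        distances.append(math.hypot(med_x - sal_x, med_y - sal_y))
    return distances
```

Small distances indicate frames in which the group tended to look at the most salient location, so the resulting series expresses how effective salience was in driving orienting from moment to moment.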

Gaze position data collected while overtly viewing the Entity video were used to characterize spatial orienting behavior when the human-like characters appeared in the scene (see Figure 2D). The attention grabbing property of each character was defined on the basis of three statistical criteria: (1) change of the gaze position with respect to the initial frame (Entity video); (2) significant difference between gaze position in the Entity and No_Entity videos; and (3) reduction of the distance between gaze position and character position, compared with the same distance computed at the initial frame (Entity video). The combination of these three constraints allowed us to detect gaze shifts (criterion 1) that were specific for the Entity video (criterion 2) and that occurred toward the character (criterion 3).

Each criterion was evaluated at each frame, comparing group-median values against a 95% confidence interval. For criteria 1 and 3, the confidence interval was computed by using the variance of the distance between gaze position at the current frame and gaze position at the initial frame (Entity video). For criterion 2, the confidence interval was computed by using the variance of the distance between gaze position in the Entity video and gaze position in the No_Entity video. We scored the character as attention grabbing (AG) when all three criteria were satisfied for at least four consecutive frames. If this was not satisfied after 25 frames (1 s), the character was scored as non-attention grabbing (NoAG). In the preliminary study, this procedure identified 15 attention-grabbing and 10 non-attention-grabbing characters. For attention-grabbing characters we parameterized the processing times (A_time), considering the first frame when all three criteria were satisfied, and the amplitude of the shifts (A_ampl), considering the shift of the gaze position at the end of the four-frame window (see Figure 2D).
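
The scoring rule can be illustrated with the following sketch, which takes the three criteria as per-frame boolean sequences counted from the character's appearance. The function name and return structure are hypothetical, and the sketch assumes that the four-frame run must be completed within the first 25 frames; the text does not specify how a run straddling the 1 s limit was handled.

```python
import numpy as np

def score_character(c1, c2, c3, min_run=4, max_frames=25, fps=25.0):
    """Score one character as AG or NoAG from three per-frame
    boolean criteria (frame 0 = character appearance)."""
    all_met = (np.asarray(c1, dtype=bool)
               & np.asarray(c2, dtype=bool)
               & np.asarray(c3, dtype=bool))[:max_frames]
    run = 0
    for i, ok in enumerate(all_met):
        run = run + 1 if ok else 0          # length of the current run
        if run == min_run:
            first = i - min_run + 1         # first frame meeting all criteria
            return {"label": "AG",
                    "first_frame": first,
                    "a_time_s": first / fps,  # processing time (A_time)
                    "window_end": i}          # frame where A_ampl would be read
    return {"label": "NoAG", "first_frame": None,
            "a_time_s": None, "window_end": None}
```

For example, a character for which all three criteria first hold together at frame 10 and remain satisfied through frame 13 would be scored AG, with a processing time of 10/25 = 0.4 s.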

fMRI Analyses: SPM

Our main SPM analyses (SPM8, Wellcome Department of Cognitive Neurology) utilized orienting efficacy parameters computed in the preliminary study to analyze fMRI data acquired during covert viewing of the videos. We also performed more targeted ROI analyses of the covert fMRI runs using parameters based on in-scanner eye movement recordings (see Supplemental Experimental Procedures), and used in-scanner parameters to analyze imaging data acquired during overt viewing of the videos (eye movements allowed during fMRI). All analyses included first-level within-subject analyses and second-level (random effects) analyses for statistical inference at the group level (Penny and Holmes, 2004).

Main Analyses: Covert Spatial Orienting

The aim of the fMRI analysis of the No_Entity video was to highlight regions of the brain where activity covaried with the level of salience in the visual input, areas where activity reflected the tendency of the subjects to pay attention toward/away from the most salient location of the image (efficacy of salience), and areas modulated by attention shifting irrespective of salience. The first-level models included three covariates of interest: S_mean, SA_dist, and Sac_freq. Each model also included losses of fixation modeled as events of no interest, plus the head motion realignment parameters. The time series were high-pass filtered at 0.0083 Hz and prewhitened by means of a first-order autoregressive model, AR(1). Contrast images averaging the estimated parameters for the two relevant fMRI runs (see Table S1 in Supplemental Experimental Procedures) entered three one-sample t tests assessing separately the effects of S_mean, SA_dist, and Sac_freq at the group level.

The aim of the fMRI analysis of the Entity video was to identify regions showing transient responses to the human-like characters, and to assess whether the attention-grabbing efficacy of each character modulated these transient responses. The 25 characters were divided into 15 attention-grabbing and 10 non-attention-grabbing events, modeled as different event types using delta functions time-locked to the characters’ appearance and convolved with the standard SPM HRF. Two separate first-level models included the modulatory effects related to either the processing time (A_time) or the amplitude of the spatial shift (A_ampl) associated with each of the attention-grabbing characters. All models included losses of fixation as events of no interest, plus the head motion realignment parameters. The time series were high-pass filtered at 0.0083 Hz and prewhitened by means of a first-order autoregressive model, AR(1). The second-level analyses included one full-factorial ANOVA to test for the main (mean) effect of attention-grabbing and non-grabbing characters and any difference between these, plus two separate one-sample t tests assessing the effects of A_time and A_ampl at the group level.
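
To make the event model concrete, the sketch below builds an event regressor from delta functions and a companion regressor whose delta heights carry a mean-centered parameter such as A_time. The onsets, parameter values, repetition time, and HRF shape are hypothetical choices for illustration, and the function names are not part of SPM; SPM's own handling of parametric modulators may differ in detail.

```python
import numpy as np
from math import gamma

def double_gamma_hrf(dt, duration=32.0):
    """Generic double-gamma HRF (an assumed approximation of a
    canonical HRF), sampled every dt seconds."""
    t = np.arange(0.0, duration, dt)
    h = t**5 * np.exp(-t) / gamma(6) - t**15 * np.exp(-t) / gamma(16) / 6.0
    return h / h.sum()

def stick_regressor(onsets_s, weights, n_scans, tr_s, dt=0.1):
    """Delta functions at the given onsets, scaled by weights,
    convolved with the HRF and sampled once per scan."""
    n_fine = int(round(n_scans * tr_s / dt))
    sticks = np.zeros(n_fine)
    for onset, w in zip(onsets_s, weights):
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += w
    conv = np.convolve(sticks, double_gamma_hrf(dt))[:n_fine]
    step = int(round(tr_s / dt))
    return conv[::step][:n_scans]

def event_and_modulator(onsets_s, values, n_scans, tr_s):
    """Main event regressor plus a parametric modulator built from
    mean-centered values (e.g., A_time of each AG character)."""
    values = np.asarray(values, dtype=float)
    centered = values - values.mean()
    main = stick_regressor(onsets_s, np.ones(len(onsets_s)), n_scans, tr_s)
    modulator = stick_regressor(onsets_s, centered, n_scans, tr_s)
    return main, modulator
```

Mean-centering the parameter separates the average response to the characters (main regressor) from the response component that scales with the parameter (modulator).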

For these main analyses we report activations corrected for multiple comparisons at cluster level (p-corr. < 0.05; cluster size estimated at p-unc. = 0.005), considering the whole brain as the volume of interest. The localization of the activation clusters was based on the anatomical atlas of the human brain by Duvernoy (1991). In addition, we report ROI analyses focusing on the rTPJ, which has been identified as a key region for stimulus-driven orienting using traditional cueing paradigms (e.g., Corbetta et al., 2008). The rTPJ ROI included voxels showing a significant response to the character appearance (see Figure 3A) and belonging to the superior temporal gyrus or the supramarginal gyrus as anatomically defined by the AAL atlas (Tzourio-Mazoyer et al., 2002).

Overt Spatial Orienting

For the fMRI analyses of the data collected during free viewing of the videos (overt orienting), we used behavioral indexes derived from gaze position data recorded in the scanner—that is, behavioral and imaging data recorded concurrently in the same subjects and fMRI runs. The first-level models were analogous to the models used for the main analyses (covert orienting), with the exception that the new models did not include any predictor modeling losses of fixation. Group-level analyses consisted of one-sample t tests and a full factorial ANOVA (see above) testing for all attention-related effects now in free viewing conditions. Moreover, paired t tests directly compared attention-related effects in the overt and covert conditions. Statistical thresholds were corrected for multiple comparisons at cluster level (p-corr. < 0.05; cluster size estimated at p-unc. = 0.005), considering the whole brain as the volume of interest.

fMRI Analyses: IRC Analyses

As for the standard SPM analyses, the IRC analyses included two steps: first, the estimation of covariance parameters in each single subject, and then the use of between-subjects variance to determine parametric statistics (in SPM) for random effects inference at the group level. The IRCs were computed for the covert viewing conditions of the Entity and No_Entity videos, and for the overt viewing condition of the No_Entity video. The Entity video was presented only once in the overt viewing condition (run 7: performed primarily to confirm orienting behavior in the scanner) and could not be submitted to the IRC analysis.

Using multiple regressions at each voxel, separately for each subject and each condition, we fitted the time course of the BOLD response recorded during the first presentation of the video (e.g., the third run for the Entity video) with the time course of the BOLD response recorded in the same voxel during the second presentation of the same video (e.g., the fourth run for the Entity video; see Table S1 in Supplemental Experimental Procedures). The resulting regression parameter captures the covariance between the BOLD signals at the same voxel when the subject is presented twice with the same complex stimuli. Accordingly, IRC identifies areas responding to systematic changes within the complex stimuli, without any a priori knowledge or assumptions about the content of the stimuli and the cognitive processes associated with it (synchronization; see also Hasson et al., 2004). It should be noted that this procedure will miss any area showing learning-related effects that occur only during the first (e.g., encoding) or second (e.g., retrieval) presentation of the video, and it is therefore not suitable for the investigation of memory processes.
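
The voxel-wise regression underlying IRC can be sketched as follows. This is a simplified illustration using ordinary least squares on arrays of shape (scans × voxels); the function name is hypothetical, and the sketch omits prewhitening and the full set of nuisance regressors described in the next paragraph.

```python
import numpy as np

def irc_beta(run1, run2, nuisance=None):
    """For each voxel, regress the run-1 time course on the run-2
    time course of the same voxel and return the slope.
    run1, run2: arrays of shape (n_scans, n_voxels).
    nuisance: optional (n_scans, n_regressors) confounds."""
    n_scans, n_voxels = run1.shape
    if nuisance is None:
        nuisance = np.empty((n_scans, 0))
    betas = np.empty(n_voxels)
    for v in range(n_voxels):
        # Predictor of interest first, then confounds and a constant term.
        X = np.column_stack([run2[:, v], nuisance, np.ones(n_scans)])
        coef, *_ = np.linalg.lstsq(X, run1[:, v], rcond=None)
        betas[v] = coef[0]
    return betas
```

A voxel whose response is driven by the video in the same way on both presentations yields a clearly positive slope, whereas a voxel with unrelated fluctuations in the two runs yields a slope near zero.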

Together with the voxel-specific BOLD time course, each regression model included the head motion realignment parameters and global signal of both fMRI runs (data and predictor runs). The regression models concerning the covert viewing conditions included losses of fixation as events of no interest. A cosine basis-set was included in the model to remove variance at frequencies below 0.0083 Hz. In addition, the IRC models for the Entity video included the predicted BOLD response for the human-like characters (i.e., delta functions time-locked to the characters’ onset, convolved with the HRF; separately for AG and NoAG characters), thus removing from the IRC estimation any common variance between the two runs that can be accounted for by the transient response to these stimuli.
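
A cosine basis set of the kind mentioned above can be constructed as in the sketch below. The number of components follows a rule commonly used for discrete cosine high-pass filters; it is given here as an assumption rather than as the exact SPM8 code, and the number of scans and repetition time in the example are hypothetical. A cut-off of 0.0083 Hz corresponds to a period of about 120 s.

```python
import numpy as np

def dct_highpass_basis(n_scans, tr_s, cutoff_hz=0.0083):
    """Low-frequency discrete cosine regressors (constant term
    excluded) spanning frequencies below cutoff_hz."""
    cutoff_s = 1.0 / cutoff_hz
    order = int(np.floor(2.0 * n_scans * tr_s / cutoff_s + 1.0))
    t = np.arange(n_scans)
    columns = [np.sqrt(2.0 / n_scans)
               * np.cos(np.pi * (2 * t + 1) * j / (2 * n_scans))
               for j in range(1, order)]
    if not columns:
        return np.empty((n_scans, 0))
    return np.column_stack(columns)

# Example with hypothetical run parameters: 240 scans, TR = 2 s.
basis = dct_highpass_basis(n_scans=240, tr_s=2.0)
```

Adding these columns to a regression model removes slow drifts from the data and from the predictor at the same time, which is why the basis set appears in the IRC models alongside the other nuisance regressors.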

Images resulting from the within-subject estimation entered the standard second-level analyses in SPM. These included three one-sample t tests (one for each condition: overt/covert viewing of the No_Entity video, plus covert viewing of the Entity video) assessing the statistical significance of IRC at the group level. A within-subject ANOVA was used to directly compare the IRC in the three conditions. Specifically, we compared brain synchronization during covert viewing of the Entity versus No_Entity video (i.e., the effect of video condition); and synchronization during covert versus overt viewing of No_Entity video (i.e., the effect of viewing condition). The p values were corrected for multiple comparisons at the cluster level (p-corr. < 0.05; cluster size estimated at p-unc. = 0.005), considering the whole brain as the volume of interest. As for our main hypothesis-based analyses, we also specifically assessed IRC in the rTPJ ROI.

fMRI Analyses: Interregional Coupling of the rTPJ

We estimated the functional coupling of the rTPJ by extracting the average signal of the rTPJ ROI and using this as a predictor for the signal in the rest of the brain (Friston et al., 1997). For each subject, the parameters of functional coupling were estimated separately for the Entity and No_Entity videos in covert and overt viewing conditions (i.e., four multiple regression models in SPM). Together with the signal of the rTPJ ROI, the models included the head motion realignment parameters and, for the Entity video, two predictors modeling the transient effect of the attention-grabbing and non-grabbing characters (delta functions, convolved with the HRF). For the covert viewing conditions, the models included losses of fixation as events of no interest. The time series were high-pass filtered at 0.0083 Hz and prewhitened by means of a first-order autoregressive model, AR(1). Group-level significance (random effects) was assessed by using a 2 × 2 within-subjects ANOVA modeling the four conditions of interest (Entity/No_Entity videos × overt/covert viewing). Main effects and interactions were tested at a statistical threshold of p-corr. = 0.05, corrected for multiple comparisons at cluster level (cluster size estimated at p-unc. = 0.005).

Supplementary Material

Supplemental Information for this article includes one figure, one table, and Supplemental Experimental Procedures and can also be found with this article online at doi:10.1016/j.neuron.2011.02.020.

ACKNOWLEDGMENTS

The Neuroimaging Laboratory, Santa Lucia Foundation, is supported by The Italian Ministry of Health. The research leading to these results has received funding from the European Research Council under the European Union’s Seventh Framework Programme (FP7/2007-2013)/ERC grant agreement n. 242809.

REFERENCES

- Andersen RA, Snyder LH, Bradley DC, Xing J. Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu. Rev. Neurosci. 1997;20:303–330. doi: 10.1146/annurev.neuro.20.1.303.

- Asplund CL, Todd JJ, Snyder AP, Marois R. A central role for the lateral prefrontal cortex in goal-directed and stimulus-driven attention. Nat. Neurosci. 2010;13:507–512. doi: 10.1038/nn.2509.

- Bartels A, Zeki S, Logothetis NK. Natural vision reveals regional specialization to local motion and to contrast-invariant, global flow in the human brain. Cereb. Cortex. 2008;18:705–717. doi: 10.1093/cercor/bhm107.

- Beauchamp MS, Petit L, Ellmore TM, Ingeholm J, Haxby JV. A parametric fMRI study of overt and covert shifts of visuo-spatial attention. Neuroimage. 2001;14:310–321. doi: 10.1006/nimg.2001.0788.

- Carmi R, Itti L. Visual causes versus correlates of attentional selection in dynamic scenes. Vision Res. 2006;46:4333–4345. doi: 10.1016/j.visres.2006.08.019.

- Colby CL, Goldberg ME. Space and attention in parietal cortex. Annu. Rev. Neurosci. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319.

- Constantinidis C, Steinmetz MA. Neuronal responses in area 7a to multiple-stimulus displays: I. neurons encode the location of the salient stimulus. Cereb. Cortex. 2001;11:581–591. doi: 10.1093/cercor/11.7.581.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat. Rev. Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Corbetta M, Akbudak E, Conturo TE, Snyder AZ, Ollinger JM, Drury HA, Linenweber MR, Petersen SE, Raichle ME, Van Essen DC, Shulman GL. A common network of functional areas for attention and eye movements. Neuron. 1998;21:761–773. doi: 10.1016/s0896-6273(00)80593-0.

- Corbetta M, Patel G, Shulman GL. The reorienting system of the human brain: From environment to theory of mind. Neuron. 2008;58:306–324. doi: 10.1016/j.neuron.2008.04.017.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu. Rev. Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Duvernoy HM. The Human Brain. Surface, Three-Dimensional Sectional Anatomy and MRI. Springer-Verlag; Wien: 1991.

- Einhäuser W, Spain M, Perona P. Objects predict fixations better than early saliency. J. Vis. 2008;8(14):18, 1–26. doi: 10.1167/8.14.18.

- Elazary L, Itti L. Interesting objects are visually salient. J. Vis. 2008;8(3):3, 1–15. doi: 10.1167/8.3.3.

- Fairhall SL, Indovina I, Driver J, Macaluso E. The brain network underlying serial visual search: Comparing overt and covert spatial orienting, for activations and for effective connectivity. Cereb. Cortex. 2009;19:2946–2958. doi: 10.1093/cercor/bhp064.