Background

The global evidence base for health care is extensive and expanding, with nearly 2 million articles published annually. One estimate suggests that 75 trials and 11 systematic reviews are published daily [1]. Research syntheses, in a variety of established and emerging forms, are well recognised as essential tools for summarising evidence accurately and reliably [2]. Systematic reviews provide health care practitioners, patients and policy makers with information to help them make informed decisions. It is essential that those conducting systematic reviews are cognisant of the potential biases within primary studies and of how such biases could affect review results and subsequent conclusions.

Rigorous and systematic methodological approaches to conducting research synthesis emerged throughout the twentieth century, with methods to identify and reduce biases evolving more recently [3,4]. The Cochrane Collaboration has made substantial contributions to how biases are considered in systematic reviews and primary studies. Our objective in this paper is to review some of the landmark methodological contributions by members of the Cochrane Bias Methods Group (BMG) to the body of evidence that guides current bias assessment practices, and to outline immediate and longer-term objectives for future research.

Empirical works published prior to the establishment of the Cochrane Collaboration

In 1948, the British Medical Research Council published results of what many consider the first 'modern' randomised trial [5,6]. In the 65 years since, the methods used in primary medical research have developed continually, with the aim of reducing inaccuracy in estimates of treatment effects due to potential biases. A large body of literature has accumulated showing how study characteristics, study reports and publication processes can potentially bias the results of primary studies and systematic reviews. Much of the methodological research during the first 20 years of The Cochrane Collaboration has built upon work published before the Collaboration was founded. Reporting biases, or more specifically, publication bias and the influence of funding source(s), are not new concepts. Publication bias, initially described as the 'file drawer problem', was one of the earliest bias concepts in primary studies to emerge and has long been suspected in the social sciences [7]. In 1979 Rosenthal, a psychologist, described the issue in more detail [8], and throughout the 1980s and early 1990s an empirical evidence base began to appear in the medical literature [9-11]. Alongside this early evidence, methods to detect and mitigate publication bias also emerged [12-15]. The 1980s also saw initial evidence of what is now referred to as selective outcome reporting [16], and research investigating the influence of source of funding on study results [10,11,17,18].
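Rosenthal's 1979 paper [8] also proposed a simple way to put a number on the file drawer problem, the 'fail-safe N': how many unpublished studies averaging a null result (z = 0) would have to exist to pull the Stouffer-combined z score (the sum of the study z scores divided by the square root of their number) below the significance threshold. Solving that condition gives N = (Σz)² / z_α² − k for k observed studies. The sketch below is illustrative only: the function names and the example z scores are hypothetical, and the default threshold of 1.645 assumes a one-tailed alpha of 0.05.

```python
import math


def stouffer_z(z_values):
    """Combined z score: sum of study z scores divided by sqrt(number of studies)."""
    return sum(z_values) / math.sqrt(len(z_values))


def fail_safe_n(z_values, z_alpha=1.645):
    """Rosenthal's fail-safe N (sketch).

    Solves sum(z) / sqrt(k + N) = z_alpha for N: the number of extra studies
    with z = 0 needed to bring the combined z down to z_alpha. Assumes the
    z scores are oriented so that positive values favour the effect.
    """
    k = len(z_values)
    n = sum(z_values) ** 2 / z_alpha ** 2 - k
    # A negative value means the combined result is already non-significant.
    return max(0.0, n)


zs = [2.1, 1.8, 2.5, 0.9, 1.6]  # hypothetical per-study z scores
print(round(fail_safe_n(zs), 1))  # 24.3
```

Here 25 null studies in file drawers would be enough to make the combined result non-significant. Because the method assumes the missing studies average exactly zero and ignores effect sizes, it is best read as a rough heuristic rather than a test for publication bias.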

The importance of rigorous aspects of trial design (e.g. randomisation, blinding, attrition, treatment compliance) was known in the early 1980s [19] and informed the development by Thomas Chalmers and colleagues of a quality assessment scale to evaluate the design, implementation, and analysis of randomized controlled trials [20]. The pre-Cochrane era saw the early stages of assessing the quality of included studies, including consideration of the most appropriate ways to assess bias. Yet no standardised means of assessing risk of bias, or “quality” as it was referred to at the time, was in place when The Cochrane Collaboration was established. The use of scales for assessing quality or risk of bias is now explicitly discouraged in Cochrane reviews, based on more recent evidence [21,22].

Methodological contributions of the Cochrane Collaboration: 1993 – 2013

In 1996, Moher and colleagues suggested that bias assessment was a new, emerging and important concept and that more evidence was required to identify trial characteristics directly related to bias [23]. Methodological literature on bias in primary studies published in the last 20 years has contributed to the evolution of bias assessment in Cochrane reviews. Current bias assessment practice is founded on published studies that provide empirical evidence of the influence of certain study design characteristics on estimates of effect, predominantly in randomised controlled trials.

The publication of Ken Schulz’s work on allocation concealment, sequence generation, and blinding [24,25] in the mid-1990s changed the way the Collaboration assessed bias in included studies, and it was recommended that included studies be assessed on how well the generated random sequence was concealed during the trial.

In 2001, the Cochrane Reporting Bias Methods Group, now known as the Cochrane Bias Methods Group, was established to investigate how reporting and other biases influence the results of primary studies. The most substantial development in bias assessment practice within the Collaboration was the introduction of the Cochrane Risk of Bias (RoB) Tool in 2008. The tool was developed on the basis of methodological contributions from meta-epidemiological studies [26,27], has since been evaluated and updated [28], and has been integrated into the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach [29].

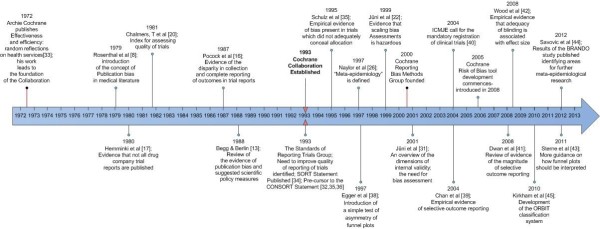

Throughout this paper we define bias as a systematic error, or deviation from the truth, in results or inferences [30]; bias should not be confused with “quality”, or how well a trial was conducted. It is also important to distinguish internal from external validity: when we describe bias we are referring to internal validity, as opposed to external validity or generalizability, which depends on demographic or other characteristics [31]. Here, we highlight landmark methodological publications that contribute to understanding how bias influences estimates of effects in Cochrane reviews (Figure 1).

Figure 1.

Timeline of landmark methods research [8,13,16,17,20,22,26,31-45].

Sequence generation and allocation concealment

Early meta-epidemiological studies assessed the impact of inadequate allocation concealment and sequence generation on estimates of effect [24,25]. Evidence suggests that the adequacy of allocation concealment modifies estimates of effect in trials [31]. More recently, several other methodological studies have examined whether concealment of allocation is associated with the magnitude of effect estimates in controlled clinical trials while avoiding confounding by disease or intervention [42,46].
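Meta-epidemiological comparisons of this kind are commonly summarised as a ratio of odds ratios (ROR): within each meta-analysis, the pooled odds ratio from inadequately concealed trials is divided by that from adequately concealed trials, and the resulting log RORs are then combined across meta-analyses. The sketch below is a simplified two-stage, fixed-effect, inverse-variance version of that idea, not the full methods described by Sterne and colleagues [27], which also cover random-effects and meta-regression approaches. The `Trial` layout, the function names and the data are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Trial:
    log_or: float    # log odds ratio for the intervention effect
    se: float        # standard error of log_or
    adequate: bool   # True if allocation concealment was judged adequate


def pool_fixed(estimates):
    """Inverse-variance fixed-effect pooling of (estimate, se) pairs."""
    weights = [1.0 / se ** 2 for _, se in estimates]
    total = sum(weights)
    pooled = sum(w * est for w, (est, _) in zip(weights, estimates)) / total
    return pooled, math.sqrt(1.0 / total)


def log_ror(meta_analysis):
    """Stage 1: log OR (inadequate) minus log OR (adequate) within one meta-analysis."""
    inadequate = [(t.log_or, t.se) for t in meta_analysis if not t.adequate]
    adequate = [(t.log_or, t.se) for t in meta_analysis if t.adequate]
    if not inadequate or not adequate:
        return None  # no within-meta-analysis comparison is possible
    est_i, se_i = pool_fixed(inadequate)
    est_a, se_a = pool_fixed(adequate)
    # The two subgroups contain different trials, so their variances add.
    return est_i - est_a, math.sqrt(se_i ** 2 + se_a ** 2)


def combined_ror(meta_analyses):
    """Stage 2: pool log RORs across meta-analyses; return ROR with a 95% CI."""
    per_ma = [r for r in map(log_ror, meta_analyses) if r is not None]
    est, se = pool_fixed(per_ma)
    return math.exp(est), math.exp(est - 1.96 * se), math.exp(est + 1.96 * se)


# Hypothetical data: two meta-analyses, odds ratios below 1 favour the intervention.
ma1 = [Trial(-0.5, 0.2, False), Trial(-0.2, 0.2, True)]
ma2 = [Trial(-0.5, 0.3, False), Trial(-0.2, 0.3, True), Trial(-0.2, 0.3, True)]
ror, lower, upper = combined_ror([ma1, ma2])
print(round(ror, 2))  # 0.74
```

With outcomes coded so that an odds ratio below 1 favours the intervention, an ROR below 1 means the inadequately concealed trials showed larger apparent benefits. Comparing trials within the same meta-analysis is what limits confounding by disease or intervention.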

More recent methodological studies have assessed the importance of proper generation of a random sequence in randomised clinical trials. It is now mandatory, in accordance with the Methodological Expectations for Cochrane Interventions Reviews (MECIR) conduct standards, for all Cochrane systematic reviews to assess potential selection bias (sequence generation and allocation concealment) within included primary studies.

Blinding of participants, personnel and outcome assessment

The concept of the placebo effect has been considered since the mid-1950s [47], and the importance of blinding trial interventions to participants has long been recognised, with the first empirical evidence published in the early 1980s [48]. The body of empirical evidence on the influence of blinding has grown since the mid-1990s, especially in the last decade, with some evidence highlighting that blinding is important for several reasons [49]. Currently, the Cochrane risk of bias tool suggests that blinding of participants and personnel, and blinding of outcome assessment, be assessed separately. Moreover, consideration should be given to the type of outcome (i.e. objective or subjective) when assessing bias, as evidence suggests that subjective outcomes are more prone to bias due to lack of blinding [42,44]. As yet there is no empirical evidence of bias due to lack of blinding of participants and study personnel. However, there is evidence of bias for studies described as 'blind' or 'double-blind', terms which usually imply blinding of one or both of these groups. In empirical studies, lack of blinding in randomized trials has been shown to be associated with more exaggerated estimated intervention effects [42,46,50].

Different people can be blinded in a clinical trial [51,52]. Study reports often describe blinding in broad terms, such as 'double blind', which make it impossible to know who was blinded [53]. Such terms are also used very inconsistently [52,54,55], and explicit reporting of the blinding status of study participants and personnel remains infrequent even in trials published in top journals [56], despite explicit recommendations. Blinding of the outcome assessor is particularly important, both because the mechanism of bias is simple and foreseeable, and because the evidence for bias is unusually clear [57]. A review of blinding methods highlights the variety of approaches used in practice [58]. Further research is ongoing within the Collaboration to determine the best way to account for the influence of lack of blinding in primary studies. As with selection bias, assessment of performance and detection bias is a mandatory component of risk of bias assessment under the MECIR standards.

Reporting biases

Reporting biases have long been identified as potentially influencing the results of systematic reviews. Such bias arises when the dissemination of research findings is influenced by the nature and direction of results, although there is still debate over explicit criteria for what constitutes a 'reporting bias'. More recently, biases arising from non-process related issues (e.g. source of funding, publication bias) have been referred to as meta-biases [59]. Here we discuss the literature that has emerged in the last twenty years on two well-established reporting biases: non-publication of whole studies (often simply called publication bias) and selective outcome reporting.

Publication bias

The last two decades have produced a large body of evidence of the presence of publication bias [60-63] and of why authors fail to publish [64,65]. Because it has long been recognized that investigators frequently fail to report their research findings [66], many more recent papers have focused on methods for detecting publication bias and estimating its effect. An array of methods to test for publication bias, along with additional recommendations, is now available [38,43,67-76], and many of these methods have been evaluated [77-80]. Automatic generation of funnel plots has been incorporated into the software used to produce Cochrane reviews (RevMan), and funnel plots are encouraged for outcomes with more than ten studies [43]. A thorough overview of methods is included in Chapter 10 of the Cochrane Handbook for Systematic Reviews of Interventions [81].
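As one example of these methods, Egger's test [38] regresses each study's standardised effect (its estimate divided by its standard error) on its precision (one over the standard error). With no small-study effects the fitted line should pass close to the origin, so an intercept far from zero signals funnel plot asymmetry, which may reflect publication bias but can also have other causes such as genuine heterogeneity [43]. The following is a minimal ordinary least squares sketch in plain Python; the function name and data are hypothetical, it is not RevMan's implementation, and it returns the intercept's t statistic (with k − 2 degrees of freedom) rather than a p-value.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel plot asymmetry (OLS sketch).

    Fits z_i = a + b * precision_i, where z_i = effect_i / se_i and
    precision_i = 1 / se_i. Returns (intercept a, SE of a, t = a / SE(a)).
    """
    k = len(effects)
    if k < 3:
        raise ValueError("need at least three studies")
    y = [e / s for e, s in zip(effects, std_errors)]
    x = [1.0 / s for s in std_errors]
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(r ** 2 for r in residuals) / (k - 2)  # residual variance
    se_intercept = math.sqrt(sigma2 * (1.0 / k + x_bar ** 2 / sxx))
    return intercept, se_intercept, intercept / se_intercept


# Hypothetical effect estimates in which smaller studies (larger SEs) show larger effects.
ses = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
noise = [0.05, -0.05, 0.05, -0.05, 0.05, -0.05]
effects = [(2.0 + 0.3 / s + e) * s for s, e in zip(ses, noise)]
intercept, se_intercept, t_stat = egger_test(effects, ses)
print(f"intercept={intercept:.2f}, t={t_stat:.1f} on {len(ses) - 2} df")
```

The guidance that funnel plots are used only for outcomes with around ten or more studies reflects the low power of tests like this one when k is small.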

Selective outcome reporting

While the concept of publication bias has been well established, studies reporting evidence of selective reporting of outcomes in trial reports have appeared more recently [39,41,82-87]. In addition, some studies have investigated why some outcomes are omitted from published reports [41,88-90], as well as the impact of omitting outcomes on the findings of meta-analyses [91]. More recently, methods for evaluating selective reporting, notably the ORBIT (Outcome Reporting Bias in Trials) classification system, have been developed. One approach to mitigating selective reporting is to develop field-specific core outcome measures [92]; the work of the COMET (Core Outcome Measures in Effectiveness Trials) initiative [93] is supported by many members of the Cochrane Collaboration. Further research is being conducted on selective reporting of outcomes and of trial analyses, an area that overlaps considerably with efforts to improve primary study reports, protocol development and trial registration.

Evidence on how to conduct risk of bias assessments

Often overlooked are the processes by which systematic evaluations or assessments are conducted. In addition to empirical evidence on specific sources of bias, other methodological studies have led to changes in the processes used to assess risk of bias. One influential study published in 1999 highlighted the hazards of scoring the 'quality' of clinical trials when conducting meta-analyses, and is one of the reasons why each bias is assessed separately as 'high', 'low' or 'unclear' risk rather than through a combined score [22,94]. Earlier work investigated blinding of readers, data analysts and manuscript writers [51,95]. More recently, work has assessed blinding reviewers to the authorship and institutions of primary studies when conducting risk of bias assessments, suggesting that results differ between blinded and unblinded RoB assessments. However, uncertainty over best practice remains, given the time and resources needed to implement blinding [96].
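The difference between a combined score and domain-based judgements can be sketched as a small data structure. The version below keeps one 'low', 'unclear' or 'high' judgement per domain and, when an outcome-level summary is wanted, applies a 'most severe judgement wins' rule (high if any key domain is high, otherwise unclear if any is unclear, otherwise low). This is our reading of the summary approach suggested in version 5 of the Cochrane Handbook, not an official algorithm, and the domain keys and function names are hypothetical.

```python
from enum import IntEnum


class Judgement(IntEnum):
    # Ordered by severity so that max() picks the most serious judgement.
    LOW = 0
    UNCLEAR = 1
    HIGH = 2


def summarise(domains, key_domains):
    """Outcome-level summary: the most severe judgement among the key domains."""
    return max(domains[d] for d in key_domains)


def additive_score(domains):
    """Naive 'quality score' (one point per low-risk domain), shown only for contrast."""
    return sum(1 for j in domains.values() if j is Judgement.LOW)


# Hypothetical trials with the same additive score but different risk profiles.
trial_a = {"sequence_generation": Judgement.LOW,
           "allocation_concealment": Judgement.HIGH,
           "blinding_outcome_assessment": Judgement.LOW,
           "incomplete_outcome_data": Judgement.LOW}
trial_b = dict(trial_a, allocation_concealment=Judgement.LOW,
               blinding_outcome_assessment=Judgement.UNCLEAR)
key = list(trial_a)
for name, trial in [("A", trial_a), ("B", trial_b)]:
    print(name, additive_score(trial), summarise(trial, key).name)
# A 3 HIGH
# B 3 UNCLEAR
```

Both trials score 3 out of 4, yet one has a serious flaw in allocation concealment; summing points hides that, whereas per-domain judgements keep it visible.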

Complementary contributions

Quality of reporting and reporting guidelines

Assessing primary studies for potential biases is a challenge [97]. During the early 1990s, poor reporting of randomized trials was observed, along with the impediments it created for systematic reviews, especially for what is now referred to as 'risk of bias assessment'. In 1996, an international group of epidemiologists, statisticians, clinical trialists, and medical editors, some of whom were involved in establishing the Cochrane Collaboration, published the CONSORT Statement [32], a checklist of items to be addressed in a report of the findings of an RCT. CONSORT has since been revised and updated twice [35,36], and its impact has been widely noted; for example, the Patient-Centered Outcomes Research Institute (PCORI) considered CONSORT one of the major milestones in health research methods over the last century [98].

Issues of poor reporting extend far beyond randomized trials, and many groups have developed guidance to aid reporting of other study types. The EQUATOR Network’s library for health research reporting includes more than 200 reporting guidelines [99]. Despite evidence that the quality of reporting has improved over time, systemic issues with the clarity and transparency of reporting remain [100,101]. Such inadequacies in primary study reporting leave systematic review authors unable to assess the presence and extent of bias in primary studies and its possible impact on review results; continued improvements in trial reporting are needed to support more informed risk of bias assessments in systematic reviews.

Trial registration

During the 1980s and 1990s there were several calls to mitigate publication bias and selective reporting via trial registration [102-104]. In 2004, after some resistance, the BMJ and The Lancet announced that they would publish only registered clinical trials [105], and the International Committee of Medical Journal Editors made a statement to the same effect [40]. Despite the substantial impact of trial registration [106], uptake is still not optimal and registration is not mandatory for all trials. A recent report indicated that only 22% of trials subject to mandatory FDA reporting requirements had reported results on ClinicalTrials.gov [107]. One study suggested that, despite trial registration being strongly encouraged and even mandated in some jurisdictions, only 45.5% of a sample of 323 trials were adequately registered [108].

Looking forward

Currently, there are three major ongoing initiatives that will contribute to how the Collaboration assesses bias. First, the Cochrane risk of bias tool has been criticised [109] for its ease of use and reliability [110,111], and it is currently being revised: a working group has been established to improve the format of the tool, with version 2.0 due to be released in 2014. Second, study design issues arise when assessing risk of bias in non-randomised studies included in systematic reviews [112-114]. Even 10 years ago there were 114 published tools for assessing risk of bias in non-randomised studies [115]. An ongoing Cochrane Methods Innovation Fund project will lead to the release of a tool for assessing non-randomized studies, as well as tools for cluster and cross-over trials [116]. Third, selective reporting in primary studies is systemic [117], yet more sophisticated means of assessing it remain somewhat unexplored by the Collaboration. An initiative is under way to explore optimal ways to assess selective reporting within trials. Its findings will be considered in conjunction with the release of the revised RoB tool and its extension for non-randomized studies.

More immediate issues

Given the increase in meta-epidemiological research, the evidence needed to establish that a study characteristic may lead to bias needs to be explicitly defined. One long-debated issue is the influence of funders as a potential source of bias. In one empirical study, more than half of the protocols for industry-initiated trials stated that the sponsor owns the data, needs to approve the manuscript, or both; none of these constraints was stated in any of the trial publications [118]. It is important that information about vested interests is collected and presented when relevant [119].

There is ongoing debate about the risk of bias in trials stopped early for benefit. A systematic review and a meta-epidemiological study showed that such truncated RCTs were associated with greater effect sizes than RCTs not stopped early, particularly for trials with small sample sizes [120,121]. These results were widely debated [122], and recommendations related to this item are being considered.
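Why truncation can exaggerate effects, even when every trial is conducted honestly, can be seen in a small simulation: if a trial stops as soon as an interim z statistic crosses a threshold, the trials that stop early are disproportionately those whose early data happened to overshoot the true effect. The sketch below assumes a normally distributed outcome with known standard deviation, equally sized blocks of patients between interim looks, and a fixed stopping threshold of z > 2.5; these settings and the function name are illustrative assumptions, not features of any real monitoring plan or of the studies cited above.

```python
import math
import random
import statistics


def simulate_truncation(true_effect=0.2, sd=1.0, n_per_look=50, looks=4,
                        z_stop=2.5, n_trials=2000, seed=1):
    """Simulate two-arm trials that may stop early for benefit (illustrative sketch).

    Each look adds n_per_look patients per arm. Returns the mean effect
    estimate among trials stopped early and among trials that completed.
    """
    rng = random.Random(seed)
    block_se = sd * math.sqrt(2.0 / n_per_look)  # SE of one block's mean difference
    stopped, completed = [], []
    for _ in range(n_trials):
        running_sum = 0.0
        for look in range(1, looks + 1):
            running_sum += rng.gauss(true_effect, block_se)
            estimate = running_sum / look  # equal blocks, so average of block means
            se = block_se / math.sqrt(look)
            if look < looks and estimate / se > z_stop:
                stopped.append(estimate)
                break
        else:  # no early stop: the trial ran to its final look
            completed.append(estimate)
    return statistics.mean(stopped), statistics.mean(completed)


stopped_mean, completed_mean = simulate_truncation()
print(f"stopped early: {stopped_mean:.3f}, completed: {completed_mean:.3f}, true: 0.2")
```

With these settings every early-stopped estimate must exceed 2.5 times its standard error at the look where it stopped (at least about 0.29), so the stopped trials average well above the true effect of 0.2, while completed trials typically land at or somewhat below it. Lowering `n_per_look` raises that boundary in effect-size units, which is consistent with the larger exaggeration reported for small truncated trials.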

In addition, recent meta-epidemiological studies of binary and continuous outcomes showed that treatment effect estimates in single-centre RCTs were significantly larger than in multicentre RCTs, even after controlling for sample size [123,124]. The Bias in Randomized and Observational Studies (BRANDO) project, which combined data from all available meta-epidemiological studies [44], found consistent results for subjective outcomes when comparing single-centre and multicentre trials. Several reasons may explain these differences: small-study effects, reporting bias, higher risk of bias in single-centre studies, or factors related to the selection of participants, treatment administration and care providers’ expertise. Further studies are needed to explore the role and effect of these different mechanisms.

Longer term issues

The scope of methodological research, and the resulting contributions to and evolution of bias assessment over the last 20 years, has been substantial. However, much work remains to be done, particularly in step with innovations in systematic review methodology itself. There is no single standardised methodological approach to the conduct of systematic reviews. Depending on the clinical question, it may be most appropriate to conduct a network meta-analysis, a scoping review or a rapid review, or to update any of these reviews. As these differing types of review develop, bias assessment methods need to develop concurrently.

The way in which research synthesis is conducted may change further with technological advances [125]. Globally, there are numerous initiatives to establish integrated administrative databases which may open up new research avenues and methodological questions about assessing bias when primary study results are housed within such databases.

Despite the increase in meta-epidemiological research identifying study characteristics that could contribute to bias in studies, further investigation is needed. For example, there has as yet been little research on integrating risk of bias results into review findings; this is done infrequently, and guidance on how to do it could be improved [126]. Similarly, although some work has been done, little is known about the magnitude and direction of the effect of a given bias, or of several biases combined, on effect estimates for a particular trial and, in turn, for a set of trials [127].

Conclusion

To summarise, much research has been conducted to develop our understanding of bias in trials and of how these biases could influence the results of systematic reviews. Much of this work has been conducted since the Cochrane Collaboration was established, either as a direct initiative of the Collaboration or thanks to the work of many affiliated individuals. There has been clear advancement in mandatory processes for assessing bias in Cochrane reviews. These processes, based on a growing body of empirical evidence, have aimed to improve the overall quality of the systematic review literature. However, many areas of bias remain unexplored, and as the evidence evolves, the processes used to assess and interpret biases and review results will also need to adapt.

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

This paper was invited for submission by the Cochrane Bias Methods Group. AH and IB conceived of the initial outline of the manuscript, LT collected information and references and drafted the manuscript, DM reviewed the manuscript and provided guidance on structure, DM, DGA, IB and AH all provided feedback on the manuscript and suggestions of additional literature. All authors read and approved the final manuscript.

“…to manage large quantities of data objectively and effectively, standardized methods of appraising information should be included in review processes. … By using these systematic methods of exploration, evaluation, and synthesis, the good reviewer can accomplish the task of advancing scientific knowledge”. Cindy Mulrow, 1986, BMJ.

Contributor Information

Lucy Turner, Email: lturner@ohri.ca.

Isabelle Boutron, Email: isabelle.boutron@bch.aphp.fr.

Asbjørn Hróbjartsson, Email: ah@cochrane.dk.

Douglas G Altman, Email: Doug.Altman@csm.ox.ac.uk.

David Moher, Email: dmoher@ohri.ca.

Acknowledgements

We would like to thank the Canadian Institutes of Health Research for their long-term financial support (2005–2015; CIHR Funding Reference Number CON-105529); this funding has enabled much of the group's progress in the last decade. We would also like to thank Jodi Peters for help preparing the manuscript, and Jackie Chandler for coordinating this paper as part of the series. We would also like to thank the Bias Methods Group membership for their interest and involvement with the group. We would especially like to thank Matthias Egger and Jonathan Sterne, previous BMG convenors, and Julian Higgins, Jennifer Tetzlaff and Laura Weeks for their extensive contributions to the group.

Cochrane awards received by BMG members

The Cochrane BMG currently has a membership of over 200 statisticians, clinicians, epidemiologists and researchers interested in issues of bias in systematic reviews. For a full list of BMG members, please log in to Archie or visit http://www.bmg.cochrane.org for contact information.

Bill Silverman prize recipients

2009 - Moher D, Tetzlaff J, Tricco AC, Sampson M, Altman DG. Epidemiology and reporting characteristics of systematic reviews. PLoS Medicine 2007 4(3): e78. doi: http://dx.doi.org/10.1371/journal.pmed.0040078.

Thomas C. Chalmers award recipients

2001 (tie) - Henry D, Moxey A, O’Connell D. Agreement between randomised and non-randomised studies - the effects of bias and confounding [abstract]. Proceedings of the Ninth Cochrane Colloquium, 2001.

2001 (runner-up) - Full publication: Sterne JAC, Jüni P, Schulz KF, Altman DG, Bartlett C, and Egger M. Statistical methods for assessing the influence of study characteristics on treatment effects in “meta-epidemiological” research. Stat Med 2002;21:1513–1524.

2010 - Kirkham JJ, Riley R, Williamson P. Is multivariate meta-analysis a solution for reducing the impact of outcome reporting bias in systematic reviews? [abstract] Proceedings of the Eighteenth Cochrane Colloquium, 2010.

2012 - Page MJ, McKenzie JE, Green SE, Forbes A. Types of selective inclusion and reporting bias in randomised trials and systematic reviews of randomised trials [presentation]. Proceedings of the Twentieth Cochrane Colloquium, 2012.

References

- Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 2010;7:e1000326. doi: 10.1371/journal.pmed.1000326.

- Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP. et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. J Clin Epidemiol. 2009;62:e1–e34. doi: 10.1016/j.jclinepi.2009.06.006.

- Hedges LV. Commentary. Statist Med. 1987;6:381–385. doi: 10.1002/sim.4780060333.

- Chalmers I, Hedges LV, Cooper H. A brief history of research synthesis. Eval Health Prof. 2002;25:12–37. doi: 10.1177/0163278702025001003.

- Medical Research Council. STREPTOMYCIN treatment of pulmonary tuberculosis. Br Med J. 1948;2:769–782.

- Hill AB. Suspended judgment. Memories of the British streptomycin trial in tuberculosis. The first randomized clinical trial. Control Clin Trials. 1990;11:77–79. doi: 10.1016/0197-2456(90)90001-I.

- Sterling TD. Publication decisions and their possible effects on inferences drawn from tests of significance - or vice versa. J Am Stat Assoc. 1959;54:30–34.

- Rosenthal R. The file drawer problem and tolerance for null results. Psycholog Bull. 1979;86:638–641.

- Dickersin K, Chan S, Chalmers TC, Sacks HS, Smith H Jr. Publication bias and clinical trials. Control Clin Trials. 1987;8:343–353. doi: 10.1016/0197-2456(87)90155-3.

- Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–872. doi: 10.1016/0140-6736(91)90201-Y.

- Dickersin K, Min YI, Meinert CL. Factors influencing publication of research results. Follow-up of applications submitted to two institutional review boards. JAMA. 1992;267:374–378. doi: 10.1001/jama.1992.03480030052036.

- Light KE. Analyzing nonlinear scatchard plots. Science. 1984;223:76–78. doi: 10.1126/science.6546323.

- Begg CB, Berlin JA. Publication bias: a problem in interpreting medical data. J Royal Stat Soc Series A (Stat Soc) 1988;151:419–463. doi: 10.2307/2982993.

- Dear KBG, Begg CB. An approach for assessing publication bias prior to performing a meta-analysis. Stat Sci. 1992;7:237–245. doi: 10.1214/ss/1177011363.

- Hedges LV. Modeling publication selection effects in meta-analysis. Stat Sci. 1992;7:246–255. doi: 10.1214/ss/1177011364.

- Pocock SJ, Hughes MD, Lee RJ. Statistical problems in the reporting of clinical trials. A survey of three medical journals. N Engl J Med. 1987;317:426–432. doi: 10.1056/NEJM198708133170706.

- Hemminki E. Study of information submitted by drug companies to licensing authorities. Br Med J. 1980;280:833–836. doi: 10.1136/bmj.280.6217.833.

- Gotzsche PC. Multiple publication of reports of drug trials. Eur J Clin Pharmacol. 1989;36:429–432. doi: 10.1007/BF00558064.

- Kramer MS, Shapiro SH. Scientific challenges in the application of randomized trials. JAMA. 1984;252:2739–2745. doi: 10.1001/jama.1984.03350190041017.

- Chalmers TC, Smith H Jr, Blackburn B, Silverman B, Schroeder B, Reitman D. et al. A method for assessing the quality of a randomized control trial. Control Clin Trials. 1981;2:31–49. doi: 10.1016/0197-2456(81)90056-8.

- Emerson JD, Burdick E, Hoaglin DC, Mosteller F, Chalmers TC. An empirical study of the possible relation of treatment differences to quality scores in controlled randomized clinical trials. Control Clin Trials. 1990;11:339–352. doi: 10.1016/0197-2456(90)90175-2.

- Juni P, Witschi A, Bloch R, Egger M. The hazards of scoring the quality of clinical trials for meta-analysis. JAMA. 1999;282:1054–1060. doi: 10.1001/jama.282.11.1054.

- Moher D, Jadad AR, Tugwell P. Assessing the quality of randomized controlled trials. Current issues and future directions. Int J Technol Assess Health Care. 1996;12:195–208. doi: 10.1017/S0266462300009570.

- Schulz KF. Subverting randomization in controlled trials. JAMA. 1995;274:1456–1458. doi: 10.1001/jama.1995.03530180050029.

- Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias. Dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA. 1995;273:408–412. doi: 10.1001/jama.1995.03520290060030.

- Naylor CD. Meta-analysis and the meta-epidemiology of clinical research. BMJ. 1997;315:617–619. doi: 10.1136/bmj.315.7109.617.

- Sterne JA, Juni P, Schulz KF, Altman DG, Bartlett C, Egger M. Statistical methods for assessing the influence of study characteristics on treatment effects in 'meta-epidemiological' research. Stat Med. 2002;21:1513–1524. doi: 10.1002/sim.1184.

- Higgins JP, Altman DG, Gotzsche PC, Juni P, Moher D, Oxman AD. et al. The cochrane collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928. doi: 10.1136/bmj.d5928.

- Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P. et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336:924–926. doi: 10.1136/bmj.39489.470347.AD.

- Green S, Higgins J. Glossary. Cochrane handbook for systematic reviews of interventions 4.2. 5 [Updated May 2005] 2009.

- Juni P, Altman DG, Egger M. Systematic reviews in health care: assessing the quality of controlled clinical trials. BMJ. 2001;323:42–46. doi: 10.1136/bmj.323.7303.42.

- Begg C, Cho M, Eastwood S, Horton R, Moher D, Olkin I. et al. Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA. 1996;276:637–639. doi: 10.1001/jama.1996.03540080059030.

- Cochrane AL. Effectiveness and efficiency: random reflections on health services. 1973.

- A proposal for structured reporting of randomized controlled trials. The standards of reporting trials group. JAMA. 1994;272(24):1926–1931. doi: 10.1001/jama.1994.03520240054041.

- Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet. 2001;357:1191–1194. doi: 10.1016/S0140-6736(00)04337-3.

- Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c332. doi: 10.1136/bmj.c332.

- Schulz KF, Chalmers I, Altman DG, Grimes DA, Dore CJ. The methodologic quality of randomization as assessed from reports of trials in specialist and general medical journals. Online J Curr Clin Trials. 1995;197:81. [PubMed] [Google Scholar]

- Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315:629–634. doi: 10.1136/bmj.315.7109.629.

- Chan AW, Hrobjartsson A, Haahr MT, Gotzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004;291:2457–2465. doi: 10.1001/jama.291.20.2457.

- De Angelis C, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R. et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. N Engl J Med. 2004;351:1250–1251. doi: 10.1056/NEJMe048225.

- Dwan K, Altman DG, Arnaiz JA, Bloom J, Chan AW, Cronin E. et al. Systematic review of the empirical evidence of study publication bias and outcome reporting bias. PLoS One. 2008;3:e3081. doi: 10.1371/journal.pone.0003081.

- Wood L, Egger M, Gluud LL, Schulz KF, Juni P, Altman DG. et al. Empirical evidence of bias in treatment effect estimates in controlled trials with different interventions and outcomes: meta-epidemiological study. BMJ. 2008;336:601–605. doi: 10.1136/bmj.39465.451748.AD.

- Sterne JA, Sutton AJ, Ioannidis JP, Terrin N, Jones DR, Lau J. et al. Recommendations for examining and interpreting funnel plot asymmetry in meta-analyses of randomised controlled trials. BMJ. 2011;343:d4002. doi: 10.1136/bmj.d4002.

- Savovic J, Jones HE, Altman DG, Harris RJ, Juni P, Pildal J. et al. Influence of reported study design characteristics on intervention effect estimates from randomized, controlled trials. Ann Intern Med. 2012;157:429–438. doi: 10.7326/0003-4819-157-6-201209180-00537.

- Kirkham JJ, Dwan KM, Altman DG, Gamble C, Dodd S, Smyth R. et al. The impact of outcome reporting bias in randomised controlled trials on a cohort of systematic reviews. BMJ. 2010;340:c365. doi: 10.1136/bmj.c365.

- Pildal J, Hrobjartsson A, Jorgensen KJ, Hilden J, Altman DG, Gotzsche PC. Impact of allocation concealment on conclusions drawn from meta-analyses of randomized trials. Int J Epidemiol. 2007;36:847–857. doi: 10.1093/ije/dym087.

- Beecher HK. The powerful placebo. J Am Med Assoc. 1955;159:1602–1606. doi: 10.1001/jama.1955.02960340022006.

- Chalmers TC, Celano P, Sacks HS, Smith H Jr. Bias in treatment assignment in controlled clinical trials. N Engl J Med. 1983;309:1358–1361. doi: 10.1056/NEJM198312013092204.

- Hróbjartsson A, Gøtzsche PC. Placebo interventions for all clinical conditions. Cochrane Database Syst Rev. 2010;1. doi: 10.1002/14651858.CD003974.pub3.

- Hrobjartsson A, Thomsen AS, Emanuelsson F, Tendal B, Hilden J, Boutron I. et al. Observer bias in randomised clinical trials with binary outcomes: systematic review of trials with both blinded and non-blinded outcome assessors. BMJ. 2012;344:e1119. doi: 10.1136/bmj.e1119.

- Gotzsche PC. Blinding during data analysis and writing of manuscripts. Control Clin Trials. 1996;17:285–290. doi: 10.1016/0197-2456(95)00263-4.

- Haahr MT, Hrobjartsson A. Who is blinded in randomized clinical trials? A study of 200 trials and a survey of authors. Clin Trials. 2006;3:360–365. doi: 10.1177/1740774506069153.

- Schulz KF, Chalmers I, Altman DG. The landscape and lexicon of blinding in randomized trials. Ann Intern Med. 2002;136:254–259. doi: 10.7326/0003-4819-136-3-200202050-00022.

- Devereaux PJ, Manns BJ, Ghali WA, Quan H, Lacchetti C, Montori VM. et al. Physician interpretations and textbook definitions of blinding terminology in randomized controlled trials. JAMA. 2001;285:2000–2003. doi: 10.1001/jama.285.15.2000.

- Boutron I, Estellat C, Ravaud P. A review of blinding in randomized controlled trials found results inconsistent and questionable. J Clin Epidemiol. 2005;58:1220–1226. doi: 10.1016/j.jclinepi.2005.04.006.

- Montori VM, Bhandari M, Devereaux PJ, Manns BJ, Ghali WA, Guyatt GH. In the dark: the reporting of blinding status in randomized controlled trials. J Clin Epidemiol. 2002;55:787–790. doi: 10.1016/S0895-4356(02)00446-8.

- Hróbjartsson A, Thomsen ASS, Emanuelsson F, Tendal B, Hilden J, Boutron I. et al. Observer bias in randomized clinical trials with measurement scale outcomes: a systematic review of trials with both blinded and nonblinded assessors. Can Med Assoc J. 2013;185:E201–E211. doi: 10.1503/cmaj.120744.

- Boutron I, Estellat C, Guittet L, Dechartres A, Sackett DL, Hrobjartsson A. et al. Methods of blinding in reports of randomized controlled trials assessing pharmacologic treatments: a systematic review. PLoS Med. 2006;3:e425. doi: 10.1371/journal.pmed.0030425.

- Goodman S, Dickersin K. Metabias: a challenge for comparative effectiveness research. Ann Intern Med. 2011;155:61–62. doi: 10.7326/0003-4819-155-1-201107050-00010.

- Sterling TD, Rosenbaum WL, Weinkam JJ. Publication decisions revisited: the effect of the outcome of statistical tests on the decision to publish and vice versa. Am Stat. 1995;49:108–112.

- Moscati R, Jehle D, Ellis D, Fiorello A, Landi M. Positive-outcome bias: comparison of emergency medicine and general medicine literatures. Acad Emerg Med. 1994;1:267–271. doi: 10.1111/j.1553-2712.1994.tb02443.x.

- Liebeskind DS, Kidwell CS, Sayre JW, Saver JL. Evidence of publication bias in reporting acute stroke clinical trials. Neurology. 2006;67:973–979. doi: 10.1212/01.wnl.0000237331.16541.ac.

- Carter AO, Griffin GH, Carter TP. A survey identified publication bias in the secondary literature. J Clin Epidemiol. 2006;59:241–245. doi: 10.1016/j.jclinepi.2005.08.011.

- Weber EJ, Callaham ML, Wears RL, Barton C, Young G. Unpublished research from a medical specialty meeting: why investigators fail to publish. JAMA. 1998;280:257–259. doi: 10.1001/jama.280.3.257.

- Dickersin K, Min YI. NIH clinical trials and publication bias. Online J Curr Clin Trials. 1993;50:4967.

- Dickersin K. How important is publication bias? A synthesis of available data. AIDS Educ Prev. 1997;9:15–21.

- Vevea JL, Hedges LV. A general linear model for estimating effect size in the presence of publication bias. Psychometrika. 1995;60:419–435. doi: 10.1007/BF02294384.

- Taylor SJ, Tweedie RL. Practical estimates of the effect of publication bias in meta-analysis. Aust Epidemiol. 1998;5:14–17.

- Givens GH, Smith DD, Tweedie RL. Publication bias in meta-analysis: a Bayesian data-augmentation approach to account for issues exemplified in the passive smoking debate. Stat Sci. 1997. pp. 221–240.

- Duval S, Tweedie R. Trim and fill: a simple funnel-plot-based method of testing and adjusting for publication bias in meta-analysis. Biometrics. 2000;56:455–463. doi: 10.1111/j.0006-341X.2000.00455.x.

- Sterne JA, Egger M. Funnel plots for detecting bias in meta-analysis: guidelines on choice of axis. J Clin Epidemiol. 2001;54:1046–1055. doi: 10.1016/S0895-4356(01)00377-8.

- Terrin N, Schmid CH, Lau J, Olkin I. Adjusting for publication bias in the presence of heterogeneity. Stat Med. 2003;22:2113–2126. doi: 10.1002/sim.1461.

- Schwarzer G, Antes G, Schumacher M. A test for publication bias in meta-analysis with sparse binary data. Stat Med. 2007;26:721–733. doi: 10.1002/sim.2588.

- Peters JL, Sutton AJ, Jones DR, Abrams KR, Rushton L. Contour-enhanced meta-analysis funnel plots help distinguish publication bias from other causes of asymmetry. J Clin Epidemiol. 2008;61:991–996. doi: 10.1016/j.jclinepi.2007.11.010.

- Moreno SG, Sutton AJ, Ades AE, Stanley TD, Abrams KR, Peters JL. et al. Assessment of regression-based methods to adjust for publication bias through a comprehensive simulation study. BMC Med Res Methodol. 2009;9:2. doi: 10.1186/1471-2288-9-2.

- Moreno SG, Sutton AJ, Turner EH, Abrams KR, Cooper NJ, Palmer TM. et al. Novel methods to deal with publication biases: secondary analysis of antidepressant trials in the FDA trial registry database and related journal publications. BMJ. 2009;339:b2981. doi: 10.1136/bmj.b2981.

- Terrin N, Schmid CH, Lau J. In an empirical evaluation of the funnel plot, researchers could not visually identify publication bias. J Clin Epidemiol. 2005;58:894–901. doi: 10.1016/j.jclinepi.2005.01.006.

- Peters JL, Sutton AJ, Jones DR, Abrams KR, Rushton L. Comparison of two methods to detect publication bias in meta-analysis. JAMA. 2006;295:676–680. doi: 10.1001/jama.295.6.676.

- Peters JL, Sutton AJ, Jones DR, Abrams KR, Rushton L. Performance of the trim and fill method in the presence of publication bias and between-study heterogeneity. Stat Med. 2007;26:4544–4562. doi: 10.1002/sim.2889.

- Ioannidis JP, Trikalinos TA. The appropriateness of asymmetry tests for publication bias in meta-analyses: a large survey. CMAJ. 2007;176:1091–1096. doi: 10.1503/cmaj.060410.

- Sterne JAC, Egger M, Moher D. In: Cochrane handbook for systematic reviews of interventions. Version 5.1.0 (updated March 2011) edition. Higgins JPT, Green S, editors. The Cochrane Collaboration; 2011. Chapter 10: addressing reporting biases.

- Hutton JL, Williamson PR. Bias in meta-analysis due to outcome variable selection within studies. J R Stat Soc Ser C (Appl Stat). 2002;49:359–370.

- Hahn S, Williamson PR, Hutton JL. Investigation of within-study selective reporting in clinical research: follow-up of applications submitted to a local research ethics committee. J Eval Clin Pract. 2002;8:353–359. doi: 10.1046/j.1365-2753.2002.00314.x.

- Chan AW, Krleza-Jeric K, Schmid I, Altman DG. Outcome reporting bias in randomized trials funded by the Canadian Institutes of Health Research. CMAJ. 2004;171:735–740. doi: 10.1503/cmaj.1041086.

- von Elm E, Rollin A, Blumle A, Senessie C, Low N, Egger M. Selective reporting of outcomes of drug trials. Comparison of study protocols and published articles. 2006.

- Furukawa TA, Watanabe N, Omori IM, Montori VM, Guyatt GH. Association between unreported outcomes and effect size estimates in Cochrane meta-analyses. JAMA. 2007;297:468–470. doi: 10.1001/jama.297.5.468-b.

- Page MJ, McKenzie JE, Forbes A. Many scenarios exist for selective inclusion and reporting of results in randomized trials and systematic reviews. J Clin Epidemiol. 2013.

- Chan AW, Altman DG. Identifying outcome reporting bias in randomised trials on PubMed: review of publications and survey of authors. BMJ. 2005;330:753. doi: 10.1136/bmj.38356.424606.8F.

- Smyth RM, Kirkham JJ, Jacoby A, Altman DG, Gamble C, Williamson PR. Frequency and reasons for outcome reporting bias in clinical trials: interviews with trialists. BMJ. 2011;342:c7153. doi: 10.1136/bmj.c7153.

- Dwan K, Gamble C, Williamson PR, Kirkham JJ. Systematic review of the empirical evidence of study publication bias and outcome reporting bias - an updated review. PLoS One. 2013;8:e66844. doi: 10.1371/journal.pone.0066844.

- Williamson PR, Gamble C, Altman DG, Hutton JL. Outcome selection bias in meta-analysis. Stat Methods Med Res. 2005;14:515–524. doi: 10.1191/0962280205sm415oa.

- Williamson P, Altman D, Blazeby J, Clarke M, Gargon E. Driving up the quality and relevance of research through the use of agreed core outcomes. J Health Serv Res Policy. 2012;17:1–2. doi: 10.1258/jhsrp.2011.011131.

- The COMET Initiative. http://www.comet-initiative.org/, Last accessed 19th September 2013.

- Greenland S, O’Rourke K. On the bias produced by quality scores in meta-analysis, and a hierarchical view of proposed solutions. Biostat. 2001;2:463–471. doi: 10.1093/biostatistics/2.4.463.

- Berlin JA. Does blinding of readers affect the results of meta-analyses? University of Pennsylvania Meta-analysis Blinding Study Group. Lancet. 1997;350:185–186. doi: 10.1016/s0140-6736(05)62352-5.

- Morissette K, Tricco AC, Horsley T, Chen MH, Moher D. Blinded versus unblinded assessments of risk of bias in studies included in a systematic review. Cochrane Database Syst Rev. 2011. p. MR000025.

- Moher D, Pham B, Jones A, Cook DJ, Jadad AR, Moher M. et al. Does quality of reports of randomised trials affect estimates of intervention efficacy reported in meta-analyses? Lancet. 1998;352:609–613. doi: 10.1016/S0140-6736(98)01085-X.

- Gabriel SE, Normand SL. Getting the methods right–the foundation of patient-centered outcomes research. N Engl J Med. 2012;367:787–790. doi: 10.1056/NEJMp1207437.

- Simera I, Moher D, Hoey J, Schulz KF, Altman DG. A catalogue of reporting guidelines for health research. Eur J Clin Invest. 2010;40:35–53. doi: 10.1111/j.1365-2362.2009.02234.x.

- Hopewell S, Dutton S, Yu LM, Chan AW, Altman DG. The quality of reports of randomised trials in 2000 and 2006: comparative study of articles indexed in PubMed. BMJ. 2010;340:c723. doi: 10.1136/bmj.c723.

- Turner L, Shamseer L, Altman DG, Weeks L, Peters J, Kober T. et al. Consolidated standards of reporting trials (CONSORT) and the completeness of reporting of randomised controlled trials (RCTs) published in medical journals. Cochrane Database Syst Rev. 2012;11:MR000030. doi: 10.1002/14651858.MR000030.pub2.

- Simes RJ. Publication bias: the case for an international registry of clinical trials. J Clin Oncol. 1986;4:1529–1541. doi: 10.1200/JCO.1986.4.10.1529.

- Moher D. Clinical-trial registration: a call for its implementation in Canada. CMAJ. 1993;149:1657–1658.

- Horton R, Smith R. Time to register randomised trials. The case is now unanswerable. BMJ. 1999;319:865–866. doi: 10.1136/bmj.319.7214.865.

- Abbasi K. Compulsory registration of clinical trials. BMJ. 2004;329:637–638. doi: 10.1136/bmj.329.7467.637.

- Moja LP, Moschetti I, Nurbhai M, Compagnoni A, Liberati A, Grimshaw JM. et al. Compliance of clinical trial registries with the World Health Organization minimum data set: a survey. Trials. 2009;10:56. doi: 10.1186/1745-6215-10-56.

- Prayle AP, Hurley MN, Smyth AR. Compliance with mandatory reporting of clinical trial results on ClinicalTrials.gov: cross sectional study. BMJ. 2012;344:d7373. doi: 10.1136/bmj.d7373.

- Mathieu S, Boutron I, Moher D, Altman DG, Ravaud P. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA. 2009;302:977–984. doi: 10.1001/jama.2009.1242.

- Higgins JPT, Altman DG, Sterne JAC. In: Cochrane handbook for systematic reviews of interventions. Version 5.1.0 (updated March 2011) edition. Higgins JPT, Green S, editors. The Cochrane Collaboration; 2011. Chapter 8: assessing risk of bias in included studies.

- Hartling L, Ospina M, Liang Y, Dryden DM, Hooton N, Krebs SJ. et al. Risk of bias versus quality assessment of randomised controlled trials: cross sectional study. BMJ. 2009;339:b4012. doi: 10.1136/bmj.b4012.

- Hartling L, Hamm MP, Milne A, Vandermeer B, Santaguida PL, Ansari M. et al. Testing the risk of bias tool showed low reliability between individual reviewers and across consensus assessments of reviewer pairs. J Clin Epidemiol. 2012.

- Higgins JP, Ramsay C, Reeves B, Deeks JJ, Shea B, Valentine J. et al. Issues relating to study design and risk of bias when including non-randomized studies in systematic reviews on the effects of interventions. Res Syn Methods. 2012. doi: 10.1002/jrsm.1056.

- Norris SL, Moher D, Reeves B, Shea B, Loke Y, Garner S. et al. Issues relating to selective reporting when including non-randomized studies in systematic reviews on the effects of healthcare interventions. Res Syn Methods. 2012. doi: 10.1002/jrsm.1062.

- Valentine J, Thompson SG. Issues relating to confounding and meta-analysis when including non-randomized studies in systematic reviews on the effects of interventions. Res Syn Methods. 2012. doi: 10.1002/jrsm.1064.

- Deeks JJ, Dinnes J, D’Amico R, Sowden AJ, Sakarovitch C, Song F. et al. Evaluating non-randomised intervention studies. Health Technol Assess. 2003;7:iii–173. doi: 10.3310/hta7270.

- Chandler J, Clarke M, Higgins J. Cochrane methods. Cochrane Database Syst Rev. 2012. pp. 1–56.

- Dwan K, Altman DG, Cresswell L, Blundell M, Gamble CL, Williamson PR. Comparison of protocols and registry entries to published reports for randomised controlled trials. Cochrane Database Syst Rev. 2011. p. MR000031.

- Gotzsche PC, Hrobjartsson A, Johansen HK, Haahr MT, Altman DG, Chan AW. Constraints on publication rights in industry-initiated clinical trials. JAMA. 2006;295:1645–1646. doi: 10.1001/jama.295.14.1645.

- Roseman M, Turner EH, Lexchin J, Coyne JC, Bero LA, Thombs BD. Reporting of conflicts of interest from drug trials in Cochrane reviews: cross sectional study. BMJ. 2012;345:e5155. doi: 10.1136/bmj.e5155.

- Bassler D, Briel M, Montori VM, Lane M, Glasziou P, Zhou Q. et al. Stopping randomized trials early for benefit and estimation of treatment effects: systematic review and meta-regression analysis. JAMA. 2010;303:1180–1187. doi: 10.1001/jama.2010.310.

- Montori VM, Devereaux PJ, Adhikari NK, Burns KE, Eggert CH, Briel M. et al. Randomized trials stopped early for benefit: a systematic review. JAMA. 2005;294:2203–2209. doi: 10.1001/jama.294.17.2203.

- Goodman S, Berry D, Wittes J. Bias and trials stopped early for benefit. JAMA. 2010;304:157–159. doi: 10.1001/jama.2010.931.

- Dechartres A, Boutron I, Trinquart L, Charles P, Ravaud P. Single-center trials show larger treatment effects than multicenter trials: evidence from a meta-epidemiologic study. Ann Intern Med. 2011;155:39–51. doi: 10.7326/0003-4819-155-1-201107050-00006.

- Bafeta A, Dechartres A, Trinquart L, Yavchitz A, Boutron I, Ravaud P. Impact of single centre status on estimates of intervention effects in trials with continuous outcomes: meta-epidemiological study. BMJ. 2012;344:e813. doi: 10.1136/bmj.e813.

- Ip S, Hadar N, Keefe S, Parkin C, Iovin R, Balk EM. et al. A Web-based archive of systematic review data. Syst Rev. 2012;1:15. doi: 10.1186/2046-4053-1-15.

- Hopewell S, Boutron I, Altman DG, Ravaud P. Incorporation of assessments of risk of bias of primary studies in systematic reviews of randomized trials: a cross-sectional review. Journal TBD. in press.

- Turner RM, Spiegelhalter DJ, Smith GC, Thompson SG. Bias modelling in evidence synthesis. J R Stat Soc Ser A Stat Soc. 2009;172:21–47. doi: 10.1111/j.1467-985X.2008.00547.x.