Abstract

Resampling-based multiple testing procedures are widely used in genomic studies to identify differentially expressed genes and to conduct genome-wide association studies. However, the power and stability properties of these popular resampling-based multiple testing procedures have not been extensively evaluated. Our study focuses on investigating the power and stability of seven resampling-based multiple testing procedures frequently used in high-throughput data analysis for small sample size data through simulations and gene oncology examples. The bootstrap single-step minP procedure and the bootstrap step-down minP procedure perform the best among all tested procedures, when sample size is as small as 3 in each group and either familywise error rate or false discovery rate control is desired. When sample size increases to 12 and false discovery rate control is desired, the permutation maxT procedure and the permutation minP procedure perform best. Our results provide guidance for high-throughput data analysis when sample size is small.

1. Introduction

With rapidly developing biotechnology, microarrays and next generation sequencing have been widely used in biomedical and biological fields for identifying differentially expressed genes, detecting transcription factor binding sites, and mapping complex traits using single nucleotide polymorphisms (SNPs) [1–7]. The multiple testing error rates associated with thousands, even millions of hypotheses testing, need to be taken into account. Common multiple testing error rates controlled in multiple hypotheses testing are the familywise error rate (FWER), which is the probability of at least one false rejection [8, 9] and the false discovery rate (FDR), which is the expected proportion of falsely rejected null hypotheses [10].

Resampling-based multiple testing procedures are widely used in high-throughput data analysis (e.g., microarray and next generation sequencing), especially when the sample size is small or the distribution of test statistic is nonnormally distributed or is unknown. Resampling-based multiple testing procedures can account for dependent structures among P values or test statistics, resulting in lower type II errors. The commonly used resampling techniques include permutation tests and bootstrap methods.

Permutation tests are nonparametric statistical significance tests, where the test statistics' distribution under the null hypothesis is constructed by calculating all possible values or a concrete number of test statistics (usually 1000 or above) from permuted observations under the null hypothesis. The theory of the permutation test is based on the work by Fisher and Pitman in the 1930s. Permutation tests are distribution-free, which can provide exact P values even when sample size is small.

The bootstrap method, first introduced by Efron [11] and further discussed by Efron and Tibshirani [12], is a way of approximating the sampling distribution from just one sample. Instead of taking many simple random samples from the population to find the sampling distribution of a sample statistic, the bootstrap method repeatedly samples with replacement from one random sample. Efron [11] showed that the bootstrap method provides an asymptotically unbiased estimator for the variance of a sample median and for error rates in a linear discrimination problem (outperforming cross-validation). Freedman [13] conclusively showed that bootstrap approximation of the distribution of least square estimates is valid. Finally, Hall [14] showed that the bootstrap method's reduction of error coverage probability, from o(n −1/2) to o(n −1), makes the bootstrap method one order of magnitude more accurate than the delta method. The P values computed by the bootstrap method are less exact than P values obtained from the permutation method, and additionally, P values estimated by the bootstrap method are asymptotically convergent to the true P values [15].

Different resampling methods can draw different conclusions, however, when applied to the same data set. An investigation of multiple testing error rate control, power, and stability of those resampling methods under different situations is necessary to provide guidance for data analysis, so that optimal methods in different scenarios could be used to maximize power and minimize multiple testing error rates.

In this paper, we focus on investigating the power and stability properties of several commonly used resampling-based multiple testing procedures: (1) the permutation tests [16]; (2) the permutation-based significant analysis of microarray (SAM) procedure [17]; and (3) the bootstrap multiple testing procedures [15].

2. Materials and Methods

2.1. Permutation Test

To carry out a permutation test based on a test statistic that measures the size of an effect of interest, we proceed as follows.

Compute the test statistics for the observed data set, such as two sample t-test statistics.

Permute the original data in a way that matches the null hypothesis to get permuted resamples and construct the reference distribution using the test statistics calculated from permuted resamples.

Calculate the critical value of a level α test based on the upper α percentile of the reference distribution, or obtain the raw P value by computing the proportion of permutation test statistics that are as extreme as or more extreme than the observed test statistic.

Westfall and Young [16] proposed two methods to adjust raw P values to control the multiple testing error rates. One is single-step minP procedure and the other is single-step maxT procedure.

The single-step minP adjusted P values are defined as [18]

| (1) |

The single-step maxT adjusted P values are defined in terms of test statistics T i, namely, [18]

| (2) |

where H M is the complete null hypothesis. P l is the raw P value for the lth hypothesis, and T l is the observed test statistic for the lth hypothesis.

2.2. Significance Analysis of Microarrays (SAM) Procedure

The Significance Analysis of Microarrays (SAM) procedure proposed by Tusher et al. [17] identifies genes with significant changes in expression using a set of gene-specific t-tests. In SAM, genes are assigned with scores relative to change in gene expression and its standard deviation of repeated measurements. Scatter plots of the observed relative differences and the expected relative differences estimated through permutation identifies statistically significant genes based on a fixed threshold.

Based on the description of SAM in Tusher et al. [17], the SAM procedure can be summarized as follows.

Compute a test statistic t i for each gene i (i = 1,…, g).

Compute order statistics t (i) such that t (1) ≤ t (2) ≤ ⋯≤t (g).

Perform B permutations of the responses/covariates y 1,…, y n. For each permutation b, compute the permuted test statistics t i,b and the corresponding order statistics t (1),b ≤ t (2),b ≤ ⋯≤t (g),b.

From the B permutations, estimate the expected values of order statistics by .

Form a quantile-quantile (Q-Q) plot (SAM plot) of the observed t (i) versus the expected .

For a given threshold Δ, starting at the origin, and moving up to find the first i = i 1 such that . All genes past i 1 are called significant positives. Similarly, starting at the origin and moving down to the left, find the first i = i 2 such that . All genes past i 2 are called significant negatives. Define the upper cut point Cutup(Δ) = min{t (i) : i ≤ i 1} = t (i1) and the lower cut point Cutlow(Δ) = max{t (i) : i ≥ i 2} = t (i2).

For a given threshold, the expected number of false rejections E(V) is estimated by computing the number of genes with t i,b above Cutup(Δ) or below Cutlow(Δ) for each of the B permutation and averaging the numbers over B permutations.

A threshold Δ is chosen to control the Fdr (Fdr = (E(V)/r)) under the complete null hypothesis, at an acceptable nominal level.

2.3. Bootstrap Method

The bootstrap method based on estimated null distribution of test statistics was introduced by Pollard and van der Laan [15] and proceeds as follows:

Compute the observed test statistic for the observed data set.

Resample the data with replacement within each group to obtain bootstrap resamples, compute the resampled test statistics for each resampled data set, and construct the reference distribution using the centered and/or scaled resampled test statistics.

Calculate the critical value of a level α test based on the upper α percentile of the reference distribution, or obtain the raw P values by computing the proportion of bootstrapped test statistics that is as extreme as or more extreme than the observed test statistic.

The MTP function based on the bootstrap method includes single-step minP and maxT adjusted P values, as well as step-down minP and step-down maxT adjusted P values. The single-step maxT and minP adjusted P values are defined as before.

The step-down minP adjusted P values are defined as

| (3) |

and the step-down maxT adjusted P values are defined as

| (4) |

where |t s1 | ≥|t s2 | ≥⋯≥|t sm| denote the ordered test statistics [18].

2.4. Simulation Setup

Simulation studies were conducted to compare the power and stability of the resampling-based multiple testing procedures for both independent test statistics and dependent test statistics. According to Rubin et al. [19], the power is defined as the expected proportion of true positives. The stability is measured as the variance of true discoveries and variance of total discoveries.

In our first simulation study, each set includes 100 independently generated groups of two samples with equal sample size of 3 or 12 in each group. 100 repetitions are chosen because computationally 100 is more efficient than 1000 or even higher repetitions. 1000 repetitions are also tried and similar results are obtained. Thus, 100 repetitions are chosen for computational efficiency. The total number of genes (m) is set to be 2000 with the fraction of true null hypotheses (m 0/m) at 50%. In the two-group comparison, the standardized logarithms of gene expression levels are generated from multivariate normal distribution. One group has 50% of genes with means at μ and the remaining with means at 0. All genes in the other group have means at 0. The mean expression level μ on log2 scale is set to be from 1 to 6 with step 0.50 for the first simulation study. The variances of the standardized logarithm of gene expression levels are equal to 1 in both groups. Thus, the mean differences of μ in gene expression between the two groups are the Cohen's d effect sizes. The pairwise correlation coefficients of test statistics are set to be 0 in our simulation study. The test statistics used are equal variance t-test throughout the simulation study. The FWER/FDR level is set at 5% (α = 0.05).

We conducted another simulation study to examine the effect of fraction of true null hypotheses on power and stability. In our second simulation study, each data set includes 100 independently generated samples of two groups with equal sample size of 3. The total number of genes (m) is set to be 1000, with the fraction of differentially expressed genes ((m − m 0)/m) equal to 10%, 25%, 50%, 75%, and 90% to cover all possible scenarios. In the two-group comparison, the gene expression level on log2 scale is generated randomly from a multivariate normal distribution with μ = 0 and σ = 1. The correlations between genes are randomly fluctuated between 0 and 1 to mimic the correlations in real microarray data. The mean differences are set between 1 and 2, with the step equaling the inverse of the number of differentially expressed gene (m − m 0). The variances are set to 1. Equal variance t-tests are used for this simulation study, and the FWER/FDR level is set at 5% (α = 0.05).

The mt.maxT and mt.minP functions in R were used to evaluate the Westfall and Young's permutation test. The sam function in R was used for the SAM procedure. The Bootstrap method proposed by Pollard and van der Laan [15] was executed using the MTP function in R. The MTP function includes the maxT method, the minP method, the single step procedure, and the step-down procedure, which results into four different functions, including single-step maxT (ss.maxT), single-step minP (ss.minP), step-down maxT (sd.maxT), and step-down minP (sd.minP).

2.5. Cancer Microarray Example

Ovarian cancer is a common cause of cancer deaths in women [20]. Microarray experiments were conducted to identify differentially expressed genes between chemotherapy favorable patients and chemotherapy unfavorable patients [21]. Those differentially expressed genes could be used to develop optimal treatment for a new ovarian cancer patient by predicting possible response to chemotherapy. The gene expression data of 12,625 genes from 6 patients' mRNA samples, obtained from Moreno et al.'s ovarian cancer microarray study, were used to show the differences in the number of total discoveries among those resampling-based multiple testing procedures with FWER or FDR controlled at 5% (data accessible at NCBI GEO database [22], accession GSE7463). The preprocessing of the ovarian cancer data set was done using the RMA background correction, quantile normalization, and robust linear model summarization. The raw P values and the adjusted P values of comparisons between the chemotherapy favorable group (3 subjects) and chemotherapy unfavorable group (3 subjects) were calculated using the resampling-based multiple testing functions in the multitest package and the siggenes package in R.

3. Results

Simulation studies were conducted to compare the power and stability across all tested multiple testing procedures for normally distributed data with either independent or randomly correlated test statistics. The sample size is 3 or 12 in each group for independent test statistics and 3 in each group for randomly correlated test statistics.

3.1. Simulation Results for Independent Test Statistics

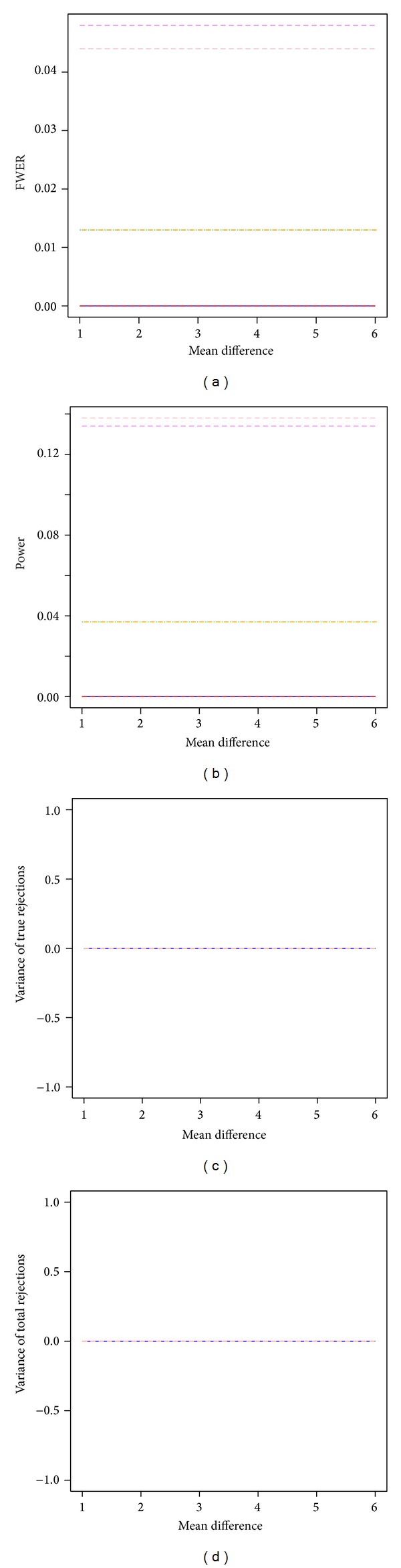

For independent test statistics with FWER controlled at 5%, the two bootstrap minP procedures outperformed all other tested procedures when sample size is 3 in each group (Figure 1). Both the bootstrap single-step minP and the bootstrap step-down minP procedures were more powerful than all other tested procedures, and their FWER estimates were close to 5% nominal level. The two permutation-based procedures (mt.maxT and mt.minP) had no power to detect any significant difference between groups, and their FWER estimates were close to 0. The power of the bootstrap maxT procedures (ss.maxT and sd.maxT) were between the permutation procedures and the bootstrap minP procedures. The estimated variances of true discoveries and total number of discoveries were around 0 for all tested resampling-based multiple testing procedures. The estimated FWER, power, and stability were constant across effect sizes.

Figure 1.

Power and stability properties of resampling-based multiple testing procedures for independent test statistics with FWER controlled at 5% and small sample size of 3 in each group (m 0/m = 50%). Solid blue line: permutation single-step maxT procedure (mt.maxT function); red dashed line: permutation single-step minP (mt.minP function); green dotted line: bootstrap single-step maxT (MTP ss.maxT function); violet dashed line: bootstrap single-step minP (MTP ss.minP function); orange dashed line: bootstrap step-down maxT (MTP sd.maxT function); pink dashed line: bootstrap step-down minP (MTP sd.minP function).

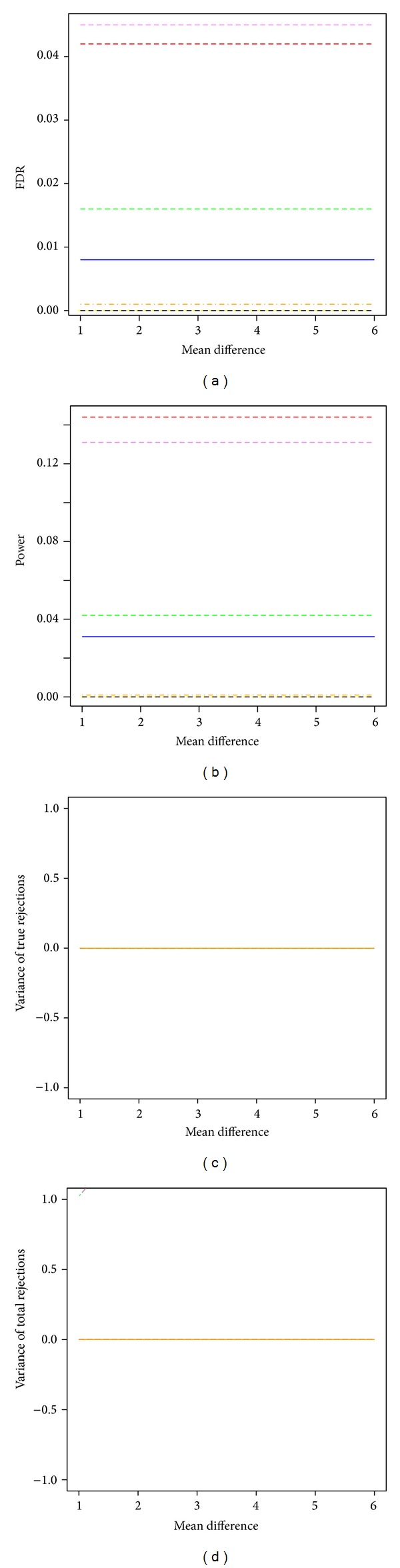

The bootstrap single-step and step-down minP procedures remained to have the largest power among all tested procedures when FDR was controlled at 5% and sample size was 3 in each group (Figure 2). The FDR estimates from the bootstrap single-step and step-down minP procedures also stayed around 5% nominal level. Both the SAM procedure and the two permutation-based maxT and minP procedures had no power to detect any significant difference, and their FDR estimates were also close to 0. Both the FDR estimates and power of the two bootstrap single-step and step-down maxT procedures were between the SAM procedure, the permutation procedures, and the bootstrap minP procedures. All resampling-based multiple testing procedures had estimated variances of true discoveries and total number of discoveries around 0. The estimated FDR, power, and stability were constant across effect sizes.

Figure 2.

Power and stability properties of resampling-based multiple testing procedures for independent test statistics with FDR controlled at 5% and small sample size of 3 in each group (m 0/m = 50%). Yellow dashed line: permutation single-step maxT procedure (mt.maxT function); black dashed line: permutation single-step minP (mt.minP function); solid blue line: bootstrap single-step maxT (MTP ss.maxT function); red dashed line: bootstrap single-step minP (MTP ss.minP function); green dashed line: bootstrap step-down maxT (MTP sd.maxT function); violet dashed line: bootstrap step-down minP (MTP sd.minP function); orange dashed line: SAM procedure (sam function).

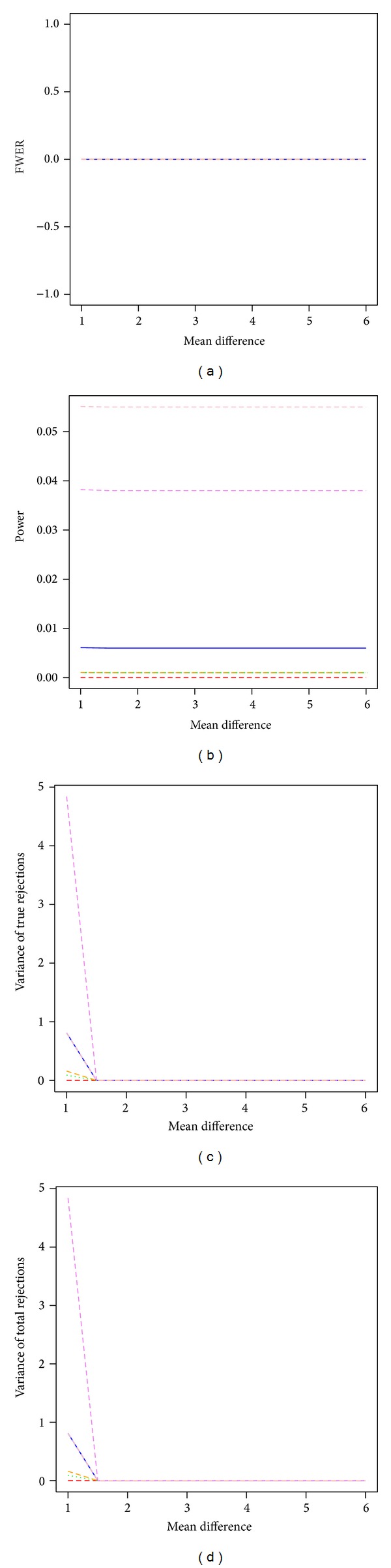

The bootstrap step-down minP procedure had the largest power across all tested procedures when sample size increased to 12 in each group (Figure 3). The bootstrap single-step maxT procedure, the bootstrap step-down maxT procedure, and the permutation single-step minP procedure showed almost zero power for detecting any difference between groups. All tested procedures had FWER estimates around 0 and showed very small estimated variances of true rejections and variances of total rejections. The estimated FWER and power remained constant across effect sizes.

Figure 3.

Power and stability properties of resampling-based multiple testing procedures for independent test statistics with FWER controlled at 5% and moderate sample size of 12 in each group (m 0/m = 50%). Solid blue line: permutation single-step maxT procedure (mt.maxT function); red dashed line: permutation single-step minP (mt.minP function); green dotted line: bootstrap single-step maxT (MTP ss.maxT function); violet dashed line: bootstrap single-step minP (MTP ss.minP function); orange dashed line: bootstrap step-down maxT (MTP sd.maxT function); pink dashed line: bootstrap step-down minP (MTP sd.minP function).

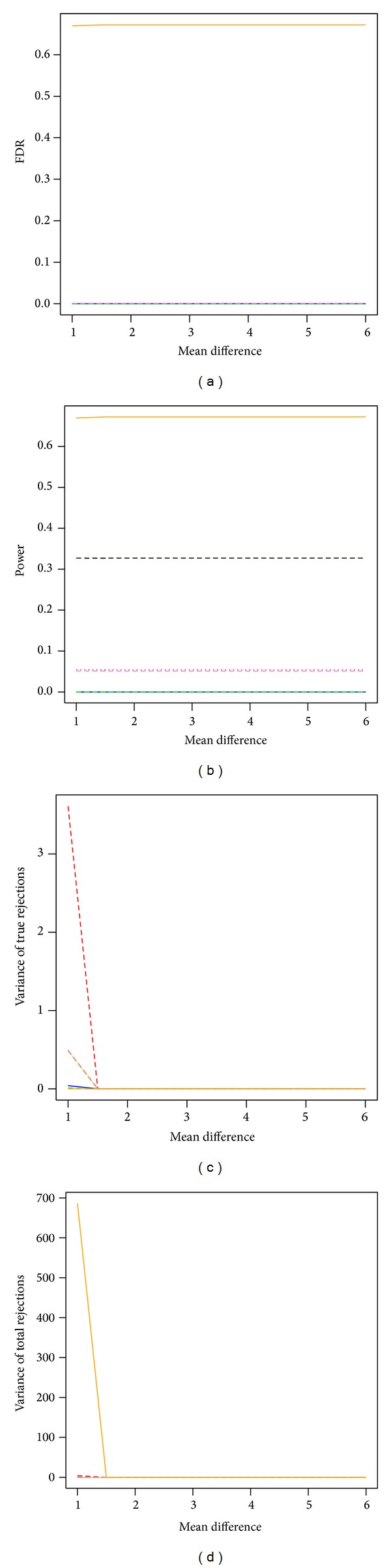

The permutation single-step maxT procedure and the permutation single-step minP procedure performed the best when FDR is controlled at 5% and sample size is 12 in each group (Figure 4). The two permutation maxT and minP procedures had much larger power than the four bootstrap MTP procedures and also had estimated FDR less than 5%. The SAM procedure failed to control the FDR at the desired level of 5%, although it had larger power than all other tested procedures. The estimated variances of total discoveries from the SAM procedure were much larger than all other procedures when the effect size is around 1. The permutation single-step maxT and minP procedures had small variances of true discoveries and total discoveries. The four bootstrap MTP procedures had low power but similar stability as the permutation maxT and minP procedures. The estimated FDR and power were also constant across effect sizes.

Figure 4.

Power and stability properties of resampling-based multiple testing procedures for independent test statistics with FDR controlled at 5% and small sample size of 12 in each group (m 0/m = 50%). Yellow dashed line: permutation single-step maxT procedure (mt.maxT function); black dashed line: permutation single-step minP (mt.minP function); solid blue line: bootstrap single-step maxT (MTP ss.maxT function); red dashed line: bootstrap single-step minP (MTP ss.minP function); green dashed line: bootstrap step-down maxT (MTP sd.maxT function); violet dashed line: bootstrap step-down minP (MTP sd.minP function); orange dashed line: SAM procedure (sam function).

3.2. Simulation Results for Dependent Test Statistics

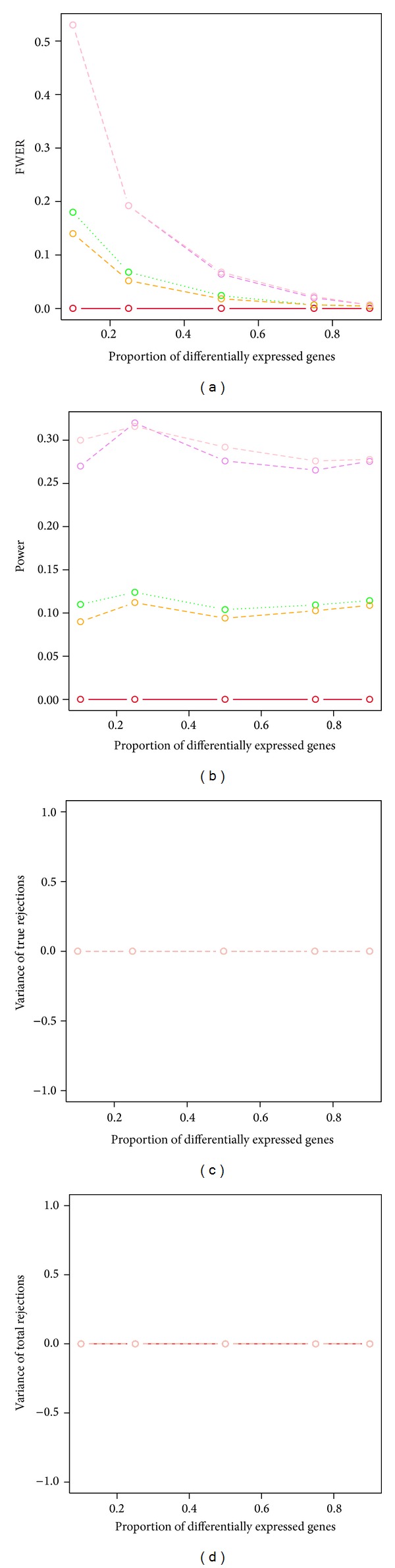

The two bootstrap minP procedures (ss.minP and sd.minP) showed higher power than all other tested procedures across various proportions of nontrue null hypotheses, when test statistics are dependent and FWER is controlled. The two bootstrap minP procedures had desired FWER control as well, when the proportions of nontrue null hypotheses were greater than 50% (Table 1 and Figure 5). The two bootstrap maxT procedures (ss.maxT and sd.maxT) had lower power than the two bootstrap minP procedures. They showed desired FWER control, however, when the proportions of nontrue null hypotheses were over 25%. The permutation single-step maxT and minP procedures had no power to detect any significant difference between groups. All resampling-based procedures had estimated variances of true discoveries and total discoveries around 0 across various proportions of nontrue null hypotheses, when sample size was as small as 3 in each group.

Table 1.

Comparison of the estimated FWER and power for the resampling-based multiple testing procedures with FWER controlled at 5%.

| (m − m 0)/m | mt.maxT | mt.minP | ss.maxT | ss.minP | sd.maxT | sd.minP | |

|---|---|---|---|---|---|---|---|

| FWER | 0.10 | 0.00 | 0.00 | 0.18 | 0.53 | 0.14 | 0.53 |

| 0.25 | 0.00 | 0.00 | 0.07 | 0.19 | 0.05 | 0.19 | |

| 0.50 | 0.00 | 0.00 | 0.02 | 0.06 | 0.02 | 0.07 | |

| 0.75 | 0.00 | 0.00 | 0.01 | 0.02 | 0.01 | 0.02 | |

| 0.90 | 0.00 | 0.00 | 0.00 | 0.01 | 0.00 | 0.01 | |

|

| |||||||

| Power | 0.10 | 0.00 | 0.00 | 0.11 | 0.27 | 0.09 | 0.30 |

| 0.25 | 0.00 | 0.00 | 0.12 | 0.32 | 0.11 | 0.32 | |

| 0.50 | 0.00 | 0.00 | 0.10 | 0.28 | 0.09 | 0.29 | |

| 0.75 | 0.00 | 0.00 | 0.11 | 0.27 | 0.10 | 0.28 | |

| 0.90 | 0.00 | 0.00 | 0.11 | 0.28 | 0.11 | 0.28 | |

mt.maxT: permutation single-step maxT procedure; mt.minP: permutation single-step minP procedure; ss.maxT: bootstrap single-step maxT procedure; ss.minP: bootstrap single-step minP procedure; sd.maxT: bootstrap step-down maxT procedure; sd.minP: bootstrap step-down minP procedure.

Figure 5.

Power and stability properties of resampling-based multiple testing procedures for dependent test statistics with random correlations and the FWER is controlled at 5% (n = 3 in each group). Blue dashed line: permutation single-step maxT procedure (mt.maxT function); solid red line: permutation single-step minP (mt.minP function); green dotted line: bootstrap single-step maxT (MTP ss.maxT function); orange dashed line: bootstrap step-down maxT (MTP sd.maxT function); violet dashed line: bootstrap single-step minP (MTP ss.minP function); pink dashed line: bootstrap step-down minP (MTP sd.minP function).

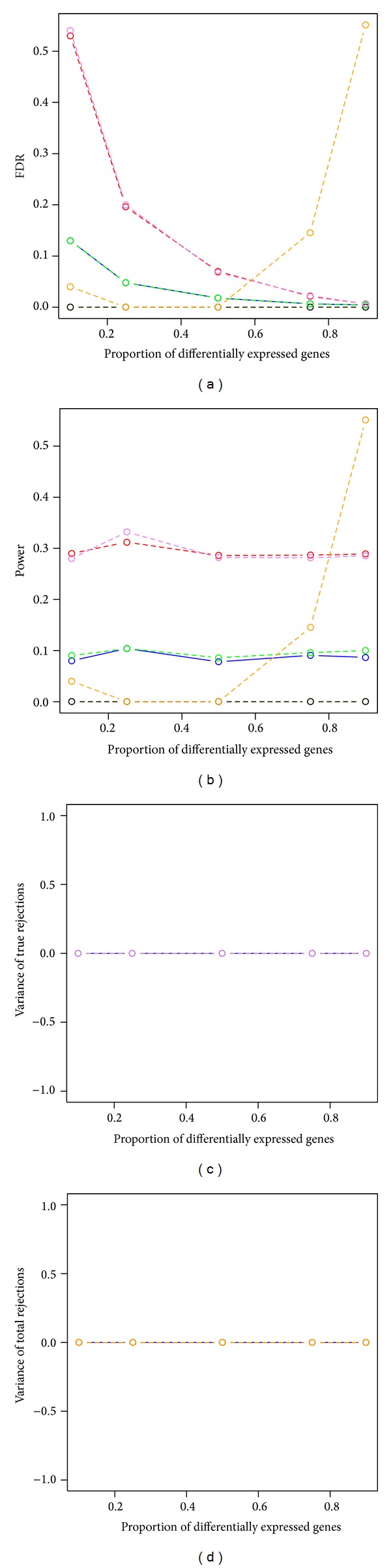

The power and stability of all four bootstrap methods (ss.minP, sd.minP, ss.maxT, and sd.maxT) and the two permutation methods (mt.maxT and mt.minP) showed similar results, when FDR were controlled, as that when FWER were controlled (Table 2 and Figure 6). The SAM procedure had decent FDR control, but very low power when the proportions of nontrue null hypotheses were less than 50%. Both the estimated FDR and power increased when the proportions of nontrue null hypotheses were greater than 50% for the SAM procedure.

Table 2.

Comparison of the estimated FDR and power for the resampling-based multiple testing procedures with FDR controlled at 5%.

| (m − m 0)/m | mt.maxT | mt.minP | ss.maxT | ss.minP | sd.maxT | sd.minP | sam | |

|---|---|---|---|---|---|---|---|---|

| FDR | 0.10 | 0.00 | 0.00 | 0.13 | 0.53 | 0.13 | 0.54 | 0.04 |

| 0.25 | 0.00 | 0.00 | 0.05 | 0.20 | 0.05 | 0.20 | 0.00 | |

| 0.50 | 0.00 | 0.00 | 0.02 | 0.07 | 0.02 | 0.07 | 0.00 | |

| 0.75 | 0.00 | 0.00 | 0.01 | 0.02 | 0.01 | 0.02 | 0.15 | |

| 0.90 | 0.00 | 0.00 | 0.00 | 0.01 | 0.00 | 0.01 | 0.55 | |

|

| ||||||||

| Power | 0.10 | 0.00 | 0.00 | 0.08 | 0.29 | 0.09 | 0.28 | 0.04 |

| 0.25 | 0.00 | 0.00 | 0.10 | 0.31 | 0.10 | 0.33 | 0.00 | |

| 0.50 | 0.00 | 0.00 | 0.08 | 0.29 | 0.09 | 0.28 | 0.00 | |

| 0.75 | 0.00 | 0.00 | 0.09 | 0.29 | 0.10 | 0.28 | 0.15 | |

| 0.90 | 0.00 | 0.00 | 0.09 | 0.29 | 0.10 | 0.29 | 0.55 | |

mt.maxT: permutation single-step maxT procedure; mt.minP: permutation single-step minP procedure; ss.maxT: bootstrap single-step maxT procedure; ss.minP: bootstrap single-step minP procedure; sd.maxT: bootstrap step-down maxT procedure; sd.minP: bootstrap step-down minP procedure; sam: the SAM procedure.

Figure 6.

Power and stability properties of resampling-based multiple testing procedures for dependent test statistics with random correlations and the FDR is controlled at 5% (n = 3 in each group). Yellow dashed line: permutation single-step maxT procedure (mt.maxT function); black dashed line: permutation single-step minP (mt.minP function); solid blue line: bootstrap single-step maxT (MTP ss.maxT function); green dashed line: bootstrap step-down maxT (MTP sd.maxT function); red dashed line: bootstrap single-step minP (MTP ss.minP function); violet dashed line: bootstrap step-down minP (MTP sd.minP function); orange dashed line: SAM procedure (sam function).

3.3. Real Data Example

The gene expression levels of 12625 genes from 6 subjects on log2 scale were used to compare total number of discoveries identified from all tested resampling-based multiple testing procedures (Table 3). The two bootstrap minP procedures had more rejections than the two bootstrap maxT procedures, when FWER was controlled at 5%. The bootstrap step-down minP and single-step minP procedures remained higher number of rejections than the bootstrap step-down maxT and single-step maxT procedures, when FDR was controlled at 5%. The SAM procedure only rejected 2 genes. The permutation maxT and minP procedures rejected none of those genes. The bootstrap multiple testing procedures has higher power than all other tested procedures and rejected much more null hypotheses compared to the permutation test procedures. The bootstrap minP procedures rejected more hypotheses than the bootstrap maxT procedures. The total number of rejections from this real microarray data analysis is consistent with the results from the simulation studies.

Table 3.

Comparisons of number of total discoveries for the resampling-based multiple testing procedures for the ovarian cancer example with 12,625 genes.

| Resampling methods | Rejected number of hypothesis | |

|---|---|---|

| FWER controlled at 5% | FDR controlled at 5% | |

| mt.maxT | 0 | 0 |

| mt.minP | 0 | 0 |

| ss.maxT | 250 | 385 |

| sd.maxT | 407 | 397 |

| ss.minP | 1785 | 1649 |

| sd.minP | 1766 | 1706 |

| sam | 2 | |

mt.maxT: permutation single-step maxT procedure; mt.minP: permutation single-step minP procedure; ss.maxT: bootstrap single-step maxT procedure; ss.minP: bootstrap single-step minP procedure; sd.maxT: bootstrap step-down maxT procedure; sd.minP: bootstrap step-down minP procedure; sam: the SAM procedure.

4. Discussion

This paper investigated the power and stability properties of several popular resampling-based multiple testing procedures for both independent and dependent test statistics, when sample size is small or moderate, using available functions in R. Our simulation results and real data example show that the bootstrap single-step and step-down minP procedures perform the best for both small sample size data (3 in each group) and moderate sample size data (12 in each group) when FWER control is desired. The bootstrap single-step and step-down minP procedures are the best when FDR control is desired for data with small sample size (3 in each group). The permutation maxT and minP procedures perform the best for data with moderate sample size when FDR control is desired. The SAM procedure overestimates FDR, although it has higher power than the permutation and bootstrap maxT and minP procedures.

The simulation results also showed that the permutation test procedures have no power to detect any significant differences between groups when sample size is as small as 3 in each group; the permutation test procedures perform well when sample size increases to 12 in each group; the SAM procedure has no power for detecting significant differences when the proportion of nontrue null hypotheses is less than 50% and sample size is 3; the bootstrap multiple testing procedures perform better than the permutation test procedures and the SAM procedure for small sample size data.

The zero power of the permutation test procedures is due to its limited number of permutated test statistics for data set with small sample sizes. For example, the complete number of enumeration is only 20 for both permutation single-step maxT procedure and permutation single-step minP procedure when sample size is only 3 in each group. Thus, the smallest raw P value from the permutation procedures will be 0.05. After adjusting the raw P values to control FWER or FDR, all adjusted P values will be larger than 0.05, thus no hypotheses will be rejected. As such, the estimated FWER, FDR, and power will all be zero.

Our current investigation only focuses on normally distributed data. Further investigation is needed to extend the distribution in the simulations from multivariate normal to other distributions, such as lognormal and binomial distributions. To examine the power and stability properties of those resampling-based multiple testing procedures, under nonnormal distributions, will be a focus for our future research.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

Acknowledgments

The authors thank Professor Carlos S. Moreno for permission of using his microarray data. This work was supported in part by the National Institute of Minority Health and Health Disparities Awards U54MD007584 (J. Hedges, PI) and G12MD007601 (M. Berry, PI).

References

- 1.Kulesh DA, Clive DR, Zarlenga DS, Greene JJ. Identification of interferon-modulated proliferation-related cDNA sequences. Proceedings of the National Academy of Sciences of the United States of America. 1987;84(23):8453–8457. doi: 10.1073/pnas.84.23.8453. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Schena M, Shalon D, Davis RW, Brown PO. Quantitative monitoring of gene expression patterns with a complementary DNA microarray. Science. 1995;270(5235):467–470. doi: 10.1126/science.270.5235.467. [DOI] [PubMed] [Google Scholar]

- 3.Lashkari DA, Derisi JL, Mccusker JH, et al. Yeast microarrays for genome wide parallel genetic and gene expression analysis. Proceedings of the National Academy of Sciences of the United States of America. 1997;94(24):13057–13062. doi: 10.1073/pnas.94.24.13057. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Pollack JR, Perou CM, Alizadeh AA, et al. Genome-wide analysis of DNA copy-number changes using cDNA microarrays. Nature Genetics. 1999;23(1):41–46. doi: 10.1038/12640. [DOI] [PubMed] [Google Scholar]

- 5.Buck MJ, Lieb JD. ChIP-chip: considerations for the design, analysis, and application of genome-wide chromatin immunoprecipitation experiments. Genomics. 2004;83(3):349–360. doi: 10.1016/j.ygeno.2003.11.004. [DOI] [PubMed] [Google Scholar]

- 6.Mei R, Galipeau PC, Prass C, et al. Genome-wide detection of allelic imbalance using human SNPs and high-density DNA arrays. Genome Research. 2000;10(8):1126–1137. doi: 10.1101/gr.10.8.1126. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Hehir-Kwa JY, Egmont-Petersen M, Janssen IM, Smeets D, van Kessel AG, Veltman JA. Genome-wide copy number profiling on high-density bacterial artificial chromosomes, single-nucleotide polymorphisms, and oligonucleotide microarrays: a platform comparison based on statistical power analysis. DNA Research. 2007;14(1):1–11. doi: 10.1093/dnares/dsm002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Hochberg Y, Tamhane AC. Multiple Comparison Procedures. New York, NY, USA: John Wiley & Sons; 1987. [Google Scholar]

- 9.Shaffer JP. Multiple hypothesis testing: a review. Annual Review of Psychology. 1995;46:561–584. [Google Scholar]

- 10.Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. Journal of the Royal Statistical Society B. 1995;57(1):289–300. [Google Scholar]

- 11.Efron B. Bootstrap methods: another look at the jackknife. The Annals of Statistics. 1979;7(1):1–26. [Google Scholar]

- 12.Efron B, Tibshirani R. An Introduction to the Bootstrap. New York, NY, USA: CRC Press; 1994. [Google Scholar]

- 13.Freedman DA. Bootstrapping regression models. The Annals of Statistics. 1981;9(6):1218–1228. [Google Scholar]

- 14.Hall P. On the bootstrap and confidence intervals. The Annals of Statistics. 1986;14(4):1431–1452. [Google Scholar]

- 15.Pollard KS, van der Laan MK. Choice of a null distribution in resampling-based multiple testing. Journal of Statistical Planning and Inference. 2004;125(1-2):85–100. [Google Scholar]

- 16.Westfall PH, Young SS. Resampling-Based Multiple Testing: Examples and Methods for P-Value Adjustment. New York, NY, USA: John Wiley & Sons; 1993. [Google Scholar]

- 17.Tusher VG, Tibshirani R, Chu G. Significance analysis of microarrays applied to the ionizing radiation response. Proceedings of the National Academy of Sciences of the United States of America. 2001;98(9):5116–5121. doi: 10.1073/pnas.091062498. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Ge Y, Dudoit S, Speed TP. Resampling-based multiple testing for microarray data analysis. Test. 2003;12(1):1–77. [Google Scholar]

- 19.Rubin D, Dudoit S, van der Laan M. A method to increase the power of multiple testing procedures through sample splitting. Statistical Applications in Genetics and Molecular Biology. 2006;5(1, article 19) doi: 10.2202/1544-6115.1148. [DOI] [PubMed] [Google Scholar]

- 20.Jemal A, Siegel R, Ward E, et al. Cancer statistics, 2006. CA: A Cancer Journal for Clinicians. 2006;56(2):106–130. doi: 10.3322/canjclin.56.2.106. [DOI] [PubMed] [Google Scholar]

- 21.Moreno CS, Matyunina L, Dickerson EB, et al. Evidence that p53-mediated cell-cycle-arrest inhibits chemotherapeutic treatment of ovarian carcinomas. PLoS ONE. 2007;2(5, article e441) doi: 10.1371/journal.pone.0000441. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Edgar R, Domrachev M, Lash AE. Gene Expression Omnibus: NCBI gene expression and hybridization array data repository. Nucleic Acids Research. 2002;30(1):207–210. doi: 10.1093/nar/30.1.207. [DOI] [PMC free article] [PubMed] [Google Scholar]