Abstract

Objectives

This research is designed to examine demographic differences between the ACTIVE sample and the larger, nationally representative Health and Retirement Study (HRS) sample.

Methods

After describing some relevant demographics (Age, Education, Sex, and Race/Ethnicity), we use three statistical methods to determine sample differences: Logistic Regression Modeling (LRM), Decision Tree Analysis (DTA), and Post-Stratification and Raking Methods. Where differences are found, we create sample weights that other researchers can use to adjust for these differences.

Results

By using the resulting sample weights, all results of ACTIVE analyses can be said to be nationally representative based on HRS demographics.

Discussion

Weights are typically provided with data sets to improve the representation of the sample to a nationally representative characterization. With minor sample weighting the ACTIVE sample is treated this way.

Keywords: demographics, statistical methods, sample weights, nationally representative sample, ACTIVE

Introduction

The ACTIVE study was designed to test the transfer effects of cognitive training on everyday abilities in older adults (see Ball et al., 2002; Jobe et al., 2001; McArdle & Prindle, 2008; Rebok, Carlson, & Langbaum, 2007). Various assertions were made at the outset of the ACTIVE program of research: (1) The sample would be large enough to obtain precise estimates of training effects; with N>2,800 this aim seems achievable. (2) The individuals were randomly assigned to three different training interventions and a no-contact control group. This randomized trial design allows direct comparison of groups of trained and not-trained individuals with unambiguous results, and this also seems to have been achieved. (3) The effects of cognitive training will transfer to measures of everyday functioning through their effects on cognitive abilities. This assertion has been tested at all occasions, with evidence of transfer at 5 and 10 years after training was conducted (see McArdle & Prindle, 2008; Rebok et al., 2007; Willis et al., 2006). (4) The study enrolled a volunteer sample of older adults, with targeted efforts to include African Americans because they had been under-represented in prior cognitive aging research. How representative the sample is of the older US population has not been examined fully, which limits the inferences that can be drawn from study findings. (5) The cognitive training programs are effective for a national population. This assumption has also been underlying all inferences, but it has not yet been fully examined.

The ACTIVE sample and resulting data set was created by asking a number of persons (over 5,000) to participate, and enrolling 2,802 participants. The subsequent randomization to four groups brings each group to about n=700 in number. While we assume the initial sampling reflects some form of participant sampling bias itself, we do not pursue this matter further. We also do not pursue the analysis of the randomized treatments as this has been reported elsewhere (Ball et al., 2002; Willis et al., 2006). What is pursued here is an assessment of the national representation of the participants in ACTIVE.

The idea that results from the selected sample of people can generalize to the entire population of older adults is of obvious importance for a study of this magnitude. Many recent claims have been made about the growth and decline of specific cognitive functions (e.g., Horn, 1967; Kaufman, Kaufman, Liu, & Johnson, 2009; McArdle, Ferrer-Caja, Hamagami, & Woodcock, 2002; Schaie & Willis, 1993; Zimprich & Martin, 2002), but the national samples used in these studies were all assumed to be representative of some important population. As far as we can tell, these key assumptions were not fully examined.

In order to examine the presumption that the ACTIVE sample is nationally representative, it is compared here to the sample of the Health and Retirement Study (HRS; Juster & Suzman, 1995; McArdle, Fisher, & Kadlec, 2007), considering the HRS sample as a proxy for a nationally representative distribution of people. To carry out these analyses, we use publicly available data (from ICPSR) for both the ACTIVE and HRS studies (see ICPSR and HRS websites) to collate comparable sample demographic characteristics (Age, Education, Sex, Race/Ethnicity) for each study sample. To see if there is any deviation between the two studies we use three approaches: 1) logistic regression modeling (LRM) to examine group differences; 2) a more exploratory data mining approach termed decision tree analysis (DTA; following McArdle, 2011, 2012); and 3) the idea of weighting the sample to account for any deviations of the ACTIVE study from the HRS population characteristics with Post-Stratification and Raking.

As a result, a new set of sampling weights (see Cole & Hernan, 2008; Kish, 1995) are obtained using the Post-Stratification, LRM, DTA, and Raking approaches and applied to assess how the weights affect outcomes previously reported. Each process uses the same demographic variables that were used in the sample association analysis (Age, Education, Sex, and Race/Ethnicity). To the degree that any subsequent analyses of ACTIVE data use these sampling weights, it can be said that the results of these analyses are as nationally representative as the HRS.

Methods

Participants

The data were accessible from the University of Michigan ICPSR’s data repository and from the HRS database. From these files, the demographics for each person were available as outlined above. The data files were merged together, and years of age, years of education, Sex and race/ethnicity were equated between samples. For age, the sample of ACTIVE included persons aged 65 to 95 years (Jobe et al., 2001). Because the HRS age range was broader (about 50–95), the HRS sample was reduced to include only persons 65 to 95 years, to be directly in line with ACTIVE. The HRS restricted sample is N=10,487.

Next the demographic variables were recoded for simplicity and interpretation. The Age variable was centered at 65 for all subsequent analyses. Years of education included the reported number of years of education through high school diploma (1–12), associate degree (14), bachelors degree (16), masters degree (18), and the PhD/MD (20). The final Education variable was centered at 12. Sex was coded in the female direction, with males coded 0 and females coded 1. Race/Ethnicity includes responses of White, Black, and Other, where White is the baseline (0), and Black (1) and Other (1) are simple contrasts allowing for direct estimates of group differences.
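The recoding described above can be sketched in a few lines of Python. This is only an illustration of the stated coding scheme, not the authors' own code; the function name `recode` and the field names are hypothetical.

```python
def recode(age_years, educ_years, sex, race):
    """Recode one respondent using the scheme described in the text.

    Field names are hypothetical; only the coding rules come from the paper.
    """
    return {
        "age_c": age_years - 65,                 # Age centered at 65
        "educ_c": educ_years - 12,               # Education centered at 12
        "female": 1 if sex == "Female" else 0,   # males 0, females 1
        "black": 1 if race == "Black" else 0,    # contrast against White baseline
        "other": 1 if race == "Other" else 0,    # contrast against White baseline
    }


# Hypothetical respondent: 73.6 years old, associate degree, Black female
example = recode(73.6, 14, "Female", "Black")
baseline = recode(65, 12, "Male", "White")
```

With this coding, a 65-year-old White male with 12 years of education has every predictor equal to zero, so the intercept of any later model refers to that reference person.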

Initial Data Description

The ACTIVE sample was drawn from six metropolitan/surrounding areas within the United States (Birmingham, AL; Boston, MA; Indianapolis, IN; Baltimore, MD; State College, PA; Detroit, MI). The impetus for such a design was the nature of the training program. Six locations were chosen to sample various areas of the United States, while maintaining close connection with participants to minimize costs of the training portion of the study. Within these six areas Black participants were oversampled because of their low prevalence in previous research on elderly cognitive training (Jobe et al., 2001). Each location varied the method used for recruitment, but the main forms included onsite presentations, introductory letters, newspaper advertisements, and follow-up telephone calls. Study candidates were drawn from public records and retirement service participants. In particular, participants had to be at least 65 years old, and have no physical or mental handicaps that would prevent them from performing the training program and cognitive testing.

The HRS is considered a nationally representative sample of adults generally 50 and older (see Juster & Suzman, 1995), as long as the sample weights are applied. In comparing the HRS and ACTIVE, it is noted that they have similar aims in following longitudinally the trajectory of an aging population in the United States. The massive size of the HRS sample, and previous work to bring it in line with countrywide population parameters, means it serves as a good prototype for ACTIVE (Hauser & Willis, 2005). The HRS includes a great deal of demographic information, but we focus on the participant’s self-reported age, education, Sex and race/ethnicity. Both Age and Education are reported in years (Education in terms of years of formal schooling), and Sex is listed as Male or Female. Race/Ethnicity is indexed in several ways, and here we create sub-groups of White, Black, and Other as shown in Table 1 for the HRS respondents over age 65.

Table 1.

Demographic Simple Statistics for ACTIVE and HRS

A. ACTIVE sample demographic variables

| Statistic | Age | Education | Sex (% female) | Ethnicity: White | Ethnicity: Black | Ethnicity: Other |
|---|---|---|---|---|---|---|
| Mean | 73.6 | 13.5 | 75.9% | 72.4% | 26.0% | 1.64% |
| Std. Dev. | 5.91 | 2.77 | | | | |
| N | 2,802 | 2,802 | 2,802 | 2,028 | 728 | 46 |

B. HRS 2010 sample demographic variables (Note: Only for respondents over age 65; HRS respondent weights used.)

| Statistic | Age | Education | Sex (% female) | Ethnicity: White | Ethnicity: Black | Ethnicity: Other |
|---|---|---|---|---|---|---|
| Mean | 76.1 | 12.3 | 58.3% | 83.7% | 13.9% | 2.34% |
| Std. Dev. | 7.01 | 3.23 | | | | |
| N | 10,487 | 10,486 | 10,487 | 8,781 | 1,461 | 245 |

The Other category includes individuals who reported their race/ethnicity as Asian, Latino, or Native American. These subcategories were sampled in rather small percentages, leading to very small cell sizes when the data are further crossed with other variables. For a clear comparison of the sample demographics, the ACTIVE demographics are listed in part A of Table 1. Here, the sample average Age and Education are listed next to the proportions of females (Sex) and defined Race/Ethnic groups. Some of these proportions differ between the samples, and these differences are examined through the models of study association.

The ACTIVE study was started in 1998, when participant enrollment began. Measurement of persons began in the next year, and two-year follow-up testing of participants was completed in 2002 (Jobe et al., 2001). In contrast, the HRS began in 1992, so demographic comparisons could be biased by effects of time (Juster & Suzman, 1995). To rectify the difference in initial sampling, the year 2000 sample and weights from the HRS were used as the prototype to compare to ACTIVE.

If the ACTIVE sample was found to have some biases for certain demographic proportions, we may wish to weight the sample to bring these ACTIVE proportions in line with the HRS population. The first step in this process was to create brackets for age and education to have good coverage of each value across the spectrum of ages and years of education. These brackets are shown in Table 2. The brackets were created by grouping age in 5-year intervals from age 65 to 95 (the age range for ACTIVE), and they illustrate the potential nonlinearity of the predictors. The restrictions based on age for HRS from the previous analysis were carried over so that the age ranges were equal across groups. The brackets were also formed to ensure that cell-based methods (Post-Stratification and Raking) would provide legitimate weights and would converge on a solution.

Table 2.

Demographic Brackets For ACTIVE Sample

| Age Categories | Freq. | Percent | Cumul. Freq. | Cumul. Per. |
|---|---|---|---|---|
| 65–69 | 819 | 29.23 | 819 | 29.23 |
| 70–74 | 864 | 30.84 | 1683 | 60.06 |
| 75–79 | 609 | 21.73 | 2292 | 81.8 |
| 80–84 | 372 | 13.28 | 2664 | 95.07 |
| 85–89 | 119 | 4.25 | 2783 | 99.32 |
| 90–94 | 19 | 0.68 | 2802 | 100 |

| Educ. Categories | Freq. | Percent | Cumul. Freq. | Cumul. Per. |
|---|---|---|---|---|
| 0–4 years | 3 | 0.11 | 3 | 0.11 |
| 5–8 years | 86 | 3.07 | 89 | 3.18 |
| 9–12 years | 1030 | 36.76 | 1119 | 39.94 |
| 13 years | 786 | 28.05 | 1905 | 67.99 |
| 14 years | 120 | 4.28 | 2025 | 72.27 |
| 15 years | 318 | 11.35 | 2343 | 83.62 |
| 16+ years | 459 | 16.38 | 2802 | 100 |

Notes: The higher age brackets were collapsed (85–94) because of lower cell sizes. The same is true of the first two education categories.

Models of Analysis

The first part of the analysis tests whether certain demographic variables predict study association (ACTIVE versus HRS). This was done by implementing a logistic regression process where the outcome is study assignment (see Hosmer & Lemeshow, 1989; McArdle & Hamagami, 1994). Participants in the HRS are assigned 0 and those in ACTIVE are assigned 1. The sample demographics are used as predictors (age, education, Sex, race/ethnicity). The analysis of study association was broken down into a few steps to progressively build a full model of predictors. Each predictor was entered individually to report a baseline in predicted variance (pseudo R2); a 5% level of significance is reported. After this, the complete set was entered as a multiple logistic regression.
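As a minimal sketch of the single-predictor step, the following Python code fits a logistic regression of study membership (HRS=0, ACTIVE=1) on one predictor by Newton-Raphson and computes a pseudo R2. Several things here are assumptions for illustration: the authors used SAS PROC LOGISTIC rather than this code, the text does not state which pseudo R2 was reported (McFadden's version is used here), and the eight data points are invented.

```python
import math


def _prob(b0, b1, x):
    """Predicted probability of ACTIVE membership under the logistic model."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))


def fit_logistic(x, y, iters=25):
    """Fit logit P(y=1) = b0 + b1*x by Newton-Raphson; returns (b0, b1)."""
    b0 = b1 = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for xi, yi in zip(x, y):
            p = _prob(b0, b1, xi)
            w = p * (1.0 - p)
            g0 += yi - p              # gradient w.r.t. intercept
            g1 += (yi - p) * xi       # gradient w.r.t. slope
            h00 += w                  # entries of the information matrix
            h01 += w * xi
            h11 += w * xi * xi
        det = h00 * h11 - h01 * h01
        # Newton step: solve the 2x2 system (information matrix) * delta = gradient
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1


def log_lik(x, y, b0, b1):
    """Bernoulli log-likelihood of the data at the given coefficients."""
    return sum(
        yi * math.log(_prob(b0, b1, xi)) + (1 - yi) * math.log(1 - _prob(b0, b1, xi))
        for xi, yi in zip(x, y)
    )


def mcfadden_r2(x, y):
    """McFadden pseudo R2: 1 - LL(model) / LL(intercept-only model)."""
    b0, b1 = fit_logistic(x, y)
    ybar = sum(y) / len(y)
    null_b0 = math.log(ybar / (1.0 - ybar))
    return 1.0 - log_lik(x, y, b0, b1) / log_lik(x, y, null_b0, 0.0)


# Invented toy data: centered education and study membership (ACTIVE = 1)
educ_c = [0, 1, 2, 3, 4, 5, 6, 7]
in_active = [0, 0, 1, 0, 1, 0, 1, 1]

b0, b1 = fit_logistic(educ_c, in_active)
odds_ratio = math.exp(b1)       # odds ratio per additional year of education
r2 = mcfadden_r2(educ_c, in_active)
```

The odds ratio is the exponentiated slope, so a value above 1 means that higher values of the predictor are associated with ACTIVE rather than HRS membership.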

Next a Decision Tree Analysis (DTA) using a Classification and Regression Tree (CART) approach was used to predict group association for the two studies (see McArdle, 2011, 2012). The historical view of DTA is presented in detail elsewhere (see Breiman, Friedman, Olshen, & Stone, 1984), and there are many available computer programs (see McArdle, 2011; Strobl, Malley, & Tutz, 2009). DTAs have a few common features. (1) DTAs are admittedly “explorations” of available data. (2) In most DTAs, the outcomes are considered to be so critical that it does not seem to matter how we create the forecasts as long as they are “maximally accurate.” (3) Some of the DTA data used have a totally unknown structure, and experimental manipulation is not a formal consideration. (4) DTAs are only one of many statistical tools that could have been used. The popularity of DTA comes from its easy-to-interpret dendrograms, or Tree structures, and the related Cartesian subplots. DTA programs are now widely available and very easy to use and interpret. The DTA used here was based on a CART classification method (R programs using “rpart” and “party”; Hothorn, Hornik, & Zeileis, 2006) with the binary outcomes of ACTIVE versus HRS and the demographics listed above as inputs. No utilities were used, so the sample sizes were not reweighted. Splitting on a given variable is done by selecting the variable that offers the maximal prediction of the outcome in a set of variables. These splitting potentials take into account data in categorical and continuous configurations. The analyses also include a comparison of the various weights and their effects on the demographics used (biases in means are examined).
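The splitting step can be illustrated with a small Python sketch that searches every predictor and cut-point for the binary split giving the largest drop in Gini impurity. Gini impurity is a common CART criterion, but the text does not state the exact settings used with “rpart” or “party”, so the criterion, the function names, and the six invented respondents are all assumptions made for illustration.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    p = sum(labels) / n
    return 2.0 * p * (1.0 - p)


def best_split(rows, labels):
    """Return (variable, cutpoint, impurity_decrease) for the best binary split.

    rows   : list of dicts holding numeric (or 0/1 coded) predictors
    labels : list of 0/1 outcomes (HRS = 0, ACTIVE = 1)
    A case goes left when its value is <= cutpoint, right otherwise.
    """
    n = len(labels)
    parent = gini(labels)
    best = (None, None, 0.0)
    for var in rows[0]:
        for cut in sorted({r[var] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[var] <= cut]
            right = [y for r, y in zip(rows, labels) if r[var] > cut]
            if not left or not right:
                continue  # not a real split
            child = (len(left) * gini(left) + len(right) * gini(right)) / n
            if parent - child > best[2]:
                best = (var, cut, parent - child)
    return best


# Invented toy data: centered age and sex for six respondents
rows = [
    {"age_c": 1, "female": 1},
    {"age_c": 2, "female": 0},
    {"age_c": 3, "female": 1},
    {"age_c": 12, "female": 0},
    {"age_c": 15, "female": 1},
    {"age_c": 20, "female": 0},
]
labels = [1, 1, 1, 0, 0, 0]

split = best_split(rows, labels)
```

A full tree applies the same search recursively within each resulting subgroup, which is how higher-order interactions and nonlinear cut-points enter the model.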

In the Post-Stratification and Raking methods, the general approach is to use cell-based proportions to re-weight underrepresented cells from the sample to match the population proportions (Holt & Smith, 1979). This procedure used Sex- and age-ordered categories as the splits for cell association. Further division of cells by race and/or education created empty stratified cells in the sample. Alternatively, we can use a “raking” approach (Deville, 1993) to make sample proportions more closely match the population proportions (in this case, those of the HRS). The raking process for creating the sample weights involves knowing the relative population proportions of the demographics that we are using in our analyses (age, education, Sex, race/ethnicity). For this, we use the weighted HRS data (HRS proportions using the sample weights created for that data). The raking process iterates weights by smoothing out over-sampled categories and increasing weights on under-sampled portions. If, at the end of the iterative process, the weights have not converged, then new brackets should be made to account for low-information cells. This technique can be thought of as a two-way Post-Stratification that rakes along columns and then along rows to progressively revise sample weights to match population proportions over separate cell divisions (Little, 1993).
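The raking iteration described above can be sketched as iterative proportional fitting over two margins. This is a simplified illustration rather than the authors' procedure: the function name `rake`, the two-margin setup (Sex and Race/Ethnicity only), and the toy cell counts are assumptions, and the target proportions are rounded from the HRS panel of Table 1 so that each margin sums to 1. The tolerance of 0.001 and the cap of 50 iterations mirror the stopping rule reported later in the text.

```python
def rake(cells, targets, tol=0.001, max_iter=50):
    """Rake cell weights toward target margins (iterative proportional fitting).

    cells   : list of dicts with one category per margin and a weight 'w'
              (modified in place)
    targets : {margin: {category: population proportion}}
    Returns the number of full passes used.
    """
    for iteration in range(1, max_iter + 1):
        # Adjust one margin at a time so its weighted shares match the targets
        for margin, target in targets.items():
            total = sum(c["w"] for c in cells)
            share = {}
            for c in cells:
                share[c[margin]] = share.get(c[margin], 0.0) + c["w"] / total
            for c in cells:
                c["w"] *= target[c[margin]] / share[c[margin]]
        # Stop when every margin is within tol of its target
        total = sum(c["w"] for c in cells)
        worst = 0.0
        for margin, target in targets.items():
            for cat, prop in target.items():
                got = sum(c["w"] for c in cells if c[margin] == cat) / total
                worst = max(worst, abs(got - prop))
        if worst < tol:
            return iteration
    return max_iter


# Invented toy sample of 100 people cross-classified by sex and race
counts = {
    ("F", "White"): 55, ("F", "Black"): 20, ("F", "Other"): 1,
    ("M", "White"): 17, ("M", "Black"): 6, ("M", "Other"): 1,
}
cells = [
    {"sex": s, "race": r, "n": n, "w": float(n)} for (s, r), n in counts.items()
]

# Target margins, rounded from Table 1 (HRS panel) so that each sums to 1
targets = {
    "sex": {"F": 0.583, "M": 0.417},
    "race": {"White": 0.837, "Black": 0.139, "Other": 0.024},
}

iterations = rake(cells, targets)
# Relative weight for each person in a cell: raked cell total / cell count
person_weight = {(c["sex"], c["race"]): c["w"] / c["n"] for c in cells}
```

Because each pass rescales whole categories, cells that are over-represented relative to the targets end up with person weights below 1 and under-represented cells with weights above 1.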

Finally, in order to assess how these weights affect the intervention effects previously reported (Ball et al., 2002; Willis et al., 2006), results of unweighted repeated measures MANOVA are compared to the results of weighted repeated measures MANOVA, using weights obtained through the models described above. This provides the opportunity to determine how well the unweighted means match the weighted means. If the means change substantially, then we have reason to believe that the sample proportions led to biased results that are not generalizable to the general population, and that use of the weights would reduce this bias.

Results

Logistic Regression Model (LRM) Analyses

Simple Effects of Individual Predictors

The first set of results comes from logistic regressions with single predictors of study association (see Appendix Table 1). From this we can see how well each variable predicts association without possible collinearity effects. A list of the results of single predictors for study association is displayed in Table 3. The logistic model estimates the propensity of being enrolled in ACTIVE versus HRS as a function of age, education, Sex, and race. Differences were detected, with lower ages and higher education in the ACTIVE sample. Additionally, the ACTIVE sample was significantly more likely to be female than the HRS sample and to include significantly more Blacks than the HRS sample.

Table 3.

Logistic predictors of study association (HRS=0, ACTIVE=1)

| Predictor | Single Logistic Indicators: OR | Single Logistic Indicators: 95% CI | Multiple Indicators: OR | Multiple Indicators: 95% CI |
|---|---|---|---|---|
| Age | 0.945 | (0.938, 0.951) | 0.953 | (0.947, 0.960) |
| Education | 1.142 | (1.125, 1.159) | 1.173 | (1.154, 1.193) |
| Sex | 2.253 | (2.049, 2.477) | 2.354 | (2.133, 2.597) |
| Race (B) | 2.158 | (1.950, 2.387) | 2.201 | (1.975, 2.452) |
| Race (O) | 0.813 | (0.591, 1.118) | 0.91 | (0.654, 1.268) |

Notes: Each letter indicates a different logistic regression model. Sex is effect coded with males −0.5 and females 0.5. Ethnicity is coded with white as baseline and black and other effects are modeled. Individual logistic pseudo R2 values: age= 0.022; education= 0.025; Sex= 0.023; black= 0.023; other= 0.016. R2 value for the multiple indicator logistic regression = 0.084.

In addition to these odds ratio estimates, we get a sense for the ability to discern study association with the pseudo R2 values. Data in this table give us an idea of the ability of the predictor variables to correctly classify persons, rather than the amount of explained variance as in a traditional regression analysis. In this kind of comparison, these variables offer little evidence that we could correctly identify persons as being HRS or ACTIVE participants with any degree of certainty. But here this result implies that there is very little bias in the sampling procedures between these two samples. Since these estimates are run as separate logistic regressions, we move to a multiple predictor model to see if the results hold.

Main Effects Regression

In an effort to determine how well the demographic variables could capture person-study association, we implemented a multiple logistic regression analysis, with Age, Education, Sex and Race/Ethnicity entered as multiple predictors of study association. Results are presented in Table 3; overall pseudo R2 = 0.084. The main effects of these variables in predicting whether a person was a member of the ACTIVE or HRS sample were similar to those of the single predictor models reported above. All main effects were significant, indicating several independent effects, with the exception of the Other race/ethnicity category, which was the only predictor that showed no bias between samples. The overall ability of these variables to correctly classify persons remains relatively low, as expected from the individual effects outlined previously. These results are in line with the previous analyses, but there is only a small gain in prediction with multiple predictors.

Interaction Effects Regression

The model was extended to include multiple predictors and all two-way interactions of these same predictors. In the model, we look to see if the main effects still hold, and how the interactions may change the interpretations stated in the previous two sections. Results are shown in Table 4.

Table 4.

Study association analysis with two-way interaction terms

| Predictor | β | S.E. | OR | 95% CI |
|---|---|---|---|---|
| Age | 0.026 | 0.027 | 1.026 | (0.972, 1.082) |
| Education | 0.260 | 0.062 | 1.296 | (1.148, 1.463) |
| Sex | 0.912 | 0.406 | 2.489 | (1.124, 5.510) |
| Race (B) | 0.482 | 0.053 | 2.623 | (2.134, 3.225) |
| Race (O) | −0.574 | 0.186 | 0.317 | (0.153, 0.658) |
| Age*Sex | −0.006 | 0.008 | 0.994 | (0.978, 1.010) |
| Age*Education | 0.001 | 0.001 | 1.001 | (0.999, 1.004) |
| Age*Black | 0.024 | 0.010 | 1.024 | (1.005, 1.044) |
| Age*Other | −0.095 | 0.026 | 0.909 | (0.864, 0.956) |
| Education*Sex | −0.082 | 0.019 | 0.922 | (0.888, 0.956) |
| Education*Black | −0.011 | 0.020 | 0.989 | (0.951, 1.029) |
| Education*Other | −0.091 | 0.058 | 0.913 | (0.815, 1.022) |
| Sex*Black | −0.067 | 0.136 | 0.935 | (0.717, 1.220) |
| Sex*Other | 0.197 | 0.380 | 1.217 | (0.579, 2.562) |

Notes: overall model R2 = 0.087.
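For readers who want to move between the β and OR columns, the usual conversion is OR = exp(β) with a Wald interval of exp(β ± 1.96 × S.E.). The short Python sketch below applies it to the Education row of Table 4. It assumes that conventional relation holds for the row in question; rows whose predictors are coded differently (the race contrasts appear to be scaled differently, for example) would need the matching transformation.

```python
import math


def odds_ratio(beta, se, z=1.96):
    """Convert a logit coefficient and its standard error to (OR, lower, upper)."""
    return (
        math.exp(beta),
        math.exp(beta - z * se),
        math.exp(beta + z * se),
    )


# Education row of Table 4: beta = 0.260, S.E. = 0.062
or_educ, lo_educ, hi_educ = odds_ratio(0.260, 0.062)
```

The result agrees with the tabled Education values to within rounding of the published coefficient.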

We note that the main effect of Age is now not significant, but the effect of the interaction of age with each race/ethnic category is significant. The effects of Education, Sex, and Black race mimic the multiple regression results previously presented. The interaction of Education and Sex showed a disadvantage for males in the ACTIVE study versus the HRS sample.

The overall effect of adding two-way interactions provides little prediction value to the overall model (R2 = 0.084 → 0.087) compared to the model when only main effects are included, so we will only use the main effects model. The pseudo-R2 provides a limited view of the differences between the two studies, with only about 8% of the prediction accounted for by the sample characteristics selected in the analysis. With a small effect given sample demographics for HRS and ACTIVE, we conclude that only minor differences exist between the samples.

Decision Tree Analyses (DTA)

The same set of data was examined using data mining techniques (see Appendix Table 2). In these models, we allow all possible nonlinear interactions between the demographic characteristics available. Study association was again the predicted outcome, with the demographic variables of Age, Education, Sex, and Race/Ethnicity used as predictors at the possible splitting nodes. The outcome of this analysis is a decision tree that splits persons into groups based on cut-points for continuous variables, and on group membership for categorical variables.

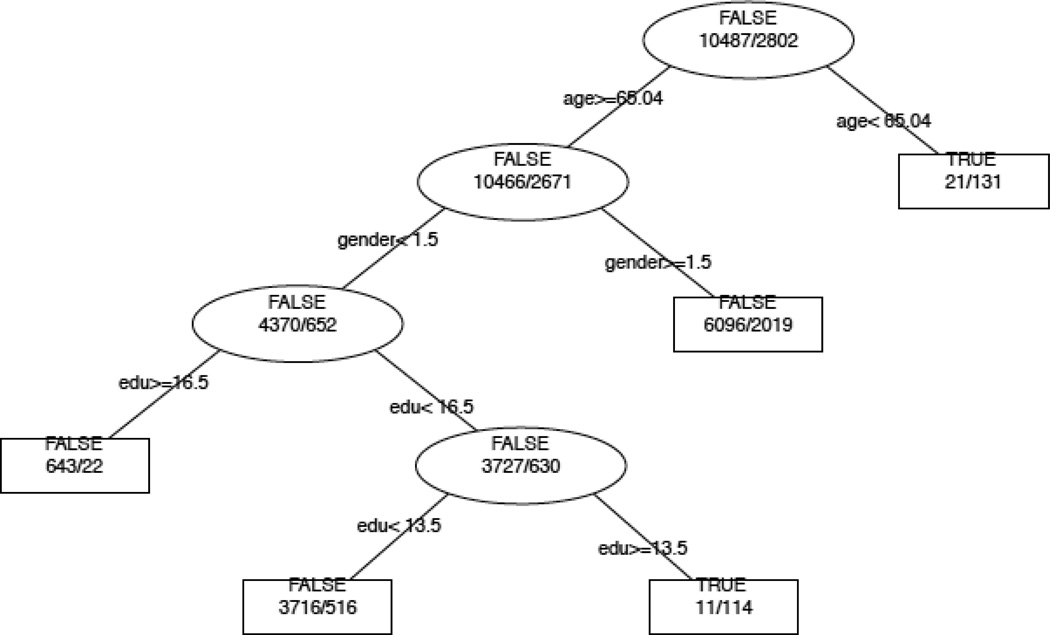

The final tree is shown in Figure 1. This is based on 23 groups determined to have the best splits by the “rpart” R program (see R Core Team, 2013; Strobl et al., 2009; Therneau, Atkinson, & Ripley, 2012). In this case, age provided the first split at age 65.04. Next Sex was used as a splitting variable, with females going to the left path. Then Education was used to split the data at 16 years of education, and then it was used again at 13 years of education for the lower branch. Thus, the optimal tree that we found suggested Age (13.4%), Education (3.9%), Sex (0.7%), and then Race/Ethnicity (0.3%) to be important variables for organizing persons based on study association (with variable importance in the order listed). The overall accuracy of this DTA was 14.6%, a slight increase over the LRM value of 8.4%. This shows the specific nonlinearity (especially within Education) and the resulting higher-order interactions between the variables that would not be apparent in the simple two-way interactions portrayed in the above LRM.

Figure 1. Snapshot of DTA-PARTY decision tree.

(Note: The root node at the top indicates the first split and the variable it splits on (age, with age being dichotomized at 65.04 years). Circle nodes are non-terminal nodes and square nodes are terminal nodes. A TRUE terminal node is one where the program predicts the outcome as part of the ACTIVE sample; FALSE terminal nodes are ones where HRS association is predicted. The left value is the HRS sample at a given node and the right value is the ACTIVE sample. The overall accuracy of the model is PR=14.6%.)

Post-Stratification and Raking Methods

The ACTIVE time 1 data were used to create weights based on HRS weighted proportions. For the Post Stratification method, the Sex-by-age and Sex-by-ethnicity proportions were used to create sample weights. The HRS proportions were divided by the ACTIVE proportions to return the relative weight to be given to each cell. If the proportion for older males was higher in ACTIVE than HRS, their weight would be less than 1 (indicating that this group is overrepresented).
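The ratio rule just described (population proportion divided by sample proportion) can be written out directly. The Python sketch below is an illustration only: the paper's weights were built on Sex-by-age and Sex-by-ethnicity cells, whereas this example uses the simpler race/ethnicity margin from Table 1, and the function name `post_stratify` is hypothetical.

```python
def post_stratify(sample_props, population_props):
    """Relative weight per cell: population proportion / sample proportion."""
    return {
        cell: population_props[cell] / sample_props[cell]
        for cell in sample_props
    }


# Race/ethnicity proportions taken from Table 1
active = {"White": 0.724, "Black": 0.260, "Other": 0.0164}
hrs = {"White": 0.837, "Black": 0.139, "Other": 0.0234}

weights = post_stratify(active, hrs)
```

Here Black participants, who were deliberately oversampled in ACTIVE, receive a weight below 1, while White and Other participants receive weights above 1.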

A similar method of weighting was established for the raking process. For this, three interaction terms were created for Sex by: Age (12 cells), Education (12 cells), and Race/Ethnicity (6 cells). Once we establish that we essentially have three post-stratified proportions that we will ‘rake’ over, the method is more clearly identified as an extension of post-stratification. The raking procedure used these three interactions to create marginal sample weights for ACTIVE based on proportions from HRS with marginal weights. The stopping rule for raking terminated the program when the calculated percentages differed from the marginal percentages by less than 0.001. This was achieved in 5 iterations when a maximum of 50 were requested.

Creating Sample Weights for ACTIVE

We create sampling weights from the LRM in the usual ways (see Cole & Hernan, 2008). Similarly, sampling weights can be easily created from the DTA output by assuming that the probability of inclusion in ACTIVE is the percentage of ACTIVE participants in the final nodes. In Table 5 we list a few sample statistics for the un-weighted and weighted demographics in the ACTIVE sample. The LRM and DTA methods seem to yield values more in line with the original un-weighted sample statistics.
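One way to turn predicted probabilities of ACTIVE membership into relative weights is sketched below in Python. The exact transformation used by the authors is not given in the text, so this is an assumption: it takes the inverse of each person's predicted probability and rescales so the weights average 1. The probabilities and ages are invented; in practice the probabilities would come from the fitted LRM or from the proportion of ACTIVE cases in a person's terminal DTA node.

```python
def relative_weights(p_active):
    """Inverse-probability weights rescaled to have a mean of 1.

    p_active : predicted probability of ACTIVE membership for each person
               (assumed to be strictly between 0 and 1)
    """
    raw = [1.0 / p for p in p_active]
    mean_raw = sum(raw) / len(raw)
    return [r / mean_raw for r in raw]


def weighted_mean(values, weights):
    """Weighted mean of a variable."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Invented example: older people are less likely to be in ACTIVE
p_active = [0.10, 0.20, 0.25, 0.40]
ages = [82, 76, 71, 66]

w = relative_weights(p_active)
unweighted_age = sum(ages) / len(ages)
weighted_age = weighted_mean(ages, w)
```

People who resemble the under-represented part of the reference sample (low predicted probability of being in ACTIVE) are weighted up, so in this toy case the weighted mean age is higher than the unweighted mean.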

Table 5.

Unweighted and Weighted ACTIVE Statistics

A. Unweighted Statistics

| Statistic | Age | Education | Sex (% female) | Ethnicity: White | Ethnicity: Black | Ethnicity: Other |
|---|---|---|---|---|---|---|
| Mean | 73.6 | 13.5 | 75.9% | 72.4% | 26.0% | 1.64% |
| Std. Dev. | 5.91 | 2.77 | | | | |
| N | 2,802 | 2,802 | 2,802 | 2,028 | 728 | 46 |

B. LRM Weighted Statistics

| Statistic | Age | Education | Sex (% female) | Ethnicity: White | Ethnicity: Black | Ethnicity: Other |
|---|---|---|---|---|---|---|
| Mean | 74.2 | 13.3 | 74.2% | 77.1% | 21.20% | 1.74% |
| Std. Dev. | 5.99 | 2.67 | | | | |
| N | 2,802 | 2,802 | 2,802 | 2,160 | 593 | 49 |

C. DTA Weighted Statistics

| Statistic | Age | Education | Sex (% female) | Ethnicity: White | Ethnicity: Black | Ethnicity: Other |
|---|---|---|---|---|---|---|
| Mean | 74.0 | 13.3 | 75.8% | 72.3% | 26.1% | 1.60% |
| Std. Dev. | 5.75 | 2.56 | | | | |
| N | 2,802 | 2,802 | 2,802 | 2,025 | 732.1 | 44.9 |

The demographic statistics in Table 5 were then tested for equivalence with a Repeated Measures MANOVA testing weighted and un-weighted values of Age, Education, and Sex for equality. The means of these variables were significantly different in an overall test for equality (Wilks’ Lambda = 0.251, F15,2787 = 553, p < 0.001), indicating that these sampling weights are not equivalent.

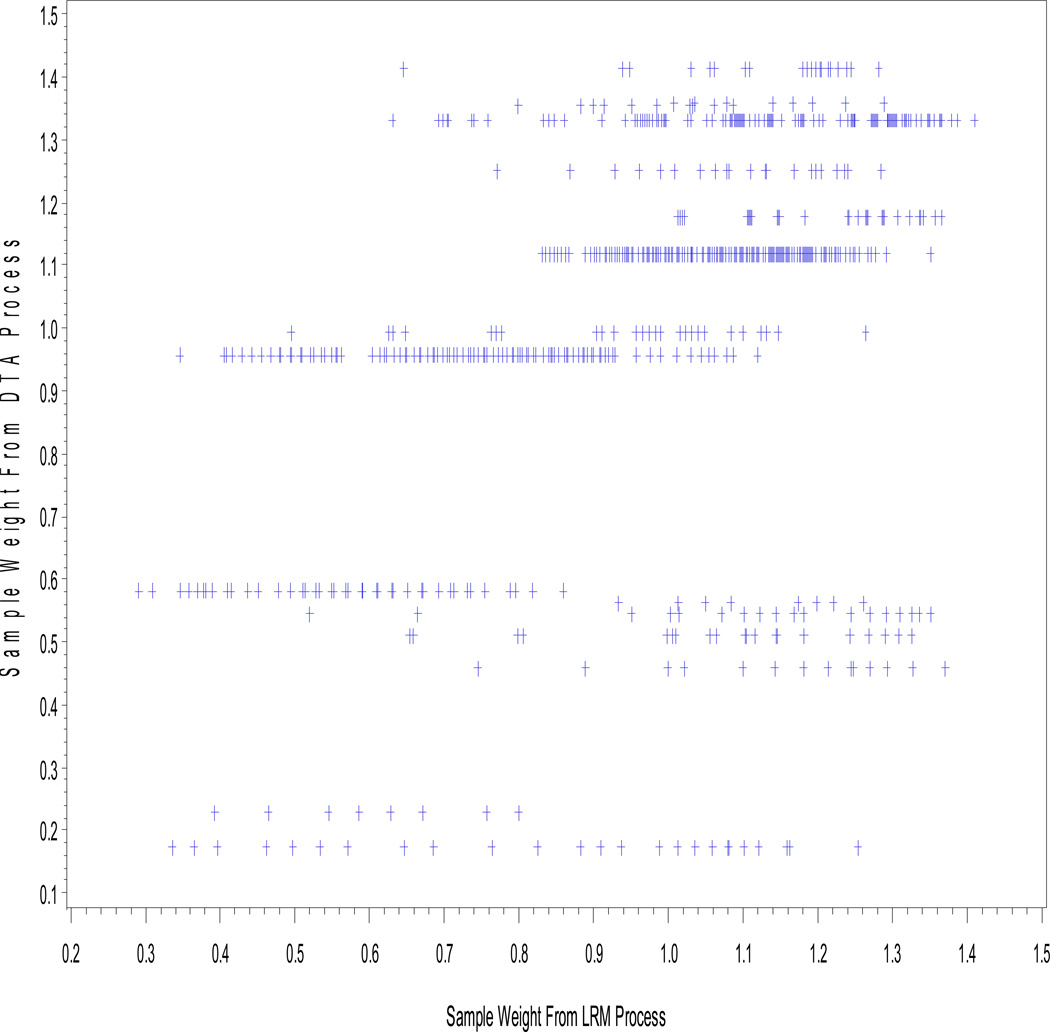

These sets of sampling weights are compared directly in Figure 2. The figure portrays the distributions of each of the weighting methods. Each method differs in implementation, but values tend to cluster around 1, for no change in person weighting. The LRM, DTA, and post-stratification methods provide peaked distributions, whereas the raking method has a relatively flat distribution.

Figure 2. Scatterplot of DTA determined weights as a function of LRM determined weights.

(Note: Probabilities were transformed to relative weights, with possible weight scores being greater than 0. The correlation between the two weights was found to be r = 0.482. The bars reflect the relatively lower resolution of the optimal classification tree where 23 terminal nodes are used to identify the probabilities of study association.)

Results of MANOVA Analyses: Weighted vs Unweighted

The weights did not change the patterns of means (results available from authors), except for minor variations in explained variance.

Discussion

A few statistically significant differences between the original ACTIVE sample and the more nationally representative weighted HRS sample were identified. The ACTIVE sample was slightly younger, more educated, more female, and included more Blacks than the HRS sample. However, we should point out that the statistical models used here (LRM and DTA) have already proven they can pick up substantial sampling biases (see McArdle, 2012), and that is not really the case here. In essence, the ACTIVE participants are very much like the HRS participants when we only consider their ages, the level of their educational attainments, their Sex, and their race/ethnicity (i.e., only between 8.4% and 14.6% different).

The sampling weights we created show some changes to the demographic factors, with modifications mainly to Sex and race/ethnicity breakdowns. The 2000 Current Population Survey (CPS) provides estimates of the United States population makeup on these variables. The average age of individuals over 65 years old was 74.5 years, with males being 42.4% and females 57.6% of the population. The breakdown of race indicated that in 2000, 88.5% of the US population was White, 8.4% was Black, and 3.1% was of another race (Asian, Pacific Islander, Native American). The educational attainment of the selected group of older adults was measured to be 12.5 years of education. These point to an oversampling of females and individuals with higher levels of education in the HRS, and now in ACTIVE as well. The lack of a full realization of the White subgroup (back to 88.5%) is a dramatic effect of the sampling approaches used in these studies. Again, in the ACTIVE Study, this was a direct result of the deliberate attempts to enroll Black participants.

The indicators used in the current study identify major person characteristics that each study should have within its dataset. These data could be expanded in future studies to accommodate more features about persons to make sure the samples are unbiased. Features such as eyesight, driving habits, and general mobility may be important aspects of a study question, and it would make sense to reweight the ACTIVE sample if these are important baseline characteristics. As a starting point for examining the national representativeness of ACTIVE, this first look provides good support for a sample that can be compared to the national population.

In conclusion, we have created four sets of sampling weights for each person (labeled LMR, DTA, Post-Stratification and Raking) that can now be applied to any subsequent analysis of ACTIVE data. Although we have not created Inverse Mills ratios that could be used in a “Heckman” type regression correction, the same concepts are used here (see Puhani, 2000).

The choice between sampling weights must be made by the researcher (see Stapleton, 2002). Nevertheless, if any of these sampling weights are used in subsequent analyses, then the ACTIVE sample can be said to be nationally representative, or at least as nationally representative as the HRS sample, and this seems a definite advantage. However, given the small range of sociodemographic differences between the ACTIVE and HRS samples noted above and the lack of bias from sampling techniques, the use of sample weights in an analysis of intervention effects would not change the pattern of reported outcomes through 5 years post-intervention. That is, results through 5 years reported by the ACTIVE investigators can be considered generalizable to the US population.

Acknowledgments

This research was conducted under grant number U01AG014282 from the National Institute on Aging to the New England Research Institutes (NERI). ACTIVE is supported by grants from the National Institute on Aging and the National Institute of Nursing Research to Hebrew Senior Life (U01NR04507), Indiana University School of Medicine (U01NR04508), Johns Hopkins University (U01AG14260), New England Research Institutes (U01AG14282), Pennsylvania State University (U01AG14263), University of Alabama at Birmingham (U01AG14289), and University of Florida (U01AG14276). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Nursing Research, National Institute on Aging, or the National Institutes of Health. Representatives of the funding agency have been involved in the review of the manuscript but not directly involved in the collection, management, analysis, or interpretation of the data.

Dr. McArdle was a member of the Data and Safety Monitoring Board of the ACTIVE Study from 1995 to 2000, but has never had financial gains from this study. The authors both thank Dr. Sharon Tennstedt from NERI for her constant concerns and continuing oversight of this project.

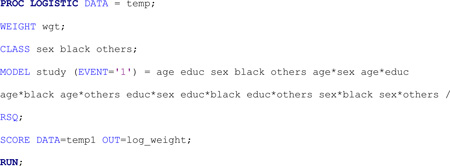

Appendix Table 1: The LRM Approach to Sample Weighting (using SAS® 9.2 PROC/Logistic software)

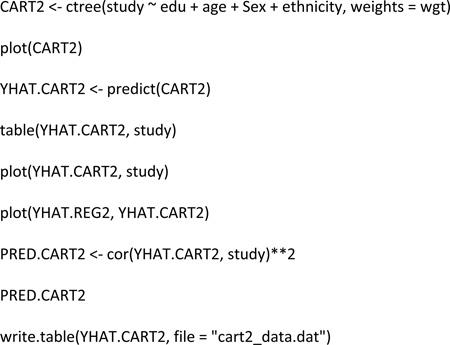

Appendix Table 2: The DTA Approach to Sample Weighting (using R 2.15.2 with package – “party”)

REFERENCES

- Ball K, Berch DB, Helmers KF, Jobe JB, Leveck MD, Marsiske M, et al. Effects of cognitive training interventions with older adults: a randomized controlled trial. JAMA. 2002;288(18):2271–2281. doi: 10.1001/jama.288.18.2271.

- Breiman L, Friedman J, Olshen R, Stone C. Classification and regression trees. Pacific Grove, CA: Wadsworth and Brooks/Cole; 1984.

- Cole SR, Hernan MA. Constructing inverse probability weights for marginal structural models. Am J Epidemiol. 2008;168(6):656–664. doi: 10.1093/aje/kwn164.

- Deville JC, Sarndal CE, Sautory O. Generalized raking procedures in survey sampling. J Am Stat Assoc. 1993;88(423):1013–1020.

- Hauser RM, Willis RJ. Survey design and methodology in the Health and Retirement Study and the Wisconsin Longitudinal Study. In: Waite Linda J., editor. Aging, Health, and Public Policy: Demographic and Economic Perspectives, Supplement to Population and Development Review. Vol. 30. New York: Population Council; 2005. 2004.

- Holt D, Smith TMF. Post Stratification. J R Stat Soc Ser A. 1979;142(1):33–46.

- Horn JL, Cattell RB. Age differences in fluid and crystallized intelligence. Acta Psychologica. 1967;26:107–129. doi: 10.1016/0001-6918(67)90011-x.

- Hosmer DW, Lemeshow S. Applied logistic regression. New York, NY: Wiley; 1989.

- Hothorn T, Hornik K, Zeileis A. Unbiased Recursive Partitioning: A conditional inference framework. Journal of Computational and Graphical Statistics. 2006;17:492–514.

- Jobe JB, Smith DM, Ball K, Tennstedt SL, Marsiske M, Willis SL, et al. ACTIVE: a cognitive intervention trial to promote independence in older adults. Control Clin Trials. 2001;22(4):453–479. doi: 10.1016/s0197-2456(01)00139-8.

- Juster T, Suzman R. Overview of the Health and Retirement Study. J Hum Resour. 1995;30:S7–S56.

- Kaufman AS, Kaufman JC, Liu X, Johnson CK. How do educational attainment and Sex relate to fluid intelligence, crystallized intelligence, and academic skills at ages 22–90 years? Arch Clin Neuropsychol. 2009;24(2):153–163. doi: 10.1093/arclin/acp015.

- Kish L. Methods for Design Effects. J Off Stat. 1995;11:55–77.

- Little RJA. Post-Stratification: a Modeler’s Perspective. J Am Stat Assoc. 1993;88:1001–1012.

- McArdle JJ. Exploratory data mining using CART in the Behavioral science. In: Cooper H, Panter A, editors. Handbook of Methodology in the Behavioral Sciences. Washington, DC: APA Books; 2011.

- McArdle JJ. Dealing With Longitudinal Attrition Using Logistic Regression and Decision Tree Analyses. In: McArdle JJ, Ritschard G, editors. Contemporary Issues in Exploratory Data Mining in the Behavioral Sciences. NY: Routledge; 2012.

- McArdle JJ, Ferrer-Caja E, Hamagami F, Woodcock RW. Comparative longitudinal structural analyses of the growth and decline of multiple intellectual abilities over the life span. Dev Psychol. 2002;38(1):115–142.

- McArdle JJ, Fisher GG, Kadlec KM. Latent variable analyses of age trends of cognition in the Health and Retirement Study, 1992–2004. Psychol Aging. 2007;22(3):525–545. doi: 10.1037/0882-7974.22.3.525.

- McArdle JJ, Hamagami F. Logit and multilevel logit modeling studies of college graduation for 1984–85 freshman student athletes. J Am Stat Assoc. 1994;89(427):1107–1123.

- McArdle JJ, Prindle JJ. A latent change score analysis of a randomized clinical trial in reasoning training. Psychol Aging. 2008;23(4):702–719. doi: 10.1037/a0014349.

- Puhani PA. The Heckman Correction for Sample Selection and Its Critique. J Econ Surv. 2000;14(1):53–68.

- R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2013. ISBN 3-900051-07-0, URL http://www.R-project.org/

- Rebok GW, Carlson MC, Langbaum JB. Training and maintaining memory abilities in healthy older adults: traditional and novel approaches. J Gerontol B Psychol Sci Soc Sci. 2007;62(Spec No 1):53–61. doi: 10.1093/geronb/62.special_issue_1.53.

- Schaie KW, Willis SL. Age difference patterns of psychometric intelligence in adulthood: Generalizability within and across ability domains. Psychol Aging. 1993;8(1):44–55. doi: 10.1037//0882-7974.8.1.44.

- Stapleton LM. The incorporation of sample weights into multilevel structural equation models. Struct Equ Modeling. 2002;9(4):475–502.

- Strobl C, Malley J, Tutz G. An introduction to recursive partitioning: rationale, application, and characteristics of classification and regression trees, bagging, and random forests. Psychol Methods. 2009;14(4):323–348. doi: 10.1037/a0016973.

- Therneau T, Atkinson B, Ripley B. rpart: Recursive Partitioning. R package version. 2012;4:1–10.

- Willis SL, Tennstedt SL, Marsiske M, Ball K, Elias J, Koepke KM, et al. Long-term effects of cognitive training on everyday functional outcomes in older adults. JAMA. 2006;296(23):2805–2814. doi: 10.1001/jama.296.23.2805.

- Zimprich D, Martin M. Can longitudinal changes in processing speed explain longitudinal age changes in fluid intelligence? Psychol Aging. 2002;17(4):690–695. doi: 10.1037/0882-7974.17.4.690.