Abstract

Background

Anesthesiology residencies are developing trainee assessment tools to evaluate 25 milestones that map to the 6 core competencies. The effort will be facilitated by development of automated methods to capture, assess, and report trainee performance to program directors, the Accreditation Council for Graduate Medical Education and the trainees themselves.

Methods

We leveraged a perioperative information management system to develop an automated, near-real-time performance capture and feedback tool that provides objective data on clinical performance and requires minimal administrative effort. Prior to development, we surveyed trainees about satisfaction with clinical performance feedback and about preferences for future feedback.

Results

Resident performance on 24,154 completed cases has been incorporated into our automated dashboard, and trainees now have access to their own performance data. Eighty percent (48 of 60) of our residents responded to the feedback survey. Overall, residents ‘agreed/strongly agreed’ that they desire frequent updates on their clinical performance on defined quality metrics and that they desired to see how they compared to the residency as a whole. Prior to deployment of the new tool, they ‘disagreed’ that they were receiving feedback in a timely manner. Survey results were used to guide the format of the feedback tool that has been implemented.

Conclusions

We demonstrate the implementation of a system that provides near real-time feedback concerning resident performance on an extensible series of quality metrics, and which is responsive to requests arising from resident feedback about desired reporting mechanisms.

Introduction

In July of 2014 all anesthesiology residency programs will enter the Next Accreditation System (NAS) of the Accreditation Council for Graduate Medical Education (ACGME). A major aspect of the NAS involves the creation of roughly 30 milestones for each specialty that will map to different areas within the construct of the six core competencies: Patient Care, Medical Knowledge, Systems-Based Practice (SBP), Professionalism, Interpersonal and Communication Skills, and Practice-Based Learning and Improvement (PBLI).1 The milestones have been described by leaders of the ACGME as “specialty-specific achievements that residents are expected to demonstrate at established intervals as they progress through training.”1 In anesthesiology, these intervals are currently conceived as progressing through 5 stages ranging from the performance expected at the end of the clinical base year (Entry Level) to the performance level expected following a period of independent practice (Advanced Level).* The education community recognizes both the opportunities and challenges that will be present in the implementation of the Milestones Project and the NAS.

Finding the appropriate means by which to assess and report on anesthesiology resident performance on all of the milestones and core competencies as required in the ACGME Anesthesiology Core Program Requirements may prove daunting.† For instance, there are 60 residents in the authors’ residency program. There are currently 25 Milestones proposed for anesthesiology with 5 possible levels of performance for each milestone. Each resident is expected to be thoroughly assessed across this entire performance spectrum every 6 months, resulting in 1,500 milestone ratings (60 residents × 25 milestones) for each evaluation cycle reported to the ACGME, along with a report of personal performance provided to each resident.* Finding methods that can moderate the administrative workload on the program directors, clinical competency committees, and residents while still providing highly reliable data will be of great benefit.

Previous studies have reported on similar work in the development of an automated case log system.2,3 However, to our knowledge there are no descriptions of an automated, near-real-time performance feedback tool that provides residents and program directors with data on objective clinical performance concerning the quality of patient care that residents deliver. The increasing adoption of electronic record keeping in the perioperative period opens the possibility of developing real-time or near-real-time process monitoring and feedback systems. Similar systems have been developed in the past to comply with the Joint Commission mandate for ongoing professional practice evaluation and to improve perioperative processes.4–10

In this report we will describe the development of a near real-time performance feedback system utilizing data collected as part of routine care via an existing perioperative information management system. The system described has two main functionalities. First, it allows program directors to assess a number of the milestones under the Systems-Based Practice (SBP) and Practice-Based Learning and Improvement (PBLI) core competencies.* Second, it provides residents with near real-time performance feedback concerning a wide array of clinical performance metrics. Both functions require minimal clerical or administrative efforts from trainees or training programs. This report will progress in three parts: 1) describe the creation of this system through an iterative process involving resident feedback, 2) detail quantitative baseline data that can be used for future standard setting, and 3) describe the specifics of how data obtained through this system can be used as part of the milestones assessment.

Materials & Methods

Our study was reviewed and approved by the Vanderbilt University School of Medicine Institutional Review Board (Nashville, Tennessee).

Creation of Automated Data Collection and Reporting Tool

We began by creating logic to score each completed anesthesia case that was stored in our perioperative information management system and for which a trainee provided care. Each case was scored on a series of quality metrics, which are shown in table 1 and are based on national standards, practice guidelines, or locally derived, evidence-based protocols for process and outcome measures.11–16 All cases were evaluated, although some cases were not eligible for scoring across all five of the metrics. For each case, if any one of the gradable items was scored as a failure, the overall case was scored as a failure. If a metric did not apply to a given case (e.g., the central line precaution metric in a case where no central line was inserted), that particular metric was omitted from the scoring for the case. This logic was then programmed into a structured query language script and added to our daily data processing jobs that pull data from our perioperative information management system into our perioperative data warehouse (PDW). Our daily jobs are set up to run at midnight to capture and process all cases from the previous day.
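The scoring rule described above can be sketched in a few lines. The following Python is a minimal illustration only, not the structured query language script that runs in production. The metric identifiers, the `score_case` function, and the choice to return `None` when no metric applies to a case are assumptions made for this example.

```python
from typing import Dict, Optional

# Hypothetical identifiers for the five quality metrics named in this report.
METRICS = (
    "antibiotic_compliance",
    "central_line_precautions",
    "glucose_monitoring",
    "temperature_management",
    "pacu_entry_pain",
)


def score_case(results: Dict[str, Optional[bool]]) -> Optional[bool]:
    """Return True (pass), False (fail), or None (no metric applied).

    Each value is True if the metric was met, False if it was failed,
    and None if the metric did not apply to the case.
    """
    # Metrics that do not apply are omitted from scoring.
    outcomes = [results.get(name) for name in METRICS]
    applicable = [outcome for outcome in outcomes if outcome is not None]
    if not applicable:
        # Assumption: a case with no applicable metric is left unscored.
        return None
    # Any single failed metric fails the whole case.
    return all(applicable)


# A case with no central line: that metric is skipped, the rest pass.
case_a = {
    "antibiotic_compliance": True,
    "central_line_precautions": None,
    "glucose_monitoring": True,
    "temperature_management": True,
    "pacu_entry_pain": True,
}
# The same case, but with the pain metric failed.
case_b = dict(case_a, pacu_entry_pain=False)

print(score_case(case_a))  # True
print(score_case(case_b))  # False
```

Representing a non-applicable metric as `None` rather than as a pass keeps the denominator for each metric honest when pass rates are later computed per metric.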

After the cases and their associated scores were brought into the PDW, we used Tableau (Version 7.0, Tableau Software, Seattle, WA) to create a series of data visualizations. The first visualization was a view designed for the Program Director and Clinical Competency Committee in order to show the performance of all residents on each metric, plotted over time (see fig. 1). The second visualization was a view designed for individual trainees. This is a password-protected website that displays the individual performance for the resident viewing their data as compared to aggregate data for their residency class and for the complete residency cohort, all of which is plotted over time (see fig. 2). Both the program director and the individual trainee visualizations contain an embedded window that displays a list of cases where failures have been flagged with a hyperlink to view the electronic anesthesia care record for that case. Row-level filtering was applied at the user level to ensure that each trainee was only able to view their own records. Because the PDW is updated nightly, the visualizations automatically provide new case data each night shortly after midnight.
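The aggregation and row-level filtering behind the trainee view can be illustrated outside of Tableau. The sketch below is hypothetical: the `ScoredCase` record, its field names, and the `resident_view` function are assumptions for illustration and do not describe the actual PDW schema or Tableau configuration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class ScoredCase:
    case_id: str
    resident_id: str
    residency_class: str  # hypothetical label, e.g. graduation year
    month: str            # e.g. "2013-03"
    passed: bool


def monthly_pass_rate(cases: Iterable[ScoredCase]) -> Dict[str, float]:
    """Fraction of cases passed in each month."""
    totals: Dict[str, int] = defaultdict(int)
    passes: Dict[str, int] = defaultdict(int)
    for case in cases:
        totals[case.month] += 1
        passes[case.month] += int(case.passed)
    return {month: passes[month] / totals[month] for month in sorted(totals)}


def resident_view(cases: List[ScoredCase], resident_id: str) -> dict:
    """Build the data behind one trainee's dashboard."""
    own = [c for c in cases if c.resident_id == resident_id]
    own_classes = {c.residency_class for c in own}
    classmates = [c for c in cases if c.residency_class in own_classes]
    return {
        "own": monthly_pass_rate(own),
        "class": monthly_pass_rate(classmates),
        "program": monthly_pass_rate(cases),
        # Row-level filter: only the viewer's own failed cases are listed.
        "failed_cases": [c.case_id for c in own if not c.passed],
    }


# Toy data only; these are not study results.
cases = [
    ScoredCase("c1", "r1", "2014", "2013-03", True),
    ScoredCase("c2", "r1", "2014", "2013-03", False),
    ScoredCase("c3", "r2", "2014", "2013-03", True),
    ScoredCase("c4", "r3", "2015", "2013-03", True),
]
view = resident_view(cases, "r1")
print(view["own"], view["program"], view["failed_cases"])
```

The class and program comparators are aggregates only, so a trainee sees peer performance without seeing any other trainee's individual cases.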

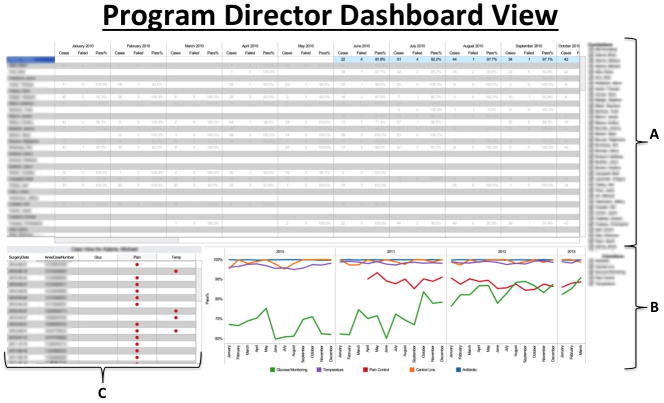

Figure 1.

This figure displays the Program Director dashboard view, which is accessed through a password-protected website. The different panels comprising this dashboard show: A) tabular numerical listings of individual resident scores plotted by case pass/failure rate by month, with ‘pass/fail’ as a binary score taking into account passing on all 5 metrics or not; B) color-coded graphical representation of performance of all residents on each individual metric over time; and C) listings of individual cases in which failures occurred, with each row representing one case and the metric(s) failed in that case being depicted by the red dots. In this portion of the dashboard, a read-only version of the anesthesia record for a case in question can be accessed from our AIMS by clicking on the case (one row represents one case). Additionally, in panel ‘A’ in the figure, resident performance can be sorted by column in ascending or descending order, giving the program director immediate access to the residents that are top performers and those that are low performers on defined quality metrics. Thus, the Program Director can quickly assess individual resident performance and global programmatic performance from this one dashboard. [AIMS = Anesthesia Information Management System]

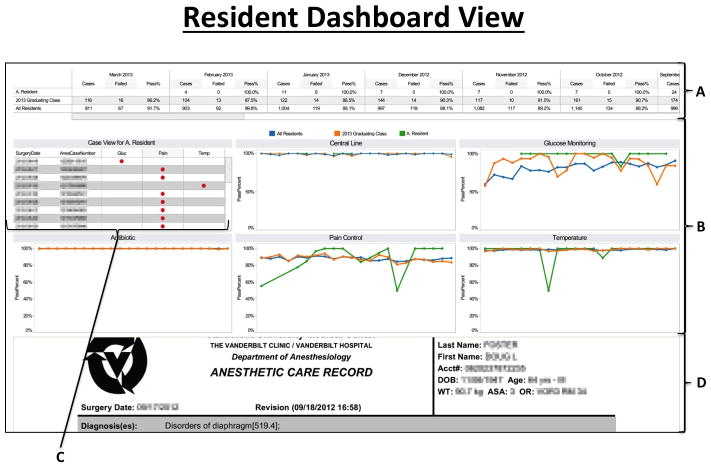

Figure 2.

This figure displays the Resident dashboard view, which is accessed through a password-protected website. The different panels comprising this dashboard show: A) a tabular view of the case pass/fail rate of a single resident in comparison to CA training level and entire program by month, with ‘pass/fail’ as a binary score taking into account passing on all 5 metrics or not; B) a graphical representation of the performance of a single resident in comparison to CA level and entire program by individual metrics by month; C) individual case listings showing where resident performance failures occurred; and D) the PDF form of the case record in our AIMS, which can be accessed by clicking on one of the cases listed in panel C. [CA = Clinical Anesthesia; AIMS = Anesthesia Information Management System]

Resident Input for Refining Feedback Tool

As part of the process of refining this feedback tool, an anonymous online survey was sent to all 60 residents in our training program using Research Electronic Data Capture (REDCap) to elicit their opinions about clinical performance feedback (see appendix 1).17 We omitted questions about respondent demographics in order to keep the responses as anonymous as possible. This was done because we wanted credible feedback about an area where the residents could potentially report dissatisfaction, as comments about ways to improve this system in order to make it meaningful to the end user are crucial to its success.

Resident Orientation

A short user guide and an explanatory email were developed and delivered to each trainee as an orientation to the web-based feedback tool. The user guide is provided as Supplemental Digital Content 1, entitled Resident Performance Dashboard User Manual and Logic.

Results

Survey Results

Eighty percent (48/60) of the residents completed the survey. Table 2 displays the summary data from the first portion of the resident survey, which included nine questions sampling four themes:

- satisfaction with the frequency/timeliness of feedback prior to development of this application
- satisfaction with the amount of feedback prior to system development
- desire to receive performance data with comparison to peers and faculty
- knowledge of current departmental quality metrics.

On average, the 48 survey respondents ‘agreed/strongly agreed’ that they desire frequent updates on their personal clinical performance in defined quality metrics (e.g., pain score upon entry to the postanesthesia care unit [PACU], postoperative nausea and vomiting rate) and that they desired to know how they compared to the residency as a whole and to faculty. Additionally, while residents were ‘neutral’ concerning the timeliness of feedback and amount of feedback from faculty evaluators, they on average ‘disagreed’ that they received feedback in the right amount or in a timely manner concerning practice performance data.

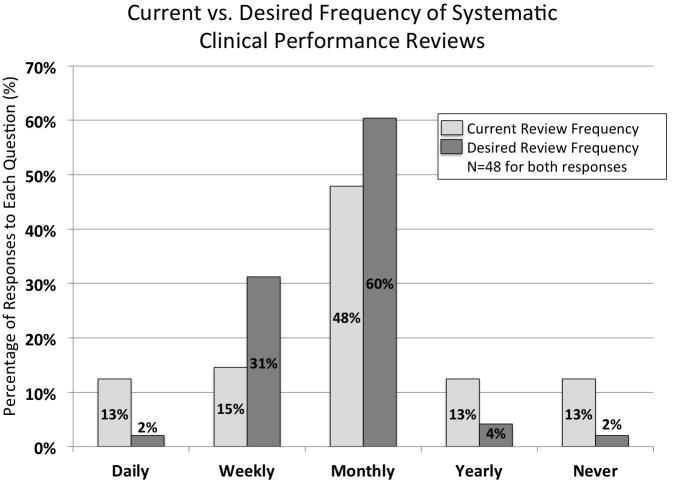

Figure 3 displays the results concerning the residents’ report of how often they systematically review their clinical performance now and how often they would like to do so. Of note, 91% responded that they would like to receive a systematic review of practice performance data every 1–4 weeks. The survey also included several questions concerning the practice performance areas in which the residents believe that they could improve (see table 3). Ninety percent (43/48) of residents responded that they could improve in at least one, and often multiple, areas. However, 10.4% (5/48) of respondents believed that they were compliant (i.e., highly effective) in all of the six areas listed. Table 4 lists qualitative thematic data from a question at the end of the survey to which residents could give free text answers about other performance metrics about which they would like to receive feedback. Finally, all respondents except one noted that they would like to receive this feedback in some electronic form, either by email, website, or smartphone application (see table 5).

Figure 3.

This figure depicts the resident responses to questions on an anonymous survey administered during the development phase of the feedback tool. The questions asked about the resident’s current and desired frequency of systematic clinical performance reviews. Results are shown as the percentage of respondents answering in the categories offered. Of note, over 25% of residents said that they currently perform a systematic review of their performance once per year or less often, whereas 91% stated that they would like a systematic review every 1–4 weeks. N=48 for both questions.

The results of the resident survey were used to guide the final format of the feedback tool and the comparators displayed in the data fields. The specific website format that had been in development was refined and comparisons to each resident’s overall class and the entire residency were added.

Performance Results

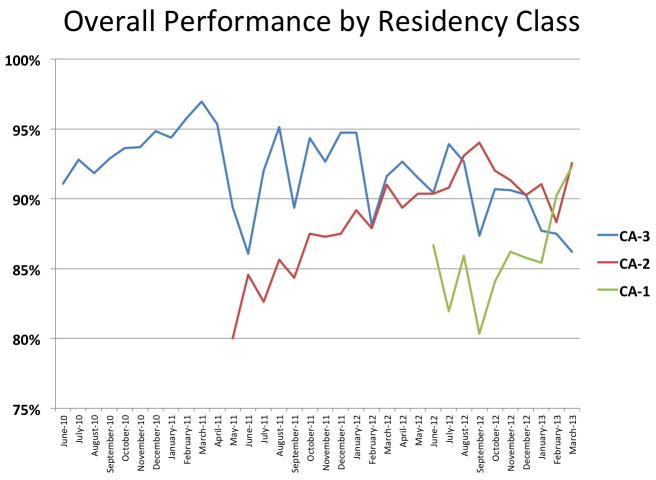

We successfully created and launched the automated case evaluation tool and the automated web visualizations for both the Program Director/Education Office staff and individual trainees as shown in figures 1 and 2. Creation and validation of the case evaluation metrics was completed in less than four weeks, and development of the visualizations took approximately 60 hours of developer time. Data on 24,154 completed anesthetics from the period February 1, 2010 to March 31, 2013 have been provided to our 60 trainees. Table 6 shows a breakdown of the frequency with which a given metric applied to a case performed by a resident. Antibiotic compliance applied to the greatest percentage of cases and central line insertion precautions applied to the fewest. The large fraction of cases not eligible for scoring on the pain metric were patients who were admitted directly to the intensive care unit after surgery, bypassing the PACU, or cases where a nurse anesthetist assumed care at the end of the case. Overall performance by residency class is shown in figure 4 and individual resident performance for the current CA-3 class is shown in figure 5. Examination of figures 4 and 5 indicates little if any improvement of resident performance on these clinical tasks across the arc of their training. Performance appears to peak in the second clinical anesthesia year. Figure 6 shows the performance of the entire residency on each individual metric over time.
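The applicability breakdown summarized in table 6 amounts to counting, for each metric, the share of cases that were eligible for scoring. A minimal sketch follows, assuming each case is stored as a mapping from a hypothetical metric name to `True`, `False`, or `None` (not applicable). The function name and the toy data are illustrative and are not the study's results.

```python
from typing import Dict, List, Optional


def applicability_rates(
    cases: List[Dict[str, Optional[bool]]],
) -> Dict[str, float]:
    """Fraction of cases to which each metric applied.

    A metric applies to a case when its result is True or False;
    None (or a missing key) means the case was not eligible.
    """
    if not cases:
        return {}
    names = sorted({name for case in cases for name in case})
    return {
        name: sum(case.get(name) is not None for case in cases) / len(cases)
        for name in names
    }


# Toy data only; these are not the study's results.
toy_cases = [
    {"antibiotic_compliance": True, "pacu_entry_pain": None},
    {"antibiotic_compliance": True, "pacu_entry_pain": True},
    {"antibiotic_compliance": False, "pacu_entry_pain": None},
    {"antibiotic_compliance": None, "pacu_entry_pain": False},
]
rates = applicability_rates(toy_cases)
print(rates)
```

In this toy set the pain metric applies to half of the cases, mirroring the situation in which patients bypass the PACU and therefore have no entry pain score.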

Figure 4.

This figure illustrates the overall case pass rate of each of our current residency classes by month over the past 36 months. Of note, there is no demonstrable improvement over time, as would be expected with longer periods of training, and as is expected to be demonstrated in the Milestones Project.

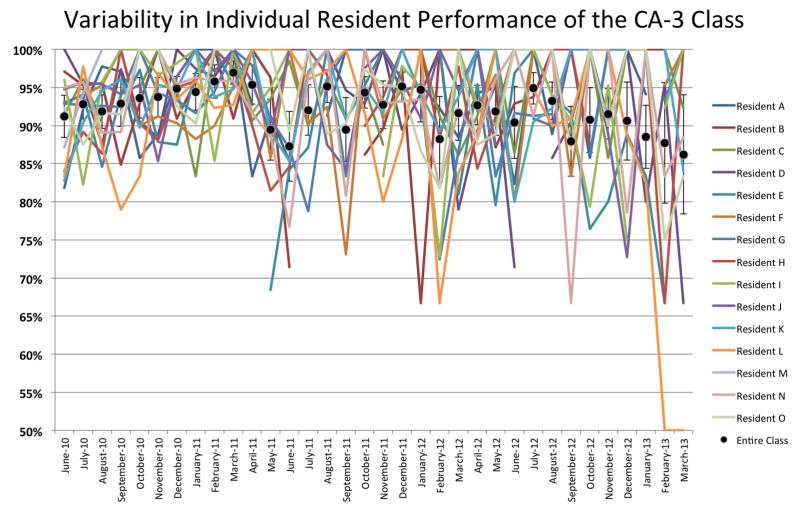

Figure 5.

This figure represents marked variability in the percentage case pass rate of individual residents of the current CA-3 class by month over their entire anesthesia residency, which starts with a month of anesthesia in June at the end of intern year. [CA = clinical anesthesia] There is no discernible pattern of improvement in performance for any resident over time when practicing in a system where feedback on quality metrics was not provided.

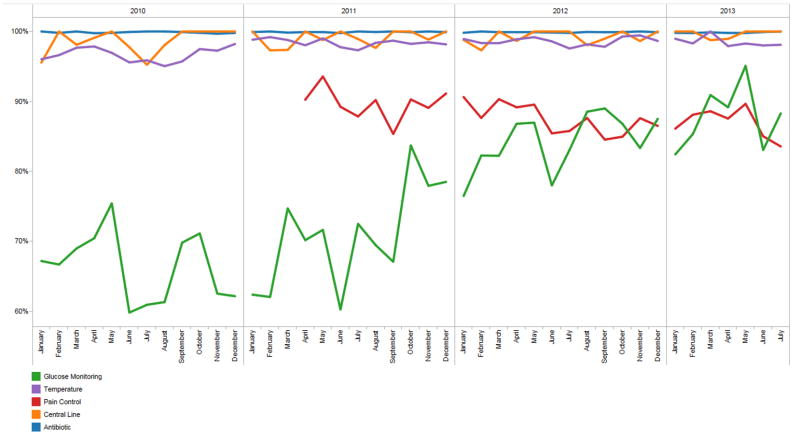

Figure 6.

This figure shows the overall programmatic performance on each of the five quality metrics over the study period. Most of the case failures are accounted for by the glucose monitoring and pain metrics. There is an observed improvement in glucose monitoring and temperature management over time, and the variability in central line documentation is reduced. Much of the improvement in glucose monitoring is likely due to decision support reminders embedded in our anesthesia information management system. Pain management does not appear to improve; however, our data show that on average >90% of patients arrive in the PACU with a pain score <7. [PACU = post-anesthesia care unit]

Discussion

We have created an automated system for near real-time clinical performance feedback to anesthesia residents using a perioperative information management system. The minimal marginal cost of developing this system was a long-term benefit of the substantial prior investment in our dedicated anesthesia information technology development effort.18 The clinical performance metrics described in this report are process and near-operating room outcome measures that are readily available and related to the quality of care delivered. They provide feedback about this care as an ongoing formative assessment of anesthesiology residents. The system is extensible to other forms of documentation and machine-captured data and for use in Ongoing Professional Practice Evaluation for nonresident clinicians, as has been demonstrated in previous reports by our group.7,9

Since implementation, this resident performance evaluation system has required minimal ongoing effort. It provides continuous benefits to our training program and resident trainees with respect to assessment and professional development in the domains of SBP and PBLI. The strengths of this system are 1) its ability to provide objective, detailed data about routine clinical performance and 2) its ability to scale in both the level at which the metrics are evaluated and the number of metrics evaluated, both of which are in line with the ACGME Milestones Project goals.

Concerning the first cited strength, the system described is able to give the program director, the clinical competency committee, and the resident objective, detailed feedback about routine personal clinical performance. Many specialties, including anesthesiology, use case logging systems to track clinical experience, and these logs are used as a surrogate for performance in routine care.2,3,19 Case logs, however, do not contain evaluative information regarding clinical performance. Simulation has come to play a major role in technical and non-technical skill evaluations as another means of performance assessment.20 However, much anesthesia simulation training in the nontechnical domain involves participants or teams of participants responding to emergency scenarios that are 5–15 min in duration. Conversely, it is not feasible to run simulation sessions for hours on end to investigate how residents deliver care in more routine circumstances. What is needed is an ongoing assessment of the quality of the actual clinical performance of residents during the 60–80 h per week that they spend delivering anesthesia care in operating rooms. Faculty evaluations of residents have traditionally been used to do this, and a recent report has better quantified how to systematically use faculty evaluation data.21 Faculty evaluations of residents, however, can be biased about practice performance due to several factors, including the personality type of the resident being evaluated.22

In contrast, an automated system that extracts performance data directly from the electronic medical record (EMR) can add copious objective, detailed, near real-time data as a part of the ongoing formative assessment of the resident. This system would supplement, not replace, these other assessment modalities as one part of navigating the milestones. For instance, while many of the patient care milestones can be accomplished through faculty evaluations, case logs, and simulation, the requirements for the PBLI and SBP core competencies include milestones such as “incorporation of quality improvement and patient safety initiatives into personal practice” (PBLI1), “analysis of practice to identify areas in need of improvement” (PBLI2), and having a “systems-based approach to patient care” (SBP1).* While these milestones could be assessed in a number of ways, a system that requires little administrative support and supplies residents with individualized, objective feedback about their clinical performance concerning published quality metrics provides residents with feedback about the areas in their practice in which they need to incorporate quality improvement and patient safety initiatives. Personalized, objective self-assessment feedback promotes quality improvement on routine practices within internal medicine, such as the longitudinal management of diabetes and prevention of cardiovascular disease, and this advantage could cross over to anesthesiology training.23–25

In relation to the second cited strength – the ability to scale in terms of depth and scope – our system is designed to be scalable for future growth. Any process or outcome measure that can be given a categorical (e.g., pass/fail, yes/no, present/not present) logic for analysis could be added to this system. Additionally, the pass/fail cutoff for a number of metrics could be changed according to training level, which aligns with the NAS and milestones concept. For instance, we currently use an entry-to-PACU pain score of > 7 as a cutoff for failure of this metric. This may be appropriate for a CA-1 resident (Junior Level) to demonstrate competency, but it may represent failure for a CA-3 resident (Senior Level). Longitudinal feedback with increasing expectations may be valuable for anesthesiology trainees. A recent systematic review of the impact of assessment and feedback on clinician performance found that feedback can positively impact physicians’ clinical performance when it is provided in a systematic fashion by an authoritative and credible source over several years.26
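A training-level-dependent cutoff of the kind proposed above could be expressed as a small lookup. In the sketch below, only the current cutoff (a score of > 7 is a failure) comes from this report. The stricter CA-2 and CA-3 values, the `CUTOFF_BY_LEVEL` table, and the `pain_metric_passed` function are hypothetical and are shown only to make the idea concrete.

```python
from typing import Dict, Optional

# The > 7 failure cutoff is the one currently in use, per the text.
CURRENT_CUTOFF = 7

# Hypothetical level-specific cutoffs; only the value 7 comes from the report.
CUTOFF_BY_LEVEL: Dict[str, int] = {"CA-1": 7, "CA-2": 6, "CA-3": 5}


def pain_metric_passed(
    pain_score: Optional[int], ca_level: str
) -> Optional[bool]:
    """Score the entry-to-PACU pain metric for one case.

    Returns None when no PACU entry score exists (metric not applicable).
    A score strictly greater than the cutoff is a failure.
    """
    if pain_score is None:
        return None
    # Unknown training levels fall back to the current cutoff.
    cutoff = CUTOFF_BY_LEVEL.get(ca_level, CURRENT_CUTOFF)
    return pain_score <= cutoff


print(pain_metric_passed(7, "CA-1"))     # True
print(pain_metric_passed(7, "CA-3"))     # False
print(pain_metric_passed(None, "CA-2"))  # None
```

Keeping the cutoffs in a table rather than in the scoring logic means that standard setting can change the thresholds without touching the code that scores cases.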

Several limitations of this report should be noted. First, we have not completed an analysis of the effect of this performance feedback system on the actual performance of the residents as they progress through our residency program. In fact, although our data demonstrate that performance does not improve with training year, which runs counter to the very purpose of the milestones, we believe that this finding may be due to several factors not related to resident development. These may include the high baseline pass rate on some metrics (e.g., antibiotics), recent operational changes for others (e.g., glucose monitoring), and lack of familiarity with the quality metrics being measured, as noted in the resident survey response (table 2). For example, in 2011 we implemented clinical decision support to promote appropriate intraoperative glucose monitoring, and this likely confounds the longitudinal analysis of the CA-3 class performance. However, we believe that sharing the process of development with others is of value in order to show what can be operationalized over a short period of time when a baseline departmental investment in the anesthesia and perioperative information management system and personnel to create such tools has been made.6,7,9,18,27–29 Second, it appears that the individual resident performance variance increases over time. While we did not have a formal measure of this, a possible explanation is that residents are likely to encounter higher acuity patients and cases in which all of the performance metrics apply as they progress through their residency. While the system is able to report performance over time on a series of metrics, this is not an independent measure of a resident’s performance because trainees are supported by both their supervising attendings and systems-level functions (e.g., pop-up reminders to administer antibiotics). While we cannot fully rule out a faculty-to-resident interaction, it is likely mitigated to some degree by the random daily assignments made amongst our 120 faculty physicians who supervise residents. Third, we have not investigated the validity and reliability of our composite performance metric, which is heavily influenced by the antibiotic metric as this is the item most commonly present (table 6). While there is no ‘gold standard’ in this domain, previous research has shown that composite scoring systems aggregated from evidence-based measures address these difficulties.24,25,30 Finally, even though the residents stated that they would like to see their data compared to faculty performance, this has not been added as there are a number of confounders that are being discussed (e.g., faculty scores being heavily affected by the performance of the residents and certified registered nurse anesthetists whom the faculty themselves supervise).

Future directions will involve a number of developments. First, evaluation of the effect of this feedback system on longitudinal performance will be undertaken. Because the project is still in the development phase and the number of metrics to be included is expanding, we are currently using the tool only to provide formative feedback. It does, however, fulfill the ACGME requirement to give residents feedback on their personal clinical effectiveness as queried each year in the annual ACGME survey. Second, fair performance standards need to be evaluated and set concerning quality care.30 On the five metrics measured thus far, what minimum percentage pass rate is required to demonstrate competency, and is this the same at all years of training and beyond? Several studies have examined this problem in simulation settings, and these rigorous methods now must be applied to clinical performance.31–33 Third, further process measures will be added to the core list we have in place, such as the use of multi-modal analgesia in opioid-dependent patients or avoidance or treatment of the triple-low state.34,35 In addition to the Milestones already discussed above, we plan to expand the use of automatic data capture from the EMR to aid in the assessment of a wide range of the Milestones (Patient Care-2,3,4,7; Professionalism-1; PBLI-3). While the thorough assessment of resident performance and progress should continue to be tracked through multiple sources of input, we believe that use of objective, near-real-time data from the EMR can be leveraged to provide feedback on 12 of the 25 proposed Milestones (see table 7). Finally, this system could be modified to track additional objective perioperative outcome measures, such as elevated troponin, need for reintubation, acute kidney injury, efficacy of regional blocks, and delirium, all of which can be captured from data in our EMR and all of which were requested by our residents in the survey that we administered (table 4).

In summary, we described the design and implementation of an automated resident performance system that provides near real-time feedback to the program and to anesthesia residents from our perioperative information management system. The process and outcome metrics described in this report are related to the quality of care delivered. This system requires minimal administrative effort to maintain and generates automated reports along with an online dashboard. While this project has just begun and multiple future investigations concerning its validity, reliability, and scope must be completed to evaluate its effectiveness, this use of the EMR should function as one valuable piece in the Milestones puzzle.

Supplementary Material

Supplemental Digital Content 1. Resident Performance Dashboard User Manual and Logic

Acknowledgments

Funding: Department of Anesthesiology, Vanderbilt University, Nashville, Tennessee and Vanderbilt Institute for Clinical and Translational Research, Nashville, Tennessee (UL1 TR000445 from NCATS/NIH)

Appendix 1. Resident Survey

| Question* | Strongly Disagree | Disagree | Neutral | Agree | Strongly Agree |
|---|---|---|---|---|---|
| I am familiar with the quality performance measures used by my department and I understand the components assessed in each metric (e.g., normothermia, CVL insertion practice, etc.) | | | | | |
| I think that our electronic medical record is appropriately utilized to give me data about my clinical performance | | | | | |
| I am satisfied with the amount of feedback that I get about my clinical performance from faculty | | | | | |
| I receive timely feedback about my clinical performance from faculty | | | | | |
| I am satisfied with the amount of feedback that I get about my clinical performance from practice performance data (e.g., PONV, pain scores in PACU, on time first starts, etc.) | | | | | |
| I receive timely feedback about my clinical performance from practice performance data (e.g., PONV, pain scores in PACU, on time first starts, etc.) | | | | | |
| I would like to receive frequent updates about my clinical practice according to defined performance metrics (e.g., PONV, pain scores in PACU, on time first starts, etc.) | | | | | |
| I would like to receive frequent updates about my clinical practice according to defined performance metrics with comparison to mean performance in my residency class and the residency as a whole | | | | | |
| I would like to receive frequent updates about my clinical practice according to defined performance metrics with comparison to mean performance of faculty | | | | | |

| Question | Response options |
|---|---|
| How often do you systematically review your clinical performance? | Every case; Daily; Weekly; Monthly; Yearly; Never |
| How often would you like to receive a systematic review of your clinical performance? | Every case; Daily; Weekly; Monthly; Yearly; Never |
| How would you like to receive this feedback? [ABLE TO SELECT MULTIPLE ANSWERS] | Automated Email; Website Login; Smartphone App; Paper Copy |
| In which of the following areas could your performance improve? [ABLE TO SELECT MULTIPLE ANSWERS] | |
| In what area(s) do you think that you need the most improvement? [ABLE TO SELECT MULTIPLE ANSWERS] | |
| Please describe performance metrics about which you would like to receive frequent feedback? | [FREE TEXT BOX] |
| Can you think of a specific case in the last month where your performance could have improved? (Please give details if you are willing. All responses are anonymous.) | Yes; No; [FREE TEXT BOX] |

This survey was transformed into REDCap for web-based dissemination to the residents.

CVL = central venous line; OR = operating room; PACU = postanesthesia care unit; PONV = postoperative nausea and vomiting.

Footnotes

From Draft Anesthesiology Milestones circulated to all anesthesiology program directors (Ver 2012.11.11). http://anesthesia.stonybrook.edu/anesfiles/AnesthesiologyMilestones_Version2012.11.11.pdf Last accessed October 16, 2013.

Anesthesiology Program Requirements. http://www.acgme.org/acgmeweb/Portals/0/PFAssets/ProgramRequirements/040_anesthesiology_f07012011.pdf. Last accessed October 16, 2013.

Conflict of Interest: The authors declare no competing interests.

Contributor Information

Jesse M. Ehrenfeld, Department of Anesthesiology, Vanderbilt University School of Medicine

Matthew D. McEvoy, Department of Anesthesiology, Vanderbilt University School of Medicine

William R. Furman, Department of Anesthesiology, Vanderbilt University School of Medicine, Vanderbilt University Medical Center

Dylan Snyder, Department of Anesthesiology, Vanderbilt University School of Medicine, Vanderbilt University Medical Center

Warren S. Sandberg, Department of Anesthesiology, Vanderbilt University School of Medicine, Vanderbilt University Medical Center

References

- 1. Nasca TJ, Philibert I, Brigham T, Flynn TC. The next GME accreditation system--rationale and benefits. N Engl J Med. 2012;366:1051–6. doi: 10.1056/NEJMsr1200117.

- 2. Brown DL. Using an anesthesia information management system to improve case log data entry and resident workflow. Anesth Analg. 2011;112:260–1. doi: 10.1213/ANE.0b013e3181fe0558.

- 3. Simpao A, Heitz JW, McNulty SE, Chekemian B, Brenn BR, Epstein RH. The design and implementation of an automated system for logging clinical experiences using an anesthesia information management system. Anesth Analg. 2011;112:422–9. doi: 10.1213/ANE.0b013e3182042e56.

- 4. O’Reilly M, Talsma A, VanRiper S, Kheterpal S, Burney R. An anesthesia information system designed to provide physician-specific feedback improves timely administration of prophylactic antibiotics. Anesth Analg. 2006;103:908–12. doi: 10.1213/01.ane.0000237272.77090.a2.

- 5. Spring SF, Sandberg WS, Anupama S, Walsh JL, Driscoll WD, Raines DE. Automated documentation error detection and notification improves anesthesia billing performance. Anesthesiology. 2007;106:157–63. doi: 10.1097/00000542-200701000-00025.

- 6. Sandberg WS, Sandberg EH, Seim AR, Anupama S, Ehrenfeld JM, Spring SF, Walsh JL. Real-time checking of electronic anesthesia records for documentation errors and automatically text messaging clinicians improves quality of documentation. Anesth Analg. 2008;106:192–201. doi: 10.1213/01.ane.0000289640.38523.bc.

- 7. Ehrenfeld JM, Henneman JP, Peterfreund RA, Sheehan TD, Xue F, Spring S, Sandberg WS. Ongoing professional performance evaluation (OPPE) using automatically captured electronic anesthesia data. Jt Comm J Qual Patient Saf. 2012;38:73–80. doi: 10.1016/s1553-7250(12)38010-0.

- 8. Kooij FO, Klok T, Hollmann MW, Kal JE. Decision support increases guideline adherence for prescribing postoperative nausea and vomiting prophylaxis. Anesth Analg. 2008;106:893–8. doi: 10.1213/ane.0b013e31816194fb.

- 9. Ehrenfeld JM, Epstein RH, Bader S, Kheterpal S, Sandberg WS. Automatic notifications mediated by anesthesia information management systems reduce the frequency of prolonged gaps in blood pressure documentation. Anesth Analg. 2011;113:356–63. doi: 10.1213/ANE.0b013e31820d95e7.

- 10. Kheterpal S, Gupta R, Blum JM, Tremper KK, O’Reilly M, Kazanjian PE. Electronic reminders improve procedure documentation compliance and professional fee reimbursement. Anesth Analg. 2007;104:592–7. doi: 10.1213/01.ane.0000255707.98268.96.

- 11. Kurz A, Sessler DI, Lenhardt R. Perioperative normothermia to reduce the incidence of surgical-wound infection and shorten hospitalization. Study of Wound Infection and Temperature Group. N Engl J Med. 1996;334:1209–15. doi: 10.1056/NEJM199605093341901.

- 12. Rupp SM, Apfelbaum JL, Blitt C, Caplan RA, Connis RT, Domino KB, Fleisher LA, Grant S, Mark JB, Morray JP, Nickinovich DG, Tung A; American Society of Anesthesiologists Task Force on Central Venous Access. Practice guidelines for central venous access: A report by the American Society of Anesthesiologists Task Force on Central Venous Access. Anesthesiology. 2012;116:539–73. doi: 10.1097/ALN.0b013e31823c9569.

- 13. Bratzler DW, Houck PM; Surgical Infection Prevention Guidelines Writers Workgroup; American Academy of Orthopaedic Surgeons; American Association of Critical Care Nurses; American Association of Nurse Anesthetists; American College of Surgeons; American College of Osteopathic Surgeons; American Geriatrics Society; American Society of Anesthesiologists; American Society of Colon and Rectal Surgeons; American Society of Health-System Pharmacists; American Society of PeriAnesthesia Nurses; Ascension Health; Association of periOperative Registered Nurses; Association for Professionals in Infection Control and Epidemiology; Infectious Diseases Society of America; Medical Letter; Premier; Society for Healthcare Epidemiology of America; Society of Thoracic Surgeons; Surgical Infection Society. Antimicrobial prophylaxis for surgery: An advisory statement from the National Surgical Infection Prevention Project. Clin Infect Dis. 2004;38:1706–15. doi: 10.1086/421095.

- 14. Classen DC, Evans RS, Pestotnik SL, Horn SD, Menlove RL, Burke JP. The timing of prophylactic administration of antibiotics and the risk of surgical-wound infection. N Engl J Med. 1992;326:281–6. doi: 10.1056/NEJM199201303260501.

- 15. Ehrenfeld JM, McCartney KL, Denton J, Rothman B, Peterfreund R. Impact of an Intraoperative Diabetes Notification System on Perioperative Outcomes. Presented at the Annual Meeting of the American Society of Anesthesiologists; Washington, D.C. October 17, 2012; p. A284.

- 16. Apfelbaum JL, Silverstein JH, Chung FF, Connis RT, Fillmore RB, Hunt SE, Nickinovich DG, Schreiner MS, Barlow JC, Joas TA. Practice guidelines for postanesthetic care: An updated report by the American Society of Anesthesiologists Task Force on Postanesthetic Care. Anesthesiology. 2013;118:291–307. doi: 10.1097/ALN.0b013e31827773e9.

- 17. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–81. doi: 10.1016/j.jbi.2008.08.010.

- 18. Sandberg WS. Anesthesia information management systems: Almost there. Anesth Analg. 2008;107:1100–2. doi: 10.1213/ane.0b013e3181867fd0.

- 19. Seufert TS, Mitchell PM, Wilcox AR, Rubin-Smith JE, White LF, McCabe KK, Schneider JI. An automated procedure logging system improves resident documentation compliance. Acad Emerg Med. 2011;18(Suppl 2):S54–8. doi: 10.1111/j.1553-2712.2011.01183.x.

- 20. Ross AJ, Kodate N, Anderson JE, Thomas L, Jaye P. Review of simulation studies in anaesthesia journals, 2001–2010: Mapping and content analysis. Br J Anaesth. 2012;109:99–109. doi: 10.1093/bja/aes184.

- 21. Baker K. Determining resident clinical performance: getting beyond the noise. Anesthesiology. 2011;115:862–78. doi: 10.1097/ALN.0b013e318229a27d.

- 22. Schell RM, Dilorenzo AN, Li HF, Fragneto RY, Bowe EA, Hessel EA 2nd. Anesthesiology resident personality type correlates with faculty assessment of resident performance. J Clin Anesth. 2012;24:566–72. doi: 10.1016/j.jclinane.2012.04.008.

- 23. Holmboe ES, Meehan TP, Lynn L, Doyle P, Sherwin T, Duffy FD. Promoting physicians’ self-assessment and quality improvement: The ABIM diabetes practice improvement module. J Contin Educ Health Prof. 2006;26:109–19. doi: 10.1002/chp.59.

- 24. Lipner RS, Weng W, Caverzagie KJ, Hess BJ. Physician performance assessment: prevention of cardiovascular disease. Adv Health Sci Educ Theory Pract. 2013. doi: 10.1007/s10459-013-9447-7. Epub ahead of print.

- 25. Weifeng W, Hess BJ, Lynn LA, Holmboe ES, Lipner RS. Measuring physicians’ performance in clinical practice: Reliability, classification accuracy, and validity. Eval Health Prof. 2010;33:302–20. doi: 10.1177/0163278710376400.

- 26. Veloski J, Boex JR, Grasberger MJ, Evans A, Wolfson DB. Systematic review of the literature on assessment, feedback and physicians’ clinical performance: BEME Guide No. 7. Med Teach. 2006;28:117–28. doi: 10.1080/01421590600622665.

- 27. Ehrenfeld JM, Funk LM, Van Schalkwyk J, Merry AF, Sandberg WS, Gawande A. The incidence of hypoxemia during surgery: Evidence from two institutions. Can J Anaesth. 2010;57:888–97. doi: 10.1007/s12630-010-9366-5.

- 28. Epstein RH, Dexter F, Ehrenfeld JM, Sandberg WS. Implications of event entry latency on anesthesia information management decision support systems. Anesth Analg. 2009;108:941–7. doi: 10.1213/ane.0b013e3181949ae6.

- 29. Rothman B, Sandberg WS, St Jacques P. Using information technology to improve quality in the OR. Anesthesiol Clin. 2011;29:29–55. doi: 10.1016/j.anclin.2010.11.006.

- 30. Hess BJ, Weng W, Lynn LA, Holmboe ES, Lipner RS. Setting a fair performance standard for physicians’ quality of patient care. J Gen Intern Med. 2011;26:467–73. doi: 10.1007/s11606-010-1572-x.

- 31. Boulet JR, De Champlain AF, McKinley DW. Setting defensible performance standards on OSCEs and standardized patient examinations. Med Teach. 2003;25:245–9. doi: 10.1080/0142159031000100274.

- 32. Wayne DB, Butter J, Cohen ER, McGaghie WC. Setting defensible standards for cardiac auscultation skills in medical students. Acad Med. 2009;84:S94–6. doi: 10.1097/ACM.0b013e3181b38e8c.

- 33. Wayne DB, Fudala MJ, Butter J, Siddall VJ, Feinglass J, Wade LD, McGaghie WC. Comparison of two standard-setting methods for advanced cardiac life support training. Acad Med. 2005;80:S63–6. doi: 10.1097/00001888-200510001-00018.

- 34. Buvanendran A, Kroin JS. Multimodal analgesia for controlling acute postoperative pain. Curr Opin Anaesthesiol. 2009;22:588–93. doi: 10.1097/ACO.0b013e328330373a.

- 35. Sessler DI, Sigl JC, Kelley SD, Chamoun NG, Manberg PJ, Saager L, Kurz A, Greenwald S. Hospital stay and mortality are increased in patients having a “triple low” of low blood pressure, low bispectral index, and low minimum alveolar concentration of volatile anesthesia. Anesthesiology. 2012;116:1195–203. doi: 10.1097/ALN.0b013e31825683dc.

Supplementary Materials

Supplemental Digital Content 1. Resident Performance Dashboard User Manual and Logic