Abstract

Objective

While there has been a dramatic increase in the number of evidence-based practices (EBPs) to improve child and adolescent mental health, the poor uptake of these EBPs has led to investigations of factors related to their successful dissemination and implementation. The purpose of this systematic review was to identify key findings from empirical studies examining the dissemination and implementation of EBPs for child and adolescent mental health.

Method

Out of 14,247 citations initially identified, 73 articles drawn from 44 studies met inclusion criteria. The articles were classified by implementation phase (exploration, preparation, implementation, and sustainment) and specific implementation factors examined. These factors were divided into outer (i.e., system level) and inner (i.e., organizational level) contexts.

Results

Few studies utilized true experimental designs; most were observational. Of the many inner context factors that were examined in these studies (e.g., provider characteristics, organizational resources, leadership), fidelity monitoring and supervision had the strongest empirical evidence. While the focus of fewer studies, implementation interventions focused on improving organizational climate and culture were associated with better intervention sustainment as well as child and adolescent outcomes. Outer contextual factors such as training and use of specific technologies to support intervention use were also important in facilitating the implementation process.

Conclusions

The further development and testing of dissemination and implementation strategies is needed in order to more efficiently move EBPs into usual care.

Keywords: children, dissemination and implementation research, evidence-based practice, mental health, substance abuse

INTRODUCTION

While the last several decades have been marked by the development of a number of important preventive and clinical evidence-based practices (EBPs) for mental health problems among children and adolescents, the science of disseminating and implementing these practices into community and clinical settings has received considerably less attention.1 Indeed, the poor uptake of EBPs in usual care settings remains one of the major barriers to providing safe, effective, and efficient mental health care.1,2 The improvement of this process is the focus of dissemination and implementation research.

For the purposes of this review we define dissemination as the “targeted distribution of information and intervention materials to a specific public health or clinical practice audience. The intent is to spread knowledge and the associated evidence-based interventions.”3 We define implementation as “the use of strategies to introduce or change evidence-based health interventions within specific settings.”3,4 Both dissemination and implementation are considered “active” strategies, standing in contrast to processes described as “diffusion,” which refer to the natural uptake of innovations.5,6 Implementation research falls under the broad rubric of “translational science” and is considered “T3” where T1 is the translation of basic science discovery to a clinical intervention (e.g., translating a basic biological process into a medication, or a behavioral process to a psychosocial intervention), T2 expands basic findings to clinical practice, and T3 is the dissemination and/or implementation of a new intervention.7

We define EBPs as those health interventions that are supported by rigorous scientific research, allow for clinical judgment and expertise in their application, and provide for consumer choice, preference, and culture.8,9 While the extent to which EBPs are being provided in usual care for children and adolescents is not clear, it is clear that there are critical gaps in the quality and effectiveness of mental health care currently being delivered to children.1 Indeed, the ability to adopt, implement, and sustain EBPs is becoming increasingly important for mental health, schools, and other human service organizations and providers10 as well as for integration into primary health care settings.

Implementation research is informed by a range of theories including seminal work on the diffusion of innovation in agriculture.6,11 This work was later generalized to the diffusion of innovations broadly and in social service settings in particular.5 In the 1970s, work developed in the United Kingdom addressed intervention effectiveness in health care settings through systematic reviews of the scientific literature12 and the systematic application of relevant research to practice,13 leading to clearinghouses to support dissemination of information regarding EBPs.14 In the 1980s and 1990s there was growing interest in the study of the implementation of innovations in business15,16 and an increasing impetus for quality improvement in health care that culminated in the Health Care Quality Improvement Act (1986)17 and later the Institute of Medicine’s Crossing the Quality Chasm report (2001).18 Specific calls from the National Institutes of Health (NIH) to support implementation research began in 1999,3 and Centers for Disease Control funding began in 2009.19 NIH-sponsored conferences20 and training programs21 focused on dissemination and implementation research have also helped to advance the field.

Moving from the development of interventions to implementation in usual care is often a lengthy process and that time lag compromises the well-being of children with mental health needs.22 A number of mechanisms have been developed to accelerate this process through support for research and practice in implementation science. For example, NIH supports active research and training programs focused squarely on the dissemination and implementation of EBPs.3 The W.T. Grant Foundation is funding studies to better understand how research evidence is accessed, shared, and interpreted by policymakers and practitioners,4,23 and the Centers for Disease Control and Prevention also funds studies to examine translation of EBPs into usual care.24

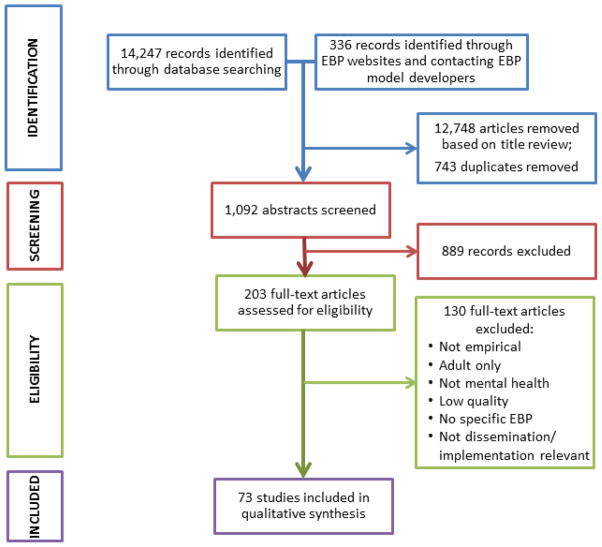

There are a number of published dissemination and implementation frameworks; most approach implementation as a complex, multiphasic process that involves multiple stakeholders in service systems, organizations, and practices.11,25,26 One such framework developed specifically for public mental health and social service settings is the Exploration, Preparation, Implementation, Sustainment (EPIS) model. It divides the dissemination and implementation process into the following 4 phases: Exploration (consideration of new approaches to providing services), Preparation (planning for providing a new service), Implementation (provision of this new service), and Sustainment (maintaining this new service over time).10 The EPIS model also emphasizes the importance of contextual factors, both inside the unit providing services (i.e., service organization, individual providers) and in the larger environment in which the service unit operates (e.g., policy and funding, relationships with intervention developers and technical assistance providers, certification and regulatory environment). Figure 1 shows the multiple phases and levels of the EPIS framework. Note that some factors (e.g., fidelity, provider attitudes, interorganizational networks) are relevant to multiple EPIS phases. In order to illuminate this complexity, we provide the following hypothetical example:

Figure 1.

Exploration, preparation, implementation, sustainment (EPIS) multilevel implementation framework: factors impacting evidence-based treatment (EBT) implementation in child and adolescent mental health. Note: This figure depicts some examples of factors in the outer and inner contexts to be considered in each phase of the EPIS framework, as found in this study and in the original article. From Aarons et al. Advancing a conceptual model of evidence-based practice implementation in public service sectors. 2011;28(1):4–23.10 Reprinted with permission from Springer.

In the exploration phase, a service system, organization (e.g., hospital, clinic, community-based provider), or individual considers what factors might be important in regard to implementing a practice. For a new medication, these might include regulatory and reimbursement issues (e.g., Food and Drug Administration [FDA] approval, health plan formularies) and the need for training and support for physicians and pharmacists in appropriate prescribing practices and potential drug interactions. In the preparation phase, changes in formularies would be made and electronic medical records would need to be amended to allow for documenting indications and prescribing the new medication. Plans would need to be made for physician/pharmacist training, including scheduling, procuring space, and follow-up coaching and support, if needed. In the implementation phase, training begins, along with assuring that the medication is now available in formularies and that patients can obtain it from pharmacies. In the sustainment phase, ongoing monitoring of appropriate prescribing practices, patient adherence, adverse events, and outcomes would be utilized to understand and increase the likelihood of positive outcomes. While this example is oversimplified, it illustrates that there are a number of issues to be considered in each EPIS phase in order to facilitate effective implementation of an EBP.

In addition to EPIS, we considered a number of frameworks and approaches for framing the results of this review. For example, Damschroder et al.25 identified multiple models, theories, and frameworks based on previous synthesis of implementation literature as well as individual frameworks. They synthesized 19 of these to develop the Consolidated Framework for Implementation Research (CFIR). Powell et al.11 examined the characteristics of 65 implementation strategies and documented “key” processes including planning, educating, financing, restructuring, managing quality, and attending to the policy context. Meyers et al.27 reviewed 25 implementation theories and developed the Quality Implementation Framework (QIF), which has 4 phases to address implementation processes. Each of these approaches overlaps with the EPIS framework. For example, like the CFIR, the EPIS framework has a strong emphasis on structures and processes in the outer policy and inner organizational contexts. Like the QIF, the EPIS framework has phases that help guide thinking and problem solving regarding implementation.

While there is variability in the focus and contexts considered in extant approaches, the EPIS framework integrates other theories of systemic and organizational change that help to inform EBP implementation efforts. In addition, for the purposes of this systematic review the EPIS framework was deemed appropriate because of its emphasis on public sector service settings, where a majority of children and adolescents receive mental health services. These service sectors include mental health, child welfare, substance abuse treatment, and primary care settings, and they are commonly strongly impacted by both outer policy and inner organizational context factors. Indeed, most of the studies that met inclusion criteria for this review took place in such public sector service settings.

The purpose of this systematic review is to examine the current state of the science regarding the implementation of EBPs for the prevention and treatment of mental health problems among children and adolescents in community, primary care, and specialty mental health settings.

METHOD

Databases and Search Methodology

We conducted a systematic literature search for all of the available empirical studies examining dissemination and implementation of evidence-based practices in child and adolescent mental health using PubMed, Embase, PsycInfo, Web of Science, Cumulative Index to Nursing and Allied Health Literature (CINAHL), and Cochrane Library. Search terms relating to 1) dissemination and implementation, 2) mental health including DSM-IV diagnoses, and 3) child and adolescent populations were used to search each database (see Supplement 1, available online, for Boolean search parameters). A second search strategy involved contacting model developers of prominent child/adolescent-focused EBPs and examining publication lists on EBP websites. See Table S1, available online, for the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) Systematic Review Checklist.

Study Selection

Included were English-language empirical journal articles that examined the dissemination and implementation of EBPs in child and adolescent mental health between 1991 and December 2011. Studies were excluded if they did not examine dissemination or implementation issues in child and adolescent mental health or if they contained no empirical data (e.g., case descriptions of implementations or a summary of “lessons learned” from such efforts). Screening of search results followed the PRISMA statement recommendations for reporting of the systematic review process.28

A team of 4 investigators (1 child/adolescent psychiatrist, 2 child/adolescent psychologists, 1 general psychiatry resident) completed the review of titles, abstracts, and full-text articles. Investigators independently reviewed and selected articles resulting from the database, developer, and website searches. Once the results of these searches were merged and duplicates eliminated, the investigators employed an iterative calibration procedure prior to reviewing article abstracts. During the calibration procedure, 10% of the abstracts were reviewed by the 4 investigators until consensus was reached to either reject or include each article for full-text review. After the calibration procedure was conducted, the team divided the remaining abstracts for independent investigator review. The resulting full-text articles were then reviewed by at least 2 investigators for final inclusion. Finally, these full-text articles were rated on methodological rigor and relevance to EBP dissemination and implementation issues in child and family mental health. Scores ranged from 1 (high rigor/high relevance) to 3 (low rigor/low relevance). Studies that received a score of 3 were discussed by the team of coauthors and eliminated if consensus was reached that the study did not meet methodological rigor for inclusion or was not relevant enough to EBP dissemination and implementation in children’s mental health. Each study included in this qualitative review was examined and agreed upon for inclusion by all 4 coauthors.

Coding of Studies

Included studies were then coded by setting (e.g., mental health, school, child welfare), type of EBP (i.e., prevention, clinical), design (e.g., experimental, descriptive quantitative, qualitative, mixed methods), EPIS phase, and EPIS contextual level factors. Terms utilized by authors to describe their work were examined; however, articles were classified according to the EPIS model based on the results and data that were presented. For example, a number of studies purported to address sustainment; however, in some cases the intervention was not carried out long enough (i.e., a minimum of 1 year) to meet criteria for sustainment.

RESULTS

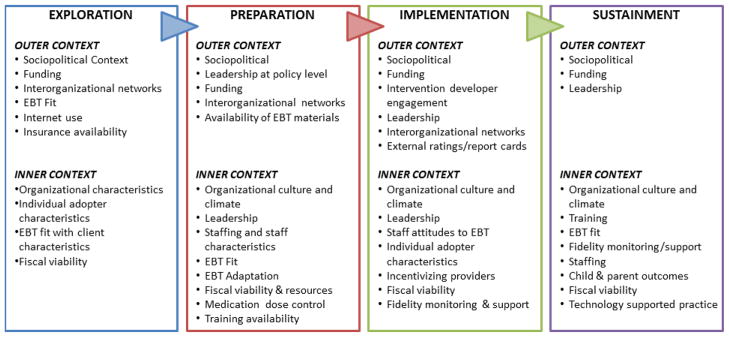

A total of 14,247 citations were identified from database searches based on title alone, with an additional 336 records identified through EBP websites and contacting EBP model developers. These citations were further distilled down to 1,092 after reviewing the abstracts and eliminating duplicates. The abstract review resulted in 203 potential articles for full review; 104 were kept after full article review. Finally, these studies were assessed for methodological rigor and relevance to dissemination and implementation, resulting in 73 articles that met all study criteria for inclusion (see Figure 2 for the PRISMA diagram). Table 1 provides a synopsis of those papers utilizing randomized experimental methods. Table S2, available online, lists the key characteristics of all papers included in this review.

Table 1.

Summary of Randomized Studies of Evidence-Based Practice (EBP) Dissemination and Implementation in Youth Mental Health

| Paper | EPIS Stage | Prevention/Treatment | Treatment/Practice Studied | Treatment/Practice Setting | Contextual Focus | Intervention | Unit(s) of Analysis | N |
|---|---|---|---|---|---|---|---|---|
| Aarons et al., 200956 | Implementation | Prevention | SafeCare | Child welfare | Inner (organizational; provider; fidelity) | SafeCare vs. care as usual x fidelity monitoring vs. no monitoring | Multiple-organization/provider (organization dropped from final analyses) | 153/25 |
| Summary: In multivariate model analyses, SafeCare plus fidelity monitoring had higher staff retention rates than SafeCare plus no monitoring. Older age, greater perceived job autonomy, and lower expressed intention to leave their job also positively correlated with higher staff retention rates. | | | | | | | | |
| Aarons et al., 200937 | Implementation, Sustainment | Prevention | SafeCare | Child welfare | Inner (organizational; provider; fidelity; coaching) | SafeCare vs. care as usual x fidelity monitoring vs. no monitoring | Multiple-agencies/providers (analyses were conducted to address clustering of providers in programs) | 21/99 |
| Summary: Providers in programs implementing SafeCare had lower levels of emotional exhaustion. Significant predictors of emotional exhaustion included age (older=less emotional exhaustion) and caseload (higher=greater emotional exhaustion). | | | | | | | | |
| Barwick et al., 200958 | Implementation | Treatment | CAFAS | Mental health clinics | Inner (organizational) | Use of a community of practice approach for improving adherence | Single-provider (randomization was done by organization, which was not addressed in the analyses) | (6)/37 |
| Summary: Providers working in organizations using a Community of Practice implementation model had higher rates of CAFAS use; their knowledge regarding the CAFAS increased over time, while that of providers in the Practice as Usual programs decreased. | | | | | | | | |
| Beidas et al., 201251 | Implementation | Treatment | CBT for anxiety | Mental health clinicians in private practice | Outer (training) | 3 different training workshops (routine, computerized, and routine augmented with active learning principles) | Single-provider | 115 |
| Summary: Based on the Adherence and Skill Checklist, the three workshops did not show significant differences in adherence, skill, and knowledge; however, participants preferred the in-person over the computerized training. Follow-up played a critical role with adherence and skill but was not a part of the randomized design. | | | | | | | | |
| Di Noia et al., 200329 | Exploration | Prevention | Multiple | Schools, community agencies | Outer (information transmission) | 3 different dissemination strategies (pamphlet, CD, internet) | Single-provider | 188 |
| Summary: Exploration impacts (accessibility of materials, self-efficacy to select/recommend an intervention, and behavioral intentions to encourage adoption of interventions) were strongest in the internet group, intermediate in the CD-ROM group, and weakest in the pamphlet group. | | | | | | | | |
| Epstein et al., 200752 | Preparation, Implementation | Treatment | Stimulant Medication Dosage Determination Support | Primary care clinics | Inner (provider; fidelity; coaching) | A pre-packaged and specialist-supported titration trial to determine optimal methylphenidate dose in children with ADHD | Multiple-patient, provider, practice (note patient and provider levels dropped from key analyses) | 145/52/12 |
| Summary: Availability of the titration service increased the use of titration trials but not the monitoring of ADHD symptoms, and it did not impact ADHD symptomatology overall. However, patients who actually received this service received higher stimulant doses and experienced greater improvement in symptoms. | | | | | | | | |
| Foster and Stiffman, 200934 | Preparation, Sustainment | Treatment | Decision Support System | Social services | Inner (organizational; provider) | Personal digital assistant (PDA) vs. PDA plus desktop computer | Single-provider | 28 |
| Summary: The addition of a personal digital assistant version of a desktop decision support system for social service workers increased EBP use. | | | | | | | | |
| Garner et al., 201162 | Implementation | Treatment | Adolescent Community Reinforcement Approach (A-CRA) and Assertive Continuing Care (ACC) | Substance abuse treatment clinics | Inner (organizational) | Pay for performance (payments for receiving a rating of at least minimal competency on an audio session recording and for each adolescent receiving a targeted threshold of treatment) | Multiple-providers and agency | 95/29 |
| Summary: Therapists in the pay for performance condition reported significantly greater intentions to achieve each of the two quality targets (to achieve monthly intervention competence and to deliver the intervention at a targeted threshold level) during the first 3 months of the experiment. | | | | | | | | |
| Glisson et al., 201060 | Implementation | Treatment | MST and ARC (availability, responsiveness, and continuity) | Mental health clinics | Inner (organizational-culture/climate) | Organizational intervention (ARC) that addresses service barriers, provides training in principles of effective service systems, and facilitates the development of flexibility, openness, and engagement among providers | Multiple-youth, providers, counties (number of providers not specified, but this level was included in analyses) | 615/?/14 |
| Summary: At 6-month follow-up youth total problem behavior in the MST plus ARC condition was at a nonclinical level and significantly lower than in other conditions. At 18-month follow-up youth in the MST plus ARC condition entered out-of-home placements at a significantly lower rate than in the other conditions. | | | | | | | | |
| Henggeler et al., 199753 | Implementation | Treatment | MST | Mental health clinics | Inner (provider; fidelity) | Implementation of MST without fidelity measures compared to care as usual (and MST with fidelity measures drawing on prior publications) | Multiple-clinics/therapists/youth | 2/4/155 |
| Summary: Modest outcomes were achieved when not considering fidelity to MST, especially when compared to prior MST trials that included fidelity monitoring. Parent and adolescent ratings of treatment adherence predicted low rates of re-arrest; and therapist ratings of treatment adherence and treatment engagement predicted decreased self-reported index offenses and low probability of incarceration, respectively. | | | | | | | | |
| Henggeler et al., 200835 | Implementation, Sustainment | Treatment | Contingency Management within the context of MST | Mental health and substance abuse clinics | Outer (training); Inner (organizational; provider; fidelity; coaching) | Workshop training + intensive quality assurance vs. workshop training only | Multiple-provider/caregiver-youth | 30/70 |
| Summary: Workshop plus intensive quality assurance was more effective than workshop only at increasing practitioner implementation of contingency management techniques in the short-term based on youth and caregiver reports, and these increases were sustained based on youth reports. | | | | | | | | |
| Holth et al., 201195 | Implementation, Sustainment | Treatment | Multiple (MST, contingency management) | Mental health and substance abuse clinics | Outer (training); Inner (organizational; provider; fidelity; coaching) | Workshop training + intensive quality assurance vs. workshop training only | Multiple-supervisor/provider | 21/41 |
| Summary: Compared to workshop only, intensive quality assurance enhanced therapist adherence to CBT techniques but not to contingency management. Cannabis abstinence increased as a function of time in therapy and was more likely with stronger adherence to contingency management. | | | | | | | | |
| Kolko et al., 201259 | Implementation | Treatment | AF-CBT | Mental health clinics, child welfare | Outer (training) | “Learning community”-based training versus training as usual | Single-agency (not addressed in analyses)/providers | (10)/182 |
| Summary: Providers who participated in the community condition rated higher on knowledge and use of skills included in the EBP. Practitioners in both groups reported significantly more negative perceptions of organizational climate during the intervention phase. | | | | | | | | |
| Lochman et al., 200955 | Implementation | Prevention | Coping Power | Schools | Outer (training); Inner (provider; fidelity); Outcomes (youth) | 2 different levels of training and a control condition | Multiple-school/counselor/student (school not included in modeling; some schools shared counselors) | (57)/49/531 |
| Summary: The higher intensity training condition resulted in better engagement with students and better child outcomes (reductions in children’s externalizing behavior problems and improvement in their social and academic skills). | | | | | | | | |
| Pentz et al., 199057 | Implementation | Prevention | Midwestern Prevention Project | Schools | Inner (organizational; provider; fidelity); Outcomes (participant) | Implementation versus wait list control (by school) | Multiple-schools, teachers, students | 42/65/5065 |
| Summary: All schools implemented the program. Exposure to the intervention was the strongest predictor of student outcome (minimizing increases in drug use behavior). Reinvention (adherence/adaptation) was not related to student outcome. | | | | | | | | |
| Rohrbach et al., 199333 | Preparation, Implementation | Prevention | Adolescent Alcohol Prevention Trial | Schools | Inner (organizational-leadership; adopter) | Brief versus intensive training x principal versus no principal intervention | Multiple-teacher, school | 60/25 |
| Summary: There was no difference in rates of implementation/fidelity between the brief and intensive teacher training. Principal training increased implementation/fidelity. Teachers who reported higher rates of implementation/fidelity also reported less teaching experience, stronger self-efficacy, greater enthusiasm, better preparedness, higher teaching method compatibility, and greater principal encouragement. Integrity of implementation was related to positive program outcomes. | | | | | | | | |

Note: ADHD=attention-deficit/hyperactivity disorder; AF-CBT=Alternatives for Families: A Cognitive-Behavioral Therapy; ARC=availability, responsiveness, and continuity; CAFAS=Child and Adolescent Functional Assessment Scale; CBT=cognitive behavioral therapy; CD=compact disc; MST=Multisystemic Therapy.

In the following sections we present an analysis of the findings of the included 73 articles organized by the EPIS framework constructs. Papers that address more than one EPIS phase (n=16) are counted and analyzed in all relevant EPIS phase sections; results from these studies are described in each relevant phase. Table 2 provides a summary of key paper characteristics by EPIS phase.

Table 2.

Summary of Key Characteristics of Studies by Exploration, Preparation, Implementation, Sustainment (EPIS) Framework Phase

| | Exploration (n=2) | Preparation (n=19) | Implementation (n=60) | Sustainment (n=8) | TOTAL (N=73)a, n | TOTAL (N=73)a, % |
|---|---|---|---|---|---|---|
| Intervention Type | | | | | | |
| Preventive | 1 | 8 | 22 | 3 | 27 | 37.0 |
| Clinical | 1 | 11 | 38 | 5 | 46 | 63.0 |
| Design and Analysis | | | | | | |
| Experimental | 1 | 3 | 14 | 4 | 18 | 24.7 |
| Multilevelb | 0 | 6 | 20 | 3 | 23 | 31.5 |
| Contexts Examined | | | | | | |
| Inner | 1 | 18 | 56 | 8 | 67 | 91.8 |
| Outer | 2 | 11 | 24 | 3 | 27 | 37.0 |
| Intervention | 1 | 2 | 3 | 0 | 4 | 5.5 |
| Outcomesc | 0 | 0 | 12 | 1 | 12 | 16.4 |

Note:

a Total = nonduplicated count of studies.

b Multilevel = studies that examined results using hierarchical modeling (e.g., providers clustered within clinics).

c Outcomes = parent, child, and/or family outcomes.
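
As a quick arithmetic check, the percentages in the Total column of Table 2 can be reproduced by dividing each nonduplicated count by the 73 included articles. The short Python sketch below does this; the names `total_counts` and `percent_of_total` are illustrative only and are not part of the original review.

```python
# Nonduplicated counts taken from the Total column of Table 2 (N = 73 articles).
TOTAL_ARTICLES = 73

total_counts = {
    "Preventive": 27,
    "Clinical": 46,
    "Experimental": 18,
    "Multilevel": 23,
    "Inner": 67,
    "Outer": 27,
    "Intervention": 4,
    "Outcomes": 12,
}


def percent_of_total(count, total=TOTAL_ARTICLES):
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Percentage of the 73 articles in each category (hypothetical helper output).
percentages = {label: percent_of_total(n) for label, n in total_counts.items()}

for label, pct in percentages.items():
    print(f"{label}: {pct}%")
```

Because articles can examine more than one context, the context rows are not expected to sum to 100%.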

Exploration

The Exploration phase involves awareness of a clinical or service issue and developing processes to identify an improved approach to address that issue. Two papers met our review criteria and addressed issues of exploration. One paper focused on preventive interventions;29 the other focused on a clinical intervention.30 The preventive intervention paper utilized a randomized design and found that using the internet to facilitate the exploration of preventive interventions by schools and community agencies was more effective than pamphlets or CDs (outer contexts of the exploration process [i.e., information transmission]).29 The treatment paper utilized both qualitative and quantitative (i.e., mixed) descriptive methods to assess the pilot implementation of a program for providing services to children with attention-deficit/hyperactivity disorder in primary care settings. The authors found that the intervention itself required adaptation to provide better guidance for addressing assessment of insurance coverage, managing complex clinical situations, and working with mental health specialists in both outer [interorganizational networks] and inner [patient, family] contexts.30

Preparation

The Preparation phase involves planning and decision making regarding adoption and implementation of an innovation and addressing system, organizational, and individual readiness for change. Nineteen papers addressed issues of preparation with 8 focused on preventive interventions (with the Triple P Positive Parenting Program®31 [n=3] and the Arson Prevention Program for Children [n=2]32 being the most commonly studied). Eleven studies focused on clinical interventions with 2 focused specifically on medication guidelines. The remainder focused on different psychosocial/psychotherapeutic interventions. Almost all of the papers involved an explicit focus on the inner context of the preparation and implementation process (n=17) with organizational (e.g., staffing, resources, climate), individual provider, and leadership factors being the most commonly examined. Eleven papers included an explicit focus on outer contexts with interorganizational networks, sociopolitical contexts, and training factors being the most commonly examined. One paper focused explicitly on the intervention itself and ways it should be adapted to improve prospects for effective implementation. Only 3 papers utilized randomized experimental designs. The remainder utilized descriptive methods, including 3 that exclusively utilized qualitative methods.

The 3 randomized controlled trials examined different methods to increase the likelihood of EBP implementation. Rohrbach et al.33 found that the training of the school principal, but not the intensity of teacher training, increased the likelihood of adoption of a school-based intervention. Foster and Stiffman34 found that the addition of a personal digital assistant version of a desktop decision support system for social service workers increased EBP use. Epstein et al.52 found that (1) providing pre-packaged medications to enable practitioners to conduct n-of-1 double-blind placebo-controlled trials of different stimulant doses for use with individual patients, along with (2) specialist support to pediatric practices for interpreting these trials, increased the use of such trials, though it did not increase the frequency of monitoring of ADHD symptoms over time. None of these papers examined the importance or impacts of outer contextual factors in preparation for implementation.

Descriptive studies also consistently supported the importance of inner contextual factors during the preparation process and suggested that outer contextual factors may be important as well. Inner context factors that emerged as important beyond those identified in the above experimental studies include the fit (or perceived fit) of the intervention with the organization,32,35–37 provider self-efficacy,38 the availability of appropriate training,38,39 adequate resources (including personnel and funding),39–43 use of locally and nationally generated data for the decision-making process,37,41,44 leadership (e.g., leadership skills, administrator support of quality improvement goals, attitudes towards EBP implementation),37,39,42,43,45 and organizational culture and climate.43,46 Some studies suggested that providers earlier in their careers had more positive attitudes towards EBPs and were more likely to implement them,42,46 while others suggested that more experienced providers were more likely to actually adopt an intervention.35 Outer contextual factors associated with preparation included the quality of interorganizational networks,38,47 leadership from policymakers at the state level,41,48 and the availability of implementation materials from nationally recognized sources.41

Implementation

The Implementation phase addresses factors related to the active implementation and scale up of an innovation. Sixty papers (82% of those included in this review) addressed issues of implementation. Twenty-three of these papers focused on preventive interventions (with the SafeCare® child neglect intervention49 [n=4] and the Triple P Positive Parenting Program31 [n=3] being the most commonly studied) and 37 focused on clinical interventions (with Multisystemic Therapy®50 being the most commonly studied [n=12]). Almost all of these papers included an explicit focus on the inner context of the implementation process (n=56), with training/fidelity monitoring and support and individual provider characteristics being the most commonly examined. Twenty-four papers included an explicit focus on outer contexts, with training and interorganizational networks (particularly EBP developer engagement in implementation) being the most commonly examined. The importance of adherence to EBP protocol was examined in 27 papers, while the place of flexibility and adaptation of EBPs for use with diverse populations and settings was examined in 8 papers. Twelve papers examined the relationship of implementation quality and related factors to child, parent, and/or family outcomes. Fourteen papers utilized randomized experimental designs, 2 utilized nonrandomized experimental designs, and 4 used quasiexperimental designs. The remainder used descriptive methods, including 14 that exclusively utilized qualitative methods.

Five of the 16 studies with experimental designs focused on the level of provider support necessary to assure implementation with adherence to EBP protocols. A consistent finding across these studies, which involved multiple EBPs and both preventive and clinical services, is that ongoing supervision, fidelity monitoring, and support to providers resulted in higher levels of adherence/fidelity.51–55 Two other experimental studies suggested that supervision, monitoring, and support also improve staff retention56 and reduce staff emotional exhaustion.37 One paper found that training of school principals, but not the teachers delivering the intervention, improved adherence/fidelity to the EBP protocol.33 Two of the above papers extended their work to demonstrate that higher levels of adherence/fidelity resulted in better patient/family outcomes;53,55 another study that examined the impact of fidelity/adherence on student outcomes in the experimental arm of its effectiveness study was unable to find such a relationship.57 Studies with experimental designs also support the premise that attention to organizational factors results in more successful implementation of EBPs, including the formation of “communities of practice”58 or “learning communities”59 to support EBP implementation and the use of Availability, Responsiveness, and Continuity (ARC), an organizational intervention designed to improve organizational culture and climate in mental health and social service organizations.60 The latter is particularly notable as it tied improvement in organizational culture and climate to youth outcomes in organizations that received the ARC intervention.60 However, this study did not find an impact of ARC on intermediary implementation outcomes as identified by Proctor et al.61 (i.e., fidelity). One experimental study suggested that incentives to providers increase the quality of EBP implementation.62 Finally, studies that examined different approaches to initial training (brief versus intensive,33 didactic versus experiential,63 in-person versus videoconference64) were unable to demonstrate significant differences in their impacts on implementation success.

Descriptive studies were generally consistent with the findings of the experimental studies around the need for training and ongoing monitoring/support in order to achieve successful implementation35,65–71 and that better adherence was related to better child outcomes.67,72–74 Additional inner contextual factors that were related to more successful implementation in these studies included those related to provider characteristics,75 including sociodemographics,35 experience,43,76 disciplinary background,35 exposure to32,38 and attitudes towards EBPs,35,69,71,77–80 sense of self-efficacy,32,38,68,77 opportunities for reward/achievement,67,80 concerns about competing demands [which reduced likelihood of implementation],71,79 and ethnic matching of therapist and youth.78,81 Supervisor factors associated with implementation included experience with EBPs82 and relationships with supervisees,70 while organizational factors included leadership,65,66,79,80,83 organizational culture and climate,36,73,84 perceived fit of an EBP with the organization’s mission,21,25,53,63,80 organizational support for implementation,43,65,67–69,79,83 and effectively addressing institutional barriers68,79,85 such as financing,65,75,80 resources,36 readiness to change,35 and governance [i.e., for-profit organizations were more likely to implement EBPs than nonprofits].76 Within the context of fidelity to EBP protocols, some studies suggested that allowing therapists flexibility in their implementation of the EBP (e.g., adapting cognitive behavioral therapy for anxiety to better meet specific child needs,86 language translation65) improved engagement, treatment retention, and child outcomes.86 Other studies noted that adaptation was common when providers implemented EBPs,87–90 with another study developing a process in which EBP developers worked with providers and youth to identify and approve potential adaptations.91 Child and family characteristics69,78,81,92 and family engagement in the treatment process35,69,79 were also reported to be related to implementation success.

Outer contextual factors identified in these studies that supported the implementation process include interorganizational/provider networks,38,79,80 external ratings/report cards,93 and interactions between organizations and/or individual providers and EBP developers.89

Finally, these studies also suggest that additional attention should be paid to the interaction of different variables in the implementation process. For example, Asgary-Eden and Lee found that provider experience was a significant predictor of implementation only in those organizations that were perceived as less amenable to implementation (in those organizations that were perceived amenable to implementation, provider experience was not related to implementation).43 Schoenwald et al. found that organizational factors were predictive of child outcomes only when EBP adherence was low among the organization’s providers.67

Several papers raised questions about optimal methods for measuring implementation quality (which is generally defined as adherence to EBP protocols). Independent observation, provider self-report, and parent report have all been utilized in implementation studies. Studies that have compared at least 2 of these methods suggest that agreement between them is generally good94 but that providers tend to rate their implementation quality as higher than independent observers do;94 in one study, independent observer ratings of quality were related to child outcomes, but provider ratings were not.94

Sustainment

The Sustainment phase focuses on the maintenance of an innovation beyond 1 year. Only 8 papers addressed issues of sustainment, with 3 of these papers focused on preventive interventions (with SafeCare49 being the most commonly studied [n=2]) and 5 focused on clinical interventions (with Contingency Management in combination with Multisystemic Therapy50 being the most commonly studied [n=2]). All of these papers included an explicit focus on the inner context of the implementation process, with training/fidelity monitoring and support being the most commonly examined. Three papers included an explicit focus on outer contexts, with interorganizational networks being the most commonly examined. One paper examined the relationship of sustainment and factors related to child, parent, and/or family outcomes. Four papers utilized randomized experimental designs and 4 utilized descriptive methods, including 1 that exclusively utilized qualitative methods.

Three of the 4 randomized controlled trials examined the impacts of different intensities of training/fidelity monitoring and support. Both Henggeler et al.54 and Holth et al.95 found that ongoing fidelity monitoring and support was superior to an initial workshop only for sustaining adherence to EBP protocols (in this case Multisystemic Therapy and Contingency Management), although Holth et al.’s results suggest that the impacts may have been limited to the use of cognitive behavioral therapy techniques included in Multisystemic Therapy and not Contingency Management. Aarons et al.37 found that fidelity monitoring resulted in lower levels of staff emotional exhaustion when such monitoring took place in the context of sustainment of an EBP (in this case SafeCare) compared to fidelity monitoring for providers providing usual care services. In their small clinical trial comparing desktop computer only versus desktop computer plus personal digital assistant access to a decision-making support system, Foster and Stiffman34 found that use of the system declined during the sustainment phase of their trial, though use of the decision support system remained higher in the desktop computer plus personal digital assistant condition during the sustainment phase.

The descriptive studies reinforced the importance of access to ongoing supervision to assure the sustainment of an intervention with fidelity65,96 (and that connections with the EBP developers themselves can be particularly helpful in supporting sustainment)65 while also suggesting that careful selection of the EBP (taking into account important characteristics of the organization, staff, and children and families served),65,69 addressing sustainability during the preparation and implementation phases,65 and a supportive organizational culture increase the likelihood of sustainment.84

DISCUSSION

Of the many inner contextual factors that have been examined in the studies included in this review (e.g., provider characteristics, organizational resources, leadership), fidelity monitoring and supervision were most frequently examined and have the strongest empirical evidence. Ongoing fidelity assessment, supervision, and support increase the likelihood that expected intervention effects will be realized and have important ancillary benefits including reduced staff burnout and improved staff retention. This is an important finding for health system and organization leaders, as a strong and stable workforce is critical for delivery of effective services and cost containment.97 While the focus of fewer studies, it is also compelling that interventions specifically focused on improving organizational culture and climate were associated with better intervention sustainment as well as better child and adolescent outcomes. These studies suggest that there is more to dissemination and implementation than simply getting individual providers to deliver interventions with fidelity, and that we must also pay attention to characteristics of the workplace.73,84

Our findings suggest that factors such as training strategies and technologies to support intervention use are important to the dissemination and implementation process, though the results are more clearly linked to the outer context in contrast to the inner context. Studies suggest that web-based clearinghouses that provide EBP descriptions, efficacy/effectiveness ratings, and relevance ratings for particular service sectors or disorders will be more accessible and have higher utility with easier, more flexible access than print or physical media.98,99 Though there is currently a great deal of interest in the relative impacts of different dissemination approaches, we were largely unable to find studies that support specific sets of strategies in this regard. Other outer contextual factors that appear to support the dissemination and implementation process include connections with EBP developers and interorganizational networks that link key stakeholders and facilitate interaction and communication. Unfortunately, we found little to no focus on policy or larger system issues that may impact uptake of EBPs. This is notable given that federal, state, and local legislation and policies often dictate or encourage EBP adoption and use, but such policies do not always provide appropriate infrastructure support for implementation (e.g., funded vs. unfunded mandates).100

This review raises a number of important methodological issues in the field. First, while we found a large number (n=14,583) of citations that invoke the terms dissemination, implementation, sustainability, and sustainment, we identified only 73 papers reporting well-conducted, empirical studies of implementation process and/or outcomes in children’s mental health. When we adjust this number for the fact that several papers were published drawing data from the same studies, the number of unique studies is even smaller (n=44). While this low yield is partially the result of our inclusion criteria, which excluded review articles and single case descriptions, most of the papers identified by our search terms did not address dissemination or implementation per se. As the relatively young field of implementation science matures and garners, for example, its own set of specific search terms in Medical Subject Headings (MeSH),101 conducting more efficient literature searches will be possible, but identifying relevant articles is currently more challenging than is typical in studies of children’s mental health.

Second, studies encompassed different research methods including quantitative, qualitative, and mixed methods. This is consistent with the needs of a developing field of study, but it is also indicative of the complexity of studying the dissemination and implementation process, which often involves individual providers nested within organizations and larger interorganizational networks, all functioning in a complex policy environment.102 Only 11 papers (15%) utilized a cluster-randomized design necessary to address these complexities. While such studies are centrally important to advancing the field (and we hope a future systematic review will be able to identify many more), it is also important to acknowledge that some dissemination and implementation research questions do not easily lend themselves to experimental designs (e.g., changes in policy) and the other methodological approaches included in this review will continue to remain important even as the field advances. For example, where the number of organizational units may be limited, thus decreasing quantitative statistical power, qualitative methods can be used for triangulation (to corroborate findings) and expansion (to add new information) of results.103 Other alternatives include rollout designs, where multiple cohorts of providers or organizations are randomized in sequence, and each cohort serves as the control group for the previous cohort.104,105 Other methods will be required to identify and explicate complex interactions and change mechanisms within social and organizational contexts. It may be particularly fruitful to draw on measurement and process assessment from other fields including time series analysis, regression discontinuity designs, system dynamics, network analysis, and qualitative methods.105,106

Third, that this literature was dominated by implementation phase studies addressing training and training support is most likely because most of this research was conducted by intervention developers working to determine the most efficient and effective ways to implement their programs with fidelity. There was also a disproportionate number of studies examining implementation of Multisystemic Therapy (MST). MST, one of the most widely utilized and best-studied EBPs for adolescents with behavior problems, was the subject of one of the earliest NIH-funded implementation studies in child mental health.67 However, as implementation science matures, research studies should address a more diverse array of EBPs and provide better coverage of the exploration and sustainment phases of this complex process.

Limitations of this review should be noted. First, the heterogeneity of papers included in this systematic review, which range from cluster randomized trials to qualitative investigations, precluded the utilization of quantitative meta-analytic techniques. The field needs to advance further before such techniques can be employed. Second, this paper utilizes the EPIS Framework as an organizing heuristic for this systematic review; it does not, however, represent an empirical test of this framework. Third, this paper focuses on dissemination and implementation research in child and adolescent mental health. Many of the general principles that emerged from this systematic review do not appear to differ in major ways from those emerging from research in other settings.107 However, children’s mental health prevention and clinical services have a number of distinguishing characteristics that could lead to a greater separation of this literature over time. For example, children typically live in a setting with parents/caregivers and many interventions involve the family. There is a preponderance of psychosocial interventions for children and adolescents and a much thinner evidence base for psychopharmacologic interventions. Professionals working with children and adolescents often receive additional specialty training that distinguishes them from those serving adults. Service organizations and providers often are dedicated specifically to child services. There are also often different insurance and funding infrastructures for child and adolescent-focused services. Indeed, some findings highlighted in this review, such as the differential impacts of training on provider fidelity in implementing two EBPs in a single study (i.e., Multisystemic Therapy and Contingency Management),95 suggest that an exclusive focus on child and adolescent mental health is warranted.

The present review reflects the current state of the field of implementation research as applied to child and adolescent mental health, capturing its progress from an era focused largely on intervention development to one that places increasing importance on moving effective interventions into widespread use in order to have a positive impact on public health. Forces moving the field in this direction include the development of evidence-based medicine and its spread to mental health prevention and treatment, growing interest in and funding for research focusing on mechanisms for effective EBP implementation, and calls from funding agencies to increase the use of EBPs. Because scholarly and applied interest in implementation is increasing, there is a burgeoning number of studies underway that will enrich this literature over the next several years. Indeed, there are a number of implementation research areas ripe for study. These include approaches such as effective public–academic collaborations and community partnered research,108 engaging intervention developers to consider implementation during the intervention design,109 including implementation research as part of efficacy and effectiveness studies,110 the use of technology in training,111 coaching and fidelity assessment and feedback,24 the use of organizational development strategies to improve implementation efficiency,60 the use of a strategic and comprehensive approach to move large-scale implementation through EBP exploration, preparation, implementation, and sustainment,24 and strategies to scale up EBPs across entire service systems.112 These studies will carry additional significance in the coming years as the Affordable Care Act places greater emphasis on mental health services as an essential benefit as well as greater accountability regarding the use of evidence-based treatments, integration of allied health and primary care, overall quality of care, cost efficiency, and patient outcomes.113

Implementation research is inherently complex. It involves the study of system, organizational, individual, and social change that takes place in complex systems involving patients, clinicians, organizations, and policymakers, drawing on an increasingly sophisticated evidence base and supported by exciting technological advances. Despite this complexity, the development and testing of dissemination and implementation strategies is needed in order to efficiently move effective interventions into usual care. Indeed, the results of dissemination and implementation studies must be broadly applied so that the benefits of basic scientific discovery and clinical intervention can be effectively and efficiently translated to improving the public health status of individuals and the communities in which they reside.

Supplementary Material

Figure 2.

Preferred reporting items for systematic reviews and meta-analyses (PRISMA) study selection flow diagram.

Acknowledgments

This study was supported in part by National Institutes of Health (NIH) grants R01DA022239, R01MH072961, P30MH074678, and R25MH080916.

Footnotes

Supplemental material cited in this article is available online.

Drs. Novins and Aarons served as the statistical experts for this research.

Disclosure: Dr. Novins is a deputy editor for the Journal of the American Academy of Child and Adolescent Psychiatry and co-editor for the special series of papers in this Journal on Dissemination and Implementation research. He has received funding for research, evaluation, and/or technical assistance activities from NIH, the Administration for Children and Families (ACF), the Substance Abuse and Mental Health Services Administration, and the U.S. Department of Justice. Dr. Green has received funding for research and/or evaluation from NIH, the Centers for Disease Control and Prevention (CDC), and ACF. Dr. Aarons is co-editor for the special series of papers in this Journal focused on Dissemination and Implementation research. He has received funding for research and/or evaluation from NIH, CDC, and ACF.

Contributor Information

Dr. Douglas K. Novins, University of Colorado Anschutz Medical Campus

Dr. Amy E. Green, University of California–San Diego

Dr. Rupinder K. Legha, University of Colorado School of Medicine

Dr. Gregory A. Aarons, University of California–San Diego

References

- 1.Hoagwood K, Burns BJ, Kiser L, Ringeisen H, Schoenwald SK. Evidence-based practice in child and adolescent mental health services. Psychiatr Serv. 2001;52(9):1179–1189. doi: 10.1176/appi.ps.52.9.1179.

- 2.Chambers DA, Wang PS, Insel TR. Maximizing efficiency and impact in effectiveness and services research. Gen Hosp Psychiatry. 2010;32(5):453. doi: 10.1016/j.genhosppsych.2010.07.011.

- 3.NIH. [Accessed January 9, 2013]; Program announcement (PAR-13-055) Dissemination and implementation research in health (R01) 2013 http://grants.nih.gov/grants/guide/pa-files/PAR-13-055.html.

- 4.William T Grant Foundation. Request for Research Proposals: Understanding the acquisition, interpretation, and use of research evidence in policy and practice. New York, NY: 2012.

- 5.Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82(4):581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- 6.Rogers EM. Diffusion of Innovations. 5th ed. New York: Free Press; 2003.

- 7.Woolf SH. The meaning of translational research and why it matters. JAMA. 2008;299(2):211–213. doi: 10.1001/jama.2007.26.

- 8.Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, DC: National Academy Press; 2001.

- 9.American Psychological Association. Policy statement on evidence-based practice in psychology. American Psychological Association; 2005.

- 10.Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health. 2011;38(1):4–23. doi: 10.1007/s10488-010-0327-7.

- 11.Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69:123–157. doi: 10.1177/1077558711430690.

- 12.Cochrane AL. Effectiveness and efficiency: random reflections on health services. London: Nuffield Provincial Hospitals Trust; 1972.

- 13.Dopson S, Locock L, Gabbay J, Ferlie E, Fitzgerald L. Evidence-based medicine and the implementation gap. Health. 2003;7(3):311–330.

- 14.Palinkas LA, Soydan H. New Horizons of Translational Research and Research Translation in Social Work. Res Social Work Prac. 2012;22(1):85–92.

- 15.Klein KJ, Sorra JS. The challenge of innovation implementation. Academy of Management Review. 1996:1055–1080.

- 16.Tornatzky LG, Klein KJ. Innovation characteristics and innovation adoption-implementation: A meta-analysis of findings. IEEE Transactions on Engineering Management. 1982;(1):28–45.

- 17.States U, editor. Title IV- Health Quality Improvement Act. Title 42 U.S. Code 11101. 1986.

- 18.IOM. Crossing the quality chasm: A new health system for the 21st century. National Academies Press; 2001.

- 19.CDC. [Accessed 4/29/2013]; Implementation science: What CDC is doing. 2012 http://www.cdc.gov/globalaids/What-CDC-is-Doing/implementation-science.html.

- 20.NIH. 5th Annual NIH Conference on the Science of Implementation and Dissemination; Bethesda, Maryland. 2012.

- 21.Implementation Research Institute. [Accessed 04/29/13, 2013]; http://cmhsr.wustl.edu/Training/IRI/Pages/ImplementationResearchTraining.aspx.

- 22.Balas EA, Boren SA. Managing clinical knowledge for healthcare improvements. In: Bemmel J, McCray AT, editors. Yearbook of Medical Informatics 2000: Patient-Centered Systems. Stuttgart, Germany: Schattauer Verlagsgesellschaft mbH; 2000. pp. 65–70.

- 23.Lavis JN, Posada FB, Haines A, Osei E. Use of research to inform public policymaking. Lancet. 2004;364(9445):1615–1621. doi: 10.1016/S0140-6736(04)17317-0.

- 24.Aarons GA, Green AE, Palinkas LA, et al. Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implement Sci. 2012;7(32) doi: 10.1186/1748-5908-7-32.

- 25.Damschroder L, Aron D, Keith R, Kirsh S, Alexander J, Lowery J. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- 26.Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation research: A synthesis of the literature. Tampa: University of South Florida, Louis de la Parte Florida Mental Health Institute, the National Implementation Research Network; 2005. (FMHI Publication #231)

- 27.Meyers DC, Durlak JA, Wandersman A. The quality implementation framework: A synthesis of critical steps in the implementation process. Am J Community Psychol. 2012;50(3–4):462–480. doi: 10.1007/s10464-012-9522-x.

- 28.Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Medicine. 2009;6(7):e1000097. doi: 10.1371/journal.pmed.1000097.

- 29.Di Noia J, Schwinn TM, Dastur ZA, Schinke SP. The relative efficacy of pamphlets, CD-ROM, and the Internet for disseminating adolescent drug abuse prevention programs: An exploratory study. Prev Med. 2003;37(6 Pt1):646–653. doi: 10.1016/j.ypmed.2003.09.009.

- 30.Leslie LK, Weckerly J, Plemmons D, Landsverk J, Eastman S. Implementing the American Academy of Pediatrics Attention-Deficit/Hyperactivity Disorder Diagnostic Guidelines in primary care settings. Pediatrics. 2004;114(1):129–140. doi: 10.1542/peds.114.1.129.

- 31.Sanders MR. Triple P-Positive Parenting Program: Towards an empirically validated multilevel parenting and family support strategy for the prevention of behavior and emotional problems in children. Clin Child Fam Psychol Rev. 1999;2(2):71–90. doi: 10.1023/a:1021843613840.

- 32.Henderson JL, MacKay S, Peterson-Badali M. Closing the Research-Practice Gap: Factors Affecting Adoption and Implementation of a Children’s Mental Health Program. J Clin Child Adolesc Psychol. 2006;35(1):2–12. doi: 10.1207/s15374424jccp3501_1.

- 33.Rohrbach LA, Graham JW, Hansen WB. Diffusion of a school-based substance abuse prevention program: predictors of program implementation. Prev Med. 1993;22(2):237–260. doi: 10.1006/pmed.1993.1020.

- 34.Foster KA, Stiffman AR. Child welfare workers’ adoption of decision support technology. J Technol Hum Serv. 2009;27(2):106–126. doi: 10.1080/15228830902749039.

- 35.Henggeler SW, Chapman JE, Rowland MD, et al. Statewide adoption and initial implementation of contingency management for substance-abusing adolescents. J Consult Clin Psychol. 2008;76(4):556–567. doi: 10.1037/0022-006X.76.4.556.

- 36.Zazzali JL, Sherbourne C, Hoagwood KE, Greene D, Bigley MF, Sexton TL. The adoption and implementation of an evidence based practice in child and family mental health services organizations: A pilot study of functional family therapy in New York State. Adm Policy Ment Health. 2008;35(1–2):38–49. doi: 10.1007/s10488-007-0145-8.

- 37.Aarons GA, Fettes DL, Flores LE, Sommerfeld DH. Evidence-based practice implementation and staff emotional exhaustion in children’s services. Behav Res Ther. 2009;47(11):954–960. doi: 10.1016/j.brat.2009.07.006.

- 38.Henderson JL, Mackay S, Peterson-Badali M. Interdisciplinary knowledge translation: lessons learned from a mental health: fire service collaboration. Am J Community Psychol. 2010;46(3–4):277–288. doi: 10.1007/s10464-010-9349-2.

- 39.Henderson CE, Taxman FS, Young DW. A Rasch model analysis of evidence-based treatment practices used in the criminal justice system. Drug Alcohol Depend. 2008;93(1–2):163–175. doi: 10.1016/j.drugalcdep.2007.09.010.

- 40.Pankratz MM, Hallfors DD. Implementing evidence-based substance use prevention curricula in North Carolina public school districts. J Sch Health. 2004;74(9):353–358. doi: 10.1111/j.1746-1561.2004.tb06628.x.

- 41. Rohrbach LA, Ringwalt CL, Ennett ST, Vincus AA. Factors associated with adoption of evidence-based substance use prevention curricula in US school districts. Health Educ Res. 2005;20(5):514–526. doi: 10.1093/her/cyh008.

- 42. Aarons GA. Transformational and Transactional Leadership: Association With Attitudes Toward Evidence-Based Practice. Psychiatr Serv. 2006;57(8):1162–1169. doi: 10.1176/appi.ps.57.8.1162.

- 43. Asgary-Eden V, Lee CM. Implementing an Evidence-Based Parenting Program in Community Agencies: What Helps and What Gets in the Way? Adm Policy Ment Health. 2011;39(6):1–11. doi: 10.1007/s10488-011-0371-y.

- 44. Stevens J, Kelleher KJ, Wang W, Schoenwald SK, Hoagwood KE, Landsverk J. Use of psychotropic medication guidelines at child-serving community mental health centers as assessed by clinic directors. Community Ment Health J. 2011;47(3):361–363. doi: 10.1007/s10597-010-9352-y.

- 45. Sanders MR, Prinz RJ, Shapiro CJ. Predicting utilization of evidence-based parenting interventions with organizational, service-provider and client variables. Adm Policy Ment Health. 2009;36(2):133–143. doi: 10.1007/s10488-009-0205-3.

- 46. Aarons GA, Sawitzky AC. Organizational culture and climate and mental health provider attitudes toward evidence-based practice. Psychol Serv. 2006;3(1):61–72. doi: 10.1037/1541-1559.3.1.61.

- 47. Carstens CA, Panzano PC, Massatti R, Roth D, Sweeney HA. A naturalistic study of MST dissemination in 13 Ohio communities. J Behav Health Serv Res. 2009;36(3):344–360. doi: 10.1007/s11414-008-9124-4.

- 48. Henderson CE, Young DW, Farrell J, Taxman FS. Associations among state and local organizational contexts: Use of evidence-based practices in the criminal justice system. Drug Alcohol Depend. 2009;103:S23–32. doi: 10.1016/j.drugalcdep.2008.12.006.

- 49. Chaffin M, Hecht D, Bard D, Silovsky JF, Beasley WH. A statewide trial of the SafeCare home-based services model with parents in Child Protective Services. Pediatrics. 2012;129(3):509–515. doi: 10.1542/peds.2011-1840.

- 50. Henggeler SW. Multisystemic therapy: An overview of clinical procedures, outcomes, and policy implications. Child Adolesc Ment Health. 1999;4(1):2–10.

- 51. Beidas R, Edmonds J, Marcus S, Kendall P. An RCT of Training and Consultation as Implementation Strategies for an Empirically Supported Treatment. Psychiatr Serv. 2012;4(4):197–206. doi: 10.1176/appi.ps.201100401.

- 52. Epstein JN, Rabiner D, Johnson DE, et al. Improving attention-deficit/hyperactivity disorder treatment outcomes through use of a collaborative consultation treatment service by community-based pediatricians: a cluster randomized trial. Arch Pediatr Adolesc Med. 2007;161(9):835–840. doi: 10.1001/archpedi.161.9.835.

- 53. Henggeler SW, Melton GB, Brondino MJ, Scherer DG, Hanley JH. Multisystemic therapy with violent and chronic juvenile offenders and their families: the role of treatment fidelity in successful dissemination. J Consult Clin Psychol. 1997;65(5):821–833. doi: 10.1037//0022-006x.65.5.821.

- 54. Henggeler SW, Sheidow AJ, Cunningham PB, Donohue BC, Ford JD. Promoting the implementation of an evidence-based intervention for adolescent marijuana abuse in community settings: Testing the use of intensive quality assurance. J Clin Child Adolesc Psychol. 2008;37(3):682–689. doi: 10.1080/15374410802148087.

- 55. Lochman JE, Boxmeyer C, Powell N, Qu L, Wells K, Windle M. Dissemination of the Coping Power program: importance of intensity of counselor training. J Consult Clin Psychol. 2009;77(3):397–409. doi: 10.1037/a0014514.

- 56. Aarons GA, Sommerfeld DH, Hecht DB, Silovsky JF, Chaffin MJ. The impact of evidence-based practice implementation and fidelity monitoring on staff turnover: Evidence for a protective effect. J Consult Clin Psychol. 2009;77(2):270–280. doi: 10.1037/a0013223.

- 57. Pentz MA, Trebow EA, Hansen WB, MacKinnon DP, et al. Effects of program implementation on adolescent drug use behavior: The Midwestern Prevention Project (MPP). Eval Rev. 1990;14(3):264–289.

- 58. Barwick MA, Peters J, Boydell K. Getting to uptake: Do communities of practice support the implementation of evidence-based practice? J Can Acad Child Adolesc Psychiatry. 2009;18(1):16–29.

- 59. Kolko DJ, Baumann BL, Herschell AD, Hart JA, Holden EA, Wisniewski SR. Implementation of AF-CBT by Community Practitioners Serving Child Welfare and Mental Health: A Randomized Trial. Child Maltreat. 2012;17(1):32–46. doi: 10.1177/1077559511427346.

- 60. Glisson C, Schoenwald SK, Hemmelgarn A, et al. Randomized trial of MST and ARC in a two-level evidence-based treatment implementation strategy. J Consult Clin Psychol. 2010;78(4):537–550. doi: 10.1037/a0019160.

- 61. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

- 62. Garner BR, Godley SH, Bair CM. The impact of pay-for-performance on therapists’ intentions to deliver high-quality treatment. J Subst Abuse Treat. 2011;41(1):97–103. doi: 10.1016/j.jsat.2011.01.012.

- 63. Herschell AD, McNeil CB, Urquiza AJ, et al. Evaluation of a treatment manual and workshops for disseminating parent-child interaction therapy. Adm Policy Ment Health. 2009;36(1):63–81. doi: 10.1007/s10488-008-0194-7.

- 64. Vismara LA, Young GS, Stahmer AC, Griffith EM, Rogers SJ. Dissemination of evidence-based practice: can we train therapists from a distance? J Autism Dev Disord. 2009;39(12):1636–1651. doi: 10.1007/s10803-009-0796-2.

- 65. Hoagwood KE, Kelleher K, Murray LK, Jensen PS. Implementation of evidence-based practices for children in four countries: a project of the World Psychiatric Association. Rev Bras Psiquiatr. 2006;28(1):59–66. doi: 10.1590/s1516-44462006000100012.

- 66. Roberts-Gray C, Gingiss PM, Boerm M. Evaluating school capacity to implement new programs. Eval Program Plann. 2007;30(3):247–257. doi: 10.1016/j.evalprogplan.2007.04.002.

- 67. Schoenwald SK, Sheidow AJ, Letourneau EJ, Liao JG. Transportability of multisystemic therapy: evidence for multilevel influences. Ment Health Serv Res. 2003;5(4):223–239. doi: 10.1023/a:1026229102151.

- 68. Turner KMT, Nicholson JM, Sanders MR. The role of practitioner self-efficacy, training, program and workplace factors on the implementation of an evidence-based parenting intervention in primary care. J Prim Prev. 2011:1–18. doi: 10.1007/s10935-011-0240-1.

- 69. Aarons GA, Palinkas LA. Implementation of evidence-based practice in child welfare: Service provider perspectives. Adm Policy Ment Health. 2007;34(4):411–419. doi: 10.1007/s10488-007-0121-3.

- 70. Fagen MC, Flay BR. Sustaining a school-based prevention program: results from the Aban Aya Sustainability Project. Health Educ Behav. 2009;36(1):9–23. doi: 10.1177/1090198106291376.

- 71. McBride N, Farringdon F, Midford R. Implementing a school drug education programme: reflections on fidelity. International Journal of Health Promotion and Education. 2002;40(2):40–50.

- 72. Robbins MS, Feaster DJ, Horigian VE, Puccinelli MJ, Henderson C, Szapocznik J. Therapist adherence in brief strategic family therapy for adolescent drug abusers. J Consult Clin Psychol. 2011;79(1):43–53. doi: 10.1037/a0022146.

- 73. Schoenwald SK, Carter RE, Chapman JE, Sheidow AJ. Therapist adherence and organizational effects on change in youth behavior problems one year after multisystemic therapy. Adm Policy Ment Health. 2008;35(5):379–394. doi: 10.1007/s10488-008-0181-z.

- 74. Schoenwald SK, Sheidow AJ, Chapman JE. Clinical supervision in treatment transport: effects on adherence and outcomes. J Consult Clin Psychol. 2009;77(3):410–421. doi: 10.1037/a0013788.

- 75. Aarons GA, Wells RS, Zagursky K, Fettes DL, Palinkas LA. Implementing evidence-based practice in community mental health agencies: A multiple stakeholder analysis. Am J Public Health. 2009;99(11):2087–2095. doi: 10.2105/AJPH.2009.161711.

- 76. Schoenwald SK, Chapman JE, Kelleher K, et al. A survey of the infrastructure for children’s mental health services: implications for the implementation of empirically supported treatments (ESTs). Adm Policy Ment Health. 2008;35(1–2):84–97. doi: 10.1007/s10488-007-0147-6.

- 77. Klimes-Dougan B, August GJ, Lee C-YS, et al. Practitioner and site characteristics that relate to fidelity of implementation: The Early Risers prevention program in a going-to-scale intervention trial. Prof Psychol: Res Prac. 2009;40(5):467–475.

- 78. Schoenwald SK, Letourneau EJ, Halliday-Boykins C. Predicting therapist adherence to a transported family-based treatment for youth. J Clin Child Adolesc Psychol. 2005;34(4):658–670. doi: 10.1207/s15374424jccp3404_8.

- 79. Langley AK, Nadeem E, Kataoka SH, Stein BD, Jaycox LH. Evidence-based mental health programs in schools: Barriers and facilitators of successful implementation. School Mental Health. 2010;2(3):105–113. doi: 10.1007/s12310-010-9038-1.

- 80. Palinkas LA, Aarons GA. A view from the top: Executive and management challenges in a statewide implementation of an evidence based practice to reduce child neglect. International Journal of Child Health and Human Development. 2009;2(1):47–55.

- 81. Chapman JE, Schoenwald SK. Ethnic Similarity, Therapist Adherence, and Long-Term Multisystemic Therapy Outcomes. J Emot Behav Dis. 2011;19(1):3–16. doi: 10.1177/1063426610376773.

- 82. Henggeler SW, Schoenwald SK, Liao JG, Letourneau EJ, Edwards DL. Transporting efficacious treatments to field settings: The link between supervisory practices and therapist fidelity in MST programs. J Clin Child Adolesc Psychol. 2002;31(2):155–167. doi: 10.1207/S15374424JCCP3102_02.

- 83. Kam CM, Greenberg MT, Walls CT. Examining the role of implementation quality in school-based prevention using the PATHS curriculum. Promoting Alternative THinking Skills Curriculum. Prev Sci. 2003;4(1):55–63. doi: 10.1023/a:1021786811186.

- 84. Glisson C, Schoenwald SK, Kelleher K, et al. Therapist turnover and new program sustainability in mental health clinics as a function of organizational culture, climate, and service structure. Adm Policy Ment Health. 2008;35(1–2):124–133. doi: 10.1007/s10488-007-0152-9.

- 85. Jensen-Doss A, Hawley KM, Lopez M, Osterberg LD. Using evidence-based treatments: The experiences of youth providers working under a mandate. Prof Psychol: Res Prac. 2009;40(4):417–424.

- 86. Chu BC, Kendall PC. Therapist responsiveness to child engagement: flexibility within manual-based CBT for anxious youth. J Clin Psychol. 2009;65(7):736–754. doi: 10.1002/jclp.20582.

- 87. Pankratz MM, Jackson-Newsom J, Giles SM, Ringwalt CL, Bliss K, Bell M. Implementation fidelity in a teacher-led alcohol use prevention curriculum. J Drug Educ. 2006;36(4):317–333. doi: 10.2190/H210-2N47-5X5T-21U4.

- 88. Palinkas LA, Schoenwald SK, Hoagwood K, Landsverk J, Chorpita BF, Weisz JR. An ethnographic study of implementation of evidence-based treatments in child mental health: First steps. Psychiatr Serv. 2008;59(7):738–746. doi: 10.1176/ps.2008.59.7.738.

- 89. Palinkas LA, Aarons GA, Chorpita BF, Hoagwood K, Landsverk J, Weisz JR. Cultural exchange and the implementation of evidence-based practices. Research on Social Work Practice. 2009;19(5):602–612.

- 90. Riley KJ, Rieckmann T, McCarty D. Implementation of MET/CBT 5 for Adolescents. J Behav Health Serv Res. 2008;35(3):304–314. doi: 10.1007/s11414-008-9111-9.

- 91. Ozer EJ, Wanis MG, Bazell N. Diffusion of school-based prevention programs in two urban districts: adaptations, rationales, and suggestions for change. Prev Sci. 2010;11(1):42–55. doi: 10.1007/s11121-009-0148-7.