Significance

One primary goal of computational neuroscience is to uncover fundamental principles of computations that are performed by the brain. In our work, we took direct inspiration from biology for a technical application of brain-like processing. We make use of neuromorphic hardware—electronic versions of neurons and synapses on a microchip—to implement a neural network inspired by the sensory processing architecture of the nervous system of insects. We demonstrate that this neuromorphic network achieves classification of generic multidimensional data—a widespread problem with many technical applications. Our work provides a proof of concept for using analog electronic microcircuits mimicking neurons to perform real-world computing tasks, and it describes the benefits and challenges of the neuromorphic approach.

Keywords: bioinspired computing, spiking networks, machine learning, multivariate classification

Abstract

Computational neuroscience has uncovered a number of computational principles used by nervous systems. At the same time, neuromorphic hardware has matured to a state where fast silicon implementations of complex neural networks have become feasible. En route to future technical applications of neuromorphic computing, the current challenge lies in the identification and implementation of functional brain algorithms. Taking inspiration from the olfactory system of insects, we constructed a spiking neural network for the classification of multivariate data, a common problem in signal and data analysis. In this model, real-valued multivariate data are converted into spike trains using “virtual receptors” (VRs). Their output is processed by lateral inhibition and drives a winner-take-all circuit that supports supervised learning. VRs are conveniently implemented in software, whereas the lateral inhibition and classification stages run on accelerated neuromorphic hardware. When trained and tested on real-world datasets, we find that the classification performance is on par with a naïve Bayes classifier. An analysis of the network dynamics shows that stable decisions in output neuron populations are reached in less than 100 ms of biological time, matching the time-to-decision reported for the insect nervous system. By leveraging a population code, the network tolerates the variability of neuronal transfer functions and the trial-to-trial variation that is inevitably present on the hardware system. Our work provides a proof of principle for the successful implementation of a functional spiking neural network on a configurable neuromorphic hardware system that can readily be applied to real-world computing problems.

The remarkable sensory and behavioral capabilities of all higher organisms are provided by the network of neurons in their nervous systems. The computing principles of the brain have inspired many powerful algorithms for data processing, most importantly the perceptron and, building on top of that, multilayer artificial neural networks, which are being applied with great success to various data analysis problems (1). Although these networks operate with continuous values, computation in biological neuronal networks relies on the exchange of action potentials, or “spikes.”

Simulating networks of spiking neurons with software tools is computationally intensive, imposing limits on the duration of simulations and maximum network size. To overcome this limitation, several groups around the world have started to develop hardware realizations of spiking neuron models and neuronal networks (2–10) for studying the behavior of biological networks (11). The approach of the Spikey hardware system used in the present study is to enable high-throughput network simulations by speeding up computation by a factor of 10^4 compared with biological real time (12, 13). It has been developed as a reconfigurable multineuron computing substrate supporting a wide range of network topologies (14).

In addition to providing faster tools for neurosimulation, high-throughput spiking network computation in hardware offers the possibility of using spiking networks to solve real-world computational problems. The massive parallelism is a potential advantage over conventional computing when processing large amounts of data in parallel. However, conventional algorithms are often difficult to implement using spiking networks for which many neuromorphic hardware substrates are designed. Novel algorithms have to be designed that embrace the inherent parallelism of a brain-like computing architecture.

A common problem in data analysis is classification of multivariate data. Many problems in artificial intelligence relate to classification in one way or another, such as object recognition or decision making. Classification is the basis for data mining and, as such, has widespread applications in industry. We interact with classification systems in many aspects of daily life, for example in the form of Web shop recommendations, driver assistance systems, or when sending a letter with a handwritten address that is deciphered automatically in the post office.

In this work, we present a neuromorphic network for supervised classification of multivariate data. We implemented the spiking network part on a neuromorphic hardware system. Using a range of datasets, we demonstrate how the classifier network supports nonlinear separation through encoding by virtual receptors, whereas lateral inhibition transforms the input data into a sparser encoding that is better suited for learning.

Results

We first outline our spiking neural network design and show examples of the network activity during operation in supervised classification of multivariate data. Then we analyze the temporal dynamics of the classification process and compare the network classification performance against the performance of a naïve Bayes (NB) classifier. We show that the network tolerates the neuronal variability that is present on the hardware by leveraging a population code. Finally, we demonstrate that the network design is generic and can be applied, without reparameterization, to different multivariate problems. We used the PyNN software package for network implementations on the Spikey neuromorphic hardware system (15, 16). For simplicity, all temporal parameters are specified in the biological time domain throughout this study. The actual time values referring to the spiking network execution on the hardware are 10^4 times smaller due to the speedup factor of the accelerated Spikey system.

A Spiking Network for Supervised Learning of Data Classification.

In multivariate classification problems, data are typically organized as observations of a number of variables arranged in a matrix X, with rows corresponding to observations and columns to real-valued features. Each observation has an associated class label stored in a binary matrix Y, with Yi,j = 1 if the observation i belongs to class j. The aim is to find a mapping A such that argmax(X·A) = Y, with argmax returning 1 for the maximal value in each row and 0 otherwise. The classes of new observations X′ can then be predicted by applying the transformation argmax(X′·A) = Y′. The architecture of the insect olfactory system maps well on this task (17–19).
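The formulation above can be illustrated with a few lines of NumPy. This is only a sketch under stated assumptions: the toy values in `X` and `Y` are hypothetical, and the least-squares fit merely stands in for a trained mapping A (the spiking network learns its weights differently). The helper name `one_hot_argmax` is ours.

```python
import numpy as np

def one_hot_argmax(scores):
    """Return a binary matrix with 1 at the row-wise maximum and 0 elsewhere."""
    winners = np.argmax(scores, axis=1)
    out = np.zeros(scores.shape, dtype=int)
    out[np.arange(scores.shape[0]), winners] = 1
    return out

# Toy data (hypothetical): 4 observations, 2 features, 2 classes.
X = np.array([[1.0, 0.1],
              [0.9, 0.2],
              [0.1, 1.0],
              [0.2, 0.8]])
Y = np.array([[1, 0],
              [1, 0],
              [0, 1],
              [0, 1]])

# Stand-in for training: a least-squares estimate of the mapping A.
A, *_ = np.linalg.lstsq(X, Y.astype(float), rcond=None)

# Predict class labels by taking the row-wise maximum of X·A.
Y_pred = one_hot_argmax(X @ A)
```

New observations X′ would be classified the same way, as `one_hot_argmax(X_new @ A)`.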

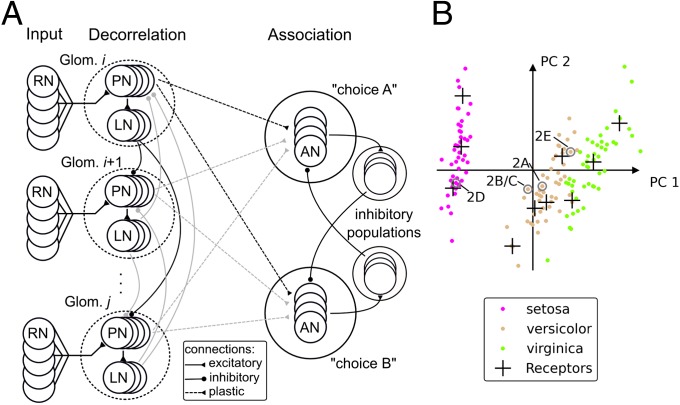

We designed a classifier network that approximates the basic blueprint of the insect olfactory system, without claiming to be an exact model of the biological reality. Its three-stage architecture consists of an input layer, a decorrelation layer, and an association layer (Fig. 1A). We provide a detailed description in SI Materials and Methods and a parameter list in Table S1.

Fig. 1.

Network architecture and real-world classification problem. (A) Schematic of the generic network. AN, association neuron; LN, local inhibitory neuron; PN, projection neuron; RN, receptor neuron. (B) Projection of the complete iris dataset to the first two principal components (97.7% variance explained) and locations of 10 VRs. Annotations refer to data points presented in Fig. 2.

In the input layer, real-valued multidimensional data are transformed into bounded and positive firing rates. The data enter the network via ensembles of receptor neurons (RNs). RNs fire spikes at specified rates which are computed from the real-valued input data using “virtual receptors” (VRs) (17) (see also SI Materials and Methods, VRs for details). A VR corresponds to the center of a linear (cone-shaped) radial basis function in feature space. The magnitude of its response to a data point (a “stimulus”) depends on the distance between the VR and the stimulus. Hence, the VR response is large for small distances between stimulus and receptor, and vice versa. VRs are placed in data space in a self-organized manner using the neural gas algorithm (20).
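A minimal sketch of the VR encoding idea follows. It assumes a cone-shaped response that falls linearly with Euclidean distance and is normalized by the largest distance in the dataset; the exact normalization used in the study is given in SI Materials and Methods and may differ. The function name `vr_responses`, the parameter `max_rate`, and the toy coordinates are hypothetical.

```python
import numpy as np

def vr_responses(data, vr_positions, max_rate=1.0):
    """Cone-shaped (linear) radial basis responses of each VR to each data point.

    data:         array of shape (n_points, n_features)
    vr_positions: array of shape (n_vrs, n_features)
    Returns an array of shape (n_points, n_vrs) with values in [0, max_rate].
    """
    # Euclidean distance between every data point and every VR.
    d = np.linalg.norm(data[:, None, :] - vr_positions[None, :, :], axis=2)
    d_max = d.max()
    if d_max == 0:
        return np.full(d.shape, max_rate)
    # Assumed normalization: response decreases linearly to 0 at the largest distance.
    return max_rate * (1.0 - d / d_max)

# Toy example (hypothetical values): three stimuli, two VRs.
data = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 4.0]])
vrs = np.array([[0.0, 0.0], [3.0, 4.0]])
rates = vr_responses(data, vrs)
```

A stimulus that coincides with a VR yields the maximal response, and the response shrinks as the stimulus moves away, which gives the bounded, positive values needed to set RN firing rates.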

RN ensembles project onto projection neurons (PNs) in the decorrelation layer, which are grouped in ensembles that represent the so-called glomeruli in the insect antennal lobe. Each RN ensemble targets one glomerulus, which thus receives excitatory input that represents the activation of one VR. The PNs project to local inhibitory neurons (LNs), which laterally inhibit other glomeruli. Moderate lateral inhibition between glomeruli reduces correlations between the variables they represent without degrading the encoding to a fully orthogonalized representation (14, 21–23).

The output of the decorrelation layer is projected to the association layer, in which supervised learning for data classification is realized. Association neurons (ANs) are grouped in as many populations as there are classes in the dataset. Each population in the association layer is assigned one label from the dataset (for example, “choice A” and “choice B” as indicated in Fig. 1A). The AN populations project onto associated populations of inhibitory neurons. The strong inhibition between AN populations induces a soft winner-take-all (sWTA) behavior in the association layer. The synaptic weights from PNs to ANs are initialized randomly. An activity pattern presented to the network will thus by chance deliver more input to one of the “choice” populations than to the others, resulting in higher firing rate of that population (the “winner population”). If the label of the winner population matches the one of the stimulus, the network performed a correct classification. We used a 50% connection probability from RNs to PNs, from PNs to LNs and to ANs, and from excitatory to inhibitory neurons in the sWTA circuit (Table S1). Inhibitory populations are fully connected to excitatory populations.

We train the network in a supervised fashion by presenting stimuli with known class labels. If classification was correct, active synapses from PNs to the winner population are potentiated. If classification was incorrect, active synapses are depressed (see Materials and Methods for a detailed description of the algorithm). This learning rule is derived from the delta rule for perceptron training (24, 25). Network training leads to an optimized set of synaptic weights for classification of the dataset. After successful training, the winner population in the association layer indicates which class a stimulus belongs to, and it can predict the class adherence for unseen stimuli.
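The update described above can be sketched as follows. This is not the hardware algorithm from Materials and Methods: the step size, the threshold that defines an "active" synapse, and the weight clipping are assumptions made for illustration, and the name `update_weights` is hypothetical.

```python
import numpy as np

def update_weights(weights, pn_rates, winner, label,
                   step=0.05, active_threshold=0.0, w_max=1.0):
    """Reward-style update of PN-to-AN weights after one stimulus presentation.

    weights:  array of shape (n_pn, n_classes)
    pn_rates: array of shape (n_pn,), PN activity during the presentation
    winner:   index of the AN population with the highest spike count
    label:    index of the true class of the stimulus
    """
    new_w = weights.copy()
    active = pn_rates > active_threshold       # assumed definition of "active"
    sign = 1.0 if winner == label else -1.0    # potentiate if correct, depress otherwise
    new_w[active, winner] += sign * step       # only synapses onto the winner change
    return np.clip(new_w, 0.0, w_max)          # assumed weight bounds

w0 = np.full((3, 2), 0.5)
pn = np.array([10.0, 0.0, 5.0])
w_correct = update_weights(w0, pn, winner=0, label=0)
w_wrong = update_weights(w0, pn, winner=1, label=0)
```

Only synapses from active PNs onto the winner population are modified, so inactive inputs and the losing populations keep their weights.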

Application of the Neuromorphic Classifier Network to a Real-World Dataset.

We implemented the classifier network on the Spikey hardware system, which has been described in detail previously (14). We assessed its performance using Fisher's iris dataset (26) as a benchmark. The iris dataset is a four-dimensional dataset describing features of the blossom leaves for three species of the iris flower, Iris setosa, Iris virginica, and Iris versicolor. This dataset is particularly well suited for this study for two reasons. First, it contains only 150 data points, which makes rapid prototyping of the network feasible. Second, the constellation of the data points allows for a fine-grained interpretation of the classifier capabilities: The I. setosa class is well separated from the other two, making learning the classification boundary easy (Fig. 1B). Separation of the I. virginica and I. versicolor classes is more difficult because they partly overlap in feature space. Classifier performance on this separation indicates how well the classifier copes with more challenging problems. Separating such overlapping data classes typically requires supervised learning methods, because there is no clear “gap” between the classes in data space that would allow an unsupervised method to detect class boundaries.

We used 10 VRs to encode the dataset. They represented the data points by firing intensities, which were used to generate the RN spike trains in the input layer using a gamma point process. The number of VRs determines the number of glomeruli, and thus the total number of neurons required for the network. The specific choice of 10 VRs was a compromise between choosing a number as high as possible while staying within the maximal neuron count of 192 on the present neuromorphic hardware system (see SI Results, Number of Glomeruli for a detailed explanation).

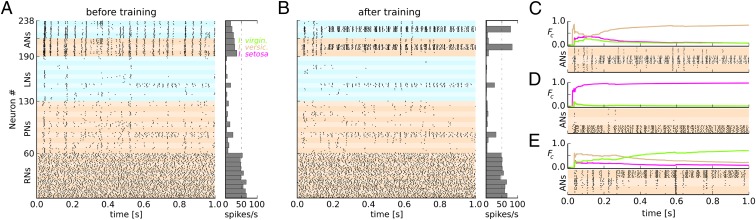

The spiking activity of the classifier network is depicted in Fig. 2. Fig. 2A shows the activity of all neurons in all three layers in the beginning of the training phase when stimulated with the data point annotated as “2A” in Fig. 1B. The activity pattern across the RN population expresses the activation level of the VRs. PNs exhibited sparser activity compared with RNs, largely due to lateral inhibition from LNs. All three populations of ANs responded with approximately the same intensity because the weights from decorrelation layer to the association layer are initially random. Due to the strong lateral inhibition in the association layer, all three populations showed synchronized and oscillating activity. The population associated to the I. setosa class emitted a slightly higher number of spikes than the others during the 1-s stimulus presentation. Because the presented data point belonged to the I. versicolor class, this association was wrong, and hence the weights of synapses targeting the I. setosa population were reduced after this presentation as part of the training procedure. During the training phase, 80% of all data points were presented and the weights adjusted according to the learning rule after each presentation. Fig. 2B shows network activity in response to a sample from the I. versicolor class in the test phase. The AN population activity rapidly converged to a representation that indicated the correct association after only a few spikes and maintained this state throughout the duration of the stimulus presentation.

Fig. 2.

Network activity during stimulus presentation before and after training. (A) Untrained network. Spike raster display and population spike count of all neuron populations in the network in response to the presentation of one data point from I. versicolor as indicated in Fig. 1B. Distinct neuronal populations are labeled by alternating color saturation. Warm/cool color, excitatory/inhibitory population. The stimulus was applied at time t = 0 s for the duration of 1 s. (B) Network activity after training during 1 s of stimulation with a test sample from I. versicolor as labeled in Fig. 1B. (C–E) Spiking activity and temporal evolution of Fc(t) (Eq. 1) for all three excitatory AN populations in response to three different data samples as labeled in Fig. 1B. Color of Fc(t) trace indicates the Iris species associated to the respective AN population (color code as in Fig. 1B). Only spiking activity from excitatory ANs is shown.

To assess the convergence of the association layer activity to a winner population, we calculated the cumulative fraction of spikes Fc(t) from each population c at time t as follows:

$$F_c(t) = \frac{I_c(t)}{I_{\mathrm{all}}(t)} \qquad [1]$$

where Ic(t) indicates the number of spikes emitted by population c within the interval (0,t], whereas Iall(t) refers to the total number of spikes from all AN populations. Fc(t) thus reflects, at each time point t, the integrated activity of one AN population compared with the total AN activity up to that point in time. Fig. 2C shows the resulting population dynamics for the example in Fig. 2B, together with spike trains in the AN populations. For this data point, it took about 150–200 ms before the network activity converged toward a stable state with the I. versicolor population having the highest activity, indicating the correct association. This convergence happened faster for data samples from the well-separated I. setosa class (Fig. 2D). In a third example from the I. versicolor class close to the class boundary, the network first showed a slightly higher firing rate for the correct class, but eventually converged to a wrong decision (Fig. 2E).
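The cumulative spike fraction can be computed from recorded spike times as in the following sketch. The spike times are hypothetical, the function name `cumulative_spike_fraction` is ours, and returning zeros before the first AN spike (when the ratio is undefined) is a convention we assume here.

```python
import numpy as np

def cumulative_spike_fraction(spike_times_by_pop, t):
    """Fraction of all AN spikes in (0, t] that each population contributed.

    spike_times_by_pop: list with one sequence of spike times (s) per AN population
    """
    counts = np.array(
        [np.sum((np.asarray(s) > 0) & (np.asarray(s) <= t)) for s in spike_times_by_pop],
        dtype=float,
    )
    total = counts.sum()
    if total == 0:
        return np.zeros_like(counts)  # assumed convention when no spikes have occurred
    return counts / total

# Hypothetical spike times (in seconds) for three AN populations.
pops = [[0.01, 0.05, 0.20], [0.02, 0.03, 0.04, 0.06], [0.50]]
F = cumulative_spike_fraction(pops, t=0.1)
winner = int(np.argmax(F))
```

Evaluating the function on a grid of t values yields traces like those described for Fig. 2 C–E, and the index of the largest fraction gives the winner population at that time.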

Time to Decision and Classification Performance.

We used Gorodkin's K-category correlation coefficient RK to measure classification performance (27) (see SI Materials and Methods, Evaluation of Classifier Performance for a formal definition and rationale behind our preference of RK over more frequently used performance measures like “percent correct”).
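For orientation, a K-category correlation coefficient can be computed from a confusion matrix as sketched below. The formal definition used in the study is given in SI Materials and Methods and is not reproduced here; this sketch uses the commonly cited confusion-matrix form of the multiclass correlation coefficient, which we assume to be equivalent. The function name `k_category_correlation` and the example matrices are hypothetical.

```python
import numpy as np

def k_category_correlation(confusion):
    """Multiclass correlation coefficient from a K x K confusion matrix.

    Rows are true classes, columns are predicted classes.
    """
    C = np.asarray(confusion, dtype=float)
    s = C.sum()            # total number of samples
    c = np.trace(C)        # number of correctly classified samples
    t = C.sum(axis=1)      # samples per true class
    p = C.sum(axis=0)      # samples per predicted class
    numerator = c * s - t @ p
    denominator = np.sqrt((s**2 - p @ p) * (s**2 - t @ t))
    # Assumed convention: return 0 when the coefficient is undefined.
    return numerator / denominator if denominator > 0 else 0.0

perfect = k_category_correlation([[10, 0, 0], [0, 10, 0], [0, 0, 10]])
binary = k_category_correlation([[5, 1], [2, 4]])
```

For two classes this expression reduces to the Matthews correlation coefficient. Unlike "percent correct", it is not inflated when one class dominates the dataset.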

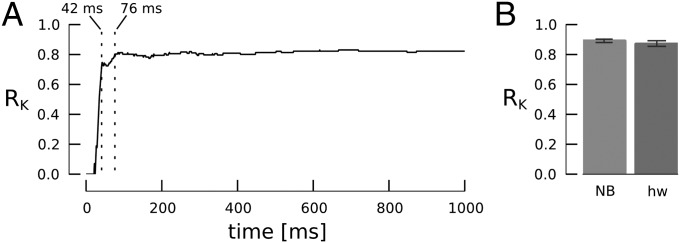

In our network, each data class is represented by a different AN population. For each presentation of a test stimulus, the population that generated the most spikes within a certain observation time window is the winner population, indicating either a correct or incorrect classification. We computed RK across all test samples in a time-resolved manner by varying the time t after stimulus onset that was used to count AN spikes. As shown in Fig. 3A, RK rapidly approaches a stable maximum, indicating a time-to-decision of less than 100 ms in biological time, which corresponds to 10 μs of real time on the Spikey chip.

Fig. 3.

Classification performance. (A) RK obtained for decision time points between 0 and 1,000 ms from a single cross-validation run. The vertical dotted lines indicate when RK first exceeds values of 0.7 (42 ms) and 0.8 (76 ms). (B) Classification performance of the hardware classifier network (hw) at decision time of 1 s compared with a naïve Bayes classifier (NB) in fivefold cross-validation. Error bars indicate 20th/80th percentile from 10 repetitions.

We next compared the absolute classification performance with that of the NB classifier, which we use here as a benchmark for conventional machine learning methods. We chose NB because it is a linear classifier without any free parameters, so it delivers robust classification without the need for parameter tuning. We evaluated RK across the entire 1-s stimulus presentation. For the iris dataset, the NB classifier yields an average RK of 0.89 (P20 = 0.88, P80 = 0.90) in 50 repetitions of fivefold cross-validation and thus slightly outperforms the neuromorphic classifier with RK = 0.87 (P20 = 0.85, P80 = 0.89, 50 repetitions) (Fig. 3B). The performance evaluation is described in detail in SI Materials and Methods.

To examine the classification outcome in more detail, we inspected the confusion matrix produced by the classifier network (Table S2 and SI Results, Per-Class Classification Performance). The classifier only produced errors on the more challenging separation of I. versicolor and I. virginica, whereas it always succeeded in separating I. setosa. This observation indicates that the classifier network is capable of delivering reliable classification not only of well-separable data, but also in cases where samples from different classes overlap in feature space.

Tolerance Against Neuronal Variability.

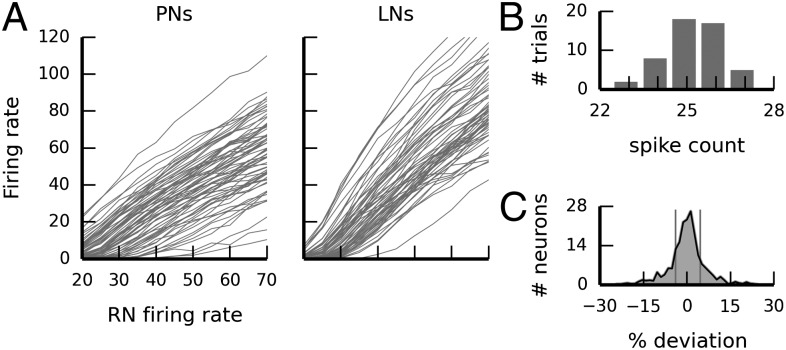

The analog circuits used to represent neurons in the Spikey system exhibit inherent variability that the classifier network must tolerate to be useful in practice. Two sources of variability on the hardware system can be distinguished: “temporal noise” and “fixed-pattern noise.” Temporal noise (including thermal noise and other sources of stochastic variability) affects the circuits on short timescales in an unpredictable fashion. In contrast, fixed-pattern noise is caused by device mismatch. Device mismatch describes the deviance of an electronic component from its specification due to inevitable variations in the manufacturing process. The variations of neuron parameters due to device mismatch occur on much slower timescales and can be regarded as constant for our use case. They introduce heterogeneity across all analog components—neurons and synapses—according to a fixed pattern (hence the term “fixed-pattern noise”). The individual variation can be measured and calibrated for. The integrated development environment of the Spikey system contains calibration methods that reduce the amount of fixed-pattern variability. However, such generic calibration methods cannot account for all network configurations in an efficient manner, because calibration at the neuron level does not take into account network effects. This is particularly relevant for the Spikey system, which was designed to accommodate a wide variety of network topologies (14). In our case, the fixed-pattern variation that remains after built-in calibration manifests itself in variability of the neurons' transfer functions that relate input rate to output rate. Both maximal output rate and slope of the transfer functions varied considerably across PNs and LNs (Fig. 4A).

Fig. 4.

Neuromorphic hardware variability. (A) Variability of transfer functions across all PNs and LNs on the hardware chip. Each line corresponds to one neuron. (B) Hardware trial-to-trial variability: Histogram of spike counts for one example neuron across 50 repeated stimulations with identical “frozen input” of 1-s duration (average spike count, 25.3; variance, 1.0). (C) Histogram of per-neuron spike counts relative to the individual average spike count emitted by each of the 192 neurons across 50 repeated identical 1-s stimulations. The vertical lines indicate 20th/80th percentile (P20 = 3.99, P80 = 4.58).

Due to its stochastic nature, temporal noise cannot be avoided by systematic measures such as calibration of synaptic weights. We quantified the variation in spike count caused by temporal noise by measuring the variability of the spike count in all 192 hardware neurons across 50 repetitions with identical stimuli. For this purpose, we generated input spike trains only once and used them repeatedly as input to all 192 neurons (“frozen input”). We used six gamma processes of order five and mean rate of 25 spikes/s to mimic the inputs that PNs receive in the classifier network. We adjusted the weights of the neurons to yield a mean output frequency of 25.4 spikes/s. The neurons exhibited moderate trial-to-trial variability under these conditions. Fig. 4B shows the distribution of spike counts for one exemplary neuron that produced 25.3 spikes on average, with a variance of 1.0. This amount of variability is also reflected when considering the total population (Fig. 4C). On average, the individual spike trains from the same neuron varied with a Fano factor (28) of 0.083, which is smaller than the variability inherent to the gamma process used for the generation of the RN spike trains (γ = 5, Fano factor = 0.2). Thus, the trial-to-trial variability due to temporal noise intrinsic to the neuromorphic hardware is small compared with those variations imposed by the biologically realistic stochastic generation of input spike trains.
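The Fano factor comparison can be reproduced in simulation for the input side. The sketch below assumes a single gamma renewal process of order 5 at 25 spikes/s that starts at stimulus onset, counted over 1 s; the function names, the number of trials, and the random seed are arbitrary choices of ours, and this is not the hardware measurement itself.

```python
import numpy as np

def gamma_spike_counts(rate, order, duration, n_trials, rng):
    """Spike counts in [0, duration] for a gamma renewal process of given order."""
    # Draw more intervals than needed so every trial covers the full window.
    n_isi = int(3 * rate * duration) + 20
    isis = rng.gamma(shape=order, scale=1.0 / (order * rate), size=(n_trials, n_isi))
    spike_times = np.cumsum(isis, axis=1)
    return np.sum(spike_times <= duration, axis=1)

def fano_factor(counts):
    """Variance of the spike count divided by its mean."""
    counts = np.asarray(counts, dtype=float)
    return counts.var(ddof=1) / counts.mean()

rng = np.random.default_rng(0)
counts = gamma_spike_counts(rate=25.0, order=5, duration=1.0, n_trials=2000, rng=rng)
fano_input = fano_factor(counts)      # expected to lie near 1/order = 0.2

# Example hardware neuron from Fig. 4B: variance 1.0 at a mean of 25.3 spikes.
fano_example_neuron = 1.0 / 25.3
```

For a gamma process of order γ the Fano factor approaches 1/γ for long counting windows, which is where the value 0.2 for γ = 5 comes from. The example neuron in Fig. 4B has a ratio of about 0.04, well below that.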

The classifier network achieved the reported performance despite transfer function variability caused by fixed-pattern noise and trial-to-trial variability caused by temporal noise and by the stochasticity of the input. This robustness is the result of considerable efforts to optimize network topology. The key to achieving robustness in our network was to leverage population coding. Two network properties proved essential to ensure a valid population code. First, synchronization of neurons within a population should be avoided because it violates the rate code assumption of independent neurons within each population. We achieved this by sparsifying the input to individual neurons, i.e., using 50% connection probability instead of full connectivity. Second, population sizes must be sufficiently large to reduce the variance of the population transfer function. We provide a detailed explanation of how these properties affect network operation in SI Results (Figs. S1 and S2, and SI Results, Network Optimization for Robustness Against Neuronal Variability).

General Applicability to Other Datasets.

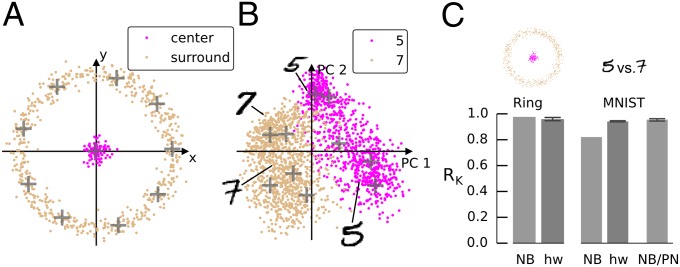

To demonstrate the ability of the network to solve nonlinear problems, we applied it to the classification of a 2D “Ring” dataset. This simple dataset consists of two classes, one class situated in a cluster centered at the origin and a second class surrounding it (Fig. 5A). It has skewed class proportions with sevenfold more data points in the surround than in the center class. In addition, the arrangement of data points requires a nonlinear separation between the center and surround classes. Our network achieves this separation through the VR encoding: By using 10 VRs to represent a 2D dataset, we transform the data into a higher-dimensional space in which linear separation is possible. The classifier network running on the Spikey system achieved an average performance of RK = 0.96 on the Ring dataset (NB: RK = 0.98; Fig. 5C, Left).

Fig. 5.

Application to generic classification problems. (A) Ring dataset (training samples) and locations of 10 VRs. (B) First two principal components of MNIST digits “5” (893 samples) and “7” (1,107 samples) from the training set (2,000 samples total) and VR locations. (C) Performance comparison of the naïve Bayes classifier (NB) vs. the classifier network on hardware (hw), and the NB classifier trained on PN firing rates (NB/PN). Error bars indicate 20th/80th percentile from 10 repetitions. When trained on the VR responses, the NB performance is deterministic for these datasets because the training and test datasets are fixed. For NB/PN, we extracted the PN firing rates from the 10 repeated network runs that we used to assess the spiking network. Hence, the NB classification performance varies.

The Modified National Institute of Standards and Technology (MNIST) database is a commonly used high-dimensional benchmark problem with practical relevance (http://yann.lecun.com/exdb/mnist/). The database contains images of handwritten digits from 0 to 9, digitized to 28 × 28 pixels. Hence, each observation has 28·28 = 784 dimensions. The dataset is divided into a training and a test set to enable reproducible benchmarking. We picked a subset of this dataset consisting of the digits “5” and “7,” using 2,000 samples from the training set and 1,920 samples from the test set (Fig. 5B). On the MNIST dataset, the spiking network outperformed the NB classifier by a large margin (hardware network: mean RK = 0.94; NB: 0.82; Fig. 5C, Right). Interestingly, when training the NB classifier on the spike counts produced by PNs in the network (that is, after the lateral inhibition stage in the decorrelation layer), its performance increases to a level similar to that obtained with the classifier network (mean RK = 0.96). This observation is in line with a previous study which demonstrated that lateral inhibition increases classifier performance on a 184-dimensional odor dataset (17).

The reason for this effect lies in the fact that lateral inhibition transforms the broad, overlapping receptive fields of VRs (and in consequence RNs) into more localized representations of input space. In other words, receptive fields of PNs are narrower than those of VRs, and they overlap to a lesser degree. As a result, PN activity is also sparser than VR activity, that is, only few PN populations respond to a particular stimulus. This behavior can be observed, for example, when comparing the spike counts of RNs and PNs in Fig. 2 A and B. Sparser activity and more local receptive fields simplify the training process, because it becomes easier to identify the input units that are relevant to discriminate data points in a particular region of input space (the “credit assignment problem”). We explain the soft partitioning effect provided by lateral inhibition in detail in SI Results, Effect of Lateral Inhibition on Classification Performance, including an illustrated example (Fig. S3).

Speed Considerations.

The major advantage of using accelerated neuromorphic hardware for spiking neuronal simulations is its potentially fast execution time. On the neuromorphic hardware system used in this study, simulations run with a speedup factor of 10^4. Hence, presenting all 150 iris data points for 1 s (biological time) each to the hardware network takes 150 s/10^4 = 15 ms pure network run time. Practical applications require data transfer for spikes and synaptic weights to and from the system as well as the parameterization of the hardware network, which adds to the pure network run time (for details, see SI Materials and Methods and Fig. S4). These factors depend on the efficiency of the software interface for the hardware system. Because we are working with a prototype setup, its interface is under constant development and improvement. At the time of writing, the hardware system effectively achieved an overall 13-fold speedup compared with biological real time. We want to stress that this number may improve as the software interface is continuously optimized.
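The timing arithmetic can be checked with a short calculation. The constant and function names are ours, and the wall-clock estimate simply divides the biological duration by the reported 13-fold effective speedup, which is our reading of that figure rather than a measured value.

```python
SPEEDUP_CHIP = 1e4        # acceleration of the network dynamics on the chip
SPEEDUP_EFFECTIVE = 13.0  # reported overall speedup including data transfer

def hardware_time(bio_seconds, speedup):
    """Convert a duration in biological time to elapsed time at a given speedup."""
    return bio_seconds / speedup

bio_total = 150 * 1.0                                       # 150 stimuli, 1 s each
pure_run = hardware_time(bio_total, SPEEDUP_CHIP)           # 0.015 s = 15 ms
decision = hardware_time(0.1, SPEEDUP_CHIP)                 # 1e-5 s = 10 microseconds
wall_clock = hardware_time(bio_total, SPEEDUP_EFFECTIVE)    # roughly 11.5 s
```

The gap between 15 ms of pure network run time and roughly 11.5 s of elapsed time shows that, in this prototype setup, communication and configuration overhead dominate rather than the network dynamics.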

Discussion

We demonstrated the implementation of a spiking neuronal network for classification of multidimensional data on a neuromorphic hardware system. The network is capable of separating data in a nonlinear fashion through encoding by VRs. The transformation by lateral inhibition increases classification performance. The network performed robustly in the presence of stochastic trial-to-trial variability inherent to the hardware system. It is not restricted to any specific kind of data, but can classify arbitrary real-valued, multidimensional data, and is hence suited to a broad range of classification tasks. It achieved performance values comparable to a standard machine learning classifier, which points to the network's applicability to real-world problems. The present network implementation is a proof of concept that can serve as a building block for classifier tasks on neuromorphic hardware. Together with the high speedup factor of the neuromorphic hardware system, our classification network is an important step toward high-performance neurocomputing.

We verified the capability of our implementation of VRs to transform data into a higher-dimensional space in which linear separation is possible. The network we presented contains a linear classifier, with the additional constraint that the separating hyperplane must pass through the origin (29). As such, it is limited to separating linear problems. We overcame this limitation through the VR approach, which provides a higher-dimensional representation of the data. Our results on the MNIST dataset point out that the lateral inhibition step is crucial for successful classification of real-world, high-dimensional datasets. Although more complex machine learning algorithms like support vector machines or restricted Boltzmann machines may allow for better classification performance directly on the VR data, the strength of our approach lies in the simplicity of a linear classifier combined with appropriate filtering of input data through the lateral inhibition step, which is very efficiently carried out in a massively parallel neuromorphic hardware network.

Lateral inhibition provides a soft partitioning of input space that facilitates classifier training. Note that this circumstance also points out a limitation of the presented classifier network, because class boundaries in data space can only be optimally represented if they coincide with partition borders. A straightforward way to deal with this problem is to increase the number of VRs and glomeruli, resulting in a more fine-grained partitioning of data space. Such an approach will be possible using emerging large-scale neuromorphic hardware systems supporting tens of thousands of neurons (8, 30).

VRs depend on a self-organizing process that is trained in data space. A particularly interesting prospect is to implement this process on the neuronal substrate. Spiking self-organizing maps have been described in the literature (31–33), suggesting that, in principle, it is possible to implement a self-organizing process on a neuromorphic hardware system. However, the learning rules used in these studies would require sophisticated control logic, which makes it difficult to implement them on the Spikey system. A more straightforward and mathematically well-founded approach has recently been put forward by Nessler et al. (34). They suggested a probabilistic, self-organizing mechanism to learn prototypes in feature space using spike timing-dependent plasticity (STDP) and a winner-take-all circuit, which is suited to represent the VR encoding. An integrated implementation of this encoding together with the classifier network we present here will likely require a much higher neuron count and more flexible plasticity mechanisms than are available on the Spikey system (13). On-chip implementations may become feasible with the BrainScaleS wafer-scale hardware system, which extends the number of available neurons by up to several orders of magnitude and provides more sophisticated plasticity mechanisms (35, 36). In that system, multiple identical neuromorphic modules may be implemented on a single silicon wafer and communicate through high-bandwidth connections. Moreover, advanced control logic for on-chip implementation of elaborate STDP rules is under development (36), which is designed to be compatible with the self-organized prototype learning mechanisms described by Nessler et al. (34). In addition, the deterministic connectivity structure of the glomerular classifier network presented here facilitates splitting the network across different neuromorphic modules. The increased neuron count available in a large-scale system would allow for a larger number of VRs to solve more complex problems and would enable scaling the network to larger population sizes to support robustness against noise.

Analysis of the dynamic network activation in response to the onset of a stimulus presentation revealed a fast decision time: the average performance reached its maximum in less than 100 ms of biological time (Fig. 3A). This is in good agreement with recent measurements in insects. In the honeybee, a prominent animal model for studying learning and memory, it was shown that the encoding of the identity of an olfactory stimulus at the level of PNs evolved rapidly within tens of milliseconds (37, 38). Neuronal populations at the output of the mushroom body encode odor–reward associations. These neuronal populations fulfill a function similar to that of the ANs in our network. In a classical conditioning paradigm, they indicated the classification of the conditioned stimulus (an odor that was previously paired with a sugar reward) in less than 200 ms (39).

Our network proved to be robust against neuronal variability, which is an important factor in the design of neuromorphic algorithms. Biological neuronal networks face a similar challenge. The study of neuronal variability has been an integral part of neuroscience ever since the seminal study by Mainen and Sejnowski (40). Many neural processes, such as neurotransmitter release or spike initiation, are stochastic in nature, so a certain amount of variability is inevitable in biological neuronal networks (41). In the same vein, the analog nature of the circuits in the hardware enables the massive speedup and integration density, but unavoidably entails variability. In our case, we achieved tolerance against variability by using a population code. Generally, accelerated analog neurocomputing requires models that can cope with and, ideally, make use of variability. The design of these models will benefit greatly from a deep understanding of biological circuits, interpreted in the light of variability. Likewise, creating functional networks on an analog neuromorphic substrate provides insight into critical properties that networks must possess to operate under noisy conditions.
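Why a population code helps against variability can be shown with a toy calculation. The sketch below is an illustration under stated assumptions, not a model of the hardware: each neuron is given a random gain (standing in for variability of transfer functions) and additive noise on every response (standing in for trial-to-trial variation). The function name and all parameter values are hypothetical.

```python
import numpy as np


def mean_population_error(n_neurons, drive=40.0, gain_sd=0.3, noise_sd=5.0,
                          n_repeats=2000, seed=0):
    """Mean absolute deviation of the population-averaged rate from `drive`.

    All parameter values are hypothetical and chosen only for illustration.
    """
    rng = np.random.default_rng(seed)
    # Variability of transfer functions: every neuron has its own gain.
    gains = rng.normal(1.0, gain_sd, size=(n_repeats, n_neurons))
    # Trial-to-trial variation: additive noise on every single response.
    noise = rng.normal(0.0, noise_sd, size=(n_repeats, n_neurons))
    rates = np.clip(gains * drive + noise, 0.0, None)  # rates cannot be negative
    population_rate = rates.mean(axis=1)  # average over the population
    return float(np.abs(population_rate - drive).mean())


errors = {n: mean_population_error(n) for n in (1, 8, 64)}
for n, err in errors.items():
    print(f"population size {n:3d}: mean absolute error {err:.2f}")
```

Because the deviations of individual neurons are independent in this toy model, the error of the population average shrinks roughly with the square root of the population size.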

Materials and Methods

Stimuli were presented to the classifier network in a sequential manner. For each stimulus i, the corresponding feature vector x_i was obtained from the observation matrix X and converted into a firing-rate representation with VRs, from which spike trains were generated by a gamma point process (42). Each stimulus was presented for 1 s of biological time. Synaptic weights that fulfilled a Hebbian eligibility constraint were updated after each stimulus presentation. A synaptic weight was eligible for updating if the target neuron was a member of the winner population and if the spike count emitted by the presynaptic neuron during the previous stimulus presentation exceeded a threshold (fixed to 35 spikes in the 1-s stimulus interval). Eligible synapses were potentiated by a fixed amount if classification was correct, or depressed by a fixed amount if classification was incorrect. A formal description of the training algorithm is available in SI Materials and Methods, Network Training and Supervised Learning Rule.
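The spike generation and the eligibility-gated weight update described above can be sketched as follows. This is a minimal illustration, not the implementation used in this work: the function names, the gamma shape parameter, the weight step `DELTA_W`, and the weight bounds are assumptions; only the 35-spike threshold and the 1-s stimulus duration are taken from the text.

```python
import numpy as np

SPIKE_THRESHOLD = 35  # presynaptic spikes per 1-s stimulus (from the text)
DELTA_W = 0.01        # hypothetical fixed weight step
W_MAX = 1.0           # hypothetical upper weight bound


def gamma_spike_train(rate_hz, duration_s=1.0, shape=5.0, rng=None):
    """Spike times of a gamma renewal process with mean rate `rate_hz`.

    The shape parameter is an assumption; the text does not state it.
    """
    rng = np.random.default_rng() if rng is None else rng
    if rate_hz <= 0:
        return np.array([])
    scale = 1.0 / (shape * rate_hz)  # mean interval = shape * scale = 1 / rate
    times = []
    t = rng.gamma(shape, scale)
    while t < duration_s:
        times.append(t)
        t += rng.gamma(shape, scale)
    return np.array(times)


def update_weights(weights, pre_counts, winner_mask, correct):
    """Potentiate or depress all eligible synapses by a fixed amount.

    weights:     array (n_pre, n_post)
    pre_counts:  spike count of each presynaptic neuron, shape (n_pre,)
    winner_mask: True for postsynaptic neurons in the winner population
    correct:     whether the last stimulus was classified correctly
    """
    active = np.asarray(pre_counts) > SPIKE_THRESHOLD
    winners = np.asarray(winner_mask, dtype=bool)
    eligible = active[:, None] & winners[None, :]
    new_weights = np.array(weights, dtype=float)
    new_weights[eligible] += DELTA_W if correct else -DELTA_W
    # Clipping to [0, W_MAX] is an assumption, not stated in the text.
    return np.clip(new_weights, 0.0, W_MAX)


rng = np.random.default_rng(0)
rates = np.array([60.0, 10.0, 45.0])  # hypothetical VR firing rates in Hz
pre_counts = np.array([len(gamma_spike_train(r, rng=rng)) for r in rates])
weights = update_weights(np.full((3, 2), 0.5), pre_counts,
                         winner_mask=[True, False], correct=True)
print(pre_counts, weights, sep="\n")
```

Only synapses that connect a sufficiently active presynaptic neuron to a member of the winner population change; all other weights are left untouched.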

Network training was implemented in an interactive chip-in-the-loop fashion: Stimuli were processed by the network on the chip. After each stimulus, the network response was evaluated on the host computer, where the weight changes were calculated. The network was then reconfigured and the next stimulus was presented.
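The control flow of such a chip-in-the-loop procedure might look like the sketch below. It is an illustration only: `run_on_chip` is a hypothetical placeholder for the hardware run, and `fake_chip` and `fixed_step_rule` are software stand-ins (with made-up parameters) so that the loop can be executed without hardware. None of these names belong to the Spikey software.

```python
import numpy as np


def train_chip_in_the_loop(stimuli, labels, weights, run_on_chip, update_rule):
    """Present stimuli one by one and update weights on the host in between.

    run_on_chip(stimulus, weights) -> (pre_counts, post_counts)   [hypothetical]
    update_rule(weights, pre_counts, winner, correct) -> new weights
    """
    n_correct = 0
    for stimulus, label in zip(stimuli, labels):
        # 1. The stimulus is processed by the network on the chip.
        pre_counts, post_counts = run_on_chip(stimulus, weights)
        # 2. The response is evaluated on the host computer.
        winner = int(np.argmax(post_counts))
        correct = winner == label
        n_correct += int(correct)
        # 3. The weight changes are calculated on the host.
        weights = update_rule(weights, pre_counts, winner, correct)
        # 4. The new weights configure the network for the next run.
    return weights, n_correct


def fake_chip(stimulus, weights):
    """Software stand-in for the hardware: a plain linear network."""
    pre_counts = np.asarray(stimulus, dtype=float)
    return pre_counts, pre_counts @ weights


def fixed_step_rule(weights, pre_counts, winner, correct, step=0.05, threshold=35):
    """Fixed-step update of synapses from active inputs onto the winner."""
    new_weights = weights.copy()
    active = pre_counts > threshold
    new_weights[active, winner] += step if correct else -step
    return np.clip(new_weights, 0.0, 1.0)


stimuli = [[50, 5], [5, 50]] * 10  # hypothetical spike counts per input
labels = [0, 1] * 10
trained, n_correct = train_chip_in_the_loop(
    stimuli, labels, np.full((2, 2), 0.5), fake_chip, fixed_step_rule)
print(trained, n_correct)
```

Passing the hardware run and the update rule as functions keeps the host-side loop independent of the backend, so the same loop could in principle drive a simulator or a chip.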

Acknowledgments

We are deeply indebted to Daniel Brüderle for his support in using the Spikey system, and we thank Eric Müller for technical assistance and Mihai A. Petrovici and Emre Neftci for comments and discussion. We also thank the two anonymous reviewers for insightful comments that stimulated new experiments that led to great improvements of the manuscript. This work was supported by Deutsche Forschungsgemeinschaft Grants SCHM2474/1-1 and 1-2 (to M.S.), a grant from the German Ministry of Education and Research [Bundesministerium für Bildung und Forschung (BMBF)], Grant 01GQ1001D [to M.S. (Bernstein Center for Computational Neuroscience Berlin, Project A7) and M.P.N. (Project A9)], and a grant from BMBF [Bernstein Focus Neuronal Learning: Insect Inspired Robots; Grant 01GQ0941 (to M.P.N.)]. T.P. has received funding from the European Union Seventh Framework Programme under Grant 243914 (Brain-i-Nets) and Grant 269921 (BrainScaleS).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1303053111/-/DCSupplemental.

References

- 1. Hertz JA, Krogh AS, Palmer RG. Introduction to the Theory of Neural Computation. Vol 1. Redwood City, CA: Addison-Wesley; 1991.

- 2. Mahowald M, Douglas RJ. A silicon neuron. Nature. 1991;354(6354):515–518. doi: 10.1038/354515a0.

- 3. Indiveri G, Chicca E, Douglas R. Artificial cognitive systems: From VLSI networks of spiking neurons to neuromorphic cognition. Cognit Comput. 2009;1:119–127.

- 4. Yu T, Sejnowski TJ, Cauwenberghs G. Biophysical neural spiking, bursting, and excitability dynamics in reconfigurable analog VLSI. IEEE Trans Biomed Circuits Syst. 2011;5(5):420–429. doi: 10.1109/TBCAS.2011.2169794.

- 5. Indiveri G, et al. Neuromorphic silicon neuron circuits. Front Neurosci. 2011;5:73. doi: 10.3389/fnins.2011.00073.

- 6. Renaud S, et al. PAX: A mixed hardware/software simulation platform for spiking neural networks. Neural Netw. 2010;23(7):905–916. doi: 10.1016/j.neunet.2010.02.006.

- 7. Brüderle D, et al. A comprehensive workflow for general-purpose neural modeling with highly configurable neuromorphic hardware systems. Biol Cybern. 2011;104(4-5):263–296. doi: 10.1007/s00422-011-0435-9.

- 8. Furber SB, et al. Overview of the SpiNNaker System Architecture. IEEE Trans Comput. 2013;62(12):2454–2467.

- 9. Choudhary S, et al. Silicon neurons that compute. In: Villa AEP, Duch W, Érdi P, Masulli F, Palm G, editors. Artificial Neural Networks and Machine Learning–ICANN 2012. Berlin: Springer; 2012. pp. 121–128.

- 10. Merolla P, et al. A digital neurosynaptic core using embedded crossbar memory with 45pJ per spike in 45nm. In: Custom Integrated Circuits Conference (CICC), 2011 IEEE. Piscataway, NJ: IEEE; 2011. pp. 1–4.

- 11. Imam N, et al. Implementation of olfactory bulb glomerular-layer computations in a digital neurosynaptic core. Front Neurosci. 2012;6:83. doi: 10.3389/fnins.2012.00083.

- 12. Brüderle D, et al. Simulator-like exploration of cortical network architectures with a mixed-signal VLSI system. In: Proceedings of 2010 IEEE International Symposium on Circuits and Systems. Piscataway, NJ: IEEE; 2010. pp. 2784–2787.

- 13. Schemmel J, Gruebl A, Meier K, Mueller E. Implementing synaptic plasticity in a VLSI spiking neural network model. In: Proceedings of the 2006 International Joint Conference on Neural Networks (IJCNN). Vancouver: IEEE; 2006. pp. 1–6.

- 14. Pfeil T, et al. Six networks on a universal neuromorphic computing substrate. Front Neurosci. 2013;7:11. doi: 10.3389/fnins.2013.00011.

- 15. Davison AP, et al. PyNN: A common interface for neuronal network simulators. Front Neuroinform. 2008;2:11. doi: 10.3389/neuro.11.011.2008.

- 16. Brüderle D, et al. Establishing a novel modeling tool: A Python-based interface for a neuromorphic hardware system. Front Neuroinform. 2009;3:17. doi: 10.3389/neuro.11.017.2009.

- 17. Schmuker M, Schneider G. Processing and classification of chemical data inspired by insect olfaction. Proc Natl Acad Sci USA. 2007;104(51):20285–20289. doi: 10.1073/pnas.0705683104.

- 18. Huerta R, Nowotny T, García-Sanchez M, Abarbanel HDI, Rabinovich MI. Learning classification in the olfactory system of insects. Neural Comput. 2004;16(8):1601–1640. doi: 10.1162/089976604774201613.

- 19. Huerta R, Nowotny T. Fast and robust learning by reinforcement signals: Explorations in the insect brain. Neural Comput. 2009;21(8):2123–2151. doi: 10.1162/neco.2009.03-08-733.

- 20. Martinetz T, Schulten K. A “neural-gas” network learns topologies. In: Kohonen T, Mäkisara K, Simula O, Kangas J, editors. Artificial Neural Networks. Amsterdam: Elsevier B.V., North-Holland; 1991. pp. 397–402.

- 21. Linster C, Sachse S, Galizia CG. Computational modeling suggests that response properties rather than spatial position determine connectivity between olfactory glomeruli. J Neurophysiol. 2005;93(6):3410–3417. doi: 10.1152/jn.01285.2004.

- 22. Wick SD, Wiechert MT, Friedrich RW, Riecke H. Pattern orthogonalization via channel decorrelation by adaptive networks. J Comput Neurosci. 2010;28(1):29–45. doi: 10.1007/s10827-009-0183-1.

- 23. Schmuker M, Yamagata N, Nawrot MP, Menzel R. Parallel representation of stimulus identity and intensity in a dual pathway model inspired by the olfactory system of the honeybee. Front Neuroeng. 2011;4:17. doi: 10.3389/fneng.2011.00017.

- 24. Brader JM, Senn W, Fusi S. Learning real-world stimuli in a neural network with spike-driven synaptic dynamics. Neural Comput. 2007;19(11):2881–2912. doi: 10.1162/neco.2007.19.11.2881.

- 25. Soltani A, Wang X-J. Synaptic computation underlying probabilistic inference. Nat Neurosci. 2010;13(1):112–119. doi: 10.1038/nn.2450.

- 26. Fisher R. The use of multiple measurements in taxonomic problems. Ann Eugen. 1936;7(2):179–188.

- 27. Gorodkin J. Comparing two K-category assignments by a K-category correlation coefficient. Comput Biol Chem. 2004;28(5-6):367–374. doi: 10.1016/j.compbiolchem.2004.09.006.

- 28. Nawrot MP, et al. Measurement of variability dynamics in cortical spike trains. J Neurosci Methods. 2008;169(2):374–390. doi: 10.1016/j.jneumeth.2007.10.013.

- 29. Häusler C, Nawrot MP, Schmuker M. A spiking neuron classifier network with a deep architecture inspired by the olfactory system of the honeybee. In: 2011 5th International IEEE/EMBS Conference on Neural Engineering. Piscataway, NJ: IEEE; 2011. pp. 198–202.

- 30. Schemmel J, et al. A wafer-scale neuromorphic hardware system for large-scale neural modeling. In: Proceedings of the 2010 International Symposium on Circuits and Systems (ISCAS). Paris: IEEE; 2010. pp. 1947–1950.

- 31. Ruf B, Schmitt M. Self-organization of spiking neurons using action potential timing. IEEE Trans Neural Netw. 1998;9(3):575–578. doi: 10.1109/72.668899.

- 32. Choe Y, Miikkulainen R. Self-organization and segmentation in a laterally connected orientation map of spiking neurons. Neurocomputing. 1998;21(1-3):139–158.

- 33. Behi T, Arous N. Word recognition in continuous speech and speaker independent by means of recurrent self-organizing spiking neurons. Signal Processing. 2011;5(5):215–226.

- 34. Nessler B, Pfeiffer M, Buesing L, Maass W. Bayesian computation emerges in generic cortical microcircuits through spike-timing-dependent plasticity. PLoS Comput Biol. 2013;9(4):e1003037. doi: 10.1371/journal.pcbi.1003037.

- 35. Pfeil T, et al. Is a 4-bit synaptic weight resolution enough? – constraints on enabling spike-timing dependent plasticity in neuromorphic hardware. Front Neurosci. 2012;6:90. doi: 10.3389/fnins.2012.00090.

- 36. Friedmann S, Frémaux N, Schemmel J, Gerstner W, Meier K. Reward-based learning under hardware constraints-using a RISC processor embedded in a neuromorphic substrate. Front Neurosci. 2013;7:160. doi: 10.3389/fnins.2013.00160.

- 37. Krofczik S, Menzel R, Nawrot MP. Rapid odor processing in the honeybee antennal lobe network. Front Comput Neurosci. 2008;2:9. doi: 10.3389/neuro.10.009.2008.

- 38. Strube-Bloss MF, Herrera-Valdez MA, Smith BH. Ensemble response in mushroom body output neurons of the honey bee outpaces spatiotemporal odor processing two synapses earlier in the antennal lobe. PLoS One. 2012;7(11):e50322. doi: 10.1371/journal.pone.0050322.

- 39. Strube-Bloss MF, Nawrot MP, Menzel R. Mushroom body output neurons encode odor-reward associations. J Neurosci. 2011;31(8):3129–3140. doi: 10.1523/JNEUROSCI.2583-10.2011.

- 40. Mainen ZF, Sejnowski TJ. Reliability of spike timing in neocortical neurons. Science. 1995;268(5216):1503–1506. doi: 10.1126/science.7770778.

- 41. Boucsein C, Nawrot MP, Schnepel P, Aertsen A. Beyond the cortical column: Abundance and physiology of horizontal connections imply a strong role for inputs from the surround. Front Neurosci. 2011;5:32. doi: 10.3389/fnins.2011.00032.

- 42. Tuckwell HC. Introduction to Theoretical Neurobiology: Volume 2, Nonlinear and Stochastic Theories. Cambridge, UK: Cambridge Univ Press; 1988.
