Abstract

We investigated the relationship between change over time in severity of depression symptoms and facial expression. Depressed participants were followed over the course of treatment and video recorded during a series of clinical interviews. Facial expressions were analyzed from the video using both manual and automatic systems. Automatic and manual coding were highly consistent for FACS action units, and showed similar effects for change over time in depression severity. For both systems, when symptom severity was high, participants made more facial expressions associated with contempt and smiled less, and the smiles that did occur were more likely to be accompanied by facial actions associated with contempt. These results are consistent with the “social risk hypothesis” of depression. According to this hypothesis, when symptoms are severe, depressed participants withdraw from other people in order to protect themselves from anticipated rejection, scorn, and social exclusion. As their symptoms fade, participants send more signals indicating a willingness to affiliate. The finding that automatic facial expression analysis was both consistent with manual coding and produced the same pattern of depression effects suggests that automatic facial expression analysis may be ready for use in behavioral and clinical science.

I. INTRODUCTION

Unipolar depression is a commonly occurring mental disorder that meaningfully impairs quality of life for patients and their relatives [42]. It is marked by depressed mood and anhedonia, as well as changes in appetitive motivation, cognition, and behavior [3]. Theoretical conjectures concerning the nature of depression have focused on neurobiological, psychosocial, and evolutionary processes. Recent work also underscored the important role of facial behavior in depression as a mechanism of emotional expression and nonverbal communication. The current study used manual and automatic facial expression analysis to investigate the impact of depressive symptom severity on facial expressions in a dataset of clinical interviews.

A. Facial Expression and Depression

A facial expression can be examined with regard to both its structure and its function. The structure of a facial expression refers to the specific pattern of muscle contraction that produces it, while the function of a facial expression refers to its potential as a bearer of information about emotions, intentions, and desires [31]. According to the neuro-cultural model, facial expressions of emotion are subject to two sets of determinants [14]. First, a neurologically-based “facial affect program” links particular emotions with the firing of particular facial muscles. Second, cultural learning dictates what events elicit which emotions and what display rules are activated in a given context. Display rules are social norms regarding emotional expression that may lead to a facial expression being deamplified, neutralized, qualified, masked, amplified, or simulated [15].

From this perspective (i.e., due to the influence of display rules), it is impossible to infer a person’s “true” feelings, intentions, and desires from a single facial expression with absolute confidence. The current study addresses this issue by analyzing patterns of facial expression over time and by presenting the structure of these patterns before speculating about their functional meaning.

A number of theories attempt to predict and explain the patterns of facial behavior displayed by individuals suffering from depression. However, these theories make predictions based on broad emotional or behavioral dimensions, and some work is required to convert them to testable hypotheses based on facial expression structure. This conversion is accomplished by linking the contraction of particular facial muscles to particular emotions [15] which have been located on the relevant emotional dimensions [29, 30, 33, 41].

The current study explores three emotional dimensions: valence, dominance, and affiliation. Valence captures the attractiveness and hedonic value of an emotion or behavioral intention; happiness is positive in valence, while anger, contempt, disgust, fear, and sadness are negative in valence. Dominance captures the status, power, and control of an emotion or behavioral intention; anger, contempt, and disgust are positive in dominance, while fear and sadness are negative in dominance. Affiliation captures the solidarity, friendliness, and approachability of an emotion or behavioral intention; happiness, sadness, and fear are positive in affiliation, while anger, contempt, and disgust are negative in affiliation.
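The sign assignments in this paragraph can be collected in a small lookup table, which makes it easier to see which emotions a dimension-based prediction refers to. The following is a minimal sketch; the dictionary layout and the `emotions_with` helper are illustrative names, and `None` marks the one case (the dominance of happiness) that the text does not specify.

```python
# Signs taken from the paragraph above: +1 positive, -1 negative,
# None where the text does not place the emotion on that dimension.
EMOTION_DIMENSIONS = {
    "happiness": {"valence": +1, "dominance": None, "affiliation": +1},
    "anger":     {"valence": -1, "dominance": +1, "affiliation": -1},
    "contempt":  {"valence": -1, "dominance": +1, "affiliation": -1},
    "disgust":   {"valence": -1, "dominance": +1, "affiliation": -1},
    "fear":      {"valence": -1, "dominance": -1, "affiliation": +1},
    "sadness":   {"valence": -1, "dominance": -1, "affiliation": +1},
}


def emotions_with(**signs):
    """Return (sorted) the emotions whose dimensions match every requested sign."""
    return sorted(
        emotion
        for emotion, dims in EMOTION_DIMENSIONS.items()
        if all(dims[name] == sign for name, sign in signs.items())
    )
```

For example, `emotions_with(affiliation=-1)` lists the negative-affiliation emotions, and `emotions_with(valence=-1, affiliation=+1)` lists the emotions that are negative in valence but positive in affiliation.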

The mood-facilitation (MF) hypothesis [39] states that moods increase the likelihood and intensity of “matching” emotions and decrease the likelihood and intensity of “opposing” emotions. As depression is marked by pervasively negative mood, this hypothesis predicts that the depressed state will be marked by potentiation of facial expressions related to negative valence emotions and attenuation of facial expressions related to positive valence emotions [53].

The emotion context insensitivity (ECI) hypothesis [40] views depression as a defensive motivational state of environmental disengagement. It posits that depressed mood serves to conserve resources in low-reward environments by inhibiting overall emotional reactivity. As such, this hypothesis predicts that the depressed state will be marked by attenuation of all facial expressions of emotion.

The social risk (SR) hypothesis [1] views depression as a risk-averse motivational state activated by threats of social exclusion. It posits that depressed mood tailors communication patterns to the social context in order to minimize outcome variability. This is accomplished by signaling submission (i.e., negative dominance) in competitive contexts and withdrawal (i.e., negative affiliation) in exchange-oriented contexts where requests for aid may be ignored or scorned. Help-seeking behavior (i.e., negative valence and positive affiliation) is reserved for reciprocity-oriented contexts with allies and kin who are likely to provide the requested succor.

B. Summary of Previous Work

Early work demonstrated that untrained observers were able to identify depressed individuals and depression severity from nonverbal behavior [54, 55]. These findings impelled a long history of research investigating the facial expressions of patients with depression. Overall, this work has indicated that depression is characterized by the attenuation of smiles produced by the zygomatic major muscle (e.g., [10, 19, 25, 32, 50]). Studies also found differences in terms of other facial expressions, but these results were largely equivocal. For example, some studies found evidence of attenuation of facial expressions related to negative valence emotions [5, 25, 26, 38, 50], while others found evidence of their potentiation [6, 37, 44, 47]. Although a full literature review is beyond the scope of this paper, a summary of this work and its limitations is included to motivate our approach.

First, many previous studies confounded current and past depression by comparing depressed and non-depressed control groups. Because history of depression has been highly correlated with heritable individual differences in personality traits (e.g., neuroticism) [34], any between-group differences could have been due to either current depression or stable individual differences. The studies that addressed this issue by following depressed participants over time have yielded interesting results. For instance, patterns of facial expression during an intake interview were predictive of later clinical improvement [7, 17, 45], and patterns of facial expression changed over the course of treatment [22, 24, 32, 43].

Second, many previous studies examined the facial expressions of depressed participants in contexts of low sociality (e.g., viewing static images or film clips alone). However, research has found that rates of certain facial expressions can be very low in such contexts [23]. As such, previous studies may have been influenced by a social “floor effect.”

Third, the majority of previous work explored an extremely limited sample of facial expressions. However, proper interpretation of the function of a facial expression requires comprehensive description of its structure. For example, the most prominent finding in depression so far has been the attenuation of zygomatic major activity. This led many researchers to conclude that depression is marked by attenuation of expressed positive affect. However, when paired with the activation of other facial muscles, zygomatic major contraction has been linked to all of the following, in addition to positive affect: polite engagement, pain, contempt, and embarrassment (e.g., [2, 15]).

To explore this issue, a number of studies on depression [10, 25, 38, 51] compared smiles with and without the “Duchenne marker” (i.e., orbicularis oculi contraction), which has been linked to “felt” but not “social” smiles [16]. Because the results of these studies were somewhat equivocal, more research is needed on the occurrence of different smile types in depression. Similarly, with only one exception, no studies have examined the occurrence of “negative affect smiles” in depression. These smiles include the contraction of facial muscles that are related to negative valence emotions. Reed et al. [37] found that negative affect smiles were more common in currently depressed participants than in those without current symptomatology.

Finally, the majority of previous work used manual facial expression analysis (i.e., visual coding). Although humans can become highly reliable at coding even complex facial expressions, the training required for this and the coding itself is incredibly time-consuming. In an effort to alleviate this time burden, a great deal of interdisciplinary research has been devoted to the development of automated systems for facial expression analysis. Although initial applications to clinical science are beginning to appear [13, 28], very few automatic systems have been trained or tested on populations with psychopathology.

C. The Current Study

The current study addresses the limitations of previous research and makes several contributions to the literature. First, we utilize a longitudinal design and follow depressed patients over the course of treatment to examine whether their patterns of facial expression change with symptom reduction. By sampling participants over time, we are able to control for personality and other correlates of depression, as well as stable individual differences in expressive behavior.

Second, we explore patterns of facial expression during a semi-structured clinical interview. In addition to providing a highly social context, this interview is also more ecologically valid than a solitary stimulus-viewing procedure.

Third, we examine a wide range of facial actions conceptually and empirically related to different affective states and communicative functions. This allows us to explore the possibility that depression may affect different facial actions in different ways. In addition to examining these facial actions individually, we also examine their co-occurrence with smiling. This allows us to explore complex expressions such as Duchenne smiles and negative affect smiles.

Finally, we evaluate an automatic facial expression analysis system on its ability to detect patterns of facial expression in depression. If an automated system can achieve comparability with manual coding, it may become possible to code larger datasets with minimal human involvement.

II. METHODS

A. Participants

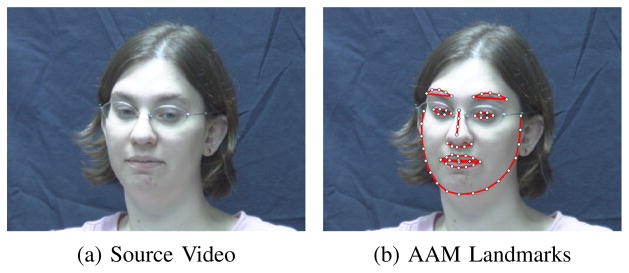

Video from 34 adults (67.6% female, 88.2% white, mean age 41.6 years) in the Spectrum database [13] has been FACS-coded. The participants were diagnosed with major depressive disorder [3] using a structured clinical interview [20]. They were also interviewed on one or more occasions using the Hamilton Rating Scale for Depression (HRSD) [27] to assess symptom severity over the course of treatment (i.e., interpersonal psychotherapy or a selective serotonin reuptake inhibitor). These interviews were recorded using four hardware-synchronized analogue cameras. Video from a camera roughly 15 degrees to the participant’s right was digitized into 640x480 pixel arrays at a frame rate of 29.97 frames per second (Fig. 1a).

Fig. 1. Example images from the Spectrum dataset [13]

Data from all 34 participants was used in the training of the automatic facial expression analysis system. To be included in the depression analyses, participants had to have high symptom severity (i.e., HRSD ≥ 15) during their initial interview and low symptom severity (i.e., HRSD ≤ 7) during a subsequent interview. A total of 18 participants (61.1% female, 88.9% white, mean age 41.1 years) met these criteria, yielding a total of 36 interviews for analysis. Because manual FACS coding is highly time-consuming, only the first three interview questions (about depressed mood, feelings of guilt, and suicidal ideation) were analyzed. These segments ranged in length from 857 to 7249 frames with an average of 2896.7 frames. To protect participant confidentiality, the Spectrum database is not publicly available.
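The inclusion rule for the depression analyses can be sketched as a simple filter over interview records. This is an illustration only: the `Interview` record and its field names are hypothetical, and the choice of the first qualifying low-severity interview is an assumption, since the text does not say which subsequent interview was used when several met the threshold.

```python
from dataclasses import dataclass

HIGH_SEVERITY_MIN = 15  # HRSD >= 15 counts as high symptom severity
LOW_SEVERITY_MAX = 7    # HRSD <= 7 counts as low symptom severity


@dataclass
class Interview:
    participant_id: str  # hypothetical identifier
    session: int         # 1 = initial interview
    hrsd: int            # HRSD total score


def select_interview_pairs(interviews):
    """Return {participant_id: (high_interview, low_interview)} for eligible participants."""
    by_participant = {}
    for interview in interviews:
        by_participant.setdefault(interview.participant_id, []).append(interview)

    pairs = {}
    for pid, sessions in by_participant.items():
        sessions.sort(key=lambda s: s.session)
        initial, later = sessions[0], sessions[1:]
        if initial.hrsd < HIGH_SEVERITY_MIN:
            continue  # initial interview was not high severity
        # Assumption: use the first later interview that meets the low threshold.
        low = next((s for s in later if s.hrsd <= LOW_SEVERITY_MAX), None)
        if low is not None:
            pairs[pid] = (initial, low)
    return pairs
```

Each eligible participant contributes exactly two interviews, which matches the 18 participants and 36 interviews reported above.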

B. Manual FACS Coding

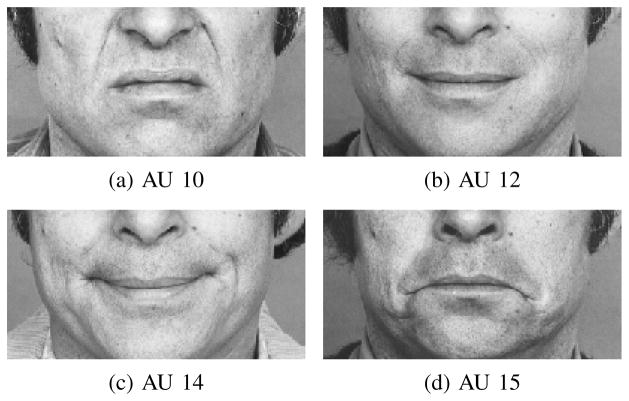

The Facial Action Coding System (FACS) [18] is the current gold standard for facial expression annotation. FACS decomposes facial expressions into component parts called action units. Action units (AU) are anatomically-based facial actions that correspond to the contraction of specific facial muscles. For example, AU 12 codes contractions of the zygomatic major muscle and AU 6 codes contractions of the orbicularis oculi muscle. For annotating facial expressions, an AU may occur alone or in combination with other AUs. For instance, the combination of AU 6+12 corresponds to the Duchenne smile described earlier.

Participant facial behavior was manually FACS coded from video by certified and experienced coders. Expression onset, apex, and offset were coded for 17 commonly occurring AU. Overall inter-observer agreement for AU occurrence, quantified by Cohen’s Kappa [12], was 0.75; according to convention, this can be considered good or excellent agreement. The current study analyzed nine of these AU (Table I) that are conceptually related to affect [15] and occurred sufficiently in the database (i.e., ≥5% occurrence).
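For readers unfamiliar with the statistic, Cohen's Kappa corrects the observed proportion of agreement for the agreement expected by chance. The sketch below computes it for one AU from two coders' frame-level occurrence labels; the function name and the binary 0/1 encoding are illustrative assumptions, not a description of the software used in the study.

```python
def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two equal-length sequences of binary (0/1) frame labels."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("label sequences must be non-empty and equally long")
    n = len(coder_a)
    # Observed agreement: share of frames on which the coders give the same label.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from each coder's marginal rate of coding the AU as present.
    rate_a = sum(coder_a) / n
    rate_b = sum(coder_b) / n
    expected = rate_a * rate_b + (1 - rate_a) * (1 - rate_b)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)
```

A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance.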

TABLE I.

FACS Coded Action Units

| AU | Muscle Name | Related Emotions |
|---|---|---|
| 1 | Frontalis | Fear, Sadness, Surprise |
| 4 | Corrugator | Anger, Fear, Sadness |
| 6 | Orbicularis Oculi | Happiness |
| 10 | Levator | Disgust |
| 12 | Zygomatic Major | Happiness |
| 14 | Buccinator | Contempt |
| 15 | Triangularis | Sadness |
| 17 | Mentalis | Sadness |
| 24 | Orbicularis Oris | Anger |

C. Automatic FACS Coding

Face Registration: In order to register two facial images (i.e., the “reference image” and the “sensed image”), a set of points called landmark points is utilized. Facial landmark points indicate the location of the important facial components (e.g., eye corners, nose tip, jaw line). In order to automatically interpret a novel face image, an efficient method for tracking the facial landmark points is required. In this study, we utilized Active Appearance Models (AAM) to track facial landmark points. AAM is a powerful statistical approach that can simultaneously match the face shape and texture (i.e., model) to a new facial image [36]. In this study, we used 66 facial landmark points (Fig. 1b). In order to build the AAM shape and appearance model, we used approximately 3% of frames for each subject. The frames were then automatically aligned using a gradient-descent AAM fit. Afterwards, we utilized a 2D similarity transformation to map the landmark points to the reference points, which were calculated by averaging over all frames.
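A 2D similarity transformation combines uniform scaling, rotation, and translation. The sketch below estimates one by least squares from matched landmark pairs, treating each point as a complex number so that the transform is w = a·z + b. The least-squares criterion and the function names are assumptions made for illustration; the text does not state how the transformation was estimated.

```python
def fit_similarity(source, target):
    """Least-squares 2D similarity transform mapping source points onto target points.

    Points are (x, y) tuples. Returns complex (a, b) such that w = a * z + b,
    where |a| is the scale and the argument of a is the rotation angle.
    """
    z = [complex(x, y) for x, y in source]
    w = [complex(x, y) for x, y in target]
    if len(z) != len(w) or len(z) < 2:
        raise ValueError("need at least two matched landmark pairs")
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    # Closed-form solution on mean-centred points.
    num = sum((zi - z_mean).conjugate() * (wi - w_mean) for zi, wi in zip(z, w))
    den = sum(abs(zi - z_mean) ** 2 for zi in z)
    if den == 0:
        raise ValueError("source landmarks must not all coincide")
    a = num / den
    b = w_mean - a * z_mean
    return a, b


def apply_similarity(a, b, points):
    """Apply w = a * z + b to a list of (x, y) points."""
    out = []
    for x, y in points:
        p = a * complex(x, y) + b
        out.append((p.real, p.imag))
    return out
```

In this setting, `source` would hold the 66 tracked landmarks of a frame and `target` the mean reference landmarks; applying the fitted transform removes differences in head position, in-plane rotation, and scale.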

Feature Extraction: Facial expressions appear as changes in facial shape (e.g., curvedness of the mouth) and appearance (e.g., wrinkles and furrows). In order to describe facial expression efficiently, it would be helpful to utilize a representation that can simultaneously describe the shape and appearance of an image. Localized Binary Pattern Histogram (LBPH), Histogram of Oriented Gradient (HOG), and localized Gabor are some well-known features that can be used for this purpose [48]. Localized Gabor features were used in this study, as they have been found to be relatively robust to alignment error [11]. A bank of 40 Gabor filters (i.e., 5 scales and 8 orientations) was applied to regions defined around the 66 landmark points, which resulted in 2640 Gabor features per video frame.
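The feature count follows directly from the filter bank: 5 scales × 8 orientations = 40 filters, and 40 filters × 66 landmarks = 2640 features per frame. The sketch below illustrates one way such features could be computed, taking the magnitude of each filter's response on a patch centred at each landmark. The kernel size, wavelengths, envelope widths, and the use of response magnitude are all assumptions chosen for illustration; the text specifies only the number of scales, orientations, and landmarks.

```python
import cmath
import math

N_SCALES, N_ORIENTATIONS, N_LANDMARKS = 5, 8, 66


def gabor_kernel(scale, orientation, size=9):
    """Complex Gabor kernel; wavelength and envelope width are illustrative choices."""
    wavelength = 4.0 * (math.sqrt(2) ** scale)  # assumed spacing between scales
    sigma = 0.56 * wavelength                   # assumed Gaussian envelope width
    theta = math.pi * orientation / N_ORIENTATIONS
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            x_rot = x * math.cos(theta) + y * math.sin(theta)
            envelope = math.exp(-(x * x + y * y) / (2 * sigma * sigma))
            row.append(envelope * cmath.exp(2j * math.pi * x_rot / wavelength))
        kernel.append(row)
    return kernel


def gabor_magnitude(image, cx, cy, kernel):
    """Magnitude of the filter response for the patch centred on pixel (cx, cy)."""
    half = len(kernel) // 2
    total = 0j
    for dy, row in enumerate(kernel):
        for dx, weight in enumerate(row):
            total += image[cy + dy - half][cx + dx - half] * weight
    return abs(total)


def frame_features(image, landmarks):
    """One magnitude per (landmark, filter) pair; patches must lie inside the image."""
    bank = [gabor_kernel(s, o) for s in range(N_SCALES) for o in range(N_ORIENTATIONS)]
    return [gabor_magnitude(image, cx, cy, k) for cx, cy in landmarks for k in bank]
```

Here `image` is a grayscale frame stored as a list of pixel rows and `landmarks` is a list of integer `(x, y)` positions far enough from the border for the patch to fit.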

Dimensionality Reduction: In many pattern classification applications, the number of features is extremely large. This size makes the analysis and classification of the data a very complex task. In order to extract the most important and efficient features of the data, several linear techniques, such as Principal Component Analysis (PCA) and Independent Component Analysis (ICA), and nonlinear techniques, such as Kernel PCA and Manifold Learning, have been proposed [21]. We utilized the manifold learning technique to reduce the dimensionality of the Gabor features. The idea behind this technique is that all the data points lie on a low dimensional manifold that is embedded in a high dimensional space [8]. Specifically, we utilized Laplacian Eigenmap [4] to extract the low-dimensional features and then, similar to Mahoor et al. [35], we applied spectral regression to calculate the corresponding projection function for each AU. Through this step, the dimensionality of the Gabor data was reduced to 29 features per video frame.

Classifier Training: In machine learning, feature classification aims to assign each input value to one of a given set of classes. A well-known classification technique is the Support Vector Machine (SVM). The SVM classifier applies the “kernel trick,” which uses dot product, to keep computational loads reasonable. The kernel functions (e.g., linear, polynomial, and radial basis function (RBF)) enable the SVM algorithm to fit a hyperplane with a maximum margin into the transformed high dimensional feature space. RBF kernels were used in the current study. To build a training set, we randomly sampled 50 positive and 50 negative frames from each subject. To train and test the SVM classifiers, we used the LIBSVM library [9]. To find the best classifier and kernel parameters (e.g., C and γ), we used a “grid-search.”
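The training-set construction and the parameter search can be sketched without reference to a particular SVM implementation. In the sketch below, the function names are illustrative, taking all available frames when a subject has fewer than 50 of a class is an assumption, and the log2 exponent ranges for C and γ are a commonly used coarse grid rather than values reported in the text.

```python
import itertools
import random


def sample_training_frames(labels_by_subject, per_class=50, seed=0):
    """Pick up to `per_class` positive and negative frame indices per subject.

    `labels_by_subject` maps a subject id to a list of 0/1 labels for one AU.
    Returns (subject, frame_index, label) triples.
    """
    rng = random.Random(seed)
    sampled = []
    for subject, labels in sorted(labels_by_subject.items()):
        for wanted in (1, 0):
            frames = [i for i, label in enumerate(labels) if label == wanted]
            # Assumption: use every frame when fewer than `per_class` exist.
            chosen = rng.sample(frames, min(per_class, len(frames)))
            sampled.extend((subject, i, wanted) for i in chosen)
    return sampled


def rbf_parameter_grid(c_exponents=range(-5, 16, 2), gamma_exponents=range(-15, 4, 2)):
    """Candidate (C, gamma) pairs on a log2 grid; the ranges are assumptions."""
    return [(2.0 ** c, 2.0 ** g)
            for c, g in itertools.product(c_exponents, gamma_exponents)]
```

A grid search would train one RBF-kernel classifier per `(C, gamma)` pair on the sampled frames and keep the pair with the best cross-validated performance.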

D. Data Analysis

To enable comparison between interviews with high and low symptom severity, the same facial expression measures were extracted from both. First, to capture how often the different expressions occurred, we calculated the proportion of all frames during which each AU was present. Second, to explore complex facial expressions, we also looked at how often different AU co-occurred with AU 12 (i.e., smiles). To do so, we calculated the proportion of smiling frames during which each AU occurred. These measures were calculated separately for each interview and compared within-subjects using paired-sample t-tests.
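The two measures and the within-subject comparison can be sketched as follows. The frame representation (one set of present AUs per frame) and the function names are illustrative assumptions, and returning `None` for interviews without smiles is also an assumption, since the text does not say how such cases were handled.

```python
import math


def au_base_rate(frames, au):
    """Proportion of all frames in which `au` is present.

    `frames` is a list of sets, one per video frame, holding the AUs coded as present.
    """
    return sum(au in frame for frame in frames) / len(frames)


def au_rate_during_smiles(frames, au, smile_au=12):
    """Proportion of smiling frames (AU 12 present) in which `au` also occurs."""
    smiling = [frame for frame in frames if smile_au in frame]
    if not smiling:
        return None  # undefined when the interview contains no smiles
    return sum(au in frame for frame in smiling) / len(smiling)


def paired_t_statistic(high, low):
    """t statistic and degrees of freedom for paired per-participant measures."""
    diffs = [h - l for h, l in zip(high, low)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two participants")
    mean = sum(diffs) / n
    variance = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    if variance == 0:
        raise ValueError("t is undefined when all differences are identical")
    return mean / math.sqrt(variance / n), n - 1
```

Each participant contributes one value per measure from the high-severity interview and one from the low-severity interview; the resulting t statistic is compared against a t distribution with n − 1 degrees of freedom (17 for the 18 participants analyzed here).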

We compared manual and automatic FACS coding with respect to both agreement and reliability using a leave-one-subject-out cross-validation. Agreement represents the extent to which two systems tend to make the same judgment on a frame-by-frame basis [49]. That is, for any given frame, do both systems detect the same AU? Inter-system reliability, in contrast, represents the extent to which the judgments of different systems are proportional when expressed as deviations from their means [49]. For each video, reliability asks whether the systems are consistent in their estimates of the proportion of frames that include a given AU. For many purposes, such as comparing the proportion of positive and negative expressions in relation to severity of depression, reliability of measurement is what matters. Agreement was quantified using F1-score [52], which is the harmonic mean of precision and recall. Reliability was assessed using intraclass correlation (ICC) [46].
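The two metrics can be sketched as follows. F1 is computed from precision (P) and recall (R) as 2PR/(P + R), treating manual coding as the reference. For reliability, the sketch uses a single-measure consistency form of the ICC; this choice is an assumption, because the text does not state which ICC form was used. Function names are illustrative.

```python
def f1_score(manual, automatic):
    """Frame-level F1 for binary labels, treating manual coding as the reference."""
    tp = sum(m == 1 and a == 1 for m, a in zip(manual, automatic))
    fp = sum(m == 0 and a == 1 for m, a in zip(manual, automatic))
    fn = sum(m == 1 and a == 0 for m, a in zip(manual, automatic))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def icc_consistency(ratings):
    """Single-measure consistency ICC from a two-way ANOVA decomposition.

    `ratings` is a list of per-video tuples with one value per system,
    e.g. (manual_proportion, automatic_proportion).
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ms_rows = ss_rows / (n - 1)
    ms_error = (ss_total - ss_rows - ss_cols) / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
```

The contrast between the metrics is visible in a simple case: if one system's proportions are uniformly shifted relative to the other's, the consistency ICC remains 1 even though frame-level agreement cannot be perfect.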

III. RESULTS

A. Comparison of manual and automatic coding

Manual and automatic coding were highly consistent (Table II). The two systems demonstrated high reliability with respect to the proportion of frames in which each AU was present; ICC scores were 0.850 or higher for every single AU and above 0.700 for most AU combinations. Inter-system agreement with respect to the frame-by-frame presence of each AU was moderate to high, with F1 scores above 0.700 for most single AUs and above 0.600 for most AU combinations.

TABLE II.

Inter-System Reliability and Agreement

| Single Action Units | | | Action Unit Combinations | | |
|---|---|---|---|---|---|
| AU | ICC | F1-score | AU | ICC | F1-score |
| 1 | 0.957 | 0.775 | 1+12 | 0.931 | 0.736 |
| 4 | 0.954 | 0.796 | 4+12 | 0.918 | 0.714 |
| 6 | 0.850 | 0.714 | 6+12 | 0.879 | 0.767 |
| 10 | 0.910 | 0.742 | 10+12 | 0.931 | 0.767 |
| 12 | 0.854 | 0.773 | | | |
| 14 | 0.889 | 0.708 | 12+14 | 0.953 | 0.658 |
| 15 | 0.893 | 0.674 | 12+15 | 0.941 | 0.631 |
| 17 | 0.882 | 0.725 | 12+17 | 0.698 | 0.571 |
| 24 | 0.911 | 0.713 | 12+24 | 0.706 | 0.630 |

B. Depression findings

Manual FACS coding indicated that patterns of facial expression varied with depressive symptom severity. Specifically, the highly depressed state was marked by three structural patterns of facial expressive behavior (Tables III and IV). Compared with low symptom severity interviews, high symptom severity interviews were marked by (1) significantly lower overall AU 12 activity, (2) significantly higher overall AU 14 activity, and (3) significantly more AU 14 activity during smiling. There were also two non-significant trends worth noting. Compared to the low symptom severity interviews, the high symptom severity interviews were marked by (4) less overall AU 15 activity and (5) more AU 10 activity during smiling.

TABLE III.

Proportion of All Frames

| AU | Manual FACS Coding | | | Automatic FACS Coding | | |
|---|---|---|---|---|---|---|
| | High | Low | p | High | Low | p |
| 1 | 0.169 (0.209) | 0.208 (0.260) | .58 | 0.182 (0.174) | 0.224 (0.232) | .46 |
| 4 | 0.177 (0.245) | 0.152 (0.196) | .69 | 0.172 (0.201) | 0.155 (0.173) | .72 |
| 6 | 0.082 (0.117) | 0.134 (0.199) | .27 | 0.120 (0.111) | 0.133 (0.131) | .70 |
| 10 | 0.210 (0.275) | 0.162 (0.227) | .51 | 0.222 (0.234) | 0.155 (0.155) | .24 |
| 12 | 0.191 (0.167) | 0.392 (0.313) | .02* | 0.223 (0.160) | 0.322 (0.221) | .09† |
| 14 | 0.247 (0.244) | 0.139 (0.159) | .03* | 0.278 (0.184) | 0.170 (0.142) | .01* |
| 15 | 0.059 (0.167) | 0.119 (0.134) | .06† | 0.085 (0.124) | 0.151 (0.123) | .01* |
| 17 | 0.310 (0.264) | 0.313 (0.193) | .97 | 0.357 (0.191) | 0.353 (0.110) | .92 |
| 24 | 0.123 (0.124) | 0.142 (0.156) | .65 | 0.184 (0.130) | 0.154 (0.136) | .50 |

Values are Mean (Standard Deviation). High = High Symptom Severity; Low = Low Symptom Severity. * p<.05; † p<.10.

TABLE IV.

Proportion of Smiling Frames

| AU | Manual FACS Coding | | | Automatic FACS Coding | | |
|---|---|---|---|---|---|---|
| | High | Low | p | High | Low | p |
| 1 | 0.178 (0.240) | 0.277 (0.326) | .21 | 0.149 (0.217) | 0.204 (0.258) | .25 |
| 4 | 0.125 (0.192) | 0.060 (0.114) | .22 | 0.107 (0.192) | 0.060 (0.107) | .36 |
| 6 | 0.313 (0.306) | 0.301 (0.292) | .88 | 0.290 (0.266) | 0.262 (0.262) | .65 |
| 10 | 0.461 (0.393) | 0.294 (0.350) | .09† | 0.317 (0.292) | 0.236 (0.319) | .25 |
| 14 | 0.247 (0.310) | 0.042 (0.073) | .03* | 0.182 (0.244) | 0.051 (0.082) | .05* |
| 15 | 0.086 (0.155) | 0.115 (0.112) | .55 | 0.089 (0.138) | 0.114 (0.120) | .55 |
| 17 | 0.253 (0.209) | 0.215 (0.205) | .57 | 0.282 (0.218) | 0.274 (0.224) | .89 |
| 24 | 0.057 (0.068) | 0.062 (0.072) | .88 | 0.100 (0.132) | 0.054 (0.067) | .26 |

Values are Mean (Standard Deviation). High = High Symptom Severity; Low = Low Symptom Severity. * p<.05; † p<.10.

These results were largely replicated by automatic FACS coding. With the exception of the finding regarding AU 10 during smiling, all of the differences that were significant or trending according to manual FACS coding were also significant or trending according to the automatic system.

IV. DISCUSSION

A. Mood-facilitation Hypothesis

The MF hypothesis predicted that participants would show potentiation of facial expressions related to negative valence emotions (i.e., anger, contempt, disgust, fear, and sadness) and attenuation of facial expressions related to positive valence emotions (i.e., happiness). The results for this hypothesis were mixed. In support of this hypothesis, participants showed potentiation of expressions related to contempt (i.e., AU 14) and attenuation of expressions related to happiness (i.e., AU 12) when severely depressed. When participants did smile while severely depressed, they included more facial expressions related to contempt (i.e., AU 14) and disgust (i.e., AU 10). Contrary to this hypothesis, however, there was evidence of attenuation of expressions related to sadness (i.e., AU 15). There were also other expressions related to happiness (i.e., AU 6) that did not show attenuation and expressions related to anger (i.e., AU 24) and fear (i.e., AU 4) that did not show potentiation.

B. Emotion Context Insensitivity Hypothesis

The ECI hypothesis predicted that participants would show attenuation of all facial expressions of emotion during high symptom severity interviews. The results did not support this hypothesis. Although participants did show attenuation of expressions related to happiness (i.e., AU 12) and sadness (i.e., AU 15) during high symptom severity interviews, they also showed potentiation of expressions related to contempt (i.e., AU 14). Additionally, there was no evidence of attenuation of other facial expressions (i.e., AU 1, 4, 6, 10, 17, or 24).

C. Social Risk Hypothesis

The SR hypothesis predicted that participants’ patterns of facial expression would be determined by both their level of symptom severity and by their social context. Because it is unclear whether participants experienced the clinical interview as primarily competitive, exchange-oriented, or reciprocity-oriented, each of these possibilities was explored.

Competitive Submission: The SR hypothesis predicted that participants would display more submission in competitive contexts while severely depressed. Submission was operationalized as potentiation of negative dominance expressions (i.e., fear and sadness) and attenuation of positive dominance expressions (i.e., anger, contempt, and disgust). The results did not support this hypothesis. Contrary to this hypothesis, participants showed attenuation of expressions related to sadness (i.e., AU 15) and potentiation of expressions related to contempt (i.e., AU 14). Additionally, there was no evidence of potentiation of expressions related to fear (i.e., AU 1 or 4) or of attenuation of expressions related to anger (i.e., AU 4 or 24) and disgust (i.e., AU 10).

Exchange-oriented Withdrawal: The SR hypothesis predicted that participants would display more withdrawal in exchange-oriented contexts while severely depressed. Withdrawal was operationalized as potentiation of negative affiliation expressions (i.e., anger, contempt, and disgust) and attenuation of positive affiliation expressions (i.e., happiness, sadness, and fear). The results partially supported this hypothesis. In support of this hypothesis, when severely depressed, participants showed potentiation of expressions related to contempt (i.e., AU 14), as well as attenuation of expressions related to happiness (i.e., AU 12) and sadness (i.e., AU 15). Furthermore, when participants did smile, they included more expressions related to contempt (i.e., AU 14) and disgust (i.e., AU 10). There was no evidence, however, of attenuation of expressions related to fear (i.e., AU 4) or of potentiation of expressions related to anger (i.e., AU 24). There were also other expressions related to happiness (i.e., AU 6) and sadness (i.e., AU 1) that did not show attenuation.

Reciprocity-oriented Help-seeking: The SR hypothesis predicted that participants would display more help-seeking in reciprocity-oriented contexts while severely depressed. Help-seeking was operationalized as potentiation of expressions that are both negative in valence and positive in affiliation (i.e., fear and sadness). The results did not support this hypothesis. Contrary to this hypothesis, participants showed attenuation of expressions related to sadness (i.e., AU 15) while severely depressed. Additionally, there was no evidence of potentiation of other expressions related to sadness (i.e., AU 17) or fear (i.e., AU 1 or 4).

D. Conclusions

Of the hypotheses explored in this study, the “exchange-oriented withdrawal” portion of the social risk hypothesis was most successful at predicting the obtained results. From this perspective, participants experienced the clinical interview as a primarily exchange-oriented context and, when severely depressed, were attempting to minimize their social burden by withdrawing (i.e., by signaling that they did not want to affiliate). Social withdrawal is thought to manage social burden by presenting the depressed individual as a self-sufficient group member who is neither a competitive rival nor a drain on group resources. This response may have been triggered by a drop in participants’ self-perceived “social investment potential” engendered by the interview questions about depressive symptomatology.

Frame-by-frame agreement between manual and automatic FACS coding was good, and reliability for our specific measures was very good. The distinction between agreement and reliability is important because the two metrics capture different constructs. Here, reliability of the automatic system refers to its ability to identify patterns of facial expression, i.e., how much of the time each AU occurred. This is a different task than frame-level agreement, which refers to the system’s ability to identify and classify individual AU events. The results suggest that, while there is still room for improvement in terms of agreement, reliability for proportion of frames is already quite high. Given that agreement is an overly conservative measure for many applications, this finding implies that automatic facial analysis systems may be ready for use in behavioral science.

E. Limitations and Future Directions

Although the use of a highly social context confers advantages in terms of ecological validity, it also presents a number of limitations. First, interviews in general are less structured than the viewing of pictures or film. All participants were asked the same questions, but how much detail they used in responding and the extent of follow-up questions asked by the interviewer varied. Unlike with pictures or film, it was also possible for participants to influence the experimental context with their own behavior. For example, recent work found that the vocal prosody of interviewers varied with the symptom severity of their depressed interviewees [56].

Second, the specific questions analyzed in this study (i.e., about depressed mood, feelings of guilt, and suicidal ideation) likely colored the types of emotions and behaviors participants displayed, e.g., these questions may have produced a context in which positive affect was unlikely to occur. Future studies might explore the behavior of depressed participants in social contexts likely to elicit a broader range of emotion. These questions were also the first three in the interview and facial behavior may change as a function of time, e.g., participants’ feelings and goals may change over the course of an interview.

Finally, although the current study represents an important step towards more comprehensive description of facial expressions in depression, future work would benefit from the exploration of additional measures. Specifically, future studies should explore depression in terms of facial expression intensity, symmetry, and dynamics, as well as other aspects of facial behavior such as head pose and gaze. Measures of symmetry would be especially informative for AU 14, as this facial action has been linked to contempt primarily when it is strongly asymmetrical or unilateral [15].

Fig. 2. Example images from the FACS manual [18]

Acknowledgments

The authors wish to thank Nicole Siverling and Shawn Zuratovic for their generous assistance. This work was supported in part by US National Institutes of Health grants R01 MH61435 and R01 MH65376 to the University of Pittsburgh.

References

- 1. Allen NB, Badcock PBT. The social risk hypothesis of depressed mood: Evolutionary, psychosocial, and neurobiological perspectives. Psychological Bulletin. 2003;129(6):887–913. doi: 10.1037/0033-2909.129.6.887.

- 2. Ambadar Z, Cohn JF, Reed LI. All smiles are not created equal: Morphology and timing of smiles perceived as amused, polite, and embarrassed/nervous. Journal of Nonverbal Behavior. 2009;33(1):17–34. doi: 10.1007/s10919-008-0059-5.

- 3. American Psychiatric Association. Diagnostic and statistical manual of mental disorders. 4th ed. Washington, DC: 1994.

- 4. Belkin M, Niyogi P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Computation. 2003;15(6):1373–1396.

- 5. Berenbaum H, Oltmanns TF. Emotional experience and expression in schizophrenia and depression. Journal of Abnormal Psychology. 1992;101(1):37–44. doi: 10.1037//0021-843x.101.1.37.

- 6. Brozgold AZ, Borod JC, Martin CC, Pick LH, Alpert M, Welkowitz J. Social functioning and facial emotional expression in neurological and psychiatric disorders. Applied Neuropsychology. 1998;5(1):15–23. doi: 10.1207/s15324826an0501_2.

- 7. Carney RM, Hong BA, O’Connell MF, Amado H. Facial electromyography as a predictor of treatment outcome in depression. The British Journal of Psychiatry. 1981;138(6):485–489. doi: 10.1192/bjp.138.6.485.

- 8. Cayton L. Algorithms for manifold learning. Technical Report CS2008-0923. University of California; San Diego: 2005.

- 9. Chang CC, Lin CJ. LIBSVM: a library for support vector machines. ACM Transactions on Intelligent Systems and Technology. 2011;2(3):27.

- 10. Chentsova-Dutton YE, Tsai JL, Gotlib IH. Further evidence for the cultural norm hypothesis: positive emotion in depressed and control European American and Asian American women. Cultural Diversity and Ethnic Minority Psychology. 2010;16(2):284–295. doi: 10.1037/a0017562.

- 11. Chew SW, Lucey P, Lucey S, Saragih J, Cohn JF, Matthews I, Sridharan S. In the pursuit of effective affective computing: The relationship between features and registration. IEEE Transactions on Systems, Man, and Cybernetics. 2012;42(4):1006–1016. doi: 10.1109/TSMCB.2012.2194485.

- 12. Cohen J. A coefficient of agreement for nominal scales. Educational and Psychological Measurement. 1960;20(1):37–46.

- 13. Cohn JF, Kruez TS, Matthews I, Yang Y, Nguyen MH, Padilla MT, Zhou F, De la Torre F. Detecting depression from facial actions and vocal prosody. Affective Computing and Intelligent Interaction. 2009:1–7.

- 14. Ekman P, Friesen WV. The repertoire of nonverbal behavior: Categories, origins, usage, and coding. Semiotica. 1969;1:49–98.

- 15. Ekman P, Matsumoto D. Facial expression analysis. Scholarpedia. 2008;3.

- 16. Ekman P, Davidson RJ, Friesen WV. The Duchenne smile: Emotional expression and brain physiology: II. Journal of Personality and Social Psychology. 1990;58(2):342.

- 17. Ekman P, Matsumoto D, Friesen WV. Facial Expression in Affective Disorders. Oxford: 1997.

- 18. Ekman P, Friesen WV, Hager J. Facial Action Coding System (FACS): A technique for the measurement of facial movement. Research Nexus; Salt Lake City, UT: 2002.

- 19. Ellgring H. Nonverbal communication in depression. Cambridge University Press; Cambridge: 1989.

- 20. First MB, Gibbon M, Spitzer RL, Williams JB. User’s guide for the structured clinical interview for DSM-IV Axis I disorders (SCID-I, Version 2.0, October 1995 Final Version). Biometric Research; New York: 1995.

- 21. Fodor IK. A survey of dimension reduction techniques. Technical Report UCRL-ID-148494. Lawrence Livermore National Laboratory; 2002.

- 22. Fossi L, Faravelli C, Paoli M. The ethological approach to the assessment of depressive disorders. Journal of Nervous and Mental Disease. 1984. doi: 10.1097/00005053-198406000-00004.

- 23. Fridlund AJ. Human facial expression: An evolutionary view. Academic Press; 1994.

- 24. Gaebel W, Wolwer W. Facial expression and emotional face recognition in schizophrenia and depression. European Archives of Psychiatry and Clinical Neuroscience. 1992;242(1):46–52. doi: 10.1007/BF02190342.

- 25. Gaebel W, Wolwer W. Facial expressivity in the course of schizophrenia and depression. European Archives of Psychiatry and Clinical Neuroscience. 2004;254(5):335–42. doi: 10.1007/s00406-004-0510-5.

- 26. Gehricke JG, Shapiro D. Reduced facial expression and social context in major depression: discrepancies between facial muscle activity and self-reported emotion. Psychiatry Research. 2000;95(2):157–167. doi: 10.1016/s0165-1781(00)00168-2.

- 27. Hamilton M. Development of a rating scale for primary depressive illness. British Journal of Social and Clinical Psychology. 1967;6(4):278–296. doi: 10.1111/j.2044-8260.1967.tb00530.x.

- 28. Hamm J, Kohler CG, Gur RC, Verma R. Automated facial action coding system for dynamic analysis of facial expressions in neuropsychiatric disorders. Journal of Neuroscience Methods. 2011. doi: 10.1016/j.jneumeth.2011.06.023.

- 29. Hess U, Blairy S, Kleck RE. The influence of facial emotion displays, gender, and ethnicity on judgments of dominance and affiliation. Journal of Nonverbal Behavior. 2000;24(4):265–283.

- 30. Hess U, Adams R Jr, Kleck R. Who may frown and who should smile? Dominance, affiliation, and the display of happiness and anger. Cognition and Emotion. 2005;19(4):515–536.

- 31. Horstmann G. What do facial expressions convey: Feeling states, behavioral intentions, or action requests? Emotion. 2003;3(2):150. doi: 10.1037/1528-3542.3.2.150.

- 32. Jones IH, Pansa M. Some nonverbal aspects of depression and schizophrenia occurring during the interview. Journal of Nervous and Mental Disease. 1979;167(7):402–9. doi: 10.1097/00005053-197907000-00002.

- 33. Knutson B. Facial expressions of emotion influence interpersonal trait inferences. Journal of Nonverbal Behavior. 1996;20(3):165–182.

- 34. Kotov R, Gamez W, Schmidt F, Watson D. Linking big personality traits to anxiety, depressive, and substance use disorders: A meta-analysis. Psychological Bulletin. 2010;136(5):768. doi: 10.1037/a0020327.

- 35. Mahoor MH, Cadavid S, Messinger DS, Cohn JF. A framework for automated measurement of the intensity of non-posed facial action units. Computer Vision and Pattern Recognition Workshops. 2009:74–80.

- 36. Matthews I, Baker S. Active appearance models revisited. International Journal of Computer Vision. 2004;60(2):135–164.

- 37. Reed LI, Sayette MA, Cohn JF. Impact of depression on response to comedy: a dynamic facial coding analysis. Journal of Abnormal Psychology. 2007;116(4):804–809. doi: 10.1037/0021-843X.116.4.804.

- 38. Renneberg B, Heyn K, Gebhard R, Bachmann S. Facial expression of emotions in borderline personality disorder and depression. Journal of Behavior Therapy and Experimental Psychiatry. 2005;36(3):183–196. doi: 10.1016/j.jbtep.2005.05.002.

- 39. Rosenberg EL. Levels of analysis and the organization of affect. Review of General Psychology. 1998;2(3):247–270.

- 40. Rottenberg J, Gross JJ, Gotlib IH. Emotion context insensitivity in major depressive disorder. Journal of Abnormal Psychology. 2005;114(4):627–639. doi: 10.1037/0021-843X.114.4.627.

- 41. Russell JA, Bullock M. Multidimensional scaling of emotional facial expressions: Similarity from preschoolers to adults. Journal of Personality and Social Psychology. 1985;48(5):1290.

- 42. Saarni SI, Suvisaari J, Sintonen H, Pirkola S, Koskinen S, Aromaa A, Lönnqvist J. Impact of psychiatric disorders on health-related quality of life: general population survey. British Journal of Psychiatry. 2007;190(4):326–332. doi: 10.1192/bjp.bp.106.025106.

- 43. Sakamoto S, Nameta K, Kawasaki T, Yamashita K, Shimizu A. Polygraphic evaluation of laughing and smiling in schizophrenic and depressive patients. Perceptual and Motor Skills. 1997;85(3f):1291–1302. doi: 10.2466/pms.1997.85.3f.1291.

- 44. Schwartz GE, Fair PL, Salt P, Mandel MR, Klerman GL. Facial muscle patterning to affective imagery in depressed and nondepressed subjects. Science. 1976;192(4238):489–491. doi: 10.1126/science.1257786.

- 45. Schwartz GE, Fair PL, Mandel MR, Salt P, Mieske M, Klerman GL. Facial electromyography in the assessment of improvement in depression. Psychosomatic Medicine. 1978;40(4):355–360. doi: 10.1097/00006842-197806000-00008.

- 46. Shrout PE, Fleiss JL. Intraclass correlations: uses in assessing rater reliability. Psychological Bulletin. 1979;86(2):420. doi: 10.1037//0033-2909.86.2.420.

- 47. Sloan DM, Strauss ME, Quirk SW, Sajatovic M. Subjective and expressive emotional responses in depression. Journal of Affective Disorders. 1997;46(2):135–141. doi: 10.1016/s0165-0327(97)00097-9.

- 48. Tian YL, Kanade T, Cohn JF. Facial expression analysis. 2005:247–275. doi: 10.1109/34.908962.

- 49. Tinsley HE, Weiss DJ. Interrater reliability and agreement of subjective judgments. Journal of Counseling Psychology. 1975;22(4):358.

- 50. Tremeau F, Malaspina D, Duval F, Correa H, Hager-Budny M, Coin-Bariou L, Macher JP, Gorman JM. Facial expressiveness in patients with schizophrenia compared to depressed patients and non-patient comparison subjects. American Journal of Psychiatry. 2005;162(1):92–101. doi: 10.1176/appi.ajp.162.1.92.

- 51. Tsai JL, Pole N, Levenson RW, Munoz RF. The effects of depression on the emotional responses of Spanish-speaking Latinas. Cultural Diversity and Ethnic Minority Psychology. 2003;9(1):49–63. doi: 10.1037/1099-9809.9.1.49.

- 52. van Rijsbergen CJ. Information Retrieval. 2nd ed. Butterworth; London: 1979.

- 53. Watson D, Clark LA, Carey G. Positive and negative affectivity and their relation to anxiety and depressive disorders. Journal of Abnormal Psychology. 1988;97(3):346. doi: 10.1037//0021-843x.97.3.346.

- 54. Waxer P. Nonverbal cues for depression. Journal of Abnormal Psychology. 1974;83(3):319. doi: 10.1037/h0036706.

- 55. Waxer P. Nonverbal cues for depth of depression: Set versus no set. Journal of Consulting and Clinical Psychology. 1976;44(3):493. doi: 10.1037//0022-006x.44.3.493.

- 56. Yang Y, Fairbairn CE, Cohn JF. Detecting depression severity from intra- and interpersonal vocal prosody. IEEE Transactions on Affective Computing. In press. doi: 10.1109/T-AFFC.2012.38.