Summary

In many studies with a survival outcome, it is often not feasible to fully observe the primary event of interest. This often leads to heavy censoring and thus, difficulty in efficiently estimating survival or comparing survival rates between two groups. In certain diseases, baseline covariates and the event time of non-fatal intermediate events may be associated with overall survival. In these settings, incorporating such additional information may lead to gains in efficiency in estimation of survival and testing for a difference in survival between two treatment groups. If gains in efficiency can be achieved, it may then be possible to decrease the sample size of patients required for a study to achieve a particular power level or decrease the duration of the study. Most existing methods for incorporating intermediate events and covariates to predict survival focus on estimation of relative risk parameters and/or the joint distribution of events under semiparametric models. However, in practice, these model assumptions may not hold and hence may lead to biased estimates of the marginal survival. In this paper, we propose a semi-nonparametric two-stage procedure to estimate and compare t-year survival rates by incorporating intermediate event information observed before some landmark time, which serves as a useful approach to overcome semi-competing risks issues. In a randomized clinical trial setting, we further improve efficiency through an additional calibration step. Simulation studies demonstrate substantial potential gains in efficiency in terms of estimation and power. We illustrate our proposed procedures using an AIDS Clinical Trial Protocol 175 dataset by estimating survival and examining the difference in survival between two treatment groups: zidovudine and zidovudine plus zalcitabine.

Keywords: Efficiency Augmentation, Kaplan Meier, Landmark Prediction, Semi-competing Risks, Survival Analysis

1 Introduction

In many research settings with an event time outcome, it is often not feasible to fully observe the primary event of interest as these outcomes are typically subject to censoring. In the presence of noninformative censoring, the Kaplan Meier (KM) estimator (Kaplan & Meier, 1958) can provide consistent estimation of the event rate. However, such a simple estimate may not be extremely precise in settings with heavy censoring. To improve the efficiency, many novel procedures have been proposed to take advantage of available auxiliary information. For example, when the auxiliary information consists of a single discrete variable W, it has been shown that the nonparametric maximum likelihood estimator of survival is n−1∑i F̂T|W(t|Wi) where F̂T|W is the KM estimate of survival based on the subsample with W = Wi (Rotnitzky & Robins, 2005). Murray & Tsiatis (1996) considered a nonparametric estimation procedure to incorporate a single discrete covariate and provided theoretical results on when such augmentation enables more efficient estimation than the KM estimate. However, when multiple and/or continuous covariates are available, such fully nonparametric procedures may not perform well due to the curse of dimensionality.

Additional complications may arise when auxiliary variables include intermediate event information observed over time. In many clinical studies, information on non-fatal intermediate events associated with survival may be available in addition to baseline covariates. For example, in acute leukemia patients, the development of acute graft-versus-host disease is often monitored as it is predictive of survival following bone marrow or stem cell transplantation (Lee et al., 2002; Cortese & Andersen, 2010). The occurrence of bacterial pneumonia provides useful information for predicting death among HIV-positive patients (Hirschtick et al., 1995). In these settings, incorporating intermediate event information along with baseline covariates may lead to gains in efficiency for the estimation and comparison of survival rates.

In the aforementioned examples, the primary outcome of interest is time to a terminal event such as death and the intermediate event is time to a non-terminal event. This setting is referred to as a semi-competing risk setting since the occurrence of the terminal event would censor the non-terminal event but not vice versa. With a single intermediate event and no baseline covariates, Gray (1994) proposed a kernel smoothing procedure to incorporate such event information in order to improve estimation of survival. Parast et al. (2011) proposed a nonparametric procedure for risk prediction of residual life when there is a single intermediate event and a single discrete marker. Parast et al. (2012) extended this procedure to incorporate multiple covariates using a flexible varying-coefficient model. However, such methods cannot be easily extended to allow for both multiple intermediate events and baseline covariates due to the curse of dimensionality (Robins & Ritov, 1997).

Most existing methods for analyzing semi-competing risk data focus on estimation of relative risk parameters and/or the joint distribution of events under semiparametric models (Fine et al., 2001; Siannis et al., 2007; Jiang et al., 2005). These semiparametric models, while useful in approximating the relationship between the event times and predictors, may not be fully accurate given the complexity of the disease process. Therefore, marginal survival rates derived under such models may be biased and thus lead to invalid conclusions (Lin & Wei, 1989; Hjort, 1992; Lagakos, 1988). To overcome such limitations, we propose a two-stage procedure by (i) first using a semiparametric approach to incorporate baseline covariates and intermediate event information observed before some landmark time; and (ii) then estimating the marginal survival nonparametrically by smoothing over risk scores derived from the model in the first stage. The landmarking approach allows us to overcome semi-competing risk issues and the smoothing procedure in the second stage ensures the consistency of our survival estimates.

In a randomized clinical trial (RCT) setting, there is often interest in testing for a treatment difference in terms of survival. Robust methods to incorporate auxiliary information when testing for a treatment effect have been previously proposed in the literature. Cook & Lawless (2001) discuss a variety of statistical methods that have been proposed including parametric and semiparametric models. Gray (1994) adopted kernel estimation methods which incorporate information on a single intermediate event. To incorporate multiple baseline covariates, Lu & Tsiatis (2008) used an augmentation procedure to improve the efficiency of estimating the log hazard ratio, β, from a Cox model and testing for an overall treatment effect by examining the null hypothesis β = 0 and demonstrated substantial gains in efficiency. However, if the Cox model does not hold, the hazard ratio estimate converges to a parameter that may be difficult to interpret and may depend on the censoring distribution. It would thus be desirable to use a model-free summary measure to assess treatment difference. Furthermore, none of the existing procedures incorporate both intermediate event and baseline covariate information. In this paper, we use our proposed two-stage estimation procedure, which utilizes all available information, to make more precise inference about treatment difference as quantified by the difference in t-year survival rates.

The rest of the paper is organized as follows. Section 2 describes the proposed two-stage estimation procedure in a one-sample setting. Section 3 presents a testing procedure using these resulting estimates. We present an additional augmentation step which takes advantage of treatment randomization to further improve efficiency in an RCT setting. Simulation studies given in Section 4 demonstrate substantial potential gains in efficiency in terms of estimation and power. In Section 5 we illustrate our proposed procedures using an AIDS Clinical Trial comparing the survival rates of HIV patients treated with zidovudine and those treated with zidovudine plus zalcitabine.

2 Estimation

For the ith subject, let T𝕃i denote the time of the primary terminal event of interest, T𝕊i denote the vector of intermediate event times, Zi denote the vector of baseline covariates, and Ci denote the administrative censoring time, assumed independent of (T𝕃i, T𝕊i, Zi). Due to censoring, for T𝕃i and T𝕊i, we could potentially observe X𝕃i = min(T𝕃i, Ci), X𝕊i = min(T𝕊i, Ci) and δ𝕃i = I(T𝕃i ≤ Ci), δ𝕊i = I(T𝕊i ≤ Ci). Due to semi-competing risks, T𝕊i is additionally subject to informative censoring by T𝕃i, while T𝕃i is only subject to administrative censoring and cannot be censored by T𝕊i. Let t0 denote some landmark time prior to t. Our goal is to estimate S(t) = P(T𝕃i > t) using intermediate event information collected up to t0 and baseline covariate information, where t is some pre-specified time point such that P(X𝕃 > t | T𝕃 ≥ t0) ∈ (0, 1) and P(T𝕃 ≤ t0, T𝕊 ≤ t0) ∈ (0, 1).

2·1 Two-stage estimation procedure

For t > t0, S(t) = P(T𝕃i > t) can be expressed as S(t | t0)S(t0), where

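$$S(t \mid t_0) = P(T_{\mathbb{L}i} > t \mid T_{\mathbb{L}i} > t_0) \quad \text{and} \quad S(t_0) = P(T_{\mathbb{L}i} > t_0). \tag{2·1}$$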

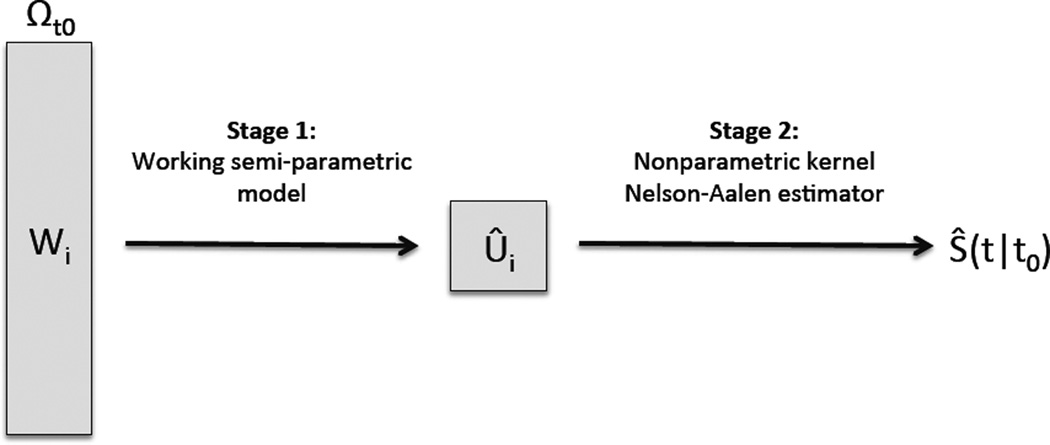

We first consider estimation of S(t | t0) with a two-stage procedure using subjects in the subset Ωt0 = {i : X𝕃i > t0} as illustrated in Figure 1. Note that conditional on X𝕃i > t0, T𝕊i is observable up to T𝕊i ∧ t0 and I(T𝕊i ≤ t0) is always observable. Let Wi = [Zi𝖳, I(T𝕊i ≤ t0), min(T𝕊i, t0)]𝖳 for all subjects in Ωt0. Note that for any function of W, ζ(·),

$$S(t \mid t_0) = E\{S_{\zeta(W_i)}(t \mid t_0) \mid X_{\mathbb{L}i} > t_0\}, \tag{2·2}$$

where Sζ(W)(t | t0) = P{T𝕃i > t | X𝕃i > t0, ζ(Wi)}. Hence if Ŝζ(W)(t | t0) is a consistent estimator of Sζ(W)(t | t0), S(t | t0) can be estimated consistently with $n_{t_0}^{-1}\sum_{i \in \Omega_{t_0}} \hat S_{\zeta(W_i)}(t \mid t_0)$, where $n_{t_0} = \sum_{i=1}^{n} I(X_{\mathbb{L}i} > t_0)$. When T𝕊i consists of a single intermediate event and Z consists of a single discrete marker, the nonparametric estimation procedure proposed in Parast et al. (2011) can be used to obtain a consistent estimator of SW(t | t0) with ζ(W) = W. However, when the dimension of W is not small, such nonparametric estimation of SW(t | t0) may not behave well (Robins & Ritov, 1997). Instead, we propose to reduce the dimension of W by first approximating SW(t | t0), where ζ(W) = W, with a working semiparametric model and then using the model to construct a low-dimensional function that is in turn used to derive an estimator for S(t | t0). A useful example of such a model is the landmark proportional hazards model (Van Houwelingen & Putter, 2008; Van Houwelingen, 2007; Van Houwelingen & Putter, 2012)

$$S_W(t \mid t_0) = \exp\{-\Lambda_0^{t_0}(t)\exp(\beta^{\mathsf T} W)\}, \tag{2·3}$$

where $\Lambda_0^{t_0}(\cdot)$ is the unspecified baseline cumulative hazard function for T𝕃i among Ωt0 and β is an unknown vector of coefficients. This is referred to as our Stage 1. Let β̂ be the maximizer of the corresponding log partial likelihood function and $\hat\Lambda_0^{t_0}(\cdot)$ be the Breslow-type estimate of baseline hazard (Breslow, 1972). When (2·3) is correctly specified, $\hat S_W(t \mid t_0) = \exp\{-\hat\Lambda_0^{t_0}(t)\exp(\hat\beta^{\mathsf T} W)\}$ could consistently estimate SW(t | t0). However, if (2·3) is not correctly specified, ŜW(t | t0) would no longer be a consistent estimator of SW(t | t0) and thus may lead to a biased estimate of S(t | t0) (Lagakos, 1988; O’Neill, 1986). On the other hand, the risk score Ûi ≡ β̂𝖳Wi may still be highly predictive of T𝕃i. This motivates us to overcome the difficulty arising from model misspecification and multi-dimensionality of Wi by using ζ(Wi) = Ui ≡ β0𝖳Wi. Therefore, S(t | t0) = E{SUi(t | t0)}, where Su(t | t0) = P(T𝕃i > t | T𝕃i > t0, Ui = u), and β0 is the limit of β̂, which always exists (Hjort, 1992). In essence, we use the working Cox model as a tool to construct Ûi in stage 1 and estimate S(t | t0) as $n_{t_0}^{-1}\sum_{i \in \Omega_{t_0}} \hat S_{\hat U_i}(t \mid t_0)$, where Ŝu(t | t0) is a consistent estimator of Su(t | t0) obtained in stage 2.

Figure 1.

Illustration of proposed two-stage procedure

To estimate Su(t | t0) in stage 2, we use a nonparametric conditional Nelson-Aalen estimator (Beran, 1981) based on subjects in Ωt0. Specifically, for any given t and u, we use the synthetic data {(X𝕃i, δ𝕃i, Ûi), i ∈ Ωt0} to obtain a local constant estimator for the conditional cumulative hazard Λu(t | t0) = −log Su(t | t0) as

$$\hat\Lambda_u(t \mid t_0) = \int_{t_0}^{t} \frac{\sum_{i \in \Omega_{t_0}} K_h(\hat D_{ui})\, dN_i(z)}{\sum_{i \in \Omega_{t_0}} K_h(\hat D_{ui})\, Y_i(z)}$$

as in Cai et al. (2010), where Yi(t) = I(X𝕃i ≥ t), Ni(t) = I(X𝕃i ≤ t)δ𝕃i, D̂ui = Ûi − u, K(·) is a smooth symmetric density function, Kh(x) = K(x/h)/h, and $h = O(n^{-\upsilon})$ is a bandwidth with 1/2 > υ > 1/4. The resulting estimate for Su(t | t0) is Ŝu(t | t0) = exp{−Λ̂u(t | t0)}. The uniform consistency of Ŝu(t | t0) in u for Su(t | t0) can be shown using similar arguments as in Cai et al. (2010) under mild regularity conditions. Note that this consistency still holds when Ui is discrete as long as it takes only a finite number of values (Du & Akritas, 2002). Finally, we propose to estimate S(t | t0) as

$$\hat S(t \mid t_0) = n_{t_0}^{-1} \sum_{i \in \Omega_{t_0}} \hat S_{\hat U_i}(t \mid t_0), \qquad n_{t_0} = \sum_{i=1}^{n} I(X_{\mathbb{L}i} > t_0).$$

The uniform consistency of Ŝu(t | t0) along with the consistency of β̂ for β0 ensures the consistency of Ŝ(t | t0) for S(t | t0). This approach is similar to the semiparametric dimension reduction estimation procedure proposed in Hu et al. (2011) and Hu et al. (2010) for estimating the conditional mean response in the presence of missing data.
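The stage 2 smoothing step can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: it assumes a Gaussian kernel for K, takes the stage 1 risk scores as given (the Cox fit is not shown), and the function names `gaussian_kernel`, `cond_cum_hazard` and `landmark_conditional_survival` are hypothetical.

```python
import numpy as np

def gaussian_kernel(x):
    """Standard normal density, an assumed choice for the symmetric kernel K."""
    return np.exp(-0.5 * x**2) / np.sqrt(2.0 * np.pi)

def cond_cum_hazard(u, x, delta, score, t0, t, h):
    """Kernel-weighted Nelson-Aalen estimate of Lambda_u(t | t0).

    x, delta, score hold the observed time, event indicator and risk score
    for subjects in the landmark subset {i : X_L > t0} only.
    """
    w = gaussian_kernel((score - u) / h) / h          # K_h(U_i - u)
    total = 0.0
    # Add one weighted hazard increment per distinct event time in (t0, t].
    for s in np.unique(x[(delta == 1) & (x > t0) & (x <= t)]):
        events = np.sum(w * ((x == s) & (delta == 1)))
        at_risk = np.sum(w * (x >= s))
        if at_risk > 0:                               # guard against underflow
            total += events / at_risk
    return total

def landmark_conditional_survival(x, delta, score, t0, t, h):
    """Average exp{-Lambda_hat_{U_i}(t | t0)} over the landmark subset."""
    keep = x > t0
    xs, ds, us = x[keep], delta[keep], score[keep]
    surv = [np.exp(-cond_cum_hazard(u, xs, ds, us, t0, t, h)) for u in us]
    return float(np.mean(surv))
```

With a very large bandwidth every subject receives the same weight, so the sketch reduces to the exponentiated Nelson-Aalen estimator on the landmark subset, which is a convenient sanity check.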

Now that we have obtained an estimate of S(t | t0) in (2·1), an estimate for S(t0) follows similarly from this same two-stage procedure, replacing Wi with Zi and Ωt0 with all patients. More specifically, let β̂* be the maximizer of the partial likelihood function corresponding to the Cox model:

$$P(T_{\mathbb{L}i} > t \mid Z_i) = \exp\{-\Lambda_0(t)\exp(\beta^{*\mathsf T} Z_i)\}, \tag{2·4}$$

where Λ0(·) is the baseline cumulative hazard function and β* is a vector of coefficients. We then define Ûi* = β̂*𝖳Zi and use a local constant estimator, Λ̂u(t0), to obtain $\hat S(t_0) = n^{-1}\sum_{i=1}^{n} \hat S_{\hat U_i^*}(t_0)$, where Ŝu(t0) = exp{−Λ̂u(t0)} is a consistent estimator of Su(t0) = P(T𝕃i > t0 | Ui* = u), with Ui* = β0*𝖳Zi and β0* the limit of β̂*. An estimate for our primary quantity of interest S(t) incorporating intermediate event and covariate information follows as ŜLM(t) ≡ Ŝ(t | t0)Ŝ(t0), where LM indicates that we have used a landmark time to decompose our estimate into two components.
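The landmark decomposition S(t) = S(t | t0)S(t0) has an exact analogue for the KM estimator, which may help build intuition for ŜLM(t): the KM estimate at t equals the KM estimate at t0 multiplied by the KM estimate at t computed only from subjects with X𝕃i > t0. The sketch below checks this on a small made-up dataset; the data values and the helper name `km_survival` are illustrative assumptions, not taken from the paper. The proposed estimator replaces each KM factor with its smoothed, covariate-informed counterpart.

```python
import numpy as np

def km_survival(x, delta, t, start=0.0):
    """Kaplan-Meier estimate of P(T > t | T > start) using subjects with X > start."""
    keep = x > start
    x, delta = x[keep], delta[keep]
    surv = 1.0
    for s in np.unique(x[(delta == 1) & (x <= t)]):
        d = np.sum((x == s) & (delta == 1))   # events at time s
        n = np.sum(x >= s)                    # subjects at risk just before s
        surv *= 1.0 - d / n
    return surv

# Hypothetical observed times and event indicators (1 = event, 0 = censored).
x = np.array([0.4, 0.7, 1.1, 1.3, 1.6, 1.9, 2.2, 2.4, 2.8, 3.0])
delta = np.array([1, 0, 1, 1, 0, 1, 1, 0, 1, 0])
t0, t = 1.0, 2.0

s_t0 = km_survival(x, delta, t0)              # estimate of S(t0)
s_cond = km_survival(x, delta, t, start=t0)   # estimate of S(t | t0)
s_direct = km_survival(x, delta, t)           # estimate of S(t)
print(s_t0 * s_cond, s_direct)
```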

2·2 Theoretical Properties of the Two-Stage Estimator

In this section, we provide theoretical results on how the proposed estimator compares to the standard KM estimator denoted by ŜKM(t). We also aim to investigate how various components of the proposed procedure contribute to the gain in efficiency. To this end, we first show in Supplementary Appendix A that the variability of β̂ and β̂* can be ignored when making inference about ŜLM(t). In Appendix I, we show that the influence functions of Ŝ(t0), Ŝ(t|t0), and ŜLM(t) can be decomposed as the influence function corresponding to the full data without censoring plus an additional component accounting for variation due to censoring.

To examine the potential gain in efficiency of our proposed estimator over the KM estimator, in Appendix II, we show that the reduction in variance is always non-negative taking the form

as defined in Appendix II. Obviously, there would not be any gain in the absence of censoring with G(t) ≡ 1. The maximum reduction is achieved when SUi(t | t0) = P(T𝕃i ≥ t | T𝕃i ≥ t0, Wi) and . This condition for optimal efficiency is parallel to the recoverability condition described in Hu et al. (2010) for conditional mean response estimation, and is satisfied when the working models in (2·3) and (2·4) are correctly specified. It is important to note that the optimality of the proposed estimator is within the class of estimators constructed based on the specific choice of W and thus depends on t0. In Sections 4 and 5, we examine the sensitivity of our estimator to the choice of t0 and propose data-driven rules to further improve the robustness of our approach by combining information from multiple t0s.

To shed light on where the gain in efficiency is coming from, we show in Supplementary Appendix B.1 that ŜLM(t) ≈ ŜKM(t) + ℛ̂(t), where

That is, our proposed estimator can also be expressed as the KM estimator augmented by a mean zero martingale ℛ̂(t) corresponding to censoring weighted by baseline covariate and intermediate event information. The term ℛ̂(t) is negatively correlated with ŜKM(t) and improves efficiency by leveraging independent censoring and the correlation between T𝕃i and {T𝕊i, Zi}. The proposed two-stage modeling framework allows us to optimally use this additional information while protecting us against model misspecification. This decomposition also connects our proposed estimator to the existing augmentation procedures such as those considered in Lu & Tsiatis (2008). The advantage of our proposal is that the modeling step naturally estimates the optimal bases without necessitating the correct specification of the model. Variance estimates can be obtained using a perturbation-resampling procedure as described in Supplementary Appendix C.

3 Comparing Survival Between Two Treatment Groups

Consider a two-arm RCT setting where we use 𝒢 to denote treatment assignment with 𝒢 taking a value B with probability p and A otherwise. For 𝒢 ∈ {A, B}, let S𝒢(t) denote the survival rate of T𝕃 at time t in group 𝒢 and ŜLM,𝒢(t) denote the aforementioned two-stage estimator based on data from treatment group 𝒢. An estimator for the risk difference, Δ(t) = SA(t) − SB(t), may be obtained as Δ̂LM(t) = ŜLM,A(t) − ŜLM,B(t). The standard error of Δ̂LM(t) can be estimated as σ̂(Δ̂LM(t)) using a perturbation-resampling procedure as described in Supplementary Appendix C. A normal confidence interval (CI) for Δ(t) may be constructed accordingly. To test the null hypothesis of H0 : Δ(t) = 0, a Wald-type test may be performed based on ZLM(t) = Δ̂LM(t)/σ̂(Δ̂LM(t)). To compare this testing procedure to a test based on ŜKM(t) let Δ̂KM(t) = ŜKM,A(t)− ŜKM,B(t) denote the KM estimate for Δ(t) where ŜKM,𝒢(t) is the KM estimate of survival at time t in group 𝒢.
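As a small illustration of the Wald-type test and normal CI described above, the following sketch takes a point estimate and its standard error as inputs. The function name `wald_test` and the numbers in the usage line are hypothetical; in practice the standard error would come from the perturbation-resampling procedure, which is not reproduced here.

```python
from statistics import NormalDist

def wald_test(delta_hat, se_hat, level=0.95):
    """Wald statistic, two-sided p-value and normal CI for a risk difference."""
    z = delta_hat / se_hat
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    crit = NormalDist().inv_cdf(0.5 + level / 2.0)    # e.g. about 1.96 for 95%
    ci = (delta_hat - crit * se_hat, delta_hat + crit * se_hat)
    return z, p_value, ci

# Hypothetical inputs, for illustration only.
z, p_value, ci = wald_test(delta_hat=0.05, se_hat=0.03)
print(z, p_value, ci)
```

The null hypothesis Δ(t) = 0 is rejected at the 0.05 level when the p-value falls below 0.05, or equivalently when the 95% CI excludes zero.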

Through an augmentation procedure in a RCT setting, Lu & Tsiatis (2008) demonstrated substantial gains in power when testing the log hazard ratio under a Cox model. Though Lu & Tsiatis (2008) does not offer procedures to estimate or test for a difference in t-year survival rates, the investigation of their useful and effective procedure is worthwhile in our setting. The augmentation procedure in Lu & Tsiatis (2008) (LT) leads to significant gains in efficiency by leveraging two key sets of assumptions: (1) C is independent of {T𝕃, Z} given 𝒢 and (2) 𝒢 is independent of Z. The LT procedure takes advantage of such information by explicitly creating functional bases and augmenting over both censoring martingales and I(𝒢 = B) − p, weighted by these specified bases. Similar to the arguments given in Section 2 and Supplementary Appendix B.1, one may interpret Δ̂LM(t) as augmenting Δ̂KM(t) with an optimal basis of censoring martingales approximated by the working models. However, our procedure does not require explicit specification of the basis and also leverages information on C being independent of T𝕊.

However, Δ̂LM(t) does not yet leverage information on the randomization of treatment assignment (that is, 𝒢 is independent of Z) and hence it is possible to further improve this estimator via augmentation. One may follow similar strategies as the LT procedure by explicitly augmenting over certain basis functions of Z. Specifically, let H(Z) be any arbitrary vector of basis functions. An augmented estimator can be obtained as Δ̂LM(t; ε, H) = Δ̂LM(t) + ε𝖳 ∑i{I(𝒢i = B) − p}H(Zi), where ε is chosen to minimize the variance of Δ̂LM(t; ε, H). However, a poor choice of bases and/or a large number of non-informative bases might result in a loss of efficiency in finite samples (Tian et al., 2012). Here, we propose to explicitly derive an optimal basis using the form of the influence function for Δ̂LM(t) and subsequently use our two-stage procedure to estimate the basis. Specifically, in Supplementary Appendix B.2 we derive an explicit form for the optimal choice for H(Z) in this setting as

| (3·1) |

where SZi,𝒢(t) ≡ P(T𝕃i > t | Zi, 𝒢i = 𝒢) for 𝒢 = A, B. There are various ways one may estimate (3·1). We choose to guard against possible model misspecification and consider a robust estimate using the proposed two-stage procedure. Specifically, we first fit a Cox model to treatment group 𝒢 using only Z information to obtain an estimated log hazard ratio, β̂𝒢, and then approximate −log SZ,𝒢(t) as Λ̂u,𝒢(β̂𝒢, t) evaluated at u = β̂𝒢𝖳Z, where Λ̂u,𝒢(β, t) is obtained similarly to Λ̂u(β, t) but only using data in the treatment group 𝒢. We denote the resulting estimated basis as Ĥopt(Zi, t). We then obtain an augmented estimator for Δ(t) as Δ̂AUG(t) = Δ̂LM(t) − ε̂∑i{I(𝒢i = B) − p}Ĥopt(Zi, t), where ε̂ is an estimate of cov{Δ̂LM(t), ∑i{I(𝒢i = B) − p}Ĥopt(Zi, t)}[var{∑i{I(𝒢i = B) − p}Ĥopt(Zi, t)}]⁻¹, which can be approximated using the perturbation procedure as described in Supplementary Appendix C. Note that we use the proposed two-stage procedure to approximate the optimal basis for augmentation. Therefore, when the working models do not hold, the estimated basis may not be optimal but can still improve efficiency empirically by capturing important associations between T𝕃i and Zi. In addition, we estimate ε empirically as ε̂ to guard against model misspecification. Since the variability due to Ĥopt and ε̂ has a negligible contribution asymptotically to the variance of Δ̂AUG(t), such calibration and weighting improve the robustness of our proposed approach. Hence, this approach could ultimately improve the efficiency of Δ̂AUG(t) even in the presence of model misspecification.
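The calibration step can be sketched as follows, assuming that paired resampling replicates of Δ̂LM(t) and of the scalar augmentation term ∑i{I(𝒢i = B) − p}Ĥopt(Zi, t) are already available. How those replicates are generated is described in Supplementary Appendix C and is not reproduced here; the function name `augment` and all inputs are hypothetical.

```python
import numpy as np

def augment(delta_lm, aug_term, delta_lm_reps, aug_term_reps):
    """Return the augmented estimate and the estimated coefficient eps_hat.

    delta_lm, aug_term           : observed estimate and observed augmentation term
    delta_lm_reps, aug_term_reps : paired resampling replicates of the two quantities
    """
    cov = np.cov(delta_lm_reps, aug_term_reps)   # 2 x 2 sample covariance matrix
    eps_hat = cov[0, 1] / cov[1, 1]              # cov{.,.} * [var{.}]^{-1}
    return delta_lm - eps_hat * aug_term, eps_hat
```

Because ε̂ is the least-squares coefficient of the estimator replicates on the augmentation replicates, subtracting ε̂ times the augmentation term cannot increase the sample variance across replicates, which is the sense in which the augmentation is calibrated empirically.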

4 Simulations

We conducted simulation studies to examine the finite sample properties of the proposed estimation procedures. If a gain in efficiency is observed over the KM estimate, one may consider whether this advantage is due in part to incorporating covariate information or incorporating T𝕊 information or both. To address this question, let denote an estimate of S(t) incorporating only T𝕊 information where ŜT𝕊(t | t0) is obtained using the two-stage procedure with W = [I(T𝕊i ≤ t0), min(T𝕊i, t0)]𝖳. In addition, let ŜZ(t) denote an estimate of S(t) incorporating only Z information. In our numerical examples, we additionally estimate these quantities for comparison. In an effort to compare our results to those examined previously in the literature, we apply our method to similar simulation settings as in Gray (1994). Three settings were considered: (i) a no treatment effect setting i.e. null setting (ii) a small treatment effect setting (iii) a large treatment effect setting. For illustration, t0 =1 year and t = 2 years i.e. we are interested in the probability of survival past 2 years. In all settings, censoring, Ci, was generated from a Uniform(0.5,2.5) distribution. For each treatment group, n=1000 and results summarize 2000 replications under the null setting (i) and 1000 replications under settings (ii) and (iii). Variance estimates are obtained using the perturbation-resampling method. Under the null setting (i), the event times for each treatment group (Treatment A and Treatment B) were generated from a Weibull distribution with scale parameter and shape parameter 1/a1 i.e. as where a1 = 1.5, b1 = 1, and U1 ~ Uniform(0,1). The conditional distribution T𝕃 − T𝕊 | T𝕊 was generated from a Weibull distribution with scale parameter and shape parameter 1/a2 i.e. as where a2 = 1.5, b2 = exp(g(T𝕊)), g(t) = 0.15−0.5t2 and U2 ~ Uniform(0,1). We take the covariate Z to be 0.75U2 + .25U3 where U3 ~ Uniform(0,1). 
Note that in these simulations, T𝕊 must occur before T𝕃 though our procedures do not require this assumption to hold. In this setting, P(X𝕃i < t, δ𝕃i = 1) = 0.37 and P(X𝕊i < t0, δ𝕊i = 1) = 0.59 in each group. In setting (ii) the Treatment A event times were generated as shown above and the Treatment B event times were generated similarly but with b1 = 1.2. In this setting, P(X𝕃i < t, δ𝕃i = 1) = 0.39 and P(X𝕊i < t0, δ𝕊i = 1) = 0.65 in the Treatment B group. Finally, in setting (iii) the Treatment A event times were generated as shown above and the Treatment B event times were generated similarly but with b1 = 1.4. In this setting, P(X𝕃i < t, δ𝕃i = 1) = 0.43 and P(X𝕊i < t0, δ𝕊i = 1) = 0.70 in the Treatment B group.

As in most nonparametric functional estimation procedures, the choice of the smoothing parameter h is critical. In our setting, the ultimate quantity of interest is the marginal survival and the estimator is effectively an integral of the conditional survival estimates. To eliminate the impact of the bias of the conditional survival function on the resulting estimator, we also require the standard undersmoothing assumption of $h = O(n^{-\delta})$ with δ ∈ (1/4, 1/2). To obtain an appropriate h we first use the bandwidth selection procedure given by Scott (1992) to obtain $h_{opt}$, and then we let $h = h_{opt}\, n^{-c_0}$ for some c0 ∈ (1/20, 3/10) to ensure the desired rate for h. In all numerical examples, we chose c0 = 0.10.
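The stated range for c0 is consistent with an initial bandwidth of order n^(−1/5): 1/5 + 1/20 = 1/4 and 1/5 + 3/10 = 1/2, so c0 = 0.10 gives an overall rate of n^(−0.3). The sketch below illustrates the adjustment under the assumption that the initial bandwidth is the normal-reference rule 1.06·sd·n^(−1/5); the exact rule taken from Scott (1992) is not stated in the text, so this constant is a stand-in, and the function name `undersmoothed_bandwidth` is hypothetical.

```python
import numpy as np

def undersmoothed_bandwidth(score, c0=0.10):
    """Shrink an n^(-1/5)-rate bandwidth by n^(-c0) to obtain undersmoothing."""
    n = score.size
    # Assumed normal-reference rule; substitute the preferred selector here.
    h_opt = 1.06 * np.std(score, ddof=1) * n ** (-1.0 / 5.0)
    return h_opt * n ** (-c0)

score = np.linspace(0.0, 1.0, 1000)   # hypothetical risk scores
h = undersmoothed_bandwidth(score)
print(h)
```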

Results from estimation of S(t) are shown in Table 1. The standard error estimates obtained by the perturbation-resampling procedure approximate the empirical standard error estimates well. While all estimators have negligible bias and satisfactory coverage levels, our proposed estimate which incorporates both Z and T𝕊 information is more efficient than that from the standard Kaplan Meier estimate with relative efficiencies with respect to mean squared error (MSE) around 1.20. Though extensive simulation studies may be performed, we expect that such efficiency gains would not be seen in settings with little censoring or with weak correlation between the intermediate and terminal event (Cook & Lawless, 2001). Note that in all three settings, Ci ~ Uniform(0.5, 2.5) and thus P(C < t0) = 0.25 and P(C < t) = 0.75. To examine efficiency gains under varying degrees of censoring, we simulated data as in setting (i) but with (a) more censoring where Ci ~ Uniform(0.5, 2.15) and (b) less censoring Ci ~ Uniform(0.5, 3.5). In (a) P(C < t0) = 0.30 and P(C < t) = 0.90 and the relative efficiency with respect to MSE was 1.40 (not shown). In (b) P(C < t0) = 0.17 and P(C < t) = 0.50 and the relative efficiency with respect to MSE was 1.10 (not shown).

Table 1.

Estimates of S(t) for t = 2 years in treatment group B in settings (i), (ii) and (iii) using a Kaplan Meier estimator, ŜKM(t), the proposed estimator using Z information only, ŜZ(t), T𝕊 information only, ŜT𝕊(t), and Z and T𝕊 information, ŜLM(t), with corresponding empirical standard error (ESE), average of the standard error estimates from the perturbation-resampling method (ASE), efficiency relative to ŜKM(t) with respect to mean squared error (MSE) (REMSE), and empirical coverage (Cov) of the 95% CIs.

| | ŜKM(t) | ŜZ(t) | ŜT𝕊(t) | ŜLM(t) |
|---|---|---|---|---|
| Setting (i) | | | | |
| Estimate | 0.4205 | 0.4267 | 0.4238 | 0.4246 |
| Bias | −0.0004 | 0.0060 | 0.0031 | 0.0039 |
| ESE | 0.0225 | 0.0220 | 0.0220 | 0.0207 |
| ASE | 0.0231 | 0.0218 | 0.0218 | 0.0206 |
| REMSE | - | 1.07 | 1.04 | 1.20 |
| Cov | 0.95 | 0.95 | 0.94 | 0.95 |
| Setting (ii) | | | | |
| Estimate | 0.3676 | 0.3745 | 0.3716 | 0.3720 |
| Bias | −0.0008 | 0.0060 | 0.0032 | 0.0036 |
| ESE | 0.0224 | 0.0222 | 0.0219 | 0.0210 |
| ASE | 0.0227 | 0.0213 | 0.0215 | 0.0201 |
| REMSE | - | 1.07 | 1.03 | 1.19 |
| Cov | 0.96 | 0.94 | 0.94 | 0.93 |
| Setting (iii) | | | | |
| Estimate | 0.3248 | 0.3322 | 0.3289 | 0.3292 |
| Bias | 0.0001 | 0.0074 | 0.0040 | 0.0043 |
| ESE | 0.0224 | 0.0222 | 0.0218 | 0.0206 |
| ASE | 0.0222 | 0.0208 | 0.0210 | 0.0196 |
| REMSE | - | 1.06 | 1.01 | 1.20 |
| Cov | 0.94 | 0.94 | 0.91 | 0.93 |

To examine the sensitivity of our estimator to the choice of t0 and to further improve the robustness of our procedure by combining information from multiple t0s, we estimate S(t) with t0 ∈ ℧ = {k0.25, k0.50, k0.75}, where kp is the 100pth percentile of {X𝕊i : δ𝕊i = 1}, in setting (i). We denote the resulting estimators and corresponding standard errors estimated using the perturbation-resampling procedure as Ŝk0.25(t), Ŝk0.50(t), Ŝk0.75(t) and σ̂k0.25, σ̂k0.50, σ̂k0.75, respectively. One potential data-driven approach to choose t0 would be to set t0 as $\hat t_0 = \arg\min_{t_0 \in ℧} \hat\sigma_{t_0}$ and let $\hat S_{\hat t_0}(t)$ denote the corresponding estimator of S(t). Alternatively, one could consider an optimal linear combination of Ŝt0(t) = (Ŝk0.25(t), Ŝk0.50(t), Ŝk0.75(t))𝖳, Ŝcomb(t) = wcombŜt0(t), where wcomb = 1𝖳Σ̂−1[1𝖳Σ̂−11]−1 and Σ̂ is the estimated covariance matrix of Ŝt0(t) estimated using the perturbation-resampling procedure. The variance of Ŝcomb(t) can be estimated as [1𝖳Σ̂−11]−1. Due to the high collinearity of the components of Ŝt0(t), we expect that in finite samples, Σ̂−1 may be an unstable estimate of var{Ŝt0(t)}−1. Consequently Ŝcomb(t) may not perform well and [1𝖳Σ̂−11]−1 may under-estimate the true variance of Ŝcomb(t). Another alternative would be an inverse variance weighted (IVW) estimator of S(t), ŜIVW(t) = wIVWŜt0(t), where wIVW = 1𝖳diag(Σ̂)−1[1𝖳diag(Σ̂)−11]−1. Under the current simulation setting (ii), the empirical standard errors of Ŝk0.25(t), Ŝk0.50(t), Ŝk0.75(t), $\hat S_{\hat t_0}(t)$, Ŝcomb(t), and ŜIVW(t) are 0.0215, 0.0210, 0.0207, 0.0210, 0.0206, and 0.0206, respectively. Although asymptotically the variance of $\hat S_{\hat t_0}(t)$ should be the same as that of Ŝk0.75(t), due to the similarity among {σ̂t0, t0 ∈ ℧}, the variability in $\hat t_0$ does contribute additional noise to $\hat S_{\hat t_0}(t)$. As expected, using [1𝖳Σ̂−11]−1, the average of the estimated standard errors of Ŝcomb(t) is 0.0202, which is slightly lower than the empirical standard error of Ŝcomb(t). For ŜIVW(t), the average of the estimated standard errors is 0.0204, which is close to the empirical standard error.
The empirical coverage levels of the 95% confidence intervals using Ŝcomb(t) and ŜIVW(t) are 0.945 and 0.943, respectively, indicating that such estimators perform well when combining across a small number of choices for t0. This suggests that our proposed estimators are not overly sensitive to the choice of t0 and a reasonable approach in practice may be to estimate S(t) using the proposed combined or IVW estimator.
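The two weighting schemes can be sketched as follows. The input estimates and covariance matrix are made-up values for illustration, the function name `combine_landmarks` is hypothetical, and the variance formula used for the IVW estimator (the full quadratic form in Σ̂) is an assumption, since the text does not state how that variance is computed. The variance of the optimally weighted combination is the reciprocal of 1𝖳Σ̂−11.

```python
import numpy as np

def combine_landmarks(estimates, cov):
    """Optimal linear combination and IVW combination of landmark estimators."""
    ones = np.ones(len(estimates))
    cov_inv_ones = np.linalg.solve(cov, ones)        # Sigma^{-1} 1
    w_comb = cov_inv_ones / (ones @ cov_inv_ones)    # optimal weights, sum to 1
    inv_diag = 1.0 / np.diag(cov)
    w_ivw = inv_diag / inv_diag.sum()                # inverse-variance weights
    return {
        "s_comb": float(w_comb @ estimates),
        "var_comb": float(1.0 / (ones @ cov_inv_ones)),
        "s_ivw": float(w_ivw @ estimates),
        "var_ivw": float(w_ivw @ cov @ w_ivw),       # assumed variance formula
        "w_comb": w_comb,
        "w_ivw": w_ivw,
    }

# Hypothetical estimates at three landmark times and their covariance matrix.
estimates = np.array([0.42, 0.43, 0.41])
cov = 1e-4 * np.array([[4.6, 4.0, 3.9],
                       [4.0, 4.4, 4.0],
                       [3.9, 4.0, 4.3]])
result = combine_landmarks(estimates, cov)
print(result["s_comb"], result["s_ivw"])
```

When the component estimators are highly correlated, as in this toy covariance matrix, the optimal weights depend on the inverse of a nearly singular matrix and can be unstable, whereas the IVW weights use only the diagonal and are always positive.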

For comparing treatment groups, we show results on the estimation precision and power in testing in Table 2. Similar to above, let Δ̂Z(t) denote the estimate of Δ(t) using Z information only and Δ̂T𝕊(t) denote the estimate using T𝕊 information only. Corresponding test statistics can then be constructed similarly to ZLM(t). With respect to estimation precision for the treatment difference in survival rate, our proposed procedure yields about a 49% gain in efficiency compared to the KM estimator. For testing for a treatment difference, under the null setting (i), the type I error is close to the nominal level of 0.05, with Δ̂AUG(t) having a slightly higher value of 0.0538. When there is a small treatment difference, setting (ii), the power to detect a difference in survival at time t = 2 is 0.374 using a standard Kaplan Meier estimate and 0.457 when using the proposed procedure incorporating Z and T𝕊 information. We gain more power through additional augmentation, which results in a power of 0.520. Similarly, in setting (iii), when there is a large treatment difference, power increases from 0.846 to 0.941 after incorporating Z and T𝕊 information and augmentation. These results suggest that in this setting, we can gain substantial efficiency by using the proposed procedures.

Table 2.

Results from testing for a difference in survival at time t = 2 years between the two treatment groups using a Kaplan Meier estimator, Δ̂KM(t), the proposed estimator using Z information only, Δ̂Z(t), T𝕊 information only, Δ̂T𝕊(t), Z and T𝕊 information, Δ̂LM(t), and the augmented estimator, Δ̂AUG(t), with corresponding empirical standard error (ESE), average of the standard error estimates from the perturbation-resampling method (ASE), efficiency relative to Δ̂KM(t) with respect to MSE (REMSE), and type I error (α) or power.

| | KM: Δ̂KM(t) | Z only: Δ̂Z(t) | T𝕊 only | Z and T𝕊 | Augmented |
|---|---|---|---|---|---|
| Setting (i), no treatment difference | | | | | |
| Estimate | −0.0002 | −0.0002 | −0.0004 | −0.0001 | 0.0003 |
| ESE | 0.0323 | 0.0318 | 0.0316 | 0.0300 | 0.0282 |
| ASE | 0.0326 | 0.0308 | 0.0309 | 0.0291 | 0.0267 |
| REMSE | - | 1.12 | 1.12 | 1.26 | 1.49 |
| α | 0.0415 | 0.0462 | 0.0544 | 0.0467 | 0.0538 |
| Setting (ii), small treatment difference | | | | | |
| Estimate | 0.0525 | 0.0519 | 0.0520 | 0.0523 | 0.0525 |
| ESE | 0.0318 | 0.0312 | 0.0312 | 0.0292 | 0.0276 |
| ASE | 0.0323 | 0.0305 | 0.0306 | 0.0287 | 0.0263 |
| REMSE | - | 1.12 | 1.11 | 1.27 | 1.51 |
| Power | 0.3740 | 0.4110 | 0.4060 | 0.4570 | 0.5200 |
| Setting (iii), large treatment difference | | | | | |
| Estimate | 0.0954 | 0.0942 | 0.0947 | 0.0952 | 0.0952 |
| ESE | 0.0317 | 0.0312 | 0.0310 | 0.0288 | 0.0270 |
| ASE | 0.0320 | 0.0301 | 0.0303 | 0.0283 | 0.0259 |
| REMSE | - | 1.13 | 1.11 | 1.27 | 1.53 |
| Power | 0.8460 | 0.8690 | 0.8650 | 0.9090 | 0.9410 |
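The link between standard error and power in Table 2 can be illustrated with a normal approximation. The following is a minimal sketch, assuming the tests are two-sided Wald tests at level 0.05 based on the estimate divided by its estimated standard error; the function name `approx_power` is hypothetical and is not part of the proposed procedure.

```python
from statistics import NormalDist


def approx_power(delta, se, alpha=0.05):
    """Approximate power of a two-sided Wald test of H0: delta = 0.

    Assumes the estimator is approximately normal with mean `delta`
    and standard deviation `se` (an assumption of this sketch).
    """
    z = NormalDist()
    crit = z.inv_cdf(1 - alpha / 2)   # two-sided critical value
    shift = abs(delta) / se           # standardized true difference
    return z.cdf(shift - crit) + z.cdf(-shift - crit)


# Setting (ii) of Table 2: average estimate and ASE for two estimators.
power_km = approx_power(0.0525, 0.0323)    # Kaplan Meier
power_aug = approx_power(0.0525, 0.0263)   # augmented estimator

print(round(power_km, 3), round(power_aug, 3))
```

Under this approximation the two values come out close to the simulated powers of 0.374 and 0.520 reported in Table 2. Because variance scales roughly as 1/n, a relative efficiency of about 1.5 suggests that approximately two thirds of the sample size would give the same precision as the KM estimator, although this is only a heuristic reading of the table.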

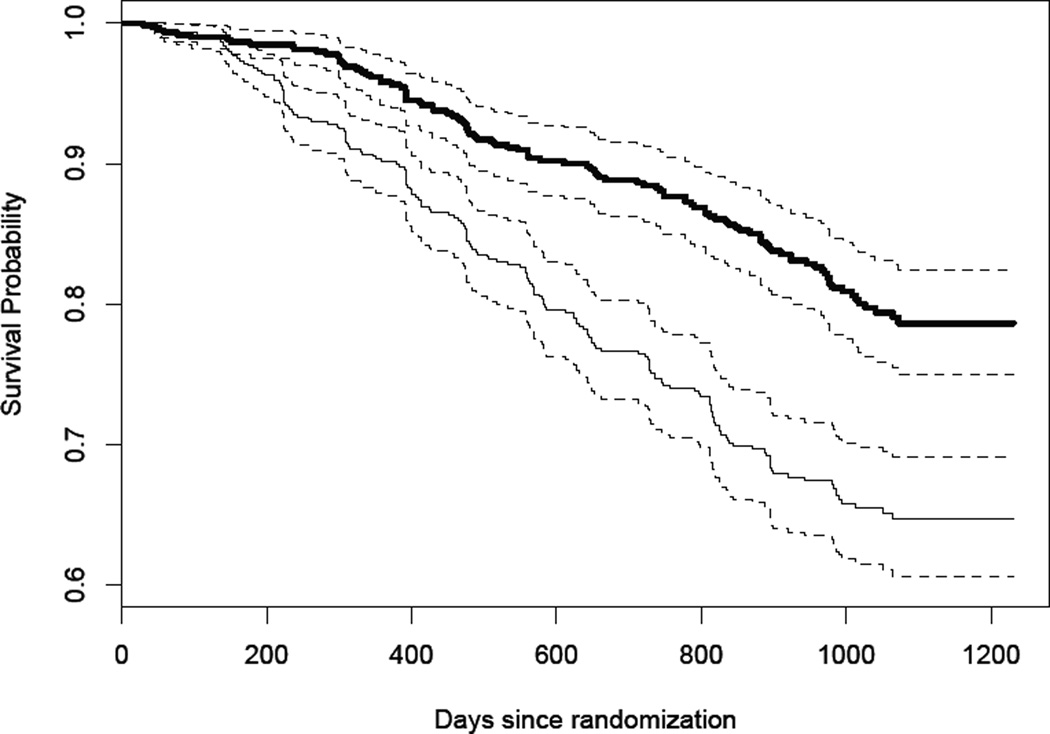

5 Example

We illustrate the proposed procedures using a dataset from the AIDS Clinical Trials Group (ACTG) Protocol 175 (Hammer et al., 1996). This dataset consists of 2467 patients randomized to 4 different treatments: zidovudine only, zidovudine + didanosine, zidovudine + zalcitabine, and didanosine only. The long term event of interest, T𝕃, is the time to death, and the intermediate event information consists of two intermediate events, T𝕊 = (T𝕊1, T𝕊2)𝖳, where T𝕊1 = time to an AIDS-defining event (e.g., pneumocystis pneumonia) and T𝕊2 = time to a 50% decline in CD4. If a patient experienced multiple intermediate events of one kind, for example multiple AIDS-defining events, the earliest occurrence of the event was used. For illustration, we take t0 = 1 year and t = 2.5 years and examine survival in patients from the zidovudine only (mono group, n=619) and zidovudine + zalcitabine (combo group, n=620) groups. Figure 2 displays the KM estimate of survival in each group. Baseline covariates, Z, include the mean of two baseline CD4 counts, Karnofsky score, age at randomization, weight, symptomatic status, use of zidovudine in the 30 days prior to randomization, and days of antiretroviral therapy before randomization. Results are shown in Table 3. Our proposed procedure leads to a t-year survival rate estimate of 0.926 for the mono group and 0.953 for the combo group. The KM estimate of survival is similar, though slightly smaller, in both groups.

Figure 2.

Kaplan Meier estimate of survival for the mono group (thin black line) and combo group (thick black line) with corresponding 95% confidence intervals (dashed lines).

Table 3.

Estimates of S(t) for t = 2.5 years in two treatment groups from ACTG Protocol 175 using a Kaplan Meier estimator, ŜKM(t), the proposed estimator using Z information only, ŜZ(t), T𝕊 information only, and Z and T𝕊 information, ŜLM(t), with corresponding standard error from the perturbation-resampling method (SE).

| | KM: ŜKM(t) | Z only: ŜZ(t) | T𝕊 only | Z and T𝕊 |
|---|---|---|---|---|
| Treatment: Zidovudine | | | | |
| Estimate | 0.92 | 0.93 | 0.93 | 0.93 |
| SE | 0.0114 | 0.0108 | 0.0107 | 0.0105 |
| Treatment: Zidovudine and Zalcitabine | | | | |
| Estimate | 0.95 | 0.96 | 0.95 | 0.95 |
| SE | 0.0101 | 0.0091 | 0.0094 | 0.0091 |

To examine the sensitivity of our estimator to the choice of t0 in this example, we estimate S(t) with t0 ∈ ℧ = {k0.25, k0.50, k0.75} = {392, 582, 818} days, where kp is the 100p-th percentile of {X𝕊1i : δ𝕊1i = 1, X𝕊2i : δ𝕊2i = 1}, X𝕊ji = min(T𝕊ji,Ci) and δ𝕊ji = I(T𝕊ji ≤ Ci) for j = 1, 2 in the zidovudine only group. Note that when t0 = 365 days as used above, Ŝ(t) = 0.9260 with estimated standard error equal to 0.0105. With t0 ∈ ℧, our estimates of S(t) are 0.9272, 0.9262, and 0.9262 and estimates of standard error are 0.0106, 0.0105, and 0.0107, respectively. As suggested above, a reasonable alternative approach would be to estimate S(t) using the proposed combined or IVW estimator. Here, Ŝcomb(t) = 0.9265 and ŜIVW(t) = 0.9266 with standard error estimates 0.0105 and 0.0105, respectively. Therefore, we find that our estimates of survival and corresponding standard error are not sensitive to the choice of t0 in this example and that both of the combined procedures yield similar point estimates and standard errors.
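The idea of pooling estimates across landmark times can be sketched with a plain inverse-variance weighted average of the three estimates reported above. This is only an illustration: it treats the standard errors as fixed weights and ignores the correlation between estimates computed from the same patients, so it is not necessarily the ŜIVW(t) estimator defined earlier in the paper, and the function name `ivw_estimate` is hypothetical.

```python
def ivw_estimate(estimates, std_errors):
    """Inverse-variance weighted average of several point estimates.

    Weights are 1 / SE^2. Correlation between the estimates is ignored
    (an assumption of this sketch), so no pooled SE is returned.
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    weighted_sum = sum(w * est for w, est in zip(weights, estimates))
    return weighted_sum / sum(weights)


# Estimates of S(2.5) and their SEs for t0 = 392, 582 and 818 days.
s_hat = [0.9272, 0.9262, 0.9262]
se_hat = [0.0106, 0.0105, 0.0107]

s_ivw = ivw_estimate(s_hat, se_hat)
print(round(s_ivw, 4))
```

Because the three standard errors are nearly equal, the weights are almost uniform and the pooled value sits close to the simple average of the three estimates, in line with the combined estimates reported in the text. A valid standard error for the pooled estimate would need the joint distribution of the three estimates, for example from the perturbation-resampling draws.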

We are interested in testing H0 : Δ(2.5) ≡ Smono(2.5) − Scombo(2.5) = 0. Results for comparing the two treatment groups are shown in Table 4. Point estimates of Δ(2.5) from various procedures are reasonably close to each other with the KM estimator being 0.0255 and our proposed estimators being 0.0271 and 0.0278 without and with further augmentation, respectively. The proposed procedure provides an estimate which is roughly 19% more efficient than that from the KM estimate. The p-value for this test decreased from 0.0942 to 0.0533 after incorporating information on baseline covariates and T𝕊. Furthermore, augmentation in addition to the two-stage procedure provides an estimate approximately 49% more efficient than the KM estimate and a p-value of 0.0267.

Table 4.

Results from testing for a difference in survival at time t = 2.5 years between the two treatment groups from ACTG Protocol 175 using a Kaplan Meier estimator, Δ̂KM(t), the proposed estimator using Z information only, Δ̂Z(t), T𝕊 information only, Z and T𝕊 information, Δ̂LM(t), and the augmented estimator, with corresponding standard error from the perturbation-resampling method (SE), efficiency relative to Δ̂KM(t) with respect to variance (RE), and p-value.

| | KM: Δ̂KM(t) | Z only: Δ̂Z(t) | T𝕊 only | Z and T𝕊 | Augmented |
|---|---|---|---|---|---|
| Estimate | 0.03 | 0.03 | 0.02 | 0.03 | 0.03 |
| SE | 0.0153 | 0.0143 | 0.0143 | 0.0140 | 0.0125 |
| RE | 1.00 | 1.13 | 1.14 | 1.19 | 1.49 |
| p-value | 0.09 | 0.04 | 0.10 | 0.05 | 0.03 |
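The p-values and relative efficiencies in Table 4 can be approximately recovered from the reported point estimates and standard errors. The sketch below assumes a two-sided Wald test with a standard normal reference distribution and defines relative efficiency as a ratio of squared standard errors; both helper names are hypothetical, and small discrepancies are expected because the published standard errors are rounded.

```python
from statistics import NormalDist


def wald_p_value(estimate, se):
    """Two-sided p-value for H0: parameter = 0 under a normal approximation."""
    z = abs(estimate) / se
    return 2.0 * (1.0 - NormalDist().cdf(z))


def relative_efficiency(se_reference, se_new):
    """Variance of the reference estimator divided by that of the new one."""
    return (se_reference / se_new) ** 2


# Point estimates quoted in the text and SEs from Table 4.
p_km = wald_p_value(0.0255, 0.0153)    # Kaplan Meier
p_lm = wald_p_value(0.0271, 0.0140)    # Z and T_S information
p_aug = wald_p_value(0.0278, 0.0125)   # with further augmentation

re_lm = relative_efficiency(0.0153, 0.0140)
re_aug = relative_efficiency(0.0153, 0.0125)

print(round(p_km, 3), round(p_lm, 3), round(p_aug, 3))
print(round(re_lm, 2), round(re_aug, 2))
```

The computed values track the reported p-values (0.0942, 0.0533, 0.0267) and relative efficiencies (1.19, 1.49) to within rounding, which makes explicit that the smaller p-values come from smaller standard errors rather than from a larger estimated difference.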

6 Remarks

We have proposed a semi-nonparametric two-stage procedure to improve the efficiency in estimating S(t) in a RCT setting. We demonstrate with both theoretical and numerical studies that there are substantial potential efficiency gains in both estimation and testing of t-year survival. Our approach can easily incorporate multiple intermediate events, which is appealing when multiple recurrent event information is useful for predicting the terminal events.

This procedure uses the landmark concept developed in Van Houwelingen (2007), Van Houwelingen & Putter (2012) and Parast et al. (2012) to leverage information on multiple intermediate events and baseline covariates, which overcomes complications that arise in a semi-competing risk setting. Existing work on landmark prediction has focused primarily on the prediction of residual life while this paper focuses on improving the estimation of marginal survival. Model misspecification may lead to inaccurate prediction, but would not void the validity of many of the previously proposed prediction procedures. In contrast, model misspecification of the conditional survival could lead to a biased estimate of the marginal survival. To guard against model misspecification, we use working models to estimate the conditional survival with an additional layer of calibration which ensures the consistency of the marginal survival estimates. We derive asymptotic expansions of the proposed estimator to provide insight into why this method would always improve efficiency compared to the KM estimator and when we would expect to achieve optimal efficiency. The proposed approach can be seen as being related to Bang & Tsiatis (2000), which proposes a partitioned estimator that makes use of the cost history for censored observations to estimate overall medical costs, and to Hu et al. (2011) and Hu et al. (2010), which use a semiparametric dimension reduction procedure to estimate the conditional mean response in the presence of missing data.

To avoid any bias due to model misspecification, we use the Cox model only as a tool for dimension reduction, followed by a nonparametric kernel Nelson-Aalen estimator of survival to obtain a consistent final estimate of survival. Two conditions are required for the consistency of Ŝu(t|t0): censoring must be independent, and the score derived under a given working model, Û = β̂′W, must converge to a deterministic score U = β′W. This convergence would require the objective function used to derive β̂ to have a unique optimizer. Such an objective function could involve convex functions such as a partial likelihood or an estimating function with a unique solution such as those considered in Tian et al. (2007) and Uno et al. (2007). In this paper, we have used the Cox model as a working model to obtain β̂ and it has been shown that the Cox partial likelihood has a unique solution even under model misspecification (Hjort, 1992; Van Houwelingen, 2007). Though misspecification of the working model will not affect consistency, it may affect the efficiency of the proposed estimator.
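The second stage described above, smoothing over a one-dimensional score with a kernel Nelson-Aalen estimator, can be sketched as follows. This is a minimal illustration, not the authors' implementation: the scores are assumed to have been computed already from a fitted working model, the Gaussian kernel and the bandwidth are illustrative choices, the toy data are hypothetical, and all function names are made up for this sketch. In the landmark setting the same calculation would be applied to the subjects still at risk at t0.

```python
import math


def gaussian_kernel(x):
    """Standard normal density, used here as the smoothing kernel."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def kernel_nelson_aalen_survival(t, u, times, events, scores, h):
    """Estimate P(T > t | U = u) as exp(-kernel-weighted Nelson-Aalen)."""
    weights = [gaussian_kernel((s - u) / h) / h for s in scores]
    cum_hazard = 0.0
    for x_i, d_i, w_i in zip(times, events, weights):
        if d_i == 1 and x_i <= t:
            # Kernel-weighted size of the risk set at the event time x_i.
            at_risk = sum(w for x, w in zip(times, weights) if x >= x_i)
            if at_risk > 0.0:
                cum_hazard += w_i / at_risk
    return math.exp(-cum_hazard)


def marginal_survival(t, times, events, scores, h):
    """Average the conditional estimates over the observed scores."""
    total = sum(
        kernel_nelson_aalen_survival(t, u, times, events, scores, h)
        for u in scores
    )
    return total / len(scores)


# Hypothetical toy data: observed times, event indicators, working scores.
toy_times = [1.0, 2.0, 3.0, 4.0]
toy_events = [1, 0, 1, 1]
toy_scores = [0.1, 0.2, 0.3, 0.4]

s_smooth = marginal_survival(3.0, toy_times, toy_events, toy_scores, h=0.2)
s_flat = marginal_survival(3.0, toy_times, toy_events, toy_scores, h=1e6)
```

With a very large bandwidth every subject receives essentially the same weight and the estimate collapses to the exponential of the ordinary Nelson-Aalen estimator, which ignores the score. Smaller bandwidths let the score inform the estimate, and this is where any efficiency gain would come from.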

In general, our methods are not restricted to the Cox model being the working model; any working model with this property along with providing root-n convergence of β̂ would be valid and ensure consistency of the final estimate. For example, a proportional odds model or other semi-parametric transformation model could be considered as long as this property holds. However, it is unclear if the maximizer of the rank correlation under the single-index models or the non-parametric transformation model for survival data (Khan & Tamer, 2009) is unique under model misspecification. Therefore, some care should be taken in the choice of the working models. It is interesting to note that it has been shown in some non-survival settings that generalized linear model-type estimators are robust to misspecification of the link function and that the resulting coefficients can be estimated consistently up to a scalar (Li & Duan, 1989). In such settings, even under model misspecification, we would not expect any loss of efficiency due to the link misspecification. Further investigation of this property in a survival setting would be valuable for future research.

The ability to include multiple intermediate events is a desirable property of this proposed procedure. In settings with a small number of potential intermediate events, including all intermediate event information in stage 1 would not affect the efficiency of the overall estimate due to the fact that the variability in β̂ does not contribute to the asymptotic variance (shown in Supplementary Appendix A). However, in finite samples, this additional noise could be problematic and in settings with many potential intermediate events, a regularization procedure (Friedman et al., 2001; Tian et al., 2012) may be considered for variable selection in stage 1.

As in most nonparametric functional estimation procedures, a sufficiently large sample size is needed to obtain stable estimation performance and observe efficiency gains. The degree of efficiency gain using this proposed procedure may be less than expected in small sample sizes. Alternative robust methods should be considered in such settings.

Acknowledgements

The authors would like to thank the AIDS Clinical Trials Group for providing the data for use in this example. Support for this research was provided by National Institutes of Health grants T32-GM074897, R01-AI052817, R01-HL089778-01A1, R01-GM079330 and U54LM008748. The majority of this work was completed while Parast was a Ph.D. candidate in biostatistics at Harvard University.

Appendix

Asymptotic properties of ŜLM(t)

We explicitly examine the asymptotic properties of our estimate of survival compared to the KM estimate of survival. Recall that our proposed estimate ŜLM(t) = Ŝ(t | t0)Ŝ(t0). Let β̂ and β̂* be the maximizers of the log partial likelihood functions corresponding to the proportional hazards working models (2·3) and (2·4), respectively. In addition, let β0 and β0* denote the limits of β̂ and β̂*, respectively. Let , and ŜLM(β*, β, t) = Ŝ(β*, t0)Ŝ(β, t | t0), where Ŝu(β*, t0) = exp{−Λ̂u(β*, t0)}, Ŝυ(β, t | t0) = exp{−Λ̂υ(β, t | t0)}, and Λ̂u(β*, t0) and Λ̂υ(β, t | t0) are obtained by replacing β̂* and β̂ in Λ̂u(t0) and Λ̂υ(t | t0) by β* and β, respectively. Then Ŝ(β̂*, t0) = Ŝ(t0), Ŝ(β̂, t | t0) = Ŝ(t | t0), and ŜLM(β̂*, β̂, t) = ŜLM(t). Furthermore, with a slight abuse of notation, we define and Hence and Sυ(t | t0) = Sυ(β0, t | t0). We will derive asymptotic expansions for , which can be decomposed as

In Appendix I below we will express and 𝒲̂LM(t, t0) in martingale notation. Using these martingale representations, in Appendix II we examine the variance of ŜLM(β̂*, β̂, t) and show that this variance is always less than or equal to the variance of ŜKM(t) and the reduction is maximized when the assumed working models in (2·3) and (2·4) are true.

Assumption A.1 Censoring, C, is independent of {T𝕃, Z} given treatment assignment, 𝒢.

Assumption A.2 Treatment assignment, 𝒢, is independent of Z.

Regularity Conditions (C.1) Throughout, we assume several regularity conditions: (1) the joint density of {T𝕃, T𝕊, C} is continuously differentiable with density function bounded away from 0 on [0, t], (2) and W𝖳β0 have continuously differentiable densities and Z is bounded, (3) h = O(n^(−υ)) with 1/4 < υ < 1/2, (4) K(x) is a symmetric smooth kernel function with continuous second derivative on its support and ∫ K̇(x)² dx < ∞, where K̇(x) = dK(x)/dx.

Appendix I

Martingale Representation

In Supplementary Appendix A we show that the variability of β̂* and β̂ is negligible and can be ignored in making inferences on Ŝ(β̂*, t0), Ŝ(β̂*, t|t0) and . Therefore, we focus on the asymptotic expansions of 𝒲̃Z (t0), 𝒲̃(t | t0), and 𝒲̃LM(t, t0) with and β0 and express these quantities in martingale notation. Throughout, we will repeatedly use the fact that nt0/n ≈ SC(t0)S(t0), where SC(t0) = P(C > t0).
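The approximation nt0/n ≈ SC(t0)S(t0) can be checked numerically. The sketch below assumes that nt0 counts the subjects whose observed time X𝕃i = min(T𝕃i, Ci) exceeds t0, and it uses independent exponential event and censoring times purely for illustration; the rates, the seed and the function name are hypothetical choices.

```python
import math
import random


def landmark_risk_fraction(n, t0, event_rate, censor_rate, seed=0):
    """Fraction of simulated subjects still under observation at t0.

    Event and censoring times are independent exponentials here
    (an assumption of this sketch, not of the paper).
    """
    rng = random.Random(seed)
    still_at_risk = 0
    for _ in range(n):
        t_event = rng.expovariate(event_rate)
        t_censor = rng.expovariate(censor_rate)
        if min(t_event, t_censor) > t0:   # X > t0: neither has occurred
            still_at_risk += 1
    return still_at_risk / n


t0, event_rate, censor_rate = 1.0, 0.3, 0.2
empirical = landmark_risk_fraction(100_000, t0, event_rate, censor_rate)
theoretical = math.exp(-event_rate * t0) * math.exp(-censor_rate * t0)
print(round(empirical, 3), round(theoretical, 3))
```

Under independent censoring, P(X𝕃 > t0) = P(T𝕃 > t0)P(C > t0) = S(t0)SC(t0), so the empirical fraction converges to this product by the law of large numbers.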

Theorem 1. Assume A.1, A.2 and Regularity Conditions (C.1) hold. Note that in Supplementary Appendix A it was shown that 𝒲̃LM(t) ≈ 𝒲̃LM(t, t0).

| (I·1) |

| (I·2) |

| (I·3) |

and 𝒲̃LM(t) converges to a Gaussian process indexed by t ∈ [t0, τ].

Proof of Theorem 1. Using a Taylor series expansion and Lemma A.3 of Bilias et al. (1997),

Let Ni(s) = I(X𝕃i ≤ s, δ𝕃i = 1), Yi(s) = I(X𝕃i ≥ s) and . By a martingale representation, . Using a change of variable where , and a Taylor series expansion, let and , where f(·) is the probability density function corresponding to S(·),

It then follows that

| (I·4) |

To understand how Ŝ(β̂*, t0) can be viewed as a KM estimator augmented by censoring martingales, we next express the influence function of 𝒲Z(t0) in such a form. From (A.2) in Supplementary Appendix A and using martingale representation once again and integration by parts,

| (I·5) |

where and ΛC(s) = −log SC(s). Using similar arguments, it can be shown that

| (I·6) |

| (I·7) |

where and πUi(s|t0) = P(X𝕃i ≥ υ|Ui = u, X𝕃i > t0).

Using (I·5), (I·7) and (A.2) in Supplementary Appendix A, we now examine the form of our complete proposed estimator for S(t), ŜLM(β̂*,β̂, t), and obtain

| (I·8) |

| (I·9) |

| (I·10) |

It is interesting to note that these two terms, and , converge to two independent normal distributions and that E{ϕ1i(t, t0)ϕ2i(t, t0)} = 0. Since SUi(t|t0) is a bounded smooth monotone function in t and is a martingale process, is Donsker. This, coupled with the fact that the simple class {I(X𝕃i > t)|t} is Donsker, implies that {ϕji(t, t0)|t}, j = 1, 2 are Donsker by Theorem 9.30 of Kosorok (2008). Therefore 𝒲̂LM(t) converges to a Gaussian process indexed by t ∈ [t0, τ].

Appendix II

Variance of ŜLM(β̂*, β̂, t)

In this section we compare the variance of the proposed estimator to that of the KM estimator, as described in the next theorem.

Theorem 2. Assume A.1, A.2, and Regularity Conditions (C.1) hold. The proposed estimator, ŜLM(β̂*, β̂, t), is always at least as efficient as the Kaplan Meier estimate. Specifically,

where ΔVarLM denotes the difference in variance.

Proof of Theorem 2. We first note that it can be shown that Ŝ(β̂*, t0) and Ŝ(β̂, t|t0) are asymptotically independent and hence can be handled separately. From (I·4), we can easily examine the variance of Ŝ(β̂*, t0) and compare it to the variance of ŜKM(t0). The resulting form of the difference in variance allows us to draw some interesting conclusions regarding the reduction in variance when using the proposed marginal estimate. Note that this result concerns only one component of our complete estimate, since ŜLM(β̂*, β̂, t) = Ŝ(β̂*, t0)Ŝ(β̂, t|t0); however, we consider the results and conclusions worthy of discussion. Similar to Murray & Tsiatis (1996), we use a simple application of the conditional variance formula along with martingale properties, integration by parts and independent censoring,

| (II·1) |

where G(s) = 1/Sc(s). Murray & Tsiatis (1996) showed that . Comparing these two variances, we have

Here and in the sequel, for any random variable 𝒰, we use notation S𝒰(t | s) to denote P(T𝕃 > t | T𝕃 > s, 𝒰) and S(t | s) = P(T𝕃 > t | T𝕃 > s). Provided that is always more efficient than ŜKM(t0), asymptotically. Furthermore, ΔVar is maximized when the model used to obtain the score, , is the true model. To see this, we note that if is replaced with the true conditional survival SZi(t), the corresponding variance reduction over the KM estimator is . Comparing the variance reduction using SZi(t) versus using an arbitrary function of Zi, denoted by 𝒰i, we have

Since 𝒰i is a function of Zi, E{SZi(t0|s) | T𝕃i > s, 𝒰i} = E[E{I(T𝕃i > t0) | T𝕃i > s, Zi} | T𝕃i > s, 𝒰i] = E{I(T𝕃i > t0) | T𝕃i > s, 𝒰i} = S𝒰i(t0|s), and hence E{[S𝒰i(t0|s) − S(t0|s)][SZi(t0|s) − S𝒰i(t0|s)] | T𝕃i > s} = 0. Therefore, ΔVar(Z) − ΔVar(𝒰) ≥ 0. Since, under correct specification of the working model, the score-based conditional survival coincides with the true conditional survival, ŜZ(t) achieves maximal efficiency when the fitted Cox model holds.

We next derive the variance form of our complete estimate, 𝒲̃LM(t, t0). From (I·8), Var{𝒲̃LM(t, t0)} ≈ E[{ϕ1i(t, t0) + ϕ2i(t, t0)}²] = E{ϕ1i(t, t0)²} + E{ϕ2i(t, t0)²} since it can be shown that E{ϕ1i(t, t0)ϕ2i(t, t0)} = 0. It follows from (I·5) and (II·1) that

Using similar arguments as above and the expression in (I·6), we can show that

where G(s|t0) = 1/P(Ci > s|t0). It follows along with (I·7) that

Therefore, Var{𝒲̂LM(t, t0)} ≈

Note that ŜKM(t) = ŜKM(t|t0)ŜKM(t0) where ŜKM(t|t0) is the KM estimate of survival at t using only Ωt0. Similarly to (A.2) in Supplementary Appendix A, using a Taylor series expansion, one can show that ŜKM(t) − S(t) ≈ S(t0)[ŜKM(t|t0) − S(t|t0)] + S(t|t0)[ŜKM(t0) − S(t0)] and thus,

where we have used the independence of ŜKM(t|t0) and ŜKM(t0), and KM variance estimates from Murray & Tsiatis (1996). Examining the difference which we denote by ΔVarLM,

Thus, the proposed estimator, ŜLM(β̂*, β̂, t), is at least as efficient as the Kaplan Meier estimate. In addition, the reduction is maximized when the assumed working models in (2·3) and (2·4) are true. Note that the gain in efficiency depends on t0 and the true values of β and β*. The maximum reduction is within the class of estimators constructed using W and t0. The amount of reduction would also depend on the magnitude of β and β*. In particular, if β and β* are equal to zero, there would be no efficiency gain, but the larger β and β* are, the more variation we would expect in SUi(t|s) and which would lead to a larger expected reduction in variance.
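The first-order expansion of the KM estimator used in the proof above rests on an exact algebraic identity for a product of two estimators. As a brief worked step, written in LaTeX and using only the quantities already defined in the proof:

```latex
\begin{align*}
\hat S_{KM}(t) - S(t)
  &= \hat S_{KM}(t \mid t_0)\,\hat S_{KM}(t_0) - S(t \mid t_0)\,S(t_0) \\
  &= S(t_0)\,\{\hat S_{KM}(t \mid t_0) - S(t \mid t_0)\}
   + S(t \mid t_0)\,\{\hat S_{KM}(t_0) - S(t_0)\} \\
  &\quad + \{\hat S_{KM}(t \mid t_0) - S(t \mid t_0)\}\,
           \{\hat S_{KM}(t_0) - S(t_0)\}.
\end{align*}
```

The last term is a product of two errors that are each of order n^(−1/2), so it is of order n^(−1) and can be dropped after scaling by n^(1/2). Combined with the asymptotic independence of the two factors, this gives Var{ŜKM(t)} ≈ S(t0)² Var{ŜKM(t|t0)} + S(t|t0)² Var{ŜKM(t0)}, and the same argument applies to the product form of ŜLM(β̂*, β̂, t).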

References

- Bang H, Tsiatis A. Estimating medical costs with censored data. Biometrika. 2000;87:329–343.

- Beran R. Nonparametric regression with randomly censored survival data. Technical report. University of California Berkeley; 1981.

- Bilias Y, Gu M, Ying Z. Towards a general asymptotic theory for Cox model with staggered entry. The Annals of Statistics. 1997;25:662–682.

- Breslow N. Contribution to the discussion of the paper by Dr Cox. Journal of the Royal Statistical Society, Series B. 1972;34:216–217.

- Cai T, Tian L, Uno H, Solomon S, Wei L. Calibrating parametric subject-specific risk estimation. Biometrika. 2010;97:389–404. doi: 10.1093/biomet/asq012.

- Cook R, Lawless J. Some comments on efficiency gains from auxiliary information for right-censored data. Journal of Statistical Planning and Inference. 2001;96:191–202.

- Cortese G, Andersen P. Competing risks and time-dependent covariates. Biometrical Journal. 2010;52:138–158. doi: 10.1002/bimj.200900076.

- Du Y, Akritas M. Uniform strong representation of the conditional Kaplan-Meier process. Mathematical Methods of Statistics. 2002;11:152–182.

- Fine J, Jiang H, Chappell R. On semi-competing risks data. Biometrika. 2001;88:907–919.

- Friedman J, Hastie T, Tibshirani R. The Elements of Statistical Learning. Volume 1. Springer Series in Statistics; 2001.

- Gray R. A kernel method for incorporating information on disease progression in the analysis of survival. Biometrika. 1994;81:527–539.

- Hammer S, Katzenstein D, Hughes M, Gundacker H, Schooley R, Haubrich R, Henry W, Lederman M, Phair J, Niu M, et al. A trial comparing nucleoside monotherapy with combination therapy in HIV-infected adults with CD4 cell counts from 200 to 500 per cubic millimeter. New England Journal of Medicine. 1996;335:1081–1090. doi: 10.1056/NEJM199610103351501.

- Hirschtick R, Glassroth J, Jordan M, Wilcosky T, Wallace J, Kvale P, Markowitz N, Rosen M, Mangura B, Hopewell P, et al. Bacterial pneumonia in persons infected with the human immunodeficiency virus. New England Journal of Medicine. 1995;333:845. doi: 10.1056/NEJM199509283331305.

- Hjort NL. On inference in parametric survival data models. International Statistical Review/Revue Internationale de Statistique. 1992;60:355–387.

- Hu Z, Follmann D, Qin J. Semiparametric dimension reduction estimation for mean response with missing data. Biometrika. 2010;97:305–319. doi: 10.1093/biomet/asq005.

- Hu Z, Follmann D, Qin J. Dimension reduced kernel estimation for distribution function with incomplete data. Journal of Statistical Planning and Inference. 2011;141:3084–3093. doi: 10.1016/j.jspi.2011.03.030.

- Jiang H, Fine J, Chappell R. Semiparametric analysis of survival data with left truncation and dependent right censoring. Biometrics. 2005;61:567–575. doi: 10.1111/j.1541-0420.2005.00335.x.

- Kaplan E, Meier P. Nonparametric estimation from incomplete observations. Journal of the American Statistical Association. 1958:457–481.

- Khan S, Tamer E. Inference on endogenously censored regression models using conditional moment inequalities. Journal of Econometrics. 2009;152:104–119.

- Kosorok MR. Introduction to Empirical Processes and Semiparametric Inference. Springer; 2008.

- Lagakos S. The loss in efficiency from misspecifying covariates in proportional hazards regression models. Biometrika. 1988;75:156–160.

- Lee S, Klein J, Barrett A, Ringden O, Antin J, Cahn J, Carabasi M, Gale R, Giralt S, Hale G, et al. Severity of chronic graft-versus-host disease: association with treatment-related mortality and relapse. Blood. 2002;100:406–414. doi: 10.1182/blood.v100.2.406.

- Li K-C, Duan N. Regression analysis under link violation. The Annals of Statistics. 1989;17:1009–1052.

- Lin D, Wei L. The robust inference for the Cox proportional hazards model. Journal of the American Statistical Association. 1989:1074–1078.

- Lu X, Tsiatis A. Improving the efficiency of the log-rank test using auxiliary covariates. Biometrika. 2008;95:679–694.

- Murray S, Tsiatis A. Nonparametric survival estimation using prognostic longitudinal covariates. Biometrics. 1996:137–151.

- O’Neill T. Inconsistency of the misspecified proportional hazards model. Statistics & Probability Letters. 1986;4:219–222.

- Parast L, Cheng SC, Cai T. Incorporating short-term outcome information to predict long-term survival with discrete markers. Biometrical Journal. 2011;53:294–307. doi: 10.1002/bimj.201000150.

- Parast L, Cheng S-C, Cai T. Landmark prediction of long term survival incorporating short term event time information. Journal of the American Statistical Association. 2012;107:1492–1501. doi: 10.1080/01621459.2012.721281.

- Robins J, Ritov Y. Toward a curse of dimensionality appropriate (CODA) asymptotic theory for semi-parametric models. Statistics in Medicine. 1997;16:285–319. doi: 10.1002/(sici)1097-0258(19970215)16:3<285::aid-sim535>3.0.co;2-#.

- Rotnitzky A, Robins J. Inverse probability weighted estimation in survival analysis. Encyclopedia of Biostatistics. 2005:2619–2625.

- Scott D. Multivariate Density Estimation. Vol. 1. New York: Wiley; 1992.

- Siannis F, Farewell V, Head J. A multi-state model for joint modeling of terminal and non-terminal events with application to Whitehall II. Statistics in Medicine. 2007;26:426–442. doi: 10.1002/sim.2342.

- Tian L, Cai T, Goetghebeur E, Wei L. Model evaluation based on the sampling distribution of estimated absolute prediction error. Biometrika. 2007;94:297–311.

- Tian L, Cai T, Zhao L, Wei L. On the covariate-adjusted estimation for an overall treatment difference with data from a randomized comparative clinical trial. Biostatistics. 2012. doi: 10.1093/biostatistics/kxr050.

- Uno H, Cai T, Tian L, Wei LJ. Evaluating prediction rules for t-year survivors with censored regression models. Journal of the American Statistical Association. 2007;102:527–537.

- Van Houwelingen H. Dynamic prediction by landmarking in event history analysis. Scandinavian Journal of Statistics. 2007;34:70–85.

- Van Houwelingen H, Putter H. Dynamic predicting by landmarking as an alternative for multi-state modeling: an application to acute lymphoid leukemia data. Lifetime Data Analysis. 2008;14:447–463. doi: 10.1007/s10985-008-9099-8.

- Van Houwelingen J, Putter H. Dynamic Prediction in Clinical Survival Analysis. CRC Press; 2012.
