Abstract

Background

In non-experimental comparative effectiveness research using healthcare databases, outcome measurements must be validated to evaluate and potentially adjust for misclassification bias. We aimed to validate claims-based myocardial infarction algorithms in a Medicaid population using an HIV clinical cohort as the gold standard.

Methods

Medicaid administrative data for 2002–2008 were linked to the UNC CFAR HIV Clinical Cohort using social security number, first name and last name, and myocardial infarctions were adjudicated. Sensitivity, specificity, positive predictive value, and negative predictive value were calculated.

Results

A total of 1,063 individuals were included. Over a median observed time of 2.5 years, 17 had a myocardial infarction. Specificity ranged from 0.982 to 0.994, with the highest specificity obtained using criteria requiring the ICD-9 code in the primary or secondary position and a length of stay ≥ 3 days. Sensitivity of myocardial infarction ascertainment varied from 0.588 to 0.824 depending on the algorithm.

Conclusion

Specificities of the claims-based myocardial infarction ascertainment criteria were uniformly high, but small changes in specificity affected positive predictive value in this low-incidence cohort. Sensitivities varied with the ascertainment criteria. The choice of algorithm should be guided by the study question, prioritizing the validation parameters that minimize bias while also considering precision.

Introduction

Large healthcare databases are useful for conducting non-experimental comparative effectiveness research. Although not perfect, their populations are often closer to the ideal study population than those of ad hoc studies because they are less selected; information on outpatient prescription drug exposure is good; the data are generally available; and their large sample size provides an opportunity to examine rare outcomes.1 Because these data are collected primarily for administrative rather than research purposes, however, outcome measurements should be validated to quantify or minimize bias due to misclassification.

Measures of accuracy, namely sensitivity, specificity, positive predictive value (PPV) and negative predictive value (NPV), are used to quantify misclassification. Sensitivity and specificity are generally used to assess outcome and exposure misclassification, whereas PPV and NPV are most often used for population selection. Comparative effectiveness and safety studies involve a tradeoff between maximizing sensitivity and maximizing specificity, and the choice of measure should be based on the overarching study question.2 In studies estimating relative effects, specificity is the most important outcome misclassification measure, because when outcome misclassification is non-differential, perfect specificity leads to unbiased relative risk estimates even if sensitivity is low.3 High sensitivity allows identification of most events and reduces bias in effect measures on the absolute scale (risk difference [RD] or number needed to treat).4 Many validation studies start with a large administrative healthcare database in which algorithms defining events are validated against a gold standard (e.g., medical records). These studies can calculate only PPVs, not sensitivity and specificity, because they lack gold-standard information on persons without the event (true negatives).
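A short derivation makes this tradeoff concrete. Assume no exposure misclassification and non-differential outcome misclassification, and let $R_1$ and $R_0$ denote the true risks in the exposed and unexposed groups. The observed risk in group $j$ combines detected true cases with false positives:

$$
\begin{aligned}
R_j^{*} &= \mathrm{Se}\,R_j + (1-\mathrm{Sp})(1-R_j), \qquad j \in \{0, 1\},\\
RD^{*} &= R_1^{*} - R_0^{*} = (\mathrm{Se} + \mathrm{Sp} - 1)(R_1 - R_0).
\end{aligned}
$$

When $\mathrm{Sp} = 1$, $R_j^{*} = \mathrm{Se}\,R_j$, so sensitivity cancels from $RR^{*} = R_1^{*}/R_0^{*} = R_1/R_0$, whereas $RD^{*} = \mathrm{Se}\,(R_1 - R_0)$ remains attenuated by any sensitivity below 1.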

Observational clinical cohort studies have contributed substantially to our understanding of the effectiveness of different antiretroviral treatments for HIV clinical management.5–10 Similarities and differences between clinical cohort studies and more traditional observational studies (e.g., interval cohorts) have been discussed elsewhere.11 Briefly, participants in clinical cohort studies are enrolled as they seek or receive care, and the medical record is the source of the information collected on them. Although these studies are used to examine the effects of treatments in real-world settings, they may not accrue the person-time of follow-up required to study rare events.

The accuracy of myocardial infarction (MI) ascertainment in administrative healthcare data has been assessed; however, most studies present only PPV because they lack the true negatives needed to estimate sensitivity and specificity.12–17 Further, some validation studies used algorithms to identify MI events that may now be outdated because of changes in patient treatment and in healthcare services and reimbursement.15–17 For example, many current MI ascertainment algorithms include a length-of-stay criterion of ≥ 3 days, yet analyses of hospital discharge records from Minnesota and New England suggest that the median length of stay for patients hospitalized with MI is decreasing.18,19 These observations justify periodic reassessment and validation of the MI algorithms used for outcome ascertainment as systems for diagnostic coding, healthcare practices and reimbursement policies change.20,21

By linking clinical cohort data to administrative healthcare data, it is possible to validate algorithms defining health outcomes of interest. In this study, we used the UNC CFAR HIV Clinical Cohort (UCHCC) study and North Carolina (NC) Medicaid administrative data to validate different claims-based definitions of MI in an HIV-infected population.

Methods

Study Population

We used the UCHCC and NC Medicaid administrative data for this validation study. The UCHCC is a dynamic clinical cohort study, initiated in 2000, that includes all HIV-infected patients ≥18 years of age except those unable or unwilling to provide written informed consent in English or Spanish. The cohort draws on several data sources, including existing hospital electronic databases, medical chart abstractions, in-person interviews, and data from federal agencies such as mortality information. UCHCC participants are not seen at fixed intervals but as clinical care requires.

The Medicaid program is a jointly state- and federally funded program providing healthcare benefits to individuals with low income. Individuals qualify based on age, disability, income and financial resources.22 The Medicaid data contain healthcare service reimbursement information, including doctor visits, hospital care, outpatient visits, treatments, emergency use, prescription medications, diagnoses, procedures and provider information. These data also include reimbursement information for Medicaid patients who are also enrolled in Medicare (dually eligible beneficiaries). We included all HIV-infected NC Medicaid beneficiaries ≥ 18 years of age, including dually eligible beneficiaries, with Medicaid enrollment between January 1, 2002 and December 31, 2008. HIV-infected patients were identified in the Medicaid administrative data as those with an ICD-9 code of 042 in any position or a prescription for any of the 27 FDA-approved antiretrovirals between 2002 and 2008; antiretrovirals were identified through National Drug Codes (NDC). Patients enrolled in both the UCHCC and Medicaid at any point between 2002 and 2008 formed the validation sample. For these patients, we merged the UCHCC and Medicaid administrative data on social security number, first name and last name.
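The HIV case definition and the deterministic linkage step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's code: the record classes, field names and normalization rules (digits-only SSN, trimmed and upper-cased names) are hypothetical, and real linkage would also need rules for missing or conflicting identifiers.

```python
from dataclasses import dataclass, field


# Hypothetical record layouts; field names are illustrative only and are not
# the actual NC Medicaid or UCHCC variable names.
@dataclass
class MedicaidPerson:
    ssn: str
    first_name: str
    last_name: str
    dx_codes: list[str] = field(default_factory=list)   # ICD-9-CM codes, any position
    ndc_codes: list[str] = field(default_factory=list)  # dispensed National Drug Codes


@dataclass
class CohortPerson:
    ssn: str
    first_name: str
    last_name: str
    cohort_id: str


def is_hiv_case(person: MedicaidPerson, antiretroviral_ndcs: set[str]) -> bool:
    """HIV case if ICD-9 042 appears in any position or any antiretroviral NDC was dispensed."""
    has_042 = any(code.replace(".", "").startswith("042") for code in person.dx_codes)
    has_arv = any(ndc in antiretroviral_ndcs for ndc in person.ndc_codes)
    return has_042 or has_arv


def link_key(ssn: str, first: str, last: str) -> tuple[str, str, str]:
    """Exact-match key; these normalization rules are assumptions."""
    digits = "".join(ch for ch in ssn if ch.isdigit())
    return digits, first.strip().upper(), last.strip().upper()


def link(medicaid: list[MedicaidPerson], cohort: list[CohortPerson]):
    """Deterministic linkage on SSN + first name + last name."""
    index = {link_key(c.ssn, c.first_name, c.last_name): c for c in cohort}
    pairs = []
    for m in medicaid:
        match = index.get(link_key(m.ssn, m.first_name, m.last_name))
        if match is not None:
            pairs.append((m, match))
    return pairs
```

In a real application the antiretroviral NDC set would be built from the 27 FDA-approved agents; any NDC values passed in for testing are placeholders.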

Validation study mechanics

We synchronized periods of continuous Medicaid eligibility with UCHCC follow-up and included all patients in both Medicaid and the UCHCC between 2002 and 2008 with at least 30 days of observed time in both data sources. Observed time began at the latest of (i) January 1, 2002, (ii) entry into the UCHCC, or (iii) start of Medicaid enrollment, and continued until the earliest of (i) December 31, 2008, (ii) 12 months after the last documented CD4 count or HIV RNA measurement in the UCHCC, or (iii) a gap of more than 30 days in Medicaid enrollment. If a patient was lost to HIV care in the UCHCC (i.e., more than 12 months without a documented CD4 count or HIV RNA measurement) or lost Medicaid coverage for more than 30 days and later re-entered HIV care or Medicaid, the subsequent time was not included in these analyses. For patients who died, observed time ended on the date of death.
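The start and stop rules for observed time reduce to a maximum over start dates and a minimum over stop dates. The sketch below uses hypothetical inputs: `medicaid_end` is assumed to be the last day of the first continuous Medicaid eligibility span (gaps of ≤ 30 days bridged), and "12 months after the last CD4 or HIV RNA measurement" is taken as the same calendar day one year later.

```python
import calendar
from datetime import date
from typing import Optional

STUDY_START = date(2002, 1, 1)
STUDY_END = date(2008, 12, 31)


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the last day of the month."""
    y, m = divmod(d.month - 1 + months, 12)
    year, month = d.year + y, m + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


def observation_window(
    uchcc_entry: date,
    medicaid_start: date,
    medicaid_end: date,   # assumed: last day of the first continuous eligibility span
    last_lab: date,       # last CD4 count or HIV RNA measurement in the UCHCC
    death: Optional[date] = None,
    min_days: int = 30,
) -> Optional[tuple[date, date]]:
    """Return (start, end) of synchronized observed time, or None if it is too short."""
    start = max(STUDY_START, uchcc_entry, medicaid_start)       # latest start date
    stops = [STUDY_END, add_months(last_lab, 12), medicaid_end]
    if death is not None:
        stops.append(death)
    end = min(stops)                                            # earliest stop date
    if (end - start).days < min_days:
        return None
    return start, end
```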

Event definitions

We included all patients who had either a definite or probable MI as defined in the UCHCC and Medicaid data sources during the study period. In the initial validation analysis, we included the first MI event documented in either the UCHCC or Medicaid during the observed period and imposed no restriction on event dates when assessing validation parameters. In a secondary analysis, we accounted for multiple MI events per patient, timing of the event, and length of observed time in each source by dividing the observed period into standardized time increments (3, 6, 12 and 24 months). To maintain synchronicity between data sources and to account for differing lengths of follow-up, an increment was excluded from the analysis if the observed period ended partway through it. Increment lengths were chosen to reflect the lengths of continuous follow-up often required in comparative effectiveness studies. Because of sample size constraints, we did not consider increments longer than 24 months.
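One way to form the standardized increments is to lay consecutive, non-overlapping windows from the start of observed time, drop a final window that is only partly observed, and then flag each window by whether an MI is recorded in each source. This is an illustrative sketch with hypothetical function names; it does not reproduce the GEE estimation step.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping to the last day of the month."""
    y, m = divmod(d.month - 1 + months, 12)
    year, month = d.year + y, m + 1
    return date(year, month, min(d.day, calendar.monthrange(year, month)[1]))


def complete_increments(start: date, end: date, months: int) -> list[tuple[date, date]]:
    """Consecutive half-open windows [s, e) of `months` months lying fully within
    observed time [start, end]; a partially observed final window is dropped."""
    windows = []
    k = 0
    while True:
        s = add_months(start, k * months)
        e = add_months(start, (k + 1) * months)
        if e > end + timedelta(days=1):  # window would extend past the last observed day
            break
        windows.append((s, e))
        k += 1
    return windows


def classify_increments(windows, gold_dates, claim_dates):
    """For each window, flag whether an MI is recorded in the gold standard and in claims."""
    return [
        (s, e, any(s <= d < e for d in gold_dates), any(s <= d < e for d in claim_dates))
        for s, e in windows
    ]
```

For example, with observed time starting January 1, 2003, a gold-standard MI dated March 10, 2003 and a Medicaid claim dated August 2, 2003 fall in different 6 month windows (one false negative and one false positive) but in the same 12 month window (one true positive). Date discrepancies of this kind are one mechanism by which short increments can lower estimated sensitivity.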

Myocardial Infarction definition—UNC CFAR HIV Clinical Cohort (Gold Standard)

Myocardial infarction events were initially identified in the UCHCC through medical chart abstraction and adjudicated by healthcare personnel. The MI event definition expands on the World Health Organization definition and includes serum marker, electrocardiogram (ECG) and chest pain criteria.23,24

Myocardial Infarction definition—Medicaid Administrative Claims

Because we were interested in validating incident MI events, and most new MI events present in the inpatient rather than the outpatient setting, we used inpatient claims. The administrative data included claims for patients enrolled in Medicaid and for those dually eligible for Medicaid and Medicare. Our initial MI event definition required an ICD-9-CM diagnosis code of 410 in the 1st or 2nd position and a length of stay ≥ 3 days, as in previous validation studies.15–17 We then varied the algorithm to determine which would best identify MI events in this population. The 12 algorithms considered combined: (i) ICD-9 code 410.xx in the 1st or 2nd position versus any position; (ii) any length of stay, ≥ 1 day, or ≥ 3 days; and (iii) inclusion or exclusion of diagnosis related group (DRG) codes 121, 122 and 123.
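The 12 claims-based definitions are the full cross of two code-position rules, three length-of-stay thresholds and an optional DRG criterion, so they can be enumerated and applied to each inpatient claim. In this sketch the claim structure is hypothetical, and treating a qualifying DRG as an alternative to the ICD-9 criterion (subject to the same length-of-stay threshold) is an assumption about how the DRG codes were combined.

```python
from dataclasses import dataclass
from itertools import product
from typing import Optional

MI_DRGS = {"121", "122", "123"}


@dataclass(frozen=True)
class Algorithm:
    position: str      # "1st_or_2nd" or "any"
    min_los_days: int  # 0 = any length of stay, 1, or 3
    use_drg: bool      # also accept DRG 121, 122 or 123 (assumed OR criterion)


# 2 position rules x 3 length-of-stay thresholds x DRG on/off = 12 algorithms
ALGORITHMS = [
    Algorithm(pos, los, drg)
    for pos, los, drg in product(("1st_or_2nd", "any"), (0, 1, 3), (False, True))
]


def flags_mi(dx_codes: list[str], los_days: int, drg: Optional[str], alg: Algorithm) -> bool:
    """Apply one algorithm to one inpatient claim with ordered ICD-9-CM diagnosis codes."""
    eligible = dx_codes[:2] if alg.position == "1st_or_2nd" else dx_codes
    icd_hit = any(code.replace(".", "").startswith("410") for code in eligible)
    drg_hit = alg.use_drg and drg in MI_DRGS
    return (icd_hit or drg_hit) and los_days >= alg.min_los_days
```

Stripping the decimal point lets the same check accept codes stored either as `410.71` or `41071`.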

Statistical Analysis

We examined baseline demographic and clinical characteristics of the NC Medicaid population, the UCHCC and the validation sample for the enrollees identified with an MI in the gold standard. We then cross-tabulated MI events identified in the two sources, based on the definitions outlined above, to estimate sensitivity (the proportion of gold-standard MI events that were identified in Medicaid), specificity (the proportion of gold-standard non-events that were classified as non-events in Medicaid), PPV (the proportion of Medicaid-identified MI events that were true MI events) and NPV (the proportion of Medicaid-identified non-events that were true non-events). Exact binomial 95% confidence intervals (CI) quantified the precision of each validation measure.25 In the secondary analysis, intercept-only generalized estimating equation models with a binomial distribution, independent working correlation structure and logit link were used to estimate sensitivity and specificity for the 3, 6, 12 and 24 month time increments.

Finally, we explored the impact of outcome misclassification on relative and absolute risk estimates in a hypothetical population of 1,100 individuals with a baseline probability of exposure to a hypothetical risk factor of 0.09 and a risk of MI of 0.10 in the exposed and 0.08 in the unexposed (true risk ratio [RR]: 1.25; true risk difference [RD]: 0.02). We used sensitivities and specificities estimated in our validation study to calculate the expected percent bias in the estimated RR and RD under different definitions of MI, assuming no exposure misclassification and non-differential outcome misclassification. We used the following equations to calculate the observed RR and RD:

$$\widehat{RR} = \frac{a/(a+b)}{c/(c+d)}, \qquad \widehat{RD} = \frac{a}{a+b} - \frac{c}{c+d}$$

where, with $p$ the proportion exposed, $R_1$ and $R_0$ the risks in the exposed and unexposed, and Se and Sp the sensitivity and specificity of the outcome definition,

$$a = \mathrm{Se}\,p\,R_1 + (1-\mathrm{Sp})\,p\,(1-R_1)$$

$$b = (1-\mathrm{Se})\,p\,R_1 + \mathrm{Sp}\,p\,(1-R_1)$$

$$c = \mathrm{Se}\,(1-p)\,R_0 + (1-\mathrm{Sp})\,(1-p)\,(1-R_0)$$

$$d = (1-\mathrm{Se})\,(1-p)\,R_0 + \mathrm{Sp}\,(1-p)\,(1-R_0)$$
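The observed RR and RD follow directly from the four cells a, b, c and d defined above, so the calculation can be transcribed as a small function. In this sketch, `percent_bias` uses the conventional definition 100 × (observed − true)/true; that definition is an assumption here, and the function names are hypothetical.

```python
def observed_effects(se: float, sp: float, p_exposed: float,
                     risk_exposed: float, risk_unexposed: float) -> tuple[float, float]:
    """Observed (RR, RD) under non-differential outcome misclassification and
    no exposure misclassification, using the 2x2 cells a, b, c, d."""
    a = se * p_exposed * risk_exposed + (1 - sp) * p_exposed * (1 - risk_exposed)
    b = (1 - se) * p_exposed * risk_exposed + sp * p_exposed * (1 - risk_exposed)
    c = se * (1 - p_exposed) * risk_unexposed + (1 - sp) * (1 - p_exposed) * (1 - risk_unexposed)
    d = (1 - se) * (1 - p_exposed) * risk_unexposed + sp * (1 - p_exposed) * (1 - risk_unexposed)
    r1, r0 = a / (a + b), c / (c + d)  # observed risks in exposed and unexposed
    return r1 / r0, r1 - r0


def percent_bias(observed: float, true: float) -> float:
    """Assumed conventional definition: 100 * (observed - true) / true."""
    return 100.0 * (observed - true) / true
```

Because a + b = p and c + d = 1 − p, the exposure prevalence drops out of both observed risks; it matters only for the cell counts, not for the expected RR or RD.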

We quantified the percent bias for both the RR and RD using the following equation:

All analyses were conducted using SAS version 9.2 or Intercooled Stata 11. The study was approved by the [REDACTED FOR BLINDING] on the Protection of the Rights of Human Subjects.

Results

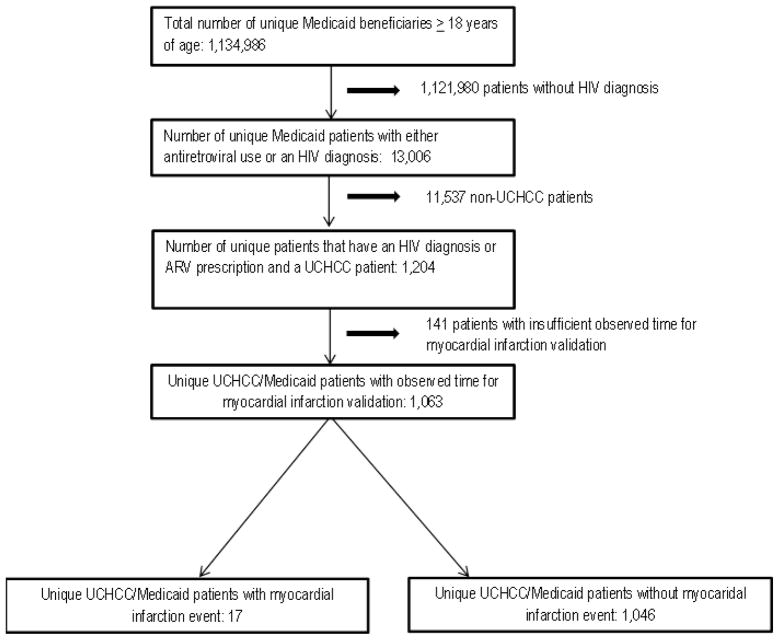

Between 2002 and 2008 there were 1,134,986 NC Medicaid beneficiaries ≥ 18 years of age, of whom 13,006 were HIV-infected based on the ICD-9 or antiretroviral-use criteria. Of the 2,340 HIV-infected patients in the UCHCC who received care between 2002 and 2008, 1,204 were also Medicaid beneficiaries. Of these, 141 were excluded because they lacked sufficient follow-up time in either data source or because their period of Medicaid eligibility did not overlap with follow-up time in the UCHCC, leaving 1,063 patients in the validation sample (Figure 1). The median observed time for the validation sample was 2.5 years (interquartile range: 0.9, 4.7; full range: 0.2, 7.0). The distributions of most demographic and clinical characteristics were similar across the overall Medicaid population, the UCHCC and the validation sample (Table 1). The overall Medicaid population and the validation sample included greater proportions of Black patients and women than the UCHCC, and the validation sample had a larger proportion of intravenous drug users. Clinically, patients in the validation sample had log HIV RNA and CD4 cell counts at entry into care at UNC similar to those of the UCHCC. In the validation sample, 17 patients had an MI during their observation period, for a total of 19 MI events.

Figure 1.

Generation of the sample population used to validate myocardial infarction outcomes ascertained from the North Carolina Medicaid administrative data. The North Carolina Medicaid data were linked to the UNC CFAR HIV Clinical Cohort (UCHCC) study via social security number, last name and first name.

Table 1.

Clinical and socio-demographic characteristics of Medicaid, UNC HIV Clinical Cohort and Validation Sample populations

| Characteristic | Medicaid N=13,006 n (%) | UCHCC N=2,340 n (%) | Validation sample N=1,063 n (%) |
|---|---|---|---|
| Gender | | | |
| Female | 5,918 (45.5) | 706 (30.2) | 437 (41.1) |
| Age at UCHCC/Medicaid entry, years | | | |
| <40 | 5,505 (42.3) | 1,259 (53.8) | 541 (50.9) |
| 40–50 | 4,699 (36.1) | 783 (33.5) | 389 (36.6) |
| >50 | 2,802 (21.5) | 298 (12.7) | 133 (12.5) |
| Race | | | |
| White | 2,740 (21.1) | 738 (31.5) | 229 (21.5) |
| Black | 9,221 (71.0) | 1,369 (58.5) | 757 (71.2) |
| Hispanic | 0 (0.0) | 138 (5.9) | 22 (2.1) |
| Asian | 68 (0.5) | * | 0 (0.0) |
| Native American/Pacific Islander | 169 (1.3) | 42 (1.8) | 32 (3.0) |
| Other | 0 (0.0) | 48 (2.1) | 22 (2.1) |
| Unknown | 808 (6.0) | * | * |
| Insurance at UCHCC entry | | | |
| Medicaid | NA | 572 (24.5) | 475 (44.7) |
| Medicare | NA | 133 (5.7) | 54 (5.1) |
| Other public insurance | NA | 149 (6.4) | 71 (6.7) |
| Private | NA | 630 (27.0) | 117 (11.0) |
| No insurance | NA | 849 (36.3) | 344 (32.4) |
| Men who have sex with men (MSM) | | | |
| Yes | NA | 914 (39.1) | 263 (24.7) |
| Intravenous drug user (IDU) | | | |
| Yes | NA | 338 (14.0) | 235 (22.1) |
| CD4 count (cells/μL) most proximal to UCHCC entry, median (IQR)† | NA | 310 (104, 507) | 280 (73, 483) |
| log HIV RNA (copies/mL) most proximal to UCHCC entry, median (IQR)†† | NA | 4.4 (3.2, 5.0) | 4.4 (3.4, 5.1) |

\* Cell counts < 11 not displayed.

<0.01 percent missing values for insurance status in the UCHCC and validation cohort, respectively.

† <0.01 percent missing CD4 count values for the UCHCC and the validation cohort, respectively.

†† <0.01 percent missing log HIV RNA values for the UCHCC and the validation cohort, respectively.

Table 2 displays the validation test characteristics comparing MI events in the UCHCC with those identified in the Medicaid data using varying algorithms. The algorithm most frequently used to identify MI events in administrative data, ICD-9 code 410 in the 1st or 2nd position and a length of stay ≥ 3 days, yielded a sensitivity of 0.588 (95% CI: 0.329, 0.816) and a specificity of 0.994 (95% CI: 0.988, 0.998). Removing the length-of-stay criterion increased sensitivity to 0.647 (95% CI: 0.383, 0.857) and decreased specificity to 0.988 (95% CI: 0.980, 0.994). The position of the diagnosis code also influenced validation parameters: allowing ICD-9 code 410 to appear in any of the nine diagnosis positions while keeping the ≥ 3 day length-of-stay requirement increased sensitivity to 0.765 (95% CI: 0.501, 0.932). Removing both the position and length-of-stay requirements resulted in the highest sensitivity and lowest specificity (sensitivity = 0.824 [95% CI: 0.566, 0.962]; specificity = 0.982 [95% CI: 0.972, 0.999]). PPVs were low for all algorithms explored (range: 0.438–0.625), whereas NPVs remained consistently high (0.993–0.997). Adding DRG codes 121, 122 and 123 did not appreciably change the validation parameters (data not shown).

Table 2.

Sensitivity, specificity, positive predictive value, and negative predictive value resulting from the comparison of different claims-based ascertainment criteria for myocardial infarction events with myocardial infarction events observed in the UNC-CFAR HIV Clinical Cohort (gold standard).

| Medicaid claims-based ascertainment algorithm† (n=1,063; 17 UCHCC events) | Se (TP) | 95% CI | Sp (TN) | 95% CI | PPV (FP) | 95% CI | NPV (FN) | 95% CI |
|---|---|---|---|---|---|---|---|---|
| ICD-9 410.xx in 1st or 2nd position; length of stay ≥ 3 days | 0.588 (10) | 0.329, 0.816 | 0.994 (1040) | 0.988, 0.998 | 0.625 (6) | 0.354, 0.848 | 0.993 (7) | 0.986, 0.997 |
| ICD-9 410.xx in 1st or 2nd position; length of stay ≥ 1 day | 0.588 (10) | 0.329, 0.816 | 0.993 (1039) | 0.986, 0.997 | 0.588 (7) | 0.329, 0.816 | 0.993 (7) | 0.986, 0.997 |
| ICD-9 410.xx in 1st or 2nd position; any length of stay | 0.647 (11) | 0.383, 0.857 | 0.988 (1034) | 0.980, 0.994 | 0.478 (12) | 0.268, 0.694 | 0.994 (6) | 0.987, 0.997 |
| ICD-9 410.xx in any position; length of stay ≥ 3 days | 0.765 (13) | 0.501, 0.932 | 0.991 (1037) | 0.984, 0.996 | 0.591 (9) | 0.364, 0.793 | 0.996 (4) | 0.990, 0.999 |
| ICD-9 410.xx in any position; length of stay ≥ 1 day | 0.765 (13) | 0.501, 0.932 | 0.989 (1034) | 0.980, 0.994 | 0.520 (12) | 0.313, 0.64 | 0.996 (4) | 0.990, 0.999 |
| ICD-9 410.xx in any position; any length of stay | 0.824 (14) | 0.566, 0.962 | 0.982 (1028) | 0.972, 0.999 | 0.438 (18) | 0.264, 0.623 | 0.997 (3) | 0.992, 0.999 |

Se: Sensitivity; Sp: Specificity; PPV: Positive Predictive Value; NPV: Negative Predictive Value; CI: Confidence Interval; TP: True Positive; TN: True Negative; FP: False Positive; FN: False Negative

† Myocardial infarctions were identified in the UNC-CFAR HIV Clinical Cohort through extensive medical chart abstraction and adjudicated by healthcare personnel using modified WHO MONICA criteria.

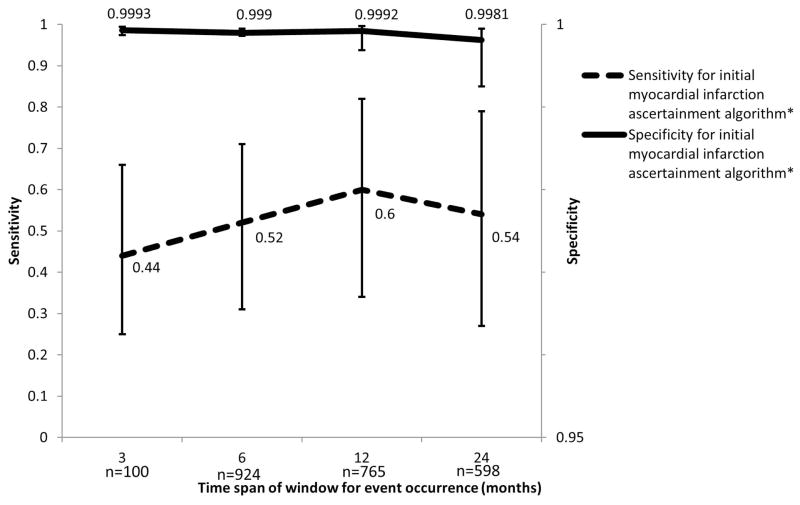

We also examined the effect of length of observation, timing of events and multiple MI events per patient, using the MI ascertainment criteria most commonly used in the literature (ICD-9 code in the 1st or 2nd position and a length of stay ≥ 3 days). Because each time increment had to be fully observed, the number of unique patients included decreased as increments lengthened from 3 to 24 months (from 1,007 to 598 patients). With 24 month increments, sensitivity and specificity were similar to those in the first validation analysis (sensitivity = 0.538 [95% CI: 0.268, 0.788]; specificity = 0.998 [95% CI: 0.993, 0.999]). Sensitivity was lowest when the event had to occur within the same three month increment in both data sources (0.444 [95% CI: 0.250, 0.658]) and increased for the 6 and 12 month increments (0.516 [95% CI: 0.314, 0.713] and 0.600 [95% CI: 0.338, 0.815], respectively) (Figure 2). A similar relationship between length of observed time and sensitivity and specificity was observed for the other MI algorithms; for all algorithms, sensitivity was highest with the standardized 12 month increment (data not shown).

Figure 2.

Sensitivity and false positive rate for claims-based identification of myocardial infarctions allowing for varying periods of continuous eligibility (3, 6, 12, 24 months). Myocardial infarction events were identified by ICD-9 code 410 in the 1st or 2nd position and a length of stay ≥ 3 days.

Table 3 displays the effect of outcome misclassification in a hypothetical population using sensitivity and specificity estimates from three algorithms: (1) ICD-9 code 410 in the 1st or 2nd position and length of stay ≥ 3 days; (2) ICD-9 code 410 in the 1st or 2nd position and length of stay ≥ 1 day; and (3) ICD-9 code 410 in any position with any length of stay. Given a population of 1,100 individuals, a baseline probability of exposure to a hypothetical risk factor of 0.09, and a risk of MI of 0.10 in the exposed and 0.08 in the unexposed (true RR: 1.25; true RD: 0.02), a sensitivity of 0.588 and a specificity of 0.994 result in an observed RR of 1.21 and an observed RD of 0.015. A sensitivity of 0.824 and a specificity of 0.982 would result in an RR of 1.10 and an RD of 0.009. An assessment of bias shows that the percent bias is highest for both relative and absolute measures when specificity is lowest.

Discussion

We examined the sensitivity, specificity, PPV and NPV of various algorithms to identify MI events among HIV-infected individuals enrolled in the NC Medicaid program, using events adjudicated in the UCHCC as the gold standard. With the best algorithm for relative effect measures, we achieved a specificity of 0.994, which translates to a bias of around 11% under plausible parameter values for a study of antiretrovirals and MI risk using administrative healthcare data. Specificity was high for all ascertainment algorithms (0.982–0.994); however, even small deviations in specificity increased the bias of effect measures.

Compared with other studies, the sensitivity of a commonly used algorithm to identify MIs (ICD-9 code 410 in the 1st or 2nd position and length of stay ≥ 3 days) was low in our study (0.59); other studies reported sensitivities ranging from 0.65 to 0.83.13,26 The lower sensitivity may be explained by our study population and by ICD-9 code position. HIV-infected patients are often admitted to the hospital for HIV-related and general medical comorbidities, and an MI occurring during such a stay may not be coded in the 1st or 2nd ICD-9 code position. Expanding the criteria to include all ICD-9 code positions would therefore be expected to increase sensitivity, as we observed. Rosamond et al. noted that the sensitivity of ICD-9 code 410 has also declined over time, in part because of changes in diagnostic practices and the use of differing algorithms for defining MI in the gold standard.27

We reviewed other diagnoses in the Medicaid data and the UCHCC for patients who remained misclassified under the most sensitive algorithm (three false negatives) or the most specific algorithm (six false positives). Under the most sensitive algorithm, three patients with an MI in the gold standard were not identified in Medicaid. Two of these patients had ICD-9 codes for non-MI cardiovascular conditions on or around the gold-standard diagnosis date (unspecified chest pain and precordial pain; coronary atherosclerosis of native coronary artery). The third patient had no ICD-9 codes related to cardiovascular conditions on or around the UCHCC diagnosis date. Under the most specific algorithm, six patients had a Medicaid claim for an MI but no MI in the UCHCC. Five of these six patients had other, non-MI cardiovascular conditions recorded in the UCHCC; the sixth had no cardiovascular conditions noted but did have a history of drug abuse.

We also assessed the impact of varying lengths of observed time, timing of events and multiple MI events on the performance of the ascertainment criteria. Sensitivity was lowest for the shortest time increment, suggesting that event dates recorded in the Medicaid data did not always match those recorded in the UCHCC. Sensitivities for the 6 and 12 month increments were similar to those calculated in the first validation analysis. The decrease in sensitivity for the 24 month increment was likely due to the smaller number of patients with at least 24 months of observed time. These results suggest that requiring a full 12 months of Medicaid eligibility may maximize the sensitivity of claims-based MI identification algorithms.

PPVs for the MI ascertainment algorithms in our study were substantially lower than those reported in previous studies (0.93–0.97),12,15–17 most likely because of the low prevalence of MI in this population. Although PPV is important for some research questions, it is less important in the context of comparative effectiveness research. Nevertheless, the low PPVs suggest that the administrative healthcare data used here may not be ideal for selecting a study cohort.
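The dependence of PPV on prevalence follows from Bayes' theorem: with sensitivity and specificity held fixed, PPV falls as the outcome becomes rarer while NPV rises. The sketch below (hypothetical function names) makes that relationship explicit.

```python
def ppv(se: float, sp: float, prevalence: float) -> float:
    """Positive predictive value from sensitivity, specificity and prevalence."""
    true_pos = se * prevalence
    false_pos = (1 - sp) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def npv(se: float, sp: float, prevalence: float) -> float:
    """Negative predictive value from sensitivity, specificity and prevalence."""
    true_neg = sp * (1 - prevalence)
    false_neg = (1 - se) * prevalence
    return true_neg / (true_neg + false_neg)
```

For example, holding sensitivity at 0.588 and specificity at 0.994, `ppv(0.588, 0.994, prevalence)` can be compared at the validation-sample cumulative incidence of about 0.016 (17/1,063) and at the higher prevalences typical of settings where earlier PPV studies were conducted.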

Chubak et al. and Setoguchi et al. explored bias related to outcome misclassification in a hypothetical population (Chubak) and a Medicare population (Setoguchi).28,29 Their results quantified outcome misclassification bias on the relative scale but did not address bias on the absolute scale. Because absolute measures such as the RD are often used in comparative safety and effectiveness studies, the impact of imperfect specificity and sensitivity on both types of effect measures warrants attention. In our hypothetical example, as expected, deviations from perfect specificity biased results on the relative scale, while increases in sensitivity decreased bias on the absolute scale; both sensitivity and specificity, however, influenced absolute measures. Although perfect specificity reduces bias in relative effect measures, the resulting reduction in the number of identified cases may substantially decrease the precision of estimates. The choice of ascertainment algorithm should therefore be guided by the study question, prioritizing the validation parameter that minimizes bias while maximizing precision. For this HIV Medicaid population, it may be important to use an algorithm that relaxes the ICD-9 code position requirement to maximize sensitivity with minimal loss of specificity.

Our study has limitations. The number of events available for validation was small, which limited the precision of our estimates. Further, we intentionally conducted this study in a Medicaid HIV population, limiting the generalizability of these algorithms to other populations or data sources. Despite these limitations, our study has important implications. Because this population includes patients seeking care throughout NC, we will be able to examine the effects of antiretrovirals on MI in a more generalizable population. Each ascertainment algorithm had relatively high specificity and can be used in comparative effectiveness studies of antiretroviral use and MI outcomes in the NC Medicaid population. Finally, the measures of validity reported here may be used by other researchers to assess the role of outcome misclassification in studies using administrative healthcare databases.

Acknowledgments

The authors would like to thank Drs. Charles Poole, Michelle Jonsson Funk, and William Miller for their assistance with the preparation of this manuscript.

Grant Support: This project was supported in part by grants M01RR00046 and UL1RR025747 from the National Center for Research Resources, National Institutes of Health, and by the BIRCWH grant (#K12 DA035150) from ORWH, NIDA and the NIH. The project was also supported by National Institutes of Health grants P30 AI590410 and R01 AG023178 and by Agency for Healthcare Research and Quality grant R01 HS018731.

Footnotes

Preliminary results were presented at the 27th International Conference on Pharmacoepidemiology and Therapeutic Risk Management, Chicago, IL, August 15, 2011

References

- 1.Sturmer T, Jonsson Funk M, Poole C, Brookhart MA. Nonexperimental comparative effectiveness research using linked healthcare databases. Epidemiology. 2011 May;22(3):298–301. doi: 10.1097/EDE.0b013e318212640c. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Kleinbaum D, Kupper L, Muller K, Nizam A. Applied regression analysis and other multivariable methods. 3. Pacific Grove, CA: International Thomson Publishing Company; 1998. [Google Scholar]

- 3.Solomon DH, Glynn RJ, Rothman KJ, et al. Subgroup analyses to determine cardiovascular risk associated with nonsteroidal antiinflammatory drugs and coxibs in specific patient groups. Arthritis Rheum. 2008 Aug 15;59(8):1097–1104. doi: 10.1002/art.23911. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Rothman K, Greenland S. Modern Epidemiology. 2. Lippincott Williams & Wilkins; 1998. [Google Scholar]

- 5.Sabin CA, Worm SW, Weber R, et al. Use of nucleoside reverse transcriptase inhibitors and risk of myocardial infarction in HIV-infected patients enrolled in the D:A:D study: a multi-cohort collaboration. Lancet. 2008 Apr 26;371(9622):1417–1426. doi: 10.1016/S0140-6736(08)60423-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Friis-Moller N, Reiss P, Sabin CA, et al. Class of antiretroviral drugs and the risk of myocardial infarction. The New England journal of medicine. 2007 Apr 26;356(17):1723–1735. doi: 10.1056/NEJMoa062744. [DOI] [PubMed] [Google Scholar]

- 7.Weber R, Sabin CA, Friis-Moller N, et al. Liver-related deaths in persons infected with the human immunodeficiency virus: the D:A:D study. Archives of internal medicine. 2006 Aug 14–28;166(15):1632–1641. doi: 10.1001/archinte.166.15.1632. [DOI] [PubMed] [Google Scholar]

- 8.d’Arminio A, Sabin CA, Phillips AN, et al. Cardio- and cerebrovascular events in HIV-infected persons. Aids. 2004 Sep 3;18(13):1811–1817. doi: 10.1097/00002030-200409030-00010. [DOI] [PubMed] [Google Scholar]

- 9.Althoff KN, Justice AC, Gange SJ, et al. Virologic and immunologic response to HAART, by age and regimen class. AIDS. 2010 Oct 23;24(16):2469–2479. doi: 10.1097/QAD.0b013e32833e6d14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Crane HM, Grunfeld C, Willig JH, et al. Impact of NRTIs on lipid levels among a large HIV-infected cohort initiating antiretroviral therapy in clinical care. AIDS. 2011 Jan 14;25(2):185–195. doi: 10.1097/QAD.0b013e328341f925. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11. Lau B, Gange SJ, Moore RD. Risk of non-AIDS-related mortality may exceed risk of AIDS-related mortality among individuals enrolling into care with CD4+ counts greater than 200 cells/mm³. J Acquir Immune Defic Syndr. 2007 Feb 1;44(2):179–187. doi: 10.1097/01.qai.0000247229.68246.c5.

- 12. Varas-Lorenzo C, Castellsague J, Stang MR, Tomas L, Aguado J, Perez-Gutthann S. Positive predictive value of ICD-9 codes 410 and 411 in the identification of cases of acute coronary syndromes in the Saskatchewan Hospital automated database. Pharmacoepidemiology and Drug Safety. 2008 Aug;17(8):842–852. doi: 10.1002/pds.1619.

- 13. Pladevall M, Goff DC, Nichaman MZ, et al. An assessment of the validity of ICD Code 410 to identify hospital admissions for myocardial infarction: The Corpus Christi Heart Project. International Journal of Epidemiology. 1996 Oct;25(5):948–952. doi: 10.1093/ije/25.5.948.

- 14. Chambless LE, Toole JF, Nieto FJ, Rosamond W, Paton C. Association between symptoms reported in a population questionnaire and future ischemic stroke: the ARIC study. Neuroepidemiology. 2004 Jan–Apr;23(1–2):33–37. doi: 10.1159/000073972.

- 15. Kiyota Y, Schneeweiss S, Glynn RJ, Cannuscio CC, Avorn J, Solomon DH. Accuracy of Medicare claims-based diagnosis of acute myocardial infarction: estimating positive predictive value on the basis of review of hospital records. American Heart Journal. 2004 Jul;148(1):99–104. doi: 10.1016/j.ahj.2004.02.013.

- 16. Petersen LA, Wright S, Normand SL, Daley J. Positive predictive value of the diagnosis of acute myocardial infarction in an administrative database. Journal of General Internal Medicine. 1999 Sep;14(9):555–558. doi: 10.1046/j.1525-1497.1999.10198.x.

- 17. Choma NN, Griffin MR, Huang RL, et al. An algorithm to identify incident myocardial infarction using Medicaid data. Pharmacoepidemiology and Drug Safety. 2009 Nov;18(11):1064–1071. doi: 10.1002/pds.1821.

- 18. Berger AK, Duval S, Jacobs DR, Jr, et al. Relation of length of hospital stay in acute myocardial infarction to postdischarge mortality. The American Journal of Cardiology. 2008;101(4):428–434. doi: 10.1016/j.amjcard.2007.09.090.

- 19. Saczynski JS, Lessard D, Spencer FA, et al. Declining length of stay for patients hospitalized with AMI: impact on mortality and readmissions. Am J Med. 2010 Nov;123(11):1007–1015. doi: 10.1016/j.amjmed.2010.05.018.

- 20. Benchimol EI, Manuel DG, To T, Griffiths AM, Rabeneck L, Guttmann A. Development and use of reporting guidelines for assessing the quality of validation studies of health administrative data. Journal of Clinical Epidemiology. 2011 Aug;64(8):821–829. doi: 10.1016/j.jclinepi.2010.10.006.

- 21. De Coster C, Quan H, Finlayson A, et al. Identifying priorities in methodological research using ICD-9-CM and ICD-10 administrative data: report from an international consortium. BMC Health Services Research. 2006;6:77. doi: 10.1186/1472-6963-6-77.

- 22. Prela CM, Baumgardner GA, Reiber GE, et al. Challenges in merging Medicaid and Medicare databases to obtain healthcare costs for dual-eligible beneficiaries: using diabetes as an example. PharmacoEconomics. 2009;27(2):167–177. doi: 10.2165/00019053-200927020-00007.

- 23. Bild DE, Bluemke DA, Burke GL, et al. Multi-ethnic study of atherosclerosis: objectives and design. American Journal of Epidemiology. 2002 Nov 1;156(9):871–881. doi: 10.1093/aje/kwf113.

- 24. Crane HM, Paramsothy P, Rodriguez C, et al. Primary versus secondary myocardial infarction events among HIV-infected individuals in the CNICS cohort. 19th Conference on Retroviruses and Opportunistic Infections; Seattle, WA. 2012.

- 25. Rosner B. Fundamentals of Biostatistics. 4th ed. Belmont: Duxbury Press; 1995.

- 26. Rosamond WD, Chambless LE, Sorlie PD, et al. Trends in the sensitivity, positive predictive value, false-positive rate, and comparability ratio of hospital discharge diagnosis codes for acute myocardial infarction in four US communities, 1987–2000. American Journal of Epidemiology. 2004 Dec 15;160(12):1137–1146. doi: 10.1093/aje/kwh341.

- 27. Rosamond W. Are migraine and coronary heart disease associated? An epidemiologic review. Headache. 2004 May;44(Suppl 1):S5–12. doi: 10.1111/j.1526-4610.2004.04103.x.

- 28. Chubak J, Pocobelli G, Weiss NS. Tradeoffs between accuracy measures for electronic health care data algorithms. Journal of Clinical Epidemiology. 2012 Mar;65(3):343–349.e2. doi: 10.1016/j.jclinepi.2011.09.002.

- 29. Setoguchi S, Solomon DH, Glynn RJ, Cook EF, Levin R, Schneeweiss S. Agreement of diagnosis and its date for hematologic malignancies and solid tumors between Medicare claims and cancer registry data. Cancer Causes & Control. 2007 Jun;18(5):561–569. doi: 10.1007/s10552-007-0131-1.