Summary

The gene regulation network (GRN) is a high-dimensional complex system, which can be represented by various mathematical or statistical models. The ordinary differential equation (ODE) model is one of the popular dynamic GRN models. High-dimensional linear ODE models have been proposed to identify GRNs, but with a limitation of the linear regulation effect assumption. In this article, we propose a sparse additive ODE (SA-ODE) model, coupled with ODE estimation methods and adaptive group LASSO techniques, to model dynamic GRNs that could flexibly deal with nonlinear regulation effects. The asymptotic properties of the proposed method are established and simulation studies are performed to validate the proposed approach. An application example for identifying the nonlinear dynamic GRN of T-cell activation is used to illustrate the usefulness of the proposed method.

Keywords: Adaptive group LASSO, Differential equations, High-dimensional data, Sparse additive models, Time course microarray data, Variable selection

1. Introduction

The gene regulatory network (GRN) is a complex system associated with biological activities at the cellular level, such as cell growth, division, development, and response to environmental stimuli (Carthew and Sontheimer, 2009), and it should be modeled in a dynamic way (Hecker et al., 2009). New high-throughput technologies such as DNA microarrays and next-generation RNA-Seq enable us to observe the dynamic features of gene expression profiles on a genome scale. In particular, the time course gene expression data collected with these technologies allow investigators to study gene regulatory networks from a dynamic point of view in more detail. Several models have been proposed for GRN construction, such as information theory models (Steuer et al., 2002; Stuart et al., 2003); Boolean networks (Kauffman, 1969; Thomas, 1973; Bornholdt, 2008); Bayesian networks (Heckerman, 1996; Imoto et al., 2003; Needham et al., 2007; Werhli and Husmeier, 2007); latent variable models (Shojaie and Michailidis, 2009); and other regression models (Kim et al., 2009).

In particular, many models have been proposed for inferring gene regulatory networks from time course gene expression data. Examples include dynamic Boolean networks and probabilistic Boolean networks (Liang et al., 1998; Akutsu et al., 2000; Shmulevich et al., 2002; Martin et al., 2007), dynamic Bayesian networks (Murphy and Mian, 1999; Friedman et al., 2000; Hartemink et al., 2001; Zou and Conzen, 2005; Song et al., 2009), vector autoregressive and state space models (Hirose et al., 2008; Kojima et al., 2009; Shimamura et al., 2009), and differential equation models (Voit, 2000; Holter et al., 2001; DeJong, 2002; Yeung et al., 2002). In addition, Xing and colleagues have recently introduced temporal exponential random graph models and time-varying networks to capture the dynamics of networks (Hanneke and Xing, 2006; Guo et al., 2007; Kolar et al., 2010). Most of these dynamic network models, such as dynamic Bayesian networks and random graph models, require extensive computation for posterior inference, which limits them to small networks. Song et al. (2009) also introduced a time-varying dynamic Bayesian network to model structurally varying directed graphs. Gupta et al. (2007) proposed a hierarchical hidden Markov regression model for determining gene regulatory networks from gene expression microarray data, which also allows covariate effects to vary between states and gene clusters to vary over time. Furthermore, Gupta and Ibrahim (2007) introduced a hierarchical regression mixture model that combines gene clustering and motif discovery in a unified framework, in which a Monte Carlo method was used for simultaneous variable selection (for motifs) and clustering (for time course gene expression data). More recently, Shojaie et al. (2012) proposed an adaptive thresholding estimate within the framework of graphical Granger causality for reconstructing regulatory networks from time-course gene expression data.

In this paper we focus on ordinary differential equation (ODE) models for dynamic GRN construction. The ODE approach models the dynamic change in the expression of a gene (the derivative of its expression level) as a function of the expression levels of all related genes, so the dynamic features of the GRN are quantified automatically and naturally. Positive and negative regulation, as well as feedback effects, can all be captured systematically by the ODE model. A general ODE model for GRNs can be written as:

$$X'(t) = F\{X(t), \theta\}, \tag{1.1}$$

where t ∈ [t0, T] (0 ≤ t0 < T < ∞) is time, X(t) = (X1(t), ⋯, Xp(t))T is the vector of expression levels of genes 1, ⋯, p at time t, and X′(t) is the first-order derivative of X(t). F is a link function, depending on a vector of parameters θ, that quantifies the regulatory effects of regulator genes on the expression change of a target gene. In general, F can take any linear or nonlinear functional form. Many GRN models are based on linear ODEs because of their simplicity. Lu et al. (2011) proposed the following linear ODEs for dynamic GRN identification and applied the SCAD approach for variable selection,

$$X_k'(t) = \sum_{j=1}^{p}\theta_{kj}X_j(t), \qquad k = 1, \ldots, p, \tag{1.2}$$

where parameters θ = {θkj}k,j=1, ⋯, p quantify the regulations and interactions among the genes in the network. In practice, however, there is little a priori justification for assuming that the effects of regulatory genes take a linear form. Thus, the linear ODE models may be very restrictive for practical applications. In fact, nonlinear parametric ODE models have been proposed for gene regulatory networks (Weaver et al., 1999; Sakamoto and Iba, 2001; Spieth et al., 2006), but the variable selection (network edge identification) problem for high-dimensional nonlinear ODEs has not been addressed. In this paper, we intend to extend the high-dimensional linear ODE models in Lu et al. (2011) to a more general additive nonparametric ODE model for modeling high-dimensional nonlinear GRNs:

$$X_k'(t) = \mu_k + \sum_{j=1}^{p} f_{kj}\{X_j(t)\}, \qquad k = 1, \ldots, p, \tag{1.3}$$

where μk is an intercept term and fkj(·) is a smooth function that quantifies the nonlinear regulatory relationship between genes j and k in the GRN. Based on the sparsity principle of gene regulatory networks and other biological systems, we usually assume that, for each gene Xk, only a small number of the nonlinear effects fkj(·) are nonzero, although the total number of genes, p, in the network may be large. Thus, we refer to model (1.3) as the sparse additive ODE (SA-ODE) model. We also assume that the gene expression of the kth gene is measured at multiple time points ti, i = 1, ⋯, n, that is,

$$Y_k(t_i) = X_k(t_i) + \varepsilon_k(t_i), \qquad i = 1, \ldots, n, \; k = 1, \ldots, p, \tag{1.4}$$

where the measurement errors εk(ti) (i = 1, ⋯, n) are assumed to be i.i.d. with mean zero and variance σk². The challenge is how to perform model selection for the nonparametric SA-ODE model (1.3) under sparsity constraints on the index set {j : fkj(·) ≠ 0} of functions fkj(·) that are not identically zero.
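To make models (1.3) and (1.4) concrete, the following minimal sketch simulates a small SA-ODE and noisy measurements of it. The three-gene network, its regulation functions, the noise level and the classical fourth-order Runge-Kutta solver are all hypothetical choices for illustration; they are not taken from the paper. A `None` entry marks a component fkj that is identically zero, which is exactly the sparsity structure the selection procedure tries to recover.

```python
import numpy as np

def sa_ode_rhs(x, mu, f):
    """X'_k = mu_k + sum_j f[k][j](X_j); f[k][j] is None when gene j does not regulate gene k."""
    dx = np.array(mu, dtype=float)
    for k, row in enumerate(f):
        for j, fkj in enumerate(row):
            if fkj is not None:
                dx[k] += fkj(x[j])
    return dx

def rk4_solve(x0, mu, f, times):
    """Solve the SA-ODE on the grid `times` with the classical 4th-order Runge-Kutta scheme."""
    path = [np.asarray(x0, dtype=float)]
    for h in np.diff(times):
        x = path[-1]
        k1 = sa_ode_rhs(x, mu, f)
        k2 = sa_ode_rhs(x + 0.5 * h * k1, mu, f)
        k3 = sa_ode_rhs(x + 0.5 * h * k2, mu, f)
        k4 = sa_ode_rhs(x + h * k3, mu, f)
        path.append(x + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0)
    return np.vstack(path)  # shape (n, p): row i is X(t_i)

# Hypothetical 3-gene network: gene 2 drives gene 1 through sin, gene 1 drives gene 3
# through tanh, and every gene has a linear self-decay term.
f = [
    [lambda x: -0.5 * x, np.sin, None],
    [None, lambda x: -0.3 * x, None],
    [np.tanh, None, lambda x: -0.4 * x],
]
mu = [0.0, 0.0, 0.0]
times = np.linspace(0.0, 10.0, 101)
X = rk4_solve([1.0, 2.0, 0.5], mu, f, times)

# Measurement model (1.4): Y_k(t_i) = X_k(t_i) + eps_k(t_i), eps i.i.d. N(0, 0.1^2) here.
rng = np.random.default_rng(0)
Y = X + rng.normal(0.0, 0.1, size=X.shape)
```

Because gene 2 only decays, its trajectory has the closed form 2e^(−0.3t), which gives a quick accuracy check on the solver.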

There exist several classes of parameter estimation methods for ODE models, including the nonlinear least squares (NLS) method (Hemker, 1972; Bard, 1974; Li et al., 2005; Xue et al., 2010), the two-stage smoothing-based estimation method (Varah, 1982; Brunel, 2008; Chen and Wu, 2008a,b; Liang and Wu, 2008; Wu et al., 2012), principal differential analysis (PDA) and its extensions (Ramsay, 1996; Heckman and Ramsay, 2000; Ramsay and Silverman, 2005; Poyton et al., 2006; Ramsay et al., 2007; Varziri et al., 2008; Qi and Zhao, 2010), and Bayesian approaches (Putter et al., 2002; Huang et al., 2006; Donnet and Samson, 2007). Among these methods, we focus on the two-stage smoothing-based estimation method: in the first stage, a nonparametric smoothing approach is used to estimate both the state variables and their derivatives from the observed data; in the second stage, these estimated functions are plugged into the ODEs, and the unknown parameters are estimated from the resulting pseudo-regression model. In particular, the two-stage smoothing-based estimation method avoids numerically solving the differential equations and does not need the initial or boundary conditions of the state variables. This method also decouples the high-dimensional ODEs, which allows us to perform variable selection and parameter estimation for one equation at a time (Voit and Almeida, 2004; Jia et al., 2011; Lu et al., 2011). These advantages, together with its computational efficiency, greatly outweigh its disadvantage, a small loss of estimation accuracy, in dealing with high-dimensional nonlinear ODE models (Lu et al., 2011).

Over the past two decades, much work has been done on penalization methods for variable selection and parameter estimation with high-dimensional data, including the bridge estimator (Frank and Friedman, 1993); the least absolute shrinkage and selection operator (LASSO) (Tibshirani, 1996) and its extensions, such as the adaptive LASSO (Zou, 2006), the group LASSO (Yuan and Lin, 2006) and the adaptive group LASSO (Wang and Leng, 2008); the smoothly clipped absolute deviation (SCAD) penalty (Fan and Li, 2001); and the elastic net (Zou and Hastie, 2006), among others. Recently, the LASSO approach has been applied to high-dimensional nonparametric sparse additive (regression) models to perform variable selection and parameter estimation simultaneously (Meier et al., 2009; Ravikumar et al., 2009; Huang et al., 2010; Cantoni et al., 2011). In this paper, we propose to couple the ideas of the two-stage smoothing-based estimation method and high-dimensional variable selection techniques to perform variable selection and nonparametric function estimation for the proposed SA-ODE model (1.3). This is not a trivial combination of the two ideas; we have to tackle several critical challenges: 1) the two-stage smoothing-based estimation method converts the nonparametric SA-ODE model into a pseudo-sparse additive regression model in which the covariates and the response variables are derived from nonparametric smoothing estimates of the ODE state variables and their derivatives (instead of directly observed data); 2) the resulting errors in the pseudo-sparse additive regression model are not i.i.d. but dependent; and 3) the number of covariates, p, in the SA-ODE model is usually large (possibly much larger than the sample size). Thus, establishing the theoretical properties of the proposed method is not trivial.
To the best of our knowledge, this is the first attempt to propose a variable selection method for a high-dimensional nonparametric ODE model, and the first to establish theoretical results for penalized estimators in ODE models under the “large p, small n” setting.

The remainder of this paper is organized as follows. In Section 2, we propose a five-step variable selection procedure for the SA-ODE model (1.3). In Section 3, the theoretical properties of the proposed method are established in the “large p, small n” setting. In Section 4, we present some simulation results to validate the proposed method. To illustrate the usefulness of the proposed methods, we apply the proposed method to identify a dynamic gene regulatory network based on time course gene expression data from a T-cell activation study in Section 5. We conclude the paper with some remarks in Section 6. The detailed technical proofs are given in the Appendix.

2. Pseudo-Sparse Additive Model and Variable Selection

In this section, we propose a five-step variable selection procedure for the SA-ODE model (1.3). In Step I, we use a nonparametric smoothing approach to estimate both the ODE state variables and their derivatives based on the measurement model (1.4). In Step II, we use spline functions to approximate each of the nonparametric additive components in the SA-ODE model (1.3), and then substitute the estimated state variables and their derivatives from Step I into the SA-ODE model (1.3) to form a ‘pseudo’ sparse additive model. In Step III, we apply the group LASSO to obtain an initial estimator and reduce the dimension of the problem. In Step IV, we apply the adaptive group LASSO (Wang and Leng, 2008; Huang et al., 2010), which combines ideas from the adaptive LASSO (Zou, 2006) and the group LASSO (Yuan and Lin, 2006), for component selection. Because Step II deliberately uses a large number of basis functions to approximate the nonparametric functions, some of these basis functions may be unnecessary. In Step V, we therefore apply the regular LASSO to the model selected in Step IV to shrink some of the B-spline basis coefficients to zero, which yields a more parsimonious final model.

Step I. Nonparametric Smoothing

First, we apply a nonparametric smoothing approach, such as smoothing splines, regression splines, penalized splines or local polynomial regression, to estimate the state variables Xk(t) and their derivatives X′k(t) based on model (1.4). In this paper, we adopt penalized splines (Ruppert et al., 2003; Li and Ruppert, 2008; Claeskens et al., 2009; Wu et al., 2012) to obtain the estimates X̂k(t) and X̂′k(t), k = 1, ⋯, p. That is, for each 1 ≤ k ≤ p, we approximate Xk(t) by $\delta_k^T N_{k,\nu+1}(t)$, where δk = (δk,−ν, ⋯, δk,Kk)T is the unknown coefficient vector to be estimated from the data, and Nk,ν+1(t) = {Nk,−ν,ν+1(t), ⋯, Nk,Kk,ν+1(t)}T is the B-spline basis function vector of degree ν and dimension Kk + ν + 1 at a sequence of knots t0 = τk,−ν = τk,−ν+1 = ⋯ = τk,−1 = τk,0 < τk,1 < ⋯ < τk,Kk < τk,Kk+1 = τk,Kk+2 = ⋯ = τk,Kk+ν+1 = T on [t0, T] (Schumaker, 1981). Define the n × (Kk + ν + 1) matrix Nk = {Nk,ν+1(t1), ⋯, Nk,ν+1(tn)}T, Yk = (Yk(t1), ⋯, Yk(tn))T, and let $\Omega_k = \int_{t_0}^{T} N''_{k,\nu+1}(t)\,N''_{k,\nu+1}(t)^T\,dt$. The penalized spline (P-spline) objective function is a sum of squared residuals penalized by the integrated squared second-order derivative of the spline function:

$$\sum_{i=1}^{n}\{Y_k(t_i) - \delta_k^T N_{k,\nu+1}(t_i)\}^2 + \lambda_k\int_{t_0}^{T}\{\delta_k^T N''_{k,\nu+1}(t)\}^2\,dt = (Y_k - N_k\delta_k)^T(Y_k - N_k\delta_k) + \lambda_k\,\delta_k^T\Omega_k\delta_k. \tag{2.1}$$

The minimizer of (2.1) is $\hat\delta_k = (N_k^T N_k + \lambda_k\Omega_k)^{-1}N_k^T Y_k$. Then we have

$$\hat X_k(t) = \hat\delta_k^T N_{k,\nu+1}(t), \qquad \hat X_k'(t) = \hat\delta_k^T N'_{k,\nu+1}(t). \tag{2.2}$$

Following de Boor (2001), derivatives of spline functions can be expressed in terms of lower-order spline functions, so explicit expressions for $N'_{k,\nu+1}(t)$, and hence for $\hat X_k'(t)$, are available. To determine λk, we use the standard generalized cross-validation (GCV) method (Craven and Wahba, 1979). Note that if longitudinal replicate data are available (see our application example in Section 5), nonparametric mixed-effects smoothing methods can be used in this step to obtain better smoothing results (Wu and Zhang, 2006; Lu et al., 2011).
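Step I can be sketched as follows for a single gene. This is a minimal illustration under stated assumptions, not the authors' code: cubic B-splines (ν = 3) on equally spaced interior knots with repeated boundary knots, the penalty matrix Ωk approximated by a midpoint rule on a fine grid, and λk chosen by GCV over a fixed log-spaced grid. The helper names (`clamped_knots`, `bspline_basis`, `bspline_deriv`, `pspline_fit`) are hypothetical. The derivative routine uses the standard recursion that writes a B-spline derivative through lower-degree B-splines.

```python
import numpy as np

def clamped_knots(t0, T, n_interior, degree):
    """Knots with degree+1 copies of each boundary and equally spaced interior knots."""
    inner = np.linspace(t0, T, n_interior + 2)
    return np.concatenate([np.full(degree, t0), inner, np.full(degree, T)])

def bspline_basis(x, knots, degree):
    """Cox-de Boor recursion: basis matrix of shape (len(x), len(knots) - degree - 1)."""
    x = np.asarray(x, dtype=float)
    B = np.zeros((x.size, len(knots) - 1))
    for i in range(len(knots) - 1):
        B[:, i] = (knots[i] <= x) & (x < knots[i + 1])
    last = np.nonzero(knots[:-1] < knots[1:])[0][-1]   # last non-empty knot interval
    B[x == knots[-1], last] = 1.0                       # include the right endpoint T
    for d in range(1, degree + 1):
        Bd = np.zeros((x.size, len(knots) - d - 1))
        for i in range(len(knots) - d - 1):
            left, right = knots[i + d] - knots[i], knots[i + d + 1] - knots[i + 1]
            if left > 0:
                Bd[:, i] += (x - knots[i]) / left * B[:, i]
            if right > 0:
                Bd[:, i] += (knots[i + d + 1] - x) / right * B[:, i + 1]
        B = Bd
    return B

def bspline_deriv(x, knots, degree, order=1):
    """order-th derivative of the basis, written via lower-degree B-splines."""
    if order == 0:
        return bspline_basis(x, knots, degree)
    lower = bspline_deriv(x, knots, degree - 1, order - 1)
    D = np.zeros((lower.shape[0], len(knots) - degree - 1))
    for i in range(D.shape[1]):
        left, right = knots[i + degree] - knots[i], knots[i + degree + 1] - knots[i + 1]
        if left > 0:
            D[:, i] += degree / left * lower[:, i]
        if right > 0:
            D[:, i] -= degree / right * lower[:, i + 1]
    return D

def pspline_fit(t, y, n_interior=20, degree=3, lambdas=None):
    """P-spline fit of (2.1) with lambda chosen by GCV; returns X_hat, X_hat', lambda."""
    lambdas = np.logspace(-8, 2, 41) if lambdas is None else lambdas
    knots = clamped_knots(t.min(), t.max(), n_interior, degree)
    N = bspline_basis(t, knots, degree)
    grid = np.linspace(t.min(), t.max(), 2001)
    mid = 0.5 * (grid[:-1] + grid[1:])
    N2 = bspline_deriv(mid, knots, degree, order=2)
    Omega = N2.T @ N2 * (grid[1] - grid[0])   # midpoint rule for the integral of N'' N''^T
    n, best = len(y), None
    for lam in lambdas:
        A = N.T @ N + lam * Omega
        delta = np.linalg.solve(A, N.T @ y)
        df = np.trace(np.linalg.solve(A, N.T @ N))   # trace of the smoother matrix
        gcv = n * np.sum((y - N @ delta) ** 2) / (n - df) ** 2
        if best is None or gcv < best[0]:
            best = (gcv, lam, delta)
    _, lam, delta = best
    x_hat = lambda s: bspline_basis(s, knots, degree) @ delta
    dx_hat = lambda s: bspline_deriv(s, knots, degree, 1) @ delta
    return x_hat, dx_hat, lam

# Usage on one hypothetical noisy expression profile.
rng = np.random.default_rng(1)
t = np.linspace(0.0, 2 * np.pi, 200)
y = np.sin(t) + rng.normal(0.0, 0.05, t.size)
x_hat, dx_hat, lam = pspline_fit(t, y)
```

The returned `dx_hat` plays the role of X̂′k(t). Because it can be evaluated at any t in [t0, T], it also supports the data augmentation used in Step II.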

Step II. Pseudo-Sparse Additive Models

In this step, we propose a method to identify significant functions in model (1.3) using a high-dimensional variable selection technique, the group LASSO. First, following an idea similar to that of Varah (1982); Brunel (2008); Chen and Wu (2008a,b); Liang and Wu (2008); Wu et al. (2012), we substitute the estimated state variables X̂k(t) and their derivatives X̂′k(t) into ODE model (1.3) to form the following pseudo-sparse additive (PSA) model:

$$H_{ki} = \mu_k + \sum_{j=1}^{p} f_{kj}(\hat X_{ji}) + \Upsilon_{ki}, \qquad i = 1, \ldots, n, \tag{2.3}$$

where Hki = X̂′k(ti) and X̂ji = X̂j(ti), and ϒki is the sum of the measurement errors and the estimation errors of X̂′k(t) and X̂k(t) from Step I. In model (2.3), the response variables and the covariates are derived from the nonparametric smoothing estimates of the derivatives and of the state variables, respectively. Moreover, the resulting error terms ϒki are not i.i.d. but dependent. Thus, this is not a standard sparse additive regression model of the kind studied in the literature (Meier et al., 2009; Ravikumar et al., 2009; Huang et al., 2010; Cantoni et al., 2011), which is why we call it a ‘pseudo’ sparse additive (PSA) model. Since X̂k(t) and X̂′k(t) in the above model are estimated as continuous functions of t in Step I, we may evaluate them at more time points than the original observation times for the analysis in the next step. Other investigators (D’Haeseleer et al., 1999; Wessels et al., 2001; Bansal et al., 2006) have used this data augmentation strategy for ODE parameter estimation, and we adopt a similar idea here.

Remark 1. Decoupled property. Note that the substitution approach in model (2.3) allows us to decouple the p-dimensional ODE model into p independent one-dimensional ODEs (Voit and Almeida, 2004; Jia et al., 2011; Lu et al., 2011), so that we can deal with the variable selection problem for these ODEs one at a time. This is a unique feature of the two-stage smoothing-based estimation method for ODEs (Varah, 1982; Brunel, 2008; Chen and Wu, 2008a,b; Liang and Wu, 2008; Wu et al., 2012).

We adopt an idea similar to that of Huang et al. (2010) and use truncated series expansions in B-spline bases to approximate the additive components in model (2.3). Let t0 = ξ0 < ξ1 < ⋯ < ξKn < ξKn+1 = T be a partition of the interval [t0, T], where $K_n = O(n^{\varpi})$ (0 < ϖ < 0.5) is a positive integer such that $\max_{0 \le m \le K_n}|\xi_{m+1} - \xi_m| = O(n^{-\varpi})$. Let 𝒮n be the space of polynomial splines on [t0, T] of degree l ≥ 1 consisting of functions s satisfying: (i) s is a polynomial of degree l on the subintervals Im = [ξm, ξm+1), m = 0, ⋯, Kn − 1, and IKn = [ξKn, ξKn+1]; (ii) for l ≥ 2 and 0 ≤ l′ ≤ l − 2, s is l′ times continuously differentiable on [t0, T]. Then there exists a normalized B-spline basis {ϕm, 1 ≤ m ≤ mn} on [t0, T] for 𝒮n, where mn ≡ Kn + l, such that any $f_{nkj} \in \mathcal{S}_n$ can be expressed as

$$f_{nkj}(x) = \sum_{m=1}^{m_n}\beta_{kjm}\phi_m(x), \tag{2.4}$$

where βkjm are spline coefficients. Here we propose to choose the number of basis functions, mn, conservatively large (more than enough, i.e., under-smoothing). Note that it is computationally prohibitive to select a different mn for each function fkj(·) when p is large. To deal with this problem, we re-apply the LASSO approach in Step V to shrink unnecessary basis coefficients to zero. Replacing fkj by its B-spline approximation in (2.4), model (2.3) can be expressed as

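$$H_{ki} = \mu_k + \sum_{j=1}^{p}\sum_{m=1}^{m_n}\beta_{kjm}\phi_m(\hat X_{ji}) + \Upsilon^{*}_{ki}, \qquad i = 1, \ldots, n, \tag{2.5}$$

where ϒ*ki is the sum of ϒki and the errors of approximating the additive regression functions by splines. Let βkj = (βkj1, ⋯, βkjmn)T (k, j = 1, ⋯, p) and $\beta_k = (\beta_{k1}^T, \ldots, \beta_{kp}^T)^T$. Then, for each k, we have p groups of parameters, and our goal is to select the nonzero groups, i.e., the nonzero βkj, j = 1, ⋯, p.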

Step III. Group LASSO

For model (1.3), a constraint is needed to address the identifiability problem, namely Efkj(Xj(t)) = 0 (j = 1, ⋯, p). Thus, for model (2.5), we impose the constraints $\sum_{i=1}^{n}\sum_{m=1}^{m_n}\beta_{kjm}\phi_m(\hat X_{ji}) = 0$, j = 1, ⋯, p. Equivalently, these constraints can be removed by centering the response and the basis functions. Let $\bar\phi_{jm} = n^{-1}\sum_{i=1}^{n}\phi_m(\hat X_{ji})$ and ψm(x) ≡ ψjm(x) = ϕm(x) − ϕ̄jm. Write Zij = {ψ1(X̂ji), ⋯, ψmn(X̂ji)}T, Zj = (Z1j, ⋯, Znj)T, and Z = (Z1, ⋯, Zp). Let $\bar H_k = n^{-1}\sum_{i=1}^{n}H_{ki}$ and Hk = (Hk1 − H̄k, ⋯, Hkn − H̄k)T. Similar to Brunel (2008) and Wu et al. (2012), a weight function with boundary restrictions is imposed to achieve a better convergence rate for parameter estimation. Let Dk1 = diag{dk1(t1), ⋯, dk1(tn)}, Dk2 = diag{dk2(t1), ⋯, dk2(tn)} and Dk3 = diag{dk3(t1), ⋯, dk3(tn)}, where dk1(t), dk2(t) and dk3(t) are prescribed non-negative weight functions on [t0, T] with boundary conditions dk1(t0) = dk1(T) = 0, dk2(t0) = dk2(T) = 0 and dk3(t0) = dk3(T) = 0. More discussion on how to select the weight function can be found in Brunel (2008) and Wu et al. (2012). With this notation, we obtain the group LASSO estimator β̃k by minimizing the following penalized weighted least squares criterion,

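$$L_{k1}(\beta_k; \lambda_{k1}) = (H_k - Z\beta_k)^T D_{k1}(H_k - Z\beta_k) + \lambda_{k1}\sum_{j=1}^{p}\|\beta_{kj}\|_2, \tag{2.6}$$

where λk1 is a penalty parameter, which can be determined by BIC or EBIC (Chen and Chen, 2008). Here we have dropped μk from the arguments of Lk1 because of the centering, with μ̂k = H̄k. Based on the group LASSO estimator β̃k, we also obtain the estimates of the nonparametric functions, $\tilde f_{kj}(x) = \sum_{m=1}^{m_n}\tilde\beta_{kjm}\psi_m(x)$, 1 ≤ j ≤ p.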

Step IV. Adaptive Group LASSO

The above group LASSO penalty treats all groups equally, which is not optimal. To allow different amounts of shrinkage for different groups, we use an adaptive group LASSO. In this step, we perform the adaptive group LASSO based on the results of Step III, setting $w_{kj} = \|\tilde\beta_{kj}\|_2^{-1}$ if ‖β̃kj‖2 > 0 and wkj = ∞ otherwise. We then obtain the adaptive group LASSO estimator β̂k by minimizing the penalized weighted least squares criterion

$$L_{k2}(\beta_k; \lambda_{k2}) = (H_k - Z\beta_k)^T D_{k2}(H_k - Z\beta_k) + \lambda_{k2}\sum_{j=1}^{p} w_{kj}\|\beta_{kj}\|_2, \tag{2.7}$$

with a penalty parameter λk2, which can also be determined by BIC or EBIC (Chen and Chen, 2008). We then obtain the adaptive group LASSO estimates of μk and fkj, μ̂k = H̄k and $\hat f_{kj}(x) = \sum_{m=1}^{m_n}\hat\beta_{kjm}\psi_m(x)$, 1 ≤ j ≤ p.

Step V. Regular LASSO for Shrinking Basis Coefficients

In Step II, we approximate each of the nonparametric functions in the pseudo-sparse additive model intentionally using a larger number of basis functions (under-smoothing). Thus, some of these basis functions may not be necessary. In this step, we re-apply the regular LASSO or adaptive LASSO to the final model selected from the adaptive group LASSO in Step IV to shrink the coefficients of unnecessary basis functions into zero so that we can obtain a final parsimonious model. The minimization criterion for the adaptive LASSO is

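$$L_{k3}(\beta_k^{(s)}; \lambda_{k3}) = (H_k - Z^{(s)}\beta_k^{(s)})^T D_{k3}(H_k - Z^{(s)}\beta_k^{(s)}) + \lambda_{k3}\sum_{j=1}^{s}\sum_{m=1}^{m_n} w_{kjm}|\beta_{kjm}^{(s)}|, \tag{2.8}$$

where the superscript “(s)” denotes the quantities corresponding to the groups selected in Step IV, s is the total number of selected groups, and λk3 is a penalty parameter. The weight wkjm is set as $w_{kjm} = |\hat\beta_{kjm}|^{-1}$ if $\hat\beta_{kjm} \neq 0$, and wkjm = ∞ otherwise.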

3. Theoretical Results

In this section, we establish the asymptotic properties of the proposed group LASSO estimator in Step III and the adaptive group LASSO estimator in Step IV for the pseudo-sparse additive model derived from a set of ODEs in Section 2. This is challenging because we need to integrate the asymptotic results for the two-stage smoothing-based estimation method for ODE models (Varah, 1982; Brunel, 2008; Chen and Wu, 2008a,b; Liang and Wu, 2008; Wu et al., 2012) with those for sparse additive models (Meier et al., 2009; Ravikumar et al., 2009; Huang et al., 2010; Cantoni et al., 2011).

Let r be a nonnegative integer and ζ ∈ (0, 1] such that ϱ = r + ζ > 0.5. Let ℱ be the collection of functions f on [t0, T] whose rth derivative f^(r) exists and satisfies the Lipschitz condition of order ζ: $|f^{(r)}(s) - f^{(r)}(t)| \le C|s - t|^{\zeta}$ for s, t ∈ [t0, T], with a generic positive constant C. In model (1.3), without loss of generality, suppose that the first q components are nonzero, that is, fkj(x) ≠ 0 for 1 ≤ j ≤ q, but fkj(x) ≡ 0 for q + 1 ≤ j ≤ p. Let A1 = {1, ⋯, q}, A0 = {q + 1, ⋯, p}, Ã1 = {j : ‖β̃kj‖2 ≠ 0, 1 ≤ j ≤ p}, and Ã2 = A1 ∪ Ã1. Let |A| denote the cardinality of any index set A. For any function f(x) with x ∈ [a, b], define $\|f\|_2 = \{\int_a^b f^2(x)\,dx\}^{1/2}$ whenever the integral exists. For 1 ≤ k ≤ p, we make the following assumptions:

Assumption A:

(A1) For , there exists a constant M > 0 such that and .

(A2) $K_k \sim c\,n^{\vartheta}$ with 1/(2ν + 3) ≤ ϑ < 1, and $\lambda_k = O(n^{\pi})$ with π ≤ ν/(2ν + 3).

(A3) $X_k(t) \in C^{\nu+1}[t_0, T]$ with ν ≥ 2.

(A4) $K^{*} = (K_k + \nu - 1)(\lambda_k\tilde c_1)^{1/4}n^{-1/4} < 1$ for some constant c̃1.

(A5) The random design points t1, ⋯, tn are i.i.d. with cumulative distribution function Q(t) and a positive, continuous density ρ(t). Moreover, ρ(t) is bounded away from 0 and +∞ and has a bounded and continuous first-order derivative.

Assumption B:

(B1) l + 1 ≥ ϱ.

(B2) The number of nonzero components q is fixed, and there is a constant cf > 0 such that min1≤j≤q ‖fkj‖2 ≥ cf for the nonzero fkj's.

(B3) The random variables εk(ti) (i = 1, ⋯, n) are i.i.d. with E[εk(ti)] = 0 and Var[εk(ti)] = σk². Furthermore, their tail probabilities satisfy P{|εk(ti)| > x} ≤ K exp(−Cx²), i = 1, ⋯, n, for all x ≥ 0 and some constants C and K.

(B4) E[fkj(Xj(t))] = 0 and fkj ∈ ℱ for k, j = 1, ⋯, p.

(B5) ν ≥ 3ϱ.

(B6) Both weight functions dk1(·) and dk2(·) are bounded and nonnegative on [t0, T] with dk1(t0) = dk1(T) = 0 and dk2(t0) = dk2(T) = 0. For simplicity, assume that ‖dk1‖∞ ≤ 1 and ‖dk2‖∞ ≤ 1. Moreover, both dk1(t) and dk2(t) have bounded and continuous first-order derivatives.

Note that Assumption A is required to derive the local properties of the penalized spline estimates; similar conditions were used by Claeskens et al. (2009) and Wu et al. (2012). Assumption B1 is required for the nonparametric smoothing approaches (Huang, 2003; Xue et al., 2010). Assumptions B2, B3 and B4 are standard conditions for nonparametric additive models (Huang et al., 2010). Assumption B5 means that the state variables Xk(·) are smoother than the additive functions fkj(·); this is required to control the error of the first-stage nonparametric smoothing so that the error in the pseudo-sparse additive model of Step II is of the same order. Assumption B6 handles the boundary effect in derivative estimation, so that the proposed adaptive group LASSO estimator can achieve the optimal nonparametric convergence rate.

Theorem 1 Suppose that Assumptions A and B hold and for a sufficiently large constant C. Then we have

With probability tending to one, |Ã1| ≤ M1|A1| = M1q for a finite constant M1 > 1.

If and as n → ∞, then all the nonzero βk,j, 1 ≤ j ≤ q, are selected with probability tending to one.

.

Theorem 2 Suppose that Assumptions A and B hold and that for a sufficiently large constant C. Then we have the following results:

Let Ãf = {j : ‖f̃k,j‖2 > 0, 1 ≤ j ≤ p}. There is a constant M1 > 1 such that, with probability tending to one, |Ãf| ≤ M1q.

If $m_n\log(p\,m_n)/n \to 0$ and as n → ∞, then all the nonzero additive components fkj, 1 ≤ j ≤ q, are selected with probability tending to one.

, j ∈ Ã2.

Corollary 1 Suppose that Assumptions A and B hold. If and $m_n \asymp n^{1/(2\varrho+1)}$, we have

If $n^{-2\varrho/(2\varrho+1)}\log(p) \to 0$ as n → ∞, then with probability tending to one, all the nonzero components fkj, 1 ≤ j ≤ q, are selected and the number of selected components is no more than M1q.

, j ∈ Ã2.

Theorem 3 Suppose Assumptions A and B hold. If , $m_n \asymp n^{1/(2\varrho+1)}$, and $\lambda_{k2} \le O(n^{1/2})$ and satisfies and , then $P(\|\hat f_{kj}\|_2 > 0, j \in A_1 \text{ and } \|\hat f_{kj}\|_2 = 0, j \in A_0) \to 1$, that is, the adaptive group LASSO consistently selects the nonzero components. In addition, .

Detailed proofs of Theorems 1–3 and Corollary 1 are given in the Appendix.

Remark 2. We note that, for the λk1, λk2 and mn given in Theorem 3, the number of zero components can be as large as $\exp(o(n^{2\varrho/(2\varrho+1)}))$, which is much larger than n. Thus, under the conditions of the theorems, the proposed adaptive group LASSO estimator is selection consistent and achieves the optimal rate of convergence even when p is much larger than n.

Remark 3. All of these theoretical results (Theorems 1–3 and Corollary 1) can be extended to the case of fixed design points t1, ⋯, tn, in which case we can assume that there exists a distribution function Q(t) with a positive and continuous derivative density ρ(t) such that for the empirical distribution Qn(t), . This assumption was also adopted by Zhou et al. (1998) and Zhou and Wolfe (2000).

For the pseudo-sparse additive models derived from a set of nonlinear/nonparametric ODEs, we have obtained theoretical results (Theorems 1–3 and Corollary 1) similar to those of Huang et al. (2010) for nonparametric additive regression models. To prove these results, we develop a key lemma (Lemma A.1 in the Appendix) on the convergence rate of the projection involving the weighted residuals of the derivative estimates of the state variables in the ODEs. In addition, we use the weight functions Dk1 and Dk2 in the group LASSO objective function (2.6) and the adaptive group LASSO objective function (2.7), respectively. In a parametric ODE setting, Wu et al. (2012) used a weight function with similar boundary conditions to estimate constant coefficients in ODE models. They found that the convergence rate of the parametric estimator depends on the boundary conditions of the weight function: if the weight function is zero at both boundaries, the standard root-n rate can be achieved for the constant ODE parameter estimates; otherwise, only the optimal nonparametric convergence rate can be reached. For the pseudo-sparse additive models, we have achieved the optimal nonparametric convergence rate for the adaptive group LASSO estimator (Theorem 3) of the nonparametric functions in the SA-ODE model under the zero-boundary assumption for the weight function. If this zero-boundary assumption does not hold, a convergence rate slower than the optimal nonparametric rate is expected, although we do not provide a detailed proof of this claim. Our proofs of Theorems 1–3 and Corollary 1 follow ideas similar to those in Huang et al. (2010), but several technical challenges must be handled because the pseudo-sparse additive model differs from the sparse additive regression model in Huang et al. (2010).

4. Simulation Studies

In this section, we design simulation experiments to validate the proposed variable selection method for the SA-ODE model. We consider a true SA-ODE model with 8 coupled ODEs as a dynamic network (simulation studies with a higher-dimensional SA-ODE model are computationally prohibitive with current computing resources):

| (4.1) |

| (4.2) |

| (4.3) |

and we set

| (4.4) |

| (4.5) |

| (4.6) |

| (4.7) |

| (4.8) |

We assume the measurement model as

| (4.9) |

Note that, to mimic the real data example in the next section, we also allow replicated measurements, j = 1, 2, ⋯, nk, at each time point for the kth equation, and the number of replicates may differ across equations. In our simulation studies, the numbers of replicates for the 8 equations are (n1, n2, n3, n4, n5, n6, n7, n8) = (50, 60, 70, 40, 50, 30, 45, 35), and the number of equally spaced measurement time points is n = 15 or 150. If the replicates are repeated measures or longitudinal data, as in our real data example in the next section, the nonparametric mixed-effects smoothing approach (Wu and Zhang, 2006) can be used for the first-step nonparametric smoothing, which is more efficient (Lu et al., 2011). In our simulation studies, we also consider the case of longitudinal replicates. We first generated the mean trajectory of the observational data, X̄k(t), by numerically solving the ODE models (4.1)–(4.3) with initial values of the state variables (on the log scale), Xk(0) = Xk0, sampled from a normal distribution with mean 8 and standard deviation 5. We then assumed that the observational data depart randomly from the mean trajectory at time ti by bki, which follows a standard normal distribution. In addition, the measurement error εk(tij) is assumed to follow a normal distribution with mean zero and variance σ², with σ² = 0.01 or 0.1 in our simulation studies. In summary, the observational data were generated as yk(tij) = X̄k(tij) + bki + εk(tij). The number of simulation runs is 100.

We evaluate the performance of the proposed adaptive group LASSO for the pseudo-sparse additive models derived from the ODEs using the simulated data described above. In Step II, 12 basis functions were used to approximate each nonparametric component, and the data augmentation strategy (D’Haeseleer et al., 1999; Wessels et al., 2001; Bansal et al., 2006; Lu et al., 2011) was used, with 2000 data points taken from the smoothed estimates in Step I. The simulation results are reported in Table 1. When the sample size is small (n = 15) and the measurement error is large (σ² = 0.1), the true positive rate (TP) of the proposed adaptive group LASSO method ranges from 51% to 84% across the eight ODEs, and the false positive rate (FP) ranges from 33% to 45%. When the sample size is increased to n = 150, the minimum TP across the eight ODEs rises to 74% and the FP falls to 17–30%. When the measurement error is reduced from σ² = 0.1 to σ² = 0.01 with n = 15, the minimum TP is 68% and the FP falls to 25–36%. When the sample size is increased (n = 150) and the measurement error is reduced (σ² = 0.01), the TP ranges from 88% to 100% and the FP falls further to 7–16%. These results show that the proposed adaptive group LASSO method performs reasonably well, and its selection approaches perfect accuracy as the measurement error decreases and the sample size increases.
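The summary rates in Table 1 are per-equation averages over simulation runs. The sketch below shows one way to compute them; it assumes that TP% is the proportion of true regulators of gene k that are selected and FP% is the proportion of non-regulators that are wrongly selected. The section does not state these denominators explicitly, so treat the definitions, like the hypothetical helper names `tp_fp_rates` and `summarize`, as assumptions.

```python
import numpy as np

def tp_fp_rates(selected, true, p):
    """Assumed definitions: TP = |selected & true| / |true|, FP = |selected - true| / (p - |true|)."""
    selected, true = set(selected), set(true)
    tp = len(selected & true) / len(true)
    fp = len(selected - true) / (p - len(true))
    return tp, fp

def summarize(runs, true, p):
    """Mean and standard deviation of (TP, FP) over simulation runs; each run is a selected set."""
    rates = np.array([tp_fp_rates(s, true, p) for s in runs])
    return rates.mean(axis=0), rates.std(axis=0, ddof=1)
```

For example, with p = 8 candidate regulators, true regulators {0, 2, 5} and a selected set {0, 2, 3}, the rates are TP = 2/3 and FP = 1/5.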

Table 1.

Simulation results for the adaptive group LASSO in Step IV. The numbers are the averages of the true positive rate (TP%) and false positive rate (FP%) over 100 simulation replicates for different scenarios of sample sizes and measurement errors. Standard deviations are given in parentheses.

| σ² | Eqn # | TP% (n = 15) | FP% (n = 15) | TP% (n = 150) | FP% (n = 150) |
|---|---|---|---|---|---|
| 0.1 | (1) | 51 (0.57) | 45 (0.64) | 74 (0.39) | 30 (0.27) |
| | (2) | 55 (0.48) | 44 (0.37) | 78 (0.27) | 27 (0.34) |
| | (3) | 61 (0.56) | 39 (0.42) | 80 (0.37) | 22 (0.4) |
| | (4) | 84 (0.39) | 45 (0.53) | 100 (0.0) | 17 (0.33) |
| | (5) | 61 (0.72) | 38 (0.61) | 78 (0.31) | 26 (0.33) |
| | (6) | 70 (0.42) | 33 (0.39) | 94 (0.12) | 25 (0.27) |
| | (7) | 72 (0.37) | 39 (0.48) | 93 (0.22) | 21 (0.33) |
| | (8) | 75 (0.62) | 37 (0.69) | 96 (0.11) | 19 (0.17) |
| 0.01 | (1) | 71 (0.83) | 29 (0.21) | 88 (0.22) | 7 (0.09) |
| | (2) | 74 (0.51) | 33 (0.31) | 95 (0.13) | 12 (0.16) |
| | (3) | 68 (0.44) | 25 (0.25) | 94 (0.17) | 8 (0.20) |
| | (4) | 89 (0.26) | 28 (0.63) | 100 (0.0) | 10 (0.34) |
| | (5) | 68 (0.29) | 29 (0.79) | 91 (0.12) | 16 (0.14) |
| | (6) | 79 (0.47) | 36 (0.58) | 100 (0.0) | 9 (0.18) |
| | (7) | 80 (0.48) | 31 (0.44) | 97 (0.11) | 13 (0.12) |
| | (8) | 88 (0.33) | 28 (0.83) | 100 (0.0) | 11 (0.23) |

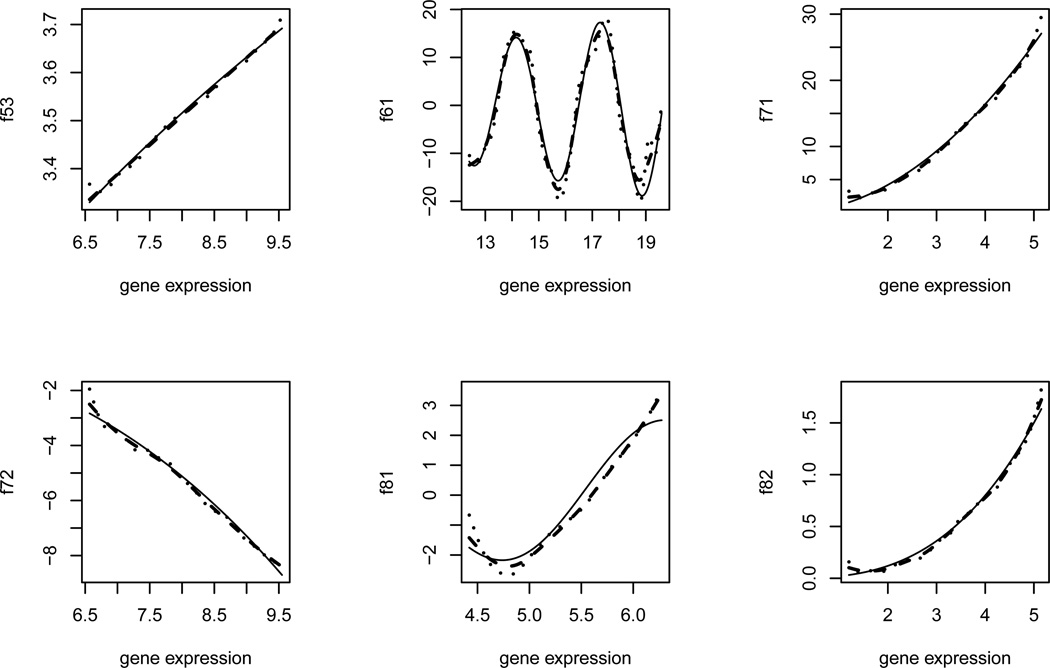

To illustrate the effect of Step V (the regular LASSO for shrinking basis coefficients) in the variable selection procedure proposed in Section 2, Figure 1 plots the estimated nonparametric functions from Step IV (adaptive group LASSO) and Step V, together with the true functions, from one simulation run. Both estimates have similar trends and capture the complex nonlinear functions, but the adaptive group LASSO estimates from Step IV (dotted lines) are too wiggly, presumably because too many basis functions are included (under-smoothing). This is why Step V is needed: it shrinks some of the unnecessary basis coefficients to zero using the regular LASSO, which produces better (smoother) estimates (dashed lines in Figure 1). Step V estimates also perform better at the boundaries and inflection points of the curves than Step IV estimates, although this observation is based on only one simulation run. In general, Step V estimates are expected to be better for smoother functions than those of Step IV, since Step V is designed to correct the under-smoothing of Step IV. To quantify the gains from Step V, Table 2 lists the residual sum of squares (RSS) from Steps IV and V for each of the 15 nonlinear functions. The RSS for each function fij is defined as $\mathrm{RSS}(f_{ij}) = \sum_{n=1}^{n_{ss}} \{\hat f_{ij}(x_{nij}) - f_{ij}(x_{nij})\}^2$, where $\hat f_{ij}$ is estimated from Step IV or V, fij(xnij) is the true function value, and nss is the sample size (here 2,000). Table 2 shows that Step V reduces the RSS relative to Step IV in most cases; however, for two of the 15 functions, f22 and f51, the RSS from Step V is larger than that from Step IV.
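The idea behind Step V and the RSS criterion can be illustrated with a small, self-contained sketch. It assumes a cubic B-spline basis with 12 functions on [0, 1], a hypothetical true function and pseudo-response, and a plain coordinate-descent LASSO for the objective (1/2n)‖y − Zb‖² + λ‖b‖₁; the unpenalized fit only stands in for a Step IV estimate, so this is not the paper's implementation.

```python
import numpy as np


def bspline_basis(x, n_basis=12, degree=3):
    """Clamped B-spline basis on [0, 1] with equally spaced interior knots (Cox-de Boor)."""
    x = np.asarray(x, dtype=float)
    interior = np.linspace(0.0, 1.0, n_basis - degree + 1)[1:-1]
    t = np.concatenate([np.zeros(degree + 1), interior, np.ones(degree + 1)])
    # Degree-0 functions: indicators of the non-empty knot intervals [t_i, t_{i+1}).
    B = np.zeros((x.size, t.size - 1))
    for i in range(t.size - 1):
        if t[i] < t[i + 1]:
            B[:, i] = (x >= t[i]) & (x < t[i + 1])
    nonempty = np.nonzero(t[:-1] < t[1:])[0]
    B[x == t[-1], nonempty[-1]] = 1.0  # close the last interval so x = 1 is covered
    # Cox-de Boor recursion: raise the degree one step at a time.
    for p in range(1, degree + 1):
        Bp = np.zeros((x.size, t.size - 1 - p))
        for i in range(t.size - 1 - p):
            left, right = t[i + p] - t[i], t[i + p + 1] - t[i + 1]
            if left > 0:
                Bp[:, i] += (x - t[i]) / left * B[:, i]
            if right > 0:
                Bp[:, i] += (t[i + p + 1] - x) / right * B[:, i + 1]
        B = Bp
    return B


def lasso_cd(Z, y, lam, n_iter=200):
    """Cyclic coordinate descent for (1/2n)||y - Z b||^2 + lam * ||b||_1 (no intercept)."""
    n, p = Z.shape
    b = np.zeros(p)
    col_sq = (Z ** 2).sum(axis=0) / n
    r = np.array(y, dtype=float)  # residual y - Z b, with b = 0 initially
    for _ in range(n_iter):
        for j in range(p):
            if col_sq[j] == 0.0:
                continue
            r += Z[:, j] * b[j]                       # partial residual without coordinate j
            rho = Z[:, j] @ r / n
            b[j] = np.sign(rho) * max(abs(rho) - lam, 0.0) / col_sq[j]  # soft-threshold
            r -= Z[:, j] * b[j]
    return b


def rss(f_hat_vals, f_true_vals):
    """RSS = sum over the n_ss evaluation points of (f_hat - f)^2."""
    return float(np.sum((np.asarray(f_hat_vals) - np.asarray(f_true_vals)) ** 2))


rng = np.random.default_rng(1)
x = np.sort(rng.uniform(0.0, 1.0, 2000))         # n_ss = 2000 points, as in the text
f_true = np.sin(2 * np.pi * x)                    # hypothetical true regulation function
y = f_true + rng.normal(0.0, 0.3, x.size)         # hypothetical pseudo-response
Z = bspline_basis(x, n_basis=12)
beta_ls = np.linalg.lstsq(Z, y, rcond=None)[0]    # unpenalized fit, a stand-in for Step IV
beta_l1 = lasso_cd(Z, y, lam=0.005)               # regular LASSO on the basis coefficients
print("RSS unpenalized:", rss(Z @ beta_ls, f_true))
print("RSS LASSO-shrunk:", rss(Z @ beta_l1, f_true))
print("nonzero coefficients:", np.count_nonzero(beta_l1), "of", beta_l1.size)
```

The penalty level `lam` here is arbitrary; in practice it would be chosen by a data-driven criterion, and the printed RSS values depend on the simulated data.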

Figure 1.

Simulation example: A comparison of the true functions (solid lines) and estimated functions from the adaptive group LASSO in Step IV (dotted lines) and from Step V after further basis coefficient shrinking (dashed lines) from one simulation run.

Table 2.

Comparisons of residual sum of squares (RSS) from Step IV and V.

| f11 | f12 | f21 | f22 | f31 | f32 | f41 | f51 | |

| (×10³) | (×10) | (×10³) | (×10³) | (×10³) | ||||

| Step IV | 7.0 | 53.1 | 8.2 | 1.5 | 34.3 | 7.0 | 9.0 | 1.1 |

| Step V | 6.8 | 9.9 | 8.0 | 2.7 | 8.6 | 5.8 | 1.2 | 2.8 |

| f52 | f53 | f61 | f71 | f72 | f81 | f82 | ||

| (×10⁶) | (×10⁻²) | (×10⁵) | (×10⁶) | (×10) | (×10²) | (×10³) | ||

| Step IV | 8.6 | 35.3 | 1.5 | 1.2 | 19.2 | 8.0 | 4.0 | |

| Step V | 8.1 | 9.0 | 1.4 | 1.1 | 8.4 | 5.1 | 3.9 | |

5. Application: Identification of Nonlinear Dynamic Gene Regulatory Networks

In this section, we apply the proposed method to identify a nonlinear dynamic gene regulatory network based on time course microarray data for T-cell activation. The central event in the generation of an immune response is the activation of T-lymphocytes (T-cells). Activated T-cells proliferate and produce cytokines involved in the regulation of effector cells such as B cells and macrophages, which are the primary mediators of the immune response. T-cell activation is initiated by the interaction between the T-cell receptor (TCR) complex and the antigenic peptide presented on the surface of an antigen-presenting cell. This event triggers a network of signaling molecules, including kinases, phosphatases and adaptor proteins that couple the stimulatory signal received from the TCR to gene transcription events in the nucleus (Iwashima et al., 1994; Ley et al., 1991).

To better understand the gene regulatory network during T-cell activation, Rangel et al. (2004) performed two experiments to characterize the response of a human T-cell line (Jurkat) to PMA and ionomycin treatment. In the first experiment, they monitored the expression of 88 genes using cDNA array technology across 10 unequally spaced time points (0, 2, 4, 6, 8, 18, 24, 32, 48 and 72 h), with 34 replicates per gene. In the second experiment, an identical protocol was used, but additional genes were added to the arrays and each gene was replicated 10 times. Genes that displayed very poor reproducibility between the two experiments were removed, and the expression data for 58 genes were retained for the final analysis by Rangel et al. (2004), where details of data collection and preprocessing can be found. Rangel et al. (2004) applied a linear state space model (SSM) to identify the transcriptional network from these highly replicated expression profiles. Beal et al. (2005) proposed a variational Bayesian treatment for identifying a suitable dimension of the hidden state space in the SSM. In this paper, we apply the proposed SA-ODE model and the variable selection method of the previous sections to establish a nonlinear dynamic regulatory network among the 58 genes for the T-cell activation process. Through this example, we demonstrate that some of the gene regulation effects are essentially nonlinear and that a linear network model may not be sufficient to capture the nonlinear features of the dynamic network.

We consider the 58 T-cell activation genes that Rangel et al. (2004) identified as reproducible across the two experiments. For consistency, we use only the expression data from the first experiment, with each gene replicated 34 times at 10 time points. The 34 replicates for each gene showed a similar expression pattern during the T-cell activation experiment, so it is reasonable to smooth the data for each gene using the nonparametric mixed-effects smoothing spline technique (Wu and Zhang, 2006; Lu et al., 2011) to obtain estimates of the mean expression curve and its derivative for each gene. In the second step, these estimates were plugged into ODE model (1.3) to form the pseudo-sparse additive (PSA) model (2.3). Penalized pseudo-least squares with the group LASSO and adaptive group LASSO penalties was then used to identify significant regulations (connections) among the 58 genes with potentially nonlinear regulation effects. We obtained the fitted curves for all genes by numerically integrating the 58-gene ODE system simultaneously, with parameters estimated from the adaptive group LASSO in Step IV and after shrinking the basis coefficients with the regular LASSO in Step V, respectively.
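Obtaining fitted curves by integrating the estimated system amounts to solving the fitted ODEs forward in time from the initial values. A minimal sketch, assuming the additive form dX_k/dt = μ_k + Σ_j f_kj(X_j) with already-fitted component functions, and using a classical fourth-order Runge–Kutta scheme (our choice of solver, not necessarily the authors'); the two-gene system below is hypothetical.

```python
import numpy as np


def sa_ode_rhs(x, mu, components):
    """Right-hand side dX_k/dt = mu_k + sum_j f_kj(X_j) of an additive ODE system.

    components[k] lists (j, f_kj) pairs: a selected regulator index j and its
    fitted component function f_kj (any callable on a scalar).
    """
    dx = np.array(mu, dtype=float)
    for k, terms in enumerate(components):
        for j, f in terms:
            dx[k] += f(x[j])
    return dx


def integrate_rk4(x0, times, mu, components):
    """Classical fourth-order Runge-Kutta on the grid `times`; returns one row per time."""
    xs = np.empty((len(times), len(x0)))
    xs[0] = x0
    for i in range(len(times) - 1):
        h = times[i + 1] - times[i]
        x = xs[i]
        k1 = sa_ode_rhs(x, mu, components)
        k2 = sa_ode_rhs(x + 0.5 * h * k1, mu, components)
        k3 = sa_ode_rhs(x + 0.5 * h * k2, mu, components)
        k4 = sa_ode_rhs(x + h * k3, mu, components)
        xs[i + 1] = x + h / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return xs


# Hypothetical two-gene system: gene 0 decays, gene 1 is driven nonlinearly by gene 0.
components = [
    [(0, lambda v: -0.1 * v)],
    [(0, np.tanh), (1, lambda v: -0.2 * v)],
]
mu = [0.0, 0.5]
times = np.linspace(0.0, 72.0, 721)  # fine grid covering the 0-72 h design
path = integrate_rk4(np.array([8.0, 6.0]), times, mu, components)
print(path[-1])
```

A fine grid is used because the observed design points (0 to 72 h) are too widely and unequally spaced to serve directly as integration steps; the fitted curve at the observation times can then be read off the grid.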

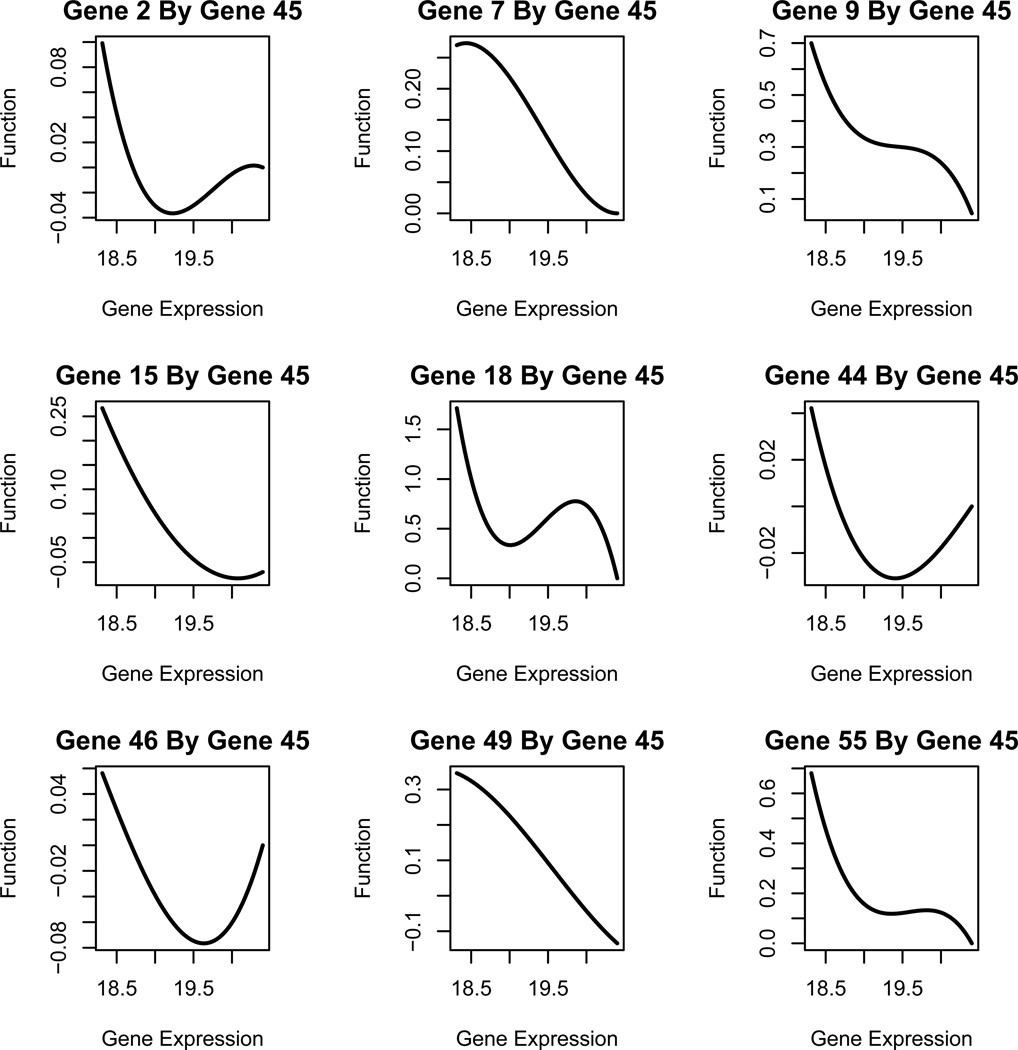

Figure 2 shows the fitted expression curves (dashed lines) for 9 genes from Step V of the proposed procedure, overlaid with the raw data (dots) and the smoothed mean curves from Step I (solid lines). For comparison, the estimates from the linear ODE model (Lu et al., 2011) were also obtained and plotted (dotted lines). The proposed nonparametric additive ODE models fit the data better (and are closer to the smoothed mean curves) than the linear ODE model. The proposed SA-ODE models not only fit simple monotonic curves well, but can also flexibly fit complex nonlinear curves reasonably well. For each of the 58 genes, Table 3 lists the regulatory genes identified by the proposed method. One important feature of the identified GRN is that each of the 58 genes is regulated by only a few other genes (ranging from 1 to 8 genes), which reflects the sparseness of the network connections.

Figure 2.

Real data analysis for T cell activation experimental data (dots): the estimation results for gene FYB (gene 45) and 9 genes regulated by gene FYB which include nonparametric smoothing estimates of mean expression curves from Step I (solid lines), the estimated curves (dashed lines) based on the proposed SA-ODE model from Step V of the proposed procedure, and the estimated curves (dotted lines) using the linear ODE model fit from Lu et al. (2011).

Table 3.

T-cell activation gene regulatory network: variable selection results from the adaptive group LASSO. For a particular gene listed in Columns 1 and 4, Columns 2 and 5 list the genes that have significant regulation effects on it (inward influence), and Columns 3 and 6 list the genes on which it has a significant regulation effect (outward influence).

| Gene | Inward Influence Genes | Outward Influence Genes | Gene | Inward Influence Genes | Outward Influence Genes |
|---|---|---|---|---|---|
| 1 | 29, 32, 43 | | 30 | 6, 46, 52 | |
| 2 | 32, 41, 45 | | 31 | 25 | 6, 10, 23, 27, 35, 46, 48 |
| 3 | 29, 35, 41 | | 32 | 48 | 1, 2, 5, 21, 24, 26, 56, 57 |
| 4 | 25 | | 33 | 27, 28, 41 | 11, 23 |
| 5 | 32, 35 | | 34 | 6, 27 | |
| 6 | 6, 25, 31 | 6, 9, 16, 26, 30, 34, 42, 52 | 35 | 20, 31 | 3, 5, 9, 14, 18, 20, 21, 38 |
| 7 | 45, 52, 57 | 11, 23 | 36 | 37 | 23 |
| 8 | 25 | | 37 | 25 | 12, 13, 16, 22, 27, 36, 40, 56 |
| 9 | 6, 17, 27, 28, 35, 45 | | 38 | 11, 28, 29, 35 | |
| 10 | 31, 51, 52 | | 39 | 25 | 53 |
| 11 | 7, 13, 33, 46, 57 | 14, 18, 21, 38 | 40 | 37, 46 | |
| 12 | 29, 37 | | 41 | 20, 55 | 2, 3, 15, 17, 25, 33, 42, 50, 58 |
| 13 | 37 | 11, 14, 15, 23, 50 | 42 | 6, 41, 46 | |
| 14 | 11, 13, 20, 28, 29, 35 | | 43 | 27 | 1 |
| 15 | 13, 41, 45 | | 44 | 28, 29, 45, 57 | |
| 16 | 6, 27, 37 | | 45 | 52 | 2, 7, 9, 15, 18, 44, 46, 49, 55 |
| 17 | 41, 48 | 9, 54 | 46 | 31, 45 | 11, 23, 24, 30, 40, 42, 58 |
| 18 | 11, 35, 45, 54 | | 47 | 27 | |
| 19 | 25 | 58 | 48 | 31, 48 | 17, 28, 32, 48 |
| 20 | 29, 35, 55 | 14, 25, 35, 41, 57 | 49 | 35, 45, 52 | |
| 21 | 11, 28, 32, 35 | | 50 | 13, 41, 57 | |
| 22 | 27, 37, 52 | | 51 | 25 | 10 |
| 23 | 7, 13, 31, 33, 36, 46, 57 | | 52 | 6 | 7, 10, 22, 29, 30, 45, 49, 53, 55 |
| 24 | 32, 46, 57 | | 53 | 39, 52 | |
| 25 | 20, 28, 41 | 4, 6, 8, 19, 26, 31, 37, 39, 51 | 54 | 17 | |
| 26 | 6, 25, 32 | | 55 | 27, 45, 52 | 20, 41 |
| 27 | 31, 37 | 9, 16, 22, 33, 34, 43, 47, 55, 58 | 56 | 32, 37 | |
| 28 | 48, 57 | 9, 14, 21, 25, 33, 38, 44 | 57 | 20, 32 | 7, 11, 23, 24, 28, 44, 50 |
| 29 | 52 | 1, 3, 12, 14, 20, 38, 44 | 58 | 19, 27, 41, 46 | |

In agreement with the findings of Rangel et al. (2004), our model identified the important adaptor molecule in the T-cell receptor signaling pathway, FYB (gene 45 in Table 3), as one of the genes with the highest number of outward connections. In addition to the 6 FYB-regulated genes found by Rangel et al. (2004), we identified three more FYB-regulated genes, which carry functions in proliferation (gene 2), interference (gene 44) and apoptosis (gene 49). This is presumably because the proposed nonparametric additive ODE model and variable selection method can identify significant nonlinear regulation effects, which the linear state space model (SSM) used by Rangel et al. (2004) cannot capture. As another interesting finding, the proposed model identified the direct regulation of integrin (gene 15) by FYB, which has been corroborated experimentally (Peterson et al., 2001). In contrast, the linear SSM used by Rangel et al. (2004) found only an indirect regulation mediated by IL3Rθ (gene 55) and TRAF5 (gene 3). The estimated regulation functions of FYB (gene 45 in Table 3) on other genes are shown in Figure 3. Our results show that FYB has a positive regulatory effect on genes 9, 18, 46 and 55 throughout its expression range, but a negative effect on gene 7, which agrees with the findings of Rangel et al. (2004). For gene 15, which Rangel et al. (2004) found to be indirectly regulated by FYB, we found that the direct regulation effect is positive when the FYB expression level is low and becomes negative when the FYB expression level is high. Similarly, for the three FYB-regulated genes newly discovered by our method (genes 2, 44 and 49), the regulation effect changes from positive to negative when FYB expression is high.
This demonstrates that the proposed nonparametric additive ODE model may help discover not only nonlinear regulation effects, but also effects that vary with the expression level of the regulator genes.
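Statements such as "positive when FYB expression is low and negative when it is high" can be checked numerically on an estimated regulation function. The sketch below scans a function over a range of regulator levels and reports where it changes sign; `f_hypothetical` is a made-up stand-in, not one of the functions estimated in Figure 3.

```python
import numpy as np


def sign_changes(f, lo, hi, n_grid=1001):
    """Locate regulator levels in [lo, hi] where a fitted regulation function f changes sign.

    f is evaluated on an equally spaced grid; each strict sign change between neighbouring
    grid points is refined by linear interpolation, and grid points where f is exactly
    zero are reported as they are.
    """
    x = np.linspace(lo, hi, n_grid)
    y = f(x)
    s = np.sign(y)
    i = np.nonzero(s[:-1] * s[1:] < 0)[0]
    crossings = x[i] - y[i] * (x[i + 1] - x[i]) / (y[i + 1] - y[i])
    return np.sort(np.concatenate([x[s == 0], crossings]))


def f_hypothetical(level):
    # Hypothetical regulation function: positive at low regulator levels, negative at high ones.
    return 50.0 - level ** 2


print(sign_changes(f_hypothetical, 2.0, 12.0))  # one crossing near sqrt(50), about 7.07
```

The grid resolution limits how finely a sign change can be located; with a smooth spline estimate, the interpolated crossing is usually accurate to well below the grid spacing.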

Figure 3.

Estimated regulation functions of genes influenced by gene 45 (FYB)

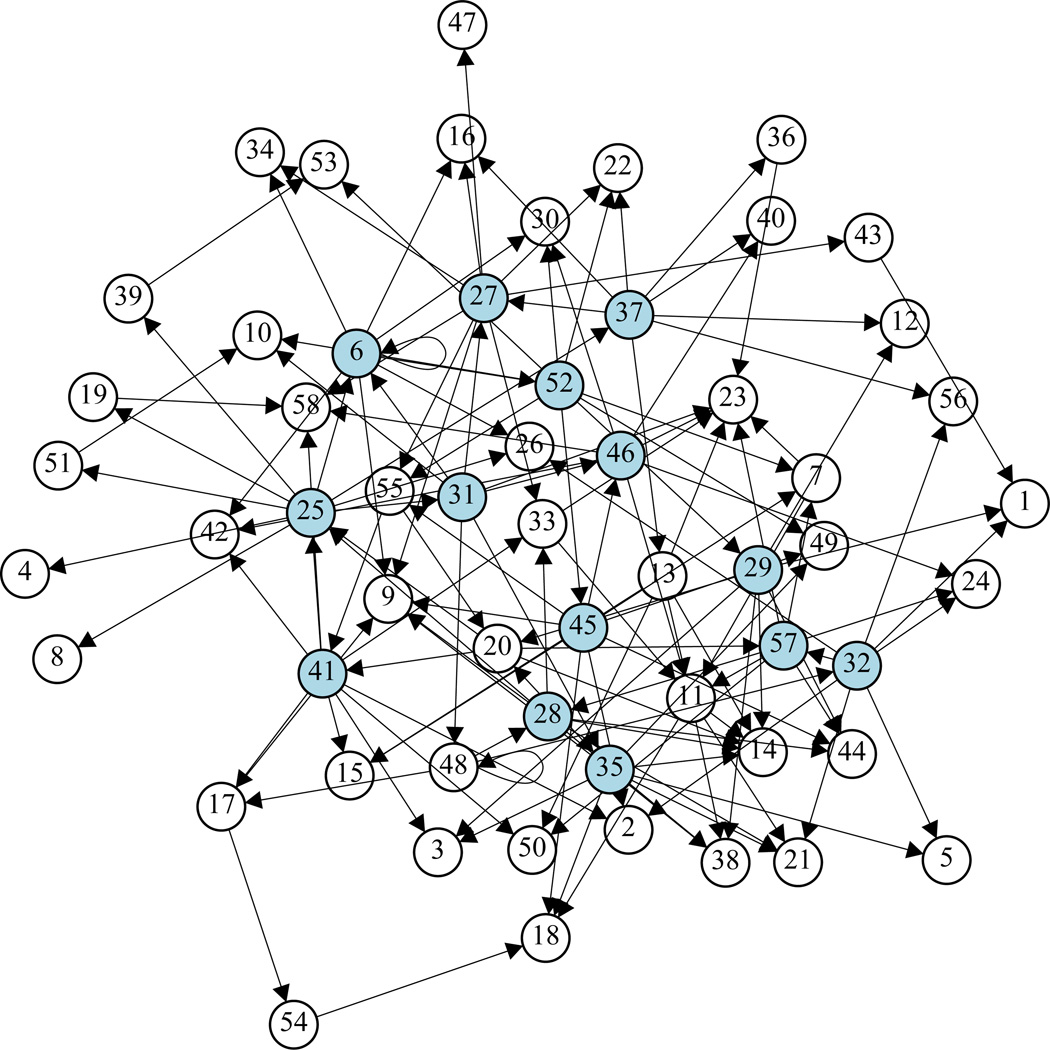

We used the R package igraph to plot the estimated GRN in Figure 4 and visualize the complete network. The figure shows 14 ‘big’ regulator genes, each of which regulates more than 5 other genes (see also Table 3). Due to space limitations, we will report more detailed annotations and biological implications of this dynamic GRN for T-cell activation elsewhere. Note that statistical methods and modeling approaches can only identify potential regulation effects in actual GRNs; some of these findings can be confirmed by the existing literature, while others may require new experiments to validate. We expect that the proposed SA-ODE model and variable selection methods provide a flexible tool to explore and identify additive nonlinear regulation effects in GRNs.
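The count of 14 "big" regulators can be reproduced directly from the outward-influence columns of Table 3. The sketch assumes that "more than 5 other genes" means at least six distinct targets excluding self-regulation (genes 6 and 48 list themselves as targets); the resulting edge list is the kind of input a graph library would take to draw a network such as Figure 4.

```python
# Outward-influence lists transcribed from Table 3; genes with an empty list are omitted.
OUTWARD = {
    6: [6, 9, 16, 26, 30, 34, 42, 52],
    7: [11, 23],
    11: [14, 18, 21, 38],
    13: [11, 14, 15, 23, 50],
    17: [9, 54],
    19: [58],
    20: [14, 25, 35, 41, 57],
    25: [4, 6, 8, 19, 26, 31, 37, 39, 51],
    27: [9, 16, 22, 33, 34, 43, 47, 55, 58],
    28: [9, 14, 21, 25, 33, 38, 44],
    29: [1, 3, 12, 14, 20, 38, 44],
    31: [6, 10, 23, 27, 35, 46, 48],
    32: [1, 2, 5, 21, 24, 26, 56, 57],
    33: [11, 23],
    35: [3, 5, 9, 14, 18, 20, 21, 38],
    36: [23],
    37: [12, 13, 16, 22, 27, 36, 40, 56],
    39: [53],
    41: [2, 3, 15, 17, 25, 33, 42, 50, 58],
    43: [1],
    45: [2, 7, 9, 15, 18, 44, 46, 49, 55],
    46: [11, 23, 24, 30, 40, 42, 58],
    48: [17, 28, 32, 48],
    51: [10],
    52: [7, 10, 22, 29, 30, 45, 49, 53, 55],
    55: [20, 41],
    57: [7, 11, 23, 24, 28, 44, 50],
}


def big_regulators(outward, min_targets=6):
    """Genes that regulate at least `min_targets` genes other than themselves."""
    return sorted(g for g, targets in outward.items()
                  if len(set(targets) - {g}) >= min_targets)


big = big_regulators(OUTWARD)
print(len(big), big)
# 14 [6, 25, 27, 28, 29, 31, 32, 35, 37, 41, 45, 46, 52, 57]

# Directed edges (regulator, target), e.g. for plotting with a graph library.
edges = [(g, target) for g, targets in OUTWARD.items() for target in targets]
print(len(edges))  # 143
```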

Figure 4.

Graph of the T-cell activation GRN formed by 58 genes. Each gene is represented as a node; arrows indicate the direction of influence. The 14 “big” regulators are shown in blue.

6. Concluding Remarks

In this paper, we have proposed a sparse additive ordinary differential equation (SA-ODE) model for dynamic gene regulatory networks (GRNs) to capture nonlinear regulation effects. We developed a five-step variable selection and estimation procedure that couples the two-step smoothing-based ODE estimation method with LASSO techniques, and we established the asymptotic properties of the proposed methods. Simulation studies demonstrate the good performance of the proposed variable selection method. We have successfully applied the proposed method to construct a nonlinear dynamic GRN for T-cell activation, and discovered additional regulation effects in the complex nonlinear network of 58 genes compared with the existing linear network modeling approach. The real data analysis also shows that the proposed SA-ODE model can not only capture complex nonlinear regulation effects, but also identify effects that vary with the expression levels of regulator genes.

In the theoretical development in Section 3, we have shown that the adaptive group LASSO selects the correct model with probability approaching 1 and achieves the optimal nonparametric convergence rate for the proposed SA-ODE model; these results are similar to those obtained by Huang et al. (2010) for a nonparametric additive regression model. Note that we convert the proposed SA-ODE model into a ‘pseudo’ sparse additive (PSA) model, which differs from the additive regression model of Huang et al. (2010) in two respects: both the response variables and the covariates in the PSA model are derived from the first-step nonparametric smoothing of the state variables and their derivatives, rather than observed directly; and the error terms in the PSA model are dependent rather than i.i.d. To tackle these challenges, we used a weight function with boundary restrictions in the penalized least squares (LASSO) criterion and established a key lemma to achieve the optimal convergence rate.

Alternative models for dynamic GRNs include Boolean networks, Bayesian networks and hidden Markov models, among others (Liang et al., 1998; Akutsu et al., 2000; Shmulevich et al., 2002; Martin et al., 2007; Murphy and Mian, 1999; Friedman et al., 2000; Hartemink et al., 2001; Zou and Conzen, 2005; Song et al., 2009; Hirose et al., 2008; Kojima et al., 2009; Shimamura et al., 2009; Gupta et al., 2007). It would be interesting to compare these approaches with the proposed ODE modeling method in terms of computational cost and the ability to recover the true network, but such a comparison is beyond our current computational capacity. We employed the two-stage smoothing-based ODE estimation method for the SA-ODE model to reduce the computational cost and simplify the implementation of LASSO-based variable selection. One limitation of this two-stage method is that it requires direct observations of all state variables in the system; variable selection for high-dimensional ODE models with partially observed state variables remains a challenging problem. Other, more efficient estimation approaches for ODE models, such as the numerical discretization-based estimation method (Wu et al., 2012) and the generalized profiling approach (Ramsay et al., 2007), may also be considered for high-dimensional ODE variable selection. We believe this direction is worth exploring, although the computational cost and implementation details need careful consideration. In the proposed SA-ODE model (1.3), we consider only a nonparametric additive structure of the related variables. It could be extended to a nonparametric structure involving interactions (Radchenko and James, 2010), since co-regulation is common in gene regulatory networks. We are currently working on this problem and will report the results elsewhere.

Acknowledgments

The authors would like to thank the editor, an associate editor, and two referees for their constructive comments and suggestions. This research was partially supported by the NIAID/NIH grants HHSN272201000055C and AI087135 as well as two University of Rochester CTSI pilot awards (UL1RR024160) from the National Center for Research Resources. Liang’s research was partially supported by NSF grants DMS-1007167 and DMS-1207444, and by Award Number 11228103 from the National Natural Science Foundation of China.

APPENDIX

Proofs

The theoretical results in Theorems 1–3 and Corollary 1 can be proved similarly to Huang et al. (2010), but there are additional technical challenges. The major differences between the ‘pseudo’ sparse additive (PSA) model (2.3) and the additive regression model in Huang et al. (2010) are: (i) both the response variables and the covariates in the PSA model are derived from the first-step nonparametric smoothing of the state variables and their derivatives, rather than observed data; and (ii) the error terms ϒki in the PSA model are dependent rather than i.i.d. To tackle these problems, we establish the following lemma. For 1 ≤ k ≤ p, let for 1 ≤ j ≤ p, 1 ≤ m ≤ mn (for notational simplicity, we suppress the dependence on k), and $T_n = \max_{1 \le j \le p,\, 1 \le m \le m_n} |T_{jm}|$.

Lemma A.1 Under Assumptions A, B2–B4 and B6 in Section 3, we have .

Proof: From (2.2), we know that Tjm can be expressed as follows.

with εk = (εk(t1), ⋯, εk(tn))T and gω(ti) being the ω-th component of the n-dimensional vector . Under Assumption B3, the εk(ti)’s are i.i.d. and sub-Gaussian. Moreover, given the ti’s, the coefficient of εk(tω) is fixed. Therefore, conditional on the ti’s, the Tjm’s are sub-Gaussian.

Let and . Then by the maximal inequality for sub-Gaussian random variables (Lemmas 2.2.1 and 2.2.2, van der Vaart and Wellner, 1996), we have , where C1 > 0 is a constant. Therefore,

| (A.1) |
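To make the appeal to the sub-Gaussian maximal inequality concrete, its generic form is recorded below. This is a sketch under stated assumptions, not a reconstruction of the display lost from the text above: it takes ψ2(x) = exp(x²) − 1, treats the p·mn variables Tjm as conditionally sub-Gaussian given t = (t1, …, tn), and uses the fact that the L1 norm is bounded by a constant multiple of the ψ2 Orlicz norm; K and K′ denote absolute constants.

```latex
% Generic form of Lemma 2.2.2 in van der Vaart and Wellner (1996) with psi_2(x) = e^{x^2} - 1,
% applied conditionally on t to the N = p m_n variables T_{jm}.
\Big\| \max_{1 \le j \le p,\, 1 \le m \le m_n} |T_{jm}| \Big\|_{\psi_2 \mid t}
  \le K\, \psi_2^{-1}(p\, m_n) \max_{j,m} \| T_{jm} \|_{\psi_2 \mid t},
  \qquad \psi_2^{-1}(N) = \sqrt{\log(1 + N)},
\\[4pt]
E(T_n \mid t) \le K' \sqrt{\log(1 + p\, m_n)}\, \max_{j,m} \| T_{jm} \|_{\psi_2 \mid t}.
```

The logarithmic dependence on p·mn is what allows the number of candidate regulators and basis functions to grow with n while the maximum of the Tjm remains controlled.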

Now we discuss the order of E(sn). As in Wu et al. (2012), we consider the integral approximation of Tjm because of boundary effects. By the strong law of large numbers, we have

| (A.2) |

From the integration by parts, it follows that

where and the second equality holds because of the boundary condition dk1(t0) = dk1(T) = 0. Denote and

By Hölder’s inequality, we have

| (A.3) |

From the proof of Lemma 6 in Wu et al. (2012), we know that

| (A.4) |

for some positive constant C2. By the extremal equality, it follows that , where L1(μ, μ) is the bi-variate Lebesgue function space with L1-norm. Continuing to apply integration by parts and the boundary condition dk1(t0) = dk1(T) = 0, we have

Then for some positive constant C3. By the properties of B-splines, we have

| (A.5) |

for some positive constant C4. Combining (A.3)–(A.5) together, it follows that for some positive constant C. Then from (A.2), we have that . Therefore for some positive constant C. Thus from (A.1), we have

for some positive constant C.

Proof of Theorem 1. The proof of part (i) is similar in spirit to the proof of Theorem 1 in Huang et al. (2010). But some technical challenges have to be tackled since here we need to consider the estimation errors of and X̂k(t) (involving the measurement error) and the approximation error of the additive regression functions by splines. Specifically, from (2.3) and Taylor expansion, we have

where Xji lies between X̂j(ti) and Xj(ti).

For Dk1 = diag{dk1(t1), ⋯, dk1(tn)}, we have . Let , where Ξk = (Ξk(t1), ⋯, Ξk(tn))T, Πk = (Πk1, ⋯, Πkn)T and δk = (δk1, ⋯, δkn)T with . For any integer m, let

where for |A| = q1 = m ≥ 0 (here the inverse matrix can also be replaced by the generalized inverse), , SAk = λk1UAk and ‖UAk‖2 = 1. Then from the expression of and X̂k(t), we have with .

For a sufficiently large constant C1 > 0, define

and

where m0 ≥ 0. Similar to the proof of Theorem 2.1 of Wei and Huang (2010), it follows that (Z, Ξk) ∈ Ωq ⇒ |Ã1| ≤ M1q for a constant M1 > 1 and

| (A.6) |

By the triangle and Cauchy-Schwarz inequalities,

| (A.7) |

Since for $m_n = n^{1/(2\varrho+1)}$, we have that, for all m ≥ q and n sufficiently large,

| (A.8) |

for some constant C2 > 0. From Lemma 1(i) of Wu et al. (2012) and Theorem 2(a) of Claeskens et al. (2009), we have and $E[\hat X_k(t)] - X_k(t) = O(\kappa^{\nu+1}) + O(\lambda_k n^{-1} \kappa^{-2}) = O(n^{-(\nu+1)/(2\nu+3)})$ for $\kappa = n^{-1/(2\nu+3)}$ and $\lambda_k = O(n^{\nu/(2\nu+3)})$. Then $\Pi_{ki} = O(q\, n^{-\nu/(2\nu+3)})$. Under Assumption B5, it follows that 3/(4ν + 6) ≤ 1/(4ϱ + 2). Then for all m ≥ q and n sufficiently large, we have

| (A.9) |

for some constant C3 > 0. Finally, it follows from (A.6)–(A.9) that P(Ωq) → 1. This completes the proof of part (i) of Theorem 1.

Before proving part (ii), we first prove part (iii) of Theorem 1. By the definition of ,

| (A.10) |

Let A2 = {j : ‖βkj‖2 ≠ 0 or ‖β̃kj‖2 ≠ 0} and dk2 = |A2|. By part (i), dk2 = Op (q). By (A.10) and the definition of A2,

| (A.11) |

Let and . Write . We have . (A.11) can therefore be expressed as . Note that . These two expressions indicate that

| (A.12) |

Let be the projection of ηk on the span of ; i.e., . By the Cauchy–Schwarz inequality and ab ≤ a² + b²/4, we have that

| (A.13) |

From (A.12) and (A.13), we have

| (A.14) |

By the definition of Dk1, we know that is a nonnegative definite matrix and its smallest positive eigenvalue cn* exists. Under Assumptions A5 and B3, by Lemma 3 in Huang et al. (2010) and part (i), we have with probability converging to one. Since and 2ab ≤ a² + b², from (A.14), we have

It follows that

| (A.15) |

Let and with f*(·) defined by (2.4). For each component ηi of ηk, we have

Since |μk − H̄k|2 = Op(n−1) and , we have

| (A.16) |

where and are projections of Гk = {Гk(t1), ⋯, Гk(tn)}T and Λk = {fkA2(X̂ (t1)), ⋯, fkA2 (X̂ (tn))}T on the span of , respectively. We have . Now

where 𝒵jm = {ψm(X̂j(t1)), ⋯, ψm(X̂j(tn))}T. By Lemma A.1, we have

It follows that,

| (A.17) |

Combining (A.15)–(A.17), we get

Since dk2 = Op(q), with probability converging to one, we have

| (A.18) |

This completes the proof of part (iii).

We now prove part (ii). Under Assumption B2, ‖fkj‖2 ≥ cf, 1 ≤ j ≤ q, and , we have for n sufficiently large. By a result of de Boor (2001), see also (12) of Stone (1986), there are positive constants c1 and c2 such that . It follows that . Therefore, if ‖βkj‖2 ≠ 0 but ‖β̃kj‖2 = 0, then , which contradicts part (iii) since and as n → ∞.

Proof of Theorem 2. By the definition of kj, 1 ≤ j ≤ p, parts (i) and (ii) follow directly from parts (i) and (ii) of Theorem 1. Now consider part (iii). By the properties of splines (de Boor, 2001), there exist positive constants c1 and c2 such that Thus

| (A.19) |

By Assumption B3, . Part (iii) follows.

Corollary 1 follows from Theorem 2 directly. The proof of Theorem 3 essentially follows the proof of Corollary 2 in Huang et al. (2010) by similar changes to those given in the proof of part (iii) of Theorem 1, and we omit it here.

Contributor Information

Hulin Wu, Email: Hulin_Wu@urmc.rochester.edu, Department of Biostatistics and Computational Biology, University of Rochester, School of Medicine and Dentistry, 601 Elmwood Avenue, Box 630, Rochester, NY 14642.

Tao Lu, Email: stat.lu11@gmail.com, Department of Epidemiology and Biostatistics, State University of New York, Albany, NY 12144.

Hongqi Xue, Email: Hongqi_Xue@urmc.rochester.edu, Department of Biostatistics and Computational Biology, University of Rochester, School of Medicine and Dentistry, 601 Elmwood Avenue, Box 630, Rochester, NY 14642.

Hua Liang, Email: hliang@gwu.edu, Department of Statistics, George Washington University, 801 22nd St. NW, Washington, D.C. 20052.

References

- Akutsu T, Miyano S, Kuhara S. Inferring qualitative relations in genetic networks and metabolic pathways. Bioinformatics. 2000;16:727–734. doi: 10.1093/bioinformatics/16.8.727.

- Bansal M, Della Gatta G, di Bernardo D. Inference of gene regulatory networks and compound mode of action from time course gene expression profiles. Bioinformatics. 2006;22:815–822. doi: 10.1093/bioinformatics/btl003.

- Bard Y. Nonlinear Parameter Estimation. New York: Academic Press; 1974.

- Beal MJ, Falciani F, Ghahramani Z, Rangel C, Wild DL. A Bayesian approach to reconstructing genetic regulatory networks with hidden factors. Bioinformatics. 2005;21:349–356. doi: 10.1093/bioinformatics/bti014.

- Bornholdt S. Boolean network models of cellular regulation: prospects and limitations. Journal of the Royal Society Interface. 2008;5(Suppl 1):S85–S94. doi: 10.1098/rsif.2008.0132.focus.

- Brunel N. Parameter estimation of ODE’s via nonparametric estimators. Electronic Journal of Statistics. 2008;2:1242–1267.

- Cantoni E, Flemming JM, Ronchetti E. Variable selection in additive models by nonnegative garrote. Statistical Modelling. 2011;11:237–252.

- Carthew RW, Sontheimer EJ. Origins and mechanisms of miRNAs and siRNAs. Cell. 2009;136:642–655. doi: 10.1016/j.cell.2009.01.035.

- Chen J, Chen Z. Extended Bayesian information criteria for model selection with large model spaces. Biometrika. 2008;95:759–771.

- Chen J, Wu H. Estimation of time-varying parameters in deterministic dynamic models. Statistica Sinica. 2008a;18:987–1006.

- Chen J, Wu H. Efficient local estimation for time-varying coefficients in deterministic dynamic models with applications to HIV-1 dynamics. Journal of the American Statistical Association. 2008b;103:369–384.

- Claeskens G, Krivobokova T, Opsomer JD. Asymptotic properties of penalized spline estimators. Biometrika. 2009;96:529–544.

- Craven P, Wahba G. Smoothing noisy data with spline functions: estimating the correct degree of smoothing by the method of generalized cross-validation. Numerische Mathematik. 1979;31:377–403.

- de Boor C. A Practical Guide to Splines. revised ed. New York: Springer-Verlag; 2001.

- DeJong H. Modeling and simulation of genetic regulatory systems: a literature review. Journal of Computational Biology. 2002;9:67–103. doi: 10.1089/10665270252833208.

- D’Haeseleer P, Wen X, Fuhrman S, Somogyi R. Linear modeling of mRNA expression levels during CNS development and injury. Pacific Symposium on Biocomputing. 1999;4:41–52. doi: 10.1142/9789814447300_0005.

- Donnet S, Samson A. Estimation of parameters in incomplete data models defined by dynamical systems. Journal of Statistical Planning and Inference. 2007;137:2815–2831.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96:1348–1360.

- Frank I, Friedman J. A statistical view of some chemometrics regression tools (with discussion) Technometrics. 1993;35:109–148.

- Friedman N, Linial M, Nachman I, Peer D. Using Bayesian networks to analyze expression data. Journal of Computational Biology. 2000;7:601–620. doi: 10.1089/106652700750050961.

- Guo F, Hanneke S, Fu W, Xing EP. Recovering temporally rewiring networks: a model-based approach; Proceedings of the 24th International Conference on Machine Learning (ICML); 2007.

- Gupta M, Ibrahim JG. Variable selection in regression mixture modeling for the discovery of gene regulatory networks. Journal of the American Statistical Association. 2007;102:867–880.

- Gupta M, Qu P, Ibrahim JG. A temporal hidden Markov regression model for the analysis of gene regulatory networks. Biostatistics. 2007;8:805–820. doi: 10.1093/biostatistics/kxm007.

- Hanneke S, Xing EP. Discrete Temporal Models of Social Networks; In proceedings of the Workshop on Statistical Network Analysis, the 23rd International Conference on Machine Learning (ICML-SNA); 2006.

- Hartemink A, Gifford D, Jaakkola T, Young R. Using graphical models and genomic expression data to statistically validate models of genetic regulatory networks. Pacific Symposium on Biocomputing. 2001;6:422–433. doi: 10.1142/9789814447362_0042.

- Hecker M, Lambeck S, Toepfer S, van Someren E, Guthke R. Gene regulatory network inference: data integration in dynamic models - a review. Biosystems. 2009;96:86–103. doi: 10.1016/j.biosystems.2008.12.004.

- Heckerman D. A tutorial on learning with Bayesian networks. Microsoft Research Technical Report: MSR-TR-95-06. 1996 [Google Scholar]

- Heckman NE, Ramsay JO. Penalized regression with model-based penalties. Canadian Journal of Statistics. 2000;28:241–258. [Google Scholar]

- Hemker P. Numerical methods for differential equations in system simulation and in parameter estimation. Analysis and Simulation of Biochemical Systems. 1972:59–80. [Google Scholar]

- Hirose O, Yoshida R, Imoto S, Yamaguchi R, Higuchi T, Charnock-Jones DS, Print C, Miyano S. Statistical inference of transcriptional module-based gene networks from time course gene expression profiles by using state space models. Bioinformatics. 2008;24:932–942. doi: 10.1093/bioinformatics/btm639. [DOI] [PubMed] [Google Scholar]

- Holter NS, Maritan A, Cieplak M, Fedoroff NV, Banavar JR. Dynamic modeling of gene expression data. Proceedings of the National Academy of Sciences of the United States of America. 2001;98:1693–1698. doi: 10.1073/pnas.98.4.1693.

- Huang J, Horowitz JL, Wei F. Variable selection in nonparametric additive models. The Annals of Statistics. 2010;38:2282–2313. doi: 10.1214/09-AOS781.

- Huang JZ. Local asymptotics for polynomial spline regression. The Annals of Statistics. 2003;31:1600–1635.

- Huang Y, Liu D, Wu H. Hierarchical Bayesian methods for estimation of parameters in a longitudinal HIV dynamic system. Biometrics. 2006;62:413–423. doi: 10.1111/j.1541-0420.2005.00447.x.

- Imoto S, Sunyong K, Goto T, Aburatani S, Tashiro K, Kuhara S, Miyano S. Bayesian network and nonparametric heteroscedastic regression for nonlinear modeling of genetic network. Journal of Bioinformatics and Computational Biology. 2003;6:231–252. doi: 10.1142/s0219720003000071.

- Iwashima M, Irving BA, van Oers NS, Chan AC, Weiss A. Sequential Interactions of the TCR with two Distinct Cytoplasmic Tyrosine Kinases. Science. 1994;263:1136–1139. doi: 10.1126/science.7509083.

- Jia G, Stephanopoulos GN, Gunawan R. Parameter estimation of kinetic models from metabolic profiles: Two-phase dynamic decoupling method. Bioinformatics. 2011;27:1964–1970. doi: 10.1093/bioinformatics/btr293.

- Kauffman SA. Metabolic stability and epigenesis in randomly constructed genetic nets. Journal of Theoretical Biology. 1969;22:437–467. doi: 10.1016/0022-5193(69)90015-0.

- Kim H, Lee JK, Park T. Inference of large-scale gene regulatory networks using regression-based network approach. J. Bioinform. Comput. Biol. 2009;7:717–735. doi: 10.1142/s0219720009004278.

- Kojima K, Yamaguchi R, Imoto S, Yamauchi M, Nagasaki M, Yoshida R, Shimamura T, Ueno K, Higuchi T, Gotoh N, Miyano S. A state space representation of VAR models with sparse learning for dynamic gene networks. Genome Informatics. 2009;22:56–68.

- Kolar M, Song L, Ahmed A, Xing EP. Estimating time-varying networks. Annals of Applied Statistics. 2010;4:94–123.

- Ley SC, Davies AA, Druker B, Crumpton MJ. The T-Cell Receptor/CD3 Complex and CD2 Stimulate the Tyrosine Phosphorylation of Indistinguishable Patterns of Polypeptides in the Human T-Leukemic Cell-Line Jurkat. European Journal of Immunology. 1991;21:2203–2209. doi: 10.1002/eji.1830210931.

- Li Y, Ruppert D. On the asymptotics of penalized splines. Biometrika. 2008;95:415–436.

- Li Z, Osborne MR, Prvan T. Parameter estimation in ordinary differential equations. IMA Journal of Numerical Analysis. 2005;25:264–285.

- Liang H, Wu H. Parameter estimation for differential equation models using a framework of measurement error in regression models. Journal of the American Statistical Association. 2008;103:1570–1583. doi: 10.1198/016214508000000797.

- Liang S, Fuhrman S, Somogyi R. REVEAL, a general reverse engineering algorithm for inference of genetic network architectures. Proc. Pacific Symp. on Biocomputing. 1998;3:18–29.

- Lu T, Liang H, Li H, Wu H. High dimensional ODEs coupled with mixed-effects modeling techniques for dynamic gene regulatory network identification. Journal of the American Statistical Association. 2011;106:1242–1258. doi: 10.1198/jasa.2011.ap10194.

- Martin S, Zhang Z, Martino A, Faulon JL. Boolean dynamics of genetic regulatory networks inferred from microarray time series data. Bioinformatics. 2007;23:866–874. doi: 10.1093/bioinformatics/btm021.

- Meier L, van de Geer S, Buhlmann P. High-dimensional additive modeling. The Annals of Statistics. 2009;37:3779–3821.

- Murphy K, Mian S. Modelling gene expression data using dynamic Bayesian networks. Technical Report, University of California, Berkeley. 1999.

- Needham CJ, Bradford JR, Bulpitt AJ, Westhead DR. A primer on learning in Bayesian networks for computational biology. PLoS Computational Biology. 2007;3:e129. doi: 10.1371/journal.pcbi.0030129.

- Peterson EJ, Woods ML, Dmowski SA, Derimanov G, Jordan MS, Wu JN, Myung PS, Liu Q, Pribila JT, Freedman BD, Shimizu Y, Koretzky GA. Coupling of the TCR to integrin activation by SLAP-130/Fyb. Science. 2001;293:2263–2265. doi: 10.1126/science.1063486.

- Poyton AA, Varziri MS, McAuley KB, McLellan PJ, Ramsay JO. Parameter estimation in continuous-time dynamic models using principal differential analysis. Computers & Chemical Engineering. 2006;30:698–708.

- Putter H, Heisterkamp SH, Lange JMA, de Wolf F. A Bayesian approach to parameter estimation in HIV dynamical models. Statistics in Medicine. 2002;21:2199–2214. doi: 10.1002/sim.1211.

- Qi X, Zhao H. Asymptotic efficiency and finite-sample properties of the generalized profiling estimation of the parameters in ordinary differential equations. The Annals of Statistics. 2010;38:435–481.

- Radchenko P, James GM. Variable selection using adaptive nonlinear interaction structures in high dimensions. Journal of the American Statistical Association. 2010;105:1541–1553.

- Ramsay JO. Principal differential analysis: data reduction by differential operators. Journal of the Royal Statistical Society, Series B. 1996;58:495–508.

- Ramsay JO, Hooker G, Campbell D, Cao J. Parameter estimation for differential equations: a generalized smoothing approach (with discussion). Journal of the Royal Statistical Society, Series B. 2007;69:741–796.

- Ramsay JO, Silverman BW. Functional Data Analysis. 2nd ed. New York: Springer; 2005.

- Rangel C, Angus J, Ghahramani Z, Lioumi M, Sotheran E, Gaiba A, Wild DL, Falciani F. Modeling T-Cell activation using gene expression profiling and state-space models. Bioinformatics. 2004;20:1361–1372. doi: 10.1093/bioinformatics/bth093.

- Ravikumar P, Lafferty J, Liu H, Wasserman L. Sparse additive models. Journal of the Royal Statistical Society, Series B. 2009;71:1009–1030.

- Ruppert D, Wand MP, Carroll RJ. Semiparametric Regression. Cambridge: Cambridge University Press; 2003.

- Sakamoto E, Iba H. Inferring a system of differential equations for a gene regulatory network by using genetic programming; Proceedings of the IEEE Congress on Evolutionary Computation; 2001. pp. 720–726.

- Schumaker LL. Spline Functions: Basic Theory. New York: Wiley; 1981.

- Shimamura T, Imoto S, Yamaguchi R, Fujita A, Nagasaki M, Miyano S. Recursive regularization for inferring gene networks from time-course gene expression profiles. BMC Systems Biology. 2009;3:41–54. doi: 10.1186/1752-0509-3-41.

- Shmulevich I, Dougherty ER, Kim S, Zhang W. Probabilistic Boolean networks: a rule-based uncertainty model for gene regulatory networks. Bioinformatics. 2002;18:261–274. doi: 10.1093/bioinformatics/18.2.261.

- Shojaie A, Basu S, Michailidis G. Adaptive thresholding for reconstructing regulatory networks from time-course gene expression data. Stat Biosci. 2012;4:66–83.

- Shojaie A, Michailidis G. Analysis of gene sets based on the underlying regulatory network. J. Comput. Biol. 2009;16:407–426. doi: 10.1089/cmb.2008.0081.

- Song L, Kolar M, Xing EP. Time-Varying Dynamic Bayesian Networks. Proceedings of the 23rd Conference on Neural Information Processing Systems (NIPS); 2009.

- Spieth C, Hassis N, Streichert F. Comparing mathematical models on the problem of network inference; Proceedings of 8th Annual Conference on Genetic and evolutionary computation; 2006. pp. 279–285.

- Steuer R, Kurths J, Daub CO, Weise J, Selbig J. The mutual information: detecting and evaluating dependencies between variables. Bioinformatics. 2002;18:S231–S240. doi: 10.1093/bioinformatics/18.suppl_2.s231.

- Stone CJ. The Dimensionality Reduction Principle for Generalized Additive Models. The Annals of Statistics. 1986;14:590–606.

- Stuart JM, Segal E, Koller D, Kim SK. A gene-coexpression network for global discovery of conserved genetic modules. Science. 2003;302:249–255. doi: 10.1126/science.1087447.

- Thomas R. Boolean formalization of genetic control circuits. Journal of Theoretical Biology. 1973;42:563–585. doi: 10.1016/0022-5193(73)90247-6.

- Tibshirani RJ. Regression shrinkage and selection via the Lasso. Journal of the Royal Statistical Society, Series B. 1996;58:267–288.

- van der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes. New York: Springer; 1996.

- Varah JM. A spline least squares method for numerical parameter estimation in differential equations. SIAM Journal on Scientific and Statistical Computing. 1982;3:28–46.

- Varziri MS, Poyton AA, McAuley KB, McLellan PJ, Ramsay JO. Selecting optimal weighting factors in iPDA for parameter estimation in continuous-time dynamic models. Computers & Chemical Engineering. 2008;32:3011–3022.

- Voit EO. Computational Analysis of Biochemical Systems: a Practical Guide for Biochemists and Molecular Biologists. Cambridge: Cambridge University Press; 2000.

- Voit EO, Almeida J. Decoupling dynamical systems for pathway identification from metabolic profiles. Bioinformatics. 2004;22:1670–1681. doi: 10.1093/bioinformatics/bth140.

- Wang H, Leng C. A note on the adaptive group Lasso. Computational Statistics & Data Analysis. 2008;52:5277–5286.

- Weaver DC, Workman CT, Stormo GD. Modeling regulatory networks with weight matrices. Pacific Symposium on Biocomputing. 1999;4:112–123. doi: 10.1142/9789814447300_0011.

- Wei F, Huang J. Consistent group selection in high-dimensional linear regression. Bernoulli. 2010;16:1369–1384. doi: 10.3150/10-BEJ252.

- Werhli AV, Husmeier D. Reconstructing gene regulatory networks with Bayesian networks by combining expression data with multiple sources of prior knowledge. Statistical Applications in Genetics and Molecular Biology. 2007;6(1):Article 15. doi: 10.2202/1544-6115.1282.

- Wessels LF, van Someren EP, Reinders MJ. A comparison of genetic network models. Pacific Symposium on Biocomputing. 2001;6:508–519.

- Wu H, Xue H, Kumar A. Numerical algorithm-based estimation methods for ODE models via penalized spline smoothing. Biometrics. 2012;68:344–352. doi: 10.1111/j.1541-0420.2012.01752.x.

- Wu H, Zhang JT. Nonparametric regression methods for longitudinal data analysis. Hoboken: John Wiley & Sons; 2006.

- Xue H, Miao H, Wu H. Sieve estimation of constant and time-varying coefficients in nonlinear ordinary differential equation models by considering both numerical error and measurement error. The Annals of Statistics. 2010;38:2351–2387. doi: 10.1214/09-aos784.

- Yeung MKS, Tegner J, Collins JJ. Reverse engineering gene networks using singular value decomposition and robust regression. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:6163–6168. doi: 10.1073/pnas.092576199.

- Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society, Series B. 2006;68:49–67.

- Zhou S, Shen X, Wolfe D. Local asymptotics for regression splines and confidence regions. The Annals of Statistics. 1998;26:1760–1782.

- Zhou S, Wolfe D. On derivative regression in spline estimation. Statistica Sinica. 2000;10:93–108.

- Zou H. The adaptive LASSO and its oracle properties. Journal of the American Statistical Association. 2006;101:1418–1429.

- Zou H, Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society, Series B. 2006;67:301–320.

- Zou M, Conzen SD. A new dynamic Bayesian network (DBN) approach for identifying gene regulatory networks from time course microarray data. Bioinformatics. 2005;21:71–79. doi: 10.1093/bioinformatics/bth463.