Abstract

There is a rich literature on Bayesian variable selection for parametric models. Our focus is on generalizing methods and asymptotic theory established for mixtures of g-priors to semiparametric linear regression models having unknown residual densities. Using a Dirichlet process location mixture for the residual density, we propose a semiparametric g-prior which incorporates an unknown matrix of cluster allocation indicators. For this class of priors, posterior computation can proceed via a straightforward stochastic search variable selection algorithm. In addition, Bayes factor and variable selection consistency are shown to result under a class of proper priors on g even when the number of candidate predictors p is allowed to increase much faster than the sample size n, under sparsity assumptions on the true model size.

Keywords: Asymptotic theory, Bayes factor, g-prior, Large p, small n, Model selection, Posterior consistency, Subset selection, Stochastic search variable selection

1. INTRODUCTION

Bayesian variable selection is widely applied, with O’Hara and Sillanpää (2009) providing a recent review. There is a rich literature proposing variable selection methods and studying asymptotic properties for parametric models, while our focus is variable selection in semiparametric linear regression models of the form:

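$$Y_n = X_\gamma \beta_\gamma + \epsilon_n, \qquad \epsilon_i \overset{iid}{\sim} f, \quad i = 1, \ldots, n, \tag{1}$$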

where Yn is n × 1, γ = {γj, j = 1, …, p} ∈ Γ, γj = 1 if the jth candidate predictor is included in the model with γj = 0 otherwise, Γ is the set of all possible subsets that are given non-zero prior probability, $p_\gamma = \sum_{j=1}^{p} \gamma_j$ is the size of model γ, βγ is the pγ × 1 vector of regression coefficients, Xγ is the n × pγ design matrix containing the predictors in model γ, and f is an unknown residual density. Our focus is on avoiding parametric assumptions on f, while accommodating high-dimensional settings in which the number of candidate predictors p can be much larger than the sample size n but Γ is restricted to sparse models having pγ < n.
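The indexing of a submodel by the inclusion vector γ can be illustrated with a minimal Python sketch. The helper name `select_submodel` and the toy design matrix are hypothetical and are used only for illustration; they are not part of the proposed method.

```python
import numpy as np

def select_submodel(X, gamma):
    """Return the design matrix X_gamma and model size p_gamma for inclusion vector gamma."""
    gamma = np.asarray(gamma, dtype=bool)
    if gamma.shape[0] != X.shape[1]:
        raise ValueError("gamma must have one entry per candidate predictor")
    # Keep only the columns with gamma_j = 1; p_gamma is the number of included predictors.
    return X[:, gamma], int(gamma.sum())

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(6, 4))   # toy design: n = 6, p = 4
gamma = [1, 0, 1, 0]                      # include the 1st and 3rd predictors
X_gamma, p_gamma = select_submodel(X, gamma)
print(X_gamma.shape, p_gamma)
```

Restricting Γ to sparse models then amounts to only ever visiting vectors γ whose sum is smaller than n.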

There has been limited consideration of variable selection in semiparametric Bayesian models, with essentially no results on asymptotic properties. In particular, it would be appealing to provide a computationally efficient procedure for Bayesian variable selection based on (1) for which it can be shown that the posterior probability on the true model converges to one as n → ∞ even when the number of candidate predictors increases much faster than n. In order for the asymptotic analysis to reflect the high dimensionality, it is important to allow p to grow with n. There has been some consideration of increasing p asymptotics in Bayesian parametric models. Castillo and van der Vaart (2012) study concentration of the posterior distribution in the normal means problem. Armagan et al. (2013) provide conditions for consistency in high-dimensional normal linear regression with shrinkage priors on the coefficients. Jiang (2007) studies convergence rates of the predictive distribution resulting from Bayesian model averaging in generalized linear models with high-dimensional predictors. These approaches do not consider consistency of model selection or semiparametric settings.

This article proposes a practical, useful and general methodology for Bayesian variable selection in semiparametric linear models (1), while providing basic theoretical support by showing Bayes factor and variable selection consistency. We also extend our approach and theory to increasing model dimensions involving p ≫ n candidate predictors while making sparsity assumptions on the true model. Our approach relies on placing a Dirichlet process (DP, Ferguson, 1972) location mixture of Gaussians (Lo, 1984) prior on the residual density f, inducing clustering of subjects. We introduce a prior on the coefficients βγ specific to each model γ, which generalizes mixtures of g-priors (Zellner and Siow, 1980; Liang et al., 2008) to include cluster allocation indices induced through the Dirichlet process. The formulation leads to a straightforward implementation via a stochastic search variable selection (SSVS) algorithm (George and McCulloch, 1997).

Section 2 develops the proposed framework. Section 3 considers asymptotic properties. Section 4 contains simulation results. Section 5 applies the approach to a type 2 diabetes data example, and the proofs of Theorems are contained in the Appendix.

2. MIXTURES OF SEMIPARAMETRIC g-PRIORS

2.1 Model Formulation

In this section, we propose a new class of priors for Bayesian variable selection in linear regression models with an unknown residual density characterized via a Dirichlet process (DP) location mixture of Gaussians. In particular, let

| (2) |

where xγ,i is the ith row of Xγ and does not include an intercept as we do not restrict f to have zero mean, and f is a density with respect to Lebesgue measure on ℜ. We address uncertainty in subset selection by placing a prior on γ, while the prior on βγ characterizes prior knowledge of the size of the coefficients for the selected predictors.

The DP mixture prior on the density f induces clustering of the n subjects into k groups/subclusters, where k is random and each group has a distinct intercept in the linear regression model. Let A denote an n × k allocation matrix, with Aij = 1 if the ith subject is allocated to the jth cluster and 0 otherwise. The jth column of A then sums to nj, the number of subjects allocated to subcluster j, with $\sum_{j=1}^{k} n_j = n$. Following Kyung, Gill and Casella (2009), conditionally on the allocation matrix A, (2) can be represented as a linear model with random intercepts

| (3) |

where A is random with a certain prior probability given by the coefficients in the summation of the likelihood expression (8) and the response and predictors are centered prior to analysis. In the special case in which A = 1n, the model reduces to a linear regression model with a common intercept η and Gaussian residuals. In this case, the conditional posterior for η given A = 1n is , which has realizations increasingly concentrated at zero as n increases.
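As a concrete illustration of the allocation matrix A, the following Python sketch builds A from a vector of cluster labels and checks that its columns sum to the subcluster sizes nj. The function name `allocation_matrix` and the example labels are hypothetical.

```python
import numpy as np

def allocation_matrix(labels):
    """Build the n x k binary matrix A with A[i, j] = 1 iff subject i is in cluster j."""
    labels = np.asarray(labels)
    clusters, idx = np.unique(labels, return_inverse=True)
    A = np.zeros((labels.size, clusters.size), dtype=int)
    A[np.arange(labels.size), idx] = 1
    return A

labels = [0, 2, 0, 1, 2, 2]      # hypothetical allocation of n = 6 subjects to k = 3 clusters
A = allocation_matrix(labels)
n_j = A.sum(axis=0)              # column sums give the subcluster sizes
print(A)
print(n_j, n_j.sum())
```

When all subjects share a single label, the same function returns a single column of ones, which corresponds to the special case A = 1n discussed above.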

We would like the prior on the regression coefficients to retain the essential elements of Zellner’s g-prior (Zellner, 1986), while being suitably adapted to the semiparametric case. To this effect, we propose a mixture of semi-parametric g-priors constructed to scale the covariance matrix in Zellner’s g-prior to reflect the clustering phenomenon as follows:

| (4) |

Prior (4) inherits advantages of previous mixtures of g-priors including computational efficiency in computing marginal likelihoods (conditional on A) and robustness to mis-specification of g. The prior can be interpreted as having arisen from the analysis of a conceptual sample generated using a scaled design matrix , reflecting the clustering phenomenon due to the DP kernel mixture prior. Moreover, the proposed prior leads to Bayes factor and variable selection consistency in semi-parametric linear models (2) as we will show.

Note that , so , implying that the prior variance of βγ conditional on (g, τ) is higher for the semi-parametric g-prior than for the traditional g-prior for any allocation matrix A. To assess the influence of A on the prior for βγ, we ran simulations which revealed that for fixed (n, p), var(βγl) increases but cov(βγl, βγl′) decreases as the number of underlying subclusters in the data increases (l′, l = 1, …, p, l′ ≠ l). This suggests that as the number of groups in A increases, the components of βγ are likely to be more dispersed, with decreasing association between each other.

2.2 Bayes Factor in Semiparametric Linear Models

In studying asymptotic properties of our proposed approach, we follow standard practice in Bayesian model selection, and assume that the data Yn = (y1, …, yn)′ arise from one of the models in the list under consideration. This true model is denoted $\mathcal{M}_1$ as defined in equation (5). For pairwise comparison, we evaluate the evidence in favor of $\mathcal{M}_1$ compared to an alternative model $\mathcal{M}_2$ using the Bayes factor, where

| (5) |

where γj ∈ Γ indexes models of dimension pj and π(βγj) is defined in (4), j = 1, 2. Our prior specification philosophy is similar to the one adopted by Guo and Speckman (2009) for normal linear models, in that we assign proper priors on all elements of both βγ1, βγ2 conditional on (g, τ−1), and an improper prior on τ−1 for a more objective assessment. However unlike Guo and Speckman (2009), our focus is on Bayesian variable selection in semi-parametric linear models.

Note that the conditional likelihood of the response after marginalizing out η in (3) is $L(Y_n \mid A, \beta_\gamma, \tau^{-1}) = N(X_\gamma \beta_\gamma, \tau^{-1} \Sigma_A)$ (Kyung et al., 2009). Thus conditional on A and under the DP mixture of Gaussians prior on f, $\mathcal{M}_j$ in (5) reduces to the normal linear model:

| (6) |

where . Under a mixture of semi-parametric g-priors, we can directly use expression (17) in Guo and Speckman (2009) to obtain (conditional on A) for j = 1, 2

| (7) |

where .

Also, marginalizing over all possible subcluster allocations for a given sample size n, the following marginal likelihood can be obtained under a DP prior on f (Kyung et al., 2009):

| (8) |

where is the collection of all possible n×k matrices corresponding to different allocations of n subjects into k subclusters, and is the collection of all possible allocation matrices for a sample size n with wl = 1. In the limiting case as n → ∞, we have as the class of limiting allocation matrices. Further using (7), the Bayes factor in favor of $\mathcal{M}_1$ conditional on the allocation matrix A is given by

| (9) |

where , (j = 1, 2). Finally using (8), the unconditional Bayes factor in favor of $\mathcal{M}_1$ marginalizing out A is

| (10) |

2.3 Posterior Computation

We propose an MCMC algorithm for posterior computation for model (2), which combines a stochastic search variable selection (SSVS) algorithm (George and McCulloch, 1997) with recently proposed methods for efficient computation in DP mixture models. In particular, we utilize the slice sampler of Walker (2007) incorporating the modification of Yau et al. (2011). Using Sethuraman’s (1994) stick-breaking representation, let

| (11) |
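Sethuraman's stick-breaking construction can be sketched numerically as follows. This is a minimal illustration that assumes the standard form of the construction, with stick fractions drawn from Beta(1, m) for DP precision m, and that truncates the infinite sequence at a hypothetical number of sticks (`num_sticks`). It also shows that only finitely many weights can exceed a fixed positive threshold u, which is the property that slice-type samplers exploit; the threshold value used here is arbitrary.

```python
import numpy as np

def stick_breaking_weights(m, num_sticks, rng):
    """Truncated stick-breaking weights: w_h = v_h * prod_{l<h} (1 - v_l), v_h ~ Beta(1, m)."""
    v = rng.beta(1.0, m, size=num_sticks)
    # Length of stick remaining before each break (1 for the first break).
    remaining = np.concatenate(([1.0], np.cumprod(1.0 - v)[:-1]))
    return v * remaining

rng = np.random.default_rng(1)
weights = stick_breaking_weights(m=1.0, num_sticks=200, rng=rng)

u = 0.05                                 # arbitrary positive threshold for illustration
active = np.flatnonzero(weights > u)     # components whose weight exceeds u
print(weights.sum(), active)
```

Because the weights are non-negative and sum to at most one, no more than 1/u of them can exceed u, so the set `active` is always finite regardless of the truncation level.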

The slice sampler of Walker (2007) relies on augmentation with uniform latent variables, which allows us to move from an infinite summation for P in (11) to a finite sum given the uniform latent variable. In particular,

For the DP precision parameter, we specify the hyperprior m ~ Ga(am, bm) for greater flexibility. We specify a Ga(aτ, bτ) prior on τ and Be(a1, b1) prior on Pr(γl = 1) for implementing SSVS, l = 1, …, p. We choose π(g) as the hyper-g prior with a = 4 and use the fact that to sample g using a griddy Gibbs approach employing equally spaced quantiles. Inverting the n × n matrix ΣA in the mixtures of semiparametric g-prior in (4) does not add much to the computational burden even for large n, as we can use the closed form expression , where k grows at a rate log(n) (Antoniak, 1974) and is small to moderate in most practical applications. We outline the posterior computation steps in Appendix I.
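The logarithmic growth of the number of clusters k can be checked with a short calculation. The sketch below uses the standard expression for the prior expected number of clusters among n subjects under a DP with precision m, namely the sum of m/(m + i − 1) over i = 1, …, n; the function name and the chosen values of m and n are illustrative only.

```python
import math

def expected_num_clusters(m, n):
    """Prior expected number of clusters among n subjects under a DP with precision m."""
    return sum(m / (m + i) for i in range(n))

for n in (100, 1000, 10000):
    # Compare the expected number of clusters with log(n).
    print(n, round(expected_num_clusters(1.0, n), 2), round(math.log(n), 2))
```

For m = 1 the expectation is the nth harmonic number, which exceeds log(n) by less than one, so k remains small relative to n even for large samples.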

3. ASYMPTOTIC PROPERTIES

In this section we establish asymptotic properties for the proposed approach using γ1 to index the true model $\mathcal{M}_1$ defined in (5) and γ2 to index an arbitrary model $\mathcal{M}_2$ being compared to $\mathcal{M}_1$, with $\mathcal{M}_1 \subset \mathcal{M}_2$ denoting nesting of $\mathcal{M}_1$ in $\mathcal{M}_2$. Before proceeding, we introduce some regularity conditions essential for the development of asymptotic theory.

- (A1′) .

- (A2′) For $\mathcal{M}_1 \nsubseteq \mathcal{M}_2$, , with .

- (A1) For $p_1 = O(n^{a_1})$, $0 \le a_1 < 1$, .

- (A2) For $\mathcal{M}_1 \nsubseteq \mathcal{M}_2$, , where $b_{A,2} \in [0, b_{A,1})$ for fixed $p_1$, $p_2$, and $b_{A,2} \in (0, b_{A,1})$ for $p_j = O(n^{a_j})$ ($j = 1, 2$, $0 \le a_1 < a_2 < 1$).

(A1), (A2) depend on the allocation matrix A, which is an n × k binary matrix that for large n tends to have k ≪ n and to be very sparse, containing mostly zeros with sparsity increasing with column index. We also assume the following for the class of proper priors π(g) on g:

- (A3) There exists a constant k ≥ 0 such that for any constant $c_0 > 1$ and any sequence $a_n \approx n$. Here $a_n \approx b_n$ implies that $\lim_{n \to \infty} a_n / b_n > 0$.

- (A4) There exists a constant $k_u$ such that $k - (p_2 - p_1)/2 < k_u \le k$ and .

We state (A1′), (A2′) as the standard assumptions for establishing Bayes factor consistency in normal linear models, on which our assumptions (A1), (A2) are based. We develop asymptotic theory for semiparametric linear models (5) based on assumptions (A1)–(A4). We note that (A1) is stronger compared to (A1′), since (A1) implies (A1′) as . Further, in the extreme case when A = In, we have , so that (A1′) implies (A1). Again when A =1n, for large n, for . Hence , where is the centered design matrix. When , (A1′) implies (A1).

Assumption (A2) can be interpreted as a positive ‘limiting distance’ between the two models corresponding to design matrices Xγ1 and Xγ2 in (3) conditional on A, after marginalizing out η, i.e. . Such a ‘limiting distance’ (Δ21,A) can be considered as a natural extension of the definition of distance between two normal linear models in Casella et al. (2009) and Moreno et al. (2010) to models with random intercept as in (3).

Assumptions (A3), (A4) define a class of proper priors for g described in Guo and Speckman (2009). This class includes hyper-g and hyper-g/n priors with 2 < a ≤ 4 (Liang et al., 2008), Zellner-Siow priors (Zellner and Siow, 1980) as well as beta-prime priors (Maruyama and George, 2008). These assumptions on π(g) are thus satisfied by several standard priors and hence are quite reasonable.

The following lemma gives the limits of quantities such as , which would be useful for establishing asymptotic properties. The proof follows directly using Lemmas 1, 2 of Guo and Speckman (2009) and from (6), which essentially states that under the DP mixture of Gaussians prior on f for $\mathcal{M}_j$ in (5) and conditional on allocation matrix A, , j = 1, 2.

Lemma 1

Let assumptions (A1), (A2) hold.

- If $\mathcal{M}_1 \subset \mathcal{M}_2$, conditional on A, , under $\mathcal{M}_1$.

- If $\mathcal{M}_1 \nsubseteq \mathcal{M}_2$, conditional on A, , under $\mathcal{M}_1$.

As shown by the following result, the proposed approach leads to Bayes factor consistency when comparing fixed dimensional models as well as models growing at the rate $O(n^t)$, 0 < t < 1, when the truth is sparse.

Theorem I

Let assumptions (A1), (A2) hold.

- (I) Suppose p1 and p2 are fixed. If $\mathcal{M}_1 \subset \mathcal{M}_2$, then under $\mathcal{M}_1$ and assumptions (A3), (A4), as n → ∞ and if $p_2 - p_1 > 2 + 2(k - k_u)$, as n → ∞. Further, if $\mathcal{M}_1 \nsubseteq \mathcal{M}_2$, then under $\mathcal{M}_1$ and assumption (A3), as n → ∞.

- (II) Suppose pj is growing at the rate $O(n^{a_j})$, j = 1, 2, with $0 \le a_1 < a_2 < 1$. Then under $\mathcal{M}_1$ and assumption (A3), as n → ∞.

REMARK 1

Although we omit the proof here, Theorem I can be modified to accommodate the case of improper priors on g (i.e. ). In such a case, assumptions (A3), (A4) are excluded and we require p2 − p1 ≥ 3 for a.s. convergence in (I) for $\mathcal{M}_1 \subset \mathcal{M}_2$.

The next result establishes model selection consistency for the proposed approach, even in cases when the cardinality of the model space increases with n. In particular, we consider cases when the number of candidate predictors pn is growing at the rate $O(n^a)$, a > 0, but the prior on the model space assigns zero probability to models growing at a rate equal to or faster than n. When a ≥ 1, the prior support consists of models constructed using $O(n^t)$ (0 ≤ t < 1) sized subsets of $p_n = O(n^a)$ candidate predictors.

To elaborate, let the support of the prior on the model space be the union of two sets of models. The first is the set of all (non-null) models γ such that there exists a sample size $n_0 < \infty$ for which γj = 0 for all $j > p_{n_0}$; the second is the set of all models with dimensions growing at a rate strictly less than n. Letting p0 = max{j : γ in the first set, γj = 1}, we can discard predictors having a higher index than p0 for all γ in the first set and treat that set as finite dimensional, having $2^{p_0} - 1$ elements (excluding the null model). Let γjl denote the lth model having dimension pj. Consider the following sequence of priors, which penalizes models with increasing dimensions, thus encouraging sparsity:

| (12) |

When the truth is sparse such that ∈ , we have the following result.

Theorem II

Suppose assumptions (A1)–(A4) hold. For fixed p and under for any prior on Γ with π( ) > 0. When $p_n = O(n^a)$ (a > 0) and under , for $\pi_n(\gamma_{jl})$ defined as in (12).

4. SIMULATION STUDY

We present the results of two simulation studies comparing our method (SLM) with the normal linear model (NLM) having (designed to assign comparable prior information when the residual is Gaussian), the lasso (Tibshirani, 1996) and elastic net (Zou and Hastie, 2005), as well as robust variable selection methods including an MM-type regression estimator (Yohai, 1987; Koller and Stahel, 2011), and a median regression model with SSVS for variable selection (Yu et al., 2013). The data is generated as follows:

where xi is a ten dimensional predictor (p=10), with xij, j = 1, …, 10 generated independently from U(−1,1), and βT = (3, 2, −1, 0, 1.5, 1, 0, −4, −1.5, 0).
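The data-generating design can be sketched in Python as follows. The predictors and coefficient vector follow the description above. The residual distributions used here are hypothetical stand-ins, since only their qualitative shapes (bimodal or Gaussian) are used in this illustration: an equal mixture of N(−2, 1) and N(2, 1) for the bimodal case and N(0, 1) for the Gaussian case.

```python
import numpy as np

# True coefficient vector from the simulation design (p = 10).
BETA_TRUE = np.array([3, 2, -1, 0, 1.5, 1, 0, -4, -1.5, 0], dtype=float)

def simulate(n, rng, bimodal=True):
    """Simulate (X, y) with x_ij ~ U(-1, 1) and y = X beta + residual."""
    X = rng.uniform(-1.0, 1.0, size=(n, BETA_TRUE.size))
    if bimodal:
        # Hypothetical bimodal residual: equal mixture of N(-2, 1) and N(2, 1).
        centers = rng.choice([-2.0, 2.0], size=n)
        eps = centers + rng.normal(0.0, 1.0, size=n)
    else:
        # Hypothetical Gaussian residual: N(0, 1).
        eps = rng.normal(0.0, 1.0, size=n)
    return X, X @ BETA_TRUE + eps

rng = np.random.default_rng(2024)
X, y = simulate(n=100, rng=rng)
print(X.shape, y.shape, np.flatnonzero(BETA_TRUE))
```

Seven of the ten coefficients are non-zero, so a correct selection procedure should include exactly those seven predictors.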

We used Ga(0.1, 1) prior on the DP precision parameter and Be(0.1, 1) prior on P(γj = 1), j=1,…,p, which corresponds to a weakly informative prior favoring parsimony. We update g using the griddy Gibbs approach having 1000 equally spaced quantiles for corresponding to a = 4 in the hyper-g prior. For both SLM and NLM, we ran 50,000 iterations with a burn in of 5,000. We implemented the lasso (L1) and elastic net (EL) using the GLMNET package in R with default settings, while the MM-type estimator (LMR) was implemented using ‘lmrob’ function in ‘robustbase’ package in R and the median regression with SSVS (QR) was implemented using function ‘SSVSquantreg’ in ‘MCMCpack’ package in R, with a Be(0.1, 1) prior on the prior inclusion probability for predictors. All results are summarized across 20 replicates. The computation time for SLM per iteration was marginally slower than NLM. The mixing for the fixed effects was good under both the methods. The results for SLM do not appear to be sensitive to the hyper-parameters in π(m), but are mildly sensitive to hyper-parameters in π(g) for n = 100.
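To see why a Be(0.1, 1) prior on the inclusion probability favors parsimony, one can compute its prior mean and the implied prior expected model size. The sketch below uses only the standard formula for the mean of a Beta(a, b) distribution; the function name is hypothetical.

```python
def beta_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)

p = 10                                       # number of candidate predictors in the simulation
prior_inclusion = beta_mean(0.1, 1.0)        # prior mean of Pr(gamma_j = 1)
expected_model_size = p * prior_inclusion    # by linearity of expectation
print(round(prior_inclusion, 4), round(expected_model_size, 3))
```

A priori, each predictor is included with probability of about one in eleven, so fewer than one of the ten predictors is expected to be in the model before seeing the data.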

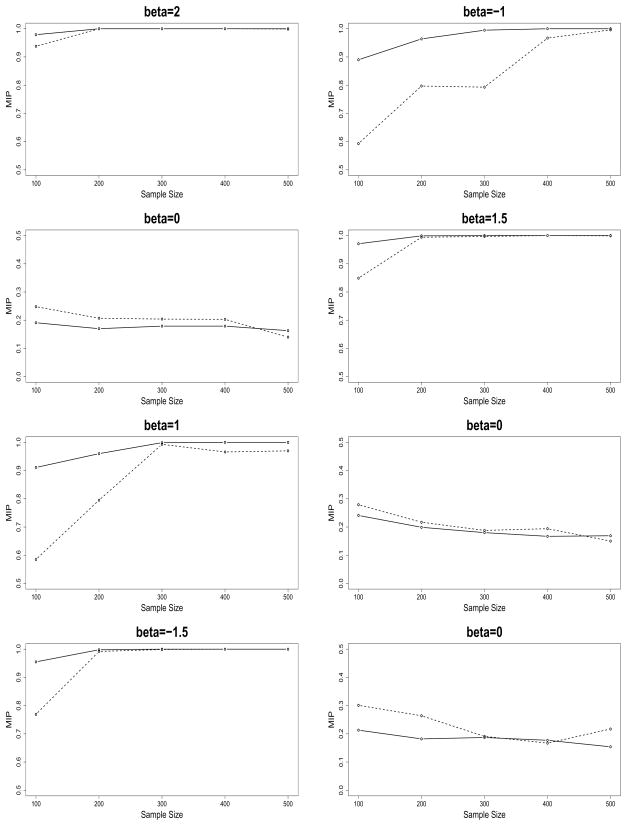

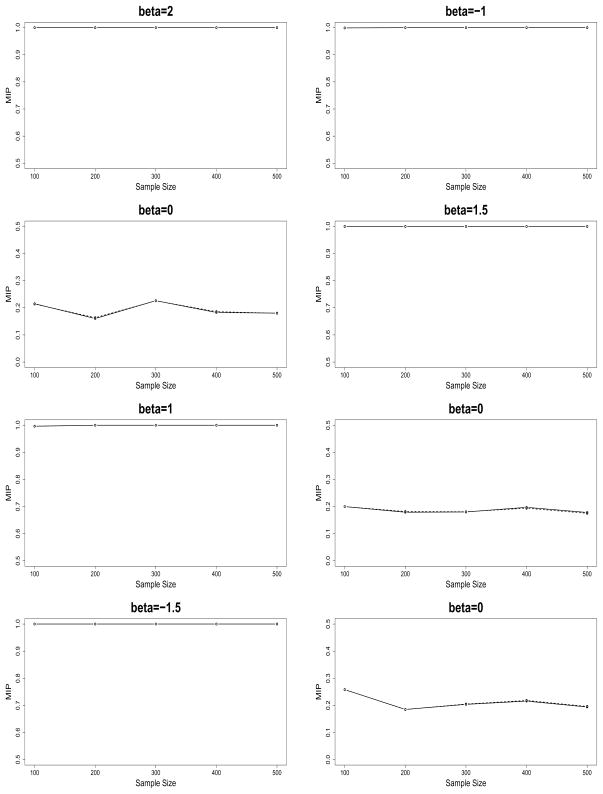

We study the marginal inclusion probabilities (MIP) under SLM and NLM over varying sample sizes in Figures 1 and 2. These plots suggest a faster rate of increase of the MIP for the important predictors under SLM as compared to NLM when the true residuals are non-Gaussian, and a very similar rate of increase under both methods when the true residuals are Gaussian (thus justifying the prior choice for NLM). In contrast, the exclusion probabilities for the unimportant predictors converge to one slowly under both the methods, reflecting the well known tendency for slower accumulation of evidence in favor of the true null.

Figure 1.

Marginal Inclusion Probabilities (MIP) over varying sample sizes: Truth generated from bimodal residual. Solid lines - Semi-parametric Linear Model, dashed lines - Normal Linear Model.

Figure 2.

Marginal Inclusion Probabilities (MIP) over varying sample sizes: Truth generated from Gaussian residual. Solid lines - Semi-parametric Linear Model, dashed lines - Normal Linear Model.

Tables 1 and 2 present some summaries for n = 100 for Case I. The MIPs in Table 1 suggest correct variable selection decisions by SLM, but poor performance by NLM, which fails to exclude any of the unimportant predictors under the median probability model. Further, L1, EL and QR seem to favor an overly complex model by choosing a superset of the important predictors. In terms of estimation of the fixed effects, SLM has the highest degree of accuracy as reflected by the smallest mean square error ( ) in Table 2, where βT is the vector of true regression coefficients. In addition, the replicate average mean square error for out of sample prediction for a test sample size of 25 (Table 2) is smallest under the SLM, followed by the lasso and elastic net. NLM is seen to be clearly inadequate for prediction purposes, as indicated by its out of sample predictive MSE, which is roughly twice that of the other methods. Thus, when the true residual is non-Gaussian, the SLM has the best performance compared to competitors, whereas NLM performs poorly in general.

Table 1.

Fixed effects estimates and marginal inclusion probabilities (MIP) for fixed effects for Case I when n=100.

| βT | MIPSLM | βSLM | MIPNLM | βNLM | βL1 | βEL | βLMR | βQR |

|---|---|---|---|---|---|---|---|---|

| 3 | 1.00 | 2.88(2.34, 3.41) | 1.00 | 2.83(1.86, 3.81) | 3.08 | 3.08 | 3.15 | 2.92 |

| 2 | 0.99 | 1.89(1.34, 2.44) | 0.98 | 1.95(0.96, 2.91) | 2.06 | 2.06 | 2.11 | 1.84 |

| −1 | 0.93 | −0.91(−1.46, −0.36) | 0.75 | −0.78(−1.75,0.03) | −0.98 | −0.98 | −0.87 | −0.78 |

| 0 | 0.45 | −0.01(−0.44,0.44) | 0.53 | 0.006(−0.82, 0.81) | 0.01 | 0.009 | −0.003 | −0.02 |

| 1.5 | 0.98 | 1.43(0.89,1.98) | 0.90 | 1.35(0.35, 2.35) | 1.54 | 1.54 | 1.57 | 1.29 |

| 1 | 0.90 | 0.79(0.28, 1.35) | 0.68 | 0.54(−0.26, 1.48) | 0.74 | 0.74 | 0.66 | 0.42 |

| 0 | 0.43 | −0.005(−0.44, 0.42) | 0.53 | −0.05(−0.85, 0.73) | −0.04 | −0.04 | −0.09 | −0.06 |

| −4 | 1.00 | −3.89(−4.43, −3.33) | 1.00 | −3.75(−4.74, −2.74) | −4.05 | −4.04 | −4.14 | −3.95 |

| −1.5 | 0.99 | −1.54(−2.08, −0.98) | 0.92 | −1.43(−2.41, −0.41) | −1.57 | −1.57 | −1.54 | −1.30 |

| 0 | 0.42 | 0.008(−0.43, 0.43) | 0.54 | −0.12(−0.93, 0.64) | −0.12 | −0.12 | −0.06 | −0.14 |

SLM: Semi-parametric linear model, NLM: Normal linear model, L1: Lasso, EL: Elastic Net, LMR: MM-type estimator, QR: Median regression with SSVS.
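The median probability model decisions discussed for Table 1 can be reproduced from the reported MIPs with a short Python sketch. The MIP values are copied from Table 1; the function names are hypothetical, and the rule used is the usual one of including predictors whose marginal inclusion probability is at least 0.5.

```python
import numpy as np

BETA_TRUE = np.array([3, 2, -1, 0, 1.5, 1, 0, -4, -1.5, 0], dtype=float)
MIP_SLM = np.array([1.00, 0.99, 0.93, 0.45, 0.98, 0.90, 0.43, 1.00, 0.99, 0.42])
MIP_NLM = np.array([1.00, 0.98, 0.75, 0.53, 0.90, 0.68, 0.53, 1.00, 0.92, 0.54])

def mip_from_draws(gamma_draws):
    """Estimate MIPs as the average of sampled inclusion indicators (one row per MCMC draw)."""
    return np.asarray(gamma_draws, dtype=float).mean(axis=0)

def median_probability_model(mip, threshold=0.5):
    """Include the predictors whose marginal inclusion probability is at least the threshold."""
    return np.asarray(mip) >= threshold

selected_slm = median_probability_model(MIP_SLM)
selected_nlm = median_probability_model(MIP_NLM)
print(np.array_equal(selected_slm, BETA_TRUE != 0))   # SLM recovers the true support
print(int(selected_nlm.sum()))                        # NLM retains every predictor
```

With these values, the SLM selection coincides with the set of non-zero true coefficients, while the NLM selection retains all ten predictors.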

Table 2.

Summaries for Case I when n=100.

| Measure | SLM | NLM | L1 | EL | LMR | QR |

|---|---|---|---|---|---|---|

| MSE around βT | 0.07 | 0.21 | 0.24 | 0.24 | 0.40 | 0.50 |

| MSE for out of sample prediction | 7.70 | 16.44 | 8.33 | 8.32 | 8.83 | 9.11 |

SLM: Semi-parametric linear model, NLM: Normal linear model, L1: Lasso, EL: Elastic Net, LMR: MM-type estimator, QR: Median regression with SSVS. MSE: mean square error and βT is the vector of true regression coefficients.

5. APPLICATION TO DIABETES DATA

The prevalence of diabetes in the United States is expected to more than double to 48 million people by 2050 (Mokdad et al., 2001). Previous medical studies have suggested that Diabetes Mellitus type II (DM II) or adult onset diabetes could be associated with high levels of total cholesterol (Brunham et al., 2007) and obesity (often characterized by BMI and waist to hip ratio) (Schmidt et al., 1992), as well as hypertension (indicated by a high systolic or diastolic blood pressure or both), which is twice as prevalent in diabetics compared to non-diabetic individuals (Epstein and Sowers, 1992).

We develop a comprehensive variable selection strategy for indicators of DM II in African-Americans based on data obtained from Department of Biostatistics, Vanderbilt University website. Our primary focus is to discover important indicators of DM II by modeling the continuous outcome glycosylated hemoglobin (> 7mg/dL indicates a positive diagnosis of diabetes) based on predictors such as total cholesterol (TC), stabilized glucose (SG), high density lipoprotein (HDL), age, gender, body mass index (BMI) indicator (overweight and obese with normal as baseline), systolic and diastolic blood pressure (SBP and DBP), waist to hip ratio (WHR) and postprandial time indicator (PPT) (1 if blood was drawn within 2 hours of a meal, 0 otherwise). We note that lower levels of HDL have been known to be associated with insulin resistance syndrome, often considered a precursor of DM II with a conversion rate around 30%. We also expect PPT to be a significant indicator as blood sugar levels are high up to 2 hours after a meal.

After excluding the records containing missing values, the data consisted of 365 subjects, which were split into multiple training and test samples of sizes 330 and 35 respectively. The replicate averaged fixed effects estimates (multiplied by 100) for the SLM, NLM, L1, EL, LMR and QR are presented in Table 3, and the marginal inclusion probabilities (MIP) for the SLM, NLM and QR are summarized in Table 4. We also evaluate the out of sample predictive performance for each training-test split using predictive MSE in Table 5, and additionally provide the mean coverage (COV) and width (CIW) of 95% pointwise credible intervals for the predicted responses under SLM and NLM. The same values of hyper-parameters were used as in Section 4. For each replicate, we randomized the initial starting points and made 100,000 runs for SLM (burn in = 20,000) and 50,000 runs for NLM (burn in = 5,000).

Table 3.

Fixed effects (times 100) for type-II diabetes example.

| Predictor | β̂SLM | β̂NLM | β̂L1 | β̂EL | β̂LMR | β̂QR |

|---|---|---|---|---|---|---|

| TC | 0.55(0.11,0.73) | 0.74(0.25,1.20) | 0.75 | 0.75 | 0.29 | 0.01 |

| SG | 2.11(1.75,2.48) | 2.82(2.5,3.15) | 2.83 | 2.82 | 2.99 | 3.23 |

| HDL | −0.50(−1.4,0.015) | −0.36(−1.61,0) | −1.02 | −1.02 | −0.42 | 0 |

| Age | 0.34(−0.06,1.3) | 0.98(0,2.35) | 1.19 | 1.19 | 0.57 | 0.04 |

| Gender | −3.72(−30.12,4.39) | −1.53(−25.46,3.22) | −19.66 | −19.81 | −7.87 | −0.86 |

| BMI(overwt) | 1.55(−9.43,24.03) | 2.04(−3.33,29.53) | 4.33 | 4.27 | 15.12 | 1.84 |

| BMI(obese) | −0.74(−20.33,13.44) | −0.91(−21.93,6.14) | −14.88 | −15.03 | 8.16 | 0.62 |

| SBP | 0.53(0,1.35) | 0.03(−0.13,0.65) | 0.25 | 0.25 | 0.56 | 0.009 |

| DBP | −0.03(−0.99,0.69) | 0(−0.45,0.45) | 0.018 | 0.017 | −0.55 | 0.002 |

| WHR | 224.27(67.72,381.88) | 3.16(−44.74,91.4) | 90.47 | 91.53 | 90.79 | 129.23 |

| PPT | 21.42(1.89,57.49) | 33.04(0,80.39) | 47.31 | 47.32 | 37.55 | 18.99 |

Table 4.

Marginal Inclusion Probabilities for SLM, NLM, QR in type-II diabetes data.

| Predictor | TC | SG | HDL | Age | Gender | BMI(overwt) | BMI(obese) | SBP | DBP | WHR | PPT |

|---|---|---|---|---|---|---|---|---|---|---|---|

| MIPSLM | 0.97 | 1.00 | 0.64 | 0.43 | 0.17 | 0.15 | 0.22 | 0.72 | 0.23 | 0.93 | 0.64 |

| MIPNLM | 0.98 | 1.00 | 0.39 | 0.67 | 0.12 | 0.13 | 0.11 | 0.14 | 0.10 | 0.13 | 0.68 |

| MIPQR | 0.02 | 1.00 | 0.002 | 0.03 | 0.08 | 0.10 | 0.08 | 0.01 | 0.004 | 0.71 | 0.42 |

Table 5.

Out of Sample Prediction.

| Replicate | S 1 | S 2 | S 3 | S 4 | S 5 | S 6 | S 7 | S 8 |

|---|---|---|---|---|---|---|---|---|

| MSESLM | 1.25 | 1.24 | 1.55 | 1.21 | 1.45 | 1.47 | 3.44 | 1.23 |

| MSENLM | 1.23 | 1.33 | 1.74 | 1.29 | 1.14 | 1.46 | 3.43 | 1.52 |

| MSEL1 | 1.28 | 1.45 | 2.49 | 2.34 | 1.13 | 1.45 | 3.47 | 1.75 |

| MSEEL | 1.29 | 1.47 | 2.51 | 2.36 | 1.14 | 1.45 | 3.48 | 1.75 |

| MSELMR | 2.23 | 1.21 | 2.15 | 1.02 | 1.09 | 1.36 | 4.06 | 1.69 |

| MSEQR | 1.82 | 1.91 | 2.64 | 1.15 | 1.64 | 2.68 | 3.98 | 2.44 |

| CovSLM | 100.00 | 97.14 | 100.00 | 97.14 | 100.00 | 100.00 | 91.42 | 100.00 |

| CovNLM | 97.12 | 97.14 | 94.28 | 97.14 | 100.00 | 97.14 | 91.42 | 100.00 |

| CIWNLM | 5.92 | 5.41 | 5.84 | 5.94 | 5.93 | 5.91 | 5.59 | 5.90 |

| CIWSLM | 6.93 | 6.16 | 6.80 | 6.81 | 6.84 | 6.86 | 6.13 | 6.77 |

MSE: out of sample predictive mean square error, Cov: 95% credible interval coverage, CIW: 95% credible interval width.
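The three summaries reported in Table 5 can be computed from posterior predictive draws along the following lines. This is a sketch under stated assumptions: the point prediction is taken to be the posterior predictive mean, the pointwise 95% interval is taken to be the equal-tailed interval between the 2.5% and 97.5% quantiles, and the function name and synthetic inputs are hypothetical.

```python
import numpy as np

def predictive_summaries(y_test, pred_draws, level=0.95):
    """Return (MSE, coverage in percent, mean interval width) for a test sample.

    pred_draws has shape (num_draws, n_test): posterior predictive draws per test point.
    """
    y_test = np.asarray(y_test, dtype=float)
    pred_draws = np.asarray(pred_draws, dtype=float)
    alpha = 1.0 - level
    lower = np.quantile(pred_draws, alpha / 2.0, axis=0)
    upper = np.quantile(pred_draws, 1.0 - alpha / 2.0, axis=0)
    point = pred_draws.mean(axis=0)            # assumed point predictor
    mse = float(np.mean((y_test - point) ** 2))
    cov = float(100.0 * np.mean((y_test >= lower) & (y_test <= upper)))
    ciw = float(np.mean(upper - lower))
    return mse, cov, ciw

rng = np.random.default_rng(7)
y_test = np.zeros(35)                          # synthetic test responses (n_test = 35)
pred_draws = rng.normal(0.0, 1.0, size=(4000, 35))
mse, cov, ciw = predictive_summaries(y_test, pred_draws)
print(round(mse, 4), cov, round(ciw, 2))
```

With a test sample of 35 subjects, coverage can only take values that are multiples of 1/35; for example, 34 covered responses out of 35 corresponds to 97.14%.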

It is interesting to note from Table 4 that the variable selection decisions under SLM (using median probability model) are quite different compared to the NLM. In particular, while both the models successfully identify total cholesterol, stabilized glucose and postprandial time as important predictors, it is only the SLM which identifies systolic blood pressure (MIP = 0.72), HDL (MIP = 0.64) and waist to hip ratio (MIP = 0.93) as important indicators, compared to NLM which assigns MIP = 0.14, 0.39 and 0.13 to these three predictors respectively. Age is identified as an important predictor under NLM (MIP = 0.67), but not under SLM (MIP=0.43). For both the methods, the MIPs for BMI (overweight and obese) were low, which could potentially be attributed to adjusting for the other obesity factors such as waist to hip ratio. From Tables 3 and 4, we also see that the lasso, elastic net and the MM-type estimator select an overly complex model by excluding minimal number of predictors, while the quantile regression with SSVS fails to include several important predictors and selects a highly parsimonious and inadequate model.

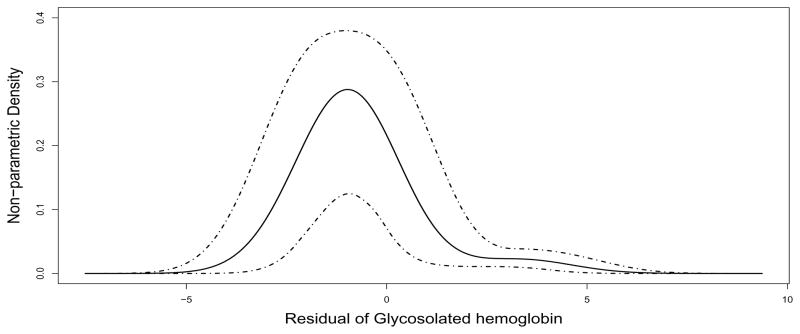

Variable selection in this application is clearly influenced by the assumptions on the residual density, with the nonparametric residual density providing a more realistic characterization that should lead to a more accurate selection of the important predictors. Figure 3 shows an estimate of the residual density obtained from the SLM analysis, suggesting a uni-modal right skewed density with a heavy right tail. The SLM results suggest that a mixture of two Gaussians provides an adequate characterization of this density. The computation time for SLM is only marginally slower than NLM, and in addition SLM exhibits good mixing for most of the fixed effects (Table 6). These results are robust to SSVS starting points, and consistency in the results across training-test splits also indirectly suggests adequate computational efficiency of SSVS.

Figure 3.

Residual density for Type II Diabetes study under Semi-parametric Linear Model.

Table 6.

Auto-correlations across lags for fixed effects in type-II diabetes data.

| Predictor | Lag 1 SLM | Lag 1 NLM | Lag 5 SLM | Lag 5 NLM | Lag 10 SLM | Lag 10 NLM | Lag 25 SLM | Lag 25 NLM | Lag 50 SLM | Lag 50 NLM |

|---|---|---|---|---|---|---|---|---|---|---|

| TC | 0.22 | 0.18 | 0.113 | 0.194 | 0.073 | 0.159 | 0.032 | 0.111 | 0.013 | 0.059 |

| SG | 0.59 | 0.06 | 0.386 | 0.038 | 0.285 | 0.022 | 0.14 | 0.009 | 0.06 | 0.016 |

| HDL | 0.19 | 0.02 | 0.081 | 0.012 | 0.041 | 0.013 | 0.01 | 0.021 | 0.0005 | −0.006 |

| Age | 0.21 | 0.04 | 0.072 | 0.009 | 0.053 | −0.0001 | 0.025 | 0.006 | 0.007 | −0.014 |

| Gender | 0.06 | −0.007 | 0.030 | 0.0003 | 0.013 | −0.006 | 0.009 | −0.014 | 0.005 | 0.019 |

| BMI(overwt) | 0.02 | −0.002 | 0.01 | −0.006 | 0.006 | 0.013 | −0.006 | 0.009 | 0.0014 | 0.018 |

| BMI(obese) | 0.02 | 0.002 | 0.017 | 0.004 | 0.004 | 0.018 | 0.007 | −0.003 | 0.000 | 0.000 |

| SBP | 0.29 | 0.0711 | 0.137 | 0.019 | 0.096 | 0.007 | 0.047 | 0.03 | 0.014 | 0.022 |

| DBP | 0.07 | 0.0239 | 0.021 | 0.019 | 0.019 | 0.031 | 0.009 | −0.003 | 0.004 | −0.012 |

| WHR | 0.44 | 0.0642 | 0.353 | 0.043 | 0.321 | 0.061 | 0.251 | 0.06 | 0.186 | −0.003 |

| PPT | 0.22 | 0.0600 | 0.118 | 0.047 | 0.068 | 0.045 | 0.015 | 0.004 | −0.002 | 0.019 |
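Lag autocorrelations like those in Table 6 can be computed from stored MCMC draws with a few lines of code. The sketch below is a generic illustration: the function `autocorr` is a hypothetical helper, and a synthetic AR(1) chain stands in for the posterior draws of a regression coefficient, since the actual chains are not available here.

```python
import numpy as np


def autocorr(chain, lag):
    """Sample autocorrelation of a 1-D chain at a non-negative integer lag."""
    x = np.asarray(chain, dtype=float)
    x = x - x.mean()
    if lag == 0:
        return 1.0
    return float(np.dot(x[:-lag], x[lag:]) / np.dot(x, x))


# Synthetic AR(1) chain (coefficient 0.5) used as a stand-in for posterior draws.
rng = np.random.default_rng(0)
n_draws, phi = 20000, 0.5
chain = np.empty(n_draws)
chain[0] = rng.normal()
for t in range(1, n_draws):
    chain[t] = phi * chain[t - 1] + rng.normal()

# Same lags as reported in Table 6.
acf = {lag: autocorr(chain, lag) for lag in (1, 5, 10, 25, 50)}
for lag, value in acf.items():
    print(f"lag {lag:2d}: {value: .3f}")
```

Autocorrelations that decay quickly toward zero as the lag grows, as for most predictors in the table, indicate good mixing. A slower decay, such as that for WHR under the SLM, indicates stronger dependence between successive draws.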

In terms of out-of-sample predictive MSE (Table 5), the relative performance of SLM, NLM, L1 and EL varies across training-test splits, so that none of the models can be said to dominate the others, while LMR and QR produce relatively inferior prediction results. Overall, the NLM has narrower 95% pointwise credible intervals than the SLM, often resulting in poorer coverage for out-of-sample predictions. In conclusion, the SLM succeeds in choosing the most reasonable model for DM II, consistent with previous medical evidence, and compares favorably with its competitors for prediction purposes.
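The two predictive summaries discussed here, out-of-sample MSE and coverage of 95% pointwise credible intervals, can be computed from posterior predictive draws as in the sketch below. The function `predictive_summary` and the synthetic data are illustrative assumptions. The example only shows the general mechanism by which intervals that are too narrow lose coverage and does not reproduce the study's numbers.

```python
import numpy as np


def predictive_summary(y_test, draws, level=0.95):
    """Return (MSE of the posterior predictive mean, pointwise interval coverage).

    `draws` has shape (n_draws, n_test): one row per posterior predictive draw.
    """
    y_test = np.asarray(y_test, dtype=float)
    draws = np.asarray(draws, dtype=float)
    alpha = 1.0 - level
    lower, upper = np.quantile(draws, [alpha / 2.0, 1.0 - alpha / 2.0], axis=0)
    mse = float(np.mean((y_test - draws.mean(axis=0)) ** 2))
    coverage = float(np.mean((y_test >= lower) & (y_test <= upper)))
    return mse, coverage


# Synthetic test set: responses scatter around mu with unit standard deviation.
rng = np.random.default_rng(0)
n_test, n_draws = 500, 4000
mu = rng.normal(size=n_test)
y_test = mu + rng.normal(size=n_test)

# Two sets of predictive draws with the same centre but different spread.
wide = mu + rng.normal(scale=1.0, size=(n_draws, n_test))
narrow = mu + rng.normal(scale=0.5, size=(n_draws, n_test))

mse_wide, cov_wide = predictive_summary(y_test, wide)
mse_narrow, cov_narrow = predictive_summary(y_test, narrow)
print(f"wide:   MSE = {mse_wide:.3f}, coverage = {cov_wide:.3f}")
print(f"narrow: MSE = {mse_narrow:.3f}, coverage = {cov_narrow:.3f}")
```

In this synthetic setting the two sets of draws give nearly the same MSE, but the narrower intervals cover far fewer test responses, which is the pattern described for the NLM relative to the SLM.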

Acknowledgments

This work was supported by Award Number R01ES017240 from the National Institute of Environmental Health Sciences. The authors thank the referees and the associate editor for their valuable comments.

APPENDIX A: PROOF OF RESULTS

Proof of Theorem I

Using methods similar to those in the proof of Theorem 2 in Guo and Speckman (2009), it can be shown that, conditional on A and under assumptions (A3) and (A4), the upper and lower bounds of are

and . Similarly, . Therefore,

| (13) |

Case (I): For fixed pj (j = 1, 2) and large n,

, ignoring terms independent of n. Using the results in the proofs of Theorems 2 and 3 in Guo and Speckman (2009), we can show that

when

⊈

. Again for

⊂

, using results in the aforementioned proofs, we have

. Further for

⊂

when p2 − p1 > 2 + 2(k − ku), we have

, where δ > 0 is such that i ≤ 2(k − ku) + 2δ < i + 1 when i ≤ 2(k − ku) < i + 1. This implies that for large enough n,

| (14) |

Then for large enough n, we have,

| (15) |

where ζ*(n) is the left-hand side of equation (14), which is independent of A, and ζ*(n) → 0 as n → ∞ (using (A4)). Dividing both sides of (15) by L(Yn|

), we have

as n → ∞.

Case (II): For increasing dimensions p1 = O(n^{a1}), p2 = O(n^{a2}) with 0 ≤ a1 < a2 < 1, we assume only (A3) for g ~ π(g), so that ku = 0. Using (13), we have

| (16) |

Let us consider the following cases under 0 ≤ a1 < a2 < 1.

Case C1:

⊂

. We have

, j=1,2, and

. Using Lemma 1 of Guo and Speckman (2009),

Moreover

under

. Then for large n,

where a* > 0 is such that 0 < 1 − a* − a2 < 1. This implies that

under

for any constant K* > 0.

Case C2:

⊈

. Using Lemma 1,

For fixed τ−1 and bA,2 > 0 (under (A2)), . This implies that in the limiting case when n → ∞, we have

| (17) |

where K* > 0 is a constant. Denoting the upper bounds as ζ*(n), it is clear that ζ*(n) is independent of A and ζ*(n) → 0 as n → ∞ when 0 ≤ a1 < a2 < 1. Using similar arguments as in equation (15) of Case (I), we have and consistency follows.

Proof of Theorem II

Given the assumptions (A1)–(A4), Bayes factor consistency holds under the different cases elaborated in Theorem I. For fixed p, the proof follows trivially using Bayes factor consistency. For increasing pn = O(n^a) (a > 0), our prior is

, where π ~ Be(a1, b1) and

. Let Wγ denote the prior weight for γ ∈

after marginalizing out π under the Be(a1, b1) prior (W1 being the weight for

). Let

Bayes factor between models γ and

, let D = {pγ : γ ∈

} and denote

= {γ ∈

: dim(γ) = pj}. Note that under (A1)–(A4) and

∈

,

for all

, using Theorem I. Also,

where

for large enough n, and ε0 → 0 as n → ∞ since all the individual terms in the finite summation → 0 using Theorem I. Further using (17), the upper bound of

for any γjl ∈

is given by ζ*(n) = n^{−K*} when n is large, where K* > 0 is a constant. Noting that the cardinality of

, we have for large n,

Now note that W1 is fixed and the cardinality of D < κ0n for some constant κ0 > 0. Thus it is clear that as n → ∞ for large K*. The rest is straightforward.

APPENDIX B: COMPUTATIONAL STEPS FOR MCMC

The posterior computation steps are:

- Step 1.1. Update the ν’s after marginalizing out the augmented uniform variable using π(νh|−) = Be(1 + nh, Σj>h nj + m), h = 1, …, M, where M is the total number of clusters satisfying , with wh = νh Πl<h(1 − νl).

- Step 1.2. Update ui, i = 1, …, n, from its full conditional as described in Walker (2007).

- Step 2. Update the cluster membership of different subjects using f(yi|ui, Aih = 1) ∝ N(yi|ηh, xγ,i, βγ, τ−1)I(h ∈ Bw(ui)), h = 1, …, M, with Bw(ui) defined as in Section 2.3.

- Step 3. Update the Dirichlet process atom ηl for the l-th cluster using , where is the cardinality of the l-th cluster, l = 1, …, M.

- Step 4. Update the DP precision using .

- Step 5. Letting , update precision τ using .

- Step 6. Using the hyper-g prior and the fact that for a = 4, we can adopt the griddy Gibbs approach (Ritter and Tanner, 1992) to update g.

- Step 7. Update the prior inclusion probability π = Pr(γj = 1) using f(π|−) = Be(a1 + pγ, b1 + p − pγ).

- Step 8. Update γj’s one at a time by computing their posterior inclusion probabilities after marginalizing out βγ and conditional on inclusion indicators for the remaining predictors as well as g, τ and A. Denote γ(j) and γ(−j) as the vector of current variable inclusion indicators with γj fixed at 1 and 0 respectively, and let pγ(j) and pγ(−j) denote the corresponding vector sums. We can sample γj from the Bernoulli conditional posterior distribution with probabilities Pr(γj = 1|−) = pj1/(pj1 + pj0) and Pr(γj = 0|−) = pj0/(pj1 + pj0), where

- Step 9. Set {βj : γj = 0} = 0 and update βγ = {βj : γj = 1} using π(βγ|−) = N(βγ; E, V), where and .
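A few of the steps above have closed forms stated in the text and can be sketched directly. The code below is a minimal illustration under stated assumptions, not the authors' implementation. All function names are hypothetical. The Step 8 weights pj1 and pj0 are taken as inputs on the log scale, because their formulas are not reproduced above. The Step 6 routine is a simple discretized draw on a grid, which is a simplification of the griddy Gibbs sampler of Ritter and Tanner (1992). The log density passed to it in the usage lines is only the hyper-g prior kernel (1 + g)^(−a/2) with a = 4, standing in for the full conditional of g.

```python
import numpy as np


def update_sticks(cluster_sizes, m, rng):
    """Step 1.1: nu_h ~ Be(1 + n_h, sum_{j>h} n_j + m), w_h = nu_h * prod_{l<h}(1 - nu_l)."""
    n_h = np.asarray(cluster_sizes, dtype=float)
    tail = n_h[::-1].cumsum()[::-1] - n_h  # sum of n_j over j > h
    nu = rng.beta(1.0 + n_h, tail + m)
    w = nu * np.concatenate(([1.0], np.cumprod(1.0 - nu)[:-1]))
    return nu, w


def update_inclusion_prob(gamma, a1, b1, rng):
    """Step 7: pi ~ Be(a1 + p_gamma, b1 + p - p_gamma)."""
    gamma = np.asarray(gamma)
    p_gamma = int(gamma.sum())
    return rng.beta(a1 + p_gamma, b1 + gamma.size - p_gamma)


def sample_indicator(log_pj1, log_pj0, rng):
    """Step 8: draw gamma_j with Pr(gamma_j = 1) = pj1 / (pj1 + pj0), given log weights."""
    prob_one = float(np.exp(log_pj1 - np.logaddexp(log_pj1, log_pj0)))
    return int(rng.random() < prob_one), prob_one


def griddy_draw(log_density, grid, rng):
    """Step 6 (simplified): sample from an unnormalized log density discretized on a grid."""
    logp = np.array([log_density(value) for value in grid])
    probs = np.exp(logp - logp.max())  # subtract the max for numerical stability
    probs /= probs.sum()
    return float(rng.choice(grid, p=probs))


# Usage with made-up inputs (cluster sizes, hyperparameters and weights are assumptions).
rng = np.random.default_rng(1)
nu, w = update_sticks([5, 3, 2], m=1.0, rng=rng)
pi = update_inclusion_prob([1, 0, 1, 0, 0], a1=1.0, b1=1.0, rng=rng)
gamma_j, prob_one = sample_indicator(log_pj1=-10.0, log_pj0=-12.0, rng=rng)
g = griddy_draw(lambda value: -2.0 * np.log1p(value), np.linspace(0.01, 100.0, 2000), rng)
print(w, pi, gamma_j, prob_one, g)
```

Working with log weights in Step 8 avoids underflow when the marginal likelihoods under γ(j) and γ(−j) are both very small, which is common for moderate n.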

Contributor Information

Suprateek Kundu, Email: sk@stat.tamu.edu.

David B. Dunson, Email: dunson@stat.duke.edu.

References

- 1. Antoniak CE. Mixtures of Dirichlet processes with applications to Bayesian nonparametric problems. Annals of Statistics. 1974;2:1152–1174.

- 2. Armagan A, Dunson DB, Lee J, Bajwa WU, Strawn N. Posterior consistency in high-dimensional linear models. Biometrika. 2013. To appear.

- 3. Brunham LR, Kruit JK, Pape TD, Timmins JM, Reuwer AQ, Vasanji Z, Marsh BJ, Rodrigues B, Johnson JD, Parks JS, Verchere CB, Hayden MR. β-cell ABCA1 influences insulin secretion, glucose homeostasis and response to thiazolidinedione treatment. Nature Medicine. 2007;13:340–347. doi: 10.1038/nm1546.

- 4. Casella G, Girón FJ, Martínez ML, Moreno E. Consistency of Bayesian procedures for variable selection. Annals of Statistics. 2009;37:1207–1228.

- 5. Castillo I, van der Vaart A. Needles and straws in a haystack: Posterior concentration for possibly sparse sequences. Annals of Statistics. 2012;40:2069–2101.

- 6. Epstein M, Sowers JR. Diabetes mellitus and hypertension. Hypertension. 1992;19:403–418. doi: 10.1161/01.hyp.19.5.403.

- 7. Ferguson TS. A Bayesian analysis of some nonparametric problems. Annals of Statistics. 1973;1:209–230.

- 8. George EI, McCulloch RE. Approaches for Bayesian Variable Selection. Statistica Sinica. 1997;7(2):339–74.

- 9. Guo R, Speckman P. Bayes factor consistency in linear models. In the 2009 International Workshop on Objective Bayes Methodology; Philadelphia. 2009.

- 10. Jiang W. Bayesian variable selection for high dimensional generalized linear models: Convergence rates of the fitted densities. Annals of Statistics. 2007;35:1487–1511.

- 11. Koller M, Stahel WA. Sharpening Wald-type inference in robust regression for small samples. Computational Statistics and Data Analysis. 2011;55:2504–2515.

- 12. Kyung M, Gill J, Casella G. Characterizing the variance improvement in linear Dirichlet random effects models. Statistics and Probability Letters. 2009;79:2343–2350.

- 13. Liang F, Paulo R, Molina G, Clyde MA, Berger JO. Mixtures of g-priors for Bayesian Variable Selection. Journal of the American Statistical Association. 2008;103:410–423.

- 14. Lo AY. On a class of Bayesian nonparametric estimates: I. density estimates. Annals of Statistics. 1984;12:351–357.

- 15. Maruyama Y, George E. A g–prior extension for p > n. 2008. arXiv:0801.4410v1 [stat.ME].

- 16. Mokdad AH, Bowman BA, Ford ES, Vinicor F, Marks JS, Koplan JP. The continuing epidemics of obesity and diabetes in the United States. Journal of the American Medical Association. 2001;286:1195–1200. doi: 10.1001/jama.286.10.1195.

- 17. O’Hara RB, Sillanpää MJ. Review of Bayesian variable selection methods: What, how and which. Bayesian Analysis. 2009;4:85–118.

- 18. Yu K, Chen CWS, Reed C, Dunson D. Bayesian Variable Selection in Quantile Regression. Statistics and its Interface. 2013;6:261–274.

- 19. Ritter C, Tanner MA. Facilitating the Gibbs sampler: the Gibbs stopper and the griddy-Gibbs sampler. Journal of the American Statistical Association. 1992;87:861–868.

- 20. Schmidt MI, Duncan BB, Canani LH, Karohl C, Chambless L. Association of waist-hip ratio with diabetes mellitus. Strength and possible modifiers. Diabetes Care. 1992;15:912–4. doi: 10.2337/diacare.15.7.912.

- 21. Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B. 1996;58(1):267–288.

- 22. Walker S. Sampling the Dirichlet mixture model with slices. Communications in Statistics - Simulation and Computation. 2007;36:45–54.

- 23. Yau C, Papaspiliopoulos O, Roberts G, Holmes C. Bayesian non-parametric hidden Markov models with applications in genomics. Journal of the Royal Statistical Society, Series B. 2011;73(Part 1):33–57. doi: 10.1111/j.1467-9868.2010.00756.x.

- 24. Yohai VJ. High breakdown-point and high efficiency estimates for regression. Annals of Statistics. 1987;15:642–65.

- 25. Zellner A, Siow A. Bayesian Statistics: Proceedings of the First International Meeting. Valencia: University of Valencia Press; 1980. Posterior odds ratios for selected regression hypotheses; pp. 585–603.

- 26. Zellner A. On assessing prior distributions and Bayesian regression analysis with g-prior distributions. Bayesian Inference and Decision Techniques: Essays in Honor of Bruno de Finetti. 1986:233–243.

- 27. Zou H, Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society, Series B. 2005;67:301–320.