Abstract

This article provides the author's perspective on the development of digital hearing aids and how digital signal processing approaches have led to changes in hearing aid design. Major landmarks in the evolution of digital technology are identified, and their impact on the development of digital hearing aids is discussed. Differences between analog and digital approaches to signal processing in hearing aids are identified.

Keywords: acoustic amplification, digital hearing aids, programmable hearing aids, digital signal processing

The need to communicate has yielded a vast array of inventions. The need to compute has similarly stimulated our inventiveness. These 2 powerful forces have danced together for generations and have yielded several remarkable offspring. Information technology is perhaps the most widely known, but there are other less well-known progeny, such as the ubiquitous microchip doing its bit (pun intended) in almost every modern electrical device.

In the predigital era, hearing aids did little more than amplify sound. Today, the hearing aid is a far more complex instrument in which amplification is combined with advanced forms of signal processing for speech enhancement, noise reduction, self-adapting directional inputs, feedback cancellation, data monitoring, and acoustic scene analysis, as well as the means for a wireless link with other communication systems. This article traces the application of digital signal processing (DSP) approaches to hearing aid applications and how these approaches introduced new ways of thinking regarding the fundamentals of acoustic amplification. The article is clearly biased in the direction of the author's own thinking as one who played a role in the development of digital hearing aids.

Background

Prelude to Digital Hearing Aids: Digitizing Audio Signals

The fundamental difference between analog and digital hearing aids is that in the digital hearing aid, the audio signal is converted to a sequence of discrete samples, processed digitally, and then converted back to an analog signal. An important first step in developing the field of digital audio was that of developing a device for converting analog audio signals to digital form and then converting the digital signal back to analog form. It should come as no surprise that this crucial first step in digital audio was taken at Bell Laboratories, which has pioneered so many other major advances in science and engineering. What may come as a surprise, however, is when and why this work was done. During World War II, there was a pressing need to develop a secure telephone link between Washington and London. The project, known simply as Project X, was initiated in the early 1940s and involved several of the most brilliant minds at the time, including Claude Shannon, Harry Nyquist, and, for a short period, Alan Turing on a visit from England.1,2

To ensure that the speech signals were encrypted securely, the Vernam cipher was used. This method of encryption required that the signal be represented as a sequence of binary digits (0s and 1s). The binary signal was then encrypted by adding a random sequence of binary digits to the signal, thereby creating another sequence of binary digits that is effectively a random sequence, except to those who knew the binary sequence used to encrypt the signal. A key component of this project was the development of a method for digitizing the speech signal. The speech was filtered into a series of narrow bands, and the amplitude in each band was sampled and converted into a binary signal. The binary signals from the different filter bands were combined, encrypted, and then transmitted. The receiving party had a recording of the binary sequence used to encrypt the speech, which was then used to decode the received signal.
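The encryption step described above can be illustrated with a minimal sketch, assuming that "adding" binary digits means addition modulo 2 (bitwise exclusive-or). The function name `vernam` and the 8-digit message are hypothetical and chosen only for illustration; nothing here is intended to reproduce the actual Project X hardware.

```python
import secrets

def vernam(bits, key):
    """Add two equal-length binary sequences modulo 2 (bitwise XOR)."""
    if len(bits) != len(key):
        raise ValueError("key must be as long as the message")
    return [b ^ k for b, k in zip(bits, key)]

# Hypothetical digitized speech, already reduced to binary digits.
message = [1, 0, 1, 1, 0, 0, 1, 0]

# Random key of the same length; the receiving party holds an identical copy.
key = [secrets.randbits(1) for _ in message]

ciphertext = vernam(message, key)    # looks random without the key
recovered = vernam(ciphertext, key)  # adding the same key again undoes the encryption
```

Because modulo-2 addition is its own inverse, the same operation serves for both encryption and decryption, which is why the receiving party needs only a copy of the key sequence.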

Vacuum tubes were used in the construction of the device so that, on completion, this rather special analog-to-digital converter with encryption required 30 seven-foot racks of equipment and 30 kW of power to produce a digital audio signal of 1 mW.2 The device was obviously not practical, except for its highly specialized and very important secret application.

Project X remained a closely guarded secret until 1975.2 However, work on the project generated new ways of thinking about the nature of speech, how information is conveyed by analog and digital signals, and on the use of pulses for encoding and transmitting audio signals. These ideas were embodied in later, unclassified work that had a substantial impact on communication technology, such as the development of practical pulse-code modulation systems. More important, powerful new theories were developed such as Shannon's information theory,3 which drew on ideas inherent to Project X. In his classic article, Shannon not only provided a proof of the sampling theorem initially proposed by Nyquist4 but also provided the underlying theory for reconstructing an analog signal from its digital form.

The problem of converting analog audio signals to digital form was addressed once again almost 20 years after Project X. As before, the work was done at Bell Laboratories, but this time, the motivation was to improve the efficiency of developing and evaluating speech-processing systems by means of digital simulation. There was much interest at the time in improving the efficiency of telephone transmissions on the transatlantic cable. Various vocoder systems were being evaluated, but the construction of each system was time-consuming, and progress was slow. The simulation of experimental speech-processing systems on a digital computer represented a much more efficient way of developing, evaluating, and modifying complex systems without having to build one in hardware until the design of the system was finalized. It was also recognized that if speech signals could be digitized and entered into a digital computer, new ways of analyzing, processing, and synthesizing speech could then be developed. With these objectives in mind, analog-to-digital converters (ADC) and digital-to-analog converters (DAC) were developed for audio signals.5

Relevance of the Sampling Theorem for Modern Hearing Aids

It was inconceivable at the time (circa 1948) that the sampling theorem and the methods developed for efficient sampling of audio signals in pulse-code modulation systems would be of any relevance to the design of hearing aids. Today, these considerations are of paramount importance for modern hearing aids, most of which employ digital technology. It is also important for audiologists and others interested in the capabilities of digital hearing aids to understand the theoretical and practical limitations of digital sampling. A tutorial on digital sampling is provided in the review article by Levitt.6
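One practical limitation of digital sampling can be shown with a short sketch. The sampling theorem implies that a signal component above half the sampling rate cannot be distinguished from a lower-frequency component after sampling (aliasing). The 16-kHz sampling rate and the tone frequencies below are assumptions chosen for illustration and do not describe any particular hearing aid.

```python
import math

def sample_tone(freq_hz, rate_hz, n_samples):
    """Return n_samples of a unit-amplitude cosine sampled at rate_hz."""
    return [math.cos(2 * math.pi * freq_hz * n / rate_hz)
            for n in range(n_samples)]

rate = 16000        # assumed sampling rate in Hz
nyquist = rate / 2  # highest frequency that can be represented unambiguously

above = sample_tone(10000, rate, 32)  # 10-kHz tone, above the 8-kHz limit
alias = sample_tone(6000, rate, 32)   # 6-kHz tone (rate minus 10 kHz)

# The two sample sequences agree to within floating-point rounding error.
max_diff = max(abs(a - b) for a, b in zip(above, alias))
```

This is the reason an anti-aliasing filter precedes the ADC: components above half the sampling rate must be removed before sampling, since they cannot be separated from lower-frequency components afterward.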

Once practical methods for digitizing audio signals were developed, attention focused on developing methods of processing audio signals digitally. Initially, software was written to replicate conventional methods of analog signal processing, such as the classic Butterworth and Bessel filters. It was soon realized, however, that the capabilities of DSP far exceeded analog signal processing. It was also recognized that there are fundamental differences between analog signal processing and DSP and that it was necessary to change one's mind-set in using DSP; that is, one needs to think in digital rather than analog terms. This change in mind-set opened the door to a vast new terrain, resulting in substantial advances in signal processing per se, which in turn had a major impact on the development of digital hearing aids.

Initially, the driving force behind the development of DSP was the need for more efficient methods of developing and evaluating complex speech transmission systems. Consequently, many of the early advances in DSP were in the area of speech analysis and processing.5 The tremendous capabilities of DSP and the challenges raised by this new technology were of considerable interest to researchers and led to research by scientists in many disciplines. As a consequence, advances in DSP were both rapid and broad in scope, encompassing many different fields, including musical acoustics, room acoustics, underwater sound, ultrasonics, video signal transmission, and image processing. The field of DSP is now substantially greater than that of speech processing, which fathered it (and mothered it in its early days). Since the early development of DSP focused on problems in speech analysis, synthesis, and perception, many of the DSP techniques developed in those early days turned out to be of particular value for the future development of hearing aids.

In the sections that follow, the sequence followed in applying digital approaches to hearing aid applications is described. Initially, digital processing was done offline. As technology allowed, real-time processing using computer-controlled analog equipment was used for experiments, then real-time DSP with a laboratory computer, and finally real-time DSP in a wearable instrument.

Signal Processing for Hearing Aid Applications

Offline DSP

The earliest work in DSP used a large mainframe computer, and processing was done offline. This approach made for slow progress because of the long turnaround time. For example, in one of the earliest experiments at Bell Laboratories in which DSP was used to study a method of acoustic amplification, it took several days to prepare the test stimuli.7 The sequence of events began with an analog recording of the unprocessed speech signal being brought to the ADC and a digital tape containing the unprocessed digitized signal prepared. The tape was then sent to the computer, where it sat in a queue waiting to be processed. After processing, the digital signal was sent to the DAC, where an analog recording of the processed speech was prepared. The analog recording was then checked. Glitches were detected, and the process was repeated until an error-free version of the processed speech was obtained. The analog recording of the processed speech was then sent to an audiology research clinic for evaluation since there were no clinical facilities at Bell Laboratories. Although this experiment did not yield any dramatic new information (subjects with different audiograms showed different improvements in speech intelligibility with frequency shaping), the experiment was important in that it demonstrated the enormous potential of DSP for the field of audiology. Complex methods of signal processing that would require considerable effort to be implemented using analog circuits could be implemented digitally with relative ease. Of particular interest to the researchers involved in this project were the new insights provided by computer simulation in studying new forms of acoustic amplification.

Computer-Controlled Analog Equipment

The introduction of small laboratory computers in the mid-1960s circumvented the inconvenience and inefficiency of offline processing. The laboratory computers at the time were too slow for digital processing of audio signals in real time but could be used to control analog equipment for real-time processing. This combination of digital and analog technology proved to be a practical approach to online experimental testing and was widely used during the 1970s. There was considerable interest in multichannel amplitude compression during this period, and many of the key experiments evaluating multichannel systems used computer-controlled analog equipment. A particularly important series of experiments evaluating multiband amplitude compression using this approach was performed by Louis Braida and his colleagues at the Massachusetts Institute of Technology.8–13 The development of equipment to perform advanced signal processing in real time allowed for systematic investigation of a complex form of hearing aid processing. The successful melding of digital and analog technology was not lost on hearing aid engineers, and the first so-called “digital” hearing aids were in fact hybrid instruments with digital control of analog components.

The use of a dedicated laboratory computer to control analog equipment also allowed for the convenient implementation of adaptive test procedures for the fitting of complex hearing aids. In an adaptive test, the stimulus on any given trial is dependent on the data obtained on previous trials. Adaptive testing is more efficient than traditional methods of testing, in which the stimulus levels to be used are decided on before the experiment. The efficiency of adaptive testing relative to nonadaptive testing is substantial if several variables need to be adjusted. The process of fitting a hearing aid involves the adjustment of several variables so that the use of an adaptive procedure is very attractive in terms of improving the efficiency of hearing aid evaluation and fitting. The complexity of multivariate adaptive fitting procedures, however, precluded the use of these procedures until the introduction of computer-assisted testing. A multivariate adaptive strategy for fitting a hearing aid was developed in the mid-1970s,14,15 but the use of this technique was limited to research laboratories until the development of programmable hearing aids.
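The defining property of an adaptive test, that each stimulus depends on earlier responses, can be seen in a minimal sketch of a simple up-down rule with a single variable. This is a deliberately simplified illustration and not the multivariate fitting strategy cited above; the function names, the 42-dB threshold, and the deterministic simulated listener are all assumptions.

```python
def simple_up_down(respond, start_level, step, n_trials):
    """Run a simple 1-up/1-down track.

    respond(level) returns True for a positive response. The level is
    lowered after a positive response and raised after a negative one.
    """
    level = start_level
    history = []
    for _ in range(n_trials):
        positive = respond(level)
        history.append((level, positive))
        level = level - step if positive else level + step
    return history

# Hypothetical listener who always responds at or above 42 dB.
def listener(level):
    return level >= 42

track = simple_up_down(listener, start_level=60, step=4, n_trials=12)
levels = [lvl for lvl, _ in track]
```

After the initial descent, the presented levels alternate around the listener's threshold, so most trials are placed where they are most informative. With several variables to adjust, the bookkeeping grows quickly, which is consistent with the need for computer-assisted testing noted above.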

The 1980s was a period of substantial activity, in which several research laboratories applied advanced methods of signal processing to problems in acoustic amplification. The technology used in these investigations included programmable digital filters, offline implementation of advanced signal-processing algorithms, and digital control of analog or sampled data systems. The avenues of research described in the following paragraphs provided considerable insight with respect to the capabilities of DSP in addressing the most basic problems in acoustic amplification. These experiments laid the groundwork for the types of signal processing that could be used in wearable digital hearing aids.

A major thrust was the investigation of adaptive signal processing for noise reduction. Graupe16 developed a self-adaptive filter for noise reduction using a single microphone input. A chip implementing Graupe's method of noise reduction was developed (Intellitech's Zeta Blocker17) and incorporated in several early hearing aids using advanced signal processing. A number of researchers investigated adaptive filtering approaches to noise reduction using the output of 2 or more microphones. For example, Chabries and his colleagues18,19 investigated the use of an adaptive filter with 2 microphone inputs: one input containing speech and noise and the other containing a linearly processed version of the noise. The system works well if the noise microphone is placed near the noise source. This is not a convenient arrangement for everyday use of a hearing aid. A more practical arrangement is to place both microphones on the head. One approach is to place a microphone on each ear. The sum of the 2 microphones consists of both speech and noise, while the difference between the 2 microphones consists of noise only for a speech source directly in front of the listener. These 2 inputs (the sum and difference, respectively, of the signals at each ear) are used as the inputs to the 2-input adaptive filter. An experimental evaluation of this technique showed significant improvements in intelligibility relative to normal binaural listening in an acoustic environment with little or no reverberation.20 Implementation of this technique, however, requires a link between the 2 ears, which is not very practical, although this may change with improved methods of wireless transmission.
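The 2-input adaptive filter can be sketched with the least-mean-square (LMS) algorithm, used here as one common way of adapting such a filter; the source does not state which adaptation rule the cited studies used. In this sketch, the reference input contains noise only, and the noise reaching the primary input is assumed to be related to it by a short finite impulse response path. The sine wave standing in for speech, the path coefficients, the step size, and all names are illustrative assumptions.

```python
import math
import random

def lms_cancel(primary, reference, n_taps=4, mu=0.01):
    """Two-input LMS noise canceller; returns (enhanced signal, final weights)."""
    w = [0.0] * n_taps
    buf = [0.0] * n_taps  # most recent reference samples, newest first
    out = []
    for d, x in zip(primary, reference):
        buf = [x] + buf[:-1]
        noise_est = sum(wi * bi for wi, bi in zip(w, buf))
        e = d - noise_est  # primary input minus the estimated noise
        w = [wi + 2 * mu * e * bi for wi, bi in zip(w, buf)]
        out.append(e)
    return out, w

def power(x):
    """Mean-square value of a sequence."""
    return sum(v * v for v in x) / len(x)

random.seed(0)
n = 4000
speech = [0.5 * math.sin(2 * math.pi * 0.01 * k) for k in range(n)]  # stand-in for speech
noise = [random.gauss(0.0, 1.0) for _ in range(n)]

# Assumed 2-tap path from the noise source to the primary microphone.
path = [0.8, -0.3]
noise_at_primary = [path[0] * noise[k] + (path[1] * noise[k - 1] if k else 0.0)
                    for k in range(n)]
primary = [s + v for s, v in zip(speech, noise_at_primary)]

enhanced, weights = lms_cancel(primary, noise)

# Residual noise in the second half of the output, after adaptation.
residual = [e - s for e, s in zip(enhanced[n // 2:], speech[n // 2:])]
```

The filter output converges toward the speech signal because the speech is uncorrelated with the reference input, so only the noise component of the primary input can be predicted from the reference and subtracted. Reverberation lengthens and complicates the path between the 2 inputs, which is consistent with the poorer performance reported in reverberant rooms.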

A more practical implementation of the 2-input adaptive filter is that in which both microphones are mounted on the same ear: an omnidirectional microphone to pick up both speech and noise and a directional microphone pointed toward the noise source to pick up mostly noise. A system of this type was found to work well in a room with low reverberation21 but not in the presence of significant reverberation.22 This result is similar to that obtained with a directional microphone input in a reverberant environment. A variation of this approach using several microphones was developed by Chazan et al.23 Techniques in which the outputs of 2 or more microphones are processed to achieve directionality and reduce background noise require advanced processing and are well beyond the capabilities of analog hearing aids. These early studies laid the groundwork for the development of multimicrophone digital hearing aids with superior directional characteristics.

Another area of research involved nonlinear methods of enhancing the speech signal for people with severe high-frequency hearing loss. One such method, frequency lowering, is to lower the high-frequency speech components into a lower frequency region, where the listener has relatively good residual hearing. There is a long history of research on frequency lowering. (Note that the term frequency lowering is a general descriptor covering any form of frequency recoding in which the output has lower frequency content than the input.) Two commonly used forms of frequency lowering are (1) frequency transposition or frequency shifting, in which signal components in a high-frequency region are shifted to a lower frequency region, and (2) frequency compression, in which all frequencies in the signal are lowered proportionally or with some frequency warping. Most of the early evaluations of frequency lowering did not show significant improvements in speech recognition.24 However, the 1980s saw a revival of interest in frequency lowering largely because new methods of signal processing had recently been developed that were substantially more powerful than the techniques used in the past and allowed for innovative new ways of addressing the problem. Reed and her colleagues,25,26 for example, were able to obtain significant improvements in speech recognition, particularly for stops, fricatives, and affricates, using these new methods of frequency lowering.

Digital approaches to speech processing enabled the implementation of other methods of speech enhancement that were based on the results of perceptual studies designed to identify the cues most important for good speech intelligibility (clear speech).27,28 Many of these differences are manifested in increased consonant-to-vowel intensity ratios (CVR), the importance of which for speech recognition was demonstrated earlier by Hecker.29 The signal-processing capabilities of the 1980s provided the means for detailed investigations of the effects of increasing consonant amplitude and duration on intelligibility.30–35 The results of these studies were mixed in that some improvements were obtained, such as improved consonant recognition, but a significant increase in intelligibility was not always obtained. Nevertheless, the results were sufficiently promising for engineers to investigate methods for improving CVRs automatically using the new tools provided by DSP.36

Other methods of speech processing involved enhancing the temporal structure of speech so as to reduce the masking of weak sounds by more intense sounds and, in the case of reverberant speech, to restore the temporal modulations of the speech signal reduced by the temporal smoothing of the reverberation. Experimental evaluations of these techniques yielded mixed results.37–40 Yet other signal-processing approaches were designed to increase the spectral contrasts in the speech signal so as to compensate for the reduced frequency resolution of the impaired ear.41–46 Experimental evaluations of this approach have also yielded mixed results. Both approaches have nevertheless shown sufficient promise to warrant additional research in the area.

The use of either offline DSP or computer-controlled analog equipment provided substantial new insights with respect to developing improved methods of signal processing for people with hearing loss, but to implement these techniques in a digital hearing aid, it was necessary that methods be developed for digital processing of audio signals in real time.

DSP in Real Time: The Digital Master Hearing Aid

The first digital computers performed computations in sequence. To speed up digital processing, computers were developed using parallel processing, in which arrays of numbers were processed simultaneously (the early supercomputers) instead of 1 number at a time. By the late 1970s, high-speed laboratory computers were developed that incorporated array processing. These array processors were capable of processing audio signals in real time.

The events leading to the development of a digital hearing aid using array processing followed the usual process of trial and error; that is, we learn from our mistakes. In the late 1970s, it was decided to build a digital hearing aid as part of the ongoing research in acoustic amplification at the City University of New York. Douglas Mook, who was a summer student at the time, and Myron Zimmerman, with input from the author and some funds from an ongoing research grant, designed a reduced instruction set computer microprocessor to serve as the central element of the proposed hearing aid. CMOS technology was chosen because of its low power consumption, but it was found to be too slow for real-time operation. A hybrid system with 3 analog channels was then designed. However, the engineer responsible for hardware development left the group soon after (possibly because of the frustration of trying to build a complex system with limited resources), and the project was terminated. Having learned from this mistake, the group decided to follow the path of computer simulation. At the time, the idea of a computer-simulated hearing aid was viewed as “a variation of the master hearing aid approach” using “an on-line computer to simulate an adjustable hearing aid, the adjustments being made by the computer according to an established multivariate optimization routine.”47 A valuable feature of this approach was that one did not have to worry about hardware constraints, although the difficulties of real-time programming were not appreciated at the time.

Whereas computer-controlled analog equipment for real-time signal processing had proven to be very useful, the limitations of analog signal processing remained. The array processor provided the means for processing speech and other audio signals digitally in real time. It was thus possible to develop a digital hearing aid using a high-speed array processor for real-time digital processing of audio signals.

A grant application to develop a digital hearing aid using a high-speed array processor was submitted to the National Institutes of Health and was funded in 1981. After much pain and suffering in developing the software for real-time operation, a working system was up and running by the summer of 1982.48 Although it worked well and was capable of advanced signal processing previously not thought possible in a hearing aid, the device was met with some amusement by clinicians. It was a large instrument mounted on a rack of equipment with FM radio links between the processor and an ear-worn unit containing a microphone and output transducer. At that time, the idea of a computer being small enough to fit on or in the ear was viewed as science fiction, and as one wag put it, “It may be a good hearing aid, but you'll need a friend with a wheelbarrow behind you to carry the instrument.”

The array processor digital hearing aid was designed as a research tool (ie, a master hearing aid) for exploring the potential of DSP in hearing aids. An important feature of its design was that it could be used to simulate experimental hearing aids. As illustrated by the preceding account of the difficulties encountered in actually constructing a digital hearing aid, a basic problem in hearing aid research up until that time was that any new idea for improving signal processing required that an instrument be constructed to evaluate the technique. In most cases, the evaluation of the experimental technique was negative, and it was not clear whether the fault lay in the limitations of the hardware implementation or whether it resulted from a fundamental limitation of the technique itself. There are many examples in hearing aid research in which new ideas were tried and found to be wanting and then tried again some time later, with better equipment and usually (but not always) with better results.49

The underlying philosophy in developing the array processor digital master hearing aid was to evaluate new methods of signal processing without regard to the size or complexity of the necessary instrumentation. If significant improvements could be obtained, such as improved speech intelligibility in noise, then the problem of achieving these results, or as close an approximation as possible, with a simpler, more practical instrument would then be addressed. If improved results could not be obtained using sophisticated signal processing without hardware limitations, then there would be no point in proceeding further with that form of processing.

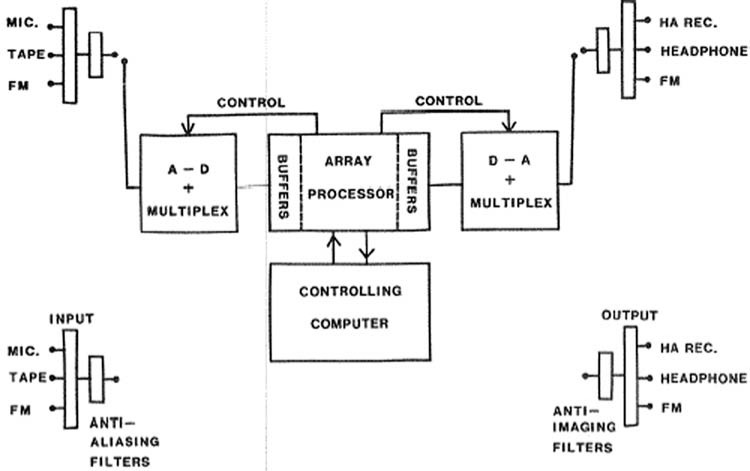

Figure 1 shows a block diagram of the array processor digital hearing aid. There were 3 possible inputs: microphone, tape player, and FM receiver. The analog input signal was fed to an anti-aliasing filter and ADC. The digitized output was fed to the array processor (MAP 300) where the signal was processed. The type of processing was determined by the controlling computer (DEC LSI-11/23). For example, simple linear filtering might be used for one experimental condition; another condition might require advanced signal processing for noise reduction. The output of the array processor was then fed to the DAC and anti-imaging filter and the resulting analog signal fed to 1 of the 3 possible outputs: hearing aid receiver, headphone, or FM transmitter. The array processor was dedicated to high-speed processing. The smaller, controlling computer was programmed to implement adaptive procedures for determining the type of processing and relevant parameter values to be used at any given time in an experiment.

Figure 1.

A block diagram of the array processor digital hearing aid. From Levitt H, Neuman AC, Mills R, Schwander TJ. A digital master hearing aid. J Rehab Res and Dev. 1986; 23(1): 79–87. Reprinted with permission.

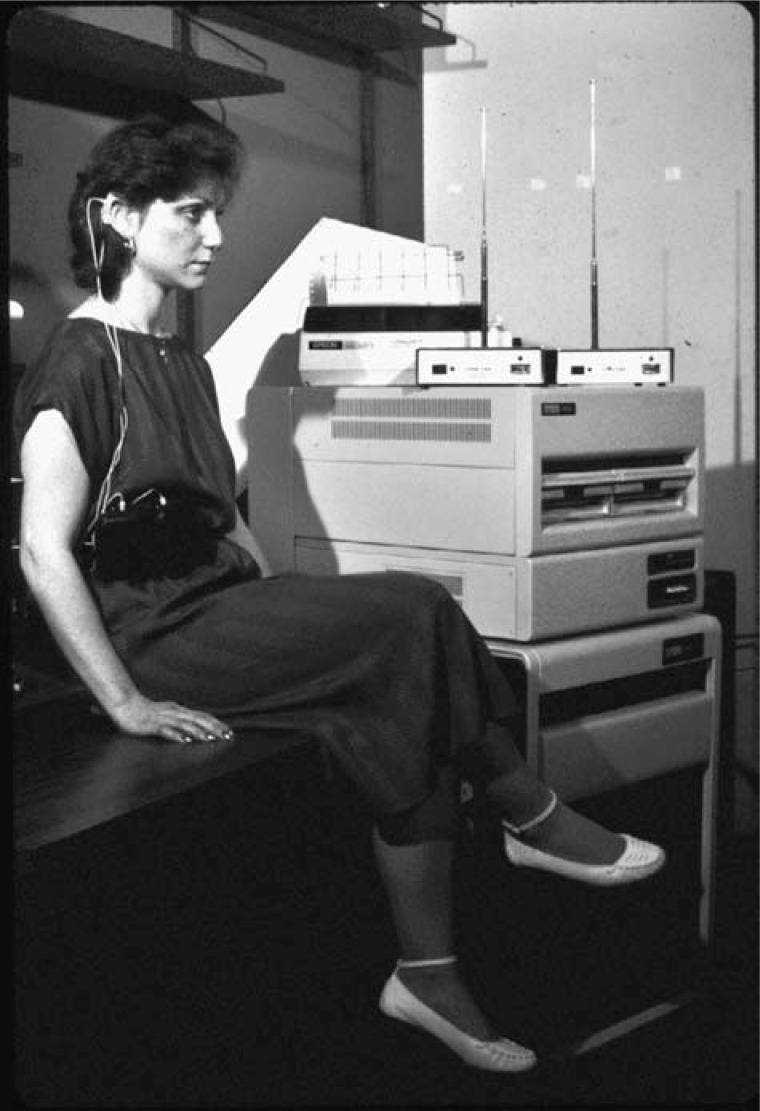

Figure 2 shows a person using the system. She is wearing a hearing aid case containing a microphone and hearing aid receiver. The output of the microphone is fed to a body-worn FM unit, which transmits the signal to the input stage of the processor. A second FM unit is used to transmit the processed signal to a body-worn FM receiver, the output of which is connected to the hearing aid receiver. The 2 large units on the right consist of the array processor and controlling computer. The FM receiver and transmitter connected to the input and output, respectively, of the processor are visible above the unit. A printer is behind the 2 FM units.

Figure 2.

Photograph of the array processor digital hearing aid and a person using the system. Photograph reprinted with permission of the City University of New York.

A series of experiments was carried out using the array processor master hearing aid. One set of studies developed and evaluated various methods of signal processing for noise reduction, such as linear filtering, spectrum subtraction, adaptive filters of various kinds, and spectral shaping,50–62 as well as methods for reducing reverberation63 and a general approach to compression amplification.64 The second avenue of investigation focused on adaptive strategies for hearing aid fitting and other audiological applications.65–69 The experiments on adaptive strategies for hearing aid fitting were of particular interest since the original rationale for developing the array processor digital hearing aid was to develop a computerized master hearing aid that would employ efficient adaptive techniques for fitting hearing aids.

The array processor digital hearing aid turned out to be a powerful tool for investigating basic issues in acoustic amplification, but it was also difficult to use, requiring the skills of an expert computer programmer. Shortly after the development of the array processor digital hearing aid, high-speed chips for DSP were introduced. These DSP chips were fast enough to process audio signals in real time, and it was decided to develop a simpler digital master hearing aid that would be easier to use. This instrument did not have the capability of implementing highly sophisticated methods of DSP, but it could be used for simulating hearing aids that were current at that time. The instrument was used in experiments evaluating prescriptive fitting strategies70 and the effect of resonances introduced by coupling a behind-the-ear (BTE) hearing aid to an ear mold using acoustic tubing.71 An interesting variation of the instrument was The Chameleon,72 which, when connected to a conventional hearing aid, would proceed to measure its electroacoustic characteristics and then simulate that hearing aid.

The array processor digital hearing aid was an important milestone in the development of digital hearing aids in that it demonstrated the tremendous capabilities of DSP in real time. The research studies using this instrument provided considerable insight as to what could be achieved with respect to noise and reverberation reduction (single microphone or multiple microphone inputs), more general forms of compression amplification, and multivariate adaptive fitting using DSP. The subsequent development of a simpler digital master hearing aid using DSP chips dedicated to high-speed DSP was a straw in the wind for the future development of a wearable digital hearing aid. Although the DSP chips and supporting components used in this simpler digital master hearing aid were too large with too high a power consumption for a wearable instrument, it was only a matter of time before these chips could be made small enough with sufficiently low power consumption for a practical, wearable digital hearing aid.

Wearable Digital Hearing Aids

The section that follows traces the development of wearable digital hearing aids. Note that the material contains references to commercial products. No endorsements are intended. These products are significant in a historical context. They were the first of their kind to be marketed and hence available to consumers.

As with almost every important invention, more than 1 individual or group can rightfully lay claim to the invention or an important aspect of it. In 1975, Graupe et al73 reported the development of a 6-channel instrument with digital control of the gain in each channel. Graupe went on to develop the Zeta Noise Blocker, which, as described above, was a self-adapting filter that automatically attenuated frequency channels containing high noise levels. In 1977, Mangold and Leijon74 reported on the use of a programmable filter in acoustic amplification and subsequently developed a programmable multichannel compression hearing aid using digital control of analog components.75 Two Australian groups also investigated the possible use of microprocessors in acoustic amplification at this time.76,77 A programmable digital filter for use in audio experiments was also developed by Trinder at about the same time.78 This filter was used by Studebaker in an experiment on spectrum shaping.79 A patent for a digital hearing aid was obtained by Moser in 1980.80 The patent covers the concept of a digital hearing aid in general terms.

Engebretsen et al at the Central Institute for the Deaf (CID) began working on a digital hearing aid in the early 1980s and were the first to construct a wearable instrument including fabrication of the DSP chips.81–87 The instrument was patented in 1985.88 The CID group later joined with the 3M Company in a consortium to develop digital hearing aids. The array processor digital hearing aid developed at the City University of New York48 was working in the summer of 1982 and was used for more than a decade in a series of experiments investigating the capabilities of DSP in a hearing aid.50–66,69 Although not wearable, it was the first digital hearing aid in regular use as a research tool. A prototype wearable digital hearing aid was developed by Nunley et al in 1983.89 It was a relatively large body-worn instrument and was not developed beyond the prototype stage. Concurrent with these developments, the Audimax Corporation developed a body-worn sampled-data hearing aid in which acoustic feedback was cancelled by means of a finite impulse response (FIR) filter in an electrical feedback loop adjusted to be equal and opposite to the acoustic feedback loop.90

A wearable microprocessor hearing aid was developed at University College London by Adrian Fourcin during the same period.91 The instrument embodied a fundamentally different approach to acoustic amplification. The rationale was that a simplified acoustic signal conveying important speech cues would be more helpful to a person with limited residual hearing than the complex audio signal. The first version of this hearing aid extracted the voice fundamental frequency from the speech signal and presented it to the listener as a frequency-modulated sine wave with an amplitude and frequency range appropriate for the listener's residual hearing.91 This instrument was known as the sinusoidal voice hearing aid and was modeled on an earlier instrument developed by Fourcin for single-channel stimulation of an extracochlear implant.92 More advanced versions of the instrument (speech-pattern element hearing aids) include coding of amplitude envelope and the presence of voiceless excitation in addition to the voice fundamental frequency.93–96

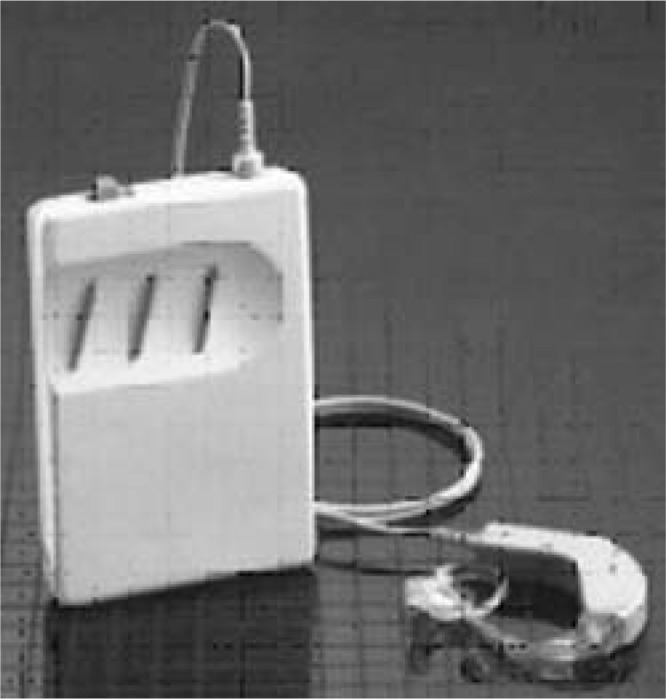

The high-speed DSP chips developed in the 1980s provided the means for a wearable digital hearing aid. Although the earliest DSP chips were relatively large and consumed too much power for hearing aid applications, chips of smaller size and lower power consumption were subsequently developed specifically for use in hearing aids. The Nicolet Corporation is credited with developing the first commercial digital hearing aid using custom fabricated chips.97–99 Nicolet's Project Phoenix under the direction of Kurt Hecox was initiated in 1984 and ended in 1989, when Nicolet withdrew from the hearing aid market. Their first hearing aid consisted of a body-worn processor with a hardwire connection to ear-mounted transducers. The hearing aid is shown in Figure 3. A BTE digital hearing aid, shown in Figure 4, was developed toward the end of the project.

Figure 3.

Photograph of the Project Phoenix body-worn digital hearing aid. Photograph reprinted with permission of Audible-Difference®.

Figure 4.

Photograph of the Project Phoenix behind-the-ear digital hearing aid. Photograph reprinted with permission of Audible-Difference®.

The Project Phoenix hearing aid, although not a commercial success, demonstrated the feasibility of a wearable digital hearing aid, and the race was on for other hearing aid companies to develop commercially viable digital hearing aids. Bell Laboratories developed a hybrid digital-analog hearing aid in which digital circuits controlled a 2-channel compression amplifier.100 Field trials carried out at the City University of New York showed the instrument to be superior to state-of-the-art analog hearing aids that were available at that time, but AT&T (the parent company of Bell Laboratories), for reasons unrelated to the product, decided to terminate the project (and all other small projects) to concentrate on its core business. AT&T assigned the rights to their hearing aid to the Resound Corporation in 1987, and after further refinement, the hearing aid was marketed and was an immediate success.

It is significant to note that the companies that led the way in implementing digital technology in hearing aids were not traditional hearing aid companies but rather major industrial companies with a history of innovative research and development. These companies also introduced new ideas and methods that had a lasting impact on the field.

Nicolet, AT&T/Resound, and 3M developed sophisticated digital instrumentation for audiological measurement and fitting of hearing aids. Nicolet developed the Aurora, which included basic audiometry, real-ear measurement, adaptive methods of hearing aid fitting, middle-ear analysis, and access to a computerized database in a single instrument.99 The system developed by Resound100 included an automated method for measuring loudness growth in octave bands (the LGOB procedure101), the output of which was used to program the hearing aid for appropriate wide-dynamic-range compression for each individual. The company also introduced the ultrasonic remote control, which made it easier to adjust hearing aids. 3M developed a fitting system configured around a personal computer with software for simplifying the fitting of a 2-channel compression hearing aid with multiple memories and data-logging capabilities.102

The first commercially available programmable hearing aid, the Audiotone System 2000, was introduced by Dahlberg in 1988.103 Other hearing aid companies followed soon after with programmable instruments such as the Bernafon PHOX and the Siemens Triton. In 1989, the 3M Corporation introduced the Memory Mate, a 2-channel compression hearing aid with multiple memories and data-logging capabilities. For a description of these and other early programmable hearing aids, see Sandlin104 and Sammeth.105 Within a few years, virtually every hearing aid company was marketing 1 or more programmable hearing aids. These instruments used analog components (amplifiers, filters, limiters) controlled by a digital unit. As such, they provided some of the potential benefits of digital hearing aids, such as memory for storing parameter settings, the ability to modify settings of the hearing aid easily and with greater precision than earlier hearing aids, the capability for paired-comparison testing, and convenient selection of appropriate parameter settings for different acoustic environments.

The switch to programmable hearing aids led to changes in methods of hearing aid dispensing. Initially, all of the companies producing programmable hearing aids had proprietary fitting systems. Unfortunately, these fitting systems were expensive, and since the hearing aids produced by 1 company could be fitted only with that company's proprietary fitting system, the cost of equipping a hearing aid dispensary with the fitting systems of several different companies became prohibitive. To address this problem, the hearing aid industry developed standardized hardware and software platforms (HI-PRO and NOAH) to serve as a common interface between a computer and a digital hearing aid. This allowed for a relatively inexpensive personal computer to be used as the programming tool, with each manufacturer providing proprietary software for fitting its instruments. The system could also interface with office management software. It was not long before computers became essential equipment for every hearing aid dispensary.

The next significant milestone in the development of wearable digital hearing aids was the introduction of sampled data instruments using switched capacitor technology. It is important to bear in mind that the digitization of analog signals involves 2 distinct stages: (1) sampling the value of the waveform at discrete intervals in time and (2) converting the sampled value to binary form (ie, a series of 0s and 1s). A system that uses the sampled values without converting them to binary form (stage 2) is known as a sampled-data system. Sampled-data systems have several important advantages over digitally controlled analog instruments. The signals are discrete, and many basic DSP algorithms can be implemented using this technology, such as FIR filters with precise, independent control of both amplitude and phase. The power consumption of sampled-data hearing aids is also less than that of a digital hearing aid, so that low-power, sampled-data hearing aids were feasible long before digital hearing aids could be developed with sufficiently low power consumption to be practical.
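The 2 stages of digitization can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions and not a description of any particular hearing aid: the function names, the 16-kHz sampling rate, the 8-bit word length, and the 2-tap averaging filter are all hypothetical choices made for the example. The FIR filter operates on the sampled values directly, as a sampled-data system would, and the conversion to binary codes (stage 2) is shown separately.

```python
import math


def sample(signal, sample_rate_hz, duration_s):
    """Stage 1: read the waveform at discrete, equally spaced instants."""
    n = int(round(sample_rate_hz * duration_s))
    return [signal(k / sample_rate_hz) for k in range(n)]


def quantize(samples, bits, full_scale=1.0):
    """Stage 2: map each sampled value to a signed integer code of `bits` bits."""
    levels = 2 ** (bits - 1)
    codes = []
    for x in samples:
        code = int(round(x / full_scale * levels))
        codes.append(max(-levels, min(levels - 1, code)))  # clip to the code range
    return codes


def fir_filter(samples, coeffs):
    """FIR filter applied to the sampled values themselves (no binary conversion).

    Python floats stand in here for the unquantized sampled values.
    """
    out = []
    for n in range(len(samples)):
        acc = 0.0
        for k, c in enumerate(coeffs):
            if n - k >= 0:
                acc += c * samples[n - k]
        out.append(acc)
    return out


def tone(t):
    """Hypothetical test input: a 1-kHz sine wave at half of full scale."""
    return 0.5 * math.sin(2 * math.pi * 1000 * t)


sampled = sample(tone, 16000, 0.001)          # stage 1 only: 16 sampled values
codes = quantize(sampled, bits=8)             # stage 2: 8-bit integer codes
smoothed = fir_filter(sampled, [0.5, 0.5])    # processing without stage 2
```

In this sketch, `smoothed` is computed from `sampled` without ever forming `codes`, which is the sense in which a sampled-data system skips stage 2.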

The first commercial sampled-data hearing aid, the Argosy Electronics 3-Channel-Clock hearing aid, was designed by David Preves at the end of the 1980s.106 It consisted of 3 channels with an adjustable frequency response that was far superior to earlier digitally controlled analog devices. The frequency response was determined by the clock rate, hence, its unusual name. The Ensoniq Sound Selector was another early sampled-data hearing aid with low power consumption and excellent frequency-shaping capabilities.107 The instrument was manufactured by an electronic keyboard company and incorporated well-tried sampled data technology that had proven its worth in another highly competitive market. The Ensoniq Sound Selector had 13 frequency channels, allowing for much greater programmable frequency resolution than previously available.

This was a period of rapid change in the hearing aid industry. New hearing aids embodying novel designs with increasingly more complex signal processing were introduced in quick succession by different manufacturers. At the same time, work progressed intensely on developing the ultimate prize: a true digital hearing aid. The main problem in developing a commercially viable digital hearing aid was that of reducing the size and power consumption of the digital chips. Analog chips for hearing aid applications had been refined over many years so that even when digital chips were developed with an acceptable level of power consumption and small enough for use in a practical hearing aid, there remained the problem of competing with analog technology that was well established with chips of even smaller size and lower power consumption. Advances in digital chip technology continued steadily over the years, so that it was essentially a matter of time before digital chips became competitive with analog chips for hearing aid applications.

The turning point came in 1996, when Widex introduced the Senso, the first commercially successful digital hearing aid.108 The Oticon Company began marketing their digital hearing aid, the DigiFocus, immediately afterward. The DigiFocus was based on the JUMP-1 digital hearing aid platform, which had been distributed the year before to audiological research centers worldwide to spur independent investigations of how best to implement digital technology in acoustic amplification.108

It is not coincidental that a fundamental change in the market forces driving hearing aid development occurred at about the time DSP in hearing aids became a reality. Hearing aid manufacturers, to be competitive, began to pay more attention to improved signal processing. The introduction of multichannel amplitude compression in hearing aids signaled this important change. Considering that the consumer audio industry had already embraced digital technology and that multichannel compression was already a well-established technique in audio recording and reproduction, it is not surprising that the first use of digital technology in hearing aids accompanied the development of multichannel compression hearing aids.

The first generation of digital hearing aids used a fixed multichannel architecture. As a consequence, these hearing aids were not very different conceptually from the previous generation of hybrid digital-analog hearing aids. Multichannel compression amplification was a trusted design, and the first digital instruments essentially refined this format. There is an interesting analogy here. As noted earlier, the first DSP algorithms used in the telecommunications industry replicated conventional methods of analog signal processing. It was only later that DSP algorithms were developed that took advantage of the unique capabilities of digital processing. The evolution of digital hearing aids appears to be following the same pattern. Whereas the first digital hearing aids were essentially refined versions of the digitally controlled analog hearing aids that they replaced, the recent introduction of digital hearing aids with an open architecture has allowed for the development of innovative new designs that take greater advantage of the unique capabilities of digital processing.

Have Digital Hearing Aids Lived Up to Their Promise?

Now that the field of acoustic amplification has embraced digital technology, it is relevant to ask whether the quality of acoustic amplification has actually improved with the introduction of digital technology. More important, has digital technology, by providing the means for new ways of signal processing, opened up new, more productive ways of thinking about acoustic amplification?

Comparing Analog and Digital Instruments

The digital hearing aid represents a major technological breakthrough, and when these instruments were first introduced, it was widely expected that digital instruments would show substantial improvements in benefit over analog hearing aids. Early experimental comparisons between digital and analog hearing aids, however, showed only small to negligible advantages for the digital instruments.109–119 Subjective assessments showed the largest improvements. Relatively few improvements were obtained with objective measurements. It was also demonstrated by Bentler et al120 in a clever experiment that there was a significant halo effect in that if a subject believed a hearing aid to be a digital instrument, then the subjective assessment of the hearing aid was likely to be more positive than if the subject believed otherwise.

In retrospect, these findings are not surprising considering that early digital hearing aids were essentially refinements of the programmable analog hearing aids that they had replaced. The important advantage of digital hearing aids is that their capabilities exceed those of analog hearing aids. This advantage became increasingly apparent with the introduction of digital hearing aids using a less constrained or open architecture that allowed for innovative signal processing to be implemented.

How Digital Hearing Aids Differ From Their Analog Predecessors

Compression Amplification

If a digital and an analog hearing aid have the same compression characteristics, then an experimental comparison between the 2 hearing aids should not show a difference. The advantage of digital hearing aids in this context is that more advanced forms of compression can be achieved using digital techniques.121 These advantages include sharper filter boundaries, less filter overlap, and more flexible and more precise control of changes in gain. The concepts of attack and release times developed with analog processing can be implemented with digital processing, but a more general approach to the temporal dynamics of amplifier gain is possible with digital processing.
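The concepts of attack and release times can be made concrete with a minimal single-channel sketch in Python. It is an illustration only, under stated assumptions: the threshold, compression ratio, time constants, and function names are hypothetical and are not taken from any instrument discussed in this article. The gain is computed anew at every sample from a smoothed level estimate, so the rule mapping level to gain could be replaced by any other rule, which is the sense in which digital processing allows a more general treatment of gain dynamics.

```python
import math


def smoothing_coeff(time_constant_s, sample_rate_hz):
    """One-pole smoothing coefficient for a given time constant."""
    return math.exp(-1.0 / (time_constant_s * sample_rate_hz))


def compress(samples, sample_rate_hz, threshold_db=-20.0, ratio=3.0,
             attack_s=0.005, release_s=0.050):
    """Single-channel compressor (all parameter values are assumed)."""
    a_att = smoothing_coeff(attack_s, sample_rate_hz)
    a_rel = smoothing_coeff(release_s, sample_rate_hz)
    envelope = 0.0
    out = []
    for x in samples:
        level = abs(x)
        # Track rising levels with the attack constant, falling levels with release.
        coeff = a_att if level > envelope else a_rel
        envelope = coeff * envelope + (1.0 - coeff) * level
        level_db = 20.0 * math.log10(max(envelope, 1e-9))
        # Above threshold, each `ratio` dB of input yields 1 dB of output.
        over_db = max(0.0, level_db - threshold_db)
        gain_db = -over_db * (1.0 - 1.0 / ratio)
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out


loud = compress([1.0] * 16000, 16000)    # 1 s of full-scale input
quiet = compress([0.01] * 16000, 16000)  # 1 s of input 40 dB below full scale
```

With these assumed settings, the quiet input stays below the threshold and passes unchanged, whereas the gain applied to the loud input falls over the attack time toward a steady reduction of about 13.3 dB (20 dB above threshold at a ratio of 3).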

Feedback Cancellation

An important advantage of DSP is that digital filters can be programmed to provide independent adjustment of amplitude and phase. This capability has allowed for the development of techniques for canceling acoustic feedback.122 Analog methods of feedback control typically reduce the hearing aid gain (eg, by means of a notch filter) in those frequency regions in which the acoustic feedback is likely to become unstable and produce an intense whistling sound. Although this approach may prevent unstable oscillations, it does not eliminate feedback. Acoustic feedback is present even when the hearing aid is not in oscillation, and as a consequence, the overall frequency gain characteristic of the hearing aid when worn on the ear is altered by the acoustic feedback.

Of the various developments in digital hearing aids, improved control of acoustic feedback has had the greatest impact. All of the modern high-end digital hearing aids use feedback cancellation. This form of feedback control involves more than avoidance of loud unpleasant whistling; it also reduces major constraints on overall gain, thereby allowing for greater use of open ear molds, more accurate control of the overall frequency-gain characteristic, and other improvements in hearing aid design. The methods of feedback cancellation that have been implemented require precise, adaptive control of both amplitude and phase in the feedback circuit, which would not have been possible in analog hearing aids.

A more subtle problem imposed by acoustic feedback is that the hearing aid may not provide the required gain in the high frequencies. High-frequency gain is commonly prescribed for a high-frequency hearing loss, and there are many cases in which the prescribed high-frequency gain cannot be provided because the gain must be limited to prevent unstable acoustic feedback. Another manifestation of the constraints imposed by acoustic feedback is that ear molds, or a hearing aid case, with a good seal are needed to reduce the leakage between the hearing aid receiver and the microphone. These ear molds are uncomfortable and are a cause of the occlusion effect. With digital cancellation of acoustic feedback, a good seal may no longer be needed; in cases of mild to moderate losses, more comfortable open ear molds can be used.

Digital cancellation of acoustic feedback is not perfect because of practical constraints on filter complexity, but this type of processing does allow for substantially more gain without unstable feedback. An additional advantage of digital feedback cancellation is that it does not alter the frequency-gain characteristic of the hearing aid. Without feedback cancellation, by contrast, suboscillatory acoustic feedback (feedback that is not strong enough to cause unstable oscillations) alters the frequency-gain characteristic of the hearing aid when it is worn on the ear.
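The principle of adaptive feedback cancellation can be sketched in Python as follows. This is a simplified illustration and not the method used in any specific hearing aid: it assumes a hypothetical 4-tap feedback path, a noise probe at the receiver, no external sound at the microphone, and a normalized least-mean-squares (NLMS) update, which is one common adaptive-filter algorithm chosen here for the example. An adaptive FIR filter estimates the acoustic path from receiver to microphone, and the estimated feedback is subtracted from the microphone signal.

```python
import random


def nlms_feedback_estimate(receiver_out, mic_in, taps, mu=0.5, eps=1e-8):
    """Adapt an FIR model of the feedback path and return it with the residual."""
    w = [0.0] * taps          # current estimate of the feedback path
    history = [0.0] * taps    # most recent receiver samples, newest first
    residual = []
    for u, m in zip(receiver_out, mic_in):
        history = [u] + history[:-1]
        estimate = sum(wk * hk for wk, hk in zip(w, history))
        e = m - estimate      # microphone signal with estimated feedback removed
        norm = sum(hk * hk for hk in history) + eps
        w = [wk + mu * e * hk / norm for wk, hk in zip(w, history)]
        residual.append(e)
    return w, residual


rng = random.Random(0)
true_path = [0.0, 0.3, -0.2, 0.1]   # hypothetical acoustic feedback path
receiver_out = [rng.uniform(-1.0, 1.0) for _ in range(4000)]

# Microphone signal: the receiver output filtered by the feedback path
# (external sound is assumed to be absent in this sketch).
mic_in = []
for n in range(len(receiver_out)):
    mic_in.append(sum(h * receiver_out[n - k]
                      for k, h in enumerate(true_path) if n - k >= 0))

estimated_path, residual = nlms_feedback_estimate(receiver_out, mic_in, taps=4)
```

Because each filter coefficient is adjusted independently, the estimate matches the feedback path in both amplitude and phase. In a worn hearing aid, the external sound is present and the receiver signal depends on the microphone signal, which makes the estimation problem considerably harder than in this open-loop sketch.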

Noise Reduction

A major problem in acoustic amplification that has eluded a satisfactory solution is that of background noise. It was hoped that advanced methods of DSP would provide significantly improved speech recognition in noise. Experimental evaluations of a wide range of signal-processing strategies have shown that for the case of a single microphone input, advanced methods of signal processing provide little if any improvement in speech recognition despite substantial reductions in the audibility of the background noise.50–52,54,56

The directional microphone represents a much simpler approach to improving speech recognition in noise. Hearing aids with directional microphones were first marketed in the United States in 1971,123 and although they were well received initially, the use of directional microphones in hearing aids gradually declined after 1980. A problem with directional microphones is that there are many acoustic environments in which they provide no benefit, such as speech and noise coming from the same direction or a reverberant environment.124 Interest in directional microphones was revived in 1992, when Phonak introduced the AudioZoom™, which allowed the user to switch between a directional and an omnidirectional input to the hearing aid. Whereas advanced technology is not required for manual switching of a microphone input, automatic switching or adaptive adjustment of directionality does require sophisticated signal processing in order to be effective. Experimental evaluations of adjustable directional inputs to a hearing aid have been positive,125,126 but the problem of predicting when a directional input is appropriate is proving to be difficult.127 Hearing aids with self-adaptive directional inputs are designed to identify the directions of the most powerful sounds, to determine whether those sounds are speech or noise, and to adjust the directional characteristics of the input accordingly. This is not a simple problem, and practical systems that have been developed have their shortcomings.128
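One common way of obtaining a directional input is to subtract a delayed rear-microphone signal from the front-microphone signal. The Python sketch below computes the resulting sensitivity as a function of the angle of arrival. It is offered only as an illustration under stated assumptions: the 12-mm microphone spacing, the free-field plane-wave model, and the function name are hypothetical, and the sketch does not describe any of the products mentioned above.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0


def directional_response(angle_deg, freq_hz, spacing_m=0.012, internal_delay_s=None):
    """Magnitude response of a 2-microphone delay-and-subtract array.

    angle_deg is the direction of arrival (0 = front, 180 = rear).
    The default internal delay equals the acoustic travel time between
    the microphones, which gives a cardioid pattern with a null at the rear.
    """
    if internal_delay_s is None:
        internal_delay_s = spacing_m / SPEED_OF_SOUND_M_S
    # Extra travel time from the front microphone to the rear microphone.
    external_delay_s = (spacing_m * math.cos(math.radians(angle_deg))
                        / SPEED_OF_SOUND_M_S)
    omega = 2.0 * math.pi * freq_hz
    return abs(1.0 - cmath.exp(-1j * omega * (internal_delay_s + external_delay_s)))


front = directional_response(0.0, 1000.0)
side = directional_response(90.0, 1000.0)
rear = directional_response(180.0, 1000.0)
```

In this model, sound from the rear is cancelled and sound from the front is passed. Changing `internal_delay_s` moves the direction of the null, which is the parameter that an adaptive directional system would adjust.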

The limited benefit obtained from a directional input in the everyday use of a hearing aid has engendered new thinking about how to improve directional benefit in real-world listening. This has resulted in the development of signal-processing techniques for recognizing and classifying the acoustic environment129 to automatically adjust parameters of the hearing aid. This is a departure from traditional methods of signal processing, which have typically focused on signals that have been received and not on signals that might be received. This form of signal processing involves aspects of automatic sound recognition. Elementary levels of automatic speech recognition have also been used in some digital hearing aids, such as determining whether speech or noise is present, recognizing whether the speech is voiced or voiceless, or extracting and modifying the voice fundamental frequency. It is likely that more advanced forms of automatic sound recognition will be implemented in the digital hearing aids of the future. In this respect, digital hearing aids have fulfilled the promise of introducing new ways of thinking about acoustic amplification.

Wireless Links Between Hearing Aids and Telephones

The use of Bluetooth wireless transmissions for linking telephones and hearing aids has important advantages over the current use of a low-frequency magnetic link using telecoils. A major problem with telecoils is that of finding the “sweet spot” for a good connection. This depends on the appropriate alignment of the telecoil axis relative to the direction of the magnetic field. This is not always easy to do, as many hearing aid wearers will attest. The problem is made more difficult by the fact that the direction of the magnetic field in a room loop is different from that in a telephone, where the handset is held in the natural position for telephone use. A high-frequency radio link such as Bluetooth not only provides a more convenient and more reliable connection with telephones and other communication devices but can also be used to program a digital hearing aid or transfer data from a hearing aid with data-logging capabilities.

Binaural Amplification

Hearing instruments that are currently advertised as binaural hearing aids do not have the capability for the appropriate interaural adjustments, especially with respect to the interaural phase, and are actually bilateral rather than binaural hearing aids.130 A true binaural hearing aid requires a connection between the 2 ears for the convenient control of interaural amplitude and phase differences. A hard-wire connection is not practical, and a conventional AM or FM radio link draws too much power. A novel way of providing a low-power wireless link between the 2 ears is to transmit control signals rather than the full audio signal. Since the bit rate for control signals is small, the power consumption of the transmitter and receiver is low enough to be practical.

Siemens Hearing Instruments131 has recently introduced a bilateral hearing instrument in which a hearing aid on one ear transmits control signals to the hearing aid on the opposite ear. This instrument represents an important step toward the realization of a true binaural hearing aid. The binaural auditory system is particularly sensitive to interaural phase, and an instrument of this type provides the means for controlling both interaural amplitude and phase characteristics with the high accuracy needed for true binaural amplification.

Frequency Transposition/Compression

A method of signal processing that has been visited time and again since the earliest days of electronic signal processing is that of frequency lowering for people with a high-frequency hearing loss. As noted earlier, most of the early evaluations of frequency lowering did not show significant improvements in speech recognition.24 Methods of frequency lowering have improved since these early evaluations, and these improved methods of frequency lowering have been implemented in digital hearing aids.

AVR Sonovation introduced a body-worn digital hearing aid using frequency lowering in 1991 and a BTE transposing hearing aid several years later.132,133 Digital processing is used to identify voiceless sounds and to compress the frequencies of these sounds proportionally by an amount appropriate for each user. This method of frequency lowering is essentially an improvement of the transposition method pioneered by Johansson,134 in which the high-frequency components of voiceless fricatives are transposed downward and added to the low-frequency components, thereby masking them. Proportional frequency lowering introduces less distortion and less masking of the processed sounds, particularly if the minimum required amount of proportional compression is used. Experimental evaluations of the AVR Transonic frequency-transposing hearing aid have shown positive results but not for all subjects.135–140
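The difference between proportional frequency lowering and transposition by a fixed shift can be shown with a short Python sketch. The function names and numerical values are hypothetical and serve only to illustrate the 2 mappings; they are not the parameters of the AVR instruments or of Johansson's transposer.

```python
def proportional_lowering(freq_hz, compression_factor):
    """Divide every frequency by the same factor, preserving frequency ratios."""
    return freq_hz / compression_factor


def fixed_shift_transposition(freq_hz, shift_hz, cutoff_hz):
    """Shift components at or above the cutoff downward by a fixed amount.

    Components below the cutoff are left in place, so the shifted
    components can land on top of them.
    """
    return freq_hz - shift_hz if freq_hz >= cutoff_hz else freq_hz


# Two components of a hypothetical voiceless fricative, 1 octave apart.
low_component_hz, high_component_hz = 4000.0, 8000.0

compressed = [proportional_lowering(f, 2.0)
              for f in (low_component_hz, high_component_hz)]
shifted = [fixed_shift_transposition(f, 3000.0, 4000.0)
           for f in (low_component_hz, high_component_hz)]
```

With proportional lowering, the 2 components remain 1 octave apart (2000 and 4000 Hz), whereas a fixed shift of 3000 Hz places them at 1000 and 5000 Hz and alters the ratio between them.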

Digital hearing aids provide greater flexibility and more accurate control of frequency lowering than was possible with analog hearing aids, with concomitant improvements in the benefits provided by frequency lowering. There is continued development in this area, as witnessed by the recent introduction of the Widex Inteo hearing aid with frequency lowering.141

Data Logging in Hearing Aids

Hearing aids are now capable of documenting patterns of hearing aid use, such as volume control settings and daily usage, of recognizing and classifying the acoustic environment,142 and of storing information about the types of sounds being received (speech, noise). A method of monitoring hearing aid usage using analog technology was implemented as far back as 1975 in an early study on a wearable analog master hearing aid.143 An electrochemical elapsed-time indicator (Curtis Instruments, model 120 PC) was used to monitor power flow from the hearing aid battery. More extensive digital monitoring of hearing aid usage was used some 10 years later in Project Phoenix.98 The data from these studies were used for research purposes. The first data logging in a hearing aid available for clinical use was in the 3M hearing aid, which provided information about usage of the multiple memories contained in the hearing aid.102

But as indicated above, current hearing aids can recognize and classify the acoustic environments in which the hearing aid has been worn.129 This capability requires monitoring of the acoustic environment coupled with automatic sound recognition. It will be of great interest to see whether the clinical implementation of data monitoring will be used effectively to improve fitting and counseling and, in the longer term, to improve hearing aids.

Concluding Comments

When the first cumbersome, desk-mounted array processor digital hearing aid was developed, it was predicted that digital hearing aids would not only be able to do everything that an analog hearing aid could do but also be able to do things that analog hearing aids could not do, and, most important of all, digital hearing aids would change our way of thinking about acoustic amplification.6 In retrospect, this prediction has proven to be reasonably accurate. Modern digital hearing aids differ substantially from analog hearing aids and incorporate features that are well beyond the capabilities of analog instruments. Digital technology, in general, has much to offer toward the development of better hearing aids. Of the many benefits of digital technology, the most important is that our thinking regarding the fundamentals of acoustic amplification has changed dramatically and for the better.

Acknowledgments

I wish to thank the Journal of Rehabilitation Research and Development for permission to reproduce Figure 1, the City University of New York for permission to reproduce Figure 2, and Audible-Difference for permission to reproduce Figures 3 and 4. Preparation of this article was supported by grant H133G050228 from the National Institute on Disability and Rehabilitation Research. The opinions expressed in this article are those of the author and do not necessarily reflect those of the Department of Education. The author does not endorse any of the products mentioned in this article.

References

- 1.Fagen MD. ed. A History of Engineering & Science in the Bell System: National Service in War and Peace (1925–1975). Murray Hill, NJ: AT&T Bell Laboratories; 1978 [Google Scholar]

- 2.Hodges A. Alan Turing: The Enigma. Touchstone ed. New York, NY: Simon and Schuster; 1984: 246–249 [Google Scholar]

- 3.Shannon CE. A mathematical theory of communication. Bell System Technical Journal. 1948;27: 379–423, 623–656 [Google Scholar]

- 4.Nyquist H. Certain topics in telegraph transmission. AIEE Trans. 1928;47: 214, 617 [Google Scholar]

- 5.Milman S. ed. A History of Engineering & Science in the Bell System: Communication Sciences (1925–1980). Murray Hill, NJ: AT&T Bell Laboratories; 1984: 110–111 [Google Scholar]

- 6.Levitt H. Digital hearing aids: a tutorial review. J Rehab Res Dev. 1987;24(4): 7–20 [PubMed] [Google Scholar]

- 7.Black R, Levitt H. Evaluation of a Shaped Gain Handset for Telephone Users With Impaired Hearing, Internal Report. Murray Hill, NJ: Bell Telephone Laboratories; 1969 [Google Scholar]

- 8.Coln MCW. A Computer Controlled Amplitude Compressor [master's thesis]. Cambridge: Massachussetts Institute of Technology; 1979 [Google Scholar]

- 9.Lippmann RP, Braida LD, Durlach NI. Study of multichannel amplitude compression and linear amplification for persons with sensorineural hearing loss. J Acoust Soc Am. 1980;69: 524–534 [DOI] [PubMed] [Google Scholar]

- 10.Bustamante DK, Braida LD. Wideband compression and spectral sharpening for hearing-impaired listeners. J Acoust Soc Am. 1986;80(suppl 1): S12–S13 [Google Scholar]

- 11.DeGennaro SD, Braida LD, Durlach NI. Multiband syllabic compression for severely impaired listeners. J Rehab Res Dev. 1986;23(1): 17–24 [PubMed] [Google Scholar]

- 12.Bustamante DK, Braida LD. Multiband compression limiting for hearing impaired listeners. J Rehab Res Dev. 1987;24(4): 149–160 [PubMed] [Google Scholar]

- 13.Bustamante DK, Braida LD. Principle-component amplitude compression for the hearing impaired. J Acoust Soc Am. 1987;82: 1227–1242 [DOI] [PubMed] [Google Scholar]

- 14.Levitt H. Methods for the evaluation of hearing aids. Scand Audiol. 1978;(suppl 6): 199–240 [PubMed] [Google Scholar]

- 15.Levitt H. Adaptive testing in audiology. Scand Audiol. 1978;(suppl 6): 241–291 [PubMed] [Google Scholar]

- 16.Graupe D, Grosspietsch JK, Basseas SP. A single-microphone-based self-adaptive filter of noise from speech and its performance evaluation. J Rehab Res Dev. 1987;24(4): 127–134 [PubMed] [Google Scholar]

- 17.Graupe D, Grosspitch J, Taylor RA. A self-adaptive noise filtering system. Hearing Instruments. 1986;37(9): 29–34 [Google Scholar]

- 18.Chabries DM, Christiansen RW, Brey RH, Robinette MS, Harris RE. Application of adaptive signal processing to speech enhancement for the hearing impaired. J Rehab Res Dev. 1987;24(4): 65–74 [PubMed] [Google Scholar]

- 19.Brey RH, Robinette MS, Chabries DM, Christiansen RW. Improvement in speech intelligibility in noise employing an adaptive filter with normal and hearing-impaired subjects. J Rehab Res Dev. 1987;24(4): 75–86 [PubMed] [Google Scholar]

- 20.Peterson PM, Durlach NI, Rabinowitz WM, Zurek PM. Multimicrophone adaptive beamforming for interference reduction in hearing aids. J Rehab Res Dev. 1987;24(4): 103–110 [PubMed] [Google Scholar]

- 21.Schwander TJ, Levitt H. Effect of two-microphone noise reduction on speech recognition by normal-hearing listeners. J Rehab Res Dev. 1987;24(4): 87–92 [PubMed] [Google Scholar]

- 22.Weiss M. Use of an adaptive noise canceller as an input preprocessor for a hearing aid. J Rehab Res Dev. 1987;24(4): 93–102 [PubMed] [Google Scholar]

- 23.Chazan D, Medan Y, Shvadron U. Evaluation of adaptive multimicrophone algorithms for hearing aids. J Rehab Res Dev. 1987;24(4): 111–118 [PubMed] [Google Scholar]

- 24.Pickett JM. Frequency lowering for hearing aids. In: Levitt H, Pickett JM, Houde RA. eds. Sensory Aids for the Hearing Impaired. New York, NY: IEEE; Press; 1980: 191–194 [Google Scholar]

- 25.Reed CM, Schultz KI, Braida LD, Durlach NI. Discrimination and identification of frequency-lowered speech in listeners with high-frequency hearing impairment. J Acoust Soc Am. 1985;78: 2139–2141 [DOI] [PubMed] [Google Scholar]

- 26.Posen PM, Reed CM, Braida LD. Intelligibility of frequency-lowered speech produced by a channel vocoder. J Rehab Res Dev. 1993;30(1): 26–38 [PubMed] [Google Scholar]

- 27.Picheny M, Durlach N, Braida L. Speaking clearly for the hard of hearing, I: Intelligibility differences between clear and conversational speech. J Speech Hear Res. 1985;28: 96–103 [DOI] [PubMed] [Google Scholar]

- 28.Picheny M, Durlach N, Braida L. Speaking clearly for the hard of hearing, II: acoustic characteristics of clear and conversational speech. J Speech Hear Res. 1986;29: 434–446 [DOI] [PubMed] [Google Scholar]

- 29.Hecker MHL. A Study of the Relationship Between Consonant-Vowel Ratios and Speaker Intelligibility [doctoral dissertation]. Palo Alto, Calif: Stanford University; 1974 [Google Scholar]

- 30.Gordon-Salant S. Effects of acoustic modification on consonant recognition by elderly hearing-impaired subjects. J Acoust Soc Am. 1987;81: 1199–1202 [DOI] [PubMed] [Google Scholar]

- 31.Gordon-Salant S. Recognition of natural and time/intensity altered CVs by young and elderly subjects with normal hearing. J Acoust Soc Am. 1986;80: 1599–1607 [DOI] [PubMed] [Google Scholar]

- 32.Montgomery AA, Edge RA. Evaluation of two speech enhancement techniques to improve intelligibility for hearing impaired adults. J Speech Hear Res. 1988;31: 386–393 [DOI] [PubMed] [Google Scholar]

- 33.Revoile SG, Holden-Pitt L, Edward D, Pickett JM. Some rehabilitative considerations for future speech-processing hearing aids. J Rehab Res Dev. 1986;23(1): 89–94 [PubMed] [Google Scholar]

- 34.Revoile SG, Holden-Pitt L, Edward D, Pickett JM, Brandt F. Speech-cue enhancement for the hearing impaired: amplification of burst/murmur cues for improved perception of final stop voicing. J Rehab Res Dev. 1987;24: 207–216 [PubMed] [Google Scholar]

- 35.Guelke RW. Consonant burst enhancement: a possible means to improve intelligibility for the hard of hearing. J Rehab Res Dev. 1987;24: 217–220 [PubMed] [Google Scholar]

- 36.Preves D, Fortune T, Woodruff B, Newton J. Strategies for enhancing the consonant to vowel ratio with in-the-ear hearing aids. Ear Hear. 1991;12(6 suppl): 139S–153S [DOI] [PubMed] [Google Scholar]

- 37.Langhans T, Strube HW. Speech enhancement by nonlinear multiband envelope filtering. In: Proceedings of the 1982 IEEE International Conference on Acoustics, Speech and Signal Processing. Vol 1. New York, NY: IEEE; 1982: 156–159 [Google Scholar]

- 38.Clarkson PM, Bahgat SF. Envelope expansion methods for speech enhancement. J Acoust Soc Am. 1991;89: 1378–1382 [DOI] [PubMed] [Google Scholar]

- 39.Hermansky H, Wan E, Avendano C. Speech enhancement based on temporal processing. In: Proceedings of the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP-95). Vol 1. New York, NY: IEEE; 1995: 405–408 [Google Scholar]

- 40.Avendano C, Hermansky H. Study on the dereverberation of speech based on temporal envelope filtering. Paper presented at: Fourth International Conference on Spoken Language Processing; October 3–6, 1996; Philadelphia, Pa. [Google Scholar]

- 41.Boers PM. Formant enhancement of speech for listeners with sensorineural hearing loss. In: IPO Annual Progress Report No. 15. Eindhoven, the Netherlands: Institut voor Perceptie Onderzoek; 1980: 21–28 [Google Scholar]

- 42.Bunnell HT. On enhancement of spectral contrast in speech for hearing-impaired listeners. J Acoust Soc Am. 1990;88: 2546–2556 [DOI] [PubMed] [Google Scholar]

- 43.Stone MA, Moore BCJ. Spectral feature enhancement for people with sensorineural hearing impairment: effects on speech intelligibility and quality. J Rehab Res Dev. 1992;29(2): 39–56 [DOI] [PubMed] [Google Scholar]

- 44.Simpson AM, Moore BCJ, Glasberg BR. Spectral enhancement to improve the intelligibility of speech in noise for hearing impaired listeners. Acta Otolaryngol. 1990;469(suppl): 101–107 [DOI] [PubMed] [Google Scholar]

- 45.Franck BA, van Kreveld-Bos CS, Dreschler WA, Verschuure H. Evaluation of spectral enhancement in hearing aids, combined with phonemic compression. J Acoust Soc Am. 1999;106(3 pt 1): 1452–1464 [DOI] [PubMed] [Google Scholar]

- 46.Alcantara JI, Dooley GJ, Blamey PJ, Seligman PM. Preliminary evaluation of a formant enhancement algorithm on the perception of speech in noise for normally hearing listeners. Audiology. 1994;33: 15–27 [DOI] [PubMed] [Google Scholar]

- 47.Levitt H. Hearing aids: prescription, selection, evaluation. In: Levitt H, Pickett JM, Houde RA. eds. Sensory Aids for the Hearing Impaired. New York, NY: IEEE Press; 1980: 29–34 [Google Scholar]

- 48.Levitt H. An array-processor computer hearing aid. ASHA. 1982;24: 805 [Google Scholar]

- 49.Levitt H, Pickett JM, Houde RA. eds. Sensory Aids for the Hearing Impaired. New York, NY: IEEE Press; 1980 [Google Scholar]

- 50.Levitt H, Neuman AC, Mills R, Schwander TJ. A digital master hearing aid. J Rehab Res Dev. 1986;23(1): 79–87 [PubMed] [Google Scholar]

- 51.Bakke MH, Neuman AC, Toraskar J. The effect of Wiener filtering on consonant recognition in noise. ASHA. 1987;29: 189 [Google Scholar]

- 52.Levitt H, Sullivan JA, Neuman AC, Rubin-Spitz J. Experiments with a programmable master hearing aid. J Rehab Res Dev. 1987;24(4): 29–54 [PubMed] [Google Scholar]

- 53.Neuman AC, Schwander TJ. The effect of filtering on the intelligibility and quality of speech in noise. J Rehab Res Dev. 1987;24(4): 127–134 [PubMed] [Google Scholar]

- 54.Levitt H, Neuman A, Sullivan J. Studies with digital hearing aids. Acta Otolaryngol (Stockh.). 1990;(suppl 469): 57–69 [PubMed] [Google Scholar]

- 55.Hochberg I, Boothroyd A, Weiss M, Hellman S. Effect of noise and noise suppression on speech perception by cochlear implantees. Ear Hear. 1992;13: 263–271 [DOI] [PubMed] [Google Scholar]

- 56.Levitt H, Bakke M, Kates J, Neuman A, Schwander T, Weiss M. Signal processing for hearing impairment. Scand Audiol. 1993;(suppl 38): 7–19 [PubMed] [Google Scholar]

- 57.Weiss M, Neuman AC. Noise reduction in hearing aids. In: Studebaker GA, Hochberg I. eds. Acoustical Factors Affecting Hearing Aid Performance. 2nd ed. Needham Heights, Mass: Allyn & Bacon; 1993: 337–352 [Google Scholar]

- 58.Levitt H. Digital hearing aids. In: Studebaker GA, Hochberg I. eds. Acoustical Factors Affecting Hearing Aid Performance. 2nd ed. Needham Heights, Mass: Allyn & Bacon; 1993: 317–335 [Google Scholar]

- 59.Levitt H, Bakke M, Kates J, Neuman A, Weiss M. Advanced signal processing hearing aids. In: Beilin J, Jensen GR. eds. Recent Developments in Hearing Instrument Technology, 15th Danavox Symposium-1993. Copenhagen, Denmark: Stougaard Jensen; 1993: 333–358 [Google Scholar]

- 60.Cudahy E, Levitt H. Digital hearing aids: a historical perspective. In: Sandlin RE. ed. Understanding Digitally Programmable Hearing Aids. Needham Heights, Mass: Allyn & Bacon; 1994: 275–313 [Google Scholar]

- 61.Levitt H. Processing of speech signals for physical and sensory disabilities. Proc Natl Acad Sci U S A. 1995;92: 9999–10006 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Levitt H. Digital hearing aids, past, present, and future. In: Tobin H. ed. Practical Hearing Aid Selection and Fitting. Monograph 001. Washington, DC: VA-Rehabilitation Research and Development Service; 1997: xi–xxiii [Google Scholar]

- 63.Neuman AC, Eisenberg L. Evaluation of a dereverberation technique. J Commun Disord. 1991;24(3): 211–221 [DOI] [PubMed] [Google Scholar]

- 64.Levitt H, Neuman A. Evaluation of orthogonal polynomial compression. J Acoust Soc Am. 1991;90: 241–252 [DOI] [PubMed] [Google Scholar]

- 65.Levitt H. Computer applications in audiology and rehabilitation of the hearing impaired. J Commun Disord. 1980;13: 471–481 [DOI] [PubMed] [Google Scholar]

- 66.Neuman AC, Levitt H, Mills R, Schwander T. An evaluation of three adaptive hearing aid selection strategies. J Acoust Soc Am. 1987;82: 1967–1976 [DOI] [PubMed] [Google Scholar]

- 67.Eisenberg L, Levitt H. Paired comparison judgments for hearing aid selection in children. Ear Hear. 1991;12: 417–430 [DOI] [PubMed] [Google Scholar]

- 68.Levitt H. Adaptive procedures for hearing aid prescription and other audiologic applications. J Am Acad Audiol. 1992;3: 119–131 [PubMed] [Google Scholar]

- 69.Neuman AC. The application of adaptive test strategies to hearing aid selection. In: Studebaker GA, Hochberg I. eds. Acoustical Factors Affecting Hearing Aid Performance. 2nd ed. Needham Heights, Mass: Allyn & Bacon; 1993: 103–117 [Google Scholar]

- 70.Sullivan JA, Levitt H, Hwang J-Y, Hennessey AM. An experimental comparison of four hearing aid prescription methods. Ear Hear. 1988;9: 22–32 [DOI] [PubMed] [Google Scholar]

- 71.Sullivan JA, Levitt H, Hwang J-Y, Hennessey AM. Discriminability of frequency response irregularities in hearing aids. In: Steele RD, Gerrey W. eds. Proceedings of the Tenth Annual Conference on Rehabilitation Technology. Washington, DC: RESNA-Association for the Advancement of Rehabilitation Technology; 1987: 407–409 [Google Scholar]

- 72.Levitt H, Sullivan JA, Hwang J. The Chameleon: a computerized hearing aid measurement/simulation system. Hearing Instruments. 1986;37(2): 16–18 [Google Scholar]

- 73.Graupe D, Causey GD. Development of a hearing aid system with independently adjustable subranges of its spectrum using microprocessor hardware. Bull Prosthet Res. 1975;12: 241–242 [Google Scholar]

- 74.Mangold S, Rissler-Åkesson G. Programmable filter helps faulty hearing. Eltek Aktuell Elektron. 1977;20(15): 64–66 [Google Scholar]

- 75.Mangold S, Leijon A. Programmable hearing aid with multichannel compression. Scand Audiol. 1979;8: 121–126 [DOI] [PubMed] [Google Scholar]

- 76.Dowle RD, Vaughan R, Holmes WH. Digital processing of audio signals in the development of a modern integrated hearing aid. In: Conference Digest IREECON International Sydney 1979. Sydney, Australia: The Institution of Radio and Electronics Engineering Australia; 1979: 290–293 [Google Scholar]

- 77.Yip JCS. A microprocessor-controlled system for electroacoustic testing of hearing aids and for audiological experiments. Presented at: Conference on Microprocessor Systems; November 1981; Brisbane, Australia [Google Scholar]

- 78.Trinder JP. Hardware-software configuration for high performance digital filtering in real-time. In: Proceedings of the International Conference on Acoustics, Speech, and Signal Processing. New York, NY: IEEE; 1982 [Google Scholar]

- 79.Studebaker GA, Sherbecoe RL, Matesich JS. Spectrum shaping with a hardware digital filter. J Rehab Res Dev. 1987;24(4): 21–28 [PubMed] [Google Scholar]

- 80.Moser LM. inventor. Hearing aid with digital processing for correlation of signals from plural microphones, dynamic range control, or filtering using an erasable memory. US patent 4,187,413. February 5, 1980 [Google Scholar]

- 81.Popelka GR, Engebretson AM. A computer-based audiologic system for hearing aid assessment. Hearing Instruments. 1983;34(7): 6–8 [Google Scholar]

- 82.Engebretson AM, Morley RE, Jr, O'Connell MP. A wearable pocket sized instrument for digital hearing aid and other hearing prosthesis applications, ICASSP86 Proceedings. Paper presented at: IEEE IECEJ ASJ International Conference on Acoustics, Speech, and Signal Processing. IEEE Institute of Electronic Communication, Japan Acoustic Society; April 1986; Tokyo, Japan. [Google Scholar]

- 83.Morley RE, Jr, Engebretson AM, Trotta JG. Multiprocessor digital signal processing system for real-time audio applications. IEEE Trans Acoust Speech Signal Process. 1986;34: 225–231 [Google Scholar]

- 84.Engebretson AM, Popelka GR, Morley RE, Niemoeller AF, Heidbreder AF. A digital hearing aid and computer-based fitting procedure. Hearing Instruments. 1986;37(2): 8–14 [Google Scholar]

- 85.Popelka G. Computer-assisted hearing-aid evaluation and fitting program. Adv Otorhinolaryngol. 1987;37: 166–168 [DOI] [PubMed] [Google Scholar]

- 86.Engebretson AM, Morley RE, Popelka GR. Development of an ear-level digital hearing aid and computer-assisted fitting procedure: an interim report. J Rehab Res Dev. 1987;24(4): 55–64 [PubMed] [Google Scholar]

- 87.Popelka GR. Computer technology and hearing aids. In: Sandlin R. ed. Handbook of Technical and Theoretical Considerations in Hearing Aid Amplification. San Diego, Calif: College Hill Press; 1988: 239–263 [Google Scholar]

- 88.Engebretson AM, Morley RE, Jr, Popelka G. inventors. Hearing aids, signal supplying apparatus, systems for compensating hearing deficiencies, and methods. US patent 4,548,082. October 22, 1985 [Google Scholar]

- 89.Nunley J, Staab W, Steadman J, Wechsler P, Spenser B. A wearable digital hearing aid. Hear J. 1983: 34–35 [Google Scholar]

- 90.Levitt H, Dugot R, Kopper KW. inventors; Audimax Corporation. Programmable digital hearing aid system. US patent 4,731,850. 1988 [Google Scholar]

- 91.Rosen S, Walliker JR, Fourcin A, Ball V. A microprocessor based acoustic hearing aid for the profoundly impaired listener. J Rehab Res Dev. 1987;24: 239–260 [PubMed] [Google Scholar]

- 92.Douek E, Fourcin AJ, Moore BCJ, Clarke GP. A new approach to the cochlear implant. Proc R Soc Med. 1977;70: 379–383 [PMC free article] [PubMed] [Google Scholar]

- 93.Faulkner A, Fourcin AJ, Moore BC. Psychoacoustic aspects of speech pattern coding for the deaf. Acta Otolaryngol Suppl. 1990;469: 172–180 [PubMed] [Google Scholar]

- 94.Faulkner A, Ball V, Rosen SR, Moore BC, Fourcin AJ. Speech pattern hearing aids for the profoundly hearing-impaired: speech perception and auditory abilities. J Acoust Soc Am. 1992;91: 2136–2155 [DOI] [PubMed] [Google Scholar]

- 95.Faulkner A, Walliker JR, Howard IS, Ball V, Fourcin AJ. New developments in speech pattern element hearing aids for the profoundly deaf. Scand Audiol Suppl. 1993;38: 124–135 [PubMed] [Google Scholar]

- 96.Fourcin A. Speech analytic hearing aids for the profoundly deaf: STRIDE. In: Ballabio E, Porrero P, De La Bellacasa RP. eds. Rehabilitation Technology. Amsterdam, the Netherlands: IOS Press; 1993 [Google Scholar]

- 97.Hecox KE, Miller E. New hearing instrument technologies. Hearing Instruments. 1988;39: 38–40 [Google Scholar]

- 98.Cummins KL, Hecox KE. Ambulatory testing of digital hearing aid algorithms. In: Proceedings of the 10th Annual RESNA Conference. Washington, DC: RESNA Press; 1987: 398–400 [Google Scholar]