Abstract

Social and technological innovations often spread through social networks as people respond to what their neighbors are doing. Previous research has identified specific network structures, such as local clustering, that promote rapid diffusion. Here we derive bounds that are independent of network structure and size, such that diffusion is fast whenever the payoff gain from the innovation is sufficiently high and the agents’ responses are sufficiently noisy. We also provide a simple method for computing an upper bound on the expected time it takes for the innovation to become established in any finite network. For example, if agents choose log-linear responses to what their neighbors are doing, it takes on average less than 80 revision periods for the innovation to diffuse widely in any network, provided that the error rate is at least 5% and the payoff gain (relative to the status quo) is at least 150%. Qualitatively similar results hold for other smoothed best-response functions and populations that experience heterogeneous payoff shocks.

Keywords: convergence time, local interaction model, noisy best response, logit, coordination game

Local Interaction Topology and Fast Diffusion

New ideas and ways of doing things often spread through social networks. People tend to adopt an innovation with increasing likelihood, depending on the proportion of their friends and neighbors who have adopted it. The innovation in question might be a technological advance such as a new piece of software, a medical drug (1), or a new hybrid seed (2). Or it might represent a social practice, such as contraception (3), a novel form of employment contract (4), or a group behavior such as binge drinking (5).

In recent years such diffusion models have been extensively studied from both a theoretical and an empirical standpoint. Some authors have highlighted the importance of the payoff gains from the innovation: Larger gains lead to faster adoption (2, 6). Others have pointed to the role that the network topology plays in the rate at which an innovation spreads (6–19).

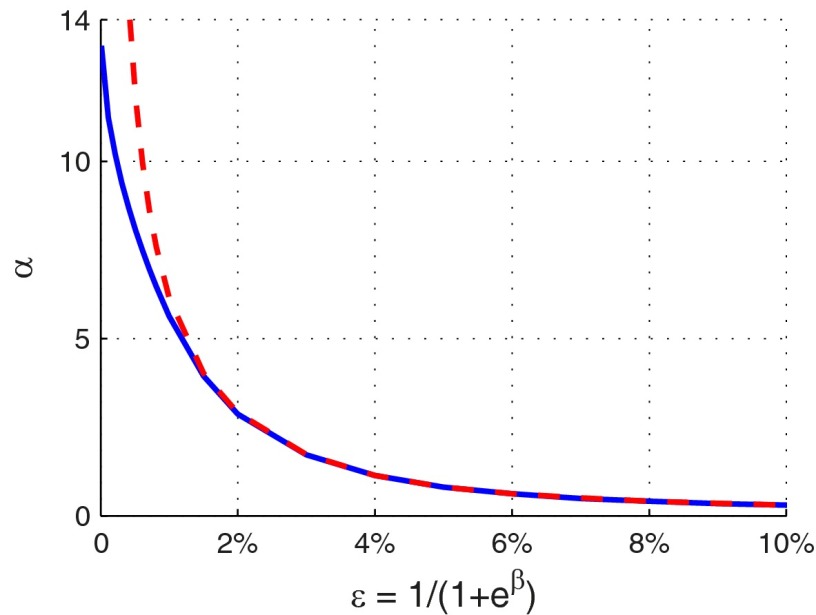

A key finding of this literature is that the amount of local clustering has a significant effect on the speed with which the innovation spreads. Fig. 1 presents three examples of simple networks that differ in the amount of local clustering. Fig. 1A shows a randomly generated network in which the neighborhoods of nearby agents have relatively little overlap. In such a network the expected waiting time until the innovation spreads grows exponentially in the network size unless the payoff gains from the innovation are very large (11, 18). Fig. 1B is a ring in which agents are connected to nearby agents. In this case the expected waiting time until the innovation becomes widely adopted is bounded independently of the network size (20). The intuition for this result is that the innovation gains an initial foothold relatively quickly within small groups of adjacent agents on the ring, and it then maintains its hold even when those outside the group have not yet adopted. Thus, the innovation tends to diffuse quite rapidly as it gains independent footholds among many groups scattered across the network. A similar logic applies to the lattice in Fig. 1C; in this case the local footholds consist of squares or rectangles of agents.

Fig. 1.

Four-regular networks with different topologies: random network (Left), ring network (Center), and lattice network on a torus (Right).

The contribution of this paper is to show that innovations can in fact spread quite rapidly in any finite network (as long as it does not have isolated vertices). The methods we use to establish our results are quite different from those in the prior networks literature, many of which rely on the “independent footholds” argument outlined above. Instead we take our inspiration from another branch of the learning literature, namely the analysis of dynamics when agents choose noisy best responses to samples drawn at random from the whole population. If the population is large, such a process can be well approximated by a deterministic (mean-field) dynamical system (14, 21–25).

This setup is different from network models, in which agents choose noisy best responses to a fixed set of neighbors. To see the distinction, consider the situation where agents can coordinate on one of two actions: A (the innovation) or B (the status quo). Assume for the moment that they choose pure (instead of noisy) best responses to the actions of their neighbors. Such a game will typically have a vast number of heterogeneous equilibria whose structure depends on the fine details of the network topology. By contrast, in a global interaction model with sampling there will typically be just three equilibria, two of which are homogeneous and stable and the other one heterogeneous and unstable.

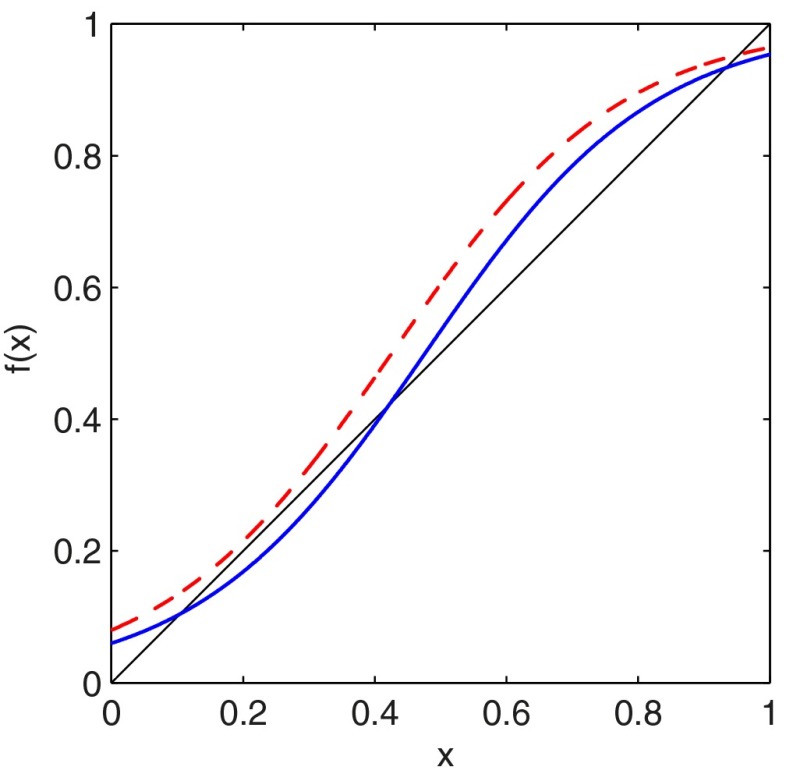

Now consider a (possibly noisy) best-response process and an unboundedly large number of agents who interact globally. The expected motion can be represented by a deterministic, continuous-time dynamic of the form $\dot{x}_t = f(x_t) - x_t$, where $x_t$ is the proportion of agents playing action A at time t, and $\dot{x}_t$ is the rate of change of this variable. Under fairly general assumptions $f(x)$ has a convex–concave shape as shown in Fig. 2. Depending on the choice of parameters, such a curve will typically have either one or three rest points. In the latter case the middle one (the up-crossing) is unstable and the other two are stable. One can use a mean-field approach to study the effects of varying the payoffs and the distribution of sample sizes on the location of these rest points. The lower the unstable rest point is, the smaller the basin of attraction of the status quo equilibrium and hence the easier it is to escape that equilibrium (14, 23).
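The one-versus-three rest point dichotomy can be illustrated numerically. The sketch below counts sign changes of $f(x) - x$ on a grid. It assumes that the response function is the logit for a pure coordination game, written here as $f(x) = 1/(1 + e^{\beta(1 - (2+\alpha)x)})$; the function names and the parameter values in the usage lines are illustrative choices, not taken from the paper.

```python
import math

def logit_response(x, alpha, beta):
    """Assumed logit response: probability of choosing A when a share x plays A."""
    return 1.0 / (1.0 + math.exp(beta * (1.0 - (2.0 + alpha) * x)))

def rest_points(alpha, beta, grid=10_000):
    """Approximate the zeros of f(x) - x on [0, 1] by locating sign changes."""
    points = []
    prev_x = 0.0
    prev_g = logit_response(0.0, alpha, beta)  # f(0) - 0
    for i in range(1, grid + 1):
        x = i / grid
        g = logit_response(x, alpha, beta) - x
        if prev_g == 0.0 or prev_g * g < 0.0:
            # Report the midpoint of the grid cell that brackets the zero.
            points.append(0.5 * (prev_x + x))
        prev_x, prev_g = x, g
    return points

# Low noise, modest gain: two stable rest points and one unstable one.
low_noise = rest_points(alpha=0.5, beta=6.0)
# Higher noise (initial error rate 10%) and larger gain: a single rest point.
high_noise = rest_points(alpha=2.0, beta=math.log(9.0))
```

In the first case the status quo is locally stable and must be escaped; in the second the only rest point lies well above one-half, which is the situation the argument in the text exploits.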

Fig. 2.

The logit response function.

Of particular interest is the case where there is a single rest point and it lies above the halfway mark (0.5, 0.5) (red dashed curve in Fig. 2). [In almost any normal form game with the logit response function, for sufficiently large noise levels there is a unique rest point (a quantal response equilibrium) (26). In the case when agents best respond to random samples, if there exists a $1/k$-dominant equilibrium (where k is a suitably chosen sample size), then the dynamics have a unique stable rest point (21, 24).] In this case the process moves from the initial state (all B) to a majority playing A in a bounded length of time. In an earlier paper we examined the implications of this observation for the logit best-response function (25). The main result in that paper is that, if the payoff gain from the innovation and/or the noise level is sufficiently large, then there is a unique rest point lying above (0.5, 0.5) and the expected waiting time until a majority of the agents choose A is bounded above independently of the population size; moreover, we give an explicit estimate of the expected waiting time. However, this argument depends crucially on the assumption that the agents interact globally, which ensures that the expected dynamics have the simple form shown in Fig. 2. This allows one to use standard stochastic approximation techniques to estimate the waiting time as a function of the payoffs, noise level, and sample size. In a network setting, by contrast, the underlying state space is vastly more complex and generally the unperturbed process will possess a large number of rest points, each with a different stochastic potential. Thus, the approximation techniques from our earlier paper do not apply to this case.

The contribution of the present paper is to show that, despite the complications introduced by the network setting, we can derive an upper bound on the rate of diffusion that holds uniformly for all networks provided that the payoff gain from the innovation is sufficiently high and the noise level is not too low. These results are established using martingale arguments rather than the stochastic approximation methods that are common in the prior literature. The bound we establish is easy to compute, given the shape of the stochastic response function. Thus, our approach provides a practical estimation method that can in principle be applied to empirical data.

We emphasize that our results do not imply that network topology does not matter at all. Indeed, the diffusion rate will be faster than the upper bound that we establish, for classes of networks identified by previous research (14, 27). Thus, our results are particularly useful in situations where the exact topology of the network is not known or is changing over time.

The remainder of the paper is organized as follows. The Local Interaction Model introduces the adoption model with local interactions. In Topology-Free Fast Diffusion in Regular Networks we establish the main results for regular networks, and in General Networks we extend the arguments. In Smooth Stochastic Best-Response Functions we show that the results are robust for a large family of stochastic best-response functions other than the logit. We also show that the results can be interpreted in terms of payoff heterogeneity instead of noisy best responses.

The Local Interaction Model

The model is expressed in terms of a stochastic process denoted $\Gamma(\alpha, \beta, G)$ that depends on three parameters: the potential gain α, the noise level β, and the interaction graph (or network) G. Consider a population of N agents numbered from 1 to N linked by an undirected graph G. We assume throughout this paper that each agent is connected to at least one other agent; i.e., the graph G does not have isolated nodes. Each agent chooses one of two available actions, A and B. Interaction is given by a symmetric 2 × 2 coordination game with payoff matrix

$$\begin{array}{c|cc} & A & B \\ \hline A & a,\ a & c,\ d \\ B & d,\ c & b,\ b \end{array}$$

where $a > d$ and $b > c$. This game has potential function

$$\begin{array}{c|cc} & A & B \\ \hline A & a - d & 0 \\ B & 0 & b - c \end{array}$$

Define the normalized potential gain of passing from equilibrium $(B, B)$ to $(A, A)$ as

$$\alpha = \frac{(a - d) - (b - c)}{b - c}.$$

Assume without loss of generality that the potential function achieves its strict maximum at the equilibrium $(A, A)$ or equivalently $\alpha > 0$. Hence $(A, A)$ is the risk-dominant equilibrium of the game; note that $(A, A)$ may or may not also be the Pareto-dominant equilibrium. Standard results in evolutionary game theory imply that $(A, A)$ will be selected in the long run (28–30).

An important special case is when interaction is given by a pure coordination game with payoff matrix

$$\begin{array}{c|cc} & A & B \\ \hline A & 1 + \alpha,\ 1 + \alpha & 0,\ 0 \\ B & 0,\ 0 & 1,\ 1 \end{array} \qquad [1]$$

In this case we can think of B as the “status quo” and of A as the “innovation.” The term α is now the payoff gain of adopting the innovation relative to the status quo. Note that the potential function of the pure coordination game is proportional to the potential function in the general case. It follows that for the logit model and under a suitable rescaling of the noise level, the two settings are in fact equivalent.

Payoffs are as follows. At the start of each time period every pair of agents linked by an edge interact once and they receive the one-shot payoffs from the game defined in [1]. Thus, each agent’s payoff in a given period is the sum of the payoffs from his pairwise interactions in that period. Note that players with a high number of connections will, ceteris paribus, have higher payoffs per period. Formally, let $\pi_{ij}(s_i, s_j)$ be i’s payoff from interacting with j, when i plays $s_i$ and j plays $s_j$. Letting $N_i$ denote the set of i’s neighbors, the total payoff for i from playing $s_i$ is $\sum_{j \in N_i} \pi_{ij}(s_i, s_j)$.

We posit the following revision process. At times $t = k/N$ with $k \in \mathbb{N}$, and only at these times, one agent is chosen uniformly at random (independently over time) to revise his action. (The results in this paper also hold under the following alternative revision protocol. Time is continuous, each agent has a Poisson clock that rings once per period in expectation, and an agent revises his action when his clock rings.) When revising, an agent observes the actions currently used by his neighbors in the graph G. Assume that a fraction x of agent i’s neighbors are playing A; then i chooses a noisy best response given by the logit model,

$$\Pr(i \text{ chooses } A) = f(x; \alpha, \beta) = \frac{e^{\beta(1+\alpha)x}}{e^{\beta(1+\alpha)x} + e^{\beta(1-x)}},$$

where $1/\beta$ is a measure of the noise in the revision process. For convenience we sometimes drop the dependence of f on β in the notation and simply write $f(x; \alpha)$, and in some cases we drop both α and β and write $f(x)$. Let $\varepsilon = f(0; \alpha, \beta) = 1/(1 + e^{\beta})$ denote the error rate at the start of the process when nobody has yet adopted the innovation A. This is called the initial error rate. Both β and ε measure the noise level of the process. Because ε is easier to interpret as the rate at which agents depart from best response at the outset, we sometimes express our results in terms of both β and ε.
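The relation between the two noise measures can be made concrete with a few lines of code. This is a minimal sketch, assuming the logit response for the pure coordination game takes the form $f(x; \alpha, \beta) = e^{\beta(1+\alpha)x} / (e^{\beta(1+\alpha)x} + e^{\beta(1-x)})$, so that the initial error rate is $f(0) = 1/(1 + e^{\beta})$. The function names are illustrative.

```python
import math

def logit_response(x, alpha, beta):
    """Probability of choosing A when a fraction x of neighbors play A (assumed form)."""
    num = math.exp(beta * (1.0 + alpha) * x)
    return num / (num + math.exp(beta * (1.0 - x)))

def error_rate_from_beta(beta):
    """Initial error rate: probability of choosing A when no neighbor has adopted."""
    return 1.0 / (1.0 + math.exp(beta))

def beta_from_error_rate(eps):
    """Inverse of error_rate_from_beta, valid for 0 < eps < 1."""
    return math.log((1.0 - eps) / eps)

# An initial error rate of 5% corresponds to beta = ln(19), roughly 2.94.
beta = beta_from_error_rate(0.05)
# Under this form the agent is indifferent when a share 1 / (2 + alpha) of neighbors play A.
indifference = logit_response(1.0 / (2.0 + 1.5), 1.5, beta)
```

Working with the error rate rather than β makes it easier to compare parameter choices across models, since the error rate has a direct behavioral interpretation.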

Embedded in the above formulation is the assumption that the error rate depends only on the proportion of an agent’s neighbors playing A or B and it does not depend on the number of neighbors, that is, on the agent’s degree. In Smooth Stochastic Best-Response Functions we show that this follows naturally if we assume that in each period each agent experiences an idiosyncratic shock that affects his realized payoffs from all interactions during that period. The logit is one of the most commonly used models of this type (7, 8, 31–33). The key feature of logit for our results is that the probability of not choosing the best response action decreases smoothly as a function of the payoff difference between the two choices. In Smooth Stochastic Best-Response Functions we show that the main result in this paper remains true for a large family of smooth stochastic response functions that are qualitatively similar to the logit. The stochastic response functions we consider emerge in a setting where agents myopically best respond to the actions of their neighbors, and payoffs from playing each action are subject to random shocks. (The response functions can also be viewed as a primitive element of the model that can be estimated directly from empirical data.)

The above revision process fully describes the stochastic process $\Gamma(\alpha, \beta, G)$. The states of the process are the adoption vectors $x(t) = (x_i(t))_{1 \le i \le N}$, where $x_i(t) = 1$ if agent i plays A at time t, and $x_i(t) = 0$ otherwise. The adoption rate is defined as $\bar{x}(t) = \frac{1}{N}\sum_{i=1}^{N} x_i(t)$. By assumption, the process starts in the all-B state, namely $x(0) = (0, \ldots, 0)$.

We now turn to the issue of speed of diffusion, measured in terms of the expected time until a majority of the population adopts action A. Formally, define the random hitting time

$$T(\alpha, \beta, G) = \min\{t : \bar{x}(t) \ge 1/2\}.$$

This measure is appropriate because it captures the time it takes for the system to escape the status quo equilibrium $(B, B)$. More generally, given $p \in (0, 1)$ one can consider the waiting time until $\bar{x}(t)$ is at least p for the first time. The method of analysis in this paper can be extended in a straightforward way to treat this case.
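The process and its hitting time are straightforward to simulate. The following sketch assumes the logit response in the form $1/(1 + e^{\beta(1 - (2+\alpha)x)})$ and uses a ring network purely as an example; `ring_neighbors`, `hitting_time`, and the cap `max_periods` are hypothetical names introduced for illustration.

```python
import math
import random

def logit_response(x, alpha, beta):
    """Assumed logit response for the pure coordination game."""
    return 1.0 / (1.0 + math.exp(beta * (1.0 - (2.0 + alpha) * x)))

def ring_neighbors(n, k=1):
    """Ring in which each agent is linked to its k nearest agents on each side."""
    return [[(i + s) % n for s in range(-k, k + 1) if s != 0] for i in range(n)]

def hitting_time(neighbors, alpha, beta, rng, max_periods=1000.0):
    """Simulate asynchronous logit revisions starting from the all-B state.

    Returns the first time, in revisions per capita, at which at least half
    of the agents play A, or None if that has not happened by max_periods.
    """
    n = len(neighbors)
    state = [0] * n
    adopters = 0
    steps = 0
    while steps < max_periods * n:
        steps += 1
        i = rng.randrange(n)  # each agent is equally likely to revise
        x = sum(state[j] for j in neighbors[i]) / len(neighbors[i])
        new = 1 if rng.random() < logit_response(x, alpha, beta) else 0
        adopters += new - state[i]
        state[i] = new
        if 2 * adopters >= n:
            return steps / n  # one period corresponds to n revisions
    return None

rng = random.Random(0)
ring = ring_neighbors(50, k=2)
t = hitting_time(ring, alpha=2.0, beta=math.log(9.0), rng=rng)
```

Averaging `hitting_time` over many runs and over different graphs gives a Monte Carlo estimate of the expected waiting time that can be compared with the analytical bounds.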

Fast diffusion is defined as follows.

Definition 1:

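Given a family $\mathcal{G}$ of graphs, we say that the family of processes $\{\Gamma(\alpha, \beta, G) : G \in \mathcal{G}\}$ displays fast diffusion if there exists a constant $S = S(\alpha, \beta, \mathcal{G})$ such that for any $G \in \mathcal{G}$ the expected waiting time until a majority of agents play A under process $\Gamma(\alpha, \beta, G)$ is at most S independently of G; that is, $\mathbb{E}[T(\alpha, \beta, G)] \le S$.

When the above conditions are satisfied, we say that $\Gamma(\alpha, \beta, \mathcal{G})$ displays fast diffusion.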

Topology-Free Fast Diffusion in Regular Networks

In this section we establish our first result on sufficient conditions for fast diffusion in d-regular networks, and we provide an upper bound on the expected waiting time for a majority of the population to adopt. In the next section we show how to extend these arguments to general networks.

Fast Diffusion in Regular Networks.

To find sufficient conditions for fast diffusion, we begin by lower bounding the expected change in the adoption rate in any state where adopters constitute a weak minority.

A graph G is d regular if every node in G has exactly d neighbors. Throughout this section, . Denote by the family of all d-regular graphs. Fix a payoff gain , a noise level , and a graph . Consider a state of the process such that . For any , denote by the fraction of players who have exactly k neighbors currently playing A.

The expected next period adoption of an agent i who has k neighbors currently playing A is

Note that each agent is chosen to revise with equal probability; hence the expected change in the population adoption rate is

| [2] |

We wish to bound expression [2] from below, because this provides a lower bound on the expected change in the adoption rate for all configurations that have a minority of adopters. We begin by expressing as the population average of the fraction of i’s neighbors who have adopted the innovation. Let denote the set of neighbors of i. We can then write

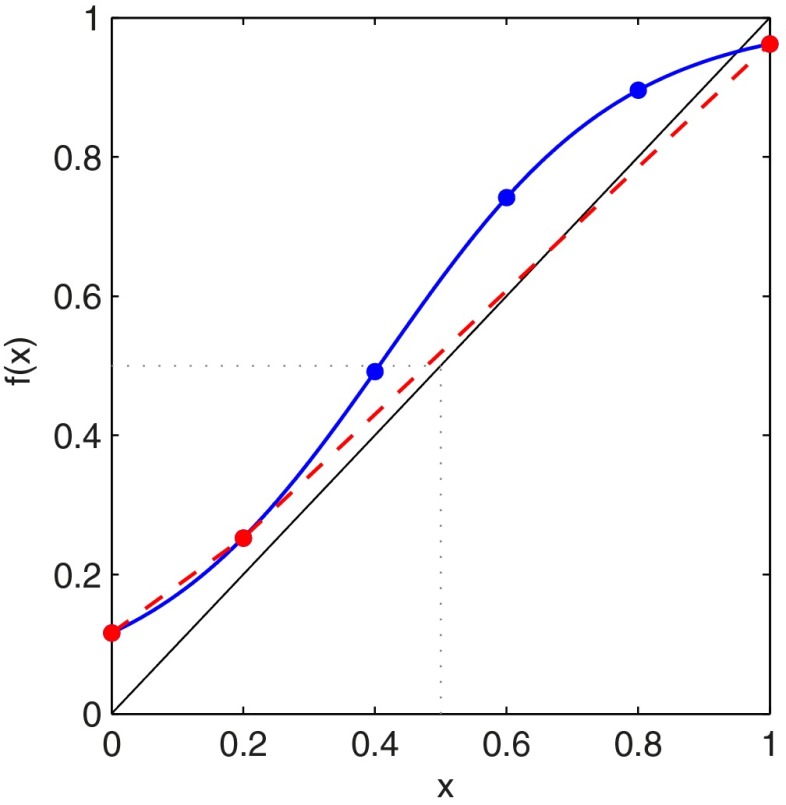

Note that the weights “convexify” the set of points . Denote by the lower envelope of the convex hull of . Fig. 3 illustrates the construction.

Fig. 3.

The function (red dashed line) is the lower envelope of the convex hull of the set (solid circles).

We now apply Jensen’s inequality for the convex function , to obtain

Using identity [2], we obtain

| [3] |

Given , and , define the quantities

and

If the function lies strictly above the identity function (the 45° line) on the interval or, equivalently, if , then the expected change in the adoption rate is positive for any state with a weak minority of adopters. This implies diffusion is fast for the family of d-regular graphs, as the following result shows. (The entire argument can be adapted to estimate the waiting time until a proportion of players have adopted; here we have stated the results in terms of the target for simplicity.)
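The lower envelope construction is easy to carry out numerically. The sketch below builds the points $(k/d, f(k/d))$ for $k = 0, \ldots, d$, computes the lower convex hull with a monotone-chain scan, and returns the minimum gap between the envelope and the 45° line on $[0, 1/2]$. It assumes the logit form $f(x) = 1/(1 + e^{\beta(1 - (2+\alpha)x)})$; the helper names, and the name `mu_d` for the minimum gap, are chosen here for illustration.

```python
import math

def logit_response(x, alpha, beta):
    """Assumed logit response for the pure coordination game."""
    return 1.0 / (1.0 + math.exp(beta * (1.0 - (2.0 + alpha) * x)))

def lower_hull(points):
    """Vertices of the lower convex hull of points sorted by increasing x."""
    hull = []
    for p in points:
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            # Drop the middle vertex if it lies on or above the segment hull[-2] -> p.
            if (x2 - x1) * (p[1] - y1) - (y2 - y1) * (p[0] - x1) <= 0:
                hull.pop()
            else:
                break
        hull.append(p)
    return hull

def envelope_at(hull, x):
    """Evaluate the piecewise-linear lower envelope at x by interpolation."""
    for (x1, y1), (x2, y2) in zip(hull, hull[1:]):
        if x1 <= x <= x2:
            return y1 + (y2 - y1) * (x - x1) / (x2 - x1)
    raise ValueError("x lies outside the range of the hull")

def mu_d(alpha, beta, d):
    """Minimum over [0, 1/2] of (lower envelope of the d+1 points) minus x."""
    pts = [(k / d, logit_response(k / d, alpha, beta)) for k in range(d + 1)]
    hull = lower_hull(pts)
    # The gap is piecewise linear, so its minimum on [0, 1/2] is attained
    # at a hull vertex inside the interval or at the endpoint x = 1/2.
    candidates = [x for x, _ in hull if x <= 0.5] + [0.5]
    return min(envelope_at(hull, x) - x for x in candidates)

# Degree 4, payoff gain 200%, initial error rate 10% (beta = ln 9).
gap = mu_d(alpha=2.0, beta=math.log(9.0), d=4)
```

A positive value means the envelope stays above the identity on the whole interval, so the expected change in the adoption rate is positive in every state with a weak minority of adopters; a negative value means this sufficient condition fails for that parameter choice.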

Theorem 1.

There exist uniform lower bounds on the payoff gain and the noise level such that the expected waiting time until a majority of agents play A is bounded for all regular graphs of degree at least one, irrespective of the number of agents. Concretely, displays fast diffusion whenever and . Furthermore, given any , displays fast diffusion whenever .

Prior work in the literature on fast diffusion in evolutionary models has focused mainly on the topological properties of the graphs in the family that guarantee fast diffusion (18, 20, 27). In contrast, Theorem 1 establishes topology-free bounds that guarantee fast diffusion for the entire family of d-regular graphs.

Proof:

The proof proceeds in two steps. First, we show that the expected change in the adoption rate is strictly positive whenever the adoption rate is at most . Second, we show that the expected waiting time until a majority adopts is bounded for all graphs in .

Fix some . By construction, . In addition, Lemma 1 provides a positive lower bound for for all . It follows from [3] that the expected change in the adoption rate is strictly positive for any state such that .

Lemma 1.

Let ; then for all .

Proof:

Because f is first convex and then concave, there exists such that for any we have and for any we have

The right-hand side is the equation of the line passing through the points and . In particular, we have that for all . We claim that for all . Note that by definition this holds with equality for . Moreover, so the opposite inequality must hold for . This completes the proof of Lemma 1.

■

The following claim provides explicit conditions that ensure that .

Claim 1.

If , then . It follows that when , the expected change in the adoption rate is positive in any state with .

The Proof of Claim 1 relies on elementary calculus, using the logit formula, and can be found in SI Text.

We now turn to the second step in the Proof, namely that the expected waiting time to reach a majority of adopters is bounded for the family of -regular graphs whenever .

We already know that for any and for any , for any graph and any state of the process such that the adoption rate is at most one-half, the expected change in the adoption rate is positive. By Lemma 1 we know that

| [4] |

We now establish a uniform upper bound on the expected waiting time for all -regular graphs (of any size), expressed in terms of .

Lemma 2.

Assume that is positive. Then for any the expected waiting time until a majority of the population adopts the innovation satisfies

The proof of Lemma 2 can be found in SI Text. It is based on inequality [4] and a careful accounting of the worst-case expected waiting time to go from a state with adoption rate to any state with adoption rate , where .

Upper Bound on the Expected Waiting Time.

We now show how to obtain a bound on the absolute magnitude of the waiting time for any regular graph, irrespective of size or degree, using a technique similar to one contained in the Proof of Theorem 1.

Fix the payoff gain and the noise level and let G be a d-regular graph on N vertices. Given an adoption level , the expected change in the adoption rate is given by [2]. We want to lower-bound this quantity independently of d. To this effect, let denote the lower envelope of the convex hull of the graph . The function is convex, so it follows that for any d

It is easy to show that , where . (The proof is similar to that of Lemma 1.)

We can now apply Lemma 2 to establish a uniform upper bound on the expected waiting time, for any d and any d-regular graph (of any size), in terms of the shape of the function . Specifically, for any and such that and for any regular graph G we have

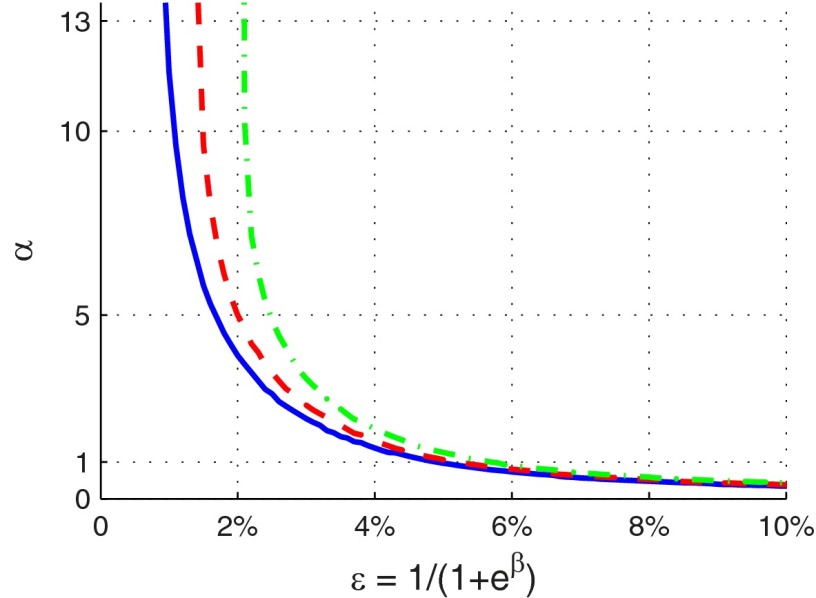

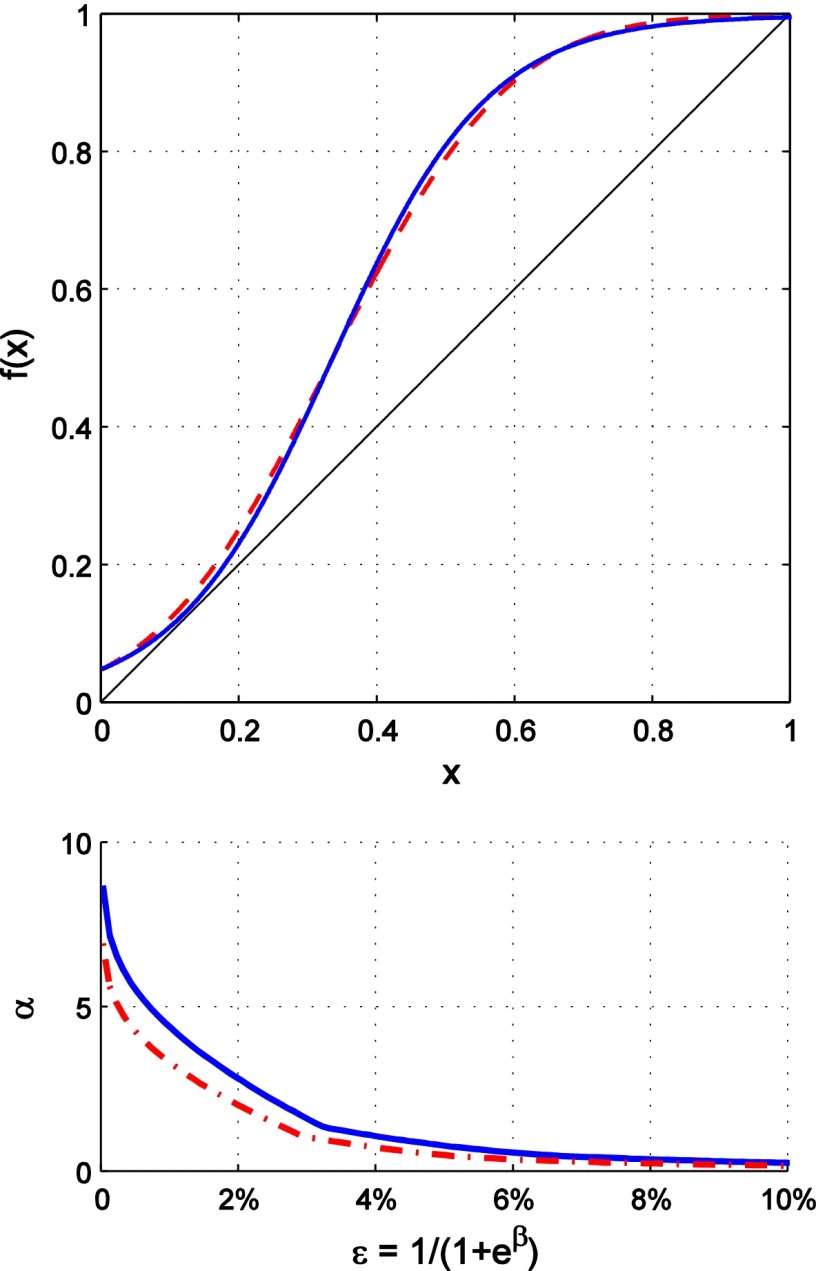

Fig. 4 shows the expected waiting time to reach a majority of adopters, based on the above upper bound and on numerical simulations of the term . The expected waiting time is at most 100, 60, and 40 revisions per capita for payoff gains lying above the blue (solid), red (dashed), and green (dash–dot) lines, respectively. Fig. 4 shows, for example, that for and , the expected waiting time is at most 60 revisions per capita, for regular graphs of arbitrary size.

Fig. 4.

Expected waiting times to reach a majority of adopters. The expected waiting time is at most 100, 60, and 40 revisions per capita for payoff gains above the blue (solid), red (dashed), and green (dash–dot) lines, respectively.

General Networks

In this section we show how our framework can be applied to more general families of graphs. We derive a similar result to Theorem 1, but the proof is more complex and relies on stopping time results in the theory of martingales. Consider a finite graph G and let $d_i$ denote the degree of agent i. We assume that $d_i \ge 1$ for all i; i.e., there are no isolated nodes. Denote the average degree in the graph by $\bar{d} = \frac{1}{N}\sum_{i=1}^{N} d_i$. We use the following measure of adoption. For a state $x(t)$, define

$$\tilde{x}(t) = \frac{1}{N \bar{d}} \sum_{i=1}^{N} d_i\, x_i(t).$$

This is the probability of interacting with an adopter when placed at the end of a randomly chosen link in the graph G (14, 23). In particular, adopters with higher degrees are weighted more heavily because they are more “visible” to other players. Note that the adoption rate $\tilde{x}(t)$ always lies in the interval $[0, 1]$, and it reduces to the usual definition when G is regular.
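The difference between the plain and the degree-weighted adoption measure is easiest to see on a small example. The sketch below assumes the weighted measure is the share of link ends attached to adopters, $\sum_i d_i x_i / \sum_i d_i$, as the verbal description above suggests; the function name and the adjacency-list representation are illustrative choices.

```python
def adoption_rates(neighbors, state):
    """Return (plain adoption rate, degree-weighted adoption rate)."""
    n = len(neighbors)
    degrees = [len(nb) for nb in neighbors]
    plain = sum(state) / n
    weighted = sum(d * s for d, s in zip(degrees, state)) / sum(degrees)
    return plain, weighted

# Star with one hub (agent 0) and four leaves; only the hub has adopted.
star = [[1, 2, 3, 4], [0], [0], [0], [0]]
star_plain, star_weighted = adoption_rates(star, [1, 0, 0, 0, 0])

# Ring of six agents (2-regular); two agents have adopted.
ring = [[(i - 1) % 6, (i + 1) % 6] for i in range(6)]
ring_plain, ring_weighted = adoption_rates(ring, [1, 1, 0, 0, 0, 0])
```

On the star the hub alone accounts for half of all link ends, so the weighted measure is 0.5 even though only one agent in five has adopted. On the ring the two measures coincide, as they do on any regular graph.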

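The definitions of the expected waiting time and of fast diffusion introduced in The Local Interaction Model extend naturally to the adoption rate measure $\tilde{x}(t)$. Concretely, a family of graphs $\mathcal{G}$ displays fast diffusion if for any graph $G \in \mathcal{G}$ the expected waiting time until $\tilde{x}(t) \ge 1/2$ is bounded independently of the size of G. Note that the analysis that follows carries through if we define fast diffusion relative to a threshold other than $1/2$.

We begin by considering graphs with degrees bounded above by some integer D. Denote by $\mathcal{G}_D$ the family of all such graphs. Fix a state $x(t)$ such that $\tilde{x}(t) \le 1/2$. We propose to study the expected change in the adoption rate, namely $\mathbb{E}[\tilde{x}(t + 1/N) - \tilde{x}(t)]$.

Choose some individual i and let $k_i(t)$ be the number of adopters among i’s neighbors at time t. The expected change in i’s adoption rate is

$$\mathbb{E}\bigl[x_i(t + 1/N) - x_i(t)\bigr] = \frac{1}{N}\left[f\!\left(\frac{k_i(t)}{d_i}\right) - x_i(t)\right],$$

where $d_i$ is the degree of agent i. Taking the (weighted) average across all agents, we obtain

$$\mathbb{E}\bigl[\tilde{x}(t + 1/N) - \tilde{x}(t)\bigr] = \frac{1}{N} \cdot \frac{1}{N \bar{d}} \sum_{i=1}^{N} d_i \left[f\!\left(\frac{k_i(t)}{d_i}\right) - x_i(t)\right],$$

where $\bar{d}$ is the average degree. Each adopter j is counted once by each of its $d_j$ neighbors, so $\sum_i k_i(t) = \sum_j d_j x_j(t)$. We can therefore rewrite the adoption rate as

$$\tilde{x}(t) = \frac{1}{N \bar{d}} \sum_{i=1}^{N} d_i\, x_i(t) = \frac{1}{N \bar{d}} \sum_{i=1}^{N} d_i\, \frac{k_i(t)}{d_i}. \qquad [5]$$

Hence the expected change in the state variable can be written

$$\mathbb{E}\bigl[\tilde{x}(t + 1/N) - \tilde{x}(t)\bigr] = \frac{1}{N} \cdot \frac{1}{N \bar{d}} \sum_{i=1}^{N} d_i \left[f\!\left(\frac{k_i(t)}{d_i}\right) - \frac{k_i(t)}{d_i}\right]. \qquad [6]$$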

Let and consider the set . Let denote the lower envelope of the convex hull of the set . Note that for all so [6] implies that

By definition is convex, and a fortiori so is . By applying Jensen’s inequality and using [5], we obtain

| [7] |

Thus, a sufficient condition for the expected motion to be positive is that lies strictly above the identity function on the interval . The function is decreasing on this interval; hence this condition is equivalent to

Define the function

Several key properties of the function are summarized in the following result.

Proposition 1.

, and for .

Proof:

The intuition for the first statement is that when , it is a best response to play A whenever at least one neighbor plays A. Formally, for we have for all . It follows that the lower envelope of the convex hull of the set is given by the line that joins and . This implies that , which is strictly positive. The last two statements of Proposition 1 follow from Claim 1 in the Proof of Theorem 1.

■
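The first statement can be checked directly against the logit formula. The sketch below assumes the response function $f(x) = 1/(1 + e^{\beta(1 - (2+\alpha)x)})$, for which $f(x) > 1/2$ exactly when $x > 1/(2+\alpha)$, and it assumes that the condition elided in the proof is $\alpha > D - 2$, which is what this indifference point implies for the smallest positive fraction $1/D$. The function name and parameter values are illustrative.

```python
import math

def logit_response(x, alpha, beta):
    """Assumed logit response for the pure coordination game."""
    return 1.0 / (1.0 + math.exp(beta * (1.0 - (2.0 + alpha) * x)))

def one_adopter_suffices(alpha, beta, max_degree):
    """True if f(k/d) > 1/2 for every 1 <= k <= d <= max_degree."""
    return all(
        logit_response(k / d, alpha, beta) > 0.5
        for d in range(1, max_degree + 1)
        for k in range(1, d + 1)
    )

D = 10
just_above = one_adopter_suffices(D - 2 + 0.01, beta=3.0, max_degree=D)
just_below = one_adopter_suffices(D - 2 - 0.01, beta=3.0, max_degree=D)
```

The binding case is an agent of degree D with a single adopting neighbor: A is the more likely choice for that agent only if $(2 + \alpha)/D > 1$.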

To study the family of all graphs, we can consider the limit as D tends to infinity. We claim that is finite for all . Indeed, let denote the lower envelope of the convex hull of the set , and let

If we define , then for all the expected change in the adoption rate is positive for any graph as long as .

The next result extends Theorem 1 to the family of all graphs. It establishes the existence of a payoff threshold for fast diffusion, as well as an absolute bound on the expected waiting time.

Theorem 2.

Given a noise level , if the payoff gain exceeds the threshold , then diffusion is fast for all graphs. Moreover, when , for any graph the expected waiting time until the adoption rate is at least satisfies

Theorem 2 shows that for any graph the expected time until the adoption rate reaches is uniformly bounded above as long as the payoff gain is greater than a threshold value that depends on the noise level. Moreover, Theorem 2 provides an explicit bound on the expected waiting time that is easily computed and has a simple geometric interpretation.
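A bound of this kind can be evaluated numerically from the shape of the response function alone. The sketch below is an illustration under stated assumptions rather than a reproduction of the theorem: it assumes the logit form $f(x) = 1/(1 + e^{\beta(1 - (2+\alpha)x)})$, approximates the lower convex envelope of the graph of f on a fine grid, and takes the bound to be $1/\mu$, where $\mu$ is the minimum gap between the envelope and the 45° line on $[0, 1/2]$, as the optional-stopping argument in the proof suggests. The names `waiting_time_bound` and `grid` are hypothetical, and the resulting values need not coincide with those reported in Table 1.

```python
import math

def logit_response(x, alpha, beta):
    """Assumed logit response for the pure coordination game."""
    return 1.0 / (1.0 + math.exp(beta * (1.0 - (2.0 + alpha) * x)))

def lower_hull(points):
    """Vertices of the lower convex hull of points sorted by increasing x."""
    hull = []
    for p in points:
        while len(hull) >= 2:
            (x1, y1), (x2, y2) = hull[-2], hull[-1]
            if (x2 - x1) * (p[1] - y1) - (y2 - y1) * (p[0] - x1) <= 0:
                hull.pop()
            else:
                break
        hull.append(p)
    return hull

def waiting_time_bound(alpha, eps, grid=2000):
    """Return 1/mu in revisions per capita, or infinity if mu <= 0."""
    beta = math.log((1.0 - eps) / eps)  # initial error rate eps = 1 / (1 + e^beta)
    pts = [(i / grid, logit_response(i / grid, alpha, beta)) for i in range(grid + 1)]
    hull = lower_hull(pts)
    mu = math.inf
    for (x1, y1), (x2, y2) in zip(hull, hull[1:]):
        if x1 > 0.5:
            break
        # On each linear piece the minimum gap sits at an endpoint of the piece.
        right = min(x2, 0.5)
        y_right = y1 + (y2 - y1) * (right - x1) / (x2 - x1)
        mu = min(mu, y1 - x1, y_right - right)
    return 1.0 / mu if mu > 0 else math.inf

large_gain = waiting_time_bound(alpha=10.0, eps=0.10)
moderate_gain = waiting_time_bound(alpha=1.0, eps=0.10)
no_gain = waiting_time_bound(alpha=0.0, eps=0.01)
```

The geometric interpretation is that the bound is the reciprocal of the smallest vertical distance between the convexified response curve and the diagonal over the lower half of the unit interval.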

We can improve on the threshold in Theorem 2 if we restrict ourselves to graphs with degrees bounded by some number D. Specifically, for any integer , if the payoff gain exceeds , then diffusion is fast for all graphs with degrees bounded by D. Moreover, if , the expected waiting time satisfies for every graph .

A notable feature of these results is that the bounds are topology-free: They do not depend on any of the network details. The only other result in the literature of this nature is proposition 4 in (34), which shows that guarantees fast diffusion for all graphs in . (The same bound arises in a number of other evolutionary models that are based on deterministic best-response processes, in particular refs. 11, 21, 24, and 35.) Proposition 1 shows that the bound is better than this; indeed for many families of graphs it is much better.

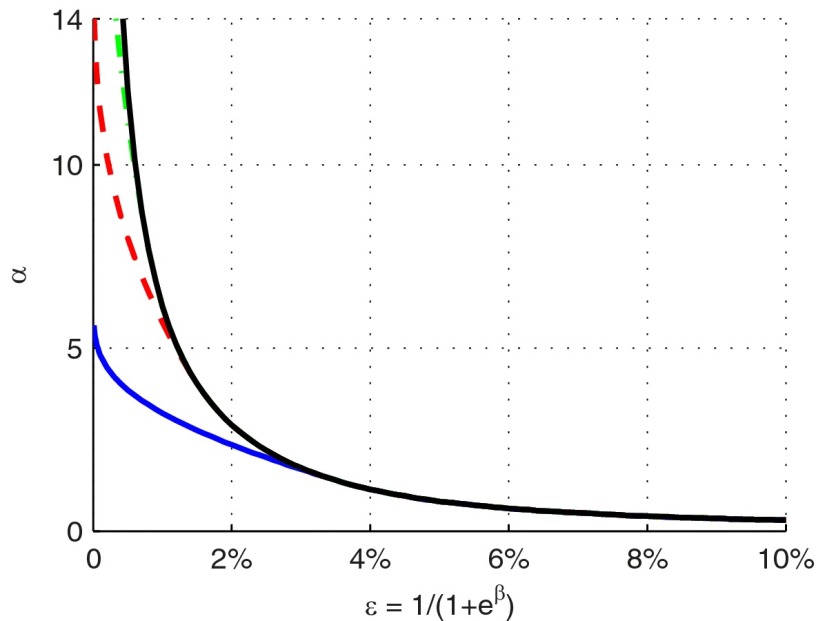

Fig. 5 plots the threshold for several values of D. Fig. 5 also includes the threshold ; combinations of and above this line have the property that diffusion is fast for the family of all finite graphs.

Fig. 5.

Threshold for (blue solid line), (red dashed line), (green dash–dot line) and (black solid line).

For each pair and for any value of D, the term is uniquely determined by the shape of the function and can be easily calculated. Table 1 presents the bounds on the expected waiting time established in Theorem 2. The bounds apply to all finite graphs, irrespective of maximum degree or size. For example, when and , it takes at most 55 revisions per capita in expectation until the adoption rate exceeds .

Table 1.

Upper bounds on the expected waiting time for any graph

| Payoff gain (rows) \ initial error rate (columns) | 1% | 2.5% | 5% | 10% |
|---|---|---|---|---|
|  | — | — | 205 | 30 |
|  | — | — | 55 | 21 |
|  | — | 116 | 41 | 20 |
|  | 437 | 81 | 40 | 20 |

Remark:

Note that the waiting time upper bound in Theorem 2 cannot be lower than . Indeed, the points and are always part of the graph of f, which implies that is at most .

The numbers in Table 1 are expressed in terms of revisions per capita. The actual rate at which individuals revise will depend on the particular situation that is being modeled. For example, consider a new communication technology that is twice as good as the old technology when used by two agents who communicate with each other (a payoff gain of 100%). Suppose that people review their decision about which technology to use once a week and that they choose a best response 9 times out of 10 initially, when there are no adopters (an initial error rate of 10%). The model predicts that the new technology will be widely adopted in less than 7 months irrespective of the network topology.

Proof of Theorem 2:

Inequality [7] implies that for any we have

| [8] |

We want to estimate the stopping time . Note that the process stopped at T is a submartingale. Define the process and note that by [8] we know that stopped at T is still a submartingale.

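Doob’s optional stopping theorem says that if $(Z_t)$ is a submartingale and if the stopping time T satisfies $\Pr(T < \infty) = 1$, $\mathbb{E}|Z_T| < \infty$, and $\mathbb{E}[Z_t \mathbf{1}_{\{T > t\}}] \to 0$ as $t \to \infty$, then $\mathbb{E}[Z_T] \ge \mathbb{E}[Z_0]$ (36). In our case, note that there exists $p > 0$ such that with probability at least p we have , which implies that . This implies the three conditions of the optional stopping theorem. Rewriting this result, we obtain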

Noting that , we obtain , as was to be proved. (In the Proof of Theorem 1 we use a different method to establish the stronger bound instead of .)

■
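The bookkeeping behind the last step can be spelled out as follows. This is a sketch under stated assumptions: time is measured in revisions per capita, so one revision advances t by $1/N$; $\mu > 0$ denotes a uniform lower bound on the drift of the degree-weighted adoption rate $\tilde{x}_t$ before the stopping time T; and $Z_t$ is an auxiliary process. The symbols $\mu$ and $Z_t$ are notation chosen here for illustration.

```latex
\begin{align*}
\mathbb{E}\bigl[\tilde{x}_{t+1/N} - \tilde{x}_t \,\big|\, \tilde{x}_t\bigr] \ge \frac{\mu}{N}
  \quad (t < T)
  &\;\Longrightarrow\;
  Z_t := \tilde{x}_t - \mu t \ \text{stopped at } T \text{ is a submartingale}, \\
\mathbb{E}[Z_T] \ge \mathbb{E}[Z_0] = 0
  &\;\Longrightarrow\;
  \mu\, \mathbb{E}[T] \le \mathbb{E}[\tilde{x}_T] \le 1
  \;\Longrightarrow\;
  \mathbb{E}[T] \le \frac{1}{\mu}.
\end{align*}
```

The first implication uses the fact that each revision subtracts exactly $\mu/N$ from $Z_t$ through the time term, which offsets the lower bound on the drift. The second uses the starting state $\tilde{x}_0 = 0$ and the fact that an adoption rate never exceeds 1.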

Remark:

The analysis so far corresponds to a worst-case analysis over all graphs with degrees below a certain D. When the degree distribution is known, this information can be used in conjunction with identity [6] to derive a more precise payoff threshold for fast diffusion. To illustrate, suppose we are given a degree distribution , where denotes the fraction of agents in the network that have degree d. To be specific let us consider the case where P is described by the truncated power law for some . (Such a network is said to be scale-free). Empirical studies of real networks show that they often resemble scale-free networks; examples include author citation networks, the World Wide Web, and the networks of sexual partners (37). A network formation process that generates scale-free networks is the preferential attachment model, where a new agent added to the network is more likely to link with existing nodes that have a high degree (38). However, we want to stress that apart from the degree distribution, our results do not impose any constraints on the realized topology of the network.

Fig. 6 plots a numerically simulated upper bound on the threshold for fast diffusion for the truncated distribution on the interval , where is a normalizing constant. These simulations show that for small noise levels the threshold for the truncated power law is significantly lower compared with the threshold for all graphs.
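A truncated power-law degree distribution of this kind can be tabulated in a few lines. The exponent and the truncation interval are not recoverable from the text above, so the values used in the usage lines (exponent 2.5, degrees 5 to 500) are placeholders chosen only for illustration, as are the function names.

```python
def truncated_power_law(gamma, d_min, d_max):
    """P(d) = kappa * d**(-gamma) for d_min <= d <= d_max, with kappa normalizing."""
    weights = {d: d ** (-gamma) for d in range(d_min, d_max + 1)}
    kappa = 1.0 / sum(weights.values())
    return {d: kappa * w for d, w in weights.items()}

def link_weighted(dist):
    """Probability that a randomly chosen link end belongs to an agent of degree d."""
    mean_degree = sum(d * p for d, p in dist.items())
    return {d: d * p / mean_degree for d, p in dist.items()}

# Placeholder parameters, not taken from the paper.
degree_dist = truncated_power_law(gamma=2.5, d_min=5, d_max=500)
link_dist = link_weighted(degree_dist)
```

The link-weighted version is the relevant one for the degree-weighted adoption measure used in this section, because high-degree agents are encountered more often at the end of a randomly chosen link.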

Fig. 6.

The estimated bound (blue solid line) for topology-free fast diffusion for a power-law degree distribution ( for ). [Empirical studies of scale-free networks typically find exponents between 2.1 and 4 (38).] Also shown is the threshold (red dashed line).

The estimated curve in Fig. 6 has a very similar shape to the curves in Fig. 5. In particular, fast diffusion is achieved when the noise level is 5% and the payoff gain is at least . Changing the exponent and the parameters of the degree distribution yields qualitatively and quantitatively similar threshold functions.

Smooth Stochastic Best-Response Functions

In this paper we have established the existence of a payoff gain threshold that ensures fast diffusion in networks. The bound is “topology-free” in the sense that it holds for all networks. In this section we show that our method for proving these results does not depend in any crucial way on the logit response function: Results similar to those of Theorems 1 and 2 hold for a large family of response functions that are qualitatively similar to the logit and that arise from idiosyncratic payoff shocks.

Assume that at the start of each period each agent’s payoffs from playing A and B are perturbed by independent payoff shocks and . These shocks alter the player’s payoffs from all interactions during the period. In particular, agent i’s payoff from an interaction with j when playing is . His total payoff over the period is

where is the set of i’s neighbors, which has elements.

We stress that the shocks and are assumed to be constant for all of the agent’s interactions in a given period; they are also independent across agents and across periods. Let us assume that the shocks are identically distributed with cumulative distribution function and density . The variance of the shocks is captured by the noise parameter ; as tends to infinity the variance goes to zero. Let denote the cumulative distribution function of the random variable .

Suppose that x is the proportion of adopters (i.e., A players) in the neighborhood of a given agent i in a given period. Let denote the expected difference (excluding payoff shocks) from a single interaction:

Assume that whenever an agent revises his action, he chooses a (myopic) best response given his realized payoffs in that period. Thus, from an observer’s standpoint the probability that i chooses A is

Note that this probability does not depend on the agent’s degree.
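The degree independence follows in one step. The notation below is assumed for illustration: $u(a, a_j)$ is the unperturbed payoff from one interaction, $\varepsilon_i^A$ and $\varepsilon_i^B$ are agent $i$'s shocks, $N_i$ is the neighbor set with $d_i$ elements, $\Delta(x)$ is the expected payoff difference per interaction, and $F$ is taken to be the cumulative distribution function of $\varepsilon_i^B - \varepsilon_i^A$. Because the same shock applies to each of the $d_i$ interactions, the difference in total payoffs is

$$
U_i(A) - U_i(B) \;=\; \sum_{j \in N_i} \bigl[u(A, a_j) - u(B, a_j)\bigr] + d_i\bigl(\varepsilon_i^A - \varepsilon_i^B\bigr) \;=\; d_i\bigl[\Delta(x) + \varepsilon_i^A - \varepsilon_i^B\bigr].
$$

Since $d_i > 0$, the sign of this difference does not depend on $d_i$, and ties occur with probability zero when the shocks have a density, so

$$
\Pr(i \text{ chooses } A) \;=\; \Pr\bigl(\varepsilon_i^B - \varepsilon_i^A < \Delta(x)\bigr) \;=\; F\bigl(\Delta(x)\bigr).
$$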

If the shocks are drawn from an extreme-value distribution of the form , then is distributed according to a logistic distribution, and the resulting response function is the logit.
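The link between extreme-value shocks and the logit can be checked by simulation. The Python sketch below is illustrative only and rests on stated assumptions: payoffs are taken to be 1 + α for coordinating on A, 1 for coordinating on B, and 0 otherwise, so that the payoff difference is (1 + α)x − (1 − x); the shocks are Gumbel with scale 1/β; and the parameter values and function names are hypothetical. It compares the frequency with which a shocked agent prefers A against the closed-form logit probability.

```python
import math
import random


def payoff_difference(x, alpha):
    """Assumed per-interaction payoff difference between A and B."""
    return (1.0 + alpha) * x - (1.0 - x)


def logit_response(x, alpha, beta):
    """Closed-form logit probability of choosing A."""
    return 1.0 / (1.0 + math.exp(-beta * payoff_difference(x, alpha)))


def simulated_response(x, alpha, beta, draws=100_000, seed=0):
    """Frequency with which A is the best response under Gumbel shocks."""
    rng = random.Random(seed)
    delta = payoff_difference(x, alpha)

    def gumbel():
        # Inverse-CDF sampling of a Gumbel shock with scale 1/beta.
        u = rng.random()
        while u == 0.0:  # avoid log(0)
            u = rng.random()
        return -math.log(-math.log(u)) / beta

    # A is chosen when its shocked payoff exceeds that of B.
    hits = sum(1 for _ in range(draws) if delta + gumbel() > gumbel())
    return hits / draws


for x in (0.1, 0.35, 0.6):
    sim = simulated_response(x, alpha=0.83, beta=3.0)
    exact = logit_response(x, alpha=0.83, beta=3.0)
    print(f"x = {x:.2f}: simulated {sim:.3f}, logit {exact:.3f}")
```

The agreement reflects the standard fact that the difference of two independent Gumbel variables with a common scale follows a logistic distribution with that scale.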

Another natural example is given by normally distributed payoff shocks. If the shocks are normally distributed with mean zero and standard deviation , the resulting response function is the probit choice rule (39), given by

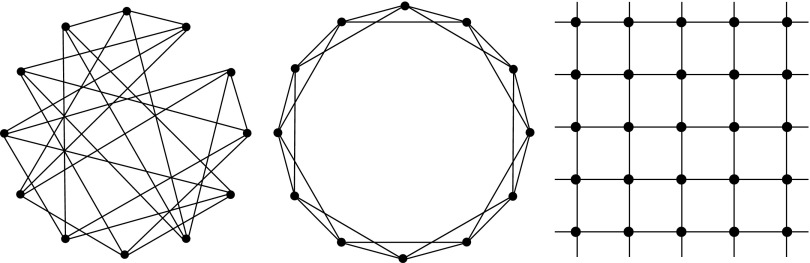

where is the cumulative distribution function of the standard normal distribution. It will be seen that this response function, plotted in Fig. 7, is very similar in shape to the logit. Moreover, the bound between slow and fast diffusion is qualitatively very similar to that in the other models.
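The similarity between the two response functions can be illustrated numerically. The following Python sketch is a hedged comparison under assumptions not fixed by the text: the payoff difference is taken to be (1 + α)x − (1 − x), each normal shock is assumed to have standard deviation 1/β (so the difference of two shocks has standard deviation √2/β), and the parameter values are hypothetical. The scaling of the probit may therefore differ from the exact expression used for Fig. 7.

```python
import math


def payoff_difference(x, alpha):
    """Assumed per-interaction payoff difference between A and B."""
    return (1.0 + alpha) * x - (1.0 - x)


def logit_response(x, alpha, beta):
    """Logit probability of choosing A."""
    return 1.0 / (1.0 + math.exp(-beta * payoff_difference(x, alpha)))


def standard_normal_cdf(z):
    """Standard normal CDF expressed through the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def probit_response(x, alpha, beta):
    """Probit probability of choosing A, assuming shocks ~ N(0, 1/beta^2)."""
    z = beta * payoff_difference(x, alpha) / math.sqrt(2.0)
    return standard_normal_cdf(z)


alpha, beta = 1.0, 3.0  # hypothetical values
for k in range(0, 11, 2):
    x = k / 10
    print(f"x = {x:.1f}: logit {logit_response(x, alpha, beta):.3f}, "
          f"probit {probit_response(x, alpha, beta):.3f}")
```

Both functions equal 1/2 at the indifference point, are increasing in x, and are symmetric around that point; they differ mainly in how quickly the tails flatten.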

Fig. 7.

(Upper) Logit response function (blue solid line) and normally distributed payoff shocks (red dashed line) (, ). (Lower) Thresholds for topology-free fast diffusion: logit (blue solid line) and normally distributed payoff shocks (red dashed line) .

In general, consider a family of densities with parameter . Assume that satisfies the following conditions: (i) is continuous; for every it is quasi-concave in and symmetric around 0; (ii) for any the distribution given by second-order stochastically dominates the distribution given by ; and (iii) tends to zero as tends to zero, and it tends to the Dirac delta function as tends to infinity.

The first condition implies that is also quasi-concave and symmetric around zero. This implies that the function is convex for and concave for . Hence the response function has a convex–concave shape. The second and third conditions say that the amount of payoff disturbances is arbitrarily large for small , decreases in , and tends to zero as tends to infinity. It follows that converges to random choice for close to 0 and to the best-response function for large.
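These shape and limit properties can be checked numerically for a concrete case. The Python sketch below uses the logistic distribution as the example family, with a hypothetical precision parameter β and hypothetical function names; it tests the sign of a central second difference on either side of zero and evaluates the distribution function for very small and very large β.

```python
import math


def logistic_cdf(z, beta):
    """Numerically stable logistic CDF with precision parameter beta."""
    t = beta * z
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def second_difference(beta, z, h=1e-3):
    """Central second difference of the CDF at z; its sign indicates curvature."""
    return (logistic_cdf(z + h, beta) - 2.0 * logistic_cdf(z, beta)
            + logistic_cdf(z - h, beta))


beta = 3.0  # hypothetical value
for z in (-1.0, -0.5, -0.1, 0.1, 0.5, 1.0):
    shape = "convex" if second_difference(beta, z) > 0 else "concave"
    print(f"z = {z:+.1f}: F = {logistic_cdf(z, beta):.3f} ({shape})")

# Limiting behavior: near-random choice for small beta, near-best response for large beta.
for b in (1e-6, 3.0, 1e3):
    print(f"beta = {b:g}: F(-0.3) = {logistic_cdf(-0.3, b):.4f}, "
          f"F(0.3) = {logistic_cdf(0.3, b):.4f}")
```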

We are now in a position to extend Theorem 2 to general families of response functions. Let denote the lower envelope of the convex hull of the set , and let

The next result extends Theorem 2 to families of payoff shocks that satisfy conditions i–iii.

Theorem 3.

Assume that agents experience independent and identically distributed payoff shocks drawn from a density that satisfies conditions i–iii. For any noise level , there exists a payoff threshold such that whenever , diffusion is fast for all graphs. Moreover, if , then for every graph G the expected waiting time until the adoption rate is at least is at most .
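The lower envelope of a convex hull, which enters the construction above, is straightforward to compute on a grid. The Python sketch below is an illustration under several assumptions rather than the construction used in the proof: it samples the logit response with an assumed payoff difference (1 + α)x − (1 − x) on an evenly spaced grid over [0, 1], picks β so that this assumed response equals 5% at x = 0, and uses a hypothetical payoff gain α = 1.5. The comparison with the 45-degree line on [0, 1/2] is included only as an illustrative diagnostic and is not claimed to be the exact quantity in the theorem.

```python
import bisect
import math


def logit_response(x, alpha, beta):
    """Logit probability of choosing A under the assumed payoff difference."""
    delta = (1.0 + alpha) * x - (1.0 - x)
    return 1.0 / (1.0 + math.exp(-beta * delta))


def lower_convex_envelope(points):
    """Lower hull (Andrew's monotone chain) of points sorted by increasing x."""
    hull = []
    for px, py in points:
        while len(hull) >= 2:
            (ox, oy), (ax, ay) = hull[-2], hull[-1]
            cross = (ax - ox) * (py - oy) - (ay - oy) * (px - ox)
            if cross > 0:  # strict left turn: the last vertex stays on the hull
                break
            hull.pop()
        hull.append((px, py))
    return hull


def evaluate_envelope(hull, x):
    """Linear interpolation between consecutive hull vertices."""
    xs = [p[0] for p in hull]
    k = bisect.bisect_right(xs, x) - 1
    k = max(0, min(k, len(hull) - 2))
    (x0, y0), (x1, y1) = hull[k], hull[k + 1]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


alpha = 1.5            # hypothetical payoff gain
beta = math.log(19.0)  # makes the assumed logit response equal 0.05 at x = 0
n = 1000
grid = [k / n for k in range(n + 1)]
points = [(x, logit_response(x, alpha, beta)) for x in grid]
hull = lower_convex_envelope(points)
gap = min(evaluate_envelope(hull, x) - x for x in grid if x <= 0.5)
print(f"hull vertices: {len(hull)}, smallest gap on [0, 1/2]: {gap:.4f}")
```

The envelope coincides with the response function on its initial convex portion and then follows a single chord to the right endpoint, which is why only a fraction of the grid points survive as hull vertices.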

In SI Text we present a different characterization of response functions that are similar to the logit, in terms of error functions. A salient characteristic of the logit is that the probability of an error can be expressed as a decreasing function of the payoff difference between the two alternatives. The risk-dominant equilibrium remains stochastically stable for a large class of such response functions (30). We show that there is a one-to-one correspondence between decreasing, convex error functions and families of payoff disturbances. It follows that the main message of Theorems 1 and 2 carries through for a general class of error functions.

Conclusion

In this paper we have studied some of the factors that affect the speed of diffusion of innovations on social networks. The two main factors that we identify are the payoff gain of the innovation relative to the status quo and the amount of noise in the players’ response functions.

As has been noted by a number of authors, including Griliches in his classic study of hybrid corn (2), larger payoff gains tend to increase the speed with which an innovation spreads. This makes intuitive sense. A less obvious but equally important factor is the amount of noise or variability in the players’ behavior. This variability can be variously interpreted as errors, experimentation, or unobserved payoff shocks. Under all of these interpretations, greater variability tends to increase the speed at which an innovation spreads. The reason is that higher variability makes it easier to escape from the initial low equilibrium. A particularly interesting finding is that different combinations of variability and payoff gain determine a threshold above which diffusion is fast in a “strong” sense; namely, the expected diffusion time is uniformly bounded irrespective of population size and interaction structure. These results are robust to quite general parameterizations of the variability in the system. For the logit, which is commonly used in empirical work, the waiting time is bounded (and quite small absolutely) if the initial error rate is at least 5% and the payoff gain from the innovation is at least 83% relative to the status quo.

Unlike previous results, our bounds on waiting time are topology-free: they apply to all networks irrespective of size and structure. In addition, our method of analysis extends to families of graphs with restrictions on the degree distribution. The virtue of this approach is that it yields concrete predictions that are straightforward to compute even when the fine details of the network structure are unknown, which is arguably the case in many real-world applications. Moreover, in practice social networks are constantly in flux, which makes predictions that depend on the specific network topology quite problematic. We conjecture that our framework can be extended to settings where the network coevolves with players’ choices, as in refs. 40 and 41 for example, but we leave this issue for future work.

Acknowledgments

We thank Glenn Ellison and participants in the 2014 Sackler Colloquium, the MIT theory workshop, and the 2013 Brown University Mini-Conference on Networks for constructive suggestions. This research was sponsored by the Office of Naval Research Grant N00014-09-1-0751 and the Air Force Office of Scientific Research Grant FA9550-09-1-0538.

Footnotes

This paper results from the Arthur M. Sackler Colloquium of the National Academy of Sciences, “In the Light of Evolution VIII: Darwinian Thinking in the Social Sciences,” held January 10–11, 2014, at the Arnold and Mabel Beckman Center of the National Academies of Sciences and Engineering in Irvine, CA. The complete program and audio files of most presentations are available on the NAS website at www.nasonline.org/ILE-Darwinian-Thinking.

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1400842111/-/DCSupplemental.

References

- 1. Coleman J, Katz E, Menzel H. The diffusion of an innovation among physicians. Sociometry. 1957;20(4):253–270.

- 2. Griliches Z. Hybrid corn: An exploration in the economics of technological change. Econometrica. 1957;25(4):501–522.

- 3. Munshi K, Myaux J. Social norms and the fertility transition. J Dev Econ. 2006;80(1):1–38.

- 4. Young H, Burke M. Competition and custom in economic contracts: A case study of Illinois agriculture. Am Econ Rev. 2001;91(3):559–573.

- 5. Kremer M, Levy D. Peer effects and alcohol use among college students. J Econ Perspect. 2008;22(3):189–206.

- 6. Bala V, Goyal S. Learning from neighbours. Rev Econom Stud. 1998;65(3):595–621.

- 7. Blume L. The statistical mechanics of strategic interaction. Games Econ Behav. 1993;5(3):387–424.

- 8. Blume L. The statistical mechanics of best-response strategy revision. Games Econ Behav. 1995;11(2):111–145.

- 9. Valente T. Network Models of the Diffusion of Innovations. Cresskill, NJ: Hampton Press; 1995.

- 10. Valente T. Network models and methods for studying the diffusion of innovations. In: Carrington PJ, Scott J, Wasserman S, editors. Models and Methods in Social Network Analysis. Cambridge, UK: Cambridge Univ Press; 2005. pp. 98–116.

- 11. Morris S. Contagion. Rev Econ Stud. 2000;67(1):57–78.

- 12. Watts DJ. A simple model of global cascades on random networks. Proc Natl Acad Sci USA. 2002;99(9):5766–5771. doi: 10.1073/pnas.082090499.

- 13. Watts D, Dodds P. Influentials, networks, and public opinion formation. J Consum Res. 2007;34(4):441–445.

- 14. Jackson M, Yariv L. Diffusion of behavior and equilibrium properties in network games. Am Econ Rev. 2007;97(2):92–98.

- 15. Vega-Redondo F. Complex Social Networks. Cambridge, UK: Cambridge Univ Press; 2007.

- 16. Golub B, Jackson M. Naïve learning in social networks and the wisdom of crowds. Am Econ J Microecon. 2010;2(1):112–149.

- 17. Jackson MO. Social and Economic Networks. Princeton, NJ: Princeton Univ Press; 2010.

- 18. Montanari A, Saberi A. The spread of innovations in social networks. Proc Natl Acad Sci USA. 2010;107(47):20196–20201. doi: 10.1073/pnas.1004098107.

- 19. Newton J, Angus S. Coalitions, tipping points and the speed of evolution. Working Paper 2013-02. Sydney, NSW, Australia: University of Sydney School of Economics; 2013.

- 20. Ellison G. Learning, local interaction, and coordination. Econometrica. 1993;61(5):1047–1071.

- 21. Sandholm W. Almost global convergence to p-dominant equilibrium. Int J Game Theory. 2001;30(1):107–116.

- 22. Blume L, Durlauf S. Equilibrium concepts for social interaction models. Int Game Theory Rev. 2003;5(3):193–209.

- 23. López-Pintado D. Contagion and coordination in random networks. Int J Game Theory. 2006;34(3):371–381.

- 24. Oyama D, Sandholm W, Tercieux O. Sampling best response dynamics and deterministic equilibrium selection. Theor Econ. 2014; in press.

- 25. Kreindler G, Young H. Fast convergence in evolutionary equilibrium selection. Games Econ Behav. 2013;80(1):39–67.

- 26. McKelvey R, Palfrey T. Quantal response equilibria for normal form games. Games Econ Behav. 1995;10(1):6–38.

- 27. Young H. Individual Strategy and Social Structure: An Evolutionary Theory of Institutions. Princeton, NJ: Princeton Univ Press; 1998.

- 28. Kandori M, Mailath G, Rob R. Learning, mutation, and long run equilibria in games. Econometrica. 1993;61(1):29–56.

- 29. Young H. The evolution of conventions. Econometrica. 1993;61(1):57–84.

- 30. Blume L. How noise matters. Games Econ Behav. 2003;44(2):251–271.

- 31. McFadden DL. Quantal choice analysis: A survey. Ann Econ Soc Meas. 1976;5(4):363–390.

- 32. Anderson S, De Palma A, Thisse J. Discrete Choice Theory of Product Differentiation. Cambridge, MA: MIT Press; 1992.

- 33. Brock WA, Durlauf SN. Interactions-based models. Handb Econ. 2001;1(5):3297–3380.

- 34. Young H. The dynamics of social innovation. Proc Natl Acad Sci USA. 2011;108(4):21285–21291. doi: 10.1073/pnas.1100973108.

- 35. Ellison G. Learning from personal experience: One rational guy and the justification of myopia. Games Econ Behav. 1997;19(2):180–210.

- 36. Grimmett G, Stirzaker D. Probability and Random Processes. New York: Oxford Univ Press; 1992.

- 37. Liljeros F, Edling CR, Amaral LA, Stanley HE, Aberg Y. The web of human sexual contacts. Nature. 2001;411(6840):907–908. doi: 10.1038/35082140.

- 38. Barabási A-L, Albert R. Emergence of scaling in random networks. Science. 1999;286(5439):509–512. doi: 10.1126/science.286.5439.509.

- 39. Myatt D, Wallace C. A multinomial probit model of stochastic evolution. J Econ Theory. 2003;113(2):286–301.

- 40. Jackson M, Watts A. On the formation of interaction networks in social coordination games. Games Econ Behav. 2002;41:265–291.

- 41. Staudigl M. On a general class of stochastic co-evolutionary dynamics. Working Paper 1001. Vienna: Department of Economics, University of Vienna; 2010.