Abstract

While age-related declines in facial expression recognition are well documented, previous research relied mostly on isolated faces devoid of context. We investigated the effects of context on age differences in recognition of facial emotions and in visual scanning patterns of emotional faces. While their eye movements were monitored, younger and older participants viewed facial expressions (i.e., anger, disgust) in contexts that were emotionally congruent, incongruent, or neutral to the facial expression to be identified. Both age groups had highest recognition rates of facial expressions in the congruent context, followed by the neutral context, and recognition rates in the incongruent context were worst. These context effects were more pronounced for older adults. Compared to younger adults, older adults exhibited a greater benefit from congruent contextual information, regardless of facial expression. Context also influenced the pattern of visual scanning characteristics of emotional faces in a similar manner across age groups. In addition, older adults initially attended more to context overall. Our data highlight the importance of considering the role of context in understanding emotion recognition in adulthood.

Keywords: emotion recognition, facial expressions, gaze patterns, context, aging

Aging and the Malleability of Emotion Recognition: The Role of Context

The ability to identify emotions plays a critical role in effective social functioning as it aids the development of interpersonal and intergroup relationships and can limit social conflict (Fischer & Manstead, 2008). Therefore, inability to decode emotion could lead to inappropriate behavior and problems in social relations in everyday life (e.g., Ciarrochi, Chan, & Caputi, 2000; Marsh, Kozak, & Ambady, 2007). The literature on facial emotion recognition and aging suggests a substantial decline in the ability to recognize facial emotions in later life (e.g., Isaacowitz et al., 2007; see meta-analysis in Ruffman, Henry, Livingstone, & Phillips, 2008). The majority of previous research, however, has focused on isolated facial expressions without considering the role of context, despite a growing body of research suggesting that age differences in emotion recognition of facial expressions may depend on context (Aviezer, Hassin, Bentin, & Trope, 2008; Barrett, Mesquita, & Gendron, 2011; Isaacowitz & Stanley, 2011). In the present study, therefore, we investigated the role of context in age differences in emotion recognition of facial expressions.

Aging and Emotion Recognition

A large body of literature consistently reports age-related declines in emotion recognition of facial expressions, especially those expressing anger, fear, and sadness (e.g., Calder et al., 2003; Isaacowitz et al., 2007; Mill, Allik, Realo, & Valk, 2009; Murphy & Isaacowitz, 2010; Ruffman et al., 2008). In contrast, age equivalence has been reported for disgust recognition (Henry et al., 2008; Orgeta & Phillips, 2008) and some studies show that older adults may even have greater disgust recognition than their younger counterparts (e.g., Calder et al., 2003; Ruffman, Halberstadt, & Murray, 2009; Wong, Cronin-Golomb, & Neargarder, 2005).

Why do older adults show such difficulty in recognizing certain negative emotions? While the exact mechanism underlying such age-related declines is not well understood, some eye-tracking studies suggest that the different gaze pattern strategies for sampling information from facial expressions could lead to poorer emotion recognition in older adults (Sullivan, Ruffman, & Hutton, 2007; Wong et al., 2005). Identifying emotions of anger, fear, and sadness requires more information from the upper part of the face (i.e., the eye region) than the lower part of the face (i.e., the mouth region), whereas identifying disgusted emotions relies more on the lower part of the face (e.g., Calder, Young, Kean, & Dean, 2000). Older adults were shown to fixate more to the mouth region than to the eye region when viewing angry, fearful, and sad faces than were younger adults (Wong et al., 2005, Experiment 3), while no age differences were found for disgusted faces. Similarly, Sullivan and colleagues (2007, Experiment 2) found that compared to younger adults, older adults, in general, looked longer at the mouth region of faces portraying varying emotions and they looked less at the eye region of fearful and disgusted faces. In a more recent study, Murphy and Isaacowitz (2010) also found that older adults showed less gazing toward the eye region when viewing angry faces, compared to their younger counterparts, although they found no age difference in mouth-looking patterns.

Wong and colleagues (2005) further reported that the tendency in older adults to look less at the eye region was indeed correlated with worse recognition accuracy for anger, fear, and sadness, while greater amounts of looking in the mouth region were associated with better recognition of disgust (Wong et al., 2005, Experiment 3). Sullivan et al. (2007) also found older adults' increased mouth-looking to be associated with worse recognition of grouped emotions of anger, sadness, and fear, although lesser amounts of eye-looking in older adults were not associated with better recognition of these emotions. Together, these findings suggest that older adults use a less optimal gaze strategy for extracting diagnostic information related to facial expressions that are best identified from the eyes. This trend might hamper their ability to identify facial expressions of anger, fear, and sadness.

The majority of previous research, however, has focused almost exclusively on isolated facial expressions, devoid of context; this method has been guided by the view that basic facial expressions are universal (e.g., Ekman, 1993) and categorically discrete signals of emotion (Young et al., 1997; see also, Aviezer, Hassin, Bentin, et al., 2008, for a review). Facial expressions are rarely encountered in isolation in the real world; rather, they are perceived in a context accompanied by bodily expressions, voices, and situational information that may directly influence the perception of the facial expressions. Indeed, growing evidence has suggested that recognition of facial expressions can be influenced by context more than has been previously considered (e.g., Aviezer, Hassin, Ryan, et al., 2008; Barrett, Lindquist, & Gendron, 2007; Meeren, van Heijnsbergen, & de Gelder, 2005; Righart & de Gelder, 2008).

Emotion Recognition of Facial Expressions in Context

Based on samples of younger adults, recent research has shown that contextual elements, including emotional body postures (or body postures holding emotional objects; Aviezer et al., 2009; Aviezer, Hassin, Ryan, et al., 2008; Meeren et al., 2005), visual scenes (Righart & de Gelder, 2008), descriptions of situations (Carroll & Russell, 1996; Kim et al., 2004), voices (de Gelder & Vroomen, 2000), word choice (Lindquist, Barrett, Bliss-Moreau, & Russell, 2006), and task goals (Barrett & Kensinger, 2010) can each influence attributions or categorizations of a particular emotion expressed by faces. Contextual information is perceived early and automatically, such that a rapid neural response (200 ms or less) occurs when perceiving facial expressions in emotional scenes (Righart & de Gelder, 2008) or in body postures (Meeren et al., 2005), and that perceivers appear to be unable to ignore contextual information when being explicitly told to do so (Aviezer, Bentin, Dudareva, & Hassin, 2011). Moreover, these contextual influences are not disrupted by cognitive load (Aviezer et al., 2011). These findings suggest that contextual information is rapidly integrated with the facial expression.

Recent studies conducted by Aviezer and colleagues (Aviezer, Hassin, Ryan, et al., 2008; Aviezer et al., 2009) further demonstrated that the magnitude of the contextual influence on the recognition of facial expressions depends on the perceptual similarity between the target facial expression and the facial expression associated with the emotional context. The perceptual similarity between facial expressions has been assessed using computational models that calculated similarities among basic facial expressions based on their physical properties (Susskind, Littlewort, Bartlett, Movellan, & Anderson, 2007), as well as by evaluating the pattern of recognition errors made by human perceivers (for details, see Aviezer, Hassin, Bentin, et al., 2008). For example, the facial expression of disgust is most similar to that of anger, but less similar to that of sadness, and even less similar to that of fear (Aviezer, Hassin, Bentin, et al., 2008; Aviezer, Hassin, Ryan, et al., 2008). Therefore, when disgusted faces were embedded on the bodies of models conveying emotions of disgust, anger, sadness, and fear (Aviezer, Hassin, Ryan, et al., 2008, Experiment 1), the accuracy for disgusted faces in the disgust context was 91%, but the accuracy fell to 59% in a fear context, 35% in a sad context, and 11% in an angry context. Moreover, when the percentage of confusability (i.e., responses corresponding to the context emotion) was assessed, the pattern of categorization reversed. That is, 87% of the disgusted faces were categorized as “anger” in the anger context, 29% as “sad” in the sad context, and 13% as “fear” in the fear context. Thus, the patterns of accuracy and confusability indicate that the more similar the target facial expression is to the expression associated with the emotional context, the stronger the contextual influence (see Aviezer et al., 2009 for similar findings with individuals in the pre-clinical stages of Huntington's disease).

By monitoring eye gaze patterns, Aviezer, Hassin, Ryan, and colleagues (2008, Experiment 3) also showed that emotional context can actually alter the characteristic scanning of emotional facial expressions. When disgusted faces appeared in a disgust context (and in a neutral context in which no specific emotion was conveyed by context), both the mouth and eye regions received equal frequency of fixation; however, when the same faces appeared in an anger context (i.e., an incongruent context), more fixations were made to the eye regions than to the mouth regions. Conversely, when angry faces appeared in an anger context (and in a neutral context), more fixations were directed to the eye than the mouth regions, but the two regions received similar numbers of fixations when the same faces were placed in a disgust context. These shifts in characteristic eye-scanning patterns in the incongruent context occurred from an early stage, as assessed by the first gaze duration (e.g., gaze was faster to enter the eye region when disgusted faces appeared in the angry context).

Could adding contextual information attenuate age differences in emotion recognition? Lack of emotional context may be one of the potential mechanisms for the effects of age on emotion recognition in lab tasks (Isaacowitz & Stanley, 2011). According to the social expertise perspective proposed by Hess and colleagues (Hess, 2006; Leclerc & Hess, 2007), increasing age is associated with increased sensitivity to relevant social cues that facilitate adaptive functioning in social situations. In addition, the cognitive aging literature also suggests that aging brings an increased reliance on context or environmental support, perhaps as a way of compensating for age-related perceptual and cognitive declines (e.g., Morrow & Rogers, 2008; Spieler, Mayr, & LaGrone, 2006; Smith, Park, Earles, Shaw, & Whiting, 1998). Therefore, older adults may rely more on context to help them identify facial expressions. Consequently, the use of isolated facial stimuli may limit an older adult's opportunity to draw upon external affective information, thereby leading to an underestimation of the capacities of older adults for emotion recognition.

Adopting contextual approaches to aging and emotion recognition may thus increase the ecological validity of tests of emotion-recognition skill sets (Isaacowitz & Stanley, 2011), and improve our understanding of the effects of aging on emotion recognition ability. Supporting this idea, Murphy, Lehrfeld, and Isaacowitz (2010) recently found that older adults performed better than younger adults when discriminating between posed versus spontaneous smiles in a task utilizing dynamic facial stimuli (presumed to provide more cues than are available in static faces). In addition, Ruffman, Sullivan, and Dittrich (2009, Experiment 3) have shown that when bodily and facial expressions were presented in tandem, there were no age differences in anger recognition, although there were still age differences in fear and sadness recognition. Together, these findings appear to suggest that age-related deficits in the recognition of emotion in facial expressions can be attenuated by using a more ecological approach that incorporates contextual elements.

The Present Study

The present study was specifically designed to test age differences in the recognition and eye-scanning of facial expressions in context. Using the same paradigm as in Aviezer, Hassin, Ryan, et al. (2008, Experiment 3), the present study focused on facial expressions of anger and disgust. As the recognition of angry and disgusted faces in older adults has previously raised theoretical interest because of the dissociated developmental trajectories of these emotions despite both being negative (e.g., Ruffman et al., 2008), focusing on these emotions would allow us to assess whether age differences in contextual influence are further moderated by specific emotions (anger vs. disgust). The faces were presented in one of three contexts: neutral (e.g., a disgusted face in a non-emotional, neutral pose, i.e., similar to typical isolated faces), congruent (e.g., a disgusted face placed on a body holding a disgusting object), or incongruent (e.g., a disgusted face in a threatening pose waving an angry fist).

We tested the following hypotheses. First, emotion recognition accuracy for angry and disgusted expressions will depend on the congruence of the context. Both expressions will be better recognized in the congruent context than in the neutral context, whereas those embedded in an incongruent context will be recognized poorly, as those target expressions will be categorized as expressing the emotion conveyed by context (Aviezer, Hassin, Ryan, et al., 2008). Second, older adults will show better emotion recognition in the congruent context, and worse recognition in the incongruent context, compared to their younger counterparts. Third, these age differences will differ by facial expressions, such that the effects will particularly occur for anger, and not for disgust (e.g., Calder et al., 2003; Ruffman, Halberstadt, & Murray, 2009). Fourth, older adults will show more mouth- than eye-looking patterns, whereas younger adults will show a reverse pattern; these age differences will be more pronounced for angry expressions than for disgust expressions (Sullivan et al., 2007; Wong et al., 2005). Finally, older adults should show more context-looking patterns than younger adults.

Method

Participants

Thirty-seven younger adults (23 female; aged 18-29 years) and 47 older adults (39 female; aged 61-92 years) participated in this study. Younger participants were recruited from a psychology course and through flyers posted on campus at Brandeis University. Older adults were recruited from the Boston community through advertisements in local newspapers. Participants received either course credit or a monetary stipend. All participants were native speakers of English and the sample of participants was 86.9% White, 8.3% African American, 2.4% Asian, and 2.4% Hispanic. Table 1 shows the participants' demographic characteristics, visual acuity, and cognitive functioning. As shown in Table 1, older participants had high-functioning cognitive abilities.

Table 1. Means and Standard Deviations of Participants' Demographic Information and Results From Perceptual and Cognitive Tests.

| Variable | Younger M | Younger SD | Older M | Older SD |
|---|---|---|---|---|
| Education (years)* | 15.68 | 1.68 | 18.09 | 2.00 |
| Health | 4.05 | 0.7 | 3.87 | 0.81 |
| Vision measures | | | | |
| Snellen visual acuity* | 23.32 | 5.57 | 31.89 | 13.19 |
| Rosenbaum near vision* | 22.36 | 3.04 | 28.67 | 5.04 |
| Pelli-Robson contrast* | 1.70 | 0.11 | 1.49 | 0.19 |
| Cognitive measures | | | | |
| MMSE | 29.70 | 0.62 | 29.39 | 0.86 |
| Forward Digit Span | 7.03 | 1.09 | 6.85 | 1.46 |
| Backward Digit Span | 5.24 | 1.18 | 5.14 | 1.48 |
| Digit Symbol Substitution | 0.50 | 0.09 | 0.50 | 0.10 |
| Shipley Vocabulary Test* | 13.92 | 2.27 | 15.49 | 2.80 |

Notes. Means are given for all participants who completed the recognition task. Asterisks denote significant age differences at p < .05. Self-reported current health, ranging from 1 (poor) to 5 (excellent). Snellen chart for visual acuity (Snellen, 1862). Rosenbaum Pocket Vision Screener for near vision (Rosenbaum, 1984). Pelli-Robson Contrast Sensitivity Chart (Pelli, Robson & Wilkins, 1988). Mini-Mental State Examination (MMSE: Folstein, Folstein, & McHugh, 1975), means indicate number of correct responses of 30. Forward digit span, backward digit span, and digit symbol are subtests of the Wechsler Adult Intelligence Scale–Revised (Wechsler, 1981). Shipley Vocabulary Test (Zachary, 1986), means indicate number of correct responses of 21.

Stimuli and Apparatus

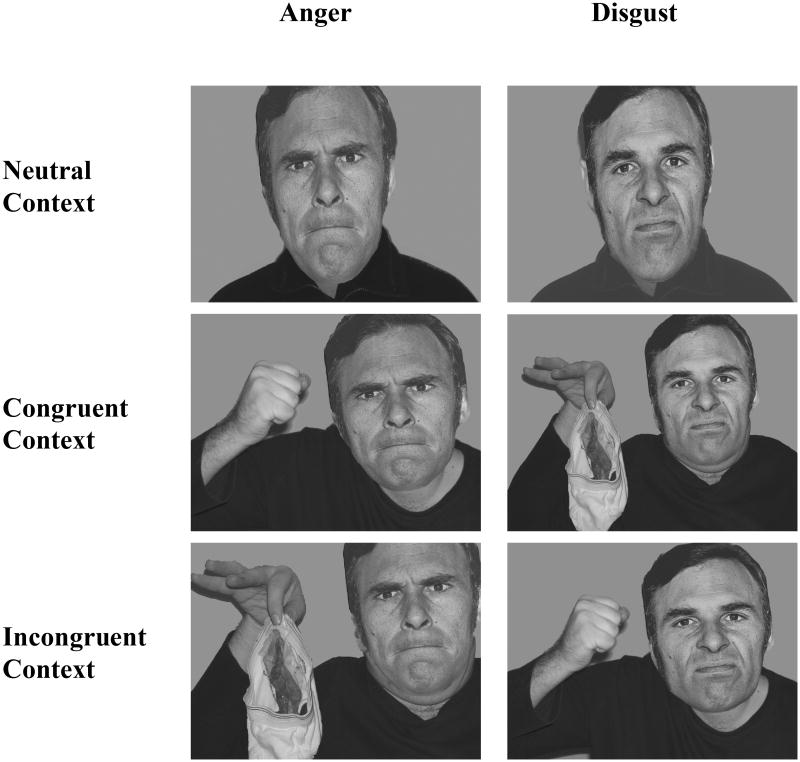

Experimental stimuli were borrowed from Aviezer, Hassin, Ryan, et al. (2008, Experiment 3). Images of 10 target middle-aged faces (five females) expressing anger and disgust were chosen from Ekman and Friesen's (1976) facial set. These target faces were placed on images of models (one female and one male upper body to be used for female and male faces, accordingly) in emotional contexts that had three levels: neutral, congruent, or incongruent to the target face emotions. Figure 1 illustrates examples of angry and disgusted faces presented in a neutral, congruent, and incongruent context. As shown in Figure 1, the neutral contextual information was a partial exposure of a body excluding any emotional bodily gesture or object. Thus, angry and disgusted faces embedded in the neutral information could be analogous to typical isolated facial expressions. For angry faces, the congruent contextual information included an upper body with a clenched fist, raised in anger. On the other hand, the congruent contextual information presented for disgusted faces was an upper body holding an emotionally laden object (i.e., dirty underwear). The incongruent contextual information presented for angry and disgusted faces was the congruent contextual information for disgusted and angry faces, respectively. For example, the incongruent contextual information presented for the angry faces was the upper body holding a pair of dirty underwear and the incongruent contextual information for the disgusted faces was the upper body with a raised, angry fist. These emotional context images have been previously shown to be highly and equally recognizable indicators of their respective emotional categories among adults ranging in age from their 20s to their 60s (Aviezer, Hassin, Ryan, et al., 2008; Aviezer et al., 2009).

Figure 1.

Examples of angry and disgusted faces in a neutral, congruent, and incongruent context. Body-context images taken from Aviezer et al.'s (2008) collection. Facial expressions from Ekman and Friesen (1976). Reproduced with permission from the Paul Ekman Group.

The combination of both the angry and disgusted faces with the three contextual levels created six groupings of face-context compound stimuli (n = 10 for each group). That is, the facial expressions of anger appeared in a neutral, angry, and disgust context, and facial expressions of disgust also appeared in a neutral, angry, and disgust context. Thus, a total of 60 target, face-context composites were included. An additional 30 filler face-context composites, in which facial expressions of other emotions (fear, happiness, sadness, surprise, and neutral) were embedded in a congruent or a neutral context, were also included in order to create a broad distribution of possible responses.¹ While the total visual area was the same in all trials, the relative area of faces and contexts varied somewhat; however, the faces for a particular target emotion were always the same size.
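The 2 (Facial Expression) × 3 (Context) design described above can be enumerated in a few lines. The following is only an illustrative sketch: the face identifiers, field names, and function names are hypothetical and are not taken from the original stimulus set, and the 30 filler composites are omitted.

```python
from itertools import product

EXPRESSIONS = ("anger", "disgust")
CONTEXT_LEVELS = ("neutral", "congruent", "incongruent")
N_FACES = 10  # target faces per expression-context grouping (from the text)


def body_emotion(expression: str, level: str) -> str:
    """Return the emotion conveyed by the body context for one design cell."""
    if level == "neutral":
        return "neutral"
    if level == "congruent":
        return expression
    # Incongruent: the body conveys the other target emotion.
    return "disgust" if expression == "anger" else "anger"


def build_target_composites():
    """Enumerate every target face-context composite (hypothetical IDs)."""
    return [
        {
            "face_id": face_id,  # placeholder index, not an Ekman-Friesen label
            "expression": expression,
            "context_level": level,
            "body_emotion": body_emotion(expression, level),
        }
        for expression, level in product(EXPRESSIONS, CONTEXT_LEVELS)
        for face_id in range(1, N_FACES + 1)
    ]


composites = build_target_composites()
print(len(composites))  # 6 groupings x 10 faces
```

Deriving the body emotion from the expression and context level, rather than storing it by hand, makes explicit that the incongruent context for one expression is the congruent context for the other.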

The face-context compound stimuli were displayed on a 17-inch monitor with a pixel resolution of 1280 × 1024 and a gray background. GazeTracker software was used to present the stimuli. The order of the experimental and filler stimuli was randomized across the three lists and the order of the lists was randomized across participants. The viewing distance was 24 inches from the screen and the composite subtended a visual angle of approximately 35° - 38° horizontally and 20° - 23° vertically.

Eye movements were monitored with a D6 Remote Tracking Optics apparatus, which tracks gaze by sending illumination from a remote unit to the pupil of the selected eye of the participant. The eye tracker automatically sampled the position of the selected eye at a rate of 60 Hz. A fixation was defined as a period in which gaze was directed within a 1° visual angle for at least 100 ms (Manor & Gordon, 2003) within the pre-determined Region of Interest (ROI).
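To make the fixation criterion concrete, the sketch below applies a simple dispersion-threshold rule to gaze samples expressed in degrees of visual angle. It is an assumption-laden illustration, not the algorithm used by the eye tracker or GazeTracker software: the function names are hypothetical, "within 1°" is interpreted here as a bounding box no wider or taller than 1°, and assignment of fixations to ROIs is left out. At 60 Hz, the 100 ms minimum corresponds to six consecutive samples.

```python
import math


def _dispersion(window):
    """Largest extent (in degrees) of the window along either axis."""
    xs = [x for x, _ in window]
    ys = [y for _, y in window]
    return max(max(xs) - min(xs), max(ys) - min(ys))


def detect_fixations(samples, rate_hz=60.0, max_dispersion_deg=1.0,
                     min_duration_ms=100.0):
    """Group (x, y) gaze samples into fixations with a dispersion threshold.

    Assumption: a fixation is a run of samples whose bounding box stays
    within max_dispersion_deg for at least min_duration_ms.
    """
    min_samples = math.ceil(min_duration_ms * rate_hz / 1000.0)
    fixations = []
    start, n = 0, len(samples)
    while start + min_samples <= n:
        end = start + min_samples  # exclusive index of the candidate window
        if _dispersion(samples[start:end]) > max_dispersion_deg:
            start += 1  # no fixation begins here; slide forward one sample
            continue
        # Extend the window while the dispersion criterion still holds.
        while end < n and _dispersion(samples[start:end + 1]) <= max_dispersion_deg:
            end += 1
        window = samples[start:end]
        fixations.append({
            "start_ms": start * 1000.0 / rate_hz,
            "duration_ms": len(window) * 1000.0 / rate_hz,
            "x": sum(x for x, _ in window) / len(window),
            "y": sum(y for _, y in window) / len(window),
        })
        start = end
    return fixations


# Six stable samples, then six stable samples at a distant location.
gaze = [(0.0, 0.0)] * 6 + [(5.0, 5.0)] * 6
print(detect_fixations(gaze))
```

Each detected fixation could then be tested against ROI boundaries (eyes, mouth, context) to obtain the first-fixation and fixation-duration measures.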

Procedure

Participants were tested individually. After providing informed consent, each participant completed a demographic questionnaire, visual acuity test, and cognitive measures. The participant was then seated in front of the eye tracker and a 17-point calibration was administered to ensure that the eye tracker was accurately recording the position of the pupil. Following the calibration procedure, the participant completed an emotion recognition task for each of the 90 images (60 experimental and 30 filler face-context composites). The recognition task was self-paced in order to provide sufficient time for older adults to process the face-context images: the participant was asked to look at each photograph freely and to click the left mouse button to continue on to the emotion-judgment screen. The participant was told to select, in their own time, the emotional label that best described the emotion being displayed on the face from a list of seven distinct emotions (anger, sadness, surprise, disgust, happiness, fear, or neutral). Each image and each emotion-judgment screen was followed by a 0.5-second fixation-cross slide to realign the participant's gaze to the center of the screen. Participants could not return to the photograph screen once they had continued to the judgment screen. Each participant was given six practice trials before the experimental trials.

Results

The presentation of results is divided into two sections. The first section reports age differences in emotion recognition of facial expressions as a function of context. The second section reports results on age-related gaze patterns toward emotional faces and contexts. For all analyses of variance (ANOVAs) involving within-subjects factors, p values were adjusted using the Greenhouse-Geisser correction for violations of the sphericity assumption. All post-hoc comparisons were Bonferroni corrected.

Age Differences in Emotion Recognition of Faces in Contexts

Recognition of correct emotion

Mean correct recognition accuracy (in percentage) was analyzed in a 2 (Age: younger, older) × 2 (Facial Expression: angry, disgust) × 3 (Context: neutral, congruent, incongruent) mixed-model ANOVA, with Age as a between-subjects factor and Facial Expression and Context as within-subjects factors. There were significant main effects of Facial Expression, F(1, 82) = 8.18, p < .01, ηp2 = .09, and Context, F(2, 164) = 586.11, p < .001, ηp2 = .88, and a marginal main effect of Age, F(1, 82) = 3.38, p = .07, ηp2 = .04 (see Table 2 for mean accuracy scores). These main effects were also qualified by a number of interactions. There was a significant Facial Expression × Context interaction, F(2, 164) = 22.25, p < .001, ηp2 = .21. Simple main effects were conducted on the means across context conditions within facial expressions. As shown in Table 2, supporting our hypothesis, within angry expressions, recognition accuracy was higher in the congruent than in the neutral context, and was lowest in the incongruent context, F(2, 81) = 226.85, p < .001, ηp2 = .85 (all ps < .001); the same results were found within disgusted expressions, F(2, 81) = 616.15, p < .001, ηp2 = .94 (all ps < .001). Importantly, in line with our hypothesis, a significant Age × Context interaction emerged, F(2, 164) = 14.40, p < .001, ηp2 = .15. A test of simple main effects within contexts revealed that older adults showed significantly better accuracy than younger adults in the congruent context, F(1, 82) = 4.80, p < .05, ηp2 = .06. This pattern was reversed in the incongruent context, as older adults demonstrated poorer accuracy than younger adults, F(1, 82) = 26.43, p < .001, ηp2 = .24. There was no age difference in the neutral context, F < 1 (see Table 2).
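The partial eta-squared values reported above can be recovered from each F statistic and its degrees of freedom through the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies that identity to several of the reported effects; the function and variable names are illustrative only.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared computed from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (effect label, F, df_effect, df_error) as reported in the text.
reported_effects = [
    ("Facial Expression", 8.18, 1, 82),
    ("Context", 586.11, 2, 164),
    ("Age", 3.38, 1, 82),
    ("Facial Expression x Context", 22.25, 2, 164),
    ("Age x Context", 14.40, 2, 164),
]

for label, f_value, df_effect, df_error in reported_effects:
    eta = partial_eta_squared(f_value, df_effect, df_error)
    print(f"{label}: {eta:.2f}")
```

Rounded to two decimals, these reproduce the effect sizes given in the text (.09, .88, .04, .21, and .15).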

Table 2. Percentage of Correctly Recognized Facial Expression in Younger and Older Groups.

| Age Group | Facial Expression | Neutral M | Neutral SE | Congruent M | Congruent SE | Incongruent M | Incongruent SE |
|---|---|---|---|---|---|---|---|
| Younger | Anger | 65.80 | 3.32 | 86.46 | 2.38 | 38.65 | 3.69 |
| Younger | Disgust | 59.82 | 3.66 | 88.29 | 2.16 | 15.68 | 2.36 |
| Younger | All Expressions | 62.81 | 2.64 | 87.37 | 1.99 | 27.16 | 2.34 |
| Older | Anger | 57.47 | 2.94 | 92.77 | 2.11 | 16.62 | 3.18 |
| Older | Disgust | 66.81 | 3.25 | 93.62 | 1.91 | 5.58 | 2.10 |
| Older | All Expressions | 62.14 | 2.35 | 93.19 | 1.76 | 11.10 | 2.07 |
| All | Anger | 61.63 | 2.22 | 89.61 | 1.59 | 27.63 | 2.40 |
| All | Disgust | 63.31 | 2.45 | 90.95 | 1.44 | 10.63 | 1.58 |

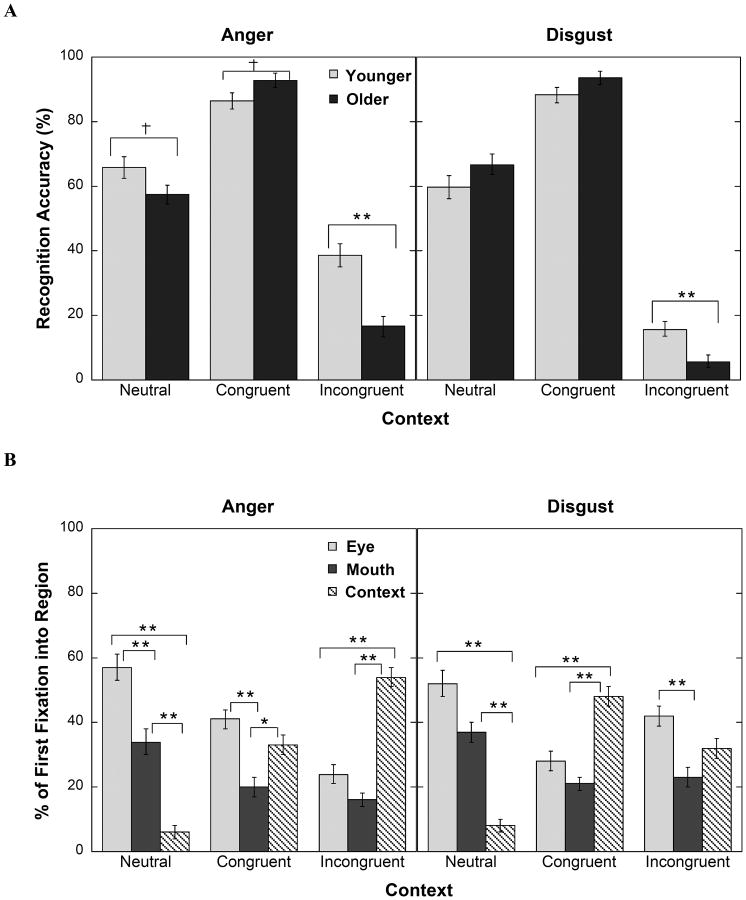

This two-way interaction was further qualified by a significant three-way Age × Context × Facial Expression interaction, F(2, 164) = 3.60, p < .05, ηp2 = .04. Figure 2A shows this three-way interaction. Simple main effects were conducted on the means across context conditions within facial expressions. For angry expressions, there was a trend toward younger adults being more accurate than older adults in the neutral context, F(1, 82) = 3.52, p = .06, ηp2 = .04; yet, the pattern appeared to be reversed in the congruent context in which older adults tended to be more accurate than younger adults, F(1, 82) = 3.93, p = .05, ηp2 = .05, though this effect was only marginally significant. However, older adults performed significantly worse than younger adults in the incongruent context, F(1, 82) = 21.08, p < .001, ηp2 = .21. For disgusted expressions, there were no age differences in the neutral, F(1, 82) = 2.04, p = .16, ηp2 = .02, nor in the congruent context, F(1, 82) = 3.41, p = .07, ηp2 = .04; however, older adults again performed significantly less accurately than younger adults in the incongruent context, F(1, 82) = 10.21, p < .01, ηp2 = .11.

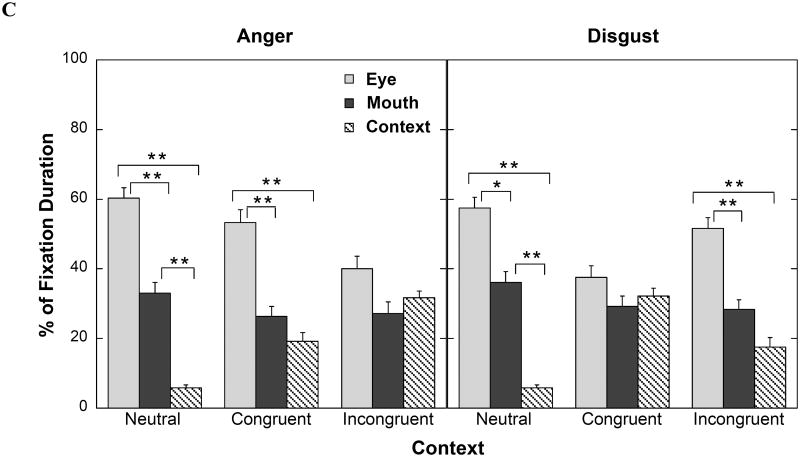

Figure 2.

A. Emotion recognition accuracy for angry and disgusted expressions for younger and older adults as a function of emotional context. B. Percent first fixation to each ROI (eye, mouth and context regions), as a function of facial expression and emotional context. C. Percent fixation duration to each ROI (eye, mouth and context regions) relative to total fixation duration, as a function of facial expression and emotional context. Error bars represent standard errors of the means.

†p ≤ .07, *p < .05, **p < .01.

Due to a high proportion of female participants in the current study, gender was included as a covariate in the original 2 (Age) × 2 (Facial Expression) × 3 (Context) mixed-model ANOVA. Gender emerged as a significant covariate, F(1, 80) = 6.15, p < .05, ηp2 = .07, as female participants recognized target emotions more accurately than male participants (Mfemale = 58.80%, SE = 1.16; Mmale = 53.19%, SE = 1.95). The main effect of Age became significant, F(1, 80) = 4.83, p < .05, ηp2 = .06, as younger adults showed better emotion recognition than did older adults (Myounger = 58.84%, SE = 1.49; Molder = 53.50%, SE = 1.71). However, the Age × Gender interaction was not significant, F < 1. Of importance, the two-way Facial Expression × Context and Age × Context interactions remained significant, F(2, 160) = 14.26, p < .001, ηp2 = .15 and F(2, 160) = 10.65, p < .001, ηp2 = .12, respectively. The three-way Age × Facial Expression × Context interaction, however, became non-significant, F(2, 160) = 2.43, p = .09, ηp2 = .03. Thus, even accounting for gender differences, older adults still demonstrated larger context effects than younger adults for emotion recognition, but these age differences did not vary by facial expression.²

The fact that we did not find a strong age effect for anger in the neutral condition is surprising, given the well-documented disadvantage in anger recognition found in previous studies (e.g., Calder et al., 2003; Wong et al., 2005). To explore this further, the participants were split into three groups by dividing the older adults into a young-old group (YO: n = 23; range 61-71 years) and an old-old group (OO: n = 24; range 72-92 years). We then performed an ANOVA on anger recognition in the neutral context with gender as a covariate. Gender was a significant covariate, F(1, 80) = 4.13, p < .05, ηp2 = .05, and the effect of Age was also significant, F(2, 80) = 4.11, p < .05, ηp2 = .09. While there was no difference between the younger and YO groups, t < 1, the OO group showed poorer anger recognition than the younger group, t(59) = 2.40, p < .05.

Recognition of incorrect emotion

We further examined contextual influences on the pattern of error responses of younger and older adults. Table 3 shows the percentage of each emotion category as a response to the target facial expression presented in the particular context with the upper matrix representing the younger group and the lower matrix representing the older group. The shaded cells correspond to correct recognition, and all other cells represent the percentage of incorrect responses by emotion category. The element-by-element comparison of these two matrices reveals that they are highly correlated, r(42) = .98, p < .001, indicating that younger and older groups categorized emotions in a similar way (see Mill et al., 2009). Because we were particularly interested in age differences in contextual influences on the inaccurate recognition of context emotions, we tested whether there were age differences in the percentage of times the target face was categorized as expressing the context emotion rather than any other emotion, following Aviezer, Hassin, Ryan et al. (2008). Because the effects in the congruent context (accurate recognition) were reported above, we will only report effects in the neutral and incongruent contexts.

Table 3. Confusion Matrices for Facial Expression in Younger and Older Groups.

| Labeled Emotion | Anger: Neutral | Anger: Congruent | Anger: Incongruent | Disgust: Neutral | Disgust: Congruent | Disgust: Incongruent |
|---|---|---|---|---|---|---|
| Younger group | | | | | | |
| Anger | 65.80 | 86.46 | 38.65 | 28.42 | 8.60 | 74.91 |
| Disgust | 10.20 | 3.00 | 43.69 | 59.82 | 88.29 | 15.68 |
| Sadness | 9.16 | 2.72 | 2.99 | 2.93 | 1.00 | 2.27 |
| Surprise | 3.07 | 1.44 | 2.35 | 0.86 | 0.22 | 0.28 |
| Happiness | 0.00 | 0.00 | 0.30 | 0.00 | 0.00 | 0.00 |
| Fear | 3.27 | 1.44 | 2.25 | 0.58 | 0.00 | 1.06 |
| Neutral | 8.00 | 4.89 | 7.23 | 6.97 | 1.63 | 3.61 |
| Older group | | | | | | |
| Anger | 57.47 | 92.77 | 16.62 | 16.89 | 2.59 | 88.94 |
| Disgust | 9.87 | 1.96 | 75.06 | 66.81 | 93.62 | 5.78 |
| Sadness | 7.12 | 0.48 | 1.31 | 2.88 | 2.29 | 0.92 |
| Surprise | 5.43 | 0.70 | 1.03 | 2.50 | 0.66 | 0.00 |
| Happiness | 0.00 | 0.00 | 0.19 | 0.37 | 0.00 | 0.00 |
| Fear | 11.38 | 2.78 | 1.19 | 2.80 | 0.42 | 2.02 |
| Neutral | 7.92 | 1.51 | 2.92 | 6.11 | 1.24 | 1.65 |

Note. The shaded cells represent the percentage of correct recognitions.
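The element-by-element comparison of the two confusion matrices can be illustrated with a short sketch. It assumes the rounded percentages printed in Table 3 and an ordinary Pearson correlation over the 42 paired cells; the function names are illustrative, and because the tabled values are rounded group means, the result need not match the reported coefficient exactly.

```python
from math import sqrt

# Table 3 percentages. Rows: labeled emotion (Anger, Disgust, Sadness,
# Surprise, Happiness, Fear, Neutral). Columns: presented Anger in the
# neutral, congruent, incongruent contexts, then presented Disgust likewise.
YOUNGER = [
    [65.80, 86.46, 38.65, 28.42, 8.60, 74.91],
    [10.20, 3.00, 43.69, 59.82, 88.29, 15.68],
    [9.16, 2.72, 2.99, 2.93, 1.00, 2.27],
    [3.07, 1.44, 2.35, 0.86, 0.22, 0.28],
    [0.00, 0.00, 0.30, 0.00, 0.00, 0.00],
    [3.27, 1.44, 2.25, 0.58, 0.00, 1.06],
    [8.00, 4.89, 7.23, 6.97, 1.63, 3.61],
]
OLDER = [
    [57.47, 92.77, 16.62, 16.89, 2.59, 88.94],
    [9.87, 1.96, 75.06, 66.81, 93.62, 5.78],
    [7.12, 0.48, 1.31, 2.88, 2.29, 0.92],
    [5.43, 0.70, 1.03, 2.50, 0.66, 0.00],
    [0.00, 0.00, 0.19, 0.37, 0.00, 0.00],
    [11.38, 2.78, 1.19, 2.80, 0.42, 2.02],
    [7.92, 1.51, 2.92, 6.11, 1.24, 1.65],
]


def flatten(matrix):
    """Return the matrix cells as one list, row by row."""
    return [value for row in matrix for value in row]


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


younger_cells = flatten(YOUNGER)
older_cells = flatten(OLDER)
r = pearson_r(younger_cells, older_cells)
print(f"{len(younger_cells)} paired cells, r = {r:.2f}")
```

A high coefficient here reflects that both groups concentrate their responses in the same cells (mostly Anger and Disgust labels), which is the sense in which the two groups categorized emotions similarly.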

A 2 (Age) × 2 (Facial Expression) × 3 (Context) mixed-model ANOVA showed a significant Age × Context interaction, F(2, 164) = 16.90, p < .001, ηp2 = .17.³ A test of simple main effects within contexts revealed that there were no age differences in the neutral context (F < 1), indicating that both younger and older adults rarely categorized the target facial expressions as expressing neutral emotion (Myounger = 7.73%, SE = 1.36; Molder = 7.32%, SE = 1.21). There were, however, significant age differences in the incongruent context, F(1, 82) = 24.60, p < .001, ηp2 = .23. In the incongruent context, older adults were more likely to categorize the target facial expressions as expressing the context emotion than were younger adults (Myounger = 61.22%, SE = 3.28; Molder = 82.93%, SE = 2.91).

The significant three-way Age × Facial Expression × Context interaction, F(2, 164) = 4.46, p < .05, ηp2 = .05, shows that in the incongruent context significant age differences emerged for both angry and disgusted expressions, F(1, 82) = 23.71, p < .001, ηp2 = .22 and F(1, 82) = 10.01, p < .01, ηp2 = .11, respectively, with older adults showing greater contextual influences than younger adults. As shown in Table 3, these age differences appear to be larger for expressions of anger (Mdiff = 31.37%) than for disgust (Mdiff = 14.03%).
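The two age gaps cited from Table 3 can be recovered directly from the incongruent-context columns. The sketch below assumes that "contextual influence" is the percentage of trials on which the face was labeled with the context emotion (Disgust for angry faces, Anger for disgusted faces); the dictionary layout and function name are illustrative only.

```python
# Percentage of incongruent-context trials labeled with the context emotion,
# read from Table 3.
CONTEXT_LABEL_RATE = {
    # Angry face in a disgust context, labeled "Disgust"
    "anger": {"younger": 43.69, "older": 75.06},
    # Disgusted face in an anger context, labeled "Anger"
    "disgust": {"younger": 74.91, "older": 88.94},
}


def age_gap(expression):
    """Older minus younger rate of context-emotion labeling, in percentage points."""
    rates = CONTEXT_LABEL_RATE[expression]
    return round(rates["older"] - rates["younger"], 2)


for expression in CONTEXT_LABEL_RATE:
    print(expression, age_gap(expression))
```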

To summarize, age differences in the pattern of recognition errors further support our hypothesis that older adults were more likely to be influenced by emotional context when recognizing facial expressions than younger adults.

Age Differences in Gaze Patterns for Emotional Faces in Contexts

If eye-tracking data were missing from more than three slides for any particular emotion-context condition due to loss of eye position, that participant's data were removed from that condition. Approximately 1.6% of all data points were removed on the basis of these criteria.⁴,⁵ Three regions of interest (ROIs) were created for each target face: (1) the eye region, a rectangular area covering the highest point of the eyebrow down to the bridge of the nose and across the entire width of the target's eyes; (2) the mouth region, a rectangular area containing the mouth from the bottom of the chin to below the nose and the entire width of the mouth; and (3) the context region, any other areas outside the eye and mouth regions, including contextual information.

Two types of eye movement measures were gathered: (a) the percent first fixation is the percentage of first fixations that landed within the ROI, and (b) the percent fixation duration is the percentage of time spent fixating in each ROI relative to the total time spent looking at the entire image (Murphy & Isaacowitz, 2010). While the percent first fixation denotes the scanning priority of different regions at an early stage (when the image was perceptually recognized), the percent fixation duration can provide details regarding which regions of the image were given selective attention over time once the image has been perceptually recognized. A 2 (Age) × 2 (Facial Expression) × 3 (Context) × 3 (ROI: eye, mouth, context) mixed-model ANOVA was conducted on each measure, with Age as a between-subjects factor and the other three as within-subjects factors. Table 4 summarizes the results. We also examined the number of fixations, and similar patterns emerged; therefore, we do not discuss this measure further.⁶ There were no gender effects for gaze patterns, so gender was not further considered in the gaze analysis.
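The two measures can be made concrete with a minimal sketch. It is not the authors' analysis pipeline: the `Rect` and `Fixation` types, the pixel coordinates, and the function names are all hypothetical, and the sketch assumes that each trial is a time-ordered list of fixations and that any fixation outside the eye and mouth rectangles counts as the context region.

```python
from dataclasses import dataclass

ROIS = ("eye", "mouth", "context")


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in screen pixels (hypothetical coordinates)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x, y):
        return self.left <= x <= self.right and self.top <= y <= self.bottom


@dataclass(frozen=True)
class Fixation:
    x: float
    y: float
    duration_ms: float


def classify(fix, eye, mouth):
    """Assign a fixation to the eye, mouth, or context ROI."""
    if eye.contains(fix.x, fix.y):
        return "eye"
    if mouth.contains(fix.x, fix.y):
        return "mouth"
    return "context"  # everything outside the two face rectangles


def percent_first_fixation(trials, eye, mouth):
    """Percentage of trials whose first fixation landed in each ROI."""
    scored = [trial for trial in trials if trial]
    if not scored:
        raise ValueError("no trials with fixations")
    counts = dict.fromkeys(ROIS, 0)
    for trial in scored:
        counts[classify(trial[0], eye, mouth)] += 1
    return {roi: 100.0 * counts[roi] / len(scored) for roi in ROIS}


def percent_fixation_duration(trial, eye, mouth):
    """Share of one trial's total fixation time spent in each ROI."""
    totals = dict.fromkeys(ROIS, 0.0)
    for fix in trial:
        totals[classify(fix, eye, mouth)] += fix.duration_ms
    total = sum(totals.values())
    if total == 0:
        raise ValueError("trial has no fixation time")
    return {roi: 100.0 * totals[roi] / total for roi in ROIS}


# Made-up example: two trials for one participant in one condition.
eye_roi = Rect(100, 100, 300, 160)
mouth_roi = Rect(150, 220, 250, 270)
trials = [
    [Fixation(200, 130, 300), Fixation(200, 240, 100), Fixation(400, 400, 100)],
    [Fixation(50, 500, 200), Fixation(200, 130, 200)],
]
pff = percent_first_fixation(trials, eye_roi, mouth_roi)
pfd = percent_fixation_duration(trials[0], eye_roi, mouth_roi)
print(pff, pfd)
```

In this toy example one of the two first fixations lands on the eyes and one on the context, while the first trial's fixation time is split 60/20/20 across eye, mouth, and context.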

Table 4. Means for Percent First Fixation (PFF) and Percent Fixation Duration (PFD) (standard errors in parentheses).

| Age Group | Facial Expression | ROI | Neutral PFF | Neutral PFD | Congruent PFF | Congruent PFD | Incongruent PFF | Incongruent PFD |
|---|---|---|---|---|---|---|---|---|
| Younger | Anger | Eye | 60.35 (5.30) | 62.1 (4.79) | 52.76 (4.42) | 57.16 (4.90) | 30.35 (3.99) | 43.97 (4.80) |
| Younger | Anger | Mouth | 32.07 (5.50) | 33.27 (4.48) | 18.97 (4.13) | 28.41 (4.33) | 15.86 (3.02) | 28.37 (3.96) |
| Younger | Anger | Context | 4.14 (2.68) | 3.94 (1.86) | 24.14 (4.34) | 13.55 (3.24) | 47.24 (4.72) | 23.28 (4.22) |
| Younger | Disgust | Eye | 53.79 (5.26) | 56.23 (5.04) | 33.10 (4.66) | 42.39 (5.04) | 50.69 (4.95) | 57.38 (5.00) |
| Younger | Disgust | Mouth | 38.97 (5.09) | 36.03 (4.66) | 19.66 (3.49) | 31.16 (4.18) | 22.07 (4.17) | 28.18 (4.67) |
| Younger | Disgust | Context | 4.48 (2.88) | 3.36 (1.74) | 44.14 (4.44) | 26.10 (4.01) | 23.79 (3.81) | 13.40 (3.34) |
| Older | Anger | Eye | 53.71 (4.83) | 55.55 (4.36) | 35.43 (4.02) | 46.47 (4.46) | 18.00 (3.63) | 31.02 (4.37) |
| Older | Anger | Mouth | 35.14 (5.01) | 34.32 (4.08) | 20.00 (3.76) | 24.26 (3.94) | 16.00 (2.74) | 23.60 (3.60) |
| Older | Anger | Context | 8.57 (2.44) | 8.99 (1.69) | 41.14 (3.95) | 24.65 (2.94) | 60.57 (4.29) | 40.03 (3.84) |
| Older | Disgust | Eye | 50.57 (4.78) | 53.2 (4.58) | 22.00 (4.24) | 30.47 (4.58) | 34.00 (4.50) | 43.66 (4.55) |
| Older | Disgust | Mouth | 35.43 (4.63) | 36.66 (4.24) | 22.00 (3.17) | 24.22 (3.81) | 23.14 (3.79) | 29.10 (4.25) |
| Older | Disgust | Context | 11.14 (2.62) | 9.28 (1.58) | 50.86 (4.04) | 36.34 (3.65) | 39.43 (3.47) | 23.82 (3.04) |

Percent first fixation

The 2 (Age) × 2 (Facial Expression) × 3 (Context) × 3 (ROI) mixed-model ANOVA yielded a significant main effect for ROI, F(2, 124) = 8.53, p < .001, ηp2 = .12, which was qualified by a significant Age × ROI interaction, F(2, 124) = 3.67, p < .05, ηp2 = .06. Simple main effects within ROIs indicated that younger adults were more likely than older adults to initially land on the eye region (younger: M = 46.84%, SE = 3.82; older: M = 35.62%, SE = 3.47), F(1, 62) = 4.73, p < .05, ηp2 = .07; however, there were no age differences in the mouth region (younger: M = 24.60%, SE = 3.59; older: M = 25.29%, SE = 3.27), F < 1. Older adults, on the other hand, were more likely to initially land on the context region than younger adults (younger: M = 24.66%, SE = 2.92; older: M = 35.29%, SE = 2.65), F(1, 62) = 7.27, p < .01, ηp2 = .11.⁷ There were also two significant two-way interactions, the Facial Expression × ROI interaction, F(2, 124) = 4.56, p < .05, ηp2 = .07, and the Context × ROI interaction, F(4, 248) = 79.55, p < .001, ηp2 = .56, which were further qualified by a significant Facial Expression × Context × ROI interaction, F(4, 248) = 36.66, p < .001, ηp2 = .37. The four-way Age × Facial Expression × Context × ROI interaction did not reach significance, F < 2. None of the remaining effects were significant.
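The age-by-ROI means reported above can be recovered from the percent first fixation cells in Table 4. The sketch assumes that each marginal mean is the unweighted average of the six expression-by-context cells for that age group and ROI, which holds when the same participants contribute to every cell; the variable and function names are illustrative.

```python
# Percent first fixation cells from Table 4, ordered as
# Anger (neutral, congruent, incongruent), Disgust (neutral, congruent, incongruent).
PFF_CELLS = {
    ("younger", "eye"): [60.35, 52.76, 30.35, 53.79, 33.10, 50.69],
    ("younger", "mouth"): [32.07, 18.97, 15.86, 38.97, 19.66, 22.07],
    ("younger", "context"): [4.14, 24.14, 47.24, 4.48, 44.14, 23.79],
    ("older", "eye"): [53.71, 35.43, 18.00, 50.57, 22.00, 34.00],
    ("older", "mouth"): [35.14, 20.00, 16.00, 35.43, 22.00, 23.14],
    ("older", "context"): [8.57, 41.14, 60.57, 11.14, 50.86, 39.43],
}


def marginal_mean(age_group, roi):
    """Unweighted mean over the six expression-by-context cells."""
    cells = PFF_CELLS[(age_group, roi)]
    return sum(cells) / len(cells)


for (age_group, roi) in PFF_CELLS:
    print(f"{age_group:8s} {roi:8s} {marginal_mean(age_group, roi):.3f}")
```

The averages agree with the means given in the text to within rounding of the tabled values, with the age gap confined to the eye and context regions.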

Figure 2B illustrates the three-way interaction. The three-way interaction was decomposed using tests of simple main effects within expressions and within contexts across ROIs. For angry faces, the simple main effects of ROI were significant in each context condition. In the neutral context, more first fixations landed on the eye region than on the mouth region, and the fewest landed on the context region (all ps < .01), F(2, 61) = 111.71, p < .001, ηp2 = .79. In the congruent context, on the other hand, more first fixations landed on the eye and context regions than on the mouth region (ps < .05), while the eye and context regions did not differ as initial landing positions (p = .09), F(2, 61) = 11.80, p < .001, ηp2 = .28. In the incongruent context, more first fixations landed on the context region than on either the eye or mouth region (ps < .01), while the eye and mouth regions drew equivalent numbers of initial fixations (p = .09), F(2, 61) = 37.15, p < .001, ηp2 = .55.

For disgusted faces, the simple main effects of ROI were also significant in each context condition. In the neutral context, both the eye and mouth regions drew more first fixations than the context region (ps < .01), while the eye and mouth regions drew equivalent numbers of first fixations (p = .08), F(2, 61) = 79.40, p < .001, ηp2 = .72. In the congruent context, on the other hand, more first fixations landed on the context region than on either the eye or mouth region (ps < .01), while the eye and mouth regions attracted equivalent numbers of initial fixations (p = .48), F(2, 61) = 18.25, p < .001, ηp2 = .37. In the incongruent context, more first fixations landed on the eye region than on the mouth region (p < .01), F(2, 61) = 6.21, p < .01, ηp2 = .17.

Percent fixation duration

The percent fixation duration analysis yielded a significant main effect of ROI, F(2, 124) = 24.84, p < .001, ηp2 = .29, which was qualified by a significant Facial Expression × ROI interaction, F(2, 124) = 5.11, p < .01, ηp2 = .08, and a Context × ROI interaction, F(4, 248) = 42.15, p < .001, ηp2 = .41. These interactions were further qualified by a significant three-way Facial Expression × Context × ROI interaction, F(4, 248) = 31.54, p < .001, ηp2 = .34, but the four-way Age × Facial Expression × Context × ROI interaction was not significant, F < 1. None of the remaining main effects or two- and three-way interactions reached significance.
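Readers who want to check the reported effect sizes can recover partial eta squared from each F ratio and its degrees of freedom. The sketch below uses the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error); the function name is illustrative, and small discrepancies in the second decimal are expected because the published F values are themselves rounded.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    effect = f_value * df_effect
    return effect / (effect + df_error)


# F ratios taken from the percent fixation duration analysis above.
main_effect_roi = partial_eta_squared(24.84, 2, 124)
expression_by_roi = partial_eta_squared(5.11, 2, 124)
three_way = partial_eta_squared(31.54, 4, 248)
print(round(main_effect_roi, 2), round(expression_by_roi, 2), round(three_way, 2))
```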

To disentangle the Facial Expression × Context × ROI interaction, simple main effects tests were performed within expressions and within context conditions by comparing fixations across ROIs. Figure 2C illustrates the three-way interaction. When angry faces appeared in the neutral context, the effect of ROI, F(2, 61) = 200.71, p < .001, ηp2 = .87, indicated a higher percentage of fixation duration in the eye region than the mouth and context regions, along with a higher percentage of fixation duration in the mouth region than the context region (all ps < .001). In the congruent context, the effect of ROI, F(2, 61) = 25.78, p < .001, ηp2 = .46, showed a higher percentage of fixation duration in the eye area than the other two areas (ps < .001), along with no significant difference in percent fixation duration between the mouth and context regions (p = .23). On the other hand, the effect of ROI was not significant, F(2, 61) = 2.83, p = .07, ηp2 = .09, in the incongruent context, indicating the percent fixation duration was equivalent across all regions.

When disgusted faces appeared in the neutral context, the effect of ROI, F(2, 61) = 209.12, p < .001, ηp2 = .87, indicated a higher percentage of fixation duration in the eye region than the mouth and context regions, along with a higher percentage of fixation duration in the mouth region than the context region (all ps < .05). In the congruent context, however, the effect of ROI was not significant, F(2, 61) = 1.29, p = .28, ηp2 = .04. In other words, the percent fixation duration did not differ across the regions. In the incongruent context, the effect of ROI, F(2, 61) = 22.92, p < .001, ηp2 = .43, indicated a higher percent fixation duration in the eye region than in both the mouth and context regions (ps < .01), along with no significant difference between the mouth and context regions (p = .07).

Overall, the gaze patterns assessed by the percent first fixation and percent fixation duration in the scanning of angry and disgusted faces suggest that the contexts in which the emotional faces were embedded influenced the way those faces were scanned. Moreover, the percent first fixation data suggest that the context regions received similar or greater initial attention compared to the face regions in the congruent and incongruent contexts. Regarding age differences in gaze patterns, collapsed across expressions, younger adults showed more initial fixation toward the eye region compared to older adults, while older adults showed more initial fixation toward the context region compared to their younger counterparts. However, there was no evidence that older adults looked less at the eye region when looking at angry faces.⁸

Discussion

The aim of the present study was to investigate age differences in contextual influence on the recognition of facial expressions (anger, disgust) and visual scanning patterns of emotional faces. While age-related declines in emotion recognition of facial expressions are well documented (e.g., Ruffman et al., 2008), previous research relied mostly on isolated faces devoid of context. Although the recognition of isolated facial expressions is of theoretical interest, this approach lacks the ecological validity of viewing the expression in a naturalistic setting (e.g., Barrett et al., 2011; Isaacowitz & Stanley, 2011), which limits our ability to understand the effects of aging on emotion recognition. Indeed, we observed that emotional context not only influenced the emotion recognized from the face for both younger and older adults, but also impacted the way the face was visually scanned. Importantly, supporting our hypothesis, the results of the present study demonstrated that older adults were more likely to be influenced by context in their judgment of emotion recognition of facial expressions as well as in their scanning of facial information.

Age Differences in Emotion Recognition in Context

Our results show that emotion recognition accuracy for angry and disgusted expressions depended on the congruence of the context. Both expressions were better recognized in the congruent context than in the neutral context, whereas those embedded in an incongruent context were recognized poorly, as the participants categorized those target expressions as expressing the emotion conveyed by context. Thus, replicating Aviezer, Hassin, Ryan, et al. (2008), the present study also demonstrated that context can induce a categorical shift in the recognition of facial emotion (Aviezer, Hassin, Ryan, et al., 2008; Aviezer et al., 2009).

With regard to age differences, older adults showed better emotion recognition when contextual information was congruent to the face to be recognized, but showed worse emotion recognition when contextual information was incongruent, compared to their younger counterparts. There was, however, no age difference in emotion recognition when contextual information was neutral. The shifted pattern of age differences in the congruent vs. incongruent contexts suggests that, compared to their younger counterparts, older adults benefited more from congruent contextual information in identifying target facial expressions, whereas they were more prone to contextual errors from incongruent contextual information. Thus, greater contextual influence for older adults is consistent with recent arguments that the contextual element should be incorporated in assessing age differences in emotion recognition of facial expressions (Isaacowitz & Stanley, 2011; Murphy, Lehrfeld, & Isaacowitz, 2010).

Contrary to our prediction and previous findings of robust age-related declines in anger recognition (e.g., Isaacowitz et al., 2007; Mill et al., 2009; Ruffman et al., 2008), there was only weak evidence in the present study that older adults showed worse anger recognition in the neutral context, which disappeared after controlling for gender. When the older group was split into young-old and old-old groups, the analysis of anger recognition in the neutral context further indicated that the lack of age differences was driven, in part, by the fact that the young-old group showed anger recognition equivalent to that of the younger group, and only the old-old group showed reduced anger recognition compared to the younger group. The reason for this finding could be that non-emotional cues conveyed by the neutral context made the angry face stand out more, or at least made it more meaningful to older adults (especially those in the young-old group), even though we assumed that facial expressions placed in the neutral context would be perceived like typical isolated facial expressions. If this is the case, future studies could explore whether age-related deficits in the recognition of other negative facial emotions, such as fear and sadness, could be reduced or even removed by providing more contextual cues, even if those cues are neutral.⁹

An examination of emotion-specific response biases of younger and older adults revealed a highly similar response pattern, suggesting that younger and older adults confused emotion categories in a comparable way (see Mill et al., 2009 for similar findings based on isolated facial expressions). Nevertheless, older adults were more likely than younger adults to categorize angry and disgusted expressions in the incongruent context as expressing the emotion conveyed by context rather than any other emotion. Furthermore, these age differences in the probability of making contextual errors appeared to be larger for angry expressions than for disgusted expressions.

Why is it that younger and older adults showed somewhat different patterns of contextual errors with each facial expression? One possibility might be a potential difference of emotional meaning associated with the face-posture congruence and the face-object congruence. That is, the angry context emotion was presented by an emotional body posture, whereas the disgust context emotion was displayed by a body posture in conjunction with an emotional object. The pairing of a disgusted face on an angry posture may indeed be incongruent, but the pairing of an angry face with a body holding a disgusting object may not necessarily be incongruent. In a real situation, a person might be angered by the presence of a dirty object in their environment. Perhaps younger adults perceive such face-object pairing to be congruent, leading them to fewer contextual errors. This could cause better recognition for angry expressions than disgusted expressions in the incongruent context. It is, however, important to stress that older adults were equally likely to be influenced by context regardless of such potential difference of emotional meaning associated with types of contextual information. It may be that older adults' increased attention to context made them less sensitive to this subtle aspect of congruence.

Age Differences in Visual Scanning of Faces in Context

Examining the pattern of the percent first fixation during face scanning revealed that angry and disgusted faces were scanned differently from an early stage of processing in accordance with Aviezer, Hassin, Ryan, et al. (2008). In the neutral and congruent contexts, the eye region was more frequently the initial landing position, compared to the mouth region, for angry faces whereas a more symmetrical initial landing position between the eye and mouth regions was observed for disgusted faces. This initial scanning pattern, however, reversed when the angry and disgusted faces were placed in the incongruent context. Furthermore, the context region also received initial fixations equally or even more than the eye and/or mouth region in the congruent and incongruent contexts. Thus, the results extend the claim that context not only influences the actual processing of emotional faces from an early stage (Aviezer, Hassin, Ryan, et al., 2008), but that context is also perceived early and automatically (e.g., Meeren et al., 2005).

The pattern of findings for percent fixation duration further showed that participants looked more at the eye than the mouth region when viewing angry faces in the neutral and congruent contexts, and that they showed symmetrical looking patterns (between the two regions) in the incongruent context. In contrast, participants showed symmetrical looking patterns when viewing disgusted faces in the congruent context, but they exhibited more eye-looking when viewing the same faces in the incongruent context. In other words, both angry and disgusted faces in an angry context were scanned like angry faces, and those faces in a disgust context were scanned like disgusted faces. This pattern strongly suggests that context influences how information is extracted from faces by shifting the profile of gaze patterns to facial expressions (Aviezer, Hassin, Ryan, et al., 2008).

With regard to age differences in gaze patterns, younger and older adults differed in how they scanned a face in context at an early stage of processing. That is, during the initial moments of the scan, younger adults were more likely to fixate on the eye region, whereas older adults were more likely to fixate on the context region. There was, however, no age difference in the initial mouth-looking pattern. The results further support our hypotheses that younger adults focus more on information garnered from the eye region (e.g., Sullivan et al., 2007; Wong et al., 2005), whereas older adults rely more on contextual information when recognizing facial expressions in context. We further observed that this tendency occurred from an early stage of processing. Surprisingly, the gaze patterns (based on the two measures) in scanning angry and disgusted faces across contexts were nearly identical for younger and older adults. Thus, no evidence existed that older adults looked less at the eye region when looking at angry faces, even in the neutral context. This lack of age difference in scanning of facial regions may, in part, explain why there was weak evidence for age-related deficits in anger recognition in the neutral context in the present study. As we postulated earlier, non-emotional cues conveyed by the neutral context might somehow lead older adults' attention to the eye region. This possibility, and the underlying mechanism, should be explored further in future studies.

Taken together, our results indicate that older adults rely more on context than their younger counterparts when they encounter emotional facial expressions embedded in context. Such increased reliance on context seems to play a beneficial role, as older adults showed better emotion recognition when contextual information was congruent to target facial expressions. Yet, at the same time, such reliance could lead older adults to be more prone to contextual errors. One could argue that older adults may have simply relied on or been distracted by context, which is perhaps more salient than information from facial expression, due to an age-related decrease in inhibitory effectiveness (Hasher & Zacks, 1988). While this is possible, a recent study by Ko, Lee, Yoon, Kwon, and Mather (2011) found no evidence that older adults (whether Westerners or East Asians) were more likely to be influenced by irrelevant contextual information when isolated faces were placed in emotional scenes (based on their face-emotion intensity ratings). Such divergent findings on context effects suggest that the extent to which older adults are influenced by emotional context may depend on the type of context in which target faces are embedded. Body postures (with emotional objects) may be more relevant contextual cues in recognizing facial expressions than background scenes; therefore, older adults may attend more to related contextual information in order to enable their reading of facial expressions. Future studies will have to further explore the role of context type in age differences in emotion recognition.

The results of the eye scanning data reveal that older adults were more likely to initially fixate on the context region, whereas younger adults initially attended more to the eye region. This finding further supports the idea that older adults rely more on context when recognizing facial expressions. However, these age differences occurred regardless of facial expressions and congruity of contextual information, and there were no age differences at all based on the fixation duration measure. Thus, except for the initial moments of the scan, there was a dissociation between recognition and the eye scanning data as a function of age, which seems to suggest that eye scanning is not the only pathway through which context influences performance of older adults. This finding may be attributable to older adults placing more weight on context than younger adults when making emotional judgments of facial expressions.

Limitations

While the present study's use of context provides a way to increase ecological validity, and thereby improves the current understanding of emotion recognition and aging, several limitations should be discussed. First, only two target emotions were tested in the present study, which limits the application of the findings. Future studies, therefore, should include more emotions to create diverse combinations of facial expressions and context in order to systematically assess whether context effects differ by emotion and age group. Second, following the original Aviezer, Hassin, Ryan et al. (2008) design, we did not disentangle a potential difference in the face-posture congruency from that of the face-external object congruency. Therefore, future investigations should systematically investigate whether emotional facial expressions paired with the posture congruence, versus those paired with the external contextual congruence, make any difference. Third, we used static stimuli, which are still artificial and far from relevant to daily life experience. Future studies should use more realistic examples, such as dynamic displays, to better assess age-related differences (or similarities) in emotion recognition in context (Isaacowitz & Stanley, 2011). Finally, while we think that the accumulated life experiences of older adults may engender their sensitivity to, or increase their reliance upon, contextual cues, we did not directly assess individual differences in levels of social interactions. Thus, future investigations should examine the direct relationship between the social experiences of older adults and their use of context for emotion recognition.

Conclusions

Our findings provide evidence not only that context influences emotion recognition of facial expressions for both younger and older adults, but also that such contextual influences are greater for older adults. Eye-tracking results further suggest that context influences visual scanning characteristics of emotional faces in a similar manner across age groups, but older adults are more likely to initially attend to contextual information than younger adults. To conclude, our results highlight the importance of incorporating context into the study of emotion recognition and aging to better understand how emotion recognition skills change across the lifespan. If context becomes increasingly important with age, it may be a key determinant of when older adults are able to accurately identify emotions in their environment, and when they are led astray and make inaccurate judgments.

Acknowledgments

This research was supported by National Institutes of Health Grant R01 AG026323 to Derek M. Isaacowitz. Soo Rim Noh is now at the Department of Psychology, Duksung Women's University. Derek Isaacowitz is now at the Department of Psychology, Northeastern University. We are grateful to Hillel Aviezer and his research group who allowed us to use their stimuli. We thank Jennifer Tehan Stanley for her comments on a previous draft of this paper. Partial results were presented at the Gerontological Society of America in New Orleans (November 2010).

Footnotes

Age differences in emotion recognition based on the filler images were also tested. Overall, there were no age differences in emotion recognition collapsed across facial expressions (Myounger = 90.49%, SE = 1.85; Molder = 87.29%, SE = 1.69), t < 2. Due to unbalanced numbers of stimuli in each facial expression category, we conducted independent sample t-tests to compare emotion recognition of fear, happiness, sadness, surprise, and neutral between age groups. The only age difference emerged for sadness, as older adults had lower recognition of sadness than younger adults (Myounger = 85.95%, SE = 3.28; Molder = 75.11%, SE = 3.48), t(80) = 2.23, p < .05 (all others, ts < 1).

As there were significant age-related impairments in visual acuity and visual contrast sensitivity, we carried out three separate analyses of covariance (ANCOVAs) with vision scores on the Snellen chart for visual acuity (Snellen, 1862), Rosenbaum Pocket Vision Screener for near vision (Rosenbaum, 1984), and Pelli Robson Contrast Sensitivity Chart (Pelli et al., 1988). These analyses did not remove the significant effect of Age × Context interaction, F(2, 136) = 12.58, p < .001, ηp2 = .16, F(2, 156) = 11.14, p < .001, ηp2 = .13, and F(2, 160) = 6.86, p < .01, ηp2 = .08, respectively. The three-way Age × Facial Expression × Context interaction remained significant controlling for scores on the Rosenbaum vision screening test, F(2, 156) = 3.99, p < .05, ηp2 = .05, but became non-significant controlling for scores on the Snellen chart and Pelli Robson Contrast Sensitivity Chart, F(2, 136) = 1.68, p = .19, ηp2 = .02 and F(2, 160) = 2.56, p = .08, ηp2 = .03.

Gender was not a significant covariate so it was removed from the analysis.

Of the sample, 20 (8 younger, 12 older) were excluded from the eye-tracking analyses (but remained in the recognition data) because of poor calibration for various reasons (e.g., reflective eyewear, contact lenses). There were no differences between the trackable and non-trackable participants within the age groups on any cognitive or visual measures except for education; trackable younger adults had completed more years of education (M = 16.00, SD = 1.60) than the non-trackble younger adults (M = 14.50, SD = .93), p < .05.

We also repeated the recognition data analyses based only on trackable participants. For recognition accuracy, all the effect patterns were maintained, but the Age × Facial Expression × Context became non-significant, F < 3. For the same analysis with a gender as a covariate, the only different result was that the Facial Expression × Context interaction became non-significant, F < 2. For contextual influence (categorization of the faces as the emotion by context), all the effect patterns were maintained, but the Age × Facial Expression × Context became non-significant, F < 2.

We also tested whether younger and older adults differed in their response to contextualized faces based on the total fixation duration, i.e. the summed duration of all fixations that encapsulated the entire face-context images across all ROIs. This could be considered a form of reaction time measure as it constitutes how long it took for the participant to stop looking at the image during the untimed task. A 2 (Age) × 2 (Facial Expression) × 3 (Context) mixed-model ANOVA revealed significant main effects of Facial Expression, F(1, 58) = 4.59, p < .05, ηp2 = .07, and Context, F(2, 116) = 14.17, p < .001, ηp2 = .20, which were further qualified by an Age × Context interaction, F(2, 116) = 5.33, p < .02, ηp2 = .08. Simple main effects analyses within contexts indicated that none of the age differences emerged significant (Fs < 2), suggesting that younger and older adults did not differ in the amount of time they looked at the face-context images in each context condition.

To discern whether age-related declines in visual acuity and visual contrast sensitivity contributed to age differences in the percent first fixation to different regions, we conducted ANCOVAs with performance on the three vision measures. These analyses did not remove the significant effect of Age x ROI interaction; F(2, 106) = 5.62, p < .01, ηp2 = .10 for the Snellen, F(2, 116) = 4.96, p < .05, ηp2 = .08 for the Rosenbaum, and F(2, 118) = 5.88, p < .01, ηp2 = .09 for the Pelli Robson Contrast Sensitivity Chart.

Given previous findings suggesting that older adults look less at the eye region when viewing angry faces (e.g., Murphy & Isaacowitz, 2010; Wong et al., 2005), we conducted planned analyses of eye scanning of angry faces in the neutral context, based on both two (younger, older) and three (younger, YO, OO) age groups. No age differences were found in any of these analyses, Fs < 2.

We thank an anonymous reviewer for this valuable comment.

References

- Aviezer H, Bentin S, Dudareva V, Hassin RR. The automaticity of emotional face-context integration. Emotion. 2011;11:1406–1414. doi: 10.1037/a0023578.

- Aviezer H, Bentin S, Hassin RR, Meschino WS, Kennedy J, Grewal S, et al. Moscovitch M. Not on the face alone: perception of contextualized face expressions in Huntington's disease. Brain. 2009;132:1633–1644. doi: 10.1093/brain/awp067.

- Aviezer H, Hassin RR, Bentin S, Trope Y. Putting facial expressions into context. In: Ambady N, Skowronski JJ, editors. First impressions. New York: Guilford Press; 2008. pp. 255–286.

- Aviezer H, Hassin RR, Ryan J, Grady CL, Susskind J, Anderson AK, et al. Bentin S. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychological Science. 2008;19:724–732. doi: 10.1111/j.1467-9280.2008.02148.x.

- Barrett LF, Kensinger EA. Context is routinely encoded during emotion perception. Psychological Science. 2010;21:595–599. doi: 10.1177/0956797610363547.

- Barrett LF, Lindquist K, Gendron M. Language as a context for emotion perception. Trends in Cognitive Sciences. 2007;11:327–332. doi: 10.1016/j.tics.2007.06.003.

- Barrett LF, Mesquita B, Gendron M. Context in emotion perception. Current Directions in Psychological Science. 2011;20:286–290. doi: 10.1177/0963721411422522.

- Calder AJ, Keane J, Manly T, Sprengelmeyer R, Scott S, Nimmo-Smith I, Young AW. Facial expression recognition across the adult life span. Neuropsychologia. 2003;41:195–202. doi: 10.1016/S0028-3932(02)00149-5.

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:527–551. doi: 10.1037/0096-1523.26.2.527.

- Carroll JM, Russell JA. Do facial expressions signal specific emotions? Judging emotion from the face in context. Journal of Personality and Social Psychology. 1996;70:205–218. doi: 10.1037/0022-3514.70.2.205.

- Ciarrochi JV, Chan AYC, Caputi P. A critical evaluation of the emotional intelligence construct. Personality and Individual Differences. 2000;28:539–561. doi: 10.1016/S0191-8869(99)00119-1.

- D6 Remote Optic Tracking Optics [Apparatus] Bedford, MA: Applied Science Laboratories; 2009.

- de Gelder B, Vroomen J. The perception of emotions by ear and by eye. Cognition & Emotion. 2000;14:289–311. doi: 10.1080/026999300378824.

- Ekman P. Facial expression of emotion. American Psychologist. 1993;48:384–392. doi: 10.1037/0003-066X.48.4.384.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press; 1976.

- Fischer AH, Manstead AS. Social functions of emotion. In: Lewis M, Haviland-Jones JM, Barrett LF, editors. Handbook of emotions. 3rd ed. New York: Guilford Press; 2008. pp. 456–468.

- Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- GazeTracker [Computer Software] Charlottesville, VA: Eyetellect, LLC.

- Hasher L, Zacks RT. Working memory, comprehension, and aging: A review and a new view. In: Bower GH, editor. The psychology of learning and motivation. Vol. 22. New York: Academic Press; 1988. pp. 193–225.

- Henry JD, Ruffman T, McDonald S, Peek O'Leary MA, Phillips LH, Brodaty H, Rendell PG. Recognition of disgust is selectively preserved in Alzheimer's disease. Neuropsychologia. 2008;46:1363–1370. doi: 10.1016/j.neuropsychologia.2007.12.012.

- Hess TM. Adaptive aspects of social cognitive functioning in adulthood: age-related goal and knowledge influences. Social Cognition. 2006;24:279–309. doi: 10.1521/soco.2006.24.3.279.

- Isaacowitz DM, Loeckenhoff C, Lane R, Wright R, Sechrest L, Riedel R, Costa PT. Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychology and Aging. 2007;22:147–159. doi: 10.1037/0882-7974.22.1.147.

- Isaacowitz DM, Stanley JT. Bringing an ecological perspective to the study of aging and emotion recognition: Past, current, and future methods. Journal of Nonverbal Behavior [Special issue]. Advance online publication. 2011. doi: 10.1007/s10919-011-0113-6.

- Kim H, Somerville LH, Johnstone T, Polis S, Alexander AL, Shin LM, et al. Whalen PJ. Contextual modulation of amygdala responsivity to surprised faces. Journal of Cognitive Neuroscience. 2004;16:1730–1745. doi: 10.1162/0898929042947865.

- Ko S, Lee T, Yoon H, Kwon J, Mather M. How does context affect assessment of facial emotion? The role of culture and age. Psychology and Aging. 2011;26:48–59. doi: 10.1037/a0020222.

- Leclerc CM, Hess TM. Age differences in the bases for social judgments: Tests of a social expertise perspective. Experimental Aging Research. 2007;33:95–120. doi: 10.1080/03610730601006446.

- Lindquist KA, Barrett LF, Bliss-Moreau E, Russell JA. Language and the perception of emotion. Emotion. 2006;6:125–138. doi: 10.1037/1528-3542.6.1.125.

- Manor BR, Gordon E. Defining the temporal threshold for ocular fixation in free-viewing visuocognitive tasks. Journal of Neuroscience Methods. 2003;128:85–93. doi: 10.1016/S0165-0270(03)00151-1.

- Marsh AA, Kozak MN, Ambady N. Accurate identification of fear facial expressions predicts prosocial behaviors. Emotion. 2007;7:239–251. doi: 10.1037/1528-3542.7.2.239.

- Meeren HK, van Heijnsbergen CC, de Gelder B. Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:16518–16523. doi: 10.1073/pnas.0507650102.

- Mill A, Allik J, Realo A, Valk R. Age-related differences in emotion recognition ability: A cross-sectional study. Emotion. 2009;9:619–630. doi: 10.1037/a0016562.

- Morrow DG, Rogers WA. Environmental support: An integrative framework. Human Factors. 2008;50:589–613. doi: 10.1518/001872008X312251.

- Murphy NA, Isaacowitz DM. Age effects and gaze patterns in recognizing emotional expressions: An in-depth look at gaze measures and covariates. Cognition & Emotion. 2010;24:436–452. doi: 10.1080/02699930802664623.

- Murphy NA, Lehrfeld J, Isaacowitz DM. Recognition of posed and spontaneous dynamic smiles in younger and older adults. Psychology and Aging. 2010;25:811–821. doi: 10.1037/a0019888.

- Orgeta V, Phillips LH. Effects of age and emotional intensity on the recognition of facial emotion. Experimental Aging Research. 2008;34:63–79. doi: 10.1093/geronb/gbq007.

- Pelli DG, Robson JG, Wilkins AJ. The design of a new letter chart for measuring contrast sensitivity. Clinical Vision Science. 1988;2:187–199.

- Righart R, de Gelder B. Rapid influence of emotional scenes on encoding of facial expressions. An ERP study. Social Cognitive and Affective Neuroscience. 2008;3:270–278. doi: 10.1093/scan/nsn021.

- Rosenbaum JG. The biggest reward for my invention isn't money. Medical Economics. 1984;61:152–163.

- Ruffman T, Henry JD, Livingstone V, Phillips LH. A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neuroscience & Biobehavioral Reviews. 2008;32:863–881. doi: 10.1016/j.neubiorev.2008.01.001.

- Ruffman T, Halberstadt J, Murray J. Recognition of facial, auditory, and bodily emotions in older adults. Journal of Gerontology: Psychological Sciences. 2009;64(B):P696–P703. doi: 10.1093/geronb/gbp072.

- Ruffman T, Sullivan S, Dittrich W. Older adults' recognition of bodily and auditory expressions of emotion. Psychology and Aging. 2009;24:614–622. doi: 10.1037/a0016356.

- Smith AD, Park DC, Earles JL, Shaw RJ, Whiting WL. Age differences in context integration in memory. Psychology and Aging. 1998;13:21–28. doi: 10.1037//0882-7974.13.1.21.

- Snellen H. Letterproeven tot Bepaling der Gezichtsscherpte. Utrecht: 1862.

- Spieler DH, Mayr U, LaGrone S. Outsourcing cognitive control to the environment: Adult age differences in the use of task cues. Psychonomic Bulletin & Review. 2006;13:787–793. doi: 10.3758/BF03193998.

- Sullivan S, Ruffman T, Hutton SB. Age differences in emotion recognition skills and the visual scanning of emotion faces. Journal of Gerontology: Psychological Sciences. 2007;62B:P53–P60. doi: 10.1093/geronb/gbp072.

- Susskind JM, Littlewort GC, Bartlett MS, Movellan JR, Anderson AK. Human and computer recognition of facial expressions of emotion. Neuropsychologia. 2007;45:152–162. doi: 10.1016/j.neuropsychologia.2006.05.001.

- Wechsler D. Manual for the Wechsler Adult Intelligence Scale–Revised. New York: Psychological Corp; 1981.

- Wong B, Cronin-Golomb A, Neargarder S. Patterns of visual scanning as predictors of emotion identification in normal aging. Neuropsychology. 2005;19:739–749. doi: 10.1037/0894-4105.19.6.739.

- Young AW, Rowland D, Calder AJ, Etcoff NL, Seth A, Perrett DI. Facial expression megamix: Tests of dimensional and category accounts of emotion recognition. Cognition. 1997;63:271–313. doi: 10.1016/S0010-0277(97)00003-6.

- Zachary R. Shipley Institute of Living Scale, revised manual. Los Angeles: Western Psychological Services; 1986.