Abstract

Spectral CT provides information for material characterization and quantification because of its ability to separate different basis materials. Dual-energy (DE) CT provides two sets of measurements at two different source energies. In principle, two materials can be accurately decomposed from DECT measurements. However, many clinical and industrial applications require three or more material images. For triple-material decomposition, a third constraint, such as volume conservation, mass conservation or both, is required to solve for three sets of unknowns from two sets of measurements. The recently proposed flexible image-domain (ID) multi-material decomposition (MMD) method assumes that each pixel contains at most three materials out of several possible materials and decomposes a mixture pixel by pixel. We propose a penalized-likelihood (PL) method with edge-preserving regularizers for each material to reconstruct multi-material images from sinogram data using a similar constraint. We develop an optimization transfer method with a series of pixel-wise separable quadratic surrogate (PWSQS) functions to monotonically decrease the complicated PL cost function. The PWSQS algorithm separates pixels to allow simultaneous update of all pixels, but keeps the basis materials coupled to allow a faster convergence rate than our previously proposed material- and pixel-wise SQS algorithms. In 2D fan-beam simulations, compared with the ID method, the PL method greatly reduced noise, streak and cross-talk artifacts in the reconstructed basis component images, and achieved much smaller root-mean-square (RMS) errors.

Index Terms: spectral CT, dual-energy CT, multi-material decomposition, statistical image reconstruction, optimization transfer

I. INTRODUCTION

X-ray computed tomography (CT) images the spatial distribution of attenuation coefficients of the object being scanned. Attenuation maps have many applications, both in medical diagnosis and treatment and in industry for nondestructive evaluation. A conventional CT scanner measures a single sinogram at a single X-ray source potential. Conventional image reconstruction methods process such measurements to produce a scalar-valued image of the scanned object.

In practice the scanned object always contains multiple materials. For example, the organs and tissues of the human body contain typical basis materials such as blood, fat, muscle, water, cortical bone, air and contrast agent [1], [2]. Material attenuation coefficients depend on the energy of the incident photons. An X-ray beam in clinical practice is usually composed of photons with a wide range of energies, and each photon energy is attenuated differently by the materials in the object. If uncorrected, this energy dependence causes artifacts, such as beam-hardening artifacts, in images reconstructed by conventional methods [3]. This energy dependence also makes basis-material decomposition possible [1], [4]–[8]. Numerous applications of two-material decomposition have been explored, including CT-based attenuation correction for positron emission tomography (PET) [7], [9], beam-hardening artifact correction [10], [11], and virtual un-enhancement (VUE) CT [1], [8].

Dual-energy (DE) CT methods, pioneered by Alvarez, Macovski and colleagues [4], [12]–[15], are the predominant approaches for reconstructing two basis materials (e.g., soft tissue and bone). They decomposed the energy dependence of attenuation coefficients into two components, one approximating the photoelectric interaction and the other approximating Compton scattering, and separated these two components from two sets of measurements at two different source energies. Although DECT methods were originally proposed in the late 1970s and early 1980s, DECT scanners became clinically available only recently with technological developments such as fast kVp-switching, dual-source CT and dual-layer detectors. These new techniques have brought renewed interest in DECT [6], [7], [9], [16]–[26].

Several methods have been developed for reconstructing two basis materials from one CT scan with a single tube voltage setting. Ritchings and Pullan [27] described a technique for acquiring spectrally different data by filtering alternate detector elements. Taschereau et al. [28] retrofitted a preclinical microCT scanner with a filter wheel that alternates two beam filters between successive projections; one filter provides a low-energy beam while the other provides a high-energy beam. We [29] proposed a statistical penalized weighted least-squares (PWLS) method for reconstructing two basis materials from a single-voltage CT scan, exploiting differences in the incident spectra of rays created by filtration, such as split [30] and bow-tie filters. One major limitation of decomposition methods based on single-voltage CT is the significant overlap between the two spectra generated by the different filters.

Many clinical and industrial applications require three or more component images [1], [22], [31], [32]. Quantifying liver fat concentration requires images of four constituent materials: liver tissue, blood, fat and contrast agent [1], [32]. Multi-material decomposition (MMD) can generate VUE images by removing the effect of contrast agents from contrast-enhanced CT exams without an additional contrast-free scan, reducing patient dose [1]. For radiotherapy, it is also useful to know the distributions of materials other than bone and soft tissue, such as calcium, metal (e.g., gold) and iodine-based contrast agent.

Typically, spectral CT methods reconstruct images of L0 = M0 basis materials from M0 sets of measurements with M0 different spectra [5]. Sukovic and Clinthorne [5] separated L0 = 3 basis materials from M0 = 3 sinograms acquired using M0 = 3 distinct source voltages, one of which was near the K-edge of one basis material. Generally, spectral CT requires multiple scans or specialized scanner designs, such as quasi-monochromatic sources [33] or energy-resolved photon-counting detectors [34]. In this paper, we focus on MMD using DECT, which is currently the only commercially available version of spectral CT. However, the general formulation is applicable to a variety of spectral CT approaches.

A third criterion, such as volume conservation [21], mass conservation [22] or both [1], can enable reconstructing three basis materials from DECT measurements. Volume (mass) conservation assumes that the sum of the volumes (masses) of the three constituent materials equals the volume (mass) of the mixture. To reconstruct L0 > 3 materials from DECT, solving this ill-posed problem requires additional assumptions. Mendonca et al. [1] proposed an image-domain (ID) method to reconstruct multiple materials pixel by pixel from a DECT scan. In addition to both the volume and mass conservation assumptions, that method assumes that each pixel contains a mixture of at most three materials, where the material types can vary between pixels. It establishes a material library containing all the possible triplets of basis materials for a specific application. It obtains a dual-material density pair from the DECT measurements through a projection-based decomposition approach, and then generates a linear-attenuation-coefficient (LAC) pair for each pixel at two selected distinct energies (e.g., 70 and 140 keV). Given a LAC pair, a material triplet and the sum-to-one constraint derived from both the volume and mass conservation assumptions, triple-material decomposition is solved for each pixel. The method sequentially decomposes each pixel into the different triplets of the material library in a prioritized order, and collects the solutions for the volume fractions that satisfy a box ([0, 1]) constraint and the sum-to-one constraint. If there is a solution, it moves on to the next pixel and skips material triplets with lower priorities. If no material triplet yields a feasible solution, it relaxes the box constraints and accepts the volume fractions corresponding to the triplet with minimal Hausdorff distance to the LAC pair over all possible triplets.

Inspired by the ID method [1], [35], we proposed a penalized-likelihood (PL) method [36] with an edge-preserving regularizer to reconstruct multi-material images. Statistical image reconstruction methods based on physical models of the CT system and the scanned object, and on statistical models of the measurements, are well known to produce images with lower noise and higher quality. The proposed PL method treats each material image as a whole, instead of pixel by pixel, so that prior knowledge, such as piecewise smoothness, can help solve the reconstruction problem.

The cost function of the PL method is minimized under the constraints that each pixel contains at most three materials, the volume fractions of the basis materials sum to one, and the fractions lie in the box [0, 1]. It is difficult to minimize the PL cost function directly. The preliminary PL method [36] applied optimization transfer principles to develop a series of pixel- and material-wise separable quadratic surrogates that monotonically decrease the cost function. The separability in both pixels and materials made the curvatures of the surrogate functions large, causing slow convergence. In this paper, we propose an optimization transfer method with pixel-wise separable quadratic surrogates (PWSQS) that keep the materials coupled. The coupling between materials results in faster convergence. The retained separability across pixels lets the PWSQS algorithm update all pixels simultaneously and makes the constrained optimization on each pixel easy.

We evaluated the proposed PL method on a modified NCAT chest phantom [37] containing fat, blood, omnipaque300 (iodine-based contrast agent), cortical bone, and air. Compared with the ID method, the PL method reconstructed component images with lower noise, greatly reduced streak artifacts, and alleviated the cross-talk phenomenon in which a component of one material appears in the image of another material. The RMS errors of the PL method were about 60% lower than those of the filtered ID method for fat, blood, omnipaque300 and cortical bone.

The organization of this paper is as follows. Section II introduces the physical models, including the measurement and object models. Section III describes the PL method. Section IV derives the PWSQS algorithm. Section V shows the results. Section VI presents conclusions.

II. Physical Models

A. Measurement Model

We use the following general model to describe the measurement physics for X-ray CT. The detector measures X-ray photons emerging from the object at M0 ≥ 1 different incident spectra. With current technologies, different incident spectra can be realized either by scanning with different X-ray spectra, such as fast kVp-switching [16] or dual-source CT [18], or by energy-resolved photon-counting detectors [34]. Let Yim denote the measurement for the ray ℒim, which is the ith ray of the mth energy scan, where m = 1, …, M0, i = 1, …, Nd, and Nd is the number of rays. For notational simplicity we assume that the same number of rays is measured for each incident spectrum, but the physics model and methods presented in this paper can easily be generalized to cases where different incident spectra have different numbers of recorded rays. For a ray ℒim of infinitesimal width, the mean of the projection measurements can be expressed as:

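$$\bar{y}_{im} = \int I_{im}(\mathcal{E}) \exp\left(-\int_{\mathcal{L}_{im}} \mu(\vec{x}, \mathcal{E})\, d\ell\right) d\mathcal{E} + r_{im} \tag{1}$$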

where μ(x⃗, ℰ) denotes the 3D unknown spatially- and energy-dependent attenuation distribution, ∫ℒim · dℓ denotes the “line integral” function along line ℒim, and the incident X-ray intensity Iim(ℰ) incorporates the source spectrum and the detector gain. In reality, the measurements suffer from background signals such as Compton scatter, dark current and noise. The ensemble mean of those effects (for the ray ℒim) is denoted as rim. We treat each Iim(ℰ) and rim as known nonnegative quantities. In practice, Iim(ℰ) can be determined by careful calibration [38], and rim are estimated by some preprocessing steps prior to iterative reconstruction [39]–[41].
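As an illustration only, (1) can be evaluated numerically by discretizing the spectrum into energy bins and approximating the line integral by a Riemann sum along the ray. The function name `mean_measurement` and this sampling scheme are assumptions of the sketch, not part of the method described here.

```python
import numpy as np

def mean_measurement(I_E, mu_samples, dl, r=0.0):
    """Approximate the mean measurement (1) for a single ray.

    I_E        : (K,) incident intensity per energy bin (spectrum times detector
                 gain, already multiplied by the bin width).
    mu_samples : (S, K) attenuation mu(x, E_k) at S equally spaced points on the ray.
    dl         : spacing between the samples along the ray (e.g., in mm).
    r          : mean background signal r_im.
    """
    line_integrals = mu_samples.sum(axis=0) * dl  # (K,) Riemann sum per energy bin
    return float(np.sum(I_E * np.exp(-line_integrals)) + r)

# Two energy bins; a uniform 100 mm path with mu = 0.02 /mm and 0.01 /mm.
I_E = np.array([1000.0, 500.0])
mu_samples = np.tile([0.02, 0.01], (100, 1))
ybar = mean_measurement(I_E, mu_samples, dl=1.0, r=5.0)  # 1000 e^-2 + 500 e^-1 + 5
```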

B. Object Model for Basis Material Decomposition

We describe the object model for basis material decomposition as

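$$\mu(\vec{x}, \mathcal{E}) = \sum_{l=1}^{L_0} \mu_l(\mathcal{E}) \sum_{j=1}^{N_p} b_j(\vec{x})\, x_{lj} \tag{2}$$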

where μl(ℰ) denotes the energy-dependent LAC of the lth material type, bj(x⃗) denotes spatial basis functions (e.g., pixels), and xlj denotes the volume fraction of the lth material in the jth pixel. Conventionally, one reconstructs L0 = M0 sets of basis materials from M0 sets of measurements with M0 different spectra [42].

Volume conservation [21], mass conservation [22] and their combination [1] have been used to provide extra information for solving L0 = M0 + 1 sets of unknowns from M0 sets of independent measurements. Volume (mass) conservation assumes that the sum of the volumes (masses) of the three constituent materials equals the volume (mass) of the mixture. Mendonca et al. [1] pointed out that any reasonable method for material decomposition already makes an implicit assumption of mass conservation. They used both volume and mass conservation to produce a model for the LAC of a mixture of materials. In this paper we adopt their model, in which the volume fractions xlj satisfy the following sum-to-one and box constraints:

$$\sum_{l=1}^{L_0} x_{lj} = 1, \qquad 0 \le x_{lj} \le 1, \qquad \forall\, l, j \tag{3}$$

We relax the lower limit of the box constraint to be slightly smaller than 0 and the upper limit to be slightly greater than 1, i.e., xlj ≥ −εL, where εL > 0 is small, and xlj ≤ 1 + εU, where εU > 0 is small. This relaxation is similar to the work in [43], where negative values are allowed for the reconstructed densities of basis materials such as water and iodine. It is reasonable because the estimated volume fractions are just coefficients for combining the linear attenuation coefficients of the basis materials to produce the equivalent attenuation of a mixture. The sum-to-one constraint in (3) provides an extra criterion for solving L0 = M0 + 1 sets of unknowns from M0 sets of measurements.

Additional assumptions are needed to estimate L0 > M0 + 1 sets of unknowns from M0 sets of measurements [1]. We assume that each pixel contains at most (M0 + 1) types of materials and the material types can vary between pixels, i.e.

$$\sum_{l=1}^{L_0} \mathbb{1}\{x_{lj} \neq 0\} \le M_0 + 1, \qquad \forall\, j \tag{4}$$

Let Ω be an (M0 + 1)-tuple library containing all tuples formed from the L0 pre-selected materials of interest. Given a tuple ω in Ω, there are only (M0 + 1) unknowns for each pixel, which can be solved from M0 sets of measurements with the help of the box and sum-to-one constraints in (3). Note that air must be included as one basis material type even though it is typically not of primary interest, because the field of view (FOV) of the scanner always contains locations with zero LAC, and air is the only material whose LAC is zero.
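The per-pixel constraints can be checked directly. This is a minimal sketch; the helper name `is_feasible` and its tolerance parameters are hypothetical, and `eps_low`/`eps_high` stand in for the small relaxations εL and εU of the box limits.

```python
import numpy as np

def is_feasible(x_j, max_materials, eps_low=0.0, eps_high=0.0, tol=1e-8):
    """Check one pixel's volume fractions against (3) (optionally relaxed) and (4).

    x_j           : length-L0 volume-fraction vector of pixel j.
    max_materials : M0 + 1, the maximum number of nonzero fractions allowed by (4).
    eps_low/high  : hypothetical relaxation margins for the box limits 0 and 1.
    """
    x_j = np.asarray(x_j, dtype=float)
    sums_to_one = abs(x_j.sum() - 1.0) <= tol                      # sum-to-one in (3)
    in_box = bool(np.all(x_j >= -eps_low - tol) and np.all(x_j <= 1.0 + eps_high + tol))
    few_materials = np.count_nonzero(np.abs(x_j) > tol) <= max_materials  # (4)
    return bool(sums_to_one and in_box and few_materials)

print(is_feasible([0.6, 0.4, 0.0, 0.0, 0.0], max_materials=3))  # DECT: M0 + 1 = 3; True
```

Keeping the sparsity test (4) separate from the box and sum-to-one tests mirrors the way the text treats (4) as the additional assumption needed when L0 > M0 + 1.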

C. Combining Measurement and Object Model

Let x denote the image vector x = (x1, …, xl, …, xL0) ∈ ℝNp×L0 for xl = (xl1, …, xlj, …, xlNp) ∈ ℝNp of the lth material. Combining the general measurement model (1) and the object model (2), the mean of the projection measurement ȳim(x) can be represented as follows,

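$$\bar{y}_{im}(x) = \bar{y}_{im}\big(s_{im}(x)\big) \tag{5}$$

for m = 1, …, M0 and i = 1, …, Nd, where

$$\bar{y}_{im}(s) \triangleq \int I_{im}(\mathcal{E})\, e^{-\mu(\mathcal{E})^T s}\, d\mathcal{E} + r_{im}, \qquad s \in \mathbb{R}^{L_0} \tag{6}$$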

The linear attenuation vector μ(ℰ) and the sinogram vector sim(x) are defined as

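$$\mu(\mathcal{E}) \triangleq \big(\mu_1(\mathcal{E}), \dots, \mu_{L_0}(\mathcal{E})\big)^T \tag{7}$$

$$s_{im}(x) \triangleq \big(s_{im1}(x), \dots, s_{imL_0}(x)\big)^T \tag{8}$$

$$s_{iml}(x) \triangleq [A_m x_l]_i = \sum_{j=1}^{N_p} a_{mij}\, x_{lj} \tag{9}$$

where Am denotes the Nd × Np system matrix with entries

$$a_{mij} \triangleq \int_{\mathcal{L}_{im}} b_j(\vec{x})\, d\ell \tag{10}$$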

As usual, we ignore the exponential edge gradient effects caused by the nonlinearity of Beer’s law [44], [45].
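A minimal sketch of the discretized forward model (5)–(10), assuming the energy integral in (6) is replaced by a sum over K energy bins and that Am is available as a dense matrix (a practical implementation would use a projector such as the distance-driven one mentioned in Section V). For convenience the sketch stores x as an (Np, L0) array whose column l is xl; the names `sinogram_vectors` and `mean_counts` are hypothetical.

```python
import numpy as np

def sinogram_vectors(A_m, x):
    """Rows are s_im(x) from (8)-(9): entry (i, l) is [A_m x_l]_i.

    A_m: (Nd, Np) system matrix; x: (Np, L0) array whose column l is x_l.
    """
    return A_m @ x

def mean_counts(A_m, x, I_mE, mu_E, r_m):
    """ybar_im(x) for all rays of scan m, with the energy integral in (6) as a sum.

    I_mE: (Nd, K) incident intensity per ray and energy bin (bin width included);
    mu_E: (K, L0) material LACs mu_l(E_k); r_m: (Nd,) background means.
    """
    s = sinogram_vectors(A_m, x)   # (Nd, L0)
    exponent = s @ mu_E.T          # (Nd, K): mu(E_k)^T s_im(x)
    return np.sum(I_mE * np.exp(-exponent), axis=1) + r_m
```

Because the exponent depends on x only through the L0-dimensional vectors sim(x), the data term can be handled ray by ray as a function of sim, which is what Section IV exploits.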

III. Penalized-Likelihood (PL) Reconstruction

For normal clinical exposures, the X-ray CT measurements are often modeled as independent Poisson random variables with means given by (1), i.e., Yim ∼ Poisson{ȳim(x)}.

The corresponding negative log-likelihood for independent measurements Yim has the form

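$$-\log \mathsf{P}\big(\{Y_{im}\} \mid x\big) \equiv \bar{L}(x) \tag{11}$$

where ≡ means “equal to within irrelevant constants independent of x”, and

$$\bar{L}(x) \triangleq \sum_{m=1}^{M_0}\sum_{i=1}^{N_d} \Big[\bar{y}_{im}\big(s_{im}(x)\big) - Y_{im}\, h_{im}\big(s_{im}(x)\big)\Big] \tag{12}$$

$$h_{im}(s) \triangleq \log \bar{y}_{im}(s) \tag{13}$$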
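Up to constants independent of x (the ≡ above), the data term is the familiar Poisson negative log-likelihood, the sum over all rays and scans of ȳim − Yim log ȳim. A short sketch that evaluates it from given means; the function name is hypothetical.

```python
import numpy as np

def neg_log_likelihood(ybar, Y):
    """Poisson negative log-likelihood sum(ybar - Y log ybar), dropping log(Y!).

    ybar: positive mean measurements; Y: measured counts (same shape).
    """
    ybar = np.asarray(ybar, dtype=float)
    Y = np.asarray(Y, dtype=float)
    return float(np.sum(ybar - Y * np.log(ybar)))
```

Each term is minimized when the mean equals the measured count, which is why noisy low-count rays pull less strongly on the estimate than a least-squares fit would suggest.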

We estimate component fraction images x from the noisy measurements Yim by minimizing a Penalized-Likelihood (PL) cost function subject to constraints given in (3) and (4) on the elements of x as follows:

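$$\hat{x} = \arg\min_{x}\; \Psi(x) \quad \text{subject to (3) and (4)} \tag{14}$$

$$\Psi(x) \triangleq \bar{L}(x) + R(x) \tag{15}$$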

The edge-preserving regularization term R(x) is

$$R(x) \triangleq \sum_{l=1}^{L_0} R_l(x_l) \tag{16}$$

where the regularizer for the lth material is

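$$R_l(x_l) \triangleq \beta_l \sum_{j=1}^{N_p} \sum_{k \in \mathcal{N}_{lj}} \kappa_{lj}\, \kappa_{lk}\, \Psi_l(x_{lj} - x_{lk}) \tag{17}$$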

where the potential function Ψl is a hyperbola

$$\Psi_l(t) \triangleq \delta_l^2 \left(\sqrt{1 + (t/\delta_l)^2} - 1\right) \tag{18}$$

where κlj and κlk are parameters that encourage uniform spatial resolution [46], and 𝒩lj is a neighborhood of pixel xlj. The regularization parameters βl and δl can be chosen differently for different materials according to their properties.

Since the LAC of air is zero, the air component does not contribute to the data fitting term L̄(x) in (11); nevertheless, the regularizer R(x) in (16) should include the air component because its image is piecewise smooth like the other components. One could generalize the regularizer to exploit joint sparsity of the component images [47], [48].
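A minimal sketch of the regularizer (16)–(18) for 2D images, assuming κlj = 1 for all pixels and a symmetric 4-nearest-neighbor neighborhood 𝒩lj (the text does not fix either choice). Because the neighborhood is symmetric and the hyperbola is even, each neighboring pair appears twice in the double sum of (17), which the code accounts for with a factor of 2. Function names are hypothetical.

```python
import numpy as np

def hyperbola(t, delta):
    """Hyperbola potential (18): delta^2 (sqrt(1 + (t/delta)^2) - 1)."""
    return delta ** 2 * (np.sqrt(1.0 + (t / delta) ** 2) - 1.0)

def material_penalty(img, beta, delta):
    """R_l in (17) for one 2D material image, assuming kappa = 1 and 4-neighbors.

    Each unordered neighbor pair is counted twice in the double sum of (17)
    (symmetric neighborhood, even potential), hence the factor 2.
    """
    img = np.asarray(img, dtype=float)
    dx = img[:, 1:] - img[:, :-1]   # horizontal neighbor differences
    dy = img[1:, :] - img[:-1, :]   # vertical neighbor differences
    return float(2.0 * beta * (hyperbola(dx, delta).sum() + hyperbola(dy, delta).sum()))

def total_penalty(images, betas, deltas):
    """R(x) in (16): sum of per-material penalties, including the air image."""
    return sum(material_penalty(im, b, d) for im, b, d in zip(images, betas, deltas))
```

The hyperbola behaves like t²/2 for |t| much smaller than δl and like δl|t| for |t| much larger than δl, which is what makes it edge-preserving; a smaller δl therefore preserves weaker edges.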

IV. Optimization Algorithm

Because the cost function Ψ(x) in (15) is difficult to minimize directly, we apply optimization transfer principles (OTP) [49]–[52] to develop an algorithm that monotonically decreases Ψ(x) each iteration. We find a pixel-wise separable quadratic surrogate (PWSQS) ϕ(n) (x) of the cost function, and then minimize ϕ(n) (x) under constraints given in (3) and (4) on each pixel. We loop over all tuples in the pre-determined material library Ω, minimize the surrogates under box and sum-to-one constraints in (3), and determine the optimal tuple for each pixel as the one minimizing the surrogate of that pixel.

A. Optimization Transfer Principles

The optimization transfer method [49], [50] replaces the cost function Ψ(x) that is difficult to minimize with a surrogate function ϕ(n) (x) that is easier to minimize at the nth iteration. The next estimate x(n+1) is the minimizer of the surrogate function, i.e.,

$$x^{(n+1)} = \arg\min_{x}\; \phi^{(n)}(x) \tag{19}$$

Repeatedly choosing a surrogate function and minimizing it at each iteration yields a sequence of vectors {x(n)} that monotonically decreases the original cost function Ψ(x). The monotonicity is guaranteed by the following surrogate conditions:

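$$\phi^{(n)}(x^{(n)}) = \Psi(x^{(n)}), \qquad \phi^{(n)}(x) \ge \Psi(x) \;\; \forall x \tag{20}$$

Together they give Ψ(x(n+1)) ≤ ϕ(n)(x(n+1)) ≤ ϕ(n)(x(n)) = Ψ(x(n)), where the middle inequality holds because x(n+1) minimizes ϕ(n).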
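The monotonicity argument can be seen on a toy 1D problem. This sketch uses an illustrative cost (not the PL cost) and a quadratic surrogate whose curvature upper-bounds the second derivative of the cost, so that both conditions in (20) hold and each closed-form surrogate minimization cannot increase the cost.

```python
import math

def cost(x):
    """Toy cost: a hyperbola term plus a small quadratic (not the PL cost)."""
    return math.sqrt(1.0 + (x - 2.0) ** 2) + 0.1 * x ** 2

def grad(x):
    return (x - 2.0) / math.sqrt(1.0 + (x - 2.0) ** 2) + 0.2 * x

CURVATURE = 1.2  # cost''(x) <= 1 (hyperbola term) + 0.2 (quadratic term)

def optimization_transfer(x0, n_iter=50):
    """Iterate x_{n+1} = argmin_x phi_n(x) with
    phi_n(x) = cost(x_n) + grad(x_n) (x - x_n) + CURVATURE / 2 (x - x_n)^2,
    which satisfies phi_n(x_n) = cost(x_n) and phi_n(x) >= cost(x) for all x."""
    xs = [x0]
    for _ in range(n_iter):
        xs.append(xs[-1] - grad(xs[-1]) / CURVATURE)  # closed-form surrogate minimizer
    return xs

costs = [cost(x) for x in optimization_transfer(-5.0)]
```

A larger curvature still gives a valid surrogate but takes smaller steps, which is the same trade-off that motivates keeping the materials coupled in the PWSQS design.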

To derive surrogate functions for Ψ(x) in (15), we consider the data fidelity term (11) and regularizer term (16) separately.

B. Surrogate of the Data Fidelity Term

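1) First Surrogate: Non-Separable Convex Surrogate: The first step is to derive a convex surrogate as a function of the sinogram vector sim. Since him(sim) is convex (see Appendix A-A), it is bounded below by its tangent plane:

$$h_{im}(s_{im}) \ge h_{im}(s_{im}^{(n)}) + \nabla h_{im}(s_{im}^{(n)})\,(s_{im} - s_{im}^{(n)})$$

where $s_{im}^{(n)} \triangleq s_{im}(x^{(n)})$. This inequality and the nonnegativity of the Poisson random variable Yim lead to our first surrogate for the data fidelity term L̄(x):

$$\bar{L}(x) \le \phi_1^{(n)}(x) \triangleq \sum_{m=1}^{M_0}\sum_{i=1}^{N_d} g_{im}^{(n)}\big(s_{im}(x)\big) \tag{21}$$

where

$$g_{im}^{(n)}(s) \triangleq \bar{y}_{im}(s) - Y_{im}\Big[h_{im}(s_{im}^{(n)}) + \nabla h_{im}(s_{im}^{(n)})\,(s - s_{im}^{(n)})\Big] \tag{22}$$

$$g_{im}^{(n)}(s) = \int I_{im}(\mathcal{E})\, e^{-\mu(\mathcal{E})^T s}\, d\mathcal{E} - Y_{im}\, \nabla h_{im}(s_{im}^{(n)})\, s + C \tag{23}$$

If the source is monoenergetic and rim = 0, then him is linear, and the first surrogate exactly equals the original negative log-likelihood data fidelity term L̄(x); in general it should therefore be a reasonably tight surrogate function.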
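The first surrogate rests on a tangent-plane lower bound for a convex function of sim. The check below uses log ȳim(s) with a discretized spectrum as the convex function: it is a log-sum-exp of affine functions of s, hence convex, and it becomes linear for a single energy bin with rim = 0, matching the properties stated for him above. Treating it as him is our reading of the text, and the function names are hypothetical.

```python
import numpy as np

def log_ybar(s, I_E, mu_E, r):
    """log( sum_k I_k exp(-mu_k^T s) + r ): a log-sum-exp of affine functions of s.

    I_E: (K,) positive bin intensities; mu_E: (K, L0) rows mu(E_k); r: background.
    """
    return float(np.log(np.sum(I_E * np.exp(-mu_E @ s)) + r))

def grad_log_ybar(s, I_E, mu_E, r):
    """Gradient of log_ybar with respect to s (length-L0 vector)."""
    w = I_E * np.exp(-mu_E @ s)             # (K,) contribution of each energy bin
    return -(w @ mu_E) / (w.sum() + r)

def tangent_plane(s, s0, I_E, mu_E, r):
    """First-order expansion of log_ybar at s0, a global lower bound by convexity."""
    return log_ybar(s0, I_E, mu_E, r) + grad_log_ybar(s0, I_E, mu_E, r) @ (s - s0)
```

Multiplying this lower bound by −Yim ≤ 0 turns it into an upper bound on the −Yim log ȳim part of the likelihood, which is why the first surrogate majorizes L̄(x).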

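2) Second Surrogate: Non-Separable Quadratic Surrogate: The second step is to find a quadratic surrogate of the first convex surrogate given in (22) and (23). We rewrite $g_{im}^{(n)}(s_{im})$ as follows:

| (24) |

where C denotes the constants in (23) that are irrelevant to sim, and

| (25) |

Let c̆(·; α) given in (55) denote the optimal curvature for f(·; α) given in [52, Eqn. (28)]. We form the optimal quadratic surrogate of f(·; α) using c̆(·; α), and substitute it into (24) to obtain a quadratic surrogate of $g_{im}^{(n)}$ (see Appendix A-B):

$$q_{im}^{(n)}(s) \triangleq g_{im}^{(n)}(s_{im}^{(n)}) + \nabla g_{im}^{(n)}(s_{im}^{(n)})\,(s - s_{im}^{(n)}) + \frac{1}{2}\,(s - s_{im}^{(n)})^T C_{im}^{(n)}\,(s - s_{im}^{(n)}) \tag{26}$$

where the L0 × L0 curvature matrices are given by

| (27) |

| (28) |

Summing this quadratic surrogate over all rays leads to the following non-separable quadratic surrogate for the first convex surrogate $\phi_1^{(n)}(x)$:

$$\phi_2^{(n)}(x) \triangleq \sum_{m=1}^{M_0}\sum_{i=1}^{N_d} q_{im}^{(n)}\big(s_{im}(x)\big) \tag{29}$$

where $q_{im}^{(n)}$ is given in (26).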

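3) Third Surrogate: Pixel-Wise Separable Quadratic Surrogate: Define the pixel vector xj ≜ (x1j, …, xL0j). The non-separable quadratic surrogate (29) couples the pixel vectors xj. Non-separable surrogates are inconvenient for simultaneous update algorithms and for enforcing the constraints in (3) and (4) on each pixel. To derive a simple simultaneous update algorithm that is fully parallelizable and suitable for an ordered-subsets implementation [53], [54], we next find a pixel-wise separable quadratic surrogate of (29) by applying De Pierro's additive convexity trick [50], [53]. This novel pixel-wise separable quadratic surrogate remains non-separable with respect to the basis materials.

Appendix A-C derives it in detail. Writing $s_{im}(x) = \sum_j \pi_{mij}\big[\frac{a_{mij}}{\pi_{mij}}(x_j - x_j^{(n)}) + s_{im}^{(n)}\big]$ and using the convexity of the per-ray quadratic surrogate $q_{im}^{(n)}$ from the second step, it is defined as

$$\phi_3^{(n)}(x) \triangleq \sum_{j=1}^{N_p} \phi_{3,j}^{(n)}(x_j) \tag{30}$$

$$\phi_{3,j}^{(n)}(x_j) \triangleq \sum_{m=1}^{M_0}\; \sum_{i:\, \pi_{mij} > 0} \pi_{mij}\, q_{im}^{(n)}\!\left(\frac{a_{mij}}{\pi_{mij}}\big(x_j - x_j^{(n)}\big) + s_{im}^{(n)}\right) \tag{31}$$

The πmij values are nonnegative, are zero only when amij is zero, and satisfy $\sum_{j} \pi_{mij} = 1$. For our empirical results, we use the following typical choice for πmij [53]:

$$a_{mi} \triangleq \sum_{j=1}^{N_p} a_{mij} \tag{32}$$

$$\pi_{mij} = \frac{a_{mij}}{a_{mi}} \tag{33}$$

4) Pixel-Wise Separable Quadratic Surrogate in Matrix-Vector Form: The first, second and third surrogates in (21), (29) and (30) satisfy

$$\bar{L}(x) \le \phi_1^{(n)}(x) \le \phi_2^{(n)}(x) \le \phi_3^{(n)}(x), \qquad \text{with equality at } x = x^{(n)} \tag{34}$$

Therefore, the third surrogate is a surrogate function of L̄(x). It is much easier to minimize under the proposed constraints since it is quadratic and separable with respect to pixels.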

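Combining the function value (60), gradient (61) and Hessian (62) derived in Appendix A-C, the pixel-wise separable quadratic surrogate has the following matrix-vector form (with the elements of x ordered pixel by pixel):

$$\phi_L^{(n)}(x) \triangleq \bar{L}(x^{(n)}) + \nabla \bar{L}(x^{(n)})\,(x - x^{(n)}) + \frac{1}{2}\,(x - x^{(n)})^T D_L^{(n)}\,(x - x^{(n)}) \tag{35}$$

where $D_L^{(n)}$ is a block diagonal matrix over j = 1, …, Np, i.e.,

$$D_L^{(n)} = \operatorname{diag}\big\{D_{L,1}^{(n)}, \dots, D_{L,N_p}^{(n)}\big\} \tag{36}$$

$$D_{L,j}^{(n)} = \sum_{m=1}^{M_0}\; \sum_{i:\, a_{mij} > 0} \frac{a_{mij}^2}{\pi_{mij}}\, C_{im}^{(n)} \tag{37}$$

and $C_{im}^{(n)}$ is defined in (27). The L0 × L0 matrix $D_{L,j}^{(n)}$ is not diagonal due to the outer products in (27). With the choice (33), $a_{mij}^2/\pi_{mij} = a_{mij}\, a_{mi}$.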
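De Pierro's trick and the choice (32)–(33) can be checked numerically: with πmij = amij/ami, the separable curvature (37) becomes the sum over rays of amij ami C_im, and the resulting separable quadratic upper-bounds the coupled one. This sketch treats a single scan m, uses random positive semidefinite matrices in place of the curvature matrices of (27), and uses hypothetical function names.

```python
import numpy as np

def depierro_weights(A):
    """pi_ij = a_ij / a_i with a_i = sum_j a_ij, as in (32)-(33), for one scan.

    A: (Nd, Np) nonnegative system matrix with no all-zero rows.
    """
    return A / A.sum(axis=1, keepdims=True)

def pixel_curvatures(A, C):
    """D_j = sum_i (a_ij^2 / pi_ij) C_i = sum_i a_ij a_i C_i for every pixel j, as in (37).

    C: (Nd, L0, L0) per-ray curvature matrices. Returns an (Np, L0, L0) array.
    """
    a_i = A.sum(axis=1)
    return np.einsum("ij,i,ilm->jlm", A, a_i, C)

def coupled_quadratic(A, C, dx):
    """0.5 * sum_i ds_i^T C_i ds_i with ds_i = sum_j a_ij dx_j (not separable in j)."""
    ds = A @ dx                                      # (Nd, L0)
    return 0.5 * np.einsum("il,ilm,im->", ds, C, ds)

def separable_quadratic(D, dx):
    """0.5 * sum_j dx_j^T D_j dx_j (separable in pixels, coupled in materials)."""
    return 0.5 * np.einsum("jl,jlm,jm->", dx, D, dx)
```

Each D_j is a full L0 × L0 block, so the materials of a pixel remain coupled while different pixels decouple, which is exactly the structure the PWSQS algorithm relies on.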

C. Surrogate of the Penalty Term

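To derive an SQS function for the penalty term, we apply De Pierro's additive convexity trick [50], [53], [55] in a similar fashion and use Huber's optimal curvature [56, p. 185] for the potential function Ψl. The SQS function has the following matrix-vector form:

$$\phi_R^{(n)}(x) = R(x^{(n)}) + \nabla R(x^{(n)})\,(x - x^{(n)}) + \frac{1}{2}\,(x - x^{(n)})^T D_R^{(n)}\,(x - x^{(n)}) \tag{38}$$

where $D_R^{(n)}$ is a diagonal matrix, i.e.,

$$D_R^{(n)} = \operatorname{diag}\big\{d_{R,lj}^{(n)}\big\}_{l = 1, \dots, L_0;\; j = 1, \dots, N_p} \tag{39}$$

| (40) |

where ωΨ(t) ≜ Ψ̇(t)/t.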
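For the hyperbola (18), Huber's curvature has the closed form ωΨ(t) = 1/√(1 + (t/δ)²), and the quadratic built from it touches Ψ at ±t0 and lies above it elsewhere. A minimal check; the function names are hypothetical.

```python
import numpy as np

def hyperbola(t, delta):
    return delta ** 2 * (np.sqrt(1.0 + (t / delta) ** 2) - 1.0)

def hyperbola_derivative(t, delta):
    return t / np.sqrt(1.0 + (t / delta) ** 2)

def huber_curvature(t, delta):
    """omega(t) = psi'(t) / t, written in closed form so it is finite (= 1) at t = 0."""
    return 1.0 / np.sqrt(1.0 + (t / delta) ** 2)

def huber_surrogate(t, t0, delta):
    """Quadratic majorizer of the hyperbola at t0 with Huber's curvature omega(t0)."""
    return (hyperbola(t0, delta) + hyperbola_derivative(t0, delta) * (t - t0)
            + 0.5 * huber_curvature(t0, delta) * (t - t0) ** 2)
```

Huber's curvature is valid here because the hyperbola is even and ωΨ(t) is nonincreasing in |t|; it is smaller than the maximum curvature 1 whenever t0 ≠ 0, which gives larger steps than a fixed-curvature bound.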

D. Pixel-Wise Separable Quadratic Surrogate (PWSQS)

Combining the surrogates for the data fidelity term (35) and penalty term (38), the PWSQS function for the cost function Ψ(x) is

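$$\phi^{(n)}(x) \triangleq \phi_L^{(n)}(x) + \phi_R^{(n)}(x) \tag{41}$$

It is easier to minimize ϕ(n)(x) than the original cost function Ψ(x) because, by construction, it is quadratic and separable with respect to pixels. For the sake of optimization under constraints on each pixel, we rewrite ϕ(n)(x) as a sum over pixels j:

$$\phi^{(n)}(x) \equiv \sum_{j=1}^{N_p} \phi_j^{(n)}(x_j) \tag{42}$$

where

$$\phi_j^{(n)}(x_j) \triangleq \nabla_{x_j}\Psi(x^{(n)})\,(x_j - x_j^{(n)}) + \frac{1}{2}\,(x_j - x_j^{(n)})^T H_j^{(n)}\,(x_j - x_j^{(n)}) \tag{43}$$

where ∇xj denotes the gradient with respect to xj, and the L0 × L0 Hessian matrix of $\phi_j^{(n)}$ is

$$H_j^{(n)} = D_{L,j}^{(n)} + D_{R,j}^{(n)}, \qquad D_{R,j}^{(n)} \triangleq \operatorname{diag}\big\{d_{R,1j}^{(n)}, \dots, d_{R,L_0 j}^{(n)}\big\} \tag{44}$$

Here $D_{L,j}^{(n)}$ is the jth diagonal block of the data-term curvature in (36), and the $d_{R,lj}^{(n)}$ are the diagonal elements of the penalty curvature in (39).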

E. Optimization With Material Constraints

After designing the surrogate (42), the next step of the optimization transfer algorithm is to minimize ϕ(n) (x) under constraints given in (3) and (4) on each pixel. Because ϕ(n) (x) is pixel-wise separable, one can minimize for all pixels simultaneously. We now focus on the problem of minimizing under the proposed constraints.

Let ω denote a tuple in the material library Ω, i.e., ω ∈ Ω. The optimization problem on the jth pixel is

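$$\big(\hat{x}_j, \hat{\omega}\big) = \arg\min_{\omega \in \Omega,\; x_j}\; \tilde{\phi}_j^{(n)}(x_j) \quad \text{s.t.} \quad \sum_{l \in \omega} x_{lj} = 1,\;\; 0 \le x_{lj} \le 1 \;\; (l \in \omega),\;\; x_{lj} = 0 \;\; (l \notin \omega) \tag{45}$$

where

$$\tilde{\phi}_j^{(n)}(x_j) \triangleq \frac{1}{2}\, x_j^T H\, x_j - p^T x_j \tag{46}$$

$$H \triangleq H_j^{(n)} \tag{47}$$

$$p \triangleq H_j^{(n)} x_j^{(n)} - \big(\nabla_{x_j}\Psi(x^{(n)})\big)^T \tag{48}$$

The function (46) equals the pixel surrogate (43) to within a constant independent of xj.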

The goal of this optimization is to estimate xj and material types ω. We solve it as follows.

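- For each ω ∈ Ω, find the optimal x̂j(ω) and the corresponding minimal value of the pixel surrogate (46). Without loss of generality, consider a given ω = (1, …, L) for some L between 1 and L0; then the optimization problem is

$$\hat{x}_j(\omega) = \arg\min_{x_j(\omega)}\; \frac{1}{2}\, x_j(\omega)^T H(\omega)\, x_j(\omega) - p(\omega)^T x_j(\omega) \quad \text{s.t.} \quad \sum_{l=1}^{L} x_{lj} = 1,\;\; 0 \le x_{lj} \le 1 \tag{49}$$

  where $x_j(\omega) \triangleq (x_{1j}, \dots, x_{Lj})$, and H(ω) and p(ω) are formed from the elements of H and p with indexes corresponding to ω = (1, …, L), respectively.
- Determine the best tuple ω̂ by comparing the minimal values of (46) over all ω ∈ Ω, i.e., $\hat{\omega} = \arg\min_{\omega \in \Omega} \tilde{\phi}_j^{(n)}\big(\hat{x}_j(\omega)\big)$.

Obtain x̂j ≡ x̂j(ω̂), padded with zeros for l ∉ ω̂.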

Given the material types, i.e., given ω, the optimization problem defined in (49) is a standard convex quadratic programming problem. We used the generalized sequential minimal optimization (GSMO) algorithm [57] to solve (49), and parallelized GSMO to update all pixels simultaneously. The pseudo-code of GSMO for solving the quadratic optimization problem with the constraints in (49) is summarized in the supplementary material. Other quadratic programming methods could also be used to solve (49).
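The paper uses GSMO [57]; as a stand-in, the sketch below uses a plain SMO-style method (maximal-violating-pair updates that preserve the sum-to-one constraint) for (49), followed by the tuple search described above. It is an illustration under the assumptions that H is symmetric positive definite and that the box limits satisfy lo ≤ 1/L ≤ hi; it is not the authors' GSMO implementation, and tuples are given as 0-based material indexes.

```python
import numpy as np

def solve_simplex_qp(H, p, lo=0.0, hi=1.0, tol=1e-10, max_iter=10_000):
    """Minimize 0.5 x^T H x - p^T x subject to sum(x) = 1 and lo <= x <= hi.

    SMO-style: each step moves along e_a - e_b (which keeps sum(x) fixed) for the
    maximal KKT-violating pair. Assumes H is symmetric positive definite and
    lo <= 1/L <= hi, so the uniform starting point is feasible.
    """
    L = len(p)
    x = np.full(L, 1.0 / L)
    for _ in range(max_iter):
        g = H @ x - p                              # gradient of the quadratic
        up = x < hi - 1e-15                        # entries that may increase
        down = x > lo + 1e-15                      # entries that may decrease
        if not up.any() or not down.any():
            break
        a = np.flatnonzero(up)[np.argmin(g[up])]
        b = np.flatnonzero(down)[np.argmax(g[down])]
        if g[b] - g[a] <= tol:                     # KKT conditions hold to within tol
            break
        eta = H[a, a] + H[b, b] - 2.0 * H[a, b]    # curvature along e_a - e_b
        t = (g[b] - g[a]) / eta                    # exact line minimizer
        t = min(t, hi - x[a], x[b] - lo)           # stay inside the box
        x[a] += t
        x[b] -= t
    return x

def best_tuple(H, p, library, lo=0.0, hi=1.0):
    """Solve the reduced QP (49) for every tuple and keep the smallest value of (46).

    Returns (tuple, length-L0 solution padded with zeros, surrogate value).
    """
    best = None
    for omega in library:
        idx = list(omega)
        H_w, p_w = H[np.ix_(idx, idx)], p[idx]
        x_w = solve_simplex_qp(H_w, p_w, lo, hi)
        value = 0.5 * x_w @ H_w @ x_w - p_w @ x_w
        if best is None or value < best[2]:
            x_full = np.zeros(len(p))
            x_full[idx] = x_w                      # zeros for materials not in the tuple
            best = (omega, x_full, value)
    return best
```

Because every pixel solves its own small QP, the loop over pixels (omitted here) is embarrassingly parallel, which is what the simultaneous PWSQS update exploits.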

F. Ordered-Subset PWSQS Algorithm Outline

We use the ordered subsets approach to accelerate the “convergence” to a good local minimum [52] by replacing the gradient in (43) with a subset gradient scaled by the total number of subsets.
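A sketch of the subset-gradient scaling. How the views were split into subsets is not specified here; interleaved subsets (views q, q + Q, q + 2Q, …) are assumed, and the function names are hypothetical. With the 984 views and 41 subsets of Section V, each subset holds 24 views.

```python
import numpy as np

def interleaved_subsets(n_views, n_subsets):
    """Subset q holds views q, q + Q, q + 2Q, ... (an assumed, common choice)."""
    return [np.arange(q, n_views, n_subsets) for q in range(n_subsets)]

def scaled_subset_gradient(per_view_grads, subset, n_subsets):
    """Approximate the full gradient by Q times the gradient summed over one subset.

    per_view_grads: (n_views, ...) gradient contribution of each view.
    """
    return n_subsets * per_view_grads[subset].sum(axis=0)

subsets = interleaved_subsets(984, 41)
print([len(s) for s in subsets][:3])  # [24, 24, 24]
```

Averaged over all subsets, the scaled subset gradients reproduce the full gradient exactly; each subiteration therefore costs about 1/Q of a full gradient evaluation.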

The overall ordered-subset pixel-wise separable quadratic surrogate (OS-PWSQS) algorithm for minimizing the PL cost function with constraints given in (14) is outlined in Table I.

TABLE I.

Ordered-subset pixel-wise separable quadratic surrogate (OS-PWSQS) algorithm outline.

| Step |
|---|
| 1) Choose the πmij factors using (33). |
| 2) Initialize x(0) using the results of the image-domain method [1]. |
| 3) For each iteration d = 1, …, Diter: |
| a) For each subset (subiteration) q = 1, …, Qiter: |
| i) n = (d − 1) + (q − 1)/Qiter. |
| ii) Compute the scaled subset gradient L̇qj of the data fidelity term, using ∇sim ȳim and ∇sim him given in (50) and (52), respectively. |
| iii) Compute the gradient Ṙqj of the penalty term. |
| iv) Compute the L0 × L0 curvature matrices Hj(n) using (44), with the data-term matrices defined in (27) and the penalty curvatures defined in (40). |
| v) Compute H and p using (47) and (48). |
| vi) For each tuple ω ∈ Ω: |
| A) Form xj(ω), H(ω) and p(ω) by extracting the elements of xj, H and p with indexes corresponding to ω. |
| B) Obtain the minimizer x̂j(ω) of the QP problem in (49) using GSMO. |
| C) Compute and store the minimal surrogate function value of (49). |
| End |
| vii) Determine the optimal ω̂ as the tuple with the smallest stored surrogate value. |
| viii) Obtain x̂j ≡ x̂j(ω̂), padded with zeros for l ∉ ω̂. |
| ix) Update all pixels: x(n+1/Qiter) = x̂ = (x̂1, …, x̂j, …, x̂Np). |
| End (the last subiteration yields x(d), the estimate after iteration d) |
| End |

V. Results

To evaluate the proposed PL method for MMD and to compare it with the ID method [1], we simulated a DECT scan and reconstructed volume fractions of a modified NCAT chest phantom [37] containing fat, blood, omnipaque300 (iodine-based contrast agent), cortical bone and air. We generated virtual un-enhancement (VUE) images from the reconstructed volume fractions using these two methods.

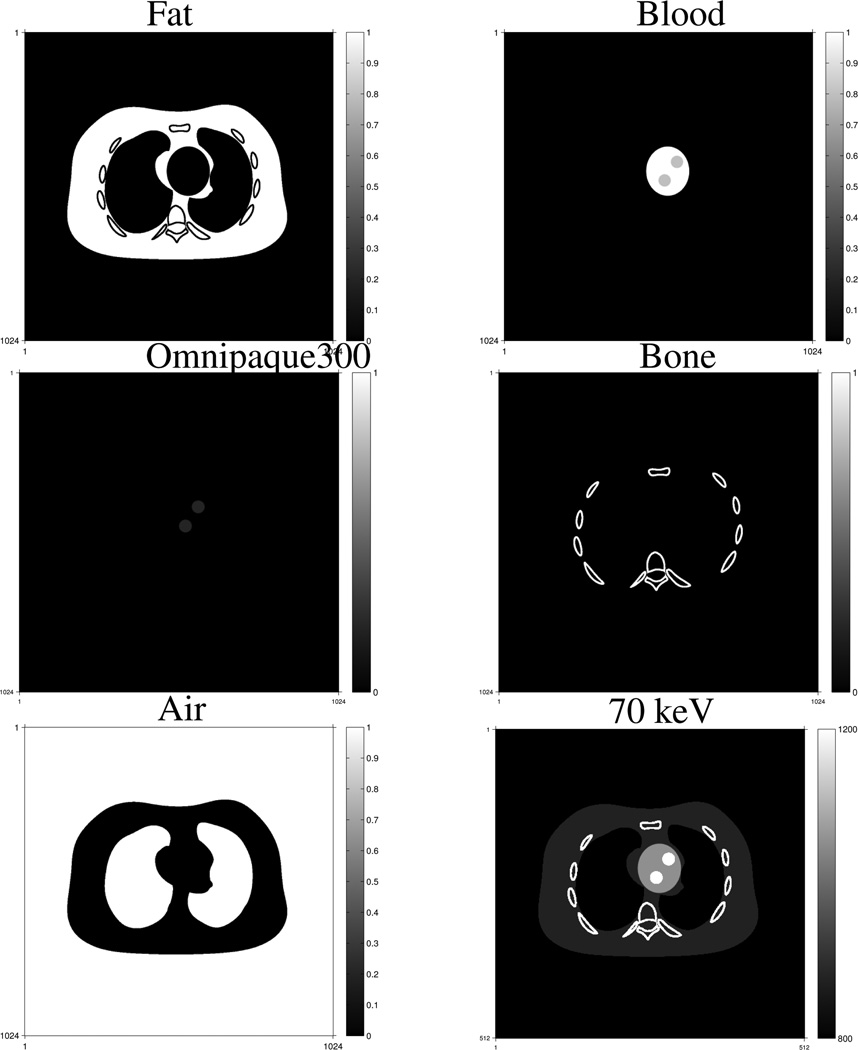

Fig. 1 shows the true volume fractions and the monoenergetic image at 70 keV of the simulated NCAT chest phantom. The simulated true images were 1024 × 1024 with a pixel size of 0.49 mm, while the reconstructed images were 512 × 512 with a pixel size of 0.98 mm. We introduced this model mismatch deliberately to test the MMD methods. We down-sampled the simulated true component images to the size of the reconstructed images by linear averaging, and used these down-sampled images for comparison with the reconstructed images.
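Assuming that linear averaging here means averaging each non-overlapping 2 × 2 block (1024 × 1024 to 512 × 512), the down-sampling is a reshape followed by a mean. The function name is hypothetical.

```python
import numpy as np

def block_average(img, factor=2):
    """Down-sample a 2D image by averaging non-overlapping factor x factor blocks."""
    img = np.asarray(img, dtype=float)
    n0, n1 = img.shape
    if n0 % factor or n1 % factor:
        raise ValueError("image size must be divisible by the down-sampling factor")
    return img.reshape(n0 // factor, factor, n1 // factor, factor).mean(axis=(1, 3))
```

Because averaging is linear, down-sampled volume fractions still sum to one in every pixel, so the reference images obey the same constraint (3) as the reconstructions.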

Fig. 1.

True volume fractions and the monoenergetic image at 70 keV of the NCAT chest phantom. The volume fractions are in the range of [0, 1] and the monoenergetic image is displayed over [800, 1200] with the shifted Hounsfield unit (HU) scale where air is 0 HU and water is 1000 HU.

We simulated the geometry of a GE LightSpeed X-ray CT fan-beam system with an arc detector of 888 detector channels and 984 views over 360°. The size of each detector cell was 1.0239 mm. The source-to-detector distance was Dsd = 949.075 mm, and the source-to-rotation-center distance was Ds0 = 541 mm. We included a quarter detector offset to reduce aliasing. We used the distance-driven (DD) projector [58] to generate projections of the true object. We simulated two incident spectra with X-ray tube voltages of 140 kVp and 80 kVp, and normalized each by its total intensity, obtained by summing the intensities over all energy bins. We generated noiseless measurements ȳim of the simulated NCAT phantom using (1) and the normalized spectra. To add Poisson distributed noise to the noiseless measurements ȳim, we first chose 2 × 10^5 incident photons per ray for the 140 kVp measurements, and then determined the number of incident photons per ray for the 80 kVp measurements according to the ratio of the total intensities of the originally simulated spectra at high and low energies. The number of incident photons per ray for the 80 kVp measurements was 2 × 10^5 · Ii2/Ii1 = 6 × 10^4, where Ii1 and Ii2 denote the total intensities of the ith ray for the 140 kVp and 80 kVp spectra, respectively.
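The photon-count arithmetic and Poisson noise generation can be sketched as follows. The spectrum totals and per-ray means are placeholders, not the simulated spectra, and the helper names are hypothetical; the stated numbers imply Ii2/Ii1 = 6 × 10^4 / (2 × 10^5) = 0.3.

```python
import numpy as np

def low_kvp_photons(n_high, total_high, total_low):
    """Photons per ray for the low-kVp scan, scaled by the ratio of spectrum totals."""
    return n_high * total_low / total_high

def noisy_measurements(n_incident, ybar_normalized, rng):
    """Poisson counts whose means are n_incident times the normalized-spectrum means."""
    return rng.poisson(n_incident * np.asarray(ybar_normalized, dtype=float))

n_80 = low_kvp_photons(2e5, total_high=1.0, total_low=0.3)  # placeholder totals, ratio 0.3
rng = np.random.default_rng(0)
Y_80 = noisy_measurements(n_80, np.full(1000, 0.5), rng)    # placeholder per-ray means
```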

For this simulation, the triplet material library Ω contained five triplets selected from five materials (fat, blood, omnipaque300, cortical bone and air), excluding any triplet that combines omnipaque300 with cortical bone or omnipaque300 with fat. (This material library is based on the fact that contrast agent does not spread into cortical bone or fat.) We implemented the ID method with several different priority lists of material triplets as described in [1], and found that its performance depends on the priorities of the material triplets in the list. We selected the priority list that produced the best ID image quality in terms of noise, artifacts and cross-talk in the component images. To initialize the PL iteration, we applied a 3 × 3 median filter to the ID images to reduce noise, especially salt-and-pepper noise due to cross-talk among component images. (See Fig. 1 in the supplementary material for the ID images.) The PL method does not use a priority list, because it determines the optimal triplet for each pixel as the one that minimizes the PWSQS function in (46).
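The five-triplet library follows mechanically from the two excluded pairs. This sketch encodes them directly; the names are illustrative. Of the ten possible triplets, only one containing omnipaque300 survives (with blood and air), plus the four triplets without contrast agent.

```python
from itertools import combinations

MATERIALS = ["fat", "blood", "omnipaque300", "cortical bone", "air"]
EXCLUDED_PAIRS = [{"omnipaque300", "cortical bone"}, {"omnipaque300", "fat"}]

def triplet_library(materials, excluded_pairs):
    """All 3-material tuples that contain none of the excluded material pairs."""
    return [t for t in combinations(materials, 3)
            if not any(pair <= set(t) for pair in excluded_pairs)]

library = triplet_library(MATERIALS, EXCLUDED_PAIRS)
for triplet in library:
    print(triplet)
```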

We used the conventional projection-domain dual-material decomposition method with polynomial approximation [4], followed by FBP, to reconstruct water-iodine density images, and chose 70 keV and 140 keV to yield LAC pairs for the ID method. We also tried a more sophisticated dual-material decomposition method, the statistical sinogram restoration method proposed in [9], but the final reconstructed component images were very similar to those obtained using the polynomial approximation. For the PL method we chose βl = 2^8, 2^11, 2^11, 2^8, 2^4 and δl = 0.01, 0.01, 0.005, 0.01, 0.1 for fat, blood, omnipaque300, cortical bone and air, respectively. We ran 500 iterations of the optimization transfer algorithm in Table I with 41 subsets to accelerate convergence. Because (14) is a nonconvex problem, the algorithm finds a local minimum.

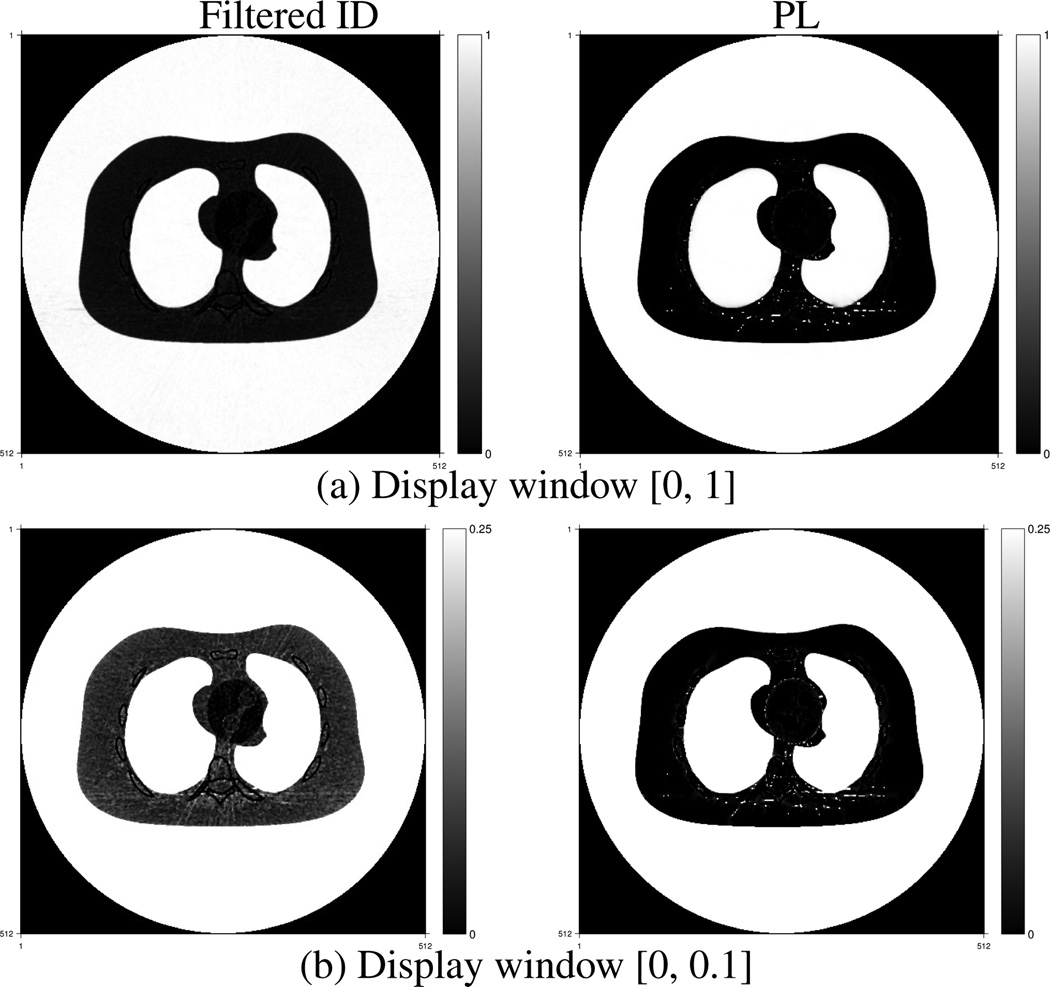

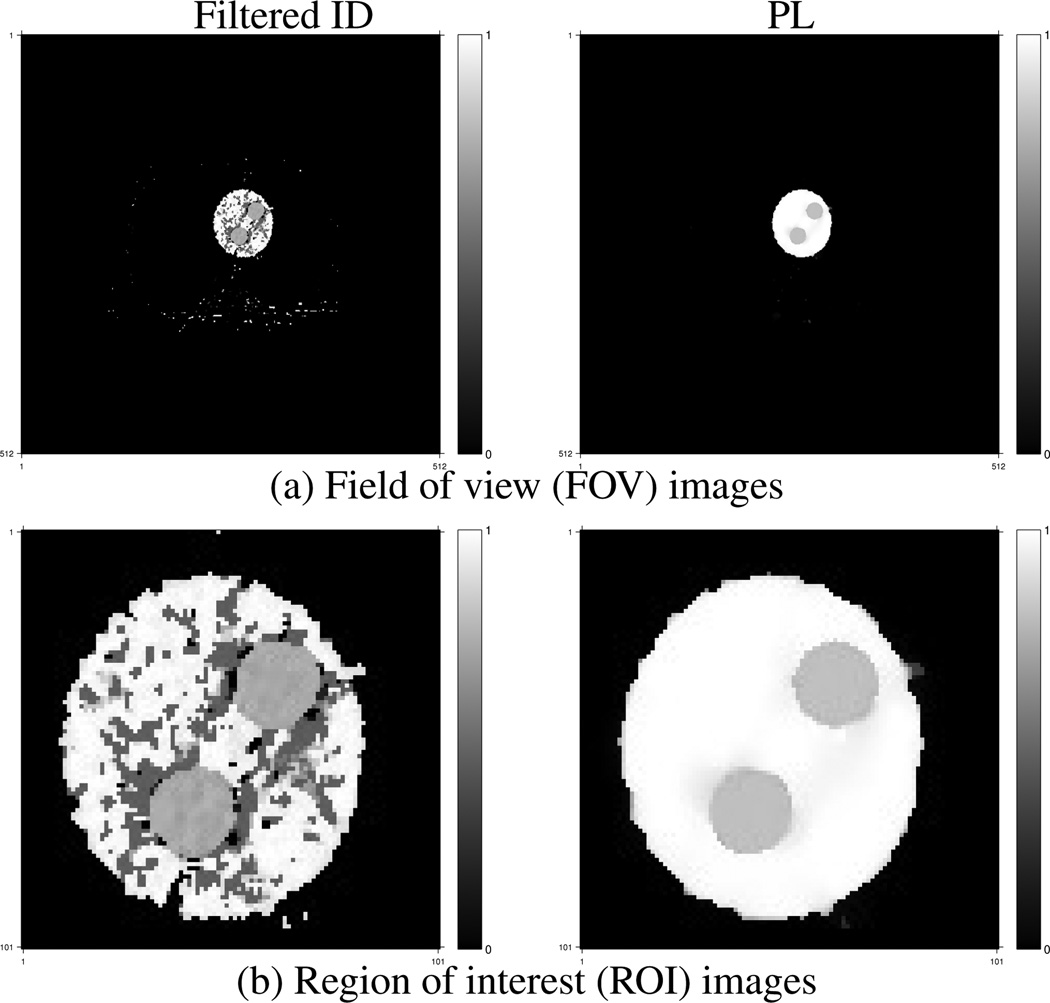

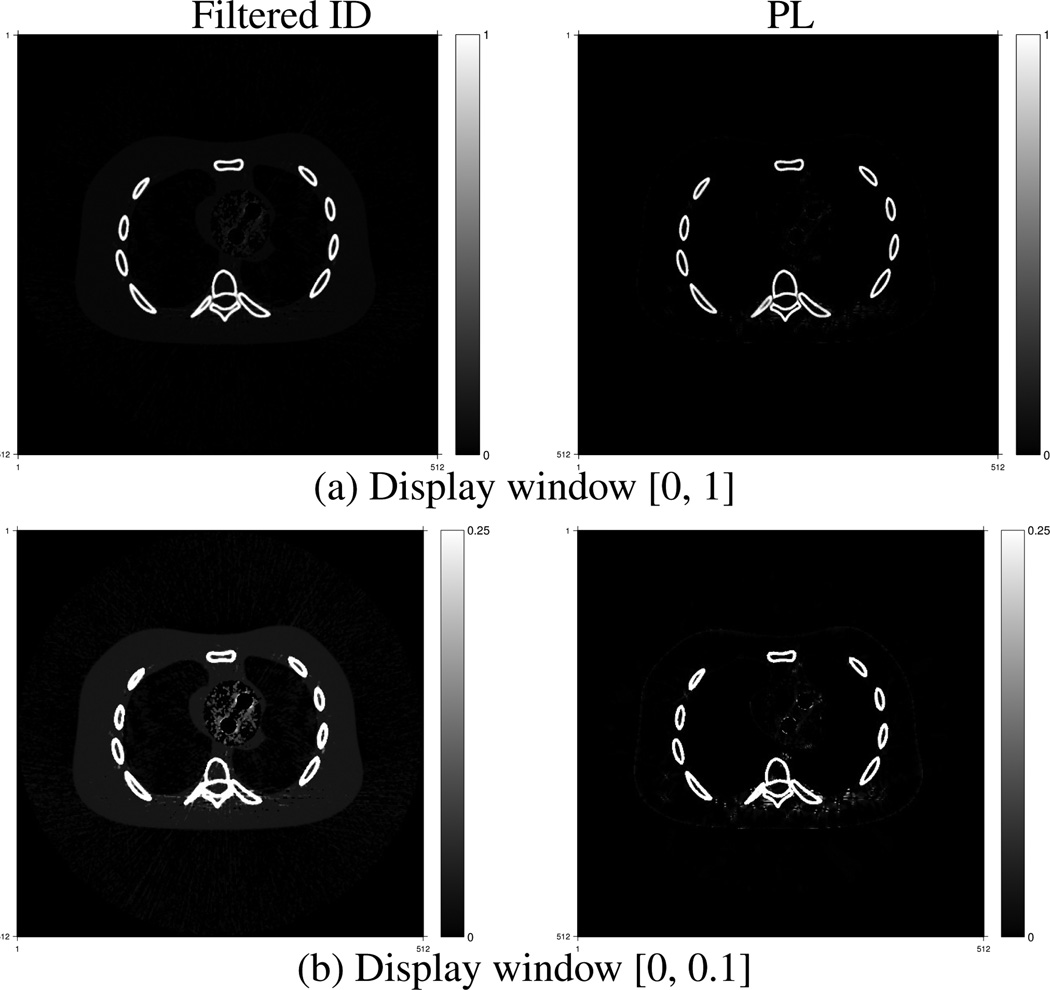

Fig. 2–Fig. 6 show the estimated volume fractions of the five materials reconstructed by the PL method and the filtered ID method. The grayscale values represent the volume fractions of each material. The big white disks in Fig. 6 are due to the circular reconstruction support. The streak-like artifacts in the images reconstructed by the filtered ID method are very similar to those in Fig. 4 of [35] and Fig. 6 of [1]. The PL method greatly reduces these streak-like artifacts. Material cross-talk is evident in the filtered ID results: blood appears in the fat image in Fig. 2, especially in the heart region; cortical bone appears in the blood image (upper left image in Fig. 3); and fat appears in the cortical bone image (lower left image in Fig. 5). The PL method alleviated this cross-talk very effectively. In addition, the PL method reconstructed component images with lower noise.

Fig. 2.

Fat component fraction images reconstructed by the filtered ID method (left) and the PL method (right).

Fig. 6.

Air component fraction images reconstructed by the filtered ID method (left) and the PL method (right).

Fig. 4.

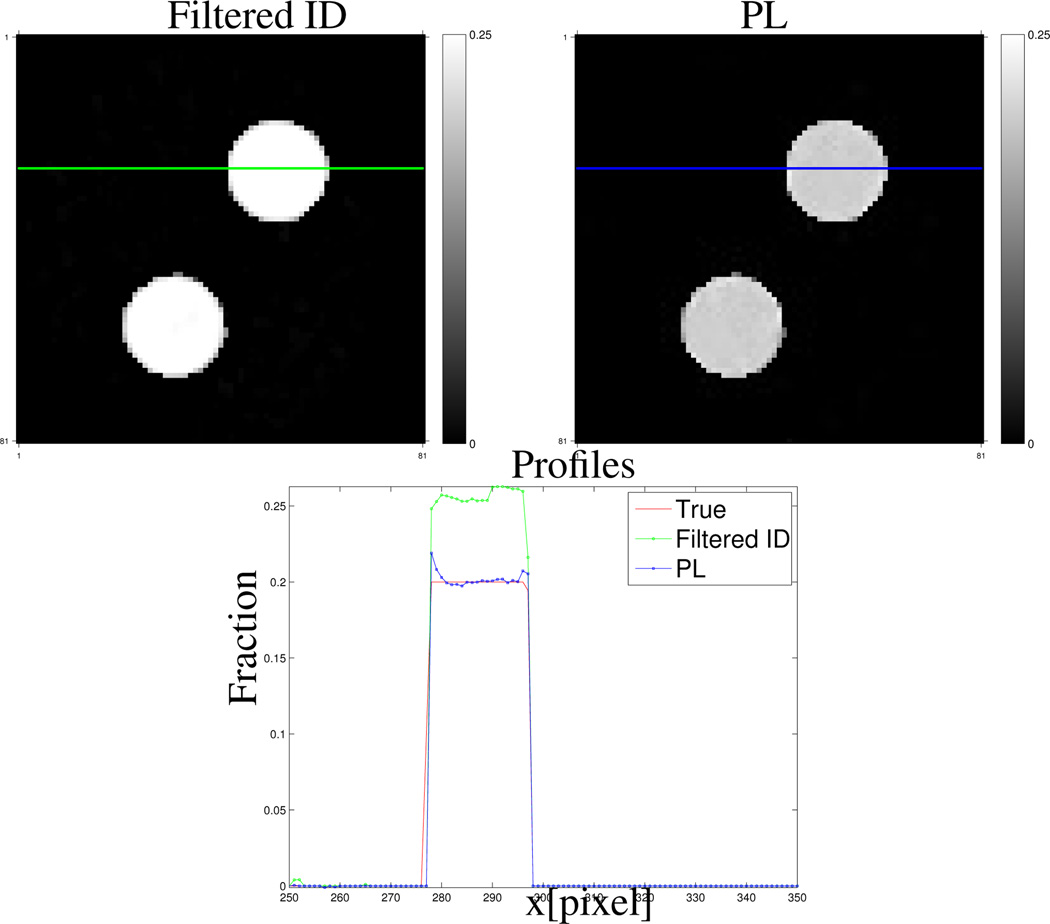

Zoomed-in omnipaque300 component fraction images reconstructed by the filtered ID method (upper left) and the PL method (upper right). The lower image shows the horizontal profiles.

Fig. 3.

Blood component fraction images reconstructed by the filtered ID method (left) and the PL method (right).

Fig. 5.

Cortical bone component fraction images reconstructed by the filtered ID method (left) and the PL method (right).

The lower image in Fig. 4 shows profiles of the down-sampled true omnipaque300 component image and of the omnipaque300 images reconstructed by the PL and filtered ID methods. The profile locations are indicated by a blue line and a green line in the PL and filtered ID images, respectively. The PL method corrected the bias introduced by the filtered ID method. Profiles of the other reconstructed component images are provided in the supplementary material.

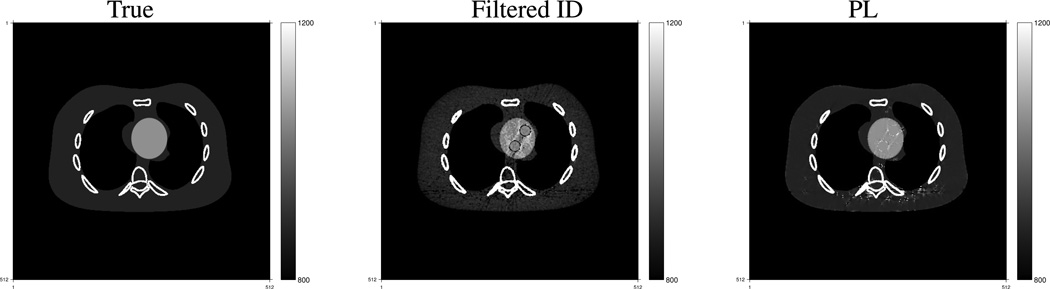

We constructed the virtual un-enhancement (VUE) images by replacing the volume of contrast agent (omnipaque300) in each pixel by the same volume of blood, following the method introduced in [1], [35]. Fig. 7 shows the true VUE image and the VUE images at 70 keV constructed from the component images reconstructed by the filtered ID and PL methods. The images are displayed in a window of [800, 1200] on the shifted Hounsfield unit (HU) scale where air is 0 HU and water is 1000 HU. Having more accurate contrast agent and blood component images, the PL method produced a VUE image that is closer to the truth, while the VUE image from the filtered ID method has more obvious residuals of contrast agent. The PL method also decreased beam-hardening artifacts in the monochromatic VUE image.
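A sketch of the VUE construction described above, assuming the volume fractions are stored as an (L0, Np) array and using the shifted HU scale of the figures (air 0 HU, water 1000 HU, i.e., 1000 μ/μwater). Any LAC values passed in are placeholders, not the values used in the paper, and the function names are hypothetical.

```python
import numpy as np

def vue_fractions(x, blood, contrast):
    """Replace the contrast-agent volume in each pixel by the same volume of blood.

    x: (L0, Np) volume fractions; blood, contrast: row indexes of those materials.
    """
    x_vue = np.array(x, dtype=float, copy=True)
    x_vue[blood] += x_vue[contrast]
    x_vue[contrast] = 0.0
    return x_vue

def monoenergetic_image(x, mu_at_E):
    """Sum over materials of mu_l(E) x_l, i.e., the object model (2) at one energy."""
    return np.asarray(mu_at_E, dtype=float) @ x

def shifted_hu(mu_img, mu_water):
    """Shifted Hounsfield scale used in the figures: air -> 0 HU, water -> 1000 HU."""
    return 1000.0 * np.asarray(mu_img, dtype=float) / mu_water
```

Moving volume from one material to another keeps each pixel's fractions summing to one, so the VUE image remains consistent with the constraint (3).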

Fig. 7.

True and estimated virtual un-enhancement (VUE) images at 70 keV. The display window is [800, 1200] HU on an HU scale shifted by 1000 HU, i.e., air is 0 HU and water is 1000 HU.

We calculated the root-mean-square (RMS) error of the component fractions of each material within the reconstruction support, using the down-sampled true images as the reference. Table II lists the RMS errors of the component images reconstructed by the ID method, the filtered ID method and the PL method; the errors of the component images are scaled by 10³ for easy comparison. Table II also lists the RMS errors of the VUE images obtained with the ID, filtered ID and PL methods. Median filtering greatly decreased the RMS errors of the ID images, especially for the fat and blood basis materials. Compared with the filtered ID method, the PL method lowered the RMS errors by about 50% to 70% for the fat, blood, omnipaque300 and cortical bone component images, by about 2% for the air image, and by about 20% for the monochromatic VUE image at 70 keV.
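The RMS evaluation described above can be sketched as follows. This assumes the true images are down-sampled by block averaging with an integer factor and that the reconstruction support is a centered disk; both are illustrative assumptions, and the helper names are hypothetical.

```python
import numpy as np

def block_downsample(img, factor):
    """Average non-overlapping factor x factor blocks (image size must be divisible)."""
    ny, nx = img.shape
    return img.reshape(ny // factor, factor, nx // factor, factor).mean(axis=(1, 3))

def circular_support(ny, nx, radius):
    """Boolean disk mask centered in the image, standing in for the reconstruction support."""
    y, x = np.mgrid[:ny, :nx]
    cy, cx = (ny - 1) / 2, (nx - 1) / 2
    return (y - cy) ** 2 + (x - cx) ** 2 <= radius ** 2

def rms_in_support(estimate, truth, support):
    """Root-mean-square error computed only over pixels where `support` is True."""
    diff = (estimate - truth)[support]
    return float(np.sqrt(np.mean(diff ** 2)))

# Table II reports component-fraction errors multiplied by 10^3, e.g.
# 1e3 * rms_in_support(x_hat, block_downsample(x_true, 2), mask)
```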

TABLE II.

RMS error comparison of the component fraction images reconstructed by the ID method, the filtered ID method and the PL method. The errors of the component fraction images are unitless and scaled by 10³. The errors of the VUE images are in HU.

| Method | Fat | Blood | Omnipaque300 | Cortical bone | Air | VUE (HU) |
|---|---|---|---|---|---|---|
| ID | 156.3 | 134.7 | 4.0 | 38.1 | 49.5 | 54.6 |
| Filtered ID | 101.6 | 62.5 | 3.3 | 34.9 | 42.0 | 54.3 |
| PL | 51.3 | 19.4 | 1.4 | 16.6 | 41.2 | 43.6 |
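The relative improvements quoted in the text follow directly from Table II. The short check below computes the percentage reduction in RMS error of the PL method relative to the filtered ID method, using only the numbers in the table.

```python
# RMS errors from Table II (component errors scaled by 10^3; VUE in HU).
filtered_id = {"Fat": 101.6, "Blood": 62.5, "Omnipaque300": 3.3,
               "Cortical bone": 34.9, "Air": 42.0, "VUE": 54.3}
pl = {"Fat": 51.3, "Blood": 19.4, "Omnipaque300": 1.4,
      "Cortical bone": 16.6, "Air": 41.2, "VUE": 43.6}

def relative_reduction(before, after):
    """Percentage decrease from `before` to `after`."""
    return 100.0 * (before - after) / before

reductions = {k: relative_reduction(filtered_id[k], pl[k]) for k in filtered_id}
for name, pct in reductions.items():
    print(f"{name:14s} {pct:5.1f}%")
```

This gives about 49.5% (fat), 69.0% (blood), 57.6% (omnipaque300), 52.4% (cortical bone), 1.9% (air) and 19.7% (VUE).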

VI. Conclusions

We proposed a statistical image reconstruction method for multi-material decomposition (MMD) from DECT measurements. We used a PL cost function consisting of a negative log-likelihood term and an edge-preserving regularization term for each basis material. To help solve the ill-posed problem of estimating multiple sets of unknowns from two sets of sinograms, we adopted the mass and volume conservation assumption and assumed that each pixel contains at most three basis materials. Compared with the ID method [1], [35], which uses the same assumptions, the proposed PL method reconstructed component and monochromatic VUE images with reduced noise, streak artifacts and cross-talk. Compared with the filtered ID method, the PL method lowered the RMS error by about 50% to 70% for the fat, blood, omnipaque300 and cortical bone basis material images, and by about 20% for the monochromatic VUE image at 70 keV.

Because the PL cost function is complex and non-convex, it is difficult to minimize directly. We previously introduced an optimization transfer method [36] with a series of material- and pixel-wise separable quadratic surrogate functions to monotonically decrease the PL cost function. Separability in both pixels and materials forces the curvature of each surrogate function to be large, and the larger the curvature, the slower the convergence rate. Moreover, the constraints on each pixel couple the estimates of the material fractions, so even with a surrogate function that is separable across materials, the minimization step would not be separable. In this paper, we proposed a PWSQS optimization transfer method whose quadratic surrogate functions are separable across pixels only. The PL cost function decreases faster with the PWSQS method (results not shown). However, iterative methods for MMD are computationally expensive. In each iteration, an MMD method requires one forward projection and one back-projection for each basis-material image, the solution of a constrained quadratic programming problem for each physically meaningful material tuple, and a comparison of the results over all material tuples to determine the optimal tuple for each pixel. The simulations in this paper used 2D fan-beam data; applying the PWSQS method to 3D data will require further work on accelerating the optimization. One potential acceleration strategy is to combine OS-PWSQS with spatially non-uniform optimization transfer [59].
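The per-pixel step described above (solve a constrained quadratic program for each allowed material tuple, then keep the best tuple) can be sketched as follows. This is an illustration under stated assumptions, not the authors' solver: one pixel's quadratic surrogate is represented by a hypothetical gradient `g` and symmetric positive-definite Hessian `H` over all L materials at the current estimate `x0`; the constraints are taken to be nonnegative volume fractions that sum to one; and each small QP is solved exactly by enumerating candidate supports and solving the equality-constrained KKT system on each. That enumeration is a swapped-in technique chosen for brevity and is practical only because each tuple has at most three materials.

```python
import itertools
import numpy as np

def solve_simplex_qp(H, g, x0, allowed, tol=1e-12):
    """Minimize q(x) = g.(x - x0) + 0.5 (x - x0)^T H (x - x0)
    subject to x >= 0, sum(x) = 1 and x_j = 0 for j not in `allowed`.
    Assumes H is symmetric positive definite."""
    n = len(g)
    best_x, best_q = None, np.inf
    for k in range(1, len(allowed) + 1):
        for support in itertools.combinations(allowed, k):
            S = list(support)
            # KKT system on support S: H_SS x_S + lam * 1 = H[S,:] x0 - g_S, 1^T x_S = 1.
            kkt = np.zeros((k + 1, k + 1))
            kkt[:k, :k] = H[np.ix_(S, S)]
            kkt[:k, k] = 1.0
            kkt[k, :k] = 1.0
            rhs = np.append(H[S, :] @ x0 - g[S], 1.0)
            sol = np.linalg.solve(kkt, rhs)
            if np.any(sol[:k] < -tol):
                continue  # violates nonnegativity, so not a feasible candidate
            x = np.zeros(n)
            x[S] = np.maximum(sol[:k], 0.0)
            d = x - x0
            q = g @ d + 0.5 * d @ H @ d
            if q < best_q:
                best_x, best_q = x, q
    return best_x, best_q

def best_material_tuple(H, g, x0, tuples):
    """Solve the constrained QP for every allowed material tuple; keep the lowest cost."""
    results = [(t, *solve_simplex_qp(H, g, x0, t)) for t in tuples]
    return min(results, key=lambda r: r[2])
```

Since every candidate is feasible and the true constrained minimizer is one of the candidates (H positive definite), the smallest candidate cost is the exact constrained minimum for that tuple.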

The PL cost function has two parameters for each material: a regularization parameter βl and an edge-preserving parameter δl. We found that the parameter choice for one material component influenced the reconstructed image of another component, so an appropriate combination of parameters must be determined carefully for each application. Huh and Fessler [60] used a material-cross penalty for DECT reconstruction that exploits the prior knowledge that different component images share common edges; this idea could be used for MMD as well. Choosing regularizers for the PL method and optimizing their parameters require further investigation.
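To make the roles of βl and δl concrete, the sketch below evaluates an edge-preserving roughness penalty for one material image using the Huber potential [56] on horizontal and vertical neighbor differences. The Huber choice and the first-order neighborhood are assumptions for illustration; this section does not state which edge-preserving potential the paper used.

```python
import numpy as np

def huber(t, delta):
    """Huber potential: quadratic for |t| <= delta, linear beyond (edge-preserving)."""
    a = np.abs(t)
    return np.where(a <= delta, 0.5 * t ** 2, delta * a - 0.5 * delta ** 2)

def roughness_penalty(x, beta, delta):
    """beta * sum of Huber potentials of first-order neighbor differences of image x."""
    dh = np.diff(x, axis=1)   # horizontal neighbor differences
    dv = np.diff(x, axis=0)   # vertical neighbor differences
    return beta * (huber(dh, delta).sum() + huber(dv, delta).sum())
```

βl scales the whole penalty, while δl sets the difference size beyond which the penalty grows only linearly: a unit step costs 0.5 with δl = 10 (quadratic regime) but only about 0.01 with δl = 0.01, so a small δl penalizes sharp material boundaries much less.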

Since the PL cost function is non-convex, good initialization is important. We initialized the PL method with the results of the ID method followed by median filtering. Median filtering decreased the noise and RMS errors of the ID images, but did not preserve the sum-to-one constraint that the ID images satisfied. Even with this initialization, the PL method converged to a good local minimum close to the truth. As future work, we will investigate image-domain “statistical” reconstruction methods that are computationally more practical than the PL method; such methods could also serve to initialize the PL method.
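A toy 1D example with hypothetical values shows why median filtering each material image independently need not preserve the sum-to-one constraint: the median is taken separately per material, so the filtered fractions at a pixel can come from different neighbors.

```python
import numpy as np

def median3(signal):
    """3-point median filter applied to interior samples; endpoints are left unchanged."""
    out = signal.astype(float).copy()
    windows = np.stack([signal[:-2], signal[1:-1], signal[2:]])
    out[1:-1] = np.median(windows, axis=0)
    return out

# Rows: materials A, B, C; columns: three pixels, each containing a single material.
fractions = np.array([[1.0, 0.0, 0.0],
                      [0.0, 1.0, 0.0],
                      [0.0, 0.0, 1.0]])
filtered = np.array([median3(f) for f in fractions])
print(fractions.sum(axis=0))  # [1. 1. 1.]
print(filtered.sum(axis=0))   # [1. 0. 1.]
```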

We used the contrast agent omnipaque300 as a basis material in this paper. Alternatively, one can use diluted contrast agent or iodine as basis materials; future work will investigate the effects of using various basis materials. Future work also includes applying the PL method to real spectral CT data, e.g., from fast kVp-switching DE scans, dual-source scans, or dual-layer detectors, to decompose materials as needed by the application.

Supplementary Material

ACKNOWLEDGMENT

We thank Paulo Mendonca for discussions about the ID method. The second author thanks Paul Kinahan and Adam Alessio for numerous discussions related to DE imaging.

This work is supported in part by NIH grants R01 HL-098686 and R01 CA-115870.

Appendix A

Surrogate Function Derivations

This appendix gives the derivation details of the surrogate design in Section IV.

A. Convexity Proof

This section proves the convexity of him(sim). The gradient of ȳim(sim) with respect to sim is

| (50) |

and the Hessian is

| (51) |

Since the Hessian matrix is positive semidefinite, the function ȳim(sim) is convex.
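As a numerical sanity check of this argument, the sketch below assumes the standard polyenergetic mean model ȳ(s) = Σk Ik exp(−βkᵀ s), a sum over discretized energy bins k with nonnegative weights Ik and attenuation vectors βk (hypothetical names; the paper's exact definition is given in the main text and is not repeated here). Its Hessian Σk Ik exp(−βkᵀ s) βk βkᵀ is a nonnegative combination of rank-one outer products and is therefore positive semidefinite. A constant background term would not change the gradient or Hessian.

```python
import numpy as np

def mean_counts(s, I, B):
    """y(s) = sum_k I_k exp(-B[k] . s); s: (L,), I: (K,), B: (K, L)."""
    return float(I @ np.exp(-B @ s))

def mean_counts_grad(s, I, B):
    """Gradient: -sum_k w_k B[k] with nonnegative weights w_k = I_k exp(-B[k] . s)."""
    w = I * np.exp(-B @ s)
    return -(B.T @ w)

def mean_counts_hessian(s, I, B):
    """Hessian: sum_k w_k B[k] B[k]^T, positive semidefinite because every w_k >= 0."""
    w = I * np.exp(-B @ s)
    return (B.T * w) @ B
```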

The gradient of him(sim) with respect to sim is

| (52) |

The Hessian of him(sim) is,

| (53) |

where we define the following “probability density function”

| (54) |

and used the fact that zim(sim) ≤ ȳim(sim) for the first inequality. Since the Hessian matrix of him(sim) is positive-semidefinite, him(sim) is a convex function of sim.

B. Derivation of Non-Separable Quadratic Surrogate

This section derives in detail the non-separable quadratic surrogate of Section IV-B2.

The optimal curvature c̆(·; α) for f(x; α) derived in [52] is

| (55) |

Thus the optimal quadratic surrogate for f(x; α) is

| (56) |

where q(x; x0, α) ≥ f(x; α).

Substituting (56) into (24) leads to a non-separable quadratic surrogate of , i.e.,

| (57) |

where is defined in (28). Because is a quadratic function of sim, one can rewrite it as (26).

C. Derivation of Pixel-Wise Separable Quadratic Surrogate

This section derives the pixel-wise separable quadratic surrogate in Section IV-B3.

We rewrite the sinogram vector as

| (58) |

provided and πmij is zero only if amij is zero. If the πmij’s are nonnegative, then we can apply the convexity inequality to the quadratic function defined in (26) to write

| (59) |

where bmij is defined in (32).

The value of evaluated at x(n) is

| (60) |

The column gradient of has elements xlj

| (61) |

The “matched function value” and “matched derivative” properties are inherent to optimization transfer methods [51]. The Hessian has elements

| (62) |

where is defined in (28).
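The convexity argument in this appendix can be checked numerically on a simplified model. The sketch below uses hypothetical notation and a single nonnegative system matrix A shared by all materials (an assumption): each ray's material line-integral vector is si = Σj aij xj, where xj holds the L material fractions of pixel j, and the quadratic part of the surrogate is Σi ½ (si − si0)ᵀ Ci (si − si0) with Ci positive semidefinite. Writing si − si0 = Σj πij (aij/πij)(xj − xj0) with πij = aij / Σk aik and applying convexity gives a pixel-wise separable bound whose L×L curvature blocks are Dj = Σi aij (Σk aik) Ci. Each block keeps the materials within a pixel coupled, as in PWSQS. The linear (gradient) term of the surrogate is already separable and identical on both sides, so it is omitted.

```python
import numpy as np

def pwsqs_curvature_blocks(A, C):
    """Per-pixel L x L curvature blocks D_j = sum_i a_ij * (sum_k a_ik) * C_i.

    A: (n_rays, n_pix) nonnegative system matrix; C: (n_rays, L, L) PSD curvatures.
    """
    row_sums = A.sum(axis=1)                         # sum_k a_ik for each ray i
    weights = A * row_sums[:, None]                  # a_ij * sum_k a_ik
    return np.einsum("ij,ilm->jlm", weights, C)      # shape (n_pix, L, L)

def quadratic_part(A, C, X, X0):
    """sum_i 0.5 (s_i - s0_i)^T C_i (s_i - s0_i), with s_i = sum_j a_ij x_j."""
    dS = A @ (X - X0)                                # (n_rays, L)
    return 0.5 * np.einsum("il,ilm,im->", dS, C, dS)

def separable_part(D, X, X0):
    """Pixel-wise separable bound: sum_j 0.5 (x_j - x0_j)^T D_j (x_j - x0_j)."""
    dX = X - X0                                      # (n_pix, L)
    return 0.5 * np.einsum("jl,jlm,jm->", dX, D, dX)
```

For any X, `quadratic_part(A, C, X, X0) <= separable_part(D, X, X0)`, with equality at X = X0, which are the majorization and matched-value properties the derivation relies on.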

Contributor Information

Yong Long, Email: yong.long@sjtu.edu.cn, CT Systems and Application Laboratory, GE Global Research Center, Niskayuna, NY 12309.

Jeffrey A. Fessler, Email: fessler@umich.edu, Department of Electrical Engineering and Computer Science, University of Michigan, Ann Arbor, MI 48109.

References

- 1. Mendonca PRS, Lamb P, Sahani D. A flexible method for multi-material decomposition of dual-energy CT images. IEEE Trans. Med. Imag. 2014 Jan;33(no. 1):99–116. doi: 10.1109/TMI.2013.2281719.

- 2. Elbakri IA, Fessler JA. Segmentation-free statistical image reconstruction for polyenergetic X-ray computed tomography with experimental validation. Phys. Med. Biol. 2003 Aug;48(no. 15):2543–2578. doi: 10.1088/0031-9155/48/15/314.

- 3. Brooks RA, Di Chiro G. Beam-hardening in X-ray reconstructive tomography. Phys. Med. Biol. 1976 May;21(no. 3):390–398. doi: 10.1088/0031-9155/21/3/004.

- 4. Alvarez RE, Macovski A. Energy-selective reconstructions in X-ray computed tomography. Phys. Med. Biol. 1976 Sept;21(no. 5):733–744. doi: 10.1088/0031-9155/21/5/002.

- 5. Sukovic P, Clinthorne NH. Basis material decomposition using triple-energy X-ray computed tomography. IEEE Instrumentation and Measurement Technology Conference, Venice. 1999;3:1615–1618.

- 6. Sukovic P, Clinthorne NH. Penalized weighted least-squares image reconstruction for dual energy X-ray transmission tomography. IEEE Trans. Med. Imag. 2000 Nov;19(no. 11):1075–1081. doi: 10.1109/42.896783.

- 7. Kinahan PE, Alessio AM, Fessler JA. Dual energy CT attenuation correction methods for quantitative assessment of response to cancer therapy with PET/CT imaging. Technology in Cancer Research and Treatment. 2006 Aug;5(no. 4):319–328. doi: 10.1177/153303460600500403.

- 8. Zhang L-J, Peng J, Wu S-Y, Jane Wang Z, Wu X-S, Zhou C-S, Ji X-M, Lu G-M. Liver virtual non-enhanced CT with dual-source, dual-energy CT: a preliminary study. European Radiology. 2010;20(no. 9):2257–2264. doi: 10.1007/s00330-010-1778-7.

- 9. Noh J, Fessler JA, Kinahan PE. Statistical sinogram restoration in dual-energy CT for PET attenuation correction. IEEE Trans. Med. Imag. 2009 Nov;28(no. 11):1688–1702. doi: 10.1109/TMI.2009.2018283.

- 10. Coleman AJ, Sinclair M. A beam-hardening correction using dual-energy computed tomography. Phys. Med. Biol. 1985 Nov;30(no. 11):1251–1256. doi: 10.1088/0031-9155/30/11/007.

- 11. Goodsitt MM. Beam hardening errors in post-processing dual energy quantitative computed tomography. Med. Phys. 1995 Jul;22(no. 7):1039–1047. doi: 10.1118/1.597590.

- 12. Macovski A, Alvarez RE, Chan J, Stonestrom JP, Zatz LM. Energy dependent reconstruction in X-ray computerized tomography. Computers in Biology and Medicine. 1976 Oct;6(no. 4):325–336. doi: 10.1016/0010-4825(76)90069-x.

- 13. Alvarez RE, Macovski A. X-ray spectral decomposition imaging system. U.S. Patent No. 4,029,963. 1977 Jun 14.

- 14. Marshall WH, Alvarez RE, Macovski A. Initial results with prereconstruction dual-energy computed tomography (PREDECT). Radiology. 1981 Aug;140(no. 2):421–430. doi: 10.1148/radiology.140.2.7255718.

- 15. Stonestrom JP, Alvarez RE, Macovski A. A framework for spectral artifact corrections in X-ray CT. IEEE Trans. Biomed. Engin. 1981 Feb;28(no. 2):128–141. doi: 10.1109/TBME.1981.324786.

- 16. Huh W, Fessler JA, Alessio AM, Kinahan PE. Fast kVp-switching dual energy CT for PET attenuation correction. Proc. IEEE Nuc. Sci. Symp. Med. Im. Conf; 2009. pp. 2510–2515.

- 17. Huh W, Fessler JA. Model-based image reconstruction for dual-energy X-ray CT with fast kVp switching. Proc. IEEE Intl. Symp. Biomed. Imag; 2009. pp. 326–329.

- 18. Primak AN, Ramirez Giraldo JC, Liu X, Yu L, McCollough CH. Improved dual-energy material discrimination for dual-source CT by means of additional spectral filtration. Med. Phys. 2009 Mar;36(no. 4):1359–1369. doi: 10.1118/1.3083567.

- 19. Giakos GC, Chowdhury S, Shah N, Mehta K, Sumrain S, Passerini A, Patnekar N, Evans EA, Fraiwan L, Ugweje OC, Nemer R. Signal evaluation of a novel dual-energy multimedia imaging sensor. IEEE Trans. Med. Imag. 2002 Oct;51(no. 5):949–954.

- 20. Kappler S, Grasruck M, Niederlöhner D, Strassburg M, Wirth S. Dual-energy performance of dual-kVp in comparison to dual-layer and quantum-counting CT system concepts. Proc. SPIE 7258, Medical Imaging 2009: Phys. Med. Im. 2009:725842.

- 21. Yu L, Liu X, McCollough CH. Pre-reconstruction three-material decomposition in dual-energy CT. Proc. SPIE 7258, Medical Imaging 2009: Phys. Med. Im. 2009:72581V.

- 22. Liu X, Yu L, Primak AN, McCollough CH. Quantitative imaging of element composition and mass fraction using dual-energy CT: Three-material decomposition. Med. Phys. 2009 Apr;36(no. 5):1602–1609. doi: 10.1118/1.3097632.

- 23. Nogueira LP, Barroso RC, Pinheiro CJG, Braz D, de Oliveira LF, Tromba G, Sodini N. Mapping lead distribution in bones by dual-energy computed microtomography with synchrotron radiation. Proc. IEEE Nuc. Sci. Symp. Med. Im. Conf; 2009. pp. 3471–3474.

- 24. Maass C, Grimmer R, Kachelriess M. Dual energy CT material decomposition from inconsistent rays (MDIR). Proc. IEEE Nuc. Sci. Symp. Med. Im. Conf; 2009. pp. 3446–3452.

- 25. Cai C, Rodet T, Legoupil S, Mohammad-Djafari A. A full-spectral Bayesian reconstruction approach based on the material decomposition model applied in dual-energy computed tomography. Med. Phys. 2013 Nov;40(no. 11):111916. doi: 10.1118/1.4820478.

- 26. Jin Y, Fu G, Lobastov V, Edic PM, De Man B. Dual-energy performance of x-ray CT with an energy-resolved photon-counting detector in comparison to x-ray CT with dual kVp. Proc. Intl. Mtg. on Fully 3D Image Recon. in Rad. and Nuc. Med. 2013:456–460.

- 27. Ritchings RT, Pullan BR. A technique for simultaneous dual energy scanning. J. Comp. Assisted Tomo. 1979 Dec;3(no. 6):842–846.

- 28. Taschereau R, Silverman RW, Chatziioannou AF. Dual-energy attenuation coefficient decomposition with differential filtration and application to a microCT scanner. Phys. Med. Biol. 2010 Feb;55(no. 4):1141–1155. doi: 10.1088/0031-9155/55/4/016.

- 29. Long Y, Fessler JA, Balter JM. Two-material decomposition from a single CT scan using statistical image reconstruction. Proc. Amer. Assoc. Phys. Med. 2011. To appear 15164. Wed. 11:20 AM, WC-C-110-6 oral.

- 30. Rutt B, Fenster A. Split-filter computed tomography: A simple technique for dual energy scanning. J. Comp. Assisted Tomo. 1980 Aug;4(no. 4):501–509. doi: 10.1097/00004728-198008000-00019.

- 31. Laidevant AD, Malkov S, Flowers CI, Kerlikowske K, Shepherd JA. Compositional breast imaging using a dual-energy mammography protocol. Med. Phys. 2010 Jan;37(no. 1):164–174. doi: 10.1118/1.3259715.

- 32. Kis BJ, Sarnyai Z, Kakonyi R, Erdelyi M, Szabo G. Fat and iron quantification of the liver with dual-energy computed tomography in the presence of high atomic number elements. Proc. 2nd Intl. Mtg. on image formation in X-ray CT. 2012:230–233.

- 33. Masetti S, Roma L, Rossi PL, Lanconelli N, Baldazzi G. Preliminary results of a multi-energy CT system for small animals. J. Inst. 2009 Jun;4(no. 6):06011. (6 pp.)

- 34. Wang X, Meier D, Mikkelsen S, Maehlum GE, Wagenaar DJ, Tsui BMW, Patt BE, Frey EC. MicroCT with energy-resolved photon-counting detectors. Phys. Med. Biol. 2011 May;56(no. 9):2791–2816. doi: 10.1088/0031-9155/56/9/011.

- 35. Mendonca PRS, Bhotika R, Thomsen BW, Licato PE, Joshi MC. Multi-material decomposition of dual-energy CT data. Proc. SPIE. 2010;7622:76221W.

- 36. Long Y, Fessler JA. Multi-material decomposition using statistical image reconstruction in X-ray CT. Proc. 2nd Intl. Mtg. on image formation in X-ray CT. 2012:413–416.

- 37. Segars WP, Tsui BMW. Study of the efficacy of respiratory gating in myocardial SPECT using the new 4-D NCAT phantom. IEEE Trans. Nuc. Sci. 2002 Jun;49(no. 3):675–679.

- 38. Ruth C, Joseph PM. Estimation of a photon energy spectrum for a computed tomography scanner. Med. Phys. 1997 May;24(no. 5):695–702. doi: 10.1118/1.598159.

- 39. Colijn AP, Beekman FJ. Accelerated simulation of cone beam X-ray scatter projections. IEEE Trans. Med. Imag. 2004 May;23(no. 5):584–590. doi: 10.1109/tmi.2004.825600.

- 40. Yavuz M, Fessler JA. Statistical image reconstruction methods for randoms-precorrected PET scans. Med. Im. Anal. 1998 Dec;2(no. 4):369–378. doi: 10.1016/s1361-8415(98)80017-0.

- 41. Yavuz M, Fessler JA. Penalized-likelihood estimators and noise analysis for randoms-precorrected PET transmission scans. IEEE Trans. Med. Imag. 1999 Aug;18(no. 8):665–674. doi: 10.1109/42.796280.

- 42. Sukovic P, Clinthorne NH. Data weighted vs. non-data weighted dual energy reconstructions for X-ray tomography. Proc. IEEE Nuc. Sci. Symp. Med. Im. Conf. 1998;3:1481–1483.

- 43. Zhang R, Thibault JB, Bouman CA, Sauer KD, Hsieh J. Model-based iterative reconstruction for dual-energy X-ray CT using a joint quadratic likelihood model. IEEE Trans. Med. Imag. 2014 Jan;33(no. 1):117–134. doi: 10.1109/TMI.2013.2282370.

- 44. Glover GH, Pelc NJ. Nonlinear partial volume artifacts in x-ray computed tomography. Med. Phys. 1980 May;7(no. 3):238–248. doi: 10.1118/1.594678.

- 45. Joseph PM, Spital RD. The exponential edge-gradient effect in x-ray computed tomography. Phys. Med. Biol. 1981 May;26(no. 3):473–487. doi: 10.1088/0031-9155/26/3/010.

- 46. Fessler JA, Rogers WL. Spatial resolution properties of penalized-likelihood image reconstruction methods: Space-invariant tomographs. IEEE Trans. Im. Proc. 1996 Sept;5(no. 9):1346–1358. doi: 10.1109/83.535846.

- 47. Valenzuela J, Fessler JA. Joint reconstruction of Stokes images from polarimetric measurements. J. Opt. Soc. Am. A. 2009 Apr;26(no. 4):962–968. doi: 10.1364/josaa.26.000962.

- 48. He X, Fessler JA, Cheng L, Frey EC. Regularized image reconstruction algorithms for dual-isotope myocardial perfusion SPECT (MPS) imaging using a cross-tracer edge-preserving prior. IEEE Trans. Med. Imag. 2011 Jun;30(no. 6):1169–1183. doi: 10.1109/TMI.2010.2087031.

- 49. Lange K, Hunter DR, Yang I. Optimization transfer using surrogate objective functions. J. Computational and Graphical Stat. 2000 Mar;9(no. 1):1–20.

- 50. De Pierro AR. A modified expectation maximization algorithm for penalized likelihood estimation in emission tomography. IEEE Trans. Med. Imag. 1995 Mar;14(no. 1):132–137. doi: 10.1109/42.370409.

- 51. Jacobson MW, Fessler JA. An expanded theoretical treatment of iteration-dependent majorize-minimize algorithms. IEEE Trans. Im. Proc. 2007 Oct;16(no. 10):2411–2422. doi: 10.1109/tip.2007.904387.

- 52. Erdoğan H, Fessler JA. Monotonic algorithms for transmission tomography. IEEE Trans. Med. Imag. 1999 Sept;18(no. 9):801–814. doi: 10.1109/42.802758.

- 53. Erdoğan H, Fessler JA. Ordered subsets algorithms for transmission tomography. Phys. Med. Biol. 1999 Nov;44(no. 11):2835–2851. doi: 10.1088/0031-9155/44/11/311.

- 54. Hudson HM, Larkin RS. Accelerated image reconstruction using ordered subsets of projection data. IEEE Trans. Med. Imag. 1994 Dec;13(no. 4):601–609. doi: 10.1109/42.363108.

- 55. Fessler JA. Statistical image reconstruction methods for transmission tomography. In: Sonka M, Michael Fitzpatrick J, editors. Handbook of Medical Imaging, Volume 2. Medical Image Processing and Analysis. Bellingham: SPIE; 2000. pp. 1–70.

- 56. Huber PJ. Robust statistics. New York: Wiley; 1981.

- 57. Keerthi SS, Gilbert EG. Convergence of a generalized SMO algorithm for SVM classifier design. Machine Learning. 2002;46(no. 1–3):351–360.

- 58. De Man B, Basu S. Distance-driven projection and backprojection in three dimensions. Phys. Med. Biol. 2004 Jun;49(no. 11):2463–2475. doi: 10.1088/0031-9155/49/11/024.

- 59. Kim D, Pal D, Thibault J-B, Fessler JA. Accelerating ordered subsets image reconstruction for X-ray CT using spatially non-uniform optimization transfer. IEEE Trans. Med. Imag. 2013 Nov;32(no. 11):1965–1978. doi: 10.1109/TMI.2013.2266898.

- 60. Huh W, Fessler JA. Iterative image reconstruction for dual-energy x-ray CT using regularized material sinogram estimates. Proc. IEEE Intl. Symp. Biomed. Imag; 2011. pp. 1512–1515.
