Abstract

Background

Studies of whether inpatient mortality in U.S. teaching hospitals rises in July as a result of organizational disruption and relative inexperience of new physicians (‘July effect’) find small and mixed results, perhaps because study populations primarily include low-risk inpatients whose mortality outcomes are unlikely to exhibit a July effect.

Methods and Results

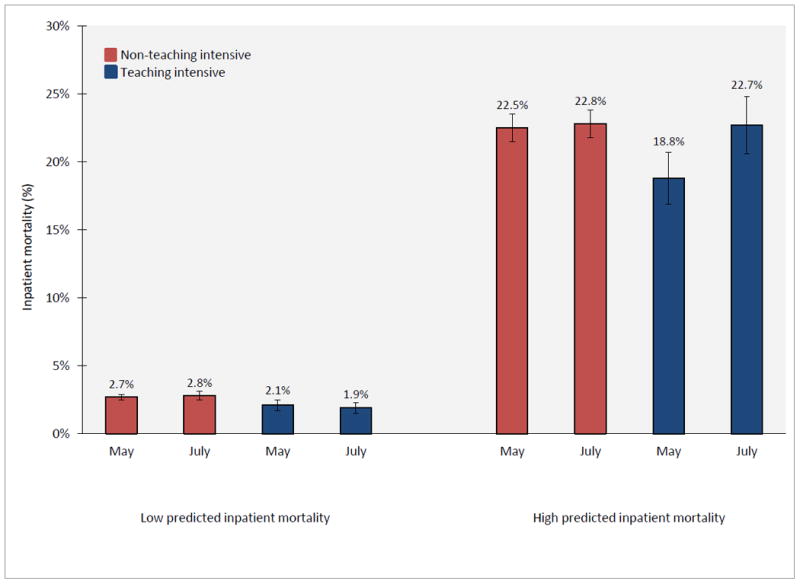

Using the U.S. Nationwide Inpatient Sample, we estimated difference-in-difference models of mortality, percutaneous coronary intervention (PCI) rates, and bleeding complication rates, for high and low risk patients with acute myocardial infarction (AMI) admitted to 98 teaching-intensive and 1353 non-teaching-intensive hospitals during May and July 2002 to 2008. Among patients in the top quartile of predicted AMI mortality (high risk), adjusted mortality was lower in May than July in teaching-intensive hospitals (18.8% in May, 22.7% in July, p<0.01), but similar in non-teaching-intensive hospitals (22.5% in May, 22.8% in July, p=0.70). Among patients in the lowest three quartiles of predicted AMI mortality (low risk), adjusted mortality was similar in May and July in both teaching-intensive hospitals (2.1% in May, 1.9% in July, p=0.45) and non-teaching-intensive hospitals (2.7% in May, 2.8% in July, p=0.21). Differences in PCI and bleeding complication rates could not explain the observed July mortality effect among high risk patients.

Conclusions

High risk AMI patients experience similar mortality in teaching- and non-teaching-intensive hospitals in July, but lower mortality in teaching-intensive hospitals in May. Low risk patients experience no such “July effect” in teaching-intensive hospitals.

Keywords: July effect, inpatient mortality, acute myocardial infarction

Introduction

Each summer U.S. teaching hospitals experience a turnover of resident physicians, leading many to investigate whether declines in patient outcomes occur as a result of operational disruption and relative inexperience of new cohorts of physicians (“July effect”).1-3 While substantial variability in results exists across studies of the July effect, most large and high quality studies find a relatively small but statistically significant increase in mortality at the start of the residency year.1

An important reason why prior estimated July effects may have been mixed and small in magnitude is that most studies do not examine whether the July effect varies according to the predicted risk of inpatient mortality. Mortality outcomes of patients at low risk of inpatient mortality – either because of few severe co-morbid conditions or because the disease necessitating hospitalization is relatively low risk – may be unaffected by resident inexperience in July, whereas mortality among hospitalized patients with high predicted mortality may be most affected by errors or relative inexperience at the start of the residency year.

While several studies have examined the July effect among patients at high risk of inpatient mortality – e.g. patients with femoral neck fractures4, patients undergoing cardiac surgery5-8, and trauma patients9-11 – these studies have been primarily surgery-oriented in nature and do not include comparisons to patients at lower risk of inpatient mortality.

A second limitation of most prior studies is that they do not adequately distinguish between teaching hospitals that are highly teaching-intensive versus those that are not. While some studies distinguish teaching hospitals as being minor or major,3 even among major teaching hospitals there may be substantial variation in the number of resident physicians per bed. The July effect is more likely to occur in hospitals that rely heavily on resident physicians for patient care than hospitals in which residents play a smaller role.

We studied inpatient mortality among a national sample of patients admitted with acute myocardial infarction (AMI) to U.S. hospitals during May and July 2002 to 2008. We studied AMI given its prevalence, range in mortality risk, and the clinical importance of early recognition of complications and implementation of optimal medical therapy and of percutaneous coronary intervention (PCI). We estimated the difference in inpatient mortality between May and July in teaching-intensive and non-teaching-intensive hospitals (July effect) for patients at low and high predicted risk of inpatient mortality after AMI. We hypothesized that a July mortality increase in teaching-intensive hospitals would be greatest for patients already at high risk of inpatient mortality because this group of patients may be most susceptible to errors arising from organizational disruption and the relative inexperience of residents in July. In order to assess possible mechanisms of a differential July effect between low and high risk patients with AMI, we also estimated rates of PCI and rates of complication from bleeding among both groups.

Methods

Data source

We used the Nationwide Inpatient Sample (NIS) to identify a nationally representative sample of patients admitted to U.S. hospitals with AMI. The NIS approximates a 20-percent stratified random sample of U.S. hospitals. Each hospital discharge includes demographic and clinical data on each patient, including age, sex, race (White, Black, Hispanic, Other/Unknown), month and year of hospital admission, length of stay, primary and secondary diagnoses and procedures, and disposition (e.g., inpatient death). Diagnoses are coded according to the International Classification of Diseases, Ninth Revision (ICD-9). Use of the data for this project was approved by the Institutional Review Board at the University of Southern California.

Study sample

In our baseline analysis, we identified patients admitted with AMI during May and July 2002 to 2008. Patients with AMI were identified according to ICD-9 criteria in the Agency for Health Care Research and Quality (AHRQ) Inpatient Quality Indicators, version 3.2 (AHRQ, Rockville, Maryland).12, 13 We studied patients admitted during May and July, rather than longer timeframes such as March-to-May and July-to-September, to minimize differences in patient characteristics and outcomes that may occur with seasonal variation.1 To establish a clean comparison between May and July, we studied patients whose admission date to the hospital was during May 1 to May 31 or July 1 to July 31. In our baseline analysis, we did not study patients admitted during June since some residency programs begin within the month. However, in additional analyses we explored differences in mortality between teaching-intensive and non-teaching-intensive hospitals throughout the academic year.

We studied AMI given its prevalence, range in mortality risk across patients, and the importance for clinical outcomes of early recognition of AMI and its complications and early implementation of optimal medical therapy and PCI in appropriate patients. Identifying a condition with a large range in mortality risk across patients is important to assessing whether the relative inexperience of residents in July disproportionately impacts patients at high rather than low mortality risk. Similarly, relative inexperience of residents may be expected to have its greatest adverse effect for conditions in which early recognition and management of disease is particularly important. We studied all patients with AMI, regardless of whether they were admitted directly to a hospital or transferred from another hospital; our results were unchanged when transfers were excluded.

Definition of teaching-intensive hospitals

Teaching hospitals have traditionally been identified according to the American Hospital Association's (AHA) Annual Survey, which defines teaching hospitals by an American Medical Association approved residency, membership in the Council of Teaching Hospitals, or a ratio of resident physicians to beds of ≥ 0.25.14 Within this definition, however, teaching hospitals vary significantly in the extent to which residents are involved in the care of the hospitals' patients. For instance, in some teaching hospitals trainees do not perform specific procedures whereas in other hospitals they do, leading some analyses of the July effect to focus on procedures performed by trainees only.7, 15 Similarly, teaching hospitals vary significantly in the ratio of resident physicians to hospital beds,3 so an estimated July effect may be attenuated when a substantial number of the teaching hospitals studied have relatively few resident physicians per bed.

In order to appropriately focus our analysis of the July effect among teaching hospitals in which a substantial amount of patient care is actually delivered by resident physicians, we divided hospitals into the following categories based on prior studies: non-teaching hospitals (zero residents per bed), very minor or minor teaching hospitals (>0 - 0.25 residents per bed), major teaching hospitals (>0.25 – 0.60 residents per bed), and very major teaching hospitals (>0.60 residents per bed).16, 17 Based on these categorizations, we defined teaching-intensive hospitals as those that were very major teaching hospitals. Non-teaching-intensive hospitals were defined as all other hospitals. Teaching-intensive or very major teaching hospitals approximately corresponded to the top quartile of teaching hospitals in terms of the ratio of residents per bed. In addition to our baseline analysis which studied the July effect using this binary classification of teaching-intensive and non-teaching-intensive hospitals, we studied how mortality differences between July and May varied across the four more specific hospital categories.
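The categorization above can be sketched in a few lines of Python. This is an illustrative sketch only: the function names and category labels are hypothetical, and the treatment of the boundary values (0.25 and 0.60 assigned to the lower category) is an assumption read off the ">0 - 0.25" and ">0.25 – 0.60" ranges stated in the text.

```python
def teaching_category(residents_per_bed: float) -> str:
    """Map a resident-to-bed ratio to one of the four hospital categories.

    Boundary handling is an assumption: 0.25 counts as minor and
    0.60 counts as major, following the ">" signs in the text.
    """
    if residents_per_bed < 0:
        raise ValueError("resident-to-bed ratio must be non-negative")
    if residents_per_bed == 0:
        return "non-teaching"
    if residents_per_bed <= 0.25:
        return "very minor or minor teaching"
    if residents_per_bed <= 0.60:
        return "major teaching"
    return "very major teaching"


def is_teaching_intensive(residents_per_bed: float) -> bool:
    """Binary classification used in the baseline analysis."""
    return teaching_category(residents_per_bed) == "very major teaching"
```

Under this sketch, a hospital with 0.45 residents per bed is a major teaching hospital but falls into the non-teaching-intensive group in the binary classification.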

Our final sample of patients admitted with AMI included 14919 patients admitted to 98 teaching-intensive hospitals (7630 in May; 7289 in July) and 61298 admitted to 1353 non-teaching-intensive hospitals (31375 in May; 29923 in July).

Outcome variables

Our primary outcome variable was all-cause inpatient mortality. Longer mortality measures such as 30-day mortality could not be evaluated since the NIS does not follow patients after discharge. In order to evaluate mechanisms for how a July mortality effect among patients with AMI could be mediated, we analyzed rates of PCI and bleeding complications associated with either PCI or anti-coagulant therapy. We hypothesized that patients admitted with AMI to teaching-intensive hospitals in July may experience relatively lower rates of PCI, higher rates of bleeding complications, or both. PCI was identified through procedural codes; bleeding complications were identified through ICD-9 diagnosis codes for hemorrhage associated with a procedure or anti-coagulation therapy and procedural codes for blood transfusion.

Risk stratification of patients with AMI

In order to study the July effect among patients at highest predicted risk of inpatient mortality after AMI, we computed mortality after AMI for each individual in our database using a validated risk adjustment tool from the AHRQ.12 The AHRQ risk prediction tool includes risk parameters for patient age, sex, and relevant diagnoses and procedure codes that have been estimated from national AMI discharge data. These risk parameters can be applied to other claims-based discharge data to predict patient-level inpatient mortality after AMI. We applied the AHRQ risk parameters to each patient in our data to obtain patient-level predicted mortality. We did not directly estimate a risk prediction model; rather, the AHRQ tool allows investigators to use administrative data to compute predicted mortality for a patient using AHRQ's risk coefficients already estimated from national data. A priori and based on prior studies, we defined patients dichotomously to be at high predicted inpatient mortality risk after AMI if their predicted mortality was in the top quartile and at low risk if their predicted mortality was in the bottom three quartiles.18 In order to ensure that our categorization of high and low risk patients was homogenous across teaching-intensive and non-teaching intensive hospitals, risk quartiles were defined for the entire population rather than separately for teaching-intensive and non-teaching-intensive hospital populations.
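The pooled definition of risk quartiles can be illustrated as follows. This is a minimal sketch, not the authors' code: the function name is hypothetical, the input is assumed to be the list of AHRQ predicted mortalities for all patients regardless of hospital type, and the choice of the "inclusive" percentile method is an arbitrary assumption.

```python
from statistics import quantiles


def high_risk_flags(predicted_mortality: list[float]) -> list[bool]:
    """Flag patients whose predicted mortality lies in the top quartile.

    The 75th-percentile cutoff is computed once over the pooled sample,
    so the same threshold applies to teaching-intensive and
    non-teaching-intensive hospitals.
    """
    # quantiles(..., n=4) returns the three quartile cut points.
    cutoff = quantiles(predicted_mortality, n=4, method="inclusive")[2]
    return [p > cutoff for p in predicted_mortality]


# Hypothetical predicted mortalities for eight pooled patients.
pooled = [0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07, 0.08]
flags = high_risk_flags(pooled)
```

Computing the cutoff separately within each hospital group would instead label the top quarter of each group as high risk, which would make the two high risk groups differ in absolute predicted mortality. With tied values at the cutoff, the flagged group need not be exactly one quarter of the sample.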

We chose predicted-mortality categories that were broad enough to ensure an adequate sample size for comparison but that also exhibited a substantial difference in predicted mortality. For instance, predicted inpatient mortality after AMI in the top quartile and bottom three quartiles of patients in our data was 19.8% (3774 deaths among 19054 patients) and 3.32% (11964 deaths among 57163 patients), respectively. Our results were insensitive to alternative definitions of high risk such as the top tercile, quintile, and decile of predicted inpatient mortality. In addition to defining high risk patients as the top quartile of inpatient mortality, we also explored whether the estimated July effect varied with more discrete measures of predicted inpatient mortality.

Baseline statistical analysis

We estimated a difference-in-difference model comparing inpatient mortality among patients admitted with AMI to teaching-intensive versus non-teaching-intensive hospitals in May and July of the same calendar year. In this model, the difference in mortality between May and July in teaching-intensive hospitals is compared to the difference in mortality between May and July in non-teaching-intensive hospitals, the latter difference accounting for any seasonal differences in AMI mortality occurring between the two months. Difference-in-difference models have been commonly used to study the July effect.1, 3, 19-22

We estimated a difference-in-difference multivariable logistic regression model of the following form:

where Di was a binary indicator variable for inpatient mortality in hospitalization i, Julyi was a July indicator variable, Teachi was an indicator variable for teaching-intensive hospital status, Julyi*Teachi was the July indicator variable interacted with teaching-intensive hospital status (i.e., July effect), Zi was a vector of covariates including patient age (continuous), sex, race/ethnicity, AHRQ predicted mortality after AMI, and length of stay, and εi was the error term. The model's standard errors were clustered at the hospital level to allow for correlation in outcomes across patients at a hospital. In order to study whether the July effect was different for patients at highest predicted risk of inpatient mortality after AMI, we estimated this model separately for patients with high and low predicted inpatient mortality risk (defined as top versus bottom three quartiles of predicted inpatient mortality, respectively). In order to evaluate mechanisms through which a July mortality effect among patients with AMI could be mediated, we estimated two additional logistic models in which the first outcome variable was a binary indicator for whether PCI was performed during hospitalization and the second outcome variable was a binary indicator for whether a bleeding complication occurred during hospitalization. Covariates in each of these models were identical to the baseline model. STATA, version 11 (STATA Corporation, College Station, Texas) was used for statistical analyses and the 95% confidence interval around reported means reflects 0.025 in each tail or P ≤ 0.05.
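One specification consistent with these definitions is the latent-variable form of a logistic difference-in-difference model, shown below as a sketch. The coefficient symbols $\beta_0, \ldots, \beta_3$ and $\gamma$ and the latent outcome $D_i^{*}$ are illustrative notation assumed here, not taken from the text; $D_i$, $July_i$, $Teach_i$, $Z_i$ and $\varepsilon_i$ correspond to Di, Julyi, Teachi, Zi and εi above.

$$
D_i^{*} = \beta_0 + \beta_1\, July_i + \beta_2\, Teach_i + \beta_3\, (July_i \times Teach_i) + \gamma' Z_i + \varepsilon_i, \qquad D_i = \mathbf{1}\left[D_i^{*} > 0\right]
$$

If $\varepsilon_i$ is assumed to follow a standard logistic distribution, this is equivalent to $\operatorname{logit} \Pr(D_i = 1) = \beta_0 + \beta_1 July_i + \beta_2 Teach_i + \beta_3 (July_i \times Teach_i) + \gamma' Z_i$, and $\beta_3$ is the coefficient that captures the July effect.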

Additional analyses

In addition to our baseline analyses, we also explored several other questions related to the July effect.

First, we studied whether the mortality difference between AMI patients admitted to teaching-intensive and non-teaching-intensive hospitals was greatest in July compared to all other months and declined over time. We hypothesized that mortality differences between teaching-intensive and non-teaching intensive hospitals would be greatest in July and would decline over time until May, at least among AMI patients with high predicted mortality risk. We analyzed this question by estimating a multivariable logistic regression of the following form:

where Di was again a binary indicator variable for inpatient mortality, Monthi was a vector of indicator variables for each month (omitted month was May), Teachi was an indicator variable for teaching-intensive hospital status, Monthi*Teachi was a vector of month indicator variables interacted with teaching-intensive hospital status, Zi was a vector of covariates including patient age, sex, race/ethnicity, AHRQ predicted mortality after AMI, and length of stay, and εi was the error term. The model's standard errors were again clustered at the hospital level. We reported adjusted inpatient mortality for high and low risk AMI patients hospitalized in teaching-intensive and non-teaching-intensive hospitals, as well as odds ratios of mortality between teaching-intensive and non-teaching-intensive hospitals, according to calendar month. Mortality odds ratios between teaching-intensive and non-teaching-intensive hospitals in a given month (e.g., July, August, September, etc.) were compared to identical mortality odds ratios in May.

Second, we analyzed whether the estimated July effects for high and low risk AMI patients varied according to hospitals of different teaching intensity, rather than comparing very major teaching hospitals (i.e., ‘teaching-intensive’) to all other hospitals (i.e., ‘non-teaching-intensive’). Specifically, we estimated a multivariable logistic regression of the following form:

where Di was a binary indicator variable for inpatient mortality, Julyi was a July indicator variable, Teach_intensityi was a vector of indicator variables for teaching-intensity category (non-teaching hospitals [zero residents per bed], very minor or minor teaching hospitals [>0 - 0.25 residents per bed], major teaching hospitals [>0.25 – 0.6 residents per bed], and very major teaching hospitals [>0.6 residents per bed]),16, 17 Julyi*Teach_intensityi was a vector of these teaching-intensity indicators interacted with the July indicator variable, Zi was a vector of covariates including patient age, sex, race/ethnicity, AHRQ predicted mortality after AMI, and length of stay, and εi was the error term. The model's standard errors were clustered at the hospital level. We hypothesized that July mortality effects would rise with the teaching intensity of hospitals or be present at only the most teaching-intensive hospitals.

Third, we analyzed whether the estimated July effects changed in magnitude over the study period. In 2003, the Accreditation Council for Graduate Medical Education (ACGME) implemented national duty hour regulations which established a maximum 80-hour work week and reduced shift lengths to no longer than 30 consecutive hours, among other provisions.23 An increasing trend in adherence to duty hour regulations by residency programs may have mixed impacts on the July effect. Increases in resident oversight may mitigate the July effect over time, while in contrast, increased patient hand-offs and decreased continuity of care may make the July effect stronger in recent years. We analyzed whether the July effect varied by year by estimating a multivariable logistic regression of the following form:

where Di was a binary indicator variable for inpatient mortality, Julyi was a July indicator variable, Teachi was an indicator variable for teaching-intensive hospital status, and Julyi*Teachi*Yeari was the interaction between the July indicator variable, teaching-intensive hospital indicator, and a vector of year indicators (omitted year was 2002). Zi was a vector of covariates including patient age, sex, race/ethnicity, AHRQ predicted mortality after AMI, and length of stay, and εi was the error term. The model's standard errors were clustered at the hospital level.

Fourth, we analyzed whether the estimated July effect varied with more discrete measures of predicted inpatient mortality risk, rather than estimating the July effect dichotomously for patients in the top versus bottom three quartiles of predicted mortality. We divided patients into four categories on the basis of predicted inpatient mortality: those in the bottom two quartiles of predicted risk (i.e., bottom half), third quartile, 75th – 90th percentile, and top decile. We chose a broader categorization for patients at low predicted inpatient mortality risk (i.e., we combined the bottom two quartiles) since AHRQ predicted mortality was low in this population (1.7%), necessitating a greater sample size to be able to detect a statistically significant July effect. Similarly, we divided the top quartile into patients with predicted mortality between the 75th – 90th percentile and those in the top decile, since deaths were more prevalent in these groups. We estimated a multivariable logistic regression of the following form:

where Di was a binary indicator variable for inpatient mortality, Julyi was a July indicator variable, Teachi was an indicator variable for teaching-intensive hospital status, and Julyi*Teachi*Severityi was the interaction between the July indicator variable, teaching-intensive hospital indicator, and indicators for each of the four predicted mortality groups we defined. Zi was a vector of covariates including patient age, sex, race/ethnicity, AHRQ predicted mortality after AMI, and length of stay, and εi was the error term. The model's standard errors were clustered at the hospital level. We reported adjusted inpatient mortality in teaching-intensive and non-teaching-intensive hospitals in May and July, as well as odds ratios, for each of these predicted inpatient mortality groups.

Results

Baseline analyses

The mean age of patients was lower in teaching-intensive hospitals compared to non-teaching-intensive hospitals (e.g., 66.3y v 68.8y in May, p < 0.001) as was the proportion of patients that were female (e.g., 38.2% v 41.1% in May, p = 0.003) (Table 1). Unadjusted inpatient mortality was lower among patients in teaching-intensive hospitals (e.g., 5.6% v 8.0% in May, p < 0.001) as was AHRQ predicted inpatient mortality (e.g., 6.9% v 7.8% in May, p < 0.001). Rates of PCI were higher in teaching-intensive hospitals.

Table 1. Characteristics of patients admitted with AMI to U.S. teaching-intensive and non-teaching-intensive hospitals, 2002-2008.

| Characteristic | Teaching-intensive hospitals, May | Teaching-intensive hospitals, July | Non-teaching-intensive hospitals, May | Non-teaching-intensive hospitals, July |
|---|---|---|---|---|
| No. of hospitals | 98 | 98 | 1353 | 1353 |
| No. of patients with AMI | 7073 | 6810 | 31932 | 30402 |
| Mean patient age, y (sd) | 66.3 (14.1) | 66.0 (14.2) | 68.8 (14.7) | 68.3 (14.8) |
| Female, No. (%) | 2702 (38.2) | 2658 (39.0) | 13112 (41.1) | 12433 (40.9) |
| Race, No. (%) | | | | |
| White | 4278 (60.5) | 4068 (59.7) | 18072 (56.6) | 17188 (56.5) |
| Black | 629 (8.9) | 573 (8.4) | 1601 (5.0) | 1586 (5.2) |
| Hispanic | 441 (6.2) | 450 (6.6) | 1272 (4.0) | 1227 (4.0) |
| Other or unknown | 1725 (24.4) | 1719 (25.2) | 10987 (34.4) | 10401 (34.2) |
| Mean No. Charlson-Deyo comorbidities (sd) | 2.4 (1.5) | 2.4 (1.6) | 2.5 (1.6) | 2.4 (1.6) |
| Deaths, No. | 398 | 429 | 2547 | 2374 |
| Mortality rate, % (95% CI) | 5.6 (5.1 - 6.2) | 6.3 (5.7 - 6.9) | 8.0 (7.7 - 8.3) | 7.8 (7.5 - 8.1) |
| Predicted mortality rate, Mean % (95% CI) | 6.9 (6.7 - 7.1) | 6.8 (6.6 - 7.0) | 7.8 (7.7 - 7.9) | 7.5 (7.4 - 7.6) |
| No. patients with PCI (%) | 3269 (46.2) | 3165 (46.5) | 12472 (39.1) | 12191 (40.1) |
| No. patients with bleeding complications (%) | 764 (10.8) | 758 (11.1) | 2219 (6.9) | 2119 (7.0) |

Notes: Teaching-intensive hospitals were defined as teaching hospitals with a ratio of resident physicians to hospital beds of more than 0.6. Non-teaching-intensive hospitals were defined as all other hospitals. Predicted mortality was based on a validated risk-adjustment tool from the Agency for Health care Research and Quality.

Unadjusted inpatient mortality was lower in teaching-intensive hospitals in May compared to July (5.6% v 6.3%, p = 0.069) whereas it was slightly higher in non-teaching-intensive hospitals in May compared to July (8.0% v 7.8%, p = 0.42). The unadjusted difference-in-difference July mortality effect implied by these estimates was a 0.8 percentage point mortality increase (p = 0.04), computed as [(6.3% - 5.6%) – (7.8% - 8.0%)]. AHRQ predicted mortality was slightly lower in July than May in teaching-intensive hospitals (6.8% v 6.9%), and lower in non-teaching-intensive hospitals (7.5% v 7.8%). The difference-in-difference July mortality effect implied by AHRQ predicted estimates was a 0.3 percentage point mortality increase (p = 0.12), computed as [(6.8% - 6.9%) – (7.5% - 7.8%)]. Rates of PCI increased between May and July in both teaching-intensive and non-teaching-intensive hospitals.
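The unadjusted difference-in-difference arithmetic can be reproduced from the death and patient counts in Table 1. The sketch below uses hypothetical helper names. It suggests why the reported effect is 0.8 percentage points even though the rounded rates in the bracketed expression give 0.9: the calculation from counts yields roughly 0.84.

```python
def rate_pct(deaths: int, patients: int) -> float:
    """Mortality rate in percent."""
    return 100.0 * deaths / patients


def diff_in_diff(treat_pre: float, treat_post: float,
                 ctrl_pre: float, ctrl_post: float) -> float:
    """Change in the treated group minus change in the comparison group."""
    return (treat_post - treat_pre) - (ctrl_post - ctrl_pre)


# Counts from Table 1 (deaths, patients with AMI).
teach_may = rate_pct(398, 7073)
teach_july = rate_pct(429, 6810)
nonteach_may = rate_pct(2547, 31932)
nonteach_july = rate_pct(2374, 30402)

effect_from_counts = diff_in_diff(teach_may, teach_july,
                                  nonteach_may, nonteach_july)
effect_from_rounded = diff_in_diff(5.6, 6.3, 8.0, 7.8)

print(f"from counts:  {effect_from_counts:.2f} percentage points")   # about 0.84
print(f"from rounded: {effect_from_rounded:.1f} percentage points")  # 0.9
```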

Among patients at highest predicted risk of inpatient mortality after AMI (defined as those in the top quartile of AHRQ predicted risk), actual mortality in teaching-intensive hospitals was substantially lower in May compared to July (285 v 333 deaths; 20.6% v 24.8%, p = 0.01) (Table 2). In contrast, mortality was similar among high risk patients between May and July in non-teaching-intensive hospitals (1908 v 1730 deaths; 22.3% v 22.3%, p = 0.98). Among these high risk patients, the unadjusted July mortality effect was 4.4 percentage points (p = 0.02). Unadjusted rates of PCI were not statistically different between May and July in teaching-intensive hospitals (275 v 267 PCIs; 19.9% v 19.9%, p = 0.97) and in non-teaching-intensive hospitals (1115 v 1052 PCIs; 13.0% v 13.5%, p = 0.33). The same was true for bleeding complications.
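The unadjusted May versus July comparisons for high risk patients can be approximated with a pooled two-proportion z-test. This is a sketch under an assumption: the text does not state which test produced the unadjusted p-values, so the test below is a stand-in, and the function name is hypothetical. Applied to the counts above, it gives p-values close to the reported 0.01 and 0.98.

```python
from math import erfc, sqrt


def two_proportion_p_value(x1: int, n1: int, x2: int, n2: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p2 - p1) / se
    # For a standard normal, the two-sided tail area is erfc(|z| / sqrt(2)).
    return erfc(abs(z) / sqrt(2))


# High risk patients: deaths and patients in May, then July.
p_teaching = two_proportion_p_value(285, 1381, 333, 1344)
p_non_teaching = two_proportion_p_value(1908, 8561, 1730, 7768)

print(f"teaching-intensive:     p = {p_teaching:.3f}")
print(f"non-teaching-intensive: p = {p_non_teaching:.2f}")
```

This test ignores clustering of patients within hospitals, which the regression models account for through hospital-level clustered standard errors.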

Table 2. Inpatient mortality, rates of PCI and bleeding complications among patients admitted with AMI during May and July, according to teaching-intensive hospital status and predicted inpatient mortality risk.

| Measure | Teaching-intensive hospitals, May | Teaching-intensive hospitals, July | Non-teaching-intensive hospitals, May | Non-teaching-intensive hospitals, July |
|---|---|---|---|---|
| Patients at high predicted inpatient mortality | | | | |
| No. patients | 1381 | 1344 | 8561 | 7768 |
| Deaths, No. | 285 | 333 | 1908 | 1730 |
| Mortality rate, % (95% CI) | 20.6 (18.5 - 22.9) | 24.8 (22.5 - 27.2) | 22.3 (21.4 - 23.2) | 22.3 (21.4 - 23.2) |
| Received PCI, No. | 275 | 267 | 1117 | 1052 |
| PCI rate, % (95% CI) | 19.9 (17.8 - 22.1) | 19.9 (17.8 - 22.1) | 13.0 (12.3 - 13.8) | 13.5 (12.8 - 14.3) |
| Bleeding complication, No. | 170 | 179 | 600 | 544 |
| Bleeding rate, % (95% CI) | 12.3 (10.6 - 14.2) | 13.3 (11.5 - 15.3) | 7.0 (6.5 - 7.6) | 7.0 (6.5 - 7.6) |
| Patients at low predicted inpatient mortality | | | | |
| No. patients | 5692 | 5466 | 23371 | 22634 |
| Deaths, No. | 113 | 96 | 639 | 644 |
| Mortality rate, % (95% CI) | 2.0 (1.6 - 2.4) | 1.8 (1.4 - 2.1) | 2.7 (2.5 - 3.0) | 2.8 (2.6 - 3.1) |
| Received PCI, No. | 2994 | 2898 | 11355 | 11139 |
| PCI rate, % (95% CI) | 52.6 (51.3 - 53.9) | 53.0 (51.7 - 54.3) | 48.6 (47.9 - 49.2) | 49.2 (48.6 - 49.9) |
| Bleeding complication, No. | 594 | 579 | 1619 | 1575 |
| Bleeding rate, % (95% CI) | 10.4 (9.7 - 11.3) | 10.6 (9.8 - 11.4) | 6.9 (6.6 - 7.3) | 7.0 (6.6 - 7.3) |

Notes: Predicted mortality was based on a validated risk-adjustment tool from the Agency for Health care Research and Quality. Patients at high predicted inpatient mortality were defined as those in the top quartile of predicted mortality. Patients at low predicted inpatient mortality were defined as those in the bottom three quartiles of predicted mortality.

Among patients in the bottom three quartiles of AHRQ predicted risk (low risk), actual mortality was similar in May and July in teaching-intensive hospitals (113 v 96 deaths; 2.0% v 1.8%, p = 0.36) and non-teaching-intensive hospitals (639 v 644 deaths; 2.7% v 2.8%, p = 0.48). Among low risk patients, the unadjusted July mortality effect was −0.3 percentage points (p = 0.27). PCI rates were not statistically different between May and July in teaching-intensive hospitals (2994 v 2898 PCIs; 52.6% v 53.0%, p = 0.599) and in non-teaching-intensive hospitals (11355 v 11139 PCIs; 48.6% v 49.2%, p = 0.16). The same was true for bleeding complications.

Adjusted mortality among high risk patients was substantially lower in May than July in teaching-intensive hospitals (18.8% in May, 95% CI 16.9% - 20.7%; 22.7% in July, 95% CI 20.6% - 24.8%; p = 0.006) but similar between these months in non-teaching-intensive hospitals (22.5% in May, 95% CI 21.5% - 23.5%; 22.8% in July, 95% CI 21.8% - 23.8%; p = 0.70) (Figure 1). The adjusted difference-in-difference July mortality effect among high risk patients was 3.6 percentage points (p = 0.017). Adjusted mortality among low risk patients was not statistically significantly different between May and July in teaching-intensive hospitals (2.1% in May, 95% CI 1.6% - 2.5%; 1.9% in July, 95% CI 1.5% - 2.3%; p = 0.45) or non-teaching-intensive hospitals (2.7% in May, 95% CI 2.4% - 2.9%; 2.8% in July, 95% CI 2.6% - 3.1%; p = 0.21) (Figure 1). The adjusted difference-in-difference July mortality effect among low risk patients was -0.3 percentage points (p = 0.237).

Figure 1.

Adjusted inpatient mortality among patients admitted with AMI during May and July, according to teaching-intensive hospital status and predicted inpatient mortality risk. Adjusted inpatient mortality for teaching-intensive and non-teaching-intensive hospitals during May and July was estimated from a difference-in-difference logistic regression model which adjusted for patient age, sex, race, AHRQ predicted mortality, and year. The July mortality effect among high risk patients is (22.7 – 18.8) – (22.8 – 22.5) = 3.6 percentage points, p-value = 0.02. The July mortality effect among low risk patients is (1.9 – 2.1) – (2.8 – 2.7) = -0.3 percentage points, p-value = 0.24.

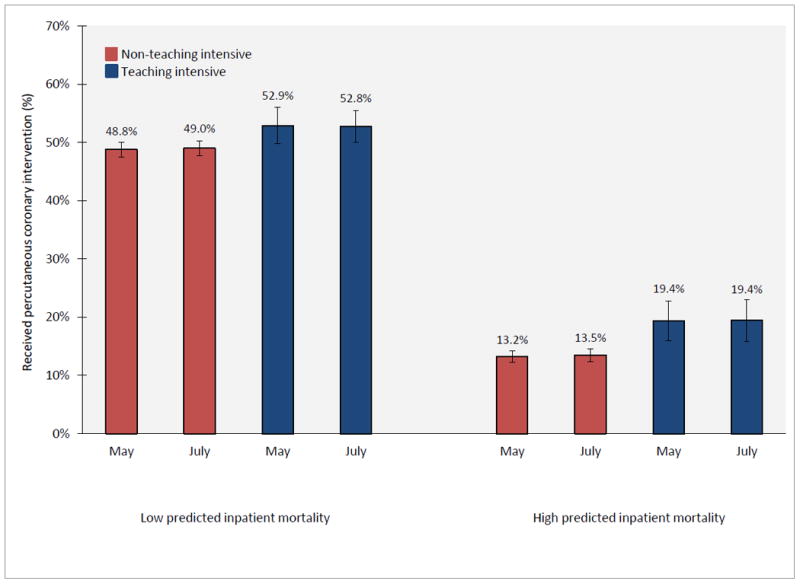

Figures 2 and 3 explore whether differences in rates of PCI and/or bleeding complications explain the greater July mortality effect estimated among high risk patients with AMI compared to low risk patients. Adjusted rates of PCI among high risk patients with AMI were not statistically significantly different between May and July in teaching-intensive hospitals (19.4% in May, 95% CI 16.0% - 22.8%; 19.4% in July, 95% CI 15.9% - 23.0%; p = 0.98) or non-teaching-intensive hospitals (13.2% in May, 95% CI 12.1% - 14.2%; 13.5% in July, 95% CI 12.4% - 14.6%; p = 0.53) (Figure 2). Adjusted PCI rates among low risk patients were also similar between May and July in both teaching-intensive and non-teaching-intensive hospitals.

Figure 2.

Adjusted rates of percutaneous coronary intervention among patients admitted with AMI during May and July, according to teaching-intensive hospital status and predicted inpatient mortality risk. Adjusted rates of PCI for teaching-intensive and non-teaching-intensive hospitals during May and July were estimated from a difference-in-difference logistic regression model which adjusted for patient age, sex, race, AHRQ predicted mortality, and year. The July PCI effect among high risk patients is (19.4 – 19.4) – (13.5 – 13.2) = -0.3 percentage points, p-value = 0.80. The July PCI effect among low risk patients is (52.8 – 52.9) – (49.0 – 48.8) = -0.3 percentage points, p-value = 0.71.

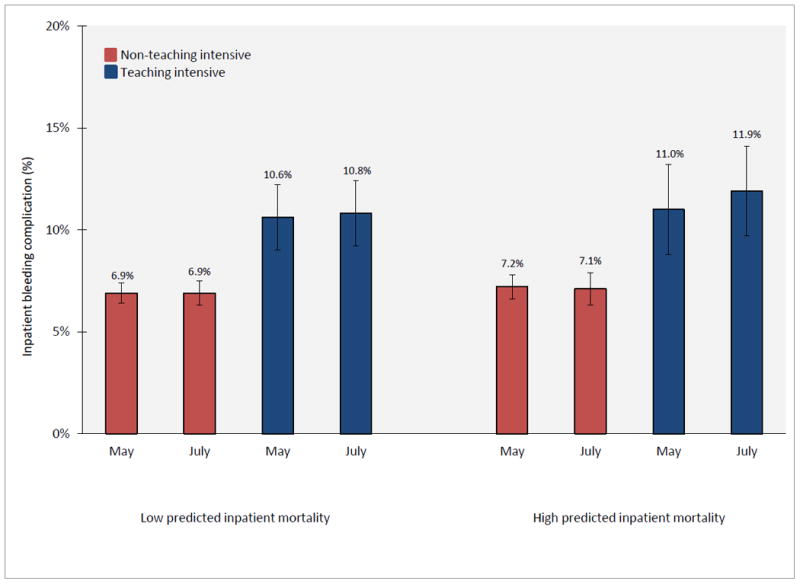

Figure 3.

Adjusted rates of complications from bleeding among patients admitted with AMI during May and July, according to teaching-intensive hospital status and predicted inpatient mortality risk. Adjusted rates of bleeding complications for teaching-intensive and non-teaching-intensive hospitals during May and July were estimated from a difference-in-difference logistic regression model which adjusted for patient age, sex, race, AHRQ predicted mortality, and year. The July bleeding effect among high risk patients is (11.9 – 11.0) – (7.1 – 7.2) = 1.0 percentage points, p-value = 0.53. The July bleeding effect among low risk patients is (10.8 – 10.6) – (6.9 – 6.9) = 0.2 percentage points, p-value = 0.94.

Adjusted rates of bleeding complications among high risk patients were not statistically significantly different between May and July in teaching-intensive hospitals (11.0% in May, 95% CI 8.9% - 13.2%; 11.9% in July, 95% CI 9.6% - 14.1%; p = 0.52) or non-teaching-intensive hospitals (7.2% in May, 95% CI 6.5% - 7.8%; 7.1% in July, 95% CI 6.4% - 7.9%; p = 0.92) (Figure 3). Adjusted rates of complications from bleeding among low risk patients were likewise similar between May and July in both teaching-intensive and non-teaching-intensive hospitals.
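The difference-in-difference estimates quoted in the captions of Figures 2 and 3 are simple arithmetic on four adjusted rates. As a minimal sketch, the Python snippet below reproduces the PCI estimates from the adjusted rates reported for Figure 2. The helper name `did_percentage_points` is a hypothetical label chosen for illustration and is not taken from the study's own code.

```python
def did_percentage_points(teach_may, teach_july, nonteach_may, nonteach_july):
    """July effect in percentage points:
    (July - May change in teaching-intensive hospitals) minus
    (July - May change in non-teaching-intensive hospitals)."""
    teaching_change = teach_july - teach_may
    nonteaching_change = nonteach_july - nonteach_may
    # Round to one decimal place to match the precision of the reported rates.
    return round(teaching_change - nonteaching_change, 1)


# Adjusted PCI rates (%) as reported for Figure 2.
pci_high_risk = did_percentage_points(19.4, 19.4, 13.2, 13.5)
pci_low_risk = did_percentage_points(52.9, 52.8, 48.8, 49.0)
print(pci_high_risk, pci_low_risk)
```

The helper takes rates in percent. The p-values in the captions come from the regression model and cannot be recovered from the four rates alone.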

Additional analyses

Differences in mortality between teaching-intensive and non-teaching-intensive hospitals according to month of hospitalization

Analysis of mortality differences between teaching-intensive and non-teaching-intensive hospitals over the entire academic year confirmed our baseline results. Among high risk AMI inpatients, the adjusted odds ratio of mortality between teaching-intensive and non-teaching-intensive hospitals was highest in July and generally declined over the course of the academic year spanning July to May/June (Table 3). For example, among high risk AMI patients the adjusted odds ratio of mortality between teaching-intensive and non-teaching-intensive hospitals was 0.79 in May, compared with its highest value of the year in July (OR 1.00), p = 0.02. From July to May, the adjusted odds ratio of mortality between teaching-intensive and non-teaching-intensive hospitals generally declined in magnitude, for example 0.94 in October, 0.86 in December, and 0.74 in March. Although odds ratios for most of the months, except July and October, were not statistically significantly different from May, they did demonstrate an overall declining trend from July to May. The odds ratio of mortality between teaching-intensive and non-teaching-intensive hospitals in June was higher than the odds ratio in May, but lower than the odds ratio in July, presumably reflecting differences in start dates of new residents across teaching-intensive hospitals. Consistent with our baseline results, the odds ratio of mortality for low risk AMI patients hospitalized in teaching-intensive versus non-teaching-intensive hospitals did not vary significantly throughout the academic year.

Table 3. Adjusted inpatient mortality among patients admitted with AMI to teaching-intensive and non-teaching intensive hospitals, according to month of admission.

| Month | Low risk: teaching-intensive | Low risk: non-teaching-intensive | Low risk: odds ratio (95% CI) | Low risk: p-value of OR compared to May | High risk: teaching-intensive | High risk: non-teaching-intensive | High risk: odds ratio (95% CI) | High risk: p-value of OR compared to May |
|---|---|---|---|---|---|---|---|---|
| January | 2.1% | 2.8% | 0.72 (0.55 - 0.90) | 0.73 | 20.4% | 25.0% | 0.75 (0.64 - 0.86) | 0.55 |
| February | 2.6% | 2.9% | 0.89 (0.71 - 1.07) | 0.24 | 21.8% | 24.1% | 0.87 (0.74 - 1.00) | 0.35 |
| March | 2.0% | 2.8% | 0.71 (0.56 - 0.86) | 0.59 | 18.5% | 22.9% | 0.74 (0.62 - 0.86) | 0.59 |
| April | 2.1% | 2.8% | 0.74 (0.53 - 0.94) | 0.80 | 19.7% | 23.3% | 0.79 (0.66 - 0.92) | 0.97 |
| May | 2.1% | 2.7% | 0.76 (0.60 - 0.97) | - | 18.9% | 22.5% | 0.79 (0.68 - 0.91) | - |
| June | 2.1% | 2.6% | 0.81 (0.63 - 0.98) | 0.69 | 20.9% | 23.4% | 0.85 (0.72 - 0.98) | 0.42 |
| July | 1.9% | 2.9% | 0.64 (0.48 - 0.80) | 0.23 | 22.9% | 22.8% | 1.00 (0.86 - 1.15) | 0.02 |
| August | 2.1% | 2.6% | 0.78 (0.62 - 0.95) | 0.88 | 20.1% | 23.1% | 0.82 (0.69 - 0.95) | 0.70 |
| September | 1.8% | 2.5% | 0.71 (0.55 - 0.86) | 0.63 | 20.6% | 22.5% | 0.89 (0.76 - 1.02) | 0.24 |
| October | 2.1% | 2.8% | 0.73 (0.58 - 0.88) | 0.74 | 21.9% | 22.9% | 0.94 (0.80 - 1.07) | 0.05 |
| November | 2.3% | 2.6% | 0.86 (0.66 - 1.05) | 0.43 | 21.2% | 22.8% | 0.90 (0.76 - 1.05) | 0.21 |
| December | 1.9% | 2.8% | 0.68 (0.53 - 0.83) | 0.45 | 20.7% | 23.0% | 0.86 (0.74 - 0.97) | 0.31 |

Notes: Odds ratio reflects comparison of mortality between teaching-intensive and non-teaching intensive hospitals. P-value reflects comparison of mortality odds ratio in teaching-intensive versus non-teaching-intensive hospitals in a given month, relative to May. For example, the mortality odds ratio between teaching-intensive and non-teaching intensive hospitals in July is compared to the identical odds ratio in May, which is defined as the ‘July effect.’ Similar comparisons are made for other months, relative to May.
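The note above defines the July effect as a comparison of two odds ratios. As a rough illustration only, the Python sketch below computes unadjusted odds ratios directly from the adjusted mortality percentages in Table 3 for high risk patients and then takes their ratio. The function names are hypothetical, and because the published odds ratios come from the regression model, values computed this way only approximate them.

```python
def odds(rate_percent):
    """Convert a mortality rate in percent to odds."""
    p = rate_percent / 100.0
    return p / (1.0 - p)


def odds_ratio(teaching_percent, nonteaching_percent):
    """Odds of death in teaching-intensive vs non-teaching-intensive hospitals."""
    return odds(teaching_percent) / odds(nonteaching_percent)


# High risk patients, Table 3 (adjusted mortality, %).
or_may = odds_ratio(18.9, 22.5)
or_july = odds_ratio(22.9, 22.8)

# Ratio of the July odds ratio to the May odds ratio: values above 1
# indicate that the teaching-hospital advantage seen in May shrinks in July.
july_vs_may = or_july / or_may
print(f"May OR ~ {or_may:.2f}, July OR ~ {or_july:.2f}, ratio ~ {july_vs_may:.2f}")
```

Computed this way, the May and July odds ratios come out at roughly 0.80 and 1.01, close to the model-based 0.79 and 1.00 in Table 3. The associated p-value requires the underlying regression and is not reproduced here.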

Association between July effect and hospital teaching intensity

Mortality increases among high risk AMI patients in July relative to May were primarily concentrated at very major teaching hospitals (those with greater than 0.6 residents per bed and defined in our baseline analyses as ‘teaching intensive’) rather than hospitals of lower teaching intensity (Table 4). For example, the odds ratio of mortality in July relative to May was 1.01 in non-teaching hospitals, 1.10 in very minor or minor teaching hospitals (p = 0.35 for odds ratio compared to non-teaching hospitals), 0.90 in major teaching hospitals (p = 0.40 for odds ratio compared to non-teaching), and 1.30 in very major teaching hospitals (p = 0.02 for odds ratio compared to non-teaching).

Table 4. Adjusted inpatient mortality among patients admitted with AMI in May and July, according to teaching hospital status.

| Teaching hospital status | Low risk: May | Low risk: July | Low risk: odds ratio (95% CI) | Low risk: p-value of OR compared to non-teaching | High risk: May | High risk: July | High risk: odds ratio (95% CI) | High risk: p-value of OR compared to non-teaching |
|---|---|---|---|---|---|---|---|---|
| Non-teaching | 3.0% | 3.1% | 1.06 (0.92 - 1.23) | - | 23.4% | 23.5% | 1.01 (0.91 - 1.11) | - |
| Very minor or minor | 2.3% | 2.6% | 1.15 (0.85 - 1.45) | 0.60 | 21.8% | 23.3% | 1.10 (0.92 - 1.28) | 0.35 |
| Major | 2.1% | 2.1% | 1.00 (0.70 - 1.31) | 0.73 | 20.0% | 18.5% | 0.90 (0.69 - 1.11) | 0.40 |
| Very major | 2.1% | 1.9% | 0.90 (0.66 - 1.15) | 0.29 | 18.8% | 22.7% | 1.30 (1.06 - 1.55) | 0.02 |

Notes: Odds ratio reflects comparison of mortality in July relative to May in each hospital type. Very minor or minor teaching hospitals were defined as those with up to 0.25 residents per bed; major > 0.25 – 0.60 residents per bed; very major > 0.6 residents per bed. P-value reflects comparison of July-May mortality odds ratio for patients hospitalized in a given hospital type, relative to odds ratio for patients hospitalized in non-teaching hospitals. For example, among high risk patients, the p-value comparing the July-May mortality odds ratio in very major teaching hospitals to the odds ratio in non-teaching hospitals is 0.02.
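The resident-to-bed thresholds in the notes to Table 4 can be written as a small classification rule. The Python sketch below is illustrative only: the function name `teaching_status` is hypothetical, and treating hospitals with zero residents per bed as non-teaching is an assumption, since the notes do not state how the non-teaching group was identified.

```python
def teaching_status(residents_per_bed):
    """Map a resident-to-bed ratio to the teaching categories of Table 4."""
    if residents_per_bed < 0:
        raise ValueError("residents_per_bed must be non-negative")
    if residents_per_bed == 0:
        # Assumption: hospitals with no residents form the non-teaching group.
        return "Non-teaching"
    if residents_per_bed <= 0.25:
        # "Up to 0.25 residents per bed" per the table notes.
        return "Very minor or minor"
    if residents_per_bed <= 0.60:
        # "> 0.25 - 0.60 residents per bed" per the table notes.
        return "Major"
    # "> 0.6 residents per bed": the 'teaching-intensive' group.
    return "Very major"


for ratio in (0.0, 0.10, 0.25, 0.40, 0.60, 0.75):
    print(ratio, teaching_status(ratio))
```

Only the last category corresponds to the hospitals labeled teaching-intensive in the baseline analyses.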

Trends in the July effect from 2002 to 2008

The July effect among high risk AMI patients increased in magnitude over the study period, although differences across years were not statistically significant at p < 0.05 (Supplementary Table 1). For example, in 2002, adjusted mortality among high risk AMI patients admitted to teaching-intensive hospitals was 27.7% in May and 26.5% in July (July-May odds ratio 1.08), compared to 26.7% in May and 26.1% in July in non-teaching-intensive hospitals (July-May odds ratio 0.96). In contrast, in 2008, adjusted mortality among high risk AMI patients admitted to teaching-intensive hospitals was 14.2% in May and 21.6% in July (July-May odds ratio 1.75), compared to 18.2% in May and 17.0% in July in non-teaching-intensive hospitals (July-May odds ratio 0.91). Despite increasing in magnitude over the study period, the July effect odds ratio – which reflects a comparison of the July-May mortality odds ratio in teaching-intensive hospitals compared to the identical ratio in non-teaching-intensive hospitals – was not statistically distinguishable across years given reduced sample sizes in analyses broken down by year.

July effect across alternative categories of predicted inpatient mortality risk

In addition to estimating the July effect for binary categorizations of high and low risk AMI patients, we also analyzed whether the July effect varied across finer categories of predicted inpatient mortality risk. The July effect in teaching-intensive hospitals was primarily concentrated among patients in the top decile of predicted inpatient mortality risk (Supplementary Table 2). The magnitude of the July effect increased with predicted inpatient mortality risk, although the July effect did not differ statistically significantly across predicted risk categories, with the exception of the top decile of risk.

Discussion

Using data from a national sample of patients admitted with AMI to U.S. hospitals, we found that adjusted mortality among high risk AMI patients was similar in teaching- and non-teaching-intensive hospitals in July, but was substantially lower in teaching-intensive hospitals compared to non-teaching-intensive hospitals in May and throughout the rest of the academic year. Importantly, because adjusted mortality among high risk AMI patients was similar between teaching- and non-teaching-intensive hospitals in July, our findings do not suggest any role of avoidance of teaching-intensive hospitals in July. Rather, adjusted mortality among high risk AMI patients is generally lower in teaching-intensive hospitals throughout the year, except for July, consistent with an adverse impact of organizational disruption and physician inexperience in teaching-intensive hospitals in July on outcomes of high risk AMI patients.

The increase in mortality in July relative to May among high risk patients admitted to teaching-intensive hospitals was concentrated at the most teaching intensive hospitals: very major teaching hospitals with > 0.6 residents per bed. We found no July mortality increase among low risk patients with AMI admitted to teaching-intensive hospitals. Differences in rates of PCI and complications from bleeding could not explain the observed July mortality increase among high risk patients with AMI.

While prior studies have focused on surgical patients4-11 and intensive care unit patients in U.S. hospitals24, 25 as well as emergency admissions to British hospitals26 – patients at arguably high risk of inpatient mortality – to our knowledge no national studies exist evaluating how the July effect is modified by severity of patient illness. This is important because one reason why July effects estimated in prior studies may have been mixed and small in magnitude is that most studies do not examine patient populations whose mortality outcomes are most likely to be adversely impacted by the relative inexperience of residents in July.

Our findings suggest not only that patients at high predicted risk of inpatient mortality may be most susceptible to adverse events occurring during resident turnover, but also that interventions targeting high risk patients in July may improve mortality substantially. While our analysis suggests that rates of PCI and complications from bleeding do not explain the July mortality effect among high risk patients with AMI, we could not explore whether delays in the timing of PCI, errors of medication administration,2 or other failures to diagnose and expeditiously treat complications of AMI could explain our findings. Our finding that the July mortality effect among patients with AMI is greatest for those already at high risk of inpatient mortality suggests that greater supervisory attention to these patients may be warranted. This additional oversight may provide a safeguard against errors made due to resident or fellow inexperience and organizational disruption. Importantly, because we focused our analysis on patients admitted with AMI during the month of July – as opposed to patients admitted during June who continued their hospitalization into July – our results should not reflect errors arising from hand-off of patients between resident physicians changing over from June to July.

The estimated July effect among high risk AMI patients admitted to teaching-intensive hospitals also appeared to increase over the study period, though differences across years were not statistically significant, perhaps due to low sample sizes in analyses broken down by year. This suggests that while increases in resident oversight would have been expected to diminish the July effect over time, increased patient hand-offs and decreased continuity of care may have more than offset this effect.

Our study also highlighted an interesting risk-treatment paradox in both teaching-intensive and non-teaching intensive hospitals, whereby AMI patients with lower inpatient predicted mortality risk were more likely to undergo PCI than high-risk patients who, in theory, would be most likely to benefit from revascularization. This phenomenon has been attributed to a number of explanations including uncertainty among physicians about the benefits of PCI in higher risk patients who are arguably not reflective of clinical trial populations; risk aversion among physicians; and limited clinical information available in administrative databases which may make patients who are clinically inappropriate for PCI appear appropriate to researchers analyzing these data.27, 28

Our study had several limitations. Despite our difference-in-difference study design, our results may still be confounded by differences in hospital staffing or patient characteristics that occur in teaching-intensive hospitals in July. For instance, we were unable to account for additional resident supervision occurring in teaching-intensive hospitals in July that may mitigate our results. We were also unable to ensure that the care of specific patients in teaching-intensive hospitals was provided by trainees, a limitation that has led some studies to focus on July effects in outcomes of procedures performed only by trainees.7, 15 We were able to partly address this issue, however, by focusing our analysis of teaching hospitals on those that were highly teaching-intensive, an innovation over prior studies. Similarly, physicians recently completing residency or fellowship may begin independent practice in July and may also be responsible for the observed July effect. Although our difference-in-difference study design should account for this possibility if new physicians are equally likely to begin their careers in teaching-intensive and non-teaching-intensive hospitals in July, we cannot exclude the contribution of new attending physicians to the observed July effect.

Our study also relied on administrative diagnosis codes, which may inaccurately reflect patient risk if coding practices vary between teaching and non-teaching hospitals. However, institutional norms which lead to biases in the measurement of illness severity should be similar across months and therefore addressed by our difference-in-difference study design. Our study also focused only on AMI and may not generalize to comparisons of July effects among high and low risk patients admitted with other acute conditions. Finally, although we demonstrated that differences in rates of PCI and bleeding complications could not explain the greater July mortality effect among high risk patients admitted with AMI, we could not identify the specific pathways by which increased mortality in teaching-intensive hospitals in July occurred.

Despite its limitations, our study illustrates that the July mortality effect in teaching-intensive hospitals is most pronounced in high risk patient populations for whom relative physician inexperience and organizational disruption would be predicted to be most adversely impactful. Recognition of the unique impact of resident turnover on the outcomes of high risk patients in teaching-intensive hospitals may shape policies to improve mortality outcomes in this vulnerable population.

Supplementary Material

Acknowledgments

Funding Sources: Support was provided by the Office of the Director, National Institutes of Health (1DP5OD017897-01, Dr. Jena), by the National Institute on Aging (1R03AG031990-A1, Dr. Romley), and the Leonard Schaeffer Center for Health Policy and Economics at the University of Southern California (Dr. Romley).

Footnotes

Conflict of Interest Disclosures: None.

References

- 1. Young JQ, Ranji SR, Wachter RM, Lee CM, Niehaus B, Auerbach AD. “July effect”: Impact of the academic year-end changeover on patient outcomes: A systematic review. Ann Intern Med. 2011;155:309–315. doi: 10.7326/0003-4819-155-5-201109060-00354.

- 2. Phillips DP, Barker GE. A July spike in fatal medication errors: A possible effect of new medical residents. J Gen Intern Med. 2010;25:774–779. doi: 10.1007/s11606-010-1356-3.

- 3. Huckman RS, Barro JR, National Bureau of Economic Research. Cohort turnover and productivity: The July phenomenon in teaching hospitals. Cambridge, Mass.: National Bureau of Economic Research; 2005.

- 4. Anderson KL, Koval KJ, Spratt KF. Hip fracture outcome: Is there a “July effect”? Am J Orthop (Belle Mead NJ) 2009;38:606–611.

- 5. Bakaeen FG, Huh J, LeMaire SA, Coselli JS, Sansgiry S, Atluri PV, Chu D. The July effect: Impact of the beginning of the academic cycle on cardiac surgical outcomes in a cohort of 70,616 patients. Ann Thorac Surg. 2009;88:70–75. doi: 10.1016/j.athoracsur.2009.04.022.

- 6. Dhaliwal AS, Chu D, Deswal A, Bozkurt B, Coselli JS, Lemaire SA, Huh J, Bakaeen FG. The July effect and cardiac surgery: The effect of the beginning of the academic cycle on outcomes. Am J Surg. 2008;196:720–725. doi: 10.1016/j.amjsurg.2008.07.005.

- 7. Shuhaiber JH, Goldsmith K, Nashef SA. Impact of cardiothoracic resident turnover on mortality after cardiac surgery: A dynamic human factor. Ann Thorac Surg. 2008;86:123–130. doi: 10.1016/j.athoracsur.2008.03.041. discussion 130-121.

- 8. Englesbe MJ, Fan Z, Baser O, Birkmeyer JD. Mortality in Medicare patients undergoing surgery in July in teaching hospitals. Ann Surg. 2009;249:871–876. doi: 10.1097/SLA.0b013e3181a501bd.

- 9. Claridge JA, Schulman AM, Sawyer RG, Ghezel-Ayagh A, Young JS. The “July phenomenon” and the care of the severely injured patient: Fact or fiction? Surgery. 2001;130:346–353. doi: 10.1067/msy.2001.116670.

- 10. Highstead RG, Johnson LS, Street JH 3rd, Trankiem CT, Kennedy SO, Sava JA. July--as good a time as any to be injured. J Trauma. 2009;67:1087–1090. doi: 10.1097/TA.0b013e3181b8441d.

- 11. Schroeppel TJ, Fischer PE, Magnotti LJ, Croce MA, Fabian TC. The “July phenomenon”: Is trauma the exception? J Am Coll Surg. 2009;209:378–384. doi: 10.1016/j.jamcollsurg.2009.05.026.

- 12. Agency for Healthcare Research and Quality. Guide to inpatient quality indicators: Quality of care in hospitals--volume, mortality, and utilization. 2007.

- 13. Romley JA, Jena AB, Goldman DP. Hospital spending and inpatient mortality: Evidence from California: An observational study. Ann Intern Med. 2011;154:160–167. doi: 10.7326/0003-4819-154-3-201102010-00005.

- 14. American Hospital Association. 2010 annual survey database. 2010.

- 15. Haller G, Myles PS, Taffe P, Perneger TV, Wu CL. Rate of undesirable events at beginning of academic year: Retrospective cohort study. BMJ. 2009;339:b3974. doi: 10.1136/bmj.b3974.

- 16. Volpp KG, Rosen AK, Rosenbaum PR, Romano PS, Even-Shoshan O, Canamucio A, Bellini L, Behringer T, Silber JH. Mortality among patients in VA hospitals in the first 2 years following ACGME resident duty hour reform. JAMA. 2007;298:984–992. doi: 10.1001/jama.298.9.984.

- 17. Volpp KG, Rosen AK, Rosenbaum PR, Romano PS, Even-Shoshan O, Wang Y, Bellini L, Behringer T, Silber JH. Mortality among hospitalized Medicare beneficiaries in the first 2 years following ACGME resident duty hour reform. JAMA. 2007;298:975–983. doi: 10.1001/jama.298.9.975.

- 18. Volpp KG, Rosen AK, Rosenbaum PR, Romano PS, Itani KM, Bellini L, Even-Shoshan O, Cen L, Wang Y, Halenar MJ, Silber JH. Did duty hour reform lead to better outcomes among the highest risk patients? J Gen Intern Med. 2009;24:1149–1155. doi: 10.1007/s11606-009-1011-z.

- 19. Anderson KL, Koval KJ, Spratt KF. Hip fracture outcome: Is there a “July effect”? Am J Orthop (Belle Mead NJ) 2009;38:606–611.

- 20. Barry WA, Rosenthal GE. Is there a July phenomenon? The effect of July admission on intensive care mortality and length of stay in teaching hospitals. J Gen Intern Med. 2003;18:639–645. doi: 10.1046/j.1525-1497.2003.20605.x.

- 21. Englesbe MJ, Fan Z, Baser O, Birkmeyer JD. Mortality in Medicare patients undergoing surgery in July in teaching hospitals. Ann Surg. 2009;249:871–876. doi: 10.1097/SLA.0b013e3181a501bd.

- 22. Smith ER, Butler WE, Barker FG 2nd. Is there a “July phenomenon” in pediatric neurosurgery at teaching hospitals? J Neurosurg. 2006;105:169–176. doi: 10.3171/ped.2006.105.3.169.

- 23. Philibert I, Friedmann P, Williams WT. New requirements for resident duty hours. JAMA. 2002;288:1112–1114. doi: 10.1001/jama.288.9.1112.

- 24. Barry WA, Rosenthal GE. Is there a July phenomenon? The effect of July admission on intensive care mortality and length of stay in teaching hospitals. J Gen Intern Med. 2003;18:639–645. doi: 10.1046/j.1525-1497.2003.20605.x.

- 25. Ayas NT, Norena M, Wong H, Chittock D, Dodek PM. Pneumothorax after insertion of central venous catheters in the intensive care unit: Association with month of year and week of month. Qual Saf Health Care. 2007;16:252–255. doi: 10.1136/qshc.2006.021162.

- 26. Jen MH, Bottle A, Majeed A, Bell D, Aylin P. Early in-hospital mortality following trainee doctors' first day at work. PLoS One. 2009;4:e7103. doi: 10.1371/journal.pone.0007103.

- 27. McAlister FA. The end of the risk-treatment paradox? A rising tide lifts all boats. J Am Coll Cardiol. 2011;58:1766–1767. doi: 10.1016/j.jacc.2011.07.028.

- 28. Motivala AA, Cannon CP, Srinivas VS, Dai D, Hernandez AF, Peterson ED, Bhatt DL, Fonarow GC. Changes in myocardial infarction guideline adherence as a function of patient risk: An end to paradoxical care? J Am Coll Cardiol. 2011;58:1760–1765. doi: 10.1016/j.jacc.2011.06.050.
