Abstract

Softness perception intrinsically relies on haptic information. However, through everyday experiences we learn correspondences between felt softness and the visual effects of exploratory movements that are executed to feel softness. Here, we studied how visual and haptic information is integrated to assess the softness of deformable objects. Participants discriminated between the softness of two softer or two harder objects using only-visual, only-haptic or both visual and haptic information. We assessed the reliabilities of the softness judgments using the method of constant stimuli. In visuo-haptic trials, discrepancies between the two senses' information allowed us to measure the contribution of the individual senses to the judgments. Visual information (finger movement and object deformation) was simulated using computer graphics; input in visual trials was taken from previous visuo-haptic trials. Participants were able to infer softness from vision alone, and vision considerably contributed to bisensory judgments (∼35%). The visual contribution was higher than predicted from models of optimal integration (senses are weighted according to their reliabilities). Bisensory judgments were less reliable than predicted from optimal integration. We conclude that the visuo-haptic integration of softness information is biased toward vision, rather than being optimal, and might even be guided by a fixed weighting scheme.

Keywords: integration, softness, visuo-haptic, multisensory, material perception

1. Introduction

Humans use information from several modalities in everyday life, especially when interacting with objects in their environment. We refer to more than one sense simultaneously when we estimate the properties of an object in order to use it, grasp it or explore it properly. However, several properties of the environment seem to be more specifically linked to one sense as compared to the others. For example, many material properties of an object (such as thermal conductivity, weight or compliance) are most specifically linked to haptics. One important material property is softness, which is the psychological correlate of the compliance of a surface. Compliance is defined by the relation between the force applied to an object and the object's deformation, including the position of its surface. The compliance of objects plays an important role in haptic discrimination, classification and identification of objects (Bergmann Tiest & Kappers, 2006; Hollins, Bensmaia, Karlof, & Young, 2000), as well as in the manipulation of objects, because it determines how the object is deformed by the hand (Srinivasan & Lamotte, 1995). Softness perception intrinsically relies on haptic information. Only the haptic sense directly measures both force and position information, which are necessary to judge softness. In contrast, the visual sense can directly measure position changes, but intrinsic visual cues to force are hardly available. However, through everyday experience, we can learn correspondences between the haptically perceived softness and the visual or auditory effects of exploratory movements that are executed to feel softness, thus providing indirect information on the object's softness (cf. Ernst, 2007). For example, when a compliant object comes into contact with an indenter, such as our finger or another object, our vision can provide us with information concerning the time-course and pattern of the object's surface deformation around the contact region. 
In this study, we investigated whether the softness of objects with deformable surfaces can be inferred from such indirect visual information, and, if yes, how this indirect visual information is integrated with the direct haptic information.

The integration of information has been studied extensively, both for the case of different visual depth signals (e.g., perspective signals, disparity or shading) and for cases of multisensory integration, where both senses have direct access to the property to be perceived, e.g., seen and felt size (Ernst & Bulthoff, 2004). A precondition of integration is the spatial as well as the temporal congruence of the different signals (Stein & Meredith, 1993). However, if discrepancies between the signals are below the threshold of perception, integration is still observed (Calvert, Spence, & Stein, 2004). Jacobs (2002) suggests that such integration takes into account all signals that are available for a given property. Signal-specific estimates for that property are derived from each signal, and then all estimates are combined into a percept through “weighted averaging”:

$$\hat{S} = \sum_i w_i \hat{s}_i, \qquad \sum_i w_i = 1, \quad 0 \le w_i \le 1 \tag{1}$$

Weighted averaging describes the percept of a property well when stimuli are created whose signals provide conflicting information on this property (Backus & Banks, 1999; Young, Landy, & Maloney, 1993). Averaging different estimates has been further predicted to increase the percept's reliability, which is the inverse of the percept's variance (Landy, Maloney, Johnston, & Young, 1995). This prediction was confirmed by lower discrimination thresholds for a property in multisignal as compared to single-signal situations (Perotti, Todd, Lappin, & Phillips, 1998). The integration of signal-specific estimates has in several cases been successfully described by principles that originated from the maximum-likelihood-estimation (MLE) model. The model (Ernst & Banks, 2002; Landy et al., 1995) statistically describes an optimal integration under the assumptions that noise in each signal-specific estimate follows a Gaussian distribution and that these noise distributions are mutually independent. Under these conditions, the MLE rule states that optimal integration, in the sense of producing the lowest-variance estimate, is achieved by averaging all individual signal estimates weighted by their normalized reciprocal variances, i.e., their relative reliabilities:

$$w_j = \frac{1/\sigma_j^2}{\sum_i 1/\sigma_i^2} \tag{2}$$

If the MLE rule is used to combine two estimates with independent noise, for instance a visual and a haptic estimate, the reliability of the final estimate is found using the following equation:

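$$\frac{1}{\sigma_{vh}^2} = \frac{1}{\sigma_v^2} + \frac{1}{\sigma_h^2} \quad\Longleftrightarrow\quad \sigma_{vh}^2 = \frac{\sigma_v^2 \, \sigma_h^2}{\sigma_v^2 + \sigma_h^2} \tag{3}$$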

Thus, the combined estimate has lower variance than either the visual or the haptic estimate alone. Several studies have reported evidence for optimal integration of different signal-specific estimates within a single sense. In vision, for example, estimates from disparity and texture signals (Hillis, Watt, Landy, & Banks, 2004) seem to be optimally integrated in order to perceive surface slant. Regarding the haptic domain, the integration of force and position information for shape perception has been shown to follow predictions from optimal integration (Drewing & Ernst, 2006; Drewing, Wiecki, & Ernst, 2008). In particular, the MLE model has been successfully applied to the integration of redundant information in different modalities, e.g., for visuo-haptic integration of size, shape, position information (Ernst & Banks, 2002; Gepshtein, Burge, Ernst, & Banks, 2005; Helbig & Ernst, 2007, 2008; van Beers, Sittig, & Gon, 1996, 1999) or for visuo-auditory integration (Alais & Burr, 2004). However, there are also many cases in which suboptimal reliability-dependent weighting has been observed (Gepshtein et al., 2005; Rosas, Wagemans, Ernst, & Wichmann, 2005). Whereas most authors agree that the estimates' weights depend on reliabilities, there is some debate over the conditions in which weights are indeed set optimally (Rosas et al., 2005).
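The reliability-based weighting described above can be illustrated with a minimal numerical sketch. The function names and the example variances are hypothetical and chosen only for illustration; they are not values from this study. The sketch assumes two estimates with independent Gaussian noise, as the MLE model does.

```python
def mle_weights(var_v, var_h):
    """Weights as normalized reciprocal variances (relative reliabilities)."""
    rel_v, rel_h = 1.0 / var_v, 1.0 / var_h
    total = rel_v + rel_h
    return rel_v / total, rel_h / total


def mle_combined_variance(var_v, var_h):
    """Variance of the optimally combined estimate (reliabilities add up)."""
    return (var_v * var_h) / (var_v + var_h)


def mle_estimate(est_v, est_h, var_v, var_h):
    """Weighted average of the visual and haptic estimates."""
    w_v, w_h = mle_weights(var_v, var_h)
    return w_v * est_v + w_h * est_h


# Hypothetical example: vision is four times noisier than haptics.
var_v, var_h = 4.0, 1.0
w_v, w_h = mle_weights(var_v, var_h)
var_vh = mle_combined_variance(var_v, var_h)
combined = mle_estimate(1.09, 0.92, var_v, var_h)
```

In this hypothetical case the less reliable visual estimate receives the smaller weight, and the combined variance is below the variance of either single estimate.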

As concerns the multisensory perception of softness, only a few studies, which investigated the perception of softness in virtual surfaces, have been conducted. It has been shown that perceived softness, when haptically judged from tapping a virtual surface, can be altered by incongruent tapping sounds (DiFranco, Beauregard, & Srinivasan, 1997). Other studies indicate that visual information on surface displacement can improve and even dominate haptic softness judgments: Wu, Basdogan, and Srinivasan (1999) explored the influence of visual feedback on the softness perception of virtual compliant buttons. When using haptic information alone, judgments on the button's softness varied with button location. Such location bias, however, disappeared when both visual information and haptic information were provided. In addition, the judgments were more reliable in the visuo-haptic than in the haptic condition. Srinivasan, Beauregard, and Brock (1996) conducted an experiment, in which participants compared the softness of two springs. The participants could press the springs and feel the corresponding displacement and forces through their hands. At the same time, the deformation of the springs was displayed on a computer monitor. The relationship between the visually presented deformation of each spring and actual displacement was systematically varied. In this experiment, the participants seem to have based their softness judgment mainly on visual information. Finally, a recent study (Kuschel, Di Luca, Buss, & Klatzky, 2010) investigated visuo-haptic softness perception of virtual surfaces with respect to the MLE model of an optimal integration. According to the authors' analysis, the visual and haptic weights were chosen optimally when visual and haptic softness information was congruent, but not when information was incongruent. The weights in the incongruent conditions were similar to the weights in the congruent condition. 
The authors interpret these fixed (or “sticky”) weights in terms of a longer-term optimization to a congruent natural environment. Note, however, that this study did not include a direct measure of visual softness estimates. Instead, the authors relied on educated guesses for this measure when drawing conclusions about optimal or non-optimal integration of visual and haptic estimates in visuo-haptic perception. Hence, the conclusions from this study are somewhat tentative. Taken together, these studies do, however, indicate an important or even dominant role for visual information in visuo-haptic softness judgments on virtual surfaces.

A major corresponding role for visual information is not necessarily to be expected for more natural cases of softness perception, in particular when participants explore deformable surfaces, as in the present study. One reason is that the softness of deformable surfaces is perceived haptically via more precise input than the softness of virtual surfaces: the virtual surfaces in the previous studies were haptically rendered by displaying net reaction forces from an object to the entire finger and their softness could be sensed via kinesthetic input alone. In contrast, deformable surfaces can be further haptically distinguished by their differential skin deformation at the contact area of the finger, i.e., by cutaneous input (cf. Srinivasan & Lamotte, 1995). Srinivasan and Lamotte (1995) found that due to the additional cutaneous input, deformable surfaces (here, a rubber stimulus) are far better discriminated by touch than rigid ones (for example a piano key, which corresponds to the virtual surfaces). Consistent with this finding, recent empirical work (Bergmann Tiest & Kappers, 2008) demonstrated that cutaneous input, as opposed to kinesthetic input, plays a dominant role in the softness perception of deformable surfaces. Moreover, in the studies that demonstrate visual dominance (Srinivasan et al., 1996; Wu et al., 1999), the virtual surfaces had relatively high compliances (1.5 to 7.1 mm/N), which resulted in easily visible surface displacements. Lower visual weights of about 40% are reported for the less-compliant stimuli (0.7 mm/N) in Kuschel et al. (2010). Overall, the virtual stimuli investigated so far might have usually had quite high reliability of visual information and probably quite low reliability of haptic information, and, hence visual information might have played a major role.

Here, we investigate visuo-haptic softness perception for deformable stimuli. For our study, we created two different sets of rubber stimuli with lower compliances than those used in most previous studies: one with compliances between 0.147 and 0.23 mm/N (“harder” set) and one with compliances between 0.73 and 1.15 mm/N (“softer” set). According to the above considerations, we expected that haptic softness estimates for these stimuli would be more reliable than in previous studies, while visual estimates would be less reliable, probably resulting in lower reliabilities for vision compared to haptics. Moreover, we expected that visual reliabilities for the harder stimuli would be lower than those for the softer stimuli because visible deformations of the harder stimuli would be more subtle than those of the softer stimuli. The MLE model of optimal integration predicts that visuo-haptic estimates are more reliable than estimates from either sense alone. Further, the model predicts that the bisensory estimates represent a compromise between visual and haptic estimates with weights for each sense in accordance with its relative reliability. That is, the model predicts more weight for haptic than for visual estimates in bisensory integration. Additionally, the visual weight should be lower (and the haptic weight higher) for the hard stimuli (with lower visual reliability) than for the soft stimuli. A previous study (Drewing, Ramisch, & Bayer, 2009) suggested that participants could judge the softness of deformable surfaces by visual information alone, showing that it is possible to estimate softness without any force information from the haptic modality. This study also provided preliminary hints that vision may be weighted more than it should be if visuo-haptic softness perception were optimal. In the previous study, participants judged softness by the method of magnitude estimation (Stevens, 1956) and judgment variance was taken to indicate estimate reliability. 
However, not only perceptual processes, but also higher-order processes can contribute to judgment variance. Hence, the previous study did not allow testing the quantitative predictions of the MLE model.

In the present study, we tested quantitatively whether visual and haptic softness information is integrated in an optimal manner, as described by the MLE model. In each trial, participants compared a standard stimulus to one of nine comparison stimuli and judged which of the two stimuli was softer. Using a two-interval forced-choice task and the method of constant stimuli, we quantified perceptual reliability (assessed by just-noticeable differences [JNDs]) and perceived softness (assessed by points of subjective equality [PSEs]). Participants explored two harder or two softer deformable stimuli under three different modality conditions: using only haptic information (only-haptic), using only visual information (only-visual) and using both (visuo-haptic). In the haptic condition, the participants explored two real, rubber stimuli by touching them with their finger. In the visuo-haptic condition, the participants explored the physical stimuli while a real-time visual representation of their finger movements and the stimulus deformation was presented on a monitor. In the visual condition, we only displayed visual virtual stimuli based on finger movements recorded previously during the visuo-haptic condition. The redisplay of recorded finger movements ensured that the visual information on softness in the only-visual conditions exactly matched the visual information in the visuo-haptic conditions, improving the validity of predicting visuo-haptic performance from visual performance. Note also that the sum of the information provided in the only-haptic condition (tactile, kinesthetic and motor information on executed exploration) and in the only-visual condition (only-visual information) equals the information in the visuo-haptic condition (visual, tactile, kinesthetic and motor information on executed exploration), which warrants that predictions for visuo-haptic performance are based on exactly the same information as is the performance itself. 
The approach is valid, given the evidence that in visuo-haptic compliance judgments, the system first separately produces a visual estimate (from visual information only) and another haptic one (from tactile, kinesthetic and motor information), which are then integrated at some second stage (Kuschel et al., 2010).

Further, we introduced small discrepancies between visual and haptic information in a proportion of the visuo-haptic trials in order to assess the visual and haptic weights in bisensorily judged softness (note that visual and haptic weights always sum to one, so that in practice it was sufficient to analyze only the visual weights). Observed visuo-haptic reliability and the observed weights were compared to optimal reliability and weights predicted by the MLE model from visual- and haptic-only reliabilities.

2. Methods

2.1. Participants

A total of eight healthy participants from the University of Giessen were tested (mean age: 26, age range: 20–30, five females, three males). All participants had normal or corrected-to-normal visual acuity. Six participants were right-handed and two were left-handed. None reported cutaneous or motor impairments. Participants were naïve to the purpose of the study and were paid 8 € per hour for participation. Our research adhered to the tenets of the Declaration of Helsinki.

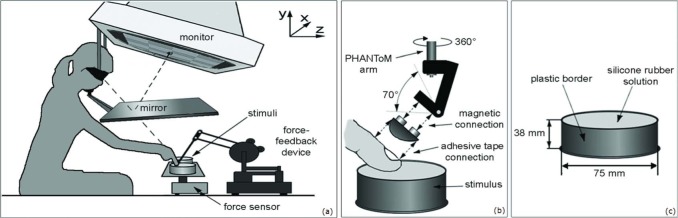

2.2. Apparatus

Stimuli were presented in a visuo-haptic workbench (Figure 1), including a PHANToM 1.5A force-feedback device (spatial resolution: 0.03 mm, temporal resolution: 1,000 Hz), a 22″ computer screen (Iiyama MA203 DT, 120 Hz, 1,280 × 1,024 pixels), liquid-crystal shutter glasses (CrystalEyes™, StereoGraphics) and a force sensor. The force sensor consisted of a measuring beam (LCB 130) and a measuring amplifier (GSV-2AS, force resolution 0.05 N, temporal resolution: 682 Hz, ME-Messsysteme GmbH). The PHANToM monitored the position of the finger as the participants touched real rubber stimuli. The participant's index finger was connected to the PHANToM via a novel, custom-built adapter, which was fixed to the fingernail by double-sided tape in order to allow the fingertip to directly touch the physical rubber stimuli. In this way, the participants had both kinesthetic and tactile input on compliance. The weight of the adapter was about the same as that of the original ‘thimble-gimble’ and it allowed the participants to explore the stimuli in a 30 × 20 × 15 cm workspace. Participants looked via stereo glasses and via a mirror onto the screen. The mirror prevented them from seeing their hand and the stimuli. The stimuli were displayed on the screen as cylinders within a virtual 3D scene. The apparatus was calibrated to align the visual and haptic stimuli spatially. PHANToM, screen and sensor were connected to a PC. Custom-made software controlled the experiment, collected responses and recorded the data from the PHANToM and the sensor (recording interval: 5 ms). An experimenter placed the correct rubber stimuli, as indicated by the software, in the apparatus.

Figure 1.

(a) Visuo-haptic set-up. (b) Adapter between the participant's index finger and the PHANToM's arm. One part of the adapter was fixed to the PHANToM, while the second part was fixed to the finger by double-faced adhesive tape. Both parts were connected with permanent magnets, which allowed the participant to easily disconnect the finger from the set-up. This type of connection allowed the participants to touch and feel the natural rubber stimulus, while finger trajectories were measured by the PHANToM. (c) Rubber stimulus.

2.3. Stimuli

The haptic stimuli consisted of silicone rubber cylinders (75 mm diameter × 38 mm high) embedded in a plastic dish. The surface was flat and the stimuli had no discriminable differences in texture or in size, but varied only in compliance. Compliance was varied by mixing a two-component silicone rubber solution (Modasil Extra, Modulor) with varying, but measured amounts of a diluent (silicone oil V50, Modulor). Two sets of stimuli were created. Each set (both the “hard” one and the “soft” one) consisted of nine rubber specimens, which differed in compliance from the hardest to the softest. The compliances of the hard stimuli were 0.147, 0.153, 0.164, 0.18, 0.19, 0.2, 0.21, 0.22 and 0.23 mm/N. The compliances of the soft stimuli were 0.73, 0.78, 0.83, 0.87, 0.92, 0.98, 1.04, 1.09, and 1.15 mm/N.

In the experiment, these stimuli were compared to standard stimuli of 0.92 mm/N (soft set) and of 0.19 mm/N (hard set). The number of standard stimuli (with the same compliance) was the same as the number of comparison stimuli (i.e., nine “copies” of the standard vs. nine comparisons) in order to avoid possible unequal mechanical wear in standard and comparisons. The copies of the standards did not differ by more than 0.002 mm/N in the hard condition and 0.01 mm/N in the soft condition. The standard-comparison pairings were changed between sessions of the experiment in order to prevent a comparison stimulus always being compared to the same single copy of a standard stimulus. The compliance of the stimuli was determined using the experimental setup. Each specimen was placed on a rigid platform that was mounted on a force transducer. We repeatedly indented the stimuli with a rigid, flat-ended cylindrical probe (circular flat area of 1 cm²) that was attached to the PHANToM device. The PHANToM measured the position of the probe. The probe was slowly lowered by hand to indent the flat surface centrally, until the force reached 10 N for the soft set and 40 N for the hard set. Then, it was retracted. This procedure was repeated four times on each specimen. For each stimulus, we calculated the mean depth of indentation of the cylindrical probe at forces between 0 and 9 N for the soft set and 0–29 N for the hard set (steps of 1 N, bins of ±0.4 N). The displacement increased linearly with increasing force. Statistically, linear regressions of displacement on force explained most of the variance in the data points (r² ≥ 0.99 for each stimulus) and the intercepts of the regressions were close to zero (0.08). The compliance of a stimulus was then well defined by the slope of the respective regression line.
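The compliance estimate described above, i.e., the slope of a linear regression of displacement on force, can be sketched as follows. This is a minimal illustration with synthetic, noise-free data for a hypothetical specimen of 0.92 mm/N; the helper name `fit_line` is an assumption and does not refer to the software used in the study.

```python
def fit_line(forces, displacements):
    """Ordinary least-squares fit of displacement (mm) on force (N).

    Returns (slope, intercept, r_squared); the slope is the compliance in mm/N.
    """
    n = len(forces)
    mean_f = sum(forces) / n
    mean_d = sum(displacements) / n
    sxx = sum((f - mean_f) ** 2 for f in forces)
    sxy = sum((f - mean_f) * (d - mean_d) for f, d in zip(forces, displacements))
    slope = sxy / sxx
    intercept = mean_d - slope * mean_f
    ss_tot = sum((d - mean_d) ** 2 for d in displacements)
    ss_res = sum((d - (intercept + slope * f)) ** 2
                 for f, d in zip(forces, displacements))
    return slope, intercept, 1.0 - ss_res / ss_tot


# Synthetic example: mean indentation depths at 0..9 N in steps of 1 N,
# generated from an ideal linear specimen (not measured data).
forces = [float(f) for f in range(10)]
displacements = [0.92 * f for f in forces]
compliance, intercept, r_squared = fit_line(forces, displacements)
```

With real measurements the intercept and r² would additionally indicate how well the linear description holds for a given specimen.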

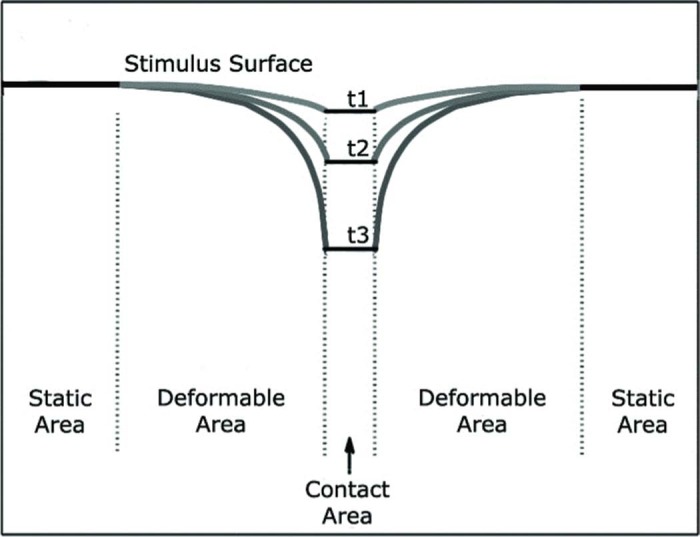

A virtual visual scene was shown on the monitor. Finger positions were represented by a “visual finger,” a small sphere of 8 mm diameter. Visual softness stimuli were cylinders with the same height (38 mm), diameter (75 mm) and positions as the natural rubber stimuli. Participants saw a top-side view of the visual stimuli (Figure 1a). The 3D shape of the stimuli and their deformation was rendered by shading combined with the stereo cues. Position, size and color of the visual stimuli corresponded to those of the natural haptic ones. Visual stimuli deformed with the virtual indentation of the visual finger into the object as follows. We defined three distinct areas on the visible stimulus surface: the contact area, 6 mm in diameter (the area of contact between the finger and the surface of the stimulus); the deformable area, an annulus with an outer radius of 21 mm (the area that was deformed by the indentation of the finger); and the static area (the area that never deformed). The contact area was not visible to the participants since it was covered by the visual finger, as happens during the exploration of a real compliant object with a finger. Visible deformation was limited to the deformable area. The surface in the deformable area was vertically displaced with the vertical indentation of the visual finger into the object; the displacement increased with the indentation of the visual finger and with the proximity of a surface point to the area of finger–object contact (contact area, Figure 2). The vertical displacement, V, at the surface point, Pxz, for a given vertical finger indentation, y, of the object was determined by the following equation:

Figure 2.

Visual virtual stimulus indentation. 2D (vertical cross-section) depiction of the rendering method for visual stimuli. The lines labeled t1, t2 and t3 show the deformation of the visual stimulus at three time points during the exploration of the physical stimulus with the finger. From t1 to t3, the indentation of the stimulus increases. The contact area is the area of the visual stimulus that was not rendered because it was hidden behind the sphere representing the participant's finger.

| (4) |

where d(Pxz) is the distance of point Pxz (in mm) to the border of the deformable area (Figure 2). The indentation, y, was equal to zero, when the virtual sphere representing the finger just contacted the surface of the stimulus.

In visuo-haptic trials, the positions of the visual finger representations were determined from the actual positions of the participant's finger. In all conditions, the visual finger's x-, z- and y-coordinates before object contact (no stimulus indentation) were identical to the coordinates of the participant's finger. In trials with consistent haptic and visual information on compliance (“consistent visuo-haptic trials”) the indentation of the visual finger into the object (and the resulting stimulus deformation) was also identical to the actual indentation of the participant's finger into the natural stimulus. In “inconsistent” visuo-haptic trials, the seen indentation, y, of the finger was obtained by multiplying the actual indentation of the natural stimulus by a constant “indentation factor” (which also changed the observable stimulus deformation; Equation 4). The indentation factor was greater than one when a more-compliant visual displacement compared to the manually explored natural rubber stimulus was desired and less than one for a visually less-compliant stimulus. With an indentation factor greater than one, the visual finger indents (and deforms) the seen stimulus more than the participant's finger indents the rubber stimulus and vice versa.

Further, we recorded the participants' movement trajectories (i.e., the indentation of the natural stimulus over time) during visuo-haptic trials. We used these data later in only-visual trials, in which participants judged stimulus softness from the characteristics of visual finger position and deformation over time only. In other words, for the only-visual condition, we recorded the 3D virtual visual “movies” that the participants observed during their own exploration movements in the visuo-haptic trials, then presented these 3D virtual visual movies in random order during the only-visual condition. Each movie essentially consisted of the two visual stimuli that were successively indented and deformed by the visual finger representation (the sphere). The participants passively watched them without moving their fingers. The compliance of the visual stimuli was defined by the compliance of the originally explored rubber stimulus multiplied by the indentation factor in visual presentation (being one for consistent visuo-haptic trials).
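The role of the indentation factor can be made concrete with a short sketch. The function names are hypothetical; the example compliances (0.92 mm/N haptic, 1.09 mm/N visual) are those of one inconsistent soft standard in this study, whereas the factor itself is derived here from the stated definition rather than quoted from the paper.

```python
def seen_indentation(actual_indentation_mm, indentation_factor):
    """Indentation of the visual finger for a given real finger indentation."""
    return actual_indentation_mm * indentation_factor


def visual_compliance(haptic_compliance, indentation_factor):
    """Visually specified compliance of a stimulus (mm/N)."""
    return haptic_compliance * indentation_factor


def required_factor(target_visual_compliance, haptic_compliance):
    """Indentation factor needed to display a desired visual compliance."""
    return target_visual_compliance / haptic_compliance


# Visually softer standard: the factor must exceed one.
factor = required_factor(1.09, 0.92)
shown = seen_indentation(2.0, factor)      # a real indentation of 2 mm
check = visual_compliance(0.92, factor)    # recovers the visual compliance
```

A factor of one reproduces the consistent case, in which seen and felt indentation are identical.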

2.4. Design and procedures

Within participants, we varied the compliance set of the stimuli (hard vs. soft set) and the modality (visuo-haptic, only-visual, only-haptic), in which softness information was presented. In visuo-haptic conditions, visual and haptic information on compliance was either consistent, or inconsistent (visual information indicated a softer or harder object than the haptic information). For each modality and compliance set, we assessed the participants' ability to discriminate softness by measuring JND. We also measured the PSEs, in particular to estimate the weighting of visual and haptic information from inconsistent visuo-haptic conditions. We used a two-alternative forced-choice paradigm combined with the method of constant stimuli.

In each trial, the participants were presented, one after another, a standard stimulus and one of the nine comparison stimuli from the same compliance set. Participants judged which stimulus was softer. The standard stimuli in the only-haptic and the consistent visuo-haptic conditions had visual and haptic compliances of 0.19 mm/N (hard set) and 0.92 mm/N (soft set). Standard stimuli in the inconsistent visuo-haptic conditions had haptic compliances of 0.19 and 0.92 mm/N, but higher (0.21 and 1.09 mm/N, respectively) or lower (0.163 and 0.83 mm/N, respectively) visual compliances. The compliance of the six standard stimuli in the only-visual condition corresponded to the visual compliance information in the six visuo-haptic standards. Comparison stimuli were always presented in the same modality as the standard; comparison stimuli in visuo-haptic conditions always had consistent visual and haptic compliances.

In order to obtain psychometric functions, we presented each pair of standard and comparison stimulus 20 times and split the presentation into 10 sessions. In each session, each pair was presented twice: once with the standard located on the left side and once on the right side. Trials of each modality condition were blocked within each session and the order of trials within each block was randomized. The visuo-haptic block (108 trials = 3 standards × 2 compliances × 9 comparisons × 2 stimulus locations) was always performed first because the participant's exploratory behavior in the visuo-haptic condition defined the stimuli in the only-visual condition. The order of the only-visual block and the only-haptic block was balanced between participants. In the only-visual block, the visual information from each single visuo-haptic trial, recorded previously in the same session, was redisplayed once (108 trials, see above), testing softness discrimination for each of the three visual standard stimuli per compliance condition. The only-haptic blocks contained 36 trials (= 1 standard × 2 compliances × 9 comparisons × 2 stimulus locations). In each of the 10 sessions, a corresponding visuo-haptic, only-visual and only-haptic block were presented. Sessions were conducted on 10 different days and each session took approximately 90 minutes.
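The trial counts stated above follow directly from the design factors, as the following bookkeeping sketch shows. The variable names are hypothetical; all numbers are taken from the design description.

```python
# Design factors as described in the text.
compliance_sets = 2       # hard and soft
comparisons = 9           # comparison stimuli per set
locations = 2             # standard on the left or on the right
sessions = 10

standards_visuo_haptic = 3   # one consistent, two inconsistent standards
standards_only_haptic = 1

# Trials per block within one session.
visuo_haptic_block = standards_visuo_haptic * compliance_sets * comparisons * locations
only_visual_block = visuo_haptic_block   # every visuo-haptic trial is redisplayed once
only_haptic_block = standards_only_haptic * compliance_sets * comparisons * locations
trials_per_session = visuo_haptic_block + only_visual_block + only_haptic_block

# Repetitions of each standard-comparison pair and trials per psychometric function.
repetitions_per_pair = locations * sessions
trials_per_function = comparisons * repetitions_per_pair
```

The resulting number of trials per psychometric function is the quantity that enters each individual fit.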

In each trial of the visuo-haptic and only-haptic conditions, the participants first had to place their right index finger in a “waiting” area. The experimenter started the trial with a button press. Then, an auditory warning signal prompted the participants to start the exploration of the stimulus on the left. Participants had 2.5 seconds to move their finger to the stimulus and to explore it. After this time, another warning signal prompted the participants to move toward the other stimulus (again 2.5 seconds for movement and exploration, followed by another warning signal that indicated the end of the trial and the beginning of the response phase). After the exploration phase, the participants indicated the stimulus that they considered to be the softer one by a button press. In visuo-haptic conditions, participants touched the physical stimuli and saw the virtual visual ones (in 3D). In only-haptic trials, the monitor went black except for a small red cross, indicating the center of the stimulus they had to explore, and for the sphere representing the finger. Both cross and sphere disappeared once the participants touched the surface of the stimulus. In the only-visual trials, the participants looked at the representation of previous exploration movements. The order of only-visual trials was random, also with respect to the order of the previous explorations. In this condition, each trial started automatically once the participants placed their finger in the “waiting” area. Between trials the experimenter changed the rubber specimen (haptic and visuo-haptic conditions).

2.5. Data analysis

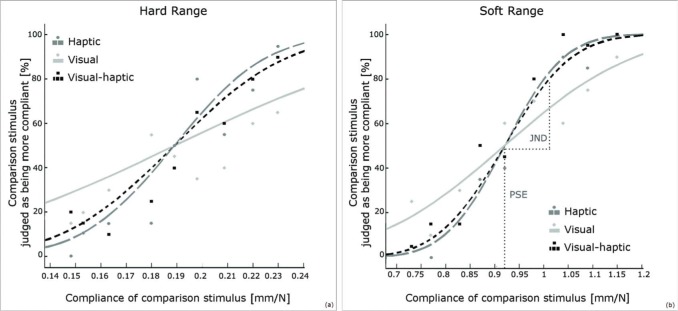

We determined individual psychometric functions for each of the 14 standards (2 Compliance sets × 7 Standards [1 haptic + 3 visuo-haptic + 3 visual]), i.e., we plotted the proportion of trials in which a comparison was perceived as being softer than the standard against the compliance of the comparison stimulus. We calculated the JNDs and the PSEs by fitting cumulative Gaussian functions to the proportion of “softer” responses (including 180 trials each; details in Drewing & Ernst, 2006, see Figure 3) using the psignifit toolbox, which implements MLE methods (Wichmann & Hill, 2001). In two out of 112 cases, these values were removed from further analyses because the JNDs were clear outliers that deviated by more than four standard deviations from the average in the respective condition. The PSE is defined as the intensity (here compliance) of the comparison stimulus at which discrimination performance is at chance (here 50%). It was assumed that the perceived softness of the standard stimulus and that of the stimulus corresponding to the PSE were equal. For the unisensory conditions, only the JND was estimated; the PSE was fixed by equating it to the compliance of the respective standard stimulus. The JND was defined as the difference between the PSE and the compliance of the comparison at which it was judged to be softer than the standard 84% of the time. It was assumed that JND²/2 estimated the percept's variance (cf. Ernst & Banks, 2002). Visual and visuo-haptic JND values were determined as averages of the single JNDs for the three different standard stimuli in these modality conditions. We predicted the JNDs in the consistent visuo-haptic conditions from the corresponding JNDs in the unisensory conditions, under the assumption that visual and haptic information is optimally integrated (Equation 3, MLE model). The predicted JNDs were compared with the actual observed JNDs. 
For analysis, JNDs were transformed into Weber fractions, i.e., ratios between the JND and the compliance value of the corresponding (middle) standard stimulus (either hard: 0.19 mm/N or soft: 0.92 mm/N):

$$W = \frac{\mathrm{JND}}{s_{\mathrm{standard}}} \qquad (5)$$

Figure 3.

Typical psychometric data and fits for an individual participant and for standard stimuli of 0.19 mm/N (hard condition [a]) and 0.92 mm/N (soft condition [b]).
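As a worked illustration of the Weber-fraction transformation and of the optimal-integration prediction for bisensory JNDs, the following sketch may help. It assumes the standard MLE combination rule for independent noise (Ernst & Banks, 2002), in which the bisensory variance is the product of the unisensory variances divided by their sum; the exact form of Equation 3 is not reproduced in this section, so that rule is an assumption here. The function names are hypothetical, and the inputs are the group-average Weber fractions reported in the Discussion rather than individual data.

```python
import math

def weber_fraction(jnd, standard_compliance):
    """Weber fraction: JND divided by the compliance of the standard."""
    return jnd / standard_compliance

def predicted_bisensory_jnd(jnd_v, jnd_h):
    """MLE prediction for independent noise, using variance = JND**2 / 2."""
    var_v = jnd_v ** 2 / 2.0
    var_h = jnd_h ** 2 / 2.0
    var_vh = (var_v * var_h) / (var_v + var_h)
    return math.sqrt(2.0 * var_vh)

# Group-average Weber fractions (visual, haptic) and standards in mm/N.
conditions = {
    "hard": {"standard": 0.19, "wf_v": 2.00, "wf_h": 0.18},
    "soft": {"standard": 0.92, "wf_v": 0.38, "wf_h": 0.16},
}

predicted_wf = {}
for name, c in conditions.items():
    jnd_v = c["wf_v"] * c["standard"]  # convert back to JNDs in mm/N
    jnd_h = c["wf_h"] * c["standard"]
    jnd_vh = predicted_bisensory_jnd(jnd_v, jnd_h)
    predicted_wf[name] = weber_fraction(jnd_vh, c["standard"])
    print(f"{name}: predicted visuo-haptic Weber fraction = {predicted_wf[name]:.3f}")
```

Under this rule, the predicted bisensory Weber fraction can never exceed the smaller of the two unisensory ones, which is the benchmark against which the observed values are compared.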

We further predicted optimal visual weights for the two inconsistent visuo-haptic conditions from the corresponding JNDs in the two unisensory conditions (Equation 2, MLE model). Optimal weights were compared to the empirical visual weights that were obtained from PSEs in the inconsistent visuo-haptic conditions, as follows:

$$w_v = \frac{\mathrm{PSE} - s_h}{s_v - s_h} \qquad (6)$$

where $s_v$ and $s_h$ refer to the visually and haptically specified compliance in the standard stimulus. Individual weights were averaged over the two inconsistent visuo-haptic conditions.
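The two weight computations described above can be sketched as follows. This is a hedged illustration, not the authors' analysis code. The optimal weight uses the standard MLE rule (each sense weighted by its relative reliability, i.e., inverse variance), which is assumed here to match Equation 2. The empirical weight is the usual estimate of how far the PSE is shifted from the haptically specified toward the visually specified compliance. The function names and the example PSE and discrepancy values are hypothetical.

```python
def optimal_visual_weight(jnd_v, jnd_h):
    """Reliability-based weight: r_v / (r_v + r_h), with r = 1 / JND**2.

    The factor 1/2 in variance = JND**2 / 2 cancels in the ratio.
    """
    rel_v = 1.0 / jnd_v ** 2
    rel_h = 1.0 / jnd_h ** 2
    return rel_v / (rel_v + rel_h)

def empirical_visual_weight(pse, s_v, s_h):
    """Relative shift of the PSE from the haptic toward the visual compliance."""
    if s_v == s_h:
        raise ValueError("weights are only identifiable when s_v differs from s_h")
    return (pse - s_h) / (s_v - s_h)

# Optimal weights from the group-average Weber fractions (illustrative only;
# the study computed weights per participant).
w_opt_hard = optimal_visual_weight(2.00, 0.18)
w_opt_soft = optimal_visual_weight(0.38, 0.16)

# Hypothetical inconsistent standard: vision signals 0.99 mm/N, haptics
# 0.85 mm/N, and the measured PSE is 0.90 mm/N.
w_emp = empirical_visual_weight(pse=0.90, s_v=0.99, s_h=0.85)

print(f"optimal w_v (hard) = {w_opt_hard:.3f}")
print(f"optimal w_v (soft) = {w_opt_soft:.3f}")
print(f"empirical w_v (hypothetical PSE) = {w_emp:.3f}")
```

A PSE equal to the haptically specified compliance yields a visual weight of 0, and a PSE equal to the visually specified compliance yields a weight of 1.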

Finally, it was checked whether visuo-haptic JNDs could be, alternatively, explained by stochastic selection rather than by integration (Nardini, Jones, Bedford, & Braddick, 2008; Serwe, Drewing, & Trommershäuser, 2009; Kuschel et al. 2010). Whereas integration models assume that redundant visual and haptic information is integrated in each single bisensory trial, stochastic selection refers to the case that participants switch between the senses from trial to trial and never integrate. Probabilities for using either sense then correspond to the sense's empirical weight. We predicted visuo-haptic JNDs (and Weber fractions) for the case of stochastic selection using the following equation:

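$$\sigma_{vh}^2 = w_v\,\sigma_v^2 + w_h\,\sigma_h^2 + w_v w_h\,(\check{s}_v - \check{s}_h)^2 \qquad (7)$$

The variances $\sigma^2$ were equated with $\mathrm{JND}^2/2$, the weights, $w$, with the empirical weights, $w_{emp}$, and the signal estimates, $\check{s}$, with the signals, $s$.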
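The stochastic selection prediction can be illustrated with a short sketch. It assumes the usual variance of a two-component mixture: on each trial the visual estimate is used with probability equal to the visual weight and the haptic estimate otherwise. The function name is hypothetical, and the inputs are group averages reported in the Results and Discussion, so the output need not match the reported averages of per-participant predictions.

```python
import math

def stochastic_selection_jnd(jnd_v, jnd_h, w_v, s_v, s_h):
    """JND predicted if one sense is selected per trial and never integrated."""
    w_h = 1.0 - w_v
    var_v = jnd_v ** 2 / 2.0
    var_h = jnd_h ** 2 / 2.0
    # Mixture variance: weighted unisensory variances plus a term for the
    # spread between the two signal values (zero in consistent conditions).
    var_mix = w_v * var_v + w_h * var_h + w_v * w_h * (s_v - s_h) ** 2
    return math.sqrt(2.0 * var_mix)

# Hard set, consistent standard (s_v == s_h), group-average values.
standard = 0.19                 # mm/N
jnd_v = 2.00 * standard         # visual Weber fraction of 200%
jnd_h = 0.18 * standard         # haptic Weber fraction of 18%
w_v = 0.36                      # average empirical visual weight

jnd_sel = stochastic_selection_jnd(jnd_v, jnd_h, w_v, standard, standard)
wf_sel = jnd_sel / standard
print(f"stochastic-selection Weber fraction (hard) = {wf_sel:.2f}")
```

Because the mixture variance is a weighted average of the unisensory variances (plus a non-negative term), selection can never be more reliable than the better sense alone, unlike integration.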

3. Results

When asked after the experiment, none of the participants reported noticing inconsistencies between the visual and haptic information in a proportion of the visuo-haptic trials.

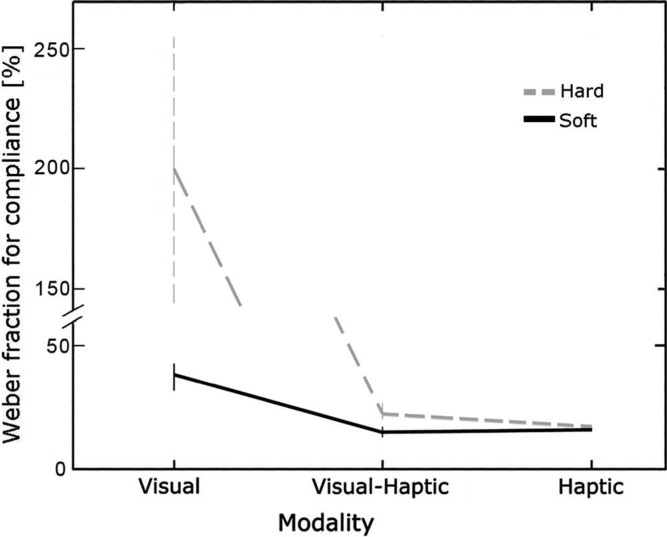

3.1. Weber fractions

The individual Weber fractions (Figure 4) were entered into an analysis of variance (ANOVA) with the two within-participants factors modality (only-visual, only-haptic, visuo-haptic) and compliance set (hard vs. soft). Both main effects were significant (modality: F(2,14) = 11.99, p = 0.001; compliance set: F(1,7) = 11.31, p = 0.012), as was the interaction, F(2,14) = 8.55, p = 0.004. t-tests showed that, as expected, Weber fractions for the hard stimuli were higher than those for the soft stimuli when participants used only-visual information, t(7) = 3.07, p = 0.009 (one-tailed), or both visual and haptic information, t(7) = 2.67, p = 0.016 (one-tailed). There was no significant difference between hard and soft conditions when exploring only haptically, t(7) = 1.00, p = 0.34 (two-tailed). Within the hard condition, Weber fractions were, as anticipated, larger when using only-visual information than when using only-haptic or visuo-haptic information, t(7) = 3.27, p = 0.006, and t(7) = 3.16, p = 0.007, respectively (one-tailed). Surprisingly, only-haptic Weber fractions tended to be lower than visuo-haptic Weber fractions, instead of higher as predicted, t(7) = 2.3, p = 0.061 (two-tailed). Within the soft condition, observed trends were in the expected directions: Weber fractions in the only-visual condition were higher than in the visuo-haptic and the only-haptic conditions (t(7) = 4.66, p = 0.001 and t(7) = 4.16, p = 0.002, respectively). Only-haptic Weber fractions tended to be higher than visuo-haptic ones, t(7) = 1.58, p = 0.078 (all tests one-tailed).

Figure 4.

Average JND reported as a percentage of the standards' compliance values (average Weber fraction) for each modality and compliance set condition. Error bars represent standard errors of the mean (SEM). The y axis, as well as the dotted gray line, is cut in order to give a better rendering of the data.

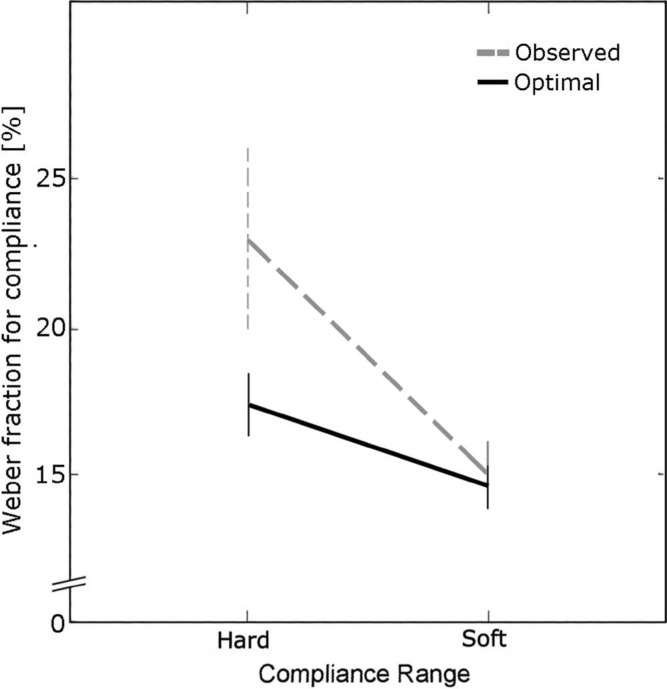

We then compared the observed visuo-haptic Weber fractions to those quantitatively predicted for optimal integration (Figure 5). Individual values were entered into an ANOVA with the two within-participant variables observation (observed vs. predicted) and compliance (hard vs. soft). We found a significant main effect of compliance, F(1,7) = 6.57, p = 0.037, and a significant interaction compliance × observation, F(1,7) = 6.44, p = 0.039. We did not find a significant main effect of observation, F(1,7) = 4.45, p = 0.073. t-tests separated by compliance set indicated that observed Weber fractions were higher than optimal within the hard condition, t(7) = 2.35, p = 0.025 (one-tailed), but not within the soft condition, t(7) = 0.63, p = 0.27 (one-tailed).

Figure 5.

Average observed and optimal Weber fractions as a function of compliance set. Error bars represent standard errors of the mean (SEM).

Finally, we predicted JNDs and Weber fractions for the visuo-haptic conditions in both compliance sets from the stochastic selection model (Equation 7). The averaged predicted Weber fractions were equal to 111% (hard) and 25% (soft), whereas the observed ones were equal to 23% (hard) and 15% (soft). Paired t-tests confirmed that Weber fractions predicted from stochastic selection were significantly higher than those observed (hard: t(7) = 2.29, p = 0.027; soft: t(7) = 6.5, p < 0.001, one-tailed), rejecting the stochastic selection model as an alternative explanation for the data.

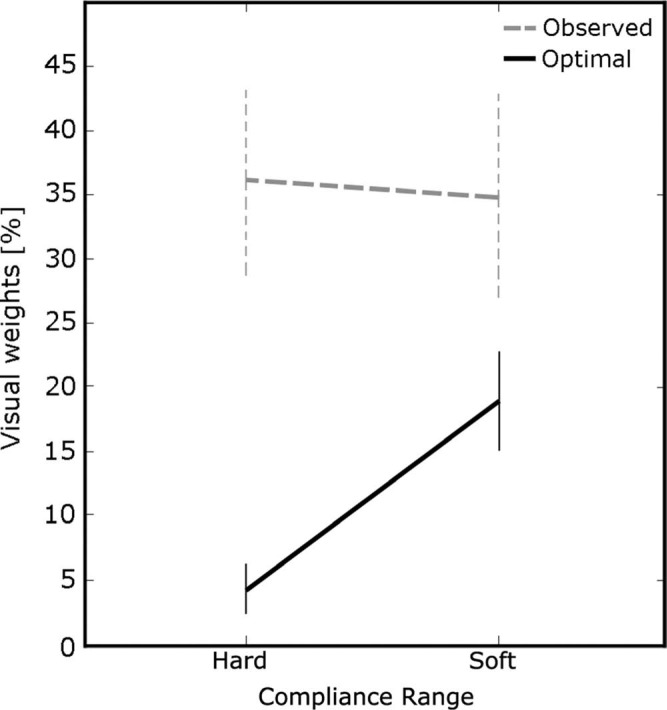

3.2. PSEs and weights

The averaged individual PSE values from the consistent visuo-haptic conditions were 0.194 mm/N for the hard range and 0.914 mm/N for the soft range, and thus, as should be the case, close to the compliance of the standard stimuli (hard = 0.19 mm/N and soft = 0.92 mm/N). PSE values for the inconsistent visuo-haptic conditions were used to calculate empirical visual weights (Figure 6). The empirical weights were compared with the predicted optimal weights. Weights were entered into an ANOVA with the two within-participant variables observation (observed vs. predicted) and compliance set (hard vs. soft). Both the main effect of compliance set, F(1,7) = 6.03, p = 0.044, and the main effect of observation, F(1,7) = 13.43, p = 0.008, were significant, as was the interaction between the two variables, F(1,7) = 12.81, p = 0.009. Vision was weighted more than predicted from optimal integration. t-tests confirmed this effect both for the soft, t(7) = 2.45, p = 0.043, and the hard compliance set, t(7) = 4.40, p = 0.003. The effect was more pronounced for the hard than for the soft compliance set (difference between average predicted and observed weight: 32% vs. 16%, respectively). Also, individual data points in Figure 7b show the larger deviation of the observed from the predicted weights for the hard as compared to the soft set (larger vertical distance of triangles from the line of identity). Consistent with this, there was a significant difference between the soft and the hard stimuli for the predicted visual weights, t(7) = 5.92, p < 0.001, but not for the observed weights, t(7) = 0.24, p = 0.81.

Figure 6.

Average observed and optimal visual weights. Error bars represent standard errors of the mean (SEM).

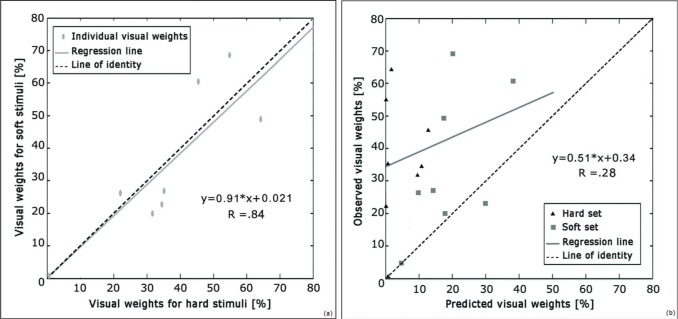

Figure 7.

Individual visual weights, regression lines and line of identity (dotted): (a) individual weights for the soft stimulus set as a function of weights for the hard stimuli; (b) observed vs. predicted weights for both soft (darker triangles) and hard (lighter squares) set.

Numerically, the weights observed for the two compliance sets were almost identical (wv = 0.36 for the hard set; wv = 0.35 for the soft set). In Figure 7a, it can be seen that this holds for each individual: Individual weights in hard conditions were highly similar to the individual weights in the soft condition (correlation: r = 0.84). Thereby, average individual weights spread across almost the entire possible range: Weights can take values between 0 and 1, but in this study individual empirical weights actually ranged from 0 to 0.64 for the hard set and from 0.04 to 0.69 for the soft set. In contrast, predicted optimal individual weights covered a much smaller range (hard set [0.001 to 0.125]; soft set [0.04 to 0.38]; Figure 7b). In other words, each participant weighted vision similarly in both compliance sets, while weights considerably differed between individuals. This suggests that each participant used their own idiosyncratic weight values, rather than adapting the weight to the senses' reliabilities.

4. Discussion

In the present study, we investigated how participants judged the softness of objects with deformable surfaces using haptic, visual or both types of information. Whereas haptic information is directly informative for softness perception, visual information provides indirect informative signals from which softness can be inferred, but cannot be perceived. One important result of the present experiment is that despite this, participants were partially able to discriminate between the stimuli using visual signals alone. Much as expected, participants were able to visually discriminate between soft stimuli, but were substantially worse at distinguishing between harder stimuli. This finding is consistent with our earlier results from magnitude estimation (Drewing et al., 2009) and confirms our hypothesis that visual information from the exploration of soft stimuli (Weber fraction, 38%) is more reliable than that from hard stimuli (200%), probably because the visual effects of exploration are much more subtle in the latter case. Also as expected, haptic softness information was more reliable than visual information, with little difference between soft and hard stimuli (Weber fractions: hard = 18%; soft = 16%).

A second result revealed that unlike in previous studies with virtual surfaces (Srinivasan et al., 1996; Wu et al., 1999), vision did not dominate the bisensory judgments (average visual weight, here ∼35%). We had expected a minor role for visual information with the present deformable surfaces as compared to rigid virtual surfaces because haptic softness discrimination has been shown to be considerably more reliable with deformable surfaces compared to rigid surfaces (Srinivasan & Lamotte, 1995). Indeed, in our study, haptic was much better than visual discrimination. Thus, the smaller role of vision in bisensory judgments is consistent with the general idea that the weights change proportionally to the relative reliabilities of the estimates, i.e., toward haptics in the present study as compared to the previous studies.

We tested quantitatively whether visual and haptic information on softness is integrated in an optimal way (Ernst & Banks, 2002). First, the MLE model of optimal integration predicts that bisensory judgments are more reliable than judgments from either sense alone, if weights are chosen optimally. For soft stimuli, bisensory discrimination tended to be better than only-visual and only-haptic discrimination (the latter test marginally failed to reach significance; Weber fractions: 15%, 38% and 16%, respectively). In contrast, for the hard stimuli, visuo-haptic performance was in between only-visual and only-haptic performance (23%, 200% and 18%, respectively). Consistent with these findings, the observed visuo-haptic Weber fractions were higher than those predicted from optimal integration for hard stimuli. For soft stimuli, the observed Weber fractions did not differ from the optimal ones (15% vs. 15%). Second, the MLE model of optimal integration predicts the relative weighting of the senses' estimates. Observed weights in bisensory judgments differed from the optimal weights for both hard and soft stimuli. Vision was weighted more than it should be according to the predictions of optimal integration. We conclude that visual and haptic information on the softness of deformable surfaces is not integrated optimally, which, for the hard stimuli, may even have resulted in a decline of visuo-haptic as compared to only-haptic performance.

We predicted optimal weights under the assumption that in bisensory judgments the noises of visual and haptic estimates are statistically independent (cf. Equations 2 and 3 above, and Ernst & Banks, 2002). Given the prolonged manual exploration of each stimulus, it may be that shared sources of error affected the visual and haptic estimates in each trial. That is, the estimates' noises might have been positively correlated. Importantly, this does not change our conclusions: Oruc, Maloney, and Landy (2003; Equations 5 and 6) showed that with positively correlated noises optimal weights are lower for the less-reliable estimate than with uncorrelated noises. In the present study, the less-reliable estimate is the visual estimate. It is weighted more than would be optimal when assuming uncorrelated noises and thus even more so when assuming the lower optimal weights predicted for positively correlated noises. Moreover, if the reliability of the bisensory estimates falls between the reliabilities of the cues in isolation (only-haptic and only-visual), as was the case for the hard stimuli, this outcome is inconsistent with an optimal choice of weights independent of the noises' correlation (Oruc et al., 2003; Equation 7), suggesting a distinctly suboptimal choice. In other words, even when assuming correlated noises, our findings suggest suboptimal integration that is biased toward vision.
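The argument about correlated noise can be made concrete with a small numerical sketch. It uses the textbook minimum-variance weights for a linear combination of two unbiased estimates whose noises have correlation `rho`; this is assumed to correspond to the result of Oruc et al. (2003), whose equations are not reproduced here. The function names are hypothetical, the standard deviations are the group-average soft-set Weber fractions used as stand-ins, and the correlation values are purely illustrative.

```python
def optimal_weight_v(sigma_v, sigma_h, rho):
    """Minimum-variance weight for the visual estimate under correlation rho."""
    denom = sigma_v ** 2 + sigma_h ** 2 - 2.0 * rho * sigma_v * sigma_h
    return (sigma_h ** 2 - rho * sigma_v * sigma_h) / denom

def combined_variance(sigma_v, sigma_h, rho, w_v):
    """Variance of w_v * visual + (1 - w_v) * haptic with correlated noise."""
    w_h = 1.0 - w_v
    return (w_v ** 2 * sigma_v ** 2 + w_h ** 2 * sigma_h ** 2
            + 2.0 * rho * w_v * w_h * sigma_v * sigma_h)

sigma_v, sigma_h = 0.38, 0.16  # vision is the less reliable estimate

weights = {}
for rho in (0.0, 0.2, 0.4):    # illustrative correlations
    w = optimal_weight_v(sigma_v, sigma_h, rho)
    var = combined_variance(sigma_v, sigma_h, rho, w)
    weights[rho] = w
    print(f"rho = {rho:.1f}: optimal w_v = {w:.3f}, combined variance = {var:.4f}")
```

Two properties relevant to the argument follow from this form: the optimal weight of the less reliable estimate shrinks as the correlation grows, and the optimally combined variance never exceeds the smaller unisensory variance, because setting the weight to 0 or 1 is always an available choice.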

But was visual information on compliance in the visuo-haptic condition indeed comparable to that in the only-visual condition? Visual information was actively gathered in the visuo-haptic condition, but passively obtained in the only-visual condition. The experimental procedure ensured that the presented visual signals exactly matched in both conditions, but still one may speculate that the additional motor information in the visuo-haptic condition influenced how visual information was processed into a compliance estimate. Findings from Kuschel et al. (2010) argue against this speculation. Their findings suggest that visual position information is not combined into a compliance estimate with haptic (tactile, kinesthetic and motor) force information. Instead, they suggest that in visuo-haptic compliance judgments each sense first arrives at a separate compliance estimate (vision from visual information, haptics from tactile, kinesthetic and motor information), and then the two separate estimates are combined. This further corroborates the validity of our approach and our conclusions on suboptimal integration. One might further speculate that participants paid less attention to the judgments in the passive only-visual condition than in the active visuo-haptic condition, and that we did not observe optimal integration because we underestimated visual reliability (and, as a consequence, also the predicted optimal visual weights and the predicted bisensory reliability). However, this speculation cannot explain the observation that, for hard stimuli, bisensory reliability was lower than haptic reliability, because this observation is in no case consistent with optimal integration (Oruc et al., 2003; Equation 7).

So, overall we found strong evidence for suboptimal integration of compliance estimates. Interestingly, although the optimal visual weights were different for the two compliance sets, the observed weights for the hard and the soft set were almost the same. This pattern of results implies that the overestimation of vision relative to optimality was more pronounced for the hard as compared to the soft set. We interpret the difference in overestimation as a consequence of the unexpected similarity of the observed weights in the two compliance conditions despite the differences in visual reliabilities. The considerably lower reliability of visual information on hard as compared to soft stimuli (with haptic reliability being about the same; as assessed by the Weber fractions) predicted the difference in optimal weights. The similarity of observed weights under these circumstances means that these did not even partially follow the senses' relative reliabilities. Put another way, we found evidence for a weighting that is neither optimal nor suboptimal reliability-dependent weighting, as has been reported in previous studies (Rosas et al., 2005). One interpretation of this result is that the system gives a fixed weight to visual compliance estimates even though visual reliability differs. Fixed suboptimal weights can explain why visuo-haptic performance in the hard condition was even worse than the haptic performance. Specifically, because the highly unreliable visual information about hard stimuli contributed too much to the percept, this impaired performance. The idea of fixed weights is also consistent with findings from Kuschel et al. (2010) on the visuo-haptic softness perception of virtual surfaces. Additional support is provided by the present findings that visual weights did considerably differ between the participants, whereas for each participant the visual weight used for soft stimuli was nearly identical to the visual weight used for hard stimuli.

Why should participants use fixed weights in visuo-haptic softness perception, but not in other tasks (e.g., in size and form perception; cf. Ernst & Bulthoff, 2004)? That is, why should participants not have adapted their weights to changing visual reliabilities in the present soft and hard conditions? One possible answer is that they lacked (fast) access to their visual reliabilities. Optimal integration with optimal reliability-dependent weights presupposes that the reliabilities are available short-term. Short-term adaptation of weights might not be possible in the case of visuo-haptic softness perception because information about visual reliability may not be immediately available to the brain. In order to explain the necessary short-term (trial-by-trial) availability of reliability information in cases where optimal integration occurred, it has been suggested that a percept's reliability might be immediately coded in the pattern of activity across a population of perceptual neurons, and that this population code may enable optimal integration (e.g., Ernst & Banks, 2002). In contrast, vision does not provide a direct softness percept; rather, softness is inferred indirectly from visual information. It seems plausible that such indirect estimates lack immediate reliability information. If, however, short-term adaptation is not possible, long-term adaptations will determine the senses' weighting. Note that visual information is highly reliable in many everyday contexts, and thus the automatic integration of visual information with fixed weights, independent of whether the information is direct or indirect, may represent a valuable long-term adaptation. However, these are mere speculations that need to be tested in future experiments.

Finally, it is notable in its own right that participants can judge compliance from visual information alone. What kind of information might they use? A previous study (Drewing et al., 2009) showed that people can provide reliable magnitude estimates of softness when watching another person exploring a compliant stimulus. In that study, participants simultaneously saw the position of the finger in the stimulus, the finger and stimulus deformations and the hemodynamic state of the fingertip. The 2D pattern of blood volume beneath the fingernail provides a potent cue to applied force (Mascaro & Asada, 2001), and thus a softness estimate could have been, in principle, judged from the combination of this cue with visually perceived finger position. Additional cues potentially may have been given in the specific pattern of stimulus deformation. However, in the present study, the real fingertip was not visible and stimulus deformation followed the same shape independent of compliance. The only information available stems from finger movement, namely the time-course and pattern of the indentation of the stimulus. Thereby, the finger movement is only passively observed, and thus the programmed motor forces are not known. At first glance, this visual cue seems to provide position information only, with no force information, hence making a softness judgment impossible. However, there are two possibilities by which participants may have been able to visually judge softness: they may have used a standard force in exploration, being able to judge softness differences from maximal indentation alone. The larger observed indentation then indicates the more compliant of two stimuli. Alternatively, participants may have taken into account the pattern of stimulus indentation over time: Less-compliant stimuli decelerate the indenting finger in less time than do more-compliant stimuli, providing cues to compliance that are not constrained to the use of a single standard force. 
Then, the longer deceleration phase indicates the more-compliant stimulus. Both potential cues rely on the detection of differences between the observable indentation profiles, and thus can explain the much higher Weber fractions for the harder as compared to the softer stimuli by the more subtle visible indentations of the harder stimuli. However, it is an open question whether the above cues are indeed used to visually estimate softness.

5. Conclusions

In summary, we demonstrated that participants can infer the softness of natural deformable stimuli from the visual effects of stimulus exploration alone (stimulus deformation, trajectory), and that this secondary visual information is integrated with the primary haptic information in visuo-haptic judgments. Visuo-haptic estimates were more biased toward visual information than would be predicted by an optimal integration strategy. Moreover, observed visual weights were similar for the hard and the soft stimuli, whereas optimal visual weights had been predicted to be larger for the soft relative to the hard set. Correspondingly, visuo-haptic softness discrimination was worse than predicted, at least for the hard stimuli. Taken together, these results argue against an optimal integration of direct haptic with indirect visual information. It even seems as if vision is assigned a fixed weight independent of visual reliability, at least in this context. This may point to long-term influences in multisensory integration, which are usually not taken into account in current modeling approaches.

Acknowledgments

We wish to thank two anonymous reviewers for their helpful criticism, suggestions and comments on an earlier draft, and Michael Rector for native-speaker and stylistic advice. The research leading to these results has received funding from the European Community's Seventh Framework Programme FP7/2007–2013 under grant agreement number 214728-2.

Biography

Cristiano Cellini is a PhD candidate at the University of Giessen (Germany). He holds a BSc degree in general and experimental psychology and an MSc degree in experimental psychology, both from the University of Florence, Italy. He did his master's thesis at the University of Leiden, Netherlands, in Professor Bernard Hommel's lab, studying the binding problem in multiple object tracking (MOT).

His research project deals with the integration of information from different sources. The estimate of an environmental property by a sensory system can be represented as the operation by which the nervous system does the estimation. This operation could refer to different modalities (vision and touch) or could also refer to different cues within a modality. Precisely, during his PhD he worked on visuo-haptic cross modal integration (visuo-haptic integration on the estimation of softness), sensorimotor integration (Haptic Flash Lag Effect) and visual cues integration (Center of Gravity effect on saccadic eye movement: color vs. luminance).

Lukas Kaim studied computer sciences at the University of Applied Sciences in Giessen and received a PhD degree in psychology from the University of Giessen in 2010. Currently, he works as a scientist of experimental and perceptual psychology in the Department of Psychology, Giessen University. The main focus of his research is on active haptic touch, specifically, the relationship between parametric variations of the exploratory movements and the haptic percept. Since 2010 he has worked in a company that develops tactile sensing systems.

Knut Drewing received a PhD degree in psychology from the University of Munich in 2001 and a postdoctoral lecture qualification (habilitation) in psychology in 2010 from Giessen University.

He worked in the Max-Planck-Institutes for Psychological Research (Munich) and for Biological Cybernetics (Tuebingen). Currently, he is a senior scientist and private lecturer of experimental and perceptual psychology in the Department of Psychology, Giessen University.

His research is in haptic perception, multisensory integration and human movement control.

Contributor Information

Cristiano Cellini, Department of General Psychology, Justus-Liebig-University of Giessen, Otto-Behaghel-Strasse 10F, 35394 Giessen, Germany; e-mail: Cristiano.Cellini@psychol.uni-giessen.de.

Lukas Kaim, Department of General Psychology, Justus-Liebig-University of Giessen, Otto-Behaghel-Strasse 10F, 35394 Giessen, Germany; e-mail: Lukas.Kaim@psychol.uni-giessen.de.

Knut Drewing, Department of General Psychology, Justus-Liebig-University of Giessen, Otto-Behaghel-Strasse 10F, 35394 Giessen, Germany; e-mail: Knut.Drewing@psychol.uni-giessen.de.

References

- Alais D., Burr D. The ventriloquist effect results from near-optimal bimodal integration. Current Biology. (2004);14(3):257–262. doi: 10.1016/j.cub.2004.01.029.

- Backus B. T., Banks M. S. Estimator reliability and distance scaling in stereoscopic slant perception. Perception. (1999);28(2):217–242. doi: 10.1068/p2753.

- Bergmann Tiest W. M., Kappers A. M. Analysis of haptic perception of materials by multidimensional scaling and physical measurements of roughness and compressibility. Acta Psychologica. (2006);121(1):1–20. doi: 10.1016/j.actpsy.2005.04.005.

- Bergmann Tiest W. M., Kappers A. M. Kinaesthetic and cutaneous contributions to the perception of compressibility. In: Ferre M., editor. Haptics: Perception, devices and scenarios. Berlin: Springer; (2008). pp. 255–264.

- Calvert G. A., Spence C., Stein B. E., editors. The handbook of multisensory processes. Cambridge, MA: MIT Press; (2004).

- DiFranco D., Beauregard G., Srinivasan M. A. The effect of auditory cues on the haptic perception of stiffness in virtual environments. Proceedings of the ASME Dynamic Systems and Control Division. (1997);61:17–22.

- Drewing K., Ernst M. O. Integration of force and position cues for shape perception through active touch. Brain Research. (2006);1078:92–100. doi: 10.1016/j.brainres.2005.12.026.

- Drewing K., Ramisch A., Bayer F. Haptic, visual and visuo-haptic softness judgments for objects with deformable surfaces. EuroHaptics Conference, 2009 and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems. World Haptics 2009. Third Joint, Salt Lake City, UT. IEEE; (2009). pp. 640–645.

- Drewing K., Wiecki T. V., Ernst M. O. Material properties determine how force and position signals combine in haptic shape perception. Acta Psychologica. (2008);128(2):264–273. doi: 10.1016/j.actpsy.2008.02.002.

- Ernst M. O., Banks M. S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. (2002);415(6870):429–433. doi: 10.1038/415429a.

- Ernst M. O., Bulthoff H. H. Merging the senses into a robust percept. Trends in Cognitive Sciences. (2004);8(4):162–169. doi: 10.1016/j.tics.2004.02.002.

- Ernst M. O. Learning to integrate arbitrary signals from vision and touch. Journal of Vision. (2007);7(5). doi: 10.1167/7.5.7.

- Gepshtein S., Burge J., Ernst M. O., Banks M. S. The combination of vision and touch depends on spatial proximity. Journal of Vision. (2005);5(11):1013–1023. doi: 10.1167/5.11.7.

- Helbig H. B., Ernst M. O. Optimal integration of shape information from vision and touch. Experimental Brain Research. (2007);179(4):595–606. doi: 10.1007/s00221-006-0814-y.

- Helbig H. B., Ernst M. O. Visual-haptic cue weighting is independent of modality-specific attention. Journal of Vision. (2008);8(1):21, 1–16. doi: 10.1167/8.1.21.

- Hillis J. M., Watt S. J., Landy M. S., Banks M. S. Slant from texture and disparity cues: Optimal cue combination. Journal of Vision. (2004);4(12):967–992. doi: 10.1167/4.12.1.

- Hollins M., Bensmaia S., Karlof K., Young F. Individual differences in perceptual space for tactile textures: Evidence from multidimensional scaling. Perception and Psychophysics. (2000);62((8)):1534–1544. doi: 10.3758/BF03212154. [DOI] [PubMed] [Google Scholar]

- Jacobs R. A. What determines visual cue reliability? Trends in Cognitive Sciences. (2002);6((8)):345–350. doi: 10.1016/S1364-6613(02)01948-4. [DOI] [PubMed] [Google Scholar]

- Kuschel M., Di Luca M., Buss M., Klatzky R. L. Combination and integration in the perception of visual-haptic compliance information. IEEE Transactions on Haptics. (2010);3((4)):234–244. doi: 10.1109/TOH.2010.9. [DOI] [PubMed] [Google Scholar]

- Landy M. S., Maloney L. T., Johnston E. B., Young M. Measurement and modeling of depth cue combination: In defense of weak fusion. Vision Research. (1995);35((3)):389–412. doi: 10.1016/0042-6989(94)00176-m. [DOI] [PubMed] [Google Scholar]

- Mascaro S. A., Asada H. H. Photoplethysmograph fingernail sensors for measuring finger forces without haptic obstruction. IEEE Transactions on Robotics and Automation. (2001);17((5)):698–708. [Google Scholar]

- Nardini M., Jones P., Bedford R., Braddick O. Development of cue integration in human navigation. Current Biology. (2008);18((9)):689–693. doi: 10.1016/j.cub.2008.04.021. [DOI] [PubMed] [Google Scholar]

- Oruc I., Maloney L. T., Landy M. S. Weighted linear cue combination with possibly correlated error. Vision Research. (2003);43((23)):2451–2468. doi: 10.1016/s0042-6989(03)00435-8. [DOI] [PubMed] [Google Scholar]

- Perotti V. J., Todd J. T., Lappin J. S., Phillips F. The perception of surface curvature from optical motion. Perception and Psychophysics. (1998);60((3)):377–388. doi: 10.3758/bf03206861. [DOI] [PubMed] [Google Scholar]

- Rosas P., Wagemans J., Ernst M. O., Wichmann F. A. Texture and haptic cues in slant discrimination: Reliability-based cue weighting without statistically optimal cue combination. Journal of the Optical Society of America A: Optics, Image Science, and Vision. (2005);22(5):801–809. doi: 10.1364/josaa.22.000801.

- Serwe S., Drewing K., Trommershäuser J. Combination of noisy directional visual and proprioceptive information. Journal of Vision. (2009);9(5):11–14. doi: 10.1167/9.5.28.

- Srinivasan M. A., Lamotte R. H. Tactual discrimination of softness. Journal of Neurophysiology. (1995);73(1):88–101. doi: 10.1152/jn.1995.73.1.88.

- Srinivasan M. A., Beauregard G. L., Brock D. L. The impact of visual information on the haptic perception of stiffness in virtual environments. Proceedings of the ASME Dynamic Systems and Control Division. (1996);58:555–559.

- Stein B. E., Meredith M. A. The merging of the senses. Nature. (1993);364(6438):588.

- Stevens S. S. The direct estimation of sensory magnitudes – loudness. American Journal of Psychology. (1956);69(1):1–25. doi: 10.2307/1418112.

- van Beers R. J., Sittig A. C., Gon J. J. How humans combine simultaneous proprioceptive and visual position information. Experimental Brain Research. (1996);111(2):253–261. doi: 10.1007/BF00227302.

- van Beers R. J., Sittig A. C., Gon J. J. Integration of proprioceptive and visual position-information: An experimentally supported model. Journal of Neurophysiology. (1999);81(3):1355–1364. doi: 10.1152/jn.1999.81.3.1355.

- Wichmann F. A., Hill N. J. The psychometric function: I. Fitting, sampling, and goodness of fit. Perception and Psychophysics. (2001);63(8):1293–1313. doi: 10.3758/BF03194544.

- Wu W. C., Basdogan C., Srinivasan M. A. Visual, haptic, and bimodal perception of size and stiffness in virtual environments. Proceedings of the ASME Dynamic Systems and Control Division. (1999);67:19–26.

- Young M. J., Landy M. S., Maloney L. T. A perturbation analysis of depth perception from combinations of texture and motion cues. Vision Research. (1993);33(18):2685–2696. doi: 10.1016/0042-6989(93)90228-o.