Abstract

The neurobiological basis of reading is of considerable interest, yet analyzing data from subjects reading words aloud during functional MRI data collection can be difficult. Therefore, many investigators use surrogate tasks such as visual matching or rhyme matching to eliminate the need for spoken output. Use of these tasks has been justified by the presumption of “automatic activation” of reading‐related neural processing when a word is viewed. We have tested the efficacy of using a nonreading task for studying “reading effects” by directly comparing blood oxygen level dependent (BOLD) activity in subjects performing a visual matching task and an item naming task on words, pseudowords (meaningless but legal letter combinations), and nonwords (meaningless and illegal letter combinations). When compared directly, there is significantly more activity during the naming task in “reading‐related” regions such as the inferior frontal gyrus (IFG) and supramarginal gyrus. More importantly, there are differing effects of lexicality in the tasks. A whole‐brain task (matching vs. naming) by string type (word vs. pseudoword vs. nonword) by BOLD timecourse analysis identifies regions showing this three‐way interaction, including the left IFG and left angular gyrus (AG). In the majority of the identified regions (including the left IFG and left AG), there is a string type × timecourse interaction in the naming but not the matching task. These results argue that the processing performed in specific regions is contingent on task, even in reading‐related regions and is thus nonautomatic. Such differences should be taken into consideration when designing studies intended to investigate reading. Hum Brain Mapp 34:2425–2438, 2013. © 2012 Wiley Periodicals, Inc.

Keywords: fMRI, orthography, phonology, spoken, language, adult

INTRODUCTION

The neurobiological underpinnings of reading have been studied since the advent of functional neuroimaging [e.g., Petersen et al., 2009], and interest in the neural processing systems contributing to fluent reading has grown considerably. A recent PubMed (http://www.ncbi.nlm.nih.gov/sites/entrez) search of the terms “reading” or “language” and “fMRI,” “PET,” or “neuroimaging” returned 9,194 results, of which 6,836 were published in the last 10 years. As a whole, this research has contributed much to our knowledge about the neuroscience of reading, including identification of brain regions consistently used in single word reading [see Bolger et al., 2009; Fiez and Petersen, 2009; Jobard et al., 2009; Turkeltaub et al., 2009; Vigneau et al., 2009 for meta‐analyses], how the neural systems for reading change with development [reviewed in Schlaggar and McCandliss, 2009], and how these systems may be disrupted in dyslexic readers [see Gabrieli, 2009; Shaywitz, 2009]. However, due to the technical difficulties of imaging during spoken output, including recording verbal responses [Nelles et al., 2009] and the possibility of movement related artifacts [Mehta et al., 2009], many groups have used implicit reading tasks such as matching [e.g., Tagamets et al., 2009], ascender judgments [e.g., Price et al., 2009; Turkeltaub et al., 2009], target string detection [e.g., Vinckier et al., 2009], and silent reading [e.g., Dehaene et al., 2009]. In fact, only 375 of the aforementioned neuroimaging studies are found if “aloud” or “spoken” is added to the PubMed search terms described above.

The use of nonvocal tasks for studying reading‐related processing has been justified by the proposition that the reading pathway is automatically activated whenever a word is viewed. Automaticity in reading has a long history, dating back at least to William James [James, 2009]. Behavioral studies of reading have provided some evidence for the automatic activation of reading pathways when viewing (or matching or scanning) words. For example, in the classic word‐color Stroop effect, subjects are slower to report the ink color of words that name a color other than the ink color, an indication that the word itself has been read despite its lack of relevance to the task at hand [see MacLeod, 2009]. Additionally, two influential models of word reading, a connectionist model in which orthographic, phonologic, and semantic processors work together to produce a spoken word [e.g., Harm and Seidenberg, 2009], and the dual route connectionist model in which words are processed in distinct phonologic and orthographic pathways [e.g., Coltheart et al., 2009], generally assume automatic activation of these neural components whenever a word is viewed. Finally, evidence in support of automatic activation can be found in semantic priming; when there is a short time between a semantically related prime and a target word to be read, there is facilitation (a decrease in response time) for reading the target word [e.g., Neely, 2009].

Early functional neuroimaging studies also support the concept of “automatic activation.” As described above, functional neuroimaging studies have generally converged on a set of left hemisphere regions used for single word reading [Jobard et al., 2009; Turkeltaub et al., 2009; Bolger et al., 2009; Vigneau et al., 2009], including a region near the left occipitotemporal border in the fusiform cortex termed the visual word form area (VWFA) [see McCandliss et al., 2009 for a review], regions near the left supramarginal gyrus (SMG) and angular gyrus (AG) which have been reported as phonologic and/or semantic processors [Binder et al., 2009; Church et al., 2009; Graves et al., 2009; Sandak et al., 2009], and regions in the left inferior frontal gyrus (IFG) thought to be involved in phonological processing and/or articulatory processes [Booth et al., 2009; Fiez et al., 2009; Mechelli et al., 2009]. Many studies that do not require reading aloud [e.g., Cohen et al., 2009; Dehaene et al., 2009; Polk et al., 2009; Price et al., 2009; Tagamets et al., 2009; Turkeltaub et al., 2009] show activity in these regions.

However, there is some evidence that task manipulation may alter lexical processing in these regions. Returning to semantic priming provides one such example. While there is always facilitation of responses to targets with semantically related primes if the prime and target are presented close together in time, there is also evidence of top down control changing that relationship [Neely, 2009]. If subjects are taught nonsemantically related prime/target associations, the learned (but not semantically related) prime will facilitate response time to read the target word if prime and target are presented with an adequate time apart (on the order of several hundred ms). Moreover, in this case, the semantically related prime becomes inhibitory (causing an increase in response time to name the semantically related target). Thus, the “lexical processing” required for primed word processing can be affected by top‐down control.

Further, there is evidence that task manipulation can affect reading‐related neural processing in at least some brain regions. For example, activity differences between the processing of letters and digits are reduced in an orthographic processing region when the subjects are asked to name the stimuli aloud relative to silent reading [Polk et al., 2009]. Starrfeldt and Gerlach [2009] have also shown differential stimulus effects for color versus category naming in the VWFA. Twomey et al. [2009] demonstrated task‐dependent activation patterns in the VWFA and left IFG regions for words, pseudowords that sound like words, and pseudowords that do not sound like words. Tasks that emphasize specific processing components of reading, such as rhyme matching versus spelling, show clear distinctions in BOLD activity in regions such as the SMG, IFG, and VWFA [Bitan et al., 2009; Booth et al., 2009]. More regions show differential activation in dyslexic and typical readers when subjects read words aloud than when subjects perform an implicit reading task [Brunswick et al., 2009].

In this study, we directly test for neural processing differences between subjects reading aloud and making a visual matching judgment on three classes of orthographic stimuli: words, pseudowords (defined as orthographically legal letter combinations), and nonwords (defined as orthographically illegal letter combinations). Variations of visual matching have been used as an implicit reading task [e.g., Tagamets et al., 2009], and we contend that this matching task involves a form of low level or implicit visual processing similar to that involved in tasks like ascender judgments or unique string detection. By using both word and nonword stimuli, we are able to test not only for task effects (i.e., matching vs. naming), but also for interactions between string type (word vs. pseudoword vs. nonword) and task. String type × task interactions are most likely to reflect processing differences between the two tasks. While activity may be generally reduced for the implicit task (matching) relative to the explicit task (reading), if there is truly automatic activation of the reading pathway, there should be similar effects of string type in the two tasks. If, in contrast, the string types are processed differently in the two tasks, this difference likely reflects an effect of top‐down control on reading‐related processes, a result that would necessarily encourage caution when comparing implicit reading to reading aloud, or when assuming that implicit reading tasks act as surrogates for explicit reading.

METHODS

Participants

Subjects included 22 (10 males) right‐handed native English speakers aged 21–26 years. All were screened for neurologic and psychiatric diagnoses and medications by telephone interview and questionnaire. The majority were from the Washington University or Saint Louis University communities, and all were either college students or college graduates. All gave informed, written consent and were reimbursed for their time per the Washington University Human Studies Committee approval. All subjects were tested for IQ using two subtests of the Wechsler Abbreviated Scale of Intelligence [Wechsler, 2009] and for reading level using three subtests of the Woodcock‐Johnson III (Letter‐Word ID, Passage Comprehension, and Word Attack) [Woodcock and Johnson, 2009]. All subjects had above average IQ (mean = 127, range 115–138, standard deviation 6.4) and reading level (mean standard reading level 17.3 years of education (college graduates), range 15.4–18 years of education (the maximum estimated by the WJ‐III), standard deviation 0.88).

Stimuli

All stimuli consisted of 4‐letter strings. Letter strings were of three types: real words (e.g., ROAD), pseudowords with all orthographically legal letter combinations (e.g., PRET), or nonwords with orthographically illegal letter combinations in English (e.g., PPID). Each letter subtended ∼0.5° of horizontal visual angle, and strings were presented in uppercase Verdana font in white on a black background.

In the item naming task (hereinafter “naming task”), one string was presented foveally, replacing a central fixation crosshair. All strings were presented for 1 s. Forty‐five strings, including 15 real words (e.g., FACE), 15 pseudowords (e.g., RALL), and 15 nonwords (e.g., GOCV), were presented in pseudorandom order in each of four runs per subject, resulting in a total of 180 stimuli. The strings consisted of a subset of those presented in the string matching task described below. Stimuli were pseudorandomized within run with the constraint that no string type appear on more than three consecutive trials, and run order was counterbalanced across subjects.

In the string matching task (hereinafter “matching task”), two strings appeared parafoveally, one above the fixation crosshair and one below (each ∼1.5° vertical visual angle from the fixation cross). Each pair was presented for 1.5 s. The pairs were either both real words, both pseudowords, or both nonwords. Subjects saw a single run of each stimulus type, with 60 pairs per run. Within each run, half of the pairs (30) were the same and half (30) were different. Of the pairs that were different, half (15 pairs) differed in all four character positions and half (15 pairs) differed in only two character positions. A total of four separate pseudorandom orders were generated for each run/stimulus type. Examples of the matching stimuli can be seen in Table 1.

Table 1.

Examples of string matching stimuli

| String type | Same pairs | Different pairs: easy (four character difference) | Different pairs: difficult (two character difference) |
|---|---|---|---|
| Words | ROAD+ROAD | FACE+COAT | LAND+TEND |
| Legal pseudowords | RALL+RALL | TARE+FLOY | KRIT+PRET |
| Illegal nonwords | GOCV+GOCV | BAOO+NLES | FOCR+WECR |
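The pair types illustrated in Table 1 can be checked mechanically. The following is a minimal sketch rather than the stimulus-generation code used in the study; the function names are hypothetical, and it assumes that a pair's category depends only on the number of character positions at which the two strings differ.

```python
def count_differing_positions(top: str, bottom: str) -> int:
    """Count the character positions at which two equal-length strings differ."""
    if len(top) != len(bottom):
        raise ValueError("strings must have the same length")
    return sum(a != b for a, b in zip(top, bottom))


def classify_pair(top: str, bottom: str) -> str:
    """Label a 4-letter pair as 'same', 'difficult' (2 differences) or 'easy' (4)."""
    n_diff = count_differing_positions(top, bottom)
    labels = {0: "same", 2: "difficult", 4: "easy"}
    if n_diff not in labels:
        # Pairs differing in 1 or 3 positions do not belong to any category
        # described in the text.
        raise ValueError(f"unexpected number of differing positions: {n_diff}")
    return labels[n_diff]


# Example pairs taken from Table 1.
for top, bottom in [("ROAD", "ROAD"), ("FACE", "COAT"), ("KRIT", "PRET")]:
    print(top, bottom, classify_pair(top, bottom))
```

A check of this kind could be run over a full stimulus list to confirm the 30/15/15 split of same, easy, and difficult pairs within each run.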

Task Design

Two tasks were used in this study: string naming and string matching. Each subject performed both tasks. Of note, both tasks were embedded within a longer study consisting of single letter and picture matching tasks, single letter and picture naming tasks, a rhyme judgment task, and a picture–sound judgment task. Altogether, each subject performed 16 runs split over two scanning sessions held 1–28 days apart. The order of the runs was counterbalanced within and across scanning sessions.

For the naming task, the 45 stimuli (15 of each string type) were intermixed with 90 null frames in which only a fixation crosshair was presented. The trials were arranged such that the strings were presented sequentially or with one, two, or three null frames between strings. Each trial consisted of a single 2.5 s TR; thus, given the 1 s stimulus duration, the time between the offset of one stimulus and the onset of the next was 1.5, 4, 6.5, or 9 s. Such jitter allows the event‐related timecourse to be extracted [Miezin et al., 2009]. Subjects were instructed to read each item aloud as accurately and quickly as possible.
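The jitter values quoted above follow directly from the trial timing. As a small worked sketch (the function and constant names are illustrative, not taken from the study), the gap between the offset of one string and the onset of the next is the number of intervening frames times the TR, minus the 1 s stimulus duration:

```python
TR_S = 2.5             # duration of one MR frame (one trial), in seconds
STIM_DURATION_S = 1.0  # naming-task strings were shown for 1 s


def gap_between_stimuli(null_frames: int,
                        tr_s: float = TR_S,
                        stim_s: float = STIM_DURATION_S) -> float:
    """Time from the offset of one stimulus to the onset of the next.

    `null_frames` is the number of fixation-only frames inserted between
    two consecutive stimulus frames (0 to 3 in the naming task).
    """
    if null_frames < 0:
        raise ValueError("null_frames must be non-negative")
    onset_to_onset = (null_frames + 1) * tr_s
    return onset_to_onset - stim_s


gaps = [gap_between_stimuli(k) for k in range(4)]
print(gaps)
```

The same function with `stim_s=1.5` and zero to two null frames would describe the matching task timing given in the next paragraph.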

In the matching task, each stimulus pair was presented for 1.5 s, within a 2.5 s TR trial. Sixty stimulus trials of the same type (e.g., all real words) were intermixed with 60 null frames in each run such that the stimuli appeared either sequentially or with one or two null frames between pairs. Subjects were instructed to press a button with one index finger if the stimuli were the same and with the other index finger if they were different. The hands assigned to the “same” and “different” judgments were counterbalanced across subjects. Stimuli were pseudorandomized within each run so that no more than two consecutive correct responses required the same hand for a response.

Behavioral Measures

Behavioral data were collected with digital voice recording software for the naming task [described in Nelles et al., 2009] and with a PsyScope compatible optical button box for the matching task [Cohen et al., 2009]. For the naming task, responses were scored as correct for pseudowords if the subject gave the correct sequence of orthographic to phonologic conversions. Responses to the nonwords were scored liberally: if the subject incorporated a sound associated with all letters or graphemes in the string in the correct order, the response was scored as “correct.” For example, correct responses to PPID included “pi‐pid” and “pid.”

MR Data Acquisition and Preprocessing

A Siemens 3T Trio scanner (Erlangen, Germany) was used to collect all functional and anatomical scans. A single high‐resolution structural scan was acquired using a sagittal magnetization‐prepared rapid gradient echo (MP‐RAGE) sequence (slice time echo = 3.08 ms, TR = 2.4 s, inversion time = 1 s, flip angle = 8°, 176 slices, 1 × 1 × 1 mm voxels). All functional runs were acquired parallel to the anterior‐posterior commissure plane using an asymmetric spin‐echo echo‐planar pulse sequence (TR = 2.5 s, T2* evolution time 27 ms, flip angle 90°). Complete brain coverage was achieved by collecting 32 contiguous interleaved 4‐mm axial slices (4 × 4 mm² in‐plane resolution).

Preliminary image processing included removal of a single pixel spike caused by signal offset, whole brain normalization of signal intensity across frames, movement correction within and across runs, and slice by slice normalization to correct for differences in signal intensity due to collecting interleaved slices. For a detailed description, see Miezin et al. [2009].

After preprocessing, data were transformed into a common stereotactic space based on Talairach and Tournoux [2009] using an in‐house atlas composed of the average anatomy of 12 healthy young adults aged 21–29 years and 12 healthy children aged 7–8 years [see Brown et al., 2009; Lancaster et al., 2009; Snyder, 2009 for methods]. As part of the atlas transformation, the data were resampled isotropically at 2 × 2 × 2 mm³. Registration was accomplished via a 12 parameter affine warping of each individual's MP‐RAGE to the atlas target using difference image variance minimization as the objective function. The atlas‐transformed images were also checked qualitatively against a reference average to ensure appropriate registration.

Participant motion was corrected and quantified using an analysis of head position based on rigid body translation and rotation. In‐scanner movement was relatively low, as subjects were instructed to hold as still as possible during each run and were fitted with a thermoplastic mask molded to each individual's face. Nevertheless, frame‐by‐frame movement correction data from the rotations and translations in the x, y, and z planes were compiled to summarize movement as a single measurement in millimeters root mean square (rms). In this experiment movement ranged from 0.10 to 0.54 mm rms (mean = 0.273 mm rms, standard deviation = 0.120 mm). The difference in movement between the matching (mean = 0.262 mm rms, standard deviation = 0.127 mm) and naming (mean = 0.284 mm rms, standard deviation = 0.114 mm) tasks was not significant.

fMRI Processing and Data Analysis

Statistical analyses of event‐related fMRI data were based on the general linear model (GLM) conducted using in‐house software programmed in the Interactive Data Language (IDL, Research Systems, Boulder, CO) as previously described [Brown et al., 2009; Miezin et al., 2009; Schlaggar et al., 2009]. The GLM for each subject included time as a nine‐level factor made up of nine MR frames (22.5 s, 2.5 s/frame) following the presentation of the stimulus, task as a two‐level factor (matching and naming), and string type as a three‐level factor (words, pseudowords, and nonwords). No assumptions were made regarding the shape of the hemodynamic response function. Only correct trials were included in the analysis; errors were coded separately in the GLM but were not analyzed.
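Modeling each of the nine post-stimulus frames with its own regressor, with no assumed response shape, is commonly called a finite impulse response (FIR) model. The sketch below is not the in-house IDL implementation; it is a hypothetical, minimal illustration of how the regressors for a single condition could be laid out, assuming stimulus onsets are expressed as frame indices. A full model would contain one such set of columns per task and string type, plus the separately coded error trials and any baseline terms, none of which are shown here.

```python
def fir_design_matrix(onset_frames, n_frames, n_timepoints=9):
    """Build an FIR design matrix for one condition.

    Rows are MR frames and columns are post-stimulus time points.
    Entry [f][t] is 1 when frame f lies t frames after a stimulus onset.
    """
    matrix = [[0] * n_timepoints for _ in range(n_frames)]
    for onset in onset_frames:
        for lag in range(n_timepoints):
            frame = onset + lag
            if frame < n_frames:  # ignore lags that run past the end of the run
                matrix[frame][lag] = 1
    return matrix


# Two stimuli two frames apart: their modeled responses overlap in time,
# which is why jittered spacing is needed to estimate each time point.
design = fir_design_matrix(onset_frames=[0, 2], n_frames=12)
for row in design:
    print(row)
```

Because overlapping events place a 1 in different columns of the same row, the estimated weight for each column is that time point's contribution to the timecourse, which is how a nine-point timecourse can be recovered without specifying a hemodynamic response function.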

First, a two task (matching vs. naming) × three string type (words vs. pseudowords vs. nonwords) × 9 timepoint voxelwise whole brain repeated measures ANOVA was conducted. A Monte Carlo correction was used to guard against false positives resulting from conducting a large number of statistical comparisons over many images [Forman et al., 2009; McAvoy et al., 2009]. To achieve a P < 0.05 corrected for multiple comparisons, a threshold of 24 contiguous voxels with a Z > 3.5 was applied.
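Applying the combined height and extent threshold can be sketched as follows. This is an illustrative example only: it does not perform the Monte Carlo simulation that justified the 24-voxel extent, the function name is hypothetical, and it assumes that "contiguous" means voxels sharing a face, which the text does not specify.

```python
from collections import deque

# Offsets to the six face-sharing neighbours of a voxel (an assumption).
FACE_NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0),
                   (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def surviving_clusters(zmap, z_thresh=3.5, min_voxels=24):
    """Return clusters of contiguous voxels with Z > z_thresh and enough voxels.

    `zmap` maps integer voxel indices (i, j, k) to Z scores.
    Each returned cluster is a set of voxel indices.
    """
    above = {voxel for voxel, z in zmap.items() if z > z_thresh}
    seen = set()
    clusters = []
    for start in above:
        if start in seen:
            continue
        # Breadth-first search over suprathreshold neighbours.
        cluster = {start}
        seen.add(start)
        queue = deque([start])
        while queue:
            i, j, k = queue.popleft()
            for di, dj, dk in FACE_NEIGHBOURS:
                neighbour = (i + di, j + dj, k + dk)
                if neighbour in above and neighbour not in seen:
                    seen.add(neighbour)
                    cluster.add(neighbour)
                    queue.append(neighbour)
        if len(cluster) >= min_voxels:
            clusters.append(cluster)
    return clusters
```

With these defaults, a 3 × 3 × 3 block of suprathreshold voxels (27 voxels) survives, whereas an isolated pair of voxels is discarded regardless of how high its Z scores are.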

This voxelwise ANOVA produced three images of interest: voxels with a main effect of timecourse (activity that showed differences among the nine time points collapsing across task and string type), voxels with a task × timecourse interaction (activity that shows timecourse differences between the matching and naming tasks), and voxels with a string type × task × timecourse interaction (activity that shows timecourse differences between the three string types dependent on the two task conditions).

Regions were extracted from these images using an in‐house peak‐finding algorithm (courtesy of Avi Snyder) that located activity peaks within the Monte Carlo corrected contiguous voxel images, by first smoothing with a 4‐mm kernel, then extracting only peaks with a Z score >3.5, containing 24 contiguous voxels and located at least 10 mm from other peaks.
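The peak-finding algorithm itself is in-house and is not described in detail, so the following is only a hedged sketch of the final separation step. It assumes a greedy rule (keep the strongest peak, then discard any weaker peak closer than 10 mm to one already kept), which may differ from how the actual algorithm resolves nearby peaks; smoothing and the voxel-extent check are omitted, and the function name is hypothetical.

```python
import math


def separate_peaks(peaks, z_thresh=3.5, min_distance_mm=10.0):
    """Keep peaks with Z > z_thresh that lie at least min_distance_mm apart.

    `peaks` is a list of ((x, y, z), z_score) tuples with coordinates in mm.
    Stronger peaks take priority over weaker ones (an assumed rule).
    """
    candidates = [p for p in peaks if p[1] > z_thresh]
    candidates.sort(key=lambda p: p[1], reverse=True)
    kept = []
    for coord, score in candidates:
        far_enough = all(math.dist(coord, other) >= min_distance_mm
                         for other, _ in kept)
        if far_enough:
            kept.append((coord, score))
    return kept


example = [((0.0, 0.0, 0.0), 5.0),   # strongest peak, kept
           ((4.0, 0.0, 0.0), 4.0),   # within 10 mm of the first, dropped
           ((20.0, 0.0, 0.0), 3.6),  # far from the first, kept
           ((30.0, 0.0, 0.0), 3.0)]  # below the Z threshold, dropped
print(separate_peaks(example))
```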

The nature of the statistical effects was demonstrated both by performing planned posthoc ANOVA comparisons (i.e., string type × timecourse interactions within each task separately) and by extracting the timecourse (percent BOLD signal change at each of the 9 time points) in every individual subject for each stimulus type in each task in each of the regions defined from the ANOVAs described above. Percent BOLD signal change at each time point was averaged across all subjects and these average timecourses were plotted for each stimulus type in each task.
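The group-average timecourses are simple per-time-point means. A minimal sketch, assuming each subject's timecourse for a given region, task, and string type is already expressed as percent BOLD signal change (the function name and the example values are hypothetical):

```python
def average_timecourse(subject_timecourses):
    """Average percent-signal-change timecourses across subjects.

    `subject_timecourses` is a list with one timecourse per subject,
    each a list with the same number of time points.
    """
    if not subject_timecourses:
        raise ValueError("at least one subject timecourse is required")
    n_timepoints = len(subject_timecourses[0])
    if any(len(tc) != n_timepoints for tc in subject_timecourses):
        raise ValueError("all timecourses must have the same length")
    n_subjects = len(subject_timecourses)
    return [sum(tc[t] for tc in subject_timecourses) / n_subjects
            for t in range(n_timepoints)]


# Made-up values for two subjects and three time points.
print(average_timecourse([[0.0, 0.5, 1.0], [0.0, 0.25, 0.5]]))
```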

In the task × timecourse analysis, we have labeled some regions as showing activity in only one task or the other. To make that distinction we calculated the main effect of timecourse in the regions defined by the task type × timecourse ANOVA for the matching and naming tasks, separately. Because we are defining our main effect of timecourse by looking for changes in BOLD activity across 9 timepoints in predefined ROIs, there were some regions that were defined as showing statistically significant BOLD activity in a task, although there was only a small deflection from baseline (<0.05% BOLD signal change at the peak deflection). We argue such small changes in signal are unlikely to be biologically meaningful and have labeled these as showing activity for only one task in Figure 2A. We have noted those locations as showing activity <0.05% BOLD signal change in Table 3.
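The labeling rule described above can be written out explicitly. This is a sketch under stated assumptions: "peak deflection" is taken to be the largest absolute percent signal change across the time points, a peak of exactly 0.05% is treated as meaningful, and the function names and labels are illustrative rather than the authors' own.

```python
MIN_PEAK_PERCENT = 0.05  # smallest peak deflection treated as meaningful


def peak_deflection(timecourse):
    """Largest absolute deviation from baseline, in percent signal change."""
    return max(abs(value) for value in timecourse)


def is_meaningfully_active(timecourse, significant,
                           min_peak=MIN_PEAK_PERCENT):
    """A task counts as active only if its main effect of time is both
    statistically significant and large enough at its peak."""
    return significant and peak_deflection(timecourse) >= min_peak


def label_region(matching_tc, matching_significant,
                 naming_tc, naming_significant):
    """Label a region by the task(s) in which it shows meaningful activity."""
    matching = is_meaningfully_active(matching_tc, matching_significant)
    naming = is_meaningfully_active(naming_tc, naming_significant)
    if matching and naming:
        return "both tasks"
    if naming:
        return "naming only"
    if matching:
        return "matching only"
    return "neither task"
```

Under this rule, a region whose matching timecourse is statistically significant but peaks at 0.03% would be labeled "naming only", provided its naming timecourse is significant and reaches at least 0.05%.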

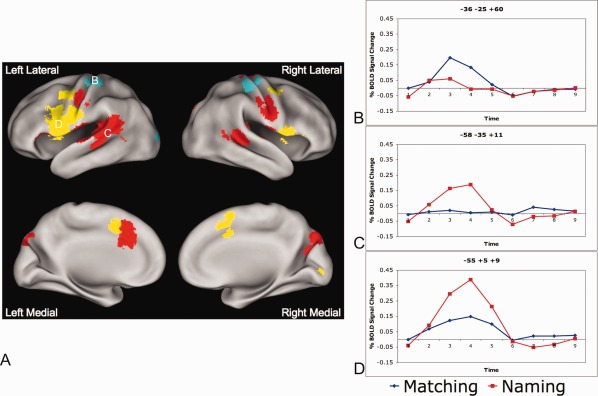

Figure 2.

Task by timecourse effects. A: Regions obtained from a whole brain task (matching vs. naming) by timecourse repeated measures ANOVA. Blue regions show more activity for matching than naming (in a statistical test of reliability). Red regions show activity in the naming task but have no significant activity > 0.05% BOLD signal change in the matching task. Yellow regions are active in both tasks, but have more activity in the naming task relative to the matching task. B: Timecourses for an exemplar blue (matching > naming) region (left finger sensorimotor region: −36, −28, 57). Timecourses for matching are shown in blue and for naming in red. C: Timecourses for an exemplar red (naming only) region (left auditory cortex: −56, −26, 10). Timecourses for matching are shown in blue and for naming in red. D: Timecourses for an exemplar yellow (naming > matching) region (left IFG: −52, 2, 10). Timecourses for matching are shown in blue and for naming in red.

Table 3.

Task by timecourse regions

| x | y | z | Anatomical location |
|---|---|---|---|
| String matching > string naming | | | |
| Main effect of time in naming and matching tasks (Fig. 2A, blue) | | | |
| −27 | −97 | 10 | Left occipital |
| 37 | −22 | 58 | Right sensorimotor |
| 46 | −25 | 54 | Right sensorimotor |
| Main effect of time in matching, no main effect or <0.05% signal change in naming (Fig. 2A, blue) | | | |
| −36 | −25 | 60 | Left finger sensorimotorᵃ |
| String naming > string matching | | | |
| Main effect of time in naming and matching tasks (Fig. 2A, yellow) | | | |
| 17 | −88 | 0 | Right occipital |
| −46 | 2 | 46 | Left premotor |
| 45 | 2 | 54 | Right premotor |
| 11 | 11 | 52 | Right anterior cingulate |
| −15 | 9 | 43 | Left anterior cingulate |
| 3 | 15 | 42 | Right anterior cingulate |
| −55 | 5 | 9 | Left inferior frontal gyrus |
| 58 | 12 | 3 | Right inferior frontal gyrus |
| −54 | 10 | 19 | Left inferior frontal gyrus |
| −46 | 12 | 28 | Left inferior frontal gyrus |
| −40 | 6 | 7 | Left mid insula |
| 47 | 11 | 5 | Right mid insula |
| 53 | 22 | −3 | Right anterior insula |
| −45 | −16 | 40 | Left sensorimotor |
| Main effect of time in naming, no main effect or <0.05% signal change in matching (Fig. 2A, red) | | | |
| −10 | −84 | 34 | Left medial parietal/occipital junctionᵃ |
| 10 | −83 | 41 | Right medial parietal/occipital junctionᵃ |
| 17 | −80 | 34 | Right medial parietal/occipital junctionᵃ |
| −58 | −66 | 7 | Left superior temporal sulcusᵃ |
| 63 | −53 | 9 | Right superior temporal sulcus |
| −56 | −49 | 18 | Left supramarginal gyrusᵃ |
| −41 | −40 | 18 | Left supramarginal gyrusᵃ |
| 25 | −64 | −21 | Right superior temporal sulcusᵃ |
| −58 | −35 | 11 | Left superior temporal gyrus |
| 55 | −34 | 3 | Right superior temporal gyrus |
| −48 | −32 | −1 | Left superior temporal gyrus |
| −44 | −29 | 13 | Left superior temporal gyrusᵃ |
| 43 | −29 | 13 | Right superior temporal gyrusᵃ |
| −15 | −32 | 70 | Left superior parietal |
| 18 | −28 | 63 | Right superior parietal |
| −57 | −17 | 7 | Left mouth sensorimotor |
| 56 | −10 | 8 | Right mouth sensorimotorᵃ |
| 50 | −11 | 35 | Right mouth sensorimotorᵃ |
| −57 | −7 | 25 | Left mouth sensorimotorᵃ |
| 57 | −6 | 25 | Right mouth sensorimotorᵃ |
| −43 | 21 | −1 | Left anterior insulaᵃ |
| −8 | 22 | 27 | Left anterior cingulateᵃ |
| −10 | 22 | 41 | Left anterior cingulateᵃ |

ᵃ Denotes regions with statistically significant (P < 0.05) but not biologically significant (<0.05% BOLD signal change) activity.

Regions defined in a whole brain task (matching vs. naming) by timecourse‐repeated measures ANOVA (reported in MNI coordinates, depicted on the brain in Fig. 2).

To ensure the effects were not due to response time differences between the two tasks, a second set of GLMs was generated for each subject as described above but with an additional regressor coding the response time for each individual trial. Thus response time could be used as a continuous regressor and unique variance related to response time should be assigned to that variable.

RESULTS

Behavioral Results

Subjects showed high accuracy in both the naming (average 98.3%) and matching (average 98.0%) tasks. A 3 (string type: words, pseudowords, nonwords) × 2 (task type: matching and naming) repeated measures ANOVA indicated no difference between the tasks (P = 0.770) or the string types (P = 0.17), and no string type × task interaction (P = 0.98).

An analysis of response time with a 3 (string type: words, pseudowords, nonwords) × 2 (task type: matching and naming) repeated measures ANOVA demonstrated a string type × task interaction (P < 0.0001), and though there was no effect of task (P = 0.289), there was a significant effect of string type (P < 0.0001). Posthoc three‐level (string type) ANOVAs performed for the matching and naming tasks individually showed that the task × string type interaction was driven by an effect of string type on response time for the naming task (P < 0.0001, posthoc paired t‐tests indicated nonwords > pseudowords > words) that was not present in the matching task (P = 0.46). See Table 2 for details.

Table 2.

Behavioral results

| Task and string type | Accuracy (%): average | Accuracy (%): range | Accuracy (%): sd | Response time (ms): average | Response time (ms): range | Response time (ms): sd |
|---|---|---|---|---|---|---|
| Naming | | | | | | |
| Words | 99.0 | 95.0–100 | 1.6 | 837 | 647–1,032 | 100 |
| Pseudowords | 98.2 | 91.7–100 | 2.3 | 932 | 752–1,102 | 100 |
| Nonwords | 97.5 | 93.3–100 | 2.5 | 1,038 | 851–1,270 | 120 |
| Average | 98.3 | 95.5–100 | 1.3 | 955 | 741–1,103 | 100 |
| Statistical effects | No effect of string type | | | Nonwords > pseudowords > words | | |
| Matching | | | | | | |
| Words | 98.6 | 95.0–100 | 1.8 | 914 | 705–1,253 | 139 |
| Pseudowords | 98.3 | 90.0–100 | 2.5 | 889 | 701–1,325 | 138 |
| Nonwords | 97.9 | 73.3–100 | 5.1 | 910 | 771–1,483 | 164 |
| Average | 98.0 | 88.9–100 | 2.5 | 904 | 735–1,331 | 147 |
| Statistical effects | No effect of string type | | | No effect of string type | | |

Imaging Results

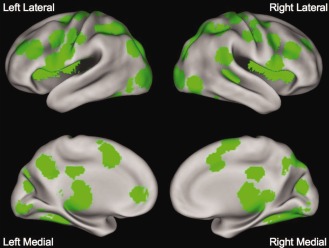

Regions common to both matching and naming tasks

Many regions showed statistically significant activity with BOLD signal change >0.05% in both the matching and naming tasks, as seen in Figure 1. These regions were in locations thought to be important for reading, including the left VWFA, IFG, and posterior AG, as described in the introduction. However, there was also significant activity throughout bilateral primary visual and fusiform cortex, and in regions thought to be involved in directing spatial attention (such as the left and right superior parietal cortex) or control processes (such as bilateral intraparietal sulcus and frontal operculum).

Figure 1.

Main effect of timecourse. Regions showing a main effect of time and at least 0.05% peak BOLD signal change in both the string‐matching and string‐naming tasks.

Task by timecourse effects

Many regions displayed a task (matching vs. naming) × timecourse effect (Fig. 2, Table 3). Of these regions, only bilateral finger sensorimotor cortex and a single left occipital region showed more activity for matching relative to naming (regions shown in blue in Fig. 2A, timecourses for left finger motor cortex in Fig. 2B). Many more regions, including bilateral oropharyngeal sensorimotor cortex and auditory cortex, showed statistically significant (P < 0.05) activity with BOLD signal change >0.05% from baseline only during the naming task (regions shown in red in Fig. 2A, timecourses for a representative region in auditory cortex in Fig. 2C). A third set of regions, including the left IFG, demonstrated activity in both matching and naming tasks, but significantly more activity in the naming task (regions shown in yellow in Fig. 2A, timecourses for a representative left IFG region in Fig. 2D).

String type by task by timecourse effects

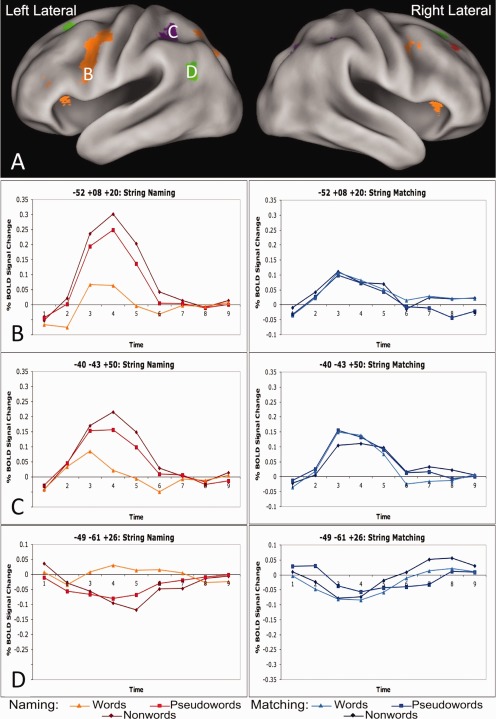

Perhaps most important for evaluating implicit versus explicit reading tasks are the regions showing a task (matching vs. naming) × string type (word vs. pseudowords vs. nonwords) × timecourse (time points 1–9) interaction (Fig. 3, Table 4), as it is this interaction that most likely reveals lexical processing differences between the two tasks. Regions identified in this analysis do not simply have different overall levels of activity between tasks, but have differing effects of string type dependent on the task demands.

Figure 3.

String type × task × timecourse effects. Regions identified in a whole brain string type (words vs. pseudowords vs. nonwords) × task (matching vs. naming) × timecourse‐repeated measures ANOVA. Region colors described in the legend are elaborated upon in the text as effect types 1, 2, and 3.

Table 4.

String‐type by task by timecourse regions

| x | y | z | Anatomical location | String type × time, string matching (P value) | String type × time, string naming (P value) | Task × time (P value) |
|---|---|---|---|---|---|---|
| Orange regions in Figure 3 | | | | | | |
| −22 | −74 | 39 | Left superior occipital | >0.05 | <0.001 | <0.01 |
| −23 | −60 | 46 | Left posterior parietal | >0.05 | <0.001 | <0.01 |
| 22 | −70 | 52 | Right posterior parietal | >0.05 | <0.001 | <0.01 |
| −42 | 1 | 39 | Left MFG | >0.05 | <0.001 | <0.001 |
| 46 | 6 | 37 | Right MFG | <0.01 (nonwords < pseudowords) | <0.001 | <0.001 |
| −49 | −3 | 49 | Left superior posterior frontal | >0.05 | <0.001 | <0.001 |
| −42 | 3 | 27 | Left IFG | >0.05 | <0.001 | <0.001 |
| −52 | 8 | 20 | Left IFG | >0.05 | <0.001 | <0.001 |
| −46 | 39 | 14 | Left anterior IFG | >0.05 | <0.001 | <0.001 |
| −34 | 21 | 4 | Left insula | >0.05 | <0.001 | <0.001 |
| 32 | 22 | 4 | Right insula | >0.05 (P = 0.02 with RT regressed) | <0.001 | <0.01 |
| −3 | 13 | 54 | Medial superior frontal | >0.05 | <0.001 | <0.001 |
| Purple regions in Figure 3 | | | | | | |
| 28 | −64 | 41 | Right occipitoparietal | >0.05 | <0.001 | >0.05 (P = 0.01 with RT regressed) |
| −41 | −43 | 50 | Left lateral parietal | >0.05 | <0.001 | >0.05 |
| 44 | −37 | 48 | Right lateral parietal | <0.01 (P = 0.06 with RT regressed) | <0.001 | >0.05 |
| Green regions in Figure 3 | | | | | | |
| −49 | −61 | 26 | Left AG | <0.03 (words/nonwords < pseudowords) | <0.001 | >0.05 |
| −10 | −35 | 38 | Left posterior cingulate | >0.05 | <0.001 | <0.05 (matching < naming) |
| −8 | −47 | 39 | Left precuneus | >0.05 | <0.001 | >0.05 |
| 3 | −37 | 47 | Right precuneus | >0.05 | <0.001 | <0.001 (matching < naming) |
| −23 | 18 | 46 | Left superior frontal | >0.05 | <0.001 | <0.05 (naming < matching) |
| 20 | 31 | 46 | Right superior frontal | >0.05 | <0.001 | <0.01 (naming < matching) |
| Red regions in Figure 3 | | | | | | |
| 29 | 35 | 39 | Right MFG | BOLD activity < 0.05% | <0.01 | <0.01 |

Regions defined in a whole brain string type (illegal nonwords vs. legal pseudowords vs. words) by task (matching vs. naming) by time repeated measures ANOVA (in MNI coordinates), with a Z ≥ 3.5 (P < 0.05 corrected for multiple comparisons). Colors reflect those used in Figure 3. Regions with statistical effects that do not strictly conform to the grouping described in the text are noted and effect direction is described in the table. Any changes in effect significance for individual regions when response time is regressed are noted.

Planned posthoc comparisons were done on each of these three‐way interaction regions to explore the separate task type × timecourse and string type × timecourse effects; the timecourses showed three general patterns:

One group of regions showed positive timecourses with an effect of lexicality in the naming task (pseudowords and nonwords > words) but no such effect in the matching task. These regions also demonstrated a task × timecourse effect, with significantly more BOLD activity for nonword naming and much lower BOLD activity for all string types in the matching task (which are instead qualitatively similar in activity level to word naming). Regions demonstrating these effects are shown in orange in Figure 3 and timecourses from a representative left IFG region are shown in Figure 4B.

The second group of regions also demonstrated positive timecourses and an effect of string type (nonwords > pseudowords > words) × timecourse (time points 1–9) in the naming but not matching task (purple in Figure 3, timecourses from a representative left lateral parietal region in Fig. 4C). However, in these regions there was no task × timecourse effect, as the average level of matching activity is equivalent to the average BOLD activity in the naming task. Of note, in the representative left lateral parietal region depicted in Figure 4C, there may have been an effect of lexicality in addition to the string type effect, as there was a much larger increase in BOLD activity for naming pseudowords relative to words than for naming nonwords relative to pseudowords. However, the other regions in this category displayed an equivalent increase in the amount of activity for reading pseudowords relative to words and nonwords relative to pseudowords.

A third group contained regions with negative BOLD timecourses that also showed an effect of string type × timecourse in the naming task (nonwords < pseudowords < words) but not the matching task. As in Group 2, there was no task × timecourse effect, as the magnitude of negative deflection in the matching task was similar to the negative deflection of nonword naming (all of which were more negative than word naming). These regions are depicted in green in Figure 3 and timecourses for a representative left AG region are shown in Figure 4D. Notably, these regions showed similar effects to those described for Group 2, only with a negative range of BOLD activity change.

Figure 4.

Examples of regions showing three types of string type × task × timecourse effects. A: Lateral views of regions showing a string type (nonwords vs. pseudowords vs. words) by task (matching vs. naming) by timecourse interaction. B: Timecourses from an exemplar orange region (left lateral IFG: −53, 8, and 20) in the string‐naming task in the left panel and string‐matching task in the right panel. While there is positive activity in both the naming and matching tasks, there is also a task × time effect in this region. Moreover, there is an effect of lexicality (pseudowords and nonwords > words) in the naming, but not the matching task. C: Timecourses from an exemplar purple region (left lateral parietal: −40, −43, and 50) in the string‐naming task on the left and string‐matching task on the right. There is no task × timecourse effect in these regions, and the string type × task × timecourse interaction is driven by an effect of string type (nonwords > pseudowords > words) in the naming task while there are no such differences in the matching task. D: Timecourses from an exemplar green region (left AG: −49, −61, and 26) in the string‐naming task on the left and string‐matching task on the right. There is no task × timecourse effect in the green regions, and the string type × task × timecourse interaction is driven by a lexicality effect (pseudowords and nonwords < words) in the string‐naming task but no lexicality or string type × time effects in the string‐matching task.

In addition to these general patterns, there is a single region with dissimilar effects from those described above. A right posterior frontal region (shown in red in Fig. 3) demonstrated positive timecourses and a string type × timecourse effect in the naming task (nonwords > pseudowords and words) but no statistically significant activity >0.05% BOLD signal change in the matching task.
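The grouping of regions described above can be read as a small decision rule over the post hoc tests. The following Python sketch is only an illustration under stated assumptions: the function name `classify_region`, its arguments, and the uniform 0.05 cutoff are hypothetical and are not taken from the analysis code used in this study. Regions flagged in Table 4 as not strictly conforming to a group would still need case-by-case handling.

```python
def classify_region(p_string_matching, p_string_naming, p_task,
                    positive_timecourse, active_in_matching=True,
                    alpha=0.05):
    """Assign a three-way interaction region to a color group.

    Hypothetical helper: inputs are the post hoc P values for
    string type x time (matching), string type x time (naming),
    and task x time, plus the sign of the BOLD timecourse.
    """
    string_in_naming = p_string_naming < alpha
    string_in_matching = p_string_matching < alpha
    task_effect = p_task < alpha

    if not active_in_matching:
        # No activity above 0.05% signal change in the matching task.
        return "red"
    if not string_in_naming or string_in_matching:
        # Does not fit the "string type effect in naming only" pattern.
        return "nonconforming"
    if not positive_timecourse:
        # Negative timecourses; task x time is not used to split
        # this group in this sketch.
        return "green"
    if task_effect:
        return "orange"  # positive timecourses, task x time present
    return "purple"      # positive timecourses, no task x time effect
```

For example, `classify_region(0.2, 0.0005, 0.0005, True)` returns `"orange"`, where 0.2 and 0.0005 are placeholder values standing in for the ">0.05" and "<0.001" entries of a row in Table 4.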

While there is a task × string‐type interaction in response time that mimics the imaging effects (nonwords RT > pseudowords RT > words RT), the imaging results described above are not dependent on response time. When RT was added as an individual trial regressor to the GLM, all regions with string type × task × timecourse interactions described above continued to show the interaction. One right occipitoparietal region (purple in Fig. 3) changed from a nonsignificant to a significant task type × time effect when RT was regressed. One region in the right insula (orange in Fig. 3) showed a significant string‐type × timecourse effect in the string matching task once RT was regressed out, though this effect was nonsignificant before RT regression. The regions affected by RT regression are noted in Table 4 and may represent regions in which there is an effect of both lexical processing and performance as reflected in RT, such that the former becomes visible only once the latter is removed.
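The logic of adding RT as a trial-level regressor can be illustrated with a much simpler model than the one used here. The sketch below is an assumption-laden simplification: it collapses each trial to a single amplitude instead of a nine-point timecourse, fits one common RT slope across conditions, and uses made-up numbers. The function name `rt_adjusted_means` is hypothetical.

```python
from statistics import mean

def rt_adjusted_means(trials):
    """Estimate condition means with response time (RT) as a covariate.

    `trials` is a list of (condition, rt, amplitude) tuples. This fits
    amplitude = intercept[condition] + slope * rt by ordinary least
    squares (one common RT slope), then reports each condition's
    predicted amplitude at the grand-mean RT.
    """
    by_cond = {}
    for cond, rt, amp in trials:
        by_cond.setdefault(cond, []).append((rt, amp))

    # Common slope from within-condition deviations (the exact OLS
    # solution for condition indicators plus a single RT regressor).
    num = den = 0.0
    cond_means = {}
    for cond, rows in by_cond.items():
        rt_bar = mean(r for r, _ in rows)
        amp_bar = mean(a for _, a in rows)
        cond_means[cond] = (rt_bar, amp_bar)
        for rt, amp in rows:
            num += (rt - rt_bar) * (amp - amp_bar)
            den += (rt - rt_bar) ** 2
    slope = num / den if den else 0.0

    grand_rt = mean(rt for _, rt, _ in trials)
    adjusted = {cond: amp_bar - slope * (rt_bar - grand_rt)
                for cond, (rt_bar, amp_bar) in cond_means.items()}
    return slope, adjusted

# Made-up trials for illustration only (not data from this study):
# amplitude = condition baseline + 0.1 * RT, with slower nonword RTs.
trials = [("word", rt, 0.2 + 0.1 * rt) for rt in (0.6, 0.7, 0.8)]
trials += [("nonword", rt, 0.5 + 0.1 * rt) for rt in (0.9, 1.0, 1.1)]
slope, adjusted = rt_adjusted_means(trials)
```

In this toy case the nonword minus word difference in `adjusted` equals the difference in condition baselines, showing how a string type effect can persist once the RT contribution is accounted for.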

DISCUSSION

Here, we have demonstrated that while there are similarities in BOLD activity for reading aloud and matching words, pseudowords, and nonwords, there are also considerable differences between these two tasks in the level of evoked activity and in the effects of lexical manipulation in reading‐related regions. Many classically described reading‐related regions, including the left IFG and AG, show an effect of lexicality only in the naming task. The task × stimulus‐type interactions provide an argument for reconsidering the general automaticity of reading‐related processing, offer grist for further insights into the neural processing underlying the matching and naming tasks, and give reason for careful consideration of study designs that use implicit reading tasks as a surrogate for explicit reading tasks.

Limited automatic activation of reading‐related pathways

Because of the difficulties of collecting and analyzing fMRI data while the subjects are speaking aloud (detailed in the introduction), some investigators have substituted implicit reading tasks for aloud word reading, assuming that there is automatic activation of the reading pathway, a point also critiqued by Schlaggar and McCandliss [2007]. While there is BOLD activity in the traditionally described “reading” pathway during the implicit reading (visual matching) task, this activity fails to distinguish between strings with different lexical properties (words, pseudowords, and nonwords), whereas reading aloud does produce this distinction. A critical point, then, is that while there is activity in some classically described reading regions in implicit reading, there is not general equivalence in the way these classically described reading regions process items during explicit and implicit reading tasks.

It should be noted that while we argue the differences observed are most likely due to the differing processing demands elicited by the tasks themselves, there are other features that distinguish between the matching and naming conditions. In the matching task, two stimuli are presented simultaneously and parafoveally. By contrast, a single stimulus is presented foveally in the naming task. Additionally, the stimulus types were intermixed in the naming task, while they were presented in separate runs in the matching task. Finally, in the matching task, the stimulus presentation was 1.5 s and required a button press response. For the naming task, the stimulus presentation was 1 s and required a speaking response. There was a difference in response times to name nonwords versus pseudowords and words, which was not present in the matching task. While these constraints were necessary due to our desire to optimize the individual tasks for other performance measures (i.e., to minimize the working memory load and maximize accuracy), they could be the source of some of the task × timecourse effects. Indeed, differences between the tasks are quite likely to be driving some of the task × timecourse effects observed in finger and oropharyngeal sensorimotor cortex regions as well as auditory processing regions. It is also likely that the presence of two stimuli in the matching task contributes to the increased activity for matching over naming in the left occipital region observed in the task × timecourse interaction. However, we argue that these other task differences are less likely to be contributing significantly to the task × string type × timecourse interactions. Our primary argument is that these task × string type × timecourse interactions demonstrate differential processing between the two tasks. The presentation and response differences exist only between the tasks; they are consistent across the stimulus types within each task, making it less likely that the three‐way interactions shown in Figures 3 and 4 are driven by the surface differences between tasks.
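The reasoning in the preceding paragraph can be made concrete with a toy calculation. The sketch below assumes that a surface difference between tasks acts as an additive shift in the timecourse that is identical for every string type within a task; that additivity is an assumption of this illustration, not a demonstrated property of the data, and the numbers are made up. The function names are hypothetical, and only two string types are used to keep the contrast short.

```python
def string_effect_difference(tc):
    """Per-time-point (nonword - word) effect in naming minus matching.

    `tc[task][string]` is a list of BOLD values over time points.
    A nonzero result that varies over time is the signature of a
    task x string type x timecourse interaction in this simplified
    two-level contrast.
    """
    n_time = len(tc["naming"]["word"])
    return [
        (tc["naming"]["nonword"][t] - tc["naming"]["word"][t])
        - (tc["matching"]["nonword"][t] - tc["matching"]["word"][t])
        for t in range(n_time)
    ]

def add_task_offset(tc, task, offset):
    """Return a copy with `offset[t]` added to every string type of one task."""
    out = {k: {s: list(v) for s, v in d.items()} for k, d in tc.items()}
    for s in out[task]:
        out[task][s] = [x + o for x, o in zip(out[task][s], offset)]
    return out

# Made-up values for illustration only (not data from this study).
tc = {
    "naming": {"word": [0.0, 0.2, 0.1], "nonword": [0.0, 0.5, 0.3]},
    "matching": {"word": [0.0, 0.2, 0.1], "nonword": [0.0, 0.2, 0.1]},
}
before = string_effect_difference(tc)
shifted = add_task_offset(tc, "matching", [0.1, 0.4, 0.2])
after = string_effect_difference(shifted)
```

Here `before` and `after` agree at every time point: a shift applied equally to all string types within one task changes the task × timecourse effect but cancels out of the three-way contrast. A task difference that affected string types unequally would not cancel, which is why the argument is framed in terms of likelihood.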

Differential effects of ‘lexical’ processing

The pattern of BOLD activity during the matching and naming tasks may inform our understanding of the type of neural processing performed in regions involved in the two tasks. The left supramarginal gyrus (SMG) and AG have sometimes been treated as a single region performing phonological and/or semantic processing [Booth et al., 2009]. However, regions in these two locations show very different effects in this study. The SMG does not show BOLD activity >0.05% signal change during the matching task and also shows no task × timecourse interaction or task × stimulus type × timecourse interaction. On the other hand, the AG, which has been purported to be involved in semantic processing [Binder et al., 2009; Binder et al., 2009; Graves et al., 2010], shows a negative range of BOLD activity. In both the naming and matching tasks, the BOLD signal shows a negative deflection from baseline. In the matching task, this activity is equivalently negative for all three stimulus types (see a lack of stimulus type × timecourse interaction in the matching task, Fig. 4A), and the percent signal change is equivalent to the negative deflection for naming nonwords (see Fig. 4D). There is also a negative deflection of BOLD activity from baseline for naming pseudowords and nonwords, but no change in BOLD activity from baseline when reading words, consistent with previous reports [Bolger et al., 2009; Church et al., 2009; Church et al., 2009; Graves et al., 2010]. Interestingly, this pattern is also present in other members of the default mode network (green regions in Fig. 3, see Raichle et al., 2001 for a further description of the default mode network).

A pattern of BOLD activity nearly inverse to that observed in the left AG can be observed in left and right anterior superior parietal lobule (SPL) regions, where there is very little activity for reading words but stronger activity for reading pseudowords and nonwords that is equivalent to the activity produced by matching all string types (purple regions in Fig. 3). In the case of the SPL regions, these differences may be related to increased shifts of spatial attention or task difficulty, as these regions are near left IPS regions in the dorsal attention network [Corbetta et al., 2000] and left lateral parietal regions in the fronto‐parietal control network [Dosenbach et al., 2006]. Likewise, the negative deflections in default mode network regions (including the AG) may be related to the level of difficulty in performing the tasks on the particular stimuli, not necessarily due to a generally high level of semantic processing ongoing at rest that continues when reading words but decreases when naming pseudowords and nonwords or matching words, pseudowords, and nonwords. When considered together, the pattern of activity in the AG and lateral parietal regions indicates a reduced need for task level control or attentional processing when reading words relative to reading pseudo‐ and nonwords or matching letter strings.

Implications for study design

Given the described patterns of task‐related differences, we suggest a careful consideration of task design when attempting to draw conclusions about neural activity related to reading. While we particularly promote the use of a truly explicit reading task, we acknowledge that finding the “right” task to study even single‐word reading can be difficult.

Reading silently may not suffice for fMRI analyses, in part because it is difficult for the investigator to ensure subjects are performing the task or to monitor errors during silent reading. For example, if the subject becomes inattentive or drowsy, the experimenter has no way to remove responses made during that state. As many stimulus‐related differences appear as reduced activity for reading words, inattention or failure to perform the task may reduce stimulus‐related differences. There is also increasing evidence that even if the subject is performing the task adequately, error responses change BOLD activity in many brain regions [Dosenbach et al., 2006; Garavan et al., 2002]. If experimenters are not able to detect and then either remove or control for error responses, then those responses may artificially contribute to differences in BOLD activity. It should be noted, though, that reading aloud is ecologically less common than silent reading in adults.

Making a low‐level vocal response such as “yes” to a word, nonword, or nonletter string is also unlikely to be equivalent to reading and likely more similar to the matching results presented here. The task control demands of reading and making a single, repetitive response are disparate, and we have shown here that varying task demand does have an effect on the BOLD activity in reading regions.

Our call to use overt reading in order to study reading should not be taken as a call against using other lexical (or nonlexical) processing tasks to study processes related to reading. For example, many studies of orthographic specialization entail comparing word and letter stimuli versus nonword and nonletter stimuli like false fonts, which cannot be named overtly. Spelling or lexical decision tasks have been used to emphasize graphemic, phonological, and/or semantic processes. However, the results presented here argue that investigators should not conflate the study of orthographic processing in matching, or perhaps even spelling or lexical decision tasks, with the study of “reading.” The limitations of any “nonreading” task should be taken into account when designing neuroanatomic models of single‐word reading.

It is also true that single word reading aloud may not be the most ecologically valid construct, as much adult reading is silent and requires processing not just a single word, but sentences. Such “reading for meaning” adds layers of complexity such as syntax and grammar. Efforts are underway to study these other components of reading [Lee and Newman, 2010; Yarkoni et al., 2008] while being able to measure task compliance via a measured response. Yet, designing and implementing experimental paradigms that can both present such complex stimuli in a controlled way and allow for the attribution of neural responses to particular parts of these stimuli is nontrivial. Nonetheless, we argue single word reading is valuable for multiple reasons: (1) much of the cognitive psychology literature on reading is limited to single word reading, making lexical tasks a reasonable means to look for correspondences between psychological constructs and neural activity, (2) reading development is one of our core interests and often progresses through a stage of aloud, single word reading, and (3) a good understanding of the neural processing related to single words will aid in the understanding of the more complex processing required of more ecologically valid experimental designs.

Finally, these task considerations may be particularly important when comparing different subject groups such as children (early readers) to adults or dyslexic to typical fluent readers. When making group‐based comparisons, not only can the task potentially confound lexical processing (as demonstrated here), but subject group comparisons assuming equivalent performance in the two groups may also confound results [a problematic point expanded on in Carp et al., 2012, Church et al., 2009, Palmer et al., 2004, and Schlaggar and McCandliss, 2007].

SUMMARY AND CONCLUSIONS

There are task‐related differences in BOLD responses to words, pseudowords, and nonwords when directly comparing adults performing an implicit (visual matching) and explicit reading task. String type (words vs. pseudowords vs. nonwords) × timecourse effects were only present during an explicit naming task in many putative reading regions. The pattern of such effects indicates an automaticity or decreased difficulty in reading words during the naming task only. We suggest that these task‐related differences should be considered when designing studies for the purpose of understanding neural activity related to reading processes.

ACKNOWLEDGMENTS

The authors gratefully acknowledge Fran Miezin for his technical assistance in collecting and analyzing the data, particularly the spoken responses. The authors thank Rebecca Coalson and Kelly McVey for their help in acquiring functional data, and thank Jessica Church and Kelly Barnes for their very helpful discussions about and critical reading of this article.

REFERENCES

- Binder JR, Desai RH, Graves WW, Conant LL (2009): Where is the semantic system? A critical review and meta‐analysis of 120 functional neuroimaging studies. Cereb Cortex 19:2767–2796.

- Binder JR, Medler DA, Desai R, Conant LL, Liebenthal E (2005a): Some neurophysiological constraints on models of word naming. Neuroimage 27:677–693.

- Binder JR, Westbury CF, McKiernan KA, Possing ET, Medler DA (2005b): Distinct brain systems for processing concrete and abstract concepts. J Cogn Neurosci 17:905–917.

- Bitan T, Cheon J, Lu D, Burman DD, Gitelman DR, Mesulam MM, Booth JR (2007): Developmental changes in activation and effective connectivity in phonological processing. Neuroimage 38:564–575.

- Bolger DJ, Hornickel J, Cone NE, Burman DD, Booth JR (2008): Neural correlates of orthographic and phonological consistency effects in children. Hum Brain Mapp 29:1416–1429.

- Bolger DJ, Perfetti CA, Schneider W (2005): Cross‐cultural effect on the brain revisited: Universal structures plus writing system variation. Hum Brain Mapp 25:92–104.

- Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TB, Mesulam MM (2002): Functional anatomy of intra‐ and cross‐modal lexical tasks. Neuroimage 16:7–22.

- Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TB, Mesulam MM (2004): Development of brain mechanisms for processing orthographic and phonologic representations. J Cogn Neurosci 16:1234–1249.

- Booth JR, Cho S, Burman DD, Bitan T (2007): Neural correlates of mapping from phonology to orthography in children performing an auditory spelling task. Dev Sci 10:441–451.

- Brown TT, Lugar HM, Coalson RS, Miezin FM, Petersen SE, Schlaggar BL (2005): Developmental changes in human cerebral functional organization for word generation. Cereb Cortex 15:275–290.

- Brunswick N, McCrory E, Price CJ, Frith CD, Frith U (1999): Explicit and implicit processing of words and pseudowords by adult developmental dyslexics: A search for Wernicke's Wortschatz? Brain 122:1901–1917.

- Carp J, Fitzgerald KD, Taylor SF, Weissman DH (2012): Removing the effect of response time on brain activity reveals developmental differences in conflict processing in the posterior medial frontal cortex. Neuroimage 59:853–860.

- Church JA, Coalson RS, Lugar HM, Petersen SE, Schlaggar BL (2008): A developmental fMRI study of reading and repetition reveals changes in phonological and visual mechanisms over age. Cereb Cortex 18:2054–2065.

- Church JA, Balota DA, Petersen SE, Schlaggar BL (2010a): Manipulation of length and lexicality localizes the functional neuroanatomy of phonological processing in adult readers. J Cogn Neurosci 23:1475–1493.

- Church JA, Petersen SE, Schlaggar BL (2010b): The “Task B Problem” and other considerations in developmental functional neuroimaging. Hum Brain Mapp 31:852–862.

- Cohen JD, MacWhinney B, Flatt M, Provost J (1993): PsyScope: A new graphic interactive environment for designing psychology experiments. Behav Res Methods Instrum Comput 25:257–271.

- Cohen L, Dehaene S (2004): Specialization within the ventral stream: The case for the visual word form area. Neuroimage 22:466–476.

- Cohen L, Martinaud O, Lemer C, Lehericy S, Samson Y, Obadia M, Slachevsky A, Dehaene S (2003): Visual word recognition in the left and right hemispheres: Anatomical and functional correlates of peripheral alexias. Cereb Cortex 13:1313–1333.

- Coltheart M, Rastle K, Perry C, Langdon R, Ziegler J (2001): DRC: A dual route cascaded model of visual word recognition and reading aloud. Psychol Rev 108:204–256.

- Corbetta M, Kincade JM, Ollinger JM, McAvoy MP, Shulman GL (2000): Voluntary orienting is dissociated from target detection in human posterior parietal cortex. Nat Neurosci 3:292–297.

- Dehaene S, Naccache L, Cohen L, Bihan DL, Mangin JF, Poline JB, Riviere D (2001): Cerebral mechanisms of word masking and unconscious repetition priming. Nat Neurosci 4:752–758.

- Dosenbach NUF, Visscher KM, Palmer ED, Miezin FM, Wenger KK, Kang HC, Burgund ED, Grimes AL, Schlaggar BL, Petersen SE (2006): A core system for the implementation of task sets. Neuron 50:799–812.

- Fiez J, Balota D, Raichle M, Petersen S (1999): Effects of lexicality, frequency, and spelling‐to‐sound consistency on the functional anatomy of reading. Neuron 24:205–218.

- Fiez J, Petersen S (1998): Neuroimaging studies of word reading. Proc Natl Acad Sci USA 95:914–921.

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, Noll DC (1995): Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): Use of a cluster‐size threshold. Magn Res Med 33:636–647.

- Gabrieli JD (2009): Dyslexia: A new synergy between education and cognitive neuroscience. Science 325:280–283.

- Garavan H, Ross TJ, Murphy K, Roche RA, Stein EA (2002): Dissociable executive functions in the dynamic control of behavior: Inhibition, error detection, and correction. Neuroimage 17:1820–1829.

- Graves WW, Desai R, Humphries C, Seidenberg MS, Binder JR (2010): Neural systems for reading aloud: A multiparametric approach. Cereb Cortex 20:1799–1815.

- Harm MW, Seidenberg MS (2004): Computing the meanings of words in reading: Cooperative division of labor between visual and phonological processes. Psychol Rev 111:662–720.

- James W (1890): The Principles of Psychology. New York: Henry Holt and Company.

- Jobard G, Crivello F, Tzourio‐Mazoyer N (2003): Evaluation of the dual route theory of reading: A metanalysis of 35 neuroimaging studies. Neuroimage 20:693–712.

- Lancaster JL, Glass TG, Lankipalli BR, Downs H, Mayberg H, Fox PT (1995): A modality‐independent approach to spatial normalization of tomographic images of the human brain. Hum Brain Mapp 3:209–223.

- Lee D, Newman SD (2010): The effect of presentation paradigm on syntactic processing: An event related FMRI study. Hum Brain Mapp 31:65–79.

- MacLeod CM (1991): Half a century of research on the Stroop effect: An integrative review. Psychol Bull 109:163–203.

- McAvoy MP, Ollinger JM, Buckner RL (2001): Cluster size thresholds for assessment of significant activation in fMRI. Neuroimage 13:S198.

- McCandliss BD, Cohen L, Dehaene S (2003): The visual word form area: Expertise for reading in the fusiform gyrus. Trends Cogn Sci 7:293–299.

- Mechelli A, Gorno‐Tempini ML, Price CJ (2003): Neuroimaging studies of word and pseudoword reading: Consistencies, inconsistencies, and limitations. J Cogn Neurosci 15:260–271.

- Mehta S, Grabowski TJ, Razavi M, Eaton B, Bolinger L (2006): Analysis of speech‐related variance in rapid event‐related fMRI using a time‐aware acquisition system. Neuroimage 29:1278–1293.

- Miezin FM, Maccotta L, Ollinger JM, Petersen SE, Buckner RL (2000): Characterizing the hemodynamic response: Effects of presentation rate, sampling procedure, and the possibility of ordering brain activity based on relative timing. Neuroimage 11:735–759.

- Neely JH (1977): Semantic priming and retrieval from lexical memory: Roles of inhibitionless spreading activation and limited‐capacity attention. J Exp Psych Gen 106:226–254.

- Nelles JL, Lugar HM, Coalson RS, Miezin FM, Petersen SE, Schlaggar BL (2003): An automated method for extracting response latencies of subject vocalizations in event‐related fMRI experiments. Neuroimage 20:1865–1871.

- Palmer ED, Brown TT, Petersen SE, Schlaggar BL (2004): Investigation of the functional neuroanatomy of single word reading and its development. Sci Stud Read 8:203–223.

- Petersen SE, Fox PT, Posner MI, Mintun M, Raichle ME (1988): Positron emission tomographic studies of the cortical anatomy of single‐word processing. Nature 331:585–589.

- Polk TA, Stallcup M, Aguirre GK, Alsop DC, D'Esposito M, Detre JA, Farah MJ (2002): Neural specialization for letter recognition. J Cogn Neurosci 14:145–159.

- Price CJ, Wise RJS, Frackowiak RSJ (1996): Demonstrating the implicit processing of visually presented words and pseudowords. Cereb Cortex 6:62–70.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL (2001): A default mode of brain function. Proc Natl Acad Sci USA 98:676–682.

- Sandak R, Mencl WE, Frost SJ, Rueckl JG, Katz L, Moore DL, Mason SA, Fulbright RK, Constable RT, Pugh KR (2004): The neurobiology of adaptive learning in reading: A contrast of different training conditions. Cogn Affect Behav Neurosci 4:67–88.

- Schlaggar B, Brown T, Lugar H, Visscher K (2002): Functional neuroanatomical differences between adults and school‐age children in the processing of single words. Science 296:1476–1479.

- Schlaggar BL, McCandliss BD (2007): Development of neural systems for reading. Ann Rev Neurosci 30:475–503.

- Shaywitz SE (1998): Dyslexia. N Engl J Med 338:307–312.

- Snyder AZ (1996): Difference image vs. ratio image error function forms in PET‐PET realignment. In: Myer R, Cunningham VJ, Bailey DL, Jones T, editors. Quantification of Brain Function Using PET. San Diego, CA: Academic Press. pp 131–137.

- Starrfelt R, Gerlach C (2007): The visual what for area: Words and pictures in the left fusiform gyrus. Neuroimage 35:334–342.

- Tagamets MA, Novick JM, Chalmers ML, Friedman RB (2000): A parametric approach to orthographic processing in the brain: An fMRI study. J Cogn Neurosci 12:281–297.

- Talairach J, Tournoux P (1988): Co‐planar stereotaxic atlas of the human brain: 3‐dimensional proportional system: An approach to cerebral imaging. Stuttgart: Thieme.

- Turkeltaub PE, Eden GF, Jones KM, Zeffiro TA (2002): Meta‐analysis of the functional neuroanatomy of single‐word reading: Method and validation. Neuroimage 16:765–780.

- Turkeltaub PE, Gareau L, Flowers DL, Zeffiro TA, Eden GF (2003): Development of neural mechanisms for reading. Nat Neurosci 6:767–773.

- Twomey T, Duncan KJK, Price CJ, Devlin JT (2011): Top‐down modulation of ventral occipito‐temporal responses during visual word recognition. Neuroimage 55:1242–1251.

- Vigneau M, Beaucousin V, Herve PY, Duffau H, Crivello F, Houde O, Mazoyer B, Tzourio‐Mazoyer N (2006): Meta‐analyzing left hemisphere language areas: Phonology, semantics, and sentence processing. Neuroimage 30:1414–1432.

- Vinckier F, Dehaene S, Jobert A, Dubus JP, Sigman M, Cohen L (2007): Hierarchical coding of letter strings in the ventral stream: Dissecting the inner organization of the visual word‐form system. Neuron 55:143–156.

- Wechsler D (1999): Wechsler Abbreviated Scale of Intelligence, 3rd ed. San Antonio: The Psychological Corporation.

- Woodcock RW, Johnson MB (2002): Woodcock‐Johnson‐Revised Tests of Achievement. Itasca, IL: Riverside Publishing.

- Yarkoni T, Speer NK, Balota DA, McAvoy MP, Zacks JM (2008): Pictures of a thousand words: Investigating the neural mechanisms of reading with extremely rapid event related FMRI. Neuroimage 42:973–987.