Abstract

Objective

About half of medical and health-related studies are not published. We conducted a systematic review of reports on reasons given by investigators for not publishing their studies in peer-reviewed journals.

Methods

MEDLINE, EMBASE, PsycINFO, and SCOPUS (up to 13 September 2013) and the references of identified articles were searched to identify reports of surveys that provided data on reasons given by investigators for not publishing studies. The proportions of non-submission and of each reason for non-publication were calculated using the number of unpublished studies as the denominator. Because of heterogeneity across studies, quantitative pooling was not conducted. Exploratory subgroup analyses were conducted.

Results

We included 54 survey reports. Data from 38 included reports were available to estimate proportions of at least one reason given for not publishing studies. The proportion of non-submission among unpublished studies ranged from 55% to 100%, with a median of 85%. The reasons given by investigators for not publishing their studies included: lack of time or low priority (median 33%), studies being incomplete (median 15%), study not for publication (median 14%), manuscript in preparation or under review (median 12%), unimportant or negative result (median 12%), poor study quality or design (median 11%), fear of rejection (median 12%), rejection by journals (median 6%), author or co-author problems (median 10%), and sponsor or funder problems (median 9%). In general, the frequency of reasons given for non-publication was not associated with the source of unpublished studies, study design, or time when a survey was conducted.

Conclusions

Non-submission of studies for publication remains the main cause of non-publication of studies. Measures to reduce non-publication of studies and alternative models of research dissemination need to be developed to address the main reasons given by investigators for not publishing their studies, such as lack of time or low priority and fear of being rejected by journals.

Introduction

About half of medical and health-related research studies remain unpublished [1], [2], and there is ongoing debate about whether selective publication could be justified [3], [4]. However, ethical obligations in research require the appropriate dissemination and publication of all research results [5]. When research methods and results are inaccessible to users of research, the investment in such research contributes little or nothing to knowledge or practice. Failure to publish research can be regarded as unethical and as a form of research misconduct [6]. In addition, empirical evidence indicates the existence of research dissemination bias, as published studies tend to differ systematically from unpublished studies [1], [7]. Adverse consequences of research inaccessibility include unnecessary duplication, harm to patients, waste of limited resources, and loss of (trust in) scientific integrity [1], [8].

The dissemination profile of research is influenced by the interests of a variety of different stakeholders [8]. For example, there have been a number of high-profile cases in which unfavourable results of studies sponsored by pharmaceutical companies were publicly inaccessible for commercial reasons [1], [9]. Rejection by journals may also cause non-publication of studies. However, evidence indicates that many studies remain unpublished because researchers fail to write up and submit their work to journals [10]–[12]. Researchers are motivated to publish as many studies as possible because of a “publish or perish” culture [13], [14]. It is therefore surprising that investigators, who need to publish, are themselves the main cause of non-publication of studies.

Many recently published surveys have reported the reasons researchers give for failing to publish their work in peer-reviewed journals. We conducted a systematic review of these surveys to improve our understanding of the reasons given by investigators for not publishing studies, and to help develop new or better measures to reduce non-publication of completed research.

Methods

We searched MEDLINE, EMBASE, PsycINFO, and SCOPUS for relevant reports up to 13 September 2013 (see Appendix S1 for the search strategy). References of retrieved articles and reviews on publication bias were also examined for relevant studies. Titles and abstracts of citations retrieved from the electronic databases were screened by two independent reviewers. Full-text articles of possibly relevant studies were assessed by one reviewer to identify eligible studies.

We included any reports of surveys that provided data on reasons given by investigators for not publishing studies they conducted. Articles that discussed reasons for not publishing studies in general but did not provide empirical data on reasons given by investigators were excluded. There were no restrictions on languages or publication status. We used Google Translate to obtain key information from studies published in languages other than English.

Data extraction was conducted by one reviewer (FS) and checked by a second reviewer (YL or LH). From the included survey reports, we extracted the following data: source and types of unpublished studies (conference abstracts, study protocols, survey of academics or professionals, postgraduate submissions, rejected manuscripts, study sponsors, and trial registries), survey methods, response rate, number of unpublished studies, number of unsubmitted studies, and stated reasons for not publishing.

Methods used to categorise reasons for not publishing differed across the included surveys. Stated reasons for not publishing were grouped into categories including lack of time or low priority, unimportant or negative results, journal rejection, and fear of being rejected. We used the number of unpublished studies as the denominator to calculate the proportion of non-submission and of each specific reason for non-publication in each included survey report. Proportions were first transformed using the Freeman-Tukey double arcsine transformation [15], [16], so that the transformed values are approximately normally distributed. The transformed proportions were then used to estimate 95% confidence intervals and in meta-regression analyses. Using transformed proportions to estimate confidence intervals also avoids lower limits below 0% or upper limits above 100% when the observed proportion approaches 0% or 100%.

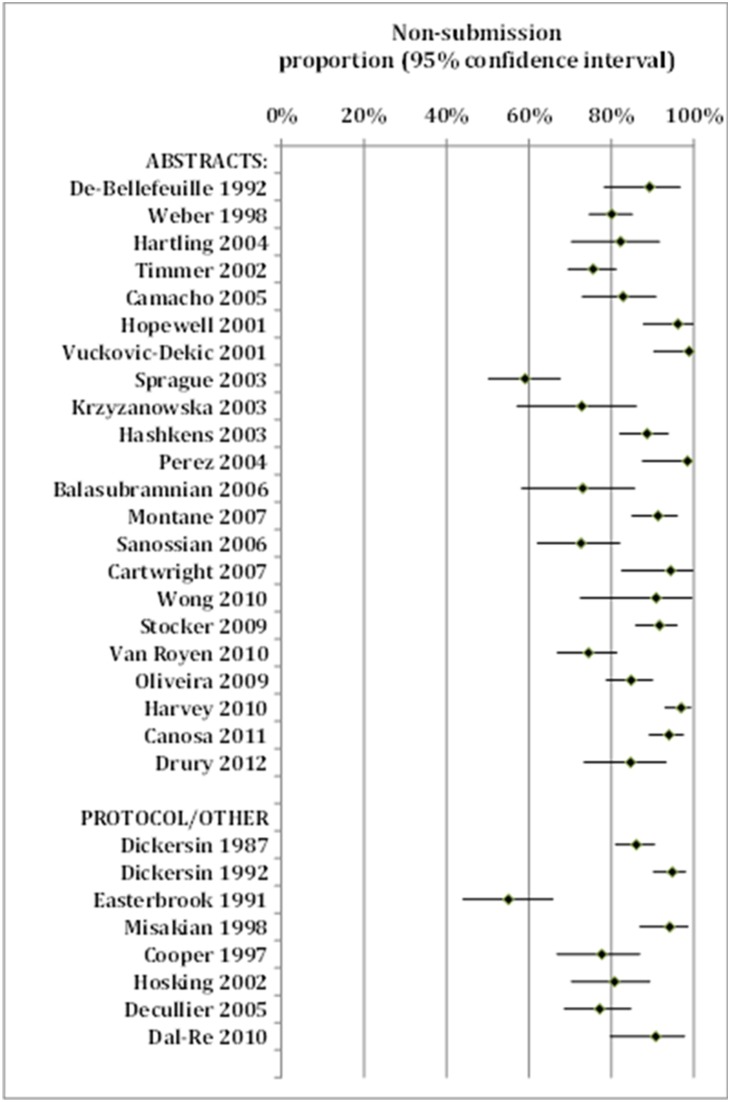
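The transformation step can be sketched in Python as follows. This is a minimal illustration, assuming the standard double arcsine form t = arcsin√(x/(n+1)) + arcsin√((x+1)/(n+1)) with approximate variance 1/(n + 0.5), and Miller's inverse [16] for back-transforming confidence limits. Here x is the number of unpublished studies for which a reason was stated and n is the number of unpublished studies in one report. The function names and the choice to clamp out-of-range limits to 0 or 1 are our own hypothetical choices, not the review's Stata code.

```python
import math


def ft_transform(x: int, n: int) -> tuple[float, float]:
    """Freeman-Tukey double arcsine transform of x events out of n.

    Returns the transformed value t and its approximate variance 1 / (n + 0.5).
    """
    t = math.asin(math.sqrt(x / (n + 1))) + math.asin(math.sqrt((x + 1) / (n + 1)))
    return t, 1.0 / (n + 0.5)


def ft_inverse(t: float, n: int) -> float:
    """Miller's inverse of the double arcsine for a single report of size n."""
    t_min = math.asin(math.sqrt(1 / (n + 1)))  # value of t when x = 0
    t_max = math.pi - t_min                      # value of t when x = n
    # Outside [t_min, t_max] the inverse is undefined; clamp to 0 or 1.
    if t <= t_min:
        return 0.0
    if t >= t_max:
        return 1.0
    s = math.sin(t)
    inner = s + (s - 1 / s) / n
    root = math.sqrt(max(0.0, 1 - inner ** 2))
    return 0.5 * (1 - math.copysign(1.0, math.cos(t)) * root)


def proportion_ci(x: int, n: int, z: float = 1.96) -> tuple[float, float, float]:
    """Observed proportion x / n with a 95% CI built on the transformed scale."""
    t, var = ft_transform(x, n)
    half_width = z * math.sqrt(var)
    return x / n, ft_inverse(t - half_width, n), ft_inverse(t + half_width, n)


# Hypothetical example: 0 of 20 unpublished studies cite a given reason.
print(proportion_ci(0, 20))  # lower limit is 0.0, never negative
```

Because the limits are computed on the transformed scale and then mapped back, they stay within 0 to 1 even when the observed proportion is 0% or 100%.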

Because of significant heterogeneity across reports, quantitative pooling of results was not conducted. We used forest plots to visually present individual results of included reports, and presented medians and ranges (minimum to maximum) of reported proportions of reasons given for non-publication.
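The descriptive summary used instead of pooling is simple to reproduce. A minimal Python sketch, assuming one (reason count, unpublished studies) pair per survey report; the function name and the example counts are hypothetical.

```python
from statistics import median


def summarise(surveys):
    """Median and range of per-report proportions.

    Each proportion uses the number of unpublished studies as the denominator.
    """
    proportions = [k / n for k, n in surveys]
    return median(proportions), min(proportions), max(proportions)


# Hypothetical counts from three survey reports.
med, lo, hi = summarise([(12, 40), (30, 65), (5, 20)])
print(f"median {med:.0%} (range {lo:.0%} to {hi:.0%})")  # median 30% (range 25% to 46%)
```

Unlike a pooled estimate, the median gives each report equal weight regardless of its size.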

For exploratory subgroup analyses, included reports were grouped by type of unpublished study (abstracts vs. protocols or other), design of unpublished studies (clinical trials only vs. other or mixed designs), period in which the survey was conducted (before 2000, 2000 to 2004, and 2005 onwards), rate of non-submission, and response rate. Differences between subgroups were tested using univariate random-effects meta-regression (the “metareg” command in Stata/IC 12.1 for Windows), with Bonferroni correction for multiple testing.
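The review used Stata's metareg command; the Python sketch below is not a reimplementation of it, and metareg's default estimation and test options may differ (for example in how the between-report variance τ² is estimated and whether a Knapp-Hartung adjustment is applied). It illustrates one common approach under stated assumptions: a method-of-moments (DerSimonian-Laird-type) estimate of τ², followed by weighted least squares with weights 1/(vᵢ + τ²) and a two-sided z-test for the slope. The function name `mm_metareg` and its inputs (transformed proportions `y`, within-report variances `v`, and a covariate `x` such as a 0/1 subgroup indicator) are hypothetical.

```python
import math


def mm_metareg(y, v, x):
    """Univariate random-effects meta-regression, method-of-moments tau^2.

    y: transformed proportion per report; v: its within-report variance;
    x: one covariate per report (e.g. 1 = abstracts, 0 = other sources).
    Returns (slope, standard error, two-sided p-value, tau^2).
    """
    k = len(y)
    if k < 3:
        raise ValueError("need at least three reports")

    def wls(w):
        # Closed-form weighted least squares for y = b0 + b1 * x.
        sw = sum(w)
        swx = sum(wi * xi for wi, xi in zip(w, x))
        swxx = sum(wi * xi * xi for wi, xi in zip(w, x))
        swy = sum(wi * yi for wi, yi in zip(w, y))
        swxy = sum(wi * xi * yi for wi, xi, yi in zip(w, x, y))
        det = sw * swxx - swx ** 2
        if det == 0:
            raise ValueError("covariate must vary across reports")
        b1 = (sw * swxy - swx * swy) / det
        b0 = (swy - b1 * swx) / sw
        # Entries (00, 01, 11) of the inverse of X'WX.
        return b0, b1, (swxx / det, -swx / det, sw / det)

    # Step 1: fixed-effect fit and residual heterogeneity statistic Q.
    w = [1 / vi for vi in v]
    b0, b1, (m00, m01, m11) = wls(w)
    q = sum(wi * (yi - b0 - b1 * xi) ** 2 for wi, yi, xi in zip(w, y, x))

    # Step 2: moment estimator tau^2 = (Q - (k - 2)) / tr(P), truncated at 0,
    # where tr(P) = sum(w) - tr((X'WX)^-1 X'W^2X).
    a00 = sum(wi ** 2 for wi in w)
    a01 = sum(wi ** 2 * xi for wi, xi in zip(w, x))
    a11 = sum(wi ** 2 * xi * xi for wi, xi in zip(w, x))
    tr_p = sum(w) - (m00 * a00 + 2 * m01 * a01 + m11 * a11)
    tau2 = max(0.0, (q - (k - 2)) / tr_p)

    # Step 3: refit with random-effects weights and test the slope with a z-test.
    w_re = [1 / (vi + tau2) for vi in v]
    _, slope, (_, _, var_slope) = wls(w_re)
    se = math.sqrt(var_slope)
    p = math.erfc(abs(slope / se) / math.sqrt(2))
    return slope, se, p, tau2


# Hypothetical transformed proportions, variances and a 0/1 subgroup indicator.
slope, se, p, tau2 = mm_metareg([0.2, 0.9, 0.3, 1.0], [0.01] * 4, [0, 0, 1, 1])
```

With a binary covariate, the slope is the estimated difference between the two subgroups on the transformed scale.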

Results

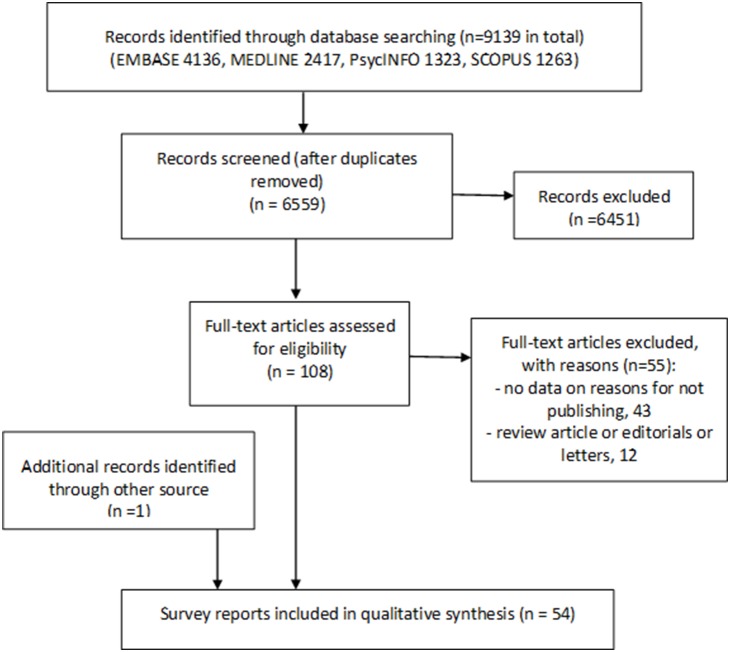

The process of study selection is shown in Figure 1. After screening the titles and abstracts of 6559 retrieved citations, we identified 108 possibly relevant citations. After examining the 108 full-text articles, we included 53 reports that provided investigators’ reasons for not publishing their studies [10], [11], [17]–[67]. One additional report was identified by an informal search of PubMed [68], because the formal search excluded titles containing the terms “meta-analysis” or “systematic review” (see Appendix S1). In total, we included 54 survey reports.

Figure 1. Flow diagram for study selection.

The main characteristics of the included reports are presented in Appendix S2. Non-publication was generally defined as lack of full publication in peer-reviewed journals. Of the 54 included reports, 10 surveys were conducted before 2000, 17 conducted from 2000 to 2004, and 27 conducted since 2005. There were 27 surveys of authors of unpublished conference abstracts, 11 reports in which unpublished studies were revealed by academics or professionals who responded to a survey, 7 reports in which unpublished studies were identified from protocol cohorts, 4 surveys of postgraduate submissions, two surveys of studies sponsored by a funding body, two surveys of rejected manuscripts, and one survey of studies from a trial registry.

There were 38 reports that included unpublished studies with a mix of different designs or types, 11 reports that included only unpublished trials, and one each for unpublished animal research, epidemiological research, qualitative research, methodological research, and systematic reviews (Appendix S2).

Survey methods mainly included postal or email questionnaires, and telephone or face-to-face interviews. The response rate by authors ranged from 8% to 100%, with a median of 64% (Appendix S2). According to 43 reports with sufficient data, the median number of unpublished studies was 65 (range 7 to 223). Of the 54 included reports, 38 provided sufficient data to estimate proportions of at least one stated reason.

Main reasons for non-publication

Table 1 presents the medians and ranges of reported proportions of reasons for non-publication. Estimated proportions (with 95% confidence intervals) of reasons for non-publication from individual reports and results of statistical tests for heterogeneity are shown in Appendix S3 (forest plots).

Table 1. Reasons given by investigators for non-publication of studies: summary of results of included surveys.

| Reason | No. of surveys that reported the reason | Total no. of stated reasons / unpublished studies | Reported proportion: median (range) |
|---|---|---|---|
| Non-submission | 30 | 2156/2592 | 85% (55%, 100%) |
| Study incomplete or ongoing | 18 | 273/1509 | 15% (3%, 56%) |
| In preparation or under review | 22 | 293/1778 | 12% (3%, 65%) |
| Study not for publication | 9 | 107/880 | 14% (3%, 38%) |
| Similar findings published | 10 | 52/867 | 5% (3%, 13%) |
| Submission rejected by journal | 25 | 210/2197 | 6% (2%, 27%) |
| Fear of being rejected | 9 | 110/926 | 12% (6%, 26%) |
| Lack of time or low priority | 32 | 873/2634 | 33% (11%, 60%) |
| Results not important or negative | 19 | 293/1593 | 12% (1%, 34%) |
| Poor study quality or design | 16 | 197/1611 | 11% (2%, 32%) |
| Sponsor/funder problems | 4 | 31/230 | 9% (5%, 24%) |
| Author/co-author problems | 14 | 156/1337 | 10% (4%, 23%) |
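The totals column of Table 1 can also be read as a crude ratio of stated reasons to unpublished studies summed across surveys. The Python sketch below computes these ratios purely for comparison with the reported medians; it is not a pooled estimate (it ignores weighting and between-survey heterogeneity), and the review deliberately did not pool results. For example, the crude ratio for non-submission is 2156/2592, about 83%, slightly below the median of 85%.

```python
# Table 1: (stated reason count, unpublished studies, reported median)
table1 = {
    "Non-submission": (2156, 2592, 0.85),
    "Study incomplete or ongoing": (273, 1509, 0.15),
    "In preparation or under review": (293, 1778, 0.12),
    "Study not for publication": (107, 880, 0.14),
    "Similar findings published": (52, 867, 0.05),
    "Submission rejected by journal": (210, 2197, 0.06),
    "Fear of being rejected": (110, 926, 0.12),
    "Lack of time or low priority": (873, 2634, 0.33),
    "Results not important or negative": (293, 1593, 0.12),
    "Poor study quality or design": (197, 1611, 0.11),
    "Sponsor/funder problems": (31, 230, 0.09),
    "Author/co-author problems": (156, 1337, 0.10),
}

# Crude ratio of the column totals; NOT a pooled estimate (no weighting,
# heterogeneity ignored), shown only to contrast with the reported medians.
crude = {reason: k / n for reason, (k, n, _) in table1.items()}

for reason, (k, n, med) in table1.items():
    print(f"{reason}: crude {crude[reason]:.0%}, median {med:.0%}")
```

Differences between the two columns (for example 13% crude vs. 9% median for sponsor or funder problems) reflect the fact that large surveys dominate the totals, whereas the median weights each survey equally.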

Two reports included only studies that had been rejected for publication by journals [35], [46]. Using data from the other 30 reports, the proportion of non-submission among unpublished studies ranged from 55% to 100%, with a median of 85% (Table 1 and Figure 2).

Figure 2. Proportions of non-submission among unpublished studies – results of individual surveys.

The most commonly stated reason for non-publication was lack of time or low priority (median 33%, range: 11% to 60%) (Table 1). Other important reasons for non-publication included: studies being incomplete or still ongoing (median 15%), study not for publication (median 14%), manuscript in preparation or under review (median 12%), unimportant or negative result (median 12%), poor study quality or design (median 11%), fear of rejection (median 12%), rejection by journals (median 6%), author or co-author problems (median 10%), and sponsor or funder problems (median 9%).

Findings from the included reports in which data were insufficient for quantitative analyses were qualitatively consistent with the above results. For example, lack of time was often mentioned as an important reason for non-publication of studies (Appendix S2).

Heterogeneity in reasons given for non-publication

There was substantial heterogeneity in the proportion of reasons given for non-publication across individual reports (Table 1 and Appendix S3). The results of exploratory subgroup analyses are presented in Appendix S4. In most cases, heterogeneity across reports could not be explained by source of unpublished studies, study design, period of survey, response rate, or non-submission rate (Appendix S4). Four of the 57 statistical tests of subgroup differences were statistically significant at P<0.05, and only one remained significant after Bonferroni correction for multiple testing: a higher proportion of non-submission was associated with a lower proportion of journal rejection as a reason for non-publication (P<0.01 after Bonferroni correction). This is expected, because unsubmitted manuscripts cannot be rejected by journals. Specifically, each 10% increase in non-submission was associated with a 0.7% reduction in journal rejection as a stated reason. For the other three subgroup analyses with P<0.05, the differences were no longer statistically significant after Bonferroni correction (see Appendix S4 for details).
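With 57 tests, the Bonferroni correction amounts to comparing each P value with 0.05/57, about 0.00088, or equivalently multiplying each P value by 57 (capped at 1) and comparing the result with 0.05. A minimal Python sketch; the function name and the example P values are hypothetical, not the review's data.

```python
def bonferroni(p_values, alpha=0.05):
    """Bonferroni adjustment: multiply each p-value by the number of tests, capped at 1.

    Returns the adjusted p-values and whether each remains significant at alpha.
    """
    m = len(p_values)
    adjusted = [min(1.0, p * m) for p in p_values]
    return adjusted, [p_adj < alpha for p_adj in adjusted]


# Hypothetical: two nominally significant results among 57 tests.
adjusted, significant = bonferroni([0.01, 0.0005] + [0.5] * 55)
print(significant[:2])  # [False, True]
```

A nominal P of 0.01 becomes 0.57 after adjustment and loses significance, while a nominal P of 0.0005 becomes 0.0285 and remains significant.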

Discussion

Our systematic review is the most comprehensive review of reasons given by investigators for not publishing studies. As a median of 85% of unpublished studies had not been submitted to journals, non-publication of many studies was directly caused by the failure of authors to write up and submit their work. The most commonly stated reason for non-publication was lack of time or low priority. Other stated reasons included unimportant or negative results, study not completed, study not for publication, fear of journal rejection, poor study quality or design, manuscript in preparation or under review, author or co-author problems, and sponsor or funder problems.

Acceptability of reasons given for not publishing studies

Research dissemination will be a biased process when non-publication is due to negative results, which is clearly unacceptable [8]. Some of the stated reasons seem acceptable for non-publication of a study, such as manuscript in preparation or under review, or the study being still ongoing. However, the acceptability of most stated reasons is disputable in terms of potential bias in the research literature and the ethics of scientific conduct [69], [70].

Except for two reports that included only studies rejected by a journal, journal rejection was an infrequently stated reason for non-publication (median 6%). Rejection by a journal may not necessarily be an acceptable reason for non-publication, as many studies which were eventually published had previously been rejected by one or more journals. Okike et al. found that, in five years, 76% of the manuscripts rejected by the Journal of Bone and Joint Surgery (American Volume) had been published in other journals [46].

Fear of journal rejection (median 12%) was a more frequently stated reason than actual rejection by journals (median 6%). Therefore, the low proportion of journal rejection as a reason for non-publication may be partly due to the selective submission of studies by investigators. To some extent, experienced researchers may be able to guess whether and where a study is likely to be accepted for publication. Fear of being rejected may originate from a perception that results are unimportant or statistically non-significant, awareness of poor study quality, or similar findings having already been published by others. Brice and Chalmers found that only 12% of high-quality medical journals explicitly encourage authors to submit manuscripts of robust research, “regardless of the direction or strength of the results” [71]. Unfortunately, there was no direct empirical evidence to reveal why authors felt their work was unlikely to be accepted by a journal.

Lack of time or low priority (median 33%) is a debatable reason for not publishing studies. Individual researchers may become swamped if engaged in several streams of research that could each be developed for journal publication. Preparing, submitting, revising, and re-submitting manuscripts is very time-consuming, and repeated rejection by several journals before a study is eventually accepted is not unusual. For career advancement, the rewards from publishing in high-impact journals are greater than those from low-impact journals. Many researchers may indeed have insufficient time to publish all their work in peer-reviewed journals. It is therefore pragmatic for investigators to focus on “wonderful results” rather than “negative results”, as the former may be more likely to be published in a high-impact journal [20]. Fanelli found that the frequency of reporting positive results was associated with ‘the more competitive and productive academic environments’ in the United States [72].

Some unpublished studies were reportedly not intended for publication in peer-reviewed journals (median 14%). Postgraduate theses or dissertations are used to obtain academic degrees or professional certificates; however, there is no good reason not to publish degree theses [73]. Feasibility or pilot studies aim to help investigators develop full-scale studies, and the methods and results of such studies should be published or made publicly accessible to other researchers. Findings from industry-sponsored trials are used to gain regulatory approval of commercial products, and such studies should also be published or made publicly accessible [74].

Poor study quality or design problems as a reason for non-publication (median 11%) is also debatable. It has been suggested that the quality rather than the number of publications should be emphasized when measuring researchers’ scientific productivity [75]. However, we need to distinguish between the number of studies published and the number of studies conducted. A reduced emphasis on the volume of published material should not be used to justify non-publication of studies that have been conducted [1], [76]. The results of poor-quality studies may have no immediate impact on clinical practice and health policy. However, methodological issues or problems experienced in failed studies may help other investigators avoid similar mistakes [77]. It may be difficult to publish failed studies in peer-reviewed journals, and alternative dissemination approaches may be required. For example, clinical trial registries may provide a convenient platform to record the methods and results of failed trials [78].

Suppression of unfavourable results by study sponsors or funders has always been a concern [8]. However, only four surveys provided data on the proportion of sponsor or funder problems as a reason for non-publication [21], [34], [39], [68]. A survey of study protocols with different designs, conducted in 1990, found that sponsor control of data accounted for 24% of the 78 unpublished studies [34]. The three more recent studies reported lower proportions of sponsor or funder problems as a reason for non-publication (5%, 7%, and 11%, respectively) [21], [39], [68]. Non-publication because of sponsors or funders was considered rare but important in another three studies that did not provide quantitative data [33], [50], [59].

Some studies were not published because other investigators had already published similar findings (median 5%). However, unnecessary duplication should be distinguished from appropriate replication. Empirical evidence does reveal unnecessary duplication in health research, and new research should be justified by what is already known from systematically reviewing existing evidence [79]. However, with regard to completed studies, results from all primary research should be properly maintained and made publicly accessible.

Author or co-author problems were further reasons given for not publishing studies (median 10%), including job or post changes, trouble with co-authors, and another person being responsible for writing up. It is unclear why a higher proportion of author problems was associated with a lower response rate, and why author problems were a more frequent reason for non-publication of clinical trials than of studies with mixed or other designs (Appendix S4). To some extent, author problems are likely to be associated with other stated reasons such as lack of time or low priority, unimportant results, and poor study quality. For example, if the principal investigator decides not to publish, it may be very difficult for junior co-investigators to publish the results, even if they would like to [80].

Implications for strategies to reduce non-publication of studies

Prospective registration of clinical trials at their inception has been developed in order to reduce selective publication of trials [8], [81]. However, it is currently mandatory only for certain categories of clinical trials (for example, trials of medicinal products or medical devices). It is still difficult, if not impossible, to uncover unpublished observational research and basic biomedical studies. In addition to prospective registration of studies and other enforcement measures, further measures are required that address the main reasons given by investigators for not publishing their studies.

Existing recommendations for reducing non-publication of studies may have given insufficient consideration to the reasons most commonly stated by investigators, such as lack of time or low priority. For instance, the problem of lack of time needs to be addressed from the beginning to the end of a research project. First, as Altman recommended, we need “less research, better research, and research done for the right reasons” [82]. Any new research should be relevant and of sufficiently high quality [79]. If national registries of funded projects (across funding bodies) were available, research funders would be able to take into account the recent and current workload of investigators, so that investigators do not conduct too many studies simultaneously. Moreover, researchers who failed to publish findings from their completed studies could be given lower priority for further funding, preferably across funding bodies. The publication rate of studies funded by the Health Technology Assessment (HTA) Programme in the UK was 98% after 2002, achieved by publishing the results in the programme’s own journal (HTA monograph) and withholding 10% of funds until the full report was published [83].

Second, the peer-reviewed publication process should be streamlined and modified to save the time of investigators, peer reviewers, and editors [84]. It would save time if different journals adopted the same general guidelines for manuscript submission. Many studies are rejected by multiple journals before they are eventually accepted for publication. The effort and time required to publish studies that are likely to be rejected by multiple journals is particularly great, because the manuscript must be reformatted before each submission to a different journal, and a single manuscript must go through repeated editorial and peer-review processes.

For some studies, publication in a conventional journal may be too time-consuming to pursue, or the chance of acceptance by a journal may be extremely slim for various reasons. There is therefore a need for alternative ways to retain “unpublishable” studies so that other researchers can easily identify these studies and access their methods and results. Publishing work through such alternative approaches should take investigators much less time than publishing in conventional journals. Publication through an alternative system should be accepted as fulfilling the mandatory research dissemination required by research sponsors, funders, and other regulatory bodies. Given advances in information technology, developing publication processes alternative to conventional journals is technically feasible. For example, established trial registration systems could serve as alternative models for research dissemination, whereby results are posted together with previously registered protocols [84]. Other models of scientific communication have also been tested in some research fields [85], [86].

Limitations of the review

Biased selection of studies for publication may be a common problem in any research field. However, it is unclear whether the results of this systematic review are directly relevant to non-publication of research in fields other than medical and health-related research. Reasons for non-publication may differ even within medical and health-related research. Further research is required to examine the similarities and differences in research dissemination across fields.

The quality of the included surveys was not formally assessed, as we are not aware of any validated tool for assessing the quality of such studies. One readily available indicator of survey quality may be the response rate by authors, which ranged from 8% to 100% (median 64%). Publication bias and outcome reporting bias are likely in the included studies. The estimated frequency of stated reasons may have been exaggerated when multiple reasons reported by a small number of respondents in some surveys were lumped together into a single “other” category, which could not be used for calculating proportions. Multiple reasons for non-publication of individual studies were usually allowed in the included surveys, so the frequency of some stated reasons may have been under-estimated when investigators selected only some of several interrelated reasons. In addition, we were unable to distinguish one-off rejections from repeated rejections by journals.

This systematic review included very diverse studies in terms of sources of unpublished studies, types of unpublished studies, research fields, survey methods, selection of survey participants, and questions asked about reasons for not publishing studies. Although including diverse reports may improve the generalizability of the findings, there was significant heterogeneity in results across the included reports. In general, the frequency of the main reasons given for non-publication was not associated with the source of unpublished studies, study design, response rate, or time when a survey was conducted. Of the four significant subgroup differences (P<0.05) found in a large number of subgroup analyses, three were no longer statistically significant after Bonferroni correction. In most cases, the differences between subgroups were small and of unclear practical importance. In addition, the power of the meta-regression analyses was limited by the relatively small number of primary studies included. Therefore, the results of our subgroup analyses should be interpreted with great caution [87].

Conclusions

Non-submission of studies for publication remains the main cause of non-publication of medical and health-related studies. Measures to reduce non-publication of studies and alternative models of research dissemination need to be developed taking into account the common reasons given by investigators for not publishing their studies, such as lack of time or low priority and fear of being rejected by journals.

Supporting Information

Appendix S1. Literature search strategy (Ovid MEDLINE, EMBASE).

(PDF)

Appendix S2. The main characteristics of reports that provided data on reasons for non-publication of studies.

(PDF)

Appendix S3. Forest plots of stated reasons for non-publication of studies.

(PDF)

Appendix S4. Results of meta-regression analyses of differences between subgroups.

(PDF)

PRISMA Checklist.

(PDF)

Data Availability

The authors confirm that all data underlying the findings are fully available without restriction. All relevant data are within the paper and its Supporting Information files.

Funding Statement

The authors received no specific funding for this work.

References

- 1. Chan AW, Song F, Vickers A, Jefferson T, Dickersin K, et al. (2014) Increasing value and reducing waste: addressing inaccessible research. Lancet 383: 257–266. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Blumle A, Meerpohl JJ, Schumacher M, von Elm E (2014) Fate of clinical research studies after ethical approval–follow-up of study protocols until publication. PLoS One 9: e87184. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. de Winter J, Happee R (2013) Why selective publication of statistically significant results can be effective. PLoS One 8: e66463. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. van Assen MA, van Aert RC, Nuijten MB, Wicherts JM (2014) Why publishing everything is more effective than selective publishing of statistically significant results. PLoS One 9: e84896. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. World Medical Association (2013) World Medical Association Declaration of Helsinki: Ethical Principles for Medical Research Involving Human Subjects. JAMA 310: 2191–2194. [DOI] [PubMed] [Google Scholar]

- 6. Chalmers I (1990) Under-reporting research is scientific misconduct. JAMA 263: 1405–1408. [PubMed] [Google Scholar]

- 7. Dwan K, Gamble C, Williamson PR, Kirkham JJ (2013) Systematic review of the empirical evidence of study publication bias and outcome reporting bias - an updated review. PLoS One 8: e66844. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Song F, Parekh S, Hooper L, Loke YK, Ryder J, et al. (2010) Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess 14: iii, ix–xi, 1–193. [DOI] [PubMed]

- 9. McGauran N, Wieseler B, Kreis J, Schuler YB, Kolsch H, et al. (2010) Reporting bias in medical research - a narrative review. Trials 11: 37. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Dickersin K, Chan S, Chalmers TC, Sacks HS, Smith H Jr (1987) Publication bias and clinical trials. Control Clin Trials 8: 343–353. [DOI] [PubMed] [Google Scholar]

- 11. Weber EJ, Callaham ML, Wears RL, Barton C, Young G (1998) Unpublished research from a medical specialty meeting: why investigators fail to publish. JAMA 280: 257–259. [DOI] [PubMed] [Google Scholar]

- 12.Chalmers I, Dickersin K (2013) Biased under-reporting of research reflects biased under-submission more than biased editorial rejection. F1000Res 2 (1). doi:10.12688/f1000research.2-1.v1. [DOI] [PMC free article] [PubMed]

- 13. van Dalen HP, Henkens K (2012) Intended and unintended consequences of a publish-or-perish culture: A worldwide survey. Journal of the American Society for Information Science and Technology 63: 1282–1293.

- 14. Tijdink JK, Vergouwen ACM, Smulders YM (2013) Publication Pressure and Burn Out among Dutch Medical Professors: A Nationwide Survey. PLoS ONE 8: e73381.

- 15. Freeman MF, Tukey JW (1950) Transformations related to the angular and the square root. Annals of Mathematical Statistics 21: 607–611.

- 16. Miller JJ (1978) The inverse of the Freeman-Tukey double arcsine transformation. American Statistician 32: 138.

- 17. Ammenwerth E, de Keizer N (2007) A viewpoint on evidence-based health informatics, based on a pilot survey on evaluation studies in health care informatics. Journal of the American Medical Informatics Association 14: 368–371.

- 18. Balasubramanian SP, Kumar ID, Wyld L, Reed MW (2006) Publication of surgical abstracts in full text: A retrospective cohort study. Annals of the Royal College of Surgeons of England 88: 57–61.

- 19. Bullen CR, Reeve J (2011) Turning postgraduate students’ research into publications: a survey of New Zealand masters in public health students. Asia-Pacific Journal of Public Health 23: 801–809.

- 20. Calnan M, Davey Smith G, Sterne JAC (2006) The publication process itself was the major cause of publication bias in genetic epidemiology. Journal of Clinical Epidemiology 59: 1312–1318.

- 21. Camacho LH, Bacik J, Cheung A, Spriggs DR (2005) Presentation and subsequent publication rates of phase I oncology clinical trials. Cancer 104: 1497–1504.

- 22. Canosa D, Ferrero F, Melamud A, Otero PD, Merech RS, et al. (2011) [Full-text publication of abstracts presented at the 33th Argentinean pediatric meeting and non publication related factors]. Archivos Argentinos de Pediatria 109: 56–59.

- 23. Cartwright R, Khoo AK, Cardozo L (2007) Publish or be damned? The fate of abstracts presented at the International Continence Society Meeting 2003. Neurourology & Urodynamics 26: 154–157.

- 24. Cooper H, DeNeve K, Charlton K (1997) Finding the missing science: The fate of studies submitted for review by a human subjects committee. Psychological Methods 2: 447–452.

- 25. Dal-Re R, Pedromingo A, Garcia-Losa M, Lahuerta J, Ortega R (2010) Are results from pharmaceutical-company-sponsored studies available to the public? [Erratum appears in Eur J Clin Pharmacol. 2011 Jun; 67(6):643]. European Journal of Clinical Pharmacology 66: 1081–1089.

- 26. De Bellefeuille C, Morrison CA, Tannock IF (1992) The fate of abstracts submitted to a cancer meeting: factors which influence presentation and subsequent publication. Annals of Oncology 3: 187–191.

- 27. Decullier E, Chapuis F (2006) Impact of funding on biomedical research: a retrospective cohort study. BMC Public Health 6: 165.

- 28. Decullier E, Lheritier V, Chapuis F (2005) Fate of biomedical research protocols and publication bias in France: retrospective cohort study. BMJ 331: 19.

- 29. Dickersin K, Min YI (1993) NIH clinical trials and publication bias. Online Journal of Current Clinical Trials Doc No 50 (3).

- 30. Dickersin K, Min YI, Meinert CL (1992) Factors influencing publication of research results. Follow-up of applications submitted to two institutional review boards. JAMA 267: 374–378.

- 31. Donaldson IJ, Cresswell PA (1996) Dissemination of the work of public health medicine trainees in peer-reviewed publications: an unfulfilled potential. Public Health 110: 61–63.

- 32. Drury NE, Maniakis-Grivas G, Rogers VJ, Williams LK, Pagano D, et al. (2012) The fate of abstracts presented at annual meetings of the Society for Cardiothoracic Surgery in Great Britain and Ireland from 1993 to 2007. European Journal of Cardio-Thoracic Surgery 42: 885–889.

- 33. Dyson DH, Sparling SC (2006) Delay in final publication following abstract presentation: American College of Veterinary Anesthesiologists annual meeting. Journal of Veterinary Medical Education 33: 145–148.

- 34. Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR (1991) Publication bias in clinical research. Lancet 337: 867–872.

- 35. Green R, Del Mar C (2006) The fate of papers rejected by Australian Family Physician. Australian Family Physician 35: 655–656.

- 36. Hartling L, Craig WR, Russell K, Stevens K, Klassen TP (2004) Factors influencing the publication of randomized controlled trials in child health research. Archives of Pediatrics & Adolescent Medicine 158: 983–987.

- 37. Harvey SA, Wandersee JR (2010) Publication rate of abstracts of papers and posters presented at Medical Library Association annual meetings. Journal of the Medical Library Association 98: 250–255.

- 38. Hashkes P, Uziel Y (2003) The publication rate of abstracts from the 4th Park City Pediatric Rheumatology meeting in peer-reviewed journals: what factors influenced publication? Journal of Rheumatology 30: 597–602.

- 39. Hoeg RT, Lee JA, Mathiason MA, Rokkones K, Serck SL, et al. (2009) Publication outcomes of phase II oncology clinical trials. American Journal of Clinical Oncology 32: 253–257.

- 40. Hopewell S, Clarke M (2001) Methodologists and their methods. Do methodologists write up their conference presentations or is it just 15 minutes of fame? International Journal of Technology Assessment in Health Care 17: 601–603.

- 41. Hosking EJ, Albert T (2002) Bottom drawer papers: Another waste of clinicians’ time. Medical Education 36: 693–694.

- 42. Krzyzanowska MK, Pintilie M, Tannock IF (2003) Factors associated with failure to publish large randomized trials presented at an oncology meeting. JAMA 290: 495–501.

- 43. Misakian AL, Bero LA (1998) Publication bias and research on passive smoking: comparison of published and unpublished studies. JAMA 280: 250–253.

- 44. Montane E, Vidal X (2007) Fate of the abstracts presented at three Spanish clinical pharmacology congresses and reasons for unpublished research. European Journal of Clinical Pharmacology 63: 103–111.

- 45. Morris CT, Hatton RC, Kimberlin CL (2011) Factors associated with the publication of scholarly articles by pharmacists. American Journal of Health-System Pharmacy 68: 1640–1645.

- 46. Okike K, Kocher MS, Nwachukwu BU, Mehlman CT, Heckman JD, et al. (2012) The fate of manuscripts rejected by The Journal of Bone and Joint Surgery (American Volume). Journal of Bone & Joint Surgery - American Volume 94: e130.

- 47. Oliveira LR, Figueiredo AA, Choi M, Ferrarez CE, Bastos AN, et al. (2009) The publication rate of abstracts presented at the 2003 urological Brazilian meeting. Clinics (Sao Paulo, Brazil) 64: 345–349.

- 48. Perez S, Hashkes PJ, Uziel Y (2004) [The impact of the annual scientific meetings of the Israel Society of Rheumatology as measured by publication rates of the abstracts in peer-reviewed journals]. Harefuah 143: 266–269, 319.

- 49. Petticrew M, Egan M, Thomson H, Hamilton V, Kunkler R, et al. (2008) Publication bias in qualitative research: What becomes of qualitative research presented at conferences? Journal of Epidemiology and Community Health 62: 552–554.

- 50. Reveiz L, Cardona AF, Ospina EG, de Agular S (2006) An e-mail survey identified unpublished studies for systematic reviews. Journal of Clinical Epidemiology 59: 755–758.

- 51. Reysen S (2006) Publication of Nonsignificant Results: A Survey of Psychologists’ Opinions. Psychological Reports 98: 169–175.

- 52. Rodriguez SP, Vassallo JC, Berlin V, Kulik V, Grenoville M (2009) [Factors related to the approval, development and publication of research protocols in a paediatric hospital]. Archivos Argentinos de Pediatria 107: 504–509, e501.

- 53. Rotton J, Foos PW, Van Meek L, Levitt M (1995) Publication practices and the file drawer problem: A survey of published authors. Journal of Social Behavior & Personality 10: 1–13.

- 54. Sanossian N, Ohanian AG, Saver JL, Kim LI, Ovbiagele B (2006) Frequency and determinants of nonpublication of research in the stroke literature. Stroke 37: 2588–2592.

- 55. Smith MA, Barry HC, Williamson J, Keefe CW, Anderson WA (2009) Factors related to publication success among faculty development fellowship graduates. Family Medicine 41: 120–125.

- 56. Snedeker KG, Totton SC, Sargeant JM (2010) Analysis of trends in the full publication of papers from conference abstracts involving pre-harvest or abattoir-level interventions against foodborne pathogens. Preventive Veterinary Medicine 95: 1–9.

- 57. Sprague S, Bhandari M, Devereaux PJ, Swiontkowski MF, Tornetta P 3rd, et al. (2003) Barriers to full-text publication following presentation of abstracts at annual orthopaedic meetings. Journal of Bone & Joint Surgery - American Volume 85-A: 158–163.

- 58. Stocker J, Fischer T, Hummers-Pradier E (2009) Better than presumed - German College of General Practitioners’ (DEGAM) congress abstracts and published articles. [German] Besser als gedacht - DEGAM Kongress-Abstracts und veröffentlichte Artikel. Zeitschrift für Allgemeinmedizin 85: 123–129.

- 59. ter Riet G, Korevaar DA, Leenaars M, Sterk PJ, Van Noorden CJ, et al. (2012) Publication bias in laboratory animal research: a survey on magnitude, drivers, consequences and potential solutions. PLoS ONE 7: e43404.

- 60. Timmer A, Hilsden RJ, Cole J, Hailey D, Sutherland LR (2002) Publication bias in gastroenterological research - a retrospective cohort study based on abstracts submitted to a scientific meeting. BMC Medical Research Methodology 2: 7.

- 61. Timmons S, Park J (2008) A qualitative study of the factors influencing the submission for publication of research undertaken by students. Nurse Education Today 28: 744–750.

- 62. Tricco AC, Pham B, Brehaut J, Tetroe J, Cappelli M, et al. (2009) An international survey indicated that unpublished systematic reviews exist. Journal of Clinical Epidemiology 62: 617–623, e615.

- 63. Van Royen P, Sandholzer H, Griffiths F, Lionis C, Rethans JJ, et al. (2010) Are presentations of abstracts at EGPRN meetings followed by publication? European Journal of General Practice 16: 100–105.

- 64. Vawdrey DK, Hripcsak G (2013) Publication bias in clinical trials of electronic health records. Journal of Biomedical Informatics 46: 139–141.

- 65. Vuckovic-Dekic L, Gajic-Veljanoski O, Jovicevic-Bekic A, Jelic S (2001) Research results presented at scientific meetings: To publish or not? Archive of Oncology 9: 161–163.

- 66. Woo JMP, Furst D, McCurdy DK, Lund OI, Eyal R, et al. (2012) Factors contributing to non-publication of abstracts presented at the American College of Rheumatology/Association of Rheumatology Health Professionals annual meeting. Arthritis and Rheumatism 64: S806–S807.

- 67. Woodrow R, Jacobs A, Llewellyn P, Magrann J, Eastmond N (2012) Publication of past and future clinical trial data: Perspectives and opinions from a survey of 607 medical publication professionals. Current Medical Research and Opinion 28: S18.

- 68. Wong SS, Fraser C, Lourenco T, Barnett D, Avenell A, et al. (2010) The fate of conference abstracts: systematic review and meta-analysis of surgical treatments for men with benign prostatic enlargement. World J Urol 28: 63–69.

- 69. Yee GC, Hillman AL (1997) Applied pharmacoeconomics. When can publication be legitimately withheld? Pharmacoeconomics 12: 511–516.

- 70. Gyles C (2012) Is there ever good reason to not publish good science? Can Vet J 53: 587–588.

- 71. Brice A, Chalmers I (2013) Medical journal editors and publication bias. BMJ 347. doi:10.1136/bmj.f6170.

- 72. Fanelli D (2010) Do pressures to publish increase scientists’ bias? An empirical support from US States Data. PLoS One 5: e10271.

- 73. Chalmers I (2012) For ethical, economic and scientific reasons, health-relevant degree theses must be made publicly accessible. Evid Based Med 17: 69–70.

- 74. Doshi P, Dickersin K, Healy D, Vedula SS, Jefferson T (2013) Restoring invisible and abandoned trials: a call for people to publish the findings. BMJ 346. doi:10.1136/bmj.f2865.

- 75. Ioannidis JP, Greenland S, Hlatky MA, Khoury MJ, Macleod MR, et al. (2014) Increasing value and reducing waste in research design, conduct, and analysis. Lancet 383: 166–175.

- 76. Hart A (2011) The scandal of unpublished research. Matern Child Nutr 7: 333–334.

- 77. Smyth RL (2011) Lessons to be learnt from unsuccessful clinical trials. Thorax 66: 459–460.

- 78. Riveros C, Dechartres A, Perrodeau E, Haneef R, Boutron I, et al. (2013) Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals. PLoS Med 10: e1001566.

- 79. Chalmers I, Bracken MB, Djulbegovic B, Garattini S, Grant J, et al. (2014) How to increase value and reduce waste when research priorities are set. Lancet 383: 156–165.

- 80. Rubiales AS (2013) Who is responsible for publishing the results of old trials? BMJ 347. doi:10.1136/bmj.f7199.

- 81. Wager E, Williams P (2013) “Hardly worth the effort”? Medical journals’ policies and their editors’ and publishers’ views on trial registration and publication bias: quantitative and qualitative study. BMJ 347: f5248.

- 82. Altman DG (1994) The scandal of poor medical research. BMJ 308: 283–284.

- 83. Turner S, Wright D, Maeso R, Cook A, Milne R (2013) Publication rate for funded studies from a major UK health research funder: a cohort study. BMJ Open 3 (5). doi:10.1136/bmjopen-2012-002521.

- 84. Wager E (2006) Publishing clinical trial results: the future beckons. PLoS Clin Trials 1: e31.

- 85. Callaway E (2012) Geneticists eye the potential of arXiv. Nature 488: 19.

- 86. Burdett AN (2013) Science communication: Self-publishing’s benefits. Science 342: 1169–1170.

- 87. Sun X, Ioannidis JP, Agoritsas T, Alba AC, Guyatt G (2014) How to use a subgroup analysis: users’ guide to the medical literature. JAMA 311: 405–411.

Supplementary Materials

- Literature search strategy (Ovid MEDLINE, EMBASE). (PDF)
- The main characteristics of reports that provided data on reasons for non-publication of studies. (PDF)
- Forest plots of stated reasons for non-publication of studies. (PDF)
- Results of meta-regression analyses of differences between subgroups. (PDF)
- PRISMA Checklist. (PDF)

Data Availability Statement

The authors confirm that all data underlying the findings are fully available without restriction. All relevant data are within the paper and its Supporting Information files.