Abstract

Recent advances in mobile devices have profoundly changed people's daily lives. In particular, the easy access to information provided by the smartphone has had a tremendous impact. However, the impact of mobile devices on healthcare has been limited. Diagnosis and treatment of diseases are still initiated by the occurrence of symptoms, and technologies and devices that emphasize disease prevention and early detection outside hospitals are underdeveloped. Besides healthcare, mobile devices have not yet been designed to fully benefit people with special needs, such as the elderly and those with certain disabilities, such as blindness. In this paper, we present an overview of our research on a new wearable computer called eButton. The concepts of its design and electronic implementation are described. Several applications of the eButton are described, including evaluating diet and physical activity, studying sedentary behavior, assisting blind and visually impaired people, and monitoring older adults suffering from dementia.

Keywords: Wearable Computer, Mobile Computing, Healthcare, Health Monitoring, Wellness, Diet, Physical Activity, Sedentary behavior, Lifestyle, Chronic Disease, Obesity, Older Adults, Navigational Assistance to the blind

1. INTRODUCTION

Healthcare has recently become one of the most debated issues in the United States. More than 1.7 million Americans die annually of chronic diseases, such as heart disease, cancer, stroke, diabetes, and chronic obstructive pulmonary disease, accounting for approximately 70% of all U.S. deaths [1]. The costs of chronic diseases account for about 75% of the more than $2 trillion spent on medical care annually [2]. The recent implementation of health care reform has been a significant effort to improve healthcare. However, the reform is mostly focused on medical insurance. While paying for care is certainly important, the primary root of the high healthcare cost perhaps lies in the rising number of cases where people need care, compounded by the increasing costs of hospital visits. Unfortunately, the current healthcare system in the U.S. does not actually focus on “health care”. Instead, the system focuses on “sick care”. It is in fact a symptom-based system primarily designed for a patient to see a doctor who finds a cure or a relief. With the most advanced medical research and facilities in the world, the U.S. healthcare system has worked very well after a disease is diagnosed. However, it has not worked well in disease prevention. In recent years, an unhealthy lifestyle has been adopted by an increasing portion of the U.S. population. More than 60% of U.S. adults are overweight, and approximately one-third are obese [3]. Obesity has caused a steady rise in chronic diseases, such as cardiovascular disease, cancer, lung disease, and diabetes. Obesity causes approximately 300,000 premature deaths each year in the U.S. [4]. A recent study indicates that the estimated direct and indirect costs of obesity to the U.S. economy are at least $215 billion annually [5].

Among numerous health and wellness related factors, lifestyle modification, including adopting a healthy diet and active living, is extremely important because these practices effectively reduce the occurrence of chronic diseases, providing the best solution to the current healthcare problem. Towards this goal, intelligent wearable systems need to be developed to collect real-world data on individuals’ lifestyles so that they can better understand their own risks of chronic diseases and be empowered by physicians and caregivers to improve their lifestyles.

Besides healthcare, the envisioned intelligent wearable systems can provide tremendous benefits to certain segments of the population. For example, older people suffering from dementia or other degenerative neurological diseases may be monitored by these systems to ensure their safety and wellbeing. Likewise, disabled individuals, such as the blind, can take advantage of advanced sensors, data processors, and wireless communication links within the wearable system to navigate in both indoor and outdoor environments and accomplish their daily living tasks. Unfortunately, the current designs of mobile devices, such as smartphones, tablet computers and smart wristwatches, are not well suited for use by these individuals because they cannot operate these devices effectively. As a result, the advanced functions of these devices are far from being fully utilized.

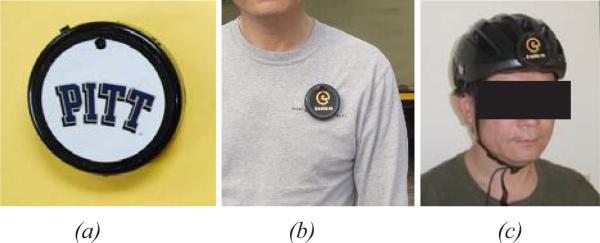

Recognizing the limitations of current mobile devices, we have developed a wearable computer called eButton as a data collection hub in the personal space [6; 7]. The eButton is small and lightweight, resembling a decorative object rather than an electronic gadget (Fig. 1). The face of the device can be designed according to the individual's age, gender, and preference. Despite its simple and personalized appearance, eButton is a complex miniature computer with a powerful CPU and an array of sensors for data collection. This paper gives an overview of the design concepts and describes a number of applications that we have explored recently, including diet and physical activity evaluation, sedentary behavior evaluation, and assistance to the elderly and blind. We finally discuss certain practical issues, such as privacy concerns, before concluding this paper.

Fig. 1.

(a) eButton has a personalized appearance; (b) and (c) wearing methods examples of

2. SYSTEM DESIGN

2.1 Design Concepts

The eButton utilizes the same electronic components as those used in the smartphone, such as ARM-based, power-efficient microprocessors, MEMS sensors, integrated wireless communication channels, and solid state data storage. However, there are major conceptual differences between the designs of the smartphone and eButton, described as follows.

Body Location

The smartphone is not a wearable device; therefore, its design does not need to consider wearability. In the design of eButton, wearability is a critical issue. We studied a number of body locations and selected the chest (Fig. 1b) as the primary site to wear the device. At this site, people often use a pin, a badge or a printed marker to decorate their clothing or make a certain statement (e.g., indicating an organizational/political affiliation or support). By replacing the chest pin with a miniature computer, many new functions can be achieved while keeping its original appearance and function. In addition, when the eButton is pinned at this location, it is able to acquire data not only from the external environment, but also from the internal space of the body, since this location is very close to the heart, the lungs, and any implantable device, which, if present, is often implanted below the collarbone. Therefore, the chest location facilitates the future use of the eButton to acquire physiological data from biological sensors by wirelessly passing both information and energy (for powering sensors and implants) across a cloth/tissue layer.

Besides the chest, the eButton can also be worn at other locations, such as on a bicycle helmet (Fig. 1c) or a hat which, based on our experiments, allows acquisition of better images during sports and certain physical activities (e.g., hiking).

User Interface

The smartphone must be operated actively. As a result, it is equipped with a set of sophisticated interfaces (e.g., high-resolution touch-screen, real or simulated keyboard, voice and sound channels). The eButton, on the other hand, aims at a nearly passive operation. Its user interface is thus much simpler, including an audio channel through an earphone connection, a vibrator that delivers messages in certain predefined vibrating patterns, and a mechanical interface allowing the user to communicate with the device by tapping.

Device Size

The size of the smartphone is mainly limited by its screen, which must be large enough for both visualization and touch-screen operation. In contrast, the eButton is not subject to such a limitation. Its size can be reduced aggressively, constrained mainly by the size/weight of the battery. As semiconductor technology advances rapidly, chips become smaller and more power-efficient. As a result, battery size/weight can be reduced, and so can the size/weight of the eButton.

Operational Mode

The smartphone must spend most of its time “sleeping” in a pocket or a purse because it is designed to work intermittently. This represents a major limitation in its health-related applications. In contrast, the eButton adopts a continuous operational mode. Its cameras and other sensors can keep open eyes on various events and activities (e.g., eating, physical activity, sedentary behavior, navigational landmarks, and events or structures that may lead to an injury). As advanced data processing algorithms are improved and implemented not only on-board, but also on powerful platforms on the Internet, the acquired data can increasingly be interpreted by computer vision instead of human observation. Our goal is to substantially reduce human involvement in observing the sensor data while providing both information and assistance whenever the wearer needs them.

In summary, the smartphone is designed to be a user-operated handheld computer for interpersonal communication and app-based information access, while the eButton is designed to be a self-operated wearable computer within the personal space. Obviously, one can take advantage of their operational differences to form a joint system. For example, the tremendous computing task of the eButton can be shared by the smartphone, which is otherwise idle. In addition, the smartphone provides a set of sophisticated user interfaces that the eButton lacks. This joint system thus has functions far exceeding those currently provided by the smartphone alone, capable of helping people in a completely new dimension.

2.2 Wearable System Design

The eButton has been redesigned several times to improve its functions. The most recent design has a diameter of 60 mm and a weight approximately one quarter of that of a smartphone. It has a microprocessor with the 32-bit ARM Cortex A9 architecture and four processing cores clocked at a maximum of 1.4 GHz. It can run the Linux or Android operating system, making public-domain software resources available for app development. The device has 2 GB of RAM, 8 GB of NAND flash memory, and wireless communication ports supporting both Bluetooth and Wi-Fi. As described previously, the eButton is equipped with an array of sensors that collect real-world data as needed. These sensors include two wide-angle cameras providing a large field of view with stereo and depth information, a UV sensor that distinguishes indoor and outdoor environments, a 3-in-1 inertial measurement unit (IMU) consisting of one 3-axis accelerometer, one 3-axis gyroscope and one magnetometer for motion/orientation measurements, an audio processor for entertainment and delivering information to the user, a proximity sensor for observing arm/hand motion in front of the body to assist activity recognition, a barometer gauging the distance from the eButton to the floor after a certain calibration, and a global positioning system (GPS) receiver determining the geographical location of the wearer. The acquired data can be stored, with or without preprocessing, in flash storage within the device or wirelessly transmitted to a smartphone or a server using the Bluetooth or Wi-Fi channel.

The eButton design provides two mechanisms to attach the device to clothing. The first mechanism uses a needle in the same way as the regular chest pin. The second mechanism uses a pair of disc magnets across the cloth while the needle, made of gold-plated steel, is attracted to the space between the two magnets. During vigorous physical activity, the two mechanisms can be used simultaneously to improve stability.

3. APPLICATIONS

In this section, we briefly describe several applications of eButton that we have investigated, including evaluating diet, physical activity, and sedentary behavior; monitoring older adults with dementia; and helping blind and visually impaired people find their way using the device.

3.1 Dietary Assessment

The eButton utilizes a simple mechanism for dietary assessment. At the chest location, the cameras have a field of view shown as the blue-shaded region in Fig. 2. Without attention from the wearer, pictures of the food on the table are automatically taken at a preset rate, e.g., one picture every two seconds. As a result, the entire eating process is recorded. These pictures (e.g., Fig. 3a) are then processed in the following steps [8-11]: First, regularly shaped utensils (e.g., circular plates or bowls) are detected (Fig. 3b). Next, food items are segmented based on color, texture and a complexity measure (Fig. 3c). Then, the volume of each food item is estimated based on a food-specific shape model (e.g., a part of an ellipsoid for a plate of spaghetti) after a coordinate calibration. This shape model, shown in Fig. 3d as a wire mesh, is fitted to the food by changing its size and shape until the model and food are closely matched. Since the volume of the shape model is known, the volume of the food can be estimated by equating the two volumes (Fig. 3e). Finally, the information about calories and nutrients is obtained from a database, such as the Food and Nutrient Database for Dietary Studies (FNDDS, a public domain database developed by the U.S. Department of Agriculture), using the food name and volume as inputs [12].
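The last two steps of this pipeline (volume from a fitted shape model, then a database lookup) can be illustrated with a minimal sketch. It assumes the fitted model is the upper half of an ellipsoid and that the database returns a density and an energy density for the named food. The function names, the lookup table, and all numeric values below are hypothetical placeholders and are not taken from FNDDS or from our implementation.

```python
import math

def half_ellipsoid_volume_cm3(a_cm, b_cm, height_cm):
    """Volume of the upper half of an ellipsoid with semi-axes a, b and height."""
    return (2.0 / 3.0) * math.pi * a_cm * b_cm * height_cm

# Hypothetical stand-in for a nutrient database query.
# The numbers are placeholders for illustration, NOT values from FNDDS.
FOOD_TABLE = {
    "spaghetti": {"density_g_per_cm3": 0.6, "kcal_per_g": 1.5},
}

def estimate_energy_kcal(food_name, volume_cm3, table=FOOD_TABLE):
    """Convert an estimated food volume to energy via mass."""
    entry = table[food_name]
    mass_g = volume_cm3 * entry["density_g_per_cm3"]
    return mass_g * entry["kcal_per_g"]

# Semi-axes as they might come out of the model-fitting step (assumed values).
volume = half_ellipsoid_volume_cm3(a_cm=8.0, b_cm=6.0, height_cm=3.0)
energy = estimate_energy_kcal("spaghetti", volume)
print(f"volume = {volume:.1f} cm^3, energy = {energy:.1f} kcal")
```

In this sketch the accuracy of the final energy value depends entirely on the fitted dimensions and on the food-specific table entry, which is why the shape-fitting and food identification steps carry most of the estimation error.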

Fig. 2.

The cameras on the eButton automatically take pictures of food

Fig. 3.

Food image processing: (a) raw image, (b) dining plate detection, (c) food segmentation, (d) food shape modeling, (e) fitting result

To evaluate the accuracy of this method, we compared the results obtained by eButton and physical measurement (ground truth) using 100 real-world foods [10]. Although there were significant errors when food was irregularly shaped or the food image was occluded, in most cases (85/100), the error was on the order of 30%, which represents a significant improvement over the visual estimation of the same foods [10].

3.2 Physical Activity and Sedentary Events

It is well known that physical activity plays a critical role in health and wellness. Unlike the diet evaluation case where objective sensors for eating evaluation are rare, there have been numerous commercial devices for physical activity evaluation, such as pedometers to count the number of steps and accelerometers to gauge body motion. These devices can be worn in several ways, such as a waist belt attachment (e.g., Actigraph) and a wristband (e.g., Fitbit and Jawbone UP). Although these devices provide objective measurements, they cannot identify certain activities specifically, such as sedentary activities on which most people spend the majority of their daytime [13].

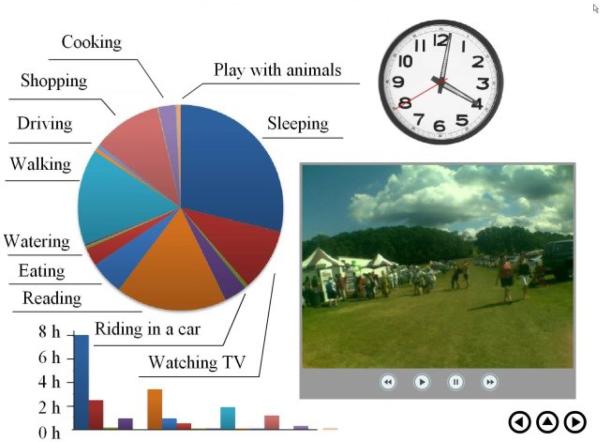

Since the eButton is equipped with both motion and visual sensors, it has multiple ways to evaluate both exercises and sedentary activities, depending on the “resolution” requirement in activity evaluation. The simplest method utilizes a single accelerometer by measuring the average signal amplitude within certain time windows. These amplitude measures are then converted to caloric expenditure [14]. The second method uses one or two cameras from which both activity type and duration are identified. Then, the physical activity compendium (a standard database) is utilized to calculate the caloric expenditure [15]. Currently, activity recognition is mostly performed manually by visually scanning the recorded image sequence assisted by an event segmentation algorithm [16; 17]. It is desirable to automate this time-consuming process. Unfortunately, this automation is difficult mainly because eButton produces “first-person” images in which the person performing the activities is not in the recorded images. Although the general problem of activity recognition has not been solved, we found that certain commonly performed activities, such as walking, can be identified by image processing algorithms [18; 19]. The last method utilizes multiple sensor outputs to form a pattern vector. A pattern recognition algorithm, such as the support vector machine (SVM) [20], is then applied to identify activities. We have also used a personal experience model in which the activities performed by an individual are modeled in terms of probabilities to assist activity recognition [18]. These methods have been implemented and studied using real-world data. The results are presented in a condensed form for observation. Fig. 4 shows a snapshot of an interactive video summary of the activities as observed by eButton from a male adult during a weekend day. When each piece of the pie is activated by the mouse, the video summary shows an image sequence of the activity performed with the clock showing the corresponding time.
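The first two methods can be sketched in a few lines. The sketch assumes non-overlapping windows, subtracts the window mean of the acceleration magnitude as a crude gravity baseline, and uses the common convention that 1 MET corresponds to roughly 1 kcal per kilogram of body mass per hour. The function names, the sampling rate, the window length, and the MET value are illustrative assumptions. The device-specific calibration that maps amplitude to calories is deliberately left out.

```python
import math
from statistics import fmean

def windowed_mean_amplitude(samples, fs_hz, window_s):
    """Return one mean-amplitude value per non-overlapping time window.

    samples: sequence of (x, y, z) accelerometer readings in g.
    The window mean of the magnitude is subtracted as a crude gravity
    baseline (an assumption of this sketch).
    """
    n = int(round(fs_hz * window_s))
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    amplitudes = []
    for start in range(0, len(magnitudes) - n + 1, n):
        window = magnitudes[start:start + n]
        baseline = fmean(window)
        amplitudes.append(fmean([abs(m - baseline) for m in window]))
    return amplitudes

def compendium_kcal(met, body_mass_kg, duration_h):
    """Energy from a MET value, using the convention 1 MET ~ 1 kcal/kg/h."""
    return met * body_mass_kg * duration_h

# Synthetic demo: 10 s at rest followed by 10 s of 2 Hz movement at 50 Hz.
FS_HZ = 50
still = [(0.0, 0.0, 1.0)] * 500
moving = [
    (0.0, 0.0, 1.0 + 0.5 * math.sin(2 * math.pi * 2 * i / FS_HZ))
    for i in range(500)
]
amplitudes = windowed_mean_amplitude(still + moving, FS_HZ, window_s=10)
print(amplitudes)

# Illustrative MET value only; real values come from the compendium [15].
print(compendium_kcal(met=1.5, body_mass_kg=60, duration_h=2))
```

The resting window yields an amplitude of zero and the moving window a clearly larger value, which is the contrast the amplitude-based method relies on. The camera-based method replaces the amplitude with an identified activity type and duration before the MET calculation.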

Fig. 4.

Snapshot of an interactive video summary of a single day of events experienced by an individual.

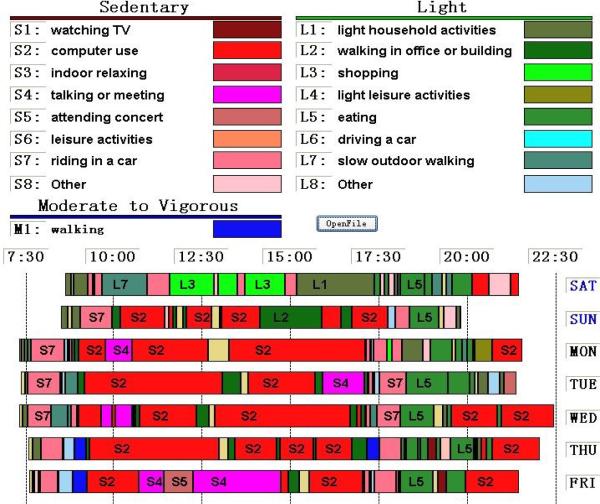

For health and wellness, maintaining an active lifestyle is important. Therefore, in another experiment, we used the eButton to probe individuals’ sedentary behavior. Fig. 5 summarizes the activities performed by a female software engineer during one week using the second method described previously (camera-based method with manual activity recognition). It can be observed from Fig. 5 that sitting in front of the computer at home or at the office occupied most of the daytime, that there were no intentional physical exercises, that very few physical activities at the moderate level were performed, and that vigorous physical activities were totally absent. In addition, hours of sitting time without a short break dominated work hours. Although this sedentary lifestyle is common in modern society, if not corrected, it may lead to becoming overweight and/or the development of chronic diseases.
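Once activities have been labeled, uninterrupted sitting time of the kind noted above can be extracted mechanically. The following minimal sketch assumes each labeled event carries start and end times in minutes since midnight. The `Event` type, the label names, and the sample day are hypothetical and serve only to illustrate the bookkeeping.

```python
from dataclasses import dataclass

@dataclass
class Event:
    start_min: int  # minutes since midnight
    end_min: int
    label: str

# Hypothetical label set; real labels depend on the annotation scheme.
SEDENTARY = {"computer", "tv", "reading"}

def sedentary_bouts(events, max_gap_min=0):
    """Merge back-to-back sedentary events into uninterrupted bouts.

    Any non-sedentary event, or an unrecorded gap longer than
    max_gap_min, ends the current bout.
    """
    bouts = []
    current = None
    for ev in sorted(events, key=lambda e: e.start_min):
        if ev.label in SEDENTARY:
            if current is not None and ev.start_min - current[1] <= max_gap_min:
                current[1] = ev.end_min
            else:
                if current is not None:
                    bouts.append(tuple(current))
                current = [ev.start_min, ev.end_min]
        elif current is not None:
            bouts.append(tuple(current))
            current = None
    if current is not None:
        bouts.append(tuple(current))
    return bouts

day = [
    Event(540, 660, "computer"),
    Event(660, 720, "computer"),
    Event(720, 735, "walking"),
    Event(735, 900, "computer"),
    Event(900, 930, "walking"),
    Event(1200, 1290, "tv"),
]
bouts = sedentary_bouts(day)
longest_min = max(end - start for start, end in bouts)
total_min = sum(end - start for start, end in bouts)
print(bouts, longest_min, total_min)
```

Reporting the longest bout alongside the total separates a day with frequent short breaks from one with the same total sitting time but no breaks.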

Fig. 5.

One-week summary of daytime activities for a female software engineer

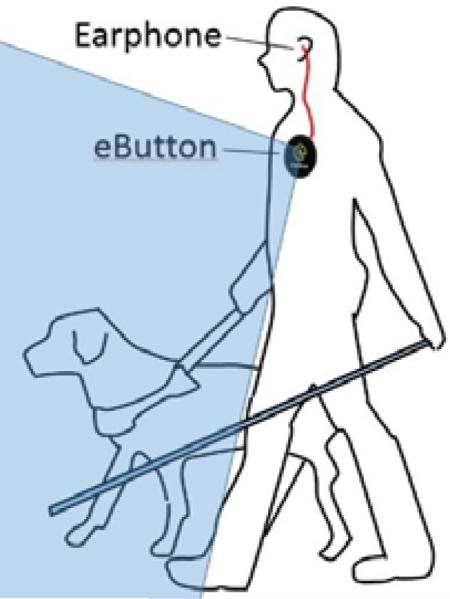

3.3 Assistance to Blind People

We have investigated the use of eButton to help the blind and visually impaired navigate the environment. Our basic system design is illustrated in Fig. 6. An eButton is worn on the chest, which requires no attention by the wearer. A short cord connects the eButton with an earphone, providing navigational instructions to the wearer by voice. This design has two unique features. First, the use of the eButton does not interfere with the use of other assistive means, such as a cane or a guide dog. Second, the device has both a fixed height above the ground and a fixed camera view in the direction of travel at all times. As a result, the acquired image sequence can closely match a pre-stored image sequence acquired by another person with normal vision who has worn the eButton in the same way and previously walked along the same route. This valuable feature allows navigation by matching two image sequences, equivalent to following an established route in an indoor or outdoor map [21]. Therefore, the eButton approach has clear advantages over the existing smartphone-based approaches, which often require device handling and suffer from greater variability in acquired images due to varying camera location and orientation.

Fig. 6.

The eButton provides guiding information without interfering with other means for navigation

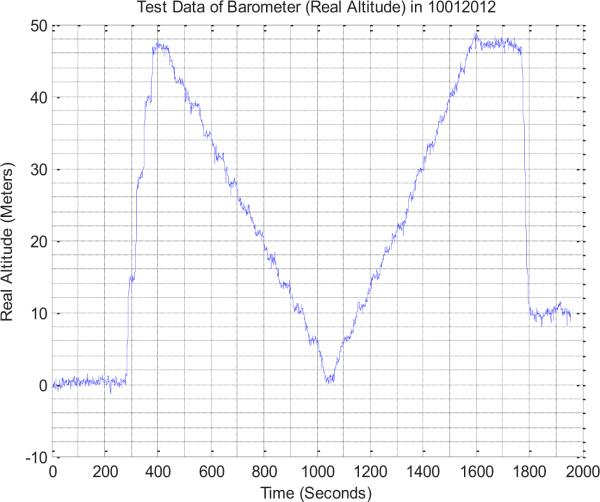

During navigation, the GPS receiver (for the outdoor case) or the Wi-Fi receiver (for the indoor case) within the eButton provides a rough route while the video and inertial sensor data fine-tune the route. In the indoor case, a calibrated barometer within the eButton measures the relative height of the wearer with respect to the entrance level and thereby detects the floor level in a multi-story building [21; 22]. Fig. 7 plots an example of height measurement. The subject first took the elevator to the 12th floor. It can be observed that the elevator stopped 3 times before reaching the destination. Next, the subject took the stairs down to the first floor, then up to the 12th floor, and finally took the elevator down to the third floor (nonstop). Clearly, the floor level can be determined if the height of each floor of the building is known.
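The floor-level computation can be sketched as follows. The sketch converts pressure to altitude with the widely used standard-atmosphere approximation, takes the difference from the reading at the entrance, and divides by a known per-floor height. The function names, the 3.5 m floor height, and the pressure values are assumptions for illustration. A real deployment would also need to handle weather-related pressure drift.

```python
def pressure_to_altitude_m(pressure_hpa, reference_hpa=1013.25):
    """Standard-atmosphere approximation of altitude from pressure."""
    return 44330.0 * (1.0 - (pressure_hpa / reference_hpa) ** (1.0 / 5.255))

def altitude_to_pressure_hpa(altitude_m, reference_hpa=1013.25):
    """Inverse of pressure_to_altitude_m, used here to synthesize readings."""
    return reference_hpa * (1.0 - altitude_m / 44330.0) ** 5.255

def floor_level(pressure_hpa, entrance_hpa, floor_height_m, entrance_floor=1):
    """Floor index from the height relative to the building entrance."""
    relative_height_m = (
        pressure_to_altitude_m(pressure_hpa) - pressure_to_altitude_m(entrance_hpa)
    )
    return entrance_floor + round(relative_height_m / floor_height_m)

FLOOR_HEIGHT_M = 3.5   # assumed uniform floor height
entrance_hpa = 1005.0  # assumed calibration reading at the entrance level

# Synthesize the pressure expected 11 floors above the entrance.
entrance_alt_m = pressure_to_altitude_m(entrance_hpa)
reading_hpa = altitude_to_pressure_hpa(entrance_alt_m + 11 * FLOOR_HEIGHT_M)

print(floor_level(entrance_hpa, entrance_hpa, FLOOR_HEIGHT_M))
print(floor_level(reading_hpa, entrance_hpa, FLOOR_HEIGHT_M))
```

Because only the difference between two altitude estimates is used, errors that affect both readings equally largely cancel, which is why a calibration at the entrance level is sufficient for floor detection.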

Fig. 7.

Barometer output when a subject took an elevator to the 12th floor, walked down and up the stairs and then took the elevator again down to the third floor.

3.4 Safety Monitoring for Older Adults

As in the previous case, the eButton can be worn unobtrusively and passively on the chest of older adults, allowing continuous monitoring of their health, safety and wellbeing. We conducted a preliminary study on this important application for older adults with dementia [23; 24]. Our system structure is shown in Fig. 8. The system consists of two data acquisition devices, an eButton and a self-constructed prototype wristwatch which records heart rate and skin temperature. The data acquired by the wristwatch are wirelessly transmitted to the eButton, where the physiological data join the eButton-acquired data to form a single data stream. This data stream is then sent through the Wi-Fi router to a secure remote server, which serves as a computational platform for advanced data processing and access by caregivers.
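One simple way to picture the joining of the two data sources into a single stream is a timestamp-ordered merge. The sketch below assumes each record is a `(timestamp_s, source, payload)` tuple and that each device delivers its own records in time order. The record layout and field names are hypothetical, and the actual framing used between the wristwatch and the eButton is not described here.

```python
import heapq

def merge_streams(*streams):
    """Merge time-sorted record streams into one time-ordered list.

    Each record is (timestamp_s, source, payload); ordering uses the
    timestamp only, so payloads never need to be comparable.
    """
    return list(heapq.merge(*streams, key=lambda record: record[0]))

# Hypothetical sample records from the two devices.
watch = [
    (0.0, "watch", {"heart_rate_bpm": 72, "skin_temp_c": 33.1}),
    (1.0, "watch", {"heart_rate_bpm": 73, "skin_temp_c": 33.1}),
]
ebutton = [
    (0.5, "ebutton", {"accel_g": (0.0, 1.0, 0.0)}),
    (1.5, "ebutton", {"accel_g": (0.1, 0.9, 0.0)}),
]

combined = merge_streams(watch, ebutton)
for record in combined:
    print(record)
```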

Fig. 8.

Components of the safety monitoring system for the elderly: Physiological data acquired by the wristwatch join the data acquired by eButton. The combined data are then transmitted over Wi-Fi to a remote server where they are processed and accessed by caregivers.

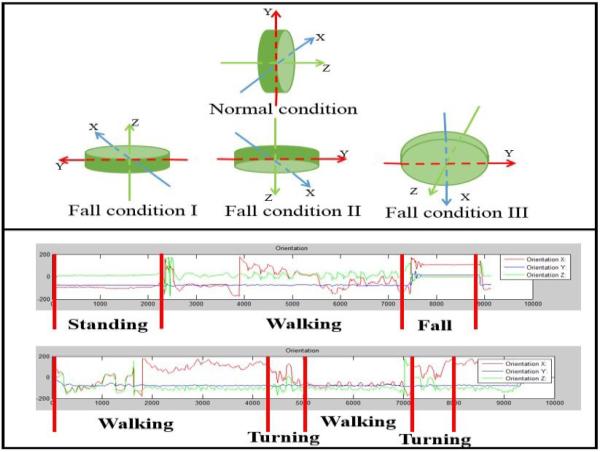

In our system design, the data from motion sensors and the barometer are utilized to monitor body motion and posture (e.g., walking, standing, and sitting). The GPS and visual data are used to determine the location of the wearer in a similar way to that described in the previous section. The system detects a possible fall by observing the rotation angles in a coordinate transformation of the motion sensor data [25]. At a normal standing or sitting position, the Y-axis after transformation is nearly aligned with the gravity vector (“normal condition” in Fig. 9), determined according to the output of a magnetometer. In case of a fall or lying down, the Y-axis changes significantly (the three “fall conditions” in Fig. 9). A fall is detected if the Y-axis rotates rapidly within a predefined short period. By imposing this constraint, the case where the elder lies down intentionally can be excluded. The bottom panel in Fig. 9 shows our experimental data in which the rotation angles of all three axes after the transformation are plotted. It can be observed that the Y-axis had a significant change when a simulated fall took place. Our test results with repeated maneuvers indicated that, using this transformation method, the accuracy in detecting simulated falls reached 95% [24].
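The timing constraint that separates a fall from lying down intentionally can be illustrated with a simplified sketch. Instead of the full coordinate transformation of the motion sensor data, it estimates the tilt of the device Y-axis relative to gravity directly from accelerometer samples and flags a fall when the tilt grows by more than a threshold within a short period. This substitution, the function names, the 60 degree threshold, and the 1 s period are all assumptions for illustration.

```python
import math

def tilt_deg(ax, ay, az):
    """Angle in degrees between the device Y-axis and the measured gravity."""
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        return 0.0
    cosine = max(-1.0, min(1.0, ay / norm))
    return math.degrees(math.acos(cosine))

def detect_fall(samples, fs_hz, angle_thresh_deg=60.0, max_duration_s=1.0):
    """Return the sample index where a rapid tilt is detected, else None.

    A fall is flagged when the tilt increases by at least angle_thresh_deg
    within max_duration_s. Threshold values are illustrative only.
    """
    angles = [tilt_deg(*s) for s in samples]
    span = max(1, int(round(fs_hz * max_duration_s)))
    for i in range(len(angles)):
        last = min(i + span, len(angles) - 1)
        for k in range(i + 1, last + 1):
            if angles[k] - angles[i] >= angle_thresh_deg:
                return k
    return None

def transition(n_steps):
    """Synthetic rotation of the Y-axis from upright to horizontal."""
    return [
        (math.sin(math.pi / 2 * (s + 1) / n_steps),
         math.cos(math.pi / 2 * (s + 1) / n_steps),
         0.0)
        for s in range(n_steps)
    ]

FS_HZ = 50
upright = [(0.0, 1.0, 0.0)] * 100
lying = [(1.0, 0.0, 0.0)] * 100
fast = upright + transition(20) + lying    # 0.4 s rotation: simulated fall
slow = upright + transition(200) + lying   # 4 s rotation: lying down slowly

fall_index = detect_fall(fast, FS_HZ)
slow_index = detect_fall(slow, FS_HZ)
print(fall_index, slow_index)
```

Both synthetic sequences end in the same horizontal posture, and only the speed of the rotation differs, which is the property the predefined short period is meant to exploit.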

Fig. 9.

Upper Panel: A fall is likely when any one of the three fall conditions is detected. Bottom Panel: The Y-component of the transformation vector (blue curve) has a large increase in a simulated fall.

4. DISCUSSION

It has been well recognized that the rise of the smartphone marked a historical milestone in the development of information technology. Although the smartphone and its tablet cousin currently dominate the market of mobile devices, the tide will change. It is highly likely that many forms of “wearables” will join the club of mobile devices, and this trend has recently become increasingly clear. Among numerous real-world applications, wearables will play important roles in health and wellness because they operate within the personal space, being attached to the human body either directly or through a layer of clothing. As mentioned previously, wearables can operate autonomously. Their “eyes” are always open to observe both the external environment and the internal space of the human body, providing a new set of functions for health and wellness far beyond those provided by the smartphone alone.

Although the future of wearables is very promising, there are many challenges in the design of these devices. The most challenging problem is perhaps finding the best balance between device functions and privacy concerns. With high-resolution cameras, advanced sensors (some are implantable within the body), high-performance microprocessors, fast wireless connections, and nearly unlimited data storage and processing power offered by cloud computing platforms, miniature computers like eButton will soon be able to acquire broad datasets that not only help the wearer, but also expose him/her to a high risk of privacy breach. In addition, when cameras are activated, wearables can record other people's image and behavior without their consent, causing serious privacy concerns. While part of the privacy problem is in the legal and regulatory domains, there may be powerful technical solutions, such as limiting the scope of data acquisition using an intelligent control so that only the data relevant to the designated purpose are acquired. Another technical solution is to automate data analysis, avoid human observation of recorded data, and/or block privacy-sensitive information. Advanced algorithms to implement these solutions are yet to be developed.

It must be recognized that wearables operate at the boundary between a biological body and a man-made information system. Hence, wearables must meet special requirements on wearability, different from the case of the smartphone. Currently, wearability is perhaps the most challenging problem in wearable device design. Although many body locations (e.g., wrist, head, arm and chest) and clothing sites (outerwear and underwear) can be considered, it is difficult to find an ideal design and location that not only implement the aforementioned device functions, but are also unobtrusive and convenient. In addition, wearables are expected to be pleasant-looking if they are exposed. It is also expected that these devices support convenient human-computer interfaces, such as a display and a textual input mechanism. While a perfect wearable design that provides all desired properties may not exist, studies on maximizing both wearability and functionality are extremely important.

In order to support automatic operation, the “big data” problem of wearables must be solved. The multimodal data, including image sequences and waveforms, require tremendous processing power. Although fast wireless links and web-based computing platforms are helpful, advanced algorithms, such as those for information extraction, pattern recognition, and database access, must be developed with dedicated efforts.

In the wearable design, a power supply is always a critical issue since the battery often has a dominant effect on device size and weight. The use of low-power components and power management schemes is essential. In addition, harvesting energy produced by the human body in the thermal, metabolic (cell based), or mechanical form and converting the energy to electricity provide an attractive option.

5. CONCLUSION

We have presented our recent studies on eButton as a wearable computing platform in the domain of health and wellness. Our design concepts of the eButton have been described, the electronic components and device functions have been overviewed, and several applications of the eButton, including diet and physical activity assessment, sedentary behavior evaluation, and assistance to the elderly and blind, have been described. Our studies have shown that new wearable devices like eButton can play an important role in personal health and wellness.

ACKNOWLEDGMENTS

This work was supported in part by National Institutes of Health grants U01 HL91736, R01CA165255, and R21CA172864. The opinions expressed in this article are the author's own and do not reflect the view of the National Institutes of Health, the Department of Health and Human Services, or the United States government.

General Terms

Algorithms, Measurement, Documentation, Performance, Design

REFERENCES

- 1. Centers for Disease Control and Prevention. Chronic diseases: the power to prevent, the call to control, at-a-glance. 2009. http://www.cdc.gov/chronicdisease/resources/publications/aag/chronic.htm.

- 2. Centers for Disease Control and Prevention. Rising Health Care Costs are Unsustainable. 2013. http://www.cdc.gov/workplacehealthpromotion/businesscase/reasons/rising.html.

- 3. Flegal KM, Carroll MD, Ogden CL, Curtin LR. Prevalence and trends in obesity among US adults, 1999-2008. JAMA. 2010 Jan 20;303(3):235–241. doi: 10.1001/jama.2009.2014.

- 4. U.S. Department of Health and Human Services. Overweight and obesity: a major public health issue. 2001. http://odphp.osophs.dhhs.gov/pubs/prevrpt/01fall/pr.htm.

- 5. Hammond RA, Levine R. The economic impact of obesity in the United States. Diabetes Metab Syndr Obes. 2010;3:285–295. doi: 10.2147/DMSOTT.S7384.

- 6. Sun M, Fernstrom JD, Jia W, Hackworth SA, Yao N, Li Y, Li C, Fernstrom MH, Sclabassi RJ. A wearable electronic system for objective dietary assessment. J Am Diet Assoc. 2010 Jan;110(1):45–47. doi: 10.1016/j.jada.2009.10.013.

- 7. Bai Y, Li C, Yue Y, Jia W, Li J, Mao Z-H, Sun M. Designing a wearable computer for lifestyle evaluation. Proceedings of the 38th Annual Northeast Biomedical Engineering Conference (NEBEC); Philadelphia, PA. March 16-18, 2012. pp. 243–244.

- 8. Nie J, Wei Z, Jia W, Li L, Fernstrom JD, Sclabassi RJ, Sun M. Automatic detection of dining plates for image-based dietary evaluation. Conf Proc IEEE Eng Med Biol Soc; Buenos Aires, Argentina. August 31 - September 4, 2010. pp. 4312–4315.

- 9. Chen HC, Jia W, Yue Y, Li Z, Sun YN, Fernstrom JD, Sun M. Model-based measurement of food portion size for image-based dietary assessment using 3D/2D registration. Meas Sci Technol. 2013 Oct;24(10). doi: 10.1088/0957-0233/24/10/105701.

- 10. Jia W, Chen HC, Yue Y, Li Z, Fernstrom J, Bai Y, Li C, Sun M. Accuracy of food portion size estimation from digital pictures acquired by a chest-worn camera. Public Health Nutr. 2013 Dec 4:1–11. doi: 10.1017/S1368980013003236.

- 11. Jia W, Yue Y, Fernstrom JD, Zhang Z, Yang Y, Sun M. 3D localization of circular feature in 2D image and application to food volume estimation. Proc. IEEE 34th Annual Conf. on Engr. in Medicine and Biology; San Diego, CA. Aug. 28 - Sept. 1, 2012. pp. 4545–4548.

- 12. Ahuja JKA, Montville JB, Omolewa-Tomobi G, Heendeniya KY, Martin CL, Steinfeldt LC, Anand J, Adler ME, Lacomb RP, Moshfegh AJ. USDA Food and Nutrient Database for Dietary Studies, 5.0. U.S. Department of Agriculture, Agricultural Research Service, Food Surveys Research Group; Beltsville, MD: 2012.

- 13. Kerr J, Marshall SJ, Godbole S, Chen J, Legge A, Doherty AR, Kelly P, Oliver M, Badland HM, Foster C. Using the sensecam to improve classifications of sedentary behavior in free-living settings. American Journal of Preventive Medicine. 2013 Mar;44(3):290–296. doi: 10.1016/j.amepre.2012.11.004.

- 14. ActiGraph Service. What are counts? 2011. https://help.theactigraph.com/entries/20723176-what-are-counts.

- 15. Ainsworth BE, Haskell WL, Herrmann SD, Meckes N, Bassett DR, Jr., Tudor-Locke C, Greer JL, Vezina J, Whitt-Glover MC, Leon AS. 2011 compendium of physical activities: a second update of codes and MET values. Med Sci Sports Exerc. 2011 Aug;43(8):1575–1581. doi: 10.1249/MSS.0b013e31821ece12.

- 16. Li Z, Wei Z, Jia W, Sun M. Daily life event segmentation for lifestyle evaluation based on multi-sensor data recorded by a wearable device. Proceedings of the 35th IEEE Int. Conf. Engineering in Medicine and Biology; Osaka, Japan. July 3-7, 2013. pp. 2858–2861.

- 17. Zhang W, Jia W, Sun M. Segmentation for efficient browsing of chronical video recorded by a wearable device. Proc. IEEE 36th Northeast Biomedical Engineering Conference; New York, NY. March 26-28, 2010.

- 18. Li Z. Study on analytic methods for human physical activity recognition based on wearable systems. Doctoral Thesis. Ocean University of China; 2013.

- 19. Zhang H, Li L, Jia W, Fernstrom JD, Sclabassi RJ, Mao ZH, Sun M. Physical activity recognition based on motion in images acquired by a wearable camera. Neurocomputing. 2011 Jun 1;74(12-13):2184–2192. doi: 10.1016/j.neucom.2011.02.014.

- 20. Burges CJC. A tutorial on Support Vector Machines for pattern recognition. Data Mining and Knowledge Discovery. 1998 Jun;2(2):121–167.

- 21. Bai Y. A Wearable Indoor Navigation System for the Blind and Visually Impaired Individuals. Doctoral Thesis. University of Pittsburgh; 2014.

- 22. Bai Y, Jia W, Zhang H, Mao ZH, Sun M. Helping the blind to find the floor of destination in multistory buildings using a barometer. Proceedings of the 35th IEEE Int. Conf. Engineering in Medicine and Biology; Osaka, Japan. July 3-7, 2013. pp. 4738–4741.

- 23. PandaCare: Demo Prepaing. 2013. https://blogs.cornell.edu/cornellcup2013pandacare/

- 24. Li C, Bai Y, Li M, Lang P, Sun M, Chen Y, Sejdic E. PandaCare: electronic unit for demensia care. Final Report, 2013 Cornell Cup Competition. 2013.

- 25. STARLINO. DCM tutorial – an introduction to orientation kinematics. 2011. http://www.starlino.com/dcm_tutorial.html.