Abstract

The protection of privacy of individual-level information in genome-wide association study (GWAS) databases has been a major concern of researchers following the publication of “an attack” on GWAS data by Homer et al. [1]. Traditional statistical methods for confidentiality and privacy protection of statistical databases do not scale well to deal with GWAS data, especially in terms of guarantees regarding protection from linkage to external information. The more recent concept of differential privacy, introduced by the cryptographic community, is an approach that provides a rigorous definition of privacy with meaningful privacy guarantees in the presence of arbitrary external information, although the guarantees may come at a serious price in terms of data utility. Building on such notions, Uhler et al. [2] proposed new methods to release aggregate GWAS data without compromising an individual's privacy. We extend the methods developed in [2] for releasing differentially-private χ2-statistics by allowing for an arbitrary number of cases and controls, and for releasing differentially-private allelic test statistics. We also provide a new interpretation by assuming the controls’ data are known, which is a realistic assumption because some GWAS use publicly available data as controls. We assess the performance of the proposed methods through a risk-utility analysis on a real data set consisting of DNA samples collected by the Wellcome Trust Case Control Consortium and compare the methods with the differentially-private release mechanism proposed by Johnson and Shmatikov [3].

Keywords: differential privacy, genome-wide association study (GWAS), Pearson χ2-test, allelic test, contingency table, single-nucleotide polymorphism (SNP)

1. Introduction

A genome-wide association study (GWAS) tries to identify genetic variations that are associated with a disease. A typical GWAS examines single-nucleotide polymorphisms (SNPs) from thousands of individuals and produces aggregate statistics, such as the χ2-statistic and the corresponding p-value, to evaluate the association of a SNP with a disease.

For many years researchers have assumed that it is safe to publish aggregate statistics of SNPs that they found most relevant to the disease. Because these aggregate statistics were pooled from thousands of individuals, they believed that their release would not compromise the participants’ privacy. However, this belief was challenged when Homer et al. [1] demonstrated that, under certain conditions, given an individual's genotype, one only needs the minor allele frequencies (MAFs) in a study and other publicly available MAF information, such as SNP data from the HapMap project, in order to “accurately and robustly” determine whether the individual is in the test population or the reference population. Here, the test population can be the cases in a study, and the reference population can be the data from the HapMap project. Homer et al. [1] defined a distance metric that contrasts the similarity between an individual and the test population and that between the individual and the reference population, and constructed a t-test based on this distance metric. They then showed that their method of identifying an individual's membership status has almost zero false positive rate and zero false negative rate.

However, Braun et al. [4] argued that the key assumptions of the Homer et al. [1] attack are too stringent to be applicable in realistic settings. Most problematic are the assumptions that (i) the SNPs are in linkage equilibrium and (ii) that the individual, the reference population, and the test population are samples from the same underlying population. They presented a sensitivity analysis of the key assumptions and showed that violation of the first assumption results in a substantial increase in variance and violation of the second condition, together with the condition that the reference population and the test population have different sizes, results in the test statistic deviating considerably from the standard normal distribution.

Notwithstanding the apparent limitation of the Homer et al. [1] attack, the National Institutes of Health (NIH) was cautious about the potential breach of privacy in genetic studies (see Couzin [5] and Zerhouni and Nabel [6]), and swiftly instituted an elaborate approval process that every researcher has to go through in order to gain access to aggregate genetic data. This NIH policy remains in effect today.

The paper by Homer et al. [1] attracted considerable attention within the genetics community and spurred interest in investigating the vulnerability of confidentiality protection of GWAS databases. The research efforts include modifications and extensions of the Homer et al. attack, alternative formulations of the identification problem, and different aspects of attacking and protecting the GWAS databases; e.g., see [7–17]. In partial response to this literature, Uhler et al. [2] proposed new methods for releasing aggregate GWAS data without compromising an individual's privacy by focusing on the release of differentially-private minor allele frequencies, χ2-statistics and p-values.

In this paper, we develop a differentially-private allelic test statistic and extend the results on differentially-private χ2-statistics in [2] to allow for an arbitrary number of cases and controls. We start with some main definitions and notation in Section 2. The new sensitivity results are presented in Section 3. Uhler et al. [2] proposed an algorithm based on the Laplace mechanism for releasing the M most relevant SNPs in a differentially-private way. In the same paper they also developed an alternative approach to differential privacy in the GWAS setting using what is known as the exponential mechanism linked to an objective function perturbation method by Chaudhuri et al. [18]. This was proposed as a way to achieve a differentially-private algorithm for detecting epistasis. But the exponential mechanism could in principle have also been used as a direct alternative to the Laplace mechanism of Uhler et al. [2]. This is in fact what Johnson and Shmatikov [3] proposed. Their method selects the top-ranked M SNPs using the exponential mechanism. In Section 4 we review the algorithm based on the Laplace mechanism from [2] and propose a new algorithm based on the exponential mechanism by adapting the method by Johnson and Shmatikov [3]. Finally, in Section 5 we compare our two algorithms to the algorithm proposed in [3] by analyzing a data set consisting of DNA samples collected by the Wellcome Trust Case Control Consortium (WTCCC) and made available to us for reanalysis.

2. Main Definitions and Notation

The concept of differential privacy, recently introduced by the cryptographic community (e.g., Dwork et al. [19]), provides a notion of privacy guarantees that protect GWAS databases against arbitrary external information.

Definition 1. Let $\mathcal{D}$ denote the set of all data sets. Write D ~ D′ if D and D′ differ in one individual. A randomized mechanism K is ε-differentially private if, for all D ~ D′ and for any measurable set S ⊂ Range(K),

$$\Pr[K(D) \in S] \le e^{\varepsilon}\, \Pr[K(D') \in S].$$

Definition 2. The sensitivity of a function $f : \mathcal{D}^N \to \mathbb{R}^d$, where $\mathcal{D}^N$ denotes the set of all databases with N individuals, is the smallest number S(f) such that

$$\| f(D) - f(D') \|_1 \le S(f)$$

for all data sets D, D′ ∈ $\mathcal{D}^N$ such that D ~ D′. Releasing f(D) + b, where b ~ Laplace(0, S(f)/ε), satisfies the definition of ε-differential privacy (e.g., see [19]). This type of release mechanism is often referred to as the Laplace mechanism. Here ε is the privacy budget; a smaller value of ε implies stronger privacy guarantees.
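As a minimal sketch of the Laplace mechanism for a scalar statistic, the Python below draws the noise as the difference of two exponential variates, which follows a Laplace(0, scale) distribution. The function names are hypothetical, and the scale S(f)/ε is the standard choice for this mechanism rather than code taken from the paper.

```python
import random


def sample_laplace(scale, rng):
    """Draw one Laplace(0, scale) variate.

    The difference of two independent Exponential(rate=1/scale)
    variates has a Laplace(0, scale) distribution.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def laplace_mechanism(value, sensitivity, epsilon, rng):
    """Release value + Laplace(0, sensitivity / epsilon) noise (illustrative)."""
    if sensitivity <= 0 or epsilon <= 0:
        raise ValueError("sensitivity and epsilon must be positive")
    return value + sample_laplace(sensitivity / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    # A smaller epsilon means a larger noise scale, i.e. stronger privacy.
    for eps in (0.1, 1.0, 10.0):
        print(eps, laplace_mechanism(50.0, 4.27, eps, rng))
```

The noise scale grows as ε shrinks, which is the privacy-utility trade-off examined in Section 5.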

2.1. SNP Summaries Using Contingency Tables

Following the notation in [20], we can summarize the data for a single SNP in a case-control study with R cases and S controls using a 2 × 3 genotype contingency table shown in Table 1, or a 2×2 allelic contingency table shown in Table 2. We require that margins of the contingency table be positive.

Table 1.

Genotype distribution

| | 0 minor alleles | 1 minor allele | 2 minor alleles | Total |
|---|---|---|---|---|
| Case | r0 | r1 | r2 | R |
| Control | s0 | s1 | s2 | S |
| Total | n0 | n1 | n2 | N |

Table 2.

Allelic distribution

| | Minor allele | Major allele | Total |
|---|---|---|---|
| Case | r1 + 2r2 | 2r0 + r1 | 2R |
| Control | s1 + 2s2 | 2s0 + s1 | 2S |
| Total | n1 + 2n2 | 2n0 + n1 | 2N |

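Definition 3. The (Pearson) χ2-statistic based on a genotype contingency table (Table 1) is

$$Y = \sum_{i=0}^{2} \left[ \frac{(r_i - n_i R/N)^2}{n_i R/N} + \frac{(s_i - n_i S/N)^2}{n_i S/N} \right].$$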

Definition 4. The allelic test is also known as the Cochran-Armitage trend test for the additive model. The allelic test statistic based on a genotype contingency table (Table 1) is equivalent to the χ2-statistic based on the corresponding allelic contingency table (Table 2). The allelic test statistic can be written as

$$Y_A = \frac{2N^3}{RS} \cdot \frac{\left\{ (s_1 + 2s_2) - \frac{S}{N}(n_1 + 2n_2) \right\}^2}{2N(n_1 + 2n_2) - (n_1 + 2n_2)^2}.$$

The Pearson χ2-test for genotype data and the allelic test for allele data are among the most commonly used statistical tests for association in GWAS. Zheng et al. [21] suggest using the allelic test when the genetic model of the phenotype is additive, and the Pearson χ2-test when the genetic model is unknown.
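The two statistics can be computed directly from the counts in Table 1. The sketch below is illustrative only: the function names are hypothetical, the Pearson statistic is the usual sum of (observed − expected)²/expected over the six cells, and the allelic statistic uses the closed form obtained by applying the 2 × 2 Pearson statistic to Table 2.

```python
def pearson_chi2(cases, controls):
    """Pearson chi-square statistic for a 2 x 3 genotype table.

    cases = (r0, r1, r2) and controls = (s0, s1, s2).
    """
    R, S = sum(cases), sum(controls)
    N = R + S
    if R == 0 or S == 0:
        raise ValueError("row margins must be positive")
    y = 0.0
    for r_i, s_i in zip(cases, controls):
        n_i = r_i + s_i
        if n_i == 0:
            raise ValueError("column margins must be positive")
        exp_r = n_i * R / N  # expected case count under independence
        exp_s = n_i * S / N  # expected control count under independence
        y += (r_i - exp_r) ** 2 / exp_r + (s_i - exp_s) ** 2 / exp_s
    return y


def allelic_statistic(cases, controls):
    """Allelic test statistic, i.e. the 2 x 2 chi-square on allele counts."""
    R, S = sum(cases), sum(controls)
    N = R + S
    minor_controls = controls[1] + 2 * controls[2]
    minor_total = (cases[1] + controls[1]) + 2 * (cases[2] + controls[2])
    if R == 0 or S == 0 or minor_total in (0, 2 * N):
        raise ValueError("margins of the allelic table must be positive")
    dev = minor_controls - S * minor_total / N
    return 2 * N**3 / (R * S) * dev**2 / (2 * N * minor_total - minor_total**2)


if __name__ == "__main__":
    cases, controls = (10, 20, 30), (30, 20, 10)
    print(pearson_chi2(cases, controls))
    print(allelic_statistic(cases, controls))
```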

3. Sensitivity Results

Under the assumption that there are an equal number of cases and controls, Uhler et al. [2] found the sensitivities of the χ2-statistic, the corresponding p-value and the projected p-value. For completeness, we briefly review these results here.

Theorem 3.1 (Uhler et al. [2]). The sensitivity of the χ2-statistic based on a 3×2 contingency table with positive margins and N/2 cases and N/2 controls is 4N/(N + 2).

Theorem 3.2. (Uhler et al. [2]). The sensitivity of the p-values of the χ2-statistic based on a 3 × 2 contingency table with positive margins and N/2 cases and N/2 controls is exp(−2/3), when the null distribution is a χ2-distribution with 2 degrees of freedom.

Corollary 3.3 (Uhler et al. [2]). Projecting all p-values larger than p* = exp(−N/c) onto p* results in a sensitivity of exp(−N/c) −exp for any fixed constant c ≥ 3, which is a factor of N/2.

In the remainder of this section, we generalize these results to allow for an arbitrary number of cases and controls. This makes the proposed methods applicable in a typical GWAS setting, in which there are more controls than cases, as researchers often use data pertaining to other diseases as controls to increase the statistical power.

3.1. Sensitivity Results for the Pearson χ2-Statistic

We first consider the situation in which the adversary has complete information about the controls. This situation arises when a GWAS uses publicly available data for the controls, such as those from the HapMap project. In this scenario, it is only necessary to protect information about the cases.

Theorem 3.4. Let $\mathcal{D}$ denote the set of all 2 × 3 contingency tables with positive margins, R cases and S controls. Suppose the numbers of controls of all three genotypes are known. Let N = R + S, and smax = max{s0, s1, s2}. The sensitivity of the χ2-statistic based on tables in $\mathcal{D}$ is bounded above by

$$\frac{N^2}{RS} \left( 1 - \frac{1}{s_{\max} + 1} \right).$$

Proof. See Appendix A.

Theorem 3.4 gives an upper bound for the sensitivity of the χ2-statistic based on 2 × 3 contingency tables with positive margins and known numbers of controls for all three genotypes. In Corollary 3.5 we show that, assuming r0 ≥ r2 and s0 ≥ s2, which reflects the definition of a major and minor allele, the upper bound for the sensitivity is attained.

Corollary 3.5. Let $\mathcal{D}$ denote the set of all 2 × 3 contingency tables with positive margins, R cases and S controls. We further assume that for tables in $\mathcal{D}$, r0 ≥ r2 and s0 ≥ s2; i.e., in the case and control populations the number of individuals having two minor alleles is no greater than the number of individuals having two major alleles. The sensitivity of the χ2-statistic based on tables in $\mathcal{D}$ is

$$\frac{N^2}{RS} \left( 1 - \frac{1}{\max\{S, R\} + 1} \right),$$

where N = R + S.

Proof. For a change that occurs in the cases, we first treat s0, s1, and s2 as fixed, and get the result in Theorem 3.4. By taking (r0, r1, r2, s0, s1, s2) = (r0, 1, r2, 0, S, 0), r0 ≥ r2 > 0, and changing the table in the direction of u = (1, −1, 0, 0, 0, 0), we attain the upper bound $\frac{N^2}{RS}\left(1 - \frac{1}{S+1}\right)$. The same analysis for a change that occurs in the controls shows that the maximum change of the Pearson χ2-statistic (i.e., Y in Appendix A) is $\frac{N^2}{RS}\left(1 - \frac{1}{R+1}\right)$.
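The table used in this proof can be checked numerically. The sketch below is an illustration under stated assumptions: the closed form `chi2_sensitivity` encodes N²/(RS)·(1 − 1/(max{S, R} + 1)), which is our reading of Corollary 3.5 (it reduces to 4N/(N + 2) when R = S and gives 4.27 for the WTCCC sample sizes quoted in Section 5.3), and the function names are hypothetical.

```python
def chi2(cases, controls):
    """Pearson chi-square for a 2 x 3 table, in the simplified form
    N^2/(RS) * (sum_i s_i^2/n_i - S^2/N)."""
    R, S = sum(cases), sum(controls)
    N = R + S
    n = [r + s for r, s in zip(cases, controls)]
    core = sum(s * s / n_i for s, n_i in zip(controls, n)) - S * S / N
    return N * N / (R * S) * core


def chi2_sensitivity(R, S):
    """Assumed closed form for the sensitivity in Corollary 3.5."""
    N = R + S
    return N * N / (R * S) * (1 - 1 / (max(R, S) + 1))


if __name__ == "__main__":
    # Table from the proof with r0 = 3, r2 = 1 and S = 7:
    before = ((3, 1, 1), (0, 7, 0))
    after = ((4, 0, 1), (0, 7, 0))  # one case moves from genotype 1 to 0
    print(abs(chi2(*after) - chi2(*before)), chi2_sensitivity(5, 7))
    print(round(chi2_sensitivity(1748, 2938), 2))
```

In this small example the observed change equals the closed-form value, which illustrates that the bound is attained rather than merely approached.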

If we have no knowledge of either the cases or the controls, we get the sensitivity result presented in Corollary 3.5. On the other hand, when the controls are known, we can use Theorem 3.4 to reduce the sensitivity assigned to each set of SNPs grouped by the maximum number of controls among the three genotypes. However, in most GWAS the number of controls, S, is large and smax = max{s0, s1, s2} ≥ S/3. In this case, the following computation shows that the reduction in sensitivity obtained by Theorem 3.4 is insignificant:

$$\frac{N^2}{RS}\left(1 - \frac{1}{S+1}\right) - \frac{N^2}{RS}\left(1 - \frac{1}{s_{\max}+1}\right) = \frac{N^2}{RS}\left(\frac{1}{s_{\max}+1} - \frac{1}{S+1}\right) \le \frac{N^2}{RS} \cdot \frac{2S}{(S+1)(S+3)},$$

which tends to zero as S grows.
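To get a feel for how small this reduction is, the sketch below evaluates the bound N²/(RS)·(1 − 1/(smax + 1)) at the two extremes smax = S and smax = ⌈S/3⌉ for the sample sizes reported in Section 5.3. The form of the bound is our reading of Theorem 3.4 and the function name is hypothetical.

```python
def bound_known_controls(R, S, s_max):
    """Assumed upper bound from Theorem 3.4 when control counts are known."""
    N = R + S
    return N * N / (R * S) * (1 - 1 / (s_max + 1))


if __name__ == "__main__":
    R, S = 1748, 2938                                  # WTCCC cases, controls
    widest = bound_known_controls(R, S, S)             # s_max = S
    tightest = bound_known_controls(R, S, -(-S // 3))  # s_max = ceil(S / 3)
    print(widest, tightest, widest - tightest)
```

For these sample sizes the two bounds differ by less than 0.1%, so grouping SNPs by smax buys very little additional utility.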

In order to improve on statistical utility, Uhler et al. [2] proposed projecting the p-values that are larger than a threshold value onto the threshold value itself to reduce the sensitivity. In Theorem 3.6 we generalize this result to nonnegative score functions, showing how to incorporate projections into the Laplace mechanism.

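Theorem 3.6. Given a nonnegative function f(d), define hC(d) = max{C, f(d)}, with C > 0; i.e., we project values of f(d) that are smaller than C onto C. Let s denote the sensitivity of hC(d), and suppose Y ~ Laplace(0, s/ε). Then W(d) = max{C, Z(d)}, with Z(d) = hC(d) + Y, is ε-differentially private.

Proof. From the definition of W(d), we know that W(d) ≥ C for all d. Let d ~ d′. For t > C, W(d) has the same density at t as Z(d), so

$$\frac{p_{W(d)}(t)}{p_{W(d')}(t)} = \frac{\exp(-\varepsilon\, |t - h_C(d)| / s)}{\exp(-\varepsilon\, |t - h_C(d')| / s)} \le \exp\left( \frac{\varepsilon\, |h_C(d) - h_C(d')|}{s} \right) \le e^{\varepsilon}.$$

For t = C,

$$\frac{P(W(d) = C)}{P(W(d') = C)} = \frac{P(Y \le C - h_C(d))}{P(Y \le C - h_C(d'))} \le e^{\varepsilon},$$

because shifting the argument of the Laplace(0, s/ε) distribution function by at most s changes its value by a factor of at most e^ε.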

For example, when we apply this result to χ2-statistics in a differentially-private mechanism, we set C to be the χ2-statistic that corresponds to a small p-value and use an upper bound for the sensitivity of the projection function as sC, namely
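The projected release of Theorem 3.6 can be sketched in a few lines. This is an illustration only: the function names are hypothetical, and the noise scale s/ε is an assumption based on the usual Laplace mechanism, with s taken to be the sensitivity of the projected score.

```python
import random


def sample_laplace(scale, rng):
    """Laplace(0, scale) variate as a difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def projected_laplace_release(score, threshold, sensitivity, epsilon, rng):
    """Sketch of W(d) = max{C, h_C(d) + Y} with h_C(d) = max{C, f(d)}.

    `threshold` plays the role of C, and `sensitivity` is assumed to be the
    sensitivity of the projected function h_C.
    """
    projected = max(threshold, score)                       # h_C(d)
    noisy = projected + sample_laplace(sensitivity / epsilon, rng)  # Z(d)
    return max(threshold, noisy)                            # W(d)


if __name__ == "__main__":
    rng = random.Random(1)
    # Scores below the threshold are released as the threshold about half
    # of the time, because the added noise is symmetric around zero.
    print([round(projected_laplace_release(3.0, 20.0, 4.27, 1.0, rng), 2)
           for _ in range(5)])
```

Projecting both before and after adding noise keeps every released value at or above C, so the output never reveals how far below the threshold an unremarkable SNP lies.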

3.2. Sensitivity Results for the Allelic Test Statistic

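Theorem 3.7. The sensitivity of the allelic test statistic based on a 2 × 3 contingency table with positive margins, R cases and S controls is given by the maximum of

$$\frac{8N^2 S}{R(2S+3)(2S+1)}, \quad \frac{4N^2 \left[ (2R^2 - 1)(2S - 1) - 1 \right]}{SR(2R+1)(2R-1)(2S+1)}, \quad \frac{8N^2 R}{S(2R+3)(2R+1)}, \quad \frac{4N^2 \left[ (2S^2 - 1)(2R - 1) - 1 \right]}{RS(2S+1)(2S-1)(2R+1)}.$$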

Proof. See Appendix B.
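The sketch below evaluates four candidate expressions for the allelic sensitivity and compares two of them with the change in the statistic at the extreme tables named in Appendix B. The expressions are reconstructed from those extreme tables and should be read as an assumption rather than a quotation of the theorem; the function names are hypothetical.

```python
def allelic_statistic(cases, controls):
    """Allelic test statistic from genotype counts (r0, r1, r2), (s0, s1, s2)."""
    R, S = sum(cases), sum(controls)
    N = R + S
    minor_controls = controls[1] + 2 * controls[2]
    minor_total = (cases[1] + controls[1]) + 2 * (cases[2] + controls[2])
    dev = minor_controls - S * minor_total / N
    return 2 * N**3 / (R * S) * dev**2 / (2 * N * minor_total - minor_total**2)


def allelic_sensitivity(R, S):
    """Maximum of four candidate changes (assumed form of Theorem 3.7)."""
    N = R + S

    def one_side(a, b):
        # a: size of the group in which one individual changes genotype,
        # b: size of the other group.
        first = 8 * N * N * b / (a * (2 * b + 3) * (2 * b + 1))
        second = (4 * N * N * ((2 * a * a - 1) * (2 * b - 1) - 1)
                  / (a * b * (2 * a + 1) * (2 * a - 1) * (2 * b + 1)))
        return first, second

    return max(one_side(R, S) + one_side(S, R))


if __name__ == "__main__":
    R, S = 5, 7
    # Extreme table with all controls at genotype 0; one case moves 0 -> 2.
    d1 = abs(allelic_statistic((0, 1, 4), (7, 0, 0))
             - allelic_statistic((1, 1, 3), (7, 0, 0)))
    # Extreme table with all cases at genotype 0; one case moves 0 -> 2.
    d2 = abs(allelic_statistic((4, 0, 1), (0, 1, 6))
             - allelic_statistic((5, 0, 0), (0, 1, 6)))
    print(d1, d2, allelic_sensitivity(R, S))
```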

4. Privacy-Preserving Release of the Top M Statistics

In a GWAS setting, researchers usually assign to every SNP a score that reflects its association with a disease, but only release scores for the M most significant SNPs. Most commonly used scores are the Pearson χ2-statistic, the allelic test statistic, and the corresponding p-values. If those M SNPs were chosen according to a uniform distribution, ε-differential privacy can be achieved by the Laplace mechanism with noise , where s denotes the sensitivity of the scoring statistic. Recall that ε is the privacy budget, so a smaller value of ε implies stronger privacy guarantees.

However, by releasing M SNPs according to their rankings, an attacker knows that the released SNPs have higher scores than all other SNPs regardless of the face value of the released scores. Therefore, we need a more sophisticated algorithm for releasing the M most significant SNPs.

Adapting from the differentially-private algorithm for releasing the most frequent patterns in Bhaskar et al. [22], Uhler et al. [2] suggested an algorithm (see Algorithm 1) for releasing the M most relevant SNPs ranked by their χ2- statistics or the corresponding p-values while satisfying differential privacy. They also showed that adding noise directly to the χ2-statistic achieves a better trade-off between privacy and utility than by adding noise to the p-values or cell entries themselves. Using the results from Section 3, we can now also apply this algorithm when the number of cases and controls differ.

Algorithm 1.

The ε-differentially private algorithm for releasing the M most relevant SNPs using the Laplace mechanism.

Input: The score (e.g., χ2-statistic or allelic test statistic) used to rank all M′ SNPs, the number of SNPs, M, that we want to release, the sensitivity, s, of the statistic, and ε, the privacy budget.

Output: M noisy statistics.

1. Add Laplace noise with mean zero and scale 4Ms/ε to the true statistics.

2. Pick the top M SNPs with respect to the perturbed statistics. Denote the corresponding set of SNPs by $\mathcal{S}$.

3. Add new Laplace noise with mean zero and scale 2Ms/ε to the true statistics in $\mathcal{S}$.
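The three steps of Algorithm 1 can be sketched as follows. The noise scales 4Ms/ε (selection) and 2Ms/ε (release) are assumptions that correspond to splitting the privacy budget evenly between the two stages; the function names are hypothetical.

```python
import random


def sample_laplace(scale, rng):
    """Laplace(0, scale) variate as a difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def release_top_m_laplace(scores, m, sensitivity, epsilon, rng):
    """Sketch of Algorithm 1: returns {snp_index: noisy_score} for M SNPs."""
    if not 0 < m <= len(scores):
        raise ValueError("m must be between 1 and the number of SNPs")
    select_scale = 4 * m * sensitivity / epsilon   # assumed Step 1 scale
    release_scale = 2 * m * sensitivity / epsilon  # assumed Step 3 scale
    # Step 1: perturb every true score.
    perturbed = [q + sample_laplace(select_scale, rng) for q in scores]
    # Step 2: keep the M SNPs with the largest perturbed scores.
    order = sorted(range(len(scores)), key=lambda i: perturbed[i], reverse=True)
    chosen = order[:m]
    # Step 3: add fresh noise to the TRUE scores of the chosen SNPs.
    return {i: scores[i] + sample_laplace(release_scale, rng) for i in chosen}


if __name__ == "__main__":
    scores = [61.0, 54.0, 53.0, 52.0, 48.0, 34.0, 5.0, 3.0]
    print(release_top_m_laplace(scores, 3, 4.27, 5.0, random.Random(0)))
```

Note that Step 3 discards the perturbed values from Step 1 and draws new noise, so the released scores do not leak the selection noise.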

While Algorithm 1 is based on the Laplace mechanism, in Algorithm 2 we propose a new algorithm based on the exponential mechanism by adapting and simplifying the ideas proposed by Johnson and Shmatikov [3]. The first application of the exponential mechanism in the GWAS setting was given in [2], which resulted in a differentially private algorithm for detecting epistasis.

Algorithm 2.

The ε-differentially private algorithm for releasing the M most relevant SNPs using the exponential mechanism.

Input: The score (e.g., χ2-statistic or allelic test statistic) used to rank all M′ SNPs, the number of SNPs, M, that we want to release, the sensitivity, s, of the statistic, and ε, the privacy budget.

Output: M noisy statistics.

1. Let $\mathcal{S} = \emptyset$ and qi = score of SNPi.

2. For i ∈ {1, . . ., M′}, set $w_i = \exp\left( \frac{\varepsilon q_i}{4Ms} \right)$.

3. Set $p_i = w_i / \sum_{j=1}^{M'} w_j$, i ∈ {1, . . ., M′}, the probability of sampling the ith SNP.

4. Sample k ∈ {1, . . ., M′} with probability {p1, . . ., pM′}. Add SNPk to $\mathcal{S}$. Set qk = –∞.

5. If the size of $\mathcal{S}$ is less than M, return to Step 2.

6. Add new Laplace noise with mean zero and scale 2Ms/ε to the true statistics in $\mathcal{S}$.
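A minimal sketch of Algorithm 2 follows. The weights exp(ε·qi/(4Ms)) and the release scale 2Ms/ε are assumptions (an even split of the budget between selection and release), the function names are hypothetical, and removing a sampled SNP from the candidate list stands in for setting its score to −∞.

```python
import math
import random


def sample_laplace(scale, rng):
    """Laplace(0, scale) variate as a difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def release_top_m_exponential(scores, m, sensitivity, epsilon, rng):
    """Sketch of Algorithm 2: returns {snp_index: noisy_score} for M SNPs."""
    if not 0 < m <= len(scores):
        raise ValueError("m must be between 1 and the number of SNPs")
    remaining = list(range(len(scores)))
    chosen = []
    while len(chosen) < m:
        # Subtracting the current maximum avoids overflow in exp() and
        # leaves the sampling probabilities unchanged.
        q_max = max(scores[i] for i in remaining)
        weights = [math.exp(epsilon * (scores[i] - q_max) / (4 * m * sensitivity))
                   for i in remaining]
        k = rng.choices(remaining, weights=weights, k=1)[0]
        chosen.append(k)
        remaining.remove(k)  # same effect as setting q_k = -infinity
    release_scale = 2 * m * sensitivity / epsilon  # assumed Step 6 scale
    return {k: scores[k] + sample_laplace(release_scale, rng) for k in chosen}


if __name__ == "__main__":
    scores = [61.0, 54.0, 53.0, 52.0, 48.0, 34.0, 5.0, 3.0]
    print(release_top_m_exponential(scores, 3, 4.27, 5.0, random.Random(0)))
```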

Theorem 4.1. Algorithm 2 is ε-differentially private.

Proof. See Appendix C.

5. Application of Differentially Private Release Mechanisms to Human GWAS Data

In this section we evaluate the trade-off between data utility and privacy risk by applying Algorithm 1 and Algorithm 2 with the new sensitivity results developed in Section 3 to a GWAS data set containing human DNA samples from WTCCC. We also compare the performance of Algorithm 1 and Algorithm 2 to that of the LocSig method developed by Johnson and Shmatikov [3]. Essential to the LocSig method is a scoring function based on the p-value of a statistical test. In this paper, we call the resulting scores the JS scores. In contrast to [3], which used the p-value of the G-test to construct the JS scores, we use the p-value of the Pearson χ2-test instead.

5.1. Data Set from WTCCC: Crohn's Disease

We use a real data set that was collected by the WTCCC and intended for genome-wide association studies of Crohn's disease. The data set consists of DNA samples from 3 cohorts, the subjects of which all lived within Great Britain and identified themselves as white Europeans: 1958 British Birth Cohort (58C), UK Blood Services (NBS), and Crohn's disease (CD). In the original study [23] the DNA samples from the 58C and NBS cohorts are treated as controls and those from the CD cohort as cases.

The data were sampled using the Affymetrix GeneChip 500K Mapping Array Set. The genotype data were called by an algorithm named CHIAMO (see [23]), which WTCCC developed and deemed more powerful than Affymetrix's BRLMM genotype calling algorithm. According to the WTCCC analysis, some DNA samples were contaminated or came from non-Caucasian ancestry. In addition, they indicated that some SNPs did not pass quality control filters. Finally, WTCCC [23] removed additional SNPs from their analysis by visually inspecting cluster plots.

5.2. Our Re-Analysis of the WTCCC Data

In [23], the authors mainly used the allelic test and the Pearson χ2-test to find SNPs with a strong association with Crohn's disease, and reported the relevant statistics and their p-values for the most significant SNPs. In general, the Wellcome Trust Case Control Consortium [23] considered a SNP significant if its allelic test p-value or χ2-test p-value was smaller than 10⁻⁵. In the supplementary material of [23] they reported 26 significant SNPs, 6 of which were imputed. Per [23], imputing SNPs that do not exist in the WTCCC databases does not affect the calculation of the allelic test statistics or the Pearson χ2-statistics of SNPs already in the WTCCC databases; therefore, we disregard the imputed SNPs in our analysis and retain 20 significant SNPs.

We followed the filtering process in [23] closely and removed DNA samples and SNPs that [23] deemed contaminated. However, we did not remove any further SNPs due to poor cluster plots. We verified that our processing of the raw genotype data leads to the same results as those published in the supplementary material of [23]: our calculations for 16 of the 20 reported significant SNPs match those in [23], deviating no more than 2% in allelic test statistic and χ2-statistic. However, we found that a number of significant SNPs were not reported by the WTCCC. We corresponded with one of the principal authors of [23] and received confirmation that the WTCCC also found those SNPs to be significant. However, Wellcome Trust Case Control Consortium [23] did not report these SNPs because they suffered from poor calling quality according to visual inspection of the cluster plot, a procedure that we did not implement. We excluded from our analysis these SNPs that have significant allelic test p-values or χ2-test p-values, but are not reported by the WTCCC.

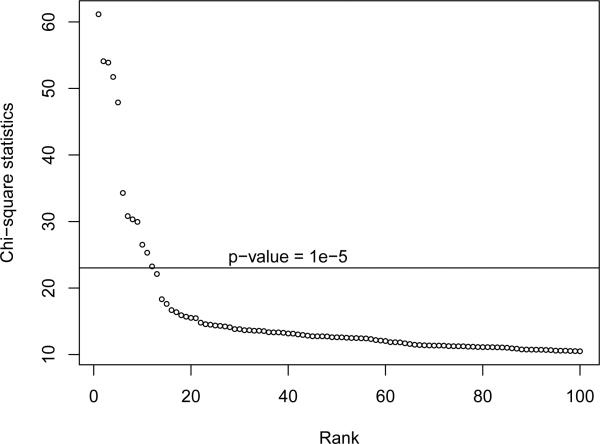

In Figure 1 we plot the χ2-statistics resulting from our analysis in descending order. Note that there is a large gap between the 5th and the 6th largest χ2-statistics. This is an important observation for the risk-utility analysis of the perturbed statistics in Section 5.3. Because of the nature of the distribution of the top χ2-statistics in this data set, it is easier to recover all top 5 SNPs as the top rated 5 SNPs in the perturbed data than it is to recover all top M SNPs for M < 5 or M > 5, as is evident in Figure 2, which we discuss in the next section.

Figure 1.

Unperturbed top χ2-statistics in descending order.

Figure 2.

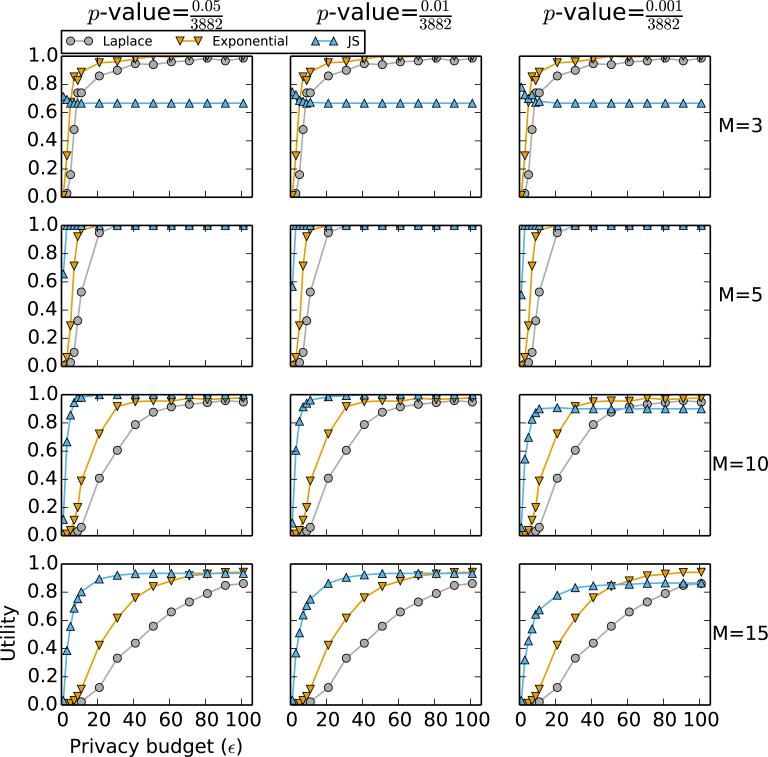

Performance comparison of Algorithm 1 (“Laplace”), Algorithm 2 with χ2-statistics as scores (“Exponential”), and the LocSig method in Johnson and Shmatikov [3] (“JS”) based on the p-value of the χ2-statistic. Each row corresponds to a fixed M, the number of top SNPs to release. Each column corresponds to a fixed threshold p-value, which is relevant to the LocSig method only; it is irrelevant to the other methods. Data used to generate this figure consist of SNPs with p-values smaller than 10⁻⁵ and a randomly chosen 1% sample of SNPs with p-values larger than 10⁻⁵; the total number of SNPs used for calculation is 3882.

To summarize, we were able to reproduce a high percentage of significant SNPs from [23]. Therefore, we are confident that our data processing procedure is sound and the χ2-statistics and allelic test statistics that we obtained from the data are comparable with those produced in a high quality GWAS.

5.3. Risk-Utility Analysis of Differentially Private Pearson χ2-statistics

In this section, we use the χ2-statistics obtained from the WTCCC dataset described in Section 5.1 and analyze the statistical utility of releasing differentially private χ2-statistics for various privacy budgets, ε. With 1748 cases and 2938 controls in the WTCCC dataset, we use Corollary 3.5 to obtain a sensitivity of 4.27 for the χ2-statistic.

We define statistical utility as follows: let $\mathcal{S}_0$ be the set of top M SNPs ordered according to their true χ2-statistics and let $\mathcal{S}$ be the set of top M SNPs chosen after perturbation (either by Algorithm 1 using the Laplace mechanism or by Algorithm 2 using the exponential mechanism). Then the utility as a function of ε is

$$u(\varepsilon) = \frac{|\mathcal{S}_0 \cap \mathcal{S}|}{|\mathcal{S}_0|}.$$

We perform the following procedure to approximate the expected utility E[u(ε)] for Algorithm 1: (i) add Laplace noise with mean zero and scale 4Ms/ε to the true χ2-statistics, where s is the sensitivity of the χ2-statistic; (ii) pick the top M SNPs with respect to the perturbed χ2-statistics; (iii) denote the sets of SNPs chosen according to the true and perturbed χ2-statistics by $\mathcal{S}_0$ and $\mathcal{S}$, respectively; (iv) calculate $u(\varepsilon) = |\mathcal{S}_0 \cap \mathcal{S}| / |\mathcal{S}_0|$. We repeat the aforementioned procedure 50 times for a fixed ε and report the average of the utility u(ε). To approximate E[u(ε)] for Algorithm 2, we repeat 50 times the process of generating $\mathcal{S}$ by performing steps 1–5 in Algorithm 2 and report the average of the utility u(ε). In order to approximate E[u(ε)] for the procedure LocSig from [3], we rank the SNPs by their χ2-statistics but replace the scores in Step 1 by the JS scores.
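The Monte Carlo procedure for Algorithm 1 can be sketched as below. It assumes the utility is the fraction of the true top M SNPs recovered after perturbation and that the selection noise has scale 4Ms/ε; both are our reading of the text, and the function names and example scores are hypothetical.

```python
import random


def sample_laplace(scale, rng):
    """Laplace(0, scale) variate as a difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def top_m(scores, m):
    """Indices of the m largest scores, as a set."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return set(order[:m])


def utility(true_top, chosen):
    """Fraction of the true top SNPs that were recovered."""
    return len(true_top & chosen) / len(true_top)


def expected_utility_laplace(scores, m, sensitivity, epsilon, reps, rng):
    """Average utility over `reps` runs of the selection step of Algorithm 1."""
    truth = top_m(scores, m)
    scale = 4 * m * sensitivity / epsilon  # assumed selection noise scale
    total = 0.0
    for _ in range(reps):
        perturbed = [q + sample_laplace(scale, rng) for q in scores]
        total += utility(truth, top_m(perturbed, m))
    return total / reps


if __name__ == "__main__":
    scores = [61.0, 54.0, 53.0, 52.0, 48.0, 34.0] + [5.0] * 50
    rng = random.Random(0)
    for eps in (1.0, 10.0, 100.0):
        print(eps, expected_utility_laplace(scores, 5, 4.27, eps, 50, rng))
```

Only the selection step matters for this utility measure, so the release noise of Step 3 is omitted from the simulation.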

The runtimes of the different algorithms vary considerably (see Table 3). The runtimes were obtained on a PC with an Intel i5-3570K CPU, 32 GB of RAM and the Ubuntu 13.04 operating system. Calculating the χ2-statistics from genotype tables is a trivial task and takes very little time. Calculating the JS scores can be a daunting task, however, if one cannot find a clever simplification. The JS score is essentially the shortest Hamming distance between the original database and the set of databases at which the significance of the p-value changes. Thus without any simplifications, one would need to search the entire space of databases in order to find the table with the shortest Hamming distance. In our implementation for finding the JS score for a genotype table based on the p-value corresponding to the χ2-statistic, we simplify the calculation by greedily following the path of maximum change of the χ2-statistic until we find a table with altered significance.

Table 3.

Comparison of runtime for the simulations in Section 5.3. The number of repetitions is 50, the number of different values for M is 4, the number of different values for ε is 15, and the number of SNPs is around 4000. $\mathcal{S}$ is the set of SNPs to be released after the perturbation.

| Method | Time spent on generating $\mathcal{S}$ (in minutes) | Time spent on calculating the scores (in minutes) |
|---|---|---|
| Algorithm 1 (Laplace) | 0.04 | ≈ 0 |
| Algorithm 2 (Exponential) | 1.53 | ≈ 0 |
| LocSig (JS) | 2.00 | 3.50 |

In Figure 2, we compare the performance of Algorithm 1 (based on the Laplace mechanism) and Algorithm 2 (based on the exponential mechanism) to LocSig ([3]). It is clear that when ε = 1, the LocSig method outperforms the other methods with respect to utility. Nevertheless, we note a few features regarding the performance of LocSig.

When M = 3, the utility of the LocSig method cannot exceed 0.67 even as ε continues to increase. This artifact is due to the fact that the ranking of SNPs based on the JS scores is different from the ranking based on the χ2-statistics.

Table 4 gives the top 6 SNPs ranked by their χ2-statistics and the corresponding JS scores. For all threshold p-values, the JS score of the 4th SNP is larger than that of the 2nd SNP and that of the ith SNP for i ≥ 5. Thus, when ε is sufficiently large, the LocSig method will almost always output the 1st, 3rd, and 4th SNPs. Consequently, the utility for the LocSig method will not increase when ε increases.
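The ceiling of 0.67 follows directly from the scores in Table 4. The short sketch below (hypothetical function names, scores copied from Table 4) ranks the six SNPs by JS score and measures the overlap with the top M SNPs by χ2-statistic, which is the utility LocSig approaches as ε grows.

```python
# JS scores from Table 4; columns are already ordered by chi-square rank.
JS_SCORES = {
    "0.001/K": [51, 31, 37, 33, 25, 6],
    "0.01/K": [61, 38, 47, 41, 33, 13],
    "0.05/K": [69, 43, 55, 48, 38, 18],
}


def top_positions(scores, m):
    """Positions (0-based chi-square ranks) of the m largest scores."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return set(order[:m])


def limiting_utility(js_scores, m):
    """Overlap between the top m by chi-square and the top m by JS score."""
    truth = set(range(m))
    return len(truth & top_positions(js_scores, m)) / m


if __name__ == "__main__":
    for threshold, js in JS_SCORES.items():
        print(threshold, sorted(top_positions(js, 3)), limiting_utility(js, 3))
```

For every threshold the JS ranking selects the 1st, 3rd, and 4th SNPs, so two of the three true top SNPs are recovered.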

The LocSig method is sensitive to the choice of p-value. This becomes apparent in the plots for M = 15 in Figure 2. The risk-utility curves of the LocSig method tend to have lower utility for the same ε when the threshold p-value is smaller.

Even though Algorithm 1 and Algorithm 2 do not perform as well as the LocSig method for small values of ε, they do not suffer from the aforementioned issues. Furthermore, we can see from Figure 2 that the exponential mechanism always outperforms the Laplace mechanism, i.e., it achieves a higher utility for each value of ε.

Table 4.

Ranking of the top 6 SNPs by χ2-statistics and the corresponding JS scores. Scores are rounded to the nearest integer. K denotes the total number of SNPs.

| Scoring scheme | Threshold p-value | 1st | 2nd | 3rd | 4th | 5th | 6th |
|---|---|---|---|---|---|---|---|
| χ2-statistic | - | 61 | 54 | 54 | 52 | 48 | 34 |
| JS score | 0.001/K | 51 | 31 | 37 | 33 | 25 | 6 |
| JS score | 0.01/K | 61 | 38 | 47 | 41 | 33 | 13 |
| JS score | 0.05/K | 69 | 43 | 55 | 48 | 38 | 18 |

To summarize, in this application Algorithm 2 outperforms Algorithm 1. The method based on LocSig improves on Algorithm 2 for small values of ε, but shows some problematic behavior when ε increases. Finally, the LocSig method comes at a much higher computational cost than the other two algorithms and might not be computationally feasible for some data sets.

6. Conclusions

A number of authors have argued that it is possible to use aggregate data to compromise the privacy of individual-level information collected in GWAS databases. We have used the concept of differential privacy and built on the approach in Uhler et al. [2] to propose new methods to release aggregate GWAS data without compromising an individual's privacy. A key component of the differential privacy approach involves the sensitivity of a released statistic when we remove an observation. In this paper, we have obtained sensitivity results for the Pearson χ2-statistic for an arbitrary number of cases and controls. Furthermore, we showed that the sensitivity can be reduced in the situation where data for the cases (or the controls) are known to the attacker. Nevertheless, we also showed that the reduction in sensitivity is insignificant in typical GWAS, in which the number of cases is large.

By incorporating the two-step differentially-private mechanism for releasing the top M SNPs (Algorithm 1) with the projected Laplace perturbation mechanism (Theorem 3.6), we have created an algorithm that outputs significant SNPs while preserving differential privacy. We demonstrated that the algorithm works effectively in human GWAS datasets, and that it produces outputs that resemble the outputs of regular GWAS. We also showed that the performance of Algorithm 1, which is based on the Laplace mechanism, can be improved by using Algorithm 2, which is based on the exponential mechanism. Furthermore, Algorithm 2 is computationally more efficient than the LocSig method of Johnson and Shmatikov [3], and it performs better for increasing values of ε.

Finally, we showed that a risk-utility analysis of the algorithm allows us to understand the trade-off between privacy budget and statistical utility, and therefore helps us decide on the appropriate level of privacy guarantee for the released data. We hope that approaches such as those that we demonstrate in this paper will allow the release of more information from GWAS going forward and allay the privacy concerns that others have voiced over the past decade.

Acknowledgment

This research was partially supported by NSF Awards EMSW21-RTG and BCS-0941518 to the Department of Statistics at Carnegie Mellon University, and by NSF Grant BCS-0941553 to the Department of Statistics at Pennsylvania State University. This work was also supported in part by the National Center for Research Resources, Grant UL1 RR033184, and is now at the National Center for Advancing Translational Sciences, Grant UL1 TR000127 to Pennsylvania State University. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NSF and NIH. We thank the Wellcome Trust Case Control Consortium for giving us access to the data used in this paper to illustrate our methods.

Appendices

Appendix A. Proof of Theorem 3.4

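Proof. The Pearson χ2-statistic can be written as

$$Y = \sum_{i=0}^{2} \left[ \frac{(r_i - n_i R/N)^2}{n_i R/N} + \frac{(s_i - n_i S/N)^2}{n_i S/N} \right] \tag{A.1a}$$

$$\phantom{Y} = \frac{N^2}{RS} \left( \sum_{i=0}^{2} \frac{s_i^2}{n_i} - \frac{S^2}{N} \right). \tag{A.1b}$$

We denote a contingency table and its column sums by v = (r0, r1, r2, s0, s1, s2, n0, n1, n2). Let v′ = v + u, with v′ and v differing by Hamming distance 1. Finding the sensitivity of Y boils down to finding v and u that maximize |Y(v + u) − Y(v)|.

Suppose r0 > 0 and consider u = (−1, 1, 0, 0, 0, 0, −1, 1, 0). As a consequence of (A.1b) we find that

$$Y(v + u) - Y(v) = \frac{N^2}{RS} \left( \frac{s_0^2}{n_0 (n_0 - 1)} - \frac{s_1^2}{n_1 (n_1 + 1)} \right).$$

Because r0 > 0, we get that n0 = r0 + s0 ≥ 1 + s0, and

$$0 \le \frac{s_0^2}{n_0 (n_0 - 1)} \le \frac{s_0}{s_0 + 1} = 1 - \frac{1}{s_0 + 1} \le 1 - \frac{1}{s_{\max} + 1}.$$

Similarly,

$$0 \le \frac{s_1^2}{n_1 (n_1 + 1)} \le \frac{s_1}{s_1 + 1} = 1 - \frac{1}{s_1 + 1} \le 1 - \frac{1}{s_{\max} + 1}.$$

Therefore,

$$|Y(v + u) - Y(v)| \le \frac{N^2}{RS} \left( 1 - \frac{1}{s_{\max} + 1} \right).$$

A similar analysis for all possible directions u and scenarios in which r1 > 0 or r2 > 0 reveals that the sensitivity of Y is bounded above by $\frac{N^2}{RS}\left(1 - \frac{1}{s_{\max} + 1}\right)$.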

Appendix B. Proof of Theorem 3.7

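Proof. We denote a contingency table and its column sums by v = (r0, r1, r2, s0, s1, s2, n0, n1, n2). With the number of cases, R, and the number of controls, S, fixed, we can simply write vs = (s1, s2, n1, n2) or vr = (r1, r2, n1, n2). Then the allelic test statistic can be written as

$$Y_A(v_s) = \frac{2N^3}{RS} \cdot \frac{\left\{ (s_1 + 2s_2) - \frac{S}{N}(n_1 + 2n_2) \right\}^2}{2N(n_1 + 2n_2) - (n_1 + 2n_2)^2}, \qquad Y_A(v_r) = \frac{2N^3}{RS} \cdot \frac{\left\{ (r_1 + 2r_2) - \frac{R}{N}(n_1 + 2n_2) \right\}^2}{2N(n_1 + 2n_2) - (n_1 + 2n_2)^2}.$$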

Let v′ = v + u, with v′ and v differing by Hamming distance 1. Finding the sensitivity of YA boils down to finding v and v′ that maximize |YA(v′) − YA(v)|. This is equivalent to maximizing and , with us and ur defined as follows:

In other words, when r0 > 0, we search for tables that maximize or ; when s0 > 0, we search for tables that maximize and .

Let's first consider the case r0 > 0. We have and . Denote by

then

Therefore, tables that maximize also maximize . Furthermore, for the same table vs, the change of YA(vs) in the direction of is no less than that in the direction of

Fixing n1 and n2, depends only on s1 and s2. So maximizing is equivalent to maximizing the absolute value of

where . Note that the term D does not depend on s1 or s2. There are three scenarios:

- when n0 = n2, is maximized when s1 + 2s2 is minimized or maximized;

- when n0 > n2, is maximized when is maximized or minimized, which occurs when s1 + 2s2 is minimized or maximized;

- when n0 < n2, is maximized when is maximized or minimized as well. Because

is maximized when (s1+2s2) is minimized, and it is minimized when (s1 + 2s2) is maximized.

The preceding analysis shows that for any given n1 and n2, is maximized when (s1 + 2s2) is maximized or minimized; in other words, to maximize , we only need to consider tables for which (s1, s2) = (0, 0) or (s1, s2) = (n1, n2).

Given (s1, s2) = (0, 0), we have , and

So is maximized when 2n0 + n1 is minimized. Because (r0 > 0, s1 = s2 = 0) ⟹ (r0 ≥ 1, r1 = n1, r2 = n2, s0 = S) ⟹ (n0 ≥ S + 1, n1 ≥ 1), the minimum occurs at vs = (0, 0, 1, R − 2), i.e.,

Given (s1, s2) = (n1, n2), we have , and

Because (s1 = n1, s2 = n2) ⟹ (r1 = r2 = 0) ⟹ (r0 = R) ⟹ (n0 ≥ R), we have . So is maximized when 2n0 + n1 is minimized, which occurs at vs = (1, S − 1, 1, S − 1), i.e.,

To summarize, when r0 > 0, for any table vs, the change of YA(vs) in the direction of is no less than that in the direction of . The maximum change of YA in the direction of occurs

The same analysis for s0 > 0 reveals that , and the maximum change of YA in the direction of
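The case analysis in this proof can likewise be cross-checked by exhaustive search on small tables. The sketch below is an illustration only, not the authors' implementation: it builds the 2×2 allele-count table from genotype counts, evaluates the usual 2×2 χ2-statistic, and takes the largest change over all single-individual genotype changes. It assumes that genotype i carries i copies of the minor allele and that the statistic is defined as 0 when any margin of the allele table is empty; all function names are hypothetical.

```python
from itertools import product


def allelic_chi2(r, s):
    """Chi-square on the 2x2 allele-count table built from genotype counts.

    Assumption: genotype i (i = 0, 1, 2) carries i copies of the minor allele.
    """
    a, b = r[1] + 2 * r[2], 2 * r[0] + r[1]  # cases: minor, major allele counts
    c, d = s[1] + 2 * s[2], 2 * s[0] + s[1]  # controls: minor, major allele counts
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0  # assumption: statistic set to 0 when a margin is empty
    n = a + b + c + d  # total number of alleles, 2N
    return n * (a * d - b * c) ** 2 / denom


def compositions(total):
    """All (x0, x1, x2) of non-negative integers that sum to total."""
    for x0 in range(total + 1):
        for x1 in range(total - x0 + 1):
            yield (x0, x1, total - x0 - x1)


def neighbors(counts):
    """Count vectors reached by moving one individual from genotype i to j."""
    for i, j in product(range(3), repeat=2):
        if i != j and counts[i] > 0:
            moved = list(counts)
            moved[i] -= 1
            moved[j] += 1
            yield tuple(moved)


def allelic_sensitivity(R, S):
    """Largest |YA(v') - YA(v)| over all tables v and Hamming-1 neighbors v'."""
    best = 0.0
    for r in compositions(R):
        for s in compositions(S):
            base = allelic_chi2(r, s)
            for r_new in neighbors(r):  # one case changes genotype
                best = max(best, abs(allelic_chi2(r_new, s) - base))
            for s_new in neighbors(s):  # one control changes genotype
                best = max(best, abs(allelic_chi2(r, s_new) - base))
    return best
```

Comparing the output for a few small (R, S) pairs against the closed-form expression of the theorem is a quick way to catch transcription errors in either.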

Appendix C. Proof of Theorem 4.1

Proof. To show that Algorithm 2 is ε-differentially private, it suffices to show that choosing S is ε/2-differentially private. The rest of the proof follows from the proof of Algorithm 1 in Uhler et al. [2].

Following the notation in McSherry and Talwar [24], we define the random variable of sampling a single SNP, , by

where q(D, i) is the score for SNPi, s is the sensitivity for the scoring function q(D, i), and μ(I) = 1/M′ is constant. We also define

where B is a set of SNPs and qB denotes the scoring function given that the SNPs in B have been sampled and thus have 0 sampling probability in subsequent sampling steps. Note that

Let σ denote a permutation of BS.
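The sampling step analyzed in this proof, in which SNPs are drawn one at a time and each drawn SNP has zero probability in later draws, can be sketched as follows. This is a generic exponential mechanism in the style of McSherry and Talwar [24] combined with an even split of the selection budget across draws; the exact exponent used in Algorithm 2 is not reproduced in this appendix, so the scaling below is an assumption. The function and parameter names (`sample_snps`, `epsilon_select`) are hypothetical.

```python
import math
import random


def sample_snps(scores, num_select, epsilon_select, sensitivity, rng=None):
    """Draw num_select distinct SNP indices, favoring high scores.

    Assumption: the selection budget epsilon_select is split evenly across
    the draws, and each draw uses weights exp(eps_draw * q / (2 * sensitivity)).
    """
    rng = rng or random.Random()
    eps_draw = epsilon_select / num_select
    remaining = list(range(len(scores)))
    chosen = []
    for _ in range(num_select):
        # Subtract the current maximum score for numerical stability; this
        # rescales all weights by a common factor and leaves probabilities unchanged.
        top = max(scores[i] for i in remaining)
        weights = [
            math.exp(eps_draw * (scores[i] - top) / (2.0 * sensitivity))
            for i in remaining
        ]
        pick = rng.choices(remaining, weights=weights, k=1)[0]
        chosen.append(pick)
        remaining.remove(pick)  # a sampled SNP cannot be drawn again
    return chosen


# Usage: pick 2 of 5 SNPs with a fixed seed for reproducibility.
selected = sample_snps([3.0, 10.0, 0.5, 7.0, 1.0], 2, 1.0, 4.0, random.Random(0))
```

With a small budget the draws are close to uniform, and with a very large budget the mechanism returns the top-scoring SNPs almost surely, which is the privacy-utility trade-off the risk-utility analysis examines.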

Contributor Information

Fei Yu, Department of Statistics, Carnegie Mellon University, Pittsburgh, PA 15213-3890, USA.

Stephen E. Fienberg, Department of Statistics, Heinz College, Machine Learning Department, and Cylab, Carnegie Mellon University, Pittsburgh, PA 15213-3890, USA.

Aleksandra Slavković, Department of Statistics, Department of Public Health Sciences, Penn State University, University Park, PA 16802, USA.

Caroline Uhler, Institute of Science and Technology Austria, Am Campus 1, 3400 Klosterneuburg, Austria.

References

- 1. Homer N, Szelinger S, Redman M, Duggan D, Tembe W, Muehling J, Pearson JV, Stephan DA, Nelson SF, Craig DW. Resolving individuals contributing trace amounts of DNA to highly complex mixtures using high-density SNP genotyping microarrays. PLoS Genetics. 2008;4:e1000167. doi: 10.1371/journal.pgen.1000167.

- 2. Uhler C, Slavkovic AB, Fienberg SE. Privacy-preserving data sharing for genome-wide association studies. Journal of Privacy and Confidentiality. 2013;5:137–166.

- 3. Johnson A, Shmatikov V. Privacy-preserving data exploration in genome-wide association studies. Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2013:1079–1087. doi: 10.1145/2487575.2487687.

- 4. Braun R, Rowe W, Schaefer C, Zhang J, Buetow K. Needles in the haystack: identifying individuals present in pooled genomic data. PLoS Genetics. 2009;5:e1000668. doi: 10.1371/journal.pgen.1000668.

- 5. Couzin J. Whole-genome data not anonymous, challenging assumptions. Science. 2008;321:1278. doi: 10.1126/science.321.5894.1278.

- 6. Zerhouni EA, Nabel EG. Protecting aggregate genomic data. Science. 2008;322:44. doi: 10.1126/science.322.5898.44b.

- 7. Jacobs KB, Yeager M, Wacholder S, Craig D, Kraft P, Hunter DJ, Paschal J, Manolio TA, Tucker M, Hoover RN, Thomas GD, Chanock SJ, Chatterjee N. A new statistic and its power to infer membership in a genome-wide association study using genotype frequencies. Nature Genetics. 2009;41:1253–7. doi: 10.1038/ng.455.

- 8. Clayton D. On inferring presence of an individual in a mixture: a Bayesian approach. Biostatistics. 2010;11:661–73. doi: 10.1093/biostatistics/kxq035.

- 9. Sankararaman S, Obozinski G, Jordan MI, Halperin E. Genomic privacy and limits of individual detection in a pool. Nature Genetics. 2009;41:965–7. doi: 10.1038/ng.436.

- 10. Visscher PM, Hill WG. The limits of individual identification from sample allele frequencies: theory and statistical analysis. PLoS Genetics. 2009;5:e1000628. doi: 10.1371/journal.pgen.1000628.

- 11. Wen X, Stephens M. Using linear predictors to impute allele frequencies from summary or pooled genotype data. Annals of Applied Statistics. 2010;4:1158–1182. doi: 10.1214/10-aoas338.

- 12. Lumley T, Rice K. Potential for revealing individual-level information in genome-wide association studies. JAMA. 2010;303:659–60. doi: 10.1001/jama.2010.120.

- 13. Loukides G, Gkoulalas-Divanis A, Malin B. Anonymization of electronic medical records for validating genome-wide association studies. Proceedings of the National Academy of Sciences of the United States of America. 2010;107:7898–903. doi: 10.1073/pnas.0911686107.

- 14. Zhou X, Peng B, Li Y, Chen Y. To release or not to release: evaluating information leaks in aggregate human-genome data. ESORICS, Springer. 2011:607–627.

- 15. Masca N, Burton PR, Sheehan NA. Participant identification in genetic association studies: improved methods and practical implications. International Journal of Epidemiology. 2011;40:1629–42. doi: 10.1093/ije/dyr149.

- 16. Im HK, Gamazon ER, Nicolae DL, Cox NJ. On sharing quantitative trait GWAS results in an era of multiple-omics data and the limits of genomic privacy. American Journal of Human Genetics. 2012;90:591–8. doi: 10.1016/j.ajhg.2012.02.008.

- 17. Gymrek M, McGuire AL, Golan D, Halperin E, Erlich Y. Identifying personal genomes by surname inference. Science. 2013;339:321–4. doi: 10.1126/science.1229566.

- 18. Chaudhuri K, Monteleoni C, Sarwate AD. Differentially private empirical risk minimization. Journal of Machine Learning Research. 2011;12:1069–1109.

- 19. Dwork C, McSherry F, Nissim K, Smith A. Calibrating noise to sensitivity in private data analysis. Theory of Cryptography. 2006:1–20.

- 20. Devlin B, Roeder K. Genomic control for association studies. Biometrics. 1999;55:997–1004. doi: 10.1111/j.0006-341x.1999.00997.x.

- 21. Zheng G, Joo J, Yang Y. Pearson's test, trend test, and MAX are all trend tests with different types of scores. Annals of Human Genetics. 2009;73:133–40. doi: 10.1111/j.1469-1809.2008.00500.x.

- 22. Bhaskar R, Laxman S, Smith A, Thakurta A. Discovering frequent patterns in sensitive data. Proceedings of the 16th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM Press; New York, New York, USA: 2010. pp. 503–512.

- 23. Wellcome Trust Case Control Consortium. Genome-wide association study of 14,000 cases of seven common diseases and 3,000 shared controls. Nature. 2007;447:661–78. doi: 10.1038/nature05911.

- 24. McSherry F, Talwar K. Mechanism design via differential privacy. 48th Annual IEEE Symposium on Foundations of Computer Science (FOCS'07). 2007:94–103.