Abstract

The communication of uncertainty in clinical evidence is an important endeavor that poses difficult conceptual, methodological, and ethical problems. Conceptual problems include logical paradoxes in the meaning of probability and “ambiguity,” the second-order uncertainty arising from the lack of reliability, credibility, or adequacy of probability information. Methodological problems include questions about optimal methods for representing fundamental uncertainties and for communicating these uncertainties in clinical practice. Ethical problems include questions about whether communicating uncertainty enhances or diminishes patient autonomy and produces net benefits or harms. This article reviews the limited but growing literature on these problems and efforts to address them and identifies key areas of focus for future research. It is argued that the critical need moving forward is for greater conceptual clarity and consistent representational methods that make the meaning of various uncertainties understandable, and for clinical interventions to support patients in coping with uncertainty in decision making.

Keywords: uncertainty, probability, ambiguity, evidence, communication

The communication of uncertainty in clinical evidence has become an increasingly important endeavor in health care. As the growing evidence-based medicine (EBM) movement has expanded the base of scientific knowledge supporting medical decisions, it has also brought to light substantial limitations in this knowledge and a need to convey these limitations to health professionals and patients. Meanwhile, awareness of scientific uncertainty in health care, and of the need to communicate it, has been further heightened by an increasing cultural emphasis on informed patient choice, promoted by the shared decision-making movement, and by expanding mass media coverage of numerous medical controversies ranging from drug safety to disease screening and treatment.

While these recent trends have increased the amount and visibility of uncertainty in health care, the demand to communicate uncertainty to decision makers has always existed. From a descriptive standpoint, people use information about uncertainty to determine their confidence in existing evidence and in decisions based on this evidence (Fischhoff & MacGregor, 1982; Griffin & Tversky, 1992; Kahneman & Tversky, 1982; Smithson, 2008). Appropriate calibration of this confidence depends on information about uncertainty. From a normative standpoint as well, people not only need but deserve information about uncertainty. The ethical principle of respect for patient autonomy, which underlies the ideal of informed decision making, affirms the right of individuals to the information necessary to make good decisions, and the obligation of health professionals to provide this information to patients. Information about uncertainty is critical in this regard, because it allows decision makers to judge for themselves whether existing evidence is sufficient to justify action.

Yet despite increasing awareness of uncertainty in clinical evidence and the ever-present psychological and ethical demands to communicate this uncertainty to patients, the task itself is complicated by several problems. Some are conceptual and relate to questions about the meaning and nature of uncertainty in clinical evidence. Exactly what are we communicating? Other problems are methodological and relate to questions about the optimal approaches for representing important uncertainties and communicating them in clinical practice. How should we communicate these uncertainties? Still other problems are ethical, and relate to the unclear benefits and potential harms of communicating uncertainty, along with inadequate understanding about the appropriate clinical circumstances in which uncertainty ought to be communicated. Why should we communicate uncertainty, and what are the consequences of doing so?

New Contribution

In this article, I will discuss each category of problems (conceptual, methodological, and ethical) that poses challenges for the communication of uncertainty in clinical evidence. I will review the limited but growing literature on efforts to address these problems and recommend key directions for future research. I will argue that the critical need moving forward is for greater conceptual clarity and consistent representational methods that make the meaning of various uncertainties understandable to patients, and for patient-centered interventions that apply these methods to help patients cope with this uncertainty in health care decision making.

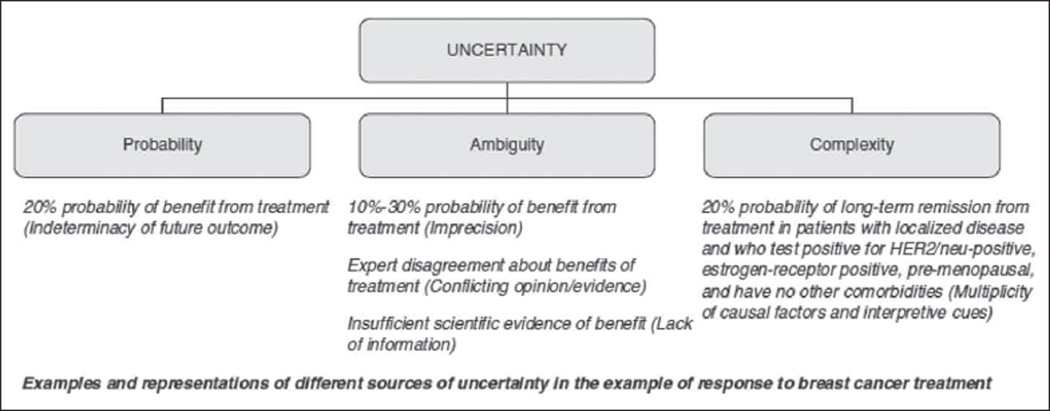

To accomplish these tasks, some operational definitions are needed, since uncertainty itself is a concept with multiple meanings. Following Smithson (1989) and recent work on this topic (Han, Klein, & Arora, 2011), I define uncertainty as the subjective consciousness of ignorance. As such, uncertainty is a “metacognition” (a thinking about thinking) characterized by self-awareness of incomplete knowledge about some aspect of the world. Our concern is with the uncertainty that pertains to clinical evidence and exists in the minds of producers and consumers of this evidence. Three principal sources of this uncertainty can be distinguished (Figure 1): (a) probability, (b) ambiguity, and (c) complexity (Han, Klein, & Arora, 2011). Probability (otherwise known as “risk”) refers to the fundamental indeterminacy or randomness of future outcomes and has also been termed “aleatory” or first-order uncertainty; the exemplar is the point estimate of risk (e.g., “20% probability of benefit from treatment”). Ambiguity refers to the lack of reliability, credibility, or adequacy of information about probability and is also known as “epistemic” or “second-order” uncertainty. Ambiguity arises in situations in which risk information is unavailable, inadequate, or imprecise; the exemplar is the confidence interval around a point estimate (e.g., “10% to 30% probability of benefit from treatment”). Complexity refers to features of risk information that make it difficult to understand; examples include the presence of conditional probabilities or of multiplicity in risk factors, outcomes, or decisional alternatives, which diminish the comprehensibility of risk information or produce information overload. This article will focus primarily on probability and ambiguity, since these are the primary sources of uncertainty when clinical evidence is limited. The distinctive problems they raise will be analyzed separately, while their common problems will be addressed as consequences of “uncertainty” in general.

Figure 1.

Sources of uncertainty in clinical evidence (Han, Klein, & Arora, 2011)

The evidence in which different uncertainties are manifest ranges from anecdotal clinical observations to data from randomized clinical trials. The current discussion, however, will focus on two forms of evidence that have similar functions and applications in the care of individual patients: (a) probability or “risk” estimates produced by clinical prediction models (CPMs) and (b) practice recommendations articulated by clinical practice guidelines (CPGs). CPMs use characteristics of the patient, disease, or treatment to estimate individualized probabilities of health outcomes and thus “provide the evidence-based input” for shared decision making (Steyerberg, 2010). CPGs synthesize available evidence to predict the best course of action for individual patients and thus “assist practitioner and patient decisions about appropriate health care for specific clinical circumstances” (Institute of Medicine, 1990). Both CPMs and CPGs embody the primary goal of EBM: “the conscientious, explicit, and judicious use of current best evidence in making decisions about individual patients” (Sackett, Rosenberg, Gray, Haynes, & Richardson, 1996, p. 71). In doing so, however, they also embody uncertainty, given their function of transforming raw data from multiple primary sources into actionable higher-order evidence. CPMs and CPGs thus provide a useful focus for our discussion, although the problems of communicating uncertainty arise whenever scientific evidence of any kind (for example, primary data from clinical trials) is applied to clinical decision making. These problems are conceptual, methodological, and ethical in nature and will be discussed in turn.

Conceptual Problems

The first problems are conceptual and relate to the meaning and existence of various uncertainties that need to be communicated to patients.

The Paradox of Single-Event Probability

The most fundamental uncertainty in clinical evidence is probability, and the conceptual problem it entails arises from the endeavor to apply objective probability estimates to the domain of single events experienced by individual patients. Objective probability estimates in health care are derived from and expressed in terms of the observed frequency of past outcomes in a population of individuals, and enable inferences about the frequency of expected future outcomes in a similar population.

The problem, however, is that objective probability estimates are logically incoherent when applied to the prospect of a future event experienced by a single individual with only one life to live. As Hacking (2001) has observed, “It does not make sense to speak of the ‘frequency’ of a single event.” This conceptual paradox has been the subject of long-standing conflict between “frequentist” and “subjective” interpretations that view probability as an objective property of the material world versus a subjective mental state (Gigerenzer, Swijtink, Daston, Beatty, & Krueger, 1989; Gillies, 2000). The subjectivist interpretation was articulated by de Finetti in his statement “probability does not exist” (de Finetti, 1974), meaning that probabilities are not factual accounts of reality but linguistic constructs expressing a person’s degree of belief or confidence about the future. In this view, there is no single “true” probability or right action for individual patients; rather, their future outcomes are indeterminate, unknowable, and ultimately subject to randomness.

This deep conceptual problem, the indeterminacy or randomness arising from incoherence in the idea of objective single-event probability, is inherent in the interpretation of both individualized risk estimates and CPGs. However, it is difficult for patients to grasp (Han, Lehman, et al., 2009; Weinfurt, Sulmasy, Schulman, & Meropol, 2003) and is obscured by communication efforts that focus solely on conveying the magnitude of risks or the strength of recommendations rather than their conceptual meaning. Inattention to the conceptual limitations of single-event probabilities is further reinforced by major health care initiatives. Perhaps the most conspicuous example is the ideal of personalized health care, that is, care that is “calibrated to each patient and personally effective for each individual” (U.S. Department of Health and Human Services, 2008, p. 1), which assumes the possibility of estimating individuals’ “true” risk of health outcomes. Similarly, efforts to disseminate CPGs assume the possibility of defining “appropriate care” for individuals.

Yet these efforts ultimately run up against the conceptual problem of single-event probability, as noted by Fuchs (2011):

… the heterogeneity of patient populations and uncertainty about the response of individual patients to an intervention means that it is often difficult or impossible to determine in advance which ones will prove to help particular patients and which will turn out to have been unnecessary. There is no escaping the fact that many interventions are valuable for some patients even if, for the population as a whole, their cost is greater than their benefit. (p. 586)

Although heterogeneity of populations and treatment effects may be an empirical problem, potentially resolvable by methodological means (Kent, Rothwell, Ioannidis, Altman, & Hayward, 2010), the deeper irresolvable problem is conceptual rather than empirical, and reflects the limited applicability of probability estimates—derived by averaging the aggregate outcomes experienced by a population—to single events experienced by individual persons (Asch & Hershey, 1995; Rose, 1994). The problem originates from the very idea of single-event objective probability: such probability simply does not exist in a literal sense and would be unknowable even if heterogeneity could be methodologically eliminated (Knight, 1921).

Importantly, the logical incoherence of objective probabilities does not undermine their potential utility in clinical decision making. Objective probabilities can inform subjective probabilities (de Finetti, 1974), and that is their principal value in health care and other decision making domains. A woman who learns from an online CPM that her lifetime risk of breast cancer is “12%,” for example, ends up with an evidence-based and thus better-informed risk perception. Nevertheless, the logical incoherence of objective single-event probabilities calls for clarity and precision in their presentation and use. The woman in our example needs to be made aware that “12%” is not a literal representation of her own “true” risk but a figurative expression of scientists’ confidence based on the aggregated outcomes of individuals whose characteristics are similar—but not completely equivalent—to her own. Her true risk is anyone’s guess and contingent on factors beyond the available evidence that may justify higher or lower levels of confidence (Griffin & Tversky, 1992). Estimating this risk is not a strictly scientific act in which “facts” are discovered, but a social process in which personal confidence is constructed and expressed in mathematical terms.

This view of probability as a socially constructed, metaphorical expression of confidence in an unpredictable future is a departure from conventional clinical discourse and the expressed aspirations of personalized health care and EBM. It is also difficult for patients to understand (Han, Lehman, et al., 2009), and thus represents an important conceptual problem in efforts to communicate uncertainty in clinical evidence.

Ambiguity Versus Probability

Ambiguity, the other key uncertainty pertaining to probability estimates and CPGs, also poses conceptual problems. From a normative standpoint, probability estimates themselves are expressions of uncertainty. Some experts have thus questioned the logical coherence and necessity of invoking an additional “second order” of uncertainty—that is, uncertainty about probability (Howard, 1988). Morgan et al. (2009) make the case as follows:

Very often people are interested in using ranges or even second-order probability distributions on probabilities—to express “uncertainty about their uncertainty” … this usually arises from an implicit confusion that there is a “true” probability out there … and people want to express uncertainty about that “true” probability. Of course, there is no such thing. The probability itself is a way to express uncertainty. A second-order distribution rarely adds anything useful. (p. 46)

This argument against communicating ambiguity, which depends on the subjectivists’ rejection of a single “true” probability, is logically coherent and defensible from a normative standpoint.
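The normative argument can be made concrete with a small calculation. For a single event, a second-order distribution (weights placed on candidate probabilities) collapses to one probability: the weighted average of the candidates. The sketch below is a minimal illustration that assumes a discrete second-order distribution and uses Ellsberg-style urns chosen only for simplicity; the function name `predictive_probability` is hypothetical. It shows that a known 50/50 urn and an urn of entirely unknown composition yield the same probability of drawing red.

```python
from fractions import Fraction

def predictive_probability(second_order):
    """Collapse a discrete second-order distribution into one probability.

    `second_order` maps each candidate probability p of the event to the
    weight (degree of belief) assigned to that candidate. For a single
    event, P(event) is the weighted average of the candidate values of p.
    """
    total_weight = sum(second_order.values())
    if total_weight == 0:
        raise ValueError("weights must not all be zero")
    return sum(w * p for p, w in second_order.items()) / total_weight

# Known urn: 50 red balls out of 100, so p = 1/2 with certainty.
known = {Fraction(1, 2): 1}

# Ambiguous urn: anywhere from 0 to 100 red balls out of 100,
# with every composition judged equally plausible (an assumption).
ambiguous = {Fraction(k, 100): 1 for k in range(101)}

print(predictive_probability(known))
print(predictive_probability(ambiguous))
```

Both calls print 1/2. On the subjectivist view quoted above, this is why a second-order distribution adds little for a single event; the behavioral evidence reviewed next shows that people nonetheless do not treat the two urns as equivalent.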

Yet this argument is not a valid description of how people actually think and behave. A large body of behavioral research has shown that people do distinguish between first- and second-order uncertainty, that is, between probability and ambiguity. In choice experiments, people show a clear preference for options involving known probabilities over options involving unknown (ambiguous) probabilities (Camerer & Weber, 1992; Ellsberg, 1961). Likewise, when decision makers are presented with probability estimates accompanied by information about ambiguity (for example, confidence intervals expressing second-order uncertainty), they form pessimistic judgments of these estimates and avoid decision making (Han, Klein, Lehman, et al., 2011; Kuhn, 1997; Kuhn & Budescu, 1996; Viscusi, 1997; Viscusi, Magat, & Huber, 1999). These responses, collectively known as “ambiguity aversion,” demonstrate that regardless of whether ambiguity “exists” from a normative standpoint, it is psychologically real and an important determinant of people’s confidence in decision making, over and above the confidence they infer from probability estimates themselves. Ambiguity exists and matters to people, irrespective of whether it should.

This tension between normative and descriptive accounts nonetheless raises the need to clarify that the distinction between ambiguity and probability is not an objective feature of the real world but a conceptual abstraction, ultimately justified by its heuristic value in decision making. Morgan et al. (2009), for example, acknowledge that although distinguishing and communicating ambiguity lacks justification from a normative standpoint, it has the potential utility of allowing experts “to provide information about the confidence they have in their judgment.” Communicating ambiguity (for example, using confidence intervals to represent imprecision in probability estimates) can thus be construed as a way of augmenting the formal expression of confidence and disabusing individuals of the notion that a single “true” probability exists regarding their own futures. Still, the distinction between ambiguity and probability may be neither logically coherent nor useful for all individuals, and thus represents another important conceptual problem in the communication of uncertainty in clinical evidence.

Methodological Problems

Ensuring people’s understanding of the uncertainties in clinical evidence is further complicated by the fact that methods for representing these uncertainties are only beginning to be developed. Recent developments are promising but raise important problems and questions, which will now be discussed.

Representing Indeterminacy (Randomness)

Indeterminacy or randomness (the first-order or aleatory uncertainty arising from the unpredictability of future events) is represented by probability estimates in theory, but only implicitly, and is difficult for laypersons to understand (Han, Lehman, et al., 2009). Commonly used methods of representing probability, such as numeric terms (percentages and natural frequencies, i.e., proportions), verbal (qualitative) terms, and visual representations (Ancker, Senathirajah, Kukafka, & Starren, 2006; Lipkus, 2007; Visschers, Meertens, Passchier, & de Vries, 2009), focus exclusively on conveying the magnitude of risk estimates. Whether the randomness inherent in risk estimates can be effectively communicated thus remains an open question.

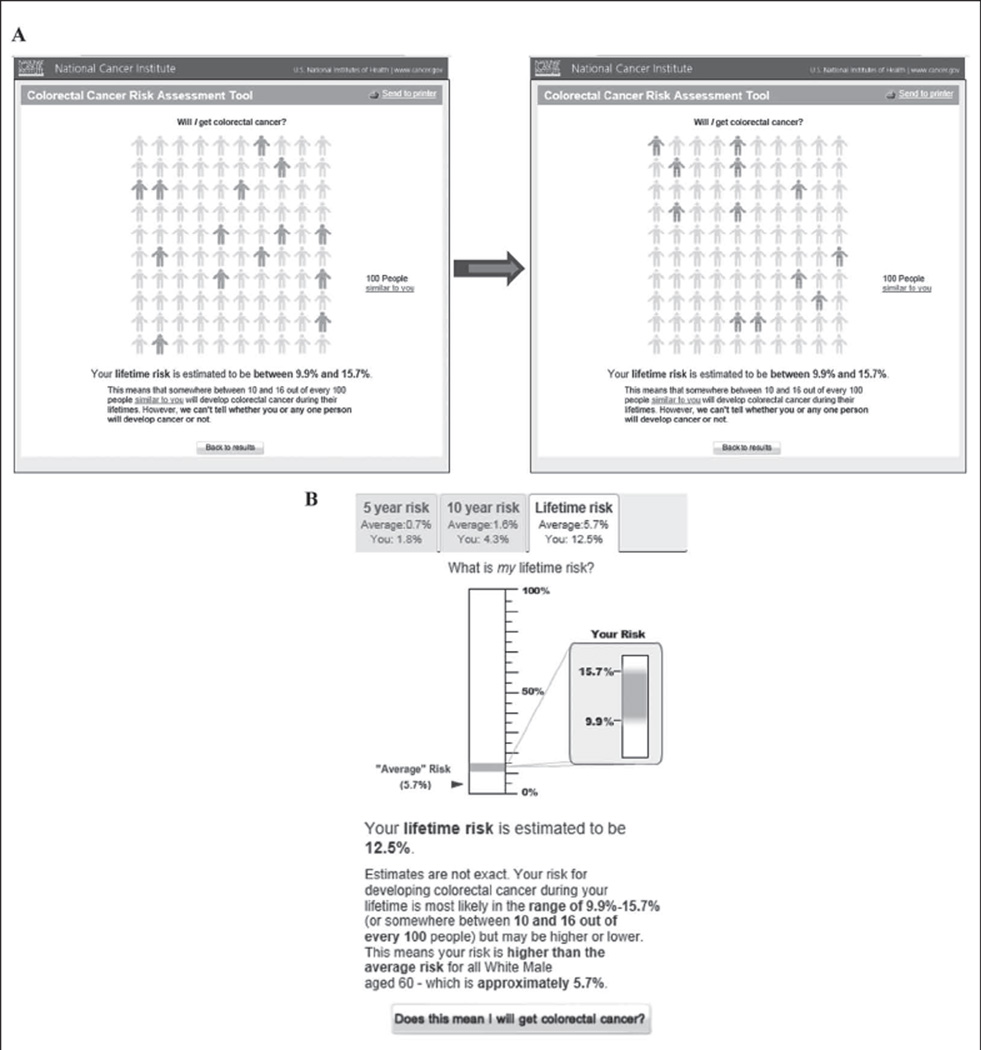

A small number of studies have begun exploring this question using novel visual representations, such as icon arrays displaying affected individuals in a scattered rather than a conventional clustered or sequential arrangement across the array. Ancker, Chan, and Kukafka (2009) recently developed a game-like web-based interactive graphical display of disease risk that requires users to click on an icon array to search for affected individuals within a population. Working with the National Cancer Institute (NCI) Colorectal Cancer Risk Assessment Tool (NCI, 2010), other researchers (Han, Klein, et al., 2012) have developed an icon array-based visualization that uses software animation to express randomness by dynamically varying the distribution of affected individuals (Figure 2A).

Figure 2.

Software-based representations of randomness and ambiguity in probability estimates: National Cancer Institute (NCI) Colorectal Cancer Risk Assessment Tool (NCI, 2010)

(A) Dynamic visual representation of randomness. Animation is used to randomly change the pattern of shaded icons every 2 seconds. (B) Representation of ambiguity. Textual and visual representations of a confidence interval; blurred borders of the interval are used to convey imprecision.
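The scattered, animated icon array described above can be sketched in a few lines. The version below is a hypothetical text-based illustration, not the NCI tool’s implementation: it places a fixed number of affected icons (`#`) at random positions among 100, so each call produces a new pattern that conveys the same risk. A real display would redraw on a timer (every 2 seconds in Figure 2A); the timer is omitted here, and the function name and the 12-in-100 risk are illustrative assumptions.

```python
import random

def scattered_icon_array(n_affected, n_total=100, width=10, rng=None):
    """Return rows of a text icon array with affected icons at random positions.

    '#' marks an affected individual and '.' an unaffected one. The number of
    '#' icons always equals n_affected; only their positions are random.
    """
    if not 0 <= n_affected <= n_total:
        raise ValueError("n_affected must be between 0 and n_total")
    if rng is None:
        rng = random.Random()
    # Choose which positions are affected, sampling without replacement.
    affected = set(rng.sample(range(n_total), n_affected))
    icons = ["#" if i in affected else "." for i in range(n_total)]
    # Split the flat list of icons into rows of `width` icons each.
    return ["".join(icons[start:start + width]) for start in range(0, n_total, width)]

# Two "frames" of the same illustrative risk (12 in 100), each with a new pattern.
# A real animation would redraw on a timer instead of printing.
rng = random.Random(2012)
for frame in range(2):
    print("\n".join(scattered_icon_array(12, rng=rng)))
    print()
```

Because positions are drawn without replacement, every frame shows exactly the stated number of affected individuals; only their arrangement varies, which is the property the animation relies on to convey randomness.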

The limited available evidence suggests that these methods have no significant effect on risk perceptions, although their effects on patient understanding are unknown. In one study, the communication of randomness was associated with greater subjective uncertainty about estimated risk (Han, Klein, et al., 2012). However, several important questions remain. First, which representational methods are most effective in improving patient understanding of randomness, and under what circumstances? Dynamic icon arrays, for example, may be more effective than static arrays in increasing subjective awareness of uncertainty, but their effects may be moderated by personality characteristics (Han, Klein, et al., 2012). Second, does communicating randomness affect decision making or provide added value beyond communicating only the magnitude of risk estimates? Finally, are there effective methods of representing randomness pertaining to CPGs?

Representing Ambiguity

Equally challenging methodological questions pertain to the representation of ambiguity (epistemic uncertainty). Ambiguity is pervasive in clinical evidence and represented in various ways. In risk modeling, ambiguity arises at numerous levels ranging from the specification of model parameters to model structure and the adequacy of the modeling process itself (Bilcke, Beutels, Brisson, & Jit, 2011; Spiegelhalter & Riesch, 2011). Ambiguity arising from some sources—for example, imprecision engendered by model misspecification and limitations in external validity—is readily quantifiable and representable using confidence intervals, although some controversy exists regarding the appropriate mathematical derivation, interpretation, and clinical usefulness of confidence intervals in individual risk prediction (Grossi, 2006; Hanson & Howard, 2010; Kattan, 2011; Willink & Lira, 2005). Nonetheless, confidence intervals are widely used in risk modeling although they have rarely been incorporated in decision support tools and their optimal representational methods have only begun to be explored (Figure 2B; Han, Klein, et al., 2009; Han, Klein, Lehman, et al., 2011; Muscatello, Searles, MacDonald, & Jorm, 2006; Spiegelhalter, Pearson, & Short, 2011).
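As a simplified illustration of how sampling imprecision is quantified, the sketch below computes a 95% Wilson score interval for a risk estimated as a simple proportion of similar individuals, echoing the “20% probability of benefit” example used earlier. This is an assumption made for clarity: intervals for clinical prediction models are usually derived from uncertainty in fitted model parameters, and the Wilson interval is swapped in here only because it is a standard interval for a proportion. The function name is hypothetical, and the interval captures imprecision only, not the higher-order ambiguity about model validity discussed next.

```python
import math

def wilson_interval(events, n, z=1.96):
    """Approximate 95% Wilson score interval for the proportion events / n."""
    if n <= 0 or not 0 <= events <= n:
        raise ValueError("need n > 0 and 0 <= events <= n")
    p_hat = events / n
    denom = 1 + z**2 / n
    # Center is pulled slightly toward 0.5; half-width shrinks as n grows.
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2))
    return center - half_width, center + half_width

# Same 20% point estimate, based on 100 versus 1,000 similar individuals.
for n in (100, 1000):
    low, high = wilson_interval(events=n // 5, n=n)
    print(f"20% point estimate, n={n}: {low:.1%} to {high:.1%}")
```

The same point estimate yields a much narrower interval when based on more individuals, which is the sense in which the interval expresses confidence in the estimate rather than a different risk.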

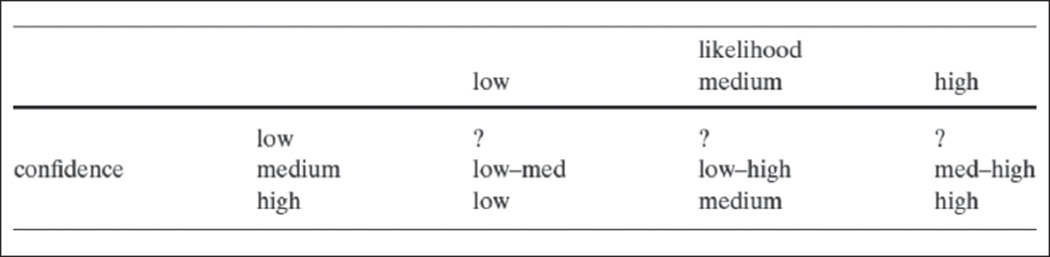

Another important area of investigation is how to represent higher-order ambiguity arising from sources such as shortcomings in the validity and adequacy of risk prediction models themselves. Because this type of ambiguity is not readily quantifiable, it requires representation in qualitative terms. Risbey and Kandlikar (2007) have shown how higher-order ambiguity might be expressed in terms of “low,” “medium,” and “high” degrees of confidence in risk estimates (Figure 3), but such descriptors have not been applied in health risk communication efforts.

Figure 3.

A rating scheme for associating qualitative estimates of confidence and likelihood (ambiguity and probability) (Risbey & Kandlikar, 2007)

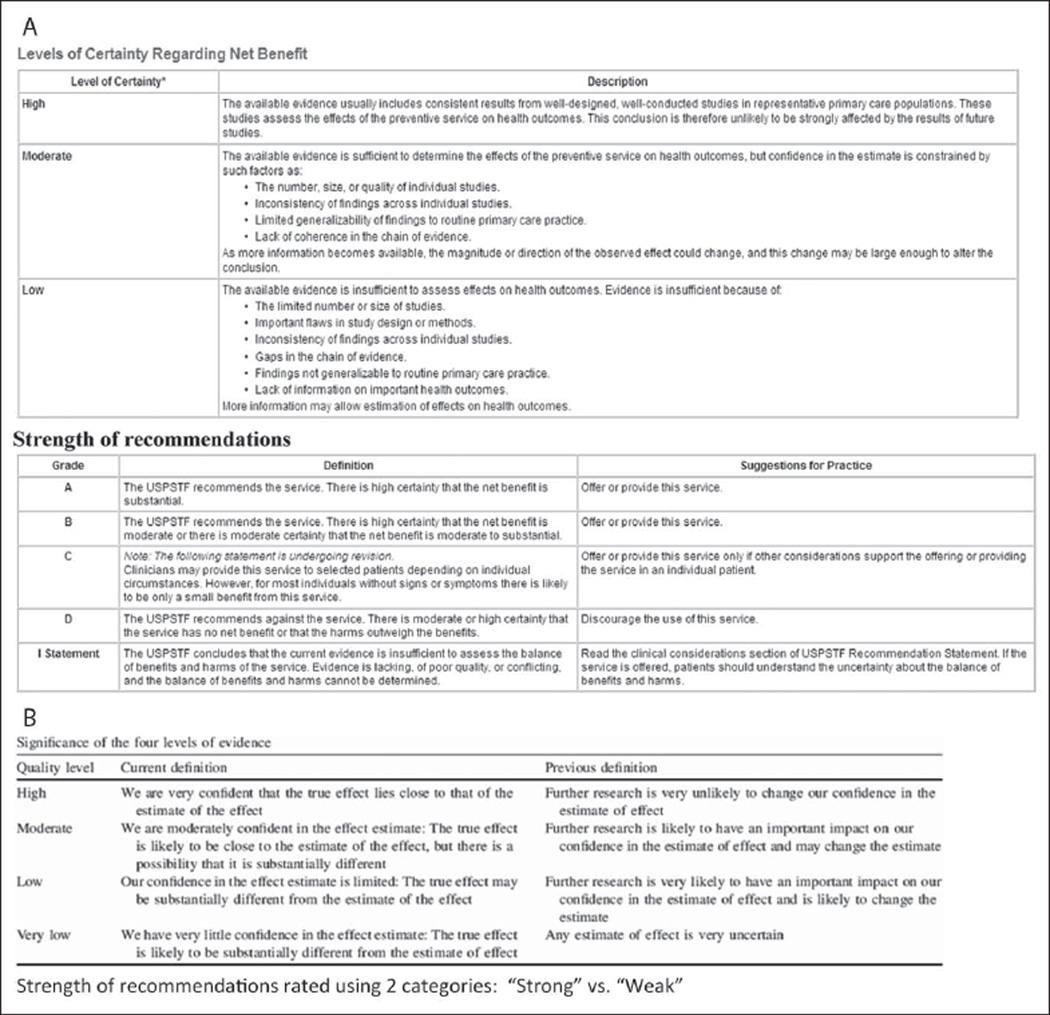

However, a conceptually analogous approach has been applied in innovative efforts to rate clinical evidence and CPGs. Formal qualitative two-dimensional rating schemes have been developed to separately assess the quality of evidence and the strength of guideline recommendations (Figure 4). These schemes are thus analogous to efforts that distinguish ambiguity from probability, and confidence from likelihood, in clinical risk prediction. In these schemes, ratings of the quality (ambiguity) of evidence express the level of confidence warranted by the evidence (and are described precisely in these terms), while ratings of the strength (probability) of recommendations express the estimated “effect size” or likelihood that a given clinical action is “correct.” The U.S. Preventive Services Task Force (2008) uses a three-category scale (low, moderate, high) to rate levels of certainty regarding the evidence and a five-category letter grade scale to rate the strength of recommendations. The Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group uses a four-category scale (very low, low, moderate, high) to rate the “quality” of evidence, defined in terms of “confidence” in estimates of an intervention’s effect, and a two-category scale (weak, strong) for the strength of recommendations (Balshem et al., 2011). The American College of Physicians uses an adaptation of the GRADE criteria that replaces the “very low” category for evidence quality with “insufficient” (Qaseem, Snow, Owens, & Shekelle, 2010). In all these schemes, the quality of evidence is graded according to key sources of ambiguity, including inconsistency, imprecision, and indirectness (limited generalizability and applicability) of results, and methodological problems leading to bias (Balshem et al., 2011; Guyatt, Oxman, Vist, et al., 2008).
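The two-dimensional structure of these schemes can be expressed as a small data type in which evidence quality and recommendation strength are stored as separate fields, neither derived from the other. The sketch below is a hypothetical Python encoding of the GRADE levels listed above; the class names are assumptions and do not correspond to any official GRADE software. A USPSTF variant would substitute its three certainty levels and five letter grades, and the American College of Physicians variant would replace the very low level with an insufficient level.

```python
from dataclasses import dataclass
from enum import IntEnum

class EvidenceQuality(IntEnum):
    """GRADE quality of evidence (confidence in the effect estimate), low to high."""
    VERY_LOW = 1
    LOW = 2
    MODERATE = 3
    HIGH = 4

class RecommendationStrength(IntEnum):
    """GRADE strength of recommendation."""
    WEAK = 1
    STRONG = 2

@dataclass(frozen=True)
class RatedRecommendation:
    """A guideline statement rated on two separate axes.

    `quality` plays the role of ambiguity (confidence in the evidence);
    `strength` plays the role of probability (likelihood the action is
    correct). Neither field is computed from the other.
    """
    statement: str
    quality: EvidenceQuality
    strength: RecommendationStrength

    def label(self) -> str:
        q = self.quality.name.lower().replace("_", " ")
        s = self.strength.name.lower()
        return f"{s} recommendation, {q}-quality evidence"

rec = RatedRecommendation(
    statement="Hypothetical recommendation text",
    quality=EvidenceQuality.LOW,
    strength=RecommendationStrength.STRONG,
)
print(rec.label())  # strong recommendation, low-quality evidence
```

The example deliberately pairs a strong recommendation with low-quality evidence, a combination these schemes permit and whose coherence is questioned in the discussion that follows.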

Figure 4.

Qualitative rating schemes for the quality of evidence and the strength of clinical practice guideline recommendations

(A) United States Preventive Services Task Force (2008). (B) Grading of Recommendations Assessment, Development and Evaluation (GRADE) Working Group (Balshem et al., 2011).

These rating systems are a major advance in the communication of uncertainty in clinical evidence; however, they also raise several methodological problems. One problem is that separating ratings of the quality of evidence (ambiguity) and the strength of recommendations (probability) can produce logically paradoxical results. In their analysis for the Intergovernmental Panel on Climate Change, Risbey and Kandlikar (2007) noted,

… likelihood and confidence cannot be fully separated. Likelihoods contain implicit confidence levels. When an event is said to be extremely likely (or extremely unlikely) it is implicit that we have high confidence. It wouldn’t make any sense to declare that an event was extremely likely and then turn around and say that we had low confidence in that statement … People would rightly ask us how we could give such a high (near certain) likelihood to an event about which we profess to have little understanding. (p. 24)

High likelihood ratings in cases of low confidence in risk information do not make sense (Figure 3). In the domain of CPGs, one can similarly criticize efforts to combine low-quality evidence (corresponding to low confidence) with strong recommendations (corresponding to estimates of high likelihood) as being logically incoherent. Yet as proponents of evidence rating schemes argue, the strength of clinical recommendations is determined not only by ambiguity but by other factors, such as the magnitude of potential benefits or harms, the number, effectiveness, and cost of available alternatives, ethical values, and perspectives (e.g., the precautionary principle; Balshem et al., 2011; Guyatt, Oxman, Kunz, et al., 2008; Kunz et al., 2008; Resnick, 2004). These factors beyond ambiguity and the scientific evidence itself may justify strong recommendations based on low-quality evidence (or weak recommendations based on high-quality evidence). The unresolved methodological question, however, is how to clearly represent these factors and their added influence on judgments of the strength of recommendations.
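The coherence problem quoted from Risbey and Kandlikar (2007) can be stated as a simple rule: a near-certain likelihood paired with low confidence should be flagged. The sketch below is a hypothetical check, not the published Figure 3 scheme; the three confidence levels follow the qualitative descriptors mentioned earlier, and the 0.9 cutoff for “near-certain” is an arbitrary assumption. Consistent with the argument above, a flag here signals that the additional factors behind a rating (benefits, harms, alternatives, values) need to be made explicit, not that the rating is necessarily wrong.

```python
from enum import IntEnum

class Confidence(IntEnum):
    """Qualitative confidence in the information behind a likelihood."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3

def needs_justification(likelihood: float, confidence: Confidence,
                        extreme: float = 0.9) -> bool:
    """Return True when a near-certain likelihood rests on low confidence.

    `likelihood` is a probability in [0, 1]. Values at or above `extreme`,
    or at or below 1 - `extreme`, count as near-certain (hypothetical cutoff).
    """
    if not 0.0 <= likelihood <= 1.0:
        raise ValueError("likelihood must be between 0 and 1")
    if not 0.5 < extreme <= 1.0:
        raise ValueError("extreme must be in (0.5, 1]")
    near_certain = likelihood >= extreme or likelihood <= 1.0 - extreme
    return near_certain and confidence is Confidence.LOW

# "Extremely likely" with low confidence is flagged; the others are not.
print(needs_justification(0.95, Confidence.LOW))   # True
print(needs_justification(0.95, Confidence.HIGH))  # False
print(needs_justification(0.50, Confidence.LOW))   # False
```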

Additional important methodological questions regarding the representation of ambiguity in clinical evidence remain to be resolved. The language and categories used to describe the quality of evidence in existing rating schemes have begun to be explored (Akl et al., 2012; Lomotan, Michel, Lin, & Shiffman, 2010; Schunemann, Best, Vist, & Oxman, 2003), but other methods of representing ambiguity—for example, using alternative qualitative descriptors or visual approaches—might also be effective. Perhaps most important, existing rating schemes are directed at clinicians and policy makers rather than patients. More research is needed to develop methods of representing ambiguity in clinical evidence to patients and to assess the effectiveness of these methods in promoting meaningful clinical outcomes such as patient understanding and informed and shared decision making.

Communicating Uncertainty Clinically: The Subjectivization of Objective Probability

While we understand little about how best to represent the uncertainties pertaining to clinical evidence (both probability and ambiguity), we know even less about strategies for communicating these uncertainties in clinical practice. One natural strategy is to integrate representations of these uncertainties within decision support interventions such as decision aids. The problem then becomes one of implementing these interventions effectively at the point of care. Another strategy is to enhance patient–clinician discussions of uncertainty in clinical encounters. This strategy poses other challenges, including training clinicians and creating the systemic conditions needed (time, educational resources) to facilitate these discussions.

Yet these tasks are complicated further by problems unique to the topic of uncertainty. In the final analysis, the formulation of judgments about the magnitude of probabilities and the strength of clinical recommendations for individual patients amounts to a process in which objective probabilities given by the evidence—whether consisting of a CPM or a CPG—are integrated with other types of evidence and translated into personal feelings of confidence. In this process, the individual’s probability, or “risk,” is not scientifically discovered but socially constructed. To the extent that communicating uncertainty in clinical evidence involves this process—what might be termed the subjectivization of objective probability—effective communication entails more than the unidirectional transmission of risk information from expert to layperson. It requires an interactive exchange that facilitates the joint construction of confidence by clinicians and patients, based on available evidence and personal values. This type of exchange falls within the ideal of shared decision making, the specific process elements of which have begun to be defined (Makoul & Clayman, 2006; Moumjid, Gafni, Bremond, & Carrere, 2007). Yet the communication of uncertainty might also entail other elements that are less well-understood, including the provision of emotional support and the facilitation of psychological coping (Han, Klein, & Arora, 2011). At this time, however, we lack empirical evidence about the specific strategies (e.g., language, counseling techniques) that clinicians should use to accomplish these goals.

Ethical Problems

The last set of challenges in communicating uncertainty about clinical evidence is ethical and relates to the unclear benefits and potentially adverse outcomes of communicating uncertainty, as well as to questions about the appropriate clinical circumstances in which uncertainty ought to be communicated.

Patient Autonomy

The primary ethical justification for communicating uncertainty about clinical evidence is the principle of respect for patient autonomy, which acknowledges an individual patient’s capacity for self-determination and right to make choices based on his or her own values (Beauchamp & Childress, 1994). This principle provides the primary ethical justification for the broader ideals of informed and shared decision making, of which the communication of uncertainty is one vital element (Braddock, Edwards, Hasenberg, Laidley, & Levinson, 1999; Briss et al., 2004; Moumjid et al., 2007). In theory, communicating the uncertainty pertaining to clinical evidence (both probability and ambiguity) should enhance autonomy by enabling patients to judge for themselves whether existing evidence warrants action.

Whether communicating uncertainty truly enhances patient autonomy, however, is currently unknown, and there are barriers to this goal. Information about risk and uncertainty is abstract, complex, and difficult to understand, particularly given patients’ well-documented deficits in health numeracy and literacy (Reyna, Nelson, Han, & Dieckmann, 2009). There are also psychological limits to the amount of information patients are able to process, and practical limits on the time and resources available to facilitate this processing. Given all these limitations, communicating uncertainty may simply confuse patients and lead them to defer decision making to the clinician, paradoxically diminishing rather than enhancing patient autonomy. Furthermore, not all patients prefer maximal information and participation in decision making. Additional empirical evidence is needed to understand patients’ preferences regarding the communication of uncertainty and its effects on patient autonomy.

Benefits and Harms

There may be benefits other than maximizing autonomy that justify communicating at least some uncertainties in clinical evidence. Patients with life-limiting illness, for example, often must consider treatment options associated with substantial scientific uncertainty. Under these circumstances, scientific uncertainty may be a source of hope: a lack of definitive evidence, or wide confidence intervals around estimated benefits, may suggest greater outcome variability and thus a higher likelihood that a given individual will “beat the odds” (Gould, 1985; Innes & Payne, 2009). Such hope, however, could be beneficial or harmful depending on how it affects well-being and decision making.

Communicating ambiguity in clinical evidence also poses potential harms that arise from the phenomenon of “ambiguity aversion,” a psychological response characterized by pessimistic judgments about risks and decision outcomes and by avoidance of decision making. This robust effect has been demonstrated in numerous decision-making domains, including health care. Experimental studies have shown that ambiguous information about health risks heightens perceptions of these risks, and that ambiguity concerning the outcomes of health-protective measures makes people less willing to adopt them (Ritov & Baron, 1990; Viscusi, 1997; Viscusi, Magat, & Huber, 1991). Intervention studies have demonstrated that informing people about uncertainties surrounding controversial cancer-screening measures decreases their interest in screening (Frosch, Kaplan, & Felitti, 2003; Volk, Spann, Cass, & Hawley, 2003). Perceived ambiguity about cancer prevention and screening recommendations has been shown to be negatively associated both with cancer-protective behaviors and with perceptions that may influence these behaviors (Han et al., 2007; Han, Moser, & Klein, 2006). Finally, communicating ambiguity about disease risk estimates through confidence intervals has been shown to increase risk perceptions and worry (Han, Klein, Lehman, et al., 2011).

These outcomes (heightened risk perceptions and worry, pessimistic judgments of the benefits and harms of medical interventions, and avoidance of decision making) raise ethical concerns because they may reduce patient well-being and promote refusal of potentially beneficial interventions. Nevertheless, ambiguity aversion may be ethically justifiable. One can argue that the optimal response of an individual to scientific uncertainty about a medical intervention is to be skeptical about its benefits, to be cautious about its harms, and to defer decision making until better evidence is available.

Furthermore, ambiguity aversion is not universal; many people are ambiguity-indifferent (Camerer & Weber, 1992). This variation may reflect individual differences in (a) tolerance of ambiguity and uncertainty (Geller, Faden, & Levine, 1990; Han, Reeve, Moser, & Klein, 2009); (b) personality traits such as optimism (Bier & Connell, 1994; Highhouse, 1994; Pulford, 2009); (c) fundamental values, beliefs, and worldviews; and (d) other moderators that remain to be defined. Because ambiguity aversion has such varied sources, it is difficult to determine whether this response is adaptive (a judgment that depends on one’s perspective) and ethically warranted, although in some circumstances it is clearly neither. A patient might, for instance, choose one intervention over another based on misconceptions about the degree of ambiguity surrounding both. Erroneous beliefs about the quality of supporting evidence, unrealistic expectations of certainty about future outcomes, or misunderstandings of the scientific method might all lead patients to attribute excess certainty or excess uncertainty (what might be termed “pseudoambiguity”) to different alternatives, and thereby to make poor decisions. Evidence suggests that both kinds of misattribution occur: laypersons underestimate the ambiguity surrounding controversial medical interventions (Schwartz, Woloshin, Fowler, & Welch, 2004), whereas physicians may overestimate ambiguity when expert consensus exists (Han, Klabunde, et al., 2012).

A final potential harm of communicating uncertainty consists of negative effects on patient experiences with care. Emerging evidence suggests that communicating uncertainty may reduce patients’ satisfaction with their decisions (Politi, Clark, Ombao, Dizon, & Elwyn, 2011). It might also reduce trust in experts, a concern raised by risk analysts outside of the health care domain, although the sparse empirical evidence on this outcome is inconclusive (Johnson & Slovic, 1995).

Conclusion

Important conceptual, methodological, and ethical problems pose numerous challenges to the communication of uncertainty in clinical evidence. Conceptual problems include logical paradoxes in the meaning of probability and ambiguity, as well as questions about their distinguishability and importance in clinical decision making. Methodological problems include questions about the optimal methods for representing fundamental uncertainties, including probability and ambiguity (both of which are difficult to quantify and thus require alternative modes of representation), and for communicating these uncertainties in clinical practice. Ethical problems include questions about whether communicating uncertainty enhances or diminishes patient autonomy, and whether it yields net benefits or net harms given ambiguity aversion and potential adverse effects on patients’ experiences with care.

At this time, more empirical evidence is needed to answer these questions definitively and to provide specific recommendations regarding the optimal approaches for representing and communicating uncertainty in clinical practice. However, sufficient empirical data and theoretical insights exist to identify key areas of focus for future research and practice.

1. Determine the right type and amount of uncertainty information to communicate

The foregoing discussion has identified numerous evidence-related uncertainties, only some of which have been explicitly communicated to patients. Further conceptual and ethical analysis is needed to identify and prioritize the types of uncertainty that ought to be communicated to ensure informed patient decision making. Which uncertainties matter most likely depends on various factors, including the nature and associated risks of the decision at hand, the number of decisional alternatives, and the types of available evidence; analogous work has been done to establish criteria for shared decision making (Whitney, McGuire, & McCullough, 2004). At the same time, further empirical research is needed to determine the optimal amount of uncertainty information that clinicians should communicate to patients. The difficulty is that communicating uncertainty can lead to negative outcomes: not only ambiguity aversion, as discussed previously, but also information overload and confusion resulting solely from the added complexity that uncertainty information introduces to decision making, complexity that is itself a source of uncertainty (Figure 1; Han, Klein, & Arora, 2011). More work is needed to determine at what point this added complexity undermines the benefits of communicating uncertainty.

2. Improve conceptual understanding of uncertainty in clinical evidence

A strong ethical justification exists for helping patients understand the uncertainties underlying individualized clinical risk estimates and practice guidelines. This understanding, however, entails not only computational reasoning but also higher-order comprehension of randomness and ambiguity, fundamental but abstract concepts that have long eluded even statisticians and philosophers. To what extent patients’ comprehension of these concepts can be improved is an open question, since relatively little work has been devoted to this aim. Initial progress has been made in developing explicit representations of randomness and ambiguity in clinical risk estimates and practice guidelines, but we do not know whether these representations improve patient understanding or how best to measure such understanding. More research is needed to develop methods to measure and improve conceptual understanding of the uncertainties that pertain to risk information, practice recommendations, and other types of evidence.

3. Standardize the language and methods used to represent and communicate uncertainty

Promising new representational methods are beginning to provide clinicians and patients with a language for communicating about uncertainties in clinical evidence. Despite these advances, however, existing ambiguity rating systems for guideline recommendations do not yet fully agree with one another, and there have been no efforts to standardize methods for communicating uncertainty in probability estimates (Waters, Sullivan, Nelson, & Hesse, 2009). Such diversity in representational methods only magnifies ambiguity and generates uncertainty due to complexity (Han, Klein, & Arora, 2011), potentially confusing clinicians and patients. Moving forward, the language for expressing uncertainty in clinical evidence should ideally be standardized. The same applies to clinician–patient discussions of uncertainty in clinical encounters, where standardized language and communication approaches may help to minimize both underrecognition of ambiguity and pseudoambiguity. Any such effort, of course, faces the challenge of achieving consensus among diverse stakeholders, including policy makers, risk modelers, guideline developers, medical educators, and clinicians.

4. Promote patient centeredness in the communication of uncertainty

Existing methods for representing probability and ambiguity in clinical evidence have been developed and validated through expert consensus. This is appropriate since experts have the requisite scientific knowledge to represent the normative perspective on the state of evidence and the professional standards used to evaluate it. However, when it comes to producing subjective ratings of probability or ambiguity (i.e., recommendation strength or evidence quality) at the single-event level of clinical care, the perspective of the patient is paramount. Although uncertainty rating schemes may be defined by expert consensus, they need to make sense to patients—whose conceptual understanding, evidentiary standards, and thresholds for action may differ from those of experts. Moving forward, efforts to develop formal systems for rating uncertainty in clinical evidence should incorporate the patient perspective in order to ensure their comprehensibility, meaningfulness, and appropriate use by patients as well as clinicians.

Incorporating the patient perspective is equally important in efforts to communicate uncertainty clinically. Individual patients vary in their preferences for information and their tolerance of uncertainty; many, but not all, patients are averse to ambiguity. At the same time, communicating uncertainty may not always enhance autonomy or improve outcomes. Together, these considerations suggest that clinicians should tailor the communication of uncertainty to patients’ preferences for information and their tolerance of uncertainty. Importantly, such a tailored approach does not obviate the need to standardize methods for representing uncertainty; rather, it calls for flexible application of these methods with different individuals. A tailored approach nevertheless has ethical limits, because not communicating uncertainty could be misconstrued as communicating certainty, potentially engendering overconfidence in an otherwise undesirable alternative; as Edwards (2003, p. 692) has put it, “Offering too much apparent certainty may be to misinform.”

Consider a man who is offered prostate-specific antigen screening without discussion of the uncertainties surrounding its net benefits and harms—an unfortunately common scenario (Han, Coates, Uhler, & Breen, 2006; Hoffman et al., 2009). This man has a high likelihood of subsequently being diagnosed with early-stage prostate cancer, undergoing surgical treatment, and eventually suffering irreversible incontinence and erectile dysfunction that may make him regret his initial screening choice. Of course, the randomness of single events makes this man’s outcome unpredictable, and this aleatory uncertainty should ideally have been communicated to him. But the more critical uncertainty that might have changed this man’s decision and thus should have been communicated is the ambiguity pertaining to the benefits of screening. Clinicians are arguably obligated to communicate this type of uncertainty irrespective of patient preferences for information or tolerance of uncertainty. More research is needed to specify when such an approach is warranted, and what uncertainties ought to be communicated.

Yet the optimal strategy may be not to tailor communication to patients’ tolerance of uncertainty, but rather to provide patients with the support needed to increase this tolerance. This is, however, the alternative about which we know the least. Tolerance of uncertainty implies adaptive coping with the consciousness of ignorance, but the nature of this coping and the ways to facilitate it remain to be defined. Such coping likely involves more than cognitive processes alone, and facilitating it may therefore require a “patient-centered” approach focused not only on exchanging information but also on responding to emotions and promoting healing physician–patient relationships (Epstein & Street, 2007). More work is needed to develop strategies for supporting patients’ tolerance of uncertainty. Until then, a tailored approach to communicating uncertainty may be the most prudent strategy.

For in the end, the communication of uncertainty in clinical evidence burdens patients and clinicians alike with a set of difficult new tasks that they have been historically ill-prepared to undertake: to affirm the value of available evidence while simultaneously recognizing its inevitable limitations; to undertake decisive action while acknowledging all the reasons for indecision; to have faith about the rightness of one’s actions and about what the future holds while affirming the irreducibility of doubt. These formidable challenges favor a cautious approach to communicating uncertainty while optimal strategies are worked out through further research. The problem, however, is that the current rate of production of uncertainty in clinical evidence is too great and the ethical stakes too high to delay efforts to communicate uncertainty to patients. What the task therefore requires are good faith efforts to communicate uncertainty while providing support—primarily for the patients who must cope with uncertainty about clinical evidence but also for the clinicians who must tolerate uncertainty about the communication task itself. Exactly what this support entails and how to provide it are the pressing questions for future research.

Acknowledgments

The author thanks Dominick Frosch and Steven Woolf for commentary at the Eisenberg Conference Series 2011 Meeting, and David Spiegelhalter, Richard Street, Robert Volk, and anonymous reviewers for helpful input on an earlier version of this article.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: A stipend covering a portion of the lead author’s work on this article was provided by the John M. Eisenberg Center for Clinical Decisions and Communications Science at Baylor College of Medicine with funding support from the Agency for Healthcare Research and Quality under Contract No. HHSA290200810015C, Rockville, MD.

Footnotes

Portions of this article were presented at the Eisenberg Conference Series 2011 Meeting, “Differing Levels of Clinical Evidence: Exploring Communication Challenges in Shared Decision Making” conducted by the John M. Eisenberg Center for Clinical Decisions and Communications Science at Baylor College of Medicine, Houston, Texas under contract to the Agency for Healthcare Research and Quality, Contract No. HHSA290200810015C, Rockville, Maryland. The author of this article is responsible for its content. No statement may be construed as the official position of the Agency for Healthcare Research and Quality of the U.S. Department of Health and Human Services.

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

References

- Akl EA, Guyatt GH, Irani J, Feldstein D, Wasi P, Shaw E, Schunemann HJ. “Might” or “suggest”? No wording approach was clearly superior in conveying the strength of recommendation. Journal of Clinical Epidemiology. 2012;65:268–275. doi: 10.1016/j.jclinepi.2011.08.001.

- Ancker JS, Chan C, Kukafka R. Interactive graphics for expressing health risks: development and qualitative evaluation. Journal of Health Communication. 2009;14:461–475. doi: 10.1080/10810730903032960.

- Ancker JS, Senathirajah Y, Kukafka R, Starren JB. Design features of graphs in health risk communication: a systematic review. Journal of the American Medical Informatics Association. 2006;13:608–618. doi: 10.1197/jamia.M2115.

- Asch DA, Hershey JC. Why some health policies don’t make sense at the bedside. Annals of Internal Medicine. 1995;122:846–850. doi: 10.7326/0003-4819-122-11-199506010-00007.

- Balshem H, Helfand M, Schunemann HJ, Oxman AD, Kunz R, Brozek J, Guyatt GH. GRADE guidelines: 3. Rating the quality of evidence. Journal of Clinical Epidemiology. 2011;64:401–406. doi: 10.1016/j.jclinepi.2010.07.015.

- Beauchamp TL, Childress JF. Principles of biomedical ethics. 4th ed. New York, NY: Oxford University Press; 1994.

- Bier VM, Connell BL. Ambiguity seeking in multi-attribute decisions: Effects of optimism and message framing. Journal of Behavioral Decision Making. 1994;7:169–182.

- Bilcke J, Beutels P, Brisson M, Jit M. Accounting for methodological, structural, and parameter uncertainty in decision-analytic models: A practical guide. Medical Decision Making. 2011;31:675–692. doi: 10.1177/0272989X11409240.

- Braddock CH III, Edwards KA, Hasenberg NM, Laidley TL, Levinson W. Informed decision making in outpatient practice: time to get back to basics. Journal of the American Medical Association. 1999;282:2313–2320. doi: 10.1001/jama.282.24.2313.

- Briss P, Rimer B, Reilley B, Coates RC, Lee NC, Mullen P; Task Force on Community Preventive Services. Promoting informed decisions about cancer screening in communities and healthcare systems. American Journal of Preventive Medicine. 2004;26:67–80. doi: 10.1016/j.amepre.2003.09.012.

- Camerer C, Weber M. Recent developments in modeling preferences: uncertainty and ambiguity. Journal of Risk and Uncertainty. 1992;5:325–370.

- de Finetti B. Theory of probability. Vol. 1. New York, NY: John Wiley; 1974.

- Edwards A. Communicating risks. British Medical Journal. 2003;327:691–692. doi: 10.1136/bmj.327.7417.691.

- Ellsberg D. Risk, ambiguity, and the Savage axioms. Quarterly Journal of Economics. 1961;75:643–669.

- Epstein RM, Street RL Jr. Patient-centered communication in cancer care: Promoting healing and reducing suffering. Bethesda, MD: National Institutes of Health; 2007. (NIH Publication No. 07-6225).

- Fischhoff B, MacGregor D. Subjective confidence in forecasts. Journal of Forecasting. 1982;1:155–172.

- Frosch DL, Kaplan RM, Felitti VJ. A randomized controlled trial comparing internet and video to facilitate patient education for men considering the prostate specific antigen test. Journal of General Internal Medicine. 2003;18:781–787. doi: 10.1046/j.1525-1497.2003.20911.x.

- Fuchs VR. The doctor’s dilemma: What is “appropriate” care? New England Journal of Medicine. 2011;365:585–587. doi: 10.1056/NEJMp1107283.

- Geller G, Faden RR, Levine DM. Tolerance for ambiguity among medical students: Implications for their selection, training and practice. Social Science & Medicine. 1990;31:619–624. doi: 10.1016/0277-9536(90)90098-d.

- Gigerenzer G, Swijtink Z, Porter T, Daston L, Beatty J, Krueger L. The empire of chance: How probability changed science and everyday life. Cambridge, England: Cambridge University Press; 1989.

- Gillies D. Philosophical theories of probability. London, England: Routledge; 2000.

- Gould SJ. The median isn’t the message. Discover. 1985 Jun;6:40–42.

- Griffin D, Tversky A. The weighing of evidence and the determinants of confidence. Cognitive Psychology. 1992;24:411–435.

- Grossi E. How artificial intelligence tools can be used to assess individual patient risk in cardiovascular disease: Problems with the current methods. BMC Cardiovascular Disorders. 2006;6:20. doi: 10.1186/1471-2261-6-20.

- Guyatt GH, Oxman AD, Kunz R, Falck-Ytter Y, Vist GE, Liberati A, Schunemann HJ. Going from evidence to recommendations. British Medical Journal. 2008;336:1049–1051. doi: 10.1136/bmj.39493.646875.AE.

- Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, Schunemann HJ. GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. British Medical Journal. 2008;336:924–926. doi: 10.1136/bmj.39489.470347.AD.

- Hacking I. An introduction to probability and inductive logic. New York, NY: Cambridge University Press; 2001.

- Han PK, Coates RJ, Uhler RJ, Breen N. Decision making in prostate-specific antigen screening: National Health Interview Survey, 2000. American Journal of Preventive Medicine. 2006;30:394–404. doi: 10.1016/j.amepre.2005.12.006.

- Han PKJ, Klabunde CN, Noone AM, Earle CC, Ayanian JZ, Ganz PA, et al. Physicians’ beliefs about surveillance testing for breast cancer survivors: Findings from a national survey. Manuscript under review; 2012.

- Han PK, Klein WM, Arora NK. Varieties of uncertainty in health care: a conceptual taxonomy. Medical Decision Making. 2011;31:828–838. doi: 10.1177/0272989X11393976.

- Han PK, Klein WM, Killam B, Lehman T, Massett H, Freedman AN. Representing randomness in the communication of individualized cancer risk estimates: Effects on cancer risk perceptions, worry, and subjective uncertainty about risk. Patient Education and Counseling. 2012;86:106–113. doi: 10.1016/j.pec.2011.01.033.

- Han PK, Klein WM, Lehman T, Killam B, Massett H, Freedman AN. Communication of uncertainty regarding individualized cancer risk estimates: effects and influential factors. Medical Decision Making. 2011;31:354–366. doi: 10.1177/0272989X10371830.

- Han PK, Klein WM, Lehman TC, Massett H, Lee SC, Freedman AN. Laypersons’ responses to the communication of uncertainty regarding cancer risk estimates. Medical Decision Making. 2009;29:391–403. doi: 10.1177/0272989X08327396.

- Han PK, Kobrin SC, Klein WM, Davis WW, Stefanek M, Taplin SH. Perceived ambiguity about screening mammography recommendations: association with future mammography uptake and perceptions. Cancer Epidemiology, Biomarkers, and Prevention. 2007;16:458–466. doi: 10.1158/1055-9965.EPI-06-0533.

- Han PK, Lehman TC, Massett H, Lee SJ, Klein WM, Freedman AN. Conceptual problems in laypersons’ understanding of individualized cancer risk: a qualitative study. Health Expectations. 2009;12:4–17. doi: 10.1111/j.1369-7625.2008.00524.x.

- Han PK, Moser RP, Klein WM. Perceived ambiguity about cancer prevention recommendations: relationship to perceptions of cancer preventability, risk, and worry. Journal of Health Communication. 2006;11(Suppl. 1):51–69. doi: 10.1080/10810730600637541.

- Han PK, Reeve BB, Moser RP, Klein WM. Aversion to ambiguity regarding medical tests and treatments: measurement, prevalence, and relationship to sociodemographic factors. Journal of Health Communication. 2009;14:556–572. doi: 10.1080/10810730903089630.

- Hanson RK, Howard PD. Individual confidence intervals do not inform decision-makers about the accuracy of risk assessment evaluations. Law and Human Behavior. 2010;34:275–281. doi: 10.1007/s10979-010-9227-3.

- Highhouse S. A verbal protocol analysis of choice under ambiguity. Journal of Economic Psychology. 1994;15:621–635.

- Hoffman RM, Couper MP, Zikmund-Fisher BJ, Levin CA, McNaughton-Collins M, Helitzer DL, Barry MJ. Prostate cancer screening decisions: Results from the National Survey of Medical Decisions (DECISIONS study). Archives of Internal Medicine. 2009;169:1611–1618. doi: 10.1001/archinternmed.2009.262.

- Howard RA. Uncertainty about probability: A decision analysis perspective. Risk Analysis. 1988;8:91–98.

- Innes S, Payne S. Advanced cancer patients’ prognostic information preferences: A review. Palliative Medicine. 2009;23:29–39. doi: 10.1177/0269216308098799.

- Institute of Medicine. Clinical practice guidelines: Directions for a new program. Washington, DC: National Academies Press; 1990.

- Johnson BB, Slovic P. Presenting uncertainty in health risk assessment: Initial studies of its effects on risk perception and trust. Risk Analysis. 1995;15:485–494. doi: 10.1111/j.1539-6924.1995.tb00341.x.

- Kahneman D, Tversky A. Variants of uncertainty. Cognition. 1982;11:143–157. doi: 10.1016/0010-0277(82)90023-3.

- Kattan MW. Doc, what are my chances? A conversation about prognostic uncertainty. European Urology. 2011;59:224. doi: 10.1016/j.eururo.2010.10.041.

- Kent DM, Rothwell PM, Ioannidis JP, Altman DG, Hayward RA. Assessing and reporting heterogeneity in treatment effects in clinical trials: A proposal. Trials. 2010;11:85. doi: 10.1186/1745-6215-11-85.

- Knight FH. Risk, uncertainty, and profit. Boston, MA: Houghton Mifflin; 1921.

- Kuhn KM. Communicating uncertainty: Framing effects on responses to vague probabilities. Organizational Behavior and Human Decision Processes. 1997;71:55–83.

- Kuhn KM, Budescu DV. The relative importance of probabilities, outcomes, and vagueness in hazard risk decisions. Organizational Behavior and Human Decision Processes. 1996;68:301–317.

- Kunz R, Djulbegovic B, Schunemann HJ, Stanulla M, Muti P, Guyatt G. Misconceptions, challenges, uncertainty, and progress in guideline recommendations. Seminars in Hematology. 2008;45:167–175. doi: 10.1053/j.seminhematol.2008.04.005.

- Lipkus IM. Numeric, verbal, and visual formats of conveying health risks: Suggested best practices and future recommendations. Medical Decision Making. 2007;27:696–713. doi: 10.1177/0272989X07307271.

- Lomotan EA, Michel G, Lin Z, Shiffman RN. How should we write guideline recommendations? Interpretation of deontic terminology in clinical practice guidelines: Survey of the health services community. Quality & Safety in Health Care. 2010;19:509–513. doi: 10.1136/qshc.2009.032565.

- Makoul G, Clayman ML. An integrative model of shared decision making in medical encounters. Patient Education and Counseling. 2006;60:301–312. doi: 10.1016/j.pec.2005.06.010.

- Morgan G, Dowlatabadi H, Henrion M, Keith D, Lempert R, McBride S, Wilbanks T, editors. Best practice approaches for characterizing, communicating, and incorporating scientific uncertainty in decisionmaking. A report by the U.S. Climate Change Science Program and the subcommittee on Global Change Research (Synthesis and Assessment Product 5.2M). Washington, DC: National Oceanic and Atmospheric Administration, U.S. Climate Change Science Program; 2009.

- Moumjid N, Gafni A, Bremond A, Carrere MO. Shared decision making in the medical encounter: Are we all talking about the same thing? Medical Decision Making. 2007;27:539–546. doi: 10.1177/0272989X07306779.

- Muscatello DJ, Searles A, MacDonald R, Jorm L. Communicating population health statistics through graphs: A randomised controlled trial of graph design interventions. BMC Medicine. 2006;4:33. doi: 10.1186/1741-7015-4-33.

- National Cancer Institute. Colorectal cancer risk assessment tool. 2010. Retrieved from http://www.cancer.gov/colorectalcancerrisk/

- Politi MC, Clark MA, Ombao H, Dizon D, Elwyn G. Communicating uncertainty can lead to less decision satisfaction: A necessary cost of involving patients in shared decision making? Health Expectations. 2011;14:84–91. doi: 10.1111/j.1369-7625.2010.00626.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pulford BD. Is luck on my side? Optimism, pessimism, and ambiguity aversion. Quarterly Journal of Experimental Psychology. 2009;62:1079–1087. doi: 10.1080/17470210802592113. [DOI] [PubMed] [Google Scholar]

- Qaseem A, Snow V, Owens DK, Shekelle P. The development of clinical practice guidelines and guidance statements of the American College of Physicians: Summary of methods. Annals of Internal Medicine. 2010;153:194–199. doi: 10.7326/0003-4819-153-3-201008030-00010. [DOI] [PubMed] [Google Scholar]

- Resnick D. The precautionary principle and medical decision making. Journal of Medicine and Philosophy. 2004;29:281–299. doi: 10.1080/03605310490500509. [DOI] [PubMed] [Google Scholar]

- Reyna VF, Nelson WL, Han PK, Dieckmann NF. How numeracy influences risk comprehension and medical decision making. Psychological Bulletin. 2009;135:943–973. doi: 10.1037/a0017327. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Risbey JS, Kandlikar M. Expressions of likelihood and confidence in the IPCC uncertainty assessment process. Climatic Change. 2007;85:19–31. [Google Scholar]

- Ritov I, Baron J. Reluctance to vaccinate: Omission bias and ambiguity. Journal of Behavioral Decision Making. 1990;3:263–277. [Google Scholar]

- Rose G. The strategy of preventive medicine. New York, NY: Oxford University Press; 1994. [Google Scholar]

- Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: What it is and what it isn’t. British Medical Journal. 1996;312:71–72. doi: 10.1136/bmj.312.7023.71. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schunemann HJ, Best D, Vist G, Oxman AD. Letters, numbers, symbols and words: how to communicate grades of evidence and recommendations. Canadian Medical Association Journal. 2003;169:677–680. [PMC free article] [PubMed] [Google Scholar]

- Schwartz LM, Woloshin S, Fowler FJ, Jr, Welch HG. Enthusiasm for cancer screening in the United States. Journal of the American Medical Association. 2004;291:71–78. doi: 10.1001/jama.291.1.71. [DOI] [PubMed] [Google Scholar]

- Smithson M. Ignorance and uncertainty: Emerging paradigms. New York, NY: Springer-Verlag; 1989.

- Smithson M. Psychology’s ambivalent view of uncertainty. In: Bammer G, Smithson M, editors. Uncertainty and risk: Multidisciplinary perspectives. London, England: Earthscan; 2008. pp. 205–217.

- Spiegelhalter D, Pearson M, Short I. Visualizing uncertainty about the future. Science. 2011;333:1393–1400. doi: 10.1126/science.1191181.

- Spiegelhalter DJ, Riesch H. Don’t know, can’t know: Embracing deeper uncertainties when analysing risks. Philosophical Transactions of the Royal Society A: Mathematical, Physical, and Engineering Sciences. 2011;369:4730–4750. doi: 10.1098/rsta.2011.0163.

- Steyerberg EW. Clinical prediction models: A practical approach to development, validation, and updating. New York, NY: Springer; 2010.

- U.S. Department of Health and Human Services. Personalized health care: Opportunities, pathways, resources. 2008. Retrieved from http://www.hhs.gov/myhealthcare/

- U.S. Preventive Services Task Force. U.S. Preventive Services Task Force Grade definitions. 2008. Retrieved from http://www.uspreventiveservicestaskforce.org/uspstf/grades.htm.

- Viscusi WK. Alarmist decisions with divergent risk information. The Economic Journal. 1997;107:1657–1670.

- Viscusi WK, Magat WA, Huber J. Communication of ambiguous risk information. Theory and Decision. 1991;31:159–173.

- Viscusi WK, Magat WA, Huber J. Smoking status and public responses to ambiguous scientific risk evidence. Southern Economic Journal. 1999;66:250–270.

- Visschers VH, Meertens RM, Passchier WW, de Vries NN. Probability information in risk communication: A review of the research literature. Risk Analysis. 2009;29:267–287. doi: 10.1111/j.1539-6924.2008.01137.x.

- Volk RJ, Spann SJ, Cass AR, Hawley ST. Patient education for informed decision making about prostate cancer screening: A randomized controlled trial with 1-year follow-up. Annals of Family Medicine. 2003;1:22–28. doi: 10.1370/afm.7.

- Waters EA, Sullivan HW, Nelson W, Hesse BW. What is my cancer risk? How internet-based cancer risk assessment tools communicate individualized risk estimates to the public: Content analysis. Journal of Medical Internet Research. 2009;11(3):e33. doi: 10.2196/jmir.1222.

- Weinfurt KP, Sulmasy DP, Schulman KA, Meropol NJ. Patient expectations of benefit from phase I clinical trials: Linguistic considerations in diagnosing a therapeutic misconception. Theoretical Medicine and Bioethics. 2003;24:329–344. doi: 10.1023/a:1026072409595.

- Whitney SN, McGuire AL, McCullough LB. A typology of shared decision making, informed consent, and simple consent. Annals of Internal Medicine. 2004;140:54–59. doi: 10.7326/0003-4819-140-1-200401060-00012.

- Willink R, Lira I. A united interpretation of different uncertainty intervals. Measurement. 2005;38:61–66.