ABSTRACT

BACKGROUND

Implementing new programs and practices is challenging, even when they are mandated. Implementation Facilitation (IF) strategies that focus on partnering with sites show promise for addressing these challenges.

OBJECTIVE

Our aim was to evaluate the effectiveness of an external/internal IF strategy within the context of a Department of Veterans Affairs (VA) mandate of Primary Care–Mental Health Integration (PC-MHI).

DESIGN

This was a quasi-experimental, Hybrid Type III study. Generalized estimating equations assessed differences across sites.

PARTICIPANTS

Patients and providers at seven VA primary care clinics receiving the IF intervention and national support and seven matched comparison clinics receiving national support only participated in the study.

INTERVENTION

We used a highly partnered IF strategy incorporating evidence-based implementation interventions.

MAIN MEASURES

We evaluated the IF strategy using VA administrative data and RE-AIM framework measures for two 6-month periods.

KEY RESULTS

Evaluation of RE-AIM measures from the first 6-month period indicated that PC patients at IF clinics had nine times the odds (OR=8.93, p<0.001) of also being seen in PC-MHI (Reach) compared to patients at non-IF clinics. PC providers at IF clinics had seven times the odds (OR=7.12, p=0.029) of referring patients to PC-MHI (Adoption) compared to providers at non-IF clinics, and a greater proportion of providers’ patients at IF clinics were referred to PC-MHI (Adoption) compared to non-IF clinics (β=0.027, p<0.001). Compared to PC patients at non-IF clinics, patients at IF clinics did not differ significantly in their odds of being referred for first-time mental health specialty clinic visits (Effectiveness; OR=1.34, p=0.232) or of receiving same-day access (Implementation; OR=1.90, p=0.350). Program sustainability (Maintenance) was assessed by repeating these analyses for a second 6-month period; results were similar to those of the first period.

CONCLUSION

The addition of a highly partnered IF strategy to national level support resulted in greater Reach and Adoption of the mandated PC-MHI initiative, thereby increasing patient access to VA mental health care.

Electronic supplementary material

The online version of this article (doi:10.1007/s11606-014-3027-2) contains supplementary material, which is available to authorized users.

KEY WORDS: implementation research, primary care, mental health, health policy

INTRODUCTION

Implementing new practices and sustaining clinical practice change are challenging,1–3 and decades of organizational science and more recent implementation science work have identified limitations in both top-down mandates and bottom-up approaches.4–7 Implementation Facilitation (IF) has shown promise in implementing programs and practices, particularly at locations that would otherwise be unable to conduct quality improvement efforts. IF strategies that bundle evidence-based implementation interventions8 usually also focus on building relationships and partnering with sites.8–11 Facilitators use particular activities and techniques depending on the purpose of facilitation and stakeholder needs.12–15 Unfortunately, when funding ends, sites often have difficulty sustaining changes.16

We developed an IF strategy in collaboration with the Department of Veterans Affairs’ (VA) regional mental health and primary care leadership that addresses these challenges. We served as consultants, providing implementation science expertise,6,17 tools18 and resources19 needed to support implementation. VA regional and facility leadership provided expertise in their organizational structures and clinical processes, and identified typical implementation facilitators and barriers within their network.

We worked together to design an implementation strategy incorporating scientific, clinical and operational evidence within the context of a VA policy to implement Primary Care–Mental Health Integration (PC-MHI).19 This policy mandates implementation of two complex care models, Co-Located Collaborative Care and Care Management, at primary care sites serving more than 5,000 Veterans.20 To support PC-MHI program implementation, VA established a national program office that provides ongoing consultation, technical assistance, education and training, dissemination of best practices, and informational tools.21 The support from the national program office operated as the comparison condition in our test of the IF strategy (described in more detail below).

The IF strategy included a national external expert facilitator (NEEF) and an internal regional facilitator (IRF) who partnered with providers and regional, facility, and clinic managers to implement PC-MHI. The NEEF had expertise in implementation science and the evidence base for PC-MHI. The IRF had protected time to support implementation activities, was embedded within the clinical organization at the regional level, and was familiar with local and regional organizational structures, procedures, culture, and clinical processes. The pilot of this strategy showed promising results but was not rigorously evaluated.19 Nonetheless, it led to an extramurally funded evaluation of the IF strategy, in which we partnered with similar stakeholders in two other VA regional networks within the context of the same VA policy to implement PC-MHI. This article describes the quantitative evaluation component of that larger study.

For the larger study, we used mixed methods to document facilitation and implementation activities; facilitators’ and stakeholders’ time spent in implementation activities; stakeholders’ perceptions of facilitation activities; and the NEEF’s process for transferring IF skills and knowledge to the IRFs. For all study sites, we also documented organizational context and perceptions of evidence for PC-MHI, as well as the PC-MHI components that were implemented. For this manuscript, we report only the quantitative evaluation of the IF strategy’s effectiveness using administrative data.

It is important to note that the implementation effort did not rely on research funding for PC-MHI personnel. Also, the IF team was blinded to the evaluation we conducted to test the hypothesis that, compared to national support alone, national support plus this highly partnered facilitation strategy would improve implementation of PC-MHI.

METHODS

Evaluation of the IF strategy was conducted using a multi-site, quasi-experimental, Hybrid Type III22 study design with a matched comparison group. The study was approved by the VA Central Institutional Review Board and conducted from February 2009 through August 2013.

Network Selection

The VA is divided into 21 geographic regions termed networks. To identify sites, we matched at both network and clinic levels. We selected and recruited two IF networks based on 1) organizational structures with network mental health (MH) leadership similar to the network in which we developed the IF strategy,19,23 2) ability to identify an IRF who could provide 50 % effort to facilitate the clinical initiative, and 3) willingness to participate. To avoid possible contamination between IF and non-IF sites within the same network, we matched and compared intervention sites to sites in other networks. We interviewed network-level MH leaders to assess and match networks on strength of MH service line structure23 and current network-level facilitation efforts, and we selected and recruited as comparison (non-IF) networks the two networks that most closely resembled the IF networks on these domains.

Clinic Selection

Each IF network MH leader identified four primary care (PC) clinics, one located in a VA medical center (VAMC) and three in community-based outpatient clinics (CBOCs), that 1) would have difficulty implementing PC-MHI without assistance, 2) served, or had potential to serve, 5,000 or more PC patients during the study period, and 3) planned to implement a PC-MHI program during fiscal year 2009. This resulted in two VAMCs and six CBOCs that received IF. MH leaders in non-IF networks identified all eligible clinics. We interviewed clinic leadership at all sites to document characteristics used to match sites (see Tables 1 and 2). One IF CBOC did not complete the implementation plan within the study period and evaluation time periods could not be established. We excluded that CBOC and its matched site from this analysis. Analyses of the RE-AIM measures comparing IF and non-IF groups at both late-phase implementation and maintenance evaluation periods were conducted on a total of two matched pairs of VAMCs and five matched pairs of CBOCs (N=14).

Table 1.

Site Matching Characteristics

| Matched Sites‡ | Facility Type | Clinic Size, IF (# of unique patients) | Clinic Size, Non-IF (# of unique patients) | # of PC Providers, IF | # of PC Providers, Non-IF | Rural/Urban*, IF | Rural/Urban*, Non-IF | Academic Affiliation, IF | Academic Affiliation, Non-IF | Perceived Need for PC-MHI, IF | Perceived Need for PC-MHI, Non-IF | Innovative, IF | Innovative, Non-IF | PC-MHI Program, IF | PC-MHI Program, Non-IF |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A1/B1 | VAMC§ | 5,632 | 7,454 | 6 | 13 | Urban† | Urban | Yes | Yes | Yes | No | At times | No | No | No |
| A2/B2 | CBOC‖ | 9,224 | 11,308 | 12 | 10 | Urban† | Urban† | Yes | Yes | Yes | Yes | At times | At times | No | No |
| A3/B3 | CBOC | 4,025 | 5,944 | 5.5 | 6 | Urban | Urban† | Yes | Yes | Yes | Yes | Yes | Yes | No | Partial |
| A4/B4 | CBOC | 5,654 | 7,527 | 6 | 6 | Urban† | Urban† | No | Yes | No | No | Yes | Yes | No | No |
| C1/D1 | VAMC | 34,805 | 35,000 | 16 | 30 | Urban | Urban | Yes | Yes | No | Yes | Yes | At times | No | Partial |
| C2/D2 | CBOC | 14,763 | 13,600 | 12.6 | 11 | Urban | Urban | Yes | Yes | No | No | No | No | No | No |
| C3/D3 | CBOC | 8,125 | 8,463 | 8 | 7 | Urban | Urban | No | Yes | Yes | Yes | No | At times | No | No |

* Determined by US Census Bureau Metropolitan Statistical Area codes

† Clinic leadership perceived location as rural or suburban

‡ A, B, C, D denote matched networks; 1, 2, 3, 4 denote matched clinics

§ VAMC=VA Medical Center

‖ CBOC=community based outpatient clinic

Table 2.

Baseline (Preparation Phase) Characteristics

| Matched Sites‡ | Facility Type | # of PC Visits, IF | # of PC Visits, Non-IF | # of PC Patients, IF | # of PC Patients, Non-IF | # of PC Providers, IF | # of PC Providers, Non-IF | # of PC-MHI Visits, IF** | # of PC-MHI Visits, Non-IF |
|---|---|---|---|---|---|---|---|---|---|
| A1/B1 | VAMC | 8,696 | 14,839 | 4,500 | 6,362 | 10 | 10 | 0+ | 0 |
| A2/B2 | CBOC | 13,835 | 16,292 | 7,577 | 7,266 | 16 | 12 | 0+ | 0 |
| A3/B3 | CBOC | 6,000 | 10,805 | 3,219 | 4,976 | 6 | 7 | 0+ | 11++ |
| A4/B4 | CBOC | 7,486 | 11,087 | 4,631 | 4,759 | 7 | 7 | 0+ | 0 |
| C1/D1 | VAMC | 26,836 | 31,878 | 12,442 | 20,595 | 26 | 44 | 0 | 65 |
| C2/D2 | CBOC | 14,378 | 19,722 | 7,976 | 7,978 | 14 | 12 | 0 | 0 |
| C3/D3 | CBOC | 7,066 | 7,430 | 4,835 | 4,289 | 8 | 7 | 0 | 0 |
| Total | | 84,297 | 112,053 | 45,180 | 56,225 | 87 | 99 | 0 | 76++ |
| Mean | | 12,042.4 | 16,007.6 | 6,454.3 | 8,032.1 | 12.43 | 14.14 | 0 | 10.86 |
| Mean Difference (SD) | | 3,965.1 (7,706.7)* | | 1,577.9 (4,609.1)* | | 1.71 (10.67)* | | 10.86 (17.13)* | |

* p > 0.05. There was no statistical difference in the average number of PC visits, PC patients, and PC providers between IF and non-IF sites.

‡ A, B, C, D denote matched networks; 1, 2, 3, 4 denote matched clinics

§ VAMC=VA Medical Center

‖ CBOC=community based outpatient clinic

** 1,863 PC-MHI encounters were dropped because the sites did not have PC-MHI programs. Thus, no encounters were recorded during the preparation phase at any IF site.

++ Two non-IF sites recorded 76 PC-MHI encounters and had partial PC-MHI programs during this phase.

The Implementation Facilitation Strategy

To implement PC-MHI concordant with the VA policy mandate, facilitators collaborated with stakeholders at all levels during several phases of implementation. We briefly outline the facilitation activities here. Greater detail can be found in an online appendix and in a manual we developed for use inside and outside of the VA.24 The NEEF for this study was an implementation researcher with clinical leadership experience; she devoted an average of approximately 15 % effort across all of the sites over the study period. In the implementation preparation phase, the NEEF briefed network-level administrative and medical leadership about IF. The network mental health leader identified facilitation sites and alerted medical center leadership to the facilitation opportunity. The network mental health leader and NEEF visited each site, engaging mental health and primary care leadership at the medical center and clinic levels, and detailing facilitation activities. Each of the two IF network mental health leaders identified an IRF who was clinically trained and had experience in PC-MHI. The IRFs’ time was supported at 50 % effort through study funds. Additional pre-implementation activities focused on maintaining site level leadership support through regular updates on implementation planning, identifying key stakeholders, and conducting a formative evaluation to identify potential barriers and facilitators to implementing PC-MHI.

When sites identified or hired PC-MHI staff, facilitators conducted site visits to initiate a design phase during which they partnered with key stakeholders (e.g., primary care and mental health leadership, nursing leadership, administrative personnel, suicide prevention coordinators, and informatics personnel) to help them make PC-MHI program decisions and adapt programs to site-level needs. These site visits included academic detailing about the PC-MHI program to clinic leadership, providers, and administrators; and marketing to primary care staff. The design phase concluded with a comprehensive implementation plan.18

During early PC-MHI program implementation, facilitators continued to partner with stakeholders to help sites implement and refine their plans, assess and address barriers, and monitor progress, providing the findings to each site and PC-MHI provider (audit and feedback). Audit and feedback included documenting the completion of activities identified in a site’s implementation plan and monitoring use of the 534 clinic code and PC-MHI program fidelity. Facilitators also continued marketing and established regional learning collaboratives of site-level PC-MHI staff and champions who met monthly to review implementation progress and share lessons learned.

Facilitators and stakeholders continued partnering during late-phase implementation to sustain PC-MHI. Activities included continued audit and feedback of the implementation process, problem identification and resolution, and integrating PC-MHI into organizational systems and processes. Over time, and with close mentoring, the NEEF transferred IF knowledge and skills to the IRF, thus fostering retention of these skills within local organizations.

Evaluation

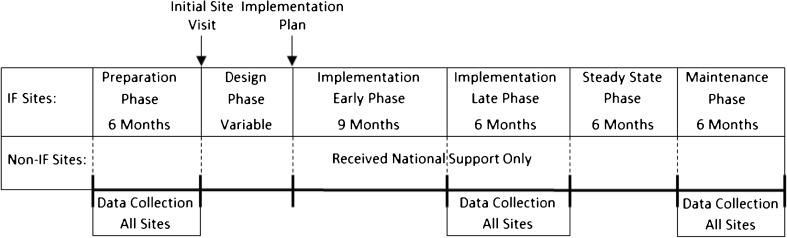

This evaluation was based upon the RE-AIM (Reach, Effectiveness, Adoption, Implementation, and Maintenance) framework (Table 4).25,26 We specified two evaluation periods (see Fig. 1): late-phase implementation and maintenance phase. These periods were determined by completion of the implementation plan.18 Evaluation periods for non-IF sites were the same as their matched IF sites.

Table 4.

Evaluation of PC-MHI Implementation

| RE-AIM Measure (n) | Outcome Measure | IF Sites vs. Non-IF Sites (Percent/Proportion) | Model 1 OR/β (95 % CI) | Model 2 OR/β (95 % CI) |
|---|---|---|---|---|
| Late-Phase Implementation | | | | |
| Reach (98,758) | % of Patients with PC-MHI Encounter | 4.14 % vs. 1.53 % | OR = 6.20 (1.30, 29.53)* | OR = 8.93 (2.99, 26.61)† |
| Effectiveness (98,758) | % of Patients Referred to MHSC | 0.87 % vs. 0.63 % | OR = 1.17 (0.73, 1.89) | OR = 1.34 (0.83, 2.18) |
| Adoption (183) | % of Providers Referring to PC-MHI | 86.02 % vs. 70.00 % | OR = 6.02 (1.26, 28.61)* | OR = 7.12 (1.22, 41.57)* |
| Adoption (183) | Proportion of PCP Patients Referred to PC-MHI | 0.028 vs. 0.014 | β = 0.024 (0.009, 0.039)† | β = 0.027 (0.012, 0.041)† |
| Implementation (2,017) | % PC-MHI Referrals with Same-Day Access | 27.97 % vs. 7.43 % | OR = 2.42 (0.59, 9.83) | OR = 1.90 (0.49, 7.30) |
| Maintenance Phase | | | | |
| Reach (98,238) | % of Patients with PC-MHI Encounter | 4.77 % vs. 2.13 % | OR = 3.57 (1.57, 8.11)* | OR = 4.30 (1.90, 9.73)† |
| Effectiveness (98,238) | % of Patients Referred to MHSC | 1.15 % vs. 0.75 % | OR = 1.32 (0.81, 2.17) | OR = 1.49 (0.84, 2.64) |
| Adoption (183) | % of Providers Referring to PC-MHI | 91.21 % vs. 66.30 % | OR = 7.27 (1.71, 30.82)* | OR = 9.73 (1.95, 48.56)* |
| Adoption (183) | Proportion of PCP Patients Referred to PC-MHI | 0.031 vs. 0.016 | β = 0.020 (0.007, 0.034)* | β = 0.022 (0.008, 0.037)* |
| Implementation (2,090) | % PC-MHI Referrals with Same-Day Access | 28.82 % vs. 22.47 % | OR = 1.57 (0.55, 2.39) | OR = 0.82 (0.47, 1.43) |

* p < 0.05; † p < 0.001. PC-MHI Primary Care-Mental Health Integration; PCP Primary Care Provider; MHSC Mental Health Specialty Clinic; IF Implementation Facilitation; non-IF non-Implementation Facilitation; OR odds ratio; CI confidence interval; β regression coefficient

Figure 1.

Study Periods. The initial site visit date served as the index for the Preparation and Design Phase periods. The Implementation Plan date served as the index date for all subsequent periods and varied by Implementation Facilitation (IF) site. Non-Implementation Facilitation (non-IF) sites were assigned the index dates of their matched IF sites.

We used Medical SAS® Outpatient data sets to identify patient encounters (see Table 3), and Primary Care Management Module (PCMM) data sets to identify PC providers at each site. We conducted all analyses using SAS® software, version 9.1 (SAS Institute Inc., Cary, North Carolina).

Table 3.

RE-AIM Evaluation Measures Defined

| RE-AIM Constructs | Study Measures | Definition and Specification |
|---|---|---|
| Reach represents the absolute number/proportion of targeted patients receiving the evidence-based practice | The percentage of patients seen in PC with a PC-MHI encounter. | Patient encounters were identified from the VHA Medical SAS Outpatient data as visits with a PC clinic code (322 Women’s Primary Care, 323 Primary Care, and 350 Geriatric Primary Care). Patient PC-MHI encounters were identified by clinic code 534. |
| Effectiveness represents the clinical impact of the evidence-based practice as implemented in routine care settings | The percentage of PC patients with an initial visit to mental health specialty care. | Patients with an initial mental health specialty visit were identified in the Medical SAS Outpatient data using clinic codes 500–599 (excluding 534), with receipt of direct patient care as identified by CPT code and the absence of a mental health specialty visit in the preceding year. |
| Adoption represents the absolute number/proportion of staff using the evidence-based practice | 1) The percentage of PCPs referring at least one patient to PC-MHI. 2) The proportion of PCPs’ patients referred to PC-MHI. | PCPs were identified from the VHA PCMM data set. The Medical SAS Outpatient data set was used to verify that each PCP provided direct patient care during the specified study period. Initial PC-MHI encounters were defined as the first PC-MHI visit at that site in the past year as identified using the Medical SAS Outpatient data. A referral was credited to a PCP whenever a patient’s first PC-MHI encounter followed a PC encounter at the same site during the study period. Initial PC-MHI encounters with latencies exceeding 6 months from the date of PC encounter were not considered as referrals. PCPs were only credited with referrals if they were the provider of record associated with the PC encounter, or in the absence of a provider of record for the encounter, were listed as the patient’s assigned provider. |
| Implementation represents the fidelity of the evidence-based practice as implemented in routine care | The percentage of patients referred to PC-MHI who were seen on the same day (open access). | PC-MHI referrals were identified for each patient as defined above under Adoption. The date of the patient’s PC encounter and the patient’s PC-MHI encounter were compared, and encounters occurring on the same date were considered same day. |
| Maintenance represents the degree to which implementation of the evidence-based practice is sustained | A re-assessment of each measure defined above during the maintenance phase. | The definitions and specifications listed above were used to recalculate each measure during the maintenance phase of the study. |

PC Primary Care; PC-MHI Primary Care-Mental Health Integration; CPT Current Procedural Terminology; PCP Primary Care Provider; PCMM Primary Care Management Module
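The Adoption referral-crediting rules in Table 3 are the most intricate of the measure definitions, so they are restated below as a minimal Python sketch. It is not the study's SAS code: the `Encounter` record and all function names are hypothetical, "past year" is assumed to mean 365 days, "6 months" is assumed to mean 183 days, crediting the most recent qualifying PC visit is our assumption, and the PCMM and CPT direct-care checks are omitted.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, Iterable, List, Optional

PC_CODES = {322, 323, 350}          # Women's PC, Primary Care, Geriatric PC (Table 3)
PC_MHI_CODE = 534                   # PC-MHI clinic code (Table 3)
LOOKBACK = timedelta(days=365)      # assumption: "past year" = 365 days
MAX_LATENCY = timedelta(days=183)   # assumption: "6 months" = 183 days


@dataclass(frozen=True)
class Encounter:                    # hypothetical record layout, not the VA schema
    patient_id: str
    site: str
    clinic_code: int
    visit_date: date
    provider_of_record: Optional[str] = None


def initial_pc_mhi(encs: List[Encounter], start: date, end: date) -> List[Encounter]:
    """First PC-MHI encounter per patient and site in [start, end] with none in the prior year."""
    mhi = sorted((e for e in encs if e.clinic_code == PC_MHI_CODE),
                 key=lambda e: e.visit_date)
    initial, seen = [], set()
    for e in mhi:
        key = (e.patient_id, e.site)
        if key in seen or not start <= e.visit_date <= end:
            continue
        seen.add(key)
        prior = any(p.patient_id == e.patient_id and p.site == e.site
                    and e.visit_date - LOOKBACK <= p.visit_date < e.visit_date
                    for p in mhi)
        if not prior:
            initial.append(e)
    return initial


def credited_pcp(mhi: Encounter, encs: List[Encounter], assigned: Dict[str, str],
                 start: date) -> Optional[str]:
    """PCP credited with the referral behind an initial PC-MHI encounter, if any."""
    pcs = [e for e in encs
           if e.patient_id == mhi.patient_id and e.site == mhi.site
           and e.clinic_code in PC_CODES and e.visit_date >= start
           and timedelta(0) <= mhi.visit_date - e.visit_date <= MAX_LATENCY]
    if not pcs:
        return None  # no qualifying PC encounter, so no referral is credited
    pc = max(pcs, key=lambda e: e.visit_date)  # assumption: most recent preceding PC visit
    # Provider of record first; otherwise fall back to the patient's assigned PCP.
    return pc.provider_of_record or assigned.get(mhi.patient_id)


def pct_pcps_referring(pcps: Iterable[str], encs: List[Encounter],
                       assigned: Dict[str, str], start: date, end: date) -> float:
    """Adoption measure 1: percentage of PCPs credited with at least one referral."""
    pcp_set = set(pcps)
    referrers = {credited_pcp(m, encs, assigned, start)
                 for m in initial_pc_mhi(encs, start, end)}
    return 100.0 * len(pcp_set & referrers) / len(pcp_set)


if __name__ == "__main__":
    encs = [
        Encounter("p1", "A1", 323, date(2011, 3, 1), "drX"),
        Encounter("p1", "A1", 534, date(2011, 3, 1)),   # same-day handoff
        Encounter("p2", "A1", 323, date(2011, 1, 5)),   # no provider of record
        Encounter("p2", "A1", 534, date(2011, 2, 10)),
        Encounter("p3", "A1", 534, date(2011, 4, 1)),   # no preceding PC visit
    ]
    assigned = {"p2": "drY", "p3": "drZ"}
    pct = pct_pcps_referring(["drX", "drY", "drZ"], encs, assigned,
                             date(2011, 1, 1), date(2011, 6, 30))
    print(round(pct, 1))  # prints 66.7
```

In this toy example, drY is credited through the assigned-provider fallback, and drZ is not credited because the patient's PC-MHI visit had no preceding PC encounter at the site.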

Variables

Study measures of RE-AIM and their definition and specification are detailed in Table 3. Two require further explanation here. By design, this study evaluated the effectiveness of IF on patient process measures rather than clinical outcomes. One objective of PC-MHI is to decrease workload in mental health specialty clinics (MHSC), to allow greater focus on patients with more severe mental illness. Therefore, for program Effectiveness, we hypothesized that IF sites would have a lower percentage of initial MHSC encounters than non-facilitation sites.
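The Effectiveness numerator (initial MHSC visits, per Table 3) can be sketched the same way. This is a minimal illustration, not the study's code: the `Visit` record is hypothetical, its `direct_care` flag stands in for the CPT-based direct-care check, "preceding year" is assumed to mean 365 days, and any prior MHSC-coded visit is assumed to disqualify a visit as initial.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Set

PC_MHI_CODE = 534
LOOKBACK = timedelta(days=365)  # assumption: "preceding year" = 365 days


@dataclass(frozen=True)
class Visit:  # hypothetical record layout
    patient_id: str
    clinic_code: int
    visit_date: date
    direct_care: bool  # hypothetical stand-in for the CPT-based direct-care check


def is_mhsc(v: Visit) -> bool:
    """Mental health specialty clinic visit: codes 500-599 excluding PC-MHI (534)."""
    return 500 <= v.clinic_code <= 599 and v.clinic_code != PC_MHI_CODE


def pct_initial_mhsc(pc_patients: Set[str], visits: List[Visit],
                     start: date, end: date) -> float:
    """Percentage of PC patients with an initial MHSC visit in [start, end]."""
    mhsc = [v for v in visits if is_mhsc(v)]
    initial = set()
    for v in mhsc:
        if (v.patient_id not in pc_patients or not v.direct_care
                or not start <= v.visit_date <= end):
            continue
        # Initial means no MHSC visit of any kind in the preceding year (assumption).
        had_prior = any(p.patient_id == v.patient_id
                        and v.visit_date - LOOKBACK <= p.visit_date < v.visit_date
                        for p in mhsc)
        if not had_prior:
            initial.add(v.patient_id)
    return 100.0 * len(initial) / len(pc_patients)


if __name__ == "__main__":
    pc = {"p1", "p2", "p3", "p4"}
    visits = [
        Visit("p1", 502, date(2011, 3, 1), True),   # initial MHSC visit
        Visit("p2", 502, date(2010, 6, 1), True),   # prior-year MHSC visit...
        Visit("p2", 502, date(2011, 3, 1), True),   # ...so this one is not initial
        Visit("p3", 534, date(2011, 3, 1), True),   # PC-MHI, excluded from MHSC
    ]
    print(pct_initial_mhsc(pc, visits, date(2011, 1, 1), date(2011, 6, 30)))  # 25.0
```

Under the study's hypothesis, a successful PC-MHI program would lower this percentage at IF sites relative to non-IF sites.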

A key component of co-located collaborative care is open access (the ability to provide “warm handoffs” or same-day referrals from PC to MH providers). As a proxy for Implementation fidelity, we identified the percentage of all patients with an initial PC-MHI encounter who had a PC encounter on the same day.
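Once referrals have been credited, the same-day proxy reduces to comparing two dates per referral. The sketch below uses a hypothetical tuple layout and invented toy numbers. It computes the measure per site and pooled, and shows that a pooled percentage is weighted toward the sites with more referrals, which is one reason site-level clustering matters for this measure.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, Tuple

# Hypothetical layout: (site, PC encounter date, initial PC-MHI encounter date)
Referral = Tuple[str, date, date]


def same_day_pct_by_site(referrals: List[Referral]) -> Dict[str, float]:
    """Percentage of credited referrals seen in PC-MHI on the PC visit date, per site."""
    totals: Dict[str, int] = defaultdict(int)
    same: Dict[str, int] = defaultdict(int)
    for site, pc_date, mhi_date in referrals:
        totals[site] += 1
        if pc_date == mhi_date:
            same[site] += 1
    return {site: 100.0 * same[site] / n for site, n in totals.items()}


def same_day_pct_pooled(referrals: List[Referral]) -> float:
    """Pooled percentage across all sites (sites with more referrals dominate)."""
    same = sum(1 for _, pc_date, mhi_date in referrals if pc_date == mhi_date)
    return 100.0 * same / len(referrals)


if __name__ == "__main__":
    d1, d2 = date(2011, 3, 1), date(2011, 3, 8)
    toy = [("small", d1, d1), ("small", d1, d2)] + [("large", d1, d2)] * 8
    print(same_day_pct_by_site(toy))   # {'small': 50.0, 'large': 0.0}
    print(same_day_pct_pooled(toy))    # 10.0
```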

To compare outcomes between implementation and control sites, we used generalized estimating equations (GEE), as operationalized by PROC GENMOD in SAS Enterprise Guide 5.1, to account for the clustering of observations (patients or providers) within sites. For the dichotomous outcomes, we used a binomial distribution with a logit link; for the second Adoption measure (the proportion of a provider’s patients referred to PC-MHI), we used a normal distribution with an identity link. For all models, we assumed a compound symmetry (exchangeable) structure for the correlations within each site. To reduce the complexity of the regression analyses, we included only patient-level and site-level characteristics that differed substantially between implementation and control sites.

We examined all variables available during the late-phase implementation and maintenance phases, including number of unique primary care patients, number of primary care providers (PCPs), age, gender, marital status, and percent service connection (the degree to which health problems caused or exacerbated by military service prevented the Veteran from working). None of the available patient-level variables differed substantially between implementation and control sites in either the late-phase implementation or the maintenance phase: gender differed by 1.1 and 0.83 percentage points, respectively; marital status by 4.4 and 3.9 percentage points; age by 0.48 and 0.81 years; and service connection by 3.3 and 3.7 percentage points. The number of PCPs also did not differ substantially between implementation and control sites in the late-phase implementation (mean=12.9 and mean=13.3, respectively) or maintenance phase (mean=13.0 and mean=13.1, respectively). However, despite site matching, the number of unique primary care patients differed substantially between implementation and control sites in both the late-phase implementation (mean=6,107 and mean=8,001, respectively) and the maintenance phase (mean=5,972 and mean=8,062, respectively). Therefore, in addition to a model accounting only for clustering within sites (Model 1), we fit a second model for each late-phase implementation and maintenance phase outcome that included the number of primary care patients as a covariate (Model 2).
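For readers who prefer an equation, the models described above can be written compactly as follows. The notation is introduced here for illustration and is not the authors': $Y_{ij}$ is the binary outcome for patient or PCP $i$ at site $j$, $P_{ij}$ is the proportion of PCP $i$'s patients referred, $\mathrm{IF}_j$ indicates an IF site, $N_j$ is the site's number of unique PC patients, and $\rho$ is the common within-site correlation. Model 1 omits the $\beta_2 N_j$ term; Model 2 includes it. The odds ratios in Table 4 correspond to $\exp(\beta_1)$ in the logit model, and the β entries presumably correspond to $\beta_1$ in the identity-link model.

```latex
% Binary outcomes (Reach, Effectiveness, first Adoption measure, Implementation)
\operatorname{logit} \Pr(Y_{ij} = 1) = \beta_0 + \beta_1\,\mathrm{IF}_j + \beta_2\,N_j,
\qquad \mathrm{OR}_{\mathrm{IF}} = e^{\beta_1}

% Second Adoption measure: normal distribution, identity link
\mathbb{E}[P_{ij}] = \beta_0 + \beta_1\,\mathrm{IF}_j + \beta_2\,N_j

% Compound-symmetry (exchangeable) working correlation for the n_j observations at site j
R_j = (1 - \rho)\,I_{n_j} + \rho\,\mathbf{1}_{n_j}\mathbf{1}_{n_j}^{\top}
```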

RESULTS

Baseline measures were gathered during the study’s 6-month preparation phase (see Fig. 1). There was no statistical difference in average number of PC visits, PC patients, and PCPs between IF and non-IF sites (see Table 2).

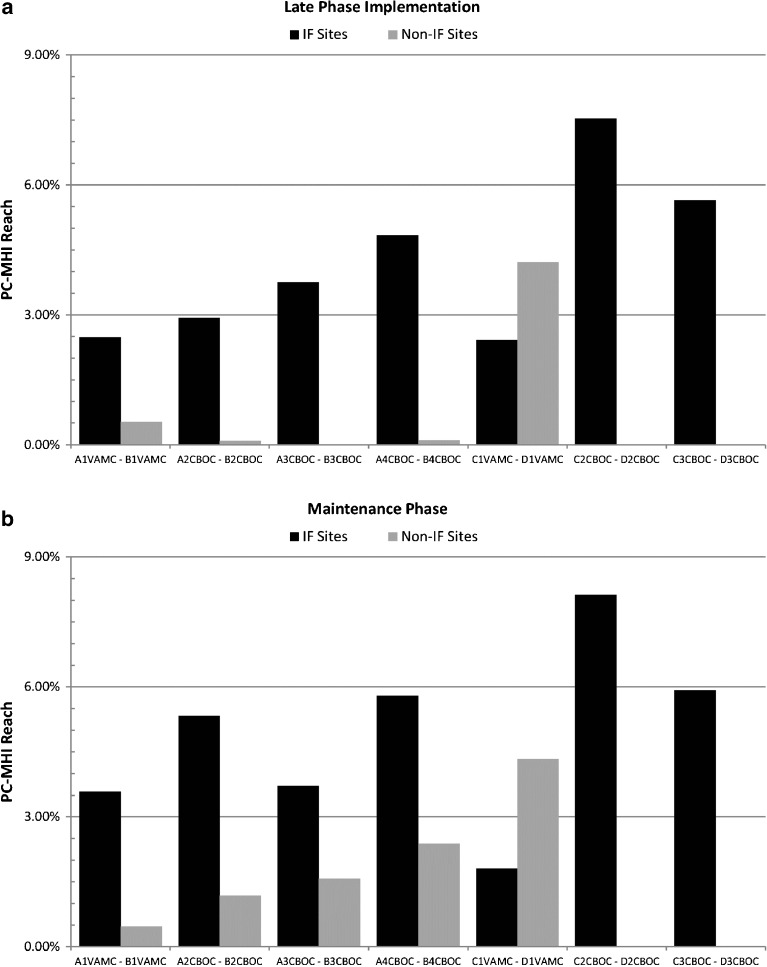

The percentage of PC patients seen in PC-MHI (Reach) was greater at IF sites than at non-IF sites during both the late-phase implementation (4.14 % vs. 1.53 %) and maintenance evaluation (4.77 % vs. 2.13 %) periods (see Fig. 2). In both the GEE model that accounted for clustering of patients within sites (Model 1) and a second model that additionally controlled for the number of PC patients (Model 2), odds ratios indicated significantly higher odds of PC patients at IF sites being seen in PC-MHI compared to patients at non-IF sites during both evaluation periods (Table 4).
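The odds ratios above are model-based. For comparison, a crude odds ratio can be computed directly from the pooled Reach percentages in Table 4; the minimal sketch below does so (function names are ours). The crude values (roughly 2.8 and 2.3) ignore site clustering and the patient-count covariate, so they are not expected to match the GEE estimates, and here they are noticeably smaller.

```python
def odds(p: float) -> float:
    """Convert a probability to odds."""
    return p / (1.0 - p)


def crude_odds_ratio(p_if: float, p_non_if: float) -> float:
    """Unadjusted OR from two pooled proportions; ignores site clustering and covariates."""
    return odds(p_if) / odds(p_non_if)


# Pooled Reach percentages from Table 4, expressed as proportions.
reach_late = crude_odds_ratio(0.0414, 0.0153)
reach_maint = crude_odds_ratio(0.0477, 0.0213)
print(f"late-phase: {reach_late:.2f}, maintenance: {reach_maint:.2f}")
```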

Figure 2.

Reach Measure Comparisons. The charts above illustrate the differences in the percentage of Primary Care (PC) patients seen in Primary Care-Mental Health Integration (PC-MHI) during (a) Late-Phase Implementation and (b) Maintenance Phase. The black bars represent Reach for each of the seven Implementation Facilitation (IF) sites and the gray bars represent each of the seven non-Implementation Facilitation (Non-IF) sites. Bars are grouped by matched sites.

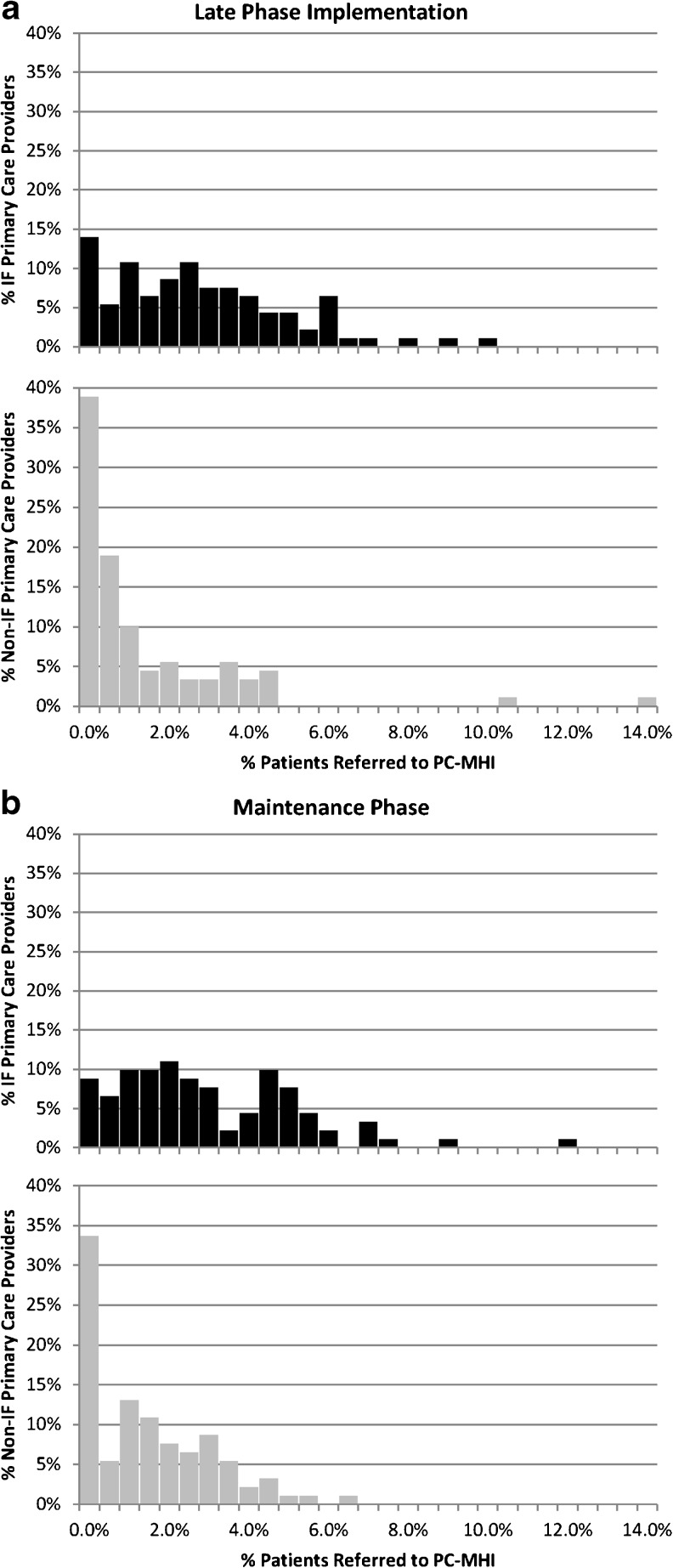

For the first Adoption measure, a higher percentage of PCPs at IF sites referred at least one patient to PC-MHI during both evaluation periods (86.02 % vs. 70.00 % and 91.21 % vs. 66.30 %, respectively) (see Fig. 3). Odds ratios from both Model 1 (accounting for clustering of PCPs by site) and Model 2 (additionally controlling for the number of PC patients) indicated significantly higher odds that PCPs at IF sites referred at least one patient to PC-MHI compared to PCPs at non-IF sites during both evaluation periods (Table 4). The second Adoption measure indicated that a greater proportion of PCPs’ patients received initial referrals to PC-MHI at IF clinics than at non-IF clinics during both evaluation periods (0.028 vs. 0.014 and 0.031 vs. 0.016, respectively). Both GEE models indicated that a significantly higher proportion of PCPs’ patients at IF sites were referred to PC-MHI compared to non-IF sites during both evaluation periods (Table 4).

Figure 3.

Adoption Measure Comparisons. The histograms illustrate, in aggregate, the distribution of Primary Care Providers (PCPs) and the percentage of patients they referred to Primary Care-Mental Health Integration (PC-MHI) during (a) Late-Phase Implementation and (b) Maintenance Phase. Non-Implementation Facilitation (non-IF) sites had more PCPs referring no patients to PC-MHI and referred a lower proportion of patients overall during both study phases compared to PCPs at Implementation Facilitation (IF) sites (see Table 4).

For the Implementation fidelity measure, a greater percentage of patients at IF sites received a warm handoff to PC-MHI during both evaluation periods (27.97 % vs. 7.43 % and 28.82 % vs. 22.47 %, respectively). These differences were not statistically significant once we accounted for the clustering of patients within sites (Model 1) and controlled for the number of PC patients (Model 2). However, the number of PC patients at a site was a statistically significant predictor of same-day access at both time points (p=0.013 and p<0.001, respectively), with negative parameter estimates indicating higher percentages of warm handoffs to PC-MHI at sites with fewer PC patients. For the Effectiveness measure, a greater percentage of PC patients at IF sites than at non-IF sites had an initial mental health specialty clinic visit during both evaluation periods (0.87 % vs. 0.63 % and 1.15 % vs. 0.75 %, respectively). However, these differences were also not statistically significant in the GEE analyses (Table 4).

DISCUSSION

The design and timing of our study took advantage of a naturally occurring experiment associated with a policy initiative to implement PC-MHI programs throughout VA. This allowed us to document the potential added value of a highly partnered IF strategy. The addition of this strategy to national-level education and technical assistance resulted in significant differences in PC-MHI Reach and Adoption when compared to matched clinics that did not receive IF. These findings were sustained through the maintenance phase. We suspect that sites receiving IF experienced these improvements at least in part because we developed and implemented this strategy through clinical-research partnerships.19 Additionally, we engaged stakeholders at all levels and helped them learn about and adapt PC-MHI to their local context, needs, and preferences, as well as address site-specific implementation barriers. It is important to note that as the IRF became more skilled, the NEEF became less involved. Thus sustained findings through the maintenance phase may be attributable to the efforts of the IRF.

Our measure of Effectiveness did not differ significantly between facilitation and non-facilitation sites.27 While we hypothesized that the IF strategy would decrease initial MHSC encounters by shifting care to PC-MHI, PC-MHI may have increased identification of patients needing mental health specialty care. This is consistent with emerging literature, which notes a significant increase in second visits in MHSC for patients seen in PC-MHI.28 This may be because PC-MHI increases detection of MH disorders and decreases no-show rates.29–32 Future work should evaluate the influence of PC-MHI programs on the complexity of patients entering specialty MH care.

Because this evaluation relied solely on administrative data, we developed a proxy measure of Implementation fidelity: first access to PC-MHI care on the same day as a PCP appointment. Though a larger percentage of patients at IF sites met this measure than those at non-IF sites, this difference was not significant when we accounted for site-level clustering and number of primary care patients seen. Interestingly, the number of primary care patients was a statistically significant predictor of the measure. There are several possible explanations. The first possibility is that PC-MHI programs at smaller sites were more highly resourced in proportion to their patient population. The second possibility is that smaller sites, such as CBOCs, have stronger familiarity and relationships between PC and PC-MHI providers, making “warm handoffs” more likely. Alternatively, this may be an artifact of how we defined the measure: we were unable to account for PC-MHI referrals from other clinic staff or self-referral by Veterans. Regardless of the cause, the need to ensure that same-day access occurs at larger sites should be addressed in future studies, as well as in the VA’s implementation of the PC-MHI program.

Our results should be considered in light of the following limitations. First, despite a rigorous site matching process, we could not fully control for the potential for selection bias. Second, the PC-MHI clinic code may have been incorrectly applied at non-IF sites, making our findings conservative. We identified that the PC-MHI clinic code was inappropriately applied at four IF sites in the preparation phase and at two non-IF sites in the late-phase implementation and maintenance phases. Facilitators identified and corrected inappropriate use of this code at IF sites. Because we assessed program implementation at all sites, we were able to identify this error at some, but not all, non-IF sites. Finally, although the primary aim of this evaluation was to determine the effectiveness of IF, we were unable to collect patient-level clinical outcomes because of limits of the clinical data systems. Rather, we reported process of care measures that are assumed to be correlated with patient outcomes. Future studies will document the clinical impact of PC-MHI.

Quantitative data used for this evaluation do not provide us with information about particular IF activities, their effect on adoption and sustainability, the amount of time facilitators devoted to sites, or the different ways sites implemented PC-MHI programs. The larger study will provide future analyses of clinical and qualitative data with further insights into these important questions. In addition, future research should examine long-term maintenance of these types of initiatives.

This IF strategy was implemented as a network model, with each IRF working at 50% effort to support PC-MHI implementation at four clinics and to serve as an informant for the evaluation of the strategy. Alternatively, the internal facilitator could be located at the facility or clinic level. The appropriate percent effort for facilitators would depend on the complexity of the program or practice being facilitated, the purpose of facilitation, and the number of organizations targeted.
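As a rough illustration of how facilitator effort scales with the number of organizations targeted, assume (hypothetically) that each IRF's 50% effort corresponds to 0.50 full-time equivalent (FTE) and was divided evenly across the four clinics supported. The effort available per clinic would then be:

```latex
e_{\text{clinic}} = \frac{E_{\text{IRF}}}{n_{\text{clinics}}}
                  = \frac{0.50\ \text{FTE}}{4}
                  = 0.125\ \text{FTE per clinic}
```

Under the same even-split assumption, spreading a fixed total effort over more clinics reduces per-clinic effort proportionally.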

In much of the IF literature, researchers serve as initiators and facilitators for experimental interventions.8 A unique feature of this project was that clinical leadership, rather than researchers, initiated the program. Further, facilitators were blinded to the evaluation. By doing this, we hoped clinicians and managers would view IF efforts as a significant clinical program rather than merely an experiment, thereby fostering buy-in. We also hoped they would view facilitators as aligned with current and long-term clinical needs and policy, rather than with an experimental and possibly temporary program.

Before the IF intervention, none of the eight IF clinics was implementing an evidence-based PC-MHI program. Midway through the study, seven of the eight had implemented programs. The remaining clinic was affiliated with a medical center that had not yet implemented the policy, so facilitators concluded that it would be most productive to concentrate their efforts at the medical center level. This clinic eventually implemented the program, but not in time to be included in the study.

Researchers and policymakers have observed that implementation scientists struggle to create actionable guidance for managers.33 By embedding our work within true clinical-research partnerships, we enabled the sharing of implementation knowledge and the creation of a “shelf-ready” implementation strategy. VA leadership has already adopted IF nationally and has expanded it beyond PC-MHI to other clinical initiatives, with our facilitators training clinical operations personnel and developing resources.24,34,35 Although this work was conducted within VA, the IF strategy could be applied in other healthcare systems willing to provide resources dedicated to innovative implementation.

Electronic supplementary material

(DOCX 25 kb)

Acknowledgements

The authors thank Carrie Edlund, MS for assistance with editing and manuscript preparation.

This material is based on work supported by the U.S. Department of Veterans Affairs (VA) Health Services Research and Development (HSR&D) Service and the VA Quality Enhancement Research Initiative (QUERI) (grant SDP 08–316).

Disclaimer

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs.

Conflicts of Interest

The authors declare that they do not have a conflict of interest.

REFERENCES

- 1. National Research Council. Improving the quality of health care for mental and substance-use conditions: Quality Chasm Series. Washington, DC: The National Academies Press; 2006.

- 2. Grol R, Wensing M, Eccles M. Improving Patient Care: The Implementation of Change in Clinical Practice. London: Elsevier; 2005.

- 3. Wensing M, Fluit C, Grol R. Educational strategies. In: Grol R, Wensing M, Eccles M, Davis D, editors. Improving Patient Care: The Implementation of Change in Clinical Practice. 2nd ed. West Sussex, UK: John Wiley and Sons, Ltd.; 2013. pp. 197–209.

- 4. Greenhalgh T, Robert G, MacFarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: Systematic review and recommendations. Milbank Q. 2004;82:581–629. doi: 10.1111/j.0887-378X.2004.00325.x.

- 5. Ferlie EB, Shortell SM. Improving the quality of health care in the United Kingdom and the United States: A framework for change. Milbank Q. 2001;79:281–315. doi: 10.1111/1468-0009.00206.

- 6. Parker LE, Kirchner JE, Bonner LM, et al. Creating a quality improvement dialogue: Utilizing knowledge from frontline staff, managers, and experts to foster health care quality improvement. Qual Health Res. 2009;19:229–242. doi: 10.1177/1049732308329481.

- 7. Parker LE, De Pillis E, Altschuler A, Rubenstein LV, Meredith LS. Balancing participation and expertise: A comparison of locally and centrally managed health care quality improvement within primary care practices. Qual Health Res. 2007;17:1268–1279. doi: 10.1177/1049732307307447.

- 8. Stetler CB, Legro MW, Rycroft-Malone J, et al. Role of external facilitation in implementation of research findings: A qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implement Sci. 2006;1.

- 9. Taylor EF, Machta RM, Meyers DS, Genevro J, Peikes DN. Enhancing the primary care team to provide redesigned care: The roles of practice facilitators and care managers. Ann Fam Med. 2013;11:80–83. doi: 10.1370/afm.1462.

- 10. Dogherty E, Harrison M, Baker C, Graham I. Following a natural experiment of guideline adaptation and early implementation: A mixed-methods study of facilitation. Implement Sci. 2012;7:9. doi: 10.1186/1748-5908-7-9.

- 11. Rycroft-Malone J, Harvey G, Seers K, Kitson A, McCormack B, Titchen A. An exploration of the factors that influence the implementation of evidence into practice. J Clin Nurs. 2004;13:913–924. doi: 10.1111/j.1365-2702.2004.01007.x.

- 12. Harvey G, Loftus-Hills A, Rycroft-Malone J, et al. Getting evidence into practice: The role and function of facilitation. J Adv Nurs. 2002;37:577–588. doi: 10.1046/j.1365-2648.2002.02126.x.

- 13. Hayden P, Frederick L, Smith BJ, Broudy A. Developmental Facilitation: Helping Teams Promote Systems Change. Collaborative Planning Project for Planning Comprehensive Early Childhood Systems. Denver, CO: Center for Collaborative Educational Leadership; 2001. pp. 2–21.

- 14. Heron J. Dimensions of Facilitator Style. Guildford: University of Surrey; 1977.

- 15. Thompson GN, Estabrooks C, Degner LF. Clarifying the concepts in knowledge transfer: A literature review. J Adv Nurs. 2006;53:691–701. doi: 10.1111/j.1365-2648.2006.03775.x.

- 16. Wiltsey Stirman S, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: A review of the empirical literature and recommendations for future research. Implement Sci. 2012;7:17. doi: 10.1186/1748-5908-7-17.

- 17. Kirchner JE, Parker LE, Bonner LM, Fickel JJ, Yano E, Ritchie MJ. Roles of managers, frontline staff and local champions, in implementing quality improvement: Stakeholders' perspectives. J Eval Clin Pract. 2012;18:63–69. doi: 10.1111/j.1365-2753.2010.01518.x.

- 18. Fortney JC, Pyne JM, Smith JL, et al. Steps for implementing collaborative care programs for depression. Popul Health Manag. 2009;12:69–79. doi: 10.1089/pop.2008.0023.

- 19. Kirchner J, Edlund CN, Henderson K, Daily L, Parker LE, Fortney JC. Using a multi-level approach to implement a primary care mental health (PCMH) program. Fam Syst Health. 2010;28:161–174. doi: 10.1037/a0020250.

- 20. Veterans Health Administration. Uniform Mental Health Services in VA Medical Centers and Clinics. VHA Handbook 1160.01. Washington, DC: Department of Veterans Affairs; 6-11-2008.

- 21. Post EP, Metzger M, Dumas P, Lehmann L. Integrating mental health into primary care within the Veterans Health Administration. Fam Syst Health. 2010;28:83–90. doi: 10.1037/a0020130.

- 22. Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50:217–226. doi: 10.1097/MLR.0b013e3182408812.

- 23. Charns MP. Organization design of integrated delivery systems. Hosp Health Serv Adm. 1997;42:411–432.

- 24. Kirchner JE, Ritchie MJ, Dollar KM, Gundlach P, Smith JL. Implementation Facilitation Training Manual: Using External and Internal Facilitation to Improve Care in the Veterans Health Administration. 2013. http://www.queri.research.va.gov/tools/implementation/Facilitation-Manual.pdf. Accessed August 25, 2014.

- 25. Glasgow RE, McKay HG, Piette JD, Reynolds KD. The RE-AIM framework for evaluating interventions: What can it tell us about approaches to chronic illness management? Patient Educ Couns. 2001;44:119–127. doi: 10.1016/S0738-3991(00)00186-5.

- 26. Glasgow RE. Translating research to practice: Lessons learned, areas for improvement, and future directions. Diabetes Care. 2003;26:2451–2456. doi: 10.2337/diacare.26.8.2451.

- 27. Pfeiffer PN, Szymanski BR, Zivin K, Post EP, Valenstein M, McCarthy JF. Are primary care mental health services associated with differences in specialty mental health clinic use? Psychiatr Serv. 2011;62:422–425. doi: 10.1176/appi.ps.62.4.422.

- 28. Wray LO, Szymanski BR, Kearney LK, McCarthy JF. Implementation of primary care-mental health integration services in the Veterans Health Administration: Program activity and associations with engagement in specialty mental health services. J Clin Psychol Med Settings. 2012;19:105–116. doi: 10.1007/s10880-011-9285-9.

- 29. Zivin K, Pfeiffer PN, Szymanski BR, et al. Initiation of Primary Care-Mental Health Integration programs in the VA Health System: Associations with psychiatric diagnoses in primary care. Med Care. 2010;48:843–851. doi: 10.1097/MLR.0b013e3181e5792b.

- 30. Unutzer J, Katon W, Callahan CM, et al. Collaborative care management of late-life depression in the primary care setting: A randomized controlled trial. JAMA. 2002;288:2836–2845. doi: 10.1001/jama.288.22.2836.

- 31. Krahn DD, Bartels SJ, Coakley E, et al. PRISM-E: Comparison of integrated care and enhanced specialty referral models in depression outcomes. Psychiatr Serv. 2006;57:946–953. doi: 10.1176/appi.ps.57.7.946.

- 32. Zanjani F, Miller B, Turiano N, Ross J, Oslin D. Effectiveness of telephone-based referral-care management, a brief intervention designed to improve psychiatric treatment engagement. Psychiatr Serv. 2008;59:776–781. doi: 10.1176/appi.ps.59.7.776.

- 33. Atkins D. QUERI: Connecting Research and Patient Care. HSR&D Field Based Meeting; July 10, 2010.

- 34. Ritchie MJ, Dollar KM, Kearney LK, Kirchner JE. Research and Services Partnerships: Responding to needs of clinical operations partners: Transferring implementation facilitation knowledge and skills. Psychiatr Serv. 2014;65(2):141–143. doi: 10.1176/appi.ps.201300468.

- 35. Kirchner JE, Kearney LK, Ritchie MJ, Dollar KM, Swensen AB, Schohn M. Research & Services Partnerships: Lessons learned through a national partnership between clinical leaders and researchers. Psychiatr Serv. 2014;65(5):577–579. doi: 10.1176/appi.ps.201400054.
