Abstract

With the increasing recognition of health literacy as a worldwide research priority, the development and refinement of indices to measure the construct is an important area of inquiry. Furthermore, the proliferation of online resources and research means that there is a growing need for self-administered instruments. We undertook a systematic overview to identify all published self-administered health literacy assessment indices to report their content and considerations associated with their administration. A primary aim of this study was to assist those seeking to employ a self-reported health literacy index to select one that has been developed and validated for an appropriate context, as well as with desired administration characteristics. Systematic searches were carried out in four electronic databases, and studies were included if they reported the development and/or validation of a novel health literacy assessment measure. Data were systematically extracted on key characteristics of the instruments: breadth of construct (“generic” vs. “content- or context-specific” health literacy), whether it was an original instrument or a derivative, country of origin, administration characteristics, age of target population (adult vs. pediatric), and evidence for validity. Thirty-five articles met the inclusion criteria. There were 27 original instruments (27/35; 77.1%) and 8 derivative instruments (8/35; 22.9%). Twenty-two indices measured “general” health literacy (22/35; 62.9%) while the remainder measured condition- or context-specific health literacy (13/35; 37.1%). Most health literacy measures were developed in the United States (22/35; 62.9%), and about half had adequate face, content, and construct validity (16/35; 45.7%).
Given the number of measures available for many specific conditions and contexts, and that several have acceptable validity, our findings suggest that the research agenda should shift towards the investigation and elaboration of health literacy as a construct itself, in order for research in health literacy measurement to progress.

Introduction

Health literacy, one commonly cited definition of which is “the degree to which individuals have the capacity to obtain, process, and understand basic health information and services needed to make appropriate health decisions” [1], is increasingly identified as an important research and policy priority [2], [3]. Using available assessment indices, a high prevalence of lower health literacy has been reported in many countries in both population-representative [4] and patient samples [5], [6]. The largest available survey from the United States, for example, was conducted by the Department of Health and Human Services in 2003, and identified 35% of the population as having ‘basic’ or ‘below basic’ levels of health literacy [7]. In an Australian sample, Barber et al. [4] found a varying prevalence of ‘low’ health literacy based on their use of three separate assessment measures: between 6.8% (measured by the Test of Functional Health Literacy in Adults) [8] and 26.0% (measured by the Newest Vital Sign) [9].

Although there are many currently available indices, it has been noted that they are not all of equal quality [10]. Repeated criticisms of existing health literacy indices have noted their lack of comprehensiveness, unsuitability to specific patient populations, and psychometric weakness and heterogeneity [11], [12], [13]. The response to these criticisms, particularly in the past few years, has been to develop more indices, which have parsed health literacy into context-specific constructs [14], [15] as well as attempting more comprehensively to measure the concept in its entirety [16]. The most extensive review of available indices noted that all had significant deficiencies limiting their acceptability and generalizability [11]. While that review provided an assessment of available indices up to 2008, there have been many other indices subsequently published. In addition, the aforementioned review focused primarily on the psychometric aspects of individual indices, rather than on characteristics associated with their administration. Subsequent reviews on indices appropriate for eHealth applications [17] and diabetes [18] have been limited in scope and are useful only to select indices for these specific applications.

A key focus in recent research on health literacy measurement has been the development of self-administered indices. These do not require a trained research assistant or clinician to administer, and have been referred to in the literature as ‘indirect’ [18] or ‘self-reported’ instruments [11]. They have the advantage of potentially decreasing the burden placed on clinical practitioners while assessing health literacy, thereby reducing the resources required for research in this area. Yet, there is no available review which reports administration characteristics and critically appraises available self-administered measures, in order to support those looking to choose a measure, as well as to identify what research gaps exist in this area.

We therefore undertook a systematic overview to identify all available self-administered health literacy measurement indices and to report their validity, their administration characteristics, and their intended uses. A primary aim of this study was to assist those seeking to use a health literacy index to select one that has been developed and validated for an appropriate context, as well as with desired administration characteristics. Furthermore, we aimed to clarify to what extent the development of additional health literacy measures is appropriate.

Methods

Search strategy

We conducted systematic searches of the following electronic databases: OVID Medline (1946 to April 2014), OVID Embase (1980 to April 2014), OVID PsycINFO (1987 to April 2014), and CINAHL (1981 to April 2014). The searches were undertaken in two phases: first, on 11 September 2012, then an update search was carried out on 8 April 2014. All references were imported into EndNote X4 (Thomson Reuters, 2011).

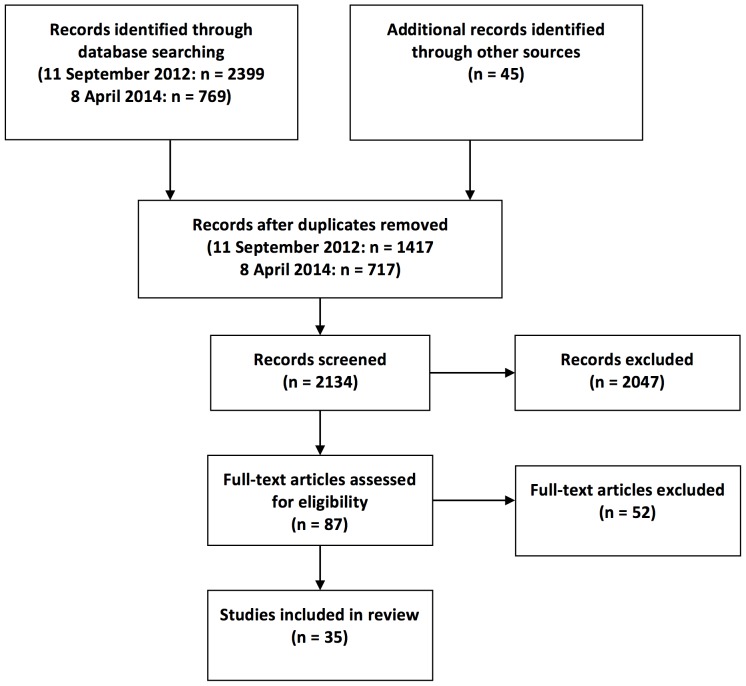

The search strategy was developed with a specialist librarian, with an emphasis on sensitivity to identifying studies reporting the development of condition- and specialty-specific health literacy measures, in addition to measures of general health literacy. Search terms used included literacy, health, assessment, indices, and measurement. The full search strategy is available as supporting information; see Appendix S1. Our systematic searches were augmented by ‘snowballing’ [19] from the reference lists of key identified papers, which identified 45 additional studies for title and abstract review. The PRISMA flow diagram is included as Figure 1 and the PRISMA checklist is available as supporting information (Checklist S1).

Figure 1. PRISMA Flow Diagram.

Study selection

In order to capture the literature as broadly as possible, we included studies that reported the development and/or validation of a novel measure of health-related literacy. For inclusion, studies must have reported assessing the validity of a measure, as well as providing some description of its administration characteristics. Generic measures, as well as those focussing on more specialized health literacy such as “nutrition literacy” [20] and “eHealth literacy” [21] were included. We included only those references published in English, which reported the development and/or validation of a measure that was available in English (measures were also included if they were available in English as well as another language). Only one key reference was included for each measure (usually the first to report on a particular measure). We excluded studies that reported the development or validation of literacy measures that were not specifically related to health, as well as those that reported solely on “numeracy”, since the relationship between numeracy and health outcomes in the absence of literacy assessment remains unclear [22].

Data extraction and synthesis

One author (BO) conducted the search; three authors (BO, DC, IRC) scanned abstracts, and selected full text articles for review. The remaining two authors (JV, SZ) contributed to developing the data extraction forms and to analysis of the extracted data. We designed and used structured forms to extract pertinent information from each article. One author extracted data from each included reference into a data extraction form in Microsoft Excel; another author (either DG or IRC) independently extracted data from each reference as well. Disagreements were resolved by consensus.

Calculations of means and standard deviations were undertaken in Microsoft Excel. Data were systematically extracted on key characteristics of the instruments: breadth of construct (‘general’ vs. ‘condition- or context- specific’ health literacy), whether it was an original instrument or a derivative, country, time for completion, number of items, age of target population (adult vs. pediatric), as well as evidence for validity. We extracted data relevant to assessing the face validity (the extent to which an index appears to measure what it is supposed to), content validity (how far items of which the index is comprised reflect the concept to be measured), and construct validity (the extent to which the index as a whole measures what it is supposed to) of included measures [22].
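The descriptive statistics in this review were calculated in Microsoft Excel. Purely as an illustration, an equivalent calculation can be sketched in Python. The administration times below are hypothetical placeholder values, not data extracted in this review, and the choice of the sample standard deviation (the counterpart of Excel's `STDEV.S`) is an assumption, since the formula used is not stated.

```python
from statistics import mean, stdev


def summarize(values):
    """Return (mean, sample standard deviation), each rounded to one decimal."""
    return round(mean(values), 1), round(stdev(values), 1)


# Hypothetical administration times in minutes (NOT data from this review).
times_minutes = [2, 5, 10, 20, 30]

avg, sd = summarize(times_minutes)
print(f"{avg} (SD {sd}) minutes")
```

If the population standard deviation were intended instead, `statistics.pstdev` could be substituted for `stdev`.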

Results

Literature search

Our initial database search, carried out on 12 September 2012, identified 2399 references. An update search was carried out on 8 April 2014 identifying 769 references which had been published after the original search was carried out. Hand searching of reference lists of included papers resulted in an additional 45 references. After removing duplicate references, 2431 abstracts were scanned for inclusion and 2047 were excluded. 87 full-text articles were obtained for review, of which 52 were excluded because upon full-text review they did not report the development of a novel or newly validated self-report health literacy measure. Thus, 35 articles reporting the development and/or validation of a health literacy instrument were included (Figure 1), all of which were published during or after 2004.

Study characteristics

Type of measure

Of 35 instruments included in the review, there were 27 original instruments (27/35; 77.1%) and 8 derivative instruments (8/35; 22.9%), which were either modifications or short-form versions of original instruments (Appendix S2). We classified the measures into two groups: general (stated as measuring “health literacy”, or literacy and its implications for general use of health information); and condition- or specialty-specific (stated as measuring “literacy” with a health-related prefix, such as “oral health literacy” [23] or “colon cancer literacy” [14]). Most measures were classified as general (22/35; 62.9%), while the remainder (13/35; 37.1%) were classified as condition- or specialty-specific. Indices were available for a variety of conditions, such as Human Immunodeficiency Virus (HIV) infection [24] and cancer [25], [26], as well as specific indications such as eHealth [21] (Table 1).

Table 1. Indications for general and content- or context- specific measures.

| Indication | Measures |
| --- | --- |
| General health literacy | 3 questions [27]; NAAL HL [28]; SILS [29]; CCHL [30]; CHC [31]; HLSI [16]; METER [32]; Talking touchscreen [33]; Graph literacy [34]; Health LiTT [35]; CHL [36]; TAIMI [37]; MHLS [38]; Canadian high school student measure [39]; HLSI short form [40]; SDPI-HH HL [41]; Massey 2012 measure [42]; CAHPS Item Set [43]; AAHLS [44]; HeLMS [45]; HLQ [46] |
| Dental/oral health literacy | HeLD [23]; Harper 2014 measure [47] |
| Diabetes literacy | FCCHL [48] |
| Cancer literacy | SIRACT [26]; CMLT-L/CMLT-R [25] |
| Mental health literacy | Reavley 2014 measure [49] |
| Nutrition literacy | FLLANK [50]; NLAI [20] |
| Hospital literacy | HCAHPS Item Set [51] |
| HIV literacy | HIV-HL [24] |
| Medication literacy | MedLitRxSE [52] |
| Colon cancer literacy | ACCL [14] |
| Intellectual disability literacy | ILDS [53] |
| eHealth literacy | eHEALS [21] |

Country of origin

Most of the available instruments to measure health literacy have been validated in the United States (22/35; 62.9%), with Australia and Japan being the next most frequent countries of origin of health literacy indices (each contributed 4/35; 11.4%). Two indices were validated in each of the following countries: the United Kingdom, Canada, and Germany. There were two measures initially validated in multiple countries: the ‘Graph literacy’ scale (in Germany and the United States) [34] and the Intellectual Disability Literacy Scale (ILDS; validated in the United Kingdom, India, China, and Singapore) [53] (Table 2).

Table 2. Country of origin of measures.

| Country of origin | Measures |
| --- | --- |
| United States | 3 questions [27]; NAAL HL [28]; SILS [29]; HLSI [16]; METER [32]; Talking touchscreen [33]; Health LiTT [35]; HLSI short form [40]; SDPI-HH HL [41]; Massey 2012 measure [42]; CAHPS Item Set [43]; SIRACT [26]; CMLT-L/CMLT-R [25]; FLLANK [50]; NLAI [20]; ACCL [14]; MedLitRxSE [52]; HCAHPS Item Set [51] |
| Australia | HeLMS [45]; HLQ [46]; HeLD [23]; Reavley 2014 measure [49] |
| Japan | FCCHL [48]; CCHL [30]; CHL [36]; TAIMI [37] |
| Canada | eHEALS [21]; Canadian high school student measure [39] |
| United Kingdom | ILDS [53]; AAHLS [44] |
| China | ILDS [53] |
| Germany | CHC [31] |
| India | ILDS [53] |
| Korea | MHLS [38] |
| Singapore | ILDS [53] |

Setting

Most included studies reported the setting from which participants were recruited for validation. About a third of the instruments were validated in populations recruited from secondary and specialty care settings (12/35; 34.3%), while about a quarter of studies recruited in primary care settings (8/35; 22.9%). The remainder of validation studies recruited from diverse non-clinical settings (17/35; 48.6%), such as schools, community centres, and shopping malls. Two studies [39], [47] reported validating general health literacy measures through online surveys and did not provide information about the characteristics of the subjects recruited. Three studies [41], [46], [52] recruited participants from more than one setting (Appendix S2).

Age of participants

The majority of available indices were initially studied and validated with populations between 18 and 65 years old. The mean age of participants in included studies ranged from 18 to 76 years. Six indices were validated in populations younger than 18 years: the eHealth Literacy Scale (eHEALS) [21], the Food Label Literacy for Applied Nutrition Knowledge scale (FLLANK) [50], the All Aspects of Health Literacy Scale (AAHLS) [44], and three unnamed measures: one for adolescents [42], one for Canadian high school students [39], and one to assess mental health literacy [49]. One of these, the eHEALS, is suggested by its authors to be valid for all ages.

Psychometric characteristics

Sixteen indices were assessed in included studies as having adequate face, content, and construct validity (16/35; 45.7%) (Table 3). Eighteen indices had adequate face and content validity, but construct validity was not assessed in the study included in this review (18/35; 51.4%). One index (1/35; 2.9%), validated in one of the largest studies included in this review (6083 participants), established face validity only [37]. There were no clear differences in validity between general indices and content- or context-specific indices (Table 3). No included studies reported that sensitivity to change in health literacy level over time could be measured using the instrument, but test-retest reliability was reported as being adequate for four measures [21], [45], [50], [53]. The authors of one measure noted that although test-retest reliability had not been addressed in their study, they intended to assess it in a future study [46].

Table 3. Psychometrics of general and context- or content- specific indices.

| Levels of validity addressed | Number (%) of general measures at each level | Number (%) of context- or content-specific indices at each level |
| --- | --- | --- |
| Face only | 1 (4.5) | 0 (0) |
| Face and content | 11 (50.0) | 6 (46.2) |
| Face, content, and construct | 10 (45.5) | 7 (53.8) |
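As a worked check of the column percentages in Table 3, the short Python sketch below recomputes them from the counts in the table. The denominators (22 general and 13 specific measures) are the group sizes stated in the Results; the function and variable names are illustrative only.

```python
def percent(count, total):
    """Percentage of `count` out of `total`, rounded to one decimal place."""
    return round(100 * count / total, 1)


# Counts copied from Table 3 as (general, specific) pairs.
table3 = {
    "Face only": (1, 0),
    "Face and content": (11, 6),
    "Face, content, and construct": (10, 7),
}

# Group sizes reported in the Results (assumed to be the column denominators).
GENERAL_TOTAL, SPECIFIC_TOTAL = 22, 13

for level, (general, specific) in table3.items():
    print(level, percent(general, GENERAL_TOTAL), percent(specific, SPECIFIC_TOTAL))
```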

Time to administer/number of items

Only 16 studies (16/35; 45.7%) reported how long it takes to administer the instrument being reported; this ranged from 2 minutes (Medical Term Recognition Test; METER) [32] to 70 minutes (Cancer Message Literacy Test-Listening/Cancer Message Literacy Test-Reading; CMLT-L/CMLT-R) [25]. Overall, health literacy indices required on average 19.1 (SD 19.6) minutes to administer, although there are 7 available measures that can be administered in 5 minutes or less [9], [21], [27], [29], [32], [36], [40] (Appendix S2). General measures took an average of 22.3 minutes (SD 26.1) to complete, while condition- and specialty-specific measures took an average of 20.8 minutes (SD 22.5). Thirty-two studies (32/35; 91.4%) reported the number of items included, which varied from 1 [29] to 80 [32] (SD 20.5) (Appendix S2).

Discussion

Statement of principal findings

This review reports the administration characteristics and validity of 35 health literacy measures, and aims to assist selection of an appropriate index. The majority of included indices have been developed and validated in the United States. There is a clear trend towards the development of more measures in recent years. The instruments identified in this review are mostly intended for adults (with only six available for pediatric populations) and have been primarily tested in non-clinical settings. Several instruments take 5 minutes or less to complete, and there are many with adequate validity.

Low health literacy is strongly associated with worse health outcomes [54]. In order to improve the provision of healthcare for patients with low health literacy, it has been suggested that we need to have appropriate measurement tools to identify these individuals at clinical and population levels. Several authors have proposed that new health literacy assessment measures are required [11], [12], [55], [56] yet we have identified 35 indices, validated for use in a wide variety of populations. While it is understandable that no single assessment measure has been able to represent a complex construct such as health literacy in its entirety, there are additional aspects to the construct itself that are inherent to the way in which it is assessed. Our review is the first to use systematic overview methodology to review the administration, development, and grouping of generic and condition- or context- specific self-report measures. We used a sensitive search strategy to report the breadth of health-related literacy assessment, building upon an earlier review of the psychometric properties of 19 health literacy instruments [11].

Conceptual implications for health literacy

The measures included in this review represent a cross-sectional evaluation of 10 years of health literacy measurement research. We focused on self-report measures because these require fewer resources than those for which examiners (or clinicians) must be trained and provided. Consequently, we believe there will be more of a future in self-administered measures, as they are more scalable and can more easily be incorporated into online surveys, which are becoming more prevalent in health research [57], [58], [59].

It has been noted that there is underlying conceptual disagreement about what health literacy is and that this may be contributing to an “unstable foundation” in research in this area [60]. Our review identified two broad classes of measures: ‘general’ measures, which made up just under two thirds of the available self-report measures (22/35; 62.9%), and ‘condition- or context-specific’ measures, which made up the remainder (13/35; 37.1%). While ‘general’ measures seek to measure the construct in its entirety, ‘condition- or context-specific’ measures are focused on a specific area in which health literacy is used by individuals in the course of making health decisions. These two streams of research diverge to some extent in how they conceptualize health literacy; while the development of ‘general’ measures seeks to better measure (and thereby more comprehensively define) the entire construct based on some a priori definition of health literacy, ‘condition- or context-specific’ indices are developed in the course of identifying a particular area in which the authors (presumably) believe it will be more beneficial to measure specific deficits that can be ameliorated. Existing research has not found clear patterns of effectiveness in interventions that are designed to mitigate the detrimental effects of low health literacy [60], [61], [62].

Complementary to ‘mitigating’ the negative effects is the idea of ‘improving’ health literacy, which is widespread throughout available policy documents [63], [64], [65], [66]. Yet, there is little evidence on how to do this. The separate streams of research identified in this overview may offer some promise for developing a way of improving people's health literacy: either ‘general’ health literacy should be targeted, or some context-specific operationalization of health literacy should be addressed. None of these measures demonstrated sensitivity to change, so even if it were possible to ‘improve’ health literacy, it appears no current measure would be appropriate to assess this.

Limitations

This study has identified that new measures are being developed and published frequently. One important limitation is therefore that other measures developed and published since the search was carried out have not been included. In addition, given the clinical heterogeneity and diversity of health literacy measurement, it is possible that relevant studies available at the time the search was carried out have not been retrieved. In particular, since only one reference was included for each measure, it is probable that many of these measures were further validated in subsequent studies. Although the risk of bias was not determined for each individual study, the quality of instruments reported in included studies was assessed; since the primary aim of this study was to assess the quality of these measures, it was felt that being inclusive regarding studies would allow this study to more adequately represent the available literature. Given that one of the conclusions of this study is that the need for additional health literacy measures should be questioned, this selection bias serves only to further the point that there are already many high-quality general and specific indices available. Limiting this study to self-administered measures has the advantage of providing those looking for one of these measures with an accessible, clear reference to guide selection of an index of this type; however, it excludes many other health literacy measures, which may be of lesser quality. Conclusions of a review encompassing all the measures may prove less optimistic about the quality of available indices for health literacy measurement, which is what the most comprehensive available review including these measures identified [11].

Relevance to an ongoing research agenda for health literacy measurement

Researchers and clinicians alike need to consider practicalities of administration, including how long a measure takes to complete, whether it is suitable for self-completion (either online or with paper and pencil), and in what other circumstances and populations it has been used. Our review draws attention to the fact that several health literacy assessments require a great deal of time, potentially exceeding the average length of a primary care consultation. In a large European study, for example, Deveugele et al. [67] found that the average length of a primary care consultation was 10.7 (SD 6.7) minutes. In this review, the average time required to administer a clinical or clinical/research health literacy assessment was 20.7 (SD 23.6) minutes. Since primary care was a common location in which these indices were developed and validated, it is critical that future research in this area is attentive to the reality of busy clinical practice. Our review demonstrates that, currently, health literacy assessment using most available tools is impractical in clinical practice due to the time required, despite authors' suggestions that some of these measures should be incorporated into consultations. Despite the association between low health literacy and poorer outcomes, there is no evidence that health literacy screening has an effect on health outcomes [68]. It seems unlikely that health literacy assessment will become a fixture of clinical practice. Resources might be better allocated to developing interventions to mitigate the effect of low health literacy on health outcomes, an effect for which there is already a strong evidence base.

Almost two thirds of available health literacy indices included in this review (22/35; 62.9%) have been developed and validated in populations in the United States. Indices from the United States may not be fully transferable to another health system, although it should be noted that this review only included English language papers. Again, in contrast to proposed research agendas for health literacy [55], [56], it is unclear whether this is best dealt with by undertaking validation of existing indices in other countries and health systems, as opposed to developing new indices to address these issues.

Interventions intended to mitigate the effects of low health literacy on outcomes have had mixed effects [60], [61], [62]. Some have improved outcomes such as end-of-life care preferences [69], whereas others have had no effect on outcomes such as hemoglobin A1c in patients with type 2 diabetes [70]. Although it is somewhat unclear what benefit the development of additional health literacy assessment measures could provide, one key deficiency is that in order to move forward in ‘improving’ people's health literacy, either new indices must be developed or existing indices must be tested to determine if they are sensitive to change over time. It will not be possible to ascertain whether people's health literacy can be ‘improved’ until there are measures which have been shown to be sensitive to change in this regard.

Conclusion

Asking the right questions is critical to effective research, and doing so is necessary to eliminate “research waste” [71]. There are currently 35 self-report health literacy measures available, validated in a variety of contexts and intended for diverse applications. Since many already have adequate validity, it should be identified whether existing measures are sensitive to change as a result of improved health literacy. It is probable that some of these existing measures may be adequate for this purpose, but if not, this is a key deficiency that would suggest the development of new measures. Further conceptual work on health literacy is necessary to understand whether it is a static or dynamic construct. These findings will influence the research agenda for whether it is necessary to develop new measures, or to expand the use of existing ones.

Supporting Information

Checklist S1. PRISMA Checklist. (DOC)

Appendix S1. Search strategy for MEDLINE. (DOCX)

Appendix S2. Characteristics of included indices. (DOCX)

Acknowledgments

Ethical approval was not required for this systematic review. We would like to thank Nia Roberts for her assistance with the search strategy.

Data Availability

The authors confirm that all data underlying the findings are fully available without restriction. All relevant data are within the paper.

Funding Statement

This publication reports research conducted as part of the iPEx programme, which presents independent research funded by the National Institute for Health Research (NIHR) under its Programme Grants for Applied Research funding scheme (RP-PG-0608-10147). The views expressed in this publication are those of the authors, representing iPEx, and not necessarily those of the NHS, the NIHR, or the Department of Health. Braden O'Neill's studentship is funded by the Rhodes Trust. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Ratzan SC, Parker RM (2000) Introduction. In: Selden CR, Zorn M, Ratzan SC, Parker, RM (Eds.), National Library of Medicine Current Bibliographies in Medicine: Health Literacy. Bethesda, MD: National Institutes of Health, U.S. Department of Health and Human Services. [Google Scholar]

- 2.Kickbusch I, Pelikan J, Apfel F, Tsouros A (2013) Health literacy- the solid facts. World Health Organization. Available: http://www.euro.who.int/__data/assets/pdf_file/0008/190655/e96854.pdf

- 3. Bailey SC, McCormack LA, Rush SR, Paasche-Orlow MK (2013) The progress and promise of health literacy research. J Health Comm 18:5–8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4. Barber MN, Staples M, Osborne RH, Clerehan R, Elder C, et al. (2009) Up to a quarter of the Australian population may have suboptimal health literacy depending upon the measurement tool: results from a population-based survey. Health Promot Internat 24:252–261. [DOI] [PubMed] [Google Scholar]

- 5. Peterson PN, Shetterly SM, Clarke CL, Bekelman DB, Chan PS, et al. (2011) Health literacy and outcomes among patients with heart failure. JAMA 305:1695–1701. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6. Williams MV, Parker RM, Baker DW, Parikh NS, Pitkin K, et al. (1995) Inadequate functional health literacy among patients at two public hospitals. JAMA 274:1677–1682. [PubMed] [Google Scholar]

- 7. Koh HK, Berwick DM, Clancy CM, Baur C, Brach C, et al. (2012) New federal policy initiatives to boost health literacy can help the nation move beyond the cycle of costly 'crisis care'. Health Aff 31:434–443. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Parker RM, Baker DW, Williams MV, Nurss JR (1995) The test of functional health literacy in adults. J Gen Intern Med 10:537–541. [DOI] [PubMed] [Google Scholar]

- 9. Weiss BD, Mays MZ, Martz W, Castro KM, DeWalt DA, et al. (2005) Quick assessment of literacy in primary care: the Newest Vital Sign. Ann Fam Med 3:514–522. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Mancuso JM (2009) Assessment and measurement of health literacy: An integrative review of the literature. Nurs Health Sci 11:77–89. [DOI] [PubMed] [Google Scholar]

- 11. Jordan JE, Osborne RH, Buchbinder R (2011) Critical appraisal of health literacy indices revealed variable underlying constructs, narrow content and psychometric weaknesses. J Clin Epi 64:366–379. [DOI] [PubMed] [Google Scholar]

- 12. Baker DW (2006) The meaning and the measure of health literacy. J Gen Intern Med 21:878–883. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Fransen MP, Van Schaik TM, Twickler TB, Essink-Bot ML (2011) Applicability of internationally available health literacy measures in the Netherlands. J Health Comm 16:134–149. [DOI] [PubMed] [Google Scholar]

- 14. Pendlimari R, Holubar SD, Hassinger JP, Cima RR (2011) Assessment of colon cancer literacy in screening colonoscopy patients: A validation study. J Surg Res 175:221–226. [DOI] [PubMed] [Google Scholar]

- 15. Diviani N, Schulz PJ (2012) First insights on the validity of the concept of Cancer Literacy: A test in a sample of Ticino (Switzerland) residents. Patient Educ Couns 87:152–159. [DOI] [PubMed] [Google Scholar]

- 16. McCormack L, Bann C, Squiers L, Berkman ND, Squire C, et al. (2010) Measuring health literacy: a pilot study of a new skills-based instrument. J Health Comm 15:51–71. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Collins SA, Currie LM, Bakken S, Vawdrey DK, Stone PW (2012) Health literacy screening instruments for eHealth applications: A systematic review. J Biomed Inform 45:598–607.

- 18. Al Sayah F, Williams B, Johnson JA (2013) Measuring Health Literacy in Individuals With Diabetes: A Systematic Review and Evaluation of Available Measures. Health Educ Behav 40:42–55.

- 19. Greenhalgh T, Peacock R (2005) Effectiveness and efficiency of search methods in systematic reviews of complex evidence: audit of primary sources. BMJ 331:1064–1065.

- 20. Gibbs H, Chapman-Novakofski K (2013) Establishing content validity for the Nutrition Literacy Assessment Instrument. Preventing Chronic Disease 10.

- 21. Norman CD, Skinner HA (2006) eHEALS: The eHealth Literacy Scale. J Med Internet Res 8:e27.

- 22. Portney LG, Watkins MP (1993) Foundations of clinical research: applications to practice. Stamford, CT: Appleton & Lange. 912 p.

- 23. Jones K, Parker E, Mills H, Brennan D, Jamieson LM (2014) Development and psychometric validation of a Health Literacy in Dentistry scale (HeLD). Comm Dent Health 31:37–43.

- 24. Ownby RL, Waldrop-Valverde D, Hardigan P, Caballero J, Jacobs R, et al. (2013) Development and validation of a brief computer-administered HIV-Related Health Literacy Scale (HIV-HL). AIDS Behav 17:710–718.

- 25. Mazor KM, Roblin DW, Williams AE, Greene SM, Gaglio B, et al. (2012) Health literacy and cancer prevention: two new instruments to assess comprehension. Patient Educ Couns 88:54–60.

- 26. Agre P, Stieglitz E, Milstein G (2007) The Case for Development of a New Test of Health Literacy. Oncol Nurs Forum 33:283–289.

- 27. Chew LD, Bradley KA, Boyko EJ (2004) Brief Questions to Identify Patients with Inadequate Health Literacy. Fam Med 36:588–594.

- 28. Kutner M, Greenberg E, Jin Y, Paulsen C (2006) The health literacy of America's adults: Results from the 2003 National Assessment of Adult Literacy. Washington: National Center for Education Statistics, US Department of Education. 76 p.

- 29. Morris NS, MacLean CD, Chew LD, Littenberg B (2006) The Single Item Literacy Screener: Evaluation of a brief instrument to identify limited reading ability. BMC Fam Pract 7:21.

- 30. Ishikawa H, Nomura K, Sato M, Yano E (2008) Developing a measure of communicative and critical health literacy: a pilot study of Japanese office workers. Health Promot Int 23:269–274.

- 31. Steckelberg A, Hülfenhaus C, Kasper J, Rost J, Mühlhauser I (2009) How to measure critical health competences: development and validation of the Critical Health Competence Test (CHC Test). Adv Health Sci Educ Theory Pract 14:11–22.

- 32. Rawson KA, Gunstad J, Hughes J, Spitznagel MB, Potter V, et al. (2010) The METER: a brief, self-administered measure of health literacy. J Gen Intern Med 25:67–71.

- 33. Yost KJ, Webster K, Baker DW, Jacobs EA, Anderson A, et al. (2010) Acceptability of the talking touchscreen for health literacy assessment. J Health Comm 15:80–92.

- 34. Galesic M, Garcia-Retamero R (2011) Graph literacy: A cross-cultural comparison. Med Decis Making 31:444–457.

- 35. Hahn EA, Choi SW, Griffith JW, Yost KJ, Baker DW (2011) Health literacy assessment using talking touchscreen technology (Health LiTT): A new item response theory-based measure of health literacy. J Health Comm 16:150–162.

- 36. Ishikawa H, Yano E (2011) The relationship of patient participation and diabetes outcomes for patients with high vs. low health literacy. Patient Educ Couns 84:393–397.

- 37. Takahashi Y, Sakai M, Fukui T, Shimbo T (2011) Measuring the ability to interpret medical information among the Japanese public and the relationship with inappropriate purchasing attitudes of health-related goods. Asia-Pacific Journal of Public Health 23:386–398.

- 38. Tsai TI, Lee SYD, Tsai YW, Kuo KN (2011) Methodology and validation of health literacy scale development in Taiwan. J Health Comm 16:50–61.

- 39. Begoray DL, Kwan B (2012) A Canadian exploratory study to define a measure of health literacy. Health Promot Int 27:23–32.

- 40. Bann CM, McCormack LA, Berkman ND, Squiers LB (2012) The Health Literacy Skills Instrument: a 10-item short form. J Health Comm 3:191–202.

- 41. Brega AG, Jiang L, Beals J, Manson SM, Acton KJ, et al. (2012) Special diabetes program for Indians: reliability and validity of brief measures of print literacy and numeracy. Ethnicity Dis 22:207–214.

- 42. Massey PM, Prelip M, Calimlim BM, Quiter ES, Glik DC (2012) Contextualizing an expanded definition of health literacy among adolescents in the health care setting. Health Educ Res 26:961–974.

- 43. Weidmer BA, Brach C, Hays RD (2012) Development and evaluation of CAHPS survey items assessing how well healthcare providers address health literacy. Med Care 50:S3–11.

- 44. Chinn D, McCarthy C (2013) All Aspects of Health Literacy Scale (AAHLS): Developing a tool to measure functional, communicative and critical health literacy in primary healthcare settings. Patient Educ Couns 90:247–253.

- 45. Jordan JE, Buchbinder R, Briggs AM, Elsworth GR, Busija L, et al. (2013) The Health Literacy Management Scale (HeLMS): A measure of an individual's capacity to seek, understand and use health information within the healthcare setting. Patient Educ Couns 91:228–235.

- 46. Osborne RH, Batterham RW, Elsworth GR, Hawkins M, Buchbinder R, et al. (2013) The grounded psychometric development and initial validation of the Health Literacy Questionnaire (HLQ). BMC Public Health 13:658.

- 47. Harper R (2014) Development of a Health Literacy Assessment for Young Adult College Students: A Pilot Study. J Am Coll Health 62:125–134.

- 48. Ishikawa H, Takeuchi T, Yano E (2008) Measuring functional, communicative, and critical health literacy among diabetic patients. Diabetes Care 31:874–879.

- 49. Reavley NJ, Morgan AJ, Jorm AF (2014) Development of scales to assess mental health literacy relating to recognition of and interventions for depression, anxiety disorders and schizophrenia/psychosis. Aust N Z J Psychiatry 48:61–69.

- 50. Reynolds JS, Treu JA, Njike V, Walker J, Smith E, et al. (2012) The validation of a food label literacy questionnaire for elementary school children. J Nutr Educ Behav 44:262–266.

- 51. Weidmer BA, Brach C, Slaughter ME, Hays RD (2012) Development of Items to Assess Patients' Health Literacy Experiences at Hospitals for the Consumer Assessment of Healthcare Providers and Systems (CAHPS) Hospital Survey. Med Care 50:S12–21.

- 52. Sauceda JA, Loya AM, Sias JJ, Taylor T, Wiebe JS, et al. (2012) Medication literacy in Spanish and English: psychometric evaluation of a new assessment tool. J Am Pharm Assoc 52:e231–240.

- 53. Scior K, Furnham A (2011) Development and validation of the intellectual disability literacy scale for assessment of knowledge, beliefs and attitudes to intellectual disability. Res Dev Disabil 32:1530–1541.

- 54. Berkman ND, Sheridan SL, Donahue KE, Halpern DJ, Crotty K (2011) Low health literacy and health outcomes: an updated systematic review. Ann Intern Med 155:97–107.

- 55. Pleasant A, McKinney J, Rikard RV (2011) Health Literacy Measurement: A Proposed Research Agenda. J Health Comm 16:11–21.

- 56. McCormack L, Haun J, Sorensen K, Valerio M (2013) Recommendations for advancing health literacy measurement. J Health Comm 18:9–14.

- 57. Lupianez-Villanueva F, Mayer MA, Torrent J (2009) Opportunities and challenges of Web 2.0 within the health care systems: an empirical exploration. Inform Health Soc Care 34:117–126.

- 58. Miller LMS, Bell RA (2012) Online health information seeking: the influence of age, information trustworthiness, and search challenges. Journal of Aging and Health 24:525–541.

- 59. Berkman ND, Davis TC, McCormack L (2010) Health Literacy: What Is It? J Health Comm 15:9–19.

- 60. Sheridan SL, Halpern DJ, Viera AJ, Berkman ND, Donahue KE, et al. (2011) Interventions for Individuals with Low Health Literacy: A Systematic Review. J Health Comm 16:30–54.

- 61. Allen K, Zoellner J, Motley M, Estabrooks PA (2011) Understanding the Internal and External Validity of Health Literacy Interventions: A Systematic Literature Review Using the RE-AIM Framework. J Health Comm 16:55–72.

- 62. Clement S, Ibrahim S, Crichton N, Wolf M, Rowlands G (2009) Complex interventions to improve the health of people with limited literacy: A systematic review. Patient Educ Couns 75:340–351.

- 63. Department of Health (2012) The Power of Information: Putting all of us in control of the health and care information we need. London, UK. p. 115.

- 64. NHS England Patients and Information Directorate (2013) Transforming Participation in Health and Care. London, UK.

- 65. Puntoni S (2011) Health Literacy in Wales: A scoping document for Wales. Cardiff: Welsh Assembly Government.

- 66. United Nations Educational Scientific and Cultural Organization (UNESCO) (1997) International Standard Classification of Education. Geneva, Switzerland.

- 67. Deveugele M, Derese A, van den Brink-Muinen A, Bensing J, De Maeseneer J (2002) Consultation length in general practice: cross sectional study in six European countries. BMJ 325:472.

- 68. Paasche-Orlow MK, Wolf MS (2007) Evidence Does Not Support Clinical Screening of Literacy. J Gen Intern Med 23:100–102.

- 69. Volandes AE, Paasche-Orlow MK, Barry MJ, Gillick MR, Minaker KL, et al. (2009) Video decision support tool for advance care planning in dementia: randomised controlled trial. BMJ 338:b2159.

- 70. Gerber BS, Brodsky IG, Lawless KA, Smolin LI, Arozullah AM, et al. (2005) Implementation and evaluation of a low-literacy diabetes education computer multimedia application. Diabetes Care 28:1574–1580.

- 71. Chalmers I, Glasziou P (2009) Avoidable waste in the production and reporting of research evidence. Lancet 374:86–89.

Associated Data

Supplementary Materials

PRISMA Checklist.

(DOC)

Search strategy for MEDLINE.

(DOCX)

Characteristics of included indices.

(DOCX)

Data Availability Statement

The authors confirm that all data underlying the findings are fully available without restriction. All relevant data are within the paper.