Abstract

For classification problems with significant class imbalance, subsampling can reduce computational costs at the price of inflated variance in estimating model parameters. We propose a method for subsampling efficiently for logistic regression by adjusting the class balance locally in feature space via an accept–reject scheme. Our method generalizes standard case-control sampling, using a pilot estimate to preferentially select examples whose responses are conditionally rare given their features. The biased subsampling is corrected by a post-hoc analytic adjustment to the parameters. The method is simple and requires one parallelizable scan over the full data set.

Standard case-control sampling is inconsistent under model misspecification for the population risk-minimizing coefficients θ*. By contrast, our estimator is consistent for θ* provided that the pilot estimate is. Moreover, under correct specification and with a consistent, independent pilot estimate, our estimator has exactly twice the asymptotic variance of the full-sample MLE, even if the selected subsample comprises a minuscule fraction of the full data set, as happens when the original data are severely imbalanced. The factor of two improves to 1 + 1/c if we multiply the baseline acceptance probabilities by c > 1 (and weight points with acceptance probability greater than 1), taking roughly (1 + c)/2 times as many data points into the subsample. Experiments on simulated and real data show that our method can substantially outperform standard case-control subsampling.

Key words and phrases: Logistic regression, case-control sampling, subsampling

1. Introduction

In recent years, statisticians, scientists and engineers have increasingly been analyzing enormous data sets. When data sets grow sufficiently large, computational costs may play a major role in the analysis, potentially constraining our choice of methodology or the number of data points we can afford to process. Computational savings can translate directly to statistical gains if they:

- enable us to experiment with and prototype a variety of models, instead of trying only one or two,

- allow us to refit our models more often to adapt to changing conditions,

- allow for cross-validation, bagging, boosting, bootstrapping or other computationally intensive statistical procedures, or

- open the door to using more sophisticated statistical techniques on a compressed data set.

Bottou and Bousquet (2008) discuss the tradeoffs arising when we adopt this point of view. One simple manifestation of these tradeoffs is that we may run out of computing resources before we run out of data, in effect making the sample size n a function of the efficiency of our fitting method.

1.1. Imbalanced data sets

Class imbalance is pervasive in modern classification problems and has received a great deal of attention in the machine learning literature [Chawla, Japkowicz and Kotcz (2004)]. It can come in two forms:

Marginal imbalance. One of the classes is quite rare; for instance, ℙ(Y = 1) ≈ 0. Such imbalance typically occurs in data sets for predicting click-through rates in online advertising, detecting fraud or diagnosing rare diseases.

Conditional imbalance. For most values of the features X, the response Y is very easy to predict; for instance, ℙ(Y = 1|X = 0) ≈ 0 but ℙ(Y = 1|X = 1) ≈ 1. For example, such imbalance might arise in the context of email spam filtering, where well-trained classifiers typically make very few mistakes.

Both or neither of the above may occur in any given data set. The machine learning literature on class imbalance usually focuses on the first type, but the second type is also common.

If, for example, our data set contains one thousand or one million negative examples for each positive example, then many of the negative data points are in some sense redundant. Typically in such problems, the statistical noise is primarily driven by the number of representatives of the rare class, whereas the total size of the sample determines the computational cost. If so, we might hope to finesse our computational constraints by subsampling the original data set in a way that enriches for the rare class. Such a strategy must be implemented with care if our ultimate inferences are to be valid for the full data set.

This article proposes one such data reduction scheme, local case-control sampling, for use in fitting logistic regression models. The method requires one parallelizable scan over the full data set and yields a potentially much smaller subsample containing roughly half of the information found in the original data set.

1.2. Subsampling

The simplest way to reduce the computational cost of a procedure is to subsample the data before doing anything else. However, uniform subsampling from an imbalanced data set is inefficient since it fails to exploit the unequal importance of the data points.

Case-control sampling—sampling uniformly from each class but adjusting the mixture of the classes—is a more promising approach. This procedure originated in epidemiology, where the positive examples (cases) are typically diseased patients and negative examples (controls) are disease-free [Mantel and Haenszel (1959)]. Often, an equal number of cases and controls are sampled, resulting in a subsample with no marginal imbalance, and costly measurements of predictor variables are only made for selected patients [Breslow, Day et al. (1980)]. This method is useful in our context as well, since a logistic regression model fitted on the subsample can be converted to a valid model for the original population via a simple adjustment to the intercept [Anderson (1972), Prentice and Pyke (1979)].

However, standard case-control sampling still may not make most efficient use of the data. For instance, it does nothing to exploit conditional imbalance in a data set that is marginally balanced. Even with some marginal imbalance, a control that looks similar to the cases is often more useful for discrimination purposes than one that is obviously not a case.

We propose a method, local case-control sampling, which attempts to remedy imbalance locally throughout the feature space. Given a pilot estimate (α̃, β̃) of the logistic regression parameters, local case-control sampling preferentially keeps data points for which Y is surprising given X. Specifically, if p̃(x) = exp(α̃ + β̃′x)/(1 + exp(α̃ + β̃′x)) is the pilot estimate of ℙ(Y = 1|X = x), we accept (xi, yi) with probability |yi − p̃(xi)|, the ℓ1 residual of the pilot model. In the presence of extreme marginal or conditional imbalance, these errors will generally be quite small and the subsample can be many orders of magnitude smaller than the full data set.

Just as with case-control sampling, we can fit our model to the subsample and make an equally simple correction to obtain an estimate for the original data set. When the logistic regression model is correctly specified and the pilot is consistent and independent of the data, the asymptotic variance of the local case-control estimate is exactly twice the variance of a logistic regression fit on the (potentially much larger) full data set. This factor of two improves to 1 + 1/c if we accept with probability c|yi − p̃(xi)| ∧ 1 and weight accepted points by a factor of c|yi − p̃(xi)| ∨ 1. For example, if c = 5 then the variance of the subsampled estimate is only 20% greater than the variance of the full-sample MLE. The subsample we take with c > 1 is no more than c times larger than the subsample for c = 1, and for data sets with large imbalance is roughly (1 + c)/2 times as large.

Local case-control sampling also improves on the bias of standard case-control sampling. When the logistic regression model is misspecified, case-control sampling is in general inconsistent for the risk minimizer in the original population. By contrast, local case-control sampling is always consistent given a consistent pilot, and is also asymptotically unbiased when the pilot is. Sections 5 and 6 present empirical results demonstrating the advantages of our approach in simulations and on the Yahoo! webspam data set.

1.3. Notation and problem setting

Our setting is that of predictive classification: we are given n independent and identically distributed observations, each consisting of predictors xi ∈ 𝒳 and a binary response yi ∈ {0, 1}, with joint probability measure ℙ. For our purposes, we assume the predictors are mapped into some real covariate vector space, so that 𝒳 ⊆ ℝ^p. Our aim is to learn the function

$$p(x) = \mathbb{P}(Y = 1 \mid X = x) \tag{1}$$

or equivalently to learn

$$f(x) = \operatorname{logit}(p(x)) = \log\frac{p(x)}{1 - p(x)}, \tag{2}$$

which could be infinite for some x.

A linear logistic regression model assumes f is linear in x; that is,

$$f(x) = f_\theta(x) = \alpha + \beta' x, \tag{3}$$

where θ = (α, β) ∈ ℝ^(p+1). This is less of a restriction than it might seem, since x may represent a very large basis expansion of some smaller set of “raw” features.

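Nevertheless, in the real world, f is unlikely to satisfy our parametric model for any given basis x. When the model is misspecified, we can still view logistic regression as an M-estimator with convex loss equal to the negative log-likelihood for a single data point:

$$\rho(\theta; x, y) = -y\, f_\theta(x) + \log\left(1 + e^{f_\theta(x)}\right). \tag{4}$$

As an M-estimator, under general conditions logistic regression in large samples will converge to the minimizer of the population risk R(θ) = 𝔼ρ(θ; X, Y) [Huber (2011)]. That is, θ̂ converges to the population maximizer of the expected log-likelihood

$$\theta^* = \arg\max_{\theta}\; \mathbb{E}\left[Y f_\theta(X) - \log\left(1 + e^{f_\theta(X)}\right)\right] \tag{5}$$

$${} = \arg\max_{\theta}\; \mathbb{E}\left[p(X) f_\theta(X) - \log\left(1 + e^{f_\theta(X)}\right)\right]. \tag{6}$$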

If f = fθ0 for some θ0, then θ* = θ0; otherwise fθ* is the best linear approximation to f in the sense of (5). For a misspecified model, fθ̂ cannot possibly converge to f no matter what sampling scheme or estimation procedure we use, or how much data we obtain. Consistency, then, will mean that θ̂ → θ* in probability.

Model misspecification is ubiquitous in real-world applications of regression methods. For reasons of exposition, the misspecification always takes a simple form in our simulations; for example, in Example 1 there are two binary predictors, and we would have correct specification if only we added one interaction. In the real world, however, it usually is neither possible nor even desirable to expand the feature basis until the model is correctly specified. For instance, if p = 1000, then there are roughly 500,000 quadratic terms. Even if we included all those terms as features, we would still be missing cubic terms, quartic terms, and so on.

Some kinds of misspecification are milder than others, and some are easier to find and fix than others. Seeking better-specified models (without adding too much model complexity) is a worthy goal, but realistically perfect specification is unattainable.

Our goal, then, is to speed up computation while still obtaining a good estimate of θ*, the population logistic regression parameters. As we will see, standard case-control sampling achieves the first goal, but may fail at the second.

1.4. Related work

Recent years have seen substantial work on classification in imbalanced data sets. See Chawla, Japkowicz and Kotcz (2004) and He and Garcia (2009) for surveys of machine learning efforts on this problem. Many of the methods proposed involve some form of undersampling the majority class, oversampling the minority class, or both. Owen (2007) examined the limit of marginally imbalanced logistic regression and proved it is equivalent to fitting an exponential family model to the minority class.

One recurring theme is to preferentially sample negative examples that lie near positive examples in feature space. For example, Mani and Zhang (2003) propose selecting majority-class examples whose average distance to their three nearest minority examples is smallest. Our method has a similar flavor since the probability of sampling a negative example (x, 0) is p̃(x), which is large when the features x are similar to those characteristic of positive examples.

Our proposal lies more in the tradition of the epidemiological case-control sampling literature. In particular, case-control sampling within several categorical strata has been studied by Fears and Brown (1986), Breslow and Cain (1988), Weinberg and Wacholder (1990), Scott and Wild (1991). Typically, the strata are based on easy-to-measure screening variables available for a wide population, with more laborious-to-collect variables being measured on the sampled subjects. Lumley, Shaw and Dai (2011) discuss survey calibration methods for efficient regression in two-stage sampling schemes, which are interesting but too computationally intensive for our purposes here.

2. Case-control subsampling

Case-control sampling is commonly carried out by taking all the cases and exactly c times as many controls for some fixed c (e.g., c = 1, 2, 5). However, for our purposes it will be simpler to consider a nearly equivalent procedure based on accept–reject sampling.

Define some acceptance probability function a(y) and let b = log(a(1)/a(0)), the log-selection bias. Consider the following algorithm:

1. Generate independent zi ~ Bernoulli(a(yi)).

2. Fit a logistic regression to the subsample S = {(xi, yi) : zi = 1}, obtaining unadjusted estimates θ̂S = (α̂S, β̂S).

3. Assign α̂ ← α̂S − b and β̂ ← β̂S.

Specifically, we could generate the zi by first generating ui ~ U(0, 1) independent of the pilot, the data, and each other, then taking zi = 1{ui ≤ a(yi)}. Note that steps (2)–(3) are equivalent to logistic regression with offset b for each data point.
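The three steps above can be sketched in a few lines of NumPy. This is only an illustrative sketch: the function names, the plain Newton–Raphson fitter and the convention that column 0 of `X` is the intercept are assumptions made for the example, not part of the procedure described here.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable logistic function.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def fit_logistic(X, y, n_iter=50, tol=1e-10):
    """Plain Newton-Raphson logistic regression (hypothetical helper).

    Column 0 of X is assumed to be the intercept column of ones.
    """
    theta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        prob = sigmoid(X @ theta)
        grad = X.T @ (y - prob)                             # score vector
        hess = (X * (prob * (1.0 - prob))[:, None]).T @ X   # information matrix
        step = np.linalg.solve(hess, grad)
        theta = theta + step
        if np.max(np.abs(step)) < tol:
            break
    return theta

def case_control_fit(X, y, a1, a0, rng):
    """Accept-reject case-control sampling with intercept correction.

    a1, a0 are the acceptance probabilities a(1) and a(0).
    """
    accept_prob = np.where(y == 1, a1, a0)
    z = rng.uniform(size=len(y)) <= accept_prob   # step 1: z_i = 1{u_i <= a(y_i)}
    theta_s = fit_logistic(X[z], y[z])            # step 2: unadjusted fit on S
    theta = theta_s.copy()
    theta[0] -= np.log(a1 / a0)                   # step 3: subtract b from the intercept
    return theta, z
```

In a marginally imbalanced data set one might, for example, take `a1 = 1` and `a0` equal to the empirical odds of a case, which yields roughly equal numbers of cases and controls; that data-driven choice of `a0` is a convenience for experimentation rather than something prescribed above.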

This variant is convenient to analyze because the subsample thus obtained is an i.i.d. sample from a new population:

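$$\mathbb{P}_S(X \in A,\, Y = y) = \mathbb{P}(X \in A,\, Y = y \mid Z = 1) = \frac{a(y)}{\bar a}\,\mathbb{P}(X \in A,\, Y = y), \tag{7}$$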

with ā = a(1)ℙ(Y = 1) + a(0)ℙ(Y = 0), the marginal probability of Z = 1.

The estimate (α̂, β̂) is motivated by a simple application of Bayes’ rule relating the odds of Y = 1 in ℙ and ℙS. If g(x) is the true conditional log-odds function for ℙS, we have

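$$g(x) = \log\frac{\mathbb{P}(Y = 1 \mid Z = 1, X = x)}{\mathbb{P}(Y = 0 \mid Z = 1, X = x)} \tag{8}$$

$${} = \log\frac{\mathbb{P}(Y = 1 \mid X = x)\,\mathbb{P}(Z = 1 \mid Y = 1, X = x)}{\mathbb{P}(Y = 0 \mid X = x)\,\mathbb{P}(Z = 1 \mid Y = 0, X = x)} \tag{9}$$

$${} = f(x) + b. \tag{10}$$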

That is, the log-odds g(x) in our biased population is simply a vertical shift by b of the log-odds f(x) in the original population, so given an estimate of g we can subtract b to estimate f. If the model is correctly specified, logistic regression on the subsample yields a consistent estimate for the function g(x), so the estimate for f(x) is also consistent.

Note that the derivation (8)–(10) is equally valid if the sampling bias b depends on x, in which case we have g(x) = f(x) + b(x). Local case-control sampling exploits this more general identity.

2.1. Conditional probability and the logit loss

If X is integrable, then upon differentiating the population risk (5) with respect to θ we obtain the population score criterion:

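$$0 = \int \left(p(x) - \frac{e^{f_\theta(x)}}{1 + e^{f_\theta(x)}}\right)\binom{1}{x}\,d\mathbb{P}(x). \tag{11}$$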

Informally, the best linear predictor is the one that gets the conditional probabilities right on average. Note this is not the same as a predictor that gets the conditional log-odds right on average.

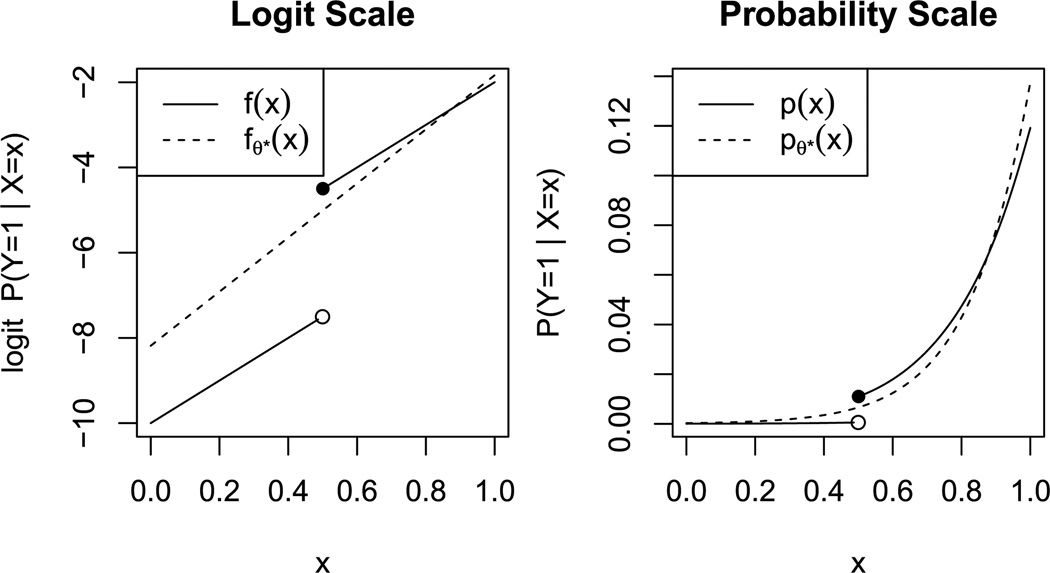

To illustrate the difference between approximating probabilities and approximating logits, suppose that X ~ U(0, 1) and f(x) = −10 + 5x + 3 · 1{x > 0.5}. The left panel of Figure 1 shows f(x) as a solid line and its best linear approximation as a dashed line. On the logit scale, the dashed line appears to be a very poor fit to the solid curve. It fits reasonably well for large x, but it appears more or less to ignore the smaller values of x.

Fig. 1.

The best linear fit fθ* (x) approximates the true log-odds f(x) in the sense of matching its implied conditional probabilities, not logits.

The right panel of Figure 1 shows why. When we transform both curves to the probability scale, the fit looks much more reasonable. fθ*(x) need not approximate f(x) particularly well for small x, because in that range even a large change in the log-odds produces a negligible change in the conditional probability p(x). By contrast, fθ*(x) needs to approximate f(x) well for larger x, where p(x) changes more rapidly.
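The best linear fit in this example can be approximated numerically. The sketch below minimizes the population risk for X ~ U(0, 1) and f(x) = −10 + 5x + 3 · 1{x > 0.5}, replacing the expectation over X by a midpoint-rule average on a fine grid. The grid size, the damped Newton iteration and all function names are assumptions made for illustration.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable logistic function.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def true_log_odds(x):
    # f(x) = -10 + 5x + 3 * 1{x > 0.5}
    return -10.0 + 5.0 * x + 3.0 * (x > 0.5)

def risk(theta, D, p):
    # Grid average of log(1 + e^eta) - p(x) * eta, with eta = alpha + beta * x.
    eta = D @ theta
    return np.mean(np.logaddexp(0.0, eta) - p * eta)

def best_linear_fit(n_grid=20000, max_iter=200, tol=1e-10):
    """Approximate the population risk minimizer (alpha*, beta*)."""
    x = (np.arange(n_grid) + 0.5) / n_grid        # midpoints of a grid on (0, 1)
    D = np.column_stack([np.ones(n_grid), x])     # design matrix (1, x)
    p = sigmoid(true_log_odds(x))                 # true conditional probabilities
    theta = np.zeros(2)
    for _ in range(max_iter):
        q = sigmoid(D @ theta)                    # fitted probabilities
        grad = D.T @ (q - p) / n_grid             # gradient of the risk
        if np.max(np.abs(grad)) < tol:
            break
        hess = (D * (q * (1.0 - q))[:, None]).T @ D / n_grid
        step = np.linalg.solve(hess, grad)
        t, r0 = 1.0, risk(theta, D, p)
        # Step halving keeps the iteration from increasing the risk.
        while risk(theta - t * step, D, p) > r0 + 1e-14 and t > 1e-6:
            t *= 0.5
        theta = theta - t * step
    return theta, x, p
```

Comparing `D @ theta` with `true_log_odds(x)`, and `sigmoid(D @ theta)` with `p`, gives the logit-scale and probability-scale views of the fit, respectively. At the returned solution the fitted and true probabilities agree on average, both unweighted and weighted by x, which is the sense in which the fit gets the conditional probabilities right on average.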

In general, logistic regression places highest priority on fitting f where dp/df = p(x)(1 − p(x)) is largest: where f(x) ≈ 0 and p(x) ≈ 0.5. In this example, with its strong marginal imbalance, the regions that matter most are those where p(x) is largest. This often makes sense in applications such as medical screening or advertising click-through rate prediction, where accuracy is most important when the probability of disease or click-through is nonnegligible. In Section 7, we consider how to modify the method to obtain classifiers that prioritize correctness near some other, user-defined level curve of p(x).

Finally, note that Figure 1 suggests the case-control sampling estimate is unlikely to be consistent for θ* in general. The nature of our linear approximation in the left panel is intimately related to the fact that f(x) < 0 everywhere in the sample space. If f(x) were shifted upward by some constant, the response of the dashed curve would be more complicated than a simple constant shift by b, since the relative importance of the two segments would change. Therefore, estimating f(x) + b and then subtracting b may not be a successful strategy.

2.2. Inconsistency of case-control under misspecification

If the linear model is misspecified, the case-control estimate is generically not consistent for the best linear predictor θ* as n → ∞ [Xie and Manski (1989), Manski and Thompson (1989)]. The unadjusted estimate will instead converge to the best linear predictor of g for the distribution ℙS, which solves the score criterion

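$$0 = \int \left(\frac{e^{g(x)}}{1 + e^{g(x)}} - \frac{e^{f_\theta(x)}}{1 + e^{f_\theta(x)}}\right)\binom{1}{x}\,d\mathbb{P}_S(x). \tag{12}$$

Because g(x) = f(x) + b and the adjustment subtracts b from the fitted intercept, the large-sample limit of the adjusted case-control sampling estimate with bias b solves, in θ, the population score criterion

$$0 = \int \left(\frac{e^{f(x) + b}}{1 + e^{f(x) + b}} - \frac{e^{f_\theta(x) + b}}{1 + e^{f_\theta(x) + b}}\right)\binom{1}{x}\,d\mathbb{P}_S(x), \tag{13}$$

which differs from (11) in two ways. First, the integral is taken over a different distribution for X. Second, and more importantly, the integrand is different. We are now approximating f(x) in a different sense than we were.

In general under misspecification, this limit is different for every b. If we sample cases and controls equally, in the limit we will get a different answer than if we sample twice as many controls; and in either case we will get a different answer than if we use the entire data set or subsample uniformly.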

These differences can be quite consequential for our inferences about β or the predictive performance of our model, as we see next.

Example 1 (Oatmeal and disease risk). In this fictitious example, we consider estimating the effect of exposure to oatmeal on a person’s risk of developing some rare disease. Suppose that 10% of the population has a family history of the disease, half the population eats oatmeal (independently of family history), and that both exposure and family history are binary predictors. Suppose further that the true conditional log-odds function f(x) is given by the top-left panel of Table 1.

Table 1.

Disease risk in the full population, and in the population created by case-control sampling with equal numbers in each class

| | Original population (ℙ): History − | Original population (ℙ): History + | Case-control population (ℙS): History − | Case-control population (ℙS): History + |
| --- | --- | --- | --- | --- |
| Conditional log-odds (f under ℙ, g under ℙS) | | | | |
| Oatmeal − | −5 | −4 | −1.2 | −0.2 |
| Oatmeal + | −10 | −1 | −6.2 | 2.8 |
| Conditional probabilities | | | | |
| Oatmeal − | 0.007 | 0.02 | 0.24 | 0.46 |
| Oatmeal + | 5E−5 | 0.37 | 0.002 | 0.94 |

The corresponding conditional probabilities p(x) are given in the lower-left panel of Table 1. Notice that oatmeal increases the risk for people who are already at risk by virtue of their family history, but has a protective effect for everyone else. This interaction means that the additive logistic regression model is misspecified.

Because only the probabilities in the “History +” column are large enough to matter, the fitted model for f(x) pays more attention to the at-risk population, for whom oatmeal elevates the risk of disease. A logistic regression on a large sample from this population estimates the coefficient for oatmeal as approximately 1.4, implying an odds ratio of about 4.0. This is close to the marginal odds ratio of roughly 4.3 that we would obtain if we did not control for family history.

Suppose, however, that we sampled an equal number of cases and controls. Then the conditional log-odds of disease in our sample would reflect the top-right panel of Table 1, with all cells increased by the same amount.

For large samples, the case-control estimate of the oatmeal coefficient is approximately −0.8, implying an odds ratio of about 0.44. Using case-control sampling has reversed our inference about the effect of oatmeal exposure, because after shifting the log-odds the left column becomes much more important.

Example 2 (Two-class Gaussian model). Suppose that ℙ(Y = 1) = 1%, and that X|Y ~ N(μY, ΣY). Let

| (14) |

| (15) |

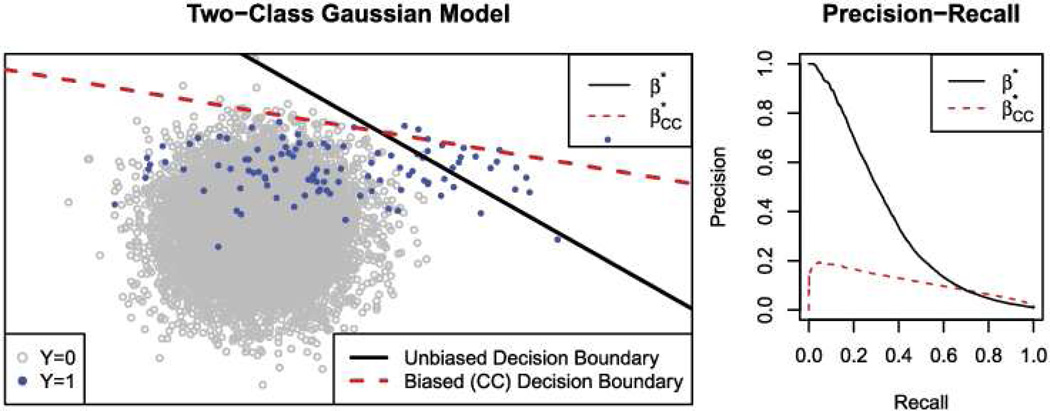

Data simulated from this model are shown in the left panel of Figure 2. In this example, the true log-odds f(x) is an additive quadratic function of the two coordinates X1 and X2.

Fig. 2.

At left, biased (case-control) and unbiased decision boundaries for the bivariate Gaussian mixture model. At right, precision–recall curves for β* and its case-control counterpart.

In this example as in the previous one, the population-optimal case-control parameters differ substantially from the optimal parameters in the original population, with dramatic effects for the predictive performance of the model. The decision boundaries for the two estimates are overlaid on the left panel of Figure 2. In the right panel, we plot the precision–recall curves resulting from each set of parameters on a large test set.

2.3. Weighted case-control sampling

A simple alternative to standard case-control sampling is to weight the subsampled data points by the inverse of their probability of being sampled. We include weighted case-control sampling as a competitor in our simulation studies in Section 5. Because it is a Horvitz–Thompson estimator with positive sampling probabilities for any (x, y) pair, this method is √n-consistent, and asymptotically normal and unbiased under general conditions [Horvitz and Thompson (1952)].

Although weighting succeeds in removing the bias induced by the case-control sampling, this consistency comes at a cost of increasing the variance, since the effective sample size is reduced [Scott and Wild (1986, 2002)].

Despite its inefficiency, the weighted case-control method can be an attractive means of obtaining a consistent pilot if another good pilot is not immediately available, and we later will use it to that end in our experiments.
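A minimal sketch of the weighted variant follows, assuming the same accept–reject scheme as in Section 2 with class-dependent acceptance probabilities a(1) and a(0). The function names and the simple Newton–Raphson solver are hypothetical; any weighted logistic regression routine could play the same role.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable logistic function.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def weighted_logistic_fit(X, y, w, n_iter=50, tol=1e-10):
    """Newton-Raphson for logistic regression with observation weights w."""
    theta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        prob = sigmoid(X @ theta)
        grad = X.T @ (w * (y - prob))                           # weighted score
        hess = (X * (w * prob * (1.0 - prob))[:, None]).T @ X   # weighted information
        step = np.linalg.solve(hess, grad)
        theta = theta + step
        if np.max(np.abs(step)) < tol:
            break
    return theta

def weighted_case_control_fit(X, y, a1, a0, rng):
    """Subsample with probabilities a(y), then weight each kept point by 1 / a(y)."""
    accept_prob = np.where(y == 1, a1, a0)
    keep = rng.uniform(size=len(y)) <= accept_prob
    w = 1.0 / accept_prob[keep]          # inverse-probability (Horvitz-Thompson) weights
    theta = weighted_logistic_fit(X[keep], y[keep], w)
    return theta, keep                   # no intercept adjustment is needed
```

Unlike the unweighted procedure, no intercept correction is applied afterwards, because the weights already restore the original class balance in the estimating equations.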

3. Local case-control subsampling

In this section, we describe local case-control subsampling, a generalization of standard case-control sampling that both improves on its efficiency and resolves its problem of inconsistency. To achieve these benefits, we require a pilot estimate, that is, a good guess θ̃ = (α̃, β̃) for the population-optimal θ*.

3.1. The local case-control sampling algorithm

Local case-control sampling differs from case-control sampling only in that the acceptance probability a is allowed to depend on x as well as y. Our criterion for selection will be the degree of “surprise” we experience upon observing yi given xi:

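$$a(x, y) = |y - \tilde p(x)| = \begin{cases} 1 - \tilde p(x), & y = 1, \\ \tilde p(x), & y = 0, \end{cases} \tag{16}$$

where p̃(x) = exp(α̃ + β̃′x)/(1 + exp(α̃ + β̃′x)) is the pilot estimate of ℙ(Y = 1|X = x). The algorithm is:

1. Generate independent zi ~ Bernoulli(a(xi, yi)).

2. Fit a logistic regression to the sample S = {(xi, yi) : zi = 1} to obtain unadjusted estimates θ̂S = (α̂S, β̂S).

3. Assign α̂ ← α̂S + α̃ and β̂ ← β̂S + β̃.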

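As before, steps (2)–(3) are equivalent to fitting a logistic regression in the subsample with offsets −α̃ − β̃′xi. The zi are generated as in Section 2, and the adjustment is again justified by (8)–(10), only now with the constant b replaced by

$$b(x) = \log\frac{a(x, 1)}{a(x, 0)} = \log\frac{1 - \tilde p(x)}{\tilde p(x)} = -\tilde\alpha - \tilde\beta' x. \tag{17}$$

In other words, the subsample is drawn from a measure ℙS with

$$g(x) = f(x) + b(x) = f(x) - \tilde\alpha - \tilde\beta' x. \tag{18}$$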

If f(x) is well approximated by the pilot estimate, then g(x) ≈ 0 throughout feature space. That is, conditional on selection into S, yi given xi is nearly a fair coin toss.

To motivate this choice heuristically, recall that the Fisher information for the log-odds of a Bernoulli random variable is maximized when the probability is 1/2: fair coin tosses are more informative than heavily biased ones. In effect, local case-control sampling tilts the conditional distribution of Y given X = x to make each yi in the subsample more informative. We then fit a logistic regression in the more favorable sampling measure, and “tilt back” to obtain a valid estimate for the original population.

In marginally imbalanced data sets where ℙ(Y = 1|X = x) is small everywhere in the predictor space, a good pilot has p̃(x) ≈ 0 for all x, and the number of cases discarded by this algorithm will be quite small. If we wish to avoid discarding any cases, we can always modify the algorithm so that instead of keeping (x, 1) with probability a(x, 1), we keep it with probability 1 and assign weight a(x, 1).
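The local case-control algorithm of this section can be sketched as follows. This is an illustrative sketch, not a reference implementation: the function names, the Newton–Raphson fitter, and the convention that the intercept is absorbed as column 0 of `X` (so that the pilot is a single vector `theta_pilot`) are all assumptions of the example.

```python
import numpy as np

def sigmoid(z):
    # Numerically stable logistic function.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def fit_logistic(X, y, n_iter=50, tol=1e-10):
    """Plain Newton-Raphson logistic regression (hypothetical helper)."""
    theta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        prob = sigmoid(X @ theta)
        grad = X.T @ (y - prob)
        hess = (X * (prob * (1.0 - prob))[:, None]).T @ X
        step = np.linalg.solve(hess, grad)
        theta = theta + step
        if np.max(np.abs(step)) < tol:
            break
    return theta

def local_case_control_fit(X, y, theta_pilot, rng):
    """Local case-control subsampling with a given pilot estimate.

    X includes a leading column of ones; theta_pilot stacks the pilot
    intercept and slopes in the same order.
    """
    p_pilot = sigmoid(X @ theta_pilot)               # pilot probabilities
    accept_prob = np.abs(y - p_pilot)                # a(x, y) = |y - p_pilot(x)|
    keep = rng.uniform(size=len(y)) <= accept_prob   # step 1: accept-reject
    theta_sub = fit_logistic(X[keep], y[keep])       # step 2: unadjusted fit
    return theta_sub + theta_pilot, keep             # step 3: add the pilot back
```

With a reasonable pilot, `keep.mean()` is typically a small fraction in imbalanced problems, and the responses in the retained subsample are close to balanced at every x, which also tends to make the second-stage fit numerically easy.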

3.2. Choosing the pilot fit

In many applications, there may be a natural choice of pilot fit θ̃; for instance, if we are refitting a classification model every day to adapt to a changing world, then yesterday’s fit is a natural choice for today’s pilot.

If no pilot fit is available from such a source, we recommend an initial pass of weighted case-control sampling (described in Section 2.3) to obtain the pilot. Weighted case-control sampling using a fixed fraction of the original sample is itself √n-consistent and asymptotically unbiased for the true parameters. Consequently, if the pilot were fit using an independent data set, the second-stage estimate would enjoy consistency and asymptotic unbiasedness per the results in Section 4.

Our experiments suggest that mild inaccuracy in the pilot estimate, and using a data-dependent pilot, do not unduly degrade the performance of the local case-control algorithm. For example, in Simulation 2 of Section 5.2, the pilot is fifty times less efficient than the final local case-control estimate. The main role of the pilot fit is to guide us in discarding most of the data points for which yi is obvious given xi while keeping those for which yi is conditionally surprising.

In our example and simulations, we use a pilot sample about the same size as the local case-control subsample, on the principle that we can afford to spend about as much time computing the pilot as computing the second-stage estimate. When ℙ(Y = 1|X) is small throughout 𝒳, this rule amounts roughly to weighted case-control sampling using all the cases and one control per case. Although the above rule has worked reasonably well for us, at this time we can offer no finite-sample guarantees that any given pilot sample size is large enough.

Because standard case-control sampling amounts to local case-control sampling with a constant-only pilot fit, we might expect that the pilot fit need not be perfect to improve upon case-control sampling. Our experiments in Sections 5 and 6 support this intuition.

3.3. Taking a larger or smaller sample

As we will see in Section 4.3, under correct model specification, and with an independent and consistent pilot, the baseline procedure described above has exactly twice the asymptotic variance of a logistic regression estimated with the full sample, despite using a potentially very small subset of the data. We can improve upon this factor of two by increasing the size of the subsample.

One simple way to achieve this is to multiply all acceptance probabilities by some constant c, for example, c = 5. When deciding whether to sample the point (xi, yi), we would then generate zi ~ Bernoulli(ca(xi, yi) ∧ 1) and assign weight wi = ca(xi, yi) ∨ 1 to each sampled point. This amounts to a larger, weighted subsample from ℙS, and we can make the same correction to the estimates from the subsample. We see in Section 4.4 that for c > 1 the factor of two is replaced by a factor of 1 + 1/c.

In the case of large imbalance, most of the p̃(xi) are near 0 or 1. For c > 1, the marginal acceptance probability at xi becomes

$$\mathbb{P}(Z = 1 \mid X = x) = p(x)\,\bigl[c\,(1 - \tilde p(x)) \wedge 1\bigr] + (1 - p(x))\,\bigl[c\,\tilde p(x) \wedge 1\bigr] \tag{19}$$

$${} \approx (1 + c)\,p(x)(1 - p(x)), \tag{20}$$

where the approximation holds for p(x) ≈ p̃(x) ≈ 0 or 1. For c = 1, the marginal acceptance probability is p(x)(1 − p̃(x)) + (1 − p(x))p̃(x) ≈ 2p(x)(1 − p(x)), so for c > 1 we take roughly (1 + c)/2 times as many data points as for c = 1. For example, if c = 5, the subsample accepted is roughly 3 times as large, and the relative efficiency improves from 1/2 to 5/6.
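The acceptance rule and weights for general c, together with the arithmetic behind the "roughly 3 times as large" figure, can be checked with a short sketch. The function names are hypothetical, and `variance_factor` simply encodes the factor 1 + 1/c implied by the figures quoted above (2 for c = 1, and 20% above the full-sample MLE for c = 5); it is not derived here.

```python
import numpy as np

def scaled_acceptance(y, p_pilot, c):
    """Acceptance probability (c*a ∧ 1) and weight (c*a ∨ 1), with a = |y - p_pilot|."""
    ca = c * np.abs(y - p_pilot)
    return np.minimum(ca, 1.0), np.maximum(ca, 1.0)

def marginal_acceptance(p, p_pilot, c):
    """P(Z = 1 | X = x) when P(Y = 1 | X = x) = p and the pilot probability is p_pilot."""
    acc_case, _ = scaled_acceptance(1.0, p_pilot, c)      # acceptance if y = 1
    acc_control, _ = scaled_acceptance(0.0, p_pilot, c)   # acceptance if y = 0
    return p * acc_case + (1.0 - p) * acc_control

def variance_factor(c):
    # Asymptotic variance relative to the full-sample MLE, assumed 1 + 1/c for c >= 1.
    return 1.0 + 1.0 / c

# With strong imbalance and an accurate pilot, the subsample for c = 5 is
# about (1 + 5) / 2 = 3 times the size of the subsample for c = 1.
p = np.array([1e-4, 1e-3, 0.999])
size_ratio = marginal_acceptance(p, p, 5.0) / marginal_acceptance(p, p, 1.0)
```

Here `size_ratio` is close to 3 in every entry, while `variance_factor(5.0)` gives 1.2, matching the relative efficiency of 5/6 quoted above.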

Alternatively, if n is extremely large, even a small fraction of the full data set may still be more than we want. In that case, we can proceed as above with c < 1, or simply sample any desired number ns of data points uniformly from the local case-control subsample.

4. Asymptotics of the local case-control estimate

We now turn to examining the asymptotic behavior of the local case-control estimate. We first establish consistency, assuming a consistent pilot estimate θ̃. We expressly do not assume that the pilot estimate is independent of the data, since in some cases we may recycle into the subsample some of the data we used to calculate the pilot.

By assuming independence of θ̃ and the data, we can obtain finer results about the asymptotic distribution of θ̂. We show it is asymptotically unbiased when θ̃ is, and derive the asymptotic variance of the estimate. When the logistic regression model is correctly specified, the local case-control estimate has exactly twice the asymptotic variance of the MLE for the full data set.

4.1. Preliminaries

For better clarity of notation in this section, we will use the letter λ in place of θ̃ to denote pilot estimates. Additionally, we drop the notation α and absorb the constant term into x, so that fθ(x) = θ′x. To avoid trivialities, assume without loss of generality that there is no υ ∈ ℝ^p for which 𝔼|υ′X| = 0 (if not, we can discard redundant features).

For π ∈ [0, 1] define the “soft hinge” function

$$h_\pi(\eta) = \log\left(1 + e^{\eta}\right) - \pi\eta \tag{21}$$

and note that

$$\rho(\theta; x, y) = h_y(\theta' x). \tag{22}$$

As a function of η, h is positive and strictly convex, its magnitude is bounded by 1 + |η|, and it has Lipschitz constant max(π, 1 − π) ≤ 1. If π < 1, h diverges as η → ∞, and if π > 0 h diverges as η → −∞.
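The properties listed above can be checked numerically. The sketch below assumes that the soft hinge takes the form h_π(η) = log(1 + e^η) − πη, which is the form consistent with the stated positivity, the bound 1 + |η| and the Lipschitz constant max(π, 1 − π); the function names are hypothetical.

```python
import numpy as np

def soft_hinge(eta, pi):
    # Assumed form: h_pi(eta) = log(1 + e^eta) - pi * eta, computed stably.
    return np.logaddexp(0.0, eta) - pi * eta

def soft_hinge_slope(eta, pi):
    # Derivative in eta: sigmoid(eta) - pi, which lies in [-pi, 1 - pi].
    return 0.5 * (1.0 + np.tanh(0.5 * eta)) - pi

def logistic_loss(eta, y):
    # Negative Bernoulli log-likelihood at log-odds eta, for y in {0, 1}.
    prob = 0.5 * (1.0 + np.tanh(0.5 * eta))
    return -(y * np.log(prob) + (1 - y) * np.log(1.0 - prob))

eta = np.linspace(-20.0, 20.0, 401)
checks = {}
for pi in (0.0, 0.3, 1.0):
    h = soft_hinge(eta, pi)
    checks[pi] = (
        bool(np.all(h > 0.0)),                                   # positivity
        bool(np.all(h <= 1.0 + np.abs(eta))),                    # bounded by 1 + |eta|
        bool(np.all(np.abs(soft_hinge_slope(eta, pi)) <= max(pi, 1.0 - pi))),
        bool(np.all(np.diff(h, 2) > -1e-12)),                    # convexity on the grid
    )
```

For y ∈ {0, 1}, `soft_hinge(eta, y)` agrees with `logistic_loss(eta, y)` up to rounding, so under this assumed form the per-observation logistic loss is the soft hinge with π = y.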

As a function of λ, aλ(x, y) = |y − exp(λ′x)/(1 + exp(λ′x))| has Lipschitz constant ≤ ‖x‖. Hence, ā(λ) = 𝔼aλ(X, Y) ∈ (0, 1) with Lipschitz constant ≤ 𝔼‖X‖. The marginal acceptance probability given x is

| (23) |

Given pilot λ, the local case-control subsampling scheme effectively samples from the probability measure ℙλ, where

$$d\mathbb{P}_\lambda(x, y) = \frac{a_\lambda(x, y)}{\bar a(\lambda)}\,d\mathbb{P}(x, y), \tag{24}$$

and ā(λ) = ∫ aλ(x, y) dℙ(x, y) is the marginal probability of acceptance. Under this measure,

| (25) |

Because aλ(x, y) ≤ 1, if g(X, Y) is integrable under ℙ it is also integrable under any ℙλ.

Conditioning on X, we can write the population risk of the logistic regression parameters θ with respect to sampling measure ℙλ as

| (26) |

By Cauchy–Schwarz, the integrand in (26) is bounded by 2(1 + ‖θ‖‖x‖). If 𝔼‖X‖ < ∞, then, we may appeal to dominated convergence and take limits with respect to θ and λ inside the integral.

Rλ(θ) is strictly convex because the integrand is, and always has a unique population minimizer if there is no separating hyperplane in the population.

Lemma 1. Assume there is no υ for which

| (27) |

Henceforth, we refer to this assumption as nonseparability. Then Rλ(θ) attains a unique minimum for every λ ∈ ℝp.

Denote by the empirical risk on a local case-control subsample taken using the pilot estimate λ. Then

| (28) |

It will be somewhat simpler to replace the random subsample size with its expectation nā(λ). Define

| (29) |

Since minimizing (28) with respect to θ is equivalent to minimizing (29), the two are equivalent for our purposes.

If the unadjusted parameters θ̂S minimize R̂λ, the local case-control estimate θ̂ = θ̂S + λ is an M-estimator minimizing Q̂λ(θ) = R̂λ(θ − λ). We use analogous notation for the population version:

| (30) |

For any given pilot estimate λ and large n, we expect

| (31) |

Define the right-hand side of (31) to be θ̄(λ), the large-sample limit of local case-control sampling with pilot estimate fixed at λ. The best linear predictor for the original population corresponds to the case λ = 0 (uniform subsampling), that is, θ* = θ̄(0). Consistency means that for large n, θ̂ ≈ θ*.

Recall that if the model is correctly specified with true parameters θ0, then θ̄(λ) = θ0 for any fixed pilot estimate λ. Minimizing Q̂λ therefore yields a consistent estimate. Unfortunately, in the misspecified case θ̄(λ) ≠ θ̄(0) = θ*. In this sense, local case-control sampling with the pilot λ held fixed is in general not consistent for θ*. However, we see below that it is consistent if λ = θ*.

Proposition 2. Assume 𝔼‖X‖ < ∞, that the classes are nonseparable, and that θ* = θ̄(0) is the best linear predictor for the original measure ℙ. Then

| $\bar\theta(\theta^*) = \theta^*$ | (32) |

In other words, if we could only choose our pilot perfectly, then the local case-control estimate would converge to θ* as n → ∞.

Proof of Proposition 2. Write . The population optimality criterion for LCC with pilot λ is

| (33) |

| (34) |

Noting that , if we evaluate the above at λ = θ = θ*, we obtain

| (35) |

| (36) |

which is exactly half the population score (11) for the original population. Since θ* optimizes the risk for the original population, this value is 0.

There is an intuitive explanation of this result: in ℙθ*, the acceptance probabilities are p*(X) if Y = 0 and 1 − p*(X) if Y = 1; hence they play the same role as the pseudoresiduals Y − p*(X) did in the original measure ℙ. For example, the point (x, 0) would contribute p*(x)x to the gradient if we evaluated the full-sample score at θ*. Evaluating the subsample score at 0, the same point now contributes to the score—but only if it is accepted, which occurs with probability exactly p* (x). So, in essence, the subsampling stands in for the reweighting that we otherwise would have done when fitting our logistic regression to the full sample.

Of course, in practice we never have a perfect pilot (if we did, we would not need to estimate θ*), but Proposition 2 suggests that if λ is near θ*, minimizing Q̂λ yields a good estimate. In fact, we will see that if λn converges in probability to θ*, then θ̂n does as well.
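As a rough illustration of the scheme analyzed in this section, the NumPy sketch below accepts each point with probability |y − p̃(x)|, fits an unpenalized logistic regression to the accepted points, and adds the pilot back to the fitted coefficients. The function names (`fit_logistic`, `local_case_control`), the simulated data, and the use of the true parameter as an idealized pilot are assumptions made for this example only.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def fit_logistic(X, y, n_iter=25):
    """Newton-Raphson for unpenalized logistic regression.
    X is assumed to already contain an intercept column."""
    theta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ theta)
        hess = (X * (p * (1.0 - p))[:, None]).T @ X
        theta = theta + np.linalg.solve(hess, X.T @ (y - p))
    return theta

def local_case_control(X, y, pilot, rng):
    """Accept (x, y) with probability |y - p_pilot(x)|, fit on the
    accepted points, then add the pilot back to the coefficients."""
    accept_prob = np.abs(y - sigmoid(X @ pilot))
    keep = rng.uniform(size=len(y)) <= accept_prob
    theta_s = fit_logistic(X[keep], y[keep])
    return theta_s + pilot, keep

# Hypothetical demo: rare positives, correctly specified model,
# and an idealized pilot equal to the true parameter.
rng = np.random.default_rng(0)
n = 200_000
theta0 = np.array([-4.0, 1.0, 1.0])
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
y = (rng.uniform(size=n) < sigmoid(X @ theta0)).astype(float)

theta_hat, keep = local_case_control(X, y, pilot=theta0, rng=rng)
print("fraction of points kept:", keep.mean())
print("LCC estimate:", theta_hat)
```

In this idealized setting the subsample is a small fraction of the data, yet the adjusted estimate should land close to `theta0`; with an estimated pilot the same two steps apply, with `pilot` replaced by the pilot fit.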

4.2. Consistency

For our asymptotic results, assume we have an infinite reservoir (x1, y1), (x2, y2), … of i.i.d. pairs, a sequence of i.i.d. U(0, 1) variables u1, u2, … for making accept–reject decisions, and a sequence of pilot estimates λ1, λ2, …. The λn are possibly dependent upon the data, but the ui are assumed to be independent of everything else.

θ̂n is the local case-control estimate, computed using pilot λn, data (x1, y1), …, (xn, yn), and accept–reject decisions zi = 1{ui ≤ aλn(xi, yi)}.

The main result of this section is that if the pilot estimate λn is consistent for θ*, then so is θ̂n. The details are somewhat technical, especially the proof of Proposition 3, but the main idea is that if λn converges in probability to θ*, then for large n

| (37) |

in the appropriate sense. Q̂λn is what the local case-control estimate actually minimizes, whereas the last function is minimized by θ*, our ultimate target.

First, we establish pointwise convergence.

Proposition 3. If 𝔼‖X‖ < ∞ and λn → λ∞ in probability, then for each θ ∈ ℝp,

| (38) |

Because we avoid assuming independence between the pilot λn and the data (xi, yi), the proof is technical and is deferred to the Appendix. The proof relies on the coupling of the acceptance decisions zi for different pilot estimates through ui. With this coupling, two nearby pilot estimates will differ on very few accept–reject decisions.
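The coupling can be seen numerically. The sketch below reuses one set of uniforms ui for two nearby pilots and counts how many accept–reject decisions differ, then repeats the comparison with fresh uniforms for the second pilot. The data-generating model, the perturbation size, and all variable names are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000
X = np.column_stack([np.ones(n), rng.normal(size=n)])
theta_true = np.array([-2.0, 1.5])
y = (rng.uniform(size=n) < 1.0 / (1.0 + np.exp(-X @ theta_true))).astype(float)

def accept_prob(X, y, lam):
    """Local case-control acceptance probability |y - p_lam(x)|."""
    p = 1.0 / (1.0 + np.exp(-X @ lam))
    return np.abs(y - p)

lam_a = theta_true
lam_b = theta_true + np.array([0.01, -0.01])   # a nearby pilot

# Coupled decisions: both pilots are compared against the SAME u_i.
u = rng.uniform(size=n)
z_a = u <= accept_prob(X, y, lam_a)
z_b = u <= accept_prob(X, y, lam_b)
frac_diff_coupled = np.mean(z_a != z_b)

# Uncoupled decisions: the second pilot gets fresh uniforms.
u_fresh = rng.uniform(size=n)
z_b_indep = u_fresh <= accept_prob(X, y, lam_b)
frac_diff_indep = np.mean(z_a != z_b_indep)

print("coupled disagreement:  ", frac_diff_coupled)
print("uncoupled disagreement:", frac_diff_indep)
```

With shared uniforms, a decision flips only when ui falls between the two thresholds, so the disagreement rate is bounded by the average gap between the acceptance probabilities; with fresh uniforms it is far larger.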

Because neither Q̂λn(θ) nor Qλ∞(θ) changes very fast, pointwise convergence also implies uniform convergence on compacts.

Proposition 4. If 𝔼‖X‖ < ∞ and λn → λ∞ in probability, then for compact Θ ⊆ ℝp,

| (39) |

Proof. Define

| (40) |

By Proposition 3, Fn(θ) → 0 in probability pointwise. Next, we show it is Lipschitz. The integrand in (35) is x times two factors each bounded by ±1, hence

| (41) |

Similarly for Q̂λn, we have

| (42) |

so that

| (43) |

It follows that, with probability tending to 1, Fn(θ) has Lipschitz constant less than c = 3ā(λ∞)⁻¹𝔼‖X‖.

Now, for any ε > 0, we can cover Θ with finitely many Euclidean balls of radius δ = ε/c, centered at θ1, …, θM(ε). Let An(ε) be the event that Fn has Lipschitz constant less than c and

| (44) |

On An(ε), we have supθ∈Θ |Fn(θ)| < 2ε, and ℙ(An(ε)) → 1 as n → ∞.

Finally, we come to the main result of the section, in which we prove that the local case-control estimate is consistent when the pilot is. Because the functions are strictly convex, we can ignore everything but a neighborhood of θ*.

Theorem 5. Assume 𝔼‖X‖ < ∞ and the classes are nonseparable. If λn → θ* in probability, then the local case-control estimate θ̂n → θ* in probability as well.

Proof. Let Θ ⊆ ℝp be any compact set with θ* in its interior, and let

| (45) |

where the strict inequality follows from strict convexity. Uniform convergence implies that with probability tending to 1,

| (46) |

which implies in turn that

| (47) |

Whenever this is the case, the strictly convex function Q̂λn has a unique minimizer in the interior of Θ. Since Θ was arbitrary, we can take its diameter to be less than any δ > 0. Hence, θ̂n → θ* in probability.

4.3. Asymptotic distribution

In this section, we derive the asymptotic distribution of the local case-control logistic regression estimate, in the same asymptotic regime as the previous section. To prove our results here, we assume the pilot estimate λn is independent of our data set. This would not be the case if our pilot were based on a subsample of the data (the procedure we use for all our simulations), but it could hold if the pilot came from a model fitted to data from an earlier time period.

The main result of this section is that if the logistic regression model is correctly specified and the pilot is consistent, the asymptotic covariance matrix of the local case-control estimate for θ is exactly twice the asymptotic covariance matrix of a logistic regression performed on the entire data set. For the results in this section, we will need 𝔼‖X‖² < ∞.

It will be convenient to give names to some recurring quantities. First, we have seen that if 𝔼‖X‖ < ∞ we can differentiate Qλ(θ) inside the integral to obtain the gradient of the population risk:

| (48) |

| (49) |

Whereas G is the expectation of the logistic regression score with respect to ℙλ, we can also define its covariance matrix:

| (50) |

When 𝔼‖X‖² < ∞, J(θ, λ) is finite and is continuous in θ and λ by dominated convergence.

Since the derivatives of the integrand in (48) are uniformly bounded by 2‖x‖², dominated convergence implies we can again differentiate inside the integral. Differentiating with respect to θ we obtain

| (51) |

| (52) |

Here, the integrand is dominated by xx′, so dominated convergence again applies and thus we see that H is continuous in θ and λ. H(θ, λ) ≻ 0 for any θ, λ since we have assumed there is no nonzero υ for which 𝔼|υ′ X| = 0. Finally, define the matrix of crossed partials:

| (53) |

To be concrete, . Continuity of C again follows from noting the derivative of the integrand in (48) with respect to λ is dominated by 8‖x‖2.

To begin, we consider the behavior of θ̄(λ) for λ near θ*. By Proposition 2, we have G(θ*, θ*) = 0. Since H (θ, λ) ≻ 0, we can apply the implicit function theorem to the relation G(θ̄(λ), λ) = 0 to obtain

| (54) |

By standard M-estimator theory, if we fix λ and send n → ∞ the coefficients of a logistic regression performed on a sample of size |S| from ℙλ would be asymptotically normal with covariance matrix

| (55) |

In light of this and the fact that |S| ≈ ā(λ)n, we might predict the following.

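Theorem 6. Assume 𝔼‖X‖² < ∞. If λn → θ* in probability, independently of the data, then

| $\sqrt{n}\,\bigl(\hat\theta_n - \bar\theta(\lambda_n)\bigr) \xrightarrow{d} N\bigl(0,\ \bar a(\theta^*)^{-1}\,\Sigma\bigr)$ | (56) |

with Σ = H(θ*, θ*)⁻¹ J(θ*, θ*) H(θ*, θ*)⁻¹.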

Again, we defer the proof to the Appendix. We can combine (56) with (54) to immediately obtain the following reassuring facts.

Corollary 7. Assume 𝔼‖X‖² < ∞ and λn is a sequence of pilot estimators given independently of the data. Then:

If λn is √n-consistent, so is θ̂n.

If λn is asymptotically unbiased, so is θ̂n.

If then with

| (57) |

In (57), we have suppressed the arguments of θ* in H, C, ā and J.

The first term in (57) characterizes the contribution of conditional bias (given λn) to the overall variance, and the second is the contribution of conditional variance.

In the special case where the logistic regression model is correctly specified, we have the following.

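Theorem 8. Assume the logistic regression model is correct and let Σfull be the asymptotic variance of the MLE for the full sample. Then if 𝔼‖X‖² < ∞ and λn → θ0 in probability, independently of the data, we have

| $\sqrt{n}\,(\hat\theta_n - \theta_0) \xrightarrow{d} N(0,\ 2\Sigma_{\mathrm{full}})$ | (58) |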

Hence, although the size of a local case-control subsample is roughly nā(λ), the variance of θ̂ is the same as if we took a simple random sample of size n/2 from the full data set. In other words, each point sampled is worth about 1/(2ā(λ)) points sampled uniformly.

Proof of Theorem 8. If logistic regression is correctly specified for ℙ, it is also correctly specified for ℙλ, regardless of λ, so θ̄(λ) ≡ θ0. Furthermore, by standard maximum likelihood theory (the information equality), J(θ0, λ) = H(θ0, λ) for each λ. Therefore, (56) specializes to

| (59) |

But

| (60) |

If and λ = θ0, then (60) simplifies to

| (61) |

| (62) |

| (63) |

This result is surprisingly simple. No characterization like Theorem 8 is available for the case-control and weighted case-control estimates, whose variances are not simple scalar multiples of Σfull.
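A small Monte Carlo experiment gives a sanity check on the factor of two. The sketch below is an illustration under assumptions that are not from the original experiments: a two-parameter correctly specified model, the pilot fixed at the true θ0 (so it is independent of the data), and a plain Newton–Raphson fitter named `fit_logistic`. It compares the total variance of the local case-control estimate with that of the full-sample MLE across replications.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def fit_logistic(X, y, n_iter=25):
    """Newton-Raphson for unpenalized logistic regression."""
    theta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ theta)
        hess = (X * (p * (1.0 - p))[:, None]).T @ X
        theta = theta + np.linalg.solve(hess, X.T @ (y - p))
    return theta

rng = np.random.default_rng(2)
theta0 = np.array([-2.0, 1.0])     # hypothetical true parameters
n, reps = 20_000, 300
full_est, lcc_est, kept = [], [], []
for _ in range(reps):
    X = np.column_stack([np.ones(n), rng.normal(size=n)])
    p0 = sigmoid(X @ theta0)
    y = (rng.uniform(size=n) < p0).astype(float)
    full_est.append(fit_logistic(X, y))               # full-sample MLE
    keep = rng.uniform(size=n) <= np.abs(y - p0)      # pilot fixed at theta0
    lcc_est.append(fit_logistic(X[keep], y[keep]) + theta0)
    kept.append(keep.mean())

var_full = np.var(np.array(full_est), axis=0).sum()
var_lcc = np.var(np.array(lcc_est), axis=0).sum()
variance_ratio = var_lcc / var_full
mean_fraction_kept = float(np.mean(kept))
print("variance ratio (LCC / full):", variance_ratio)
print("average fraction of data kept:", mean_fraction_kept)
```

The ratio is itself a noisy estimate, so it should only be expected to fall in the neighborhood of 2, while the fraction of data kept stays well below one half.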

We can offer a simple heuristic argument for Theorem 8, similar to that of Proposition 2. In ℙθ0, the acceptance probability âλ(x) for an observation at x is 2p(x)(1 − p(x)), and given that it is accepted it contributes ¼xx′ to the observed information (the subsample fit is evaluated at 0, where the fitted probability is 1/2). In the full sample, a point at x is always accepted but contributes less, p(x)(1 − p(x))xx′, to the observed information. Again, the sampling probability stands in for the reweighting we would have done in the full sample. If p(x)(1 − p(x)) is very small, we are discarding most of the data instead of keeping all of it and assigning it a tiny weight in the fit.

The practical meaning of Theorem 8 is that local case-control sampling is most advantageous when ā(θ0) = 𝔼(|Y − p̃(X)|) is small, that is, when Y is easy to predict throughout much of the covariate space. This can happen as a result of marginal or conditional imbalance, or both. Standard case-control sampling can also improve our efficiency in the presence of marginal imbalance, but unlike local case-control sampling, it does not exploit conditional imbalance. Hence, we would expect local case-control to outperform standard case-control most dramatically when the conditional imbalance is very high, as in the simulation of Section 5.2.

For data-dependent pilots, the efficiency picture is somewhat more complicated. For example, θ̄(λ) is approximately a linear function of λ − θ*. Thus, if λ is unbiased but correlated with the noise in the data, we might get more or less variance relative to (58), depending on how this correlation interacts with C. If the model is correctly specified, it is less clear whether an adversarially chosen pilot can affect the efficiency.

Either way, we do not anticipate serious problems from nonindependence. To stress-test our results against violations of independence, we expressly use a data-dependent pilot for all of our experiments: namely, a weighted case-control sample with sample points allowed to be recycled for the second-stage fit.

4.4. Variance for a larger sample

In Section 3.3, we proposed increasing the size of the local case-control subsample by multiplying all the acceptance probabilities a(x, y) by a constant c > 1 and assigning weight w = ca(x, y) when ca(x, y) > 1. We analyze the asymptotic variance here as a function of c. To simplify matters, suppose the model is correctly specified and λ is fixed at θ0.

The weighted log-likelihood for the subsample and its derivatives are then

| (64) |

| (65) |

| (66) |

Conditionally on x, there is a p(x)·(c(1 − p(x)) ∧ 1) chance y = z = 1 and w = c(1 − p(x))∨1, where p(x) = pθ0(x). Similarly, there is a (1 − p(x))·(cp(x) ∧ 1) chance y = 0, z = 1, and w = cp(x) ∨ 1. We immediately obtain

| (67) |

The expectation and variance of the score evaluated at 0 are

| (68) |

| (69) |

| (70) |

and the expected Hessian is

| (71) |

We have derived

| (72) |

For c = 1, we recover the factor of two from (58), but with c = 5, for example, we pay only a 20% increase in variance relative to the full sample.
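The scaled acceptance rule and its variance cost can be sketched in a few lines. The helper names below are hypothetical, and the form 1 + 1/c for the variance factor is an assumption inferred from the two values quoted in the text (a factor of 2 at c = 1 and a 20% increase at c = 5), not a formula copied from (72).

```python
import numpy as np

def scaled_acceptance(a, c):
    """Given baseline acceptance probabilities a and a constant c >= 1,
    return (acceptance probability, weight) = (min(c*a, 1), max(c*a, 1)).
    Their product is always c*a, so points that would be 'over-accepted'
    are kept with probability 1 and carry the excess as a weight."""
    ca = c * np.asarray(a, dtype=float)
    return np.minimum(ca, 1.0), np.maximum(ca, 1.0)

def variance_factor(c):
    """Assumed asymptotic variance relative to the full-sample MLE."""
    return 1.0 + 1.0 / c

probs, weights = scaled_acceptance([0.1, 0.5], c=5)
for c in (1, 2, 5, 10):
    print(c, variance_factor(c))
```

Under this assumed form, the marginal benefit of enlarging the subsample shrinks quickly: most of the gap to the full-sample variance is closed by moderate values of c.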

5. Simulations

Here, we compare our method to standard weighted and unweighted case-control sampling for two-class Gaussian models like the one considered in Section 2.2. The standard case-control estimates use a 50–50 split between the two classes.

5.1. Simulation 1: Two-class Gaussian, different variances

We begin with a five-dimensional two-class Gaussian simulation where the classes have different covariance matrices. If X|Y = y ~ N(μy, Σy), then

| (73) |

Equation (73) is linear if Σ1 = Σ0, and quadratic otherwise, so if the two covariance matrices were the same the linear logistic model would be correctly specified. In this case the model is incorrectly specified, letting us compare the behavior of the different methods under model misspecification.

Take ℙ(Y = 1) = 1%, μ0 = 0, and μ1 = (1, 1, 1, 1, 4)′. The covariance matrices are Σ0 = diag(1, 1, 1, 1, 9) and Σ1 = I5. Hence f(x) is additive, but with a nonzero quadratic term in x5.

For our simulation, we first generate a large (n = 10⁶) sample from the population described above. Second, we obtain a pilot model using the weighted case-control method on ns = 1000 data points. Next, we take a local case-control sample of size 1000 using that pilot model.

For comparison, we obtain standard case-control (CC) and weighted case-control (WCC) estimates. For the comparison estimators we do not use a sample of size 1000 again but rather use the total number of observations seen by the LCC model or the pilot model, roughly 2000, so the LCC estimate must pay for its pilot sample. We repeat this entire procedure 1000 times.
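For readers who want to reproduce the population of Simulation 1, a minimal data generator might look as follows. The function name `simulate_sim1` and the random seed are assumptions; the class prevalence, means, and covariances are the ones stated above.

```python
import numpy as np

def simulate_sim1(n, rng, prevalence=0.01):
    """Draw (X, y) with P(Y = 1) = prevalence,
    X | Y = 0 ~ N(0, diag(1, 1, 1, 1, 9)) and X | Y = 1 ~ N(mu1, I_5)."""
    mu1 = np.array([1.0, 1.0, 1.0, 1.0, 4.0])
    sd0 = np.sqrt(np.array([1.0, 1.0, 1.0, 1.0, 9.0]))
    y = (rng.uniform(size=n) < prevalence).astype(int)
    X = rng.normal(size=(n, 5))     # standard normal draws for every row
    X[y == 0] *= sd0                # class 0: rescale the fifth coordinate
    X[y == 1] += mu1                # class 1: shift by mu1
    return X, y

rng = np.random.default_rng(3)
X, y = simulate_sim1(200_000, rng)
print("share of positives:", y.mean())
```

A pilot fit and a local case-control subsample can then be drawn from `(X, y)`; the smaller n used here, compared with the n = 10⁶ of the text, only keeps the example light.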

Table 2 shows the squared bias and variance of β̂ over the 1000 realizations for each of the three methods. As expected, we face a bias-variance tradeoff in choosing between the WCC and CC methods, whereas the LCC method improves substantially on the bias of CC and the variance of WCC. Standard errors for both bias and variance are computed via bootstrapping the 1000 realizations.

Table 2.

Estimated bias and variance of β̂ for each sampling method. For β̂ ∈ ℝᵖ, we define Bias² = ‖𝔼β̂ − β‖² and Var = 𝔼‖β̂ − 𝔼β̂‖².

| Simulation | Method | Bias² | (s.e.) | Var | (s.e.) |
|---|---|---|---|---|---|
| Simulation 1 (Σ0 ≠ Σ1 ⇒ model misspecified) | LCC | 0.0049 | (0.00031) | 0.025 | (0.00059) |
| | WCC | 0.023 | (0.0022) | 0.16 | (0.0038) |
| | CC | 0.15 | (0.0016) | 0.043 | (0.00096) |
| Simulation 2 (Σ0 = Σ1 ⇒ model correct) | LCC | 0.0037 | (0.0083) | 0.039 | (0.00045) |
| | WCC | 0.59 | (0.064) | 1.7 | (0.017) |
| | CC | 0.06 | (0.042) | 0.87 | (0.0086) |

More surprising is the fact that LCC enjoys smaller bias than WCC and smaller variance than CC, dominating the other two methods on both measures. The improvement in variance over the CC estimate is likely due to the conditional imbalance present in the sample, while the improvement in bias over the WCC estimate may come from the fact that the methods are only unbiased asymptotically and the LCC estimate is closer to its asymptotic limiting behavior.

5.2. Simulation 2: Two-class Gaussian, same variance

Next, we simulate a two-class Gaussian model with each class having the same variance, so that the true log-odds function f is linear. We also increase the dimension to 50 for this simulation.

Since the model is now correctly specified, all three methods are asymptotically unbiased. However, in this case we introduce more substantial conditional imbalance, to demonstrate the variance-reduction advantages of local case-control sampling in that setting.

For this example, ℙ(Y = 1) = 10%, , μ0 = 0₅₀, and Σ0 = Σ1 = I₅₀. We repeat the procedure from Section 5.1, now with ns = 10⁴. Instead of generating a full sample, the full data set is implicit and we sample directly from ℙS.

In this example, the difference between the methods is more dramatic. Table 2 shows the squared bias and variance of the three methods. Here, local case-control enjoys substantially better variance than the other two methods, improving on CC more than twenty-fold. For the correct pilot model, ā(θ0) is roughly 0.005, so the local case-control subsample size is around n/200. Since the model is correctly specified, the variance is roughly twice that of logistic regression on the full sample of size n. In other words, local case-control subsampling is roughly 100 times more efficient than uniform subsampling.

Asymptotically, all three methods are unbiased but it appears that LCC again enjoys a smaller bias in finite samples.
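The efficiency figure quoted for this simulation is simple arithmetic: a subsample of about nā points that behaves like n/2 uniformly sampled points makes each retained point worth about 1/(2ā) uniform ones. The helper name below is hypothetical.

```python
def lcc_points_equivalent(a_bar):
    """Number of uniformly sampled points matched by one local
    case-control point, assuming the subsample has about n * a_bar
    points and twice the full-sample variance."""
    return 1.0 / (2.0 * a_bar)

a_bar = 0.005                               # value quoted for Simulation 2
inverse_fraction = 1.0 / a_bar              # subsample is about n / 200
efficiency = lcc_points_equivalent(a_bar)   # points-equivalent per sample
print(inverse_fraction, efficiency)
```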

6. Web spam data set

Relative to standard case-control sampling, local case-control sampling is especially well-suited for data sets with significant conditional imbalance, that is, data sets in which yi is easy to predict for most xi.

One such application is spam filtering. To demonstrate the advantages of local case-control sampling and compare asymptotic predictions to actual performance, we test our method on the Web Spam data available on the LIBSVM website and originally from Webb, Caverlee and Pu (2006). The data set contains 350,000 web pages, of which about 60% are labeled as “web spam,” that is, web pages designed to manipulate search engines rather than display legitimate content. This data set is marginally balanced, though as we will see the conditional imbalance is considerable.

As features, we use frequency of the 99 unigrams that appeared in at least 200 documents, log-transformed with an offset so as to reduce skew in the features. In this data set, the downsampling ratio ā is around 10%, that is, when using a good pilot we will retain about 10% of the observations.

Since we only have a single data set, we use subsampling as a method to assess the sampling distribution of our estimators. In each of 100 replications, we begin by taking a uniform subsample of size n = 100,000 from the population of 350,000 documents. After obtaining 100 data sets of size n = 100,000, we use the same procedure as we used in our two simulations with nS = 10,000.

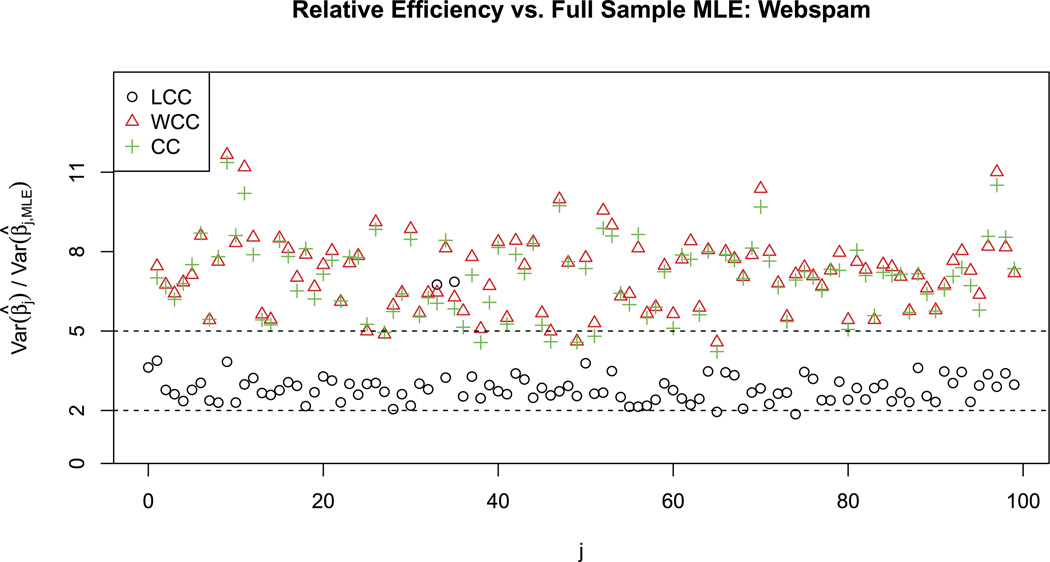

Our asymptotic theory predicts that the variance of the local case-control sampling estimate of θ should be a little more than twice the variance using the full sample (more because the model is misspecified and our pilot has some variance). Because the full sample is close to marginally balanced, the standard case-control sampling methods should do about as well as a uniform subsample of size 20,000—that is, they should have variance roughly 5 times that of the full sample.

Note that 20,000 is roughly twice the size of the local case-control sample, since we are counting the pilot sample against the local case-control method. If we had a readily available pilot model, as we would in many applications, it would be more relevant to give the CC and WCC methods access to only 10,000 data points, doubling their variance relative to the observed variance in this experiment.
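The predicted variance ratios in the last two paragraphs follow from treating a uniform subsample of size m as having roughly n/m times the full-sample variance. A short check, with a hypothetical helper name:

```python
def uniform_relative_variance(n_full, n_sub):
    """Approximate variance of a uniform-subsample fit relative to the
    full-sample fit, assuming variance scales as 1 / sample size."""
    return n_full / n_sub

n = 100_000
with_pilot_budget = uniform_relative_variance(n, 20_000)     # CC/WCC given 20,000 points
without_pilot_budget = uniform_relative_variance(n, 10_000)  # CC/WCC given 10,000 points
lcc_prediction = 2.0   # asymptotic prediction; "a little more" in practice
print(with_pilot_budget, without_pilot_budget, lcc_prediction)
```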

The theoretical predictions come reasonably close in this experiment, as shown in Figure 3. The horizontal axis indexes each of the 100 coefficients to be fit (there are 99 covariates and an intercept), and the vertical axis gives the variance of each estimated coefficient, relative to the variance of the same coefficient in a model fitted to the full sample.

Fig. 3.

Relative variance of coefficients for different subsampling methods. The theoretical predictions (2× variance for local case-control, 5× variance for standard) are reasonably close to the mark, though a bit optimistic.

The magnitude of our improvement over standard case-control sampling is substantial here, but could be much larger in a data set with an even stronger signal. The key point is that standard case-control methods have no way to exploit conditional imbalance, so the more there is, the more local case-control dominates the other methods.

7. Discussion

We have shown that in imbalanced logistic regression, we can speed up computation by subsampling the data in a biased fashion and making a post-hoc correction to the coefficients estimated in the subsample. Standard case-control sampling is one such scheme, but it has two main flaws: it has no way to exploit conditional imbalance, and when the model is misspecified it is inconsistent for the population risk minimizer.

Local case-control sampling generalizes standard case-control sampling to address both flaws, subsampling with a bias that is allowed to depend on both x and y. When the pilot is consistent, our estimate is consistent even under misspecification, and if the model is correct then local case-control sampling has exactly twice the asymptotic variance of logistic regression on the full data set. Our simulations suggest that local case-control performs favorably in practice.

7.1. Translating computational gains to statistical gains

In the Introduction, we motivated our inquiry by identifying four ways that computational gains can translate to statistical ones. Specifically, we suggested that computational savings can:

1. enable us to experiment with and prototype a variety of models, instead of trying only one or two,
2. allow us to refit our models more often to adapt to changing conditions,
3. allow for cross-validation, bagging, boosting, bootstrapping, or other computationally intensive statistical procedures, or
4. open the door to using more sophisticated statistical techniques on a compressed data set.

It is relatively clear how our proposed method can help with points (1) and (2). As for point (3), faster fitting procedures can directly speed up straightforward resampling techniques like bootstrapping or cross-validation, possibly making them feasible at scales where they previously were not. We discuss in Section 7.2 how it can also help with boosting.

The basic method as we have described it above does not deliver on point (4), because the pilot model and second-stage model are the same. However, an extension of our method can help, which we discuss below.

There is no reason in principle why the pilot model must be linear, or belong to the same model class as the model we fit to the local case-control sample. We can use any pilot predictions in the sampling algorithm, and then model the log-odds in the subsample quite flexibly—by a GAM, kernel logistic regression, random forests or any other method—so long as we can use offsets − f̃(xi) in the second-stage procedure. For example, we could use as our pilot fit a simple model with a few important variables explaining most of the response, and in the second stage estimate more complex models refining the first.

Formally, our theoretical results may not cover this use. Suppose the second-stage model can be written as a logistic regression after some basis expansion. Then consistency of the second-stage estimate requires either that the pilot be consistent (the new variables contribute nothing to the population fit) or that the second-stage model be correctly specified. If neither of these assumptions holds approximately, then our estimate could be biased—though perhaps not as biased as case-control sampling, which is a special case of local case-control with an intercept-only pilot.

If we are prototyping, guarantees of consistency may not be a high priority. If they are, then as with case-control sampling, we can repair the inconsistency of the local case-control estimate by using a Horvitz–Thompson estimator with weights aθ̃(xi, yi)⁻¹. This may come at a cost of some added variance. It would be interesting to examine the bias of local case-control and the variance of weighted local case-control in this more general problem setting.
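A weighted variant along these lines could be sketched as follows: sample with the usual acceptance probabilities, then fit a logistic regression to the accepted points with inverse-probability weights, without adding the pilot back. The function names, the simulated data, and the deliberately crude pilot (which ignores one feature) are assumptions made for illustration.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def fit_weighted_logistic(X, y, w, n_iter=25):
    """Newton-Raphson for logistic regression with observation weights w."""
    theta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ theta)
        hess = (X * (w * p * (1.0 - p))[:, None]).T @ X
        theta = theta + np.linalg.solve(hess, X.T @ (w * (y - p)))
    return theta

def weighted_lcc(X, y, pilot_logodds, rng):
    """Sample with a = |y - p_pilot(x)|, then fit with weights 1 / a.
    No pilot is added back: the weights undo the sampling bias."""
    a = np.abs(y - sigmoid(pilot_logodds))
    keep = rng.uniform(size=len(y)) <= a
    theta = fit_weighted_logistic(X[keep], y[keep], 1.0 / a[keep])
    return theta, keep

# Hypothetical demo with a crude pilot that uses only the first feature.
rng = np.random.default_rng(4)
n = 200_000
theta0 = np.array([-2.0, 1.0, 0.5])
X = np.column_stack([np.ones(n), rng.normal(size=(n, 2))])
y = (rng.uniform(size=n) < sigmoid(X @ theta0)).astype(float)
crude_pilot = -2.0 + 0.8 * X[:, 1]

theta_w, keep = weighted_lcc(X, y, crude_pilot, rng)
print("weighted LCC estimate:", theta_w)
print("fraction kept:", keep.mean())
```

Because the weights restore the original population, the second-stage model here targets θ directly, whatever the quality of the pilot; the price is the extra variance mentioned above when some accepted points carry large weights.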

7.2. Extensions

This work suggests extensions in several directions, described below.

Indifference point other than 50%

In some applications (e.g., diagnostic medical screening), a false negative may be more costly than a false positive, or vice-versa. One of the implications of the discussion in Section 2.2 is that the Bernoulli log-likelihood implicitly places most emphasis on approximating the log-odds well near the 0 (50% probability) level curve, which may not be appropriate if the decision boundary relevant to our application is at 10%. In general, we would expect to obtain a better model in the large-n limit if we target the decision boundary we care most about.

In a sense, the reason that standard case-control sampling performed so badly in Example 2 of Section 2.2 is that it targeted a level curve of ℙ(Y = 1|X = x) other than 50%. Specifically, it targeted the level curve corresponding to 50% in the subsampling population for equal-sampled case-control sampling, which corresponds to the marginal ℙ(Y = 1) level curve in the original population.

What happened by accident in Example 2 need not always be one, and it would be interesting to generalize our procedure so as to target any chosen decision threshold. More generally still, our indifference point could depend on our features x—in online advertising, for instance, some advertisers may be willing to pay more per click than others.

Boosting

In Section 7.1 we suggested using offsets to obtain a complex second-stage fit. Alternatively, we can obtain any fitted log-odds function fs(x) for the sample and simply add it to the pilot f̃(x) to obtain an estimate for f(x).

This observation suggests the possibility of iteratively fitting a “base model” to the subsample, then adding it to f̃(x) to obtain a new pilot for the next iteration. Indeed, that iterative algorithm is closely related to the AdaBoost algorithm of Freund and Schapire (1997). Even more similarly to AdaBoost, we could weight each point by |yi − p̃(xi)| instead of sampling it with that probability.

Friedman, Hastie and Tibshirani (2000) show that the AdaBoost algorithm can be thought of as fitting a logistic regression model additive in base learners. In AdaBoost, the function simply records the number of classifiers fm classifying x as belonging to class +1 minus the number classifying it as class −1, and Friedman et al. show that can be thought of as approximating the log-odds of Y = +1 given X = x.

The difference is that while AdaBoost weights the point (xi, yi) by exp{−(2yi − 1)Fm(xi)}, the local case-control version would use weights

| (74) |

Operationally, this alternative weighting scheme limits the influence of “outliers,” that is, hard-to-classify points that can unduly drive the AdaBoost fit.

Logistic regression with regularization

In high-dimensional settings, lasso- or ridge-penalized logistic regressions are often preferable to standard logistic regression, the model considered here. One could use local case-control sampling with a regularized version of logistic regression, but our asymptotic results might need revisiting in such a case—especially in a high-dimensional asymptotic regime [p ≫ n or p/n → γ ∈ (0, ∞)]. Since the high-dimensional setting is important in modern statistics and machine learning, this bears further investigation.

Other generalized linear models

One way of viewing the method is as a way of “tilting” the conditional distribution of Y by a linear function of X in the natural parameter space so as to enrich our subsample for more informative observations. We could use similar tricks on other GLMs.

For instance, suppose we are given a Poisson variable with natural parameter η = log 𝔼Y. By sampling with acceptance probability proportional to exp(ξY), we obtain (conditional on acceptance) a Poisson with natural parameter η + ξ. Since Poisson variables with larger means carry more information, this could yield a substantial improvement over uniform subsampling.

If our data arise from a Poisson GLM with η(x) ≈ α + β′x, we could generalize the local case-control scheme by sampling (xi, yi) with probability proportional to exp{(ξ0 − α − β′xi)yi}, where the extra parameter ξ0 guarantees that we always tilt the conditional mean of yi upward. Similar generalizations may apply for multinomial logit and survival models.
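The Poisson tilting identity is easy to check by simulation. The sketch below is only a numerical illustration, and it makes one simplifying assumption: it uses ξ ≤ 0, so that exp(ξy) is itself a valid acceptance probability with no normalizing constant (this tilts the mean downward; an upward tilt would need a bounded proportionality constant, which is not addressed here). The parameter values are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
eta, xi = np.log(3.0), -0.7           # natural parameter and tilt (xi <= 0)
y = rng.poisson(np.exp(eta), size=500_000)

# Accept each draw with probability exp(xi * y), which lies in (0, 1].
keep = rng.uniform(size=y.size) <= np.exp(xi * y)
accepted = y[keep]

tilted_mean = np.exp(eta + xi)        # mean of a Poisson with parameter eta + xi
print("accepted mean:    ", accepted.mean())
print("accepted variance:", accepted.var())
print("predicted mean:   ", tilted_mean)
```

For a Poisson variable the mean and variance coincide, so both empirical summaries of the accepted draws should sit near `tilted_mean`.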

Acknowledgements

The authors are grateful to Jerome Friedman for suggesting that we investigate the bias of case-control sampling, and to Nike Sun for helpful comments and suggestions.

APPENDIX A

PROOF OF LEMMA 1 (UNIQUENESS OF θ*)

Because Rλ(θ) is strictly convex, it is sufficient to show that Rλ(θ) → ∞ as θ → ∞ in any direction.

Assume w.l.o.g. there is some neighborhood N ⊆ ℝp for which

| (75) |

h(η; πN) is linear in its second argument, and is increasing for sufficiently large η. Thus, for large enough ‖θ‖ε, the population risk for ℙ is

| (76) |

| (77) |

| (78) |

ℙλ ≫ ℙ for any λ, so (75) holds for ℙλ with the same N (but a different πN < 1). Thus, we can repeat the same argument with ℙ replaced by ℙλ.

APPENDIX B

PROOF OF PROPOSITION 3 (POINTWISE CONVERGENCE)

Fix θ and begin by writing

| (79) |

Let be the Bernoulli selection decisions, generated by comparing mutually independent ui ~ U(0, 1) to the threshold aλ(xi, yi). The are independent conditional on λ and the data. Also, write , so that .

By the Cauchy–Schwarz inequality, we have

| (80) |

Now, for δ > 0 define Λδ = {λ: ‖λ − λ∞‖ < δ}. For λ ∈ Λ1, we have

| (81) |

which is integrable by assumption. Finally let 𝔼n denote an average taken over indices i = 1, …, n, that is, . Then

| (82) |

By continuity, . Therefore, it suffices to show . Because by the law of large numbers, it suffices equally well to show that .

Now fix ε > 0 and take K large enough that 𝔼(m·1{m > K}) < ε. For λn ∈ Λ1 we have

| (83) |

With probability one the second term is eventually less than 2ε. Further, for λn ∈ Λδ, we have

| (84) |

| (85) |

Now, write

| (86) |

iff ui lies between aλn (xi, yi) and aλ∞(xi, yi). Hence, conditionally on λn and the data, the di are mutually independent nonnegative random variables bounded by K with means

| (87) |

since ∇λaλ(xi, yi) ≤ ‖xi‖ < mi.

Continuing, we have

| (88) |

| (89) |

Conditioning on λ and {(xi, yi)}, the first term is a sum of independent zero-mean random variables that are bounded in absolute value by K. By Hoeffding’s inequality,

| (90) |

Since this bound is deterministic, the same applies to the unconditional probability that 𝔼n(d − μ) is large. Take δ = ε/(K + K²). With probability tending to 1, λn ∈ Λδ and the event in (90) holds, in which case

| (91) |

Since ε was arbitrary, the proof is complete.

APPENDIX C

PROOF OF THEOREM 6 [DISTRIBUTION OF θ̂ − θ̄(λ)]

By the mean value theorem, we have for each n

| (92) |

where ϕn is some convex combination of θ̂n and θ̄(λn). Noting that the LHS is by definition 0 and rearranging, we obtain

| (93) |

If we can show the first factor tends in probability to and the second tends in distribution to N(0, ā(θ*)−1 J(θ*, θ*)), then by Slutsky’s theorem we have the desired result.

Using the Skorokhod construction define a joint probability space for λn such that . We will condition on the sequence λn and use a triangular array central limit theorem for the random variables

| (94) |

| (95) |

Because λn is independent of the data, 𝔼(f(gni)|λn, zni = 1) = 𝔼λn(f(gni)) for any f. The triangular array CLT applies since

| (96) |

| (97) |

| (98) |

| (99) |

| (100) |

Therefore, defining and Z = N(0, a(θ*)−1 J(θ*, θ*)), the CLT tells us ℙ(Sn ∈ A|λn) → ℙ(Z ∈ A) whenever λn → θ*, which we assumed occurs with probability 1. By dominated convergence, we also have ℙ(Sn ∈ A) → ℙ (Z ∈ A).

Next we turn to the Hessian. We have by Theorem 5, so as well. Writing

| (101) |

we need to show that

| (102) |

Note that , which is integrable; hence . Since ā is continuous and strictly positive, and , it suffices to show that

| (103) |

Note that , and define .

Following the structure of the proof of Proposition 3, take K large enough that 𝔼(‖x‖²·1{‖x‖ > K}) < ε and truncate the hi:

| (104) |

| (105) |

The second term is eventually less than 2ε. Now, is small, because

| (106) |

Hence, by Cauchy–Schwarz

| (107) |

So on the event {max ‖λn − θ*‖, ‖ϕn − θ*‖ < δ}, we have

| (108) |

| (109) |

| (110) |

Finally, we can bound the first term exactly as we did in the proof of Proposition 3, defining and μi = 𝔼(di|xi, yi, λn) ≤ δK3. The same argument implies ℙ(𝔼n(d − μ) ≥ ε) ≤ 2 exp[−nε2/(2K4)], so as n → ∞ we have with probability approaching 1,

| (111) |

| (112) |

so taking δ < ε/K³, the right-hand side is less than 5ε.

Footnotes

Supported by NSF VIGRE Grant DMS-05-02385.

Supported in part by NSF Grant DMS-10-07719 and NIH Grant RO1-EB001988-15.

Contributor Information

William Fithian, Email: wfithian@stanford.edu.

Trevor Hastie, Email: hastie@stanford.edu.

REFERENCES

- Anderson JA. Separate sample logistic discrimination. Biometrika. 1972;59:19–35. MR0345332.

- Bottou L, Bousquet O. The tradeoffs of large scale learning. Adv. Neural Inf. Process. Syst. 2008;20:161–168.

- Breslow NE, Cain KC. Logistic regression for two-stage case-control data. Biometrika. 1988;75:11–20. MR0932812.

- Breslow NE, Day NE, et al. Statistical Methods in Cancer Research. The Analysis of Case-Control Studies 1. Geneva, Switzerland: Distributed for IARC by WHO; 1980.

- Chawla NV, Japkowicz N, Kotcz A. Editorial: Special issue on learning from imbalanced data sets. ACM SIGKDD Explor. Newsl. 2004;6:1–6.

- Fears TR, Brown CC. Logistic regression methods for retrospective case-control studies using complex sampling procedures. Biometrics. 1986;42:955–960.

- Freund Y, Schapire RE. A decision-theoretic generalization of online learning and an application to boosting. J. Comput. System Sci. 1997;55:119–139. MR1473055.

- Friedman J, Hastie T, Tibshirani R. Additive logistic regression: A statistical view of boosting. Ann. Statist. 2000;28:337–407. MR1790002.

- He H, Garcia EA. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009;21:1263–1284.

- Horvitz DG, Thompson DJ. A generalization of sampling without replacement from a finite universe. J. Amer. Statist. Assoc. 1952;47:663–685. MR0053460.

- Huber PJ. Robust Statistics. Berlin: Springer; 2011.

- Lumley T, Shaw PA, Dai JY. Connections between survey calibration estimators and semiparametric models for incomplete data. Int. Stat. Rev. 2011;79:200–220. doi: 10.1111/j.1751-5823.2011.00138.x.

- Mani I, Zhang I. kNN approach to unbalanced data distributions: A case study involving information extraction. Proceedings of Workshop on Learning from Imbalanced Datasets. Washington, DC: ICML; 2003.

- Manski CF, Thompson TS. Estimation of best predictors of binary response. J. Econometrics. 1989;40:97–123. MR0975759.

- Mantel N, Haenszel W. Statistical aspects of the analysis of data from retrospective studies of disease. J. Natl. Cancer Inst. 1959;22:719–748.

- Owen AB. Infinitely imbalanced logistic regression. J. Mach. Learn. Res. 2007;8:761–773. MR2320678.

- Prentice RL, Pyke R. Logistic disease incidence models and case-control studies. Biometrika. 1979;66:403–411. MR0556730.

- Scott AJ, Wild CJ. Fitting logistic models under case-control or choice based sampling. J. Roy. Statist. Soc. Ser. B. 1986;48:170–182. MR0867995.

- Scott AJ, Wild CJ. Fitting logistic regression models in stratified case-control studies. Biometrics. 1991;47:497–510. MR1132540.

- Scott A, Wild C. On the robustness of weighted methods for fitting models to case-control data. J. R. Stat. Soc. Ser. B Stat. Methodol. 2002;64:207–219. MR1904701.

- Webb S, Caverlee J, Pu C. Introducing the webb spam corpus: Using email spam to identify web spam automatically. Proceedings of the Third Conference on Email and Anti-Spam (CEAS); CEAS; Mountain View, CA. 2006.

- Weinberg CR, Wacholder S. The design and analysis of case-control studies with biased sampling. Biometrics. 1990;46:963–975.

- Xie Y, Manski CF. The logit model and response-based samples. Sociol. Methods Res. 1989;17:283–302.