Summary

Evidence-based rules for optimal treatment allocation are key components in the quest for efficient, effective health care delivery. Q-learning, an approximate dynamic programming algorithm, is a popular method for estimating optimal sequential decision rules from data. Q-learning requires the modeling of nonsmooth, nonmonotone transformations of the data, complicating the search for adequately expressive, yet parsimonious, statistical models. The default Q-learning working model is multiple linear regression, which is not only provably misspecified under most data-generating models, but also results in nonregular regression estimators, complicating inference. We propose an alternative strategy for estimating optimal sequential decision rules for which the requisite statistical modeling does not depend on nonsmooth, nonmonotone transformed data, does not result in nonregular regression estimators, is consistent under a broader array of data-generation models than Q-learning, results in estimated sequential decision rules that have better sampling properties, and is amenable to established statistical approaches for exploratory data analysis, model building, and validation. We derive the new method, IQ-learning, via an interchange in the order of certain steps in Q-learning. In simulated experiments IQ-learning improves on Q-learning in terms of integrated mean squared error and power. The method is illustrated using data from a study of major depressive disorder.

Some key words: Dynamic Treatment Regime, Personalized Medicine, Treatment Selection

1. Introduction

Clinical treatment decisions are based on a patient’s treatment history and current health status. Sequential decision rules, also known as dynamic treatment regimes, formalize this process by specifying a sequence of decision rules, one for each treatment decision, that take as input a patient’s treatment and covariate history and output recommended treatments. An optimal sequential decision rule is one that maximizes a desirable clinical outcome. The sequential nature of clinical decision making problems has led researchers to estimate optimal sequential decision rules using approximate dynamic programming procedures (Robins, 2004; Murphy, 2005a). Q-learning with function approximation, hereafter Q-learning, is one such popular method (Murphy, 2005a). However, Q-learning and similar variants of it involve modeling nonsmooth, nonmonotone functions of the data. Nonmonotonicity complicates the regression function whereas nonsmoothness imparts nonregularity to estimators. Inference in the presence of such nonregularity has been well-studied by Robins (2004), Chakraborty et al. (2010), Laber et al. (2014), and Moodie and Richardson (2010). However, much less attention has been directed toward the effect of nonsmoothness on the equally-important applied problems of model building and diagnostics. The preceding references all study linear working models, making little mention of their appropriateness or how to interactively build a model using data. As we demonstrate, even under simple generative models, linear working models result in questionable fits.

Rather than develop specialized exploratory and model building techniques for Q-learning, we propose to model the data before applying the necessary nonmonotone, nonsmooth operations. Because standard interactive model building techniques can be used with our new version of Q-learning, we call it IQ-learning for interactive Q-learning. Interactive model building is an essential part of extracting meaningful information from data (Henderson and Velleman, 1981; Cook and Weisberg, 1982; Henderson et al., 2010; Rich et al., 2010; Chakraborty and Moodie, 2013). This is especially true when the analysis is intended to inform clinical practice or provide scientific insight, and thus IQ-learning is especially attractive in applications.

IQ-learning has several advantages over Q-learning. For a large class of generative models, IQ-learning involves only simple, well-studied, and well-understood conditional mean and variance modeling of smooth transformations of the data, resulting in better fitting and more interpretable models (Carroll and Ruppert, 1988). Furthermore, inference for coefficients indexing the working models in IQ-learning is greatly simplified by regular normal limit theory. However, IQ-learning does not resolve the problem of nonregularity of the estimated non-terminal Q-functions, just of the coefficients on which they depend. Nevertheless, this is an important distinction from Q-learning and related methods. This issue is discussed further in Section 2.3.

2. Q- and IQ-Learning

2·1. Setup

For simplicity we consider the case of two treatment decisions and two treatment options per stage. In addition to being the most common in practice (see www4.stat.ncsu.edu/~laber/smarts.html), the two-stage, binary-treatment setting facilitates exposition while maintaining the key features of more general problems. While we focus on randomized studies, the proposed approach is valid for observational data under the same causal conditions required for Q-learning (Schulte et al., 2012).

Training data for the two-stage, two-treatment case, , consists of independent identically distributed copies of the quintuple (X1, A1, X2, A2, Y) containing data collected on a single subject. Each quintuple is time ordered and thus called a trajectory: X1 ∈ ℝp1 is baseline covariate information; A1 ∈ {−1, 1} is the first treatment; X2 ∈ ℝp2 is covariate information collected between the first and second treatment assignments; A2 ∈ {−1, 1} is the second treatment; and Y ∈ ℝ is the outcome, coded so that higher values coincide with more desirable clinical outcomes. For notational compactness and conformity with established practice, we denote the information available prior to the tth treatment assignment by Ht. Thus, H1 = X1 and .

2·2. Q-Learning

The goal of Q- and IQ-learning is estimation of a pair of decision rules π = (π1, π2) such that π t maps the domain of Ht into the set of treatments, πt : ℋt ↦ {−1, 1}. An optimal sequential decision rule πopt maximizes expected outcome. Let Eπ denote expectation under the restriction At = πt(Ht); then πopt satisfies Eπopt (Y) = supπ Eπ (Y).

Q-learning, a regression-based, approximate dynamic programming algorithm, is commonly used to estimate πopt (Murphy, 2005a). The algorithm depends on the Q-functions,

| (1) |

| (2) |

Thus, Qt(ht, at) measures the quality of treatment at when assigned to a patient with history ht, assuming that optimal treatment decisions are made in future stages (Sutton and Barto, 1998). If the Q-functions were known, the optimal sequential decision rule could be determined using dynamic programming (Bellman, 1957), yielding the solution . The Q-functions are seldom known, and in practice Q-learning mimics the dynamic programming solution by replacing the unknown conditional expectations with fitted regression models. For reasons of data scarcity, model parsimony, and simplicity, it is common to use linear models for the Q-functions:

| (3) |

where ht,0 and ht,1 are, possibly the same, subvectors of ht, and . Q-learning with linear models consists of three steps:

-

Q1

estimate β2, and hence Q2, via least squares regression of Y on H2 and A2 using the working model (3), i.e., ;

-

Q2calculate predicted future outcomes Ỹ assuming optimal Stage 2 decisions

then estimate β1, and hence Q1, via least squares regression of Ỹ on H1 and A1 using the working model (3), i.e., ; and -

Q3calculate the estimated Q-learning optimal treatment policy as

There is nothing unusual about the regression modeling in the first step, which is amenable to well-studied techniques and diagnostics. The same is not true of the modeling in the second step. The absolute value function in the definition of Ỹ means that even if the relationships among the components of H1 and H2 are approximately linear, the dependence of Ỹ on H1 would necessarily be nonmonotone. Thus the working model for Ỹ commonly used in practice is generally wrong. Furthermore, there are no simple transformations of the observed variables or simple modifications to the working model that render the true regression of Ỹ on H1 and A1 linear, even asymptotically. We illustrate this point with data generated from the model

| (4) |

where σ2, τ2, ζ, and γ2 are fixed parameters. In most applications one expects ζ = cov(X1, X2) ≠ 0. Treatments are randomly assigned at each stage as in a sequential multiple assignment randomized trial design (Murphy, 2005b; see also Lavori and Dawson, 2000, 2004). The working model in (3) for Q2(H2, A2) is correct, so the resulting predicted value Ỹ approximates the true fitted value ỸTrue = |1.25A1 + X2| − A1X1. It follows that the regression in Step Q2 approximates the regression of ỸTrue on H1 = X1 and A1. Substituting for X2 in Ỹ shows that ỸTrue = |1.25A1 + ζX1 + ξ| − A1X1, from which it is apparent that E(ỸTrue|X1, A1) is linear in X1 for fixed A1 only in the unlikely case that ζ = 0. Thus correlation between X1 and X2 induces a nonlinear dependence of Ỹ on X1.

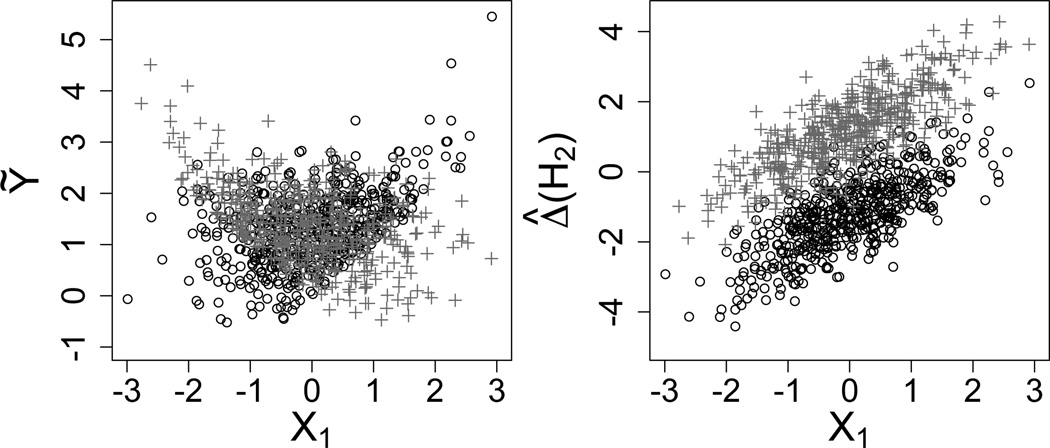

The left-hand panel of Figure 1 displays a scatterplot of Ỹ against X1 for each value of A1 based on 1,000 random draws from model (4) with ζ = 0.85, σ = 1, and τ = γ = 1/√2, using the Q-learning algorithm to calculate Ỹ. The figure illustrates nonlinearity in the regression of Ỹ on X1 and also heteroscedastic variation induced by the max operation in Step Q2. As this toy model makes clear, identifying the correct form of the regression model for E(ỸTrue | H1,A1) and fitting it efficiently would be difficult in the realistic case that the data-generating model is unknown; one approach would be to adopt nonparametric models for the Q-functions (e.g., Zhao et al., 2011; Moodie et al., 2013), but some clinicians are wary of black-box approaches and it 125 can be difficult to glean scientific knowledge from these models. Thus, common practice is to ignore the problem and settle for the best approximation afforded by fitting linear models. This problem is shared by variants of Q-learning (e.g., A-learning, Murphy, 2003; Blatt et al., 2004; Robins, 2004; Schulte et al., 2012). In contrast, the right-hand panel of Fig. 1 shows the first-stage regression model that must be fit in our proposed method, IQ-learning, described next. It is a common analysis of covariance model in X1 and A1.

Fig. 1.

Scatterplots of Ỹ (left) and Δ̂(H2) (right) against X1 for A1 = −1 (black circles) and A1 = 1 (grey crosses) for 1, 000 random samples from the toy model. Step 2 of the Q-learning algorithm requires modeling the data in the left plot; note the nonlinearity and heteroscedasticity. Data in the right plot must be modeled for IQ-learning; note the common analysis of covariance structure.

2·3. IQ-Learning

IQ-learning replaces the difficult problem of modeling the predicted future optimal outcomes with two ordinary mean-variance function modeling problems. Its practical advantages result from the fact that there is a wealth of models and theory for mean-variance function modeling (Carroll and Ruppert, 1988). Thus it has the potential for better model building and diagnostics. The modeling required is familiar and is generally interactive. We first describe the IQ-learning algorithm in general terms and then discuss special cases that are useful in practice.

Whereas Q-learning models maxa2∈{−1,1} Q2(H2, a2) directly, IQ-learning starts with the Q2 contrast and main-effect functions: Δ(H2) = {Q2(H2, 1) − Q2(H2, −1)} /2; μ(H2) = {Q2(H2, 1) + Q2(H2, −1)} /2. The contrast and main-effect functions are linear, and hence smooth and monotone functions of Q2(·). Let gh1, a1(·) denote the conditional distribution of the contrast Δ(H2) given H1 = h1 and A1 = a1. With these definitions Q1(h1, a1) defined in (2) can be written as

| (5) |

The IQ-learning estimator of Q1(h1, a1) has the form

| (6) |

where L̂(h1, a1) and ĝh1, a1(·) are estimators of E {μ(H2) | H1 = h1, A1 = a1} and gh1, a1(·).

Let Q̂2(H2, A2) denote the estimator obtained in Step Q1 of the Q-learning algorithm. Define the estimated main-effect and contrast functions

| (7) |

Then L̂(h1, a1) is obtained by modeling the regression of μ̂(H2) on H1 and A1 for which linear models are often adequate as no unusual transformations are involved. Obtaining ĝh1, a1(·) is accomplished by estimating the conditional distribution of Δ̂(H2) given H1 and A1. For this we exploit mean-variance function modeling as explained in Section 2·4. Thus we have the following algorithm for IQ-learning:

-

IQ1

use Step Q1 of the Q-learning algorithm to obtain β̂2 and ;

-

IQ2

regress the estimated main-effect function μ̂(H2) from (7) on H1 and A1 to obtain an estimator L̂(h1, a1) of E {μ(H2) | H1 = h1, a1};

model the conditional distribution of the estimated contrast function Δ̂(H2) from (7) given H1 = h1 and A1 = a1 to obtain an estimator ĝh1, a1(·) of gh1, a1(·);

- combine the estimators from IQ2a and IQ2b to obtain

-

IQ3

define the IQ-learning estimated optimal treatment policy so that .

Completing our algorithm requires specific models for Steps IQ1, IQ2a, and IQ2b. As noted previously, Steps IQ1 and IQ2a are usually straightforward and linear models will often suffice. We now show how to accomplish the modeling in Step IQ2b efficiently and with sufficient flexibility for many applications by using mean-variance models.

2·4. Location-Scale Working Models for gh1, a1(·)

Henceforth we consider mean-variance, location-scale estimators of gh1, a1(·) of the form

| (8) |

where m̂(h1, a1) is an estimator of m(h1, a1) = E {Δ(H2) | H1 = h1, A1 = a1}, σ̂2(h1, a1) is an estimator of σ2(h1, a1) = E [{Δ(H2) − m(h1, a1)}2 | H1 = h1, A1 = a1], and ϕ̂ is an estimator of the density of the standardized residuals {Δ(H2) − m(h1, a1)} /σ(h1, a1), say ϕh1, a1, which we assume does not depend on the history h1 or the treatment a1. In other words, we assume that all of the dependence of Δ(H2) on (H1, A1) is captured by the conditional mean and variance functions. The great success of mean-variance function modeling (Carroll and Ruppert, 1988) suggests that this assumption is reasonable quite generally; however, we also note that substantial departures from the assumption can be investigated by stratifying on h1 and a1 and comparing higher-order moments, such as skewness and kurtosis, or nonparametric density estimates of the empirical residuals {Δ̂(H2) − m̂(H1,A1)} /σ̂(H1, A1) across the strata. We now describe two special cases of the estimator in (8).

Let ϕ denote the density of a standard normal random variable. A simple but useful estimator of gh1, a1(·) is the normal location-scale model:

| (9) |

which is a special case of (8) with ϕ̂ = ϕ. An advantage of this model is that can be evaluated in closed form. In particular,

| (10) |

where Φ is the standard normal cumulative distribution function. If the mean and variance functions are correctly specified and ϕh1, a1 = ϕ, then the IQ-learning location-scale model is correct. As commonly implemented, Q-learning fits a misspecified model and thus estimators are not consistent. The closed form expression in (10) makes it possible to study the bias in Q-learning when the true data-generating model is a normal mean-variance function model. Details are in the Supplementary Material.

The normal location-scale model assumes that ϕh1, a1 = ϕ, the standard normal density. Violations of normality can be diagnosed via examination of the observed standardized residuals, êi = {Δ̂(H2,i) − m̂{(H1, i, A1, i)}/σ̂(H1,i, A1,i) (i = 1, …, n). When greater modeling flexibility is desired, the normality assumption can be dropped and the empirical distribution of the êi used instead. Defining

| (11) |

leads to the nonparametric location-scale estimator of ∫|z|gh1, a1(z)dz, . We show in Section 2·5 that the nonparametric location-scale estimator is consistent and asymptotically normal.

Data for estimating optimal sequential decision rules are typically expensive to collect, so samples are seldom large and parametric mean and variance function models are more useful than nonparametric models. Thus we assume that m(h1, a1) = m(h1, a1; θ) and σ(h1, a1) = σ(h1, a1; γ) for some θ ∈ ℝpm and γ ∈ ℝps. Similarly, we assume that L(h1, a1) = L(h1, a1; α), α ∈ ℝpL. For the results in Sections 3 we completed specification of the IQ-learning algorithm in Section 2·3 as follows. Steps IQ2a and IQ2b are amended to:

-

IQ2a

Set L̂(h1, a1) = L(h1, a1; α̂), where

-

IQ2bi

Set m̂(h1, a1) = m(h1, a1;θ̂), where

-

IQ2biiSet σ̂(h1, a1) = σ(h1, a1; γ̂) where

- IQ2biii

We have used a simple model for the conditional variance in step IQ2bii; for a discussion of other conditional variance estimators and their asymptotic properties see Carroll and Ruppert (1988).

2·5. Asymptotic Theory

Asymptotic distribution theory for is covered by standard results for linear regression, so we address only the asymptotic distribution of for the particular parametric estimators defined in the IQ-learning algorithm. Define the population residuals

and the population parameters:

Let θ̂, γ̂, β̂2, and α̂ denote n1/2-consistent estimators of their population analogs θ*, γ*, , and α*. For x ∈ ℝp, let ℬd(x) denote a ball of radius d centered at x, and let En denote the empirical expectation operator so that . The asymptotic results are stated in terms of the seven centered statistics:

The following assumptions are used to establish the limit theory for IQ-learning:

-

(A1N)

n1/2 (ΔL, Δm, Δσ) is asymptotically N {0,ΣN(h1, a1)};

-

(A1E)

n1/2 (ΔL, Δθ, Δγ, Δβ, Δε) is asymptotically N {0, ΣE(h1, a1)};

-

(A2)let k1, k2, and k3 denote fixed positive constants, the class of functions

is a P-measurable Donsker class with a square-integrable envelope. In addition, J(θ, γ, β2) = Ef(H2, H1, A1; θ, γ, β2) is continuously differentiable with a bounded derivative in a neighborhood of ; -

(A3)

the random variable has a continuously differentiable density κ with derivative κ′(z) satisfying |∫z2κ′(z)dz| < ∞.

These assumptions are relatively mild with (A1N), (A1E), and the first part of (A2) verifiable using standard techniques, e.g., the multivariate central limit and Donsker preservation theorems (see Kosorok, 2008, and the Supplementary Material). Assumption (A3) is generally more difficult to verify, but its validity can be roughly assessed using the observed residuals ê1,…, ên. We have not sought the most general assumptions, but rather a set of simple assumptions that illustrate what is needed for the IQ-learning estimator to be well-behaved.

The first result states the asymptotic normality of the normal and nonparametric location-scale IQ-learning estimators of the first-stage Q-function. Let and denote the normal and nonparametric location-scale estimators, respectively, so that

Define I(υ, t, s) = υ + s−1∫|z|ϕ {(z − t)/s}dz. Let I*(h1, a1) denote I{L(h1, a1; α*), m(h1, a1; θ*), σ(h1, a1; γ*)}. The following is proved in the Supplementary Material.

Theorem 1 (Asymptotic normality.). Let h1 and a1 be fixed.

- Assume (A1N). Then

converges in distribution to N{0, ∇I* (h1, a1)⊤ΣN (h1, a1)∇I* (h1, a1)}. - Assume (A1E), (A2), and (A3). Then

converges in distribution to .

Theorem 1 shows that both location-scale estimators and are asymptotically normal under the stated conditions, which do not require correct specification of the IQ-learning models. Under (C1) and (C2) below, the IQ-learning models are correctly specified, and consistency and asymptotic normality of the IQ-learning estimators follow.

-

(C1)

Let Z denote a standard normal random variable, then .

-

(C2)

Let W be a random variable with density κ(·), then .

The following results are direct consequences of Theorem 1 and we omit their proofs.

Corollary 1. Assume and . Then

if (C1), ;

if (C2), .

Theorem 2. Assume (A1N) and the conditions of Theorem 1. Let h1 and a1 be fixed.

If (C1) then converges in distribution to N{0, ∇I*(h1, a1)⊤ΣN (h1, a1)∇I*(h1, a1)}.

If (A1E), (A2), (A3) and (C2) then converges in distribution to .

Remark 1. Theorem 2 can be used to construct asymptotically valid confidence intervals for the first-stage Q-function for fixed patient history h1 and first-stage treatment a1. This is a notoriously difficult task with Q-learning (Laber et al., 2014). In practice, due to the complexity of the variance terms, the bootstrap may be preferred. Remarks about how to extend the above results to obtain bootstrap consistency are made in the Supplementary Material.

Remark 2. As noted in the introduction, IQ-learning does not alleviate the inherent nonregularity present in sequential decision making problems; see Robins (2004), Laber et al. (2014), and Chakraborty et al. (2010). However, IQ-learning is consistent for a nonregular scenario of interest, the so-called global null in which there is no treatment effect for any patients at the second stage, i.e., almost surely. To see this, note that assuming (A1N), (C1) holds with m(H1, A1; θ*) = 0 with σ(H1, A1; γ*) → 0 almost surely. Part 1 of Corollary 1 depends only on part 1 of Theorem 1 and part 1 of Theorem 2. For the more complex case in which we conjecture that using a mixture of normals to estimate gh1,a1(·) may lead to improved small–sample performance.

3. Monte Carlo Results

We compare the small-sample performance of IQ- and Q-learning in using average value of the learned treatment regimes, integrated mean squared error of the first-stage Q-function, and coverage and width of 95% nonparametric bootstrap confidence intervals for the first-stage Q-function. A key advantage of IQ-learning is its compatibility with common model building steps, which we illustrate in the Supplementary Material with a study of the power to detect nonlinear effects in the first-stage Q-function using IQ-learning and Q-learning. Software implementing the IQ-learning estimators is available as part of the iqLearn package on the comprehensive R network (cran.us.r-project.org/). The Monte Carlo results show that for the class of generative models we consider, IQ-learning generally performs better than Q-learning in terms of value, integrated mean squared error, coverage, and width of the confidence intervals. Simulations in the Supplementary Material show that IQ-learning also has higher power to detect nonlinear effects.

Simulations in this section use data from the following class of generative models:

where {ΩAR1 (0.5)}i,j = (0.5)|i−j|, , and ζA1 = (1.5 + 0.5A1)1/2. Thus, the class is indexed by the dimension p, the distributions of ξ and ϕ, and the coefficient vectors β2,0 and β2,1. Here we fix p = 4; results for p = 8 are similar and are provided in the Supplementary Material. We consider ξ ~ Normalp (0, Ip). We fix the main effect parameter β2,0 and vary the second-stage treatment effect size by scaling β2,1 as follows: β2,0 = 12p+2/‖12p+2‖, , where C ranges over a grid from 0 to 2, and 1d denotes a d-dimensional vector of 1s. In addition, we fix the theoretical R2 of the second-stage regression model at 0.6 by specifying and solving for the variance that yields the desired R2. Additional simulations, provided in the Supplementary Material, show results for R2 = 0.4, 0.8 and non-normal error distributions for ξ;

We consider linear working models for the mean and log variance functions

where now . In addition to Q-learning with linear working models, we include results using support vector regression using a Gaussian kernel (Zhao et al., 2011) to estimate both Q-functions. We compare the two versions of Q-learning with two IQ-learning estimators that differ in the estimation of gh1,a1(·) and the model for σ(h1, a1; γ): a normal estimator of the residual distribution and a restricted variance model, log{σ(h1, a1; γ)} = γ0 + a1γ1, that depends only on treatment; and a nonparametric estimator of the residual distribution with a log-linear variance model that depends on h1 and a1. When ξ ~ Normalp(0, Ip), both these estimators are correctly specified. Q-learning is always correctly specified at the second stage but only correctly specified at the first stage when C = 0 and hence β2,1 = 0.

Results are based on a training set of size n = 250 and M = 2,000 Monte Carlo data sets for each generative model. Additional results for n = 500 are provided in the Supplementary Material. For the nonparametric IQ-learning estimator, which is always correctly specified, the true Q-functions and subsequent optimal regime are estimated using a test set of 10,000 observations. Recall that the value, Eπ (Y), of an arbitrary policy π is the expected outcome if all patients are assigned treatment according to π, that is, Eπ (Y) = E(E [E {Y | H2, a2} | H1, a1]) evaluated at a2 = π2(H2) and a1 = π1(H1). For a given training set of size n and an algorithm that produces an estimated optimal policy, say π̂, we define the average value as E {Eπ̂ (Y)}, where the outer expectation is taken over all training sets of size n. We estimate the average value of the IQ- and Q-learning estimators using a test set of size 10,000 to estimate the inner expectation and 2,000 Monte Carlo replications to estimate the outer expectation. We compare average values of the learned IQ and Q regimes to the value of the true optimal regime and present the proportion of optimal value obtained. For an estimator Q̂1(h1, a1) of the first-stage Q-function, Q1(h1, a1), define the integrated mean squared error as E [{Q̂1(H1, A1) − Q1(H1, A1)}2], where the expectation is taken over the joint distribution of (H1, A1) as well as the training data.

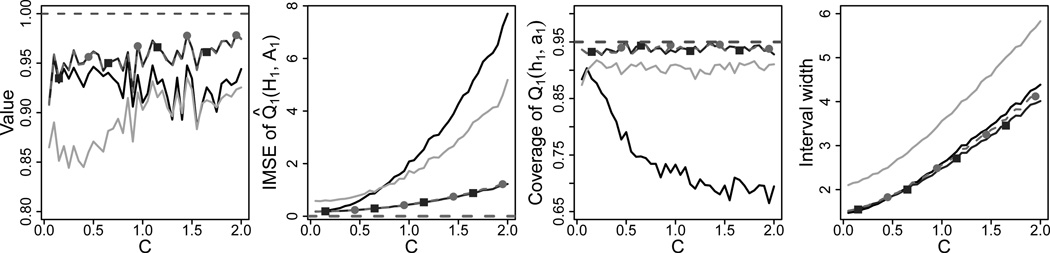

Confidence intervals for Q1(h1, a1) based on IQ-learning and Q-learning estimators are formed by bootstrapping the respective estimators and taking percentiles. For example, if l̂ and û denote the 100 × η/2 and 100 × (1 − η/2) percentiles of the bootstrap distribution of Q̂IQ(h1, a1) based on 1,000 bootstrap resamples, then the 100 × (1 − η)% confidence interval is given by (2Q̂IQ(h1, a1) − û, 2Q̂IQ(h1, a1) − l̂). Bootstrap intervals of this form are sometimes referred to as hybrid bootstrap confidence intervals (Efron and Tibshirani, 1993). Coverage and width of the foregoing confidence intervals are estimated using 2,000 Monte Carlo replications with a new instance (h1, a1) of (H1, A1) drawn for each replication. Figure 2 displays the results from this simulation, where ξ ~ Normalp(0, Ip).

Fig. 2.

Performance of the normal IQ-learning estimator, nonparametric IQ-learning estimator, support vector regression Q-learning, and linear Q-learning given by gray lines with squares, gray lines with circles, light gray lines, and black lines, respectively. Left to Right: Average proportion of optimal value attained; integrated mean squared error of Q1 estimates; coverage of 95% confidence intervals for Q1; width of 95% confidence intervals for Q1.

Figure 2 indicates that the IQ-learning estimators perform better than both Q-learning estimators. Although some gains are achieved using the more flexible support vector regression version of Q-learning, the far left panel indicates that IQ-learning attains higher average value than the Q-learning algorithms across most values of C. The second panel from the left shows that the IQ-learning reduces integrated mean squared error the most, with greater reduction as the second-stage effects increase. IQ-learning also demonstrates a large improvement over linear Q-learning in terms of the coverage of 95% confidence intervals for Q̂1 (h1, a1), as seen in the third panel. The poor coverage of linear Q-learning is attributed to bias, whereas IQ-learning is consistent because the regularity conditions given in Section 2.4 for Theorem 2 hold for the generative models in this section. Thus, IQ-learning estimators come close to achieving the nominal level. The average widths of the confidence intervals are similar for linear Q-learning and IQ-learning, as illustrated by the far right panel. Support vector regression Q-learning greatly improves coverage compared to linear Q-learning, but at the expense of wider intervals. Results from the simulation where the elements of ξ are generated independently from a t5 distribution are included in the Supplementary Material and appear similar to those in Fig. 2, suggesting the normal IQ-learning estimator is robust to slight misspecification of the residual distribution.

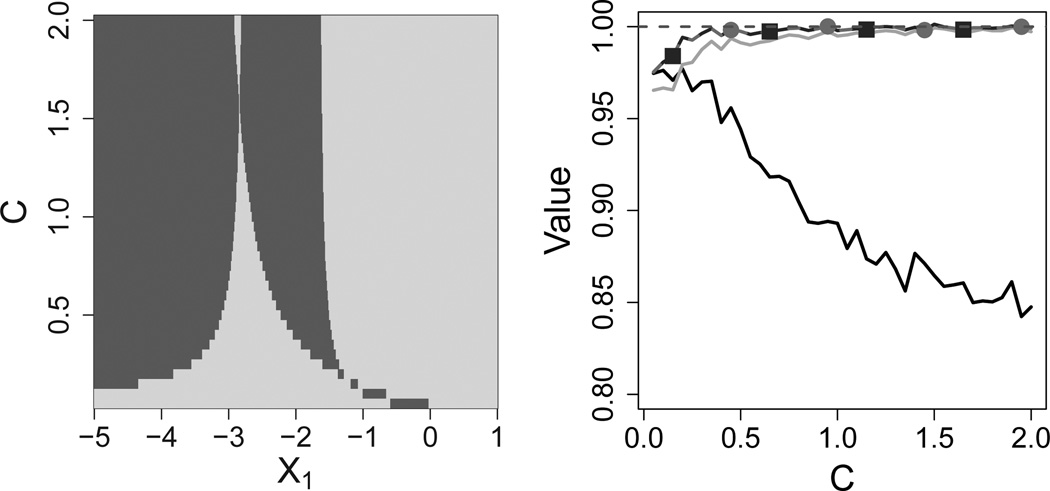

Next, we consider the model

where H2,0 = H2,1 = (1, X1, A1, X1A1, X2)⊤, β2,0 = (3, −1, 0.1, −0.1, −0.1)⊤, and β2,1 = C(−6, −2, 5, 3, −0.2)⊤. We use this example to illustrate a scenario where IQ-learning achieves a large gain in value over Q-learning with linear models. Since the predictor X1 is univariate, we can visualize which patients are treated differently by IQ-learning compared to Q-learning. The left plot in Figure 3 was obtained by deriving the true first stage Q-function, for which IQ-learning is consistent in this case, and comparing the true first-stage rule to the rule recommended by Q-learning with linear models. For each combination of (X1, C), the plot shows whether or not Q-learning makes the correct treatment decision. With this generative model, Q-learning assigns the wrong treatment to approximately half the population for a wide range of effect sizes because the true first-stage Q-function is nonlinear in X1. In contrast, with a sufficiently large sample size, IQ-learning treats all patients according to the optimal rule. A remark explaining the observed pattern is included in Section 6 of the Supplementary Material. Consequently, IQ-learning achieves higher average value than Q-learning, as displayed in the right plot of Fig. 3. Results are based on n = 250 training set samples and M = 1,000 Monte Carlo data sets. In this scenario, both IQ-learning and support vector regression Q-learning reach gains in optimal value attained of approximately 15% as the second-stage effect size grows.

Fig. 3.

A scenario where IQ-learning achieves a large gain in value over Q-learning. The constant C determines the second-stage treatment effect size, from no treatment effects (C = 0) to large effects (C = 2). In the left panel, (X1, C) pairs where linear Q-learning agrees and disagrees with the true first-stage rule are shown in dark and light gray, respectively, where X1 is a normally distributed first-stage covariate. On the right, average proportion of optimal value attained by the normal IQ-learning estimator, nonparametric IQ-learning estimator, support vector regression Q-learning, and linear Q-learning regimes shown by gray lines with squares, gray lines with circles, light gray lines, and black lines, respectively.

4. Application to STAR*D

Sequenced Treatment Alternatives to Relieve Depression (STAR*D; Fava et al., 2003; Rush et al., 2004) is a sequentially randomized study of major depressive disorder. A key feature of this trial is that patients experiencing early remission of symptoms were exempt from future randomization, complicating the analysis. We use a subset of the STAR*D data to illustrate how IQ-learning can be used to estimate an optimal dynamic treatment regime in the presence of responder-status dependent designs. There were four stages in the trial, but each patient received Citalopram in the first stage, and thus, there was no randomization. Since our aim is to demonstrate how to learn a regime using the IQ-learning machinery, we opt to perform a complete-case analysis and consider only the first two of three randomized stages. We refer to the second and third stages as stages one and two, respectively. At each stage, treatments can be categorized as a Selective Serotonin Reuptake Inhibitor or not; this is the binary treatment variable in our analysis. The first-line treatment Citalopram given in the non-randomized stage is in the class of Selective Serotonin Reuptake Inhibitors.

We use a measure of efficacy, the Quick Inventory of Depression Symptomatology score, as the outcome (Rush et al., 2004); side-effects or other competing outcomes could be accommodated using set-valued treatment regimes (Lizotte et al., 2012; Laber et al., 2013) or composite outcomes (Wang et al., 2012). The depression score ranges from 0 to 27 with higher values corresponding to more severe negative symptoms. To be consistent with our development, we recode these scores by subtracting them from 27; thus, higher values correspond to better clinical outcomes. The depression score was recorded at multiple time points throughout each stage, intermediate depression scores used as predictors in our analysis are also recoded (Rush et al., 2004, Schulte et al., 2012). At each stage, patients experiencing remission left the study. Remission was defined as a depression score of 5 or less, or greater than 21 after recoding. Henceforth, we refer to patients who achieved remission and left after stage one as responders and second-stage participants as non-responders. The data we use here consist of n = 795 patient trajectories with complete information, not including 481 patients who dropped out for reasons other than remission. The variables composing each trajectory are given in Table 1 of the Supplementary Material.

Of the 795 total patients, 329 were non-responders in the first stage and continued on to the second stage. We define our primary outcome as Y = RY1 + (1 − R)(Y1 + Y2)/2. That is, Y is taken to be Y1 for patients who left the study after stage one, and Y is taken to be the average of the depression scores measured at the end of the first and second stages for non-responders. Our responder-status version of IQ-learning is based on the Q-learning implementation described in Schulte et al. (2012). The first-stage history vector contains all information available prior to the first-stage treatment randomization. Thus, H1 = (X1,1, X1,2)⊤. The second-stage history is H2 = (X1,1, X1,2, A1, Y1, R, X2,1, X2,2)⊤, which contains all information observed before the second-stage treatment assignment. The second-stage Q-function is Q2(H2, A2) = E(Y | H2 = h2, A2 = a2) = RY1 + (1 − R) {Y1 + E(Y2 | H2 = h2, A2 = a2)} /2, where we have used the fact that R and Y1 are contained in H2. Thus the first step in the IQ-learning algorithm, and in Q-learning, is to specify and fit a model for E(Y2 | H2 = h2, A2 = a2). Defining the second-stage summary vectors as H2,0 = H2,1 = (1, X2,1, X2,2)⊤, we consider a working model of the form , that we fit via least squares. Standard regression diagnostics based on the residuals to not indicate any major departures from the usual linear modeling assumptions.

In our subset of data, all non-responders who did not receive a Selective Serotonin Reuptake Inhibitor in stage one did not receive one in stage two. Table 2 of the Supplementary Material provides the number of patients assigned to each treatment strategy. We define Ỹ = arg maxa2 Q2(H2, a2)R + {Q2(H2, −1)(1 − A1) + arg maxa2 Q2(H2, a2)(1 + A1)} (1 − R)/2. Thus, Ỹ is Q2(H2, −1) for non-responders who received A1 = −1 and arg maxa2 Q2(H2, a2) otherwise. With this working model, after substituting in Q2(H2, a2) and simplifying,

| (12) |

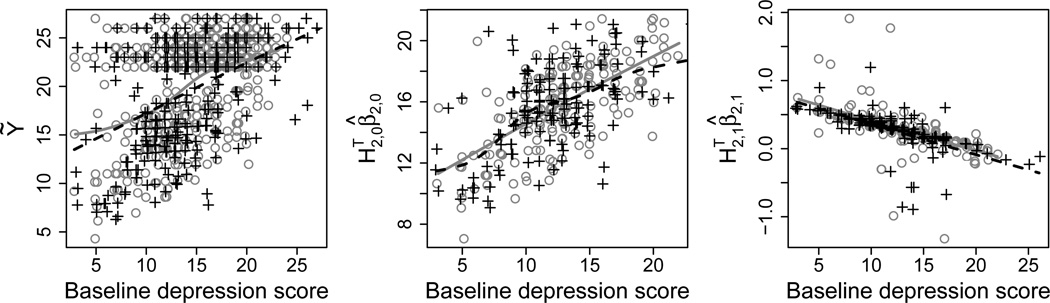

The Q-learning algorithm models E(Ỹ | H1, A1) directly. The left panel in Figure 4 shows a scatterplot of the pseudo-response Ỹ against baseline depression score by first-stage treatment. Cubic smoothing spline fits to the data are indicated by solid gray and dashed black lines for A1 = 1 and A1 = −1, respectively. These fits appear approximately linear; however, the variance appears non-constant across baseline depression score, and there is clear separation between the responder and non-responder groups.

Fig. 4.

First-stage summaries of the STAR*D data. Gray circles and black crosses represent treatments A1 = 1 and A1 = −1, respectively. The left panel shows a scatterplot of Ỹ against baseline depression score by first-stage treatment. The middle panel contains a scatterplot of against baseline depression score by first-stage treatment for non-responders. The right panel contains a scatterplot of against baseline depression score by first-stage treatment for non-responders. Cubic smoothing spline fits to the data for A1 = 1 and A1 = −1 are represented by solid gray and dashed black lines, respectively.

We can write the first three expectation terms in (12) as:

We estimate the right-hand conditional expectations by fitting three separate linear regressions, and we use logistic regression to estimate pr(R = r | H1, A1, r = 0, 1. In particular, we specify linear models of the form for E(Y1 | H1, A1, R = r), where H1,0 = H1,1 = (1, X1,1, X1,2)⊤. We posit the model for the main-effect term, which we fit with least squares using only the non-responder data. The middle and right plots in Fig. 4 display scatterplots of the non-responder realizations of the main-effect term and contrast function, respectively, against baseline depression score by first-stage treatment. Cubic smoothing spline fits to the data appear mostly linear. For the logistic regression, we fit the model .

Finally, we must obtain estimates of and in equation (12). Notice that

We can use the IQ-learning machinery to obtain an estimate of gH1, A1, R=0(·). The logistic regression previously described provides an estimate of pr(R = 0 | H1, A1). To estimate gH1, A1, R=0(·), we first specify a model for the mean of the contrast function for non-responders. We posit the model

| (13) |

where H1,0 = (1, X1,1, X1,2)⊤ and H1,1 = (1, X1,1, X1,2)⊤. This model is also used along with the logistic regression model to estimate , the fourth expectation term in (12). We fit model (13) using least squares. Exploratory analysis suggests that a constant variance assumption is reasonable, so we standardize the residuals of the mean fit using the sample standard deviation. A normal quantile-quantile plot of the standardized residuals suggests heavier tails than would be expected from a normal distribution. Thus, we opt to use the nonparametric density estimator described in Section 2.3.

Assembling the foregoing estimates of the four terms in equation (12) yields

where ĝh1, a1, R=0(·) is the nonparametric density estimator described in Section 2.3 and .

The first-stage rule estimated by Q-learning treats all training data patients with a Selective Serotonin Reuptake Inhibitor. Roughly twelve percent of the these patients are not recommended a Selective Serotonin Reuptake Inhibitor by the estimated first-stage IQ-learning rule. Broadly summarizing the IQ-learning rule, patients with very low recoded depression scores after the non-randomized stage should switch from Citalopram, a Selective Serotonin Reuptake Inhibitor, to another drug not in the class of Selective Serotonin Reuptake Inhibitors. This rule recommends a different strategy for patients who respond poorly to the initial Selective Serotonin Reuptake Inhibitor; otherwise Selective Serotonin Reuptake Inhibitor treatment strategies should continue.

Supplementary Material

Acknowledgments

We acknowledge grant support from the National Institutes of Health (Laber, Stefanski) and National Science Foundation (Stefanski). The authors thank the National Institutes of Health for providing the STAR*D data and Bibhas Chakraborty for his consultation on the analysis presented in Section 4.

Footnotes

Supplementary Material

Supplementary material available at Biometrika online includes proofs of the theoretical results stated in Section 2, additional simulation results, and a list of covariates used in Section 4.

Contributor Information

Eric B. Laber, Email: laber@stat.ncsu.edu.

Kristin A. Linn, Email: kalinn@ncsu.edu.

Leonard A. Stefanski, Email: stefansk@ncsu.edu.

References

- Bellman R. Dynamic Programming. Princeton: Princeton University Press; 1957. [Google Scholar]

- Blatt D, Murphy SA, Zhu J. A-Learning for Approximate Planning. Ann Arbor, 1001. 2004:48109–42122. [Google Scholar]

- Carroll RJ, Ruppert D. Transformation and Weighting in Regression. New York: Chapman and Hall; 1988. [Google Scholar]

- Chakraborty B, Moodie EE. Statistical Methods for Dynamic Treatment Regimes. Springer; 2013. Statistical reinforcement learning; pp. 31–52. [Google Scholar]

- Chakraborty B, Murphy SA, Strecher VJ. Inference for Non-Regular Parameters in Optimal Dynamic Treatment Regimes. Statistical Methods in Medical Research. 2010;19(3):317–343. doi: 10.1177/0962280209105013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cook RD, Weisberg S. Residuals and Influence in Regression. New York: Chapman and Hall; 1982. [Google Scholar]

- Efron B, Tibshirani RJ. An Introduction to the Bootstrap. New York: Chapman and Hall; 1993. [Google Scholar]

- Fava M, Rush AJ, Trivedi MH, Nierenberg AA, Thase ME, Sackeim HA, Quitkin FM, Wisniewski SR, Lavori PW, Rosenbaum JF, Kupfer DJ STAR*D Investigators Group. Background and Rationale for the Sequenced Treatment Alternatives to Relieve Depression (STAR*D) Study. Psychiatric Clinics of North America. 2003;26(1):457+. doi: 10.1016/s0193-953x(02)00107-7. [DOI] [PubMed] [Google Scholar]

- Henderson HV, Velleman PF. Building Multiple Regression Models Interactively. Biometrics. 1981;37(2):391–411. [Google Scholar]

- Henderson R, Ansell P, Alshibani D. Regret-regression for optimal dynamic treatment regimes. Biometrics. 2010;66(4):1192–1201. doi: 10.1111/j.1541-0420.2009.01368.x. [DOI] [PubMed] [Google Scholar]

- Kosorok MR. Introduction to Empirical Processes and Semiparametric Inference. New York: Springer; 2008. [Google Scholar]

- Laber E, Lizotte D, Ferguson B. Set-valued dynamic treatment regimes for competing outcomes. Biometrics. 2013 doi: 10.1111/biom.12132. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laber EB, Lizotte DJ, Qian M, Pelham WE, Murphy SA. Statistical Inference in Dynamic Treatment Regimes. Under Review. 2014 [Google Scholar]

- Lavori PW, Dawson R. A Design for Testing Clinical Strategies: Biased Adaptive Within-Subject Randomization. Journal of the Royal Statistical Society: Series A (Statistics in Society) 2000;163(1):29–38. [Google Scholar]

- Lavori PW, Dawson R. Dynamic Treatment Regimes: Practical Design Considerations. Clinical Trials. 2004;1(1):9–20. doi: 10.1191/1740774s04cn002oa. [DOI] [PubMed] [Google Scholar]

- Lizotte DJ, Bowling M, Murphy SA. Linear fitted-q iteration with multiple reward functions. Journal of Machine Learning Research. 2012;13:3253–3295. [PMC free article] [PubMed] [Google Scholar]

- Moodie EE, Dean N, Sun YR. Q-learning: Flexible learning about useful utilities. Statistics in Biosciences. 2013:1–21. [Google Scholar]

- Moodie EE, Richardson TS. Estimating optimal dynamic regimes: Correcting bias under the null. Scandinavian Journal of Statistics. 2010;37(1):126–146. doi: 10.1111/j.1467-9469.2009.00661.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Murphy SA. Optimal Dynamic Treatment Regimes. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 2003;65(2):331–355. [Google Scholar]

- Murphy SA. A Generalization Error for Q-Learning. Journal of Machine Learning Research. 2005a;6(7):1073–1097. [PMC free article] [PubMed] [Google Scholar]

- Murphy SA. An Experimental Design for the Development of Adaptive Treatment Strategies. Statistics in Medicine. 2005b;24(10):1455–1481. doi: 10.1002/sim.2022. [DOI] [PubMed] [Google Scholar]

- Rich B, Moodie EE, Stephens DA, Platt RW. Model checking with residuals for g-estimation of optimal dynamic treatment regimes. The international journal of biostatistics. 2010;6(2) doi: 10.2202/1557-4679.1210. [DOI] [PubMed] [Google Scholar]

- Robins JM. Proceedings of the Second Seattle Symposium in Biostatistics. New York: Springer; 2004. Optimal Structural Nested Models for Optimal Sequential Decisions; pp. 189–326. [Google Scholar]

- Rush AJ, Fava M, Wisniewski SR, Lavori PW, Trivedi MH, Sackeim HA, Thase ME, Nierenberg AA, Quitkin FM, Kashner T, Kupfer DJ, Rosenbaum JF, Alpert J, Stewart JW, McGrath PJ, Biggs MM, Shores-Wilson K, Lebowitz BD, Ritz L, Niederehe G STAR*D Investigators Group. Sequenced Treatment Alternatives to Relieve Depression (STAR*D): Rationale and Design. Controlled Clinical Trials. 2004;25(1):119–142. doi: 10.1016/s0197-2456(03)00112-0. [DOI] [PubMed] [Google Scholar]

- Schulte PJ, Tsiatis AA, Laber EB, Davidian M. Q-and a-learning methods for estimating optimal dynamic treatment regimes. arXiv preprint arXiv:1202.4177. 2012 doi: 10.1214/13-STS450. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sutton RS, Barto AG. Reinforcement Learning: An Introduction. 1st edition. Cambridge: MIT Press, Cambridge, MA, USA; 1998. [Google Scholar]

- Wang L, Rotnitzky A, Lin X, Millikan RE, Thall PF. Evaluation of viable dynamic treatment regimes in a sequentially randomized trial of advanced prostate cancer. Journal of the American Statistical Association. 2012;107(498):493–508. doi: 10.1080/01621459.2011.641416. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhao Y, Zeng D, Socinski MA, Kosorok MR. Reinforcement learning strategies for clinical trials in nonsmall cell lung cancer. Biometrics. 2011;67(4):1422–1433. doi: 10.1111/j.1541-0420.2011.01572.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.