Abstract

In clinical practice, physicians make a series of treatment decisions over the course of a patient’s disease based on his/her baseline and evolving characteristics. A dynamic treatment regime is a set of sequential decision rules that operationalizes this process. Each rule corresponds to a decision point and dictates the next treatment action based on the accrued information. A key goal is to use existing data to estimate the optimal regime, the regime that, if followed by the patient population, would yield the most favorable outcome on average. Q- and A-learning are two main approaches for this purpose. We provide a detailed account of these methods, study their performance, and illustrate them using data from a depression study.

Key words and phrases: Advantage learning, bias-variance tradeoff, model misspecification, personalized medicine, potential outcomes, sequential decision making

1. INTRODUCTION

An area of current interest is personalized medicine, which involves making treatment decisions for an individual patient using all information available on the patient, including genetic, physiologic, demographic, and other clinical variables, to achieve the “best” outcome for the patient given this information. In treating a patient with an ongoing disease or disorder, a clinician makes a series of decisions based on the patient’s evolving status. A dynamic treatment regime is a list of sequential decision rules formalizing this process. Each rule corresponds to a key decision point in the disease/disorder progression and takes as input the information on the patient to that point and outputs the treatment that s/he should receive from among the available options. A key step toward personalized medicine is thus finding the optimal dynamic treatment regime, that which, if followed by the entire patient population, would yield the most favorable outcome on average.

The statistical problem is to estimate the optimal regime based on data from a clinical trial or observational study. Q-learning (Q denoting “quality,” Watkins, 1989; Watkins and Dayan, 1992; Nahum-Shani et al., 2010) and advantage learning (A-learning, Murphy, 2003; Robins, 2004; Blatt, Murphy and Zhu, 2004) are two main approaches for this purpose and are related to reinforcement learning methods for sequential decision-making in computer science. Q-learning is based roughly on posited regression models for the outcome of interest given patient information at each decision point and is implemented through a backwards recursive fitting procedure that is related to the dynamic programming algorithm (Bather, 2000), a standard approach for deducing optimal sequential decisions. A-learning involves the same recursive strategy, but requires only posited models for the part of the outcome regression representing contrasts among treatments and for the probability of observed treatment assignment given patient information at each decision point. As discussed later, this may make A-learning more robust to model misspecification than Q-learning for consistent estimation of the optimal treatment regime.

Examples of the use of Q- and A-learning and alternative methods to deduce optimal strategies for treatment of substance abuse, psychiatric disorders, cancer, and HIV infection and for dose adjustment in response to evolving patient status have been presented (Rosthøj et al., 2006; Murphy et al., 2007a,b; Zhao, Kosorok and Zeng, 2009; Henderson, Ansell and Alshibani, 2010). Relevant work includes Thall, Millikan and Sung (2000), Thall, Sung and Etsey (2002), Robins (2004), Moodie, Richardson and Stephens (2007), Thall et al. (2007), van der Laan and Petersen (2007), Robins, Orellana and Rotnitzky (2008), Almirall, Ten Have and Murphy (2010), Orellana, Rotnitzky and Robins (2010), Zhang et al. (2012a,b), Zhao et al. (2012), Zhang et al. (2013) and Zhao et al. (2013).

The objective of this article is to provide readers interested in an introduction to estimation of optimal dynamic treatment regimes with a self-contained, detailed description of an appropriate statistical framework in which to define formally an optimal regime, of some of the operational and philosophical considerations involved, and of Q- and A-learning methods. Section 2 introduces the statistical framework, and Sections 3 and 4 discuss the form of the optimal regime. We describe and contrast Q- and A-learning in Section 5 and present systematic empirical studies of their relative performance and the effects of misspecification of the postulated models involved in Section 6. The methods are demonstrated using data from the Sequenced Treatment Alternatives to Relieve Depression (STAR*D, Rush et al., 2004) study in Section 7.

2. FRAMEWORK AND ASSUMPTIONS

Consider the setting of K prespecified, ordered decision points, indexed by k = 1,…, K, which may be times or events in the disease or disorder process that necessitate a treatment decision, where, at each point, a set of treatment options is available. Assume that there is a final outcome Y of interest for which large values are preferred. The outcome may be ascertained following the Kth decision, as with CD4 T-cell count at a prespecified follow-up time in HIV infection (Moodie et al., 2007); or may be a function of information accrued over the entire sequence of decisions, as in Henderson et al. (2010), where the outcome is the overall proportion of time a measure of blood clotting speed is kept within a target range in dosing of anticoagulant agents.

In order to define an optimal treatment regime and discuss its estimation based on data from an observational study or clinical trial, we define a suitable conceptual framework. For simplicity, our presentation is heuristic. Imagine that there is a superpopulation of patients, denoted by Ω, where one may view an element ω ∈ Ω as a patient from this population. We assume that patients in the population have been treated according to routine clinical practice for the disease or disorder prior to the first treatment decision. Consequently, immediately prior to this first decision, patient ω would present to the decision-maker with a set of baseline information (covariates) denoted by the random variable S1, discussed further below. Thus, S1(ω) is the value of his/her information immediately prior to decision 1, taking values s1, say, in a set 𝒮1. Assume that, at each decision point k = 1, …, K, there is a finite set 𝒜k of all possible treatment options, with elements ak; we do not consider continuous treatments. Denote by āk = (a1, …, ak) a possible treatment history that could be administered through decision k, taking values in 𝒜̄k = 𝒜1 × … × 𝒜k, the set of all possible treatment histories through decision k; in particular, 𝒜̄K is the set of all possible treatment histories āK through all K decisions.

We then define the potential outcomes (Robins, 1986)

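$$W^* = \bigl\{S^*_2(a_1), S^*_3(\bar a_2), \ldots, S^*_K(\bar a_{K-1}), Y^*(\bar a_K), \text{ for all } \bar a_K \in \bar{\mathcal A}_K\bigr\}. \tag{1}$$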

In (1), S*k(āk−1)(ω) denotes the value of covariate information that would arise between decisions k − 1 and k for a patient ω ∈ Ω in the hypothetical situation that s/he had previously received treatment history āk−1, taking values sk in a set 𝒮k, k = 2, …, K. Similarly, Y*(āK)(ω) is the hypothetical outcome that would result for ω were s/he to be administered the full set of K treatments in āK. In this notation, for random variables such as S*k(āk−1), the argument āk−1 is an index representing prior treatment history, not a random variable. Write S̄*k(āk−1) = {S1, S*2(a1), …, S*k(āk−1)}, which takes values s̄k in 𝒮̄k = 𝒮1 × … × 𝒮k; this definition includes the baseline covariate S1, and S̄*1(ā0) is taken equal to S1 when k = 1. The elements of W* may be discrete or continuous; in what follows, for simplicity, we take these random variables to be discrete, but the results hold more generally.

A dynamic treatment regime d = (d1, …, dK) is a set of rules that forms an algorithm for treating a patient over time; it is “dynamic” because treatment is determined based on a patient’s previous history. At the kth decision point, the kth rule dk(s̄k, āk−1), say, takes as input the patient’s realized covariate and treatment history prior to the kth treatment decision and outputs a value ak ∈ Ψk(s̄k, āk−1) ⊆ 𝒜k; for k = 1, there is no prior treatment (a0 is null), and we write d1(s1) and Ψ1(s1). Here, Ψk(s̄k, āk−1) is a specified set of possible treatment options for a patient with realized history (s̄k, āk−1), discussed further below. Accordingly, although we suppress this in the notation for brevity, the definition of a dynamic treatment regime we now present depends on the specified Ψk(s̄k, āk−1), k = 1, …, K. Because dk(s̄k, āk−1) ∈ Ψk(s̄k, āk−1) ⊆ 𝒜k, dk need only map a subset of 𝒮̄k × 𝒜̄k−1 to 𝒜k. We define these subsets recursively as

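$$\Gamma_k = \bigl\{(\bar s_k, \bar a_{k-1}) \in \bar{\mathcal S}_k \times \bar{\mathcal A}_{k-1} \text{ satisfying (i) } a_j \in \Psi_j(\bar s_j, \bar a_{j-1}),\ j = 1, \ldots, k-1, \text{ and (ii) } \mathrm{pr}\{\bar S^*_k(\bar a_{k-1}) = \bar s_k\} > 0\bigr\}, \quad k = 1, \ldots, K, \tag{2}$$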

determined by Ψ = (Ψ1, …, ΨK). The Γk contain all realizations of covariate and treatment history consistent with having followed such Ψ-specific regimes to decision k. Define the class 𝒟 of (Ψ-specific) dynamic treatment regimes to be the set of all d for which dk, k = 1,…, K, is a mapping from Γk into 𝒜k satisfying dk (s̄k, āk−1) ∈ Ψk (s̄k, āk−1) for every (s̄k, āk−1) ∈ Γk.
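To make the definitions concrete, the following Python sketch encodes a hypothetical Ψ-specific class for K = 2 with three treatment options and checks whether a candidate pair of rules (d1, d2) belongs to 𝒟. The covariate spaces, the restrictions psi1 and psi2, and the candidate rules are illustrative assumptions, not taken from any study; condition (ii) of (2) is assumed to hold for every covariate value, so Γ2 reduces to the histories whose first treatment is allowed by Ψ1.

```python
from itertools import product

# Hypothetical toy setting: K = 2, binary covariates S1 and S2,
# and three treatment options {0, 1, 2} at each decision.
S1_VALUES = (0, 1)
S2_VALUES = (0, 1)
A_VALUES = (0, 1, 2)


def psi1(s1):
    """Hypothetical Psi_1: option 2 is reserved for patients with s1 == 1."""
    return {0, 1, 2} if s1 == 1 else {0, 1}


def psi2(s1, s2, a1):
    """Hypothetical Psi_2: a patient started on option 2 must stay on it."""
    return {2} if a1 == 2 else {0, 1}


def gamma2():
    """Histories (s1, s2, a1) in Gamma_2, assuming every covariate value has
    positive probability, so only condition (i) of (2) restricts the set."""
    return [(s1, s2, a1)
            for s1, s2, a1 in product(S1_VALUES, S2_VALUES, A_VALUES)
            if a1 in psi1(s1)]


def d1(s1):
    """Candidate first rule (hypothetical)."""
    return 1 if s1 == 0 else 2


def d2(s1, s2, a1):
    """Candidate second rule (hypothetical)."""
    if a1 == 2:
        return 2
    return 1 if s2 == 1 else 0


def is_psi_specific(rule1, rule2):
    """True if (rule1, rule2) maps Gamma_1 and Gamma_2 into the allowed sets."""
    ok1 = all(rule1(s1) in psi1(s1) for s1 in S1_VALUES)
    ok2 = all(rule2(s1, s2, a1) in psi2(s1, s2, a1) for s1, s2, a1 in gamma2())
    return ok1 and ok2
```

Here is_psi_specific(d1, d2) is true, whereas a rule that always assigns option 2 at decision 1 fails, because option 2 is not in Ψ1(0).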

Specification of the Ψk (s̄k, āk−1), k = 1,…, K, is dictated by the scientific setting and objectives. Some treatment options may be unethical or impossible for patients with certain histories, making it natural to restrict the set of possible options for such patients. In the context of public health policy, the focus may be on regimes involving only treatment options that are less costly or widely available unless a patient’s condition is especially serious, as reflected in his/her covariate information. In what follows, we assume that a particular fixed set Ψ is specified, and by an optimal regime we mean an optimal regime within the class of corresponding Ψ-specific regimes.

An optimal regime should represent the “best” way to intervene to treat patients in Ω. To formalize, for any d ∈ 𝒟, writing d̄k = (d1, …, dk), k = 1, …, K, with d̄K = d, define the potential outcomes associated with d as {S*2(d1), …, S*K(d̄K−1), Y*(d)} such that, for any ω ∈ Ω with S1(ω) = s1,

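$$\begin{aligned}
S^*_2(d_1)(\omega) &= S^*_2\{d_1(s_1)\}(\omega),\\
S^*_k(\bar d_{k-1})(\omega) &= S^*_k\bigl[d_1(s_1),\, d_2\{\bar S^*_2(d_1)(\omega), d_1(s_1)\},\, \ldots,\, d_{k-1}\{\bar S^*_{k-1}(\bar d_{k-2})(\omega), d_1(s_1), \ldots\}\bigr](\omega), \quad k = 3, \ldots, K,\\
Y^*(d)(\omega) &= Y^*\bigl[d_1(s_1),\, d_2\{\bar S^*_2(d_1)(\omega), d_1(s_1)\},\, \ldots,\, d_K\{\bar S^*_K(\bar d_{K-1})(\omega), d_1(s_1), \ldots\}\bigr](\omega),
\end{aligned} \tag{3}$$

where S̄*k(d̄k−1)(ω) = {s1, S*2(d1)(ω), …, S*k(d̄k−1)(ω)}, and the trailing “…” inside each rule stands for the treatments dictated by d at the earlier decisions.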

The index d̄k−1 emphasizes that S*k(d̄k−1)(ω) represents the covariate information that would arise between decisions k − 1 and k were patient ω to receive the treatments sequentially dictated by the first k − 1 rules in d. Similarly, Y*(d)(ω) is the final outcome that ω would experience if s/he were to receive the K treatments dictated by d.

With these definitions, the expected outcome in the population if all patients with initial state S1 = s1 were to follow regime d is E{Y*(d)|S1 = s1}. An optimal regime, dopt ∈ 𝒟, say, satisfies

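$$E\{Y^*(d) \mid S_1 = s_1\} \le E\{Y^*(d^{\mathrm{opt}}) \mid S_1 = s_1\} \quad \text{for all } d \in \mathcal D \text{ and all } s_1 \in \mathcal S_1. \tag{4}$$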

Because (4) holds for every fixed s1, averaging over the distribution of S1 gives E{Y*(d)} ≤ E{Y*(dopt)} for any d ∈ 𝒟. In Section 3, we give the form of dopt satisfying (4).
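As a small numerical check of this argument, the sketch below considers a single decision (K = 1) with a hypothetical, fully known table of E{Y*(a1) | S1 = s1}, enumerates every rule d1: 𝒮1 → 𝒜1, and confirms that maximizing the marginal mean E{Y*(d)} over all rules selects the rule that maximizes the conditional mean at each s1 (unique here). All probabilities and means are invented for illustration.

```python
from itertools import product

# Hypothetical single-decision (K = 1) model, fully known for illustration.
p_s1 = {0: 0.3, 1: 0.5, 2: 0.2}            # pr(S1 = s1)
mean_y = {0: {0: 1.0, 1: 2.0},             # mean_y[s1][a1] = E{Y*(a1) | S1 = s1}
          1: {0: 3.0, 1: 0.5},
          2: {0: 1.5, 1: 1.6}}
options = (0, 1)
states = sorted(p_s1)


def marginal_value(rule):
    """E{Y*(d)} = sum over s1 of pr(S1 = s1) E{Y*(d(s1)) | S1 = s1}."""
    return sum(p_s1[s] * mean_y[s][rule[s]] for s in states)


# Enumerate every deterministic rule d1: S1 -> A1 (2**3 = 8 rules here).
all_rules = [dict(zip(states, acts)) for acts in product(options, repeat=len(states))]
best_rule = max(all_rules, key=marginal_value)

# Pointwise maximizer of E{Y*(a1) | S1 = s1} at each s1, as in (4).
pointwise = {s: max(options, key=lambda a: mean_y[s][a]) for s in states}
```

With these inputs best_rule coincides with pointwise, namely {0: 1, 1: 0, 2: 1}, with marginal value 0.3(2.0) + 0.5(3.0) + 0.2(1.6) = 2.42.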

Alternative specifications of Ψ may lead to different classes of regimes across which the optimal regime may differ. We emphasize that the definition (4) is predicated on the particular set Ψ, and hence class 𝒟, of interest. In principle, the class 𝒟 of interest is conceived based on scientific or policy objectives without reference to data available from a particular study.

Of course, potential outcomes for a given patient for all d ∈ 𝒟 are not observed. Thus, the goal is to estimate dopt in (4) using data from a study carried out on a random sample of n patients from Ω that records baseline and evolving covariate information and treatments actually received. Denote these available data as independent and identically distributed (i.i.d.) time-ordered random variables (S1i, A1i, …, SKi, AKi, Yi), i = 1, …, n, on Ω. Here, S1 is as before; Sk, k = 2, …, K, is covariate information recorded between decisions k − 1 and k, taking values sk ∈ 𝒮k; Ak, k = 1, …, K, is the recorded, observed treatment assignment, taking values ak ∈ 𝒜k; and Y is the observed outcome, taking values y ∈ 𝒴. As above, define S̄k = (S1, …, Sk) and Āk = (A1, …, Ak), k = 1, …, K, taking values s̄k ∈ 𝒮̄k and āk ∈ 𝒜̄k.

The available data may arise from an observational study involving n participants randomly sampled from the population; here, treatment assignment takes place according to routine clinical practice in the population. Alternatively, the data may arise from an intervention study. A clinical trial design that has been advocated for collecting data suitable for estimating optimal treatment regimes is that of a so-called sequential multiple-assignment randomized trial (SMART, Lavori and Dawson, 2000; Murphy, 2005). In a SMART involving K pre-specified decision points, each participant is randomized at each decision point to one of a set of treatment options, where, at the kth decision, the randomization probabilities may depend on past realized information s̄k, āk−1.

In order to use the observed data from either type of study to estimate an optimal regime, several assumptions are required. As is standard, we make the consistency assumption (e.g., Robins, 1994) that the covariates and outcomes observed in the study are those that potentially would be seen under the treatments actually received; that is, Sk = S*k(Āk−1), k = 2, …, K, and Y = Y*(ĀK). We also make the stable unit treatment value assumption (Rubin, 1978), which ensures that a patient’s covariates and outcome are unaffected by how treatments are allocated to her/him and other patients. The critical assumption of no unmeasured confounders, also referred to as the sequential randomization assumption (Robins, 1994), must also be satisfied. A strong version of this assumption states that Ak is conditionally independent of W* in (1) given {S̄k, Āk−1}, k = 1, …, K, where A0 is null, written Ak ⫫ W* | S̄k, Āk−1. In a SMART, this assumption is satisfied by design; in an observational study, it is unverifiable from the observed data. The strong version is sufficient for identification not only of the distribution of Y*(āK) but also of the joint distribution of Y*(āK) and S̄*K(āK−1), and it allows the results of Section 4 to hold. Although patients in the population and their providers may make decisions based only on past covariate information available to them, the issue is whether or not all of the information that is related to treatment assignment and future covariates and outcome is recorded in the Sk; see Robins (2004, Sections 2–3) for discussion and a relaxation of the version of the sequential randomization assumption given here. We assume henceforth that these assumptions hold.

Whether or not it is possible to estimate dopt from the available data is predicated on the treatment options in Ψk(s̄k, āk−1), k = 1, …, K, being represented in the data. For a prospectively designed SMART, ordinarily, Ψ defining the class 𝒟 of interest would dictate the design: at decision k, subjects would be randomized to the options in Ψk(s̄k, āk−1), satisfying this condition. If the data are from an observational study, all treatment options in Ψk(s̄k, āk−1) at each decision k must have been assigned to some patients. That is, if we define recursively Γkmax, k = 1, …, K, as the set of all (s̄k, āk−1) ∈ 𝒮̄k × 𝒜̄k−1 satisfying (i) pr(Aj = aj | S̄j = s̄j, Āj−1 = āj−1) > 0, j = 1, …, k − 1, and (ii) pr(S̄k = s̄k, Āk−1 = āk−1) > 0, we must have Γk ⊆ Γkmax and Ψk(s̄k, āk−1) ⊆ {ak ∈ 𝒜k : pr(Ak = ak | S̄k = s̄k, Āk−1 = āk−1) > 0} for all (s̄k, āk−1) ∈ Γk. The class of regimes that may use, at each history in Γkmax, every option received with positive probability in the data is the largest that can be considered based on the data, sometimes referred to as the class of “feasible regimes” (Robins, 2004). If this inclusion condition fails for some k = 1, …, K, dopt cannot be estimated from the data, and the class of regimes 𝒟 of interest must be reevaluated or another data source found.
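In an observational study, a rough empirical screen for this condition is to tabulate, at a given decision, which options in Ψk were actually received by patients with each observed discrete history. The sketch below is a hypothetical helper, assuming histories are stored as hashable tuples. A zero count only suggests, and cannot prove, that the corresponding probability is zero, and histories never observed cannot be checked at all.

```python
from collections import Counter, defaultdict


def unsupported_options(records, psi):
    """Flag options allowed by psi that were never received in the data.

    records: iterable of (history, action) pairs observed at one decision,
             where history is a hashable tuple such as (s1, a1, s2).
    psi:     function mapping a history to its set of allowed options.
    Returns a dict {history: set of allowed options with zero observed count}.
    Histories that never appear in records are not checked.
    """
    counts = defaultdict(Counter)
    for history, action in records:
        counts[history][action] += 1
    gaps = {}
    for history, seen in counts.items():
        # Counter returns 0 for options never recorded at this history.
        missing = {a for a in psi(history) if seen[a] == 0}
        if missing:
            gaps[history] = missing
    return gaps
```

For example, if patients with history (1,) only ever received option 0 while Ψk allows {0, 1}, the helper reports {(1,): {1}}.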

3. OPTIMAL TREATMENT REGIMES

Q- and A-learning are two approaches to estimating dopt satisfying (4) under the foregoing framework. Both involve recursive fitting algorithms; the main distinguishing feature is the form of the underlying models. To appreciate the rationale, one must understand how dopt is determined via dynamic programming, also known as backward induction. We demonstrate the formulation of dopt in terms of the potential outcomes and then show how dopt may be expressed in terms of the observed data under assumptions including those in Section 2. We sometimes highlight dependence on specific elements of quantities such as āk, writing, for example, āk as (āk−1, ak).

At the Kth decision point, for any s̄K ∈ 𝒮̄K, āK−1 ∈ 𝒜̄K−1 for which (s̄K, āK−1) ∈ ΓK, define

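$$d^{(1)\mathrm{opt}}_K(\bar s_K, \bar a_{K-1}) = \arg\max_{a_K \in \Psi_K(\bar s_K, \bar a_{K-1})} E\bigl\{Y^*(\bar a_{K-1}, a_K) \mid \bar S^*_K(\bar a_{K-1}) = \bar s_K\bigr\}, \tag{5}$$

$$V^{(1)}_K(\bar s_K, \bar a_{K-1}) = \max_{a_K \in \Psi_K(\bar s_K, \bar a_{K-1})} E\bigl\{Y^*(\bar a_{K-1}, a_K) \mid \bar S^*_K(\bar a_{K-1}) = \bar s_K\bigr\}. \tag{6}$$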

For k = K − 1, …, 1 and any s̄k ∈ 𝒮̄k, āk−1 ∈ 𝒜̄k−1 for which (s̄k, āk−1) ∈ Γk, which clearly holds if (s̄K, āK−1) ∈ ΓK, let

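$$d^{(1)\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}) = \arg\max_{a_k \in \Psi_k(\bar s_k, \bar a_{k-1})} E\Bigl[V^{(1)}_{k+1}\bigl\{\bar s_k, S^*_{k+1}(\bar a_{k-1}, a_k), \bar a_{k-1}, a_k\bigr\} \Bigm| \bar S^*_k(\bar a_{k-1}) = \bar s_k\Bigr], \tag{7}$$

$$V^{(1)}_k(\bar s_k, \bar a_{k-1}) = \max_{a_k \in \Psi_k(\bar s_k, \bar a_{k-1})} E\Bigl[V^{(1)}_{k+1}\bigl\{\bar s_k, S^*_{k+1}(\bar a_{k-1}, a_k), \bar a_{k-1}, a_k\bigr\} \Bigm| \bar S^*_k(\bar a_{k-1}) = \bar s_k\Bigr]; \tag{8}$$

where, for k = 1, ā0 is null and the conditioning event is S̄*1(ā0) = S1 = s1. These conditional expectations are well-defined by (2)(ii).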

Clearly, d(1)opt = (d(1)opt1, …, d(1)optK), with components defined in (5) and (7), is a treatment regime, as it comprises a set of rules that uses patient information to assign treatment from among the options in Ψ. The superscript (1) indicates that d(1)opt provides K rules for a patient presenting prior to decision 1 with baseline information S1 = s1; Section 4 considers optimal treatment of patients presenting at subsequent decisions after receiving possibly sub-optimal treatment at prior decisions. Note that d(1)opt is defined in a backward iterative fashion. At decision K, (5) gives the treatment that maximizes the expected potential final outcome given the prior potential information, and (6) is the maximum achieved. At decisions k = K − 1, …, 1, (7) gives the treatment that maximizes the expected outcome that would be achieved if the subsequent optimal rules already defined were followed henceforth, and (8) is the corresponding maximum. In Section A.1 of the supplemental article [Schulte et al. (2012)], we show that d(1)opt defined in (5)–(8) is an optimal treatment regime in the sense of satisfying (4).
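The backward recursion (5)–(8) can be carried out exactly when the relevant potential-outcome distributions are known. The following sketch does this for a hypothetical K = 2 model with binary covariates and treatments and Ψk = 𝒜k = {0, 1}. The functions prob_s2 and mean_y are invented inputs standing in for pr{S*2(a1) = s2 | S1 = s1} and E{Y*(a1, a2) | S1 = s1, S*2(a1) = s2}, which in practice are unknown.

```python
from itertools import product

S_VALUES = (0, 1)   # hypothetical binary covariates S1, S2
A_VALUES = (0, 1)   # Psi_k = A_k = {0, 1}


def prob_s2(s2, s1, a1):
    """Hypothetical pr{S2*(a1) = s2 | S1 = s1}."""
    p_one = (0.2 + 0.6 * a1) if s1 == 0 else (0.7 - 0.4 * a1)
    return p_one if s2 == 1 else 1.0 - p_one


def mean_y(s1, s2, a1, a2):
    """Hypothetical E{Y*(a1, a2) | S1 = s1, S2*(a1) = s2}."""
    return s1 + s2 + a2 * (2 * s2 - 1)


def backward_induction():
    """Apply (5)-(8) for K = 2; returns rules and value functions as dicts."""
    d2, v2 = {}, {}
    for s1, s2, a1 in product(S_VALUES, S_VALUES, A_VALUES):
        q = {a2: mean_y(s1, s2, a1, a2) for a2 in A_VALUES}
        d2[(s1, s2, a1)] = max(q, key=q.get)      # (5)
        v2[(s1, s2, a1)] = max(q.values())        # (6)
    d1, v1 = {}, {}
    for s1 in S_VALUES:
        # Expected optimal decision-2 value for each decision-1 option.
        q = {a1: sum(prob_s2(s2, s1, a1) * v2[(s1, s2, a1)] for s2 in S_VALUES)
             for a1 in A_VALUES}
        d1[s1] = max(q, key=q.get)                # (7)
        v1[s1] = max(q.values())                  # (8)
    return d1, v1, d2, v2
```

Under these inputs the recursion gives option 1 at decision 1 when s1 = 0 and option 0 when s1 = 1, because option 1 raises the chance of s2 = 1 (where treatment at decision 2 helps) only when s1 = 0.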

The foregoing developments express optimal regimes in terms of the distribution of potential outcomes. If an optimal regime is to be identifiable, it must be possible under the assumptions in Section 2 to express d(1)opt in terms of the distribution of the observed data. To this end, define

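$$Q_K(\bar s_K, \bar a_K) = E(Y \mid \bar S_K = \bar s_K, \bar A_K = \bar a_K), \tag{9}$$

$$d^{\mathrm{opt}}_K(\bar s_K, \bar a_{K-1}) = \arg\max_{a_K \in \Psi_K(\bar s_K, \bar a_{K-1})} Q_K(\bar s_K, \bar a_{K-1}, a_K), \tag{10}$$

$$V_K(\bar s_K, \bar a_{K-1}) = \max_{a_K \in \Psi_K(\bar s_K, \bar a_{K-1})} Q_K(\bar s_K, \bar a_{K-1}, a_K), \tag{11}$$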

and for k = K − 1,…, 1, define

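$$Q_k(\bar s_k, \bar a_k) = E\{V_{k+1}(\bar s_k, S_{k+1}, \bar a_k) \mid \bar S_k = \bar s_k, \bar A_k = \bar a_k\}, \tag{12}$$

$$d^{\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}) = \arg\max_{a_k \in \Psi_k(\bar s_k, \bar a_{k-1})} Q_k(\bar s_k, \bar a_{k-1}, a_k), \tag{13}$$

$$V_k(\bar s_k, \bar a_{k-1}) = \max_{a_k \in \Psi_k(\bar s_k, \bar a_{k-1})} Q_k(\bar s_k, \bar a_{k-1}, a_k). \tag{14}$$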

The expressions in (9)–(14) are well-defined under assumptions we discuss next. In (9) and (12), Qk(s̄k, āk) are referred to as “Q-functions,” viewed as measuring the “quality” associated with using treatment ak at decision k given the history up to that decision and then following the optimal regime thereafter. The “value functions” Vk(s̄k, āk−1) in (11) and (14) reflect the “value” of a patient’s history s̄k, āk−1 assuming that optimal decisions are made in the future. We emphasize that the rules doptk defined in (9)–(14) need not be optimal in the sense of (4) unless the sequential randomization, consistency, and positivity assumptions hold.

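As in Section 2, the treatment options in Ψ must be represented in the data in order to estimate an optimal regime; that is, each option in Ψk(s̄k, āk−1) must be received with positive probability by patients in the data with history (s̄k, āk−1). Formally, this implies that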

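$$\mathrm{pr}(A_k = a_k \mid \bar S_k = \bar s_k, \bar A_{k-1} = \bar a_{k-1}) > 0 \quad \text{for all } a_k \in \Psi_k(\bar s_k, \bar a_{k-1}) \text{ and } (\bar s_k, \bar a_{k-1}) \in \Gamma_k, \tag{15}$$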

for all k = 1,…, K. In Section A.2 of the supplemental article [Schulte et al. (2012)], under the consistency and sequential randomization assumptions and the positivity assumption (15), we show that, for any (s̄k, āk−1) ∈ Γk and ak ∈ Ψk (s̄k, āk−1), k = 1,…, K,

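$$\mathrm{pr}(\bar S_k = \bar s_k, \bar A_{k-1} = \bar a_{k-1}) > 0, \tag{16}$$

$$\mathrm{pr}(S_{k+1} = s_{k+1} \mid \bar S_k = \bar s_k, \bar A_k = \bar a_k) = \mathrm{pr}\{S^*_{k+1}(\bar a_k) = s_{k+1} \mid \bar S^*_k(\bar a_{k-1}) = \bar s_k, \bar A_{k-1} = \bar a_{k-1}\}, \tag{17}$$

$$\mathrm{pr}\{S^*_{k+1}(\bar a_k) = s_{k+1} \mid \bar S^*_k(\bar a_{k-1}) = \bar s_k, \bar A_{k-1} = \bar a_{k-1}\} = \mathrm{pr}\{S^*_{k+1}(\bar a_k) = s_{k+1} \mid \bar S^*_k(\bar a_{k-1}) = \bar s_k, \bar A_{j-1} = \bar a_{j-1}\} \tag{18}$$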

for j = 1, …, k, where (18) with j = k is the same as the right-hand side of (17), SK+1 = Y and S*K+1(āK) = Y*(āK), and when j = 1 the conditioning events do not involve treatment. By (16), the quantities in (9)–(14) are well-defined. Under (17)–(18), the conditional distributions of the observed data involved in (9)–(14) are the same as the conditional distributions of the potential outcomes involved in (5)–(8). It follows that

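$$d^{(1)\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}) = d^{\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}), \qquad V^{(1)}_k(\bar s_k, \bar a_{k-1}) = V_k(\bar s_k, \bar a_{k-1}) \tag{19}$$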

for (s̄k, āk−1) ∈ Γk, k = 1, …, K. The equivalence in (19) shows that, under the consistency, sequential randomization, and positivity assumptions, an optimal treatment regime in the (Ψ-specific) class of interest 𝒟 may be obtained using the distribution of the observed data.
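The equivalence (19) can be illustrated by simulation in the simplest case K = 1. In the sketch below, both potential outcomes are generated for every simulated patient, treatment is assigned with a probability that depends only on S1 (so the sequential randomization assumption holds by construction), and the observed outcome obeys consistency. The observed-data Q-function E(Y | S1 = s, A1 = a) should then agree, up to Monte Carlo error, with E{Y*(a) | S1 = s}, and the two should yield the same rule. The data-generating model and sample size are assumptions chosen for illustration.

```python
import numpy as np


def simulate_identification(n=200_000, seed=0):
    """Compare observed-data Q-values with potential-outcome means for K = 1."""
    rng = np.random.default_rng(seed)
    s1 = rng.integers(0, 2, size=n)                  # baseline covariate S1
    # Potential outcomes Y*(0), Y*(1); hypothetical means depend on S1.
    y0 = (s1 == 1).astype(float) + rng.normal(size=n)
    y1 = (s1 == 0).astype(float) + rng.normal(size=n)
    # Treatment depends only on S1, so A1 is independent of W* given S1.
    prop = np.where(s1 == 1, 0.7, 0.2)
    a = rng.binomial(1, prop)
    y = np.where(a == 1, y1, y0)                     # consistency: Y = Y*(A1)

    q_obs = np.zeros((2, 2))  # E(Y | S1 = s, A1 = a), from observed data only
    q_pot = np.zeros((2, 2))  # E{Y*(a) | S1 = s}, using the unseen Y*(a)
    for s in (0, 1):
        for trt, ystar in ((0, y0), (1, y1)):
            q_obs[s, trt] = y[(s1 == s) & (a == trt)].mean()
            q_pot[s, trt] = ystar[s1 == s].mean()
    return q_obs, q_pot
```

If treatment instead depended on an unrecorded variable that also affects Y*(a), the two tables could disagree, which is why the sequential randomization assumption is needed.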

There may not be a unique dopt. At any decision k, if there is more than one possible option ak maximizing the Q-function, then any rule yielding one of these ak defines an optimal regime.

4. OPTIMAL “MIDSTREAM” TREATMENT REGIME

In Section 3, we define a (Ψ-specific) optimal treatment regime starting at decision point 1 and elucidate conditions under which it may be estimated using data from a clinical trial or observational study collected through all K decisions on a sample from the patient population. The goal is to estimate the optimal regime and implement it in new patients from this population presenting at the first decision.

In routine clinical practice, however, a new patient may be encountered subsequent to decision point 1. For definiteness, suppose a new patient presents “midstream,” immediately prior to the ℓth decision point, ℓ = 2, …, K. A natural question is how to treat this patient optimally henceforth. For such a patient, the first ℓ − 1 treatment decisions presumably have been made according to routine practice, and s/he has a realized past history that may be viewed as a realization of random variables (S̄′ℓ, Ā′ℓ−1). Here, A′k, k = 1, …, ℓ − 1, represent the treatments received by such a patient according to the treatment assignment mechanism governing routine practice; and S′k, k = 1, …, ℓ, denote covariate information collected up to the ℓth decision. Write S̄′ℓ = (S′1, …, S′ℓ) and Ā′ℓ−1 = (A′1, …, A′ℓ−1).

As 𝒜k denotes the set of all possible treatment options at decision k, Ā′ℓ−1 takes on values āℓ−1 ∈ 𝒜̄ℓ−1. To define Ψ-specific regimes starting at decision ℓ, at the least, S′k must contain the same information as Sk in the data, k = 1, …, ℓ. Because the available data dictate the covariate information incorporated in the class of regimes 𝒟, if S′k contains additional information, it cannot be used in the context of such regimes. We thus take S′k and Sk to contain the same information, stated formally as the consistency assumption S′k = S*k(Ā′k−1), k = 2, …, ℓ, with S′1 playing the role of S1. Moreover, we can only consider treating new patients with realized histories (s̄ℓ, āℓ−1) that are contained in Γℓ, that is, that could have resulted from following a Ψ-specific regime through decision ℓ − 1. If the data arise from a SMART including only a subset of the treatments employed in practice, this may not hold.

We thus desire rules d(ℓ)opt, say, that dictate how to treat such midstream patients presenting with realized past history (S̄′ℓ, Ā′ℓ−1) = (s̄ℓ, āℓ−1). In the following, we regard (s̄ℓ, āℓ−1) as fixed, corresponding to the particular new patient. For k = ℓ, …, K, let Γ(ℓ)k be all elements of Γk with (s̄ℓ, āℓ−1) fixed at the values for the given new patient. Write d(ℓ) = (dℓ, …, dK) to denote regimes starting at the ℓth decision point, and define the class 𝒟(ℓ) of all such regimes to be the set of all d(ℓ) for which dk is a mapping from Γ(ℓ)k into 𝒜k satisfying dk(s̄k, āk−1) ∈ Ψk(s̄k, āk−1) for every (s̄k, āk−1) ∈ Γ(ℓ)k, k = ℓ, …, K. Then, by analogy to (4), and writing Y*(d(ℓ)) for the potential outcome of a patient who has received āℓ−1 and follows d(ℓ) from decision ℓ onward, we seek d(ℓ)opt satisfying

$$E\{Y^*(d^{(\ell)}) \mid \bar S'_\ell = \bar s_\ell, \bar A'_{\ell-1} = \bar a_{\ell-1}\} \le E\{Y^*(d^{(\ell)\mathrm{opt}}) \mid \bar S'_\ell = \bar s_\ell, \bar A'_{\ell-1} = \bar a_{\ell-1}\} \tag{20}$$

for all d(ℓ) ∈ 𝒟(ℓ) and s̄ℓ ∈ 𝒮̄ℓ, āℓ−1 ∈ 𝒜̄ℓ−1 for which (s̄ℓ, āℓ−1) ∈ Γℓ. Viewing this as a problem of making K − ℓ + 1 decisions at decision points ℓ, ℓ + 1, …, K, with initial state (S̄′ℓ, Ā′ℓ−1) = (s̄ℓ, āℓ−1), and letting 𝒱ℓ,k denote the event {S̄*k(āk−1) = s̄k, S̄′ℓ = s̄ℓ, Ā′ℓ−1 = āℓ−1}, k = ℓ, …, K, it may be shown, by an argument analogous to that in Section A.1 of the supplemental article [Schulte et al. (2012)] for ℓ = 1 and initial state S1 = s1, that d(ℓ)opt satisfying (20) is given by

$$d^{(\ell)\mathrm{opt}}_K(\bar s_K, \bar a_{K-1}) = \arg\max_{a_K \in \Psi_K(\bar s_K, \bar a_{K-1})} E\bigl\{Y^*(\bar a_{K-1}, a_K) \mid \mathcal V_{\ell,K}\bigr\}, \tag{21}$$

$$V^{(\ell)}_K(\bar s_K, \bar a_{K-1}) = \max_{a_K \in \Psi_K(\bar s_K, \bar a_{K-1})} E\bigl\{Y^*(\bar a_{K-1}, a_K) \mid \mathcal V_{\ell,K}\bigr\}, \tag{22}$$

for any s̄K ∈ 𝒮̄K, āK−1 ∈ 𝒜̄K−1 for which (s̄K, āK−1) ∈ Γ(ℓ)K; and, for k = K − 1, …, ℓ,

$$d^{(\ell)\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}) = \arg\max_{a_k \in \Psi_k(\bar s_k, \bar a_{k-1})} E\Bigl[V^{(\ell)}_{k+1}\bigl\{\bar s_k, S^*_{k+1}(\bar a_{k-1}, a_k), \bar a_{k-1}, a_k\bigr\} \Bigm| \mathcal V_{\ell,k}\Bigr], \tag{23}$$

$$V^{(\ell)}_k(\bar s_k, \bar a_{k-1}) = \max_{a_k \in \Psi_k(\bar s_k, \bar a_{k-1})} E\Bigl[V^{(\ell)}_{k+1}\bigl\{\bar s_k, S^*_{k+1}(\bar a_{k-1}, a_k), \bar a_{k-1}, a_k\bigr\} \Bigm| \mathcal V_{\ell,k}\Bigr], \tag{24}$$

for any s̄k ∈ 𝒮̄k, āk−1 ∈ 𝒜̄k−1 for which (s̄k, āk−1) ∈ Γ(ℓ)k, so that d(ℓ)opt = (d(ℓ)optℓ, …, d(ℓ)optK).

Comparison of (5)–(8) to (21)–(24) shows that the ℓth to Kth rules of the optimal regime d(1)opt that would be followed by a patient presenting at the first decision are not necessarily the same as those of the optimal regime d(ℓ)opt that would be followed by a patient presenting at the ℓth decision. In particular, noting that the conditioning sets in (5)–(8) are 𝒱1,K and 𝒱1,k, the rules are ℓ-dependent through dependence of the conditioning sets 𝒱ℓ,k, ℓ = 1, …, K, k = ℓ, …, K, on ℓ. However, we now demonstrate that these rules coincide under certain conditions.

Make the consistency, sequential randomization, and positivity (15) assumptions on the available data required to show (19) in Section 3, along with the consistency assumption on the S′k above and the sequential randomization assumption A′k ⫫ W* | S̄′k, Ā′k−1, k = 1, …, ℓ − 1, which ensures that the S′k include all information related to treatment assignment and future covariates and outcome up to decision ℓ. Note that (21)–(24) are expressed in terms of the conditional distributions pr{S*k+1(āk) = sk+1 | 𝒱ℓ,k}, k = ℓ, …, K. We can then use (18) with j = ℓ to deduce that these conditional distributions can be written equivalently as pr{S*k+1(āk) = sk+1 | S̄*k(āk−1) = s̄k}, that is, solely in terms of the distribution of the potential outcomes. By (17) and (18) with j = 1, this can be written as pr(Sk+1 = sk+1 | S̄k = s̄k, Āk = āk). This shows that (21)–(24) can be reexpressed in terms of the observed data, so that, for (s̄k, āk−1) ∈ Γk for ℓ = 1, …, K and k = ℓ, …, K,

$$d^{(\ell)\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}) = d^{\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}), \qquad V^{(\ell)}_k(\bar s_k, \bar a_{k-1}) = V_k(\bar s_k, \bar a_{k-1}). \tag{25}$$

Note that (25) subsumes (19) when ℓ = 1. The equivalence in (25) not only demonstrates that an optimal treatment regime can be obtained using the distribution of the observed data but also that the corresponding rules dictating treatment do not depend on ℓ under these assumptions. Thus, the single set of rules defined in (10) and (13) is relevant regardless of when a patient presents. That is, treatment at the ℓth decision point for a patient who presents at decision 1 and has followed the rules in dopt to that point would be determined by doptℓ evaluated at his/her history up to that point, as would treatment for a subject presenting for the first time immediately prior to decision ℓ. See Robins (2004, pages 305–306) for more discussion.

5. Q- AND A-LEARNING

5.1 Q-Learning

From (10), (13) and (19), an optimal (Ψ-specific) regime dopt may be represented in terms of the Q-functions (9), (12). Thus, estimation of dopt based on i.i.d. data (S1i, A1i,…, SKi, AKi, Yi), i = 1,…, n, may be accomplished via direct modeling and fitting of the Q-functions. This is the approach underlying Q-learning. Specifically, one may posit models Qk(s̄k,āk;ξk), say, for k = K,K − 1,…, 1, each depending on a finite-dimensional parameter ξk. The models may be linear or nonlinear in ξk and include main effects and interactions in the elements of s̄k and āk.

Estimators ξ̂k may be obtained in a backward iterative fashion for k = K, K −1,…, 1 by solving suitable estimating equations [e.g., ordinary (OLS) or weighted (WLS) least squares]. Assuming the latter, for k = K, letting Ṽ(K+1)i = Yi one would first solve

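$$\sum_{i=1}^n \frac{\partial Q_K(\bar S_{Ki}, \bar A_{Ki}; \xi_K)}{\partial \xi_K}\, \Sigma_K^{-1}(\bar S_{Ki}, \bar A_{Ki})\, \bigl\{\tilde V_{(K+1)i} - Q_K(\bar S_{Ki}, \bar A_{Ki}; \xi_K)\bigr\} = 0 \tag{26}$$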

in ξK to obtain ξ̂K, where ΣK(s̄K, āK) is a working variance model. Substituting the model QK(s̄K, āK; ξK) in (10) and accordingly writing doptK(s̄K, āK−1; ξK) = arg maxaK∈ΨK(s̄K,āK−1) QK(s̄K, āK−1, aK; ξK), substituting ξ̂K for ξK yields an estimator doptK(s̄K, āK−1; ξ̂K) for the optimal treatment choice at decision K for a patient with past history S̄K = s̄K, ĀK−1 = āK−1. With ξ̂K in hand, one would form for each i, based on (11), ṼKi = maxaK∈ΨK(S̄Ki,Ā(K−1)i) QK(S̄Ki, Ā(K−1)i, aK; ξ̂K). To obtain ξ̂K−1, setting k = K − 1, based on (12) and letting Σk(s̄k, āk) be a working variance model, one would then solve for ξk

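$$\sum_{i=1}^n \frac{\partial Q_k(\bar S_{ki}, \bar A_{ki}; \xi_k)}{\partial \xi_k}\, \Sigma_k^{-1}(\bar S_{ki}, \bar A_{ki})\, \bigl\{\tilde V_{(k+1)i} - Q_k(\bar S_{ki}, \bar A_{ki}; \xi_k)\bigr\} = 0. \tag{27}$$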

The corresponding doptK−1(s̄K−1, āK−2; ξ̂K−1) yields an estimator for the optimal treatment choice at decision K − 1 for a patient with past history S̄K−1 = s̄K−1, ĀK−2 = āK−2, assuming s/he will take the optimal treatment at decision K. One would continue this process in the obvious fashion for k = K − 2, …, 1, forming Ṽki = maxak∈Ψk(S̄ki,Ā(k−1)i) Qk(S̄ki, Ā(k−1)i, ak; ξ̂k), and solving equations of form (27) to obtain ξ̂k and the corresponding doptk(s̄k, āk−1; ξ̂k).

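We may now summarize the estimated optimal regime as d̂opt = (d̂opt1, …, d̂optK), where

$$\hat d^{\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}) = d^{\mathrm{opt}}_k(\bar s_k, \bar a_{k-1}; \hat\xi_k) = \arg\max_{a_k \in \Psi_k(\bar s_k, \bar a_{k-1})} Q_k(\bar s_k, \bar a_{k-1}, a_k; \hat\xi_k), \quad k = 1, \ldots, K. \tag{28}$$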

It is important to recognize that, even under the sequential randomization assumption, the estimated regime (28) may not be a consistent estimator for the true optimal regime unless all the models for the Q-functions are correctly specified.

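We illustrate the approach for K = 2, where at each decision there are two possible treatment options coded as 0 and 1; i.e., Ψ1(s1) = 𝒜1 = {0,1} for all s1 and Ψ2(s̄2, a1) = 𝒜2 = {0,1} for all s̄2 and a1 ∈ {0,1}. Let H10 and H11 be vectors of features of s1, and H20 and H21 vectors of features of (s̄2, a1); for example, H10 = H11 = (1, s1ᵀ)ᵀ and H20 = H21 = (1, s1ᵀ, a1, s2ᵀ)ᵀ. As in many modeling contexts, it is standard to adopt linear models for the Q-functions; accordingly, consider the models

$$\begin{aligned} Q_1(s_1, a_1; \xi_1) &= H_{10}^T\beta_1 + a_1 (H_{11}^T\psi_1),\\ Q_2(\bar s_2, \bar a_2; \xi_2) &= H_{20}^T\beta_2 + a_2 (H_{21}^T\psi_2), \end{aligned} \tag{29}$$

where ξk = (βkᵀ, ψkᵀ)ᵀ, k = 1, 2. In (29), Q2(s̄2, ā2; ξ2) is a model for E(Y | S̄2 = s̄2, Ā2 = ā2), a standard regression problem involving observable data, whereas Q1(s1, a1; ξ1) is a model for the conditional expectation of V2(s̄2, a1) = maxa2∈{0,1} E(Y | S̄2 = s̄2, A1 = a1, A2 = a2) given S1 = s1 and A1 = a1, which is an approximation to a complex true relationship; see Section 5.3. Under (29), Q2(s̄2, a1, 1; ξ2) − Q2(s̄2, a1, 0; ξ2) = H21ᵀψ2 and Q1(s1, 1; ξ1) − Q1(s1, 0; ξ1) = H11ᵀψ1. Substituting the Q-functions in (29) in (10) and (13) then yields dopt2(s̄2, a1; ξ2) = I(H21ᵀψ2 > 0) and dopt1(s1; ξ1) = I(H11ᵀψ1 > 0).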
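The two-stage procedure with OLS and the linear models (29) can be sketched in Python with NumPy as follows, assuming scalar s1 and s2 and the feature choice H10 = H11 = (1, s1)ᵀ, H20 = H21 = (1, s1, a1, s2)ᵀ. The function name and its interface are hypothetical. The stage-1 response uses the fact that, under (29), the maximum over a2 ∈ {0, 1} of Q2 is H20ᵀβ2 + max(0, H21ᵀψ2).

```python
import numpy as np


def q_learning_two_stage(s1, a1, s2, a2, y):
    """Two-stage Q-learning by OLS under linear working models of form (29).

    Hypothetical features: H_10 = H_11 = (1, s1), H_20 = H_21 = (1, s1, a1, s2).
    Returns (xi1_hat, xi2_hat, d1, d2), with xi_k = (beta_k, psi_k).
    """
    n = len(y)
    ones = np.ones(n)
    h1 = np.column_stack([ones, s1])
    h2 = np.column_stack([ones, s1, a1, s2])
    p1, p2 = h1.shape[1], h2.shape[1]

    # Stage 2: regress Y on (H_20, a2 * H_21).
    x2 = np.column_stack([h2, a2[:, None] * h2])
    xi2, *_ = np.linalg.lstsq(x2, y, rcond=None)
    beta2, psi2 = xi2[:p2], xi2[p2:]

    # Pseudo-outcome V~_2: fitted Q_2 maximized over a2 in {0, 1}.
    v2 = h2 @ beta2 + np.maximum(h2 @ psi2, 0.0)

    # Stage 1: regress V~_2 on (H_10, a1 * H_11).
    x1 = np.column_stack([h1, a1[:, None] * h1])
    xi1, *_ = np.linalg.lstsq(x1, v2, rcond=None)
    psi1 = xi1[p1:]

    def d2(s1_new, a1_new, s2_new):
        # Estimated rule I(H_21' psi2_hat > 0).
        return int(np.dot([1.0, s1_new, a1_new, s2_new], psi2) > 0)

    def d1(s1_new):
        # Estimated rule I(H_11' psi1_hat > 0).
        return int(np.dot([1.0, s1_new], psi1) > 0)

    return xi1, xi2, d1, d2
```

Fitting stage 2 before stage 1 mirrors the backward recursion: the stage-1 response is not Y but the estimated value Ṽ2i of following the estimated optimal rule at decision 2.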

We have presented (26) and (27) in the conventional WLS form, with leading term in the summand {∂Qk(S̄ki, Āki; ξk)/∂ξk} Σk⁻¹(S̄ki, Āki); taking Σk to be a constant yields OLS. At the Kth decision, with responses Yi, standard theory implies that this is the optimal leading term when var(Y | S̄K = s̄K, ĀK = āK) = ΣK(s̄K, āK), yielding the (asymptotically) efficient estimator for ξK. For k < K, with “responses” Ṽ(k+1)i, this theory may no longer apply, and deriving the optimal leading term involves considerable complication. Accordingly, it is standard to fit the posited models Qk(s̄k, āk; ξk) via OLS or WLS; some authors define Q-learning as using OLS (Chakraborty, Murphy and Strecher, 2010). The choice between OLS and WLS may be guided by whether homoscedasticity of the Ṽ(k+1)i, k = K, K − 1, …, 1, appears plausible; whether linear models suffice to approximate the relationships may also be evaluated, but see Section 5.3.

5.2 A-Learning

Advantage learning (A-learning, Blatt et al., 2004) is a term used to describe a class of alternative methods to Q-learning predicated on the fact that the entire Q-function need not be specified to estimate the optimal regime. For simplicity, we consider here only the case of two treatment options coded as 0 and 1 at each decision; i.e., Ψk(s̄k, āk−1) = 𝒜k = {0,1}, k = 1,…, K.

To fix ideas, consider (29). Note that the rule dopt2(s̄2, a1; ξ2) implied by (29) depends only on the coefficients of the terms involving a2; likewise, dopt1(s1; ξ1) depends only on the coefficients of the terms involving a1. This reflects the general result that, for purposes of deducing the optimal regime, for each k = 1, …, K, it suffices to know the contrast function Ck(s̄k, āk−1) = Qk(s̄k, āk−1, 1) − Qk(s̄k, āk−1, 0). This can be appreciated by noting that any arbitrary Qk(s̄k, āk) may be written as hk(s̄k, āk−1) + akCk(s̄k, āk−1), where hk(s̄k, āk−1) = Qk(s̄k, āk−1, 0), so that Qk(s̄k, āk−1, ak) is maximized by taking ak = I{Ck(s̄k, āk−1) > 0}, and the maximum itself is hk(s̄k, āk−1) + Ck(s̄k, āk−1)I{Ck(s̄k, āk−1) > 0}. In the case of two treatment options we consider here, the contrast function is also referred to as the optimal-blip-to-zero function (Robins, 2004; Moodie et al., 2007). Murphy (2003) considers the expression Ck(S̄k, Āk−1)[I{Ck(S̄k, Āk−1) > 0} − Ak], referred to as the advantage or regret function, as it represents the “advantage” in response incurred if the optimal treatment at the kth decision were given relative to that actually received (or, equivalently, the “regret” incurred by not using the optimal treatment). See Robins (2004) and Moodie et al. (2007) for discussion of the relationship between regrets and optimal blip functions in this and settings other than binary treatment options.
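The decomposition Qk = hk + akCk and the resulting rule, maximum, and regret can be checked numerically at a single history. The helper below is a hypothetical illustration for binary options: given the two Q-values at that history and the treatment actually received, it returns I(C > 0), the maximum h + C I(C > 0), and the regret C[I(C > 0) − a].

```python
def contrast_summary(q0, q1, a_received):
    """Binary-option decomposition of a Q-function at one history.

    q0, q1:     values of Q_k at a_k = 0 and a_k = 1 for this history.
    a_received: treatment actually received (0 or 1).
    Returns (optimal option, maximal Q-value, regret of a_received).
    """
    h = q0                               # h_k = Q_k(..., 0)
    c = q1 - q0                          # contrast C_k = Q_k(..., 1) - Q_k(..., 0)
    a_opt = int(c > 0)                   # I(C_k > 0)
    value = h + c * a_opt                # h_k + C_k I(C_k > 0), equals max(q0, q1)
    regret = c * (a_opt - a_received)    # C_k [I(C_k > 0) - a]
    return a_opt, value, regret
```

For instance, with Q-values 2.0 and 3.5 and option 0 received, the optimal option is 1, the maximum is 3.5, and the regret is 1.5, the loss from not using the optimal treatment.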

We discuss here an A-learning method based on explicit modeling of the contrast functions, which we refer to as contrast-based A-learning. This approach is implemented via recursive solution of certain estimating equations given below developed by Robins (2004), often referred to as g-estimation. See Moodie et al. (2007) and the supplementary material to Zhang et al. (2013) for details. Contrast-based A-learning is distinguished from the regret-based A-learning methods of Murphy (2003) and Blatt et al. (2004), which rely on direct modeling of the regret functions and are implemented using a different estimating equation formulation called Iterative Minimization for Optimal Regimes by Moodie et al. (2007).

All of these methods are alternatives to Q-learning, which involves modeling the full Q-functions. For k = K − 1,…, 1, the Q-functions involve possibly complex relationships, raising concern over the consequences of model misspecification for estimation of the optimal regime. As identifying the optimal regime depends only on correct specification of the contrast or regret functions, A-learning methods may be less sensitive to mismodeling; see Sections 5.3 and 6.

Although we consider these methods only in the case of binary treatment options here, they may be extended to more than two treatments at the expense of complicating the formulation; see Robins (2004) and Moodie et al. (2007).

Contrast-based A-learning proceeds as follows. Posit models Ck(s̄k, āk−1; ψk), k = 1,…, K, for the contrast functions, depending on parameters ψk. Consider decision K. Let πK(s̄K, āK−1) = pr(AK = 1|S̄K = s̄K, ĀK−1 = āK−1) be the propensity of receiving treatment 1 in the observed data as a function of past history and Ṽ(K+1)i = Yi. Robins (2004) showed that all consistent and asymptotically normal estimators for ψK are solutions to estimating equations of the form

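$$\sum_{i=1}^{n} \lambda_K(\bar S_{Ki}, \bar A_{(K-1)i})\{A_{Ki} - \pi_K(\bar S_{Ki}, \bar A_{(K-1)i})\}\{\tilde V_{(K+1)i} - A_{Ki}\, C_K(\bar S_{Ki}, \bar A_{(K-1)i}; \psi_K) - \theta_K(\bar S_{Ki}, \bar A_{(K-1)i})\} = 0 \tag{30}$$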

for arbitrary functions λK(s̄K, āK−1) of the same dimension as ψK and arbitrary functions θK(s̄K, āK−1). Assuming that the model CK(s̄K, āK−1; ψK) is correct and that var(Y|S̄K = s̄K, ĀK−1 = āK−1) is constant, the optimal choices of these functions are λK(s̄K, āK−1; ψK) = ∂/∂ψK CK(s̄K, āK−1; ψK) and θK(s̄K, āK−1) = hK(s̄K, āK−1); if the variance is not constant, the optimal λK is complex (Robins, 2004).

To implement estimation of ψK via (30), one may adopt parametric models for these functions. Although A-learning obviates the need to specify the Q-functions fully, one may posit a model hK(s̄K, āK−1; βK), say, for the optimal θK. Moreover, unless the data are from a SMART, in which case the propensities πK(s̄K, āK−1) are known, these may be modeled as πK(s̄K, āK−1; ϕK) (e.g., by logistic regression). These models are only adjuncts to estimating ψK: as long as at least one of them is correctly specified, (30) yields a consistent estimator for ψK, the so-called double robustness property. In contrast, Q-learning requires correct specification of all Q-functions; see Section 5.3 and Section A.5 of the supplemental article [Schulte et al. (2012)].

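Substituting these models in (30), one solves (30) jointly in (ψK, βK) with

$$\sum_{i=1}^{n} \frac{\partial}{\partial \beta_K} h_K(\bar S_{Ki}, \bar A_{(K-1)i}; \beta_K)\{\tilde V_{(K+1)i} - A_{Ki}\, C_K(\bar S_{Ki}, \bar A_{(K-1)i}; \psi_K) - h_K(\bar S_{Ki}, \bar A_{(K-1)i}; \beta_K)\} = 0$$

and the usual binary regression likelihood score equations in ϕK. The optimal decision at K is then I{CK(s̄K, āK−1; ψK) > 0}; as in Q-learning, substituting ψ̂K yields an estimator for the optimal treatment choice at decision K for a patient with past history S̄K = s̄K, ĀK−1 = āK−1.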
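To make (30) and the accompanying β-equation concrete, here is a minimal Python/NumPy sketch for a single decision point. It assumes linear working models for hK and CK, a logistic propensity model fitted by maximum likelihood, and λK = ∂CK/∂ψK. Under these assumptions the ψ- and β-equations are linear in (ψK, βK) once π̂K is plugged in, so a single linear solve suffices; this shortcut is specific to linear working models. Fitting ϕK first and then solving for (ψK, βK) gives the same point estimate as solving all equations jointly, because the score equations for ϕK do not involve (ψK, βK). The generative model and all function names are hypothetical. The propensity model is correct but h omits an s² term, so the example exercises double robustness: A-learning should recover ψ = (1, −0.5), whereas Q-learning, which needs the full Q-function to be right, need not.

```python
import numpy as np


def expit(x):
    return 1.0 / (1.0 + np.exp(-x))


def fit_logistic(X, a, n_iter=25):
    """Logistic-regression MLE for the propensity model, via Newton-Raphson."""
    phi = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = expit(X @ phi)
        score = X.T @ (a - p)
        info = X.T @ (X * (p * (1.0 - p))[:, None])
        phi = phi + np.linalg.solve(info, score)
    return phi


def a_learning_one_stage(X_h, X_c, X_pi, a, y):
    """Contrast-based A-learning (g-estimation) at a single decision point.

    Working models: h(s; beta) = X_h @ beta, C(s; psi) = X_c @ psi,
    pi(s; phi) = expit(X_pi @ phi). With lambda = dC/dpsi = X_c, the psi- and
    beta-equations are linear in theta = (psi, beta) given the fitted pi.
    """
    pi_hat = expit(X_pi @ fit_logistic(X_pi, a))
    W = np.hstack([a[:, None] * X_c, X_h])              # residual = y - W @ theta
    U = np.hstack([(a - pi_hat)[:, None] * X_c, X_h])   # weights in the estimating equations
    theta = np.linalg.solve(U.T @ W, U.T @ y)
    return theta[: X_c.shape[1]], theta[X_c.shape[1]:]  # (psi_hat, beta_hat)


def q_learning_one_stage(X_h, X_c, a, y):
    """Q-learning at one decision point: least squares for h + a * C."""
    W = np.hstack([a[:, None] * X_c, X_h])
    theta, *_ = np.linalg.lstsq(W, y, rcond=None)
    return theta[: X_c.shape[1]]


# Hypothetical generative model (not one from the paper): logistic propensity
# linear in s, contrast 1 - 0.5 s, but h(s) contains an s**2 term.
rng = np.random.default_rng(2012)
n = 200_000
s = rng.normal(size=n)
a = rng.binomial(1, expit(1.5 * s)).astype(float)
y = 1.0 + s + s**2 + a * (1.0 - 0.5 * s) + rng.normal(size=n)

X = np.column_stack([np.ones(n), s])  # the same (1, s) design for h, C and pi
psi_a, beta_a = a_learning_one_stage(X, X, X, a, y)
psi_q = q_learning_one_stage(X, X, a, y)
print("A-learning psi:", psi_a)  # close to the true (1, -0.5)
print("Q-learning psi:", psi_q)  # slope component biased by the omitted s**2
```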

With ψ̂K in hand, the contrast-based A-learning algorithm proceeds in a backward iterative fashion to yield ψ̂k, k = K − 1,…, 1. At the kth decision, given models hk(s̄k, āk−1; βk) and πk(s̄k, āk−1; ϕk), one solves jointly in (ψk, βk, ϕk) a system of estimating equations analogous to those above. The kth set of equations is based on “optimal responses” Ṽ(k+1)i, where, for each i, Ṽki estimates Vk(S̄ki, Ā(k−1)i). It may be shown (see Section A.3 of the supplemental article [Schulte et al. (2012)]) that E[Vk+1(S̄k+1, Āk) + Ck(S̄k, Āk−1)[I{Ck(S̄k, Āk−1) > 0} − Ak] | S̄k, Āk−1] = Vk(S̄k, Āk−1). Accordingly, define recursively Ṽki = Ṽ(k+1)i + Ck(S̄ki, Ā(k−1)i; ψ̂k)[I{Ck(S̄ki, Ā(k−1)i; ψ̂k) > 0} − Aki], k = K, K − 1,…, 1, with Ṽ(K+1)i = Yi. The equations at the kth decision are then
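The recursion for the pseudo-outcomes takes only a few lines of Python. This sketch assumes the fitted contrasts Ck(S̄ki, Ā(k−1)i; ψ̂k) have already been evaluated for each patient; the function name and example values are illustrative. Each call moves one decision back, and its output serves as the response in the estimating equations at the previous decision.

```python
import numpy as np


def a_learning_pseudo_outcome(v_next, contrast_hat, a):
    """One backward step: V~_ki = V~_(k+1)i + C_k [I{C_k > 0} - A_ki].

    v_next:       V~_(k+1)i; at the last decision this is Y_i.
    contrast_hat: C_k(S_ki, A_(k-1)i; psi_hat_k) for each patient.
    a:            treatment A_ki actually received (0/1).
    """
    v_next = np.asarray(v_next, dtype=float)
    c = np.asarray(contrast_hat, dtype=float)
    a = np.asarray(a, dtype=float)
    return v_next + c * ((c > 0).astype(float) - a)


y = np.array([10.0, 8.0, 12.0])
c2_hat = np.array([2.0, -1.0, 3.0])  # hypothetical fitted stage-2 contrasts
a2 = np.array([0, 1, 1])
v2 = a_learning_pseudo_outcome(y, c2_hat, a2)  # [12., 9., 12.]
```

Patients who received a suboptimal treatment (the first two) have their response raised by the estimated regret; the third already received the estimated optimal treatment, so its pseudo-outcome equals Y.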

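$$\begin{aligned}
&\sum_{i=1}^{n} \lambda_k(\bar S_{ki}, \bar A_{(k-1)i}; \psi_k)\{A_{ki} - \pi_k(\bar S_{ki}, \bar A_{(k-1)i}; \phi_k)\}\{\tilde V_{(k+1)i} - A_{ki}\, C_k(\bar S_{ki}, \bar A_{(k-1)i}; \psi_k) - h_k(\bar S_{ki}, \bar A_{(k-1)i}; \beta_k)\} = 0,\\
&\sum_{i=1}^{n} \frac{\partial}{\partial \beta_k} h_k(\bar S_{ki}, \bar A_{(k-1)i}; \beta_k)\{\tilde V_{(k+1)i} - A_{ki}\, C_k(\bar S_{ki}, \bar A_{(k-1)i}; \psi_k) - h_k(\bar S_{ki}, \bar A_{(k-1)i}; \beta_k)\} = 0,
\end{aligned} \tag{31}$$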

for a given specification λk(s̄k, āk−1; ψk), solved jointly with the maximum likelihood score equations for binary regression in ϕk. The estimated optimal decision at the kth decision point is then I{Ck(s̄k, āk−1; ψ̂k) > 0}. As above, the optimal λk is complex (Robins, 2004); taking λk(s̄k, āk−1; ψk) = ∂/∂ψk Ck(s̄k, āk−1; ψk) is reasonable for practical implementation.

Summarizing, the estimated optimal regime is

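$$\hat d^{\,\mathrm{opt}} = \big[\, I\{C_1(s_1; \hat\psi_1) > 0\}, \ldots, I\{C_K(\bar s_K, \bar a_{K-1}; \hat\psi_K) > 0\} \,\big]. \tag{32}$$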

How well the estimated regime (32) estimates dopt, and hence d(1)opt, depends on how close the posited Ck(s̄k, āk−1; ψk) are to the true contrast functions, as well as on correct specification of the functions hk or πk.

Henceforth, for brevity, we suppress the descriptor “contrast-based” and refer to the foregoing approach simply as A-learning.

5.3 Comparison and Practical Considerations

When K = 1, the Q-function is a model for E(Y|S1 = s1, A1 = a1). If in Q-learning this model and the variance model Σ1 in (26) are correctly specified, then, as above, the form of (26) is optimal for estimating ξ1. Accordingly, even if C1(s1; ψ1) and h1(s1; β1) are correctly modeled, (31) with K = 1 is generally not of this optimal form for any choice of λ1(s1; ψ1), and hence A-learning will yield relatively inefficient inference on ψ1 and the optimal regime. However, if in Q-learning the Q-function is mismodeled, but in A-learning C1(s1; ψ1) and π1(s1; ϕ1) are both correctly specified, then A-learning will still yield consistent inference on ψ1, and hence on the optimal regime, whereas inference on ξ1 and the optimal regime via Q-learning may be inconsistent. We assess the trade-off between consistency and efficiency in this case in Section 6. For K > 1, owing to the complications involved in specifying optimal estimating equations for Q- and A-learning, relative performance is not readily apparent; we investigate it empirically in Section 6.

In special cases, Q- and A-learning lead to identical estimators for the Q-function (Chakraborty et al., 2010). For example, this holds if the propensities for treatment are constant, as would be the case under pure randomization at each decision point, and certain linear models are used for C1(s1; ψ1) and h1(s1; β1); Section A.4 of the supplemental article [Schulte et al. (2012)] demonstrates this when K = 1 and pr(A1 = 1|S1 = s1) does not depend on s1. See Robins (2004, page 1999) and Rosenblum and van der Laan (2009) for further discussion.
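The K = 1 equivalence can be checked numerically. The Python sketch below assumes that h1 and C1 are both linear in the same covariate vector (1, s1) and plugs a constant c into the propensity in the A-learning equations. Because the β-equations force Σ Xi ri = 0 for the residuals ri, the ψ-equations Σ Xi(Ai − c)ri = 0 reduce to Σ XiAiri = 0 for any constant c, and these are exactly the least-squares normal equations of Q-learning. The two estimates of ψ1 should therefore agree to rounding error. The data-generating model is hypothetical, and h is deliberately nonlinear to show that the identity is algebraic rather than a consequence of correct specification.

```python
import numpy as np


def a_learning_constant_propensity(X, a, y, pi_const):
    """A-learning with h and C both linear in X and a constant propensity."""
    W = np.hstack([a[:, None] * X, X])
    U = np.hstack([(a - pi_const)[:, None] * X, X])
    theta = np.linalg.solve(U.T @ W, U.T @ y)
    return theta[: X.shape[1]]


def q_learning(X, a, y):
    """Least-squares fit of h + a * C with the same design X for both."""
    W = np.hstack([a[:, None] * X, X])
    theta, *_ = np.linalg.lstsq(W, y, rcond=None)
    return theta[: X.shape[1]]


rng = np.random.default_rng(7)
n = 500
s = rng.normal(size=n)
a = rng.binomial(1, 0.5, size=n).astype(float)  # pure randomization
y = 2.0 + s + 0.5 * s**2 + a * (1.0 - s) + rng.normal(size=n)  # h is nonlinear
X = np.column_stack([np.ones(n), s])

psi_q = q_learning(X, a, y)
psi_a = a_learning_constant_propensity(X, a, y, pi_const=a.mean())
psi_a_other = a_learning_constant_propensity(X, a, y, pi_const=0.3)
print(np.max(np.abs(psi_a - psi_q)), np.max(np.abs(psi_a_other - psi_q)))
```

Both printed differences are at the level of floating-point rounding. With a propensity model that actually depends on s1 (as estimated by logistic regression in finite samples), the two estimators generally differ.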

As we have emphasized, for Q-learning, while modeling the Q-function at decision K is a standard regression problem with response Y, for decisions k = K − 1,…, 1 it involves modeling the estimated value function, which at decision k depends on relationships for future decisions k + 1,…, K. Ideally, the sequence of posited models Qk(s̄k, āk; ξk) should respect this constraint; however, this may be difficult to achieve with standard regression models. To illustrate, consider (29), and assume S1, S2 are scalar, where the conditional distribution of S2 given S1 = s1, A1 = a1 is normal with mean linear in 𝒦1 = (1, s1, a1)T, say. Recall that V2(s̄2, a1; ξ2) = maxa2 Q2(s̄2, ā2; ξ2) = h2(s̄2, a1; β2) + C2(s̄2, a1; ψ2)I{C2(s̄2, a1; ψ2) > 0}. Then, if the model Q2 in (29) were correct, from (12), ideally, Q1(s1, a1) = E{V2(s1, S2, a1; ξ2)|S1 = s1, A1 = a1}. Letting φ(·) and Φ(·) be the standard normal density and cumulative distribution function, respectively, it may be shown (see Section A.5 of the supplemental article [Schulte et al. (2012)]) that

| (33) |

taking ψ22 > 0. The true Q1(s1, a1) in (33) is clearly highly nonlinear and likely poorly approximated by the posited linear model Q1(s1, a1; ξ1) in (29). For larger K, this incompatibility between true and assumed models would propagate backward through decisions K − 1,…, 1. Thus, while linear models for the Q-functions are popular in practice, the potential for such mismodeling should be recognized.
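The φ and Φ terms in (33) come from the positive part in V2. As a sketch of the key identity, assume (as the condition ψ22 > 0 suggests) that the contrast in (29) is linear in s2 with slope ψ22, so that, given S1 = s1, A1 = a1, it is normally distributed with some mean μ and standard deviation σ. Then E[C2I{C2 > 0}] = E{max(C2, 0)} = μΦ(μ/σ) + σφ(μ/σ). The Python code below checks this closed form by simulation and tabulates it over a few values of μ; because μ is linear in (s1, a1), the resulting contribution to Q1 is nonlinear in them. It illustrates the mechanism only and does not reproduce (33) itself.

```python
import math

import numpy as np


def expected_positive_part(mu, sigma):
    """E{max(X, 0)} = mu * Phi(mu / sigma) + sigma * phi(mu / sigma), X ~ N(mu, sigma^2)."""
    z = mu / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # phi(z)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))         # Phi(z)
    return mu * cdf + sigma * pdf


# Monte Carlo check of the closed form for one (mu, sigma) pair.
rng = np.random.default_rng(0)
mu, sigma = 0.3, 1.2
draws = rng.normal(loc=mu, scale=sigma, size=1_000_000)
mc = np.maximum(draws, 0.0).mean()
exact = expected_positive_part(mu, sigma)
print(mc, exact)

# The map mu -> E{max(X, 0)} is smooth but clearly nonlinear in mu.
for m in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(m, round(expected_positive_part(m, sigma), 4))
```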

An approach that may mitigate the risk of mismodeling is to employ flexible models for the Q-functions; Zhao, Kosorok and Zeng (2009) use support vector regression models. Developments in statistical learning suggest a large collection of powerful regression methods that might be used. Many of these methods must be tuned in order to balance bias and variance, a natural approach to which is to minimize the cross-validated mean squared error of the Q-functions at each decision point. An obvious downside is that the final model may be difficult to interpret, and clinicians may not be willing to use “black box” rules. One compromise is to fit a simple, interpretable model, such as a decision tree, to the fitted values of the complex model in order to explore the factors driving the recommended treatment decisions. This simple model can then be checked against scientific theory. If it appears sensible, then clinicians may be willing to use predictions from the complex model. For discussion, see Craven and Shavlik (1996).

A-learning represents a middle ground between Q-learning and these approaches in that it allows for flexible modeling of the functions hk(s̄k, āk−1) while maintaining simple parametric models for the contrast functions Ck(s̄k, āk−1). Thus, the resulting decision rule, which depends only on the contrast function, remains interpretable, while the model for the response is allowed to be nonlinear. This is also appealing in that it may be reasonable to expect, based on the underlying science, that the relationship between patient history and outcome is complex while the optimal rule for treatment assignment is dependent, in a simple fashion, on a small number of variables. The flexibility allowed by a semi-parametric model also has its drawbacks. Techniques for formal model building, critique, and diagnosis are well understood for linear models but much less so for semi-parametric models. Consequently, Q-learning based on building a series of linear models may be more appealing to an analyst interested in formal diagnostics.

A-learning may have certain advantages for making inference under the null hypothesis of no effect of any treatment regime in 𝒟 on outcome. For example, in a SMART, the propensities are specified by design, and under the null, the contrast functions are identically zero and hence correctly specified. Thus, A-learning will yield consistent estimators for the parameters defining the contrast function. See Robins (2004) and the references in Section 8.

6. SIMULATION STUDIES

We examine the finite sample performance of Q- and A-learning on a suite of simple test examples via Monte Carlo simulation. We emphasize that the methods are straightforward to implement in more complex settings than those here. To illustrate trade-offs between the methods, we begin with correctly specified models and systematically introduce misspecification of the Q-function, the propensity model, and both. We focus here on situations where the contrast function is correctly specified to gain insight into the impact of other model components. Scenarios with a misspecified contrast model can be constructed to include or exclude the target dopt, precluding generalizable conclusions. See Section A.9 of the supplemental article [Schulte et al. (2012)], Zhang et al. (2012a,b), and Zhang et al. (2013) for simulations involving misspecified contrast functions and Robins (2004, Section 9) for discussion.

In all scenarios, 10,000 Monte Carlo replications were used, and, for each generated data set, the estimated optimal regimes in (28) and (32) were obtained using the Q- and A-learning procedures in Sections 5.1 and 5.2. For simplicity, we consider one-decision (K = 1) and two-decision (K = 2) problems, where, at each decision point, there are two treatment options coded as 0 and 1. In all cases, we used Q-functions of the form Q1(s1, a1; ξ1) = h1(s1; β1) + a1C1(s1; ψ1) and Q2(s̄2, ā2; ξ2) = h2(s̄2, a1; β2) + a2C2(s̄2, a1; ψ2) to represent both true and assumed working models. With the contrast functions correctly specified, ψk, k = 1, 2, dictate the optimal regime. Thus, as one measure of performance, we focus on the relative efficiency of the estimators of components of ψk, as reflected by the ratio of Monte Carlo mean squared errors (MSEs), MSE of A-learning/MSE of Q-learning, so that values greater than 1 favor Q-learning. Recognizing that E{Y*(dopt)} is the benchmark achievable outcome on average, as a second measure we consider the extent to which the estimated regimes achieve E{Y*(dopt)} if followed by the population. Specifically, for regime d indexed by ψ1 (K = 1) or (ψ1, ψ2) (K = 2), let H(d) = E{Y*(d)}, a function of these parameters. Then H(dopt) = E{Y*(dopt)} is this function evaluated at the true parameter values, and H(d̂opt) is this function evaluated at the estimated parameter values for a given data set, where d̂opt denotes the regime estimated by Q- or A-learning. Define R(d̂opt) = E{H(d̂opt)}/H(dopt), where the expectation in the numerator is with respect to the distribution of the estimated parameters in d̂opt. We refer to R(d̂opt) as the v-efficiency of d̂opt, as it reflects the extent to which d̂opt achieves the “value” of the true optimal regime. In Section A.6 of the supplemental article [Schulte et al. (2012)], we discuss calculation of R(d̂opt).
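When the generative model is known, the v-efficiency R(d̂opt) can be approximated by Monte Carlo. The Python sketch below uses a hypothetical one-decision model of our own (not model (34)) in which E{Y*(a)|S1 = s} = h(s) + aC(s); for a rule d(s) = I{ψ0 + ψ1s > 0}, H(d) is the average of h(S) + d(S)C(S) over draws of S. The spread of the "estimated" ψ's is simulated directly as a stand-in for the sampling distribution of ψ̂1; it is not the output of Q- or A-learning.

```python
import numpy as np

# Hypothetical model: E{Y*(a) | S = s} = h(s) + a * C(s), with
# h(s) = 10 + s and C(s) = 1 - 0.5 s, and S ~ N(0, 1).
PSI_TRUE = np.array([1.0, -0.5])
rng = np.random.default_rng(1)
S = rng.normal(size=100_000)  # draws from the covariate distribution


def h_true(s):
    return 10.0 + s


def c_true(s):
    return PSI_TRUE[0] + PSI_TRUE[1] * s


def value(psi):
    """H(d) = E{Y*(d)} for the rule d(s) = I{psi_0 + psi_1 s > 0}, averaged over S."""
    d = (psi[0] + psi[1] * S > 0).astype(float)
    return np.mean(h_true(S) + d * c_true(S))


H_opt = value(PSI_TRUE)
# Stand-in for the sampling distribution of psi-hat across simulated data sets.
psi_hats = PSI_TRUE + rng.normal(scale=0.2, size=(200, 2))
R = np.mean([value(p) for p in psi_hats]) / H_opt
print(H_opt, R)
```

Because the true rule maximizes h(S) + d(S)C(S) for every draw of S, R cannot exceed one; the amount by which it falls below one reflects the outcome lost, on average, by following the estimated rules.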

6.1 One Decision Point

In this and the next section, n = 200. Here, the observed data are (S1i, A1i, Yi), i = 1,…, n. With expit(x) = exp(x)/{1 + exp(x)}, we used the class of generative models

| (34) |

indexed by , so that . For A-learning, we assumed models h1(s1; β1) = β10 + β11s1, C1(s1;ψ1) = ψ10 + ψ11s1, and π1(s1;ϕ1) = expit(ϕ10 + ϕ11s1), and for Q-learning we used Q1(s1, a1;ξ1) = h1(s1;β1) + a1C1(s1;ψ1). These models involve correctly specified contrast functions and are nested within the true models, with h1(s1; β1), and hence the Q-function, correctly specified when . The propensity model π1(s1; ϕ1) is correctly specified when . To study the effects of misspecification, we varied while keeping the others fixed, considering parameter settings of the form .

Correctly specified models

As noted in Section 5.3, when all working models are correctly specified, Q-learning is more efficient than A-learning, which for (34) occurs when . Here, the efficiency of Q-learning relative to A-learning is 1.06 for estimating and 2.74 for . Thus, Q-learning is a modest 6% more efficient in estimating but a dramatic 174% more efficient in estimating . Interestingly, the v-efficiency of the decision rules produced by the methods is similar, with , so that inefficiency in estimation of ψ1 via A-learning does not translate into a regime of poorer quality than that found by Q-learning.

Misspecified propensity model

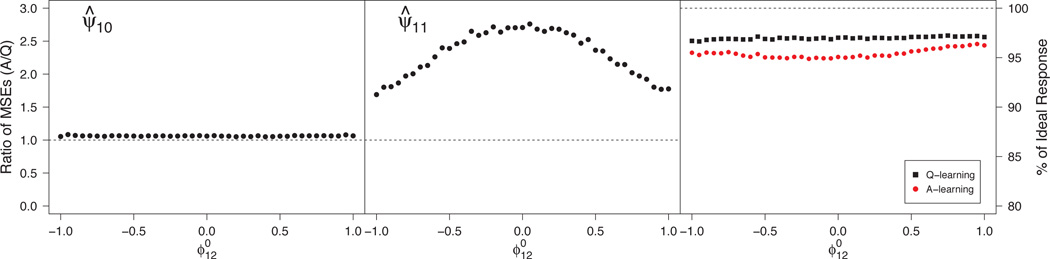

Under (34), this situation corresponds to and nonzero . An appeal of A-learning is the double robustness property noted in Section 5.2, which implies that ψ1 is estimated consistently when the propensity model is misspecified provided that the Q-function is correct. In contrast, Q-learning does not depend on the propensity model, so its performance is unaffected. Figure 1 shows the relative efficiency in estimating and the efficiency of varies from −1 to 1. The leftmost panel shows that there is minimal efficiency gain by using Q-learning instead of A-learning in estimation of . From the center panel, Q-learning yields substantial gains over A-learning for estimating . Interestingly, the gain is largest when , which corresponds to a correctly specified propensity model. Letting be the true propensity, , a possible explanation for this seemingly contradictory result in this scenario is that, as gets larger, becomes more profoundly quadratic. Consequently, the estimator for ϕ11 in the posited model π1(s1; ϕ1) = expit(ϕ10 + ϕ11s1) approaches zero, so that the estimated posited propensity approaches a constant. Because Q- and A-learning are algebraically equivalent under constant propensity here, substituting an estimated propensity that is nearly constant leads to an estimator very similar to that from Q-learning. Consequently, empirical efficiency gains decrease as . The right panel of Figure 1 shows a small gain in v-efficiency of ; both achieve good performance.

Fig 1.

Monte Carlo MSE ratios for estimators of components of ψ1 (left and center panels) and efficiencies for estimating the true dopt (right panel) under misspecification of the propensity model. MSE ratios > 1 favor Q-learning

See Section A.9 of the supplemental article [Schulte et al. (2012)] for evidence demonstrating this behavior of the propensity score and for further summaries reflecting the relative efficiency of the estimated regimes in all scenarios in this and the next section.

Misspecified Q-function

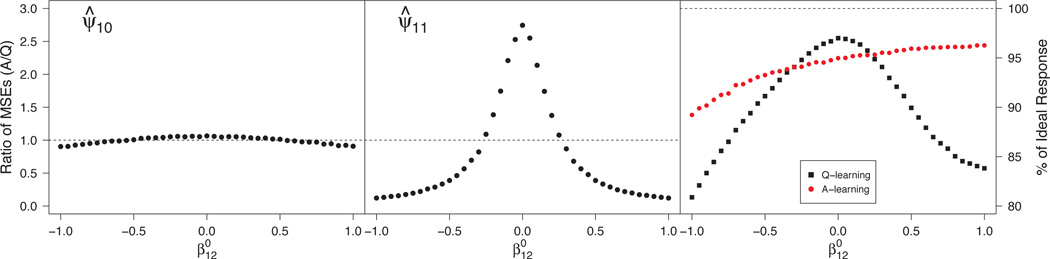

This scenario examines the second aspect of A-learning’s double-robustness, characterized in (34) by and nonzero . Here, A-learning leads to consistent estimation while Q-learning need not. The left panel of Figure 2 shows that the gain in efficiency using A-learning is minimal in estimating . The center panel illustrates the bias-variance trade-off associated with Q- versus A-learning. For far from zero, bias in the misspecified Q-function dominates the variance, and A-learning enjoys smaller MSE while, for small values of , variance dominates bias, and Q-learning is more efficient. The right panel shows that large bias in the Q-function can lead to meaningful loss (~10%) in v-efficiency of relative to .

Fig 2.

Monte Carlo MSE ratios for estimators of components of ψ1 (left and center panels) and efficiencies for estimating the true dopt (right panel) under misspecification of the Q-function. MSE ratios > 1 favor Q-learning

Both propensity model and Q-function misspecified

In our class of generative models (34), this corresponds to nonzero values of both . Rather than varying both values (e.g., over a grid), we varied one and chose the other so that it is “equivalently misspecified.” In particular, for a given value of , we selected so that the t-statistic associated with testing in the logistic propensity model and the t-statistic associated with testing in the linear Q-function would be approximately equal in distribution. Consequently, across data sets, an analyst would be equally likely to detect either form of misspecification. Details of this construction are given in Section A.7 of the supplemental article [Schulte et al. (2012)].

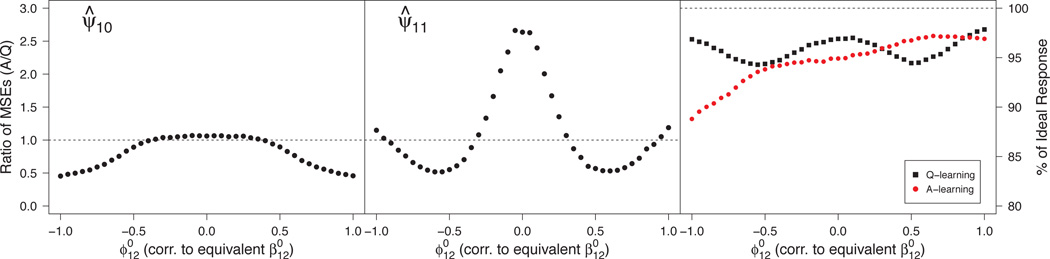

As in the preceding scenario, Figure 3 illustrates the bias-variance trade-off associated with Q- and A-learning. For large misspecification, A-learning provides a large enough reduction in bias to yield lower MSE; for small misspecification, Q-learning incurs some bias but reduces the variance enough to yield lower MSE. From the right panel of the figure, bias seems to translate into a larger loss in v-efficiency of the estimators of dopt than variance.

Fig 3.

Monte Carlo MSE ratios for estimators of components of ψ1 (left and center panels) and efficiencies for estimating the true dopt (right panel) under misspecification of both the propensity model and the Q-function. MSE ratios > 1 favor Q-learning

6.2 Two Decision Points

For K = 2, the observed data available to estimate dopt are (S1i, A1i, S2i, A2i, Yi), i = 1,…, n. For these scenarios, we used a class of true generative models that differs from those of Chakraborty et al. (2010), Song et al. (2010), and Laber et al. (2010) only in that S2 is continuous instead of binary; as the model at the first stage is saturated, this allows correct specification of the Q-function at decision 1. The generative model is

The model is indexed by , with true and contrast function , say. Because A1 and S1 are binary, the true functions are linear in are derived in terms of parameters indexing the generative model in Section A.8 of the supplemental article [Schulte et al. (2012)]. Thus, the true optimal regime has .

We assumed working models for A-learning of the form h1(s1;β1) = β10 + β11s1, C1(s1;ψ1) = ψ10 + ψ11s1, π1(s1; ϕ1) = expit(ϕ10 + ϕ11s1), h2(s1, s2, a1; β2) = β20 + β21s1 + β22a1 + β23s1a1 + β24s2, C2(s1,s2, a1;ψ2) = ψ20 + ψ21a1 + ψ22s2, and π2(s1,s2, a1;ϕ2) = expit(ϕ20 + ϕ21s1 + ϕ22a1 + ϕ23s2 + ϕ24a1s2); and, similarly, Q-functions Q1(s1, a1; ξ1) = h1(s1;β1) +a1C1(s1;ψ1) and Q2(s1,s2, a1, a2;ξ2) = h2(s1,s2, a1;β2) + a2C2(s1,s2, a1;ψ2) for Q-learning, so that the contrast functions are correctly specified in each case. Comparison of the working and generative models shows that the former are correctly specified when are both zero and are misspecified otherwise. Thus, we systematically varied these parameters to study the effects of misspecification, leaving all other parameter values fixed, taking .

Correctly specified models

This occurs when . As discussed previously, Q-learning is efficient when the models are correctly specified. Efficiencies of Q-learning relative to A-learning for estimating ψ10, ψ11, ψ20, ψ21, and ψ22 are 1.07, 1.03, 1.19, 1.44, and 1.98, respectively. Hence, Q-learning is markedly more efficient in estimating the second stage parameters but only modestly so for first stage parameters. More efficient estimators of the parameters do not translate into greater v-efficiency of the estimated regimes in this scenario, as .

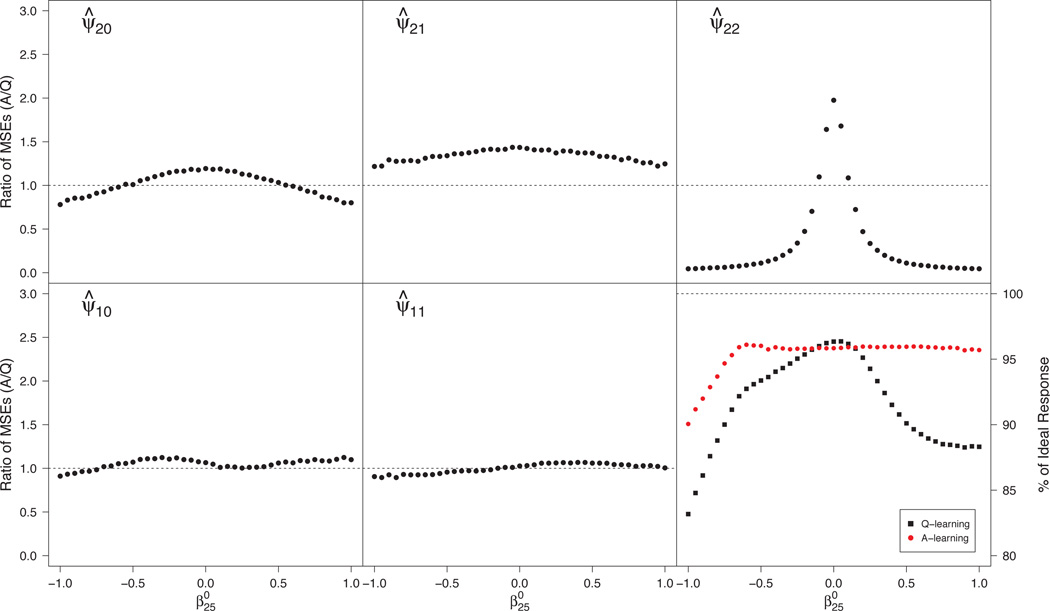

Misspecified propensity model

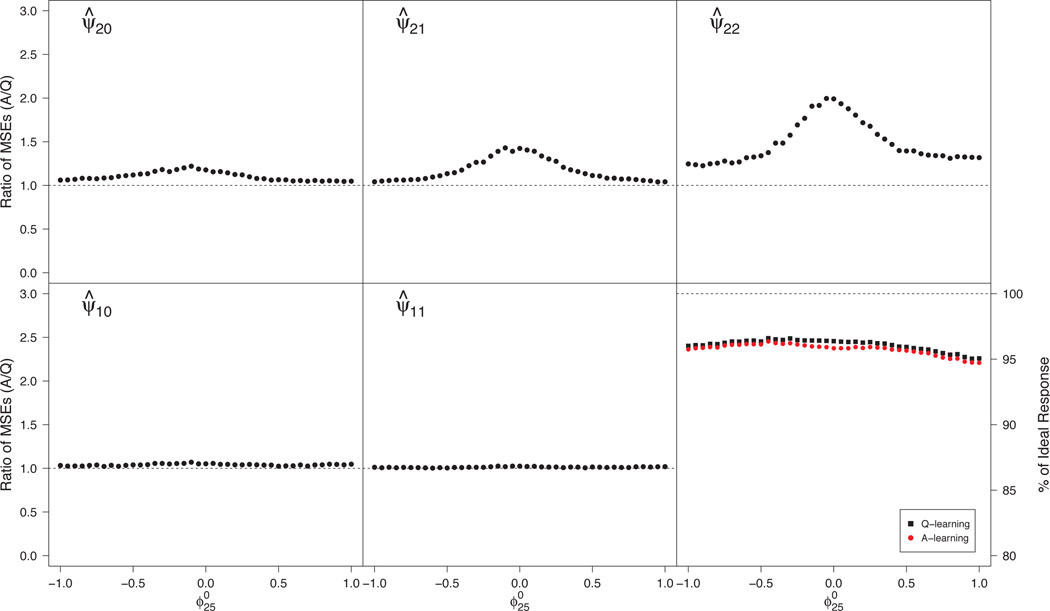

The propensity model at the second stage is misspecified when is nonzero. To isolate the effects of such misspecification, we set and varied between −1 and 1. From Figure 4, Q-learning is more efficient than A-learning for estimation of all parameters in ψ1 and ψ2, and, as in the one decision case, the efficiency gain is largest when , corresponding to a correctly specified propensity model. From the lower right panel, there appears to be little difference in v-efficiency of .

Fig 4.

Monte Carlo MSE ratios for estimators of components of ψ2 and ψ1 (upper row and lower row left and center panels) and efficiencies for estimating the true dopt (lower right panel) under misspecification of the propensity model. MSE ratios > 1 favor Q-learning

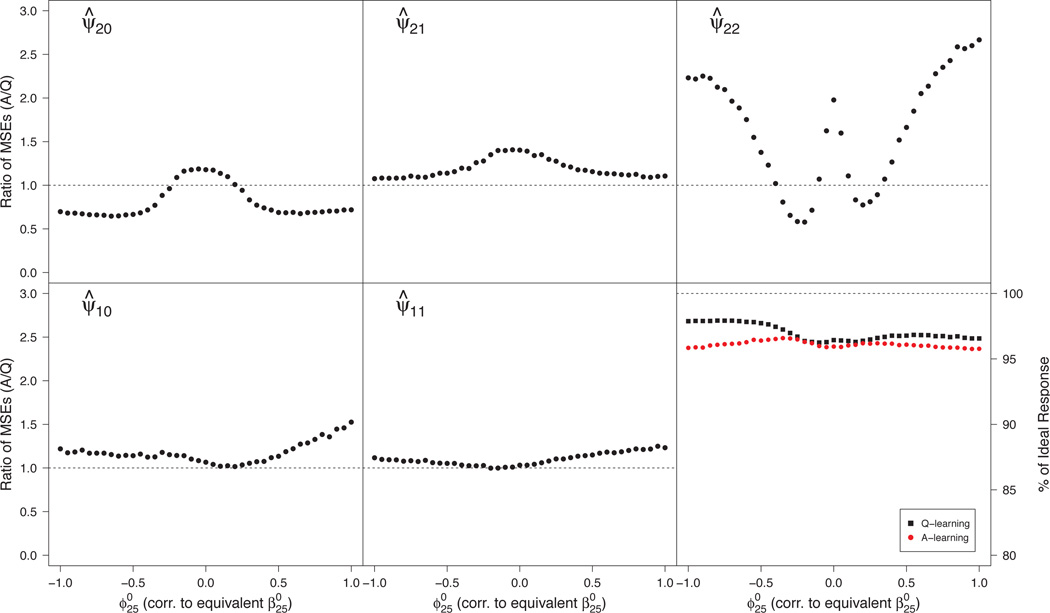

Misspecified Q-function

Under our class of generative models, the Q-function is misspecified when is nonzero. We set to focus on the effects of such misspecification. Figure 5 shows that, for the first stage parameters , there is little difference in efficiency between Q- and A-learning. The upper panels illustrate varying degrees of the bias-variance trade-off between the methods. In particular, in estimating , a small amount of misspecification leads to significant bias, and hence A-learning produces a much more accurate estimator, while, for the bias-variance trade-off is present but attenuated, and there is little difference between Q- and A-learning. In estimation of , variance appears to dominate bias, and Q-learning is preferred for the chosen range of values. From the lower right panel, relative efficiency for estimating weakly tracks the relative efficiencies of the estimated regimes , suggesting that the efficiency gain for A-learning in estimating leads to improved estimation of dopt.

Fig 5.

Monte Carlo MSE ratios for estimators of components of ψ2 and ψ1 (upper row and lower row left and center panels) and efficiencies for estimating the true dopt (lower right panel) under misspecification of the Q-functions. MSE ratios > 1 favor Q-learning

Both the propensity model and Q-function misspecified

This scenario corresponds to nonzero values of . Analogous to the one decision case, we chose pairs that are “equivalently misspecified”; see Section A.7 of the supplemental article [Schulte et al. (2012)]. From Figure 6, there is no general trend in efficiency of estimation across parameters that might recommend one method over the other. Furthermore, from the lower right panel, there is little difference in v-efficiency of the estimated regimes. One should not expect to draw broad conclusions, as neither Q- nor A-learning need be consistent here. Interestingly, despite misspecification of both models, both estimated regimes still enjoy high v-efficiency in this scenario.

Fig 6.

Monte Carlo MSE ratios for estimators of components of ψ2 and ψ1 (upper row and lower row left and center panels) and efficiencies for estimating the true dopt (lower right panel) under misspecification of both the propensity models and Q-functions. MSE ratios > 1 favor Q-learning

6.3 Moodie, Richardson, and Stephens Scenario

The foregoing simulation scenarios deliberately involve simple models for the Q-functions in order to allow straightforward interpretation. To investigate the relative performance of the methods in a more challenging setting, we generated data from a scenario similar to that in Moodie et al. (2007) in which the true contrast functions are simple yet the Q-functions are complex.

The data-generating process mimics a study in which HIV-infected patients are randomized to receive antiretroviral therapy (coded as 1) or not (coded as 0) at baseline and again at six months, where the randomization probabilities depend on baseline and six-month CD4 counts. Specifically, we generated baseline CD4 count S1 ~ Normal(450, 150²), and baseline treatment A1 was then assigned according to . We generated six-month CD4 count S2, distributed conditional on S1 = s1, A1 = a1 as Normal(1.25s1, 60²). Treatment A2 was then generated according to . In contrast to the scenario in Moodie et al. (2007), this allows all possible treatment combinations. The outcome Y is CD4 count at one year; following Moodie et al. (2007), Y was generated as , where Yopt|S1 = s1, A1 = a1, S2 = s2, A2 = a2 ~ Normal(400 + 1.6s1, 60²). Here, are the true advantage (regret) functions; we took to be the true contrast functions, so that, from Section 5.2,

| (35) |

| (36) |

It follows that the optimal treatment regime has d1opt(s1) = I(s1 < 250) and d2opt(s̄2, a1) = I(s2 < 360). While the true contrast functions are linear in s1 and s2, respectively, the implied true Q-functions are nonsmooth and possibly complex.

Following Moodie et al. (2007), for A-learning, we assumed working models h1(s1; β1) = β10 + β11s1, C1(s1; ψ1) = ψ10 + ψ11s1, h2(s1, s2, a1; β2) = β20 + β21s1 + β22a1 + β23s1a1 + β24s2, and C2(s1, s2, a1; ψ2) = ψ20 + ψ21s2, and propensity models π1(s1; ϕ1) = expit(ϕ10 + ϕ11s1) and π2(s1, s2, a1; ϕ2) = expit(ϕ20 + ϕ21s2). For Q-learning, we analogously assumed Q-functions Q1(s1, a1; ξ1) = h1(s1; β1) + a1C1(s1; ψ1) and Q2(s1, s2, a1, a2; ξ2) = h2(s1, s2, a1; β2) + a2C2(s1, s2, a1; ψ2). Note that the contrast functions in each case are correctly specified, as are the propensity models; however, the Q-functions are misspecified, as the linear models h1(s1; β1) and h2(s1, s2, a1; β2) are poor approximations to the complex forms of the true h1 and h2.

We report results for n = 1000 in Table 1. Because the Q-functions are misspecified, the Q-learning estimators for ψ1 and ψ2 are biased, while those obtained via A-learning are consistent owing to the double robustness property. This leads to the dramatic relative inefficiency of Q-learning reflected by the MSE ratios. Under the assumed models, the estimated optimal regime for Q-learning dictates that, at baseline, therapy be given to patients with baseline CD4 count less than 199.7, while that estimated using A-learning gives treatment to those with baseline CD4 count less than 249.1, almost perfectly achieving the true optimal CD4 threshold of 250. Under the data-generating process, using the baseline decision rule estimated via Q-learning may result in as many as 4.4% of patients who would receive therapy at baseline under the true optimal regime being assigned no treatment. Similarly, at the second decision, the estimated optimal regimes obtained by Q- and A-learning dictate that therapy be given to patients with six-month CD4 count less than 320.2 and 360.1, respectively. Again, A-learning yields an estimated threshold almost identical to the optimal value of 360. Because the threshold obtained via Q-learning is lower, 4.3% of patients who should receive therapy at six months would not if the estimated six-month rule from Q-learning were followed by the population.

Table 1.

Monte Carlo average (standard deviation) of estimates obtained via Q- and A-learning and ratio of Monte Carlo MSE for the Moodie, Richardson, and Stephens scenario; MSE ratios > 1 favor Q-learning

| Parameter (true value) | Q-learning | A-learning | MSE ratio |
|---|---|---|---|
| ψ10 (250) | 154.8 (23.2) | 249.1 (18.7) | 0.036 |
| ψ11 (−1) | −0.775 (0.052) | −0.998 (0.041) | 0.032 |
| ψ20 (720) | 507.3 (49.2) | 720.3 (48.4) | 0.050 |
| ψ21 (−2) | −1.584 (0.092) | −2.001 (0.085) | 0.040 |
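The MSE ratios in Table 1 can be recovered from the tabulated means and standard deviations via MSE = bias² + SD². The true values used below, (ψ10, ψ11) = (250, −1) and (ψ20, ψ21) = (720, −2), are inferred rather than stated explicitly in the text: they give the optimal thresholds of 250 and 360 quoted above, and with them the computed ratios match the tabulated ones to within rounding. The same Python sketch computes the Q-learning thresholds −ψ̂k0/ψ̂k1 from the rounded means.

```python
# Table 1 entries: (assumed true value, Q mean, Q SD, A mean, A SD).
TABLE1 = {
    "psi10": (250.0, 154.8, 23.2, 249.1, 18.7),
    "psi11": (-1.0, -0.775, 0.052, -0.998, 0.041),
    "psi20": (720.0, 507.3, 49.2, 720.3, 48.4),
    "psi21": (-2.0, -1.584, 0.092, -2.001, 0.085),
}


def mse(mean, sd, truth):
    """Monte Carlo MSE from summary statistics: bias^2 + variance."""
    return (mean - truth) ** 2 + sd ** 2


ratios = {
    name: mse(a_mean, a_sd, truth) / mse(q_mean, q_sd, truth)
    for name, (truth, q_mean, q_sd, a_mean, a_sd) in TABLE1.items()
}

# Rule "treat if psi0 + psi1 * CD4 > 0" with psi1 < 0  <=>  CD4 < -psi0 / psi1.
thresholds_q = (-154.8 / -0.775, -507.3 / -1.584)

for name, r in ratios.items():
    print(f"{name}: MSE ratio {r:.3f}")
print("Q-learning thresholds:", [round(t, 1) for t in thresholds_q])
```

The thresholds computed from the rounded means are close to the values 199.7 and 320.2 quoted in the text; small differences in the last digit are expected because the table entries are rounded.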

By Section A.6 of the supplemental article [Schulte et al. (2012)], H(dopt) = 1120, whereas (estimated standard error 1.3) and , so that the v-efficiencies of both estimated regimes are virtually equal to one. Thus, although Q-learning yields poor estimation of parameters in the contrast functions, loss in v-efficiency of the estimated optimal regime is negligible. A possible explanation is as follows. For (35) and (36), some patients near the true treatment decision boundary would have true contrast values C1(s1) or C2(s̄2, a1) close to zero. Thus, even if a regime improperly assigns treatment to these patients, they would experience only a small loss in outcome and hence have little effect on the overall average. For other patients, for whom the true contrast is not close to zero, improper assignment could result in considerable degradation of outcome. Because the proportion of patients receiving improper assignment is small in this scenario, the effect of these latter patients on the overall expected outcome is not substantial, leading to the relatively good expected outcome under the estimated Q-learning regime.

7. APPLICATION TO STAR*D

Sequenced Treatment Alternatives to Relieve Depression (STAR*D) was a randomized clinical trial enrolling 4041 patients designed to compare treatment options for patients with major depressive disorder. The trial involved four levels, where each level consisted of a 12-week period of treatment, with scheduled clinic visits at weeks 0, 2, 4, 6, 9, and 12. Severity of depression at any visit was assessed using clinician-rated and self-reported versions of the Quick Inventory of Depressive Symptomatology (QIDS) score (Rush et al., 2003), for which higher values correspond to higher severity. At the end of each level, patients deemed to have an adequate clinical response to that level’s treatment did not move on to future levels, where adequate response was defined by a 12-week clinician-rated QIDS score ≤ 5 (remission) or a 50% or greater decrease from the baseline score at the beginning of level 1 (successful reduction). During level 1, all patients were treated with citalopram. Patients continuing to level 2 due to inadequate response, in consultation with their physicians, expressed a preference to (i) switch or (ii) augment citalopram and within that preference were randomized to one of several options: (i) switch: sertraline, bupropion, venlafaxine, or cognitive therapy, or (ii) augment: citalopram plus one of bupropion, buspirone, or cognitive therapy. Patients randomized to cognitive therapy (alone or augmented with citalopram) were eligible, in the case of inadequate response, to move to a supplementary level 2A and be randomized to switch to bupropion or venlafaxine. All patients without adequate response at level 2 (or 2A) continued to level 3 and, depending on preference to (i) switch or (ii) augment, were randomized within that preference to (i) switch: mirtazapine or nortriptyline, or (ii) augment with either lithium or triiodothyronine.
Patients without adequate response continued to level 4, requiring a switch to tranylcypromine or mirtazapine combined with venlafaxine (determined by preference). Thus, although the study involved randomization, it is observational with respect to the treatment options switch or augment. For a complete description, see Rush et al. (2004); see Section A.10 of the supplemental article [Schulte et al. (2012)] for a schematic of the design.

To demonstrate formulation of this problem within the framework of Sections 2 and 3, we take level 2A to be part of level 2 and consider only levels 2 and 3, calling them stages (decision points) 1 and 2, respectively (K = 2). Some patients in stage 1 without adequate response dropped out of the study without continuing to stage 2. Hence, we analyze complete-case data, excluding dropouts, from 795 patients entering stage 1; 330 of these subsequently continued to stage 2. Let Ak, k = 1, 2, be the treatment at stage k, taking values 0 (augment) or 1 (switch); both options are feasible for all eligible subjects. Let S10 denote the baseline (study entry) QIDS score and S11 the most recent QIDS score at the beginning of stage 1, so that S1 = (S10, S11)T is the information available immediately prior to the first decision. Similarly, let S2 be the information available immediately prior to stage 2; here, S2 is the most recent QIDS score at the end of stage 1/beginning of stage 2. Finally, let T be the QIDS score at the end of stage 2. Because some patients exhibited adequate response at the end of stage 1 and did not progress to stage 2, we define the outcome of interest to be −S2 (negative QIDS score at the end of stage 1) for patients not moving to stage 2 and −(S2 + T)/2 (average of negative QIDS scores at the end of stages 1 and 2) otherwise. Writing L0 = max(5, S10/2), we thus have Y = −S2I(S2 ≤ L0) − (S2 + T)I(S2 > L0)/2, the cumulative average negative QIDS score. This illustrates the case where the outcome is a function of information accrued over the sequence of decisions.
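As a small sketch of this outcome definition, the Python function below computes Y from S10, S2, and T, assuming T is recorded as missing (NaN) for patients who responded at the end of stage 1 and therefore did not enter stage 2. The function name and example values are hypothetical.

```python
import numpy as np


def stard_outcome(s10, s2, t):
    """Y = -S2 I(S2 <= L0) - (S2 + T) I(S2 > L0) / 2, with L0 = max(5, S10 / 2)."""
    s10 = np.asarray(s10, dtype=float)
    s2 = np.asarray(s2, dtype=float)
    t = np.asarray(t, dtype=float)
    l0 = np.maximum(5.0, s10 / 2.0)
    responded = s2 <= l0                      # adequate response: no stage 2
    t_used = np.where(responded, 0.0, t)      # T is not needed (may be NaN) for responders
    return np.where(responded, -s2, -(s2 + t_used) / 2.0)


# Hypothetical patients: responder, non-responder, responder by remission.
y = stard_outcome([16.0, 16.0, 8.0], [6.0, 12.0, 5.0], [np.nan, 10.0, np.nan])
# y -> [-6., -11., -5.]
```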

From (9), Q2(s̄2, ā2) = E(Y|S̄2 = s̄2, Ā2 = ā2) = −s2{I(s2 ≤ l0) + I(s2 > l0)/2} + E(−T|S̄2 = s̄2, Ā2 = ā2, S2 > l0)I(s2 > l0)/2, so that V2(s̄2, a1) = −s2I(s2 ≤ l0) + {−s2 + U2(s̄2, a1)}I(s2 > l0)/2, where U2(s̄2, a1) = maxa2 E(−T|S̄2 = s̄2, Ā1 = ā1, A2 = a2, S2 > l0). Thus, from (12),

$$Q_1(s_1, a_1) = E\big[-S_2 I(S_2 \le l_0) + \{-S_2 + U_2(\bar S_2, A_1)\} I(S_2 > l_0)/2 \,\big|\, S_1 = s_1, A_1 = a_1\big].$$

We describe implementation for Q-learning. At the second decision point, we must posit a model for Q2(s̄2, ā2). From the form of Q2(s̄2, ā2), we need only specify a model for E(−T|S̄2 = s̄2, Ā2 = ā2, S2 > l0); given the form of the conditioning set, this may be carried out using only the data from patients moving to stage 2. Based on exploratory analysis, defining s22 to be the slope of QIDS score over stage 1 based on s11 and s2, we took this model to be of the form β20 + β21s2 + β22s22 + ψ20a2, so that the posited Q-function is

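$$Q_2(\bar{s}_2, \bar{a}_2; \xi_2) = -s_2\{I(s_2 \le l_0) + I(s_2 > l_0)/2\} + I(s_2 > l_0)(\beta_{20} + \beta_{21} s_2 + \beta_{22} s_{22} + \psi_{20} a_2)/2, \tag{37}$$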

where $\xi_2 = (\beta_{20}, \beta_{21}, \beta_{22}, \psi_{20})^T$. Under (37), $V_2(\bar{s}_2, a_1; \xi_2) = -s_2\{I(s_2 \le l_0) + I(s_2 > l_0)/2\} + I(s_2 > l_0)\{\beta_{20} + \beta_{21} s_2 + \beta_{22} s_{22} + \psi_{20} I(\psi_{20} > 0)\}/2$, and the “responses” $\tilde{V}_{2,i}$ for use in (27) may then be formed by substituting the estimate for $\xi_2$. Based on exploratory analysis, we took the posited Q-function at the first stage to be $Q_1(s_1, a_1; \xi_1) = \beta_{10} + \beta_{11} s_{11} + \beta_{12} s_{12} + a_1(\psi_{10} + \psi_{11} s_{12})$, where $s_{12}$ is the slope of QIDS score prior to stage 1 based on $s_{10}$ and $s_{11}$, and $\xi_1 = (\beta_{10}, \beta_{11}, \beta_{12}, \psi_{10}, \psi_{11})^T$. For A-learning, we posited models for the functions $h_k(\bar{s}_k, \bar{a}_{k-1})$ and $C_k(\bar{s}_k, \bar{a}_{k-1})$, $k = 1, 2$, analogous to those above, and we took the propensity models to be of the form $\pi_2(\bar{s}_2, a_1; \phi_2) = \mathrm{expit}(\phi_{20} + \phi_{21} s_2 + \phi_{22} s_{22} + \phi_{23} a_1)$ and $\pi_1(s_1; \phi_1) = \mathrm{expit}(\phi_{10} + \phi_{11} s_{11} + \phi_{12} s_{12})$. Section A.11 of the supplemental article [Schulte et al. (2012)] presents model diagnostics.
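The Q-learning steps just described (fit the stage-2 model on patients moving to stage 2, form the pseudo-outcomes $\tilde{V}_{2,i}$ by maximizing the fitted $Q_2$ over $a_2$, then fit the stage-1 model) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: the data are synthetic from a made-up generative model (not STAR*D), the slopes $s_{12}$ and $s_{22}$ are taken as simple score differences assuming unit spacing between visits, and both stages use ordinary least squares. The helper names `ols`, `simulate`, and `q_learning` are hypothetical.

```python
import random


def ols(rows, y):
    """Least squares via the normal equations, solved by Gauss-Jordan elimination."""
    p = len(rows[0])
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
           + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(p)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(p):
            if r != c:
                f = aug[r][c] / aug[c][c]
                aug[r] = [u - f * v for u, v in zip(aug[r], aug[c])]
    return [aug[i][p] / aug[i][i] for i in range(p)]


def simulate(n, seed):
    """Hypothetical toy data with the structure of the example (not real data).
    Slopes are simple differences, assuming unit spacing between visits."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        s10 = rng.uniform(12, 24)
        s11 = s10 - rng.uniform(0, 4)
        s12 = s11 - s10                        # hypothetical pre-stage-1 slope
        a1 = int(rng.random() < 0.5)
        s2 = max(0.0, s11 - 4 + a1 * (1 + 0.8 * s12) + rng.gauss(0, 3))
        rec = dict(s10=s10, s11=s11, s12=s12, a1=a1, s2=s2, s22=s2 - s11)
        if s2 > max(5.0, s10 / 2):             # moves on to stage 2
            rec["a2"] = int(rng.random() < 0.5)
            rec["t"] = 2 + 0.7 * s2 - 1.0 * rec["s22"] - 1.2 * rec["a2"] + rng.gauss(0, 1)
        data.append(rec)
    return data


def q_learning(data):
    """Two-stage Q-learning with the linear models (37) and Q1 from the text."""
    # Stage 2: regress -T on (1, s2, s22, a2) using only patients with s2 > l0
    stage2 = [d for d in data if "t" in d]
    xi2 = ols([[1.0, d["s2"], d["s22"], d["a2"]] for d in stage2],
              [-d["t"] for d in stage2])
    b20, b21, b22, psi20 = xi2
    # Pseudo-outcomes V2_tilde: fitted Q2 maximized over a2 in {0, 1}
    v2 = []
    for d in data:
        moved = d["s2"] > max(5.0, d["s10"] / 2)
        value = -d["s2"] * (0.5 if moved else 1.0)
        if moved:
            value += (b20 + b21 * d["s2"] + b22 * d["s22"] + max(psi20, 0.0)) / 2
        v2.append(value)
    # Stage 1: regress V2_tilde on (1, s11, s12, a1, a1 * s12)
    xi1 = ols([[1.0, d["s11"], d["s12"], d["a1"], d["a1"] * d["s12"]] for d in data], v2)
    return xi2, xi1


xi2, xi1 = q_learning(simulate(2000, seed=37))
# Estimated rules: switch (1) at stage 2 if psi20 > 0,
# and at stage 1 if psi10 + psi11 * s12 > 0
print("xi2 =", [round(v, 2) for v in xi2])
print("xi1 =", [round(v, 2) for v in xi1])
```

Because the toy stage-2 model is correctly specified by construction, the stage-2 estimates should land close to the generating values $(-2, -0.7, 1.0, 1.2)$; the term `max(psi20, 0.0)` is the $\psi_{20} I(\psi_{20} > 0)$ term in $V_2$ above.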

The results are given in Table 2. To illustrate the estimated regimes, we regard a treatment interaction as significant based on a test at level $\alpha = 0.10$. At the first stage, Q-learning suggests a treatment switch for patients whose QIDS slope prior to stage 1 exceeds −1.09 (obtained by solving $1.11 + 1.02 s_{12} = 0$); A-learning assigns a treatment switch for patients whose slope exceeds −1.66 (solving $0.73 + 0.44 s_{12} = 0$). At stage 2, the results suggest that all patients should switch rather than augment their existing treatments.
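The thresholds quoted above follow directly from the point estimates in Table 2. The sketch below, with hypothetical function names, reproduces them; it applies point estimates only and does not encode the significance criterion or any estimation uncertainty. Since both estimates of $\psi_{11}$ are positive, "contrast positive" is equivalent to "slope exceeds the threshold".

```python
# Point estimates (psi10, psi11) of the stage-1 treatment contrast, from Table 2
STAGE1 = {"Q-learning": (1.11, 1.02), "A-learning": (0.73, 0.44)}
# Point estimates of the stage-2 contrast psi20, from Table 2
STAGE2 = {"Q-learning": 1.10, "A-learning": 1.12}


def switch_threshold(psi10, psi11):
    """Slope s12 at which the estimated contrast psi10 + psi11 * s12 equals zero."""
    return -psi10 / psi11


def stage1_rule(s12, psi10, psi11):
    """1 = switch, 0 = augment: switch when the estimated contrast is positive."""
    return int(psi10 + psi11 * s12 > 0)


def stage2_rule(psi20):
    """The stage-2 contrast has no covariates, so the rule is the same for everyone."""
    return int(psi20 > 0)


for method, (psi10, psi11) in STAGE1.items():
    print(method, round(switch_threshold(psi10, psi11), 2), stage2_rule(STAGE2[method]))
# Q-learning -1.09 1
# A-learning -1.66 1
```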

Table 2.

STAR*D data analysis results

| Parameter | Q-learning estimate | Q-learning 95% CI | Q-learning sig. | A-learning estimate | A-learning 95% CI | A-learning sig. |
|---|---|---|---|---|---|---|
| Stage 2 | | | | | | |
| $\beta_{20}$ | −1.46 | (−3.47, 0.55) | | −1.47 | (−3.49, 0.54) | |
| $\beta_{21}$ | −0.75 | (−0.88, −0.61) | * | −0.75 | (−0.88, −0.61) | * |
| $\beta_{22}$ | 1.17 | (0.52, 1.81) | * | 1.17 | (0.52, 1.81) | * |
| $\psi_{20}$ | 1.10 | (0.02, 2.19) | * | 1.12 | (0.03, 2.22) | * |
| Stage 1 | | | | | | |
| $\beta_{10}$ | −0.62 | (−1.94, 0.70) | | −0.30 | (−1.69, 1.09) | |
| $\beta_{11}$ | −0.54 | (−0.62, −0.45) | * | −0.55 | (−0.64, −0.46) | * |
| $\beta_{12}$ | −0.08 | (−0.60, 0.45) | | 0.10 | (−0.46, 0.66) | |
| $\psi_{10}$ | 1.11 | (0.28, 1.94) | * | 0.73 | (−0.18, 1.65) | |
| $\psi_{11}$ | 1.02 | (−0.08, 2.11) | * | 0.44 | (−0.83, 1.72) | |

Asterisks indicate evidence that the parameter is nonzero, at significance level 0.05 for main effect parameters ($\beta$) and 0.10 for treatment contrast parameters ($\psi$).

8. DISCUSSION

We have provided a self-contained account of Q- and A-learning methods for estimating optimal dynamic treatment regimes, including a detailed discussion of the underlying statistical framework in which these methods may be formalized and of their relative merits. Our discussion of A-learning is limited to the case of two treatment options at each decision point. Our simulation studies suggest that, although A-learning may be inefficient relative to Q-learning in estimating the parameters that define the optimal regime when the Q-functions required by the latter are correctly specified, A-learning may offer robustness to misspecification of these Q-functions. Nonetheless, Q-learning may have practical advantages in that it involves modeling tasks familiar to most data analysts, allowing the use of standard diagnostic tools. On the other hand, A-learning may be preferred in settings where the form of the decision rules defining the optimal regime is expected not to be overly complex. However, A-learning increases in complexity with more than two treatment options at each stage, which may limit its appeal. Interestingly, in the simulation scenarios we consider, inefficiency and bias in estimating the parameters that define the optimal regime do not necessarily translate into large degradation of the average performance of the estimated regime for either method.
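To make the robustness point concrete, the following toy single-stage simulation uses a hypothetical data-generating model, not one of the paper's simulation scenarios. The true main effect is quadratic in $S$ while both methods posit a linear one, and treatment depends on $S$ through a logistic propensity that is modeled correctly. Q-learning fits the misspecified outcome regression by least squares. For A-learning we assume, for illustration, the simple estimating equations $\sum_i (1, S_i)^T\{A_i - \pi(S_i; \hat\phi)\}\{Y_i - A_i C(S_i; \psi) - h(S_i; \beta)\} = 0$ and $\sum_i (1, S_i)^T\{Y_i - A_i C(S_i; \psi) - h(S_i; \beta)\} = 0$ with $C(S; \psi) = \psi_0 + \psi_1 S$ and $h(S; \beta) = \beta_0 + \beta_1 S$. Because both equations are linear in $(\psi, \beta)$, they can be solved in one step. All names in the code are illustrative.

```python
import math
import random


def solve(m, b):
    """Solve the square system m x = b by Gauss-Jordan elimination with pivoting."""
    p = len(b)
    aug = [list(row) + [bi] for row, bi in zip(m, b)]
    for c in range(p):
        piv = max(range(c, p), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(p):
            if r != c:
                f = aug[r][c] / aug[c][c]
                aug[r] = [u - f * v for u, v in zip(aug[r], aug[c])]
    return [aug[i][p] / aug[i][i] for i in range(p)]


def cross(zs, ds):
    """Return sum_i z_i d_i^T for lists of row vectors zs and ds."""
    return [[sum(z[i] * d[j] for z, d in zip(zs, ds)) for j in range(len(ds[0]))]
            for i in range(len(zs[0]))]


def wsum(zs, ys):
    """Return sum_i z_i y_i."""
    return [sum(z[i] * y for z, y in zip(zs, ys)) for i in range(len(zs[0]))]


def expit(u):
    return 1.0 / (1.0 + math.exp(-u))


def simulate(n, seed):
    """Hypothetical toy model: quadratic main effect, contrast 0.5 + 1.0*S,
    treatment probability expit(0.8*S), so S confounds treatment."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        s = rng.gauss(0.0, 1.0)
        a = int(rng.random() < expit(0.8 * s))
        y = 1.0 + s * s + a * (0.5 + 1.0 * s) + rng.gauss(0.0, 1.0)
        out.append((s, a, y))
    return out


def fit_logistic(xs, a, iters=50):
    """Newton-Raphson maximum likelihood for a logistic regression of a on xs."""
    phi = [0.0] * len(xs[0])
    for _ in range(iters):
        p = [expit(sum(f * x for f, x in zip(phi, row))) for row in xs]
        info = cross([[pi * (1 - pi) * x for x in row] for row, pi in zip(xs, p)], xs)
        step = solve(info, wsum(xs, [ai - pi for ai, pi in zip(a, p)]))
        phi = [f + st for f, st in zip(phi, step)]
        if max(abs(st) for st in step) < 1e-10:
            break
    return phi


def q_learning(data):
    """Least squares for Y ~ beta0 + beta1*S + A*(psi0 + psi1*S); main effect is wrong."""
    rows = [[1.0, s, a, a * s] for s, a, _ in data]
    coef = solve(cross(rows, rows), wsum(rows, [y for _, _, y in data]))
    return coef[2], coef[3]


def a_learning(data):
    """A-learning with C = psi0 + psi1*S, h = beta0 + beta1*S, logistic propensity."""
    phi = fit_logistic([[1.0, s] for s, _, _ in data], [a for _, a, _ in data])
    pis = [expit(phi[0] + phi[1] * s) for s, _, _ in data]
    # Residual is Y - d_i' theta with theta = (psi0, psi1, beta0, beta1)
    ds = [[a, a * s, 1.0, s] for s, a, _ in data]
    # Estimating-function weights: (A - pi)(1, S) for psi and (1, S) for beta
    zs = [[a - p, (a - p) * s, 1.0, s] for (s, a, _), p in zip(data, pis)]
    theta = solve(cross(zs, ds), wsum(zs, [y for _, _, y in data]))
    return theta[0], theta[1]


data = simulate(10000, seed=2012)
psi_q = q_learning(data)
psi_a = a_learning(data)
print("Q-learning psi:", [round(v, 2) for v in psi_q])
print("A-learning psi:", [round(v, 2) for v in psi_a])
```

In this toy model the A-learning estimate of $\psi_1$ should be close to the true value 1.0, while the least-squares estimate is pulled well away from it, because the omitted $S^2$ term is correlated with treatment through the propensity. If treatment were instead randomized with a constant probability, least squares would also recover $\psi$ in this particular toy model, which is why confounding is built into the example.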

Although our simple simulation studies provide some insight into the relative merits of these methods, there remain many unresolved issues in estimation of optimal treatment regimes. Approaches to address the challenges of high-dimensional information and large numbers of decision points are required. Existing methods for model selection focusing on minimization of prediction error may not be best for developing models optimal for decision-making. When K is very large, the number of parameters in the models required for Q- and A-learning becomes unwieldy. The analyst may wish to postulate models in which parameters are shared across decision points; see Robins (2004), Robins et al. (2008), Orellana et al. (2010) and Chakraborty and Moodie (2012).

In our development, we have invoked a strong version of the sequential randomization assumption to simplify supporting arguments. Richardson and Robins (2013) use graphical representations to identify potential outcomes under possibly weaker assumptions. These authors also extend the notion of a dynamic treatment regime.

Formal inference procedures for evaluating the uncertainty associated with estimation of the optimal regime are challenging due to the nonsmooth nature of decision rules, which in turn leads to nonregularity of the parameter estimators; see Robins (2004), Chakraborty et al. (2010), Laber et al. (2010), Moodie and Richardson (2010), Song et al. (2010), and Laber and Murphy (2011).
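A small illustration of this nonregularity: in the STAR*D analysis above, the stage-1 “responses” involve $\psi_{20} I(\psi_{20} > 0) = \max(\psi_{20}, 0)$, which is not differentiable at $\psi_{20} = 0$. The sketch assumes an idealized estimator $\hat\psi \sim N(\psi, 1/n)$ (a hypothetical simplification) and reports the Monte Carlo mean of $\sqrt{n}\{\max(\hat\psi, 0) - \max(\psi, 0)\}$. At $\psi = 0$ this equals $E\{\max(Z, 0)\} = 1/\sqrt{2\pi} \approx 0.40$ for every $n$, so the bias does not vanish on the $\sqrt{n}$ scale, whereas for $\psi$ bounded away from zero it is essentially zero. The function name `scaled_bias` is hypothetical.

```python
import math
import random


def scaled_bias(psi, n, reps=20000, seed=1):
    """Monte Carlo mean of sqrt(n) * (max(psi_hat, 0) - max(psi, 0)),
    assuming a hypothetical estimator psi_hat ~ N(psi, 1/n)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        psi_hat = psi + rng.gauss(0.0, 1.0) / math.sqrt(n)
        total += math.sqrt(n) * (max(psi_hat, 0.0) - max(psi, 0.0))
    return total / reps


for n in (100, 10000):
    # At psi = 0 the scaled bias stays near 1/sqrt(2*pi) as n grows;
    # at psi = 1 it stays near zero.
    print(n, round(scaled_bias(0.0, n), 3), round(scaled_bias(1.0, n), 3))
```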

We have discussed sequential decision-making in the context of personalized medicine, but many other applications exist where, at one or more times in an evolving process, an action must be taken from among a set of plausible actions. Indeed, Q-learning was originally proposed in the computer science literature with these more general problems in mind; see Shortreed et al. (2010).


ACKNOWLEDGMENTS

This work was supported by NIH grants R37 AI031789, R01 CA051962, R01 CA085848, P01 CA142538, and T32 HL079896.


Supplementary Material

Supplement A: Supplement to “Q- and A-learning methods for estimating optimal dynamic treatment regimes”

Due to space constraints, technical details and further results are given in the supplementary document Schulte et al. (2012).

Contributor Information

Phillip J. Schulte, Biostatistician, Duke Clinical Research Institute, Durham, North Carolina 27701, USA (phillip.schulte@duke.edu).

Anastasios A. Tsiatis, Gertrude M. Cox Distinguished Professor, Department of Statistics, North Carolina State University, Raleigh, North Carolina 27695-8203, USA (tsiatis@ncsu.edu).

Eric B. Laber, Assistant Professor, Department of Statistics, North Carolina State University, Raleigh, North Carolina 27695-8203, USA (eblaber@ncsu.edu).

Marie Davidian, William Neal Reynolds Professor, Department of Statistics, North Carolina State University, Raleigh, North Carolina 27695-8203, USA (davidian@ncsu.edu).

REFERENCES

- Almirall D, Ten Have T, Murphy SA. Structural nested mean models for assessing time-varying effect moderation. Biometrics. 2010;66:131–139. doi: 10.1111/j.1541-0420.2009.01238.x.

- Bather J. Decision Theory: an Introduction to Dynamic Programming and Sequential Decisions. Chichester: Wiley; 2000.

- Blatt D, Murphy SA, Zhu J. A-learning for approximate planning. Technical Report 04-63. The Methodology Center, Pennsylvania State University; 2004.

- Chakraborty B, Moodie EEM. Estimating optimal dynamic treatment regimes with shared decision rules across stages: an extension of Q-learning. Unpublished manuscript; 2012.

- Chakraborty B, Murphy SA, Strecher V. Inference for non-regular parameters in optimal dynamic treatment regimes. Statistical Methods in Medical Research. 2010;19:317–343. doi: 10.1177/0962280209105013.

- Craven MW, Shavlik JW. Extracting tree-structured representations of trained networks. In: Advances in Neural Information Processing Systems. Volume 8. Denver, CO: MIT Press; 1996. pp. 24–30.

- Henderson R, Ansell P, Alshibani D. Regret-regression for optimal dynamic treatment regimes. Biometrics. 2010;66:1192–1201. doi: 10.1111/j.1541-0420.2009.01368.x.

- Lavori PW, Dawson R. A design for testing clinical strategies: biased adaptive within-subject randomization. Journal of the Royal Statistical Society, Series A. 2000;163:29–38.

- Laber EB, Murphy SA. Adaptive confidence intervals for the test error in classification. J. Amer. Statist. Assoc. 2011;106:904–913. doi: 10.1198/jasa.2010.tm10053.

- Laber EB, Qian M, Lizotte DJ, Murphy SA. Statistical inference in dynamic treatment regimes. 2010. Preprint, arXiv:1006.5831v1.

- Moodie EEM, Richardson TS, Stephens DA. Demystifying optimal dynamic treatment regimes. Biometrics. 2007;63:447–455. doi: 10.1111/j.1541-0420.2006.00686.x.

- Moodie EEM, Richardson TS. Estimating optimal dynamic regimes: correcting bias under the null. Scand. J. Statist. 2010;37:126–146. doi: 10.1111/j.1467-9469.2009.00661.x.

- Murphy SA. Optimal dynamic treatment regimes (with discussion). J. Royal Statist. Soc. Ser. B. 2003;65:331–366.

- Murphy SA. An experimental design for the development of adaptive treatment strategies. Stat. Med. 2005;24:1455–1481. doi: 10.1002/sim.2022.

- Murphy SA, Lynch KG, Oslin D, McKay JR, Ten Have T. Developing adaptive treatment strategies in substance abuse research. Drug Alcohol Depend. 2007a;88S:S24–S30. doi: 10.1016/j.drugalcdep.2006.09.008.

- Murphy SA, Oslin DW, Rush AJ, Zhu J. Methodological challenges in constructing effective treatment sequences for chronic psychiatric disorders. Neuropsychopharmacology. 2007b;32:257–262. doi: 10.1038/sj.npp.1301241.

- Nahum-Shani I, Qian M, Almirall D, Pelham WE, Gnagy B, Fabiano G, Waxmonsky J, Yu J, Murphy SA. Q-Learning: A data analysis method for constructing adaptive interventions. Technical report; 2010. doi: 10.1037/a0029373.

- Orellana L, Rotnitzky A, Robins J. Dynamic regime marginal structural mean models for estimation of optimal dynamic treatment regimes, part I: main content. Int. J. Biostatist. 2010;6(2):Article 8. doi: 10.2202/1557-4679.1200.

- Richardson TS, Robins JM. Single world intervention graphs (SWIGs): A unification of the counterfactual and graphical approaches to causality. 2013. Available at http://www.csss.washington.edu/Papers/.

- Robins JM. A new approach to causal inference in mortality studies with sustained exposure periods: Applications to control of the healthy worker survivor effect. Math. Model. 1986;7:1393–1512.

- Robins JM. Correcting for non-compliance in randomized trials using structural nested mean models. Comm. Statist. - Theory Meth. 1994;23:2379–2412.

- Robins JM. Optimal structural nested models for optimal sequential decisions. In: Lin DY, Heagerty PJ, editors. Proceedings of the Second Seattle Symposium on Biostatistics. New York: Springer; 2004. pp. 189–326.

- Robins J, Orellana L, Rotnitzky A. Estimation and extrapolation of optimal treatment and testing strategies. Stat. Med. 2008;27:4678–4721. doi: 10.1002/sim.3301.

- Rosenblum M, van der Laan MJ. Using regression models to analyze randomized trials: Asymptotically valid hypothesis tests despite incorrectly specified models. Biometrics. 2009;65:937–945. doi: 10.1111/j.1541-0420.2008.01177.x.

- Rosthøj S, Fullwood C, Henderson R, Stewart S. Estimation of optimal dynamic anticoagulation regimes from observational data: A regret-based approach. Stat. Med. 2006;25:4197–4215. doi: 10.1002/sim.2694.

- Rubin DB. Bayesian inference for causal effects: The role of randomization. Ann. Statist. 1978;6:34–58.

- Rush AJ, Fava M, Wisniewski SR, Lavori PW, Trivedi MH, Sackeim HA, Thase ME, Nierenberg AA, Quitkin FM, Kashner TM, Kupfer DJ, Rosenbaum JF, Alpert J, Stewart JW, McGrath PJ, Biggs MM, Shores-Wilson K, Lebowitz BD, Ritz L, Niederehe G. Sequenced Treatment Alternatives to Relieve Depression (STAR*D): rationale and design. Control. Clin. Trials. 2004;25:119–142. doi: 10.1016/s0197-2456(03)00112-0.

- Rush AJ, Trivedi MH, Ibrahim HM, Carmody TJ, Arnow B, Klein DN, Markowitz JC, Ninan PT, Kornstein S, Manber R, Thase ME, Kocsis JH, Keller MB. The 16-item quick inventory of depressive symptomatology (QIDS), clinician rating (QIDS-C), and self-report (QIDS-SR): a psychometric evaluation in patients with chronic major depression. Biological Psychiatry. 2003;54:573–583. doi: 10.1016/s0006-3223(02)01866-8.

- Schulte PJ, Tsiatis AA, Laber EB, Davidian M. Supplement to “Q- and A-learning Methods for Estimating Optimal Dynamic Treatment Regimes”. 2012. doi: 10.1214/13-STS450.

- Shortreed SM, Laber E, Lizotte DJ, Stroup TS, Pineau J, Murphy SA. Informing sequential clinical decision-making through reinforcement learning: an empirical study. Mach. Learn. 2010;11:109–136. doi: 10.1007/s10994-010-5229-0.

- Song R, Wang W, Zeng D, Kosorok MR. Penalized Q-learning for dynamic treatment regimes. 2010. Preprint, arXiv:1108.5338v1. doi: 10.5705/ss.2012.364.

- Thall PF, Millikan RE, Sung H. Evaluating multiple treatment courses in clinical trials. Stat. Med. 2000;19:1011–1028. doi: 10.1002/(sici)1097-0258(20000430)19:8<1011::aid-sim414>3.0.co;2-m.

- Thall PF, Sung H, Etsey E. Selecting therapeutic strategies based on efficacy and death in multicourse clinical trials. J Amer. Statist. Assoc. 2002;97:29–39.