Significance

Many scientific challenges involve the study of stochastic dynamic systems for which only noisy or incomplete measurements are available. Inference for partially observed Markov process models provides a framework for formulating and answering questions about these systems. Except when the system is small, or approximately linear and Gaussian, state-of-the-art statistical methods are required to make efficient use of available data. Evaluation of the likelihood for a partially observed Markov process model can be formulated as a filtering problem. Iterated filtering algorithms carry out repeated Monte Carlo filtering operations to maximize the likelihood. We develop a new theoretical framework for iterated filtering and construct a new algorithm that dramatically outperforms previous approaches on a challenging inference problem in disease ecology.

Keywords: sequential Monte Carlo, particle filter, maximum likelihood, Markov process

Abstract

Iterated filtering algorithms are stochastic optimization procedures for latent variable models that recursively combine parameter perturbations with latent variable reconstruction. Previously, theoretical support for these algorithms has been based on the use of conditional moments of perturbed parameters to approximate derivatives of the log likelihood function. Here, a theoretical approach is introduced based on the convergence of an iterated Bayes map. An algorithm supported by this theory displays substantial numerical improvement on the computational challenge of inferring parameters of a partially observed Markov process.

An iterated filtering algorithm was originally proposed for maximum likelihood inference on partially observed Markov process (POMP) models by Ionides et al. (1). Variations on the original algorithm have been proposed to extend it to general latent variable models (2) and to improve numerical performance (3, 4). In this paper, we study an iterated filtering algorithm that generalizes the data cloning method (5, 6) and is therefore also related to other Monte Carlo methods for likelihood-based inference (7–9). Data cloning methodology is based on the observation that iterating a Bayes map converges to a point mass at the maximum likelihood estimate. Combining such iterations with perturbations of model parameters improves the numerical stability of data cloning and provides a foundation for stable algorithms in which the Bayes map is numerically approximated by sequential Monte Carlo computations.
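The observation behind data cloning, that iterating a Bayes map concentrates mass at the maximizer of the likelihood, can be illustrated numerically. The following is a minimal sketch and is not taken from the paper or its supplement: the grid, the binomial-type likelihood (7 successes in 10 trials), and the names `bayes_map`, `grid`, and `likelihood` are all illustrative assumptions.

```python
def bayes_map(density, likelihood):
    """One Bayes map: multiply a gridded density by the likelihood and renormalize."""
    unnorm = [d * l for d, l in zip(density, likelihood)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


# Hypothetical one-parameter example on a grid over [0, 1].
# The likelihood t^7 (1 - t)^3 is maximized at t = 0.7.
grid = [i / 100 for i in range(101)]
likelihood = [t ** 7 * (1 - t) ** 3 for t in grid]

density = [1 / len(grid)] * len(grid)  # flat starting density
for _ in range(50):                    # 50 repeated applications ("clones" of the data)
    density = bayes_map(density, likelihood)

# Grid point with the largest mass, and total mass within 0.1 of the maximizer.
mode = grid[max(range(len(grid)), key=density.__getitem__)]
mass_near_mle = sum(d for t, d in zip(grid, density) if abs(t - 0.7) <= 0.1)
```

In this sketch no parameter perturbation is applied, so it corresponds to the unperturbed Bayes map rather than to the perturbed map studied in this paper.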

We investigate convergence of a sequential Monte Carlo implementation of an iterated filtering algorithm that combines data cloning, in the sense of Lele et al. (5), with the stochastic parameter perturbations used by the iterated filtering algorithm of ref. 1. Lindström et al. (4) proposed a similar algorithm, termed fast iterated filtering, but the theoretical support for that algorithm involved unproved conjectures. We present convergence results for our algorithm, which we call IF2. Empirically, it can dramatically outperform the previous iterated filtering algorithm of ref. 1, which we refer to as IF1. Although IF1 and IF2 both involve recursively filtering through the data, the theoretical justification and practical implementations of these algorithms are fundamentally different. IF1 approximates the Fisher score function, whereas IF2 implements an iterated Bayes map. IF1 has been used in applications for which no other computationally feasible algorithm for statistically efficient, likelihood-based inference was known (10–15). The extra capabilities offered by IF2 open up further possibilities for drawing inferences about nonlinear partially observed stochastic dynamic models from time series data.

Iterated filtering algorithms implemented using basic sequential Monte Carlo techniques have the property that they do not need to evaluate the transition density of the latent Markov process. Algorithms with this property have been called plug-and-play (12, 16). Various other plug-and-play methods for POMP models have been recently proposed (17–20), due largely to the convenience of this property in scientific applications.

An Algorithm and Related Questions

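A general POMP model consists of an unobserved stochastic process $\{X(t), t \ge t_0\}$ with observations $Y_1, \dots, Y_N$ made at times $t_1, \dots, t_N$. We suppose that $X(t)$ takes values in $\mathbb{X} \subset \mathbb{R}^{\dim(\mathbb{X})}$, $Y_n$ takes values in $\mathbb{Y} \subset \mathbb{R}^{\dim(\mathbb{Y})}$, and there is an unknown parameter θ taking values in $\Theta \subset \mathbb{R}^{\dim(\Theta)}$. We adopt notation $y_{m:n} = y_m, y_{m+1}, \dots, y_n$ for integers $m \le n$, so we write the collection of observations as $Y_{1:N}$. Writing $X_n = X(t_n)$, the joint density of $X_{0:N}$ and $Y_{1:N}$ is assumed to exist, and the Markovian property of $X_{0:N}$ together with the conditional independence of the observation process means that this joint density can be written as

$$f_{X_{0:N},Y_{1:N}}(x_{0:N}, y_{1:N}; \theta) = f_{X_0}(x_0; \theta) \prod_{n=1}^{N} f_{X_n \mid X_{n-1}}(x_n \mid x_{n-1}; \theta)\, f_{Y_n \mid X_n}(y_n \mid x_n; \theta).$$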

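The data consist of a sequence of observations, $y^*_{1:N}$. We write $f_{Y_{1:N}}(y_{1:N}; \theta)$ for the marginal density of $Y_{1:N}$, and the likelihood function is defined to be $\ell(\theta) = f_{Y_{1:N}}(y^*_{1:N}; \theta)$. We look for a maximum likelihood estimate (MLE), i.e., a value $\hat\theta$ maximizing $\ell(\theta)$. The IF2 algorithm (Algorithm IF2) provides a plug-and-play Monte Carlo approach to obtaining $\hat\theta$. A simplification of IF2 arises when $N = 1$, in which case, iterated filtering is called iterated importance sampling (2) (SI Text, Iterated Importance Sampling). Algorithms similar to IF2 with a single iteration have been proposed in the context of Bayesian inference (21, 22) (SI Text, Applying Liu and West’s Method to the Toy Example and Fig. S1). When $M = 1$ and $h_n(\theta \mid \varphi; \sigma)$ degenerates to a point mass at φ, the IF2 algorithm becomes a standard particle filter (23, 24). In the IF2 algorithm description, $\Theta^{F,m}_{n,j}$ and $X^{F,m}_{n,j}$ are the jth particles at time n in the Monte Carlo representation of the mth iteration of a filtering recursion. The filtering recursion is coupled with a prediction recursion, represented by $\Theta^{P,m}_{n,j}$ and $X^{P,m}_{n,j}$. The resampling indices $k_{1:J}$ in IF2 are taken to be a multinomial draw for our theoretical analysis, but systematic resampling is preferable in practice (23). A natural choice of $h_n(\cdot \mid \varphi; \sigma)$ is a multivariate normal density with mean φ and variance $\sigma^2 \Sigma_n$ for some covariance matrix $\Sigma_n$, but in general, $h_n$ could be any conditional density parameterized by σ. Combining the perturbations over all of the time points, we define

$$h(\theta_{0:N} \mid \varphi; \sigma) = h_0(\theta_0 \mid \varphi; \sigma) \prod_{n=1}^{N} h_n(\theta_n \mid \theta_{n-1}; \sigma).$$

We define an extended likelihood function on $\Theta^{N+1}$ by

$$\check\ell(\theta_{0:N}) = \int f_{X_0}(x_0; \theta_0) \prod_{n=1}^{N} f_{X_n \mid X_{n-1}}(x_n \mid x_{n-1}; \theta_n)\, f_{Y_n \mid X_n}(y^*_n \mid x_n; \theta_n)\, dx_{0:N}.$$

Each iteration of IF2 is a Monte Carlo approximation to a map

$$T_\sigma f(\theta_N) = \frac{\int \check\ell(\theta_{0:N})\, h(\theta_{0:N} \mid \varphi; \sigma)\, f(\varphi)\, d\varphi\, d\theta_{0:N-1}}{\int \check\ell(\theta_{0:N})\, h(\theta_{0:N} \mid \varphi; \sigma)\, f(\varphi)\, d\varphi\, d\theta_{0:N}}, \tag{1}$$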

with f and $T_\sigma f$ approximating the initial and final density of the parameter swarm. For our theoretical analysis, we consider the case when the SD of the parameter perturbations is held fixed at $\sigma_m = \sigma > 0$ for $m = 1, \dots, M$. In this case, IF2 is a Monte Carlo approximation to $T_\sigma^M f(\theta)$. We call the fixed σ version of IF2 “homogeneous” iterated filtering since each iteration implements the same map. For any fixed σ, one cannot expect a procedure such as IF2 to converge to a point mass at the MLE. However, for fixed but small σ, we show that IF2 does approximately maximize the likelihood, with an error that shrinks to zero in a limit as $\sigma \to 0$ and $M \to \infty$. An immediate motivation for studying the homogeneous case is simplicity; it turns out that even with this simplifying assumption, the theoretical analysis is not entirely straightforward. Moreover, the homogeneous analysis gives at least as much insight as an asymptotic analysis into the practical properties of IF2, when $\sigma_m$ decreases down to some positive level $\sigma > 0$ but never completes the asymptotic limit $\sigma_m \to 0$. Iterated filtering algorithms have been primarily developed in the context of making progress on complex models for which successfully achieving and validating global likelihood optimization is challenging. In such situations, it is advisable to run multiple searches and continue each search up to the limits of available computation (25). If no single search can reliably locate the global maximum, a theory assuring convergence to a neighborhood of the maximum is as relevant as a theory assuring convergence to the maximum itself in a practically unattainable limit.

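| Algorithm IF2. Iterated filtering |
| --- |
| input: |
| Simulator for $f_{X_0}(x_0; \theta)$ |
| Simulator for $f_{X_n \mid X_{n-1}}(x_n \mid x_{n-1}; \theta)$, n in $1:N$ |
| Evaluator for $f_{Y_n \mid X_n}(y_n \mid x_n; \theta)$, n in $1:N$ |
| Data, $y^*_{1:N}$ |
| Number of iterations, M |
| Number of particles, J |
| Initial parameter swarm, $\{\Theta^0_j,\ j \text{ in } 1:J\}$ |
| Perturbation density, $h_n(\theta \mid \varphi; \sigma)$, n in $1:N$ |
| Perturbation sequence, $\sigma_{1:M}$ |
| output: Final parameter swarm, $\{\Theta^M_j,\ j \text{ in } 1:J\}$ |
| For m in $1:M$ |
| $\Theta^{F,m}_{0,j} \sim h_0(\theta \mid \Theta^{m-1}_j; \sigma_m)$ for j in $1:J$ |
| $X^{F,m}_{0,j} \sim f_{X_0}(x_0; \Theta^{F,m}_{0,j})$ for j in $1:J$ |
| For n in $1:N$ |
| $\Theta^{P,m}_{n,j} \sim h_n(\theta \mid \Theta^{F,m}_{n-1,j}, \sigma_m)$ for j in $1:J$ |
| $X^{P,m}_{n,j} \sim f_{X_n \mid X_{n-1}}(x_n \mid X^{F,m}_{n-1,j}; \Theta^{P,m}_{n,j})$ for j in $1:J$ |
| $w^m_{n,j} = f_{Y_n \mid X_n}(y^*_n \mid X^{P,m}_{n,j}; \Theta^{P,m}_{n,j})$ for j in $1:J$ |
| Draw $k_{1:J}$ with $P(k_j = i) = w^m_{n,i} \big/ \sum_{u=1}^{J} w^m_{n,u}$ |
| $\Theta^{F,m}_{n,j} = \Theta^{P,m}_{n,k_j}$ and $X^{F,m}_{n,j} = X^{P,m}_{n,k_j}$ for j in $1:J$ |
| End For |
| Set $\Theta^m_j = \Theta^{F,m}_{N,j}$ for j in $1:J$ |
| End For |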
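The steps of Algorithm IF2 can be sketched in code. What follows is a minimal illustration under stated assumptions, not the authors' implementation (which the paper says is in the R package pomp): it handles a scalar parameter only, uses a Gaussian random walk as the perturbation density, and uses multinomial resampling. The toy AR(1) model, its constants, and all function and variable names (`if2`, `rinit`, `rprocess`, `dmeasure`) are hypothetical choices made for this example.

```python
import math
import random


def if2(y, rinit, rprocess, dmeasure, swarm, sigmas, rng):
    """Sketch of IF2 for a scalar parameter with Gaussian perturbations.

    y        : observations y*_1, ..., y*_N
    rinit    : simulator for the initial state given a parameter value
    rprocess : simulator for the next state given the previous state
    dmeasure : evaluator for the measurement density
    swarm    : initial parameter swarm (one value per particle)
    sigmas   : perturbation sequence sigma_1, ..., sigma_M
    """
    J = len(swarm)
    for sigma in sigmas:                                  # For m in 1:M
        # Perturb the swarm, then draw initial states.
        theta = [rng.gauss(t, sigma) for t in swarm]
        x = [rinit(t, rng) for t in theta]
        for y_n in y:                                     # For n in 1:N
            # Prediction: perturb parameters, then simulate the latent process.
            theta = [rng.gauss(t, sigma) for t in theta]
            x = [rprocess(xj, t, rng) for xj, t in zip(x, theta)]
            # Weights need only the measurement density (plug-and-play).
            w = [dmeasure(y_n, xj, t) for xj, t in zip(x, theta)]
            # Multinomial resampling of (parameter, state) pairs.
            k = rng.choices(range(J), weights=w, k=J)
            theta = [theta[i] for i in k]
            x = [x[i] for i in k]
        swarm = theta                                     # filtered swarm at time N
    return swarm


# Hypothetical toy POMP (an assumption, not from the paper):
# AR(1) latent state with unknown drift theta, observed with Gaussian noise.
A, PROC_SD, OBS_SD = 0.8, 0.3, 0.5


def rinit(theta, rng):
    return rng.gauss(theta / (1 - A), 0.5)


def rprocess(x, theta, rng):
    return A * x + theta + rng.gauss(0.0, PROC_SD)


def dmeasure(y, x, theta):
    z = (y - x) / OBS_SD
    return math.exp(-0.5 * z * z) / (OBS_SD * math.sqrt(2 * math.pi))


rng = random.Random(1)
true_theta, N, J, M = 1.0, 50, 200, 20

# Simulate a dataset from the toy model.
x = rinit(true_theta, rng)
y = []
for _ in range(N):
    x = rprocess(x, true_theta, rng)
    y.append(rng.gauss(x, OBS_SD))

# Perturbation SD decreasing geometrically from 0.1 to 0.01.
sigmas = [0.1 * (0.01 / 0.1) ** (m / (M - 1)) for m in range(M)]
swarm0 = [rng.uniform(0.0, 3.0) for _ in range(J)]
final_swarm = if2(y, rinit, rprocess, dmeasure, swarm0, sigmas, rng)
estimate = sum(final_swarm) / J  # swarm mean as a point estimate
```

Only simulators for the latent process are called, so the transition density is never evaluated. Using the swarm mean as a point estimate is a choice made for this sketch.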

The map $T_\sigma$ can be expressed as a composition of a parameter perturbation with a Bayes map that multiplies by the likelihood and renormalizes. Iteration of the Bayes map alone has a central limit theorem (CLT) (5) that forms the theoretical basis for the data cloning methodology of refs. 5 and 6. Repetitions of the parameter perturbation may also be expected to follow a CLT. One might therefore imagine that the composition of these two operations also has a Gaussian limit. This is not generally true, since the rescaling involved in the perturbation CLT prevents the Bayes map CLT from applying (SI Text, A Class of Exact Non-Gaussian Limits for Iterated Importance Sampling). Our agenda is to seek conditions guaranteeing the following:

- (A1) For every fixed $\sigma > 0$, $\lim_{m \to \infty} T_\sigma^m f = f_\sigma$ exists.

- (A2) When J and M become large, IF2 numerically approximates $f_\sigma$.

- (A3) As the noise intensity becomes small, $f_\sigma$ approaches a point mass at the MLE, if it exists.

Stability of filtering problems and uniform convergence of sequential Monte Carlo numerical approximations are closely related, and so A1 and A2 are studied together in Theorem 1. Each iteration of IF2 involves standard sequential Monte Carlo filtering techniques applied to an extended model where latent variable space is augmented to include a time-varying parameter. Indeed, all M iterations together can be represented as a filtering problem for this extended POMP model on M replications of the data. The proof of Theorem 1 therefore leans on existing results. The novel issue of A3 is then addressed in Theorem 2.

Convergence of IF2

First, we set up some notation. Let be a Markov chain taking values in such that has density , and has conditional density given for . Suppose that is constructed on the canonical probability space with for . Let be the corresponding Borel filtration. To consider a time-rescaled limit of as , let be a continuous-time, right-continuous, piecewise constant process defined at its points of discontinuity by when k is a nonnegative integer. Let be the filtered process defined such that, for any event ,

| [2] |

where is the indicator function for event E and

In Eq. 2 , denotes probability under the law of , and denotes expectation under the law of . The process is constructed so that has density . We make the following assumptions.

- (B1) converges weakly as to a diffusion , in the space of right-continuous functions with left limits equipped with the uniform convergence topology. For any open set with positive Lebesgue measure and , there is a such that .

- (B2) For some and , has a positive density on , uniformly over the distribution of for all and .

- (B3) is continuous in a neighborhood for some .

- (B4) There is an with for all , and .

- (B5) There is a such that when , for all σ.

- (B6) There is a such that implies , for all σ and all n.

Conditions B1 and B2 hold when corresponds to a reflected Gaussian random walk and is a reflected Brownian motion (SI Text, Checking Conditions B1 and B2). More generally, when is a location-scale family with mean φ away from a boundary, then will behave like Brownian motion in the interior of . B4 follows if is compact and is positive and continuous as a function of θ and . B5 can be guaranteed by construction. B3 and B6 are undemanding regularity conditions on the likelihood and extended likelihood. A formalization of A1 and A2 can now be stated as follows.

Theorem 1. Let be the map of Eq. 1 and suppose B2 and B4. There is a unique probability density such that for any probability density f on ,

| [3] |

where is the norm of f. Let be the output of IF2, with . There is a finite constant such that, for any function and all M,

| [4] |

Proof. B2 and B4 imply that is mixing, in the sense of ref. 26, for all sufficiently large k. The results of ref. 26 are based on the contractive properties of mixing maps in the Hilbert projective metric. Although ref. 26 stated their results in the case where T itself is mixing, the required geometric contraction in the Hilbert metric holds as long as is mixing for all for some (ref. 27, theorem 2.5.1). Corollary 4.2 of ref. 26 implies Eq. 3, noting the equivalence of the Hilbert projective metric and the total variation norm shown in their lemma 3.4. Then, corollary 5.12 of ref. 26 implies Eq. 4, completing the proof of Theorem 1. A longer version of this proof is given in SI Text, Additional Details for the Proof of Theorem 1.

Results similar to Theorem 1 can be obtained using Dobrushin contraction techniques (28). Results appropriate for noncompact spaces can be obtained using drift conditions on a potential function (29). Now we move on to our formalization of A3:

Theorem 2. Assume B1–B6. For , .

Proof. Let and . From B4, . For positive constants , , , and , define

We can pick , , , , and so that . Suppose that is initialized with the stationary distribution identified in Theorem 1. Now, set M to be the greatest integer less than , and let be the event that spends at least a fraction of time in . Formally,

We wish to show that is small for σ small. Let be the set of sample paths that spend at least a fraction of time up to time M in , i.e.,

Then, we calculate

| [5] |

| [6] |

| [7] |

We used B5 and B6 to arrive at Eq. 5, then, to get to Eq. 6, we have taken σ small enough that and . From B3, is an open set, and B1 therefore ensures each of the probabilities and in Eq. 7 tends to a positive limit as given by the probability under the limiting distribution (SI Text, Lemma S1). The term tends to zero as since, by construction, and . Setting , and noting that is constructed to have stationary marginal density , we have

which can be made arbitrarily small by picking small and σ small, completing the proof.

Demonstration of IF2 with Nonconvex Superlevel Sets

Theorems 1 and 2 do not involve any Taylor series expansions, which are basic in the justification of IF1 (2). This might suggest that IF2 can be effective on likelihood functions without good low-order polynomial approximations. In practice, this can be seen by comparing IF2 with IF1 on a simple 2D toy example in which the superlevel sets are connected but not convex. We also compare with particle Markov chain Monte Carlo (PMCMC) implemented as the particle marginal Metropolis–Hastings algorithm of ref. 17. The justification of PMCMC also does not depend on Taylor series expansions, but PMCMC is computationally expensive compared with iterated filtering (30). Our toy example has a constant and nonrandom latent process, for . The known measurement model is

This example was designed so that a nonlinear combination of the parameters is well identified whereas each parameter is marginally weakly identified. For the truth, we took . We supposed that is suspected to fall in the interval and is expected in . We used a uniform distribution on this rectangle to specify the prior for PMCMC and to generate random starting points for all of the algorithms. We set observations, and we used a Monte Carlo sample size of particles. For IF1 and IF2, we used filtering iterations, with initial random walk SD 0.1 decreasing geometrically down to 0.01. For PMCMC, we used filtering iterations with random walk SD 0.1, awarding PMCMC 100 times the computational resources offered to IF1 and IF2. Independent, normally distributed parameter perturbations were used for IF1, IF2, and PMCMC. The random walk SD for PMCMC is not immediately comparable to that for IF1 and IF2, since the latter add the noise at each observation time whereas the former adds it only between filtering iterations. All three methods could have their parameters fine-tuned, or be modified in other ways to take advantage of the structure of this particular problem. However, this example demonstrates a feature that makes tuning algorithms tricky: The nonlinear ridge along contours of constant becomes increasingly steep as increases, so no single global estimate of the second derivative of the likelihood is appropriate. Reparameterization can linearize the ridge in this toy example, but in practical problems with much larger parameter spaces, one does not always know how to find appropriate reparameterizations, and a single reparameterization may not be appropriate throughout the parameter space.
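The geometrically decreasing random walk SD described above (0.1 down to 0.01) can be written as a short helper. This is a sketch under an assumption: the text gives only the two end points, so the interpolation rule used here (a constant ratio between successive iterations, with both end points included) and the name `geometric_schedule` are hypothetical.

```python
def geometric_schedule(sd_start, sd_end, n_iter):
    """Return n_iter SDs decreasing geometrically from sd_start to sd_end."""
    if n_iter == 1:
        return [sd_start]
    # Constant ratio chosen so the last value equals sd_end.
    ratio = (sd_end / sd_start) ** (1.0 / (n_iter - 1))
    return [sd_start * ratio ** m for m in range(n_iter)]


# Illustrative call with 5 iterations; the iteration count is arbitrary here.
sds = geometric_schedule(0.1, 0.01, 5)
```

Other cooling rules, for example a fixed fractional reduction per block of iterations, would fit the same description; the helper simply makes one such choice explicit.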

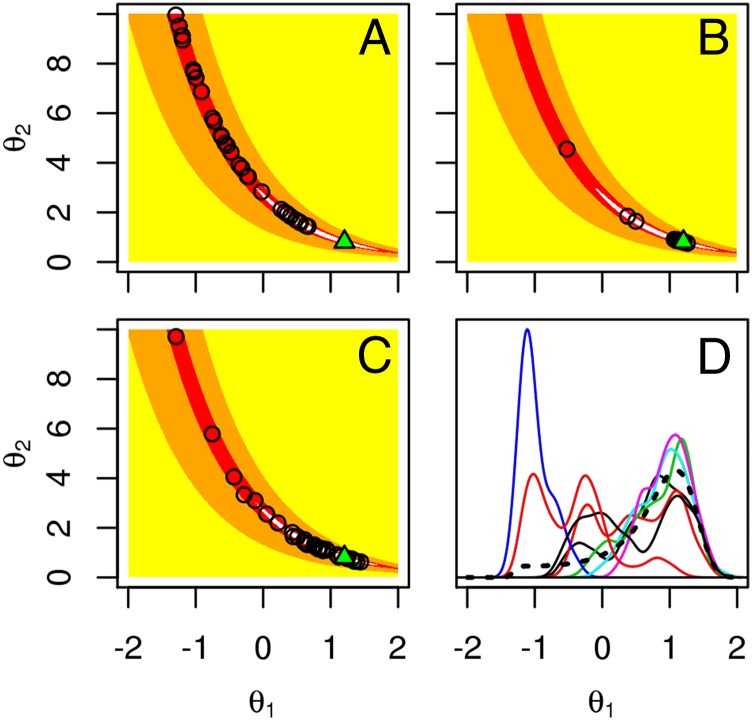

Fig. 1 compares the performance of the three methods, based on 30 Monte Carlo replications. These replications investigate the likelihood and posterior distribution for a single draw from our toy model, since our interest is in the Monte Carlo behavior for a given dataset. For this simulated dataset, the MLE is , shown as a green triangle in Fig. 1 A−C. In this toy example, the posterior distribution can also be computed directly by numerical integration. In Fig. 1A, we see that IF1 performs poorly on this challenge. None of the 30 replications approach the MLE. The linear combination of perturbed parameters involved in the IF1 update formula can all too easily knock the search off a nonlinear ridge. Fig. 1B shows that IF2 performs well on this test, with almost all of the Monte Carlo replications clustering in the region of highest likelihood. Fig. 1C shows the end points of the PMCMC replications, which are nicely spread around the region of high posterior probability. However, Fig. 1D shows that mixing of the PMCMC Markov chains was problematic.

Fig. 1.

Results for the simulation study of the toy example. (A) IF1 point estimates from 30 replications (circles) and the MLE (green triangle). The region of parameter space with likelihood within 3 log units of the maximum (white), within 10 log units (red), within 100 log units (orange), and lower (yellow). (B) IF2 point estimates from 30 replications (circles) with the same algorithmic settings as IF1. (C) Final parameter value of 30 PMCMC chains (circles). (D) Kernel density estimates of the posterior for for the first eight of these 30 PMCMC chains (solid lines), with the true posterior distribution (dotted black line).

Application to a Cholera Model

Highly nonlinear, partially observed, stochastic dynamic systems are ubiquitous in the study of biological processes. The physical scale of the systems varies widely from molecular biology (31) to population ecology and epidemiology (32), but POMP models arise naturally at all scales. In the face of biological complexity, it is necessary to determine which scientific aspects of a system are critical for the investigation. Giving consideration to a range of potential mechanisms, and their interactions, may require working with highly parameterized models. Limitations in the available data may result in some combinations of parameters being weakly identifiable. Despite this, other combinations of parameters may be adequately identifiable and give rise to some interesting statistical inferences. To demonstrate the capabilities of IF2 for such analyses, we fit a model for cholera epidemics in historic Bengal developed by King et al. (10). The model, the data, and the implementations of IF1 and IF2 used below are all contained in the open source R package pomp (33). The code generating the results in this article is provided as supplementary data (Datasets S1 and S2).

Cholera is a diarrheal disease caused by the bacterial pathogen Vibrio cholerae. Without appropriate medical treatment, severe infections can rapidly result in death by dehydration. Many questions regarding cholera transmission remain unresolved: What is the epidemiological role of free-living environmental vibrio? How important are mild and asymptomatic infections for the transmission dynamics? How long does protective immunity last following infection? The model we consider splits up the study population of individuals into those who are susceptible, , infected, , and recovered, . is assumed known from census data. To allow flexibility in representing immunity, is subdivided into , where we take . Cumulative cholera mortality in each month is tracked with a variable that resets to zero at the beginning of each observation period. The state process, , follows a stochastic differential equation,

driven by a Brownian motion . Nonlinearity arises through the force of infection, , specified as

where is a periodic cubic B-spline basis; model seasonality of transmission; model seasonality of the environmental reservoir; and are scaling constants set to , and we set . The data, consisting of monthly counts of cholera mortality, are modeled via for .

The inference goal used to assess IF1 and IF2 is to find high-likelihood parameter values starting from randomly drawn starting values in a large hyperrectangle (Table S1). A single search cannot necessarily be expected to reliably obtain the maximum of the likelihood, due to multimodality, weak identifiability, and considerable Monte Carlo error in evaluating the likelihood. Multiple starts and restarts may be needed both for effective optimization and for assessing the evidence to validate effective optimization. However, optimization progress made on an initial search provides a concrete criterion to compare methodologies. Since IF1 and IF2 have essentially the same computational cost, for a given Monte Carlo sample size and number of iterations, shared fixed values of these algorithmic parameters provide an appropriate comparison.

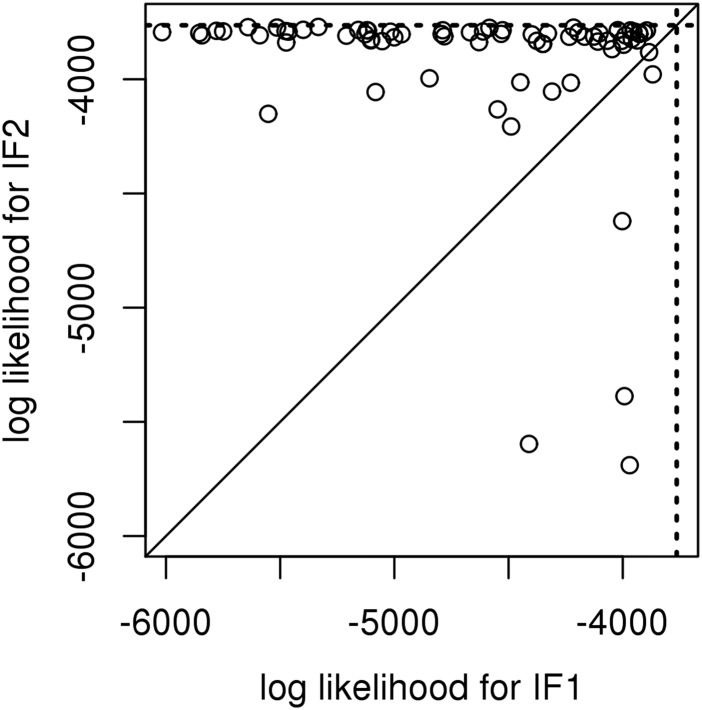

Fig. 2 compares results for 100 searches with particles and iterations of the search. An initial Gaussian random walk SD of 0.1 geometrically decreasing down to a final value of 0.01 was used for all parameters except , , , , and . For those initial value parameters, the random walk SD decreased geometrically from 0.2 down to 0.02, but these perturbations were applied only at time . Since some starting points may lead both IF1 and IF2 to fail to approach the global maximum, Fig. 2 plots the likelihoods of parameter vectors output by IF1 and IF2 for each starting point. Fig. 2 shows that, on this problem, IF2 is considerably more effective than IF1. This maximization was considered challenging for IF1, and ref. 10 required multiple restarts and refinements of the optimization procedure. Our implementation of PMCMC failed to converge on this inference problem (SI Text, Applying PMCMC to the Cholera Model and Fig. S2), and we are not aware of any previous successful PMCMC solution for a comparable situation. For IF2, however, this situation appears routine. Some Monte Carlo replication is needed because searches occasionally fail to approach the global optimum, but replication is always appropriate for Monte Carlo optimization procedures.

Fig. 2.

Comparison of IF1 and IF2 on the cholera model. Points are the log likelihood of the parameter vector output by IF1 and IF2, both started at a uniform draw from a large hyperrectangle (Table S1). Likelihoods were evaluated as the median of 10 particle filter replications (i.e., IF2 applied with and ) each with particles. Seventeen poorly performing searches are off the scale of this plot (15 due to the IF1 estimate, 2 due to the IF2 estimate). Dotted lines show the maximum log likelihood reported by ref. 10.
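The likelihood evaluation described in the caption, a median over replicated particle filter runs with the parameter held fixed, can be sketched as follows. This is an illustration under assumptions rather than the code used for Fig. 2: the toy AR(1) model, the particle count, and the names `pfilter_loglik` and `median_loglik` are hypothetical, and multinomial resampling is used for brevity.

```python
import math
import random
import statistics


def pfilter_loglik(y, rinit, rprocess, dmeasure, theta, J, rng):
    """Bootstrap particle filter estimate of the log likelihood at a fixed theta."""
    x = [rinit(theta, rng) for _ in range(J)]
    loglik = 0.0
    for y_n in y:
        x = [rprocess(xj, theta, rng) for xj in x]   # predict
        w = [dmeasure(y_n, xj, theta) for xj in x]   # weight
        loglik += math.log(sum(w) / J)               # conditional likelihood of y_n
        x = rng.choices(x, weights=w, k=J)           # multinomial resampling
    return loglik


def median_loglik(y, rinit, rprocess, dmeasure, theta, J, reps, rng):
    """Median of replicated particle filter log likelihood estimates."""
    runs = [pfilter_loglik(y, rinit, rprocess, dmeasure, theta, J, rng)
            for _ in range(reps)]
    return statistics.median(runs)


# Hypothetical toy model (an assumption): AR(1) state with drift theta.
A, PROC_SD, OBS_SD = 0.8, 0.3, 0.5


def rinit(theta, rng):
    return rng.gauss(theta / (1 - A), 0.5)


def rprocess(x, theta, rng):
    return A * x + theta + rng.gauss(0.0, PROC_SD)


def dmeasure(y, x, theta):
    z = (y - x) / OBS_SD
    return math.exp(-0.5 * z * z) / (OBS_SD * math.sqrt(2 * math.pi))


rng = random.Random(7)
x = rinit(1.0, rng)
y = []
for _ in range(40):                                   # data simulated at theta = 1
    x = rprocess(x, 1.0, rng)
    y.append(rng.gauss(x, OBS_SD))

ll_true = median_loglik(y, rinit, rprocess, dmeasure, 1.0, 200, 10, rng)
ll_far = median_loglik(y, rinit, rprocess, dmeasure, 2.0, 200, 10, rng)
```

Taking a median across replications reduces the influence of occasional poor filter runs on the reported value.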

A fair numerical comparison of methods is difficult. For example, it could hypothetically be the case that the algorithmic settings used here favor IF2. However, the settings used are those that were developed for IF1 by ref. 10 and reflect considerable amounts of trial and error with that method. Likelihood-based inference for general partially observed nonlinear stochastic dynamic models was considered computationally unfeasible before the introduction of IF1, even in situations considerably simpler than the one investigated in this section (19). We have shown that IF2 offers a substantial improvement on IF1, by demonstrating that it functions effectively on a problem at the limit of the capabilities of IF1.

Discussion

Theorems 1 and 2 assert convergence without giving insights into the rate of convergence. In the particular case of a quadratic log likelihood function and additive Gaussian parameter perturbations, is Gaussian, and explicit calculations are available (SI Text, Gaussian and Near-Gaussian Analysis of Iterated Importance Sampling). If is close to quadratic and the parameter perturbation is close to additive Gaussian noise, then exists and is close to the limit for the approximating Gaussian system (SI Text, Gaussian and Near-Gaussian Analysis of Iterated Importance Sampling). These Gaussian and near-Gaussian situations also demonstrate that the compactness conditions for Theorem 2 are not always necessary. In the case , IF2 applies to the more general class of latent variable models. The latent variable model, extended to include a parameter vector that varies over iterations, nevertheless has the formal structure of a POMP in the context of the IF2 algorithm. Some simplifications arise when (SI Text, Iterated Importance Sampling, Gaussian and Near-Gaussian Analysis, and A Class of Exact Non-Gaussian Limits) but the proofs of Theorems 1 and 2 do not greatly change.
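The Gaussian case mentioned above can be illustrated with a scalar calculation. The sketch below is not the analysis in the SI Text. It assumes a likelihood proportional to $\exp\{-(\theta - \hat\theta)^2 / (2\tau^2)\}$, a single additive $N(0, \sigma^2)$ perturbation per iteration, and a Gaussian starting density; the symbols τ, `mle`, and the function name are assumptions. Under these assumptions each iteration maps a Gaussian to a Gaussian, and the limiting variance $v$ solves $v^2 + \sigma^2 v - \sigma^2 \tau^2 = 0$.

```python
import math


def perturbed_bayes_step(mean, var, sigma, mle, tau):
    """One perturbation followed by one Bayes update, for Gaussian densities."""
    # Perturbation: adding N(0, sigma^2) noise inflates the variance.
    var_pert = var + sigma ** 2
    # Bayes map: multiply by the Gaussian likelihood and renormalize.
    post_var = 1.0 / (1.0 / var_pert + 1.0 / tau ** 2)
    post_mean = post_var * (mean / var_pert + mle / tau ** 2)
    return post_mean, post_var


sigma, mle, tau = 0.05, 1.0, 1.0
mean, var = 5.0, 4.0                 # arbitrary starting Gaussian
for _ in range(2000):
    mean, var = perturbed_bayes_step(mean, var, sigma, mle, tau)

# Positive root of v^2 + sigma^2 v - sigma^2 tau^2 = 0.
limit_var = (-sigma ** 2 + math.sqrt(sigma ** 4 + 4 * sigma ** 2 * tau ** 2)) / 2
```

For small σ the limiting variance is close to στ, so in this toy setting the limit is centered at the maximizer with spread shrinking as σ decreases.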

A variation on iterated filtering, making white noise perturbations to the parameter rather than random walk perturbations, has favorable asymptotic properties (3). However, practical algorithms based on this theoretical insight have not yet been published. Our experience suggests that white noise perturbations can be effective in a neighborhood of the MLE but fail to match the performance of IF2 for global optimization problems in complex models.

The main theoretical innovation of this paper is Theorem 2, which does not depend on the specific sequential Monte Carlo filter used in IF2. One could, for example, modify IF2 to use an ensemble Kalman filter (20, 34) or an unscented Kalman filter (35). Or, one could take advantage of variations of sequential Monte Carlo that may improve the numerical performance (36). However, basic sequential Monte Carlo is a general and widely used nonlinear filtering technique that provides a simple yet theoretically supported foundation for the IF2 algorithm. The numerical stability of sequential Monte Carlo for the extended POMP model constructed by IF2 is comparable, in our cholera example, to the model with fixed parameters (SI Text, Consequences of Perturbing Parameters for the Numerical Stability of SMC and Fig. S3).

Acknowledgments

We acknowledge constructive comments by two anonymous referees, the editor, and Joon Ha Park. Funding was provided by National Science Foundation Grants DMS-1308919 and DMS-1106695, National Institutes of Health Grants 1-R01-AI101155, 1-U54-GM111274, and 1-U01-GM110712, and the Research and Policy for Infectious Disease Dynamics program of Department of Homeland Security and National Institutes of Health, Fogarty International Center.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1410597112/-/DCSupplemental.

References

- 1. Ionides EL, Bretó C, King AA. Inference for nonlinear dynamical systems. Proc Natl Acad Sci USA. 2006;103(49):18438–18443. doi: 10.1073/pnas.0603181103.

- 2. Ionides EL, Bhadra A, Atchadé Y, King AA. Iterated filtering. Ann Stat. 2011;39(3):1776–1802.

- 3. Doucet A, Jacob PE, Rubenthaler S. 2013. Derivative-free estimation of the score vector and observed information matrix with application to state-space models. arxiv:1304.5768.

- 4. Lindström E, Ionides EL, Frydendall J, Madsen H. Efficient iterated filtering. System Identification. Vol 16. Elsevier; New York: 2012. pp. 1785–1790.

- 5. Lele SR, Dennis B, Lutscher F. Data cloning: Easy maximum likelihood estimation for complex ecological models using Bayesian Markov chain Monte Carlo methods. Ecol Lett. 2007;10(7):551–563. doi: 10.1111/j.1461-0248.2007.01047.x.

- 6. Lele SR, Nadeem K, Schmuland B. Estimability and likelihood inference for generalized linear mixed models using data cloning. J Am Stat Assoc. 2010;105(492):1617–1625.

- 7. Doucet A, Godsill SJ, Robert CP. Marginal maximum a posteriori estimation using Markov chain Monte Carlo. Stat Comput. 2002;12(1):77–84.

- 8. Gaetan C, Yao J-F. A multiple-imputation Metropolis version of the EM algorithm. Biometrika. 2003;90(3):643–654.

- 9. Jacquier E, Johannes M, Polson N. MCMC maximum likelihood for latent state models. J Econom. 2007;137(2):615–640.

- 10. King AA, Ionides EL, Pascual M, Bouma MJ. Inapparent infections and cholera dynamics. Nature. 2008;454(7206):877–880. doi: 10.1038/nature07084.

- 11. Laneri K, et al. Forcing versus feedback: Epidemic malaria and monsoon rains in NW India. PLoS Comput Biol. 2010;6(9):e1000898. doi: 10.1371/journal.pcbi.1000898.

- 12. He D, Ionides EL, King AA. Plug-and-play inference for disease dynamics: Measles in large and small populations as a case study. J R Soc Interface. 2010;7(43):271–283. doi: 10.1098/rsif.2009.0151.

- 13. Blackwood JC, Cummings DAT, Broutin H, Iamsirithaworn S, Rohani P. Deciphering the impacts of vaccination and immunity on pertussis epidemiology in Thailand. Proc Natl Acad Sci USA. 2013;110(23):9595–9600. doi: 10.1073/pnas.1220908110.

- 14. Shrestha S, Foxman B, Weinberger DM, Steiner C, Viboud C, Rohani P. Identifying the interaction between influenza and pneumococcal pneumonia using incidence data. Sci Transl Med. 2013;5:191ra84. doi: 10.1126/scitranslmed.3005982.

- 15. Blake IM, et al. The role of older children and adults in wild poliovirus transmission. Proc Natl Acad Sci USA. 2014;111(29):10604–10609. doi: 10.1073/pnas.1323688111.

- 16. Bretó C, He D, Ionides EL, King AA. Time series analysis via mechanistic models. Ann Appl Stat. 2009;3:319–348.

- 17. Andrieu C, Doucet A, Holenstein R. Particle Markov chain Monte Carlo methods. J R Stat Soc Ser B. 2010;72(3):269–342.

- 18. Toni T, Welch D, Strelkowa N, Ipsen A, Stumpf MP. Approximate Bayesian computation scheme for parameter inference and model selection in dynamical systems. J R Soc Interface. 2009;6(31):187–202. doi: 10.1098/rsif.2008.0172.

- 19. Wood SN. Statistical inference for noisy nonlinear ecological dynamic systems. Nature. 2010;466(7310):1102–1104. doi: 10.1038/nature09319.

- 20. Shaman J, Karspeck A. Forecasting seasonal outbreaks of influenza. Proc Natl Acad Sci USA. 2012;109(50):20425–20430. doi: 10.1073/pnas.1208772109.

- 21. Kitagawa G. A self-organising state-space model. J Am Stat Assoc. 1998;93:1203–1215.

- 22. Liu J, West M. Combined parameter and state estimation in simulation-based filtering. In: Doucet A, de Freitas N, Gordon NJ, editors. Sequential Monte Carlo Methods in Practice. Springer; New York: 2001. pp. 197–224.

- 23. Arulampalam MS, Maskell S, Gordon N, Clapp T. A tutorial on particle filters for online nonlinear, non-Gaussian Bayesian tracking. IEEE Trans Signal Process. 2002;50(2):174–188.

- 24. Doucet A, de Freitas N, Gordon NJ, editors. Sequential Monte Carlo Methods in Practice. Springer; New York: 2001.

- 25. Ingber L. Simulated annealing: Practice versus theory. Math Comput Model. 1993;18:29–57.

- 26. Le Gland F, Oudjane N. Stability and uniform approximation of nonlinear filters using the Hilbert metric and application to particle filters. Ann Appl Probab. 2004;14(1):144–187.

- 27. Eveson SP. Hilbert’s projective metric and the spectral properties of positive linear operators. Proc London Math Soc. 1995;3(2):411–440.

- 28. Del Moral P, Doucet A. Particle motions in absorbing medium with hard and soft obstacles. Stochastic Anal Appl. 2004;22(5):1175–1207.

- 29. Whiteley N, Kantas N, Jasra A. Linear variance bounds for particle approximations of time-homogeneous Feynman–Kac formulae. Stochastic Process Appl. 2012;122(4):1840–1865.

- 30. Bhadra A. Discussion of ‘particle Markov chain Monte Carlo methods’ by C. Andrieu, A. Doucet and R. Holenstein. J R Stat Soc B. 2010;72:314–315.

- 31. Wilkinson DJ. Stochastic Modelling for Systems Biology. Chapman & Hall; Boca Raton, FL: 2012.

- 32. Keeling M, Rohani P. Modeling Infectious Diseases in Humans and Animals. Princeton Univ. Press; Princeton, NJ: 2009.

- 33. King AA, Ionides EL, Bretó CM, Ellner S, Kendall B. 2009. pomp: Statistical inference for partially observed Markov processes (R package). Available at cran.r-project.org/web/packages/pomp.

- 34. Yang W, Karspeck A, Shaman J. Comparison of filtering methods for the modeling and retrospective forecasting of influenza epidemics. PLOS Comput Biol. 2014;10(4):e1003583. doi: 10.1371/journal.pcbi.1003583.

- 35. Julier S, Uhlmann J. Unscented filtering and nonlinear estimation. Proc IEEE. 2004;92(3):401–422.

- 36. Cappé O, Godsill S, Moulines E. An overview of existing methods and recent advances in sequential Monte Carlo. Proc IEEE. 2007;95(5):899–924.
