Abstract

Background: The long-term success of child obesity prevention and control efforts depends not only on the efficacy of the approaches selected, but also on the strategies through which they are implemented and sustained. This study introduces the Multilevel Implementation Framework (MIF), a conceptual model of factors affecting the implementation of multilevel, multisector interventions, and describes its application to the evaluation of two of three state sites (CA and MA) participating in the Childhood Obesity Research Demonstration (CORD) project.

Methods/Design: A convergent mixed-methods design is used to document intervention activities and identify determinants of implementation effectiveness at the CA-CORD and MA-CORD sites. Data will be collected from multiple sectors and at multiple levels of influence (e.g., delivery system, academic-community partnership, and coalition). Quantitative surveys will be administered to coalition members and staff in participating delivery systems. Qualitative, semistructured interviews will be conducted with project leaders and key informants at multiple levels (e.g., leaders and frontline staff) within each delivery system. Document analysis of project-related materials and in vivo observations of training sessions will occur on an ongoing basis. Specific constructs assessed will be informed by the MIF. Results will be shared with project leaders and key stakeholders for the purposes of improving processes and informing sustainability discussions and will be used to test and refine the MIF.

Conclusions: Study findings will contribute to knowledge about how to coordinate and implement change strategies within and across sectors in ways that effectively engage diverse stakeholders, minimize policy resistance, and maximize desired intervention outcomes.

Introduction

Despite national efforts to combat the obesity epidemic, almost one third of US children and adolescents 2–19 years of age remain overweight or obese (BMI ≥85th percentile).1 Racially/ethnically diverse and low socioeconomic status groups in particular are disproportionately affected.2 To more effectively prevent and control childhood obesity, federal agencies, such as the National Institutes of Health and the CDC, have begun to promote multilevel, multisector (e.g., family,3 school,4 healthcare,5 community,6,7 and policy8) approaches that focus on changing not just individual behavior, but the broader sociophysical environment in which children live, learn, eat, and play.9,10 Recent, large-scale initiatives, such as Shape Up Somerville11 and the California Endowment's Healthy Eating, Active Communities,12 provide preliminary evidence that social ecological approaches can promote healthy behaviors and prevent weight gain in children.

Developing effective approaches is a critical first step to addressing the obesity epidemic.13 However, subsequent dissemination and implementation of such approaches across communities play an equally important role in determining whether meaningful improvements in population health and well-being are achieved and sustained.14 Dissemination, defined as active and planned efforts to persuade target groups to adopt a new program, policy, or practice, influences the rate at which evidence-based approaches spread to new settings.15 Implementation, which refers to the processes through which a new program, policy, or practice is put into use,16,17 is arguably even more critical given that it affects the extent to which desired outcomes are realized.18,19

Implementation of multilevel, multisector approaches is challenging because they require significant buy-in and coordination of activities from diverse stakeholders, each of whom may vary in their readiness, capacity, and willingness to put in place the system, environmental, and policy changes involved.20,21 The scale of proposed changes and the diversity of actors involved may also result in unintended consequences that adversely affect outcomes and sustainability (e.g., policy resistance).21,22 Policy makers, practitioners, and health promotion researchers are increasingly aware of these challenges.9 However, in part because so few multilevel, multisector interventions have been developed, currently little is known about strategies for overcoming these challenges and effectively implementing childhood obesity prevention and control efforts within communities.

The current study contributes to the literature by introducing the Multilevel Implementation Framework (MIF), a conceptual framework, and describing its application to the evaluation of two of three state sites (MA and CA) implementing multilevel, multisector interventions as part of the Childhood Obesity Research Demonstration (CORD) project. By systematically examining the processes, activities, and resources affecting intervention uptake,17,23 this study will contribute to knowledge about how to coordinate and implement change strategies in ways that effectively engage diverse stakeholders in implementing socioecological approaches to maximize desired intervention outcomes.

Initial Conceptualization of the Multilevel Implementation Framework

Over the last decade, a growing body of research has developed around the science of dissemination and implementation. Drawing upon research from multiple disciplines, such as healthcare,21,24,25 public health,26,27 and business,16,28 a variety of models have been proposed to explain how different contextual factors and implementation activities influence organizational change efforts, consistency and quality of intervention use, and subsequent outcomes. As might be expected, these models are strongly influenced “by the service contexts chosen for emphasis and by the contextual levels that serve as primary organizing arenas”24 and, consequently, tend to differ both in constructs examined and in the level of analysis on which they are focused.29

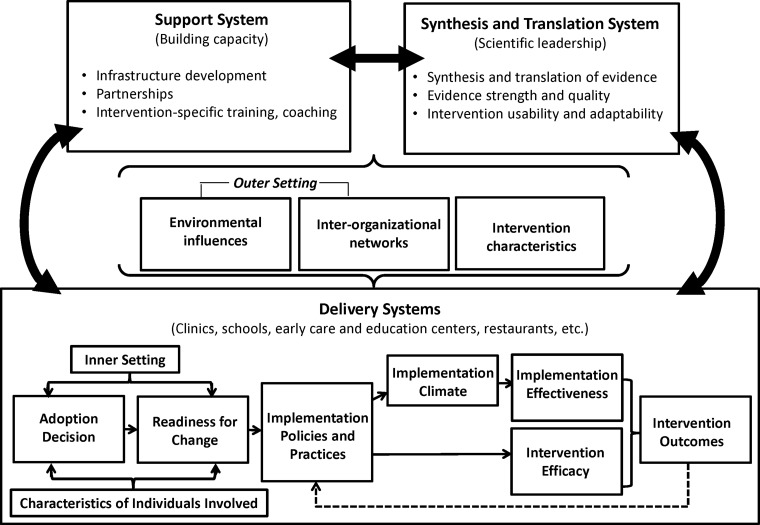

The MIF is a conceptual model developed specifically for identifying factors affecting implementation of multilevel, multisector interventions, for example, obesity prevention and control initiatives that utilize a social ecological approach (see Fig. 1).25,27,30 In developing the MIF, theoretical constructs from previous research particularly relevant to implementation of multilevel, multisector interventions were reviewed, adapted, and integrated into the framework. Specifically, the MIF is informed by constructs from the Interactive Systems Framework,27 the organizational model of innovation implementation,21,30 and the Consolidated Framework for Implementation Research.25 A more detailed overview of how each contributed to the MIF is provided in Supplementary Appendix A (see online supplementary material at www.liebertpub.com/chi).

Figure 1.

Multilevel Implementation Framework. Adapted from the Consolidated Framework for Implementation Research,25 the Interactive Systems Framework,27 and the organizational model of innovation implementation.30

Briefly, the MIF differs from other implementation models by describing how different factors interact not only within, but also across sectors to influence implementation effectiveness and subsequent intervention outcomes. Consistent with a social ecological perspective,31 the MIF does not view individual behavioral settings in isolation, but instead explicitly accounts for the broader community context in which behaviors are enacted.32 Selected constructs reflect multiple levels of influence (community, delivery system, and individual) and can be applied to identify factors affecting implementation in a wide range of contexts. For example, the MIF includes community-centered constructs that account for the roles of multiple stakeholders (funder, researcher, and practitioner) in the implementation process, as well as organization-specific constructs known to affect implementation within individual delivery systems. A complete list of MIF constructs, their definitions, and their hypothesized level of influence is provided in Table 1.30,33–46

Table 1.

Key Constructs Affecting Implementation

| Construct | Definition |
|---|---|
| Level of analysis: all | |
| Outer setting | Sociopolitical context, funding opportunities, and interorganizational networks that influence adoption and implementation24,33,34 |
| Inner setting | Structural, political, and cultural context within a given entity responsible for implementation, including previous experience/history, resource availability, and leadership support for the intervention35 |
| Characteristics of individuals involved | Knowledge, skills, beliefs, and personal characteristics of individuals responsible for implementing intervention activities and of intervention end users (e.g., children and their families).36–39 Characteristics of intervention end users play a particularly important role in implementation of public health and chronic care management interventions.38,39 |
| Intervention characteristics | Attributes of the intervention that may influence implementation, such as strength of evidence base, cost, extent to which the intervention can be adapted to meet local needs, perceived advantages, and difficulty of implementing the intervention compared to existing practice or an alternative solution15,40–43,88 |
| Level of analysis: community (academic-community partnership and coalition) | |
| Synthesis and translation system | Efforts by scientific leadership to distill and disseminate research evidence to other stakeholders (e.g., developing and adapting an intervention to fit local contexts)27,44 |
| Support system | Stakeholder efforts to build capacity for implementing and/or sustaining the intervention (intervention-specific training and coaching, coalition and/or other partnership development, and so on)27,44 |
| Delivery system | Inner context, implementation processes, and other factors influencing implementation in the organizations/sectors in which the intervention is taking place27,44 |
| Level of analysis: delivery system | |
| Readiness for change | Extent to which individuals responsible for implementation are psychologically and behaviorally prepared to make the changes necessary to implement the intervention.45,98 Readiness is strongly influenced by management support and resource availability |
| Implementation processes | Practices, policies, structures, and/or strategies used to put an intervention in place and support its use16 |
| Implementation climate | Extent to which individuals responsible for implementation perceive that participation in intervention activities is expected, supported, and rewarded by the delivery system in which they are located67 |
| Innovation-values fit | Extent to which the intervention is compatible with professional or organizational mission and values46 |
| Innovation-task fit | Extent to which individuals responsible for implementation perceive intervention activities as compatible with local task demands (e.g., work processes and preferences)15 |
| Intervention champion | An individual who promotes the intervention within the delivery system21 |

Description of the MA-CORD and CA-CORD Interventions

Funded by the CDC, the CORD project is designed to test the effectiveness of integrated clinical and public health evidence-based approaches to child obesity prevention and control.47 Though specific activities vary across the CA-CORD and MA-CORD sites, the two interventions share a number of common features.48,49 Both CA-CORD and MA-CORD seek to effect change in obesity factors and outcomes among underserved children 2–12 years of age. Their interventions are based on the obesity chronic care model50,51 and, as such, span multiple sectors (healthcare, schools, and early care and education) and levels of influence (family, organization, and community).47 CA-CORD and MA-CORD are each led by a project team that includes a public health department, a university, and at least one healthcare organization, among other partners. Both sites involve clinicians in promoting intervention activities in the clinic setting and have identified community coalitions as a primary mechanism for coordinating and sustaining intervention activities. Although the communities in which CA-CORD and MA-CORD are being implemented (Brawley and El Centro, CA, and New Bedford and Fitchburg, MA, respectively) differ in urbanicity and racial/ethnic composition, they also share certain similarities: all are low income and have a high prevalence of child overweight and obesity, with rates ranging from 37% to 46%.48,49

Table 2 provides a brief overview of CA-CORD and MA-CORD activities.52–59 More detailed descriptions of the overall CORD project, the CA-CORD and MA-CORD interventions, and the communities in which they are being implemented are available elsewhere.48,49,60,61

Table 2.

Summary of Key Intervention Components

| Sector and personnel | MA-CORD: children ages 2–12 years, Fitchburg and New Bedford, MA | CA-CORD: children ages 2–10 years, Brawley and El Centro, CA |
|---|---|---|
| Community health centers (CHCs). Personnel: providers, nurse practitioner, medical assistants, registered dietitians, community health workers (CHWs) | • Two CHCs (one per community) • Practice change initiative based on High Five for Kids52 • Learning collaborative to improve obesity-related quality of care • Two healthy weight clinics: specialized unit for overweight/obesity referrals | • Two largest clinics within one CHC (one clinic per community) • Delivery system design, including a chronic care team and modifications to electronic health records • Practice team preparation, including staff and provider training • CHW-led workshops based on several evidence-based interventions: Entre Familia,53,54 Aventuras para Niños,55,56 and Move/Me Muevo57 |
| Schools. Personnel: administrators, teachers, school nurses, food service staff, school wellness champion | • Public elementary schools (N=23), middle schools (N=5), and after-school programs (N=15) in Fitchburg and New Bedford • Eat Well Keep Moving (grades 3–4) • Planet Health (grades 5–6) • Food & Fun (grades K–3, after-school sites) • Media campaign designed by schools | • All public elementary schools (N=13) in Brawley and El Centro • School wellness policy change • SPARK Physical Education58 • Structural water promotion • Sleep curriculum and tip sheets • Parent outreach • Social marketing campaign |
| Early care and education centers. Personnel: early care and education center directors, staff, nutritionist, health education specialists | • Nine early care and education centers (for children ages 2–5 years) • I am Moving, I am Learning • NAP SACC59 • Media campaign | • Twenty-six center-based and private early care and education centers • NAP SACC59 • Policy change • SPARK Physical Education58 • Quarterly trainings, toolkit, and technical assistance • Social marketing campaign |
| Community. Personnel: coalition coordinator, public health officials, restaurant owners | • Efforts led by two municipal wellness coalitions • Safe Routes to School: planning only • Media campaign | • Parks and recreation, Boys and Girls Clubs, and gardens (N=4) • Restaurant intervention (N=3) • Social marketing campaign |

Methods/Design

In applying the MIF to examine implementation of the CA-CORD and MA-CORD interventions, a convergent, mixed-methods study design62 involving concurrent qualitative and quantitative data collection at multiple time points and levels of influence will be used to (1) document intervention activities and the processes used to implement them and (2) identify factors affecting implementation effectiveness (i.e., consistency and quality of intervention use).16

Qualitative Procedures

Three inter-related methods of qualitative data collection will be used to identify intervention activities and implementation processes, examine facilitators and barriers to intervention uptake, clarify mechanisms used to coordinate and sustain intervention activities, and empirically verify the relevance of key constructs identified in Figure 1. These qualitative procedures include:

• Semistructured interviews with site-specific CORD project leaders and key informants within each participating delivery system. Given the large number of individuals involved in implementation, a quota sampling approach will be used to ensure that key informants are selected at multiple levels within each delivery system (e.g., school district superintendent, principals, and teachers; clinic chief executive officer, providers, and frontline staff). Semistructured interview questions will gather information on constructs identified in the MIF (see Table 1). A common interview template will be used; however, specific questions will be tailored to reflect differences in respondent role (e.g., project leader, delivery system leader, and individuals responsible for implementing intervention activities), level of influence, and the intervention activities being implemented at each site. Example interview questions are provided in Supplementary Appendix B (see online supplementary material at www.liebertpub.com/chi). Interviews are expected to last between 30 and 60 minutes and, with respondents' permission, will be recorded and transcribed verbatim. Key informant interviews will take place at two time points: shortly after initial implementation (baseline) and 12 months later. Interviews with project leaders will occur once, approximately 9–10 months after initial implementation.

• In vivo observations and corresponding field notes from selected training sessions identified as critical to capacity-building efforts will be used to enhance the external validity of information obtained regarding dose delivered and received, as well as specific implementation strategies being employed.63

• Document analysis of project-related materials, such as investigator and coalition meeting minutes, which will be collected on an ongoing basis. Whereas these materials will primarily be used to document intervention activities and implementation processes, coalition meeting minutes will also be used to track changes in coalition size, diversity, stability, and outcomes (e.g., community changes, planning products, media products, and additional resources generated and/or leveraged) over time.64,65
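The quota sampling approach described for the key informant interviews can be sketched in a few lines. The roster, role labels, and quotas below are hypothetical. Within each role, candidates are simply taken in the order they agreed to participate, a stand-in for the purposive or convenience selection that distinguishes quota sampling from stratified random sampling.

```python
from collections import defaultdict


def quota_sample(candidates, quotas):
    """Fill a fixed quota of key informants at each organizational level.

    candidates: (person_id, role) tuples in the order people agreed to be
        interviewed (hypothetical structure).
    quotas: dict mapping role -> number of informants wanted for that role.

    Selection within a role is non-random (the first willing candidates),
    which is what distinguishes quota sampling from stratified random sampling.
    """
    selected = defaultdict(list)
    for person_id, role in candidates:
        if role in quotas and len(selected[role]) < quotas[role]:
            selected[role].append(person_id)
    # Levels whose quota could not be filled, with the shortfall for each.
    unfilled = {
        role: wanted - len(selected.get(role, []))
        for role, wanted in quotas.items()
        if len(selected.get(role, [])) < wanted
    }
    return dict(selected), unfilled


# Hypothetical district roster; role labels and quotas are illustrative only.
candidates = [
    ("t1", "teacher"), ("p1", "principal"), ("t2", "teacher"),
    ("s1", "superintendent"), ("p2", "principal"), ("t3", "teacher"),
    ("p3", "principal"), ("t4", "teacher"),
]
quotas = {"superintendent": 1, "principal": 2, "teacher": 3, "school nurse": 1}
chosen, unfilled = quota_sample(candidates, quotas)
print(chosen)    # {'teacher': ['t1', 't2', 't3'], 'principal': ['p1', 'p2'], 'superintendent': ['s1']}
print(unfilled)  # {'school nurse': 1}
```

Reporting unfilled quotas explicitly makes it visible when a level of a delivery system (here, a hypothetical school nurse role) is not represented among informants.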

Quantitative Procedures

To examine dynamic, emergent effects of implemented changes on participating stakeholders,22 quantitative surveys will be administered at two levels of influence: community coalition and organization. These quantitative surveys will assess specific constructs hypothesized to affect implementation at two time points: shortly after initial implementation (baseline) and 12 months later.

• Coalition survey. The coalition survey will be administered to all coalition members in the four intervention communities. At baseline, the coalition survey will include the nine-item Organizational Readiness for Implementing Change (ORIC)66 as well as additional measures assessing specific determinants of readiness for change (e.g., resource availability). At 12 months, the ORIC will be replaced with a six-item measure of implementation climate.67 Both the baseline and 12-month surveys will also include items assessing respondent demographic characteristics, 5-point Likert scale items regarding the perceived benefits of participating in the coalition,68 and the Wilder Collaboration Factors Inventory (WCFI). The WCFI comprises 47 individual Likert scale items representing six categories of factors shown to affect the success of collaborative endeavors,69,70 including (1) the local environment in which coalition members are located (e.g., history of collaboration); (2) member characteristics; (3) collaborative processes and structure; (4) quality of communication between coalition members; (5) clarity of coalition purpose; and (6) resources, specifically adequacy of coalition funds, staff, and leadership.

• Organization-level survey. The organization-level survey will be administered to staff responsible for carrying out and/or coordinating intervention activities (e.g., teachers, early care and education center directors, community health workers, and providers). At baseline, survey items will include the nine-item ORIC,66 items assessing respondent demographic characteristics, and four additional measures of innovation-values fit (extent to which intervention activities are compatible with professional or organizational mission and values), innovation-task fit (extent to which implementers perceive intervention activities as compatible with local task demands), management support, and resource availability. In the 12-month survey, the ORIC will be replaced with measures of implementation climate tailored to reflect unique intervention activities being implemented in each delivery system setting.67,71 The 12-month survey will also include Likert scale items regarding the perceived utility of specific implementation resources and capacity-building activities provided to respondents over the previous year (e.g., training sessions). Additional quantitative data on environmental and policy changes will also be collected at both time points as part of the overall CORD evaluation.60,61
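Before the coalition and organization-level survey measures can be analyzed, each respondent's Likert responses are typically collapsed into one score per scale. The sketch below averages the answered items and leaves a scale unscored when fewer than 80% of its items were answered. The item IDs, the scale-to-item mapping, and the 80% completeness rule are illustrative assumptions, not the published scoring instructions for the ORIC, the implementation climate measure, or the WCFI.

```python
from statistics import mean


def score_scales(responses, scale_items, min_answered=0.8, points=5):
    """Mean item score per scale for one respondent.

    responses: dict item_id -> int on a 1..points Likert scale, or None if skipped.
    scale_items: dict scale_name -> list of item_ids (hypothetical mapping).
    A scale is scored only if at least `min_answered` of its items were answered;
    otherwise its score is None.
    """
    scores = {}
    for scale, items in scale_items.items():
        answered = [responses[i] for i in items if responses.get(i) is not None]
        for value in answered:
            if not 1 <= value <= points:
                raise ValueError(f"{scale}: response {value} outside 1..{points}")
        if answered and len(answered) / len(items) >= min_answered:
            scores[scale] = float(mean(answered))
        else:
            scores[scale] = None
    return scores


# Hypothetical item IDs; not the actual ORIC, climate, or WCFI items or keys.
scale_items = {
    "readiness": ["r1", "r2", "r3", "r4", "r5"],
    "resources": ["q1", "q2"],
}
responses = {"r1": 4, "r2": 5, "r3": 4, "r4": None, "r5": 3, "q1": 2, "q2": None}
scores = score_scales(responses, scale_items)
print(scores)  # {'readiness': 4.0, 'resources': None}
```

Leaving a sparsely answered scale as missing, rather than averaging whatever items remain, keeps a single answered item from standing in for a multi-item construct.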

Measuring Implementation Effectiveness

Implementation effectiveness refers to the consistency and quality of intervention use.16 In the dissemination and implementation literature, conceptualizations of implementation effectiveness vary based on the nature of the intervention or organizational change being introduced.72–75 In the CORD project, dimensions of implementation effectiveness identified as critical mediators of intervention outcomes include exposure (dose and reach), quality of delivery, fidelity, participant responsiveness, and differentiation. A detailed definition of each of these dimensions is provided in Table 3. Data on three dimensions of implementation effectiveness (dose delivered, reach, and fidelity) and participant-level intervention outcomes (e.g., child BMI, physical activity, and fruit and vegetable consumption) will be collected as part of the overall CORD evaluation and are described in more detail elsewhere.60,61 Data on participant responsiveness and differentiation will be collected as part of the qualitative procedures outlined above.

Table 3.

Key Dimensions of Implementation Effectiveness

| Dimension | Definition |
|---|---|
| Dose delivered | The amount of intervention delivered or provided by interventionists |
| Exposure (dose received) | The amount of intervention received by participating individuals and organizations |
| Exposure (reach) | Intervention coverage or reach (i.e., whether all participating individuals and organizations who should be exposed to the intervention are actually exposed) |
| Quality | The manner in which staff deliver the intervention (i.e., skill/preparedness, attitude toward participants) |
| Fidelity | Degree to which intervention components are delivered as intended |
| Responsiveness (participant engagement) | The extent to which participating individuals and organizations are engaged in intervention activities and content |
| Differentiation | Identification of core versus peripheral intervention components |
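Two of the dimensions in Table 3, dose delivered and reach, often reduce to simple proportions once the intended dose and the eligible population have been defined. The sketch below uses hypothetical session and participant counts and a hypothetical `SiteRecord` structure; it is not the operational definition used in the overall CORD evaluation.

```python
from dataclasses import dataclass


@dataclass
class SiteRecord:
    """Hypothetical process-tracking counts for one delivery site."""
    site: str
    sessions_planned: int
    sessions_delivered: int
    eligible: int  # individuals who should be exposed
    exposed: int   # eligible individuals actually exposed


def dose_delivered(r: SiteRecord) -> float:
    """Share of planned intervention units actually delivered."""
    return r.sessions_delivered / r.sessions_planned


def reach(r: SiteRecord) -> float:
    """Share of the eligible population actually exposed."""
    return r.exposed / r.eligible


def summarize(records):
    """Per-site (dose delivered, reach) plus reach pooled across all sites."""
    per_site = {r.site: (round(dose_delivered(r), 2), round(reach(r), 2)) for r in records}
    pooled_reach = sum(r.exposed for r in records) / sum(r.eligible for r in records)
    return per_site, round(pooled_reach, 2)


records = [
    SiteRecord("School A", sessions_planned=20, sessions_delivered=16, eligible=400, exposed=300),
    SiteRecord("School B", sessions_planned=20, sessions_delivered=20, eligible=100, exposed=90),
]
per_site, pooled = summarize(records)
print(per_site)  # {'School A': (0.8, 0.75), 'School B': (1.0, 0.9)}
print(pooled)    # 0.78
```

Pooling weights each site by its eligible population (390 of 500 exposed, 0.78), whereas averaging the two site values would give 0.825; which summary to report is an analytic choice worth stating explicitly.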

Data Analysis

Qualitative data analysis will occur in multiple steps. First, the qualitative software program, NVivo (10.0; QSR International, Burlington, MA)76 will be used to code all qualitative data files. The initial codebook will be informed by the MIF, but may be revised to include emergent constructs identified from the data. Potential coding areas include constructs identified in Table 1, discrete implementation strategies employed, unexpected outcomes experienced, and facilitators and barriers to implementation perceived by respondents. Codes will also be assigned to describe connections between constructs, including those at different levels of analysis (also known as axial coding).77 All qualitative data files will be reviewed by at least two members of the research team. Any ambiguity or discrepancies in coding will be resolved through discussion and/or enhanced definition of codes. Once the codebook has been finalized, inter-rater reliability will be assessed by comparing level of agreement in the coding.78 Next, we will generate reports of all text segments for each code. These reports will be analyzed to identify themes in the coded data for each construct as well as the degree to which each construct positively or negatively affected implementation. The relative importance of each construct will be examined within and across sectors and communities as well as by respondent role. Results will be shared with project leaders as an additional validity check and used to test and refine the MIF. Selected findings (e.g., summary of facilitators and barriers to effective implementation in each sector) will also be shared with stakeholders for improving processes and informing discussion of sustainability.
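The protocol does not name the agreement statistic. Cohen's kappa is one common choice for two coders applying categorical codes to the same text segments, because it discounts the agreement expected by chance given each coder's code frequencies. A minimal sketch, assuming each coder's decisions are stored as parallel lists of labels (the "F"/"B"/"N" codes below are hypothetical):

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: chance-corrected agreement between two coders."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty set of segments")
    n = len(coder_a)
    # Observed agreement: share of segments that received the same code.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement, assuming each coder assigns codes independently
    # at their own marginal rates.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1:
        # Both coders used the same single code everywhere; kappa is undefined.
        return float("nan")
    return (observed - expected) / (1 - expected)


# Hypothetical codes for ten interview segments:
# "F" = facilitator, "B" = barrier, "N" = neither.
coder_1 = ["F", "F", "B", "B", "F", "N", "B", "F", "F", "B"]
coder_2 = ["F", "B", "B", "B", "F", "N", "B", "F", "N", "B"]
kappa = cohens_kappa(coder_1, coder_2)
print(round(kappa, 3))  # 0.683
```

Here the coders agree on 8 of 10 segments (0.80), but chance agreement given their code frequencies is 0.37, so kappa is about 0.68, noticeably lower than raw percent agreement.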

Quantitative survey data will also be analyzed in multiple steps. First, confirmatory factor analysis will be used to examine the underlying factor structure of quantitative survey measures. Assuming sample sizes are sufficient, measurement equivalence across sectors will also be assessed. Assessment of psychometric properties will be conducted using standard statistical packages, such as SAS (SAS Institute Inc., Cary, NC) or Stata (StataCorp LP, College Station, TX). Quantitative survey data will then be aggregated to the organization level and combined with qualitative data to empirically test pathways outlined in Figure 1, with a specific focus on establishing predictive validity (i.e., the extent to which constructs are associated with key dimensions of implementation effectiveness) and identifying best practices for implementation.
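Aggregating individual survey responses to the organization level is usually justified by showing that respondents within an organization agree. The protocol does not specify an aggregation index; one widely used option is the single-item within-group agreement index r_wg, which compares the observed within-organization variance with the variance expected if respondents answered uniformly at random on an A-point scale, (A² − 1)/12. The sketch below assumes that index, a 5-point scale, the conventional 0.70 cutoff, and hypothetical organization names and responses.

```python
from statistics import mean, variance


def rwg(scores, points=5):
    """Single-item within-group agreement: r_wg = 1 - s^2 / sigma_EU^2.

    sigma_EU^2 = (points**2 - 1) / 12 is the variance of a uniform
    ("no agreement") response distribution on a 1..points scale.
    Negative values are truncated to 0, a common convention.
    """
    if len(scores) < 2:
        raise ValueError("need at least two respondents to estimate agreement")
    expected_var = (points ** 2 - 1) / 12
    return max(0.0, 1 - variance(scores) / expected_var)


def aggregate(responses_by_org, points=5, cutoff=0.70):
    """Return org -> (organization mean, r_wg, aggregation supported?)."""
    result = {}
    for org, scores in responses_by_org.items():
        agreement = rwg(scores, points)
        result[org] = (float(mean(scores)), round(agreement, 2), agreement >= cutoff)
    return result


# Hypothetical staff responses to one 5-point climate item.
summary = aggregate({
    "Clinic A": [4, 4, 5, 4, 3],
    "School B": [1, 5, 2, 5, 3],
})
print(summary)  # {'Clinic A': (4.0, 0.75, True), 'School B': (3.2, 0.0, False)}
```

An organization that falls below the cutoff, like the hypothetical School B, would be a candidate for closer examination with the qualitative data rather than for mechanical aggregation.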

Analyses will be conducted in aggregate as well as by sector (clinic, school, and early care and education). Specific analytic approaches will vary by sector. For example, in the healthcare sector, where the number of clinics involved is small (n<5), a multiple case-study approach will be used. Case studies are well suited for studying nonlinear, context-sensitive processes, such as implementation,79 and permit in-depth analysis of individual cases as well as systematic cross-case comparison. In the school and early care and education sectors, where the number of organizations involved is moderate, case-study data will be analyzed using qualitative comparative analysis (QCA). QCA has been described as a bridge between quantitative and qualitative techniques because it uses principles from both case- and variable-oriented research to assess cross-case commonalities and differences that explain why an outcome (e.g., implementation effectiveness or behavior change) occurs.80,81 As appropriate, qualitative and quantitative data will be transformed and calibrated for use in QCA. Analyses will be conducted and visualized using either the TOSMANA81,82 or fsQCA83 software, depending on the nature of the specific constructs being tested.
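For fuzzy-set QCA, each case's raw value on a condition is calibrated into a membership score between 0 and 1. One standard approach is Ragin's direct method, which maps three researcher-chosen anchors (full non-membership, crossover, full membership) to log-odds of −3, 0, and +3. Sufficiency of a condition X for an outcome Y is then commonly summarized by consistency, Σ min(x, y) / Σ x, and coverage, Σ min(x, y) / Σ y. The plain-Python sketch below implements those formulas with hypothetical anchors and case values; it is not a substitute for the TOSMANA or fsQCA software.

```python
import math


def calibrate(value, full_out, crossover, full_in):
    """Ragin's direct method: map the three anchors to log-odds -3, 0, +3.

    Returns a fuzzy membership score in [0, 1]; full_in -> ~0.95,
    crossover -> 0.5, full_out -> ~0.05.
    """
    if not full_out < crossover < full_in:
        raise ValueError("anchors must satisfy full_out < crossover < full_in")
    deviation = value - crossover
    if deviation >= 0:
        log_odds = 3 * deviation / (full_in - crossover)
    else:
        log_odds = 3 * deviation / (crossover - full_out)
    # Numerically stable logistic transform of the log-odds.
    if log_odds >= 0:
        return 1 / (1 + math.exp(-log_odds))
    e = math.exp(log_odds)
    return e / (1 + e)


def consistency(x, y):
    """Sufficiency consistency: sum(min(x, y)) / sum(x)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(x)


def coverage(x, y):
    """Sufficiency coverage: sum(min(x, y)) / sum(y)."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(y)


# Hypothetical anchors on a 1-5 readiness scale: 2 = fully out, 3 = crossover, 4 = fully in.
readiness = [4.0, 3.5, 3.0, 2.0]
x = [round(calibrate(v, 2.0, 3.0, 4.0), 2) for v in readiness]
print(x)  # [0.95, 0.82, 0.5, 0.05]

# Hypothetical calibrated outcome (implementation effectiveness) for the same four cases.
y = [0.9, 0.6, 0.7, 0.2]
print(round(consistency(x, y), 2), round(coverage(x, y), 2))  # 0.88 0.85
```

In practice, analysts often choose anchors that avoid leaving a case at exactly 0.5, as the third hypothetical case is here, because such a case cannot be assigned to a truth-table row.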

All study procedures have been approved by the institutional review boards at San Diego State University (San Diego, CA) and the Harvard School of Public Health (Boston, MA).

Discussion

In applying the MIF to evaluate implementation at the MA-CORD and CA-CORD sites, this study will provide both sites with information that can be used to facilitate process corrections or adjustments to intervention activities and/or implementation processes being employed.84,85 In the long term, this study will also contribute to knowledge about how to effectively engage diverse stakeholder groups in multilevel, multisector interventions as well as coordinate and implement change strategies within and across behavioral settings in ways that minimize policy resistance, maximize desired intervention outcomes, and promote subsequent sustainment and scale-up of effective approaches.

As one of the first large-scale initiatives to test combined public health and clinical approaches to child obesity prevention and control, the CORD project represents an important opportunity to better understand how specified intervention strategies and delivery systems may interact within communities to affect population outcomes over time. To date, few demonstration studies or controlled trials of social ecological approaches to health promotion have systematically collected practitioner- and/or system-level data on facilitators and barriers to implementation (i.e., the processes, activities, and resources affecting uptake).17,23 Part of the challenge is that in their “ideal types,” effectiveness and implementation research do not share many design features, and thus many studies are not structured a priori to address both intervention and implementation aims.86 In recent years, however, hybrid research designs that simultaneously assess program effects and gather information on implementation have been proposed as a way to maximize the relevance of research findings to practitioners and policy makers.86,87

This type of hybrid effectiveness-implementation design may be particularly useful for evaluating interventions such as MA-CORD and CA-CORD, which include evidence-informed activities but whose overall effectiveness in real-world settings is less well established. All interventions comprise core components (elements essential to the internal logic of the intervention and considered directly responsible for intervention effects) as well as a soft, adaptable periphery (elements, structures, and systems that support implementation and sustainment, but whose necessity may vary across contexts).15 Adaptation at the periphery can facilitate delivery of core components in nonresearch settings and often reflects cultural and contextual “translations” critical to successful dissemination and implementation efforts.88,89 However, adaptation can also result in drift, that is, abandonment of core components or introduction of counterproductive elements that negatively affect intervention effectiveness.88,90 In complex interventions such as CA-CORD and MA-CORD, the distinction between core and peripheral intervention components is often difficult to determine and may be discerned only through trial and error over time as an intervention is “scaled up” and adapted for use in a variety of new contexts.25,91 Careful monitoring of intervention activities and implementation processes can significantly speed up this process. Specific evaluation activities may vary based on available resources. In contexts where resource constraints and/or respondent burden are of particular concern, document analysis of meeting minutes and semistructured interviews with project leaders can capture ad hoc adaptation and other system changes without contributing significantly to program costs.

By providing information of use in evaluating a program's potential for translation and impact in other contexts, this type of approach can decrease the time lag between “discovery” and “routine use” of programs.25,29

Limitations

As with all studies, a number of limitations should be considered. First, implementation is often a dynamic, nonlinear process characterized by considerable ambiguity.92,93 Although data will be collected at multiple points in time, recall bias may limit the accuracy of information provided by respondents. The use of multiple data sources and informants will minimize the threat of this bias and increase the validity of study findings.94,95 Second, all qualitative procedures are susceptible to subjective bias and preconceived ideas of investigators. To minimize such bias, multiple coders will be used to analyze qualitative data collected in this study. Project leaders and other key stakeholders involved with CA-CORD and MA-CORD will also have the opportunity to review and provide feedback on study findings.

Conclusion

Significant resources have been invested in the development of interventions to prevent and control childhood obesity. The long-term success of these efforts depends not only on the efficacy of the approaches selected for use, but also on the strategies through which they are implemented and sustained.46,96,97 By systematically collecting data on processes, activities, and resources affecting intervention uptake in the CA-CORD and MA-CORD sites, this study will contribute to knowledge about how to coordinate and implement multilevel, multisector change strategies in ways that effectively engage diverse stakeholders, minimize policy resistance, and maximize desired intervention outcomes.

Supplementary Material

Acknowledgments

Preparation of this article was supported by the CDC (1U18DP003377-01 and 1U18DP003370-01) and by the Pilot Studies Core of the Johns Hopkins Global Obesity Prevention Center, which is funded by the National Institute of Child Health and Human Development (U54HD070725). The information and opinions expressed herein reflect solely the position of the authors.

Author Disclosure Statement

No competing financial interests exist.

References

1. Ogden CL, Carroll MD, Kit BK, et al. Prevalence of childhood and adult obesity in the United States, 2011–2012. JAMA 2014;311:806–814

2. Wang Y, Beydoun MA. The obesity epidemic in the United States—Gender, age, socioeconomic, racial/ethnic, and geographic characteristics: A systematic review and meta-regression analysis. Epidemiol Rev 2007;29:6–28

3. Davison KK, Lawson HA, Coatsworth JD. The Family-Centered Action Model of Intervention Layout and Implementation (FAMILI): The example of childhood obesity. Health Promot Pract 2012;13:454–461

4. Kropski JA, Keckley PH, Jensen GL. School-based obesity prevention programs: An evidence-based review. Obesity 2008;16:1009–1018

5. Huang JS, Sallis JF, Patrick K. The role of primary care in promoting children's physical activity. Br J Sports Med 2009;43:19–21

6. Knai C, Pomerleau J, Lock K, et al. Getting children to eat more fruits and vegetables: A systematic review. Prev Med 2006;42:85–95

7. Singh GK, Siahpush M, Kogan MD. Neighborhood socioeconomic conditions, built environments, and childhood obesity. Health Aff 2010;29:503–512

8. Frieden TR, Dietz W, Collins J. Reducing childhood obesity through policy change: Acting now to prevent obesity. Health Aff 2010;29:357–363

9. IOM (Institute of Medicine). Accelerating progress in obesity prevention: Solving the weight of the nation. The National Academies Press: Washington, DC, 2012

10. Green LW, Orleans CT, Ottoson JM, et al. Inferring strategies for disseminating physical activity policies, programs, and practices from the successes of tobacco control. Am J Prev Med 2006;31:S66–S81

11. Economos CD, Hyatt RR, Goldberg JP, et al. A community intervention reduces BMI z-score in children: Shape Up Somerville first year results. Obesity 2007;15:1325–1336

12. Samuels SE, Craypo L, Boyle M, et al. The California Endowment's Healthy Eating, Active Communities program: A midpoint review. Am J Public Health 2010;100:2114–2123

13. Flay BR. Efficacy and effectiveness trials (and other phases of research) in the development of health promotion programs. Prev Med 1986;15:451–474

14. Glasgow RE, Lichtenstein E, Marcus AC. Why don't we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health 2003;93:1261–1267

15. Greenhalgh T, Robert G, MacFarlane F, et al. Diffusion of innovations in service organizations: Systematic review and recommendations. Milbank Q 2004;82:581–629

16. Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev 1996;21:1055–1080

17. Rabin B, Brownson RC. Developing the terminology for dissemination and implementation research. In: Brownson RC, Colditz G, Proctor EK. (eds), Dissemination and Implementation Research in Health. Oxford University Press: New York, 2012, pp. 23–51

18. Durlak JA, DuPre EP. Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol 2008;41:327–350

19. Wilson SJ, Lipsey MW, Derzon J. The effects of school-based intervention programs on aggressive behavior: A meta-analysis. J Consult Clin Psychol 2003;71:136–149

20. Hammond RA. Complex systems modeling for obesity research. Prev Chronic Dis 2009;6:A97–A106

21. Helfrich CD, Weiner BJ, McKinney MM, et al. Determinants of implementation effectiveness: Adapting a framework for complex innovations. Med Care Res Rev 2007;64:279–303

22. Sterman JD. Learning from evidence in a complex world. Am J Public Health 2006;96:505–514

23. Tunis SR, Stryer DB, Clancy CM. Practical clinical trials: Increasing the value of clinical research for decision making in clinical and health policy. JAMA 2003;290:1624–1632

24. Aarons GA, Hurlburt MS, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health 2011;38:4–23

25. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implement Sci 2009;4:50–65

26. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: The RE-AIM framework. Am J Public Health 1999;89:1322–1327

27. Wandersman A, Duffy J, Flaspohler P, et al. Bridging the gap between prevention research and practice: The interactive systems framework for dissemination and implementation. Am J Community Psychol 2008;41:171–181

28. Frambach R, Schillewaert N. Organizational innovation adoption: A multilevel framework of determinants and opportunities for future research. J Bus Res 2002;55:163–176

29. Tabak RG, Khoong EC, Chambers DA, et al. Bridging research and practice: Models for dissemination and implementation research. Am J Prev Med 2012;43:337–350

30. Weiner BJ, Lewis MA, Linnan LA. Using organization theory to understand the determinants of effective implementation of worksite health promotion programs. Health Educ Res 2009;24:292–305

31. McLeroy KR, Bibeau D, Steckler A, et al. An ecological perspective on health promotion programs. Health Educ Behav 1988;15:351–357

32. Sterman JD. Business Dynamics: Systems Thinking and Modeling for a Complex World. McGraw Hill: Boston, MA, 2000

33. Palinkas LA, Fuentes D, Finno M, et al. Inter-organizational collaboration in the implementation of evidence-based practices among public agencies serving abused and neglected youth. Adm Policy Ment Health 2014;41:74–85

34. Hurlburt MS, Aarons GA, Fettes D, et al. Interagency collaborative team model for capacity building to scale-up evidence-based practice. Child Youth Serv Rev 2014;39:160–168

35. Pettigrew AM, Woodman RW, Cameron K. Studying organizational change and development: Challenges for future research. Acad Manag J 2001;44:697–713

36. Aarons GA. Mental health provider attitudes towards adoption of evidence-based practice: The evidence-based practice attitude scale (EBPAS). Ment Health Serv Res 2004;6:61–74

37. Aarons GA. Measuring provider attitudes towards evidence-based practice: Consideration of organizational context and individual differences. Child Adolesc Psychiatr Clin N Am 2005;14:255–271

38. Feldstein AC, Glasgow RE. A practical, robust implementation and sustainability model (PRISM) for integrating research findings into practice. Jt Comm J Qual Patient Saf 2008;34:228–243

39. Rycroft-Malone J, Seers K, Chandler J, et al. The role of evidence, context, and facilitation in an implementation trial: Implications for the development of the PARIHS framework. Implement Sci 2013;8:28.

40. Panzano PC, Roth D. The decision to adopt evidence-based and other innovative mental health practices: Risky business? Psychiatr Serv 2006;57:1153–1161

41. Gustafson DH, Sainfort F, Eichler M, et al. Developing and testing a model to predict outcomes of organizational change. Health Serv Res 2003;38:751–776

42. Proctor EK, Silmere H, Raghavan R, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health 2011;38:65–76

43. Raghavan R. The role of economic evaluation in dissemination and implementation research. In: Brownson RC, Colditz G, Proctor EK. (eds), Dissemination and Implementation Research in Health. Oxford University Press: New York, 2012, pp. 94–113

44. Flaspohler P, Duffy J, Wandersman A, et al. Unpacking prevention capacity: An intersection of research-to-practice models and community-centered models. Am J Community Psychol 2008;41:182–196

45. Weiner BJ, Amick H, Lee SY. Conceptualization and measurement of organizational readiness for change: A review of the literature in health services research and other fields. Med Care Res Rev 2008;65:379–436

46. Weiner BJ, Haynes-Maslow L, Kahwati LC, et al. Implementing the MOVE! weight-management program in the Veterans Health Administration, 2007–2010: A qualitative study. Prev Chronic Dis 2012;9:110127.

47. Dooyema CA, Belay B, Foltz JL, et al. The Childhood Obesity Research Demonstration project: A comprehensive community approach to reduce childhood obesity. Child Obes 2013;9:454–459

48. Ayala GX, Binggeli-Vallarta A, Ibarra L, et al. Our Choice/Nuestra Opción: The Imperial County, California Childhood Obesity Research Demonstration Study (CA-CORD). Child Obes 2015;11:37–47

49. Taveras EM, Blaine R, Davison KK, et al. Design of the Massachusetts Childhood Obesity Research Demonstration (MA-CORD) study. Child Obes 2015;11:11–22

50. Bodenheimer T, Wagner EH, Grumbach K. Improving primary care for patients with chronic illness: The Chronic Care Model, part 2. JAMA 2002;288:1909–1914

51. Chen E, Bodenheimer T. Applying the chronic care model to the management of obesity. Obes Manag 2008;4:227–231

52. Taveras EM, Gortmaker SL, Hohman KH, et al. Randomized controlled trial to improve primary care to prevent and manage childhood obesity: The High Five for Kids study. Arch Pediatr Adolesc Med 2011;165:714–722

53. Ayala GX, Ibarra L, Horton LA, et al. Evidence supporting a promotora-delivered entertainment education intervention for improving mothers' dietary intake: The Entre Familia: Reflejos de Salud Study. J Health Commun 2014 Nov 6 [Epub ahead of print]

54. Horton LA, Parada H, Slymen DJ, et al. Targeting children's dietary behaviors in a family intervention: Entre Familia Reflejos de Salud. Salud Publica Mex 2013;55(Suppl 3):S397–S405

55. Ayala GX, Elder JP, Campbell NR, et al. Longitudinal intervention effects on parenting of the Aventuras para Niños study. Am J Prev Med 2010;38:154–162

56. Crespo NC, Elder JP, Ayala GX, et al. Results of a multi-level intervention to prevent and control childhood obesity among Latino children: The Aventuras para Niños study. Ann Behav Med 2012;43:84–100

57. Elder JP, Crespo NC, Corder K, et al. Childhood obesity prevention and control in city recreation centres and family homes: The MOVE/me Muevo Project. Pediatr Obes 2014;9:218–231

58. Sallis JF, McKenzie TL, Alcaraz JE, et al. The effects of a 2-year physical education program (SPARK) on physical activity and fitness in elementary school students: Sports, Play, and Active Recreation for Kids. Am J Public Health 1997;87:1328–1334

59. Benjamin SE, Neelon B, Ball SC, et al. Reliability and validity of a nutrition and physical activity environmental self-assessment for child care. Int J Behav Nutr Phys Act 2007;4:29–39

60. Davison KK, Falbe J, Taveras EM, et al. Evaluation overview for the Massachusetts Childhood Obesity Research Demonstration (CORD) Project. Child Obes 2015;11:23–36

61. O'Connor D, Lee RE, Mehta P, et al. Childhood Obesity Research Demonstration Project: Cross-site evaluation models. Child Obes 2015;11:92–103

62. Creswell J, Plano Clark V. Designing and Conducting Mixed Methods Research, 2nd ed. Sage: Thousand Oaks, CA, 2011

63. Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev 2012;69:123–157

64. Granner ML, Sharpe PA. Evaluating community coalition characteristics and functioning: A summary of measurement tools. Health Educ Res 2004;19:514–532

65. Chalmers ML, Housemann RA, Wiggs I, et al. Process evaluation of a monitoring log system for community coalition activities: Five-year results and lessons learned. Am J Health Promot 2003;17:190–196

66. Shea CM, Jacobs SR, Esserman DA, et al. Organizational readiness for implementing change: A psychometric assessment of a new measure. Implement Sci 2014;9:7.

67. Weiner BJ, Belden CM, Bergmire DM, et al. The meaning and measurement of implementation climate. Implement Sci 2011;6:78–89

68. Metzger ME, Alexander JA, Weiner BJ. The effects of leadership and governance processes on member participation in community health coalitions. Health Educ Behav 2005;32:455–473

69. Mattessich P, Murray-Close M, Monsey B. Collaboration: What Makes It Work, 2nd ed. Amherst H. Wilder Foundation: Saint Paul, MN, 2001

70. Zizys T. Collaboration practices in government and in business: A literature review. In: Robert J, O'Connor P. (eds), The Inter-Agency Services Collaboration Project. Wellesley Institute: Toronto, Ontario, Canada, 2007, pp. 68–88

71. Jacobs SR, Weiner BJ, Bunger AC. Context matters: Measuring implementation climate among individuals and groups. Implement Sci 2014;9:46.

72. O'Donnell C. Defining, conceptualizing, and measuring fidelity of implementation and its relationship to outcomes in K–12 curriculum intervention research. Rev Educ Res 2008;78:33–84

73. Mihalic S. The importance of implementation fidelity. Rep Emot Behav Disord Youth 2004;4:83–86

74. Keith RE, Hopp FP, Subramanian U, et al. Fidelity of implementation: Development and testing of a measure. Implement Sci 2010;5:99.

75. Dusenbury L, Brannigan R, Falco M, et al. A review of research on fidelity of implementation: Implications for drug abuse prevention in school settings. Health Educ Res 2003;18:237–256

76. Bazeley P, Jackson K. Qualitative Data Analysis With NVivo, 2nd ed. Sage: London, 2013

77. Strauss A, Corbin J. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory, 3rd ed. Sage: Thousand Oaks, CA, 1998

78. Miles MB, Huberman AM. Qualitative Data Analysis: An Expanded Sourcebook. Sage: Thousand Oaks, CA, 1994

79. Hartley J. Case study research. In: Cassell C, Symon G. (eds), Essential Guide to Qualitative Methods in Organizational Research. Sage: Thousand Oaks, CA, 2004, pp. 323–333

80. Byrne D, Ragin C. The SAGE Handbook of Case-Based Methods. Sage: Thousand Oaks, CA, 2009

81. Rihoux B, Ragin C. (eds). Configurational Comparative Methods: Qualitative Comparative Analysis (QCA) and Related Techniques. Applied Social Research Methods Series; No. 51. Sage: Thousand Oaks, CA, 2009

82. Cronqvist L. TOSMANA. Tools for small-N analysis. Version 1.3. 2007. Available at http://www.tosmana.org (last accessed July 31, 2013)

83. Ragin C. Fuzzy-Set Social Science. University of Chicago Press: Chicago, IL, 2000

84. Alexander J, Prabhu Das I, Johnson TP. Time issues in multilevel interventions for cancer treatment and prevention. J Natl Cancer Inst Monogr 2012;44:42–48

85. Rossi P, Lipsey MW, Freeman H. Evaluation: A Systematic Approach, 7th ed. Sage: Thousand Oaks, CA, 2004

86. Curran GM, Bauer M, Mittman B, et al. Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care 2012;50:217–226

87. Glasgow RE, Steiner J. Comparative effectiveness research to accelerate translation: Recommendations for an emerging field of science. In: Brownson RC, Colditz G, Proctor EK. (eds), Dissemination and Implementation Research in Health. Oxford University Press: New York, 2012, pp. 72–93

88. Aarons GA, Green AE, Palinkas LA, et al. Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implement Sci 2012;7:32.

89. Allen J, Linnan LA, Emmons KM. Fidelity and its relationship to implementation effectiveness, adaptation, and dissemination. In: Brownson RC, Colditz G, Proctor EK. (eds), Dissemination and Implementation Research in Health. Oxford University Press: New York, 2012

90. Elliott DS, Mihalic S. Issues in disseminating and replicating effective prevention programs. Prev Sci 2004;5:47–52

91. Mendel P, Meredith LS, Schoenbaum M, et al. Interventions in organizational and community context: A framework for building evidence on dissemination and implementation in health services research. Adm Policy Ment Health 2008;35:21–37

92. Ferlie E, Fitzgerald L, Wood M, et al. The nonspread of innovations: The mediating role of professionals. Acad Manag J 2005;48:117–134

93. Van de Ven AH, Polley DE, Garud R, et al. The Innovation Journey. Oxford University Press: Oxford, UK, 1999

94. Yin R. Case Study Research, 3rd ed. Sage: Thousand Oaks, CA, 2003

95. Golafshani N. Understanding reliability and validity in qualitative research. Qual Rep 2003;8:597–607

96. Derksen RE, Brink-Melis WJ, Westerman MJ, et al. A local consensus process making use of focus groups to enhance the implementation of a national integrated health care standard on obesity care. Fam Pract 2012;29:i177–i184

97. Estabrook B, Zapka J, Lemon SC. Evaluating the implementation of a hospital work-site obesity prevention intervention: Applying the RE-AIM framework. Health Promot Pract 2011;13:190–197
