Abstract

Behavioral improvement within the first hour of training is commonly explained as procedural learning (i.e., strategy changes resulting from task familiarization). However, it may additionally reflect a rapid adjustment of the perceptual and/or attentional system in a goal-directed task. In support of this latter hypothesis, we show feature-specific gains in performance for groups of participants briefly trained to use either a spectral or spatial difference between 2 vowels presented simultaneously during a vowel identification task. In both groups, the neuromagnetic activity measured during the vowel identification task following training revealed source activity in auditory cortices, prefrontal, inferior parietal, and motor areas. More importantly, the contrast between the 2 groups revealed a striking double dissociation in which listeners trained on spectral or spatial cues showed higher source activity in ventral (“what”) and dorsal (“where”) brain areas, respectively. These feature-specific effects indicate that brief training can implicitly bias top-down processing to a trained acoustic cue and induce a rapid recalibration of the ventral and dorsal auditory streams during speech segregation and identification.

Keywords: attention, hearing, MEG, plasticity, speech

Introduction

Learning often involves a rapid improvement in performance that occurs within the first hour. These rapid changes in behavioral performance were previously thought to solely reflect procedural learning, that is, changes in participants' strategies that occurred during task familiarization (Karni and Bertini 1997; Karni et al. 1998; Wright and Fitzgerald 2001). However, evidence from behavioral studies suggests that increased accuracy during the first hour may also involve increased perceptual sensitivity for auditory (Hawkey et al. 2004) or visual (Hussain et al. 2012) stimuli. That is, learning within the first hour shows specificity to the trained stimulus or feature.

The hypothesis that the first hour of training can increase perceptual sensitivity has received support from animal studies investigating rapid learning-induced plasticity in auditory processing. For instance, changes in the receptive fields of ferret auditory cortex can occur within minutes of performing a previously learned tone discrimination task (Fritz et al. 2003, 2005a, 2005b). However, these neuroplastic changes were smaller or absent if the animal listened passively to the same sounds (i.e., when the sounds were task-irrelevant). Such rapid frequency-specific changes in the receptive fields of auditory neurons have been observed in a wide variety of situations including classical conditioning (Bakin and Weinberger 1990; Edeline et al. 1993), instrumental avoidance conditioning (Bakin et al. 1996), and discrimination learning (Fritz et al. 2003). Training-induced plasticity in auditory localization has been observed in mammals (Kacelnik et al. 2006; Bajo et al. 2010), but it remains to be determined whether these changes can occur rapidly within the auditory system.

Using scalp recordings of auditory event-related potentials (ERPs), Alain et al. (2007) found that behavioral improvement during the first hour of a concurrent-vowel identification task correlated with enhancements in early (∼130 ms) and late (∼340 ms) ERPs originating from the right superior temporal gyrus (STG) and inferior prefrontal cortex, respectively. These ERP changes reflected listeners' gain in using the spectral difference between the 2 vowels, depended on listeners' attention, and were preserved only if practice was continued; familiarity with the task structure (procedural learning) was not sufficient. Rapid changes in sensory (∼100–200 ms) and later (∼320 ms) ERPs have also been observed when participants learned to identify 2 syllables that differed in voice onset time (Alain, Campeanu, et al. 2010; Ben-David et al. 2011), and these changes differed from those related to task repetition (Ben-David et al. 2011). Spierer et al. (2007) also found that a 40-min training session improved participants' ability to discriminate sound source location, an improvement paralleled by a modulation of ERP amplitude during the 195–250 ms interval that was localized to the left inferior parietal cortex. Lastly, 1 h of exposure to a new linguistic (Shtyrov et al. 2010) or musical environment (Loui et al. 2009) can also yield significant learning-related changes in ERP amplitude. Taken together, these studies provide converging evidence for rapid and dynamic neurophysiological changes that coincide with improvement in perception and appear to be associated with both auditory ventral (“what”) and dorsal (“where”) functions (Rauschecker and Tian 2000; Alain et al. 2001).

The rapid changes in ERPs during the first hour of learning differ from simple neural adaptation associated with stimulus exposure and/or task repetition without learning (Alain, Campeanu, et al. 2010; Ben-David et al. 2011) and suggest that neural systems underlying learning and memory are dynamically adjusted depending on factors like goal-directed behavior and experience. This is consistent with theories that emphasize the role of top-down processes in sensory learning (Ahissar and Hochstein 1993). For instance, during rapid learning of speech sounds, higher level representations may be used to gradually gain access to and/or finely tune the attentional “spotlight” on lower level sound features that distinguish acoustically similar stimuli (Ahissar et al. 2009).

One important issue that has not been fully analyzed or confirmed is the specificity of the neuroplastic changes observed during these early stages of learning. Although animal studies have provided evidence for rapid adjustment of the perceptual system during training (for a review see Fritz et al. 2005a), it remains to be determined whether such feature-specific effects take place in humans. In human studies, the nonspecific behavioral improvement observed in the early stages of learning (i.e., lack of specificity) is often taken as evidence for procedural learning with feature-specific effects emerging only after multiple daily training sessions (Watson 1980; Karni and Bertini 1997). Although there is some behavioral evidence for specificity within the first training session (Hawkey et al. 2004; Hussain et al. 2012), neurophysiological evidence supporting such feature-specific effects is lacking.

In the present study, we investigated whether a brief 45-min training program can yield a rapid feature-specific modulation of both behavior and neuromagnetic activity, measured subsequently while participants identified 2 vowels presented simultaneously. Two groups of participants were trained to utilize differences either in the fundamental frequency (Δƒ0) or in the spatial location (Δlocation) of the 2 concurrent vowels, acoustic features well known to stimulate distinct ventral (“what”) and dorsal (“where”) neural pathways in humans (Alain et al. 2001; Arnott et al. 2004). Shortly after training, we measured neuromagnetic brain activity using magnetoencephalography (MEG) while participants from both groups performed the same task using an identical set of double-vowel stimuli that shared the same ƒ0 and location or differed in ƒ0, location, or both. This procedure enabled us to investigate the behavioral and neuroplastic changes induced by training listeners to use either spectral or spatial cues in speech separation and identification while holding the bottom-up sensory input constant. If the rapid improvement primarily reflects procedural learning that is independent of the trained features, the training effects on behavioral and neuromagnetic data should be comparable between the differentially trained groups. If, on the other hand, learning involves a rapid adjustment in perceptual sensitivity to the task-relevant attribute, then one would predict feature-specific gains in performance. That is, participants who receive spectral training should perform better when a Δƒ0 cue is available than when a Δlocation cue is present, and vice versa. We anticipated that feature-specific gains in performance would be paralleled by changes in neuromagnetic brain activity, namely greater source activity in ventral and dorsal brain regions in the groups trained on Δƒ0 and Δlocation, respectively.

Materials and Methods

Participants

Twenty-four participants who provided written informed consent according to the University of Toronto and Baycrest Hospital Human Subject Review Committee guidelines were randomly assigned to 2 training groups: the Frequency group (7 women; aged 20–33 years; mean: 24 years) and the Location group (8 women; aged 20–31 years; mean: 24 years). All participants were right-handed native English speakers and had normal pure-tone thresholds in both ears (<25 dB HL from 250 to 8000 Hz).

Stimuli and Task

Stimuli were 4 synthetic steady-state American English vowels: /a:/ (as in father), /ɜ/ (as in her), /i:/ (as in see), and /u:/ (as in moose), henceforth referred to as “ah,” “er,” “ee,” and “oo,” respectively (Assmann and Summerfield 1994). Each vowel was 200 ms in duration (2442 samples at a 12.21-kHz sample rate, 16-bit quantization), low-pass filtered at 5 kHz, with ƒ0 (100–126 Hz, see later for details) and formant frequencies held constant for the entire duration (see Supplementary Fig. 1). Formant frequencies were patterned after a male talker from the North Texas region. The source signal was the same in all 4 vowels, simulating “equal vocal effort.” Onsets and offsets were shaped by the 2 halves of an 8-ms Kaiser window. Double-vowel stimuli were created by adding together the digital waveforms of 2 different vowels and dividing the sum by 2. Each vowel was paired with every other vowel. Stimuli were examined with an oscilloscope to ensure that there was no clipping. The vowels were added in phase, which resulted in a smaller amplitude when the 2 vowels differed in ƒ0.
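The onset/offset shaping and mixing steps described above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the authors' synthesis code: `toy_vowel` is a hypothetical stand-in (a harmonic complex limited to 5 kHz, with energy boosted near a few illustrative formant values rather than the Assmann and Summerfield 1994 tokens), and the Kaiser window's β (`KAISER_BETA`) is assumed because the text does not report it.

```python
import numpy as np

FS = 12_210         # sample rate in Hz (12.21 kHz, as stated in the text)
N_SAMPLES = 2442    # 200 ms at 12.21 kHz
RAMP_MS = 8.0       # full Kaiser window length; each half shapes one edge
KAISER_BETA = 5.0   # ASSUMPTION: beta is not reported in the text


def toy_vowel(f0_hz, formants_hz, n=N_SAMPLES, fs=FS):
    """Hypothetical vowel stand-in: harmonics of f0 up to 5 kHz, all starting
    in sine phase, weighted by a Lorentzian bump around each formant."""
    t = np.arange(n) / fs
    x = np.zeros(n)
    for k in range(1, int(5000 // f0_hz) + 1):
        f = k * f0_hz
        gain = sum(1.0 / (1.0 + ((f - fm) / 100.0) ** 2) for fm in formants_hz)
        x += gain * np.sin(2 * np.pi * f * t)
    return x / np.max(np.abs(x))  # peak-normalize to +/-1


def apply_kaiser_ramps(x, fs=FS, ramp_ms=RAMP_MS, beta=KAISER_BETA):
    """Multiply the onset by the rising half and the offset by the falling
    half of a single Kaiser window of length ramp_ms."""
    m = int(round(ramp_ms * 1e-3 * fs))  # about 98 samples for 8 ms
    win = np.kaiser(m, beta)
    half = m // 2
    y = np.array(x, dtype=float)
    y[:half] *= win[:half]
    y[-half:] *= win[-half:]
    return y


def double_vowel(v1, v2):
    """Sum two vowels sample by sample and divide by 2; if each input stays
    within +/-1, the mixture cannot clip."""
    mix = (np.asarray(v1) + np.asarray(v2)) / 2.0
    if np.max(np.abs(mix)) > 1.0:
        raise ValueError("mixture would clip")
    return mix


# Illustrative formant values only (not the study's values).
ah = apply_kaiser_ramps(toy_vowel(100.0, [730, 1090, 2440]))
ee = apply_kaiser_ramps(toy_vowel(106.0, [270, 2290, 3010]))
mix = double_vowel(ah, ee)
```

Because each single vowel is peak-normalized before mixing, dividing the sum by 2 keeps the mixture within range, which is the no-clipping property the authors checked on an oscilloscope.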

Stimuli were converted to analog forms (TDT RP-2 real-time processor, Tucker Davis Technologies, Alachua, FL, USA), fed into a headphone driver (TDT HB-7), and presented binaurally at 75 dB sound pressure level (SPL) through Etymotic ER-3A inserted earphones (Etymotic Research, Elk Grove, IL, USA) connected with a 1.5-m reflection-less plastic tube. The intensity of the stimuli was measured using a Larson-Davis SPL meter (Model 824, Provo, UT, USA). The plastic tubes from the ER-3A transducers were attached to a 2-cc coupler on an artificial ear (Model AEC100l) connected to the SPL meter. Separate measurements were taken for both left and right ear channels. Perceived sound locations were induced by applying a head-related transfer function (HRTF) coefficient from the TDT library to the vowels prior to sending them to the headphone driver (for a detailed description and behavioral validation of the HRTF coefficient, see Wightman and Kistler 1989a, 1989b; Wenzel et al. 1993). The HRTF coefficients were individually determined by a brief sound localization task at the beginning of the experiment. On each trial, 1 of the 4 vowels was presented at 1 of the 5 azimuth locations (i.e., −90°, −45°, 0°, 45°, 90°) using a variety of HRTF coefficients selected from the TDT library that best suited the participant's head size. Participants were asked to point toward the sound source location. The HRTF coefficient that resulted in the most accurate localization responses was then determined and used for the remainder of the experiment for each participant.
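The selection rule described above (keep the HRTF set that produced the most accurate pointing) could be implemented as in the following Python sketch. The error metric (mean absolute azimuth error), the candidate labels, and the pointing responses are assumptions for illustration; the text does not specify how accuracy was scored or which TDT sets were tried.

```python
import numpy as np

# Target azimuths used in the localization check (degrees; negative = left).
AZIMUTHS_DEG = np.array([-90, -45, 0, 45, 90])


def localization_error(targets_deg, responses_deg):
    """Mean absolute difference between pointed and true azimuth (degrees)."""
    targets = np.asarray(targets_deg, dtype=float)
    responses = np.asarray(responses_deg, dtype=float)
    return float(np.mean(np.abs(responses - targets)))


def select_hrtf(trials_by_set):
    """trials_by_set maps a candidate HRTF-set label to (targets, responses).
    Returns the label with the lowest error plus all errors for inspection."""
    errors = {label: localization_error(t, r)
              for label, (t, r) in trials_by_set.items()}
    best = min(errors, key=errors.get)
    return best, errors


# Hypothetical pointing data for two candidate sets.
trials = {
    "set_A": (AZIMUTHS_DEG, [-60, -30, 0, 30, 60]),  # compressed toward midline
    "set_B": (AZIMUTHS_DEG, [-90, -40, 0, 50, 90]),
}
best, errors = select_hrtf(trials)
```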

Before the training task, participants were provided with written instructions as well as exemplars of the various stimuli. Each vowel was presented individually (16 trials, 4 vowels by 2 ƒ0 levels, 100 and 106 Hz), and participants identified the vowel by pressing 1 of 4 keys on the keyboard, marked “AH,” “ER,” “EE,” and “OO.” All participants achieved single-vowel accuracy of 95% or better.

Following familiarization with the stimuli and task, participants underwent a 45-min training session. For the Frequency group, each vowel pair contained 1 vowel with ƒ0 at 100 Hz and the other with ƒ0 at 100, 103, 106, 112, or 126 Hz, resulting in 5 levels of Δƒ0: 0, 0.5, 1, 2, or 4 semitones. Both vowels were presented from the midline. For the Location group, the 2 vowels in each pair had equal ƒ0 (100 Hz) and were either both presented from the midline (0°) or presented at 15°, 30°, 45°, or 60° on opposite sides of the midline (i.e., one to the left and the other to the right), resulting in 5 levels of Δlocation: 0°, 30°, 60°, 90°, or 120°. All vowel pairs were presented in the horizontal plane, randomized, and balanced across 4 blocks of 120 trials. Participants were told that 2 different vowels would always be presented on each trial and that the 2 vowels might have the same or different pitch (Frequency group) or come from the same or different locations (Location group). Their task was to identify both vowels by sequentially pressing the corresponding keys on the keyboard. In other words, participants were implicitly trained to utilize the spectral or spatial difference between the 2 vowels to facilitate their segregation and identification. This differs from an explicit frequency or spatial discrimination task, which would have required participants to indicate whether the 2 sounds had the same or different pitch or spatial location. Five milliseconds after the participant's second response, visual feedback showing the stimuli and responses for that trial appeared for 1 s on the screen in front of the participant. The next trial started 2 s after the participant's second response.
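The ƒ0 values and spatial separations listed above follow from simple arithmetic, sketched below in Python. The equal-tempered semitone formula, ƒ = 100 · 2^(n/12), is standard; the helper names are hypothetical. Rounded to the nearest hertz, 0.5, 1, 2, and 4 semitones above 100 Hz give 103, 106, 112, and 126 Hz, and the 106-Hz vowel used later in the MEG session sits about 1 semitone above 100 Hz.

```python
import math

BASE_F0_HZ = 100.0


def f0_for_semitones(semitones, base_hz=BASE_F0_HZ):
    """ƒ0 lying `semitones` above base_hz on an equal-tempered scale."""
    return base_hz * 2 ** (semitones / 12)


def semitones_between(f1_hz, f2_hz):
    """Signed separation in semitones from f1_hz to f2_hz."""
    return 12 * math.log2(f2_hz / f1_hz)


def delta_location(offset_deg):
    """Vowels placed offset_deg left and right of the midline: total separation."""
    return 2 * offset_deg


for st in (0, 0.5, 1, 2, 4):
    print(f"{st:>3} semitones -> {f0_for_semitones(st):6.2f} Hz")
```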

The MEG session started 15 min after training. Participants were presented with 4 trial types created by orthogonally combining Δƒ0 and Δlocation. That is, the 2 vowels could have either the same ƒ0 (both 100 Hz or both 106 Hz) or different ƒ0 (one at 100 Hz and the other at 106 Hz, i.e., a 1-semitone Δƒ0), and they could come from either the same azimuth (midline) or different azimuths (one 45° to the left and the other 45° to the right, i.e., a 90° Δlocation). These 4 trial types were labeled same ƒ0 same location (SFSL), same ƒ0 different location (SFDL), different ƒ0 same location (DFSL), and different ƒ0 different location (DFDL), and were randomized and balanced across 4 blocks of 144 trials. Participants performed the same task as during training but without feedback, and they were told that the 2 vowels could have the same or different pitch and come from the same or different locations. The next trial started 1.5 s after the participant's second response.
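The orthogonal 2 × 2 design above can be expressed as a short Python sketch that builds one randomized, balanced block. How the same-ƒ0 trials were split between the 100-Hz and 106-Hz versions, and how vowel identities were assigned, is not stated; the alternation below and the omission of vowel identity are assumptions, and `make_block` is a hypothetical helper.

```python
import itertools
import random
from collections import Counter

# ƒ0 pairs (Hz) for same (S) and different (D) pitch; same-ƒ0 trials
# alternate between the two listed pairs (ASSUMPTION).
F0_PAIRS = {"S": [(100, 100), (106, 106)], "D": [(100, 106)]}
# Azimuth pairs (degrees; negative = left): midline vs 45° left/right.
AZIMUTH_PAIRS = {"S": (0, 0), "D": (-45, 45)}


def trial_types():
    """SFSL, SFDL, DFSL, DFDL: every combination of ƒ0 and location status."""
    return [f"{f}F{loc}L" for f, loc in itertools.product("SD", "SD")]


def make_block(n_trials=144, seed=None):
    """One block with equal counts of each trial type, in shuffled order."""
    types = trial_types()
    if n_trials % len(types):
        raise ValueError("n_trials must be divisible by the number of types")
    block = []
    for label in types:
        f0_options = F0_PAIRS[label[0]]
        for i in range(n_trials // len(types)):
            block.append({
                "type": label,
                "f0_hz": f0_options[i % len(f0_options)],
                "azimuth_deg": AZIMUTH_PAIRS[label[2]],
            })
    random.Random(seed).shuffle(block)
    return block


block = make_block(seed=1)
print(Counter(trial["type"] for trial in block))
```

With 144 trials and 4 types, each block contains 36 trials of each type.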

MEG Acquisition and Analysis

MEG data were recorded in a magnetically shielded room using a 151-channel whole-head neuromagnetometer (VSM Medtech, Port Coquitlam, BC, Canada). Participants sat upright with their head resting in the helmet-shaped sensor array. Head localization coils were placed on the nasion and the left and right preauricular points for coregistration of the MEG data with anatomical magnetic resonance images (MRIs) and/or with realistic estimates of the participant's head shape obtained, prior to MEG recording, with a 3-dimensional digitization system (Fastrak, Polhemus, Colchester, VT, USA). Neuromagnetic activity was sampled at 625 Hz and low-pass filtered at 200 Hz; 4 blocks were collected, each lasting about 9 min.

Synthetic aperture magnetometry (SAM), a minimum-variance beamformer algorithm (Van Veen et al. 1997), was used as a spatial filter to estimate the time course of source activity in the 0.3–20 Hz frequency range on a lattice with 5-mm spacing covering the whole brain volume. A multiple-sphere head model, in which a single sphere was fit to the digitized head shape for each MEG sensor, was used for the beamformer analysis. Waveforms of averaged source activity for each trial type were calculated following the event-related SAM approach (ER-SAM; Robinson 2004; Cheyne et al. 2006). The time course of source activity at each node/voxel was estimated as a weighted linear combination of the magnetic field measured at all MEG sensors and expressed as a normalized pseudo-Z measure (Robinson and Vrba 1998). For data reduction, the time series were downsampled by a factor of 5 (i.e., from 625 to 125 Hz, one sample every 8 ms). Time series of volumetric maps of group mean pseudo-Z values for each trial type were normalized to Talairach stereotaxic space, spatially smoothed with a Gaussian filter (full width at half maximum, 4.0 mm), overlaid on the anatomical image of a template brain (colin27, Montreal Neurological Institute; Holmes et al. 1998), and visualized with the Analysis of Functional NeuroImages software (AFNI version 2.56a; Cox 1996). Because the aim of this study was to examine the learning effect on speech segregation rather than on response processing, all trials were included regardless of accuracy.
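The weighting and normalization steps described above can be illustrated with a generic scalar minimum-variance beamformer in Python. This is a simplified sketch, not the SAM/ER-SAM implementation: it assumes a known lead field for one voxel at a fixed dipole orientation (SAM additionally searches for the optimal orientation at each voxel), adds a small Tikhonov regularization (`reg`, assumed), and normalizes by projected sensor noise under the assumption of uncorrelated, equal-variance noise (`noise_var`).

```python
import numpy as np


def mv_weights(data_cov, leadfield, reg=0.01):
    """Minimum-variance weights w = C^-1 l / (l^T C^-1 l) for one voxel.
    The constraint w^T l = 1 passes the modeled source with unit gain while
    minimizing output variance from everything else."""
    n = data_cov.shape[0]
    c = data_cov + reg * np.trace(data_cov) / n * np.eye(n)  # regularized C
    c_inv_l = np.linalg.solve(c, leadfield)
    return c_inv_l / (leadfield @ c_inv_l)


def pseudo_z(weights, evoked, noise_var):
    """Source time course w^T m(t) divided by the projected noise SD,
    sqrt(w^T (noise_var * I) w)."""
    source = weights @ evoked  # evoked: (n_sensors, n_times)
    return source / np.sqrt(noise_var * (weights @ weights))


def downsample(x, factor=5):
    """Keep every factor-th sample: 625 Hz / 5 = 125 Hz (8 ms per sample).
    Reasonable here only because the data are already band-limited to 20 Hz."""
    return x[..., ::factor]


# Synthetic example: 151 sensors, 1 s of "evoked" data at 625 Hz.
rng = np.random.default_rng(0)
a = rng.standard_normal((151, 300))
cov = a @ a.T / 300
lf = rng.standard_normal(151)
w = mv_weights(cov, lf)
z = downsample(pseudo_z(w, rng.standard_normal((151, 625)), noise_var=1.0))
```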

Statistical Analysis

Repeated-measures analysis of variance (ANOVA) followed by 1-way ANOVA, Bonferroni post hoc tests, and t-tests were conducted for behavioral data with the null-hypothesis rejection level set at 0.05.
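For a single within-subject factor such as training block, the repeated-measures ANOVA reduces to the sums-of-squares partition sketched below in Python. The helper name is hypothetical, and no sphericity correction is applied because the text does not mention one. With 12 participants per group (consistent with the reported t11 degrees of freedom) and 4 blocks, the degrees of freedom come out as (3, 33), matching the F3,33 values in the Results; with only 2 conditions, F equals the square of the paired t statistic.

```python
import numpy as np
from scipy import stats


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: array of shape (n_subjects, k_conditions), e.g. each participant's
    accuracy in each training block. Returns F, (df_effect, df_error), p.
    """
    x = np.asarray(data, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_total = np.sum((x - grand) ** 2)
    ss_cond = n * np.sum((x.mean(axis=0) - grand) ** 2)  # between conditions
    ss_subj = k * np.sum((x.mean(axis=1) - grand) ** 2)  # between subjects
    ss_error = ss_total - ss_cond - ss_subj              # subject x condition
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    p = stats.f.sf(f, df_cond, df_error)
    return f, (df_cond, df_error), p


# Simulated accuracies (not the study's data): 12 participants x 4 blocks.
rng = np.random.default_rng(0)
acc = 0.4 + 0.05 * np.arange(4) + rng.normal(0, 0.05, size=(12, 4))
f, dfs, p = rm_anova_oneway(acc)
```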

For MEG data, group analysis was conducted on pseudo-Z values averaged across stimulus types within each of seven 40-ms epochs centered at 50, 100, 150, 200, 250, 300, and 350 ms after stimulus onset. The time windows were chosen to encompass the transient auditory evoked fields elicited by double-vowel stimuli (i.e., P1m, N1m, and P2m), which peaked at about 45, 110, and 205 ms after sound onset, respectively. Moreover, these windows cover the time periods that have shown rapid neuroplastic changes related to spectral (Alain et al. 2007) or spatial training (Spierer et al. 2007). Using the mean value of each 40-ms epoch also smoothed over individual differences in the timing of double-vowel processing, captured learning-related modulations of brain activity that extended over a period of time rather than a single sample, and increased statistical sensitivity and power. First, a voxel-wise, mixed-effects 2-factor ANOVA with group as the fixed factor and participant as the random factor was computed for each epoch using the 3dANOVA2 program in AFNI. To correct for multiple comparisons, a spatial cluster-extent threshold was determined with AlphaSim using 4096 (2¹²) Monte Carlo simulations. With an uncorrected voxel-wise threshold of P < 0.05, the minimum cluster size yielding a family-wise false-positive probability of P < 0.05 was 2048 μL (32 voxels) for the 30–70 ms epoch, 1792 μL (28 voxels) for 80–120 ms, 1408 μL (22 voxels) for 130–170 ms, 1152 μL (18 voxels) for 180–220 ms, 1344 μL (21 voxels) for 230–270 ms, 1088 μL (17 voxels) for 280–320 ms, and 1280 μL (20 voxels) for 330–370 ms. Only activations whose cluster size reached the epoch-specific extent threshold listed above are reported for each contrast.
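The logic behind AlphaSim's cluster-extent threshold can be sketched as a Monte Carlo simulation in Python: generate smoothed Gaussian noise volumes, threshold each at the uncorrected voxel-wise level, record the largest surviving cluster, and choose the smallest cluster size that the null maximum reaches in at most 5% of simulations. This is a simplified illustration, not AFNI's implementation; the grid size, smoothness (`fwhm_vox`), two-sided voxel threshold, face-connectivity rule (the `scipy.ndimage.label` default), and function names are all assumptions.

```python
import numpy as np
from scipy import ndimage, stats


def null_max_cluster_sizes(shape, fwhm_vox, p_voxel=0.05, n_sims=1000, seed=0):
    """Largest suprathreshold cluster (in voxels) in each smoothed noise volume."""
    rng = np.random.default_rng(seed)
    sigma = fwhm_vox / (2.0 * np.sqrt(2.0 * np.log(2.0)))  # FWHM -> sigma
    z_thr = stats.norm.isf(p_voxel / 2.0)                   # two-sided (assumed)
    sizes = np.zeros(n_sims, dtype=int)
    for i in range(n_sims):
        noise = ndimage.gaussian_filter(rng.standard_normal(shape), sigma)
        noise = (noise - noise.mean()) / noise.std()        # re-standardize
        labels, n_clusters = ndimage.label(np.abs(noise) > z_thr)
        if n_clusters:
            sizes[i] = np.bincount(labels.ravel())[1:].max()
    return sizes


def cluster_threshold(max_sizes, alpha=0.05):
    """Smallest k such that P(null max cluster >= k) <= alpha."""
    max_sizes = np.asarray(max_sizes)
    k = 1
    while np.mean(max_sizes >= k) > alpha:
        k += 1
    return k


def apply_extent(stat_map, z_thr, min_voxels):
    """Boolean mask of suprathreshold voxels in clusters of >= min_voxels."""
    labels, _ = ndimage.label(np.abs(stat_map) > z_thr)
    keep = np.bincount(labels.ravel()) >= min_voxels
    keep[0] = False  # label 0 is background
    return keep[labels]


k = cluster_threshold(null_max_cluster_sizes((20, 20, 20), fwhm_vox=1.5, n_sims=200))
```

Converting a threshold in voxels to volume depends on the voxel size of the analyzed grid; every size pair reported above corresponds to 64 μL per voxel (e.g., 2048 μL / 32 voxels), equivalent to a 4-mm cubic voxel.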

Results

Behaviors

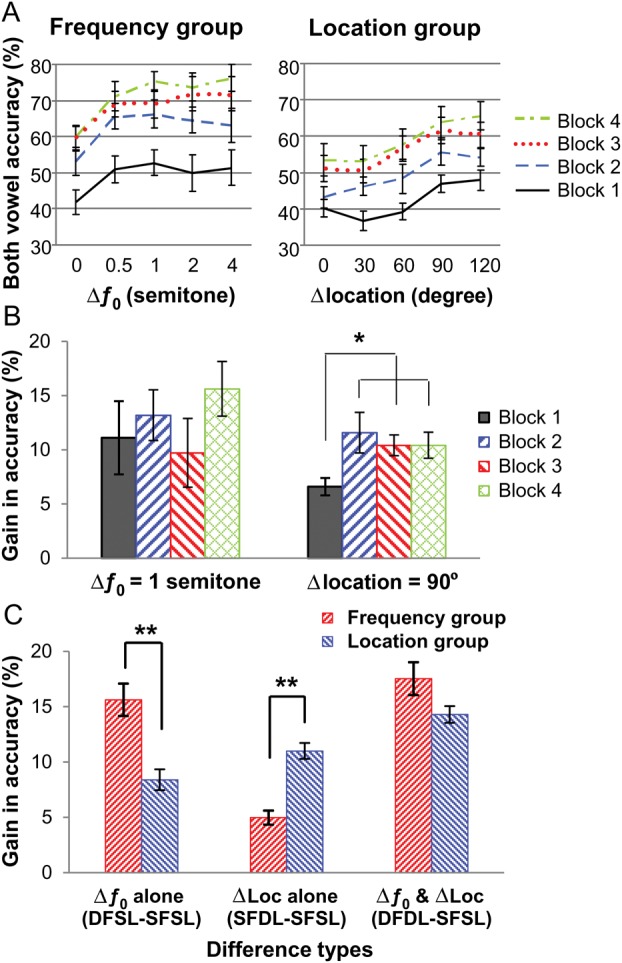

Figure 1A shows the group mean proportion of trials in which both vowels were correctly identified during the training phase as a function of Δƒ0 or Δlocation. In the first training block, both the Frequency and Location groups achieved about 40% accuracy (chance level is 25%) in the baseline condition (0-semitone Δƒ0 and 0° spatial separation), indicating no notable group difference before training. In both groups, a repeated-measures ANOVA revealed a significant main effect of block (F3,33 = 37.30 and 15.02, respectively, P < 0.001 in both cases), indicating that vowel identification accuracy improved with practice.

Figure 1.

Behavioral performance during training and MEG recording. (A) Group mean accuracy for identifying both vowels during training is plotted as a function of Δƒ0 or Δlocation. (B) Group mean gain in accuracy when Δƒ0 was 1 semitone or Δlocation was 90° during training. *P < 0.05 by paired t-tests. (C) Group mean gain in accuracy for Δƒ0 alone, Δlocation alone, and both Δƒ0 and Δlocation during MEG recording. **P < 0.001 by independent-sample t-tests. The error bars represent the standard error of the mean.

In the Frequency group, pair-wise comparisons showed that accuracy improved significantly from the first to the second block of trials (P < 0.001). Accuracy measures in all subsequent blocks were also significantly higher than that in the first block of trials (P < 0.001 in all cases). After the second block of trials, the gain in accuracy was smaller, with participants showing neither a significant improvement between the second and third blocks of trials nor between the third and fourth blocks of trials (P = 0.072 and 1.00, respectively). Nonetheless, participants were more accurate in the fourth than in the second block of trials (P < 0.01). Finally, accuracy increased with increasing ƒ0 separation between the 2 vowels (F3,33 = 12.38, P < 0.001 in both cases). Pair-wise comparison revealed that performance improved with increasing ƒ0 separation up to 1 semitone (all P < 0.05), and plateaued thereafter from 1 to 4 semitones.

Similarly, in the Location group, pair-wise comparisons showed significant gains in accuracy between the first and second blocks of trials (P < 0.02) and between the second and third blocks of trials (P < 0.05). Accuracy in all the subsequent blocks of trials was also significantly higher than in the first block of trials (P < 0.01 in all cases). There was no significant increase in accuracy between the third and fourth blocks of trials (P = 1.00). Accuracy also increased with increasing spatial separation between the 2 vowels (F3,33 = 56.27, P < 0.001 in both cases). Participants performed better when the 2 vowels were separated by 90° or 120° than when there was no or small (30° or 60°) separation (all pair-wise comparisons P < 0.01). There was no reliable difference in performance from 0° to 60° or between 90° and 120° separation.

The Location group displayed a smaller overall improvement in performance than the Frequency group during training, which might suggest different learning curves for spectral and spatial training. However, neither the interaction between group and block (F3,33 = 1.17, P = 0.34) nor the 3-way interaction between group, block, and level of difference between the 2 vowels (F12,132 = 0.56, P = 0.87) was significant, providing no evidence that the gains from practice differed between groups or across levels of Δƒ0 and Δlocation.

To assess the effects of training on listeners' ability to use the frequency or location separation between concurrent vowels, we compared, for each block, the gain in accuracy provided by the cue values later used during MEG recording, namely a 1-semitone Δƒ0 and a 90° Δlocation (Fig. 1B). For the Frequency group, the gain in accuracy for the 1-semitone Δƒ0 did not differ between blocks 1 and 4 (t11 = 1.28, P = 0.23). For the Location group, the gain in accuracy for the 90° separation was significantly higher in blocks 2–4 than in block 1 (all t11 > 2.20, P < 0.05, paired t-tests), indicating improved cue utilization after training.

During the MEG recording session, both groups were more accurate when the 2 vowels differed in ƒ0 only (DFSL), location only (SFDL), or both ƒ0 and location (DFDL) than when they shared the same ƒ0 and location (SFSL) (P < 0.001, repeated-measures ANOVA and post hoc tests). To illustrate the feature-specific benefit in performance, we performed a within-group and a between-group comparison. In both cases, we compared the gain in accuracy from Δƒ0 alone (DFSL − SFSL), Δlocation alone (SFDL − SFSL), and both Δƒ0 and Δlocation simultaneously (DFDL − SFSL). Figure 1C shows the effects of training on the gain in accuracy relative to the performance in the SFSL condition. Participants in the Frequency group showed a greater gain in performance for Δƒ0 alone than for Δlocation alone (t11 = 6.06, P < 0.001). Conversely, participants in the Location group showed a greater gain for Δlocation alone than for Δƒ0 alone (t11 = 2.38, P = 0.036). A mixed-model, repeated-measures ANOVA revealed a significant interaction between group and trial type (F2,44 = 33.32, P < 0.001). The Frequency group exhibited a higher gain from Δƒ0 alone than the Location group (t22 = 4.17, P < 0.001), whereas the Location group showed a greater gain from Δlocation alone than the Frequency group (t22 = 6.18, P < 0.001). There was no significant group difference when the 2 vowels differed in both ƒ0 and location.

Notably, we have previously shown that, in a group performing the same task on the same set of stimuli without pre-MEG training, the benefits from the Δƒ0 and Δlocation cues did not differ from one another, and the benefit from having access to both cues equaled the sum of the gains for each single cue (Du et al. 2011). Here, by contrast, in both trained groups the gain from the trained cue alone was nearly as large as the gain from having both cues simultaneously; the latter was only slightly, though significantly, higher (both t11 > 2.80, P < 0.05). Moreover, the sum of the gains from each cue was considerably larger than the gain from having both cues simultaneously (both t11 > 5.80, P < 0.01, paired t-tests).
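The gain measures and tests in the two preceding paragraphs can be sketched in Python with SciPy's t-tests. The helper names and the dictionary layout (one accuracy array per trial type, one value per participant) are assumptions for illustration; the example data are made up and do not reproduce the reported statistics.

```python
import numpy as np
from scipy import stats


def cue_gains(acc):
    """Gains relative to the no-cue baseline (SFSL), one value per participant."""
    base = np.asarray(acc["SFSL"], dtype=float)
    return {
        "df0": np.asarray(acc["DFSL"], dtype=float) - base,   # Δƒ0 alone
        "dloc": np.asarray(acc["SFDL"], dtype=float) - base,  # Δlocation alone
        "both": np.asarray(acc["DFDL"], dtype=float) - base,  # both cues
    }


def within_group(gains):
    """Paired t-test, Δƒ0-alone gain vs Δlocation-alone gain (df = n - 1)."""
    res = stats.ttest_rel(gains["df0"], gains["dloc"])
    return res.statistic, res.pvalue, len(gains["df0"]) - 1


def between_groups(gains_a, gains_b, key):
    """Independent-samples t-test on one gain (df = n_a + n_b - 2)."""
    res = stats.ttest_ind(gains_a[key], gains_b[key])
    return res.statistic, res.pvalue, len(gains_a[key]) + len(gains_b[key]) - 2


def additivity(gains):
    """Paired t-test, sum of single-cue gains vs gain with both cues."""
    res = stats.ttest_rel(gains["df0"] + gains["dloc"], gains["both"])
    return res.statistic, res.pvalue


# Made-up accuracies for 3 participants (illustration only).
freq = cue_gains({"SFSL": [0.40, 0.50, 0.45], "DFSL": [0.60, 0.70, 0.66],
                  "SFDL": [0.50, 0.50, 0.50], "DFDL": [0.70, 0.80, 0.75]})
t_within, p_within, df_within = within_group(freq)  # df = 2 here; 11 with n = 12
t_add, p_add = additivity(freq)
```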

Neuromagnetic Activity During the Double-Vowel Task

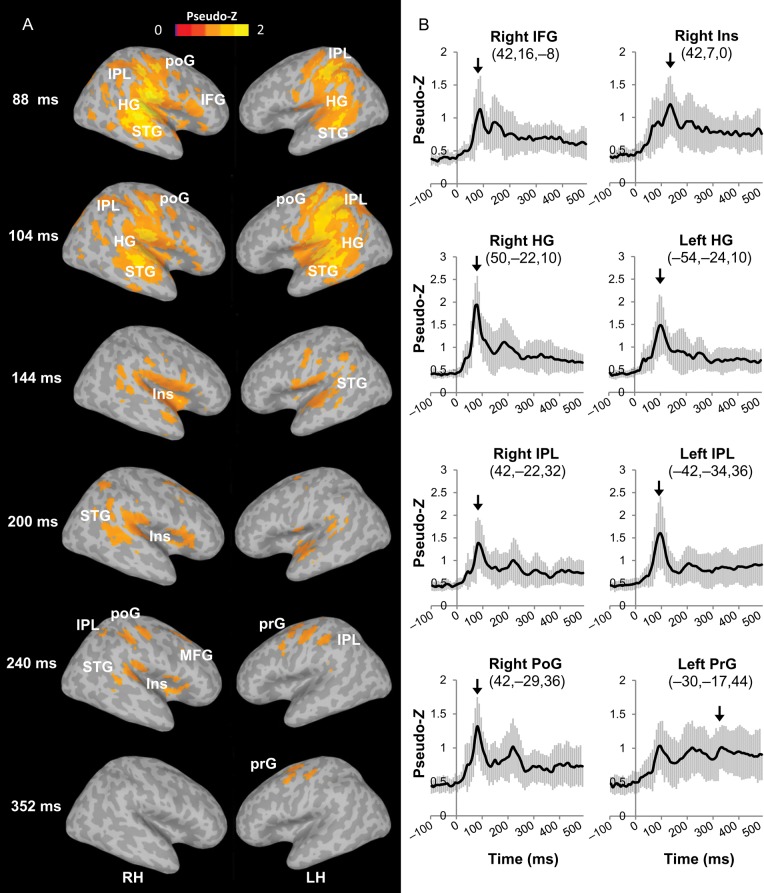

Figure 2 shows grand average images of cortical source activity (averaged across stimulus types and groups). These images reveal that performance during the double-vowel task was associated with a widely distributed neural network. More specifically, we found significant activity in bilateral auditory cortices that started in Heschl's gyrus, the location of primary auditory cortex, around 88 ms, and extended into the STG and insula by 250 ms. Activity was also observed in multimodal attention-related areas such as the right inferior frontal gyrus (IFG), bilateral inferior parietal lobule (IPL), and bilateral postcentral gyrus (poG), starting as early as 88 ms after sound onset and lasting for several hundred milliseconds. The double-vowel task also yielded later components (250–350 ms) in the left precentral gyrus (prG), presumably related to response selection and preparation.

Figure 2.

Grand mean ER-SAM maps and source waveforms during the double-vowel task. (A) ER-SAM maps averaged across all participants and all stimulus types at selected latencies are thresholded at pseudo-Z >0.9 and overlaid onto a template brain. (B) MEG source waveforms averaged across all participants and all stimulus types at selected peak voxel in ER-SAM maps. The error bars represent the standard error of the mean. The arrows indicate the latency when the chosen voxel was defined as local maxima. HG, Heschl's gyrus; IFG, inferior frontal gyrus; Ins, insula; MFG, middle frontal gyrus; IPL, inferior parietal lobule; poG, postcentral gyrus; prG, precentral gyrus; STG, superior temporal gyrus.

Feature-Specific Training Effects on Neuromagnetic Activity

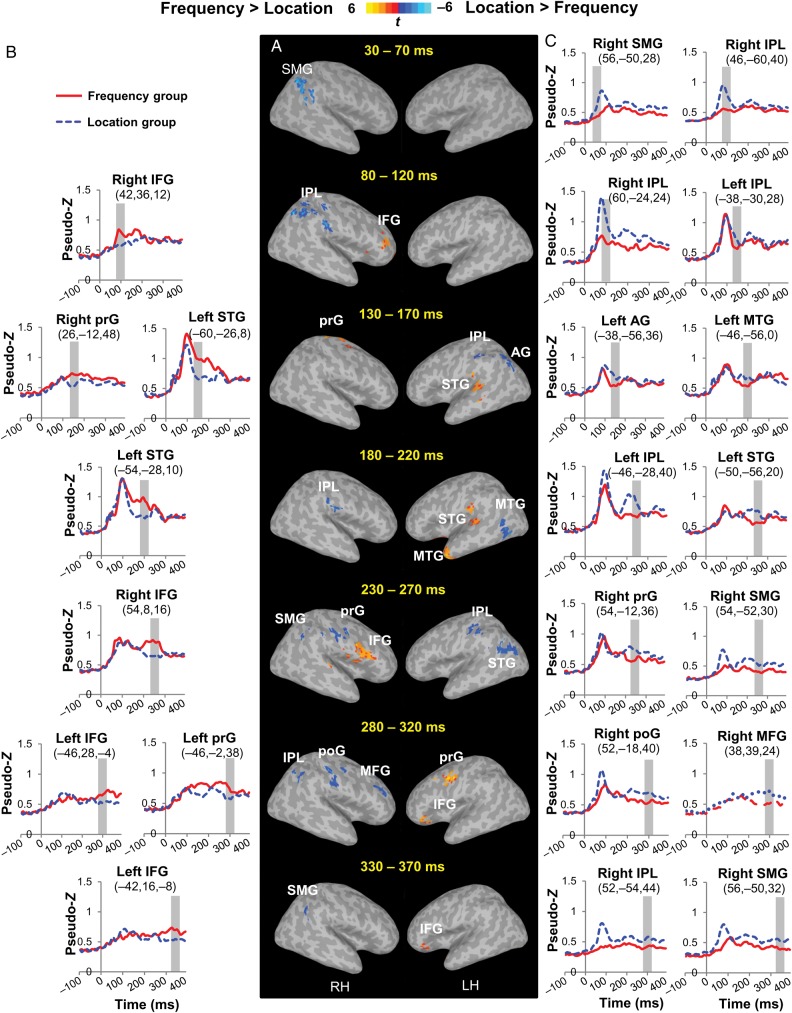

Figure 3 and Table 1 illustrate the feature-specific training effects on neuromagnetic activity. All activations were significant at family-wise corrected P < 0.05, with cluster sizes no smaller than 1088 μL. The contrast of MEG source activity between the 2 groups across trial types revealed differential patterns starting earlier than 100 ms after sound onset and lasting for several hundred milliseconds. Compared with the Location group, the Frequency group showed larger source activity in the bilateral IFG (right IFG, 80–120 and 230–270 ms intervals; left IFG, 280–370 ms interval), the left STG (130–220 ms interval), the left middle temporal gyrus (MTG, 180–220 ms interval), and bilateral prG (right prG, 130–170 ms interval; left prG, 280–320 ms interval). In comparison, the Location group exhibited larger source activity than the Frequency group in more posterior and dorsal areas, namely, the right supramarginal gyrus (SMG, 30–70, 230–270, and 330–370 ms intervals), the bilateral IPL (right IPL, 80–120 and 280–320 ms intervals; left IPL, 130–170 and 230–270 ms intervals), the left angular gyrus (AG, 130–170 ms interval), the left MTG (180–220 ms interval), the posterior area of the left STG (230–270 ms interval), the right prG (230–270 ms interval), the right poG (280–320 ms interval), and the right middle frontal gyrus (280–320 ms interval). Thus, although both groups were processing the same set of stimuli, distinct brain networks were revealed depending on the trained feature, with the Frequency group recruiting more anterior and ventral temporo-frontal areas (“what” pathway) and the Location group recruiting more posterior and dorsal temporo-parietal areas (“where” pathway).

Figure 3.

Activation maps and source waveforms showing the feature-specific training effect. (A) Contrast maps of the MEG source activity between the 2 groups across stimulus types for six 40-ms intervals are overlaid on a template brain. All activations are significant at corrected P < 0.05 and cluster size >1088 μL. (B and C) Group mean MEG source waveforms at selected clusters exhibiting remarkable group differences, (B) Frequency group > Location group; (C) Location group > Frequency group. The numbers below each cluster label show the Talairach coordinates of the peak voxel. The gray bars indicate the 40-ms interval showing a significant group difference. AG, angular gyrus; IFG, inferior frontal gyrus; IPL, inferior parietal lobule; MFG, middle frontal gyrus; MTG, middle temporal gyrus; poG, postcentral gyrus; prG, precentral gyrus; SMG, supramarginal gyrus; STG, superior temporal gyrus.

Table 1.

Feature-specific training effect on neuromagnetic activity

| Latency | Brain region | BA | Peak Talairach x (mm) | y (mm) | z (mm) | t-value | No. of voxels |
|---|---|---|---|---|---|---|---|
| Frequency group > Location group | | | | | | | |
| 80–120 ms | R inferior frontal gyrus | 46 | 42 | 36 | 12 | 3.503 | 37 |
| 130–170 ms | R precentral gyrus | 4 | 26 | −12 | 48 | 2.663 | 117 |
| | L superior temporal gyrus | 42 | −60 | −26 | 8 | 3.508 | 29 |
| 180–220 ms | L middle temporal gyrus | 21 | −46 | 7 | −32 | 6.077 | 303 |
| | L superior temporal gyrus | 41 | −54 | −28 | 10 | 3.511 | 32 |
| 230–270 ms | R inferior frontal gyrus | 44 | 54 | 8 | 16 | 2.825 | 94 |
| 280–320 ms | L precentral gyrus | 6 | −46 | −2 | 38 | 5.559 | 54 |
| | L inferior frontal gyrus | 47 | −46 | 28 | −4 | 3.276 | 37 |
| 330–370 ms | L inferior frontal gyrus | 47 | −42 | 16 | −8 | 2.443 | 21 |
| Location group > Frequency group | | | | | | | |
| 30–70 ms | R supramarginal gyrus | 40 | 56 | −50 | 28 | 4.177 | 83 |
| 80–120 ms | R inferior parietal lobule | 40 | 46 | −60 | 40 | 5.335 | 115 |
| | | 40 | 60 | −24 | 24 | 4.087 | 36 |
| 130–170 ms | L inferior parietal lobule | 40 | −38 | −30 | 28 | 2.531 | 43 |
| | L angular gyrus | 39 | −38 | −56 | 36 | 3.415 | 27 |
| 180–220 ms | L middle temporal gyrus | 19 | −46 | −56 | 0 | 4.159 | 29 |
| | R inferior parietal lobule | 40 | 65 | −26 | 24 | 3.651 | 27 |
| 230–270 ms | L superior temporal gyrus | 22 | −50 | −56 | 20 | 3.037 | 78 |
| | L inferior parietal lobule | 40 | −44 | −30 | 42 | 2.954 | 25 |
| | R precentral gyrus | 4 | 54 | −12 | 36 | 3.568 | 26 |
| | R supramarginal gyrus | 40 | 54 | −52 | 30 | 3.414 | 25 |
| 280–320 ms | R postcentral gyrus | 3 | 52 | −18 | 40 | 4.056 | 46 |
| | R middle frontal gyrus | 10 | 38 | 39 | 24 | 3.348 | 36 |
| | R inferior parietal lobule | 40 | 52 | −54 | 44 | 4.272 | 30 |
| 330–370 ms | R supramarginal gyrus | 40 | 56 | −50 | 32 | 3.183 | 22 |

Note: All activations are significant at P < 0.05 and survive family-wise correction for multiple comparisons. BA, Brodmann's area.

Brain-Behavior Correlations

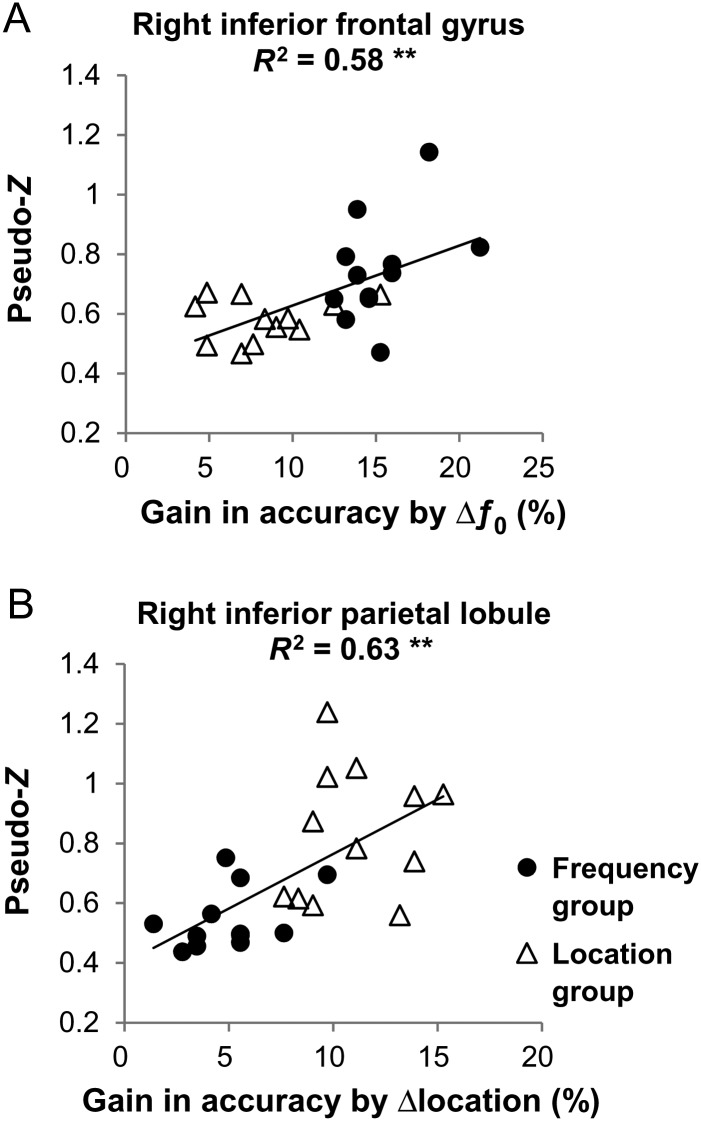

Figure 4 shows the correlations between neuromagnetic source activity and cue-induced gains in performance in 2 regions showing a significant (corrected P < 0.05) feature-specific training effect. As shown in Figure 4A, individuals' mean source activity in the right IFG during the 80–120 ms interval correlated significantly with their gain in accuracy for the Δƒ0 cue alone (R² = 0.58, P < 0.01, Pearson correlation). Participants from the Frequency and Location groups formed 2 distinct clusters, with the former showing a larger gain in performance from the Δƒ0 cue and stronger source activity in the right IFG than the latter. In contrast, individuals' mean source activity in the right IPL during the 80–120 ms interval correlated strongly with their gain in accuracy for the Δlocation cue alone (Fig. 4B, R² = 0.63, P < 0.01). Again the 2 groups clearly separated, with participants from the Location group achieving a higher gain in accuracy from the Δlocation cue and stronger source activity in the right IPL than participants from the Frequency group.

Figure 4.

Brain-behavior correlation. Individuals' mean source activity in the right inferior frontal gyrus (A) and in the right inferior parietal lobule (B) during the 80–120 ms interval, for both the Frequency (filled circles) and Location (open triangles) groups, is plotted against the listeners' gain in accuracy from the Δƒ0 cue alone and the Δlocation cue alone, respectively. These 2 regions were selected because they showed a significant (corrected P < 0.05) group difference in source activity. **P < 0.01 by Pearson correlation.
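The correlations in Figure 4 are Pearson correlations reported as R², the square of the correlation coefficient r. A minimal Python sketch of that computation, together with the usual t statistic on n − 2 degrees of freedom for testing r against zero, is given below; the per-participant values are hypothetical placeholders, not the study's data.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("x and y must have the same length (at least 3)")
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_for_r(r, n):
    """t statistic (n - 2 degrees of freedom) for H0: population r = 0; needs |r| < 1."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Hypothetical per-participant values (not data from this study).
source_activity = [0.8, 1.1, 1.3, 1.9, 2.2, 2.6]  # mean source activity, arbitrary units
cue_gain = [0.02, 0.05, 0.04, 0.10, 0.12, 0.15]   # gain in proportion correct
r = pearson_r(source_activity, cue_gain)
n = len(cue_gain)
print(f"r = {r:.3f}, R^2 = {r * r:.3f}, t({n - 2}) = {t_for_r(r, n):.2f}")
```

Because R² = r², the reported R² = 0.58 and R² = 0.63 correspond to |r| ≈ 0.76 and |r| ≈ 0.79. Converting t to a P value additionally requires the t distribution (available, for example, in SciPy), which is left out to keep the sketch dependency-free.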

Discussion

The present study was designed to investigate whether a brief training program could yield feature-specific gains in performance that would coincide with changes in neuromagnetic brain activity. As expected, participants' accuracy in identifying 2 simultaneously presented vowels improved during a 45-min training session. More importantly, the training effects transferred to the MEG recording session in a feature-specific fashion: participants who learned to use the ƒ0 separation between the 2 vowels showed greater accuracy when this cue was present than when the location difference was available. Conversely, participants who learned to use the spatial separation between vowels showed greater benefit when that information was present than on trials in which the vowels differed in ƒ0 but were presented at the same location. In addition, in both trained groups, the gain in accuracy for the trained feature was greater than that observed in participants trained on the other feature or in a control group (Du et al. 2011) that was neither exposed to nor trained on the same stimuli before the MEG recording session. These results are consistent with prior behavioral studies (Hawkey et al. 2004; Hussain et al. 2012) and provide further evidence that brief training can enhance perceptual sensitivity.

In the present study, the training phase may have acted as a priming task that biased attention toward the trained acoustic feature, even though participants in the subsequent task were not explicitly told to attend to voice or location. That is, the training may have implicitly biased participants to focus on the trained spectral or location difference between the 2 vowels, which would then appear more salient than the untrained cue during the MEG measurement that followed the brief training session. The observed pattern of behavioral results and source activity is consistent with a rapid adjustment in perceptual sensitivity, in which top-down instructions and task parameters during the training phase confer the selectivity necessary to modify a single feature representation (ƒ0 or location) without affecting other spatially organized feature representations embedded within the same neural circuitry. This training-induced selectivity appears to be fairly long-lasting and resilient: it persisted over the delay between training and MEG recording and remained even though participants received no feedback on their performance during the MEG session.

Although the pattern of behavioral and neuromagnetic data is consistent with an attentional bias and/or changes in perceptual sensitivity, the changes in performance and brain activity could also partly reflect a repetition effect. Evidence from a repetition priming paradigm has shown superior performance in identifying auditory stimuli when the same stimuli were used in a prior task (Stuart and Jones 1995). Consequently, the enhanced performance for Δƒ0 in the Frequency group and for Δlocation in the Location group could be attributed to participants' greater familiarity with the stimuli. However, this explanation is unlikely for 2 reasons. First, the SFSL condition was also presented during both training and MEG recording, yet there was little evidence of repetition priming for this trial type. Second, and more importantly, the feature-specific effects observed in the present study reflect a relative rather than an absolute difference between the training and the MEG recording. That is, the analyses focused on the gain related to having a frequency or spatial separation, an approach that controls for the same stimuli being present in both the training and the MEG recording session.
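The argument that a relative measure controls for stimulus repetition can be made concrete with a small Python sketch. It assumes that the gain from each cue alone is computed as a difference in proportion correct relative to the SFSL condition, and it uses hypothetical labels for the other conditions (DFSL for a ƒ0 difference at the same location, SFDL for a location difference at the same ƒ0) together with made-up accuracies. Adding the same familiarity benefit to every condition leaves both gains unchanged.

```python
# Assumed condition labels (only SFSL appears in the text):
#   SFSL = same f0, same location; DFSL = different f0, same location;
#   SFDL = same f0, different location.


def cue_gains(accuracy):
    """Gain in proportion correct from each cue alone, relative to SFSL."""
    baseline = accuracy["SFSL"]
    return {
        "delta_f0": accuracy["DFSL"] - baseline,
        "delta_location": accuracy["SFDL"] - baseline,
    }


def with_familiarity_boost(accuracy, boost):
    """Add the same repetition benefit to every condition (capped at 1.0)."""
    return {cond: min(1.0, p + boost) for cond, p in accuracy.items()}


accuracy = {"SFSL": 0.40, "DFSL": 0.62, "SFDL": 0.48}  # hypothetical proportions correct
gains = cue_gains(accuracy)
boosted_gains = cue_gains(with_familiarity_boost(accuracy, 0.05))
print(gains)
print(boosted_gains)  # same gains, up to floating-point rounding
```

The cancellation holds only if repetition benefits all trial types equally; a familiarity boost confined to one condition would change the difference, which is why the observation that SFSL showed little repetition priming is a useful complement to the relative measure.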

Using the ER-SAM spatial filtering technique to image cortical source activity, we have shown that concurrent-vowel segregation and identification engaged a widely distributed neural network comprising the primary and associative auditory cortices as well as prefrontal, inferior parietal, and response-related sensorimotor areas. Many of the regions showing significant source activity have been implicated in spatial and nonspatial auditory selective attention tasks and are part of a brain network that controls the focus of attention on task-relevant features or stimuli (Maeder et al. 2001; Rämä et al. 2004; Degerman et al. 2006, 2008; Krumbholz et al. 2007; Alain et al. 2008; Paltoglou et al. 2011). For instance, enhanced activity in the IPL has been consistently reported during auditory localization tasks (Arnott et al. 2004) and is thought to play an important role in auditory spatial working memory (Alain et al. 2008; Alain, Shen, et al. 2010) and/or in transforming auditory location into visuo-spatial coordinates that can guide the oculomotor system toward sound sources (Arnott and Alain 2011). In the present study, the increased IPL activity could also index auditory source separation using spatial cues. Such an account would be consistent with functional MRI (fMRI) studies showing enhanced IPL activation with an increasing number of spatially distinct sound sources presented simultaneously (Zatorre et al. 2002; Smith et al. 2010).
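ER-SAM belongs to the family of beamformer (spatial filtering) methods. As a rough illustration of the underlying idea, the Python/NumPy sketch below computes unit-gain linearly constrained minimum variance (LCMV) weights for a single source with a fixed orientation, w = C^-1 l / (l^T C^-1 l), where C is the sensor covariance and l is the source's forward field, and applies them to simulated sensor data. This is a simplified stand-in, not the authors' ER-SAM pipeline: the sensor count, forward fields, noise level, and diagonal loading are hypothetical, and steps such as orientation estimation, head modeling, and the exact noise normalization used in ER-SAM are omitted.

```python
import numpy as np


def lcmv_weights(cov, leadfield):
    """Unit-gain LCMV weights for one fixed-orientation source: w = C^-1 l / (l^T C^-1 l)."""
    cinv_l = np.linalg.solve(cov, leadfield)  # C^-1 l without forming the inverse
    return cinv_l / (leadfield @ cinv_l)


def noise_normalized(weights, data, noise_var):
    """Source time course divided by the projected white-noise std (pseudo-Z-like)."""
    return (weights @ data) / np.sqrt(noise_var * (weights @ weights))


# Simulated sensors: one target dipole, one interfering source, white noise (all hypothetical).
rng = np.random.default_rng(0)
n_sensors, n_times = 20, 1000
leadfield = rng.standard_normal(n_sensors)   # forward field of the target source
interferer = rng.standard_normal(n_sensors)  # forward field of a competing source
t = np.linspace(0.0, 1.0, n_times)
source = np.sin(2 * np.pi * 10 * t)          # target source waveform
data = (np.outer(leadfield, source)
        + np.outer(interferer, rng.standard_normal(n_times))
        + 0.1 * rng.standard_normal((n_sensors, n_times)))

cov = data @ data.T / n_times + 1e-3 * np.eye(n_sensors)  # sample covariance, small diagonal loading
weights = lcmv_weights(cov, leadfield)
estimate = weights @ data                                 # beamformer source estimate
pseudo_z = noise_normalized(weights, data, noise_var=0.01)
print(float(weights @ leadfield), float(np.corrcoef(estimate, source)[0, 1]))
```

The unit-gain constraint passes the target source unchanged, while the minimum-variance criterion suppresses the interfering source and sensor noise; dividing by the projected noise, as in `noise_normalized`, gives a time course that is comparable across source locations.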

The STG and IFG have also been repeatedly implicated in tasks that require identifying sound objects (Clarke et al. 2002; Adriani et al. 2003; Arnott et al. 2004) and are often considered part of the ventral (“what”) pathway. These areas may play an important role in speech segregation and identification, as evidenced by a prior fMRI study showing enhanced activity in the left thalamus as well as in primary and associative auditory cortices when concurrent vowels differing in ƒ0 were successfully identified (Alain et al. 2005). Moreover, there is evidence that activity in primary and associative auditory cortices is modulated by the perception of concurrent sound objects associated with increasing inharmonicity between a lower harmonic and its fundamental (Arnott et al. 2011), and these areas are also part of a network involved in speech-in-noise perception (Wong et al. 2008; Bishop and Miller 2009; Dos Santos Sequeira et al. 2010).
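Both the Δƒ0 cue and the mistuned-harmonic manipulation rely on harmonicity: the components of a voiced sound fall at integer multiples of its ƒ0, and a component that departs from that series can be heard as a separate object. The toy Python sketch below quantifies a component's mistuning relative to a given ƒ0 and groups components with the ƒ0 they fit best, loosely in the spirit of a harmonic sieve. It is an illustration under stated assumptions, not a model used in this study; the ƒ0 values and the 3% tolerance are hypothetical.

```python
def mistuning(freq_hz, f0_hz):
    """Relative deviation of a component from the nearest harmonic of f0."""
    n = max(1, round(freq_hz / f0_hz))  # index of the nearest harmonic
    return abs(freq_hz - n * f0_hz) / (n * f0_hz)


def assign_to_f0(components_hz, f0s_hz, tolerance=0.03):
    """Toy harmonic sieve: group each component with the f0 it fits best, or None."""
    groups = {}
    for freq in components_hz:
        best = min(f0s_hz, key=lambda f0: mistuning(freq, f0))
        groups[freq] = best if mistuning(freq, best) <= tolerance else None
    return groups


# Hypothetical f0s roughly 4 semitones apart (126 / 100 is close to 2 ** (4 / 12)).
f0s = [100.0, 126.0]
print(assign_to_f0([200, 252, 500, 630, 350], f0s))
# A 2nd harmonic of a 100-Hz complex shifted from 200 to 232 Hz is mistuned by 16%.
print(mistuning(232, 100.0))
```

Components that fit neither harmonic series (here, 350 Hz) are left unassigned, which is the kind of outlier that a mistuning paradigm makes perceptually salient.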

In the present study, neuromagnetic source activity associated with segregating and identifying 2 simultaneously presented vowels was observed as early as 100 ms after sound onset, both in sensory-specific areas and in multimodal areas such as the IFG and IPL. The time course of source activity in auditory, parietal, and prefrontal cortices is consistent with findings from single- and multi-unit recordings in non-human primates (e.g., Vaadia et al. 1986; Mazzoni et al. 1996) as well as intracerebral recordings in patients with epilepsy (e.g., Richer et al. 1989; Molholm et al. 2006), which have revealed neural activity time-locked to auditory stimuli as early as 100 ms after sound onset. Notably, at longer latencies (i.e., 200–400 ms poststimulus), source activity was predominantly observed in multimodal areas, including the parietal and prefrontal cortices, as well as in motor areas related to response preparation and execution, consistent with a hierarchically organized, attention-related increase in activity across sensory and attention networks (Ross et al. 2010).
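The source analyses summarize activity within short latency windows (e.g., 80–120 ms in the text and 130–170 ms through 330–370 ms in the table). A minimal Python sketch of that windowing step follows; it assumes 40-ms windows centered every 50 ms and a hypothetical 1-kHz sampling rate, and the ramp used as input is only a placeholder that makes the window means easy to check.

```python
from statistics import fmean


def window_means(times_ms, values, centers_ms, half_width_ms=20):
    """Mean value within [center - half_width, center + half_width] ms for each center."""
    means = {}
    for center in centers_ms:
        lo, hi = center - half_width_ms, center + half_width_ms
        in_window = [v for t, v in zip(times_ms, values) if lo <= t <= hi]
        means[center] = fmean(in_window)
    return means


# Hypothetical 1-kHz sampling from 0 to 400 ms; the ramp stands in for a source waveform.
times = list(range(0, 401))
waveform = [float(t) for t in times]
centers = [100, 150, 200, 250, 300, 350]  # windows 80-120, 130-170, ..., 330-370 ms
print(window_means(times, waveform, centers))
```

Per-participant window means of this kind are what the brain-behavior correlations and group contrasts operate on.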

Further, contrasting source activity between the 2 training groups revealed the neuroplastic changes associated with the feature-specific gain in performance: a double dissociation in which participants trained on spectral and spatial cues showed higher source activity in ventral (“what”) and dorsal (“where”) brain areas, respectively (Rauschecker and Tian 2000; Alain et al. 2001; Maeder et al. 2001; Arnott et al. 2004; Arnott and Alain 2011). To our knowledge, this is the first demonstration of a feature-specific change in human brain activity following brief training designed to enhance perceptual sensitivity to differences in voice pitch and voice location. Notably, this group difference was not all-or-none but rather appeared to reflect a bias toward recruiting ventral or dorsal brain regions during the double-vowel identification task. This is consistent with prior fMRI studies that have revealed relative rather than absolute differences in activation of the auditory “what” and “where” processing streams as a function of task instructions/demands and selective attention (e.g., Alain et al. 2001; Ahveninen et al. 2006; Degerman et al. 2006; Alain et al. 2008; Paltoglou et al. 2011). The group difference may also indicate changes in the tuning properties of the multimodal neurons engaged in sound identification and localization. These rapid feature-specific changes are consistent with animal studies showing task-relevant changes in the receptive fields and synchronized firing of auditory neurons within minutes of training (Bakin and Weinberger 1990; Edeline et al. 1993; Bakin et al. 1996; Fritz et al. 2003; Du et al. 2012).
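The logic of the double dissociation, and of its relative rather than all-or-none character, can be sketched with group means for one ventral and one dorsal region. In the Python sketch below, the region names follow the text but the activity values are hypothetical; a real test would use per-participant values and a statistical model of the group × region interaction rather than a comparison of means.

```python
def crossover(freq_group, loc_group, ventral="IFG", dorsal="IPL"):
    """Summarize a double dissociation from group-mean source activity per region.

    Returns the two simple group differences (Frequency minus Location)
    and their interaction contrast.
    """
    ventral_diff = freq_group[ventral] - loc_group[ventral]  # expected > 0
    dorsal_diff = freq_group[dorsal] - loc_group[dorsal]     # expected < 0
    return {
        "ventral_diff": ventral_diff,
        "dorsal_diff": dorsal_diff,
        "interaction": ventral_diff - dorsal_diff,
        "double_dissociation": ventral_diff > 0 > dorsal_diff,
    }


# Hypothetical group means (arbitrary units): both groups engage both regions,
# but the balance between them differs, i.e. a relative rather than all-or-none effect.
frequency_means = {"IFG": 3.0, "IPL": 2.0}
location_means = {"IFG": 2.2, "IPL": 2.9}
result = crossover(frequency_means, location_means)
print(result)
```

A crossover of this kind cannot be explained by a single overall difference in activity between groups, which is what makes a double dissociation more informative than either simple difference alone.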

Our findings provide further evidence for rapid changes in cortical evoked responses after less than an hour of auditory training on spectro-temporal (Alain et al. 2007; Alain, Campeanu, et al. 2010; Ben-David et al. 2011) or spatial (Spierer et al. 2007) sound features, and offer the first neuroimaging evidence for rapid perceptual (i.e., feature-specific) learning in humans. The neuromagnetic source analyses complement prior fMRI research and provide unique chronometric information regarding the sequence of neural events associated with rapid learning during speech separation and identification. Enhanced source activity in the left STG around the N1m–P2m latency (130–220 ms) and in the bilateral IFG at early (80–120 ms) and late (230–370 ms) latencies in participants trained on the frequency rather than the location cue is consistent with previous reports of spectral-training-related changes in early (∼130 ms) and late (∼340 ms) ERPs localized in the right auditory cortex and inferior prefrontal cortex, respectively (Alain et al. 2007). It also accords with rapid changes in sensory evoked responses (N1 and P2 amplitude) and in a later ERP (∼320 ms) over the left frontal site, which differed from changes related to procedural learning during stimulus exposure and task repetition, in participants trained on speech cues such as voice onset time (Alain, Campeanu, et al. 2010; Ben-David et al. 2011). On the other hand, compared with the Frequency group, the Location group showed larger source activity in the left posterior STG (230–270 ms) and in multiple bilateral parietal regions, including the IPL, SMG, and AG, throughout the observed period (30–370 ms), supporting reports of spatial-training-related changes in auditory evoked responses at 195–250 ms originating from the left inferior parietal cortex (Spierer et al. 2007).
Notably, the group difference in ventral and dorsal stream activity began as early as 100 ms after sound onset, suggesting an attentional bias toward the trained attribute that was set before stimulus presentation, together with a differential “warm-up” of the corresponding pathways. This early differential activation in ventral (e.g., IFG) and dorsal (e.g., IPL) regions correlated with individuals' behavioral improvement from spectral and spatial cues, respectively, indicating a critical role for rapid feature-specific tuning of auditory processing streams in speech segregation and identification. The feature-specific effects at later stages (200–400 ms) may reflect learning-induced alterations in task-related processes, including stimulus classification based on different sound attributes in the anterior and posterior associative auditory cortex, nonspatial and spatial working memory in prefrontal and parietal cortices, and response selection, preparation, and execution in motor-related areas. Our results are consistent with the temporal sequence of γ-band increases reported in 2 MEG studies: over the left inferior frontal and left posterior parietal cortex during the delayed maintenance phase of auditory pattern (Kaiser et al. 2003) and spatial (Lutzenberger et al. 2002) working memory tasks, respectively, and over the prefrontal cortex and higher-order executive networks during the response in both studies. Moreover, by taking advantage of the temporal resolution of MEG, our findings shed light on the timing of rapid learning-induced modulation of the ventral (nonspatial) and dorsal (spatial) pathways, complementing prior fMRI studies (Alain et al. 2001; Ahveninen et al. 2006; Degerman et al. 2006; Paltoglou et al. 2011). Our results suggest that neural systems underlying learning and memory are quickly and adaptively adjusted depending on goal-directed behavior.
Such adjustments may reflect top-down attention to task-relevant attributes that optimizes the processing of differences in stimulus frequency or location along the hierarchical auditory processing streams (Woods and Alain 1993; Woods et al. 1994, 2001). Further research combining MEG and fMRI may help clarify the neural interactions underlying such rapid neuroplastic changes and could help determine whether these rapid changes in source activity are precursors to long-term changes as training continues.

In summary, a 45-min training session aimed to improve participants' abilities to use ƒ0 or location cues to separate and identify concurrent vowels yielded behavioral benefits specific to the trained attribute. Gains in performance coincided with rapid feature-specific changes in source activity along the ventral “what” and dorsal “where” auditory pathways, respectively. These group differences reflect a rapid recalibration of the perceptual system with training and cannot be easily accounted for by procedural learning, because the stimulus-response requirements were identical in both groups. Taken together, this study provides the first neuromagnetic evidence for rapid perceptual learning in humans and shows that attention can be quickly and adaptively allocated to sound identity and sound location, an effect that is mediated by the differential engagement of brain areas along the cerebral ventral and dorsal streams.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/

Funding

This research was supported by grants from the Canadian Institutes of Health Research (MOP106619), the Natural Sciences and Engineering Research Council of Canada, Chinese State Scholarship Fund, the “973” National Basic Research Program of China (2009CB320900), and the National Natural Science Foundation of China (31170985).


Notes

Conflict of Interest: None declared.

References

- Adriani M, Maeder P, Meuli R, Thiran AB, Frischknecht R, Villemure JG, Mayer J, Annoni JM, Bogousslavsky J, Fornari E, et al. Sound recognition and localization in man: specialized cortical networks and effects of acute circumscribed lesions. Exp Brain Res. 2003;153:591–604. doi: 10.1007/s00221-003-1616-0.

- Ahissar M, Hochstein S. Attentional control of early perceptual learning. Proc Natl Acad Sci USA. 1993;90:5718–5722. doi: 10.1073/pnas.90.12.5718.

- Ahissar M, Nahum M, Nelken I, Hochstein S. Reverse hierarchies and sensory learning. Philos Trans R Soc Lond B Biol Sci. 2009;364:285–299. doi: 10.1098/rstb.2008.0253.

- Ahveninen J, Jaaskelainen IP, Raij T, Bonmassar G, Devore S, Hamalainen M, Levanen S, Lin FH, Sams M, Shinn-Cunningham BG, et al. Task-modulated “what” and “where” pathways in human auditory cortex. Proc Natl Acad Sci USA. 2006;103:14608–14613. doi: 10.1073/pnas.0510480103.

- Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc Natl Acad Sci USA. 2001;98:12301–12306. doi: 10.1073/pnas.211209098.

- Alain C, Campeanu S, Tremblay K. Changes in sensory evoked responses coincide with rapid improvement in speech identification performance. J Cogn Neurosci. 2010;22:392–403. doi: 10.1162/jocn.2009.21279.

- Alain C, He Y, Grady C. The contribution of the inferior parietal lobe to auditory spatial working memory. J Cogn Neurosci. 2008;20:285–295. doi: 10.1162/jocn.2008.20014.

- Alain C, Reinke K, McDonald KL, Chau W, Tam F, Pacurar A, Graham S. Left thalamo-cortical network implicated in successful speech separation and identification. Neuroimage. 2005;26:592–599. doi: 10.1016/j.neuroimage.2005.02.006.

- Alain C, Shen D, Yu H, Grady C. Dissociable memory- and response-related activity in parietal cortex during auditory spatial working memory. Front Psychol. 2010;1:202. doi: 10.3389/fpsyg.2010.00202.

- Alain C, Snyder JS, He Y, Reinke KS. Changes in auditory cortex parallel rapid perceptual learning. Cereb Cortex. 2007;17:1074–1084. doi: 10.1093/cercor/bhl018.

- Arnott SR, Alain C. The auditory dorsal pathway: orienting vision. Neurosci Biobehav Rev. 2011;35:2162–2173. doi: 10.1016/j.neubiorev.2011.04.005.

- Arnott SR, Bardouille T, Ross B, Alain C. Neural generators underlying concurrent sound segregation. Brain Res. 2011;1387:116–124. doi: 10.1016/j.brainres.2011.02.062.

- Arnott SR, Binns MA, Grady CL, Alain C. Assessing the auditory dual-pathway model in humans. Neuroimage. 2004;22:401–408. doi: 10.1016/j.neuroimage.2004.01.014.

- Assmann PF, Summerfield Q. The contribution of waveform interactions to the perception of concurrent vowels. J Acoust Soc Am. 1994;95:471–484. doi: 10.1121/1.408342.

- Bajo VM, Nodal FR, Moore DR, King AJ. The descending corticocollicular pathway mediates learning-induced auditory plasticity. Nat Neurosci. 2010;13:253–260. doi: 10.1038/nn.2466.

- Bakin JS, South DA, Weinberger NM. Induction of receptive field plasticity in the auditory cortex of the guinea pig during instrumental avoidance conditioning. Behav Neurosci. 1996;110:905–913. doi: 10.1037//0735-7044.110.5.905.

- Bakin JS, Weinberger NM. Classical conditioning induces CS-specific receptive field plasticity in the auditory cortex of the guinea pig. Brain Res. 1990;536:271–286. doi: 10.1016/0006-8993(90)90035-a.

- Ben-David BM, Campeanu S, Tremblay KL, Alain C. Auditory evoked potentials dissociate rapid perceptual learning from task repetition without learning. Psychophysiology. 2011;48:797–807. doi: 10.1111/j.1469-8986.2010.01139.x.

- Bishop CW, Miller LM. A multisensory cortical network for understanding speech in noise. J Cogn Neurosci. 2009;21:1790–1805. doi: 10.1162/jocn.2009.21118.

- Cheyne D, Bakhtazad L, Gaetz W. Spatiotemporal mapping of cortical activity accompanying voluntary movements using an event-related beamforming approach. Hum Brain Mapp. 2006;27:213–229. doi: 10.1002/hbm.20178.

- Clarke S, Bellmann Thiran A, Maeder P, Adriani M, Vernet O, Regli L, Cuisenaire O, Thiran JP. What and where in human audition: selective deficits following focal hemispheric lesions. Exp Brain Res. 2002;147:8–15. doi: 10.1007/s00221-002-1203-9.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Degerman A, Rinne T, Salmi J, Salonen O, Alho K. Selective attention to sound location or pitch studied with fMRI. Brain Res. 2006;1077:123–134. doi: 10.1016/j.brainres.2006.01.025.

- Degerman A, Rinne T, Sarkka AK, Salmi J, Alho K. Selective attention to sound location or pitch studied with event-related brain potentials and magnetic fields. Eur J Neurosci. 2008;27:3329–3341. doi: 10.1111/j.1460-9568.2008.06286.x.

- Dos Santos Sequeira S, Specht K, Moosmann M, Westerhausen R, Hugdahl K. The effects of background noise on dichotic listening to consonant-vowel syllables: an fMRI study. Laterality. 2010;15:577–596. doi: 10.1080/13576500903045082.

- Du Y, He Y, Ross B, Bardouille T, Wu XH, Li L, Alain C. Human auditory cortex activity shows additive effects of spectral and spatial cues during speech segregation. Cereb Cortex. 2011;21:698–707. doi: 10.1093/cercor/bhq136.

- Du Y, Wang Q, Zhang Y, Wu XH, Li L. Perceived target-masker separation unmasks responses of lateral amygdala to the emotionally conditioned target sounds in awake rats. Neuroscience. 2012;225:249–257. doi: 10.1016/j.neuroscience.2012.08.022.

- Edeline JM, Pham P, Weinberger NM. Rapid development of learning-induced receptive field plasticity in the auditory cortex. Behav Neurosci. 1993;107:539–551. doi: 10.1037//0735-7044.107.4.539.

- Fritz J, Elhilali M, Shamma S. Active listening: task-dependent plasticity of spectrotemporal receptive fields in primary auditory cortex. Hear Res. 2005a;206:159–176. doi: 10.1016/j.heares.2005.01.015.

- Fritz J, Elhilali M, Shamma S. Differential dynamic plasticity of A1 receptive fields during multiple spectral tasks. J Neurosci. 2005b;25:7623–7635. doi: 10.1523/JNEUROSCI.1318-05.2005.

- Fritz J, Shamma S, Elhilali M, Klein D. Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci. 2003;6:1216–1223. doi: 10.1038/nn1141.

- Hawkey DJ, Amitay S, Moore DR. Early and rapid perceptual learning. Nat Neurosci. 2004;7:1055–1056. doi: 10.1038/nn1315.

- Holmes CJ, Hoge R, Collins L, Woods R, Toga AW, Evans AC. Enhancement of MR images using registration for signal averaging. J Comput Assist Tomogr. 1998;22:324–333. doi: 10.1097/00004728-199803000-00032.

- Hussain Z, McGraw PV, Sekuler AB, Bennett PJ. The rapid emergence of stimulus specific perceptual learning. Front Psychol. 2012;3:226. doi: 10.3389/fpsyg.2012.00226.

- Kacelnik O, Nodal FR, Parsons CH, King AJ. Training-induced plasticity of auditory localization in adult mammals. PLoS Biol. 2006;4:e71. doi: 10.1371/journal.pbio.0040071.

- Kaiser J, Ripper B, Birbaumer N, Lutzenberger W. Dynamics of gamma-band activity in human magnetoencephalogram during auditory pattern working memory. Neuroimage. 2003;20:816–827. doi: 10.1016/S1053-8119(03)00350-1.

- Karni A, Bertini G. Learning perceptual skills: behavioral probes into adult cortical plasticity. Curr Opin Neurobiol. 1997;7:530–535. doi: 10.1016/s0959-4388(97)80033-5.

- Karni A, Meyer G, Rey-Hipolito C, Jezzard P, Adams MM, Turner R, Ungerleider LG. The acquisition of skilled motor performance: fast and slow experience-driven changes in primary motor cortex. Proc Natl Acad Sci USA. 1998;95:861–868. doi: 10.1073/pnas.95.3.861.

- Krumbholz K, Eickhoff SB, Fink GR. Feature- and object-based attentional modulation in the human auditory “where” pathway. J Cogn Neurosci. 2007;19:1721–1733. doi: 10.1162/jocn.2007.19.10.1721.

- Loui P, Wu EH, Wessel DL, Knight RT. A generalized mechanism for perception of pitch patterns. J Neurosci. 2009;29:454–459. doi: 10.1523/JNEUROSCI.4503-08.2009.

- Lutzenberger W, Ripper B, Busse L, Birbaumer N, Kaiser J. Dynamics of gamma-band activity during an audiospatial working memory task in humans. J Neurosci. 2002;22:5630–5638. doi: 10.1523/JNEUROSCI.22-13-05630.2002.

- Maeder PP, Meuli RA, Adriani M, Bellmann A, Fornari E, Thiran JP, Pittet A, Clarke S. Distinct pathways involved in sound recognition and localization: a human fMRI study. Neuroimage. 2001;14:802–816. doi: 10.1006/nimg.2001.0888.

- Mazzoni P, Bracewell RM, Barash S, Andersen RA. Spatially tuned auditory responses in area LIP of macaques performing delayed memory saccades to acoustic targets. J Neurophysiol. 1996;75:1233–1241. doi: 10.1152/jn.1996.75.3.1233.

- Molholm S, Sehatpour P, Mehta AD, Shpaner M, Gomez-Ramirez M, Ortigue S, Dyke JP, Schwartz TH, Foxe JJ. Audio-visual multisensory integration in superior parietal lobule revealed by human intracranial recordings. J Neurophysiol. 2006;96:721–729. doi: 10.1152/jn.00285.2006.

- Paltoglou AE, Sumner CJ, Hall DA. Mapping feature-sensitivity and attentional modulation in human auditory cortex with functional magnetic resonance imaging. Eur J Neurosci. 2011;33:1733–1741. doi: 10.1111/j.1460-9568.2011.07656.x.

- Rämä P, Poremba A, Sala JB, Yee L, Malloy M, Mishkin M, Courtney SM. Dissociable functional cortical topographies for working memory maintenance of voice identity and location. Cereb Cortex. 2004;14:768–780. doi: 10.1093/cercor/bhh037.

- Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proc Natl Acad Sci USA. 2000;97:11800–11806. doi: 10.1073/pnas.97.22.11800.

- Richer F, Alain C, Achim A, Bouvier G, Saint-Hilaire JM. Intracerebral amplitude distributions of the auditory evoked potential. Electroencephalogr Clin Neurophysiol. 1989;74:202–208. doi: 10.1016/0013-4694(89)90006-0.

- Robinson SE. Localization of event-related activity by SAM(erf). Neurol Clin Neurophysiol. 2004;2004:109.

- Robinson SE, Vrba J. Functional neuroimaging by synthetic aperture magnetometry (SAM). In: Yoshimoto T, Kotani M, Kuriki S, Karibe H, Nakasato N, editors. Recent advances in biomagnetism. Sendai: Tohoku University Press; 1998. pp. 302–305.

- Ross B, Hillyard SA, Picton TW. Temporal dynamics of selective attention during dichotic listening. Cereb Cortex. 2010;20:1360–1371. doi: 10.1093/cercor/bhp201.

- Shtyrov Y, Nikulin VV, Pulvermuller F. Rapid cortical plasticity underlying novel word learning. J Neurosci. 2010;30:16864–16867. doi: 10.1523/JNEUROSCI.1376-10.2010.

- Smith KR, Hsieh IH, Saberi K, Hickok G. Auditory spatial and object processing in the human planum temporale: no evidence for selectivity. J Cogn Neurosci. 2010;22:632–639. doi: 10.1162/jocn.2009.21196.

- Spierer L, Tardif E, Sperdin H, Murray MM, Clarke S. Learning-induced plasticity in auditory spatial representations revealed by electrical neuroimaging. J Neurosci. 2007;27:5474–5483. doi: 10.1523/JNEUROSCI.0764-07.2007.

- Stuart GP, Jones DM. Priming the identification of environmental sounds. Q J Exp Psychol A Hum Exp Psychol. 1995;48:741–761. doi: 10.1080/14640749508401413.

- Vaadia E, Benson DA, Hienz RD, Goldstein MH Jr. Unit study of monkey frontal cortex: active localization of auditory and of visual stimuli. J Neurophysiol. 1986;56:934–952. doi: 10.1152/jn.1986.56.4.934.

- Van Veen BD, van Drongelen W, Yuchtman M, Suzuki A. Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans Biomed Eng. 1997;44:867–880. doi: 10.1109/10.623056.

- Watson CS. Time course of auditory perceptual learning. Ann Otol Rhinol Laryngol Suppl. 1980;89:96–102.

- Wenzel EM, Arruda M, Kistler DJ, Wightman FL. Localization using nonindividualized head-related transfer functions. J Acoust Soc Am. 1993;94:111–123. doi: 10.1121/1.407089.

- Wightman FL, Kistler DJ. Headphone simulation of free-field listening. I: stimulus synthesis. J Acoust Soc Am. 1989a;85:858–867. doi: 10.1121/1.397557.

- Wightman FL, Kistler DJ. Headphone simulation of free-field listening. II: psychophysical validation. J Acoust Soc Am. 1989b;85:868–878. doi: 10.1121/1.397558.

- Wong PC, Uppunda AK, Parrish TB, Dhar S. Cortical mechanisms of speech perception in noise. J Speech Lang Hear Res. 2008;51:1026–1041. doi: 10.1044/1092-4388(2008/075).

- Woods DL, Alain C. Feature processing during high-rate auditory selective attention. Percept Psychophys. 1993;53:391–402. doi: 10.3758/bf03206782.

- Woods DL, Alain C, Diaz R, Rhodes D, Ogawa KH. Location and frequency cues in auditory selective attention. J Exp Psychol Hum Percept Perform. 2001;27:65–74. doi: 10.1037//0096-1523.27.1.65.

- Woods DL, Alho K, Algazi A. Stages of auditory feature conjunction: an event-related brain potential study. J Exp Psychol Hum Percept Perform. 1994;20:81–94. doi: 10.1037//0096-1523.20.1.81.

- Wright BA, Fitzgerald MB. Different patterns of human discrimination learning for two interaural cues to sound-source location. Proc Natl Acad Sci USA. 2001;98:12307–12312. doi: 10.1073/pnas.211220498.

- Zatorre RJ, Bouffard M, Ahad P, Belin P. Where is ‘where’ in the human auditory cortex? Nat Neurosci. 2002;5:905–909. doi: 10.1038/nn904.
