Abstract

In this paper, the existence, uniqueness and uniform stability of memristor-based fractional-order neural networks (MFNNs) with two different types of memductance functions are investigated. Moreover, we formulate complex-valued memristor-based fractional-order neural networks (CVMFNNs) with two different types of memductance functions and analyze the existence, uniqueness and uniform stability of such networks. By using the Banach contraction principle and analysis techniques, some sufficient conditions are obtained to ensure the existence, uniqueness and uniform stability of the considered MFNNs and CVMFNNs. The analysis is based on the theory of fractional-order differential equations with discontinuous right-hand sides. Finally, four numerical examples are presented to show the effectiveness of the theoretical results.

Keywords: Fractional-order, Memristor-based neural networks, Banach contraction principle, Time delays

Introduction

Fractional calculus is an old branch of mathematics that deals with derivatives and integrals of arbitrary non-integer order. It was first introduced about 300 years ago. Owing to its complexity and the lack of an application background, it did not attract much attention for a long time. Recently, it has been applied to model many real-world phenomena in physics, engineering and economics, such as dielectric polarization, electromagnetic waves, viscoelastic systems, heat conduction, biology and finance (Podlubny 1999; Kilbas et al. 2006; Ahmeda and Elgazzar 2007; Hilfer 2000). For such phenomena, a fractional-order model can give more accurate results than the corresponding integer-order model. This rests on two main advantages of fractional-order models over their integer-order counterparts: first, the fractional order is an additional parameter that enriches the system performance by adding one degree of freedom; second, fractional derivatives provide an excellent instrument for describing the memory and hereditary properties of various processes. That is, a fractional-order model has infinite memory. Because of this wide range of applications, fractional calculus has attracted the attention of many researchers, and some good results have been reported in the literature; see Laskin (2000), Deng and Li (2005), Delavari et al. (2012), Peng et al. (2008), Wu et al. (2009) and references therein.

In the past few decades, the stability analysis of neural networks has received considerable attention, and neural networks have been applied in various fields such as communication systems, image processing, signal processing, pattern recognition, optimization problems and other engineering areas; see Seow et al. (2010), Guo and Li (2012), Bouzerdoum and Pattison (1993), Kosko (1988) and references therein. In Wu et al. (2012), the authors studied the robust asymptotic stability of uncertain BAM neural networks with both interval time-varying delays and stochastic disturbances. Some new synchronization conditions were obtained in Yang et al. (2014) for discontinuous neural networks with time-varying mixed delays by using state feedback and impulsive control. In recent years, fractional calculus, owing to its significant features (more degrees of freedom and infinite memory), has been used to model artificial neural networks; the fractional-order formulation of neural network models is also justified by research results on biological neurons. The study of fractional-order neural network models is more complex because of the solution methods of fractional calculus. Some researchers have analyzed fractional-order neural networks and proposed interesting results; see Yu et al. (2012), Kaslik and Sivasundaram (2012), Chen et al. (2013), Boroomand and Menhaj (2009), Zou et al. (2014) and references therein.

On the other hand, potential applications of neural networks call for new theory that supports novel or more effective functions and mechanisms, in particular applications that involve complex-valued signals (Hirose 2012; Nitta 2004; Tanaka and Aihara 2009). This indicates that the dynamic analysis of complex-valued neural networks is very important. A complex-valued neural network is an extension of a real-valued neural network with complex-valued states, outputs, connection weights and activation functions. The use of complex-valued inputs/outputs, weights and activation functions makes it possible to increase the functionality and performance of neural networks and to reduce the training time. In real-valued neural networks, the activation function is usually chosen to be bounded and analytic. However, in the complex domain, according to Liouville's theorem (Mathews and Howell 1997), every bounded entire function must be constant. Thus, if the activation function is entire and bounded in the complex domain, then it is constant, which is not suitable. Therefore, choosing an appropriate activation function is the main challenge in complex-valued neural networks. Compared with real-valued recurrent neural networks, research on complex-valued ones has progressed slowly because their properties are more complicated. Recently, some authors have focused on these properties and proposed interesting results; see Hu and Wang (2012), Duan and Song (2010), Rao and Murthy (2009), Zhou and Song (2013), Huang et al. (2014), Chen and Song (2013), Xu et al. (2013) and references therein.

The memristor is a two-terminal nonlinear circuit element that was introduced comparatively recently in electronic circuit theory. It was first postulated theoretically by Chua (1971), and a memristor device was later designed and fabricated by a team at the Hewlett-Packard Company (Tour and He 2008; Strukov et al. 2008). After a practical memristor device was realized, the memristor became a topic of great interest because of its potential applications in nonvolatile memory storage, computers with no booting time, brain-like computers, etc. This circuit element shares many properties of resistors and has the same unit of measurement (the ohm). The memristor has attracted much attention because of two main properties: its memory characteristic and its nanometer dimensions. The memory characteristic is determined by its physical structure and external input. When the voltage applied to a memristor is turned off, the memristor remembers its most recent value until it is turned on the next time. It is well known that the memristor exhibits features similar to those of neurons in the human brain. Based on these features, the memristor has been used to build a new model of neural networks. Neural networks can be constructed from nonlinear circuits and have been studied extensively. In such a circuit, the self-feedback connection weights and the connection weights are implemented by resistors. If memristors are used instead of resistors, the resulting model is called a memristor-based neural network. A memristor-based neural network is a state-dependent switching system, because the values of the connection weights change according to the state.
Very recently, the dynamic behaviors of memristor-based neural networks have been studied by many researchers, and some excellent results have been reported in the literature; see Zhang et al. (2013), Yang et al. (2014), Wu and Zeng (2012, 2013, 2014), Wu et al. (2011, 2013a, b), Cai and Huang (2014), Guo et al. (2013), Qi et al. (2014), Wen et al. (2013), Chen et al. (2014) and references therein. Because it is a state-dependent switching system, a memristor-based neural network is a differential equation with a discontinuous right-hand side, so its solutions cannot be defined in the classical sense. Filippov (1988) proposed a solution concept in which a differential equation with a discontinuous right-hand side is transformed into a differential inclusion. Most researchers have investigated memristor-based neural networks within the framework of Filippov solutions; see Zhang et al. (2013), Yang et al. (2014), Wu and Zeng (2012, 2013, 2014), Wu et al. (2011, 2013a, b), Cai and Huang (2014), Guo et al. (2013), Qi et al. (2014), Wen et al. (2013), Chen et al. (2014) and references therein. In Yang et al. (2014), the authors studied the exponential synchronization of memristive Cohen–Grossberg neural networks with mixed delays. Several sufficient conditions for the global exponential stability of memristive neural networks with time-varying impulses were derived in Qi et al. (2014). In Wu and Zeng (2014), the authors investigated the passivity problem for memristor-based neural networks with two different types of memductance functions and proposed some sufficient conditions for passivity.

As the above discussion shows, the analysis of fractional-order neural networks and of memristor-based neural networks has become an active research area. Given the applications and features of both, it is necessary to analyze the dynamic behaviors of memristor-based fractional-order neural networks (MFNNs). In Chen et al. (2014), the authors introduced memristor-based fractional-order neural networks and established sufficient conditions for global Mittag–Leffler stability and synchronization by using the Lyapunov method. However, the existence, uniqueness and uniform stability of MFNNs with two different types of memductance functions have not been investigated in the existing literature. In this paper, we consider both real-valued and complex-valued memristor-based fractional-order neural networks (CVMFNNs) with time delay and two different types of memductance functions. Sufficient conditions that guarantee the existence, uniqueness and uniform stability of both networks are derived by using the Banach contraction principle and the framework of Filippov solutions.

The rest of this paper is organized as follows. In the “Preliminaries” section, the models of real-valued and complex-valued MFNNs with time delays and two different types of memductance functions are described, and the necessary definitions, lemmas and assumptions are provided. In the “Main results” section, sufficient conditions for the existence and uniqueness of the solution and for the uniform stability of both networks are derived by using the Banach contraction principle and the framework of Filippov solutions. In the “Numerical examples” section, four numerical examples are given to demonstrate the effectiveness of the theoretical results. Finally, conclusions are given in the “Conclusion” section.

Notation

$\mathbb{R}^n$ and $\mathbb{C}^n$ denote the n-dimensional Euclidean space and the n-dimensional complex space, respectively. Throughout this paper, the solutions of all the systems considered are intended in Filippov’s sense. co denotes the closure of the convex hull generated by real numbers and . Similarly, co denotes the closure of the convex hull generated by complex numbers and . denote the complex-valued function, where .

Preliminaries

In this section, we give some basic definitions, lemmas and assumptions that are used later to derive the main results of this paper.

Definition 1

The fractional integral of order for a function f is defined as

| 1 |

where and is the gamma function defined as .
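For reference, a commonly used textbook form of this operator (see, e.g., Podlubny 1999) is sketched below. The lower limit $t_0$ and the integration variable $s$ are notational assumptions made here and may differ from the symbols used in Eq. (1).

```latex
I^{\alpha} f(t) = \frac{1}{\Gamma(\alpha)} \int_{t_0}^{t} (t-s)^{\alpha-1} f(s)\,\mathrm{d}s,
\qquad t \ge t_0,\ \alpha > 0,
\qquad
\Gamma(\alpha) = \int_{0}^{\infty} s^{\alpha-1} e^{-s}\,\mathrm{d}s .
```

As a quick check, for the constant function $f \equiv 1$ this gives $I^{\alpha} 1 = (t-t_0)^{\alpha}/\Gamma(\alpha+1)$, which reduces to $t - t_0$ when $\alpha = 1$.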

Definition 2

The Caputo fractional derivative of order for a function (the set of all order continuous differentiable functions on ) is defined by

| 2 |

where and n is a positive integer such that .
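To make the definition concrete, the following minimal sketch approximates a Caputo derivative numerically for an order between 0 and 1, where the standard form is $D^{\alpha} f(t) = \frac{1}{\Gamma(1-\alpha)} \int_{t_0}^{t} (t-s)^{-\alpha} f'(s)\,\mathrm{d}s$. It uses the L1 discretization, a standard scheme from the numerical literature that is not taken from this paper; the function name `caputo_l1` and the uniform grid are assumptions made for illustration.

```python
import math
import numpy as np


def caputo_l1(f_vals, h, alpha):
    """Approximate the Caputo derivative of order alpha in (0, 1) at the last grid point.

    f_vals holds samples f(t_0), f(t_0 + h), ..., f(t_0 + n*h) on a uniform grid.
    """
    n = len(f_vals) - 1
    k = np.arange(n)  # k = 0, ..., n-1
    # L1 weights: b_k = (k+1)^(1-alpha) - k^(1-alpha)
    b = (k + 1.0) ** (1.0 - alpha) - k ** (1.0 - alpha)
    diffs = np.diff(f_vals)  # f(t_{j+1}) - f(t_j) for j = 0, ..., n-1
    # The increment over [t_j, t_{j+1}] is paired with the weight b_{n-1-j}.
    return h ** (-alpha) / math.gamma(2.0 - alpha) * np.dot(b[::-1], diffs)


if __name__ == "__main__":
    alpha = 0.5
    t = np.linspace(0.0, 1.0, 1001)
    h = t[1] - t[0]
    # For f(t) = t^2 the exact Caputo derivative is 2 t^(2-alpha) / Gamma(3-alpha).
    print(caputo_l1(t ** 2, h, alpha), 2.0 / math.gamma(3.0 - alpha))
```

The scheme replaces $f$ by its piecewise-linear interpolant, so it is exact for linear functions and only approximate otherwise; the history sum over all past grid points reflects the infinite-memory property of fractional derivatives.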

Lemma 1

If the Caputo fractional derivative is integrable, then:

| 3 |

In particular, for , one can obtain:

| 4 |
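For orientation, the form in which this lemma is usually stated in the fractional-calculus literature is sketched below. The symbols ($n-1 < \alpha < n$, initial time $t_0$) are assumptions made here and may differ from those of Eqs. (3) and (4).

```latex
I^{\alpha}\, D^{\alpha} f(t) = f(t) - \sum_{k=0}^{n-1} \frac{f^{(k)}(t_0)}{k!}\,(t-t_0)^{k},
\qquad n-1 < \alpha < n,
\\[4pt]
I^{\alpha}\, D^{\alpha} f(t) = f(t) - f(t_0),
\qquad 0 < \alpha < 1 .

% Worked check with f(t) = t, t_0 = 0, 0 < \alpha < 1:
D^{\alpha} t = \frac{t^{1-\alpha}}{\Gamma(2-\alpha)},
\qquad
I^{\alpha}\!\left[\frac{t^{1-\alpha}}{\Gamma(2-\alpha)}\right]
 = \frac{1}{\Gamma(2-\alpha)} \cdot \frac{\Gamma(2-\alpha)}{\Gamma(2)}\, t = t = f(t) - f(0).
```

The worked check uses the power rule $I^{\alpha} t^{\beta} = \frac{\Gamma(\beta+1)}{\Gamma(\beta+1+\alpha)}\, t^{\beta+\alpha}$ with $\beta = 1-\alpha$.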

Consider the real-valued memristor-based fractional-order neural networks (RVMFNNs) described by the following differential equation:

| 5 |

where , n corresponds to the number of units in the neural network, denotes the state variable associated with the pth neuron, is a constant, denotes the external input, and are the nonlinear activation functions of the qth unit at time t, and and are the memristive connection weights without and with time delays, respectively, which are defined as

| 6 |

in which and represent the memductances of the memristors and . Here, represents the memristor between the activation function and , and represents the memristor between the activation function and .

From the physical structure of a memristor device, one sees that

| 7 |

where and are the charges corresponding to the memristors and , and and denote the magnetic fluxes corresponding to the memristors and , respectively.

The initial conditions associated with (5) are of the form

| 8 |

where and norm of an element in is .

Consider the CVMFNNs described by the following differential equation:

| 9 |

where , n corresponds to the number of units in the neural network, denotes the complex-valued state variable associated with the pth neuron, is a constant, denotes the external input, and are the nonlinear complex-valued activation functions of the qth unit at time t, and and are the complex-valued memristive connection weights without and with time delays, respectively, which are defined as

| 10 |

in which and represent the memductances of the memristors and . Here, represents the memristor between the activation function and , and represents the memristor between the activation function and .

From the physical structure of a memristor device, one sees that

| 11 |

where and are the charges corresponding to the memristors and , and and denote the magnetic fluxes corresponding to the memristors and , respectively.

The initial conditions associated with (9) are of the form

| 12 |

where and norm of an element in is and .

Many studies show that pinched hysteresis loops are the fingerprint of memristive devices. Under different pinched hysteresis loops, memristive systems evolve in different ways. It is generally known that the pinched hysteresis loop is due to the nonlinearity of the memductance function. In this paper, we consider two typical memductance functions, each for the real-valued and the complex-valued networks, which gives the following four cases.

Case 1

The memductance functions and are given by

| 13 |

where and are constants, .

Case 2

The memductance functions and are given by

| 14 |

where and are constants, .

Case 3

The complex-valued memductance functions and are given by

| 15 |

where and are constants, .

Case 4

The complex-valued memductance functions and are given by

| 16 |

where and are constants, .

According to the features of the memristors given in Cases 1–4, the following four cases can occur.

Case 1′

In Case 1, we have

| 17 |

where the switching jumps , connection weights and are constants, .
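The state-dependent switching described in this case can be sketched in code. The snippet below is a minimal illustration that assumes each weight takes one constant value while the magnitude of the state is below the switching jump and another constant value above it; the function names and all numerical values are hypothetical and are not taken from Eq. (17).

```python
def memristive_weight(x_p, threshold, w_inside, w_outside):
    """Weight seen by neuron p: w_inside when |x_p| < threshold, w_outside when |x_p| > threshold.

    At |x_p| == threshold the switching law leaves the value open; this sketch
    arbitrarily returns w_outside there.
    """
    return w_inside if abs(x_p) < threshold else w_outside


def filippov_hull(w_inside, w_outside):
    """Closed interval co{w_inside, w_outside} in which a Filippov selection takes its values."""
    return (min(w_inside, w_outside), max(w_inside, w_outside))


if __name__ == "__main__":
    # Hypothetical values: switching jump 1.0, weights 0.2 and -0.3.
    print(memristive_weight(0.4, 1.0, 0.2, -0.3))
    print(memristive_weight(1.7, 1.0, 0.2, -0.3))
    print(filippov_hull(0.2, -0.3))
```

Because the weight jumps at the switching threshold, the right-hand side of the network is discontinuous there, which is why the convex hull of the two values is the natural object in the Filippov framework.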

Case 2′

In Case 2, and are continuous functions, and

| 18 |

where and are constants, .

Case 3′

In Case 3, we have

| 19 |

where the switching jumps , connection weights and are constants, .

Case 4′

In Case 4, and are complex-valued continuous functions, and

| 20 |

where , and are constants, .

Remark 1

A memristor-based neural network is a special kind of differential equation with a discontinuous right-hand side, because it is a state-dependent switching system: the connection weights change depending on the state variable. Consequently, its solutions cannot be obtained in the classical sense. Filippov (1988) proposed a solution concept in which a differential equation with a discontinuous right-hand side is transformed into a differential inclusion. Many authors have studied memristor-based neural networks and obtained good results within the framework of Filippov solutions; see Zhang et al. (2013), Yang et al. (2014), Wu and Zeng (2012, 2013, 2014), Wu et al. (2011, 2013a, b), Cai and Huang (2014), Guo et al. (2013), Qi et al. (2014), Wen et al. (2013), Chen et al. (2014) and references therein. If the connection weights do not change with the state variable, then the memristor-based neural network reduces to a conventional neural network.

Definition 3

A set-valued map with nonempty values is said to be upper-semicontinuous at if, for any open set containing , there exists a neighborhood of such that . The map is said to have a closed (convex, compact) image if, for each , is closed (convex, compact).

Definition 4

For the system , with discontinuous right-hand sides, a set-valued map is defined as

where is the closure of the convex hull of set and is a Lebesgue measure of set . A solution in Filippov’s sense of the Cauchy problem for this system with initial condition is an absolutely continuous function , which satisfies and the differential inclusion:
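For reference, the standard forms of the Filippov set-valued map and of the associated differential inclusion, as commonly stated in the literature on discontinuous systems (Filippov 1988), can be sketched as follows. The symbols $K[f]$, $B(x,\delta)$ (the open ball of radius $\delta$ centred at $x$), $N$ and $\mu$ are notational assumptions made here.

```latex
K[f](x) = \bigcap_{\delta > 0} \; \bigcap_{\mu(N) = 0}
  \overline{\mathrm{co}}\,\bigl[ f\bigl(B(x,\delta) \setminus N\bigr) \bigr],
\qquad
\dot{x}(t) \in K[f](x(t)) \quad \text{for almost all } t .

% Worked example: f(x) = -\operatorname{sgn}(x)
K[f](x) =
\begin{cases}
\{-1\}, & x > 0,\\
[-1,\,1], & x = 0,\\
\{1\}, & x < 0 .
\end{cases}
```

In the worked example the map is single-valued wherever $f$ is continuous and becomes the whole interval between the one-sided limits at the discontinuity; the value of $f$ at the single point $x = 0$ is irrelevant because sets of measure zero are discarded.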

Definition 5

The solution of systems (5) and (9) is said to be stable if for any there exists such that implies for any two solutions and . It is uniformly stable if the above is independent of .

Assumption 1

satisfy the Lipschitz conditions, i.e., for any there exist positive constants such that

| 21 |
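A Lipschitz condition of this kind can be checked numerically for a candidate activation function. The sketch below estimates the smallest Lipschitz constant of `tanh` on a bounded interval from difference quotients on a grid; the choice of `tanh`, the interval and the function name `lipschitz_estimate` are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np


def lipschitz_estimate(f, lo=-5.0, hi=5.0, n=801):
    """Largest difference quotient |f(u) - f(v)| / |u - v| over all distinct grid pairs."""
    u = np.linspace(lo, hi, n)
    fu = f(u)
    du = u[:, None] - u[None, :]    # all pairwise differences u_i - u_j
    df = fu[:, None] - fu[None, :]  # matching differences f(u_i) - f(u_j)
    mask = du != 0                  # exclude the diagonal u_i == u_j
    return np.max(np.abs(df[mask]) / np.abs(du[mask]))


if __name__ == "__main__":
    # For tanh the true Lipschitz constant is 1 (the maximum of its derivative).
    print(lipschitz_estimate(np.tanh))
```

A grid estimate is only a lower bound on the true constant, so in practice one would round it up or use an analytical bound on the derivative when verifying the assumption.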

Assumption 2

and satisfy the following conditions:

Assumption 3

and satisfy the following conditions:

Assumption 4

Let , where denotes the imaginary unit, that is, . and can be expressed by separating into its real and imaginary part as

where and and and . For notational simplicity, and are denoted by and respectively.

The partial derivatives of with respect to : and exist and are continuous. Similarly, the partial derivatives of with respect to : and exist and are continuous.

- The partial derivatives and are bounded, that is, there exist positive constant numbers such that

- Also, the partial derivatives and are bounded, that is, there exist positive constant numbers such that

Then, according to the mean value theorem for multivariable functions, we have that for any

| 22 |

Assumption 5

satisfy the Lipschitz conditions in the complex domain, i.e., for any there exist positive constants such that

| 23 |

Main results

In this section, some sufficient conditions for the existence, uniqueness and uniform stability of both the RVMFNNs and the CVMFNNs considered are derived.

Real-valued memristor-based fractional-order neural networks

We first consider RVMFNNs with time delays and two different types of memductance functions. By using Filippov’s solution, differential inclusions and the Banach contraction principle, some sufficient conditions are obtained to ensure the existence, uniqueness and uniform stability of the considered RVMFNNs.

Theorem 1

Under Case 1′, if Assumptions 1–2 are satisfied, then system (5) with the initial condition (8) is uniformly stable.

Proof

By the theories of differential inclusions and set-valued maps, it follows from (5) that

| 24 |

or equivalently, for there exist measurable functions and such that

| 25 |

for and .

Consider and with . and are any two solutions of the system (5) with initial conditions where , where . We have

Multiplying both sides by , we can write

| 26 |

From (26), we have

Taking absolute values and multiplying both sides by , we get

| 27 |

From (27), we can obtain

| 28 |

The above Eq. (28) can be rewritten as

| 29 |

From (29), for any there exists a such that when . Thus, the solution is uniformly stable.

Theorem 2

Under Case 2′, if Assumptions 1–2 are satisfied, then system (5) with the initial condition (8) is uniformly stable.

Proof

By (5), it follows that

| 30 |

Transform (30) into the compact form as follows:

| 31 |

where .

Consider and with . and are any two solutions of the system (31) with initial conditions where , where . We have

Multiplying both sides by , we can write

| 32 |

From (32), we have

Taking absolute values and multiplying both sides of the above by , we get

| 33 |

From (33) we can obtain

| 34 |

The above Eq. (34) can be rewritten as

| 35 |

From (35), for any there exists a such that when . Thus, the solution is uniformly stable.

Theorem 3

If Assumptions 1 and 2 hold, then system (5) has a unique equilibrium point, which is uniformly stable.

Proof

Let and construct a mapping defined by

| 36 |

where .

Now, we will show that is a contraction mapping on endowed with the Euclidean space norm. In fact, for any two different points we have

| 37 |

Based on Assumption 1,

| 38 |

which implies that is a contraction mapping on . Hence, there exists a unique fixed point such that i.e.

| 39 |

That is

| 40 |

for which implies that is an equilibrium point of system (5). Moreover, it follows from Theorem 1 and Theorem 2 that is uniformly stable.
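The contraction argument can be illustrated numerically. The sketch below assumes constant weights, `tanh` for both activation functions, and a map of the type commonly used in such proofs, $\Phi(u) = (A + B)\tanh(u/c) + I$ with $x^{*} = u^{*}/c$; the exact map of Eq. (36), the norm and all numerical values here are illustrative assumptions (the 1-norm is used instead of the Euclidean norm for simplicity).

```python
import numpy as np


def contraction_constant(c, A, B):
    """1-norm bound max_q sum_p (|a_pq| + |b_pq|) / c_q for tanh (Lipschitz constant 1)."""
    return np.max((np.abs(A) + np.abs(B)).sum(axis=0) / c)


def equilibrium_by_iteration(c, A, B, I, tol=1e-12, max_iter=1000):
    """Iterate u <- (A + B) tanh(u / c) + I and return the equilibrium x* = u* / c."""
    u = np.zeros_like(I, dtype=float)
    for _ in range(max_iter):
        u_next = (A + B) @ np.tanh(u / c) + I
        if np.linalg.norm(u_next - u, 1) < tol:
            return u_next / c
        u = u_next
    raise RuntimeError("fixed-point iteration did not converge")


if __name__ == "__main__":
    # Hypothetical parameters chosen so that the contraction constant is below 1.
    c = np.array([2.0, 2.0])
    A = np.array([[0.3, -0.2], [0.1, 0.4]])
    B = np.array([[0.2, 0.1], [-0.1, 0.2]])
    I = np.array([0.5, -0.3])
    x_star = equilibrium_by_iteration(c, A, B, I)
    print(contraction_constant(c, A, B), x_star)
    # Residual of -c x + A f(x) + B g(x) + I at the computed point.
    print(-c * x_star + (A + B) @ np.tanh(x_star) + I)
```

At an equilibrium the delayed and undelayed states coincide, which is why both weight matrices act on the same vector. When the contraction constant is below 1, the iteration converges geometrically from any starting point, mirroring the uniqueness statement of the Banach contraction principle.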

Remark 2

If , then system (5) can be written as

| 41 |

where . Then the sufficient conditions for the existence, uniqueness and uniform stability of RVMFNNs in Theorems 1–3 reduce to those for the integer-order real-valued memristor-based neural networks (41).

Remark 3

Some sufficient conditions for the existence, uniqueness and uniform stability of RVMFNNs are derived in Theorems 1–3 based on Filippov’s solution, differential inclusion theory and the Banach contraction principle. Next, we obtain sufficient conditions for the existence, uniqueness and uniform stability of CVMFNNs in the following theorems, again based on Filippov’s solution, differential inclusion theory and the Banach contraction principle.

Complex-valued memristor-based fractional-order neural networks

In this section, we consider CVMFNNs with time delays and two different types of memductance functions. First, we separate the CVMFNNs into two equivalent RVMFNNs; then, by using Filippov’s solution, differential inclusions and the Banach contraction principle, some sufficient conditions are obtained for the existence, uniqueness and uniform stability of the considered CVMFNNs.

Theorem 4

Under Case 3′, if Assumptions 3–4 are satisfied, then system (9) with the initial condition (12) is uniformly stable.

Proof

Separating the real and imaginary parts, the complex-valued memristor-based fractional-order neural network system (9) can be expressed as

| 42 |

| 43 |
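The separation into real and imaginary parts rests on the product rule for complex numbers: a complex weight times a complex activation value contributes to both the real and the imaginary equation. The following minimal sketch shows this with real arithmetic only; the function name and the sample values are hypothetical.

```python
def split_product(a, f):
    """Return the real and imaginary parts of a * f using only real arithmetic.

    With a = aR + i*aI and f = fR + i*fI:
        Re(a f) = aR*fR - aI*fI,   Im(a f) = aR*fI + aI*fR.
    """
    aR, aI = a.real, a.imag
    fR, fI = f.real, f.imag
    return aR * fR - aI * fI, aR * fI + aI * fR


if __name__ == "__main__":
    a = complex(0.3, -0.7)  # hypothetical complex connection weight
    f = complex(1.2, 0.4)   # hypothetical complex activation value
    print(split_product(a, f), a * f)
```

Each complex-valued term of the network therefore yields two real-valued terms in the real-part equation and two in the imaginary-part equation, which is why an n-dimensional complex system becomes a 2n-dimensional real system.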

By theories of differential inclusions and set-valued maps, from (42) and (43), it follows that

| 44 |

| 45 |

or equivalently, for there exist measurable functions and such that

| 46 |

| 47 |

Clearly, for and .

Consider and with and . and are any two solutions of the system (9) with initial conditions where , where . We have

Multiplying both sides by , we can write

| 48 |

| 49 |

From (48), we have

Taking absolute values and multiplying both sides of the above by , we get

| 50 |

From (50) we can obtain

| 51 |

The above Eq. (51) can be rewritten as

| 52 |

Similarly, from Eq. (49), one obtains

Taking absolute values and multiplying both sides by , we have

| 53 |

From (53) we can obtain

| 54 |

The above Eq. (54) can be rewritten as

| 55 |

From Eqs. (52) and (55), we obtain the following form:

| 56 |

| 57 |

where

Eqs. (56) and (57) can be rewritten in the following form:

| 58 |

| 59 |

Substituting (59) into (56), we have

Similarly, substituting (58) into (57), we have

If we take,

where and .

Then Eq. (56) becomes,

| 60 |

Similarly if we take,

where and .

Then Eq. (57) becomes,

| 61 |

From Eqs. (60) and (61), for any there exists a such that when . Thus, the solution is uniformly stable.

Theorem 5

Under Case 4′, if Assumptions 3–4 are satisfied, then system (9) with the initial condition (12) is uniformly stable.

Proof

Separating the real and imaginary parts, the complex-valued memristor-based fractional-order neural network system (9) can be expressed as

| 62 |

| 63 |

| 64 |

| 65 |

Clearly, for

Consider and with and . and are any two solutions of the system (9) with initial conditions where , where . We have

Multiplying both sides by , we can write

| 66 |

| 67 |

From Eq. (66), we have

Taking absolute values and multiplying both sides by , we get

| 68 |

From (68) we can obtain

| 69 |

The above Eq. (69) can be rewritten as

| 70 |

Similarly, from Eq. (67), one obtains

Taking absolute values and multiplying both sides by , we have

| 71 |

From (71) we can obtain

| 72 |

The above Eq. (72) can be rewritten as

| 73 |

From Eqs. (70) and (73), we obtain the following form:

| 74 |

| 75 |

where

Eqs. (74) and (75) can be rewritten in the following form:

| 76 |

| 77 |

Substituting (77) into (74), we have

Similarly, substituting (76) into (75), we have

If we take,

where and .

Then Eq. (74) becomes,

| 78 |

Similarly if we take,

where and . Then Eq. (75) becomes,

| 79 |

From Eqs. (78) and (79), for any there exists a such that when . Thus, the solution is uniformly stable.

Theorem 6

If Assumptions 3–5 hold, then system (9) has a unique equilibrium point, which is uniformly stable.

Proof

Let and construct a mapping defined by

| 80 |

where .

Now, we will show that is a contraction mapping on endowed with the complex space norm. In fact, for any two different points we have

| 81 |

Based on Assumption 5,

| 82 |

which implies that is a contraction mapping on . Hence, there exists a unique fixed point such that i.e.

| 83 |

That is

| 84 |

for which implies that is an equilibrium point of system (9). Moreover, it follows from Theorem 4 and Theorem 5 that is uniformly stable.

Remark 4

If , then system (9) can be written as

| 85 |

where . Then the sufficient conditions for the existence, uniqueness and uniform stability of CVMFNNs in Theorems 4–6 reduce to those for the integer-order complex-valued memristor-based neural networks (85).

Remark 5

Many authors have investigated dynamic properties of memristor-based neural networks with time delays, such as global stability, synchronization, anti-synchronization, passivity and dissipativity; see Zhang et al. (2013), Yang et al. (2014), Wu and Zeng (2013, 2014), Chen et al. (2014), Wu and Zeng (2012), Wu et al. (2011, 2013a, b), Cai and Huang (2014), Guo et al. (2013), Qi et al. (2014), Wen et al. (2013) and references therein. In Wu and Zeng (2014), the authors investigated the passivity problem for memristor-based neural networks with two different types of memductance functions and proposed some sufficient conditions for passivity. In Chen et al. (2014), the authors introduced memristor-based fractional-order neural networks and proposed sufficient conditions that guarantee global Mittag–Leffler stability and synchronization by using the Lyapunov method. The existence, uniqueness and uniform stability of memristor-based fractional-order neural networks with two different types of memductance functions have not been investigated in the literature. In this paper, both real-valued and complex-valued MFNNs with time delay and two different types of memductance functions are considered. The results obtained here improve and extend those of previous works.

Numerical examples

In this section, we give some numerical examples to show the effectiveness of our proposed theoretical results.

Example 1

Consider memristor-based fractional-order neural networks with time delays

| 86 |

where the fractional order is chosen as and the activation functions described by

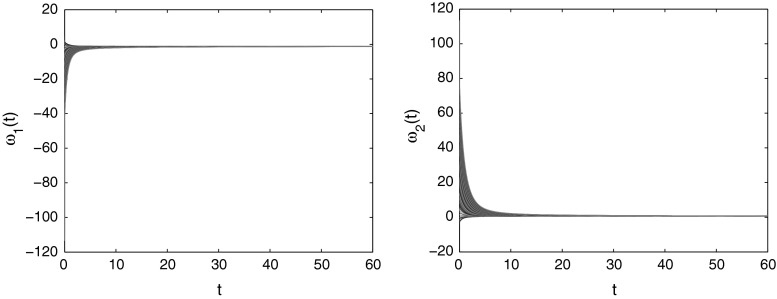

Clearly, . The Assumption 2 is verified by using the above parameters. However, system (86) has a unique uniformly stable solution according to Theorems 1 and 3. Also, according to Theorem 3, system (86) has a unique equilibrium point and which is said to be uniformly stable. Figure 1 shows that the solution of system (86) is converges uniformly to the equilibrium point .

Fig. 1.

Time responses and state trajectories of RVMFNNs (86) with
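A simulation of this kind can be sketched with an explicit fractional rectangle rule, $x_{n+1} = x_0 + \frac{h^{\alpha}}{\Gamma(\alpha+1)} \sum_{j=0}^{n} \bigl[(n+1-j)^{\alpha} - (n-j)^{\alpha}\bigr] F_j$, where $F_j$ is the right-hand side at step $j$. This scheme is a standard numerical method and is not claimed to be the one used for Fig. 1. All numerical values below (order, delay, weights, switching threshold, `tanh` activations, constant initial history) are illustrative assumptions and are not the parameters of system (86).

```python
import math
import numpy as np


def switched(x, threshold, inside, outside):
    """Row p of the weight matrix uses `inside` if |x_p| < threshold, else `outside`."""
    return np.where(np.abs(x)[:, None] < threshold, inside, outside)


def simulate(x0, alpha=0.9, tau=0.5, h=0.01, t_end=10.0):
    """Explicit fractional rectangle rule for a 2-neuron delayed memristive network."""
    # Hypothetical parameters, chosen only for illustration.
    c = np.array([1.0, 1.0])
    a_in = np.array([[0.2, -0.1], [0.1, 0.2]])
    a_out = np.array([[0.3, -0.2], [0.2, 0.3]])
    b_in = np.array([[0.1, 0.1], [-0.1, 0.1]])
    b_out = np.array([[0.2, 0.1], [-0.1, 0.2]])
    ext = np.zeros(2)
    threshold = 1.0

    n_steps = int(round(t_end / h))
    m = int(round(tau / h))            # delay measured in steps
    x = np.empty((n_steps + 1, 2))
    x[0] = x0
    rhs = np.empty((n_steps, 2))
    k = np.arange(n_steps)
    w = (k + 1.0) ** alpha - k ** alpha  # w[i] = (i+1)^alpha - i^alpha
    coef = h ** alpha / math.gamma(alpha + 1.0)

    for n in range(n_steps):
        x_del = x[n - m] if n >= m else x[0]  # constant history on [-tau, 0]
        A = switched(x[n], threshold, a_in, a_out)
        B = switched(x_del, threshold, b_in, b_out)
        rhs[n] = -c * x[n] + A @ np.tanh(x[n]) + B @ np.tanh(x_del) + ext
        # Memory sum: the right-hand side at step j gets the weight w[n - j].
        x[n + 1] = x[0] + coef * (w[n::-1] @ rhs[: n + 1])
    return x


if __name__ == "__main__":
    traj = simulate([1.5, -1.2])
    print(traj[0], traj[-1])
```

The full history sum at every step makes the cost quadratic in the number of steps, which is the numerical counterpart of the infinite memory of fractional-order models; running the simulation from two nearby initial histories and comparing the trajectories gives an informal picture of uniform stability.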

Example 2

Consider memristor-based fractional-order neural networks with time delays

| 87 |

where the fractional order is chosen as and the activation functions described by

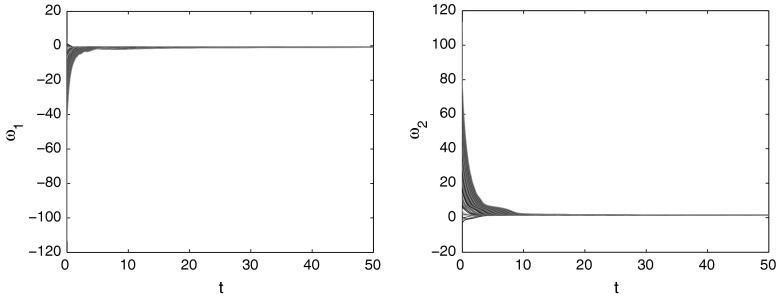

Obviously, . By using the above parameters the Assumption 2 is verified easily. Therefore, system (87) has a unique uniformly stable solution according to Theorems 2 and 3. Also, according to Theorem 3, system (87) has a unique equilibrium point and which is said to be uniformly stable. Figure 2 shows that the solution of system (87) is converges uniformly to the equilibrium point .

Fig. 2.

Time responses and state trajectories of MFNNs (87) with

Example 3

Consider a class of complex-valued memristor-based fractional-order neural networks with time delays

| 88 |

where the fractional order is chosen as and the activation functions described by

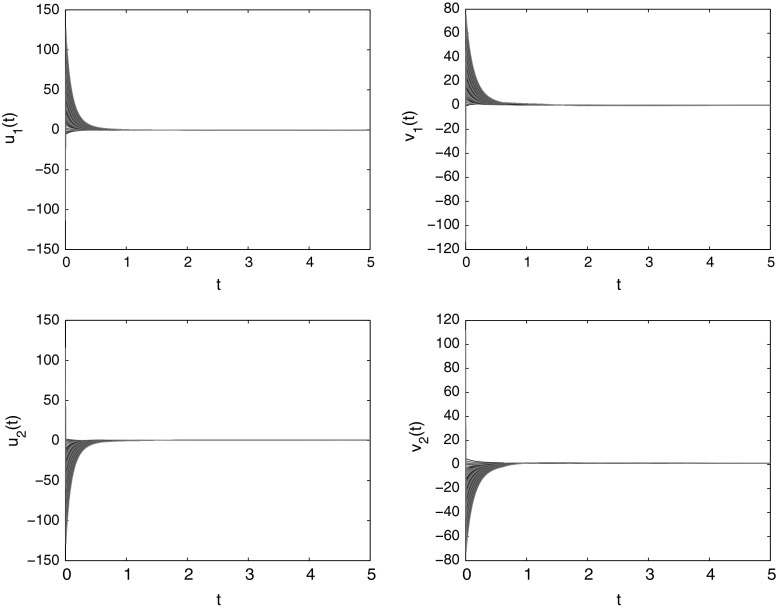

Obviously, . By using the above parameters the Assumption 3 is verified easily. Therefore, system (88) has a unique uniformly stable solution according to Theorems 4 and 6. Also, according to Theorem 6, system (88) has a unique equilibrium point and which is said to be uniformly stable. Figure 3 shows that the solution of system (88) is converges uniformly to the equilibrium point .

Fig. 3.

Time responses and state trajectories of real and imaginary parts of CVMFNNs (88) with

Example 4

Consider a class of complex-valued memristor-based fractional-order neural networks with time delays

| 89 |

where the fractional order is chosen as and the activation functions described by

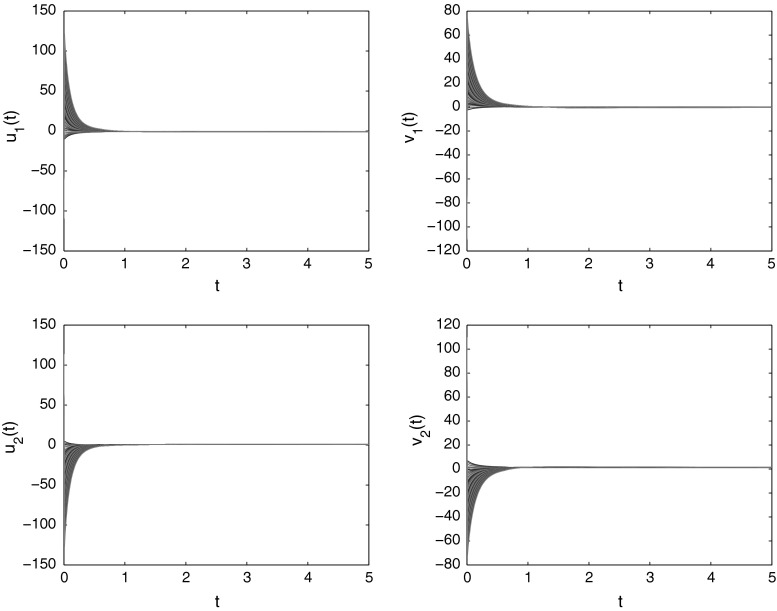

Clearly, we know that . By using the above parameters the Assumption 3 is verified easily. Moreover, system (89) has a unique uniformly stable solution according to Theorems 5 and 6. Also, according to Theorem 6, system (89) has a unique equilibrium point and which is said to be uniformly stable. Figure 4 shows that the solution of system (89) is converges uniformly to the equilibrium point .

Fig. 4.

Time responses and state trajectories of real and imaginary parts of CVMFNNs (89) with

Conclusion

In this paper, the existence and uniform stability of a class of MFNNs with time delay and two different types of memductance functions, as well as of CVMFNNs with time delay and two different types of memductance functions, have been investigated. By using the Banach contraction principle, differential inclusions and the framework of Filippov solutions, some new sufficient conditions that ensure the existence and uniform stability of the addressed MFNNs and CVMFNNs have been derived. Numerical examples demonstrate the effectiveness of the theoretical results.

Acknowledgments

This work was supported by NBHM research Project No. 2/48(7)/2012/NBHM(R.P.)/R and D-II/12669, the National Natural Science Foundation of China under Grant Nos. 61272530 and 11072059, the Natural Science Foundation of Jiangsu Province of China under Grant No. BK2012741, and the Specialized Research Fund for the Doctoral Program of Higher Education under Grant Nos. 20110092110017 and 20130092110017.

Contributor Information

R. Rakkiyappan, Email: rakkigru@gmail.com

G. Velmurugan, Email: gvmuruga@gmail.com

Jinde Cao, Email: jdcao@seu.edu.cn.

References

- Ahmeda E, Elgazzar AS. On fractional order differential equations model for nonlocal epidemics. Phys A. 2007;379:607–614. doi: 10.1016/j.physa.2007.01.010.

- Boroomand A, Menhaj M. Fractional-order Hopfield neural networks. Lecture notes in computer science. 2009;5506:883–890.

- Bouzerdoum A, Pattison TR. Neural network for quadratic optimization with bound constraints. IEEE Trans Neural Netw. 1993;4:293–303. doi: 10.1109/72.207617.

- Cai Z, Huang L. Functional differential inclusions and dynamic behaviors for memristor-based BAM neural networks with time-varying delays. Commun Nonlinear Sci Numer Simul. 2014;19:1279–1300. doi: 10.1016/j.cnsns.2013.09.004.

- Chen X, Song Q. Global stability of complex-valued neural networks with both leakage time delay and discrete time delay on time scales. Neurocomputing. 2013;121:254–264. doi: 10.1016/j.neucom.2013.04.040.

- Chen L, Chai Y, Wu R, Ma T, Zhai H. Dynamics analysis of a class of fractional-order neural networks with delay. Neurocomputing. 2013;111:190–194. doi: 10.1016/j.neucom.2012.11.034.

- Chen J, Zeng Z, Jiang P. Global Mittag–Leffler stability and synchronization of memristor-based fractional-order neural networks. Neural Netw. 2014;51:1–8. doi: 10.1016/j.neunet.2013.11.016.

- Chua LO. Memristor-the missing circuit element. IEEE Trans Circuit Theory. 1971;18:507–519. doi: 10.1109/TCT.1971.1083337.

- Delavari H, Baleanu D, Sadati J. Stability analysis of Caputo fractional-order nonlinear systems revisited. Nonlinear Dyn. 2012;67:2433–2439. doi: 10.1007/s11071-011-0157-5.

- Deng WH, Li CP. Chaos synchronization of the fractional Lu system. Phys A. 2005;353:61–72. doi: 10.1016/j.physa.2005.01.021.

- Duan CJ, Song Q. Boundedness and stability for discrete-time delayed neural networks with complex-valued linear threshold neurons. Discrete Dyn Nat Soc. 2010; Article ID 368379:1–19.

- Filippov A. Differential equations with discontinuous right-hand sides. Dordrecht: Kluwer; 1988.

- Guo D, Li C. Population rate coding in recurrent neuronal networks with unreliable synapses. Cogn Neurodyn. 2012;6:75–87. doi: 10.1007/s11571-011-9181-x.

- Guo Z, Wang J, Yan Z. Global exponential dissipativity and stabilization of memristor-based recurrent neural networks with time-varying delays. Neural Netw. 2013;48:158–172. doi: 10.1016/j.neunet.2013.08.002.

- Hilfer R. Applications of fractional calculus in physics. Singapore: World Scientific; 2000.

- Hirose A. Complex-valued neural networks. Berlin: Springer; 2012.

- Hu J, Wang J. Global stability of complex-valued recurrent neural networks with time-delays. IEEE Trans Neural Netw Learn Syst. 2012;23:853–865. doi: 10.1109/TNNLS.2012.2195028.

- Huang Y, Zhang H, Wang Z. Multistability of complex-valued recurrent neural networks with real-imaginary-type activation functions. Appl Math Comput. 2014;229:187–200. doi: 10.1016/j.amc.2013.12.027.

- Kaslik E, Sivasundaram S. Nonlinear dynamics and chaos in fractional-order neural networks. Neural Netw. 2012;32:245–256. doi: 10.1016/j.neunet.2012.02.030.

- Kilbas AA, Srivastava HM, Trujillo JJ. Theory and applications of fractional differential equations. New York: Elsevier; 2006.

- Kosko B. Bidirectional associative memories. IEEE Trans Syst Man Cybern. 1988;18:49–60. doi: 10.1109/21.87054.

- Laskin N. Fractional market dynamics. Phys A. 2000;287:482–492. doi: 10.1016/S0378-4371(00)00387-3.

- Mathews JH, Howell RW. Complex analysis for mathematics and engineering. 3rd ed. Boston: Jones and Bartlett; 1997.

- Nitta T. Orthogonality of decision boundaries of complex-valued neural networks. Neural Comput. 2004;16:73–97. doi: 10.1162/08997660460734001.

- Peng GJ, Jiang YL, Chen F. Generalized projective synchronization of fractional order chaotic systems. Phys A. 2008;387:3738–3746. doi: 10.1016/j.physa.2008.02.057.

- Podlubny I. Fractional differential equations. San Diego: Academic Press; 1999.

- Qi J, Li C, Huang T. Stability of delayed memristive neural networks with time-varying impulses. Cogn Neurodyn. 2014;8:429–436.

- Rao VSH, Murthy GR. Global dynamics of a class of complex valued neural networks. Int J Neural Syst. 2009;18:165–171. doi: 10.1142/S0129065708001476.

- Seow MJ, Asari VK, Livingston A. Learning as a nonlinear line of attraction in a recurrent neural network. Neural Comput Appl. 2010;19:337–342. doi: 10.1007/s00521-009-0304-9.

- Strukov DB, Snider GS, Stewart DR, Williams RS. The missing memristor found. Nature. 2008;453:80–83. doi: 10.1038/nature06932.

- Tanaka G, Aihara K. Complex-valued multistate associative memory with nonlinear multilevel functions for gray-level image reconstruction. IEEE Trans Neural Netw. 2009;20:1463–1473. doi: 10.1109/TNN.2009.2025500.

- Tour JM, He T. The fourth element. Nature. 2008;453:42–43. doi: 10.1038/453042a.

- Wen S, Zeng Z, Huang T, Chen Y. Passivity analysis of memristor-based recurrent neural networks with time-varying delays. J Frankl Inst. 2013;350:2354–2370. doi: 10.1016/j.jfranklin.2013.05.026.

- Wu XJ, Lu HT, Shen SL. Synchronization of a new fractional-order hyperchaotic system. Phys Lett A. 2009;373:2329–2337. doi: 10.1016/j.physleta.2009.04.063.

- Wu A, Zeng Z, Zhu X, Zhang J. Exponential synchronization of memristor-based recurrent neural networks with time delays. Neurocomputing. 2011;74:3043–3050. doi: 10.1016/j.neucom.2011.04.016.

- Wu H, Liao X, Feng W, Guo S. Mean square stability of uncertain stochastic BAM neural networks with interval time-varying delays. Cogn Neurodyn. 2012;6:443–458. doi: 10.1007/s11571-012-9200-6.

- Wu H, Zhang L, Ding S, Guo X, Wang L. Complete periodic synchronization of memristor-based neural networks with time-varying delays. Discrete Dyn Nat Soc. 2013a; Article ID 140153:1–12.

- Wu A, Zeng Z, Xiao J. Dynamic evolution evoked by external inputs in memristor-based wavelet neural networks with different memductance functions. Adv Differ Equ. 2013;258:1–14.

- Wu A, Zeng Z. Dynamic behaviors of memristor-based recurrent neural networks with time-varying delays. Neural Netw. 2012;36:1–10. doi: 10.1016/j.neunet.2012.08.009.

- Wu A, Zeng Z. Anti-synchronization control of a class of memristive recurrent neural networks. Commun Nonlinear Sci Numer Simul. 2013;18:373–385. doi: 10.1016/j.cnsns.2012.07.005.

- Wu A, Zeng Z. Passivity analysis of memristive neural networks with different memductance functions. Commun Nonlinear Sci Numer Simul. 2014;19:274–285. doi: 10.1016/j.cnsns.2013.05.016.

- Xu X, Zhang J, Shi J. Exponential stability of complex-valued neural networks with mixed delays. Neurocomputing. 2014;128:483–490.

- Yang X, Cao J, Ho DWC. Exponential synchronization of discontinuous neural networks with time-varying mixed delays via state feedback and impulsive control. Cogn Neurodyn. 2014. doi: 10.1007/s11571-014-9307-z.

- Yang X, Cao J, Yu W. Exponential synchronization of memristive Cohen–Grossberg neural networks with mixed delays. Cogn Neurodyn. 2014;8:239–249. doi: 10.1007/s11571-013-9277-6.

- Yu J, Hu C, Jiang H. α-stability and α-synchronization for fractional-order neural networks. Neural Netw. 2012;35:82–87. doi: 10.1016/j.neunet.2012.07.009.

- Zhang G, Shen Y, Wang L. Global anti-synchronization of a class of chaotic memristive neural networks with time-varying delays. Neural Netw. 2013;46:1–8. doi: 10.1016/j.neunet.2013.04.001.

- Zhou B, Song Q. Boundedness and complete stability of complex-valued neural networks with time delay. IEEE Trans Neural Netw Learn Syst. 2013;24:1227–1238. doi: 10.1109/TNNLS.2013.2247626.

- Zou T, Qu J, Chen L, Chai Y, Yang Z. Stability analysis of a class of fractional-order neural networks. TELKOMNIKA Indones J Electr Eng. 2014;12:1086–1093.