Abstract

Despite an increasingly vast literature on cophylogenetic reconstructions for studying host–parasite associations, understanding the common evolutionary history of such systems remains a problem that is far from being solved. Most algorithms for host–parasite reconciliation use an event-based model, where the events include in general (a subset of) cospeciation, duplication, loss, and host switch. All known parsimonious event-based methods then assign a cost to each type of event in order to find a reconstruction of minimum cost. The main problem with this approach is that the cost of the events strongly influences the reconciliation obtained. Some earlier approaches attempt to avoid this problem by finding a Pareto set of solutions and hence by considering event costs under some minimization constraints. To deal with this problem, we developed an algorithm, called Coala, for estimating the frequency of the events based on an approximate Bayesian computation approach. The benefits of this method are 2-fold: (i) it provides more confidence in the set of costs to be used in a reconciliation, and (ii) it allows estimation of the frequency of the events in cases where the data set consists of trees with a large number of taxa. We evaluate our method on simulated and on biological data sets. We show that in both cases, for the same pair of host and parasite trees, different sets of frequencies for the events lead to equally probable solutions. Moreover, often these solutions differ greatly in terms of the number of inferred events. It appears crucial to take this into account before attempting any further biological interpretation of such reconciliations. More generally, we also show that the set of frequencies can vary widely depending on the input host and parasite trees. Indiscriminately applying a standard vector of costs may thus not be a good strategy.

Keywords: approximate Bayesian computation, cophylogeny, host/parasite systems, likelihood-free inference

Cophylogeny is the reconstruction of ancient relationships among ecologically linked groups of organisms from their phylogenetic information. The study of host–parasite systems has a long history and has already been well addressed in the literature (e.g., Charleston 1998; Conow et al. 2010; Huelsenbeck et al. 1997; Merkle and Middendorf 2005; Page 1994b; Paterson and Banks 2001). It also has broad applications throughout biology. For instance, the same mathematical model can be applied to gene–species associations (Bansal et al. 2012; Doyon et al. 2011a,b; Hallett and Lagergren 2001; Tofigh et al. 2011). Hence, any method developed for host/parasite associations could be applicable to both situations. Indeed, there have lately been attempts to introduce a general framework that incorporates all existing models (Wieseke et al. 2013).

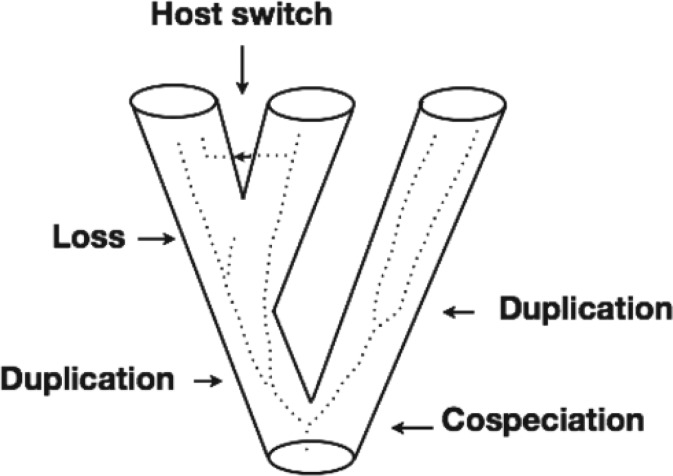

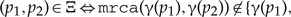

Our work focuses on reconstructing the coevolutionary history of host–parasite systems. Specifically, we are given a host tree $H$, a parasite tree $P$, and a function $\varphi$ mapping the leaves of $P$ to the leaves of $H$. In general, four main macro-evolutionary events are assumed to be recovered: (i) cospeciation, when the parasite diverges in correspondence to the divergence of a host species; (ii) duplication, when the parasite diverges “without the stimulus of host speciation” (Paterson and Banks 2001); (iii) host-switching, when a parasite switches, or jumps, from one host species to another independently of any host divergence; and (iv) loss, which can describe three different and indistinguishable situations: (a) speciation of the host species independently of the parasite, which then follows just one of the new host species due to factors such as, for instance, geographical isolation; (b) cospeciation of host and parasite, followed by extinction of one of the new parasite species; and (c) same as (b) with failure to detect the parasite in one of the two new host species. These events are depicted in Figure 1.

Figure 1.

Recoverable events for a coevolutionary reconstruction. The tube represents the host tree and the dotted lines the parasite tree.

A parsimonious solution for reconciling the phylogenetic trees for hosts on one side, and parasites on the other, simply assigns a cost to each of the four types of events and then seeks to minimize the total cost of the mapping. If host switches are forbidden, exact solutions can be found in time linear in the size of the trees (e.g., Goodman et al. 1979; Guigó et al. 1996; Mirkin et al. 1995; Page 1994a). If timing information is available, for example, if we happen to know the order in which speciation events occurred in the host phylogeny, then any proposed reconciliation must also respect the temporal constraints imposed by the available timing information. Host switches are thus restricted to occur only between coexisting species. When coexistence relationships are known for all host species, the reconciliation problem can again easily be solved using dynamic programming, this time polynomially in the size of the trees (Conow et al. 2010; Drinkwater and Charleston 2014; Libeskind-Hadas and Charleston 2009). However, when timing information is not available, the difficulty of separating between compatible and incompatible switches makes the reconciliation problem NP-hard (Ovadia et al. 2011; Tofigh et al. 2011). A number of algorithms have been developed that allow for solutions that are biologically unfeasible, that is, solutions where some of the switches induce a contradictory timing ordering for the internal vertices of the host tree (Doyon et al. 2011). In this case, the algorithms are able to generate optimal solutions in polynomial time. For the fastest existing ones, see for example Bansal et al. (2012).

Clearly, in all situations, the choice of cost values is crucial to the solution(s) found. Indeed, arbitrarily choosing a cost vector may lead to optimal solutions whose events do not necessarily reflect reality (e.g., Charleston 2003 describes a study on the distribution of the events in optimal reconciliations). From a biological point of view, reasonable cost values for an event-based reconciliation are not easily chosen. It is also natural to think that the frequency of the events is not constant across data sets. Thus, different pairs of host/parasite phylogenies might be associated with different event costs. Moreover, our results show that for the same pair of host and parasite trees, different reconciliations (in the sense of presenting a different set of frequencies for the events) may constitute equally probable solutions. It is thus crucial to take this into account before attempting any further biological interpretation of such reconciliations.

Some approaches (Charleston 2012; Libeskind-Hadas et al. 2014) attempt to choose the costs of the events by adopting some minimization constraints and by focusing on Pareto optimal solutions. As indicated in Ronquist (2003), if each event is associated with a cost that is inversely related to its likelihood (the more likely the event, the smaller its cost), then the most parsimonious reconstruction will also, in some sense, be the most likely explanation of the observed data. Likelihood-based approaches should in general be preferred to parsimony-based methods as they remove the subjective step of cost parameter choice and rely instead on a simultaneous inference of parameter values and events. Some work has been done along these lines, for instance in testing for coevolution (Huelsenbeck et al. 1997, 2000). This, however, excluded duplications and tended to overestimate the number of host switches. In Szöllősi et al. (2013), by contrast, all four types of events are considered, but the method was developed with the objective of reconstructing a species tree starting from multiple gene trees. The aim is similar in Arvestad et al. (2003), but the type of approach is different and the model is again incomplete, as in Huelsenbeck et al. (1997, 2000), this time not allowing for host switches. The likelihood approach adopted in Huelsenbeck et al. (1997, 2000) and Szöllősi et al. (2013) moreover has the drawback of being computationally intensive.

The huge space of possible solutions is also an issue, for instance, in population genetics for reconstructing the evolutionary history of a set of individuals. Since the early work of Pritchard et al. (1999), the literature from this domain has seen classical Monte Carlo methods and their variants being replaced by Approximate Bayesian Computation (ABC), a set of more efficient statistical techniques (Beaumont et al. 2002). In complex models, likelihood calculation is often unfeasible or computationally prohibitive. ABC methods, also called likelihood-free inference methods, bypass this issue while remaining statistically well-founded. For more details, we refer to the review of Marin et al. (2012) as well as the convergence results in Fearnhead and Prangle (2012).

Following these ideas, we developed an algorithm, called Coala (Coala stands for “COevolution Assessment by a Likelihood-free Approach”, and is also the Portuguese spelling for Koala, the arboreal herbivorous marsupial native to Australia), for estimating the frequency of the events based on a likelihood-free approach. Given a pair of “known” host and parasite trees and a prior probability distribution associated with the events, Coala simulates the temporal evolution of a set of species (the parasites) following the evolution of another set (the hosts) as represented by the latter's known phylogenetic tree. In this way, it generates under different parameter values a number of simulated multilabeled parasite trees which are then compared with the known parasite tree. The ABC principle is to keep the parameter values (event probabilities) giving rise to parasite trees that are “close” to the known one. The output of the algorithm is then a distribution on such parameter values that is a surrogate of the posterior probability for the events which would best explain the observed data.

To the best of our knowledge, the only other method that might be compared with ours is the parameter adaptive approach CoRe-Pa (Merkle et al. 2010). In this case, the space of cost vectors is explored either by sampling such vectors at random assuming a uniform distribution model or by using a more sophisticated approach, the so-called Nelder–Mead simplex method (Nelder and Mead 1965). The first appears to be the option by default in CoRe-Pa. In both cases, the function to minimize is the difference between the probabilities directly computed from the cost vector chosen and the actual relative frequencies observed during the reconstruction using such vector. This choice may appear somewhat circular as one would expect that, because reconstruction is driven by the cost vector, the frequency of the events thus reconstructed not only would, but indeed should agree with it.

Method

General Framework

The method we propose relies on ABC, a family of likelihood-free Bayesian inference algorithms that attempt to estimate posterior densities for problems where the likelihood is unknown a priori. Given a set of observed data $D_0$ and starting with a prior distribution $\pi$ on the parameter space $\Theta$ of the model, the objective is to estimate the parameter values $\theta \in \Theta$ that could lead to the observed data using a Bayesian framework. More precisely, the Bayesian paradigm consists in finding the posterior $\pi(\theta \mid D_0)$ given $D_0$, defined as:

$$\pi(\theta \mid D_0) = \frac{p(D_0 \mid \theta)\,\pi(\theta)}{\int_{\Theta} p(D_0 \mid \theta')\,\pi(\theta')\,d\theta'}$$

If the likelihood function $p(D_0 \mid \theta)$ cannot be derived, then a likelihood-free approximation can be used to estimate this posterior distribution and thus the parameter values. In general, a likelihood-free computation involves a chain of parameter proposals and only accepts a set of parameter values on condition that the model with these values generates data that satisfy a performance criterion with respect to the observed data (Sisson et al. 2007, 2009). Strict acceptance (or inversely rejection) is based on whether the generated data $D$ perfectly match the observed data $D_0$. In cases where the probability of perfectly matching the data is very small, a tolerance $\epsilon$ is adopted to relax the rejection policy to $d(D, D_0) \le \epsilon$, where $d$ is a distance measure. In either case, this is called the fitting criterion. Note that this fitting criterion often relies only on a summary statistic instead of the full data sets $D$ and $D_0$. Moreover, for complex models where the prior and posterior densities are believed to be sufficiently different, the acceptance rate is very low, and a likelihood-free Sequential Monte Carlo (SMC) search that involves many iterations is then a more appropriate strategy. SMC is also preferred among other possible methods as it is flexible, easy to implement, parallelisable, and applicable to general settings (Del Moral et al. 2012).
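The acceptance rule described above can be illustrated with a minimal rejection sampler. This is only a sketch on a toy model (a coin bias inferred from a number of heads), not the coevolution model; the function names `abc_rejection` and `simulate_heads` and all numerical settings are assumptions made for the example.

```python
import random

def abc_rejection(observed, simulate, distance, sample_prior, epsilon, n_draws, rng):
    """Keep the prior draws whose simulated data lie within epsilon of the observed data."""
    accepted = []
    for _ in range(n_draws):
        theta = sample_prior(rng)                     # propose a parameter value from the prior
        simulated = simulate(theta, rng)              # generate data under the model
        if distance(simulated, observed) <= epsilon:  # fitting criterion
            accepted.append(theta)
    return accepted

# Toy model (an assumption for illustration): theta is a coin bias and
# the data are the number of heads observed in 50 tosses.
def simulate_heads(theta, rng, n_tosses=50):
    return sum(rng.random() < theta for _ in range(n_tosses))

rng = random.Random(0)
posterior_sample = abc_rejection(
    observed=35,
    simulate=simulate_heads,
    distance=lambda d, d0: abs(d - d0),
    sample_prior=lambda r: r.random(),  # uniform prior on [0, 1]
    epsilon=2,
    n_draws=5000,
    rng=rng,
)
posterior_mean = sum(posterior_sample) / len(posterior_sample)
```

The accepted values form an approximate sample from the posterior; shrinking `epsilon` tightens the approximation at the price of a lower acceptance rate, which is the motivation for the sequential scheme.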

The ABC–SMC algorithms approximate the posterior distribution by using a large set of randomly chosen parameter values. Over sufficiently many iterations and under suitable conditions, the stationary distribution of the Markov chain will approach the distribution $\pi(\theta \mid d(D, D_0) \le \epsilon)$, which will converge to the posterior density $\pi(\theta \mid D_0)$ if the statistics used to compare the generated data with the real one are sufficient and the tolerance $\epsilon$ is small enough. In our case, the observed data $D_0$ are a pair of host and parasite trees, denoted by $H$ and $P$ respectively, and a list of associations between parasite and host leaves. The parameter vector $\theta$ of the model is composed of the probabilities of each one of four events: speciation of the parasite together with a speciation of its host (called cospeciation); speciation of the parasite without concomitant speciation of the host (called duplication); switch (also known as jump) of the parasite to another host (called host switch, which is further assumed to be without loss on the original host); and speciation of the host without concomitant speciation of the parasite, and thus loss of the parasite for one of the new host species (called loss). We thus have that $\theta$ stands for a vector of four probabilities $\langle p_c, p_d, p_s, p_l \rangle$ corresponding, respectively, to these four events. Note that each node in the host tree either matches a node in the parasite tree or represents a loss, giving rise to the four possible events. For this reason, the parameter $\theta$ is constrained such that $p_c + p_d + p_s + p_l = 1$ (see Section “Parasite tree generation algorithm” for more details).

Starting from the host tree $H$ and respecting the probabilities of the events specified in a given parameter vector $\theta$, we generate a number of simulated parasite trees.

Once a parasite tree is thus simulated, it can be compared with the real parasite tree $P$ by computing a distance between the two. For a given parameter vector $\theta$, we can then produce a distance summary of the generated trees, and use this as a criterion in the ABC rejection method. The latter selects the parameter vector(s) that approximate the observed data within a given tolerance threshold.

The ABC–SMC procedure allows us to refine the list of accepted probability vectors by sampling a vector $\theta$, introducing a small perturbation to it to produce a vector $\theta^*$, and then collecting a new distance summary for $\theta^*$.

The list of vectors output in the final step of the algorithm defines the posterior distribution of the coevolutionary event probabilities for the given pair $H$ and $P$. Table 1 shows a summary of the notation used throughout this work.

Table 1.

Notation

| Notation | Description |
| --- | --- |
| $H$ | Host tree. |
| $P$ | Parasite tree. |
| $\varphi$ | Function from the leaves of $P$ to the leaves of $H$. It represents the associations between currently living host species and parasites. |
| $\lambda$ | Function from the vertices of $P$ to the vertices of $H$. It represents the reconciliation between $P$ and $H$ and extends $\varphi$. |
| $V_c$, $V_d$, $V_s$ | Sets of parasite vertices associated with, respectively, cospeciation, duplication, and host switch events. |
| $A_s$ | Set containing arcs of the parasite tree that are associated to host switch events. |
| $V_l$ | Multiset containing all vertices that are associated to loss events. |
| $D_0$ | Observed data. |
| $D$ | Generated data. |
| $\Theta$ | Parameter space. |
| $\theta$ | Parameter value. |
| $\tilde{P}$ | Simulated parasite tree. |
| $p_e$ | Probability of the event $e$, where $e \in \{c, d, s, l\}$. |
| $c_e$ | Cost of the event $e$, where $e \in \{c, d, s, l\}$. |
| $n_e$ | Number of observed events of the type $e$, where $e \in \{c, d, s, l\}$. |

Note: $c$ = cospeciation, $d$ = duplication, $s$ = host switch, and $l$ = loss.

Parasite Tree Generation Algorithm

The Duplication–Transfer–Loss (DTL) model

To simulate the coevolutionary history of the two input phylogenies, we rely on the event-based model presented in Tofigh et al. (2011), and later further analyzed in Bansal et al. (2012).

A rooted phylogenetic tree is a leaf-labeled tree that models the evolution of a set of taxa from their most recent common ancestor (placed at the root). The internal vertices of the tree correspond to the speciation events. The tree is rooted, so a direction is intrinsically assumed that corresponds to the direction of increasing evolutionary time. Henceforth, by a phylogenetic tree $T$, we mean a rooted tree with labeled leaves where every vertex has in-degree 1 and out-degree 2 except for the leaves, which have out-degree 0. For such a tree $T$, the set of vertices is denoted by $V(T)$, the set of arcs by $A(T)$, and the set of leaves by $L(T)$. The root of $T$ is denoted by $r(T)$. Given an arc $a \in A(T)$, going from $v$ to $w$, we call its head, denoted by $h(a)$, the vertex $w$ and its tail, denoted by $t(a)$, the vertex $v$. For a vertex $v \in V(T)$, we define the set of descendants of $v$, denoted by $Des(v)$, as the set of vertices in the subtree of $T$ rooted at $v$ (including $v$). Similarly, the set of ancestors of $v$, denoted by $Anc(v)$, is the set of vertices in the unique path from the root of $T$ to $v$ (including the end points). For a vertex $v \in V(T)$ different from the root, we call its parent, denoted by $par(v)$, the vertex $w$ for which there is the arc $(w, v) \in A(T)$. We denote by $lca(v, w)$ the most recent common ancestor of $v$ and $w$ in $T$. Finally, we denote by $\preceq$ the partial order induced by the ancestry relation in the tree. Formally, for $v, w \in V(T)$, we say that $v \preceq w$ if $w \in Anc(v)$. If neither $v \preceq w$ nor $w \preceq v$, the vertices are said to be incomparable.
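The tree vocabulary defined above (ancestors, descendants, most recent common ancestor, comparability) can be sketched with a small parent-pointer structure. The class `Node` and the helper names are hypothetical, chosen for illustration; they are not taken from any Coala code.

```python
class Node:
    """Vertex of a rooted tree; children are given at construction time."""
    def __init__(self, label, children=()):
        self.label = label
        self.children = list(children)
        self.parent = None
        for child in self.children:
            child.parent = self

def ancestors(v):
    """Vertices on the path from the root to v, end points included (root first)."""
    path = []
    while v is not None:
        path.append(v)
        v = v.parent
    return path[::-1]

def descendants(v):
    """Vertices of the subtree rooted at v, including v itself."""
    result = [v]
    for child in v.children:
        result.extend(descendants(child))
    return result

def lca(v, w):
    """Most recent common ancestor of v and w (None if they share no tree)."""
    anc_w = set(ancestors(w))
    for u in reversed(ancestors(v)):  # walk from v up to the root
        if u in anc_w:
            return u
    return None

def comparable(v, w):
    """True when one vertex is an ancestor of (or equal to) the other."""
    return v in ancestors(w) or w in ancestors(v)

# Example tree ((A, B)X, C)R
a, b, c = Node("A"), Node("B"), Node("C")
x = Node("X", [a, b])
r = Node("R", [x, c])
```

In this example `lca(a, b)` is `x`, `lca(a, c)` is the root `r`, and `a` and `c` are incomparable.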


Let $H$ and $P$ be the phylogenetic trees for the host and parasite species respectively. We define $\varphi$ as a function from the leaves of $P$ to the leaves of $H$ that represents the association between currently living host species and parasites. These associations are part of the input of our algorithm, together with the trees themselves. In our model, we allow each parasite to be related to one and only one host, whereas a host can be related to zero, one, or more than one parasite. More formally, $\varphi$ is thus a function that need be neither surjective nor injective.

A reconciliation $\lambda$ is a function from the vertices of $P$ to the vertices of $H$ that is an extension of $\varphi$. In particular, $\lambda$ partitions the set of internal vertices of $P$ into three sets $V_c$, $V_d$, and $V_s$, which correspond to the vertices of $P$ associated with, respectively, cospeciations, duplications, and host switches. The reconciliation also defines a subset $A_s$ of the arcs of $P$, which corresponds to the arcs associated with host switches.

Given a reconciliation $\lambda$, the following holds (Tofigh et al. 2011; Charleston 2002):

- For any leaf $v$ of $P$, $\lambda(v) = \varphi(v)$ ($\lambda$ extends $\varphi$).

- For any internal vertex $v$ of $P$ with children $v_1$ and $v_2$:

  - (a) $\lambda(v_i)$ is not a proper ancestor of $\lambda(v)$, for $i \in \{1, 2\}$ (a child cannot be mapped to an ancestor of the parent).

  - (b) $\lambda(v_1)$ or $\lambda(v_2)$ is a descendant of $\lambda(v)$ (one of the two children is mapped to the subtree rooted at the parent).

- For any arc $(v, w)$ of $P$, $(v, w) \in A_s$ if and only if $\lambda(v)$ and $\lambda(w)$ are incomparable (the arc is an arc denoting a host switch).

- For any internal vertex $v$ of $P$ with children $v_1$ and $v_2$:

  - (a) $v \in V_s$ only if $(v, v_1) \in A_s$ or $(v, v_2) \in A_s$ ($v$ is associated with a host switch).

  - (b) $v \in V_d$ only if $\lambda(v)$ is equal to, or an ancestor of, the most recent common ancestor of $\lambda(v_1)$ and $\lambda(v_2)$ (the children are mapped to comparable vertices and $v$ is associated with a duplication event).

  - (c) $v \in V_c$ only if $\lambda(v)$ is the most recent common ancestor of $\lambda(v_1)$ and $\lambda(v_2)$, and $\lambda(v_1)$ and $\lambda(v_2)$ are incomparable ($v$ is associated with a cospeciation event).

The losses are identified by a multiset $V_l$ (a generalization of a set where the elements are allowed to appear more than once) whose elements are vertices of $H$, containing all the vertices that are in the path between the image of a vertex of $P$ and the image of one of its children. The images themselves are not included in the count, except for the duplication event, where one of the images is included.

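The triple is said to be a reconciliation. Given a vector $\langle c_c, c_d, c_s, c_l \rangle$ of nonnegative real values that correspond to the cost of each type of event, the cost of a reconciliation is equal to $c_c |V_c| + c_d |V_d| + c_s |V_s| + c_l |V_l|$, where $|V_c|$, $|V_d|$, $|V_s|$, and $|V_l|$ are the numbers of cospeciations, duplications, host switches, and losses.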
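As a small worked illustration of how the cost of a reconciliation depends on the cost vector, the sketch below scores two hypothetical reconciliations of the same pair of trees under two cost vectors. The event counts and the cost values are invented for the example and are not results from this study.

```python
EVENTS = ("c", "d", "s", "l")  # cospeciation, duplication, host switch, loss

def reconciliation_cost(event_counts, costs):
    """Sum over event types of (unit cost) x (number of events of that type)."""
    return sum(costs[e] * event_counts[e] for e in EVENTS)

# Two hypothetical reconciliations with the same number of parasite speciations (8).
recon_x = {"c": 5, "d": 1, "s": 2, "l": 3}
recon_y = {"c": 3, "d": 1, "s": 4, "l": 0}

# Two illustrative cost vectors.
costs_1 = {"c": 0, "d": 1, "s": 2, "l": 1}
costs_2 = {"c": 0, "d": 1, "s": 1, "l": 2}

cost_table = {
    ("x", 1): reconciliation_cost(recon_x, costs_1),  # 0*5 + 1*1 + 2*2 + 1*3 = 8
    ("y", 1): reconciliation_cost(recon_y, costs_1),  # 0*3 + 1*1 + 2*4 + 1*0 = 9
    ("x", 2): reconciliation_cost(recon_x, costs_2),  # 0*5 + 1*1 + 1*2 + 2*3 = 9
    ("y", 2): reconciliation_cost(recon_y, costs_2),  # 0*3 + 1*1 + 1*4 + 2*0 = 5
}
```

Under the first vector the reconciliation with more cospeciations is cheaper, whereas under the second the one with more host switches wins, which is the sensitivity to the cost vector discussed in the introduction.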

Finally, a reconciliation is said to be acyclic or time feasible if there exists a total order on the vertices of $H$ that is consistent with the two partial orders induced by $H$ and $P$ and respects all temporal constraints imposed by both tree topologies and by the set of host switch events. For a detailed definition of a time-feasible scenario, we refer to Stolzer et al. (2012).

Evolution of parasites

The evolution of the parasites is simulated by following the evolution of the hosts, traversing the phylogenetic tree $H$ from the root to the leaves and progressively constructing the phylogenetic tree $\tilde{P}$ for the parasites. During this process, a single parasite vertex $v$ can be in two different states: mapped or unmapped. At the moment of its creation, a new vertex $v$ is unmapped and is assigned a temporary position on an arc $a$ of the host tree $H$. We denote this position by $\langle v : a \rangle$. From this position, we can decide to map $v$ to a vertex of $H$ (all coevolutionary events except for loss) or, in the case of a loss, to move $v$ to another position. In the first case, $v$ is always mapped to the vertex $w$ that is the head of the arc $a$. We denote this mapping by $\langle v : w \rangle$.

In all three nonloss cases (cospeciation, duplication, and host switch), the parasite is supposed to speciate, so two children are created for $v$, denoted by $v_1$ and $v_2$. Their positioning along arcs of the host tree then depends on which of the three events took place. In the case of a loss, no child for $v$ is created (at this step) because there is no parasite speciation, and $v$ is just moved to one of the two arcs outgoing from the head of $a$, chosen randomly. Notice however that, in order to avoid confusing a loss with another event (for instance, a cospeciation), some precautions must be taken, as explained below in the description of the simulation of a loss event.

These choices, together with the general framework for our parasite tree generation method, are provided next.

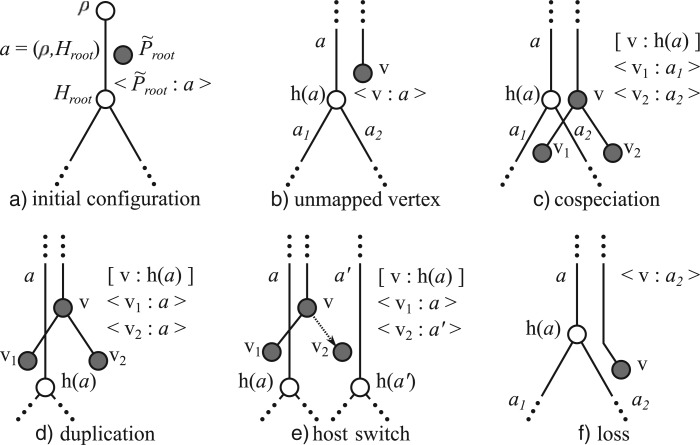

Starting the generation

The generation of the simulated parasite tree $\tilde{P}$ starts with the creation of its root vertex. This vertex is positioned before the root of $H$, on the arc whose head is that root. This allows the simulation of events that happened in the parasite tree before the most recent common ancestor of all host species in $H$. Figure 2a depicts this initial configuration.

Figure 2.

Events during the generation of the parasite tree $\tilde{P}$. The host tree has white vertices and the parasite tree gray vertices. The association $\langle v : a \rangle$ indicates that an unmapped parasite vertex $v$ is positioned on the arc $a$ of the host tree. The association $\langle v : w \rangle$ indicates that the parasite vertex $v$ is mapped to the host vertex $w$. a) initial configuration; b) unmapped vertex; c) cospeciation; d) duplication; e) host switch; f) loss.

The evolutionary events

For any vertex $v$ of $\tilde{P}$ that is not yet mapped and whose position is $\langle v : a \rangle$ (Fig. 2b), we choose to apply one among the four allowed operations, depending on the probability of each event. In what follows, we denote by $w$ the head of the arc $a$ and by $a_1$ and $a_2$ the arcs outgoing from $w$.

Cospeciation (Fig. 2c): We apply the mapping $\langle v : w \rangle$ and we create the vertices $v_1$ and $v_2$ as children of $v$. We position them as follows: $\langle v_1 : a_1 \rangle$ and $\langle v_2 : a_2 \rangle$. This operation is executed with probability $p_c$.

Duplication (Fig. 2d): We apply the mapping $\langle v : w \rangle$ and we create the vertices $v_1$ and $v_2$ as children of $v$. Both $v_1$ and $v_2$ are positioned on $a$. This operation is executed with probability $p_d$.

Host switch (Fig. 2e): We apply the mapping $\langle v : w \rangle$ and we create the vertices $v_1$ and $v_2$ as children of $v$. We then randomly choose one of the two children, say $v_1$, and position it on $a$. Finally, we randomly choose an arc $a'$ that does not violate the time feasibility of the reconstruction so far (Stolzer et al. 2012). If such an arc does not exist, it is not possible for a host switch to take place. In this case, we choose between the three remaining events with probability $p_e / (p_c + p_d + p_l)$ with $e \in \{c, d, l\}$. Otherwise, we position $v_2$ on $a'$. This operation is executed with probability $p_s$.

Loss (Fig. 2f): This operation is executed with probability $p_l$ and consists of randomly choosing an arc $a'$ outgoing from the head of $a$ and positioning $v$ on it. Observe that we are considering only losses resulting from lineage sorting. It would be interesting to incorporate extinction or failure to detect infection, but this would require the addition of new parameters, thus making the model more complex to analyze. However, if $v$ was created by a duplication event and is being processed for the first time, we have to verify whether its sibling vertex $v'$ was already processed and also suffered a loss. In this case, $v$ must be positioned on the same arc where $v'$ was positioned. This procedure is adopted to avoid later mappings where a duplication followed by two losses would be confused with a cospeciation.
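The choice among the four operations, including the fallback when no time-feasible arc exists for a host switch, can be sketched as follows. Renormalizing the three remaining probabilities is our reading of the fallback rule; the function name `sample_event` and the example probabilities are assumptions made for illustration.

```python
import random

EVENTS = ("c", "d", "s", "l")  # cospeciation, duplication, host switch, loss

def sample_event(theta, switch_possible, rng):
    """Draw the event applied to an unmapped parasite vertex.

    theta maps each event code to its probability (the four values sum to one).
    When no time-feasible arc is available, the host switch is excluded and the
    remaining probabilities are renormalized (an assumption of this sketch).
    """
    if switch_possible:
        candidates = EVENTS
    else:
        candidates = tuple(e for e in EVENTS if e != "s")
    weights = [theta[e] for e in candidates]
    # random.choices rescales the weights, which performs the renormalization.
    return rng.choices(candidates, weights=weights, k=1)[0]

rng = random.Random(1)
theta = {"c": 0.4, "d": 0.1, "s": 0.3, "l": 0.2}
draws_with_switch = [sample_event(theta, True, rng) for _ in range(2000)]
draws_without_switch = [sample_event(theta, False, rng) for _ in range(2000)]
```

Note that the call fails if every remaining weight is zero (for instance, a vector with $p_s = 1$ when no switch is possible), so a full simulator would need to handle that case explicitly.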

We also assume that no evolutionary event takes place whenever a leaf of $H$ is reached. This means that, if $v$ is positioned on an arc incoming to a leaf, then $v$ is mapped to the leaf and no further operation is executed. Hence, the generation of $\tilde{P}$ terminates when all the created vertices are mapped (i.e., have reached a leaf of the host tree). The leaves of the parasite tree are then labeled according to their mapping to the leaves of the host tree. Observe that as more than one parasite can be mapped to the same host, $\tilde{P}$ is a multilabeled tree (i.e., a tree whose leaf labels need not be unique). Finally, some combinations of host switches can introduce an incompatibility due to the temporal constraints imposed by the host and parasite trees, as well as by the reconciliation itself. During the generation of the parasite tree, we allow only host switches that do not violate the time-feasibility constraints. For the criteria used to assess time feasibility, we refer to Stolzer et al. (2012).

Note that in this model, we do not use information about edge lengths. This is a positive aspect of the method in the sense that branch lengths are not always easy to determine with accuracy. On the other hand, we cannot simulate the “null events” (parasite doing nothing in the host tree). Moreover, for now, we do not simulate “failure to diverge”, which describes a situation where a host speciates whereas the parasite does not but continues to inhabit both of the new host species. Despite the importance of this event, mathematically speaking it is not clear how to include it in the cophylogenetic reconciliation model because we would have to allow the association of a parasite to multiple hosts. The ideas presented by Drinkwater and Charleston (2014) for the improvement of node mapping algorithms may help with simulating the “failure to diverge” event in future work.

Because the simulation model is restricted to the events of cospeciation, duplication, host switch, and loss, the probabilities of these four events sum up to one.

Cophylogeny Parameter Estimation Algorithm

Prior distribution

The parameter $\theta$ lives in the probability simplex (the $p_e$'s are positive and sum to one). It is then standard to sample $\theta$ from a Dirichlet distribution, which is a family of continuous multivariate probability distributions parameterized by a vector of positive real numbers that determine the shape of the distribution (Gelman et al. 2003).

In our simulations, we adopt a uniform Dirichlet distribution (namely $Dir(1, 1, 1, 1)$) that corresponds to sampling uniformly from the simplex. This is often used when there is no previous knowledge favoring one component (e.g., coevolutionary event) of $\theta$ over another. However, the method we implemented allows the user to specify other prior distributions when such knowledge is available.
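Sampling an event-probability vector from a Dirichlet prior can be sketched with the standard construction that normalizes independent gamma draws. The function name `sample_dirichlet` and the seed are assumptions for the example; with all concentration parameters equal to one, the draw is uniform on the simplex.

```python
import random

def sample_dirichlet(alphas, rng):
    """Draw one vector from Dirichlet(alphas) by normalizing independent gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for alpha in alphas]
    total = sum(draws)
    return [g / total for g in draws]

rng = random.Random(42)
# Uniform prior over the four event probabilities (cospeciation, duplication,
# host switch, loss): all concentration parameters set to 1.
theta = dict(zip(("c", "d", "s", "l"), sample_dirichlet([1.0, 1.0, 1.0, 1.0], rng)))
```

A user with prior knowledge (for example, that cospeciation is expected to dominate) would pass unequal concentration parameters instead.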

Choice of summary statistic and fitting criterion

The ABC inference method is based on the choice of a summary statistic that describes the data while performing a dimension reduction task. The latter is used to evaluate the quality of agreement (similarity) between the simulated data sets (the generated parasite trees) and the observed (the real parasite tree). In our case, the summary statistic will be based on the measured distances between the generated parasite trees and the real one.

The distance of each simulated tree to the real parasite tree is therefore informative about the quality of the vector $\theta$ that generated it. Hence, the distance that will be used must take into account: (i) how well the simulated tree represents the set of trees generated by a given vector, and (ii) how topologically similar the simulated tree is to the real parasite tree.

Concerning the first point, the intuition is as follows. In our model, when generating a parasite tree, the expected frequency of an event should be close to the corresponding probability value of the parameter vector used to generate the tree. To this purpose, for a given vector $\theta$ and for each simulated tree $\tilde{P}$ that was generated according to this vector, we keep track of the number of events associated with this simulation. We compare the observed number of events $n_e$ of each type to the expected number. Observe that the expected number of events can be easily calculated using the size of the parasite tree and the vector $\theta$. A tree is a good representative if the observed number of events is close to the expected one. More formally, for a real parasite tree $P$, a vector $\theta$, and a simulated parasite tree $\tilde{P}$ for which the observed numbers of events are $n_c$, $n_d$, $n_s$, and $n_l$, we define a measure as follows:

As concerns point (ii), we use a metric for comparing phylogenetic trees. There is a wide literature on distances for phylogenetic trees (Felsenstein 2003). Our choice was driven by the need to have one that can be computed efficiently and accurately. Unfortunately, many of the distances used in biology are also NP-hard to compute (Baroni et al. 2005; Hein 1990; Waterman and Smith 1978), whereas some of the fastest, like for instance, the Robinson–Foulds distance (Robinson and Foulds 1981) which can be calculated in linear time (Day 1985), are poorly distributed and thus not good enough discriminators (Bryant and Steel 2009; Steel and Penny 1993). Moreover, many efficient-to-compute distances are not robust to small changes (such as in the position of a single leaf) in one of the two trees.

Recall that in our method the leaves of the parasite tree are labeled according to their mapping to the leaves of the host tree and that more than one parasite can be mapped to the same host. Hence, we are interested in distances between multilabeled trees.

In our context, the distance that best meets the requirement of efficiency and accuracy appears for now to be the maximum agreement area cladogram (MAAC) (Ganapathy et al. 2006). This is a generalization for multi-labeled trees of the well-known maximum agreement subtree (Finden and Gordon 1985; Farach-Colton et al. 1995) and it corresponds to the number of leaves in the largest isomorphic subtree that is common to two (multilabeled) trees. Clearly this isomorphism takes into account the labels of the trees. The MAAC distance can be calculated in time where is the size of the largest input tree (Ganapathy et al. 2006).

We use a normalized version of MAAC that takes into account also the number of leaves in common between the two trees. More formally, for two trees and with leaf sets and respectively, we define the measure as follows:

Observe that the intersection operation involves multisets. We recall that a multiset is a generalization of a set where the elements are allowed to appear more than once; hence, the operations take into account their multiplicity in the following way: if the multiplicity of an element $x$ in a multiset $A$ is given by $m_A(x)$, then the multiplicity of $x$ in $A \cap B$ is given by $m_{A \cap B}(x) = \min(m_A(x), m_B(x))$.
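The multiset intersection can be illustrated with Python's `collections.Counter`, whose `&` operator keeps the minimum multiplicity of each element. The leaf labels below are invented; they stand for host names attached to the leaves of a real and a simulated parasite tree.

```python
from collections import Counter

# Leaf labels (host names) of two multilabeled trees; a label may repeat
# when several parasites are associated with the same host.
real_leaves = Counter(["h1", "h1", "h2", "h3"])
simulated_leaves = Counter(["h1", "h2", "h2", "h4"])

# Multiset intersection: each label is kept with the smaller of its two multiplicities.
common = real_leaves & simulated_leaves
n_common = sum(common.values())  # number of leaves in common, counted with multiplicity
```

Here `h1` appears twice in the first multiset and once in the second, so it is counted once; `h3` and `h4` are not shared, giving two leaves in common.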

Finally, we propose a distance that is based on these two components and . For a real parasite , a vector , and a simulated parasite tree , we define the distance as follows:

According to our experiments (see Supplementary Material available at, http://dx.doi.org/10.5061/dryad.9g5fp), the most appropriate values are and but this can be set by the user. The main drawback of this distance is that it is not a metric; however, it achieves good results with respect to discriminating the trees as observed in our experiments.

In Coala, we implemented two other distances, both of which are variations of the MAAC. A user can choose the most appropriate one depending on the case. In this article, we show only the results for the two-component distance, as this had the most discriminating power (data not shown).

Given a parameter vector , we generate trees and for each of them we consider the distance of from the real parasite tree . From this set of distances, , we produce a summary, denoted by , that characterizes the set of trees generated with the parameter vector. In our experiments, we choose as the average of all the produced distances.

The summary is the value that is used in the rejection/acceptance step of the ABC method.

Finally, it is worth noting that although the choice of a summary statistic (or equivalently here a summary tree distance) is independent from the generation process (coevolution model), such a choice may have a deep impact on the performance and the results of the method. This is one of the main issues with ABC-related methods. Some recent works have attempted to improve this step (Fearnhead and Prangle 2012). From the experiments done however, we can already see that the two-component distance seems to be a good enough discriminator.

ABC–SMC Procedure

The ABC–SMC procedure is composed of a sequence of rounds. For each of these rounds, we define a tolerance value () which determines the percentage of parameter vectors to be accepted. Associated with a tolerance value , we have a threshold which is the largest value of the summary statistic associated with the accepted parameter vectors.

- Initial round ():

- Draw an initial set of parameter vectors from the prior . Then, for each generate trees . Select parameter vectors that have the smallest , thus defining the threshold and the set of accepted parameter vectors.

- Following rounds ():

- Sample a parameter vector from the set . Create a parameter vector by perturbing . The perturbation is performed by adding to each coordinate of a randomly chosen value in and normalising it.

- Generate trees and compute . If , add into the quantile set . If , return to Step 1.

- Based on the set , select parameter vectors that have the smallest , thus defining the threshold and the set of accepted parameters.

The final set of accepted parameter vectors is the result of the ABC–SMC procedure and characterizes the list of parameter vectors that may explain the evolution of the pair of host and parasite trees given as input.
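The rounds described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the Coala implementation: the names (`abc_smc`, `summary`, `delta`, `quantile_size`) are hypothetical, the prior is taken to be uniform on the probability simplex, perturbed coordinates are clipped at zero before normalisation, and `summary` stands in for the whole step "simulate parasite trees and summarize their distances to the real tree".

```python
import random


def sample_prior(rng, k=4):
    """Draw one vector uniformly from the probability simplex (assumed prior)."""
    draws = [rng.expovariate(1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def perturb(theta, delta, rng):
    """Add uniform noise in [-delta, delta] to each coordinate, then renormalise.

    Clipping negative values at zero is an assumption of this sketch.
    """
    moved = [max(0.0, t + rng.uniform(-delta, delta)) for t in theta]
    total = sum(moved)
    return [m / total for m in moved] if total > 0 else list(theta)


def keep_best(scored, tolerance):
    """Keep the `tolerance` fraction with the smallest summary value.

    Returns the kept (summary, vector) pairs and the threshold, i.e. the
    largest summary value among the kept vectors.
    """
    ranked = sorted(scored, key=lambda pair: pair[0])
    kept = ranked[:max(1, round(tolerance * len(ranked)))]
    return kept, kept[-1][0]


def abc_smc(summary, n_initial, quantile_size, tolerances, delta, rng):
    # Initial round: sample from the prior and keep the best fraction.
    scored = []
    for _ in range(n_initial):
        theta = sample_prior(rng)
        scored.append((summary(theta), theta))
    accepted, threshold = keep_best(scored, tolerances[0])

    # Following rounds: perturb previously accepted vectors until the
    # quantile set is full, then keep the best fraction again.
    for tolerance in tolerances[1:]:
        quantile_set = []
        while len(quantile_set) < quantile_size:
            _, parent = rng.choice(accepted)
            candidate = perturb(parent, delta, rng)
            value = summary(candidate)
            if value <= threshold:
                quantile_set.append((value, candidate))
        accepted, threshold = keep_best(quantile_set, tolerance)

    return [theta for _, theta in accepted], threshold
```

Because both the prior and the perturbation are uniform in this sketch, no importance weights are computed; with a different prior, weights would have to be introduced as noted in the text.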

Let us observe that, because in all our experiments we are assuming a uniform prior distribution and also are performing the perturbations in a uniform way, the weights induced by the proposals appear to be uniform (Beaumont et al. 2009). However, in the case of a different prior, weights should be used in the process in order to correct the posterior distribution according to the perturbation made.

Clustering the Results

Coala implements a hierarchical clustering procedure to group the final list of accepted parameter vectors. The basic process of a hierarchical clustering is as follows. At the beginning, each parameter vector forms a single cluster. Then at each step, the pair of clusters that have the smallest distance to each other are merged to form a new cluster. The distance that we use between the vectors and is the distance, which is a weighted Euclidean distance defined as follows:

At the end of this process, we have a single cluster containing all the items represented as a tree (hierarchical cluster tree or dendrogram) showing the relationship among all the original items. As we make no assumptions concerning the space of the vectors we are dealing with, we chose to apply a more general but still efficient method, introduced in Langfelder et al. (2007), to select the branches to be cut in the dendrogram. The method proceeds in two steps. Starting with the complete dendrogram, it first identifies preliminary clusters that satisfy some criteria: for example, they contain a certain minimum number of objects (to avoid spurious divisions), any two clusters are at least some distance apart, etc. (Langfelder et al. 2007, for more details). In a second step, all the items that have not been assigned to any cluster are tested for sufficient proximity to preliminary clusters; if the nearest cluster is close enough, the item is assigned to that cluster, otherwise the item remains clustered according to the complete dendrogram.

Finally, once the vectors are split into clusters, we associate to each one a representative parameter vector. To define each coordinate of the “consensus” parameter vector, we take the mean value of the respective coordinate in all the parameter vectors which are inside the cluster. We then normalize the “consensus” coordinates to sum to one.
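The construction of the representative vector can be sketched as follows; the function name is hypothetical. When every vector in the cluster already sums to one, the coordinate-wise means also sum to one up to rounding, so the final normalization mainly guards against accumulated rounding error.

```python
def consensus_vector(cluster):
    """Coordinate-wise mean of the vectors in a cluster, normalized to sum to one."""
    n_coordinates = len(cluster[0])
    means = [
        sum(vector[i] for vector in cluster) / len(cluster)
        for i in range(n_coordinates)
    ]
    total = sum(means)
    return [m / total for m in means]


# Example with two accepted vectors (cospeciation, duplication, host switch, loss).
representative = consensus_vector([
    [0.5, 0.5, 0.0, 0.0],
    [0.7, 0.1, 0.1, 0.1],
])
```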

Experimental Results and Discussion

We evaluated our method in two different ways. First we designed a self-test to show that the principle underlying it is sound and to test it on simulated data sets.

We then extended the evaluation to four real examples that correspond to biological data sets from the literature. This choice was dictated by: (i) the availability of the trees and of their leaf mapping; and (ii) the desire to, again, cover for situations as widely different as possible in terms of the events supposed to have taken place during the host–parasite coevolution. As a matter of fact, the first point drove the choice more than the second: there are not so many examples available from which it is easy to extract the tree and/or leaf mapping and that are big enough to represent meaningful data sets on which to test Coala. All four examples were also analyzed in the original paper from which they were extracted by one or more of the existing algorithms that search for a most parsimonious (possibly cyclic) reconciliation (i.e., for a reconciliation of minimum cost). Except in one case, which is a heuristic strategy and therefore does not guarantee optimality of the solution, all existing algorithms need to receive as input the cost of the events, which is thus established a priori and drives the conclusions on the results obtained.

Finally, we applied Coala to a biological data set of our own, representing the coevolution of bacteria from the Wolbachia genus and the various arthropods that host them. This data set was selected because of its size: each tree has 387 leaves.

Experimental Parameters

All data sets were processed by Coala configured with the same parameters. For each data set, we generated parameter vectors in the first round. For each of the vectors, we generated parasite trees using our method. We required these trees to have a size at most twice the one of the real parasite tree, otherwise the tree was discarded as being too different from the original. If a given vector did not generate such trees in 5000 trials, then the vector was immediately associated with a distance equal to 1 which indicated that it represented the real data badly.

We used the average of all the 1000 distances produced as a fitting criterion in the rejection/acceptance step of the ABC method. The tolerance value used in the first round was . For the remaining rounds , we defined . Notice that defines the size of the quantile set which must be produced in each new round. Thus, after the last round, we have accepted vectors. These vectors are grouped into clusters and a representative vector is associated with each cluster as explained in the Section “Clustering the results.”
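The fitting criterion described above can be sketched as follows. The callables `simulate`, `size_of`, and `distance` are hypothetical placeholders for the tree simulator, the tree size, and the tree distance; the sketch also assumes that the budget of 5000 trials is counted over the whole set of trees generated for one vector, which the text does not state explicitly.

```python
import random
from statistics import mean


def summary_statistic(theta, simulate, size_of, distance, real_tree,
                      n_trees=1000, max_trials=5000, rng=None):
    """Average distance of simulated trees to the real tree, or 1.0 on failure."""
    rng = rng or random.Random()
    size_limit = 2 * size_of(real_tree)
    distances = []
    for _ in range(max_trials):
        tree = simulate(theta, rng)
        if size_of(tree) > size_limit:
            # Discard trees more than twice as large as the real parasite tree.
            continue
        distances.append(distance(tree, real_tree))
        if len(distances) == n_trees:
            return mean(distances)
    # Not enough acceptable trees within the trial budget:
    # the vector is considered to represent the real data badly.
    return 1.0
```

Returning the worst possible distance for such vectors keeps them in the ranking while ensuring that they are rejected whenever better candidates exist.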

We ran the experiments using and rounds. The number of rounds is an important parameter, which defines the characteristics of the list of accepted parameter vectors.

However, observe that a high number of rounds will tend to overfit the data and thus hide a possible variability in the list of accepted vectors that could provide significant alternatives for explaining the studied pair of trees.

Because we are interested in exploring different alternatives for each data set, we present only the results obtained after running Coala for 3 rounds. The results involving 5 rounds may be found in the Supplementary Material available at http://dx.doi.org/10.5061/dryad.9g5fp.

Simulated Data sets

We first evaluated our model on simulated data. Clearly, in order to do this, we have to generate the phylogenies for the hosts and parasites whose coevolution is being studied in such a way that the probability of each event is known. The basic idea is that if we are able to select a “typical” (or representative) parasite tree that is generated starting from a host tree and a given probability vector , Coala should be able to list values close to among the vectors accepted in the last round.

It is important to observe that many different probability vectors can explain the same pair of trees. We will therefore consider it acceptable if Coala produces clusters that are relatively close to .

Generating simulated data sets

Due to the high variability of the parasite trees which can be simulated given a host tree and a vector , the task of choosing the most “typical” tree can be hard. To simplify this task and select a typical tree, we impose two conditions which must be observed by the simulated tree. The first one requires that the candidate tree should have a size close to the median for all the trees which are simulated using and . The second condition requires that the observed number of events of a candidate tree should be very close to the expected number given .

In practical terms, we execute the following procedure: in order to get realistic data sets we choose a real host tree (see Supplementary Material available at http://dx.doi.org/10.5061/dryad.9g5fp, for more information). Then, given a probability vector and , we generate 2000 parasite trees using our model, without imposing any limit on the size of the generated trees. We then compute the median size of all generated trees and we filter out those whose size is far from this value (difference greater than 1 or 2 leaves from the median value). Finally, we select as typical tree the one that shows the smallest distance between the vector and the vector of observed frequencies of events.
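The selection of a typical tree can be sketched as follows. Each simulated tree is represented here only by its size and its observed event counts, and the distance between the probability vector and the observed frequencies is taken to be Euclidean; both choices, like the function name, are assumptions made for illustration.

```python
from math import dist
from statistics import median


def select_typical_tree(simulated, theta, size_tolerance=1):
    """Pick the tree of near-median size whose event frequencies best match theta.

    `simulated` is a list of (size, event_counts) pairs, with event_counts
    ordered like theta (cospeciation, duplication, host switch, loss).
    """
    median_size = median(size for size, _ in simulated)
    candidates = [
        (size, counts) for size, counts in simulated
        if abs(size - median_size) <= size_tolerance
    ]

    def frequencies(counts):
        total = sum(counts)
        return [c / total for c in counts]

    return min(candidates, key=lambda item: dist(theta, frequencies(item[1])))


trees = [
    (10, (8, 1, 1, 0)),
    (11, (5, 2, 2, 1)),
    (30, (7, 1, 1, 1)),  # matches theta exactly but is far from the median size
    (10, (2, 3, 3, 2)),
    (12, (7, 1, 1, 1)),
]
typical = select_typical_tree(trees, (0.7, 0.1, 0.1, 0.1))
```

In this toy example the tree of size 30 is filtered out by the size condition, so the tree of size 12 is selected.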

We generated in this way 9 data sets, each associated with one of 9 probability vectors (see the Supplementary Material available at http://dx.doi.org/10.5061/dryad.9g5fp, for more details). The vectors were chosen to cover different patterns of probability. All data sets were generated with the same host tree of 36 leaves.

Self-test

As concerns the self-test, we designed the following procedure. Let denote the simulated parasite tree chosen in correspondence of the probability vector , as explained in the previous section. We recall that the host tree remains the same during all the self-test experiments. For a pair of host and parasite trees (), we ran Coala 50 times. In each run , we computed the quality that corresponded to how well the method was able to recover the target vector used for generating the data set . To do this, for each run , we considered the representative vectors of the clusters produced as output. We computed the distance for each of the representative vectors to the target vector and set to the smallest value among them.
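The quality of one run can be sketched as the smallest distance between the cluster representatives and the target vector. The Euclidean distance used below is a stand-in chosen for this illustration, and the function name is hypothetical.

```python
from math import dist


def run_quality(representatives, target):
    """Smallest distance from any cluster representative to the target vector."""
    return min(dist(rep, target) for rep in representatives)


# Three hypothetical cluster representatives from one run.
quality = run_quality(
    [[0.5, 0.2, 0.2, 0.1], [0.7, 0.1, 0.1, 0.1], [0.9, 0.0, 0.1, 0.0]],
    [0.7, 0.1, 0.1, 0.1],
)
```

A small value means that at least one cluster recovered a vector close to the one used to generate the data set, even if other clusters are far from it.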

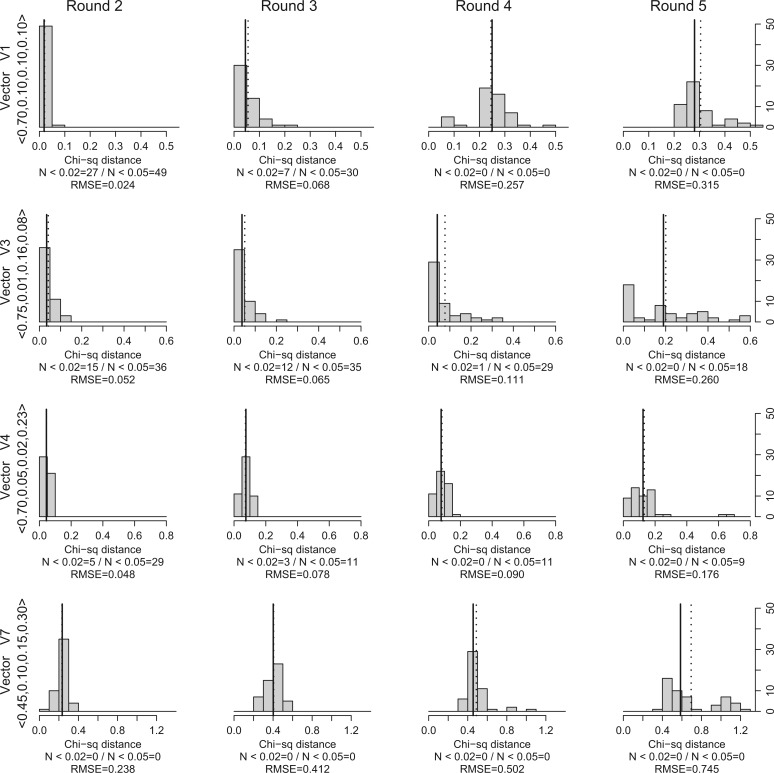

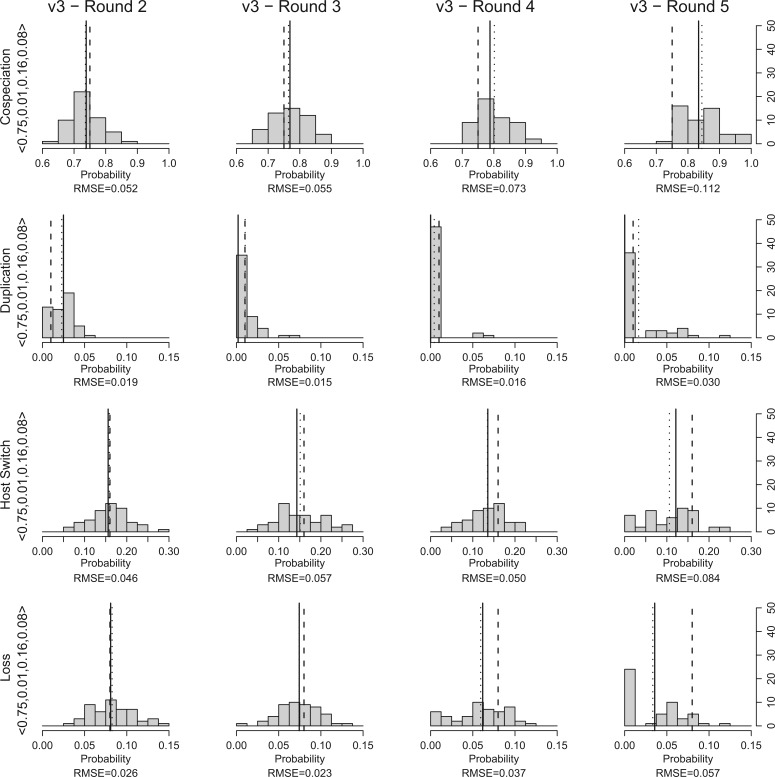

Figure 3 shows the distribution of the quality values which were obtained at the end of each round (from 2 to 5) for the simulated data sets , , , and (the results for the remaining data sets can be found in the Supplementary Material available at http://dx.doi.org/10.5061/dryad.9g5fp). Figure 4 shows the histograms of the event probabilities observed for the 50 parameter vectors with smallest distance at the end of each round for data set (again, the results for the remaining data sets are available in the Supplementary Material available at http://dx.doi.org/10.5061/dryad.9g5fp).

Figure 3.

For each simulated data set, we ran Coala 50 times and, at the end of each round (from 2 to 5), we took note of the cluster whose representative parameter vector had the smallest distance to the probability vector used to generate the simulated data set. The histograms show the distribution of the smallest distance observed on each one of the 50 runs at the end of each round (for the simulated data sets , , , and .). The solid and dotted vertical lines indicate median and mean values, respectively.

Figure 4.

For each simulated data set, we ran Coala 50 times and, at the end of each round (from 2 to 5), we took note of the cluster whose representative parameter vector had the smallest distance to the probability vector used to generate the simulated data set. The histograms show the distribution of the event probabilities observed on the list of parameter vectors which have the smallest distance on each run for the data set . The solid and dotted vertical lines indicate median and mean values, respectively. The dashed vertical line indicates the “target” value.

For data sets whose cospeciation probability is at least 0.50, our results (Fig. 3) show that in rounds 2 and 3, Coala is able to select parameter vectors that are close to the target probability vector. Looking at the histograms of these two rounds, we can observe that in most of the runs, the closest parameter vector has a low distance to the target. After the third round, this tendency changes and the closest parameter vectors show high distances, indicating that Coala is mainly selecting vectors which are far from the target one.

Because Coala is based on an ABC–SMC approach, the accepted vectors in one round have summary statistics (i.e., average distances) smaller than the threshold defined in the previous round. This means that at each new round, Coala selects parameter vectors that are more likely to explain the pair of trees given as input because their simulated parasite trees are, on average, closer to the real one.

Although we try to choose the best representative parasite tree for each pair (, ), we cannot guarantee that is the best explanation for the association between and . Even so, Coala was able to select parameter vectors that are close to the target probability vector in the first rounds. Figure 4 shows the histograms of the event probabilities observed among the 50 parameter vectors with smallest distance at the end of each round for data set , and confirms these observations. We can see that at round 2, the median and mean event probabilities (solid and dotted vertical lines respectively) are very close to the target value (dashed vertical line). When we increase the number of rounds, the distance between the median/mean probabilities and the target values increases.

When we decrease the cospeciation probability to values smaller than 0.50, Coala selects very few vectors which are close to the target vector. When the cospeciation probability decreases while the duplication and host switch probabilities increase, the variability of the tree topologies observed increases exponentially. Due to this, selecting a typical tree becomes an almost impossible task and this may explain the obtained results. Increasing the number of simulated trees to compute the summary statistic might enable us to improve the quality of the results. However, this would require a much longer execution time.

Biological Data sets Extracted from the Literature

To evaluate Coala on biological data sets, we extracted four pairs of host and parasite trees from the literature. However, due to space issues, in this work we present only two of them. A description and the results obtained on the additional biological data sets can be found in the Supplementary Material available at http://dx.doi.org/10.5061/dryad.9g5fp. Before presenting and discussing the data sets, we provide details on how we performed the analyses.

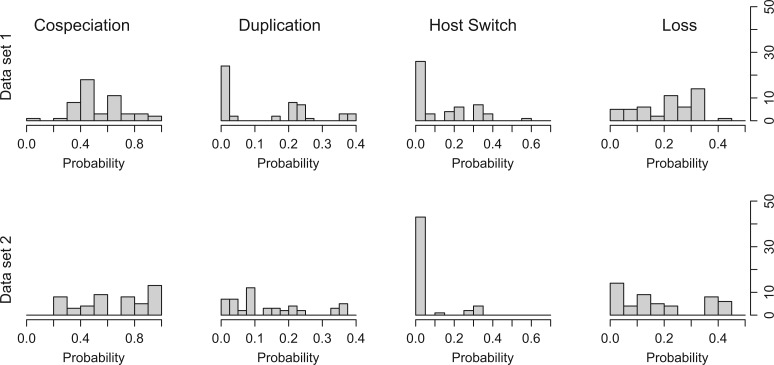

Each data set was processed by Coala as described in the Section “Experimental parameters.” Table 2 shows the representative parameter vectors obtained for each one of the data sets and Figure 5 the histograms of the event probabilities of the list of accepted vectors obtained at the end of the third round.

Table 2.

Representative probability vectors produced by Coala at Round 3

| Data set | Cluster | Cospeciation | Duplication | Host switch | Loss | No. of vectors |
|---|---|---|---|---|---|---|
| 1 | 0 | 0.030 | 0.000 | 0.557 | 0.413 | 1 |
| 1 | 1 | 0.461 | 0.258 | 0.000 | 0.281 | 24 |
| 1 | 2 | 0.554 | 0.000 | 0.270 | 0.176 | 20 |
| 1 | 3 | 0.910 | 0.016 | 0.058 | 0.016 | 5 |
| 2 | 1 | 0.851 | 0.082 | 0.000 | 0.066 | 25 |
| 2 | 2 | 0.473 | 0.204 | 0.000 | 0.323 | 10 |
| 2 | 3 | 0.238 | 0.349 | 0.000 | 0.413 | 8 |
| 2 | 4 | 0.580 | 0.002 | 0.282 | 0.136 | 7 |

Figure 5.

Distribution of the probability values for each event type observed on the parameter values accepted on the third round while processing the biological data sets 1 and 2.

In order to compare our results to the existing literature, we transformed each one of the representative parameter vectors into a vector of costs that was then used to compute optimal reconciliations between the host and the parasite trees given as input. The transformation was done by defining $c_i = -\ln p_i$ for each event type $i$ (cospeciation, duplication, host switch, and loss), which is based on a commonly accepted idea that the cost of an event is inversely related to its probability (e.g., Charleston 1998; Ronquist 2003; Huelsenbeck et al. 1997). Indeed, if $p_i$ is equal to 1, then we expect all the events to be of type $i$, thus the cost of the corresponding event must be 0. Similarly, if $p_i$ is equal to 0, we expect that event never happens, and thus the cost must be assigned to $\infty$.
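The transformation from probabilities to costs can be sketched as follows, assuming it is the negative natural logarithm, which reproduces the costs of Table 3 from the probabilities of Table 2 to within rounding. The `floor` parameter is an assumption of this sketch that keeps the cost finite when a probability is zero; the value 13.816 appearing in Table 3 equals $-\ln(10^{-6})$ to three decimals, which suggests, but does not confirm, a floor of that order.

```python
import math


def probabilities_to_costs(probabilities, floor=1e-6):
    """Map event probabilities to costs with c = -ln(p).

    Probabilities below `floor` (an assumed value) are raised to `floor`
    so that the cost of a zero-probability event stays finite.
    """
    return [-math.log(max(p, floor)) for p in probabilities]


# Representative vector of Cluster 1, data set 1 (Table 2):
# cospeciation, duplication, host switch, loss.
costs = probabilities_to_costs([0.461, 0.258, 0.0, 0.281])
```

The probabilities in Table 2 are rounded to three decimals, so costs recomputed from them can differ from the published ones in the last digit, and a probability displayed as 0.000 need not be exactly zero.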

To the best of our knowledge, the only methods that enumerate all optimal reconciliations are CoRe-Pa (Merkle et al. 2010), Notung (Stolzer et al. 2012), and Eucalypt (Donati et al. 2014). However, CoRe-Pa in some cases misses solutions, probably because it considers some additional constraints. Notung does not allow cospeciation costs different from zero, and the remaining event costs must be integers. We thus present the results of Eucalypt, which allows the configuration of all event costs and accepts real numbers.

Table 3 shows, for each data set, the vector of costs (for cospeciation, duplication, host switch, and loss) produced by transforming the representative parameter vectors obtained after the third round (Table 2). The next column indicates the cost of the optimal solution, and the following four columns give the numbers of each event type observed among the enumerated scenarios. Finally, the last two columns indicate, respectively, the total number of acyclic and cyclic scenarios.

Table 3.

Event vectors obtained by transforming the probability vectors (Table 2) into cost vectors

| Data set | Cluster | Cospeciation cost | Duplication cost | Host switch cost | Loss cost | Optimal cost | Cospeciations | Duplications | Host switches | Losses | Acyclic scenarios | Cyclic scenarios |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 0 | 3.517 | 13.816 | 0.584 | 0.885 | 14.044 | 1 | 0 | 15 | 2 | 2944 | 0 |
| 1 | 1 | 0.775 | 1.355 | 7.824 | 1.270 | 48.664 | 11 | 2 | 3 | 11 | 2 | 0 |
| 1 | 2 | 0.591 | 8.517 | 1.310 | 1.736 | 16.217 | 9 | 0 | 7 | 1 | 1 | 0 |
| 1 | 3 | 0.094 | 4.160 | 2.844 | 4.154 | 24.892 | 9 | 0 | 7 | 1 | 1 | 0 |
| 2 | 1 | 0.161 | 2.496 | 9.210 | 2.717 | 153.544 | 22 | 11 | 8 | 18 | 0 | 12 |
| 2 | 2 | 0.748 | 1.592 | 9.210 | 1.130 | 105.393 | 22 | 19 | 0 | 52 | 1 | 0 |
| 2 | 3 | 1.436 | 1.053 | 8.112 | 0.884 | 97.548 | 22 | 19 | 0 | 52 | 1 | 0 |
| 2 | 4 | 0.545 | 6.266 | 1.265 | 1.996 | 72.588 | 17 | 5 | 19 | 4 | 4 | 0 |

Note: The event-count columns give the number of events of each type observed among the enumerated scenarios. The last two columns indicate, respectively, the total number of acyclic and cyclic scenarios.
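As a worked check of how the entries of Table 3 relate to each other, the cost of an optimal solution can be recomputed as the sum, over the four event types, of the event cost multiplied by the number of events of that type. The function name below is hypothetical, and the small differences from the published totals presumably come from the rounding of the displayed costs.

```python
def reconciliation_cost(event_costs, event_counts):
    """Total cost of a reconciliation: sum of cost times count per event type."""
    return sum(cost * count for cost, count in zip(event_costs, event_counts))


# Data set 1, Cluster 1: costs and counts in the order
# cospeciation, duplication, host switch, loss.
total_cluster_1 = reconciliation_cost((0.775, 1.355, 7.824, 1.270), (11, 2, 3, 11))

# Data set 1, Cluster 2.
total_cluster_2 = reconciliation_cost((0.591, 8.517, 1.310, 1.736), (9, 0, 7, 1))
```

Both recomputed totals agree with the values 48.664 and 16.217 reported in the table to within a few hundredths.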

Data set 1: flavobacterial endosymbionts and their insect hosts

This data set was extracted from the work of Rosenblueth et al. (2012) and is composed of a pair of host and parasite trees which have each 17 species (see Supplementary Material available at http://dx.doi.org/10.5061/dryad.9g5fp). The parameter adaptive approach of CoRe-Pa (Merkle et al. 2010) was used to infer the more appropriate cost vectors for analyzing this data set. Nine such vectors were produced. However, only one, , was associated with a feasible reconciliation in the sense that host switches happened between contemporary species only (the branch length was used to infer this information). Because CoRe-Pa can produce unfeasible (i.e., cyclic) solutions during the parameter adaptive approach, Rosenblueth et al. decided to complement their study with Jane 3 (Conow et al. 2010), which uses a genetic algorithm approach to produce only acyclic reconciliations. They thus started with the only cost vector obtained by CoRe-Pa associated with a feasible reconciliation, however transforming it into integer numbers (a requirement of the software), and then gradually changed the costs until a feasible reconciliation was produced (again using branch-length information). This procedure resulted in the cost vector and a reconciliation with 9 cospeciations, 0 duplication, 7 host switches and 1 loss, the same as obtained by CoRe-Pa.

Running Coala on this data set, we obtain 3 nonsingleton clusters which are quite different from each other (Table 2). Cluster 0 is formed by a single accepted vector which did not cluster with any other because it is too far apart. Cluster 1 shows probabilities of 0.46, 0.26, and 0.28, respectively, for cospeciation, duplication, and loss. After transforming these into costs (Table 3), the obtained reconciliation scenarios have 11 cospeciations, 2 duplications, 3 host switches, and 11 losses. Clusters 2 and 3 show very low duplication probability. Although Cluster 2 exhibits intermediate values for the remaining probabilities, Cluster 3 has a very high cospeciation probability value (0.91) and low host switch (0.06) and loss (0.02). Due to the low duplication value, these clusters show the same reconciliation scenario: 9 cospeciations, 0 duplications, 7 host switches, and 1 loss, which is identical to the one proposed by Rosenblueth et al. (2012).

Data set 2: rodents and hantaviruses

This data set is taken from Ramsden et al. (2009, Fig. 2) and considers the coevolution of hantaviruses with their insectivore and rodent hosts. The host tree consists of a total of 34 hosts (28 rodents and 6 insectivores) and the parasite tree includes 42 hantaviruses. It was strongly believed that hantaviruses cospeciated with rodents because their phylogenetic trees have topological similarities with three consistently well-defined clades (Hughes and Friedman 2000; Jackson and Charleston 2004; Nemirov et al. 2004; Plyusnin and Morzunov 2001). The authors show that to support this hypothesis, the evolutionary rate of the RNA sequences of the hantaviruses should be several orders of magnitude smaller than the rates which are normally observed in RNA viruses that replicate with RNA-dependent RNA polymerase (Hanada et al. 2004). By analyzing the cophylogenetic reconciliations, the authors show that scenarios with more than 20 cospeciations are statistically nonsignificant. To explain the topological congruences, the authors point to the fact that host-switching followed by pathogen speciation can generate congruence between trees, particularly when pathogens preferentially switch among closely related hosts. Based on this fact and on the patterns of amino acid replacement observed in these viruses (compatible with host-specific adaptation), the authors conclude that the coevolutionary history of these hosts and parasites is the result of a recent history of preferential host-switching and local adaptation.

Looking at Table 2, we can observe that Clusters 1, 2, and 3 have representative vectors with zero probability for host switch events: Cluster 1 has a very high cospeciation probability (0.85), whereas Clusters 2 and 3 have probability values which are almost equally distributed among cospeciation, duplication, and loss events. After transforming these vectors into costs (Table 3), we obtain scenarios with a high number of cospeciations which is considered nonsignificant by Ramsden et al. (2009).

Unlike the others, Cluster 4 shows a vector with a host switch probability higher than the probabilities of duplication and loss. When converted into costs (Table 3), this generates time-consistent scenarios with 17 cospeciations, 5 duplications, 19 host switches, and 4 losses, a result much closer to the explanation given by Ramsden et al. (2009). These results reinforce the idea that, although Coala is able to identify vectors which can explain a pair of trees, prior knowledge of the dynamics of the interactions between the two groups of species is important to identify the clusters that better explain their coevolution.

Wolbachia and their Arthropod Hosts Data set

Wolbachia is a large, phylogenetically diverse monophyletic genus of intracellular bacteria that are currently considered the most abundant endosymbionts in arthropods. In insects alone, it is estimated that over 65% of the species are infected by Wolbachia. The data set used in this article corresponds to Wolbachia species that were detected in an extensive set of arthropods collected from 4 young, isolated islands (less than 5 Myr old) (Simões et al. 2011; Simões 2012). The trees are a subset of those discussed in Simões et al. (2011) and Simões (2012), for which we retained only the cases where the parasites were associated with a unique host, the hosts diverged by at least 2% at the level of the CO1 genes that were used for reconstructing their phylogenetic tree, and the Wolbachia sequences (corresponding to the fbpA gene) differed by at least one SNP. Each resulting tree is composed of 387 leaves. The initial results presented in Simões (2012) seemed to indicate that host switches might be quite frequent even among hosts that are physiologically and molecularly very distinct and thus phylogenetically distant.

The Wolbachia-arthropods data set was also processed by Coala as described in the Section “Experimental parameters.” Table 4 shows the three clusters which were obtained at the end of the third round. All these clusters have high cospeciation probabilities (>0.77). The first cluster has a very low duplication probability and a host switch probability around 0.05. The two other clusters point to a relatively high duplication probability and a low level of host switches. The difference between them is related to the probability of losses, which is around 0.14 for Cluster 2 and zero for Cluster 3.

Table 4.

Representative probability vectors produced by Coala, at the end of the third round, while processing the Wolbachia-arthropods data set

| Cluster | Cospeciation | Duplication | Host switch | Loss | No. of vectors |
|---|---|---|---|---|---|
| 1 | 0.866 | 0.006 | 0.055 | 0.073 | 26 |
| 2 | 0.771 | 0.078 | 0.010 | 0.141 | 22 |
| 3 | 0.964 | 0.022 | 0.014 | 0.000 | 2 |

Cluster 1 goes in the direction of what was presented in Simões (2012) where the author suggested that in the last 3 Ma, there were many transfers of Wolbachia, including between different arthropod orders, that is over large phylogenetic distances. Clusters 2 and 3 point to an opposite scenario.

Similarly to the analysis performed for the small biological data sets, we transformed each one of the representative parameter vectors into a vector of costs that was then used to compute optimal reconciliations between the host and the parasite trees given as input.

What is most striking in the results obtained for this data set is the huge number of optimal reconciliations that can be derived for all clusters. Because the total number of solutions makes enumerating all of them impossible, for this data set we computed only the costs of the optimal solutions and the total number of solutions. Additionally, for each cluster, we sampled 10,000 solutions and checked for the presence of acyclic solutions. Table 5 summarizes the results obtained.

Table 5.

Total number of solutions obtained by transforming the probability vectors (Table 4) into cost vectors for the Wolbachia-arthropods data set

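| Cluster | Cospeciation cost | Duplication cost | Host switch cost | Loss cost | Optimal cost | Solutions | Acyclic solutions |
|---|---|---|---|---|---|---|---|
| 1 | 0.144 | 5.116 | 2.899 | 2.623 | 917.475 | | No |
| 2 | 0.260 | 2.551 | 4.595 | 1.961 | 1407.877 | | No |
| 3 | 0.037 | 3.817 | 4.269 | 13.816 | 1375.725 | | Yes |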

For the small sampling that we performed, we were able to find feasible (acyclic) solutions only with the cost vector produced with the event probabilities of Cluster 3. However, the results obtained with all the other four data sets used here lead us to suggest that the number of feasible solutions might quite possibly remain large.

Conclusions

We have developed an automated method that, starting from two phylogenies representing sets of host and parasite species, allows extraction of information about the costs of the events in a most probable reconciliation. It is clear that within a parsimony-based approach, an optimal solution strictly depends on the specific values attributed to these costs. However, there is seldom enough information for assigning those values a priori. Indeed, we observe in the results we obtained on a diverse selection of data sets that the costs inferred by our simulations may be very different across data sets, thus motivating the use of estimated instead of fixed costs. Such costs may even differ widely for the same pair of host–parasite trees, as is observed for the Wolbachia-arthropods data set.

These costs are inversely related to the likelihood of the events, and so to their expected frequency. For this reason, providing information on the frequencies of the events is an important issue, in particular in the cases where the reconciliation methods fail to find a solution. The latter can happen, for instance, if all the optimal solutions that are identified by the existing reconciliation algorithms are biologically unfeasible due to the presence of cycles, because finding an acyclic reconciliation is an NP-hard problem. In addition, if the host and parasite trees are large (for instance, on the order of hundreds of taxa), these cases cannot be handled by the existing reconciliation algorithms in the sense that there are too many solutions to test for acyclicity.

As future work, we first plan to refine the model used for the reconciliation problem, including more biological information and making it more realistic. In particular, we could include information about the distance of the allowed host switches (for instance if we expect a host switch to rarely happen between species that are too far from each other), or allow the mapping of the leaves to be an association instead of a function (thus addressing the cases where a parasite can be found in more than one host species). Moreover, we should also consider the case where the input phylogenies are not fully resolved, meaning that the trees are not binary.

A more efficient exploration of the parameter space is another important direction for future work: it would significantly increase the efficiency of our procedure and also allow larger trees to be handled.

It is important to observe that most studies on cophylogeny assume that the phylogenies of the organisms are correct. Clearly, this may affect the results observed. It would therefore be interesting to be able to infer the cophylogenetic reconciliation directly from sequence data.

Finally, the accuracy of the results obtained by our method depends on the choice of the metric used for comparing trees. Designing new metrics that can be computed efficiently while still capturing the similarity for multilabeled, not fully resolved trees is therefore another important future issue which we believe is also interesting per se.

Availability

The Coala program is available at http://coala.gforge.inria.fr/ and runs on any machine with Java 1.6 or higher. The Eucalypt program is available at http://eucalypt.gforge.inria.fr/.

Running Time

The experiments were executed at the IN2P3 Computing Center (http://cc.in2p3.fr/). For the simulated data sets, each pair of trees was processed with 3 threads to speed up the simulation process. The time necessary to complete 5 rounds for all 50 runs varied from 1 to 2 days depending on the size of the trees. For the biological data sets 1 to 4, we also used 3 threads. The observed execution times for 5 rounds ranged from a couple of hours for the smallest data set (Data set 1) to 1 day for Data set 4. Due to its size, the Wolbachia-arthropods data set was processed with 150 threads and required approximately 8 days to complete 5 rounds.

Supplementary Material

Data available from the Dryad Digital Repository: http://dx.doi.org/10.5061/dryad.9g5fp.

Funding

The research leading to these results was funded by the European Research Council under the European Community's Seventh Framework Programme (FP7/2007-2013) / ERC grant agreement no. [247073]10, and by the French National Research Agency (ANR) / Project ANR MIRI BLAN08-1335497. It was supported by the ANR funded LabEx ECOFECT.

References

- Arvestad L., Berglund A.-C., Lagergren J., Sennblad B. Bayesian gene/species tree reconciliation and orthology analysis using MCMC. Bioinformatics. 2003;19:i7–i15. doi: 10.1093/bioinformatics/btg1000. [DOI] [PubMed] [Google Scholar]

- Bansal M.S., Alm E., Kellis M. Efficient algorithms for the reconciliation problem with gene duplication, horizontal transfer and loss. Bioinformatics. 2012;28:i283–i291. doi: 10.1093/bioinformatics/bts225. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baroni M., Grünewald S., Moulton V., Semple C. Bounding the number of hybridisation events for a consistent evolutionary history. J. Math. Biol. 2005;51:171–182. doi: 10.1007/s00285-005-0315-9. [DOI] [PubMed] [Google Scholar]

- Beaumont M.A., Cornuet J.-M., Marin J.-M., Robert C.P. Adaptive approximate Bayesian computation. Biometrika. 2009;96:983–990. [Google Scholar]

- Beaumont M.A., Zhang W., Balding D.J. Approximate Bayesian computation in population genetics. Genetics. 2002;162:2025–2035. doi: 10.1093/genetics/162.4.2025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bryant D., Steel M. Computing the distribution of a tree metric. IEEE/ACM Trans. Comput. Biol. Bioinf. 2009;6:420–426. doi: 10.1109/TCBB.2009.32. [DOI] [PubMed] [Google Scholar]

- Charleston M.A. Jungles: a new solution to the host/parasite phylogeny reconciliation problem. Math. Biosci. 1998;149:191–223. doi: 10.1016/s0025-5564(97)10012-8. [DOI] [PubMed] [Google Scholar]

- Charleston M.A. 2002. Biological Evolution and Statistical Physics vol. 585 of Lecture Notes in Physics chap. Principles of cophylogenetic maps. Berlin Heidelberg: Springer. pp. 122–147.

- Charleston M.A. Recent results in cophylogeny mapping. Adv. Parasitol. 2003;54:303–330. doi: 10.1016/s0065-308x(03)54007-6. [DOI] [PubMed] [Google Scholar]

- Charleston M.A. 2012. Treemap 3b. Available from: https://sites.google.com/site/cophylogeny/.

- Conow C., Fielder D., Ovadia Y., Libeskind-Hadas R. Jane: a new tool for the cophylogeny reconstruction problem. Algorithms Mol. Biol. 2010;5:10. doi: 10.1186/1748-7188-5-16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Day W.H.E. Optimal algorithms for comparing trees with labeled leaves. J. Classif. 1985;2:7–28. [Google Scholar]

- Del Moral P., Doucet A., Jasra A. An adaptive sequential Monte Carlo method for approximate Bayesian computation. Stat. Comput. 2012;22:1009–1020. [Google Scholar]

- Donati B., Baudet C., Sinaimeri B., Crescenzi P., Sagot M.-F. Eucalypt: Efficient tree reconciliation enumerator. Algorithms Mol. Biol. 2014 doi: 10.1186/s13015-014-0031-3. (in press) [DOI] [PMC free article] [PubMed] [Google Scholar]

- Doyon J.-P., Hamel S., Chauve C. An efficient method for exploring the space of gene tree/species tree reconciliations in a probabilistic framework. IEEE/ACM Trans. on Comput. Biol. Bioinf. 2011a;9:26–39. doi: 10.1109/TCBB.2011.64. [DOI] [PubMed] [Google Scholar]

- Doyon J.-P., Ranwez V., Daubin V., Berry V. Models, algorithms and programs for phylogeny reconciliation. Brief. Bioinform. 2011b;12:392–400. doi: 10.1093/bib/bbr045. [DOI] [PubMed] [Google Scholar]

- Doyon J.-P., Scornavacca C., Gorbunov K. Y., Szöllősi G. J., Ranwez V., Berry V. 2011c. An efficient algorithm for gene/species trees parsimonious reconciliation with losses, duplications and transfers. In: Tannier E., editors. Proceedings of the 8th annual RECOMB Satellite Workshop on Comparative Genomics (RECOMB-CG 2010) vol. 6398 of Lecture Notes in Bioinformatics. Heidelberg: Springer. pp. 93–108.

- Drinkwater B., Charleston M.A. An improved node mapping algorithm for the cophylogeny reconstruction problem. Coevolution. 2014;2:1–17. [Google Scholar]

- Farach-Colton M., Przytycka T.M., Thorup M. On the agreement of many trees. Inform. Process. Lett. 1995;55:297–301. [Google Scholar]

- Fearnhead P., Prangle D. Constructing summary statistics for approximate Bayesian computation: semi-automatic approximate Bayesian computation. J. R. Stat. Soc.: Series B Stat. Methodol. 2012;74:419–474. [Google Scholar]

- Felsenstein J. Inferring phylogenies. Sunderland, MA: Sinauer Associates, Inc; 2003. [Google Scholar]

- Finden C.R., Gordon A.D. Obtaining common pruned trees. J. Classif. 1985;2:255–276. [Google Scholar]

- Ganapathy G., Goodson B., Jansen R., Le H., Ramachandran V., Warnow T. Pattern identification in biogeography. IEEE/ACM Trans. Comput. Biol. Bioinf. 2006;3:334–346. doi: 10.1109/TCBB.2006.57. [DOI] [PubMed] [Google Scholar]

- Gelman A., Carlin J.B., Stern H.S., Rubin D.B. Bayesian data analysis. London: Chapman & Hall; 2003. [Google Scholar]

- Goodman M., Czelusniak J., Moore G., Romero-Herrera A., Matsudai G. Fitting the gene lineage into its species lineage, a parsimony strategy illustrated by cladograms constructed from globin sequences. Syst. Zool. 1979;28:132–163. [Google Scholar]

- Guigó R., Muchnik I., Smith T. Reconstruction of ancient molecular phylogeny. Mol. Phylogenet. Evol. 1996;6:189–213. doi: 10.1006/mpev.1996.0071. [DOI] [PubMed] [Google Scholar]

- Hallett M.T, Lagergren J. Efficient algorithms for lateral gene transfer problems. In: Lengauer T., editor. Proceedings of the fifth Annual International Conference on Research in Computational Molecular Biology (RECOMB) New York: ACM; 2001. pp. 149–156. [Google Scholar]

- Hanada K., Suzuki Y., Gojobori T. A large variation in the rates of synonymous substitution for RNA viruses and its relationship to a diversity of viral infection and transmission modes. Mol. Biol. Evol. 2004;21:1074–1080. doi: 10.1093/molbev/msh109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hein J. Reconstructing evolution of sequences subject to recombination using parsimony. Math. Biosci. 1990;98:185–200. doi: 10.1016/0025-5564(90)90123-g. [DOI] [PubMed] [Google Scholar]

- Huelsenbeck J.P., Rannala B., Larget B. A Bayesian framework for the analysis of cospeciation. Evolution. 2000;54:352–364. doi: 10.1111/j.0014-3820.2000.tb00039.x. [DOI] [PubMed] [Google Scholar]

- Huelsenbeck J.P., Rannala B., Yang Z. Statistical tests of host-parasite cospeciation. Evolution. 1997;51:410–419. doi: 10.1111/j.1558-5646.1997.tb02428.x. [DOI] [PubMed] [Google Scholar]

- Hughes A.L., Friedman R. Evolutionary diversification of protein-coding genes of hantaviruses. Mol. Biol. Evol. 2000;17:1558–1568. doi: 10.1093/oxfordjournals.molbev.a026254. [DOI] [PubMed] [Google Scholar]

- Jackson A.P., Charleston M.A. A cophylogenetic perspective of RNA-virus evolution. Mol. Biol. Evol. 2004;21:45–57. doi: 10.1093/molbev/msg232. [DOI] [PubMed] [Google Scholar]

- Langfelder P., Zhang B., Horvath S. Defining clusters from a hierarchical cluster tree: the Dynamic Tree Cut package for R. Bioinformatics. 2007;24:719–720. doi: 10.1093/bioinformatics/btm563. [DOI] [PubMed] [Google Scholar]

- Libeskind-Hadas R., Charleston M.A. On the computational complexity of the reticulate cophylogeny reconstruction problem. J. Comput. Biol. 2009;16:105–117. doi: 10.1089/cmb.2008.0084. [DOI] [PubMed] [Google Scholar]

- Libeskind-Hadas R., Wu Y.-C., Bansal M.S., Kellis M. Pareto-optimal phylogenetic tree reconciliation. Bioinformatics. 2014;30:i87–i95. doi: 10.1093/bioinformatics/btu289. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marin J.-M., Pudlo P., Robert C.P., Ryder R.J. Approximate Bayesian computational methods. Stat. Comput. 2012;22:1167–1180. [Google Scholar]

- Merkle D., Middendorf M., Wieseke N. A parameter-adaptive dynamic programming approach for inferring cophylogenies. BMC Bioinformatics. 2010;11:10. doi: 10.1186/1471-2105-11-S1-S60. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Merkle D., Middendorf M. Reconstruction of the cophylogenetic history of related phylogenetic trees with divergence timing information. Theor. Biosci. 2005;123:277–299. doi: 10.1016/j.thbio.2005.01.003. [DOI] [PubMed] [Google Scholar]

- Mirkin B., Muchnik I., Smith T. A biologically consistent model for comparing molecular phylogenies. J. Comput. Biol. 1995;2:J493–J507. doi: 10.1089/cmb.1995.2.493. [DOI] [PubMed] [Google Scholar]

- Nelder J., Mead R. A simplex method for function minimization. Comput. J. 1965;7:308–313. [Google Scholar]

- Nemirov K., Vaheri A., Plyusnin A. Hantaviruses: co-evolution with natural hosts. Rec. Res. Dev. Virol. 2004;6:201–228. [Google Scholar]

- Ovadia Y., Fielder D., Conow C., Libeskind-Hadas R. The cophylogeny reconstruction problem is NP-complete. J. Comput. Biol. 2011;18:59–65. doi: 10.1089/cmb.2009.0240. [DOI] [PubMed] [Google Scholar]

- Page R.D.M. Maps between trees and cladistic analysis of historical associations among genes, organisms, and areas. Syst. Biol. 1994a;43:58–77. [Google Scholar]

- Page R.D.M. Parallel phylogenies: reconstructing the history of host-parasite assemblages. Cladistics. 1994b;10:155–173. [Google Scholar]

- Paterson A.M., Banks J. Analytical approaches to measuring cospeciation of host and parasites: Through a glass, darkly. Int. J. Parasitol. 2001;31:1012–1022. doi: 10.1016/s0020-7519(01)00199-0. [DOI] [PubMed] [Google Scholar]

- Plyusnin A., Morzunov S.P. Virus evolution and genetic diversity of hantaviruses and their rodent hosts. Curr. Top. Microbiol. Immunol. 2001;256:47–75. doi: 10.1007/978-3-642-56753-7_4. [DOI] [PubMed] [Google Scholar]

- Pritchard J., Seielstad M., Perez-Lezaun A., Feldman M. Population growth of human Y chromosomes: a study of Y chromosome microsatellites. Mol. Biol. Evol. 1999;16:1791–1798. doi: 10.1093/oxfordjournals.molbev.a026091. [DOI] [PubMed] [Google Scholar]

- Ramsden C., Holmes E., Charleston M. Hantavirus evolution in relation to its rodent and insectivore hosts. Mol. Biol. Evol. 2009;26:143–153. doi: 10.1093/molbev/msn234. [DOI] [PubMed] [Google Scholar]

- Robinson D.F., Foulds L.R. Comparison of phylogenetic trees. Math. Biosci. 1981;55:131–147. [Google Scholar]

- Ronquist F. Tangled trees: phylogeny, cospeciation, and coevolution chap. Parsimony analysis of coevolving species associations. Chicago, USA: University of Chicago Press; 2003. pp. 22–64. [Google Scholar]

- Rosenblueth M., Sayavedra L., Sámano-Sánchez H., Roth A., Martínez-Romero E. Evolutionary relationships of flavobacterial and enterobacterial endosymbionts with their scale insect hosts (hemiptera: Coccoidea) J. Evolution. Biol. 2012;25:2357–2368. doi: 10.1111/j.1420-9101.2012.02611.x. [DOI] [PubMed] [Google Scholar]

- Simões P.M., Mialdea G., Reiss D., Sagot M.-F., Charlat S. Wolbachia detection: an assessment of standard pcr protocols. Mol. Ecol. Resour. 2011;11:567–572. doi: 10.1111/j.1755-0998.2010.02955.x. [DOI] [PubMed] [Google Scholar]

- Simões P.M. 2012. Diversity and dynamics of Wolbachia-host associations in arthropods from the Society archipelago, French Polynesia. Ph.D. thesis. France: University of Lyon 1.

- Sisson S.A., Fan Y., Tanaka M.M. Sequential Monte Carlo without likelihoods. Proc. Natl. Acad. Sci. USA. 2007;104:1760–1765. doi: 10.1073/pnas.0607208104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Sisson S.A., Fan Y., Tanaka M.M. Sequential Monte Carlo without likelihoods. erratum 1041760. Proc. Natl. Acad. Sci. USA. 2009;106:16889–16889. doi: 10.1073/pnas.0607208104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Steel M., Penny D. Distributions of tree comparison metrics – some new results. Syst. Biol. 1993;42:126–141. [Google Scholar]

- Stolzer M.L., Lai H., Xu M., Sathaye D., Vernot B., Durand D. Inferring duplications, losses, transfers and incomplete lineage sorting with nonbinary species trees. Bioinformatics. 2012;28:i409–i415. doi: 10.1093/bioinformatics/bts386. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Szöllősi G.J., Rosikiewicz W., Boussau B., Tannier E., Daubin V. Efficient exploration of the space of reconciled gene trees. Syst. Biol. 2013;62:901–912. doi: 10.1093/sysbio/syt054. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tofigh A., Hallett M., Lagergren J. Simultaneous identification of duplications and lateral gene transfers. IEEE/ACM Trans. Comput. Biol. Bioinf. 2011;8:517–535. doi: 10.1109/TCBB.2010.14. [DOI] [PubMed] [Google Scholar]

- Waterman M.S., Smith T.F. On the similarity of dendrograms. J. Theor. Biol. 1978;73:789–800. doi: 10.1016/0022-5193(78)90137-6. [DOI] [PubMed] [Google Scholar]

- Wieseke N., Bernt M., Middendorf M. Unifying parsimonious tree reconciliation. In: Darling A., Stoye J., editors. Proceedings of the 13th International Workshop on Algorithms in Bioinformatics (WABI 2013) vol. 8126 of Lecture Notes in Computer Science. Heidelberg: Springer; 2013. pp. 200–214. [Google Scholar]