Abstract

Understanding the nature of object representations in the human brain is critical for understanding the neural basis of invariant object recognition. However, the degree to which object representations are sensitive to object viewpoint is unknown. Using fMRI we employed a parametric approach to examine the sensitivity to object view as a function of rotation (0°–180°), category (animal/vehicle) and fMRI-adaptation paradigm (short or long-lagged). For both categories and fMRI-adaptation paradigms, object-selective regions recovered from adaptation when a rotated view of an object was shown after adaptation to a specific view of that object, suggesting that representations are sensitive to object rotation. However, we found evidence for differential representations across categories and ventral stream regions. Rotation cross-adaptation was larger for animals than vehicles, suggesting higher sensitivity to vehicle than animal rotation, and largest in the left fusiform/occipito-temporal sulcus (pFus/OTS), suggesting this region has low sensitivity to rotation. Moreover, right pFus/OTS and FFA responded more strongly to front than back views of animals (without adaptation) and rotation cross-adaptation depended both on the level of rotation and the adapting view. This result suggests a prevalence of neurons that prefer frontal views of animals in fusiform regions. Using a computational model of view-tuned neurons, we demonstrate that differential neural view tuning widths and relative distributions of neural-tuned populations in fMRI voxels can explain the fMRI results. Overall, our findings underscore the utility of parametric approaches for studying the neural basis of object invariance and suggest that there is no complete invariance to object view.

INTRODUCTION

Recognizing an object across different rotations is one of the most difficult challenges faced by the human visual system, as it produces incredible variability in the appearance of an object. Yet, humans can recognize objects across rotations (referred to as view-invariant object recognition) in less than a few hundred milliseconds (Biederman et al., 1974; Grill-Spector and Kanwisher, 2005; Thorpe et al., 1996). How are objects represented in human visual cortex to allow efficient recognition?

Some theories suggest that invariant recognition is accomplished by largely view-invariant representations of objects (Recognition by Components, RBC, Biederman, 1987; generalized cylinders, Marr, 1982). That is, the underlying neural representations respond similarly to an object across its views. However, other theories suggest that object representations are view-dependent, that is, they consist of several 2D views of an object (Basri and Ullman, 1988; Bulthoff and Edelman, 1992; Poggio and Edelman, 1990; Tarr, 1995; Tarr and Gauthier, 1998; Ullman, 1989, 1998). Invariant object recognition is accomplished by interpolation across these views (Logothetis et al., 1994; Poggio and Edelman, 1990; Ullman, 1989) or by a distributed neural code across view-tuned neurons (Perrett et al., 1998).

Single unit electrophysiology studies in primates indicate that the majority of neurons in monkey inferotemporal cortex are view-dependent (Desimone, 1996; Kayaert et al., 2005; Logothetis and Pauls, 1995; Logothetis et al., 1995; Perrett, 1996; Vogels and Biederman, 2002; Vogels et al., 2001; Wang et al., 1996) with a small minority (5–10%) of neurons showing view-invariant responses across object rotations (Booth and Rolls, 1998; Logothetis and Pauls, 1995; Logothetis et al., 1995; Perrett et al., 1991).

In humans, fMRI-adaptation (fMRI-A) studies (Grill-Spector et al., 1999; Grill-Spector and Malach, 2001) and analysis of distributed response patterns (Eger et al., 2008) have been used to study the invariant nature of object representations with variable results. Short-lagged fMRI-A experiments (Grill-Spector et al., 2006), in which the test stimulus is presented immediately after the adapting stimulus, suggest that object representations in the lateral occipital complex (LOC; Malach et al., 1995) are view-dependent (Ewbank et al., 2007; Fang et al., 2007; Gauthier et al., 2002; Grill-Spector et al., 1999; but see Valyear et al., 2006). However, long-lagged fMRI-A experiments (Grill-Spector et al., 2006), in which many intervening stimuli occur between the test and adapting stimulus, have provided some evidence for view-invariant representations in ventral LOC (Eger et al., 2004; James et al., 2002), especially in the left hemisphere (Vuilleumier et al., 2002) and the parahippocampal place area (PPA; Epstein et al., 2008). Also, a recent study showed that the distributed LOC responses to a particular object remained stable across 60° rotations (Eger et al., 2008).

It is unknown whether differences across fMRI studies reflect differences in the neural representations depending on object category and cortical region, or whether they reflect methodological differences across studies (e.g., the level of object rotation and the fMRI-adaptation paradigm used). For example, the Vuilleumier et al. (2002) and Eger et al. (2008) studies used smaller rotations than the Grill-Spector et al. (1999) study. Also, fMRI-A effects may reflect different mechanisms at short and long time scales (Grill-Spector et al., 2006), with higher invariance manifesting at longer time scales. Indeed, a recent fMRI-A study by Epstein and colleagues (2008) showed viewpoint sensitivity for places in the PPA for short-lagged fMRI-A and view invariance for places in the PPA for long-lagged fMRI-A. Critically, previous studies do not provide a comprehensive characterization of object view representation necessary to address theories of object recognition. Therefore, we sought to: (1) establish the view-sensitivity of neural populations in object-selective cortex across several levels of object rotation, (2) examine whether view-sensitivity varies across ventral stream regions, (3) determine whether view-sensitivity varies across object categories and short and long-lagged fMRI-A paradigms, and (4) relate experimental results to putative neural responses using a computational model.

To address these questions, we measured viewpoint sensitivity across object-selective cortex using fMRI-A for two object categories (animals and vehicles), across four parametric changes of viewpoint (rotations of Δ0°, Δ60°, Δ120°, Δ180° around the vertical axis), and two fMRI-A paradigms (short-lagged and long-lagged), see Fig. 1. Using a parametric measurement of object rotation effects is important as it provides a more rigorous characterization of the sensitivity of object representations to viewpoint.

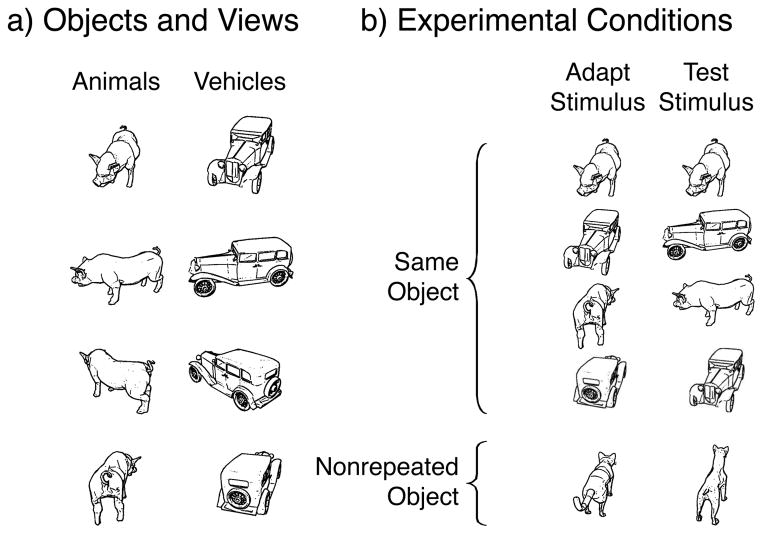

Figure 1. Stimuli and Experimental design.

a) Examples of animal and vehicle stimuli used in the experiments. b) Examples of experimental conditions in adaptation experiments: left column depicts the adapting stimulus, right the test stimulus. In the short-lagged adaptation paradigm, adapting and test stimuli appeared in succession. In the long-lagged paradigm, the test stimulus appeared a few minutes after the adapting stimulus with many intervening stimuli.

MATERIALS AND METHODS

General methodological considerations

fMRI-Adaptation (fMRI-A) is a method used to measure sensitivity to transformations by adapting the neural representation to a particular stimulus (measured as lowered fMRI signal) and comparing the level of response to a specific change in the stimulus after adaptation (Grill-Spector et al., 1999; Grill-Spector and Malach, 2001). In fMRI-A studies of object viewpoint sensitivity subjects are adapted to an object in a particular viewpoint. Then they are shown the same object from a different viewpoint. Recovery from adaptation in response to the rotated object is interpreted as reflecting viewpoint sensitivity (providing evidence for a view-dependent representation), whereas adaptation to new views of the object (i.e., rotation cross-adaptation) is interpreted as viewpoint invariance.

Rotation cross-adaptation depends on both the level of the rotation and the underlying neural tuning to specific object views. If object representations are view invariant, there will be significant rotation cross-adaptation across all levels of object rotation. Alternatively, if object representations are view-dependent, there will be recovery from adaptation (no cross-adaptation) at some level of rotation. Notably, if the view tuning of the neural population is wider than the range of rotations tested, the profile of recovery from adaptation may manifest as a monotonic recovery from adaptation as a function of the level of rotation. However, if the population view tuning is narrower than the range of rotations tested, there may be adaptation only for the zero rotation condition and complete recovery from adaptation (i.e. no rotation cross-adaptation) for non-zero rotations (as shown in the computational model, Fig. 9).

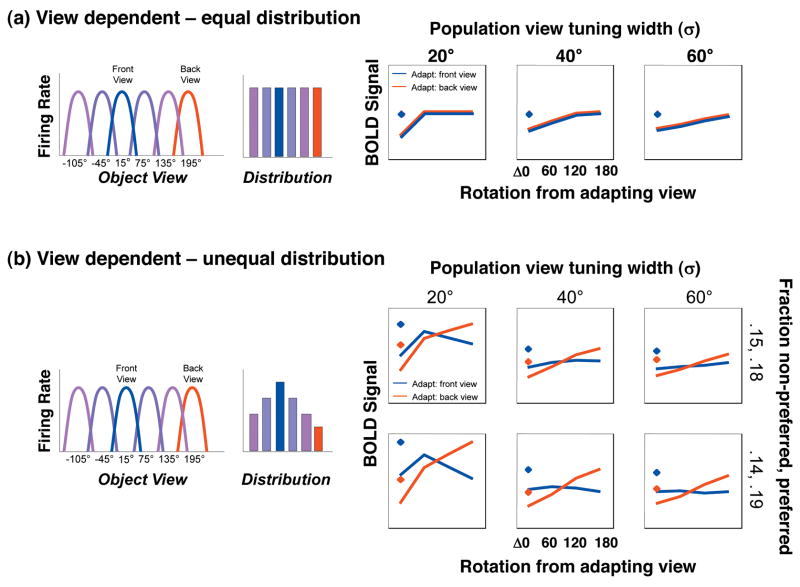

Figure 9. Mixture of View-Dependent Neural Populations Model.

Left: Schematic illustration of the view-tuning and distribution of neural populations tuned to different views in a voxel. Each bar represents the relative proportion of neurons in a voxel tuned to a view, and each is color coded by the preferred view. Right: result of computational implementation of the model illustrating predicted BOLD responses during fMRI-A experiments. Diamonds: responses without adaptation; Lines: response after adaptation with a front view (blue line) or back view (red line). Across columns the view tuning width varies, across rows the distribution of neural populations preferring specific views varies. a) Mixture of view-dependent neural populations which are equally distributed in a voxel. This model predicts a similar pattern of recovery from adaptation regardless of the adapting view. b) Mixture of view-dependent neural populations in a voxel with a higher proportion of neurons that prefer the front view. This model predicts higher BOLD responses to frontal views of animals without adaptation and a different pattern of rotation cross-adaptation during fMRI-A experiments.
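The qualitative predictions described above can be illustrated with a minimal sketch of a mixture of view-tuned populations. This is not the implementation used for Fig. 9: the Gaussian tuning shape, the six preferred views, the tuning widths, the population weights, and the multiplicative adaptation rule (with the hypothetical parameter `adapt_strength`) are all assumptions chosen for illustration.

```python
import math

def circular_distance(a_deg, b_deg):
    """Smallest angular difference between two views, in degrees."""
    d = abs(a_deg - b_deg) % 360
    return min(d, 360 - d)

def tuning(preferred, view, width):
    """Gaussian view tuning (assumed shape); the peak response is 1."""
    return math.exp(-circular_distance(preferred, view) ** 2 / (2 * width ** 2))

def voxel_response(test_view, preferred_views, weights, width,
                   adapt_view=None, adapt_strength=0.5):
    """Weighted sum of population responses in one voxel."""
    total = 0.0
    for pref, w in zip(preferred_views, weights):
        r = tuning(pref, test_view, width)
        if adapt_view is not None:
            # Assumed rule: each population is suppressed in proportion
            # to how strongly it responded to the adapting view.
            r *= 1.0 - adapt_strength * tuning(pref, adapt_view, width)
        total += w * r
    return total

def recovery_profile(adapt_view, rotations, preferred_views, weights, width):
    """Adapted / unadapted response for each rotation from the adapting view."""
    profile = []
    for rot in rotations:
        test = adapt_view + rot
        adapted = voxel_response(test, preferred_views, weights, width,
                                 adapt_view=adapt_view)
        unadapted = voxel_response(test, preferred_views, weights, width)
        profile.append(adapted / unadapted)
    return profile

# Hypothetical populations and weights, for illustration only.
PREFS = [15, 75, 135, 195, 255, 315]
UNIFORM = [1 / 6] * 6
FRONT_BIASED = [0.4, 0.15, 0.1, 0.1, 0.1, 0.15]
ROTATIONS = [0, 60, 120, 180]

narrow = recovery_profile(15, ROTATIONS, PREFS, UNIFORM, width=20)
wide = recovery_profile(15, ROTATIONS, PREFS, UNIFORM, width=90)
```

Under these assumed parameters, the narrowly tuned mixture shows adaptation only at Δ0° and near-complete recovery from Δ60° onward, whereas the broadly tuned mixture recovers gradually with rotation. Passing `FRONT_BIASED` to `voxel_response` without an adapting view gives a larger response to the 15° view than to the 195° view.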

We also measured, for the first time using fMRI, the level of response to specific object views in the absence of adaptation. This measurement is important for two reasons: (1) finding higher activation for a specific view over other views will provide evidence for view-dependent representations, and (2) the interpretation of adaptation experiments necessitates measurements of responses before adaptation. On an intuitive level, a lower response to a rotated version of an object after presentation of a specific view of that object may reflect cross-adaptation across views, or it may simply reflect a lower response to the second view of that object. Thus, measuring fMRI responses without adaptation is necessary for differentiating the potential sources of lowered responses during fMRI-A experiments.

Subjects

A total of 10 subjects (seven males, three females, ages 23–37) participated in this study. All subjects had normal or corrected-to-normal vision. Experiments were approved by the Stanford Internal Review Board on Human Subjects Research, and all subjects provided informed consent to participate in all experiments.

Stimuli

Stimuli were line-drawings of 4-legged animals and 4-wheeled vehicles (Fig. 1a). All objects were rotated around the vertical axis of rotation at the approximate center of mass. All object images were acquired approximately 30° above the horizontal plane to minimize self-occlusion. The 0° view was defined as the head (animals) or headlights (vehicles) directed toward the viewer. Images were then acquired for the 15° (front), 75°, 135°, and 195° (back) views rotated clockwise around the vertical axis. Objects were normalized such that the horizontal span of the largest view for each object was equated across all objects, and the apparent size for each object was consistent across all views. Across all rotations, stimuli subtended (min-max) 3–13° of visual angle vertically, and 4–13° horizontally. Scrambled images were created by randomly scrambling images into 400 squares. The presentation of stimuli was programmed in MATLAB (The Mathworks Inc., Natick, MA) using the Psychophysics Toolbox (Brainard, 1997).
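The scrambling step can be sketched as follows. The stimuli were generated in MATLAB, so this Python sketch is only an illustration: it assumes the 400 squares form a 20 × 20 grid, that image dimensions are divisible by the grid size, and that an image is a list of pixel rows. The function name `scramble` and its parameters are hypothetical.

```python
import random

def scramble(image, grid=20, seed=None):
    """Cut an image into grid x grid blocks and shuffle the blocks.

    `image` is a list of rows, each row a list of pixel values.
    A 20 x 20 grid yields 400 squares (assumed layout).
    """
    rows, cols = len(image), len(image[0])
    if rows % grid or cols % grid:
        raise ValueError("image dimensions must be divisible by the grid size")
    bh, bw = rows // grid, cols // grid

    # Collect the blocks in row-major order.
    blocks = [
        [row[c * bw:(c + 1) * bw] for row in image[r * bh:(r + 1) * bh]]
        for r in range(grid)
        for c in range(grid)
    ]

    # Randomly permute block positions.
    rng = random.Random(seed)
    rng.shuffle(blocks)

    # Reassemble the shuffled blocks into a full image.
    out = []
    for r in range(grid):
        for y in range(bh):
            line = []
            for c in range(grid):
                line.extend(blocks[r * grid + c][y])
            out.append(line)
    return out
```

Shuffling whole blocks preserves the pixel content of the original image while destroying its global shape.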

Experiments

Eight subjects (three females, five males) participated in Experiment 1, seven subjects (three females, four males; ages 23–37) participated in Experiment 2, short-lagged (SL) fMRI-A, and eight subjects (two females, six males; ages 25–37) participated in Experiment 3, long-lagged (LL) fMRI-A. Six of the subjects participated in all three experiments (two females, four males). All subjects participated in several additional sessions including high-resolution whole brain anatomy, retinotopic experiments and an FFA localizer. LOC localizers were obtained during experimental sessions.

Experiment 1: Responses to Object Views Without Adaptation

Subjects participated in two runs of Experiment 1. Each run consisted of 16 blocks of intact line drawings of animals and vehicles, each lasting 16 s, which alternated with 8 s blocks of scrambled versions of randomly selected images that served as the baseline. Images were shown at a rate of 1 Hz. Within each of the blocks of the experiment, subjects were presented with stimuli from one category (animals or vehicles) and one view (15°, 75°, 135°, or 195°, Fig. 1a). All combinations of views and categories were seen twice with unique stimuli across the two runs. The order of blocks for each combination of category and view, as well as which stimuli were assigned to each block and condition, was randomized for each subject. Participants were instructed to categorize each image as an animal, vehicle, or other (scrambled image or fixation) using a button box, while maintaining fixation. A maximum of two object images were randomly replaced by scrambled images within each object block to maintain subject vigilance.

Experiment 2: Short-Lagged fMRI-A

Experiment 2 utilized a rapid event-related design in which subjects viewed 2 s trials of objects, scrambled objects or fixation. The experimental paradigm is similar to that of Kourtzi and Kanwisher (2000). During object trials, an adapting image of an animal or vehicle was presented in either the front or back view for 300 ms (the adapting stimulus), followed by fixation for 500 ms. A second object was then presented for 300 ms (test stimulus), followed by 900 ms of fixation. The second image in each pair was either a different (nonrepeated) object from the same category presented in the same view as the adapting object, or the same object rotated Δ0°, Δ60°, Δ120°, or Δ180° around the vertical axis (Fig. 1b). All objects appeared in all conditions. Stimuli were spaced such that there were on average 125 trials between repetitions of the same object (either identical image or the same object in a different level of rotation). Scrambled image trials contained two different scrambled images shown with the same timing as object images. During fixation trials the fixation cross flashed twice (300 ms and 800 ms). Conditions were assigned to trials using software that optimized the presentation of experimental conditions for deconvolution (Burock et al., 1998; Optseq, FreeSurfer Functional Analysis Stream). Participants categorized each stimulus as animal, vehicle, or other (scrambled or fixation flash) while maintaining fixation. Subjects participated in 10 runs of this experiment using different images with 30 trials per experimental condition.

Experiment 3: Long-Lagged fMRI-A

Each run included an adapting phase followed by a rapid event-related test phase. The experimental paradigm is similar to paradigms used in previous studies of viewpoint effect in object recognition (Koutstaal et al., 2001; Vuilleumier et al., 2002). Adapting phase: For each 64 s adapt phase, a set of four novel animals (two in the 15° view and two in the 195° view) and four novel vehicles (two in the 15° view and two in the 195° view) were shown. Stimuli appeared for 800 ms with 200 ms inter-stimulus interval. Stimuli were presented eight times, in a counterbalanced order that ensured that each stimulus was preceded equally often by every other stimulus. Participants performed a one-back matching task while maintaining fixation. Test phase: Stimuli were presented in a rapid event-related design that began approximately 45 s after the adapt phase. During each 2 s trial, a stimulus was presented for 1500 ms, followed by 500 ms of fixation. All objects presented during the adapt phase were shown again during the test phase in four rotations: Δ0°, Δ60°, Δ120°, and Δ180° from the adapting view. In addition, previously unseen (nonrepeated) animals and vehicles were presented, half in the 15° and half in 195° view (Fig. 1b). Each run also contained scrambled images and fixation-only trials. Conditions were assigned to trials using a counterbalancing algorithm that ensured that each condition was preceded by every other condition an equal number of times across all runs, and stimuli were assigned to rotation conditions such that the same object never appeared twice in a row. On average, 17 trials intervened between presentations of the same object. Participants categorized each stimulus as an animal, vehicle, or neither (scrambled or fixation trials) while maintaining fixation. Each subject participated in 12–14 runs of Experiment 3 which included 24–26 trials per condition.

We note that some groups refer to this type of paradigm as “priming” (e.g., Ganel et al., 2006; Koutstaal et al., 2001; Vuilleumier et al., 2002). However, we believe that a distinction should be made between the paradigm and the behavioral and/or brain consequences of the paradigm. Therefore, we use the term priming when describing behavioral effects induced by repetition and fMRI-A or repetition suppression when referring to fMRI measurements (Grill-Spector et al., 2006; Sayres and Grill-Spector, 2006).

Visual Meridian and Eccentricity Mapping

Retinotopic cortex was delineated in each subject using standard methods. Subjects viewed rotating wedges to define meridians, and expanding and contracting annuli to define eccentricity while fixating (Grill-Spector and Malach, 2004).

Object and Face-Selective Cortex Localization

Object-selective cortex was localized in a standard manner (Grill-Spector, 2003; Grill-Spector et al., 1998; Malach et al., 1995) using an independent block design localizer scan consisting of grayscale photographs of animals, vehicles, scenes, and scrambled images from all categories. Eight intact-object blocks, two blocks for each stimulus category, were presented in a random order and were interleaved with nine blocks of scrambled images. Face-selective regions were identified in a separate session using an independent localizer scan consisting of four blocks each of grayscale photographs of human faces, inanimate objects (abstract sculptures and vehicles), and scrambled versions of these images (Kanwisher et al., 1997). Images used during the localizer scans were not used in experimental conditions.

MRI Acquisition

Functional imaging data were acquired with a 3 Tesla GE Signa scanner at the Lucas Imaging Center at Stanford University equipped with a custom receive-only surface coil (NOVA Systems). A gradient-echo spiral imaging sequence was used to obtain 16 slices prescribed perpendicular to the calcarine sulcus and covering posterior cortex (4 mm × 3.125 mm × 3.125 mm voxels, TR: 1000 ms, TE: 30 ms, flip angle: 60°, field of view: 20 cm, matrix size: 64 × 64). Inplane anatomical slices were acquired for each session (16 slices, 4 mm × 0.78 mm × 0.78 mm voxels, flip angle: 15°, field of view: 20 cm, bandwidth: 15.63 kHz). A high-resolution whole head anatomical volume was acquired for each subject in a separate session using a standard head coil (124 sagittal slices, 1.2 mm × 0.86 mm × 0.86 mm voxels, flip angle: 6°, field of view: 22 cm, bandwidth: 31.25 kHz).

Data Analysis

Anatomical images

BrainVoyager QX (BrainInnovations, Maastricht) was used to segment and reconstruct the cortex from the high-resolution volume for each subject in order to restrict analyses to activity within the cortical surface and for visualization on the inflated and flattened cortex.

Functional Preprocessing

BrainVoyager 2000 (BrainInnovations, Maastricht) was used to remove linear drift, high-pass filter (3 cycles per run), and motion correct each functional run by aligning all acquisitions to the first acquisition of each run, and then aligning across runs. Individual runs with more than 1.5 mm of motion were excluded from further analyses (less than 10% of all runs were excluded). The inplane anatomical images and corresponding functional images were aligned to the high-resolution anatomical volume for each subject and projected into Talairach space.

Blocked-Design Experiments

Data were analyzed using a general linear model (GLM) implemented in BrainVoyager 2000 which predicts the response of each voxel by convolving boxcar functions representing each experimental condition with a canonical hemodynamic response function (Boynton et al., 1996). GLM analyses were applied to individual subjects’ data, and responses (percent signal change from baseline) were estimated for each condition and averaged across subjects. Percent signal change was estimated by dividing the beta values of each condition by the mean response during the baseline period.
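The percent-signal-change step described above amounts to a simple normalization, sketched below. The analysis itself was run in BrainVoyager; the function name, the dictionary layout, and the multiplication by 100 (to express the ratio as a percentage) are assumptions made for illustration.

```python
def percent_signal_change(betas, baseline_mean):
    """Express each condition's GLM beta relative to the baseline signal.

    `betas` maps condition names to beta values; `baseline_mean` is the
    mean response during the baseline period, in the same units.
    """
    if baseline_mean == 0:
        raise ValueError("baseline mean must be non-zero")
    # Scaling by 100 to report a percentage is an assumption.
    return {cond: 100.0 * beta / baseline_mean for cond, beta in betas.items()}
```

For example, a beta of 5.0 against a baseline of 1000.0 corresponds to a 0.5% signal change under this convention.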

Rapid Event-Related Experiments

The timecourses for each condition were estimated using a deconvolution algorithm implemented in BrainVoyager QX across a 12 s window after trial onset (Burock et al., 1998; Dale and Buckner, 1997). The deconvolution algorithm produces estimates of the hemodynamic response at each TR (TR=1 s) during a 12 s window after trial onset. Response magnitudes for experimental conditions were estimated in each subject as the mean of the percent signal change (relative to fixation-only trials) of the peak and two highest adjacent time points. These estimates were then averaged across subjects for each condition.
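The response-magnitude estimate can be sketched as below. The sketch assumes that "the peak and two highest adjacent time points" means the peak sample together with its two neighboring samples, and that the window is shifted inward when the peak falls at either end of the 12 s window; both readings are assumptions, as is the function name.

```python
def peak_response(timecourse):
    """Mean of the peak sample and its two neighbors.

    `timecourse` holds the deconvolved percent signal change at each TR
    (for example, 12 values for a 12 s window at TR = 1 s).
    """
    n = len(timecourse)
    if n == 0:
        raise ValueError("timecourse is empty")
    peak = max(range(n), key=timecourse.__getitem__)
    # Center a 3-sample window on the peak, clamped to the time window.
    start = max(0, min(peak - 1, n - 3))
    window = timecourse[start:start + 3]
    return sum(window) / len(window)
```

Averaging around the peak, rather than taking the single maximum, makes the estimate less sensitive to noise in any one time point.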

Region-of-Interest Selection

Primary Visual Cortex

We defined the boundaries of primary visual cortex (V1) in each subject using standard meridian and eccentricity mapping techniques. We identified in each subject the representation of the vertical meridian along the upper and lower banks of the calcarine sulcus which are the borders between V1 and V2. ROIs were selected to include all of V1 in each hemisphere (Fig. 2), and responses were averaged across hemispheres and subjects.

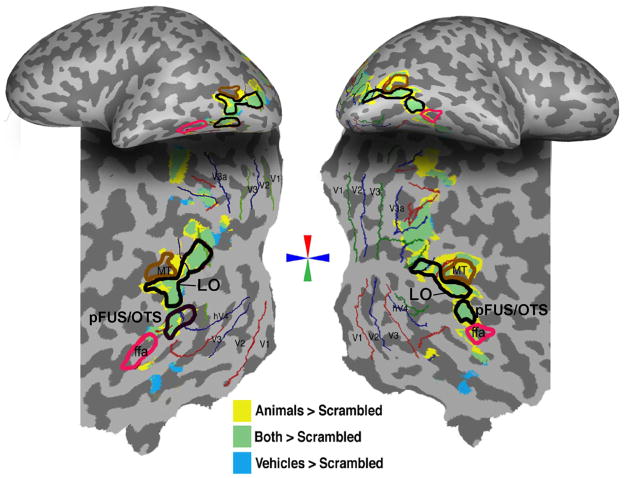

Figure 2. Object selective activations.

Object selective activations for a representative subject shown on the inflated (top) and flattened cortex (bottom). Activations for animals > scrambled (P < .00001, voxel level, yellow) and vehicles > scrambled (P < .00001, voxel level) during the localizer experiment are superimposed. Regions that activated to both contrasts are shown in green. The FFA was defined as a region in the fusiform gyrus (or occipito-temporal sulcus) that responded more to faces than cars and novel objects (P < .001, voxel level). Red, blue and green lines denote visual meridians from the retinotopy experiment. MT is marked as a region in the posterior bank of the inferior temporal sulcus that responded more strongly for moving than stationary low contrast rings (P < .0001).

Object-Selective Cortex

Object-selective regions-of-interest (ROIs) were defined functionally using independent blocked-design localizer scans for each subject (Fig. 2) as regions that activated more strongly to images of cars vs. scrambled and animals vs. scrambled (P< 0.00001, voxel level, uncorrected). This contrast activates a constellation of regions in lateral occipital cortex (Fig. 2).

We subdivided the LOC into two ROIs, LO and pFUS/OTS. The LO ROI was adjacent and posterior to area hMT, located between the lower and horizontal meridians of the contralateral visual field. Recently we have shown that this region is retinotopically organized (Sayres and Grill-Spector, 2008) and partially overlaps with LO2 as defined by Larsson and Heeger (2006). The pFUS/OTS (Fig. 2) overlapped the fusiform and/or occipito-temporal sulcus, was inferior to LO, and did not overlap with a retinotopically defined region. This ROI has been labeled pFUS/OTS in our previous studies (Sayres and Grill-Spector, 2006; Vinberg and Grill-Spector, 2008).

ROIs were defined to be of the same volume in each subject (LO ~440 mm³, and pFUS/OTS ~220 mm³) and were centered around peak activations restricted to gray matter using tools in BrainVoyager QX. We also separated the LO ROI into a posterior and a ventral portion (each about 220 mm³). There were no significant differences between the responses of the LO subregions. Generally, our results do not depend on the ROI size, as we found similar results for half and double sized ROIs (see Supplementary Figs 2 & 3).

We also localized a face-selective region in the lateral fusiform gyrus (fusiform face area, FFA; Kanwisher et al., 1997) that was anterior to pFUS/OTS. FFA also responded more strongly to animals than scrambled images (P < .001, voxel level).

Adaptation Effects

The significance of adaptation effects was determined using a paired t-test between the mean response in each condition and the mean response of the nonrepeated condition (Table 3) as in previous studies (Grill-Spector et al., 1999; Grill-Spector and Malach, 2001; Sayres and Grill-Spector, 2006). Complementary statistical analyses were performed to measure significant recovery from adaptation using paired t-tests relative to the identical condition (Table 4).
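The paired comparison described above can be sketched as follows, with one response value per subject in each condition. The function name is hypothetical, and the sketch returns only the t statistic and degrees of freedom; the P value would be obtained from a t distribution (for example, `scipy.stats.ttest_rel` performs the full test).

```python
import math
from statistics import mean, stdev

def paired_t(condition, reference):
    """Paired t statistic and degrees of freedom.

    `condition` and `reference` hold one response per subject, in the
    same subject order (e.g., a rotation condition vs. nonrepeated).
    """
    if len(condition) != len(reference) or len(condition) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [c - r for c, r in zip(condition, reference)]
    # Standard error of the mean difference; zero if all differences match.
    se = stdev(diffs) / math.sqrt(len(diffs))
    if se == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / se, len(diffs) - 1
```

A response significantly below the nonrepeated condition indicates adaptation (Table 3), and a response significantly above the identical condition indicates recovery from adaptation (Table 4).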

Table 3.

Cross-adaptation (+: Significantly different than novel non-rotated condition; paired t-test; P < .05)

Columns give the rotation from the adapted view, grouped by adapting view (front or back).

| Category | Lag | ROI | Front 0° | Front 60° | Front 120° | Front 180° | Back 0° | Back 60° | Back 120° | Back 180° |
|---|---|---|---|---|---|---|---|---|---|---|
| Vehicles | Short | LO | + | − | − | − | + | − | − | − |
| Vehicles | Short | LH pFUS/OTS | − | − | − | − | + | − | − | − |
| Vehicles | Short | RH pFUS/OTS | + | − | − | − | + | − | − | − |
| Vehicles | Short | FFA | + | − | − | − | + | − | − | − |
| Vehicles | Long | LO | + | − | − | − | + | − | − | − |
| Vehicles | Long | LH pFUS/OTS | − | − | − | − | + | − | − | − |
| Vehicles | Long | RH pFUS/OTS | + | − | − | − | + | − | − | − |
| Vehicles | Long | FFA | + | − | − | + | + | − | − | − |
| Animals | Short | LO | + | − | − | + | + | + | − | − |
| Animals | Short | LH pFUS/OTS | + | + | − | + | + | + | + | − |
| Animals | Short | RH pFUS/OTS | + | − | − | + | + | + | − | − |
| Animals | Short | FFA | + | + | + | + | + | + | − | − |
| Animals | Long | LO | − | − | + | − | + | − | − | − |
| Animals | Long | LH pFUS/OTS | + | + | + | − | + | + | − | − |
| Animals | Long | RH pFUS/OTS | + | + | + | + | + | − | − | − |
| Animals | Long | FFA | − | + | + | + | − | − | − | − |

Table 4.

Recovery from adaptation (+: Significantly different than identical condition; paired t-test; P < .05)

Columns give the rotation from the adapted view (or the novel, nonrepeated condition), grouped by adapting view (front or back).

| Category | Lag | ROI | Front 60° | Front 120° | Front 180° | Front Novel | Back 60° | Back 120° | Back 180° | Back Novel |
|---|---|---|---|---|---|---|---|---|---|---|
| Vehicles | Short | LO | + | + | − | + | + | − | − | + |
| Vehicles | Short | LH pFUS/OTS | − | − | − | − | + | − | + | + |
| Vehicles | Short | RH pFUS/OTS | + | − | − | + | + | + | − | + |
| Vehicles | Short | FFA | + | + | + | + | + | − | + | + |
| Vehicles | Long | LO | + | + | − | + | − | + | + | + |
| Vehicles | Long | LH pFUS/OTS | − | − | − | − | − | − | + | + |
| Vehicles | Long | RH pFUS/OTS | + | + | − | + | + | − | + | + |
| Vehicles | Long | FFA | + | + | − | + | + | + | + | + |
| Animals | Short | LO | + | + | + | + | − | + | + | + |
| Animals | Short | LH pFUS/OTS | − | + | − | + | − | + | + | + |
| Animals | Short | RH pFUS/OTS | + | + | − | + | − | + | + | + |
| Animals | Short | FFA | − | + | − | + | + | + | + | + |
| Animals | Long | LO | − | − | − | − | − | + | − | + |
| Animals | Long | LH pFUS/OTS | − | − | − | + | − | − | + | + |
| Animals | Long | RH pFUS/OTS | − | − | − | + | + | + | + | + |
| Animals | Long | FFA | − | − | − | − | − | − | + | − |

Analysis of Variance of fMRI-A data

Analysis of rotation effects

To examine the effects of rotation, percent signal change was submitted to a 3-way analysis of variance (ANOVA) for each ROI with rotation (Δ0°, Δ60°, Δ120°, Δ180°), adapting view (front, back) and adaptation paradigm (short-lagged, long lagged) as factors. Subjects were treated as a random variable. The analysis of rotation effects only included trials in which rotated versions of the adapting object were shown, and did not include the non-repeated condition (in which different objects in the same view were shown). The complete results of this analysis are reported in Table 1.

Table 1.

Results of rotation-effects ANOVA using factors of rotation (0°/60°/120°/180°), adapting view (front/back), and adaptation paradigm (SL/LL) for each ROI. Bold: significant at P < .05. Italics: significant at P = .05.

| Category | ROI | Rotation, F(3,39) | Adapt View, F(1,13) | Paradigm, F(1,13) | Adapt View × Rotation, F(3,39) | Rotation × Paradigm, F(3,39) | Adapt View × Paradigm, F(1,13) | Adapt View × Rotation × Paradigm, F(3,39) |
|---|---|---|---|---|---|---|---|---|
| Vehicles | LO | 13.67 | 3.62 | 7.37 | 0.09 | 0.73 | 0.47 | 0.04 |
| Vehicles | LH pFUS/OTS | 3.68 | 3.44 | 2.31 | 1.21 | 0.27 | 0.07 | 0.34 |
| Vehicles | RH pFUS/OTS | 11.69 | 2.03 | 1.46 | 0.14 | 1.71 | 0.02 | 1.59 |
| Vehicles | FFA | 9.27 | 1.78 | 0.26 | 2.34 | 1.07 | 3.40 | 0.95 |
| Animals | LO | 11.99 | 0.08 | 8.89 | 0.49 | 4.34 | 0.42 | 1.35 |
| Animals | LH pFUS/OTS | 5.97 | 1.28 | 1.65 | 1.74 | 2.90 | 0.09 | 0.95 |
| Animals | RH pFUS/OTS | 23.32 | 0.14 | 1.71 | 3.68 | 1.26 | 1.59 | 1.53 |
| Animals | FFA | 7.57 | 0.03 | 0.09 | 4.71 | 2.71 | 4.79 | 0.35 |

Analysis of the basic adaptation effect across experiments

To examine whether the basic adaptation effect (for identical images) differed across the short and long-lagged adaptation paradigms (Fang et al., 2007; Grill-Spector et al., 2006), we performed a second ANOVA using repetition (repeated, nonrepeated), adaptation paradigm (short-lagged, long-lagged), and adapting view (front, back) as factors. This analysis only included the two Δ0° conditions: two repeated identical stimuli, and two different objects from the same category (animal or vehicle) in the same view. The complete results of this analysis are reported in Table 2.

Table 2.

Results of adaptation-effects ANOVA using factors of repetition (repeated/nonrepeated), adapting view (front/back), and adaptation paradigm (SL/LL) for each ROI. Bold: significant at P < .05. Italics: significant at P = .05.

| Category | ROI | Repetition, F(1,13) | Adapt View, F(1,13) | Paradigm, F(1,13) | Adapt View × Repetition, F(1,13) | Repetition × Paradigm, F(1,13) | Adapt View × Paradigm, F(1,13) | Adapt View × Repetition × Paradigm, F(1,13) |
|---|---|---|---|---|---|---|---|---|
| Vehicles | LO | 31.22 | 0.001 | 8.15 | 0.12 | 0.10 | 0.48 | 0.32 |
| Vehicles | LH pFUS/OTS | 13.82 | 0.85 | 1.22 | 3.46 | 0.001 | 0.31 | 0.01 |
| Vehicles | RH pFUS/OTS | 24.27 | 4.54 | 1.50 | 0.01 | 0.64 | 0.06 | 0.51 |
| Vehicles | FFA | 48.37 | 0.17 | 0.32 | 8.43 | 0.04 | 0.36 | 0.76 |
| Animals | LO | 37.30 | 0.11 | 7.17 | 0.008 | 10.17 | 1.69 | 0.006 |
| Animals | LH pFUS/OTS | 95.82 | 0.23 | 1.18 | 0.04 | 7.24 | 0.07 | 0.41 |
| Animals | RH pFUS/OTS | 32.99 | 4.34 | 1.17 | 1.61 | 0.81 | 0.001 | 0.001 |
| Animals | FFA | 34.17 | 17.01 | 0.08 | 0.25 | 8.96 | 0.96 | 0.19 |

Controls for Factors that May Affect LOC Responses

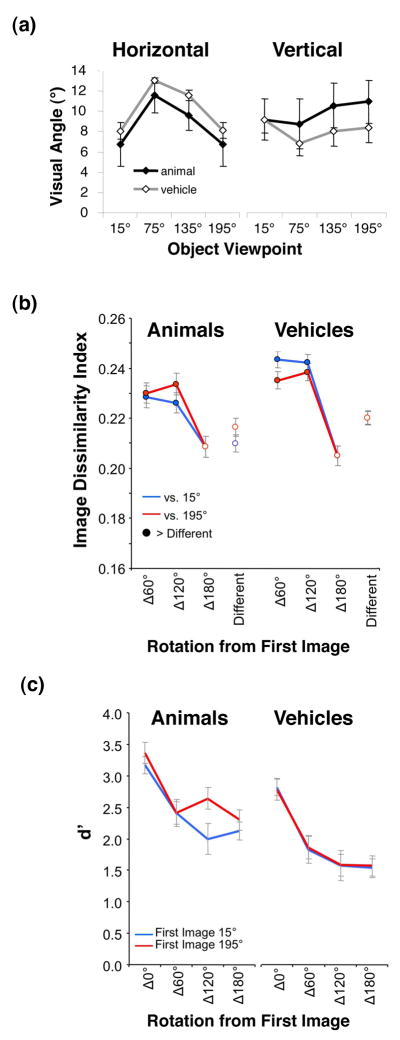

Visual Angle of Object Stimuli (Fig. 8a)

Figure 8. Stimulus size, pixel-wise dissimilarity & behavioral similarity.

a) Mean visual angle of stimuli measured horizontally (left) and vertically (right) for each category and viewpoint. Error bars represent one standard deviation across images. b) Average pixel-wise image dissimilarity between all adapting and test stimuli used in the experiments. Blue: versus the 15° view. Red: versus the 195° view. Rotated versions of the same object (filled circles) are more dissimilar (t-test, P < .05) than two different objects in the same view (Different condition). c) Subjects’ discrimination performance (d′) for the same-different discrimination task as a function of rotation.

We measured the mean horizontal and vertical visual angle for animal and vehicle stimuli at each viewpoint to examine the variation in the visual angle across stimuli and examine the possibility that LOC responses to different views were related to the size of the stimuli in that view. Our results show that visual angle is not an explanatory factor for LOC responses, but may account for V1 responses.

Physical Dissimilarity (Fig. 8b)

To examine whether adaptation effects in high-level visual areas may be due to low-level pixel-wise differences between stimuli, we calculated an image dissimilarity index (IDI; Grill-Spector et al., 1999; Vuilleumier et al., 2002) between the adapting (initial) views (15° and 195°) and test views (15°, 75°, 135°, and 195°) for all objects.

Where p is the number of pixels, x̃ is the initial image, x is the test image, and N is the number of images. A high dissimilarity index indicates that two images are very dissimilar, whereas a low dissimilarity index indicates that two images are similar, with the IDI of identical images being zero.
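As a rough illustration of how such an index behaves, the sketch below assumes that the IDI is the mean absolute pixel difference between each initial image and its test image, averaged over the N image pairs. This specific normalization is an assumption made for illustration, as are the function name `image_dissimilarity_index` and the representation of each image as a flat list of pixel intensities. The only properties taken from the description above are that identical images yield zero and that more dissimilar images yield larger values.

```python
def image_dissimilarity_index(initial_images, test_images):
    """Assumed IDI: mean absolute pixel difference, averaged over image pairs.

    initial_images, test_images: lists of N images, each a flat list of
    p pixel intensities (hypothetical representation for this sketch).
    """
    if len(initial_images) != len(test_images):
        raise ValueError("need the same number of initial and test images")
    n_images = len(initial_images)
    total = 0.0
    for x_init, x_test in zip(initial_images, test_images):
        if len(x_init) != len(x_test):
            raise ValueError("paired images must have the same number of pixels")
        n_pixels = len(x_init)
        # Per-pair dissimilarity: average absolute difference across pixels.
        total += sum(abs(a - b) for a, b in zip(x_init, x_test)) / n_pixels
    # Average across the N image pairs.
    return total / n_images
```

Under this assumed form, comparing an image with itself returns exactly zero, matching the stated property of the index.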

Perceived Similarity (Fig. 8c)

The six subjects who participated in all three imaging experiments completed a behavioral experiment conducted outside the scanner approximately six months after the scans. We adopted a method from a previous behavioral study of object viewpoint (Hayward and Williams, 2000). On each 3 s trial, subjects were presented with an adapting stimulus for 500 ms in either the 15° or 195° view, followed by 500 ms of fixation, then a test stimulus for 500 ms, and finally 1500 ms of fixation. Each stimulus was presented randomly shifted 0°–4° of visual angle vertically and horizontally from fixation so that the overlap of the 2D images could not be used as a cue for stimulus similarity. The second stimulus was the same object as the first stimulus on half of the trials, and a different object on the other half. The second stimulus in each trial (both same and different trials) could be presented in the same viewpoint as the first stimulus in the pair (Δ0°), or rotated Δ60°, Δ120°, or Δ180°. Subjects indicated whether the second stimulus was the same object as the first, regardless of a change in viewpoint, as quickly and accurately as possible. We measured the degree of perceived similarity between two objects as the ability to discriminate between them (d′) as a function of rotation.
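For readers unfamiliar with d′, the sketch below shows one common way to compute it from trial counts at a single rotation level, assuming the standard formula d′ = z(hit rate) − z(false-alarm rate). Here a "hit" is a "same" response on a same-object trial and a "false alarm" is a "same" response on a different-object trial. The log-linear correction for rates of 0 or 1 and the function name `d_prime` are assumptions of this sketch, not details reported for the experiment.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    A log-linear correction (add 0.5 to each count, 1 to each total) keeps
    the rates away from 0 and 1, where the z-transform is undefined.
    This correction is an assumption of the sketch.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)
```

Computing `d_prime` separately for the Δ0°, Δ60°, Δ120°, and Δ180° trials would give a discrimination measure as a function of rotation, in the spirit of the analysis described above.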

Computational Simulations of Mixtures of View Dependent Neural Populations

We used a computational model to validate that a mixture of view-dependent neural populations can generate the observed fMRI data. We modeled the BOLD response in a voxel as the summed responses of view-dependent neural populations centered at several views. The tuning of a population of neurons to certain object views was modeled with a Gaussian centered at the preferred view. We will refer to this as the population receptive field. The computational model had several free parameters: (1) the proportion of neurons in a voxel tuned to a specific view, pi; (2) the view tuning width, σ; (3) the number of Gaussians; and (4) the location of the Gaussian centers (i.e., the preferred viewpoint that produced the maximal response).

The same Gaussian model was used to estimate responses with and without adaptation. We implemented a standard scaling model of adaptation (Avidan et al., 2002; Grill-Spector, 2006; McMahon and Olson, 2007). The scaling model of adaptation suggests that the level of adaptation is proportional to the neuron’s initial response. The factor which determines the level of adaptation is the adaptation constant, c. The maximal degree of adaptation (1−c) occurs for Gaussians centered at the adapting view, with no adaptation (c = 0) if the difference between the initial view and the Gaussian’s preferred view is much larger than its standard deviation: (x0 − μi)² ≫ σ².

| (1) |

G(x0, μi, σ) reflects a Gaussian centered at view μi, where μi = 15°, 75°, 135°, 195°, 255°, 315°, and n is the number of Gaussians. The parameter pi reflects the proportion of neurons in a voxel tuned to view i, where Σi pi = 1.

| (2) |

This is the same as (1) except for an adaptation factor Ai which reflects the adaptation of neurons tuned to view μi after an initial presentation with the 15° or 195° view.

| (3) |

In Fig. 9 we show results using a model with six Gaussians centered on views 60° apart (15°, 75°, 135°, 195°, 255°, and 315°) in which we varied σ from 20° to 60°, and pi from equal proportions of neurons tuned to each view (all six populations were set to pi = 0.17) to unequal proportions, in which the largest proportion of neurons tuned to a view was pi = 0.19 and the smallest was pi = 0.14, with linearly interpolated values in between. We also examined the effects of different numbers of Gaussians (varied from 4–12, Supplementary Fig. 4) and of the location of the Gaussian centers relative to the test views (Supplementary Fig. 5). Results remain similar as long as there is a sufficient number of Gaussians to cover the view space.

In the simulations in Fig. 9, we used a value of c = 0.26 for the adaptation constant, which was estimated from fMRI-A data as one minus the average amount of adaptation (for the repetition of an identical image) across adapting views, categories, and adaptation paradigms. In Supplementary Fig. 6 we show the effect of varying c on the model’s performance. Changing c changes the level of adaptation, but the profile of results remains similar.
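The model described in this section can be sketched in a few lines of code. The sketch below is an illustration under stated assumptions, not the simulation code used for Fig. 9. It assumes (a) Gaussians with a peak of 1 evaluated on circular view distance, (b) an adaptation factor of the form Ai = 1 − c·G(x0, μi, σ), so that a population centered on the adapting view x0 is scaled by (1 − c) and a population far from x0 is unaffected, and (c) the six centers and c = 0.26 given in the text. The function names and the example proportions are hypothetical.

```python
import math

CENTERS = [15, 75, 135, 195, 255, 315]  # preferred views (degrees), from the text


def circular_distance(a, b):
    """Smallest angular difference between two views, in degrees (assumption)."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def gaussian(x, mu, sigma):
    """View tuning curve with peak 1 at the preferred view mu."""
    return math.exp(-circular_distance(x, mu) ** 2 / (2 * sigma ** 2))


def bold(x, p, sigma, centers=CENTERS):
    """Unadapted voxel response: proportion-weighted sum of population responses."""
    return sum(p_i * gaussian(x, mu, sigma) for p_i, mu in zip(p, centers))


def bold_adapted(x, x0, p, sigma, c=0.26, centers=CENTERS):
    """Response to test view x after adaptation to view x0 (scaling model).

    Assumed adaptation factor: A_i = 1 - c * G(x0, mu_i, sigma).
    """
    total = 0.0
    for p_i, mu in zip(p, centers):
        a_i = 1 - c * gaussian(x0, mu, sigma)
        total += p_i * a_i * gaussian(x, mu, sigma)
    return total


# Equal proportions of neurons tuned to each view.
equal_p = [1 / 6] * 6

# Hypothetical front-biased proportions (largest at 15 degrees, smallest at
# 195 degrees), renormalized to sum to 1; the exact values are an assumption.
raw = [0.19, 0.17, 0.15, 0.14, 0.15, 0.17]
front_biased_p = [v / sum(raw) for v in raw]

for rotation in (0, 60, 120, 180):
    adapted = bold_adapted(15 + rotation, 15, equal_p, sigma=20)
    print(rotation, round(adapted / bold(15 + rotation, equal_p, sigma=20), 3))
```

With equal proportions the unadapted responses to front and back views coincide, while the front-biased proportions give a higher unadapted response to the 15° view than to the 195° view. Varying `sigma` changes how far adaptation spreads across rotations.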

RESULTS

Experiment 1: Response to Views of Objects Without Adaptation

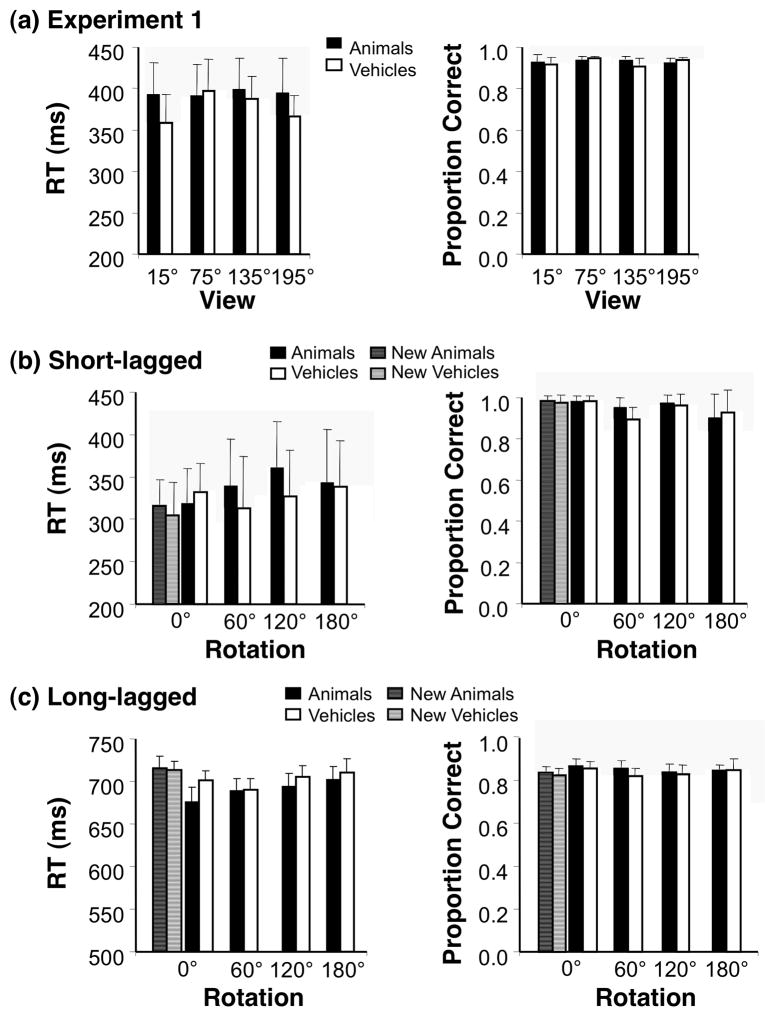

We examined whether different views of objects elicited equivalent or differential fMRI responses across object-selective cortex. Subjects viewed blocks of objects from one category and view while performing a categorization task (Fig. 1 and Methods). There were no significant differences in response times (ANOVAs; all Fs < 1.20, Ps > 0.37) or accuracy (all Fs < 1.16, Ps > 0.35) across conditions (Fig. 3a).

Figure 3. Behavioral Responses.

(a) Mean response time (RT) and accuracy (proportion correct) averaged across 8 subjects who participated in Experiment 1. Error bars indicate standard error of the mean (SEM). (b) Behavioral responses for the test stimulus (second stimulus in each pair) during the short-lagged fMRI-adaptation experiment averaged across 7 subjects. Error bars: SEM. (c) Behavioral responses for the test stimulus during the long-lagged fMRI-adaptation experiment averaged across 8 subjects. Error bars indicate SEM.

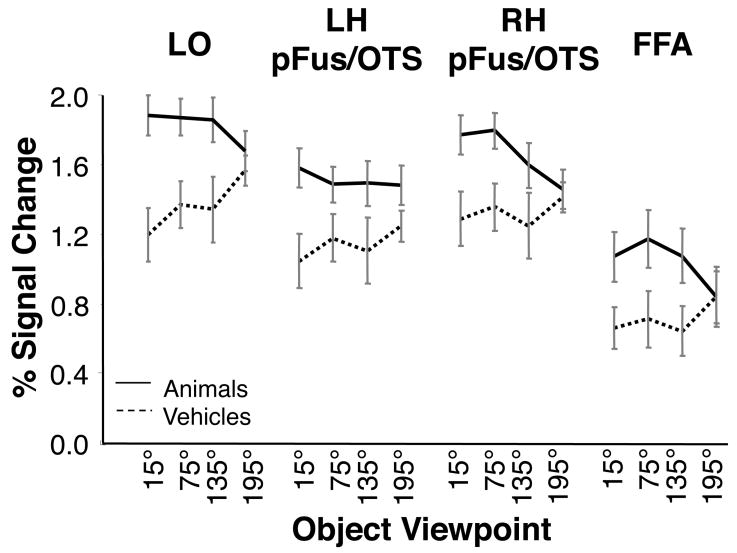

Responses to all animal and vehicle views were significantly higher than responses to scrambled images in all object-selective ROIs (t-tests; all Ps < .001; Fig. 4). An analysis of variance (ANOVA) with factors of stimulus category (animal/vehicle), ROI (LO, pFUS/OTS, and FFA), hemisphere (right/left) and viewpoint (15°, 75°, 135°, and 195°) revealed a significant interaction between category and viewpoint (F(3,21) = 5.90, P < .01, Fig. 4). When each ROI was analyzed separately, the interaction between category and view was significant in LO (F(3,21) = 3.96, P < .03), RH pFUS/OTS (F(3,21) = 3.59, P < .04), and FFA (F(3,21) = 7.95, P < .01), but not in the LH pFUS/OTS (F(3,21) = 1.95, P = .15).

Figure 4. BOLD responses to object views across object- and face-selective cortex.

Mean fMRI responses to animal (solid) and vehicle (dashed) views relative to a scrambled baseline. Data are averaged across 8 subjects. Error bars indicate SEM.

Because of the significant interaction between category and viewpoint, we examined data for animal and vehicle stimuli separately in subsequent analyses. We found no significant effect of vehicle view (F = 2.41, P = .10) and no differences between hemispheres (all Fs < 1.48, all Ps > .24) in any ROI, although there was a nonsignificant trend towards higher responses to back than front vehicle views. For animals, we found differential responses across ROIs. LO responded similarly across all animal views (F(3,21) = .993, P = .40) and there were no hemispheric differences in its response to animal views (F(3,21) = .60, P = .60). Responses across views of animals differed between hemispheres in pFUS/OTS (F(3,21) = 4.05, P < .03). Right pFUS/OTS revealed a differential response across animal views (main effect of view: F(3,21) = 3.75, P < .03) with higher responses to more frontal animal views. However, there was no significant effect of animal view in the left pFUS/OTS (F(3,21) = 2.23, P = .70). Thus, left and right pFUS/OTS ROIs were analyzed separately in subsequent analyses. Similar to the right pFUS/OTS, the FFA responded more strongly to frontal than back animal views (effect of view: F(3,21) = 4.24, P < .02), but FFA responses did not differ across hemispheres (F < 1, P > .72).

We were not able to detect spatially distinct regions in object-selective cortex that are tuned to specific object views (Supplementary Fig. 1). Nevertheless, it is possible that neural populations tuned to specific object views are spatially distinct at a finer spatial resolution. One optical imaging study of monkey IT cortex (Wang et al., 1996) suggests that neural populations tuned to specific face views are arranged in a columnar structure (columns were around 400 μm in diameter).

The results of Experiment 1 revealed a significant response to all views of animals and vehicles across object-selective cortex, and a bias for higher responses for frontal animal views in right pFUS/OTS and FFA. However, the results of Experiment 1 were not sufficient for determining whether the response across object views reflects view-invariant neural populations or a mixture of view-dependent neural populations tuned to different views within these ROIs. We can differentiate between these alternatives by examining the profile of recovery from adaptation as a function of object rotation in the same ROIs. Interpretation of fMRI-A results will be considered in light of the response biases to certain views observed in right pFUS/OTS and FFA.

Measuring view-sensitivity using fMRI-A

We next examined sensitivity to object view using fMRI-A in which we adapted object-selective cortex with a front or back view of an animal or vehicle and then tested whether there was cross-adaptation to a rotated view of this object or a recovery from adaptation across object rotation. We hypothesized that regions sensitive to object view will recover from adaptation with rotation depending on the view tuning of the underlying neural populations and the range of rotations tested. In contrast, regions that are view-invariant would show cross-adaptation to rotated views of the object.

We examined fMRI-A effects across object-selective cortex across four levels of rotation (Δ0°, Δ60°, Δ120° and Δ180°), two categories (animals and vehicles), and two adapting views (15° and 195° views). We also examined whether sensitivity to object view varied across short-lagged (SL) and long-lagged (LL) fMRI-A paradigms (see Methods). For each ROI, we analyzed fMRI responses with respect to category (animal/vehicle), level of rotation from the adapting view (Δ0°, Δ60°, Δ120°, and Δ180°), adapting view (15°/195°), and adaptation paradigm (short/long lagged). Significance of effects was assessed using ANOVAs and paired t-tests (see Methods).

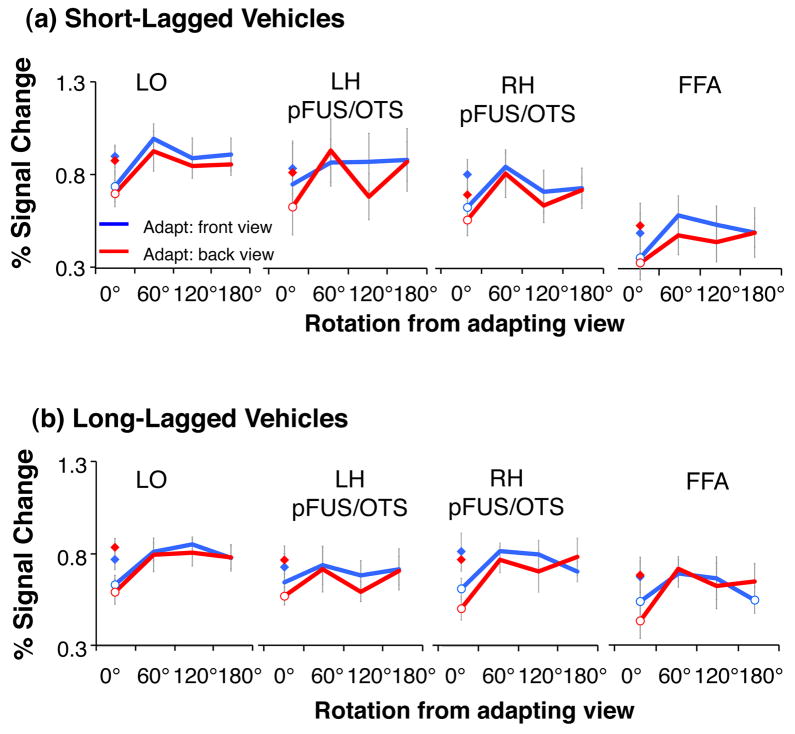

Sensitivity to Vehicle Rotation

In each of the ventral stream ROIs we found significant adaptation to the repetition of identical images of vehicles (Figure 5, Table 2). Adaptation effects did not differ by adapting view (front and back) or adaptation paradigm (except for LO; Table 2). Critically, in all ROIs and both adaptation paradigms we found a significant effect of rotation (Table 1), which did not differ across adapting views or adaptation paradigms (Table 1, Fig. 5). Full recovery from adaptation occurred for views rotated 60° or more from the adapting view (Table 4), suggesting view sensitivity to vehicle rotations of 60° or more across all ventral stream ROIs.

Figure 5. LO responses for vehicles during short and long-lagged fMRI-A experiments.

Panel a) shows results of short-lagged adaptation study, while panel b) shows long-lagged results. Blue: Adapting stimulus was presented in the front view. Red: Adapting stimulus was presented in the back view. Diamonds: responses to novel objects shown in the same view as adapting objects. Open circles: significant adaptation (lower than novel objects, P < 0.05, paired t-test across subjects). Responses are plotted relative to a blank (with fixation) baseline. Error bars indicate averaged SEM.

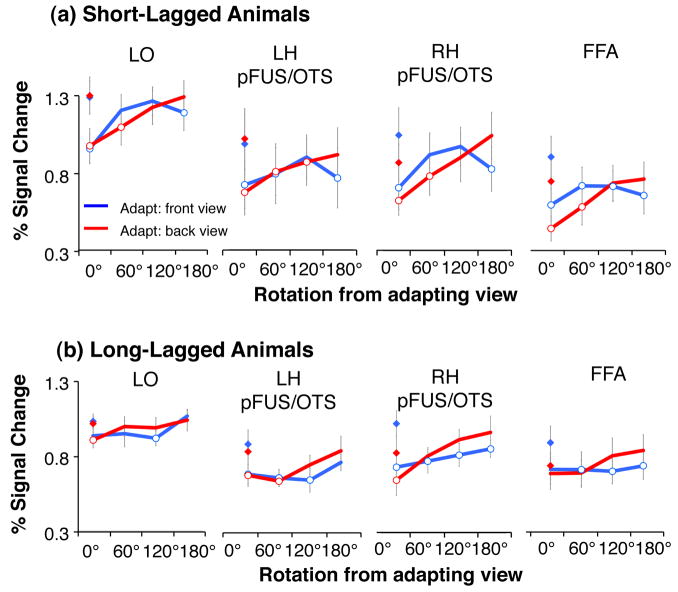

Sensitivity to Animal Rotation

In each of the ventral stream ROIs we also found significant adaptation to the repetition of identical images of animals (Fig. 6). Adaptation effects were significant in all ROIs for both adapting views (front and back) and both SL and LL adaptation paradigms (Table 2, Fig. 6). We also found a significant effect of rotation in all ROIs (Table 1, Fig. 6). However, the profile of rotation cross-adaptation differed from vehicle data. First, we found differential effects of cross-adaptation across ROIs. In LO and left pFUS/OTS we found a significant effect of rotation that did not differ with the adapting view (Table 1), whereas in right pFUS/OTS and FFA we found that rotation effects varied with the adapting view: there was steeper recovery from adaptation when adapting with the back view of animals than the front view of animals (and a significant interaction between adapting view × rotation, Table 1). Second, while rotation effects were significant in all ROIs, there was cross-adaptation for animal rotations up to 60° in the SL experiment for all ROIs and a gradual recovery from adaptation as rotation increased (Fig. 6, Table 3). This suggests lower sensitivity to animal rotation than vehicle rotation within the same ROIs because there was cross-adaptation across larger rotations for animals than vehicles. Third, comparing responses across ventral ROIs revealed that the left pFUS/OTS seemed to have the lowest sensitivity to animal rotations as it remained adapted for rotations as large as 120° for both SL and LL paradigms (Fig. 6 and Table 3).

Figure 6. LO responses for animals during short and long-lagged fMRI-A experiments.

Panel a) shows results of short-lagged adaptation study, while panel b) shows long-lagged results. Blue: Adapting stimulus was presented in the front view. Red: Adapting stimulus was presented in the back view. Diamonds: responses to novel objects shown in the same view as adapting objects. Open circles: significant adaptation (lower than novel objects, P < 0.05, paired t-test across subjects). Responses are plotted relative to a blank (with fixation) baseline. Error bars indicate averaged SEM.

Since this is the first evidence for differential rotation effects across adapting views, we examined responses of right pFUS/OTS and bilateral FFA in the fMRI-A experiments in more detail. We found a monotonic recovery from adaptation as a function of rotation level when adapting with the back view of animals, but not when adapting with the front view of animals (Fig. 6a). This differential profile of cross-adaptation for front and back views of animals was more pronounced in the LL fMRI-A experiment in that there was significant cross-adaptation across all rotations when adapting with the front view of animals, but no cross-adaptation when adapting with a back view of an animal (Fig. 6b). During the SL fMRI-A experiment, there was a monotonic increase in response across rotations when adapting with a back view. However, when adapting with the front view, responses initially increased for 60° and 120° rotations, but decreased for the 180° rotation, showing significant cross-adaptation between front and back views of an animal (Fig. 6a, Table 3).

The differential profile of fMRI-A depending on the adapting animal view cannot be explained by differential behavioral responses during the fMRI experiments, which were similar across adapting views (Fig. 3b,c). This differential profile of recovery from adaptation as a function of adapting view may be the result of the higher responses to frontal than back views of animals observed in Experiment 1 and for nonrepeated images in the fMRI-A experiments (Figs. 4–6).

Comparison of Rotation and Repetition Effects across SL and LL fMRI-A

Our analyses revealed significant adaptation and rotation effects in all ventral stream regions, but we found differences across ROIs in their sensitivity to rotation and differences in their sensitivity to object rotations across categories. We next examined whether there were differences across SL and LL adaptation paradigms, as recent reports suggest that different mechanisms may underlie adaptation at different time scales and that there is higher rotation invariance in the LL than SL paradigm (at least in the PPA; Epstein et al., 2008).

For most ROIs and both categories, we found no significant interaction between rotation effects and the adaptation paradigm (Table 1). Thus, in general, rotation effects were similar across LL and SL adaptation. However, we found an interaction between animal rotation and fMRI-A paradigm in LO and left pFUS/OTS because of larger cross-adaptation across animal rotations in the LL experiment than in the SL experiment (Fig. 6). For example, in the left pFUS/OTS we found fMRI-A to rotated views of animals (relative to the nonrepeated condition) in both LL and SL experiments. However, in the LL experiment responses to rotated versions of animals were not significantly different from the zero rotation condition, while in the SL experiment there was some recovery from adaptation relative to zero rotation (Table 4).

We also examined if the magnitude of adaptation effects differed across paradigms. We found no differential adaptation effects for vehicles in any ROI (Table 2). In contrast, we found differences in the level of adaptation for animals across paradigms in most ROIs (Table 2) with larger fMRI-A in the SL than LL experiment. This result is consistent with previous findings showing stronger adaptation with fewer intervening stimuli between repetitions of the same image, as in the SL experiment (Sayres and Grill-Spector, 2006).

Examination of Additional Factors that May Affect fMRI-A Results

We performed several controls to examine whether the observed fMRI-A results in object selective cortex can be explained by nonspecific adaptation effects, behavioral responses, or low-level stimulus effects. Specifically, we examined V1 responses to test whether adaptation effects in LOC are specific, or may be propagated from low level visual areas. We further examined whether fMRI-A results may be correlated with subjects’ performance during the scan and/or may be related to the physical or perceptual similarity across object views. Results of these analyses are discussed in detail below, and show that these factors do not explain our fMRI-A results.

No adaptation in V1

We examined V1 responses during the adaptation experiments. We reasoned that if adaptation is a general phenomenon it would also occur in primary visual cortex. Further, if responses in object-selective regions were driven by low-level stimulus effects, we should observe the same profile of responses in V1.

In contrast to LOC, V1 did not adapt to repetitions of the same image regardless of experiment or category (Fig. 7, all Fs < 2.4, Ps > 0.18). The absence of fMRI-A in V1 is consistent with previous studies (Grill-Spector et al., 1999; Sayres and Grill-Spector, 2006). Analysis of responses in V1 as a function of object rotation showed that activation varied across rotations and that there was a significant interaction between adapting view and rotation (main effect of object rotation, F(1,11) = 20.6, P = .0001; interaction between rotation and adapting view, F(3,33) = 8.3, P = .0003), but no effect of fMRI-A paradigm (F(1,11) = .14, P = .72). V1 responses were highest for the 60° rotation from the front view and the 120° rotation from the back view of both object categories. That is, V1 responses were largest for the 75° view of both vehicles and animals and lowest for both the 0° and 180° rotations, corresponding to front and back views. Note that this response profile parallels the visual angle corresponding to the horizontal size of objects, which is largest for the 75° view and smallest for the front and back views due to foreshortening (Fig. 8a).

Figure 7. V1 responses across object viewpoint during short and long-lagged fMRI-A experiments.

Responses are plotted as a function of the object viewpoint with percentage signal change calculated relative to a blank (with fixation) baseline. Blue: Adapting stimulus was presented in the front view. Red: Adapting stimulus was presented in the back view. Diamonds: responses to novel objects shown in the same view as adapting objects. Error bars indicate averaged SEM. V1 revealed no significant adaptation, but showed a preference for object views that had the largest horizontal extent (see Figure 8a).

Overall, V1 exhibited a different profile of response as compared to our object-selective ROIs, as its responses tracked the horizontal elongation of objects. The differential profile of response across V1 and object-selective cortex during our experiments suggests that the profile of responses in object-selective cortex is unlikely to be due to residual effects propagated from V1 or differences in image sizes of different views of objects.

fMRI-A results do not relate to performance changes

Behavioral data during adaptation experiments are shown in Fig. 3b & c. We found no effects of rotation on accuracy in the LL experiment for animals or vehicles (Fig 3b & c, all Fs< .87, all Ps> .47), and accuracy was at ceiling in the SL experiment. There were no significant rotation effects in response times for either the SL or LL experiment for vehicles or animals (all Fs< 2.8, Ps > .06).

We next examined the correlation between each subject’s average response time (RT) in all experimental conditions and the corresponding fMRI responses for all conditions. Response times and fMRI responses were first normalized by dividing each response time and fMRI response by that subject’s overall average response time or fMRI response. We found no significant correlation between fMRI-A and RT in any ROI or experiment (all SL: r² < .33, P > .05; all LL: r² < .34, P > .05). This finding suggests that fMRI-A effects are not driven by performance changes, consistent with previous studies (Sayres and Grill-Spector, 2006; Xu et al., 2007).
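The normalization and correlation steps described above can be sketched as follows for a single subject. This is a minimal illustration only: the function names are hypothetical, and the way values were pooled across subjects before testing significance is not specified here, so the sketch makes no assumption about it.

```python
import math


def normalize_by_mean(values):
    """Divide each per-condition value by the subject's overall mean."""
    mean = sum(values) / len(values)
    return [v / mean for v in values]


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


def rt_fmri_r_squared(rts, fmri_responses):
    """r^2 between normalized RTs and normalized fMRI responses across conditions."""
    r = pearson_r(normalize_by_mean(rts), normalize_by_mean(fmri_responses))
    return r ** 2
```

Because each measure is divided by a constant per subject, the normalization does not change a single subject's correlation. Its purpose is to put subjects on a common scale before their values are combined.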

Physical similarities do not explain differential adaptation effects for back and front views of animals

Measurements of similarity (IDI, see Methods) revealed that images of an object rotated 180° were more similar than images of an object rotated by the smaller amounts of 60° or 120°. These effects did not depend upon adapting views (Fig. 8b). In addition, two different objects (nonrepeated) at the same viewpoint were more similar than two rotated versions of the same object. Note that this nonrepeated condition yielded the highest responses during the fMRI adaptation experiment, not the lowest as would be predicted from the high similarity. Finally, there was no significant correlation between BOLD responses in fMRI-A experiments and similarity measures in any ROI (all rs < .62, Ps > .1). Thus, pixel-level image dissimilarity does not provide an explanation for fMRI-A results.

Perceptual similarity does not explain differential adaptation effects for back and front views of animals

Our measurement of perceived similarity (d′, see Methods) decreased monotonically with rotation for both animals (F(3,18) = 17.30, P < .0001) and vehicles (F(3,18) = 34.60, P < .0001, see Fig. 8c), suggesting that the level of object rotation affects the ability to discriminate between objects. However, there were no differences in d′ depending on whether the first image was a front or back view of an animal (F = 1.62, P > .22) or vehicle (F < 1, P > .83). There was a significant negative correlation between d′ and fMRI responses for both animals and vehicles during the SL experiment (all ROIs, mean d′ vs. mean fMRI responses: r < −0.71, Ps < 0.05). In the LL experiment there was a significant negative correlation between d′ and responses for vehicles in LO and right pFUS/OTS (r < −.80, P < .02; all other ROIs, r > −0.04, P > .32). Thus, perceived similarity may account for the results of the SL adaptation experiment in which views that are perceptually more similar yield higher adaptation than views that are dissimilar. However, perceived similarity does not explain the differential effects of adaptation when adapting with a front vs. back view of an animal in right pFUS/OTS and the FFA.

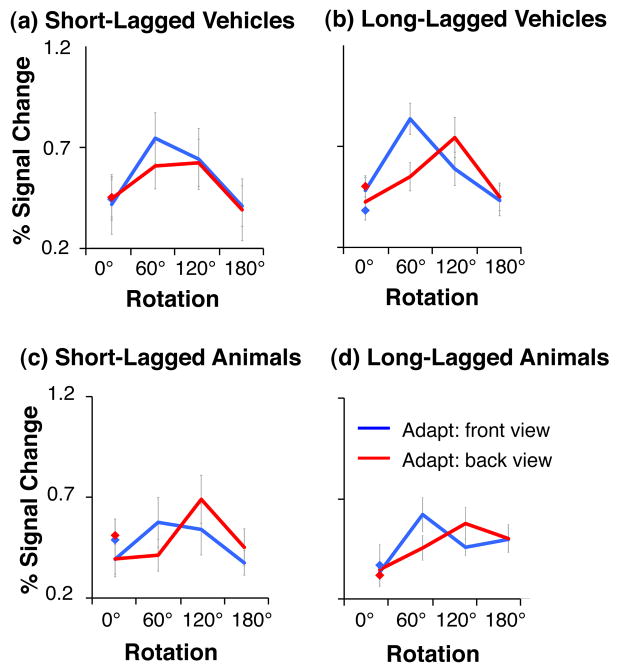

Mixtures of View-Dependent Neural Populations Explain fMRI Results

Our data suggest that objects are largely represented by view-dependent representations across object-selective cortex. Further, we found differences in the representation across categories and across ROIs. To better characterize the underlying representations, we implemented computational simulations to link BOLD responses with putative neural responses. We modeled BOLD responses in an fMRI voxel as the sum of neural responses across populations of view-dependent neurons (Fig. 9 and Methods). The model simulates a mixture of neural populations within each voxel tuned to different object views, and BOLD responses are proportional to the sum of responses across all neural populations. Results of the simulations indicate that the two main parameters that affect the profile of fMRI data are the view tuning width of the neural population and the proportion of neurons in a voxel that prefers each specific view.

Fig. 9a shows the response characteristics of a model of a voxel containing a mixture of view-dependent neural populations tuned to different object views, in which there is an equal number of neurons preferring each view. In this model, responses to front and back views are identical when there is no adaptation (Fig. 9a, diamonds), and the profile of adaptation as a function of rotation is similar when adapting with the front or back views (Fig. 9a). Such a model provides an account of the observed fMRI-A responses to vehicles across object-selective cortex, and for animals in LO ROIs and left pFUS/OTS. Comparison of panels across Fig. 9a shows that increasing the population tuning width results in cross-adaptation across larger rotations. Thus, this model suggests that the difference between the representation of animals and vehicles in LO is likely due to a narrower population view tuning width for vehicles than animals (a tuning width of σ < 40° produces complete recovery from adaptation for rotations larger than 60°, as observed for vehicles). Further, the model suggests that the population tuning width for animals is largest in the left pFUS/OTS.

Fig. 9b shows the predicted responses for a model of a voxel that contains a mixture of neural populations tuned to different object views with more neurons preferring the front than back view. With no adaptation, the BOLD response for the front view is higher than the back view (Fig. 9b, blue diamond vs. red diamond). Notably, this model predicts that the profile of adaptation differs when adapting with different views, and that the recovery from adaptation across rotations is steeper when adapting with the back view (red in Fig. 9b) than front view (blue in Fig. 9b). Rotation cross-adaptation depends on the population tuning width: when adapting with the front view, cross-adaptation may occur for all rotations when σ ≥ 40°. When σ ≤ 20°, there is recovery from adaptation for small and intermediate rotations, but there may appear to be cross-adaptation for large rotations. However, lower responses after a large rotation simply reflect a lower response to the non-preferred view rather than rotation cross-adaptation. Importantly, both the view-tuning width and the proportion of neurons in a voxel preferring a specific view contribute to fMRI responses. Thus, results of this model suggest that the responses of right pFUS/OTS and FFA can be explained by a model composed of mixtures of neural populations tuned to different views of animals, with a larger population preferring the front view of animals.

We also examined how other model parameters (the total number of Gaussians, the location of their centers, and the value of the adaptation constant) affected the profile of results (Supplementary Figs. 4–6). Importantly, the profile of results does not depend strongly on the number of view-tuned populations (represented by the number of Gaussians) as long as all views can be represented by a linear combination of neural populations (note the failure for 4 Gaussian models with narrow view tuning, Supplementary Fig. 4). Results also do not depend on the location of the Gaussian centers, as the profile of results remains similar even when Gaussian centers do not coincide with the test or adapting views (Supplementary Fig. 5). The exception is simulations with six (or fewer) Gaussians with narrow tuning, centered away from the adapting views (Supplementary Fig. 5d, left column). In this condition there was little adaptation for identical images, which is inconsistent with the profile of neural responses we found. Finally, changing the value of the adaptation constant mainly changes the degree of adaptation to the zero rotation (Supplementary Fig. 6).

Overall, simulation results show that the tuning width determines the generalization to new views of an object: larger tuning width produces cross-adaptation across larger rotations. When there is an over-representation of a specific view (i.e., a larger neural population tuned to this view) there are higher responses to this view over other views without adaptation and the profile of fMRI rotation cross-adaptation depends both on the level of rotation and the adapting view.

DISCUSSION

We found evidence that animal and vehicle representations across human object-selective cortex are sensitive to viewpoint. First, we found that after being adapted to a particular view of an object, the majority of regions in object-selective cortex recovered from adaptation to some degree when rotated views of the same object were shown. This recovery occurred for both short and long-lagged fMRI-A paradigms, and across two stimulus categories (animals and vehicles). These results are consistent with previous fMRI-A studies showing that LOC responses are sensitive to object rotation (Grill-Spector et al., 2001; Grill-Spector et al., 1999), face view (Fang et al., 2007; Grill-Spector et al., 2001; Grill-Spector et al., 1999; Pourtois et al., 2005), and views of novel objects (Ewbank and Andrews, 2008; Ewbank, Schluppeck, and Andrews, 2005; Gauthier et al., 2002).

Second, frontal views of animals elicited higher responses than rear views in right pFUS/OTS and bilateral FFA without adaptation in Experiment 1, and for non-repeated objects in the SL and LL fMRI-A experiments, suggesting that a larger neural population prefers frontal animal views in these regions. Third, for regions that preferred the front views of animals, the profile of rotation cross-adaptation depended on the adapting view (front vs. back). This response profile can be explained by a model in which voxels contain a mixture of view-dependent neural populations with a prevalence of neurons preferring the front view of animals.

At the same time, we found differences in the representations of object view across object-selective cortex and categories. We found that vehicle representations were more sensitive to rotation than animal representations. Across object-selective cortex, vehicle images shown in a view that was rotated 60° from the adapting view produced complete recovery from adaptation. For animals, we found significant cross-adaptation across rotations of 60° in several object-selective regions. These results can be explained by more narrowly view-tuned neurons representing vehicles than animals as we show in the simulations. Further, fMRI-A experiments measuring the effects of vehicle rotations for smaller rotations of 0–60° will be useful for validating the predictions of our model and testing whether ventral stream subregions are sensitive or insensitive to smaller vehicle rotations.

Differences in recovery from adaptation with rotation between categories may be due to several factors, including differences in the geometry of objects (e.g., for some categories there may be large shape changes across views, but for others these changes may be subtle), differential exposure to views of a category (e.g., there may be a similar level of exposure to front and back views of vehicles, but higher exposure to front views of animals; Freedman et al., 2006), and familiarity (e.g., learning may modify sensitivity to object shape and view; Ewbank and Andrews, 2008; Freedman et al., 2006; Gauthier et al., 1999; Jiang et al., 2007; Logothetis and Pauls, 1995).

Representation of objects in the FFA

We found significant levels of adaptation to both vehicles and animals in the FFA, consistent with previous studies that reported FFA adaptation to non-faces such as butterflies and houses (Avidan et al., 2002; Ewbank et al., 2005; Schiltz and Rossion, 2006). The FFA also recovered from adaptation with rotation for both animals and vehicles. Previous results have shown that the FFA recovers from adaptation to rotated faces (Ewbank and Andrews, 2008; Fang et al., 2007; Grill-Spector et al., 1999; Pourtois et al., 2005). Our current results extend previous findings by suggesting that the FFA is sensitive to changes in the viewpoint of both animate and inanimate objects. One possibility is that face-specific mechanisms underlie viewpoint sensitivity to vehicles and animals in the FFA. However, since vehicles do not contain facial features, and we found similar effects for vehicles in object-selective cortex, this possibility is unlikely. Alternatively, adaptation to vehicles may stem from a small subpopulation of neurons in the FFA that responds to vehicles and shows view-dependent recovery from adaptation during fMRI-A experiments.

Differences across adaptation paradigms

In general, adaptation effects were larger for animals in the SL than in the LL adaptation experiments. In LO especially, we found robust fMRI-A for animals during the SL experiment and substantially less fMRI-A during the LL experiment (both a main effect of paradigm and a significant interaction between paradigm and adaptation effect; see Table 2). These findings are consistent with previous results showing larger adaptation when less time and fewer intervening stimuli occur between repetitions of the same image (Henson et al., 2004; Sayres and Grill-Spector, 2006) and with results showing significantly less fMRI-A in LO for LL than SL repetitions of the same image (Ganel et al., 2006).

The sensitivity to object rotation was largely similar across adaptation paradigms. However, LO and left pFUS/OTS responses to animals showed a shallower profile of recovery from adaptation in the LL than SL experiments. Thus, differences in the reported sensitivity to object view across previous studies (Grill-Spector et al., 1999; James et al., 2002; Valyear et al., 2006; Vuilleumier et al., 2002) may be due to a combination of factors, including differences in the object categories subjects viewed, the amounts of rotation, and the fMRI-A paradigms. For example, previous SL fMRI-A studies also used larger rotations, and they generally show more rotation sensitivity than studies that used LL fMRI-A with smaller rotations. Notably, the present experiments suggest that a more useful approach to examining sensitivity to object transformations in visual cortex is a parametric approach. This allows characterization of the sensitivity of responses to a range of transformation parameters rather than just characterizing regions as being “invariant” or “not invariant” to a transformation.

While we found only subtle differences in sensitivity to rotation across SL and LL experiments, Epstein and colleagues (2008) recently reported significant differences in PPA sensitivity to rotated scenes across SL and LL fMRI-A experiments. In particular, they found greater sensitivity to scene rotation in SL than LL fMRI-A experiments. Epstein and colleagues suggested that their results indicate different neural mechanisms across shorter and longer time scales. We hypothesize that the source of the differential findings between our results and those of Epstein and colleagues may be a regional difference, with the PPA being more sensitive to fMRI-A paradigm differences than the LOC or FFA (see also Verhoef et al., 2008, who showed differential neural adaptation across monkey IT and PFC).

Importance of measuring the non-adapted BOLD responses for interpretation of adaptation results

Our results underscore the importance of measuring the level of response to different stimuli without adaptation, in addition to measuring cross-adaptation across parametric object transformations. Our data and model (Fig. 9) suggest that even a moderate difference in the level of response to different stimuli without adaptation may affect the profile of fMRI-A results. Determining whether lower responses after adaptation reflect cross-adaptation across changes in a parameter, or the response to a non-preferred stimulus, is difficult without first measuring the response before adaptation to different stimuli.
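The following sketch illustrates this point. It is not the model shown in Fig. 9. It assumes four Gaussian view-tuned populations in a voxel with an over-representation of front-preferring neurons, and the same proportional gain-reduction rule for adaptation. The population weights, tuning width, and adaptation strength are hypothetical.

```python
import math

PREFERRED = (0, 60, 120, 180)       # hypothetical population centers (0 = front)
WEIGHTS = (0.40, 0.25, 0.20, 0.15)  # hypothetical share of neurons per population
SIGMA = 30.0                        # assumed tuning width, in degrees
STRENGTH = 0.5                      # assumed maximal gain reduction

def tuning(view, preferred):
    """Gaussian view tuning over the 0-180 degree range used here."""
    return math.exp(-(view - preferred) ** 2 / (2.0 * SIGMA ** 2))

def voxel(test_view, adapt_view=None):
    """Weighted sum of population responses, optionally after adaptation."""
    total = 0.0
    for pref, weight in zip(PREFERRED, WEIGHTS):
        gain = 1.0
        if adapt_view is not None:
            gain -= STRENGTH * tuning(adapt_view, pref)
        total += weight * gain * tuning(test_view, pref)
    return total

front, back = 0, 180
print("non-adapted front:      ", round(voxel(front), 3))
print("non-adapted back:       ", round(voxel(back), 3))
print("front after front adapt:", round(voxel(front, adapt_view=front), 3))
print("back after front adapt: ", round(voxel(back, adapt_view=front), 3))
```

In this toy voxel, the response to the back view after adapting to the front view is lower than the response to the repeated front view, even though it has fully recovered relative to the non-adapted back-view response. Without the non-adapted measurements, that low response could be misread as cross-adaptation across a 180° rotation.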

From Neurons to Voxels

Inferences about the properties of the underlying neural populations in the human brain from the profile of fMRI-A results require an understanding of the relationship between neural tuning to a transformation and neural adaptation. However, the precise relationship between neural tuning to a parameter (e.g., view sensitivity) and adaptation effects in object-selective cortex is not well understood (Grill-Spector et al., 2006). Sawamura and colleagues (2006) examined cross-adaptation in IT neurons across different objects and revealed that two object stimuli (A, B) that elicit similar responses in IT neurons produce less cross-adaptation (i.e., cross-adaptation from B→A) than adaptation due to repetitions of identical stimuli (A→A or B→B). One interpretation of the results of Sawamura et al. (2006) is that cross-adaptation in IT occurs when different stimuli have overlapping inputs and recovery from adaptation occurs when inputs are non-overlapping.

Another caveat in interpreting results of fMRI-A experiments is that BOLD responses reflect the aggregate neural responses in a voxel, and thus likely reflect the properties of the majority neural population in a voxel. Therefore, our data suggest that the majority of neurons in human object-selective cortex are view-dependent. It is possible that alongside this majority there is a small minority of view-invariant neurons (Booth and Rolls, 1998; Logothetis et al., 1995).

Presently, only a few studies have directly examined the relation between neural sensitivity to a transformation and neural adaptation. Lueschow and colleagues (1994) showed that cross-adaptation to changes in a parameter (position) reflects neural insensitivity to that change. Recently, Verhoef and colleagues (2008) showed that the level of cross-adaptation in monkey IT neurons across changes in object size depends on the difference in size between the adapting stimulus and the test stimulus. Verhoef and colleagues found size tuned neurons in IT cortex and showed a graded profile of recovery from adaptation as the size difference between the adapting stimulus and the test stimulus increased. These results suggest that cross-adaptation across object transformations is consistent with insensitivity to that transformation at the neural level. Conversely, recovery from adaptation resulting from a transformation is consistent with sensitivity to that transformation at the neural level.

Implications for Theories of Object Recognition

Our data suggest that multiple representations of objects exist across the human ventral stream and that (at least for animal stimuli) there may be a hierarchy of representations across object-selective cortex, from LO, which exhibits higher sensitivity to animal view, to left fusiform regions, which exhibit lower sensitivity to animal view. Given the evidence that neurons are not completely invariant to object view, how is invariant object recognition accomplished? One appealing model for invariant object recognition is that objects are represented by a population code in which single neurons may be selective to a particular view, but the distributed representation across the neural population (in a voxel or across a cortical region) is robust to changes in object view (Perrett et al., 1998).

Another point to consider is whether the finding of view-dependent representations in the human ventral stream necessitates downstream view-invariant neurons in order to support view-invariant recognition. One possibility is that the ventral stream provides a representation on which perceptual decisions are performed by neurons outside visual cortex and these downstream neurons are view-invariant. Examples of view-invariant neurons have been found in the hippocampus, perirhinal cortex and prefrontal cortex (Freedman et al., 2001, 2003; Quiroga et al., 2005; Quiroga et al., 2008). Alternatively, a population (distributed) code across view-tuned neurons in the ventral stream may be sufficient for view-invariant object recognition. A distributed code can support invariant recognition across object rotations if information about object view and information about object identity are independent. For example, responses of “animal-selective neurons” may be modulated by animal view. However, as long as responses across this population of animal neurons are higher than the responses to other objects (and views), animal information can be recovered across views.
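The distributed-code argument can be sketched as follows. This is a toy illustration under stated assumptions, not an analysis of our data. Each "animal-selective" neuron is assumed to have a separable response (a category gain multiplied by Gaussian view tuning), preferred views are assumed to tile all views evenly, and the readout is a simple unweighted sum. The gains and tuning width are hypothetical.

```python
import math

SIGMA = 30.0                            # assumed tuning width, in degrees
PREFERRED = range(0, 360, 20)           # assumed even tiling of preferred views
GAIN = {"animal": 1.0, "vehicle": 0.3}  # hypothetical category gains

def circ_dist(a, b):
    """Smallest angular distance between two views, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)

def neuron(category, view, preferred):
    """Separable response: category gain times Gaussian view tuning."""
    view_term = math.exp(-circ_dist(view, preferred) ** 2 / (2.0 * SIGMA ** 2))
    return GAIN[category] * view_term

def pooled(category, view):
    """Unweighted sum over the population, a simple linear readout."""
    return sum(neuron(category, view, p) for p in PREFERRED)

views = range(0, 181, 30)
animal = [pooled("animal", v) for v in views]
vehicle = [pooled("vehicle", v) for v in views]
single = [neuron("animal", v, 0) for v in views]  # one front-preferring neuron

for v, a, veh, s in zip(views, animal, vehicle, single):
    print(f"view {v:3d}: pooled animal {a:.2f}, pooled vehicle {veh:.2f}, "
          f"single neuron {s:.4f}")
```

Under these assumptions, the single neuron's response changes strongly with view, yet the pooled response to animals stays above the pooled response to vehicles at every view, so category information can be read out without any individual view-invariant neuron.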

In sum, our results provide evidence for the view sensitivity of object representations across human object-selective cortex evidenced through a combination of both standard fMRI and fMRI-A paradigms. These data provide important empirical constraints for theories of object recognition, and highlight the importance of parametric manipulations for capturing neural selectivity to any type of stimulus transformation.

Supplementary Material

Acknowledgments

This research was funded by NSF grant 0345920 and Whitehall Foundation grant 2005-05-111-RES to KGS and NIH postdoctoral fellowship 5F32EY015045 to DA.

References

- Avidan G, Hasson U, Hendler T, Zohary E, Malach R. Analysis of the neuronal selectivity underlying low fMRI signals. Curr Biol. 2002;12:964–972. doi: 10.1016/s0960-9822(02)00872-2.

- Basri R, Ullman S. The alignment of objects with smooth surfaces. Computer Vision. 1988.

- Biederman I. Recognition-by-components: a theory of human image understanding. Psychol Rev. 1987;94:115–147. doi: 10.1037/0033-295X.94.2.115.

- Biederman I, Rabinowitz JC, Glass AL, Stacy EW Jr. On the information extracted from a glance at a scene. J Exp Psychol. 1974;103:597–600. doi: 10.1037/h0037158.

- Booth MC, Rolls ET. View-invariant representations of familiar objects by neurons in the inferior temporal visual cortex. Cereb Cortex. 1998;8:510–523. doi: 10.1093/cercor/8.6.510.

- Boynton GM, Engel SA, Glover GH, Heeger DJ. Linear systems analysis of functional magnetic resonance imaging in human V1. J Neurosci. 1996;16:4207–4221. doi: 10.1523/JNEUROSCI.16-13-04207.1996.

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10:433–436.

- Bulthoff HH, Edelman S. Psychophysical support for a two-dimensional view interpolation theory of object recognition. Proc Natl Acad Sci U S A. 1992;89:60–64. doi: 10.1073/pnas.89.1.60.

- Burock MA, Buckner RL, Woldorff MG, Rosen BR, Dale AM. Randomized event-related experimental designs allow for extremely rapid presentation rates using functional MRI. Neuroreport. 1998;9:3735–3739. doi: 10.1097/00001756-199811160-00030.

- Dale AM, Buckner RL. Selective averaging of rapidly presented individual trials using fMRI. Hum Brain Mapp. 1997;5:329–340. doi: 10.1002/(SICI)1097-0193(1997)5:5<329::AID-HBM1>3.0.CO;2-5.

- Desimone R. Neural mechanisms for visual memory and their role in attention. Proc Natl Acad Sci. 1996;93:13494–13499. doi: 10.1073/pnas.93.24.13494.

- Eger E, Ashburner J, Haynes J, Dolan RJ, Rees G. fMRI activity patterns in human LOC carry information about object exemplars within category. J Cogn Neurosci. 2008;20:356–370. doi: 10.1162/jocn.2008.20019.

- Eger E, Henson RN, Driver J, Dolan RJ. BOLD repetition decreases in object-responsive ventral visual areas depend on spatial attention. J Neurophysiol. 2004;92:1241–1247. doi: 10.1152/jn.00206.2004.

- Epstein RA, Parker WA, Feiler AM. Two kinds of fMRI repetition suppression? Evidence for dissociable neural mechanisms. J Neurophysiol. 2008;99:2877–2886. doi: 10.1152/jn.90376.2008.

- Ewbank MP, Andrews TJ. Differential sensitivity for viewpoint between familiar and unfamiliar faces in human visual cortex. Neuroimage. 2008;40:1857–1870. doi: 10.1016/j.neuroimage.2008.01.049.