Abstract

Background

Unrelieved pain among nursing home (NH) residents is a well-documented problem. Attempts have been made to enhance pain management for older adults, including those in NHs. Several evidence-based clinical guidelines have been published to assist providers in assessing and managing acute and chronic pain in older adults. Despite the proliferation and dissemination of these practice guidelines, research has shown that intensive systems-level implementation strategies are necessary to change clinical practice and patient outcomes within a health-care setting. One promising approach is embedding guidelines into explicit protocols and algorithms to enhance decision making.

Purpose

The goal of this article is to describe several issues that arose in the design and conduct of a study that compared the effectiveness of pain management algorithms coupled with a comprehensive adoption program with that of education alone in improving evidence-based pain assessment and management practices, decreasing pain and depressive symptoms, and enhancing mobility among NH residents.

Methods

The study used a cluster-randomized controlled trial (RCT) design in which the individual NH was the unit of randomization. Rogers’ Diffusion of Innovations Theory provided the framework for the intervention. Outcome measures were surrogate-reported usual pain; self-reported usual and worst pain; and self-reported pain-related interference with activities, depression, and mobility.

Results

The final sample consisted of 485 NH residents from 27 NHs. The investigators were able to use a staggered enrollment strategy to recruit and retain facilities. The adaptive randomization procedures were successful in balancing intervention and control sites on key NH characteristics. Several strategies were successfully implemented to enhance the adoption of the algorithm.

Limitations/Lessons

The investigators encountered several methodological challenges that were inherent to both the design and the implementation of the study. The most problematic issue concerned the measurement of outcomes in persons with moderate to severe cognitive impairment. It was difficult to identify valid, reliable, and sensitive outcome measures that could be applied to all NH residents regardless of the ability to self-report. Another challenge was the inability to incorporate advances in implementation science into the ongoing study.

Conclusions

Methodological challenges are inevitable in the conduct of an RCT. Efforts to optimize internal validity by adhering to the study protocol can be compromised by logistical issues that emerge during the course of the study.

Introduction

Conducting clinical trials in any setting is challenging. However, the nursing home (NH) environment can be particularly daunting. Implementing a complex multimodal intervention adds to the investigators’ anxieties. Unexpected challenges occur, regardless of the care with which the research team plans and executes the study. In sharing and discussing the ‘hits’ and ‘misses’, an investigative team can contribute to science by allowing others to learn from their experiences. In this spirit, we describe our experiences conducting a randomized controlled trial (RCT) to test a set of pain assessment and management algorithms (step-by-step flowcharts for clinical use) in 27 Washington State NHs. We focus on several design decisions we made and some challenges we faced as a result of these decisions. We also discuss other obstacles that we had to overcome. Our examples of these ‘untoward consequences’ and other challenges may provide insight and guidance to other investigators.

Rationale for the study

Pain is a common problem among older NH residents and is associated with depression, sleep disturbance, decreased mobility, increased health-care utilization, and physical and social role dysfunction [1–3]. Despite the scope of the problem and its negative consequences, there is much evidence that pain is often not assessed and is undertreated in this vulnerable group, even though evidence-based guidelines for assessing and treating pain are available [2–9].

Changing clinical practice involves attention to multiple factors that influence individuals’ or groups’ willingness and ability to incorporate new knowledge or a new system of care. This use of multiple strategies to encourage the adoption of evidence-based practices is a staple of implementation science, which the National Institutes of Health defines as ‘the scientific study of methods to promote the integration of research findings and evidence-based interventions into health-care policy and practice’ (http://grants.nih.gov/grants/guide/pa-files/PAR-10-038.html). This area of scientific inquiry seeks to understand the behavior of health-care professionals and support staff, health-care organizations, consumers and family members, and policy makers in context as key variables in the sustainable adoption, implementation, and uptake of evidence-based interventions. Implementation scientists recognize that there may be two components being tested in a clinical trial: the intervention itself (in this case, the pain management algorithm) and the implementation approaches [10].

One classic and widely used framework to understand, study, and facilitate the adoption of new behaviors into regular practice is Rogers’ Diffusion of Innovations Theory [11]. According to this theory, an innovation is an idea or a process that is perceived as new by an individual or a group. Diffusion is the process by which an innovation is communicated and adopted over time among the members of a social system. Many successful strategies for changing clinical practice incorporate Rogers’ ideas. Potentially successful change strategies include feedback to care providers from ‘influential colleagues’, multidisciplinary teams, follow-up reminders, and academic detailing, which involves providing practicing clinicians with specific education about best practices [12,13]. Gilbody et al. [14] reported that clinician education alone was ineffective in changing care practices, but that complex, multifaceted interventions incorporating clinician education were successful. Specific strategies within these multicomponent interventions included collaborative patient management, clinician education, enhanced roles for nurses, and use of opinion leaders. The authors also identified the use of algorithms as an effective method for changing practice.

Clinical algorithms are visual road maps that use a step-by-step decision-making process to enhance clinicians’ ability to make sound clinical decisions based on best evidence [15]. Clinical trials conducted in diverse settings have shown that assessment and treatment algorithms are effective in improving practice and patient outcomes [16–18].

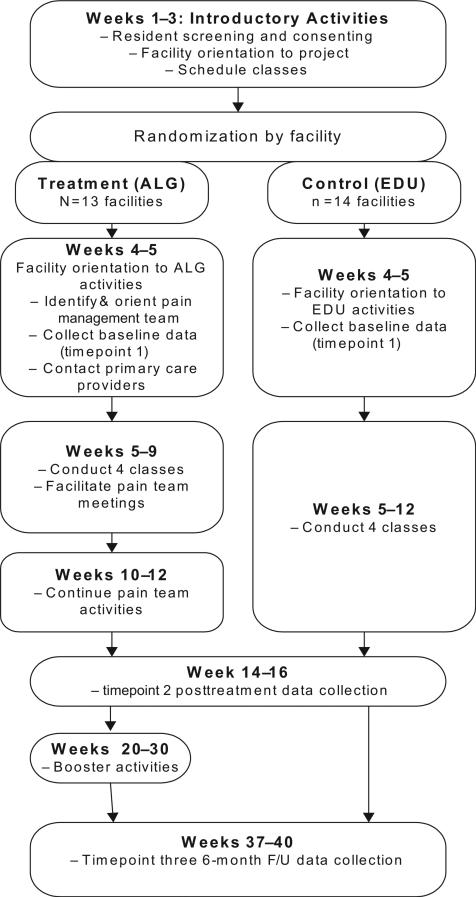

The aim of the study was to examine the effectiveness of a set of pain management algorithms coupled with a comprehensive adoption program in improving evidence-based pain assessment and management practices, decreasing pain and depression, and improving mobility among NH residents. The algorithm (ALG) intervention was compared to an education (EDU)-only control. Table 1 lists the specific aims and accompanying hypotheses of the trial, and Figure 1 outlines the study procedures.

Table 1.

Study Aims and Hypotheses

| Aims | Hypotheses |
|---|---|
| Aim 1. Evaluate the effectiveness of a pain management algorithm coupled with intense diffusion strategies (ALG) as compared with pain education (EDU) alone in improving pain, mobility, and depression among nursing home residents at the 14–16-week timepoint. | Hypothesis 1-1. At posttreatment (timepoint 2), ALG residents will demonstrate greater decreases in surrogate-reported pain than residents in the EDU group. |
| | Hypothesis 1-2. At posttreatment (timepoint 2), ALG residents will demonstrate less depression, less self-reported pain intensity and pain-related interference, and enhanced mobility compared with residents in the EDU group. |
| Aim 2. Determine the extent to which adherence to the ALG and organizational factors are associated with changes in residents’ mobility, pain, and depression and the extent to which changes in these variables are associated with changes in outcomes. | Hypothesis 2-1. Pain treatment plans for residents in ALG facilities will show greater pre- to posttreatment increases in adherence than will EDU facilities. |
| | Hypothesis 2-2. Pre- to posttreatment changes in residents’ mobility, pain, and depression will be associated with adherence to the algorithms and organizational factors. |
| Aim 3. Evaluate the persistence of changes in process and outcome variables at long-term follow-up (6 months postintervention). | Hypothesis 3-1. Differences between ALG and EDU residents’ outcomes will be maintained at 6-month follow-up (timepoint 3). |
| | Hypothesis 3-2. Differences in adherence to the ALG, pre- and post-ALG intervention, will be maintained at 6-month follow-up (timepoint 3). |

ALG: algorithm intervention; EDU: education-only control.

Figure 1.

Schematic of the study activities.

Study design decisions

The study used a cluster RCT design with NHs as the unit of randomization. We chose to randomize facilities rather than individuals within facilities to minimize cross-contamination between intervention and control participants, which can dilute treatment effects [19]. Using a standard approach for RCTs, randomization occurred following recruitment of NH residents within each facility and completion of the baseline measurements, and prior to initiating any intervention activities (ALG) or classes (EDU). Assignment to ALG or EDU was made by the statistician coauthors (M. B. N./N. P.). To manage enrollment, data collection, and other study activities, facilities were enrolled in several waves from September 2006 to December 2009. All study procedures were approved by the Swedish Medical Center Institutional Review Board (IRB) (FWA00000544).

Eligible NH residents were (1) 65 years or older; (2) receiving residential, long-term care at the facility (not Medicare-funded skilled nursing or rehabilitation services); and (3) experiencing moderate to severe pain at some time in the week prior to screening. Participants gave signed consent; whenever a resident was incapable of providing consent, the surrogate decision maker agreed to participation on the resident’s behalf. We chose to include persons with moderate to severe cognitive impairment because they comprise nearly 50% of NH residents [20], and we wanted to maximize the generalizability of our study findings. Also, older persons with cognitive impairment are at greater risk for underdetection and undertreatment of pain than older persons who are cognitively intact [5,21–23].

We applied principles of the Diffusion of Innovations Theory to several strategies that we implemented to support adoption of the proposed clinical changes. First, the ALG intervention focused on the facility, rather than individual clinicians or primary care providers (PCPs). This approach was chosen primarily because of the relative infrequency of physician visits to the NH [24,25], the educational needs of the NH staff, and the central role of the NH staff in assessing pain. Also, the goal of changing the NH culture and making systematic changes to promote acceptance and use of the algorithms dictated a focus on the facility rather than individual PCPs [11,26].

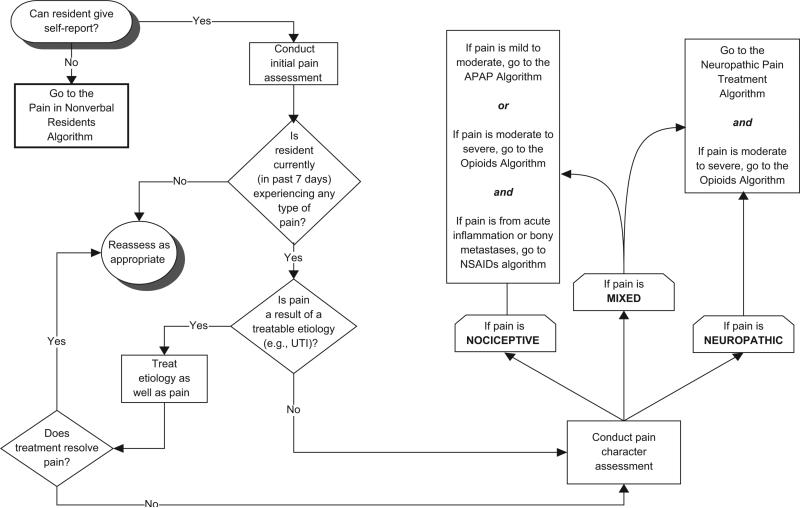

Second, the intervention focused on the assessment and treatment algorithms to address pain. Each facility received copies of a pain management algorithm book for all licensed nursing staff. The algorithm book contained 11 linked algorithms covering the following topics: general pain assessment (see Figure 2), pain assessment and management in cognitively impaired NH residents, opioid initiation and titration, acetaminophen administration, guidance on appropriate use of nonsteroidal anti-inflammatory agents, pharmacologic treatment of neuropathic pain, and assessment and treatment for three common side effects, that is, constipation, sedation, and delirium. Also included in each section were ‘Guiding Principles’, formatted as bulleted lists, as well as tables of commonly used pain medications. The investigators drafted the book, which was based on the most current published clinical guidelines [8,27–29] and high-quality literature reviews [30] available at the time of the study. All content was then reviewed by a panel of six nationally recognized experts in geriatric pain management and dementia. To keep the content current throughout the project, we revised the book 2 years after the initial edition was produced, based on an updated literature review and a second expert panel review.

Figure 2.

General pain assessment algorithm.

UTI: urinary tract infection; NSAIDs: nonsteroidal anti-inflammatory drugs; APAP: acetaminophen.

In addition to copies of the pain management algorithm book, intervention facilities received a three-ring binder containing supplemental, high-value resource materials as well as an additional copy of the algorithm book to serve as a central reference guide in the facility. Each nursing unit and staff development coordinator also received copies of the resource binder. The algorithm and reference materials addressed several barriers affecting pain management, which included misconceptions about pain in older adults, evaluation of pain in NH residents with cognitive impairment, older adults’ increased sensitivity to medication side effects, and polypharmacy [31,32].

We provided staff education and support for using the algorithm and resource materials. One of the investigators (A. D. or M. E.) led one class each week for 4 weeks. The classes covered each algorithm, and content was reviewed as necessary. Each class was offered as many as three times to facilitate attendance across shifts. We also videotaped the classes for staff who were unable to attend the live sessions. Case studies were incorporated into classes to demonstrate use of the algorithms. We also encouraged staff to discuss challenging pain management cases from their own practice and demonstrated how to apply the algorithms to these cases.

Third, we guided each facility in forming a multidisciplinary pain management team of opinion leaders that included one or more ‘clinical champions’. The purpose of the teams was to provide leadership, stability, and guidance for implementing the algorithm in practice. These teams began meeting during the third week of the classes and continued for 2 weeks after the classes ended. They worked with one of the investigators (A. D.) to identify and address organizational barriers to pain management and to discuss specific, challenging pain problems encountered in NHs. The pain teams were also charged with reviewing and customizing multiple chart forms (e.g., pain flow sheets, pain assessment forms) and policies developed by the investigators for incorporation into the facility's procedures and processes.

Fourth, we sought the support and involvement of PCPs. Upon randomization of facilities to the intervention, staff identified PCPs who regularly practiced in the facility. These clinicians received a letter from the Medical Director and investigators that introduced the project and outlined the study aims and procedures. The letter listed the investigators’ telephone numbers and email addresses and encouraged PCPs to contact any of them with questions or concerns. It also invited them to attend a 30-min web conference that explained the algorithm process and the rationale for focusing on facility-wide efforts to enhance pain assessment and management rather than on direct engagement with PCPs. Each PCP was offered a US$100 honorarium for watching the webinar and was invited to join the pain team.

Our fifth strategy was aimed at maintaining adherence to the algorithm and other pain management practices after the period of intensive training and pain team start-up activities. Approximately 8 weeks following the intervention, we initiated biweekly ‘boosters’, which continued for 8 weeks. Boosters included items such as pens and magnets featuring pain-related logos, and ‘Pain-Free Zone’ posters. We also sent ‘Fast Facts FAXes’ containing brief descriptions of information that was presented in the algorithm classes. In some facilities, we conducted telephone conferences with pain team members that focused on continued problem solving around specific cases or strategies for overcoming barriers to implementation of the algorithms.

Another methodological decision we made in designing the trial was to avoid a ‘standard care’ or ‘no intervention’ control condition. We based our decision on the concept of ‘resentful demoralization’, which occurs when people feel that they are receiving less-than-optimal treatment [33,34]. Our experience working with NHs has shown that administration and staff are eager for any assistance to improve care. Had we provided half of the facilities with little or nothing, the inequity between intervention and control facilities and participating NH residents could have hampered recruitment, morale, and willingness to stay involved in the project. Similar problems also could have occurred with a wait-list design, because wait-listed facilities would have had to provide baseline data and then wait several months to receive the intervention. Although we recognized that control facilities might show some improvement in pain-related processes or outcomes, we believed that education alone was much less likely than the ALG intervention to change practices and NH resident outcomes significantly. Thus, the EDU arm satisfied the goal of minimizing frustration and attrition while maximizing statistical power to detect significant differences between the ALG and EDU groups.

The EDU procedure consisted of four classes scheduled as 1-h weekly in-services. The content covered basic principles of pain assessment and management for frail, older adults. Similar to the ALG classes, videotapes of each session were made available at every EDU facility.

The NH residents were the primary unit of analysis for addressing the specific aims of the study, and all analyses were based on intention to treat. We used linear mixed models [35] to estimate the association between outcome variables and predictors. All linear mixed models included a random effect for the facility to account for possible correlation of NH residents’ outcomes within each facility. We carried out both unadjusted and covariate-adjusted comparisons of outcomes between the ALG and EDU groups. The unadjusted comparison included ALG versus EDU (a binary variable) as the predictor. The covariate-adjusted models included covariates selected with forward stepwise variable selection (p < 0.05 for inclusion); as an alternative, we also considered backward elimination (p > 0.05 for exclusion). After covariates were selected, group (ALG vs. EDU) was added to the model, and the covariate-adjusted association between group and outcome was estimated. All calculations were performed in R, version 2.13.0 (R Foundation for Statistical Computing, Vienna, Austria).
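For readers less familiar with linear mixed models, a facility random-intercept model of the kind described above can be written as follows. This is a sketch under stated assumptions: the article does not give its exact model specification, so the notation (resident i in facility j, group indicator ALG_j equal to 1 for ALG facilities and 0 for EDU facilities, selected covariates x_ij, random intercept u_j) and the normal error terms are ours.

```latex
% Assumed random-intercept model for resident i in facility j
y_{ij} = \beta_0 + \beta_1\,\mathrm{ALG}_j + \boldsymbol{\gamma}^{\top}\mathbf{x}_{ij} + u_j + \varepsilon_{ij},
\qquad u_j \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma_\varepsilon^2)

% Implied correlation between two residents of the same facility
\rho = \frac{\sigma_u^2}{\sigma_u^2 + \sigma_\varepsilon^2}
```

In this form the unadjusted comparison drops the covariate term, and in both versions the coefficient of interest is the ALG-versus-EDU difference. Because residents of the same facility share the same u_j, their outcomes are correlated with intraclass correlation ρ, which is the within-facility correlation the random effect is meant to absorb.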

Addressing challenges in implementing the study

Despite careful planning and consideration of alternatives to the study design, we encountered several challenges. One major challenge involved our attempts to test a complex, multimodal intervention in a variety of facilities. We used an RCT design, which is the gold standard for evaluating therapies [36]. This model maximizes internal validity, often at the expense of external validity; it typically is used in efficacy studies where the focus is on delivery of a standard treatment or intervention to a homogeneous group under optimal conditions [37]. The RCT also is used in effectiveness studies such as ours, where the intervention is delivered in a ‘real-world setting’ [37]. Although fidelity to the study protocol is critical to the RCT study design, process evaluation and measures are less emphasized than outcomes. In contrast, implementation studies often incorporate formative and summative evaluation of processes, including rates of adoption and fidelity to the clinical intervention. These are critical elements of the implementation study design [10].

In our study, we included evaluation of changes in process measures and their association with patient outcomes as one specific aim (Table 1, specific aim 2). Our two process variables were a measure of adherence to the algorithms that used a chart audit tool [38] and a survey of organizational structures and processes that supported and reflected pain best practices. In addition, we conducted focus group interviews at four intervention facilities in order to capture qualitative data about facilitators and barriers to the adoption of the algorithms.

However, we missed opportunities to collect process data that could have provided insight into our findings. One example was our failure to explore the reasons for, and effects of, low PCP engagement in the study. Of approximately 75 PCPs who practiced in the intervention facilities, only 2 viewed the webinar explaining the study and the algorithms, and few were involved in the pain teams. Because our focus was on the nursing staff and medical directors at the facilities, we did not monitor or address potential PCP nonadherence to evidence-based pain practices. When we conducted our focus groups at the end of the study, some nursing staff reported that PCPs’ refusal to respond to pain assessment data or to follow staff's recommendations was sometimes a problem. Had we incorporated ongoing, intensive monitoring of barriers and addressed these barriers during the study (as is done in some implementation studies), we might have increased the effectiveness of the intervention.

Another key issue arose as a result of our decision to include the NH residents with moderate to severe cognitive impairment. Of the 485 NH residents enrolled in the study, 361 (74%) were determined to be able to provide reliable self-report at baseline. At timepoints 2 and 3, 317/430 (74%) and 265/390 (68%) participants provided reliable self-report, respectively.
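As a quick arithmetic check, the percentages above, and the complementary share of residents who could not give reliable self-report, follow directly from the reported counts. A short Python sketch (the dictionary layout and the `pct` helper are ours, for illustration only):

```python
# (participants giving reliable self-report, participants assessed), from the text.
COUNTS = {
    "Timepoint 1 (baseline)": (361, 485),
    "Timepoint 2": (317, 430),
    "Timepoint 3": (265, 390),
}


def pct(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


for label, (reliable, assessed) in COUNTS.items():
    unable = assessed - reliable
    print(f"{label}: {reliable}/{assessed} reliable ({pct(reliable, assessed)}%), "
          f"{unable} unable ({pct(unable, assessed)}%)")
```

The complements work out to 26%, 26%, and 32%, which is where the 26%–32% range of participants unable to self-report comes from.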

Because 26%–32% of our study participants could not provide reliable self-report, we could not use residents’ self-reported pain as the primary outcome without losing statistical power. Thus, we needed a primary outcome measure that could be collected for all NH residents, regardless of the ability to communicate verbally. Initially, we chose the Functional Independence Measure (FIM) to assess ability to ambulate, either in a wheelchair or walking. This outcome seemed appropriate in that physical functioning frequently is used as an outcome in pain therapy trials [39] and in that the FIM is a valid, reliable, and widely used measure that allows for an objective evaluation that is not reliant on patient report [40–42]. Moreover, Morrison et al. [43] reported a significant, negative association between pain and FIM-locomotion scores in a large sample of older adults with pain. Early in the trial, however, we realized that our sample of patients was very physically impaired; thus, the FIM was unlikely to be sensitive enough to detect the relatively small improvements that could be expected. For this reason, we examined other outcomes that were measured in all NH residents: depression, pain behaviors, and surrogate (certified nursing assistant (CNA)) reports of usual pain intensity.

The Cornell Scale for Depression in Dementia was collected for all NH residents; its reliability and validity are supported in persons with and without dementia [44–47]. However, depression was a secondary outcome measure in that it was influenced by, but not solely attributable to, pain; thus, it was not an appropriate primary outcome measure.

We then considered the pain behaviors tool, Checklist of Nonverbal Pain Indicators (CNPI). We had initially chosen the scale because psychometric data that were available at the time suggested that it was reliable, valid, and clinically useful. It assessed only six groups of behaviors, at rest and with movement, and was readily understood by CNAs. However, subsequent reviews [48] as well as data from our study [49] demonstrated that the CNPI had psychometric deficiencies that raised questions about its suitability as the primary outcome for pain.

We finally chose a surrogate pain intensity measure as the primary outcome for all the NH residents. We used the Iowa Pain Thermometer (IPT), a tool that uses a graphic representation of a thermometer in which the base is white and becomes increasingly red as one moves up the column. The base is anchored with the words ‘no pain’, and the top of the thermometer is anchored with ‘the most intense pain imaginable’, with additional word descriptors between these two extremes to represent different levels of pain intensity. In all, 13 evenly spaced circles, corresponding with numeric values from 0 to 12, are placed between the thermometer's verbal descriptors. Several studies have shown that the IPT is reliable, valid, and generally preferred over other pain intensity tools by older adults [50–52].

CNAs were chosen as the surrogate reporters of NH residents’ pain. Research has shown that with proper training, CNA proxy reports can be highly accurate [53], and their estimates correlate more strongly with residents’ self-reports than do estimates from other care providers [54,55]. CNAs were asked to observe the participant at rest and during movement or transfers that occurred during morning care and to report each behavior observed. Based on the behaviors they observed, they rated residents’ pain on a scale from 0 = no pain to 12 = the most intense pain imaginable. To ensure that CNAs completed the IPT and the CNPI accurately, we conducted in-service training sessions at every facility prior to beginning data collection. Each facility also received an 8-min training DVD to educate new CNAs and CNAs who were unable to attend the in-service training. To minimize missing data and further ensure accuracy, research assistants interviewed CNAs after participants’ morning care and marked the CNAs’ responses to both the IPT and CNPI, clarifying instructions and items whenever necessary.

Relying on surrogate pain reports was not entirely satisfactory, because self-reported pain is generally viewed as the gold standard for measuring this subjective experience [9,29,56–58]. However, the CNA-reported IPT allowed us to include the entire sample in the analysis. Studies of outcomes in persons with advanced dementia and persons at end of life frequently use surrogate-reported outcomes because patient report usually is unavailable [59–61].

We retained self-reported usual and worst pain intensity as secondary outcomes, again using the IPT. Only data from participants who could provide verbal, reliable responses were included in the self-reported pain outcomes. Participants who were completely nonverbal, or who were unable to respond to the question, ‘Have you experienced aches, pains or discomfort in the past week?’ (alternative phrases, e.g., ‘Do you hurt anywhere?’ or ‘Do you have any sore spots?’, were used when necessary) and to rate their pain intensity, were listed as ‘nonverbal’. Participants also had to give reliable responses. Reliability was assessed in one of two ways. When the study began, participants were asked to describe the worst pain they had ever experienced and to locate its intensity on the IPT. A response was considered reliable whenever the participant described a worst-ever pain and located it in the top third of the IPT (all reported having experienced severe pain as their worst). Some participants, however, struggled with this task even though they otherwise seemed capable of responding to other questions in the interview. After discussing this problem with the data collection staff and among the investigators, we adopted an alternate approach. The research assistant interviewing the participant reviewed the participant's answers for usual, worst, least, and current pain. Whenever all responses were consistent (e.g., worst pain was greater than least pain), the participant was assessed as providing reliable answers. When responses were not consistent, the research assistant asked the participant for clarification (e.g., ‘How is your least pain higher than your current pain?’). If the participant changed the response to a logically consistent one, the response was marked reliable. If the participant still could not correct the discrepancy, the response was considered unreliable, and the participant's data were not included in the analyses.
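The second, consistency-based reliability check can be sketched as follows. This is a minimal illustration, not the study's data-collection procedure: the record layout (`PainRatings`), the exact ordering rules (least ≤ usual ≤ worst and least ≤ current ≤ worst, with ties allowed), and the prompt wording beyond the article's own example are our assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional

IPT_MIN, IPT_MAX = 0, 12  # Iowa Pain Thermometer numeric range


@dataclass
class PainRatings:
    """Self-reported IPT ratings from one interview (hypothetical record layout)."""
    usual: int
    worst: int
    least: int
    current: int


def inconsistencies(r: PainRatings) -> List[str]:
    """Return one clarification prompt per logically inconsistent rating.

    Assumed rule: least <= usual <= worst and least <= current <= worst,
    with ties allowed (e.g., for constant pain).
    """
    for name, value in vars(r).items():
        if not IPT_MIN <= value <= IPT_MAX:
            raise ValueError(f"{name} rating {value} is outside the 0-12 IPT range")
    prompts = []
    if r.least > r.worst:
        prompts.append("How is your least pain higher than your worst pain?")
    for name in ("usual", "current"):
        value = getattr(r, name)
        if value > r.worst:
            prompts.append(f"How is your {name} pain higher than your worst pain?")
        if value < r.least:
            prompts.append(f"How is your least pain higher than your {name} pain?")
    return prompts


def is_reliable(initial: PainRatings, clarified: Optional[PainRatings] = None) -> bool:
    """Reliable if the first answers are consistent, or if the answers given
    after clarification are consistent; otherwise the data are excluded."""
    if not inconsistencies(initial):
        return True
    return clarified is not None and not inconsistencies(clarified)


# Usage: least pain (6) above current pain (4) triggers a clarification prompt.
answers = PainRatings(usual=5, worst=9, least=6, current=4)
for prompt in inconsistencies(answers):
    print(prompt)
print(is_reliable(answers, PainRatings(usual=5, worst=9, least=3, current=4)))
```

Whether ties count as consistent is a design choice the article does not state; allowing them avoids flagging residents whose pain is constant.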

NH recruitment and retention

We initially estimated that 20 facilities (10 ALG and 10 EDU) would yield an adequate sample of participating NH residents. Because of the relationships that were forged during previous projects [62–64], we were able to secure the commitment of 22 facilities prior to initiating the study. However, fewer than the expected number of NH residents were eligible and/or willing to participate at each facility. To enroll additional NHs, we again called upon partners from earlier studies, and also asked Directors of Nursing at participating facilities to recommend the study to their colleagues at nonparticipating NHs. Although 6 of the 22 originally selected facilities did not participate (one because of closure), we were able to leverage our community connections and recruit 28 facilities. One of these facilities served as a pilot site, leaving us 27 NHs available for randomization.

Initially, we enrolled facilities in pairs to make data collection and other activities manageable. This decision turned out to have advantages as well as disadvantages. At times, the staggered enrollment allowed NHs to remain in the study. Several NHs wanted to defer enrollment because they anticipated a state survey in the next month, experienced turnover in key administrative or nursing staff, struggled with inadequate staffing, or were in the throes of an ownership change. Our ability to slate them for a later entry into the study actually facilitated retention. The lag time between recruitment and enrollment, however, was problematic because some facilities initiated their own pain management programs or moved on to other clinical priorities while waiting to be enrolled. When these facilities were finally invited to enroll in the study, they declined. The administrative turnover could create problems, as well. Whenever the Administrator or Director of Nursing Services who signed the original agreement left the facility, the successor did not always feel obliged to honor the agreement.

Randomization also was complicated by enrolling facilities in multiple waves. We initially planned to recruit facilities in pairs matched on size (≤110 beds or >110 beds), ownership (for profit or not for profit), and quality (based on number of deficiencies or stars on the five-star quality rating system). Within each matched pair, one facility was to be randomized to intervention and the other to control, with equal chance of assignment. This strategy was successful for the first several pairs but failed later, when our choices became more limited: we often enrolled facilities simply because they were ready to participate. As a result, the statisticians had to revise the randomization procedure. Specifically, 6 of the first 18 facilities were not paired and were randomized singly with an equal chance of assignment to either condition. The last nine facilities were randomized with an adaptive randomization scheme that set the probability of each possible assignment according to the resulting balance of ALG versus EDU allocations on key facility characteristics. Assignments that maintained or improved the balance of facility characteristics between arms had a higher probability than assignments that lessened it.
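The adaptive step can be illustrated with a sequential, Pocock–Simon-style minimization using a biased coin, one common way to make balance-improving assignments more likely. The article does not specify its exact scheme, so this is a hedged sketch: the factor names, the imbalance measure (sum of |#ALG − #EDU| across the new facility's factor categories), and the favored-arm probability of 0.8 are all hypothetical.

```python
import random
from typing import Dict, List, Tuple

ARMS = ("ALG", "EDU")
# Hypothetical facility profile: factor name -> category label.
Facility = Dict[str, str]


def imbalance(allocated: List[Tuple[Facility, str]], new: Facility, arm: str) -> int:
    """Total imbalance if `new` were assigned to `arm`: for each factor, count the
    already-allocated facilities in the same category as `new`, add `new` to
    `arm`, and sum |#ALG - #EDU| across factors (Pocock-Simon style)."""
    total = 0
    for factor, level in new.items():
        counts = {a: 0 for a in ARMS}
        for facility, assigned in allocated:
            if facility.get(factor) == level:
                counts[assigned] += 1
        counts[arm] += 1
        total += abs(counts["ALG"] - counts["EDU"])
    return total


def assignment_probabilities(allocated, new, p_favored=0.8) -> Dict[str, float]:
    """Give the balance-improving arm probability `p_favored` (hypothetical value);
    use a fair coin when both arms leave the same imbalance."""
    scores = {arm: imbalance(allocated, new, arm) for arm in ARMS}
    if scores["ALG"] == scores["EDU"]:
        return {"ALG": 0.5, "EDU": 0.5}
    favored = min(ARMS, key=lambda arm: scores[arm])
    other = "EDU" if favored == "ALG" else "ALG"
    return {favored: p_favored, other: 1.0 - p_favored}


def assign(allocated, new, rng: random.Random, p_favored=0.8) -> str:
    """Randomize one facility with the biased coin and record the result."""
    probs = assignment_probabilities(allocated, new, p_favored)
    arm = "ALG" if rng.random() < probs["ALG"] else "EDU"
    allocated.append((new, arm))
    return arm


# Usage with hypothetical profiles built from the matching variables in the text.
rng = random.Random(2009)
allocated = [({"size": ">110 beds", "ownership": "for profit",
               "quality": "below average"}, "ALG")]
newcomer = {"size": ">110 beds", "ownership": "for profit", "quality": "above average"}
print(assignment_probabilities(allocated, newcomer))
print(assign(allocated, newcomer, rng))
```

Keeping a nonzero probability for the less-balancing arm preserves an element of chance in every assignment, which is the trade-off between balance and unpredictability that such schemes make.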

Although the adaptive randomization procedures required more work, the effort paid off. Table 2 compares the intervention and control sites on the matching variables. Differences between intervention and control facilities on these factors were minimal, and none was statistically significant (all p > .50).

Table 2.

Characteristics of participating nursing homes (N = 27)

| Characteristic | Treatment (n = 13) | Control (n = 14) | p valueᵃ |
|---|---|---|---|
| Number of beds, mean (SD) | 149.6 (42.7) | 139.6 (39.7) | .535 |
| Number of deficiencies | | | |
| Less than Washington State average | 6 | 7 | .842 |
| Greater than Washington State average | 7 | 7 | |
| CMS star rating (range 0–5), mean (SD) | 2.8 (1.4) | 2.9 (1.2) | .837 |
| Ownership | | | |
| Not for profit | 7 | 7 | .704 |
| For profit | 5 | 7 | |
| Government | 1 | 0 | |

SD: standard deviation; CMS: Centers for Medicare and Medicaid Services.

ᵃ Chi-squared test for number of deficiencies, Fisher's exact test for ownership, and unpaired two-sample t-test with unequal variances (Welch's test) for ranked and continuous variables.
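As a check on the footnote, the two simplest comparisons can be recomputed from the rounded summary values in Table 2. The sketch below uses only the Python standard library; the function names are illustrative. It computes Welch's t statistic and Welch–Satterthwaite degrees of freedom for number of beds, and a Pearson chi-squared statistic for the deficiency categories, using the fact that a chi-squared variable with 1 degree of freedom has upper-tail probability erfc(√(x/2)). Whether the original analysis applied a continuity correction is not stated; the uncorrected statistic used here is an assumption.

```python
import math


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite df
    from group means (m), standard deviations (s), and sizes (n)."""
    v1, v2 = s1 ** 2 / n1, s2 ** 2 / n2  # squared standard errors
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def chi2_2x2(a, b, c, d):
    """Pearson chi-squared (no continuity correction) for [[a, b], [c, d]].

    With 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2)).
    """
    observed = ((a, b), (c, d))
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    return stat, math.erfc(math.sqrt(stat / 2))


# Number of beds (Table 2): treatment vs. control.
t, df = welch_t(149.6, 42.7, 13, 139.6, 39.7, 14)
# Deficiencies (Table 2): rows = below/above state average, columns = treatment/control.
stat, p = chi2_2x2(6, 7, 7, 7)
print(f"beds: t = {t:.2f}, df = {df:.1f}")
print(f"deficiencies: chi2 = {stat:.3f}, p = {p:.3f}")
```

With these inputs the bed comparison gives t ≈ 0.63 on about 24.5 degrees of freedom, and the deficiency comparison gives χ² ≈ 0.040 with p ≈ 0.842, consistent with the reported .842. Converting the Welch t to a p value requires the t distribution (for example, `scipy.stats.t.sf`), which is omitted here to keep the example dependency-free.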

Lessons learned and conclusion

Without an ideal testing situation in which resources are unlimited, every study design decision involves weighing options against scientific principles such as internal and external validity. The resulting decisions reflect the research team's best judgment given the scientific tools available. Our experiences may guide investigators who plan future implementation trials or other trials among NH residents.

First, we encourage any research team that sets out to test the effectiveness of a complex intervention to consider the theories and methods developed by implementation scientists. This field is growing rapidly, as shown by journals such as Implementation Science and the establishment of the Dissemination and Implementation Research in Health (DIRH) Study Section at the National Institutes of Health [65,66]. Curran et al.'s [10] recent article on hybrid designs for effectiveness and implementation studies offers helpful recommendations for designing trials that maximize both internal and external validity. Damschroder and colleagues [67] reviewed published implementation theories and synthesized them into a comprehensive framework for implementation research. Although these articles were not published in time for us to apply them to our study, our experience underscores their potential value for future studies.

A second lesson is that researchers should carefully consider the challenges of conducting pain research in persons who cannot self-report. Many of the core outcomes recommended for chronic pain clinical trials rely on patient report. Although the authors of these recommendations acknowledge that behavioral observation or proxy report of outcomes is sometimes necessary, they made no recommendations for specific measures [68]. In their comprehensive review, Herr and colleagues [48] evaluated every published pain behavioral assessment tool for older persons with cognitive impairment and found that no measure was adequate for every population or setting. An expert panel reviewed several of the tools and, on the basis of several characteristics including clinical utility, recommended two of them [69]; however, other studies question the psychometric properties of these tools [49,70]. There is an urgent need to identify or develop a more specific, sensitive, and clinically useful pain measure for nonverbal persons. We suggest that these vulnerable persons still be included in clinical pain trials, but that researchers exercise great caution in choosing outcome measures.

Improving the quality of pain management delivered to NH residents is an urgent health-care need. This article describes a complex clinical trial designed to improve pain assessment and management practice in NHs. We describe several decisions that were made to maximize internal and external validity, as well as to address the logistical challenges we encountered. In addition, we discuss several problems that arose during the course of the study and the ways in which we approached these challenges. It is our hope that the insights and strategies gained from this study will assist others in designing future studies and addressing anticipated challenges.

Acknowledgments

This material is the result of work supported with resources and the use of facilities at the Swedish Medical Center, Seattle, WA; the University of Pennsylvania School of Nursing; and the Philadelphia VA Medical Center. Mary Ersek conceived the study, procured funding, served as principal investigator of the project, and drafted the manuscript. Nayak Polissar and Moni B Neradilek developed, described, and implemented the randomization procedures, power calculations, and statistical analyses. Anna Du Pen and Keela Herr participated in the study design. Anna Du Pen and Anita Jablonski assisted in coordinating the study and refining the methods. All authors assisted in drafting the manuscript and approved the final manuscript. Clinical trials registration: NCT01399567, Swedish Medical Center, Seattle, WA.

Funding

The project described was supported by Award Number R01NR009100 from the National Institute of Nursing Research.

Footnotes

Conflict of interest

The content is solely the responsibility of the authors and does not necessarily represent the official views, positions, or policies of the National Institute of Nursing Research, the National Institutes of Health, the Department of Veterans Affairs, or the United States Government.

References

- 1. Lapane KL, Quilliam BJ, Chow W, et al. The association between pain and measures of well-being among nursing home residents. J Am Med Dir Assoc. 2012;13:344–49. doi: 10.1016/j.jamda.2011.01.007.

- 2. Sengupta M, Bercovitz A, Harris-Kojetin LD. Prevalence and management of pain, by race and dementia among nursing home residents: United States, 2004. NCHS Data Brief. 2010;30:1–8.

- 3. AGS Panel on Persistent Pain in Older Persons. The management of persistent pain in older persons. J Am Geriatr Soc. 2002;50:S205–S224. doi: 10.1046/j.1532-5415.50.6s.1.x.

- 4. Decker SA, Culp KR, Cacchione PZ. Evaluation of musculoskeletal pain management practices in rural nursing homes compared with evidence-based criteria. Pain Manag Nurs. 2009;10:58–64. doi: 10.1016/j.pmn.2008.02.008.

- 5. Won A, Lapane K, Gambassi G, et al. Correlates and management of nonmalignant pain in the nursing home. SAGE Study Group. Systematic Assessment of Geriatric Drug Use via Epidemiology. J Am Geriatr Soc. 1999;47:936–42. doi: 10.1111/j.1532-5415.1999.tb01287.x.

- 6. Teno J, Bird C, Mor V. The Prevalence and Treatment of Pain in US Nursing Homes. Center for Gerontology and Health Care Research; Providence, RI: 2002.

- 7. Teno JM, Kabumoto G, Wetle T, et al. Daily pain that was excruciating at some time in the previous week: Prevalence, characteristics, and outcomes in nursing home residents. J Am Geriatr Soc. 2004;52:762–67. doi: 10.1111/j.1532-5415.2004.52215.x.

- 8. American Medical Directors Association. Pain Management in the Long-Term Care Setting: Clinical Practice Guideline. AMDA; Columbia, MD: 2003.

- 9. Hadjistavropoulos T, Herr K, Turk DC, et al. An interdisciplinary expert consensus statement on assessment of pain in older persons. Clin J Pain. 2007;23:S1–S43. doi: 10.1097/AJP.0b013e31802be869.

- 10. Curran GM, Bauer M, Mittman B, et al. Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50:217–26. doi: 10.1097/MLR.0b013e3182408812.

- 11. Rogers E. Diffusion of Innovations. 5th edn. Free Press; New York: 2003.

- 12. Yano EM, Fink A, Hirsch SH, et al. Helping practices reach primary care goals. Lessons from the literature. Arch Intern Med. 1995;155:1146–56.

- 13. Titler MG. Translation science and context. Res Theory Nurs Pract. 2010;24:35–55. doi: 10.1891/1541-6577.24.1.35.

- 14. Gilbody S, Whitty P, Grimshaw J, et al. Educational and organizational interventions to improve the management of depression in primary care: A systematic review. JAMA. 2003;289:3145–51. doi: 10.1001/jama.289.23.3145.

- 15. Jablonski AM, DuPen AR, Ersek M. The use of algorithms in assessing and managing persistent pain in older adults. Am J Nurs. 2011;111:34–43; quiz 4–5. doi: 10.1097/01.NAJ.0000395239.60981.2f.

- 16. Du Pen AR, Du Pen S, Hansberry J, et al. An educational implementation of a cancer pain algorithm for ambulatory care. Pain Manag Nurs. 2000;1:116–28. doi: 10.1053/jpmn.2000.19333.

- 17. Du Pen SL, Du Pen AR, Polissar N, et al. Implementing guidelines for cancer pain management: Results of a randomized controlled clinical trial. J Clin Oncol. 1999;17:361–70. doi: 10.1200/JCO.1999.17.1.361.

- 18. Adli M, Bauer M, Rush AJ. Algorithms and collaborative-care systems for depression: Are they effective and why? A systematic review. Biol Psychiatry. 2006;59:1029–38. doi: 10.1016/j.biopsych.2006.05.010.

- 19. Campbell M, Fitzpatrick R, Haines A, et al. Framework for design and evaluation of complex interventions to improve health. BMJ. 2000;321:694–96. doi: 10.1136/bmj.321.7262.694.

- 20. Gruber-Baldini AL, Zimmerman SI, Mortimore E, et al. The validity of the minimum data set in measuring the cognitive impairment of persons admitted to nursing homes. J Am Geriatr Soc. 2000;48:1601–6. doi: 10.1111/j.1532-5415.2000.tb03870.x.

- 21. Bernabei R, Gambassi G, Lapane K, et al. Management of pain in elderly patients with cancer. SAGE Study Group. Systematic Assessment of Geriatric Drug Use via Epidemiology. JAMA. 1998;279:1877–82. doi: 10.1001/jama.279.23.1877.

- 22. Horgas AL, Tsai PF. Analgesic drug prescription and use in cognitively impaired nursing home residents. Nurs Res. 1998;47:235–42. doi: 10.1097/00006199-199807000-00009.

- 23. Morrison RS, Siu AL. A comparison of pain and its treatment in advanced dementia and cognitively intact patients with hip fracture. J Pain Symptom Manage. 2000;19:240–48. doi: 10.1016/s0885-3924(00)00113-5.

- 24. Ferrell BA, Ferrell BR, Osterweil D. Pain in the nursing home. J Am Geriatr Soc. 1990;38:409–14. doi: 10.1111/j.1532-5415.1990.tb03538.x.

- 25. Shield RR, Wetle T, Teno J, et al. Physicians ‘missing in action’: Family perspectives on physician and staffing problems in end-of-life care in the nursing home. J Am Geriatr Soc. 2005;53:1651–57. doi: 10.1111/j.1532-5415.2005.53505.x.

- 26. Weissman DE, Griffie J, Muchka S, et al. Building an institutional commitment to pain management in long-term care facilities. J Pain Symptom Manage. 2000;20:35–43. doi: 10.1016/s0885-3924(00)00168-8.

- 27. American Geriatrics Society. The management of persistent pain in older persons. J Am Geriatr Soc. 2002;50:S205–S224. doi: 10.1046/j.1532-5415.50.6s.1.x.

- 28. American Medical Directors Association. Pain Management in the Long-Term Care Setting: Clinical Practice Guideline. 2nd edn. AMDA; Columbia, MD: 2009.

- 29. American Geriatrics Society Panel. Pharmacological management of persistent pain in older persons. J Am Geriatr Soc. 2009;57:1331–46. doi: 10.1111/j.1532-5415.2009.02376.x.

- 30. Dworkin RH, O'Connor AB, Backonja M, et al. Pharmacologic management of neuropathic pain: Evidence-based recommendations. Pain. 2007;132:237–51. doi: 10.1016/j.pain.2007.08.033.

- 31. Ersek M. Enhancing effective pain management by addressing patient barriers to analgesic use. J Hosp Palliat Nurs. 1999;1:87–96.

- 32. Tarzian AJ, Hoffmann DE. Barriers to managing pain in the nursing home: Findings from a statewide survey. J Am Med Dir Assoc. 2004;5:82–88. doi: 10.1097/01.JAM.0000110648.46882.B3.

- 33. Cook TD, Campbell DT. Quasi-Experimentation: Design and Analysis Issues for Field Settings. Houghton Mifflin Company; Boston, MA: 1979.

- 34. Schwartz CE, Chesney MA, Irvine MJ, et al. The control group dilemma in clinical research: Applications for psychosocial and behavioral medicine trials. Psychosom Med. 1997;59:362–71. doi: 10.1097/00006842-199707000-00005.

- 35. Laird NM, Ware JH. Random-effects models for longitudinal data. Biometrics. 1982;38:963–74.

- 36. Friedman L, Furberg C, DeMets D. Fundamentals of Clinical Trials. 4th edn. Springer; New York: 2010.

- 37. Glasgow RE, Lichtenstein E, Marcus AC. Why don't we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health. 2003;93:1261–7. doi: 10.2105/ajph.93.8.1261.

- 38. Jablonski A, Ersek M. Nursing home staff adherence to evidence-based pain management practices. J Gerontol Nurs. 2009;35:28–34; quiz 6–7. doi: 10.3928/00989134-20090701-02.

- 39. Dworkin RH, Turk DC, Wyrwich KW, et al. Interpreting the clinical importance of treatment outcomes in chronic pain clinical trials: IMMPACT recommendations. J Pain. 2008;9:105–21. doi: 10.1016/j.jpain.2007.09.005.

- 40. Przybylski BR, Dumont ED, Watkins ME, et al. Outcomes of enhanced physical and occupational therapy service in a nursing home setting. Arch Phys Med Rehabil. 1996;77:554–61. doi: 10.1016/s0003-9993(96)90294-4.

- 41. Beloosesky Y, Grinblat J, Epelboym B, et al. Functional gain of hip fracture patients in different cognitive and functional groups. Clin Rehabil. 2002;16:321–28. doi: 10.1191/0269215502cr497oa.

- 42. Diamond PT, Felsenthal G, Macciocchi SN, et al. Effect of cognitive impairment on rehabilitation outcome. Am J Phys Med Rehabil. 1996;75:40–43. doi: 10.1097/00002060-199601000-00011.

- 43. Morrison RS, Magaziner J, McLaughlin MA, et al. The impact of post-operative pain on outcomes following hip fracture. Pain. 2003;103:303–11. doi: 10.1016/S0304-3959(02)00458-X.

- 44. Alexopoulos GS, Abrams RC, Young RC, et al. Use of the Cornell Scale in nondemented patients. J Am Geriatr Soc. 1988;36:230–36. doi: 10.1111/j.1532-5415.1988.tb01806.x.

- 45. Muller-Thomsen T, Arlt S, Mann U, et al. Detecting depression in Alzheimer's disease: Evaluation of four different scales. Arch Clin Neuropsychol. 2005;20:271–76. doi: 10.1016/j.acn.2004.03.010.

- 46. Alexopoulos GS. The Cornell Scale for Depression in Dementia: Administration and Scoring Guidelines. White Plains; New York: 2002.

- 47. Barca ML, Engedal K, Selbaek G. A reliability and validity study of the Cornell Scale among elderly inpatients, using various clinical criteria. Dement Geriatr Cogn Disord. 2010;29:438–47. doi: 10.1159/000313533.

- 48. Herr K, Bursch H, Black B. State of the art review of tools for assessment of pain in nonverbal older adults. doi: 10.1016/j.jpainsymman.2005.07.001. Available at: http://prc.coh.org/PAIN-NOA.htm.

- 49. Ersek M, Herr K, Neradilek MB, et al. Comparing the psychometric properties of the Checklist of Nonverbal Pain Behaviors (CNPI) and the Pain Assessment in Advanced Dementia (PAIN-AD) instruments. Pain Med. 2010;11:395–404. doi: 10.1111/j.1526-4637.2009.00787.x.

- 50. Herr K, Spratt KF, Garand L, et al. Evaluation of the Iowa pain thermometer and other selected pain intensity scales in younger and older adult cohorts using controlled clinical pain: A preliminary study. Pain Med. 2007;8:585–600. doi: 10.1111/j.1526-4637.2007.00316.x.

- 51. Taylor L, Harris J, Epps C, et al. Psychometric evaluation of selected pain intensity scales for use with cognitively impaired and cognitively intact older adults. Rehabil Nurs. 2005;30:55–61. doi: 10.1002/j.2048-7940.2005.tb00360.x.

- 52. Ware LJ, Epps CD, Herr K, et al. Evaluation of the Revised Faces Pain Scale, Verbal Descriptor Scale, Numeric Rating Scale, and Iowa Pain Thermometer in older minority adults. Pain Manag Nurs. 2006;7:117–25. doi: 10.1016/j.pmn.2006.06.005.

- 53. Snow AL, Weber JB, O'Malley KJ, et al. NOPPAIN: A nursing assistant-administered pain assessment instrument for use in dementia. Dement Geriatr Cogn Disord. 2004;17:240–46. doi: 10.1159/000076446.

- 54. Engle VF, Graney MJ, Chan A. Accuracy and bias of licensed practical nurse and nursing assistant ratings of nursing home residents’ pain. J Gerontol A Biol Sci Med Sci. 2001;56:M405–M411. doi: 10.1093/gerona/56.7.m405.

- 55. Ersek M, Polissar N, Neradilek MB. Development of a composite pain measure for persons with advanced dementia: Exploratory analyses in self-reporting nursing home residents. J Pain Symptom Manage. 2011;41:566–79. doi: 10.1016/j.jpainsymman.2010.06.009.

- 56. International Association for the Study of Pain. Pain terminology. 2008. Available at: http://www.iasp-pain.org/AM/Template.cfm?Section=Pain_Definitions.

- 57. Herr K, Coyne PJ, Key T, et al. Pain assessment in the nonverbal patient: Position statement with clinical practice recommendations. Pain Manag Nurs. 2006;7:44–52. doi: 10.1016/j.pmn.2006.02.003.

- 58. Gibson SJ. IASP global year against pain in older persons: Highlighting the current status and future perspectives in geriatric pain. Expert Rev Neurother. 2007;7:627–35. doi: 10.1586/14737175.7.6.627.

- 59. Kutner JS, Bryant LL, Beaty BL, et al. Symptom distress and quality-of-life assessment at the end of life: The role of proxy response. J Pain Symptom Manage. 2006;32:300–10. doi: 10.1016/j.jpainsymman.2006.05.009.

- 60. Teno JM, Clarridge BR, Casey V, et al. Family perspectives on end-of-life care at the last place of care. JAMA. 2004;291:88–93. doi: 10.1001/jama.291.1.88.

- 61. Kiely DK, Volicer L, Teno J, et al. The validity and reliability of scales for the evaluation of end-of-life care in advanced dementia. Alzheimer Dis Assoc Disord. 2006;20:176–81. doi: 10.1097/00002093-200607000-00009.

- 62. Ersek M, Grant MM, Kraybill BM. Enhancing end-of-life care in nursing homes: Palliative Care Educational Resource Team (PERT) program. J Palliat Med. 2005;8:556–66. doi: 10.1089/jpm.2005.8.556.

- 63. Ersek M, Kraybill BM, Hansberry J. Assessing the educational needs and concerns of nursing home staff regarding end-of-life care. J Gerontol Nurs. 2000;26:16–26. doi: 10.3928/0098-9134-20001001-05.

- 64. Ersek M, Wood BB. Development and evaluation of a Nursing Assistant Computerized Education Programme. Int J Palliat Nurs. 2008;14:502–509. doi: 10.12968/ijpn.2008.14.10.31495.

- 65. Alegria M. AcademyHealth 25th Annual Research Meeting chair address: From a science of recommendation to a science of implementation. Health Serv Res. 2009;44:5–14. doi: 10.1111/j.1475-6773.2008.00936.x.

- 66. Madon T, Hofman KJ, Kupfer L, et al. Public health. Implementation science. Science. 2007;318:1728–29. doi: 10.1126/science.1150009.

- 67. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- 68. Dworkin RH, Turk DC, Farrar JT, et al. Core outcome measures for chronic pain clinical trials: IMMPACT recommendations. Pain. 2005;113:9–19. doi: 10.1016/j.pain.2004.09.012.

- 69. Herr K, Bursch H, Ersek M, et al. Use of pain-behavioral assessment tools in the nursing home: Expert consensus recommendations for practice. J Gerontol Nurs. 2010;36:18–29; quiz 30–31. doi: 10.3928/00989134-20100108-04.

- 70. Zwakhalen SM, Hamers JP, Berger MP. The psychometric quality and clinical usefulness of three pain assessment tools for elderly people with dementia. Pain. 2006;126:210–20. doi: 10.1016/j.pain.2006.06.029.