Abstract

Visual perceptual learning (VPL) can occur as a result of a repetitive stimulus-reward pairing in the absence of any task. This suggests that rules that guide Conditioning, such as stimulus-reward contingency (e.g. that stimulus predicts the likelihood of reward), may also guide the formation of VPL. To address this question, we trained subjects with an operant conditioning task in which there were contingencies between the response to one of three orientations and the presence of reward. Results showed that VPL only occurred for positive contingencies, but not for neutral or negative contingencies. These results suggest that the formation of VPL is influenced by similar rules that guide the process of Conditioning.

Keywords: Vision, Visual perceptual learning, Reward, Operant Conditioning, Contingency

When an animal is exposed to a particular relationship between a predictable signal and food or predators, the animal learns an association between them, and consequently, its behavior changes in specifiable ways (Mackintosh, 1983). How the animal uses predictable signals to change its behavior, and the rules that govern the animal's appetitive behavior toward rewards, or avoidance behavior against punishments, have been investigated extensively over the past 100 years (Mackintosh, 1983; Schultz, 2006; Wasserman & Miller, 1997). How these rules apply to other reward/learning phenomena, such as changes in the perceptual experience of the predictable signals after their association with reward (Law & Gold, 2008; 2009; Mackintosh, 1983; Seitz & Watanabe, 2003; Seitz, Kim, & Watanabe, 2009; Seitz & Watanabe, 2005; T. Watanabe, Nanez, & Sasaki, 2001), remains unclear.

Visual perceptual learning (VPL) is the process by which the adult neural system can achieve long-term enhanced performance on visual tasks as a result of experience (Sasaki, Nanez, & Watanabe, 2010). A dominant view in the field of VPL has been that top-down, task-related factors are required for learning to occur (Ahissar & Hochstein, 1993; Herzog & Fahle, 1997; Petrov, Dosher, & Lu, 2006; Schoups, Vogels, Qian, & Orban, 2001; Shiu & Pashler, 1992). This view is supported by a number of perceptual learning studies in which features conveying no useful information to a task showed no, or little, perceptual change (Ahissar & Hochstein, 1993; Schoups et al., 2001; Shiu & Pashler, 1992).

Recent studies, however, demonstrated that top-down, task-related factors are not necessary for perceptual learning to occur (Ludwig & Skrandies, 2002; Nishina, Seitz, Kawato, & Watanabe, 2007; Seitz et al., 2009; Seitz & Watanabe, 2003; Seitz, Nanez, Holloway, Koyama, & Watanabe, 2005a; Seitz & Watanabe, 2005; Seitz, Lefebvre, Watanabe, & Jolicoeur, 2005b; Tsushima, Seitz, & Watanabe, 2008; Watanabe et al., 2001; 2002). This type of perceptual learning is called “task-irrelevant perceptual learning” (Sasaki et al., 2010; Seitz & Watanabe, 2005). Seitz and Watanabe hypothesized that successful recognition of task-targets leads to a sense of accomplishment that elicits internal reward signals, and that VPL can occur as a result of repeated pairing between the perceptual features and reward signals (Seitz & Watanabe, 2005). This hypothesis is based on the assumption that reward signals are released diffusively throughout the brain when subjects successfully recognize task-targets.

If this hypothesis is correct then reward signals in the absence of an actual task should also elicit VPL. This possibility was addressed in a further study of task-irrelevant VPL that demonstrated VPL could occur even without any task involvement (Seitz et al., 2009). In that study subjects passively viewed visual orientation stimuli, which were temporally paired with liquid-rewards during several days of training. Confirming the hypothesis that repeated pairing between perceptual features and reward signals would lead to VPL, visual sensitivity improvements occurred through stimulus-reward pairing in the absence of a task and without awareness of the stimulus presentation.

At face value, task-irrelevant VPL resembles Conditioning, which is a form of learning in which repeated pairing of arbitrary features with rewards or punishments leads to a representation of the rewards or punishments that is evoked by the features (Schultz, 2006; Wasserman & Miller, 1997). A question arises whether VPL follows the same rules of contingency as found in Conditioning (Rescorla, 1968; Schultz, 2006; Schultz, Dayan, & Montague, 1997). A hallmark of Conditioning is the contingency rule, along with contiguity and prediction error (Schultz, 2006). Contingency refers to the requirement that a reward occur more frequently, or less frequently, in the presence of a stimulus than in its absence. When the probability of a reward is higher during the conditioned stimulus than at other times (positive contingency), excitatory Conditioning occurs, and when the probability is lower (negative contingency), negative Conditioning occurs (Rescorla, 1968; 1988a; 1988b; Schultz, 2006; Shanks, 1987). If there were a common mechanism underlying Conditioning and VPL, then both VPL and Conditioning should follow the same rules of contingency. In that case, we should see positive VPL, negative VPL, or no VPL in accordance with the contingency between the predicted signal and reward.

To test the role of stimulus-reward contingencies in VPL, we developed a novel training paradigm combining VPL and Operant Conditioning in which human subjects, who were deprived of food and water, were trained on a go/no-go task with orientation stimuli that were associated with different liquid reward probabilities (Fig. 1B) (Dorris & Glimcher, 2004; Lauwereyns, Watanabe, Coe, & Hikosaka, 2002; Leon & Shadlen, 1999; Pessiglione et al., 2008; Seitz et al., 2009). Results showed that VPL occurred only for stimuli trained with positive contingency, whereas learning was not found with zero or negative contingencies. These results demonstrate that continuous temporal pairings between a visual stimulus and reward are not sufficient for VPL and suggest that VPL and Conditioning share common principles.

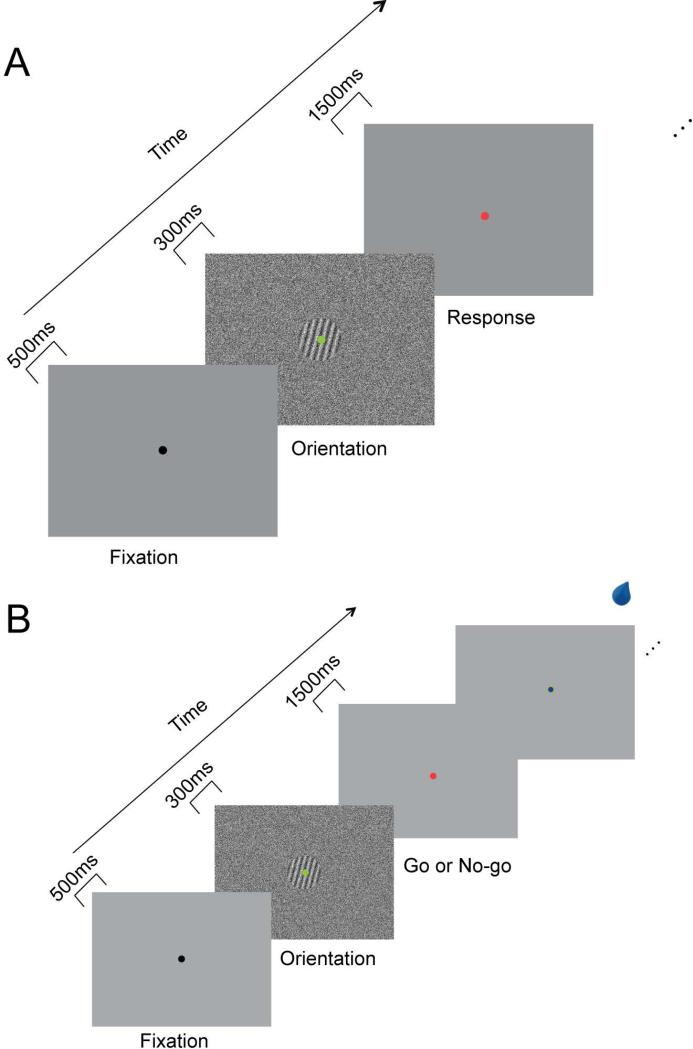

Fig. 1.

Orientation discrimination test and training procedures. (A) Orientation discrimination test procedure. Subjects gazed upon a small green dot in the center of the screen from the fixation to an orientation stimulus. After the signal orientation stimulus disappeared, the color of the fixation dot changed from green to red. As soon as it changed color, subjects had 1500 ms to respond with a key-press regarding which of the 135°, 75°, or 15° orientations was presented. (B) Subjects performed a go/no-go task by choosing between pressing (go) and not pressing (no-go) a button, in response to orientation stimuli. The chance of getting a drop of water (~1 ml of water over 300 ms) varied depending on the presented orientation stimulus.

Method

Subjects

A total of 18 human subjects (aged between 19 and 35; 9 male and 9 female) participated in the experiment. The experiment was conducted in accordance with the IRB protocol approved by the Committee on Human Research of Boston University.

Procedure

Stimuli and apparatus

On each trial, the signal stimulus was a sinusoidal grating (4 cpd; 4 deg diameter; luminance distributed 50±50 cd/m2), which was spatially overlapped by a full contrast noise mask (Seitz et al., 2009). The grating was presented at one of three orientations: 135 deg, 75 deg or 15 deg. Signal to noise ratios (SNRs) were manipulated by a combination of changes in signal and noise by randomly choosing the S/N proportion of pixels from the grating image and 1-S/N proportion of pixels from a noise image (randomly generated for each trial). The noise was generated from a sinusoidal luminance distribution.
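The pixel-mixing scheme described above can be sketched in a few lines. This is a minimal Python illustration, not the MATLAB/Psychophysics Toolbox code used in the study. The function names, the 64-pixel image size, the orientation convention, and the reading of "sinusoidal luminance distribution" as a sinusoid sampled at a uniformly random phase are all assumptions, and the circular aperture is omitted. The default of 16 cycles per image follows from 4 cpd over a 4 deg stimulus.

```python
import math
import random

def make_grating(size, orientation_deg, cycles=16, mean=50.0, amp=50.0):
    """Square sinusoidal grating, luminance in cd/m^2 (mean +/- amp)."""
    theta = math.radians(orientation_deg)
    image = []
    for y in range(size):
        row = []
        for x in range(size):
            # Distance along the modulation axis, as a fraction of image width.
            u = (x * math.cos(theta) + y * math.sin(theta)) / size
            row.append(mean + amp * math.sin(2 * math.pi * cycles * u))
        image.append(row)
    return image

def make_noise(size, rng, mean=50.0, amp=50.0):
    """Assumed noise model: a sinusoid sampled at a uniformly random phase."""
    return [[mean + amp * math.sin(rng.uniform(0.0, 2 * math.pi))
             for _ in range(size)] for _ in range(size)]

def mix_signal_and_noise(signal, noise, snr, rng):
    """Take a proportion `snr` of pixels from the grating, the rest from noise."""
    size = len(signal)
    n_pixels = size * size
    signal_pixels = set(rng.sample(range(n_pixels), round(snr * n_pixels)))
    stimulus = [row[:] for row in noise]
    for index in signal_pixels:
        y, x = divmod(index, size)
        stimulus[y][x] = signal[y][x]
    return stimulus, signal_pixels

rng = random.Random(0)            # a fresh noise image would be drawn per trial
grating = make_grating(64, 135)
stimulus, signal_pixels = mix_signal_and_noise(grating, make_noise(64, rng), 0.2, rng)
```

Under this reading, an SNR of 0.2 means that 20% of the stimulus pixels carry the grating and the remaining 80% carry noise.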

Stimuli appeared on a 19-inch CRT monitor with a resolution of 1024 by 768 pixels and a refresh rate of 85 Hz. Viewing distance was 57 cm. Water was delivered using a water dispenser (ValveLink8.2 system, Automate Scientific, Inc), which was controlled by the Psychophysics Toolbox (Brainard, 1997) for MATLAB.

Pre- and post-test orientation discrimination tests

Pre- and post-test orientation discrimination tests were conducted at least one day before (pre-test) and one day after (post-test) the training period. Each trial started with a 500 ms fixation period during which subjects gazed upon a small black dot in the center of the screen. Then the fixation dot turned green and an oriented grating (135 deg, 75 deg or 15 deg) masked in noise (0.03, 0.05, 0.08, 0.12, and 0.2 SNR) was presented for 300 ms. Finally, the color of the fixation dot changed from green to red, and a blank gray screen appeared, indicating that a response was required (Fig. 1A). As soon as the dot color changed to red, subjects had 1500 ms to respond with a key-press regarding which of the 135°, 75°, or 15° orientations was presented. The three orientations and five signal-to-noise levels were pseudo-randomly interleaved with 52 repetitions per condition, yielding a 1-hour-long session with 780 trials.
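The trial count follows directly from the design (3 orientations x 5 SNRs x 52 repetitions = 780). A minimal sketch of such a trial list is shown below. The names are hypothetical, and a plain seeded shuffle is assumed because the source does not specify any further constraints on the pseudo-random order.

```python
import itertools
import random

ORIENTATIONS_DEG = (135, 75, 15)
TEST_SNRS = (0.03, 0.05, 0.08, 0.12, 0.2)
REPETITIONS = 52

def build_test_session(seed=None):
    """Return a shuffled list of (orientation, snr) pairs for one test session."""
    conditions = list(itertools.product(ORIENTATIONS_DEG, TEST_SNRS))
    trials = conditions * REPETITIONS
    # Assumption: an unconstrained shuffle stands in for "pseudo-random".
    random.Random(seed).shuffle(trials)
    return trials

trials = build_test_session(seed=1)
print(len(trials))  # 780
```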

Training Sessions

Training sessions were conducted on 9 consecutive days. In these sessions subjects performed a go/no-go task by pressing the spacebar (go) or not pressing the spacebar (no-go), in response to the appearance of orientation stimuli (Pessiglione et al., 2008). In each trial there was a 500-ms fixation period, then the fixation dot changed to green, indicating the presence of an oriented grating (135 deg, 75 deg or 15 deg) masked in noise (0.05, 0.08, 0.12, and 0.2 SNR) that was presented for 300 ms, and finally a red fixation dot (interrogation dot) appeared for 1.5 seconds. When the red dot appeared, subjects made or withheld their response. A small blue dot briefly appeared if the spacebar was being pressed at the end of the 1.5-second response period. If the spacebar was not pressed, ~1 ml of water was delivered over 300 ms with a probability of 50% (Fig. 1B). If the subjects pressed the spacebar, ~1 ml of water was delivered with a probability that depended on the presented orientation (for example, 80% for orientation 135 deg, 50% for orientation 75 deg, 20% for orientation 15 deg; actual stimulus-reward mapping counterbalanced across subjects). Subjects were not informed about the reward contingencies; however, during the first training session subjects were told to “try to avoid either keep pressing the key or keep not pressing the key”. Each training session consisted of 192 presentations of each orientation and lasted ~50 minutes.
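The reward schedule can be made concrete with a small simulation. The sketch below uses the example mapping from the text (the actual mapping was counterbalanced across subjects). It expresses contingency as the difference between the reward probability for a go response and the 50% reward probability for a no-go response; that is one plausible reading of the design, not a formula given in the source. All function names are hypothetical.

```python
import random

P_REWARD_NO_GO = 0.5
# Example mapping from the text; the real mapping was counterbalanced.
P_REWARD_GO = {135: 0.8, 75: 0.5, 15: 0.2}

def contingency(orientation_deg):
    """Assumed measure: P(reward | go) minus P(reward | no-go)."""
    return P_REWARD_GO[orientation_deg] - P_REWARD_NO_GO

def reward_delivered(orientation_deg, pressed, rng):
    """Draw one reward outcome for a single training trial."""
    p = P_REWARD_GO[orientation_deg] if pressed else P_REWARD_NO_GO
    return rng.random() < p

def simulated_reward_rate(orientation_deg, pressed, n_trials=20000, seed=0):
    """Fraction of simulated trials on which water is delivered."""
    rng = random.Random(seed)
    hits = sum(reward_delivered(orientation_deg, pressed, rng)
               for _ in range(n_trials))
    return hits / n_trials

for orientation in (135, 75, 15):
    print(orientation, round(contingency(orientation), 2))
```

With this mapping the three orientations have contingencies of +0.3, 0, and -0.3, which corresponds to the positive-, zero-, and negative-contingency labels used in the Results.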

Results

To test whether subjects followed the rule of contingency during the training sessions, we first performed two-way ANOVA with repeated measures on the percentage of go responses with factors Orientation (3) and Session (9 sessions) (Fig. 2A). This revealed significant main effects for Session (F(8,136)=53.44, p<0.001) and Orientation (F(2,34)=13.52, p<0.001) with a significant interaction of Orientation X Session (F(16,272)=7.6, p<0.001). Overall results showed an increase of responses for the positive-contingency orientation and a decrease of responses for the negative-contingency orientation as compared to responses for the zero-contingency orientation. These data suggest that performance during training was influenced by stimulus-reward contingency.
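The degrees of freedom reported above can be checked against the design of 18 subjects, 3 orientations, and 9 sessions. The helper below is a hypothetical sketch. It assumes a fully within-subject design with a separate error term per effect and no sphericity correction, which the source does not state explicitly.

```python
def rm_anova_df(n_subjects, levels_a, levels_b):
    """(effect df, error df) for each term of a two-way within-subject ANOVA."""
    df_a = levels_a - 1
    df_b = levels_b - 1
    df_ab = df_a * df_b
    n = n_subjects - 1
    return {
        "A": (df_a, df_a * n),
        "B": (df_b, df_b * n),
        "AxB": (df_ab, df_ab * n),
    }

# A = Orientation (3 levels), B = Session (9 levels)
print(rm_anova_df(n_subjects=18, levels_a=3, levels_b=9))
# {'A': (2, 34), 'B': (8, 136), 'AxB': (16, 272)}
```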

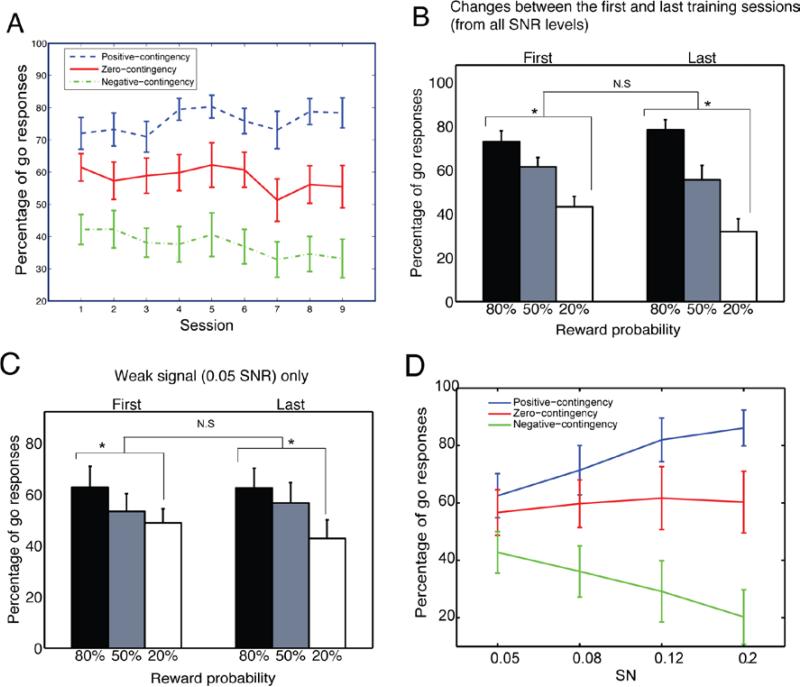

Fig. 2.

The go/no-go data. (A) Subjects chose go responses significantly more often than no-go responses when the positive-contingency orientation was presented (dashed line), and they chose go responses less often when the negative-contingency orientation was presented (dash-dotted line) in the last training session. Subjects’ choices between go responses and no-go responses were not significantly different when the zero-contingency orientation was presented (solid line). (B) Learning of the contingencies between reward and orientations occurred even in the first training session. The learning effect persisted during the training sessions. However, there was no significant difference in choosing go responses between the first and last training sessions. (C) No significant changes between the first and last training sessions in 0.05 SNR. Error bars are standard errors. (D) Mean percentage of go responses of three orientations from the weakest (0.05) to the strongest (0.2) SNR levels in the last training session. Error bars are standard errors.

To further understand these results, we examined whether there was a significant difference in choosing go responses between the three orientations in the first training session. Results showed that contingency impacted learning even in the first training session (F(2,34)=13.56, p<0.001) (Fig. 2B). However, there was no significant difference between the means of the three orientations in choosing go responses over the first 30 trials (F(2,34)=1.73, p=0.19). Furthermore, the pattern of responses for each of the orientations didn't significantly change between the first and last training session (F(1,17)=1.00, p=0.32) (Fig. 2B). A similar pattern of results was found for the weakest signal. There was a significant difference between the three orientations in the first training session (0.05 SNR, F(2,34)=3.39, p=0.046) (Fig. 2C) and the pattern didn't significantly change between the first and last training sessions (0.05 SNR, p=0.88) (Fig. 2C), suggesting that training effects, including the time course, were similar across different trial difficulties. Overall, these results suggest that the contingency-based difference in performance across orientations was learned quickly within the first training session, was similar across SNRs, and was largely maintained across the 9 days of training. This fast learning of stimulus-reinforcement contingencies is similar to that found in physiological responses of monkeys in the context of classical conditioning with similar stimuli (Frankó, Seitz, & Vogels, 2010).

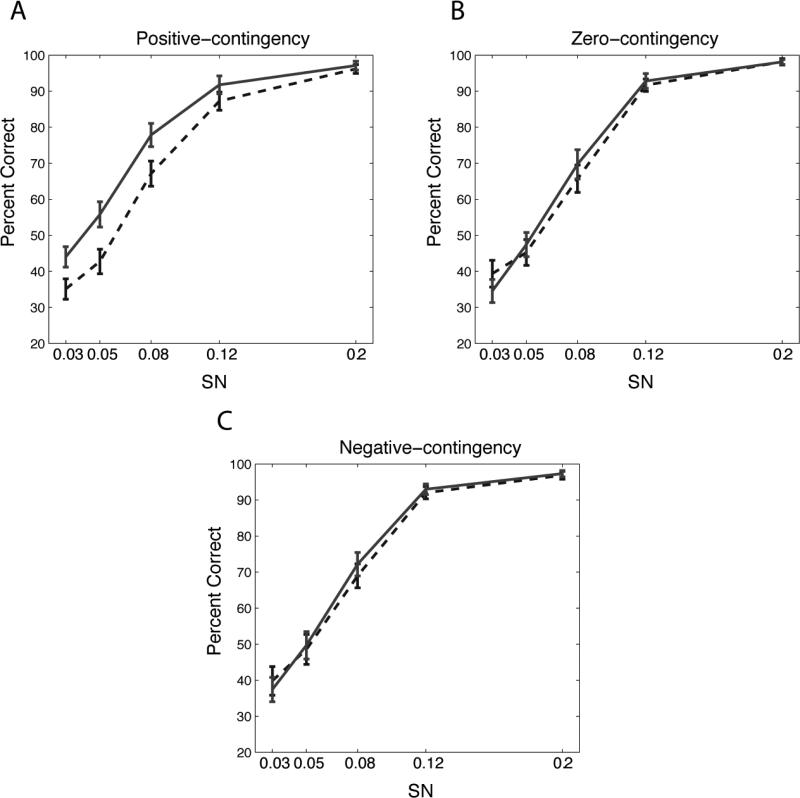

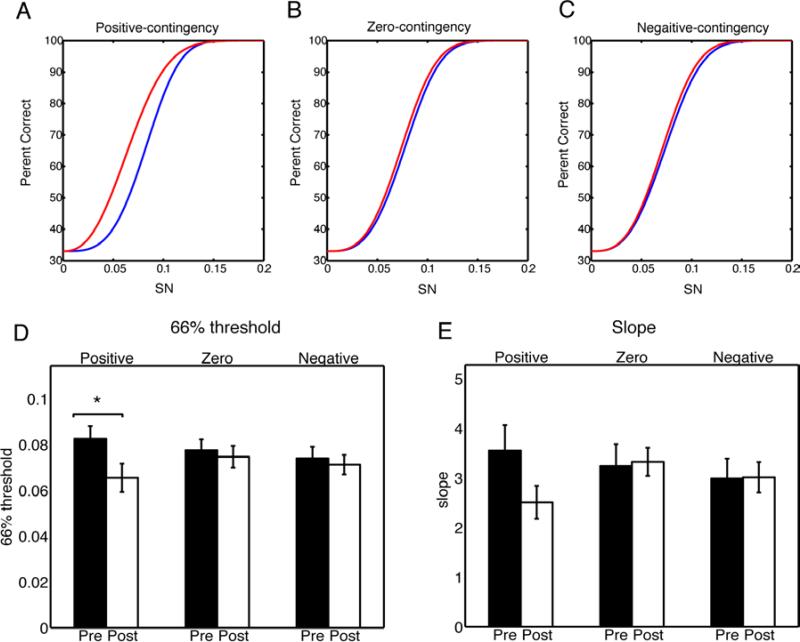

Given that performance on the different orientations followed the rule of contingency during training, we next assessed whether contingency influenced VPL. To address this, we examined subjects’ performance on orientation discrimination in the pre- and post-test sessions (Fig. 3A), which were conducted before and after the nine days of Conditioning, respectively. Results showed that there were significant differences among the three orientations in terms of performance change (F(2,17)=3.89, p=0.0267, one-way ANOVA with repeated measures on the average across SNRs for each orientation). Notably, psychometric functions in the pre-test did not significantly differ by orientation (F(2,34)=0.49, p=0.61, two-way ANOVA with repeated measures; within-subject factors Orientation and SNR). Analysis of learning on each orientation individually showed a significant improvement for the positive-contingency orientation (F(1,17)=10.63, p<0.01, two-way ANOVA with repeated measures), but no significant change for the zero-contingency orientation (F(1,17)=0.08, p=0.78) or the negative-contingency orientation (F(1,17)=0.09, p=0.77) (Fig. 3B and Fig. 3C). To further characterize VPL, we estimated thresholds (we chose 66% correct because there were three orientations and thus chance level was 33%) and slopes using a Weibull function (Fig. 4A-C). These parameters can provide a more precise estimate of the learning effect, which overall accuracy might obscure. An ANOVA revealed a significant difference in the mean threshold between the pre- and post-test (F(1,17)=7.76, p=0.013), with a marginal interaction with Orientation (F(2,34)=13.52, p=0.08), and a significant difference only for the positive-contingency orientation (p=0.015, paired t-test) (Fig. 4D). However, the mean slope didn't show any change (F(1,17)=1.30, p=0.26), with no interaction with Orientation (F(2,34)=1.94, p=0.16). Together, these results suggest that stimulus-reward contingencies during training, at least positive ones, shape VPL.
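The threshold estimate can be illustrated with a short sketch. The source does not give the Weibull parameterization or the fitting procedure, so everything here is an assumption: a guess rate of 1/3 (three alternatives), no lapse term, a scale parameter `alpha` and slope `beta`, and a coarse least-squares grid search standing in for whatever fitting routine was actually used. The data are synthetic and serve only to show that the procedure recovers known parameters.

```python
import math

GUESS_RATE = 1.0 / 3.0   # three response alternatives

def weibull(snr, alpha, beta, guess=GUESS_RATE):
    """Proportion correct predicted at a given SNR."""
    return guess + (1.0 - guess) * (1.0 - math.exp(-((snr / alpha) ** beta)))

def threshold(alpha, beta, target=0.66, guess=GUESS_RATE):
    """SNR at which the fitted curve reaches `target` proportion correct."""
    p = (target - guess) / (1.0 - guess)
    return alpha * (-math.log(1.0 - p)) ** (1.0 / beta)

def fit_weibull(snrs, proportion_correct):
    """Least-squares grid search over alpha (0.001-0.3) and beta (0.5-6.0)."""
    best = None
    for i in range(1, 301):
        alpha = i / 1000.0
        for j in range(5, 61):
            beta = j / 10.0
            sse = sum((weibull(x, alpha, beta) - p) ** 2
                      for x, p in zip(snrs, proportion_correct))
            if best is None or sse < best[0]:
                best = (sse, alpha, beta)
    return best[1], best[2]

snrs = [0.03, 0.05, 0.08, 0.12, 0.2]
synthetic = [weibull(x, 0.1, 2.0) for x in snrs]   # made-up data, not the study's
alpha_hat, beta_hat = fit_weibull(snrs, synthetic)
snr_at_66 = threshold(alpha_hat, beta_hat)
```

In this parameterization a lower threshold after training means that less signal is needed to reach 66% correct, while the slope describes how steeply performance rises around that point.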

Fig. 3.

Results of pre- and post-orientation discrimination tests.

Dashed curves indicate psychometric functions from the pre-test and solid curves from the post-test. SN represents a S/N ratio. Error bars are standard errors. (A) Only the positive-contingency orientation showed significant performance improvement. No significant improvement for the (B) zero-contingency or the (C) negative-contingency orientations.

Fig. 4.

Changes of thresholds and slopes. Two parameters for the Weibull function, a threshold yielding 66% correct performance and a slope were acquired from each of the fitted psychometric curves. The mean threshold and the mean slope were used to plot the Weibull functions for each orientation and session (pre-test in blue and post-test in red). There was a significant difference between pre- and post-test in threshold in the positive-contingency orientation (Fig. 4D). However, changes in slopes failed to reach significance (Fig. 4E).

Discussion

To understand rules guiding the formation of VPL we investigated how VPL is related to Operant Learning. To accomplish this, we investigated the role of the contingency rule, a hallmark of Conditioning (Schultz, 2006), in VPL. We hypothesized that if VPL followed the rule of contingency in Conditioning (Rescorla, 1968) we should observe VPL in the positive-contingency orientation and no VPL in the zero-contingency orientation. The results were consistent with our hypothesis.

An important question that must be addressed is whether water delivery in our study was actually rewarding. For example, Seitz et al. (2009) showed that subjects rated water to be pleasant when food and water deprived (for 5 hours) and neutral without deprivation. Further, only after deprivation did stimulus-water pairing produce VPL. To check whether water delivery was treated as a reward in the present study, we asked subjects after the conclusion of the 9-day training period whether they enjoyed the water delivery during training. All subjects reported enjoying drinking the water. These results, together with our observation that contingency impacted learning, suggest that water delivery was indeed a reward.

Previous research has shown a role of top-down attention in perceptual learning (Ahissar & Hochstein, 1993). Top-down attention enhances task-relevant signals (Moran & Desimone, 1985) and inhibits task-irrelevant signals (Friedman-Hill, Robertson, Desimone, & Ungerleider, 2003). Can top-down attention explain these results? While previous research on attention and VPL (Ahissar & Hochstein, 1993; Schoups et al., 2001) compared learning on attended vs. unattended stimuli, in our task all orientations were attended features and equally task-relevant. Thus, while one can conjecture how different levels of attention may be associated with different levels of reward (e.g. Frankó, Seitz, & Vogels, 2010), we cannot easily dissociate the extent to which VPL was shaped by top-down attention, reward, or both, since attention might be directly modulated by a contingency between a stimulus and reward. Further studies will be required to address this issue more exhaustively.

Alternatively, VPL might occur due to a consistent pairing between a visual stimulus and reward (Seitz et al., 2009; Seitz & Watanabe, 2003; 2005; Watanabe et al., 2001). In that case, the “temporal contiguity” between rewards and visual stimuli would play a crucial role for VPL to occur (Mackintosh, 1983; Seitz & Watanabe, 2005). However, if this were the case, it would be difficult to explain why no VPL occurred in the zero-contingency orientation, since the zero-contingency orientation had been paired with reward at 50% probability during Operant Conditioning.

According to Law and Gold (2009), reward-driven reinforcement signals first guide changes in connections between sensory neurons and the decision process that interprets the sensory information, and then the same mechanism further refines these connections to more strongly weight inputs from the most relevant sensory neurons, thereby improving perceptual sensitivity. Consistent with this theory, our results show that reward contingencies were quickly learned in the first session and then learning occurred only for the stimulus that was positively predictive of reward.

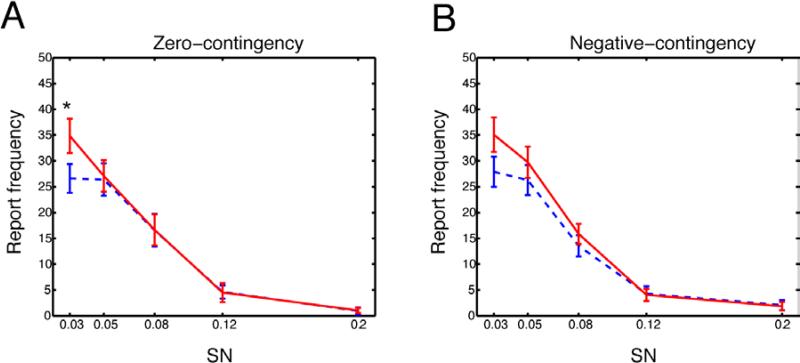

An interesting question is the extent to which the VPL effect was due to response biases to report the positive-contingency orientation, especially when stimulus signals were weak. For example, it has been proposed that if a response is reinforced in the presence of reward, response tendencies to the reward would increase (Spence, 1936). In one experiment, pigeons showed not only excitatory Conditioning to a positive-contingency conditioned stimulus but also inhibitory Conditioning to a negative-contingency conditioned stimulus after discrimination training (Honig, Boneau, Burstein, & Pennypacker, 1963; Spence, 1936). Indeed, our results during the training period showed that subjects’ responses were biased positively toward the positive-contingency orientation and negatively toward the negative-contingency orientation. Although the procedures of the orientation discrimination task and the go/no-go task were completely different in our study, the key question of whether biases existed in the pre- and post-test sessions remains. To address this, we examined report frequencies of the positive-contingency orientation in the incorrect trials of the neutral-contingency and negative-contingency trials. There were no significant changes between the pre- and post-tests in report frequency for either the zero-contingency orientation (F(1,17)=0.96, p=0.34, two-way ANOVA with repeated measures) or the negative-contingency orientation (F(1,17)=0.09, p=0.77) (Fig. 5A and Fig. 5B); however, we found a significant interaction for the zero-contingency orientation (F(4,68)=3.17, p=0.02) (Fig. 5A) and a marginal interaction for the negative-contingency orientation (F(4,68)=2.27, p=0.07) (Fig. 5B). Further analysis showed that only for the zero-contingency orientation was there a significant difference between pre- and post-test at the 0.03 SNR (p=0.02, paired t-test) (Fig. 5A). Although only the weakest SNR of the zero-contingency orientation showed a significant increase in response frequency, these results do suggest that the increased performance for the positive-contingency orientation may be influenced by bias toward the rewarded response. These results are consistent with Chalk et al. (2010) and Seitz et al. (2005a), who showed that both bias and sensitivity enhancements can arise together in VPL and may be a result of perceptual hallucinations when signal levels are low, and are consistent with a framework of perceptual rather than response biases (Chalk et al., 2010; Seitz et al., 2005a).
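The bias measure used here can be sketched as a simple tally over test trials. The function name and trial format below are hypothetical, and normalizing by the number of incorrect trials for the presented orientation is an assumption, since the source does not state how report frequency was computed. The demo trials are invented for illustration.

```python
def positive_report_rate(trials, presented, positive):
    """Share of incorrect trials for `presented` on which `positive` was reported.

    `trials` is an iterable of (presented_orientation, reported_orientation).
    """
    errors = [reported for shown, reported in trials
              if shown == presented and reported != presented]
    if not errors:
        return None  # no incorrect trials, so the rate is undefined
    return sum(reported == positive for reported in errors) / len(errors)

# Invented trials: 135 deg plays the role of the positive-contingency orientation.
demo_trials = [(75, 75), (75, 135), (75, 15), (75, 135), (15, 135), (15, 15)]
zero_rate = positive_report_rate(demo_trials, presented=75, positive=135)
negative_rate = positive_report_rate(demo_trials, presented=15, positive=135)
```

With three response alternatives, an unbiased observer who errs would be expected to pick each of the two wrong orientations about equally often, so a rate well above one half on error trials would point toward a bias to report the positive-contingency orientation.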

Fig. 5.

Report frequencies toward the positive-contingency orientation in the incorrect trials of the neutral-contingency trials (Fig. 5A) and negative-contingency trials (Fig. 5B). Dashed blue curves indicate the pre-test and solid red curves indicate the post-test. SN represents a S/N ratio. Error bars are standard errors.

A related question is why negative contingencies didn't also influence VPL. Several studies have shown that negative contingency can cause a negative learning effect in human subjects (Allan, 1993; Dickinson, Shanks, & Evenden, 1984; Shanks, 1987). Consistent with this, performance on the negative-contingency orientation was below that of the neutral-contingency orientation during training. These data provide evidence that the VPL results were not simply an indication of a stimulus-response association during Conditioning; otherwise, we should have seen negative VPL on the negative-contingency orientation. However, there is a question of whether positive learning effects due to negative contingencies, which inform that something isn't rewarding, were partially countermanded by the negative influence of response biases on performance. Further research will be required to clarify the extent to which negative contingencies and punishments influence VPL.

An interesting question is why subjects can distinguish the orientations paired with zero and negative contingencies during conditioning but show no improvement for these orientations in the following perceptual test. For example, we can see in Fig. 2D that the differences in go/no-go performance (in the last training session) extend even to the lowest SNR level. An ANOVA showed that there was no significant difference in the mean percentage of go responses between the 4 SNRs (F(2,51)=0.47, p=0.62) (Fig. 2D), a significant difference between the three orientations (F(2,34)=8.85, p<0.01), and a significant interaction between SNR and orientation (F(6,102)=5.24, p<0.01). Further analysis showed that there was a significant difference in the mean percentage of go responses between zero-contingency and negative-contingency even at the weakest SNR (0.05) (day 9, p=0.039, paired t-test). A possible explanation for this difference in findings between the go/no-go task and the orientation discrimination task may be that the go/no-go task reveals learning of stimulus-reinforcement contingencies, which are learned very quickly, whereas the orientation discrimination task better taps into perceptual sensitivities. A similar dissociation was found by Frankó, Seitz, & Vogels (2010) in the responses of V4 cells. Further research may be required to better understand this dissociation.

In summary, the present study suggests that common rules guide VPL and Operant Learning. In particular, positive stimulus reward contingencies, and not simple reward pairings, appear to be required to drive VPL. However, further questions remain, such as how attention is shaped by these contingencies, the role of punishments in learning and what neural mechanisms underlie VPL with Operant Conditioning.

Acknowledgement

This study was supported by NIH under Grant R01 EY015980.

References

- Ahissar M, Hochstein S. Attentional control of early perceptual learning. Proceedings of the National Academy of Sciences of the United States of America. 1993;90(12):5718–5722. doi:10.1073/pnas.90.12.5718.

- Allan LG. Human contingency judgments: rule based or associative? Psychological Bulletin. 1993;114(3):435–448. doi:10.1037/0033-2909.114.3.435.

- Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10(4):433–436. doi:10.1163/156856897X00357.

- Chalk M, Seitz AR, Series P. Rapidly learned stimulus expectations alter perception of motion. Journal of Vision. 2010;10(8):2–2. doi:10.1167/10.8.2.

- Dickinson A, Shanks D, Evenden J. Judgement of act-outcome contingency: The role of selective attribution. The Quarterly Journal of Experimental Psychology Section A. 1984;36(1):29–50. doi:10.1080/14640748408401502.

- Dorris MC, Glimcher PW. Activity in posterior parietal cortex is correlated with the relative subjective desirability of action. Neuron. 2004;44(2):365–378. doi:10.1016/j.neuron.2004.09.009.

- Frankó E, Seitz AR, Vogels R. Dissociable neural effects of long-term stimulus-reward pairing in macaque visual cortex. Journal of Cognitive Neuroscience. 2010;22(7):1425–1439. doi:10.1162/jocn.2009.21288.

- Friedman-Hill SR, Robertson LC, Desimone R, Ungerleider LG. Posterior parietal cortex and the filtering of distractors. Proceedings of the .... 2003. doi:10.1073/pnas.0730772100.

- Herzog MH, Fahle M. The role of feedback in learning a vernier discrimination task. Vision Research. 1997;37(15):2133–2141. doi:10.1016/s0042-6989(97)00043-6.

- Honig WK, Boneau CA, Burstein KR, Pennypacker HS. Positive and negative generalization gradients obtained after equivalent training conditions. Journal of Comparative and Physiological Psychology. 1963;56(1):111–116. doi:10.1037/h0048683.

- Lauwereyns J, Watanabe K, Coe B, Hikosaka O. A neural correlate of response bias in monkey caudate nucleus. Nature. 2002;418(6896):413–417. doi:10.1038/nature00892.

- Law C-T, Gold JI. Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nature Neuroscience. 2008;11(4):505–513. doi:10.1038/nn2070.

- Law C-T, Gold JI. Reinforcement learning can account for associative and perceptual learning on a visual-decision task. Nature Neuroscience. 2009;12(5):655–663. doi:10.1038/nn.2304.

- Leon MI, Shadlen MN. Effect of expected reward magnitude on the response of neurons in the dorsolateral prefrontal cortex of the macaque. Neuron. 1999;24(2):415–425. doi:10.1016/s0896-6273(00)80854-5.

- Ludwig I, Skrandies W. Human perceptual learning in the peripheral visual field: sensory thresholds and neurophysiological correlates. Biological Psychology. 2002;59(3):187–206. doi:10.1016/s0301-0511(02)00009-1.

- Mackintosh NJ. Conditioning and associative learning. Clarendon Press; Oxford: 1983.

- Moran J, Desimone R. Selective attention gates visual processing in the extrastriate cortex. Science. 1985;229(4715):782–784. doi:10.1126/science.4023713.

- Nishina S, Seitz AR, Kawato M, Watanabe T. Effect of spatial distance to the task stimulus on task-irrelevant perceptual learning of static Gabors. Journal of Vision. 2007;7(13):2, 1–10. doi:10.1167/7.13.2.

- Pessiglione M, Petrovic P, Daunizeau J, Palminteri S, Dolan RJ, Frith CD. Subliminal instrumental conditioning demonstrated in the human brain. Neuron. 2008;59(4):561–567. doi:10.1016/j.neuron.2008.07.005.

- Petrov AA, Dosher BA, Lu Z-L. Perceptual learning without feedback in non-stationary contexts: data and model. Vision Research. 2006;46(19):3177–3197. doi:10.1016/j.visres.2006.03.022.

- Rescorla RA. Probability of shock in the presence and absence of CS in fear conditioning. Journal of Comparative and Physiological Psychology. 1968;66(1):1–5. doi:10.1037/h0025984.

- Rescorla RA. Behavioral studies of Pavlovian conditioning. Annual Review of Neuroscience. 1988a;11:329–352. doi:10.1146/annurev.ne.11.030188.001553.

- Rescorla RA. Pavlovian conditioning: It's not what you think it is. American Psychologist. 1988b;43(3):151. doi:10.1037//0003-066x.43.3.151.

- Sasaki Y, Nanez JE, Watanabe T. Advances in visual perceptual learning and plasticity. Nature Reviews Neuroscience. 2010;11(1):53–60. doi:10.1038/nrn2737.

- Schoups A, Vogels R, Qian N, Orban G. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412(6846):549–553. doi:10.1038/35087601.

- Schultz W. Behavioral theories and the neurophysiology of reward. Annual Review of Psychology. 2006;57:87–115. doi:10.1146/annurev.psych.56.091103.070229.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275(5306):1593–1599. doi:10.1126/science.275.5306.1593.

- Seitz AR, Watanabe T. Psychophysics: Is subliminal learning really passive? Nature. 2003;422(6927):36. doi:10.1038/422036a.

- Seitz AR, Kim D, Watanabe T. Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron. 2009;61(5):700–707. doi:10.1016/j.neuron.2009.01.016.

- Seitz AR, Nanez JE, Holloway SR, Koyama S, Watanabe T. Seeing what is not there shows the costs of perceptual learning. Proceedings of the National Academy of Sciences of the United States of America. 2005a;102(25):9080–9085. doi:10.1073/pnas.0501026102.

- Seitz A, Watanabe T. A unified model for perceptual learning. Trends in Cognitive Sciences. 2005;9(7):329–334. doi:10.1016/j.tics.2005.05.010.

- Seitz A, Lefebvre C, Watanabe T, Jolicoeur P. Requirement for high-level processing in subliminal learning. Current Biology : CB. 2005b;15(18):R753–5. doi:10.1016/j.cub.2005.09.009.

- Shanks DR. Acquisition functions in contingency judgment. Learning and Motivation. 1987;18(2):147–166.

- Shiu LP, Pashler H. Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Perception & Psychophysics. 1992;52(5):582–588. doi:10.3758/bf03206720.

- Spence KW. The nature of discrimination learning in animals. Psychological Review. 1936;43(5):427–449. doi:10.1037/h0056975.

- Tsushima Y, Seitz AR, Watanabe T. Task-irrelevant learning occurs only when the irrelevant feature is weak. Current Biology : CB. 2008;18(12):R516–7. doi:10.1016/j.cub.2008.04.029.

- Wasserman EA, Miller RR. What's elementary about associative learning? Annual Review of Psychology. 1997;48:573–607. doi:10.1146/annurev.psych.48.1.573.

- Watanabe T, Nanez JE, Sasaki Y. Perceptual learning without perception. Nature. 2001;413(6858):844–848. doi:10.1038/35101601.

- Watanabe T, Nanez JE, Koyama S, Mukai I, Liederman J, Sasaki Y. Greater plasticity in lower-level than higher-level visual motion processing in a passive perceptual learning task. Nature Neuroscience. 2002;5(10):1003–1009. doi:10.1038/nn915.