Abstract

Single-trial analyses of ensemble activity in alert animals demonstrate that cortical circuits dynamics evolve through temporal sequences of metastable states. Metastability has been studied for its potential role in sensory coding, memory, and decision-making. Yet, very little is known about the network mechanisms responsible for its genesis. It is often assumed that the onset of state sequences is triggered by an external stimulus. Here we show that state sequences can be observed also in the absence of overt sensory stimulation. Analysis of multielectrode recordings from the gustatory cortex of alert rats revealed ongoing sequences of states, where single neurons spontaneously attain several firing rates across different states. This single-neuron multistability represents a challenge to existing spiking network models, where typically each neuron is at most bistable. We present a recurrent spiking network model that accounts for both the spontaneous generation of state sequences and the multistability in single-neuron firing rates. Each state results from the activation of neural clusters with potentiated intracluster connections, with the firing rate in each cluster depending on the number of active clusters. Simulations show that the model's ensemble activity hops among the different states, reproducing the ongoing dynamics observed in the data. When probed with external stimuli, the model predicts the quenching of single-neuron multistability into bistability and the reduction of trial-by-trial variability. Both predictions were confirmed in the data. Together, these results provide a theoretical framework that captures both ongoing and evoked network dynamics in a single mechanistic model.

Keywords: gustatory cortex, hidden Markov models, network dynamics, ongoing activity, spiking network models

Introduction

Sensory networks respond to stimuli with dynamic and time-varying modulations in neural activity. Single-trial analyses of sensory responses have shown that cortical networks may quickly transition from one state of coordinated firing to another as a stimulus is being processed or a movement is being performed (Abeles et al., 1995; Seidemann et al., 1996; Laurent et al., 2001; Baeg et al., 2003; Lin et al., 2005; Rabinovich et al., 2008; Ponce-Alvarez et al., 2012). Applying a hidden Markov model (HMM) analysis to spike trains recorded from neuronal ensembles in the gustatory cortex (GC) revealed that taste-evoked activity progresses through a sequence of metastable states (Jones et al., 2007). Each metastable state can be described as a pattern of ensemble activity (a collection of firing rates) lasting tens to hundreds of milliseconds. Transitions between different states are characterized by coordinated changes in firing rates across multiple neurons in an ensemble. This description of taste-evoked activity in GC has been successful in capturing essential features of gustatory codes, such as trial-to-trial variability (Jones et al., 2007) and learning-dependent changes (Moran and Katz, 2014). In addition, describing neural responses as sequences of metastable states has been proposed as an explanation for the role of GC in ingestive decisions (Miller and Katz, 2010). The observation that similar dynamics exist also in frontal (Abeles et al., 1995; Seidemann et al., 1996; Durstewitz et al., 2010), motor (Kemere et al., 2008), premotor, and somatosensory (Ponce-Alvarez et al., 2012) cortices further emphasizes the generality of this framework.

Although the functional significance of these transitions among metastable states is being actively investigated (Moran and Katz, 2014), very little is known about their genesis. A recent attractor-based model has successfully demonstrated that external inputs can give rise to transitions of metastable states by perturbing the network away from a stable spontaneous state (Miller and Katz, 2010). However, sequences of metastable states could also occur spontaneously, hence reflecting intrinsic network dynamics (Deco and Hugues, 2012; Deco and Jirsa, 2012; Litwin-Kumar and Doiron, 2012; Mattia and Sanchez-Vives, 2012; Litwin-Kumar and Doiron, 2014). Intrinsic activity patterns have indeed been observed in vivo during periods of ongoing activity (i.e., neural activity in the absence of overt sensory stimulation). Such ongoing patterns have been reported in visual (Arieli et al., 1996; Tsodyks et al., 1999; Kenet et al., 2003; Fiser et al., 2004), somatosensory (Petersen et al., 2003; Luczak et al., 2007) and auditory (Luczak et al., 2009) cortices, and the hippocampus (Foster and Wilson, 2006; Diba and Buzsáki, 2007; Luczak et al., 2009). The functional significance of ongoing activity, its origin and its relationship to evoked activity remains, however, elusive.

Here, we analyzed ongoing activity to investigate whether transitions among metastable states can occur in the absence of sensory stimulation in the GC of alert rats. We found that ongoing activity, much like evoked activity, is characterized by sequences of metastable states. Transitions among consecutive states are triggered by the coactivation of several neurons in the ensemble, with some neurons capable of producing multiple (i.e., ≥3) firing rates across the different states (a feature hereby referred to as multistability). To elucidate how a network could intrinsically generate transitions among multistable states, we introduce a spiking neuron model of GC capable of multistable states and exhibiting the same ongoing transition dynamics observed in the data. We prove the existence of multistable states analytically via mean field theory, and the existence of transient dynamics via computer simulations of the same model. The model reproduced many properties of the data, including the pattern of correlations between single neurons and ensemble activity, the multistability of single neurons, its reduction upon stimulus presentation, and the stimulus-induced reduction of trial-by-trial variability. To our knowledge, this is the first unified and mechanistic model of ongoing and evoked cortical activity.

Materials and Methods

Experimental subjects and surgical procedures.

Adult female Long–Evans rats (weight: 275–350 g) were used for this study (Samuelsen et al., 2012). Briefly, animals were kept in a 12 h:12 h light/dark cycle and received ad libitum access to food and water, unless otherwise mentioned. Movable bundles of 16 microwires (25 μm nichrome wires coated with fromvar) attached to a “mini-microdrive” (Fontanini and Katz, 2006; Samuelsen et al., 2012) were implanted in GC (anteroposterior 1.4, mediolateral ±5 from bregma, dorsoventral −4.5 from dura) and secured in place with dental acrylic. After electrode implantation, intraoral cannulae (IOC) were inserted bilaterally and cemented (Phillips and Norgren, 1970; Fontanini and Katz, 2006). At the end of the surgery, a positioning bolt for restraint was cemented in the acrylic cap. Rats were given at least 7 d for recovery before starting the behavioral procedures outlined below. All experimental procedures were approved by the Institutional Animal Care and Use Committee of Stony Brook University and complied with university, state, and federal regulations on the care and use of laboratory animals (for more details, see Samuelsen et al., 2012).

Behavioral training.

Upon completion of postsurgical recovery, rats began a water restriction regimen in which water was made available for 45 min a day. Body weight was maintained at >85% of presurgical weight. Following a few days of water restriction, rats began habituation to head-restraint and training to self-administer fluids through IOCs by pressing a lever. Upon learning to press a lever for water, rats were trained that water reward was delivered exclusively by pressing the lever following an auditory cue (i.e., a 75 dB pure tone at a frequency of 5 kHz). The interval at which lever pressing delivered water was progressively increased to 40 ± 3 s (intertrial interval). To receive fluids, rats had to press the lever within a 3-s-long window following the cue. Lever-pressing triggered the delivery of fluids and stopped the auditory cue. During training and experimental sessions, additional tastants were automatically delivered at random times near the middle of the intertrial interval, at random trials, and in the absence of the anticipatory cue. Upon termination of each recording session, the electrodes were lowered by at least 150 μm so that a new ensemble could be recorded. Stimuli were delivered through a manifold of 4 fine polyimide tubes slid into the IOC. A computer-controlled, pressurized, solenoid-based system delivered ∼40 μl of fluids (opening time ∼40 ms) directly into the mouth. The following tastants were delivered: 100 mm NaCl, 100 mm sucrose, 100 mm citric acid, and 1 mm quinine HCl. Water (∼50 μl) was delivered to rinse the mouth clean through a second IOC 5 s after the delivery of each tastant. Each tastant was delivered for at least 6 trials in each condition.

Electrophysiological recordings.

Single-neuron action potentials were amplified, bandpass filtered (at 300–8000 Hz), digitized, and recorded to a computer (Plexon). Single units of at least 3:1 signal-to-noise ratio were isolated using a template algorithm, cluster cutting techniques, and examination of interspike interval plots (Offline Sorter, Plexon).

Data analysis.

All data analysis and model simulations were performed using custom software written in MATLAB (MathWorks), Mathematica (Wolfram Research), and C. Starting from a pool of 299 single neurons in 37 sessions, neurons with peak firing rate <1 Hz (defined as silent) were excluded from further analysis, as well as neurons with a large peak ∼6–10 Hz in the spike power spectrum, which were considered somatosensory (Katz et al., 2001; Samuelsen et al., 2012; Horst and Laubach, 2013). Only ensembles with more than two simultaneously recorded neurons were included in the rest of the analyses (27 ensembles with 167 neurons). We analyzed ongoing activity in the 5 s interval preceding either the auditory cue or taste delivery, and evoked activity in the 5 s interval following taste delivery exclusively in trials without anticipatory cue.

HMM analysis.

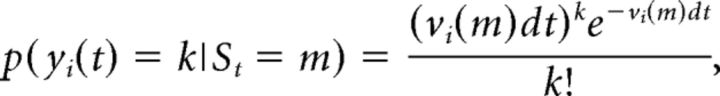

The details of the HMM have been reported previously (Abeles et al., 1995; Jones et al., 2007; Escola et al., 2011; Ponce-Alvarez et al., 2012); here we briefly outline the methods of this procedure, especially where our methods differ from previous accounts. Under the HMM, a system of N recorded neurons is assumed to be in one of a predetermined number of hidden (or latent) states. Each state is defined as a vector of N firing rates, one for each simultaneously recorded neuron. In each state, the neurons were assumed to discharge as stationary Poisson processes (Poisson-HMM).

We denote by [yi(1),…,yi(T)] the spike train of the i-th neuron in the current trial, where yi(t) = k if k spikes occur in the interval [t, t + dt] and zero otherwise; dt = 1 ms, and T is the total duration of the trial in ms (or, alternatively, the number of bins because dt = 1 ms). Denoting with St the hidden state of the ensemble at time t, the probability of having k spikes/s from neuron i in a given state m in the interval dt is given by the Poisson distribution as follows:

|

where vi(m) is the firing rate of neuron i in state m. The firing rates vi(m) completely define the states and are also called “emission probabilities” in HMM parlance. We matched the HMM to the data segmented in 1 ms bins. Given the short duration of the bins, we assumed that, in each bin, either 0 or 1 spike is emitted by each neuron, with probabilities p(yi(t) = 0|St = m) = e−vi(m)dt and p(yi(t) = 1|St = m) = 1 − e−vi(m)dt, respectively. We also neglected the simultaneous firing of ≥2 neurons (a rare event): if more than one neuron fired in a given bin, a single spike was randomly assigned to one of the firing neurons.

The HMM is fully determined by the emission probabilities defining the states and the transition probabilities among those states (assumed to obey the Markov property). The emission and transition probabilities were found by maximization of the log-likelihood of the data given the model via the expectation-minimization (EM), or Baum-Welch, algorithm (Rabiner, 1989). This is known as “training the HMM.” For each session and type of activity (ongoing vs evoked), ensemble spiking activity from all trials was binned at 1 ms intervals before training assuming a fixed number of latent states M (Jones et al., 2007; Escola et al., 2011). We used always the same state as the initial state (e.g., state 1) but ran the algorithm starting 200 ms before the interval of interest to obtain an unbiased estimate of the first state of each sequence. For each given number of states M, the Baum-Welch algorithm was run 5 times, each time with random initial conditions for the transition and emission probabilities. The procedure was repeated for M ranging from 10 to 20 for ongoing activity trials, and from 10 to 40 for evoked activity trials, based on previous accounts (Jones et al., 2007; Miller and Katz, 2010; Escola et al., 2011). For evoked activity, each HMM was trained on all four tastes simultaneously, using overall the same number of trials as for ongoing activity. Of the models thus obtained, the one with largest total likelihood was taken as the best HMM match to the data, and then used to estimate the probability of the states given the model and the observations in each bin of each trial (a procedure known as “decoding”). During decoding, only those states with probability exceeding 80% in at least 50 consecutive 1 ms bins were retained (henceforth denoted simply as “states”).

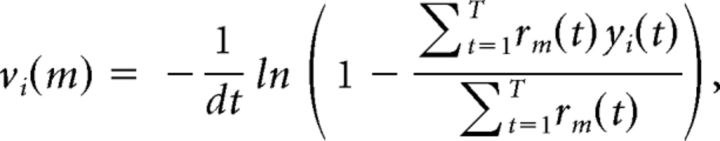

For the firing rate analysis of Figure 2, the firing rates vi(m) were obtained from the analytical solution of the maximization step of the Baum-Welch algorithm as follows:

|

Here, rm(t) = p(St = m|y(1),…,y(T)) is the probability that the state at time t is m, given the observations. This procedure allows for sampling the variability of vi(m) across trials, which can be then used for inferential statistics (see State specificity and firing rate distributions).

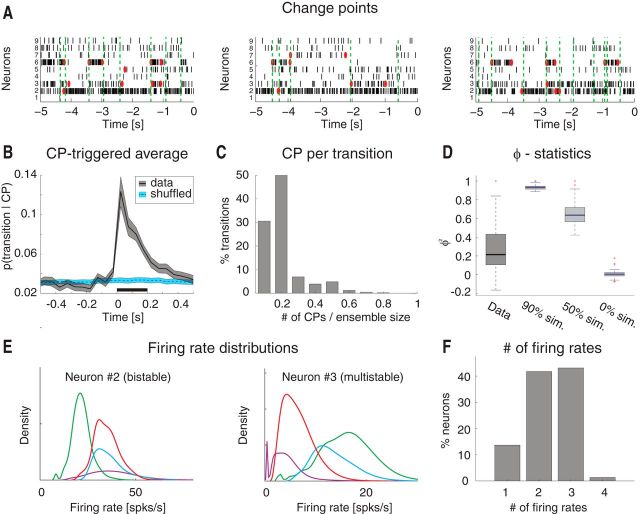

Figure 2.

Single-neuron coactivation drives ensemble transitions. A, Change points (CPs), represented by red dots, and state transitions (vertical dashed green lines) are superimposed to a raster plot of simultaneously recorded neurons (same trials as the first three in Fig. 1A). x-axis for top, time preceding taste delivery (0 = stimulus delivery); y-axis, ensemble neuron index. B, CPTA of ensemble transitions for the empirical dataset (black) and for the trial-shuffled dataset (blue). Shaded bounds represent SE. Thick black line below traces indicates p < 0.05 (bin-wise t test with multiple-bin Bonferroni correction). x-axis, time lag from CP (seconds); y-axis, probability of a transition given a CP at t = 0. C, Fraction of transitions co-occurring with CPs within a 200 ms window before the state transition (significant window from B). x-axis, number of single neuron's CPs divided by the ensemble size; y-axis, fraction of transitions co-occurring with CPs (%). D, Correlation (φ2 statistics) between the signs of single neurons' and ensemble firing rate changes for the empirical (“Data”) and simulated datasets. In the latter, 90%, 50%, and 0% of simulated neurons had firing rate changes matched to those of the whole ensemble (boxes in box-plots represent 95% CIs). y-axis, φ2 statistics. E, Firing rate distributions across states for representative neurons 2 and 3 from the ensemble in Figures 1A and 2A (color-coded as in Fig. 1A; each curve represents the empirical distribution of firing rates in each state). x-axis, firing rate (spikes/s); y-axis, probability density of states. F, Number of different firing rates per neuron across all sessions: 42% of all neurons had ≥3 different firing rates across states. x-axis, minimal number of different firing rates across states; y-axis, fraction of neurons (%).

The distribution of state durations across sessions, ts, was fitted with an exponential function f(ts;a, b) = a · exp(b · ts) using nonlinear least-squares minimization, and 95% confidence intervals (CIs) were computed according to a standard procedure (see e.g., Wolberg, 2006).

Firing rate modulations and change point (CP) analysis.

We detected changes in single neurons' instantaneous firing rate (in single trials) by using the CP procedure based on the cumulative distribution of spike times of Gallistel et al., 2004. Briefly, the procedure selects a sequence of putative variations of firing rate, called “change points,” as the points where the cumulative record of the spike count produces local maximal changes in slope. A putative CP was accepted as valid if the spike count rates before and after the CP were significantly different (binomial test, p < 0.05) (for details, see Gallistel et al., 2004). This method, which is based on a binomial test for the spike count (Gallistel et al., 2004), was chosen because it is a simple and general tool for analyzing cumulative records and had been already empirically validated for the analysis of CPs in GC (Jezzini et al., 2013).

Single-neuron responsiveness to stimuli.

Neurons with significant poststimulus modulations of firing rate were defined as stimulus-responsive. The analysis was based on the CP procedure performed in [ − 1, 5] s intervals around stimulus delivery occurring at time 0 (for further details, see Jezzini et al., 2013). The CP procedure was performed on the cumulative record of spike counts over all evoked trials for a given taste. If any CP was detected before taste delivery, the CP analysis was repeated with a more stringent criterion, until no prestimulus CPs were detected. If no CP was found in response to any of the four tastes, the neuron was deemed not responsive. If a CP was detected in the postdelivery interval for at least one taste, a t test was performed to establish whether the post-CP activity was significantly different from baseline activity during the 1 s interval before taste delivery (p < 0.05), and only then accepted as a significant CP. Neurons with at least one significant CP were considered stimulus-responsive.

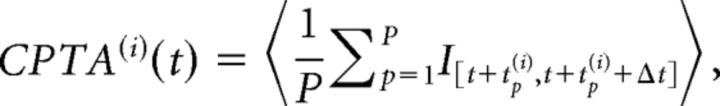

CP-triggered average (CPTA).

The CPTA of ensemble transitions, formally reminiscent of the spike-triggered average (Chichilnisky, 2001; Dayan and Abbott, 2001), estimates the average HMM transition at a lag t from a CP. Given a set of CPs at times [t1(i),…,tP(i)] for the i-th neuron, its CPTA is defined as follows:

|

where 〈…〉 denotes average across trials, Δt = 50 ms, and I[a,b] = 1 if a transition occurs in the interval [a, b], and zero otherwise. Significance of the CPTA was assessed by comparing the simultaneously recorded data with 100 trial-shuffled ensembles (t test, p < 0.05, Bonferroni corrected for multiple bins), in which we randomly permuted each spike train across trials, to scramble the simultaneity of the ensemble recordings. A CPTA peak at zero indicates that, on average, CPs co-occur with ensemble transitions, whereas, for example, a significant CPTA for positive lag indicates that CPs tend to precede transitions (see Fig. 2B).

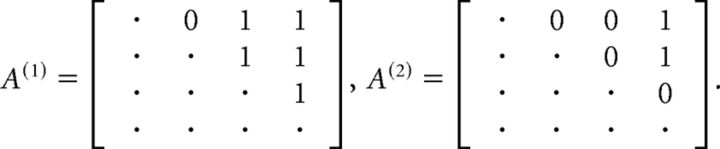

State specificity and firing rate distributions.

We defined a neuron as “state-specific” if its firing rate varied across different HMM states. To assess state specificity for the i-th unit, we collected the set of firing rates across all trials and states (see HMM analysis). To determine whether the distribution of firing rates varied across states, we performed a nonparametric one-way ANOVA (unbalanced Kruskal–Wallis, p < 0.05). The smallest number of significantly different firing rates for a given neuron in a given state was found with a post hoc multiple-comparison rank analysis (with Bonferroni correction). Given a p value plk for the pairwise post hoc comparison between states l and k, we considered the symmetric M × M matrix A with elements Alk = 1 if the rates were different (plk < 0.05) and Alk = 0 otherwise. For example, consider the case of 4 states and the following two outcomes of s as follows:

|

In the first case, the firing rate in state 1 is different from that in states 3 and 4 (first row); it is different in state 2 versus 3 and 4 (second row), and in state 3 versus 4 (third row). The conclusion is that f1 ≠ f3, f1 ≠ f4 (from the first row), and f3 ≠ f4 (from the third row), whereas f2, although different from f3 and f4, may not be different from f1. It follows that at least 3 different firing rates are found across states. In the example of matrix A(2), the smallest number of different rates is 2 (e.g., f1 ≠ f4).

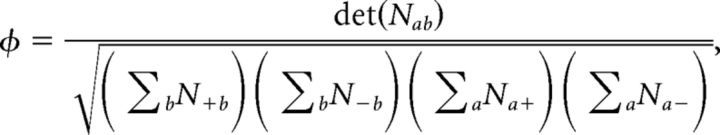

Single unit-ensemble correlations and φ statistics.

We estimated the nonparametric correlation between ensemble and single-unit firing rate modulations in single trials, by means of their φ statistics. For each ensemble transition between consecutive states m → n, we looked at whether the i-th neuron increased (si(m → n) = + 1) or decreased (si(m → n) = − 1) its firing rate during the transition, and analogously for the ensemble mean firing rate sens(m → n) = ± 1. For each transition m → n, we counted the number of ensemble neurons that increased their firing rate when the whole ensemble increased its firing rate as follows:

and analogously for the other three possibilities n+−, n−+, n−−, thus obtaining a 2 × 2 matrix nab(m → n), for a, b = +, −. We then compiled the contingency matrix with elements Nab = ∑nab(m → n), by summing each nab(m → n) over all transitions and sessions. Based on these quantities, the φ statistics (Pearson, 1904; Cramer, 1946) were computed as follows:

|

If changes in single neurons perfectly matched ensemble changes (as in the case of UP and DOWN states), then φ = 1, whereas φ = 0 in case of no correlation and 0 < φ < 1 in case of partial coactivation of neurons and ensembles.

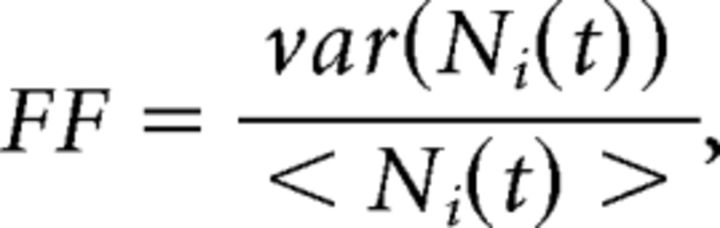

Fano factor (FF).

The raw FF for the i-th unit is defined as follows:

|

where Ni(t) is the spike count for the i-th neuron in the [t, t + Δt] bin, and 〈…〉 and var are the mean and variance across trials. To control for the difference in firing rates at different times, the raw FF was mean-matched across all bins and conditions, and its mean and 95% CIs were estimated via linear regression (for details, see Churchland et al., 2010). We used a window of 200 ms for the comparison between model and data. Results were qualitatively similar for a wide range of windows sizes (Δt = 50 to 500 ms), sliding along at 50 ms steps.

Spiking neuron network model.

Our recurrent network model comprised N = 5000 randomly connected leaky integrate-and-fire (LIF) neurons, with a fraction nE = 0.8 of excitatory (E) and nI = 0.2 inhibitory (I) neurons. Connection probability pβα from neurons in population α ∈ E, I to neurons in population β ∈ E, I were pEE = 0.2 and pEI = pIE = pII = 0.5. A fraction f = 0.9 of excitatory neurons were arranged into Q different clusters, with the remaining fraction belonging to an unstructured (“background”) population (Amit and Brunel, 1997b). Synaptic weights Jβα from neurons in population α ∈ E, I to neurons in population β ∈ E, I scaled with N as Jβα = jβα/, with jβα constants having the following values (units of mV): jEI = 3.18,jIE = 1.06, jII = 4.24, jEE = 1.77. Within an excitatory cluster, synaptic weights were potentiated: they took values J+jEE with J+ > 1, whereas synaptic weights between neurons belonging to different clusters were depressed to values J−jEE, with J− = 1 − γf(J+ − 1) < 1, with γ = 0.5. The latter relationship between J+ and J− ensures balance between overall potentiation and depression in the network (Amit and Brunel, 1997b).

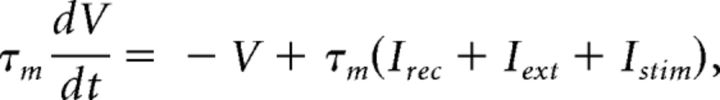

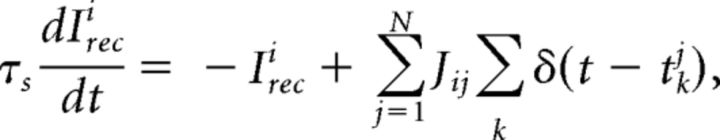

Below spike threshold, the membrane potential V of each LIF neuron evolved according to the following:

|

with a membrane time constant τm = 20 ms for excitatory and 10 ms for inhibitory neurons. The input current was the sum of a recurrent input Irec, an external current Iext representing an ongoing afferent input from other areas, and an input stimulus Istim representing a delivered taste during evoked activity only. In our units, a membrane capacitance of 1 nF is set to 1. A spike was said to be emitted when V crossed a threshold Vthr, after which V was reset to a potential Vreset = 0 for a refractory period of τref = 5 ms. Spike thresholds were chosen so that, in the unstructured network (i.e., with J+ = J− = 1), the E and I populations had average firing rates of 3 and 5 spikes/s, respectively (Amit and Brunel, 1997b). The recurrent synaptic input Ireci to neuron i evolved according to the dynamical equation as follows:

|

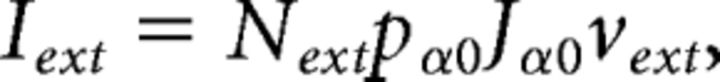

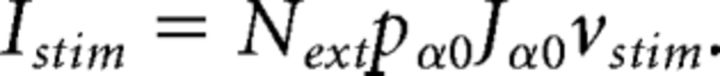

where tkj was the arrival time of k-th spike from the j-th presynaptic neuron, and τs was the synaptic time constant (3 and 2 ms for E and I neurons, respectively). The postsynaptic current (PSC) elicited by a single incoming spike was exp( − t/τs)Θ(t), where Θ(t) = 1 for t ≥ 0, and Θ(t) = 0 otherwise. The ongoing external current to a neuron in population α was constant and given by the following:

|

where Next = nEN, pα0 = pEE, and Jα0 = with jE0 = 0.3, jI0 = 0.1, and vext = 7 spikes/s, respectively. During evoked activity, stimulus-selective neurons received an additional transient input representing 1 of the 4 incoming stimuli. The percentage of neurons responsive to 1, 2, 3, or 4 stimuli was modeled after the estimates obtained from the data, which implied that stimuli targeted overlapping neurons (see Results). We tested two alternative model stimuli: a biologically realistic stimulus vstimth(t) resembling thalamic stimulation (Liu and Fontanini, 2014), modeled as a double exponential with peak amplitude of 0.3 vext and rise times of 50 ms and decay times of 500 ms, or a stimulus of constant amplitude vstimbox ranging from 0 to 0.5vext (“box” stimulus). In the following, we measure the stimulus amplitude as percentage of vext (e.g., “30%” corresponds to vstim = 0.3 vext. The onset of each stimulus was always t = 0, the time of taste delivery. The stimulus current to a neuron in population α was constant and given by the following:

|

Mean field analysis of the model.

The spiking network model described in the previous subsection is a complex system capable of many behaviors depending on the parameter values. One main aim of the model is finding under what conditions it can sustain multiple configurations of activity that can be later interpreted as HMM states. Parameter search was used relying on an analytical procedure for networks of LIF neurons known as “mean field theory” or “population density approach” (see, e.g., Amit and Brunel, 1997b; Brunel and Hakim, 1999; Fusi and Mattia, 1999). Under the conditions stated below, this theory provides a global picture of network behavior together with the associated parameter values, which can then be tested in model simulations.

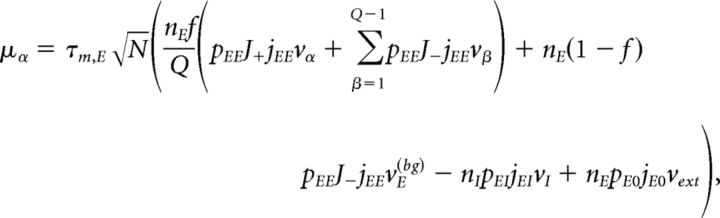

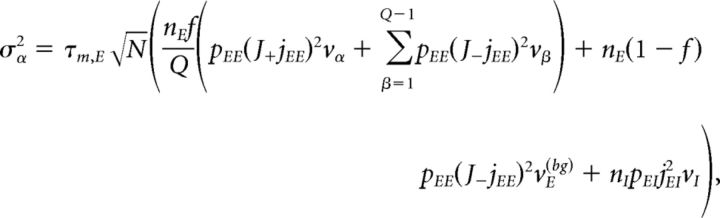

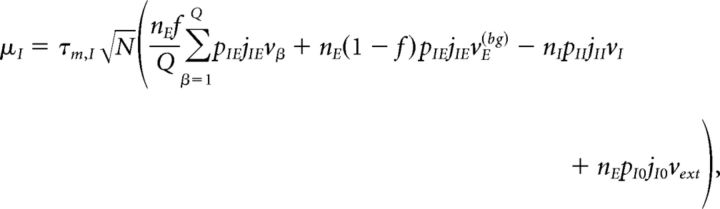

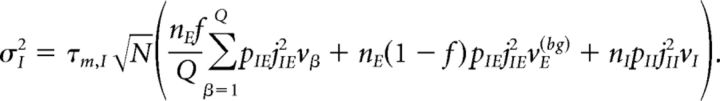

Under typical conditions, each neuron of the network receives a large number of small PSCs per integration time constant. In such a case, the dynamics of the network can be analyzed under the diffusion approximation, which is amenable to the population density approach. The network has α = 1,…, Q + 2 subpopulations, where the first Q indices label the Q excitatory clusters, α = Q + 1 labels the “background” excitatory population, and α = Q + 2 labels the homogeneous inhibitory population. In the diffusion approximation (Tuckwell, 1988; Lánský and Sato, 1999; Richardson, 2004), the input to each neuron is completely characterized by the infinitesimal mean μα and variance σα2 of the postsynaptic potential. Adding up the contributions from all afferent inputs, μα and σα2 for an excitatory neuron belonging to cluster α (Amit and Brunel, 1997b) are given by the following:

|

|

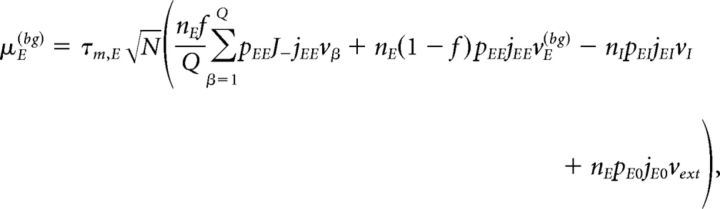

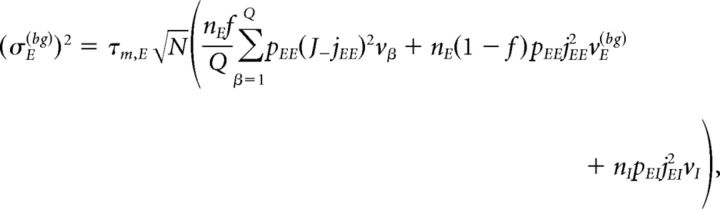

where vE(bg) is the firing rate of the unstructured (“background”) E population. Afferent current and variance to a neuron belonging to the background E population and to the homogeneous inhibitory population are as follows:

|

|

|

|

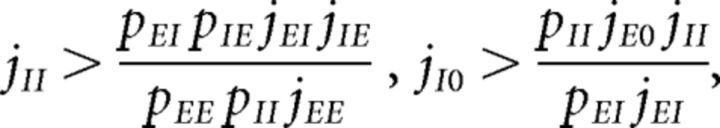

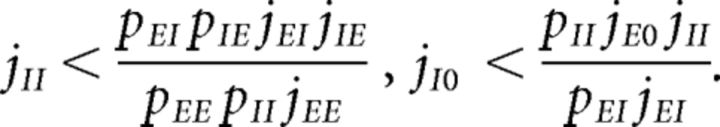

Parameters were chosen so as to have a balanced unstructured network. In other words, our network with J+ = J− = 1 (where all E → E synaptic weights are equal) would operate in the balanced asynchronous regimen where incoming contributions from excitatory and inhibitory inputs balance out and the peristimulus time interval over time is approximately flat. In such a regimen, one can solve for the excitatory and inhibitory firing rates as linear functions of the external firing rate vext, up to terms of , provided the connection probabilities and synaptic weights satisfy one of the following conditions (van Vreeswijk and Sompolinsky, 1996, 1998; Renart et al., 2010): either

|

or

|

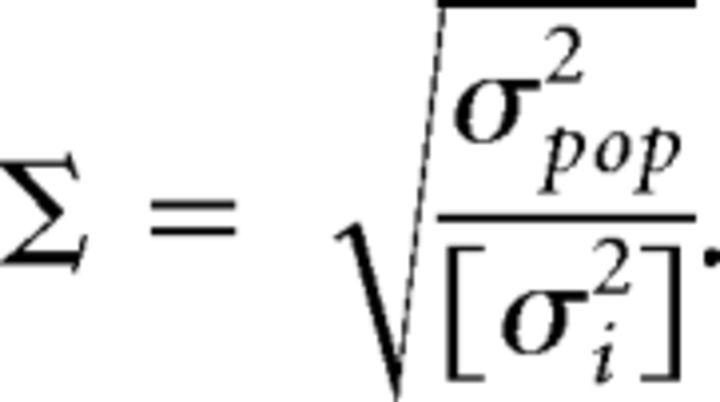

Asynchronous activity was confirmed by computing the network synchrony (Golomb and Hansel, 2000), defined as follows:

|

where σi2 is the time-averaged variance in the instantaneous firing rate vi(t) of the i-th neuron, […] denotes the average over all neurons in the network, and σpop2 is the time-averaged variance of the instantaneous population firing rate [vi(t)]. The network synchrony Σ is expected to be of order 1 in a fully synchronized network, but of order Σ ∾ O in networks of size N that are in the asynchronous state. We used bins of variable size (from 10 to 50 ms) to estimate Σ, obtaining similar results in all cases.

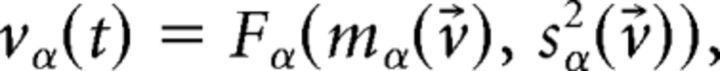

The unstructured network has only one dynamical state (i.e., a stationary point of activity) where all E and I neurons have constant firing rate vE and vI, respectively. In the structured network (where J+ > 1), the network undergoes continuous transitions among a repertoire of states, as shown in the main text. Not to confuse the network's states of activity with the HMM states, we shall from here on use the term “network configurations” instead. Admissible network configurations must satisfy the Q + 2 self-consistent mean field equations (Amit and Brunel, 1997b) as follows:

|

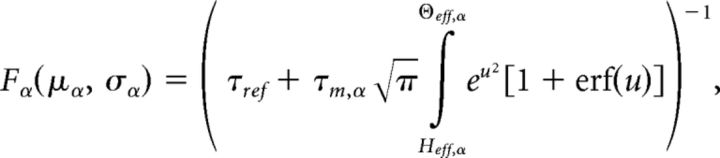

where v⃗ = [v1,…,vQ,vEbg,vI] is the firing rate vector and Fα(μα, σα2) is the current-to-rate response function of the LIF neurons. For fast synaptic times, i.e., ≪ 1, Fα(μα, σα2) is well approximated (Brunel and Sergi, 1998; Fourcaud and Brunel, 2002) as follows:

|

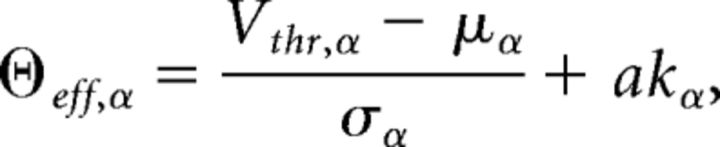

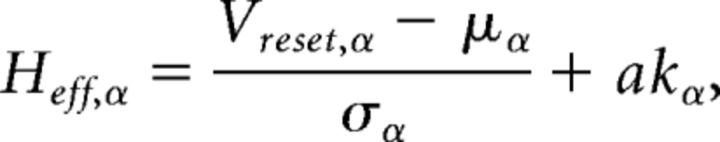

where

|

|

where kα = is the square root of the ratio of synaptic time constant to membrane time constant, and a = ∾ 1.03. This theoretical response function has been fitted successfully to the firing rate of neocortical neurons in the presence of in vivo-like fluctuations (Rauch et al., 2003; La Camera et al., 2006, 2008).

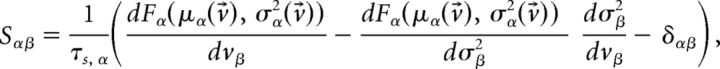

The fixed points v⃗* of the mean field equations were found with Newton's method (Press et al., 2007). The fixed points can be either stable (attractors) or unstable depending on the eigenvalues λα of the stability matrix:

|

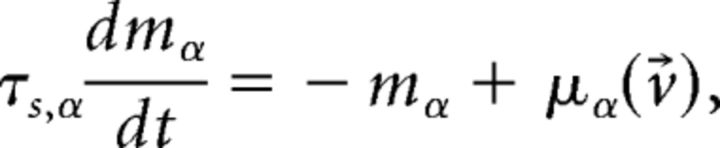

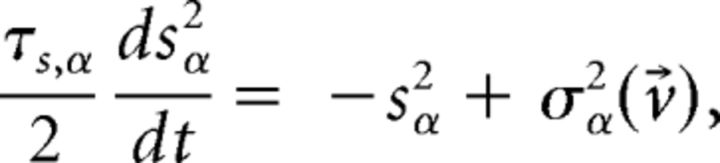

evaluated at the fixed point v⃗* (Mascaro and Amit, 1999). If all eigenvalues have negative real part, the fixed point is stable (attractor). If at least one eigenvalue has positive real part, the fixed point is unstable. Stability is meant with respect to an approximate linearized dynamics of the mean and variance of the input current:

|

|

|

where μα and σα2 are the stationary values for fixed v⃗ given earlier. For fast synaptic dynamics in the asynchronous balanced regimen, these rate dynamics are in very good agreement with simulations (La Camera et al., 2004; for more detailed discussions, see Renart et al., 2004; Giugliano et al., 2008).

Model simulations.

The dynamical equations of the LIF neurons were integrated with the Euler algorithm with a time step of dt = 0.1 ms. We simulated 30 ensembles during ongoing activity and 20 ensembles during evoked activity. We chose four different stimuli per session during evoked activity (as in the data). Trials were 5 s long. In all cases, we preprocessed each ensemble by picking at random a distribution of neurons per ensemble that matched the distribution of ensemble sizes found in the data, and performed the same analyses performed on the data (e.g., HMM, CPTA, FF). The range of hidden states M for the HMM in the model was the same as the one used for the data (see above), except in the case of analyses involving simulated ensembles of 30 neurons (see Fig. 5A), where M varied from 20 to 60 states. To determine the number of active clusters at any given time (see Fig. 4), a cluster was considered active if its population firing rate was >20 spikes/s in a 50 ms bin. This criterion gave a good separation of the firing rates of active and inactive clusters in the multistable region (see Fig. 3B, region with J+ > J′). A cluster was activated in a particular bin if its population firing rate crossed the 20 spikes/s threshold in that bin.

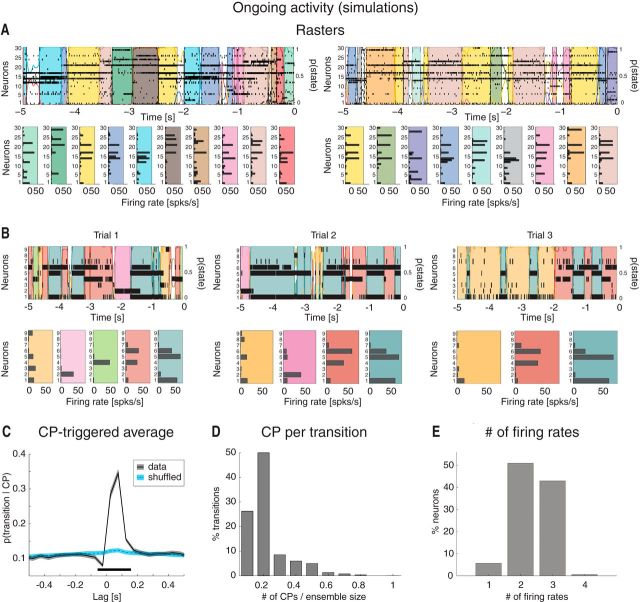

Figure 5.

Ongoing activity in the model is characterized by sequences of states. A, Two representative trials from an ensemble with 30 neurons from Figure 4C, one neuron per cluster (notations as in Fig. 1A). Raster plots (top) are overlaid with HMM states, together with their firing rates across neurons (bottom). Top, x-axis, time preceding the next event (0 = stimulus delivery); y-axis, left: ensemble neuron index; right: probability of HMM states. Bottom, x-axis, firing rates (spikes/s); y-axis, ensemble neuron index. B, Three representative trials of simulated data after keeping only 9 randomly chosen neurons, together with a representation of the corresponding HMM states (bottom). Notations are as in A. C, CPTA of ensemble transitions for the simulated dataset (black) and for a trial-shuffled dataset (blue). Same conventions as in Figure 2B. Thick black line indicates p < 0.05 (t test with multiple-bin Bonferroni correction). x-axis, time lag from CP (seconds); y-axis, probability of a transition given a CP at t = 0. D, Fraction of transitions co-occurring with CPs within the 200 ms significant window from B. x-axis, number of single neurons' CPs divided by the ensemble size; y-axis, fraction of transitions co-occurring with CPs (%). E, Number of different firing rates per neuron across all sessions: 44% of all model neurons had ≥3 different firing rates across ensemble states (compare with Fig. 2F). x-axis, minimal number of different firing rates across states; y-axis, fraction of neurons (%).

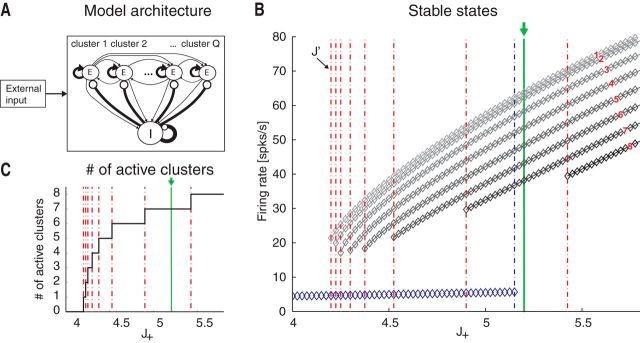

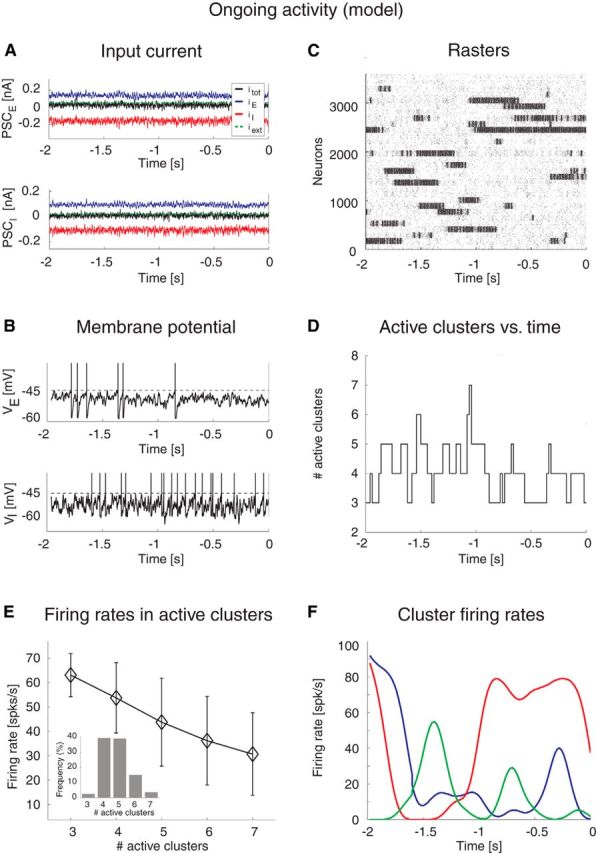

Figure 4.

Dynamics of the spiking network model during ongoing activity. Simulation of the network in Figure 3 with 4000 excitatory and 1000 inhibitory LIF neurons, Q = 30 clusters at intracluster synaptic potentiation J+ = 5.2. A, Incoming PSC to an excitatory (PSCE, top plot) and an inhibitory (PSCI, bottom plot) neuron: EPSC (blue trace), IPSC (red trace), external current (green line), and total current (black trace) are in a balanced regimen. x-axis, time (seconds); y-axis, PSC (nA). B, Membrane potential from representative excitatory (VE, top plot) and inhibitory (VI, bottom plot) neurons. Vertical bars represent spikes. Horizontal dashed lines indicate threshold for spike emission. x-axis, time (seconds); y-axis, membrane potential V (mV). For illustration purposes, V was linearly transformed to obtain the threshold for spike emission at −45 mV and the reset potential after a spike at −60 mV. C, Representative rasterplot from excitatory clustered neurons. Each dot represents a spike (background population not shown). Clusters of neurons that are currently active appear as darker regions of the raster. x-axis, time (seconds); y-axis, neuron index. D, Time course of the number of active clusters from the representative trial in C. x-axis, time (seconds); y-axis, number of active clusters. E, Average firing rates in the active clusters as a function of the number of active clusters across all simulated sessions. Error bars indicate SD. x-axis, number of active clusters; y-axis, average cluster firing rate (spikes/s). Inset, Occurrence of states with different counts of active clusters for 5% stimulus amplitude. x-axis, number of active clusters; y-axis, frequency of occurrence (% of total time). F, Instantaneous cluster firing rate in three representative clusters (red, blue, and green lines) from trial in C. x-axis, time (seconds); y-axis, firing rate (spikes/s).

Figure 3.

Attractor landscape of the spiking network model. A, Network architecture. Excitatory (E) and inhibitory (I) LIF neurons are recurrently connected (black lines), with E neurons organized in Q clusters; intracluster synaptic connections are potentiated (thick arrows), intercluster connections are depressed. Line thickness illustrates relative synaptic weight strength. B, Mean field theory analysis of the network in A. Firing rates for excitatory clusters attractor states (diamonds) are shown as a function of the intracluster potentiation parameter J+ (in units of JEE). Below the critical point J′ = 4.2 (i.e., left most vertical dotted line), the only stable state has low firing rate ∼5 spikes/s (blue diamonds). At J+ = J′, a bifurcation occurs whereby states with active clusters at higher firing rate coexist (top diamond). There are in total Q such states. As J+ is further increased, states with ≥2 active clusters exist. For each state, all active clusters have the same firing rate (reported on the vertical axis). Vertical green line at J+ = 5.2 indicates the value chosen for the spiking network simulations. Vertical red lines indicate critical points where a new configuration appears with an increased number of active clusters. x-axis, intracluster synaptic potentiation (J+); y-axis, firing rate (spikes/s). C, Number of active clusters as a function of J+. Notations are as in B. For J+ = J′, all attractor states have only one active cluster; for J+ = 5.2 (green line), there are 7 possible configurations of attractor states, with 1, 2, …, 7 active clusters, respectively. x-axis, intracluster synaptic potentiation (J+); y-axis, firing rate (spikes/s).

Results

Characterization of ongoing cortical activity as a sequence of ensemble states

We analyzed ensemble activity from extracellular recordings in the gustatory cortex (GC) of behaving rats. In our experimental paradigm, rats were delivered one of four tastants (sucrose, sodium chloride, citric acid, and quinine, denoted respectively as S, N, C, and Q) through an intraoral cannula, followed by a water rinse to clean the mouth from taste residues (Samuelsen et al., 2012). In half of the trials, taste delivery was preceded by an auditory cue (75 db, 5 kHz), signaling taste availability upon lever press; in the other half of the trials, taste was delivered randomly in the absence of an anticipatory cue. Expected and unexpected trials were randomly interleaved. Rats were engaged in a task to prevent periods of rest. We analyzed ongoing activity in the 5 s interval preceding either the auditory cue or taste delivery, and evoked activity in the 5 s interval following taste delivery in trials without anticipatory cue. The interval length was chosen based on the fact that population activity in GC encodes taste-related information for at least 5 s after taste delivery (Jezzini et al., 2013) and the ongoing interval was designed to match the length of the evoked one.

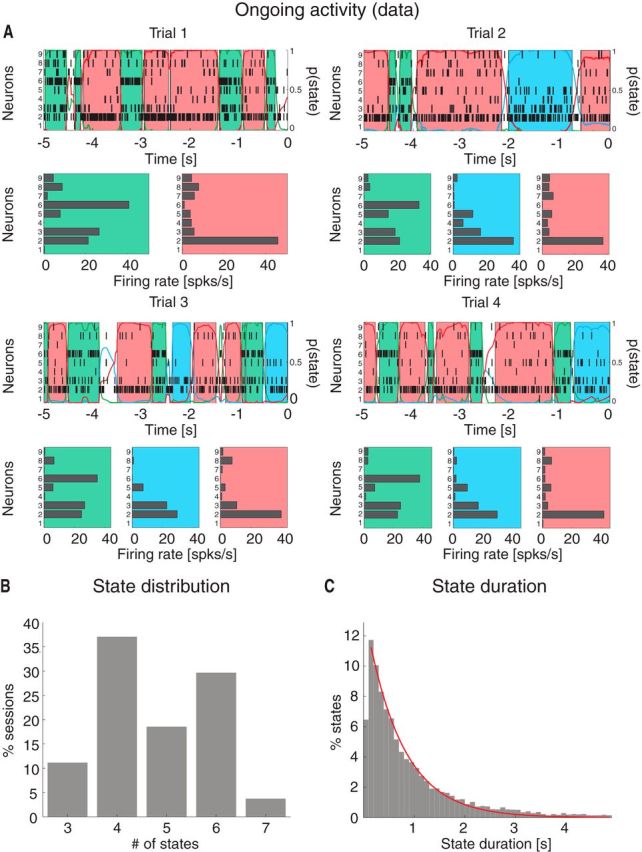

Visual inspection of ensemble spiking activity during ongoing intertrial periods (Fig. 1A) reveals that several neurons simultaneously change their firing rates. This suggests that, similarly to taste-evoked activity (Jones et al., 2007), ongoing activity in GC could be characterized in terms of sequences of states, where each state is defined as a collection of constant firing rates across simultaneously recorded neurons (Fig. 1A; for details, see Materials and Methods). We assumed that the dynamics of state transitions occurred according to a Markov stochastic process and performed an HMM analysis in single trials (Seidemann et al., 1996; Jones et al., 2007; Escola et al., 2011; Ponce-Alvarez et al., 2012). For each neural ensemble and type of activity (ongoing vs evoked), spike trains were fitted to several Poisson-HMMs that differed for number of latent states and the initial conditions. The model providing the largest likelihood was selected as the best model, but only states with probability >80% in at least 50 consecutive 1 ms bins were retained as valid states (called simply “states” in the following; for details, see Materials and Methods). The number of states across sessions ranged from 3 to 7 (mean ± SD: 4.8 ± 1.1; Fig. 1B) with approximately exponentially distributed state durations with mean of 717 ms (95%, CI: [687, 747]; Fig. 1C). The number of states was correlated with the number of neurons in each state (R2 = 0.3, p < 0.01). However, such correlation disappeared when rarely occurring states (occurring for <3% of the total session duration) were excluded (R2 = 0.1, p > 0.1), suggesting that the most frequent states are present even in small ensembles.

Figure 1.

Ongoing activity is characterized by sequences of states. A, Four representative trials from one ensemble of nine simultaneously recorded neurons, segmented according to their ensemble states (HMM analysis). Black vertical lines indicate action potentials. States are color-coded: smooth colored lines represent the probability for each state; shaded colored areas indicate intervals where the probability of a state exceeds 80%. Below each single-trial population raster, average firing rates across simultaneously recorded neurons are plotted (states are color-coded). x-axis for population rasters: time preceding the next event at (0 = stimulus delivery); x-axis for average firing rates panels: firing rates (spikes/s); y-axis for population rasters: left, ensemble neuron index, right, probability of HMM states; y-axis for firing rate panels: ensemble neuron index. B, Distribution of number of states per ensemble across all sessions (only states lasting >50 ms are included). x-axis, number of states per ensemble; y-axis, frequency of occurrence. C, Distribution of state durations across all sessions together with the least-squares exponential fit (red line; see Materials and Methods). x-axis, state duration; y-axis, frequency of states occurrence.

To gain further insight into the structure revealed by HMM analysis, we pitted the ensemble state transitions against modulations of firing rate in single neurons. The latter were found using a change-point (CP) procedure applied to the cumulative distribution of spike counts (Gallistel et al., 2004; Jezzini et al., 2013). Examples from three representative trials are shown in Figure 2A, where it can be seen that CPs from multiple neurons (red dots) tend to align with ensemble state transitions (dashed vertical green lines). The relationship between CPs and state transitions was characterized with a CP-triggered average (CPTA) of state transitions. The CPTA estimates the likelihood of observing a state transition at time t, given a CP in any neuron of the ensemble at time t = 0. If detection of CPs and state transitions were instantaneous, a significant peak of the CPTA at positive times would indicate that state transitions tend to follow a CP, whereas a peak at negative times would indicate that state transitions tend to precede CPs. We found a significant positive CPTA in the interval between t = 0 and t = 200 ms (Fig. 2B, black trace), with a peak at time t = 0. However, we must be cautious to conclude that state transitions tend to follow CPs in this case. Because of our requirement that the state's posterior probability must exceed 80% to detect an ensemble transition, the scored time of occurrence is likely to lag behind the correct time, which would skew the CPTA toward positive values. In any case, the CPTA peak is found around t = 0 (Fig. 2B) and shows that the largest proportion of state transitions co-occurs with a CP. Significance was established by comparison with a CPTA in a trial-shuffled dataset (p < 0.05, t test with Bonferroni correction; see Materials and Methods). In the trial-shuffled dataset, the CPTA was flat, indicating that the relation between CPs and ensemble transitions was lost (Fig. 2B, blue trace).

The fraction of neurons having CPs co-occurring with a state transition ranged from a single neuron to half of the ensemble (Fig. 2C), indicating that more than one neuron, but not all neurons in the ensemble, coactivate during a state transition. In support of this result, we found that population firing rate modulations and single-neuron modulations were partially correlated across transitions (Fig. 2D; φ2 = 0.21 ± 0.04, mean ± SD). We explained the observed correlation by comparing it with three surrogate datasets (correlations in the four datasets were all significantly different; χ2(3) = 110, p < 10−10, Kruskal–Wallis one-way ANOVA with post hoc multiple comparisons). The empirical correlation was significantly larger than in the case of a surrogate dataset in which all neurons changed their firing rates at random (φ2 = 0.01 ± 0.01), but significantly lower than in surrogate datasets with high levels of global coordination. A dataset with 90% and 50% of the neurons having co-occurring changes in firing rates yielded a φ2 = 0.93 ± 0.01 and φ2 = 0.66 ± 0.02, respectively (Fig. 2D). Together, these results confirm that state transitions are due to a partial (and variable in size) coactivation of a fraction of neurons in the whole ensemble.

Beyond unveiling the multistate structure described above, inspection of the representative examples in Figure 1A also provides indications on the spiking behavior of single neurons. Single neurons' firing rates appear to be bistable in some cases and multistable in others. To quantify this phenomenon, we computed the firing rate distributions across states for each neuron. Figure 2E shows two representative examples featuring mixtures of distributions peaked at multiple characteristic firing rates (different states are color-coded). To find out the minimal number of firing rates across states for all recorded neurons, we conducted a conservative post hoc comparison after a nonparametric one-way ANOVA of the firing rates for each neuron (see Materials and Methods). The mean firing rates varied significantly across states for 90% of neurons (150 of 167; Kruskal–Wallis one-way ANOVA, p < 0.05), with at least 42% (70 of 167) of all neurons being multistable (i.e., having three or more significantly different firing rate distributions across states) (Fig. 2F).

Together, these results demonstrate that ongoing activity in GC can be described as a sequence of multiple states in which a portion of neurons changes firing activity in a coordinated manner. In addition, our analyses show that almost half of the neurons switch between at least three different firing rates across states.

A spiking network model with a landscape of multistable attractors

We have shown that ongoing activity in rat GC is structured and characterized by sequences of metastable states. Biologically plausible models capable of generating such states, based on populations of spiking neurons, have been put forward (Deco and Hugues, 2012; Litwin-Kumar and Doiron, 2012, 2014). However, the hallmark of these models is bistability in single neurons, which fire at either low or high firing rate, depending on the state of the network (Amit and Brunel, 1997b; Renart et al., 2007; Churchland and Abbott, 2012). Instead, a substantial fraction of GC neurons can exhibit ≥3 stable firing rates (Fig. 2F), raising a challenge to existing models. To address this issue, we developed a spiking network model capable of multistability, where each single neuron can attain several firing rates (i.e., ≥3). The network contains 4000 excitatory (E) and 1000 inhibitory (I) LIF neurons (Fig. 3A; see Materials and Methods) that are mutually and randomly connected. A fraction of E neurons form an unstructured (“background”) population with mean synaptic strength JEE. The majority of E neurons are organized into Q recurrent clusters. Synaptic weights within each cluster are potentiated (with mean value of 〈J〉 = J+JEE, with J+ > 1), whereas synapses between E neurons belonging to different clusters are depressed (mean value 〈J〉 = J−JEE, with J− < 1). Inhibitory neurons are randomly interconnected to themselves and to the E neurons. All neurons also receive constant external input representing contributions from other brain regions and/or an applied stimulus (such as taste delivery to mimic our data).

As explained in detail below, a theoretical analysis of this model using mean field theory predicts the presence of a vast landscape of attractors and the existence of a multistable regimen where each neuron can fire at several different firing rates.

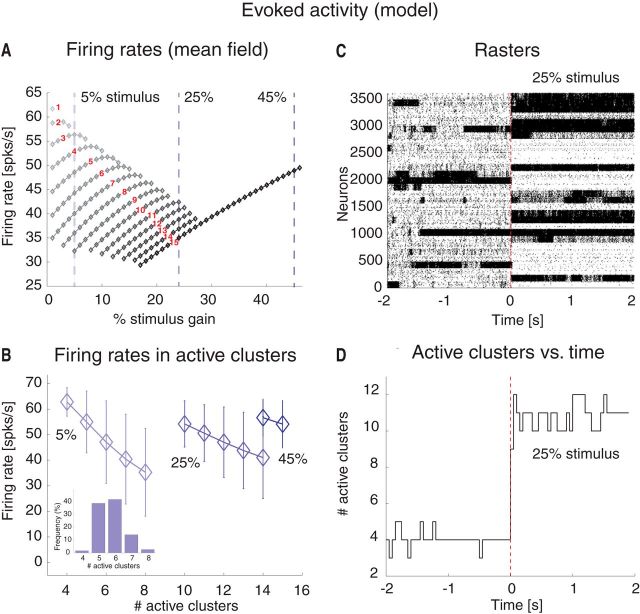

Mean field analysis of the model

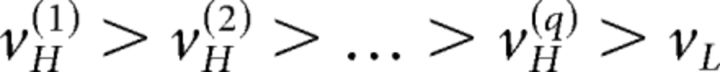

A mean field theory analysis (see Materials and Methods) predicts that, depending on the intracluster potentiation parameter J+, the cortical network can exhibit configurations with one or more active clusters, where we use the term “configuration” of the network to distinguish its admissible states of firing rate activities across neurons from the ensemble states revealed by an HMM analysis. A network configuration is fully specified by the number and identity of its active clusters, although we shall often focus on the number of active clusters regardless of their identity. The predicted population firing rates, as a function of J+ and the number of active clusters in stable network configurations, are shown in Figure 3B. For very weak values of J+ ≳ 1, the network has one stable configuration of activity (“background” configuration), where all E neurons fire at a low rate vL ∾ 5 Hz (Fig. 3B, blue diamonds left to the leftmost vertical red line at J′ = 4.2). As J+ increases beyond the first critical point J′ > 1, a bifurcation occurs (Fig. 3B). At the bifurcation, a new set of attractors emerges characterized by a single cluster active at a higher firing rate vH, while the rest of the network fires at ≈ vL (Fig. 3B, gray diamonds between the two leftmost vertical red lines). There are Q possible such configurations, one for each cluster. As we increase J+ in small steps, we cross several bifurcation points (Fig. 3B, vertical red lines). To the right of each line, a new set of global configurations is added to the previous ones, characterized by an increasing number of simultaneously active clusters, whose firing rates depend on the number of active clusters. For example, for J+ = 5.2 (green line), the network can be in 1 of 30 configurations with only 1 active cluster at vH(1) = 64 spikes/s; with 2 active clusters at vH(2) = 62 spikes/s ; with 3 active clusters at vH(3) = 58 spikes/s , and so on. A configuration with q of Q simultaneously active clusters can be realized in any one of possible configurations, giving rise to a vast landscape of attractors.

The firing rates vH(q) (q ≤ Q) depend on the number q of simultaneously active clusters in decreasing fashion as follows:

|

The lower firing rate per cluster in a larger number of active clusters is the consequence of recurrent inhibition because the whole network is kept in a state of global balance of excitation and inhibition (van Vreeswijk and Sompolinsky, 1996, 1998; Renart et al., 2007). For each maximal number qmax of allowed active clusters in the range of J+ in Figure 3B, any subset of qmax clusters could in principle be active, and the network can be in any one of the global configurations characterized by a number of active clusters compatible with the intracluster potentiation J+. The number of different firing rates per neuron in the stable configurations (diamonds) are plotted as a function of J+ in Figure 3C.

It is important to realize that these configurations are stable and would thus persist indefinitely, only in a network with an infinite number of neurons. Moreover, configurations with the same number of active clusters are equally likely (for a given value of J+) only if all clusters contain exactly the same number of neurons. We show next in model simulations that, in a network with a finite number of neurons, the persistent configurations are spontaneously destabilized, resulting in the network going through a sequence of metastable states, as observed in the data. Moreover, slight variations in the number of neurons per cluster greatly decrease the number of observed configurations, also in keeping with the data.

Model simulations: asynchronous activity and multistable regimen in the spiking network model

We tested the predictions of the theory (Fig. 3) in a simulation of the model in the regimen corresponding to J+ = 5.2 (Fig. 3B, green line). A total of 90% of the E neurons were assigned to Q = 30 clusters with potentiated intracluster connectivity J+JEE. The clusters had a variable size with a mean number of 120 neurons and 1% SD. In the “background” configuration, the network was in the balanced regimen as shown in Figure 4A for one E (top) and one I neuron (bottom), respectively. In this regimen, the excitatory (blue), inhibitory (red), and external (green) PSCs sum up to a total current with mean close to zero (black trace) and large variance. This E/I balance results in irregular spike trains originating from substantial membrane potential fluctuations (Fig. 4B). In line with this, the network had a global synchrony index of Σ ≈ 0.01 ∾ 1/ (Golomb and Hansel, 2000), which is the signature of asynchronous activity (see Materials and Methods). The fluctuations generated in the network turned the stable configurations predicted by the mean field analysis (Fig. 3B) into configurations of finite duration, and the network dynamically sampled different metastable configurations with different patterns of activations across clusters (Fig. 4C), reminiscent of the sequences shown in Figure 1. In each configuration, q of Q clusters were simultaneously active at firing rate vH(q). In the example of Figure 4C, the number of active clusters ranged from 3 to 7 at any given time (Fig. 4D). The number of active clusters across all sessions was approximately bell-shaped ∼4.8 ± 0.9 (mean ± SD; Fig. 4E, inset); the configurations with appreciable frequency of occurrences had 3–7 active clusters, with firing rates ranging from a few to ∼70 spikes/s. Configurations with 1 or 2 active clusters, although predicted in mean field (Fig. 3B), were not observed during simulations, presumably due to low probability of occurrence and/or inhomogeneity in cluster size (more on this point below). Moreover, the average population firing rate in each cluster was inversely proportional to the number of active clusters, as predicted by the theory (Fig. 4E). The actual firing rates as a function of the number of active clusters were also in good agreement with the values predicted by mean field theory (Fig. 3B). As the network hops across configurations, the firing rates of single neurons also change over time, jumping to values that depend on the number of coactive clusters at any given time (Fig. 4F; different neurons are in different colors). These results suggest that this model can provide an explanation of the ongoing dynamics observed in the data, as we show next.

Ensemble states in the network model

The model configurations with multiple active clusters could be the substrate for the states observed in the data. To show this, we performed an HMM analysis on 30 simulated model sessions, each containing 40 ongoing trials per session, each trial lasting 5 s (Fig. 5). Because all neurons in the same cluster tend to be active or inactive at the same time (Fig. 4C), it is sufficient to consider one neuron per cluster in the HMM analysis. Figure 5A shows examples of segmentation in states for a simulated ensemble of 30 neurons (each randomly chosen from a different cluster). The duration and distribution of states in the sequences depended on a number of factors, among which the amount of heterogeneity in cluster size (larger clusters were more likely to be in an active state), the synaptic time constants, and the intracluster potentiation parameter J+. For our set of parameters, we found 34 ± 4 states per session, ranging from 29 to 39. Examples of these states are shown in Figure 5A.

Different configurations with the same number, but different identity, of active clusters would produce different states; thus, in principle, our network could produce a very large number of states if the clusters would transition independently. Three factors limit this number to the actually observed one: (1) Because of to the network's organization in clusters competing via recurrent inhibition, the number of predicted configurations that are stable have between 1 and 7 active clusters for J+ = 5.2, as predicted by the theory (Fig. 3B, diamonds on the green vertical line). No configurations with 8, …, 30 clusters are stable because activation of one cluster will tend to suppress, via recurrent inhibition, the activation of other clusters. (2) Not all predicted configurations have the same likelihood of being visited by the dynamics of the network; in our example, the probability of observing configurations with <3 active clusters in model simulations has very low probability (Fig. 4E), further reducing the total number of visited configurations. (3) Because of a slight heterogeneity in cluster size (1% variance across clusters), configurations with the larger clusters active tend to be sampled the most (i.e., they tend to recur more often and last longer), presumably because of a larger basin of attraction and/or a deeper potential well in the attractor landscape. All these factors working together produce an itinerant network dynamics among only a handful of configurations (compared with the large number possible a priori), which in turn originate only a handful of ensemble states in an HMM analysis of the same simulated data (Fig. 5A).

The presence of recurrent inhibition and the heterogeneity in cluster size are also responsible for a higher degree of cluster coactivation than expected by chance had the clusters been independent. This was tested by comparison with a surrogate dataset obtained by random trial shuffling of the simulated data. The distributions of the number of cluster coactivations in 50 ms bins in the original versus the shuffled data were significantly different (Kolmogorov–Smirnov test, Neff = 3 × 104, p < 10−12) (Press et al., 2007). In particular, activations of single clusters were more frequent in the trial-shuffled dataset, whereas coactivations of multiple clusters were more frequent in the original model simulations (one-way ANOVA with Bonferroni corrected multiple comparisons, p < 0.01). Finally, the average number of different configurations was significantly larger in the trial-shuffled dataset (Wilcoxon rank-sum test across sessions, z = −2.4, p < 0.05). Together, these results show that the model network, although producing asynchronous activity hence low pairwise spike train correlations (data not shown), induces more coordinated clusters' coactivations and a much more limited number of network configurations than expected by chance. In turn, this is compatible with a limited number of states as revealed by an HMM analysis (Fig. 5A).

An HMM analysis on 30 simulated neurons taken from 30 different clusters is bound to produce a larger number of states than detected in the data because in the latter only ensembles of 3–9 simultaneously recorded neurons were available. Moreover, it is not guaranteed that the recorded neurons all came from different clusters. Thus, to facilitate comparison between model and data, we picked a distribution of ensemble sizes matching the empirical distribution (3–9 neurons) and chose standard physiological values for all other parameters (see Materials and Methods). Three representative trials from an ensemble with 9 neurons are shown in Figure 5B, revealing the reoccurrence of HMM states across trials, with 8.3 ± 1.7 states (mean ± SD), ranging from 5 to 12 (a range much closer to the range of 3–7 states found in the data), and approximately exponential duration with mean of 239 ms (95% CI: [234, 243]). The number of states detected was correlated with the number of neurons in each state (R2 = 0.36, p < 0.01), as could be expected because the probability of detecting a state will increase in larger ensembles until neurons from all clusters are sampled. The model states matched other characteristic features of the data: state transitions followed CPs with a similar shape of the CPTA (Fig. 5C; compare with Fig. 2B) and were often congruent with the comodulation of a fraction of the neurons (Fig. 5D; compare with Fig. 2C). Moreover, we found that 44% of the neurons had ≥3 firing rates (Fig. 5E), essentially the same fraction (42%) found in the data (Fig. 2F). No additional tuning of the parameters was required to obtain this match.

Comparison between ongoing and stimulus-evoked activity: reduction of multistability

Previous work has proposed various relationships between patterns of ongoing activity and responses evoked by sensory stimuli (see, e.g., Arieli et al., 1996; Kenet et al., 2003; Fontanini and Katz, 2008; Luczak et al., 2009; Fiser et al., 2010; Abbott et al., 2011; Berkes et al., 2011). We performed a series of analyses of the experimental and simulated data to determine whether the model network, developed to reproduce GC ongoing activity, captured the essential features of taste-evoked activity with no additional tuning of parameters.

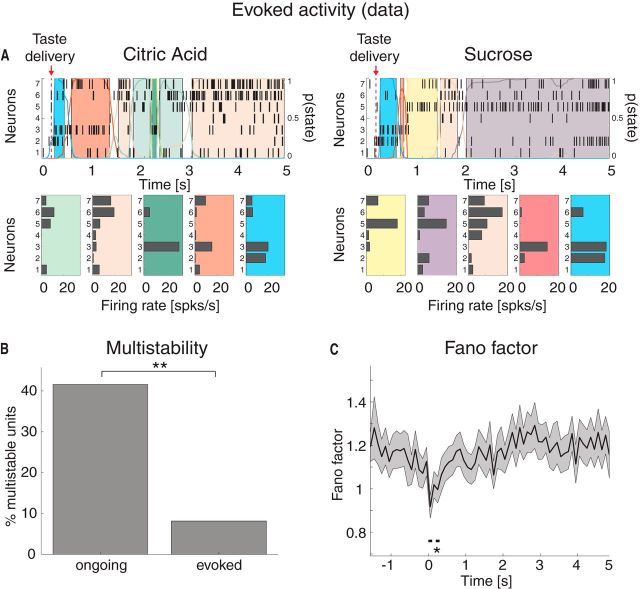

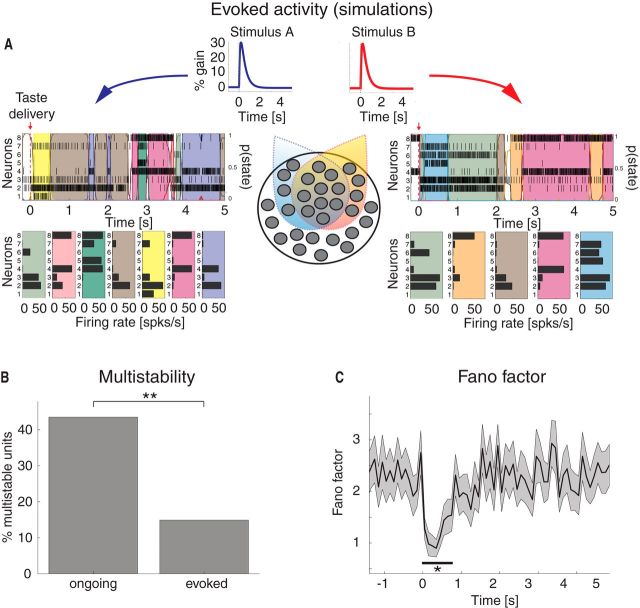

We first performed an HMM analysis on the data recorded during evoked activity in the [0,5] s interval post-taste delivery, as by Jones et al., 2007 (Figure 6A). We found a range of 4–11 states per taste across sessions (mean ± SD: 7.2 ± 1.6) with an approximate exponential distribution of durations with mean 306 ms (95% CI: [278, 334]). However, during evoked activity, only 8% of the neurons had >2 different firing rates across states compared with 42% during ongoing activity (Fig. 6B; χ2(1) = 51, p < 10−12). This suggests that a stimulus steers the firing rates of single neurons away from the multistable regimen, hence reducing the range and number of different firing rates available. Moreover, in keeping with previous reports (Churchland et al., 2010), the stimulus caused a significant drop in trial-by-trial variability, as measured by the mean-matched FF (see Materials and Methods; Fig. 6C).

Figure 6.

Taste-evoked activity: reduction of multistability and trial-by-trial variability in the experimental data. A, Two representative examples of population rasters together with their segmentation in HMM states during evoked activity in GC, in response to citric acid (left) and sucrose (right) delivery, respectively. Red arrow indicates taste delivery at t = 0. The remaining notations are as in Fig. 1A. Top, x-axis, time relative to taste delivery (0 = stimulus delivery); y-axis, left, ensemble neuron index, right, probability of HMM states. Bottom, x-axis: firing rates (spikes/s); y-axis, ensemble neuron index. B, Fraction of multistable neurons across all states during ongoing (left) and evoked activity (right) in GC. **p < 0.01 (χ2 test). x-axis, ongoing and evoked conditions; y-axis, fraction of multistable neurons (%). C, Time course of the mean-matched FF in a time interval around taste delivery (occurring at time t = 0) across all data. Shaded bounds represent 95% CIs. The thick horizontal black line indicates bins where the evoked FF is significantly different from baseline (*p < 0.05, see Materials and Methods). x-axis, time (seconds); y-axis, FF.

To gain insight into the mechanism responsible for the observed reduction in multistability, we investigated whether this new configuration imposed by the stimulus is compatible with the attractor landscape predicted by the model (Fig. 3B). We analyzed the behavior of the model network in the presence of a stimulus mimicking thalamic input following taste delivery (see Materials and Methods). Four stimuli were used (to mimic the 4 tastants used in experiment), which differed only in the neurons they targeted, according to the same empirical distribution found in the data (2 representative stimuli are shown in the diagram of Fig. 7A). Specifically, the fractions of neurons responsive to n = 1, 2, 3, or all 4 stimuli were 17% (27 of 162), 22% (36 of 162), 26% (42 of 162), and 35% (57 of 162), respectively. All other model parameters were chosen as in the analysis of ongoing activity.

Figure 7.

Taste-evoked activity: reduction of multistability and trial-by trial variability in the model. A, Two examples of population rasters together with their segmentation in HMM states during evoked activity in the model network, in response to two surrogate stimuli. Clusters may be selective to more than one stimulus. Middle bottom, Dark circles and colored areas represent, respectively, individual clusters and their selectivity to the two different stimuli A (blue) and B (red). The remaining conventions are as in Figure 6A. Model stimuli (middle top) consist of double exponentials peaking at 30% of vext (50 ms rise time and 500 ms decay time). x-axis, time (seconds); y-axis, stimulus amplitude (%). B, Fraction of multistable neurons across all states during ongoing and evoked activity in the model. **p < 0.01. x-axis, ongoing and evoked conditions; y-axis, fraction of multistable neurons (%). C, Time course of the mean-matched FF in a time interval around stimulus presentation (occurring at time t = 0) across all simulated sessions (same conventions as in Fig. 6C, *p < 0.05). x-axis, time (seconds); y-axis, FF.

With a biologically realistic stimulus peaking at 30% of vext (see Materials and Methods), we found a range of 4–17 states per stimulus across sessions (mean ± SD: 10.0 ± 3.0), with an approximate exponential distribution of state durations with mean 227 ms (95% CI: [219, 235]). Representative trials from simulated activity evoked by two of the four different stimuli are shown in Figure 7A, together with their segmentation in HMM states. As in the data, a significantly smaller fraction (15%) of neurons had ≥3 different firing rates across states compared with ongoing activity (44%; Fig. 7B; χ2(1) = 24, p < 10−5). This result was robust to changes in stimulus shape and amplitude; using different stimuli gave a comparable fraction of multistable neurons (either varying stimulus peak amplitude from 10% to 30% of vext, or using a box stimulus; data not shown, see Materials and Methods). The model also captured the stimulus-induced reduction in trial-by-trial variability found in the data (Fig. 7C), as measured by the mean-matched FF, confirming previous results found with similar model networks (Deco and Hugues, 2012; Litwin-Kumar and Doiron, 2012).

Both the empirical data and the behavior of the network under stimulation could be explained with the same theoretical analysis used to predict ongoing activity (Fig. 3). We repeated the mean field analysis for a fixed value of J+ = 5.2 (Fig. 3B, green vertical line and arrow) but varying the stimulus amplitude. The results are shown in Figure 8A. The number of active clusters tends to increase with stimulus amplitude, whereas the configurations with fewer active clusters gradually disappear. Moreover, one observes a reduction in the range of firing rates across states until, for a stimulus amplitude ≳ 30% of vext, only configurations with 15 active clusters are theoretically possible. Computer simulations confirmed these predictions (Fig. 8B): although the match between predicted and simulated firing rates was not perfect (presumably due to finite size effects and the approximations used for synaptic dynamics), the predicted narrowing of the firing rate range for stronger stimuli was evident. As with ongoing activity, we also found that the network spent most of its time visiting configurations with an intermediate number of active clusters among all those theoretically possible predicted in mean field (Fig. 8B, inset), suggesting, again, that different network configurations have different basins of attraction, with larger basins corresponding to longer durations. An example simulation for a stimulus amplitude of 30% of vext is shown in Figure 8C, together with the running score of the number of active clusters (Fig. 8D).

Figure 8.

Mean field predictions of evoked activity and comparison with model simulations. A, Attractor states of the model during evoked activity predicted by mean field theory for J+ = 5.2 and as a function of stimulus amplitude. Diamonds represent mean firing rate in active clusters for each attractor state, featuring the number of active clusters indicated by the red numbers (see also Fig. 3B). Stimulus amplitude varied from 1% to 45% of vext. Vertical dashed lines indicate attractor states for stimuli of 5%, 25%, and 45%. The firing rate range decreases as the stimulus amplitude is increased, and it reduces to a single configuration with 15 active clusters for stimuli >30%. x-axis, stimulus increase (%); y-axis, firing rate (spikes/s). B, Mean firing rates in active clusters in simulations of the model for the three stimuli marked by vertical lines in A. Error bars indicate SD. x-axis, number of active clusters; y-axis, average cluster firing rate (spikes/s). Inset, Frequency of configurations with different numbers of active clusters for 5% stimulus. x-axis, active clusters; y-axis, frequency of occurrence (% of total simulation time across all simulations). C, Rasterplot of spike trains from excitatory clustered neurons (background excitatory population not shown) in a representative trial encompassing both periods of ongoing and evoked activity (compare with Fig. 4C), using a “box” stimulus with amplitude at 30% of vext in the [0, 2]s interval (ongoing activity corresponds to the [−2, 0]s interval). Vertical red line indicates stimulus onset. x-axis, time (seconds); y-axis, neuron index. D, Number of active clusters versus time (same representative trial as in C). Notations as in Fig. 4D. x-axis, time (seconds); y-axis, active clusters.

In conclusion, stimulating a cortical network during a state of multistable ongoing activity reduces not only the trial-by-trial variability, as previously reported, but also multistability, defined as the single neurons' ability to exhibit multiple (≥3) firing rates across states. This may be linked to a reduction in complexity of evoked neural representations (Rigotti et al., 2013).

Discussion

Taste-evoked activity in GC has been characterized as a progression through a sequence of metastable states, where each state is a collection of firing rates across simultaneously recorded neurons (Jones et al., 2007). Here we demonstrate that metastable states can also be observed during ongoing activity, and we report several quantitative comparisons between ongoing and evoked activity. The most notable difference is that single neurons are multistable during ongoing activity (expressing ≥3 different firing rates across states), whereas they are mostly bistable during evoked activity (expressing at most 2 different firing rates across states).

These results were reproduced in a biologically plausible, spiking network model of cortical activity amenable to theoretical analysis. The network had dense and sufficiently strong intracluster connections, which endowed it with a rich landscape of stable states with several different firing rates. To our knowledge, this rich repertoire of network attractors and its specific modification under stimulation have not been reported before. The stable states become metastable in a finite network, wherein endogenously generated fluctuations induce an itinerant dynamics among ensemble states. As observed in the data, this phenomenon did not require overt external stimulation. The model captured other essential features of the data, such as the distribution of single neurons' firing rates across states and the partial coactivation of neurons associated to each ensemble transition. Moreover, without additional tuning, the model reproduced the reduction of multistability and trial-by-trial variability at stimulus onset.

The reduction in trial-by-trial variability confirms previous results obtained across several brain areas (Churchland et al., 2010), which were also reproduced in spiking models similar to ours (Deco and Hugues, 2012; Litwin-Kumar and Doiron, 2012, 2014). As for multistability, our theory offers a mechanistic explanation of its origin and its stimulus-induced reduction. The theory predicts the complete abolition of multistability above a critical value of the external stimulation (Fig. 8A), when the network exhibits only one firing rate across all neurons in active clusters. In the more plausible range of mid-strength stimulations, the model shows a reduction in multistability and a contraction of the range of firing rates.

External and internal sources of metastability in GC

Metastable activity can be induced by an external stimulus as shown recently (Miller and Katz, 2010, 2013) in a spiking network model. Their model relies on feedforward connections between recurrent cortical modules responsible for taste detection, taste identification, and decision to spit or swallow. Each stage corresponds to the activation of a particular module, and transitions between stages are driven by stochastic fluctuations in the network. Although this model reproduces the dynamics of taste-evoked activity, it relies on an external stimulus to ignite the transitions, and thus would not explain metastable dynamics during ongoing activity. Our model provides a mechanistic explanation of intrinsically generated state sequences via a common mechanism during both ongoing and evoked activity.

The segmentation of ongoing activity in a sequence of metastable states suggests that the network hops among persistent activity configurations that lose stability by mechanisms, including intrinsically generated noise (Shpiro et al., 2009; Deco and Hugues, 2012; Deco and Jirsa, 2012; Litwin-Kumar and Doiron, 2012; Mattia and Sanchez-Vives, 2012; Litwin-Kumar and Doiron, 2014), some process of adaptation (Giugliano et al., 2004, 2008; Treves, 2005; Shpiro et al., 2009), or winnerless competition (Rabinovich et al., 2001). In our model and in previous models similar to ours (Deco and Hugues, 2012; Litwin-Kumar and Doiron, 2012, 2014), the mechanism is internally generated noise that destabilizes the stable attractors predicted by mean field theory.

In a finite network, the stable states become metastable due to fluctuations of neural activity similar to those typically observed in vivo (Shadlen and Newsome, 1994; Rauch et al., 2003; La Camera et al., 2006). These fluctuations are a consequence of the network being in the balanced regimen (van Vreeswijk and Sompolinsky, 1996, 1998; Renart et al., 2007), which produces irregular and asynchronous spiking activity (Brunel and Hakim, 1999; Renart et al., 2010). In the network containing a finite number of neurons, this fluctuating activity can destabilize the stable states and produce hopping behavior among metastable states (Deco and Hugues, 2012; Deco and Jirsa, 2012; Litwin-Kumar and Doiron, 2012; Mattia and Sanchez-Vives, 2012; Litwin-Kumar and Doiron, 2014).

Relationship between ongoing and evoked activity

The nature of the relationship between ongoing and evoked states remains elusive. One proposal for the role of ongoing activity is that it serves as a repertoire of representations sampled by the neural circuit during evoked activity (Arieli et al., 1996; Kenet et al., 2003; Luczak et al., 2009). According to this proposal, evoked states occupy a subset of ongoing states in a reduced representational space, such as the space of principal components, or the space obtained after multidimensional scaling (Luczak et al., 2009). This applies especially to the activity of auditory or somatosensory neurons during high and low activity states called “UP” and “DOWN” states (MacLean et al., 2005; Barthó et al., 2009; Luczak et al., 2009, 2013).

An alternative proposal regards ongoing activity as a Bayesian prior that incrementally adjusts to the statistics of external stimuli during early development (Fiser et al., 2004; Berkes et al., 2011). In this framework, ongoing activity starts out as initially different from evoked activity and progressively shifts toward it.

Our model's explanation of the genesis and structure of ensemble states has two main implications: if, on the one hand, ongoing and evoked activities are undoubtedly linked (they emerge from the same network structure and organization); on the other hand, they may differ in important ways. Given the network's structure, only a handful of states are allowed to emerge through network dynamics, which might suggest that ongoing activity constrains the repertoire of sensory responses. However, the same network's structure, especially its synaptic organization in potentiated clusters, can be obtained as the result of learning external stimuli (Amit and Brunel, 1997b; Amit and Mongillo, 2003; Tkacik et al., 2010; Litwin-Kumar and Doiron, 2014), so from this point of view it is the realm of evoked responses that defines the structure of ongoing activity. In any case, even though originating from the same network structure and mechanisms, evoked and ongoing activity can be characteristically different. They may differ in the number of ensemble states or firing rate distributions across neurons, resulting in only a partial overlap when analyzed as in (Luczak et al., 2009) (data not shown). Stimuli drive neurons at higher and lower firing rates than typically observed in ongoing activity, preventing ongoing activity from completely encompassing the evoked states. This is a direct consequence of our finding that activity in GC is not compatible with UP and DOWN states, both in terms of the dynamics of state transitions (Fig. 2D) and in terms of the wider range of firing rates attained by single neurons (multistability; Fig. 2F).

The observed differences between ongoing and evoked activity can be understood thanks to our spiking network model. This model shows how the presence of a stimulus induces a change in the landscape of metastable configurations (Fig. 8A). This change depends on the strength of the stimulus and is incremental, quenching the range and the number of the active clusters in admissible configurations. Beyond a critical point (≳ 30% stimulus strength in Fig. 8A), the only states left are in configurations where all stimulated clusters are simultaneously active, and evoked states are intrinsically different from ongoing states. Although they still relate to ongoing states due to their common network origin, evoked states contain information that is not available in the ongoing activity itself (and indeed, this information can be used to decode the taste, see e.g., Jones et al., 2007; Samuelsen et al., 2012; Jezzini et al., 2013).

Mechanisms of itinerant multistable activity