Abstract

Continuous-time Markov processes over finite state-spaces are widely used to model dynamical processes in many fields of natural and social science. Here, we introduce a maximum likelihood estimator for constructing such models from data observed at a finite time interval. This estimator is dramatically more efficient than prior approaches, enables the calculation of deterministic confidence intervals in all model parameters, and can easily enforce important physical constraints on the models such as detailed balance. We demonstrate and discuss the advantages of these models over existing discrete-time Markov models for the analysis of molecular dynamics simulations.

I. INTRODUCTION

Estimating the parameters of a continuous-time Markov jump process model based on discrete-time observations of the state of a dynamical system is a problem which arises in many fields of science, including physics, biology, sociology, meteorology, and finance.1–4 Diverse applications include the progression of credit risk spreads,5 social mobility,6 and the evolution of DNA sequences in a phylogenetic tree.7 In chemical physics, these models, also called master equations, describe first-order chemical kinetics, and are the principal workhorses for modeling chemical reactions.8

For complex physical systems, the derivation of kinetic models from first principles is often intractable. In these circumstances, the parameterization of models from data is often a superior approach. As an example, consider the dynamical behavior of solvated biomolecules, such as proteins and nucleic acids. Despite the microscopic complexity of their equations of motion, relatively simple multi-state kinetics often arise, as exemplified by the ubiquity of two- and few-state Markov process models for protein folding.9–14

Partly because computationally efficient and numerically robust estimators for continuous-time Markov models have been unavailable, the field of computational biophysics has relied widely on discrete-time Markov models to fit and interpret the output of molecular dynamics (MD) simulations. Also called Markov state models (MSMs), these methods describe the molecular kinetics observed in an MD simulation as a jump process with a discrete time interval generally on the order of ∼10–100 ns.15,16 These models provide convenient estimators for key quantities of interest for molecular systems, such as the free energies of various metastable conformational states, the time scales of their interconversion, and the dominant transition pathways.17–20

In this work, we introduce an efficient maximum likelihood estimator for continuous-time Markov models on a finite state space from discrete-time data. The source of data used here is identical to that employed in fitting discrete-time Markov chain models—namely, the number of observed transitions between each pair of states within a specified time interval. We demonstrate the properties of these models on simple systems, and apply them to the analysis of the folding of the FiP35 WW protein domain.

II. BACKGROUND

Consider a time-homogeneous continuous-time Markov process {X(t) : t ≥ 0} over a finite state space, 𝒮 = {1, …, n}. The process is determined completely by an n × n matrix K, variously called its rate matrix, infinitesimal generator,21 substitution matrix,22 or intensity matrix.23

For an interval τ > 0, begin with the n × n matrix, T(τ), of probabilities that the process, observed in state i, is found in state j after the interval τ,

$$ T(\tau)_{ij} = \mathbb{P}\left[X(t+\tau) = j \mid X(t) = i\right], \tag{1} $$

which, by time-homogeneity, is independent of t. The rate matrix of the process, K, is defined as

$$ K = \lim_{\tau \to 0^{+}} \frac{T(\tau) - I_n}{\tau}. \tag{2} $$

Given K and any time interval, τ, the transition probability matrix, T(τ), can be expressed as a matrix exponential,

$$ T(\tau) = \exp(\tau K) = \sum_{k=0}^{\infty} \frac{(\tau K)^k}{k!}. \tag{3} $$

A particular rate matrix K corresponds to a valid continuous-time Markov process if and only if its off-diagonal elements are non-negative and its row sums equal zero. These constraints are necessary to ensure that the probabilities propagated by the dynamics remain non-negative and sum to one. We denote by 𝒦 this set of admissible rate matrices,

$$ \mathcal{K} = \left\{ K \in \mathbb{R}^{n \times n} : K_{ij} \ge 0 \ \text{for all} \ i \ne j, \quad K_{ii} = -\sum_{j \ne i} K_{ij} \right\}. \tag{4} $$
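As a concrete illustration of Eqs. (3) and (4), the following minimal sketch checks membership in 𝒦 and evaluates exp(τK) with a truncated Taylor series plus scaling and squaring. It is written in plain Python for portability; the helper names (`is_rate_matrix`, `matmul`, `expm`) and the two-state example matrix are assumptions made for this sketch, and in practice a library routine for the matrix exponential would normally be preferred.

```python
import math

def is_rate_matrix(K, tol=1e-12):
    """Return True if K has non-negative off-diagonals and zero row sums (Eq. 4)."""
    n = len(K)
    for i in range(n):
        if any(K[i][j] < 0 for j in range(n) if j != i):
            return False
        if abs(sum(K[i])) > tol:
            return False
    return True

def matmul(A, B):
    """Dense product of two square matrices stored as lists of lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def expm(K, tau, terms=20):
    """Approximate exp(tau * K) by a Taylor series with scaling and squaring."""
    n = len(K)
    # Choose s so that tau*K / 2**s has a small norm before summing the series.
    norm = max(sum(abs(tau * x) for x in row) for row in K)
    s = max(0, math.ceil(math.log2(norm)) + 1) if norm > 0 else 0
    A = [[tau * x / 2 ** s for x in row] for row in K]
    T = [[float(i == j) for j in range(n)] for i in range(n)]   # running sum, starts at I
    term = [row[:] for row in T]                                 # holds A^k / k!
    for k in range(1, terms + 1):
        term = [[x / k for x in row] for row in matmul(term, A)]
        T = [[T[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    for _ in range(s):                                           # undo the scaling
        T = matmul(T, T)
    return T

K = [[-0.3, 0.3], [0.1, -0.1]]    # hypothetical two-state rate matrix
T = expm(K, tau=2.0)              # rows of T are probability distributions
```

For a two-state process the result can be compared against the closed form T₁₂(τ) = a/(a+b)·(1 − e^{−(a+b)τ}), with a = K₁₂ and b = K₂₁.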

Furthermore, we denote by 𝒯 the set of all embeddable transition probability matrices, that is, those which could originate as the transition probability matrix, T(τ), induced by some continuous-time Markov process,

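$$ \mathcal{T} = \left\{ T(\tau) \in \mathbb{R}^{n \times n} : \exists \, K \in \mathcal{K} \ \text{such that} \ T(\tau) = \exp(\tau K) \right\}. \tag{5} $$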

It is well known that the set 𝒯 is a proper subset of the set of all stochastic matrices; not all stochastic matrices are embeddable.24,25 A complete description of the topological structure of 𝒯 as well as the necessary and sufficient conditions for a stochastic matrix to be embeddable are open problems in the theory of Markov processes.

Although Eq. (2) serves as the definition of the rate matrix of a continuous-time Markov process, it is generally not directly suitable as a method for parameterizing Markov models, particularly for applications in chemical kinetics. The attempt to numerically approximate the limit in Eq. (2) from empirically measured transition probabilities would be valid if the generating process were exactly Markovian. However, in chemical kinetics, a Markov process model—the chemical master equation—is an approximation valid only for time scales longer than the molecular relaxation time.26,27 A suitable Markov model which is predictive over long time scales must capture both the instantaneous kinetics as well as, to use the vocabulary of Mori-Zwanzig formalism, the effective contribution of the integrated memory kernel.28,29

Our goal is to address this parameterization problem. The primary contribution of this work is an efficient algorithm for estimating K from discrete-time observations. We adopt a direct maximum likelihood approach, with $O(n^3)$ work per iteration. Many constraints on the solution, such as detailed balance or specific sparsity patterns on K, can be introduced in a straightforward manner without additional cost.

Prior work on this subject is extensive. Crommelin and Vanden-Eijnden proposed a method for estimating K in which a discrete-time transition probability matrix is first fit to the observed data, followed by the determination of the rate matrix, K, such that exp(Kτ) is nearest to the target empirical transition probability matrix.30,31 The nature of this calculation depends on the norm used to define the concept of “nearest”: under a Frobenius norm, this problem has a closed form solution, while the norm of Crommelin and Vanden-Eijnden leads to a quadratic program. A similar approach was advocated by Israel et al.32

Kalbfleisch and Lawless proposed a maximum likelihood estimator for K.33 Without constraints on the rate matrix, their proposed optimization involves the construction and inversion of an $n^2 \times n^2$ Hessian matrix at each iteration of the optimization, rendering it prohibitively costly ($O(n^6)$ scaling per iteration) for moderate to large state spaces.

A series of expectation maximization (EM) algorithms is described by Asmussen, Nerman, and Olsson; Holmes and Rubin; Bladt and Sørensen; and Hobolth and Jensen.22,23,34,35 These algorithms treat the state of the system between observation intervals as an unobserved latent variable, which when interpolated via EM leads to more efficient estimators. A review of these algorithms is presented by Metzner et al.21 At best, each iteration of the proposed methods scales as $O(n^5)$.

III. MAXIMUM LIKELIHOOD ESTIMATION

A. Log-likelihood and gradient

We take our source of data to be one or more observed discrete-time trajectories from a Markov process, $x = \{x_0, x_\tau, \ldots, x_{N\tau}\}$, in a finite state space, observed at a regular time interval, τ.

The likelihood of the data given the model and the initial state is given in terms of the transition probability matrix as the product of the transition probabilities assigned to each of the observed jumps in the trajectory,

$$ P(x \mid K, x_0) = \prod_{k=0}^{N-1} T(\tau)_{x_{k\tau},\, x_{(k+1)\tau}}. \tag{6} $$

When more than one independent trajectory is observed, the data likelihood is a product over trajectories, with individual terms given by Eq. (6).

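Because many transitions are potentially observed multiple times, Eq. (6) generally contains many repeated terms. Define the observed transition count matrix $C(\tau) \in \mathbb{R}^{n \times n}$,

$$ C(\tau)_{ij} = \sum_{k=0}^{N-1} \mathbf{1}\left(x_{k\tau} = i\right) \mathbf{1}\left(x_{(k+1)\tau} = j\right). \tag{7} $$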

Collecting repeated terms, the likelihood can be rewritten more compactly as

$$ P(x \mid K, x_0) = \prod_{i,j} T(\tau)_{ij}^{\,C(\tau)_{ij}}. \tag{8} $$
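The bookkeeping behind Eqs. (7) and (8) is short enough to sketch directly. The example below tallies a count matrix from a state trajectory and evaluates the logarithm of the compact likelihood; the function names, the zero-based state labels, and the example transition matrix are assumptions of this sketch.

```python
import math

def count_matrix(traj, n_states):
    """Eq. (7): C[i][j] is the number of observed i -> j jumps between consecutive frames."""
    C = [[0] * n_states for _ in range(n_states)]
    for a, b in zip(traj[:-1], traj[1:]):
        C[a][b] += 1
    return C

def log_likelihood(C, T):
    """Logarithm of Eq. (8): sum over i, j of C_ij * ln(T_ij), skipping zero counts."""
    return sum(c * math.log(t)
               for c_row, t_row in zip(C, T)
               for c, t in zip(c_row, t_row) if c > 0)

traj = [0, 0, 1, 1, 1, 0, 1]          # states labeled from 0 (a convention of this sketch)
C = count_matrix(traj, n_states=2)    # [[1, 2], [1, 2]]
T = [[0.6, 0.4], [0.3, 0.7]]          # hypothetical transition probability matrix
ll = log_likelihood(C, T)
```

Summing over the count matrix gives the same value as summing the log-probability of each observed jump in order, which is the content of the step from Eq. (6) to Eq. (8).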

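Suppose that the rate matrix, K, is parameterized by a vector, $\theta \in \mathbb{R}^b$, of independent variables, K = K(θ). In the most general case, every off-diagonal element of the rate matrix may individually be taken as an independent variable, with $b = n^2 - n$. As discussed in Section III B, other parameterizations may be used to enforce certain properties on K. The logarithm of the data likelihood is

\begin{align}
\mathcal{L}(\theta; \tau) &\equiv \ln P(x \mid K(\theta), x_0) \tag{9} \\
&= \sum_{ij} C(\tau)_{ij} \, \ln T(\tau)_{ij} \tag{10} \\
&= \sum_{ij} \left( C(\tau) \circ \ln \exp\left(\tau K(\theta)\right) \right)_{ij}, \tag{11}
\end{align}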

where ln(X) is the element-wise natural logarithm, exp(X) is the matrix exponential, and X∘Y is the Hadamard (element-wise) matrix product. Note that the element-wise logarithm and matrix exponential are not inverses of one another.

The most straightforward parameter estimator—the maximum likelihood estimator (MLE)—selects parameters which maximize the likelihood of the data,

$$ \theta_{\mathrm{MLE}} = \operatorname*{arg\,max}_{\theta} \ \mathcal{L}(\theta; \tau). \tag{12} $$

To solve Eq. (12), we focus our attention on quasi-Newton optimizers that utilize the first derivatives of the log-likelihood, $\mathcal{L}(\theta; \tau)$, with respect to θ. This requires an efficient algorithm for computing the gradient, $\nabla_\theta \mathcal{L}(\theta; \tau)$. We achieve this by starting from the eigendecomposition of K,

$$ K = V \operatorname{diag}(\lambda) \, U^T, \tag{13} $$

where the columns of U and V contain the left and right eigenvectors of K, respectively, jointly normalized such that $V^{-1} = U^T$, and λ are the corresponding eigenvalues. Assuming that K has no repeated eigenvalues, the derivatives of the induced transition probability matrix, $\partial T(\tau)_{ij} / \partial \theta_u$, are given by33,36

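$$ \frac{\partial T(\tau)}{\partial \theta_u} = V \left( \left( U^T \frac{\partial K}{\partial \theta_u} V \right) \circ X(\lambda, \tau) \right) U^T, \tag{14} $$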

where X(λ, t) is an n × n matrix with entries

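$$ X(\lambda, t)_{ij} = \begin{cases} t \, e^{t \lambda_i} & i = j, \\[4pt] \dfrac{e^{t \lambda_i} - e^{t \lambda_j}}{\lambda_i - \lambda_j} & i \ne j. \end{cases} \tag{15} $$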

The elements of the gradient of the log-likelihood can then be constructed as

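$$ \frac{\partial \mathcal{L}}{\partial \theta_u} = \sum_{ij} \left( D \circ V \left( \left( U^T \frac{\partial K}{\partial \theta_u} V \right) \circ X(\lambda, \tau) \right) U^T \right)_{ij}, \tag{16} $$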

where Dij = C(τ)ij/Tij.

A direct implementation of Eq. (16) requires at least four n × n matrix multiplications for each element of θ, indexed by u. If the parameter vector, θ, contains $O(n^2)$ parameters, then computing the full gradient will require $O(n^5)$ floating point operations (FLOPs). However, two properties of the Hadamard product and matrix trace can be exploited to dramatically reduce the computational complexity of constructing the gradient vector to $O(n^3)$ FLOPs,

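$$ \sum_{ij} \left( A \circ B \right)_{ij} = \operatorname{Tr}\left( A^T B \right), \tag{17} $$

$$ \operatorname{Tr}\left( A^T \left( B \circ C \right) \right) = \operatorname{Tr}\left( \left( A \circ B \right)^T C \right). \tag{18} $$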

Using these identities, the gradient of the log-likelihood can be rewritten as

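$$ \frac{\partial \mathcal{L}}{\partial \theta_u} = \sum_{ij} \left( \frac{\partial K}{\partial \theta_u} \circ Z \right)_{ij}, \qquad Z = U \left( \left( V^T D \, U \right) \circ X(\lambda, \tau) \right) V^T. \tag{19} $$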

Note that because Z is independent of u, it can be constructed once at the beginning of a gradient calculation at a cost of $O(n^3)$ FLOPs, and reused for each index, u. The remainder of the work involves constructing the derivative matrix ∂K/∂θu, which is generally quite sparse, and a single inexpensive sum of a Hadamard product. Overall, this rearrangement reduces the complexity of constructing the full gradient vector from $O(n^5)$ to $O(n^3)$ FLOPs.
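The rearrangement can be checked in a few lines. The sketch below starts from Eq. (16) and uses only the identity $\sum_{ij}(A \circ B)_{ij} = \operatorname{Tr}(A^T B)$ together with the cyclic invariance of the trace; the shorthand $W_u = U^T (\partial K / \partial \theta_u) V$, $M = V^T D\, U$, and $X = X(\lambda, \tau)$ is introduced here purely for convenience and is not notation from the text.

$$
\begin{aligned}
\frac{\partial \mathcal{L}}{\partial \theta_u}
&= \sum_{ij} \left( D \circ V (W_u \circ X) U^T \right)_{ij}
 = \operatorname{Tr}\left( D^T V (W_u \circ X) U^T \right)
 = \operatorname{Tr}\left( M^T (W_u \circ X) \right) \\
&= \operatorname{Tr}\left( (M \circ X)^T W_u \right)
 = \operatorname{Tr}\left( V (M \circ X)^T U^T \frac{\partial K}{\partial \theta_u} \right)
 = \operatorname{Tr}\left( Z^T \frac{\partial K}{\partial \theta_u} \right)
 = \sum_{ij} \left( \frac{\partial K}{\partial \theta_u} \circ Z \right)_{ij},
\end{aligned}
$$

with $Z = U (M \circ X) V^T$. All dependence on u is confined to the final Hadamard product, which is why Z needs to be formed only once per gradient evaluation.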

B. Reversible parameterization

In the application of these models to domain-specific problems, additional constraints on the Markov process may be known, and enforcing these constraints during parameterization can enhance the interpretability of solutions as well as provide a form of regularization.

For many molecular systems, it is known that the underlying dynamics are reversible, and this property can be enforced in Markov models as well. A Markov process is reversible when the rate matrix, K, satisfies the detailed balance condition with respect to a stationary distribution, π, towards which the process relaxes over time,

$$ \lim_{t \to \infty} \left[ \exp(tK) \right]_{ij} = \pi_j, \tag{20} $$

$$ \pi_i K_{ij} = \pi_j K_{ji}. \tag{21} $$

This constraint can be enforced on solutions through the design of the parameterization function, K(θ). If K is reversible, Eq. (21) implies that a real symmetric n × n matrix, S, can be formed, which we refer to as the symmetric rate matrix, such that

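$$ S = \operatorname{diag}\left(\sqrt{\pi}\right) K \operatorname{diag}\left(\sqrt{\pi}\right)^{-1}, \qquad S_{ij} = \sqrt{\frac{\pi_i}{\pi_j}} \, K_{ij}. \tag{22} $$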

Because of this symmetry and the constraint on the row sums of K, only the upper triangular (exclusive of the main diagonal) elements of S, and the stationary vector, π, need to be directly encoded by the parameter vector, θ, to fully specify K. Furthermore, since the elements of π are constrained to be positive, working with the element-wise logarithm of π can enhance numerical stability. For the off-diagonal elements of S, which are only constrained to be non-negative, the same logarithm transformation is inapplicable, as it is incompatible with sparse solutions that set one or more rate constants equal to zero. For these reasons, we use a parameter vector, θ, of length $b = n(n-1)/2 + n$, with $\theta = (\theta^{(S)}, \theta^{(\pi)})$. The first $n(n-1)/2$ elements, notated $\theta^{(S)}$, encode the off-diagonal elements of S. The remaining n elements are notated $\theta^{(\pi)}$, and are used to construct the stationary distribution, π. From S and π, the off-diagonal and diagonal elements of K are then constructed from Eq. (22). In explicit notation, the construction is

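$$ \operatorname{vech}(S) = \theta^{(S)}, \tag{23} $$

$$ \pi_i = \frac{\exp\left(\theta^{(\pi)}_i\right)}{\sum_{j=1}^{n} \exp\left(\theta^{(\pi)}_j\right)}, \tag{24} $$

$$ K_{ij} = \begin{cases} \sqrt{\pi_j / \pi_i} \; S_{ij} & i \ne j, \\[4pt] -\sum_{k \ne i} K_{ik} & i = j, \end{cases} \tag{25} $$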

where vech(A) is the row-major vectorization of the elements of a symmetric n × n matrix above the main diagonal,

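$$ \operatorname{vech}(A) = \left( A_{12}, A_{13}, \ldots, A_{1n}, A_{23}, \ldots, A_{2n}, \ldots, A_{n-1,n} \right). \tag{26} $$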

The necessary gradients of Eq. (25), $\partial K_{ij} / \partial \theta_u$, are sparse. For a fixed u indexing an element of $\theta^{(S)}$ (that is, $u \le n(n-1)/2$), the n × n matrix $\partial K / \partial \theta_u$ contains only four nonzero entries, whereas for u indexing an element of $\theta^{(\pi)}$, the same matrix contains 3n − 2 nonzero entries. The sum of its Hadamard product with Z in Eq. (19) can thus be computed in O(1) or O(n) time, respectively. For the remainder of this work, we focus exclusively on this reversible parameterization for K(θ).
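To make the construction concrete, the following minimal sketch maps a parameter vector to a reversible rate matrix. It assumes that π is obtained from its log-weights by normalized exponentials and that S enters through K_ij = sqrt(π_j/π_i)·S_ij for i ≠ j; the function name `build_rate_matrix` and the numerical values are hypothetical.

```python
import math

def build_rate_matrix(theta, n):
    """Map theta = (theta_S, theta_pi) to a reversible rate matrix K and its stationary vector."""
    n_s = n * (n - 1) // 2
    theta_s, theta_pi = theta[:n_s], theta[n_s:]
    # Stationary distribution from log-weights (normalized exponentials; assumed form).
    m = max(theta_pi)
    w = [math.exp(t - m) for t in theta_pi]
    total = sum(w)
    pi = [x / total for x in w]
    K = [[0.0] * n for _ in range(n)]
    u = 0
    for i in range(n):                  # row-major walk over the strict upper triangle
        for j in range(i + 1, n):
            s_ij = theta_s[u]
            K[i][j] = math.sqrt(pi[j] / pi[i]) * s_ij
            K[j][i] = math.sqrt(pi[i] / pi[j]) * s_ij
            u += 1
    for i in range(n):                  # diagonal fixed by the zero-row-sum constraint
        K[i][i] = -sum(K[i][j] for j in range(n) if j != i)
    return K, pi

# Hypothetical parameters for n = 3: three entries for S, then three log-weights for pi.
theta = [0.2, 0.0, 0.5, 0.0, math.log(2.0), math.log(3.0)]
K, pi = build_rate_matrix(theta, n=3)
```

Because π_i K_ij = sqrt(π_i π_j)·S_ij is symmetric in i and j, detailed balance holds for any θ, and a zero entry in the S block (here between the first and third states) gives an exactly zero rate, which is the reason for not log-transforming those parameters.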

C. Optimization

Equipped with the log-likelihood and an efficient algorithm for the gradient, we now consider the construction of maximum likelihood estimates, Eq. (12). Among the first-order quasi-Newton methods tested, we find the limited-memory Broyden-Fletcher-Goldfarb-Shanno optimizer with bound constraints (L-BFGS-B) to be the most successful and robust.37,38

To begin the optimization, we choose the initial guess for θ according to the following procedure. First, we fit the maximum likelihood reversible transition probability matrix using Algorithm 1 of Prinz et al.39 Next, we compute its principal matrix logarithm using an inverse scaling and squaring algorithm and divide by τ to obtain a candidate generator, $\tilde{K}$.40 Generally, the MLE reversible transition matrix is not embeddable, and thus $\tilde{K}$ is complex or has negative off-diagonal entries, and does not correspond to any valid continuous-time Markov process. We take the initial guess for $\theta^{(\pi)}$ directly from the stationary eigenvector of the MLE transition matrix, and for $\theta^{(S)}$ from the nearest (by Frobenius norm) valid rate matrix to $\tilde{K}$, obtained by taking the real part of $\tilde{K}$ and setting its negative off-diagonal entries to zero.25

The optimization problem is non-convex in the general case and may have multiple local optima. Varying the optimizer’s initialization procedure can thus mitigate the risk of convergence to a low-quality local optimum. One alternative initialization for K is the pseudo-generator, $K_p = (T(\tau) - I_n)/\tau$, which arises from a first-order Taylor approximation to the matrix exponential. After the optimization has terminated, a useful check is to compare the maximum likelihood transition matrix T(τ) estimated during initialization with the exponential of the recovered rate matrix, $\exp(\tau K_{\mathrm{MLE}})$. Large differences between the two matrices, or their eigenspectra and relaxation time scales, may be symptomatic of non-embeddability of the data or a convergence failure of the optimizer. If the data are available at a lag time shorter than τ, convergence failures can often also be circumvented by using a converged rate matrix obtained from a model at a shorter lag time as an initial guess for a model at a longer lag time.
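The pseudo-generator is simple to form. The sketch below is only an illustration: the function name `pseudo_generator` and the example matrix are assumptions, not part of the original procedure.

```python
def pseudo_generator(T, tau):
    """K_p = (T - I) / tau, the first-order approximation to the generator of T = exp(tau * K)."""
    n = len(T)
    return [[(T[i][j] - (1.0 if i == j else 0.0)) / tau for j in range(n)]
            for i in range(n)]

T = [[0.90, 0.10, 0.00],
     [0.05, 0.90, 0.05],
     [0.00, 0.20, 0.80]]        # hypothetical row-stochastic transition matrix
Kp = pseudo_generator(T, tau=2.0)
```

Whenever T is row-stochastic, the off-diagonal entries of K_p are non-negative and its rows sum to zero, so K_p is always an admissible rate matrix in the sense of Eq. (4), regardless of whether T itself is embeddable.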

D. Implementation notes

Because S is symmetric, it can be diagonalized efficiently at a cost of approximately $4n^3/3$ FLOPs. The resulting eigenvectors can then be rescaled by $\operatorname{diag}(\sqrt{\pi})$ and its inverse to give the left and right eigenvectors of K. Compared to diagonalizing the non-symmetric matrix K directly, this can yield a speedup of 2–10× in the critical diagonalization step required to compute the gradient vector.

For each pair of states with an observed transition count, (i, j) such that $C(\tau)_{ij} > 0$, the gradient expressions in Eqs. (16) and (19) are only defined when $T_{ij} > 0$. A sufficient condition to ensure this property is that K be irreducible,41 but this cannot be straightforwardly ensured throughout every iteration of the L-BFGS-B optimization without heavy-handed measures such as requiring every off-diagonal element of K to be strictly positive. In practice, we find that replacing any zero values in T with a small constant, such as $1 \times 10^{-20}$, when computing the matrix D in Eq. (19) is sufficient to avoid this instability.

Furthermore, note that calculation of X(λ, t) by direct implementation of Eq. (15) can suffer from a substantial loss of accuracy for close-lying eigenvalues. The matrix can instead be computed in a more precise manner using the $\operatorname{exprel}(x) \equiv (e^x - 1)/x$ or $\operatorname{expm1}(x) \equiv e^x - 1$ routines, which are designed to be accurate for small x and are available in numerical libraries such as SLATEC, GSL, and the upcoming release of SciPy.42–44
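One way to apply this idea is to factor the off-diagonal entries of Eq. (15) as $e^{t\lambda_j}\,\operatorname{expm1}(t(\lambda_i - \lambda_j))/(\lambda_i - \lambda_j)$. The sketch below uses `math.expm1` from the Python standard library; the function name `x_matrix` and the example eigenvalues are assumptions. For exactly equal eigenvalues it falls back to the limiting value $t\,e^{t\lambda_i}$, a choice made for this sketch since the text assumes distinct eigenvalues.

```python
import math

def x_matrix(lam, t):
    """X(lambda, t) from Eq. (15), using expm1 for the off-diagonal divided differences."""
    n = len(lam)
    X = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            d = lam[i] - lam[j]
            if d == 0.0:
                # Diagonal entries, and the limiting value for exactly repeated eigenvalues.
                X[i][j] = t * math.exp(t * lam[i])
            else:
                # (e^{t l_i} - e^{t l_j}) / (l_i - l_j) = e^{t l_j} * expm1(t d) / d
                X[i][j] = math.exp(t * lam[j]) * math.expm1(t * d) / d
    return X

lam = [0.0, -1.0, -1.0 - 1e-13]     # two nearly degenerate eigenvalues (illustrative)
X = x_matrix(lam, t=1.0)
```

With eigenvalues this close, the naive difference of two exponentials loses most of its significant digits to cancellation, whereas the factored form stays close to the limiting value $t\,e^{t\lambda}$.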

IV. QUANTIFYING UNCERTAINTY

Since all data sets are finite, statistical uncertainty in any estimate of a probabilistic model is unavoidable. Therefore, key quantities of interest beyond the maximum likelihood rate matrix itself, $K_{\mathrm{MLE}} = K(\theta_{\mathrm{MLE}})$, are estimates of the sampling uncertainty in $K_{\mathrm{MLE}}$, and estimates of the sampling uncertainty in quantities derived from $K_{\mathrm{MLE}}$, such as its stationary eigenvector, π, its eigenvalues, $\lambda_i$, and relaxation time scales.

In the large sample size limit, the central limit theorem guarantees that the distribution of $\theta_{\mathrm{MLE}}$ converges to a multivariate normal distribution with a covariance matrix which can be estimated by the inverse of the negative Hessian of the log-likelihood function evaluated at $\theta_{\mathrm{MLE}}$, assuming that the MLE does not lie on a constraint boundary.45 This can be thought of as a second order Taylor expansion for the log-likelihood surface at the MLE; the log-likelihood is approximated as a paraboloid with negative curvature whose peak is at the MLE and whose width is determined by the Hessian matrix at the peak. The exponential of the log-likelihood, the likelihood surface, is then Gaussian, and the multivariate delta method can be used to derive expressions for the asymptotic variance in scalar functions of $\theta_{\mathrm{MLE}}$.45 Computationally, the critical component is the computation of the (negative) Hessian matrix,

\begin{align}
H_{uv} &= -\frac{\partial^2 \mathcal{L}}{\partial \theta_u \, \partial \theta_v} \tag{27} \\
&= \sum_{ij} \left[ \frac{C_{ij}}{T_{ij}^2} \frac{\partial T_{ij}}{\partial \theta_u} \frac{\partial T_{ij}}{\partial \theta_v} - \frac{C_{ij}}{T_{ij}} \frac{\partial^2 T_{ij}}{\partial \theta_u \, \partial \theta_v} \right], \tag{28}
\end{align}

and its inverse.

A. Approximate analytic Hessian

Direct calculation of the Hessian requires both the evaluation of the first derivatives of T as well as the more costly second derivatives. A more efficient alternative, as pointed out by Kalbfleisch and Lawless, is to approximate the second derivatives by estimates of their expectations.33

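Let $C_i = \sum_j C_{ij}$. Taking the expected value of $C_{ij}$ conditional on $C_i$, we approximate $C_{ij} \approx T_{ij} C_i$. This makes it possible to factor $C_i$ out of the summation over j in Eq. (28), and exploit the property that $\sum_j \partial^2 T_{ij} / \partial \theta_u \, \partial \theta_v = 0$, which holds because each row of T sums to one, simplifying Eq. (28) to

$$ H_{uv} \approx \sum_{ij} \frac{C_i}{T_{ij}} \frac{\partial T_{ij}}{\partial \theta_u} \frac{\partial T_{ij}}{\partial \theta_v}. \tag{29} $$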

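Equipped with the approximation in Eq. (29), the asymptotic variance-covariance matrix of θ is calculated as the matrix inverse of H, $\Sigma = H^{-1}$, and the asymptotic variance in each derived quantity g(θ) is estimated using the multivariate delta method,45

$$ \operatorname{Var}\left[ g(\theta_{\mathrm{MLE}}) \right] \approx \nabla g(\theta_{\mathrm{MLE}})^T \, \Sigma \, \nabla g(\theta_{\mathrm{MLE}}). \tag{30} $$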

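For example, the asymptotic variance in the stationary distribution can be calculated as

$$ \operatorname{Var}(\pi_i) \approx \sum_{jk} \frac{\partial \pi_i}{\partial \theta^{(\pi)}_j} \, \Sigma^{(\pi)}_{jk} \, \frac{\partial \pi_i}{\partial \theta^{(\pi)}_k}, \tag{31} $$

where $\Sigma^{(\pi)}$ represents the lower-right n × n block of the asymptotic variance-covariance matrix and

$$ \frac{\partial \pi_i}{\partial \theta^{(\pi)}_j} = \pi_i \left( \delta_{ij} - \pi_j \right). \tag{32} $$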
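The delta-method calculation for π can be sketched as follows, assuming that π is obtained from $\theta^{(\pi)}$ by normalized exponentials, so that the Jacobian is $\pi_i(\delta_{ij} - \pi_j)$. The function names and the numerical values of the parameters and covariance block are hypothetical.

```python
import math

def softmax(theta_pi):
    """Normalized exponentials: the assumed map from theta_pi to pi."""
    m = max(theta_pi)
    w = [math.exp(t - m) for t in theta_pi]
    z = sum(w)
    return [x / z for x in w]

def stationary_variance(theta_pi, cov_pi):
    """Delta-method variance of each pi_i, given the covariance block of theta_pi."""
    pi = softmax(theta_pi)
    n = len(pi)
    # Jacobian J[i][j] = d pi_i / d theta_j = pi_i * (delta_ij - pi_j)
    J = [[pi[i] * ((i == j) - pi[j]) for j in range(n)] for i in range(n)]
    return [sum(J[i][j] * cov_pi[j][k] * J[i][k]
                for j in range(n) for k in range(n)) for i in range(n)]

theta_pi = [0.0, 0.5, -0.3]              # hypothetical parameter values
cov_pi = [[0.04, 0.0, 0.0],
          [0.0, 0.01, 0.0],
          [0.0, 0.0, 0.09]]              # hypothetical covariance block
var_pi = stationary_variance(theta_pi, cov_pi)
```

Each row of this Jacobian sums to zero, so uncertainty that shifts all elements of $\theta^{(\pi)}$ by the same amount contributes nothing to the variance of π, as expected for a normalized distribution.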

Other key quantities of interest for biophysical applications include the exponential relaxation time scales of the Markov model,

$$ \tau_i = -\frac{1}{\lambda_i}. \tag{33} $$

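The asymptotic variance in the relaxation time scales, $\tau_i$, is

$$ \operatorname{Var}(\tau_i) \approx \frac{1}{\lambda_i^4} \sum_{uv} \frac{\partial \lambda_i}{\partial \theta_u} \, \Sigma_{uv} \, \frac{\partial \lambda_i}{\partial \theta_v}, \tag{34} $$

where $\partial \lambda_i / \partial \theta_u$ follows from standard expressions for derivatives of eigensystems,46

$$ \frac{\partial \lambda_i}{\partial \theta_u} = \left( U^T \frac{\partial K}{\partial \theta_u} V \right)_{ii}. \tag{35} $$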
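The chain-rule step behind the time scale variance is worth writing out once. Starting from $\tau_i = -1/\lambda_i$ and applying the delta method, with $\operatorname{Var}(\lambda_i)$ used here as shorthand for $\nabla \lambda_i^T \, \Sigma \, \nabla \lambda_i$ (notation introduced only for this sketch):

$$
\frac{\partial \tau_i}{\partial \theta_u} = \frac{1}{\lambda_i^2} \frac{\partial \lambda_i}{\partial \theta_u}
\quad \Longrightarrow \quad
\operatorname{Var}(\tau_i) \approx \frac{\operatorname{Var}(\lambda_i)}{\lambda_i^4}
\quad \Longrightarrow \quad
\frac{\sqrt{\operatorname{Var}(\tau_i)}}{\tau_i} \approx \frac{\sqrt{\operatorname{Var}(\lambda_i)}}{|\lambda_i|}.
$$

To first order, the relative uncertainty in a relaxation time scale therefore equals the relative uncertainty in the corresponding eigenvalue.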

The sampling uncertainty in other derived properties which depend continuously on θ can be calculated similarly.

When the MLE solution lies at the boundary of the feasible region, with one or more elements of θ(S) equal to zero, we adopt an active set approach to approximate Σ. We refer to the elements of θ(S) which do not lie on a constraint boundary as free parameters. The Hessian block for the free parameters is constructed and inverted, and the variance and covariance of the constrained elements as well as their covariance with the free parameters are taken to be zero.

V. NUMERICAL EXPERIMENTS

We performed numerical experiments on three datasets, which demonstrate different aspects of our estimator for continuous-time Markov processes. Where appropriate, we compare these models to reversible discrete-time Markov models which directly estimate T(τ), parameterized via Algorithm 1 of Prinz et al.39

A. Recovering a known rate matrix

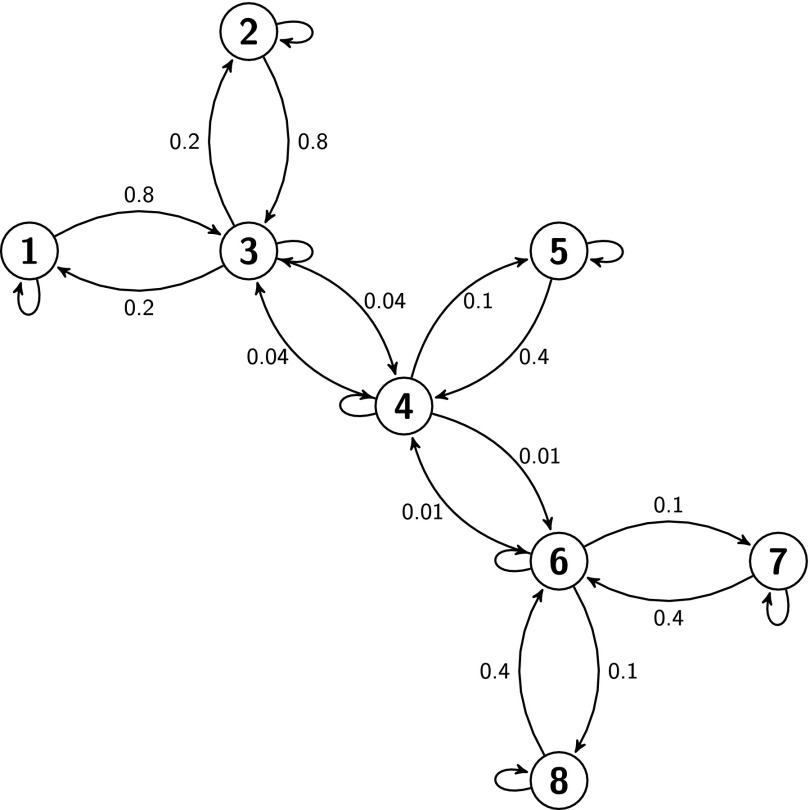

First, we constructed a simple synthetic eight-state Markov process with known rates. The network is shown in Fig. 1. The largest non-zero eigenvalue of K is $\lambda_2 \approx -9.40 \times 10^{-3}$, which corresponds to a slowest exponential relaxation time scale, $\tau_2 \approx 106.4$ (arbitrary time units).

FIG. 1.

A simple eight state Markov process. Connected states are labeled with the pairwise rate constants, Kij. Self transition rates (not shown), Kii, are equal to the negative sum of each state’s outgoing transition rates, in accordance with Eq. (4).

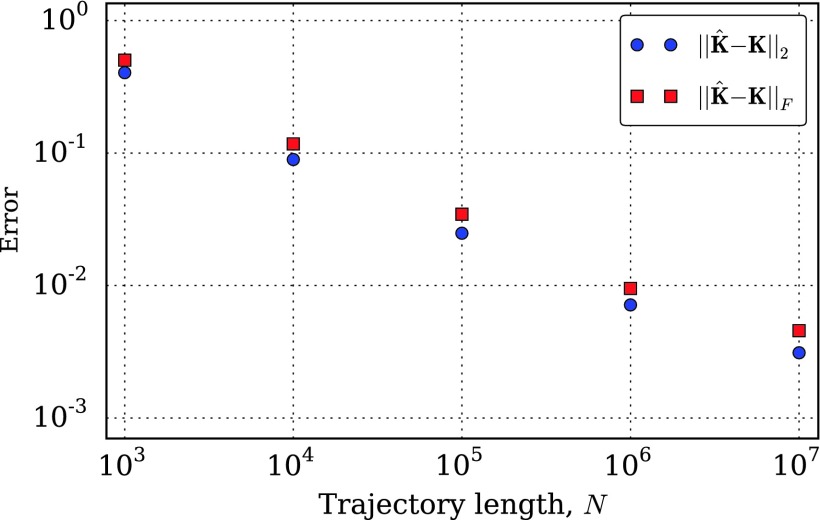

From this model, we simulated discrete-time data with a collection interval of 1 time unit by calculating the matrix exponential of K and propagating the discrete-time Markov chain. In Fig. 2, we show the convergence of the models estimated from these simulation data to the true model, as the length of the simulated trajectories grows. As expected, the fit parameters get more accurate as the size of the data set grows. We observe approximately power law convergence as measured by the 2-norm and Frobenius norm over the range of trajectory lengths studied.
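The propagation step can be sketched as follows: once T = exp(K) for the chosen collection interval is in hand, each new frame is drawn from the row of T belonging to the current state. The function name `simulate_chain`, the seed handling, and the two-state matrix are assumptions of this sketch rather than details of the experiment.

```python
import random

def simulate_chain(T, n_steps, x0=0, seed=0):
    """Sample a discrete-time trajectory whose one-step probabilities are the rows of T."""
    rng = random.Random(seed)
    states = range(len(T))
    traj = [x0]
    for _ in range(n_steps):
        # Draw the next state from the row of T belonging to the current state.
        traj.append(rng.choices(states, weights=T[traj[-1]])[0])
    return traj

T = [[0.9, 0.1], [0.2, 0.8]]     # hypothetical T(1) = exp(K) for a two-state process
traj = simulate_chain(T, n_steps=20000)
```

Row-normalizing the transition counts of a long trajectory generated this way recovers T to within sampling error, which is the property that the convergence experiment relies on.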

FIG. 2.

Convergence of the estimated rate matrix, $K_{\mathrm{MLE}}$, to the true generating rate matrix in Fig. 1 for discrete-time trajectories of increasing length simulated from the process in Fig. 1 with a time step of 1. Using either a 2-norm (blue) or Frobenius norm (red), we see roughly power law convergence over the range of trajectory lengths studied.

The true rate matrix for this continuous-time Markov process is sparse: only 7 of the 28 possible pairs of distinct states are directly connected in Fig. 1. Can this graph structure be recovered by our estimator? This task is challenging because of the nature of the discrete-time data. The observation that the system transitioned from state i (at time t) to state j (at time t + 1) does not imply that $K_{ij}$ is non-zero. Instead, the observed i → j transition may have been mediated by one or more other states; the process may have jumped from i to k, and then again from k to j, all within the observation interval.

When the rate matrix, K is irreducible, the corresponding transition probability matrix T(τ) is strictly positive for every positive lag time, τ.41 This implies that in the limit that the trajectory length, N, approaches infinity, at least 1 transition count will almost surely be observed between any pair of states, regardless of the sparsity of K.

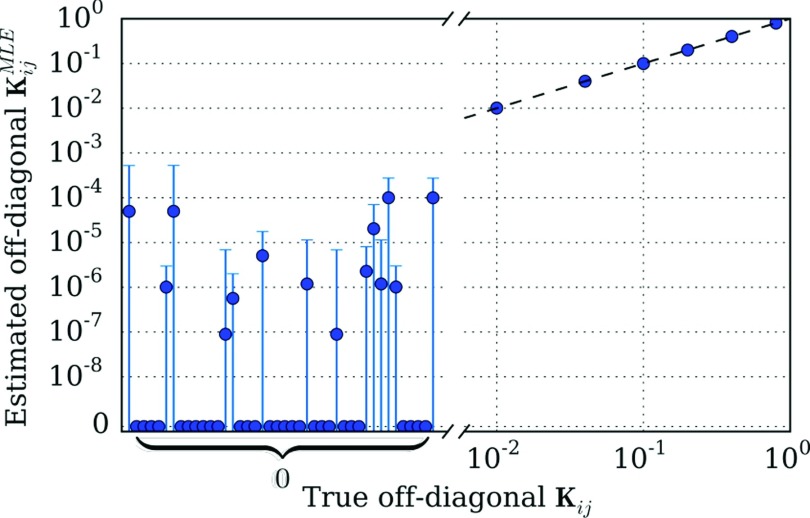

In Fig. 3, we attempt to resolve the underlying graph structure using the model estimated with a trajectory of length $N = 10^7$. The plot compares the estimated rate matrix elements with the true values. We find that all of the true connections are well-estimated, and that many of the zero rates are also correctly identified. However, the maximum likelihood estimator also identifies very low, but non-zero rates between many of the states which are in fact disconnected.

FIG. 3.

Comparison of the estimated and true off-diagonal rate matrix elements for a trajectory of length $N = 10^7$ simulated from the process in Fig. 1 with a time step of 1. The true non-zero elements of K are well-estimated, as shown in the right portion of the plot; here, error bars are small enough to be fully obscured by point markers. On the other hand, the estimator spuriously estimates non-zero rates between many of the states which are not connected in the underlying process. However, the 95% confidence intervals for these spurious rates each overlap with zero.

We computed 95% (1.96σ) confidence intervals for each of the estimated rate matrix elements, $K_{ij}$. For each of the spuriously non-zero elements, these confidence intervals overlapped with zero. None of the confidence intervals for the properly non-zero rates overlapped with zero. These uncertainty estimates can therefore be used, in combination with the MLE, to identify the underlying graph structure.

This example demonstrates that some degree of sparsity-inducing regularization or variable selection may be required to robustly identify the underlying graph structure in a Markov process.

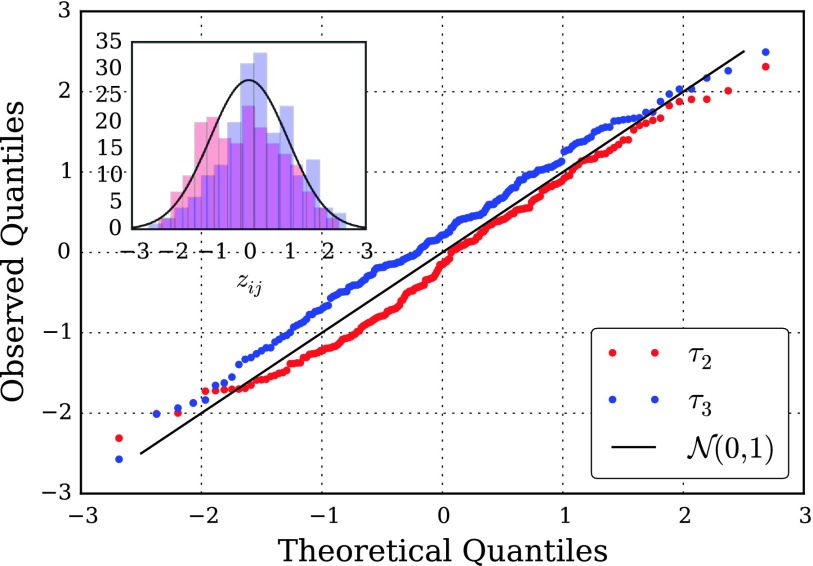

B. Accuracy of uncertainty estimates

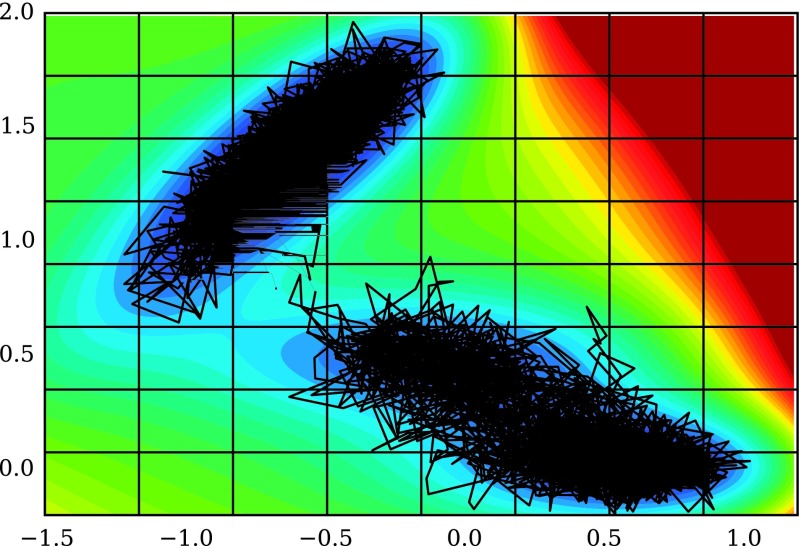

How accurate are the approximate asymptotic uncertainty expressions derived in Section IV? To answer this question, we performed a numerical experiment with twenty independent and identically distributed collections of trajectories of Brownian dynamics on a two-dimensional potential. One of those trajectories is shown superimposed on the potential in Fig. 4, along with the 8 × 8 grid used to discretize the process. The Brownian dynamics simulations were performed following the same procedure described in McGibbon, Schwantes, and Pande.47

FIG. 4.

Brownian dynamics on the 2-dimensional Müller potential was discretized by projecting the simulated trajectories onto an 8 × 8 grid. A typical trajectory is shown in black. The resulting discrete-state process can be approximated as a continuous-time Markov process.

To assess the accuracy of the asymptotic approximations, we compare the empirical distribution of the estimated parameters over the separate data sets with the theoretical distribution which would be expected based on the Gaussian approximation. Consider a scalar model parameter g, such as one of the relaxation time scales or equilibrium populations. Fitting a model separately on each of the twenty data sets yields estimates of the parameter and of its asymptotic variance, $\{(\hat{g}_i, \hat{\sigma}_i^2)\}_{i=1}^{20}$. If these estimates are accurate, then each $\hat{g}_i$ is normally distributed, $\hat{g}_i \sim \mathcal{N}(g, \hat{\sigma}_i^2)$. Our goal is to examine the accuracy of the estimated variances, $\hat{\sigma}_i^2$. Note that the true value of g is unknown, but it cancels when examining standardized differences between the estimates, which, assuming normality, should follow a standard normal distribution,

$$ z_{ij} = \frac{\hat{g}_i - \hat{g}_j}{\sqrt{\hat{\sigma}_i^2 + \hat{\sigma}_j^2}} \sim \mathcal{N}(0, 1). \tag{36} $$
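Computing the standardized differences is a one-liner over all pairs. The sketch below assumes the usual form for the difference of two independent normal estimates, in which the variances add; the function name and the example numbers are hypothetical.

```python
import math
from itertools import combinations

def standardized_differences(estimates, variances):
    """z_ij = (g_i - g_j) / sqrt(var_i + var_j) for all pairs i < j, as in Eq. (36)."""
    return [(estimates[i] - estimates[j]) / math.sqrt(variances[i] + variances[j])
            for i, j in combinations(range(len(estimates)), 2)]

g_hat = [10.2, 9.7, 10.5, 9.9]          # hypothetical estimates of one scalar quantity
var_hat = [0.09, 0.16, 0.09, 0.16]      # hypothetical asymptotic variances
z = standardized_differences(g_hat, var_hat)
```

Twenty data sets yield 190 such pairs, which are the points that would populate a quantile-quantile comparison against the standard normal distribution.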

In Fig. 5, we compare the empirical and theoretical distributions of $z_{ij}$, (i, j) : 1 ≤ i ≤ 20, i < j ≤ 20, for estimates of the first two relaxation time scales using a quantile-quantile (Q-Q) plot, a powerful method of comparing distributions. The observation that the Q-Q plot runs close to the y = x line is encouraging, and shows that the observed deviates are close to normally distributed, and that the approximator’s variance estimates are of the appropriate magnitude. This suggests that the asymptotic error expressions can be of practical utility for practitioners.

FIG. 5.

Quantile-quantile plot of the standardized differences, Eq. (36), between estimated relaxation time scales, τ2 and τ3, on twenty i.i.d. datasets. If the estimated time scales are normally distributed with the calculated asymptotic variances, the quantiles of their standardized differences would match exactly with the theoretical quantiles of the standard normal distribution.

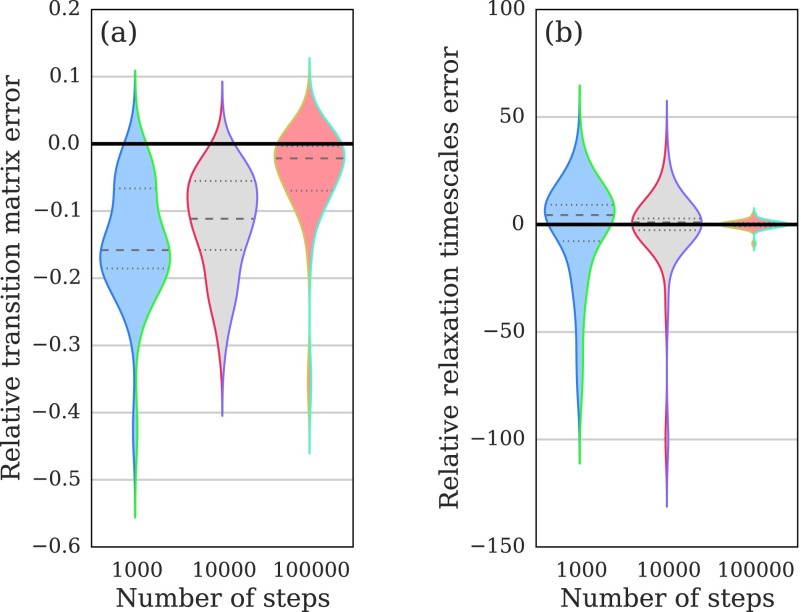

C. Comparison with discrete-time MSMs

In a data-limited regime, are continuous-time Markov models more capable than discrete-time MSMs? We extended the analysis in Section V A to a larger class of generating processes in order to address this question. We began by sampling random 100-state Markov process rate matrices from scale free random graphs.48 Details of the random rate matrix generation are described in the Appendix.

From each random rate matrix, K, we sampled three discrete-time trajectories of different lengths. Each trajectory was used individually to fit both a continuous-time and discrete-time Markov model, and the parameterized models were then compared to the underlying system from which the trajectories were simulated to assess the convergence properties of the approaches.

In Fig. 6, we consider two notions of error. The first is the Frobenius-norm error in the elements of the estimated transition matrix. Unlike the experiment in Fig. 2, we used the transition matrix as the basis of the measure so that the continuous-time and discrete-time models could be compared on an equal footing. The second is the max-norm error in the estimated relaxation time scales, which measures a critical spectral property of the models. In both panels of Fig. 6, the distribution of the difference in error between the continuous-time and discrete-time models is plotted; values below zero indicate that the continuous-time model performed better for a particular class of trajectories, whereas values above zero indicate the reverse. For each condition, we performed N = 30 replicates.

FIG. 6.

Violin plots of the relative error between continuous-time and discrete-time Markov models for kinetics on random graphs. Values below zero indicate lower error for the continuous-time model, whereas values above zero indicate the reverse. The shape displays the data density, computed with a Gaussian kernel density estimator. Panel (a): as measured by the Frobenius-norm error in the estimated transition matrices, the continuous-time model achieves lower errors, with a larger advantage for shorter trajectories. Panel (b): as measured by the max-norm error in the estimated relaxation time scales, the two models are not distinguishable.

Our results show that, as measured by the transition matrix error, the continuous-time Markov process model is more accurate in the regimes considered. A binomial sign test rejects the hypothesis that the two estimators give the same error for all three conditions (two-sided p values of [$2 \times 10^{-9}$, $2 \times 10^{-9}$, $1 \times 10^{-3}$] for trajectories of length [$10^3$, $10^4$, $10^5$] steps, respectively). The relative advantage of the continuous-time Markov model decreases as the trajectory length increases; its advantage is in the sparse data regime, when no transition counts have been observed between a significant number of pairs of states.

In contrast, as measured by the relaxation time scale estimation error, we observe no significant difference between the continuous-time and discrete-time estimators. A binomial sign test does not definitively reject the hypothesis that the two estimators give the same error for any of the three conditions (two-sided p values of [0.02, 0.36, 0.85] for trajectories of length [$10^3$, $10^4$, $10^5$] steps, respectively). Neither estimator is consistently more accurate in recovery of the dominant spectral properties of the dynamics.
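For readers unfamiliar with the test, a two-sided binomial sign test on paired comparisons can be sketched as below. The function name `sign_test_p` is an assumption, and the sketch takes no position on how ties were handled in the experiments.

```python
from math import comb

def sign_test_p(k, n):
    """Two-sided binomial sign test p-value for k 'wins' out of n paired comparisons."""
    # Probability of a split at least as lopsided as the observed one, under p = 1/2.
    tail = sum(comb(n, i) for i in range(min(k, n - k) + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)

p_all = sign_test_p(30, 30)     # 2 * 2**-30, about 1.9e-9
```

With 30 replicates, the smallest p value this test can return is 2⁻²⁹ ≈ 1.9 × 10⁻⁹, attained when every replicate favors the same estimator; this is of the same size as the smallest values quoted above.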

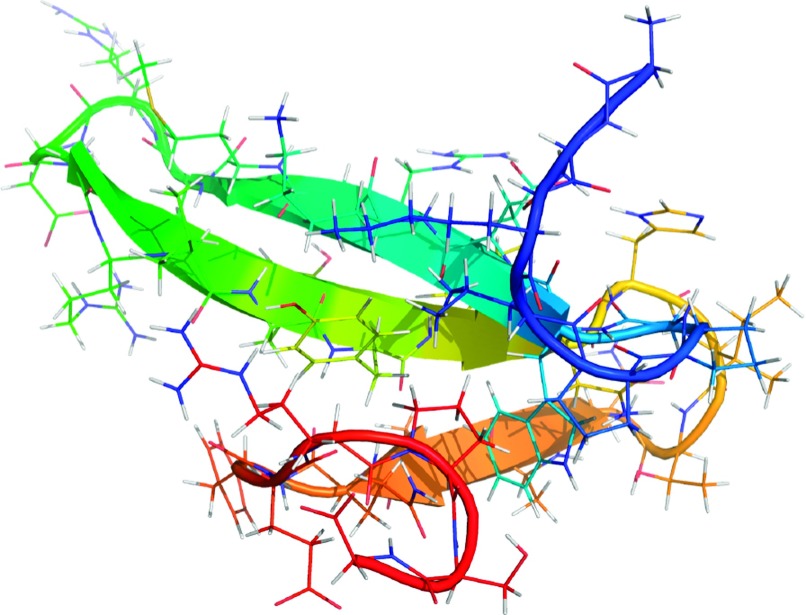

D. Application to protein folding and lag time selection

How can these models be applied to the analysis of MD simulations of protein folding? We obtained two independent ultra-long 100 μs MD simulations of the FiP35 WW protein,50 a small 35 residue β-sheet protein (Fig. 7), performed by D.E. Shaw Research on the ANTON supercomputer.49

FIG. 7.

The FiP35 WW protein, in its native state. We analyzed two 100 μs MD trajectories of its folding performed by D.E. Shaw Research to estimate a Markov process model for its conformational dynamics.49

In order to focus on the construction of discrete-state Markov models, we initially projected every snapshot of the MD trajectories, which were available at a 200 ps time interval, into a discrete state space with 100 states in a way consistent with prior work.47 Briefly, this involved the extraction of the distance between the closest non-hydrogen atoms in each pair of amino acids in each simulation snapshot,51 followed by the application of time-structure independent components analysis (tICA) to extract the four most slowly decorrelating degrees of freedom,52,53 which were then clustered into 100 states using the k-means algorithm.54,55

Although the equations of motion for a protein’s dynamics in an MD simulation are Markovian, the generating process of the data analyzed by our model is not. The pre-processing procedure which projects the original dynamics from a high-dimensional continuous state space (the positions and momenta of the constituent atoms) into a lower dimensional continuous space or discrete state space is not information preserving, and destroys the Markov property.28,29 For chemical dynamics, qualitative features of the non-Markovianity are well understood. Consider, for example, a metastable system with two states, A and B. The system in state A may stochastically oscillate across the boundary surface many times without committing to state B. Whereas for a Markov process the probability distribution of the waiting time that the system spends in any state before exiting is exponential, chemical dynamics are expected to show a higher propensity for short waiting times, corresponding to so-called recrossing events.27 This effect is more pronounced when viewing the process at short lag times; the bias induced by approximating the process as Markov decreases with lag time.57

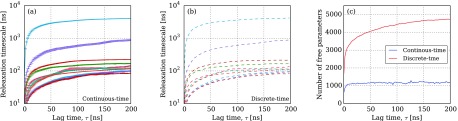

For the FiP35 WW domain, we observe that the change in the relaxation time scales of the continuous-time and discrete-time Markov models with respect to lag time is essentially identical, as shown in Fig. 8. For both model classes, the estimated relaxation time scales increase and converge with respect to lag time. This is consistent with our results in Fig. 6(b), which suggest that the estimated time scales are the same for both models, especially as the length of the trajectories grows. While fitting the models in Fig. 8, we observed a small number (2–4) of convergence failures at long lag times, which were notable due to a dramatic discontinuity in the relaxation time scale curve. This problem was solved by reinitializing the optimization at these lag times from the converged solutions at adjacent lag times.

FIG. 8.

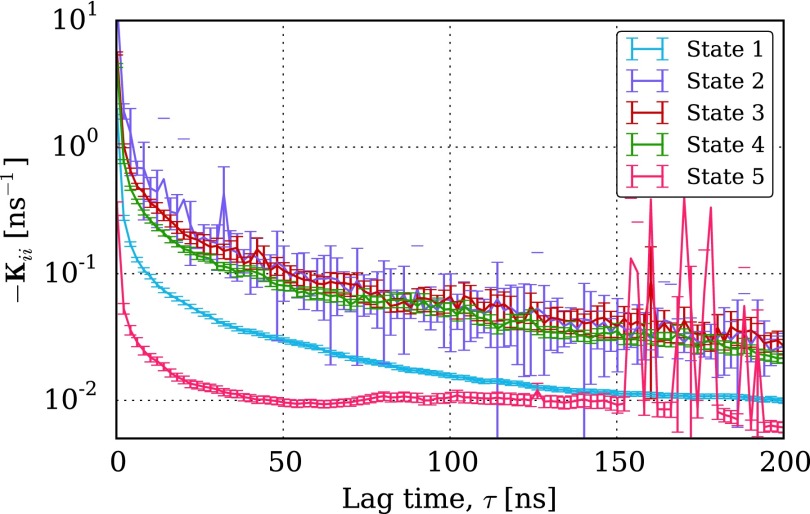

Implied exponential relaxation time scales of parameterized (a) continuous-time Markov process model and (b) discrete-time Markov model as a function of lag time. The relaxation time scales computed by the two algorithms coincide almost exactly (r² = 0.999 978). (c) The number of free (non-zero) parameters estimated by the discrete-time and continuous-time models, respectively; the continuous-time Markov model achieves a more parsimonious representation of the data.
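To make the comparison of time scales concrete, the following is a minimal sketch, not the estimator used in this work. It assumes a small symmetric (hence reversible, with uniform stationary distribution) toy rate matrix, and all function names are hypothetical. It computes relaxation time scales as −1/λ from the eigenvalues λ of K, and implied time scales as −τ/ln μ from the eigenvalues μ of T(τ) = exp(Kτ); when T is generated exactly by K, the two agree.

```python
import numpy as np

def propagator(K, lag_time):
    """Return T(tau) = exp(K * tau) for a symmetric rate matrix K."""
    w, V = np.linalg.eigh(K)                 # symmetric K -> real eigenpairs
    return (V * np.exp(w * lag_time)) @ V.T  # V diag(exp(w tau)) V^T

def timescales_from_rates(K):
    """Time scales -1 / lambda_i from the nonzero eigenvalues of K."""
    w = np.sort(np.linalg.eigvalsh(K))[::-1]  # w[0] is (numerically) zero
    return -1.0 / w[1:]

def timescales_from_transitions(T, lag_time):
    """Implied time scales -tau / ln(mu_i) from the eigenvalues of T(tau)."""
    mu = np.sort(np.linalg.eigvalsh(T))[::-1]  # mu[0] is (numerically) one
    return -lag_time / np.log(mu[1:])

# Toy 3-state rate matrix (illustrative values only); rows sum to zero.
K = np.array([[-0.30,  0.20,  0.10],
              [ 0.20, -0.25,  0.05],
              [ 0.10,  0.05, -0.15]])
tau = 5.0

ts_ct = timescales_from_rates(K)
ts_dt = timescales_from_transitions(propagator(K, tau), tau)
print(ts_ct, ts_dt)
```

With models estimated from finite data, the agreement is only approximate, which is what the correlation reported in the caption quantifies.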

Because of the essentially unchanged nature of the relaxation time scale spectrum, we suggest that when choosing a particular lag time, the same approach be used for discrete-time and continuous-time Markov models. Ideally, this entails the selection of a lag time large enough that the relaxation time scales are independent of lag time.58,59 For the continuous-time Markov model, other techniques may be appropriate as well. For example, in Fig. 9 we show the convergence of selected diagonal entries of the rate matrix as a function of lag time. As described in the context of transition state theory, these rate constants should plateau with increasing τ, which provides another related basis to select the parameter.60,61

FIG. 9.

Convergence of selected rate matrix elements as a function of lag time. A plausible method for lag time selection would be to choose τ such that some or all of these entries are determined to have plateaued.
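One simple way to operationalize such a plateau criterion is sketched below. This is an illustrative heuristic rather than a procedure used in this work: the function name, the relative tolerance, and the example values are all assumptions. It returns the first lag time after which every successive relative change in the monitored quantity stays below the tolerance.

```python
def first_plateau_lag(lag_times, values, rel_tol=0.02):
    """Return the first lag time from which all successive relative
    changes in `values` are below `rel_tol`, or None if there is none."""
    changes = [abs(b - a) / abs(a) for a, b in zip(values, values[1:])]
    for i in range(len(changes)):
        if all(c < rel_tol for c in changes[i:]):
            return lag_times[i]
    return None

# Made-up example: a rate-matrix entry (arbitrary units) versus lag time (ns).
lags = [10, 20, 40, 80, 160, 320]
entry = [-1.0, -1.5, -1.8, -1.9, -1.91, -1.912]
chosen = first_plateau_lag(lags, entry)
print(chosen)
```

The same helper could be applied to relaxation time scales instead of rate matrix entries; the choice of tolerance remains a judgment call.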

The most significant difference between the continuous-time and discrete-time estimators in this case is the sparsity of the parameterized models. In Fig. 8(c), we compare the number of non-zero independent parameters for both models as a function of τ. Of the independent parameters for both the continuous-time and discrete-time models, only ≈1200 are nonzero for the continuous-time model, regardless of lag time. In contrast, the number of nonzero parameters for the discrete-time model continues to increase with lag time.
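One way to see why a discrete-time model tends to be denser is that, even when K is sparse, the exact propagator T(τ) = exp(Kτ) generally has no zero entries for a connected system. The sketch below illustrates this on a toy linear chain; the chain model, the threshold, and the function names are assumptions made for illustration and are not taken from the FiP35 analysis.

```python
import numpy as np

def chain_rate_matrix(n, rate=1.0):
    """Symmetric rate matrix of a linear chain: only neighbors are coupled."""
    K = np.zeros((n, n))
    idx = np.arange(n - 1)
    K[idx, idx + 1] = rate
    K[idx + 1, idx] = rate
    np.fill_diagonal(K, -K.sum(axis=1))  # rows sum to zero
    return K

def count_offdiagonal_nonzeros(M, threshold=1e-12):
    """Count off-diagonal entries whose magnitude exceeds `threshold`."""
    off = ~np.eye(M.shape[0], dtype=bool)
    return int(np.count_nonzero(np.abs(M[off]) > threshold))

n = 6
K = chain_rate_matrix(n)
w, V = np.linalg.eigh(K)
T = (V * np.exp(w * 1.0)) @ V.T          # T(tau) = exp(K tau) with tau = 1

n_K = count_offdiagonal_nonzeros(K)      # only nearest-neighbor couplings
n_T = count_offdiagonal_nonzeros(T)      # every pair of states is coupled
print(n_K, n_T)
```

In this toy case K has 2(n − 1) nonzero off-diagonal entries while T is fully dense, mirroring the qualitative trend in Fig. 8(c).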

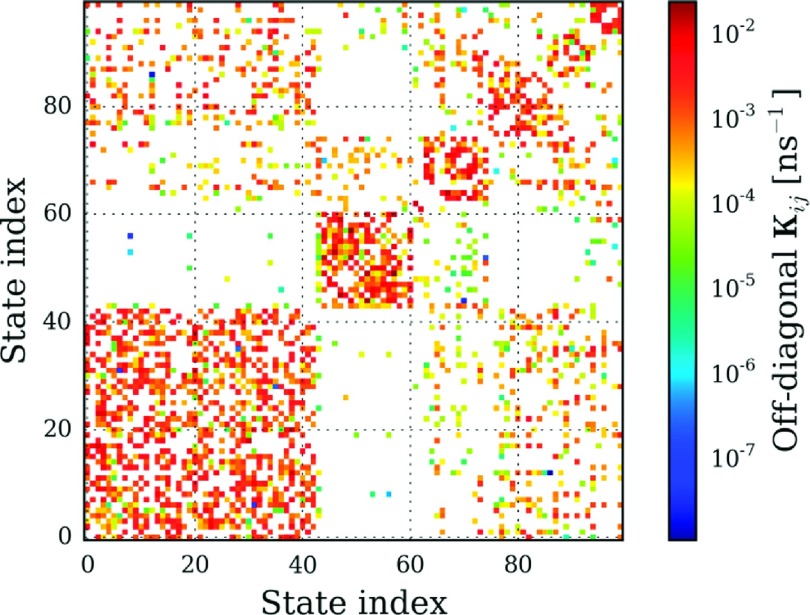

We anticipate that the sparsity of K may aid in the analysis and interpretation of Markov models. In Fig. 10, we show the MLE rate matrix computed at τ = 100 ns. The state indices were sorted such that states grouped together via Perron cluster cluster analysis (PCCA) were given adjacent indices.56 The evident block structure of the matrix visually indicates that the protein’s conformational space consists of a small number of regions with generally high within-region rate constants, but weak between-region coupling. Although a detailed analysis of the biophysics of these conformations is beyond the scope of this work, visual analysis of these structures indicates that the model resolves folded and unfolded, as well as partially folded intermediate states.

FIG. 10.

The maximum likelihood rate matrix, computed at a lag time, τ, of 100 ns. The state indices were sorted into seven macrostates using the PCCA algorithm.56
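The index sorting that exposes the block structure can be sketched as follows. This is a minimal illustration: the macrostate labels are assumed to be given (for example by PCCA, which is not implemented here), and the function name and toy matrix are hypothetical.

```python
import numpy as np

def sort_by_macrostate(K, labels):
    """Permute rows and columns of K so that states sharing a macrostate
    label receive adjacent indices. Returns the permuted matrix and order."""
    order = np.argsort(labels, kind="stable")
    return K[np.ix_(order, order)], order

# Toy 4-state rate matrix in which states {0, 2} and {1, 3} interconvert.
K = np.array([[-3.0,  0.0,  3.0,  0.0],
              [ 0.0, -2.0,  0.0,  2.0],
              [ 3.0,  0.0, -3.0,  0.0],
              [ 0.0,  2.0,  0.0, -2.0]])
labels = np.array([0, 1, 0, 1])          # assumed macrostate assignment

K_sorted, order = sort_by_macrostate(K, labels)
print(order)
print(K_sorted)
```

After the permutation, the within-macrostate rates sit in diagonal blocks and the between-macrostate couplings in the off-diagonal blocks.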

In interpreting the 1.96σ error bars on the relaxation time scales in Fig. 8(a), a cautionary note is warranted. Our error analysis considers the number of observed transitions between states but does not take into account any notion of uncertainty in the proper definitions of the states themselves, or the error inherent in approximating a non-Markovian process with a Markov process. The observation in Fig. 8(a) that the magnitude of the systematic shift in the time scales with respect to lag time is much larger than the error bars suggests that the Markov approximation (a model misspecification) is a larger source of error, for this dataset, than the statistical uncertainty in model parameters. For these reasons, we caution that these error bars should be interpreted as lower bounds rather than upper bounds.

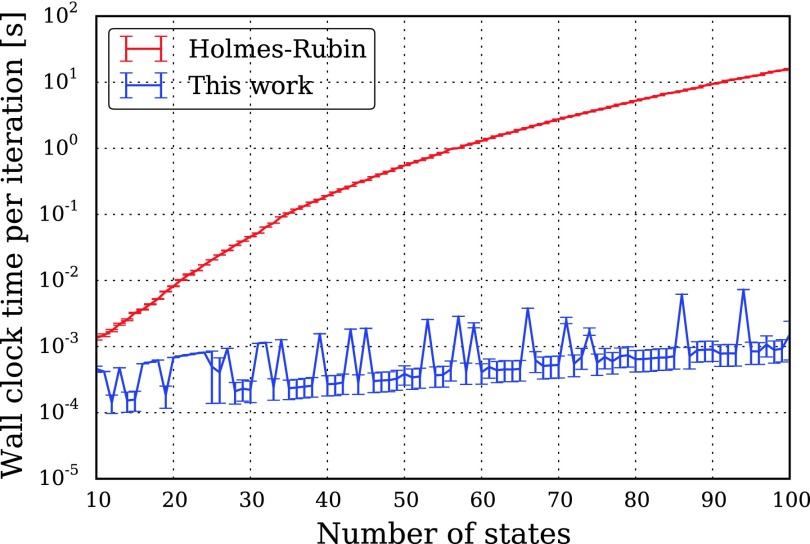

E. Performance

In order to assess the performance of our maximum likelihood estimator, we compared it with an algorithm by Holmes and Rubin, which solves the same Markov process parameterization problem using an expectation-maximization approach.22 Because the original code was unavailable, we reimplemented the algorithm following the description by Metzner et al., where it is denoted “Algorithm 4: Enhanced MLE-method for the reversible case.”21 The algorithm scales as O(n⁵), where n is the number of states. Its rate-limiting step involves an O(n⁵) FLOP contraction of five n × n matrices into a rank-4 tensor of dimension n on each axis.62 For benchmarking, we constructed a variant of the FiP35 WW protein dataset from Section V D, in which we varied the number of states between 10 and 100 during clustering. All models were fit on an Intel Xeon E5-2650 processor using a single thread.

As shown in Fig. 11, and expected on the basis of the O(n⁵) vs. O(n³) scaling, the performance difference between the algorithms is substantial. For n = 100, our algorithm is roughly four orders of magnitude faster per iteration; our algorithm takes on the order of 1 ms per iteration, while the Holmes-Rubin estimator’s iteration takes over 10 s. Using the L-BFGS-B optimizer’s default convergence criteria, roughly three quarters (68/91) of the runs of our algorithm converge in fewer than 100 iterations; a solution is often achieved long before the EM estimator has performed a single iteration.
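As a rough consistency check on these numbers, and ignoring constant prefactors (an assumption, since the two implementations need not share them), the cost ratio implied by the two scaling laws at n = 100 can be compared with the ratio of the reported per-iteration times:

```latex
\frac{n^{5}}{n^{3}} = n^{2} = 100^{2} = 10^{4},
\qquad
\frac{10\ \mathrm{s}}{1\ \mathrm{ms}} = 10^{4}.
```

The measured gap of about four orders of magnitude is therefore in line with the asymptotic scaling.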

FIG. 11.

Performance of our Markov process estimator, as compared to the Holmes-Rubin EM estimator.22 Each iteration of our O(n³) estimator takes on the order of 1 ms, while the O(n⁵) Holmes-Rubin estimator takes over 10 s per iteration for a 100 state model. Using default convergence criteria, our estimator often achieves a solution long before the EM estimator finishes a single iteration.

VI. CONCLUSIONS

In this work, we have introduced a maximum likelihood estimator for continuous-time Markov processes on discrete state spaces. This model can be used to estimate transition rates between various substates in a dynamical system based on observations of the system at a discrete time interval. Various constraints on the solution, such as detailed balance, can be easily incorporated into the model, and asymptotic error analysis can give confidence intervals in model parameters and derived quantities.

With the efficient parameterization problem solved, these continuous-time Markov models offer several advantages over existing MSM methodologies. As compared to discrete-time MSMs, these models are more interpretable for chemists and biologists because they do not arbitrarily discretize time. Although a lag time is used internally during parameterization, the final estimated quantities are familiar rate constants from chemical kinetics, as opposed to the somewhat unintuitive transition probabilities in a discrete-time MSM. Furthermore, these models are more parsimonious, and unlike the discrete-time MSM are able to detect that many pairs of states are not immediately kinetically adjacent to one another. This makes it possible to more clearly recover the underlying graph structure of the kinetics. For applications such as the determination of transition pathways in protein dynamics, we anticipate that this property will be valuable.

Many extensions of this model are possible in future work. The simple nature of the constraints on θ makes Bayesian approaches, especially Hamiltonian Monte Carlo, particularly attractive.63 In particular, because of the separation of θ(π) and θ(S) in the parameterization, strong informative priors on π may be added to extend the work of Trendelkamp-Schroer and Noé.64 The appropriate sparsity-inducing priors on θ(S) may be a topic of future work.

An implementation of this estimator is available in the MSMBuilder software package at http://msmbuilder.org/ under the GNU Lesser General Public License.

Acknowledgments

This work was supported by the National Science Foundation and National Institutes of Health under Grant Nos. NIH R01-GM62868, NIH S10 SIG 1S10RR02664701, and NSF MCB-0954714. We thank Mohammad M. Sultan, Matthew P. Harrigan, John D. Chodera, Kyle A. Beauchamp, Ariana Peck, and the reviewers for helpful feedback during the preparation of this work.

APPENDIX: RANDOM RATE MATRICES

Scale-free random graphs with 100 states were generated using the Barabási–Albert preferential attachment model with m = 3.48 From the graph’s adjacency matrix, we generated a symmetric rate matrix S by sampling a log-normally distributed random variable (μ = −3, σ = 2) for each connected edge. The stationary distribution, π, was sampled from Dirichlet(α = 1). The matrix S was then scaled by , which tuned the relaxation time scales in the range between 10² and 10³ time steps, and used with π in Eq. (25) to construct K.
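The construction described in this appendix might be sketched as follows. Several parts are assumptions rather than the authors' code: the preferential-attachment routine is a simple hand-written variant, the `scale` argument is a hypothetical placeholder for the scaling factor, and Eq. (25) is assumed to take the detailed-balance form K_ij = S_ij (π_j/π_i)^{1/2} for i ≠ j, with each diagonal entry set so that rows sum to zero.

```python
import numpy as np

def barabasi_albert_adjacency(n, m, rng):
    """Hand-written preferential attachment (one common initialization:
    the first new node connects to nodes 0..m-1)."""
    adj = np.zeros((n, n), dtype=bool)
    targets = list(range(m))
    repeated = []                       # each node listed once per edge end
    for new in range(m, n):
        for t in targets:
            adj[new, t] = adj[t, new] = True
        repeated.extend(targets)
        repeated.extend([new] * m)
        chosen = set()                  # m distinct degree-weighted targets
        while len(chosen) < m:
            chosen.add(repeated[rng.integers(len(repeated))])
        targets = list(chosen)
    return adj

def random_reversible_rate_matrix(n=100, m=3, scale=1.0, seed=0):
    rng = np.random.default_rng(seed)
    adj = barabasi_albert_adjacency(n, m, rng)
    iu = np.triu_indices(n, k=1)
    edge = adj[iu]
    S = np.zeros((n, n))
    # Log-normal weight (mu = -3, sigma = 2) on each connected edge.
    S[iu] = np.where(edge, rng.lognormal(-3.0, 2.0, size=edge.size), 0.0)
    S = scale * (S + S.T)               # `scale` is a hypothetical parameter
    pi = rng.dirichlet(np.ones(n))      # Dirichlet(alpha = 1)
    # Assumed form of Eq. (25): K_ij = S_ij * sqrt(pi_j / pi_i), i != j.
    K = S * np.sqrt(pi[None, :] / pi[:, None])
    np.fill_diagonal(K, -K.sum(axis=1)) # rows sum to zero
    return K, pi, adj

K, pi, adj = random_reversible_rate_matrix()
flux = pi[:, None] * K                  # symmetric under detailed balance
print(np.allclose(flux, flux.T))
```

Under the assumed form, π_i K_ij = S_ij (π_i π_j)^{1/2} is symmetric, so the resulting K satisfies detailed balance with respect to π by construction.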

REFERENCES

- 1. Singer B. and Spilerman S., Am. J. Sociol. 82, 1 (1976). 10.1086/226269

- 2. Madsen H., Spliid H., and Thyregod P., J. Clim. Appl. Meteorol. 24, 629 (1985).

- 3. Turner C. M., Startz R., and Nelson C. R., J. Financ. Econ. 25, 3 (1989). 10.1016/0304-405X(89)90094-9

- 4. Minin V. N. and Suchard M. A., J. Math. Biol. 56, 391 (2008). 10.1007/s00285-007-0120-8

- 5. Jarrow R. A., Lando D., and Turnbull S. M., Rev. Financ. Stud. 10, 481 (1997). 10.1093/rfs/10.2.481

- 6. Spilerman S., Am. J. Sociol. 78, 599 (1972). 10.1086/225366

- 7. Kimura M., J. Mol. Evol. 16, 111 (1980). 10.1007/BF01731581

- 8. Anderson D. F. and Kurtz T. G., Design and Analysis of Biomolecular Circuits (Springer, 2011), pp. 3–42.

- 9. Ikai A. and Tanford C., Nature 230, 100 (1971). 10.1038/230100a0

- 10. Zwanzig R., Proc. Natl. Acad. Sci. U. S. A. 94, 148 (1997). 10.1073/pnas.94.1.148

- 11. Sánchez I. E. and Kiefhaber T., J. Mol. Biol. 325, 367 (2003). 10.1016/S0022-2836(02)01230-5

- 12. Chan H. S. and Dill K. A., Proteins: Struct., Funct., Bioinf. 30, 2 (1998).

- 13. Pirchi M., Ziv G., Riven I., Cohen S. S., Zohar N., Barak Y., and Haran G., Nat. Commun. 2, 493 (2011). 10.1038/ncomms1504

- 14. Beauchamp K. A., McGibbon R. T., Lin Y.-S., and Pande V. S., Proc. Natl. Acad. Sci. U. S. A. 109, 17807 (2012). 10.1073/pnas.1201810109

- 15. Beauchamp K. A., Bowman G. R., Lane T. J., Maibaum L., Haque I. S., and Pande V. S., J. Chem. Theory Comput. 7, 3412 (2011). 10.1021/ct200463m

- 16. Senne M., Trendelkamp-Schroer B., Mey A. S., Schütte C., and Noé F., J. Chem. Theory Comput. 8, 2223 (2012). 10.1021/ct300274u

- 17. Banerjee R. and Cukier R. I., J. Phys. Chem. B 118, 2883 (2014). 10.1021/jp412130d

- 18. Shukla D., Hernández C. X., Weber J. K., and Pande V. S., Acc. Chem. Res. 48, 414 (2015). 10.1021/ar5002999

- 19. Noé F. and Fischer S., Curr. Opin. Struct. Biol. 18, 154 (2008). 10.1016/j.sbi.2008.01.008

- 20. Chodera J. D. and Noé F. F., Curr. Opin. Struct. Biol. 25, 135 (2014). 10.1016/j.sbi.2014.04.002

- 21. Metzner P., Dittmer E., Jahnke T., and Schütte C., J. Comput. Phys. 227, 353 (2007). 10.1016/j.jcp.2007.07.032

- 22. Holmes I. and Rubin G., J. Mol. Biol. 317, 753 (2002). 10.1006/jmbi.2002.5405

- 23. Bladt M. and Sørensen M., J. R. Stat. Soc., Ser. B 67, 395 (2005). 10.1111/j.1467-9868.2005.00508.x

- 24. Kingman J. F. C., Probab. Theory Relat. Fields 1, 14 (1962). 10.1007/BF00531768

- 25. Davies E., Electron. J. Probab. 15, 1474 (2010). 10.1214/EJP.v15-733

- 26. Adams J. and Doll J., Surf. Sci. 103, 472 (1981). 10.1016/0039-6028(81)90278-8

- 27. Voter A. F. and Doll J. D., J. Chem. Phys. 82, 80 (1985). 10.1063/1.448739

- 28. Mori H., Prog. Theor. Phys. 33, 423 (1965). 10.1143/PTP.33.423

- 29. Zwanzig R., Phys. Rev. 124, 983 (1961). 10.1103/PhysRev.124.983

- 30. Crommelin D. and Vanden-Eijnden E., J. Comput. Phys. 217, 782 (2006). 10.1016/j.jcp.2006.01.045

- 31. Crommelin D. and Vanden-Eijnden E., Multiscale Model. Simul. 7, 1751 (2009). 10.1137/080735977

- 32. Israel R. B., Rosenthal J. S., and Wei J. Z., Math. Finance 11, 245 (2001). 10.1111/1467-9965.00114

- 33. Kalbfleisch J. D. and Lawless J. F., J. Am. Stat. Assoc. 80, 863 (1985). 10.1080/01621459.1985.10478195

- 34. Asmussen S., Nerman O., and Olsson M., Scand. J. Stat. 23, 419 (1996), available at www.jstor.org/stable/4616418.

- 35. Hobolth A. and Jensen J. L., Stat. Appl. Genet. Mol. Biol. 4, 1 (2005). 10.2202/1544-6115.1127

- 36. Jennrich R. I. and Bright P. B., Technometrics 18, 385 (1976). 10.1080/00401706.1976.10489469

- 37. Byrd R., Lu P., Nocedal J., and Zhu C., SIAM J. Sci. Comput. 16, 1190 (1995). 10.1137/0916069

- 38. Zhu C., Byrd R. H., Lu P., and Nocedal J., ACM Trans. Math. Software 23, 550 (1997). 10.1145/279232.279236

- 39. Prinz J.-H., Wu H., Sarich M., Keller B., Senne M., Held M., Chodera J. D., Schütte C., and Noé F., J. Chem. Phys. 134, 174105 (2011). 10.1063/1.3565032

- 40. Al-Mohy A. and Higham N., SIAM J. Sci. Comput. 34, C153 (2012). 10.1137/110852553

- 41. Bakry D., Gentil I., and Ledoux M., Analysis and Geometry of Markov Diffusion Operators, Grundlehren der mathematischen Wissenschaften Vol. 348 (Springer International Publishing, 2014).

- 42. Vandevender W. H. and Haskell K. H., SIGNUM Newsl. 17, 16 (1982). 10.1145/1057594.1057595

- 43. Gough B., GNU Scientific Library Reference Manual (Network Theory Ltd., 2009).

- 44. Jones E., Oliphant T., Peterson P. et al., “SciPy: Open source scientific tools for Python,” 2001.

- 45. Linear Statistical Inference and its Applications, edited by Rao C. R. (John Wiley & Sons, Inc., 1973).

- 46. Murthy D. V. and Haftka R. T., Int. J. Numer. Methods Eng. 26, 293 (1988). 10.1002/nme.1620260202

- 47. McGibbon R. T., Schwantes C. R., and Pande V. S., J. Phys. Chem. B 118, 6475 (2014). 10.1021/jp411822r

- 48. Barabási A.-L. and Albert R., Science 286, 509 (1999). 10.1126/science.286.5439.509

- 49. Shaw D. E., Maragakis P., Lindorff-Larsen K., Piana S., Dror R. O., Eastwood M. P., Bank J. A., Jumper J. M., Salmon J. K., Shan Y., and Wriggers W., Science 330, 341 (2010). 10.1126/science.1187409

- 50. Liu F., Du D., Fuller A. A., Davoren J. E., Wipf P., Kelly J. W., and Gruebele M., Proc. Natl. Acad. Sci. U. S. A. 105, 2369 (2008). 10.1073/pnas.0711908105

- 51. McGibbon R. T., Beauchamp K. A., Schwantes C. R., Wang L.-P., Hernández C. X., Harrigan M. P., Lane T. J., Swails J. M., and Pande V. S., “MDTraj: A modern, open library for the analysis of molecular dynamics trajectories,” preprint bioRxiv (2014). 10.1101/008896

- 52. Schwantes C. R. and Pande V. S., J. Chem. Theory Comput. 9, 2000 (2013). 10.1021/ct300878a

- 53. Pérez-Hernández G., Paul F., Giorgino T., De Fabritiis G., and Noé F., J. Chem. Phys. 139, 015102 (2013). 10.1063/1.4811489

- 54. Lloyd S., IEEE Trans. Inf. Theory 28, 129 (1982). 10.1109/TIT.1982.1056489

- 55. Arthur D. and Vassilvitskii S., in Proceedings of the Eighteenth Annual ACM–SIAM Symposium on Discrete Algorithms (SIAM, 2007), pp. 1027–1035.

- 56. Deuflhard P., Huisinga W., Fischer A., and Schütte C., Linear Algebra Appl. 315, 39 (2000). 10.1016/S0024-3795(00)00095-1

- 57. Sarich M., Noé F., and Schütte C., Multiscale Model. Simul. 8, 1154 (2010). 10.1137/090764049

- 58. Swope W. C., Pitera J. W., and Suits F., J. Phys. Chem. B 108, 6571 (2004). 10.1021/jp037421y

- 59. Bowman G. R., Huang X., and Pande V. S., Methods 49, 197 (2009). 10.1016/j.ymeth.2009.04.013

- 60. Chandler D., J. Chem. Phys. 68, 2959 (1978). 10.1063/1.436049

- 61. Chandler D., in Classical and Quantum Dynamics in Condensed Phase Simulations, edited by Berne B. J., Ciccotti G., and Coker D. J. (World Scientific, Singapore, 1998), pp. 3–23.

- 62. Both our algorithm and the Holmes-Rubin estimator were implemented in the Cython language and compiled to C++. Our implementation of the Holmes-Rubin estimator is available at https://github.com/rmcgibbo/holmes_rubin.

- 63. Neal R. M., in Handbook of Markov Chain Monte Carlo, edited by Brooks S., Gelman A., Jones G. L., and Meng X.-L. (Chapman & Hall / CRC, 2011), pp. 113–162.

- 64. Trendelkamp-Schroer B. and Noé F., J. Chem. Phys. 138, 164113 (2013). 10.1063/1.4801325