Abstract

In neural circuits, statistical connectivity rules strongly depend on cell-type identity. We study dynamics of neural networks with cell-type specific connectivity by extending the dynamic mean field method, and find that these networks exhibit a phase transition between silent and chaotic activity. By analyzing the locus of this transition, we derive a new result in random matrix theory: the spectral radius of a random connectivity matrix with block-structured variances. We apply our results to show how a small group of hyper-excitable neurons within the network can significantly increase the network’s computational capacity by bringing it into the chaotic regime.

The theory of random matrices has diverse applications in nuclear [1] and solid-state [2, 3] physics, number theory and statistics [4] and models of neural networks [5–8]. The increasing use of Boolean networks to model gene regulatory networks [9–11] suggests that random matrix theory may advance our understanding of those biological systems as well. Most existing theoretical results pertain to matrices with values drawn from a single distribution, corresponding to randomly connected networks with a single connectivity rule and cell-type. Recent experimental studies describe in increasing detail the heterogeneous structure of biological networks where connection probability depends strongly on cell-type [12–17]. As a step towards bridging this gap between theory and experiment, we extend here mean-field methods used to analyze conventional randomly connected networks to networks with multiple cell-types and allow for cell-type-dependent connectivity rules. We focus here on neural networks.

Randomly connected networks of one cell-type were shown to have two important properties. First, they undergo a phase transition from silent to chaotic activity as the variance of connection strength is increased [7, 8]. Second, such networks reach optimal computational capacity near the critical point [18, 19] in a weakly chaotic regime. We find both phenomena in networks with multiple cell-types. Importantly, the effective gain of multi-type networks deviates strongly from predictions obtained by averaging across the cell types, and in many cases these networks show greater computational capacity compared to networks with cell-type independent connectivity.

The starting point for our analysis of recurrent activity in neural networks is a firing-rate model where the activation xi(t) of the ith neuron determines its firing-rate ϕi(t) through a nonlinear function ϕi(t) = tanh(xi). The activation of the ith neuron depends on the firing-rate of all N neurons in the network:

$$\dot{x}_i(t) = -x_i(t) + \sum_{j=1}^{N} J_{ij}\,\phi_j(t) \tag{1}$$

where $J_{ij}$ describes the connection weight from neuron j to neuron i. Previous work [7] considered a recurrent random network in which all connections are drawn from the same distribution. There, the matrix elements were drawn from a Gaussian distribution with mean zero and variance $g^2/N$, where g defines the average synaptic gain in the network. According to Girko’s circular law, the spectral density of the random matrix J is in this case uniform on a disk of radius g [20, 21]. When the real part of some of the eigenvalues of J exceeds 1, the quiescent state $x_i(t) = 0$ becomes unstable and the network becomes chaotic [7]. Thus, for networks with one cell-type the transition to chaotic dynamics occurs at g = 1. The chaotic dynamics persist even in the presence of noise, but the critical point $g_{\rm crit}$ shifts to values > 1, with $g_{\rm crit} = 1 - \sigma^2\log\sigma^2$ for small noise intensities $\sigma^2$ (the large-noise regime is also analyzed in [8]).
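A short simulation of Eq. (1) reproduces the single-cell-type transition at g = 1. The sketch below is only an illustration. It assumes ϕ = tanh and forward-Euler integration, and it uses values of N, the step `dt` and the duration `T` that do not come from the text. The helper names `single_type_matrix` and `simulate_rate_network` are hypothetical.

```python
import numpy as np


def single_type_matrix(N, g, seed=0):
    """Random J with i.i.d. Gaussian entries of mean 0 and variance g**2 / N."""
    rng = np.random.default_rng(seed)
    return rng.normal(0.0, g / np.sqrt(N), size=(N, N))


def simulate_rate_network(J, T=200.0, dt=0.1, seed=0):
    """Forward-Euler integration of dx/dt = -x + J @ tanh(x), i.e. Eq. (1).

    Returns an array of shape (n_steps, N) with the activations x_i(t).
    """
    rng = np.random.default_rng(seed)
    N = J.shape[0]
    x = rng.standard_normal(N)  # random initial condition
    n_steps = int(round(T / dt))
    traj = np.empty((n_steps, N))
    for step in range(n_steps):
        x = x + dt * (-x + J @ np.tanh(x))
        traj[step] = x
    return traj


if __name__ == "__main__":
    N = 300
    for g in (0.5, 2.0):
        J = single_type_matrix(N, g, seed=1)
        radius = np.abs(np.linalg.eigvals(J)).max()
        # spread of activity over the last 500 steps (all neurons)
        late_std = simulate_rate_network(J, seed=2)[-500:].std()
        print(f"g = {g}: spectral radius {radius:.2f}, late activity std {late_std:.3g}")
```

With these settings the g = 0.5 network relaxes to the quiescent state, while the g = 2 network keeps O(1) fluctuations. In both cases the largest |λ| of J lies close to g, as the circular law predicts for large N.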

We now consider networks with D cell-types, each containing a fraction $\alpha_d$ of the neurons. The mean connection weight is $\langle J_{ij}\rangle = 0$. The variances depend on the cell-types of the input (c) and output (d) neurons, $\langle J_{ij}^2\rangle = g_{c_i d_j}^2/N$, where $c_i$ ($d_j$) denotes the group that neuron i (j) belongs to. In what follows, indices i, j = 1, …, N and c, d = 1, …, D correspond to single neurons and neuron groups, respectively. Averages over realizations of J are denoted by 〈·〉. It is convenient to represent the connectivity structure using a synaptic gain matrix G. Its elements $G_{ij} = g_{c_i d_j}$ are arranged in $D^2$ blocks of sizes $N\alpha_c \times N\alpha_d$ (Fig. 1a–c, top insets). The mean synaptic gain, ḡ, is given by $\bar g = \sqrt{\sum_{c,d=1}^{D}\alpha_c\alpha_d\,g_{cd}^2}$. Defining $J_{ij} = g_{c_i d_j}J^0_{ij}$, where the $J^0_{ij}$ are drawn from a Gaussian distribution with mean zero and variance $1/N$ (but see [22] for a discussion of non-Gaussian entries), and $n_d = N\sum_{c=1}^{d}\alpha_c$ (with $n_0 = 0$), allows us to rewrite Eq. (1) in a form that emphasizes the separate contributions from each group to a neuron:

$$\dot{x}_i(t) = -x_i(t) + \sum_{d=1}^{D} g_{c_i d}\sum_{j=n_{d-1}+1}^{n_d} J^0_{ij}\,\phi_j(t) \tag{2}$$
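A block-structured J can be generated by expanding the D × D gains $g_{cd}$ into the N × N matrix G and multiplying elementwise by a Gaussian $J^0$. This minimal sketch makes two assumptions. First, neurons are sorted by group, matching the index ranges $n_{d-1}+1, …, n_d$. Second, when $N\alpha_d$ is not an integer, the last group absorbs the rounding remainder. All function names are hypothetical.

```python
import numpy as np


def group_labels(N, alpha):
    """Group label of each neuron, with neurons sorted by group.

    Group sizes are floor(alpha_d * N); the last group absorbs any remainder.
    """
    alpha = np.asarray(alpha, dtype=float)
    sizes = np.floor(alpha * N).astype(int)
    sizes[-1] = N - sizes[:-1].sum()
    return np.repeat(np.arange(alpha.size), sizes)


def block_random_matrix(N, alpha, g, seed=0):
    """J_ij = g_{c_i d_j} * J0_ij with J0_ij ~ N(0, 1/N), as in Eq. (2)."""
    rng = np.random.default_rng(seed)
    labels = group_labels(N, alpha)
    G = np.asarray(g, dtype=float)[np.ix_(labels, labels)]  # N x N gain matrix
    J0 = rng.normal(0.0, 1.0 / np.sqrt(N), size=(N, N))
    return G * J0, labels


def mean_gain(alpha, g):
    """Mean synaptic gain: g_bar = sqrt(sum_{c,d} alpha_c alpha_d g_cd**2)."""
    alpha = np.asarray(alpha, dtype=float)
    return float(np.sqrt(alpha @ np.asarray(g, dtype=float) ** 2 @ alpha))
```

For example, `block_random_matrix(1000, [0.5, 0.5], [[1, 2], [3, 4]])` returns a matrix whose upper-right block has entry variance close to $g_{12}^2/N = 4/1000$.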

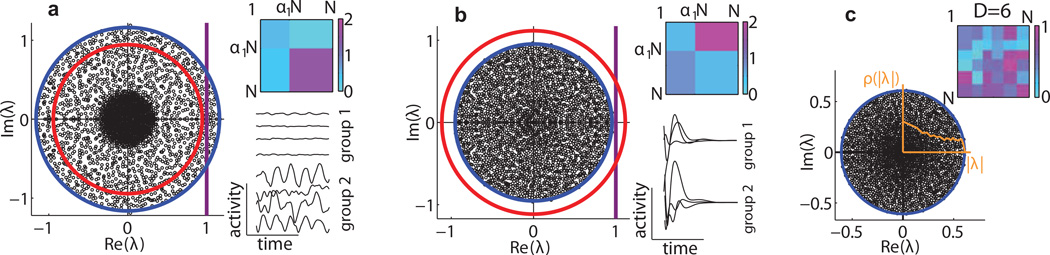

FIG. 1.

Spectra and dynamics of networks with cell-type dependent connectivity (N = 2500). The support of the spectrum of the connectivity matrix J is accurately described by $r = \sqrt{\Lambda_1}$ (radius of blue circle) for different networks. Top insets - the synaptic gain matrix G summarizes the connectivity structure. Bottom insets - activity of representative neurons from each type. The line ℜ{λ} = 1 (purple) marks the transition from quiescent to chaotic activity. (a) An example chaotic network with two cell-types. The average synaptic gain ḡ (radius of red circle) incorrectly predicts this network to be quiescent. (b) An example silent network. Here ḡ incorrectly predicts this network to be chaotic. (c) An example network with six cell-types. In all examples the radial part of the eigenvalue distribution ρ(|λ|) (orange line) is not uniform [22].

We use the dynamic mean field approach [5, 7, 23] to study the network behavior in the N → ∞ limit. After averaging Eq. (2) over the ensemble from which J is drawn, neurons are statistically identical only if they belong to the same group. Therefore, to represent the network behavior it is enough to consider the activities $\xi_d(t)$ of D representative neurons and their inputs $\eta_d(t)$.

The stochastic mean field variables ξ and η will approximate the activities and inputs in the full N dimensional network provided that they satisfy the dynamic equation

$$\dot{\xi}_d(t) = -\xi_d(t) + \eta_d(t) \tag{3}$$

and provided that $\eta_d(t)$ is drawn from a Gaussian distribution whose moments satisfy the following conditions. First, the mean $\langle\eta_d(t)\rangle = 0$ for all d. Second, the correlations of η should match the input correlations in the full network, averaged separately over each group. Using Eq. (3) and the property $\langle J^0_{ij}J^0_{kl}\rangle = \delta_{ik}\delta_{jl}/N$ we get the self-consistency conditions:

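$$\langle \eta_c(t)\,\eta_d(t+\tau)\rangle = \Big\langle \sum_{a,b=1}^{D} g_{ca}\,g_{db}\sum_{j=n_{a-1}+1}^{n_a}\ \sum_{l=n_{b-1}+1}^{n_b} J^0_{ij}J^0_{kl}\,\phi_j(t)\,\phi_l(t+\tau)\Big\rangle = \delta_{cd}\sum_{b=1}^{D}\alpha_b\,g_{cb}^2\,C_b(\tau) \tag{4}$$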

where 〈·〉 denotes averages over $i = n_{c-1}+1, \dots, n_c$ and $k = n_{d-1}+1, \dots, n_d$, in addition to the average over realizations of J. The average firing-rate correlation vector is denoted by C(τ). Its components (using the variables of the full network) are $C_d(\tau) = \frac{1}{N\alpha_d}\sum_{j=n_{d-1}+1}^{n_d}\langle\phi_j(t)\,\phi_j(t+\tau)\rangle$, translating to $C_d(\tau) = \langle\phi[\xi_d(t)]\,\phi[\xi_d(t+\tau)]\rangle$ using the mean field variables. Importantly, the covariance matrix ℋ(τ) with elements $\mathcal{H}_{cd}(\tau) = \langle\eta_c(t)\,\eta_d(t+\tau)\rangle$ is diagonal, justifying the definition of the vector $\mathbf{H} = \mathrm{diag}(\mathcal{H})$. With this in hand we rewrite Eq. (4) in matrix form
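In a finite simulation, $C_d(\tau)$ can be estimated in two steps. First, replace the ensemble average with a time average over one long trajectory. Second, average over the neurons of each group. This sketch assumes the activity is stationary and self-averaging. `group_rate_autocorrelation` is a hypothetical helper, and lags are measured in simulation time steps.

```python
import numpy as np


def group_rate_autocorrelation(phi, labels, max_lag):
    """Estimate C_d(tau) for tau = 0, 1, ..., max_lag (lags in time steps).

    phi    : array of shape (T, N) with firing rates phi_j(t) from one run
    labels : array of shape (N,) with the group index (0..D-1) of each neuron
    Returns an array of shape (D, max_lag + 1): the time average of
    phi_j(t) * phi_j(t + tau), then averaged over the neurons j in each group.
    """
    phi = np.asarray(phi, dtype=float)
    labels = np.asarray(labels)
    T = phi.shape[0]
    D = int(labels.max()) + 1
    counts = np.bincount(labels, minlength=D)  # number of neurons per group
    C = np.empty((D, max_lag + 1))
    for lag in range(max_lag + 1):
        # time-averaged lagged product, one value per neuron
        per_neuron = (phi[: T - lag] * phi[lag:]).mean(axis=0)
        C[:, lag] = np.bincount(labels, weights=per_neuron, minlength=D) / counts
    return C
```

Applied to `np.tanh` of a simulated trajectory, the rows of the result play the role of the components of C(τ).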

$$\mathbf{H}(\tau) = M\,\mathbf{C}(\tau) \tag{5}$$

where M is a constant matrix reflecting the network connectivity structure: $M_{cd} = \alpha_d\,g_{cd}^2$.

A trivial solution to this equation is H(τ) = C(τ) = 0, which corresponds to the silent network state: $x_i(t) = 0$. Recall that in the network with a single cell-type, the matrix $M = g^2$ is a scalar and Eq. (5) reduces to $H(\tau) = g^2 C(\tau)$. In this case the silent solution is stable only when g < 1. For g > 1 the autocorrelations of η are nonzero, which leads to chaotic dynamics in the N-dimensional system [7].

In the general case (D ≥ 1), Eq. (5) can be projected on the eigenvectors of M leading to D consistency conditions, each equivalent to the single group case. Each projection has an effective scalar given by the eigenvalue in place of g2 in the D = 1 case. Hence, the trivial solution will be stable if all eigenvalues of M have real part < 1. This is guaranteed if Λ1, the largest eigenvalue of M, is < 1 [24]. If Λ1 > 1 the projection of Eq. (5) on the leading eigenvector of M gives a scalar self-consistency equation analogous to the D = 1 case for which the trivial solution is unstable. As we know from the analysis of the single cell-type network, this leads to chaotic dynamics in the full network. Therefore Λ1 = 1 is the critical point of the multiple cell-type network.
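The mean-field criterion can be evaluated directly from the group fractions $\alpha_d$ and gains $g_{cd}$, using the structure matrix $M_{cd} = \alpha_d g_{cd}^2$ of Eq. (5). The sketch below uses hypothetical helper names and evaluates only the N → ∞ prediction. At exactly Λ1 = 1 the network is critical, and the sketch arbitrarily reports that case as silent.

```python
import numpy as np


def structure_matrix(alpha, g):
    """M_cd = alpha_d * g_cd**2 (the matrix in Eq. (5))."""
    alpha = np.asarray(alpha, dtype=float)
    g = np.asarray(g, dtype=float)
    return g**2 * alpha[np.newaxis, :]  # scale column d by alpha_d


def leading_eigenvalue(M):
    """Lambda_1: the Perron root of M (real, with the largest real part)."""
    return float(np.max(np.linalg.eigvals(M).real))


def predicted_state(alpha, g):
    """Mean-field prediction: 'chaotic' if Lambda_1 > 1, otherwise 'silent'."""
    lam1 = leading_eigenvalue(structure_matrix(alpha, g))
    return ("chaotic" if lam1 > 1 else "silent"), lam1


if __name__ == "__main__":
    print(predicted_state([1.0], [[0.9]]))                          # single type, g = 0.9
    print(predicted_state([0.5, 0.5], [[1.0, 2.0], [3.0, 4.0]]))    # two types
```

For D = 1 this reduces to comparing $g^2$ with 1, which recovers the single-cell-type threshold g = 1.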

Another approach to show explicitly that Λ1 = 1 at the critical point is to consider first order deviations in the network activity from the quiescent state. Here C(τ) ≈ Δ(τ) where Δ(τ) is the autocorrelation vector of the activities with elements Δd(τ) = 〈ξd(t)ξd(t + τ)〉. By invoking Eq. (3) we have

$$\mathbf{H}(\tau) = \left(1 - \frac{d^2}{d\tau^2}\right)\boldsymbol{\Delta}(\tau) \tag{6}$$
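The step from Eq. (3) to Eq. (6) uses only stationarity. Write $\eta_d = \dot\xi_d + \xi_d$ and $\Delta_d(\tau) = \langle\xi_d(t)\,\xi_d(t+\tau)\rangle$. Then $\langle\dot\xi_d(t)\,\xi_d(t+\tau)\rangle = -\Delta_d'(\tau)$, $\langle\xi_d(t)\,\dot\xi_d(t+\tau)\rangle = \Delta_d'(\tau)$ and $\langle\dot\xi_d(t)\,\dot\xi_d(t+\tau)\rangle = -\Delta_d''(\tau)$, so that, component by component,

$$
\begin{aligned}
H_d(\tau) &= \big\langle [\dot\xi_d(t)+\xi_d(t)]\,[\dot\xi_d(t+\tau)+\xi_d(t+\tau)] \big\rangle \\
&= -\Delta_d''(\tau) - \Delta_d'(\tau) + \Delta_d'(\tau) + \Delta_d(\tau)
= \left(1 - \frac{d^2}{d\tau^2}\right)\Delta_d(\tau).
\end{aligned}
$$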

Substituting Eq. (6) into Eq. (5) leads to an equation of motion for a particle with coordinates Δ(τ):

$$\frac{d^2\boldsymbol{\Delta}}{d\tau^2} = (I - M)\,\boldsymbol{\Delta}(\tau) \tag{7}$$

The particle’s trajectories depend on the eigenvalues of M. The first bifurcation (assuming the elements of M are scaled together) occurs when Λ1 = 1, in the direction parallel to the leading eigenvector. Physical solutions should have ‖Δ(τ)‖ < ∞ as τ → ∞ because Δ(τ) is an autocorrelation function. When all eigenvalues of M are smaller than 1, the trivial solution Δ(τ) = 0 is the only solution (in the neighborhood of $x_i(t) = 0$, where our approximation is accurate). At the critical point (Λ1 = 1) a nontrivial solution appears, and above it finite autocorrelations lead to chaotic dynamics in the full system.

The eigenvalue spectrum of J is circularly symmetric in the absence of correlations between matrix entries, as is evident from numerical simulations and from direct calculations using random matrix theory techniques [25]. To derive the radius r of the support of its spectral density, one can use the following scaling relationship. If all elements of the matrix $g_{cd}$ are multiplied by a constant κ, the radius r scales linearly with κ. At the same time, $M_{cd} = \alpha_d g_{cd}^2$ scales as $\kappa^2$, so $\Lambda_1 \propto \kappa^2$. Thus, $r \propto \sqrt{\Lambda_1}$. The proportionality constant can be determined by noting that for both single and multiple cell-type networks the transition occurs when a finite mass of the spectral density of J has real part > 1, which can also be verified by direct computation of the largest Lyapunov exponent [22]. Since the transition occurs at Λ1 = 1, the eigenvalues of J are bounded by the unit circle r = 1 when Λ1 = 1, so in general:

$$r = \sqrt{\Lambda_1} \tag{8}$$
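Eq. (8) can be checked numerically by comparing the largest |λ| of a sampled block-structured J with both √Λ1 and ḡ. The parameters below are an arbitrary illustration, not a configuration from Fig. 1: N = 1000, α = (0.3, 0.7), $g_{11} = 2.5$ and all other $g_{cd} = 0.5$. As in Fig. 1a, they are chosen so that ḡ < 1 < √Λ1. Function names are hypothetical.

```python
import numpy as np


def block_random_matrix(N, alpha, g, seed=0):
    """J_ij = g_{c_i d_j} * J0_ij with J0_ij ~ N(0, 1/N); neurons sorted by group."""
    rng = np.random.default_rng(seed)
    alpha = np.asarray(alpha, dtype=float)
    sizes = np.floor(alpha * N).astype(int)
    sizes[-1] = N - sizes[:-1].sum()  # last group absorbs rounding
    labels = np.repeat(np.arange(alpha.size), sizes)
    G = np.asarray(g, dtype=float)[np.ix_(labels, labels)]
    return G * rng.normal(0.0, 1.0 / np.sqrt(N), size=(N, N))


def predicted_radius(alpha, g):
    """Eq. (8): r = sqrt(Lambda_1), Lambda_1 = leading eigenvalue of M_cd = alpha_d g_cd**2."""
    M = np.asarray(g, dtype=float) ** 2 * np.asarray(alpha, dtype=float)[np.newaxis, :]
    return float(np.sqrt(np.max(np.linalg.eigvals(M).real)))


def mean_gain(alpha, g):
    """g_bar = sqrt(sum_{c,d} alpha_c alpha_d g_cd**2)."""
    alpha = np.asarray(alpha, dtype=float)
    return float(np.sqrt(alpha @ np.asarray(g, dtype=float) ** 2 @ alpha))


if __name__ == "__main__":
    alpha = [0.3, 0.7]
    g = [[2.5, 0.5], [0.5, 0.5]]
    J = block_random_matrix(1000, alpha, g, seed=0)
    empirical = np.abs(np.linalg.eigvals(J)).max()
    print(f"g_bar = {mean_gain(alpha, g):.3f}")                    # 0.889
    print(f"sqrt(Lambda_1) = {predicted_radius(alpha, g):.3f}")    # 1.372
    print(f"empirical spectral radius = {empirical:.3f}")
```

Here the average gain ḡ ≈ 0.89 would predict a quiescent network, whereas √Λ1 ≈ 1.37. The empirical spectral radius should agree with the latter up to finite-N fluctuations.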

Predictions for the radius according to Eq. (8) matched numerical simulations for a number of different matrix configurations (Fig. 1a,b). Eq. (8) also holds for networks with cell-type independent connectivity, in which case $\Lambda_1 = g^2$ and r = g. Importantly, r differs qualitatively from the mean synaptic gain ḡ. The inequality $r \neq \bar g$ is a signature of the block-structured variances. It is not observed when the variances have columnar structure [26] or when the $J_{ij}$’s are randomly permuted.

Next we analyze the network dynamics above the critical point. In the chaotic regime the persistent population-level activity is determined by the structure matrix M. Consider the decomposition $M = \sum_{c=1}^{D}\Lambda_c\,\mathbf{u}_c\mathbf{v}_c^{\top}$, where $\mathbf{u}_c, \mathbf{v}_c$ are the right and left eigenvectors ordered by the real part of their corresponding eigenvalues ℜ{Λ_c}, satisfying $\mathbf{v}_c^{\top}\mathbf{u}_d = \delta_{cd}$. We find, by analogy with the analysis of the scalar self-consistency equation in [7], that the trivial solution to Eq. (5) is unstable in the subspace $\mathcal{U}_M = \mathrm{span}\{\mathbf{u}_1, \dots, \mathbf{u}_{D^*}\}$, where D* is the number of eigenvalues of M with real part > 1. In that subspace the solution to Eq. (5) is a combination of D* different autocorrelation functions. In the (D − D*)-dimensional orthogonal complement subspace the trivial solution is stable. Consequently, the vectors H(τ), Δ(τ) are significant in $\mathcal{U}_M$, with ≈ 0 projection on any vector in $\mathcal{U}_M^{\perp}$ (Fig. 2). Note that for asymmetric M the $\mathbf{u}_c$ are not orthogonal, and $\mathcal{U}_M^{\perp}$ is spanned by the left rather than the right eigenvectors: $\mathcal{U}_M^{\perp} = \mathrm{span}\{\mathbf{v}_{D^*+1}, \dots, \mathbf{v}_D\}$.
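The decomposition can be computed numerically. Diagonalize M, sort the eigenpairs by ℜ{Λ_c}, and take the rows of $U^{-1}$ as the left eigenvectors, which enforces $\mathbf{v}_c^{\top}\mathbf{u}_d = \delta_{cd}$. The sketch below assumes M is diagonalizable and uses hypothetical helper names. It also returns D*, and it computes the coefficients of a vector on the stable directions $\mathbf{u}_c$ (c > D*), which vanish exactly when the vector lies in $\mathcal{U}_M$.

```python
import numpy as np


def eigen_split(M):
    """Eigen-decomposition of M sorted by decreasing real part.

    Returns (eigvals, U, V, D_star): right eigenvectors are the columns of U,
    left eigenvectors are the rows of V = inv(U) (so V @ U = I), and D_star
    is the number of eigenvalues with real part > 1.
    """
    eigvals, U = np.linalg.eig(np.asarray(M, dtype=float))
    order = np.argsort(-eigvals.real)
    eigvals, U = eigvals[order], U[:, order]
    V = np.linalg.inv(U)  # rows: left eigenvectors, biorthogonal to the columns of U
    D_star = int(np.sum(eigvals.real > 1))
    return eigvals, U, V, D_star


def stable_coefficients(vec, V, D_star):
    """v_c^T vec for c > D*: the coefficients of vec on the stable eigenvectors.

    The v_c (c > D*) span the orthogonal complement of U_M = span{u_1..u_D*},
    so these coefficients are all zero iff vec lies in U_M.
    """
    return V[D_star:] @ np.asarray(vec)


if __name__ == "__main__":
    M = np.array([[0.5, 2.0], [4.5, 8.0]])  # alpha = (0.5, 0.5), g = ((1, 2), (3, 4))
    lam, U, V, D_star = eigen_split(M)
    print(lam.real, D_star)
```

For this illustrative 2 × 2 matrix, Λ1 ≈ 9.05 and Λ2 ≈ −0.55, so D* = 1 and $\mathcal{U}_M^{\perp}$ is spanned by $\mathbf{v}_2$.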

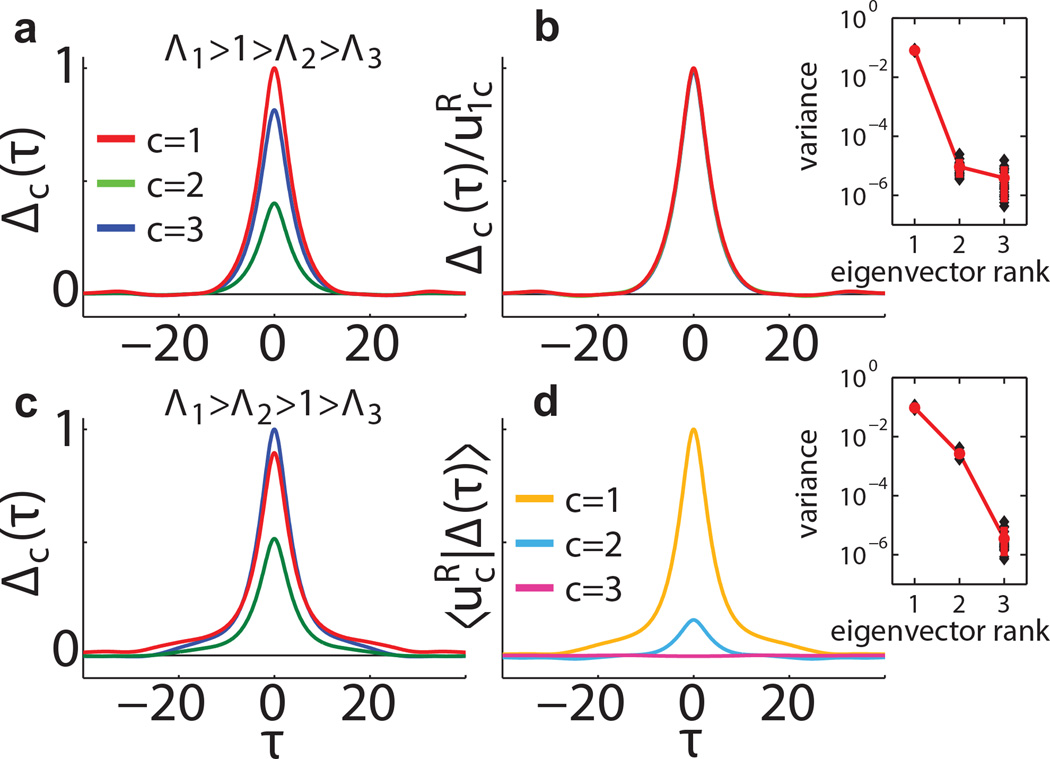

FIG. 2.

Autocorrelation modes. Example networks (N = 1200) have 3 equally sized groups with α, g such that M is symmetric. (a) When D* = 1, autocorrelations maintain a constant ratio independent of τ. (b) Rescaling by the components of $\mathbf{u}_1$ collapses the autocorrelation functions (here Λ1 = 20, Λ2 = 0.2, Λ3 = 0.1). (c) When D* = 2, the autocorrelation functions are linear combinations of two autocorrelation “modes” that decay on different timescales. Projections of these functions are shown in (d). Only projections on $\mathbf{u}_1$ and $\mathbf{u}_2$ are significantly different from 0 (here Λ1 = 20, Λ2 = 16, Λ3 = 0.1). Insets show the variance of Δ(τ) projected on $\mathbf{u}_c$, averaged over 20 networks in each setting.

In the special case D* = 1 we can write $\mathbf{H}(\tau) = q_H(\tau)\,\mathbf{u}_1$ and $\boldsymbol{\Delta}(\tau) = q_\Delta(\tau)\,\mathbf{u}_1$, where $q_H(\tau)$, $q_\Delta(\tau)$ are scalar functions of τ determined by the nonlinear self-consistency condition. Therefore, neurons in all groups have the same autocorrelation function with different amplitudes. The ratio of amplitudes is determined by the components of the leading right eigenvector of M (see Fig. 2a,b) as $\Delta_c(\tau)/\Delta_d(\tau) = u_{1c}/u_{1d}$. This ratio is independent of τ and of the firing-rate nonlinearity; the latter affects only the overall amount of activity in the network but not the ratio of activity between the subgroups.
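When D* = 1, the predicted amplitude ratios follow from $\mathbf{u}_1$ alone. The sketch below uses a hypothetical helper and returns $\Delta_c/\Delta_1$ for every group. It gives only these ratios, because the common scale $q_\Delta(\tau)$ requires solving the nonlinear self-consistency condition, which is not attempted here.

```python
import numpy as np


def amplitude_ratios(alpha, g):
    """Predicted Delta_c / Delta_1 = u_1c / u_11 in the D* = 1 regime.

    u_1 is the leading right eigenvector of M_cd = alpha_d g_cd**2; by the
    Perron-Frobenius theorem it can be chosen with strictly positive entries.
    """
    M = np.asarray(g, dtype=float) ** 2 * np.asarray(alpha, dtype=float)[np.newaxis, :]
    eigvals, U = np.linalg.eig(M)
    if np.sum(eigvals.real > 1) != 1:
        raise ValueError("the ratio prediction assumes exactly one eigenvalue with Re > 1 (D* = 1)")
    u1 = np.real(U[:, int(np.argmax(eigvals.real))])
    return u1 / u1[0]  # dividing by u1[0] also removes the arbitrary sign from eig


if __name__ == "__main__":
    print(amplitude_ratios([0.5, 0.5], [[1.0, 2.0], [3.0, 4.0]]))
```

In this two-group example the second group’s autocorrelation is predicted to be about 4.28 times that of the first at every τ.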

We illustrate how these results give insight into a perplexing question in computational neuroscience - how can a small number of neurons have a large effect on the representational capacity of the whole network? In adults, newborn neurons continuously migrate into the existing neural circuit in the hippocampus and olfactory bulb regions [27]. Impaired neurogenesis results in strong deficits in learning and memory. This is surprising since the young neurons, although hyperexcitable, constitute only a very small fraction (< 0.1) of the total network. To better understand the role young neurons may play, we analyzed a network with D = 2 groups of neurons: group 1 of young neurons that is significantly smaller than group 2 of mature neurons (α1 ≪ α2). The connectivity within the existing neural circuit is such that by itself that subnetwork would be in the quiescent state: g22 = 1 − ε < 1. To model the increased excitability of the young neurons all connections of these neurons were set to: g12 = g21 = g11 = γ > 1 − ε.
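For the young-neuron model, the effective gain √Λ1 and the mean gain ḡ can be compared directly. The sketch below uses hypothetical helper names, takes ε = 0.2 as in Fig. 3, and scans a small, arbitrary grid of α1 and γ values. It evaluates only these mean-field quantities, not learning performance.

```python
import numpy as np


def young_neuron_gains(alpha1, gamma, eps):
    """D = 2 model: group 1 = young neurons (fraction alpha1), group 2 = mature.

    g11 = g12 = g21 = gamma (hyperexcitable young neurons), g22 = 1 - eps.
    """
    alpha = np.array([alpha1, 1.0 - alpha1])
    g = np.array([[gamma, gamma], [gamma, 1.0 - eps]])
    return alpha, g


def effective_and_mean_gain(alpha, g):
    """Return (sqrt(Lambda_1), g_bar) with M_cd = alpha_d g_cd**2."""
    M = g**2 * alpha[np.newaxis, :]
    effective = np.sqrt(np.max(np.linalg.eigvals(M).real))
    mean = np.sqrt(alpha @ g**2 @ alpha)
    return float(effective), float(mean)


if __name__ == "__main__":
    eps = 0.2  # the mature subnetwork alone has g22 = 0.8 < 1 (quiescent)
    for alpha1 in (0.01, 0.05, 0.1):
        for gamma in (1.0, 2.0, 3.0):
            eff, mean = effective_and_mean_gain(*young_neuron_gains(alpha1, gamma, eps))
            print(f"alpha1={alpha1:<5} gamma={gamma}: sqrt(Lambda_1)={eff:.3f}, g_bar={mean:.3f}")
```

Even with only 5% young neurons (α1 = 0.05, γ = 3), the mean-field prediction puts the whole network above threshold (√Λ1 ≈ 1.58 > 1).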

We analyzed the network’s capacity to reproduce a target output pattern f(t). The activity of the neurons serves as a “reservoir” of waveforms from which f(t) is composed. The learning algorithm in [28] allows us to find the vector w such that $z(t) = \sum_{j=1}^{N} w_j\,\phi_j(t) \approx f(t)$, where the modified dynamics have $J_{ij} \to J_{ij} + u_i w_j$ and u is a random vector with O(1) entries. For simplicity we choose periodic target functions f(t) = sin(Ωt) and define the learning index $l_\Omega$ as the fraction of power that the output z(t) has at the target frequency. The index varies from 0 to 1 and is computed by averaging over 50 cycles.
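The learning index can be sketched as follows. The text does not specify the spectral estimator, so this sketch assumes a plain (unwindowed) FFT of z(t) over the evaluation window and counts only the frequency bin closest to Ω. `learning_index` is a hypothetical helper, and it does not implement the training algorithm of [28]. Choosing the window as an integer number of target periods places Ω exactly on a bin.

```python
import numpy as np


def learning_index(z, dt, omega):
    """Fraction of the power of z(t) in the FFT bin closest to omega.

    z     : output samples z(t_k) with t_k = k * dt
    omega : target angular frequency Omega
    """
    z = np.asarray(z, dtype=float)
    power = np.abs(np.fft.rfft(z)) ** 2
    omegas = 2.0 * np.pi * np.fft.rfftfreq(z.size, d=dt)  # angular frequency of each bin
    k = int(np.argmin(np.abs(omegas - omega)))
    return float(power[k] / power.sum())


if __name__ == "__main__":
    dt, omega = 1.0, np.pi / 120          # target period of 240 time units
    t = np.arange(50 * 240) * dt          # 50 cycles of the target
    print(learning_index(np.sin(omega * t), dt, omega))                          # close to 1
    print(learning_index(np.sin(omega * t) + np.sin(3 * omega * t), dt, omega))  # close to 0.5
```

A perfect reproduction of the target gives an index near 1, and an output with equal power at a harmonic gives about 0.5.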

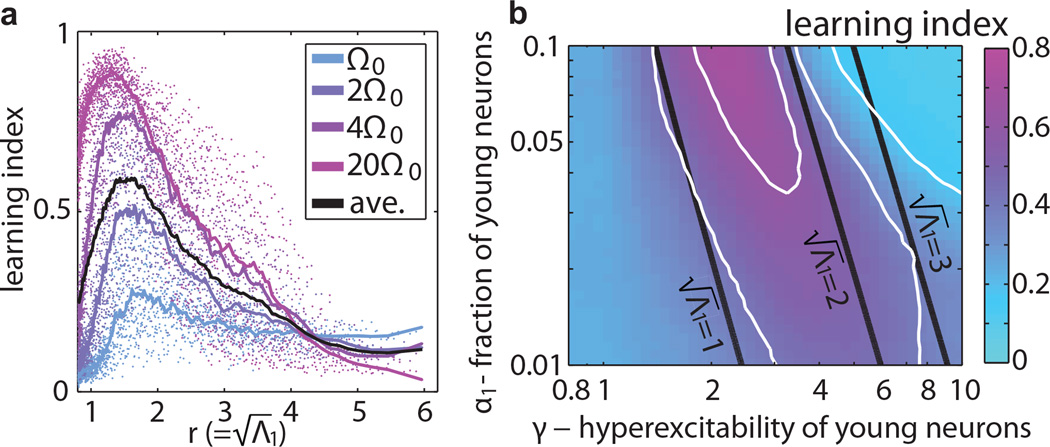

Performance depends primarily on Λ1 and not on the network structure, peaking at an intermediate value of $\sqrt{\Lambda_1}$ (Fig. 3). This is directly related to the maximal learning capacity observed at g ≈ 1.5 in networks with a single cell-type [28], and further supports the identification of $\sqrt{\Lambda_1}$ as the effective gain. Importantly, because of the block-structured connectivity, the effective gain is larger than the average gain ($\sqrt{\Lambda_1} > \bar g$) for all values of γ and α1 [22]. In other words, for the same average connection strength, networks with block-structured connectivity have a higher effective gain. This can place them in a regime with larger learning capacity than networks with shuffled connections, demonstrating that a small group of neurons could place the entire network in a state conducive to learning. Moreover, since increases in average connection strength are generally associated with increased metabolic cost, networks with block-structured connectivity can provide a more metabolically efficient way to perform computation than statistically homogeneous networks.
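In the young-neuron model the gain matrix is symmetric ($g_{12} = g_{21}$), and for that case there is a short argument for this inequality. It is offered as an illustration and is not necessarily the derivation given in [22]. Write $M_{cd} = \alpha_d g_{cd}^2$ for the structure matrix of Eq. (5) and let $A = \mathrm{diag}(\alpha_1, \dots, \alpha_D)$. Then M is similar to a symmetric matrix, so its largest eigenvalue is bounded below by any Rayleigh quotient, and the choice $w_c = \sqrt{\alpha_c}$ gives exactly $\bar g^2$:

$$
\tilde M \equiv A^{1/2} M A^{-1/2} = A^{1/2}\,(g\circ g)\,A^{1/2},
\qquad
\Lambda_1 = \lambda_{\max}(\tilde M) \;\ge\; \frac{\mathbf{w}^{\top}\tilde M\,\mathbf{w}}{\mathbf{w}^{\top}\mathbf{w}}\bigg|_{w_c=\sqrt{\alpha_c}} = \sum_{c,d=1}^{D}\alpha_c\,\alpha_d\,g_{cd}^2 = \bar g^{\,2},
$$

where $g\circ g$ has elements $g_{cd}^2$ and $\sum_c \alpha_c = 1$. Equality would require $\sqrt{\alpha}$ to be an eigenvector of $\tilde M$, which holds only if every row sum $\sum_d \alpha_d g_{cd}^2$ is the same. In the young-neuron model the two row sums are $\gamma^2$ and $\alpha_1\gamma^2 + \alpha_2(1-\varepsilon)^2$. These differ whenever $\gamma \neq 1 - \varepsilon$, so $\sqrt{\Lambda_1} > \bar g$ strictly.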

FIG. 3.

Learning capacity is primarily determined by $\sqrt{\Lambda_1}$, the effective gain of the network. (a) The learning index for four pure-frequency target functions (Ω0 = π/120) plotted as a function of the radius $\sqrt{\Lambda_1}$. The training epoch lasted approximately 100 periods of the target signal. Each point is an average over 25 networks with N = 500, ε = 0.2 and different values of α1 and γ. The line is a moving average of these points for each frequency. (b) The same data averaged over the target frequencies, shown as a function of γ and α1. Contour lines of $l_\Omega$ (white) and of $\sqrt{\Lambda_1}$ (black) coincide approximately in the region where $l_\Omega$ peaks.

Outgoing connections from any given neuron are typically all positive or all negative, obeying Dale’s law [29]. Within random networks, this issue was addressed by Rajan and Abbott [26] and Tao [30], who computed the bulk spectrum and the outliers of a model where the columns of J are separated into two groups, each with its own offset and element variance. The dynamics of networks with cell-type-dependent connectivity that is offset to respect Dale’s law were addressed in [31] with some limitations, and remain an important problem for future research.

Ultimately, neural network dynamics need to be considered in relation to external inputs. The response properties of networks with D = 1 have been recently worked out [19, 32]. The analogy between the mean field equations suggests that our results can be used to understand the non-autonomous behavior of multiple cell-type networks.

Supplementary Material

ACKNOWLEDGMENTS

The authors would like to thank Larry Abbott for his support, including comments on the manuscript, and Ken Miller for many useful discussions. This work was supported by grants R01EY019493 and P30 EY019005, NSF Career award (IIS 1254123). MS was supported by the Gatsby Foundation.

References

- 1. Mehta ML. Random Matrices. Elsevier Academic Press; 2004.

- 2. Izyumov YA. Adv. Phys. 1965;14:569.

- 3. Kosterlitz JM, Thouless DJ, Jones RC. Phys. Rev. Lett. 1976;36:1217.

- 4. Wishart J. Biometrika. 1928;20A:32.

- 5. Amari S-I. IEEE Transactions on Systems, Man and Cybernetics. 1972:643.

- 6. Sommers HJ, Crisanti A, Sompolinsky H, Stein Y. Phys. Rev. Lett. 1988;60:1895. doi: 10.1103/PhysRevLett.60.1895.

- 7. Sompolinsky H, Crisanti A, Sommers HJ. Phys. Rev. Lett. 1988;61:259. doi: 10.1103/PhysRevLett.61.259.

- 8. Molgedey L, Schuchhardt J, Schuster HG. Phys. Rev. Lett. 1992;69:3717. doi: 10.1103/PhysRevLett.69.3717.

- 9. Kauffman S. Nature. 1969;224:177. doi: 10.1038/224177a0.

- 10. Shmulevich I, Kauffman SA, Aldana M. Proceedings of the National Academy of Sciences. 2005;102:13439. doi: 10.1073/pnas.0506771102.

- 11. Pomerance A, Ott E, Girvan M, Losert W. PNAS. 2009;106:8309.

- 12. Schubert D, Kötter R, Zilles K, Luhmann HJ, Staiger JF. J. Neurosci. 2003;23:2961. doi: 10.1523/JNEUROSCI.23-07-02961.2003.

- 13. Yoshimura Y, Dantzker JL, Callaway EM. Nature. 2005;433:868. doi: 10.1038/nature03252.

- 14. Yoshimura Y, Callaway EM. Nature Neurosci. 2005;8:1552. doi: 10.1038/nn1565.

- 15. Suzuki N, Bekkers JM. J. Neurosci. 2012;32:919. doi: 10.1523/JNEUROSCI.4112-11.2012.

- 16. Franks KM, Russo MJ, Sosulski DL, Mulligan AA, Siegelbaum SA, Axel R. Neuron. 2011;72:49. doi: 10.1016/j.neuron.2011.08.020.

- 17. Levy RB, Reyes AD. J. Neurosci. 2012;32:5609. doi: 10.1523/JNEUROSCI.5158-11.2012.

- 18. Bertschinger N, Natschläger T. Neural Comp. 2004;16:1413. doi: 10.1162/089976604323057443.

- 19. Toyoizumi T, Abbott LF. Phys. Rev. E. 2011;84:051908. doi: 10.1103/PhysRevE.84.051908.

- 20. Girko V. Theory Probab. Appl. 1984;29:694.

- 21. Bai Z. The Annals of Probability. 1997;25:494.

- 22. See Supplemental Material [url], which includes Refs. [33–34] and (i) calculation of the largest Lyapunov exponent, (ii) extension to matrices with non-Gaussian entries, and (iii) comparison of the effective gain of networks with multiple cell-types and that of a network with cell-type independent connectivity.

- 23. Sompolinsky H, Zippelius A. Phys. Rev. B. 1982;25:6860.

- 24. Note that M has strictly positive elements, so by the Perron-Frobenius theorem its largest eigenvalue (in absolute value) is real and positive and the corresponding eigenvector has strictly positive components.

- 25. Aljadeff J, Renfrew D, Stern M. arXiv preprint arXiv:1411.2688. 2014.

- 26. Rajan K, Abbott LF. Phys. Rev. Lett. 2006;97:188104. doi: 10.1103/PhysRevLett.97.188104.

- 27. Zhao C, Deng W, Gage FH. Cell. 2008;132:645. doi: 10.1016/j.cell.2008.01.033.

- 28. Sussillo D, Abbott LF. Neuron. 2009;63:544. doi: 10.1016/j.neuron.2009.07.018.

- 29. Eccles J. Notes and Records of the Royal Society of London. 1976;30:219. doi: 10.1098/rsnr.1976.0015.

- 30. Tao T. Prob. Theory and Related Fields. 2013;155:231.

- 31. Cabana T, Touboul J. J. of Stat. Phys. 2013;153:211.

- 32. Rajan K, Abbott LF, Sompolinsky H. Phys. Rev. E. 2010;82:011903. doi: 10.1103/PhysRevE.82.011903.

- 33. Tao T, Vu V, Krishnapur M. Ann. Probab. 2010;38:2023.

- 34. Wolf A, Swift JB, Swinney HL, Vastano JA. Physica D. 1985;16:285.
