Abstract

Background:

Clinical guidelines, prediction tools, and computerized decision support (CDS) are underutilized outside of research contexts, and conventional teaching of evidence-based practice (EBP) skills fails to change practitioner behavior. Overcoming these challenges requires traversing practice, policy, and implementation domains. In this article, we describe a program’s conceptual design, the results of institutional participation, and the program’s evolution. Next steps include integration of instruction in principles of CDS.

Conceptual Model:

Teaching Evidence Assimilation for Collaborative Health Care (TEACH) is a multidisciplinary annual conference series involving on- and off-site trainings and facilitation within health care provider organizations (HPOs). Separate conference tracks address clinical policy and guideline development, implementation science, and foundational EBP skills. The implementation track uses a model encompassing problem delineation, identifying knowing-doing gaps, synthesizing evidence to address those gaps, adapting guidelines for local use, assessing implementation barriers, measuring outcomes, and sustaining evidence use. Training in CDS principles is an anticipated component within this track. Within participating organizations, the program engages senior administration, middle management, and frontline care providers. On-site care improvement projects serve as vehicles for developing ongoing, sustainable capabilities. TEACH facilitators conduct on-site workshops to enhance project development and the integration of stakeholder engagement and decision support. Both on- and off-site components emphasize narrative skills and shared decision-making.

Experience:

Since 2009, 430 participants have attended TEACH conferences. Delegations from five centers attended an initial series of three conferences. Improvement projects centered on stroke care, hospital readmissions, and infection control. Successful implementation efforts were characterized by strong support from senior administration, involvement of a broad multidisciplinary constituency within the organization, and on-site facilitation by TEACH faculty. Involvement of nursing management at the senior faculty level led to an increased presence of nursing and other disciplines at subsequent conferences.

Conclusions:

A multidisciplinary and multifaceted approach to on- and off-site training and facilitation may lead to enhanced use of research to improve the quality of care within HPOs. Such training may provide valuable contextual grounding for effective use of CDS within such organizations.

Keywords: Learning health care system, evidence based medicine, decision support

Introduction

The failure of efforts in evidence-based health care (EBHC) to verifiably inform clinical practice has become more conspicuous, even as the methodological rigor and sophistication of epidemiological and research tools and instruments have advanced. Clinical guidelines continue to be ignored,1,2 and decision support instruments are inconsistently utilized.3 Abundant literature attests to the failure of conventional, practitioner-based, educational efforts to change behavior.4 Finally, a landmark study5 showed that, despite richness of technology and resources within the United States health care system, only half of Americans receive evidence-based interventions for common and important health conditions.

In 2009, concerned about these failures, educators at the New York Academy of Medicine expanded a previously existing workshop to address capacity building needs within health care organizations.

“Teaching Evidence Assimilation for Collaborative Health Care (TEACH)” incorporates training in skill areas beyond critical appraisal of published research, including those required to develop clinical policies and guidelines as well as their successful implementation within organized care settings. In developing the TEACH design we were influenced by literature addressing the fragmentation of conventional training in EBHC as an underlying cause for lack of uptake of research in practice. Specifically, David Eddy had warned that the teaching of evidence-based medicine (EBM) was being confined to the context of health care delivery to individual patients, while evidence-based clinical guidelines and implementation skills were being ignored.6 Recently, a review of the status of medical education in the United States commissioned by the Carnegie Foundation determined that instruction in quality improvement and multidisciplinary, systems-based health care constitutes a major deficiency at both undergraduate and graduate levels.7 We concluded that an adequate approach to education and training in EBHC would require attention to at least three dimensions: clinical guidelines and policies, systems level implementation, and delivery of health services to individual patients.

Efforts to integrate training across the dimensions of EBHC have been sparse. Wahabi et al.8 linked conventional instruction in EBM to a capacity building program for public health project development in rural Saudi Arabia. Wright et al.9 described a multidisciplinary, emergency department based program combining evidence-based training and literature review with a team-based approach to improvement in specific clinical areas. An innovative workshop in Rio de Janeiro engages health care managers, policymakers, and clinicians in a multidisciplinary setting,10 and has more recently incorporated guideline development and implementation into the scope of training. However, training programs in evidence-based practice continue to focus largely upon clinicians and clinical educators and the skill sets applicable to individual patient care.10,11

In this article, we describe the conceptual design of the TEACH program, our program experience to date including results of institutional participation, and its evolution. In the context of future directions for the program, we also address the process of integrating instruction in principles of computerized decision support (CDS) into the TEACH program and preliminary steps in that direction.

Conceptual Design of the TEACH Program

TEACH was initiated in 2009 at the New York Academy of Medicine (NYAM) as an annual conference series linked to on-site facilitation and educational efforts for interested institutional subscribers.12 The three-day conferences combine plenary sessions with workshop-style small group learning. A three-level design corresponds to three broadly defined dimensions of EBHC, i.e., basic skills and delivery of services to individual patients (Level 1), development of clinical policies and recommendations (Level 2), and implementation of evidence-based clinical policies in specific clinical settings (Level 3). These conform to three critically important domains of activity within health care provider organizations (HPOs). Within this design, a common skill set is carried through the successive levels in a fashion that reflects increasing complexity of application. The sections that follow describe the learning objectives, target audience, and the content and structure of the TEACH conferences and workshops, as well as other features of the TEACH program.

Learning Objectives

The global objective of TEACH is to facilitate the acquisition of knowledge and skills needed to maximize the use of research within HPOs. Participants attending Level 1 are expected to acquire familiarity with the basic knowledge and skills that pertain to assembling, appraising, and applying evidence from research to specific problems and information needs. They also gain insight into the use of these skills in the care of individual patients. Level 2 participants learn to apply the same skills in a more advanced framework, that of performing evidence-based reviews as well as developing and adapting clinical policies and guidelines within an HPO. Level 3 attendees are introduced to principles of evidence-based practice on a systems level, encompassing implementation science and knowledge translation.13

Target Audience

We market TEACH conferences to a broad range of health care specialties and disciplines including professionals in training, together with those who are highly experienced. Most attendees are directly involved with the delivery of health care within HPOs. The TEACH workshop also provides a training ground for librarians, who account for 5–10 percent of attendees. Attendees may enroll as individuals or come as part of HPO delegations. Small groups of 8–10 participants are constituted to maximize interdisciplinary sharing of perspectives. Small group facilitators are similarly assigned so as to complement the diversity of participants.

Content and Structure of the TEACH Conferences

The conference design revolves around the notion that multiple dimensions of health care delivery are necessary to the objectives of EBHC, and that, to be successful, capacity building efforts must address these. Table 1 summarizes the three-level design, skill content, and specific learning objectives of the TEACH conferences and workshops. The foundations of evidence-based care track offers a practice-based approach to evidence-based practice that emphasizes relational over epidemiological principles.14,15 It embraces a broader framework of research than conventional EBM.16 Level 2 initially concentrated on the Grading of Recommendations Assessment, Development and Evaluation (GRADE) system17 and currently embraces broader issues of development of clinical policies and recommendations within HPOs. Level 3 uses the knowledge-to-action (K2A) model13 as a conceptual framework; the model encompasses the steps summarized in the Level 3 learning objectives in Table 1. TEACH participants attend all plenary sessions, but attend only one track and corresponding small group during a given conference. Members of HPO delegations are encouraged to distribute themselves throughout the different workshop tracks.

Table 1.

TEACH Conference Framework

| LEVEL | TITLE | CONTENT | LEARNING OBJECTIVES |
|---|---|---|---|
| 1 | Foundations of evidence-based care | Basic evidence literacy skills,14 narrative principles.16 | Defining problems and formulating questions. Finding relevant research. Appraising the applicability, practical importance and quality of research. |
| 2 | Clinical policies, guidelines, and the GRADE system* | Developing rapid reviews32 and recommendations to serve policy and practice needs within organized health care settings.20 Use of the GRADE* system and the GRADEpro software for evidence synthesis. | Performing systematic reviews, including rapid reviews, on well-defined health care questions. Applying the GRADE* system for evidence summaries. Finding and selecting guidelines relevant to a health care problem. Appraising the quality of guidelines. Adapting guidelines for local use. |
| 3 | Implementing evidence-based care | Use of the knowledge-to-action (K2A) framework to guide sustainable implementation of evidence-based clinical policies. | Identifying clinical problems conducive to knowledge translation methods. Applying the principles of knowledge translation and the K2A cycle to implementation of evidence-based policies. Using EBHC skills to inform the process of implementing policies. Defining and measuring outcomes of implementation of clinical policies. |

Note:

*Grading of Recommendations Assessment, Development and Evaluation (GRADE)17

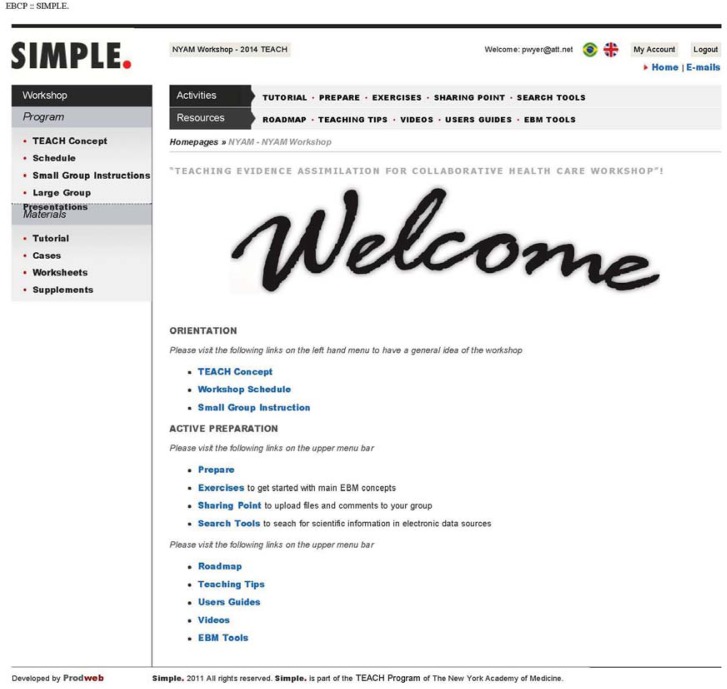

The SIMPLE Website

The TEACH program developed and uses the Scientifically Informed Medical Practice and Learning (SIMPLE) website (Figure 1). The password protected site is also used at workshops at McMaster University and in connection with a workshop series in Rio de Janeiro. The SIMPLE format accommodates multiple tracks including preparatory readings and assignments, interactive exercises, and other interactive features specific to small groups and parallel learning tracks. Conference participants have access to all materials. “Sharing point,” an interactive feature, provides a living electronic environment that enhances the small group process. It allows small group members to communicate with each other and their facilitator both before and during the workshop as well as to upload files.

Figure 1.

Home Page of the Website Developed to Facilitate the TEACH Program

Beyond the Conferences: On-Site Facilitation

The TEACH program offers facilitation of the development of evidence-based improvement projects to participating HPOs. Such projects involve organized team efforts within those institutions and the utilization of knowledge and skills introduced in the annual workshop. TEACH facilitation18 takes different forms with different HPOs. A customized plan is worked out with HPO leadership prior to their initial conference attendance. Facilitation ranges from ongoing participation in a project team, to one or more site visits over a one- or two-year period. In some cases TEACH faculty have designed and led customized on-site educational programs that complement or supplement the conference content. In others, they have served as project consultants.

Experience with the TEACH Framework

Profile of Attendance

From 2009 to 2014, 430 participants took the three-day TEACH course at NYAM. The breakdown of attendees by discipline evolved across the six-year period (Table 2). In 2009, 65/81 (80 percent) of attendees were physicians and physicians in training. During the first three years (2009–2011), physicians and physician trainees accounted for 163/230 (71 percent) of all participants. There was a modest shift toward increased nursing and allied profession participation in the third year (43/81 = 53 percent physicians and physician trainees). Nonetheless, after three years, we perceived the need to enhance multidisciplinary participation. We augmented our faculty to include facilitators with nursing backgrounds in all Level 1 and Level 3 tracks and added plenary speakers from nursing management and implementation. Facilitator teams currently include a physician, a nurse, and a librarian. We also intensified our promotion to and recruitment from allied health professions. By 2014, 30/79 (38 percent) of total attendees came from a nursing background. Physicians attending clinically related tracks accounted for 15/79 (19 percent) of attendance, while 26/79 (33 percent) were health professionals engaged in administrative and policy roles.

Table 2.

Distribution of TEACH Conference Attendees across the Tracks, 2009–2014

| YEAR | TRACK 1 (NUMBER OF PARTICIPANTS) | TRACK 2: POLICIES/RECOMMENDATIONS (NUMBER OF PARTICIPANTS) | TRACK 2: GRADE (NUMBER OF PARTICIPANTS) | TRACK 3 (NUMBER OF PARTICIPANTS) | TOTAL (NUMBER OF PARTICIPANTS) |
|---|---|---|---|---|---|
| 2009 | 40 | — | 15 | 26 | 81 |
| 2010 | 29 | — | 11 | 28 | 68 |
| 2011 | 31 | — | 15 | 35 | 81 |
| 2012 | 20 | 6 | 8 | 16 | 50 |
| 2013 | 20 | 10 | 25 | 16 | 71 |
| 2014 | 22 | 14 | 19 | 24 | 79 |
| TOTAL | 162 | 30 | 93 | 145 | 430 |
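The totals in Table 2 can be recomputed from the yearly counts. The following is a minimal sketch in Python, not part of the TEACH program materials; the variable names are illustrative, and the dash entries for 2009–2011 are assumed to mean that the policies/recommendations track was not yet offered and are counted as zero.

```python
# Yearly attendance transcribed from Table 2, in the order:
# (Track 1, Track 2 policies/recommendations, Track 2 GRADE, Track 3, reported total).
# None stands for a dash in the table (assumption: track not yet offered).
attendance = {
    2009: (40, None, 15, 26, 81),
    2010: (29, None, 11, 28, 68),
    2011: (31, None, 15, 35, 81),
    2012: (20, 6, 8, 16, 50),
    2013: (20, 10, 25, 16, 71),
    2014: (22, 14, 19, 24, 79),
}
# Bottom row of Table 2 as printed.
reported_column_totals = (162, 30, 93, 145, 430)


def count(value):
    """Treat a dash (None) as zero participants."""
    return 0 if value is None else value


# Each row's track counts should add up to that year's reported total.
row_mismatches = [
    year
    for year, (*tracks, reported_total) in attendance.items()
    if sum(count(v) for v in tracks) != reported_total
]

# Each column should add up to the TOTAL row.
column_totals = tuple(
    sum(count(row[i]) for row in attendance.values()) for i in range(5)
)

# Denominator used in the text for the first three conference years.
first_three_years = sum(attendance[year][4] for year in (2009, 2010, 2011))

print(row_mismatches)      # years whose row does not add up
print(column_totals)       # recomputed TOTAL row
print(first_three_years)   # attendees in 2009-2011
```

Under these assumptions the row and column sums agree with the table as printed, and the 2009–2011 total matches the denominator of 230 used in the text.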

Results of Institutional Participation

Although we originally intended to assess gains in knowledge and skills related to EBHC among conference attendees, this proved impractical for several reasons. Validated instruments relevant to all of the TEACH domains did not exist. Furthermore, conference attendees are already asked to do preliminary readings and other preparatory work. Increasing the time burden with cognitive testing during the preconference period seemed unwise. We therefore concentrated evaluation efforts on the use of EBHC knowledge and skill by institutional participants to achieve tangible improvements in care. Between 25 and 40 percent of TEACH participants stem from institutional delegations. In some cases, these participants were clustered into a single workshop group and used a project drawn from their specific institutional interests and needs as the learning vehicle. In other cases, delegations were distributed over the different workshop tracks in order to obtain the broadest possible experience of the conference.

Table 3 lists institutional participants by institution type, change agent and project leadership profiles, and the nature of the projects undertaken, for a representative sample. Table 4 summarizes the six participating institutions for which outcome data are available. We followed the latter for at least one year following their participation to determine the extent to which projects were carried through to implementation. Quantitative outcomes were rarely available. However, one HPO that sent delegations to the TEACH conference over a three-year period developed a program to decrease heart failure readmissions and reported a 17 percent drop in readmissions during the first year of implementation.

Table 3.

Characteristics of Institutional Subscribers to the TEACH Program and Evidence-Based Projects Developed 2009–2014

| INSTITUTION | LOCATION | TYPE | CHANGE AGENTS | PROJECT LEADERSHIP | PROJECTS | OUTCOME |
|---|---|---|---|---|---|---|
| 1 | New York City (NYC) | Community hospital adjunct to academic center | Senior administration, clinical leadership | Clinical leadership | Reducing hospital readmission for patients admitted for acute heart failure | Reduced heart failure readmissions during first year of implementation |
| 2 | Eastern Canada | Community-based academic affiliate | Emergency physician trained in evidence-based practice and quality improvement (QI) | Varied |  | Protocols developed and disseminated |
| 3 | Midwest | Hospital network linked to academic center | Emergency physician | Network QI director |  | Protocols developed and disseminated |
| 4 | Midwest | Hospital network linked to academic center | Emergency physician | Network QI director | CAUTI* | Protocols developed and disseminated |
| 5 | Philadelphia | Academic medical center and affiliate | Nursing clinical specialists | Nursing clinical specialists | CAUTI* | Protocols developed |
| 6 | NYC | Community teaching hospital | Hospital administration | Poorly defined | Sepsis care | Protocols developed |
| 7 | NYC | Ambulatory home care network | Senior administration | Various |  | NA** |

Notes:

*Catheter associated urinary tract infections (CAUTI)

**Newly initiated participation, no outcomes available

Magnet status is an award given by the American Nurses’ Credentialing Center (ANCC), an affiliate of the American Nurses Association. EBCP is a requirement for this award

Table 4.

Types of Facilitation within Institutions Subscribing to the TEACH Program

| INSTITUTION | TYPES OF FACILITATION |
|---|---|
| 1 | On-site course extending TEACH conference participation. Ongoing process facilitation by TEACH core faculty member. |
| 2 | Site visits by TEACH faculty with lecture and consultative meetings. Ongoing process facilitation by TEACH core faculty member. |
| 3 | Site visits by TEACH faculty with lecture and consultative meetings. Ongoing process and content facilitation by TEACH faculty liaison. |
| 4 | Site visits by TEACH faculty with lecture and consultative meetings. |
| 5 | On-site facilitation by core TEACH faculty. |
| 6 | No facilitation beyond TEACH attendance. |

Several potential insights emerging from the TEACH program experience with HPOs might be of use to others attempting to link conference and workshop training in EBHC to on-site improvement projects. Specifically, results seemed particularly impressive when the change agent reflected a partnership between senior administration, middle management, and clinical providers, and when the change agent and leadership had prior training in evidence-based practice. Results were less impressive when the change agent and leadership reflected limited roles and interests within the institution. Table 4 broadly suggests a qualitative relationship between the degree of project success and the intensity of facilitation efforts. Potential reasons for failure of project development included lack of leadership, isolated leadership vulnerable to replacement, and the inability to define and efficiently monitor appropriate metrics over time through the use of information technology (IT) and electronic medical record data.

Evolution of the TEACH Conference Design—The Role of Computerized Decision Support (CDS)

Lessons learned and insights gained since the TEACH program began have led to important design changes. Bringing three dimensions into a workshop framework otherwise modeled on traditional EBM approaches10,11 did not in itself prove adequate to achieving a fully integrated learning environment. Several approaches to enhancing such integration were also ineffective. For example, wrap-up summaries by attendees from different tracks of their activities during the workshop proved distracting and inconsistently engaging. Similarly, a seminar framework in which TEACH faculty presented track-specific content to participants in different tracks resulted in negative participant feedback. Maximizing use of the interactive website during the preparation period, using the opening plenary session to set the stage for the workshop experience as a whole, and selecting plenary sessions to bring forward issues pertaining to the different tracks in a way that is of interest to all participants have proved more promising.

The TEACH conference design has undergone several broad changes. We expanded Level 2 through the addition of a track aimed at developing clinical policies and guidelines within health care organizations. The track is staffed by faculty from an established hospital evidence-based practice center.19,20 Its addition rendered the guidelines and recommendations track relevant to institutional participants. The recruitment for this track further enhanced multidisciplinary participation, which in turn serves to align the TEACH environment with the principles of team-based health care.

From the outset, the TEACH program has emphasized principles of narrative medicine,21 storytelling, and the relational dimension of health care.15 Initially, a narrative medicine pioneer took active part in the conferences, including delivering plenary presentations. Although many participants were enthusiastic, others failed to recognize the relevance of these presentations. We therefore revised the approach by dedicating half of the plenary time on day 2 to a special integrated session addressing issues to do with patient-centered health care in a way that is relevant to all dimensions of the conference.

As the TEACH program has matured, the electronic health record (EHR) and CDS have come into their own. Although the advent of the EHR, and of health IT in general, has brought great promise,22 important risks and barriers to uptake are also visible.23 Furthermore, CDS remains underutilized3 even though some recent efforts report success.24 Clearly, the interface between clinical evidence, evidence-informed clinical policies and guidelines, and the delivery of care to individual patients will increasingly be an electronic one. Hence, models for profiling and responding to user attitudes and experiences have become essential components of a structured approach to implementing clinical policies25,26 and have also begun to be incorporated into knowledge translation texts.27 Accordingly, we plan to augment the Level 3 implementation track of the TEACH program by incorporating principles of IT and CDS into the learning objectives in the context of knowledge translation and implementation skills. The TEACH environment should provide a valuable learning context for the acquisition of principles of CDS and IT.

Strengths, Weaknesses, Future Perspectives

The TEACH framework offers an environment in which learners from HPOs can gain knowledge and skills pertaining to multiple dimensions of EBHC. It provides all participants with access to internationally recognized innovators in three major dimensions of EBHC, as well as intensive tutelage in a preferred learning area. The structure of TEACH reflects the complexity inherent in maximizing the contribution of information from research to the quality of health services. This opportunity, combined with the multidisciplinary, team-based makeup of the tracks and small groups and the inclusion of frontline health care professionals alongside administrators and policymakers, generates a compelling learning environment. Over time, organizations, including guideline committees from medical specialty societies, have assigned participants to attend multiple tracks within the conference, maximizing their ability to absorb the key skill sets that characterize the different domains.

A potential limitation of TEACH is that it is pitched to the needs of moderate-size HPOs. Hence, it may be perceived to have less appeal to practitioners within a small or solo practice environment, or to those within large integrated delivery systems. The practice context of the former may prevent them from directly absorbing the range of knowledge, skills, and activities embodied within the TEACH program. The latter characteristically have their own differentiated frameworks for realizing evidence-based care. However, the TEACH program has something to offer virtually any health care professional likely to enroll in it. Many health care professionals enroll as individuals and are able to increase the knowledge and skills they would be exposed to in conventional evidence-based training programs, but within a broader framework of application.

TEACH is to be distinguished from other training efforts that have encompassed multiple EBHC skill areas. One such effort offered tracks in different areas related to EBHC, including the GRADE system, knowledge translation, and the conducting of systematic reviews.28 However, the program was not generated by a unified concept of organizational capacity building and did not address the needs of delegations from HPOs. We believe that TEACH is unique among training programs in EBHC in its systematic identification and incorporation of distinct but essential domains of knowledge, skill, and proficiency. Traditional EBM workshops, largely following the McMaster University model, focus on critical appraisal skills framed within the context of the care of individual patients.10,11 Additionally, the Institute for Healthcare Improvement (IHI) conducts seminars for delegations from provider organizations focused on training in quality improvement skills, while the intensive training programs offered by Intermountain Healthcare focus more on the organizational level, also from a quality improvement standpoint. Although quality improvement efforts share goals with those of knowledge translation and the K2A model, they also have differences.29 Otherwise, the IHI program only marginally overlaps the content of training in EBHC.

From the standpoint of cost, the three-day TEACH conference compares favorably with other EBHC training programs and is priced in the lower range of such workshops. It operates on a breakeven basis within the host institution that provides section discounts on facilities for internally sponsored events. This allows the offering of tuition discounts for students and group rates for participating institutions. Additional fees are required for on-site courses and other facilitation efforts. One institution estimated the total cost of participation over a three-year period to be $100,000, of which $10,000 comprised the cost of conference attendance for approximately 20 individuals, and the remainder reflected costs of personnel time—including conference attendance and on-site time requirements—and the salary of an additional full-time on-site nurse coordinator who was hired as part of an implementation plan to decrease hospital readmissions.
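The cost figures reported by this institution can be broken down with simple arithmetic. The sketch below is illustrative only: the variable names are ours, and the per-attendee figure is approximate because the source gives the number of attendees as "approximately 20."

```python
# Figures as reported by one participating institution over three years.
total_cost = 100_000       # estimated total cost of participation (USD)
conference_fees = 10_000   # portion spent on conference attendance (USD)
attendees = 20             # approximate head count, as stated in the text

# Remainder: personnel time plus the salary of the on-site nurse coordinator.
personnel_and_salary = total_cost - conference_fees

# Share of the total that went to conference attendance.
fee_share = conference_fees / total_cost

# Approximate conference cost per attendee across the three years.
fee_per_attendee = conference_fees / attendees

print(personnel_and_salary)  # 90000
print(fee_share)             # 0.1
print(fee_per_attendee)      # 500.0
```

On these figures, conference attendance accounted for about one-tenth of the institution's estimated outlay; the bulk reflected staff time and the coordinator position.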

Effectiveness of the TEACH framework is limited by resource constraints within participating institutions. For example, the ability to collect, mine, and interpret practice-based evidence drawn from EHR data is rapidly becoming a critically important dimension of EBHC.30 Many smaller HPOs are still in the early stages of developing such capacity. We have observed that the lack of those capabilities frequently undermines the ability to create verifiable and sustainable improvements within project frameworks arising from institutional participation in TEACH. Longer-term outcome information on TEACH-related projects has proved elusive. Furthermore, we have not been able to relate actual increases in knowledge and skill on the part of conference participants to demonstrable increases in capacity for EBHC within participating institutions. The lack of validated assessment tools across the full range of required skills31 makes this an objective that will require longer-range development. We believe that we have demonstrated that it is possible for an EBHC training program operating within the scale of conventional EBM workshops to address a minimum range of skill domains required to increase the use of research within organized health care settings. An integrated learning environment for EBHC may provide a context within which principles of CDS may be absorbed in a fashion that increases utilization and effectiveness.

Acknowledgments

Funding for the TEACH conference was made possible in part by Grant # 5R13HS018607 from the Agency for Healthcare Research and Quality (AHRQ).

The following individuals contributed substantially to the TEACH project: Saadia Akhtar, MD, Mt. Sinai Beth Israel Medical Center, Icahn School of Medicine, NY; Barnet Eskin, MD, PhD, Morristown Medical Center, NJ; Ian Graham, PhD, University of Ottawa, Canada; Judy Honig, EdD, DNP, Columbia University School of Nursing, NY; T.J. Jirasevijinda, MD, Weill Cornell Medical College, NY; Barbara Lock, MD, Columbia University Medical Center, NY; Pattie Mongelis, MLS, New York City; Patricia Quinlan, RN, DScN, Hospital for Special Surgery, NY; Dorice Vieira, MLS, MPH, NY University Langone Medical Center, NY; and Arlene Smaldone, RN, PhD, Columbia University School of Nursing, NY.


References

1. Baiardini I, Braido F, Bonini M, Compalati E, Canonica GW. Why do doctors and patients not follow guidelines? Curr Opinion Allergy Clin Immunol. 2009;9:228–233. doi: 10.1097/ACI.0b013e32832b4651.

2. Barnett ML, Linder JA. Antibiotic Prescribing for Adults With Acute Bronchitis in the United States, 1996–2010. JAMA. 2014;311:2020–2022. doi: 10.1001/jama.2013.286141.

3. Elwyn G, Scholl I, Tietbohl C, Mann M, Edwards AGK, Clay C, et al. “Many miles to go....”: A systematic review of the implementation of patient decision support interventions into routine clinical practice. BMC Med Informatics Dec Making. 2013;13(Suppl 2):S14. doi: 10.1186/1472-6947-13-S2-S14.

4. Coomarasamy A, Khan KS. What is the evidence that postgraduate teaching in evidence based medicine changes anything? A systematic review. BMJ. 2004;329:1017–1021. doi: 10.1136/bmj.329.7473.1017.

5. McGlynn EA, Asch SM, Adams J, Keesey J, Hicks J, DeCristofaro A, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348:2635–2645. doi: 10.1056/NEJMsa022615.

6. Eddy DM. Evidence-based medicine: A unified approach. Health Affairs. 2005;24:9–17. doi: 10.1377/hlthaff.24.1.9.

7. Cooke M, Irby DM, O’Brien BC. Educating Physicians: A Call for Reform of Medical School and Residency. Jossey-Bass; 2010.

8. Wahabi HA, Al-Ansary LA. Innovative teaching methods for capacity building in knowledge translation. BMC Med Educ. 2011;11:85. doi: 10.1186/1472-6920-11-85.

9. Wright SW, Trott A, Lindsell CJ, Smith C, Gibler WB. Creating a System to Facilitate Translation of Evidence Into Standardized Clinical Practice: A Preliminary Report. Ann Emerg Med. 2008;51:80–86. doi: 10.1016/j.annemergmed.2007.04.009.

10. Murad MH, Montori VM, Kunz R, Letelier LM, Keitz SA, Dans AL, et al. How to teach evidence-based medicine to teachers: reflections from a workshop experience. J Eval Clin Prac. 2009;15:1205–1207. doi: 10.1111/j.1365-2753.2009.01344.x.

11. Leipzig RM, Wallace EZ, Smith LG, Sullivant J, Dunn K, McGinn T. Teaching evidence-based medicine: a regional dissemination model. Teach Learn Med. 2003;15:204–209. doi: 10.1207/S15328015TLM1503_09.

12. Lang E, Wyer P, Tabas J, Krishnan J. Educational and Research Advances Stemming From the 2007 Academic Emergency Medicine Consensus Conference in Knowledge Translation. Acad Emerg Med. 2010;17:865–869. doi: 10.1111/j.1553-2712.2010.00825.x.

13. Straus S, Tetroe J, Graham ID. Knowledge Translation in Health Care. 2nd Edition. Oxford: Wiley-Blackwell; 2013.

14. Silva SA, Charon R, Wyer PC. The marriage of evidence and narrative: scientific nurturance within clinical practice. J Evaluation Clin Prac. 2011;17:585–593. doi: 10.1111/j.1365-2753.2010.01551.x.

15. Wyer PC, Silva SA, Post SG, Quinlan P. Relationship-centred care: antidote, guidepost or blind alley? The epistemology of 21st century health care. J Eval Clin Pract. 2014;20:881–889. doi: 10.1111/jep.12224.

16. Silva SA, Wyer PC. The Roadmap: a blueprint for evidence literacy within a Scientifically Informed Medical Practice and LEarning model. Int J Person Centered Med. 2012 In Press.

17. Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336:924–926. doi: 10.1136/bmj.39489.470347.AD.

- 18.Stetler CB, Legro MW, Rycroft-Malone J, Bowman C, Curran G, Guihan M, et al. Role of “external facilitation” in implementation of research findings: a qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implementation Science. 2006;1:23. doi: 10.1186/1748-5908-1-23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Gagnon M. Hospital-Based Health Technology Assessment: Developments to Date. Pharmaco Economics. 2014;32:819–834. doi: 10.1007/s40273-014-0185-3. [DOI] [PubMed] [Google Scholar]

- 20.Umscheid CA, Williams K, Brennan PJ. Hospital-Based Comparative Effectiveness Centers: Translating Research into Practice to Improve the Quality, Safety and Value of Patient Care. J Gen Intern Med. 2010;25:1352–1355. doi: 10.1007/s11606-010-1476-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Charon R, Wyer P, the NEBM Working Group The Art of Medicine: Narrative Evidence Based Medicine. Lancet. 2008;371:296–297. doi: 10.1016/s0140-6736(08)60156-7. [DOI] [PubMed] [Google Scholar]

- 22.Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, et al. Systematic Review: Impact of Health Information Technology on Quality, Efficiency, and Costs of Medical Care. Ann Intern Med. 2006;144:742–752. doi: 10.7326/0003-4819-144-10-200605160-00125. [DOI] [PubMed] [Google Scholar]

- 23.Middleton B, Bloomrosen M, Dente MA, Hashmat B, Koppel R, Overhage JM, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;20:e2–e8. doi: 10.1136/amiajnl-2012-001458. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.McGinn TG, McCullagh L, Kannry MJ, Knaus M, Sofianou A, Wisnivesky JP, et al. Efficacy of an Evidence-Based Clinical Decision Support in Primary Care Practices A Randomized Clinical Trial. JAMA Intern Med. 2013;173(17):1584–1591. doi: 10.1001/jamainternmed.2013.8980. [DOI] [PubMed] [Google Scholar]

- 25.Kushniruk AW, Borycki EM, Anderson J, Anderson M, Nicoll J, Kannry J. Using Clinical and Computer Simulations to Reason About the Impact of Context on System Safety and Technology-Induced Error. Studies Health Technol Informatics. 2013;194:154–159. [PubMed] [Google Scholar]

- 26.Monkman H, Borycki EM, Kushrinuk AW, Kuo M. Exploring the Contextual and Human Factors of Electronic Medication Reconciliation Research: A Scoping Review. Studies Health Technol Informatics. 2013;194:166–172. [PubMed] [Google Scholar]

- 27.Gupta S, McKibbon KA. Chapter 3: Informatics Inverventions. In: Straus SE, Tetroe J, Graham ID, editors. Knowledge Translation in Health Care. Oxford: Wiley Blackwell; 2013. pp. 189–196. [Google Scholar]

- 28.University of Alberta Putting Evidence Into Practice Edmonton. http://www.pep.ualberta.ca/index.htm Accessed June 12, 2015.

- 29.Sales A. Chapter 45: Quality improvement theories. In: Straus SE, Tetroe J, Graham ID, editors. Knowledge Translation in Health Care. Oxford: Wiley Blackwell; 2013. pp. 320–328. [Google Scholar]

- 30.Weil AR. Big Data In Health: A New Era For Research And Patient Care (Editorial) Health Affairs. 2014;33:1110. doi: 10.1377/hlthaff.2014.0689. [DOI] [PubMed] [Google Scholar]

- 31.Chatterji M, Graham MJ, Wyer PC. Mapping Cognitive Overlaps between Practice-Based Learning and Improvement and Evidence-based Medicine: An Operational Definition for Assessing Resident Physician Competence. J Graduate Med Educ. 2009;1:287–298. doi: 10.4300/JGME-D-09-00029.1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Ganann R, Cilska D, Thomas H. Expediting systematic reviews: methods and implications of rapid reviews. Implementation Sci. 2010;5:56. doi: 10.1186/1748-5908-5-56. [DOI] [PMC free article] [PubMed] [Google Scholar]