Abstract

Signal transduction in living cells is vital to maintain life itself, where information transfer in a noisy environment plays a significant role. In a rather different context, the recent intensive research on ‘Maxwell's demon', a feedback controller that utilizes information of individual molecules, has led to a unified theory of information and thermodynamics. Here we combine these two streams of research, and show that the second law of thermodynamics with information reveals the fundamental limit of the robustness of signal transduction against environmental fluctuations. In particular, we find that the degree of robustness is quantitatively characterized by an informational quantity called transfer entropy. Our information-thermodynamic approach is applicable to biological communication inside cells, in which there is no explicit channel coding in contrast to artificial communication. Our result could open up a novel biophysical approach to understand information processing in living systems on the basis of the fundamental information–thermodynamics link.

The connection between information and thermodynamics is embodied in the figure of Maxwell's demon, a feedback controller. Here, the authors apply thermodynamics of information to signal transduction in chemotaxis of E. coli, predicting that its robustness is quantified by transfer entropy.

A crucial feature of biological signal transduction is that it works in a noisy environment1,2,3. To understand its mechanism, signal transduction has been modelled as noisy information processing4,5,6,7,8,9,10,11. For example, signal transduction in the chemotaxis of Escherichia coli (E. coli) has been investigated as a simple model system for sensory adaptation12,13,14,15,16. A crucial ingredient of E. coli chemotaxis is a feedback loop, which enhances the robustness of the signal transduction against environmental noise.

The information transmission inside the feedback loop can be quantified by the transfer entropy, which was originally introduced in the context of time series analysis17, and has been studied in electrophysiological systems18, chemical processes19 and artificial sensorimotors20. The transfer entropy is the conditional mutual information representing the directed information flow, and gives an upper bound of the redundancy of the channel coding in an artificial communication channel with a feedback loop21; this is a fundamental consequence of Shannon's second theorem22,23. However, as there is no explicit channel coding inside living cells, the role of the transfer entropy in biological communication has not been fully understood.

The transfer entropy also plays a significant role in thermodynamics24. Historically, the connection between thermodynamics and information was first discussed in the thought experiment of ‘Maxwell's demon' in the nineteenth century25,26,27, where the demon is regarded as a feedback controller. In the recent progress on this problem in light of modern non-equilibrium statistical physics28,29, a universal and quantitative theory of thermodynamic feedback control has been developed, leading to the field of information thermodynamics24,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48. Information thermodynamics reveals a generalization of the second law of thermodynamics, which implies that the entropy production of a target system is bounded by the transfer entropy from the target system to the outside world24.

In this article, we apply the generalized second law to establish the quantitative relationship between the transfer entropy and the robustness of adaptive signal transduction against noise. We show that the transfer entropy gives the fundamental upper bound of the robustness, elucidating an analogy between information thermodynamics and Shannon's information theory22,23. We numerically studied the information-thermodynamic efficiency of the signal transduction of E. coli chemotaxis, and found that it is efficient as an information-thermodynamic device, even when it is highly dissipative as a conventional heat engine.

Results

Model

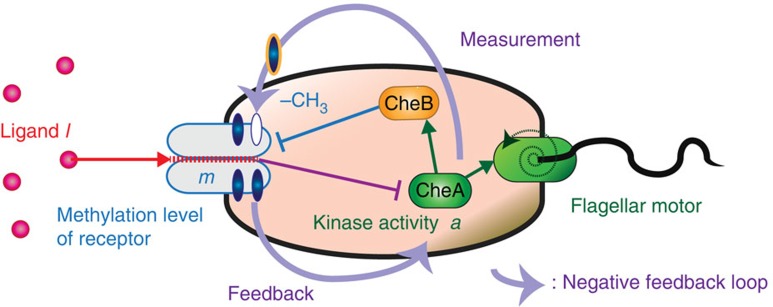

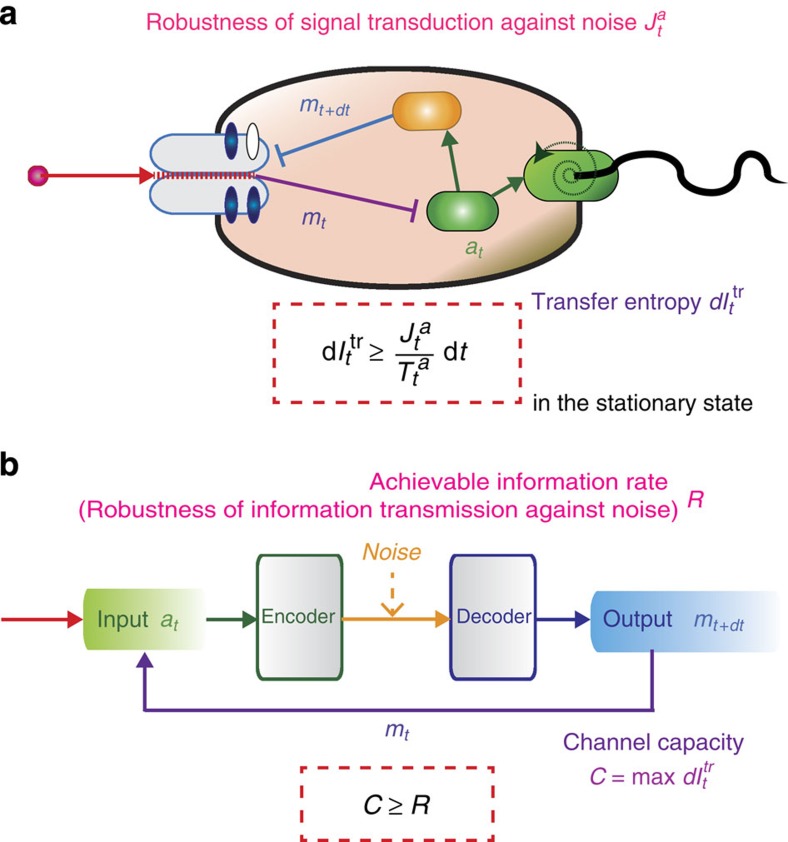

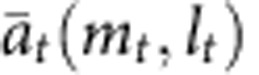

The main components of E. coli chemotaxis are the ligand density change l, the kinase activity a and the methylation level m of the receptor (Fig. 1). A feedback loop exists between a and m, which reduces the environmental noise in the signal transduction pathway from l to a (ref. 49). Let $l_t$, $a_t$ and $m_t$ be the values of these quantities at time t. They obey stochastic dynamics due to the noise, and are described by the following coupled Langevin equations7,14,16:

Figure 1. Schematic of adaptive signal transduction of E. coli bacterial chemotaxis.

Kinase activity a (green) activates a flagellar motor to move E. coli in the direction of higher ligand density l (red) by using the information stored in methylation level m (blue). CheA is the histidine kinase related to the flagellar motor, and the response regulator CheB, activated by CheA, removes methyl groups from the receptor. The methylation level m plays a similar role to the memory of Maxwell's demon8,24, which reduces the effect of the environmental noise on the target system a; the negative feedback loop (purple arrows) counteracts the influence of ligand binding.

|

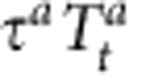

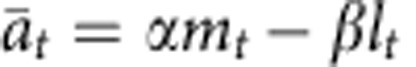

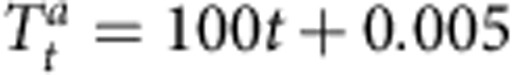

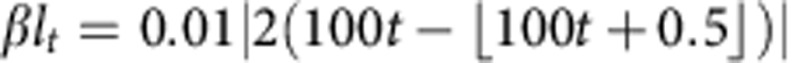

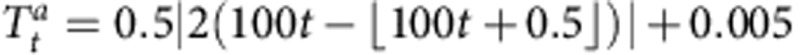

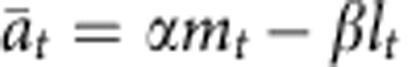

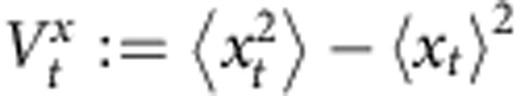

where $\bar{a}_t(m_t, l_t)$ is the stationary value of the kinase activity under the instantaneous values of the methylation level $m_t$ and the ligand signal $l_t$. In the case of E. coli chemotaxis, we can approximate $\bar{a}_t(m_t, l_t)$ as $\alpha m_t - \beta l_t$ by linearizing it around the steady-state value7,14. $\xi^x_t$ ($x = a, m$) is the white Gaussian noise with $\langle \xi^x_t \rangle = 0$ and $\langle \xi^x_t \xi^{x'}_{t'} \rangle = 2 T^x_t \delta_{xx'} \delta(t - t')$, where $\langle \cdots \rangle$ describes the ensemble average. $T^x_t$ describes the intensity of the environmental noise at time t, which is not necessarily thermal inside cells. The noise intensity $T^a_t$ characterizes the ligand fluctuation. The time constants satisfy $\tau^m \gg \tau^a > 0$, which implies that the relaxation of a to $\bar{a}_t$ is much faster than that of m.
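A minimal sketch of how the coupled Langevin equations (1) could be integrated numerically, assuming the linearized form $\bar{a}_t = \alpha m_t - \beta l_t$ and a plain Euler-Maruyama scheme (the integrator actually used by the authors is not stated in this section). The function name `simulate`, the time step `dt` and the value `T_m = 0.005` are illustrative assumptions; `tau_a`, `tau_m`, `alpha` and `T_a` follow the values quoted in the caption of Fig. 4.

```python
import math
import random


def simulate(tau_a=0.02, tau_m=0.2, alpha=2.7, T_a=0.005, T_m=0.005,
             beta_l=lambda t: 0.01 if t > 0 else 0.0,
             dt=1e-4, n_steps=2000, seed=0):
    """Euler-Maruyama integration of the linearized equation (1).

    da = -(a - (alpha*m - beta_l(t))) / tau_a * dt + sqrt(2*T_a*dt) * N(0, 1)
    dm = -a / tau_m * dt                         + sqrt(2*T_m*dt) * N(0, 1)

    T_m and dt are assumptions made for this sketch only.
    Returns a list of (t, a, m) tuples.
    """
    rng = random.Random(seed)
    a, m = 0.0, 0.0
    traj = [(0.0, a, m)]
    for k in range(n_steps):
        t = k * dt
        a_bar = alpha * m - beta_l(t)  # instantaneous stationary value of a
        da = -(a - a_bar) / tau_a * dt + math.sqrt(2.0 * T_a * dt) * rng.gauss(0.0, 1.0)
        dm = -a / tau_m * dt + math.sqrt(2.0 * T_m * dt) * rng.gauss(0.0, 1.0)
        a, m = a + da, m + dm
        traj.append((t + dt, a, m))
    return traj
```

Setting `T_a = T_m = 0` gives the noise-free response to a step in the ligand signal: `a` first drops towards $-\beta l_t$ and then relaxes back to 0 while `m` approaches $\beta l_t/\alpha$, which is the adaptation behaviour described in the text.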

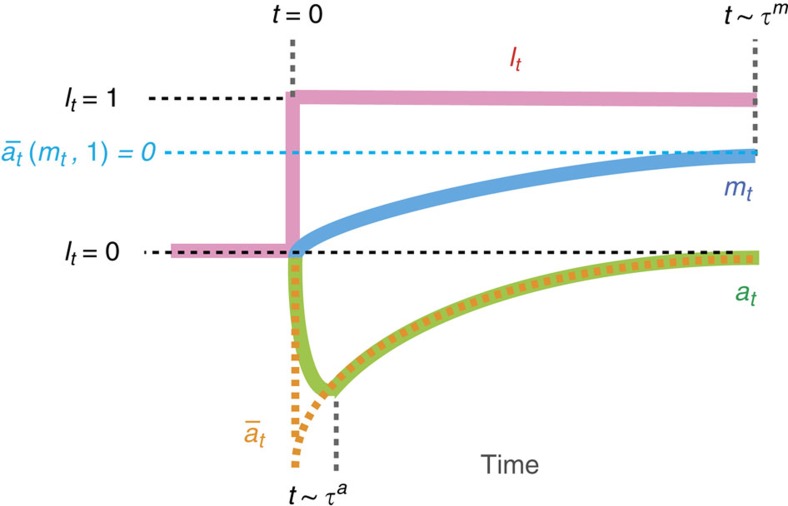

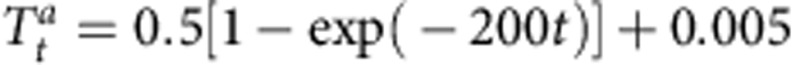

The mechanism of adaptation in this model is as follows (Fig. 2; refs 14, 16). Suppose that the system is initially in a stationary state with lt = 0 and  at time t < 0, and lt suddenly changes from 0 to 1 at time t = 0 as a step function. Then, at rapidly equilibrates to

at time t < 0, and lt suddenly changes from 0 to 1 at time t = 0 as a step function. Then, at rapidly equilibrates to  so that the difference

so that the difference  becomes small. The difference

becomes small. The difference  plays an important role, which characterizes the level of adaptation. Next, mt gradually changes to satisfy

plays an important role, which characterizes the level of adaptation. Next, mt gradually changes to satisfy  , and thus at returns to 0, where

, and thus at returns to 0, where  remains small.

remains small.

Figure 2. Typical dynamics of adaptation with the ensemble average.

Suppose that lt changes as a step function (red solid line). Then, at suddenly responds (green solid line), followed by the gradual response of mt (blue solid line). The adaptation is achieved by the relaxation of at to  (orange dashed line). The methylation level mt gradually changes to

(orange dashed line). The methylation level mt gradually changes to  (blue dashed line).

(blue dashed line).

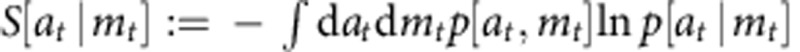

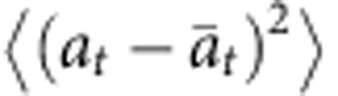

Robustness against environmental noise

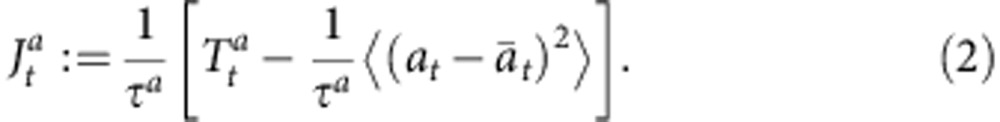

We introduce a key quantity that characterizes the robustness of adaptation, which is defined as the difference between the intensity of the ligand noise  and the mean square error of the level of adaptation

and the mean square error of the level of adaptation  :

:

|

The larger  is, the more robust the signal transduction is against the environmental noise. In the case of thermodynamics,

is, the more robust the signal transduction is against the environmental noise. In the case of thermodynamics,  corresponds to the heat absorption in a and characterizes the violation of the fluctuation–dissipation theorem28. Since the environmental noise is not necessarily thermal in the present situation,

corresponds to the heat absorption in a and characterizes the violation of the fluctuation–dissipation theorem28. Since the environmental noise is not necessarily thermal in the present situation,  is not exactly the same as the heat, but is a biophysical quantity that characterizes the robustness of adaptation against the environmental noise.

is not exactly the same as the heat, but is a biophysical quantity that characterizes the robustness of adaptation against the environmental noise.

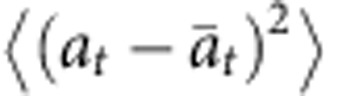

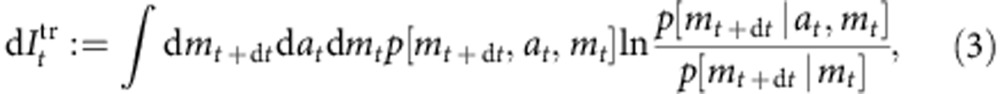

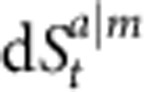

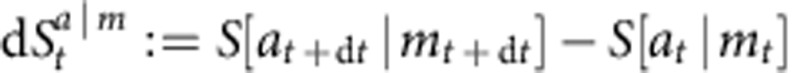

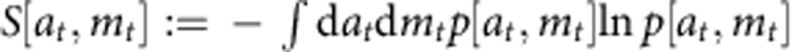

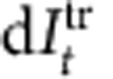

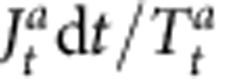

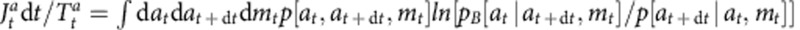

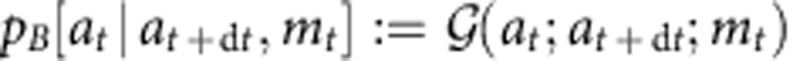

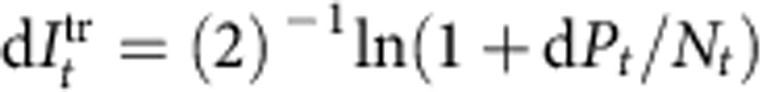

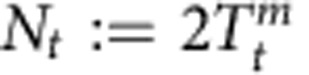
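As an illustration of how the robustness could be estimated from an ensemble of samples, the sketch below assumes the heat-absorption form $J^a_t = (1/\tau^a)\,[T^a_t - \langle (a_t - \bar{a}_t)^2 \rangle / \tau^a]$. The function name `robustness` and its argument layout are hypothetical, chosen only for this example.

```python
def robustness(a_samples, a_bar_samples, T_a, tau_a):
    """Estimate J^a = (T_a - <(a - a_bar)^2> / tau_a) / tau_a from samples.

    a_samples and a_bar_samples are equal-length sequences holding
    simultaneous values of a_t and a_bar_t across the ensemble.
    """
    n = len(a_samples)
    if n == 0 or n != len(a_bar_samples):
        raise ValueError("need two non-empty sequences of equal length")
    # Mean square error of the level of adaptation.
    mse = sum((a - ab) ** 2 for a, ab in zip(a_samples, a_bar_samples)) / n
    return (T_a - mse / tau_a) / tau_a
```

With this sign convention, perfect tracking (`a == a_bar` in every sample) gives the largest possible value `T_a / tau_a`, and a mean square error equal to `tau_a * T_a` gives zero.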

Information flow

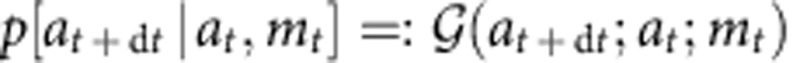

We here discuss the quantitative definition of the transfer entropy17. The transfer entropy from a to m at time t is defined as the conditional mutual information between at and mt+dt under the condition of mt:

|

where p[mt+dt,at,mt] is the joint probability distribution of (mt+dt,at,mt), and p[mt+dt|at,mt] is the probability distribution of mt+dt under the condition of (at,mt). The transfer entropy characterizes the directed information flow from a to m during an infinitesimal time interval dt (refs 17, 50), which quantifies a causal influence between them51,52. From the non-negativity of the conditional mutual information23, that of the transfer entropy follows:  .

.

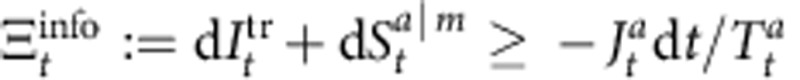

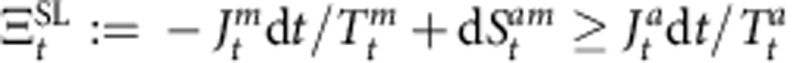

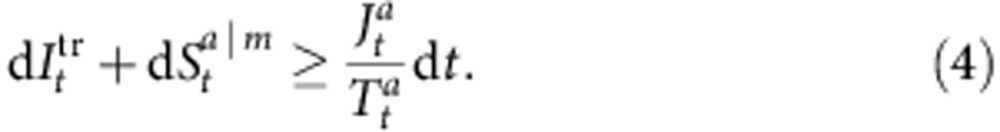

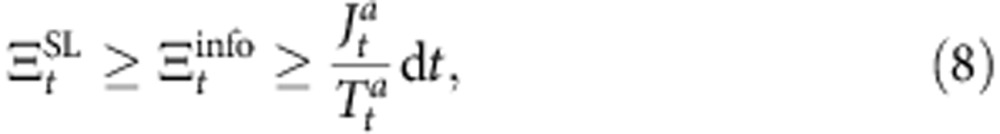

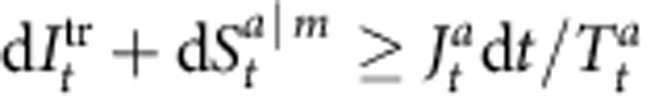

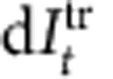
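To make the definition concrete, the following sketch evaluates a conditional mutual information $I(X;Y \mid Z)$ for a discrete joint distribution, which is what the transfer entropy reduces to if $m_{t+dt}$, $a_t$ and $m_t$ are binned into discrete values (the binning is an assumption of this example; the article works with continuous variables). The function name `conditional_mutual_information` is hypothetical.

```python
import math
from collections import defaultdict


def conditional_mutual_information(joint):
    """Return I(X;Y|Z) in nats for a discrete joint distribution.

    `joint` maps (x, y, z) -> probability. For the transfer entropy from
    a to m, read x as m_{t+dt}, y as a_t and z as m_t.
    """
    p_z = defaultdict(float)
    p_xz = defaultdict(float)
    p_yz = defaultdict(float)
    for (x, y, z), p in joint.items():
        p_z[z] += p
        p_xz[(x, z)] += p
        p_yz[(y, z)] += p
    total = 0.0
    for (x, y, z), p in joint.items():
        if p > 0.0:
            # p(x|y,z) / p(x|z) = p(x,y,z) p(z) / (p(y,z) p(x,z))
            total += p * math.log(p * p_z[z] / (p_yz[(y, z)] * p_xz[(x, z)]))
    return total
```

The result is zero when X and Y are independent given Z, and equals ln 2 when X is a copy of a fair binary Y, in line with the non-negativity stated above.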

Second law of information thermodynamics

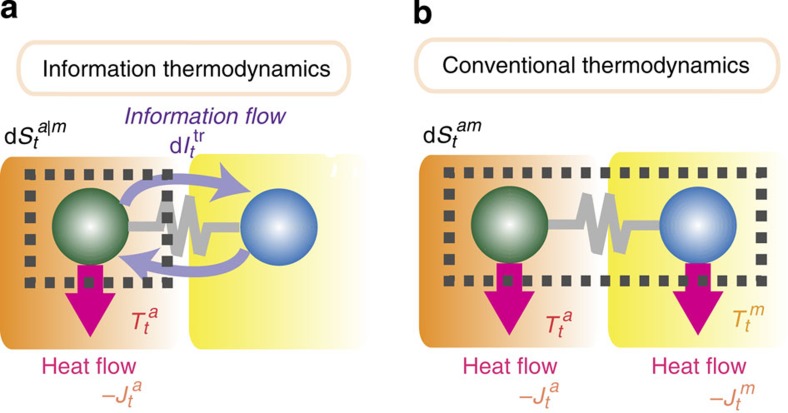

We now consider the second law of information thermodynamics, which characterizes the entropy change in a subsystem in terms of the information flow (Fig. 3). In the case of equation (1), the generalized second law is given as follows (see also Methods section):

Figure 3. Schematics of information thermodynamics and conventional thermodynamics.

A green (blue) circle indicates subsystem a (m) and a grey polygonal line indicates their interaction. (a) The second law of information thermodynamics characterizes the entropy change in a subsystem in terms of the information flow between the subsystem and the outside world (that is, $dI^{\mathrm{tr}}_t + dS^{a|m}_t \ge J^a_t\, dt / T^a_t$). The information-thermodynamics picture concerns the entropy change inside the dashed square that only includes subsystem a. (b) The conventional second law of thermodynamics states that the entropy change in a subsystem is compensated for by the entropy change in the outside world (that is, $-J^m_t\, dt / T^m_t + dS^{a,m}_t \ge J^a_t\, dt / T^a_t$). The conventional thermodynamics picture concerns the entropy change inside the dashed square, which includes the entire systems a and m. As explicitly shown in this paper, information thermodynamics gives a tighter bound of the robustness $J^a_t$ in the biochemical signal transduction of E. coli chemotaxis.

|

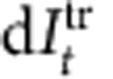

Here,  is the conditional Shannon entropy change defined as

is the conditional Shannon entropy change defined as  with

with  , which vanishes in the stationary state. The transfer entropy dIttr on the left-hand side of equation (4) shows the significant role of the feedback loop, implying that the robustness of adaptation can be enhanced against the environmental noise by the feedback using information. This is analogous to the central feature of Maxwell's demon.

, which vanishes in the stationary state. The transfer entropy dIttr on the left-hand side of equation (4) shows the significant role of the feedback loop, implying that the robustness of adaptation can be enhanced against the environmental noise by the feedback using information. This is analogous to the central feature of Maxwell's demon.

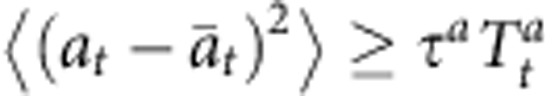

To further clarify the meaning of inequality (4), we focus on the case of the stationary state. If there were no feedback loop between m and a, then the second law would reduce to $\langle (a_t - \bar{a}_t)^2 \rangle \ge \tau^a T^a_t$, which, as naturally expected, implies that the fluctuation of the signal transduction is bounded from below by the intensity of the environmental noise. In contrast, in the presence of a feedback loop, $\langle (a_t - \bar{a}_t)^2 \rangle$ can be smaller than $\tau^a T^a_t$ owing to the transfer entropy $dI^{\mathrm{tr}}_t$ in the feedback loop:

$$
dI^{\mathrm{tr}}_t \ge \frac{J^a_t}{T^a_t}\, dt.
\tag{5}
$$

This inequality clarifies the role of the transfer entropy in biochemical signal transduction; the transfer entropy characterizes an upper bound of the robustness of the signal transduction in the biochemical network. The equality in equation (5) is achieved in the limit of $\alpha \to 0$ and $\tau^a/\tau^m \to 0$ for the linear case with $\bar{a}_t = \alpha m_t - \beta l_t$ (Supplementary Note 1). The latter limit means that a relaxes infinitely fast and the process is quasi-static (that is, reversible) in terms of a. This is analogous to the fact that Maxwell's demon can achieve the maximum thermodynamic gain in reversible processes35. In general, the information-thermodynamic bound becomes tight if $\alpha$ and $\tau^a/\tau^m$ are both small. The realistic parameters of the bacterial chemotaxis are given by $\alpha \simeq 2.7$ and $\tau^a/\tau^m \simeq 0.1$ (refs 7, 14, 16), and therefore the real adaptation process is accompanied by a finite amount of information-thermodynamic dissipation.
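The stationary-state bound can be checked in closed form for the linear model. The sketch below is our own illustration, not the calculation of Supplementary Note 1: it solves the Lyapunov equation for the stationary covariances of $(a, m)$ under $\bar{a}_t = \alpha m_t - \beta l_t$ with constant $l_t$, then evaluates $J^a$ in the heat-absorption form $(1/\tau^a)[T^a - \langle (a - \bar{a})^2 \rangle/\tau^a]$ and the Gaussian transfer-entropy rate $dI^{\mathrm{tr}}/dt = (1-\rho^2)\,V^a / [4\,T^m (\tau^m)^2]$. The name `stationary_check` and the default `T_m = 0.005` are assumptions.

```python
def stationary_check(tau_a=0.02, tau_m=0.2, alpha=2.7, T_a=0.005, T_m=0.005):
    """Stationary covariances of the linearized model and both sides of (5).

    Solves A S + S A^T + D = 0 with
    A = [[-1/tau_a, alpha/tau_a], [-1/tau_m, 0]] and D = diag(2*T_a, 2*T_m).
    T_m is an assumed value, used for illustration only.
    """
    s_am = T_m * tau_m
    s_aa = alpha * T_m * tau_m + T_a * tau_a
    s_mm = (T_m * tau_m + alpha * T_m * tau_a + T_a * tau_a ** 2 / tau_m) / alpha
    # Mean square error of the level of adaptation, <(a - a_bar)^2>.
    mse = s_aa - 2.0 * alpha * s_am + alpha ** 2 * s_mm
    # Robustness J^a in the heat-absorption form.
    J_a = (T_a - mse / tau_a) / tau_a
    # Transfer-entropy rate dI^tr/dt for Gaussian statistics.
    rho2 = s_am ** 2 / (s_aa * s_mm)
    te_rate = (1.0 - rho2) * s_aa / (4.0 * T_m * tau_m ** 2)
    return {"J_a": J_a, "te_rate": te_rate, "mse": mse}
```

Under these assumptions the default parameters give `J_a / T_a` of about -378 against a transfer-entropy rate of about 0.86, so inequality (5) holds with a finite gap, consistent with the statement that the realistic parameter regime is dissipative.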

Our model of chemotaxis has the same mathematical structure as the feedback cooling of a colloidal particle by Maxwell's demon36,38,42,47, where the feedback cooling is analogous to the noise filtering in the sensory adaptation49. This analogy is a central idea of our study; the information-thermodynamic inequalities (equation (5) in our case) characterize the robustness of adaptation as well as the performance of feedback cooling.

Numerical result

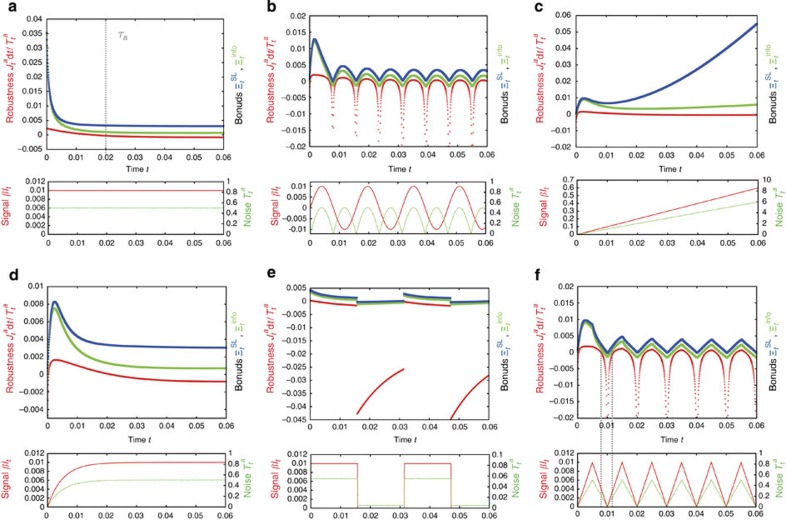

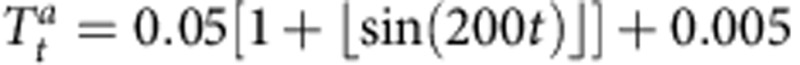

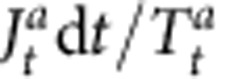

We consider the second law (equation (4)) in non-stationary dynamics, and numerically demonstrate the power of this inequality. Figure 4 shows  and

and

Figure 4. Numerical results of the information-thermodynamics bound on the robustness.

We compare the robustness  (red line), the information-thermodynamic bound

(red line), the information-thermodynamic bound  (green line) and the conventional thermodynamic bound

(green line) and the conventional thermodynamic bound  (blue line). The initial condition is the stationary state with

(blue line). The initial condition is the stationary state with  , fixed ligand signal βlt = 0, and noise intensity Ta = 0.005. We numerically confirmed that

, fixed ligand signal βlt = 0, and noise intensity Ta = 0.005. We numerically confirmed that  holds for the six transition processes. These results imply that, for the signal transduction model, the information-thermodynamic bound is tighter than the conventional thermodynamic bound. The parameters are chosen as τa = 0.02, τm = 0.2, α = 2.7 and

holds for the six transition processes. These results imply that, for the signal transduction model, the information-thermodynamic bound is tighter than the conventional thermodynamic bound. The parameters are chosen as τa = 0.02, τm = 0.2, α = 2.7 and  to be consistent with the real parameters of E. coli bacterial chemotaxis 7,14,16. We discuss the six different types of input signals βlt (red solid line) and noises

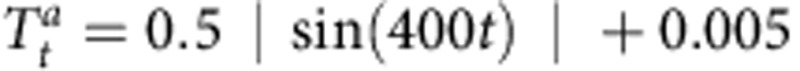

to be consistent with the real parameters of E. coli bacterial chemotaxis 7,14,16. We discuss the six different types of input signals βlt (red solid line) and noises  (green dashed line). (a) Step function: βlt = 0.01 and

(green dashed line). (a) Step function: βlt = 0.01 and  for t > 0. (b) Sinusoidal function: βlt = 0.01 sin(400t) and

for t > 0. (b) Sinusoidal function: βlt = 0.01 sin(400t) and  for t > 0. (c) Linear function: βlt = 10t and

for t > 0. (c) Linear function: βlt = 10t and  for t > 0. (d) Exponential decay: βLt = 0.01[1−exp(−200t)] and

for t > 0. (d) Exponential decay: βLt = 0.01[1−exp(−200t)] and  for t > 0. (e) Square wave:

for t > 0. (e) Square wave:  and

and  for t > 0, where

for t > 0, where  denotes the floor function. (f) Triangle wave:

denotes the floor function. (f) Triangle wave:  and

and  for t > 0.

for t > 0.

|

in six different types of dynamics of adaptation, where the ligand signal is given by a step function (Fig. 4a), a sinusoidal function (Fig. 4b), a linear function (Fig. 4c), an exponential decay (Fig. 4d), a square wave (Fig. 4e) and a triangle wave (Fig. 4f). These results confirm that  gives a tight bound of

gives a tight bound of  , implying that the transfer entropy characterizes the robustness well. In Fig. 4b,f, the robustness

, implying that the transfer entropy characterizes the robustness well. In Fig. 4b,f, the robustness  is nearly equal to the information-thermodynamics bound

is nearly equal to the information-thermodynamics bound  when the signal and noise are decreasing or increasing rapidly (for example,

when the signal and noise are decreasing or increasing rapidly (for example,  and t = 0.012 in Fig. 4f).

and t = 0.012 in Fig. 4f).

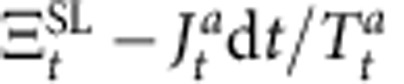
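For readers who want to reproduce the input protocols, the four signals whose closed forms are stated in the caption of Fig. 4 can be written as below. This is a sketch only: the dictionary `SIGNALS` and the helper `beta_l` are hypothetical names, the square and triangle waves are omitted because their expressions are not given here, and the accompanying noise protocols $T^a_t$ are not included.

```python
import math

# beta * l_t for t > 0, as quoted in the caption of Fig. 4 (panels a-d).
SIGNALS = {
    "step": lambda t: 0.01,
    "sinusoid": lambda t: 0.01 * math.sin(400.0 * t),
    "linear": lambda t: 10.0 * t,
    "exponential": lambda t: 0.01 * (1.0 - math.exp(-200.0 * t)),
}


def beta_l(kind, t):
    """Return beta * l_t for the named protocol; the signal is 0 for t <= 0."""
    if t <= 0.0:
        return 0.0
    return SIGNALS[kind](t)
```

A call such as `beta_l("exponential", t)` can be passed as the driving term of any integrator for equation (1).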

Conventional second law of thermodynamics

For the purpose of comparison, we next consider another upper bound of the robustness, which is given by the conventional second law of thermodynamics without information. We define the heat absorption by m as  , and the Shannon entropy change in the total system as

, and the Shannon entropy change in the total system as  with

with  , which vanishes in the stationary state. We can then show that

, which vanishes in the stationary state. We can then show that

|

is an upper bound of  , as a straightforward consequence of the conventional second law of thermodynamics of the total system of a and m (refs 28, 29). The conventional second law implies that the dissipation in m should compensate for that in a (Fig. 3). Figure 4 shows

, as a straightforward consequence of the conventional second law of thermodynamics of the total system of a and m (refs 28, 29). The conventional second law implies that the dissipation in m should compensate for that in a (Fig. 3). Figure 4 shows  along with

along with  and

and  . Remarkably, information-thermodynamic bound

. Remarkably, information-thermodynamic bound  gives a tighter bound of

gives a tighter bound of  than the conventional thermodynamics bound

than the conventional thermodynamics bound  such that

such that

|

for every non-stationary dynamics shown in Fig. 4. Moreover, we can analytically show inequalities (equation (8)) in the stationary state (Supplementary Note 4).

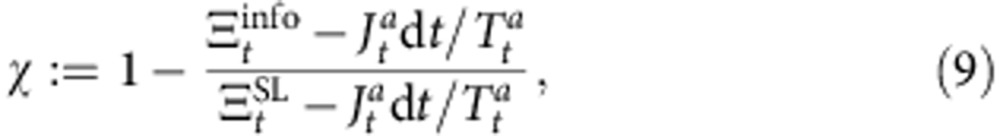

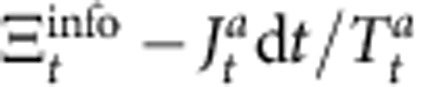
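The step from the conventional second law to this upper bound can be written out explicitly. The lines below are a sketch under the assumption that $J^a_t$ and $J^m_t$ are heat absorptions from baths of intensity $T^a_t$ and $T^m_t$, so that the total entropy production rate is the Shannon entropy change of $(a, m)$ minus the two heat terms; one natural choice consistent with equation (1), stated here as an assumption, is $J^m_t = \langle a_t \circ \dot{m}_t \rangle / \tau^m$ with $\circ$ the Stratonovich product.

```latex
% Non-negativity of the total entropy production rate of (a, m):
\frac{dS^{a,m}_t}{dt} - \frac{J^a_t}{T^a_t} - \frac{J^m_t}{T^m_t} \ge 0
% Multiplying by T^a_t > 0 and isolating J^a_t:
\quad\Longrightarrow\quad
J^a_t \le T^a_t \frac{dS^{a,m}_t}{dt} - \frac{T^a_t}{T^m_t}\, J^m_t .
```

The right-hand side is the conventional thermodynamic bound: heat released by m (negative $J^m_t$) raises the ceiling on the robustness of a.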

To compare the information-thermodynamic bound and the conventional thermodynamics one more quantitatively, we introduce an information-thermodynamic figure of merit based on the inequalities (equation (8)):

|

where the second term on the right-hand side is given by the ratio between the information-thermodynamic dissipation  and the entire thermodynamic dissipation

and the entire thermodynamic dissipation  . This quantity satisfies 0 ≤ χ ≤ 1, and

. This quantity satisfies 0 ≤ χ ≤ 1, and  (

( ) means that information-thermodynamic bound is much tighter (a little tighter) compared with the conventional thermodynamic bound. We numerically calculated χ in the aforementioned six types of dynamics of adaptation (Supplementary Figs 1–6). In the case of a linear function (Supplementary Fig. 3), we found that χ increases in time t and approaches to

) means that information-thermodynamic bound is much tighter (a little tighter) compared with the conventional thermodynamic bound. We numerically calculated χ in the aforementioned six types of dynamics of adaptation (Supplementary Figs 1–6). In the case of a linear function (Supplementary Fig. 3), we found that χ increases in time t and approaches to  . In this case, the signal transduction of E. coli chemotaxis is highly dissipative as a thermodynamic engine, but efficient as an information transmission device.

. In this case, the signal transduction of E. coli chemotaxis is highly dissipative as a thermodynamic engine, but efficient as an information transmission device.

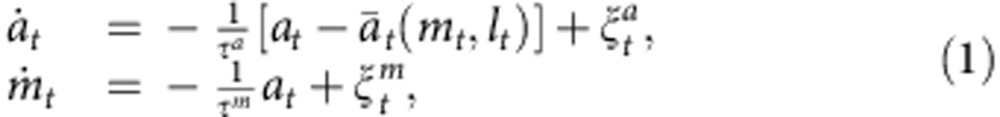
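The figure of merit is a one-line computation once the three quantities are known. The sketch below assumes the form $\chi = 1 - (\Xi^{\mathrm{info}} - J^a)/(\Xi^{\mathrm{SL}} - J^a)$ described in the text; the function name `figure_of_merit` and the error handling are our own choices.

```python
def figure_of_merit(J_a, xi_info, xi_sl):
    """Return chi = 1 - (xi_info - J_a) / (xi_sl - J_a).

    Expects J_a <= xi_info <= xi_sl, in which case 0 <= chi <= 1.
    """
    total_dissipation = xi_sl - J_a
    if total_dissipation <= 0.0:
        raise ValueError("xi_sl must exceed J_a for chi to be defined")
    return 1.0 - (xi_info - J_a) / total_dissipation
```

A value near 1 means the information-thermodynamic bound sits almost on top of the robustness; a value near 0 means it is barely tighter than the conventional bound.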

Comparison with Shannon's theory

We here discuss the similarity and the difference between our result and Shannon's information theory (refs 22, 23; Fig. 5). Shannon's second theorem (that is, the noisy-channel coding theorem) states that an upper bound of the achievable information rate R is given by the channel capacity C such that C ≥ R. The channel capacity C is defined as the maximum value of the mutual information with finite power, where the mutual information can be replaced by the transfer entropy $dI^{\mathrm{tr}}_t$ in the presence of a feedback loop21. R describes how long a bit sequence is needed for a channel coding to realize errorless communication through a noisy channel, where errorless means the coincidence between the input and output messages. Therefore, both $J^a_t$ and R characterize the robustness of information transmission against noise, and are bounded by the transfer entropy $dI^{\mathrm{tr}}_t$. In this sense, there exists an analogy between the second law of thermodynamics with information and Shannon's second theorem. In the case of biochemical signal transduction, the information-thermodynamic approach is more relevant, because there is no explicit channel coding inside cells. Moreover, while $J^a_t$ is an experimentally measurable quantity as mentioned below28,29, R cannot be properly defined in the absence of any artificial channel coding23. Therefore, $J^a_t$ is an intrinsic quantity to characterize the robustness of the information transduction inside cells.

Figure 5. Analogy and difference between our approach and Shannon's information theory.

(a) Information thermodynamics for biochemical signal transduction. The robustness $J^a_t$ is bounded by the transfer entropy $dI^{\mathrm{tr}}_t$ in the stationary states, which is a consequence of the second law of information thermodynamics. (b) Information theory for artificial communication. The achievable information rate R, given by the redundancy of the channel coding, is bounded by the channel capacity C, which is a consequence of Shannon's second theorem. If the noise is Gaussian, as is the case for E. coli chemotaxis, both the transfer entropy and the channel capacity are given by the power-to-noise ratio, under the condition that the initial distribution is Gaussian (see Methods section).

Discussion

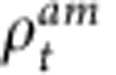

Our result can be experimentally validated by measuring the transfer entropy and thermodynamics quantities from the probability distribution of the amount of proteins in a biochemical system5,6,9,10,46,47,48,49. In fact, the transfer entropy dItr and thermodynamics quantities (that is,  and

and  ) can be obtained from the joint probability distribution of (at,mt,at+dt,mt+dt). The measurement of such a joint distribution would not be far from today's experimental technique in biophysics5,6,9,10,53,54,55,56. Experimental measurements of

) can be obtained from the joint probability distribution of (at,mt,at+dt,mt+dt). The measurement of such a joint distribution would not be far from today's experimental technique in biophysics5,6,9,10,53,54,55,56. Experimental measurements of  and

and  would lead to a novel classification of signal transduction in terms of the thermodynamics cost of information transmission.

would lead to a novel classification of signal transduction in terms of the thermodynamics cost of information transmission.

We note that, in ref. 16, the authors discussed that the entropy changes in two heat baths can be characterized by the accuracy of adaptation. In our study, we derived a bound for $J^a_t$, which is regarded as the robustness of signal transduction against the environmental noise. These two results capture complementary aspects of adaptation processes: accuracy and robustness.

We also note that our theory of information thermodynamics24 can be generalized to a broad class of signal transduction networks, including a feedback loop with time delay.

Methods

The outline of the derivation of inequality (4)

We here show the outline of the derivation of the information-thermodynamic inequality (equation (4); see also Supplementary Note 2 for details). The heat term in inequality (4) is given by the ratio between forward and backward path probabilities (refs 24, 28, 29), where the backward path probability can be calculated from the forward path probability. Thus, the difference $dI^{\mathrm{tr}}_t + dS^{a|m}_t - J^a_t\, dt / T^a_t$ is given by the Kullback–Leibler divergence23. From its non-negativity23, we have $dI^{\mathrm{tr}}_t + dS^{a|m}_t \ge J^a_t\, dt / T^a_t$. This inequality can be derived from the general inequality of information thermodynamics24 (see Supplementary Note 3 and Supplementary Fig. 7). As discussed in Supplementary Note 3, this inequality gives a weaker bound of the entropy production.

The analytical expression of the transfer entropy

In the case of E. coli chemotaxis, we have $\bar{a}_t = \alpha m_t - \beta l_t$, and equation (1) becomes linear. In this situation, if the initial distribution is Gaussian, we analytically obtain the transfer entropy up to the order of dt (Supplementary Note 4): $dI^{\mathrm{tr}}_t = \frac{1}{2} \ln\left(1 + P^m_t\, dt / N^m_t\right)$, where $N^m_t := 2 T^m_t$ describes the intensity of the environmental noise, and $P^m_t := [1 - (\rho^{am}_t)^2]\, V^a_t / (\tau^m)^2$ describes the intensity of the signal from a to m per unit time with $V^x_t := \langle x_t^2 \rangle - \langle x_t \rangle^2$, and $\rho^{am}_t := (\langle a_t m_t \rangle - \langle a_t \rangle \langle m_t \rangle) / \sqrt{V^a_t V^m_t}$. We note that $dI^{\mathrm{tr}}_t$ for the Gaussian case is greater than that of the non-Gaussian case, if $P^m_t$ and $N^m_t$ are the same23. We also note that the above analytical expression of $dI^{\mathrm{tr}}_t$ has the same form as the Shannon–Hartley theorem23.
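A short worked derivation shows why a Shannon–Hartley-like expression appears. It is a sketch assuming jointly Gaussian $(a_t, m_t)$ and the linear update of m from equation (1); the symbols $V^a_t$ (variance of $a_t$) and $\rho^{am}_t$ (correlation coefficient of $a_t$ and $m_t$) are introduced here for illustration, and the full calculation is in Supplementary Note 4.

```latex
% One time step of m from equation (1), with Gaussian increment \Delta W_t:
m_{t+dt} = m_t - \frac{a_t}{\tau^m}\, dt + \Delta W_t ,
\qquad \mathrm{Var}[\Delta W_t] = 2 T^m_t\, dt .
% Given m_t, the signal -a_t dt / \tau^m is Gaussian with variance
\mathrm{Var}\!\left[\frac{a_t}{\tau^m}\, dt \,\Big|\, m_t\right]
  = \frac{\left[1 - (\rho^{am}_t)^2\right] V^a_t}{(\tau^m)^2}\, dt^2 .
% Mutual information of a Gaussian signal in additive Gaussian noise:
dI^{\mathrm{tr}}_t
  = \frac{1}{2} \ln\!\left( 1 +
      \frac{\left[1 - (\rho^{am}_t)^2\right] V^a_t\, dt}{2 T^m_t\, (\tau^m)^2} \right)
  \simeq \frac{\left[1 - (\rho^{am}_t)^2\right] V^a_t}{4 T^m_t\, (\tau^m)^2}\, dt .
```

The ratio inside the logarithm plays the role of a signal-to-noise ratio, with the conditional variance of the kinase activity as signal power and $2 T^m_t$ as noise power per unit time.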

Additional information

How to cite this article: Ito, S. and Sagawa, T. Maxwell's demon in biochemical signal transduction with feedback loop. Nat. Commun. 6:7498 doi: 10.1038/ncomms8498 (2015).

Supplementary Material

Supplementary Figures 1-7, Supplementary Notes 1-4 and Supplementary References

Acknowledgments

We are grateful to S.-I. Sasa, U. Seifert, M. L. Rosinberg, N. Shiraishi, K. Kawaguchi, H. Tajima, A.C. Barato, D. Hartich and M. Sano for their valuable discussions. This work was supported by the Grants-in-Aid for JSPS Fellows (grant no. 24·8593), by JSPS KAKENHI grant numbers 25800217 and 22340114, by KAKENHI no. 25103003 ‘Fluctuation & Structure' and by Platform for Dynamic Approaches to Living System from MEXT, Japan.

Footnotes

Author contributions: S.I. mainly constructed the theory, carried out the analytical and numerical calculations, and wrote the paper. T.S. also constructed the theory and wrote the paper. Both authors discussed the results at all stages.

References

1. Phillips R., Kondev J. & Theriot J. Physical Biology of the Cell (Garland Science, 2009).

2. Korobkova E., Emonet T., Vilar J. M., Shimizu T. S. & Cluzel P. From molecular noise to behavioural variability in a single bacterium. Nature 428, 574–578 (2004).

3. Lestas I., Vinnicombe G. & Paulsson J. Fundamental limits on the suppression of molecular fluctuations. Nature 467, 174–178 (2010).

4. Andrews B. W. & Iglesias P. A. An information-theoretic characterization of the optimal gradient sensing response of cells. PLoS Comput. Biol. 3, e153 (2007).

5. Skerker J. M. et al. Rewiring the specificity of two-component signal transduction systems. Cell 133, 1043–1054 (2008).

6. Mehta P., Goyal S., Long T., Bassler B. L. & Wingreen N. S. Information processing and signal integration in bacterial quorum sensing. Mol. Syst. Biol. 5, 325 (2009).

7. Tostevin F. & ten Wolde P. R. Mutual information between input and output trajectories of biochemical networks. Phys. Rev. Lett. 102, 218101 (2009).

8. Tu Y. The nonequilibrium mechanism for ultrasensitivity in a biological switch: Sensing by Maxwell's demons. Proc. Natl Acad. Sci. USA 105, 11737–11741 (2008).

9. Cheong R., Rhee A., Wang C. J., Nemenman I. & Levchenko A. Information transduction capacity of noisy biochemical signaling networks. Science 334, 354–358 (2011).

10. Uda S. et al. Robustness and compensation of information transmission of signaling pathways. Science 341, 558–561 (2013).

11. Govern C. C. & ten Wolde P. R. Optimal resource allocation in cellular sensing systems. Proc. Natl Acad. Sci. USA 111, 17486–17491 (2014).

12. Barkai N. & Leibler S. Robustness in simple biochemical networks. Nature 387, 913–917 (1997).

13. Alon U., Surette M. G., Barkai N. & Leibler S. Robustness in bacterial chemotaxis. Nature 397, 168–171 (1999).

14. Tu Y., Shimizu T. S. & Berg H. C. Modeling the chemotactic response of Escherichia coli to time-varying stimuli. Proc. Natl Acad. Sci. USA 105, 14855–14860 (2008).

15. Shimizu T. S., Tu Y. & Berg H. C. A modular gradient-sensing network for chemotaxis in Escherichia coli revealed by responses to time-varying stimuli. Mol. Syst. Biol. 6, 382 (2010).

16. Lan G., Sartori P., Neumann S., Sourjik V. & Tu Y. The energy-speed-accuracy trade-off in sensory adaptation. Nat. Phys. 8, 422–428 (2012).

17. Schreiber T. Measuring information transfer. Phys. Rev. Lett. 85, 461 (2000).

18. Vicente R., Wibral M., Lindner M. & Pipa G. Transfer entropy - a model-free measure of effective connectivity for the neurosciences. J. Comput. Neurosci. 30, 45–67 (2011).

19. Bauer M., Cox J. W., Caveness M. H., Downs J. J. & Thornhill N. F. Finding the direction of disturbance propagation in a chemical process using transfer entropy. IEEE Trans. Control Syst. Technol. 15, 12–21 (2007).

20. Lungarella M. & Sporns O. Mapping information flow in sensorimotor networks. PLoS Comput. Biol. 2, e144 (2006).

21. Massey J. L. Causality, feedback and directed information. Proc. Int. Symp. Inf. Theory Appl. 303–305 (1990).

22. Shannon C. E. A mathematical theory of communication. Bell Syst. Tech. J. 27, 379 (1948).

23. Cover T. M. & Thomas J. A. Elements of Information Theory (John Wiley and Sons, 1991).

24. Ito S. & Sagawa T. Information thermodynamics on causal networks. Phys. Rev. Lett. 111, 180603 (2013).

25. Maxwell J. C. Theory of Heat (Appleton, 1871).

26. Szilard L. On the decrease of entropy in a thermodynamic system by the intervention of intelligent beings. Z. Phys. 53, 840–856 (1929).

27. Leff H. S. & Rex A. F. (eds) Maxwell's Demon 2: Entropy, Classical and Quantum Information, Computing (Princeton University Press, 2003).

28. Sekimoto K. Stochastic Energetics (Springer, 2010).

29. Seifert U. Stochastic thermodynamics, fluctuation theorems and molecular machines. Rep. Prog. Phys. 75, 126001 (2012).

30. Allahverdyan A. E., Janzing D. & Mahler G. Thermodynamic efficiency of information and heat flow. J. Stat. Mech. P09011 (2009).

31. Sagawa T. & Ueda M. Generalized Jarzynski equality under nonequilibrium feedback control. Phys. Rev. Lett. 104, 090602 (2010).

32. Toyabe S., Sagawa T., Ueda M., Muneyuki E. & Sano M. Experimental demonstration of information-to-energy conversion and validation of the generalized Jarzynski equality. Nat. Phys. 6, 988–992 (2010).

33. Horowitz J. M. & Vaikuntanathan S. Nonequilibrium detailed fluctuation theorem for repeated discrete feedback. Phys. Rev. E 82, 061120 (2010).

34. Fujitani Y. & Suzuki H. Jarzynski equality modified in the linear feedback system. J. Phys. Soc. Jpn. 79, 104003–104007 (2010).

35. Horowitz J. M. & Parrondo J. M. Thermodynamic reversibility in feedback processes. Europhys. Lett. 95, 10005 (2011).

36. Ito S. & Sano M. Effects of error on fluctuations under feedback control. Phys. Rev. E 84, 021123 (2011).

37. Sagawa T. & Ueda M. Fluctuation theorem with information exchange: role of correlations in stochastic thermodynamics. Phys. Rev. Lett. 109, 180602 (2012).

38. Kundu A. Nonequilibrium fluctuation theorem for systems under discrete and continuous feedback control. Phys. Rev. E 86, 021107 (2012).

39. Mandal D. & Jarzynski C. Work and information processing in a solvable model of Maxwell's demon. Proc. Natl Acad. Sci. USA 109, 11641–11645 (2012).

40. Bérut A. et al. Experimental verification of Landauer's principle linking information and thermodynamics. Nature 483, 187–189 (2012).

41. Hartich D., Barato A. C. & Seifert U. Stochastic thermodynamics of bipartite systems: transfer entropy inequalities and a Maxwell's demon interpretation. J. Stat. Mech. P02016 (2014).

42. Munakata T. & Rosinberg M. L. Entropy production and fluctuation theorems for Langevin processes under continuous non-Markovian feedback control. Phys. Rev. Lett. 112, 180601 (2014).

43. Horowitz J. M. & Esposito M. Thermodynamics with continuous information flow. Phys. Rev. X 4, 031015 (2014).

44. Barato A. C., Hartich D. & Seifert U. Efficiency of cellular information processing. New J. Phys. 16, 103024 (2014).

45. Sartori P., Granger L., Lee C. F. & Horowitz J. M. Thermodynamic costs of information processing in sensory adaptation. PLoS Comput. Biol. 10, e1003974 (2014).

46. Lang A. H., Fisher C. K., Mora T. & Mehta P. Thermodynamics of statistical inference by cells. Phys. Rev. Lett. 113, 148103 (2014).

47. Horowitz J. M. & Sandberg H. Second-law-like inequalities with information and their interpretations. New J. Phys. 16, 125007 (2014).

48. Shiraishi N. & Sagawa T. Fluctuation theorem for partially masked nonequilibrium dynamics. Phys. Rev. E 91, 012130 (2015).

49. Sartori P. & Tu Y. Noise filtering strategies in adaptive biochemical signaling networks. J. Stat. Phys. 142, 1206–1217 (2011).

50. Kaiser A. & Schreiber T. Information transfer in continuous processes. Physica D 166, 43–62 (2002).

51. Hlavackova-Schindler K., Palus M., Vejmelka M. & Bhattacharya J. Causality detection based on information-theoretic approaches in time series analysis. Phys. Rep. 441, 1–46 (2007).

52. Barnett L., Barrett A. B. & Seth A. K. Granger causality and transfer entropy are equivalent for Gaussian variables. Phys. Rev. Lett. 103, 238701 (2009).

53. Collin D. et al. Verification of the Crooks fluctuation theorem and recovery of RNA folding free energies. Nature 437, 231–234 (2005).

54. Ritort F. Single-molecule experiments in biological physics: methods and applications. J. Phys. Condens. Matter 18, R531 (2006).

55. Toyabe S. et al. Nonequilibrium energetics of a single F1-ATPase molecule. Phys. Rev. Lett. 104, 198103 (2010).

56. Hayashi K., Ueno H., Iino R. & Noji H. Fluctuation theorem applied to F1-ATPase. Phys. Rev. Lett. 104, 218103 (2010).
